Abstract

Multistage stochastic optimization is used to solve many real-life problems in which decisions are taken at multiple times. Such problems require a representation of the underlying stochastic processes, which are usually approximated by scenario trees. In this article, we implement seven scenario reduction algorithms: three based on random extraction, named Random, and four based on specific distance measures, named Distance-based. Three of the latter are well known in the literature, while the fourth is a new approach, namely nodal clustering. We compare all the algorithms in terms of computational cost and information cost. The computational cost is measured by the time needed for the reduction, while the information cost is measured by the nested distance between the original and the reduced tree. Moreover, we formulate and solve a multistage stochastic portfolio selection problem to measure the distance between the optimal solutions, and between the optimal objective values, obtained with the original and the reduced trees.

1 Introduction

In many real-life problems, some parameters describing the future may be uncertain, e.g. prices or demand levels. To solve this type of problem, the literature suggests exploiting the instruments provided by stochastic optimization, see Birge and Louveaux (1997) and Powell (2014). More precisely, we distinguish between single-stage stochastic optimization, where only one decision is taken, and multistage stochastic optimization, where the solution is a sequence of decisions over a given time horizon, see Shapiro et al. (2009) and Dupačová et al. (2002). To deal with these types of problems, we typically assume that the uncertain parameters are random variables with a known probability distribution. This distribution is usually approximated by a discrete distribution represented by a scenario tree.

In order to approximate the initial distribution well, the scenario tree may become rather large, making the problem computationally intractable. Therefore, several scenario tree reduction algorithms have been proposed in the literature to suit the available computational capabilities, e.g. Dupačová et al. (2003) and Pflug and Pichler (2014). In this article, we compare representative reduction algorithms with each other. In particular, we apply three reduction approaches based on random extraction and four more advanced reduction algorithms based on distance measures. With more sophisticated scenario reduction techniques, the quality of the reduction is expected to be better than with random algorithms. We assume that the quality of the reduction is given by the closeness of the two scenario trees: the original one and the reduced one. To measure the distance between two scenario trees we use the Nested Distance (ND) proposed in Pflug and Pichler (2012, 2014). The aim of this work is then to assess how good the reduction algorithms are relative to their computational effort, i.e. the computing time that each algorithm requires.

Moreover, it is well known that multistage stochastic optimization is particularly helpful for addressing portfolio management problems, see Dupačová et al. (2002) for an overview of the topic and the recent applications of Vitali et al. (2017), Kopa and Petrová (2017), Consigli et al. (2018a), Kopa et al. (2018), Moriggia et al. (2019) and references therein. For this reason, we also formulate and solve a multistage portfolio selection problem. The aim is to measure not only the distance between the initial tree and the reduced tree and the time needed to generate the reduced tree, but also the distances between the optimal objective values and between the here-and-now solutions.

This paper is structured as follows: the notation and the definition of the ND adopted throughout the paper are presented in Sect. 2. In Sect. 3, we present the reduction algorithms analysed in the subsequent sections. In particular, in Sect. 3.1 we present the most naive, non-deterministic algorithms and in Sect. 3.2 we introduce four other algorithms, which are more advanced and deterministic. Section 4 introduces the multistage portfolio selection problem together with the objective and solution distances. Section 5 is devoted to the numerical analysis. In particular, Sect. 5.2 analyses the results of the nested distance, while Sect. 5.3 uses the portfolio model to analyse the objective distance and the solution distance in Sects. 5.3.1 and 5.3.2, respectively. Section 6 concludes the paper.

2 Notation and nested distance

We represent a scenario tree as follows. Suppose that the tree consists of N nodes \(n=1,\dots ,N\). The root node is the node \(n=1\). For each node n, we define its stage t(n) and denote the set of its direct successors (children) by \(n+\); for each node except the root, we denote its direct predecessor (parent) by \(n-\), the sequence of its ancestors \(n-, n--, \ldots , 1\) by \({{\mathscr {A}}}(n)\), and the union of the set of ancestors with the node itself by \(\overline{{\mathscr {A}}}(n)={{\mathscr {A}}}(n)\cup \{n\}\). A subtree is a set of nodes consisting of a node and all its descendants. A particular case of subtree is a degenerate subtree, which is a set composed of a single node. The leaves of the tree are degenerate subtrees. A tree is called regular if all the nodes in the same stage have the same number of children. In the case of regular trees, the sequence of the numbers of children of the nodes in subsequent stages is called the branching structure (or bushiness). All nodes are divided into disjoint node sets \({{\mathscr {K}}}_t,\ t=0,\dots ,T,\) collecting the nodes of each stage. In this notation, \({\mathscr {K}}_0 = \{1\}\) contains the root, \({\mathscr {K}}_T\) contains the leaves, and \({\mathscr {K}}_t,\ t=1,\dots ,T-1,\) contain the nodes of the inner stages. By \(p_n\) we denote the absolute probability of the node n; the conditional probability of the node n (given \(n-\)) is denoted by \(q_n\). Each node also carries an A-dimensional vector of values \(\xi _n\), which is the realization of the random vector in that node.

In Fig. 1 we report an example of a regular tree spanning 4 stages, \(t=0,\ldots ,3\) and having branching structure 3-2-3. The number of nodes is \(N=28\). For instance, node \(n=7\) is on the second stage, \(t(7)=2\), so it belongs to \({{\mathscr {K}}}_2\), it has children \(7+=\{17,18,19\}\), parent \(7-=\{3\}\), ancestors \({{\mathscr {A}}}(7)=\{3,1\}\) and \(\overline{{\mathscr {A}}}(7) =\{7,3,1\}\), the corresponding vector of values of the random variable is \(\xi _7\), and, assuming that in the whole tree the conditional probabilities are equally distributed among the children of the same node, it has conditional probability \(q_7=\frac{1}{2}\) and absolute probability \(p_7=q_3\cdot q_7=\frac{1}{6}\). The set of the leaves is \({{\mathscr {K}}}_3=\{11,\ldots ,28\}\).
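To make the notation concrete, the following Python sketch builds a regular tree and computes \(n+\), \({{\mathscr {A}}}(n)\) and \(p_n\). It is only an illustration under our own assumptions: nodes are labelled breadth-first starting from the root 1 (which reproduces the labels of Fig. 1), siblings share equal conditional probabilities, and the helper names (`regular_tree`, `children`, `ancestors`, `absolute_prob`) are hypothetical.

```python
from fractions import Fraction


def regular_tree(branching):
    """Regular tree with breadth-first labels (root = 1) and, as an assumption,
    equal conditional probabilities q_n = 1/b among the b children of a node."""
    parent, stage, q = {1: None}, {1: 0}, {1: Fraction(1)}
    frontier, label = [1], 2
    for t, b in enumerate(branching, start=1):
        new_frontier = []
        for n in frontier:
            for _ in range(b):
                parent[label], stage[label], q[label] = n, t, Fraction(1, b)
                new_frontier.append(label)
                label += 1
        frontier = new_frontier
    return parent, stage, q


def children(parent, n):
    """Direct successors n+."""
    return [m for m, par in parent.items() if par == n]


def ancestors(parent, n):
    """A(n) = (n-, n--, ..., 1)."""
    out = []
    while parent[n] is not None:
        n = parent[n]
        out.append(n)
    return out


def absolute_prob(parent, q, n):
    """p_n: product of the conditional probabilities along the path to the root."""
    p = q[n]
    for a in ancestors(parent, n):
        p *= q[a]
    return p


parent, stage, q = regular_tree((3, 2, 3))  # the tree of Fig. 1
print(len(parent), children(parent, 7), ancestors(parent, 7), absolute_prob(parent, q, 7))
# prints: 28 [17, 18, 19] [3, 1] 1/6
```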

To measure the distance between two scenario trees, we adopt the concept of the ND proposed in Pflug and Pichler (2012, 2014), and then further analysed in Pflug and Pichler (2015, 2016), Timonina (2015), Kovacevic and Pichler (2015) and Vitali (2018). In these papers, the ND is computed by backward iteration and can be interpreted as the optimal transportation cost for moving one scenario tree onto the other, taking into account the conditional probabilities of the nodes and, of course, the realization of the random variable in each node.

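Given two scenario trees, we start by computing the distance between the scenarios of the first tree and the scenarios of the second tree. We denote all the elements of the second tree with a prime \((')\). For each pair of leaf nodes \(i \in {\mathscr {K}}_T\) and \(j \in {\mathscr {K}}'_T\) we compute the distance

$$\begin{aligned} \mathrm{dist}_T(i,j) = { d}\big ((\xi _{i_0},\dots ,\xi _{i_T}),(\xi '_{j_0},\dots ,\xi '_{j_T})\big ), \end{aligned}$$

where \((i_0,\dots ,i_T)\) and \((j_0,\dots ,j_T)\) are the paths from the roots to the leaves i and j, respectively, and \({ d}\) is the classic \(\ell ^1\) distance.

Then we move backward from \(t = T-1\) down to \(t=0\) and, for all combinations of \(k\in {\mathscr {K}}_t\) and \(l \in {\mathscr {K}}'_t\), we solve the following linear problem

$$\begin{aligned} \mathrm{dist}_t(k,l) = \min _{\pi }\ &\sum _{i\in k+}\sum _{j\in l+} \pi _{i,j}\, \mathrm{dist}_{t+1}(i,j)\\ \text {s.t. }\ &\sum _{j\in l+} \pi _{i,j} = q_i,\quad i\in k+,\\ &\sum _{i\in k+} \pi _{i,j} = q'_j,\quad j\in l+,\\ &\pi _{i,j}\ge 0,\quad i\in k+,\ j\in l+, \end{aligned}$$

where \(\pi \) is a transport plan between the children \(k+\) and \(l+\).

The ND between the trees is the distance between the subtrees at level 0, i.e. at their roots:

$$\begin{aligned} \mathrm{ND} = \mathrm{dist}_0(1,1'). \end{aligned}$$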
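To make the backward recursion concrete, here is a minimal, dependency-free Python sketch of the order-one ND with the \(\ell ^1\) path distance. It is our own illustration, not the implementation used in this paper. The tree encoding (lists `parent`, `value`, `q` indexed from 0, root 0 with parent -1, parents listed before their children, all leaves at the same stage T), the function names, and the small successive-shortest-path solver used for each transport LP are assumptions; any LP solver could be used for the inner problems instead.

```python
import math


def transport(q, qp, cost, eps=1e-9):
    """Optimal value of  min sum_ij pi_ij cost_ij  s.t. sum_j pi_ij = q_i,
    sum_i pi_ij = qp_j, pi >= 0, via successive shortest paths on the residual graph."""
    m, n = len(q), len(qp)
    flow = [[0.0] * n for _ in range(m)]
    supply, demand = list(q), list(qp)
    while sum(supply) > eps:
        # rows are 0..m-1, columns m..m+n-1; rows with mass left act as sources
        dist = [0.0 if s > eps else math.inf for s in supply] + [math.inf] * n
        prev = [None] * (m + n)
        for _ in range(m + n):  # Bellman-Ford passes
            changed = False
            for i in range(m):
                for j in range(n):
                    if dist[i] + cost[i][j] < dist[m + j] - eps:  # forward arc row -> column
                        dist[m + j], prev[m + j], changed = dist[i] + cost[i][j], i, True
                    if flow[i][j] > eps and dist[m + j] - cost[i][j] < dist[i] - eps:
                        dist[i], prev[i], changed = dist[m + j] - cost[i][j], m + j, True
            if not changed:
                break
        open_cols = [j for j in range(n) if demand[j] > eps]
        if not open_cols:
            break
        j = min(open_cols, key=lambda c: dist[m + c])  # cheapest column still open
        arcs, node = [], m + j
        while prev[node] is not None:  # walk back to a source row
            arcs.append((prev[node], node))
            node = prev[node]
        delta = min(supply[node], demand[j], *(flow[v][u - m] for u, v in arcs if u >= m))
        for u, v in arcs:
            if u < m:
                flow[u][v - m] += delta  # push mass from row u to column v
            else:
                flow[v][u - m] -= delta  # cancel mass previously sent from row v to column u
        supply[node] -= delta
        demand[j] -= delta
    return sum(flow[i][j] * cost[i][j] for i in range(m) for j in range(n))


def nested_distance(tree1, tree2):
    """Order-one ND: l1 distance between whole paths at the leaves, then one LP per pair."""
    def stages(tree):
        st = [0] * len(tree["parent"])
        for v in range(1, len(st)):
            st[v] = st[tree["parent"][v]] + 1
        return st

    def path(tree, v):
        out = []
        while v != -1:
            out.append(tree["value"][v])
            v = tree["parent"][v]
        return out[::-1]

    def kids(tree):
        return {k: [v for v, par in enumerate(tree["parent"]) if par == k]
                for k in range(len(tree["parent"]))}

    def at(st, t):
        return [v for v, s in enumerate(st) if s == t]

    st1, st2, k1, k2 = stages(tree1), stages(tree2), kids(tree1), kids(tree2)
    T = max(st1)
    dist = {}
    for i in at(st1, T):  # dist_T(i, j) between leaves
        for j in at(st2, T):
            dist[i, j] = sum(abs(a - b) for x, y in zip(path(tree1, i), path(tree2, j))
                             for a, b in zip(x, y))
    for t in range(T - 1, -1, -1):  # backward recursion
        for k in at(st1, t):
            for l in at(st2, t):
                cost = [[dist[i, j] for j in k2[l]] for i in k1[k]]
                dist[k, l] = transport([tree1["q"][i] for i in k1[k]],
                                       [tree2["q"][j] for j in k2[l]], cost)
    return dist[0, 0]


A = {"parent": [-1, 0, 0], "value": [(0.0,), (0.0,), (2.0,)], "q": [1.0, 0.5, 0.5]}
B = {"parent": [-1, 0], "value": [(0.0,), (1.0,)], "q": [1.0, 1.0]}
print(nested_distance(A, B))  # prints 1.0
```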

3 Scenario reduction algorithms

As mentioned above, in many situations we have a stochastic process represented by a huge scenario tree, and we would like to reduce (approximate) it by a smaller one in order to be able to solve a stochastic optimization problem based on the scenario tree itself. Many scenario reduction algorithms have been proposed in the literature. We have selected a set of representative algorithms to assess their quality in terms of computational cost and information cost, i.e. computation time and loss of the information carried by the tree. In particular, we divide the scenario reduction algorithms into two groups, which we name Random and Distance-based, see Table 1. The random algorithms randomly extract some nodes from a huge tree to generate a smaller one. These algorithms are easy to implement and fast to run. However, the resulting reduced tree is itself random, because it is based on a random sample, and therefore the quality of the reduction may also vary. The distance-based algorithms represent different classes of reduction approaches, according to the distance used to merge the closest elements or to eliminate the furthest: distance between scenarios, distance between nodes and distance between subtrees.

3.1 Random algorithms

Random algorithms create a reduced tree by randomly extracting the elements (nodes and/or scenarios) of the original tree. Indeed, these algorithms neither compute any distance measure nor solve any nested optimization. They are easy to implement and typically very fast as will be shown in the numerical section. All these algorithms, being based on a random extraction, do not produce a unique reduced tree, but they identify a different reduced tree every time they are run.

3.1.1 Nodal extraction

The first algorithm we propose takes as input an original tree and the desired structure of the reduced tree, i.e. the number of nodes in each stage and the branching structure are given. Then, at each stage, we randomly select, among the nodes of that stage of the original tree, a set of nodes that will become the nodes of the same stage of the reduced tree. Using this approach, we can completely alter the parent-child relationships of the original tree. Therefore, it can be used only for those scenario trees that exhibit stagewise independence, i.e. independence between stages. Considering the example depicted in Fig. 1 and assuming we want a reduced tree with branching 2-1-2, we could obtain, for instance, the reduced tree shown in Fig. 2. In this case, for the second stage we extracted the nodes \(\{2,4\}\), for the third the nodes \(\{9,7\}\) and for the last stage the nodes \(\{13,27,21,19\}\). Clearly, the parent-child relationships of the original tree are not preserved.
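The extraction step can be sketched in Python as follows. This is a minimal illustration, not the implementation used in our experiments: the original tree is assumed to be given as a list of node labels per stage, the function name is hypothetical, the rule that b consecutive draws share the same reduced parent is our own choice, and probabilities are not handled.

```python
import random


def nodal_extraction(nodes_by_stage, branching, rng=random):
    """Nodal extraction: at each stage draw, without replacement, as many nodes of the
    original stage as the reduced stage needs. Original parent-child links are ignored:
    b consecutive draws are simply attached to the same reduced parent."""
    root = nodes_by_stage[0][0]
    reduced_parent = {root: None}
    previous = [root]
    for t, b in enumerate(branching, start=1):
        drawn = rng.sample(nodes_by_stage[t], b * len(previous))
        for k, node in enumerate(drawn):
            reduced_parent[node] = previous[k // b]
        previous = drawn
    return reduced_parent


# Fig. 1 tree (branching 3-2-3) reduced to branching 2-1-2
stages = [[1], [2, 3, 4], list(range(5, 11)), list(range(11, 29))]
print(nodal_extraction(stages, (2, 1, 2), random.Random(0)))
```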

3.1.2 Improved nodal extraction

To address the issue of the previous approach, i.e. the loss of the parent-child relationships, nodes can be randomly sampled only among the children of the nodes already selected in the previous stage. In other words, at each stage and for each node already in the reduced tree, we sample its children in the reduced tree from its children in the original tree. Therefore, this approach proceeds forward and preserves the original parent-child relationships. Considering the example shown in Fig. 1 and assuming we want a reduced tree with branching 2-1-2, we could, for example, obtain the reduced tree shown in Fig. 3. In this case, for the second stage we extract the nodes \(\{2,4\}\); for the third stage we extract \(\{5\}\) as child of \(\{2\}\) and \(\{10\}\) as child of \(\{4\}\); for the fourth stage we extract \(\{11,13\}\) as children of \(\{5\}\) and \(\{27,28\}\) as children of \(\{10\}\). In this way, we keep the parent-child relationships of the original tree.
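A corresponding Python sketch of the improved variant, under the same assumptions as before (hypothetical function name, probabilities not handled). The only change is that the children of each reduced node are drawn from that node's own children in the original tree, given here as a `{node: [children]}` map.

```python
import random


def improved_nodal_extraction(children, root, branching, rng=random):
    """Improved nodal extraction: the children of each reduced node are drawn only
    among its own children in the original tree, so parent-child links are preserved."""
    reduced_parent = {root: None}
    previous = [root]
    for b in branching:
        current = []
        for node in previous:
            for child in rng.sample(children[node], b):
                reduced_parent[child] = node
                current.append(child)
        previous = current
    return reduced_parent


# children map of the Fig. 1 tree with breadth-first labels
children = {1: [2, 3, 4], 2: [5, 6], 3: [7, 8], 4: [9, 10]}
for n in range(5, 11):
    children[n] = [3 * n - 4, 3 * n - 3, 3 * n - 2]
print(improved_nodal_extraction(children, 1, (2, 1, 2), random.Random(1)))
```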

3.1.3 Scenario extraction

The scenario extraction approach requires as input an original tree and the number of scenarios of the reduced tree. The algorithm then consists of two steps. In the first step, it randomly samples the given number of scenarios from the original tree and generates the reduced tree as a fan of such scenarios. In the second step, it merges the overlapping nodes and sets the probabilities of the leaves so that their original ratios are preserved. For example, if we select three leaf nodes with original probabilities 0.2, 0.1 and 0.3, in the reduced tree their probabilities will be 1/3, 1/6 and 1/2, i.e. the original ones divided by their sum 0.6. The probabilities of nodes in previous stages are computed recursively as the sum of the probabilities of their children.

Using this approach, many nodes may have only one child. Therefore, the reduced tree may have a larger total number of nodes than the trees generated with the other two random algorithms.

Considering the example depicted in Fig. 1 and assuming we want a reduced tree with 4 scenarios as in the previous examples, we could, for instance, in the first step extract from the original scenario tree the scenarios having as leaves the nodes \(\{12,13,19,22\}\) and compose the fan of scenarios in Fig. 4a and then, in the second step, we obtain the final reduced scenario tree displayed in Fig. 4b. In this way, we keep the parent-children relationships of the original tree. However, we also notice that the size of the scenario tree increases compared to the other two random algorithms since the total number of nodes is 10 while with the other two approaches it was 9.
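A Python sketch of the two steps, assuming the tree is stored as a `{node: parent}` map and the leaf probabilities as a `{leaf: probability}` map; both function names are hypothetical. The example rebuilds the tree of Fig. 1 and merges the four scenarios ending at the leaves 12, 13, 19 and 22.

```python
import random


def merge_scenarios(parent, prob, sampled_leaves):
    """Second step: merge the fan of sampled scenarios into a tree. Leaf probabilities
    are divided by their sum (keeping the original ratios); every other node gets the
    sum of the probabilities of the sampled leaves below it."""
    total = sum(prob[leaf] for leaf in sampled_leaves)
    reduced = {}  # node of the reduced tree -> probability
    for leaf in sampled_leaves:
        node, share = leaf, prob[leaf] / total
        while node is not None:  # shared ancestors are merged and accumulate mass
            reduced[node] = reduced.get(node, 0) + share
            node = parent[node]
    return reduced


def extract_scenarios(parent, prob, leaves, k, rng=random):
    """First step: draw k scenarios (identified by their leaves) without replacement."""
    return merge_scenarios(parent, prob, rng.sample(leaves, k))


# Fig. 1 tree: breadth-first labels, branching 3-2-3, equiprobable leaves
parent, label = {1: None}, 2
for node, b in [(1, 3), (2, 2), (3, 2), (4, 2)] + [(n, 3) for n in range(5, 11)]:
    for _ in range(b):
        parent[label] = node
        label += 1
leaf_prob = {leaf: 1 / 18 for leaf in range(11, 29)}
reduced = merge_scenarios(parent, leaf_prob, [12, 13, 19, 22])
print(len(reduced), sorted(reduced))  # 10 nodes in total
```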

3.2 Distance-based algorithms

Since random algorithms do not use the information about the values of the nodes, it is natural to suspect that the reduced trees obtained by these methods might not be very close to the original tree. For this reason, we also consider approaches that take into account both the values of the nodes and the tree structure. In this section, we introduce four different approaches that tackle this issue.

3.2.1 Single scenario reduction

The single scenario reduction is proposed and described in detail in Dupačová et al. (2003). As the name suggests, it reduces the original tree one scenario at a time, taking as input an original scenario tree and the desired number of scenarios of the reduced tree. At each iteration, this method eliminates one scenario that is close to another scenario and also carries a small probability. To achieve this, the algorithm performs the following three steps. First, the algorithm measures the distances between all the scenarios and constructs the matrix composed of the elements

$$\begin{aligned} c_{i,j} = { d}\big ((\xi _{i_0},\dots ,\xi _{i_T}),(\xi _{j_0},\dots ,\xi _{j_T})\big ), \end{aligned}$$

where \((i_0,\dots ,i_{T})\) and \((j_0,\dots ,j_{T})\) are the paths of the scenarios i and j, respectively. We use the \(\ell ^1\) distance, although as an alternative one could use any well-defined distance on \({\mathbb {R}}^{(T+1)\times A}\). Second, for each scenario i the algorithm defines

$$\begin{aligned} D(i) = p_i \min _{j\ne i} c_{i,j}, \end{aligned}$$

where \(p_i\) are the scenario probabilities and the minimum is taken over the remaining scenarios, and the algorithm eliminates the scenario for which D(i) is the smallest. If \(\mathrm{arg}\,\mathrm{min} D(i)\) contains more than one element, we can either randomly choose the scenario to be eliminated (which leads to a non-deterministic solution) or simply eliminate the first one (which leads to a deterministic solution).

Finally, in the third step, the algorithm identifies the scenario that is the closest to the eliminated one and increases its probability by the probability of the eliminated scenario, i.e. if the eliminated scenario is \(i^*\), we find

$$\begin{aligned} j^* \in \mathop {\mathrm{arg\,min}}_{j\ne i^*} c_{i^*,j} \end{aligned}$$

and set \(p_{j^*} = p_{j^*} + p_{i^*}\). We repeat this procedure until we have the desired number of scenarios.
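The three steps can be sketched in Python as follows. This is our own minimal illustration of the elimination loop, assuming the \(\ell ^1\) distance, scenarios stored as lists of node vectors, and the deterministic tie rule (eliminate the first minimizer); the function name is hypothetical. For clarity it recomputes D(i) at every iteration instead of updating it.

```python
def single_scenario_reduction(scenarios, prob, target):
    """Single scenario reduction in the spirit of Dupačová et al. (2003).
    scenarios: list of paths, each a list of A-dimensional tuples (stages 0..T);
    prob: scenario probabilities; target: number of scenarios to keep (>= 1)."""
    def l1(x, y):
        return sum(abs(a - b) for u, v in zip(x, y) for a, b in zip(u, v))

    c = [[l1(s, r) for r in scenarios] for s in scenarios]  # c[i][j]: path distances
    prob = list(prob)
    alive = list(range(len(scenarios)))
    while len(alive) > target:
        # D(i) = p_i * min_{j != i} c_ij over the remaining scenarios
        D = {i: prob[i] * min(c[i][j] for j in alive if j != i) for i in alive}
        i_star = min(alive, key=D.get)  # ties: first index (deterministic variant)
        alive.remove(i_star)
        j_star = min(alive, key=lambda j: c[i_star][j])  # closest remaining scenario
        prob[j_star] += prob[i_star]  # it inherits the eliminated probability
    return {i: prob[i] for i in alive}


paths = [[(0,), (0,)], [(0,), (1,)], [(0,), (10,)]]
print(single_scenario_reduction(paths, [0.5, 0.25, 0.25], 2))  # {0: 0.75, 2: 0.25}
```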

The disadvantage of this algorithm is that we cannot specify a particular parent-children structure for the reduced tree but only a given number of scenarios. Moreover, it uses only absolute probabilities. Finally, this method is computationally much more demanding than the previous random algorithms, because all random algorithms have linear complexity while this method has quadratic complexity with respect to the number of scenarios (or nodes).

3.2.2 Nodal clustering

In this part, we introduce a new algorithm based on clustering. Clustering is a way of grouping n points into \(s<n\) groups, which are named clusters. Many clustering methods are described in the literature, see Kaufman and Rousseeuw (2009) for a review. In this work we consider the following agglomerative procedure: we start with each point forming a cluster on its own. Then we repeatedly join the two nearest clusters into one until we get the target number of clusters. The distance between clusters is defined as the maximum distance between the points of one cluster and the points of the other (complete linkage), i.e. if we have two clusters, say A and B, A consisting of points \(\{a_1,\dots ,a_k\}\) and B of \(\{b_1,\dots ,b_l\}\), where k and l are positive integers, then the distance between A and B is

$$\begin{aligned} { d}(A,B) = \max _{1\le i\le k,\ 1\le j\le l} { d}(a_i,b_j), \end{aligned}$$

where \({ d}(a_i,b_j)\) is some distance between the points \(a_i\) and \(b_j\), e.g. the \(\ell ^1\) or the \(\ell ^2\) distance.

Alternatively, we could represent each cluster by its centroid and compute the cluster distances as the distances between their centroids. Instead of centroids, we could use the points which have the smallest average distance to the other points of the cluster, or the smallest maximum distance to the other points of the cluster, etc.

Given as input the desired reduced tree structure, we proceed as described below.

Algorithm. We choose the final (regular) structure of the reduced tree and set \({\mathscr {K}}^*_0 := {\mathscr {K}}_0.\) Then, for \(t=0\) to \(t=T-1\) and for each node \(n \in {\mathscr {K}}^*_t\), we cluster its children into the predetermined number of groups. As the representative of each group we take the group's median, i.e. a new artificial node whose vector has as components the medians of the corresponding components of the vectors of the nodes belonging to that group. We store the label of the new node in \({\mathscr {K}}_{t+1}^*.\) Its probability is equal to the sum of the probabilities of all the members of the group and, since it is a new node, its children are the union of the children of all the members of the group. The scheme of the process is given in Algorithm 1 (in Electronic Supplementary Material).

The reduced tree is represented by the nodes in \({\mathscr {K}}_t^*,\ t = 0,\dots ,T.\) It has the required structure, but its scenarios do not need to be equiprobable. This is due to the fact that the clusters usually do not have the same number of points and the probability is taken as the sum of the probabilities of the points in the cluster.
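The following Python sketch illustrates the procedure: complete-linkage agglomerative clustering with the \(\ell ^1\) distance, component-wise medians as representatives, summed probabilities, and children inherited from all the group members. It is a minimal, unoptimized illustration under our own assumptions, not Algorithm 1 itself; the dictionary-based tree encoding, the function names and the labelling of new nodes by the tuple of merged original nodes are hypothetical.

```python
from statistics import median


def l1(x, y):
    return sum(abs(a - b) for a, b in zip(x, y))


def complete_linkage(points, s):
    """Agglomerative clustering of the indices of `points` into at most s clusters; the
    distance between two clusters is the largest pairwise l1 distance (complete linkage)."""
    clusters = [[i] for i in range(len(points))]

    def gap(pair):
        x, y = pair
        return max(l1(points[a], points[b]) for a in clusters[x] for b in clusters[y])

    while len(clusters) > s:
        x, y = min(((x, y) for x in range(len(clusters))
                    for y in range(x + 1, len(clusters))), key=gap)
        clusters[x].extend(clusters.pop(y))  # y > x, so index x is unaffected by the pop
    return clusters


def nodal_clustering(children, value, prob, root, branching):
    """Forward nodal clustering. children: {node: [children]}, value: {node: tuple},
    prob: {node: absolute probability}. New nodes are labelled by the tuple of the
    original nodes they merge (a hypothetical labelling)."""
    r_parent, r_value, r_prob = {(root,): None}, {(root,): value[root]}, {(root,): prob[root]}
    frontier = [(root,)]
    for b in branching:  # t = 0, ..., T-1
        next_frontier = []
        for group in frontier:
            kids = [c for n in group for c in children.get(n, [])]  # children of all members
            for cluster in complete_linkage([value[c] for c in kids], b):
                members = tuple(kids[i] for i in sorted(cluster))
                dim = len(value[members[0]])
                r_value[members] = tuple(median(value[m][a] for m in members) for a in range(dim))
                r_prob[members] = sum(prob[m] for m in members)
                r_parent[members] = group
                next_frontier.append(members)
        frontier = next_frontier
    return r_parent, r_value, r_prob


children = {0: [1, 2, 3, 4], 1: [5, 6], 2: [7], 3: [8], 4: [9]}
value = {0: (0,), 1: (0,), 2: (1,), 3: (10,), 4: (12,),
         5: (0,), 6: (4,), 7: (2,), 8: (20,), 9: (21,)}
prob = {0: 1.0, 1: .25, 2: .25, 3: .25, 4: .25, 5: .125, 6: .125, 7: .25, 8: .25, 9: .25}
par, val, pr = nodal_clustering(children, value, prob, 0, (2, 1))
print(val[(1, 2)], val[(3, 4)])  # (0.5,) (11.0,)
```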

The idea of applying clustering techniques to reduce the size of a scenario tree is not new in the literature. Many works propose clustering the scenarios directly, e.g. Growe-Kuska et al. (2003), Heitsch and Römisch (2003), Mandelli et al. (2013), Heitsch and Römisch (2009), Crainic et al. (2014), Beraldi and Bruni (2014), while others suggest merging the nodes by applying a nested optimization at each iteration, see Pflug and Pichler (2014), who propose the subtree merging explained in the next subsection, and Chen and Yan (2018), who adopt the same methodology. However, the approach described in Algorithm 1 differs from all these reduction algorithms because it clusters nodes rather than scenarios and because it does not need any nested optimization. Therefore, we name this reduction algorithm nodal clustering.

3.2.3 Subtree merging

As already mentioned, see Pflug and Pichler (2014) and Chen and Yan (2018), it is also possible to merge nodes considering their corresponding subtrees. These methods require a nested optimization to compute the distance between subtrees. As a representative algorithm for this class of techniques, we adopt the algorithm presented in Pflug and Pichler (2014) that uses the ND itself to measure the distance between subtrees.

The procedure starts by computing the NDs between all feasible pairs of subtrees, i.e. pairs having roots (say \(i_0\) and \(j_0\)) at the same stage, \(t(i_0)=t(j_0)\), and with the same parent, \(i_0- = j_0-\). We merge the pair with the lowest ND using the procedure described in the following paragraph. Note that the closest pair could have roots at any stage, so the algorithm proceeds neither forward nor backward.

Suppose we want to merge two subtrees with distributions \({\mathbb {P}}_1\) and \({\mathbb {P}}_2\) and roots \(i_0\) and \(j_0\). If the nodes \(i_0\) and \(j_0\) are leaves, we take their mean value as the new node. If they are not leaves, we use the optimal transport plan between \({\mathbb {P}}_1\) and \({\mathbb {P}}_2\), which we already have from the computation of the ND. We start by setting the value of the new root as the mean value of the two old roots. For the successors, we sort the optimal transport plan in descending order, i.e. we get

$$\begin{aligned} \pi _{c_1}\ge \pi _{c_2}\ge \cdots , \end{aligned}$$

where \(c_1,c_2,\ldots \) represent all possible couples of successors. For instance, if \(i_0\) and \(j_0\) have three children each, \(i_1,i_2,i_3 \in i_0+\) and \(j_1,j_2,j_3 \in j_0+\), we get nine couples \((i_1,j_1)\), \((i_1,j_2),\dots ,(i_3,j_3),\) which we reorder so that the inequalities above hold and denote by \(c_1,c_2,\dots \) for simplicity, i.e. if \(\pi _{i_2,j_1}\) is the largest then \(c_1 = (i_2,j_1)\). Then, we take the smallest m such that

$$\begin{aligned} \sum _{k=1}^{m} \pi _{c_k} \ge p, \end{aligned}$$

where p is a chosen reducing parameter, \(p\in (0,1].\) The smaller the p, the greater the reduction. We obtain m pairs of subtrees with roots \(c_1,\dots ,c_m.\) We set their probabilities as

$$\begin{aligned} p_k = \frac{\pi _{c_k}}{\sum _{l=1}^{m} \pi _{c_l}},\quad k=1,\dots ,m, \end{aligned}$$

so that \(\sum _{k=1}^m p_k = 1\) holds. Now we proceed recursively by merging the pairs \(c_1,\dots ,c_m.\) The algorithm stops when the resulting number of scenarios reaches the required amount. Otherwise, we recalculate the NDs and merge the closest pair.
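The selection of the successor pairs \(c_1,\dots ,c_m\) and of their probabilities \(p_k\) can be sketched in Python as below. The sketch assumes that the optimal transport plan is already available as a dictionary `{(i, j): pi_ij}` (in the full algorithm it comes from the ND computation); the recursive merge itself is not shown, and the function name is hypothetical.

```python
def select_successor_pairs(plan, p, eps=1e-12):
    """Given the optimal transport plan {(i, j): pi_ij} between the successors of the two
    roots, keep the smallest number m of most heavily weighted couples whose total mass
    reaches p, and renormalize their weights so that they sum to one."""
    ranked = sorted(plan.items(), key=lambda item: item[1], reverse=True)  # pi_c1 >= pi_c2 >= ...
    chosen, mass = [], 0.0
    for couple, weight in ranked:
        chosen.append((couple, weight))
        mass += weight
        if mass >= p - eps:  # smallest m with sum_{k<=m} pi_ck >= p
            break
    return [(couple, weight / mass) for couple, weight in chosen]


plan = {("i1", "j1"): 0.5, ("i2", "j2"): 0.3, ("i3", "j3"): 0.15, ("i1", "j2"): 0.05}
print(select_successor_pairs(plan, 0.7))  # two couples, weights about 0.625 and 0.375
```

A smaller p keeps fewer couples and therefore yields a stronger reduction, matching the role of p described above.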

The computational effort of this algorithm is particularly high, see the numerical experiments in Sect. 5.1, since it does not control the number of nodes in each stage, which could even increase compared with the original scenario tree. For this reason, we introduce two new versions of the subtree merging algorithm that make it computationally more tractable.

The three versions of the subtree merging algorithm considered are thus:

- SubtreeM: the original algorithm proposed in Pflug and Pichler (2014); it takes as input the original scenario tree and the number of scenarios of the reduced tree, but it does not control the number of nodes in each stage;

- nonDeg-SubtreeM: the first new version of the algorithm that we propose. It takes as input the original scenario tree and the number of scenarios of the reduced tree, but it does not consider degenerate subtrees (subtrees consisting of one node only) when looking for the couple of subtrees to merge; in other words, at each iteration, the roots \(i_0\) and \(j_0\) of the subtrees to be merged could be at any stage except the last, \(t(i_0)=t(j_0)\ne T\);

- Adj-SubtreeM: the second new version of the algorithm that we propose. This Adjusted version takes as input the original scenario tree and a specific branching structure for the reduced scenario tree (not only the number of scenarios). It differs from the original version because it proceeds forward, i.e. it starts by considering as possible subtrees to be merged only those with roots \(i_0\) and \(j_0\) at the first stage, \(t(i_0) = t(j_0) = 1\), while the original algorithm considers \(t(i_0) = t(j_0)\), \(t(i_0) \in \{1,\ldots ,T\}\). Then, the algorithm merges the closest pair of these subtrees at each iteration until the number of nodes required for the first stage is reached. After the first stage, the algorithm proceeds to the second stage by considering the subtrees having roots \(i_0\) and \(j_0\) at the second stage and a common parent, i.e. \(i_0- = j_0-\) and \(t(i_0) = t(j_0) =2\). The procedure continues forward in the same way until the desired branching is attained. The algorithm stops at the last stage, merging the leaves. When the algorithm identifies two subtrees to be merged, it follows the general procedure of the original algorithm.

A detailed analysis of the performance of the three versions of the subtree merging algorithm is reported in Sect. 5.1 where we also discuss the choice of the parameter p which is crucial for the performance of this algorithm.

3.2.4 Single node reduction

One of the most commonly used reduction algorithms is the one proposed in Heitsch and Römisch (2009). The idea is to measure the distances between nodes with the same parent, find the pairs that are close enough, reduce them, and repeat until the stopping criterion of the reduction is fulfilled. We slightly modified the algorithm, which then proceeds as follows:

1. for each pair (i, j) of nodes with the same parent compute

$$\begin{aligned} \varepsilon _{i,j} = p_i \cdot \Vert \xi _i - \xi _j\Vert + \frac{2 p_i p_j}{p_i + p_j}, \end{aligned}$$where \(p_i\) is the absolute probability of the node i and \(\xi _i\) the nodal value (note that the nodes (i, j) could be at any stage),

2. choose the pair (i, j) with the smallest \(\varepsilon _{i,j}\),

3. set the node i as the parent of the children of the node j, set \(p_i = p_i + p_j\) and remove the node j,

4. repeat until the desired number of scenarios is reached.
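A direct Python transcription of steps 1 to 4, given as a sketch under our own assumptions: the norm in \(\varepsilon _{i,j}\) is taken to be \(\ell ^1\), the tree is stored in dictionaries, the target is the number of leaves (scenarios), and all sibling pairs are re-scanned at every iteration, which is simple but not efficient. The function name is hypothetical.

```python
def single_node_reduction(parent, value, prob, target_leaves):
    """Greedy single node reduction following steps 1-4, assuming the l1 norm in epsilon.
    parent: {node: parent or None}; value: {node: tuple}; prob: {node: absolute probability}."""
    parent, prob = dict(parent), dict(prob)

    def n_leaves():
        inner = set(parent.values())
        return sum(1 for n in parent if n not in inner)

    while n_leaves() > target_leaves:
        best = None
        for i in parent:  # step 1: all ordered pairs (i, j) of siblings
            for j in parent:
                if i != j and parent[i] is not None and parent[i] == parent[j]:
                    gap = sum(abs(a - b) for a, b in zip(value[i], value[j]))
                    eps = prob[i] * gap + 2 * prob[i] * prob[j] / (prob[i] + prob[j])
                    if best is None or eps < best[0]:
                        best = (eps, i, j)  # step 2: keep the smallest epsilon
        if best is None:  # no siblings left to merge
            break
        _, i, j = best
        for child in parent:  # step 3: i adopts the children of j
            if parent[child] == j:
                parent[child] = i
        prob[i] += prob[j]
        del parent[j], prob[j]
    return parent, prob  # step 4: loop ends at the target number of leaves


parent = {0: None, 1: 0, 2: 0, 3: 0}
value = {0: (0,), 1: (0,), 2: (1,), 3: (10,)}
prob = {0: 1.0, 1: 0.25, 2: 0.25, 3: 0.5}
print(single_node_reduction(parent, value, prob, 2))
# ({0: None, 1: 0, 3: 0}, {0: 1.0, 1: 0.5, 3: 0.5})
```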

4 Portfolio selection problem, objective and solution distances

After measuring the ND between the original tree and the reduced tree, we want to understand whether the reduction algorithms induce not only close scenario trees, but also close multistage stochastic problems. Therefore, for a given multistage stochastic model, we want to observe whether closer scenario trees also induce closer solutions. To evaluate the distance between two stochastic problems built on different scenario trees, Vitali (2018) analyses not only the ND, but also the optimal solutions, both in terms of optimal objective function values and in terms of first-stage solutions. Therefore, we also formulate and solve a multistage stochastic problem to compare the objective function values and the first-stage solutions obtained with the original scenario tree and with the reduced scenario trees. Given the nature of our scenario trees in the numerical section (weekly returns of a set of assets), and considering that one of the main fields of application of multistage stochastic optimization is portfolio selection and management, see e.g. Dupačová et al. (2002), Kilianová and Pflug (2009), Vitali et al. (2017), Kopa and Petrová (2017), Consigli et al. (2018a, 2018b), Kopa et al. (2018), Moriggia et al. (2019), Rusý and Kopa (2018), we formulate a multistage portfolio selection problem.

Given a set \(i=1,\ldots ,A\) of available assets and the set of nodes \(n=1,\ldots ,N\) each with probability \(p_n\), we define the following variables:

- \(x_{n,i}\): the amount of money invested in the asset i at the node n,

- \(\mathbf {W}_{t}\): a random variable representing the wealth distribution at stage t,

- \(W_{n}\): the realizations of the random variable \(\mathbf {W}_{t}\) with \(n \in {\mathscr {K}}_t\).

Then, considering the weekly returns \(\rho _{i,n}\) realized between node n and its parent \(n-\), a diversification coefficient \(\theta \) and a turnover coefficient \(\lambda \), the problem is formulated as follows:

As objective function \(f(\mathbf {W}_T)\) in (5) we implement both the mean value of the final wealth and the Average Value-at-Risk of the final wealth. In the first case we have \(f(\mathbf {W}_T)={\mathbb {E}}[\mathbf {W}_T]\) and the objective function (5) takes the following form:

$$\begin{aligned} \max \sum _{n\in {\mathscr {K}}_T} p_n W_n, \end{aligned}$$

while in the second case we have \(f(\mathbf {W}_T)=AV@R[\mathbf {W}_T]\) and, adopting the formulation proposed in Rockafellar and Uryasev (2000, 2002), we substitute the objective function (5) with the following:

where a is an auxiliary variable, \(z_n\) is a slack variable and \(\alpha \) is a given confidence level. Thus, we propose two different models and we observe the empirical evidence obtained by maximizing the final expected wealth and by optimizing a coherent risk measure of the final wealth. Equation (6) represents the first-stage budget constraint. Equation (7) expresses the financial growth of the portfolio due to the asset returns. Equation (8) calculates the wealth achieved in each node. Constraints (9) and (10) avoid an investment higher than \(\theta \) for a single asset. Constraint (11) imposes a maximum turnover, i.e. it is not possible to change each position by more than \(\lambda \cdot 100\%\). Clearly constraint (11) is then implemented with two inequality constraints to keep the model fully linear.

Once we have solved the above model for two scenario trees, the distance between the optimal objective values, namely the objective distance, is measured using the \(\ell ^1\) norm, i.e. for two optimal objective values \(\mathrm{obj}^*_1\) and \(\mathrm{obj}_2^*\) the distance is

$$\begin{aligned} |\mathrm{obj}^*_1 - \mathrm{obj}^*_2|, \end{aligned}$$

while the distance between the optimal solutions, namely the solution distance, is based on the \(\ell ^1\) distance between the first-stage decisions. That is, if \(\mathbf {x}_1^1\) and \(\mathbf {x}_1^2\) are two here-and-now optimal solutions, the distance between them is

$$\begin{aligned} \frac{1}{2}\sum _{i=1}^{A} |x_{1,i}^1 - x_{1,i}^2|, \end{aligned}$$

where A is the dimension of the vectors \(\mathbf {x}_1^1\) and \(\mathbf {x}_1^2\), i.e. the number of assets, and \(x_{1,i}^1\) and \(x_{1,i}^2\), respectively, are the optimal amounts of the initial wealth \(W_0\) that should be invested in the asset i at time \(t=0\). By dividing by 2, we ensure that the solution distance lies in the interval \([0,W_0]\).
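Both distances are straightforward to compute; the Python sketch below (hypothetical function names) illustrates them. In the example the two allocations are nonnegative and each invests the whole initial wealth \(W_0=100\), which is the situation in which the factor 1/2 keeps the solution distance within \([0,W_0]\); here the two portfolios share no position, so the distance reaches its maximum \(W_0\).

```python
def objective_distance(obj1, obj2):
    """|obj*_1 - obj*_2|: l1 distance between two optimal objective values."""
    return abs(obj1 - obj2)


def solution_distance(x1, x2):
    """Half the l1 distance between two first-stage allocations x_{1,i}, i = 1..A."""
    return 0.5 * sum(abs(a - b) for a, b in zip(x1, x2))


# Two allocations of W0 = 100 over A = 3 assets with no common position
print(solution_distance([100, 0, 0], [0, 50, 50]))  # 100.0, i.e. W0
```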

5 Numerical study

We apply the algorithms described in Sect. 3 to different original trees. All the original trees span 4 stages and are regular. Following common practice in many problems, in particular in finance, for a given number of scenarios the trees are built with more branching in the first stages and fewer children in the last stages, to better describe the short-term information. Therefore, we consider original trees with two different branching structures: 20-5-5-2, i.e. 1000 scenarios, or 25-10-10-4, i.e. 10,000 scenarios. Our aim is to obtain a reduced scenario tree with 100 scenarios and, when the algorithm allows it, with the branching structure 5-5-2-2. Moreover, for each size of the original scenario tree, we consider different dimensions of the random vector in each node: 1, 5, 10 or 20, i.e. we construct the evolution of either 1, 5, 10 or 20 assets.

To generate the scenario trees, we observe the weekly returns of a set of 20 assets from the beginning of 2015 until the end of 2018. The list of the assets and the historical mean and standard deviation of the weekly returns are reported in Table S1. In the 1-dimension case we consider the asset #1, UBI.PA; in the 5-dimension case the assets #1-#5; in the 10-dimension case the assets #1-#10; and in the 20-dimension case all the assets.

To generate the original scenario tree, we consider two scenario generation approaches. The first approach is Monte Carlo (MC) sampling: taking into account also the historical correlation between the assets and the required structure, we sample the vector of weekly returns of each node of the tree from a multivariate normal distribution with the parameters described in Table S1. Therefore, by construction, all the generated scenarios are equiprobable and there is no dependence between subsequent stages. The second approach is the Historical Extraction (HE) proposed in Kopa et al. (2018), which generates the scenario tree by sampling from the historical series of the assets. In particular, given a time step and a branching structure, it samples historical observations trying to reproduce possible financial cycles that include both good and bad paths. As a consequence, this approach maintains the dependence between the assets, because the sampled observations naturally carry their correlation without any further estimation; it induces a dependence between stages, due to the financial cycles that the algorithm reproduces; and it generates equiprobable scenarios, because the sampled historical observations are equiprobable.

Combining the two sizes of the original tree (1000 and 10,000 scenarios) with the four dimensions of the vector in each node (1, 5, 10 and 20) and with the two scenario generation approaches (MC and HE), we obtain 16 original trees, to which we apply the reduction methods described in the previous section.
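The experimental grid is a simple Cartesian product; the short sketch below (variable names are illustrative only) enumerates it and confirms the count of 16 original trees.

```python
from itertools import product

sizes = {"1000": (20, 5, 5, 2), "10,000": (25, 10, 10, 4)}  # branching per size
dimensions = (1, 5, 10, 20)                                 # assets per node
generators = ("MC", "HE")                                   # generation approach

configs = list(product(sizes, dimensions, generators))
print(len(configs))  # 16
```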

Since the random algorithms do not produce a unique reduced tree, for each case we generate 100 reduced scenario trees with each random algorithm.

The numerical analysis starts with Sect. 5.1, where we compare the three versions of the subtree merging algorithm and discuss the choice of the parameter p.

Then, the results of the ND between the original scenario trees and the reduced scenario trees, and the computing time needed to apply each reduction algorithm are presented and discussed in Sect. 5.2.

Finally, in Sect. 5.3 we implement and solve the multistage portfolio selection problem to compare the ND with the distances between the optimal objective values and the distances between the first-stage solutions.

Throughout the study, we use the ND of order one and the \(\ell ^1\) norm to measure distances between points and scenarios, i.e.

\[ d(\xi ,\tilde{\xi }) = \sum _{t=1}^{T} \sum _{a=1}^{A} \left| \xi _{t}^{a} - \tilde{\xi }_{t}^{a} \right| , \]

where \(T=4\) is the number of stages and A is the dimension of the nodes, i.e. the number of assets considered.
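A minimal Python sketch of the order-one ND between two small trees is given below. It assumes trees stored as nested dicts (the `node` helper and this layout are hypothetical, not the authors' MATLAB code) and that SciPy's `linprog` with the HiGHS solver is available for the inner transport problems. Because the \(\ell ^1\) distance is a sum of stage-wise terms, the recursion can add each stage's contribution at the current node pair and then solve a conditional transport problem over the children; this is equivalent to the backward recursion that places the full path distance at the leaves.

```python
import numpy as np
from scipy.optimize import linprog

def node(value, children=()):
    """Tree node: an A-dimensional value and a list of (conditional prob, child)."""
    return {"value": np.atleast_1d(np.asarray(value, dtype=float)),
            "children": list(children)}

def transport_cost(p, q, cost):
    """Minimal cost of transporting distribution p onto q (small LP, HiGHS)."""
    m, n = cost.shape
    rows, rhs = [], []
    for i in range(m):                    # sum_j pi[i, j] = p[i]
        a = np.zeros((m, n)); a[i, :] = 1.0
        rows.append(a.ravel()); rhs.append(p[i])
    for j in range(n - 1):                # sum_i pi[i, j] = q[j]; the last one is implied
        a = np.zeros((m, n)); a[:, j] = 1.0
        rows.append(a.ravel()); rhs.append(q[j])
    res = linprog(cost.ravel(), A_eq=np.array(rows), b_eq=np.array(rhs),
                  bounds=(0, None), method="highs")
    return res.fun

def nested_distance(a, b):
    """Order-1 nested distance between two trees with the same number of stages,
    using the l1 distance summed over stages and assets."""
    stage_cost = float(np.abs(a["value"] - b["value"]).sum())
    if not a["children"]:                 # leaves reached in both trees
        return stage_cost
    p = [prob for prob, _ in a["children"]]
    q = [prob for prob, _ in b["children"]]
    cost = np.array([[nested_distance(ca, cb) for _, cb in b["children"]]
                     for _, ca in a["children"]])
    return stage_cost + transport_cost(p, q, cost)

# Two-scenario tree versus a one-scenario tree (illustrative numbers).
fine = node(0.0, [(0.5, node(1.0)), (0.5, node(3.0))])
coarse = node(0.0, [(1.0, node(2.0))])
print(nested_distance(fine, coarse))  # about 1.0: each half of the mass moves by 1
```

Every pair of non-leaf nodes at the same stage requires one small linear program, so the cost of this sketch grows with the product of the node counts of the two trees.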

All computations are performed in MATLAB on a computer with an Intel(R) Core(TM) i5-7200U CPU @ 2.50 GHz and 8 GB of memory. The multistage portfolio selection problem is implemented and solved in GAMS 23.2.1 using CPLEX 12.1.0. Computing times are reported in seconds.

5.1 Analysis of the subtree merging algorithm

The aim of this section is to compare the three versions of the subtree merging algorithm and to study the choice of the parameter p, in order to find which version and which value of p are most suitable for the subsequent analysis. As representative cases, we consider the original trees with 10-dimension nodes and 1000 scenarios, created both with the MC method and with the HE method. We apply the three versions of the subtree merging algorithm to obtain a reduced tree with 100 scenarios, specifying the branching 5-5-2-2 for the Adj-SubtreeM. We report results for \(p \in \{0.1, 0.2, \ldots , 1\}\), for the original tree generated with the HE method in Table 2 and with the MC method in Table 3.

The results in Tables 2 and 3 lead to the following observations:

-

the Adj-SubtreeM version is on average 10 times faster than the nonDeg-SubtreeM, which is on average 3 times faster than the SubtreeM.

-

the ND obtained with the SubtreeM version is smaller than the one obtained with the nonDeg-SubtreeM which is smaller than the one obtained with the Adj-SubtreeM. Therefore, there is a clear trade-off between the ND and the computing time of the three versions of the subtree merging algorithm.

-

as the parameter p increases, the computing time of all versions increases, but the ND does not decrease. Indeed, in almost all cases, the minimum ND is attained for a value of p in the range [0.5, 0.7]. Therefore, focusing jointly on minimizing the ND and the computing time, we set \(p=0.5\) in the subsequent analysis.

This comparison could not be performed for the original scenario tree with 10,000 scenarios because both the nonDeg-SubtreeM and the SubtreeM versions reached the time limit of 1 day for all values of p. Therefore, to be able to compare results for both the 1000 and the 10,000 cases, we adopt only the Adj-SubtreeM version in the subsequent analysis.

5.2 Comparison of the ND and computing times

We compare the time required to run each of the seven reduction algorithms described in Sect. 3 and the NDs between the reduced trees generated by the algorithms and the original scenario trees.

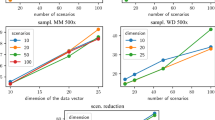

First, we analyse the results for the original scenario trees generated with the MC approach, cf. Fig. 5 and Table 4. For the reduction of the tree with 1000 scenarios, the single node reduction algorithm is the best in terms of the ND in the 1- and 10-dimension cases, while in the 5- and 20-dimension cases the single scenario reduction produces a reduced scenario tree closer to the original one (with an ND of 0.556 and 2.815, respectively). In terms of computing time, the single node reduction approach is very efficient, with an elapsed time of around 1.7 seconds. The nodal clustering algorithm is the fastest of the distance-based algorithms, but its final ND is always greater than the one obtained with the single node reduction approach. The Adj-SubtreeM algorithm is computationally the most expensive, and it also performs poorly in terms of the ND.

Fig. 5 The ND and the computing time for all the reduction algorithms, considering the original trees generated with the MC approach. On the left, the results starting from the original tree with 1000 scenarios; on the right, the results starting from the original tree with 10,000 scenarios. Each row corresponds to a different dimension of the nodes of the trees

Considering the reduction of the tree with 10,000 scenarios, the nodal clustering appears to be the most efficient algorithm for all dimensions. In the 5-dimension case, the single scenario reduction gives the same result as the nodal clustering (an ND of 0.706), but it requires much more time to run (47,600 vs 26 seconds). Moreover, for the single node reduction algorithm, and even more for the nodal clustering, the computing times remain relatively low (between 12 and 87 seconds), while for the single scenario reduction and the Adj-SubtreeM they increase significantly (between 13,000 and 53,000 seconds). Unlike in the 1000 case, the single node reduction algorithm now performs worse than the nodal clustering for all dimensions.

The random algorithms generate a reduced tree in less than 1 second and, especially in the case of 10,000 scenarios, their ND is similar to that of some of the distance-based algorithms. As expected, among the random algorithms the scenario extraction performs best: it shows a smaller ND on average, it is more stable, with a smaller standard deviation (cf. SD in Table 4), and its computing time is of the same magnitude as that of the other random algorithms.

In Figure S1 and in Table S2, we show the results obtained by reducing the original tree generated with the HE approach.

In general, we observe results similar to the MC case. When we reduce the original scenario tree with 1000 scenarios, the best performing algorithm is the single node reduction, and the single scenario reduction is the second best in the 5-, 10- and 20-dimension cases. Apart from the 1-dimension case, the nodal clustering performs even worse than the random scenario extraction algorithm. The Adj-SubtreeM algorithm produces the highest ND of the distance-based algorithms.

In the case of the 10,000 scenario tree, the nodal clustering is the best only in the 1-dimension case, while in all the other dimension cases the best performing algorithm is the single scenario reduction. However, the single scenario reduction, the nodal clustering and the single node reduction appear to be relatively close to each other in terms of the ND, while in terms of required computing time the single node reduction is the fastest. Considering the random algorithms, again the scenario extraction performs better than the others both in terms of the ND on average, and in terms of stability of the results (cf. the SD in Table S2). Moreover, the ND obtained with the scenario extraction is comparable to the one obtained with the distance-based algorithms.

5.3 Comparison of NDs, objective distances and solution distances

To evaluate how close the stochastic optimization problems defined on the original and on the reduced scenario tree are, we implement and solve the model proposed in Sect. 4 and compute the objective and here-and-now solution distances defined there. In particular, the objective distances and the solution distances are computed between the result of the optimization on the original scenario tree and the result of the optimization on the reduced scenario tree. We adopt the original and reduced trees discussed in the previous section, each with four stages, \(T=4\). The initial wealth of the portfolio selection model is \(W_0=100\), the diversification parameter is \(\theta =0.65\), the turnover coefficient is \(\lambda = 0.30\) and, when we optimize the Average Value-at-Risk, the confidence level is \(\alpha =0.05\). Since the dimension of the random variable of the scenario trees corresponds to the number of assets available for portfolio selection, we consider the cases \(A = 5, 10, 20\).
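The objective and solution distances depend on the optimal solutions of the model of Sect. 4, which is not reproduced here. As a hedged illustration of the risk measure involved, the sketch below evaluates the Average Value-at-Risk of a discrete final-wealth distribution, assuming it is defined as the expected wealth over the worst \(\alpha\) fraction of probability mass (the exact sign convention of Sect. 4 may differ). It cross-checks the direct tail average against the representation of Rockafellar and Uryasev (2000, 2002); both function names are hypothetical.

```python
def avar_lower_tail(wealth, prob, alpha=0.05):
    """Expected final wealth over the worst alpha-fraction of probability mass."""
    remaining, total = alpha, 0.0
    for w, p in sorted(zip(wealth, prob)):   # scan scenarios from the lowest wealth up
        take = min(p, remaining)             # an atom may lie only partly in the tail
        total += take * w
        remaining -= take
        if remaining <= 1e-12:
            break
    return total / alpha

def avar_rockafellar_uryasev(wealth, prob, alpha=0.05):
    """max over c of c - E[(c - W)^+] / alpha; for a discrete W the maximum is
    attained at one of the scenario values, so it suffices to try those."""
    return max(c - sum(p * max(c - w, 0.0) for w, p in zip(wealth, prob)) / alpha
               for c in wealth)

# Four equiprobable final-wealth scenarios (illustrative numbers only).
wealth = [90.0, 100.0, 110.0, 120.0]
prob = [0.25] * 4
print(avar_lower_tail(wealth, prob, 0.5))           # 95.0
print(avar_rockafellar_uryasev(wealth, prob, 0.5))  # 95.0
```

With \(\alpha = 0.05\) and four equiprobable scenarios, the whole tail lies inside the worst scenario, so both functions return its wealth, 90.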

Fig. 6 The ND and the distance between the optimal objective values for all the reduction algorithms, considering the original scenario tree generated with the MC approach and the formulation of the portfolio selection model that optimizes the expected value of the final wealth. On the left, the results starting from the original tree with 1000 scenarios; on the right, the results starting from the original tree with 10,000 scenarios. Each row corresponds to a different dimension of the nodes of the trees

5.3.1 Objective distances

We start by analysing the objective distance for the original tree generated with the MC approach and the formulation of the portfolio selection model that optimizes the expected value of the final wealth. The results are shown in Fig. 6, where we represent the objective distances and the NDs, and in Table 5.

In the case of the 1000 scenario tree, on average, the objective distances obtained when the reduced tree comes from a distance-based reduction approach are smaller than those obtained with the random reduction approaches. Among the distance-based approaches, the nodal clustering has the smallest objective distance in the 5- and 20-dimension cases and the second smallest in the 10-dimension case. The Adj-SubtreeM algorithm is the best in the 10-dimension case and performs as well as the single scenario reduction algorithm in the other cases. The single node reduction is the worst in the 5- and 10-dimension cases, but its performance improves in the 20-dimension case.

Fig. 7 The ND and the distance between the optimal objective values for all the reduction algorithms, considering the original scenario tree generated with the MC approach and the formulation of the portfolio selection model that optimizes the Average Value-at-Risk of the final wealth. On the left, the results starting from the original tree with 1000 scenarios; on the right, the results starting from the original tree with 10,000 scenarios. Each row corresponds to a different dimension of the nodes of the trees

In the case of the 10,000 scenario tree, the nodal clustering performs very well both in terms of the ND and in terms of objective distance. As in the 1000 case, we observe a stable behaviour of the nodal clustering algorithm meaning that the objective distance is constantly relatively low, while for the other distance-based algorithms we observe more volatile objective distances among the different cases.

The random algorithms show a highly dispersed behaviour in terms of objective distances. In particular, the scenario extraction algorithm has a lower SD than the other random algorithms but, on average, a higher objective distance.

Considering the case of the original tree generated with the HE approach, see Figure S2 and Table S3, we observe similar results. In particular, the nodal clustering always gives a very low objective distance and the single scenario reduction is almost always the best, while the single node reduction is relatively volatile in terms of objective distance in all the cases. The significant difference is the worse performance of the Adj-SubtreeM algorithm, whose objective distance is relatively high. Among the random reduction algorithms, the scenario extraction algorithm is again the least volatile but, in this case, it also produces the smallest average objective distance.

Let us now consider the case in which the original tree is generated with the MC approach and the portfolio selection model optimizes the Average Value-at-Risk. The results are summarized in Fig. 7 and in Table 6. In all cases, the single node reduction generates the smallest objective distance among the distance-based reduction algorithms. The other algorithms show a more volatile behaviour; only the single scenario reduction algorithm is second best in almost all cases. Among the random reduction algorithms, the scenario extraction always has the smallest SD and the lowest average objective distance. Moreover, in most cases the average objective distance of the random algorithms is even better than the objective distance of the distance-based algorithms, except for the single node reduction.

Next, we consider the case in which the original tree is generated with the HE approach and the portfolio selection model optimizes the Average Value-at-Risk. The results, presented in Figure S3 and in Table S4, are very similar to those obtained in the MC case shown above. The most notable difference is that the best performing algorithm is the single scenario reduction rather than the single node reduction. The Adj-SubtreeM still has the worst objective distance in most of the cases, but it behaves better than in the MC case.

5.3.2 Solution distances

In this section we analyse the here-and-now solution distances in relation to the ND, and we start by analysing the cases in which the original tree is generated with the MC approach and the formulation of the portfolio selection model optimizes the expected value of the final wealth. The results are shown in Fig. 8 and in Table 7.

Fig. 8 The ND and the distance between the optimal here-and-now solutions for all the reduction algorithms, considering the original scenario tree generated with the MC approach and the formulation of the portfolio selection model that optimizes the expected value of the final wealth. On the left, the results starting from the original tree with 1000 scenarios; on the right, the results starting from the original tree with 10,000 scenarios. Each row corresponds to a different dimension of the nodes of the trees

For the cases with the original scenario tree of 1000 scenarios, the single scenario reduction algorithm always produces the minimum solution distance, while the other three distance-based algorithms have a volatile behaviour. Similarly, in the case of 10,000 scenarios, the general behaviour is still volatile. However, the solution distance takes values between 10 and 30 for all the distance-based reduction algorithms, apart from the Adj-SubtreeM, whose solution distance increases substantially. Among the random algorithms, the scenario extraction gives on average the minimum solution distance and shows the lowest SD.

Fig. 9 The ND and the distance between the optimal here-and-now solutions for all the reduction algorithms, considering the original scenario tree generated with the MC approach and the formulation of the portfolio selection model that optimizes the Average Value-at-Risk of the final wealth. On the left, the results starting from the original tree with 1000 scenarios; on the right, the results starting from the original tree with 10,000 scenarios. Each row corresponds to a different dimension of the nodes of the trees

We now consider the case in which the portfolio selection model again optimizes the expected value of the final wealth, but the original tree is generated with the HE approach. The results are shown in Figure S4 and in Table S5. The Adj-SubtreeM algorithm is the worst performing of the distance-based algorithms in terms of solution distance. For the other three distance-based algorithms, the solution distance is quite volatile and no algorithm is generally better than the others.

In Fig. 9 and in Table 8, we consider the case where the original tree is generated with the MC approach and the portfolio selection model optimizes the Average Value-at-Risk. Again, the four distance-based algorithms have a volatile behaviour, so it is not possible to identify one that performs better than the others. By contrast, among the random algorithms, the scenario extraction still produces, on average, the lowest solution distance, with the lowest SD.

In Figure S5 and in Table S6, we show the case where the original tree is generated with the HE approach and the formulation of the portfolio selection model is the optimization of the Average Value-at-Risk. As before, the distance-based algorithms perform in a similar way. In the 10,000 scenario case, we notice that the single scenario reduction always performs best. The scenario extraction algorithm is again the best of the random algorithms.

6 Conclusion

In this paper, we analyse the quality of several algorithms that can be used to reduce a multistage scenario tree. We consider four classes of original multistage scenario trees, obtained by combining:

-

a given number of scenarios, 1000 or 10,000;

-

a scenario generating method, either Monte Carlo or Historical Extraction (Kopa et al. 2018).

To reduce these scenario trees, we implemented seven scenario tree reduction algorithms, three random and four distance-based. Among the four distance-based algorithms, we proposed a new one based on a clustering technique, which we call nodal clustering. Moreover, since another distance-based algorithm, Subtree Merging, proved computationally intractable for a large number of scenarios, we introduced and analysed two more tractable versions of this algorithm, together with the role of its main parameter p. We found the Adj-SubtreeM algorithm with parameter \(p=0.5\) to be the best choice. Finally, we compared the computing time and the Nested Distance between the original tree and the reduced trees obtained with the seven reduction algorithms.

In the case of the distance-based algorithms, the results show that the nodal clustering and the single node reduction algorithms are typically the fastest, while the single scenario reduction and the Adj-SubtreeM are quite computationally demanding. The results can be summarized as follows:

-

considering the original tree with 1000 scenarios, the single node reduction and the single scenario reduction produce the lowest Nested Distance in most of the cases;

-

considering the original tree with 10,000 scenarios, the two best algorithms are the single scenario reduction and the nodal clustering;

-

thus, in general, the single node reduction, the single scenario reduction and the nodal clustering are almost equivalent in terms of Nested Distance, but the single scenario reduction is the slowest.

To perform a deeper comparison between the seven reduction algorithms, we also formulated a multistage portfolio selection problem and we solved it both with the original trees and with the reduced trees, and we compared the optimal objective values and the first stage solutions. We can report the following observations:

-

considering the random algorithms, the scenario extraction algorithm appears to be the best in terms of the Nested Distance, objective value distance and solution distance; moreover, it is very fast, even if slightly slower than the other random algorithms;

-

considering the distance-based algorithms, they perform similarly in terms of objective value distance and solution distance; only the Adj-SubtreeM suffers from a relatively high objective value distance and solution distance in almost all the cases. We are aware that the authors of this algorithm, see Pflug and Pichler (2014), proposed a further improvement. However, since it is even more computationally demanding, it was not considered in this paper.

To conclude, we believe that the Nested Distance can be used efficiently to analyse the quality of scenario reduction algorithms. Furthermore, to fully compare scenario reduction algorithms, the distance between stochastic models should also be measured in terms of objective value distance and here-and-now solution distance.

References

Beraldi P, Bruni ME (2014) A clustering approach for scenario tree reduction: an application to a stochastic programming portfolio optimization problem. TOP 22(3):934–949

Birge JR, Louveaux F (1997) Introduction to stochastic programming. Springer, Berlin

Chen Z, Yan Z (2018) Scenario tree reduction methods through clustering nodes. Comput Chem Eng 109:96–111

Consigli G, Moriggia V, Benincasa E, Landoni G, Petronio F, Vitali S, di Tria M, Skoric M, Uristani A (2018a) Optimal multistage defined-benefit pension fund management. In: Handbook of recent advances in commodity and financial modeling. Springer, Berlin, pp 267–296

Consigli G, Moriggia V, Vitali S, Mercuri L (2018b) Optimal insurance portfolios risk-adjusted performance through dynamic stochastic programming. CMS 15(3–4):599–632

Crainic TG, Hewitt M, Rei W (2014) Scenario grouping in a progressive hedging-based meta-heuristic for stochastic network design. Comput Oper Res 43:90–99

Dupačová J, Hurt J, Štěpán J (2002) Stochastic modeling in economics and finance. Applied Optimization, Kluwer, Dordrecht

Dupačová J, Gröwe-Kuska N, Römisch W (2003) Scenario reduction in stochastic programming. Math Program 95(3):493–511

Gröwe-Kuska N, Heitsch H, Römisch W (2003) Scenario reduction and scenario tree construction for power management problems. In: Power tech conference proceedings, 2003 IEEE Bologna, vol 3, p 7

Heitsch H, Römisch W (2003) Scenario reduction algorithms in stochastic programming. Comput Optim Appl 24(2–3):187–206

Heitsch H, Römisch W (2009) Scenario tree reduction for multistage stochastic programs. CMS 6(2):117–133

Kaufman L, Rousseeuw PJ (2009) Finding groups in data: an introduction to cluster analysis. Wiley, New York

Kilianová S, Pflug GC (2009) Optimal pension fund management under multi-period risk minimization. Ann Oper Res 166(1):261–270

Kopa M, Petrová B (2017) Multistage risk premiums in portfolio optimization. Kybernetika 53(6):992–1011

Kopa M, Moriggia V, Vitali S (2018) Individual optimal pension allocation under stochastic dominance constraints. Ann Oper Res 260(1–2):255–291

Kovacevic RM, Pichler A (2015) Tree approximation for discrete time stochastic processes: a process distance approach. Ann Oper Res 235(1):395–421

Mandelli D, Yilmaz A, Aldemir T, Metzroth K, Denning R (2013) Scenario clustering and dynamic probabilistic risk assessment. Reliab Eng Syst Saf 115:146–160

Moriggia V, Kopa M, Vitali S (2019) Pension fund management with hedging derivatives, stochastic dominance and nodal contamination. Omega 87:127–141

Pflug GC, Pichler A (2015) Convergence of the Smoothed Empirical Process in Nested Distance. Humboldt-Universität zu Berlin, Mathematisch-Naturwissenschaftliche Fakultät II, Institut für Mathematik, https://doi.org/10.18452/8448

Pflug GC, Pichler A (2012) A distance for multistage stochastic optimization models. SIAM J Optim 22(1):1–23

Pflug GC, Pichler A (2014) Multistage stochastic optimization. Springer, Berlin

Pflug GC, Pichler A (2016) From empirical observations to tree models for stochastic optimization: convergence properties. SIAM J Optim 26(3):1715–1740

Powell WB (2014) Clearing the jungle of stochastic optimization. In: Bridging data and decisions. INFORMS, pp 109–137

Rockafellar RT, Uryasev S (2000) Optimization of conditional value-at-risk. J Risk 2:21–42

Rockafellar RT, Uryasev S (2002) Conditional value-at-risk for general loss distributions. J Bank Financ 26(7):1443–1471

Rusý T, Kopa M (2018) An asset-liability management stochastic program of a leasing company. Kybernetika 54(6):1247–1263

Shapiro A, Dentcheva D, Ruszczyński A (2009) Lectures on stochastic programming: modeling and theory. SIAM and Mathematical Programming Society, Philadelphia. https://www2.isye.gatech.edu/people/faculty/Alex_Shapiro/SPbook.pdf

Timonina AV (2015) Multi-stage stochastic optimization: the distance between stochastic scenario processes. CMS 12(1):171–195

Vitali S (2018) Multistage multivariate nested distance: an empirical analysis. Kybernetika 54(6):1184–1200

Vitali S, Moriggia V, Kopa M (2017) Optimal pension fund composition for an Italian private pension plan sponsor. CMS 14(1):135–160

Acknowledgements

Open access funding provided by Università degli Studi di Bergamo within the CRUI-CARE Agreement.


Additional information


The research was partially supported by Czech Science Foundation Grant 18-05631S, by MIUR ex-60% 2019 sci.resp. V. Moriggia, by MIUR ex-60% 2020 sci.resp. V. Moriggia and by MIUR ex-60% 2020 sci.resp. S. Vitali.

Electronic supplementary material

Tables S1–S6 and Figures S1–S5, referred to in the text, are provided as electronic supplementary material.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Horejšová, M., Vitali, S., Kopa, M. et al. Evaluation of scenario reduction algorithms with nested distance. Comput Manag Sci 17, 241–275 (2020). https://doi.org/10.1007/s10287-020-00375-4
