Abstract

The valuation of a real option should preferably include uncertainties in the model, since its value depends on future costs and revenues, which are not perfectly known today. The usual value of the option is defined as the maximal expected (discounted) profit one may achieve under optimal management of the operation. However, this approach also has its limitations, since the models for costs and revenues are quite often subject to model error. Under a prudent valuation, the possible model error should be incorporated into the calculation. In this paper, we consider the valuation of a power plant under ambiguity of probability models for costs and revenues. The valuation is done by stochastic dynamic programming, and on top of it we use a dynamic ambiguity model to obtain the prudent minimax valuation. For the valuation of the power plant under model ambiguity, we introduce a distance based on the Wasserstein distance. Another highlight of this paper is the multiscale approach: decision stages are defined on a weekly basis, while the random costs and revenues appear on a much finer scale. The idea of bridging stochastic processes is used to link the weekly decision scale with the finer simulation scale. The introduced concepts are broadly applicable and not limited to the motivating valuation problem.


1 Introduction

Since the deregulation of the energy market, the question of how to determine the value of a power plant has arisen. One possibility is the traditional approach of valuing the plant in a coordinated way, within a given portfolio of other assets, against one’s customer load. A second approach is to adopt the ideas of real option pricing from finance. The first approach leads to models resembling unit commitment (e.g., van Ackooij et al. 2018), but on a long time scale. Although the actual operation of the power plant can be represented in great detail, it is harder to incorporate other features into the model. This is typically the case for uncertainty, where one ends up with multi-stage mixed-integer programs that are not easily solved. One can also argue that it is unreasonable to model the system as fully coordinated. In contrast, when the power plant is modelled as a real option, i.e., operated in response to a set of market signals, the setting becomes that of perfect competition. Uncertainty is then modelled naturally, but at the expense of treating the plant as an independent production unit, which is less realistic in that respect.

However, the price of the real option may well serve as a financial reference base between two parties, for example between the power plant owner and a trading entity that actually operates on the market. Taking the option pricing perspective, it must be emphasized that energy markets are far less “granular” than equity markets. For instance, on the electricity market one cannot buy a delivery contract for a given hour 6 months from now. The classical pricing-hedging duality argument therefore does not apply. Moreover, when a power plant is operated, generation is locally bound to a given power output level, as a result of either ramping conditions or minimum up/down times. It is therefore reasonable to model the power plant with sufficient realism to keep the above discrepancies minimal. This is the stance we have taken in the current work. It leads us to consider a multiscale stochastic program in the sense of Glanzer and Pflug (2019), i.e., a multistage stochastic optimization problem in which each stage is itself subdivided into a given set of time instants.

To account for uncertainty, we start out with a set of typical stochastic models for the underlying prices, based on multi-factor models (e.g., Clelow and Strickland 2000) driven by Brownian motions. Clearly, such commonly used, idealized modelling assumptions are rather unrealistic. The aim and core of the present paper is thus to relax these strong assumptions by computing distributionally robust solutions to the studied operational problem, and to investigate how the resulting valuation deviates when model ambiguity is considered. Distributionally robust optimization has recently gained a lot of popularity in the literature (see Pflug and Pichler 2014, pp. 232–233, for a review of different approaches). In particular, ambiguity sets based on distance concepts between probability measures (such as the Wasserstein distance) are well supported by theory and frequently applied (e.g., Pflug and Wozabal 2007; Esfahani and Kuhn 2018; Glanzer et al. 2019; Duan et al. 2018; Gao and Kleywegt 2016). However, to the best of our knowledge, the effects of distributional robustness in real-world applications, especially multistage ones, have not yet been investigated.

In order to solve the formulated problem numerically, the given uncertainty model will be discretized on a scenario lattice. The multiscale structure could then simply mean that uncertainty is ignored within a given stage (cf., e.g., Moriggia et al. 2018). More advanced approaches do consider some uncertainty [e.g., the so-called multi-horizon approach originally suggested in Kaut et al. (2014) and subsequently studied and applied in Seljom and Tomasgard (2017), Skar et al. (2016), Werner et al. (2013), Zhonghua et al. (2015), Maggioni et al. (2019)], but the resulting paths do not necessarily connect with subsequent elements in the scenario tree/lattice. Hence, the multi-horizon approach is not appropriate for the present problem: its key requirement, namely that the two time scales may be assumed to run completely independently of each other, is not met. Indeed, we deal with two different granularities of one and the same stochastic process, which reflects the evolution of the underlying market prices. A framework for such situations, in which sub-stage paths in the lattice are carefully connected, has recently been proposed in Glanzer and Pflug (2019). We test the multiscale stochastic programming approach suggested there in the context of the present real-world application.

Although the resulting ideas will be illustrated through the power plant real option framework, their potential use clearly extends beyond this specific application. In terms of contributions, we can state:

-

For the application of real option pricing, we investigate more realistic exercise patterns. Keeping the computational burden low then naturally leads to multiscale stochastic programs. We also consider model ambiguity to mitigate the reliance on fairly idealized models for market prices. From a high-level perspective, we thus extend the literature on real-world applications that deal with two fundamental problems in stochastic programming, namely time scales of multiple granularities and model ambiguity.

-

With respect to multistage model ambiguity, we propose a new concept based on the Wasserstein distance. It is designed with computation in mind, namely in such a way that (on a discrete scenario tree/lattice) a classical backward dynamic programming recursion remains applicable. In particular, the suggested framework leads to solutions that are robust w.r.t. model misspecification in a ball around each conditional transition probability distribution. The size of these balls is controlled uniformly by a single input parameter. We also link the concept to the nested distance in such a way that it inherits a favourable stability property of the latter.

-

In the context of Wasserstein ambiguity sets, we propose a state-dependent metric as a basis for the Wasserstein distance, which accounts for more realistic worst-case scenarios. We argue that the well-appreciated statistical motivation for using Wasserstein balls is not invalidated by doing so.

The paper is organized as follows. Section 2 describes the valuation model and the uncertainty model. As is typical for real-world energy applications, a sound mathematical framework reflecting all peculiarities of the problem requires care in every detail. The underlying uncertainty factors are modelled by a continuous-time stochastic process. However, in view of the nature of the decision problem, we will eventually apply a stochastic dynamic programming algorithm that operates backwards in (discrete) time. To prepare for the computational solution, we therefore discuss all discretization steps required by the multiscale stochastic programming framework that we adopt. Section 3 is dedicated to model ambiguity; we introduce and discuss a new concept that is tailor-made for incorporating model ambiguity into dynamic stochastic optimization models on discrete structures. All numerical experiments and aspects of the computational solution algorithm are given in Sect. 4. Section 5 concludes. Some technical details and examples are deferred to the “Appendix”.

2 The model

Our valuation problem belongs to the class of discrete time sequential decision problems with finite horizon T, decisions \(u_t\), state variables \(z_t\), and a Markovian driving process \(\xi _t\):

Here T is the number of decision stages and \(g_t(z_t,u_t,\xi _t)\) is the state transition function. The driving stochastic process \(\xi _t\) is assumed to belong to \(L_1(\Omega _t,\mathcal {F}_t ; \mathbb {R}^{d_2})\), and the feasible decisions at stage t are given by the set \({\mathcal {U}}_t(z_t) \subseteq {\mathbb {R}}^m\). The set of all reachable states is denoted by \(\mathcal {Z}_t \subseteq \mathbb {R}^{d_1}\). The stage-wise profit function \(h_t : \mathbb {R}^{d_1} \times \mathbb {R}^m \times \mathbb {R}^{d_2} \rightarrow \mathbb {R}\) is continuous and satisfies the following growth condition:

for all \((z,u,x) \in \mathbb {R}^{d_1 + m + d_2}\) and some constant K. Throughout the paper, we choose the discount factor \(\beta _t = \beta ^t\) for some constant \(\beta \in (0,1]\). Any decision \(u_t\) to be made at time t may only depend on the current state \(z_t\) and the most recent observation of exogenous information \(\xi _{t-1}\); this is the non-anticipativity condition. The initial values \(\xi _0\) and \(z_0\) of the random process and of the state vector are assumed to be deterministic.

In our application, the decisions \(u_t\) represent the weekly electricity production plan for a thermal power plant. The latter is subject to many technical constraints, such as minimum up/down times or ramping constraints. Fine-grained constraints can be incorporated into the model by increasing the dimension of the state vector and keeping track of the number of hours the plant has been offline/online. Such state representations of constraints on generation assets have received attention in the literature (see, e.g., Martínez et al. 2008; Frangioni and Gentile 2006; Frangioni et al. 2008 and the references therein). A finer granularity of the time dimension and/or the state variable would result in a significant increase in the number of time steps T (and a reduction of the time step size \(\Delta t\)) as well as in the dimension of \(z_t\). For this reason, we introduce here the idea of a multiscale model: while the production decisions \(u_t\) are made on a weekly scale, the production costs and revenues are calculated on a finer time scale. To make the dynamic optimization algorithm tractable, we assume that the decisions, i.e., the production profiles, must be chosen from a pre-specified finite set. The profiles are set up such that they reflect realistic operating conditions and key choices, such as running at minimum stable generation (MSG) during off-peak hours.

Before presenting the specific instantiation of (1), let us emphasize once more that the idea of subdividing a “stage” to mitigate (the curse of) dimensionality extends well beyond the presented application. Other typical energy problems with similar mechanisms are cascaded reservoir management problems [e.g., see the extensive discussion in van Ackooij et al. (2014) as well as Escudero et al. (1996, 1999), Zéphyr et al. (2015), Cervellera et al. (2006), Aasgård et al. (2014), Séguin et al. (2017), Fleten et al. (2011)].

2.1 Instantiation of the problem: the valuation model

In our instantiation of problem (1), the time horizon spans T weeks. Each week \(t=1,...,T\) is subdivided into S equally sized blocks of hours. In terms of the notation introduced earlier, we now present the following specific versions:

-

the price process \(\xi _{t,s}=(\xi ^e_{t,s}, \xi ^f_{t,s} , \xi ^c_{t,s}) \in {\mathbb {R}}^3 \) represents the electricity price in GBP per megawatt hour (£/MWh), the fuel price in USD per tonne ($/tonne(fuel)) and the \(\text{ CO }_2\) allowance price in EUR per tonne (€/tonne(carbon)), for each block \(s=0, \dots , S\) within week t. The values within week t are \(\xi _{t,s}\) for \(s=1,\dots , S\), with the convention that \(\xi _{t,S} =\xi _{t+1,0} \) coincides with the initial prices of the next stage; this ensures continuity of prices between weeks. The information up to stage t is to be understood as the information up to the value \(\xi _{t,0}\).

-

the control \(u_t = \{u_{t,s}\}_{s=0}^{S-1} \in {\mathcal {U}} \subseteq {\mathbb {R}}_+^S\) represents the production profile vector for week t, where \(u_{t,s} \) is given in megawatts (MW) and denotes the production in block s. The profile \(u_t\) is determined before any intra-week values of week t are observed; hence \(u_t\) is \(\xi _{t,0}\)-measurable.

-

the state vector \(z_t\) is two-dimensional, i.e., \(z_t = (x_t,y_t)\) with

-

\(x_t\in {\mathbb {R}}_+\) representing the amount of \(\text{ CO }_2\) allowances (measured in tonnes of carbon) remaining for week t.

-

\(y_t \in {\mathbb {Z}}_+\) representing the number of hours the power plant was offline before the beginning of week t.

-

The objective function \(h_t : \mathbb {R}\times {\mathbb {Z}}_+ \times \mathbb {R}_+^S \times \mathbb {R}_+ \rightarrow \mathbb {R}\) is given by

The profit at each block s within week t is defined as follows:

where \({\bar{u}}_t := \sum _{s=0}^{S-1} u_{t,s}\). Costs incurred are based on the following component functions:

-

\(f^{\text{ CO }_2} : \mathbb {R}\times \mathbb {R}_+\times \mathbb {R}_+ \rightarrow \mathbb {R}_+\) gives the cost of buying more \(\text{ CO }_2\) allowances at the beginning of week \(t\,\) (before the values within week t are known);

-

\(f^{\text{ start }} : {\mathbb {Z}}_+^2 \times \mathbb {R}_+^S \times \mathbb {R}_+^2 \rightarrow \mathbb {R}_+\) gives the start-up cost at block s (one of its arguments) if the power plant has been offline before that block;

-

\(f^{\text{ tr }} : \mathbb {R}^S_+ \rightarrow \mathbb {R}_+\) represents fuel transportation costs linked to a selected production profile at the beginning of week t.

Table 1 summarizes constants used above or in the sequel.

We now describe how each state variable is updated, focusing first on the variables related to the \(\text{ CO }_2\) allowances. Whereas a given set of allowances used to be allocated free of charge, allowances are now, in principle, obtained through an auction process, which is not modelled here. Within our model, the variable \(I_t\) represents the number of additional \(\text{ CO }_2\) allowances received from the regulator at the beginning of week t (measured in tonnes of carbon). This variable is typically zero and takes a relatively large value only at the rare instants when new allowances are obtained.

Now, \(H_4\,{\bar{u}}_t\,\Delta s\) is the amount of \(\text{ CO }_2\) generated during stage t. Hence, with \(x_t\) being the remaining stock level and \(I_t\) the “inflows”, the amount of allowances one needs to buy at stage t is \(\alpha _t = [ x_t +I_{t} - H_4 \,{\bar{u}}_t\,\Delta s ]_{-}\) (Footnote 1). If \(\alpha _t\) is positive, we follow a procurement strategy based on a low/middle/high price range partition resulting from some pre-market analysis. Prices in the interval \([{\underline{b}}, {\overline{b}}]\), with \(0 \le {\underline{b}} < {\overline{b}}\), are considered middle range. This is formalized as follows:

where \(C_\alpha \) is a constant that determines the size of the extra amount to be bought.
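The shortfall \(\alpha _t\) and the price-range classification can be sketched in a few lines of Python. This is a minimal illustration, assuming that \([a]_{-} = \max (-a, 0)\) denotes the negative part; the function names and all numbers are hypothetical. The amount actually bought in each price range (which involves \(C_\alpha \)) is deliberately left out, since it is specified by the procurement rule.

```python
def negative_part(a):
    """[a]_- = max(-a, 0); assumed meaning of the bracket notation."""
    return max(-a, 0.0)


def allowance_shortfall(x_t, inflow_t, profile, h4, delta_s):
    """alpha_t = [x_t + I_t - H_4 * ubar_t * delta_s]_- in tonnes of carbon.

    profile -- production u_{t,s} (MW) for the blocks s = 0, ..., S-1
    h4      -- emission factor H_4 (from Table 1 in practice; an input here)
    delta_s -- block length; its time unit must match the one assumed by h4
    """
    ubar_t = sum(profile)                      # \bar u_t = sum_s u_{t,s}
    emissions = h4 * ubar_t * delta_s          # CO2 generated during week t
    return negative_part(x_t + inflow_t - emissions)


def price_range(price, b_low, b_high):
    """Classify a CO2 price as low / middle / high; [b_low, b_high] is middle."""
    if not 0 <= b_low < b_high:
        raise ValueError("expected 0 <= b_low < b_high")
    if price < b_low:
        return "low"
    if price <= b_high:
        return "middle"
    return "high"


# Hypothetical numbers: 42 blocks of 4 h at 400 MW, H_4 = 0.9 t/MWh.
alpha = allowance_shortfall(x_t=50_000, inflow_t=0, profile=[400.0] * 42,
                            h4=0.9, delta_s=4.0)
label = price_range(25.0, b_low=20.0, b_high=30.0)
```

With these inputs the week emits 60 480 t, so the stock of 50 000 t falls short and \(\alpha _t\) is positive; a larger stock would give \(\alpha _t = 0\) and no purchase.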

Recall that we implicitly assume that new allowances are always bought before the prices within week t are known. The cost of buying additional certificates for week t is then given by

The amount \(x_{t+1}\) of allowances remaining after this purchase is updated as follows:

The second state variable accounts for start-ups and related costs. The latter depend on the amount of time the power plant was offline. In our model, this time frame is partitioned into C different intervals of hours, denoted by \((c_j, c_{j+1}]\) for \(j=1, \dots , C-1\) and \((c_{C}, \infty )\), with \(c_1=0\), over which the start-up costs are assumed to be constant. The associated costs consist of works power, fuel burnt and extra costs. Depending on \(y_t\) and the chosen profile \(u_t\), one can readily determine in which interval each start-up of \(u_t\) falls.
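The classification of start-ups into these offline-time intervals can be sketched as follows. This is an illustrative Python sketch, not the authors' implementation: it assumes that a block with zero output means the plant is offline and that offline time accumulates in whole blocks. It also returns the offline hours at the end of the week as a plausible stand-in for \(y_{t+1}\); this is an assumption, since the exact update formula is not reproduced here. The breakpoint values in the example are hypothetical.

```python
from bisect import bisect_left


def classify_startups(y_t, profile, block_hours, breakpoints):
    """Find every start-up in a weekly profile and its offline-time interval.

    y_t         -- hours the plant was offline before the week starts
    profile     -- output u_{t,s} for s = 0, ..., S-1 (0 is taken to mean offline)
    block_hours -- length of one block in hours
    breakpoints -- [c_1, ..., c_C] with c_1 = 0; interval j (1-based) is
                   (c_j, c_{j+1}] for j < C and (c_C, inf) for j = C
    Returns a list of (block s, interval j, offline hours) and the offline
    hours at the end of the week (assumed here to play the role of y_{t+1}).
    """
    startups = []
    offline = y_t
    for s, u in enumerate(profile):
        if u > 0:
            if offline > 0:                             # off before s, on at s
                j = bisect_left(breakpoints, offline)   # number of c_k < offline
                startups.append((s, j, offline))
            offline = 0
        else:
            offline += block_hours
    return startups, offline


# Hypothetical breakpoints (hot / warm / cold start) and a short profile.
starts, y_next = classify_startups(y_t=10, profile=[0, 0, 450, 450, 0, 450],
                                   block_hours=4, breakpoints=[0, 8, 48])
```

Because `bisect_left` on the sorted breakpoints counts the \(c_k\) strictly below the offline time, it returns exactly the index j with \(c_j < \text{offline} \le c_{j+1}\).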

The induced start-up costs at block s within week t are given by:

where

-

\({\overline{W}}_s(y_t,u_t)\) is the amount of works power (MWh) for a start-up at s;

-

\({\overline{B}}_s(y_t,u_t)\) is the amount of solid fuel burnt (GJ) during a start-up at s;

-

\({\overline{E}}_s(y_t,u_t)\) denotes engineering and imbalance costs (£) during a start-up at s.

The updated state \(y_{t+1}\) is given by:

where \(\max \{ \emptyset \}=0\).

As a further cost factor, we account for the fuel transportation costs associated with each profile:

where the unit transportation cost (in £ per tonne of fuel) is given by the constant factor \(C^{tr}\).

2.2 Underlying price processes

To model the underlying uncertainties, i.e., the stochastic price evolution of electricity, fuel and \(\text{ CO }_2\) allowances, we postulate a version of a classical two-factor model. Such models are commonly used for modelling commodity markets (cf. Clelow and Strickland 2000; Ewald et al. 2018; Ribeiro and Hodges 2004; Farkas et al. 2017). More specifically, in our model the electricity price behaviour is governed by a long-term and a short-term factor, whereas the fuel and \(\text{ CO }_2\) allowance prices evolve according to one-factor models. In summary, we obtain a three-dimensional geometric Brownian motion model driven by four correlated one-dimensional Brownian components \(B^{e,\text{ sh }},B^{e,\text{ lo }},B^{f},B^{c}\). In particular, the dynamics of the underlying stochastic process F are described by the SDE

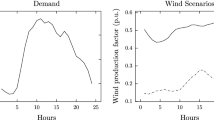

where the superscripts sh and lo refer to short term and long term, respectively. Volatility is allowed to be time-dependent but deterministic. The double-index notation \(F_{t,t}\) expresses the fact that we model the spot price as a special case of the forward price. In particular, the forward price \(F_{0,t}\) (as observed in the market at time 0) enters the solution of (6) at time t. In this way, we account for the well-known seasonality (peak and off-peak hours) inherent in electricity prices. Figure 1 visualizes this typical effect. To avoid notational clutter, we henceforth write \(\xi _t\) with a single index for the spot price, as shorthand for \(F_{t,t}\). Note that we are dealing with a continuous-time stochastic model here. This index notation should not be confused with the discrete-time multiscale indices used in the valuation model; the context will always make clear what is meant.

Regarding the dependence structure between the underlying assets, we allow for a time dependent correlation matrix

Using the (lower triangular) matrix \(L_t\) resulting from a Cholesky decomposition of \(\rho _t\), we may replace the Brownian factors \([dB_t^{e,\text{ sh }}, dB_t^{f}, dB_t^{c}, dB_t^{e,\text{ lo }}]^\top \) by the matrix-vector product \(L_t \times [dW_t^{(1)}, dW_t^{(2)}, dW_t^{(3)}, dW_t^{(4)}]^\top \), such that the underlying prices are driven by independent Wiener processes \(W_1^{\text{ s }}, W_2, W_3, W_1^{\text{ l }}\). Multiplying the volatility matrix in (6) with \(L_t\), we can write the model in the form

The non-zero components of the above coefficient matrix involve lengthy combinations of the various correlations. The precise parameters can be found in the “Appendix”.
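The Cholesky step described above can be illustrated with NumPy: drawing independent Gaussian increments \(dW\) and multiplying them by the lower-triangular factor \(L_t\) yields increments with correlation \(\rho _t\). The correlation values below are made up for illustration (they are not the calibrated parameters from the “Appendix”), and \(\rho \) is held constant over the simulated steps.

```python
import numpy as np

# Order of the factors as in the text: [e,sh], f, c, [e,lo].  Hypothetical values.
rho = np.array([
    [1.0, 0.3, 0.2, 0.5],
    [0.3, 1.0, 0.4, 0.1],
    [0.2, 0.4, 1.0, 0.0],
    [0.5, 0.1, 0.0, 1.0],
])


def correlated_increments(rho, dt, n_steps, rng):
    """Return n_steps rows of correlated Brownian increments dB = L dW."""
    L = np.linalg.cholesky(rho)              # lower triangular, rho = L @ L.T
    dW = rng.standard_normal((n_steps, rho.shape[0])) * np.sqrt(dt)
    return dW @ L.T                          # row-wise version of L @ dW


rng = np.random.default_rng(seed=1)
dB = correlated_increments(rho, dt=1 / 52, n_steps=200_000, rng=rng)
empirical_rho = np.corrcoef(dB, rowvar=False)
```

The empirical correlation of the simulated increments reproduces \(\rho \) up to sampling error, which is a quick sanity check when the correlation matrix is time-dependent and refactorized at each step.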

The solution of SDEs of the form (7) is well known to be of geometric Brownian motion type (see, e.g., Oksendal 2000, p. 62). In particular, the random vector \(\xi _t = [\xi _t^{e},\xi _t^{f},\xi _t^{c}]\) follows a three-dimensional log-normal distribution. The corresponding parameters can again be found in the “Appendix”.

2.2.1 Discretization and the associated bridge process

For our numerical solution framework, which is discussed in detail in Sect. 4, the process \(\xi \) is first discretized in all decision stages. Then, an approximate solution of the problem is computed by stochastic dynamic programming with a backward recursion. In each decision stage, the algorithm relies on the expected profit/loss associated with each possible decision for the observation blocks of the following week. To compute these values, we exploit the structure of the valuation model, the uncertainty model and the backward recursion. In particular, we are able to compute the expected profits in closed form.

Let us start with the discretization step. To account for the two different time scales explained in Sect. 2.1, namely the weekly decision scale and the much finer intra-week observation scale, we use the notation \(\xi _{t,s}\), where \(t=0,\ldots ,T\,\) runs in weeks and \(s=0,\ldots ,S\) in hour-blocks of equal size \(\Delta s\) (such that \(t+S\cdot \Delta s = t+1)\). Since intra-week data of fuel and \(\text{ CO }_2\) allowance prices, if available at all, typically show a rather stable evolution with low fluctuations, we assume those prices to be constant from Monday to Sunday. In contrast, the electricity price is truly stochastic even on a fine time scale. Looking at the expected profit function (2) at some block s during a week t, it turns out that, under our assumptions, the problem reduces to computing the expected value of the electricity price \(\xi _{t,s}^e\,\) given both the initial value \(\xi _{t,0}^e\) and the final value \(\xi _{t,S}^e\) of week t. This is because the function \(h_t(\cdot )\) is linear in \(\xi _{t,s}^e\). Mathematically speaking, we are left with computing, for all \(s=1,\ldots ,S\), the conditional expected value at time \(t+s\cdot \Delta s\) of the stochastic bridge process linking the values \(\xi _{t,0}^e\) and \(\xi _{t,S}^e\). All other parts can be computed in a straightforward way.

The one-dimensional process \(\xi _{t,s}^e\) follows a univariate lognormal distribution. Thus, its transition density \(\delta \) is available in analytical terms and the transition density of the associated bridge process can be computed explicitly. Let an initial value \(\eta _1\) of the process at the beginning of some week t and a final value \(\eta _2\) at the end of that week be given (i.e., \(\xi _{t,0}^{e}=\eta _1\) and \(\xi _{t,S}^{e}=\eta _2\)). Then, the bridge process transition density, at time \(s \in [0,S]\), is given by

where

In particular, we get for the conditional expectation

Let us emphasize that the above analytical tractability is not due to our restriction of the intra-week stochasticity to one dimension (see Glanzer and Pflug 2019 for a treatment of the more general multi-dimensional case). This restriction is purely motivated by data.
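To illustrate how such a conditional expectation can be evaluated, the following Python sketch treats the simplest case of a geometric Brownian motion with constant volatility \(\sigma \) within the week (an assumption; the general formula may involve time-dependent parameters). With elapsed time \(\tau = s\,\Delta s\) and week length \(\tau _S = S\,\Delta s\), the logarithm of the process conditioned on both endpoints is a Brownian bridge: it is Gaussian with mean \(\log \eta _1 + (\tau /\tau _S)(\log \eta _2 - \log \eta _1)\) and variance \(\sigma ^2 \tau (\tau _S - \tau )/\tau _S\), and a constant drift drops out. The lognormal mean formula then gives the conditional expectation. The function name and inputs are hypothetical.

```python
import math


def bridge_mean(eta1, eta2, s, S, sigma, delta_s):
    """E[xi_{t,s} | xi_{t,0} = eta1, xi_{t,S} = eta2] for a GBM bridge.

    Assumes constant volatility sigma (per unit of time) within the week;
    tau = s * delta_s is the elapsed time, tau_S = S * delta_s the week length.
    """
    if not 0 <= s <= S:
        raise ValueError("s must lie in [0, S]")
    tau, tau_S = s * delta_s, S * delta_s
    # log-price given both endpoints: Brownian bridge (the drift cancels)
    mean_log = math.log(eta1) + (tau / tau_S) * (math.log(eta2) - math.log(eta1))
    var_log = sigma ** 2 * tau * (tau_S - tau) / tau_S
    return math.exp(mean_log + 0.5 * var_log)   # mean of a lognormal variable


# Hypothetical inputs: 42 four-hour blocks, prices in GBP/MWh.
profile_of_means = [bridge_mean(50.0, 60.0, s, 42, sigma=0.05, delta_s=4.0)
                    for s in range(43)]
```

The endpoints are reproduced exactly, and for \(\sigma > 0\) the interior means lie above the geometric interpolation \(\eta _1^{1-\tau /\tau _S}\eta _2^{\tau /\tau _S}\) because of the variance term.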

Figure 2 illustrates a set of sample paths from the bridge process, which starts and ends in the forward prices corresponding to two consecutive weeks. The intermediate forward prices are shown for comparison of the seasonal behaviour.

3 Ambiguity for dynamic stochastic optimization models

Classical stochastic dynamic programming theory allows (1) to be solved backwards in time on the basis of the following recursion scheme:

where \(V_T (z_{T}, \xi _{T}) \equiv 0\), and \(z_0\) and \(\xi _0\) are given.

Let \(\xi \) be a Markovian process defined on a finite state space \(\Xi _0 \times \dots \times \Xi _{T}\), where on each \(\Xi _t\) there is a distance \(d_t\). Let the cardinality of \(\Xi _t\) be \(N_t\) with \(N_0=1\) (typically nondecreasing in t). Then the transition matrices \(P_t\), \(t=0, \dots , T-1\) are of the form \(N_t \times N_{t+1}\), where the \(i-\)th row of the matrix \(P_t\) is denoted by \(p_{t}(i)\, \), for all \( i=1, \dots , N_t\). Notice that each row \(p_{t}(i)\) describes a probability measure on the metric space \((\Xi _{t+1},d_{t+1})\).

Let \(\xi _{t}^i \in \Xi _t\,\) be given. Then, the conditional probability to transition to \(\xi _{t+1}^j \in \Xi _{t+1}\) is given by the jth element of the row vector \(p_{t}(i)\), denoted as \(p_{t}(i,j)\) for \(j=1,\dots , N_{t+1}\) and \(i=1, \dots , N_t\). In this discrete case, the objective of the recursion in (9) can be written as
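The discrete backward recursion can be sketched generically in Python. In this minimal sketch, the state space, the decision sets and the functions `profit` and `transition` are hypothetical placeholders supplied by the user. The stage profit may depend on both the current node i and the successor node j, which is one way (our assumption) to let a bridge-based expected intra-week profit enter the recursion. Discounting with \(\beta _t = \beta ^t\) is implemented by applying one factor \(\beta \) per stage.

```python
import numpy as np


def backward_recursion(P, states, decisions, profit, transition, beta):
    """Backward DP on a scenario lattice with finitely many states and decisions.

    P          -- list of T transition matrices; P[t] has shape (N_t, N_{t+1})
    states     -- finite collection of hashable states z
    decisions  -- decisions(t, z) -> iterable of feasible decisions u
    profit     -- profit(t, z, u, i, j) -> stage profit for transition i -> j
    transition -- transition(t, z, u, i) -> next state (must be in states)
    beta       -- one-period discount factor in (0, 1]
    Returns value functions V[t][z] (arrays over lattice nodes) and a policy.
    """
    T = len(P)
    V = [None] * (T + 1)
    V[T] = {z: np.zeros(P[-1].shape[1]) for z in states}   # V_T = 0
    policy = [dict() for _ in range(T)]
    for t in range(T - 1, -1, -1):
        n_t, n_next = P[t].shape
        V[t] = {z: np.full(n_t, -np.inf) for z in states}
        for z in states:
            for i in range(n_t):
                for u in decisions(t, z):
                    z_next = transition(t, z, u, i)
                    h = np.array([profit(t, z, u, i, j) for j in range(n_next)])
                    # sum_j p_t(i, j) * (h_t + beta * V_{t+1}(z_next, j))
                    value = P[t][i] @ (h + beta * V[t + 1][z_next])
                    if value > V[t][z][i]:
                        V[t][z][i] = value
                        policy[t][(z, i)] = u
    return V, policy


# Toy example (hypothetical): one root, two price nodes, produce (1) or not (0).
prices = [np.array([2.0]), np.array([1.0, 3.0])]
P = [np.array([[0.5, 0.5]])]
V, policy = backward_recursion(
    P, states=[0], decisions=lambda t, z: (0, 1),
    profit=lambda t, z, u, i, j: u * (prices[t + 1][j] - 1.5),
    transition=lambda t, z, u, i: 0, beta=1.0)
```

In the toy example, producing yields \(0.5\cdot (-0.5) + 0.5\cdot 1.5 = 0.5 > 0\), so the recursion selects production at the root.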

3.1 A new concept: uniform Wasserstein distances

In order to consider model ambiguity, we look for alternative transition matrices \(Q_t\), which are close to a given matrix \(P_t\). Let us first recall the general definition of the Wasserstein distance for discrete models.

Definition 3.1

Let \(P = \sum _{i=1}^n P_i\,\delta _{\xi ^i}\) and \(Q = \sum _{j=1}^{{\tilde{n}}} Q_j\,\delta _{{\tilde{\xi }}^j}\) be two discrete measures sitting on the points \(\{\xi ^1,\dots , \xi ^n \}\subset \Xi \) and \(\{{\tilde{\xi }}^1,\dots , {\tilde{\xi }}^{{\tilde{n}}} \}\subset {\tilde{\Xi }} \), respectively. Then, the Wasserstein distance between P and Q is defined as

where \(\pi = \sum _{i,j} \pi _{i,j} \cdot \delta _{\xi ^i, {\tilde{\xi }}^j}\) is a probability measure on \( \Xi \times {\tilde{\Xi }}\) with marginals P and Q, and where \(D_{ij}\) is the distance between the respective atoms \(\xi ^i\) and \({\tilde{\xi }}^j\).

We now measure the closeness between (discrete) multistage models \({\mathbb {P}}\) and \({\mathbb {Q}}\) by a uniform Wasserstein distance concept. The rows of the alternative matrices \(Q_t\) are denoted by \(q_{t}(i), \, i=1,\dots , N_t\). The measure \(q_t(i)\) sits on at most \(N_{t+1}\) points in \(\Xi _{t+1}\) and satisfies \(\mathrm{supp}(q_t(i)) \subseteq \mathrm{supp}(p_t(i))\). We then define the distance

which can be interpreted as a uniform version of scenariowise Wasserstein distances. An \(\varepsilon \)-ball around \({\mathbb {P}}\) is characterized by the fact that all its members \({\mathbb {Q}}\) satisfy

for all \(t=0,\ldots ,T-1\,\) and all \(i=1,\ldots , N_t\).
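Definition 3.1 is a small linear program, and the uniform distance is the maximum of such programs over all rows of all transition matrices. A minimal sketch using SciPy's `linprog` (HiGHS backend) follows. It assumes that each row \(q_t(i)\) is given on the same grid \(\Xi _{t+1}\) as \(p_t(i)\), with a user-supplied distance matrix on \(\Xi _{t+1}\), and it does not check the support condition; the function names are our own.

```python
import numpy as np
from scipy.optimize import linprog


def wasserstein(p, q, D):
    """min sum_ij pi_ij D_ij over couplings pi >= 0 with marginals p and q."""
    p, q, D = np.asarray(p, float), np.asarray(q, float), np.asarray(D, float)
    n, m = D.shape
    rows = np.kron(np.eye(n), np.ones((1, m)))   # sum_j pi_ij = p_i
    cols = np.kron(np.ones((1, n)), np.eye(m))   # sum_i pi_ij = q_j
    # The last column constraint is implied by the others (total mass 1).
    A_eq = np.vstack([rows, cols[:-1]])
    b_eq = np.concatenate([p, q[:-1]])
    res = linprog(D.ravel(), A_eq=A_eq, b_eq=b_eq, bounds=(0, None),
                  method="highs")
    if not res.success:
        raise RuntimeError(res.message)
    return res.fun


def uniform_wasserstein(P_mats, Q_mats, D_mats):
    """max over t and rows i of W(p_t(i), q_t(i)); D_mats[t] lives on Xi_{t+1}."""
    return max(wasserstein(P[i], Q[i], D)
               for P, Q, D in zip(P_mats, Q_mats, D_mats)
               for i in range(P.shape[0]))


# Hypothetical one-stage lattice: two successor nodes at prices 0 and 1.
D1 = np.array([[0.0, 1.0], [1.0, 0.0]])
P0 = np.array([[0.5, 0.5]])
Q0 = np.array([[0.7, 0.3]])
dist = uniform_wasserstein([P0], [Q0], [D1])
in_ball = dist <= 0.25          # Q lies in the 0.25-ball around P
```

Here 0.2 units of mass move a distance of 1, so the distance is 0.2 and the alternative model lies inside a ball of radius 0.25.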

When introducing ambiguity into the model, we would like to solve problem (1) with the objective function replaced by:

where \({\mathbb {E}}_{\mathbb {Q}}\) denotes the expectation with respect to the measure \(\mathbb {Q}\).

Our choice of the multistage distance makes it possible to keep the decomposed structure of the backward recursion, which reads:

Hence, the ambiguity approach simply extends the max model to a maximin model. The ambiguous model can also be seen as a risk-averse model, in contrast to the basic risk-neutral model. If the distance is not of this decomposable form, the backward recursion does not decompose scenariowise, and one has to find all optimal decisions in one very large algorithm that decomposes stagewise but not scenariowise. However, decomposability is the key feature of successful methods for dynamic decision problems; hence, our concept is strongly motivated by its favourable computational properties. Moreover, as we discuss now, under a mild regularity condition (mild in the sense that it always holds for discrete models, which are the basis of the whole computational framework), it can still be shown that optimal solutions are close if the underlying models are close w.r.t. the uniform Wasserstein distance.
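For a single node i, the inner minimization of the maximin recursion is again a transport linear program: one minimizes \(\sum _j q_j v_j\) over all \(q\) that can be reached from \(p_t(i)\) by a transport plan of cost at most \(\varepsilon \), with mass allowed to move only within \(\mathrm{supp}(p_t(i))\). The Python sketch below uses SciPy's `linprog`; the vector `v` stands for the continuation values (stage profit plus discounted value function) at the successor nodes and is an assumed input.

```python
import numpy as np
from scipy.optimize import linprog


def worst_case_expectation(p, v, D, eps):
    """min { sum_j q_j v_j : W(p, q) <= eps, supp(q) within supp(p) }."""
    p, v, D = (np.asarray(a, float) for a in (p, v, D))
    idx = np.flatnonzero(p > 0)                  # restrict to supp(p)
    p, v, D = p[idx], v[idx], D[np.ix_(idx, idx)]
    n = len(idx)
    c = np.tile(v, n)                            # plan pi_kj moves mass onto v_j
    A_eq = np.kron(np.eye(n), np.ones((1, n)))   # sum_j pi_kj = p_k
    A_ub = D.reshape(1, -1)                      # sum_kj pi_kj D_kj <= eps
    res = linprog(c, A_ub=A_ub, b_ub=[eps], A_eq=A_eq, b_eq=p,
                  bounds=(0, None), method="highs")
    if not res.success:
        raise RuntimeError(res.message)
    return res.fun


p = [0.5, 0.5]
v = [0.0, 1.0]                                   # hypothetical continuation values
D = [[0.0, 1.0], [1.0, 0.0]]
nominal = worst_case_expectation(p, v, D, eps=0.0)   # plain expectation
robust = worst_case_expectation(p, v, D, eps=0.2)
```

In a maximin backward recursion, this value replaces the nominal expectation \(\sum _j p_t(i,j)\, v_j\) for each candidate decision, and the decision maximizing it is kept. In the example, the adversary shifts 0.2 of the mass from the better successor to the worse one, lowering the expectation from 0.5 to 0.3.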

The general distance concept for stochastic processes (including their discrete representation in the form of scenario trees) is the nested distance introduced in Pflug (2010) and Pflug and Pichler (2012) as a multistage generalization of the classical Wasserstein distance (Footnote 2). In our case, we have a Markov process, which can be seen as a lattice process. Notice that a lattice can be interpreted as a compressed form of a tree: it can always be “unfolded” into a tree representing the same filtration structure, by splitting each node according to the number of incoming arcs. Thus, all results that hold for trees hold for lattices as well. The uniform Wasserstein distance introduced above is given by the maximum Wasserstein distance over all conditional transitions. The following stability result holds.
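The unfolding of a lattice into a tree can be made concrete with a short Python sketch: each tree node is identified with the path of lattice indices leading to it, so a lattice node reachable along k different paths becomes k tree nodes, all carrying the same conditional transition probabilities. Representing the tree as a dictionary of paths is our own illustrative choice.

```python
import numpy as np


def unfold_lattice(P):
    """Unfold a lattice with transition matrices P[0..T-1] into a tree.

    Each tree node is the path (i_0, ..., i_t) of lattice indices leading to it.
    Returns a list over stages of dicts mapping path -> path probability.
    """
    stages = [{(0,): 1.0}]                      # N_0 = 1: a single root
    for Pt in P:
        nxt = {}
        for path, prob in stages[-1].items():
            row = Pt[path[-1]]                  # conditional law at this node
            for j in np.flatnonzero(row > 0):   # only arcs that exist
                nxt[path + (int(j),)] = prob * float(row[j])
        stages.append(nxt)
    return stages


# Hypothetical lattice with two nodes per stage after the root.
P = [np.array([[0.5, 0.5]]),
     np.array([[0.2, 0.8], [0.6, 0.4]])]
tree = unfold_lattice(P)
```

The lattice has two nodes at stage 2, whereas the unfolded tree has four, one for each path; the path probabilities still sum to one.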

Proposition 3.1

Let \({\mathbb {P}}\) and \(\tilde{{\mathbb {P}}}\) be two discrete Markovian probability models defined on the filtered space \((\Omega , \sigma (\xi ))\). Assume the following Lipschitz condition regarding \({\mathbb {P}}\,\) to hold for all \(t=0, \dots , T-1\) and all values \(\xi _t^{i},\, \xi _t^{j}\), where \(i,j = 1,\dots , N_t\):

for some constants \(K_t\in {\mathbb {R}}\). Consider the generic multistage stochastic optimization problem

where the (nonanticipative) decisions x lie in some convex set and where the function \(c(\cdot ,\cdot )\) is convex in x and 1-Lipschitz w.r.t. \(\xi \). Then the relation

holds, where \(\mathsf{dl}(\cdot ,\cdot )\) denotes the nested distance, and where the constant K is given by

Proof

The first inequality is a well-known result from (Pflug and Pichler 2012, Th. 11). The statement then follows readily from (Pflug and Pichler 2014, Lem. 4.27), by using \(\mathfrak {W}^{\infty }({\mathbb {P}}, \tilde{{\mathbb {P}}})\) as a uniform bound for \(\mathfrak {W}(P_t(\cdot \vert \xi _t), {\tilde{P}}_t(\cdot \vert \xi _t))\) over all t. \(\square \)

Remark

Notice that for discrete Markov chain models the assumption in Proposition 3.1 always holds, as one can simply choose the ergodic coefficient
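For a scalar lattice with \(d_t(\xi ,\zeta ) = \vert \xi - \zeta \vert \), the smallest constant in a Lipschitz condition of the form \(\mathfrak {W}(p_t(i), p_t(j)) \le K_t\, d_t(\xi _t^i, \xi _t^j)\) is a maximum of finitely many ratios, which is why such a constant always exists on a finite lattice with distinct node values. The Python sketch below computes it under that assumed form of the condition (the displayed condition and ergodic coefficient are not reproduced here). In one dimension the Wasserstein distance reduces to the area between the two distribution functions, so no linear program is needed; the function names are hypothetical.

```python
import numpy as np


def wasserstein_1d(x, p, q):
    """W1 for two distributions on the same sorted grid x, with d = |.|."""
    x = np.asarray(x, float)
    cdf_gap = np.abs(np.cumsum(p) - np.cumsum(q))[:-1]
    return float(np.sum(cdf_gap * np.diff(x)))


def lipschitz_constant(x_t, x_next, P_t):
    """Smallest K_t with W(p_t(i), p_t(j)) <= K_t |x_t[i] - x_t[j]| for all i, j."""
    n = len(x_t)
    ratios = [wasserstein_1d(x_next, P_t[i], P_t[j]) / abs(x_t[i] - x_t[j])
              for i in range(n) for j in range(n) if i != j]
    return max(ratios, default=0.0)     # N_t = 1 (root): the condition is void


x_t, x_next = [0.0, 1.0], [0.0, 1.0, 2.0]
P_t = np.array([[1.0, 0.0, 0.0], [0.0, 0.0, 1.0]])
K_t = lipschitz_constant(x_t, x_next, P_t)
```

In the example, the two rows put all mass on successor values 0 and 2, so their distance is 2 while the current nodes are 1 apart, giving \(K_t = 2\).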

Remark

In the above construction, all models contained in the ambiguity set share exactly the same tree structure and node values. Thus, one might conjecture at first glance that the nested distance can be bounded by a simple sum of the stagewise maxima of conditional Wasserstein distances, weighted by the number of subtrees at the respective stage. A simple example in the “Appendix” shows that such a construction does not work in general.

3.2 State-dependent distances

In practice, the worst-case model for an upcoming period may often depend on the current state. In the model considered in the present paper (cf. Sect. 2.1), we decide about the procurement of additional \(\text{ CO }_2\) allowances only at the beginning of each stage. In particular, we do not buy any if the current stock is sufficient for whatever we may do during the subsequent week, regardless of their market price. If we neglect this when searching for the optimal distributionally robust production profile, the worst case may reflect a variation in the \(\text{ CO }_2\) allowance price dimension that in fact has no impact on our optimal decision. Thus, given that our stock of \(\text{ CO }_2\) allowances is sufficient, we modify the distance on the underlying three-dimensional space by projecting onto the electricity price and fuel price dimensions only. Otherwise, we keep the usual \(L_1\) norm. More formally, we define

with \(\alpha _t\) defined in Sect. 2.1 and positive weights \(w^e, w^f, w^c\). Notice that D is not a metric, as it does not separate points; however, this does not impose any restriction on our considerations.
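A minimal sketch of the state-dependent distance, assuming the weighted \(L_1\) form described above. The boolean `stock_sufficient` is a hypothetical stand-in for the condition on \(x_t\) and \(\alpha_t\) from Sect. 2.1, which is not reproduced here; the function and argument names are illustrative only.

```python
from typing import Sequence


def state_dependent_distance(
    xi: Sequence[float],
    xi_tilde: Sequence[float],
    stock_sufficient: bool,
    w_e: float = 1.0,
    w_f: float = 1.0,
    w_c: float = 1.0,
) -> float:
    """Weighted L1 distance between (electricity, fuel, CO2) price vectors.

    Hypothetical sketch: if the CO2 stock is sufficient (stand-in flag for the
    condition involving alpha_t), the CO2 component is dropped, i.e. we project
    onto the electricity and fuel dimensions; otherwise the full weighted L1
    norm is used.
    """
    if min(w_e, w_f, w_c) <= 0:
        raise ValueError("weights must be positive")
    d = w_e * abs(xi[0] - xi_tilde[0]) + w_f * abs(xi[1] - xi_tilde[1])
    if not stock_sufficient:
        d += w_c * abs(xi[2] - xi_tilde[2])
    return d


# Two points differing only in the CO2 price are at distance 0 when the stock
# is sufficient, which is why D does not separate points.
print(state_dependent_distance((50.0, 20.0, 8.0), (50.0, 20.0, 12.0), True))   # 0.0
print(state_dependent_distance((50.0, 20.0, 8.0), (50.0, 20.0, 12.0), False))  # 4.0
```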

When the uncertainty model is based on historical observations, there is a strong statistical argument for using balls w.r.t. the Wasserstein distance as ambiguity sets (cf. Esfahani and Kuhn 2018). In particular, large deviations results are available (see Bolley et al. 2007; Fournier and Guillin 2015 for the Wasserstein distance and Glanzer et al. 2019 for the nested distance) which guarantee, with a given confidence, that the true model is contained in the ambiguity set around the (smoothed) empirical measure. Such results are not invalidated by the state dependency that we introduce: neglecting a dimension can only decrease the distance between two points, so every ball w.r.t. the full distance is contained in the ball of the same radius w.r.t. D, and a given confidence bound is directly inherited. Notice, however, that a general state-dependent weighting of the dimensions would require a more careful treatment.
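The inheritance argument can be checked on a toy example. The sketch below (unweighted \(L_1\) costs and made-up price points, both purely illustrative) computes the order-1 Wasserstein distance between two small uniform discrete measures by brute force and confirms that dropping the \(\text{CO}_2\) coordinate can only lower it. The helper names are hypothetical.

```python
from itertools import permutations


def wasserstein_uniform(xs, ys, cost):
    """Order-1 Wasserstein distance between two uniform discrete measures.

    Both measures put mass 1/n on each of their n atoms. By the Birkhoff-von
    Neumann theorem an optimal coupling can then be chosen as a permutation,
    so brute force over permutations is exact (but only feasible for small n).
    """
    n = len(xs)
    if n != len(ys) or n == 0:
        raise ValueError("need two non-empty measures with the same number of atoms")
    best = min(
        sum(cost(xs[k], ys[perm[k]]) for k in range(n))
        for perm in permutations(range(n))
    )
    return best / n


def full_l1(a, b):
    """Unweighted L1 distance on (electricity, fuel, CO2)."""
    return sum(abs(u - v) for u, v in zip(a, b))


def projected_l1(a, b):
    """L1 distance after dropping the CO2 coordinate."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


xs = [(50.0, 20.0, 8.0), (55.0, 21.0, 9.0), (60.0, 22.0, 7.5)]
ys = [(52.0, 19.0, 10.0), (54.0, 23.0, 6.0), (61.0, 20.5, 9.5)]
# The projected cost is pointwise smaller, hence so is the Wasserstein distance.
print(wasserstein_uniform(xs, ys, projected_l1) <= wasserstein_uniform(xs, ys, full_l1))  # True
```

Since every coupling becomes cheaper under the projected cost, the inequality holds for any pair of measures, not only for this example.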

4 A case study

In the following, we test the framework developed in Sects. 2.1 and 3 on a specific power plant. For the present application, each week t is subdivided into \(S = 42\) blocks of 4 h each. We solve the problem one quarter ahead; thus, the horizon is \(T=13\) weeks.
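As a quick consistency check of this time discretization, the blocks tile exactly one week and the horizon covers roughly one quarter:

\[
S \cdot 4\,\mathrm{h} = 42 \cdot 4\,\mathrm{h} = 168\,\mathrm{h} = 7 \cdot 24\,\mathrm{h}, \qquad T \cdot 7\,\mathrm{d} = 13 \cdot 7\,\mathrm{d} = 91\,\mathrm{d} \approx \tfrac{365}{4}\,\mathrm{d}.
\]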

In this case, the control variables are vectors of dimension \(S=42\). The set of production profiles used in this case study consists of 10 different production schedules. This set is denoted by \({\mathcal {U}} = \{u^{(i)}\}_{i=1}^{10} \) (see Fig. 3) and is the same at every stage.

Fig. 4 Procurement strategy A when profile \(u^{(10)}\) is chosen, for different prices \(\xi _{t,0}^{c}\) and all possible remaining allowances \(x_t\) in the partition. The horizontal lines indicate the lower and upper price bounds \({\underline{b}}\) and \({\overline{b}}\), respectively.

The discrete evolution of prices is given as a lattice process \((\xi )\) defined on \(\Xi _0 \times \dots \times \Xi _T\), where each space \(\Xi _t\) has \(N_t\) elements corresponding to the nodes at stage t, i.e., \(\Xi _t = \{\xi _t^1, \dots , \xi _t^{N_t}\}\), and each node \(\xi _t^i = (\xi _t ^{e,i}, \xi _t ^{f,i}, \xi _t ^{c,i})\in {\mathbb {R}}^3 \) for all \(i=1, \dots , N_t\) and all \(t = 0, \dots , T\). As explained in Sect. 3, \(P_t\) denotes the transition probability matrix from stage t to stage \(t+1\), of dimension \(N_t \times N_{t+1}\). The lattice construction is described in Sect. 4.1.1.
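The structural requirements on the matrices \(P_t\) can be checked mechanically. The sketch below is a hypothetical helper (not part of the paper's code) that verifies the shapes \(N_t \times N_{t+1}\), that each row is a probability vector, and that each node has at most three successors, as in the ternary lattice of Sect. 4.1.1.

```python
def check_lattice(node_counts, transitions, max_successors=3, tol=1e-9):
    """Validate the transition matrices P_t of a scenario lattice.

    node_counts[t] is N_t; transitions[t] is the N_t x N_{t+1} matrix P_t,
    given as a list of rows. Each row must be a probability vector with at most
    `max_successors` positive entries (3 for a ternary lattice).
    """
    if len(transitions) != len(node_counts) - 1:
        raise ValueError("need one transition matrix per pair of consecutive stages")
    for t, P in enumerate(transitions):
        if len(P) != node_counts[t]:
            raise ValueError(f"P_{t} must have {node_counts[t]} rows")
        for i, row in enumerate(P):
            if len(row) != node_counts[t + 1]:
                raise ValueError(f"row {i} of P_{t} must have {node_counts[t + 1]} entries")
            if any(p < 0 for p in row) or abs(sum(row) - 1.0) > tol:
                raise ValueError(f"row {i} of P_{t} is not a probability vector")
            if sum(1 for p in row if p > 0) > max_successors:
                raise ValueError(f"node {i} at stage {t} has too many successors")
    return True
```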

The profit function \(h_t\) at stage t is defined by the expected profit during the upcoming week (see (2)). Profits in each block s of the week are quantified by the functions \(f_s\), for \(s=0,\dots , S-1\). Costs of buying additional allowances and transportation costs are accounted for only at the beginning of each week, while start-up costs need to be assigned at each block s. We now describe the state variables. The costs of buying new allowances depend on the strategy A defined in (3). For its computation we use \([{\underline{b}}, {\overline{b}}] = [4.4, \, 9.6]\), where these bounds were obtained by applying a simple quantile rule to the available data set. Moreover, we set \(C_\alpha = 2\).

For the partition of the amount of available \(\text{ CO }_2\) allowances \(x_t\), we consider values between 0 tonne(carbon) and \(10^5\) tonne(carbon), and we also take into account the allowances needed for each profile. In total, the partition of the state \(x_t\) has 16 elements.

For every state in the partition, Fig. 4 shows an example of the procurement strategy for different prices. Note that this example corresponds to full production, i.e., \(u^{(10)}\). The strategy for any other profile is similar; the only difference is that fewer allowances need to be bought than with \(u^{(10)}\).

The second state variable \(y_t\) is the number of hours the power plant has been offline since it was last running. The costs of restarting production depend on \(y_t\) and the chosen profile \(u_t\). The cost function is a step function given in Table 2.

Once a profile is chosen for a week, initial start-up costs are incurred at the first block in which the profile is nonzero. These initial start-up costs depend on the number of hours the power plant was offline before week t starts (i.e., \(y_t\)) plus the number of hours the chosen profile is off before production begins. For the remaining blocks, the costs depend only on the profile. Regarding the notation for the elements of \(f^{\text{ start }}\) in (4), \({\overline{E}}_s\) is the sum of the last two columns of Table 2. We illustrate the initial start-up costs for a profile that is on at the beginning of the week, for all possible values of \(y_t\) (see Fig. 5).

Since the set of profiles is finite, we can calculate the resulting value of \(y_t\) for each profile. The partition of \(y_t\) consists of these values together with the class boundaries of Table 2.
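The start-up bookkeeping described above can be sketched as follows. This is an illustration under stated assumptions: the thresholds and costs passed to `initial_startup_cost` are hypothetical placeholders, not the values of Table 2; no cost is charged if the plant is already running at the start of the week; and the end-of-week update in `next_offline_hours` is our own reading of how \(y_t\) evolves. All function names are hypothetical.

```python
from bisect import bisect_left

BLOCK_HOURS = 4  # length of one intra-week block (Sect. 4)


def offline_hours_before_start(y_t, profile):
    """Offline hours at the first producing block: y_t plus the leading off-blocks.

    Returns None if the profile never produces during the week.
    """
    for s, level in enumerate(profile):
        if level > 0:
            return y_t + BLOCK_HOURS * s
    return None


def initial_startup_cost(y_t, profile, thresholds, costs):
    """Step-function start-up cost; thresholds/costs are hypothetical placeholders.

    costs[k] applies if thresholds[k-1] < hours <= thresholds[k], and the last
    entry of costs applies above the largest threshold. No cost is charged if
    the plant is already running at the start of the week (assumption).
    """
    hours = offline_hours_before_start(y_t, profile)
    if hours is None or hours == 0:
        return 0.0
    return costs[bisect_left(thresholds, hours)]


def next_offline_hours(y_t, profile):
    """Assumed update of y: offline hours accumulated at the end of the week."""
    if all(level == 0 for level in profile):
        return y_t + BLOCK_HOURS * len(profile)
    trailing_off = 0
    for level in reversed(profile):
        if level > 0:
            break
        trailing_off += 1
    return BLOCK_HOURS * trailing_off
```

Because the profile set is finite, evaluating `next_offline_hours` for every profile yields the finitely many values of \(y_t\) that enter the partition.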

Regarding the transportation cost function in (5), we assume \(C^{tr} = 40\) (£/tonne(fuel)).

Finally, the values of the constants in Table 1 are \(H_2 = 0.78\) (£/$), \(H_3 = 0.9\) (£/€), \(H_5 = 0.0975\) (MWh/GJ), \(H_7= 0.45\) (tonne(fuel)/MWh) and \(J = 2.31\) (tonne(carbon)/tonne(fuel)).
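A small arithmetic sketch of what the constants imply, assuming that fuel consumption and allowance requirements scale linearly with generated energy (the exact cost formulas (4) and (5) are not reproduced here): combining \(H_7\) and \(J\) gives \(0.45 \cdot 2.31 = 1.0395\) tonne(carbon) per MWh. The 100 MW capacity in the usage line is purely hypothetical.

```python
H7 = 0.45  # tonne(fuel) per MWh, value from Table 1
J = 2.31   # tonne(carbon) per tonne(fuel)


def fuel_needed(energy_mwh):
    """Fuel (tonne(fuel)) burnt to generate energy_mwh."""
    return energy_mwh * H7


def allowances_needed(energy_mwh):
    """CO2 allowances (tonne(carbon)) required for energy_mwh (assumed linear)."""
    return fuel_needed(energy_mwh) * J


# Hypothetical example: a 100 MW unit running at full load for one week (168 h).
print(round(allowances_needed(100 * 168), 1))  # 17463.6
```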

4.1 The solution algorithm

We numerically solve the power plant valuation problem by a stochastic dynamic programming algorithm. A lattice structure is used as a discrete representation of the uncertainty model in all decision stages.

4.1.1 On the lattice construction

The state-of-the-art approach for the construction of scenario lattices is based on optimal quantization techniques (cf. Bally and Pagès 2003; Löhndorf and Wozabal 2018). For a given number of discretization points, such methods select the optimal locations as well as the associated probabilities such that the Wasserstein distance (or some other distance concept for probability measures) to a (continuous) target distribution is minimized. For the present study, we have implemented a stochastic approximation algorithm for the quantization task, following (Pflug and Pichler 2014, Algorithm 4.5). Referring to that algorithm, we first applied iteration step (ii) to find the atoms of all marginal distributions, separately for each stage. We then formed a lattice out of these sets of points by fixing the structure of allowed transitions, and applied step (iv) to determine all conditional transition probabilities. This also determines the absolute probabilities of each node in the lattice. The Wasserstein distance of order two was used as the objective of the minimization. We use a ternary lattice, i.e., each node has (at most) three successors with positive transition probability.
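To make the idea of stochastic-approximation quantization concrete, here is a generic one-dimensional competitive-learning quantizer, a standard scheme for order-2 optimal quantization of a single marginal. It is a sketch under our own choices (pilot-quantile initialization, step sizes \((1+k)^{-0.75}\), sample sizes) and not a transcription of Pflug and Pichler's Algorithm 4.5; the paper's marginals are three-dimensional, and the function name is hypothetical.

```python
import random


def quantize_1d(sampler, n_atoms, n_iter=20000, n_prob=20000, seed=0):
    """Stochastic-approximation (competitive learning) quantizer for one marginal.

    Minimizes the expected squared distance to the nearest atom, i.e. the
    order-2 Wasserstein distance between the target and a discrete measure
    supported on the atoms. Returns sorted atoms and their probabilities.
    """
    rng = random.Random(seed)
    # Initialize the atoms at empirical quantiles of a pilot sample.
    pilot = sorted(sampler(rng) for _ in range(1000))
    atoms = [pilot[int((k + 0.5) * len(pilot) / n_atoms)] for k in range(n_atoms)]

    def nearest(x):
        return min(range(n_atoms), key=lambda j: abs(atoms[j] - x))

    for k in range(1, n_iter + 1):
        x = sampler(rng)
        i = nearest(x)
        gamma = 1.0 / (1.0 + k) ** 0.75  # Robbins-Monro step sizes
        atoms[i] += gamma * (x - atoms[i])  # stochastic gradient step on (x - a_i)^2

    # Probabilities: share of fresh samples falling into each atom's Voronoi cell.
    counts = [0] * n_atoms
    for _ in range(n_prob):
        counts[nearest(sampler(rng))] += 1
    order = sorted(range(n_atoms), key=lambda j: atoms[j])
    return [atoms[j] for j in order], [counts[j] / n_prob for j in order]


atoms, probs = quantize_1d(lambda r: r.gauss(0.0, 1.0), 3)
```

For a standard normal target and three atoms, the result should land near the known optimal quantizer (atoms close to \(0\) and \(\pm 1.22\), with the middle atom carrying the largest probability).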

As for the notation, recall that we distinguish between stages (weeks) and intra-week blocks. Decisions are taken only at each stage, but the profit is calculated taking into account the random evolution of the prices during the entire week. The discretized process at stage t and node i is denoted by \(\xi _t^i = (\xi _t^{e,i} ,\xi _t^{f,i}, \xi _t^{c,i})\), and the values of the process within week t, starting in node i, are denoted by \(\xi _{t,s}^i = (\xi _{t,s}^{e,i} ,\xi _{t,s}^{f,i}, \xi _{t,s}^{c,i})\), for \(s=0,\dots , S\). At \(s=0\), \(\xi _{t,0}^i = \xi _t ^i\) takes the value of node i; and at \(s=S\), \(\xi _{t,S}^i = \xi _{t+1}^j\) takes the value of node j in the next stage with probability \(p_t(i,j)\), for \(j=1, \dots , N_{t+1}\). Note that if the lattice structure does not link two nodes in consecutive stages, the probability of that transition is zero.

4.2 Computing the expected profit between two decisions

The valuation of the power plant is obtained by solving (10) backwards in time from \(t=T\) to \(t=0\). In this section, we specify how to solve (10) and its robust version (12) at any stage t. We specifically concentrate on the calculation of the expected profit within each week given the current node and a successor node.

As discussed in Sect. 2.2.1, electricity prices within each week are modelled by the bridge process described there. Fuel and \(\text{ CO }_2\) allowance prices are assumed to remain constant within each week, i.e., between two consecutive stages.
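The bridge construction of Sect. 2.2.1 is not reproduced here. As an illustration only, the sketch below assumes a driftless Brownian bridge for the log electricity price, pinned at \(\xi_{t,0}^{e,i}\) and \(\xi_{t,S}^{e,i} = \xi_{t+1}^{e,j}\), and simulated block by block from the conditional Gaussian law of a Brownian bridge; the volatility value and function names are hypothetical. Fuel and \(\text{CO}_2\) prices are simply held constant over the week.

```python
import math
import random


def log_brownian_bridge(start, end, S, sigma, rng):
    """Intra-week price path xi_{t,0}, ..., xi_{t,S} pinned at both ends.

    Sketch assumption: log-prices follow a Brownian bridge with per-block
    volatility sigma, from log(start) at s = 0 to log(end) at s = S
    (no drift or intra-week seasonality).
    """
    a, b = math.log(start), math.log(end)
    logs = [a]
    for s in range(1, S):
        prev = logs[-1]
        remaining = S - s + 1  # blocks from s-1 to S
        mean = prev + (b - prev) / remaining
        std = sigma * math.sqrt((S - s) / remaining)
        logs.append(rng.gauss(mean, std))
    logs.append(b)
    return [math.exp(v) for v in logs]


def constant_path(value, S):
    """Fuel and CO2 prices are held constant within the week."""
    return [value] * (S + 1)


rng = random.Random(1)
path = log_brownian_bridge(start=50.0, end=55.0, S=42, sigma=0.05, rng=rng)
print(len(path))  # 43
```

With `sigma=0` the path reduces to geometric interpolation between the two node values, which is a convenient sanity check.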

We now turn to the computation of the expected profit, as defined in (2). In classical stochastic dynamic programming problems, \(h_t\) depends exclusively on the values observed at time t. In contrast, in Sect. 2.1 we instantiated (1) in such a way that the function \(h_t\) is defined as an expected value of the random profits within week t. Hence, given the values of node i at stage t (at block \(s=0\)) as well as the initial states \((x_t, y_t)\), the weekly profit \(h_t\) is calculated as follows

We define

for all \(j=1, \dots , N_{t+1}\). Then, \(h_t([x_t, y_t], u_t, \xi _{t,0}^i) = \sum _{j=1}^{N_{t+1}} h_t^j(x_t, y_t, u_t, \xi _{t,0}^i) \cdot p_t(i,j) \). We now compute \(h_t^j\) as follows

At stage T we set the terminal condition \(V_T=0\). Given an initial state \((x_0, y_0)\), going backwards in time from \(t=T-1\) to \(t=0\), we obtain the power plant value at \(t=0\). The latter is calculated with respect to the baseline multistage model \({\mathbb {P}}\) and is denoted by \(\nu _0({\mathbb {P}})\). The policy associated with \(\nu _0({\mathbb {P}})\) is denoted by \(u_{{\mathbb {P}}}^*\); it can be represented as a probabilistic tree of profiles.
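The backward recursion can be sketched generically for a finite set of states and profiles. This is a simplified illustration, not the paper's recursion (10): the pair \((x_t, y_t)\) is replaced by an abstract finite state with a deterministic update `step`, discounting is omitted, and `profit` stands in for the weekly expected profit \(h_t\). All names and the toy numbers in the usage example are hypothetical.

```python
import math


def backward_induction(transitions, states, profiles, profit, step):
    """Backward recursion V_t(i, x) = max_u [h_t(i, x, u) + sum_j p_t(i, j) V_{t+1}(j, step(x, u))].

    transitions[t][i][j] = p_t(i, j); profit(t, i, x, u) plays the role of the
    weekly expected profit h_t; step(x, u) is the (deterministic) state update.
    The terminal condition is V_T = 0. Returns value and policy tables indexed
    as V[t][i][x] and policy[t][i][x].
    """
    T = len(transitions)
    n_last = len(transitions[-1][0])
    V = [None] * (T + 1)
    V[T] = [{x: 0.0 for x in states} for _ in range(n_last)]
    policy = [None] * T
    for t in range(T - 1, -1, -1):
        V[t], policy[t] = [], []
        for i, row in enumerate(transitions[t]):
            values, choices = {}, {}
            for x in states:
                best_u, best_val = None, -math.inf
                for u in profiles:
                    x_next = step(x, u)
                    cont = sum(p * V[t + 1][j][x_next] for j, p in enumerate(row) if p > 0)
                    val = profit(t, i, x, u) + cont
                    if val > best_val:
                        best_u, best_val = u, val
                values[x], choices[x] = best_val, best_u
            V[t].append(values)
            policy[t].append(choices)
    return V, policy


# Toy example (hypothetical numbers): state = plant on (1) or off (0), profile u
# in {0, 1}, start-up cost 3, marginal cost 8, node prices 10 -> {12, 6}.
prices = [[10.0], [12.0, 6.0]]
trans = [[[0.5, 0.5]], [[1.0], [1.0]]]


def toy_profit(t, i, x, u):
    startup = 3.0 if (x == 0 and u == 1) else 0.0
    return u * (prices[t][i] - 8.0) - startup


V, policy = backward_induction(trans, [0, 1], [0, 1], toy_profit, lambda x, u: u)
print(V[0][0][0], policy[0][0][0])  # 1.0 1
```

In the toy example, switching on at \(t=0\) loses money in the first stage (\(10 - 8 - 3 = -1\)) but is still optimal because it avoids a later start-up cost, which is exactly the state dependence the recursion captures.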

If we incorporate ambiguity in the lattice process using the uniform Wasserstein distance, for all \(0\le t\le T-1\) we solve:

The resulting optimal value at \(t=0\) is denoted by \(\nu _0({\mathcal {Q}}^\varepsilon )\), where \({\mathcal {Q}}^\varepsilon \) is the ambiguity set defined as

A worst-case model \({{\mathbb {Q}}^\varepsilon }^*\) is any multistage probability model contained in \({\mathcal {Q}}^\varepsilon \) such that \(\nu _0({\mathcal {Q}}^\varepsilon ) = \nu _0({{\mathbb {Q}}^\varepsilon }^*)\). More concretely, the optimal value is attained at a saddle point \((u_{{\mathbb {Q}}^{\varepsilon *}} ^*, {{\mathbb {Q}}^\varepsilon }^*)\), where \(u_{{\mathbb {Q}}^{\varepsilon *}} ^*\) is the policy associated with the worst-case model.

At each node i, the objective function of the minimization problem is linear in \(q_t(i)\), and the constraints are linear as well, so the problem is a linear program. Define

for \(j=1, \dots , N_{t+1}\). Then, the minimization problem can be written as

where \(D_{k\, l} = D\left( [\xi _{t+1}^{e,k}, \, \xi _{t+1}^{f,k},\, \xi _{t+1}^{c,k}], [ \xi ^{e,l}_{t+1}, \, \xi _{t+1}^{f,l},\, \xi _{t+1}^{c,l}]\right) \) is the distance between nodes k and l at stage \(t+1\), as defined in (13) with specific weights \(w^e = 1\), \(w^f = H_1\) and \(w^c = H_3\cdot H_4\).
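Because both objective and constraints are linear, the inner minimization at a node can be passed to any LP solver. The sketch below formulates it over the coupling \(\pi\) between \(p_t(i,\cdot)\) and \(q_t(i,\cdot)\) and solves it with SciPy's `linprog`. The variable layout and the function name are our own choices, and `values` is a hypothetical stand-in for the node-wise coefficients defined above (e.g., weekly profit plus continuation value), whose exact form is not reproduced here.

```python
import numpy as np
from scipy.optimize import linprog


def worst_case_successor_probs(p, values, D, eps):
    """Minimize sum_l q_l * values[l] over all q with W_D(p, q) <= eps.

    p: baseline transition probabilities p_t(i, .) of one node,
    values: hypothetical node-wise coefficients of the successor nodes,
    D: matrix of distances D_kl between successor nodes, eps: ambiguity radius.
    Variables are the coupling entries pi[k, l] (row-major); q is its column sums.
    """
    p = np.asarray(p, dtype=float)
    values = np.asarray(values, dtype=float)
    D = np.asarray(D, dtype=float)
    n = p.size
    c = np.tile(values, n)                      # pi[k, l] contributes values[l]
    A_eq = np.kron(np.eye(n), np.ones((1, n)))  # sum_l pi[k, l] = p[k]
    A_ub = D.reshape(1, -1)                     # sum_{k,l} D_kl pi[k, l] <= eps
    res = linprog(c, A_ub=A_ub, b_ub=[eps], A_eq=A_eq, b_eq=p,
                  bounds=(0, None), method="highs")
    if not res.success:
        raise RuntimeError(res.message)
    q = res.x.reshape(n, n).sum(axis=0)
    return res.fun, q


# Two successors with values 10 and 0; moving mass between them costs 1 per unit.
obj, q = worst_case_successor_probs([1.0, 0.0], [10.0, 0.0], [[0.0, 1.0], [1.0, 0.0]], 0.5)
```

With radius 0.5, half of the mass can be shifted to the unfavourable successor, so the worst-case expectation drops from 10 to 5 and \(q = (0.5, 0.5)\).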

4.3 Impact of model ambiguity

4.3.1 The value of the power plant

We now describe the valuation of the power plant obtained by solving the backward recursions (10) and (12). We assume that the initial state \(x_0\) provides enough allowances to execute any of the profiles and that the power plant was not offline before we start, i.e., \(y_0 =0\). Moreover, the terminal condition for both problems is set to \(V_T = 0\). With the baseline model we obtain an expected profit of approximately \(\nu _0 ({\mathbb {P}})=2.3\cdot 10^6\) (\(\pounds \)). The optimal decision at \(t = 0\) is to turn off the power plant by choosing \(u^{(1)}\). The valuation of the power plant including ambiguity is computed for different radii \(\varepsilon \in [0,2]\). The resulting optimal values \(\nu _0({{\mathcal {Q}}^\varepsilon })\) are plotted against the ambiguity level in Fig. 6. We observe that the value of the power plant decreases as the ambiguity radius increases. For \(\varepsilon = 2\), it drops to \(\nu _0({{\mathcal {Q}}^\varepsilon }) = 6.8\cdot 10^5\) (\(\pounds \)).

To gain insight into how prices change under model ambiguity, we report the changes of electricity prices for the worst-case models \({{\mathbb {Q}}^\varepsilon }^*\) in Table 3. Let \(B_0\) be the vector of stagewise expectations of electricity prices with respect to the baseline model, and let \(B_\varepsilon \) be the vector of expectations with respect to each worst-case model \({{\mathbb {Q}}^\varepsilon }^*\). The relative change of the prices at each stage t is denoted by \(\theta _t\), such that \(B_\varepsilon (t) = (1-\theta _t) B_0(t)\). We observe that the largest change occurs at stage 12, where expected electricity prices decrease by up to 30% for \(\varepsilon = 2\).
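Solving \(B_\varepsilon(t) = (1-\theta_t) B_0(t)\) for the relative change gives \(\theta_t = 1 - B_\varepsilon(t)/B_0(t)\). A one-line sketch follows; the function name and the price vectors in the usage line are hypothetical, not the values of Table 3.

```python
def relative_price_changes(b0, b_eps):
    """theta_t such that B_eps(t) = (1 - theta_t) * B_0(t), stage by stage."""
    if len(b0) != len(b_eps):
        raise ValueError("vectors must have the same length")
    return [1.0 - be / bb for bb, be in zip(b0, b_eps)]


# Hypothetical expected prices: a drop from 50 to 35 corresponds to theta = 0.3 (30 %).
print([round(th, 3) for th in relative_price_changes([50.0, 40.0], [35.0, 40.0])])  # [0.3, 0.0]
```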

4.3.2 Forward in time

By iteratively solving the backward equations, we eventually obtain an initial optimal profile at \(t=0\), namely \(u_0^* = u^{(1)}\). With this initial decision, we go forward in time and create a probabilistic tree \(u^*_{\mathbb {P}}\) of the optimal decisions together with their profits. Starting with the given states and the optimal profile at \(t=0\), the updated states at stage \(t=1\) are completely determined by \(x_0,\, y_0\) and \(u_0^*\). The optimal profile at \(t = 1\), for each node \(i = 1, \dots , N_1\), is chosen by locating the grid point nearest to the updated states and taking the corresponding profile from the backward algorithm. We proceed in this way until we obtain all the optimal profiles at stage \(T-1\). Eventually, we obtain a probabilistic tree with (at most) \(3^{T}\) possible paths. Following the same procedure, we calculate the probabilistic tree of optimal profiles \(u_{{\mathbb {Q}}^{\varepsilon *}} ^*\) for each worst-case model \({{\mathbb {Q}}^\varepsilon }^*\), and the corresponding profits under the worst-case models for different radii \(\varepsilon \).
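The nearest-grid-point lookup used in the forward pass can be sketched as follows. The tie-breaking rule (towards the lower grid point) and the dictionary layout of the policy table in `lookup_profile` are hypothetical choices for illustration.

```python
from bisect import bisect_left


def nearest_grid_index(grid, value):
    """Index of the grid point closest to value; grid must be sorted ascending.

    Ties are resolved towards the lower grid point.
    """
    k = bisect_left(grid, value)
    if k == 0:
        return 0
    if k == len(grid):
        return len(grid) - 1
    return k if grid[k] - value < value - grid[k - 1] else k - 1


def lookup_profile(policy_node, x_grid, y_grid, x, y):
    """Profile chosen in the backward pass at the grid point nearest to (x, y).

    policy_node maps (x-index, y-index) to a profile label; this layout is a
    hypothetical choice for illustration.
    """
    return policy_node[(nearest_grid_index(x_grid, x), nearest_grid_index(y_grid, y))]
```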

Choosing \(u^{(1)}\) at \(t=0\) is optimal under all models. At subsequent stages, the optimal profile choices differ. Figure 7 shows the stagewise distribution of the optimal profiles chosen with the baseline model \({\mathbb {P}}\). Figure 8 shows how the profile choices change when we incorporate ambiguity in the model.

Without ambiguity, full production is chosen with positive probability in stages 6, 7, 8, 10 and 12. As the ambiguity radius increases, these probabilities drop to 0. The larger the ambiguity radius, the more often we choose to stay offline or not to produce at weekends, i.e., profiles such as \(u^{(2)},\, u^{(4)}, \, u^{(6)}\). A less likely alternative is not to produce during peak hours, by choosing \(u^{(3)}\) or \(u^{(5)}\).

Given the optimal profiles for the baseline model \({\mathbb {P}}\) and the alternative models \({{\mathbb {Q}}^\varepsilon }^*\), we can calculate the profits made along the decision tree. To be precise, we denote by \(i_\tau \in \{1, \dots , N_\tau \}\) any node index at stage \(\tau = 0, \dots , T\). Since \(N_0 = 1 \), every path forward in time up to stage t is a sequence of nodes \((1, i_1, \dots , i_{t-1}, i_t)\). The profit from \(i_\tau \) to \(i_{\tau + 1}\) is the profit made in stage \(\tau \) and is written as \(h_\tau ^ {i_\tau , i_{\tau + 1} }\). This profit is obtained with probability \(p_\tau (i_\tau , i_{\tau + 1 })\). Therefore, when we end in node \(i_t\in \{1, \dots , N_t\}\), the accumulated profit up to stage \(t-1\) is \( h_0^{1, i_1} + \cdots + h_{t-1}^{i_{t-1}, i_t} \), obtained with probability \(p_0(1,i_1) \cdots p_{t-1}(i_{t-1}, i_t)\). Figure 9 shows the accumulated profits following the tree of optimal profiles \(u_{\mathbb {P}}^*\) as well as the distribution of the final profits.
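The path bookkeeping above can be sketched by enumerating all forward paths and carrying the accumulated profit and path probability along. The stagewise profits \(h_\tau^{i_\tau, i_{\tau+1}}\) are taken as given inputs (in the paper they result from the optimal profiles and states); the function name and the toy numbers are hypothetical, and nodes are 0-based in the code.

```python
def accumulated_profit_paths(profits, transitions):
    """Enumerate all forward paths from the root node.

    profits[t][i][j] = h_t^{i,j} (profit in stage t when moving from node i to j),
    transitions[t][i][j] = p_t(i, j). Returns a list of
    (accumulated profit, path probability, final node) triples.
    Nodes are 0-based here, so the root is node 0 (node 1 in the text).
    """
    paths = [(0.0, 1.0, 0)]
    for t, P in enumerate(transitions):
        extended = []
        for acc, prob, i in paths:
            for j, p in enumerate(P[i]):
                if p > 0:  # links missing from the lattice have probability 0
                    extended.append((acc + profits[t][i][j], prob * p, j))
        paths = extended
    return paths


profits = [[[1.0, -1.0]], [[2.0, 0.0], [1.0, 3.0]]]
transitions = [[[0.5, 0.5]], [[0.3, 0.7], [0.6, 0.4]]]
paths = accumulated_profit_paths(profits, transitions)
expected = sum(profit * prob for profit, prob, _ in paths)
```

The list of (profit, probability) pairs at the final stage is exactly the discrete distribution of final profits; its mean (1.2 in this toy example) is the expected accumulated profit.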

If we include ambiguity, both the optimal profits and their probabilities change. Figure 10 shows the profit trees together with the final distribution with respect to the corresponding alternative model. We observe that for larger \(\varepsilon \) the alternative models put more weight on lower profits.

5 Conclusion

In this paper, we have shown how a realistic valuation of a power plant can be done by solving a multistage Markovian decision problem. The value is defined as the (discounted) expected net profit that one can obtain from operating the plant if an optimal production plan is implemented. In this valuation process, all relevant purchasing costs and selling prices are included in the model. The number of feasible production plans is finite, and thus a discrete multistage optimization problem has to be solved. We use the classical backward algorithm for the Markovian control problem and a forward algorithm for determining an estimate of the achievable profit and its distribution. The novelty of the paper is twofold. First, we adopt a multiscale approach, where decisions are made on a coarser scale than the one on which costs are calculated. This keeps the computational effort tractable. Second, we consider not only the baseline model for the random factors, but a set of models close to the baseline model (the ambiguity set). This allows us to incorporate the fact that the probability distributions of future costs and revenues are not known precisely. The more models, and especially the more unfavourable models, are included in the ambiguity set, the smaller the robust value of the plant. We demonstrate how the final value under model ambiguity depends on the degree of uncertainty about the correct price and cost model. Our distance for the ambiguity set depends on the state of the system, taking into account that whether two price vectors are close also depends on whether these prices are relevant in the current state. We also observed that the optimal production strategy not only depends on the degree of ambiguity, but also becomes more diversified as ambiguity increases, in contrast to the bang-bang solutions that can occur in unambiguous models.

Change history

10 December 2019

The original version of this article unfortunately contained a mistake in equation (1). The correct expression is given below:

Notes

We use here the definition \([f(x)]_{-} := -\min \{f(x),0\}\) for the negative part of a function.

The general definition of the nested distance can be found in the “Appendix”.

References

Aasgård EK, Andersen GS, Fleten S, Haugstvedt D (2014) Evaluating a stochastic-programming-based bidding model for a multireservoir system. IEEE Trans Power Syst 29(4):1748–1757

Bally V, Pagès G (2003) A quantization algorithm for solving multidimensional discrete-time optimal stopping problems. Bernoulli 9(6):1003–1049

Bolley F, Guillin A, Villani C (2007) Quantitative concentration inequalities for empirical measures on non-compact spaces. Probab Theory Relat Fields 137(3–4):541–593

Cervellera C, Chen V, Wen A (2006) Optimization of a large-scale water reservoir network by stochastic dynamic programming with efficient state space discretization. Eur J Oper Res 171(3):1139–1151

Clelow L, Strickland C (2000) Energy derivatives: pricing and risk management. Lacima Group, London

Duan C, Fang W, Jiang L, Yao L, Liu J (2018) Distributionally robust chance-constrained approximate AC-OPF with Wasserstein metric. IEEE Trans Power Syst 33(5):4924–4936

Escudero LF, de la Fuente JL, Garcia C, Prieto FJ (1996) Hydropower generation management under uncertainty via scenario analysis and parallel computation. IEEE Trans Power Syst 11(2):683–689

Escudero LF, Quintana FJ, Salmeron J (1999) CORO, a modeling and an algorithmic framework for oil supply, transformation and distribution optimization under uncertainty. Eur J Oper Res 114(3):638–656

Esfahani PM, Kuhn D (2018) Data-driven distributionally robust optimization using the Wasserstein metric: performance guarantees and tractable reformulations. Math Program 171(1–2):115–166

Ewald C-O, Zhang A, Zong Z (2018) On the calibration of the Schwartz two-factor model to WTI crude oil options and the extended Kalman filter. Ann Oper Res 282(1–2):119–130

Farkas W, Gourier E, Huitema R, Necula C (2017) A two-factor cointegrated commodity price model with an application to spread option pricing. J Bank Financ 77(C):249–268

Fleten S-E, Haugstvedt D, Steinsbø JA, Belsnes M, Fleischmann F (2011) Bidding hydropower generation: Integrating short- and long-term scheduling. MPRA Paper 44450, University Library of Munich, Germany

Fournier N, Guillin A (2015) On the rate of convergence in Wasserstein distance of the empirical measure. Probab Theory Relat Fields 162(3–4):707–738

Frangioni A, Gentile C (2006) Solving non-linear single-unit commitment problems with ramping constraints. Oper Res 54(4):767–775

Frangioni A, Gentile C, Lacalandra F (2008) Solving unit commitment problems with general ramp constraints. Int J Electr Power Energy Syst 30:316–326

Gao R, Kleywegt AJ (2016) Distributionally robust stochastic optimization with Wasserstein distance. arXiv preprint arXiv:1604.02199

Glanzer M, Pflug GCh (2019) Multiscale stochastic optimization: modeling aspects and scenario generation. Comput Optim Appl. https://doi.org/10.1007/s10589-019-00135-4

Glanzer M, Pflug GCh, Pichler A (2019) Incorporating statistical model error into the calculation of acceptability prices of contingent claims. Math Program 174(1):499–524

Kaut M, Midthun K, Werner A, Tomasgard A, Hellemo L, Fodstad M (2014) Multi-horizon stochastic programming. Comput Manag Sci 11(1):179–193

Löhndorf N, Wozabal D (2018) Gas storage valuation in incomplete markets. Optimization. http://www.optimization-online.org/DB_HTML/2017/02/5863.html

Maggioni F, Allevi E, Tomasgard A (2019) Bounds in multi-horizon stochastic programs. Ann Oper Res. https://doi.org/10.1007/s10479-019-03244-9

Martínez MG, Diniz AL, Sagastizábal C (2008) A comparative study of two forward dynamic programming techniques for solving local thermal unit commitment problems. In: 16th PSCC Conference, Glasgow, Scotland, pp 1–8

Moriggia V, Kopa M, Vitali S (2018) Pension fund management with hedging derivatives, stochastic dominance and nodal contamination. Omega

Oksendal B (2000) Stochastic differential equations, 5th edn. Springer, Berlin

Pflug GCh, Pichler A (2012) A distance for multistage stochastic optimization models. SIAM J Optim 22(1):1–23

Pflug GCh, Pichler A (2014) Multistage stochastic optimization, 1st edn. Operations research and financial engineering. Springer, Berlin

Pflug GCh, Wozabal D (2007) Ambiguity in portfolio selection. Quant Financ 7(4):435–442

Pflug GCh (2010) Version-independence and nested distributions in multistage stochastic optimization. SIAM J Optim 20(3):1406–1420

Ribeiro DR, Hodges SD (2004) A two-factor model for commodity prices and futures valuation. In: EFMA 2004 Basel Meetings Paper

Séguin S, Fleten S-E, Côté P, Pichler A, Audet C (2017) Stochastic short-term hydropower planning with inflow scenario trees. Eur J Oper Res 259(1):1156–1168

Seljom P, Tomasgard A (2017) The impact of policy actions and future energy prices on the cost-optimal development of the energy system in Norway and Sweden. Energy Policy 106(C):85–102

Skar C, Doorman G, Pérez-Valdés GA, Tomasgard A (2016) A multi-horizon stochastic programming model for the European power system. Censes working paper 2/2016, NTNU Trondheim. ISBN: 978-82-93198-13-0

van Ackooij W, Danti Lopez I, Frangioni A, Lacalandra F, Tahanan M (2018) Large-scale unit commitment under uncertainty: an updated literature survey. Ann Oper Res 271(1):11–85

van Ackooij W, Henrion R, Möller A, Zorgati R (2014) Joint chance constrained programming for hydro reservoir management. Optim Eng 15:509–531

Werner AS, Pichler A, Midthun KT, Hellemo L, Tomasgard A (2013) Risk measures in multi-horizon scenario trees. Springer, New York, pp 177–201

Zéphyr L, Lang P, Lamond BF (2015) Controlled approximation of the value function in stochastic dynamic programming for multi-reservoir systems. Comput Manag Sci 12(4):539–557

Zhonghua S, Egging R, Huppmann D, Tomasgard A (2015) A Multi-Stage Multi-Horizon Stochastic Equilibrium Model of Multi-Fuel Energy Markets. Censes working paper 2/2016, NTNU Trondheim. ISBN: 978-82-93198-15-4

Acknowledgements

Open access funding provided by University of Vienna. The authors would like to acknowledge financial support from the Gaspard-Monge program for Optimization and Operations Research (FMJH/PGMO) project with number 2017-0094H and title “Incorporating model error in the management of electricity portfolios”.


Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

Parameters for the model

The parameters corresponding to Eq. (7) in Sect. 2.2 are given by:

The parameters of the multivariate lognormal distribution of the GBM process (7), i.e., the expectation vector \(\mu (t)\) and the (components of the) variance-covariance matrix \(\Sigma (t)\) of the associated multivariate normal distribution are given by:

A remark on the nested distance

Definition 5.1

The nested distance \({{\,\mathrm{\mathsf{{d}\mathsf{{I}}}}\,}}({\mathbb {P}}, \tilde{{\mathbb {P}}})\) between two \({\mathbb {R}}^m\)-valued filtered stochastic processes \(({\mathbb {P}},{\mathcal {F}})\) and \((\tilde{{\mathbb {P}}},\tilde{{\mathcal {F}}})\) is defined as the optimal value of the following mass transportation problem, which optimizes over the set of all joint distributions that respect the given conditional marginals:

Figure 11 illustrates that the nested distance (between two trees resulting from a variation of the transition probabilities) cannot be bounded by only considering the Wasserstein distances between the subtrees with matching node values. In the given example, the maximum Wasserstein distance in the second stage is 2 and the first-stage Wasserstein distance is 4. Thus, the candidate bound \(4+2\cdot 2 = 8\) is smaller than the nested distance between the two trees, which is 10.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

van Ackooij, W., Escobar, D.D., Glanzer, M. et al. Distributionally robust optimization with multiple time scales: valuation of a thermal power plant. Comput Manag Sci 17, 357–385 (2020). https://doi.org/10.1007/s10287-019-00358-0

DOI: https://doi.org/10.1007/s10287-019-00358-0