Abstract

3D data from high-resolution volumetric imaging is a central resource for diagnosis and treatment in modern medicine. While the fast development of AI enhances imaging and analysis, commonly used visualization methods lag far behind. Recent research used extended reality (XR) for perceiving 3D images with visual depth perception and touch but relied on restrictive haptic devices. While unrestricted touch benefits volumetric data examination, implementing natural haptic interaction with XR is challenging. The research question is whether a multisensory XR application with intuitive haptic interaction adds value and should be pursued. In a study, 24 experts in biomedical imaging from research and medicine explored 3D medical shapes with three applications: a multisensory virtual reality (VR) prototype using haptic gloves, a simple VR prototype using controllers, and a standard PC application. Results of standardized questionnaires showed no significant differences among the three application types regarding usability and no significant difference between the two VR applications regarding presence. Participants agreed with the statements that VR visualizations provide better depth information, that using the hands instead of controllers simplifies data exploration, that the multisensory VR prototype allows intuitive data exploration, and that it is beneficial over traditional data examination methods. While most participants mentioned manual interaction as the best aspect, they also found it the most improvable. We conclude that a multisensory XR application with improved manual interaction adds value for volumetric biomedical data examination. We will proceed with our open-source research project ISH3DE (Intuitive Stereoptic Haptic 3D Data Exploration) to serve medical education, therapeutic decisions, surgery preparations, or research data analysis.

Introduction

Volumetric imaging techniques, e.g., Computed Tomography (CT) and Magnetic Resonance Imaging (MRI), have become indispensable resources for diagnosis and treatment in modern medicine. These advanced imaging approaches can produce high-resolution data in space and time, enabling medical professionals to obtain accurate and detailed information for clinical decision-making. Besides imaging techniques, the rapid development of artificial intelligence (AI) is another key player in the revolution of modern healthcare practices. For example, AI algorithms have enabled MRI scanning up to 10 times faster [1], paving the way to making portable MRI much more widely accessible. Also, AI algorithms can efficiently analyze large amounts of medical imaging data with great accuracy and help medical professionals monitor patients’ conditions and track treatment progress. However, the visual connection and interaction between volumetric imaging, AI systems, and medical professionals is a field in which progress lags far behind. For example, the main avenue for exploring volumetric images is still archaic: rotating pseudo-3D renderings or using artificial flythroughs with DICOM viewers on flat 2D screens. These approaches do not support stereopsis, i.e., spatial depth perception through binocular vision [2]. Nor do they support the human ability to integrate multisensory information optimally and, therefore, to better perceive shapes and sizes when haptic and visual information is available [3, 4]. Hence, spatial features and their physical properties present in the volumetric data cannot be efficiently perceived, which can lead to overlooking important details.

As an emerging technology, extended reality (XR) can render 3D images while supporting visual depth perception. In fact, in research, virtual reality (VR) has already been widely used for 3D volumetric medical image visualization, as reviewed in [5], and the same holds for mixed reality [6]. Advances have been made in various directions, including new algorithms (e.g., for 3D rendering of medical data [7]), new applications (e.g., medical data in VR for education [8, 9], surgical simulation [10], AI-assisted surgical planning [11], or multi-user surgical planning [12]), and new scientific discoveries (e.g., exploring human splenic microcirculation data in VR to understand how splenic red pulp capillaries join sinuses [13]).

Recent works [14, 15] have found that allowing users to touch and feel the tissues or organs displayed in VR can significantly improve the comprehensive understanding of the data. However, to touch the virtual objects, real 3D-printed objects were used. This is unsuitable for everyday data examination because printing volumetric data, e.g., a whole torso MRI scan, would take an extremely long time compared to data loading in XR. Nevertheless, these works highlighted the importance of touching and manipulating beyond merely seeing. Since the default controllers of commercial XR sets were not explicitly designed for touching and manipulating volumetric data, researchers have investigated how XR can be connected with additional devices for better data exploration. For example, specially designed pen-like haptic tools [16, 17] have been used with VR to create “haptic rendering” with better realism. Also, the combination of VR and pen-like haptic tools has been demonstrated for 3D medical image annotation to collect ground truth data for training AI models [18]. In specific applications, such as virtual surgical training, a pen-like device is similar to medical instruments and, therefore, could be appropriate [19]. However, for general volumetric data exploration, a pen-like device has limited flexibility and range of movement. Moreover, poking surfaces with a pen is not how humans naturally explore 3D objects.

Recently, haptic gloves have emerged as a new way to connect humans to the virtual metaverse with haptic feedback [20]. They are lightweight wearable devices designed to provide force or tactile feedback to the fingertips and, in some cases, to the palm. They have sensors to track finger and palm movements with multiple degrees of freedom (DoF) [21]. Specifically, haptic gloves enable users to interact with virtual objects in XR environments by transferring recorded finger movements to a virtual hand model. Whenever the virtual hand touches a virtual object, the XR application calculates haptic feedback and transmits it to the haptic gloves. The haptic gloves, in turn, provide the feedback, thereby simulating the sensation of touching the object.

Integrating haptic gloves into an XR application for volumetric biomedical data exploration and analysis seems promising for two reasons. First, it supports the human ability to better perceive shapes and sizes through multisensory information. Second, it enables implementing an XR application that functions like reality and can, therefore, be operated in a completely natural and intuitive way without a long learning period. However, these theoretical benefits are countered by several challenges. Even though haptic gloves perform sufficiently for gaming and other purposes, research has shown that natural and intuitive manipulation of virtual objects is still impossible, especially if exact measures and dimensions are relevant [22]. Some potential reasons have been identified, and fortunately, the biggest bottleneck seems to be the development of better algorithms [22, 23]. Nevertheless, without improving the haptic interaction with virtual objects, it is impossible to thoroughly investigate whether a multisensory XR application for volumetric biomedical image exploration and analysis is actually beneficial. Conversely, if the application adds no value, there is no need to invest effort in improving haptic interaction.

Thus, this work aims to investigate whether it is advisable to invest in multisensory XR applications for volumetric data exploration or analysis. To assess the potential benefits, we approached experts from our target group. This comprises people who work professionally with volumetric images, from image creation to image analysis in research and medicine. To enable participants to rate the usefulness of the multisensory XR application, they need to understand how different the exploration of volumetric biomedical data can be with the different application types. This means that, at this point, the focus was not on the functional execution of a specific task for which a fully functional application would also have been needed. The focus was instead on demonstrating the concepts, for which prototypes were also sufficient. Thus, we let the participants explore one volumetric data set with three applications: a standard PC application, a simple VR prototype, and a multisensory VR prototype. They rated their experience with standardized questionnaires and gave their opinion on the usefulness of a multisensory XR application.

Method

Participants

We enrolled 24 people (17 male, 7 female) who work professionally with volumetric (bio-) medical images, ranging from image creation to analysis. They were recruited via mailing lists or approached directly as employees of the research institutes where the study took place, and were asked whether they would like to test three different software applications for a study and share their opinion. Their age ranged from 23 to 65 years, with a median of 29 years. Twenty-two participants were from the involved affiliations, but they were naive to the research project. Nineteen participants worked in a research context only, two worked in a medical context only, and three worked within a combined context. The study was approved by the Ethics Committee of Bielefeld University with No 2023-258.

Procedure and Experimental Design

Participants first signed an informed consent form. After that, they explored a volumetric data set in three applications. They had no specific task to execute, as this would require implementing software features that go beyond a simple prototype stage. They completed a questionnaire after using each application. In the end, participants filled out a final questionnaire. The whole session took approximately 30 min.

The experimental design was a within-subjects design. That means all participants performed all three experimental conditions corresponding to the three applications. The first application was a standard PC application commonly used for volumetric image visualization. For this purpose, we chose the software ImageJ, which ran on a PC and was operated by a mouse. It displayed the image in 2D, and to see the third dimension, the participant had to scroll through it. The second application was the simple VR prototype that corresponds to other VR state-of-the-art applications used in research. This application displayed the image in 3D, and the participants could manipulate it with default VR controllers. To grasp virtual data, participants had to move the VR controllers close to the object and press a button. Once they released the button, the object was released as well. The third application was the multisensory VR prototype. It differed from the simple VR prototype by allowing the participants to grasp and manipulate the virtual data intuitively using their hands.

To reduce sequence effects, the order of the three experimental conditions was varied. Since there are six possible orders for three experimental conditions, and we had 24 participants, each possible order occurred exactly four times.
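
The counterbalancing described above can be sketched in a few lines. This is only an illustration: the condition labels and the function name `counterbalanced_orders` are hypothetical, and how the orders were actually assigned to individual participants is not stated in the text.

```python
from collections import Counter
from itertools import permutations

# Hypothetical labels for the three experimental conditions.
CONDITIONS = ("PC", "simple VR", "multisensory VR")


def counterbalanced_orders(n_participants, conditions=CONDITIONS):
    """Return one condition order per participant, using every
    possible order equally often (full counterbalancing)."""
    orders = list(permutations(conditions))  # 3! = 6 possible orders
    if n_participants % len(orders) != 0:
        raise ValueError("participants must be a multiple of the number of orders")
    repeats = n_participants // len(orders)
    return [order for order in orders for _ in range(repeats)]


assignment = counterbalanced_orders(24)
counts = Counter(assignment)  # each of the 6 orders appears 4 times
```

In practice, the resulting list would typically be shuffled before participants are assigned to it, so that the order does not depend on the time of enrollment.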

VR Hardware

As VR glasses, the HTC Vive Pro 2 (HTC Corporation, Taiwan) was used to display the virtual world to the user visually. For position tracking, we used the native system of the HTC Corporation, consisting of two base stations. They send infrared signals detected by the HTC Vive Pro 2 and other accessories to determine position and orientation. The HTC Vive Pro 2 was connected via cable to a PC and communicated with the server applications Vive Console and SteamVR. These, in turn, communicated with the SteamVR plugin integrated into our VR prototypes.

For the simple VR prototype, the default controllers of the HTC Vive Pro 2 were used. They use the same native position tracking system and communicate wirelessly with the SteamVR applications on the PC. Participants held them in their hands. When they pressed the button below their index finger, they could grasp virtual objects in the virtual world.

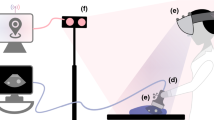

For the multisensory VR prototype, the haptic gloves SenseGlove Nova [24] (SenseGlove, Netherlands) were integrated. They come as a pair, with one glove worn on each hand. Each glove measures the finger movements with respect to the hand and sends them via Bluetooth to the server SenseCom running on a PC. The server, in turn, communicated with the SenseGlove plugin (see Notes) integrated into the VR prototype. To track the hand position and rotation in space, HTC Vive trackers (HTC Corporation, Taiwan) were attached to the gloves. They also work with the HTC Corporation’s native tracking system and the SteamVR server. Based on both sources, i.e., the hand position and orientation tracked with the HTC Vive trackers and the finger movements recorded with the haptic gloves, the users’ detailed hand and finger movements were known. Thus, a virtual avatar hand was displayed in VR and mimicked the users’ movements in near real time. Once the user touched a virtual object, the haptic glove provided force feedback to the user’s fingertips. Figure 1 shows how a user interacted with the multisensory VR prototype.
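
To illustrate how the two tracking sources can be combined, the following minimal sketch places a fingertip, measured relative to the hand, into world coordinates using the tracked hand pose. It is not the SenseGlove or SteamVR API: the function names, the quaternion layout `(w, x, y, z)`, and the example values are assumptions made for illustration only.

```python
import math


def cross(a, b):
    """Cross product of two 3D vectors."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def rotate(q, v):
    """Rotate vector v by the unit quaternion q = (w, x, y, z)."""
    w, u = q[0], q[1:]
    uv = cross(u, v)
    uuv = cross(u, uv)
    return tuple(v[i] + 2.0 * (w * uv[i] + uuv[i]) for i in range(3))


def fingertip_world(hand_position, hand_rotation, fingertip_local):
    """Combine the tracked hand pose (hypothetical tracker output) with a
    fingertip offset in the hand frame (hypothetical glove output)."""
    rotated = rotate(hand_rotation, fingertip_local)
    return tuple(hand_position[i] + rotated[i] for i in range(3))


# Example: hand at (1, 2, 3), rotated 90 degrees about the z-axis,
# fingertip one unit along the hand's local x-axis.
quarter_turn_z = (math.cos(math.pi / 4), 0.0, 0.0, math.sin(math.pi / 4))
tip = fingertip_world((1.0, 2.0, 3.0), quarter_turn_z, (1.0, 0.0, 0.0))
```

In the prototypes themselves, this transformation is handled by the hardware vendors' plugins; the sketch only shows the underlying geometry.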

Volumetric Medical Data

To demonstrate the conceptual differences of the three application types to the participants, we needed a volumetric biomedical data set that could be loaded into all three applications. We decided to use a dataset that is publicly available and can be used for the standard PC application ImageJ and VR prototypes. Thus, we used a CT scan of a human torso. The dataset of patient s0011 can be downloaded as a NIfTI file [25] for usage in ImageJ. The same dataset of patient s0011 is also available, segmented and transformed into shapes, as part of MedShapeNet [26]. MedShapeNet is a large public collection of 3D medical shapes, e.g., bones, organs, and vessels. They are based on original data from various databases, mostly captured with medical imaging devices such as computed tomography (CT) and magnetic resonance imaging (MRI) scanners. Those data were already processed, i.e., segmented with different AI methods, converted to shapes, and labeled with the name of the corresponding anatomy. These shapes consist of triangular meshes and point clouds and can be downloaded in .stl format. Thus, they can be easily loaded into VR applications where they have the exact same size as the scanned human torso.
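
Whether a loaded shape keeps its physical size can be checked from its bounding box. The sketch below reads the `vertex` lines of an ASCII .stl file and returns the axis-aligned extent; the function name and the single-triangle sample are hypothetical. Note that the STL format itself carries no unit information, so the unit of the coordinates has to be known from the data source.

```python
def stl_bounding_box(ascii_stl_text):
    """Return (min_xyz, max_xyz) over all vertices of an ASCII STL string."""
    vertices = []
    for line in ascii_stl_text.splitlines():
        parts = line.split()
        # Vertex lines have the form: vertex <x> <y> <z>
        if len(parts) == 4 and parts[0] == "vertex":
            vertices.append(tuple(float(p) for p in parts[1:]))
    if not vertices:
        raise ValueError("no vertices found; is this an ASCII STL file?")
    mins = tuple(min(v[i] for v in vertices) for i in range(3))
    maxs = tuple(max(v[i] for v in vertices) for i in range(3))
    return mins, maxs


# Hypothetical sample: a single triangle, not taken from MedShapeNet.
SAMPLE = """solid sample
  facet normal 0 0 1
    outer loop
      vertex 0 0 0
      vertex 10 0 0
      vertex 0 20 5
    endloop
  endfacet
endsolid sample
"""

box_min, box_max = stl_bounding_box(SAMPLE)
```

Binary .stl files use a different layout and would need a separate reader.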

To summarize, a CT human torso scan as an original NIfTI file was loaded into ImageJ, while the same human torso segmented into 104 parts, e.g., representing bones, organs, and vessels, was used for the VR prototypes. The latter one is shown in Fig. 2.

Fig. 2 Torso data of patient s0011 from the MedShapeNet data set (https://medshapenet.ikim.nrw/). Left: the data as originally loaded. Right: the upper body disassembled to examine how individual organs are located in the body

The VR Prototypes

Both prototypes were implemented in C# with Unity and consisted of a VR scene and several modules. For each hardware component, a module handled the communication with the device and its integration into the VR scene. One module contained the virtual environment, which was a virtual room in which the user could walk around and manipulate the data. Another module handled the volumetric data. The volumetric data parts were set up to be grasped and manipulated through the haptic gloves or the VR controllers using the libraries provided with the hardware.

After starting the VR prototypes, participants were in a room where they could move freely. In the middle of the room, the whole torso scan was displayed, see Fig. 2 left. In the simple VR prototype, participants saw their virtual hands as red and black gloves corresponding to the SteamVR plugin. By pressing buttons on the VR controller, they could manipulate the 3D medical shapes. In the multisensory VR prototype, participants’ virtual hands were displayed as blue transparent meshes based on the original plugin of SenseGlove, shown in Fig. 3. The virtual hand moved according to the participants’ hand movements. Participants could manipulate the 3D medical shapes using their hands as they would manipulate objects in reality. In both applications, the possible manipulations of the 3D medical shapes were to grasp, move, and rotate them for visual inspection from different angles. Moreover, participants could examine how the organs were located by disassembling the torso organ-wise, see Fig. 2 right.

Measures

To assess participants’ experience with the three application types and to allow for objective comparison, participants filled out standardized, established questionnaires immediately after trying each application. To check if participants found the application usable, we chose the standardized System Usability Scale (SUS) [27], which provides insights into usability. It has ten questions, each answered on a 5-point Likert scale. Based on a given formula [27], the ten answers were combined, resulting in a SUS score of 0 (poor usability) to 100 (perfect usability) per participant. Further, we intended to check whether participants could explore the volumetric data as they wished. Since, especially in VR applications, the ability to act impacts the feeling of presence [28], we chose the IGroup Presence Questionnaire (IPQ) [29] as the second questionnaire. The IPQ consists of four subcategories: the general presence G, the spatial presence SP, the involvement INV, and the experienced realism REAL. Involvement describes how strongly one is focused on interacting with the application. While G consists of one question, the other subcategories consist of multiple questions. All questions were answered on a 7-point Likert scale and combined based on a formula [29]. Thus, the scores for the subcategories range from 0 (not evident) to 6 (very prominent).
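
As an illustration of the SUS scoring, the sketch below assumes the standard scoring rule of the scale: odd-numbered items contribute their answer minus 1, even-numbered items contribute 5 minus their answer, and the sum is multiplied by 2.5. The function name `sus_score` is hypothetical.

```python
def sus_score(responses):
    """Compute a SUS score (0 to 100) from ten answers on a 1-5 scale."""
    if len(responses) != 10:
        raise ValueError("the SUS has exactly ten items")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("answers must be integers from 1 to 5")
    total = 0
    for item, answer in enumerate(responses, start=1):
        if item % 2 == 1:
            total += answer - 1   # positively worded items
        else:
            total += 5 - answer   # negatively worded items
    return total * 2.5


neutral = sus_score([3] * 10)    # all answers neutral
best = sus_score([5, 1] * 5)     # most favorable answer to every item
```

The alternating treatment of odd and even items reflects that the scale alternates between positively and negatively worded statements.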

After trying all application types and filling out the corresponding questionnaires, participants completed a final questionnaire. The goal was to investigate whether a multisensory XR application adds value for volumetric biomedical data exploration or analysis in a medical or research context. It contained four subjective questions regarding the intentions of creating a multisensory XR application, which had to be answered on a 5-point Likert scale and are listed in Table 3. Moreover, they were asked two open questions on what they liked the most and what we must improve the most about the multisensory XR application. Finally, it also contained a question about the gender and the profession.

Results

The SUS scores are reported in Table 1. The usability ratings were, on average, similar for all three application types. As the SUS score ranges between 0 and 100, we chose a repeated-measures ANOVA for comparison. It did not reveal any significant differences, \(F(2,38)=0.39,\, p=0.677\).
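
For readers unfamiliar with the test, the F statistic of a one-way repeated-measures ANOVA can be computed as in the following sketch. It assumes complete data (one score per participant and condition) and no sphericity correction; the function name and the toy scores are hypothetical and unrelated to the study data.

```python
def rm_anova_f(scores):
    """One-way repeated-measures ANOVA.

    scores: list of rows, one row per participant, one column per condition.
    Returns (F, df_conditions, df_error).
    """
    n = len(scores)      # participants
    k = len(scores[0])   # conditions
    grand = sum(sum(row) for row in scores) / (n * k)
    cond_means = [sum(row[j] for row in scores) / n for j in range(k)]
    subj_means = [sum(row) / k for row in scores]

    ss_cond = n * sum((m - grand) ** 2 for m in cond_means)
    ss_subj = k * sum((m - grand) ** 2 for m in subj_means)
    ss_total = sum((x - grand) ** 2 for row in scores for x in row)
    ss_error = ss_total - ss_cond - ss_subj  # residual after removing subject effects

    df_cond = k - 1
    df_error = (k - 1) * (n - 1)
    f_value = (ss_cond / df_cond) / (ss_error / df_error)
    return f_value, df_cond, df_error


# Toy data: 3 participants x 3 conditions.
toy = [[1, 2, 3],
       [2, 4, 3],
       [3, 3, 6]]
f_value, df_cond, df_error = rm_anova_f(toy)
```

The p-value follows from the F distribution with the two returned degrees of freedom, which a statistics package would normally provide.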

Participants filled out the IPQ for all applications to keep the experimental design simple and the participants unbiased. However, the questionnaire was created for VR applications, and some questions do not fit the experience with a PC application. Therefore, it seems reasonable to exclude the IPQ results for the standard PC application. Table 2 shows the mean and standard deviation over all participants for all IPQ subcategories for the multisensory VR prototype and the simple VR prototype. The mean values for all subcategories were similar for both applications. Since the first subcategory of the IPQ consists of just one question and all questions were answered on a 7-point Likert scale, we compared the results with a non-parametric test, i.e., the Wilcoxon signed-rank test. There were no significant differences between the two VR prototypes. This means we found no significant differences in how present in general, how present in space, how involved, and how realistic participants perceived both VR prototypes. The results are displayed as box plots in Table 2.
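
The paired comparison can be sketched as follows. The code computes the signed-rank sums with average ranks for ties and a normal approximation without tie or continuity correction; which variant the analysis software used is not stated in the text, so this is an assumption. The function name and the example ratings are hypothetical.

```python
import math


def wilcoxon_signed_rank(x, y):
    """Return (W_plus, W_minus, z) for paired samples x and y.

    Zero differences are dropped; tied absolute differences share
    their average rank.
    """
    diffs = [a - b for a, b in zip(x, y) if a != b]
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        average_rank = (i + j) / 2 + 1  # mean of the 1-based ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = average_rank
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    mean = n * (n + 1) / 4
    sd = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (min(w_plus, w_minus) - mean) / sd
    return w_plus, w_minus, z


# Hypothetical ratings of five participants for two prototypes.
w_plus, w_minus, z = wilcoxon_signed_rank([4, 5, 6, 3, 5], [3, 3, 6, 4, 2])
```

For small samples, exact tables of the signed-rank distribution are preferable to the normal approximation.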

The results of the final questionnaire are summarized in Table 3. Since the possible answers were from 1 (strongly disagree) to 5 (strongly agree), a value of 3 would indicate a neutral answer. The mean and median values are, on average, higher than 3. We wanted to examine whether the answers differ significantly from disagreement or a neutral answer. So, we performed one-sided, one-sample t-tests against 3 with \(\alpha =0.05\). As Table 3 shows, all t-tests delivered significant results. This means the answers lie significantly above the neutral value of 3. Thus, overall, participants seemed to agree with the statements.
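
A one-sided, one-sample t-test against the neutral value can be sketched as below. The ratings are made up for illustration and are not the study data; the critical value 1.714 is the tabulated one-sided 5% threshold of the t distribution with 23 degrees of freedom, which matches a sample of 24 answers.

```python
import math
import statistics

# Tabulated one-sided critical value for alpha = 0.05 and df = 23.
T_CRIT_ONE_SIDED_05_DF23 = 1.714


def one_sample_t(values, mu0):
    """Return (t, df) for testing the mean of values against mu0."""
    n = len(values)
    mean = statistics.mean(values)
    sd = statistics.stdev(values)  # sample standard deviation (n - 1)
    t_value = (mean - mu0) / (sd / math.sqrt(n))
    return t_value, n - 1


# Hypothetical answers of 24 participants on the 5-point scale.
ratings = [4] * 12 + [5] * 6 + [3] * 4 + [2] * 2
t_value, df = one_sample_t(ratings, mu0=3)
agrees = t_value > T_CRIT_ONE_SIDED_05_DF23  # one-sided: mean above neutral
```

Because Likert answers are ordinal, a non-parametric alternative such as a one-sample signed-rank test would be an equally defensible choice.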

For the open questions, participants stated that they liked most: usage and freedom of the hands (instead of VR controllers), haptic or force feedback and sense of touch, visualization of the organs, better perceiving the organs’ dimensions and shapes, feeling more inside reality while being in VR or being immersed, easy to put on, more natural interaction or intuitive usage, ability to rotate and inspect the organs, simplicity. According to the participants, the most improvable things were: the hardware reacted jerkily or jumped, the haptic or force feedback (should be smoother, more realistic, and have better timing), the accuracy of grasping (i.e., a gap between fingers and grasped objects, issues with edges, sometimes wrong organs are grasped), system robustness, too high force or resistance, grasping partially hidden objects (e.g., by using a ray cast feature), commonly used navigation through recorded slices is missing, details or structure of organs is missing, a mismatch between real and virtual hand movement, adding texture corresponding to the actual organ, more lightweight haptic gloves.

Discussions

In this work, we investigated whether a multisensory XR application can add value for volumetric biomedical data examination in medicine and research. For this purpose, we conducted a study with 24 participants who work professionally with biomedical images in research and medical contexts. The experts tested a multisensory VR prototype in which data could be manipulated intuitively using the hands. In comparison, they used a simple VR prototype that uses VR controllers for data manipulation and is comparable with state-of-the-art research, as well as a standard PC application for volumetric data examination.

Results of a standardized usability questionnaire showed no significant differences between the three application types. The presence questionnaire revealed no significant differences between the two VR applications. So, based on the standardized usability or presence questionnaires, the multisensory VR application neither adds value nor is disadvantageous for volumetric biomedical data exploration and analysis.

However, the final questionnaire asked the participants for their opinions on several statements. The outcome shows that participants think that a multisensory XR application helps to get a better understanding of the organs’ dimensions. They agreed that using hands instead of VR controllers simplifies data exploration. They felt that the multisensory VR prototype allowed intuitive data exploration. Finally, they believed that a multisensory XR application might be beneficial over traditional data exploration methods. So, based on this final questionnaire outcome, it seems that a multisensory XR application does add value for biomedical data examination.

The study had, however, several limitations. Although the possibility of manually interacting with the volumetric data was mentioned most often as one of the most positive aspects of the multisensory VR prototype, it was also listed most often as the most improvable aspect. This bottleneck of the current state of haptic gloves was known in advance. Currently, most haptic gloves available on the market provide just heuristics [23] instead of accurate hand postures, leading to a mismatch between actual hand movements and the corresponding simulation in VR. Therefore, we assume that for a fully working VR application, we have to work on improving haptic algorithms. This means that in the current study, participants did not test the multisensory VR application with the haptic perception we intend in the long run. However, considering the problems of manual interaction, it is impressive that the results of the presence questionnaire for the multisensory VR prototype were as good as those for the simple VR prototype. The same holds for the results of the usability questionnaire. We would argue that an improved manual interaction might even lead to better outcomes in the standardized questionnaires.

Another limitation is that the participants had no explicit task during the study and just explored the data. Evaluating the usability of such an application would require performing explicit, real-life tasks with the different applications. However, the VR applications are currently in the prototype stage. Therefore, it was not yet possible to perform real-life tasks, e.g., diagnosing a tumor, with those prototypes. Implementing all the features needed for real-life tasks would have been an immense effort, and completing it before a first evaluation did not seem a good strategy. The reason is that, before testing, it was unclear whether people would like such a multisensory VR prototype for those tasks or prefer the standard PC application anyway. So, in the worst case, creating a fully working application would have been a waste of time. Therefore, this very limited study just shows what the participants think such an application may or may not offer. Of course, usability studies with explicit real-life tasks are required in a later development stage of the project in order to make substantiated statements about the usefulness.

In conclusion, based on the questionnaire results being equally good despite the problematic manual interaction and especially based on the experts’ opinions, our proposed multisensory XR application for biomedical data exploration and analysis might add value. Thus, we aim to proceed with working on this research project.

Future Work

We intend to further work on our research project that we named ISH3DE, a framework for Intuitive Stereoptic Haptic 3D Data Exploration. The overall concept of our proposed ISH3DE is illustrated in Fig. 4, where the information can freely flow through the (bio-) medical professionals, the AI system, and the volumetric data via interactions in an XR scene. The information flow between volumetric data and (bio-) medical professionals, now called users, passes through the XR interface consisting of multiple hardware components. It allows the users to intuitively interact with rendered data parts, e.g., organs, by grasping, feeling, and inspecting them from different perspectives. The data flow between the users and the AI systems runs through a virtual graphical user interface in the XR scene. Users can send a request to the AI system for various actions (e.g., to enhance the image quality or segment the organs), which are then applied to the rendered volumetric data. In addition, users can also provide feedback to the AI system to further train it, such as collecting a preliminary diagnosis label. The framework code is released open-source here (https://github.com/MMV-Lab/ISH3DE).

To improve the manual interaction, we will build on our previous work, in which a hand calibration tool was implemented to create an adequate hand model that fits the user’s hand [23]. Our ongoing projects include an algorithm that calculates the hand posture from fingertip positions so that the haptic glove can provide a more accurate hand posture in VR. Additionally, the haptic feedback will be improved by rendering different elasticities of objects and enabling deformability. For this purpose, Young’s modulus of common soft tissues or organs [30] can be encoded.
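
One simple way to turn an encoded Young's modulus into a feedback force is a linear elastic (Hooke's law) model, sketched below. This is an assumption for illustration and not the planned ISH3DE implementation: the function name, the contact area, the tissue thickness, the force limit, and the modulus value in the example are all placeholders, and the modulus is not taken from [30].

```python
def contact_force(youngs_modulus_pa, contact_area_m2, rest_thickness_m,
                  penetration_m, max_force_n):
    """Linear elastic estimate of the force at a fingertip.

    F = E * A * (penetration / thickness), clamped to the largest
    force the haptic device can render (max_force_n, hypothetical).
    """
    if rest_thickness_m <= 0:
        raise ValueError("rest thickness must be positive")
    strain = max(0.0, penetration_m) / rest_thickness_m  # no force without contact
    force = youngs_modulus_pa * contact_area_m2 * strain
    return min(force, max_force_n)


# Placeholder values: 10 kPa modulus, 1 cm^2 contact area,
# 2 cm thick tissue compressed by 1 cm, device limit of 5 N.
soft = contact_force(10_000.0, 1e-4, 0.02, 0.01, max_force_n=5.0)
```

Real soft tissue behaves nonlinearly and viscoelastically, so a linear model of this kind would only be a first approximation.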

A limitation of the current prototype is that the interaction between ISH3DE and AI systems is still offline. In other words, the currently loaded data is pre-processed by AI models and converted into proper binary and ASCII .stl files. The next step of our work is to utilize ONNX [31] and the Unity neural network inference engine (Barracuda) to hook up multiple AI models to the XR application. This will allow loading raw volumetric data and requesting different AI models for different tasks, e.g., segmentation or labeling.

Another essential part of future work is close cooperation with the target groups from medicine and research. In particular, before the software content is further developed, a focus group should work out requirements. In addition, studies should be conducted in which real use cases are tested. The performance between ISH3DE and traditional software can be compared in such downstream tasks.

Conclusion

We presented a new viable path for medical professionals or researchers to explore and analyze volumetric biomedical data. It is based on the premise that supporting the human’s ability of multisensory depth perception delivers a better understanding of the data’s dimensions. A user study demonstrated that experts agree this new data exploration paradigm adds value. Therefore, we will proceed to work on the project ISH3DE, which is also open source. In the future, it may lead to more effective therapeutic decisions, better surgery preparations, enhanced medical education, or improved research data analysis.

Data Availability

Since the majority of participants consented only to the publication of summarized data, the raw data, i.e., the filled-out questionnaires, are not publicly available.

Code Availability

The framework code that was used for the study and all further developments are released open-source here (https://github.com/MMV-Lab/ISH3DE).

Notes

The official SenseGlove software is available here https://github.com/Adjuvo.

References

Zbontar, J., Knoll, F., Sriram, A., Murrell, T., Huang, Z., Muckley, M.J., Defazio, A., Stern, R., Johnson, P., Bruno, M., et al.: fastmri: An open dataset and benchmarks for accelerated mri. arXiv preprint arXiv:1811.08839 (2018)

Howard, I.P., Rogers, B.J.: Binocular Vision and Stereopsis. Oxford University Press, USA (1995)

Helbig, H.B., Ernst, M.O.: Optimal integration of shape information from vision and touch. Experimental brain research 179, 595–606 (2007)

Ernst, M.O., Banks, M.S.: Humans integrate visual and haptic information in a statistically optimal fashion. Nature 415(6870), 429–433 (2002)

Lobachev, O., Berthold, M., Pfeffer, H., Guthe, M., Steiniger, B.S.: Inspection of histological 3d reconstructions in virtual reality. Frontiers in Virtual Reality 2, 628449 (2021)

Jain, S., Gao, Y., Yeo, T.T., Ngiam, K.Y.: Use of mixed reality in neuro-oncology: A single centre experience. Life 13(2), 398 (2023)

Zörnack, G., Weiss, J., Schummers, G., Eck, U., Navab, N.: Evaluating surface visualization methods in semi-transparent volume rendering in virtual reality. Computer Methods in Biomechanics and Biomedical Engineering: Imaging & Visualization 9(4), 339–348 (2021)

Schloss, K.B., Schoenlein, M.A., Tredinnick, R., Smith, S., Miller, N., Racey, C., Castro, C., Rokers, B.: The uw virtual brain project: An immersive approach to teaching functional neuroanatomy. Translational Issues in Psychological Science 7(3), 297 (2021)

Staubli, S.M., Maloca, P., Kuemmerli, C., Kunz, J., Dirnberger, A.S., Allemann, A., Gehweiler, J., Soysal, S., Droeser, R., Däster, S., et al.: Magnetic resonance cholangiopancreatography enhanced by virtual reality as a novel tool to improve the understanding of biliary anatomy and the teaching of surgical trainees. Frontiers in Surgery 9 (2022)

Syamlan, A., Mampaey, T., Denis, K., Vander Poorten, E., Tjahjowidodo, T., et al.: A virtual spine construction algorithm for a patient-specific pedicle screw surgical simulators. In: 2022 IEEE Symposium Series on Computational Intelligence (SSCI), pp. 1493–1500 (2022). IEEE

Xu, X., Qiu, H., Jia, Q., Dong, Y., Yao, Z., Xie, W., Guo, H., Yuan, H., Zhuang, J., Huang, M., Shi, Y.: Ai-chd: An ai-based framework for cost-effective surgical telementoring of congenital heart disease. Commun. ACM 64(12), 66–74 (2021). https://doi.org/10.1145/3450409

Chheang, V., Saalfeld, P., Joeres, F., Boedecker, C., Huber, T., Huettl, F., Lang, H., Preim, B., Hansen, C.: A collaborative virtual reality environment for liver surgery planning. Computers & Graphics 99, 234–246 (2021)

Steiniger, B.S., Pfeffer, H., Gaffling, S., Lobachev, O.: The human splenic microcirculation is entirely open as shown by 3d models in virtual reality. Scientific Reports 12(1), 16487 (2022)

Reinschluessel, A.V., Muender, T., Döring, T., Uslar, V.N., Lück, T., Weyhe, D., Schenk, A., Malaka, R.: A study on the size of tangible organ-shaped controllers for exploring medical data in vr. In: Extended Abstracts of the 2021 CHI Conference on Human Factors in Computing Systems, pp. 1–7 (2021)

Muender, T., Reinschluessel, A.V., Salzmann, D., Lück, T., Schenk, A., Weyhe, D., Döring, T., Malaka, R.: Evaluating soft organ-shaped tangibles for medical virtual reality. In: CHI Conference on Human Factors in Computing Systems Extended Abstracts, pp. 1–8 (2022)

Faludi, B., Zoller, E.I., Gerig, N., Zam, A., Rauter, G., Cattin, P.C.: Direct visual and haptic volume rendering of medical data sets for an immersive exploration in virtual reality. In: Medical Image Computing and Computer Assisted Intervention–MICCAI 2019: 22nd International Conference, Shenzhen, China, October 13–17, 2019, Proceedings, Part V 22, pp. 29–37 (2019). Springer

Zoller, E.I., Faludi, B., Gerig, N., Jost, G.F., Cattin, P.C., Rauter, G.: Force quantification and simulation of pedicle screw tract palpation using direct visuo-haptic volume rendering. International journal of computer assisted radiology and surgery 15, 1797–1805 (2020)

Rantamaa, H.-R., Kangas, J., Kumar, S.K., Mehtonen, H., Järnstedt, J., Raisamo, R.: Comparison of a vr stylus with a controller, hand tracking, and a mouse for object manipulation and medical marking tasks in virtual reality. Applied Sciences 13(4), 2251 (2023)

Allgaier, M., Neyazi, B., Sandalcioglu, I.E., Preim, B., Saalfeld, S.: Immersive vr training system for clipping intracranial aneurysms. Current Directions in Biomedical Engineering 8(1), 9–12 (2022)

Wang, D., Song, M., Naqash, A., Zheng, Y., Xu, W., Zhang, Y.: Toward whole-hand kinesthetic feedback: A survey of force feedback gloves. IEEE transactions on haptics 12(2), 189–204 (2018)

Wang, D., Yuan, G., Shiyi, L., Zhang, Y., Weiliang, X., Jing, X.: Haptic display for virtual reality: progress and challenges. Virtual Reality & Intelligent Hardware 1(2), 136–162 (2019)

Krieger, K.: A vr serious game framework for haptic performance evaluation. PhD thesis, Bielefeld University (submitted)

Krieger, K., Leins, D.P., Markmann, T., Haschke, R.: Open-source hand model configuration tool (hmct). Work-in-Progress Paper at 2023 IEEE Worldhaptics Conference (2023)

SenseGlove: The New Sense in VR for enterprise. https://www.senseglove.com/product/nova/. [Online; accessed 8-March-2023] (2023)

Wasserthal, J., Breit, H.-C., Meyer, M.T., Pradella, M., Hinck, D., Sauter, A.W., Heye, T., Boll, D.T., Cyriac, J., Yang, S., Bach, M., Segeroth, M.: Totalsegmentator: Robust segmentation of 104 anatomic structures in ct images. Radiology: Artificial Intelligence 5(5) (2023). https://doi.org/10.1148/ryai.230024

Li, J., Pepe, A., Gsaxner, C., et al.: Medshapenet - a large-scale dataset of 3d medical shapes for computer vision. arXiv preprint arXiv:2308.16139 (2023)

Brooke, J.: Sus: A quick and dirty usability scale. Usability Eval. Ind. 189 (1995)

Sanchez-Vives, M.V., Slater, M.: From presence to consciousness through virtual reality. Nature reviews neuroscience 6(4), 332–339 (2005)

Schubert, T., Friedmann, F., Regenbrecht, H.: The experience of presence: Factor analytic insights. Presence: Teleoperators & Virtual Environments 10(3), 266–281 (2001)

Liu, J., Zheng, H., Poh, P.S., Machens, H.-G., Schilling, A.F.: Hydrogels for engineering of perfusable vascular networks. International journal of molecular sciences 16(7), 15997–16016 (2015)

Bai, J., Lu, F., Zhang, K., et al.: ONNX: Open Neural Network Exchange. GitHub (2019)

Funding

Open Access funding enabled and organized by Projekt DEAL. This work was supported by KITE (Plattform für KI-Translation Essen) from the REACT-EU initiative (EFRE-0801977, https://kite.ikim.nrw/). J. Chen is funded by the Federal Ministry of Education and Research (Bundesministerium für Bildung und Forschung, BMBF) in Germany under the funding reference 161L0272. J. Chen, K. Krieger, and M. Gunzer are also supported by the Ministry of Culture and Science of the State of North Rhine-Westphalia (Ministerium für Kultur und Wissenschaft des Landes Nordrhein-Westfalen, MKW NRW).

Author information

Contributions

Conceptualization of the research was performed by Kathrin Krieger, Matthias Gunzer, and Jianxu Chen. Project administration, software creation, study conceptualization, design and execution, data analysis, and visualization were performed by Kathrin Krieger. Resources and funding acquisition were organized by Jan Egger, Jens Kleesiek, Matthias Gunzer, and Jianxu Chen. The first draft of the manuscript was written by Kathrin Krieger and Jianxu Chen, and all authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Ethics Approval

This study was performed in line with the principles of the German Society of Psychology (Deutschen Gesellschaft für Psychologie, DGPs) and the Professional Association of German Psychologists (Berufsverbandes deutscher Psychologinnen und Psychologen, BdP). The Ethics Committee of Bielefeld University granted approval (No. 2023-258) on September 12, 2023.

Consent to Participate

Informed consent was obtained from all individual participants included in the study.

Consent for Publication

All participants agreed that their data could be published in anonymized, summarized form.

Competing Interests

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Krieger, K., Egger, J., Kleesiek, J. et al. Multisensory Extended Reality Applications Offer Benefits for Volumetric Biomedical Image Analysis in Research and Medicine. J Digit Imaging. Inform. med. (2024). https://doi.org/10.1007/s10278-024-01094-x

DOI: https://doi.org/10.1007/s10278-024-01094-x