Abstract

Modern companies regularly use social media to communicate with their customers. In addition to the content, the reach of a social media post may depend on the season, the day of the week, and the time of the day. We consider optimizing the timing of Facebook posts by a large Finnish consumers’ cooperative using historical data on previous posts and their reach. The content and the timing of the posts reflect the marketing strategy of the cooperative. These choices affect the reach of a post via a dynamic process where the reactions of users make the post more visible to others. We describe the causal relations of the social media publishing in the form of a directed acyclic graph, use an identification algorithm to obtain a formula for the causal effect, and finally estimate the required conditional probabilities with Bayesian generalized additive models. As a result, we obtain estimates for the expected reach of a post for alternative timings.



1 Introduction

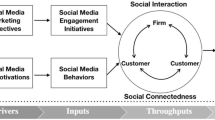

Companies widely use social media to communicate with their customers. The goals of marketing communication in social media may include, for instance, developing brand awareness, creating engagement, monitoring customer feedback, promoting products and services, and ultimately increasing sales (Kotler et al. 2017; Misirlis and Vlachopoulou 2018). As reaching the targeted audience is a prerequisite for the marketing communication to have any effect, increasing the customer reach can be regarded as an intermediate target for improving the efficiency of social media marketing.

The total reach of a social media post, i.e., the number of unique users who saw the post on their screen, can be easily monitored using tools provided by the social media platform. In addition to the content of the post and possible paid promotion, the total reach may depend on the time of posting. The effect of time can be further divided into the effects of the season, the day of the week, and the time of day. Choosing the day of the week and the time of day for a post is an attractive option because, unlike paid promotion, it does not incur additional costs. If optimizing the day and time of posting improves total reach, marketers should consider this option before spending money on paid promotion.

We consider the problem of choosing the timing that maximizes the expected total reach of a social media post. This is a problem of causal inference (Pearl 2009), which differs from the problem of predicting the total reach conditional on the timing. The causal effect of timing could be estimated from a randomized controlled trial, often called an A/B-test (Kohavi and Longbotham 2017) in the business context. For instance, Chawla and Chodak (2021) conducted an experiment on Facebook in which 96 posts were scheduled over a period of 4 days.

The experiments, however, have their limitations, which motivate the use of historical data. Unlike in email campaigns, for example, it is not possible to choose the time of posting at the individual level. It follows that an experiment must run for months if the frequency of posting is low. This is unsatisfactory if the company would like immediate support for decision-making. On the other hand, a high frequency of posting (as in Chawla and Chodak 2021) may be considered spamming by some users. It is also unclear whether the results of a short-term experiment can be generalized to another season. Other resource limitations, such as the costs of experimental designs, may create barriers to implementation, especially for smaller businesses. However, data passively observed by social media platforms are often available and can be used for causal inference if the assumptions on the underlying causal mechanisms are properly specified (Pearl 2009). For these reasons, we study how historical data on social media posts and their reach can be used as a starting point for optimizing future posts.

In nonexperimental historical data, the timing of the posts is not completely random but depends implicitly or explicitly on the applied marketing strategy and other possible confounders. Causal inference provides a well-grounded approach for handling observed and unobserved confounders. However, formal causal inference has only recently begun to emerge in marketing (Hair and Sarstedt 2021; Hünermund and Bareinboim 2021), and earlier works that use historical data on social media posts seem to be restricted to purely associational and predictive approaches. These earlier approaches include regression (e.g., de Vries et al. 2012; Pletikosa Cvijikj and Michahelles 2013), data mining (e.g., Moro et al. 2016), and some machine learning techniques (e.g., Jaakonmäki et al. 2017; Lee et al. 2018). As these approaches are not based on the principles of causal inference, it is unclear whether they can be used to guide the choice of timing for future posts.

In this paper, we implement a causal inference approach for estimating the effect of timing in Facebook publishing using historical data from a consumers’ cooperative. We express our understanding of the causal relations in the form of a directed acyclic graph, use an identification algorithm to obtain a nonparametric formula for the causal effect, and finally estimate the required conditional probabilities with Bayesian generalized additive models. Helske et al. (2021) have applied a similar high-level approach in a different context. As a result, we obtain estimates for the expected reach of a planned post for alternative timings.

The paper is organized as follows: In Sect. 2, we introduce the data set and the social media publishing process of the consumers’ cooperative. In Sect. 3, we present the methods used for estimating a causal model and summarize the results on the effect of timing. Section 4 concludes the paper.

2 Data and assumptions

We examine Facebook posts of a large Finnish consumers’ cooperative (henceforth “the cooperative”), which operates across a wide range of businesses in the retail and service sectors. The cooperative has set user reach as one of the main Key Performance Indicators (KPIs) in social media marketing. Although customer reach does not necessarily translate into sales, the cooperative assumes that it contributes positively to the relevant return on investment (ROI) measurements. Optimizing the timing at the level of the day of the week and the time of day to enhance reach is a relatively easy way to adapt social media publishing in practice without interfering with the overall marketing strategy. In contrast, because the cooperative must be frequently visible on social media, limiting publishing to only some months is unrealistic, even if posting in these months would lead to higher reach than in other months.

No earlier experiments on posting times have been run by the cooperative, so the data we use for causal inference are passively observed. The data set contains 790 Facebook posts published by the cooperative from August 21st, 2017 to August 30th, 2019. The data consist of summary variables collected by Facebook (Moro et al. 2016) and features derived from the content of the posts by the first author. The variables of the data set, with some summary statistics, are introduced in Table 1. Discussions with the marketing managers were held to identify the causal relationships between the variables in the data and other relevant latent factors affecting the publishing. The authors then formalized these insights as the causal graph presented later, which was approved by the marketing managers of the cooperative.

The response variable of interest is the total reach of the post, defined as the number of unique users who saw the post on their screen. Various features of the published individual Facebook posts are measured. As the choice of timing is a special interest of the cooperative, the day of the week and the time of the day are set as decision variables from the optimization perspective. Moreover, there are two additional time-related variables, the month and the time difference between the current and the previous post.

A Facebook post contains various visual and textual elements, which were used to classify the post content into one of four categories: inspiring, guiding, convincing, and entertaining. This subjective classification originates from the cooperative’s social media publishing strategy. Paraphrased English examples of each category are presented in Fig. 1. An additional media type variable has three categories: photos, videos (videos and shared videos), and others (statuses, links, and seven uncategorized posts). Feature extraction could be used to derive more fine-grained variables describing the post content, but we did not pursue this here due to the modest size of the data and because content type was not the primary interest of the cooperative.

An important change in the publishing strategy took place at the turn of October and November 2018 due to an exogenous consultation. A dichotomous variable called “strategy adjustment” indicates whether a post was published before or after the change. The variable “paid reach” indicates whether the cooperative bought visibility for the post.

To understand the processes that generated the data, we describe the practices the cooperative has employed in social media publishing. The creation of Facebook posts has been guided by the marketing strategy, which acts as a confounder for the three timing variables, the two content variables, and the paid reach variable. These elements of the posts have usually been chosen together, reflecting the season and the long-term marketing objectives. Moreover, the month can be seen to affect the day of the week and the time of day because of holidays and different seasonal and daily campaigns. The day of the week also affects the time of day due to differences in activity between weekdays and weekends. Furthermore, some seasons, such as upcoming holidays, and some content, such as videos, may require an additional monetary boost for increased visibility.

The reactions of Facebook users, i.e., liking, sharing, or commenting on a post, make the post more visible to other users and thus increase the total reach. The content type and the media type, as well as the content elements, are probably the most important factors explaining user reactions. Some media types can be assumed to be favoured by Facebook algorithms and thus to affect the total reach directly, but also indirectly via content elements. In addition, the activity and behaviour of users may differ between months, days of the week, and times of day. The publishing frequency can also be assumed to affect reactions and reach, especially in the case of short and long intervals between posts. Naturally, paid promotion of a Facebook post increases the reach but does not guarantee reactions to the post.

3 Causal effect estimation

We present the social media publishing process described in Sect. 2 as a directed acyclic graph (DAG) where the nodes represent variables and the arrows represent direct causal effects. The DAG contains both observed variables (white ellipses) and unobserved confounders (grey ellipses).

The DAG presented in Fig. 2 describes our understanding, obtained from discussions with the marketing managers, of the causal relations in the cooperative’s Facebook publishing. An unobserved confounding variable, the marketing strategy, is placed in the DAG to represent the post creation process that generates the three timing variables, the content and media type variables, and the paid reach variable. The strategy adjustment is an exogenous variable that affects the unobserved marketing strategy variable.

As described in Sect. 2, the content type and the media type define the actual content elements of a post, and the users’ reactions enhance the visibility of a Facebook post and increase the total reach. Hence, latent variables are included in the DAG for the content elements (textual and visual details) and for the reactions (comments, likes, and shares). Moreover, a set of unknown factors, such as competing posts, that affect both the reactions of users and the total reach is taken into account with an unobserved confounder between them.

Fig. 2 Directed acyclic graph (DAG) representing the process of Facebook publishing in the cooperative. White ellipses represent the observed variables and grey ellipses the unobserved variables. Solid arrows represent causal relationships between observed variables, whereas dashed arrows indicate the effects of unobserved confounders.

Fig. 3 Semi-Markov DAG resulting from latent projections applied to the unobserved factors in the DAG in Fig. 2. Bidirected dashed arrows represent the unobserved confounders.

We aim to estimate the causal effect of timing on the total reach for different content types. Denoting the total reach by Y, the two timing variables by \(\varvec{X}\) and the content type by C, we can express the interventional distribution of interest as \(P(Y \mid \text {do}(\varvec{X}), C)\), where \(\text {do}(\varvec{X})\) represents the decision made on \(\varvec{X}\). We are interested in the expected values \(E(Y \mid \text {do}(\varvec{X}), C)\) of this distribution, i.e., the post-interventional means for different content types.

A causal effect is identifiable if it can be uniquely expressed in terms of probability distributions of observed data (Pearl 2009). Identifiability can be checked without the actual numeric data using a graph-theoretic identification algorithm. Using the assumptions encoded in the constructed DAG, we determine the identifiability of the causal effect of the day of the week and the time of day on the total reach, conditional on the content of the post, with the IDC algorithm (Shpitser and Pearl 2006a), which is an extension of the ID algorithm (Shpitser and Pearl 2006b). These algorithms are implemented in the R package causaleffect (Tikka and Karvanen 2017a, b). Before running the algorithm, latent projections (Verma 1993) are applied to the unobserved variables in the DAG to turn the graph into semi-Markov form (Pearl 2009). The resulting semi-Markov DAG is presented in Fig. 3. For an identifiable effect, the algorithm returns a nonparametric expression consisting of conditional distributions that are estimable from the observed data.
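The latent projection step can be illustrated with a short Python sketch of the usual projection rule (after Verma 1993): an observed node A receives a directed edge to an observed node B if some directed path from A to B passes only through latent nodes, and a bidirected edge A <-> B if some latent node reaches both A and B along such all-latent paths. The graph used below is a hypothetical, simplified stand-in loosely based on the description in Sect. 2 (node names such as `Elements` and `U` are illustrative assumptions), not a reproduction of Fig. 2.

```python
def latent_projection(edges, latent):
    """Project a DAG with latent nodes onto its observed nodes.

    edges:  iterable of (parent, child) pairs of the full DAG
    latent: set of unobserved node names
    Returns (directed, bidirected): a set of (A, B) pairs for A -> B and a set
    of frozensets {A, B} for A <-> B, both between observed nodes only.
    """
    latent = set(latent)
    children, nodes = {}, set()
    for a, b in edges:
        children.setdefault(a, set()).add(b)
        nodes.update((a, b))
    observed = nodes - latent

    def reach_through_latents(start):
        # Observed nodes reachable from `start` by a directed path whose
        # intermediate nodes are all latent.
        found, seen, stack = set(), set(), list(children.get(start, ()))
        while stack:
            v = stack.pop()
            if v in seen:
                continue
            seen.add(v)
            if v in observed:
                found.add(v)  # an observed node ends the path
            else:
                stack.extend(children.get(v, ()))
        return found

    directed = {(a, b) for a in observed for b in reach_through_latents(a)}
    bidirected = set()
    for u in latent:
        targets = sorted(reach_through_latents(u))
        for i, a in enumerate(targets):
            for b in targets[i + 1:]:
                bidirected.add(frozenset((a, b)))
    return directed, bidirected


# Hypothetical toy graph (illustrative only, not the DAG of Fig. 2).
toy_edges = [
    ("Strategy", "Day"), ("Strategy", "Time"), ("Strategy", "Content"),
    ("Day", "Time"), ("Content", "Elements"), ("Elements", "Reactions"),
    ("Day", "Reactions"), ("Time", "Reactions"), ("Reactions", "Reach"),
    ("U", "Reactions"), ("U", "Reach"),
]
toy_latent = {"Strategy", "Elements", "Reactions", "U"}
directed, bidirected = latent_projection(toy_edges, toy_latent)
print(sorted(directed))
print(sorted(tuple(sorted(pair)) for pair in bidirected))
```

In this toy graph, the latent strategy becomes bidirected edges among Day, Time, and Content, while the latent content elements and reactions are absorbed into direct edges from Day, Time, and Content to Reach. The latent U leaves no bidirected edge, because after projection both of its paths end at the same observed node, Reach.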

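In our case, the IDC algorithm shows that the causal effect is identifiable and returns the expression

\[ P(Y \mid \text{do}(\varvec{X}), C) = \sum_{\varvec{Z}} P(Y \mid \varvec{X}, \varvec{Z}, C)\, P(\varvec{Z} \mid C), \tag{1} \]
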
where \(\varvec{Z}\) represents the confounders needed in the adjustment: Month, Media type, and Paid reach. In other words, Strategy adjustment and Time difference are not needed in the estimation. Pearl et al. (2016) characterize Eq. (1) as a c-specific effect, where c refers to the conditioning variable (our C). The conditions for the validity of this adjustment are similar to the standard back-door criterion (Pearl 1995): no node in \(\varvec{Z} \cup C\) is a descendant of \(\varvec{X}\), and \(\varvec{Z} \cup C\) blocks all paths between \(\varvec{X}\) and Y that contain an arrow into \(\varvec{X}\).
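A small numerical check of Eq. (1) makes the difference between conditioning and intervening concrete. The Python sketch below uses a hypothetical binary model with made-up probabilities, in which a latent strategy S drives the timing X, a single adjustment variable Z (standing in for Month, Media type, and Paid reach), and the content type C. It computes \(P(Y=1 \mid \text{do}(X=x), C=c)\) by intervening on the model, evaluates the right-hand side of Eq. (1) from the observational distribution alone, and compares both with the naive conditional \(P(Y=1 \mid X=x, C=c)\).

```python
from itertools import product

# Hypothetical binary toy model (all probabilities are illustrative assumptions):
# S = latent marketing strategy, X = timing (1 = morning), Z = paid reach,
# C = content type, Y = high reach.  Edges: S->X, S->Z, S->C, X->Y, Z->Y, C->Y.
P_S1 = 0.5                  # P(S = 1)
P_X1 = {0: 0.2, 1: 0.8}     # P(X = 1 | S = s)
P_Z1 = {0: 0.3, 1: 0.7}     # P(Z = 1 | S = s)
P_C1 = {0: 0.4, 1: 0.6}     # P(C = 1 | S = s)
NAMES = ("s", "x", "z", "c", "y")


def bern(p1, v):
    """Probability of value v for a binary variable with P(V = 1) = p1."""
    return p1 if v == 1 else 1.0 - p1


def p_y1(x, z, c):
    """P(Y = 1 | X = x, Z = z, C = c)."""
    return 0.1 + 0.2 * x + 0.4 * z + 0.1 * c


def joint(do_x=None):
    """Joint distribution over (s, x, z, c, y); do_x fixes X by intervention."""
    table = {}
    for s, x, z, c, y in product((0, 1), repeat=5):
        if do_x is None:
            px = bern(P_X1[s], x)
        else:
            px = 1.0 if x == do_x else 0.0   # the arrow S -> X is cut
        table[(s, x, z, c, y)] = (bern(P_S1, s) * px * bern(P_Z1[s], z)
                                  * bern(P_C1[s], c) * bern(p_y1(x, z, c), y))
    return table


def prob(table, **fixed):
    """Marginal probability that the named variables take the given values."""
    idx = {k: NAMES.index(k) for k in fixed}
    return sum(p for key, p in table.items()
               if all(key[idx[k]] == v for k, v in fixed.items()))


OBS = joint()


def truth(x, c):
    """P(Y = 1 | do(X = x), C = c) computed from the intervened model."""
    t = joint(do_x=x)
    return prob(t, c=c, y=1) / prob(t, c=c)


def adjusted(x, c):
    """Right-hand side of Eq. (1), using the observational distribution only."""
    return sum(prob(OBS, x=x, z=z, c=c, y=1) / prob(OBS, x=x, z=z, c=c)
               * prob(OBS, z=z, c=c) / prob(OBS, c=c) for z in (0, 1))


def naive(x, c):
    """Plain conditional P(Y = 1 | X = x, C = c), which ignores confounding."""
    return prob(OBS, x=x, c=c, y=1) / prob(OBS, x=x, c=c)


for x, c in product((0, 1), repeat=2):
    print(x, c, round(truth(x, c), 4), round(adjusted(x, c), 4), round(naive(x, c), 4))
```

In this toy model the adjusted and interventional values coincide for every (x, c), whereas the naive conditional overstates the benefit of morning posting (about 0.657 versus 0.616 for x = c = 1), because under the latent strategy morning posts are also more often paid.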

The identification assumptions will hold in the DAG of Fig. 2 even if additional mediators are added to the existing paths. An unobserved confounder affecting both the timing variables (the treatment) and the total reach (the response) would naturally make the causal effect non-identifiable (whereas if such a variable were observed, adding it to \(\varvec{Z}\) would again make Eq. (1) valid). However, no such variables were identified during the discussions with the marketing managers of the cooperative. Another potential threat to the validity of Eq. (1) would be an unblocked path between the timing variables and the content elements. Such a path occurs, for instance, if an emoji favored by the users is often present in posts published in the morning. This possibility cannot be completely ruled out, but the marketing strategy applied during the data collection does not contain any explicit guidelines linking the content elements to the time of the post.
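The graphical conditions for Eq. (1) can also be checked mechanically. The Python sketch below tests the back-door condition for a set of treatments by deleting the arrows out of the treatments and then testing d-separation with the standard moralized-ancestral-graph construction. The graph is a hypothetical toy loosely following Sect. 2 (not the DAG of Fig. 2), with `Strategy`, `Elements`, `Reactions`, and `U` playing the role of unobserved variables; the two threats discussed above are added as extra edges to show how they break the condition.

```python
def _ancestors(parents, nodes):
    result, stack = set(), list(nodes)
    while stack:
        v = stack.pop()
        if v not in result:
            result.add(v)
            stack.extend(parents.get(v, ()))
    return result


def d_separated(edges, xs, ys, zs):
    """True if xs and ys are d-separated given zs, via the moralized ancestral graph."""
    parents = {}
    for a, b in edges:
        parents.setdefault(b, set()).add(a)
    keep = _ancestors(parents, set(xs) | set(ys) | set(zs))
    # Moralize: link each node to its parents, marry co-parents, drop directions.
    adj = {v: set() for v in keep}
    for child in keep:
        ps = sorted(parents.get(child, ()))
        for i, p in enumerate(ps):
            adj[child].add(p)
            adj[p].add(child)
            for q in ps[i + 1:]:
                adj[p].add(q)
                adj[q].add(p)
    # Remove the conditioning set and look for any remaining X-Y connection.
    blocked, targets = set(zs), set(ys)
    stack, seen = [x for x in xs if x not in blocked], set()
    while stack:
        v = stack.pop()
        if v in targets:
            return False
        if v in seen:
            continue
        seen.add(v)
        stack.extend(w for w in adj[v] if w not in blocked and w not in seen)
    return True


def satisfies_backdoor(edges, xs, ys, adjustment):
    """Back-door condition for a set of treatments xs (Pearl 1995)."""
    xs, adjustment = set(xs), set(adjustment)
    children = {}
    for a, b in edges:
        children.setdefault(a, set()).add(b)
    # (i) no adjustment variable may be a descendant of the treatments
    desc, stack = set(), [w for x in xs for w in children.get(x, ())]
    while stack:
        v = stack.pop()
        if v not in desc:
            desc.add(v)
            stack.extend(children.get(v, ()))
    if (desc - xs) & adjustment:
        return False
    # (ii) blocking all back-door paths = d-separation after deleting arrows out of xs
    cut = [(a, b) for a, b in edges if a not in xs]
    return d_separated(cut, xs, ys, adjustment)


# Hypothetical toy graph loosely following Sect. 2 (not the DAG of Fig. 2).
TOY = [
    ("Adjustment", "Strategy"),
    ("Strategy", "Month"), ("Strategy", "Day"), ("Strategy", "Time"),
    ("Strategy", "Content"), ("Strategy", "Media"), ("Strategy", "Paid"),
    ("Month", "Day"), ("Month", "Time"), ("Day", "Time"),
    ("Month", "Paid"), ("Media", "Paid"),
    ("Content", "Elements"), ("Media", "Elements"), ("Elements", "Reactions"),
    ("Month", "Reactions"), ("Day", "Reactions"), ("Time", "Reactions"),
    ("Reactions", "Reach"), ("U", "Reactions"), ("U", "Reach"),
    ("Media", "Reach"), ("Paid", "Reach"), ("Month", "Reach"),
    ("Day", "Reach"), ("Time", "Reach"),
]
X, Y, Z_AND_C = {"Day", "Time"}, {"Reach"}, {"Month", "Media", "Paid", "Content"}

print(satisfies_backdoor(TOY, X, Y, Z_AND_C))
# Threat 1: the strategy links content elements to the timing.
print(satisfies_backdoor(TOY + [("Strategy", "Elements")], X, Y, Z_AND_C))
# Threat 2: an unobserved common cause of timing and reach.
print(satisfies_backdoor(TOY + [("U2", "Time"), ("U2", "Reach")], X, Y, Z_AND_C))
```

For this toy graph the first call succeeds and the two modified graphs fail, mirroring the two threats to Eq. (1) described above. Dropping `Content` from the adjustment set also fails, since the path through the content elements is then left open.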

The conditional distributions in Eq. (1) can be estimated with statistical methods. The distribution \(P(\varvec{Z} \mid C)\) is typically replaced with the corresponding empirical distribution of the data (Hernán and Robins 2020), an approach we also follow here. For estimating the conditional distribution \(P(Y \mid \varvec{X}, \varvec{Z}, C)\), a suitable statistical model can be chosen depending on the assumed parametric relationships between the variables. We estimate our model and the effects of the timing using Bayesian methods (see, e.g., Gelman et al. 2014), which allows us to take the uncertainty of the model parameters into account in the form of probability distributions when presenting the results.

We assume that the total reach of a post follows a negative binomial distribution, a generalization of the Poisson distribution and a natural choice for overdispersed count data (Hilbe 2011) such as the total reach in our case. We also model the shape parameter of the negative binomial distribution by regressing it on the content variable, which captures the large dispersion in the total reach caused by some of the posts.
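To make the overdispersion argument concrete, the following Python sketch evaluates the negative binomial pmf in the mean/shape parameterization used by the brms `negbinomial` family, where \(E(Y) = \mu\) and \(\operatorname{Var}(Y) = \mu + \mu^2/\phi\), and checks the moments numerically. The values \(\mu = 1000\) and \(\phi = 2\) are hypothetical and are not estimates from the cooperative's data.

```python
import math


def nb_logpmf(y, mu, phi):
    """Log-pmf of a negative binomial with mean mu and shape phi,
    so that Var(Y) = mu + mu**2 / phi (the brms `negbinomial` parameterization)."""
    return (math.lgamma(y + phi) - math.lgamma(phi) - math.lgamma(y + 1)
            + phi * math.log(phi / (mu + phi)) + y * math.log(mu / (mu + phi)))


def nb_moments(mu, phi, y_max):
    """Total mass, mean, and variance from the pmf truncated at y_max."""
    probs = [math.exp(nb_logpmf(y, mu, phi)) for y in range(y_max + 1)]
    total = math.fsum(probs)
    mean = math.fsum(y * p for y, p in enumerate(probs))
    var = math.fsum((y - mean) ** 2 * p for y, p in enumerate(probs))
    return total, mean, var


mu, phi = 1000.0, 2.0  # hypothetical mean reach and shape
total, mean, var = nb_moments(mu, phi, y_max=40_000)
print(f"mass={total:.6f} mean={mean:.1f} var={var:.0f} (Poisson would give var={mu:.0f})")
print(f"theoretical var = mu + mu^2/phi = {mu + mu ** 2 / phi:.0f}")
```

A Poisson model with the same mean would force the variance to equal the mean; the shape parameter \(\phi\), which here is regressed on the content type, controls how much additional variance is allowed.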

A thin plate regression spline (Wood 2003) is applied to capture the observed nonlinearity of the total reach as a function of the time of day. This leads us to use generalized additive modeling (GAM) (see, e.g., Hastie and Tibshirani 1990; Wood 2017), in which the mean is modeled through a logarithmic link.

The parametric form of the model cannot be deduced from the DAG but must be chosen separately. As we optimize the effect of two timing variables, the model includes an interaction term between them, which allows the effect of the time of day to vary across days of the week. Since the interest is in a conditional causal effect, it is also reasonable to consider interactions between these two timing variables and the content type variable.
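One possible way to write a model of this kind is sketched below. It is an illustration consistent with the description above, not the exact specification fitted in the paper, which is not reproduced here. The notation is assumed: \(t_i\) is the time of day of post i, \(d_i\) its day of the week, \(c_i\) its content type, and \(\varvec{z}_i\) collects the adjustment covariates (month, media type, and paid reach); \(f_{d,c}\) denotes a smooth function of the time of day (e.g., a thin plate regression spline) whose level and shape may differ by day of the week and content type.

\[
\begin{aligned}
Y_i &\sim \text{NegBin}(\mu_i, \phi_i), \qquad E(Y_i) = \mu_i, \quad \operatorname{Var}(Y_i) = \mu_i + \mu_i^2/\phi_i, \\
\log \mu_i &= \beta_0 + f_{d_i, c_i}(t_i) + \varvec{\gamma}^\top \varvec{z}_i, \\
\log \phi_i &= \delta_{c_i}.
\end{aligned}
\]

Under such a model, \(E(Y \mid \varvec{X}, \varvec{Z}, C, \theta_k)\) is simply \(\mu\) evaluated at the chosen timing, the observed covariates of a post, and the kth posterior draw.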

Let \(\theta _k\) denote the kth posterior sample of the model parameters. For evaluation of the posterior distribution of the causal effect of time of day \(X_1\) and day of week \(X_2\) on the total reach of a post Y, conditional on the content type C, we first compute the marginal mean of Y for a fixed \(\varvec{X}=(X_1,X_2)\), C, and \(\theta _k\) by marginalizing over the covariates \(\varvec{Z}\):

\[ \hat{E}(Y \mid \mathrm{do}(\varvec{X}), C, \theta_k) = \frac{1}{n_C} \sum_{i=1}^{n} E(Y \mid \varvec{X}, \varvec{Z}_i, C, \theta_k)\, I(C_i = C), \tag{2} \]
where n is the number of observations (posts), \(I(C_i = C)\) is the indicator function, and \(n_C= \sum _{i=1}^n I(C_i = C)\). By repeating (2) for each posterior sample \(\theta _1,\ldots ,\theta _K\) of the model parameters, we obtain samples from the posterior distribution of \(E(Y \mid \mathrm {do}(\varvec{X}), C)\), which can be used to compute, e.g., the posterior mean and 95% credible intervals for the post-interventional mean.
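Eq. (2) and the loop over posterior samples can be sketched in Python as follows. Here `mean_fn` is a hypothetical stand-in for \(E(Y \mid \varvec{X}, \varvec{Z}_i, C, \theta_k)\) from the fitted model (in the paper, a Bayesian GAM fitted with brms), and the 95% interval is taken as equal-tailed, which is an assumption. The toy posts, posterior draws, and log-linear mean function are made up purely to exercise the code.

```python
import math
import random
import statistics


def post_interventional_mean(x, c, posts, theta, mean_fn):
    """Eq. (2): average E(Y | X = x, Z_i, C = c, theta) over posts with C_i == c."""
    covariates = [z for z, c_i in posts if c_i == c]
    if not covariates:
        raise ValueError(f"no posts with content type {c!r}")
    return sum(mean_fn(x, z, c, theta) for z in covariates) / len(covariates)


def posterior_summary(x, c, posts, draws, mean_fn):
    """Posterior mean and equal-tailed 95% interval of E(Y | do(X = x), C = c)."""
    samples = [post_interventional_mean(x, c, posts, theta, mean_fn) for theta in draws]
    cuts = statistics.quantiles(samples, n=40, method="inclusive")  # 2.5%, ..., 97.5%
    return statistics.fmean(samples), cuts[0], cuts[-1]


# Made-up inputs for illustration only (NOT the cooperative's data or fitted model).
rng = random.Random(1)
toy_posts = [((rng.randint(1, 12), rng.random() < 0.3), rng.choice(["guiding", "inspiring"]))
             for _ in range(200)]                      # ((month, paid), content type)
toy_draws = [{"b0": rng.gauss(7.0, 0.1), "morning": rng.gauss(0.2, 0.1),
              "paid": rng.gauss(0.8, 0.1)} for _ in range(1000)]


def toy_mean(x, z, c, theta):
    """Hypothetical log-linear stand-in for the fitted model's mean."""
    time_of_day, paid = x[0], z[1]
    return math.exp(theta["b0"] + theta["morning"] * (time_of_day < 12) + theta["paid"] * paid)


print(posterior_summary((9.0, "Fri"), "guiding", toy_posts, toy_draws, toy_mean))
```

Averaging over the observed covariates of posts with the given content type is what replaces \(P(\varvec{Z} \mid C)\) by its empirical distribution, so no model for \(\varvec{Z}\) is needed.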

We use the R environment (R Core Team 2020) and the brms package (Bürkner 2017, 2018) for fitting the Bayesian GAM. The default diffuse priors of brms for regression coefficients were changed to proper \(N(0, 5^2)\) priors for more efficient Markov chain Monte Carlo sampling. For other model parameters, default weakly informative priors were used.

All data were used for estimating the model, and the post-interventional means of the total reach were calculated for every half hour between 8:00 and 16:00. We only consider posting times between 8:00 and 16:00 because the number of posts published outside these hours is insufficient for reliable estimates of post-interventional means. Table 2 presents, for each content type, the ten posting times with the highest post-interventional means together with the corresponding 95% credible intervals. Across all content types, the highest post-interventional mean is obtained for entertaining posts, for which the best time is estimated to be Wednesday morning. The same time of day is also optimal for convincing and guiding posts, but on Sunday and Friday, respectively. The optimal timing for inspiring content is late Saturday afternoon. A prior sensitivity analysis was conducted by changing the prior distributions of the regression coefficients to \(N(0, 10^2)\). The estimates obtained with these wider priors did not differ in practice from the original ones.
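The evaluation grid and ranking described above can be sketched in Python: 17 half-hour posting times from 8:00 to 16:00 for each of the seven days, ranked by the posterior mean of the post-interventional mean with 95% credible intervals (here equal-tailed, an assumption). The input format, a dictionary mapping (day, time) to posterior draws for one content type, is also an assumption, and the simulated draws are illustrative only.

```python
import random
import statistics

DAYS = ["Mon", "Tue", "Wed", "Thu", "Fri", "Sat", "Sun"]


def half_hour_grid(start=8.0, end=16.0):
    """Posting times every 30 minutes from start to end inclusive (decimal hours)."""
    return [start + 0.5 * k for k in range(int(round((end - start) * 2)) + 1)]


def hhmm(t):
    """Format decimal hours, e.g. 8.5 -> '08:30'."""
    return f"{int(t):02d}:{int(round((t % 1) * 60)):02d}"


def rank_timings(samples_by_timing, top=10):
    """Rank (day, time) pairs by posterior mean of E(Y | do(X), C), with 95% intervals.

    samples_by_timing maps (day, time) to a list of posterior draws for one content type.
    """
    rows = []
    for (day, t), draws in samples_by_timing.items():
        cuts = statistics.quantiles(draws, n=40, method="inclusive")
        rows.append((statistics.fmean(draws), cuts[0], cuts[-1], day, t))
    rows.sort(key=lambda r: r[0], reverse=True)
    return rows[:top]


# Simulated draws for illustration only (NOT results from the paper).
rng = random.Random(7)
toy = {(d, t): [rng.gauss(1000 + 40 * (t < 10) + 10 * i, 50) for _ in range(500)]
       for i, d in enumerate(DAYS) for t in half_hour_grid()}
for mean, lo, hi, day, t in rank_timings(toy):
    print(f"{day} {hhmm(t)}  mean={mean:.0f}  95% CI=({lo:.0f}, {hi:.0f})")
```

Repeating the ranking once per content type gives one top-ten list per type, matching the layout described for Table 2.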

As an illustration for a single day of the week, the estimated post-interventional means for Friday are presented in Fig. 4a for all content types. The behavior varies considerably between the content types, as the optimal times of day appear to be either in the morning or in the afternoon. According to the estimated effect of timing, a convincing post seems to show a rather clear difference in the expected total reach between the morning and the early afternoon, even when the width of the credible intervals is taken into account. No clear differences can be observed for the other content types on Friday. Note, however, that Fig. 4a is based on marginal estimates and the adjacent estimates are correlated, so comparing different time points by their intervals can be misleading (see, e.g., Schenker and Gentleman 2001, in a frequentist hypothesis-testing context). Using the posterior samples of the post-interventional means, we can instead estimate the causal effect by inspecting the full posterior distributions at different time points and comparing their density estimates, as illustrated in Fig. 4b. Comparing these density estimates of the expected values of the post-interventional distributions of inspiring posts at 8:00 and 14:00, we see that morning posts typically have higher reach than posts in the early afternoon (the posterior probability that the reach is higher at 8:00 than at 14:00 is 0.966).
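The point about correlated estimates can be illustrated with a short Python sketch of one straightforward way to compute such a posterior probability. Given paired posterior draws of the post-interventional means at two posting times, the probability that one exceeds the other is the fraction of paired draws in which it does. The draws below are simulated with a large shared component (hypothetical numbers, not the paper's results) to show that this probability can be close to one even when the marginal 95% intervals overlap substantially.

```python
import random
import statistics


def prob_greater(draws_a, draws_b):
    """Posterior probability that quantity A exceeds quantity B.

    The draws must be paired: element k of both lists comes from the same
    posterior sample theta_k, so their correlation is preserved.
    """
    if len(draws_a) != len(draws_b):
        raise ValueError("draws must be paired and of equal length")
    return sum(a > b for a, b in zip(draws_a, draws_b)) / len(draws_a)


# Simulated draws with a large shared component (illustrative only, NOT the paper's results).
rng = random.Random(42)
shared = [rng.gauss(0.0, 1.0) for _ in range(4000)]
mean_0800 = [10.0 + u + rng.gauss(0.0, 0.1) for u in shared]
mean_1400 = [9.7 + u + rng.gauss(0.0, 0.1) for u in shared]

ci_0800 = statistics.quantiles(mean_0800, n=40, method="inclusive")
ci_1400 = statistics.quantiles(mean_1400, n=40, method="inclusive")
print("95% intervals:", (ci_0800[0], ci_0800[-1]), (ci_1400[0], ci_1400[-1]))
print("P(mean at 8:00 > mean at 14:00) =", prob_greater(mean_0800, mean_1400))
```

Shuffling one of the lists before the comparison would destroy the pairing and give a much lower probability, which is the same mistake as comparing overlapping marginal intervals.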

4 Discussion

We have shown how causal inference can be applied to estimate the effect of the timing of social media posts on their reach when data on previous posts and their reach are available. The approach has four phases: (1) constructing a nonparametric causal model (a DAG) together with subject area experts, (2) applying an identification algorithm to obtain a formula for the causal effect, (3) fitting a Bayesian model and estimating the causal effect of timing on the total reach, and (4) finding times that maximize the expected total reach.

The strengths of the proposed approach are related to its well-defined workflow. A causal graph in the form of a DAG offers a transparent way to formalize and communicate the assumptions made about the causal relationships. The subsequent steps of the approach follow logically from these assumptions. The ID algorithm tells us how confounding should be handled, i.e., which variables are needed in the estimation. Covariate selection based on other criteria, such as cross-validation, might have led to a model containing a mediator as a covariate, which would bias the estimated causal effects (Pearl 2013). In the estimation, Bayesian GAMs allow us to include prior information, use flexible functional forms (splines), and quantify the uncertainty of the results in the form of credible intervals.

As always in causal inference with observational data, the results are conditional on the assumed DAG, and the possibility of unobserved confounders cannot be completely ruled out. The implications of alternative DAGs could be studied in a sensitivity analysis, e.g., one based on omitted variable bias (Cinelli and Hazlett 2020). The DAG modeled here represents the marketing practices of an individual cooperative and does not necessarily agree with the strategies and processes used in other companies. However, the presented causal inference approach is readily applicable to other companies’ social media publishing as well as to other business problems related to decision making.

The relatively small number of posts posed some limitations to the modeling, for example to the number of interactions that can be reliably estimated in the GAM. The number of posts was also insufficient to study the optimal timing outside the time window from 8:00 to 16:00. The credible intervals tend to be wide, especially at the endpoints of the time window. Extending the two-year data collection period further into the past would technically resolve some of these limitations, but the concern is that older data might not represent the current social media behavior of consumers.

While the cooperative focused purely on timing in the present study, a company might also be interested in optimizing the content type C, i.e., in the causal effect of the intervention \(\text {do}(C)\). However, the causal effect \(P(Y \mid \text {do}(\varvec{X}), \text {do}(C))\) is not identifiable in the graph of Fig. 2.

Future research could focus on using the knowledge of the optimal timing in daily marketing practice and on improving the estimation with additional data sources. Observed timings with the highest and lowest posterior means of the post-interventional distributions could be used to set a timing range when designing a randomized controlled trial. Given the estimated expected total reaches, such as those in Fig. 4a, publishing more posts outside 8:00–16:00 could also be worthwhile to obtain more data and possibly identify better timings. As explained in Sect. 1, there are practical limitations related to A/B-tests. However, when combined with historical data, even small experiments can provide useful additional information. Tools for combining observations and experiments in causal inference are already available (Karvanen et al. 2020).

While the importance of data is continuously growing, human expertise is still present in almost all marketing decisions. Combining data with substantive knowledge of the data-generating process is key to successful decision making. Causal inference offers a well-justified methodology for this task.

References

Bürkner PC (2017) brms: an R package for Bayesian multilevel models using Stan. J Stat Softw 80(1):1–28. https://doi.org/10.18637/jss.v080.i01

Bürkner PC (2018) Advanced Bayesian multilevel modeling with the R package brms. R J 10(1):395–411. https://doi.org/10.32614/RJ-2018-017

Chawla Y, Chodak G (2021) Social media marketing for businesses: organic promotions of web-links on Facebook. J Bus Res 135:49–65. https://doi.org/10.1016/j.jbusres.2021.06.020

Cinelli C, Hazlett C (2020) Making sense of sensitivity: extending omitted variable bias. J R Stat Soc Series B (Stat Methodol) 82(1):39–67. https://doi.org/10.1111/rssb.12348

de Vries L, Gensler S, Leeflang PS (2012) Popularity of brand posts on brand fan pages: an investigation of the effects of social media marketing. J Interact Mark 26(2):83–91. https://doi.org/10.1016/j.intmar.2012.01.003

Gelman A, Carlin JB, Stern HS et al (2014) Bayesian data analysis, 3rd edn. CRC Press, Boca Raton

Hair JF Jr, Sarstedt M (2021) Data, measurement, and causal inferences in machine learning: opportunities and challenges for marketing. J Market Theory Pract 29(1):65–77. https://doi.org/10.1080/10696679.2020.1860683

Hastie T, Tibshirani R (1990) Generalized additive models. Chapman and Hall, London

Helske J, Tikka S, Karvanen J (2021) Estimation of causal effects with small data in the presence of trapdoor variables. J R Stat Soc A Stat Soc 184(3):1030–1051. https://doi.org/10.1111/rssa.12699

Hernán MA, Robins JM (2020) Causal inference: what if. Chapman & Hall/CRC, Boca Raton

Hilbe J (2011) Negative binomial regression, 2nd edn. Cambridge University Press

Hünermund P, Bareinboim E (2021) Causal inference and data fusion in econometrics. arXiv:1912.09104

Jaakonmäki R, Müller O, Vom Brocke J (2017) The impact of content, context, and creator on user engagement in social media marketing. In: Proceedings of the 50th Hawaii international conference on system sciences, https://doi.org/10.24251/HICSS.2017.136

Karvanen J, Tikka S, Hyttinen A (2020) Do-search: a tool for causal inference and study design with multiple data sources. Epidemiology 32(1):111–119

Kohavi R, Longbotham R (2017) Online controlled experiments and A/B testing. Encycl Mach Learn Data Min 7(8):922–929. https://doi.org/10.1007/978-1-4899-7687-1_891

Kotler P, Armstrong G, Harris L et al (2017) Principles of marketing, 7th edn. Pearson

Lee D, Hosanagar K, Nair HS (2018) Advertising content and consumer engagement on social media: evidence from Facebook. Manage Sci 64(11):5105–5131. https://doi.org/10.1287/mnsc.2017.2902

Misirlis N, Vlachopoulou M (2018) Social media metrics and analytics in marketing-S3M: a mapping literature review. Int J Inf Manage 38(1):270–276. https://doi.org/10.1016/j.ijinfomgt.2017.10.005

Moro S, Rita P, Vala B (2016) Predicting social media performance metrics and evaluation of the impact on brand building: a data mining approach. J Bus Res 69(9):3341–3351. https://doi.org/10.1016/j.jbusres.2016.02.010

Pearl J (1995) Causal diagrams for empirical research. Biometrika 82(4):669–688. https://doi.org/10.1093/biomet/82.4.669

Pearl J (2009) Causality: models, reasoning, and inference, 2nd edn. Cambridge University Press

Pearl J (2013) Linear models: a useful microscope for causal analysis. J Causal Inference 1(1):155–170

Pearl J, Glymour M, Jewell NP (2016) Causal inference in statistics: a primer. Wiley

Pletikosa Cvijikj I, Michahelles F (2013) Online engagement factors on Facebook brand pages. Soc Netw Anal Min 3(4):843–861. https://doi.org/10.1007/s13278-013-0098-8

R Core Team (2020) R: a language and environment for statistical computing. R Foundation for Statistical Computing, Vienna, Austria. https://www.R-project.org/

Schenker N, Gentleman JF (2001) On judging the significance of differences by examining the overlap between confidence intervals. Am Stat 55(3):182–186

Shpitser I, Pearl J (2006a) Identification of conditional interventional distributions. In: Proceedings of the 22nd Conference on Uncertainty in Artificial Intelligence. AUAI Press, pp 437–444, https://doi.org/10.48550/ARXIV.1206.6876

Shpitser I, Pearl J (2006b) Identification of joint interventional distributions in recursive semi-Markovian causal models. In: Proceedings of the 21st National Conference on Artificial Intelligence—Volume 2. AAAI Press, pp 1219–1226

Tikka S, Karvanen J (2017a) Identifying causal effects with the R package causaleffect. J Stat Softw 76(12):1–30. https://doi.org/10.18637/jss.v076.i12

Tikka S, Karvanen J (2017b) Simplifying probabilistic expressions in causal inference. J Mach Learn Res 18:1–30

Verma T (1993) Graphical aspects of causal models. Tech. rep., R-191, UCLA, Computer Science Department

Wood SN (2003) Thin plate regression splines. J R Stat Soc Series B (Stat Methodol) 65(1):95–114. https://doi.org/10.1111/1467-9868.00374

Wood SN (2017) Generalized additive models: an introduction with R, 2nd edn. Chapman and Hall/CRC

Acknowledgements

Lauri Valkonen is grateful for the grant of The Finnish Cultural Foundation, Central Finland Regional Fund. Jouni Helske was supported by the Academy of Finland grants 331817 and 311877. This work belongs to the thematic research area “Decision analytics utilizing causal models and multiobjective optimization” (DEMO) supported by Academy of Finland (Grant Number 311877).

Funding

Open Access funding provided by University of Jyväskylä (JYU).


Ethics declarations

Conflict of interest

Lauri Valkonen: none; Jouni Helske: none; Juha Karvanen: advisor for Explicans Oy.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Valkonen, L., Helske, J. & Karvanen, J. Estimating the causal effect of timing on the reach of social media posts. Stat Methods Appl 32, 493–507 (2023). https://doi.org/10.1007/s10260-022-00664-z


DOI: https://doi.org/10.1007/s10260-022-00664-z