Abstract

Investigating the relationship between Gross Domestic Product and unemployment is one of the most important challenges in macroeconomics. In this paper, we compare the French and German economies in terms of the dynamic linkage between these variables. In particular, we use an empirical methodology to investigate how much the relationship between the growth rates of Gross Domestic Product and unemployment differs dynamically between the two major European economies over the period 2003–2019. To this aim, a Vector Autoregressive model is specified for each country to jointly model the growth rates of the two variables. A new statistical test is then proposed to assess the distance between the two estimated models. Results indicate that the dynamic linkage between Gross Domestic Product and unemployment is very similar in the two countries. This empirical evidence does not imply that the product and labor markets of France and Germany are identical, but it indicates that these markets share common dynamics. This could favor the process of economic convergence between the two countries.


1 Introduction

Gross Domestic Product (GDP) and unemployment arguably capture the essential features of an economy: the former is the final result of all its activity, and the latter is the main determinant of the well-being of its population. Not surprisingly, the relationship between these two variables, known as “Okun’s law” (see, among others, Prachowny 1993; Lee 2000; and Nebot et al. 2019 for a review), is of paramount policy importance, as it describes how a fiscal stimulus is transmitted to (un)employment. While long-run effects, as measured for example by long-run multipliers, suffice for structural studies, the design and evaluation of anti-cyclical policies require a full study of the dynamics of the relationship. This leads to our research question: is the relationship between GDP and unemployment dynamically different in the two major continental European economies, France and Germany? The key to answering this question is noting that the dynamics of the GDP-unemployment relationship can be captured by a Vector Autoregressive (VAR) model in these two variables. Thus, the question can be reformulated as “how different are the GDP-unemployment VARs for France and Germany?”, which can be answered by exploiting the measure of distance between VARs proposed in Di Iorio and Triacca (2018).

In time series analysis, evaluating the distance between series is one of the most important problems. Valuable overviews of the different dissimilarity measures proposed in the literature can be found in Liao (2005) and Fruhwirth-Schnatter (2011). In general, methods to measure the distance between time series can be classified into two main categories: model-based (parametric) methods and model-free (non-parametric) methods. For univariate time series, the Autoregressive (AR) metric proposed by Piccolo (1990), the discrepancy measure introduced by Maharaj (1996) and the cepstral distance proposed in Martin (2000) are examples of model-based distances. The Euclidean distance between estimated autocorrelation functions introduced by Galeano and Peña (2000) and the Euclidean distance between the logarithms of the corresponding normalized periodograms considered in Caiado et al. (2006) are examples of model-free distances.

As with univariate time series, there is no canonical way to measure the dissimilarity between multivariate time series. Different distance measures for multivariate time series have been proposed in Tapinos and Mendes (2013) and Triacca (2016). Recently, Di Iorio and Triacca (2018) introduced a distance measure for evaluating the closeness of two Vector Autoregressive Moving Average (VARMA) models, extending the notion of AR metric between univariate Autoregressive Moving Average (ARMA) models proposed by Piccolo (1990).

However, to the best of our knowledge, no test exists to establish whether any of these distances is significantly different from zero, whereas for univariate time series this problem has already been addressed and solved (see e.g. Corduas and Piccolo 2008). The test procedure proposed in this paper is meant to fill this gap.

The paper is organized as follows. Section 2 briefly illustrates the distance measure between pairs of VAR models derived from the distance for VARMA models proposed by Di Iorio and Triacca (2018). Section 3 introduces the Bootstrap test procedure, and in Sect. 4 its performance is analysed through a set of Monte Carlo experiments. In Sect. 5 the proposed test is used to investigate the difference between the French and German economies in terms of the dynamic linkage between GDP and unemployment. Conclusions are in Sect. 6.

2 A distance measure between VAR models

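In this section, following Di Iorio and Triacca (2018), we present the distance measure between VAR models. Consider a k-dimensional process \(\left\{ \mathbf{y }_t=\left( y_{1t},\ldots y_{kt}\right) '; \; t \in {\mathbb {Z}}\right\}\) generated by the following VAR(p) model

\[ \mathbf{y}_t = \mathbf{A}_1 \mathbf{y}_{t-1} + \dots + \mathbf{A}_p \mathbf{y}_{t-p} + \mathbf{u}_t, \]

or, in the usual matrix notation,

\[ \mathbf{A}(L)\,\mathbf{y}_t = \mathbf{u}_t, \]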

where \(\left\{ \mathbf{u }_t =\left( u_{1t},\ldots u_{kt}\right) '; \; t \in {\mathbb {Z}}\right\}\) is a k-variate white-noise process with zero mean vector and nonsingular covariance matrix \(\pmb {\Sigma }_{\mathbf{u }}\). As usual, the \((k\times k)\) matrix \(\pmb {A}(L)\) has finite polynomial elements in the lag operator L, \({\mathbf {A}}(L)=\mathbf{I }-{\mathbf {A}}_1L-\dots -{\mathbf {A}}_p L^p\), where \(\mathbf{I }\) is the \((k\times k)\) identity matrix and \(\left\{ \pmb {A}_i\right\}\) are matrices of parameters. The matrix \(\pmb {A}(L)\) is assumed to be of full rank. Nonstationarity is allowed, but explosive processes are excluded by assuming that \(\text{ det }\left[ \mathbf{A }(z)\right] \ne 0\) for all \(z \in {\mathbb {C}}\) with \(\left| z\right| <1\).
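For a bivariate VAR(1), the non-explosiveness condition can be checked directly, since \(\det[\mathbf{I}-\mathbf{A}_1 z] = 1 - \text{tr}(\mathbf{A}_1) z + \det(\mathbf{A}_1) z^2\). The following Python sketch is our own illustration, restricted to k = 2 and p = 1, and its function names are hypothetical. It computes the roots of this polynomial and checks that none lies strictly inside the unit circle; unit roots are accepted, since nonstationarity is allowed.

```python
import cmath


def var1_det_roots(A):
    """Roots of det(I - A z) = 1 - tr(A) z + det(A) z**2 for a 2x2 matrix A."""
    (a11, a12), (a21, a22) = A
    tr = a11 + a22
    det = a11 * a22 - a12 * a21
    if abs(det) < 1e-12:
        # Polynomial degenerates to 1 - tr(A) z (or to the constant 1)
        return [] if abs(tr) < 1e-12 else [1.0 / tr]
    disc = cmath.sqrt(tr * tr - 4.0 * det)
    return [(tr + disc) / (2.0 * det), (tr - disc) / (2.0 * det)]


def is_non_explosive(A, tol=1e-9):
    """True if det[A(z)] != 0 for every |z| < 1 (unit roots are allowed)."""
    return all(abs(z) >= 1.0 - tol for z in var1_det_roots(A))


print(is_non_explosive(((0.5, 0.1), (0.2, 0.3))))  # stable VAR(1)
print(is_non_explosive(((1.0, 0.0), (0.0, 1.0))))  # random walk: unit roots allowed
print(is_non_explosive(((1.2, 0.0), (0.0, 0.5))))  # explosive: excluded
```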

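Following Zellner and Palm (1974), a VAR process implies a specification of each of its individual components as a univariate ARMA process:

\[ \det\left[\mathbf{A}(L)\right] y_{it} = \text{adj}_i\left[\mathbf{A}(L)\right]\mathbf{u}_t = \theta_i(L)\,\epsilon_{it}, \qquad i=1,\ldots,k, \tag{2} \]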

where \(\epsilon _{it}\) is a white-noise process, the coefficients of the polynomial \(\theta _i(L)\) are found by equating the autocovariances of the two representations and imposing the invertibility condition, and \(\text{ adj}_i\left[ \pmb {A}(L)\right]\) denotes the i-th row of the adjoint matrix of \(\pmb {A}(L)\). For more details, see Di Iorio and Triacca (2018).
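As an illustration of this step, consider the simplest case, a bivariate VAR(1). Here \(\det[\mathbf{A}(L)]\) has degree 2 and each row of the adjoint matrix has degree 1, so each component follows an ARMA(2,1). The Python sketch below is a hypothetical helper written for this case only. It computes the lag-0 and lag-1 autocovariances of \(\text{adj}_i[\mathbf{A}(L)]\mathbf{u}_t\) and recovers the invertible MA(1) parameter by equating them with those of \(\theta_i(L)\epsilon_{it}\). Components are indexed from 0. Common factors are not cancelled, because they do not affect the AR weights entering the AR metric.

```python
import math


def implied_arma_var1(A, Sigma, i):
    """Implied ARMA(2,1) of component i (0-based) of a bivariate VAR(1), illustrative sketch.

    Returns (phi, theta, sigma2) such that
      det[A(L)]       = 1 + phi[0] L + phi[1] L^2, with phi = (-tr(A), det(A)),
      adj_i[A(L)] u_t = (1 + theta L) eps_it,      with Var(eps_it) = sigma2.
    """
    (a11, a12), (a21, a22) = A
    phi = (-(a11 + a22), a11 * a22 - a12 * a21)
    # Row i of adj[I - A L], written as b0 + b1 L
    b0 = (1.0, 0.0) if i == 0 else (0.0, 1.0)
    b1 = (-a22, a12) if i == 0 else (a21, -a11)

    def quad(a, b):  # a' Sigma b
        return sum(a[r] * Sigma[r][c] * b[c] for r in range(2) for c in range(2))

    # Autocovariances of the MA(1) process b0'u_t + b1'u_{t-1}
    g0 = quad(b0, b0) + quad(b1, b1)
    g1 = quad(b1, b0)
    rho = g1 / g0
    if abs(rho) < 1e-12:
        return phi, 0.0, g0
    # Invertible solution (|theta| <= 1) of rho = theta / (1 + theta^2)
    theta = (1.0 - math.sqrt(max(0.0, 1.0 - 4.0 * rho * rho))) / (2.0 * rho)
    return phi, theta, g1 / theta
```

For a diagonal coefficient matrix and identity covariance, the MA factor of component 0 equals \(1 - a_{22}L\), which cancels against \(\det[\mathbf{A}(L)]\) and leaves an AR(1), as expected.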

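Also let \({\mathcal {A}}_{\mathbf{y }}\) denote the set of the k invertible univariate ARMA processes corresponding to a k-variate VAR process \(\mathbf{y }\). Any univariate process \(y_{i} \in {\mathcal {A}}_{\mathbf{y }}\) admits a (possibly infinite-order) AR representation,

\[ \pi_i(L)\, y_{it} = \epsilon_{it}, \qquad \pi_i(L) = \theta_i(L)^{-1}\det\left[\mathbf{A}(L)\right] = 1 - \sum_{l=1}^{\infty} \pi_{il} L^l. \]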

Now let \(x=\left\{ x_t; t \in {\mathbb {Z}}\right\}\) and \(y=\left\{ y_t; t \in {\mathbb {Z}}\right\}\) be two invertible univariate ARMA processes. Following Piccolo (1990), we can consider the Euclidean distance between the corresponding sequences of \(\pi\)-weights, that is

\[ d(x,y) = \left( \sum_{l=1}^{\infty} \left( \pi_{xl} - \pi_{yl} \right)^2 \right)^{1/2}, \tag{3} \]

where \(\left\{ \pi _{xl}\right\}\) and \(\left\{ \pi _{yl}\right\}\) denote the sequences of AR weights of x and y, respectively.
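The AR weights in Eq. (3) can be obtained from the ARMA polynomials by matching coefficients in \(\theta(L)\pi(L) = \phi(L)\). The following is a minimal Python sketch under sign conventions of our own choosing, stated in the docstring. As in practice, it truncates the infinite sum at a finite lag.

```python
import math


def pi_weights(phi, theta, n_lags):
    """First n_lags AR(infinity) weights of an invertible ARMA model (illustrative sketch).

    Assumed conventions:
      AR polynomial  phi(L)   = 1 + phi[0] L + phi[1] L^2 + ...
      MA polynomial  theta(L) = 1 + theta[0] L + theta[1] L^2 + ...
      pi(L) = phi(L) / theta(L) = 1 - sum_l pi_l L^l
    """
    phi_full = [1.0] + list(phi)
    r = [1.0]  # coefficients of pi(L); r[0] = 1
    for l in range(1, n_lags + 1):
        val = phi_full[l] if l < len(phi_full) else 0.0
        # Coefficient matching in theta(L) r(L) = phi(L)
        for j, th in enumerate(theta, start=1):
            if l - j >= 0:
                val -= th * r[l - j]
        r.append(val)
    return [-c for c in r[1:]]


def ar_metric(pi_x, pi_y):
    """Piccolo's AR metric, Eq. (3), truncated at the common length of the two sequences."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(pi_x, pi_y)))


# AR(1) with coefficient 0.5 versus AR(1) with coefficient 0.4
print(ar_metric(pi_weights([-0.5], [], 10), pi_weights([-0.4], [], 10)))
```

For pure AR(1) models the metric reduces to the absolute difference of the coefficients. For an MA(1) with parameter \(\theta\), the weights are \(\pi_l = -(-\theta)^l\).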

On this basis, as in Di Iorio and Triacca (2018), we define, in the class of the k-variate VAR processes, \({\mathcal {V}}_k\), the following measure of distance between two VAR processes:

Definition 1

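Let \(\mathbf{x }=\left\{ \mathbf{x }_t=\left( x_{1t},\ldots x_{kt}\right) '; \; t \in {\mathbb {Z}}\right\}\) and \(\mathbf{y }=\left\{ \mathbf{y }_t=\left( y_{1t}, \ldots y_{kt}\right) '; \; t \in {\mathbb {Z}}\right\}\) be two VAR processes in \({\mathcal {V}}_k\):

\[ \mathbf{A}_x(L)\,\mathbf{x}_t = \mathbf{v}_t, \qquad \mathbf{A}_x(L) = \mathbf{I} - \mathbf{A}_{x1}L - \dots - \mathbf{A}_{xp}L^p, \]

and

\[ \mathbf{A}_y(L)\,\mathbf{y}_t = \mathbf{w}_t, \qquad \mathbf{A}_y(L) = \mathbf{I} - \mathbf{A}_{y1}L - \dots - \mathbf{A}_{yp}L^p, \]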

where \(\left\{ \mathbf{v }_t; \; t \in {\mathbb {Z}}\right\}\) and \(\left\{ \mathbf{w }_t; \; t \in {\mathbb {Z}}\right\}\) are two k-variate white-noise processes with zero mean vector and nonsingular covariance matrices \(\pmb {\Sigma }_{\mathbf{v }}\) and \(\pmb {\Sigma }_{\mathbf{w }}\), respectively. Their distance \({\mathcal {D}}(\mathbf{x },\mathbf{y })\) is given by the sum of the distances (3) between the implied ARMA models, component by component:

\[ {\mathcal{D}}(\mathbf{x},\mathbf{y}) = \sum_{i=1}^{k} d(x_i, y_i), \]

where \(x_{i}\) and \(y_{i}\) (\(i=1,\ldots ,k\)) are the univariate invertible ARMA processes implied by k-variate VAR processes \(\mathbf{x }\) and \(\mathbf{y }\), respectively. \(\square\)
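For the bivariate VAR(1) case, the pieces can be combined into a self-contained Python sketch of Definition 1. The distance is the sum over components of the truncated AR metrics. The names `component_pi_weights` and `var_distance`, and the default truncation at 50 lags, are illustrative assumptions and not part of the original method.

```python
import math


def component_pi_weights(A, Sigma, i, n_lags):
    """AR(infinity) weights, truncated at n_lags, of component i (0-based) of a bivariate VAR(1)."""
    (a11, a12), (a21, a22) = A
    det_poly = [1.0, -(a11 + a22), a11 * a22 - a12 * a21]  # det[A(L)]
    b0 = (1.0, 0.0) if i == 0 else (0.0, 1.0)              # row i of adj[A(L)] = b0 + b1 L
    b1 = (-a22, a12) if i == 0 else (a21, -a11)

    def quad(a, b):  # a' Sigma b
        return sum(a[r] * Sigma[r][c] * b[c] for r in range(2) for c in range(2))

    # Invertible MA(1) parameter matching the lag-1 autocorrelation of adj_i[A(L)] u_t
    rho = quad(b1, b0) / (quad(b0, b0) + quad(b1, b1))
    theta = 0.0 if abs(rho) < 1e-12 else (1 - math.sqrt(max(0.0, 1 - 4 * rho * rho))) / (2 * rho)
    r = [1.0]  # coefficients of pi(L)
    for l in range(1, n_lags + 1):  # (1 + theta L) pi(L) = det[A(L)]
        r.append((det_poly[l] if l < 3 else 0.0) - theta * r[l - 1])
    return [-c for c in r[1:]]      # pi(L) = 1 - sum_l pi_l L^l


def var_distance(Ax, Sx, Ay, Sy, n_lags=50):
    """D(x, y): sum over components of the truncated AR metric, Eq. (3)."""
    total = 0.0
    for i in range(2):
        px = component_pi_weights(Ax, Sx, i, n_lags)
        py = component_pi_weights(Ay, Sy, i, n_lags)
        total += math.sqrt(sum((a - b) ** 2 for a, b in zip(px, py)))
    return total


I2 = ((1.0, 0.0), (0.0, 1.0))
print(round(var_distance(((0.5, 0.0), (0.0, 0.3)), I2, ((0.4, 0.0), (0.0, 0.3)), I2), 6))  # 0.1
```

With diagonal coefficient matrices and identity covariances, only the first component differs, so \({\mathcal{D}}\) equals the difference in the first diagonal coefficient.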

The main idea is that a k-variate VAR model implies k univariate ARMA models, so the distance between two VARs can be obtained as the sum of the AR-metric distances between the implied univariate ARMA models, component by component. From this point of view, the approach is similar to that in Di Iorio and Triacca (2013). The proposed VAR distance can also be used to build a matrix of distances among several VAR models, to which Multidimensional Scaling and Cluster Analysis can be applied to highlight similarities between the models.

Remark 1

Note that the component-by-component distance is meant to compare the same VAR model in two different situations (e.g. the same economic relation in two different countries), and it assumes that the variables are ordered in the same way in the two models.

Example 1

For instance, let x and y be two VAR(1) models given by:

and

where \((u_{x1,t},u_{x2,t})^{\prime }\) and \((u_{y1,t},u_{y2,t})^{\prime }\) are bivariate white-noise processes with covariance matrix \(\Sigma _{u_x}=\Sigma _{u_y}= \left[ \begin{array}{ll} 1 &{} -0.5 \\ -0.5 &{} 1 \end{array}\right] .\)

It is easy to verify that the implied ARMA models (after cancelling the common factors) for x are

and for y are

Then \({\mathcal {D}}(\mathbf{x },\mathbf{y })=d(x_{1},y_{1})+d(x_{2},y_{2})=0.22+0 = 0.22\) \(\square\)

We note that Proposition 1 in Di Iorio and Triacca (2018, p. 207) also applies to the proposed distance \({\mathcal {D}}\), which therefore satisfies the main properties of non-negativity, symmetry and the triangle inequality.

Moreover, it is important to underline that \({\mathcal {D}}(\mathbf{x },\mathbf{y })\) is a pseudometric, since the distance can be zero even when the VARs are different. Example 2 illustrates this point.

Example 2

Let x, y be two VAR(1) models with the autoregressive coefficients as in Example 1 and the error covariance matrices \(\Sigma _{u_x}\) and \(\Sigma _{u_y}\) equal to the identity matrix. The implied models for x are:

and for y are:

In this case the distance is \({\mathcal {D}}(\mathbf{x },\mathbf{y })=0.\) \(\square\)

Remark 2

The Frobenius distance is an alternative way to compare VAR models. However, it only partially serves this purpose. For the two VAR processes described in Definition 1, the Frobenius distance is defined as

\[ d_F(\mathbf{x},\mathbf{y}) = \left\| \mathbf{B}_x - \mathbf{B}_y \right\|_F = \left( \text{tr}\left[ (\mathbf{B}_x - \mathbf{B}_y)'(\mathbf{B}_x - \mathbf{B}_y) \right] \right)^{1/2}, \]

where \(\mathbf{B }_x=\left( \mathbf{A }_{x1}|\mathbf{A }_{x2}|\dots|\mathbf{A }_{xp}\right)\) and \(\mathbf{B }_y=\left( \mathbf{A }_{y1}|\mathbf{A }_{y2}|\dots|\mathbf{A }_{yp}\right)\).

Suppose that the coefficient matrices satisfy \(\mathbf{A }_{xi}=\mathbf{A }_{yi}, \; i=1,\ldots ,p\), while the covariance matrices satisfy \(\pmb {\Sigma }_{\mathbf{v }}\ne \pmb {\Sigma }_{\mathbf{w }}\). Then \(d_F(\mathbf{x },\mathbf{y })=0\), despite the fact that the dynamics captured by the two VARs are different. A VAR is a model used to capture the linear interdependencies among multiple time series, and its cross-equation error covariance matrix contains all the information about contemporaneous correlations. Thus, an appropriate distance measure should also take into account differences in the covariance matrices; it is easy to verify that \({\mathcal {D}}(\mathbf{x },\mathbf{y })\) meets this requirement. \(\square\)
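The point of Remark 2 can be checked numerically with the Python sketch below. It covers the bivariate VAR(1) case only, and the coefficient and covariance values are arbitrary illustrative choices. The two VARs share the same coefficient matrix but have different error covariance matrices. The Frobenius distance is exactly zero, while the AR-metric distance is positive, because the implied MA parameters depend on the covariance matrix.

```python
import math


def frobenius_distance(Ax, Ay):
    """d_F for two bivariate VAR(1) models: Frobenius norm of A_x - A_y."""
    return math.sqrt(sum((Ax[r][c] - Ay[r][c]) ** 2 for r in range(2) for c in range(2)))


def component_pi_weights(A, Sigma, i, n_lags):
    """AR weights of component i (0-based) of a bivariate VAR(1) with error covariance Sigma."""
    (a11, a12), (a21, a22) = A
    det_poly = [1.0, -(a11 + a22), a11 * a22 - a12 * a21]  # det[A(L)]
    b0 = (1.0, 0.0) if i == 0 else (0.0, 1.0)              # row i of adj[A(L)] = b0 + b1 L
    b1 = (-a22, a12) if i == 0 else (a21, -a11)

    def quad(a, b):  # a' Sigma b
        return sum(a[r] * Sigma[r][c] * b[c] for r in range(2) for c in range(2))

    rho = quad(b1, b0) / (quad(b0, b0) + quad(b1, b1))     # depends on Sigma
    theta = 0.0 if abs(rho) < 1e-12 else (1 - math.sqrt(max(0.0, 1 - 4 * rho * rho))) / (2 * rho)
    r = [1.0]
    for l in range(1, n_lags + 1):                         # (1 + theta L) pi(L) = det[A(L)]
        r.append((det_poly[l] if l < 3 else 0.0) - theta * r[l - 1])
    return [-c for c in r[1:]]


def var_distance(Ax, Sx, Ay, Sy, n_lags=50):
    total = 0.0
    for i in range(2):
        px = component_pi_weights(Ax, Sx, i, n_lags)
        py = component_pi_weights(Ay, Sy, i, n_lags)
        total += math.sqrt(sum((a - b) ** 2 for a, b in zip(px, py)))
    return total


A = ((0.5, 0.2), (0.1, 0.3))
Sigma_v = ((1.0, 0.0), (0.0, 1.0))
Sigma_w = ((1.0, 0.5), (0.5, 1.0))
print(frobenius_distance(A, A))                  # 0.0
print(var_distance(A, Sigma_v, A, Sigma_w) > 0)  # True
```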

3 A Bootstrap test

To test \(H_0: {\mathcal {D}} = 0\) against \(H_1: {\mathcal {D}} > 0\), an asymptotic distribution for \(\hat{\mathcal{D}}\) could in principle be obtained as a convolution of the distributions of the distances d between the univariate ARMA models implied by the two VARs. In the class of ARIMA processes, Corduas and Piccolo (2008) proved that, for two independent ARMA(p, q) processes \(x_{t}\) and \(y_{t}\), under the null hypothesis \(d(x,y)=0\) the Maximum Likelihood estimator \({\hat{d}}^{2}\) has the asymptotic distribution

\[ \hat{d}^{2} \;\overset{a}{\sim}\; \sum_{j=1}^{K} \lambda_j\, \chi^2_{g_j}, \]

where \(\lambda _{j}\) are the eigenvalues of the covariance matrix of \(({\hat{\pi }}_{xi}-{\hat{\pi }}_{yi})\), \(K<p+q\), and \(\chi _{g_j}^{2}\) are independent chi-squared random variables with \(g_{j}\) degrees of freedom, \(g_{j}\) being the multiplicity of the eigenvalue \(\lambda _{j}\) (usually \(g_{j}=1, \forall j\)). Evaluating this distribution can be cumbersome, so approximations as well as evaluation algorithms have been proposed. For example, the density of \({\hat{d}}^{2}\) can be obtained following Mathai (1982), who focuses on a linear combination of independent but not identically distributed Gamma variables and derives its exact density as a finite sum, in terms of zonal polynomials and in terms of confluent hypergeometric functions. An alternative approximation, proposed by Corduas (2000), is based on the asymptotic distributions of the ARIMA parameter estimators. This approximation is less accurate when the MA parameters are close to the non-invertibility region, particularly with short samples (Corduas 1996).

Unfortunately, these proposals cannot be applied in our framework to derive the asymptotic distribution of \(\hat{\mathcal{D}}(\mathbf{x },\mathbf{y })\). The implied ARMA models are not independent, since they are functions of combinations of the original VAR error terms, as defined by the second part of Eq. (2). Moreover, the approximation proposed by Corduas (2000) refers to the squared distance \({\hat{d}}^{2}\), while \(\hat{\mathcal{D}}(\mathbf{x },\mathbf{y })\) is a sum of square roots of such quantities. To overcome these problems, we propose a bootstrap test procedure.

The key point in a Bootstrap test is the choice of a pseudo-data generating process that obeys the null hypothesis. In our framework, taking into account the implications of Remark 2 in the previous section, the pseudo-data \(\mathbf{x }_{bt}\) and \(\mathbf{y }_{bt}\), such that \({\mathcal{D}}(\mathbf{x }_b,\mathbf{y }_b)=\sum _{i=1}^k d(x_{bi},y_{bi})=0\), must be generated from the same VAR model (same coefficients and same error covariance matrix) with two different bootstrap redrawings of the errors. Whether to use the coefficients and residuals estimated on the observed data \(\mathbf{x }_{t}\) or on \(\mathbf{y }_{t}\) is a debatable choice. We therefore suggest a multiple testing approach in which the bootstrap test is performed twice: once generating the pseudo-data from the coefficient vectors and residuals of the VAR estimated on the observed data \(\mathbf{x }_{t}\), and once using those of the VAR estimated on \(\mathbf{y }_{t}\). In this way we obtain two bootstrap p-values, each computed as the proportion of bootstrap distances \(\tilde{\mathcal{D}}\) that exceed the statistic \({\hat{\mathcal{D}}}\) evaluated on the observed data, p-val \(=\frac{1}{B}\sum _{l=1}^B I(\tilde{\mathcal{D}}_l>{\hat{\mathcal{D}}})\), where B is the number of bootstrap redrawings and I(.) is the indicator function. The test decision rule can therefore follow two different ways:

(a) reject the null if at least one of the bootstrap p-values rejects;

(b) reject the null if both bootstrap p-values reject.

The first decision rule is less favorable to the null hypothesis, while the second one protects the null, as in common inferential practice.
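The p-value formula and the two decision rules are simple enough to sketch directly in Python. The function names are hypothetical. The comparison with the Bonferroni critical value \(\alpha/2\) follows Step 13 of the procedure.

```python
def bootstrap_pvalue(boot_distances, d_hat):
    """Proportion of bootstrap distances that exceed the observed distance d_hat."""
    return sum(d > d_hat for d in boot_distances) / len(boot_distances)


def bootstrap_decision(pval_x, pval_y, alpha=0.05, rule="max"):
    """Combine the two bootstrap p-values.

    rule="min": reject if at least one p-value rejects, rule (a);
    rule="max": reject only if both p-values reject, rule (b).
    The combined p-value is compared with the Bonferroni critical value alpha / 2.
    """
    if rule not in ("min", "max"):
        raise ValueError("rule must be 'min' or 'max'")
    pval_b = min(pval_x, pval_y) if rule == "min" else max(pval_x, pval_y)
    return pval_b, pval_b <= alpha / 2


print(bootstrap_pvalue([0.1, 0.5, 0.9, 1.2], 0.4))    # 0.75
print(bootstrap_decision(0.01, 0.20, rule="min"))     # (0.01, True)
print(bootstrap_decision(0.01, 0.20, rule="max"))     # (0.2, False)
```

With the same pair of p-values, the min rule rejects and the max rule does not, which reflects how the max rule protects the null.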

The Bootstrap procedure is described by the algorithm below, assuming without loss of generality the same lag order p for both VAR models \(\mathbf{x }\) and \(\mathbf{y }\).

Bootstrap test procedure

1. Estimate the suitable VAR(p) models on the observed data \(\mathbf{x }\) and \(\mathbf{y }\), obtaining the estimated parameters \(\vartheta_{x} = \text{vec}(\hat{A}_{x1}, \dots , \hat{A}_{xp})\) and \(\vartheta_{y}= \text{vec}(\hat{A}_{y1}, \dots , \hat{A}_{yp})\) and the k-variate residuals \({\hat{\epsilon }}_{x}\) and \({\hat{\epsilon }}_{y}\);

2. Using the estimated parameters \(\vartheta _x\) and \(\vartheta _y\) from Step 1, together with the corresponding residual covariance matrices, obtain the implied ARMA models for the univariate processes \(x_i\) and \(y_i\) (\(i=1,\ldots ,k\));

3. Compute the AR(\(\infty\)) representation of the ARMA models from Step 2, truncated at some suitable lag \({\tilde{p}}\), and obtain the estimated autoregressive coefficients \(\pi _{x_il}\) and \(\pi _{y_il}\) (\(i=1,\ldots ,k\); \(l=1,\dots ,{\tilde{p}}\));

4. Using the coefficients \(\pi _{x_il}\) and \(\pi _{y_il}\) of the AR(\({\tilde{p}}\)) representations from Step 3, compute the estimated distances \({\hat{d}}(x_i,y_i)\) (\(i=1,\ldots ,k\)) according to Eq. (3), truncated at lag \({\tilde{p}}\);

5. Estimate the VAR distance \({\hat{\mathcal{D}}}(\mathbf{x },\mathbf{y })=\sum _{i=1}^k {\hat{d}}(x_{i},y_{i})\);

6. Apply a suitable Bootstrap resampling algorithm twice to the k-variate residuals \({\hat{\epsilon }}_{x}\), obtaining two sets of pseudo-residuals \(\epsilon _{xb1}\) and \(\epsilon _{xb2}\); do the same with \({\hat{\epsilon }}_{y}\) to obtain \(\epsilon _{yb1}\) and \(\epsilon _{yb2}\);

7. Given the VAR(p) model in Step 1, generate the pseudo-data \({\mathbf {x}}_{b1}\) using the estimated parameters \(\vartheta _x\) and the pseudo-residuals \(\epsilon _{xb1}\), and the pseudo-data \({\mathbf {x}}_{b2}\) using \(\vartheta _x\) and the pseudo-residuals \(\epsilon _{xb2}\). The processes generating \({\mathbf {x}}_{b1}\) and \({\mathbf {x}}_{b2}\) obey the null hypothesis \({\mathcal {D}}({\mathbf {x}}_{b1},{\mathbf {x}}_{b2})=0\). Do the same with \(\vartheta _y\) and the pseudo-residuals \(\epsilon _{yb1}\) and \(\epsilon _{yb2}\) to generate the pseudo-data \({\mathbf {y}}_{b1}\) and \({\mathbf {y}}_{b2}\);

8. Compute \(\tilde{\mathcal{D}}_x({\mathbf {x}}_{b1},{\mathbf {x}}_{b2})\) by performing Steps 1-5 on the pseudo-data \({\mathbf {x}}_{b1}\) and \({\mathbf {x}}_{b2}\);

9. Compute \(\tilde{\mathcal{D}}_y({\mathbf {y}}_{b1},{\mathbf {y}}_{b2})\) by performing Steps 1-5 on the pseudo-data \({\mathbf {y}}_{b1}\) and \({\mathbf {y}}_{b2}\);

10. Repeat Steps 6 to 9 B times;

11. Compute the Bootstrap p-values \(p\text{-}value_x\) and \(p\text{-}value_y\) as the proportions of the B Bootstrap distances \(\tilde{\mathcal{D}}_x\) and \(\tilde{\mathcal{D}}_y\), respectively, that exceed the statistic \(\hat{\mathcal{D}}\) evaluated on the observed data, that is p-val \(=\frac{1}{B}\sum _{l=1}^B I(\tilde{\mathcal{D}}_l>\hat{\mathcal{D}})\), where I(.) is the indicator function;

12. Define the Bootstrap p-value \(pval_B\) associated with the null \({\mathcal {D}}({\mathbf {x}},{\mathbf {y}})=0\) according to one of the following decision rules:

   (a) reject the null if at least one of the Bootstrap p-values rejects: \(pval_{Bmin}=\min\{p\text{-}value_x, p\text{-}value_y\}\);

   (b) reject the null if both Bootstrap p-values reject: \(pval_{Bmax}=\max\{p\text{-}value_x, p\text{-}value_y\}\);

13. Compare the Bootstrap p-value \(pval_B\) with the Bonferroni critical value \(\frac{\alpha }{2}\), where \(\alpha\) is the usual significance level.
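The steps above can be sketched end to end in Python for the simplest case. This is not the authors' Gretl code, and every name in it is hypothetical. It assumes a zero-mean bivariate VAR(1) estimated by OLS without intercept and iid resampling of residual vectors. Pseudo-data paths start at zero with no burn-in, the AR representations are truncated at `n_lags`, and the max rule is reported.

```python
import math
import random


def fit_var1(data):
    """OLS fit of a zero-mean bivariate VAR(1); returns (A_hat, residuals, Sigma_hat)."""
    X, Y = data[:-1], data[1:]
    sxx = [[sum(x[r] * x[c] for x in X) for c in range(2)] for r in range(2)]
    sxy = [[sum(x[r] * y[c] for x, y in zip(X, Y)) for c in range(2)] for r in range(2)]
    det = sxx[0][0] * sxx[1][1] - sxx[0][1] * sxx[1][0]
    inv = [[sxx[1][1] / det, -sxx[0][1] / det], [-sxx[1][0] / det, sxx[0][0] / det]]
    # (X'X)^{-1} X'Y estimates A' (observations in rows), so transpose the result
    bt = [[sum(inv[r][m] * sxy[m][c] for m in range(2)) for c in range(2)] for r in range(2)]
    A = [[bt[c][r] for c in range(2)] for r in range(2)]
    res = [[y[r] - A[r][0] * x[0] - A[r][1] * x[1] for r in range(2)] for x, y in zip(X, Y)]
    S = [[sum(e[r] * e[c] for e in res) / len(res) for c in range(2)] for r in range(2)]
    return A, res, S


def simulate_var1(A, shocks):
    """VAR(1) path driven by a list of 2-vector shocks, starting at zero (no burn-in)."""
    y, path = [0.0, 0.0], []
    for e in shocks:
        y = [A[r][0] * y[0] + A[r][1] * y[1] + e[r] for r in range(2)]
        path.append(y)
    return path


def component_pi_weights(A, Sigma, i, n_lags):
    """Truncated AR weights of component i (0-based) of a bivariate VAR(1)."""
    (a11, a12), (a21, a22) = A
    det_poly = [1.0, -(a11 + a22), a11 * a22 - a12 * a21]
    b0 = (1.0, 0.0) if i == 0 else (0.0, 1.0)
    b1 = (-a22, a12) if i == 0 else (a21, -a11)

    def quad(a, b):
        return sum(a[r] * Sigma[r][c] * b[c] for r in range(2) for c in range(2))

    rho = quad(b1, b0) / (quad(b0, b0) + quad(b1, b1))
    theta = 0.0 if abs(rho) < 1e-12 else (1 - math.sqrt(max(0.0, 1 - 4 * rho * rho))) / (2 * rho)
    r = [1.0]
    for l in range(1, n_lags + 1):
        r.append((det_poly[l] if l < 3 else 0.0) - theta * r[l - 1])
    return [-c for c in r[1:]]


def var_distance(Ax, Sx, Ay, Sy, n_lags):
    total = 0.0
    for i in range(2):
        px = component_pi_weights(Ax, Sx, i, n_lags)
        py = component_pi_weights(Ay, Sy, i, n_lags)
        total += math.sqrt(sum((a - b) ** 2 for a, b in zip(px, py)))
    return total


def bootstrap_test(x, y, B=199, n_lags=30, seed=0):
    """Returns (D_hat, p-value_x, p-value_y, max-rule p-value)."""
    rng = random.Random(seed)
    Ax, res_x, Sx = fit_var1(x)                    # Step 1
    Ay, res_y, Sy = fit_var1(y)
    d_hat = var_distance(Ax, Sx, Ay, Sy, n_lags)   # Steps 2-5
    pvals = []
    for A, res in ((Ax, res_x), (Ay, res_y)):      # pseudo-data from each fitted model
        exceed = 0
        for _ in range(B):                         # Steps 6-10
            z1 = simulate_var1(A, [rng.choice(res) for _ in res])
            z2 = simulate_var1(A, [rng.choice(res) for _ in res])
            A1, _res1, S1 = fit_var1(z1)
            A2, _res2, S2 = fit_var1(z2)
            exceed += var_distance(A1, S1, A2, S2, n_lags) > d_hat
        pvals.append(exceed / B)                   # Step 11
    return d_hat, pvals[0], pvals[1], max(pvals)   # Step 12, rule (b)
```

The returned max-rule p-value would then be compared with \(\alpha/2\) as in Step 13. Pure Python keeps the sketch dependency-free; a real application would use a VAR library with lag selection and an intercept.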

Three remarks are in order. First, if the VAR(p) models in Step 1 are correctly specified, the estimated residuals \(\varvec{{\hat{\epsilon }}}_{t}\) do not show any autocorrelation structure. As a consequence, no particular resampling scheme for dependent data is needed, and a simple resampling procedure can be applied (MacKinnon 2002). Alternatively, a block bootstrap scheme for weakly dependent data, such as the Stationary Bootstrap (Politis and Romano 1994), can be used. Second, the proposed algorithm can be run for two \(k\)-variate VARs of different orders, say \(p_x\) and \(p_y\) with \(p_x<p_y\), since the distance \(\hat{\mathcal{D}}\) depends only on the AR(\(\infty\)) representations of the implied ARMA models. In this case the algorithm is easily adapted by recalling that a VAR(\(p_x\)) is a VAR(\(p_y\)) in which all the coefficient matrices \(A_{p_x+1},\dots ,A_{p_y}\) are zero. Finally, the same arguments that led us to introduce the proposed bootstrap strategy are the main obstacle to deriving its validity analytically. However, we have good reasons to conjecture that validity does hold. First, the max and min functions are continuous; second, the estimates of the coefficients of the autoregressive representation are consistent and asymptotically Gaussian. This conjecture is supported by the Monte Carlo simulations discussed below.
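For the block alternative mentioned in the first remark, the index generation of the Politis and Romano (1994) Stationary Bootstrap can be sketched in a few lines of Python. The function name and the `mean_block` parameter are our own. Blocks start at uniformly drawn positions, have geometrically distributed lengths with mean `mean_block`, and wrap around the end of the sample. Resampled residual vectors are then `[res[j] for j in idx]`.

```python
import random


def stationary_bootstrap_indices(n, mean_block, rng=None):
    """Resampled time indices 0..n-1 for the stationary bootstrap (illustrative sketch)."""
    if rng is None:
        rng = random.Random()
    p = 1.0 / mean_block                   # probability of starting a new block
    idx = [rng.randrange(n)]
    while len(idx) < n:
        if rng.random() < p:
            idx.append(rng.randrange(n))   # new block at a random position
        else:
            idx.append((idx[-1] + 1) % n)  # continue the block, wrapping circularly
    return idx


res = [[0.1 * t, -0.1 * t] for t in range(12)]  # made-up residual vectors
idx = stationary_bootstrap_indices(len(res), mean_block=4, rng=random.Random(0))
pseudo_residuals = [res[j] for j in idx]
```

With `mean_block = 1` every index is drawn afresh, which reduces to the simple iid resampling scheme.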

4 Monte Carlo evaluation

The performance of the proposed inferential strategy can be investigated by means of Monte Carlo experiments. All computations have been carried out using Hansl, the programming language of the free econometric project Gretl (see Note 1). The experiments were conducted on an Intel Core i7 Windows notebook, and each lasted about 33 minutes. We consider a stable bivariate VAR(1) model, a stable trivariate VAR(1) model and a cointegrated bivariate VAR(2) model.

4.1 Experiment 1

The bivariate VAR(1) is characterized by the following matrix

The size performance is studied by considering two identical VAR(1) models, generated by the same coefficient matrix A and by two sets of i.i.d. Gaussian random error vectors with identity covariance matrix, and by using the decision rule \(p\text{-}val_B=\max\{p\text{-}value_x, p\text{-}value_y\}\).

The analysis of the power behavior requires some choices, since the alternative hypothesis can take multiple forms. Without loss of generality, we consider a local alternative hypothesis in which just one of the coefficients of the second VAR differs from those of the first VAR. In this way we can control the power when the alternative is close to the null. Following this strategy, in the power experiments the coefficients of the first VAR model are given by the matrix \(\mathbf{A}(L)\), while the coefficient matrix of the second VAR model has all elements equal to those of A, except for the element \(a_{12}\), which becomes \({\tilde{a}}_{12}=a_{12}+\delta\) with \(\delta\) = 0.05, 0.25, 0.50, 0.70. As a robustness check, we perform a second power exercise in which the element \(a_{21}\) becomes \({\tilde{a}}_{21}=a_{21}+\delta\).

We perform 500 Monte Carlo replications and 500 Bootstrap redrawings, with sample sizes T = 50, which is medium for annual data but small for quarterly data, and T = 100, which is large for annual data but common for quarterly datasets and adequate for monthly datasets, so that the experiments are relevant for empirical applications. We also verified the test performance in a large sample (\(T=1000\)). The Monte Carlo results for the bivariate VAR(1) are summarized in Tables 1 and 2.

The size performance is quite good when the sample size is small, and the power displays the expected behavior when the local alternative is close to the null. When the local alternative is far from the null, the improvement in power is remarkable for \(T=100\); when the sample size is large we observe the expected behavior. The behavior of the test under the decision rule \(p\text{-}val_B=\min\{p\text{-}value_x, p\text{-}value_y\}\), available on request, is very close to that described.

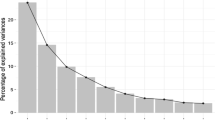

Figure 1 shows the Monte Carlo distribution of \(\hat{\mathcal{D}}\) for T = 100 under the null (left panel) and under the alternative (right panel) hypothesis, in the case \({\tilde{a}}_{12}=a_{12}+\delta\) with \(\delta =0.70\). We also computed the Locke (1976) nonparametric test of the null hypothesis that the Monte Carlo distribution is a Gamma distribution. For the distribution in the left panel, the Locke statistic is \(-0.855\) with p-value 0.392, while for the distribution in the right panel it is \(-3.50\) with p-value \(4\times 10^{-4}\).

4.2 Experiment 2

Experiment 2 considers a more general case, a trivariate VAR(1) characterized by the following coefficient matrix

and two trivariate vectors of i.i.d. Gaussian random terms with the same variance-covariance matrix \(\Sigma =\left[ \begin{array}{rrr} 1.0 &{} -0.5 &{} -0.3 \\ -0.5 &{} 1.0 &{} 0.1 \\ -0.3 &{} 0.1 &{} 1.0\\ \end{array}\right] .\)

The size performance is studied using the same strategy described for the bivariate VAR(1). In this case too, the analysis of the power behavior requires some choices. For comparison purposes, we again consider an alternative hypothesis involving just one of the coefficients of the second VAR. The coefficients of the first VAR model are given by the matrix \(\mathbf{A}(L)\), while the coefficient matrix of the second VAR model has all elements equal to those of \(\mathbf{A}(L)\), except for the element \(a_{12}\), which becomes \({\tilde{a}}_{12}=a_{12}+\delta\), with \(\delta\) = 0.1, 0.4, 0.8, 1.1. The values of \(\delta\) have been selected so that the distance between the VARs in Experiment 2 is equal to that in Experiment 1.

Table 3 reports the results for the trivariate VAR(1). The size meets expectations, while the power is less remarkable than in Experiment 1. The explanation of this behavior lies in the well-known curse of dimensionality of VARs: as the dimension k or the order p of a VAR model rises, the number of parameters increases dramatically. In the trivariate case, the experiment tries to detect the difference between two VARs that differ in just 1 parameter out of 15 (9 coefficients plus 6 variances and covariances), while in Experiment 1 one parameter out of 7 differed. For this reason, this kind of power exercise can face some difficulties, especially in small samples. However, the power shows the expected behavior for increasing sample sizes.
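The parameter counts quoted above follow from a simple formula. A zero-mean VAR(p) in k variables has \(k^2 p\) autoregressive coefficients plus \(k(k+1)/2\) distinct variances and covariances. The small Python check below uses a hypothetical helper name.

```python
def n_var_params(k, p):
    """k*k*p coefficients plus k(k+1)/2 distinct entries of the error covariance matrix."""
    return k * k * p + k * (k + 1) // 2


for k in (2, 3):
    n = n_var_params(k, 1)
    print(f"k={k}: {n} parameters, one perturbed entry = {1 / n:.1%} of them")
```

This prints 7 parameters (14.3%) for the bivariate case and 15 parameters (6.7%) for the trivariate case. A single perturbed coefficient is therefore a smaller share of the model in Experiment 2.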

4.3 Experiment 3

A third exercise is conducted on the following cointegrated bivariate VAR(2) model:

with covariance matrix \(\Sigma _\epsilon =\left[ \begin{array}{cc} 5 &{} 2 \\ 2 &{} 3 \end{array} \right]\).

As in the first experiment, for the power analysis the coefficients of the first VAR model are given by the matrix \(\mathbf{A}(L)\), while the coefficient matrix of the second VAR model has all elements equal to those of A, except for the element \(a_{21}\), which becomes \({\tilde{a}}_{21}=a_{21}+\delta\) with \(\delta\) = 0.05, 0.25, 0.50, 0.70.

As can be seen from Table 4, for \(T=50\) the local power seems roughly constant for a nominal value of 0.5, while for \(T=100\) and \(T=1000\) the rejection rates have the expected behavior.

5 The distance between France and Germany in terms of dynamic linkage between GDP and unemployment

It is generally accepted that a degree of economic convergence among the European economies is necessary for a well-functioning monetary union. Large differences in economic development among countries make achieving common goals more difficult in heavily integrated currency areas such as the Euro Area. In this framework, it is important to evaluate the degree of homogeneity between the two major European economies, Germany and France. In order to do this, we apply the proposed metric \({\mathcal {D}}\) to investigate the distance between France and Germany in terms of the dynamic linkage between Gross Domestic Product (GDP) and unemployment rate (U). The linkage between the rate of change in GDP and change in unemployment rate (Okun’s Law) has been widely studied in empirical macroeconomics, see among others Christopoulos (2004), Lee (2000), Malley and Molana (2008), Watts and Mitchell (1991), Prachowny (1993).

We use quarterly data from the Eurostat database on Gross Domestic Product at market prices, chain-linked volumes index (\(2010=100\)), seasonally and calendar adjusted (Eurostat dataset namq_10_gdp, see Note 2), and on total unemployment rates (Eurostat dataset lfsq_urgan, see Note 3). The sample period runs from the first quarter of 2003 to the fourth quarter of 2019 (2003Q1–2019Q4), for a total of 68 observations. For the German unemployment rate, values are missing for quarters I, II and IV of 2003 and 2004; these missing data are imputed by back-casting. The well-known break in GDP caused by the 2008 financial crisis has been linearised with the TRAMO procedure for both countries, see Fig. 2. The total unemployment rates are seasonally adjusted using the TRAMO-SEATS method, see the right panel of Fig. 3; the growth rates are shown in Fig. 4.

The estimated VARs jointly model the growth rates of unemployment and GDP, \(\Delta \log(U)\) and \(\Delta \log(GDP)\). Using the usual lag selection procedure based on the BIC criterion, we select two lags for both countries. The estimated parameters of these models are reported in Table 5.
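The data transformation can be illustrated with a short Python sketch. The paper's computations used Gretl, the function names here are hypothetical, and the input values in the check are made up. Counting quarters from 2003Q1 to 2019Q4 inclusive gives the 68 observations reported above. First differencing of logs then leaves 67 growth-rate observations per series.

```python
import math


def quarters_between(y0, q0, y1, q1):
    """Number of quarterly observations from y0Qq0 to y1Qq1, inclusive."""
    return (y1 - y0) * 4 + (q1 - q0) + 1


def log_growth(series):
    """Growth rates as first differences of logs: log(z_t) - log(z_{t-1})."""
    return [math.log(b) - math.log(a) for a, b in zip(series[:-1], series[1:])]


print(quarters_between(2003, 1, 2019, 4))  # 68
print(len(log_growth([100.0, 110.0, 121.0])))  # 2 growth rates from 3 levels
```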

The usual residual specification tests for serial correlation, heteroskedasticity and Gaussianity are performed. Figure 5 shows the residual cross-correlation matrices of the VAR model for Germany. The dashed lines in the plots indicate the approximate two-standard-error limits of the cross-correlations. Based on the plots, the residuals of the model do not show any strong serial or cross-correlation. Figure 6 presents the residual cross-correlation matrices of the VAR model for France. In this case too, most of the correlations in the residual series are negligible.

To test for the absence of serial correlation in the residuals of the estimated VAR models, we use the multivariate Portmanteau test (see Lütkepohl 2005, p. 171). The multivariate ARCH-LM test is used to test for the absence of heteroskedasticity up to lag 4, and the Doornik and Hansen (2008) normality test is applied to the residuals of each VAR model. The diagnostic results are presented in Table 7.

The overall conclusion is that both models adequately capture the association between the variables in time.

For these models we obtain \({\hat{\mathcal{D}}}=0.40\), and the Bootstrap test based on the max rule, with 5000 Bootstrap redrawings using the Politis and Romano (1994) Stationary Bootstrap algorithm, gives a p-value \(pval_B\times 100=81.3\). Thus we do not reject the null hypothesis of zero distance. The Bootstrap distribution of \(\tilde{\mathcal{D}}_x\), with a superimposed Gamma distribution with estimated shape 8.2 and scale 0.07, is shown in Fig. 7. For this distribution, the Locke test statistic for the null hypothesis of a Gamma distribution is \(-1.17\) with p-value 0.25, so the null is not rejected. Very similar results are obtained for the distribution of \(\tilde{\mathcal{D}}_y\).
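The text does not state how the Gamma parameters superimposed on the Bootstrap distribution were estimated. A simple option is the method of moments, sketched below in Python as an assumption of ours with a hypothetical function name. For a Gamma distribution with shape \(k\) and scale \(s\), the mean is \(ks\) and the variance is \(ks^2\). Hence \(k = \bar{m}^2/v\) and \(s = v/\bar{m}\), where \(\bar{m}\) and \(v\) are the sample mean and variance of the bootstrap distances. The check uses simulated draws, not the paper's bootstrap output.

```python
import random
import statistics


def gamma_moments_fit(draws):
    """Method-of-moments Gamma fit: shape = mean^2 / var, scale = var / mean."""
    m = statistics.mean(draws)
    v = statistics.variance(draws)
    return m * m / v, v / m


# Simulated draws; random.gammavariate(alpha, beta) uses shape alpha and scale beta
rng = random.Random(0)
draws = [rng.gammavariate(8.2, 0.07) for _ in range(20000)]
shape, scale = gamma_moments_fit(draws)
print(round(shape, 2), round(scale, 4))
```

Maximum likelihood would be the usual alternative. The moment version is shown only because it needs no numerical optimisation.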

Summarizing, the distance between the two VAR models is not significantly different from zero. A similar result has been obtained by Nebot et al. (2019). Our conclusion is also consistent with other studies emphasizing both that France and Germany are at the core of European convergence (see, for example, Lee and Mercurelli 2014) and that the largest EU economies have quite homogeneous business cycles (see, among others, Mihov 2001; Rafiq and Mallick 2008).

The empirical study verifies that, in the observed period, the two countries satisfy a necessary condition for convergence. However, this evidence cannot be considered a sufficient condition, since the study does not allow us to exclude the presence of forces in the respective labor and output markets that may hinder this process of convergence.

6 Conclusions

Given their economic weight in the euro area, it is important to identify and understand the features, similarities and divergences of the French and German economies. In this paper, we proposed an empirical methodology to investigate how much the relationship between GDP and unemployment growth rates differs dynamically between France and Germany over the period 2003–2019. In particular, the dynamic linkages between unemployment and GDP growth rates, as expressed by Okun’s law, have been compared by computing the distance proposed by Di Iorio and Triacca (2018) between the bivariate unemployment-GDP VARs estimated for the two countries. We tested the null hypothesis of zero distance using a new Bootstrap procedure and concluded that it cannot be rejected. In economic terms, the dynamic interrelationships between unemployment and GDP growth rates in France and Germany do not appear to be significantly different in the observed period. However, this evidence cannot be considered a sufficient condition for convergence, since the considered VAR model does not allow us to exclude that other forces in output or labour markets may hinder the process of convergence. Our conclusion is also consistent with other studies emphasizing that both France and Germany are at the core of European convergence and that the two biggest EU economies have quite homogeneous business cycles.

Availability of data and material

Eurostat datasets. For GDP: http://appsso.eurostat.ec.europa.eu/nui/show.do?dataset=namq_10_gdp&lang=en (namq_10_gdp, last accessed Feb 27, 2021). For total unemployment rates: http://appsso.eurostat.ec.europa.eu/nui/show.do?dataset=lfsa_urgan&lang=en (lfsq_urgan, last accessed Feb 27, 2021).

Code availability

Gretl custom code

Notes

version 2020c.

http://appsso.eurostat.ec.europa.eu/nui/show.do?dataset=namq_10_gdp&lang=en; last accessed Feb 27, 2021.

http://appsso.eurostat.ec.europa.eu/nui/show.do?dataset=lfsa_urgan&lang=en; last accessed Feb 27, 2021.

References

Caiado J, Crato N, Peña D (2006) A periodogram-based metric for time series classification. Comput Stat Data Anal 50:2668–2684

Christopoulos D (2004) The relationship between output and unemployment: evidence from Greek regions. Papers Reg Sci 83:611–620

Corduas M (1996) Uno studio sulla distribuzione asintotica della metrica autoregressiva [A study of the asymptotic distribution of the autoregressive metric]. Statistica LVI:321–332

Corduas M (2000) La metrica autoregressiva tra modelli ARIMA: una procedura operativa in linguaggio GAUSS [The autoregressive metric between ARIMA models: an operational procedure in the GAUSS language]. Quaderni di Statistica 2:1–37

Corduas M, Piccolo D (2008) Time series clustering and classification by the autoregressive metric. Comput Stat Data Anal 52:1860–1862

Di Iorio F, Triacca U (2013) Testing for Granger non-causality using the autoregressive metric. Econ Model 33:120–125

Di Iorio F, Triacca U (2018) Distance between VARMA models and its application to spatial differences analysis in the relationship GDP-unemployment growth rate in Europe. In: Rojas I, Pomares H, Valenzuela O (eds) Time series analysis and forecasting. Selected contributions from ITISE 2017. Springer, pp 203–215, ISBN:978-3-319-96944-2

Doornik JA, Hansen H (2008) An omnibus test for univariate and multivariate normality. Oxford Bull Econ Stat 70:927–939

Frühwirth-Schnatter S (2011) Panel data analysis: a survey on model-based clustering of time series. Adv Data Anal Classif 5:251–280

Galeano P, Peña D (2000) Multivariate analysis in vector time series. Resenhas 4:383–404

Lee J (2000) The robustness of Okun’s law: evidence from OECD countries. J Macroecon 22:331–356

Lee KS, Mercurelli F (2014) Convergence in the core Euro Zone under the global financial crisis. J Econ Int 29:20–63

Liao T (2005) Clustering time series data: a survey. Pattern Recogn 38:1857–1874

Locke C (1976) A test for the composite hypothesis that a population has a gamma distribution. Commun Stat Theory Methods A5:351–364

Lütkepohl H (2005) New introduction to multiple time series analysis. Springer, Berlin

MacKinnon JG (2002) Bootstrap inference in econometrics. Can J Econ 35:615–645

Maharaj EA (1996) A significance test for classifying ARMA models. J Stat Comput Simul 54(4):305–331

Malley J, Molana H (2008) Output, unemployment and Okun’s law: some evidence from the G7. Econ Lett 101:113–115

Martin RJ (2000) A metric for ARMA processes. IEEE Trans Signal Process 48:1164–1170

Mathai AM (1982) Storage capacity of a dam with gamma type inputs. Ann Inst Stat Math 34:591–597

Mihov I (2001) Monetary policy implementation and transmission in the European Monetary Union. Econ Policy 16:370–406

Nebot C, Beyaert A, Garcia-Solanes J (2019) New insights into the nonlinearity of Okun’s law. Econ Model 82:202–210

Piccolo D (1990) A distance measure for classifying ARIMA models. J Time Ser Anal 11:153–164

Politis DN, Romano JP (1994) The stationary bootstrap. J Am Stat Assoc 89:1303–1313

Prachowny MFJ (1993) Okun’s law: theoretical foundations and revised estimates. Rev Econ Stat 75:331–336

Rafiq MS, Mallick SK (2008) The effect of monetary policy on output in EMU3: a sign restriction approach. J Macroecon 30:1756–1791

Tapinos A, Mendes P (2013) A method for comparing multivariate time series with different dimensions. PLoS ONE 8(2):e54201

Triacca U (2016) Measuring the distance between sets of ARMA models. Econometrics 4:32

Watts M, Mitchell W (1991) Alleged instability of the Okun’s law relationship in Australia: an empirical analysis. Appl Econ 23(12):1829–1838

Zellner A, Palm F (1974) Time series analysis and simultaneous equation econometric models. J Econ 2:17–54

Funding

Open access funding provided by Università degli Studi di Napoli Federico II within the CRUI-CARE Agreement.


Ethics declarations

Conflict of interest

Not applicable

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

We benefited from discussions with Domenico Piccolo and Stefano Fachin. We also thank the anonymous Reviewer and the Editor for their constructive comments, which helped us to improve the manuscript. The usual disclaimer applies.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Di Iorio, F., Triacca, U. A comparison between VAR processes jointly modeling GDP and Unemployment rate in France and Germany. Stat Methods Appl 31, 617–635 (2022). https://doi.org/10.1007/s10260-021-00594-2

DOI: https://doi.org/10.1007/s10260-021-00594-2