Abstract

We propose a weighted stochastic block model (WSBM) that extends the stochastic block model to the important case of weighted edges. We consider maximum likelihood and variational approaches to parameter estimation in the WSBM and establish the consistency of the resulting estimators. We also address the problem of choosing the number of classes in a WSBM. The proposed model is applied to simulated data and to an illustrative data set.


1 Introduction

Networks are used in many scientific disciplines to represent interactions among objects of interest. For example, in the social sciences, a network typically represents social ties between actors. In biological sciences, a network can represent interactions between proteins.

The stochastic block model (SBM) (Holland et al. 1983; Snijders and Nowicki 1997) is a popular generative model that partitions vertices into latent classes. Conditional on the latent class allocations, the connection probability between two vertices depends only on the latent classes to which the two vertices belong. Many extensions of the classical SBM have been proposed, including the degree-corrected SBM (Karrer and Newman 2011; Peng and Carvalho 2016), the mixed membership SBM (Airoldi et al. 2008) and the overlapping SBM (Latouche et al. 2011).

The SBM and many of its variants are usually restricted to binary (Bernoulli) networks. However, many binary networks are obtained by thresholding a weighted relationship (Ghasemian et al. 2016), which discards potentially valuable information. Although most of the literature has focused on binary networks, there is growing interest in weighted graphs (Barrat et al. 2004; Newman 2004; Peixoto 2018).

In particular, a number of clustering methods have been proposed for weighted graphs, both algorithm based and model based. Algorithm-based methods for clustering weighted graphs can be further divided into two classes: algorithms that do not explicitly optimize any criterion (Pons and Latapy 2005; von Luxburg 2007) and those that directly optimize a criterion (Clauset et al. 2004; Stouffer and Bascompte 2011). Model-based methods (Mariadassou et al. 2010; Aicher et al. 2013, 2015; Ludkin 2020) attempt to take into account the random variability in the data. A recent review of graph clustering methods is given by Leger et al. (2014).

Mariadassou et al. (2010) present a Poisson mixture random graph model for integer-valued networks and propose a variational inference approach for parameter estimation; the model can account for covariates via a regression model. In Zanghi et al. (2010), a mixture modelling framework is considered for random graphs with discrete or continuous edges, where the edge distribution is assumed to belong to an exponential family. Aicher et al. (2013) proposed a general class of weighted stochastic block models for dense graphs in which edge weights are generated from an exponential family distribution. Their construction produces complete graphs, in which every pair of vertices is connected by some real-valued weight. Since most real-world networks are sparse, this model cannot be applied directly. To address this shortcoming, Aicher et al. (2015) extend the work of Aicher et al. (2013) by modelling edge existence with a Bernoulli distribution and edge weights with an exponential family distribution. The contributions of the edge-existence and edge-weight distributions to the likelihood function are then combined via a simple tuning parameter. However, their construction does not result in a generative model, and it is not obvious how to simulate networks from it. More recently, Ludkin (2020) presents a generalization of the SBM that allows arbitrary edge-weight distributions, together with a reversible jump Markov chain Monte Carlo sampler for estimating the parameters and the number of blocks. However, using a continuous probability distribution to model the edge weights implies that the resulting graph is complete, that is, every edge is present. This assumption is unrealistic in many applications, in which a certain proportion of the real-valued edge weights are exactly 0. Haj et al. (2020) present a binomial SBM for weighted graphs and propose a variational expectation maximization algorithm for parameter estimation.

In this paper, we propose a weighted stochastic block model (WSBM) with gamma-distributed weights, which captures the information in the weights directly through a generative model. Both maximum likelihood and variational methods are considered for parameter estimation, and consistency results are derived for both. We also address the problem of choosing the number of classes using the integrated completed likelihood (ICL) criterion (Biernacki et al. 2000). The proposed model and inference methodology are applied to an illustrative data set.

2 Model specification

In this section, we present the weighted stochastic block model in detail and introduce the main notations and assumptions.

We let \(\varOmega = ({\mathcal {V}}, {\mathcal {X}}, {\mathcal {Y}})\) denote the set of directed weighted random graphs, where \({\mathcal {V}} = \mathbb {N}\) is the countable set of vertices, \( {{\mathcal {X}}} = \{ 0, 1 \}^{\mathbb {N} \times \mathbb {N}}\) is the set of edge-existence adjacency matrices, and \( {\mathcal {Y}} = \mathbb {R}_{+}^{ \mathbb {N} \times \mathbb {N} } \) is the set of weighted adjacency matrices. Given a random adjacency matrix \(X = \{ X_{ij} \}_{i,j \in \mathbb {N}}\), \(X_{ij}=1\) if an edge exists from vertex i to vertex j and \(X_{ij}=0\) otherwise. The associated weighted random adjacency matrix \(Y = \{ Y_{ij} \}_{i,j \in \mathbb {N}}\) satisfies, for \(i \ne j\), \(Y_{ij} > 0\) if \(X_{ij}=1\) and \(Y_{ij}=0\) if \(X_{ij}=0\). Let \(\mathbb {P}\) be a probability measure on \(\varOmega \).

2.1 Generative model

We now describe how to generate a random graph (V, X, Y) with n vertices from \( \varOmega \).

-

Let \(Z_{[n]}=(Z_{1}, \ldots , Z_{n})\) be the vector of latent block allocations for the vertices, and set \(\theta =(\theta _{1}, \ldots , \theta _{Q})\) with \(\sum _{q} \theta _{q}=1\). For each vertex \(v_{i}\), draw its block label \(Z_{i} \in \{1, \ldots , Q\}\) from a multinomial distribution

$$\begin{aligned} Z_{i} \sim {{\mathcal {M}}}(1; \theta _{1}, \ldots , \theta _{Q}) \text{. } \end{aligned}$$

-

Let \(\pi = (\pi _{ql})_{q,l=1}^{Q}\) be a \(Q \times Q\) matrix with entries in [0, 1]. Conditional on the block allocations \(Z_{[n]}\), the entries \(X_{ij}\), \(i \ne j\), of the edge-existence adjacency matrix \(X_{[n]}\) are generated independently from a Bernoulli distribution

$$\begin{aligned} X_{ij} | Z_{i}=q, Z_{j}= l \sim {{\mathcal {B}}}(\pi _{ql}) \text{. } \end{aligned}$$

-

Let \(\alpha = (\alpha _{ql})_{q,l=1}^{Q} \) and \( \beta =(\beta _{ql})_{q,l=1}^{Q}\) be \(Q \times Q\) matrices with positive real entries. Conditional on the latent block allocations \(Z_{[n]}\) and the edge-existence adjacency matrix \(X_{[n]}\), the entries \(Y_{ij}\), \(i \ne j\), of the weighted adjacency matrix \(Y_{[n]}\) are generated independently from

$$\begin{aligned} Y_{ij} | X_{ij} = 1, Z_{i} = q, Z_{j} = l\sim & {} \text{ Ga }(\alpha _{ql}, \beta _{ql}) \text{, } \\ Y_{ij} | X_{ij} = 0, Z_{i} = q, Z_{j} = l\sim & {} \delta _{\{0\}} \text{, } \end{aligned}$$

where \(\text{ Ga }(\cdot ,\cdot )\) denotes the gamma distribution with shape and rate parameters, and \( \delta _{\{0\}}\) denotes the Dirac measure (point mass) at 0.

The generative framework described above is a straightforward extension of the binary stochastic block model in which a positive weight is generated from a gamma distribution for each existing edge. In particular, (X, Z) is a realization of the binary directed SBM. The gamma distribution is chosen for its flexibility: depending on its shape parameter, its density is monotonically decreasing (shape at most 1) or unimodal with a positive mode (shape greater than 1).
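The three generation steps can be written as a short sampler. The sketch below assumes NumPy, codes the class labels as 0, …, Q−1, and uses a hypothetical function name `sample_wsbm`; the parameter values in the usage line are illustrative only, not those of Sect. 7. Because β is a rate, NumPy's gamma sampler (which takes a scale) is called with scale 1/β.

```python
import numpy as np

def sample_wsbm(n, theta, pi, alpha, beta, rng=None):
    """Draw (z, X, Y) from the gamma WSBM of Sect. 2.1 (directed, no self-loops).

    theta has length Q; pi, alpha, beta are Q x Q. beta is a rate, so NumPy's
    gamma sampler (parameterized by scale) is called with scale = 1 / beta.
    """
    rng = np.random.default_rng(rng)
    theta = np.asarray(theta, dtype=float)
    pi, alpha, beta = (np.asarray(m, dtype=float) for m in (pi, alpha, beta))
    # Step 1: latent labels Z_i ~ M(1; theta), coded 0, ..., Q-1
    z = rng.choice(len(theta), size=n, p=theta)
    # Block-pair parameters for every ordered pair (i, j)
    rows, cols = z[:, None], z[None, :]
    P, A, B = pi[rows, cols], alpha[rows, cols], beta[rows, cols]
    # Step 2: edge existence X_ij ~ Bernoulli(pi_{z_i z_j}), no self-loops
    X = (rng.random((n, n)) < P).astype(int)
    np.fill_diagonal(X, 0)
    # Step 3: gamma weight on each existing edge, point mass at 0 otherwise
    Y = np.where(X == 1, rng.gamma(shape=A, scale=1.0 / B), 0.0)
    return z, X, Y

# Illustrative two-class example
z, X, Y = sample_wsbm(200, [0.5, 0.5],
                      pi=[[0.6, 0.1], [0.1, 0.6]],
                      alpha=[[2.0, 1.0], [1.0, 2.0]],
                      beta=[[1.0, 0.5], [0.5, 1.0]], rng=1)
```

Drawing all gamma variates and then masking with `np.where` keeps the code vectorized; the draws on non-edges are simply discarded.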

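The log-likelihood of the observations \(X_{[n]}\) and \(Y_{[n]}\) is given by

$$\begin{aligned} {{\mathcal {L}}}(X_{[n]}, Y_{[n]}; \theta , \pi , \alpha , \beta ) = \log \Big ( \sum _{z_{[n]} \in \{1, \ldots , Q\}^{n}} e^{{{\mathcal {L}}}_{1}(X_{[n]}, Y_{[n]}; z_{[n]}, \pi , \alpha , \beta )} \, \mathbb {P} \{ Z_{[n]} = z_{[n]} \} \Big ) \text{, } \qquad (1) \end{aligned}$$

where the sum is over all possible latent block allocations, \(\mathbb {P} \{ Z_{[n]} = z_{[n]} \} = \prod _{i=1}^{n} \theta _{z_{i}} \) is the probability of the latent block allocation \(z_{[n]}\), and

$$\begin{aligned} {{\mathcal {L}}}_{1}(X_{[n]}, Y_{[n]}; z_{[n]}, \pi , \alpha , \beta ) = \sum _{i \ne j} \Big \{ X_{ij} \log \pi _{z_{i} z_{j}} + (1 - X_{ij}) \log (1 - \pi _{z_{i} z_{j}}) + X_{ij} \log f(Y_{ij}; \alpha _{z_{i} z_{j}}, \beta _{z_{i} z_{j}}) \Big \} \end{aligned}$$

is the complete data log-likelihood, where \( f(\cdot ;a,b)\) is the gamma probability density function with shape parameter a and rate parameter b.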

2.2 Assumptions

We present several assumptions needed for the identifiability and consistency of the maximum likelihood estimates. The following four assumptions were introduced in Celisse et al. (2012) and are also required in this paper.

Assumption 1

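For every \(q \ne q^{'}\), there exists \(l \in \{1, \ldots , Q\} \) such that

$$\begin{aligned} \pi _{ql} \ne \pi _{q^{'}l} \quad \text{ or } \quad \pi _{lq} \ne \pi _{lq^{'}} \text{. } \end{aligned}$$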

Assumption 2

There exists \( \zeta \in (0,1)\) such that for all \( (q,l) \in \{1, \ldots , Q \}^{2} \),

$$\begin{aligned} \pi _{ql} \in [\zeta , 1 - \zeta ] \text{. } \end{aligned}$$

Assumption 3

There exists \(0< \gamma < 1/Q \) such that for all \( q \in \{1, \ldots , Q\}\),

$$\begin{aligned} \theta _{q} \in [\gamma , 1 - \gamma ] \text{. } \end{aligned}$$

Assumption 4

There exists \(0< \gamma < 1/Q \) and \( n_{0} \in \mathbb {N}^{*} \) such that for all \( q \in \{1, \ldots , Q\} \) and all \( n \ge n_{0} \),

$$\begin{aligned} \frac{N_{q}(z^{*}_{[n]})}{n} \ge \gamma \text{, } \end{aligned}$$

where \( N_{q}(z^{*}_{[n]} ) = | \{ 1 \le i \le n : z^{*}_{i} = q \} | \) and \(z^{*}_{[n]}\) is any realized block allocation under the WSBM.

(A1) requires that no two classes have the same connectivity probabilities; if it is violated, the model has more classes than necessary and is non-identifiable. (A2) requires that the connectivity probability between any two classes lies in the closed interval \([\zeta , 1-\zeta ] \subset (0,1)\). This assumption is slightly more restrictive than Assumption 2 of Celisse et al. (2012) in that we do not consider the boundary cases \(\pi _{ql} \in \{0,1\}\), which require special treatment and are not pursued in this paper. (A3) ensures that, with high probability, no class is empty, while (A4) is the empirical version of (A3). We note that (A4) is satisfied asymptotically under the generative framework in Sect. 2.1, since the block allocations are drawn from a multinomial distribution.

In addition to the four assumptions above, we also have the following constraints on the gamma parameters.

Assumption 5

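For every \(q \ne q^{'}\), there exists \( l \in \{1, \ldots , Q \}\) such that

$$\begin{aligned} (\alpha _{ql}, \beta _{ql}) \ne (\alpha _{q^{'}l}, \beta _{q^{'}l}) \quad \text{ or } \quad (\alpha _{lq}, \beta _{lq}) \ne (\alpha _{lq^{'}}, \beta _{lq^{'}}) \text{. } \end{aligned}$$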

(A5) requires that no two classes have the same weight distribution. This assumption is the exact counterpart of (A1).

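The log-likelihood function (1) is degenerate, which prevents the direct estimation of the parameters \(\theta , \pi , \alpha , \beta \). To see this, recall that the probability density function of a gamma distribution \(\text{ Ga }(a,b)\) with shape a and rate b is

$$\begin{aligned} f(y;a,b) = \frac{b^{a}}{\Gamma (a)} \, y^{a-1} e^{-by} \text{, } \qquad y > 0 \text{. } \end{aligned}$$

By Stirling's formula, \(\Gamma (a) = \sqrt{2\pi /a}\, (a/e)^{a} \{1 + o(1)\}\) as \(a \rightarrow \infty \), so that

$$\begin{aligned} f(y;a,b) = \sqrt{\frac{a}{2\pi }} \; \frac{1}{y} \Big ( \frac{e\, b y}{a} \Big )^{a} e^{-by} \{1 + o(1)\} \text{. } \end{aligned}$$

Setting \(b = a/y\), that is, fixing the mean \(a/b\) of the gamma distribution at the value y,

$$\begin{aligned} f(y;a,a/y) = \sqrt{\frac{a}{2\pi }} \; \frac{1}{y} \{1 + o(1)\} \text{. } \end{aligned}$$

Therefore, letting \(a \rightarrow \infty \) while keeping \(a/b = y\), we have \(f(y;a,b) \rightarrow \infty \). One can therefore show that the log-likelihood function is unbounded above. To avoid this degeneracy, we compactify the parameter space, that is, we restrict it to a compact subset that contains the true parameters. This leads to the following assumption.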
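The blow-up can be checked numerically. This plain-Python sketch (helper names are illustrative) evaluates log f(y; a, b) in the shape-rate parameterization with the mean held fixed, b = a/y, and compares it with the Stirling-based leading term ½ log(a/(2π)) − log y.

```python
import math

def gamma_logpdf(y, a, b):
    """log f(y; a, b) for the gamma density with shape a and rate b."""
    return a * math.log(b) - math.lgamma(a) + (a - 1) * math.log(y) - b * y

def log_density_at_mean(y, a):
    """Log-density at y when the mean a / b is held fixed at y (b = a / y)."""
    return gamma_logpdf(y, a, a / y)

def stirling_approx(y, a):
    """Leading-order approximation 0.5 * log(a / (2 pi)) - log(y)."""
    return 0.5 * math.log(a / (2 * math.pi)) - math.log(y)

y = 2.0  # an observed positive weight
for a in [1.0, 10.0, 100.0, 1e3, 1e4]:
    print(f"a={a:>8.0f}  log f={log_density_at_mean(y, a):8.4f}  "
          f"approx={stirling_approx(y, a):8.4f}")
```

The log-density grows like ½ log a without bound, which is why the shape and rate are confined to a compact box below.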

Assumption 6

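There exist \( 0< \alpha _{c}< \alpha _{C} < \infty \) and \( 0< \beta _{c}< \beta _{C} < \infty \) such that for all \( (q,l) \in \{ 1, \ldots , Q \}^{2}\),

$$\begin{aligned} \alpha _{ql} \in [\alpha _{c}, \alpha _{C}] \quad \text{ and } \quad \beta _{ql} \in [\beta _{c}, \beta _{C}] \text{. } \end{aligned}$$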

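With this assumption, together with (A2) and (A3), the parameters range over a compact set. For any fixed sample size n and any observed \((X_{[n]}, Y_{[n]})\), the log-likelihood is a continuous function of the parameters on this set and is therefore bounded.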

2.3 Identifiability

Sufficient conditions for the identifiability of the binary SBM with two classes were first obtained by Allman et al. (2009). Celisse et al. (2012) show that the SBM parameters are identifiable up to a permutation of the class labels under the conditions that \( \pi \theta \) has distinct coordinates and \(n \ge 2Q\). The condition on \(\pi \theta \) is mild, since the set of parameters violating it has Lebesgue measure 0. The identifiability of the weighted SBM is more challenging: the only known result (Section 4 of Allman et al. (2011)) requires all entries of \((\pi , \alpha , \beta )\) to be distinct. We note that the assumptions in the previous section are necessary, but not necessarily sufficient, for the identifiability of the parameters.

3 Asymptotic recovery of class labels

We study the posterior probability distribution of the class labels \(Z_{[n]}\) given the random adjacency matrix \(X_{[n]}\) and weight matrix \(Y_{[n]}\), which is denoted by \(\mathbb {P}(Z_{[n]}|X_{[n]},Y_{[n]})\). Since \(X_{[n]}\) and \(Y_{[n]}\) are random, \(\mathbb {P}(Z_{[n]}|X_{[n]},Y_{[n]})\) is also random.

Let \( P^{*}(X_{[n]},Y_{[n]}) := \mathbb {P}(X_{[n]},Y_{[n]}|Z_{[n]}=z_{[n]}^{*}) \) be the true conditional distribution of \((X_{[n]},Y_{[n]})\), which depends on the true parameters \((\theta ^{*},\pi ^{*},\alpha ^{*},\beta ^{*})\). We study the rate at which the posterior probability of the true labels, \(\mathbb {P}(Z_{[n]} = z^{*}_{[n]}|X_{[n]},Y_{[n]})\), converges to 1 with respect to \(P^{*}\).

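The matrices \(\pi , \alpha , \beta \) may be left unchanged when both their rows and their columns are permuted according to some permutation \(\sigma : \{1,\ldots ,Q\} \rightarrow \{1,\ldots ,Q\}\). Let \(\pi ^{\sigma }, \alpha ^{\sigma }, \beta ^{\sigma }\) be the matrices defined by

$$\begin{aligned} \pi ^{\sigma }_{ql} = \pi _{\sigma (q)\sigma (l)} \text{, } \quad \alpha ^{\sigma }_{ql} = \alpha _{\sigma (q)\sigma (l)} \text{, } \quad \beta ^{\sigma }_{ql} = \beta _{\sigma (q)\sigma (l)} \text{, } \qquad q, l \in \{1, \ldots , Q\} \text{, } \end{aligned}$$

and define the set

$$\begin{aligned} \varSigma = \{ \sigma : \pi ^{\sigma } = \pi , \ \alpha ^{\sigma } = \alpha , \ \beta ^{\sigma } = \beta \} \text{. } \end{aligned}$$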

Two vectors of class labels z and \(z^{'}\) are equivalent if there exists \(\sigma \in \varSigma \) such that \( z^{'}_{i} = \sigma (z_{i}) \text{, } \) for all i. We let [z] denote the equivalence class of z and will omit the square-brackets in the equivalence class notation as long as no confusion arises.
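For small Q, the set Σ and the equivalence of two label vectors can be checked by brute force over all Q! permutations. The sketch below assumes NumPy and 0-based labels; the function names are hypothetical, and the matrices are compared with a numerical tolerance (`np.allclose`) rather than exact equality.

```python
import itertools
import numpy as np

def invariant_permutations(pi, alpha, beta):
    """All sigma with M[sigma(q), sigma(l)] == M[q, l] for M in (pi, alpha, beta)."""
    mats = [np.asarray(m, dtype=float) for m in (pi, alpha, beta)]
    Q = mats[0].shape[0]
    result = []
    for sigma in itertools.permutations(range(Q)):
        s = np.array(sigma)
        # m[np.ix_(s, s)][q, l] == m[s[q], s[l]], i.e. the permuted matrix m^sigma
        if all(np.allclose(m[np.ix_(s, s)], m) for m in mats):
            result.append(s)
    return result

def equivalent(z, z_prime, pi, alpha, beta):
    """True if z'_i = sigma(z_i) for all i, for some sigma in Sigma."""
    z, z_prime = np.asarray(z), np.asarray(z_prime)
    return any(np.array_equal(s[z], z_prime)
               for s in invariant_permutations(pi, alpha, beta))
```

With symmetric two-class parameters, swapping the labels leaves π, α and β unchanged, so z = (0, 0, 1) and z′ = (1, 1, 0) are equivalent; if π is not symmetric under the swap, only the identity remains in Σ.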

The following result extends Theorem 3.1 of Celisse et al. (2012) to the case of WSBM.

Theorem 1

Under assumptions (A1)–(A6), for every \(t>0\),

uniformly with respect to \(z^{*}\), and for some \( \kappa > 0\) depending only on \(\pi ^{*}, \alpha ^{*}, \beta ^{*}\) but not on \(z^{*}\). Here \(z^{*} = (z_i^{*})_{i=1}^{\infty }\) with \(z_i^{*} \in \{1, \ldots , Q\}\). Furthermore, \(P^{*}\) can be replaced by \(\mathbb {P}\) under assumptions (A1)–(A3) and (A5)–(A6).

4 Maximum likelihood estimation of WSBM parameters

For the binary SBM, consistency of parameter estimation has been shown for profile likelihood maximization (Bickel and Chen 2009), spectral clustering (Rohe et al. 2011), a method of moments approach (Bickel et al. 2011), a method based on empirical degrees (Channarond et al. 2012), and others (Choi et al. 2012). Consistency of both maximum likelihood estimation and the variational approximation is established in Celisse et al. (2012) and Bickel et al. (2013), and asymptotic normality is also established in Bickel et al. (2013). Abbe (2018) reviews recent developments in the stochastic block model and community detection.

Ambroise and Matias (2012) propose a general class of sparse and weighted SBMs in which the edge distribution may have any parametric form, and study the consistency and convergence rates of the various estimators considered in their paper. However, their model requires the edge existence parameter either to be constant across the graph, that is, \(\pi _{ql} = \pi \) for all q, l, or to take the form \(\pi _{ql} = a_1 I_{q =l} + a_2 I_{q \ne l}\), where \(I_{\cdot }\) is the indicator function and \(a_1 \ne a_2\). Furthermore, they assume that, conditional on the block assignments, the edge weight \(Y_{ij}|Z_{i}=q, Z_{j}=l\) follows a parametric distribution with a single parameter \(\theta _{ql}\). They further impose the restriction that

These assumptions are more restrictive than those imposed in this paper. Jog and Loh (2015) study the problem of characterizing the boundary between success and failure of the MLE for exact recovery when edge weights are drawn from discrete distributions. More recently, Brault et al. (2020) study the consistency and asymptotic normality of the MLE and variational estimators for the latent block model, which is a generalization of the SBM. However, the model considered in Brault et al. (2020) is restricted to the dense setting and requires the observations in the data matrix to follow univariate exponential family distributions.

This section addresses the consistency of the MLE for the WSBM. In particular, we extend the results obtained in the pioneering paper of Celisse et al. (2012) to weighted graphs, and our proof closely follows their proof of consistency of the MLE. The consistency proofs for \( ( \pi , \alpha , \beta ) \) and for \( \theta \) require different treatments, since there are \(n(n-1)\) edges but only n vertices. The following result establishes the consistency of the MLE of \( (\pi , \alpha , \beta )\).

Theorem 2

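Assume that (A1), (A2), (A3), (A5) and (A6) hold. Define the MLE of \( ( \theta ^{*}, \pi ^{*}, \alpha ^{*}, \beta ^{*})\) by

$$\begin{aligned} (\hat{\theta }, \hat{\pi }, \hat{\alpha }, \hat{\beta }) = \mathop {\mathrm {arg\,max}}_{(\theta , \pi , \alpha , \beta )} {{\mathcal {L}}}(X_{[n]}, Y_{[n]}; \theta , \pi , \alpha , \beta ) \text{. } \end{aligned}$$

Then for any metric \( d(\cdot ,\cdot ) \) on \( (\pi , \alpha , \beta ) \),

$$\begin{aligned} d\big ( (\hat{\pi }, \hat{\alpha }, \hat{\beta }), (\pi ^{*}, \alpha ^{*}, \beta ^{*}) \big ) \xrightarrow [n \rightarrow \infty ]{\mathbb {P}} 0 \text{. } \end{aligned}$$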

Under an additional assumption on the rate of convergence of the estimators \( (\hat{\pi }, \hat{\alpha }, \hat{\beta }) \) of \( (\pi , \alpha , \beta )\), the consistency of \( \hat{\theta } \) can also be established.

Theorem 3

Let \( (\hat{\theta }, \hat{\pi }, \hat{\alpha }, \hat{\beta } ) \) denote the MLE of \( (\theta ^{*}, \pi ^{*}, \alpha ^{*}, \beta ^{*}) \) and assume that \( || \hat{\pi } - \pi ^{*} ||_{\infty } = o_{\mathbb {P}}( \sqrt{\log n}/n ) \), \( || \hat{\alpha } - \alpha ^{*} ||_{\infty } = o_{\mathbb {P}}( \sqrt{\log n}/n ) \) and \( || \hat{\beta } - \beta ^{*} ||_{\infty } = o_{\mathbb {P}}( \sqrt{\log n}/n ) \). Then

$$\begin{aligned} d(\hat{\theta }, \theta ^{*}) \xrightarrow [n \rightarrow \infty ]{\mathbb {P}} 0 \end{aligned}$$

for any metric d on \(\mathbb {R}^{Q}\).

5 Variational estimators

Direct maximization of the log-likelihood function is intractable except for very small graphs, since it involves a sum over \(Q^{n}\) terms. In practice, approximate algorithms such as Markov chain Monte Carlo (MCMC) and variational inference are often used for parameter inference. For the SBM, both MCMC and variational inference approaches have been proposed (Snijders and Nowicki 1997; Daudin et al. 2008). Variational inference algorithms have also been developed for the mixed membership SBM (Airoldi et al. 2008), the overlapping SBM (Latouche et al. 2011), and the weighted SBM proposed in Aicher et al. (2013). This section develops a variational inference algorithm for the WSBM, which can be considered a natural extension of the algorithm proposed in Daudin et al. (2008) for the SBM.
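To make the \(Q^{n}\) cost concrete, the log-likelihood (1) can be evaluated exactly on a toy graph by enumerating every label vector. This is a plain-Python sketch with 0-based labels and hypothetical helper names; with n = 20 and Q = 3 the enumeration would already need about 3.5 × 10^9 terms.

```python
import itertools
import math

def log_f(y, a, b):
    """Gamma log-density with shape a and rate b."""
    return a * math.log(b) - math.lgamma(a) + (a - 1) * math.log(y) - b * y

def complete_loglik(X, Y, z, pi, alpha, beta):
    """L_1(X, Y; z, pi, alpha, beta): sum over ordered pairs i != j."""
    total = 0.0
    n = len(z)
    for i in range(n):
        for j in range(n):
            if i == j:
                continue
            q, l = z[i], z[j]
            if X[i][j] == 1:
                total += math.log(pi[q][l]) + log_f(Y[i][j], alpha[q][l], beta[q][l])
            else:
                total += math.log(1.0 - pi[q][l])
    return total

def exact_loglik(X, Y, theta, pi, alpha, beta):
    """log sum_z exp(L_1(z)) P(Z = z), enumerating all Q**n label vectors."""
    n, Q = len(X), len(theta)
    terms = [sum(math.log(theta[q]) for q in z)
             + complete_loglik(X, Y, z, pi, alpha, beta)
             for z in itertools.product(range(Q), repeat=n)]
    m = max(terms)  # log-sum-exp for numerical stability
    return m + math.log(sum(math.exp(t - m) for t in terms))
```

A quick sanity check: if all classes share the same (π, α, β), every label vector has the same L₁, so the exact log-likelihood reduces to L₁ for any θ.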

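The variational method consists of approximating \(\mathbb {P}(Z_{[n]}= \cdot | X_{[n]}, Y_{[n]})\) by a product of n multinomial distributions. Let \( {{\mathcal {D}}}_{n}\) denote the set of product multinomial distributions

$$\begin{aligned} {{\mathcal {D}}}_{n} = \Big \{ D_{\tau _{[n]}} : D_{\tau _{[n]}}(Z_{[n]} = z_{[n]}) = \prod _{i=1}^{n} \prod _{q=1}^{Q} \tau _{iq}^{I_{\{ z_{i} = q \}}} \Big \} \text{, } \end{aligned}$$

where

$$\begin{aligned} \tau _{[n]} = (\tau _{1}, \ldots , \tau _{n}) \text{, } \qquad \tau _{i} = (\tau _{i1}, \ldots , \tau _{iQ}) \text{, } \qquad \tau _{iq} \ge 0 \text{, } \qquad \sum _{q=1}^{Q} \tau _{iq} = 1 \text{. } \end{aligned}$$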

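For any \(D_{\tau _{[n]}} \in {{\mathcal {D}}}_{n} \), the variational log-likelihood is defined by

$$\begin{aligned} {{\mathcal {J}}}(X_{[n]}, Y_{[n]}; \tau _{[n]}, \theta , \pi , \alpha , \beta ) = {{\mathcal {L}}}(X_{[n]}, Y_{[n]}; \theta , \pi , \alpha , \beta ) - \mathbb {KL}\big ( D_{\tau _{[n]}}, \mathbb {P}(Z_{[n]} = \cdot \,|\, X_{[n]}, Y_{[n]}) \big ) \text{. } \end{aligned}$$

Here \(\mathbb {KL}(\cdot ,\cdot )\) denotes the Kullback-Leibler divergence between two probability distributions, which is nonnegative; therefore, \({{\mathcal {J}}}\) is a lower bound on the log-likelihood function. Expanding both terms, we have that

$$\begin{aligned} {{\mathcal {J}}}(X_{[n]}, Y_{[n]}; \tau _{[n]}, \theta , \pi , \alpha , \beta ) =&\sum _{i=1}^{n} \sum _{q=1}^{Q} \tau _{iq} \log \theta _{q} - \sum _{i=1}^{n} \sum _{q=1}^{Q} \tau _{iq} \log \tau _{iq} \\&+ \sum _{i \ne j} \sum _{q,l=1}^{Q} \tau _{iq} \tau _{jl} \big \{ \log \text{ b }(X_{ij}; \pi _{ql}) + X_{ij} \log f(Y_{ij}; \alpha _{ql}, \beta _{ql}) \big \} \text{, } \end{aligned}$$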

where \(\text{ b }(\cdot ;p)\) denotes the probability mass function of a Bernoulli distribution with parameter p, and recall that \( f(\cdot ;\alpha _{ql},\beta _{ql}) \) denotes the density function of a gamma distribution \(\text{ Ga }(\alpha _{ql},\beta _{ql})\).
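The lower bound can be evaluated directly from its expansion, J = Σᵢ Σ_q τ_iq log θ_q + Σ_{i≠j} Σ_{q,l} τ_iq τ_jl {log b(X_ij; π_ql) + X_ij log f(Y_ij; α_ql, β_ql)} − Σᵢ Σ_q τ_iq log τ_iq. The NumPy sketch below (hypothetical helper names, 0-based labels) stores an n × n × Q × Q array of pair terms, which is only practical for moderate n, and zeroes the i = j entries because self-loops are excluded.

```python
import numpy as np
from math import lgamma

def pair_terms(X, Y, pi, alpha, beta):
    """G[i, j, q, l] = log b(X_ij; pi_ql) + X_ij log f(Y_ij; alpha_ql, beta_ql)."""
    X = np.asarray(X, dtype=float)
    Y = np.asarray(Y, dtype=float)
    n = X.shape[0]
    Xe = X[:, :, None, None]
    Ys = np.where(X == 1, Y, 1.0)[:, :, None, None]   # dummy weight where there is no edge
    log_f = (alpha * np.log(beta) - np.vectorize(lgamma)(alpha)
             + (alpha - 1) * np.log(Ys) - beta * Ys)
    G = Xe * np.log(pi) + (1 - Xe) * np.log1p(-pi) + Xe * log_f
    G[np.arange(n), np.arange(n)] = 0.0              # exclude i == j
    return G

def variational_lower_bound(tau, X, Y, theta, pi, alpha, beta):
    """J(X, Y; tau, theta, pi, alpha, beta) for a product-multinomial tau (n x Q)."""
    pi, alpha, beta = (np.asarray(m, dtype=float) for m in (pi, alpha, beta))
    tau = np.asarray(tau, dtype=float)
    G = pair_terms(X, Y, pi, alpha, beta)
    prior = np.sum(tau * np.log(np.asarray(theta, dtype=float)))
    pairs = np.einsum('iq,jl,ijql->', tau, tau, G)
    entropy = -np.sum(tau * np.log(np.clip(tau, 1e-300, 1.0)))  # 0 log 0 := 0
    return float(prior + pairs + entropy)
```

With one-hot rows in τ, the entropy term vanishes and J equals the complete-data log-likelihood plus Σᵢ log θ_{zᵢ}, which is a convenient check.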

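The variational algorithm works by alternately maximizing the lower bound \({{\mathcal {J}}}\) with respect to the approximating distribution \( D_{\tau _{[n]}} \) and with respect to the model parameters. Maximizing \({{\mathcal {J}}}\) with respect to \( D_{\tau _{[n]}} \) amounts to solving

$$\begin{aligned} \hat{\tau }_{[n]} = \mathop {\mathrm {arg\,max}}_{\tau _{[n]}} {{\mathcal {J}}}(X_{[n]}, Y_{[n]}; \tau _{[n]}, \theta , \pi , \alpha , \beta ) \text{, } \end{aligned}$$

where \(\theta , \pi , \alpha , \beta \) can be replaced by plug-in estimates. The solution satisfies the fixed-point relation

$$\begin{aligned} \hat{\tau }_{iq} \propto \theta _{q} \prod _{j \ne i} \prod _{l=1}^{Q} \Big [ \text{ b }(X_{ij}; \pi _{ql})\, f(Y_{ij}; \alpha _{ql}, \beta _{ql})^{X_{ij}} \; \text{ b }(X_{ji}; \pi _{lq})\, f(Y_{ji}; \alpha _{lq}, \beta _{lq})^{X_{ji}} \Big ]^{\hat{\tau }_{jl}} \text{, } \qquad \sum _{q=1}^{Q} \hat{\tau }_{iq} = 1 \text{, } \qquad (3) \end{aligned}$$

with the convention that a factor raised to the power 0 equals 1.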

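Conditional on \(\hat{\tau }_{[n]}\), the variational estimators of \( (\theta , \pi , \alpha , \beta )\) are found by solving

$$\begin{aligned} (\tilde{\theta }, \tilde{\pi }, \tilde{\alpha }, \tilde{\beta }) = \mathop {\mathrm {arg\,max}}_{(\theta , \pi , \alpha , \beta )} {{\mathcal {J}}}(X_{[n]}, Y_{[n]}; \hat{\tau }_{[n]}, \theta , \pi , \alpha , \beta ) \text{. } \end{aligned}$$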

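Closed-form updates for \(\tilde{\theta }\) and \(\tilde{\pi }\) exist and are given by

$$\begin{aligned} \tilde{\theta }_{q}&= \frac{1}{n} \sum _{i=1}^{n} \hat{\tau }_{iq} \text{, } \qquad (4) \\ \tilde{\pi }_{ql}&= \frac{\sum _{i \ne j} \hat{\tau }_{iq} \hat{\tau }_{jl} X_{ij}}{\sum _{i \ne j} \hat{\tau }_{iq} \hat{\tau }_{jl}} \text{. } \qquad (5) \end{aligned}$$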

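On the other hand, the updates for \(\tilde{\alpha }\) and \(\tilde{\beta }\) have no closed form, since the maximum likelihood estimators of the two parameters of a gamma distribution have no closed form. However, using the fact that the gamma distribution is a special case of the generalized gamma distribution, Ye and Chen (2017) derived simple closed-form estimators for the two parameters of the gamma distribution, which were shown to be strongly consistent and asymptotically normal. For \( q,l = 1, \ldots ,Q\), let us define the quantities

$$\begin{aligned} S^{(0)}_{ql} = \sum \hat{\tau }_{iq} \hat{\tau }_{jl} \text{, } \quad S^{(1)}_{ql} = \sum \hat{\tau }_{iq} \hat{\tau }_{jl} Y_{ij} \text{, } \quad S^{(2)}_{ql} = \sum \hat{\tau }_{iq} \hat{\tau }_{jl} \log Y_{ij} \text{, } \quad S^{(3)}_{ql} = \sum \hat{\tau }_{iq} \hat{\tau }_{jl} Y_{ij} \log Y_{ij} \text{, } \end{aligned}$$

where each sum runs over the ordered pairs (i, j) with \(i \ne j\) and \(X_{ij} = 1\); then the updates for \(\alpha _{ql}, \beta _{ql}\) are given by

$$\begin{aligned} \tilde{\alpha }_{ql}&= \frac{S^{(0)}_{ql} S^{(1)}_{ql}}{S^{(0)}_{ql} S^{(3)}_{ql} - S^{(1)}_{ql} S^{(2)}_{ql}} \text{, } \qquad (6) \\ \tilde{\beta }_{ql}&= \frac{\big (S^{(0)}_{ql}\big )^{2}}{S^{(0)}_{ql} S^{(3)}_{ql} - S^{(1)}_{ql} S^{(2)}_{ql}} \text{. } \qquad (7) \end{aligned}$$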

We obtain the variational estimators \((\tilde{\theta }, \tilde{\pi }, \tilde{\alpha }, \tilde{\beta })\) by iterating (3)–(7) until convergence.
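The alternation can be sketched in NumPy as below. This is an illustrative implementation under several assumptions, not the authors' code: labels are 0-based, τ is initialized at random from a Dirichlet distribution, the fixed point for τ is approximated by a bounded number of inner iterations in log space, a small floor keeps τ strictly positive, π̃ is clipped away from 0 and 1, and α̃, β̃ are clipped to a box in the spirit of (A6) whose bounds (`bounds`) are arbitrary placeholders. The gamma updates use weighted Ye–Chen estimators with weights τ̂_iq τ̂_jl on existing edges. Memory grows as n²Q².

```python
import numpy as np
from math import lgamma

def pair_terms(X, Y, pi, alpha, beta):
    """G[i, j, q, l] = log b(X_ij; pi_ql) + X_ij log f(Y_ij; alpha_ql, beta_ql); 0 if i == j."""
    n = X.shape[0]
    Xe = X[:, :, None, None]
    Ys = np.where(X == 1, Y, 1.0)[:, :, None, None]   # dummy weight where there is no edge
    log_f = (alpha * np.log(beta) - np.vectorize(lgamma)(alpha)
             + (alpha - 1) * np.log(Ys) - beta * Ys)
    G = Xe * np.log(pi) + (1 - Xe) * np.log1p(-pi) + Xe * log_f
    G[np.arange(n), np.arange(n)] = 0.0
    return G

def update_tau(tau, G, theta, inner_iter=20, tol=1e-8, floor=1e-10):
    """Fixed-point iterations for tau, computed in log space."""
    for _ in range(inner_iter):
        log_t = (np.log(theta)[None, :]
                 + np.einsum('ijql,jl->iq', G, tau)     # terms where i is the sender
                 + np.einsum('jilq,jl->iq', G, tau))    # terms where i is the receiver
        log_t -= log_t.max(axis=1, keepdims=True)
        new = np.maximum(np.exp(log_t), floor)
        new /= new.sum(axis=1, keepdims=True)
        done = np.max(np.abs(new - tau)) < tol
        tau = new
        if done:
            break
    return tau

def m_step(tau, X, Y):
    """theta and pi updates, then weighted Ye-Chen updates for alpha and beta."""
    n = X.shape[0]
    off = 1.0 - np.eye(n)
    W = X * off                                          # existing edges with i != j
    theta = tau.mean(axis=0)
    S0 = tau.T @ W @ tau
    pi = S0 / (tau.T @ off @ tau)
    Ys = np.where(W == 1, Y, 1.0)
    logY = np.log(Ys)
    S1 = tau.T @ (W * Ys) @ tau
    S2 = tau.T @ (W * logY) @ tau
    S3 = tau.T @ (W * Ys * logY) @ tau
    denom = S0 * S3 - S1 * S2
    return theta, pi, S0 * S1 / denom, S0 ** 2 / denom

def fit_wsbm_vem(X, Y, Q, n_iter=100, tol=1e-6, bounds=(1e-3, 1e3), rng=None):
    """Variational EM for the gamma WSBM; returns tau and (theta, pi, alpha, beta)."""
    rng = np.random.default_rng(rng)
    X = np.asarray(X, dtype=float)
    Y = np.asarray(Y, dtype=float)
    tau = rng.dirichlet(np.ones(Q), size=X.shape[0])    # random initialization
    for _ in range(n_iter):
        theta, pi, alpha, beta = m_step(tau, X, Y)
        pi = np.clip(pi, 1e-6, 1 - 1e-6)                 # keep log(pi), log(1 - pi) finite
        alpha, beta = np.clip(alpha, *bounds), np.clip(beta, *bounds)
        new_tau = update_tau(tau, pair_terms(X, Y, pi, alpha, beta), theta)
        converged = np.max(np.abs(new_tau - tau)) < tol
        tau = new_tau
        if converged:
            break
    return tau, (theta, pi, alpha, beta)
```

Because the algorithm only reaches a local optimum, one would typically run it from several random initializations and keep the best fit, as is done with 20 initializations in Sect. 8.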

We now address the consistency of the variational estimators derived above. The following two propositions are the counterparts of Theorems 2 and 3 for the variational estimators. We omit the proofs, since they follow arguments similar to those in the proofs of Corollary 4.3 and Theorem 4.4 of Celisse et al. (2012).

Proposition 1

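Assume that (A1), (A2), (A3), (A5) and (A6) hold, and let \( (\tilde{\theta }, \tilde{\pi }, \tilde{\alpha }, \tilde{\beta } ) \) be the variational estimators defined above. Then for any distance \(d(\cdot ,\cdot )\) on \( ( \pi , \alpha , \beta ) \),

$$\begin{aligned} d\big ( (\tilde{\pi }, \tilde{\alpha }, \tilde{\beta }), (\pi ^{*}, \alpha ^{*}, \beta ^{*}) \big ) \xrightarrow [n \rightarrow \infty ]{\mathbb {P}} 0 \text{. } \end{aligned}$$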

Proposition 2

Assume that the variational estimators \( (\tilde{\pi }, \tilde{\alpha }, \tilde{\beta }) \) converge at rate 1/n to \( (\pi ^{*}, \alpha ^{*}, \beta ^{*}) \), respectively, and that (A1), (A2), (A3), (A5) and (A6) hold. Then

$$\begin{aligned} d(\tilde{\theta }, \theta ^{*}) \xrightarrow [n \rightarrow \infty ]{\mathbb {P}} 0 \text{, } \end{aligned}$$

where d denotes any distance between vectors in \(\mathbb {R}^{Q}\).

Note that Proposition 2 requires a faster convergence rate of \( \tilde{\pi }, \tilde{\alpha }, \tilde{\beta } \), namely 1/n, than the rate \(\sqrt{\log n}/n\) assumed in Theorem 3. The same assumption is used in Theorem 4.4 of Celisse et al. (2012).

6 Choosing the number of classes

In real-world applications, the number of classes is typically unknown and needs to be estimated from the data. For the SBM, a number of methods have been developed to determine the number of classes, including methods based on the log-likelihood ratio statistic (Wang and Bickel 2017), composite likelihood (Saldaña et al. 2017), the exact integrated complete data likelihood (Côme and Latouche 2015) and a Bayesian framework (Yan 2016). Model selection for variants of the SBM has also been investigated (Latouche et al. 2014).

We apply the integrated completed likelihood (ICL) criterion developed by Biernacki et al. (2000) to choose the number of classes for the WSBM. The ICL is an approximation of the complete-data integrated likelihood. The ICL criterion for the SBM was derived by Daudin et al. (2008) under the assumptions that the prior distribution of \( (\theta , \pi )\) factorizes and that \(\theta \) has a non-informative Dirichlet prior. Here we follow the approach of Daudin et al. (2008) to derive an approximate ICL for the WSBM.

Let \(m_{Q}\) denote the model with Q blocks. The ICL criterion is an approximation of the complete-data integrated likelihood

$$\begin{aligned} \log \mathbb {P}(X_{[n]}, Y_{[n]}, Z_{[n]} | m_{Q}) = \log \int \mathbb {P}(X_{[n]}, Y_{[n]}, Z_{[n]} | \theta , \pi , \alpha , \beta , m_{Q}) \, g(\theta , \pi , \alpha , \beta ) \, d\theta \, d\pi \, d\alpha \, d\beta \text{, } \end{aligned}$$

where \( g(\theta , \pi , \alpha , \beta ) \) is the prior distribution of the parameters. Assuming a non-informative Jeffreys prior \(Dir(0.5, \ldots , 0.5)\) on \(\theta \), using a Stirling approximation to the gamma function, and applying a BIC approximation to the conditional log-likelihood function, the approximate ICL criterion for a model \(m_{Q}\) with Q blocks is:

where \(\tilde{Z}_{[n]}\) is the estimate of \(Z_{[n]}\). The derivation follows exactly the same lines as the proof of Proposition 8 of Daudin et al. (2008).
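The selection step can be sketched as follows. This sketch assumes that the approximate ICL takes the BIC-type form of Daudin et al. (2008) for directed graphs, with the edge-level penalty extended to the 3Q² parameters (π, α, β): ICL(m_Q) ≈ log P(X, Y, Z̃ | θ̃, π̃, α̃, β̃, m_Q) − (3Q²/2) log{n(n−1)} − ((Q−1)/2) log n. This penalty form and the function names are assumptions for illustration.

```python
import math

def icl_wsbm(complete_loglik, n, Q):
    """Assumed ICL: complete-data log-likelihood at (Z~, fitted parameters) minus penalties."""
    pen_edges = 0.5 * (3 * Q ** 2) * math.log(n * (n - 1))  # pi, alpha, beta: 3 Q^2 parameters
    pen_theta = 0.5 * (Q - 1) * math.log(n)                 # theta: Q - 1 free proportions
    return complete_loglik - pen_edges - pen_theta

def choose_Q(complete_logliks, n):
    """complete_logliks maps Q to log P(X, Y, Z~ | fitted parameters); returns the best Q."""
    return max(complete_logliks, key=lambda Q: icl_wsbm(complete_logliks[Q], n, Q))
```

For example, with n = 50 and hypothetical complete-data log-likelihoods of −500, −300 and −295 for Q = 1, 2, 3, the small gain of the three-class model does not pay for its extra parameters, and `choose_Q` returns 2.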

7 Simulation

We validate the theoretical results developed in the previous sections through simulation studies. In particular, we investigate how fast the parameter estimates of the WSBM converge to their true values and how accurate the posterior block allocations are. We also investigate the performance of the ICL in choosing the number of blocks.

7.1 Experiment 1 (two-class model)

For each fixed number of nodes n, 50 realizations of the WSBM are generated under the parameter setting given in (8) and (9). The variational inference algorithm derived in Sect. 5 is then applied to estimate the model parameters and class allocations.

Table 1 shows that the estimated model parameters converge to their true values as the number of nodes increases, while the posterior class assignments are accurate for all numbers of nodes considered. Table 2 shows that the ICL criterion tends to select the correct number of classes, especially when the number of nodes is large.

7.2 Experiment 2 (three-class model)

Fifty network realizations are generated under the three-class model with the parameter values given in (10) and (11), for a range of values of n.

We can see from Table 3 that the estimated parameters converge to their true values quickly as the number of nodes increases. The ICL criterion tends to overestimate the number of classes when the number of nodes is small, but consistently selects the correct model when the number of nodes is large (Table 4).

7.3 Computational complexity

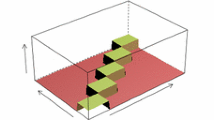

The computational cost of each iteration of the variational algorithm derived in Sect. 5 scales as \({{\mathcal {O}}}(n^2)\) for a fixed number of classes, the same as for the variational algorithm developed by Daudin et al. (2008). Therefore, the algorithm may be prohibitively expensive for networks with more than 1000 nodes. The estimated computational times for the two-class model of Sect. 7.1 and the three-class model of Sect. 7.2 are shown in Fig. 1 for various values of n; the time reported at each n is the average running time of the variational algorithm over 20 replications.

8 Application: Washington bike data set

We apply the WSBM to the Washington bike sharing scheme data set (see Notes). The data set records the start station, end station and length (travel time) of each trip. We select a one-week time window starting from January 10th, 2016, and construct the adjacency matrix X and weight matrix Y as follows:

-

\(X_{ij} = 1\) if there is at least one trip starting at station i and finishing at station j, and \(X_{ij} = 0\) otherwise.

-

\(Y_{ij}\) is the total length of trips (in minutes) from station i to station j.
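The construction of X and Y can be sketched as follows. The sketch assumes that the trips in the chosen week have already been parsed into (start station, end station, duration in minutes) tuples; this record format and the function name are hypothetical, and dropping round trips (start equal to end) is an assumption made because the WSBM has no self-loops.

```python
import numpy as np

def build_adjacency(trips, stations):
    """Return (X, Y): X[i, j] = 1 if any trip i -> j, Y[i, j] = total minutes of trips i -> j."""
    index = {s: k for k, s in enumerate(stations)}
    n = len(stations)
    X = np.zeros((n, n), dtype=int)
    Y = np.zeros((n, n))
    for start, end, minutes in trips:
        i, j = index[start], index[end]
        if i == j:
            continue          # assumed: round trips discarded (no self-loops in the WSBM)
        X[i, j] = 1
        Y[i, j] += minutes    # weights are summed over all trips from i to j
    return X, Y

X, Y = build_adjacency([("A", "B", 10.0), ("A", "B", 5.0), ("B", "C", 7.0), ("C", "C", 3.0)],
                       ["A", "B", "C"])
```

In this representation the average out-degree is `X.sum(axis=1).mean()`, and the average total trip length over connected pairs is `Y[X == 1].mean()`.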

The resulting network consists of 370 nodes with an average out-degree of 36.14. The average total length of trips between any pair of stations is 42.15 minutes. We use the ICL criterion to select the number of classes for the WSBM. For each number of classes Q, the variational inference algorithm is fitted to the network from 20 random initializations and the highest ICL value is recorded; the six-class model is chosen (Table 5). Each bike station is plotted on the map in Fig. 2, with its colour representing the estimated class assignment. Bike stations in class 6 (brown) tend to be concentrated in the central area of Washington, whereas stations in class 3 (red) tend to be located further from the center. Figure 2 also shows a spatial effect in the class assignments: stations that are close to each other tend to be in the same cluster, with the exception of class 3 (red). One potential extension of the model is to include the spatial locations of the bike stations as covariates.

The estimated class proportions \(\hat{\theta }\) given in (12) indicate that class 3 has the largest number of stations, whereas class 4 has the smallest. The estimated \(\hat{\pi }\) shows that within-class connectivity is generally higher than between-class connectivity. We further observe that the connection probabilities between bike stations in classes 1, 4 and 6 are substantially higher. Interestingly, \(\hat{\pi }\) is nearly symmetric, indicating that the probability of a trip from a station in class k to a station in class l is similar to the probability of a trip from class l to class k.

The estimated densities of travel time between each pair of classes are shown in Fig. 3. The majority of the estimated densities have mode and mean close to 0, particularly those on the diagonal of Fig. 3. This implies that the total travel times between stations in the same class are quite short. In comparison, the total travel times between stations in different classes tend to be longer. This is reasonable, as stations in different classes tend to be further apart, which in turn requires longer travel times.

9 Discussion

This paper proposes a weighted stochastic block model (WSBM) for networks, which extends the stochastic block model to weighted edges. A variational inference strategy is developed for parameter estimation. Asymptotic properties of the maximum likelihood and variational estimators are derived, and the problem of choosing the number of classes is addressed using the ICL criterion. Simulation studies are conducted to evaluate the performance of the variational estimators and of the ICL in determining the number of classes. The proposed model and inference methods are applied to an illustrative data set.

It is straightforward to extend the WSBM to allow node covariates. Let \(w_i \in \mathbb {R}^{d}\) be the covariates for each node \(i=1, \ldots , n\), and let \(w_{ij}\) be the covariates for each pair of nodes, \(i,j=1, \ldots , n, i \ne j\). The edge probability \(p_{ij}\) between a pair of nodes i, j with node i in block q and node j in block l can be modelled as

where \(\xi _{ql,0} \in \mathbb {R}\) and \(\xi _{ql,1}, \xi _{ql,2}, \xi _{ql,3} \in \mathbb {R}^{d}\). Conditional on block assignments and existence of an edge between a pair of nodes i, j, \(Y_{ij}\) can be modelled as a Gamma random variable with mean \(\mu _{ij}\) and variance \(\sigma _{ij}\) where

where \(\phi _{ql,0} \in \mathbb {R}\) and \(\phi _{ql,1}, \phi _{ql,2}, \phi _{ql,3} \in \mathbb {R}^{d}\).
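Whatever link functions are chosen for \(\mu _{ij}\) and \(\sigma _{ij}\), the gamma parameters follow from the two moments. Reading \(\sigma _{ij}\) as the variance, as stated above, a gamma distribution with shape α and rate β has mean α/β and variance α/β², so α = μ²/σ and β = μ/σ. A minimal NumPy sketch (the function name is hypothetical):

```python
import numpy as np

def gamma_shape_rate(mean, var):
    """Shape and rate of the gamma law with the given mean and variance.

    Uses mean = shape / rate and var = shape / rate**2, so
    shape = mean**2 / var and rate = mean / var (elementwise for arrays).
    """
    mean = np.asarray(mean, dtype=float)
    var = np.asarray(var, dtype=float)
    return mean ** 2 / var, mean / var

shape, rate = gamma_shape_rate(6.0, 12.0)
print(shape, rate)  # 3.0 0.5
```

The same conversion applies elementwise to matrices of \(\mu _{ij}\) and \(\sigma _{ij}\), after which the rest of the model is unchanged.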

Several future extensions are possible. First, it is desirable to investigate further theoretical properties of the maximum likelihood and variational estimators of the WSBM parameters, such as their asymptotic normality. Furthermore, some of the assumptions imposed in this work to ensure consistency of the estimators may be relaxed. Finally, the number of blocks is assumed to be fixed in the asymptotic analysis of the estimators; it would be interesting to allow the number of blocks to grow with the number of nodes.

Notes

Historical data available at https://www.capitalbikeshare.com/.

References

Abbe E (2018) Community detection and stochastic block models: recent developments. J Mach Learn Res 18:1–86

Airoldi EM, Blei DM, Fienberg SE, Xing EP (2008) Mixed membership stochastic blockmodels. J Mach Learn Res 9:1981–2014

Aicher C, Jacobs AZ, Clauset A (2013) Adapting the stochastic block model to edge-weighted networks. ICML workshop on structured learning

Aicher C, Jacobs AZ, Clauset A (2015) Learning latent block structure in weighted networks. J Compl Netw 3:221–248

Allman ES, Matias C, Rhodes JA (2009) Identifiability of parameters in latent structure models with many observed variables. Ann Stat 37:3099–3132

Allman ES, Matias C, Rhodes JA (2011) Parameter identifiability in a class of random graph mixture models. J Stat Plan Inference 141:1719–1736

Ambroise C, Matias C (2012) New consistent and asymptotically normal parameter estimates for random-graph mixture models. J R Stat Soc Ser B Stat Methodol 74:3–35

Baraud Y (2010) A Bernstein-type inequality for suprema of random processes with applications to model selection in non-Gaussian regression. Bernoulli 16:1064–1085

Barrat A, Barthélemy M, Pastor-Satorras R, Vespignani A (2004) The architecture of complex weighted networks. Proc Natl Acad Sci 101:3747–3752

Bickel PJ, Chen A (2009) A nonparametric view of network models and Newman–Girvan and other modularities. Proc Natl Acad Sci USA 106:21068–21073

Bickel PJ, Chen A, Levina E (2011) The method of moments and degree distributions for network models. Ann Stat 39:2280–2301

Bickel P, Choi D, Chang X, Zhang H (2013) Asymptotic normality of maximum likelihood and its variational approximation for stochastic blockmodels. Ann Stat 41:1922–1943

Biernacki C, Celeux G, Govaert G (2000) Assessing a mixture model for clustering with the integrated completed likelihood. IEEE Trans Pattern Anal Mach Intell 22:719–725

Brault V, Keribin C, Mariadassou M (2020) Consistency and asymptotic normality of Latent Block Model estimators. Electron J Stat 14:1234–1268

Celisse A, Daudin J-J, Pierre L (2012) Consistency of maximum-likelihood and variational estimators in the stochastic block model. Electron J Stat 6:1847–1899

Channarond A, Daudin J-J, Robin S (2012) Classification and estimation in the stochastic blockmodel based on the empirical degrees. Electron J Stat 6:2574–2601

Choi DS, Wolfe PJ, Airoldi EM (2012) Stochastic blockmodels with a growing number of classes. Biometrika 99:273–284

Clauset A, Newman MEJ, Moore C (2004) Finding community structure in very large networks. Phys Rev E 70:066111

Côme E, Latouche P (2015) Model selection and clustering in stochastic block models based on the exact integrated complete data likelihood. Stat Model 15:564–589

Daudin J-J, Picard F, Robin S (2008) A mixture model for random graphs. Stat Comput 18:173–183

Ghasemian A, Zhang P, Clauset A, Moore C, Peel L (2016) Detectability thresholds and optimal algorithms for community structure in dynamic networks. Phys Rev X 6:031005

Haj AE, Slaoui Y, Louis P-Y, Khraibani Z (2020) Estimation in a binomial stochastic blockmodel for a weighted graph by a variational expectation maximization algorithm. Commun Stat Simul Comput:1–20

Holland PW, Laskey KB, Leinhardt S (1983) Stochastic blockmodels: first steps. Soc Netw 5:109–137

Jog V, Loh P-L (2015) Information-theoretic bounds for exact recovery in weighted stochastic block models using the Rényi divergence. arXiv:1509.06418

Karrer B, Newman MEJ (2011) Stochastic blockmodels and community structure in networks. Phys Rev E 83:016107

Latouche P, Birmelé E, Ambroise C (2011) Overlapping stochastic block models with application to the French political blogosphere. Ann Appl Stat 5:309–336

Latouche P, Birmelé E, Ambroise C (2014) Model selection in overlapping stochastic block models. Electron J Stat 8:762–794

Leger J-B, Vacher C, Daudin J-J (2014) Detection of structurally homogeneous subsets in graphs. Stat Comput 24:675–692

Ludkin M (2020) Inference for a generalised stochastic block model with unknown number of blocks and non-conjugate edge models. Comput Stat Data Anal 152:107051

Mariadassou M, Robin S, Vacher C (2010) Uncovering latent structure in valued graphs: a variational approach. Ann Appl Stat 4:715–742

Newman MEJ (2004) Analysis of weighted networks. Phys Rev E 70:056131

Peixoto TP (2018) Nonparametric weighted stochastic block models. Phys Rev E 97:012306

Peng L, Carvalho L (2016) Bayesian degree-corrected stochastic blockmodels for community detection. Electron J Stat 10:2746–2779

Pons P, Latapy M (2005) Computing communities in large networks using random walks. In: Proceedings of the 20th international conference on computer and information sciences. Springer, Berlin, ISCIS’05, pp 284–293

Rohe K, Chatterjee S, Yu B (2011) Spectral clustering and the high-dimensional stochastic blockmodel. Ann Stat 39:1878–1915

Saldaña DF, Yu Y, Feng Y (2017) How many communities are there? J Comput Graph Stat 26:171–181

Snijders TAB, Nowicki K (1997) Estimation and prediction for stochastic blockmodels for graphs with latent block structure. J Classif 14:75–100

Stouffer DB, Bascompte J (2011) Compartmentalization increases food-web persistence. Proc Natl Acad Sci USA 108:3648–3652

von Luxburg U (2007) A tutorial on spectral clustering. Stat Comput 17:395–416

Wang YXR, Bickel PJ (2017) Likelihood-based model selection for stochastic block models. Ann Stat 45:500–528

Yan X (2016) Bayesian model selection of stochastic block models. In: 2016 IEEE/ACM international conference on advances in social networks analysis and mining (ASONAM), pp 323–328

Ye Z-S, Chen N (2017) Closed-form estimators for the gamma distribution derived from likelihood equations. Am Stat 71:177–181

Zanghi H, Picard F, Miele V, Ambroise C (2010) Strategies for online inference of model-based clustering in large and growing networks. Ann Appl Stat 4:687–714

Funding

Open Access funding provided by the IReL Consortium. The funding was provided by Science Foundation Ireland (Grant No. SFI/12/RC/2289-2).


Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Auxiliary results

Definition 1

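A random variable X with mean \(\mu = E(X)\) is sub-exponential if there are non-negative parameters \((\nu , b)\) such that

$$\begin{aligned} E\big ( e^{t(X - \mu )} \big ) \le e^{\nu ^{2} t^{2}/2} \quad \text{for all } |t| < \frac{1}{b}. \end{aligned}$$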

The following lemma is a straightforward consequence of the definition.

Lemma 1

If the independent random variables \(\{ X_{i} \}_{i=1}^{n}\) are sub-exponential with parameters \(( \nu _{i}, b_{i})\), \(i=1,\ldots ,n\), then \(\sum _{i=1}^{n} X_{i} \) is sub-exponential with parameters \((\sqrt{ \sum _{i=1}^{n} \nu _{i}^{2} }, \max _{1 \le i \le n} b_{i} )\).
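Lemma 1 is used repeatedly in the proofs below, for instance to pass from a single \(X_{ij} Y_{ij}\) to a sum over a block. The following minimal Python sketch only does the parameter bookkeeping the lemma prescribes; the function name `subexp_sum_params` is hypothetical, and the inputs are assumed to be parameters of independent variables.

```python
import math

def subexp_sum_params(params):
    """Parameters Lemma 1 assigns to a sum of independent sub-exponential variables.

    params: list of (nu_i, b_i) pairs; returns (sqrt(sum nu_i^2), max b_i).
    """
    if not params:
        raise ValueError("need at least one (nu, b) pair")
    nu = math.sqrt(sum(nu_i ** 2 for nu_i, _ in params))
    b = max(b_i for _, b_i in params)
    return nu, b

# Nine i.i.d. terms with parameters (2, 0.5): the sum has parameters (sqrt(9) * 2, 0.5).
print(subexp_sum_params([(2.0, 0.5)] * 9))  # (6.0, 0.5)
```

With \(n_{ql}\) identical terms the first parameter grows like \(\sqrt{n_{ql}}\,\nu\) while the second stays fixed, which is the form used later in the proof of Theorem 2.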

The following results show that for a Gamma random variable Y and a Bernoulli random variable X, both XY and \(X \log Y\) are sub-exponential random variables. They are useful for the proof of the main theorems.

Proposition 3

If \(Y \sim Ga(a, b)\) and \(X \sim Ber(\pi )\), YX is a sub-exponential random variable.

Proof

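The expectation of YX is given by

$$\begin{aligned} \mu := E(YX) = \frac{a \pi }{b}, \end{aligned}$$

and thus

$$\begin{aligned} E\big ( e^{t(YX - \mu )} \big ) = \exp \Big ( -t \frac{a}{b} \pi \Big ) E\big ( e^{tYX} \big ). \end{aligned}$$

The moment generating function of YX is given by

$$\begin{aligned} E\big ( e^{tYX} \big ) = 1 - \pi + \pi \Big ( 1 - \frac{t}{b} \Big )^{-a}, \end{aligned}$$

which is defined for \(t < b\). Taylor series expansion of \( (1 - \frac{t}{b})^{-a} \) around \(t = 0\) gives that

$$\begin{aligned} \Big ( 1 - \frac{t}{b} \Big )^{-a} = 1 + \frac{a}{b} t + \frac{a(a+1)}{2b^{2}} t^{2} + o(t^{2}). \end{aligned}$$

Similarly, Taylor series expansion of \( \exp \left( -t \frac{a}{b} \pi \right) \) around 0 gives that

$$\begin{aligned} \exp \Big ( -t \frac{a}{b} \pi \Big ) = 1 - \frac{a \pi }{b} t + \frac{a^{2} \pi ^{2}}{2b^{2}} t^{2} + o(t^{2}). \end{aligned}$$

Thus, we have

$$\begin{aligned} E\big ( e^{t(YX - \mu )} \big ) = 1 + \frac{\pi a (a + 1 - a \pi )}{2b^{2}} t^{2} + o(t^{2}), \end{aligned}$$

where the coefficient of \(t^{2}\) equals \(\mathrm{Var}(YX)/2\). This leads to

$$\begin{aligned} \log E\big ( e^{t(YX - \mu )} \big ) = \frac{\pi a (a + 1 - a \pi )}{2b^{2}} t^{2} + o(t^{2}) \quad \text{as } t \rightarrow 0. \end{aligned}$$

Hence, we can choose suitable sub-exponential parameters \((\nu , \tilde{b})\) (written \(\tilde{b}\) to avoid a clash with the gamma rate b) such that

$$\begin{aligned} E\big ( e^{t(YX - \mu )} \big ) \le e^{\nu ^{2} t^{2}/2} \quad \text{for all } |t| < 1/\tilde{b}. \end{aligned}$$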

\(\square \)
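The second-order expansion in the proof of Proposition 3 can be checked numerically. The sketch below assumes that \(Ga(a, b)\) has shape a and rate b (consistent with the moment generating function being finite only for \(t < b\)); the helper names and the values of a, b and \(\pi \) are illustrative, not taken from the paper. As t shrinks, \((E(e^{t(YX-\mu )}) - 1)/t^{2}\) should approach \(\pi a(a+1-a\pi )/(2b^{2}) = \mathrm{Var}(YX)/2\).

```python
import math

def centered_mgf_yx(t, a, b, pi):
    """E[exp(t (YX - mu))] for X ~ Ber(pi), Y ~ Ga(a, b) with shape a and rate b.

    Uses E[exp(t YX)] = 1 - pi + pi * (1 - t/b)^(-a), finite only for t < b,
    and mu = E[YX] = a * pi / b.
    """
    if t >= b:
        raise ValueError("the MGF of YX is finite only for t < b")
    mu = a * pi / b
    return math.exp(-t * mu) * (1.0 - pi + pi * (1.0 - t / b) ** (-a))

def second_order_coefficient(a, b, pi):
    """Coefficient of t^2 in the expansion, pi * a * (a + 1 - a * pi) / (2 b^2)."""
    return pi * a * (a + 1.0 - a * pi) / (2.0 * b ** 2)

a, b, pi = 2.0, 3.0, 0.4
for t in (1e-1, 1e-2, 1e-3):
    ratio = (centered_mgf_yx(t, a, b, pi) - 1.0) / t ** 2
    print(t, ratio, second_order_coefficient(a, b, pi))
```

The gap between the two printed columns shrinks roughly linearly in t, reflecting the neglected third-order term.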

Proposition 4

If \(Y \sim Ga(a, b)\) and \(X \sim Ber(\pi )\), \(X \log Y \) is a sub-exponential random variable.

Proof

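The proof is analogous to the proof of Proposition 3. It is straightforward to show that

$$\begin{aligned} \mu := E(X \log Y) = \pi \big ( \varPsi (a) - \log b \big ) \end{aligned}$$

and

$$\begin{aligned} E\big ( e^{t X \log Y} \big ) = 1 - \pi + \pi \frac{\Gamma (a + t)}{\Gamma (a) b^{t}}, \quad t > -a, \end{aligned}$$

where \(\varPsi (\cdot )\) is the digamma function. The rest of the proof follows the same lines as the proof of Proposition 3, by considering the Taylor series expansions of \( e^{- \mu t}\) and \(E( e^{t X \log Y})\) around \(t= 0\). \(\square \)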
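A similar numerical check applies to Proposition 4. The sketch assumes, as above, the shape-rate parametrization, so that \(E(Y^{t}) = \Gamma (a+t)/(\Gamma (a) b^{t})\); it recovers the mean of \(X \log Y\) as a central-difference derivative of the moment generating function at 0 and compares it with \(\pi (\varPsi (a) - \log b)\) at \(a = 1\), where \(\varPsi (1)\) is minus the Euler-Mascheroni constant. Function names are hypothetical.

```python
import math

EULER_GAMMA = 0.5772156649015329  # digamma(1) = -EULER_GAMMA

def mgf_x_log_y(t, a, b, pi):
    """E[exp(t X log Y)] = 1 - pi + pi * Gamma(a + t) / (Gamma(a) * b^t), for t > -a.

    Assumes X ~ Ber(pi) and Y ~ Ga(a, b) with shape a and rate b.
    """
    if t <= -a:
        raise ValueError("E[Y^t] is finite only for t > -a")
    moment = math.exp(math.lgamma(a + t) - math.lgamma(a) - t * math.log(b))
    return 1.0 - pi + pi * moment

def mean_by_central_difference(a, b, pi, h=1e-5):
    """Approximate E[X log Y] as the derivative of the MGF at t = 0."""
    return (mgf_x_log_y(h, a, b, pi) - mgf_x_log_y(-h, a, b, pi)) / (2.0 * h)

# For a = 1, E[X log Y] = pi * (digamma(1) - log b) = pi * (-EULER_GAMMA - log b).
a, b, pi = 1.0, 2.0, 0.3
print(mean_by_central_difference(a, b, pi), pi * (-EULER_GAMMA - math.log(b)))
```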

The following lemma provides an upper bound for the tail probability of a sub-exponential random variable.

Lemma 2

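(Sub-exponential tail bound) Suppose that X with mean \(\mu \) is sub-exponential with parameters \((\nu , b)\). Then, for all \(t \ge 0\),

$$\begin{aligned} \mathbb {P}\big ( |X - \mu | \ge t \big ) \le 2 \exp \Big ( -\frac{1}{2} \min \Big \{ \frac{t^{2}}{\nu ^{2}}, \frac{t}{b} \Big \} \Big ). \end{aligned}$$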
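One common explicit form of the sub-exponential tail bound (two-sided, under the definition with \(|t| < 1/b\)) has a Gaussian regime for \(t \le \nu ^{2}/b\) and an exponential regime beyond it. The hypothetical helper below encodes that form; capping at 1 only reflects that a probability cannot exceed 1.

```python
import math

def subexp_tail_bound(t, nu, b):
    """Bound on P(|X - mu| >= t) for X sub-exponential with parameters (nu, b), nu > 0.

    Equals 2 exp(-t^2 / (2 nu^2)) for t <= nu^2 / b and 2 exp(-t / (2 b)) beyond.
    """
    if t < 0:
        raise ValueError("t must be non-negative")
    if b == 0:
        # b = 0 is the sub-Gaussian case: only the quadratic regime remains.
        return min(1.0, 2.0 * math.exp(-t * t / (2.0 * nu * nu)))
    exponent = 0.5 * min(t * t / (nu * nu), t / b)
    return min(1.0, 2.0 * math.exp(-exponent))

print(subexp_tail_bound(3.0, 2.0, 0.5))   # Gaussian regime: t <= nu^2 / b = 8
print(subexp_tail_bound(10.0, 2.0, 0.5))  # exponential regime: t > 8
```

Combined with Lemma 1, a sum of N i.i.d. terms has \(\nu \) growing like \(\sqrt{N}\) while b stays fixed, so deviations of order \(\varepsilon N\) receive a bound that decays exponentially in N; this is the mechanism behind the tail estimates in the proofs below.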

The following inequality for suprema of random processes is needed for the proof of Theorem 2.

Proposition 5

(Baraud 2010, Theorem 2.1) Let \( (S(g))_{g \in {{\mathcal {G}}}} \) be a family of real valued and centered random variables. Fix some \(g_{0}\) in \( {{\mathcal {G}}}\).

Suppose the following two conditions hold:

1. There exist two arbitrary norms \(||\cdot ||_{2}\) and \(||\cdot ||_{\infty }\) on \({{\mathcal {G}}}\) and a nonnegative constant c such that for all \(g_{1}, g_{2} \in {{\mathcal {G}}}\) with \(g_{1} \ne g_{2}\),

$$\begin{aligned} \mathbb {E} \Big [ e^{t (S(g_{1}) - S(g_{2}))} \Big ] \le \exp \Bigg ( \frac{t^{2} ||g_{1} - g_{2}||_{2}^{2} }{ 2(1-t c ||g_{1} - g_{2} ||_{\infty }) } \Bigg ) \end{aligned}$$ for all \( t \in \Big [ 0, \frac{1}{c ||g_{1} - g_{2}||_{\infty }} \Big ) \).

2. \({{\mathcal {G}}}\) is contained in a linear space \({{\mathcal {S}}}\) of finite dimension D, endowed with the two norms \(||\cdot ||_{\infty }\) and \(||\cdot ||_{2}\) defined above, and for some constants \(u_{1} > 0\) and \(u_{2} \ge 0\),

$$\begin{aligned} {{\mathcal {G}}} \subset \{ g \in {{\mathcal {S}}} : ||g - g_{0}||_{2} \le u_1, \; c ||g-g_{0}||_{\infty } \le u_2 \} . \end{aligned}$$

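Then, for all \(x>0\),

$$\begin{aligned} \mathbb {P} \Big ( \sup _{g \in {{\mathcal {G}}}} \big ( S(g) - S(g_{0}) \big ) \ge \kappa \big ( u_{1} \sqrt{D + x} + u_{2} (D + x) \big ) \Big ) \le e^{-x}, \end{aligned}$$

where \(\kappa = 18\).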

Proof

In this section, we prove Theorems 1, 2 and 3 for the special case \( \alpha _{q,l} = \alpha _{11} \) for all \( (q,l) \in \{1, \ldots , Q \}^{2} \). The general case can be proved similarly, using the fact that \(X_{ij} \log Y_{ij}\) is a sub-exponential random variable (Proposition 4).

1.1 Proof of Theorem 1

Proof

Our proof is adapted from Celisse et al. (2012). Using the fact that for every \(z^{'}_{[n]} \in [z_{[n]}]\), \( \mathbb {P}(z^{'}_{[n]} | X_{[n]}, Y_{[n]}) = \mathbb {P}(z_{[n]} | X_{[n]}, Y_{[n]}) \), we have that

where \( |[z_{[n]}]|\) is the cardinality of the equivalence class \( [z_{[n]}] \).

By an argument similar to that of Celisse et al. (2012, Appendix B.2), one can derive an upper bound for \( P^{*} \Bigg [ \sum _{ [z_{[n]}] \ne [z^{*}_{[n]}] } \frac{ \mathbb {P}( [ Z_{[n]} ] = [ z_{[n]} ] | X_{[n]}, Y_{[n]} )}{ \mathbb {P}( [ Z_{[n]} ] = [ z^{*}_{[n]} ] | X_{[n]}, Y_{[n]} ) } > t \Bigg ] \):

where \(||z_{[n]} - z^{*}_{[n]} ||_{0} \) is the number of positions at which \(z_{[n]}\) and \(z^{*}_{[n]}\) differ.
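Theorem 1 is stated for equivalence classes \([z_{[n]}]\) of label vectors under relabelling, while \(||z_{[n]} - z^{*}_{[n]}||_{0}\) counts raw label disagreements. The hypothetical helpers below illustrate both, together with the cardinality \(|[z_{[n]}]|\) appearing in the first display of the proof; they assume labels in \(\{0, \ldots , Q-1\}\) and enumerate all Q! relabellings, so they are only meant for small Q.

```python
from itertools import permutations

def hamming(z, z_star):
    """||z - z*||_0: number of nodes whose labels differ."""
    if len(z) != len(z_star):
        raise ValueError("label vectors must have the same length")
    return sum(1 for zi, zsi in zip(z, z_star) if zi != zsi)

def class_distance(z, z_star, Q):
    """Smallest ||s(z) - z*||_0 over relabellings s of {0, ..., Q-1} (brute force)."""
    return min(hamming([s[zi] for zi in z], z_star) for s in permutations(range(Q)))

def class_size(z, Q):
    """|[z]|: number of distinct label vectors obtained by relabelling z."""
    return len({tuple(s[zi] for zi in z) for s in permutations(range(Q))})

z = [0, 0, 1, 1, 2]
z_star = [1, 1, 0, 0, 2]
print(hamming(z, z_star), class_distance(z, z_star, 3), class_size(z, 3))  # 4 0 6
```

Here z and z* disagree at four nodes but belong to the same equivalence class, which is why the theorem is phrased in terms of \([Z_{[n]}]\).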

To simplify notation, we write \(z := z_{[n]}\), \( X:=X_{[n]}\), and \(Y:=Y_{[n]}\). We have that

Consider the first term on the RHS of the last inequality,

The summand in the summation above vanishes when \( \beta ^{*}_{z_{i},z_{j}} = \beta ^{*}_{z_{i}^{*},z_{j}^{*}} \). For two vectors z and \(z^{'}\), we define

and let \(N_{r}(z) = | D(z,z^{*}) | \) denote the number of terms in the summation. Set

One can show, using Lemma B.3 of Celisse et al. (2012), that there exists some positive constant \(c^{*}\) such that \(s \ge c^{*} > 0\). We have that

Assumption (A6) implies that the random variable \( \alpha _{11}^{*} ( \log \beta ^{*}_{z_{i}, z_{j}} / \beta ^{*}_{z^{*}_{i},z^{*}_{j}} ) X_{ij} \) is bounded for all i, j. An application of Hoeffding’s inequality yields that

for some constant \(L_{1} > 0\).

By Proposition 3, the random variable \(Y_{ij} X_{ij} \) is sub-exponential and the tail bound for sub-exponential random variables (Lemma 2) implies that

for some constants \(L_{2}, L_{3} > 0\). Proposition B.4 of Celisse et al. (2012) shows that \(N_{r}(z)\) is bounded below by

Combining inequalities (14), (15) and (16), it is straightforward to show that

By Theorem 3.1 of Celisse et al. (2012), we have

for some constant \(A_{2}>0\). Therefore, with \(A := \min \{ A_{1}, A_{2} \} \)

as \( n \rightarrow \infty \). Since the upper bound does not depend on \(z^{*}\), \(P^{*}\) can be replaced by \(\mathbb {P}\). \(\square \)

1.2 Proof of Theorem 2

Proof

As the first step of the proof, we define the normalized complete data log-likelihood function

and its expectation

The following proposition shows that \(\phi _{n}(z_{[n]}, \pi , \alpha , \beta )\) uniformly converges to \( \varPhi _{n}(z_{[n]}, \pi , \alpha , \beta ) \). This result is an extension of Proposition 3.5 of Celisse et al. (2012).
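Since the displays defining \(\phi _{n}\) and \(\varPhi _{n}\) are not reproduced here, the sketch below assumes the natural WSBM analogue of the criterion of Celisse et al. (2012): for each ordered pair \(i \ne j\), a Bernoulli term for edge presence with probability \(\pi _{z_{i} z_{j}}\), plus, when the edge is present, the gamma log-density (shape \(\alpha _{z_{i} z_{j}}\), rate \(\beta _{z_{i} z_{j}}\)) of its weight, scaled by \(\rho _{n} = 1/(n(n-1))\). The function name and list-of-lists inputs are illustrative assumptions.

```python
import math

def normalized_complete_loglik(X, Y, z, pi, alpha, beta):
    """Sketch of phi_n(z, pi, alpha, beta) under an assumed Bernoulli-gamma edge model.

    X[i][j] in {0, 1} is the edge indicator and Y[i][j] > 0 the weight (read only when
    X[i][j] == 1); z[i] in {0, ..., Q-1} is the class of node i; pi, alpha, beta are
    Q x Q lists of edge probabilities, gamma shapes and gamma rates.
    """
    n = len(z)
    total = 0.0
    for i in range(n):
        for j in range(n):
            if i == j:
                continue
            q, l = z[i], z[j]
            if X[i][j] == 1:
                a, b, y = alpha[q][l], beta[q][l], Y[i][j]
                total += math.log(pi[q][l])
                # Gamma log-density with shape a and rate b evaluated at the weight y.
                total += a * math.log(b) - math.lgamma(a) + (a - 1.0) * math.log(y) - b * y
            else:
                total += math.log(1.0 - pi[q][l])
    return total / (n * (n - 1))

X = [[0, 1], [0, 0]]
Y = [[0.0, 2.0], [0.0, 0.0]]
print(normalized_complete_loglik(X, Y, [0, 0], [[0.5]], [[1.0]], [[1.0]]))
```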

Proposition 6

Under assumptions (A1), (A2), (A5), (A6), we have

where \( {{\mathcal {P}}} := \{ (z_{[n]}, \pi , \alpha , \beta ): {(A1)}, {(A2)}, {(A5)}, {(A6)} \} \).

Proof

We have that

where \( \rho _{n} = 1/(n(n-1)) \). Notice that under Assumptions (A2) and (A6),

Therefore, by Proposition 3.5 of Celisse et al. (2012),

To bound the second term on the RHS of inequality (17), we apply Proposition 5 (Theorem 2.1 of Baraud (2010)). We first define

Now, for two parameters \( \beta ^{(1)} \) and \( \beta ^{(2)} \),

Since \(X_{ij} Y_{ij} \) is sub-exponential with parameters \((\nu , b)\), Lemma 1 implies that \(\sum _{i \ne j, z_{i} = q, z_{j}=l} X_{ij} Y_{ij} \) is sub-exponential with parameters \((\sqrt{n_{ql}} \nu , b)\), where \(n_{ql} = | \{ (i, j): i \ne j, z_{i} = q, z_{j} = l\} |\). We therefore define the norms

we have

for all

where c is some nonnegative constant. Therefore, the first condition of Proposition 5 is satisfied.
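The block counts \(n_{ql}\) and the resulting parameters \((\sqrt{n_{ql}}\,\nu , b)\) of the block sums can be tabulated directly from a label vector. The sketch below uses hypothetical helper names and counts ordered pairs with \(i \ne j\), matching the sum above; classes with no members simply do not appear.

```python
import math
from collections import Counter

def block_pair_counts(z):
    """n_ql = |{(i, j): i != j, z_i = q, z_j = l}| for every ordered pair of occupied classes."""
    sizes = Counter(z)
    counts = {}
    for q, n_q in sizes.items():
        for l, n_l in sizes.items():
            # Within a block, exclude the diagonal pairs (i, i).
            counts[(q, l)] = n_q * (n_q - 1) if q == l else n_q * n_l
    return counts

def block_sum_params(z, nu, b):
    """Sub-exponential parameters (sqrt(n_ql) * nu, b) of each block sum, via Lemma 1."""
    return {ql: (math.sqrt(n_ql) * nu, b) for ql, n_ql in block_pair_counts(z).items()}

print(block_pair_counts([0, 0, 1, 1, 1]))  # {(0, 0): 2, (0, 1): 6, (1, 0): 6, (1, 1): 6}
```

The counts always sum to \(n(n-1)\), so each \(\sqrt{n_{ql}}\) is at most of order n, which is consistent with the \({{\mathcal {O}}}(n)\) scale of \(u_{1}\) below.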

Fix some \(\beta ^{(0)}\). Assumption (A6) implies that there exist \(u_{1} = {{\mathcal {O}}}(n) \) and \(u_{2} = {{\mathcal {O}}}(1)\) such that \( ||\beta - \beta ^{(0)}||_{2} \le u_{1}\) and \(c ||\beta - \beta ^{(0)}||_{\infty } \le u_{2}\). Therefore, the second condition of Proposition 5 is also satisfied with \(D = Q^{2}\). Now, we have

Since \( \sum _{i \ne j} X_{ij} Y_{ij} \beta ^{(0)}_{z_{i},z_{j}} \) is a sub-exponential random variable with parameters \((n \nu , b)\), the second term on the RHS of inequality (18) can be bounded by

To bound the first term on the RHS of inequality (18), we introduce the set \({{\mathcal {P}}}_{z_{[n]}} = \{ (\pi , \alpha , \beta ): (z_{[n]}, \pi , \alpha , \beta ) \in {{\mathcal {P}}} \}\) for every \(z_{[n]}\), and define the event

We have

by Proposition 5. Combining (18), (19) and (20),

Since \(z_{[n]}\) belongs to a set of cardinality at most \(Q^{n}\), by choosing \(x_{n} = n \log (n) \), the three sums converge to 0.
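The last step rests on \(Q^{n} e^{-x_{n}} \rightarrow 0\) for \(x_{n} = n \log n\), that is, \(n(\log Q - \log n) \rightarrow -\infty \). The quick numerical illustration below covers only this dominant term (the remaining factors in the three sums are ignored); `log_union_bound` is a hypothetical name.

```python
import math

def log_union_bound(n, Q):
    """log(Q^n * exp(-x_n)) with x_n = n log n, which equals n (log Q - log n)."""
    x_n = n * math.log(n)
    return n * math.log(Q) - x_n

for n in (10, 100, 1000):
    print(n, log_union_bound(n, Q=3))
```

The logarithm decreases faster than linearly in n once n exceeds Q, so the union bound over at most \(Q^{n}\) label vectors vanishes.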

By using the fact that \( X_{ij} \log Y_{ij} \) is a sub-exponential random variable, we can similarly show that

Since the convergence is uniform with respect to \(z_{[n]}^{*}\), \(P^{*}\) can be replaced by \(\mathbb {P}\) and the proof is completed. \(\square \)

Proposition 6 allows us to establish the following result concerning the convergence of the normalized log-likelihood function. Proposition 7 is an extension of Theorem 3.6 of Celisse et al. (2012) and allows us to establish the consistency of the MLE of \((\pi , \alpha , \beta )\).

Proposition 7

Assume that (A1), (A2), (A3), (A5) and (A6) hold. For every \(( \theta , \pi , \alpha , \beta )\), set

and

where

Then for any \( \eta > 0\),

Proof

We define the following:

By reasoning similar to that in the proof of Theorem 3.6 of Celisse et al. (2012), we can show that \( \overline{A}_{\pi ^{*}, \alpha ^{*}, \beta ^{*}} = I_{Q} \) and that it is unique. To show that, for all \( \eta >0\), \( \sup _{d((\pi , \alpha , \beta ), (\pi ^{*}, \alpha ^{*}, \beta ^{*})) \ge \eta } \mathbb {M}(\pi , \alpha , \beta ) < \mathbb {M}( \pi ^{*}, \alpha ^{*}, \beta ^{*}) \), let \( ( \overline{a}_{ql} )_{q,l} \) denote the coefficients of \( \overline{A}_{\pi , \alpha , \beta } \). We have that

where \(\mathbb {KL}_{B}( p,q)\) denotes the Kullback-Leibler divergence between two Bernoulli distributions \( \text{ Ber }(p)\) and \(\text{ Ber }(q)\), and \(\mathbb {KL}_{G}((a_{1},b_{1}),(a_{2},b_{2}))\) denotes the Kullback-Leibler divergence between two gamma distributions \( \text{ Ga }(a_{1},b_{1}) \) and \(\text{ Ga }(a_{2},b_{2})\).
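Both divergences have closed forms. The sketch below assumes the shape-rate parametrization of the gamma distribution, for which \(\mathbb {KL}_{G}((a_{1},b_{1}),(a_{2},b_{2})) = (a_{1}-a_{2})\varPsi (a_{1}) - \log \Gamma (a_{1}) + \log \Gamma (a_{2}) + a_{2}(\log b_{1} - \log b_{2}) + a_{1}(b_{2}-b_{1})/b_{1}\). Python's `math` module provides `lgamma` but no digamma, so a recurrence plus asymptotic series stands in for it; all names are illustrative.

```python
import math

def digamma(x):
    """Digamma function for x > 0 via recurrence and asymptotic series (about 1e-9 accuracy)."""
    if x <= 0:
        raise ValueError("this sketch handles x > 0 only")
    result = 0.0
    while x < 6.0:
        result -= 1.0 / x  # psi(x) = psi(x + 1) - 1/x
        x += 1.0
    inv2 = 1.0 / (x * x)
    result += math.log(x) - 0.5 / x - inv2 * (1.0 / 12 - inv2 * (1.0 / 120 - inv2 / 252))
    return result

def kl_bernoulli(p, q):
    """KL(Ber(p) || Ber(q)) for 0 < q < 1, with the convention 0 log 0 = 0."""
    total = 0.0
    if p > 0:
        total += p * math.log(p / q)
    if p < 1:
        total += (1 - p) * math.log((1 - p) / (1 - q))
    return total

def kl_gamma(a1, b1, a2, b2):
    """KL(Ga(a1, b1) || Ga(a2, b2)) with shape a and rate b."""
    return ((a1 - a2) * digamma(a1) - math.lgamma(a1) + math.lgamma(a2)
            + a2 * (math.log(b1) - math.log(b2)) + a1 * (b2 - b1) / b1)

# With a1 = a2 = 1 both laws are exponential: KL = log(b1 / b2) + b2 / b1 - 1.
print(kl_gamma(1.0, 2.0, 1.0, 1.0), math.log(2.0) - 0.5)
```

Both divergences vanish exactly when the two parameter pairs coincide, which is what makes the maximizer identified in the proof unique.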

Since the set \( \{ (\pi , \alpha , \beta ) | d( ( \pi , \alpha , \beta ), (\pi ^{*}, \alpha ^{*}, \beta ^{*}) ) \ge \eta \} \) is compact by assumption, there exists \( (\pi ^{0}, \alpha ^{0}, \beta ^{0}) \ne (\pi ^{*}, \alpha ^{*}, \beta ^{*} ) \) such that

Next we show that

We first have the following bound:

We begin with the first term on the RHS of inequality (21):

Consider the second term on the RHS of inequality (21). If \( \phi _{n}( \hat{z}, \pi , \alpha , \beta ) < \varPhi _{n}( \tilde{z}, \pi , \alpha , \beta ) \), we have

On the other hand, if \(\phi _{n}( \hat{z}, \pi , \alpha , \beta ) \ge \varPhi _{n}( \tilde{z}, \pi , \alpha , \beta ) \),

Therefore, Proposition 6 implies that

Finally, under Assumptions (A2) and (A6), the strong law of large numbers yields

Combining (22), (23) and (24), we have the desired result. \(\square \)

The consistency of \( (\hat{\pi }, \hat{\alpha }, \hat{\beta }) \) follows from Proposition 7 and Theorem 3.4 of Celisse et al. (2012). \(\square \)

1.3 Proof of Theorem 3

Proof

Let \( \hat{P}( Z_{[n]} = z_{[n]} | X_{[n]}, Y_{[n]}) \) denote the conditional distribution of \( Z_{[n]} \) under the parameters \( (\hat{\theta }, \hat{\pi }, \hat{\alpha }, \hat{\beta } )\). The following result is an extension of Proposition 3.8 of Celisse et al. (2012) and is needed to establish the consistency of \(\hat{\theta }\).

Proposition 8

Assume that (A1)-(A6) hold and that there exist estimators \(\hat{\pi }, \hat{\alpha }, \hat{\beta }\) such that \( || \hat{\pi } - \pi ||_{\infty } = o_{P}(v_{n}) \), \( || \hat{\alpha } - \alpha ||_{\infty } = o_{P}(v_{n}) \), \( || \hat{\beta } - \beta ||_{\infty } = o_{P}(v_{n}) \), with \(v_{n} = o( \sqrt{ \log n }/n) \). Let \(\hat{\theta } \) denote any estimator of \(\theta ^{*}\). Then, for every \(\epsilon > 0\),

for n large enough, and for some constants \(\kappa _{1}, \kappa _{2} > 0\) and

Proof

We can write

where

By the proof of Proposition 3.8 of Celisse et al. (2012), we have

We have that

The proof of Proposition 3.8 of Celisse et al. (2012) shows that

We have that

Since \(X_{ij} Y_{ij}\) is a sub-exponential random variable and \(X_{ij}\) is bounded for all i, j, one can show that

Using similar techniques as in the proof of Proposition 3.8 of Celisse et al. (2012), one can show that

Combining inequalities (25), (26), (27), (28) and (29), and using \( v_{n} = o( \sqrt{ \log n}/ n) \), we have, for some \(B_{1}, B_{2}, B_{3} > 0\),

where \( u_{n}^{'} = \exp \Big [ B_{2} n \log n - B_{3} \frac{ (\log n)^{2}}{n v_{n}^{2}} \Big ] \). Since \((1+(Q-1) u_{n}^{'})^{n} \rightarrow 1\) as \(n \rightarrow \infty \), the proof is complete. \(\square \)
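The final limit can be made explicit. The following short derivation (a sketch, with \(B_{2}, B_{3}\) and \(u_{n}^{'}\) as defined above) uses only \(v_{n} = o(\sqrt{\log n}/n)\) and the inequality \(1 + x \le e^{x}\):

$$\begin{aligned} v_{n}^{2} = o\Big ( \frac{\log n}{n^{2}} \Big ) \;&\Longrightarrow \; \frac{(\log n)^{2}}{n v_{n}^{2}} \Big / (n \log n) = \frac{\log n}{n^{2} v_{n}^{2}} \rightarrow \infty , \\ \text{so, for } n \text{ large,} \quad \log (n u_{n}^{'}) &= \log n + B_{2} n \log n - B_{3} \frac{(\log n)^{2}}{n v_{n}^{2}} \le \log n - n \log n \rightarrow -\infty , \\ 1 \le \big ( 1 + (Q-1) u_{n}^{'} \big )^{n} &\le \exp \big ( (Q-1)\, n u_{n}^{'} \big ) \rightarrow 1 . \end{aligned}$$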

The consistency of \( \hat{\theta } \) is a consequence of the proposition above; its proof follows the same lines as the proof of Theorem 3.9 of Celisse et al. (2012). \(\square \)

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ng, T.L.J., Murphy, T.B. Weighted stochastic block model. Stat Methods Appl 30, 1365–1398 (2021). https://doi.org/10.1007/s10260-021-00590-6


DOI: https://doi.org/10.1007/s10260-021-00590-6