Abstract

With reference to causal mediation analysis, a parametric expression for natural direct and indirect effects is derived for the setting of a binary outcome with a binary mediator, both modelled via a logistic regression. The proposed effect decomposition operates on the odds ratio scale and does not require the outcome to be rare. It generalizes the existing ones, allowing for interactions of both the exposure and the mediator with the confounding covariates. The derived parametric formulae are flexible, in that they readily adapt to the two different natural effect decompositions defined in the mediation literature. In parallel with results derived under the rare outcome assumption, they also outline the relationship between the causal effects and the corresponding pathway-specific logistic regression parameters, isolating the controlled direct effect in the natural direct effect expressions. Formulae for standard errors, obtained via the delta method, are also given. An empirical application to data from a microfinance experiment performed in Bosnia and Herzegovina is illustrated.

1 Introduction

The general purpose of mediation analysis is to understand to what extent the overall effect of a treatment/exposure (X) on an outcome (Y) is due to an intermediate variable (W), called the mediator, which is influenced by the treatment and in turn affects the outcome. Mediation analysis has been developed both for associational (Judd and Kenny 1981; Baron and Kenny 1986) and causal frameworks (Robins and Greenland 1992; Pearl 2001); see also Geneletti (2007) for an alternative approach without counterfactuals.

In a non-causal framework, the first notable contribution to mediation for the linear case is due to Cochran (1938). As is well known, Cochran’s formula decomposes the total effect of X on Y into the sum of the direct-path coefficient and the product of the coefficients along the indirect path, thereby opening the way to path analysis (Bollen 1989). Unfortunately, this one-to-one mapping between effects and regression coefficients is lost as soon as even minimal deviations from linearity, like the presence of an XW interaction term in the model for Y, are introduced. Nevertheless, a number of decompositions have been proposed where the total effect can still be written as a sum of terms with a clear interpretation; see Cox (2007) for quantile regression and Stanghellini and Doretti (2019) and Lupparelli (2019) for logistic and log-linear regression.

In a causal framework, formal definitions of total, direct and indirect effects were first introduced by Robins and Greenland (1992) and Pearl (2001); see also Pearl (2009) for a comprehensive overview. In these approaches, effect decompositions typically work on an additive scale. Specifically, Robins and Greenland (1992) introduced the so-called pure and total direct and indirect effects, so that two different decompositions are obtained overall: the pure direct effect and the total indirect effect sum to the total effect, and so do the total direct effect and the pure indirect effect. All these direct and indirect effects are referred to as natural effects, to be distinguished from the controlled direct effect as defined in Pearl (2001). Under certain assumptions, natural effects can be identified and estimated from observational data, even in a non-parametric setting, with a class of methods known as the mediation formula (Pearl 2010); see also Imai et al. (2010a, b) for a related approach. Although it avoids assuming a specific form for the mediator and outcome data generating processes, such a non-parametric estimation framework might be problematic in the presence of a continuous treatment. Similarly, when the number of confounders to adjust for is large, the curse of dimensionality is likely to occur. In these cases, a parametric setting overcomes the problem that some data configurations might have very few or no sample observations for estimation. When X is binary, a compromise solution is offered by semi-parametric methods based on the propensity score (Rosenbaum and Rubin 1983); see e.g. Huber et al. (2018).

For binary outcomes, ratio scales have also been investigated recently. In particular, VanderWeele and Vansteelandt (2010) and Valeri and VanderWeele (2013) have defined causal effects on the odds ratio scale such that the relationship between the total effect and the natural direct and indirect effects is no longer additive but multiplicative. In this framework, the aforementioned twofold decomposition is maintained and the mediation formula can still be applied, leading, with the same provisos discussed above, to either non-parametric or parametric expressions for natural effects. As in the associational case, the parametric formulations lack a one-to-one mapping with regression coefficients due to non-linearity. Nevertheless, they remain appealing since they highlight the role of path-specific coefficients in a rather intuitive way and, as a by-product, make it possible to isolate the controlled direct effect as a component of the natural direct effect.

It is important to notice that VanderWeele and Vansteelandt (2010) and Valeri and VanderWeele (2013) base their parametric identification of natural effect odds ratios on the assumption that the outcome is rare within all the strata formed by X and W, i.e., that \(P(Y=1\mid X=x,W=w)\) is small for every (x, w) configuration; see the detailed discussions in Samoilenko et al. (2018), Samoilenko and Lefebvre (2019) and VanderWeele et al. (2019). This allows them to replace the logit link with the log link, which in practice amounts to approximating effects on the odds ratio scale by effects on the risk ratio scale. When the outcome is not (conditionally) rare, this approximation is no longer valid, which represents a serious limitation in many empirical data analyses.

In this paper, we focus on a setting with a binary outcome and a binary mediator, both modelled via a logistic regression. We provide a novel parametric expression for the natural direct and indirect effects, on the odds ratio scale, that does not rely on the rare outcome assumption. Like the approaches developed under the rare outcome assumption, our formulation makes explicit the role that pathway-specific coefficients play in natural effects, isolating the controlled direct effect in the natural direct effect formulae. This is an advantage compared to other exact formulations already introduced in the literature, where parametric expressions are plugged in place of the probabilities appearing in the mediation formula (Gaynor et al. 2019; Samoilenko et al. 2018). Furthermore, our approach is rather flexible since a single parametric object, which we name the A-term, governs all the causal contrasts needed to identify the four natural effects.

In this framework, any kind of confounder can be accounted for, namely exposure-outcome, mediator-outcome and exposure–mediator confounders. Therefore, the proposed formulae can be used to estimate causal effects from observational data, provided that all relevant confounders are measured without error. Furthermore, they handle every possible interaction in the regression models, including those between the exposure (as well as the mediator) and the confounding covariates. These interactions were not previously considered by either exact or approximate approaches; see VanderWeele and Vansteelandt (2010) and Valeri and VanderWeele (2013) for a related discussion. We also derive compact formulae to compute, via the delta method, the approximate standard errors of the exact natural effect estimators. The availability of these formulae is likely to encourage the use of exact rather than approximate estimators in applications.

The paper is structured as follows. In Sect. 2, we outline the general theory leading to the natural effect decompositions, reporting the parametric formulae for the natural direct and indirect effects on the odds ratio scale. The relationship between these effects and the corresponding pathway-specific regression coefficients is studied in Sect. 2.3. In Sect. 3, we discuss an application to data gathered from a randomized microcredit experiment performed in Bosnia and Herzegovina (Augsburg et al. 2015), where a plausible mediation scheme arises which was not considered in previous analyses. Finally, in Sect. 4 some concluding remarks are offered.

2 Parametric effect decomposition

2.1 Notation and assumptions

We denote the binary outcome by Y, the binary mediator by W and the treatment/exposure, which can be of any nature, by X. We take a potential outcome approach (Rubin 1974) and let \(Y_{x}\) and \(W_{x}\) be, respectively, the random variables representing the outcome and the mediator had the exposure been set, possibly contrary to the fact, to level x. Further, \(Y_{xw}\) indicates the value of the outcome if X had been set to x and W to w.

In line with the classical causal mediation framework, we make the standard assumptions needed to identify causal direct and indirect effects. These include the so-called consistency and composition assumptions. Consistency states that, in the subgroup of units with \(X=x\), the observed variables Y and W equal the potential outcome variables \(Y_x\) and \(W_x\) respectively (VanderWeele 2009). In the mediation framework, consistency also requires that, for units with \(X=x\) and \(W=w\), Y is equal to the potential outcome \(Y_{xw}\) (VanderWeele and Vansteelandt 2009). On the other hand, composition requires that \(Y_x=Y_{xW_x}\), i.e., that the potential outcome associated with the intervention \(X=x\) be equal to the potential outcome associated with setting X to x and the mediator to \(W_x\), which is the value it would have naturally attained under \(X=x\) (VanderWeele and Vansteelandt 2009).

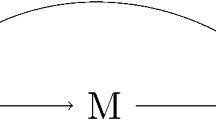

A number of assumptions concerning confounding are also required; they are summarized graphically in Fig. 1. Specifically, we assume that a set of covariates \(C=(T,S,V)\) suffices to remove the exposure-outcome (T), the mediator-outcome (S) and the exposure–mediator (V) confounding. In the conditional independence notation of Dawid (1979), this corresponds to the statements

- (1) \(Y_{xw}\perp \!\!\!\perp X \mid T\),
- (2) \(Y_{xw} \perp \!\!\!\perp W \mid (X,S)\),
- (3) \(W_{x} \perp \!\!\!\perp X \mid V\),

which have to hold for every level x and w. Another necessary assumption, sometimes termed cross-world independence (Steen and Vansteelandt 2018), is encoded by the conditional independence statement

- (4) \(Y_{xw} \perp \!\!\!\perp W_{x^{\star }} \mid C\),

for all x, \(x^{\star }\) and w. In practice, this assumption means that none of the variables addressing the mediator-outcome confounding can be affected by the treatment (which would correspond to an arrow from X to S in Fig. 1). Such an effect would compromise the identification of natural effects (Valeri and VanderWeele 2013; VanderWeele and Vansteelandt 2010), unless alternative methods to handle variables that are simultaneously confounders and mediators are introduced (Robins 1986; Daniel et al. 2015; Steen et al. 2017). In what follows, to simplify formulae we use the condensed notation \(Z=(T,S)\). Without loss of generality, we can think of Z and V as univariate random variables. This is equivalent to assuming that one covariate addresses both the exposure-outcome and the mediator-outcome confounding and another covariate addresses the exposure-mediator confounding. Results for the case of multivariate Z and V follow in a straightforward way; see “Appendix 1”.

In this framework, with reference to a situation where the exposure is changed from a reference level \(x^{\star }\) to another level x, VanderWeele and Vansteelandt (2010) introduced definitions of causal effects on the odds ratio scale. Specifically, the controlled direct effect is defined as

\[ {\mathrm{OR}}_{x,x^{\star }}^{{\mathrm{CDE}}}(w)=\frac{P(Y_{xw}=1)/P(Y_{xw}=0)}{P(Y_{x^{\star }w}=1)/P(Y_{x^{\star }w}=0)} \tag{1} \]

and describes the causal effect of the exposure on the outcome when the mediator is held at level w for every unit (VanderWeele and Vansteelandt 2010). In contrast, the pure natural direct effect

\[ {\mathrm{OR}}_{x,x^{\star }}^{{\mathrm{PNDE}}}=\frac{P(Y_{xW_{x^{\star }}}=1)/P(Y_{xW_{x^{\star }}}=0)}{P(Y_{x^{\star }W_{x^{\star }}}=1)/P(Y_{x^{\star }W_{x^{\star }}}=0)} \tag{2} \]

quantifies the same effect when the mediator is held at \(W_{x^{\star }}\), that is, at the level it would have naturally attained for each unit under the exposure level \(x^{\star }\) (VanderWeele and Vansteelandt 2010). The total natural indirect effect is given by

\[ {\mathrm{OR}}_{x,x^{\star }}^{{\mathrm{TNIE}}}=\frac{P(Y_{xW_{x}}=1)/P(Y_{xW_{x}}=0)}{P(Y_{xW_{x^{\star }}}=1)/P(Y_{xW_{x^{\star }}}=0)} \tag{3} \]

and compares the odds of \(Y=1\) had the exposure been set to x and the mediator been set to the value that it would have naturally taken if the exposure had been set to x (\(W_x\)) against the odds of \(Y=1\) had the exposure been set to x but the mediator been set to the value that it would have naturally taken if the exposure had been set to \(x^{\star }\) (\(W_{x^{\star }}\)). In other terms, the total natural indirect effect measures the causal effect on the outcome of moving the mediator from \(W_{x^{\star }}\) to \(W_x\) while keeping the exposure X fixed at level x (VanderWeele and Vansteelandt 2010).

With regard to the other decomposition, the total natural direct effect can be defined as

\[ {\mathrm{OR}}_{x,x^{\star }}^{{\mathrm{TNDE}}}=\frac{P(Y_{xW_{x}}=1)/P(Y_{xW_{x}}=0)}{P(Y_{x^{\star }W_{x}}=1)/P(Y_{x^{\star }W_{x}}=0)} \tag{4} \]

whereas the pure natural indirect effect is

\[ {\mathrm{OR}}_{x,x^{\star }}^{{\mathrm{PNIE}}}=\frac{P(Y_{x^{\star }W_{x}}=1)/P(Y_{x^{\star }W_{x}}=0)}{P(Y_{x^{\star }W_{x^{\star }}}=1)/P(Y_{x^{\star }W_{x^{\star }}}=0)}. \tag{5} \]

In line with (2), the former represents the causal effect on the outcome of moving the exposure from \(x^{\star }\) to x under an external intervention keeping the mediator at \(W_x\), the level it would have naturally taken for each unit under the exposure level x. The latter, in analogy with (3), measures the causal effect on the outcome of moving the mediator from \(W_{x^{\star }}\) to \(W_x\), with the exposure X set to level \(x^{\star }\).

Finally, the total causal effect is defined as

\[ {\mathrm{OR}}_{x,x^{\star }}^{{\mathrm{TE}}}=\frac{P(Y_{x}=1)/P(Y_{x}=0)}{P(Y_{x^{\star }}=1)/P(Y_{x^{\star }}=0)} \]

and can be decomposed in the multiplicative fashion

\[ {\mathrm{OR}}_{x,x^{\star }}^{{\mathrm{TE}}}={\mathrm{OR}}_{x,x^{\star }}^{{\mathrm{PNDE}}}\times {\mathrm{OR}}_{x,x^{\star }}^{{\mathrm{TNIE}}}={\mathrm{OR}}_{x,x^{\star }}^{{\mathrm{TNDE}}}\times {\mathrm{OR}}_{x,x^{\star }}^{{\mathrm{PNIE}}} \]

or, as it is often presented, in an additive fashion on the logarithmic scale. The choice of which natural effect to use, and thus which decomposition, depends on the research questions one aims to investigate (Hafeman and Schwartz 2009). Combining the assumptions given in Sect. 2.1, the causal effects defined above can be non-parametrically identified by using Pearl’s mediation formula (Pearl 2001, 2010; VanderWeele and Vansteelandt 2010; Valeri and VanderWeele 2013).
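As a minimal sketch of how these definitions become computable, the Python snippet below evaluates the natural-effect odds ratios through the mediation formula for a binary exposure and mediator without covariates, using \(P(Y_{xW_{x^{\star }}}=1)=\sum _{w}P(Y=1\mid X=x,W=w)\,P(W=w\mid X=x^{\star })\), which holds under the assumptions listed above. The probabilities are hypothetical values chosen only for illustration, and the function names (`cross_world_prob`, `natural_effect_ors`) are our own.

```python
"""Natural-effect odds ratios via the mediation formula (no covariates).

Sketch only: the input probabilities are hypothetical, not estimates.
"""


def odds(p):
    """Odds corresponding to a probability p in (0, 1)."""
    return p / (1.0 - p)


def cross_world_prob(p_y, p_w, x, x_star):
    """P(Y_{x W_{x*}} = 1) = sum_w P(Y=1 | x, w) P(W=w | x*).

    p_y[(x, w)] holds P(Y=1 | X=x, W=w); p_w[x] holds P(W=1 | X=x).
    """
    return p_y[(x, 0)] * (1.0 - p_w[x_star]) + p_y[(x, 1)] * p_w[x_star]


def natural_effect_ors(p_y, p_w, x=1, x_star=0):
    """Total, pure/total direct and pure/total indirect odds ratios."""
    # o[(a, b)] is the odds of Y_{a W_b} = 1; by composition Y_x = Y_{x W_x}.
    o = {(a, b): odds(cross_world_prob(p_y, p_w, a, b))
         for a in (x, x_star) for b in (x, x_star)}
    return {
        "TE": o[(x, x)] / o[(x_star, x_star)],
        "PNDE": o[(x, x_star)] / o[(x_star, x_star)],
        "TNIE": o[(x, x)] / o[(x, x_star)],
        "TNDE": o[(x, x)] / o[(x_star, x)],
        "PNIE": o[(x_star, x)] / o[(x_star, x_star)],
    }


# Hypothetical conditional probabilities, for illustration only.
p_y = {(0, 0): 0.20, (0, 1): 0.35, (1, 0): 0.60, (1, 1): 0.80}
p_w = {0: 0.40, 1: 0.55}
ors = natural_effect_ors(p_y, p_w)

# Both multiplicative decompositions reproduce the total effect.
print(abs(ors["PNDE"] * ors["TNIE"] - ors["TE"]) < 1e-12)  # True
print(abs(ors["TNDE"] * ors["PNIE"] - ors["TE"]) < 1e-12)  # True
```

Because every effect is a ratio of the same four cross-world odds, the two decompositions hold by construction; what changes between them is only which cross-world odds sits in the middle.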

2.2 Parametric formulae for causal natural effects

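As mentioned in the Introduction, parametric formulae can be derived that express the natural effects as functions of the coefficients of the logistic regression models for Y and W, which can be easily estimated from observational data. We first present results in the absence of covariates, i.e. for \(C=\emptyset\), and then address the more general case. Specifically, we can formulate the two models as

\[ \mathrm{logit}\,P(Y=1\mid X=x,W=w)=\beta _0+\beta _x x+\beta _w w+\beta _{xw}xw \tag{6} \]

and

\[ \mathrm{logit}\,P(W=1\mid X=x)=\gamma _0+\gamma _x x. \tag{7} \]

In this setting, the logarithm of the controlled direct effect (1) can be identified by

\[ \log {\mathrm{OR}}_{x,x^{\star }}^{{\mathrm{CDE}}}(w)=(\beta _x+\beta _{xw}w)(x-x^{\star }) \]

without the need to invoke the rare outcome assumption (VanderWeele and Vansteelandt 2010). Here, letting

\[ e_y(x,w)=\exp (\beta _0+\beta _x x+\beta _w w+\beta _{xw}xw),\qquad e_w(x)=\exp (\gamma _0+\gamma _x x) \]

and

\[ A_{x,x^{\star }}=\frac{\{1+e_y(x,1)\}+e_w(x^{\star })\,e^{\beta _w+\beta _{xw}x}\,\{1+e_y(x,0)\}}{\{1+e_y(x,1)\}+e_w(x^{\star })\,\{1+e_y(x,0)\}}, \tag{8} \]

we show that the parametric expressions for the logarithm of the causal effects defined in (2) and (3) are given by

\[ \log {\mathrm{OR}}_{x,x^{\star }}^{{\mathrm{PNDE}}}=\beta _x(x-x^{\star })+\log A_{x,x^{\star }}-\log A_{x^{\star },x^{\star }} \tag{9} \]

and

\[ \log {\mathrm{OR}}_{x,x^{\star }}^{{\mathrm{TNIE}}}=\log A_{x,x}-\log A_{x,x^{\star }}. \tag{10} \]

Similarly, formulae for the logarithm of the effects in (4) and (5) can be written as

\[ \log {\mathrm{OR}}_{x,x^{\star }}^{{\mathrm{TNDE}}}=\beta _x(x-x^{\star })+\log A_{x,x}-\log A_{x^{\star },x} \tag{11} \]

and

\[ \log {\mathrm{OR}}_{x,x^{\star }}^{{\mathrm{PNIE}}}=\log A_{x^{\star },x}-\log A_{x^{\star },x^{\star }}. \tag{12} \]

The mathematical derivations leading to (9) and (10) are given in “Appendix 1”, with those for (11) and (12) following in a straightforward way. Combining (9) and (10) (or, alternatively, (11) and (12)), one immediately obtains the identification expression for the logarithm of the total causal effect, which is

\[ \log {\mathrm{OR}}_{x,x^{\star }}^{{\mathrm{TE}}}=\beta _x(x-x^{\star })+\log A_{x,x}-\log A_{x^{\star },x^{\star }}. \]

The parametric object in Eq. (8) is called the A-term. Notice that its first subscript refers to the value of X in the model for Y given X and W, while the second one refers to the value of X in the model for W given X. The main advantage of this compact notation is that it creates a clear parallel between the contrasts contained in definitions (2)–(5) and those contained in the identification formulae (9)–(12). In a non-causal framework, Stanghellini and Doretti (2019) have shown that \(A_{x,x}\) is the inverse of the risk ratio of \({{\bar{W}}} = 1-W\) for varying Y when \(X=x\), that is

\[ A_{x,x}=\frac{P(W=0\mid X=x,Y=0)}{P(W=0\mid X=x,Y=1)}. \]

However, it is important to stress that this interpretation holds only when the two subscripts of the A-term take the same value.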
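The A-term formulation lends itself to a direct numerical check. The sketch below, for the no-covariate models, codes the A-term in the closed form obtained by writing the odds of \(Y_{xW_{x^{\star }}}=1\) as \(e_y(x,0)\,A_{x,x^{\star }}\) (the form spelled out in the `a_term` docstring), evaluates the four log odds ratios of (9)-(12), and compares them with a direct evaluation of the mediation formula; it also checks the risk-ratio reading of \(A_{x,x}\) via Bayes' rule. The coefficient values `BETA` and `GAMMA` are hypothetical and all helper names are ours.

```python
import math

# Hypothetical coefficients of the outcome and mediator models (illustration only).
BETA = (-1.0, 1.5, 0.8, 0.3)   # beta_0, beta_x, beta_w, beta_xw
GAMMA = (-0.2, 0.6)            # gamma_0, gamma_x


def e_y(x, w):
    """Exponentiated linear predictor of the outcome model."""
    b0, bx, bw, bxw = BETA
    return math.exp(b0 + bx * x + bw * w + bxw * x * w)


def e_w(x):
    """Exponentiated linear predictor of the mediator model."""
    g0, gx = GAMMA
    return math.exp(g0 + gx * x)


def a_term(x, x_star):
    """A_{x,x*}: x enters the outcome model, x* the mediator model.

    A = [(1 + e1) + ew * (e1 / e0) * (1 + e0)] / [(1 + e1) + ew * (1 + e0)],
    with e0 = e_y(x, 0), e1 = e_y(x, 1) and ew = e_w(x*).
    """
    e0, e1, ew = e_y(x, 0), e_y(x, 1), e_w(x_star)
    return ((1 + e1) + ew * (e1 / e0) * (1 + e0)) / ((1 + e1) + ew * (1 + e0))


def log_effects_a_term(x, x_star):
    """Log odds ratios of the four natural effects written with A-terms."""
    log_cde0 = BETA[1] * (x - x_star)  # log OR^CDE(0)

    def la(a, b):
        return math.log(a_term(a, b))

    return {
        "PNDE": log_cde0 + la(x, x_star) - la(x_star, x_star),
        "TNIE": la(x, x) - la(x, x_star),
        "TNDE": log_cde0 + la(x, x) - la(x_star, x),
        "PNIE": la(x_star, x) - la(x_star, x_star),
    }


def log_odds_mediation_formula(x, x_star):
    """log odds of Y_{x W_{x*}} = 1 from sum_w P(Y=1 | x, w) P(W=w | x*)."""
    p_w1 = e_w(x_star) / (1 + e_w(x_star))
    p_y0 = e_y(x, 0) / (1 + e_y(x, 0))
    p_y1 = e_y(x, 1) / (1 + e_y(x, 1))
    p = p_y0 * (1 - p_w1) + p_y1 * p_w1
    return math.log(p / (1 - p))


def log_effects_direct(x, x_star):
    """Same contrasts, evaluated without the A-term."""
    lo = log_odds_mediation_formula
    return {
        "PNDE": lo(x, x_star) - lo(x_star, x_star),
        "TNIE": lo(x, x) - lo(x, x_star),
        "TNDE": lo(x, x) - lo(x_star, x),
        "PNIE": lo(x_star, x) - lo(x_star, x_star),
    }


exact = log_effects_a_term(1, 0)
direct = log_effects_direct(1, 0)
for name, value in exact.items():
    print(name, round(value, 6), abs(value - direct[name]) < 1e-10)

# Risk-ratio reading of A_{x,x} (Bayes' rule), here for x = 1.
odds_y = math.exp(log_odds_mediation_formula(1, 1))  # odds of Y=1 given X=1
p_marg = odds_y / (1 + odds_y)                       # P(Y=1 | X=1)
p_w0 = 1 / (1 + e_w(1))                              # P(W=0 | X=1)
p_cond = e_y(1, 0) / (1 + e_y(1, 0))                 # P(Y=1 | X=1, W=0)
rr_inverse = ((1 - p_cond) * p_w0 / (1 - p_marg)) / (p_cond * p_w0 / p_marg)
print(abs(a_term(1, 1) - rr_inverse) < 1e-10)
```

The A-term version and the brute-force mediation formula agree to rounding error; the advantage of the former is that \(\beta _x(x-x^{\star })\), the controlled direct effect at \(w=0\), appears as an explicit additive component of the direct effects.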

The first of the two parametric decompositions above generalizes the one by Valeri and VanderWeele (2013) developed under the rare outcome assumption. Specifically, it holds that the exponential of (9) tends to the pure natural direct effect in Valeri and VanderWeele (2013, p.150) if \(P(Y=1\mid X=x,W=w)\) (or, equivalently, the exponentiated linear predictor \(e_y(x,w)\)) tends to zero for every (x, w) configuration. A similar argument applies to the total natural indirect effect, though with some distinctions regarding the set of configurations tending to zero. A formal account of these limits, also covering the other decomposition, is contained in “Appendix 2”. Furthermore, as in Valeri and VanderWeele (2013), our formulation expresses the odds ratio natural direct effects (pure and total) as the product between the controlled direct effect \({\mathrm{OR}}_{x,x^{\star }}^{{\mathrm{CDE}}}(0)\) and a residual term.
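The rare-outcome limit can be illustrated numerically. In the sketch below, `log_pnde_rare` is the limit of the exact expression as every \(e_y(x,w)\) tends to zero, which is the Valeri and VanderWeele (2013) form referred to in the text; only the outcome intercept \(\beta _0\) is varied, which drives \(P(Y=1\mid X=x,W=w)\) towards zero in every stratum. All coefficient values are hypothetical and the function names are ours.

```python
import math


def log_pnde_exact(b0, bx, bw, bxw, g0, gx, x=1.0, x_star=0.0):
    """Exact log OR^PNDE = beta_x (x - x*) + log A_{x,x*} - log A_{x*,x*}."""
    def a_term(a, b):
        e0 = math.exp(b0 + bx * a)                 # e_y(a, 0)
        e1 = math.exp(b0 + bx * a + bw + bxw * a)  # e_y(a, 1)
        ew = math.exp(g0 + gx * b)                 # e_w(b)
        return ((1 + e1) + ew * (e1 / e0) * (1 + e0)) / ((1 + e1) + ew * (1 + e0))

    return (bx * (x - x_star) + math.log(a_term(x, x_star))
            - math.log(a_term(x_star, x_star)))


def log_pnde_rare(bx, bw, bxw, g0, gx, x=1.0, x_star=0.0):
    """Limit of the exact expression as every e_y(x, w) tends to zero."""
    return (bx * (x - x_star)
            + math.log(1 + math.exp(g0 + gx * x_star + bw + bxw * x))
            - math.log(1 + math.exp(g0 + gx * x_star + bw + bxw * x_star)))


# Hypothetical coefficients; only beta_0 changes across iterations.
bx, bw, bxw, g0, gx = 1.5, 0.8, 0.3, -0.2, 0.6
gaps = {}
for b0 in (-1.0, -4.0, -8.0, -12.0):
    exact = log_pnde_exact(b0, bx, bw, bxw, g0, gx)
    approx = log_pnde_rare(bx, bw, bxw, g0, gx)
    gaps[b0] = abs(exact - approx)
    print(f"beta_0 = {b0:6.1f}: exact {exact:.5f}, rare-outcome {approx:.5f}")
```

When the outcome is common (\(\beta _0=-1\)) the two expressions differ visibly, while for very small outcome probabilities they become indistinguishable, in line with the limit statement above.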

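The extension of the above setting to the parametric inclusion of covariates C is immediate. In detail, if Eqs. (6) and (7) are modified to account for these additional covariates and for all their possible interactions, i.e., to

\[ \mathrm{logit}\,P(Y=1\mid X=x,W=w,C=c)=\beta _0+\beta _x x+\beta _w w+\beta _{xw}xw+\beta _z z+\beta _{xz}xz+\beta _{wz}wz+\beta _{xwz}xwz \tag{15} \]

and

\[ \mathrm{logit}\,P(W=1\mid X=x,C=c)=\gamma _0+\gamma _x x+\gamma _z z+\gamma _v v+\gamma _{xz}xz+\gamma _{xv}xv+\gamma _{zv}zv+\gamma _{xzv}xzv, \tag{16} \]

then the natural effects also become conditional on the covariate configuration \(C=c\). Specifically, we have

\[ \log {\mathrm{OR}}_{x,x^{\star }}^{{\mathrm{PNDE}}}(c)=\log \frac{e_y(x,0,c)}{e_y(x^{\star },0,c)}+\log A_{x,x^{\star }}(c)-\log A_{x^{\star },x^{\star }}(c) \]

and

\[ \log {\mathrm{OR}}_{x,x^{\star }}^{{\mathrm{TNIE}}}(c)=\log A_{x,x}(c)-\log A_{x,x^{\star }}(c), \]

where, denoting the exponentiated linear predictors by the compact forms

\[ e_y(x,w,c)=\exp \{\mathrm{logit}\,P(Y=1\mid X=x,W=w,C=c)\},\qquad e_w(x,c)=\exp \{\mathrm{logit}\,P(W=1\mid X=x,C=c)\}, \]

the conditional version of (8) is given by

\[ A_{x,x^{\star }}(c)=\frac{\{1+e_y(x,1,c)\}+e_w(x^{\star },c)\,\dfrac{e_y(x,1,c)}{e_y(x,0,c)}\,\{1+e_y(x,0,c)\}}{\{1+e_y(x,1,c)\}+e_w(x^{\star },c)\,\{1+e_y(x,0,c)\}}. \]

The proof follows immediately from the previous one and is also reported in “Appendix 1”. Similarly to the previous case, the parametric identification of the other causal estimands is obtained from the conditional versions of Eqs. (11) and (12). It is worth stressing that all the causal effects above are heterogeneous across covariate patterns even in the absence of the interaction terms in Eqs. (15) and (16); see for example the empirical application considered in Sect. 3. A similar argument holds for the treatment-mediator interaction and heterogeneity across treatment levels; see Sect. 2.3.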
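The claim that effects vary across covariate patterns even without interactions can be checked with a small example. The sketch below assumes hypothetical models with a single binary covariate z and no interaction terms (coefficients made up for illustration), builds the conditional A-term from the exponentiated linear predictors, and evaluates all conditional log odds ratios at \(z=0\) and \(z=1\). The helper names are ours.

```python
import math

# Hypothetical models with one binary covariate z and no interactions:
#   logit P(Y=1 | x, w, z) = -1.0 + 1.5 x + 0.8 w + 0.7 z
#   logit P(W=1 | x, z)    = -0.2 + 0.6 x + 0.5 z


def eta_y(x, w, z):
    """Linear predictor of the hypothetical outcome model."""
    return -1.0 + 1.5 * x + 0.8 * w + 0.7 * z


def eta_w(x, z):
    """Linear predictor of the hypothetical mediator model."""
    return -0.2 + 0.6 * x + 0.5 * z


def a_term(x, x_star, z):
    """Conditional A-term built from e_y(x, w, c) and e_w(x*, c)."""
    e0 = math.exp(eta_y(x, 0, z))
    e1 = math.exp(eta_y(x, 1, z))
    ew = math.exp(eta_w(x_star, z))
    return ((1 + e1) + ew * (e1 / e0) * (1 + e0)) / ((1 + e1) + ew * (1 + e0))


def conditional_log_effects(x, x_star, z):
    """Covariate-specific log odds ratios of all effects."""
    # log OR^CDE(0 | c); here the z terms cancel because the hypothetical
    # outcome model has no x-by-z interaction.
    log_cde0 = eta_y(x, 0, z) - eta_y(x_star, 0, z)

    def la(a, b):
        return math.log(a_term(a, b, z))

    return {
        "CDE0": log_cde0,
        "TE": log_cde0 + la(x, x) - la(x_star, x_star),
        "PNDE": log_cde0 + la(x, x_star) - la(x_star, x_star),
        "TNIE": la(x, x) - la(x, x_star),
        "TNDE": log_cde0 + la(x, x) - la(x_star, x),
        "PNIE": la(x_star, x) - la(x_star, x_star),
    }


for z in (0, 1):
    effects = conditional_log_effects(1, 0, z)
    print(f"z = {z}:", {k: round(math.exp(v), 4) for k, v in effects.items()})
```

The controlled direct effect is identical in the two strata, whereas the natural effects differ slightly, because the A-terms depend on z through the non-linear logistic link.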

Provided the two logistic regression models are correctly specified, consistent estimates of these causal effects are obtained by simply plugging the parameter estimates from the fitted models into the formulae above. The variance–covariance matrix of the effect estimators can be obtained via the delta method (Oehlert 1992). The explicit formulae for the first-order delta approximation of such a matrix are reported in “Appendix 3”.
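A rough sketch of the delta-method step follows. Instead of the analytic gradients of “Appendix 3”, it swaps in central finite differences to approximate the gradient of the log PNDE with respect to \((\beta _0,\beta _x,\beta _w,\beta _{xw},\gamma _0,\gamma _x)\), and then computes \(\sqrt{g^{\top }\Sigma g}\). The estimates and the covariance matrix are hypothetical; with separately fitted outcome and mediator models the joint covariance is block diagonal, and a diagonal matrix is used here only for brevity.

```python
import math

# Hypothetical estimates, ordered as
# (beta_0, beta_x, beta_w, beta_xw, gamma_0, gamma_x).
THETA_HAT = [-1.0, 1.5, 0.8, 0.3, -0.2, 0.6]
# Hypothetical covariance; diagonal here only to keep the example short.
VARIANCES = [0.020, 0.030, 0.030, 0.050, 0.010, 0.020]
SIGMA = [[VARIANCES[i] if i == j else 0.0 for j in range(6)] for i in range(6)]


def log_pnde(theta, x=1.0, x_star=0.0):
    """Exact log OR^PNDE as a function of the full parameter vector."""
    b0, bx, bw, bxw, g0, gx = theta

    def a_term(a, b):
        e0 = math.exp(b0 + bx * a)
        e1 = math.exp(b0 + bx * a + bw + bxw * a)
        ew = math.exp(g0 + gx * b)
        return ((1 + e1) + ew * (e1 / e0) * (1 + e0)) / ((1 + e1) + ew * (1 + e0))

    return (bx * (x - x_star) + math.log(a_term(x, x_star))
            - math.log(a_term(x_star, x_star)))


def numerical_gradient(f, theta, h=1e-6):
    """Central finite-difference gradient of a scalar function f(theta)."""
    grad = []
    for j in range(len(theta)):
        up, down = list(theta), list(theta)
        up[j] += h
        down[j] -= h
        grad.append((f(up) - f(down)) / (2 * h))
    return grad


def delta_method_se(f, theta, sigma):
    """First-order delta-method standard error: sqrt(g' Sigma g)."""
    g = numerical_gradient(f, theta)
    k = len(g)
    var = sum(g[i] * sigma[i][j] * g[j] for i in range(k) for j in range(k))
    return math.sqrt(var)


est = log_pnde(THETA_HAT)
se = delta_method_se(log_pnde, THETA_HAT, SIGMA)
print(f"log OR^PNDE = {est:.4f} (s.e. {se:.4f})")
```

Finite differences are convenient for checking analytic gradient formulae, but the closed-form derivatives are preferable in production code since they avoid the choice of the step size h.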

2.3 Links with the pathway-specific coefficients

The parametric formulae given in Sect. 2.2 link natural effects to their pathway-specific regression coefficients in an explicit way. Indeed, although the general expression of the A-term involves the whole set of parameters, the natural effects show a particular behavior when each of the three pathway-specific sets of parameters is null, that is, when: (i) \(\beta _x=0=\beta _{xw}\), (ii) \(\beta _w=0=\beta _{xw}\), and (iii) \(\gamma _x=0\).

In case (i), \(\log {\mathrm{OR}}_{x,x^{\star }}^{{\mathrm{PNDE}}}\) and \(\log {\mathrm{OR}}_{x,x^{\star }}^{{\mathrm{TNDE}}}\) are null, and so is \(\log {\mathrm{OR}}_{x,x^{\star }}^{{\mathrm{CDE}}}(0)\). In summary, for this case

\[ \log {\mathrm{OR}}_{x,x^{\star }}^{{\mathrm{TE}}}=\log {\mathrm{OR}}_{x,x^{\star }}^{{\mathrm{TNIE}}}=\log {\mathrm{OR}}_{x,x^{\star }}^{{\mathrm{PNIE}}}. \]

In case (ii), the A-term is equal to 1 for all the combinations of its subscripts, so that every pair of A-terms generates a null contrast on the logarithmic scale. As a consequence, we have that \(\log {\mathrm{OR}}_{x,x^{\star }}^{{\mathrm{TNIE}}}=0=\log {\mathrm{OR}}_{x,x^{\star }}^{{\mathrm{PNIE}}}\) and that

\[ \log {\mathrm{OR}}_{x,x^{\star }}^{{\mathrm{PNDE}}}=\log {\mathrm{OR}}_{x,x^{\star }}^{{\mathrm{TNDE}}}=\log {\mathrm{OR}}_{x,x^{\star }}^{{\mathrm{TE}}}=\log {\mathrm{OR}}_{x,x^{\star }}^{{\mathrm{CDE}}}(0)=\beta _x(x-x^{\star }). \]

In case (iii), again the two natural indirect effects vanish simultaneously, so that

\[ \log {\mathrm{OR}}_{x,x^{\star }}^{{\mathrm{PNDE}}}=\log {\mathrm{OR}}_{x,x^{\star }}^{{\mathrm{TNDE}}}=\log {\mathrm{OR}}_{x,x^{\star }}^{{\mathrm{TE}}}. \]

However, these quantities are not necessarily equal to \(\log {\mathrm{OR}}_{x,x^{\star }}^{{\mathrm{CDE}}}(0)=\beta _x(x-x^{\star })\) like in the previous case.
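The three special cases can be verified numerically with a short sketch, assuming hypothetical parameter values in which one pathway at a time is switched off. The function `log_effects` (our name) evaluates every log odds ratio through A-terms, and `close` compares values up to rounding.

```python
import math


def log_effects(beta, gamma, x=1.0, x_star=0.0):
    """Log odds ratios of all effects for the no-covariate models, via A-terms."""
    b0, bx, bw, bxw = beta
    g0, gx = gamma

    def log_a(a, b):  # log A_{a,b}
        e0 = math.exp(b0 + bx * a)
        e1 = math.exp(b0 + bx * a + bw + bxw * a)
        ew = math.exp(g0 + gx * b)
        return math.log(((1 + e1) + ew * (e1 / e0) * (1 + e0))
                        / ((1 + e1) + ew * (1 + e0)))

    cde0 = bx * (x - x_star)
    return {
        "CDE0": cde0,
        "TE": cde0 + log_a(x, x) - log_a(x_star, x_star),
        "PNDE": cde0 + log_a(x, x_star) - log_a(x_star, x_star),
        "TNIE": log_a(x, x) - log_a(x, x_star),
        "TNDE": cde0 + log_a(x, x) - log_a(x_star, x),
        "PNIE": log_a(x_star, x) - log_a(x_star, x_star),
    }


def close(a, b, tol=1e-12):
    return abs(a - b) < tol


# Hypothetical parameter values; one pathway is switched off in each case.
case_i = log_effects((-1.0, 0.0, 0.8, 0.0), (-0.2, 0.6))    # beta_x = beta_xw = 0
case_ii = log_effects((-1.0, 1.5, 0.0, 0.0), (-0.2, 0.6))   # beta_w = beta_xw = 0
case_iii = log_effects((-1.0, 1.5, 0.8, 0.3), (-0.2, 0.0))  # gamma_x = 0

# (i) no direct effects; total effect equals both indirect effects
print(close(case_i["PNDE"], 0), close(case_i["TNDE"], 0),
      close(case_i["TE"], case_i["TNIE"]), close(case_i["TE"], case_i["PNIE"]))
# (ii) no indirect effects; direct effects equal total effect and CDE(0)
print(close(case_ii["TNIE"], 0), close(case_ii["PNIE"], 0),
      close(case_ii["PNDE"], case_ii["CDE0"]), close(case_ii["TE"], case_ii["CDE0"]))
# (iii) no indirect effects; direct effects equal total effect, but not CDE(0)
print(close(case_iii["TNIE"], 0), close(case_iii["PNDE"], case_iii["TE"]),
      round(case_iii["TE"] - case_iii["CDE0"], 4))
```

The last printed number is non-zero: with \(\gamma _x=0\) the total effect differs from \(\beta _x(x-x^{\star })\), which is the non-collapsibility of the odds ratio discussed next.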

It is useful to notice that such a clear correspondence between model parameters and direct/indirect effects does not hold for associational (non-causal) effects. In particular, for non-linear models it is known that the condition \(\gamma _{x}=0\) does not guarantee that the marginal and conditional effects of X on Y are equal. For logistic regression, this is related to the well-known fact that odds ratios are non-collapsible association measures (Greenland et al. 1999). Also, notice that the condition \(\beta _{xw}=0\) does not generally imply that \(\log {\mathrm{OR}}_{x,x^{\star }}^{{\mathrm{PNDE}}}\) equals \(\log {\mathrm{OR}}_{x,x^{\star }}^{{\mathrm{TNDE}}}\) or that \(\log {\mathrm{OR}}_{x,x^{\star }}^{{\mathrm{PNIE}}}\) equals \(\log {\mathrm{OR}}_{x,x^{\star }}^{{\mathrm{TNIE}}}\), i.e., the causal effects are heterogeneous across treatment levels regardless of the presence of the treatment-mediator interaction. Conversely, in linear models a null interaction implies the absence of this kind of effect heterogeneity (Huber 2019). For binary outcomes, the same happens for log-linear regression models. For a more detailed discussion within the four-way decomposition framework, see VanderWeele (2014).

Another interesting situation arises when \(x^{\star }=0\), including (but not limited to) the case of binary exposures. In this case, \(\log {\mathrm{OR}}_{x,0}^{{\mathrm{CDE}}}(0)\) is obtained by evaluating \(\log {\mathrm{OR}}_{x,0}^{{\mathrm{TE}}}\) at \(\beta _w=0=\beta _{xw}\). Furthermore, \(\log {\mathrm{OR}}_{x,0}^{{\mathrm{PNIE}}}\) is obtained from \(\log {\mathrm{OR}}_{x,0}^{{\mathrm{TE}}}\) evaluated at \(\beta _x=0=\beta _{xw}\), whereas \(\log {\mathrm{OR}}_{x,0}^{{\mathrm{PNDE}}}\) equals \(\log {\mathrm{OR}}_{x,0}^{{\mathrm{TE}}}\) evaluated at \(\gamma _x=0\).
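These three identities for \(x^{\star }=0\) can be checked as follows. The sketch uses hypothetical coefficients and a non-binary exposure level \(x=2\), to reflect that the result is not limited to binary exposures; `log_effects` is our own helper, evaluating every log odds ratio through A-terms.

```python
import math


def log_effects(beta, gamma, x, x_star=0.0):
    """Log odds ratios of all effects for the no-covariate models, via A-terms."""
    b0, bx, bw, bxw = beta
    g0, gx = gamma

    def log_a(a, b):  # log A_{a,b}
        e0 = math.exp(b0 + bx * a)
        e1 = math.exp(b0 + bx * a + bw + bxw * a)
        ew = math.exp(g0 + gx * b)
        return math.log(((1 + e1) + ew * (e1 / e0) * (1 + e0))
                        / ((1 + e1) + ew * (1 + e0)))

    cde0 = bx * (x - x_star)
    return {
        "CDE0": cde0,
        "TE": cde0 + log_a(x, x) - log_a(x_star, x_star),
        "PNDE": cde0 + log_a(x, x_star) - log_a(x_star, x_star),
        "PNIE": log_a(x_star, x) - log_a(x_star, x_star),
    }


# Hypothetical coefficients and exposure level (x need not be 1).
beta, gamma, x = (-1.0, 1.5, 0.8, 0.3), (-0.2, 0.6), 2.0
full = log_effects(beta, gamma, x)

# TE with beta_w = beta_xw = 0 gives log OR^CDE(0)
te_no_w_path = log_effects((beta[0], beta[1], 0.0, 0.0), gamma, x)["TE"]
# TE with beta_x = beta_xw = 0 gives log OR^PNIE
te_no_x_path = log_effects((beta[0], 0.0, beta[2], 0.0), gamma, x)["TE"]
# TE with gamma_x = 0 gives log OR^PNDE
te_no_gamma_x = log_effects(beta, (gamma[0], 0.0), x)["TE"]

print(abs(full["CDE0"] - te_no_w_path) < 1e-12)
print(abs(full["PNIE"] - te_no_x_path) < 1e-12)
print(abs(full["PNDE"] - te_no_gamma_x) < 1e-12)
```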

3 Application to Bosnian microcredit data

Microcredit, the main tool of microfinance, makes credit accessible to individuals, often termed “unbankables”, who are considered too risky and financially unreliable to access regular loans granted by financial institutions. The main purpose of microcredit is to enhance the overall “bankability” of financially disadvantaged individuals, that is, their capability to attract loans from banks or other microfinance institutions (MFIs). We here offer an empirical application of the derived analytical results to a microcredit experiment implemented in Bosnia and Herzegovina by Augsburg et al. (2015); see also Banerjee et al. (2015) for details about the more general project involving similar experiments in other countries. This study was performed during the period 2009–2010 and targeted a particular segment of unbankable people, namely the potential clients of a well-established MFI in the country.

The main goal of the experiment was to evaluate the impact of randomly allocated microcredit loans not only on clients’ bankability but also on a number of other socioeconomic outcomes including self-employment, business ownership, income, time worked, consumption and savings. At baseline, clients were selected to take part in the experiment and enrolled in a pre-intervention survey collecting the main information on them and their household. They were then randomly assigned to the exposure (access to the microloan) or control group. After 14 months, the research team conducted a follow-up survey on the same respondents recruited at baseline. In total, 995 respondents were interviewed at both waves. In the description of the experiment contained in Augsburg et al. (2015), non-compliance issues appear to be absent, but a 17% loss to follow-up was registered. However, the reasons leading to client dropout, together with the formal analyses reported in that paper, do not suggest that a non-ignorable missingness mechanism (Molenberghs et al. 2008) occurred. The average microloan amount was 1,653 Bosnian marks (BAM, with an exchange rate at baseline of US$1 to BAM 1.634), with an average maturity of 57 weeks.

Since individuals were free to use money from the loan for business activities as well as for household consumption, a positive effect of the financing policy on many of the above-mentioned socioeconomic indicators was found (Augsburg et al. 2015). However, some of these measures can reasonably be thought of not only as final outcomes, but also as determinants of clients’ future credit attractiveness, and hence as possible mediators of the overall effect of microcredit on bankability. In particular, Banerjee et al. (2015) point to business ownership, though without any formal analysis, as the main candidate mediator. Indeed, a business can be started or maintained thanks to the initial microcredit financing, and business owners are usually more likely to access the loan market than others. Under this hypothesis, which involves a binary mediator, we compute the two odds ratio decompositions of the total causal effect into natural effects.

We make use of the notation of Sect. 2 and denote by X the binary exposure taking value 1 if the client gets the microcredit financing at baseline, by Y the binary outcome taking value 1 for clients who have access to at least one new credit line at follow-up, and by W the binary mediator with value 1 for units owning a personal business, which is also measured at follow-up. Notice that individuals with \(Y=1\) might have received loans from an MFI as well as from other traditional institutions like banks. A graphical representation of the setting under investigation is shown in Fig. 2.

From Fig. 2, it is possible to note that no exposure-outcome and exposure–mediator confounders are included in the analysis, since the exposure assignment is randomized. On the contrary, the set of possible mediator-outcome confounders S needs to be taken into account. Some preliminary research combined with subject matter considerations led us to include in S the client’s age (A), educational level (U) and number of active loans (L). In particular, age is measured in years, while educational level is coded as a binary variable taking value 1 for individuals with at least a university degree. All these covariates are measured at baseline and can be considered as pre-treatment variables. This should ensure that the cross-world independence assumption (see Sect. 2) holds, since none of the variables in S is causally affected by the exposure (VanderWeele and Vansteelandt 2009; Steen and Vansteelandt 2018). In the sample, age ranges from 17 to 70 years, with an average of 37.81 years and a median of 37 years. The first and third quartiles are 28 and 47 years respectively. Further, only 5% of sample units hold a university degree, while only 4% have three or more active loans at baseline. All the sample marginal probabilities for X, W and Y are close to 0.5. In detail, we have \(P(X=1)=0.55\), \(P(W=1)=0.54\) and \(P(Y=1)=0.57\). Furthermore, we have \(P(Y=1 \mid X=0,W=0)=0.24\), \(P(Y=1 \mid X=1,W=0)=0.67\), \(P(Y=1 \mid X=0,W=1)=0.41\) and \(P(Y=1 \mid X=1,W=1)=0.84\). These values contradict the rare outcome assumption, which is clearly violated also conditionally on the covariates S.
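The four conditional proportions above make the failure of the rare-outcome approximation concrete. As a purely arithmetic illustration (crude ratios within each mediator stratum, not adjusted for S and not part of the causal analysis), the snippet below compares the odds ratio and the risk ratio of the exposure computed from these sample proportions; under a rare outcome the two would nearly coincide.

```python
# Sample conditional probabilities P(Y=1 | X=x, W=w) reported in the text,
# keyed by (x, w).
p_y = {(0, 0): 0.24, (1, 0): 0.67, (0, 1): 0.41, (1, 1): 0.84}


def odds(p):
    """Odds corresponding to a probability p in (0, 1)."""
    return p / (1 - p)


for w in (0, 1):
    p1, p0 = p_y[(1, w)], p_y[(0, w)]
    odds_ratio = odds(p1) / odds(p0)
    risk_ratio = p1 / p0
    print(f"W={w}: OR={odds_ratio:.2f}, RR={risk_ratio:.2f}")
# W=0: OR=6.43, RR=2.79
# W=1: OR=7.55, RR=2.05
```

In both strata the odds ratio is more than twice the risk ratio, so approximating one by the other would badly distort the effect estimates.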

Table 1 contains the output of the fitted logistic regression models for the outcome and the mediator, whereas Table 2 shows the estimates of the causal effects obtained from these model parameters, together with their variability measures. The effects refer to individuals of median age and to each of the most common patterns of the other covariates. The asymptotic standard errors and confidence intervals are constructed using the delta method as illustrated in “Appendix 3”. As in standard analyses of odds ratios, the 95% confidence intervals are first built on the logarithmic scale and then exponentiated. Also, the p values refer to tests where the null hypotheses are formulated on the logarithmic scale, that is, that log-odds ratios are equal to zero. In the outcome model, the presence of interaction terms involving the confounders was explored, but none of these terms was statistically significant or, in our judgement, worth adding to the model.
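The interval and test construction just described can be sketched as follows, assuming a Wald-type interval with standard normal quantiles. The function name and the input values are hypothetical and are not taken from Table 2.

```python
import math
from statistics import NormalDist


def odds_ratio_inference(log_or, se, level=0.95):
    """Wald-type inference for an odds ratio, built on the log scale.

    Returns the odds ratio, the confidence interval obtained by
    exponentiating log_or +/- z * se, and the two-sided p value for
    the null hypothesis log OR = 0.
    """
    z_crit = NormalDist().inv_cdf(0.5 + level / 2)
    ci = (math.exp(log_or - z_crit * se), math.exp(log_or + z_crit * se))
    z_stat = log_or / se
    p_value = math.erfc(abs(z_stat) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return math.exp(log_or), ci, p_value


# Hypothetical inputs (not the values of Table 2).
est, ci, p = odds_ratio_inference(0.05, 0.027)
print(f"OR = {est:.3f}, 95% CI = ({ci[0]:.3f}, {ci[1]:.3f}), p = {p:.3f}")
```

Building the interval on the log scale keeps it within the positive half-line and makes it asymmetric around the odds ratio, which is why it can exclude or barely include 1 consistently with the p value.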

Table 1 shows that all the estimated coefficients related to the mediation pathways \(X\rightarrow W\rightarrow Y\) and \(X\rightarrow Y\) are positive and statistically significant, with the exception of the interaction \({\hat{\beta }}_{xw}\), which is positive but not significant (p value 0.643). However, there is a marked difference in the coefficient magnitudes. Indeed, \({\hat{\beta }}_{w}\) and \({\hat{\gamma }}_{x}\) are much smaller than \({\hat{\beta }}_{x}\), suggesting that the natural direct effect is the dominant component of the total effect. This is confirmed by the results in Table 2, which are rather stable across the covariate patterns examined. Specifically, the estimated natural direct effects always lie between 6.647 and 6.868, whereas all the estimates of natural indirect effects range between 1.048 and 1.059. These values are very close to those obtained by averaging across all the covariate patterns. In this setting, the pure (total) natural direct effect is the effect of the microloan when business ownership is kept at the level it would naturally take in the absence (presence) of the microloan itself. Similarly, the total (pure) natural indirect effect is the effect of moving business ownership from its natural level under no microloan to that under microloan administration, while keeping the microloan fixed to be present (absent). With regard to total effects, we observe that the estimated causal odds ratios reported in Table 2 lie around 7.100, whereas the average odds ratio across all the covariate patterns is 7.049. These values are slightly greater than, but essentially in line with, the marginal outcome/exposure odds ratio (6.885, s.e. 0.985 with the delta method). Since access to microloans is randomly assigned, this marginal odds ratio also has a causal interpretation outside the mediation framework.

All the 95% confidence intervals for the direct effects are far away from 1, corresponding to highly significant natural direct effects. On the contrary, all the 95% confidence intervals for the indirect effects barely contain 1, corresponding to p values around 6% or 7%. The low magnitude of the natural indirect effects might be due to the relatively short time between the baseline and the follow-up measurement occasions. Indeed, the original study by Augsburg et al. (2015) also suggests that a 14-month period may not be long enough to register any relevant effect of microcredit on business ownership. We have also replicated these results starting from an alternative outcome model without the XW interaction. In this model, the main effects change to \({\hat{\beta }}_{x}=1.974\) (s.e. 0.149) and \({\hat{\beta }}_{w}=0.828\) (s.e. 0.149), while the other parameters do not change appreciably. The causal odds ratios resulting from this model are substantially equivalent to the previous ones.

As a sensitivity analysis, we have also recomputed the effect estimates after widening the set of observed confounders with other potentially relevant variables initially excluded, such as income, value of family assets, gender and marital status. These results are also in line with the values in Table 2. Although this tends to confirm the validity of our causal estimates, as in every empirical study the absence of unobserved confounding cannot be guaranteed with certainty, and results have to be interpreted with caution. For example, some proxy of individuals’ creditworthiness might act as a confounder, with a positive effect on the outcome and (possibly) on the mediator. In linear models, omitting such a variable would inflate the \({\hat{\beta }}_w\) coefficient (see, e.g., Cinelli and Hazlett 2020), and thus the indirect effect estimate. However, in a logistic regression framework the distortion is far more complex because of non-collapsibility.

4 Conclusions

We have focussed on causal mediation for binary outcomes, deriving novel parametric expressions for natural direct and indirect effects, on the odds ratio scale, for settings where the mediator is a binary random variable. The proposed formulae are exact in the sense that they do not require the rare outcome assumption, and they are suitable for both natural effect decompositions existing in the causal mediation literature. Furthermore, they are written so as to keep explicit the link between natural effects and the corresponding pathway-specific coefficients of the logistic regression models assumed to govern the data generating process. In particular, in line with VanderWeele and Vansteelandt (2010) and Valeri and VanderWeele (2013), we have isolated the controlled direct effect as a component of the natural direct effect. We have also given the expressions for the approximate standard errors of the causal effect estimators, obtained via the delta method. The formulae for both the causal effects and the standard errors generalize the existing ones, especially with regard to parametric interactions between the exposure (and the mediator) and the confounders in the logistic models.

As an illustration, we have applied the derived formulae to a dataset coming from a microcredit experiment performed in Bosnia and Herzegovina (Augsburg et al. 2015), where it is plausible that the effect of randomly allocated microloans on the client’s bankability (i.e., the capability to obtain loans from financial institutions) at a 14-month follow-up is mediated by whether or not the client owns an active business. The causal effects estimated for the microcredit data are conditional on the client’s age, educational level and number of active loans at baseline. These are the mediator-outcome confounders we have chosen by combining empirical analyses and subject-matter knowledge. The results we have obtained are rather stable across the covariate patterns. Specifically, the average of the total effect odds ratios across all covariate patterns is 7.049, but only a small (and barely significant) part of this effect appears to be mediated by business ownership.

The counterfactual approach to mediation analysis requires strong assumptions about confounding, which might be difficult to meet in practice. Therefore, it would be interesting to adapt existing methods of sensitivity analysis for unobserved confounding to the present context. In particular, the interval identification method introduced by Lindmark et al. (2018) for probit regression, based on the proposal of Genbäck et al. (2015), could be adapted to logistic models. Alternatively, an instrumental variable (IV) approach might be adopted to relax the assumption of no unobserved confounding. Indeed, methods have recently been developed that allow for direct and indirect effect estimation in an IV setting (Didelez et al. 2010; Frölich 2007; Sobel 2008; Frölich and Huber 2014; Dippel et al. 2019; Mattei and Mealli 2011). These results mainly rely either on non-parametric or on linear parametric models, whereas for binary data the rare outcome assumption is invoked to exploit log-linear models. Thus, we think that the results presented in this paper may be extended to an IV framework for non-rare binary outcomes. Other promising extensions involve the case of multiple mediators, for which associational decompositions have recently been derived.

References

Augsburg B, De Haas R, Harmgart H, Meghir C (2015) The impacts of microcredit: evidence from Bosnia and Herzegovina. Am Econ J Appl Econ 7(1):183–203

Banerjee A, Karlan D, Zinman J (2015) Six randomized evaluations of microcredit: introduction and further steps. Am Econ J Appl Econ 7(1):1–21

Baron R, Kenny D (1986) The moderator–mediator variable distinction in social psychological research: conceptual, strategic, and statistical considerations. J Personal Soc Psychol 51(6):1173–1182

Bollen K (1989) Structural equations with latent variables. Wiley, New York

Cinelli C, Hazlett C (2020) Making sense of sensitivity: extending omitted variable bias. J R Stat Soc Ser B (Stat Methodol) 82(1):39–67

Cochran WG (1938) The omission or addition of an independent variate in multiple linear regression. Suppl J R Stat Soc 5(2):171–176

Cox DR (2007) On a generalization of a result of W.G. Cochran. Biometrika 94(3):755–759

Daniel R, De Stavola B, Cousens S, Vansteelandt S (2015) Causal mediation analysis with multiple mediators. Biometrics 71(1):1–14

Dawid AP (1979) Conditional independence in statistical theory (with discussion). J R Stat Soc Ser B (Stat Methodol) 41(1):1–31

Didelez V, Meng S, Sheehan NA (2010) Assumptions of IV methods for observational epidemiology. Stat Sci 25(1):22–40

Dippel C, Gold R, Heblich S, Pinto R (2019) Mediation analysis in IV settings with a single instrument. Technical report, Mimeo

Frölich M (2007) Nonparametric IV estimation of local average treatment effects with covariates. J Econom 139(1):35–75

Frölich M, Huber M (2014) Direct and indirect treatment effects: causal chains and mediation analysis with instrumental variables. Technical report, IZA discussion paper

Gaynor SM, Schwartz J, Lin X (2019) Mediation analysis for common binary outcomes. Stat Med 38(4):512–529

Genbäck M, Stanghellini E, de Luna X (2015) Uncertainty intervals for regression parameters with non-ignorable missingness in the outcome. Stat Pap 56(3):829–847

Geneletti S (2007) Identifying direct and indirect effects in a non-counterfactual framework. J R Stat Soc Ser B (Stat Methodol) 69(2):199–215

Greenland S, Robins JM, Pearl J (1999) Confounding and collapsibility in causal inference. Stat Sci 14(1):29–46

Hafeman DM, Schwartz S (2009) Opening the black box: a motivation for the assessment of mediation. Int J Epidemiol 38(3):838–845

Huber M (2019) A review of causal mediation analysis for assessing direct and indirect treatment effects. Technical report, University of Freiburg

Huber M, Lechner M, Strittmatter A (2018) Direct and indirect effects of training vouchers for the unemployed. J R Stat Soc Ser A (Stat Soc) 181(2):441–463

Imai K, Keele L, Tingley D (2010a) A general approach to causal mediation analysis. Psychol Methods 15(4):309–334

Imai K, Keele L, Yamamoto T (2010b) Identification, inference and sensitivity analysis for causal mediation effects. Stat Sci 25(1):51–71

Judd CM, Kenny DA (1981) Process analysis: estimating mediation in treatment evaluations. Eval Rev 5(5):602–619

Lindmark A, de Luna X, Eriksson M (2018) Sensitivity analysis for unobserved confounding of direct and indirect effects using uncertainty intervals. Stat Med 37(10):1744–1762

Lupparelli M (2019) Conditional and marginal relative risk parameters for a class of recursive regression graph models. Stat Methods Med Res 28(10–11):3466–3486

Mattei A, Mealli F (2011) Augmented designs to assess principal strata direct effects. J R Stat Soc Ser B (Stat Methodol) 73(5):729–752

Molenberghs G, Beunckens C, Sotto C, Kenward MG (2008) Every missingness not at random model has a missingness at random counterpart with equal fit. J R Stat Soc Ser B (Stat Methodol) 70(2):371–388

Oehlert GW (1992) A note on the delta method. Am Stat 46:27–29

Pearl J (2001) Direct and indirect effects. In: Proceedings of the 17th international conference on uncertainty in artificial intelligence, UAI’01. Morgan Kaufmann Publishers Inc., San Francisco, pp 411–420

Pearl J (2009) Causality: models, reasoning, and inference, 2nd edn. Cambridge University Press, New York

Pearl J (2010) The mediation formula: a guide to the assessment of causal pathways in non-linear models. Technical report R-363, University of California, Los Angeles

Robins J (1986) A new approach to causal inference in mortality studies with a sustained exposure period—application to control of the healthy worker survivor effect. Math Model 7(9–12):1393–1512

Robins JM, Greenland S (1992) Identifiability and exchangeability for direct and indirect effects. Epidemiology 3(2):143–155

Rosenbaum PR, Rubin DB (1983) The central role of the propensity score in observational studies for causal effects. Biometrika 70(1):41–55

Rubin DB (1974) Estimating causal effects of treatments in randomized and nonrandomized studies. J Educ Psychol 66(5):688–701

Samoilenko M, Lefebvre G (2019) Natural direct and indirect effects’ risk ratio expressions in causal mediation analysis of binary mediator and binary outcome: a fresh look at the formulas. Am J Epidemiol 188(7):1201–1203

Samoilenko M, Blais L, Lefebvre G (2018) Comparing logistic and log-binomial models for causal mediation analyses of binary mediators and rare binary outcomes: evidence to support cross-checking of mediation results in practice. Obs Stud 4:193–216

Sobel ME (2008) Identification of causal parameters in randomized studies with mediating variables. J Educ Behav Stat 33(2):230–251

Stanghellini E, Doretti M (2019) On marginal and conditional parameters in logistic regression models. Biometrika 106(3):732–739

Steen J, Vansteelandt S (2018) Graphical models for mediation analysis. arXiv preprint arXiv:1801.06069

Steen J, Loeys T, Moerkerke B, Vansteelandt S (2017) Flexible mediation analysis with multiple mediators. Am J Epidemiol 186(2):184–193

Valeri L, VanderWeele TJ (2013) Mediation analysis allowing for exposure–mediator interactions and causal interpretation: theoretical assumptions and implementation with SAS and SPSS macros. Psychol Methods 18(2):137–150

VanderWeele TJ (2009) Concerning the consistency assumption in causal inference. Epidemiology 20(6):880–883

VanderWeele TJ (2014) A unification of mediation and interaction: a four-way decomposition. Epidemiology 25(5):749–761

VanderWeele TJ, Vansteelandt S (2009) Conceptual issues concerning mediation, intervention and composition. Stat Interface 2(4):457–468

VanderWeele TJ, Vansteelandt S (2010) Odds ratios for mediation analysis for a dichotomous outcome. Am J Epidemiol 172(12):1339–1348

VanderWeele TJ, Valeri L, Ananth CV (2019) Mediation formulas with binary mediators and outcomes and the “rare outcome assumption”. Am J Epidemiol 188(7):1204–1205

Funding

Open access funding provided by Università degli Studi di Perugia within the CRUI-CARE Agreement.


Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.


Appendices

Appendix 1: Mathematical derivation of \({\mathrm{OR}}^{{\mathrm{PNDE}}}_{x,x^{\star }}\) and \({\mathrm{OR}}^{{\mathrm{TNIE}}}_{x,x^{\star }}\)

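Given the standard causal inference assumptions of Sect. 2, the natural effects can be non-parametrically identified by using Pearl’s mediation formula (Pearl 2001, 2010). For a binary mediator, the expression identifying the pure natural direct effect is

\[
{\mathrm{OR}}^{{\mathrm{PNDE}}}_{x,x^{\star }}=\frac{\sum _{w=0}^{1}P(Y=1\mid X=x,W=w)P(W=w\mid X=x^{\star }) \Big/ \sum _{w=0}^{1}P(Y=0\mid X=x,W=w)P(W=w\mid X=x^{\star })}{\sum _{w=0}^{1}P(Y=1\mid X=x^{\star },W=w)P(W=w\mid X=x^{\star }) \Big/ \sum _{w=0}^{1}P(Y=0\mid X=x^{\star },W=w)P(W=w\mid X=x^{\star })}=\frac{Q_1}{Q_2}.
\]

Given the parametric models assumed, the numerator of the expression above can be written as

\[
Q_1=\frac{\dfrac{e_y(x,0)}{1+e_y(x,0)}+\dfrac{e_y(x,1)\,e_w(x^{\star })}{1+e_y(x,1)}}{\dfrac{1}{1+e_y(x,0)}+\dfrac{e_w(x^{\star })}{1+e_y(x,1)}}=\exp (\beta _0+\beta _xx)\,\frac{1+e_y(x,1)+\exp (\beta _w+\beta _{xw}x)\,e_w(x^{\star })\{1+e_y(x,0)\}}{1+e_y(x,1)+e_w(x^{\star })\{1+e_y(x,0)\}}=\exp (\beta _0+\beta _xx)A_{x,x^{\star }},
\]

the factor \(1/\{1+e_w(x^{\star })\}\) common to the two sums having cancelled.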

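For the denominator, an analogous calculation leads to \(Q_2 = \exp (\beta _0+\beta _xx^{\star })A_{x^{\star },x^{\star }}\) and therefore to \(\log {\mathrm{OR}}_{x,x^{\star }}^{{\mathrm{PNDE}}}=\log Q_1 - \log Q_2 = \beta _x(x-x^{\star }) + \log (A_{x,x^{\star }}/A_{x^{\star },x^{\star }})\), which proves Eq. (9). Derivations for the total natural indirect effect are analogous, since we have

\[
{\mathrm{OR}}^{{\mathrm{TNIE}}}_{x,x^{\star }}=\frac{\sum _{w=0}^{1}P(Y=1\mid X=x,W=w)P(W=w\mid X=x) \Big/ \sum _{w=0}^{1}P(Y=0\mid X=x,W=w)P(W=w\mid X=x)}{\sum _{w=0}^{1}P(Y=1\mid X=x,W=w)P(W=w\mid X=x^{\star }) \Big/ \sum _{w=0}^{1}P(Y=0\mid X=x,W=w)P(W=w\mid X=x^{\star })}=\frac{Q_3}{Q_1},
\]

with \(Q_3=\exp (\beta _0+\beta _xx)A_{x,x}\), leading to \(\log {\mathrm{OR}}_{x,x^{\star }}^{{\mathrm{TNIE}}}=\log Q_3 - \log Q_1 = \log (A_{x,x}/A_{x,x^{\star }})\), that is, Eq. (10).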
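The derivation can be checked numerically. The sketch below is illustrative and uses made-up coefficient values: it computes the counterfactual odds \(Q_1\), \(Q_2\) and \(Q_3\) directly from the mediation formula with a binary mediator, and compares them with the closed forms \(\exp (\beta _0+\beta _xx)A_{x,x^{\star }}\), where \(A_{x,x^{\star }}\) is written with the quantities \(p_1,\dots ,p_4\) used in Appendix 3 (without covariates). The helper names `cf_odds` and `A` are hypothetical.

```python
import math

# Hypothetical coefficients, chosen only for illustration.
b0, bx, bw, bxw = -0.5, 1.2, 0.8, -0.3   # outcome model
g0, gx = -0.4, 0.9                        # mediator model

def e_y(x, w):
    return math.exp(b0 + bx * x + bw * w + bxw * x * w)

def e_w(x):
    return math.exp(g0 + gx * x)

def cf_odds(x, x_med):
    """Odds of Y_{x W_{x_med}} = 1 from the mediation formula with binary W."""
    p_w1 = e_w(x_med) / (1 + e_w(x_med))
    risk = sum(e_y(x, w) / (1 + e_y(x, w)) * (p_w1 if w == 1 else 1 - p_w1)
               for w in (0, 1))
    return risk / (1 - risk)

def A(x, x_med):
    """Closed form A_{x,x_med} = (p4 + p1*p2*p3) / (p4 + p2*p3)."""
    p1 = math.exp(bw + bxw * x)
    p2, p3, p4 = e_w(x_med), 1 + e_y(x, 0), 1 + e_y(x, 1)
    return (p4 + p1 * p2 * p3) / (p4 + p2 * p3)

x, xs = 1, 0
Q1, Q2, Q3 = cf_odds(x, xs), cf_odds(xs, xs), cf_odds(x, x)
or_pnde = Q1 / Q2
or_tnie = Q3 / Q1
# Each pair should agree: mediation formula vs parametric closed form.
print(or_pnde, math.exp(bx * (x - xs)) * A(x, xs) / A(xs, xs))
print(or_tnie, A(x, x) / A(x, xs))
```

Since \(e_y(x,0)=\exp (\beta _0+\beta _xx)\), the equality \(Q_1=e_y(x,0)A_{x,x^{\star }}\) is exactly the statement derived above, and the product of the two printed effects reproduces the total effect odds ratio \(Q_3/Q_2\).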

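To prove that \(A_{x,x}\) equals the inverse risk ratio term in (14), let

\[
\lambda (y,x)=\gamma _0+\gamma _xx+\log \frac{1+e_y(x,0)}{1+e_y(x,1)}+y(\beta _w+\beta _{xw}x)
\]

be the parametric expression for \(\log \frac{P(W=1\mid Y=y, X=x)}{P(W=0\mid Y=y, X=x)}\) given by Stanghellini and Doretti (2019). Then, it is straightforward to prove that

\[
A_{x,x}=\frac{1+\exp \{\lambda (1,x)\}}{1+\exp \{\lambda (0,x)\}}=\frac{P(W=0\mid Y=0,X=x)}{P(W=0\mid Y=1,X=x)},
\]

with the right-hand side term being indeed the probability ratio in (14).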
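The identity for \(A_{x,x}\) can also be checked numerically. In the sketch below, with hypothetical coefficient values, the closed form of \(A_{x,x}\) is compared with the ratio obtained from the log-odds of W given Y and X implied by the two logistic models (the hypothetical helper `lam`), and with \(P(W=0\mid Y=0,X=x)/P(W=0\mid Y=1,X=x)\) computed directly by Bayes' rule from the joint distribution of (W, Y) given X.

```python
import math

b0, bx, bw, bxw = -0.5, 1.2, 0.8, -0.3   # hypothetical outcome-model coefficients
g0, gx = -0.4, 0.9                        # hypothetical mediator-model coefficients

def e_y(x, w):
    return math.exp(b0 + bx * x + bw * w + bxw * x * w)

def e_w(x):
    return math.exp(g0 + gx * x)

def A_xx(x):
    """Closed form A_{x,x} = (p4 + p1*p2*p3) / (p4 + p2*p3) with p2 = e_w(x)."""
    p1, p2 = math.exp(bw + bxw * x), e_w(x)
    p3, p4 = 1 + e_y(x, 0), 1 + e_y(x, 1)
    return (p4 + p1 * p2 * p3) / (p4 + p2 * p3)

def lam(y, x):
    """Log-odds of W=1 given Y=y, X=x implied by the two logistic models."""
    return (g0 + gx * x + math.log((1 + e_y(x, 0)) / (1 + e_y(x, 1)))
            + y * (bw + bxw * x))

def a_via_lambda(x):
    return (1 + math.exp(lam(1, x))) / (1 + math.exp(lam(0, x)))

def p_w0_given_y(y, x):
    """P(W=0 | Y=y, X=x) computed directly from the joint law of (W, Y) given X=x."""
    p_w = {0: 1 / (1 + e_w(x)), 1: e_w(x) / (1 + e_w(x))}
    p_y = {w: (e_y(x, w) if y == 1 else 1.0) / (1 + e_y(x, w)) for w in (0, 1)}
    joint = {w: p_w[w] * p_y[w] for w in (0, 1)}
    return joint[0] / (joint[0] + joint[1])

def a_via_bayes(x):
    return p_w0_given_y(0, x) / p_w0_given_y(1, x)

for x in (0, 1):
    print(x, A_xx(x), a_via_lambda(x), a_via_bayes(x))
```

The three columns coincide for each value of x, which is what the algebraic argument states.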

The algebraic developments above remain unchanged once the covariates C needed to remove confounding are added, provided that the linear predictors are suitably modified. Specifically, the conditional versions of \(Q_1\), \(Q_2\) and \(Q_3\) become

\[
Q_{1\mid c}=e_y(x,0,z)\,A_{x,x^{\star }\mid c},\qquad Q_{2\mid c}=e_y(x^{\star },0,z)\,A_{x^{\star },x^{\star }\mid c},\qquad Q_{3\mid c}=e_y(x,0,z)\,A_{x,x\mid c},
\]

where \(e_y(x,w,z)\) and \(A_{x,x^{\star }\mid c}\) are as in Sect. 2. The derivation of Eqs. (17) and (18) is then immediate. Notice that this approach can be immediately generalized to account for multiple confounders; it suffices to replace z with \(\varvec{z}=(z_{1},\dots ,z_{p})'\) and v with \(\varvec{v}=(v_1,\dots ,v_q)'\) in the formulas above, substituting every product involving z and v with the corresponding row-column product (for instance, \(\beta _{z}z\) is replaced by \(\varvec{\beta }_{z}'\varvec{z}\) with \(\varvec{\beta }_{z}=(\beta _{z_{1}},\dots ,\beta _{z_{p}})'\) and so on).
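A minimal sketch of the multiple-confounder version follows, assuming two confounders in each model and made-up coefficient vectors; every scalar product with z or v becomes an inner product, as described above. The conditional effects obtained from the closed forms are compared with a brute-force evaluation of the conditional mediation formula. Function names are hypothetical.

```python
import numpy as np

# Hypothetical coefficients: z enters the outcome model, v the mediator model.
b0, bx, bw, bxw = -0.5, 1.0, 0.7, -0.2
bz, bxz = np.array([0.4, -0.3]), np.array([0.2, 0.1])
bwz, bxwz = np.array([0.1, 0.05]), np.array([-0.1, 0.0])
g0, gx = -0.3, 0.8
gv, gxv = np.array([0.5, -0.2]), np.array([0.1, 0.3])

def e_y(x, w, z):
    # Row-column products replace the scalar products of the one-confounder case.
    lin = b0 + bx * x + bz @ z + x * (bxz @ z)
    lin += w * (bw + bxw * x + bwz @ z + x * (bxwz @ z))
    return float(np.exp(lin))

def e_w(x, v):
    return float(np.exp(g0 + gx * x + gv @ v + x * (gxv @ v)))

def A(x, x_med, z, v):
    p1 = e_y(x, 1, z) / e_y(x, 0, z)   # exp(bw + bxw x + bwz'z + bxwz'z x)
    p2, p3, p4 = e_w(x_med, v), 1 + e_y(x, 0, z), 1 + e_y(x, 1, z)
    return (p4 + p1 * p2 * p3) / (p4 + p2 * p3)

def or_pnde(x, xs, z, v):
    # Conditional CDE part: exp{(bx + bxz'z)(x - xs)}
    cde = np.exp((bx + bxz @ z) * (x - xs))
    return float(cde * A(x, xs, z, v) / A(xs, xs, z, v))

def or_tnie(x, xs, z, v):
    return A(x, x, z, v) / A(x, xs, z, v)

def cf_odds(x, x_med, z, v):
    """Brute-force conditional odds of Y_{x W_{x_med}} = 1 given the covariates."""
    pw1 = e_w(x_med, v) / (1 + e_w(x_med, v))
    risk = sum(e_y(x, w, z) / (1 + e_y(x, w, z)) * (pw1 if w else 1 - pw1)
               for w in (0, 1))
    return risk / (1 - risk)

z, v = np.array([1.0, 0.0]), np.array([0.5, 2.0])   # one hypothetical covariate pattern
print(or_pnde(1, 0, z, v), cf_odds(1, 0, z, v) / cf_odds(0, 0, z, v))
print(or_tnie(1, 0, z, v), cf_odds(1, 1, z, v) / cf_odds(1, 0, z, v))
```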

Appendix 2: Natural effects and the rare outcome assumption

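Recalling that \(e_y(x,w)=\exp (\beta _0+\beta _xx+\beta _ww+\beta _{xw}xw)\) and \(e_w(x)=\exp (\gamma _0+\gamma _xx)\), the odds ratio pure natural direct effect is given by

\[
{\mathrm{OR}}^{{\mathrm{PNDE}}}_{x,x^{\star }}=\exp \{\beta _x(x-x^{\star })\}\,\frac{1+e_y(x,1)+\exp (\beta _w+\beta _{xw}x)\,e_w(x^{\star })\{1+e_y(x,0)\}}{1+e_y(x,1)+e_w(x^{\star })\{1+e_y(x,0)\}}\,\frac{1+e_y(x^{\star },1)+e_w(x^{\star })\{1+e_y(x^{\star },0)\}}{1+e_y(x^{\star },1)+\exp (\beta _w+\beta _{xw}x^{\star })\,e_w(x^{\star })\{1+e_y(x^{\star },0)\}}.
\]

If all four terms \(e_y(x,0)\), \(e_y(x,1)\), \(e_y(x^{\star },0)\) and \(e_y(x^{\star },1)\) tend to zero, then the expression above reduces to

\[
{\mathrm{OR}}^{{\mathrm{PNDE}}}_{x,x^{\star }}\approx \exp \{\beta _x(x-x^{\star })\}\,\frac{1+\exp (\beta _w+\beta _{xw}x)\,e_w(x^{\star })}{1+\exp (\beta _w+\beta _{xw}x^{\star })\,e_w(x^{\star })},\tag{20}
\]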

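which is the expression in Valeri and VanderWeele (2013, p.150). The same logic applies to the odds ratio total natural direct effect, which is

\[
{\mathrm{OR}}^{{\mathrm{TNDE}}}_{x,x^{\star }}=\exp \{\beta _x(x-x^{\star })\}\,\frac{1+e_y(x,1)+\exp (\beta _w+\beta _{xw}x)\,e_w(x)\{1+e_y(x,0)\}}{1+e_y(x,1)+e_w(x)\{1+e_y(x,0)\}}\,\frac{1+e_y(x^{\star },1)+e_w(x)\{1+e_y(x^{\star },0)\}}{1+e_y(x^{\star },1)+\exp (\beta _w+\beta _{xw}x^{\star })\,e_w(x)\{1+e_y(x^{\star },0)\}}.
\]

Again, if all four terms above tend to 0, this quantity tends to

\[
{\mathrm{OR}}^{{\mathrm{TNDE}}}_{x,x^{\star }}\approx \exp \{\beta _x(x-x^{\star })\}\,\frac{1+\exp (\beta _w+\beta _{xw}x)\,e_w(x)}{1+\exp (\beta _w+\beta _{xw}x^{\star })\,e_w(x)},\tag{21}
\]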

in analogy with (20). The second factors of the right-hand sides of both (20) and (21) are equal to 1 if \(\beta _{xw}=0\). This means that under the rare outcome assumption the odds ratio natural direct effects are given by the product of \({\mathrm{OR}}^{{\mathrm{CDE}}}_{x,x^{\star }}(0)=\exp \{\beta _x(x-x^{\star })\}\) (governed by \(\beta _x\) only) and a residual quantity governed by the interaction coefficient \(\beta _{xw}\). This clean separation does not occur in the exact formulae, where the residual terms are in general not equal to 1 even when \(\beta _{xw}=0\).
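The practical size of the rare outcome approximation can be gauged with a short sketch comparing the exact pure natural direct effect with approximation (20). The coefficient values are hypothetical; only the outcome intercept \(\beta _0\) is varied to make the outcome rare or common.

```python
import math

def make_A(b0, bx, bw, bxw, g0, gx):
    """Return A(a, a_med) for the no-covariate logistic models of this appendix."""
    def e_y(a, w):
        return math.exp(b0 + bx * a + bw * w + bxw * a * w)
    def A(a, a_med):
        p1, p2 = math.exp(bw + bxw * a), math.exp(g0 + gx * a_med)
        p3, p4 = 1 + e_y(a, 0), 1 + e_y(a, 1)
        return (p4 + p1 * p2 * p3) / (p4 + p2 * p3)
    return A

def pnde_exact(b0, bx, bw, bxw, g0, gx, x=1, xs=0):
    A = make_A(b0, bx, bw, bxw, g0, gx)
    return math.exp(bx * (x - xs)) * A(x, xs) / A(xs, xs)

def pnde_rare(bx, bw, bxw, g0, gx, x=1, xs=0):
    # Approximation (20): OR^CDE(0) times a residual factor driven by bxw.
    ew_s = math.exp(g0 + gx * xs)
    return (math.exp(bx * (x - xs))
            * (1 + math.exp(bw + bxw * x) * ew_s) / (1 + math.exp(bw + bxw * xs) * ew_s))

coef = dict(bx=1.0, bw=1.0, bxw=0.5, g0=0.0, gx=1.0)   # hypothetical values
for b0 in (-6.0, 0.0):   # rare outcome vs common outcome
    print(b0, pnde_exact(b0, **coef), pnde_rare(**coef))
```

With \(\beta _0=-6\) the two values nearly coincide, whereas with \(\beta _0=0\) the approximation overstates the exact effect considerably. Setting `bxw=0.0` makes the approximate residual factor exactly 1, while the exact residual factor remains different from 1 when the outcome is common.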

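The odds ratio total natural indirect effect is

\[
{\mathrm{OR}}^{{\mathrm{TNIE}}}_{x,x^{\star }}=\frac{1+e_y(x,1)+\exp (\beta _w+\beta _{xw}x)\,e_w(x)\{1+e_y(x,0)\}}{1+e_y(x,1)+e_w(x)\{1+e_y(x,0)\}}\,\frac{1+e_y(x,1)+e_w(x^{\star })\{1+e_y(x,0)\}}{1+e_y(x,1)+\exp (\beta _w+\beta _{xw}x)\,e_w(x^{\star })\{1+e_y(x,0)\}}.
\]

Contrary to the case of natural direct effects, we notice that \(e_y(x^{\star },0)\) and \(e_y(x^{\star },1)\) are not present in the expression above. Thus, we only need \(e_y(x,0)\) and \(e_y(x,1)\) to tend to zero in order to obtain the expression in Valeri and VanderWeele (2013, p.150)

\[
{\mathrm{OR}}^{{\mathrm{TNIE}}}_{x,x^{\star }}\approx \frac{\{1+e_w(x^{\star })\}\{1+\exp (\beta _w+\beta _{xw}x)\,e_w(x)\}}{\{1+e_w(x)\}\{1+\exp (\beta _w+\beta _{xw}x)\,e_w(x^{\star })\}}.
\]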

This is particularly relevant when X is binary, since it means that we only need \(P(Y=1\mid X=1,W=0)\approx 0\) and \(P(Y=1\mid X=1,W=1)\approx 0\), but not necessarily \(P(Y=1\mid X=0,W=0)\approx 0\) and \(P(Y=1\mid X=0,W=1)\approx 0\).

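Similarly, in the expression of the odds ratio pure natural indirect effect

\[
{\mathrm{OR}}^{{\mathrm{PNIE}}}_{x,x^{\star }}=\frac{1+e_y(x^{\star },1)+\exp (\beta _w+\beta _{xw}x^{\star })\,e_w(x)\{1+e_y(x^{\star },0)\}}{1+e_y(x^{\star },1)+e_w(x)\{1+e_y(x^{\star },0)\}}\,\frac{1+e_y(x^{\star },1)+e_w(x^{\star })\{1+e_y(x^{\star },0)\}}{1+e_y(x^{\star },1)+\exp (\beta _w+\beta _{xw}x^{\star })\,e_w(x^{\star })\{1+e_y(x^{\star },0)\}}
\]

the terms \(e_y(x,0)\) and \(e_y(x,1)\) are not present, so it suffices that \(e_y(x^{\star },0)\) and \(e_y(x^{\star },1)\) tend to zero to obtain

\[
{\mathrm{OR}}^{{\mathrm{PNIE}}}_{x,x^{\star }}\approx \frac{\{1+e_w(x^{\star })\}\{1+\exp (\beta _w+\beta _{xw}x^{\star })\,e_w(x)\}}{\{1+e_w(x)\}\{1+\exp (\beta _w+\beta _{xw}x^{\star })\,e_w(x^{\star })\}}.
\]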

For the case of binary X, this means that the conditions \(P(Y=1\mid X=0,W=0)\approx 0\) and \(P(Y=1\mid X=0,W=1)\approx 0\) are needed, while \(P(Y=1\mid X=1,W=0)\approx 0\) and \(P(Y=1\mid X=1,W=1)\approx 0\) are not. Overall, it is possible to conclude that the two natural indirect effects need only different subsets of the rare outcome assumption (instead of the whole assumption as traditionally defined) to be identified by the approximate parametric formulae.
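The asymmetry between the two indirect effects can be seen in a small sketch with hypothetical coefficients where \(\beta _x=-6\), so that the outcome is rare when X = 1 but common when X = 0. Under these assumed values the approximate total natural indirect effect is accurate, because it only needs \(e_y(1,\cdot )\) to be small, while the approximate pure natural indirect effect is not. The helper `nie_rare` is hypothetical and implements the two approximate formulae above.

```python
import math

# Hypothetical coefficients: bx = -6 makes the outcome rare when X = 1 only.
b0, bx, bw, bxw, g0, gx = 0.0, -6.0, 1.0, 0.5, 0.0, 1.0

def e_y(a, w):
    return math.exp(b0 + bx * a + bw * w + bxw * a * w)

def e_w(a):
    return math.exp(g0 + gx * a)

def A(a, a_med):
    p1, p2 = math.exp(bw + bxw * a), e_w(a_med)
    p3, p4 = 1 + e_y(a, 0), 1 + e_y(a, 1)
    return (p4 + p1 * p2 * p3) / (p4 + p2 * p3)

def nie_rare(a, x, xs):
    """Rare-outcome approximation: a = x gives the TNIE, a = xs gives the PNIE."""
    k = math.exp(bw + bxw * a)
    return (1 + e_w(xs)) * (1 + k * e_w(x)) / ((1 + e_w(x)) * (1 + k * e_w(xs)))

x, xs = 1, 0
tnie = A(x, x) / A(x, xs)      # depends on e_y(x, .) only
pnie = A(xs, x) / A(xs, xs)    # depends on e_y(xs, .) only
print("TNIE exact vs approx:", tnie, nie_rare(x, x, xs))
print("PNIE exact vs approx:", pnie, nie_rare(xs, x, xs))
```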

Appendix 3: Variance–covariance matrix of the estimated causal effects

Denoting by \(\varvec{\beta }=(\beta _{0},\beta _{x},\beta _{z},\beta _{xz},\beta _{w},\beta _{xw},\beta _{wz},\beta _{xwz})'\) and \(\varvec{\gamma }=(\gamma _{0},\gamma _{x},\gamma _{v},\gamma _{xv})'\) the two vectors of model parameters, by \(\varvec{\varSigma }_{\hat{\varvec{\beta }}}\) and \(\varvec{\varSigma }_{\hat{\varvec{\gamma }}}\) the variance–covariance matrices of their estimators \(\hat{\varvec{\beta }}\) and \(\hat{\varvec{\gamma }}\), and by

the true causal effect vector, the first-order approximate variance–covariance matrix of the estimator

can be obtained as \(V(\hat{\varvec{e}}) = \varvec{E}\varvec{D}\varvec{\varSigma }\varvec{D}'\varvec{E}'\), where \(\varvec{E}={\mathrm{diag}}(\varvec{e})\),

\[
\varvec{\varSigma }=\begin{pmatrix}\varvec{\varSigma }_{\hat{\varvec{\beta }}} & \varvec{0}\\ \varvec{0} & \varvec{\varSigma }_{\hat{\varvec{\gamma }}}\end{pmatrix}
\]

and \(\varvec{D}\) is the matrix of derivatives \(\varvec{D} = \partial \log \varvec{e}/\partial \varvec{\theta }'\), with \(\varvec{\theta }=(\varvec{\beta }',\varvec{\gamma }')'\) denoting the whole parameter vector. To obtain \(\varvec{D}\), it is convenient to compute the row vector \(\varvec{d}_{x,x^{\star }\mid c}=\partial A_{x,x^{\star }\mid c}/\partial \varvec{\theta }'\) first. To this end, it is useful to write \(A_{x,x^{\star }\mid c}\) as

\[
A_{x,x^{\star }\mid c}=\frac{p_1p_2p_3+p_4}{p_2p_3+p_4},
\]

with \(p_1=\exp (\beta _w+\beta _{xw}x+\beta _{wz}z+\beta _{xwz}xz)\), \(p_2=e_{w}(x^{\star },v)\), \(p_3=1+e_{y}(x,0,z)\) and \(p_4=1+e_{y}(x,1,z)\). Under this notation, the three key derivatives to compute are

while the others can be written as functions thereof. Specifically, a compact form for \(\varvec{d}_{x,x^{\star }\mid c}\) is given by

where \(\otimes\) denotes the Kronecker product and, with \(\varvec{I}_{2}\) the identity matrix of order 2, \(\varvec{d}(a,b)\) is the row vector given by the vector-matrix product \(\varvec{d}(a,b) = (1\,,\,a)[(1\,,\,b)\otimes \varvec{I}_{2}]=(1\,,\,a\,,\,b\,,\,ab)\). The vectors \(\varvec{d}_{x,x\mid c}\), \(\varvec{d}_{x^{\star },x^{\star }\mid c}\) and \(\varvec{d}_{x^{\star },x\mid c}\) can be calculated by applying the same formulas to \(A_{x,x\mid c}\), \(A_{x^{\star },x^{\star }\mid c}\) and \(A_{x^{\star },x\mid c}\), respectively. Then, the matrix \(\varvec{D}\) can be obtained as

where

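while \(\varvec{d}_1\) is a row vector of the same length as \(\varvec{\theta }\) with all its components equal to zero except those in the positions of \(\beta _{x}\) and \(\beta _{xz}\), which are equal to \(x-x^{\star }\) and \(z(x-x^{\star })\), respectively. Again, extension to multiple confounders is immediate provided that \(\varvec{\beta }\) and \(\varvec{\gamma }\) are extended as follows:

\[
\varvec{\beta }=(\beta _{0},\beta _{x},\varvec{\beta }_{z}',\varvec{\beta }_{xz}',\beta _{w},\beta _{xw},\varvec{\beta }_{wz}',\varvec{\beta }_{xwz}')',\qquad \varvec{\gamma }=(\gamma _{0},\gamma _{x},\varvec{\gamma }_{v}',\varvec{\gamma }_{xv}')'.
\]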

Clearly, in finite-sample analyses one has to plug in the estimates \(\hat{\varvec{\beta }}\) and \(\hat{\varvec{\gamma }}\) everywhere in the formulae above to obtain the estimated variance–covariance matrix \({\hat{V}}(\hat{\varvec{e}})\).
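The delta-method recipe can be sketched numerically. This is a hypothetical sketch, not the authors' implementation: it assumes one scalar confounder z in the outcome model and one scalar confounder v in the mediator model, takes the effect vector to be the four natural effect odds ratios (PNDE, TNIE, TNDE, PNIE) for a single covariate pattern, and replaces the analytic matrix \(\varvec{D}\) with central finite differences of \(\log \varvec{e}\). The helper `d_vec` reproduces \(\varvec{d}(a,b)=(1,a)[(1,b)\otimes \varvec{I}_2]\), the design row \((1,a,b,ab)\) matching the ordering of \(\varvec{\beta }\) and \(\varvec{\gamma }\). All parameter values and covariance matrices are made up.

```python
import numpy as np

def d_vec(a, b):
    """d(a, b) = (1, a)[(1, b) kron I_2] = (1, a, b, a*b)."""
    return np.array([1.0, a]) @ np.kron(np.array([[1.0, b]]), np.eye(2))

def log_effects(theta, x, xs, z, v):
    """Log odds ratios (PNDE, TNIE, TNDE, PNIE) for one covariate pattern (z, v).

    theta = (b0, bx, bz, bxz, bw, bxw, bwz, bxwz, g0, gx, gv, gxv).
    """
    beta, gamma = theta[:8], theta[8:]

    def e_y(a, w):
        # exp of the outcome linear predictor at X=a, W=w, confounder z
        return np.exp(beta[:4] @ d_vec(a, z) + w * (beta[4:] @ d_vec(a, z)))

    def e_w(a):
        # exp of the mediator linear predictor at X=a, confounder v
        return np.exp(gamma @ d_vec(a, v))

    def log_A(a, a_med):
        p1 = np.exp(beta[4:] @ d_vec(a, z))
        p2, p3, p4 = e_w(a_med), 1 + e_y(a, 0), 1 + e_y(a, 1)
        return np.log((p4 + p1 * p2 * p3) / (p4 + p2 * p3))

    # log OR^CDE part: bx (x - xs) + bxz z (x - xs)
    log_cde = beta[:4] @ (d_vec(x, z) - d_vec(xs, z))
    return np.array([
        log_cde + log_A(x, xs) - log_A(xs, xs),   # PNDE
        log_A(x, x) - log_A(x, xs),               # TNIE
        log_cde + log_A(x, x) - log_A(xs, x),     # TNDE
        log_A(xs, x) - log_A(xs, xs),             # PNIE
    ])

def delta_cov(theta, sigma_beta, sigma_gamma, x, xs, z, v, h=1e-6):
    """Effects e and first-order covariance E D Sigma D' E' (D by central differences)."""
    k = theta.size
    sigma = np.zeros((k, k))
    sigma[:8, :8] = sigma_beta      # outcome-model block
    sigma[8:, 8:] = sigma_gamma     # mediator-model block (separately fitted model)
    D = np.empty((4, k))
    for j in range(k):
        step = np.zeros(k)
        step[j] = h
        D[:, j] = (log_effects(theta + step, x, xs, z, v)
                   - log_effects(theta - step, x, xs, z, v)) / (2 * h)
    e = np.exp(log_effects(theta, x, xs, z, v))
    E = np.diag(e)
    return e, E @ D @ sigma @ D.T @ E.T

# Hypothetical estimates and covariance matrices, for illustration only.
theta_hat = np.array([-0.5, 1.0, 0.3, 0.1, 0.7, -0.2, 0.1, 0.0,
                      -0.3, 0.8, 0.4, 0.1])
e, V = delta_cov(theta_hat, 0.01 * np.eye(8), 0.01 * np.eye(4),
                 x=1, xs=0, z=1.0, v=0.5)
se = np.sqrt(np.diag(V))
print("effects:", e.round(3), "s.e.:", se.round(3))
```

Because PNDE × TNIE and TNDE × PNIE both equal the total effect odds ratio, the product of the first two entries of `e` should match the product of the last two, which gives a quick consistency check. The finite-difference Jacobian is a convenience here; the analytic row vectors described above avoid the choice of step size.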

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Doretti, M., Raggi, M. & Stanghellini, E. Exact parametric causal mediation analysis for a binary outcome with a binary mediator. Stat Methods Appl 31, 87–108 (2022). https://doi.org/10.1007/s10260-021-00562-w
