Abstract

In a measure space \((X,{\mathcal {A}},\mu )\), we consider two measurable functions \(f,g:E\rightarrow {\mathbb {R}}\), for some \(E\in {\mathcal {A}}\). We prove that the property of having equal p-norms when p varies in some infinite set \(P\subseteq [1,+\infty )\) is equivalent to the following condition:

$$\begin{aligned} \mu (\{x\in E:|f(x)|>\alpha \})=\mu (\{x\in E:|g(x)|>\alpha \})\quad \text {for all } \alpha \ge 0. \end{aligned}$$


1 Introduction

We consider a measure space \((X,{\mathcal {A}},\mu )\) and two measurable functions \(f,g:E\rightarrow {\mathbb {R}}\), for some \(E\in {\mathcal {A}}\). The aim of this paper is to characterize the property of having equal p-norms when p belongs to a sequence \(P=(p_j)_{j=1}^{\infty }\) of distinct real numbers \(p_j\ge 1\).

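Before stating our main results, let us recall the standard notation for the norms in \({\mathcal {L}}^p(E)\):

$$\begin{aligned} ||f ||_p=\left( \int _E|f|^p\,d\mu \right) ^{\frac{1}{p}}\quad (1\le p<+\infty ),\qquad ||f ||_{\infty }=\mathop {\mathrm {ess\,sup}}\limits _{E}|f|. \end{aligned}$$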

Moreover, for a measurable function \(f:E\rightarrow {\mathbb {R}}\), we write

$$\begin{aligned} \mu (|f|>\alpha )=\mu \big (\{x\in E:|f(x)|>\alpha \}\big ). \end{aligned}$$

Here is our main result.

Theorem 1

Suppose \(f,g\in {\mathcal {L}}^{p}(E)\) for all \(p\ge 1\), and \(P=(p_j)_{j=1}^{\infty }\) is a sequence of distinct real numbers \(p_j\ge 1\). Suppose also that at least one of the following two conditions holds:

- a)

P has an accumulation point in \((1,+\infty )\,\);

- b)

\(f,g\in {\mathcal {L}}^{\infty }(E)\) and

$$\begin{aligned} \sum _{j=1}^{\infty }\frac{p_j-1}{(p_j-1)^2+1}=+\infty . \end{aligned}$$(1)

Then the following two statements are equivalent:

- i)

\(||f ||_p=||g ||_p\ \quad \text {for all } p \in P\) ;

- ii)

\(\mu (|f|>\alpha )=\mu (|g|>\alpha )\quad \text { for all }\alpha \ge 0.\)

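Observe that, if \(\lim _{j} p_j=+\infty \), then (1) is equivalent to

$$\begin{aligned} \sum _{j=1}^{\infty }\frac{1}{p_j}=+\infty , \end{aligned}$$

since \(\frac{p_j-1}{(p_j-1)^2+1}\sim \frac{1}{p_j}\) as \(j\rightarrow \infty \).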

Notice moreover that, in this case, if we drop the boundedness hypothesis on f and g, the result is no longer true, as we will show with a counterexample in Sect. 4. Theorem 1 applies for example if

By contrast, the set \(P=\left\{ j^2 : j\in {\mathbb {N}}{\setminus }\{0\}\right\} \) is not admissible, since in this case the series in (1) converges.
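To make the admissibility condition (1) concrete, here is a small numerical sketch (our illustration, not part of the paper) that computes partial sums of the series in (1) for \(P=\{j\}\), \(P=\{j^2\}\) and \(P=\{j!\}\). Partial sums can only suggest, never prove, divergence. The helper names are hypothetical.

```python
import math

def series_term(p: float) -> float:
    """Term (p - 1) / ((p - 1)**2 + 1) of the series in condition (1)."""
    q = p - 1.0
    return q / (q * q + 1.0)

def partial_sum(exponents) -> float:
    """Partial sum of the series in (1) over a finite collection of exponents p_j >= 1."""
    return math.fsum(series_term(p) for p in exponents)

def linear(n):      # P = {1, 2, 3, ...}: the terms behave like 1/j
    return [float(j) for j in range(1, n + 1)]

def squares(n):     # P = {j^2}: the terms behave like 1/j^2
    return [float(j * j) for j in range(1, n + 1)]

def factorials(n):  # P = {j!}: the terms decay even faster
    return [float(math.factorial(j)) for j in range(1, n + 1)]

for n in (10_000, 100_000):
    # the linear partial sums keep growing (by about log 10 per decade),
    # while the other two stabilise
    print(n, partial_sum(linear(n)), partial_sum(squares(n)), partial_sum(factorials(20)))
```

For \(P=\{j\}\) the series diverges like the harmonic series, whereas for \(P=\{j^2\}\) and \(P=\{j!\}\) it converges, so case b) of Theorem 1 cannot be invoked for the latter two sets.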

It follows from the layer-cake formula recalled in Remark 3 that ii) implies the identity \(||f ||_p=||g ||_p\) for every \(p \in [1,+\infty )\) and all \(f,g\in {\mathcal {L}}^p(E)\). Hence, the main interest of our result lies in proving that i) implies ii).

The paper is organized as follows. In Sect. 2, we recall some results in measure theory and complex analysis. Section 3 is devoted to the proof of Theorem 1 by the use of the “Full Müntz Theorem in \({\mathcal {C}}[0,1]\)”, elementary complex analysis and the Mellin transform. In Sect. 4, we construct the counterexample to the conclusion of Theorem 1 if the boundedness hypothesis on f and g is dropped in b). In the last section, we provide some complementary results and final remarks.

2 Some preliminaries for the proof

We will need the following preliminary results.

Lemma 2

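Suppose \(P=(p_j)_{j=1}^{\infty }\) is a sequence of distinct real numbers \(p_j\ge 1\) satisfying (1) and having at most 1 as a finite accumulation point. If \(\varphi \in {\mathcal {L}}^1[0,1]\) satisfies

$$\begin{aligned} \int _0^1\varphi (x)\,x^{p-1}\,dx=0\quad \text {for all } p\in P, \end{aligned}$$(2)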

then \(\varphi (x)=0\) for almost every \(x\in [0,1]\).

Proof

By the assumptions given on P, one of the following two cases occurs:

- (b1):

P has a strictly increasing subsequence \((p_{j_k})_k\) such that

$$\begin{aligned} p_{j_k}\rightarrow +\infty \quad \hbox { and } \quad \sum _{k=1}^{\infty }\frac{1}{p_{j_k}}=+\infty \,; \end{aligned}$$

- (b2):

P has a strictly decreasing subsequence \((p_{j_k})_k\) such that

$$\begin{aligned} p_{j_k} \rightarrow \ 1 \quad \hbox { and } \quad \sum _{k=1}^{\infty }(p_{j_k}-1)=+\infty . \end{aligned}$$

With no loss of generality, we may replace P by the subsequence \(p_{j_k}\), in either case.

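Suppose that (b1) holds; then by (2) we have

$$\begin{aligned} \int _0^1\varphi (x)\,x^{p_1-1}\,x^{p-p_1}\,dx=0\quad \text {for all } p\in P. \end{aligned}$$

By the “Full Müntz Theorem in \({\mathcal {C}}[0,1]\)” given in [1, Theorem 2.1], the linear span of \(\{x^{p-p_1},p\in P\}\) is a dense subset of \({\mathcal {C}}^0[0,1]\) because in this case

$$\begin{aligned} \sum _{j=2}^{\infty }\frac{p_j-p_1}{(p_j-p_1)^2+1}=+\infty , \end{aligned}$$

since \(p_j-p_1\sim p_j\) as \(j\rightarrow \infty \) and \(\sum _j 1/p_j=+\infty \) by (b1). Hence, for any \(h\in {\mathcal {C}}^0[0,1]\) we have:

$$\begin{aligned} \int _0^1\varphi (x)\,x^{p_1-1}\,h(x)\,dx=0. \end{aligned}$$

Extend \(\varphi \equiv 0\) outside [0, 1]. Let \(\chi _{\varepsilon }\) be a family of mollifying kernels on \({\mathbb {R}}\) (see, for example, [3] or [9, Chapter 9.2]). Then, picking \(h(x)=\chi _{\varepsilon }(x-y)\) and setting \(k(x)=\varphi (x)x^{p_1-1}\), we obtain:

$$\begin{aligned} \int _{{\mathbb {R}}}k(x)\,\chi _{\varepsilon }(x-y)\,dx=0\quad \text {for all } y\in {\mathbb {R}},\ \varepsilon >0. \end{aligned}$$

Now, by [9, Chapter 9, Theorem 9.6], the left-hand side tends to k as \(\varepsilon \rightarrow 0\), whence \(k=0\) almost everywhere. Since \(x^{p_1-1}>0\) on (0, 1], consequently (2) holds if and only if \(\varphi =0\) almost everywhere.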

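If case (b2) holds, then we can define a sequence of functions \((f_j)_j\) by

$$\begin{aligned} f_j(x)=\varphi (x)\,x^{p_j-1},\qquad x\in (0,1]. \end{aligned}$$

The sequence \((f_j)_j\) converges to \(\varphi \) pointwise on (0, 1]. Moreover, choosing \(\sigma (x)=|\varphi (x)|\), we obtain that \(\sigma \in {\mathcal {L}}^1\) and

$$\begin{aligned} |f_j(x)|\le \sigma (x)\quad \text {for all } j \text { and all } x\in (0,1]. \end{aligned}$$

By the Dominated Convergence Theorem, we obtain that

$$\begin{aligned} \int _0^1\varphi (x)\,dx=\lim _{j\rightarrow \infty }\int _0^1\varphi (x)\,x^{p_j-1}\,dx=0. \end{aligned}$$

Consequently, we may assume without loss of generality that 1 belongs to P, and the proof proceeds as before, applying the “Full Müntz Theorem in \({\mathcal {C}}[0,1]\)” [1, Theorem 2.1]. \(\square \)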

Remark 3

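If \(f\in {\mathcal {L}}^p(E)\) for all \(p\ge 1\), let \(\mu _f:(0,+\infty )\rightarrow {\mathbb {R}}\) be defined by

$$\begin{aligned} \mu _f(\alpha )=\mu (|f|>\alpha ), \end{aligned}$$

which is finite by Chebyshev's inequality. It is well known that \(\mu _f\) is a monotone nonincreasing function, continuous from the right, and that

$$\begin{aligned} \int _E|f|^p\,d\mu =p\int _0^{+\infty }\alpha ^{p-1}\mu _f(\alpha )\,d\alpha \end{aligned}$$

for every \(p\in [1,+\infty )\), cf. [9, Theorem 5.51]. Note in particular that \(\mu _f\in {\mathcal {L}}^1(0,+\infty )\).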
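The formula in Remark 3 is what makes ii) imply i): \(\int _E|f|^p\,d\mu \) depends on f only through \(\mu _f\). The following minimal sketch (our illustration, with hypothetical helper names) checks it for the counting measure on a finite set. There \(\mu _f\) is a step function, so the integral can be evaluated exactly, interval by interval.

```python
import math

def distribution(values, alpha):
    """mu_f(alpha) = #{i : |f(i)| > alpha} for f given by `values` and the counting measure."""
    return sum(1 for v in values if abs(v) > alpha)

def layer_cake_pth_power(values, p):
    """Evaluate p * int_0^inf alpha^(p-1) mu_f(alpha) d(alpha) exactly.

    mu_f only jumps at the numbers |f(i)|, so the integral splits into finitely
    many intervals [lo, hi) on which mu_f is constant, and on each of them
    p * int_lo^hi alpha^(p-1) d(alpha) = hi^p - lo^p.
    """
    levels = sorted({abs(v) for v in values} | {0.0})
    total = 0.0
    for lo, hi in zip(levels, levels[1:]):
        total += distribution(values, lo) * (hi ** p - lo ** p)
    return total

def pth_power_norm(values, p):
    """||f||_p^p = sum_i |f(i)|^p for the counting measure."""
    return math.fsum(abs(v) ** p for v in values)

f_vals = [3.0, -1.0, 2.0, 0.0]
g_vals = [0.0, 2.0, 0.0, -3.0, 1.0]   # same distribution function as f_vals
for p in (1.0, 2.5, 7.0):
    print(p, pth_power_norm(f_vals, p), layer_cake_pth_power(f_vals, p), pth_power_norm(g_vals, p))
```

Because `f_vals` and `g_vals` differ only by signs, order and extra zeroes, they have the same distribution function and hence the same p-norms for every p.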

To deal with the first part of Theorem 1, namely when case a) holds, we need to recall some tools from complex analysis. The Mellin transform of a function \(\upsilon (t)\) is defined as

$$\begin{aligned} ({\mathcal {M}}\upsilon )(z)=\int _0^{+\infty }\upsilon (t)\,t^{z-1}\,dt, \end{aligned}$$

whenever the integral exists for at least one value \(z_0\) of z (cf. [8, 10, 11]).
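To illustrate the definition, the sketch below (ours) approximates the Mellin transform with a composite Simpson rule. It assumes real \(z>1\) and truncates the integral at a finite upper limit. It then compares \({\mathcal {M}}[\mathrm{e}^{-t}](z)\) with \(\Gamma (z)\), a classical identity. The helpers `simpson` and `mellin` are hypothetical names, not part of any library.

```python
import math

def simpson(func, a, b, n=20_000):
    """Composite Simpson rule on [a, b] with n (even) subintervals."""
    if n % 2:
        raise ValueError("n must be even")
    h = (b - a) / n
    s = func(a) + func(b)
    for k in range(1, n):
        s += (4 if k % 2 else 2) * func(a + k * h)
    return s * h / 3

def mellin(upsilon, z, upper=50.0, n=20_000):
    """Truncated Mellin transform int_0^upper upsilon(t) t^(z-1) dt, for real z > 1."""
    return simpson(lambda t: upsilon(t) * t ** (z - 1), 0.0, upper, n)

for z in (2.0, 3.5, 5.0):
    # M[e^{-t}](z) = Gamma(z); the tail beyond t = 50 is negligible here
    print(z, mellin(lambda t: math.exp(-t), z), math.gamma(z))
```

Simpson's rule is chosen only for simplicity. Any quadrature that handles the factor \(t^{z-1}\) near the origin would serve.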

Lemma 4

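Let \(\upsilon :[0,+\infty )\rightarrow {\mathbb {R}}\) be a function such that

$$\begin{aligned} \int _0^{+\infty }|\upsilon (s)|\,s^p\,ds<+\infty \quad \text {for all } p\ge 0. \end{aligned}$$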

Then \({\mathcal {M}}\upsilon \) is analytic in \(S=\{w\in {\mathbb {C}}: \mathfrak {R}(w)>1\}.\)

Proof

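Let \(\gamma :[0,1]\rightarrow {\mathbb {C}}\) be a triangle in S and let \(\Phi (s,z)=\upsilon (s)s^{z-1}\). Then,

$$\begin{aligned} \int _\gamma {\mathcal {M}}\upsilon (z)\,dz=\int _0^1\left( \int _0^{+\infty }\Phi (s,\gamma (t))\,ds\right) \gamma '(t)\,dt, \end{aligned}$$

with \(\gamma '(t)\) defined for all but three points \(t\in [0,1]\). Observe that

$$\begin{aligned} |\Phi (s,\gamma (t))|=|\upsilon (s)|\,s^{\mathfrak {R}(\gamma (t))-1}. \end{aligned}$$

Since \(\gamma \) is a triangle, \(|\gamma '(t)|\) is constant on every side, and so there exists \(C_1\) such that \(|\gamma '(t)|<C_1\) for all \(t\in [0,1]\) where the tangent vector is defined. Let \(R>0\) be such that \({{\,\mathrm{Supp}\,}}(\gamma )\subseteq B(0,R)\). Then \(0<\mathfrak {R}(\gamma (t))-1<R\) for all \(t\in [0,1]\), hence

$$\begin{aligned} s^{\mathfrak {R}(\gamma (t))-1}\le 1+s^{R}\quad \text {for all } s>0, \end{aligned}$$

and so

$$\begin{aligned} |\Phi (s,\gamma (t))\,\gamma '(t)|\le C_1\,|\upsilon (s)|\,\big (1+s^{R}\big ). \end{aligned}$$

By hypothesis, \(\upsilon (s)s^p\) is Lebesgue integrable for all \(p\ge 0\), so

$$\begin{aligned} \int _0^1\int _0^{+\infty }|\Phi (s,\gamma (t))\,\gamma '(t)|\,ds\,dt\le C_1\int _0^{+\infty }|\upsilon (s)|\,\big (1+s^{R}\big )\,ds<+\infty . \end{aligned}$$

Then, by the Fubini-Tonelli Theorem,

$$\begin{aligned} \int _\gamma {\mathcal {M}}\upsilon (z)\,dz=\int _0^{+\infty }\left( \int _\gamma \Phi (s,z)\,dz\right) ds. \end{aligned}$$

But now \(\upsilon (s)s^{z-1}\) is a holomorphic function of z, and then by the Cauchy integral theorem

$$\begin{aligned} \int _\gamma \Phi (s,z)\,dz=0\quad \text {for every } s>0, \end{aligned}$$

and then

$$\begin{aligned} \int _\gamma {\mathcal {M}}\upsilon (z)\,dz=0 \end{aligned}$$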

for every triangular path. Consequently, by Morera’s theorem for triangles (see for example [2]), \({\mathcal {M}}\upsilon \) is holomorphic on \(\{w\in {\mathbb {C}}: \mathfrak {R}(w)>1\}.\)\(\square \)

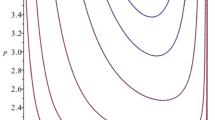

3 Proof of Theorem 1

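Since f and g belong to \({\mathcal {L}}^p(E)\) for every \(p\in [1,+\infty )\), the functions \(\mu _f\) and \(\mu _g\) of Remark 3 are finite almost everywhere, the function

$$\begin{aligned} {\mathcal {I}}(p)=\int _E\big (|f|^p-|g|^p\big )\,d\mu =||f ||_p^p-||g ||_p^p \end{aligned}$$

is well defined and finite for every \(p\in [1,+\infty )\), and

$$\begin{aligned} {\mathcal {I}}(p)=\int _0^{+\infty }\Big (\mu \big (|f|^p>t\big )-\mu \big (|g|^p>t\big )\Big )\,dt=\int _0^{+\infty }\Big (\mu _f\big (t^{\frac{1}{p}}\big )-\mu _g\big (t^{\frac{1}{p}}\big )\Big )\,dt. \end{aligned}$$

Substituting \(z=t^{\frac{1}{p}}\), the integral becomes

$$\begin{aligned} {\mathcal {I}}(p)=p\int _0^{+\infty }\varphi (z)\,z^{p-1}\,dz, \end{aligned}$$(3)

where

$$\begin{aligned} \varphi (z)=\mu _f(z)-\mu _g(z). \end{aligned}$$

Notice that \(\varphi :[0,{+\infty })\rightarrow {\mathbb {R}}\) is continuous from the right and that

$$\begin{aligned} \int _0^{+\infty }|\varphi (z)|\,z^{q}\,dz<+\infty \quad \text {for all } q\ge 0. \end{aligned}$$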

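Suppose a) holds. Since \(\varphi :[0,+\infty )\rightarrow {\mathbb {R}}\) is the difference of two monotone functions, it is differentiable almost everywhere, and hence continuous almost everywhere. Moreover, it is of bounded variation on \([a,+\infty )\) for all \(a>0\), and hence of bounded variation in a neighborhood of each \(y\in (0,+\infty )\). Notice that the integral on the right-hand side of (3) is the Mellin transform of \(\varphi ,\) hence

$$\begin{aligned} {\mathcal {I}}(p)=p\,{\mathcal {M}}\varphi (p)\quad \text {for all } p\in [1,+\infty ). \end{aligned}$$

By [10, Chapter 6.9, Theorem 28], for every \(c\in (1,+\infty )\),

$$\begin{aligned} \frac{\varphi (x^+)+\varphi (x^-)}{2}=\lim _{T\rightarrow +\infty }\frac{1}{2\pi i}\int _{c-iT}^{c+iT}{\mathcal {M}}\varphi (z)\,x^{-z}\,dz,\qquad x>0, \end{aligned}$$(4)

where \(\varphi (x^+)\) and \(\varphi (x^-)\) denote the right and left limits of \(\varphi \) at x.

By Lemma 4, \({\mathcal {M}}\varphi \) is holomorphic on \(\{w\in {\mathbb {C}}: \mathfrak {R}(w)>1\}.\) But, by i),

$$\begin{aligned} {\mathcal {M}}\varphi (p)=\frac{{\mathcal {I}}(p)}{p}=\frac{||f ||_p^p-||g ||_p^p}{p}=0\quad \text {for all } p\in P, \end{aligned}$$

and, by a), P has an accumulation point in \(\{w\in {\mathbb {C}}: \mathfrak {R}(w)>1\}.\) Then, by the identity theorem for analytic functions,

$$\begin{aligned} {\mathcal {M}}\varphi (z)=0\quad \text {for all } z\in {\mathbb {C}} \text { with } \mathfrak {R}(z)>1. \end{aligned}$$

The inversion formula (4) then becomes

$$\begin{aligned} \frac{\varphi (x^+)+\varphi (x^-)}{2}=0\quad \text {for all } x>0. \end{aligned}$$

Since \(\varphi \) is continuous almost everywhere, we have that \(\varphi (x)=0\) for almost every x. The conclusion easily follows.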

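Assume now that b) holds and that P has no accumulation points in \((1,+\infty )\). If \(||f ||_{\infty }=0\) or \(||g ||_{\infty }=0,\) then each of i) and ii) implies that \(f=g=0\) almost everywhere, and the result is achieved. Without loss of generality, we can suppose \(||f ||_{\infty }\le ||g ||_{\infty }=1\). Indeed, for every \(\lambda >0\) we have \(||\lambda f ||_p=\lambda ||f ||_p\) and \(\mu (|\lambda f|>\alpha )=\mu (|f|>\alpha /\lambda )\) for all \(\alpha \ge 0\), so both i) and ii) are unaffected if f and g are multiplied by the same positive constant; moreover, the roles of f and g are symmetric.

In this case, \(\mu _f(z)=\mu _g(z)=0\) for all \(z\ge 1\), so that

$$\begin{aligned} {\mathcal {I}}(p)=p\int _0^1\varphi (z)\,z^{p-1}\,dz \end{aligned}$$

and \(\varphi \in {\mathcal {L}}^1[0,1]\). By Lemma 2, we have that \({\mathcal {I}}(p)=0\) for all \(p\in P\) if and only if \(\varphi (z)=0\) for almost every \(z\in [0,1]\). By the right continuity, we conclude. \(\square \)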
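As a quick sanity check of the change of variables \(z=t^{1/p}\) used above, take \(E=[0,1]\) with Lebesgue measure and \(f(x)=x\). This is an illustrative example of ours, not taken from the paper. Here \(\mu _f(z)=1-z\) for \(0\le z<1\) and \(\mu _f(z)=0\) for \(z\ge 1\), and

$$\begin{aligned} p\int _0^{+\infty }\mu _f(z)\,z^{p-1}\,dz=p\int _0^1(1-z)\,z^{p-1}\,dz=p\left( \frac{1}{p}-\frac{1}{p+1}\right) =\frac{1}{p+1}=\int _0^1x^p\,dx=||f ||_p^p. \end{aligned}$$

Taking \(g(x)=1-x\) gives the same distribution function, hence the same p-norms, in agreement with the implication ii) \(\Rightarrow \) i).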

4 Construction of the counterexample

In this section, we show that, in general, the boundedness hypothesis on f and g in Theorem 1 cannot be removed when b) holds. In the first part, we give some definitions to set the problem in a more general frame; then we develop the counterexample. Precisely, we first build a continuous function \(\varphi \), defined on the positive real semiaxis, which is orthogonal to every monomial (and hence, by linearity, to every polynomial). Then, we prove that this function is of bounded variation on \([0,+\infty )\). Hence it can be written as the difference of two strictly decreasing functions, whose inverses are the functions we are looking for. To conclude, we show, as corollaries of independent interest, that by slightly modifying \(\varphi \) we can, first, make it of class \({\mathcal {C}}^n\) for any prescribed n and, second, make it orthogonal to every rational power of x with a fixed denominator. For further details on this topic, see, e.g., [5, 7].

Lemma 5

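The function \(\varphi :[0,+\infty )\rightarrow {\mathbb {R}}\) defined as

$$\begin{aligned} \varphi (x)=\mathrm{e}^{-x^{1/4}}\sin \big (x^{1/4}\big ) \end{aligned}$$

is such that

$$\begin{aligned} \int _0^{+\infty }x^n\varphi (x)\,dx=0\quad \text {for all } n\in {\mathbb {N}}. \end{aligned}$$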

Proof

This result was already known to Stieltjes, who gave it in his work on continued fractions [6, page 105]. For the convenience of the reader, we sketch a self-contained proof relying only on elementary complex analysis.

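Set

$$\begin{aligned} I_n=\int _0^{+\infty }x^n\mathrm{e}^{-(1-i)x}\,dx,\qquad n\in {\mathbb {N}}. \end{aligned}$$

Since \(|x^n \mathrm{e}^{-(1-i)x}|=x^n \mathrm{e}^{-x}\), the integral \(I_n\) is well defined for all n. Moreover, letting \(z=1-i\) and performing the change of variables \(zx=y\), we obtain:

$$\begin{aligned} I_n=\frac{1}{z^{n+1}}\int _\gamma y^n\mathrm{e}^{-y}\,dy, \end{aligned}$$

where \(\gamma \) is the half-line starting at the origin and containing the point \(1-i\). Consider the triangular path \(T_N\) in the complex plane joining the points 0, N, \(N-iN\). Since \(y^n \mathrm{e}^{-y}\) is an entire function, its integral along \(T_N\) is 0. Moreover:

$$\begin{aligned} \int _{[N-iN,\,0]}y^n\mathrm{e}^{-y}\,dy=-z^{n+1}\int _0^N x^n\mathrm{e}^{-zx}\,dx, \end{aligned}$$

and so

$$\begin{aligned} \int _0^N y^n\mathrm{e}^{-y}\,dy+\int _{[N,\,N-iN]}y^n\mathrm{e}^{-y}\,dy-z^{n+1}\int _0^N x^n\mathrm{e}^{-zx}\,dx=0. \end{aligned}$$

Then, passing to the limit as N tends to \(+\infty \), the first term tends to \(\Gamma (n+1)\), the second term tends to 0 (its modulus is at most \(N(\sqrt{2}N)^n\mathrm{e}^{-N}\)), and the third tends to \(-z^{n+1}I_n\); hence:

$$\begin{aligned} z^{n+1}I_n=\Gamma (n+1)=n!. \end{aligned}$$

Then,

$$\begin{aligned} I_n=\frac{n!}{(1-i)^{n+1}}=\frac{n!\,(1+i)^{n+1}}{2^{n+1}}=\frac{n!}{2^{\frac{n+1}{2}}}\,\mathrm{e}^{i\frac{(n+1)\pi }{4}}. \end{aligned}$$

So, taking imaginary parts and recalling that \(\mathrm{e}^{-(1-i)x}=\mathrm{e}^{-x}(\cos x+i\sin x)\),

$$\begin{aligned} \int _0^{+\infty }x^n\mathrm{e}^{-x}\sin x\,dx=\frac{n!}{2^{\frac{n+1}{2}}}\sin \frac{(n+1)\pi }{4}, \end{aligned}$$

and then, for \(n=4m+3\) with \(m\in {\mathbb {N}}\), the right-hand side vanishes, so that

$$\begin{aligned} \int _0^{+\infty }x^{4m+3}\mathrm{e}^{-x}\sin x\,dx=0\quad \text {for all } m\in {\mathbb {N}}. \end{aligned}$$

Letting \(x=u^{\frac{1}{4}},\) we arrive at

$$\begin{aligned} \frac{1}{4}\int _0^{+\infty }u^m\mathrm{e}^{-u^{1/4}}\sin \big (u^{1/4}\big )\,du=0\quad \text {for all } m\in {\mathbb {N}}. \end{aligned}$$

The function

$$\begin{aligned} \varphi (x)=\mathrm{e}^{-x^{1/4}}\sin \big (x^{1/4}\big ) \end{aligned}$$

has the requested properties. \(\square \)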
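The key computation behind Lemma 5 can be checked numerically. Taking imaginary parts of \(I_n=n!/(1-i)^{n+1}\) gives \(\int _0^{+\infty }x^n\mathrm{e}^{-x}\sin x\,dx=n!\,2^{-(n+1)/2}\sin \frac{(n+1)\pi }{4}\), which vanishes exactly when \(n\equiv 3 \pmod 4\). The sketch below is our illustration, not the paper's method. It approximates the left-hand side with Simpson's rule on a truncated interval and compares it with the closed form. The helper names are hypothetical.

```python
import math

def moment_exp_sin(n: int, upper: float = 80.0, steps: int = 40_000) -> float:
    """Composite Simpson approximation of int_0^upper x^n e^{-x} sin(x) dx.

    The tail beyond `upper` is negligible for the small n used here.
    """
    def integrand(x: float) -> float:
        return x ** n * math.exp(-x) * math.sin(x)

    h = upper / steps
    s = integrand(0.0) + integrand(upper)
    for k in range(1, steps):
        s += (4 if k % 2 else 2) * integrand(k * h)
    return s * h / 3

def closed_form(n: int) -> float:
    """Imaginary part of I_n = n!/(1 - i)^(n+1), i.e. n! sin((n+1)pi/4) / 2^((n+1)/2)."""
    return math.factorial(n) * math.sin((n + 1) * math.pi / 4) / 2 ** ((n + 1) / 2)

for n in range(12):
    # for n = 3, 7, 11 (n = 4m + 3) both columns should be zero up to rounding
    print(n, moment_exp_sin(n), closed_form(n))
```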

Lemma 6

The function \(\varphi \) defined in Lemma 5 belongs to \(BV([0,+\infty ))\).

Proof

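Observe preliminarily that \(\varphi (0)=0\) and \(\varphi \) tends to 0 at infinity; moreover, for \(x>0\),

$$\begin{aligned} \varphi '(x)=\frac{\mathrm{e}^{-x^{1/4}}}{4x^{3/4}}\Big ( \cos \big (x^{1/4}\big )-\sin \big (x^{1/4}\big )\Big ) , \end{aligned}$$

and so \(\varphi '(x)=0\) if and only if \(\tan \big (x^{1/4}\big )=1\).

The second derivative of \(\varphi \) is given by

$$\begin{aligned} \varphi ''(x)=-\frac{3\,\mathrm{e}^{-x^{1/4}}}{16\,x^{7/4}}\Big ( \cos \big (x^{1/4}\big )-\sin \big (x^{1/4}\big )\Big ) -\frac{\mathrm{e}^{-x^{1/4}}}{8\,x^{3/2}}\cos \big (x^{1/4}\big ). \end{aligned}$$

Letting

$$\begin{aligned} x_n=\Big (\frac{\pi }{4}+n\pi \Big )^4,\qquad n\in {\mathbb {N}}, \end{aligned}$$

we see that \((\varphi ''(x_n))_n\) has alternating signs, since

$$\begin{aligned} \varphi ''(x_n)=-\frac{\mathrm{e}^{-x_n^{1/4}}}{8\,x_n^{3/2}}\cos \Big (\frac{\pi }{4}+n\pi \Big )=(-1)^{n+1}\frac{\sqrt{2}\,\mathrm{e}^{-(\pi /4+n\pi )}}{16\,x_n^{3/2}}. \end{aligned}$$

So, the total variation of \(\varphi \) is the sum of the variations between consecutive stationary points, together with the variation on \([0,x_0]\). Since \(\varphi (x_n)=(-1)^n\frac{\sqrt{2}}{2}\mathrm{e}^{-(\pi /4+n\pi )}\), writing \({\mathbb {R}}^+=\{x\in {\mathbb {R}}:x\ge 0\}\), we have

$$\begin{aligned} V(\varphi ;{\mathbb {R}}^+)=|\varphi (x_0)-\varphi (0)|+\sum _{n=0}^{\infty }|\varphi (x_{n+1})-\varphi (x_n)|=\frac{\sqrt{2}}{2}\,\mathrm{e}^{-\pi /4}\Big ( 1+\sum _{n=0}^{\infty }\big (\mathrm{e}^{-n\pi }+\mathrm{e}^{-(n+1)\pi }\big )\Big ) =\frac{\sqrt{2}\,\mathrm{e}^{-\pi /4}}{1-\mathrm{e}^{-\pi }}<+\infty . \end{aligned}$$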

We are now ready to construct the counterexample. Define

where \(P(t,+\infty )\) and \(N(t,+\infty )\) are, respectively, the positive and the negative variation of \(\varphi \) on \((t,+\infty )\). The functions \(\phi \) and \(\psi \) are positive, strictly decreasing and bounded, and attain their maximum at 0. Moreover,

and

Restricting the codomain of \(\phi \) to \((0,\phi (0)]\) and that of \(\psi \) to \((0,\psi (0)]\,\), we obtain two invertible functions

Moreover, their inverses are also nonnegative decreasing functions. Define

and notice that

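Extend f and g to all of \({\mathbb {R}}\) by setting them equal to 0 outside their domains, and call the extensions \({\tilde{f}}\) and \({\tilde{g}}\). These are the functions we are looking for. Indeed, we will now prove that the identity \(\mu (|{\tilde{f}}|>\alpha )=\mu (|{\tilde{g}}|>\alpha )\) does not hold for a.e. \(\alpha \ge 0\). Suppose, by contradiction, that

$$\begin{aligned} \mu (|{\tilde{f}}|>\alpha )=\mu (|{\tilde{g}}|>\alpha )\quad \text {for a.e. } \alpha \ge 0. \end{aligned}$$

Since \({\tilde{f}}\) and \({\tilde{g}}\) are nonnegative and their level sets coincide with those of f and g, we have

$$\begin{aligned} \mu (f>\alpha )=\mu (g>\alpha )\quad \text {for a.e. } \alpha \ge 0. \end{aligned}$$

But f and g are strictly decreasing, so \(\{f>\alpha \}=[0,f^{-1}(\alpha ))\) and \(\{g>\alpha \}=[0,g^{-1}(\alpha ))\), hence

$$\begin{aligned} f^{-1}(\alpha )=g^{-1}(\alpha )\quad \text {for a.e. } \alpha \ge 0. \end{aligned}$$

Since \(f^{-1}=\phi \) and \(g^{-1}=\psi \),

$$\begin{aligned} \phi (\alpha )=\psi (\alpha )\quad \text {for a.e. } \alpha \ge 0. \end{aligned}$$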

Recall then the definition of \(\phi \) and \(\psi \) to obtain

so

and then

finding a contradiction. The proof is then completed. \(\square \)
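The total variation computed in the proof of Lemma 6 can be cross-checked numerically. Summing \(|\varphi (x_{n+1})-\varphi (x_n)|\) over the stationary points \(x_n=(\pi /4+n\pi )^4\), where \(\varphi (x_n)=(-1)^n\frac{\sqrt{2}}{2}\mathrm{e}^{-(\pi /4+n\pi )}\), gives \(\sqrt{2}\,\mathrm{e}^{-\pi /4}/(1-\mathrm{e}^{-\pi })\approx 0.674\). The sketch below (ours, with hypothetical helper names) compares this value with the variation sampled on a fine grid in the variable \(s=x^{1/4}\).

```python
import math

def phi_in_s(s: float) -> float:
    """phi(x) = e^{-x^{1/4}} sin(x^{1/4}) expressed through s = x^{1/4}."""
    return math.exp(-s) * math.sin(s)

def sampled_total_variation(upper_s: float = 40.0, steps: int = 400_000) -> float:
    """Sum of |phi(s_{k+1}) - phi(s_k)| over a uniform grid of [0, upper_s].

    x -> x^{1/4} is an increasing bijection of [0, +inf), so the total variation
    of phi as a function of x equals that of phi_in_s as a function of s.
    Beyond s = 40 the remaining variation is of order e^{-40} and is ignored.
    """
    h = upper_s / steps
    total, prev = 0.0, phi_in_s(0.0)
    for k in range(1, steps + 1):
        cur = phi_in_s(k * h)
        total += abs(cur - prev)
        prev = cur
    return total

# Closed form obtained from phi(x_n) = (-1)^n (sqrt(2)/2) e^{-(pi/4 + n pi)}.
closed_form_tv = math.sqrt(2) * math.exp(-math.pi / 4) / (1 - math.exp(-math.pi))

print(sampled_total_variation(), closed_form_tv)
```

Working in the variable s avoids the extreme stretching of the x-axis (\(x_n\) grows like \(n^4\)) without changing the total variation.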

In the following corollary, we want to extend Lemma 5 to find a continuous function orthogonal to every fractional power of x with fixed denominator.

Corollary 7

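Fix \(q\in {\mathbb {N}}{\setminus }\{0\}\). There exists a continuous function \(\varphi _q:(0,+\infty )\rightarrow {\mathbb {R}}\), not identically equal to 0, such that

$$\begin{aligned} \int _0^{+\infty }x^{\frac{n}{q}}\varphi _q(x)\,dx=0\quad \text {for all } n\in {\mathbb {N}}. \end{aligned}$$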

Proof

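Define \(I_n\) as before. We have

$$\begin{aligned} \int _0^{+\infty }x^{4m+3}\mathrm{e}^{-x}\sin x\,dx=0\quad \text {for all } m\in {\mathbb {N}}. \end{aligned}$$

Letting \(x=u^{\frac{1}{4q}}\), so that \(dx=\frac{1}{4q}u^{\frac{1}{4q}-1}\,du\), we arrive at

$$\begin{aligned} \int _0^{+\infty }u^{\frac{m+1}{q}-1}\,\mathrm{e}^{-u^{1/(4q)}}\sin \big (u^{1/(4q)}\big )\,du=0\quad \text {for all } m\in {\mathbb {N}}, \end{aligned}$$

that is, taking \(m=n+q-1\),

$$\begin{aligned} \int _0^{+\infty }u^{\frac{n}{q}}\,\mathrm{e}^{-u^{1/(4q)}}\sin \big (u^{1/(4q)}\big )\,du=0\quad \text {for all } n\in {\mathbb {N}}. \end{aligned}$$

The function

$$\begin{aligned} \varphi _q(x)=\mathrm{e}^{-x^{1/(4q)}}\sin \big (x^{1/(4q)}\big ) \end{aligned}$$

is the one we were looking for. \(\square \)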

The aim of the following lemma is to show that, if we multiply the functions \(\varphi (x)\) and \(\varphi _q(x)\), found in Lemma 5 and Corollary 7 respectively, by a suitable power of x, we obtain two new functions that keep the same orthogonality property but are arbitrarily regular. We achieve this by applying Faà di Bruno’s formula.

Lemma 8

Let \(w\in {\mathcal {C}}^{\infty }({\mathbb {R}})\) and \(0<\alpha <1\). Then the function \(g_n:[0,+\infty )\rightarrow {\mathbb {R}}\),

$$\begin{aligned} g_n(x)=x^{n+1}\,w\big (x^{\alpha }\big ), \end{aligned}$$

is of class \({\mathcal {C}}^n\) on \([0,+\infty )\), with \(g_n^{(j)}(0)=0\) for all \(j=0,1,2,\dots ,n\).

Proof

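A central tool of this proof will be Faà di Bruno’s formula, which we briefly recall. Let w and u be \({\mathcal {C}}^m\) real-valued functions such that the composition \(w\circ u\) is defined; then \(w\circ u\) is of class \({\mathcal {C}}^m\) and for \(x>0\) we have

$$\begin{aligned} (w\circ u)^{(m)}(x)=\sum \frac{m!}{k_1!\,k_2!\cdots k_m!}\,w^{(k_1+\cdots +k_m)}(u(x))\prod _{i=1}^{m}\left( \frac{u^{(i)}(x)}{i!}\right) ^{k_i}, \end{aligned}$$

where the sum runs over all m-tuples \((k_1,\dots ,k_m)\) of nonnegative integers such that \(k_1+2k_2+\cdots +mk_m=m\), or

$$\begin{aligned} (w\circ u)^{(m)}(x)=\sum _{k=1}^{m}\frac{1}{k!}\sum _{\begin{array}{c} h_1+\cdots +h_k=m\\ h_i\ge 1 \end{array}}\frac{m!}{h_1!\cdots h_k!}\,w^{(k)}(u(x))\,u^{(h_1)}(x)\cdots u^{(h_k)}(x). \end{aligned}$$

For a proof of this formula, see [4]. We have that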

and each term of this sum is of the form

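where C is a real number depending on j, h and n. Now we use Faà di Bruno’s formula to express the derivatives of \(w(x^{\alpha })\). In our case \(u(x)=x^{\alpha }\) and so

$$\begin{aligned} u^{(h)}(x)=\alpha (\alpha -1)\cdots (\alpha -h+1)\,x^{\alpha -h},\qquad x>0. \end{aligned}$$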

Consequently

and if \(h_1+\cdots +h_k=j,\)

So, applying Faà di Bruno’s formula to (5), each term has the form

To conclude observe that

and apply the theorem on the limit of the derivative. \(\square \)
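Faà di Bruno’s formula can be written as \((w\circ u)^{(m)}=\sum \frac{m!}{k_1!\cdots k_m!}\,w^{(k_1+\cdots +k_m)}(u)\prod _i\big (u^{(i)}/i!\big )^{k_i}\), summed over \(k_1+2k_2+\cdots +mk_m=m\). As a sanity check on its combinatorics, the following sketch (ours, with hypothetical helper names) enumerates the index tuples and coefficients. If every derivative equals 1 (for instance \(w=\exp \) and \(u(x)=\mathrm{e}^x-1\) at \(x=0\)), the coefficients must add up to the Bell numbers 1, 2, 5, 15, 52, 203, ...

```python
from math import factorial, prod

def multiplicity_tuples(m):
    """Yield tuples (k_1, ..., k_m) of nonnegative integers with sum_i i*k_i = m."""
    def rec(i, remaining):
        if i > m:
            if remaining == 0:
                yield ()
            return
        for k in range(remaining // i + 1):
            for rest in rec(i + 1, remaining - i * k):
                yield (k,) + rest
    yield from rec(1, m)

def faa_di_bruno_coefficient(ks):
    """m! / (k_1! ... k_m! (1!)^{k_1} ... (m!)^{k_m}); this is always an integer."""
    m = len(ks)
    denom = prod(factorial(k) * factorial(i) ** k for i, k in enumerate(ks, start=1))
    return factorial(m) // denom

def faa_di_bruno_terms(m):
    """Map each tuple to (order k_1 + ... + k_m of the outer derivative, coefficient)."""
    return {ks: (sum(ks), faa_di_bruno_coefficient(ks)) for ks in multiplicity_tuples(m)}

# m = 3 reproduces (w o u)''' = w''' u'^3 + 3 w'' u' u'' + w' u'''
print(faa_di_bruno_terms(3))
print([sum(c for _, c in faa_di_bruno_terms(m).values()) for m in range(1, 7)])
```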

As a consequence of Lemma 8, the function \(\varphi :[0,+\infty )\rightarrow {\mathbb {R}}\) in the statement of Lemma 5 can be chosen to be arbitrarily regular (but not \({\mathcal {C}}^{\infty }\)). For example, taking

$$\begin{aligned} \varphi (x)=x^{n+1}\,\mathrm{e}^{-x^{1/4}}\sin \big (x^{1/4}\big ), \end{aligned}$$

by Lemma 8 with \(w(x)=\mathrm{e}^{-x}\sin (x)\) and \(\alpha =\frac{1}{4}\), we see that \(\varphi (x)\) is of class \({\mathcal {C}}^n\), and it is still orthogonal to every monomial. The same reasoning, with the same w and \(\alpha =\frac{1}{4q}\), shows that the function \(\varphi _q\) is also of class \({\mathcal {C}}^n\) once multiplied by \(x^{n+1}\).

5 Final remarks

As a direct consequence of Theorem 1, we have the following.

Corollary 9

Suppose \(\mu (E)<+\infty \), \(f\in {\mathcal {L}}^{p}(E)\) for all \(p \ge 1\) and \(P=(p_j)_{j=1}^{\infty }\) is a sequence of distinct real numbers \(p_j\ge 1\). Let C be a nonnegative constant, and suppose that either a) or b) holds. Then, the following two conditions are equivalent:

- i)

\( \displaystyle \left( \frac{1}{\mu (E)}\right) ^{\frac{1}{p}}||f ||_p= C \qquad \text {for all }\,p\in P\,; \)

- ii)

\( \displaystyle |f(x)|= C \qquad \qquad \qquad \text { for a.e. } x\in E. \)

Indeed, it suffices to apply Theorem 1 with \(g\equiv C\) on E.

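Theorem 1 remains valid assuming the existence of an accumulation point of P in (0, 1] and supposing \(f,g\in {\mathcal {L}}^p(E)\) for all \(p>0\). In this case, \(||f||_p\) is formally defined as before, although for \(p<1\) it is no longer a norm. To prove this, first notice that, without loss of generality, we can always assume that \(f,g\ge 0\,\). Let \(\delta \in (0,1]\) be an accumulation point of P. For all \(p\in P\cap \left( \frac{\delta }{2},2\right) \),

$$\begin{aligned} ||f ||_p^p=\int _E\big (|f|^{\frac{\delta }{2}}\big )^{\frac{2p}{\delta }}\,d\mu =\big |\big |\,|f|^{\frac{\delta }{2}}\big |\big |_{\frac{2p}{\delta }}^{\frac{2p}{\delta }},\qquad ||g ||_p^p=\big |\big |\,|g|^{\frac{\delta }{2}}\big |\big |_{\frac{2p}{\delta }}^{\frac{2p}{\delta }}. \end{aligned}$$

The set \({\widetilde{P}}=\{\frac{2p}{\delta }:p\in P\cap \left( \frac{\delta }{2},2\right) \}\) is contained in \((1,+\infty )\) and has an accumulation point there (namely 2). Since \(|f|^{\delta /2},|g|^{\delta /2}\in {\mathcal {L}}^q(E)\) for all \(q\ge 1\), we can now apply case a) of Theorem 1 to \(|f|^{\delta /2}\) and \(|g|^{\delta /2}\) to find that

$$\begin{aligned} \mu \big (|f|^{\frac{\delta }{2}}>\beta \big )=\mu \big (|g|^{\frac{\delta }{2}}>\beta \big )\quad \text {for all } \beta \ge 0, \end{aligned}$$

and so \(\mu (|f|>\alpha )=\mu (|g|>\alpha )\) for all \(\alpha \ge 0\).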

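In the last part of this section, we consider the special case of \(\ell ^p\) spaces and show that Theorem 1 can be generalized in this setting. We recall that, for a sequence \(A=(a_n)_n\), the \(\ell ^p\) norms are defined as follows:

$$\begin{aligned} \Vert A\Vert _p=\Big (\sum _{n}|a_n|^p\Big )^{\frac{1}{p}}\quad (1\le p<+\infty ),\qquad \Vert A\Vert _{\infty }=\sup _n|a_n|. \end{aligned}$$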

Here is our result.

Theorem 10

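Let \(A=(a_n)_n\) and \(B=(b_n)_n\) be two sequences of real numbers in \(\ell ^1\). If \(P=(p_j)_{j=1}^{\infty }\) is a sequence of distinct real numbers \(p_j\ge 1\) having an accumulation point in \((1,+\infty ]\) and

$$\begin{aligned} \Vert A\Vert _{p_j}=\Vert B\Vert _{p_j}\quad \text {for all } j\ge 1, \end{aligned}$$

then the sequences

$$\begin{aligned} (|a_n|)_n\quad \text {and}\quad (|b_n|)_n \end{aligned}$$

can be obtained one from the other by permutation and by appending or removing some zeroes.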

Proof

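Suppose that the accumulation point is finite. Choose \(X={\mathbb {N}}\), \({\mathcal {A}}={\mathcal {P}}({\mathbb {N}})\) and \(\mu \) the counting measure. By Theorem 1, we have that

$$\begin{aligned} \#\{n:|a_n|>\alpha \}=\#\{n:|b_n|>\alpha \}\quad \text {for all } \alpha \ge 0. \end{aligned}$$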

Without loss of generality, we can suppose that \(a_n,b_n> 0\) for all \(n\in {\mathbb {N}}\). Being \((a_n)_n\) and \((b_n)_n\) absolutely convergent, we can rearrange them in such a way that A and B are nonincreasing without modifying the \(\ell ^p\) norms, thus obtaining \({\hat{A}}=({\hat{a}}_n)_n\) and \({\hat{B}}=({\hat{b}}_n)_n\), respectively. Clearly

If \({\hat{a}}_n={\hat{b}}_n\) for all n, then the theorem is proved. Assume by contradiction that \({\hat{A}}\ne {\hat{B}}\) and let \({\bar{n}}\) be the smallest index such that \({\hat{a}}_{{\bar{n}}}\ne {\hat{b}}_{{\bar{n}}}\). Suppose for instance that \({\hat{a}}_{{\bar{n}}}>{\hat{b}}_{{\bar{n}}}\) and choose

With this choice, we have

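a contradiction. Suppose now that \(+\infty \) is the only accumulation point of P. We have that

$$\begin{aligned} \lim _{j\rightarrow \infty }\Vert {\hat{A}}\Vert _{p_j}=\Vert {\hat{A}}\Vert _{\infty }={\hat{a}}_0 \end{aligned}$$

and similarly for \({\hat{B}}\), obtaining that \({\hat{a}}_0={\hat{b}}_0\). Define \({\hat{A}}_1=({\hat{a}}_n)_{n\ge 1}\) and \({\hat{B}}_1=({\hat{b}}_n)_{n\ge 1}\) and notice that:

$$\begin{aligned} \Vert {\hat{A}}_1\Vert _{p_j}^{p_j}=\Vert {\hat{A}}\Vert _{p_j}^{p_j}-{\hat{a}}_0^{p_j}=\Vert {\hat{B}}\Vert _{p_j}^{p_j}-{\hat{b}}_0^{p_j}=\Vert {\hat{B}}_1\Vert _{p_j}^{p_j}, \end{aligned}$$

and consequently \(\Vert {\hat{A}}_1\Vert _{p_j}=\Vert {\hat{B}}_1\Vert _{p_j}\) for all j. By the same reasoning, we obtain that

$$\begin{aligned} \lim _{j\rightarrow \infty }\Vert {\hat{A}}_1\Vert _{p_j}={\hat{a}}_1 \end{aligned}$$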

and the same for \({\hat{B}}_1,\) to obtain \({\hat{a}}_1={\hat{b}}_1\). Proceeding by induction, we conclude that \({\hat{A}}={\hat{B}}\). \(\square \)
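The peeling step in the last part of this proof can be mimicked exactly for a finite sequence of positive integers: read off the largest entry from the norms, remove it, and repeat. If M is the largest entry, N the number of entries and \((1+1/M)^p>N\), then \(M^p\le S(p)=\sum _n a_n^p\le NM^p<(M+1)^p\), so M is the integer part of \(S(p)^{1/p}\). The sketch below is our illustration, not part of the paper. It uses hypothetical helper names and exact integer arithmetic, with a single large p in place of the limit. It recovers the nonincreasing rearrangement from one power sum. Zero entries contribute nothing, consistent with the statement that the sequences agree up to permutation and zeroes.

```python
from typing import List

def iroot(n: int, k: int) -> int:
    """Largest integer r with r**k <= n (n >= 0, k >= 1), by binary search."""
    lo, hi = 0, 1
    while hi ** k <= n:
        hi *= 2
    # invariant: lo**k <= n < hi**k
    while hi - lo > 1:
        mid = (lo + hi) // 2
        if mid ** k <= n:
            lo = mid
        else:
            hi = mid
    return lo

def recover_from_power_sum(power_sum: int, p: int) -> List[int]:
    """Peel off the largest term repeatedly, mimicking the limit argument.

    Assumes the hidden sequence consists of nonnegative integers and that p is
    large enough that (1 + 1/M)**p > N, where M is the largest entry and N the
    number of nonzero entries; then iroot(s, p) is exactly the current largest entry.
    """
    entries = []
    s = power_sum
    while s > 0:
        a = iroot(s, p)
        entries.append(a)
        s -= a ** p
    return entries

A = [3, 1, 4, 1, 5, 9, 2, 6]
print(recover_from_power_sum(sum(a ** 64 for a in A), 64))  # -> [9, 6, 5, 4, 3, 2, 1, 1]
```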

Notice that, choosing for example \(p_j=j!\) for all \(j\ge 1\), the condition \(\Vert {\hat{A}}\Vert _{p_j}=\Vert {\hat{B}}\Vert _{p_j}\) for all j implies that \({\hat{A}}={\hat{B}}\), but in this case the series in (1) converges. Notice moreover that Theorem 10 remains valid if 1 is the only accumulation point of P, supposing that (1) holds.

Let us show with an example that, even if \(\mu (E)\) is finite, condition (1) is not necessary for the conclusion of Theorem 1. Let \(X=\{0\}\), \({\mathcal {A}}={\mathcal {P}}(X)\) and \(\mu \) the counting measure. Let \(P=(j^2)_{j\ge 1}\) and let \(f,g:\{0\}\rightarrow {\mathbb {R}}\) be two measurable functions. Suppose moreover \(\Vert f\Vert _{j^2}=\Vert g\Vert _{j^2}\) for all \(j\ge 1\). Without loss of generality, we can assume that \(f(0)=\alpha _0\ge 0\) and \(g(0)=\beta _0\ge 0\). Then, for \(j=1\),

$$\begin{aligned} \Vert f\Vert _{1}=\alpha _0,\qquad \Vert g\Vert _{1}=\beta _0, \end{aligned}$$

and consequently \(\alpha _0=\beta _0.\) However, condition (1) is not fulfilled.

The problem of the necessity of condition (1) remains open, e.g., when \(X={\mathbb {R}}\) and \(\mu \) is the Lebesgue measure.

References

[1] Borwein, P., Erdélyi, T.: The full Müntz Theorem in \({\mathcal {C}}[0,1]\) and \({\mathcal {L}}^1[0,1]\). J. Lond. Math. Soc. 54, 102–110 (1996)

[2] Conway, J.B.: Functions of One Complex Variable, 2nd edn. Springer, Berlin (1978)

[3] Friedrichs, K.O.: The identity of weak and strong extensions of differential operators. Trans. Am. Math. Soc. 55, 132–151 (1944)

[4] Roman, S.: The formula of Faà di Bruno. Am. Math. Mon. 87, 805–809 (1980)

[5] Shohat, J.A., Tamarkin, J.D.: The Problem of Moments. American Mathematical Society, New York (1943)

[6] Stieltjes, T.J.: Recherches sur les fractions continues. Ann. Fac. Sci. Univ. Toulouse 8, 1–122 (1894)

[7] Stoyanov, J.: Counterexamples in Probability. Wiley, Chichester (1997)

[8] Titchmarsh, E.C.: Introduction to the Theory of Fourier Integrals. Clarendon Press, Glasgow (1967)

[9] Wheeden, R.L., Zygmund, A.: Measure and Integral: An Introduction to Real Analysis. Marcel Dekker, New York (1977)

[10] Widder, D.V.: The Laplace Transform. Princeton University Press, Princeton (1946)

[11] Zayed, A.I.: Handbook of Function and Generalized Function Transformations. CRC Press, Boca Raton (1996)

Acknowledgements

The author wishes to warmly thank Giovanni Alessandrini for his precious help, and Gianni Dal Maso, Daniele Del Santo, Alessandro Fonda and Sergio Vesnaver for the useful discussions and suggestions. I am also very grateful to the anonymous referee for pointing out some gaps in the proofs and suggesting several improvements.


Cite this article

Klun, G. On functions having coincident p-norms. Annali di Matematica 199, 955–968 (2020). https://doi.org/10.1007/s10231-019-00907-z
