Abstract

The approximation of probability measures on compact metric spaces and in particular on Riemannian manifolds by atomic or empirical ones is a classical task in approximation and complexity theory with a wide range of applications. Instead of point measures we are concerned with the approximation by measures supported on Lipschitz curves. Special attention is paid to push-forward measures of Lebesgue measures on the unit interval by such curves. Using the discrepancy as distance between measures, we prove optimal approximation rates in terms of the curve’s length and Lipschitz constant. Having established the theoretical convergence rates, we are interested in the numerical minimization of the discrepancy between a given probability measure and the set of push-forward measures of Lebesgue measures on the unit interval by Lipschitz curves. We present numerical examples for measures on the 2- and 3-dimensional torus, the 2-sphere, the rotation group on \(\mathbb R^3\) and the Grassmannian of all 2-dimensional linear subspaces of \({\mathbb {R}}^4\). Our algorithm of choice is a conjugate gradient method on these manifolds, which incorporates second-order information. For efficient gradient and Hessian evaluations within the algorithm, we approximate the given measures by truncated Fourier series and use fast Fourier transform techniques on these manifolds.



1 Introduction

The approximation of probability measures by atomic or empirical ones based on their discrepancies is a well examined problem in approximation and complexity theory [59, 62, 67] with a wide range of applications, e.g., in the derivation of quadrature rules and in the construction of designs. Recently, discrepancies were also used in image processing for dithering [46, 72, 77], i.e., for representing a gray-value image by a finite number of black dots, and in generative adversarial networks [28].

Besides discrepancies, Optimal Transport (OT) and in particular Wasserstein distances have emerged as powerful tools to compare probability measures in recent years, see [24, 81] and the references therein. In fact, so-called Sinkhorn divergences, which are computationally much easier to handle than OT, are known to interpolate between OT and discrepancies [30]. For the sample complexity of Sinkhorn divergences we refer to [37]. The rates for approximating probability measures by atomic or empirical ones with respect to Wasserstein distances depend on the dimension of the underlying spaces, see [21, 58]. In contrast, approximation rates based on discrepancies can be given independently of the dimension [67], i.e., they do not suffer from the curse of dimensionality. Additionally, unlike OT and Sinkhorn divergences, whose evaluation requires solving a minimization problem, discrepancies can be computed without any optimization. Moreover, discrepancies admit a simple description in the Fourier domain, so that fast Fourier transforms can be used, which leads to better scalability than the aforementioned methods.

Instead of point measures, we are interested in approximations by measures supported on curves. More precisely, we consider push-forward measures of probability measures \(\omega \in {\mathcal P} ([0,1])\) by Lipschitz curves of bounded speed, with special focus on absolutely continuous measures \(\omega = \rho \lambda \) and the Lebesgue measure \(\omega = \lambda \). In this paper, we focus on approximation with respect to discrepancies. For related results on quadrature and approximation on manifolds, we refer to [31, 47, 64, 65] and the references therein. An approximation model based on the 2-Wasserstein distance was proposed in [61]. That work uses techniques completely different from ours, both in the theoretical and in the numerical part. Finally, we want to point out a relation to principal curves, which are used in computer science and graphics for approximating distributions that are approximately supported on curves [49, 50, 55, 57]. For the interested reader, we further comment on this direction of research in Remark 3 and in the conclusions. Next, we motivate our framework by several potential applications:

- In MRI sampling [11, 17], it is desirable to construct sampling curves with short sampling times (short curves) and high reconstruction quality. Unfortunately, these requirements usually contradict each other, and a good trade-off has to be found. Experiments demonstrating the power of this novel approach on a real-world scanner are presented in [60].

- For laser engraving [61] and 3D printing [20], we require nozzle trajectories based on our (continuous) input densities. Compared to the approach in [20], where points given by Lloyd's method are connected as a solution of the TSP (traveling salesman problem), our method jointly selects the points and the corresponding curve. This avoids solving a TSP, which can be quite costly, although efficient approximations exist. Further, it is not obvious that a fixed initial point approximation is a good starting point for constructing a curve.

- The model can be used for wire sculpture creation [2]. In view of this, our numerical experiment presented in Fig. 5 can be interpreted as a building plan for a wire sculpture of the Spock head, namely of a 2D surface. Clearly, the approach can also be used to create images similar to TSP Art [54], where images are created from points by solving the corresponding TSP.

- In a more manifold-related setting, the approach can be used for grand tour computation on \({\mathcal {G}}_{2,4}\) [5], see also our numerical experiment in Fig. 11. More technical details are provided in the corresponding section.

Our contribution is two-fold. On the theoretical side, we provide estimates of the approximation rates in terms of the maximal speed of the curve. First, we prove approximation rates for general probability measures on compact Ahlfors d-regular length spaces \({\mathbb {X}}\). These spaces include many compact sets in the Euclidean space \({\mathbb {R}}^d\), e.g., the unit ball or the unit cube, as well as d-dimensional compact Riemannian manifolds without boundary. The basic idea consists in combining the known convergence rates for approximation by atomic measures with cost estimates for the traveling salesman problem. As for point measures, the approximation rates \(L^{-d/(2d-2)} \le L^{-1/2}\) for general \(\omega \in {\mathcal P} ([0,1])\) and \(L^{-d/(3d-2)} \le L^{-1/3}\) for \(\omega = \lambda \), in terms of the maximal Lipschitz constant (speed) L of the curves, do not crucially depend on the dimension of \({\mathbb {X}}\). In particular, the second estimate improves a result given in [18] for the torus.

If the measures fulfill additional smoothness properties, these estimates can be improved on compact, connected, d-dimensional Riemannian manifolds without boundary. Our results are formulated for absolutely continuous measures (with respect to the Riemannian measure) having densities in the Sobolev space \(H^s({\mathbb {X}})\), \(s> d/2\). In this setting, the optimal approximation rate becomes, roughly speaking, \(L^{-s/(d-1)}\). Our proofs rely on a general result of Brandolini et al. [13] on the quadrature error achievable by integration with respect to a measure that exactly integrates all eigenfunctions of the Laplace–Beltrami operator with eigenvalues smaller than a fixed number. Hence, we need to construct measures supported on curves that fulfill this exactness criterion. More precisely, we construct such curves for the d-dimensional torus \({\mathbb {T}}^d\), the spheres \({\mathbb {S}}^d\), the rotation group \(\mathrm{SO}(3)\) and the Grassmannian \({\mathcal {G}}_{2,4}\).

On the numerical side, we are interested in finding (local) minimizers of discrepancies between a given continuous measure and those from the set of push-forward measures of the Lebesgue measure by bounded Lipschitz curves. This problem is tackled numerically on \({\mathbb {T}}^2\), \({\mathbb {T}}^3\), \({\mathbb {S}}^2\) as well as \(\mathrm{SO}(3)\) and \({\mathcal {G}}_{2,4}\) by switching to the Fourier domain. The minimizers are computed using the method of conjugate gradients (CG) on manifolds, which incorporates second-order information in the form of a multiplication by the Hessian. Thanks to the Fourier-domain approach, the required gradients and the calculations involving the Hessian can be performed efficiently by fast Fourier transform techniques at arbitrary nodes on the respective manifolds. Note that, in contrast to our approach, semi-continuous OT minimization relies on Laguerre tessellations [41], which are not available in the required form on the 2-sphere, \(\mathrm{SO}(3)\) or \({\mathcal {G}}_{2,4}\).

This paper is organized as follows: In Sect. 2 we give the necessary preliminaries on probability measures. In particular, we introduce the different sets of measures supported on Lipschitz curves that are used for the approximation. Note that measures supported on continuous curves of finite length can be equivalently characterized as push-forward measures of probability measures by Lipschitz curves. Section 3 provides the notation on reproducing kernel Hilbert spaces and discrepancies, including their representation in the Fourier domain. Section 4 contains our estimates of the approximation rates for general given measures and different approximation spaces of measures supported on curves. Following the usual lines in approximation theory, we are then concerned with the approximation of absolutely continuous measures with density functions lying in Sobolev spaces. Our main results on the approximation rates of smoother measures are contained in Sect. 5, where we distinguish between the approximation with respect to the push-forward of general measures \(\omega \in {\mathcal P}([0,1])\), of absolutely continuous measures and of the Lebesgue measure on [0, 1]. In Sect. 6 we formulate our numerical minimization problem. Our numerical algorithms of choice are briefly described in Sect. 7; for a comprehensive description of the algorithms on the different manifolds, we refer to the respective papers. Section 8 contains numerical results demonstrating the practical feasibility of our findings. Conclusions are drawn in Sect. 9. Finally, Appendix A briefly introduces the different manifolds \({\mathbb {X}}\) used in our numerical examples together with the Fourier representation of probability measures on \({\mathbb {X}}\).

2 Probability Measures and Curves

In this section, the basic notation on measure spaces is provided, see [3, 32], with focus on probability measures supported on curves. At this point, let us assume that

\({\mathbb {X}}\) is a compact metric space endowed with a bounded non-negative Borel measure \(\sigma _{\mathbb {X}}\in {\mathcal {M}} ({\mathbb {X}})\) such that \(\text {supp}(\sigma _{\mathbb {X}})={\mathbb {X}}\). Further, we denote the metric by \({{\,\mathrm{dist}\,}}_{\mathbb {X}}\).

Additional requirements on \({\mathbb {X}}\) are added along the way and notations are explained below. By \({\mathcal {B}}({\mathbb {X}})\) we denote the Borel \(\sigma \)-algebra on \({\mathbb {X}}\) and by \({\mathcal {M}}({\mathbb {X}})\) the linear space of all finite signed Borel measures on \({\mathbb {X}}\), i.e., the space of all \(\mu :{\mathcal {B}}({\mathbb {X}}) \rightarrow {\mathbb {R}}\) satisfying \(\mu ({\mathbb {X}}) < \infty \) and for any sequence \((B_k)_{k \in {\mathbb {N}}} \subset {\mathcal {B}}({\mathbb {X}})\) of pairwise disjoint sets the relation \(\mu (\bigcup _{k=1}^\infty B_k) = \sum _{k=1}^\infty \mu (B_k)\). The support of a measure \(\mu \) is the closed set

\[ \text{supp}(\mu) :=\big\{x\in {\mathbb X}: |\mu|(B)>0 \text{ for every open set } B\subset {\mathbb X} \text{ with } x\in B\big\}. \]

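For \(\mu \in {\mathcal {M}}({\mathbb {X}})\) the total variation measure is defined by

\[ |\mu|(B) :=\sup \Big\{\sum_{k=1}^\infty |\mu(B_k)| : B=\bigcup_{k=1}^\infty B_k,\ B_k\in{\mathcal B}({\mathbb X}) \text{ pairwise disjoint}\Big\}, \quad B\in{\mathcal B}({\mathbb X}). \]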

With the norm \(\Vert \mu \Vert _{{\mathcal {M}}} = |\mu |({\mathbb {X}})\) the space \({\mathcal {M}}({\mathbb {X}})\) becomes a Banach space. By \({{\mathcal {C}}}({\mathbb {X}})\) we denote the Banach space of continuous real-valued functions on \({\mathbb {X}}\) equipped with the norm \(\Vert \varphi \Vert _{{{\mathcal {C}}}({\mathbb {X}})} :=\max _{x \in {\mathbb {X}}} |\varphi (x)|\). The space \({\mathcal {M}}({\mathbb {X}})\) can be identified via Riesz's theorem with the dual space of \({\mathcal C}({\mathbb {X}})\), and the weak-\(^*\) topology on \({\mathcal {M}}({\mathbb {X}})\) gives rise to the weak convergence of measures, i.e., a sequence \((\mu _k )_k \subset {\mathcal {M}}({\mathbb {X}})\) converges weakly to \(\mu \), and we write \(\mu _k \rightharpoonup \mu \), if

\[ \lim_{k\to\infty}\int_{\mathbb X}\varphi\,\mathrm d\mu_k = \int_{\mathbb X}\varphi\,\mathrm d\mu \quad \text{for all } \varphi\in{\mathcal C}({\mathbb X}). \]

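For a non-negative, finite measure \(\mu \), let \(L^p({\mathbb {X}},\mu )\) be the Banach space (of equivalence classes) of complex-valued functions with norm

\[ \|f\|_{L^p({\mathbb X},\mu)} := \Big(\int_{\mathbb X}|f|^p\,\mathrm d\mu\Big)^{1/p},\quad 1\le p<\infty, \qquad \|f\|_{L^\infty({\mathbb X},\mu)} := \mu\text{-}\mathop{\mathrm{ess\,sup}}_{x\in{\mathbb X}}|f(x)|. \]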

By \({\mathcal {P}} ({\mathbb {X}})\) we denote the space of Borel probability measures on \({\mathbb {X}}\), i.e., non-negative Borel measures with \(\mu ({\mathbb {X}}) = 1\). This space is weakly compact, i.e., compact with respect to the topology of weak convergence. We are interested in the approximation of measures in \({\mathcal {P}} ({\mathbb {X}})\) by probability measures supported on points and curves in \({\mathbb {X}}\). To this end, we associate with \(x \in {\mathbb {X}}\) a probability measure \(\delta _x\) with values \(\delta _x(B) = 1\) if \(x \in B\) and \(\delta _x(B) = 0\) otherwise.

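The atomic probability measures at N points are defined by

\[ {\mathcal P}_N^{\mathrm{atom}}({\mathbb X}) := \Big\{\sum_{k=1}^N w_k\delta_{x_k} : x_k\in{\mathbb X},\ w_k\ge 0,\ \sum_{k=1}^N w_k=1\Big\}. \]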

In other words, \({\mathcal {P}}_N^{{{\,\mathrm{atom}\,}}} ({\mathbb {X}})\) is the collection of probability measures whose support consists of at most N points. Further restriction to equal mass distribution leads to the empirical probability measures at N points, denoted by

\[ {\mathcal P}_N^{\mathrm{emp}}({\mathbb X}) := \Big\{\frac1N\sum_{k=1}^N \delta_{x_k} : x_k\in{\mathbb X}\Big\}. \]

In this work, we are interested in the approximation by measures having their support on curves. Let \({\mathcal {C}}([a,b],{\mathbb {X}})\) denote the set of closed, continuous curves \(\gamma :[a,b]\rightarrow {\mathbb {X}}\). Although our experiments involve only closed curves, some applications might require open curves. We point out that all of our approximation results remain valid without the closedness requirement: the upper bounds cannot get worse, and closedness is not used for the lower bounds on the approximation rates. The length of a curve \(\gamma \in {\mathcal {C}}([a,b],{\mathbb {X}}) \) is given by

\[ \ell(\gamma) := \sup\Big\{\sum_{k=1}^n \mathrm{dist}_{\mathbb X}\big(\gamma(t_{k-1}),\gamma(t_k)\big) : n\in{\mathbb N},\ a\le t_0<t_1<\dots<t_n\le b\Big\}. \]

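If \(\ell (\gamma )<\infty \), then \(\gamma \) is called rectifiable. By reparametrization, see [48, Thm. 3.2], the image of any rectifiable curve in \({\mathcal {C}}([a,b],{\mathbb {X}})\) can be obtained from the set of closed Lipschitz continuous curves

\[ \mathrm{Lip}({\mathbb X}) := \big\{\gamma\in{\mathcal C}([0,1],{\mathbb X}) : \text{there is } L\ge 0 \text{ with } \mathrm{dist}_{\mathbb X}(\gamma(s),\gamma(t))\le L|s-t| \text{ for all } s,t\in[0,1]\big\}. \]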

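The speed of a curve \(\gamma \in {{\,\mathrm{Lip}\,}}({\mathbb {X}})\) is defined a.e. by the metric derivative

\[ |\dot\gamma|(t) := \lim_{s\to t}\frac{\mathrm{dist}_{\mathbb X}(\gamma(s),\gamma(t))}{|s-t|}, \]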

cf. [4, Sec. 1.1]. The optimal Lipschitz constant \(L=L(\gamma )\) of a curve \(\gamma \) is given by \(L(\gamma ) = \Vert \, |{\dot{\gamma }}| \, \Vert _{L^\infty ([0,1])}\). For a constant speed curve, it holds \(L(\gamma ) = \ell (\gamma )\).
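As a purely numerical illustration of \(\ell(\gamma)\) and \(L(\gamma)\), the following Python sketch (our own, not part of the original text) samples a curve in \({\mathbb R}^2\) at equispaced parameters. The polygonal sum over one partition is a lower bound for the supremum defining \(\ell(\gamma)\), and the largest difference quotient is a lower bound for \(L(\gamma)\). The helper names and the circle example are hypothetical choices for illustration.

```python
import numpy as np

def polygonal_length(points):
    """Length of the polygon through consecutive samples; a lower bound for ell(gamma)."""
    return float(np.sum(np.linalg.norm(np.diff(points, axis=0), axis=1)))

def max_difference_quotient(points, t):
    """Largest dist(gamma(t_k), gamma(t_{k+1})) / (t_{k+1} - t_k); a lower bound for L(gamma)."""
    steps = np.linalg.norm(np.diff(points, axis=0), axis=1)
    return float(np.max(steps / np.diff(t)))

# Example: unit circle in R^2, traversed once on [0, 1] with constant speed 2*pi.
t = np.linspace(0.0, 1.0, 10_001)
gamma = np.stack([np.cos(2 * np.pi * t), np.sin(2 * np.pi * t)], axis=1)

length = polygonal_length(gamma)
lip = max_difference_quotient(gamma, t)
print(length, lip)  # both close to 2*pi, in line with L(gamma) = ell(gamma) for constant speed
```

Both estimates approach \(2\pi\) as the partition is refined, which matches \(L(\gamma)=\ell(\gamma)\) for constant speed curves on [0, 1].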

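We aim to approximate measures in \({\mathcal {P}}({\mathbb {X}})\) from those of the subset

\[ {\mathcal P}^{\mathrm{curv}}_L({\mathbb X}) := \big\{\nu\in{\mathcal P}({\mathbb X}) : \text{there is } \gamma\in{\mathcal C}([a,b],{\mathbb X}) \text{ with } \text{supp}(\nu)\subset\gamma([a,b]) \text{ and } \ell(\gamma)\le L\big\}. \tag{1} \]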

This space is quite large and in order to define further meaningful subsets, we derive an equivalent formulation in terms of push-forward measures. For \(\gamma \in {\mathcal {C}}([0,1],{\mathbb {X}})\), the push-forward \(\gamma {_*} \omega \in {{\mathcal {P}}}({\mathbb {X}})\) of a probability measure \(\omega \in {{\mathcal {P}}}([0,1])\) is defined by \(\gamma {_*} \omega (B):=\omega (\gamma ^{-1} (B))\) for \(B \in {\mathcal {B}}({\mathbb {X}})\). We directly observe \(\text {supp}(\gamma {_*} \omega )=\gamma (\text {supp}(\omega ))\). By the following lemma, \({\mathcal {P}}^{{{\,\mathrm{curv}\,}}}_L({\mathbb {X}})\) consists of the push-forward of measures in \({\mathcal {P}}([0,1])\) by constant speed curves.
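For \(\omega=\lambda\), the definition of the push-forward gives the change-of-variables formula \(\int_{\mathbb X}\varphi\,\mathrm d(\gamma_*\lambda)=\int_0^1\varphi(\gamma(t))\,\mathrm dt\), so integrals against \(\gamma_*\lambda\) only require evaluations of the curve. A minimal sketch under our own assumptions (a curve in \({\mathbb R}^2\), the midpoint rule, and hypothetical helper names):

```python
import numpy as np

def integrate_pushforward(phi, gamma, n=100_000):
    """Approximate the integral of phi against gamma_* lambda by the midpoint rule on [0, 1]."""
    t = (np.arange(n) + 0.5) / n          # midpoints of n equal subintervals
    return float(np.mean(phi(gamma(t))))  # (1/n) * sum_k phi(gamma(t_k))

def circle(t):
    """Constant speed parametrization of the unit circle; returns an array of shape (n, 2)."""
    return np.stack([np.cos(2 * np.pi * t), np.sin(2 * np.pi * t)], axis=1)

def first_coord_squared(x):
    return x[:, 0] ** 2

value = integrate_pushforward(first_coord_squared, circle)
# Exact value: int_0^1 cos(2 pi t)^2 dt = 1/2.
```

For a general \(\omega\in{\mathcal P}([0,1])\), the uniform nodes would be replaced by samples or quadrature weights of \(\omega\).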

Lemma 1

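The space \({\mathcal {P}}^{{{\,\mathrm{curv}\,}}}_L({\mathbb {X}})\) in (1) is equivalently given by

\[ {\mathcal P}^{\mathrm{curv}}_L({\mathbb X}) = \big\{\gamma_*\omega : \gamma\in\mathrm{Lip}({\mathbb X}) \text{ with constant speed } L(\gamma)\le L,\ \omega\in{\mathcal P}([0,1])\big\}. \tag{2} \]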

Proof

Let \(\nu \in {\mathcal {P}}^{{{\,\mathrm{curv}\,}}}_L({\mathbb {X}})\) as in (1). If \(\text {supp}(\nu )\) consists of a single point \(x \in {\mathbb {X}}\) only, then \(\nu = \delta_x = \gamma_*\delta_t\) for the constant curve \(\gamma \equiv x\) and an arbitrary \(t\in [0,1]\), which shows that \(\nu \) is contained in (2).

Suppose that \(\text {supp}(\nu )\) contains at least two distinct points and let \(\gamma \in {\mathcal {C}}([a,b],{\mathbb {X}})\) with \(\text {supp}(\nu )\subset \gamma ([a,b])\) and \(\ell (\gamma )\le L\). According to [16, Prop. 2.5.9], there exist a curve \({\tilde{\gamma }} \in {{\,\mathrm{Lip}\,}}({\mathbb {X}})\) with constant speed \(\ell (\gamma )\) and a continuous non-decreasing function \(\varphi :[a,b] \rightarrow [0,1]\) with \(\gamma = {\tilde{\gamma }} \circ \varphi \). Now, define \(f:\tilde{\gamma}([0,1])\rightarrow [0,1]\) by \(f(x) :=\min \{\tilde{\gamma }^{-1}(x)\}\), where the minimum exists since \(\tilde{\gamma }^{-1}(x)\) is closed and non-empty. This function is measurable, since for every \(t \in [0,1]\) the sublevel set

\[ \{x\in\tilde\gamma([0,1]) : f(x)\le t\} = \tilde\gamma([0,t]) \]

is compact. Due to \(\text {supp}(\nu )\subset {\tilde{\gamma }}([0,1])\), we can define \(\omega :=f{_*}\nu \in {\mathcal {P}}([0,1])\). Since \(\tilde{\gamma}\circ f\) is the identity on \(\tilde{\gamma}([0,1])\), the measure \(\omega \) satisfies \(\tilde{\gamma }{_*} \omega (B)=\omega (\tilde{\gamma }^{-1}(B)) =\nu (f^{-1} (\tilde{\gamma }^{-1}(B)))= \nu (B)\) for all \(B\in {\mathcal {B}}({\mathbb {X}})\). This concludes the proof. \(\square \)

The set \({\mathcal {P}}^{{{\,\mathrm{curv}\,}}}_L({\mathbb {X}})\) contains \({\mathcal {P}}_N^{{{\,\mathrm{atom}\,}}} ({\mathbb {X}})\) if L is sufficiently large compared to N and \({\mathbb {X}}\) is sufficiently nice, cf. Sect. 4. It is reasonable to ask for more restrictive sets of approximation measures, e.g., when \(\omega \in {\mathcal {P}}([0,1])\) is assumed to be absolutely continuous. For the Lebesgue measure \(\lambda \) on [0, 1], we consider

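In the literature [18, 61], the special case of the push-forward of the Lebesgue measure \(\omega = \lambda \) on [0, 1] by Lipschitz curves in \({\mathbb {T}}^d\) was discussed and successfully used in certain applications [11, 17]. Therefore, we also consider approximations from

\[ {\mathcal P}^{\lambda\text{-}\mathrm{curv}}_L({\mathbb X}) := \big\{\gamma_*\lambda : \gamma\in\mathrm{Lip}({\mathbb X}),\ L(\gamma)\le L\big\}. \]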

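It is obvious that our probability spaces related to curves are nested,

\[ {\mathcal P}^{\lambda\text{-}\mathrm{curv}}_L({\mathbb X}) \subset {\mathcal P}^{\mathrm{a}\text{-}\mathrm{curv}}_L({\mathbb X}) \subset {\mathcal P}^{\mathrm{curv}}_L({\mathbb X}). \]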

Hence, one may expect that establishing good approximation rates is most difficult for \({\mathcal {P}}^{{{\,\mathrm{{\lambda }-curv}\,}}}_L({\mathbb {X}})\) and easier for \({\mathcal {P}}^{{{\,\mathrm{curv}\,}}}_L({\mathbb {X}})\).

3 Discrepancies and RKHS

The aim of this section is to introduce the way we quantify the distance ("discrepancy") between two probability measures. To this end, choose a continuous, symmetric function \(K:{\mathbb {X}}\times {\mathbb {X}}\rightarrow {\mathbb {R}}\) that is positive definite, i.e., for any finite number \(n \in {\mathbb {N}}\) of points \(x_j\in {\mathbb {X}}\), \(j=1,\ldots ,n\), the relation

\[ \sum_{i,j=1}^n a_i a_j K(x_i,x_j) \ge 0 \]

is satisfied for all \(a_j\in {\mathbb {R}}\), \(j=1,\ldots ,n\). By Mercer's theorem [23, 63, 76], there exist an orthonormal basis \(\{\phi _k: k \in {\mathbb {N}}\}\) of \(L^2({\mathbb {X}},\sigma _{\mathbb {X}})\) and non-negative coefficients \((\alpha _k)_{k \in {\mathbb {N}}} \in \ell _1\) such that K has the Fourier expansion

\[ K(x,y) = \sum_{k\in{\mathbb N}}\alpha_k\,\phi_k(x)\overline{\phi_k(y)}, \quad x,y\in{\mathbb X}, \tag{3} \]

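with absolute and uniform convergence of the right-hand side. If \(\alpha _k > 0\) for some \(k \in {\mathbb {N}}\), the corresponding function \(\phi _k\) is continuous. Every function \(f\in L^2({\mathbb {X}},\sigma _{\mathbb {X}})\) has a Fourier expansion

\[ f = \sum_{k\in{\mathbb N}}\hat f_k\,\phi_k, \qquad \hat f_k := \int_{\mathbb X} f\,\overline{\phi_k}\,\mathrm d\sigma_{\mathbb X}. \]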
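A quick numerical sanity check of positive definiteness, under assumptions of ours that are not taken from the text: \({\mathbb X}={\mathbb T}^1={\mathbb R}/{\mathbb Z}\) with the Lebesgue measure, \(\phi_k(x)=\mathrm e^{2\pi\mathrm i kx}\), and the hypothetical coefficients \(\alpha_k=(1+k^2)^{-1}\) for \(|k|\le M\) (zero otherwise). The resulting kernel is real, \(K(x,y)=\alpha_0+2\sum_{k=1}^M\alpha_k\cos(2\pi k(x-y))\), and its Gram matrices should have no negative eigenvalues beyond rounding.

```python
import numpy as np

def torus_kernel(x, y, M=16):
    """K(x, y) = sum_{|k| <= M} alpha_k exp(2 pi i k (x - y)), alpha_k = 1 / (1 + k^2), in real form."""
    k = np.arange(1, M + 1)
    alpha = 1.0 / (1.0 + k**2)
    diff = x[:, None] - y[None, :]
    # alpha_0 = 1 plus the paired terms k and -k, which combine to 2 * alpha_k * cos(...)
    return 1.0 + 2.0 * np.sum(alpha * np.cos(2 * np.pi * k * diff[..., None]), axis=-1)

rng = np.random.default_rng(0)
pts = rng.random(50)
gram = torus_kernel(pts, pts)
eigenvalues = np.linalg.eigvalsh(gram)  # gram is symmetric, so eigvalsh applies
```

Because the truncated kernel has rank at most \(2M+1\), several eigenvalues are zero up to rounding; none should be significantly negative.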

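The kernel K gives rise to a reproducing kernel Hilbert space (RKHS). More precisely, the function space

\[ H_K({\mathbb X}) := \Big\{f\in L^2({\mathbb X},\sigma_{\mathbb X}) : \sum_{k\in{\mathbb N}}\alpha_k^{-1}|\hat f_k|^2<\infty\Big\} \]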

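equipped with the inner product and the corresponding norm

\[ \langle f,g\rangle_{H_K({\mathbb X})} := \sum_{k\in{\mathbb N}}\alpha_k^{-1}\hat f_k\overline{\hat g_k}, \qquad \|f\|_{H_K({\mathbb X})} := \langle f,f\rangle_{H_K({\mathbb X})}^{1/2} \tag{4} \]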

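forms a Hilbert space with reproducing kernel, i.e.,

\[ K(x,\cdot)\in H_K({\mathbb X}) \quad\text{and}\quad f(x) = \langle f, K(x,\cdot)\rangle_{H_K({\mathbb X})} \quad\text{for all } f\in H_K({\mathbb X}),\ x\in{\mathbb X}. \]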

Note that \(f\in H_K({\mathbb {X}})\) implies \(\hat{f}_k=0\) if \(\alpha _k=0\), in which case we make the convention \(\alpha _k^{-1} {\hat{f}}_k=0\) in (4). The space \(H_{K} ({\mathbb {X}})\) is the closure of the linear span of \(\{ K (x_j,\cdot ): x_j \in {\mathbb {X}}\}\) with respect to the norm (4), and \(H_{K} ({\mathbb {X}})\) is continuously embedded in \(C({\mathbb {X}})\). In particular, the point evaluations in \(H_{K} ({\mathbb {X}})\) are continuous.

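The discrepancy \({\mathscr {D}}_K(\mu ,\nu )\) is defined as the dual norm on \(H_{K}({\mathbb {X}})\) of the linear functional \(T:H_K({\mathbb {X}}) \rightarrow {\mathbb {C}}\) with \(\varphi \mapsto \int _{{\mathbb {X}}} \varphi \,\mathrm {d}(\mu - \nu )\):

\[ {\mathscr D}_K(\mu,\nu) := \sup_{\|\varphi\|_{H_K({\mathbb X})}\le 1}\Big|\int_{\mathbb X}\varphi\,\mathrm d(\mu-\nu)\Big|, \]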

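see [40, 67]. Note that this resembles the dual formulation of the 1-Wasserstein distance, where the space of test functions consists of Lipschitz continuous functions and is larger. Since

\[ \int_{\mathbb X}\varphi\,\mathrm d(\mu-\nu) = \int_{\mathbb X}\langle\varphi,K(x,\cdot)\rangle_{H_K({\mathbb X})}\,\mathrm d(\mu-\nu)(x) = \Big\langle\varphi,\int_{\mathbb X}K(x,\cdot)\,\mathrm d(\mu-\nu)(x)\Big\rangle_{H_K({\mathbb X})}, \]

we obtain by Riesz's representation theorem

\[ {\mathscr D}_K(\mu,\nu) = \Big\|\int_{\mathbb X}K(x,\cdot)\,\mathrm d(\mu-\nu)(x)\Big\|_{H_K({\mathbb X})}, \]

which yields by Fubini's theorem, (3), (4) and symmetry of K that

\[ {\mathscr D}_K^2(\mu,\nu) = \int_{\mathbb X}\int_{\mathbb X}K(x,y)\,\mathrm d(\mu-\nu)(x)\,\mathrm d(\mu-\nu)(y) = \sum_{k\in{\mathbb N}}\alpha_k\,|\hat\mu_k-\hat\nu_k|^2, \tag{6} \]

where the Fourier coefficients of \(\mu , \nu \in \mathcal P({\mathbb {X}})\) are well-defined for k with \(\alpha _k\ne 0\) by

\[ \hat\mu_k := \int_{\mathbb X}\overline{\phi_k}\,\mathrm d\mu, \qquad \hat\nu_k := \int_{\mathbb X}\overline{\phi_k}\,\mathrm d\nu. \]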
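Identity (6) can be checked numerically. The sketch below rests on our own assumptions: \({\mathbb X}={\mathbb T}^1\), \(\phi_k(x)=\mathrm e^{2\pi\mathrm i kx}\), hypothetical coefficients \(\alpha_k=(1+k^2)^{-1}\) for \(|k|\le M\), and two empirical measures \(\mu,\nu\). It evaluates \({\mathscr D}_K^2(\mu,\nu)\) once through the double integral of K and once through the Fourier coefficients \(\hat\mu_k=\int\overline{\phi_k}\,\mathrm d\mu\).

```python
import numpy as np

M = 16
ks = np.arange(-M, M + 1)
alpha = 1.0 / (1.0 + ks**2)  # hypothetical Mercer coefficients, symmetric in k

def kernel(x, y):
    """K(x, y) = sum_{|k| <= M} alpha_k e^{2 pi i k (x - y)}; real because alpha_k = alpha_{-k}."""
    phases = np.exp(2j * np.pi * np.subtract.outer(x, y)[..., None] * ks)
    return np.real(phases @ alpha)

def disc_sq_kernel(x, y):
    """Double integral of K against (mu - nu) x (mu - nu) for empirical mu on x and nu on y."""
    return kernel(x, x).mean() - 2 * kernel(x, y).mean() + kernel(y, y).mean()

def fourier_coeffs(x):
    """hat mu_k = (1/N) sum_j exp(-2 pi i k x_j) for the empirical measure on the points x."""
    return np.exp(-2j * np.pi * np.outer(ks, x)).mean(axis=1)

def disc_sq_fourier(x, y):
    """Right-hand side of (6): sum_k alpha_k |hat mu_k - hat nu_k|^2."""
    return float(np.sum(alpha * np.abs(fourier_coeffs(x) - fourier_coeffs(y)) ** 2))

rng = np.random.default_rng(1)
x, y = rng.random(40), rng.random(60)
print(disc_sq_kernel(x, y), disc_sq_fourier(x, y))  # agree up to rounding
```

The Fourier form costs \(O(M(N_\mu+N_\nu))\) operations instead of the quadratic cost of the kernel sums, which is the reason for working in the Fourier domain; fast Fourier transform techniques at arbitrary nodes reduce this further.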

Remark 1

The Fourier coefficients \({\hat{\mu }}_{k}\) and \({\hat{\nu }}_{k}\) depend on both K and \(\sigma _{\mathbb {X}}\), but the identity (6) shows that \({\mathscr {D}}_K(\mu ,\nu )\) only depends on K. Thus, our approximation rates do not depend on the choice of \(\sigma _{\mathbb {X}}\). On the other hand, our numerical algorithms in Sect. 7 depend on \(\phi _k\) and hence on the choice of \(\sigma _{\mathbb {X}}\).

If \(\mu _n \rightharpoonup \mu \) and \(\nu _n \rightharpoonup \nu \) as \(n\rightarrow \infty \), then also \(\mu _n \otimes \nu _n \rightharpoonup \mu \otimes \nu \). Therefore, the continuity of K implies that \(\lim _{n \rightarrow \infty } {\mathscr {D}}_K(\mu _n,\nu _n) = {\mathscr {D}}_K(\mu ,\nu )\), so that \({\mathscr {D}}_K\) is continuous with respect to weak convergence in both arguments. Thus, for fixed \(\mu\in{\mathcal P}({\mathbb X})\) and any weakly compact subset \(P\subset {\mathcal {P}}({\mathbb {X}})\), the infimum

\[ \inf_{\nu\in P}{\mathscr D}_K(\mu,\nu) \]

is actually a minimum. All of the subsets introduced in the previous section are weakly compact.

Lemma 2

The sets \({\mathcal {P}}_N^{{{\,\mathrm{atom}\,}}}({\mathbb {X}})\), \({\mathcal {P}}_N^{{{\,\mathrm{emp}\,}}}({\mathbb {X}})\), \({\mathcal {P}}^{{{\,\mathrm{curv}\,}}}_L({\mathbb {X}})\), \({\mathcal {P}}^{{{\,\mathrm{ a-curv}\,}}}_L({\mathbb {X}})\), and \({\mathcal {P}}^{{{\,\mathrm{{\lambda }-curv}\,}}}_L({\mathbb {X}})\) are weakly compact.

Proof

It is well-known that \({\mathcal {P}}_N^{{{\,\mathrm{atom}\,}}}({\mathbb {X}})\) and \({\mathcal {P}}_N^{{{\,\mathrm{emp}\,}}}({\mathbb {X}}) \) are weakly compact.

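We show that \({\mathcal {P}}^{{{\,\mathrm{curv}\,}}}_L({\mathbb {X}})\) is weakly compact. In view of (2), let \((\gamma _k)_{k\in {\mathbb {N}}}\) be Lipschitz curves with constant speed \(L(\gamma _k)\le L\) and \((\omega _k)_{k\in {\mathbb {N}}} \subset {{\mathcal {P}}}([0,1])\). Since \({\mathcal P}([0,1])\) is weakly compact, we can extract a subsequence \((\omega _{k_j})_{j\in {\mathbb {N}}}\) with weak limit \({{\hat{\omega }}} \in {\mathcal P}([0,1])\). Now, we observe that \( {{\,\mathrm{dist}\,}}_{\mathbb {X}}( \gamma _{k_j} (s), \gamma _{k_j} (t)) \le L |s-t| \) for all \(s,t\in[0,1]\) and \(j\in {\mathbb {N}}\). Since \({\mathbb {X}}\) is compact, the Arzelà–Ascoli theorem implies that, after passing to a further subsequence (not relabeled), \((\gamma _{k_j})_{j\in {\mathbb {N}}}\) converges uniformly towards some \({{\hat{\gamma }}}\in {{\,\mathrm{Lip}\,}}({\mathbb {X}})\) with \(L({\hat{\gamma }})\le L\). For every \(\varphi\in{\mathcal C}({\mathbb X})\), the functions \(\varphi\circ\gamma_{k_j}\) converge uniformly to \(\varphi\circ\hat\gamma\), so that

\[ \Big|\int_{[0,1]}\varphi\circ\gamma_{k_j}\,\mathrm d\omega_{k_j}-\int_{[0,1]}\varphi\circ\hat\gamma\,\mathrm d\hat\omega\Big| \le \|\varphi\circ\gamma_{k_j}-\varphi\circ\hat\gamma\|_{{\mathcal C}([0,1])}+\Big|\int_{[0,1]}\varphi\circ\hat\gamma\,\mathrm d(\omega_{k_j}-\hat\omega)\Big| \rightarrow 0, \]

i.e., \((\gamma_{k_j}){_*}\omega_{k_j}\rightharpoonup {{\hat{\nu }}}:={\hat{\gamma }}{_*}{\hat{\omega }}\). Finally, \({\hat{\nu }}\) fulfills \(\text {supp}({\hat{\nu }})\subset {\hat{\gamma }}([0,1])\) and \(\ell(\hat\gamma)\le L(\hat\gamma)\le L\), so that \({\hat{\nu }}\in {{\mathcal {P}}}_{L}^{{{\,\mathrm{curv}\,}}}({\mathbb {X}})\) by (1). Thus, \({\mathcal {P}}^{{{\,\mathrm{curv}\,}}}_L({\mathbb {X}})\) is weakly compact.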

The proof for \({\mathcal {P}}^{{{\,\mathrm{ a-curv}\,}}}_L({\mathbb {X}})\) and \({\mathcal {P}}^{{{\,\mathrm{{\lambda }-curv}\,}}}_L({\mathbb {X}})\) is analogous and hence omitted. \(\square \)

Remark 2

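(Discrepancies and Convolution Kernels) Let \({\mathbb {X}}= {\mathbb {T}}^d :={\mathbb {R}}^d / {\mathbb {Z}}^d\) be the torus and \(h \in {\mathcal {C}}({\mathbb {T}}^d)\) be a function with Fourier series

\[ h(x) = \sum_{k\in{\mathbb Z}^d}\hat h_k\,\mathrm e^{2\pi\mathrm i\langle k,x\rangle}, \qquad \hat h_k := \int_{{\mathbb T}^d}h(x)\,\mathrm e^{-2\pi\mathrm i\langle k,x\rangle}\,\mathrm dx, \]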

which converges in \(L^2({\mathbb {T}}^d)\) so that \(\sum _k |{\hat{h}}_k|^2 < \infty \).

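Assume that \({\hat{h}}_k \not = 0\) for all \(k \in {\mathbb {Z}}^d\). We consider the special Mercer kernel

\[ K_h(x,y) := \sum_{k\in{\mathbb Z}^d}|\hat h_k|^2\,\mathrm e^{2\pi\mathrm i\langle k,x-y\rangle}, \quad x,y\in{\mathbb T}^d, \tag{7} \]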

with associated discrepancy \({\mathscr {D}}_h\) via (6), i.e., \(\phi _k(x) = \text {e}^{2 \pi \text {i}\langle k,x\rangle }\), \(\alpha _k = |{\hat{h}}_k|^2\), \(k \in {\mathbb {Z}}^d\) in (3). The convolution of h with \(\mu \in {{\mathcal {M}}}({\mathbb {T}}^d)\) is the function \(h * \mu \in {\mathcal C}({\mathbb {T}}^d)\) defined by

\[ (h*\mu)(x) := \int_{{\mathbb T}^d}h(x-y)\,\mathrm d\mu(y), \quad x\in{\mathbb T}^d. \]

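By the convolution theorem for Fourier transforms, it holds \(\widehat{(h * \mu )}_k = {\hat{h}}_k {{\hat{\mu }}}_k\), \(k \in {\mathbb {Z}}^d\), and we obtain by Parseval's identity for \(\mu ,\nu \in \mathcal M({\mathbb {T}}^d)\) and (7) that

\[ {\mathscr D}_h(\mu,\nu) = \Big(\sum_{k\in{\mathbb Z}^d}|\hat h_k|^2\,|\hat\mu_k-\hat\nu_k|^2\Big)^{1/2} = \|h*(\mu-\nu)\|_{L^2({\mathbb T}^d)}. \]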

In image processing, metrics of this kind were considered in [18, 33, 77].
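The identity \({\mathscr D}_h(\mu,\nu)=\|h*(\mu-\nu)\|_{L^2({\mathbb T}^d)}\) from Remark 2 can be illustrated for d = 1 under our own assumptions: a hypothetical h with \(\hat h_k=(1+k^2)^{-1}\) for \(|k|\le M\) (zero otherwise, so strictly speaking only a truncated version of the remark) and two empirical measures. Since \(h*(\mu-\nu)\) is then a trigonometric polynomial of degree M, the mean over G > 2M equispaced nodes computes its squared \(L^2\) norm exactly, up to rounding.

```python
import numpy as np

M, G = 16, 128                        # G > 2M nodes make the quadrature of |g|^2 exact
ks = np.arange(-M, M + 1)
h_hat = 1.0 / (1.0 + ks**2)           # hypothetical Fourier coefficients of h

def h(z):
    """h(z) = sum_{|k| <= M} h_hat_k e^{2 pi i k z}; real since h_hat is even in k."""
    return np.real(np.exp(2j * np.pi * z[..., None] * ks) @ h_hat)

def convolve_empirical(grid, pts):
    """(h * mu)(x) = (1/N) sum_j h(x - x_j) for the empirical measure mu on pts."""
    return h(np.subtract.outer(grid, pts)).mean(axis=1)

def mu_hat(pts):
    """Fourier coefficients hat mu_k = (1/N) sum_j e^{-2 pi i k x_j}."""
    return np.exp(-2j * np.pi * np.outer(ks, pts)).mean(axis=1)

rng = np.random.default_rng(2)
x, y = rng.random(30), rng.random(45)
grid = np.arange(G) / G
g = convolve_empirical(grid, x) - convolve_empirical(grid, y)
l2_norm_sq = float(np.mean(g**2))     # ||h * (mu - nu)||^2 in L^2(T)
fourier_sq = float(np.sum(np.abs(h_hat) ** 2 * np.abs(mu_hat(x) - mu_hat(y)) ** 2))
```

This viewpoint explains the use in dithering: the discrepancy compares the two measures after smoothing both with the filter h.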

Remark 3

(Relations to Principal Curves) A similar concept, sharing with our paper the common theme of "a curve which passes through the middle of a distribution", is that of principal curves. The notion of principal curves has been developed in a statistical framework and was successfully applied in statistics and machine learning, see [38, 55, 57]. The idea is to generalize the concept of principal components with just one direction to so-called self-consistent (principal) curves. In the seminal paper [49], the authors showed that these principal curves \(\gamma \) are critical points of the energy functional

\[ \gamma \mapsto \int_{\mathbb X}\|x-\mathrm{proj}_\gamma(x)\|_2^2\,\mathrm d\mu(x), \tag{8} \]

where \(\mu \) is a given probability measure on \({\mathbb {X}}\) and \(\mathrm {proj}_\gamma (x) = \mathrm {argmin}_{y \in \gamma } \Vert x - y\Vert _2\) is a projection of a point \(x \in {\mathbb {X}}\) on \(\gamma \). This notion has also been generalized to Riemannian manifolds in [50], see also [57] for an application on the sphere. Further investigation of principal curves in the plane, cf. [27], showed that self-consistent curves are not (local) minimizers, but saddle points of (8). Moreover, the existence of such curves is established only for certain classes of measures, such as elliptical ones. By additionally constraining the length of curves minimizing (8), these unfavorable effects were eliminated, cf. [55]. In comparison to the objective (8), the discrepancy (6) averages for fixed \(x \in {\mathbb {X}}\) the distance encoded by K to any point on \(\gamma \), instead of averaging over the squared minimal distances to \(\gamma \).
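For comparison with the discrepancy, the following hedged sketch evaluates the objective (8) for an empirical \(\mu\) in \({\mathbb R}^2\) and a closed polygonal curve \(\gamma\): \(\mathrm{proj}_\gamma\) is computed by projecting each sample onto every segment and keeping the nearest point. The polygon, the sample data, and the function names are our own illustrative assumptions.

```python
import numpy as np

def squared_dist_to_polygon(x, vertices):
    """Squared Euclidean distance from each row of x to the closed polygon with the given vertices."""
    a = vertices
    b = np.roll(vertices, -1, axis=0)                      # segment i runs from a[i] to b[i]
    ab = b - a
    ax = x[:, None, :] - a[None, :, :]                     # shape (n_points, n_segments, 2)
    s = np.clip(np.sum(ax * ab, axis=2) / np.sum(ab * ab, axis=1), 0.0, 1.0)
    proj = a[None, :, :] + s[..., None] * ab[None, :, :]   # nearest point on each segment
    d2 = np.sum((x[:, None, :] - proj) ** 2, axis=2)
    return d2.min(axis=1)                                  # keep the nearest segment

def principal_curve_energy(x, vertices):
    """Empirical version of (8): mean squared distance of the samples to the curve."""
    return float(np.mean(squared_dist_to_polygon(x, vertices)))

theta = np.linspace(0.0, 2 * np.pi, 400, endpoint=False)
circle = np.stack([np.cos(theta), np.sin(theta)], axis=1)   # polygon approximating the unit circle
rng = np.random.default_rng(3)
phi = rng.uniform(0.0, 2 * np.pi, 500)
samples_on = np.stack([np.cos(phi), np.sin(phi)], axis=1)   # energy close to 0
samples_out = 2.0 * samples_on                              # radius 2: energy close to 1
```

Only the nearest point of \(\gamma\) enters here, whereas (6) involves K evaluated between each sample and all points of the curve.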

4 Approximation of General Probability Measures

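Given \(\mu \in {\mathcal {P}}({\mathbb {X}})\), the estimates

\[ \min_{\nu\in{\mathcal P}_N^{\mathrm{atom}}({\mathbb X})}{\mathscr D}_K(\mu,\nu) \le \min_{\nu\in{\mathcal P}_N^{\mathrm{emp}}({\mathbb X})}{\mathscr D}_K(\mu,\nu) \lesssim N^{-1/2} \tag{9} \]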

are well-known, cf. [43, Cor. 2.8]. Here, the constant hidden in \(\lesssim \) depends on \({\mathbb {X}}\) and K but is independent of \(\mu \) and \(N\in {\mathbb {N}}\). In this section, we are interested in approximation rates with respect to measures supported on curves.

Our approximation rates for \({\mathcal {P}}^{{{\,\mathrm{curv}\,}}}_L({\mathbb {X}})\) are based on those for \({\mathcal {P}}_N^{{{\,\mathrm{atom}\,}}}({\mathbb {X}})\) combined with estimates for the traveling salesman problem (TSP). Let \({{\,\mathrm{TSP}\,}}_{{\mathbb {X}}}(N)\) denote the worst-case cost of a minimal cost tour in a fully connected graph G with N arbitrary nodes \(x_1,\ldots ,x_N\in {\mathbb {X}}\) and edge costs \({{\,\mathrm{dist}\,}}_{\mathbb {X}}(x_i,x_j)\), \(i,j=1,\ldots ,N\), where the worst case is taken over all choices of the nodes. Similarly, let \({{\,\mathrm{MST}\,}}_{{\mathbb {X}}}(N)\) denote the worst-case cost of a minimal spanning tree of G. To derive suitable estimates, we require that \({\mathbb {X}}\) is Ahlfors d-regular (sometimes also called Ahlfors–David d-regular), i.e., there exists \(0<d<\infty \) such that

\[ \sigma_{\mathbb X}(B_r(x)) \sim r^d \quad\text{for all } x\in{\mathbb X},\ 0<r\le\mathrm{diam}({\mathbb X}), \tag{10} \]

where \(B_r(x)=\{y\in {\mathbb {X}}: {{\,\mathrm{dist}\,}}_{{\mathbb {X}}}(x,y)\le r\}\) and the constants in \(\sim \) do not depend on x or r. Note that d is not required to be an integer and turns out to be the Hausdorff dimension. For \({\mathbb {X}}\) being the unit cube the following lemma was proved in [75].

Lemma 3

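If \({\mathbb {X}}\) is a compact Ahlfors d-regular metric space, then there is a constant \(0<C_{{{\,\mathrm{TSP}\,}}}<\infty \) depending on \({\mathbb {X}}\) such that

\[ \mathrm{TSP}_{\mathbb X}(N) \le C_{\mathrm{TSP}}\,N^{1-1/d} \quad\text{for all } N\in{\mathbb N}. \]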

Proof

Using (10) and the same covering argument as in [74, Lem. 3.1], we see that for every choice \(x_1,\ldots ,x_N\in {\mathbb {X}}\), there exist \(i\ne j\) such that \({{\,\mathrm{dist}\,}}_{{\mathbb {X}}}(x_i,x_j)\lesssim N^{-1/d}\), where the constant depends on \({\mathbb {X}}\).

Let \(S = \{x_1,\ldots ,x_N\}\) be an arbitrary selection of N points from \({\mathbb {X}}\). First, we choose \(x_i\) and \(x_j\) with \({{\,\mathrm{dist}\,}}_{{\mathbb {X}}}(x_i,x_j)\le c N^{-1/d}\). Then, we form a minimal spanning tree T of \(S \setminus \{x_{i}\}\) and augment the tree by adding the edge between \(x_i\) and \(x_j\). This construction provides us with a spanning tree of S, and hence we can estimate \({{\,\mathrm{MST}\,}}_{{\mathbb {X}}}(N) \le {{\,\mathrm{MST}\,}}_{{\mathbb {X}}}(N-1) + c N^{-1/d}\). Iterating the argument, we deduce

\[ \mathrm{MST}_{\mathbb X}(N) \le c\sum_{k=2}^{N}k^{-1/d} \lesssim N^{1-1/d}, \]

cf. [75]. Finally, the standard relation \({{\,\mathrm{TSP}\,}}_{\mathbb {X}}(N) \le 2 {{\,\mathrm{MST}\,}}_{\mathbb {X}}(N)\) for edge costs satisfying the triangle inequality concludes the proof. \(\square \)
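The relation TSP ≤ 2 MST used above is constructive: visiting the vertices of a minimal spanning tree in depth-first preorder and skipping repeated vertices yields a closed tour whose cost is at most twice the tree cost, by the triangle inequality. A hedged sketch for Euclidean points in the unit square (so d = 2), with Prim's algorithm for the tree; all function names are our own.

```python
import numpy as np

def prim_mst(points):
    """Prim's algorithm on the complete Euclidean graph; returns parent array, tree cost, distances."""
    n = len(points)
    dist = np.linalg.norm(points[:, None, :] - points[None, :, :], axis=2)
    in_tree = np.zeros(n, dtype=bool)
    best = np.full(n, np.inf)   # cheapest known edge from the tree to each vertex
    parent = np.full(n, -1)
    best[0] = 0.0
    total = 0.0
    for _ in range(n):
        i = int(np.argmin(np.where(in_tree, np.inf, best)))  # cheapest vertex outside the tree
        in_tree[i] = True
        total += best[i]
        closer = (~in_tree) & (dist[i] < best)
        best[closer] = dist[i][closer]
        parent[closer] = i
    return parent, total, dist

def preorder_tour(parent):
    """Depth-first preorder of the tree rooted at vertex 0, i.e., the shortcut doubled tree."""
    children = {i: [] for i in range(len(parent))}
    for child, par in enumerate(parent):
        if par >= 0:
            children[int(par)].append(child)
    order, stack = [], [0]
    while stack:
        v = stack.pop()
        order.append(v)
        stack.extend(reversed(children[v]))
    return order

def tour_cost(order, dist):
    """Cost of the closed tour visiting the vertices in the given order."""
    return float(sum(dist[order[k], order[(k + 1) % len(order)]] for k in range(len(order))))

rng = np.random.default_rng(4)
pts = rng.random((300, 2))
parent, mst_cost, dist = prim_mst(pts)
tour = preorder_tour(parent)
cost = tour_cost(tour, dist)
# Lemma 3 predicts growth like N^{1 - 1/d} = N^{1/2} for points in the unit square.
print(mst_cost, cost, cost / np.sqrt(len(pts)))
```

The preorder tour is only a 2-approximation of the optimal tour, which is all the proof of Lemma 3 needs.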

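To derive a curve in \({\mathbb {X}}\) from a minimal cost tour in the graph, we require the additional assumption that \({\mathbb {X}}\) is a length space, i.e., a metric space with

\[ \mathrm{dist}_{\mathbb X}(x,y) = \inf\big\{\ell(\gamma) : \gamma\colon[0,1]\to{\mathbb X} \text{ continuous},\ \gamma(0)=x,\ \gamma(1)=y\big\} \quad\text{for all } x,y\in{\mathbb X}, \]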

cf. [15, 16]. Thus, for the rest of this section, we are assuming that

\({\mathbb {X}}\) is a compact Ahlfors d-regular length space.

In this case, Lemma 3 yields the next proposition.

Proposition 1

For a compact, Ahlfors d-regular length space \({\mathbb {X}}\) it holds \({\mathcal {P}}^{{{\,\mathrm{atom}\,}}}_N({\mathbb {X}})\subset {\mathcal {P}}^{{{\,\mathrm{curv}\,}}}_{C_{{{\,\mathrm{TSP}\,}}} N^{1-1/d}}({\mathbb {X}})\).

Proof

The Hopf–Rinow theorem for length spaces, see [15, Chap. I.3] and [16, Thm. 2.5.28], yields that every pair of points \(x,y\in {\mathbb {X}}\) can be connected by a geodesic, i.e., there is a Lipschitz curve \(\gamma\colon[0,1]\to{\mathbb X}\) with constant speed, \(\gamma(0)=x\), \(\gamma(1)=y\) and \(\ell (\gamma |_{[s,t]})={{\,\mathrm{dist}\,}}_{\mathbb {X}}(\gamma (s),\gamma (t))\) for all \(0\le s\le t\le 1\). Thus, for any pair \(x,y\in {\mathbb {X}}\), there is a constant speed curve \(\gamma _{x,y}\colon[0,1]\to{\mathbb X}\) of length \(\ell (\gamma _{x,y}) = {{\,\mathrm{dist}\,}}_{\mathbb {X}}(x, y)\) with \(\gamma _{x,y}(0)=x\), \(\gamma _{x,y}(1) = y\), cf. [16, Rem. 2.5.29]. For \(\mu _N\in {\mathcal {P}}^{{{\,\mathrm{atom}\,}}}_N({\mathbb {X}})\), let \(\{x_1,\ldots ,x_N\}=\text {supp}(\mu _N)\). Concatenating the curves \(\gamma_{x_i,x_{i+1}}\) along the minimal cost tour from Lemma 3 and reparametrizing to constant speed yields a closed curve \(\gamma \in {{\,\mathrm{Lip}\,}}({\mathbb {X}})\) with \(L(\gamma)=\ell(\gamma)\le C_{\mathrm{TSP}}N^{1-1/d}\), so that \(\mu _N=\gamma {_*}\omega \in {\mathcal {P}}^{{{\,\mathrm{curv}\,}}}_{C_{\mathrm{TSP}}N^{1-1/d}}({\mathbb {X}})\) for an appropriate measure \(\omega \in {\mathcal {P}}^{{{\,\mathrm{atom}\,}}}_N([0,1])\). \(\square \)
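In a convex subset of \({\mathbb R}^d\) with the Euclidean metric (our simplifying assumption; the unit square is used below), the geodesics \(\gamma_{x,y}\) are straight segments, so the construction in the proof of Proposition 1 reduces to the constant speed parametrization of the closed polygon through the tour points. A minimal sketch with hypothetical names:

```python
import numpy as np

def constant_speed_closed_curve(tour_points):
    """Return gamma: [0, 1] -> R^d tracing the closed polygon through tour_points with constant speed."""
    a = np.asarray(tour_points, dtype=float)
    b = np.roll(a, -1, axis=0)                  # segment k joins a[k] to a[k + 1], wrapping around
    seg_len = np.linalg.norm(b - a, axis=1)
    total = seg_len.sum()                       # ell(gamma) = L(gamma) = cost of the tour
    breaks = np.concatenate([[0.0], np.cumsum(seg_len)]) / total  # parameter where each segment starts

    def gamma(t):
        t = np.atleast_1d(np.asarray(t, dtype=float)) % 1.0       # closed curve: gamma(1) = gamma(0)
        k = np.clip(np.searchsorted(breaks, t, side="right") - 1, 0, len(a) - 1)
        local = (t - breaks[k]) * total / np.where(seg_len[k] > 0, seg_len[k], 1.0)
        return a[k] + local[:, None] * (b[k] - a[k])

    return gamma, total

corners = [(0, 0), (1, 0), (1, 1), (0, 1)]
gamma, lip_const = constant_speed_closed_curve(corners)
t = np.linspace(0.0, 1.0, 4001)
samples = gamma(t)
speeds = np.linalg.norm(np.diff(samples, axis=0), axis=1) / np.diff(t)
```

For the unit square's corners the Lipschitz constant equals the perimeter 4, and no sampled difference quotient exceeds it. An atomic measure on the tour points is then the push-forward of atoms placed at the parameters `breaks[k]`.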

By Proposition 1 we can transfer approximation rates from \({\mathcal {P}}^{{{\,\mathrm{atom}\,}}}_N({\mathbb {X}})\) to \({\mathcal {P}}^{{{\,\mathrm{curv}\,}}}_L({\mathbb {X}})\).

Theorem 1

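For \(\mu \in {\mathcal {P}}({\mathbb {X}})\), it holds with a constant depending on \({\mathbb {X}}\) and K that

\[ \min_{\nu\in{\mathcal P}^{\mathrm{curv}}_L({\mathbb X})}{\mathscr D}_K(\mu,\nu) \lesssim L^{-\frac{d}{2d-2}}. \]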

Proof

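Choose \(\alpha = \frac{d-1}{d}\). For L large enough, set \(N :=\lfloor (L/C_{{{\,\mathrm{TSP}\,}}})^{\frac{1}{\alpha }} \rfloor \in {\mathbb {N}}\), so that \(C_{\mathrm{TSP}}N^{1-1/d}\le L\) and Proposition 1 gives \({\mathcal {P}}^{{{\,\mathrm{atom}\,}}}_{N}({\mathbb {X}})\subset {\mathcal {P}}^{{{\,\mathrm{curv}\,}}}_{L}({\mathbb {X}})\). According to (9), we obtain

\[ \min_{\nu\in{\mathcal P}^{\mathrm{curv}}_L({\mathbb X})}{\mathscr D}_K(\mu,\nu) \le \min_{\nu\in{\mathcal P}^{\mathrm{atom}}_N({\mathbb X})}{\mathscr D}_K(\mu,\nu) \lesssim N^{-\frac12} \lesssim L^{-\frac{1}{2\alpha}} = L^{-\frac{d}{2d-2}}. \]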

\(\square \)
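The exponents can be double-checked with exact rational arithmetic. With \(\alpha=\frac{d-1}{d}\), the choice \(N\approx(L/C_{\mathrm{TSP}})^{1/\alpha}\) in the proof above turns \(N^{-1/2}\) into \(L^{-1/(2\alpha)}\), while the choice \(N\approx L^{2/(2\alpha+1)}\) in the proof of Theorem 2 turns it into \(L^{-1/(2\alpha+1)}\). A small Python check (our own) that these equal \(\frac{d}{2d-2}\) and \(\frac{d}{3d-2}\) and never drop below \(\frac12\) and \(\frac13\), respectively, for integer \(d\ge 2\):

```python
from fractions import Fraction

def rate_exponents(d):
    """Decay exponents of Theorem 1 and Theorem 2 for an integer dimension d >= 2."""
    alpha = Fraction(d - 1, d)
    thm1 = 1 / (2 * alpha)      # N^{-1/2} with N ~ L^{1/alpha}
    thm2 = 1 / (2 * alpha + 1)  # N^{-1/2} with N ~ L^{2/(2 alpha + 1)}
    return thm1, thm2

for d in range(2, 11):
    thm1, thm2 = rate_exponents(d)
    assert thm1 == Fraction(d, 2 * d - 2) and thm1 >= Fraction(1, 2)
    assert thm2 == Fraction(d, 3 * d - 2) and thm2 >= Fraction(1, 3)
```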

Next, we derive approximation rates for \({\mathcal {P}}^{{{\,\mathrm{ a-curv}\,}}}_L({\mathbb {X}}) \) and \({\mathcal {P}}^{{{\,\mathrm{{\lambda }-curv}\,}}}_L({\mathbb {X}})\).

Theorem 2

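For \(\mu \in {\mathcal {P}}({\mathbb {X}})\), we have with a constant depending on \({\mathbb {X}}\) and K that

\[ \min_{\nu\in{\mathcal P}^{\mathrm{a}\text{-}\mathrm{curv}}_L({\mathbb X})}{\mathscr D}_K(\mu,\nu) \le \min_{\nu\in{\mathcal P}^{\lambda\text{-}\mathrm{curv}}_L({\mathbb X})}{\mathscr D}_K(\mu,\nu) \lesssim L^{-\frac{d}{3d-2}}. \]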

Proof

Let \(\alpha = \frac{d-1}{d}\), \(d \ge 2\). For L large enough, set \(N :=\lfloor L^{\frac{2}{2\alpha + 1}} /\mathrm{diam}({\mathbb {X}}) \rfloor \in {\mathbb {N}}\). By (9), there is a set of points \(\{ x_1, \ldots , x_{N } \} \subset {\mathbb {X}}\) such that

Let these points be ordered as a solution of the corresponding \({{\,\mathrm{TSP}\,}}\). Set \(x_0:=x_N\) and \(\tau _i :={{\,\mathrm{dist}\,}}_{\mathbb {X}}(x_i,x_{i+1})/L\), \(i=0, \ldots , N-1\). Note that

so that \(\tau _i \le N^{-1}\) for all \(i=0,\ldots , N -1\). We construct a closed curve \(\gamma _{\scriptscriptstyle {L}} :[0,1]\rightarrow {\mathbb {X}}\) that rests at each \(x_i\) for a while and then rushes from \(x_i\) to \(x_{i+1}\). As in the proof of Proposition 1, since \({\mathbb {X}}\) is a compact length space, we can choose \(\gamma _i\in {{\,\mathrm{Lip}\,}}({\mathbb {X}})\) with \(\gamma _i(0)=x_i\), \(\gamma _i(1) = x_{i+1}\) and \(L(\gamma _i) = {{\,\mathrm{dist}\,}}_{\mathbb {X}}(x_i, x_{i+1})\). For \(i=0,\ldots ,N-1\), we define

By construction, \(L(\gamma _{\scriptscriptstyle {L}})\) is bounded by \(\max _i {{\,\mathrm{dist}\,}}_{\mathbb {X}}(x_i,x_{i+1}) \tau _i^{-1} \le L\). Defining the measure \(\nu :=(\gamma _{\scriptscriptstyle {L}}){_*}\lambda \in {{\mathcal {P}}}^{{{\,\mathrm{{\lambda }-curv}\,}}}_{L} ({\mathbb {X}})\), the related discrepancy can be estimated by

The relation (12) yields \({\mathscr {D}}_K(\mu ,\nu _N)\le CL^{-\frac{1}{2\alpha +1}}\) with some constant \(C>0\). Since for \(\varphi \in H_K({\mathbb {X}})\) it holds \(\Vert \varphi \Vert _{L^\infty ({\mathbb {X}})} \le C_K\Vert \varphi \Vert _{H_K({\mathbb {X}})}\) with \(C_K:=\sup _{x\in {\mathbb {X}}} \sqrt{K(x,x)}\), we finally obtain by Lemma 3

\(\square \)
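To make the "rest and rush" construction in the proof of Theorem 2 concrete, the following Python sketch builds such a curve in the simplest possible setting. It assumes, for illustration only, that \({\mathbb {X}}\) is replaced by the Euclidean plane, so that geodesics are straight segments; the function name `rest_and_rush_curve` is hypothetical, and the points are assumed to be already ordered as a tour.

```python
import numpy as np

def rest_and_rush_curve(points, L):
    """Closed curve gamma: [0, 1] -> R^2 that rests at x_i and then moves with
    speed L along the straight segment to x_{i+1} (Euclidean stand-in for a geodesic).

    points: (N, 2) array ordered as a tour; the tour is closed via x_N := x_0.
    Requires tau_i = |x_{i+1} - x_i| / L <= 1/N for every i.
    """
    pts = np.asarray(points, dtype=float)
    N = len(pts)
    nxt = np.roll(pts, -1, axis=0)                 # x_{i+1}, wrapping around
    tau = np.linalg.norm(nxt - pts, axis=1) / L    # travel times tau_i
    if np.any(tau > 1.0 / N + 1e-12):
        raise ValueError("L is too small: some tau_i exceeds 1/N")

    def gamma(t):
        t = np.atleast_1d(np.asarray(t, dtype=float)) % 1.0
        i = np.minimum((t * N).astype(int), N - 1)  # index of interval [i/N, (i+1)/N)
        local = t - i / N                           # time elapsed within the interval
        rest = 1.0 / N - tau[i]                     # resting time at x_i
        # fraction of the segment already covered: 0 while resting, then linear in time
        moving = np.divide(local - rest, tau[i],
                           out=np.zeros_like(local), where=tau[i] > 0)
        frac = np.clip(moving, 0.0, 1.0)
        return pts[i] + frac[:, None] * (nxt[i] - pts[i])

    return gamma
```

Under this construction, each point \(x_i\) receives mass \(1/N-\tau _i\) from \((\gamma _{\scriptscriptstyle {L}}){_*}\lambda \), and the remaining mass \(\sum _i \tau _i\) is spread along the connecting segments.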

Note that many compact subsets of \({\mathbb {R}}^d\), such as the unit ball or the unit cube, are Ahlfors d-regular length spaces with respect to the Euclidean metric and the normalized Lebesgue measure. Moreover, many compact connected manifolds with or without boundary satisfy these conditions. In particular, all assumptions of this section are satisfied by d-dimensional connected, compact Riemannian manifolds without boundary, equipped with the Riemannian metric and the normalized Riemannian measure. This setting is studied in the next section to refine our analysis of approximation rates.

Remark 4

For \({\mathbb {X}}= {\mathbb {T}}^d\) with \(d\in {\mathbb {N}}\), the estimate

was derived in [18] provided that K satisfies an additional Lipschitz condition, where the constant in (13) depends on d and K. The rate coincides with our rate in (11) for \(d = 2\) and is worse for higher dimensions as \(\frac{d}{3d-2} > \frac{1}{3}\) for all \(d\ge 3\).
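The exponent obtained in the proof of Theorem 2 is \(1/(2\alpha +1)\) with \(\alpha = \frac{d-1}{d}\). A few lines of exact rational arithmetic (a sketch; the helper name `theorem2_exponent` is hypothetical) confirm that it simplifies to \(\frac{d}{3d-2}\), equals \(\frac{1}{2}\) for \(d=2\), and decreases towards \(\frac{1}{3}\) as d grows.

```python
from fractions import Fraction

def theorem2_exponent(d: int) -> Fraction:
    """Return 1/(2*alpha + 1) with alpha = (d - 1)/d, computed exactly."""
    alpha = Fraction(d - 1, d)
    return 1 / (2 * alpha + 1)

for d in range(2, 7):
    e = theorem2_exponent(d)
    assert e == Fraction(d, 3 * d - 2)  # closed form d/(3d-2)
    print(d, e)
```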

5 Approximation of Probability Measures Having Sobolev Densities

To study approximation rates in more detail, we follow the standard strategy in approximation theory and take additional smoothness properties into account. We shall therefore consider \(\mu \) with a density satisfying smoothness requirements. To define suitable smoothness spaces, we make additional structural assumptions on \({\mathbb {X}}\). Throughout the remaining part of this work, we suppose that

\({\mathbb {X}}\) is a d-dimensional connected, compact Riemannian manifold without boundary equipped with the Riemannian metric \({{\,\mathrm{dist}\,}}_{\mathbb {X}}\) and the normalized Riemannian measure \(\sigma _{\mathbb {X}}\).

In the first part of this section, we introduce the necessary background on Sobolev spaces and derive general lower bounds for the approximation rates. The rest of the section is devoted to upper bounds. General upper bounds are only obtained for \({\mathcal {P}}^{{{\,\mathrm{curv}\,}}}_L({\mathbb {X}})\); for the smaller spaces \({\mathcal {P}}^{{{\,\mathrm{ a-curv}\,}}}_L({\mathbb {X}})\) and \({\mathcal {P}}^{{{\,\mathrm{{\lambda }-curv}\,}}}_L({\mathbb {X}})\), we restrict ourselves to special manifolds \({\mathbb {X}}\). For a better overview, each theorem on approximation rates carries a descriptive label.

5.1 Sobolev Spaces and Lower Bounds

In order to define a smoothness class of functions on \({\mathbb {X}}\), let \(-\varDelta \) denote the (negative) Laplace–Beltrami operator on \({\mathbb {X}}\). It is self-adjoint on \(L^2({\mathbb {X}},\sigma _{\mathbb {X}})\) and has a sequence of non-negative, non-decreasing eigenvalues \((\lambda _k)_{k\in {\mathbb {N}}}\) (with multiplicities) with a corresponding orthonormal complete system of smooth eigenfunctions \(\{\phi _k: k\in {\mathbb {N}}\}\). Every function \(f \in L^2({\mathbb {X}},\sigma _{\mathbb {X}})\) has a Fourier expansion

The Sobolev space \(H^s({\mathbb {X}})\), \(s > 0\), is the set of all functions \(f \in L^2({\mathbb {X}},\sigma _{\mathbb {X}})\) with distributional derivative \((I-\varDelta )^{s/2} f \in L^2({\mathbb {X}},\sigma _{\mathbb {X}})\) and norm

For \(s>d/2\), the space \(H^s({\mathbb {X}})\) is continuously embedded into the space of Hölder continuous functions with exponent \(s - d/2\), and every function \(f \in H^s({\mathbb {X}})\) has a uniformly convergent Fourier series, see [70, Thm. 5.7]. In fact, \(H^s({\mathbb {X}})\), \(s>d/2\), is an RKHS with reproducing kernel

Hence, the discrepancy \({\mathscr {D}}_K(\mu ,\nu )\) satisfies (5) with \(H_K({\mathbb {X}})=H^s({\mathbb {X}})\). Clearly, each kernel of the above form with coefficients having the same decay as \((1+\lambda _k)^{-s}\) for \(k \rightarrow \infty \) gives rise to a RKHS that coincides with \(H^s({\mathbb {X}})\) with an equivalent norm. Appendix A contains more details of the above discussion for the torus \({\mathbb {T}}^d\), the sphere \({\mathbb {S}}^d\), the special orthogonal group \(\mathrm{SO}(3)\) and the Grassmannian \({\mathcal {G}}_{k,d}\).
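Because the kernel is diagonal in the eigenbasis, the squared discrepancy can be evaluated from Fourier coefficients via the standard identity \({\mathscr {D}}_K^2(\mu ,\nu ) = \int \int K \,\mathrm {d}(\mu -\nu )\,\mathrm {d}(\mu -\nu ) = \sum _k (1+\lambda _k)^{-s} |{\hat{\mu }}_k - {\hat{\nu }}_k|^2\). The Python sketch below does this on the circle \({\mathbb {T}} = [0,1)\), assuming complex eigenfunctions \(e^{2\pi i k x}\) with \(\lambda _k = 4\pi ^2k^2\), \(\mu \) the normalized Lebesgue measure, and a truncation at \(|k| \le M\); the function name and the truncation parameter are illustrative assumptions.

```python
import numpy as np

def sobolev_discrepancy_sq(x, w=None, s=1.0, M=64):
    """Truncated squared discrepancy between nu = sum_i w_i delta_{x_i} on T = [0, 1)
    and the normalized Lebesgue measure mu, for the kernel
    K(x, y) = sum_k (1 + (2 pi k)^2)^(-s) exp(2 pi i k (x - y)), keeping |k| <= M.
    """
    x = np.asarray(x, dtype=float)
    w = np.full(x.size, 1.0 / x.size) if w is None else np.asarray(w, dtype=float)
    k = np.arange(1, M + 1)
    # nu_hat(k) = sum_i w_i exp(-2 pi i k x_i); mu_hat(k) = 0 for k != 0, and the
    # k = 0 terms cancel because both measures have total mass 1
    nu_hat = np.exp(-2j * np.pi * np.outer(k, x)) @ w
    coeff = (1.0 + (2.0 * np.pi * k) ** 2) ** (-s)
    # |nu_hat(-k)| = |nu_hat(k)| for real weights, so negative k contribute a factor 2
    return 2.0 * float(np.sum(coeff * np.abs(nu_hat) ** 2))
```

For N equispaced points with \(N > M\), all retained coefficients \({\hat{\nu }}_k\), \(k \ne 0\), vanish, so the truncated discrepancy is zero up to rounding.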

Now, we are in a position to establish lower bounds on the approximation rates. We remark again that our results remain valid if the approximating curves are not required to be closed.

Theorem 3

(Lower bound) For \(s > d/2\) suppose that \(H_K({\mathbb {X}}) = H^s({\mathbb {X}})\) holds with equivalent norms. Assume that \(\mu \) is absolutely continuous with respect to \(\sigma _{\mathbb {X}}\) with a continuous density \(\rho \). Then, there are constants depending on \({\mathbb {X}}\), K, and \(\rho \) such that

Proof

The proof is based on the construction of a suitable fooling function to be used in (5) and follows [13, Thm. 2.16]. There exists a ball \(B\subset {\mathbb {X}}\) with \(\sigma _{\mathbb {X}}(B)>0\) and \(\rho (x)\ge \epsilon = \epsilon (B,\rho )>0\) for all \(x \in B\); the fooling functions constructed below are supported in B. We shall verify that for every \(\nu \in {{\mathcal {P}}}_N^{{{\,\mathrm{atom}\,}}}({\mathbb {X}})\) there exists \(\varphi \in H^s({\mathbb {X}})\) such that \(\varphi \) vanishes on \(\text {supp}(\nu )\) but

where the constant depends on \({\mathbb {X}}\), K, and \(\rho \). For small enough \(\delta \) we can choose 2N disjoint balls in B with diameters \(\delta N^{-1/d}\), see also [39]. For \(\nu \in {{\mathcal {P}}}_N^{{{\,\mathrm{atom}\,}}}({\mathbb {X}})\), there are N of these balls that do not intersect with \(\text {supp}(\nu )\). By putting together bump functions supported on each of the N balls, we obtain a non-negative function \(\varphi \) supported in B that vanishes on \(\text {supp}(\nu )\) and satisfies (14), with a constant that depends on \(\epsilon \), cf. [13, Thm. 2.16]. This yields

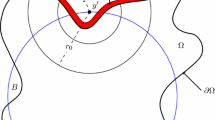

The inequality for \({\mathcal {P}}_L^{{{\,\mathrm{curv}\,}}}({\mathbb {X}})\) is derived in a similar way. Given a continuous curve \(\gamma :[0,1]\rightarrow {\mathbb {X}}\) of length L, choose N such that \(L\le \delta N N^{-1/d}\). By taking half of the radius of the above balls, there are 2N pairwise disjoint balls of radius \(\frac{\delta }{2} N^{-1/d}\) contained in B with pairwise distances at least \(\delta N^{-1/d}\). Any curve of length \(\delta N N^{-1/d}\) intersects at most N of those balls. Hence, there are N balls of radius \(\frac{\delta }{2}N^{-1/d}\) that do not intersect \(\text {supp}(\gamma )\). As above, this yields a fooling function \(\varphi \) satisfying (14), which ends the proof. \(\square \)
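The pigeonhole step in the proof of Theorem 3 (2N disjoint balls, of which at most N can contain an atom) can be illustrated on the circle \({\mathbb {T}} = [0,1)\), i.e., \(d=1\) with B the whole circle. The Python sketch below is an illustration under these assumptions, not the construction of [13]: it places 2N disjoint intervals of length proportional to \(1/N\), keeps N of them that avoid the atoms, and sums the standard smooth bumps \(e^{-1/(1-t^2)}\) on them. The function names are hypothetical, and only non-negativity and vanishing on \(\text {supp}(\nu )\) are checked; the norm estimate (14) is not reproduced.

```python
import numpy as np

def bump(t):
    """Smooth bump supported on (-1, 1): exp(-1 / (1 - t^2))."""
    t = np.asarray(t, dtype=float)
    out = np.zeros_like(t)
    inside = np.abs(t) < 1
    out[inside] = np.exp(-1.0 / (1.0 - t[inside] ** 2))
    return out

def fooling_function(atoms, delta=0.9):
    """Non-negative phi on [0, 1) vanishing at the atoms, built from N bumps."""
    atoms = np.asarray(atoms, dtype=float) % 1.0
    N = atoms.size
    width = delta / (2 * N)                        # interval length; delta < 1 keeps them disjoint
    centers = (np.arange(2 * N) + 0.5) / (2 * N)   # 2N disjoint intervals inside (0, 1)
    # an interval is hit if it contains an atom; N atoms hit at most N intervals
    hit = np.abs(atoms[None, :] - centers[:, None]) < width / 2
    free = centers[~hit.any(axis=1)][:N]

    def phi(x):
        x = np.asarray(x, dtype=float)
        return sum(bump((x - c) / (width / 2)) for c in free)

    return phi, free
```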

5.2 Upper Bounds for \({\mathcal {P}}^{{{\,\mathrm{curv}\,}}}_L({\mathbb {X}})\)

In this section, we derive upper bounds that match the lower bounds in Theorem 3 for \({\mathcal {P}}^{{{\,\mathrm{curv}\,}}}_L({\mathbb {X}})\). Our analysis makes use of the following theorem, which was already proved for \({\mathbb {X}}= {\mathbb {S}}^d\) in [51].

Theorem 4

[13, Thm. 2.12] Assume that \(\nu _r \in {{\mathcal {P}}}({\mathbb {X}})\) provides an exact quadrature for all eigenfunctions \(\phi _k\) of the Laplace–Beltrami operator with eigenvalues \(\lambda _k \le r^2\), i.e.,

Then, it holds for every function \(f \in H^s({\mathbb {X}})\), \(s > d/2\), that there is a constant depending on \({\mathbb {X}}\) and s with

For our estimates, it is important that the number of eigenfunctions of the Laplace–Beltrami operator on \({\mathbb {X}}\) belonging to eigenvalues \(\lambda _k \le r^2\) is of order \(r^d\), see [19, Chap. 6.4] and [52, Thm. 17.5.3, Cor. 17.5.8]. This is known as Weyl’s estimate for the spectrum of an elliptic operator. For some special manifolds, the eigenfunctions are given explicitly in the appendix. In the following lemma, the result of Theorem 4 is rewritten in terms of discrepancies and generalized to absolutely continuous measures with densities \(\rho \in H^s({\mathbb {X}})\).
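On the torus \({\mathbb {T}}^d = [0,1)^d\), the eigenfunctions are the exponentials \(e^{2\pi i k\cdot x}\), \(k\in {\mathbb {Z}}^d\), with eigenvalues \(4\pi ^2|k|^2\), so Weyl's estimate reduces to counting lattice points in a ball of radius \(r/(2\pi )\). The Python sketch below (function names hypothetical; the normalization \([0,1)^d\) is an assumption) compares this count with the ball volume, which grows like \(r^d\).

```python
import math
import numpy as np

def count_torus_eigenvalues(r, d):
    """Number of eigenvalues (with multiplicity) of -Laplace on T^d = [0, 1)^d that are <= r^2.
    The eigenfunctions exp(2 pi i k.x), k in Z^d, have eigenvalues (2 pi |k|)^2."""
    R = r / (2 * math.pi)
    m = int(math.floor(R))
    grid = np.arange(-m, m + 1)
    ks = np.stack(np.meshgrid(*([grid] * d), indexing="ij")).reshape(d, -1)
    return int(np.count_nonzero((ks ** 2).sum(axis=0) <= R ** 2))

def weyl_prediction(r, d):
    """Leading Weyl term: volume of the Euclidean ball of radius r / (2 pi) in R^d."""
    R = r / (2 * math.pi)
    return math.pi ** (d / 2) / math.gamma(d / 2 + 1) * R ** d
```

Doubling r multiplies both quantities by roughly \(2^d\), in line with the order \(r^d\).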

Lemma 4

For \(s>d/2\) suppose that \(H_K({\mathbb {X}}) = H^s({\mathbb {X}})\) holds with equivalent norms and that \(\nu _r\in {\mathcal {P}}({\mathbb {X}})\) satisfies (15). Let \(\mu \in {\mathcal {P}}({\mathbb {X}})\) be absolutely continuous with respect to \(\sigma _{\mathbb {X}}\) with density \(\rho \in H^s({\mathbb {X}})\). For sufficiently large r, the measures \({\tilde{\nu }}_r:=\frac{\rho }{\beta _r} \nu _r \in {\mathcal {P}}({\mathbb {X}})\) with \(\beta _r :=\int _{{\mathbb {X}}} \rho \,\mathrm {d}\nu _r\) are well defined and there is a constant depending on \({\mathbb {X}}\) and K with

Proof

Note that \(H^s({\mathbb {X}})\) is a Banach algebra with respect to addition and multiplication [22], in particular, for \(f,g \in H^s({\mathbb {X}})\) we have \(fg \in H^s({\mathbb {X}})\) with

By Theorem 4, we obtain for all \(\varphi \in H^s({\mathbb {X}})\) that

In particular, this implies for \(\varphi \equiv 1\) that

Then, application of the triangle inequality results in

According to (17), the first summand is bounded by \(\lesssim r^{-s} \Vert \varphi \Vert _{H^s({\mathbb {X}})}\Vert \rho \Vert _{H^s({\mathbb {X}})}\). It remains to derive matching bounds on the second term. Hölder’s inequality yields

where the last inequality is due to \(H^s({\mathbb {X}}) \hookrightarrow L^\infty ({\mathbb {X}})\) and (18). \(\square \)
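The reweighted measure \({\tilde{\nu }}_r = \frac{\rho }{\beta _r}\nu _r\) of Lemma 4 is easy to form when \(\nu _r\) is atomic: each weight is multiplied by the density at its node and renormalized by \(\beta _r\). The sketch below assumes the circle \({\mathbb {T}}=[0,1)\), where \(n=r+1\) equispaced nodes with equal weights integrate trigonometric polynomials of degree at most r exactly, and a trigonometric density of degree 1; in this special case \(\varphi \rho \) is integrated exactly whenever \(\varphi \) has degree at most \(r-1\), so no \(r^{-s}\) error term appears. The helper name `reweight` is hypothetical.

```python
import numpy as np

def reweight(nodes, weights, rho):
    """Weights of nu_tilde = (rho / beta) * nu for nu = sum_i weights[i] * delta_{nodes[i]}."""
    vals = rho(nodes) * weights
    beta = vals.sum()                      # beta = int rho d nu
    return vals / beta, beta

def rho(x):
    return 1.0 + 0.5 * np.cos(2 * np.pi * x)   # positive density with integral 1

r = 4
nodes = np.arange(r + 1) / (r + 1)             # exact for trig. polynomials of degree <= r
weights = np.full(r + 1, 1.0 / (r + 1))
new_w, beta = reweight(nodes, weights, rho)
approx = np.sum(new_w * np.cos(2 * np.pi * nodes))   # int cos(2 pi x) d nu_tilde
```

Here \(\int _{{\mathbb {T}}} \cos (2\pi x)\rho (x)\,\mathrm {d}x = \frac{1}{4}\), which `approx` reproduces up to rounding.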

Using the previous lemma, we derive optimal approximation rates for \({\mathcal {P}}_N^{{{\,\mathrm{atom}\,}}}({\mathbb {X}})\) and \({\mathcal {P}}^{{{\,\mathrm{curv}\,}}}_L({\mathbb {X}})\).

Theorem 5

(Upper bounds) For \(s > d/2\) suppose that \(H_K({\mathbb {X}}) = H^s({\mathbb {X}})\) holds with equivalent norms. Assume that \(\mu \) is absolutely continuous with respect to \(\sigma _{\mathbb {X}}\) with density \(\rho \in H^s({\mathbb {X}})\). Then, there are constants depending on \({\mathbb {X}}\) and K such that

Proof

By [13, Lem. 2.11] and since the Laplace–Beltrami operator has \(N \sim r^d\) eigenfunctions belonging to eigenvalues \(\lambda _k < r^2\), there exists a measure \(\nu _r\in {\mathcal {P}}^{{{\,\mathrm{atom}\,}}}_{N}({\mathbb {X}})\) that satisfies (15). In other words, (15) can be satisfied by measures in \({\mathcal {P}}^{{{\,\mathrm{atom}\,}}}_{N}({\mathbb {X}})\) with \(r\sim N^{1/d}\), where the constants depend on \({\mathbb {X}}\) and K. Thus, Lemma 4 applied to \({\tilde{\nu }}_r\in {\mathcal {P}}^{{{\,\mathrm{atom}\,}}}_N({\mathbb {X}})\) leads to (19).

The assumptions of Lemma 3 are satisfied, so that analogous arguments as in the proof of Theorem 1 yield \({\mathcal {P}}^{{{\,\mathrm{atom}\,}}}_{N}({\mathbb {X}})\subset {\mathcal {P}}^{{{\,\mathrm{curv}\,}}}_{L}({\mathbb {X}})\) with suitable \(N\sim L^{d/(d-1)}\). Hence, (19) implies (20).

\(\square \)
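The exponents in the proof of Theorem 5 follow from elementary substitutions. Assuming, as in the proof of Lemma 4, that the discrepancy bound behaves like \(r^{-s}\), the two relations used in the proof translate as follows (a worked restatement, not a new result):

```latex
r \sim N^{1/d} \;\Longrightarrow\; r^{-s} \sim N^{-s/d},
\qquad
N \sim L^{d/(d-1)} \;\Longrightarrow\; N^{-s/d} \sim L^{-s/(d-1)} .
```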

5.3 Upper Bounds for \({\mathcal {P}}^{{{\,\mathrm{ a-curv}\,}}}_L(\pmb {{\mathbb {X}}})\) and special manifolds \(\pmb {{\mathbb {X}}}\)

To establish upper bounds for the smaller space \({\mathcal {P}}^{{{\,\mathrm{ a-curv}\,}}}_L({\mathbb {X}})\), we restrict ourselves to special manifolds. The basic idea is to construct a curve and a related measure \(\nu _r\) such that all eigenfunctions of the Laplace–Beltrami operator belonging to eigenvalues below a certain threshold are integrated exactly by this measure, and then to apply Lemma 4 to estimate the minimal discrepancy. We begin with the torus.

Theorem 6

(Torus) Let \({\mathbb {X}}= {\mathbb {T}}^d\) with \(d\in {\mathbb {N}}\), \(s>d/2\) and suppose that \(H_K({\mathbb {X}}) = H^s({\mathbb {X}})\) holds with equivalent norms. Then, for any absolutely continuous measure \(\mu \in {{\mathcal {P}}}({\mathbb {X}})\) with Lipschitz continuous density \(\rho \in H^s({\mathbb {X}})\), there exists a constant depending on d, K, and \(\rho \) such that

Proof

1. First, we construct a closed curve \(\gamma _r\) such that the trigonometric polynomials from \({\Pi }_r({\mathbb {T}}^{d})\), see (33) in the appendix, are exactly integrated along this curve. Clearly, the polynomials in \({\Pi }_r(\mathbb T^{d-1})\) are exactly integrated at equispaced nodes \(x_{\varvec{k}} = \frac{{\varvec{k}}}{n}\), \({\varvec{k}}=(k_1,\ldots ,k_{d-1}) \in \mathbb N_0^{d-1}\), \(0 \le k_i \le n-1\), with weights \(1/n^{d-1}\), where \(n :=r+1\). Set \(z(\varvec{k}) :=k_1 + k_2 n + \ldots + k_{d-1} n^{d-2}\) and consider the curves

Then, each element in \({\Pi }^{d}_r\) is exactly integrated along the union of these curves, i.e., using \(I :=\{0,\ldots ,n-1\}^{d-1}\), we have

The argument is repeated for every other coordinate direction, so that we end up with \(d n^{d-1}\) curves, each defined on an interval of length \(\frac{1}{d n^{d-1}}\) and mapping into \({\mathbb {T}}^d\). The intersection points of these curves are considered as vertices of a graph whose edges are the curve segments between them; this graph is connected and each vertex has 2d edges. Since all vertex degrees are even, there exists a closed Euler path, which traverses every edge exactly once and defines a closed curve \(\gamma _r:[0,1] \rightarrow {\mathbb {T}}^d\). It has constant speed \(d n^{d-1}\) and the polynomials \({\Pi }^{d}_r\) are exactly integrated along \(\gamma _r\), i.e.,

2. Next, we apply Lemma 4 for \(\nu _r={\gamma _r}_{*}\lambda \). We observe \({\tilde{\nu }}_r ={\gamma _r}{_*}((\rho \circ \gamma _r)/{ \beta _r} \lambda )\) and deduce \(L(\rho \circ \gamma _r/\beta _r)\le L(\gamma _r)L(\rho )/{\beta _r}\lesssim r^{d-1}\sim L\) as \(\beta _r\sim 1\). Here, constants depend on d, K, and \(\rho \). \(\square \)
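The first step of the proof of Theorem 6 can be checked numerically for \(d=2\). In the sketch below (an illustration; the function name and the equispaced rule along each circle are assumptions), the \(n=r+1\) circles \(x_2 = k/n\) receive equal weight \(1/n\), and the integral of a Fourier monomial \(e^{2\pi i(a x_1 + b x_2)}\) over their union is computed. It equals 1 for \(a=b=0\) and vanishes for all other \(|a|, |b| \le r\), while the frequency \(b = n\) is aliased, which illustrates why n must exceed r. The second coordinate direction and the Euler path are not coded.

```python
import numpy as np

def line_family_average(a, b, r):
    """Integral of exp(2 pi i (a x1 + b x2)) over the union of the n = r + 1 circles
    {x2 = k/n} in T^2 = [0, 1)^2, each circle weighted by 1/n and parametrized by x1."""
    n = r + 1
    m = 2 * r + 2                                   # equispaced rule in x1, exact for |a| < m
    x1 = np.arange(m) / m
    x2 = np.arange(n) / n
    along = np.mean(np.exp(2j * np.pi * a * x1))    # integral along a single circle
    across = np.mean(np.exp(2j * np.pi * b * x2))   # equal-weight average over the circles
    return complex(along * across)
```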

Now, we provide approximation rates for \({\mathbb {X}}={\mathbb {S}}^d\).

Theorem 7

(Sphere) Let \({\mathbb {X}}= {\mathbb {S}}^d\) with \(d \ge 2\), \(s>d/2\) and suppose that \(H_K({\mathbb {X}}) = H^s({\mathbb {X}})\) holds with equivalent norms. Then, we have for any absolutely continuous measure \(\mu \in {{\mathcal {P}}}({\mathbb {X}})\) with Lipschitz continuous density \(\rho \in H^s({\mathbb {X}})\) that there is a constant depending on d, K, and \(\rho \) with

Proof

1. First, we construct a constant speed curve \(\gamma _{r}:[0,1]\rightarrow {\mathbb {S}}^{d}\) and a probability measure \(\omega _r = \rho _r \lambda \) with Lipschitz continuous density \(\rho _{r}:[0,1] \rightarrow {\mathbb {R}}_{\ge 0}\) such that for all \(p \in {\Pi }_{r}({\mathbb {S}}^{d})\), it holds

Utilizing spherical coordinates

where \(\theta _k \in [0,\pi ]\), \(k=1,\ldots ,d-1\), and \(\phi \in [0,2\pi )\), we obtain

where \(c_{d} :=(\int _{0}^{\pi } \sin (\theta )^{d-1} \,\mathrm {d}\theta )^{-1} \). There exist nodes \({\tilde{x}}_{i} \in \mathbb S^{d-1}\) and positive weights \(a_{i}\), \(i=1,\dots , n \sim r^{d-1}\), with \(\sum _{i=1}^n a_i = 1\), such that for all \(p \in {\Pi }_{r}({\mathbb {S}}^{d-1})\) it holds

To see this, substitute \(u_k = \sin \theta _k\), \(k=2,\ldots ,d-1\), apply Gaussian quadrature with \(\lceil (r+1)/2 \rceil \) nodes and corresponding weights to integrate exactly over \(u_k\), and use \(2r+1\) equispaced nodes with weights \(1/(2r+1)\) for the integration over \(\phi \), as, e.g., in [82]. Then, we define \(\gamma _{r}:[0,1]\rightarrow {\mathbb {S}}^{d}\) for \(t \in [(i-1)/n,i/n]\), \(i=1,\dots ,n\), by

Since \((1,0,\dots ,0) = \gamma _{r,i}(0) = \gamma _{r,i}(2\pi )\) for all \(i=1,\dots ,n\), the curve is closed. Furthermore, \(\gamma _{r}\) has constant speed since, for \(i=1,\dots ,n\), we have

Next, the density \(\rho _{r}:[0,1]\rightarrow {\mathbb {R}}\) is defined for \(t \in [(i-1)/n,i/n]\), \(i=1,\dots ,n\), by

We directly verify that \( \rho _{r}\) is Lipschitz continuous with \(L(\rho _r) \lesssim \max _{i} a_i n^2\). By [34], the quadrature weights fulfill \(a_i \lesssim \frac{1}{r^{d-1}}\) so that \(L(\rho _r) \lesssim n^2 r^{-(d-1) } \sim r^{d-1}\). By definition of the constant \(c_{d}\) and weights \(a_{i}\), we see that \(\rho _r\) is indeed a probability density

For \(p \in {\Pi }_{r}({\mathbb {S}}^{d})\), we obtain

Without loss of generality, p is chosen as a homogeneous polynomial of degree \(k \le r\), i.e., \(p(t x) =t^k p(x)\). Then,

and noting that, for fixed \(\alpha \in [0,2\pi ]\), the function \({\tilde{x}} \mapsto p(\cos (\alpha ), \sin (\alpha ) {\tilde{x}})\) is a polynomial of degree at most r on \({\mathbb {S}}^{d-1}\), we conclude

Now, the assertion (21) follows from (23) and since \(\int _{{\mathbb {S}}^{d}} p \,\mathrm {d}\sigma _{{\mathbb {S}}^{d}}=0\) if k is odd.

2. Next, we apply Lemma 4 for \(\nu _r={\gamma _r}_{*} \rho _r\lambda \), from which we obtain that \({\tilde{\nu }}_r={\gamma _r}{_*}((\rho \circ \gamma _r) \rho _r/{\beta _r}\lambda )\). As all \(\rho _r\) are uniformly bounded by construction and \(\rho \) is bounded due to continuity, we conclude using \(L(\rho _r) \lesssim r^{d-1}\) and \(L(\gamma _r) \sim r^{d-1}\) that

which concludes the proof. \(\square \)

Finally, we derive approximation rates for \({\mathbb {X}}=\mathrm{SO}(3)\).

Corollary 1

(Special orthogonal group) Let \({\mathbb {X}}= \mathrm{SO}(3)\), \(s>3/2\) and suppose \(H_K({\mathbb {X}}) = H^s({\mathbb {X}})\) holds with equivalent norms. Then, we have for any absolutely continuous measure \(\mu \in {{\mathcal {P}}}({\mathbb {X}})\) with Lipschitz continuous density \(\rho \in H^s({\mathbb {X}})\) that

where the constant depends on K and \(\rho \).

Proof

1. For fixed \(L\sim r^2\), we shall construct a curve \(\gamma _{r}:[0,1] \rightarrow \mathrm {SO(3)}\) with \(L(\gamma _r)\lesssim L\) and a probability measure \(\omega _r = \rho _r \lambda \) with density \(\rho _{r}:[0,1] \rightarrow {\mathbb {R}}_{\ge 0}\) and \(L(\rho _{r}) \lesssim L\), such that

We use the fact that the sphere \({\mathbb {S}}^{3}\) is a double covering of \(\mathrm {SO(3)}\). That is, there is a surjective two-to-one mapping \(a:{\mathbb {S}}^{3} \rightarrow \mathrm {SO(3)}\) satisfying \(a(x) = a(-x)\), \(x \in {\mathbb {S}}^{3}\). Moreover, we know that \(a:{\mathbb {S}}^{3} \rightarrow \mathrm {SO(3)}\) is a local isometry, see [42], i.e., it respects the Riemannian structures, implying the relations \(\sigma _{\mathrm {SO(3)}} = a_{*} \sigma _{{\mathbb {S}}^{3}}\) and

Furthermore, composition with \(a\) maps \({\Pi }_{r}(\mathrm {SO(3)})\) into \({\Pi }_{2r}({\mathbb {S}}^3)\), i.e., \(p\in {\Pi }_{r}(\mathrm {SO(3)})\) implies \(p\circ a\in {\Pi }_{2r}({\mathbb {S}}^3)\). Now, let \({\tilde{\gamma }}_{r}:[0,1]\rightarrow {\mathbb {S}}^{3}\) and \({\tilde{\omega }}_{r}\) be given as in the first part of the proof of Theorem 7 for \(d=3\), i.e., \({{\tilde{\gamma }}_r}{_*}{\tilde{\omega }}_{r}\) satisfies (21) with \(L(\tilde{\gamma }_r)\lesssim L\) and \(\tilde{\omega }_r=\tilde{\rho }_r\lambda \) with \(L(\tilde{\rho }_r)\lesssim L\).

We now define a curve \(\gamma _r\) in \(\mathrm{SO}(3)\) by

and let \(\omega _r :=\tilde{\omega }_{2r}\). For \(p\in {\Pi }_r(\mathrm{SO}(3))\), the push-forward measure \({\gamma _r}{_*} \omega _r\) leads to

Hence, property (15) is satisfied for \({\gamma _r}{_*} \omega _r={\gamma _r}{_*} (\tilde{\rho }_{2r}\lambda )\).

2. The rest follows along the lines of step 2. in the proof of Theorem 7. \(\square \)
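The double covering used in the proof of Corollary 1 is the classical map from unit quaternions to rotation matrices. In the Python sketch below (a hypothetical helper, not code from this work), every entry of the rotation matrix is a polynomial of degree two in the quaternion coordinates; this is why composing a polynomial of degree at most r in the matrix entries with \(a\) gives a polynomial of degree at most 2r on \({\mathbb {S}}^3\) (we assume here that \({\Pi }_r(\mathrm{SO}(3))\) can be described by such polynomials). The input is normalized first, and the tests check orthogonality, \(\det = 1\), and \(a(x) = a(-x)\).

```python
import numpy as np

def quat_to_rot(q):
    """Rotation matrix of the unit quaternion q = (w, x, y, z): the two-to-one map S^3 -> SO(3)."""
    w, x, y, z = np.asarray(q, dtype=float) / np.linalg.norm(q)
    # every entry is a quadratic polynomial in (w, x, y, z)
    return np.array([
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z),     2 * (x * z + w * y)],
        [2 * (x * y + w * z),     1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y),     2 * (y * z + w * x),     1 - 2 * (x * x + y * y)],
    ])
```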

5.4 Upper Bounds for \({\mathcal {P}}^{{{\,\mathrm{{\lambda }-curv}\,}}}_L(\pmb {{\mathbb {X}}})\) and special manifolds \(\pmb {{\mathbb {X}}}\)

To derive upper bounds for the smallest space \({\mathcal {P}}^{{{\,\mathrm{{\lambda }-curv}\,}}}_L({\mathbb {X}})\), we need the following specification of Lemma 4.

Lemma 5

For \(s>d/2\) suppose that \(H_K({\mathbb {X}}) = H^s({\mathbb {X}})\) holds with equivalent norms. Let \(\mu \in {\mathcal {P}}({\mathbb {X}})\) be absolutely continuous with respect to \(\sigma _{\mathbb {X}}\) with positive density \(\rho \in H^s({\mathbb {X}})\). Suppose that \(\nu _r :={\gamma _{r}}{_*} \lambda \) with \(\gamma _r\in {{\,\mathrm{Lip}\,}}({\mathbb {X}})\) satisfies (15) and let \( \beta _r :=\int _{\mathbb {X}}\rho \,\mathrm {d}\nu _r \). Then, for sufficiently large r,

is well-defined and invertible. Moreover, \(\tilde{\gamma }_r :=\gamma _r \circ g^{-1}\) satisfies \(L({\tilde{\gamma }}_r) \lesssim L( \gamma _r)\) and

where the constants depend on \({\mathbb {X}}\), K, and \(\rho \).

Proof

Since \(\rho \) is positive and continuous on the compact manifold \({\mathbb {X}}\), there is \(\epsilon >0\) with \(\rho \ge \epsilon \). To bound the Lipschitz constant \(L({\tilde{\gamma }}_r)\), we apply the mean value theorem together with the definition of g and the fact that \((g^{-1})'(s) = 1/g'(g^{-1}(s))\) to obtain

Using (18), this can be further estimated for sufficiently large r as

To derive (24), we aim to apply Lemma 4 with \(\nu _r={\gamma _r}{_*}\lambda \). We observe

so that Lemma 4 indeed implies (24). \(\square \)
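The map g in Lemma 5 is not restated here; the sketch below assumes the natural choice \(g(t) = \beta _r^{-1}\int _0^t \rho (\gamma _r(\tau ))\,\mathrm {d}\tau \), which is increasing for positive \(\rho \) and satisfies \((\gamma _r\circ g^{-1}){_*}\lambda = \frac{\rho }{\beta _r}(\gamma _r){_*}\lambda \). It computes g with a cumulative trapezoidal rule and inverts it by monotone linear interpolation (`numpy.interp`). The example uses the circle \([0,1)\) with \(\gamma (t) = t\) and the density \(1 + \frac{1}{2}\cos (2\pi x)\), both chosen for illustration; the function names are hypothetical.

```python
import numpy as np

def reparametrize(gamma, rho, M=20001):
    """Return gamma_tilde = gamma o g^{-1} and beta, where
    g(t) = (1/beta) * int_0^t rho(gamma(tau)) dtau and beta = int_0^1 rho(gamma(tau)) dtau."""
    t = np.linspace(0.0, 1.0, M)
    dens = rho(gamma(t))
    # cumulative trapezoidal rule for G(t) = int_0^t rho(gamma(tau)) dtau
    G = np.concatenate(([0.0], np.cumsum(0.5 * (dens[1:] + dens[:-1]) * np.diff(t))))
    beta = G[-1]
    g = G / beta                          # strictly increasing bijection of [0, 1]

    def gamma_tilde(s):
        return gamma(np.interp(s, g, t))  # g^{-1} via monotone interpolation

    return gamma_tilde, beta

def gamma(t):
    return np.asarray(t, dtype=float)     # unit-speed parametrization of the circle [0, 1)

def rho(x):
    return 1.0 + 0.5 * np.cos(2 * np.pi * x)  # positive density with minimum 1/2

gamma_tilde, beta = reparametrize(gamma, rho)
s = (np.arange(4000) + 0.5) / 4000        # midpoint samples of lambda on [0, 1]
approx = np.mean(np.cos(2 * np.pi * gamma_tilde(s)))  # approximates int cos(2 pi x) rho(x) dx
```

Since \((g^{-1})' = \beta /(\rho \circ \gamma \circ g^{-1})\), the Lipschitz constant grows at most by the factor \(\beta /\min \rho \) (here 2), mirroring the bound in Lemma 5.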

Compared to Theorem 6, we now trade the Lipschitz condition on \(\rho \) for a positivity requirement, which enables us to cover \({\mathcal {P}}^{{{\,\mathrm{{\lambda }-curv}\,}}}_L({\mathbb {X}})\).

Theorem 8

(Torus) Let \({\mathbb {X}}= {\mathbb {T}}^d\) with \(d\in {\mathbb {N}}\), \(s>d/2\) and suppose that \(H_K({\mathbb {X}}) = H^s({\mathbb {X}})\) holds with equivalent norms. Then, for any absolutely continuous measure \(\mu \in {{\mathcal {P}}}({\mathbb {X}})\) with positive density \(\rho \in H^s({\mathbb {X}})\), there is a constant depending on d, K, and \(\rho \) with

Proof

The first part of the proof is identical to that of Theorem 6. However, instead of Lemma 4, we now apply Lemma 5 to \(\gamma _r\) and \(\rho _r \equiv 1\). Hence, \({\tilde{\gamma }}_r = \gamma _r \circ g_r^{-1}\) satisfies \(L(\tilde{\gamma }_r)\le \frac{\beta _r}{\epsilon } d(2r +1)^{d-1} \lesssim r^{d-1}\), so that \(\tilde{\gamma }_r{_*}\lambda \) satisfies (24) and belongs to \({\mathcal {P}}^{{{\,\mathrm{{\lambda }-curv}\,}}}_L({\mathbb {X}})\) with \(L \sim r^{d-1}\). \(\square \)

The construction on \({\mathbb {X}}={\mathbb {S}}^d\) for \({\mathcal {P}}^{{{\,\mathrm{ a-curv}\,}}}_L({\mathbb {X}})\) in the proof of Theorem 7 is not compatible with \({\mathcal {P}}^{{{\,\mathrm{{\lambda }-curv}\,}}}_L({\mathbb {X}})\). Thus, the situation is different from the torus, where we have used the same underlying construction and only switched from Lemma 4 to Lemma 5. Now, we present a new construction for \({\mathcal {P}}^{{{\,\mathrm{{\lambda }-curv}\,}}}_L({\mathbb {X}})\), which is tailored to \({\mathbb {X}}={\mathbb {S}}^2\). In this case, we can transfer the ideas of the torus, but with Gauss-Legendre quadrature points.

Theorem 9

(2-sphere) Let \({\mathbb {X}}= {\mathbb {S}}^2\), \(s>1\) and suppose \(H_K({\mathbb {X}}) = H^s({\mathbb {X}})\) holds with equivalent norms. Then, we have for any absolutely continuous measure \(\mu \in {{\mathcal {P}}}({\mathbb {X}})\) with positive density \(\rho \in H^s({\mathbb {X}})\) that there is a constant depending on K and \(\rho \) with

Proof

1. We construct closed curves such that the spherical polynomials from \({\Pi }_r({\mathbb {S}}^2)\), see (35) in the appendix, are exactly integrated along these curves. It suffices to show this for the monomials \(p(x) = x_1^{k_1} x_2^{k_2} x_3^{k_3}\) with \(k_1+k_2+k_3 \le r\), restricted to \({\mathbb {S}}^2\). We select \(n = \lceil (r+1)/2 \rceil \) Gauss-Legendre quadrature points \(u_j = \cos (\theta _j)\in [-1,1]\) and corresponding weights \(2\omega _j\), \(j=1, \ldots ,n\). Note that \(\sum _{j=1}^n \omega _j = 1\). Using spherical coordinates \(x_1=\cos (\theta )\), \(x_2=\sin (\theta )\cos (\phi )\), and \(x_3=\sin (\theta )\sin (\phi )\) with \((\theta , \phi ) \in [0,\pi ] \times [0,2\pi ]\), we obtain

see also [83]. If \(k_2+k_3\) is odd, then the integral over \(\phi \) becomes zero. If \(k_2+k_3\) is even, the inner integrand is a polynomial of degree \(\le r\). In both cases we get

Substituting in each summand \(\phi = 2\pi t /\omega _j\), \(j=1,\ldots ,n\), yields

where \(\gamma _j:[0,\omega _j] \rightarrow {\mathbb {S}}^2\) is defined by

and has constant speed \(L(\gamma _j) = 2\pi \sin (\theta _j)/\omega _j\). The lower bound \(\omega _j \gtrsim \frac{1}{n}\sin (\theta _j)\), cf. [34], implies that \(L(\gamma _j)\lesssim n\). Defining a curve \(\tilde{\gamma }:[0,1]\rightarrow {\mathbb {S}}^2\) piecewise via

where \(s_j :=\omega _1 + \ldots + \omega _j\), we obtain

Further, the curve satisfies \(L(\tilde{\gamma })\lesssim r\).

As for the torus, we now rotate the sphere (i.e., permute the roles of the coordinates \(x_1,x_2,x_3\)) so that we obtain circles along orthogonal directions. This collection of circles is connected. As for the torus, each circle passing through an intersection point contributes one incoming and one outgoing edge there, so that we obtain a graph in which every vertex has even degree. Hence, there is an Euler path inducing our final curve \(\gamma _r:[0,1]\rightarrow {\mathbb {S}}^2\) with piecewise constant speed \(L(\gamma _r)\lesssim r\) satisfying

2. Let \(r \sim L\). Analogous to the end of the proof of Theorem 8, Lemma 5 now yields the assertion. \(\square \)
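Step 1 of the proof of Theorem 9 can be tested numerically. The Python sketch below (assumptions: NumPy's `numpy.polynomial.legendre.leggauss` for the Gauss–Legendre rule, an equispaced rule in \(\phi \) for the integral around each circle, and hypothetical function names) integrates each monomial \(x_1^{k_1}x_2^{k_2}x_3^{k_3}\) with \(k_1+k_2+k_3\le r\) along the latitude circles \(x_1 = u_j\), where circle j is traversed during a time interval of length \(\omega _j\). It compares the result with the exact value for the normalized surface measure, namely \(\frac{(k_1-1)!!\,(k_2-1)!!\,(k_3-1)!!}{(k_1+k_2+k_3+1)!!}\) if all \(k_i\) are even and 0 otherwise. Only the single family of circles forming \(\tilde{\gamma }\) is covered; the rotated families and the Euler path are not.

```python
import numpy as np
from math import prod

def double_factorial(k):
    """k!! for k >= -1, with (-1)!! = 0!! = 1."""
    return prod(range(k, 0, -2)) if k > 0 else 1

def sphere_monomial_mean(k1, k2, k3):
    """Exact integral of x1^k1 x2^k2 x3^k3 with respect to the normalized measure on S^2."""
    if k1 % 2 or k2 % 2 or k3 % 2:
        return 0.0
    num = double_factorial(k1 - 1) * double_factorial(k2 - 1) * double_factorial(k3 - 1)
    return num / double_factorial(k1 + k2 + k3 + 1)

def circles_curve(r):
    """Gauss-Legendre nodes u_j = cos(theta_j) and time fractions omega_j (summing to 1)."""
    n = -(-(r + 1) // 2)                         # n = ceil((r + 1) / 2)
    u, w = np.polynomial.legendre.leggauss(n)    # the weights w = 2 * omega sum to 2
    return u, w / 2

def curve_average(k1, k2, k3, r):
    """Integral of the monomial against gamma_* lambda, where circle j (latitude x1 = u_j)
    is traversed at constant speed during a time interval of length omega_j."""
    u, omega = circles_curve(r)
    m = r + 1                                    # equispaced phi-rule, exact for degree <= r
    phi = 2 * np.pi * np.arange(m) / m
    sin_t = np.sqrt(1 - u ** 2)[:, None]
    vals = u[:, None] ** k1 * (sin_t * np.cos(phi)) ** k2 * (sin_t * np.sin(phi)) ** k3
    return float(np.sum(omega * vals.mean(axis=1)))
```

The speed on circle j is \(2\pi \sin (\theta _j)/\omega _j\), which stays proportional to \(n \sim r\), in line with \(L(\gamma _j)\lesssim n\).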

To obtain the approximation rate for \({\mathbb {X}}={\mathcal {G}}_{2,4}\), we make use of its double cover \({\mathbb {S}}^2\times {\mathbb {S}}^2\), cf. Remark 8.

Theorem 10

(Grassmannian) Let \({\mathbb {X}}= {\mathcal {G}}_{2,4}\), \(s>2\) and suppose \(H_K({\mathbb {X}}) = H^s({\mathbb {X}})\) holds with equivalent norms. Then, we have for any absolutely continuous measure \(\mu \in {{\mathcal {P}}}({\mathbb {X}})\) with positive density \(\rho \in H^s({\mathbb {X}})\) that there exists a constant depending on K and \(\rho \) with

Proof

By Remark 8 in the appendix, we know that \({\mathcal {G}}_{2,4} \cong ({\mathbb {S}}^2\times {\mathbb {S}}^2)/ \{\pm 1\}\), so that it remains to prove the assertion for \({\mathbb {X}}= {\mathbb {S}}^2 \times {\mathbb {S}}^2\).

There exist pairwise distinct points \(\{x_1,\ldots ,x_N\}\subset {\mathbb {S}}^2\) such that \(\frac{1}{N}\sum _{j=1}^N \delta _{x_j}\) satisfies (15) on \({\mathbb {S}}^2\) with \(N\sim r^2\), cf. [9, 10]. On the other hand, let \(\tilde{\gamma }\) be the curve on \({\mathbb {S}}^2\) constructed in the proof of Theorem 9, so that \(\tilde{\gamma }{_*}\lambda \) satisfies (15) on \({\mathbb {S}}^2\) with \(\ell (\tilde{\gamma })\le L(\tilde{\gamma })\sim r\). Let us introduce the virtual point \(x_{N+1}:=x_1\).

The curve \(\tilde{\gamma }([0,1])\) contains a great circle. Thus, for each pair \(x_j\) and \(x_{j+1}\) there is \(O_j\in {{\,\mathrm{O}\,}}(3)\) such that \(x_j,x_{j+1}\in \varGamma _j:=O_j\tilde{\gamma }([0,1])\).

It turns out that the set on \({\mathbb {S}}^2 \times {\mathbb {S}}^2\) given by \( \bigcup _{j=1}^N (\{x_j\}\times \varGamma _j)\cup (\varGamma _j \times \{x_{j+1}\}) \) is connected. We now choose \(\gamma _j:=O_j\tilde{\gamma }\) and know that the union of the trajectories of the set of curves

is connected. Combinatorial arguments involving Euler paths, see Theorems 6 and 9, lead to a curve \(\gamma \) with \(\ell (\gamma )\le L(\gamma )\sim N L(\tilde{\gamma }) \sim r^3\), so that \(\gamma {_*} \lambda \) satisfies (15). The remaining part follows along the lines of the proof of Theorem 7. \(\square \)

Our approximation results can be extended to diffeomorphic manifolds, e.g., from \({\mathbb {S}}^2\) to ellipsoids, see also the 3D-torus example in Sect. 8. To this end, recall that we can describe the Sobolev space \(H^s({\mathbb {X}})\) using local charts, see [78, Sec. 7.2]. The exponential maps \(\exp _{x} :T_{x}{\mathbb {X}}\rightarrow {\mathbb {X}}\) give rise to local charts \((\mathring{B}_{x}(r_0), \exp _x^{-1})\), where \(\mathring{B}_{x}(r_0) :=\{y \in {\mathbb {X}}: {{\,\mathrm{dist}\,}}_{\mathbb {X}}(x,y) < r_0\}\) denotes the geodesic ball around x whose radius \(r_0\) is the injectivity radius. If \(\delta < r_0\) is chosen small enough, there exists a uniformly locally finite covering of \({\mathbb {X}}\) by a sequence of balls \((\mathring{B}_{x_j}(\delta ))_j\) with a corresponding smooth partition of unity \((\psi _j)_j\) with \(\text {supp}(\psi _j) \subset \mathring{B}_{x_j}(\delta )\), see [78, Prop. 7.2.1]. Then, an equivalent Sobolev norm is given by

where \((\psi _j f) \circ \exp _{x_j}\) is extended to \({\mathbb {R}}^d\) by zero, see [78, Thm. 7.4.5]. Using the norm (25), we can transfer results from the Euclidean setting.

Proposition 2

Let \({\mathbb {X}}_1\), \({\mathbb {X}}_2\) be two d-dimensional connected, compact Riemannian manifolds without boundary, which are \(s+1\) diffeomorphic with \(s>d/2\). Assume that for \(H_K(\mathbb X_2)=H^s({\mathbb {X}}_2)\) and every absolutely continuous measure \(\mu \) with positive density \(\rho \in H^s({\mathbb {X}}_2)\) it holds

where the constant depends on \({\mathbb {X}}_2\), K, and \(\rho \). Then, the same property holds for \({\mathbb {X}}_1\), where the constant additionally depends on the diffeomorphism.

Proof

Let \(f :{\mathbb {X}}_2 \rightarrow {\mathbb {X}}_1\) denote such a diffeomorphism and \(\rho \in H^s({\mathbb {X}}_1)\) the density of the measure \(\mu \) on \({\mathbb {X}}_1\). Any curve \({\tilde{\gamma }} :[0,1] \rightarrow {\mathbb {X}}_2\) gives rise to a curve \(\gamma :[0,1] \rightarrow {\mathbb {X}}_1\) via \(\gamma = f \circ {\tilde{\gamma }}\), which for every \(\varphi \in H^s({\mathbb {X}}_1)\) satisfies

where \(J_f\) denotes the Jacobian of f. Now, note that \(\varphi \circ f, \rho \circ f \vert \det (J_f) \vert \in H^s({\mathbb {X}}_2)\), see (16) and [78, Thm. 4.3.2], which is lifted to manifolds using (25). Hence, we can define a measure \({\tilde{\mu }}\) on \({\mathbb {X}}_2\) through the probability density \(\rho \circ f \vert \det (J_f) \vert \). Choosing \(\tilde{\gamma }_L\) as a realization for some minimizer of \(\inf _{\nu \in {\mathcal {P}}_L^{{{\,\mathrm{{\lambda }-curv}\,}}}} {\mathscr {D}}({\tilde{\mu }},\nu )\), we can apply the approximation result for \({\mathbb {X}}_2\) and estimate for \(\gamma _L = f \circ {\tilde{\gamma }}_L\) that

where the second estimate follows from [78, Thm. 4.3.2]. Now, \(L(\gamma _L) \le L(f)L\) implies

\(\square \)

Remark 5

Consider a probability measure \(\mu \) on \({\mathbb {X}}\) such that the dimension \(d_\mu \) of its support is smaller than the dimension d of \({\mathbb {X}}\). Then, \(\mu \) does not have any density with respect to \(\sigma _{\mathbb {X}}\). If \(\text {supp}(\mu )\) is itself a \(d_\mu \)-dimensional connected, compact Riemannian manifold \({\mathbb {Y}}\) without boundary, we switch from \({\mathbb {X}}\) to \({\mathbb {Y}}\). Sobolev trace theorems and reproducing kernel Hilbert space theory imply that the assumption \(H_K({\mathbb {X}})=H^s({\mathbb {X}})\) leads to \(H_{K'}({\mathbb {Y}})=H^{s'}({\mathbb {Y}})\), where \(K':=K|_{{\mathbb {Y}}\times {\mathbb {Y}}}\) is the restricted kernel and \(s'=s-(d-d_\mu )/2\), cf. [36]. If, for instance, \({\mathbb {Y}}\) is diffeomorphic to \({\mathbb {T}}^{d_\mu }\) (or \({\mathbb {S}}^{d_{\mu }}\) with \(d_\mu =2\)), and \(\mu \) has a positive density \(\rho \in H^{s'}({\mathbb {Y}})\) with respect to \(\sigma _{{\mathbb {Y}}}\), then Theorem 8 (or 9) and Proposition 2 eventually yield

If \(\text {supp}(\mu )\) is a proper subset of \({\mathbb {Y}}\), we are able to analyze approximations with \({\mathcal {P}}_L^{{{\,\mathrm{ a-curv}\,}}}({\mathbb {Y}})\). First, we observe that the analogue of Proposition 2 also holds for \({\mathcal {P}}_L^{{{\,\mathrm{ a-curv}\,}}}({\mathbb {X}}_1), {\mathcal {P}}_L^{{{\,\mathrm{ a-curv}\,}}}({\mathbb {X}}_2)\) when the positivity assumption on \(\rho \) is replaced with the Lipschitz requirement as in Theorems 6 and 7. If, for instance, \({\mathbb {Y}}\) is diffeomorphic to \({\mathbb {T}}^{d_\mu }\) or \({\mathbb {S}}^{d_\mu }\) and \(\mu \) has a Lipschitz continuous density \(\rho \in H^{s'}({\mathbb {Y}})\) with respect to \(\sigma _{{\mathbb {Y}}}\), then Theorems 6 and 7, and Proposition 2 eventually yield

6 Discretization

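In our numerical experiments, we are interested in solving

$$\begin{aligned} \min _{\nu \in {\mathcal {P}}_L^{{{\,\mathrm{{\lambda }-curv}\,}}}} {\mathscr {D}}^2_K(\mu ,\nu ). \end{aligned}$$(26)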
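Defining \( A_L :=\{\gamma \in {{\,\mathrm{Lip}\,}}({\mathbb {X}}): L(\gamma ) \le L\}\) and using the indicator function

$$\begin{aligned} \iota _{A_L}(\gamma ) :={\left\{ \begin{array}{ll} 0 &{} \text {if } \gamma \in A_L,\\ +\infty &{} \text {otherwise}, \end{array}\right. } \end{aligned}$$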
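we can rephrase problem (26) as a minimization problem over curves

$$\begin{aligned} \min _{\gamma \in {{\,\mathrm{Lip}\,}}({\mathbb {X}})} {\mathcal {J}}_L(\gamma ), \end{aligned}$$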
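where \({\mathcal {J}}_L(\gamma ):={\mathscr {D}}^2_K(\mu ,\gamma {_*}\lambda ) + \iota _{A_L}(\gamma )\). Since \({\mathbb {X}}\) is a compact, connected Riemannian manifold, any two points are joined by a shortest geodesic. Hence, we can approximate curves in \(A_L\) by piecewise shortest geodesics with N parts, i.e., by curves from

$$\begin{aligned} A_{L,N} :=\big \{\gamma \in A_L: \gamma |_{[(i-1)/N,\, i/N]} \text { is a shortest geodesic for } i=1,\ldots ,N\big \}. \end{aligned}$$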
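Next, we approximate the Lebesgue measure on [0, 1] by \(e_N :=\frac{1}{N} \sum _{i=1}^{N} \delta _{i/N}\) and consider the minimization problems

$$\begin{aligned} \min _{\gamma \in {{\,\mathrm{Lip}\,}}({\mathbb {X}})} {\mathcal {J}}_{L,N}(\gamma ), \end{aligned}$$(27)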
where \({\mathcal {J}}_{L,N}(\gamma ):={\mathscr {D}}^{2}_K (\mu , \gamma {_*}e_N) + \iota _{A_{L,N}}(\gamma )\). Since \(\mathrm {ess}\sup _{t \in [0,1]} |{\dot{\gamma }}| (t) = L(\gamma )\), the constraint \(L(\gamma ) \le L\) can be reformulated as \(\int _0^1 (|{\dot{\gamma }}| (t) - L)_+^2 \,\mathrm {d}t = 0\) (see Footnote 2). Hence, using \(x_i = \gamma (i/N)\), \(i=1,\ldots ,N\), \(x_0 = x_N\), and noting that \(|{\dot{\gamma }}| (t) = N {{\,\mathrm{dist}\,}}_{\mathbb {X}}(x_{i-1},x_{i})\) for \(t \in \left( \frac{i-1}{N},\frac{i}{N} \right) \), we rewrite problem (27) in the computationally more suitable form

$$\begin{aligned} \min _{x_1,\ldots ,x_N \in {\mathbb {X}}} {\mathscr {D}}^2_K\Big (\mu , \frac{1}{N}\sum _{i=1}^{N} \delta _{x_i}\Big ) \quad \text {subject to} \quad \sum _{i=1}^{N} \big (N {{\,\mathrm{dist}\,}}_{\mathbb {X}}(x_{i-1},x_{i}) - L\big )_+^2 = 0, \quad x_0 = x_N. \end{aligned}$$(28)
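The reduction from the integral constraint to a finite sum uses one fact about curves in \(A_{L,N}\). On each subinterval \(((i-1)/N, i/N)\), such a curve is a shortest geodesic traversed with constant speed \(N {{\,\mathrm{dist}\,}}_{\mathbb {X}}(x_{i-1},x_{i})\). The step then reads as follows:

$$\begin{aligned} \int _0^1 \big (|{\dot{\gamma }}| (t) - L\big )_+^2 \,\mathrm {d}t &= \sum _{i=1}^{N} \int _{(i-1)/N}^{i/N} \big (N {{\,\mathrm{dist}\,}}_{\mathbb {X}}(x_{i-1},x_{i}) - L\big )_+^2 \,\mathrm {d}t \\ &= \frac{1}{N} \sum _{i=1}^{N} \big (N {{\,\mathrm{dist}\,}}_{\mathbb {X}}(x_{i-1},x_{i}) - L\big )_+^2 . \end{aligned}$$

Consequently, the constraint holds if and only if \({{\,\mathrm{dist}\,}}_{\mathbb {X}}(x_{i-1},x_{i}) \le L/N\) for all \(i=1,\ldots ,N\).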
This discretization is motivated by the next proposition. To this end, recall that a sequence \((f_N)_{N\in {\mathbb {N}}}\) of functions \(f_N:{{\mathbb {X}}} \rightarrow (-\infty ,+\infty ]\) is said to \(\varGamma \)-converge to \(f :{{\mathbb {X}}} \rightarrow (-\infty ,+\infty ]\) if the following two conditions are fulfilled for each \(x \in {{\mathbb {X}}}\), see [12]:

(i) \(f(x) \le \liminf _{N \rightarrow \infty } f_N(x_N)\) whenever \(x_N \rightarrow x\),

(ii) there is a sequence \((y_N)_{N\in {\mathbb {N}}}\) with \(y_N \rightarrow x \) and \(\limsup _{N \rightarrow \infty } f_N(y_N) \le f(x)\).

The importance of \(\varGamma \)-convergence lies in the fact that every cluster point of a sequence of minimizers of \(f_N\) is a minimizer of f. Note that for non-compact manifolds \({\mathbb {X}}\), an additional equi-coercivity condition would be required.

Proposition 3

The sequence \(({\mathcal {J}}_{L,N})_{N\in {\mathbb {N}}}\) is \(\varGamma \)-convergent with limit \({\mathcal {J}}_L\).

Proof

1. First, we verify the \(\liminf \)-inequality. Let \((\gamma _N)_{N\in {\mathbb {N}}}\) be a sequence with \(\lim _{N\rightarrow \infty } \gamma _N = \gamma \), i.e., \(\sup _{t \in [0,1]} {{\,\mathrm{dist}\,}}_{\mathbb {X}}(\gamma (t),\gamma _N(t)) \rightarrow 0\). Excluding the trivial case \(\liminf _{N \rightarrow \infty } {\mathcal {J}}_{L,N}(\gamma _N) = \infty \) and passing to a subsequence \((\gamma _{N_k})_{k \in {\mathbb {N}}}\) that realizes the \(\liminf \), we may assume \(\gamma _{N_k} \in A_{L,N_k} \subset A_L\). Since \(A_L\) is closed, we directly infer \(\gamma \in A_L\). Moreover, \(e_N \rightharpoonup \lambda \), which is equivalent to the convergence of Riemann sums for \(f \in C[0,1]\), and hence also \({\gamma _N}_{*} e_N \rightharpoonup \gamma _{*}\lambda \). By the weak continuity of \({\mathscr {D}}^2_K\), we obtain

2. Next, we prove the \(\limsup \)-inequality, i.e., we construct a sequence \((\gamma _N)_{N\in {\mathbb {N}}}\) with \(\gamma _N \rightarrow \gamma \) and \(\limsup _{N \rightarrow \infty } {\mathcal {J}}_{L,N}(\gamma _N) \le {\mathcal {J}}_L(\gamma )\). We may exclude the trivial case \({\mathcal {J}}_L(\gamma ) = \infty \), so that \(\gamma \in A_L\). On every interval \([(i-1)/N,i/N]\), \(i=1,\ldots ,N\), we define \(\gamma _N\) as a shortest geodesic from \(\gamma ((i-1)/N)\) to \(\gamma (i/N)\). By construction, \(\gamma _N \in A_{L,N}\). From \(\gamma ,\gamma _N \in A_L\) we conclude

implying \(\gamma _N \rightarrow \gamma \). Similarly as in (29), we infer \(\limsup _{N \rightarrow \infty } {\mathcal {J}}_{L,N}(\gamma _N) \le {\mathcal {J}}_L(\gamma )\). \(\square \)

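In the numerical part, we use the penalized form of (28), i.e., we consider

$$\begin{aligned} \min _{x_1,\ldots ,x_N \in {\mathbb {X}}} {\mathscr {D}}^2_K\Big (\mu , \frac{1}{N}\sum _{k=1}^{N} \delta _{x_k}\Big ) + \frac{\lambda }{N} \sum _{k=1}^{N} \big (N {{\,\mathrm{dist}\,}}_{\mathbb {X}}(x_{k-1},x_{k}) - L\big )_{+}^{2}, \quad x_0 = x_N. \end{aligned}$$(30)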
7 Numerical Algorithm

For a detailed overview of Riemannian optimization, we refer to [69] and the books [1, 79]. In order to minimize (30), we take a closer look at the discrepancy term. By (6) and (7), the discrepancy can be represented as follows

Both formulas have pros and cons. The first formula allows for an exact evaluation only if the expressions \(\varPhi (x) :=\int _{{\mathbb {X}}} K(x,y) \,\mathrm {d}\mu (y)\) and \(\int _{{\mathbb {X}}} \varPhi \,\mathrm {d}\mu \) can be written in closed form; in this case, the complexity scales quadratically in the number N of points. The second formula allows for an exact evaluation only if the kernel has a finite expansion (3); in that case, the complexity scales linearly in N.
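The following Python sketch illustrates the two representations and their costs under explicit assumptions. The space is \({\mathbb {X}}= {\mathbb {T}}^2 \cong [0,1)^2\) with eigenfunctions \(e^{2\pi i k\cdot x}\), and \(\mu \) is the Lebesgue measure, so that \(\varPhi \) is constant. The truncated kernel is \(\sum _{\Vert k\Vert _\infty \le r} \alpha _k e^{2\pi i k\cdot (x-y)}\) with the hypothetical weights \(\alpha _k = (1+|k|^2)^{-s}\); this is not necessarily the kernel (34) used later. The sketch also assumes that the Fourier-side formula has the form \(\sum _k \alpha _k |{\hat{\mu }}_k - {\hat{\nu }}_k|^2\). Because the kernel is truncated, both formulas agree up to rounding. The kernel form needs \(N^2\) kernel evaluations, whereas the Fourier form needs one pass over the frequencies per point. Here the Fourier form is evaluated naively; the NFFT accelerates exactly this sum.

```python
import numpy as np


def frequencies(r):
    """All k in Z^2 with max(|k_1|, |k_2|) <= r (truncation of the expansion)."""
    k1, k2 = np.meshgrid(np.arange(-r, r + 1), np.arange(-r, r + 1), indexing="ij")
    return np.stack([k1.ravel(), k2.ravel()], axis=1)


def weights(K, s=1.5):
    """Hypothetical Sobolev-type coefficients alpha_k = (1 + |k|^2)^(-s)."""
    return (1.0 + np.sum(K**2, axis=1)) ** (-s)


def kernel(x, y, K, alpha):
    """Truncated kernel sum_k alpha_k exp(2 pi i k.(x - y)), real since alpha_k = alpha_{-k}."""
    return np.sum(alpha * np.cos(2 * np.pi * (K @ (x - y))))


def disc_kernel_form(X, K, alpha):
    """First formula for mu = Lebesgue measure: Phi(x) = alpha_0 is constant, so
    D^2 = alpha_0 - 2 alpha_0 + (1/N^2) sum_{i,j} K(x_i, x_j).
    Requires N^2 kernel evaluations, i.e., it is quadratic in N."""
    N = len(X)
    alpha0 = alpha[np.all(K == 0, axis=1)][0]
    double_sum = sum(kernel(X[i], X[j], K, alpha) for i in range(N) for j in range(N))
    return alpha0 - 2 * alpha0 + double_sum / N**2


def disc_fourier_form(X, K, alpha):
    """Second formula (assumed form): D^2 = sum_k alpha_k |mu_hat_k - nu_hat_k|^2 with
    mu_hat_k = [k == 0] and nu_hat_k = (1/N) sum_j exp(-2 pi i k.x_j).
    Linear in N for a fixed set of frequencies."""
    nu_hat = np.exp(-2j * np.pi * (X @ K.T)).mean(axis=0)
    mu_hat = np.all(K == 0, axis=1).astype(float)
    return np.sum(alpha * np.abs(mu_hat - nu_hat) ** 2)


rng = np.random.default_rng(0)
X = rng.random((50, 2))  # N = 50 points in [0, 1)^2
K = frequencies(8)
alpha = weights(K)
d_kernel = disc_kernel_form(X, K, alpha)
d_fourier = disc_fourier_form(X, K, alpha)
```

The agreement follows from \(\frac{1}{N^2}\sum _{i,j} e^{2\pi i k\cdot (x_i-x_j)} = |{\hat{\nu }}_k|^2\), so the \(k=0\) contributions cancel on both sides.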

Our approach is to use kernels fulfilling \(H_K({\mathbb {X}}) = H^s({\mathbb {X}})\), \(s > d/2\), and to approximate them by their truncated expansion with respect to the eigenfunctions of the Laplace–Beltrami operator

Then, we finally aim to minimize

where \(\lambda >0\). Our algorithm of choice is the nonlinear conjugate gradient (CG) method with Armijo line search outlined in Algorithm 1; see [25] for the Euclidean case. Notation and implementation details are described in the comments after Remark 6, where the notation does not depend on the particular choice of \({\mathbb {X}}\). The proposed method is of “exact conjugacy” and uses the second-order derivative information provided by the Hessian. For the Armijo line search itself, we use the sophisticated initialization in Algorithm 2, which also incorporates second-order information via the Hessian. The main advantages of the CG method are its simplicity and its fast convergence at low computational cost. Indeed, if Algorithm 2 is replaced by an exact line search, Algorithm 1 converges under suitable assumptions superlinearly, more precisely dN-step quadratically, towards a local minimum, cf. [73, Thm. 5.3] and [43, Sec. 3.3.2, Thm. 3.27].
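The following Python sketch illustrates the two ingredients described above, but only in the Euclidean setting of [25]; it is not a transcription of Algorithms 1 and 2, which operate on \({\mathbb {X}}^N\) with geodesics and parallel transport. The first ingredient is a CG update whose coefficient \(\beta _k = \langle \nabla F(x^{(k+1)}), H d^{(k)}\rangle / \langle d^{(k)}, H d^{(k)}\rangle \) makes consecutive directions conjugate with respect to the Hessian. This is our reading of “exact conjugacy” and is an assumption. The second ingredient is an Armijo backtracking search whose initial trial step is the Newton step along the search direction. Hessian-vector products are approximated by finite differences of gradients. All function names and constants (Armijo parameter \(10^{-4}\), halving factor, difference step) are hypothetical choices.

```python
import numpy as np


def hess_vec(grad, x, d, eps=1e-6):
    """Forward-difference approximation of H(x) d from two gradient calls.
    (On a manifold, the gradient at the shifted point would first have to be
    parallel-transported back to x.)"""
    nd = np.linalg.norm(d)
    if nd == 0.0:
        return np.zeros_like(d)
    h = eps * (1.0 + np.linalg.norm(x)) / nd  # the step h*d has length ~eps*(1+|x|)
    return (grad(x + h * d) - grad(x)) / h


def armijo(f, x, fx, slope, d, tau0, c=1e-4, shrink=0.5, max_backtracks=60):
    """Backtracking Armijo line search starting at the trial step tau0."""
    tau = tau0
    for _ in range(max_backtracks):
        if f(x + tau * d) <= fx + c * tau * slope:
            break
        tau *= shrink
    return tau


def cg_exact_conjugacy(f, grad, x0, tol=1e-8, max_iter=200):
    """Nonlinear CG with Hessian-based beta and a Newton-step Armijo initialization."""
    x = np.asarray(x0, dtype=float)
    g = grad(x)
    d = -g
    for _ in range(max_iter):
        if np.linalg.norm(g) < tol:
            break
        slope = g @ d
        if slope >= 0.0:  # not a descent direction: restart with steepest descent
            d, slope = -g, -(g @ g)
        curv = d @ hess_vec(grad, x, d)
        # Second-order information: Newton step along d as the initial trial step.
        tau0 = -slope / curv if curv > 0.0 else 1.0
        x = x + armijo(f, x, f(x), slope, d, tau0) * d
        g = grad(x)
        Hd = hess_vec(grad, x, d)  # Hessian at the new iterate applied to the old direction
        dHd = d @ Hd
        beta = (g @ Hd) / dHd if dHd > 0.0 else 0.0
        d = -g + beta * d  # ensures <d_new, H d_old> = 0
    return x
```

On a strictly convex quadratic, the initial trial step is the exact line minimizer and is accepted by the Armijo test. The iteration then reduces to linear CG and terminates after at most n steps, up to finite-difference errors.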

Remark 6

The objective in (31) violates the smoothness requirements whenever \(x_{k-1}=x_{k}\) or \({{\,\mathrm{dist}\,}}_{\mathbb {X}}(x_{k-1},x_{k}) = L/N\) for some k. However, we observe numerically that local minimizers of (31) do not belong to this set of measure zero. Consequently, if a local minimizer has a positive definite Hessian, there is a neighborhood of it in which the CG method (with exact line search) converges superlinearly. We indeed observe this behavior in our numerical experiments.

Let us briefly comment on Algorithm 1 for \({\mathbb {X}}\in \{{\mathbb {T}}^2, {\mathbb {T}}^3, {\mathbb {S}}^2,\mathrm{SO}(3),{\mathcal {G}}_{2,4}\}\), which are considered in our numerical examples. For additional implementation details, we refer to [43]. By \(\gamma _{x, d}\) we denote the geodesic with \(\gamma _{x, d}(0) = x\) and \({\dot{\gamma }}_{x, d}(0) = d\). Besides evaluating the geodesics \(\gamma _{x^{(k)}, d^{(k)}}(\tau ^{(k)})\) in the first step of each iteration, we have to compute the parallel transport of \(d^{(k)}\) along these geodesics in the second step. Furthermore, we need to compute the Riemannian gradient \(\nabla _{{\mathbb {X}}^N}F\) and products of the Hessian \(H_{{\mathbb {X}}^N} F\) with vectors d, which are approximated by the finite difference
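For \({\mathbb {X}}= {\mathbb {S}}^2\), regarded as the set of unit vectors in \({\mathbb {R}}^3\), the geodesics \(\gamma _{x,d}\) and the parallel transport along them have well-known closed forms. The following Python sketch implements these two maps. It then approximates Hessian-vector products by the common finite difference \(\big (P^{-1}\nabla F(\gamma _{x,d}(h)) - \nabla F(x)\big )/h\), where \(P^{-1}\) transports back to x. This is a plausible instance of the finite difference mentioned above, but not necessarily the exact formula of [43]. The function names and the step h are hypothetical.

```python
import numpy as np


def sphere_exp(x, d, t=1.0):
    """Geodesic gamma_{x,d}(t) on S^2 for a tangent vector d at x."""
    nd = np.linalg.norm(d)
    if nd < 1e-15:
        return x.copy()
    return np.cos(t * nd) * x + np.sin(t * nd) * (d / nd)


def sphere_transport(x, d, v, t=1.0):
    """Parallel transport of the tangent vector v at x along gamma_{x,d} to gamma_{x,d}(t)."""
    nd = np.linalg.norm(d)
    if nd < 1e-15:
        return v.copy()
    u = d / nd
    a = v @ u      # component along the geodesic direction: rotates in span{x, u}
    w = v - a * u  # component orthogonal to span{x, u}: transported unchanged
    th = t * nd
    return a * (np.cos(th) * u - np.sin(th) * x) + w


def riemannian_grad(egrad, x):
    """Project the Euclidean gradient onto the tangent space at x."""
    g = egrad(x)
    return g - (g @ x) * x


def hess_vec_fd(egrad, x, d, h=1e-6):
    """H(x) d ~ (P^{-1} grad F(gamma_{x,d}(h)) - grad F(x)) / h."""
    y = sphere_exp(x, d, h)
    vel = sphere_transport(x, d, d, h)  # geodesic velocity at time h
    # Transport back along the reversed geodesic, which starts at y with velocity -vel.
    g_back = sphere_transport(y, -vel, riemannian_grad(egrad, y), h)
    return (g_back - riemannian_grad(egrad, x)) / h
```

For \(F(x) = x^\top A x\) with symmetric A, the Riemannian Hessian is \(2(I - xx^\top )Ad - 2(x^\top A x)\,d\). This closed form serves as a reference for the finite difference.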

The gradient of the penalty term in (30) is computed by the chain rule. Here we use that the map \(x \mapsto {{\,\mathrm{dist}\,}}_{\mathbb {X}}(x,y)\) has the Riemannian gradient \(\nabla _{\mathbb {X}}{{\,\mathrm{dist}\,}}_{\mathbb {X}}(x,y) = -\log _x y/{{\,\mathrm{dist}\,}}_{\mathbb {X}}(x,y)\) for \(x\not = y\) with y outside the cut locus of x, where \(\log \) denotes the logarithmic map on \({\mathbb {X}}\). The distance is not differentiable at \(x=y\); concerning the latter point, see Remark 6. Evaluating the gradient of the penalty term at a point in \({\mathbb {X}}^N\) requires only \({\mathcal {O}}(N)\) arithmetic operations. The Riemannian gradient of the data term in (30) is computed analytically via the gradients of the eigenfunctions \(\varphi _k\) of the Laplace–Beltrami operator. The gradient of the whole data term at given points can then be evaluated efficiently by fast Fourier transform (FFT) techniques at non-equispaced nodes using the NFFT software package of Potts et al. [56]. The overall complexity of the algorithm and references for the computational details for the above manifolds are given in Table 1.
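The following Python sketch shows the \({\mathcal {O}}(N)\) penalty gradient for \({\mathbb {X}}= {\mathbb {S}}^2 \subset {\mathbb {R}}^3\). It evaluates \(\frac{\lambda }{N}\sum _{k=1}^{N}(N{{\,\mathrm{dist}\,}}_{\mathbb {X}}(x_{k-1},x_k) - L)_+^2\) with \(x_0 = x_N\), the penalty form used in the parameter heuristic of Sect. 8.1. Its Riemannian gradient follows from \(\nabla _{\mathbb {X}}{{\,\mathrm{dist}\,}}_{\mathbb {X}}(x,y) = -\log _x y/{{\,\mathrm{dist}\,}}_{\mathbb {X}}(x,y)\). The helper names are hypothetical. Consecutive points are assumed distinct and non-antipodal, so that \(\log \) is defined.

```python
import numpy as np


def sphere_dist(x, y):
    """Geodesic distance on S^2 (unit vectors in R^3)."""
    return np.arccos(np.clip(x @ y, -1.0, 1.0))


def sphere_log(x, y):
    """Logarithmic map log_x(y): the tangent vector at x pointing to y, of length dist(x, y).
    Assumes y != -x (antipodal points lie in the cut locus)."""
    v = y - (x @ y) * x  # component of y tangent at x; its norm is sin(dist)
    nv = np.linalg.norm(v)
    if nv < 1e-15:
        return np.zeros_like(x)
    return sphere_dist(x, y) * v / nv


def penalty(X, L, lam):
    """(lam/N) sum_k (N dist(x_{k-1}, x_k) - L)_+^2 with x_0 = x_N (closed polygon)."""
    N = len(X)
    d = np.array([sphere_dist(X[k - 1], X[k]) for k in range(N)])  # X[-1] plays x_0 = x_N
    return lam / N * np.sum(np.maximum(N * d - L, 0.0) ** 2)


def penalty_grad(X, L, lam):
    """Riemannian gradient of the penalty. Each point receives contributions from
    its two incident edges only, so the cost is O(N)."""
    N = len(X)
    G = np.zeros_like(X)
    for k in range(N):
        j = k - 1  # edge (x_{k-1}, x_k); j = -1 wraps around to x_N
        dk = sphere_dist(X[j], X[k])
        r = max(N * dk - L, 0.0)
        if r > 0.0:
            # d/dx of (lam/N) r^2 is 2 lam r grad(dist), with grad_x dist(x, y) = -log_x(y)/dist
            G[k] -= 2.0 * lam * r * sphere_log(X[k], X[j]) / dk
            G[j] -= 2.0 * lam * r * sphere_log(X[j], X[k]) / dk
    return G
```

A central difference of the penalty along tangent directions gives a quick sanity check of the gradient.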

8 Numerical Results

In this section, we illustrate our theoretical results by numerical examples. We start by studying the choice of parameters in our numerical model. Then, we provide examples for the approximation of absolutely continuous measures with densities in \(H^s({\mathbb {X}})\), \(s > d/2\), by push-forward measures of the Lebesgue measure on [0, 1] by Lipschitz curves for the manifolds \({\mathbb {X}}\in \{{\mathbb {T}}^2, {\mathbb {T}}^3, {\mathbb {S}}^2,\mathrm{SO}(3),{\mathcal {G}}_{2,4}\}\). Supplementary material can be found on our webpage.

8.1 Parameter Choice

We would like to emphasize that the optimization problem (31) is highly nonlinear and that its objective function has a large number of local minimizers, whose number appears to grow exponentially in N. To find reasonable (local) solutions of (26) for fixed L, we carefully adjust the parameters in problem (31), namely the number of points N, the polynomial degree r in the kernel truncation, and the penalty parameter \(\lambda \). In the following, we suppose that \(\mathrm {dim}(\text {supp}(\mu )) = d \ge 2\).

(i) Number of points N. Clearly, N should not be too small compared to L. From a computational perspective, however, it should not be too large either, since the optimization procedure is hampered by the vast number of local minimizers. From the asymptotic behavior of TSP path lengths in Lemma 3, we conclude that \(N \gtrsim \ell (\gamma )^{d/(d-1)}\) is a reasonable choice, where \(\ell (\gamma ) \le L\) is the length of the resulting curve \(\gamma \) going through the points.

(ii) Polynomial degree r. Based on the proofs of the theorems in Sect. 5.4, it is reasonable to choose

$$\begin{aligned} r \sim L^{\frac{1}{d-1}} \sim N^{\frac{1}{d}}. \end{aligned}$$

(iii) Penalty parameter \(\lambda \). If \(\lambda \) is too small, we cannot enforce that the points approximate a regular curve, i.e., that \({{\,\mathrm{dist}\,}}_{\mathbb {X}}(x_{k-1},x_{k}) \lesssim L/N\). Conversely, if \(\lambda \) is too large, the optimization procedure is hampered by the rigid constraints. Hence, to find a reasonable choice for \(\lambda \) in dependence on L, we assume that the minimizers of (31) treat both terms proportionally, i.e., for \(N\rightarrow \infty \) both terms are of the same order. Therefore, our heuristic is to choose the parameter \(\lambda \) such that

$$\begin{aligned} \min _{x_{1},\dots ,x_{N}} {\mathscr {D}}_K^{2} \Big (\mu , \frac{1}{N} \sum _{k=1}^{N} \delta _{x_{k}}\Big ) \sim N^{-\frac{2s}{d}} \sim \frac{\lambda }{N} \sum _{k=1}^{N} \big (N {{\,\mathrm{dist}\,}}_{\mathbb {X}}(x_{k-1},x_{k}) - L \big )_{+}^{2} . \end{aligned}$$

On the other hand, assuming that for the length \(\ell (\gamma ) = \sum _{k=1}^{N}{{\,\mathrm{dist}\,}}_{\mathbb {X}}(x_{k-1},x_{k})\) of a minimizer \(\gamma \) we have \(\ell (\gamma ) \sim L \sim N^{(d-1)/d}\), so that \(N {{\,\mathrm{dist}\,}}_{\mathbb {X}}(x_{k-1},x_{k}) \sim L\), the value of the penalty term behaves like

$$\begin{aligned} \frac{\lambda }{N} \sum _{k=1}^{N} \big (N {{\,\mathrm{dist}\,}}_{\mathbb {X}}(x_{k-1},x_{k}) - L \big )_{+}^{2} \sim \lambda L^{2} \sim \lambda N^{\frac{2d-2}{d}}. \end{aligned}$$

Hence, a reasonable choice is

$$\begin{aligned} \lambda \sim L^{\frac{-2s-2(d-1)}{d-1}} \sim N^{\frac{-2s-2(d-1)}{d}}. \end{aligned}$$(32)
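The exponents in (i)-(iii) and (32) can be tabulated mechanically. The short Python helper below (a hypothetical name) returns them as exact fractions for given d and s. It determines only the exponents, since the heuristics leave the constants open. It also checks the balancing condition \(\lambda L^2 \sim N^{-2s/d}\) that led to (32).

```python
from fractions import Fraction


def parameter_exponents(d, s):
    """Exponents in the heuristic scalings (i)-(iii) and (32).
    Only exponents are determined; the implied constants are not."""
    d, s = Fraction(d), Fraction(s)
    if d < 2:
        raise ValueError("the heuristics assume d >= 2")
    lam_num = -2 * s - 2 * (d - 1)
    return {
        "L_in_N": (d - 1) / d,               # N ~ L^{d/(d-1)}  <=>  L ~ N^{(d-1)/d}
        "r_in_N": 1 / d,                     # r ~ N^{1/d}
        "r_in_L": 1 / (d - 1),               # r ~ L^{1/(d-1)}
        "lambda_in_N": lam_num / d,          # (32) in terms of N
        "lambda_in_L": lam_num / (d - 1),    # (32) in terms of L
    }


def terms_balanced(d, s):
    """Check lambda * L^2 ~ N^{-2s/d}: penalty and discrepancy are of the same order."""
    e = parameter_exponents(d, s)
    return e["lambda_in_N"] + 2 * e["L_in_N"] == -2 * Fraction(s) / Fraction(d)


exps = parameter_exponents(2, Fraction(3, 2))  # the torus setting T^2 with s = 3/2
```

For \(d = 2\) and \(s = 3/2\), this gives \(\lambda \sim N^{-5/2} \sim L^{-5}\), which is the scaling used for \({\mathbb {T}}^2\) in the experiments.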

Remark 7

In view of Remark 5, the relations in (i)-(iii) become

In the rest of this subsection, we aim to provide some numerical evidence for the parameter choice above. We restrict our attention to the torus \({\mathbb {X}}= {\mathbb {T}}^{2}\) and the kernel K given in (34) with \(d=2\) and \(s = 3/2\), and we choose \(\mu \) as the Lebesgue measure on \({\mathbb {T}}^{2}\). By (32), we should then keep in mind that \(\lambda \sim N^{-5/2} \sim L^{-5}\).