Abstract

The increasing demand for communication between networked devices, connected either through an intranet or the Internet, increases the need for reliable and accurate network defense mechanisms. Network intrusion detection systems (NIDSs), which are used to detect malicious or anomalous network traffic, are an integral part of network defense. This research addresses some of the issues faced by anomaly-based network intrusion detection systems. We first identify limitations of legacy NIDS datasets, including the relatively recent CICIDS2017 dataset, which led us to develop a novel dataset, CIPMAIDS2023-1. We then propose a stacking-based ensemble approach that outperforms the state of the art for NIDS. Various attack scenarios, along with benign user traffic, were implemented on a network topology created with Graphical Network Simulator-3 (GNS-3). Key flow features are extracted for each attack using cicflowmeter and evaluated to analyze attack behavior. Several machine learning approaches are applied to the features extracted from the traffic data, and their performance is compared. The results show that the stacking-based ensemble approach is the most promising, achieving the highest weighted F1-score of 98.24%.



1 Introduction

With the increasing use of the Internet, attacks on networks are also on the rise, with denial of service (DoS) being the most common example of a network attack. Intrusion detection systems (IDSs) are an important part of the defense mechanisms that continuously monitor a system. IDSs can be categorized by detection methodology into signature-based, anomaly-based, and stateful protocol analysis systems [1]. Traditional detection mechanisms are widely recognized as no longer sufficient to cope with increasingly numerous and diverse attacks [2, 3]. What is required is an effective intrusion detection system that can handle unexpected and previously unseen attacks.

Network-based intrusion detection systems (NIDSs) usually use signature-based methods, which detect attacks for which predefined identification rules are available. The problem arises with unknown attacks, for which no such rules exist. Anomaly-based methods generally use artificial intelligence, more specifically machine learning, to detect unknown attacks by distinguishing malicious traffic from normal traffic. Instead of relying on static, explicit rules, machine learning techniques automatically learn network anomalies and misuse from the behavior of the data. This ability is one of the most important features for detecting zero-day attacks.

Various machine learning algorithms are available, often enabling higher detection rates and efficient computation in intrusion detection systems [4, 5]. However, machine learning-based intrusion detection systems must be used with caution because they can produce a high number of false positives [4, 6, 7]. The key to developing such an IDS is the ability to efficiently extract and select features that truly represent network traffic. Cicflowmeter [8], for example, has proven to be a good feature extractor for network traffic. It extracts layer 3 and layer 4 time-based features, including time intervals and various flag counts. Because it is open source, it can easily be integrated into new solutions such as the one we propose. All features extracted by cicflowmeter are flow-based, which means they can be computed from packet headers and session data with timestamps.
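To make the notion of flow-based features concrete, the sketch below groups packets into bidirectional flows by their 5-tuple (endpoint addresses, ports, protocol) and computes a few per-flow statistics from header fields and timestamps alone. The `Packet` record and the chosen features are hypothetical simplifications; cicflowmeter itself computes a far larger feature set and also applies flow timeouts, which this sketch ignores.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Packet:
    """Hypothetical parsed-packet record: header fields plus a timestamp."""
    ts: float      # capture timestamp in seconds
    src: str
    dst: str
    sport: int
    dport: int
    proto: str     # "TCP" or "UDP"
    length: int    # bytes on the wire
    flags: str = ""  # TCP flags, e.g. "S", "SA", "A"


FlowKey = Tuple[Tuple[str, int], Tuple[str, int], str]


def flow_key(p: Packet) -> FlowKey:
    # Order the two endpoints so both directions map to the same flow.
    a, b = (p.src, p.sport), (p.dst, p.dport)
    return (min(a, b), max(a, b), p.proto)


def extract_flow_features(packets: List[Packet]) -> Dict[FlowKey, dict]:
    flows: Dict[FlowKey, List[Packet]] = {}
    for p in sorted(packets, key=lambda p: p.ts):
        flows.setdefault(flow_key(p), []).append(p)
    features = {}
    for key, pkts in flows.items():
        times = [p.ts for p in pkts]
        iats = [t2 - t1 for t1, t2 in zip(times, times[1:])]  # inter-arrival times
        features[key] = {
            "duration": times[-1] - times[0],
            "packets": len(pkts),
            "bytes": sum(p.length for p in pkts),
            "mean_iat": sum(iats) / len(iats) if iats else 0.0,
            "syn_count": sum("S" in p.flags for p in pkts),
        }
    return features


# Usage: a three-packet TCP handshake collapses into one bidirectional flow.
handshake = [
    Packet(0.0, "10.0.0.5", "10.0.0.1", 40000, 80, "TCP", 60, "S"),
    Packet(0.1, "10.0.0.1", "10.0.0.5", 80, 40000, "TCP", 60, "SA"),
    Packet(0.3, "10.0.0.5", "10.0.0.1", 40000, 80, "TCP", 52, "A"),
]
flows = extract_flow_features(handshake)
```

No payload bytes are read anywhere, which is what makes such features cheap to extract and store.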

Several datasets, such as CICIDS2017, KDD, and UNSW-NB15, can be used to evaluate the performance of an IDS. In this study, the CICIDS2017 dataset is used to evaluate the proposed stacking-based ensemble model because it offers several important advantages over other datasets [9]. Our objective is to build an approach that detects both seen and unseen malicious traffic without static rules, achieving high detection rates while keeping the false-positive rate low. The key contributions of our study are:

- Critical analysis of the CICIDS2017 dataset, including new insights into its characteristics.
- Generation of a new dataset (CIPMAIDS2023-1) that overcomes the limitations of the CICIDS2017 dataset.
- Design and implementation of the proposed stacking-based ensemble model.
- Evaluation and comparative analysis of the proposed stacking-based ensemble model on our dataset, CIPMAIDS2023-1.

The remainder of the manuscript is organized as follows: Section 2 discusses background and related concepts, and Section 3 provides a brief review of existing datasets and the techniques applied to them in network intrusion detection. Section 4 sheds light on issues with these datasets, most notably those of CICIDS2017. Section 5 describes the simulation setup and implementation details, and Sect. 6 discusses the generation of the new dataset CIPMAIDS2023-1 and its methodology. The results and discussion are presented in Sect. 7, and finally, Sect. 8 concludes the study and outlines future research directions.

2 Preliminaries

This section briefly explains the basic concepts related to IDSs, machine learning, performance evaluation metrics, and other terms used in this study.

2.1 Intrusion detection systems

Intrusion detection systems, or more specifically network intrusion detection systems, examine the incoming and outgoing network traffic of servers and clients to detect any type of malicious activity. Fred Cohen stated in 1987 that detecting an intrusion is difficult in general and that the resources required to detect anomalies grow proportionally with the number of requests [10]. An NIDS should not be confused with, but rather works together with, two related security technologies: firewalls and intrusion prevention systems (IPSs). Firewalls are usually placed on the outer perimeter of the network and look for intruders before they enter it. Their main purpose is to analyze packet headers and filter incoming and outgoing traffic based on predetermined rules, which may include the protocol, IP addresses, and port numbers. An IDS, on the other hand, can also monitor and analyze activity inside the protected network, but it does not itself take action such as blocking the source of an attack or malicious traffic. This is where network intrusion prevention systems (IPSs) come into play: they monitor and analyze network activity and proactively block any malicious activity that could threaten the network. This is one of the most difficult tasks, because an appropriate response is hard to program and any inappropriate response causes problems in the live network. This study focuses on IDSs.
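The header-based filtering that a firewall performs can be sketched in a few lines. The rule format below is hypothetical, with first-match semantics and a default-deny policy chosen for illustration; it is not the configuration syntax of pfSense or any other firewall.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network
from typing import List, Optional


@dataclass
class Rule:
    """Hypothetical firewall rule over header fields; None means 'any'."""
    action: str                  # "allow" or "block"
    proto: Optional[str] = None  # e.g. "TCP", "UDP"
    src_net: str = "0.0.0.0/0"
    dst_net: str = "0.0.0.0/0"
    dst_port: Optional[int] = None

    def matches(self, proto: str, src: str, dst: str, dport: int) -> bool:
        return ((self.proto is None or self.proto == proto)
                and ip_address(src) in ip_network(self.src_net)
                and ip_address(dst) in ip_network(self.dst_net)
                and (self.dst_port is None or self.dst_port == dport))


def filter_packet(rules: List[Rule], proto: str, src: str, dst: str,
                  dport: int, default: str = "block") -> str:
    # The first matching rule decides; unmatched traffic gets the default policy.
    for rule in rules:
        if rule.matches(proto, src, dst, dport):
            return rule.action
    return default


# Usage: only HTTP to the internal web subnet is allowed in from outside.
rules = [Rule("allow", "TCP", dst_net="192.168.1.0/24", dst_port=80)]
web = filter_packet(rules, "TCP", "203.0.113.7", "192.168.1.10", 80)  # "allow"
ssh = filter_packet(rules, "TCP", "203.0.113.7", "192.168.1.10", 22)  # "block"
```

Because such a filter looks only at header fields against fixed rules, it cannot judge whether allowed traffic is itself malicious, which is the gap an IDS fills.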

2.2 IDS detection methods

The National Institute of Standards and Technology (NIST) classifies intrusion detection systems by the types of events they monitor and the ways they are deployed [1]. These IDSs also use a variety of approaches to detect incidents. Figure 1 shows the classification of IDSs by type (deployment) and detection method.

A host-based intrusion detection system (HIDS) detects unusual activity by monitoring the characteristics of a particular host and the events that occur on it. A network-based intrusion detection system (NIDS), on the other hand, monitors network traffic for specific network segments or devices and analyzes the behavior of network and application layer protocols to identify abnormal activity. Wireless IDSs monitor wireless network traffic and attempt to detect abnormal behavior of wireless network protocols. Network behavior analysis systems are another type of IDS; they analyze network data to detect attacks such as denial-of-service (DoS) and distributed denial-of-service (DDoS) attacks, certain types of malware, and policy violations. To provide more comprehensive and accurate detection, most IDS technologies use multiple detection methods, either separately or in combination. The three basic detection methods are signature-based, anomaly-based, and stateful protocol analysis.

Signature-based detection methods match specific patterns in network traffic, such as byte sequences, against already known patterns of malicious activity. Once an attack's patterns are identified, they are used to create signatures that detect the same threat again. Each attack has its own unique signature. Known attacks are easy to detect because the observed pattern only needs to be matched against the existing signatures in the database. New attacks, however, are difficult to detect because no signature for them exists yet.
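In its simplest form, signature matching is a lookup of known byte patterns in a payload. The two signatures below are hypothetical examples, and plain substring matching is a simplification of what rule engines such as Snort do. The usage example also shows the weakness discussed above: a URL-encoded variant of the same attack matches no signature.

```python
from typing import Dict, List

# Hypothetical signature database: signature name -> byte pattern.
SIGNATURES: Dict[str, bytes] = {
    "path-traversal": b"../../",
    "nop-sled": b"\x90" * 16,
}


def match_signatures(payload: bytes,
                     signatures: Dict[str, bytes] = SIGNATURES) -> List[str]:
    """Return the names of all signatures whose pattern occurs in the payload."""
    return [name for name, pattern in signatures.items() if pattern in payload]


known = match_signatures(b"GET /../../etc/passwd HTTP/1.1")        # ["path-traversal"]
variant = match_signatures(b"GET /..%2f..%2fetc/passwd HTTP/1.1")  # [] (evades the signature)
```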

Anomaly-based detection methods target unknown malicious attacks, which are increasing daily and cannot be detected by signature mechanisms. Most anomaly-based methods use machine learning to model trusted traffic and classify anything that deviates from it as anomalous or malicious. These methods generalize better than signature-based methods but have relatively high false-positive rates. Reducing these false positives is one of the most important research areas in anomaly-based network intrusion detection.
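The core idea of anomaly-based detection, learning a profile of trusted traffic and flagging deviations from it, can be illustrated with a deliberately simple statistical model. This sketch is an assumption for illustration: it uses per-feature z-scores over hypothetical flow features (`packets`, `bytes`) rather than the machine learning models evaluated in this study, and the threshold of 3 standard deviations is arbitrary.

```python
from statistics import mean, pstdev
from typing import Dict, List, Tuple

Profile = Dict[str, Tuple[float, float]]


def fit_benign_profile(benign: List[Dict[str, float]]) -> Profile:
    """Learn the mean and standard deviation of each feature from benign flows only."""
    return {k: (mean(f[k] for f in benign), pstdev([f[k] for f in benign]))
            for k in benign[0]}


def is_anomalous(flow: Dict[str, float], profile: Profile,
                 threshold: float = 3.0) -> bool:
    """Flag a flow if any feature lies more than `threshold` std devs from the benign mean."""
    for k, (mu, sigma) in profile.items():
        sigma = sigma or 1e-9  # guard against constant features
        if abs(flow[k] - mu) / sigma > threshold:
            return True
    return False


benign_flows = [
    {"packets": 10, "bytes": 1000},
    {"packets": 12, "bytes": 1100},
    {"packets": 11, "bytes": 1050},
]
profile = fit_benign_profile(benign_flows)
normal = is_anomalous({"packets": 11, "bytes": 1060}, profile)   # False
flood = is_anomalous({"packets": 500, "bytes": 30000}, profile)  # True
```

A single global threshold like this is exactly where false positives come from: any legitimate but unusual flow, such as a large file download, exceeds it.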

Stateful protocol analysis is a process for identifying deviations from predefined profiles, also known as protocol models, with commonly accepted parameters for benign protocol behavior for each protocol state. These predefined profiles, or protocol models, are based on vendor-created universal profiles that specify how certain protocols should and should not be used. Variations in the implementation of each protocol are also often accounted for in the protocol models. The fundamental drawback of stateful protocol analysis is that it requires a significant amount of resources due to the complexity of the analysis and the cost of tracking the state of multiple concurrent sessions. In addition, these approaches are also unable to detect attacks that do not violate the characteristics of commonly accepted protocol behavior.
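Stateful protocol analysis can be pictured as replaying each session through a protocol model. The sketch below is a heavily simplified, hypothetical TCP model with state names loosely borrowed from the TCP specification; it ignores sequence numbers, retransmissions, and simultaneous open, all of which a real implementation would have to handle.

```python
from typing import Dict, List, Tuple

# Simplified protocol model: (current state, observed segment) -> next state.
# Any pair missing from the table counts as a deviation from benign behavior.
TCP_MODEL: Dict[Tuple[str, str], str] = {
    ("CLOSED", "SYN"): "SYN_SENT",
    ("SYN_SENT", "SYN-ACK"): "SYN_RECEIVED",
    ("SYN_RECEIVED", "ACK"): "ESTABLISHED",
    ("ESTABLISHED", "DATA"): "ESTABLISHED",
    ("ESTABLISHED", "ACK"): "ESTABLISHED",
    ("ESTABLISHED", "FIN"): "CLOSING",
    ("CLOSING", "ACK"): "CLOSED",
}


def check_session(segments: List[str]) -> List[str]:
    """Replay one connection's segments through the model and collect violations."""
    state, violations = "CLOSED", []
    for seg in segments:
        if seg == "RST":  # a reset is always permitted and ends the session
            state = "CLOSED"
            continue
        next_state = TCP_MODEL.get((state, seg))
        if next_state is None:
            violations.append(f"{seg} not allowed in state {state}")
        else:
            state = next_state
    return violations


clean = check_session(["SYN", "SYN-ACK", "ACK", "DATA", "FIN", "ACK"])  # []
bogus = check_session(["DATA"])  # ["DATA not allowed in state CLOSED"]
```

Even this toy version must keep one state per connection, which illustrates why tracking many concurrent sessions is expensive.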

2.3 Machine learning

The impact of artificial intelligence (AI) on our daily lives is increasing. It is changing the nature of our daily activities and influencing the traditional approach to human thinking and interaction with the environment [11].

Machine learning is generally considered a subfield of artificial intelligence whose goal is to enable computers to learn from data without being explicitly programmed. It focuses on programs that improve as they are exposed to new data, which enables them to handle unseen data [12]. For example, machine learning techniques can learn and extract sophisticated patterns of network attacks from large amounts of network data, attacks that could threaten the network if left undetected [13].

Machine learning is considered a viable solution, especially when working with large amounts of data. It consists of two main phases: training and testing. In the training phase, a large amount of data is provided to an algorithm, which learns patterns and relationships from it; validation data are used during this phase to check each training step. In the testing phase, the trained model is evaluated on data it has not seen before. As discussed in [14,15,16], there are numerous machine learning algorithms, each with its own advantages and disadvantages. A comprehensive survey of deep learning methods and their applications in various domains is given in [17].
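The training, validation, and testing phases depend on keeping the three data partitions disjoint. A minimal sketch of a shuffled three-way split follows; the 70/15/15 proportions and the fixed seed are illustrative assumptions, not the split used in this study.

```python
import random
from typing import List, Sequence, Tuple


def train_val_test_split(samples: Sequence, val_frac: float = 0.15,
                         test_frac: float = 0.15, seed: int = 42
                         ) -> Tuple[List, List, List]:
    """Shuffle once, then cut the data into disjoint train/validation/test parts."""
    idx = list(range(len(samples)))
    random.Random(seed).shuffle(idx)
    n_test = round(len(idx) * test_frac)
    n_val = round(len(idx) * val_frac)
    test_part = [samples[i] for i in idx[:n_test]]
    val_part = [samples[i] for i in idx[n_test:n_test + n_val]]
    train_part = [samples[i] for i in idx[n_test + n_val:]]
    return train_part, val_part, test_part


train_set, val_set, test_set = train_val_test_split(list(range(100)))
```

For imbalanced intrusion data, a stratified split that preserves class proportions in each partition is usually preferable; scikit-learn's `train_test_split` supports this through its `stratify` parameter.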

In this research work, an ML-based approach is used to reduce false positives and false negatives. A false positive is an alert raised on normal traffic when no intrusion or attack is in progress, a known problem in rule-based NIDSs. A false negative is the failure of a rule to raise an alert on an actual attack, which also occurs in rule-based NIDSs [18].

Ensemble methods are another popular approach, in which multiple models are trained independently and their predictions are combined into a final prediction. In the stacking-based ensemble approach, base-level models are trained first and their predictions are passed to second-level models, also known as meta-models. Meta-models are generally simpler models. A key advantage of this technique is that it combines the strengths of multiple models, and ensemble methods generally improve the performance of the final model [19]. However, they require careful fine-tuning of both the base models and the meta-models.
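As an illustration of the stacking structure described above, the sketch below uses scikit-learn's `StackingClassifier` on synthetic, imbalanced three-class data standing in for flow features. The choice of base learners (random forest, decision tree, k-nearest neighbors), the logistic regression meta-model, and all hyperparameters are assumptions for illustration; they are not the configuration evaluated in this study.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier, StackingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import f1_score
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in for flow features: 3 imbalanced classes (e.g. benign, DoS, brute force).
X, y = make_classification(n_samples=2000, n_features=20, n_informative=8,
                           n_classes=3, weights=[0.7, 0.2, 0.1], random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=0)

# Level 0: base models whose predictions become the meta-model's inputs.
base_models = [
    ("rf", RandomForestClassifier(n_estimators=100, random_state=0)),
    ("dt", DecisionTreeClassifier(max_depth=10, random_state=0)),
    ("knn", KNeighborsClassifier(n_neighbors=5)),
]
# Level 1: a simpler meta-model trained on the base models' predictions.
stack = StackingClassifier(estimators=base_models,
                           final_estimator=LogisticRegression(max_iter=1000),
                           cv=5)
stack.fit(X_train, y_train)
y_pred = stack.predict(X_test)
weighted_f1 = f1_score(y_test, y_pred, average="weighted")
print(f"weighted F1: {weighted_f1:.4f}")
```

`StackingClassifier` fits the meta-model on out-of-fold predictions of the base models (here 5-fold), which reduces the risk of the meta-model overfitting to predictions the base models made on their own training data.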

In this work, we focus on flow-based network features because they are more convenient to extract and store, and many open-source flow feature extractors are available. Deep packet inspection, by contrast, is generally complicated to use and in most cases very slow under load. Developing a machine learning-based classifier requires two steps: training and testing. Each selected model has its own training techniques, advantages, and disadvantages. The process of building a model, specifying its architecture, and training and testing it is usually handled end to end by well-known frameworks such as Scikit-learn [20], TensorFlow [21], PyTorch [22], MATLAB [23], or Weka [24].

Each framework affects how long the algorithm takes to optimize and how far it can be optimized. TensorFlow offers the most developer support, while PyTorch offers more control over machine learning tasks but less developer support. For the machine learning tasks, we used Keras, a high-level API on top of TensorFlow, together with scikit-learn.

2.4 Evaluation metrics

The main metrics commonly used to evaluate machine learning-based IDSs include detection rate (true-positive rate), false-positive rate, accuracy, F1-score, and ROC curves [16, 25, 26]. True positives (TP) are attack samples correctly classified as attacks, and false positives (FP) are normal samples incorrectly classified as attacks. True negatives (TN) are normal samples correctly classified as normal, and false negatives (FN) are attack samples incorrectly classified as normal.

ROC curves are mostly used in binary detection. Accuracy measures the ratio between the number of correct classifications and the total number of samples, as shown in Eq. 1.

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{1}$$

Precision measures the ratio of correct positive classifications to the total number of predicted positive classifications, as shown in Eq. 2.

$$\text{Precision} = \frac{TP}{TP + FP} \tag{2}$$

Recall measures the number of correct positive classifications relative to the total number of positive samples in the data, as shown in Eq. 3.

$$\text{Recall} = \frac{TP}{TP + FN} \tag{3}$$

The F1-score is the harmonic mean of precision and recall, calculated as shown in Eq. 4.

$$F_1 = 2 \cdot \frac{\text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}} \tag{4}$$
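Equations 1 to 4 can be checked with a short function that computes them from confusion-matrix counts. The counts in the usage example are made up for illustration; they show how a classifier can reach high accuracy while still missing a noticeable share of attacks when benign traffic dominates.

```python
def binary_metrics(tp: int, fp: int, tn: int, fn: int) -> dict:
    """Accuracy, precision, recall and F1 from confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# 110 attack samples and 890 benign samples (hypothetical counts).
m = binary_metrics(tp=90, fp=10, tn=880, fn=20)
# accuracy 0.97, precision 0.9, recall ~0.818, F1 ~0.857
```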

We used balanced accuracy, which is commonly used in anomaly detection when one of the classes occurs much more frequently than the others. Another metric suited to imbalanced multiclass data is the weighted F1-score, computed by evaluating the F1-score for each label and then averaging these scores, weighted by the number of samples of each class in the dataset. The weighted F1-score is the primary evaluation metric in this work. Execution time could also serve as an evaluation metric, but it is not used as a primary criterion here because it varies with the computational power of the machine used, and our goal is offline detection from a full packet capture.
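The weighted F1-score described above, together with balanced accuracy (the mean of per-class recall), can be written out directly. This standard-library sketch is for illustration; for the same inputs it should agree with scikit-learn's `f1_score(..., average="weighted")` and `balanced_accuracy_score`. The labels in the usage example are hypothetical.

```python
from collections import Counter
from typing import Hashable, Sequence


def per_class_f1(y_true: Sequence, y_pred: Sequence, label: Hashable) -> float:
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == label and p == label for t, p in pairs)
    fp = sum(t != label and p == label for t, p in pairs)
    fn = sum(t == label and p != label for t, p in pairs)
    denom = 2 * tp + fp + fn  # F1 = 2TP / (2TP + FP + FN)
    return 2 * tp / denom if denom else 0.0


def weighted_f1(y_true: Sequence, y_pred: Sequence) -> float:
    """Average per-class F1, weighting each class by its share of true samples."""
    support = Counter(y_true)
    n = len(y_true)
    return sum(per_class_f1(y_true, y_pred, c) * cnt / n for c, cnt in support.items())


def balanced_accuracy(y_true: Sequence, y_pred: Sequence) -> float:
    """Mean per-class recall, so every class counts equally regardless of size."""
    support = Counter(y_true)
    recalls = [sum(t == c and p == c for t, p in zip(y_true, y_pred)) / cnt
               for c, cnt in support.items()]
    return sum(recalls) / len(recalls)


y_true = ["benign"] * 8 + ["dos"] * 2
y_pred = ["benign"] * 9 + ["dos"]  # one DoS flow missed
wf1 = weighted_f1(y_true, y_pred)        # ~0.886
bacc = balanced_accuracy(y_true, y_pred)  # 0.75, while plain accuracy is 0.9
```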

3 Literature review

The following section presents a comprehensive review of the literature on machine learning approaches to anomaly-based network intrusion detection systems. Because network protocols keep evolving, older datasets have become inadequate for modern intrusion detection scenarios. One of the most significant examples is the QUIC protocol, introduced by Google in 2012 [27], which now carries a major share of the traffic of most Google services. Consequently, there is a growing need for datasets that keep pace with these changes.

In [28], the authors used a convolutional neural network to detect network attacks, but the model achieved high detection rates for only 10 of 14 network attacks, highlighting the class bias of deep neural networks on the CICIDS2017 dataset. Similarly, in [29], the authors proposed an artificial neural network (ANN) approach to shellcode detection that outperformed signature-based detection methods and significantly reduced false positives.

In [30], the authors developed an effective intrusion detection approach using decision trees for the CICIDS2018 dataset and concluded that this approach could be extended to real-time networks, as long as the feature set remained consistent. In [31], the authors used an efficient wrapper-based feature selection method combined with an ANN to achieve a high detection rate. V. Kanimozhi and T. Prem Jacob [32] trained a deep neural network for binary classification using the recent dataset CSE-CICIDS2018 and concluded that the model could be extended to all classes of network attacks.

In [33], the authors used a hybrid multimodal solution to enhance the performance of intrusion detection systems (IDSs). They developed an ensemble model using a meta-classification approach based on stacked generalization and used two datasets, UNSW-NB15 (a packet-based dataset) and UGR’16 (a flow-based dataset), for experiments and performance evaluation. In [34], a comprehensive study of different ML techniques and their performance on the CICIDS2017 dataset is presented, covering both supervised and unsupervised ML algorithms.

Additionally, [35] presents a comprehensive review of anomaly detection strategies for high-dimensional big data, including the limitations of traditional techniques and current strategies for high-dimensional data, and discusses big-data techniques that can be used to optimize anomaly detection. In [36], the authors achieved the highest accuracy, 99.46%, on the NSL-KDD dataset using the CatBoost classifier. In [37, 38], ensemble methods achieved accuracies of 99.90% and 99.86%, respectively, on the KDD Cup'99 dataset.

In conclusion, several key points can be derived from the existing literature on network intrusion detection systems (NIDSs). First, rule-based systems are becoming outdated as new attack patterns emerge and should be replaced by more advanced, intelligent detection methods based on mathematical models. Second, machine learning approaches show great potential for detecting intrusions in large networks, but the field is still maturing because of problems such as insufficient training data and high false-positive rates. Finally, network-specific data are crucial for testing the performance of machine learning models. A chronological summary of the state-of-the-art literature on NIDS is presented in Table 1.

4 Datasets

One of the most important issues in network intrusion detection is testing and evaluating the approach. Datasets therefore play an important role in the training, evaluation, and validation of NIDSs. They also help in replicating experiments, evaluating new approaches, and comparing different approaches. Variance in the data allows machine learning algorithms to learn abnormal or malicious patterns and distinguish them from normal ones. Prior work has shown the applicability of AI algorithms on several IDS datasets [39]. Several benchmark datasets are publicly available, e.g., KDD Cup'99, NSL-KDD, CAIDA 2007, UNSW-NB15, and CICIDS2017 [15, 40, 41].

The most frequently used datasets in the literature are KDD Cup'99 and its derivative, NSL-KDD. Table 1 shows a chronological summary of some recent studies performed on these datasets [42, 43]. However, these datasets do not represent current network architectures and attack methods.

Cybersecurity researchers have created synthetic NIDS datasets in simulated environments to obtain labeled, realistic network traffic [44]. To do so, a test environment is first developed to simulate normal traffic between different servers and clients. Simulation avoids the security and privacy issues of real production networks, so these controlled environments are more reliable and secure to work with than production networks. Various attacks are then launched, attack scenarios are simulated, and labeled traffic is generated. The captured traffic can take the form of packet captures (pcap), NetFlow records, or sFlow (sampled flow) records. Features can then be extracted from the pcap files with tools such as cicflowmeter, developed by the Canadian Institute for Cybersecurity (CIC) to extract a predefined set of flow features, or the open-source Zeek IDS. The result is a set of labeled network flows composed of benign and attack traffic.
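One common way to turn captured flows into labeled flows is to record, for every attack run, the attacker and victim addresses and the time window of the attack, and then label matching flows accordingly. The sketch below assumes such a hypothetical attack schedule (`AttackWindow`); it is not necessarily how CIC or this study labeled traffic, and the addresses are documentation examples.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class AttackWindow:
    """Hypothetical record of one attack run: who attacked whom, and when."""
    label: str
    attacker_ip: str
    victim_ip: str
    start: float  # epoch seconds
    end: float


def label_flow(src_ip: str, dst_ip: str, flow_start: float,
               schedule: List[AttackWindow]) -> str:
    """Label a flow by the attack run it belongs to, or BENIGN if none matches."""
    endpoints = {src_ip, dst_ip}  # direction-agnostic match
    for w in schedule:
        if endpoints == {w.attacker_ip, w.victim_ip} and w.start <= flow_start <= w.end:
            return w.label
    return "BENIGN"


schedule = [AttackWindow("SSH-Patator", "198.51.100.7", "192.0.2.10", 1000.0, 2000.0)]
a = label_flow("198.51.100.7", "192.0.2.10", 1500.0, schedule)  # "SSH-Patator"
b = label_flow("192.0.2.10", "198.51.100.7", 1500.0, schedule)  # "SSH-Patator" (reply direction)
c = label_flow("198.51.100.7", "192.0.2.10", 2500.0, schedule)  # "BENIGN" (outside window)
```

The weakness of this scheme is that benign traffic exchanged between the same two hosts during an attack window is mislabeled as attack traffic.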

These traffic features are critical because they are used to distinguish normal from attack traffic, so they must have enough variance to capture these patterns. The feature set should also be compact enough that solutions built on it are scalable and practical. Selecting these features is therefore one of the most important tasks.
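A simple way to keep the feature set compact is to drop constant features and features that are almost perfectly correlated with one already kept. This sketch, with a 0.95 correlation threshold and hypothetical cicflowmeter-style column names, is one illustrative filter, not the feature selection procedure of this study.

```python
from statistics import pstdev
from typing import Dict, List


def pearson(x: List[float], y: List[float]) -> float:
    """Pearson correlation; both inputs must be non-constant."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    return cov / (sx * sy)


def select_features(columns: Dict[str, List[float]],
                    corr_threshold: float = 0.95) -> List[str]:
    # Step 1: constant columns carry no information for separating classes.
    candidates = [name for name, col in columns.items() if pstdev(col) > 0]
    # Step 2: greedily skip columns nearly collinear with one already kept.
    kept: List[str] = []
    for name in candidates:
        if all(abs(pearson(columns[name], columns[k])) < corr_threshold for k in kept):
            kept.append(name)
    return kept


columns = {
    "fwd_packets": [1, 2, 3, 4, 5],
    "fwd_bytes": [60, 120, 180, 240, 300],  # perfectly correlated with fwd_packets
    "urg_flag_count": [0, 0, 0, 0, 0],      # constant
    "flow_duration": [5, 1, 4, 2, 3],
}
selected = select_features(columns)  # ["fwd_packets", "flow_duration"]
```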

However, because network intrusion datasets lack a standard feature set, most approaches are domain-specific and cannot be generalized. Each dataset consists of unique features derived from domain research, and these dataset-specific feature sets make it difficult to evaluate ML models across multiple datasets and limit the reliability of the evaluation methods. The main problems that lead to a large gap between academic research and deployed ML-based NIDS solutions are described below.

- Different capture and storage methods for NIDS features due to domain-specific security events.
- The inability of machine learning models to generalize with a fixed feature set across multiple datasets from different network topologies.
- Lack of a universal aggregation method that can combine network data streams from different sources and generate standard feature sets.

Machine learning algorithms require a large amount of training data, and the quality and quantity of that data play a crucial role in the test performance of any machine learning algorithm; in practice, high-quality data often matter more than a better algorithm. Unfortunately, such datasets are expensive, raise privacy issues, and are difficult and time-consuming to generate, which is why most approaches use publicly available datasets for validation and testing [45].

4.1 Limitations of CICIDS2017 dataset

In our study, we used the CICIDS2017 dataset to train our machine learning models to detect network attacks. However, we observed issues with the dataset that led us to capture our own traffic based on similar attacks. The purpose was to test our models on traffic from our own network, avoiding the generalization problem commonly encountered when models are tested on different network topologies.

The generalization problem arises when models trained on a specific network topology fail to detect attacks on other network topologies. In our case, although our machine learning models achieved a balanced accuracy greater than 95% on CICIDS2017 traffic, they achieved only 26% recall and less than 50% accuracy on other network topologies. We therefore concluded that capturing attack traffic from the network on which the model will be tested is necessary for the model's performance to generalize. However, privacy and security concerns prevented us from capturing real network traffic that could be made publicly available. We therefore used a network simulator to simulate the victim and attack networks and captured the traffic generated in these networks in near real time.

It is important to note that each network has a specific type of benign traffic that must be considered when applying machine learning models. For instance, in our network topology, Windows machines run the Windows Update service, which constantly communicates with Microsoft servers to receive important security updates. Additionally, most Google services communicate over the QUIC protocol, a low-latency Internet transport protocol over UDP that combines advantages of both TCP and UDP [46].

To generate normal traffic, we simulated web browsing, file downloads, email, SSH, FTP sessions, and other major services such as Google and Microsoft for an entire week. The traffic was generated from multiple versions of Windows and Linux operating systems running on users’ virtual machines (VMs). Our goal was to create a comprehensive dataset that accurately reflects the traffic patterns and network topology of our network. Figure 2 provides a visual representation of our network topology.

5 Simulation setup and implementation details

5.1 Simulation setup

In [47], a standard feature set was created based on NetFlow features; the authors concluded that it reduces overall prediction time while giving comparable results. This suggests that such features could yield a stronger overall feature set when combined with additional metrics. All experimental and simulation work is performed on a desktop computer with 52 GB of memory, an Intel Core i7-7800 CPU @ 3.40 GHz, and 930 GB of disk space. The host operating system is Windows 10, and the main programming frameworks are Python libraries such as scikit-learn, Keras, and Flask. We used the Anaconda distribution, which includes these Python libraries, as the development environment for this research.

5.2 Network topology

Graphical network simulator-3 (GNS-3) [48] is used in this study to simulate a full network topology and implement various attack scenarios along with benign user traffic.

Figure 2 shows our experimental setup, with a victim network and an attacker network separated by a typical firewall, pfSense. By default, the local area network (LAN) side of the firewall is trusted, while the wide area network (WAN) side is untrusted, and traffic initiated from the WAN to the LAN is blocked. We allowed this traffic so that the network attack scenarios could be performed. The victim network also has Internet access through network address translation (NAT).

5.2.1 Victim network

We use several popular operating systems (OSs) on the victim network: Microsoft Windows 10, Windows 7, and Windows XP, and the Linux distributions Ubuntu 12, Ubuntu 16, and Ubuntu 18. The victim network can communicate with the Internet to simulate normal day-to-day traffic, including Windows updates and other network communications. The services running on the victim network include FTP, SSH, HTTP, OpenSSL, and HTTPS; these services are later exploited to simulate network attacks. DVWA, a deliberately vulnerable web application, also runs on one of the victim computers to simulate web attacks.

5.2.2 Attacker network

The attacker network includes Kali Linux, a popular offensive cybersecurity OS, along with Ubuntu 18 and Windows 10, so that network attacks are launched from multiple operating systems. The main tools used on Kali Linux are Patator, LOIC, and the GoldenEye DoS script.

5.2.3 Firewall

The firewall in this network topology is pfSense [49], chosen because it is open source and easy to configure. It can act as both a firewall and a router. It has two network interfaces, LAN and WAN; the LAN interface is trusted by default, while the WAN interface is untrusted, and traffic from WAN to LAN is blocked by default. We had to allow this traffic to perform our network attacks.

5.2.4 Traffic capture

The victim network's traffic is recorded with the well-known packet capture and analysis tool Wireshark, running on an Ubuntu 18 virtual machine. All communication on the victim network is mirrored to this virtual machine, where the traffic is recorded for each attack scenario and then passed to cicflowmeter.
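Before flow features can be computed, the recorded packets have to be read back from the capture file. The sketch below reads the classic libpcap file format with Python's standard `struct` module. Wireshark saves in pcapng format by default, so a capture would first need converting to classic pcap (for example with Wireshark's `editcap` utility); this reader is an illustration, not part of the described pipeline.

```python
import io
import struct
from typing import BinaryIO, Iterator, Tuple


def read_pcap(f: BinaryIO) -> Iterator[Tuple[float, bytes]]:
    """Yield (timestamp, raw_frame) pairs from a classic libpcap file (not pcapng)."""
    header = f.read(24)  # global header: magic, version, zone, sigfigs, snaplen, linktype
    if len(header) < 24:
        raise ValueError("truncated pcap global header")
    magic = struct.unpack("<I", header[:4])[0]
    if magic in (0xA1B2C3D4, 0xA1B23C4D):    # written little-endian
        endian = "<"
    elif magic in (0xD4C3B2A1, 0x4D3CB2A1):  # written big-endian
        endian = ">"
    else:
        raise ValueError("not a classic pcap file (convert pcapng first)")
    # The 0xA1B23C4D magic marks nanosecond rather than microsecond timestamps.
    divisor = 1e9 if magic in (0xA1B23C4D, 0x4D3CB2A1) else 1e6
    while True:
        rec = f.read(16)  # per-packet header: ts_sec, ts_frac, incl_len, orig_len
        if len(rec) < 16:
            return
        ts_sec, ts_frac, incl_len, _orig_len = struct.unpack(endian + "IIII", rec)
        yield ts_sec + ts_frac / divisor, f.read(incl_len)


# Usage: a one-frame Ethernet capture built in memory.
demo = io.BytesIO(
    struct.pack("<IHHiIII", 0xA1B2C3D4, 2, 4, 0, 0, 65535, 1)
    + struct.pack("<IIII", 1700000000, 500000, 60, 60)
    + b"\x00" * 60
)
packets = list(read_pcap(demo))  # [(1700000000.5, 60 zero bytes)]
```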

5.3 Attack traffic generation on GNS3 topology

This subsection describes the implemented attacks in detail. The following attacks were implemented on the GNS3 network shown in Fig. 2, and their network traffic was recorded. The main attack categories are denial of service, brute force, reconnaissance, and OpenSSL exploits.

5.3.1 DoS attacks

The first DoS attack uses the Low Orbit Ion Cannon (LOIC) tool, which supports three attack variants: UDP, TCP, and HTTP floods. All variants work on the same principle, and we used the TCP variant in our network. The LOIC application shows the current status of the attack, including failed requests and the threads that are trying to connect, or are already connected, to the server. We used a TCP SYN flood, which exploits the TCP three-way handshake: many incomplete connection requests exhaust the server's resources and make it unreachable. The attacker and victim machines are Kali Linux and Ubuntu 16, respectively.
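The SYN flood's footprint in traffic is a growing number of half-open connections: SYNs that are never followed by the client's final ACK. The sketch below counts them per source address from a hypothetical stream of `(src_ip, src_port, dst_ip, dst_port, flags)` tuples; the threshold of 100 is an arbitrary assumption.

```python
from collections import Counter
from typing import Dict, Iterable, List, Set, Tuple

# (src_ip, src_port, dst_ip, dst_port, tcp_flags) as seen by a passive sensor.
Segment = Tuple[str, int, str, int, str]


def half_open_counts(segments: Iterable[Segment]) -> Dict[str, int]:
    """Count, per source IP, connections whose SYN was never followed by a client ACK."""
    half_open: Set[Tuple[str, int, str, int]] = set()
    for src, sport, dst, dport, flags in segments:
        conn = (src, sport, dst, dport)
        if flags == "S":     # pure SYN: a new connection attempt
            half_open.add(conn)
        elif "A" in flags:   # an ACK in the same direction completes the handshake
            half_open.discard(conn)
    return dict(Counter(conn[0] for conn in half_open))


def syn_flood_suspects(segments: Iterable[Segment], threshold: int = 100) -> List[str]:
    return [src for src, n in half_open_counts(segments).items() if n >= threshold]


flood = [("203.0.113.9", 1000 + i, "192.0.2.10", 80, "S") for i in range(150)]
handshake = [
    ("192.0.2.20", 5000, "192.0.2.10", 80, "S"),
    ("192.0.2.10", 80, "192.0.2.20", 5000, "SA"),  # server reply: reverse direction
    ("192.0.2.20", 5000, "192.0.2.10", 80, "A"),
]
counts = half_open_counts(flood + handshake)      # {"203.0.113.9": 150}
suspects = syn_flood_suspects(flood + handshake)  # ["203.0.113.9"]
```

If the attacker spoofs source addresses, the half-open connections spread across many sources, and aggregating per destination instead would be more informative.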

The second DoS attack is slowloris, carried out with the slowloris.py Python script from Kali Linux against the Ubuntu 16 and Ubuntu 12 victim machines. The attack opens a large number of connections to the web server and keeps them open for a long time by sending partial HTTP requests that never complete. The goal is to consume all of the server's available connections and resources, interrupting the service.
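Slowloris-style attacks leave long-lived connections that carry very little data. A minimal heuristic sketch follows, using hypothetical per-flow summaries and arbitrary thresholds (at least 60 s long, at most 50 bytes/s, at least 20 such flows per client); these values are assumptions, not measurements from this study.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class HttpFlow:
    """Hypothetical per-flow summary, e.g. derived from flow-meter output."""
    client_ip: str
    duration_s: float
    fwd_bytes: int


def slow_http_suspects(flows: List[HttpFlow], min_duration: float = 60.0,
                       max_rate: float = 50.0, min_flows: int = 20) -> List[str]:
    """Flag clients holding many long-lived connections that send very few bytes per second."""
    slow: Dict[str, int] = {}
    for f in flows:
        rate = f.fwd_bytes / f.duration_s if f.duration_s > 0 else float("inf")
        if f.duration_s >= min_duration and rate <= max_rate:
            slow[f.client_ip] = slow.get(f.client_ip, 0) + 1
    return [ip for ip, n in slow.items() if n >= min_flows]


flows = ([HttpFlow("198.51.100.7", 300.0, 2000) for _ in range(50)]   # trickling requests
         + [HttpFlow("192.0.2.20", 2.0, 5000) for _ in range(50)])    # ordinary page loads
suspects = slow_http_suspects(flows)  # ["198.51.100.7"]
```

Legitimate long-lived, low-rate connections such as idle keep-alive sessions could also match, which is one reason threshold rules like this produce false positives.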

The third DoS attack is GoldenEye, an application-level DoS attack launched with the GoldenEye DoS script from the Kali Linux attacker against the Ubuntu 16 victim machine, with the intent of disrupting the service. Because the tool is a Python script, it can run on Linux and Windows platforms alike.

The next DoS attack uses the slowhttptest tool. It is similar to slowloris, and can also run a slowloris-style attack, but it is a stealthier and more interactive tool. The attacker machine is Kali Linux, and the victim machine is Ubuntu 16. This application-level DoS tool uses little bandwidth and exhausts server resources through a pool of concurrent connections. Because an HTTP server processes a request only after it has been fully received, slow connections keep the server waiting for clients to complete their requests, making it inaccessible to legitimate users.

The last DoS attack used HULK, an application-layer DoS tool, with Kali Linux as the attacker and an Ubuntu VM as the victim. HULK is an HTTP flood tool that generates a unique, obfuscated request each time. This obfuscation makes it harder to detect than other DoS tools, whose predictable, repetitive patterns are easier to match; the tool's main design principle of making every request unique is intended to let it bypass intrusion detection and prevention systems. The attack produces a high number of packets, which in turn leads to a high number of flows.

5.3.2 Brute force attacks

The brute force attack is used to crack the credentials of a user or service. We performed this attack against three protocols. The first is secure shell (SSH). Patator, a Kali Linux tool, is used to perform the brute force attack on SSH, a protocol used to secure many services over the Internet and most commonly used to log in to remote servers. The victim VM is Ubuntu 16, which runs an SSH service. We provided the tool with two files containing usernames and passwords. The resulting traffic consists of a large number of small TCP packets sent at high frequency while the tool tries username and password combinations.

The second protocol exploited is the file transfer protocol (FTP), which is used to transfer files between clients and servers and requires a valid username and password. The brute force attack on FTP is also performed with the Patator penetration testing tool, with the aim of recovering the user's username and password to gain access to the remote system. The victim is Ubuntu 16, which runs the FTP service, and the username and password files from the SSH brute force attack are reused. The behavior resembles that of the SSH brute force attack: a large number of unsuccessful login attempts over a short period of time produces small TCP packets at high frequency. Overall, the bandwidth consumption of this attack is low.

The last protocol is the hypertext transfer protocol (HTTP). For the brute force attack on HTTP, we configured the Damn Vulnerable Web Application (DVWA), a deliberately vulnerable web application used for testing web attacks. Its brute force section contains a login page for testing such attacks, and the Patator tool is used to attack it. The behavior of this attack is very similar to that of the previous brute force attacks. Because the brute force page is reached only after the application's initial login page, the session cookie must also be supplied in the attack command.

5.3.3 Heartbleed attack

Heartbeat is an extension of the TLS protocol used to confirm that the peer device is still online. Heartbleed is a vulnerability in the OpenSSL library's implementation of this extension. In a heartbeat exchange, the client sends a random payload to the server, and the server must respond with the same payload; these messages are called the heartbeat request and response. The client must also specify the length of the payload, and this is where the weakness lies: the vulnerable OpenSSL versions do not verify that the declared length matches the length of the payload actually sent.

Suppose the client sends 20 bytes of random payload but declares a length of 500. The server then returns the 20-byte payload followed by the 480 bytes that lie next to it in memory, whatever they contain. This adjacent memory may hold sensitive information such as personal data and passwords, and the attacker can repeat the request many times to read large portions of the server's memory. The victim machine is Ubuntu 12, running OpenSSL v1. The attacker first uses nmap to detect the OpenSSL vulnerability and then performs the attack with the Metasploit tool.
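The missing bounds check can be illustrated with a toy Python model of the 20-byte / 500-byte example above. This is not OpenSSL code: it assumes, for illustration only, that the received payload sits in a buffer immediately followed by other process data (`adjacent`). The patched handler follows the rule in RFC 6520 that a heartbeat message whose declared payload length is too large must be discarded silently.

```python
from typing import Optional


def vulnerable_heartbeat(payload: bytes, claimed_length: int,
                         adjacent: bytes) -> bytes:
    """Echo `claimed_length` bytes, trusting the length sent by the client."""
    # Toy memory layout: the received payload is followed by unrelated data.
    memory = payload + adjacent
    # BUG: no check that claimed_length <= len(payload).
    return memory[:claimed_length]


def patched_heartbeat(payload: bytes, claimed_length: int,
                      adjacent: bytes) -> Optional[bytes]:
    """Discard the request when the declared length exceeds the payload."""
    if claimed_length > len(payload):
        return None  # silently dropped, no response is sent
    memory = payload + adjacent
    return memory[:claimed_length]


secret = b"user=admin;password=hunter2;" * 20  # stand-in for adjacent heap data
response = vulnerable_heartbeat(b"A" * 20, 500, secret)
leaked = response[20:]  # everything beyond the genuine 20-byte payload
print(len(leaked))                                # 480
print(patched_heartbeat(b"A" * 20, 500, secret))  # None
```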

5.3.4 Web attacks

Two attacks exploit web forms. The first is SQL injection, one of the most common attacks on the Internet, which targets vulnerable web forms that pass unvalidated user input into queries. It exploits the database layer of a web application that uses user input directly in database queries; typical malicious queries steal or delete data from the database. The victim is a DVWA application configured on Ubuntu 16, and the attacker runs Kali Linux. This attack is difficult to detect because its traffic is almost identical to normal traffic: even a small change in the user's input can turn a request into this dangerous attack.
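The difference between a vulnerable query and a safe one can be shown with Python's built-in `sqlite3` module. The table, the helper functions, and the `x' OR '1'='1` payload are hypothetical and unrelated to DVWA's actual schema; the sketch only shows that concatenating input into SQL text lets the input change the query, whereas a parameterized query treats it as data.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER, name TEXT, password TEXT)")
conn.executemany("INSERT INTO users VALUES (?, ?, ?)",
                 [(1, "admin", "s3cret"), (2, "alice", "pa55")])


def find_user_unsafe(name: str) -> list:
    # Vulnerable: user input is pasted directly into the SQL text.
    query = f"SELECT name FROM users WHERE name = '{name}' ORDER BY id"
    return [row[0] for row in conn.execute(query)]


def find_user_safe(name: str) -> list:
    # Safe: the driver binds the input as a value, never as SQL syntax.
    query = "SELECT name FROM users WHERE name = ? ORDER BY id"
    return [row[0] for row in conn.execute(query, (name,))]


payload = "x' OR '1'='1"
print(find_user_unsafe(payload))  # ['admin', 'alice']: every row leaks
print(find_user_safe(payload))    # []: no user has that literal name
```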

The second web attack is cross-site scripting (XSS). Like SQL injection, it injects malicious content, in this case scripts, into unvalidated user input. Once injected, the script is delivered to victims and executed in their browsers when they visit the website, which can lead to fraud and data theft. The attack is performed from Kali Linux against the DVWA application. Like SQL injection, it is difficult to detect.
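A minimal illustration of why unescaped input enables cross-site scripting, using Python's standard `html.escape`. The two `render_comment_*` helpers are hypothetical stand-ins for a page template that displays a user comment.

```python
import html


def render_comment_unsafe(comment: str) -> str:
    # Vulnerable: the comment is inserted into the page as raw HTML.
    return f"<p>{comment}</p>"


def render_comment_safe(comment: str) -> str:
    # Escaping turns <, >, &, and quotes into HTML entities, so the
    # browser displays the script as text instead of executing it.
    return f"<p>{html.escape(comment)}</p>"


payload = "<script>alert('xss')</script>"
print(render_comment_unsafe(payload))
# <p><script>alert('xss')</script></p>
print(render_comment_safe(payload))
# <p>&lt;script&gt;alert(&#x27;xss&#x27;)&lt;/script&gt;</p>
```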

5.3.5 Portscan

Port scanning is a popular technique used in the reconnaissance phase of an attack. The attacker uses it to gather information about target systems, such as operating systems, possible vulnerabilities, running services, and port status, and to plan how to penetrate them. In a portscan, the attacker attempts to communicate with each port, whose numbers range from 0 to 65,535, and infers from the response whether the port is in use, revealing weak entry points.
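Because a portscan touches many ports on the same host, one simple way to spot it in flow records is to count the distinct destination ports that each source contacts on each destination. The sketch below is a hypothetical heuristic and not part of the paper's pipeline; the `(src, dst, dst_port)` record layout, the IP addresses, and the threshold of 100 ports are all assumptions.

```python
from collections import defaultdict
from typing import Iterable, Set, Tuple

FlowRecord = Tuple[str, str, int]  # (source IP, destination IP, destination port)


def flag_port_scanners(flows: Iterable[FlowRecord],
                       min_distinct_ports: int = 100) -> Set[Tuple[str, str]]:
    """Return (source, destination) pairs that probed many distinct ports."""
    ports = defaultdict(set)
    for src, dst, dst_port in flows:
        ports[(src, dst)].add(dst_port)
    return {pair for pair, seen in ports.items()
            if len(seen) >= min_distinct_ports}


# Hypothetical traffic: a sweep of ports 1-1024 plus ordinary web browsing.
scan = [("10.0.0.5", "10.0.0.9", port) for port in range(1, 1025)]
browsing = [("10.0.0.7", "10.0.0.9", port) for port in (80, 443)] * 50
print(flag_port_scanners(scan + browsing))  # {('10.0.0.5', '10.0.0.9')}
```

A fixed threshold is easy to evade with slow or distributed scans; the sketch only illustrates why scan traffic differs structurally from normal traffic.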

6 Dataset generation and processing methodology

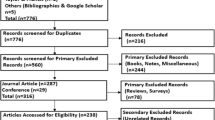

Because of the issues discussed previously, we created a new dataset, CIPMAIDS2023-1, that includes all the attacks listed in CICIDS2017 and is generated with similar tools, so that we can compare the performance of our proposed stacking-based ensemble model. The dataset generation flowchart is shown in Fig. 3.

6.1 Cicflowmeterv4 features extraction

Cicflowmeter is an open-source tool, proposed in [8], that extracts 84 features which characterize network traffic well. The tool takes a traffic capture file and generates a CSV file containing these 84 features. The features are based on OSI layer 3 and layer 4 header information and on other time- and volume-based properties of the traffic, such as the number of bytes, packets, and durations in both the forward and backward directions. The layer 3 header specifies the source IP, destination IP, and type of service, while the layer 4 header contains the source and destination ports, the sequence and acknowledgement numbers, and flags such as URG, ACK, PSH, RST, SYN, and FIN. Both TCP and UDP flows are generated. UDP flows usually end with the flow timeout, while TCP flows end with either a FIN packet or the flow timeout. The default flow timeout is 120,000,000 microseconds (120 s).
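The flow-termination rules can be sketched as follows. This is a simplified reimplementation for illustration, not cicflowmeter's actual code: the `Packet` and `Flow` types are hypothetical, only packet counts are tracked, and the timeout is assumed to be measured from the first packet of the flow.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

FLOW_TIMEOUT_US = 120_000_000  # 120 s default flow timeout from the text

FlowKey = Tuple[str, int, str, int, str]


@dataclass
class Packet:
    ts_us: int         # capture timestamp in microseconds
    src: str
    sport: int
    dst: str
    dport: int
    proto: str         # "TCP" or "UDP"
    fin: bool = False  # TCP FIN flag


@dataclass
class Flow:
    key: FlowKey       # 5-tuple in the forward (first-packet) direction
    start_us: int
    fwd_packets: int = 0
    bwd_packets: int = 0


def assemble_flows(packets: List[Packet],
                   timeout_us: int = FLOW_TIMEOUT_US) -> List[Flow]:
    active: Dict[FlowKey, Flow] = {}
    finished: List[Flow] = []
    for p in sorted(packets, key=lambda pkt: pkt.ts_us):
        fwd_key = (p.src, p.sport, p.dst, p.dport, p.proto)
        bwd_key = (p.dst, p.dport, p.src, p.sport, p.proto)
        # A packet joins an existing flow in either direction.
        if fwd_key in active:
            key, forward = fwd_key, True
        elif bwd_key in active:
            key, forward = bwd_key, False
        else:
            key, forward = fwd_key, True
        flow = active.get(key)
        # Flow timeout: close the old flow, start a new one with this packet.
        if flow is not None and p.ts_us - flow.start_us > timeout_us:
            finished.append(active.pop(key))
            flow = None
            key, forward = fwd_key, True
        if flow is None:
            flow = Flow(key=key, start_us=p.ts_us)
            active[key] = flow
        if forward:
            flow.fwd_packets += 1
        else:
            flow.bwd_packets += 1
        # A TCP FIN packet terminates the flow.
        if p.proto == "TCP" and p.fin:
            finished.append(active.pop(key))
    # Flows still open at the end of the capture are flushed as well.
    finished.extend(active.values())
    return finished


packets = [
    Packet(0, "10.0.0.1", 5000, "10.0.0.2", 80, "TCP"),
    Packet(10, "10.0.0.2", 80, "10.0.0.1", 5000, "TCP"),
    Packet(20, "10.0.0.1", 5000, "10.0.0.2", 80, "TCP", fin=True),
    Packet(0, "10.0.0.1", 53000, "10.0.0.3", 53, "UDP"),
    Packet(130_000_000, "10.0.0.1", 53000, "10.0.0.3", 53, "UDP"),
]
flows = assemble_flows(packets)
for f in flows:
    print(f.key[4], f.fwd_packets, f.bwd_packets)
# TCP 2 1   (closed by FIN)
# UDP 1 0   (closed by the 120 s timeout)
# UDP 1 0   (new flow after the timeout)
```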

6.2 Data distribution

Twenty percent of the total data is held out as test data. The remaining data is split into 75% training data and 25% validation data, so that overall 60% of the data is used for training, 20% for validation, and 20% for testing. The overall distribution of the data is shown in Fig. 4.
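The two-stage split can be reproduced with scikit-learn's `train_test_split`. The synthetic arrays, the random seed, and the use of stratification are assumptions for illustration, since the text does not state whether the split was stratified. With 1000 samples, the 20% test split followed by the 75/25 split yields 600/200/200 samples.

```python
import numpy as np
from sklearn.model_selection import train_test_split

rng = np.random.default_rng(0)
X = rng.normal(size=(1000, 10))  # synthetic stand-in for flow features
y = np.arange(1000) % 4          # four balanced synthetic classes

# Stage 1: hold out 20% of all data as the test set.
X_rest, X_test, y_rest, y_test = train_test_split(
    X, y, test_size=0.20, stratify=y, random_state=42)
# Stage 2: split the remaining 80% into 75% training / 25% validation.
X_train, X_val, y_train, y_val = train_test_split(
    X_rest, y_rest, test_size=0.25, stratify=y_rest, random_state=42)

print(len(X_train), len(X_val), len(X_test))  # 600 200 200
```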

Three classes have the fewest flows: Heartbleed, SQL injection, and cross-site scripting. The absolute number of network flows for each attack is shown in Table 2.

6.2.1 Feature preprocessing

First, all socket features are removed, because they do not properly reflect the behavior of network traffic. A socket is the combination of an IP address and a port; when two devices communicate, each side has its own socket, and these values can change while the benign or malicious behavior stays the same. The removed features are flow ID, destination IP, source IP, destination port, and timestamp. Moreover, an attacker can use spoofed IP addresses and non-standard ports to perform network attacks. After removing these features, we found several features with zero or near-zero variance, which do not contribute to distinguishing attack traffic from benign traffic. These features are BwdPSHFlags, BwdURGFlags, FwdBulkRateAvg, FwdBytes/BulkAvg, FwdPacket/BulkAvg, FwdURGFlags, SubflowBwdPackets, and URGFlagCount. Three of these 8 features relate to the URG flag, one of the TCP header flags at layer 4 of the open systems interconnection (OSI) model, possibly because this flag is deprecated. Some flows contained zero values in the "FlowBytes/s" feature; these flows were removed because zero values cannot be entered into the machine learning algorithm. Another problem was non-finite values in the "FlowBytes/s" and "FlowPackets/s" features. Fewer than 60 such flows were found, and they were also removed to prepare the data for the machine learning algorithms.
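The preprocessing steps can be sketched with pandas as follows. The column names (`FlowID`, `SrcIP`, `Label`, and so on) are hypothetical spellings of the features named in the text, and the near-zero-variance cutoff `var_threshold=1e-6` is an assumed value; the paper does not state the threshold it used.

```python
import numpy as np
import pandas as pd

# Hypothetical column names for the socket features listed in the text.
SOCKET_COLUMNS = ["FlowID", "SrcIP", "DstIP", "DstPort", "Timestamp"]
RATE_COLUMNS = ["FlowBytes/s", "FlowPackets/s"]


def preprocess(df: pd.DataFrame, label_col: str = "Label",
               var_threshold: float = 1e-6) -> pd.DataFrame:
    # 1. Drop socket features, which describe endpoints rather than behavior.
    df = df.drop(columns=[c for c in SOCKET_COLUMNS if c in df.columns])
    # 2. Drop flows with non-finite rate values (inf, -inf, NaN).
    df = df.replace([np.inf, -np.inf], np.nan).dropna(subset=RATE_COLUMNS)
    # 3. Drop flows whose FlowBytes/s is zero, as described in the text.
    df = df[df["FlowBytes/s"] != 0]
    # 4. Drop features whose variance is zero or near zero.
    variances = df.drop(columns=[label_col]).var(ddof=0)
    keep = variances[variances > var_threshold].index.tolist()
    return df[keep + [label_col]]


flows = pd.DataFrame({
    "FlowID": ["a", "b", "c", "d"],
    "SrcIP": ["10.0.0.1"] * 4,
    "FlowBytes/s": [100.0, 0.0, np.inf, 50.0],
    "FlowPackets/s": [1.0, 2.0, 3.0, 4.0],
    "URGFlagCount": [0, 0, 0, 0],
    "FlowDuration": [10, 20, 30, 40],
    "Label": ["BENIGN", "BENIGN", "DoS", "DoS"],
})
clean = preprocess(flows)
print(list(clean.columns))  # ['FlowBytes/s', 'FlowPackets/s', 'FlowDuration', 'Label']
print(len(clean))           # 2
```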

6.2.2 Feature importance

First, a random forest regressor is used to calculate the importance of the features for each network attack individually. Then, feature importance is also calculated for the brute force, DoS, and web attack groups in the dataset. Each feature is thus ranked by an importance value relative to the other features. These importances are then used to analyze network attack behavior, because the features that most affect each attack give good insight into how the attack behaves. Tables 4 and 5 show the 5 most important features of each attack.
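A per-attack ranking of this kind can be sketched with scikit-learn's `RandomForestRegressor` and its `feature_importances_` attribute. The target encoding is an assumption, since the text does not specify it: here each attack is compared against benign flows with a 0/1 regression target. The synthetic data, the feature subset, and `n_estimators=50` are also illustrative choices.

```python
import numpy as np
import pandas as pd
from sklearn.ensemble import RandomForestRegressor


def top_features_per_attack(X: pd.DataFrame, y: pd.Series,
                            benign_label: str = "BENIGN",
                            k: int = 5) -> dict:
    """Rank features per attack with a random forest regressor fitted on a
    0/1 target (attack vs. benign flows)."""
    ranking = {}
    for attack in sorted(set(y) - {benign_label}):
        mask = y.isin([benign_label, attack])
        target = (y[mask] == attack).astype(float)
        model = RandomForestRegressor(n_estimators=50, random_state=0)
        model.fit(X[mask], target)
        importances = pd.Series(model.feature_importances_, index=X.columns)
        ranking[attack] = importances.nlargest(k).index.tolist()
    return ranking


# Synthetic data in which only "FlowIATMin" separates attack from benign flows.
rng = np.random.default_rng(0)
X = pd.DataFrame(rng.normal(size=(400, 4)),
                 columns=["FlowIATMin", "FlowDuration", "FwdIATTotal",
                          "TotalBwdPackets"])
y = pd.Series(["BENIGN"] * 200 + ["PortScan"] * 200)
X.loc[200:, "FlowIATMin"] += 5.0
print(top_features_per_attack(X, y, k=2)["PortScan"][0])  # FlowIATMin
```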

Based on the important features of each class, we conclude that Flow IAT is the most important feature group for network attacks. Flow IAT (inter-arrival time) is the time between two consecutive packets of a flow; the related Fwd IAT and Bwd IAT features restrict this measurement to the forward or backward direction. The group includes the maximum, minimum, mean, and standard deviation of the time between consecutive packets in a flow.
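The Flow IAT statistics can be computed from the packet timestamps of a single flow as below. This is an illustrative sketch; the use of the population standard deviation and the zero values for single-packet flows are assumptions, and cicflowmeter's exact conventions may differ.

```python
import numpy as np


def flow_iat_stats(timestamps_us):
    """Flow IAT statistics: gaps between consecutive packets of one flow."""
    ts = np.sort(np.asarray(timestamps_us, dtype=float))
    iat = np.diff(ts)
    if iat.size == 0:  # single-packet flow: no inter-arrival times
        return {"mean": 0.0, "std": 0.0, "max": 0.0, "min": 0.0}
    return {"mean": float(iat.mean()), "std": float(iat.std()),
            "max": float(iat.max()), "min": float(iat.min())}


# Gaps of 100, 200, and 300 microseconds: mean 200, min 100, max 300.
print(flow_iat_stats([0, 100, 300, 600]))
```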

SQL injection and cross-site scripting have similar important features, including FlowPackets/s, FlowIATMin, BwdPacketLengthStd, and FlowDuration. Because they share these features, the two attacks are difficult to distinguish. The important features selected by the random forest regressor for all brute force attacks combined were FlowIATMin, FlowDuration, FwdIATTotal, FwdPacketLengthStd, and TotalBwdpackets.

For the DoS LOIC attack, no other feature comes close to the most important one, TotalLengthofBwdPackets, which is the total size of the packets in the backward direction, that is, the direction in which responses are sent to the attacker. The reason is that LOIC performs a volumetric attack that consumes bandwidth using layer 3 or 4 protocols, unlike the other attacks, which take place at the application layer. Volumetric attacks primarily include internet control message protocol (ICMP) ping flooding and TCP SYN flooding. Application layer attacks, such as slowhttptest and GoldenEye, tend to be more complex and target specific application layer protocols.

For the portscan attack, the most important features are flow duration and FlowIATMax, because a portscan mostly sends small packets to probe each port. FlowIATMax helps separate the short durations and large number of attack packets from normal traffic. Other important features are FlowBytes/s and FlowPackets/s, because portscan flows have a high packet rate while carrying an extremely small number of bytes.
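The two rate features are simple ratios over the flow duration. The sketch below uses hypothetical flow sizes to show how a tiny probe flow differs from a bulk transfer; returning infinity for a zero duration is an assumption, offered as one plausible source of the non-finite values mentioned in Sect. 6.2.1.

```python
def flow_rates(total_bytes: int, total_packets: int, duration_us: int):
    """Return (FlowBytes/s, FlowPackets/s) for one flow."""
    if duration_us == 0:
        # A zero duration makes both rates non-finite.
        return float("inf"), float("inf")
    seconds = duration_us / 1_000_000
    return total_bytes / seconds, total_packets / seconds


# Hypothetical probe flow: 2 packets, 120 bytes, 50 microseconds.
print(flow_rates(120, 2, 50))                  # about 2.4e6 bytes/s, 40,000 packets/s
# Hypothetical benign download: 500 packets, 600,000 bytes, 10 seconds.
print(flow_rates(600_000, 500, 10_000_000))    # (60000.0, 50.0)
```

Although the probe carries only 120 bytes, its packet rate is several hundred times that of the download, which is why these rate features help separate portscan flows.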

Flow duration is one of the most important features in almost all brute force attacks, owing to the rapid testing of different usernames and passwords. The main features of the combined DoS attacks are FwdPacketLengthMax, FwdIATTotal, FlowIATMean, BwdPacketLengthMean, and FlowIATMin. DoS attacks disrupt the service by sending a continuous stream of packets from the attacker's machines, and the two most important features, FwdPacketLengthMax and FwdIATTotal, help distinguish this incoming traffic from the attacker network.

6.2.3 Normalization

Normalization is performed after removing the socket features and the features with zero variance. In this work, min–max normalization, the most common type of normalization, is used. Its main disadvantage is that it is sensitive to outliers, because a single extreme value determines the range into which all other values are scaled. We also tried z-score normalization, which subtracts the mean and divides by the standard deviation so that the transformed data has a mean of 0 and a standard deviation of 1; unlike min–max normalization, it does not depend on the minimum and maximum values. We applied both normalizations to our data and chose min–max normalization because it gave better overall results. The scaler is first fitted to the training data and is then used to transform the training, validation, and test data. Importantly, the scaler is never fitted on the validation and test data, so these data remain completely unseen.
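Fitting the scaler on the training split only and reusing it for the other splits looks like this with scikit-learn's `MinMaxScaler` and `StandardScaler`. The synthetic matrices are stand-ins for the real feature splits.

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler, StandardScaler

rng = np.random.default_rng(0)
X_train = rng.normal(10.0, 3.0, size=(600, 4))  # synthetic feature matrices
X_val = rng.normal(10.0, 3.0, size=(200, 4))
X_test = rng.normal(10.0, 3.0, size=(200, 4))

# Fit min-max scaling on the training data only ...
scaler = MinMaxScaler().fit(X_train)
# ... then apply the same transformation to every split.
X_train_s = scaler.transform(X_train)
X_val_s = scaler.transform(X_val)
X_test_s = scaler.transform(X_test)

# Validation/test values may fall slightly outside [0, 1]; this is expected,
# because their minima and maxima were never used to fit the scaler.
print(X_train_s.min(axis=0), X_train_s.max(axis=0))

# The z-score alternative: mean 0 and standard deviation 1 on training data.
z_train = StandardScaler().fit(X_train).transform(X_train)
```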

6.2.4 Smote up-sampling

Most classes have a high number of flows, either because of high traffic volume or because of their nature. Web attacks and Heartbleed attacks, by their nature, produce few flows, which biases the machine learning models. Two approaches are commonly used to deal with this bias: first, up-sampling the minority classes or down-sampling the majority class; second, weighting each class according to its importance or size. Both approaches can mitigate class imbalance. Class weighting is often recommended over oversampling because it does not introduce synthetic or augmented data, and it generally, though not always, leads to better performance. In this work, several classes with few samples were up-sampled using the synthetic minority oversampling technique (SMOTE).

Cicflowmeter, a flow-based feature extractor, builds flows from the unique source-to-destination and reverse packet exchanges between endpoints [8]. It therefore generates a large number of flows for traffic involving many distinct packet exchanges, such as benign traffic, portscan attacks, DoS attacks, and brute force attacks. Web attacks and Heartbleed attacks, on the other hand, need minimal network communication to reach their target and therefore generate few flows. This class imbalance is addressed by up-sampling.

The up-sampling criterion is as follows. The benign class had approximately 40 thousand samples, and every attack class with fewer than 5000 samples in the training data was up-sampled to 5000 samples. This produced more balanced results overall than training without up-sampling, where some classes showed a strong bias.
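The up-sampling criterion can be sketched with a simplified SMOTE written in plain NumPy: each synthetic sample is placed at a random point on the segment between a minority sample and one of its k nearest same-class neighbours. This is an illustrative reimplementation, not the authors' code; its brute-force neighbour search needs memory quadratic in the class size, and in practice a library such as imbalanced-learn (`imblearn.over_sampling.SMOTE`) would be used. As in the text, it should be applied to the training split only.

```python
import numpy as np


def smote_upsample(X: np.ndarray, y: np.ndarray, target: int = 5000,
                   k: int = 5, seed: int = 0):
    """Up-sample every class with fewer than `target` samples to `target`
    using SMOTE-style interpolation between same-class nearest neighbours."""
    rng = np.random.default_rng(seed)
    X_parts, y_parts = [X], [y]
    for cls in np.unique(y):
        X_c = X[y == cls]
        n_new = target - len(X_c)
        if n_new <= 0 or len(X_c) < 2:
            continue  # large enough already, or no neighbour to interpolate
        # Squared Euclidean distances within the class (O(n^2) memory).
        sq = (X_c ** 2).sum(axis=1)
        dist = sq[:, None] + sq[None, :] - 2.0 * X_c @ X_c.T
        np.fill_diagonal(dist, np.inf)  # a point is not its own neighbour
        k_eff = min(k, len(X_c) - 1)
        neighbours = np.argsort(dist, axis=1)[:, :k_eff]
        # Pick a random base sample and one of its k nearest neighbours ...
        base = rng.integers(0, len(X_c), size=n_new)
        nbr = neighbours[base, rng.integers(0, k_eff, size=n_new)]
        # ... and place the synthetic sample at a random point between them.
        gap = rng.random((n_new, 1))
        X_parts.append(X_c[base] + gap * (X_c[nbr] - X_c[base]))
        y_parts.append(np.full(n_new, cls, dtype=y.dtype))
    return np.vstack(X_parts), np.concatenate(y_parts)


rng = np.random.default_rng(1)
X = np.vstack([rng.normal(0, 1, (200, 3)), rng.normal(5, 1, (30, 3))])
y = np.array([0] * 200 + [1] * 30)
X_up, y_up = smote_upsample(X, y, target=100)  # small target for the demo
print(np.bincount(y_up))  # [200 100]
```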

7 Results and discussion

This section is organized as follows. First, the results of the basic machine learning models on the extracted features are presented, followed by the results of the proposed ensemble stacking classifier. The data distribution is the same as described in Sect. 6.2. The main evaluation metric is the weighted F1-score, explained in Sect. 2.4, which is also the metric used by the authors of the CICIDS2017 dataset. An ablation study is carried out in Sects. 7.1 and 7.2 to justify our proposed ensemble approach.
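As a reminder of how the metric aggregates per-class results, the weighted F1-score averages each class's F1 = 2TP / (2TP + FP + FN) with weights n_c / N, where n_c is the number of true samples of class c. The sketch below computes it by hand and checks it against scikit-learn's `f1_score(..., average="weighted")`; the labels are a made-up example in which SQL injection and XSS are confused with each other.

```python
import numpy as np
from sklearn.metrics import f1_score


def weighted_f1(y_true, y_pred) -> float:
    """Per-class F1 = 2TP / (2TP + FP + FN), averaged with weights n_c / N."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    score = 0.0
    for c in np.unique(y_true):
        tp = np.sum((y_pred == c) & (y_true == c))
        fp = np.sum((y_pred == c) & (y_true != c))
        fn = np.sum((y_pred != c) & (y_true == c))
        f1_c = 2 * tp / (2 * tp + fp + fn)  # denominator > 0: class c occurs
        score += np.mean(y_true == c) * f1_c
    return float(score)


y_true = ["benign"] * 6 + ["sqli"] * 2 + ["xss"] * 2
y_pred = ["benign"] * 6 + ["sqli", "xss", "xss", "sqli"]
print(weighted_f1(y_true, y_pred))                   # approximately 0.8
print(f1_score(y_true, y_pred, average="weighted"))  # approximately 0.8
```

Here benign contributes 0.6 × 1.0, and each web attack contributes 0.2 × 0.5, so one swap between SQL injection and XSS costs 20 points of weighted F1 on this tiny example.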

7.1 Comparative evaluation of baseline models

Before turning to more complex deep learning and ensemble models, several base classifiers are evaluated; Table 3 shows their weighted F1-scores. We used SVM with multiple kernels, of which linear and RBF performed best. In addition to SVM, KNN, random forest, and logistic regression are used as base classifiers.

Table 3 shows that the base classifiers perform reasonably well on our generated dataset CIPMAIDS2023-1. Random forest with 120 estimators performed best, with a weighted F1-score of 97.7%. The confusion matrix of this random forest classifier is shown in Fig. 5, and its per-class F1-scores are listed in Table 6, showing the correct and incorrect predictions for each class. Many false-positive and false-negative predictions are made for SQL injection and cross-site scripting attacks because of the high similarity between these attacks, which was also evident in their important features computed with the random forest regressor.

Overall, this classifier works well for multiclass classification; the web attacks, SQL injection and cross-site scripting, are the main exception because of their high correlation.
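A baseline comparison of this kind can be sketched with scikit-learn. The data here come from `make_classification` as a synthetic stand-in for CIPMAIDS2023-1, so the scores will not match Table 3, and all hyperparameters other than the 120 random forest estimators and the two SVM kernels are library defaults chosen for illustration.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import confusion_matrix, f1_score
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.preprocessing import MinMaxScaler
from sklearn.svm import SVC

X, y = make_classification(n_samples=1500, n_features=20, n_informative=10,
                           n_classes=4, class_sep=1.5, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=0)
scaler = MinMaxScaler().fit(X_train)  # fitted on training data only
X_train, X_test = scaler.transform(X_train), scaler.transform(X_test)

models = {
    "SVM (linear)": SVC(kernel="linear"),
    "SVM (RBF)": SVC(kernel="rbf"),
    "KNN": KNeighborsClassifier(),
    "Random forest (120 trees)": RandomForestClassifier(n_estimators=120,
                                                        random_state=0),
    "Logistic regression": LogisticRegression(max_iter=1000),
}
scores = {}
for name, model in models.items():
    model.fit(X_train, y_train)
    scores[name] = f1_score(y_test, model.predict(X_test), average="weighted")

for name, score in sorted(scores.items(), key=lambda item: -item[1]):
    print(f"{name:28s} weighted F1 = {score:.3f}")

# Confusion matrix of the random forest: rows are true, columns predicted.
rf = models["Random forest (120 trees)"]
cm = confusion_matrix(y_test, rf.predict(X_test))
print(cm)
```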

7.2 Performance analysis of deep learning and ensemble classifiers

This subsection presents the results of deep learning models and other ensemble classifiers on the dataset. First, a multilayer perceptron (MLP) with 4 hidden layers of 450, 250, 150, and 50 neurons, respectively, is used, with a dropout of 0.2 in the last layer to avoid overfitting. The model is trained for 48 epochs with a batch size of 64. Then, a one-dimensional convolutional neural network (1DCNN) is optimized on the data. The CNN has 3 convolutional layers with 60 kernels each and a dropout of 0.2. The Adam optimizer is used with the sparse categorical cross-entropy loss. The model is trained for 150 epochs with a batch size of 24.
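The MLP configuration can be approximated with scikit-learn's `MLPClassifier`, which trains with Adam and minimizes cross-entropy over softmax outputs for multiclass problems; with a stochastic solver, `max_iter` counts epochs. This is only an approximation of the described model: `MLPClassifier` has no dropout layer, so the dropout of 0.2 is omitted, the ReLU activation is the library default rather than a stated choice, the data are synthetic, and the 1DCNN is not shown.

```python
import warnings

from sklearn.datasets import make_classification
from sklearn.exceptions import ConvergenceWarning
from sklearn.neural_network import MLPClassifier

X, y = make_classification(n_samples=800, n_features=20, n_informative=10,
                           n_classes=4, random_state=0)

# Four hidden layers of 450, 250, 150, and 50 neurons; batch size 64;
# at most 48 epochs of Adam updates (training may stop earlier if the
# loss stops improving).
mlp = MLPClassifier(hidden_layer_sizes=(450, 250, 150, 50),
                    solver="adam", batch_size=64, max_iter=48,
                    random_state=0)
with warnings.catch_warnings():
    # Stopping after 48 epochs may trigger a ConvergenceWarning.
    warnings.simplefilter("ignore", category=ConvergenceWarning)
    mlp.fit(X, y)

print([w.shape for w in mlp.coefs_])
# [(20, 450), (450, 250), (250, 150), (150, 50), (50, 4)]
print(mlp.out_activation_)  # softmax
```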

When classical machine learning methods are insufficient to avoid overfitting the data, ensemble methods come into play. Ensemble methods typically perform better than a single model because they can reduce prediction error by reducing variance or bias [19], and they make model performance more robust. We used ensemble methods to create an optimal predictive model that performs better than any of its contributing models: instead of relying on a single model, the final prediction is based on the aggregated results of several models. Ensemble methods are now considered among the best state-of-the-art solutions in various areas of machine learning.

Several ensemble techniques are also applied to the data, of which the following three performed best. First, a majority-based voting ensemble is used with SVM, KNN, and random forest as the base estimators. Second, XGBOOST, KNN, and random forest are used as the base estimators of a voting ensemble; both voting ensembles use soft voting. In hard voting, each model casts one vote for a class label and the majority label is assigned, whereas soft voting averages the predicted class probabilities of the models to assign a label to the test data. Third, a stacking-based ensemble is used, with KNN, SVM, and random forest as the base estimators and XGBOOST as the metamodel. This classifier gave the best results on the test data. Finally, the same model was retrained on the combined training and validation data and then evaluated on the test data. Table 7 shows the results of several complex classifiers applied to the data.
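The stacking and soft-voting set-ups can be sketched with scikit-learn's `StackingClassifier` and `VotingClassifier`. To keep the sketch free of extra dependencies, `GradientBoostingClassifier` stands in for the XGBOOST metamodel; `xgboost.XGBClassifier` follows the scikit-learn estimator interface and could be passed as `final_estimator` instead if installed. The synthetic data and all hyperparameters other than the 120 random forest trees are illustrative assumptions.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import (GradientBoostingClassifier,
                              RandomForestClassifier, StackingClassifier,
                              VotingClassifier)
from sklearn.metrics import f1_score
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.svm import SVC

X, y = make_classification(n_samples=800, n_features=20, n_informative=10,
                           n_classes=4, class_sep=1.5, random_state=0)
X_rest, X_test, y_rest, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=0)
X_train, X_val, y_train, y_val = train_test_split(
    X_rest, y_rest, test_size=0.25, stratify=y_rest, random_state=0)

base_estimators = [
    ("knn", KNeighborsClassifier()),
    ("svm", SVC(probability=True, random_state=0)),  # probabilities for soft voting
    ("rf", RandomForestClassifier(n_estimators=120, random_state=0)),
]
# Stacking: out-of-fold predictions of the base models feed the metamodel.
stack = StackingClassifier(
    estimators=base_estimators,
    final_estimator=GradientBoostingClassifier(random_state=0),  # XGBoost stand-in
    cv=5)
# Soft voting: average the predicted class probabilities of the base models.
vote = VotingClassifier(estimators=base_estimators, voting="soft")

scores = {}
for name, model in [("stacking", stack), ("soft voting", vote)]:
    model.fit(X_train, y_train)
    scores[name] = f1_score(y_val, model.predict(X_val), average="weighted")

# Retrain the chosen model on training + validation data, then test it once.
stack.fit(np.vstack([X_train, X_val]), np.concatenate([y_train, y_val]))
test_f1 = f1_score(y_test, stack.predict(X_test), average="weighted")
print(scores, round(test_f1, 3))
```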

Comparing the baseline models (Table 3) with the deep learning and ensemble models (Table 7) on our generated dataset CIPMAIDS2023-1 shows that our proposed ensemble approach achieves the highest weighted F1-score, 98.24%. These results indicate the superiority of the proposed ensemble approach on our dataset CIPMAIDS2023-1.

The confusion matrix of the best-performing classifier, the stacking-based ensemble classifier, is shown in Fig. 6. These experimental results show that the ensemble stacking approach achieves the highest weighted F1-score among the classifiers and that the selected cicflowmeter features are effective for network intrusion detection. This technique achieved the highest score of 98.05%, a slight improvement in the weighted F1-score together with good multiclass accuracy. Table 8 shows the weighted F1-score for each attack using this stacking-based ensemble method.

The results show a significant improvement in the F1-score for SQL injection and cross-site scripting attacks. Table 9 compares our stacking-based ensemble technique with other techniques.

The primary objective of the comparison in Table 9 is to highlight the overall performance of our proposed method relative to other state-of-the-art techniques. As stated earlier, we generated our own dataset, CIPMAIDS2023-1, to overcome the limitations of the CICIDS2017 dataset, and the two datasets share similar characteristics and the same baseline structure. It is therefore reasonable to compare our stacking-based ensemble technique with other state-of-the-art techniques on these mutually comparable datasets, CICIDS2017 and CIPMAIDS2023-1.

Sharafaldin et al. [8] achieved a highest score of 98.0%. Bakhshi et al. [50] used hybrid deep learning for multiclass attack detection, comparing multiple deep learning models; the best-performing model was a CNN with a weighted F1-score of 93.56%. Yulianto et al. [51] used the synthetic minority oversampling technique (SMOTE) and ensemble feature selection to improve their results, and their model achieved a maximum score of 90.01%. The results in Table 9 show that our proposed ensemble-based model achieves the highest F1-score of all, 98.24%. When tested on the CICIDS2017 dataset, however, our model achieved a noticeably lower weighted F1-score of 78%, compared with 98% on our similarly distributed dataset CIPMAIDS2023-1. The CICIDS2017 dataset may have unique characteristics that challenge the generalization of an intrusion detection model trained on our generated dataset, such as dataset bias, temporal factors, and collection methodology [52, 53].

Dataset bias arises when the distribution of network traffic patterns is shaped by the network environment or the data collection method. Temporal factors also affect generalization, because network traffic protocols are continuously upgraded, which introduces variance in traffic behavior. Finally, the collection methodology can introduce noise and inconsistencies into the generated data. We observed such inconsistencies in CICIDS2017 in the form of not-a-number (NaN) values in some features, which we had to remove during preprocessing.

8 Conclusion and future work

In conclusion, we have proposed a stacking-based ensemble approach for intrusion detection that achieves a weighted F1-score of up to 98.24%. We have also analyzed the effect of different techniques for handling imbalanced data and found that using class weights improves the overall performance but can degrade the multiclass performance in some cases. We also observed similarities between SQL injection and cross-site scripting attacks, which lead to a high percentage of false positives in these classes.

In future work, we plan to strengthen the validity of our results by evaluating the proposed model on other datasets. On the CICIDS2017 dataset, the weighted F1-score of the proposed model was approximately 20.24 percentage points lower than on our similarly distributed dataset CIPMAIDS2023-1. To improve the model's performance on CICIDS2017, we plan to employ improved evaluation metrics and validation techniques that can help maximize the generalization of the model across datasets. We will also explore additional network features not provided by cicflowmeter to improve discrimination between attack and benign classes. We plan to investigate the transferability of models trained on one dataset to another and to analyze how dataset diversity affects this transferability. We will also use a realistic network with public IPs to obtain more realistic traffic, which may lead to better generalization. Finally, we will explore deep packet inspection to generate features that better discriminate between attack and benign classes, which will require allocating more resources to the processing of network traffic packets.

Data availability

There are no public data or materials for this paper.

References

Scarfone, K., Mell, P.: Guide to intrusion detection and prevention systems (idps), special publication (nist sp). National Institute of Standards and Technology, Gaithersburg (2007). https://doi.org/10.6028/NIST.SP.800-94

Patil, N.V., Krishna, C.R., Kumar, K.: Distributed frameworks for detecting distributed denial of service attacks: a comprehensive review, challenges and future directions. Concurr. Comput. Pract. Exp. 33, 6197 (2021). https://doi.org/10.1002/CPE.6197

Jazi, H.H., Gonzalez, H., Stakhanova, N., Ghorbani, A.A.: Detecting http-based application layer dos attacks on web servers in the presence of sampling. Comput. Netw. 121, 25–36 (2017). https://doi.org/10.1016/J.COMNET.2017.03.018

Jallad, K.A., Aljnidi, M., Desouki, M.S.: Anomaly detection optimization using big data and deep learning to reduce false-positive. J. Big Data 7, 1–12 (2020). https://doi.org/10.1186/S40537-020-00346-1

Gupta, N., Jindal, V., Bedi, P.: Lio-ids: handling class imbalance using lstm and improved one-vs-one technique in intrusion detection system. Comput. Netw. 192, 108076 (2021)

Verma, A., Ranga, V.: Machine learning based intrusion detection systems for iot applications. Wirel. Pers. Commun. 111, 2287–2310 (2020). https://doi.org/10.1007/S11277-019-06986-8

Kasim, Ö.: An efficient and robust deep learning based network anomaly detection against distributed denial of service attacks. Comput. Netw. 180, 107390 (2020)

Sharafaldin, I., Lashkari, A.H., Ghorbani, A.A.: Toward generating a new intrusion detection dataset and intrusion traffic characterization. In: ICISSP 2018: Proceedings of the 4th International Conference on Information Systems Security and Privacy, pp. 108–116 (2018). https://doi.org/10.5220/0006639801080116

Sharafaldin, I., Lashkari, A.H., Ghorbani, A.A.: A detailed analysis of the cicids2017 data set. Commun. Comput. Inf. Sci. 977, 172–188 (2019)

Cohen, F.: Computer viruses: Theory and experiments. Comput. Secur. 6, 22–35 (1987). https://doi.org/10.1016/0167-4048(87)90122-2

Ullah, Z., Al-Turjman, F., Mostarda, L., Gagliardi, R.: Applications of artificial intelligence and machine learning in smart cities. Comput. Commun. 154, 313–323 (2020). https://doi.org/10.1016/J.COMCOM.2020.02.069

Sravani, K., Srinivasu, P.: Comparative study of machine learning algorithm for intrusion detection system. Adv. Intell. Syst. Comput. 247, 189–196 (2014). https://doi.org/10.1007/978-3-319-02931-3_23

Sahu, S.K., Sarangi, S., Jena, S.K.: A detail analysis on intrusion detection datasets. In: Souvenir of the 2014 IEEE International Advance Computing Conference, IACC 2014, 1348–1353 (2014). https://doi.org/10.1109/IADCC.2014.6779523

Al-Garadi, M.A., Mohamed, A., Al-Ali, A.K., Du, X., Ali, I., Guizani, M.: A survey of machine and deep learning methods for internet of things (iot) security. IEEE Commun. Surv. Tutor. 22, 1646–1685 (2020). https://doi.org/10.1109/COMST.2020.2988293

Kilincer, I.F., Ertam, F., Sengur, A.: Machine learning methods for cyber security intrusion detection: datasets and comparative study. Comput. Netw. 188, 107840 (2021). https://doi.org/10.1016/J.COMNET.2021.107840

Liu, H., Lang, B.: Machine learning and deep learning methods for intrusion detection systems: a survey. Appl. Sci. (2019). https://doi.org/10.3390/APP9204396

Aslam, S., Herodotou, H., Mohsin, S.M., Javaid, N., Ashraf, N., Aslam, S.: A survey on deep learning methods for power load and renewable energy forecasting in smart microgrids. Renew. Sustain. Energy Rev. 144, 110992 (2021)

Shah, S.N., Singh, M.P.: Signature-based network intrusion detection system using SNORT and WINPCAP. Int. J. Eng. Res. Technol. (IJERT) 1

Krishnaveni, S., Sivamohan, S., Sridhar, S.S., Prabakaran, S.: Efficient feature selection and classification through ensemble method for network intrusion detection on cloud computing. Clust. Comput. 24(3), 1761–1779 (2021). https://doi.org/10.1007/s10586-020-03222-y

Pedregosa, F., Varoquaux, G., Gramfort, A., Michel, V., Thirion, B., Grisel, O., Blondel, M., Prettenhofer, P., Weiss, R., Dubourg, V., Vanderplas, J., Passos, A., Cournapeau, D., Brucher, M., Perrot, M., Duchesnay, É.: Scikit-learn: machine learning in Python. J. Mach. Learn. Res. 12, 2825–2830 (2011). https://doi.org/10.5555/1953048

Abadi, M., Barham, P., Chen, J., Chen, Z., Davis, A., Dean, J., Devin, M., Ghemawat, S., Irving, G., Isard, M., et al.: TensorFlow: a system for large-scale machine learning. In: 12th USENIX Symposium on Operating Systems Design and Implementation (OSDI 16), pp. 265–283 (2016)

Paszke, A., Gross, S., Chintala, S., Chanan, G., Yang, E., DeVito, Z., Lin, Z., Desmaison, A., Antiga, L., Lerer, A.: Automatic differentiation in pytorch (2017)

MATLAB: (R2022a). The MathWorks Inc., Natick, Massachusetts (2022)

Hall, M., Frank, E., Holmes, G., Pfahringer, B., Reutemann, P., Witten, I.H.: The weka data mining software. ACM SIGKDD Explor. Newsl 11, 10–18 (2009). https://doi.org/10.1145/1656274.1656278

Moulahi, T., Zidi, S., Alabdulatif, A., Atiquzzaman, M.: Comparative performance evaluation of intrusion detection based on machine learning in in-vehicle controller area network bus. IEEE Access 9, 99595–99605 (2021). https://doi.org/10.1109/ACCESS.2021.3095962

Çavuşoğlu, Ü.: A new hybrid approach for intrusion detection using machine learning methods. Appl. Intell. 49, 2735–2761 (2019). https://doi.org/10.1007/S10489-018-01408-X

Van, T., Tran, H.A., Souihi, S., Mellouk, A.: Empirical study for dynamic adaptive video streaming service based on google transport quic protocol. In: 2018 IEEE 43rd Conference on Local Computer Networks (LCN), pp. 343–350 (2018). IEEE

Ho, S., Al Jufout, S., Dajani, K., Mozumdar, M.: A novel intrusion detection model for detecting known and innovative cyberattacks using convolutional neural network. IEEE Open J. Comput. Soc. 2, 14–25 (2021)

Shenfield, A., Day, D., Ayesh, A.: Intelligent intrusion detection systems using artificial neural networks. ICT Express 4, 95–99 (2018). https://doi.org/10.1016/J.ICTE.2018.04.003

Jamadar, R.A.: Network intrusion detection system using machine learning. Indian J. Sci. Technol. (2018). https://doi.org/10.17485/ijst/2018/v11i48/139802

Taher, K.A., Jisan, B.M.Y., Rahman, M.M.: Network intrusion detection using supervised machine learning technique with feature selection. In: 1st International Conference on Robotics, Electrical and Signal Processing Techniques, ICREST 2019, 643–646 (2019). https://doi.org/10.1109/ICREST.2019.8644161

Kanimozhi, V., Jacob, T.P.: Artificial intelligence based network intrusion detection with hyper-parameter optimization tuning on the realistic cyber dataset cse-cic-ids2018 using cloud computing. In: Proceedings of the 2019 IEEE International Conference on Communication and Signal Processing, ICCSP 2019, 33–36 (2019). https://doi.org/10.1109/ICCSP.2019.8698029

Rajagopal, S., Kundapur, P.P., Hareesha, K.S.: A stacking ensemble for network intrusion detection using heterogeneous datasets. Secur. Commun. Netw. (2020). https://doi.org/10.1155/2020/4586875

Maseer, Z.K., Yusof, R., Bahaman, N., Mostafa, S.A., Foozy, C.F.M.: Benchmarking of machine learning for anomaly based intrusion detection systems in the cicids2017 dataset. IEEE Access 9, 22351–22370 (2021). https://doi.org/10.1109/ACCESS.2021.3056614

Thudumu, S., Branch, P., Jin, J., Singh, J.J.: A comprehensive survey of anomaly detection techniques for high dimensional big data. J. Big Data 7, 1–30 (2020). https://doi.org/10.1186/S40537-020-00320-X

Bhati, N.S., Khari, M.: A new intrusion detection scheme using CatBoost classifier. In: Forthcoming Networks and Sustainability in the IoT Era: First EAI International Conference, FoNeS–IoT 2020, Virtual Event, October 1–2, 2020, Proceedings 1, pp. 169–176. Springer (2021)

Bhati, N.S., Khari, M., Malik, H., Chaudhary, G., Srivastava, S.: A new ensemble based approach for intrusion detection system using voting. J. Intell. Fuzzy Syst. 42(2), 969–979 (2022). https://doi.org/10.3233/JIFS-189764

Bhati, N.S., Khari, M.: An ensemble model for network intrusion detection using AdaBoost, random forest and logistic regression. In: Applications of Artificial Intelligence and Machine Learning: Select Proceedings of ICAAAIML 2021, pp. 777–789. Springer (2022). https://doi.org/10.1007/978-3-319-10840-7_32

Bhati, N.S., Khari, M., García-Díaz, V., Verdú, E.: A review on intrusion detection systems and techniques. Internat. J. Uncertain. Fuzziness Knowl. Based Syst. 28(Supp02), 65–91 (2020). https://doi.org/10.1142/S0218488520400140

Ferrag, M.A., Maglaras, L., Moschoyiannis, S., Janicke, H.: Deep learning for cyber security intrusion detection: approaches, datasets, and comparative study. J. Inf. Secur. Appl. 50, 102419 (2020). https://doi.org/10.1016/j.jisa.2019.102419

Khraisat, A., Gondal, I., Vamplew, P., Kamruzzaman, J.: Survey of intrusion detection systems: techniques, datasets and challenges. Cybersecurity (2019). https://doi.org/10.1186/s42400-019-0038-7

Siddique, K., Akhtar, Z., Khan, F.A., Kim, Y.: KDD Cup 99 data sets: a perspective on the role of data sets in network intrusion detection research. Computer 52, 41–51 (2019)

Hindy, H., Brosset, D., Bayne, E., Seeam, A.K., Tachtatzis, C., Atkinson, R., Bellekens, X.: A taxonomy of network threats and the effect of current datasets on intrusion detection systems. IEEE Access 8, 104650–104675 (2020). https://doi.org/10.1109/ACCESS.2020.3000179

Ferriyan, A., Thamrin, A.H., Takeda, K., Murai, J.: Generating network intrusion detection dataset based on real and encrypted synthetic attack traffic. Appl. Sci. (2021). https://doi.org/10.3390/app11177868

Sarhan, M., Layeghy, S., Moustafa, N., Portmann, M.: NetFlow datasets for machine learning-based network intrusion detection systems. In: Lecture Notes of the Institute for Computer Sciences, Social Informatics and Telecommunications Engineering (LNICST), vol. 371, pp. 117–135 (2021). https://doi.org/10.1007/978-3-030-72802-1_9

Tong, V., Tran, H.A., Souihi, S., Mellouk, A.: Empirical study for dynamic adaptive video streaming service based on Google Transport QUIC protocol. In: Proceedings of the Conference on Local Computer Networks (LCN 2018), pp. 343–350 (2019). https://doi.org/10.1109/LCN.2018.8638062

Sarhan, M., Layeghy, S., Portmann, M.: Towards a standard feature set for network intrusion detection system datasets. Mobile Netw. Appl. 27, 357–370 (2022). https://doi.org/10.1007/s11036-021-01843-0

GNS3: The software that empowers network professionals (2022). https://www.gns3.com/ (accessed 15 October 2022)

Patel, K.C., Sharma, P.: A review paper on pfSense - an open source firewall introducing with different capabilities and customization. IJARIIE 3, 2395–4396 (2017)

Bakhshi, T., Ghita, B.: Anomaly detection in encrypted internet traffic using hybrid deep learning. Secur. Commun. Netw. (2021). https://doi.org/10.1155/2021/5363750

Yulianto, A., Sukarno, P., Suwastika, N.A.: Improving AdaBoost-based intrusion detection system (IDS) performance on CIC IDS 2017 dataset. J. Phys.: Conf. Ser. 1192, 012018 (2019). https://doi.org/10.1088/1742-6596/1192/1/012018

Verkerken, M., D’hooge, L., Wauters, T., Volckaert, B., De Turck, F.: Towards model generalization for intrusion detection: unsupervised machine learning techniques. J. Netw. Syst. Manag. 30, 1–25 (2022)

D’hooge, L., Verkerken, M., Wauters, T., De Turck, F., Volckaert, B.: Investigating generalized performance of data-constrained supervised machine learning models on novel, related samples in intrusion detection. Sensors (2023). https://doi.org/10.3390/s23041846

Acknowledgements

All authors read and approved the final manuscript.

Funding

This research is funded by the Ministry of Planning, Development, and Special Initiatives through the Higher Education Commission of Pakistan under the National Center for Cyber Security (NCCS) and Cyber Security Centre, University of Warwick, UK.

Author information

Contributions

MA contributed to conceptualization, data curation, methodology, writing – original draft, software, and writing – review and editing; MH contributed to conceptualization, data curation, methodology, writing – original draft, software, writing – review and editing, formal analysis, project administration, visualization, and investigation; MHD was involved in supervision, project administration, visualization, investigation, writing – review and editing, and formal analysis; AU contributed to writing – review and editing, formal analysis, data curation, methodology, and project administration; SMM contributed to writing – review and editing, formal analysis, methodology, investigation, project administration, and funding acquisition; HM was involved in writing – review and editing, formal analysis, data curation, methodology, and project administration; CM contributed to writing – review and editing, formal analysis, methodology, investigation, and project administration.

Ethics declarations

Conflict of interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ali, M., Haque, Mu., Durad, M.H. et al. Effective network intrusion detection using stacking-based ensemble approach. Int. J. Inf. Secur. 22, 1781–1798 (2023). https://doi.org/10.1007/s10207-023-00718-7

DOI: https://doi.org/10.1007/s10207-023-00718-7