Abstract

Detecting conditional independencies plays a key role in several statistical and machine learning tasks, especially in causal discovery algorithms, yet it remains a highly challenging problem due to high dimensionality and the complex relationships present in data. In this study, we introduce LCIT (Latent representation-based Conditional Independence Test), a novel method for conditional independence testing based on representation learning. Our main contribution is a hypothesis testing framework in which, to test for the independence between X and Y given Z, we first learn to infer latent representations of the target variables X and Y that contain no information about the conditioning variable Z. The latent variables are then examined for any significant remaining dependence, which can be done with a conventional correlation test. Moreover, LCIT can also handle discrete and mixed-type data by converting discrete variables into the continuous domain via variational dequantization. Empirical evaluations on a diverse collection of synthetic and real data sets show that LCIT consistently outperforms several state-of-the-art baselines under different evaluation metrics and adapts well to nonlinear, high-dimensional, and mixed data settings.

1 Introduction

Fig. 1 Proposed Latent representation-based Conditional Independence Test (LCIT) framework. First, X and Y are transformed into their respective latent spaces using two conditional normalizing flow (CNF) modules, learned independently from samples of \(\left( X,Z\right) \) and \(\left( Y,Z\right) \), respectively. The latent variables \(\epsilon _{X}\) and \(\epsilon _{Y}\), which have standard Gaussian distributions by design, are then used as inputs to a conventional correlation test. If no significant correlation between \(\epsilon _{X}\) and \(\epsilon _{Y}\) is detected, we do not reject the null hypothesis (\({\mathcal {H}}_{0}:X\perp \!\!\! \perp Y\mid Z\)); otherwise, we reject it in favor of the alternative (\({\mathcal {H}}_{1}:X\not \perp \!\!\! \perp Y\mid Z\)).

Conditional independence (CI) tests address the problem of testing whether two random variables X and Y are statistically independent after all influences of the conditioning variables Z have been removed (denoted as \(X\perp \!\!\! \perp Y\mid Z\)), using empirical samples from their joint distribution \(p\left( X,Y,Z\right) \). More formally, we consider the hypothesis testing setting with

\({\mathcal {H}}_{0}:X\perp \!\!\! \perp Y\mid Z\quad \text {versus}\quad {\mathcal {H}}_{1}:X\not \perp \!\!\! \perp Y\mid Z.\)

As a simple example, imagine that a single light switch Z controls two light bulbs X and Y. Given that the switch Z is on, the state of X tells us nothing about the state of Y and vice versa; for example, X may be shining while Y is burnt out. In this case, we say that X and Y are conditionally independent given Z (\(X\perp \!\!\! \perp Y\mid Z\)). Conversely, imagine that two light switches X and Y control the same light bulb Z, as in staircase lighting systems. Given that Z is glowing, we can deduce the state of Y from the state of X; for example, if X is off, then Y must be on, and vice versa. In this case, we say that X and Y are conditionally dependent given Z (\(X\not \perp \!\!\! \perp Y\mid Z\)).

With this functionality, CI tests have been extensively used as the backbone of causal discovery algorithms, which aim to uncover the causal relationships embedded in observed data. More specifically, in constraint-based causal discovery methods such as the PC algorithm and its variants [1, 2], CI tests are used to detect whether each pair of variables has an intrinsic relationship that cannot be explained by any other intermediate variables; edges between pairs that do not share such a relationship are progressively removed. The outputs of such methods are extremely valuable in many scientific fields such as econometrics [3], neuroscience [4], machine learning [5,6,7], and especially bioinformatics [8, 9], where the links between genes, mutations, diseases, etc., are of central interest.

Here, we consider the continuous instance of the problem, where X, Y, and Z are real-valued random variables or vectors, which is in general significantly harder than the discrete case. This is because discrete probabilistic quantities are usually easier to estimate accurately than their continuous counterparts. In fact, many methods resort to binning continuous variables into discrete values for their tests [10,11,12]. However, this technique is prone to information loss and becomes unreliable in high dimensions due to the curse of dimensionality, which further highlights the inherent difficulty of CI testing in continuous domains.

From a technical perspective, CI tests can be roughly categorized into four major groups based on their underlying principle: distance-based methods, kernel-based methods, regression-based methods, and model-based methods. Distance-based methods [10, 12, 13] exploit the direct characterization of CI, namely \(X\perp \!\!\! \perp Y\mid Z\) if and only if \(p(x,y\mid z)=p(x\mid z)p(y\mid z)\) for all x, y, z, and aim to explicitly measure the difference between the respective probability densities or distributions. To achieve that, this approach usually relies on data discretization, which tends to become unreliable as the data dimensionality increases.

Next, kernel-based methods [14,15,16,17] use kernels to capture high-order properties of the distributions through sample-wise similarities in high-dimensional feature spaces. However, as noted in [18], the performance of kernel-based methods may also deteriorate polynomially with the dimensionality of the data.

The next group of CI tests is regression-based methods [19,20,21,22], which transform the original CI testing problem into a more manageable problem of marginal independence testing between regression residuals. However, the assumptions made by these methods are rather restrictive and may be violated in practice.

Finally, the last group of CI methods contains a diverse set of approaches that use learning algorithms as their main technical device. For example, in [23,24,25], the conditional mutual information (CMI) between X and Y given Z is used as the test statistic and estimated with k-nearest-neighbor estimators. Methods based on generative adversarial networks (GANs) [26, 27] have also been proposed, in which new samples are generated to simulate the null distribution of the test statistic. In addition, [28] uses supervised classifiers to distinguish between conditionally independent and conditionally dependent samples.

1.1 Present study

In this study, we offer a novel approach to testing for conditional independence, motivated by regression-based methods and implemented via latent representation learning with normalizing flows. Our proposed method, called Latent representation-based Conditional Independence Testing (LCIT), learns to infer the latent representations of X and Y conditioned on Z and then tests them for unconditional independence.

For real-valued data, we infer the latent variables using normalizing flows (NFs) [29, 30], a class of generative models designed to represent complex distributions in terms of much simpler “base” distributions through a sequence of bijective transformations. Here, the latent variables, i.e., the “bases” in the NF framework, are chosen to be Gaussian so that their independence can be tested with a correlation test whose p-value has a simple closed-form expression. This contrasts with many approaches in the conditional independence testing literature that require bootstrapping because their test statistics have non-trivial null distributions [15, 21,22,23,24, 26, 27].

Compared with other branches of generative methods, NFs fit our methodological design particularly well. GANs [31] can generate high-quality samples but cannot infer latent representations, while variational auto-encoders (VAEs) [32] can both generate new samples and infer latent variables, but information may be lost due to non-vanishing reconstruction errors. In contrast, the bijective map of an NF infers latent representations without any loss of information; the importance of this information preservation will become apparent in Sect. 3. Moreover, since NFs can be parametrized with neural networks, we benefit from their expressiveness, which in principle allows them to approximate a broad class of distributions to arbitrary precision.

In addition, the framework can be extended to discrete data by carefully mapping all variables into the same continuous domain. Discrete values in the conditioning variables Z can be handled with conventional preprocessing techniques such as one-hot encoding. Discrete X or Y, however, is trickier to handle because our theoretical foundation relies on their continuity. To overcome this, we adopt variational dequantization [33] to seamlessly embed the discrete distributions into the continuous domain, after which they can be treated as real-valued variables.

We demonstrate the effectiveness of our method in conditional independence testing with extensive numerical simulations, as well as in real datasets. The empirical results show that LCIT outperforms existing state-of-the-art methods in a consistent manner.

1.2 Contributions

This paper offers four key contributions, summarized as follows:

1. We present a conversion of conditional independence into unconditional independence of “latent” variables via invertible transformations of the target variables, adjusted for the conditioning set. This characterization allows us to work with the conventional marginal independence testing problem, which is less challenging than the original conditional problem.

2. We introduce a new conditional independence testing algorithm, called Latent representation-based Conditional Independence Testing (LCIT), which uses a conditional normalizing flow framework to convert the target variables into corresponding latent representations whose uncorrelatedness entails conditional independence in the original space. To the best of our knowledge, this is the first time normalizing flows have been applied to conditional independence testing. Additionally, unlike many existing nonparametric tests, LCIT computes the p-value in closed form, which is computationally cheaper than bootstrapping or permutation. See Fig. 1 for an illustration of our framework.

3. We show how to extend the framework to discrete and mixed-type data by converting discrete-valued variables to the continuous domain using variational dequantization. This extension enables the use of LCIT in general real-world applications.

4. We demonstrate the effectiveness of LCIT through comparisons against various state-of-the-art baselines on both synthetic and real datasets, where LCIT is shown to outperform the other methods in several metrics. The experimental results also indicate that LCIT handles mixed and continuous data with comparable quality.

1.3 Paper organization

In the following parts of the paper, we first briefly highlight major state-of-the-art ideas in the literature of conditional independence hypothesis testing in Sect. 2. Then, in Sect. 3, we describe in detail our proposed LCIT method for conditional independence testing for real-valued data, which is followed by the extension of our framework to mixed-type data in Sect. 4. Next, in Sect. 5, we perform experiments to demonstrate the strength of LCIT in both synthetic and real data settings. Finally, the paper is concluded with a summary and suggestions for future developments in Sect. 6.

2 Related works

Based on their main technical device, CI tests can be grouped into four main categories: distance-based methods [10, 12, 13], kernel-based methods [14,15,16,17], regression-based methods [22], and model-based methods [23, 24, 26, 27].

2.1 Distance-based methods

Starting from the most common definition of conditional independence, namely \(X\perp \!\!\! \perp Y\mid Z\) if and only if \(p\left( x\mid z\right) p\left( y\mid z\right) =p\left( x,y\mid z\right) \) (or, equivalently, \(p\left( x\mid y,z\right) =p\left( x\mid z\right) \)) for all realizations x, y, and z of X, Y, and Z, early methods laid the groundwork by explicitly estimating and comparing the relevant densities or distributions.

For example, [10] measure the distance between two conditionals \(p\left( X\mid y,z\right) \) and \(p\left( X\mid z\right) \) using the weighted Hellinger distance. Following this direction, [13] devise a new conditional dependence measure equal to the supremum of the Wasserstein distance between \(p\left( X\mid y,z\right) \) and \(p\left( X\mid y',z\right) \) over all realizations \(y,y',z\).

Additionally, in [12], the Wasserstein distance between two conditional distributions \(p\left( X,Y\mid z\right) \) and \(p\left( X\mid z\right) \otimes p\left( Y\mid z\right) \) is measured for each discretized value of z.

2.2 Kernel-based methods

Many other approaches avoid the difficulty of estimating conditional densities by using alternative characterizations of conditional independence. In particular, when X, Y, Z are jointly multivariate normal, the conditional independence \(X\perp \!\!\! \perp Y\mid Z\) reduces to the vanishing of the partial correlation coefficient \(\rho _{XY\cdot Z}\) [34], which is easy to test for zero since its Fisher transformation approximately follows a normal distribution under the null [35].
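To make the Gaussian case concrete, here is a minimal sketch (assuming NumPy and SciPy; the function name `partial_corr_test` is illustrative) of the classical partial-correlation test: the sample partial correlation is computed as the correlation of least-squares residuals of X and Y on Z, and its Fisher transform is scaled by \(\sqrt{n-d-3}\), with d the dimensionality of Z. It is valid only under the joint-normality assumption above and is not part of LCIT.

```python
import numpy as np
from scipy.stats import norm


def partial_corr_test(x, y, z):
    """Gaussian CI test of X _||_ Y | Z via partial correlation and Fisher's z.

    x, y: arrays of shape (n,); z: array of shape (n, d).
    Returns (sample partial correlation, two-sided p-value).
    """
    n, d = z.shape
    # Least-squares residuals of X and Y on Z (with intercept); their
    # correlation equals the sample partial correlation rho_{XY.Z}.
    design = np.column_stack([np.ones(n), z])
    rx = x - design @ np.linalg.lstsq(design, x, rcond=None)[0]
    ry = y - design @ np.linalg.lstsq(design, y, rcond=None)[0]
    r = np.corrcoef(rx, ry)[0, 1]
    # Fisher's z: sqrt(n - d - 3) * artanh(r) is approximately N(0, 1) under H0.
    stat = np.sqrt(n - d - 3) * np.arctanh(r)
    return r, 2.0 * norm.sf(abs(stat))


# Usage: X and Y are marginally correlated only through the common cause Z.
rng = np.random.default_rng(0)
z = rng.normal(size=(2000, 1))
x = 2.0 * z[:, 0] + rng.normal(size=2000)
y = -z[:, 0] + rng.normal(size=2000)
r_indep, p_indep = partial_corr_test(x, y, z)
r_dep, p_dep = partial_corr_test(x, x + y, z)
```

Residualizing on Z is what turns the conditional question into a marginal one here; this only works because, under joint normality, the residuals carry no further information about Z.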

Building on this, a large body of work has focused on kernel methods [14,15,16,17], which can be viewed as a nonparametric generalization of the connection between partial uncorrelatedness and conditional independence for Gaussian variables [15]. These methods follow the CI characterization established in [36], where conditional independence is expressed in terms of conditional cross-covariance operators of functions in reproducing kernel Hilbert spaces (RKHSs): \(\varSigma _{YX\mid Z}:=\varSigma _{YX}-\varSigma _{YZ}\varSigma _{ZZ}^{-1}\varSigma _{ZX}\), where the cross-covariance operator is defined by \(\left\langle g,\varSigma _{YX}f\right\rangle :={\mathbb {E}}_{XY}\left[ f\left( X\right) g\left( Y\right) \right] -{\mathbb {E}}_{X}\left[ f\left( X\right) \right] {\mathbb {E}}_{Y}\left[ g\left( Y\right) \right] \), with f and g functions in the RKHSs of X and Y, respectively. This can be interpreted as a generalization of the conventional partial covariance.

Consequently, under suitable conditions on the kernels, conditional independence holds if and only if the conditional cross-covariance operator is zero. For this reason, in [14], the Hilbert–Schmidt norm of the conditional cross-covariance operator is tested against zero under the null hypothesis.
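For intuition about the cross-covariance operator, the sketch below computes the biased empirical HSIC, i.e., the squared Hilbert–Schmidt norm of the empirical unconditional cross-covariance operator \(\varSigma _{YX}\) under Gaussian kernels. This is a simplification for illustration only: the conditional statistic of [14] additionally involves \(\varSigma _{ZZ}^{-1}\) and is not reproduced here, and the kernel bandwidth of 1 is an arbitrary assumption.

```python
import numpy as np


def gaussian_gram(a, bandwidth):
    """Gaussian-kernel Gram matrix K[i, j] = k(a_i, a_j) for a 1-D sample a."""
    sq_dists = (a[:, None] - a[None, :]) ** 2
    return np.exp(-sq_dists / (2.0 * bandwidth ** 2))


def hsic(x, y, bandwidth=1.0):
    """Biased empirical HSIC: squared Hilbert-Schmidt norm of the empirical
    (unconditional) cross-covariance operator between the RKHSs of X and Y."""
    n = x.shape[0]
    K = gaussian_gram(x, bandwidth)
    L = gaussian_gram(y, bandwidth)
    H = np.eye(n) - np.full((n, n), 1.0 / n)  # centering matrix
    return np.trace(K @ H @ L @ H) / n ** 2


# Usage: y_dep is (nearly) uncorrelated with x but strongly dependent on it.
rng = np.random.default_rng(0)
x = rng.normal(size=300)
y_dep = x ** 2 + 0.1 * rng.normal(size=300)
y_ind = rng.normal(size=300)
print(np.corrcoef(x, y_dep)[0, 1], hsic(x, y_dep), hsic(x, y_ind))
```

Unlike the plain covariance, this statistic reacts to the quadratic dependence in `y_dep`, which is the sense in which kernel operators generalize (partial) covariance.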

2.3 Regression-based methods

Regression-based CI tests [20,21,22] assume that Z is a confounder set of X and Y and that the relationships between Z and X (or Y) follow additive noise models (i.e., \(X:=f\left( Z\right) +E,\;Z\perp \!\!\! \perp E\)). Therefore, with a suitable regression function, all the information from Z embedded in X and Y can be removed by simply subtracting the fitted regression function, i.e., \(r_{X}:=X-{\hat{f}}\left( Z\right) \) and \(r_{Y}:=Y-{\hat{g}}\left( Z\right) \). After this procedure, testing \(X\perp \!\!\! \perp Y\mid Z\) simplifies to testing \(r_{X}\perp \!\!\! \perp r_{Y}\). Alternatively, in [19], the hypothesis testing problem \(X\perp \!\!\! \perp Y\mid Z\) is converted into \(X-{\hat{f}}\left( Z\right) \perp \!\!\! \perp \left( Y,Z\right) \), while in [20] it is transformed into testing \(X-{\hat{f}}\left( Z\right) \perp \!\!\! \perp \left( Y-{\hat{g}}\left( Z\right) ,Z\right) \).
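A minimal sketch of the residual-based reduction for scalar X, Y, Z, assuming polynomial least-squares regressions as the (hypothetical) choice of \({\hat{f}}\) and \({\hat{g}}\), and Pearson's test for \(r_{X}\perp \!\!\! \perp r_{Y}\), which only detects linear dependence between the residuals. It inherits the assumptions of this family, in particular additive noise.

```python
import numpy as np
from scipy.stats import pearsonr


def residual_ci_test(x, y, z, degree=3):
    """Regression-based CI test sketch for scalar X, Y, Z.

    Assumes additive noise models X = f(Z) + E_X and Y = g(Z) + E_Y, with f and g
    approximated by least-squares polynomials of the given (illustrative) degree.
    Returns the p-value of Pearson's test for r_X = X - f_hat(Z) vs r_Y = Y - g_hat(Z).
    """
    r_x = x - np.polyval(np.polyfit(z, x, degree), z)
    r_y = y - np.polyval(np.polyfit(z, y, degree), z)
    _, p_value = pearsonr(r_x, r_y)
    return p_value


# Usage: additive noise models where X _||_ Y | Z holds, and a variant where it fails.
rng = np.random.default_rng(0)
z = rng.uniform(-2.0, 2.0, size=1000)
x = np.sin(z) + 0.3 * rng.normal(size=1000)
y = z ** 2 + 0.3 * rng.normal(size=1000)
p_null = residual_ci_test(x, y, z)
p_alt = residual_ci_test(x, y + x, z)
```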

Nonetheless, the assumptions required by regression-based methods are relatively strong overall. As proven in [37], in general, we can only obtain residuals independent of Z if regression is performed against the true “cause” in the data generating process.

More specifically, it was shown in [37] that if the data admit both models \(X:=f\left( Z\right) +E_{X},\ Z\perp \!\!\! \perp E_{X}\) and \(Z:=g\left( X\right) +E_{Z},\ X\perp \!\!\! \perp E_{Z}\), then the admissible choices of f and g are confined to a very restricted space. Consequently, if the true data generating process is \(Z:=g\left( X\right) +E_{Z},\ X\perp \!\!\! \perp E_{Z}\) and we nonetheless write \(X=f\left( Z\right) +E_{X}\), then in general \(Z\not \perp \!\!\! \perp E_{X}\), so \(X-{\mathbb {E}}\left[ X\mid Z\right] \) typically still depends on Z. As an example, let \(Z:=\sqrt{X}+E,\ E\perp \!\!\! \perp X,\;E\sim {\mathcal {N}}\left( 0,1\right) \). Then \(X=\left( Z-E\right) ^{2}=Z^{2}-2EZ+E^{2}\), so the residual after subtracting \(Z^{2}+1\) is \(E^{2}-2EZ-1\), which still statistically depends on Z.

Additionally, the additive noise model assumption is easily violated. If the generating process involves non-additive noise, the regression residuals can still depend on Z, which invalidates the equivalence \(X\perp \!\!\! \perp Y\mid Z\Leftrightarrow r_{X}\perp \!\!\! \perp r_{Y}\). For example, if \(X:=f\left( Z\right) \times E\) with \(E\perp \!\!\! \perp Z,\;E\sim {\mathcal {N}}\left( 0,1\right) \), then \(X-{\mathbb {E}}\left[ X\mid Z\right] =f\left( Z\right) \times E\), whose variance \(f\left( Z\right) ^{2}\) still depends on Z whenever f is non-constant.
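The failure under non-additive noise can be checked numerically. The simulation below assumes \(f\left( Z\right) =Z\) and Z uniform on (0.5, 3), both arbitrary choices: the residual is uncorrelated with Z, yet its magnitude clearly tracks Z.

```python
import numpy as np

# Simulate X := f(Z) * E with f(Z) = Z and E ~ N(0, 1) independent of Z.
rng = np.random.default_rng(0)
n = 5000
z = rng.uniform(0.5, 3.0, size=n)
e = rng.normal(size=n)
x = z * e

# E[X | Z] = f(Z) * E[E] = 0, so the regression residual is X itself.
residual = x - np.zeros(n)

# The residual is uncorrelated with Z, yet its magnitude grows with Z,
# so the residual is not independent of Z.
corr_residual_z = np.corrcoef(residual, z)[0, 1]
corr_abs_residual_z = np.corrcoef(np.abs(residual), z)[0, 1]
print(corr_residual_z, corr_abs_residual_z)
```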

2.4 Model-based approaches

Apart from the aforementioned methods, model-based approaches rely more heavily on supervised and unsupervised learning algorithms. For instance, GANs have recently been employed [26, 27] to implicitly learn to sample from the conditionals \(p\left( X\mid Z\right) \) and \(p\left( Y\mid Z\right) \).

In [26], the main motivation is that for any dependence measure \(\rho \) and \({\tilde{X}}\sim p\left( X\mid Z\right) \) with \(Y\perp \!\!\! \perp {\tilde{X}}\), under both hypotheses, \(\rho \left( X,Y,Z\right) \) should be at least \(\rho \left( {\tilde{X}},Y,Z\right) \), with equality only under \({\mathcal {H}}_{0}\). This key observation motivates the authors to employ GANs to learn a generator for \(p\left( X\mid Z\right) \). The test's p-value can then be estimated empirically by repeatedly sampling \({\tilde{X}}\) and computing the dependence measure, without any knowledge of the null distribution. Similarly, the double GAN approach [27] goes one step further: two generators are used to learn both \(p\left( X\mid Z\right) \) and \(p\left( Y\mid Z\right) \), and the test statistic is calculated as the maximum of the generalized covariance measures over multiple transformation functions.
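The resampling idea can be sketched without a GAN by assuming that a sampler for \(p\left( X\mid Z\right) \) is already available; the GAN-based methods learn this sampler, which is the hard part and is not shown. The hypothetical `sample_x_given_z` argument, the absolute Pearson correlation as \(\rho \), and the number of draws are illustrative assumptions, not the choices made in [26, 27].

```python
import numpy as np


def resampling_ci_pvalue(x, y, z, sample_x_given_z, n_draws=200, rng=None):
    """Monte Carlo CI p-value in the spirit of sampling-based CI tests.

    sample_x_given_z(z, rng) is a hypothetical sampler for p(X | Z); GAN-based
    methods learn such a sampler, whereas here it is simply assumed to be known.
    The dependence measure is |Pearson correlation| between X and Y.
    """
    rng = np.random.default_rng() if rng is None else rng

    def dependence(a):
        return abs(np.corrcoef(a, y)[0, 1])

    observed = dependence(x)
    # Each X~ is drawn from p(X | Z) independently of Y, so it mimics H0.
    null_stats = np.array([dependence(sample_x_given_z(z, rng)) for _ in range(n_draws)])
    # Fraction of null draws at least as extreme, with the usual +1 correction.
    return (1 + np.sum(null_stats >= observed)) / (1 + n_draws)


# Usage with a known Gaussian conditional X | Z ~ N(Z, 1).
rng = np.random.default_rng(0)
z = rng.normal(size=500)
x = z + rng.normal(size=500)
y_null = z + rng.normal(size=500)   # X _||_ Y | Z
y_alt = x + rng.normal(size=500)    # Y depends on X beyond Z
sampler = lambda zz, g: zz + g.normal(size=zz.shape)
p_null = resampling_ci_pvalue(x, y_null, z, sampler, rng=rng)
p_alt = resampling_ci_pvalue(x, y_alt, z, sampler, rng=rng)
```

Because \({\tilde{X}}\) shares the dependence on Z, the marginal correlation that Z induces between X and Y is reproduced in the null draws and does not trigger a rejection.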

In contrast, since conditional independence coincides with zero conditional mutual information (CMI), a natural and well-known measure of conditional dependence, many methods estimate CMI as the test statistic [23,24,25]. In [23], the CMI is estimated via k-NN entropy estimators [38], and the null distribution of the test statistic is estimated by randomly shuffling samples of X in a way that preserves \(p\left( X\mid Z\right) \) while breaking the conditional dependence between X and Y given Z.

As an extension of [23], and because k-NN CMI estimators become inaccurate in high dimensions, which causes the tests to fail, [25] replaces CMI with a short expansion of CMI, computed via its Möbius representation, which offers simple asymptotic null distributions that ease the construction of the conditional independence test. Additionally, [24] propose a classifier-based estimator of the Kullback–Leibler divergence between \(p\left( X,Y\mid Z\right) \) and \(p\left( X\mid Z\right) \otimes p\left( Y\mid Z\right) \), which is essentially the CMI.

Following a related approach, the classification-based CI test [28] reduces CI testing to a binary classification problem. The central idea is that under the null hypothesis, a binary classifier cannot distinguish the original dataset from a shuffled dataset that enforces the conditional independence \(X\perp \!\!\! \perp Y\mid Z\), whereas under the alternative hypothesis the difference can be easily captured by the classifier.

2.5 Our approach

Our LCIT method departs from regression-based methods in that it does not require their restrictive assumptions. More specifically, we devise a “generalized residual,” referred to as a “latent representation,” that is independent of Z without assuming that Z is a confounder of X and Y or that the relationships follow additive noise models.

Moreover, while our method employs generative models, it approaches the CI testing problem from an entirely different angle—instead of learning to generate randomized samples as in GAN-based methods [26, 27], we explicitly learn the deterministic inner representations of X and Y through invertible transformations of NFs, so that we can directly check for their independence instead of adopting bootstrapping procedures.

3 Latent representation-based conditional independence testing

Let \(X,Y\in {\mathbb {R}}\) and \(Z\in {\mathbb {R}}^{d}\) be random variables and a random vector, respectively, where we wish to test for \(X\perp \!\!\! \perp Y\mid Z\) and d is the dimensionality of Z. We restrict X and Y to scalars because, in the majority of applications of conditional independence testing, including causal discovery, we typically care about the dependence of each pair of univariate variables given other variables. Additionally, according to the decomposition and union rules of probabilistic graphoids [39], the conditional independence between two sets of variables given a third set can be factorized into a series of conditional independencies between pairs of univariate variables. Therefore, for simplicity, this work focuses exclusively on real-valued X and Y.

Nonetheless, it is sometimes desirable to handle multidimensional X and Y, e.g., when they represent locations or measurements. In such cases, our proposed method can still be applied with minor extensions. In particular, if X has n dimensions and Y has m dimensions, the problem of testing \(X\perp \!\!\! \perp Y\mid Z\) can be turned into a multiple hypothesis testing problem comprising \(n\times m\) sub-problems \(X_i \perp \!\!\! \perp Y_j \mid Z,\;i=1,\ldots ,n,\;j=1,\ldots ,m\), for which several techniques are available, for example, Bonferroni’s method [40].
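For the multidimensional case, one simple way to combine the \(n\times m\) pairwise p-values is the Bonferroni-adjusted minimum p-value, sketched below in plain Python. The pairwise p-values are assumed to come from any univariate CI test such as LCIT; the function name is hypothetical.

```python
def bonferroni_ci_decision(p_values, alpha=0.05):
    """Combine the n x m pairwise p-values of X_i _||_ Y_j | Z with Bonferroni.

    p_values: nested list with n rows (components of X) and m columns
    (components of Y). Returns the Bonferroni-adjusted p-value and whether the
    joint null X _||_ Y | Z is rejected at level alpha.
    """
    flat = [p for row in p_values for p in row]
    adjusted = min(1.0, len(flat) * min(flat))
    return adjusted, adjusted < alpha


# Usage: X has 2 components and Y has 2 components, so there are 4 sub-tests.
print(bonferroni_ci_decision([[0.20, 0.01], [0.50, 0.90]]))
```

Bonferroni controls the family-wise error rate without any assumption on how the sub-tests depend on each other, at the cost of being conservative when n and m are large.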

3.1 A new characterization of conditional independence

We start by giving the fundamental observation that drives the development of our method:

Lemma 1

Assume there exist random variables \(\epsilon _{X}\) and \(\epsilon _{Y}\) such that \(\left( \epsilon _{X},\epsilon _{Y}\right) \perp \!\!\! \perp Z\), and functions f, g that are invertible w.r.t. their first argument, such that \(X=f\left( \epsilon _{X},Z\right) ,\epsilon _{X}=f^{-1}\left( X,Z\right) \) and \(Y=g\left( \epsilon _{Y},Z\right) ,\epsilon _{Y}=g^{-1}\left( Y,Z\right) \). Then

\(X\perp \!\!\! \perp Y\mid Z\;\Leftrightarrow \;\epsilon _{X}\perp \!\!\! \perp \epsilon _{Y}.\qquad (3)\)

Proof

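Since f and g are invertible (and assumed differentiable), by the change of variables rule, we have

\(p\left( x\mid z\right) =p\left( \epsilon _{X}\right) \left| \frac{\partial f^{-1}\left( x,z\right) }{\partial x}\right| ,\quad p\left( y\mid z\right) =p\left( \epsilon _{Y}\right) \left| \frac{\partial g^{-1}\left( y,z\right) }{\partial y}\right| ,\)

\(p\left( x,y\mid z\right) =p\left( \epsilon _{X},\epsilon _{Y}\right) \left| \frac{\partial f^{-1}\left( x,z\right) }{\partial x}\right| \left| \frac{\partial g^{-1}\left( y,z\right) }{\partial y}\right| ,\)

where we drop z from the densities of the latent variables due to the constraint \(\left( \epsilon _{X},\epsilon _{Y}\right) \perp \!\!\! \perp Z\), and the Jacobian of the joint map factorizes because, for fixed z, \(\epsilon _{X}\) depends only on x and \(\epsilon _{Y}\) only on y.

Thus, since the Jacobian factors are nonzero, \(p\left( x\mid z\right) p\left( y\mid z\right) =p\left( x,y\mid z\right) \) if and only if \(p\left( \epsilon _{X}\right) p\left( \epsilon _{Y}\right) =p\left( \epsilon _{X},\epsilon _{Y}\right) \), rendering \(\epsilon _{X}\) and \(\epsilon _{Y}\) marginally independent. \(\square \)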

For this reason, to test for \(X\perp \!\!\! \perp Y\mid Z\), we can instead test for \(\epsilon _{X}\perp \!\!\! \perp \epsilon _{Y}\), which is considerably less challenging because the conditioning variables have been eliminated.

Furthermore, since \(\epsilon _{X}\) and \(\epsilon _{Y}\) act as unobserved contributing factors to X and Y in the assumed generating process, we refer to them as latent representations of X and Y. This is inspired by regression-based methods, where the “residuals” are essentially the additive noises in the data generation and are obtained by subtracting the regression functions to remove all information from Z, i.e., \(r_{X}:=X-{\mathbb {E}}\left[ X\mid Z\right] \) and \(r_{Y}:=Y-{\mathbb {E}}\left[ Y\mid Z\right] \). As explained in Sect. 2, while residuals are easy to compute, they cannot completely remove the information from Z without restrictive conditions, including additive noise models and Z being a confounder set of both X and Y. Consequently, a dependence between \(r_{X}\) and \(r_{Y}\), which may be caused by remaining influences from Z, does not necessarily entail \(X\not \perp \!\!\! \perp Y\mid Z\). In contrast, with the help of NFs, the latent variables in our framework can be constructed without any assumption about the relationship between Z and X (or Y), and thus generalize beyond regression residuals.

We note that when \(p\left( x\mid z\right) \) and \(p\left( y\mid z\right) \) are smooth and strictly positive, the conditional cumulative distribution functions (CDFs), i.e., \(\epsilon _{X}:=\textrm{F}\left( x\mid z\right) \), \(\epsilon _{Y}:=\textrm{F}\left( y\mid z\right) \), \(f:=\textrm{F}^{-1}\left( \epsilon _{X}\mid z\right) \), and \(g:=\textrm{F}^{-1}\left( \epsilon _{Y}\mid z\right) \), are a natural candidate for (3). Notably, CDFs have also been employed for this kind of surrogate unconditional test in [41], where quantile regression is used to estimate them. In this study, however, Lemma 1 emphasizes the use of more generic invertible transformations that are not restricted to CDFs and can be parametrized with NFs.
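As an illustration of the CDF candidate, the simulation below uses a hypothetical heteroscedastic process whose conditional CDF is known in closed form (LCIT instead has to learn it): the CDF-based latent \(\epsilon _{X}=\textrm{F}\left( X\mid Z\right) \) shows no dependence on Z, whereas the magnitude of the residual \(X-{\mathbb {E}}\left[ X\mid Z\right] \) still grows with \(\left| Z\right| \).

```python
import numpy as np
from scipy.stats import norm

# Hypothetical heteroscedastic process with non-additive noise:
# X | Z = z ~ N(sin(z), (0.5 + z^2)^2), with Z ~ Uniform(-2, 2).
rng = np.random.default_rng(1)
n = 5000
z = rng.uniform(-2.0, 2.0, size=n)
loc, scale = np.sin(z), 0.5 + z ** 2
x = loc + scale * rng.normal(size=n)

# CDF-based latent eps_X = F(X | Z): standard uniform and independent of Z.
eps_x = norm.cdf(x, loc=loc, scale=scale)
# The regression residual X - E[X | Z], for comparison.
resid = x - loc

# Dependence on |Z| through the magnitude of each quantity.
corr_resid = np.corrcoef(np.abs(resid), np.abs(z))[0, 1]
corr_latent = np.corrcoef(np.abs(eps_x - 0.5), np.abs(z))[0, 1]
print(corr_resid, corr_latent)
```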

3.2 Conditional normalizing flows for latent variables inference

In this subsection, we explain in detail the conditional normalizing flow (CNF) models used to infer the latent representations of X and Y conditioned on Z, which are then used for the surrogate unconditional test in (3). For brevity, since the models for X and Y are implemented identically except for their learnable parameters, we only describe the CNF module for X; the module for Y is analogous.

3.2.1 Unconditional normalizing flows modeling

Normalizing flows have developed rapidly over the last decade since they were introduced in [29] and popularized in [42, 43]. For LCIT, we use CDF flows [30] thanks to their simplicity and expressiveness. However, any other valid NF can be naturally adapted into our solution.

CDF flows rest on the observation that the CDF of any strictly positive density (e.g., the Gaussian, Laplace, or Student’s t distribution) is differentiable and strictly increasing; hence, any positively weighted combination of such CDFs is also differentiable and strictly increasing, which entails invertibility. This allows us to parametrize the flow transformation in terms of a mixture of Gaussians, which is known to be a universal approximator of smooth densities [44]. Therefore, given a sufficient number of components, we can approximate any smooth probability density function to arbitrary precision.

We emphasize that while it is possible to use only a single Gaussian component, its applicability is severely limited due to its simplicity; e.g., a single Gaussian distribution is unimodal, while real-life data are often multimodal. Meanwhile, a mixture of just a few Gaussians can adequately learn many data distributions encountered in practice.

More concretely, starting with an unconditional Gaussian mixture-based NF, we denote by k the number of components, and by \(\left\{ \mu _{i},\sigma _{i}\right\} _{i=1}^{k}\) and \(\left\{ w_{i}\right\} _{i=1}^{k}\) the parameters and weights of the components in the mixture of univariate Gaussian densities \(p\left( x\right) =\sum _{i=1}^{k}w_{i}{\mathcal {N}}\left( x;\mu _{i},\sigma _{i}^{2}\right) \), where \(w_{i}\ge 0\) and \(\sum _{i=1}^{k}w_{i}=1\). The invertible mapping \({\mathbb {R}}\rightarrow \left( 0,1\right) \) is then defined as

\(u\left( x\right) =\sum _{i=1}^{k}w_{i}\varPhi \left( x;\mu _{i},\sigma _{i}\right) ,\qquad (8)\)

where \(\varPhi \left( x;\mu ,\sigma \right) \) is the cumulative distribution function of \({\mathcal {N}}\left( \mu ,\sigma ^{2}\right) \).

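Since \(u\left( x\right) \in \left( 0,1\right) \) and \(\frac{\textrm{d}u}{\textrm{d}x}=\sum _{i=1}^{k}w_{i}{\mathcal {N}}\left( x;\mu _{i},\sigma _{i}^{2}\right) =p\left( x\right) >0\), the change of variables rule implies that U has a standard uniform distribution:

\(p\left( u\right) =p\left( x\right) \left| \frac{\textrm{d}u}{\textrm{d}x}\right| ^{-1}=1,\quad u\in \left( 0,1\right) .\qquad (9)\)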

Following this reasoning, transformation (8) maps a variable x with a Gaussian mixture distribution, which can be multimodal and complex, to a much simpler standard uniform distribution. Moreover, due to its monotonicity, the process can be reversed to generate new samples of x from samples of u, although this is not needed for CI testing.
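A minimal NumPy/SciPy sketch of the unconditional Gaussian-mixture CDF flow (8), together with its derivative, which equals the mixture density. The mixture parameters below are arbitrary; when the samples are drawn from the same mixture, the histogram of u should be approximately flat, as (9) predicts.

```python
import numpy as np
from scipy.stats import norm


def mixture_cdf_flow(x, w, mu, sigma):
    """Transformation (8): u(x) = sum_i w_i * Phi(x; mu_i, sigma_i), R -> (0, 1).

    x: shape (n,); w, mu, sigma: shape (k,), with w_i >= 0 and sum(w) = 1.
    Returns u and du/dx, where du/dx equals the mixture density p(x).
    """
    u = norm.cdf(x[:, None], loc=mu, scale=sigma) @ w
    du_dx = norm.pdf(x[:, None], loc=mu, scale=sigma) @ w
    return u, du_dx


# Usage: sample a bimodal mixture and bin the transformed values.
rng = np.random.default_rng(0)
w = np.array([0.4, 0.6])
mu = np.array([-2.0, 3.0])
sigma = np.array([0.5, 1.0])
n = 20000
comp = rng.choice(2, size=n, p=w)
x = rng.normal(mu[comp], sigma[comp])
u, du_dx = mixture_cdf_flow(x, w, mu, sigma)
counts, _ = np.histogram(u, bins=10, range=(0.0, 1.0))
```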

3.2.2 From unconditional to conditional normalizing flows

Next, to extend the unconditional NF to a conditional NF, we parametrize the weights, means, and variances of the Gaussian components as functions of z using neural networks. More specifically, we parametrize \(\mu \left( z;\theta \right) \in {\mathbb {R}}^{k}\) as a simple multilayer perceptron (MLP) with real-valued outputs. Since \(\sigma ^{2}\) must be positive, we instead model \(\log \sigma ^{2}\left( z;\theta \right) \in {\mathbb {R}}^{k}\) with an MLP, similarly to \(\mu \left( z;\theta \right) \). Finally, the weights, which must be non-negative and sum to one, \(w\left( z;\theta \right) \in \left( 0,1\right) ^{k}\), are parametrized with an MLP with a Softmax activation in the last layer. Here, \(\theta \) denotes the full set of learnable parameters of these MLPs. These steps can be summarized as follows:

\(u_{\theta }\left( x,z\right) =\sum _{i=1}^{k}w_{i}\left( z;\theta \right) \varPhi \left( x;\mu _{i}\left( z;\theta \right) ,\sigma _{i}\left( z;\theta \right) \right) ,\)

with the respective derivative

\(\frac{\partial u_{\theta }\left( x,z\right) }{\partial x}=\sum _{i=1}^{k}w_{i}\left( z;\theta \right) {\mathcal {N}}\left( x;\mu _{i}\left( z;\theta \right) ,\sigma _{i}^{2}\left( z;\theta \right) \right) .\)

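It is worth emphasizing that this construction naturally preserves the property in (9). In other words, \(u_{\theta }\left( x,z\right) \in \left( 0,1\right) \) and, when the learned conditional density matches \(p\left( x\mid z\right) \),

\(p\left( u\mid z\right) =p\left( x\mid z\right) \left| \frac{\partial u_{\theta }\left( x,z\right) }{\partial x}\right| ^{-1}=1,\quad u\in \left( 0,1\right) .\)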

Therefore, U is both conditionally and marginally standard uniform regardless of the value of z, making U unconditionally independent of Z.
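The conditional version can be sketched with small NumPy MLPs, assuming one hidden layer with tanh units and random, untrained weights (the sizes d, k, and hidden are illustrative). It shows how the three heads are constrained: means are unconstrained, standard deviations are obtained from \(\log \sigma ^{2}\), and weights pass through a Softmax. It also returns \(\partial u_{\theta }/\partial x\), which can be verified against a finite difference.

```python
import numpy as np
from scipy.special import softmax
from scipy.stats import norm

rng = np.random.default_rng(0)
d, k, hidden = 3, 4, 16  # dim(Z), mixture components, hidden units (all illustrative)


def init_mlp(out_dim):
    """Random (untrained) parameters of a one-hidden-layer tanh MLP: R^d -> R^out_dim."""
    return (rng.normal(scale=0.5, size=(d, hidden)), np.zeros(hidden),
            rng.normal(scale=0.5, size=(hidden, out_dim)), np.zeros(out_dim))


def mlp(params, z):
    W1, b1, W2, b2 = params
    return np.tanh(z @ W1 + b1) @ W2 + b2


# theta: the learnable parameters of the three MLPs (mean, log-variance, weights).
theta = {"mu": init_mlp(k), "log_var": init_mlp(k), "logits": init_mlp(k)}


def conditional_cdf_flow(x, z, theta):
    """u_theta(x, z) and its derivative w.r.t. x; x has shape (n,), z has shape (n, d)."""
    mu = mlp(theta["mu"], z)                        # (n, k), real-valued
    sigma = np.exp(0.5 * mlp(theta["log_var"], z))  # (n, k), positive via log sigma^2
    w = softmax(mlp(theta["logits"], z), axis=1)    # (n, k), non-negative, rows sum to 1
    u = np.sum(w * norm.cdf(x[:, None], loc=mu, scale=sigma), axis=1)
    du_dx = np.sum(w * norm.pdf(x[:, None], loc=mu, scale=sigma), axis=1)
    return u, du_dx


# Usage on random inputs.
z_batch = rng.normal(size=(100, d))
x_batch = rng.normal(size=100)
u, du_dx = conditional_cdf_flow(x_batch, z_batch, theta)
```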

Moreover, we introduce an additional flow that depends on u only. This flow applies the inverse cumulative distribution function (iCDF) of the standard Gaussian distribution to transform U from a standard uniform variable into a standard Gaussian variable, which is the final “latent” variable used for the surrogate test in (3):

\(\epsilon _{X}=\varPhi ^{-1}\left( u_{\theta }\left( x,z\right) ;0,1\right) .\)
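The final Gaussian flow is a single call to the standard-normal quantile function. The sketch below assumes SciPy's `norm.ppf` and clips u away from 0 and 1; the clipping is a numerical safeguard assumed by this sketch rather than part of the method as described.

```python
import numpy as np
from scipy.stats import norm


def uniform_to_gaussian_latent(u, clip=1e-7):
    """Final flow eps_X = Phi^{-1}(u; 0, 1): standard uniform -> standard Gaussian.

    u is clipped to [clip, 1 - clip] because Phi^{-1}(0) = -inf and
    Phi^{-1}(1) = +inf; the clipping threshold is an illustrative assumption.
    """
    return norm.ppf(np.clip(u, clip, 1.0 - clip))


# Usage: uniform inputs become (approximately) standard Gaussian latents.
rng = np.random.default_rng(0)
eps = uniform_to_gaussian_latent(rng.uniform(size=100000))
print(eps.mean(), eps.std())
```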

3.2.3 Learning conditional normalizing flows

Similarly to conventional NFs, we adopt the maximum likelihood estimation (MLE) framework to learn the CNF modules for X and Y.

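With a fixed number of components k, the conditional likelihood of X is given by

\(p_{\theta }\left( x\mid z\right) =\frac{\partial u_{\theta }\left( x,z\right) }{\partial x}=\sum _{i=1}^{k}w_{i}\left( z;\theta \right) {\mathcal {N}}\left( x;\mu _{i}\left( z;\theta \right) ,\sigma _{i}^{2}\left( z;\theta \right) \right) ,\)

and we aim to maximize the log-likelihood of the observed X conditioned on Z over the space of \(\theta \):

\({\mathcal {L}}\left( \theta \right) =\sum _{j=1}^{n}\log p_{\theta }\left( x^{\left( j\right) }\mid z^{\left( j\right) }\right) ,\qquad \theta ^{*}=\arg \max _{\theta }{\mathcal {L}}\left( \theta \right) ,\)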

where \(\left\{ \left( x^{\left( j\right) },z^{\left( j\right) }\right) \right\} _{j=1}^{n}\) is the set of n observed samples of \(\left( X,Z\right) \).

Since \({\mathcal {L}}\) is fully differentiable, \(\theta \) can then be learned with gradient-based optimization. Algorithm 1 summarizes the main steps of the training process; the steps involving discrete data are explained in more detail in Sect. 4.
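The maximum-likelihood step can be sketched end to end without a deep-learning framework if the three MLPs are replaced by linear functions of z (a simplification made only to keep the example short) and the gradient-based optimizer is SciPy's L-BFGS-B with finite-difference gradients. The data-generating process, k = 2, and the initialization are hypothetical; this is not Algorithm 1 itself.

```python
import numpy as np
from scipy.optimize import minimize
from scipy.special import logsumexp
from scipy.stats import norm


def heads(theta, z, k):
    """Linear stand-ins for the three MLPs: z -> (mu, log sigma^2, log w), each (n, k)."""
    n, d = z.shape
    zb = np.column_stack([z, np.ones(n)])               # append a bias column
    A_mu, A_lv, A_w = theta.reshape(3, d + 1, k)
    log_var = np.clip(zb @ A_lv, -10.0, 10.0)           # clipped for numerical safety
    logits = zb @ A_w
    log_w = logits - logsumexp(logits, axis=1, keepdims=True)  # log-Softmax
    return zb @ A_mu, log_var, log_w


def neg_log_likelihood(theta, x, z, k):
    """-(1/n) * sum_j log p_theta(x_j | z_j) for the conditional Gaussian mixture."""
    mu, log_var, log_w = heads(theta, z, k)
    log_comp = norm.logpdf(x[:, None], loc=mu, scale=np.exp(0.5 * log_var))
    return -np.mean(logsumexp(log_w + log_comp, axis=1))


def fit_cnf(x, z, k=2, seed=0):
    """Maximum-likelihood fit with L-BFGS-B; returns (fitted, initial) parameters."""
    theta0 = np.random.default_rng(seed).normal(scale=0.1, size=3 * (z.shape[1] + 1) * k)
    result = minimize(neg_log_likelihood, theta0, args=(x, z, k), method="L-BFGS-B")
    return result.x, theta0


def infer_latent(theta, x, z, k=2):
    """eps = Phi^{-1}(u_theta(x, z)), with u clipped away from 0 and 1."""
    mu, log_var, log_w = heads(theta, z, k)
    cdf = norm.cdf(x[:, None], loc=mu, scale=np.exp(0.5 * log_var))
    u = np.sum(np.exp(log_w) * cdf, axis=1)
    return norm.ppf(np.clip(u, 1e-7, 1 - 1e-7))


# Usage on a hypothetical heteroscedastic process X = 2Z + exp(Z / 2) * E.
rng = np.random.default_rng(0)
z = rng.uniform(-2.0, 2.0, size=(1000, 1))
x = 2.0 * z[:, 0] + np.exp(0.5 * z[:, 0]) * rng.normal(size=1000)
theta_hat, theta_init = fit_cnf(x, z)
eps_x = infer_latent(theta_hat, x, z)
```

Minimizing the average negative log-likelihood is equivalent to maximizing \({\mathcal {L}}\); averaging only rescales the objective.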

3.3 Marginal independence test for the latents

For two jointly Gaussian variables, independence is equivalent to a zero Pearson correlation coefficient. Therefore, we can use Fisher’s transformation to obtain a test statistic that is approximately Gaussian under the null hypothesis, so the p-value is available in closed form [35].

More specifically, we first calculate the Pearson correlation coefficient r between \(\epsilon _{X}\) and \(\epsilon _{Y}\), and then turn it into the test statistic t using Fisher’s transformation:

\(t=\frac{1}{2}\ln \frac{1+r}{1-r},\)

where t is approximately Gaussian with mean \(\frac{1}{2}\ln \frac{1+\rho }{1-\rho }\) and standard deviation \(\frac{1}{\sqrt{n-3}}\), \(\rho \) is the true correlation between \(\epsilon _{X}\) and \(\epsilon _{Y}\), and n is the sample size.

Therefore, under the null hypothesis where \(\rho =0\), the p-value for t can be calculated as:

Finally, at a significance level \(\alpha \), the null hypothesis \({\mathcal {H}}_{0}\) is rejected if \(p\text {-value}<\alpha \), in which case we conclude conditional dependence \(X\not \perp \!\!\! \perp Y\mid Z\); otherwise, we fail to reject \({\mathcal {H}}_{0}\). Algorithm 2 summarizes the complete computation flow of the proposed LCIT method.
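The test on the latents can be sketched in a few lines. The sketch assumes a two-sided p-value, \(2\left( 1-\Phi \left( \sqrt{n-3}\,\left| t\right| \right) \right) \), which is the usual choice for testing \(\rho =0\) against \(\rho \ne 0\); the exact form of the p-value expression in the text is not reproduced here, and the function names are hypothetical.

```python
import math
from statistics import NormalDist


def pearson_r(a, b):
    """Sample Pearson correlation coefficient of two equal-length sequences."""
    n = len(a)
    if n != len(b) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    ma, mb = sum(a) / n, sum(b) / n
    sab = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    saa = sum((x - ma) ** 2 for x in a)
    sbb = sum((y - mb) ** 2 for y in b)
    return sab / math.sqrt(saa * sbb)


def fisher_z_pvalue(r, n):
    """Two-sided p-value for H0: rho = 0 using Fisher's transformation (requires n > 3)."""
    if n <= 3:
        raise ValueError("Fisher's transformation needs n > 3")
    r = max(min(r, 1.0 - 1e-12), -1.0 + 1e-12)  # keep atanh finite when |r| = 1
    t = math.atanh(r)                            # t = 0.5 * ln((1 + r) / (1 - r))
    z = t * math.sqrt(n - 3)                     # under H0, t ~ N(0, 1 / (n - 3))
    return 2.0 * (1.0 - NormalDist().cdf(abs(z)))


def latent_independence_test(eps_x, eps_y, alpha=0.05):
    """Return (p_value, reject); reject=True means concluding conditional dependence."""
    p_value = fisher_z_pvalue(pearson_r(eps_x, eps_y), len(eps_x))
    return p_value, p_value < alpha
```

Note the direction of the decision: a small p-value rejects \({\mathcal {H}}_{0}\) and signals dependence, while a large one only means the test found no evidence against conditional independence.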

3.4 Computational complexity

Algorithm 1 (Latents inference)

With a fixed maximum number of optimization steps, hidden units, and Gaussian components, the procedure performs \({\mathcal {O}}\left( n\right) \) sample-level evaluations of the MLPs, each costing \({\mathcal {O}}\left( d\right) \), where n and d denote the sample size and the dimensionality of Z, respectively. Therefore, the total complexity of Algorithm 1 is \({\mathcal {O}}\left( nd\right) \).

Algorithm 2 (LCIT)

Learning the CNFs of X and Y using Algorithm 1 costs \({\mathcal {O}}\left( nd\right) \), as argued above. Computing the correlation between the two n-sample latent vectors then takes only \({\mathcal {O}}\left( n\right) \) time. Therefore, the overall complexity of the LCIT algorithm is \({\mathcal {O}}\left( nd\right) \), which is linear in both the sample size and the dimensionality.

4 Handling mixed-type data

In practice, data are often not purely continuous: both the conditioning variables Z and the target variables X and Y can be discrete or mixed. A natural way to handle this is to carefully map the discrete values into the continuous domain, so that the proposed framework can be applied seamlessly as if all variables were continuous. To this end, we must handle the discrete values in the conditioning variables Z as well as those in X and Y.

4.1 Mixed data in Z

Fortunately, the data type of Z plays no role in our theoretical foundation, i.e., Lemma 1. Z only needs to be real-valued so that it can be fed into the MLPs, enabling the differentiable, gradient-based learning process. Therefore, discrete features in Z can be handled with common data processing techniques. Specifically, in our implementation, we apply one-hot encoding to each discrete variable in Z to avoid losing information.

Concretely, let \(\left[ c_{1},\ldots ,c_{p}\right] \) be the list of p values of a discrete variable \(Z_{i}\). Then, we replace \(Z_{i}\) with \(p-1\) new binary features \(Z'_{1..p-1}\) where
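For illustration, the sketch below replaces one discrete column with \(p-1\) binary indicator features. Which category is dropped as the reference level is not stated here; the sketch assumes, hypothetically, that the last category in sorted order is dropped and encoded as all zeros. Because each of the p categories still receives a distinct indicator pattern, no information is lost.

```python
def dummy_encode(column, categories=None):
    """Replace a discrete column with p - 1 binary indicator features.

    The last category (in sorted order unless `categories` is given) is used
    as the reference level and encoded as all zeros; this choice is an assumption.
    """
    if categories is None:
        categories = sorted(set(column))
    position = {c: j for j, c in enumerate(categories)}
    p = len(categories)
    # Row for value c_j has a 1 in column j (for j < p - 1) and zeros elsewhere
    return [[1 if position[v] == k else 0 for k in range(p - 1)] for v in column]


rows = dummy_encode(["a", "b", "c", "a"])  # [[1, 0], [0, 1], [0, 0], [1, 0]]
```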

4.2 Mixed data in X and Y

Because they rely on the change of variables theorem, Lemma 1 and normalizing flows in general apply only to continuous random variables X and Y. More specifically, normalizing flows are designed to learn probability density functions, which must integrate to one over a continuous space, in contrast to probability mass functions, which place probability on isolated points. Therefore, if one fits a normalizing flow directly to discrete data, the maximum likelihood objective can put arbitrarily high density on the particular discrete values of the distribution, resulting in a degenerate density model that is zero almost everywhere and infinite at a few points.

To overcome this obstacle, uniform dequantization is often applied. Assume without loss of generality that X is discrete. First, its discrete values \(\left[ c_{1},\ldots ,c_{p}\right] \) are encoded as the integers \(\left[ 0,\ldots ,p-1\right] \). Next, standard uniform noise \(E\sim {\mathcal {U}}\left[ 0,1\right) \) is added to X: \(X'=X+E\). \(X'\) is then a real-valued version of X with range \(\left[ 0,p\right) \). Since \(X'\in \left[ X,X+1\right) \), X can be recovered exactly as \(\lfloor X'\rfloor \), so \(X'\) loses no information about X, and its conditional (in)dependence with Y given Z is intact.
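A minimal sketch of uniform dequantization and its exact inverse, assuming the categories are encoded in sorted order (the encoding order is an arbitrary, hypothetical choice; any fixed bijection onto \(0,\ldots ,p-1\) works). Recovery uses the floor, not rounding to the nearest integer, because \(X'\) can exceed \(X+0.5\).

```python
import math
import random


def encode_categories(column):
    """Map the p distinct values of a discrete column to the integers 0, ..., p - 1."""
    code = {c: k for k, c in enumerate(sorted(set(column)))}
    return [code[c] for c in column]


def uniform_dequantize(codes, rng):
    """X' = X + E with E ~ U[0, 1); random.Random.random() draws from [0, 1)."""
    return [k + rng.random() for k in codes]


def requantize(values):
    """Recover X as floor(X'), because X' lies in [X, X + 1)."""
    return [math.floor(v) for v in values]


codes = encode_categories(["low", "high", "mid", "low"])  # -> [1, 0, 2, 1]
x_prime = uniform_dequantize(codes, random.Random(0))
```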

However, the resulting density is still not smooth: it is piecewise constant, with abrupt jumps at the values \(\left[ 0,\ldots ,p\right] \). Such densities are challenging to learn with normalizing flows, which rely on smooth transformations. One way to avoid this pitfall is a technique called variational dequantization [33].

In variational dequantization, to learn a marginal distribution over the discrete variable X, a learnable conditional noise distribution \(q_{\phi }\left( E\mid X\right) \) with support \(\left[ 0,1\right) \) is used instead of the fixed standard uniform distribution \({\mathcal {U}}\left[ 0,1\right) \) for every value of X. Its purpose is to make the dequantized density smoother, which in turn makes the normalizing flow easier to learn. In our framework, since we need to learn the conditional distribution of X given Z rather than the marginal of X, we learn \(q_{\phi }\left( E\mid X,Z\right) \) instead.

We now derive the new objective function for learning both \(p_{\theta }\) and \(q_{\phi }\). Let \(P_{\theta }\left( x\mid z\right) \) denote the model probability of X given Z, and let \(p_{\theta }\left( x'\mid z\right) \) denote the model density of the dequantized variable \(X'=X+E\) given Z. We have the following identity:

Viewing \(q_{\phi }\) as a variational distribution and applying Jensen's inequality, we obtain the following variational lower bound:

where the outer expectation is taken over the data. This bound holds for any valid distribution \(q_{\phi }\). To allow differentiable sampling, which in turn enables end-to-end gradient-based optimization, we again parametrize \(q_{\phi }\) with a normalizing flow.
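For reference, the identity and bound used here take the standard variational-dequantization form [33]. The display below is a restatement in this section's notation rather than a copy of the original numbered equations: the probability of a discrete value is the integral of the dequantized density over its unit cell, rewritten as an importance-weighted expectation under \(q_{\phi }\), and Jensen's inequality moves the logarithm inside the expectation.

```latex
\begin{aligned}
P_{\theta}(x\mid z) &= \int_{[0,1)} p_{\theta}(x+e\mid z)\,\mathrm{d}e
= \mathbb{E}_{e\sim q_{\phi}(\cdot\mid x,z)}\!\left[\frac{p_{\theta}(x+e\mid z)}{q_{\phi}(e\mid x,z)}\right],\\
\mathbb{E}_{(x,z)}\!\left[\log P_{\theta}(x\mid z)\right]
&\geq \mathbb{E}_{(x,z)}\,\mathbb{E}_{e\sim q_{\phi}(\cdot\mid x,z)}\!\left[\log p_{\theta}(x+e\mid z)-\log q_{\phi}(e\mid x,z)\right].
\end{aligned}
```

The bound is tight when \(q_{\phi }\left( e\mid x,z\right) =p_{\theta }\left( x+e\mid z\right) /P_{\theta }\left( x\mid z\right) \), i.e., when \(q_{\phi }\) matches the model's own conditional density of the noise on \(\left[ 0,1\right) \).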

In particular, let \(\xi \sim {\mathcal {N}}\left( 0,1\right) \) and \(\xi \perp \!\!\! \perp \left( X,Y,Z\right) \). We can express e via \(\xi \) using a normalizing flow similarly to (13) as follows:

where \(w_{i}\), \(\mu _{i}\), and \(\sigma _{i}^{2}\) are modeled with neural networks with parameters \(\phi \) in the same way as in Sect. 3.2.2.

Subsequently, the corresponding derivative of e w.r.t. \(\xi \) is given by:

Next, by the change of variables rule, we can now express \(q_{\phi }\) as follows:
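The sampling and density steps for the noise can be sketched as follows. The sketch assumes the noise flow mirrors the mixture-CDF construction, i.e., \(e=\sum _{i}w_{i}\Phi \left( \left( \xi -\mu _{i}\right) /\sigma _{i}\right) \) with \(w_{i}\), \(\mu _{i}\), \(\sigma _{i}\) produced from \(\left( x,z\right) \); the parameter values and function names are hypothetical placeholders for network outputs. With a single standard component, \(e=\Phi \left( \xi \right) \) is exactly uniform and \(\log q_{\phi }=0\), recovering uniform dequantization.

```python
import math
from statistics import NormalDist

STD_NORMAL = NormalDist()


def sample_noise_and_log_q(xi, weights, means, stds):
    """Map xi ~ N(0, 1) to e in (0, 1) and return (e, log q_phi(e | x, z)).

    weights, means and stds are hypothetical stand-ins for networks evaluated at (x, z).
    """
    # e = sum_i w_i * Phi((xi - mu_i) / sigma_i): increasing in xi, with values in (0, 1)
    e = sum(w * STD_NORMAL.cdf((xi - m) / s) for w, m, s in zip(weights, means, stds))
    # de/dxi = sum_i w_i * N(xi; mu_i, sigma_i^2), which is strictly positive
    de_dxi = sum(w * NormalDist(m, s).pdf(xi) for w, m, s in zip(weights, means, stds))
    # Change of variables: q(e) = N(xi; 0, 1) / (de/dxi)
    log_q = math.log(STD_NORMAL.pdf(xi)) - math.log(de_dxi)
    return e, log_q


def bound_term(log_p_dequantized, log_q):
    """Single-sample estimate of log p_theta(x + e | z) - log q_phi(e | x, z)."""
    return log_p_dequantized - log_q
```

Averaging `bound_term` over samples of \(\xi \) and over the data gives a Monte Carlo estimate of the variational lower bound.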

Consequently, combining (23) and (29) gives us the following objective function:

Finally, we jointly maximize the objective function with respect to \(\theta \) and \(\phi \) using the MLE approach:

In summary, Algorithm 1 lists the key steps for handling mixed-type data in our framework, while the marginal correlation test on the inferred latents (Algorithm 2) remains unchanged.

5 Experiments

5.1 Setup

In this subsection, we describe the experimental settings, including the baseline methods, parameter choices, and implementation details of our method.

5.1.1 Methods

We demonstrate the effectiveness of LCIT in conditional independence testing on synthetic and real data against popular and recent state-of-the-art methods from different families. Specifically, we consider the kernel-based KCIT method (Footnote 3) [15] as a popular competitor, the recent residual similarity-based SCIT approach (Footnote 4) [22], and the classification-based CCIT algorithm (Footnote 5) [28]. For all baselines, we use the default parameters recommended by their authors. Note that while CCIT provides hyper-parameter selection out of the box, the KCIT implementation does not provide any such procedure.

Additionally, for all methods, we first apply a standard normalization step for each of X, Y, and Z before performing the tests.

Specifically for LCIT, we parametrize \(\textrm{MLP}_{\mu }\), \(\textrm{MLP}_{\log \sigma ^{2}}\), and \(\textrm{MLP}_{w}\) as neural networks with one hidden layer, rectified linear unit (ReLU) activations, and batch normalization [45]. The hyper-parameter choices and their analysis are described below.

5.1.2 Training CNFs

We implement the CNF modules using the PyTorch framework [46]. Each CNF module is trained with the Adam optimizer using a fixed learning rate of \(5\times 10^{-3}\), a weight decay of \(5\times 10^{-5}\) to regularize model complexity, and a batch size of 64.

5.1.3 Data processing

Before feeding data into the CNF modules, in addition to the standardization applied for all methods, we handle outliers by clipping each dimension to lie between its \(2.5\%\) and \(97.5\%\) quantiles. This stabilizes training, since neural networks can be very sensitive to extreme values.
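A minimal sketch of this per-dimension preprocessing. It assumes linear-interpolation quantiles and, hypothetically, that clipping is applied before standardization; the text does not fix either detail.

```python
import statistics


def clip_to_quantiles(values):
    """Clip one dimension to its empirical 2.5% and 97.5% quantiles."""
    # n=40 gives cut points at k/40, so the first is 2.5% and the last is 97.5%
    cuts = statistics.quantiles(values, n=40, method="inclusive")
    lo, hi = cuts[0], cuts[-1]
    return [min(max(v, lo), hi) for v in values]


def standardize(values):
    """Shift to zero mean and scale to unit (population) standard deviation."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    if std == 0:
        return [0.0 for _ in values]
    return [(v - mean) / std for v in values]


def preprocess_dimension(values):
    """Hypothetical ordering: clip outliers first, then standardize."""
    return standardize(clip_to_quantiles(values))
```

Clipping first keeps the final output exactly standardized; clipping after standardization would instead bound the inputs at fixed quantiles of the standardized scale.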

The input dataset is then split into training and validation sets with a 70/30 ratio; the training portion is used to learn the parameters, and the validation set enables early stopping. In our experience, training typically requires fewer than 20 epochs.
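The split and early-stopping logic can be sketched as below. The stopping rule (stop after `patience` epochs without improvement in validation negative log-likelihood) and the patience value are assumptions for illustration; the text does not specify the criterion.

```python
import random


def train_val_split(n, train_frac=0.7, seed=0):
    """Shuffle sample indices and split them 70/30 into training and validation sets."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    cut = round(train_frac * n)
    return idx[:cut], idx[cut:]


class EarlyStopping:
    """Signal a stop once the validation NLL has not improved for `patience` epochs."""

    def __init__(self, patience=3):  # patience value is an assumption, not given in the text
        self.patience = patience
        self.best = float("inf")
        self.bad_epochs = 0

    def step(self, val_nll):
        """Record one epoch's validation negative log-likelihood; return True to stop."""
        if val_nll < self.best:
            self.best = val_nll
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience
```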

5.1.4 Hyper-parameters

The most important hyper-parameters of our model are the number of Gaussian mixture components and the hidden layer size, which together determine the expressiveness of the CNF. To determine which configuration works best, we tune these two factors using the Optuna framework [47].

Specifically, for each dimensionality of Z in \(\left\{ 25,50,75,100\right\} \), we simulate 50 random datasets with both CI and non-CI labels, in the same manner as in Sect. 5.2. For each dataset, we run 20 Optuna trials to find the best configuration, where the number of components varies in \(\left\{ 8,16,32,64,128,256\right\} \) and the number of hidden units takes values in \(\left\{ 4,8,16,32,64,128,256\right\} \). The objective is the sum of the maximized log-likelihoods of X and Y conditioned on Z. Finally, the best setting found is recorded.

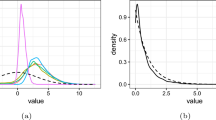

Fig. 2 summarizes the best configurations found via hyper-parameter tuning. More mixture components are usually preferable, while a small number of hidden units suffices to retain high performance. Based on these observations, we fix 32 Gaussian components for all other experiments, which keeps computation low at only a small loss of performance compared with larger numbers of components. Likewise, we use four hidden units in every experiment, since this is both computationally efficient and sufficiently expressive.

Fig. 3 Conditional independence testing performance as a function of sample size. The evaluation metrics are \(F_{1}\) score, AUC (higher is better), and Type I and Type II error rates (lower is better). We compare our proposed LCIT method with three baselines: CCIT [28], KCIT [15], and SCIT [22]. Our method achieves the best \(F_{1}\) and AUC scores and is the only method that retains good scores across all metrics and sample sizes.

Fig. 4 Conditional independence testing performance as a function of dimensionality. The evaluation metrics are \(F_{1}\) score, AUC (higher is better), and Type I and Type II error rates (lower is better). We compare our proposed LCIT method with three baselines: CCIT [28], KCIT [15], and SCIT [22]. Our method achieves the best \(F_{1}\) and AUC scores and is the only method that retains good scores across all metrics and dimensionalities.

5.2 Synthetic data

We study the effectiveness of our proposed method against state-of-the-art methods on synthetic data sets. Specifically, we first describe the data simulation process and study the sensitivity of our method to random initialization. Then, we compare our method with the baselines while varying the sample size and the dimensionality of Z. We also study mixed-type data by making a portion of the features discrete.

Following several closely related studies [15,16,17, 26], we randomly simulate the datasets according to the post-nonlinear additive noise model:

with \(X:=2E_{X}\) where \(E_{X}\), \(E_{f}\), and \(E_{g}\) are independent noise variables following the same distribution randomly selected from \(\left\{ {\mathcal {U}}\left( -1,1\right) ,{\mathcal {N}}\left( 0,1\right) ,\textrm{Laplace}\left( 0,1\right) \right\} \). The symbols \(\otimes \) and \(\left\langle \cdot ,\cdot \right\rangle \) denote the outer and inner products, respectively. Additionally, \(a,b\sim {\mathcal {U}}\left( -1,1\right) ^{d}\), \(c\sim {\mathcal {U}}\left( 1,2\right) \), and f, g are drawn uniformly from a rich set of mostly nonlinear functions \(\left\{ \alpha x,x^{2},x^{3},\tanh x,x^{-1},e^{-x},\frac{1}{1+e^{-x}}\right\} \) (Footnote 6).

For mixed-type data, we randomly choose a subset of all variables, including X, Y, and dimensions of Z, to turn them into discrete variables after they are generated. Specifically, each chosen feature is standardized and rounded to integers. Values exceeding three standard deviations are grouped. Finally, the new value range is shifted to start at zero. As a result, each selected continuous variable is converted to a discrete variable with at most five values.

5.2.1 Influence of random seeds

Since our method relies on neural networks, which may be sensitive to random initialization, we first study its sensitivity to the random seed. Specifically, we consider 50 data sets generated with 1,000 samples and 25 dimensions of Z. We then run our method with ten different random seeds and evaluate the variance of the results under four metrics, namely the \(F_{1}\) score (higher is better), the area under the receiver operating characteristic curve (AUC, higher is better), and Type I and Type II errors (lower is better). The results in Table 1 indicate that our method is robust to random initialization: it attains high expected \(F_1\) and AUC scores and low expected Type I and II error rates, all with relatively low variance.

5.2.2 Effect of different sample sizes

To study the performance of the LCIT against alternative tests across different sample sizes, we fix the dimensionality d of Z at 25 and vary the sample size from 250 to 1,000. We measure the CI testing performance under four different metrics, namely the \(F_{1}\) score (higher is better), area under the receiver operating characteristic curve (AUC, higher is better), as well as Type I and Type II errors (lower is better). More specifically, Type I error refers to the proportion of false rejections under \({\mathcal {H}}_{0}\), and Type II error reflects the proportion of false acceptances under \({\mathcal {H}}_{1}\). These metrics are evaluated using 250 independent runs for each combination of method, sample size, and label. Additionally, for \(F_{1}\) score, Type I and Type II errors, we adopt the commonly used significance level of \(\alpha =0.05\).
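The four metrics can be computed from the p-values of repeated runs as sketched below. Two choices here are assumptions rather than details given in the text: conditional dependence is treated as the positive class for \(F_{1}\), and AUC is computed from the p-values themselves, with a smaller p-value counted as stronger evidence of dependence (ties count one half).

```python
def ci_metrics(p_values, dependent, alpha=0.05):
    """Type I/II error, F1 and AUC from the p-values of repeated CI tests.

    dependent[j] is True when H1 (conditional dependence) holds in run j; treating
    dependence as the positive class for F1 is an assumption.
    """
    pos = [p for p, d in zip(p_values, dependent) if d]      # runs under H1
    neg = [p for p, d in zip(p_values, dependent) if not d]  # runs under H0
    if not pos or not neg:
        raise ValueError("need runs under both H0 and H1")
    fp = sum(p < alpha for p in neg)   # false rejections under H0
    fn = sum(p >= alpha for p in pos)  # false acceptances under H1
    tp = len(pos) - fn
    # AUC = P(p-value under H1 < p-value under H0), ties counted as 1/2
    wins = sum(1.0 if a < b else 0.5 if a == b else 0.0 for a in pos for b in neg)
    return {
        "type1": fp / len(neg),
        "type2": fn / len(pos),
        "f1": 2 * tp / (2 * tp + fp + fn),
        "auc": wins / (len(pos) * len(neg)),
    }
```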

The results are reported in Fig. 3, which shows that our method is the only one achieving good and stable performance under all four evaluation criteria. Notably, LCIT scores the highest \(F_{1}\) measure, surpassing all other tests by clear margins. LCIT, along with KCIT, also obtains the highest AUC scores, approaching \(100\%\) as more samples are used, and outperforms the two recent state-of-the-art methods CCIT and SCIT by considerable margins. For both Type I and Type II errors, LCIT consistently ranks second lowest overall, at around \(10\%\).

Meanwhile, KCIT achieves the lowest Type II errors, but its Type I errors are consistently higher than those of all other methods, suggesting that KCIT mostly returns conditional dependence as its output. In contrast, while CCIT obtains virtually no Type I errors, it becomes highly unreliable in terms of Type II errors, indicating that CCIT generally favors outputting conditional independence.

5.2.3 Effect of high-dimensional conditioning sets

In Fig. 4, we study how the performance of LCIT and the other methods changes in higher-dimensional settings. Concretely, we fix the sample size at 1,000 and increase the dimension of Z from 25 to 100. The results show that our method consistently outperforms the other state-of-the-art methods as the dimensionality of Z increases, with the highest AUC scores overall, ahead of CCIT and SCIT by up to roughly 40 percentage points, while keeping comparably low error rates.

In general, all methods show a visible decline in performance, especially KCIT and CCIT. The AUC score of KCIT drops by 20 percentage points from the smallest to the largest dimensionality, its \(F_{1}\) score quickly deteriorates to half of its initial value, and its Type I errors are always the highest among all considered algorithms. CCIT, on the other hand, again has vanishing Type I errors but exceedingly high Type II errors, similarly to Fig. 3.

5.2.4 Performance on mixed data

For X and Y, we consider two possibilities, namely when one of them is discrete and when both of them are discrete. Likewise, for Z, we consider the cases where it is completely continuous and where it is completely discrete. We conduct experiments to study the performance of LCIT on mixed-type data over different sample sizes (Fig. 5) and dimensionalities (Fig. 6).

The performance measures are relatively consistent across the discrete data configurations. More specifically, in Fig. 5, the performance of our method varies across data settings at smaller sample sizes but quickly converges as more samples become available. In Fig. 6, all data configurations yield the same AUC and Type II error, and a small disparity appears only in the \(F_{1}\) score and Type I error. Interestingly, X or Y being discrete does not degrade the performance of our method, whereas discrete variables in Z slightly do. This is potentially due to the increased dimensionality of Z, as many one-hot-encoded features are added to Z when discrete features are present.

5.3 Real data

To further demonstrate the robustness of the proposed LCIT test, we evaluate it against the other state-of-the-art CI tests on real datasets.

Real datasets of labeled triplets \(\left( X,Y,Z\right) \) for benchmarking CI tests are generally unavailable, so we instead resort to data accompanied by ground truth networks, which are still relatively rare, with few consensus benchmark datasets.

In this study, we examine two datasets from the fourth edition of the Dialogue for Reverse Engineering Assessments and Methods challenge (Footnote 7), referred to as DREAM4 [48, 49], whose data are publicly accessible together with the ground truth gene regulatory networks. The challenge's objective is to recover the gene regulatory networks from gene expression data only. The data sets are therefore well suited to evaluating our method, and CI tests in general.

Each data set includes a ground truth transcriptional regulatory network of Escherichia coli or Saccharomyces cerevisiae, along with gene expression measurements. We denote the two considered datasets as D4-A and D4-B: D4-A comes from the first sub-challenge of the contest and contains 10 genes with 105 gene expression observations, whereas D4-B comes from the second sub-challenge and consists of 100 genes with 210 gene expression samples.

Next, we extract conditionally independent and conditionally dependent triplets \(\left( X,Y,Z\right) \) from the ground truth networks. This process relies on the fact that if two nodes are directly connected in a network, they remain conditionally dependent regardless of the conditioning set. Otherwise, the union of their parent sets should d-separate all paths connecting them, rendering them conditionally independent given the joint parent set [50]. In short, if there is an edge between X and Y in the ground truth network, we add \(\left\{ \left( X,Y,Z_{i}\right) \right\} _{i=1}^{5}\) to the data set, where \(Z_{i}\) is a random subset of the remaining nodes with size \(d-4\); otherwise, we add \(\left( X,Y,\text {Parents}\left( X\right) \cup \text {Parents}\left( Y\right) \right) \). Finally, to create class-balanced datasets, we sample 30 conditional independence and 30 conditional dependence relationships for D4-A, and 50 of each for D4-B.
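The labeling rule can be sketched over a ground-truth DAG given as a parent map. The size of the random conditioning sets is a hypothetical parameter here (`subset_size`), standing in for the size stated in the text; the final class-balancing step is omitted.

```python
import random
from itertools import combinations


def extract_triplets(parents, n_subsets=5, subset_size=2, seed=0):
    """Build labelled (X, Y, Z) triplets from a ground-truth DAG.

    parents maps every node to the set of its parents. subset_size is a
    hypothetical stand-in for the size of the random conditioning sets.
    """
    rng = random.Random(seed)
    nodes = sorted(parents)
    dependent, independent = [], []
    for x, y in combinations(nodes, 2):
        if x in parents[y] or y in parents[x]:
            # Adjacent pair: dependent given any conditioning set (under faithfulness)
            rest = [v for v in nodes if v not in (x, y)]
            k = min(subset_size, len(rest))
            for _ in range(n_subsets):
                dependent.append((x, y, frozenset(rng.sample(rest, k))))
        else:
            # Non-adjacent pair: condition on the union of both parent sets
            independent.append((x, y, frozenset(parents[x] | parents[y])))
    return dependent, independent


# Usage on the chain a -> b -> c: only (a, c) is non-adjacent, separated by {b}
dep, ind = extract_triplets({"a": set(), "b": {"a"}, "c": {"b"}})
```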

The CI testing performance on the DREAM4 datasets is reported in Fig. 7. The results are broadly consistent with the synthetic data scenarios: LCIT is the best performer, followed by KCIT. Meanwhile, CCIT and SCIT considerably underperform, with AUC scores around or under \(50\%\), comparable to random guessing.

6 Conclusion and future work

In this paper, we propose a representation learning approach to conditional independence testing called LCIT. Through the use of conditional normalizing flows, we transform the difficult conditional independence testing problem into an easier unconditional independence testing problem. We demonstrate the performance of LCIT through extensive experiments on synthetic datasets with highly complex relationships, real datasets in bio-genetics, and mixed-type data settings. The empirical results show that LCIT performs well and consistently outperforms existing state-of-the-art methods.

Conditional independence testing is a generic tool that underlies a wide variety of scientific tasks, especially causal discovery. LCIT therefore offers a promising general-purpose alternative for these problems and methods.

As for future work, since LCIT is the first to adopt a latent representation-based approach, it opens the door to further conditional independence tests based on representation learning, which may substantially improve on LCIT and extend to more challenging scenarios such as heterogeneous and missing data.

Notes

1. Source code and related data are available at https://github.com/baosws/LCIT.

2. While our procedure only constrains \(\left( \epsilon _{X},\epsilon _{Y}\right) \) to be marginally Gaussian, the experiments show that the test remains robust in a wide range of scenarios.

3. We use the KCIT implementation from the CMU causal-learn package: https://github.com/cmu-phil/causal-learn.

4. We follow the authors’ original source code in MATLAB: https://github.com/Causality-Inference/SCIT.

5. We use the implementation from the original authors: https://github.com/rajatsen91/CCIT.

6. For numerical stability, the input is appropriately scaled and translated before being fed into each function.

References

Spirtes P, Glymour C (1991) An algorithm for fast recovery of sparse causal graphs. Soc Sci Comput Rev 9:62–72

Spirtes P, Glymour CN, Scheines R, Heckerman D (2000) Causation, prediction, and search. MIT Press, London

Hünermund P, Bareinboim E (2019) Causal inference and data fusion in econometrics. arXiv preprint arXiv:1912.09104

Cao Y, Summerfield C, Park H, Giordano BL, Kayser C (2019) Causal inference in the multisensory brain. Neuron 102:1076–1087

Schölkopf B, Janzing D, Lopez-Paz D (2016) Causal and statistical learning. Oberwolfach Rep 13:1896–1899

Schölkopf B, Locatello F, Bauer S, Ke NR, Kalchbrenner N, Goyal A, Bengio Y (2021) Toward causal representation learning. Proc IEEE 109:612–634

Schölkopf B, von Kügelgen J (2022) From statistical to causal learning. arXiv preprint arXiv:2204.00607

Sachs K, Perez O, Pe’er D, Lauffenburger DA, Nolan GP (2005) Causal protein-signaling networks derived from multiparameter single-cell data. Science 308:523–529

Zhang B, Gaiteri C, Bodea LG, Wang Z, McElwee J, Podtelezhnikov AA, Zhang C, Xie T, Tran L, Dobrin R et al (2013) Integrated systems approach identifies genetic nodes and networks in late-onset Alzheimer’s disease. Cell 153:707–720

Su L, White H (2008) A nonparametric Hellinger metric test for conditional independence. Economet Theory 829–864

Diakonikolas I, Kane DM (2016) A new approach for testing properties of discrete distributions. In: Proceedings of the annual symposium on foundations of computer science, pp. 685–694

Warren A (2021) Wasserstein conditional independence testing. arXiv preprint arXiv:2107.14184

Etesami J, Zhang K, Kiyavash N (2017) A new measure of conditional dependence. arXiv preprint arXiv:1704.00607

Fukumizu K, Gretton A, Sun X, Schölkopf B (2007) Kernel measures of conditional dependence. Adv Neural Inf Process Syst

Zhang K, Peters J, Janzing D, Schölkopf B (2012) Kernel-based conditional independence test and application in causal discovery. arXiv preprint arXiv:1202.3775

Doran G, Muandet K, Zhang K, Schölkopf B (2014) A permutation-based Kernel conditional independence test. In: Proceedings of the conference on uncertainty in artificial intelligence, pp. 132–141

Strobl EV, Zhang K, Visweswaran S (2019) Approximate Kernel-based conditional independence tests for fast non-parametric causal discovery. J Causal Inference

Ramdas A, Reddi SJ, Póczos B, Singh A, Wasserman L (2015) On the decreasing power of Kernel and distance based nonparametric hypothesis tests in high dimensions. In: Proceedings of the AAAI conference on artificial intelligence

Grosse-Wentrup M, Janzing D, Siegel M, Schölkopf B (2016) Identification of causal relations in neuroimaging data with latent confounders: an instrumental variable approach. NeuroImage 825–833

Zhang H, Zhou S, Zhang K, Guan J (2017) Causal discovery using regression-based conditional independence tests. In: Proceedings of the AAAI conference on artificial intelligence

Zhang H, Zhang K, Zhou S, Guan J, Zhang J (2021) Testing independence between linear combinations for causal discovery. In: Proceedings of the AAAI conference on artificial intelligence, pp. 6538–6546

Zhang H, Zhang K, Zhou S, Guan J (2022) Residual similarity based conditional independence test and its application in causal discovery. In: Proceedings of the AAAI conference on artificial intelligence

Runge J (2018) Conditional independence testing based on a nearest-neighbor estimator of conditional mutual information. In: Proceedings of the international conference on artificial intelligence and statistics, pp. 938–947

Mukherjee S, Asnani H, Kannan S (2020) CCMI: classifier based conditional mutual information estimation. In: Proceedings of the conference on uncertainty in artificial intelligence, pp. 1083–1093

Kubkowski M, Mielniczuk J, Teisseyre P (2021) How to gain on power: novel conditional independence tests based on short expansion of conditional mutual information. J Mach Learn Res 1–57

Bellot A, van der Schaar M (2019) Conditional independence testing using generative adversarial networks. Adv Neural Inf Process Syst

Shi C, Xu T, Bergsma W, Li L (2021) Double generative adversarial networks for conditional independence testing. J Mach Learn Res 1–32

Sen R, Suresh AT, Shanmugam K, Dimakis AG, Shakkottai S (2017) Model-powered conditional independence test. Adv Neural Inf Process Syst

Tabak EG, Vanden-Eijnden E (2010) Density estimation by dual ascent of the log-likelihood. Commun Math Sci 217–233

Papamakarios G, Nalisnick ET, Rezende DJ, Mohamed S, Lakshminarayanan B (2021) Normalizing flows for probabilistic modeling and inference. J Mach Learn Res 1–64

Goodfellow I, Pouget-Abadie J, Mirza M, Xu B, Warde-Farley D, Ozair S, Courville A, Bengio Y (2014) Generative adversarial nets. Adv Neural Inf Process Syst

Kingma DP, Welling M (2013) Auto-encoding variational Bayes. arXiv preprint arXiv:1312.6114

Ho J, Chen X, Srinivas A, Duan Y, Abbeel P (2019) Flow++: improving flow-based generative models with variational dequantization and architecture design. In: International conference on machine learning, pp. 2722–2730

Baba K, Shibata R, Sibuya M (2004) Partial correlation and conditional correlation as measures of conditional independence. Aust N Z J Stat 657–664

Hotelling H (1953) New light on the correlation coefficient and its transforms. J R Stat Soc Ser B (Methodological) 193–232

Fukumizu K, Bach FR, Jordan MI (2004) Dimensionality reduction for supervised learning with reproducing kernel Hilbert spaces. J Mach Learn Res 73–99

Hoyer PO, Janzing D, Mooij JM, Peters J, Schölkopf B (2008) Nonlinear causal discovery with additive noise models. Adv Neural Inf Process Syst 689–696

Kozachenko LF, Leonenko NN (1987) Sample estimate of the entropy of a random vector. Problemy Peredachi Informatsii 9–16

Pearl J, Paz A (1986) GRAPHOIDS: graph-based logic for reasoning about relevance relations or when would x tell you more about y if you already know z? In: Proceedings of the European conference on artificial intelligence, pp. 357–363

Bland JM, Altman DG (1995) Multiple significance tests: the Bonferroni method. BMJ 310:170

Petersen L, Hansen NR (2021) Testing conditional independence via quantile regression based partial copulas. J Mach Learn Res 1–47

Rezende D, Mohamed S (2015) Variational inference with normalizing flows. In: Proceedings of the international conference on machine learning, pp. 1530–1538

Dinh L, Krueger D, Bengio Y (2014) NICE: non-linear independent components estimation. arXiv preprint arXiv:1410.8516

Goodfellow I, Bengio Y, Courville A (2016) Deep learning. MIT Press, Cambridge

Ioffe S, Szegedy C (2015) Batch normalization: accelerating deep network training by reducing internal covariate shift. In: Proceedings of the international conference on machine learning, pp. 448–456

Paszke A, Gross S, Massa F, Lerer A, Bradbury J, Chanan G, Killeen T, Lin Z, Gimelshein N, Antiga L, et al (2019) PyTorch: an imperative style, high-performance deep learning library. Adv Neural Inf Process Syst

Akiba T, Sano S, Yanase T, Ohta T, Koyama M (2019) Optuna: a next-generation hyperparameter optimization framework. In: Proceedings of the ACM SIGKDD international conference on knowledge discovery & data mining, pp. 2623–2631

Marbach D, Schaffter T, Mattiussi C, Floreano D (2009) Generating realistic in silico gene networks for performance assessment of reverse engineering methods. J Comput Biol 16:229–239

Marbach D, Prill RJ, Schaffter T, Mattiussi C, Floreano D, Stolovitzky G (2010) Revealing strengths and weaknesses of methods for gene network inference. Proc Natl Acad Sci 107:6286–6291

Pearl J (1998) Graphical models for probabilistic and causal reasoning. In: Quantified representation of uncertainty and imprecision. Springer pp. 367–389

Funding

Open Access funding enabled and organized by CAUL and its Member Institutions

Author information

Contributions

BD, TN conceived and refined the idea of this work. BD, TN designed and BD performed the experiments. All authors drafted, revised the manuscript and approved the final version.

Ethics declarations

Conflict of interest

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Duong, B., Nguyen, T. Normalizing flows for conditional independence testing. Knowl Inf Syst 66, 357–380 (2024). https://doi.org/10.1007/s10115-023-01964-w

DOI: https://doi.org/10.1007/s10115-023-01964-w