Abstract

Bayesian Networks (BNs) have become increasingly popular over the last few decades as a tool for reasoning under uncertainty in fields as diverse as medicine, biology, epidemiology, economics and the social sciences. This is especially true in real-world areas where we seek to answer complex questions based on hypothetical evidence to determine actions for intervention. However, determining the graphical structure of a BN remains a major challenge, especially when modelling a problem under causal assumptions. Solutions to this problem include the automated discovery of BN graphs from data, constructing them based on expert knowledge, or a combination of the two. This paper provides a comprehensive review of combinatoric algorithms proposed for learning BN structure from data, describing 74 algorithms including prototypical, well-established and state-of-the-art approaches. The basic approach of each algorithm is described in consistent terms, and the similarities and differences between them highlighted. Methods of evaluating algorithms and their comparative performance are discussed including the consistency of claims made in the literature. Approaches for dealing with data noise in real-world datasets and incorporating expert knowledge into the learning process are also covered.



1 Introduction

The achievements of black-box machine learning, such as neural networks, are undeniable and have contributed to a renewed interest in machine learning and artificial intelligence in general. Nevertheless, it is now well understood that black-box solutions that are restricted to predictive optimisation are unsuitable to inform decision making in domains that require transparency and tractability, such as in government policy and healthcare. The recent book by Pearl and Mackenzie (2018) highlights the need for models to be capable of reasoning under causal representation, in order to offer solutions that go beyond prediction. They illustrate this by presenting a ladder of causation that consists of three levels:

- Level 1: Models that are restricted to associational relationships, and so are capable of generating predictions only; e.g., “What symptoms should we expect to observe given disease A?”.

- Level 2: Models that involve some form of causal representation and can answer questions about interventions; e.g., “What effect would taking drug A have on symptoms B given that they are caused by disease C?”.

- Level 3: Models that offer a complete form of causal representation and can answer questions about causation that extend to counterfactual reasoning; e.g., “If I had taken drug B instead of drug A, would my symptoms caused by disease C be less severe?”

Pearl and Mackenzie also refer to the three above levels as seeing, doing and imagining, respectively. The Bayesian network (BN) framework that Pearl described a few decades ago (Pearl 1985, 1988) enables us to answer questions up to and including Level 3, although this requires that the BN model be employed under causal assumptions, in which case it is referred to as a ‘causal BN’.

A BN is a probabilistic graphical model which provides a powerful general approach especially suited to modelling complex non-deterministic systems. A BN offers a compact probabilistic representation of the system and provides a means of applying probability theory to large collections, sometimes thousands or more, of variables. They have been used in many different domains, for example, in protein (Sachs et al. 2005) and gene (Imoto et al. 2004) networks in biology, psychosis (Moffa et al. 2017) and cancer care (Sesen et al. 2013) in healthcare, engineering fault diagnosis (Cai et al. 2017), and air pollution modelling (Vitolo et al. 2018). Koller and Friedman (2009) and Darwiche (2009) provide two excellent introductions to the theory behind, and use of, BNs.

A BN consists of a graph which shows the direct dependence relationships between variables, or in a causal BN, direct cause and effect relationships. The BN also defines parameters which specify the form and the strengths of these relationships. Determining these parameters is generally much easier than recovering the graph accurately and so we focus here on the latter. The graph may be specified by human experts in a domain of interest, but here, we describe structure learning algorithms which aim to learn the graph from data. We focus on combinatoric algorithms where the approach is to search or constrain the finite discrete space of possible graphs in some way. This paper aims to provide intuitive descriptions of a comprehensive range of these algorithms from the earliest, but often still competitive, algorithms, to some of the most recent advances.

Inevitably, in a field as broad and rapidly developing as this, we have had to omit, or only briefly refer to, some aspects of structure learning. Fortunately, there are other recent survey papers that cover some of these aspects more completely. For example, the paper by Glymour et al. (2019) provides more coverage of functional causal models, where assumptions about the functional form of the effect, causes and noise relationships can be used to deduce causal relationships. Vowels et al. (2021) concentrate on approaches which treat structure learning as a continuous optimisation problem, optimising an objective function and handling the acyclicity constraint as a continuous function, and Zanga et al. (2022) cover algorithms which learn from mixed observational and experimental data, and those which learn cyclic graphs. Moraffah et al. (2020) and Nogueira et al. (2022) deal with structure learning from time-series data, which we do not cover in this paper.

The paper is structured as follows: the next section covers some preliminaries about BNs, Sect. 3 covers constraint-based learning, Sect. 4 score-based learning, and Sect. 5 hybrid learning and some non-combinatorial approaches. Figure 6 provides an overview of the evolution of structure learning algorithms that are covered in this paper, and will be referenced in subsequent sections. Section 6 covers various practical considerations when applying these algorithms to synthetic and real data, including how to evaluate their output, as well as a discussion of comparative reviews of algorithm performance. Section 6 also discusses some of the main approaches these algorithms may incorporate to handle noise in the data, methods for incorporating expert knowledge into the structure learning process, some open-source software packages, and some guidelines on choosing algorithms. Lastly, we provide our concluding remarks in Sect. 7.

2 Preliminaries

A Bayesian Network, \(\mathcal{B}\), is defined by a tuple consisting of a Directed Acyclic Graph (DAG) \(G\) and a set of parameters \({\varvec{\Theta}}\) defining the strength and the shape of the relationships between variables (we shall denote sets in boldface throughout):

$$\mathcal{B}=\left(G, {\varvec{\Theta}}\right)$$

The DAG, \(G\), consists of a set of nodes (also known as vertices) \({\varvec{X}}\), each of which corresponds to one of the \(n\) variables under consideration, \({\varvec{X}}=\{{X}_{1}, \dots , {X}_{n}\}\), and a set of directed edges (or arcs) \({\varvec{E}}\), so that:

$$G=\left({\varvec{X}}, {\varvec{E}}\right)$$

We will use plain capital letters to represent individual variables or nodes, e.g. \(A, Y, {X}_{i}\). A directed edge, for example \(A \to B\), represents a direct conditional relationship between \(A\) and \(B\), or under a causal assumption, means that \(A\) is a direct cause of \(B\). The BN may simply be considered as a compact representation of the conditional independence relations in observational data, and in this non-causal interpretation, it may be used to infer conditional and marginal distributions in the observational data to provide predictive analysis. However, if we interpret the BN to be a causal BN, then the BN is a unique DAG that enables us to reason about intervention and understand the system being modelled at a deeper level. Where we have a directed edge \(A \to B\) in a graph, we say that \(A\) is a parent of \(B\), or equivalently, \(B\) is a child of \(A\).

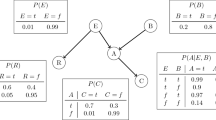

Figure 1 shows a DAG representing a simple model of two causes of (lung) cancer and two effects of cancer. It encapsulates the relationships between the variables, in particular the conditional dependence and independence relations between them. Conditional probability tells us, for example, the probability that a person will have a cloudy X-ray given that we know they have Cancer, written as \(P\left(XRay \mid Cancer\right)\), where “|” means “given”. Conditional independence tells us which variables are irrelevant to that probability. For example,

$$P\left(XRay \mid Cancer, Smoker\right)=P\left(XRay \mid Cancer\right)$$

tells us that the probability of a cloudy X-ray is independent of whether Smoker is true given that we know the person has Cancer. The symbol “\({\perp}\)” means “is independent of”, and so this is written as:

$$XRay \perp Smoker \mid Cancer$$

Figure 2 shows the three causal classes possible with three variables, together with all the conditional independence or dependence relations between \(A\) and \(C\) given \(B\) that they entail. The DAGs in Fig. 2a and b entail the same conditional independence relationship, which means that they cannot be distinguished by their conditional independence relations using observational data alone. When this is the case, we say that they belong to the same Markov Equivalence Class (MEC, but simply referred to as equivalence class from now on). However, in Fig. 2c, \(A\) and \(C\) are independent but become dependent given \(B\) (indicated by the symbol “\({\not \perp}\)”). This kind of relationship is known as explaining away and cannot be represented in undirected probabilistic graphs. A node which has multiple parents is known as a collider. The configuration shown in Fig. 2c, where \(B\) is a collider and there is no edge between \(A\) and \(C\), will be referred to here as a v-structure, although other authors use the term unshielded collider.

Probabilistic graphical models represent conditional independence through the notion of graphical separation. For example, Cancer “separates” Smoker from XRay in the graph in Fig. 1. DAGs use a special form of graphical separation known as d-separation to represent conditional independence relationships. D-separation is defined as follows (Pearl 1988): if \(X\) and \(Y\) are nodes in DAG \(G\), a subset of the remaining nodes, \({\varvec{S}}\), d-separates \(X\) from \(Y\) if \({\varvec{S}}\) blocks all paths between \(X\) and \(Y\). A path between \(X\) and \(Y\) is blocked by \({\varvec{S}}\) if at least one of the nodes between \(X\) and \(Y\) on that path meets one of the following conditions:

- it is a collider on that path (both of its adjacent edges on the path point into it), and neither it nor any of its descendants is in \({\varvec{S}}\); or

- it is not a collider on that path and it is in \({\varvec{S}}\).

If \({\varvec{S}}\) does d-separate \(X\) and \(Y\), we say that \({\varvec{S}}\) is a Sepset (also referred to as cut-set or separating set) for \(X\) and \(Y\). Figure 3 presents four examples of applying the d-separation rules to examine whether \(X\) and \(Y\) are d-separated, where the different conditioning sets are indicated by shaded nodes. Paths which are not blocked are known as active paths and are shown by green arrows, and conditioning sets which are minimal Sepsets are shaded in pink; otherwise, they are shaded in grey.
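To make the blocking rules concrete, the following is a minimal Python sketch that tests d-separation by enumerating every simple path between two nodes and applying the two conditions above. The edge-set representation and the function name `d_separated` are our own illustrative choices, and we assume that Fig. 1 contains the edges Pollution \(\to\) Cancer, Smoker \(\to\) Cancer, Cancer \(\to\) XRay and Cancer \(\to\) Dyspnoea. Because the number of paths can grow exponentially, this is only practical for small graphs.

```python
def d_separated(edges, x, y, s):
    """Return True if the node set s d-separates x and y in a DAG.

    edges is a set of (parent, child) pairs. Every simple path between x and y
    in the skeleton is enumerated and checked against the two blocking rules.
    """
    s = set(s)
    children, neighbours = {}, {}
    for parent, child in edges:
        children.setdefault(parent, set()).add(child)
        neighbours.setdefault(parent, set()).add(child)
        neighbours.setdefault(child, set()).add(parent)

    def descendants(node):
        # Depth-first search along directed edges.
        seen, stack = set(), [node]
        while stack:
            for child in children.get(stack.pop(), ()):
                if child not in seen:
                    seen.add(child)
                    stack.append(child)
        return seen

    def blocked(path):
        # A path is blocked if any intermediate node meets one of the two conditions.
        for prev, node, nxt in zip(path, path[1:], path[2:]):
            if (prev, node) in edges and (nxt, node) in edges:  # collider on this path
                if node not in s and not (descendants(node) & s):
                    return True
            elif node in s:  # non-collider that is conditioned on
                return True
        return False

    def paths(current, path):
        # Yield every simple path from current to y.
        if current == y:
            yield path
            return
        for nb in neighbours.get(current, ()):
            if nb not in path:
                yield from paths(nb, path + [nb])

    return all(blocked(p) for p in paths(x, [x]))


fig1 = {("Pollution", "Cancer"), ("Smoker", "Cancer"),
        ("Cancer", "XRay"), ("Cancer", "Dyspnoea")}
print(d_separated(fig1, "Smoker", "XRay", {"Cancer"}))     # True
print(d_separated(fig1, "Smoker", "Pollution", set()))     # True: unconditioned collider
print(d_separated(fig1, "Smoker", "Pollution", {"XRay"}))  # False: collider's descendant conditioned on
```

The last two calls show explaining away: conditioning on a descendant of the collider Cancer opens the path between its two causes.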

The BN represents the set of conditional independence relationships (and implicitly therefore, the dependence relationships too) in the joint probability distribution over all the variables, \(P({\varvec{X}})\). Two assumptions about the DAG in a BN are made:

- Markov Condition: every variable \(X\) in \(G\) is conditionally independent of its non-descendants given its parents. This is equivalent to saying that every conditional independence implied by d-separation in the DAG is present in the joint probability distribution \(P({\varvec{X}})\). Importantly, this condition means that the joint probability distribution \(P({\varvec{X}})\) can be decomposed as follows (where \({\varvec{Pa}}\left({X}_{i}\right)\) are the parents of \({X}_{i}\)):

$$P\left({\varvec{X}}\right)=\prod_{i=1}^{n}P\left({X}_{i} \mid {\varvec{Pa}}\left({X}_{i}\right)\right)$$

- Minimality Condition: we cannot remove any of the edges in the DAG without the graph implying a conditional independence relationship that is not present in \(P({\varvec{X}})\).
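The factorisation can be checked numerically on the Fig. 1 example. The sketch below builds the joint distribution as the product of the local conditional probabilities and confirms by brute-force enumeration that \(P(XRay \mid Cancer, Smoker) = P(XRay \mid Cancer)\). We assume that Pollution and Smoker are the parents of Cancer; the Dyspnoea probabilities are those used as an example later in this section, and all other numbers are hypothetical values chosen only for illustration.

```python
from itertools import product

# Assumed structure of Fig. 1 and hypothetical parameters.
# p_true[var][parent_values] = P(var = True | parents take parent_values).
parents = {
    "Pollution": (),
    "Smoker": (),
    "Cancer": ("Pollution", "Smoker"),
    "XRay": ("Cancer",),
    "Dyspnoea": ("Cancer",),
}
p_true = {
    "Pollution": {(): 0.1},
    "Smoker": {(): 0.3},
    "Cancer": {(False, False): 0.001, (False, True): 0.03,
               (True, False): 0.02, (True, True): 0.05},
    "XRay": {(False,): 0.2, (True,): 0.9},
    "Dyspnoea": {(False,): 0.05, (True,): 0.9},
}
variables = list(parents)


def joint(assignment):
    """P(x_1, ..., x_n) as the product of P(x_i | Pa(x_i))."""
    prob = 1.0
    for var in variables:
        p = p_true[var][tuple(assignment[pa] for pa in parents[var])]
        prob *= p if assignment[var] else 1.0 - p
    return prob


def marginal(evidence):
    """Sum the joint over all full assignments consistent with the evidence."""
    total = 0.0
    for values in product([False, True], repeat=len(variables)):
        assignment = dict(zip(variables, values))
        if all(assignment[k] == v for k, v in evidence.items()):
            total += joint(assignment)
    return total


def conditional(query, evidence):
    return marginal({**query, **evidence}) / marginal(evidence)


print(conditional({"XRay": True}, {"Cancer": True}))                  # about 0.9
print(conditional({"XRay": True}, {"Cancer": True, "Smoker": True}))  # about 0.9 as well
```

Without conditioning on Cancer, however, Smoker does change the probability of a cloudy X-ray, as d-separation predicts.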

Pearl (1988) expresses these two conditions by saying that \(G\) is a minimal Independence-Map (I-map) of \(P({\varvec{X}})\). If the DAG represents the causal structure of the variables, the Markov Condition is referred to as the Causal Markov Condition since it links the probabilistic and causal interpretations of the DAG.

We wish to learn the BN from a set of data \({\varvec{D}}\), which consists of \(N\) data instances \({\varvec{D}}=\{{{\varvec{d}}}_{1}, \dots , {{\varvec{d}}}_{N}\}\), each of which defines the value of each of the variables \({X}_{1}, \dots , {X}_{n}\), that is, \({\varvec{d}}=\{{x}_{1}, \dots , {x}_{n}\}\) (lower case letters denote values or states of a variable). In discrete BNs, each variable takes one of a finite set of values, and the probability of each value depends upon the values of the variable's parents. For example, in Fig. 1, the probability of Dyspnoea occurring might be 0.9 if Cancer were true, but only 0.05 if Cancer were false. This set of conditional probabilities for a discrete variable is known as the Conditional Probability Table (CPT).

Linear Gaussian BNs are based on continuous variables which are assumed to follow Gaussian distributions. Each value of a child variable is a linear combination of its parents’ values plus a local noise component. These networks are parameterised with Conditional Probability Distributions (CPDs), as opposed to CPTs. Unless stated otherwise, we will assume linear relationships when we use the term Gaussian BN. Hybrid BNs support both discrete and continuous distributions. The most common form of hybrid BN is a Conditional Linear Gaussian BN (CLGBN) which allows discrete variables to be parents of a continuous variable, with a separate Gaussian Linear Model with different weighting coefficients for each set of discrete parent values (Geiger and Heckerman 1994). While CLGBNs do not generally allow continuous variables to be parents of discrete ones, works such as those by Andrews et al. (2018) describe hybrid BNs which remove this restriction.

Constructing a BN involves two main phases: (a) determining the graphical structure and (b) determining the parameters \({\varvec{\Theta}}\). The graph and the parameters of a BN model can be determined by expert knowledge, learnt from data, or a combination of both. This paper focuses on the problem of learning the structure of BNs from data, or from both data and expert knowledge.

Learning the structure of a BN represents an NP-hard problem, partly because the solution space of graphs grows super-exponentially with the number of variables. Robinson (1973) showed that the recurrence relation:

$$|{G}_{n}|=\sum_{k=1}^{n}{\left(-1\right)}^{k+1}\binom{n}{k}{2}^{k\left(n-k\right)}|{G}_{n-k}|$$

computes the number of possible DAGs for \(n\) variables, \(|{G}_{n}|\), with \(|{G}_{0}|\) defined as 1. Using this recurrence formula, Table 1 illustrates how the number of possible DAGs grows super-exponentially as \(n\) increases. Clearly, a naïve exhaustive search is not a solution for any problem with a reasonable number of variables.
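Robinson's recurrence is straightforward to evaluate exactly. The short Python sketch below (the function name `num_dags` is ours) uses Python's arbitrary-precision integers and memoisation to show how quickly the number of labelled DAGs grows.

```python
from functools import lru_cache
from math import comb


@lru_cache(maxsize=None)
def num_dags(n):
    """Number of labelled DAGs on n nodes, |G_n|, by Robinson's recurrence."""
    if n == 0:
        return 1  # |G_0| is defined as 1
    return sum((-1) ** (k + 1) * comb(n, k) * 2 ** (k * (n - k)) * num_dags(n - k)
               for k in range(1, n + 1))


print([num_dags(n) for n in range(1, 8)])
# [1, 3, 25, 543, 29281, 3781503, 1138779265]
```

Already at seven variables there are over a billion candidate DAGs.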

In general, structure learning algorithms fall into two main classes. The first class is constraint-based methods that eliminate and orientate edges based on a series of conditional independence (CI) tests. The second class, score-based methods, represent a traditional machine learning approach where the aim is to search over different graphs maximising an objective function. The graph that maximises the objective function is returned as the preferred graph. Additionally, hybrid algorithms that combine score-based and constraint-based approaches are often viewed as a third class of structure learning. Chickering et al. (1994) demonstrate that score-based learning is NP-hard, and Chickering et al. (2004) show that constraint-based learning is as well. This is true even under favourable conditions such as limiting the number of parents to three and having a constant time method of computing scores from the data.

3 Constraint-based learning

Constraint-based learning uses CI tests on the data to determine the conditional independence relationships between the variables under investigation, and hence construct a graph consistent with the data. Constraint-based learning is often assumed to discover causal relationships under the assumptions of causal faithfulness and causal sufficiency, which we cover later in this section. As the simple examples in Fig. 2 have already shown, a set of independence relationships may be consistent with multiple DAGs. Hence, rather than producing a single DAG, constraint-based algorithms return the set of DAGs consistent with the independence relationships in the data, that is, the equivalence class introduced in Sect. 2.

Verma and Pearl (1990) show that two DAGs belong to the same equivalence class if they have the same adjacencies (same skeleton) and the same set of v-structures. The adjacencies and v-structures are represented by a Partially Directed Acyclic Graph (PDAG) which has a mixture of directed and undirected edges, with the directed edges indicating the v-structures. Figure 4a shows three DAGs which entail the same set of independence relationships even though the arrow orientations vary between \(A, B\) and \(C\). Figure 4b shows the corresponding PDAG, with directed edges indicating the v-structure \(B \to D \leftarrow C\). Implicit in that PDAG is that \(B-D-E\) and \(C-D-E\) are not v-structures, so we can deduce that there must be a directed edge \(D \to E\); filling in all the additional implicit directed arrows such as this one creates a Complete PDAG (CPDAG), as shown in Fig. 4c. The CPDAG represents the equivalence class. A directed edge in the CPDAG means that all the equivalent DAGs must have that same directed edge, whereas an undirected edge in the CPDAG indicates that the equivalent DAGs can have a directed edge in either direction.

Fig. 4 Illustration of the equivalence classes, PDAGs and CPDAGs, based on an example in Verma and Pearl (1990)
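Verma and Pearl's characterisation translates directly into a test for Markov equivalence. The sketch below compares the skeletons and v-structures of two DAGs given as sets of (parent, child) pairs. The example DAGs are our own, loosely modelled on Fig. 4 by assuming the edges \(A \to B\), \(A \to C\), \(B \to D\), \(C \to D\) and \(D \to E\); they are not read from the figure itself.

```python
def skeleton(edges):
    """Undirected adjacencies of a DAG given as (parent, child) pairs."""
    return {frozenset(e) for e in edges}


def v_structures(edges):
    """All (a, b, c) with a -> b <- c and a, c non-adjacent (a < c avoids duplicates)."""
    skel = skeleton(edges)
    parents = {}
    for p, c in edges:
        parents.setdefault(c, set()).add(p)
    return {(a, b, c)
            for b, pa in parents.items()
            for a in pa for c in pa
            if a < c and frozenset((a, c)) not in skel}


def markov_equivalent(edges1, edges2):
    """Same skeleton and same v-structures (Verma and Pearl 1990)."""
    return (skeleton(edges1) == skeleton(edges2)
            and v_structures(edges1) == v_structures(edges2))


g1 = {("A", "B"), ("A", "C"), ("B", "D"), ("C", "D"), ("D", "E")}
g2 = {("B", "A"), ("A", "C"), ("B", "D"), ("C", "D"), ("D", "E")}  # A - B reversed
g3 = {("A", "B"), ("A", "C"), ("B", "D"), ("C", "D"), ("E", "D")}  # D - E reversed
print(v_structures(g1))           # {('B', 'D', 'C')}
print(markov_equivalent(g1, g2))  # True
print(markov_equivalent(g1, g3))  # False: E -> D creates new v-structures
```

Reversing \(A-B\) leaves both the skeleton and the single v-structure unchanged, whereas reversing \(D \to E\) introduces the v-structures \(B \to D \leftarrow E\) and \(C \to D \leftarrow E\), which is why \(D \to E\) is compelled in the CPDAG.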

We noted in the Preliminaries that two assumptions are made when formally defining a Bayesian Network: the Markov and Minimality Conditions. To recap, these mean that all conditional independence relationships implied by d-separation in the DAG are present in the probability distribution. In general, however, the converse is not necessarily true, in that there may be conditional independence relationships present in the probability distribution that are not reflected by the DAG. If this is the case, we say that the DAG and the probability distribution are unfaithful to one another.

Figure 5 shows an example in which the network is unfaithful. Applying d-separation rules to the DAG would indicate that \(A\) and \(C\) are not independent. However, the particular values chosen for the CPTs shown give rise to a probability distribution where \(A\) and \(C\) are independent. Thus, there is an independence in the probability distribution which is not reflected by the DAG, and so it is unfaithful. In other words, this example shows that it is possible to have causation without association. Note that constraint-based algorithms do often make the additional assumption that all independence relationships present in the distribution are reflected in the DAG. In this case, we say that the DAG and distribution are faithful to each other, or that the DAG is a perfect map (P-map) of the distribution.
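Unfaithfulness typically arises when the effects along different paths cancel exactly. As a hypothetical illustration of our own (not the CPTs of Fig. 5), consider a linear model with edges \(A \to B\), \(B \to C\) and \(A \to C\), where \(B = \beta A + \varepsilon_B\) and \(C = \gamma B + \delta A + \varepsilon_C\) with noise terms independent of \(A\). Then \(Cov(A, C) = (\beta\gamma + \delta)\,Var(A)\), which is zero whenever \(\delta = -\beta\gamma\), even though \(A\) is a direct cause of \(C\). The sketch below evaluates this with exact fractions; the function name is ours.

```python
from fractions import Fraction


def implied_cov_ac(beta, gamma, delta, var_a=1):
    """Cov(A, C) for the hypothetical linear model A -> B -> C plus A -> C.

    B = beta * A + e_B and C = gamma * B + delta * A + e_C, with noise terms
    independent of A, so Cov(A, B) = beta * Var(A).
    """
    cov_ab = beta * var_a
    return gamma * cov_ab + delta * var_a


beta, gamma = Fraction(1, 2), Fraction(3)
print(implied_cov_ac(beta, gamma, delta=Fraction(1)))    # 5/2: A and C are dependent
print(implied_cov_ac(beta, gamma, delta=-beta * gamma))  # 0: the two paths cancel
```

In the second case a CI test on \(A\) and \(C\) would report independence, so a constraint-based algorithm assuming faithfulness would wrongly omit the edge \(A \to C\).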

Section 3.1 describes the CI tests used to determine the set of independence relationships, and the remaining three subsections each discuss a group of constraint-based algorithms. Section 3.2 describes the prototypical constraint-based algorithms that learn the graph structure globally and make the assumption of causal sufficiency, which is also explained there. Section 3.3 describes local learning algorithms, which learn the graph structure local to each variable; these local structures can then be merged to produce the overall graph. Finally, Sect. 3.4 describes algorithms which assume the possible existence of latent variables (i.e., causal insufficiency), whose output is represented by a new kind of graph covered in that subsection. The main constraint-based algorithms covered in these subsections are shown in red hues in Fig. 6, which also illustrates the evolution of structure learning algorithms covered in this review. Lastly, Table 2 summarises the constraint-based algorithms covered in terms of whether they are global or local, the type of output they produce, and the key assumptions the algorithms make. Note that faithfulness assumptions that are stronger than usual are marked in red text, and those that are weaker are marked in blue text.

3.1 Determining conditional independence

CI tests check whether nodes \(A\) and \(B\) are conditionally independent given a conditioning set of nodes \({\varvec{S}}=\{{S}_{1}, \dots , {S}_{q}\}\), where \(q\) is the number of nodes in the conditioning set. In other words, each test decides whether \({\varvec{S}}\) will be a Sepset for \(A\) and \(B\) in the learnt graph. Although we generically describe these as tests of conditional independence, the same tests are also used when \({\varvec{S}}=\varnothing\); that is, when testing whether \(A\) and \(B\) are unconditionally independent. These tests rely on an arbitrary threshold to decide conditional independence, and can only identify conditional independence relationships present in the dataset, which may not reflect those present in the true distribution. Therefore, we must recognise that these CI tests can make “mistakes”, and that these errors are more likely to occur with smaller sample sizes. Minimising the effects of these errors is an important consideration when designing constraint-based algorithms because if an edge is mistakenly removed from the graph at an early stage in the discovery process, this is likely to cause the discovery of incorrect edges at a later stage.

The most commonly used CI tests for discrete BNs are the \({G}^{2}\) and \({\chi }^{2}\) statistical tests and mutual information (MI), whereas for Gaussian BNs, Fisher’s z-test is frequently used. CI tests such as \({G}^{2}\) and \({\chi }^{2}\) assume a null hypothesis that \(A\) and \(B\) are conditionally independent given \({\varvec{S}}\). Each test produces a test statistic from which a p-value is computed: the probability of obtaining a statistic at least as extreme as the one observed if the null hypothesis were true. If the p-value is below a predefined significance level, \(\alpha\), typically chosen as 0.05, the null hypothesis is rejected and \(A\) and \(B\) are assumed to be conditionally dependent given \({\varvec{S}}\). Conversely, if the p-value is above \(\alpha\), the null hypothesis cannot be rejected and we assume \(A\) and \(B\) are conditionally independent given \({\varvec{S}}\). The general form of the \({G}^{2}\) test statistic, for observed counts \({O}_{i}\) and expected counts \({E}_{i}\) in the cells of a contingency table, is:

$${G}^{2}=2\sum_{i}{O}_{i}\ln\left(\frac{{O}_{i}}{{E}_{i}}\right)$$

which, when applied to test the conditional independence of discrete variables \(A\) and \(B\) given conditioning set \({\varvec{S}}=\{{S}_{1}, \dots , {S}_{q}\}\), becomes (Spirtes et al. 2000):

$${G}^{2}=2\sum_{a,b,s}{N}_{abs}\ln\left(\frac{{N}_{abs}{N}_{s}}{{N}_{as}{N}_{bs}}\right)$$

where \(a, b\) range over the values of \(A, B\) respectively, and \(s\) ranges over all the combinations of values of the conditioning set \({\varvec{S}}\). \({N}_{abs}\) is the number of data cases with specific values \(A=a, B=b, {\varvec{S}}=\{{s}_{1}, \dots , {s}_{q}\}\). \({N}_{bs}\) is the marginal count over all values of \(a\) for data cases with \(B=b, {\varvec{S}}=\{{s}_{1}, \dots , {s}_{q}\}\), with \({N}_{as}\) and \({N}_{s}\) being analogous marginal counts over \(b\) and \(a, b\) respectively. The degrees of freedom, \(df\), which are required to determine the p-value from the test statistic, depend upon the cardinality of the variables and are given by:

$$df=\left(\left|A\right|-1\right)\left(\left|B\right|-1\right)\prod_{i=1}^{q}\left|{S}_{i}\right|$$

where \(\left|A\right|, \left|B\right|, \left|{S}_{i}\right|\) are the number of distinct values that nodes \(A, B, {S}_{i}\) can take respectively. \(df\) is defined here under the assumption that none of the values of \({N}_{abs}\) is zero, that is, every possible combination of values is present in the data. However, it is likely that some combinations of values will be absent with limited sample sizes, and so Spirtes et al. (2000) suggest a heuristic of reducing \(df\) by 1 for every combination of values where \({N}_{abs}\) is zero. The \({\chi }^{2}\) CI test is similar, but with the test statistic defined as:

$${\chi }^{2}=\sum_{a,b,s}\frac{{\left({N}_{abs}-{E}_{abs}\right)}^{2}}{{E}_{abs}}, \qquad {E}_{abs}=\frac{{N}_{as}{N}_{bs}}{{N}_{s}}$$

where \({E}_{abs}\) is the count expected under the null hypothesis of conditional independence.
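The \({G}^{2}\) test, its degrees of freedom and the empty-cell heuristic can be sketched in plain Python as follows. To stay dependency-free, the chi-squared tail probability is computed from the regularised incomplete gamma function (a series for small arguments and a continued fraction for large ones); a statistics library would normally be used instead. Estimating cardinalities from the observed data, flooring \(df\) at 1, the row-of-dicts data format and all function names are our own assumptions, and the example data are synthetic counts constructed so that \(A\) and \(B\) are exactly independent given \(S\) but dependent marginally.

```python
import math
from collections import Counter


def chi2_sf(x, df):
    """P(X >= x) for a chi-squared variable, i.e. Q(df/2, x/2)."""
    if x <= 0:
        return 1.0
    s, z = df / 2.0, x / 2.0
    if z < s + 1.0:
        # Series for the lower function P(s, z); Q = 1 - P.
        term = math.exp(s * math.log(z) - z - math.lgamma(s + 1.0))
        total, k = term, 1
        while term > 1e-17 * total:
            term *= z / (s + k)
            total += term
            k += 1
        return max(0.0, 1.0 - total)
    # Continued fraction for Q(s, z) (modified Lentz method).
    tiny = 1e-300
    b = z + 1.0 - s
    c, d = 1.0 / tiny, 1.0 / b
    h = d
    for i in range(1, 10_000):
        an = -i * (i - s)
        b += 2.0
        d = an * d + b
        d = tiny if abs(d) < tiny else d
        c = b + an / c
        c = tiny if abs(c) < tiny else c
        d = 1.0 / d
        delta = d * c
        h *= delta
        if abs(delta - 1.0) < 1e-15:
            break
    return math.exp(s * math.log(z) - z - math.lgamma(s)) * h


def g2_test(rows, a, b, s=()):
    """G^2 test of A independent of B given S; returns (G^2, df, p-value)."""
    def cfg(r):
        return tuple(r[v] for v in s)  # configuration of the conditioning set

    n_abs = Counter((r[a], r[b], cfg(r)) for r in rows)
    n_as = Counter((r[a], cfg(r)) for r in rows)
    n_bs = Counter((r[b], cfg(r)) for r in rows)
    n_s = Counter(cfg(r) for r in rows)
    g2 = 2.0 * sum(n * math.log(n * n_s[sv] / (n_as[av, sv] * n_bs[bv, sv]))
                   for (av, bv, sv), n in n_abs.items())
    card = {v: len({r[v] for r in rows}) for v in (a, b, *s)}  # observed cardinalities
    prod_s = math.prod(card[v] for v in s)
    df = (card[a] - 1) * (card[b] - 1) * prod_s
    # Spirtes et al. (2000) heuristic: one df fewer per empty cell (floor of 1 is ours).
    df = max(1, df - (card[a] * card[b] * prod_s - len(n_abs)))
    return g2, df, chi2_sf(g2, df)


def independent_rows(s_val, p_a0, p_b0, n):
    """Synthetic rows in which A and B are exactly independent given S = s_val."""
    rows = []
    for a_val, pa in ((0, p_a0), (1, 1 - p_a0)):
        for b_val, pb in ((0, p_b0), (1, 1 - p_b0)):
            rows += [{"A": a_val, "B": b_val, "S": s_val}] * round(n * pa * pb)
    return rows


data = independent_rows(0, 0.8, 0.7, 1000) + independent_rows(1, 0.3, 0.4, 1000)
print(g2_test(data, "A", "B"))          # large G^2, tiny p-value: marginally dependent
print(g2_test(data, "A", "B", ("S",)))  # (0.0, 2, 1.0): independent given S
```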

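Another CI test for discrete BNs is mutual information (MI), which measures the amount of information shared between two variables (Cheng et al. 1997). The mutual information between two variables \(A\) and \(B\) is:

$$MI\left(A, B\right)=\sum_{a,b}P\left(a, b\right)\log\frac{P\left(a, b\right)}{P\left(a\right)P\left(b\right)}$$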

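where \(P(a, b)\) is shorthand for \(P(A=a, B=b)\), and similarly for \(P(a)\) and \(P(b)\). Conditional mutual information is defined as:

$$MI\left(A, B \mid {\varvec{S}}\right)=\sum_{a,b,{\varvec{s}}}P\left(a, b, {\varvec{s}}\right)\log\frac{P\left(a, b \mid {\varvec{s}}\right)}{P\left(a \mid {\varvec{s}}\right)P\left(b \mid {\varvec{s}}\right)}$$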

where \(P\left(a, b \mid {\varvec{s}}\right)\) is shorthand for \(P\left(A=a, B=b \mid {S}_{1}={s}_{1}, \dots , {S}_{q}={s}_{q}\right)\), and similarly for \(P\left(a \mid {\varvec{s}}\right)\) and \(P\left(b \mid {\varvec{s}}\right)\). A value of 0 for \(MI\left(A, B \mid {\varvec{S}}\right)\) indicates that there is no information flow between \(A\) and \(B\) when conditioned on \({\varvec{S}}\), that is, they are conditionally independent. In practice, a threshold value \(\epsilon\) is chosen so that if \(MI\left(A, B \mid {\varvec{S}}\right)< \epsilon\), conditional independence is assumed. The threshold \(\epsilon\) may be given an arbitrary small value such as 0.01 (Cheng et al. 1997), or it may be estimated by comparing the predictive accuracy obtained with different values (Cheng and Greiner 1999). If we rewrite the frequencies used in the definition of the \({G}^{2}\) test statistic as probabilities, we see that it differs from mutual information only by a scaling factor (with MI computed using natural logarithms):

$${G}^{2}=2N\cdot MI\left(A, B \mid {\varvec{S}}\right)$$
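A minimal sketch of the MI-based test, assuming the same row-of-dicts data format as above (function names are ours): empirical conditional mutual information is computed from counts in nats, and independence is declared when it falls below \(\epsilon\). Note that the scale of \(\epsilon\) depends on the logarithm base used, which the threshold of 0.01 quoted above does not fix.

```python
import math
from collections import Counter


def conditional_mi(rows, a, b, s=()):
    """Empirical MI(A, B | S) in nats; with s=() this is the unconditional MI."""
    n = len(rows)

    def cfg(r):
        return tuple(r[v] for v in s)

    n_abs = Counter((r[a], r[b], cfg(r)) for r in rows)
    n_as = Counter((r[a], cfg(r)) for r in rows)
    n_bs = Counter((r[b], cfg(r)) for r in rows)
    n_s = Counter(cfg(r) for r in rows)
    # P(a,b,s) * log[P(a,b|s) / (P(a|s) P(b|s))] with relative frequencies;
    # the ratio of conditional probabilities equals N_abs * N_s / (N_as * N_bs).
    return sum((c / n) * math.log(c * n_s[sv] / (n_as[av, sv] * n_bs[bv, sv]))
               for (av, bv, sv), c in n_abs.items())


def mi_independent(rows, a, b, s=(), epsilon=0.01):
    """Threshold decision: assume independence when MI < epsilon."""
    return conditional_mi(rows, a, b, s) < epsilon


copy_rows = [{"A": 0, "B": 0}] * 50 + [{"A": 1, "B": 1}] * 50  # B copies A
indep_rows = [{"A": a, "B": b} for a in (0, 1) for b in (0, 1)] * 25
print(conditional_mi(copy_rows, "A", "B"))   # ln 2, about 0.693
print(mi_independent(indep_rows, "A", "B"))  # True
# The corresponding G^2 statistic is 2N times the same quantity.
print(2 * len(copy_rows) * conditional_mi(copy_rows, "A", "B"))
```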

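In the case of Gaussian BNs, Fisher’s Z-test is commonly used to test the null hypothesis that the partial correlation coefficient is zero. Fisher’s Z-test uses Fisher’s Z-transformation, which is defined as:

$$\widehat{Z}=\frac{1}{2}\ln\left(\frac{1+{\widehat{\rho }}_{ab|{\varvec{s}}}}{1-{\widehat{\rho }}_{ab|{\varvec{s}}}}\right)$$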

where \({\widehat{\rho }}_{ab|{\varvec{s}}}\) is the partial correlation coefficient between values \(a\) of node \(A\) and values \(b\) of node \(B\), given values \({\varvec{s}}\) of the conditioning set \({\varvec{S}}\). The value \({\widehat{\rho }}_{ab|{\varvec{s}}}\) can be computed recursively with conditioning sets of increasing size (Anderson 1962; de la Fuente et al. 2004). This transformed version of the partial correlation, \(\widehat{Z}\), approximately follows a normal distribution:

$$\widehat{Z}\sim \mathcal{N}\left(\frac{1}{2}\ln\left(\frac{1+{\rho }_{ab|{\varvec{s}}}}{1-{\rho }_{ab|{\varvec{s}}}}\right), \frac{1}{N-q-3}\right)$$

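where \(q\) is the number of variables in the conditioning set. We can, therefore, use a standard normal Z-score to compute the p-value of obtaining the computed partial correlation coefficient under the null hypothesis of zero partial correlation (\({Z}_{0}=0\)):

$$p=2\left(1-\Phi\left(\sqrt{N-q-3}\,\left|\widehat{Z}-{Z}_{0}\right|\right)\right)$$

where \(\Phi\) is the cumulative distribution function of the standard normal distribution.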
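The steps above can be sketched in dependency-free Python: the partial correlation is computed with the standard first-order recursion (adding one conditioning variable at a time), transformed with Fisher's Z, and converted to a two-sided p-value using the normal CDF via `math.erf`. The recursion recomputes sub-problems and so is exponential in \(q\); for larger conditioning sets an approach based on inverting the correlation matrix is usually preferable. The simulated linear Gaussian chain \(X \to Y \to Z\), its coefficients of 0.8 and all function names are hypothetical.

```python
import math
import random


def correlation(x, y):
    """Pearson correlation of two equal-length lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sxx = sum((xi - mx) ** 2 for xi in x)
    syy = sum((yi - my) ** 2 for yi in y)
    return sxy / math.sqrt(sxx * syy)


def partial_correlation(data, a, b, s):
    """rho_{ab|s} via the recursion that adds one conditioning variable at a time."""
    if not s:
        return correlation(data[a], data[b])
    c, rest = s[0], s[1:]
    r_ab = partial_correlation(data, a, b, rest)
    r_ac = partial_correlation(data, a, c, rest)
    r_bc = partial_correlation(data, b, c, rest)
    return (r_ab - r_ac * r_bc) / math.sqrt((1 - r_ac ** 2) * (1 - r_bc ** 2))


def fisher_z_test(data, a, b, s=()):
    """Two-sided p-value for the null hypothesis of zero partial correlation."""
    n, q = len(data[a]), len(s)
    r = partial_correlation(data, a, b, list(s))
    z_hat = 0.5 * math.log((1 + r) / (1 - r))       # Fisher's Z-transformation
    stat = math.sqrt(n - q - 3) * abs(z_hat)         # Z_0 = 0 under the null
    phi = 0.5 * (1 + math.erf(stat / math.sqrt(2)))  # standard normal CDF
    return 2 * (1 - phi)


# Hypothetical linear Gaussian chain X -> Y -> Z.
random.seed(0)
N = 2000
x = [random.gauss(0, 1) for _ in range(N)]
y = [0.8 * xi + random.gauss(0, 1) for xi in x]
z = [0.8 * yi + random.gauss(0, 1) for yi in y]
data = {"X": x, "Y": y, "Z": z}
print(fisher_z_test(data, "X", "Z"))         # very small: X and Z are dependent
print(fisher_z_test(data, "X", "Z", ["Y"]))  # X and Z are independent given Y in the model
```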

The test statistics and associated p-values described in this section are usually used to make a binary decision about whether variables are conditionally independent or not. However, some algorithms also use them as a measure of the strength of association (or dependence) between variables. For example, a high mutual information value indicates that two variables are strongly associated with each other.

3.2 Global discovery algorithms

This group of constraint-based algorithms is known as global discovery algorithms, since they attempt to learn the graph structure as a whole rather than first learning the local structure relating to each variable separately, as the local constraint-based algorithms do (see Sect. 3.3). Both the global and the local constraint-based algorithms make one further assumption, known as causal sufficiency, which is important if we wish to interpret the BNs causally. This assumption means there are no latent (unmeasured) variables that would affect the causal relationships, such as variables that are a common cause of two or more of the measured variables \({\varvec{X}}\); these are widely known as latent confounders.

3.2.1 SGS algorithm

The SGS algorithm (Spirtes et al. 1990) is rather inefficient but is of interest since many constraint-based algorithms build upon its approach. SGS relies on two key theorems derived from the definition of Bayesian Networks (Verma and Pearl 1990) that apply to faithful and causally sufficient BNs:

1. if \(A \,{\not \perp}\, B \mid {\varvec{S}}\) for every subset \({\varvec{S}}\subseteq {\varvec{X}}\setminus \{A, B\}\), then \(A\) and \(B\) are adjacent in the graph (“\({\varvec{X}}\setminus \{A, B\}\)” means set \({\varvec{X}}\) with elements \(A\) and \(B\) removed);

2. if \(A\) and \(B\), and \(B\) and \(C\), are adjacent in the graph, but \(A\) and \(C\) are not adjacent, and if \(A \,{\not \perp}\, C \mid {\varvec{S}}\cup \{B\}\) for every subset \({\varvec{S}}\subseteq {\varvec{X}}\setminus \left\{A, B, C\right\}\), then \(A, B, C\) form a v-structure \(A \to B \leftarrow C\).

SGS starts from a complete (i.e., there is an edge between every pair of nodes) undirected graph on the node set \({\varvec{X}}\) and learns the Markov equivalence class in three phases:

1. Adjacency phase: making use of rule 1 above, for each pair of nodes \(A, B\) this phase performs a CI test on \(A\) and \(B\) conditional on every possible subset \({\varvec{S}}\) of the remaining nodes. If conditional independence holds for any set \({\varvec{S}}\), the edge between \(A\) and \(B\) is removed. This phase produces the graph skeleton.

2. V-structure phase: using rule 2 above, for every triple \(A, B, C\) in the skeleton where \(A, B\) and \(B, C\) are adjacent pairs, and \(A\) and \(C\) are not adjacent, perform CI tests on \(A\) and \(C\) conditional on every possible subset \({\varvec{S}}\) of the remaining nodes where \({\varvec{S}}\) contains \(B\). If \(A\) and \(C\) are conditionally dependent given every such subset \({\varvec{S}}\), then mark \(A-B-C\) as a v-structure \(A \to B \leftarrow C\). This phase produces the PDAG.

3. Orientation propagation phase: for every undirected edge in the PDAG, check whether one of the orientations would:

   a. introduce a cycle into the graph, or

   b. create a new v-structure.

   If so, then that orientation is forbidden and so the opposite orientation can be assumed. These rules are applied repeatedly until no more edges can be orientated, producing the CPDAG.

The first phase in the SGS algorithm is particularly expensive. In the worst case, it requires \(n\left(n-1\right)\cdot {2}^{n-3}\) CI tests, which makes it exponential in \(n\) and therefore infeasible for a reasonable number of variables. However, SGS is relatively stable (Spirtes et al. 2000), in that errors made in CI tests tend not to be highly amplified by subsequent steps. A CI mistake in phase 1 may result in an extraneous or missing edge, but this would not affect other decisions made in that phase. However, these adjacency errors and further errors in identifying v-structures may propagate out to cause further orientation errors.
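A compact sketch of the first two SGS phases follows. So that the output is exact, it replaces the statistical CI test with an oracle that answers CI queries from a known DAG, using Lauritzen's moralised-ancestral-graph criterion, which is equivalent to d-separation. The oracle, the example DAG (edges \(A \to B\), \(A \to C\), \(B \to D\), \(C \to D\), \(D \to E\)) and all function names are our own assumptions, and orientation propagation is omitted.

```python
from itertools import combinations


def d_separated(edges, x, y, s):
    """Oracle CI test: moralise the ancestral graph of {x, y} | s and check separation."""
    parents = {}
    for p, c in edges:
        parents.setdefault(c, set()).add(p)
    ancestral, stack = set(), [x, y, *s]
    while stack:
        v = stack.pop()
        if v not in ancestral:
            ancestral.add(v)
            stack.extend(parents.get(v, ()))
    adj = {v: set() for v in ancestral}
    for child in ancestral:
        pa = sorted(parents.get(child, ()))
        for p in pa:
            adj[p].add(child)
            adj[child].add(p)
        for p, q in combinations(pa, 2):  # "marry" co-parents
            adj[p].add(q)
            adj[q].add(p)
    seen, stack = {x}, [x]
    while stack:
        for w in adj[stack.pop()]:
            if w == y:
                return False
            if w not in s and w not in seen:
                seen.add(w)
                stack.append(w)
    return True


def subsets(items):
    for k in range(len(items) + 1):
        yield from (set(c) for c in combinations(items, k))


def sgs(nodes, indep):
    """Adjacency and v-structure phases of SGS given a CI test indep(a, b, s)."""
    adjacent = set()
    for a, b in combinations(nodes, 2):
        rest = [v for v in nodes if v not in (a, b)]
        if not any(indep(a, b, s) for s in subsets(rest)):  # rule 1
            adjacent.add(frozenset((a, b)))
    v_structs = set()
    for b in nodes:
        nbrs = sorted(v for v in nodes if frozenset((v, b)) in adjacent)
        for a, c in combinations(nbrs, 2):
            if frozenset((a, c)) in adjacent:
                continue
            rest = [v for v in nodes if v not in (a, b, c)]
            if not any(indep(a, c, s | {b}) for s in subsets(rest)):  # rule 2
                v_structs.add((a, b, c))
    return adjacent, v_structs


true_dag = {("A", "B"), ("A", "C"), ("B", "D"), ("C", "D"), ("D", "E")}
adjacent, v_structs = sgs(["A", "B", "C", "D", "E"],
                          lambda a, b, s: d_separated(true_dag, a, b, s))
print(sorted(sorted(e) for e in adjacent))  # the skeleton of true_dag
print(v_structs)                            # {('B', 'D', 'C')}
```

Even for five nodes, each pair is tested against up to \(2^{3} = 8\) conditioning sets, which illustrates why the exhaustive adjacency phase does not scale.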

3.2.2 The PC algorithm

The adjacency phase in SGS exhaustively tests every possible conditioning set for each pair of nodes. This is computationally expensive and also means that many high-order CI tests (CI tests with large conditioning sets) are performed, which are unreliable because the individual cells of each test are based on relatively few data instances. To counter these issues, the PC algorithm by Spirtes and Glymour (1991) performs the adjacency phase with conditioning sets of increasing size, checking all pairs of nodes \(A, B\) at a particular conditioning set size and removing edges \(A-B\) if a Sepset is found before moving on to larger conditioning sets. Moreover, the PC adjacency phase makes use of the result that the minimum conditioning set that d-separates two nodes must be a subset of the union of the parents of those nodes under the assumptions of faithfulness and causal sufficiency (Verma and Pearl 1990). Thus, the algorithm need only consider conditioning sets drawn from the nodes currently adjacent to \(A\) (or, symmetrically, to \(B\)). This condition has no benefit initially since PC starts from a complete graph, but it reduces the number and order of the CI tests that are performed as the adjacency phase progresses and edges are removed.

To improve computational efficiency, the v-structure phase reuses the Sepsets identified in the adjacency phase: if the Sepset found for \(A\) and \(C\) in an unshielded triple \(A-B-C\) does not contain \(B\), the triple is identified as the v-structure \(A \to B \leftarrow C\). The PC algorithm then performs orientation propagation using the “Meek Rules” (Meek 1995). Spirtes et al. (2000) give a worst-case bound on the number of CI tests performed by PC which depends on \(n\) and on \({s}_{max}\), the maximum size of any Sepset.

This complexity bound is hard to quantify in advance, but PC is polynomial given a limit on node degree (Claassen et al. 2013). Although far more efficient, the PC algorithm is less stable than SGS. For example, edges mistakenly removed in the adjacency phase can result in other edges being mistakenly retained later in the adjacency phase.
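The Sepset-based v-structure test can be sketched as follows, again as a minimal Python illustration rather than the published pseudo-code of PC. The skeleton and Sepsets are hard-coded as an adjacency phase would return them for a hypothetical DAG \(A \to B \leftarrow C\), \(B \to D\); conflicting orientations and the Meek Rules are deliberately not handled.

```python
from itertools import combinations


def orient_v_structures(adj, sepset):
    """Return the arcs (a, b) such that a -> b belongs to a v-structure.

    adj: node -> set of neighbours in the learned skeleton.
    sepset: frozenset({a, c}) -> Sepset recorded when edge a - c was removed.
    """
    arcs = set()
    for b in adj:
        for a, c in combinations(sorted(adj[b]), 2):
            if c in adj[a]:
                continue                        # shielded triple: a and c are adjacent
            if b not in sepset[frozenset((a, c))]:
                arcs.add((a, b))                # a -> b <- c
                arcs.add((c, b))
    return arcs


adj = {"A": {"B"}, "B": {"A", "C", "D"}, "C": {"B"}, "D": {"B"}}
sepset = {frozenset(("A", "C")): set(),
          frozenset(("A", "D")): {"B"},
          frozenset(("C", "D")): {"B"}}
print(sorted(orient_v_structures(adj, sepset)))   # [('A', 'B'), ('C', 'B')]
```

Only \(A-B-C\) is oriented, because \(B\) lies in the Sepsets of the other two unshielded pairs; the edge \(B-D\) would then be left to the Meek Rules.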

3.2.3 The conservative PC (CPC) algorithm

The PC and SGS algorithms assume complete faithfulness, and one direction in which constraint-based algorithms have developed is to weaken this assumption. The Conservative PC (CPC) algorithm (Ramsey et al. 2006) does this by considering separately how faithfulness is assumed in the adjacency and orientation phases of the PC algorithm, using the terms adjacency-faithfulness and orientation-faithfulness respectively. Ramsey et al. show that if only adjacency-faithfulness is assumed, the v-structure phase can detect and mark unfaithful triples. To do this, CPC considers all Sepsets of \(A\) and \(C\) when deciding whether \(A-B-C\) is a v-structure, marking it as such only if none of the Sepsets contain \(B\). Moreover, unless this “vote” is unanimous, the triple is marked as unfaithful. Thus, CPC is more cautious about orientating edges than PC, hence the name “conservative”. In simulations with a sample size of 1,000, CPC was only slightly slower than PC but produced fewer erroneous edge orientations.
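A minimal Python sketch of the conservative check is given below. The CI decisions are a hypothetical, hand-written set of independence facts standing in for (possibly erroneous) statistical tests, and the helper names are our own. The sketch tests every subset of the neighbours of \(A\) (excluding \(C\)) and of \(C\) (excluding \(A\)), treats those that separate \(A\) and \(C\) as the voting Sepsets, and commits only when the vote is unanimous; treating an empty vote as unfaithful is our own choice.

```python
from itertools import chain, combinations


def subsets(nodes):
    """All subsets of `nodes`, smallest first."""
    nodes = sorted(nodes)
    return chain.from_iterable(combinations(nodes, k) for k in range(len(nodes) + 1))


def cpc_classify(a, b, c, adj, indep):
    """Classify the unshielded triple a - b - c conservatively."""
    candidates = {frozenset(s) for s in subsets(adj[a] - {c})}
    candidates |= {frozenset(s) for s in subsets(adj[c] - {a})}
    sepsets = [s for s in candidates if indep(a, c, s)]
    with_b = sum(b in s for s in sepsets)
    if sepsets and with_b == 0:
        return "v-structure"            # no Sepset contains b
    if sepsets and with_b == len(sepsets):
        return "non-collider"           # every Sepset contains b
    return "unfaithful"                 # split (or empty) vote: leave unoriented


# Skeleton with two unshielded triples, A - B - C and A - D - C.
adj = {"A": {"B", "D"}, "B": {"A", "C"}, "C": {"B", "D"}, "D": {"A", "C"}}
facts = {frozenset("D"), frozenset("BD")}   # hypothetical: A, C independent given {D} and given {B, D}
indep = lambda x, y, s: {x, y} == {"A", "C"} and frozenset(s) in facts
print(cpc_classify("A", "B", "C", adj, indep))   # unfaithful
print(cpc_classify("A", "D", "C", adj, indep))   # non-collider
```

PC, by contrast, would orient \(A-B-C\) using whichever of the two Sepsets its adjacency phase happened to find first.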

3.2.4 The very conservative SGS (VCSGS) algorithm

Zhang and Spirtes (2008) showed that a weaker faithfulness assumption could be applied to the adjacency phase too. This weakened condition combines the minimality condition described in the Introduction with triangle faithfulness, which only assumes faithfulness for fully connected triples. Under this weakened assumption alone, it is possible to identify all other faithfulness violations. Spirtes and Zhang (2014) describe a version of SGS, the Very Conservative SGS, which would implement this weaker assumption, though they left open the question of whether it could be made efficient enough to be viable. To our knowledge, this algorithm has not been implemented.

3.2.5 The PC-stable algorithm

Colombo and Maathuis (2014) considered the effect of mistaken CI test decisions arising from limited sample sizes and, in particular, their interaction with the order in which the CI tests are performed. They showed that the output of all three phases of the original PC algorithm (and of related algorithms such as FCI and RFCI, discussed below) is sensitive to the order in which CI tests are performed. This order is generally an artefact of the way the algorithm is implemented; e.g., it may be related to the lexicographic ordering of the node names, or to the order in which the variables appear in the data. They proposed modifications to each phase of the original PC algorithm (Sect. 3.2.2) to remove this order dependence. Figure 7 presents the pseudo-code for the PC-Stable algorithm, which has the following three phases:

-

Adjacency: in the original PC algorithm, mistaken deletions of edges propagate by erroneously reducing the conditioning sets available to subsequent CI tests at the same conditioning set size. PC-Stable remedies this by recomputing adjacencies only once per conditioning set size, before any of the CI tests at that size are processed, whereas the original PC algorithm removes edges and updates adjacencies as soon as an independence relationship is detected. This is accomplished by taking a copy of the adjacencies at lines 5 and 6 of the pseudo-code and using this stable copy to determine the possible conditioning sets, ignoring any edges deleted in lines 8 to 10.

-

V-structure: the original PC algorithm re-uses the Sepset that established that a triple is unshielded to also decide whether that triple is a v-structure. Since the original algorithm can use invalid Sepsets in the adjacency phase, its sensitivity to node ordering can also adversely affect v-structure orientation. PC-Stable follows the approach adopted by CPC (Sect. 3.2.3) and considers all the Sepsets of \(A\) and \(C\) in triple \(A-B-C\) to decide whether it is a v-structure. However, PC-Stable takes a less conservative approach than CPC, which its authors term the majority rule, whereby the triple is marked as a v-structure if a majority of the Sepsets do not contain the middle node \(B\). Orientation conflicts are identified during this phase and marked by bi-directional edges, as shown in lines 13 to 19 of the pseudo-code.

-

Orientation propagation: mistaken CI tests mean that situations like that shown in Fig. 8 can occur; i.e., the two v-structures imply conflicting orientations for edge \(B-E\). The original PC algorithm would arbitrarily choose one orientation based on node processing order. PC-Stable instead marks this conflicted edge with a bidirectional arrow.
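The difference between the PC and PC-Stable adjacency phases can be reproduced in a few lines of Python. In this sketch (our own, not the pseudo-code of Fig. 7), the CI decisions are a hypothetical set of three mutually inconsistent independence results on variables \(A\), \(B\) and \(C\), of the kind that limited sample sizes can produce. With live adjacencies, the surviving edge depends on the processing order; with a frozen copy of the adjacencies for each conditioning set size, every order gives the same skeleton.

```python
from itertools import combinations, permutations


def skeleton(order, indep, stable):
    """Adjacency phase of PC (stable=False) or PC-Stable (stable=True).

    order: the order in which the variables are processed.
    indep(a, b, s): True if the CI test judges a and b independent given s.
    """
    rank = {v: i for i, v in enumerate(order)}
    adj = {v: set(order) - {v} for v in order}                 # complete graph
    size = 0
    while any(len(adj[a] - {b}) >= size for a in order for b in adj[a]):
        # PC-Stable freezes the adjacencies for the whole level; PC reads the
        # live adjacencies, which shrink as soon as an edge is removed.
        frozen = {v: set(adj[v]) for v in order} if stable else adj
        for a in order:
            for b in sorted(frozen[a], key=rank.get):
                if b not in adj[a]:
                    continue                                    # removed earlier at this level
                for s in combinations(sorted(frozen[a] - {b}, key=rank.get), size):
                    if indep(a, b, set(s)):
                        adj[a].discard(b)
                        adj[b].discard(a)
                        break
        size += 1
    return {frozenset((a, b)) for a in adj for b in adj[a]}


# Hypothetical, mutually inconsistent CI decisions:
# A _||_ B | {C},  A _||_ C | {B},  B _||_ C | {A}.
facts = {(frozenset("AB"), frozenset("C")),
         (frozenset("AC"), frozenset("B")),
         (frozenset("BC"), frozenset("A"))}
indep = lambda a, b, s: (frozenset((a, b)), frozenset(s)) in facts

pc_results = {frozenset(skeleton(list(p), indep, stable=False)) for p in permutations("ABC")}
stable_results = {frozenset(skeleton(list(p), indep, stable=True)) for p in permutations("ABC")}
print(len(pc_results) > 1, stable_results == {frozenset()})   # True True
```

Under PC, processing order \(A, B, C\) leaves only \(B-C\) while order \(B, C, A\) leaves only \(A-C\); PC-Stable removes all three edges whatever the order, because every test at a given size draws on the same snapshot.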

Pseudo-code for PC-Stable algorithm. Program code keywords are coloured blue, comments in grey, key variables in red, and application-specific complex operations or conditions in black. Note that, for clarity, this variant does not identify orientation conflicts in the orientation propagation phase. (Color figure online)

The authors compared PC-Stable with PC in a low-dimensional simulation with 50 variables, an average neighbourhood size of 2 or 4 and 1000 rows, and in a high-dimensional simulation with 1000 variables, an average neighbourhood size of 2 and 50 rows. In each setting, 250 random graphs were generated. A synthetic dataset of Gaussian variables was produced for each graph, and twenty random orderings of the variables were used with each dataset. The CI test threshold was also varied.

The behaviour of PC and PC-Stable was very similar in the low-dimensional simulation. However, in the high-dimensional simulation, PC-Stable learnt graphs with a lower SHD from the true graph, and with a much smaller variance in SHD across the different variable orderings. This demonstrated the improved accuracy and stability of PC-Stable over PC. PC-Stable was between 3 and 13 percent slower than PC because it performs more CI tests. Most recent implementations of algorithms in the PC (and FCI) family employ the order-independence strategies used by PC-Stable.

Marella and Vicard (2022) provide a variant of PC, PC-CS, which addresses selection biases introduced by complex survey designs by using modified independence tests based on resampling techniques. The algorithm was evaluated using synthetic discrete variable datasets generated from random graphs. However, rows with particular values for some variables were preferentially included in the dataset in order to simulate the complex selection biases often found in survey data. The simulation then compared PC-Stable’s and PC-CS’s ability to learn the random graph. PC-CS produced better SHD scores than PC-Stable, but it should be noted that the simulations had at most 10 variables and so were somewhat limited.

3.2.6 PC-MAX algorithm

Whereas PC uses the Sepset identified in the adjacency phase, and CPC and PC-Stable use a voting scheme, to determine whether an unshielded triple is a v-structure, PC-MAX (Ramsey 2016) uses the Sepset with the highest p-value. The intuition is that this Sepset is the one which most strongly separates the end nodes of the triple, and so it should be used to decide whether the triple is a v-structure. Similarly, when two overlapping v-structures would give rise to a bidirectional edge, as shown in Fig. 8, PC-MAX avoids the conflict by retaining only the v-structure with the highest p-value. PC-MAX also parallelises the adjacency and v-structure phases and adopts the strategies used in PC-Stable to avoid sensitivity to the order of node processing. Ramsey evaluated performance on Gaussian BNs, with PC-MAX obtaining better arc orientation than both PC and PC-Stable on a BN with 1000 variables. Scalability was demonstrated by learning graphs with 20,000 variables and a sample size of 1000 in less than 5 min on a powerful laptop with four dual-core processors (Ramsey 2016), though Ramsey observed that the score-based Fast Greedy Equivalence Search described in Sect. 4.2.2 was faster still and more accurate.
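A minimal Python sketch of the PC-MAX decision rule follows. The candidate conditioning sets and their p-values are hypothetical numbers chosen for illustration; in practice they would come from CI tests of \(A\) and \(C\) given subsets of their neighbours, and the function name is our own.

```python
def pc_max_is_v_structure(b, tests):
    """Decide whether the unshielded triple a - b - c is a v-structure, PC-MAX style.

    tests: (conditioning_set, p_value) pairs from CI tests of a and c.
    The conditioning set with the largest p-value is taken as the Sepset.
    """
    best_set, _ = max(tests, key=lambda t: t[1])
    return b not in best_set


tests = [(set(), 0.03), ({"D"}, 0.20), ({"B", "D"}, 0.65)]   # hypothetical p-values
print(pc_max_is_v_structure("B", tests))   # False: the best Sepset {B, D} contains B
```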

3.2.7 Three-phase dependency algorithm (TPDA)

The Three-Phase Dependency Algorithm (TPDA) by Cheng et al. (2002) focuses on reducing the number of statistical tests required, performing at most \(O({n}^{4})\) of them. TPDA adopts an information flow perspective to learn the graph adjacencies and differs from most constraint-based algorithms in that it uses MI tests quantitatively as a measure of information flow along paths, as well as a basis for conditional independence decisions.

Figure 9, based on an example from Cheng et al. (2002), shows a subgraph of a true network to illustrate the basic principles behind TPDA. In particular, it shows how TPDA determines whether a new edge is required between \(X\) and \(Y\) during its adjacency phase. Consider a point in the adjacency phase at which TPDA has discovered that \(\{A, B, C, D\}\) are the only shared neighbours of \(X\) and \(Y\); at this point, TPDA has not yet determined the edge orientations. It checks whether \(X\) and \(Y\) are conditionally independent by testing whether \(MI(X, Y \mid {\varvec{S}}) < \epsilon\), where \(\epsilon\) is a threshold below which information flow is considered negligible. It starts by setting \({\varvec{S}}=\{A, B, C, D\}\) and progressively removes one node at a time from \({\varvec{S}}\), each time choosing the node whose removal reduces \(MI(X, Y \mid {\varvec{S}})\) by the greatest amount. It repeats this until either a Sepset is found (in this example, it would find the Sepset \(\{A, B\}\)) or no Sepset is found. The latter situation means that the current graph is not sufficient to explain the information flow between \(X\) and \(Y\), and hence a direct edge is required between \(X\) and \(Y\).

In this way, the skeleton of the graph is built up, but with a reduced bound on the number of CI tests. In order for this approach to be sound, a stronger form of faithfulness called monotone-faithfulness must be assumed which corresponds to saying that blocking a path between two nodes never increases the information flow between them. In more detail, TPDA builds the skeleton in three phases:

-

1.

Drafting: starts with an empty graph and progressively adds undirected edges between the pairs of nodes with the highest MI scores, provided there is not already an undirected path between the pair. This creates a maximum spanning tree, that is, a tree in which there is one, and only one, path between every pair of variables and the sum of the edge scores is maximal. This tree is used as a good starting point for the next phase.

-

2.

Thickening: adds edges between non-adjacent nodes if there is no Sepset in the set of shared neighbours between the two nodes, as described above.

-

3.

Thinning: because the thickening phase adds edges greedily, a later edge addition can render an earlier one superfluous by providing an alternative route for information flow. The thinning phase identifies these superfluous edges by looking for direct edges which have parallel indirect routes that can carry the required information flow, and then removes the superfluous direct edges.

TPDA then orientates edges using the v-structure and orientation phases described in the SGS algorithm. Notwithstanding the reduced bound on the number of CI tests required, Cheng et al. (2002) reported similar accuracy and efficiency results to the PC algorithm.
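TPDA's drafting phase, as described above, is essentially Kruskal's maximum spanning tree algorithm over pairwise MI scores. The Python sketch below is our own illustration: the MI values are hypothetical, and a union-find structure is used to check whether two nodes are already joined by an undirected path.

```python
def draft(mi_scores):
    """TPDA-style drafting: add edges in decreasing order of MI unless the two
    nodes are already joined by an undirected path (checked with union-find)."""
    root = {}

    def find(v):
        root.setdefault(v, v)
        while root[v] != v:
            root[v] = root[root[v]]          # path halving
            v = root[v]
        return v

    edges = []
    for (a, b), _score in sorted(mi_scores.items(), key=lambda item: -item[1]):
        ra, rb = find(a), find(b)
        if ra != rb:                         # no path yet between a and b
            root[ra] = rb
            edges.append((a, b))
    return edges


# Hypothetical pairwise MI scores
mi = {("X", "A"): 0.30, ("A", "Y"): 0.25, ("X", "Y"): 0.20,
      ("X", "B"): 0.15, ("B", "Y"): 0.10, ("A", "B"): 0.05}
print(draft(mi))   # [('X', 'A'), ('A', 'Y'), ('X', 'B')]
```

The pair \(X, Y\) is skipped despite its relatively high score because \(X-A-Y\) already connects them; deciding whether a direct \(X-Y\) edge is nevertheless needed is left to the thickening phase.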

3.2.8 Recursive autonomy identification (RAI) algorithm

Yehezkel and Lerner (2009) also concentrated on reducing the number of CI tests, although they focussed on the costly and unreliable high-order tests. Their Recursive Autonomy Identification (RAI) algorithm assumes discrete variables and faithfulness, and starts with a complete undirected graph. Like the PC algorithm, RAI uses CI tests of increasing order. However, edge orientation is undertaken after edge removal at each conditioning set size, and this can allow RAI to identify autonomous subgraphs. These can be learnt independently of each other through recursive calls of the algorithm.

Figure 10, based on the example given in their paper, illustrates these concepts. It shows the state of the graph whilst learning the DAG shown in the inset. In particular, it shows the state after CI tests of order 0 have removed some edges and an orientation step has been performed. At this point, RAI is able to decompose this particular graph into two autonomous ancestor subgraphs marked in green, and a descendant autonomous subgraph marked in orange, which can all then be further refined independently by recursive calls to RAI. The black arrows show the edges which have been orientated after CI tests of order 0, and the red edges are undirected edges within the subgraphs which may be orientated after higher-order CI tests remove more edges. This decomposition allows the overall structure of the graph to appear early on in the learning process, and tends to avoid the higher cost and less reliable high-order CI tests. Whether this decomposition is possible depends upon the independence relationships in the data. If it is not possible, then RAI behaves like the PC algorithm. Nonetheless, the authors reported that RAI demonstrated higher structural and predictive accuracy than contemporaneous algorithms including PC, over a range of commonly evaluated BNs (Yehezkel and Lerner 2009). They also reported that RAI conducts fewer CI tests and therefore has shorter runtimes.

Illustration of autonomous subgraphs within the RAI algorithm (based on figure in Yehezkel and Lerner 2009). (Color figure online)

3.3 Local discovery algorithms

In contrast to the global algorithms described in the previous subsection, the algorithms covered in this subsection learn the local skeleton relating to each variable separately. The local structure learnt can either be the parents and children (i.e., neighbours) of each node \(T\), denoted \({\varvec{P}}{\varvec{C}}(T)\), or the Markov Blanket of \(T\), denoted \({\varvec{M}}{\varvec{B}}(T)\). The Markov Blanket of node \(T\) is defined as the minimal set of nodes \({\varvec{M}}{\varvec{B}}(T)\) such that, conditional on it, \(T\) is independent of all nodes outside \({\varvec{M}}{\varvec{B}}(T)\). Thus, \({\varvec{M}}{\varvec{B}}(T)\) shields \(T\) from the influence of all other variables. Assuming faithfulness, it can be shown that \({\varvec{M}}{\varvec{B}}(T)\) consists of the parents, children, and parents of children (also known as spouses) of \(T\).
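Given a known DAG, the parents-and-children set and the Markov Blanket can be read off directly, which is useful when checking the output of the local discovery algorithms discussed in this subsection. In this Python sketch the DAG is a hypothetical example stored as a mapping from each node to its set of parents, and the function names are our own.

```python
def parents_children(dag, t):
    """PC(t): parents plus children of t; `dag` maps node -> set of parents."""
    return set(dag[t]) | {v for v, parents in dag.items() if t in parents}


def markov_blanket(dag, t):
    """MB(t): parents, children and spouses (other parents of t's children)."""
    children = {v for v, parents in dag.items() if t in parents}
    spouses = {p for c in children for p in dag[c]} - {t}
    return parents_children(dag, t) | spouses


# Hypothetical DAG: E -> A -> T -> C <- S, C -> D
dag = {"E": set(), "A": {"E"}, "T": {"A"}, "S": set(), "C": {"T", "S"}, "D": {"C"}}
print(sorted(parents_children(dag, "T")))   # ['A', 'C']
print(sorted(markov_blanket(dag, "T")))     # ['A', 'C', 'S']
```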

In some contexts, the individual local structure of a particular variable can be useful in its own right. In particular, determining the Markov Blanket of a variable provides a principled causal approach to feature selection, and much of the motivation for, and evaluation of, these local discovery algorithms has been around this use in classification problems (Aliferis et al. 2010). However, within BN structure learning, the local skeletons are learnt for every node and then merged to form the whole skeleton. As we discuss here, this may be done as part of an overall constraint-based learning algorithm, with subsequent v-structure and orientation phases producing a CPDAG. Local discovery algorithms may also be part of hybrid approaches, which are discussed in Sect. 5.

These local structures should be symmetric. That is, for example, \(A\in {\varvec{P}}{\varvec{C}}\left(B\right)\iff B\in {\varvec{P}}{\varvec{C}}(A)\) where \({\varvec{P}}{\varvec{C}}\left(B\right)\) denotes the parents and children of node \(B\). However, errors made by CI tests can mean that local structures may not be symmetric in practice. Algorithms usually resolve these conflicts by applying the “AND-rule”, where an edge will only be included in the global skeleton if the two nodes are in each other’s parent-and-child sets. More sophisticated symmetry correction approaches can be used however—see, for example, Sect. 5.1.5.
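The AND-rule can be sketched as follows in Python. The parent-and-child sets are hypothetical, with \(C\) omitting \(A\) from its set as an asymmetric CI error might cause. The more permissive OR-rule, which keeps an edge if either node lists the other, is included only for contrast.

```python
def merge_local_skeletons(pc_sets, rule="and"):
    """Combine per-node parent-and-child sets into one undirected skeleton."""
    edges = set()
    for a, found in pc_sets.items():
        for b in found:
            symmetric = a in pc_sets.get(b, set())
            if rule == "or" or symmetric:
                edges.add(frozenset((a, b)))
    return edges


pc_sets = {"A": {"B", "C"}, "B": {"A"}, "C": set()}   # A lists C, but C does not list A
print(merge_local_skeletons(pc_sets, "and") == {frozenset("AB")})                   # True
print(merge_local_skeletons(pc_sets, "or") == {frozenset("AB"), frozenset("AC")})   # True
```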

3.3.1 Markov Blanket algorithms

The Grow-Shrink (GS) algorithm (Margaritis and Thrun 1999) was the first to exploit the concept of a Markov Blanket to reduce the number of CI tests in the adjacency phase. It consists of two steps:

-

1.

Grow: for each node \(X\) in \({\varvec{X}}\backslash \{T\}\), GS tests whether \(X\perp T | {\varvec{M}}{\varvec{B}}(T)\). If not, \(X\) is immediately added to \({\varvec{M}}{\varvec{B}}(T)\) which grows dynamically throughout this step. Nodes are tested for inclusion in \({\varvec{M}}{\varvec{B}}(T)\) in decreasing order of the strength of the association between the node \(X\) and \(T\), which is calculated in a pre-processing step.

-

2.

Shrink: the grow step may add unnecessary nodes in the Markov blankets, which this step removes. It checks if \(X\perp T | {\varvec{M}}{\varvec{B}}\left(T\right)\backslash \{X\}\) for all \(X\in {\varvec{M}}{\varvec{B}}(T)\). If yes, \(X\) is removed from \({\varvec{M}}{\varvec{B}}(T)\).
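A minimal Python sketch of the two GS steps follows. Two simplifications are assumptions of the sketch rather than part of GS as published: CI tests are replaced by an exact d-separation oracle on a hypothetical DAG (\(E \to A \to T \to C \leftarrow S\), \(C \to D\)), and the candidate order is supplied by hand instead of being computed from association strengths. Because a spouse such as \(S\) only becomes dependent on \(T\) once \(C\) is in the blanket, the grow step repeats its pass until nothing more is added.

```python
from itertools import combinations


def ancestors(dag, nodes):
    """Return `nodes` plus all their ancestors; `dag` maps node -> set of parents."""
    result, stack = set(nodes), list(nodes)
    while stack:
        for p in dag[stack.pop()]:
            if p not in result:
                result.add(p)
                stack.append(p)
    return result


def d_separated(dag, x, y, z):
    """True if z d-separates x and y (moralised ancestral graph criterion)."""
    z = set(z)
    keep = ancestors(dag, {x, y} | z)
    nbrs = {v: set() for v in keep}
    for v in keep:
        for p in dag[v]:
            nbrs[v].add(p)
            nbrs[p].add(v)
        for p, q in combinations(dag[v], 2):          # marry co-parents
            nbrs[p].add(q)
            nbrs[q].add(p)
    seen, stack = {x}, [x]
    while stack:
        v = stack.pop()
        if v == y:
            return False
        for w in nbrs[v] - seen - z:
            seen.add(w)
            stack.append(w)
    return True


def grow_shrink(t, order, indep):
    """Markov Blanket of t by Grow-Shrink; indep(x, t, s) is a CI test."""
    mb = []
    changed = True
    while changed:                                    # grow
        changed = False
        for x in order:
            if x != t and x not in mb and not indep(x, t, set(mb)):
                mb.append(x)                          # MB grows immediately
                changed = True
    for x in list(mb):                                # shrink
        if indep(x, t, set(mb) - {x}):
            mb.remove(x)
    return set(mb)


dag = {"E": set(), "A": {"E"}, "T": {"A"}, "S": set(), "C": {"T", "S"}, "D": {"C"}}
oracle = lambda x, y, s: d_separated(dag, x, y, s)
print(sorted(grow_shrink("T", ["D", "C", "A", "E", "S"], oracle)))   # ['A', 'C', 'S']
```

With this order, \(D\) is admitted first (it is marginally dependent on \(T\)) and is only discarded in the shrink step, once \(C\) is known to screen it off.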

Having constructed the Markov Blanket of all nodes in \(G\), GS performs the following steps:

-

1.

Completes the adjacency determination by removing the parents of children of \(T\) (its spouses) from each Markov Blanket \({\varvec{M}}{\varvec{B}}(T)\). A spouse \(X\) is identified by the condition \(X \perp T | {\varvec{S}}\) holding for some \({\varvec{S}}\subseteq {\varvec{M}}{\varvec{B}}(T)\backslash \{X\}\).

-

2.

Performs v-structure and orientation phases similar to those of SGS and PC.

Margaritis (2003) reported an overall complexity for GS of \(O({n}^{2}+n{b}^{2}{2}^{b})\) CI tests, where \(b={max}_{{\varvec{X}}}(\left|{\varvec{M}}{\varvec{B}}\left(X\right)\right|)\) is the size of the largest Markov Blanket. For dense networks where \(b\approx n\), this means the GS algorithm has exponential complexity, although for the more usual sparse networks \(b\) can be considered a small constant and in those cases the complexity decreases to \(O\left({n}^{2}\right).\) Margaritis (2003) reported similar adjacency performance to PC, although GS is said to produce better edge orientation.

The Incremental Association Markov Blanket (IAMB) algorithm optimises Markov Blanket discovery so that it can handle thousands of nodes (Tsamardinos et al. 2003). The authors argue that GS’s Markov Blanket grow phase is suboptimal because it is slow to discover spouses in the Markov Blanket \({\varvec{M}}{\varvec{B}}(T)\), since these often have a weak association with \(T\). This in turn leads to more CI tests in the grow and shrink phases. Instead, they propose using the conditional mutual information \(MI(X, T \mid {\varvec{M}}{\varvec{B}}(T))\) to determine the order in which a node \(X\) is considered for inclusion into \({\varvec{M}}{\varvec{B}}(T)\) during the grow phase. They also propose a variant on IAMB, called Inter-IAMB, which interleaves the grow and shrink phases. IAMB and Inter-IAMB were able to handle synthetic networks with up to 1,000 nodes, offering better accuracy in Markov Blanket discovery than GS.

Yaramakala and Margaritis (2005) suggested a further variant, Fast-IAMB. They proposed using the \({\chi }^{2}\) test statistic as the metric for deciding which nodes to add to \({\varvec{M}}{\varvec{B}}(T)\) during the grow phase. Furthermore, they argued that recomputing the statistic each time a node is added to \({\varvec{M}}{\varvec{B}}\left(T\right)\) is expensive and so proposed adding groups of nodes to \({\varvec{M}}{\varvec{B}}(T)\) before the test statistics are recomputed. They demonstrated similar accuracy in Markov Blanket identification to IAMB and Inter-IAMB, but with savings in execution time of 18–32% over the former, and 28–48% over the latter, together with a reduction in high-order CI tests.

3.3.2 Parents-and-children algorithms

The set of parents and children of node \(T\), \({\varvec{P}}{\varvec{C}}(T)\), is more directly useful for skeleton learning than \({\varvec{M}}{\varvec{B}}(T)\), and can be obtained by removing the spouses from \({\varvec{M}}{\varvec{B}}(T)\). However, the Max–Min Parents Children (MMPC), HITON-PC and SI-HITON-PC algorithms learn \({\varvec{P}}{\varvec{C}}(T)\) directly (Tsamardinos et al. 2003; Aliferis et al. 2003a, b; and Aliferis et al. 2010, respectively). Aliferis et al. (2010) defined a sound generic framework for learning \({\varvec{P}}{\varvec{C}}(T)\), into which these three specific algorithms fit, and which consists of:

-

a strategy for inclusion of a node \(X\) in \({\varvec{P}}{\varvec{C}}(T)\), heuristically prioritised, for instance, based on the strength of association between \(X\) and \(T\);

-

an elimination strategy for removal from \({\varvec{P}}{\varvec{C}}(T)\), for example, removing \(X\) from \({\varvec{P}}{\varvec{C}}(T)\) if \(X\perp T | {\varvec{S}}\) for some \({\varvec{S}}\subseteq {\varvec{P}}{\varvec{C}}(T)\backslash \{X\}\);

-

an approach for interleaving inclusion and elimination. For example, all candidate variables can first be included in \({\varvec{P}}{\varvec{C}}(T)\) and extraneous variables then eliminated, or variables can be added to \({\varvec{P}}{\varvec{C}}(T)\) one at a time, with the elimination step performed each time a new variable is added.
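One instance of this generic framework, interleaving inclusion and elimination, is sketched below in Python. An exact d-separation oracle on a hypothetical DAG stands in for statistical CI tests, the priority order is given by hand, and no symmetry correction is applied; it illustrates the framework rather than implementing MMPC or HITON-PC, and the function names are our own.

```python
from itertools import combinations


def ancestors(dag, nodes):
    """Return `nodes` plus all their ancestors; `dag` maps node -> set of parents."""
    result, stack = set(nodes), list(nodes)
    while stack:
        for p in dag[stack.pop()]:
            if p not in result:
                result.add(p)
                stack.append(p)
    return result


def d_separated(dag, x, y, z):
    """True if z d-separates x and y (moralised ancestral graph criterion)."""
    z = set(z)
    keep = ancestors(dag, {x, y} | z)
    nbrs = {v: set() for v in keep}
    for v in keep:
        for p in dag[v]:
            nbrs[v].add(p)
            nbrs[p].add(v)
        for p, q in combinations(dag[v], 2):          # marry co-parents
            nbrs[p].add(q)
            nbrs[q].add(p)
    seen, stack = {x}, [x]
    while stack:
        v = stack.pop()
        if v == y:
            return False
        for w in nbrs[v] - seen - z:
            seen.add(w)
            stack.append(w)
    return True


def interleaved_pc(t, order, indep):
    """Include candidates one at a time in priority order; after each inclusion,
    eliminate any member y with y independent of t given some subset of the others."""
    pc = []
    for x in order:
        if x == t:
            continue
        pc.append(x)                                  # inclusion
        for y in list(pc):                            # elimination
            others = [v for v in pc if v != y]
            if any(indep(y, t, set(s))
                   for k in range(len(others) + 1)
                   for s in combinations(others, k)):
                pc.remove(y)
    return set(pc)


dag = {"E": set(), "A": {"E"}, "T": {"A"}, "S": set(), "C": {"T", "S"}, "D": {"C"}}
oracle = lambda x, y, s: d_separated(dag, x, y, s)
print(sorted(interleaved_pc("T", ["D", "S", "C", "A", "E"], oracle)))   # ['A', 'C']
```

Unlike the Markov Blanket sketch, the spouse \(S\) is eliminated here, because it is marginally independent of \(T\); \(D\) and \(E\) are eliminated once \(C\) and \(A\), respectively, have been included.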

3.4 Algorithms assuming the existence of latent variables

The algorithms considered so far have assumed causal sufficiency, which is unreasonable in many real-world situations. We now consider algorithms where this assumption is not made. Explicitly including latent confounders into the DAG might be one approach to avoiding this assumption, but since these confounders are unmeasured and often unknown, this is formidably difficult. It also risks increasing the number of variables so that learning becomes intractable.

Instead, the most common approach is to learn a graph consisting of only the observed variables, while taking into account the potential existence of latent variables or confounders that might explain part of the effects or relationships between the observed variables. However, the semantics of DAGs are not rich enough to represent this information. Figure 11a illustrates this issue using a causal graph of four observed variables \(\{A, B, C, D\}\) and a latent confounder \(L\), which would entail the independence relationships \(A\perp D | B\) and \(A\perp D | C\), and the dependence relationship \(A \,{\not \perp}\, D | B, C\). If we attempt to represent this with a DAG of just the four observed variables, then no orientation of a directed edge between \(B\) and \(C\) could entail these relationships. Figure 11b presents an ancestral graph which, unlike a DAG, captures relationships due to latent confounders; ancestral graphs are described in the subsection that follows.

3.4.1 Ancestral graphs

Richardson and Spirtes (2002) introduced a new class of graph called an ancestral graph, capable of capturing the relationships between observed variables in the presence of both latent confounders and selection bias. The latter is the situation where the probability of inclusion of a data instance in the dataset depends upon one or more latent selection variables. An example might be where patients in a clinical trial do not complete the trial if they become seriously ill, and so are not present in the dataset. Crucially, DAGs are a special case of an ancestral graph, and ancestral graphs are closed under conditioning and marginalisation. This means that an ancestral graph can be used to represent the probability distribution of a partially observed DAG. Ancestral graphs have three types of edge (Zhang 2008b):

-

directed, e.g. \(A \to B\): the mapping between edge types in the ancestral graph and relationships in the underlying DAG is given in Richardson and Spirtes (2002) but is somewhat complicated. We first define “\(A\) is an ancestor of \(B\)” to mean that there is a directed path from \(A\) to \(B\) with at least two directed edges. Directed edge \(A \to B\) in the ancestral graph means that, in the underlying DAG, \(A\) is an ancestor or parent of \(B\) and/or of a selection variable, and that \(B\) is not an ancestor or parent of \(A\) nor of a selection variable. Note, for example, that this edge type does not preclude a latent variable also being a cause of both \(A\) and \(B\) (i.e., a latent confounder).

-

bidirected, e.g. \(A \leftrightarrow B\): indicates that \(A\) is not an ancestor or parent of \(B\), \(B\) is not an ancestor or parent of \(A\), and neither is an ancestor or parent of a selection variable. This edge type arises in the presence of latent confounders.

-

undirected, e.g. \(A-B\): \(A\) is an ancestor or parent of \(B\) or of a selection variable, and \(B\) is an ancestor or parent of \(A\) or of a selection variable.

As the above shows, ancestral graphs primarily provide information about the ancestral and parental relationships in the underlying DAG, hence their name. Figure 11b shows the ancestral graph which represents the relationships between the observed variables in Fig. 11a. Richardson and Spirtes (2002) state two key conditions in the definition of an ancestral graph:

-

there are no partially directed cycles. A partially directed cycle consists of an anterior path from \(A\) to \(B\) together with an edge \(B\to A\) or \(B\leftrightarrow A\). An anterior path from \(A\) to \(B\) consists of edges with no arrows pointing towards \(A\);

-

for any undirected edge \(A-B\), \(A\) and \(B\) should have no incoming arrows.

Many properties of ancestral graphs follow from these two conditions; in particular, there can be at most one edge between each pair of variables, and ancestral graphs are closed under marginalisation and conditioning. It also follows that ancestral graphs encode conditional independence relationships through a graphical criterion called m-separation, which is analogous to d-separation for DAGs. In an ancestral graph, \({\varvec{S}}\) m-separates \(A\) from \(B\) if all paths between \(A\) and \(B\) are blocked by \({\varvec{S}}\). A path is blocked if at least one node on the path is either:

-

a collider (in an ancestral graph, a node with two arrowheads pointing into it along the path), where neither the node nor any of its descendants is in \({\varvec{S}}\);

-

or a non-collider that is in \({\varvec{S}}\).

If two nodes are not adjacent in a DAG, there is a set of nodes which d-separates them. However, this does not hold for ancestral graphs. Figure 12a, based on Zhang (2008b), illustrates this: \(G\) and \(H\) are not adjacent, but no subset of the other nodes m-separates them. Therefore, a sub-class of ancestral graphs known as Maximal Ancestral Graphs (MAGs) is defined, in which non-adjacent nodes can always be m-separated. Equivalently, every absent edge in a MAG corresponds to a conditional independence relationship. A MAG can always be constructed from an ancestral graph by adding bi-directional edges, such as \(G \leftrightarrow H\) in Fig. 12b.

Maximal Ancestral Graphs (Zhang 2008b)

In the same way that an equivalence class of DAGs may be consistent with a given set of independence relationships, the independence relationships with latent and selection variables present may be consistent with multiple MAGs. Analogously to a CPDAG, the equivalence class of MAGs is represented by a Partial Ancestral Graph (PAG). Constraint-based algorithms which take account of latent confounders and selection variables generally produce a PAG. There are three types of endpoint possible at each end of an edge in a PAG:

-

an invariant arrowhead, marked as “>”, indicating that all MAGs in the equivalence class have an arrowhead at that endpoint;

-

an invariant tail, marked as “-”, indicating that all MAGs in the equivalence class have a tail at that endpoint;

-

a variant endpoint, marked as “o”, indicating that some MAGs in the equivalence class have an arrowhead, and others a tail, at that endpoint.

So, for example, an edge \(\circ \to\) in a PAG indicates that MAGs in the equivalence class may have \(\to\) or \(\leftrightarrow\) at that location, and similarly an edge \(\circ -\circ\) in the PAG indicates that MAGs in the equivalence class can have a \(\to\), \(\leftarrow\), \(\leftrightarrow\) or \(-\) edge at that location. Note that the semantics of CPDAGs and PAGs are somewhat different. In particular, whereas a \(-\) edge in a CPDAG indicates that the edge in equivalent DAGs can be either \(\to\) or \(\leftarrow\), a \(-\) edge in a PAG indicates that the edge is \(-\) in all equivalent MAGs.
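The endpoint semantics above can be made concrete with a short Python sketch that expands each variant mark "o" into the two marks it stands for. It only enumerates what the marks on a single PAG edge permit; which of these edges actually occur in MAGs of the equivalence class also depends on the rest of the graph, which the sketch ignores. The table and function names are our own.

```python
# An edge between A and B is written as (mark at A, mark at B):
# '>' arrowhead, '-' tail, 'o' variant endpoint.
MAG_EDGE_NAMES = {
    ("-", ">"): "A -> B",
    (">", "-"): "A <- B",
    (">", ">"): "A <-> B",
    ("-", "-"): "A - B",
}
EXPANSIONS = {">": [">"], "-": ["-"], "o": [">", "-"]}


def mag_edges_allowed(mark_at_a, mark_at_b):
    """MAG edge types permitted at this location by the PAG endpoint marks."""
    return {MAG_EDGE_NAMES[(x, y)]
            for x in EXPANSIONS[mark_at_a]
            for y in EXPANSIONS[mark_at_b]}


print(sorted(mag_edges_allowed("o", ">")))   # PAG edge A o-> B
print(sorted(mag_edges_allowed("o", "o")))   # PAG edge A o-o B
```

The first call yields \(\to\) and \(\leftrightarrow\), and the second all four edge types, matching the two examples above; a PAG edge with two invariant tails yields only \(-\).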

3.4.2 Fast causal inference (FCI) algorithm

Spirtes et al. (1993, 2000) described the Fast Causal Inference (FCI) algorithm for structure learning without assuming causal sufficiency, though the causal Markov and causal faithfulness conditions are still assumed. FCI produces a Partially Orientated Inducing Path Graph (POIPG), an earlier representation which is slightly less informative than a PAG. In broad overview, FCI is similar to PC in that it first determines the adjacencies in the POIPG and then orientates edges. Recall that the PC adjacency phase is optimised by using conditioning sets of increasing size. It also exploits the fact that, in a DAG, two non-adjacent nodes \(A\) and \(B\) are d-separated by the parents of one of them, so it need only consider conditioning sets which are subsets of the neighbours of \(A\) or of \(B\). The FCI adjacency phase also uses conditioning sets of increasing size. However, in a MAG a Sepset for \(A\) and \(B\) is found within \({\varvec{D}}\)-\({\varvec{S}}{\varvec{e}}{\varvec{p}}(A, B)\) (Spirtes et al. 2000, p. 134), which in general contains nodes which are not adjacent to \(A\) or \(B\), as well as those that are. This necessitates a more complex strategy for determining adjacencies:

-

1.

Firstly, an initial skeleton is estimated considering conditioning sets that are subsets of the neighbours of \(A\) or \(B\). In general, this skeleton will have some extraneous adjacencies.

-

2.

Secondly, a v-structure phase is performed to orientate some edges. The resulting graph allows us to identify nodes that are definitely not in \({\varvec{D}}\)-\({\varvec{S}}{\varvec{e}}{\varvec{p}}(A, B)\) and so conversely define a superset of \({\varvec{D}}\)-\({\varvec{S}}{\varvec{e}}{\varvec{p}}(A, B)\), denoted \({\varvec{P}}{\varvec{o}}{\varvec{s}}{\varvec{s}}{\varvec{i}}{\varvec{b}}{\varvec{l}}{\varvec{e}}\)-\({\varvec{D}}\)-\({\varvec{S}}{\varvec{e}}{\varvec{p}}(A, B)\).

-

3.

Further edges may then be removed using subsets of \({\varvec{P}}{\varvec{o}}{\varvec{s}}{\varvec{s}}{\varvec{i}}{\varvec{b}}{\varvec{l}}{\varvec{e}}\)-\({\varvec{D}}\)-\({\varvec{S}}{\varvec{e}}{\varvec{p}}(A, B)\) as conditioning sets.

V-structure identification is then repeated on this new skeleton, followed by an orientation phase which is much more complex for POIPGs (and PAGs) than for PDAGs. Zhang (2008b) augmented the process of Spirtes et al. (2000) by defining eleven orientation rules that are said to produce a sound and complete PAG as the sample size \(N\to \infty\); i.e., all arrowheads and edge tails are said to be correct, and the maximum possible number of them are determined. Colombo and Maathuis (2014) applied the same techniques used in PC-Stable to FCI to produce the FCI-Stable algorithm, which removes the dependence of the result on the order in which nodes are processed (e.g., their lexicographical ordering). FCI-Stable is often used as the benchmark when assessing learning in the presence of latent and selection variables.

3.4.3 Really fast causal inference (RFCI) algorithm

Despite the presence of “Fast” in FCI’s name, its adjacency determination is typically far more resource intensive than that of the PC algorithm. Really Fast Causal Inference (RFCI) (Colombo et al. 2012) seeks to address this by reverting to PC's approach in the adjacency phase, considering only conditioning sets drawn from the neighbours of the nodes being tested, and by having just one adjacency phase instead of two as in FCI. The v-structure phase and one of the eleven orientation rules are also modified to avoid orientation errors that might otherwise arise because the PC adjacency step is used rather than the more accurate FCI one. The authors showed that, for a large class of graphs, this produces the same PAG that FCI would. When FCI and RFCI do produce different results, RFCI produces PAGs with extra edges, thus slightly weakening the meaning of an edge. On the other hand, the consequent reduction in CI tests, particularly high-order ones, meant that RFCI was around 250 times faster for some synthetic, sparse, high-dimensional graphs (\(n = 500\)) with latent variables. It should be noted that all structural accuracy evaluations given in Colombo et al. (2012) were made against PAGs produced by FCI rather than against ‘ground-truth’ graphs.

3.4.4 Conservative fast causal inference (CFCI) algorithm

As well as developing the RFCI algorithm, Colombo et al. (2012) also investigated modifying FCI by weakening the faithfulness assumption used to identify v-structures, as Ramsey et al. (2006) had done in the Conservative PC algorithm. They classified potential v-structures as either ambiguous or unambiguous, and only used the latter in subsequent stages. This resulted in fewer arrowheads, smaller possible conditioning sets, and hence extra edges in the resultant PAG compared to FCI. Overall, CFCI produced roughly as many additional edges relative to FCI as RFCI did, though with edge orientations closer to those of FCI.
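The conservative classification of v-structures that CFCI borrows from Conservative PC can be sketched as below. As a simplifying assumption, only subsets of the current neighbours of `a` and `b` are examined (FCI proper would also consider Possible-D-Sep sets), and `indep` is again a hypothetical CI-test callable.

```python
from itertools import combinations
from typing import Callable, Dict, FrozenSet, Hashable, List, Set

Node = Hashable
CITest = Callable[[Node, Node, FrozenSet[Node]], bool]  # hypothetical CI-test interface


def classify_unshielded_triple(
    a: Node, c: Node, b: Node, adj: Dict[Node, Set[Node]], indep: CITest
) -> str:
    """Conservative check of an unshielded triple a - c - b (a and b non-adjacent).

    Collects every subset of adj(a) - {b} and of adj(b) - {a} that separates
    a and b, then commits to an orientation only when all of them agree about c.
    """
    sepsets: List[FrozenSet[Node]] = []
    for pool in (adj[a] - {b}, adj[b] - {a}):
        ordered = sorted(pool, key=str)
        for size in range(len(ordered) + 1):
            for cond in combinations(ordered, size):
                if indep(a, b, frozenset(cond)):
                    sepsets.append(frozenset(cond))
    if not sepsets:
        raise ValueError("no separating set found among the neighbours of a or b")
    if all(c not in s for s in sepsets):
        return "v-structure"   # unambiguous: orient a -> c <- b
    if all(c in s for s in sepsets):
        return "non-collider"  # unambiguous: c is not a collider
    return "ambiguous"         # separating sets disagree: leave the triple unoriented
```

When some separating sets contain `c` and others do not, the triple is marked ambiguous and left unoriented, which is what yields the fewer arrowheads described above.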

3.4.5 Fast causal inference plus (FCI+) algorithm

Fast Causal Inference Plus (FCI+) centres on another approach to speeding up the adjacency phase of FCI (Claassen et al. 2013). It retains FCI's use of conditioning sets for the pair \(A, B\) that may include ancestors of \(A\) and \(B\), not just their parents, but focuses on efficiently identifying those cases where such non-parent ancestors are actually needed in the Sepset. In doing so, the authors demonstrated that learning sparse causal graphs can be performed in polynomial time if a limit is placed on the node degree. In particular, in the worst case, FCI+ requires \(O({n}^{2\left(d+2\right)})\) CI tests, where \(n\) is the number of observed variables and \(d\) the maximum node degree. This complexity is \(O({PC}^{2})\), that is, the square of what the PC algorithm requires. Although detailed performance results are not given, the authors suggested that cases where Sepsets do include non-parents are relatively rare, and so performance may in practice be relatively close to that of PC.
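As a rough numerical illustration of this bound (simple arithmetic rather than a figure reported by Claassen et al.), and reading the \(O({PC}^{2})\) remark as comparing against a PC bound of \(O(n^{d+2})\):

\[
n^{2(d+2)} = \left(n^{d+2}\right)^{2}, \qquad n = 20,\ d = 2:\quad 20^{8} = 2.56\times 10^{10} = \left(20^{4}\right)^{2} = \left(1.6\times 10^{5}\right)^{2}.
\]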

3.4.6 Mixed variable types—MGM-FCI-MAX algorithm

Raghu et al. (2018) extended FCI to support a mixture of continuous and discrete variables in their MGM-FCI-MAX variant. They introduced regression-based tests to detect conditional independence across different variable types. Orientation accuracy is also improved by using the Sepset with the highest p-value to identify v-structures, as described for the PC-MAX algorithm (see Sect. 3.2.6). This increases the number of CI tests compared to FCI, and so the adjacency and v-structure phases are parallelised to counteract this drawback. The parallelisation yielded modest time savings, of the order of 30%, using six processor cores instead of one. The algorithm achieves more substantial savings through a further innovation: using a Mixed Graphical Model (MGM) undirected graph, rather than the customary complete undirected graph, as input to the adjacency phases. An MGM is an undirected graph which can represent conditional independence relationships between variables of mixed types; here it was generated using the algorithm described by Lee and Hastie (2015). Combining all these innovations, MGM-FCI-MAX was reported to achieve a better balance between precision and recall than CFCI and FCI, as well as significantly reduced runtimes when applied to networks of 500 variables.
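The PC-MAX rule for choosing a Sepset can be sketched as follows, assuming a hypothetical `p_value(a, b, cond)` callable that returns the p-value of the CI test of `a` and `b` given `cond`; in MGM-FCI-MAX such a function would wrap the regression-based mixed-type tests, which are not shown here.

```python
from typing import Callable, FrozenSet, Hashable, Iterable, Tuple

Node = Hashable
# Hypothetical interface: p-value of the CI test of a and b given cond.
PValue = Callable[[Node, Node, FrozenSet[Node]], float]


def orient_by_max_p(
    a: Node,
    c: Node,
    b: Node,
    candidate_sets: Iterable[FrozenSet[Node]],
    p_value: PValue,
) -> Tuple[bool, FrozenSet[Node]]:
    """Return (is_v_structure, chosen_sepset) for the unshielded triple a - c - b."""
    # Pick the conditioning set under which a and b look most independent.
    best = max(candidate_sets, key=lambda s: p_value(a, b, s))
    # a -> c <- b is oriented only if c is absent from that set.
    return c not in best, best
```

Each candidate set requires its own CI test, which is the source of the extra tests that the parallelisation described above is meant to absorb.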

4 Score-based learning

Score-based learning represents the other main class of BN structure learning and consists of two elements: (a) a search strategy that determines which path to follow in the search space of possible graphs, and (b) an objective function that can be used to evaluate each graph explored in the search space of graphs. The overriding challenge for score-based learning is to find high, or ideally the highest, scoring graphs amongst the vast number of possible graphs. As we have seen in the Introduction, a naïve exhaustive search where every possible graph is considered and scored is only feasible in problems with a handful of variables.

We first describe the objective function, which is pertinent to all score-based algorithms, in Sect. 4.1, followed by the algorithms themselves. Score-based algorithms are the most diverse type of structure learning algorithms, and there are different ways one might choose to categorise them. Here, we opt to primarily organise them according to those which do not guarantee to return the highest scoring graph, known as approximate algorithms and described in Sect. 4.2, and those which do offer that guarantee, known as exact algorithms and described in Sect. 4.3. These different groups of score-based algorithms, and their evolution, are shown in different shades of blue in Fig. 6.

The other defining characteristic of score-based algorithms is the search strategy. This is a combination of the search space used, how the algorithm traverses that search space, and how that search space might be pruned (reduced). Perhaps the simplest score-based algorithm one might imagine is one that starts with an empty graph and greedily adds the arc which most increases the score, subject to the restriction that it does not create a cycle in the graph. This process continues until no arc addition increases the score. The search space in this simple case would be DAG space (sometimes referred to as structure or graph space), and the traversal method is arc addition. Since the algorithm greedily adds arcs, there is no guarantee it will find the highest scoring graph, and so it is an approximate algorithm.
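The simple add-arc algorithm just described can be sketched in Python. The decomposable score is passed in as a hypothetical `local_score(node, parents)` callable, so only the child's term is recomputed for each candidate arc; any real decomposable score could be plugged in, and the toy score at the end is purely illustrative.

```python
from itertools import permutations
from typing import Callable, Dict, FrozenSet, Hashable, List

Node = Hashable
# Hypothetical decomposable score: local_score(node, parent_set) -> float.
LocalScore = Callable[[Node, FrozenSet[Node]], float]


def is_ancestor(parents: Dict[Node, FrozenSet[Node]], a: Node, b: Node) -> bool:
    """True if a directed path a -> ... -> b exists in the current graph."""
    stack, seen = [b], {b}
    while stack:
        for p in parents[stack.pop()]:
            if p == a:
                return True
            if p not in seen:
                seen.add(p)
                stack.append(p)
    return False


def greedy_add_arcs(nodes: List[Node], local_score: LocalScore) -> Dict[Node, FrozenSet[Node]]:
    parents: Dict[Node, FrozenSet[Node]] = {v: frozenset() for v in nodes}  # empty graph
    cached = {v: local_score(v, parents[v]) for v in nodes}  # per-node score terms
    while True:
        best_gain, best_arc = 0.0, None
        for u, v in permutations(nodes, 2):
            # Skip existing arcs and arcs whose addition would close a cycle v -> ... -> u -> v.
            if u in parents[v] or is_ancestor(parents, v, u):
                continue
            # Decomposability: only v's term changes when u -> v is added.
            gain = local_score(v, parents[v] | {u}) - cached[v]
            if gain > best_gain:
                best_gain, best_arc = gain, (u, v)
        if best_arc is None:  # no arc addition increases the score
            return parents
        u, v = best_arc
        parents[v] = parents[v] | {u}
        cached[v] = local_score(v, parents[v])


# Illustrative only: a toy score that penalises differences from fixed target parent sets.
target = {"A": set(), "B": {"A"}, "C": {"B"}}


def toy_score(v: Node, ps: FrozenSet[Node]) -> float:
    return -float(len(set(ps) ^ target[v]))


print(greedy_add_arcs(["A", "B", "C"], toy_score))  # A has no parents, B has parent A, C has parent B
```

With this toy score the search adds A → B and then B → C, after which every remaining addition either lowers the score or would create a cycle, so it stops.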

Approximate algorithms which search DAG space are described in Sect. 4.2.1. However, other kinds of search space have also been adopted. For example, Sect. 4.2.2 describes approximate algorithms which explore equivalence class space, and Sect. 4.2.3 covers those which explore node-ordering space. A node ordering is a topological ordering of the nodes in the DAG such that every parent of a node appears earlier in the ordering than the node itself. Note that a node ordering exists for a directed graph if and only if it is acyclic, and that, in general, a DAG may be consistent with multiple orderings, just as an ordering may be consistent with multiple DAGs.
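The relationship between node orderings and DAGs can be checked by brute force on tiny graphs. The sketch below represents a graph as a mapping from each node to its set of parents (an assumed representation) and enumerates all \(n!\) orderings, which is only feasible for a handful of nodes.

```python
from itertools import permutations
from typing import Dict, Hashable, List, Sequence, Set, Tuple

Node = Hashable


def is_consistent(order: Sequence[Node], parents: Dict[Node, Set[Node]]) -> bool:
    """True if every node's parents appear earlier in the ordering than the node itself."""
    position = {v: i for i, v in enumerate(order)}
    return all(position[p] < position[v] for v in order for p in parents[v])


def consistent_orderings(parents: Dict[Node, Set[Node]]) -> List[Tuple[Node, ...]]:
    """Brute-force enumeration over all n! orderings; only sensible for tiny graphs."""
    return [o for o in permutations(parents) if is_consistent(o, parents)]
```

For the collider A → C ← B this returns two orderings, a cyclic graph such as A ⇄ B has none, and the single ordering (A, B, C) is consistent with both the chain A → B → C and the empty graph.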

This categorisation by search space is also followed for the exact algorithms. Pruning the search space is particularly important for exact algorithms, where the pruning rules must be sound so as to guarantee that the pruned space still contains the optimal solution; heuristic pruning does not offer this guarantee. Table 3 describes the search space and the search space traversal method used by the score-based algorithms covered in this paper, as well as whether they are approximate or exact algorithms. It also includes the objective function used in the original paper proposing each algorithm. Note that Scutari et al. (2019a) argued that the choice of algorithm and score should be independent, and indeed many BN tools support using different score functions with each algorithm. Thus, this column does not necessarily indicate a fundamental restriction on the scores that can be used with each algorithm; rather, it gives a historical view of the preferred scores at the time each algorithm was introduced. Finally, Table 3 describes the output graph type each algorithm produces. Approximate algorithms will typically produce a single DAG with a locally optimal score, whereas exact algorithms will return a DAG with the globally optimal (that is, highest possible) score. Algorithms searching equivalence class space, however, will return a CPDAG.

4.1 Objective functions

Objective functions fall under two categories: Bayesian scores, which generally focus on the goodness of fit and allow the incorporation of prior knowledge, and information-theoretic scores, which explicitly consider model complexity in addition to the goodness of fit, aiming to avoid overfitting. Importantly, a score is said to be decomposable if the score of a graph can be decomposed into a sum of scores, each associated with a node in the graph. With a decomposable score, only the scores of nodes affected by a graph change need to be re-computed during search, rather than the score of the whole graph for every single graph modification. As a result, decomposability greatly improves computational efficiency, and virtually all algorithms use decomposable scores.

As noted in the Introduction, all the DAGs in an equivalence class entail the same conditional independence relationships, and so there is no reason to prefer one of them over the others on the basis of observational data alone. The objective function is therefore usually specified so that it gives the same score to all DAGs in an equivalence class, a property known as score equivalence. Most commonly used scores have this property. However, it is worth noting that approximate and exact algorithms that use score-equivalent objective functions often return just a single DAG. In that case, the output DAG should be regarded as a representative of the equivalence class to which it belongs. Indeed, the particular DAG within an equivalence class that the algorithm returns is usually just an artefact of the dataset (Constantinou et al. 2021b): it may depend on the lexicographical ordering of the variable names, or on the order in which the variables are encountered in the dataset.

4.1.1 Bayesian scores

Bayesian scoring functions return a relative posterior probability for a graph conditioned on the data, taking into account prior beliefs about the graphical structure and/or dependence relationship parameters. The approach provides a theoretical underpinning to assign a posterior probability to each possible structure, something that constraint-based approaches do not offer. This in turn allows Bayesian Model Averaging (BMA) where, for instance, the posterior probability of a given feature such as a specific arc can be averaged across a set of likely structures.

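Most commonly, one assumes that all graph structures are equally probable a priori. For discrete data, a Dirichlet prior is generally assumed for the parameters, which gives rise to the well-established Bayesian Dirichlet (BD) score; in its general form, this score is not score equivalent (Heckerman et al. 1995). Formally, the general BD score is defined as:

\[
BD(G, D) = \log P(G) + \sum_{i=1}^{n}\sum_{j=1}^{q_i}\left(\log\frac{\Gamma(N'_{ij})}{\Gamma(N_{ij}+N'_{ij})} + \sum_{k=1}^{r_i}\log\frac{\Gamma(N_{ijk}+N'_{ijk})}{\Gamma(N'_{ijk})}\right)
\]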

where \(\Gamma\) is the Gamma function, \(i\) is the index over the \(n\) variables, \(j\) is the index over the \(q_i\) combinations of values of the parents of node \(X_i\), and \(k\) is the index over the \(r_i\) possible values (states) of node \(X_i\). Further, \(N_{ijk}\) is the number of instances in the data \(D\) where node \(X_i\) has its \(k\)th value and its parents have their \(j\)th combination of values, and \(N_{ij}=\sum_{k=1}^{r_i}N_{ijk}\) is the total number of instances in \(D\) where the parents of \(X_i\) have their \(j\)th combination of values. Lastly, \(N'_{ijk}\) and \(N'_{ij}=\sum_{k=1}^{r_i}N'_{ijk}\) are defined analogously, based on prior beliefs about these values. \(P(G)\) is the prior probability of a particular graph structure, which is generally assumed to be the same for all graphs and so can be ignored.

A drawback of the general BD score is that it requires the user to specify each value of \(N'_{ijk}\) individually, which renders it impractical. The K2 score (Cooper and Herskovits 1992) is the BD score with \(N'_{ijk}=1\), which simplifies the general BD score to:

\[
K2(G, D) = \log P(G) + \sum_{i=1}^{n}\sum_{j=1}^{q_i}\left(\log\frac{(r_i-1)!}{(N_{ij}+r_i-1)!} + \sum_{k=1}^{r_i}\log N_{ijk}!\right)
\]

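The K2 score is not score equivalent either. Heckerman et al. (1995) introduced the score-equivalent BDe score, defined as

\[
BDe(G, D) = \log P(G) + \sum_{i=1}^{n}\sum_{j=1}^{q_i}\left(\log\frac{\Gamma(N'\theta'_{ij})}{\Gamma(N_{ij}+N'\theta'_{ij})} + \sum_{k=1}^{r_i}\log\frac{\Gamma(N_{ijk}+N'\theta'_{ijk})}{\Gamma(N'\theta'_{ijk})}\right)
\]

with \(\theta'_{ij}=\sum_{k=1}^{r_i}\theta'_{ijk}\); that is, BDe is the BD score with \(N'_{ijk}=N'\theta'_{ijk}\).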

Here \(\theta'_{ijk}\) is the prior probability that node \(X_i\) takes its \(k\)th value and its parents take their \(j\)th combination of values, under the prior distribution. \(N'\) is the equivalent sample size (ESS, also sometimes known as the imaginary sample size, ISS) and expresses our confidence in the prior parameters.

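The most commonly used Bayesian score is the BDeu score (Buntine 1991; Heckerman et al. 1995), which is the special case of BDe where the prior parameters are set to \(\theta'_{ijk}= 1/(r_i q_i)\) for all \(i, j, k\), leading to the following definition:

\[
BDeu(G, D) = \log P(G) + \sum_{i=1}^{n}\sum_{j=1}^{q_i}\left(\log\frac{\Gamma(N'/q_i)}{\Gamma(N_{ij}+N'/q_i)} + \sum_{k=1}^{r_i}\log\frac{\Gamma(N_{ijk}+N'/(r_i q_i))}{\Gamma(N'/(r_i q_i))}\right)
\]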
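The K2 and BDeu scores can be computed directly from counts. The sketch below assumes discrete data stored as a list of dictionaries, drops \(\log P(G)\) under a uniform structure prior, and supports only priors in which \(N'_{ijk}\) is the same for every \(j\) and \(k\) (true of both K2 and BDeu, but not of the general BD score). `math.lgamma` is used so that large counts do not overflow; the helper names are illustrative.

```python
from collections import Counter
from itertools import product
from math import lgamma
from typing import Callable, Dict, Hashable, List, Sequence

Row = Dict[str, Hashable]
# prior(r_i, q_i) -> N'_ijk; assumed identical for every j and k, as in K2 and BDeu.
Prior = Callable[[int, int], float]


def local_bd(rows: List[Row], child: str, parents: Sequence[str],
             states: Dict[str, List[Hashable]], prior: Prior) -> float:
    """Log BD term for one node: sum over parent configurations j of the Gamma ratios."""
    r = len(states[child])
    configs = list(product(*(states[p] for p in parents)))  # the q_i parent configurations
    a = prior(r, len(configs))                                # N'_ijk
    counts = Counter((tuple(row[p] for p in parents), row[child]) for row in rows)
    score = 0.0
    for j in configs:
        n_ij = sum(counts[(j, k)] for k in states[child])
        score += lgamma(r * a) - lgamma(n_ij + r * a)          # N'_ij = r_i * N'_ijk
        for k in states[child]:
            score += lgamma(counts[(j, k)] + a) - lgamma(a)
    return score


def bd_score(rows: List[Row], dag: Dict[str, Sequence[str]],
             states: Dict[str, List[Hashable]], prior: Prior) -> float:
    """Sum of local terms; log P(G) is dropped under a uniform structure prior."""
    return sum(local_bd(rows, v, ps, states, prior) for v, ps in dag.items())


def k2(r: int, q: int) -> float:
    return 1.0  # N'_ijk = 1


def bdeu(ess: float) -> Prior:
    return lambda r, q: ess / (r * q)  # N'_ijk = N' / (r_i q_i)


# Usage: two Markov-equivalent DAGs over binary A and B with different marginal counts.
rows = [{"A": 0, "B": 0}, {"A": 0, "B": 0}, {"A": 0, "B": 1}, {"A": 1, "B": 1}]
states = {"A": [0, 1], "B": [0, 1]}
a_to_b = {"A": (), "B": ("A",)}
b_to_a = {"A": ("B",), "B": ()}
print(bd_score(rows, a_to_b, states, bdeu(1.0)) - bd_score(rows, b_to_a, states, bdeu(1.0)))  # approximately 0
print(bd_score(rows, a_to_b, states, k2) - bd_score(rows, b_to_a, states, k2))  # log(9/8), about 0.1178
```

On this data BDeu gives A → B and B → A the same score, whereas the K2 scores differ, which illustrates why K2 is not score equivalent while BDeu is.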

Cooper and Yoo (1999) defined a variant of BDeu that is suitable for a mix of observational and interventional data, in which the terms expressing the likelihood of the data given a particular structure are omitted for a node in those data instances in which that node was intervened on. They showed that, using this approach, a combination of observational and experimental data was the most effective at identifying causally related nodes.
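The counting change behind this variant can be sketched as below. We assume, as our reading of the description above, that the omission is applied row by row, so that a row contributes to a node's counts \(N_{ijk}\) unless that node itself was intervened on in that row; the `intervened` list is a hypothetical data layout. The resulting counts would then take the place of the ordinary \(N_{ijk}\) in the BDeu formula.

```python
from collections import Counter
from typing import Dict, Hashable, List, Sequence, Set

Row = Dict[str, Hashable]


def interventional_counts(rows: List[Row], intervened: List[Set[str]],
                          child: str, parents: Sequence[str]) -> Counter:
    """N_ijk counts for `child` that skip rows in which `child` itself was set by intervention.

    intervened[t] holds the variables manipulated in row t (a hypothetical data layout).
    Rows where only a parent was intervened on still contribute to the child's counts.
    """
    return Counter(
        (tuple(row[p] for p in parents), row[child])
        for row, manipulated in zip(rows, intervened)
        if child not in manipulated
    )
```

For example, if A was set by intervention in one row, that row is dropped from A's own counts but still informs the counts of its child B.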