Abstract

Pattern mining is a fundamental data mining task with applications in several domains. In this work, we consider the scenario in which we have a sequence of datasets generated by potentially different underlying generative processes, and we study the problem of mining statistically robust patterns, which are patterns whose probabilities of appearing in transactions drawn from such generative processes respect well-defined conditions. Such conditions define the patterns of interest by describing how their probabilities evolve through the sequence of datasets: for example, they may increase, decrease, or stay stable. Due to the stochastic nature of the data, one cannot identify the exact set of statistically robust patterns by analyzing a sequence of samples, i.e., the datasets, taken from the generative processes, and has to resort to approximations. We therefore propose gRosSo, an algorithm that finds rigorous approximations of the statistically robust patterns that, with high probability, contain no false positives or no false negatives. We apply our framework to the mining of statistically robust sequential patterns and statistically robust itemsets. Our extensive evaluation on pseudo-artificial and real data shows that gRosSo provides high-quality approximations for both tasks.

1 Introduction

Frequent pattern mining [2] is one of the fundamental tasks in data mining, and requires identifying all patterns that appear in at least a fraction \(\theta \) of the transactions of a transactional dataset. Several variants of the problem have explored different types of patterns (from itemsets [3] to sequential patterns [4], to subgroups [5], to graphlets [6]) relevant to applications ranging from market basket analysis to recommendation systems to spam detection.

In several real applications, a pattern is studied in the context of a sequence of datasets, where the sequence arises, for example, from collecting the data at different time points. For example, in market basket analysis, it is natural to study the patterns (e.g., itemsets) in datasets obtained from transactions in different weeks or months. In almost all applications, one can assume that each dataset is obtained from a generative process on transactions, which generates transactions according to some probability distribution, as assumed by statistically sound pattern mining [7]. Let us consider, for example, a series of n surveys performed in n different time intervals in a supermarket, where we collect the data of the receipts of the customers. The goal of such surveys is to infer information on how the behavior of the entire customer population evolves, but, obviously, it is impossible to collect the receipts of the whole population. Thus, our datasets only represent a collection of samples from the whole population.

In such a scenario, patterns of interest are the ones whose probability of appearing in a transaction follows some well-specified trend (e.g., it increases, decreases, or is constant across datasets). In the survey example above, we may be interested in finding sequences of purchases (i.e., sequential patterns) which become more and more common in time to understand how the customers’ behavior changes over time. However, the identification of such patterns is extremely challenging, since the underlying probability distributions are unknown and the observed frequencies of the patterns in the data only approximately reflect such probabilities. As a result, considering the same trends at the level of observed frequencies leads to reporting several false positives. This problem is exacerbated by the huge number of potential candidates, which poses a severe multiple hypothesis correction problem [8]. In addition, techniques developed for significant pattern mining [7] or for statistically emerging pattern mining [9] can only be applied to (a sequence of) two datasets.

To address such challenges, in this work we introduce a novel framework to identify statistically robust patterns from a sequence of datasets, i.e., patterns whose probability of appearing in transactions follows a well-specified trend, while providing guarantees on the quality of the reported patterns in terms of false positives or in terms of false negatives.

1.1 Our contributions

In this work, we introduce the problem of mining statistically robust patterns from a sequence of datasets. In this regard, our contributions are:

- We define the problem of mining statistically robust patterns, and define an approximation of such patterns that does not contain false positives. We also describe three general types of patterns (emerging, descending, and stable) which are of interest in most scenarios.

- We introduce an algorithm, gRosSo, to obtain a rigorous approximation, without false positives, of the statistically robust patterns from a sequence of datasets with probability at least \(1-\delta \), where \(\delta \) is a confidence parameter set by the user. Our strategy is based on the concept of maximum deviation and can employ any uniform convergence bound (see Sect. 2). We show how such a strategy can be used to approximate the three types of statistically robust patterns we introduced.

- We define an approximation of the statistically robust patterns that does not contain false negatives, and explain how gRosSo can be modified to obtain such an approximation with high probability. We also discuss and prove additional guarantees that can be obtained with gRosSo.

- We apply the general framework of statistically robust patterns to mine sequential patterns. We also introduce a novel algorithm to compute an upper bound on the capacity of a sequence that can be used to bound the maximum deviation using the statistical learning concept of VC-dimension of sequential patterns, which may be of independent interest.

- We apply the general framework of statistically robust patterns to mine itemsets using the VC-dimension of itemsets to bound the maximum deviation.

- We perform an extensive experimental evaluation, mining statistically robust sequential patterns and itemsets from pseudo-artificial datasets. Our evaluation shows that relying on frequency alone leads to several spurious discoveries, while gRosSo provides high-quality approximations for both data mining tasks. Finally, we analyze real datasets by mining statistically robust sequential patterns, showing that gRosSo is able to detect various types of patterns.

1.2 Related works

We now discuss the relationship of our work to prior art on significant pattern mining, emerging pattern mining, and robust pattern mining, which are the areas most related to our work. We also focus on works that considered sequential pattern and itemset mining, which are the applications of our framework that we present in this paper, and that use concepts from statistical learning theory, as done in our work.

In significant pattern mining, the dataset is seen as a sample from an unknown distribution and one is interested in finding patterns that significantly deviate from an assumed null distribution (or hypothesis). Many variants and algorithms have been proposed for the problem. We refer the interested reader to the survey [7] and the recent works [10,11,12]. Only a few works have been proposed to mine statistically significant sequential patterns [13,14,15]. These methods are orthogonal to our approach, which focuses on finding patterns whose frequencies with respect to (w.r.t.) underlying generative distributions respect well-defined conditions through a sequence of datasets.

The first work that proposed the problem of mining emerging patterns is [16]. To the best of our knowledge, the only work that considers the problem of finding emerging patterns considering a data generative process and provides statistical guarantees is [9]. However, the proposed approach only works with two datasets and only finds patterns with significant differences in the two datasets. Instead, our approach describes more general trends of the probabilities of the patterns and considers more than two datasets, and it is unclear whether the approach of [9] can be modified to work in our scenario.

Frequent itemset mining [3] and frequent sequential pattern mining [4] are two fundamental data mining problems, and several algorithms have been proposed for these tasks (e.g., [17,18,19,20]). Servan-Schreiber et al. [21] were the first to apply the statistical learning theory concept of VC-dimension to sequential patterns, and they provided the first efficiently computable upper bound on the empirical VC-dimension of sequential patterns, based on the notion of capacity of a sequence. In this work, we propose a tighter upper bound on the capacity of a sequence, together with an algorithm to compute it, and we apply it in a different scenario. More recently, Santoro et al. [22] provided a sampling-based algorithm to compute approximations for the frequent sequential patterns problem, based on an upper bound on the VC-dimension of sequential patterns. They were also the first to consider the problem of mining true frequent sequential patterns, that is, sequential patterns that are frequent w.r.t. an underlying generative process. They proposed two approaches to compute approximations for such a problem: one based on the empirical VC-dimension and the other based on the Rademacher complexity. While we use a general framework similar to the one proposed by [22], we consider the problem of mining statistically robust patterns in a sequence of datasets, which is a different task. Riondato and Upfal [23] were the first to apply the VC-dimension to itemsets, providing a sampling-based algorithm to compute approximations for the frequent itemsets problem. Riondato and Vandin [24] were instead the first to consider the extraction of frequent patterns w.r.t. an underlying generative process, based on the concept of empirical VC-dimension of itemsets. While our application to mining statistically robust itemsets uses some of the results provided in these works, we consider the problem of mining itemsets in a sequence of datasets, which is a different problem.

Only a few works have been proposed to mine robust patterns, where robustness is usually defined by constraints on the relation between the observed frequency of a pattern in a dataset and the frequencies of its sub- or super-patterns. For example, Zhu et al. [25] define robust patterns as patterns for which, after removing some of their sub-patterns, the ratio between the original frequency and the frequency of the resulting pattern in a dataset is greater than a user-defined parameter. Egho et al. [26] introduce a space of rules patterns model and define a Bayesian criterion for evaluating the interest of sequential patterns, with the goal of mining sequential rule patterns for classification purposes. Differently from our work, these contributions focus on a single dataset and do not consider a dataset as a collection of samples from an unknown generative process.

This version of our work differs in many ways from the preliminary one that appeared in the proceedings of IEEE ICDM’20 [1]. The major changes are the following:

- We include additional proofs and pseudo-code that had been removed from the previous version due to space constraints.

- We define a false negatives free approximation, and we discuss how gRosSo can be extended to obtain such an approximation (Sect. 4.4).

- We discuss additional guarantees that can be obtained with gRosSo for both types of approximations (Sect. 4.5).

- We include another application of the general framework of statistically robust patterns, namely mining statistically robust itemsets, using the VC-dimension of itemsets to bound the maximum deviation (Sect. 6).

- We extend our experimental evaluation to include experiments on mining statistically robust itemsets from pseudo-artificial datasets, and on mining false negatives free approximations for both statistically robust sequential patterns and statistically robust itemsets (Sect. 7).

1.3 Organization of the paper

The rest of the paper is structured as follows. Section 2 contains the definitions and concepts used throughout this work. Our framework for statistically robust pattern mining is presented in Sect. 3. Section 4 describes our algorithm, gRosSo, to mine statistically robust patterns and provides discussions and proofs of the guarantees that can be obtained with it. The application of our approach for mining statistically robust sequential patterns is described in Sect. 5, while the application for mining statistically robust itemsets is described in Sect. 6. Section 7 reports the results of an extensive suite of experiments performed to evaluate the effectiveness of gRosSo on pseudo-artificial and real datasets. Section 8 concludes the paper with some final remarks.

2 Preliminaries

We now provide the definitions and the concepts used throughout the paper. We start by introducing the task of pattern mining and defining the problems of mining frequent and true frequent patterns. Then, we formally define the concept of maximum deviation, which is required by our strategy to find an approximation of the statistically robust patterns, and of VC-dimension, showing how it can be used to bound the maximum deviation.

2.1 Pattern mining

Let a dataset \(\mathcal {D} = \{\tau _1,\tau _2,\ldots ,\tau _m\}\) be a finite bag of \(|\mathcal {D}|=m\) transactions, where each transaction is an element from a domain \(\mathbb {U}\). We assume that the elements of \(\mathbb {U}\) exhibit a poset structure. We define a pattern p as an element of \(\mathbb {U}\), potentially with some constraints. (For example, in itemset mining the domain \(\mathbb {U}\) consists of all subsets of binary features called items.) A pattern p belongs to a transaction \(\tau \in \mathcal {D}\) if and only if p is contained in \(\tau \), denoted by \(p \sqsubseteq \tau \). The support set \(T_{\mathcal {D}}(p)\) of p in \(\mathcal {D}\) is the set of transactions in \(\mathcal {D}\) containing p, that is, \(T_{\mathcal {D}}(p) = \{\tau \in \mathcal {D} : p \sqsubseteq \tau \}\). Finally, the frequency \(f_{\mathcal {D}}(p)\) of p in \(\mathcal {D}\) is the fraction of transactions in \(\mathcal {D}\) to which p belongs, that is,

\[ f_{\mathcal {D}}(p) = \frac{|T_{\mathcal {D}}(p)|}{|\mathcal {D}|}. \]

Given a dataset \(\mathcal {D}\) and a minimum frequency threshold \(\theta \in (0,1]\), frequent pattern (FP) mining is the task of reporting the set \(FP(\mathcal {D},\theta )\) of all the patterns whose frequencies in \(\mathcal {D}\) are at least \(\theta \), and their frequencies, that is,

\[ FP(\mathcal {D},\theta ) = \{(p,f_{\mathcal {D}}(p)) : p \in \mathbb {U},\ f_{\mathcal {D}}(p) \ge \theta \}. \]

Given a generic set \(\mathcal {A}\) of pairs \((p,\cdot )\), where the first element of each pair is a pattern, in the following, with an abuse of notation, we use \(p \in \mathcal {A}\) to indicate that \(\exists (p,\cdot ) \in \mathcal {A}\), e.g., \(p \in FP(\mathcal {D},\theta ) \Rightarrow \exists (p,f_\mathcal {D}(p)) \in FP(\mathcal {D},\theta )\).
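As an illustration of these definitions, the following minimal sketch computes frequencies and the set \(FP(\mathcal {D},\theta )\) for the itemset case, where containment \(p \sqsubseteq \tau \) is the subset relation. The function names `frequency` and `frequent_patterns` and the toy dataset are assumptions made for this example; the exhaustive enumeration is only practical for tiny item universes and does not stand in for an efficient miner.

```python
from itertools import combinations


def frequency(pattern, dataset):
    """Fraction of transactions in `dataset` that contain `pattern`."""
    pattern = frozenset(pattern)
    support = [t for t in dataset if pattern <= frozenset(t)]
    return len(support) / len(dataset)


def frequent_patterns(dataset, theta):
    """Brute-force FP(D, theta): non-empty itemsets with frequency >= theta."""
    items = sorted(set().union(*map(set, dataset)))
    result = {}
    for size in range(1, len(items) + 1):
        for candidate in combinations(items, size):
            f = frequency(candidate, dataset)
            if f >= theta:
                result[frozenset(candidate)] = f
    return result


# Toy dataset (hypothetical): four transactions over the items a, b, c.
dataset = [{"a", "b"}, {"a", "c"}, {"a", "b", "c"}, {"b"}]
fp = frequent_patterns(dataset, 0.5)
for pattern, f in sorted(fp.items(), key=lambda kv: sorted(kv[0])):
    print(sorted(pattern), f)
```

The dictionary maps each pattern to its frequency, mirroring the pairs \((p,f_{\mathcal {D}}(p))\) in the definition.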

2.2 True frequent pattern mining

In several applications, the dataset \(\mathcal {D}\) is a sample of transactions independently drawn from an unknown probability distribution \(\pi \) on \(\mathbb {U}\), that is, the dataset \(\mathcal {D}\) is a finite bag of \(|\mathcal {D}|\) independent identically distributed (i.i.d.) samples from \(\pi \), with \(\pi : \mathbb {U} \rightarrow [0,1]\). The true support set T(p) of p is the set of transactions in \(\mathbb {U}\) to which p belongs, \(T(p) = \{\tau \in \mathbb {U} : p \sqsubseteq \tau \}\), and the true frequency \(t_{\pi }(p)\) of p w.r.t. \(\pi \) is the probability that a transaction sampled from \(\pi \) contains p, that is,

\[ t_{\pi }(p) = \sum _{\tau \in T(p)} \pi (\tau ). \]

In such a scenario, the final goal of the data mining process on \(\mathcal {D}\) is to gain a better understanding of the process that generated the data, i.e., the distribution \(\pi \), through the true frequencies of the patterns, which are unknown and only approximately reflected in the dataset \(\mathcal {D}\). Thus, given a probability distribution \(\pi \) on \(\mathbb {U}\) and a minimum frequency threshold \(\theta \in (0,1]\), true frequent pattern (TFP) mining is the task of reporting the set \(TFP(\pi ,\theta )\) of all patterns whose true frequencies w.r.t. \(\pi \) are at least \(\theta \), and their true frequencies, that is,

\[ TFP(\pi ,\theta ) = \{(p,t_{\pi }(p)) : p \in \mathbb {U},\ t_{\pi }(p) \ge \theta \}. \]

Let us note that, given a finite number of random samples from \(\pi \), the dataset \(\mathcal {D}\), it is not possible to find the exact set \(TFP(\pi ,\theta )\), and one has to resort to approximations of \(TFP(\pi ,\theta )\).

2.3 Maximum deviation

Let \(\mathcal {X}\) be a domain set and let \(\mathcal {P}\) be a probability distribution on \(\mathcal {X}\). Let \(\mathcal {G}\) be a set of functions from \(\mathcal {X}\) to [0, 1]. Given a function \(g \in \mathcal {G}\), the expectation \(\mathbb {E}[g]\) of g is defined as

\[ \mathbb {E}[g] = \mathbb {E}_{x \sim \mathcal {P}}[g(x)], \]

with \(x \in \mathcal {X}\), and, given a sample A of |A| elements drawn from \(\mathcal {P}\), the empirical average E(g, A) of g on A is defined as

\[ E(g,A) = \frac{1}{|A|}\sum _{a \in A} g(a). \]

The maximum deviation is defined as the largest difference, over all functions \(g \in \mathcal {G}\), between the expectation of g and its empirical average on a sample A, that is,

\[ \sup _{g \in \mathcal {G}} \left| \mathbb {E}[g] - E(g,A) \right| . \]

In the TFP mining task, one is interested in finding good estimates for \(t_\pi (p)\) simultaneously for each pattern \(p \in \mathbb {U}\). In such a scenario, the true frequency \(t_\pi (p)\) and the frequency \(f_{\mathcal {D}}(p)\) of a pattern p on \(\mathcal {D}\) represent, respectively, the expectation and the empirical average of a function associated with p, since

\[ t_\pi (p) = \mathbb {E}[\mathbbm {1}_{\tau }(p)] \]

and

\[ f_{\mathcal {D}}(p) = E(\mathbbm {1}_{\tau }(p),\mathcal {D}), \]

with \(\mathbbm {1}_{\tau }(p)\) the indicator function that assumes the value 1 if and only if \(p \sqsubseteq \tau \). Thus, in the TFP scenario the maximum deviation is

\[ \sup _{p \in \mathbb {U}} |t_\pi (p) - f_{\mathcal {D}}(p)|, \]

and one is interested in finding probabilistic upper bounds on such a measure, i.e., finding a \(\mu \in (0,1)\) such that (s.t.)

\[ \Pr \left( \sup _{p \in \mathbb {U}} |t_\pi (p) - f_{\mathcal {D}}(p)| \le \mu \right) \ge 1-\delta , \]

with a confidence parameter \(\delta \in (0,1)\).

Such probabilistic upper bounds on the maximum deviation can be computed with tools from statistical learning theory, e.g., VC-dimension [27] and Rademacher complexity [28]. More common techniques (e.g., Hoeffding's inequality combined with a union bound) instead do not provide useful results, since they require knowing the number of all possible patterns that can be generated by the process, which can be infinite or impractical to compute.
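To make the quantity being bounded concrete, the sketch below computes the maximum deviation for a toy itemset example in which the distribution \(\pi \) is known, which is never the case in practice. The distribution, the sample, and the function names are assumptions made for illustration, and the maximum is taken over an explicitly listed finite set of patterns rather than over all of \(\mathbb {U}\).

```python
def true_frequency(pattern, distribution):
    """t_pi(p): total probability of the transactions that contain `pattern`."""
    return sum(prob for transaction, prob in distribution.items()
               if pattern <= transaction)


def empirical_frequency(pattern, sample):
    """f_D(p): fraction of sampled transactions that contain `pattern`."""
    return sum(1 for transaction in sample if pattern <= transaction) / len(sample)


def max_deviation(patterns, distribution, sample):
    """Largest |t_pi(p) - f_D(p)| over the given finite collection of patterns."""
    return max(abs(true_frequency(p, distribution) - empirical_frequency(p, sample))
               for p in patterns)


a, b, ab = frozenset("a"), frozenset("b"), frozenset("ab")
# Hypothetical generative process over three possible transactions.
distribution = {ab: 0.5, a: 0.25, b: 0.25}
# Hypothetical sample of four transactions drawn from it.
sample = [ab, ab, ab, a]

deviation = max_deviation([a, b, ab], distribution, sample)
print(deviation)
```

Here the true frequencies of a, b, and ab are 0.75, 0.75, and 0.5, while the sample gives 1.0, 0.75, and 0.75, so the largest gap is attained by a and by ab.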

2.4 VC-dimension

The Vapnik–Chervonenkis (VC) dimension [27, 29] of a space of points is a measure of the complexity or expressiveness of a family of indicator functions, or, equivalently, of a family of subsets, defined on that space. A finite bound on the VC-dimension of a structure implies a bound on the number of random samples required to approximately learn that structure.

We define a range space as a pair \((X,\mathcal {R})\), where X is a finite or infinite set and \(\mathcal {R}\), the range set, is a finite or infinite family of subsets of X. The members of X are called points while the members of \(\mathcal {R}\) are called ranges. Given \(A \subseteq X\), we define the projection of \(\mathcal {R}\) in A as \({P_{\mathcal {R}}(A) = \{r \cap A\; : \; r \in \mathcal {R}\}}\). We define \(2^A\) as the power set of A, that is the set of all the possible subsets of A, including the empty set \(\emptyset \) and A itself. If \(P_{\mathcal {R}}(A) = 2^A\), then A is said to be shattered by \(\mathcal {R}\). The VC-dimension of a range space is the cardinality of the largest set shattered by the ranges.

Definition 1

Let \(RS = (X,\mathcal {R})\) be a range space and \(B \subseteq X\). The empirical VC-dimension EVC(RS, B) of RS on B is the maximum cardinality of a subset of B shattered by \(\mathcal {R}\).
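The notions of projection, shattering, and empirical VC-dimension can be checked by brute force on a small finite example. The sketch below is only an illustration under the assumption that the range set is finite, non-empty, and given explicitly; the function names and the interval example are not taken from the text, and the search is exponential in the number of points.

```python
from itertools import combinations


def is_shattered(points, ranges):
    """True if the projection of `ranges` on `points` is the full power set."""
    points = frozenset(points)
    projection = {points & frozenset(r) for r in ranges}
    return len(projection) == 2 ** len(points)


def empirical_vc_dimension(sample, ranges):
    """Maximum size of a subset of `sample` shattered by `ranges`."""
    distinct = list(set(sample))  # shattering concerns sets, so drop duplicates
    best = 0
    for size in range(1, len(distinct) + 1):
        if any(is_shattered(subset, ranges)
               for subset in combinations(distinct, size)):
            best = size
        else:
            # Every subset of a shattered set is shattered, so if no set of
            # this size is shattered, no larger set can be.
            break
    return best


# Hypothetical range space: points 1..4, ranges are all integer intervals
# (including the empty one).
points = [1, 2, 3, 4]
intervals = [set(range(lo, hi)) for lo in range(1, 6) for hi in range(lo, 6)]
vc = empirical_vc_dimension(points, intervals)
print(vc)
```

Intervals can isolate any subset of two points but can never select the two outer points of a triple without the middle one, which is why the search stops at size three.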

The main application of VC-dimension in statistics and learning theory is to derive the sample size needed to approximately “learn” the ranges, as defined below.

Definition 2

Let \(RS = (X,\mathcal {R})\) be a range space and let \(\gamma \) be a probability distribution on X. Given \(\mu \in (0,1)\), a bag B of elements sampled from X according to \(\gamma \) is a \(\mu \)-bag of \((X,\gamma )\) if for all \(r \in \mathcal {R}\),

\[ \left| \gamma (r) - \frac{|B \cap r|}{|B|} \right| \le \mu , \]

where \(\gamma (r)\) denotes the probability that an element drawn according to \(\gamma \) belongs to r.

A \(\mu \)-bag of \((X,\gamma )\) can be constructed by sampling points from X according to the distribution \(\gamma \), as follows.

Theorem 1

[30] Let \(RS = (X,\mathcal {R})\) be a range space and let \(\gamma \) be a probability distribution on X. Let B be a bag of |B| elements sampled from X according to \(\gamma \) and let d be the empirical VC-dimension EVC(RS, B) of RS on B. Then, given \(\delta \in (0,1)\) and

\[ \mu = \sqrt{\frac{c}{|B|}\left( d + \ln \frac{1}{\delta }\right) }, \]

where c is a universal positive constant,

the bag B is a \(\mu \)-bag of \((X,\gamma )\) with probability at least \(1-\delta \).
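A minimal numerical sketch of how such a bound behaves, assuming it has the commonly used form \(\mu = \sqrt{(c/|B|)(d + \ln (1/\delta ))}\) for a universal constant c. The function name `deviation_bound` and the default value c = 0.5 are assumptions made for illustration and are not taken from the statement above.

```python
import math


def deviation_bound(d, sample_size, delta, c=0.5):
    """Evaluate sqrt(c / |B| * (d + ln(1 / delta))).

    `d` is an (upper bound on the) empirical VC-dimension, `sample_size` is |B|,
    `delta` is the confidence parameter, and `c` is an assumed constant.
    """
    if sample_size <= 0:
        raise ValueError("sample_size must be positive")
    if not 0 < delta < 1:
        raise ValueError("delta must lie in (0, 1)")
    return math.sqrt(c / sample_size * (d + math.log(1 / delta)))


# The bound shrinks as the sample grows and widens as delta gets smaller.
for size in (1_000, 10_000, 100_000):
    print(size, round(deviation_bound(d=10, sample_size=size, delta=0.1), 4))
```

Under this form, halving \(\mu \) requires roughly four times as many samples, while the dependence on \(\delta \) is only logarithmic.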

2.4.1 Range space of patterns

We now define the range space of patterns and prove how the VC-dimension can be used to bound the maximum deviation in the TFP scenario.

Definition 3

Let \(\mathbb {U}\) be a domain and let \(\pi \) be a probability distribution on \(\mathbb {U}\). We define \(RS=(X,\mathcal {R})\) to be a range space associated with \(\mathbb {U}\) w.r.t. \(\pi \) such that:

-

\(X = \mathbb {U}\);

-

\(\mathcal {R} = \{T(p) : p \in \mathbb {U}\}\) is a family of sets of transactions such that for each pattern p the set \(T(p) = \{\tau \in \mathbb {U} : p \sqsubseteq \tau \}\) is the true support set of p.

The following theorem is a generalization of a result for sequential patterns appearing in [22]. Here, we provide it for a general pattern mining task.

Theorem 2

Let RS be the range space associated with \(\mathbb {U}\) w.r.t. \(\pi \), let \(\mathcal {D}\) be a finite bag of i.i.d. samples from \(\pi \), and let v be the empirical VC-dimension \(EVC(RS,\mathcal {D})\) of RS on \(\mathcal {D}\). Then, given \(\delta \in (0,1)\) and

\[ \mu = \sqrt{\frac{c}{|\mathcal {D}|}\left( v + \ln \frac{1}{\delta }\right) }, \]

where c is a universal positive constant,

\(\sup _{p\in \mathbb {U}}|t_\pi (p)-f_{\mathcal {D}}(p)|\le \mu \) with probability at least \(1-\delta \).

Proof

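From Theorem 1, we know that \(\mathcal {D}\) is a \(\mu \)-bag of \((\mathbb {U},\pi )\) with probability at least \(1-\delta \). Then, from Definition 2,

\[ \left| \pi (r) - \frac{|\mathcal {D} \cap r|}{|\mathcal {D}|} \right| \le \mu \]

for all \(r \in \mathcal {R}\). Given a pattern \(p \in \mathbb {U}\) and its true support set T(p), which is the range \(r_p\), from the definition of range space of patterns (Definition 3) we have

\[ \pi (r_p) = \sum _{\tau \in T(p)} \pi (\tau ) = t_\pi (p) \]

and

\[ \frac{|\mathcal {D} \cap r_p|}{|\mathcal {D}|} = \frac{|T_{\mathcal {D}}(p)|}{|\mathcal {D}|} = f_{\mathcal {D}}(p). \]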

Thus, \(\sup _{p\in \mathbb {U}}|t_\pi (p)-f_{\mathcal {D}}(p)|\le \mu \) with probability at least \(1-\delta \). \(\square \)

In Sects. 5 and 6, we discuss efficiently computable upper bounds on the empirical VC-dimension of sequential patterns and of itemsets, respectively, which we use to bound the maximum deviation of the true frequencies for the two data mining tasks.

3 Statistically robust pattern mining

In this work, we introduce the task of mining statistically robust patterns from a sequence of datasets. Let us consider the scenario in which we have a sequence \(\mathcal {D}_1^n =\{\mathcal {D}_1,\mathcal {D}_2,\dots ,\mathcal {D}_n\}\) of n datasets, where each dataset \(\mathcal {D}_i\) is a bag of \(|\mathcal {D}_i|\) i.i.d. samples taken from a probability distribution \(\pi _i\) on \(\mathbb {U}\), with \(i \in \{1,\dots ,n\}\). Let \(\varPi _1^n= \{\pi _1,\pi _2,\dots ,\pi _n\}\) denote the sequence of the n probability distributions and \(\mathcal {T}_p = \{t_{\pi _1}(p),t_{\pi _2}(p),\dots ,t_{\pi _n}(p)\}\) the sequence of the true frequencies of the pattern p w.r.t. \(\varPi _1^n\). In such a scenario, we are interested in finding patterns whose true frequencies w.r.t. \(\varPi _1^n\) respect a well-defined condition \(cond(\mathcal {T}_p)\) that describes the evolution of their true frequencies through the sequence. For example, one may be interested in finding patterns whose true frequencies are almost the same in all the probability distributions, or patterns whose true frequencies always increase/decrease, and so on. So, given n probability distributions \(\varPi _1^n= \{\pi _1,\pi _2,\dots ,\pi _n\}\) and a condition \(cond(\mathcal {T}_p)\) on the true frequencies \(\mathcal {T}_p\) that defines the patterns we are interested in, with \(cond(\mathcal {T}_p)=1\) when the condition is satisfied and \(cond(\mathcal {T}_p)=0\) otherwise, statistically robust pattern (SRP) mining is the task of reporting the set \(SRP(\varPi _1^n)\) of all patterns whose true frequencies w.r.t. \(\varPi _1^n\) respect \(cond(\mathcal {T}_p)\), that is,

\[ SRP(\varPi _1^n) = \{(p,\mathcal {T}_p) : p \in \mathbb {U},\ cond(\mathcal {T}_p) = 1\}. \]

Similarly to TFP mining, from a sequence of samples (the datasets \(\mathcal {D}_1^n\)) it is not possible to find the exact set \(SRP(\varPi _1^n)\). Thus, one has to resort to approximations. Denoting by \(\mathcal {F}_p = \{f_{\mathcal {D}_1}(p),f_{\mathcal {D}_2}(p),\dots ,f_{\mathcal {D}_n}(p)\}\) the sequence of the n frequencies of p in \(\mathcal {D}_1^n\), we define a false positives free (FPF) approximation \(\mathcal {A}_P\) of \(SRP(\varPi _1^n)\) as

\[ \mathcal {A}_P \subseteq \{(p,\mathcal {F}_p) : p \in \mathbb {U},\ cond(\mathcal {T}_p) = 1\}. \]

The approximation \(\mathcal {A}_P\) does not contain false positives, that is, patterns \(p \notin SRP(\varPi _1^n)\). In Sect. 4.4, we define an approximation that does not contain false negatives.

Now, we define three general types of patterns that can be described by the SRPs framework, and that we consider in the rest of this work.

Emerging Patterns (EP): these are patterns whose true frequencies always increase over the sequence, i.e., patterns p for which \(t_{\pi _{i+1}}(p) > t_{\pi _i}(p)+\varepsilon \), for all \(i \in \{1,\dots ,n-1\}\), for some given emerging threshold \(\varepsilon \in [0,1)\). Formally, given an emerging threshold \(\varepsilon \in [0,1)\), we define the emerging condition \(cond^{E}(\mathcal {T}_p)\) as

\[ cond^{E}(\mathcal {T}_p) = \begin{cases} 1 & \text {if } t_{\pi _{i+1}}(p) > t_{\pi _i}(p)+\varepsilon \quad \forall \, i \in \{1,\dots ,n-1\},\\ 0 & \text {otherwise.} \end{cases} \tag{1} \]

Descending Patterns (DP): these are patterns p whose true frequencies always decrease over the sequence, i.e., patterns p for which \(t_{\pi _{i}}(p) > t_{\pi _{i+1}}(p)+\varepsilon \) for all \(i \in \{1,\dots ,n-1\}\), for some given emerging threshold \(\varepsilon \in [0,1)\). Formally, given an emerging threshold \(\varepsilon \in [0,1)\), we define the descending condition \(cond^{D}(\mathcal {T}_p)\) as

\[ cond^{D}(\mathcal {T}_p) = \begin{cases} 1 & \text {if } t_{\pi _{i}}(p) > t_{\pi _{i+1}}(p)+\varepsilon \quad \forall \, i \in \{1,\dots ,n-1\},\\ 0 & \text {otherwise.} \end{cases} \]

Stable Patterns (SP): these are patterns whose true frequencies in the n probability distributions are above a minimum frequency threshold \(\theta \) and do not change too much. In particular, we consider patterns p for which \(|t_{\pi _{i}}(p) - t_{\pi _j}(p)| \le \alpha \) and \(t_{\pi _i}(p)\ge \theta \) for all \(i\ne j \in \{1,\dots ,n\}\), for some given stability threshold \(\alpha \in (0,1)\) and a minimum frequency threshold \(\theta \in (0,1)\). Formally, given a stability threshold \(\alpha \in (0,1)\) and minimum frequency threshold \(\theta \in (0,1)\), we define the stability condition \(cond^{S}(\mathcal {T}_p)\) as

\[ cond^{S}(\mathcal {T}_p) = \begin{cases} 1 & \text {if } |t_{\pi _{i}}(p) - t_{\pi _j}(p)| \le \alpha \text { and } t_{\pi _i}(p)\ge \theta \quad \forall \, i\ne j \in \{1,\dots ,n\},\\ 0 & \text {otherwise.} \end{cases} \]

Let us note that many more types of patterns can be described by our proposed framework. For example, one may be interested in patterns whose true frequencies in the different probability distributions have a ratio larger than a user-defined constant, or may be interested in patterns whose true frequencies are stable in some distributions and then increase/decrease in others, or that first increase and then decrease, and so on. In addition, for the EP and DP tasks, we provided general conditions to describe such patterns, while one may also consider constraints using a minimum frequency threshold \(\theta \).
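The three conditions can be written as simple predicates on a sequence of true frequencies. The sketch below is illustrative only: the function names are assumptions, and in practice the true frequencies are unknown, which is exactly why approximations are needed.

```python
def cond_emerging(true_freqs, eps):
    """EP: each true frequency exceeds the previous one by more than eps."""
    return all(nxt > cur + eps for cur, nxt in zip(true_freqs, true_freqs[1:]))


def cond_descending(true_freqs, eps):
    """DP: each true frequency exceeds the next one by more than eps."""
    return all(cur > nxt + eps for cur, nxt in zip(true_freqs, true_freqs[1:]))


def cond_stable(true_freqs, alpha, theta):
    """SP: all true frequencies are >= theta and pairwise within alpha."""
    # The largest pairwise gap equals max - min, so one comparison suffices.
    return (min(true_freqs) >= theta
            and max(true_freqs) - min(true_freqs) <= alpha)


# Hypothetical true frequencies of three patterns over n = 3 distributions.
print(cond_emerging([0.1, 0.3, 0.6], eps=0.1))                 # True
print(cond_descending([0.6, 0.3, 0.1], eps=0.1))               # True
print(cond_stable([0.50, 0.52, 0.49], alpha=0.05, theta=0.4))  # True
```

Other trends mentioned above, such as patterns that first increase and then decrease, would be expressed in the same way as a different predicate on the sequence.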

4 gRosSo: approximating the statistically robust patterns

In this section, we describe gRosSo, mininG statistically RObuSt patterns from a Sequence Of datasets, our strategy to provide a rigorous approximation of the SRPs. In particular, gRosSo aims to find an approximation that does not contain false positives (i.e., a FPF approximation, see Sect. 3) with high probability. In Sects. 4.1–4.3 we show how to apply such a strategy to mine approximations of the three types of SRPs we defined in the previous section. gRosSo can also be modified to find approximations with guarantees on the false negatives: in Sect. 4.4, we show how to use gRosSo to find such approximations for the EP task. Finally, in Sect. 4.5 we describe additional guarantees that can be obtained with gRosSo for both types of approximations.

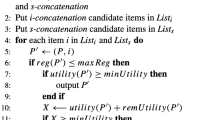

Algorithm 1 shows the pseudo-code of gRosSo. For a fixed \(cond(\mathcal {T}_p)\) that defines the SRPs we are interested in, and given the sequence \(\mathcal {D}_1^n\) of n datasets and a confidence parameter \(\delta \in (0,1)\) as input, we start by computing an upper bound \(\mu _i\) on the maximum deviation w.r.t. \(\pi _i\) for each dataset \(\mathcal {D}_i\), i.e., \(\sup _{p \in \mathbb {U}}|t_{\pi _i}(p) - f_{\mathcal {D}_i}(p)|\le \mu _i\), with \(i \in \{1,\dots ,n\}\) (lines 1-2). Each upper bound is computed using \(\delta /n\) as confidence parameter, thus \(\Pr (\sup _{p\in \mathbb {U}}|t_{\pi _i}(p)-f_{\mathcal {D}_i}(p)|\le \mu _i) \ge 1-\delta /n\), \(\forall \, i \in \{1,\dots ,n\}\). We denote by \(\mu _1^n = \{\mu _1,\mu _2,\dots ,\mu _n\}\) the sequence of the n upper bounds on the maximum deviations. (Such upper bounds can be computed, for example, using Theorem 2 and the VC-dimension.)

Since \(cond(\mathcal {T}_{p})\) considers the true frequencies \(\mathcal {T}_p\), which are unknown, we need to define a new condition \(cond_P(\mathcal {F}_{p},\mu _1^n)\) on the frequencies \(\mathcal {F}_{p}\) and on the upper bounds \(\mu _1^n\). This new FPF condition takes into account the uncertainty of the data in our samples, i.e., the datasets, in order to avoid false positives, and, for a pattern p, it must be \(cond(\mathcal {T}_{p})=0 \implies cond_P(\mathcal {F}_{p},\mu _1^n)=0\) if \(\sup _{p\in \mathbb {U}}|t_{\pi _i}(p)-f_{\mathcal {D}_i}(p)|\le \mu _i\) holds \(\forall i \in \{1,\dots ,n\}\). Figure 1 shows such conditions for the EP and SP scenarios as examples. Let us note that \(cond_P(\mathcal {F}_{p},\mu _1^n)\) can also be evaluated considering only a subsequence of the frequencies \(\mathcal {F}_{p}\), and if \(cond_P(\mathcal {F}_{p},\mu _1^n)=0\) for some subsequence, then there are no guarantees that \(cond(\mathcal {T}_{p})=1\).

Then, we aim to find a starting set of possible candidates. For each dataset \(\mathcal {D}_i\), we compute the minimum frequency threshold \(\tilde{\theta }_i\) which the patterns must have in such a dataset to verify \(cond_P(\mathcal {F}_{p},\mu _1^n)\) (line 3). We then mine the dataset \(\mathcal {D}_k\), where \(k = {{\,\mathrm{arg\,max}\,}}_{i \in \{1,\dots ,n\}}{\tilde{\theta }_i} \), with the corresponding minimum frequency threshold \(\tilde{\theta }_k\), obtaining the set \(\mathcal {B} = FP(\mathcal {D}_k,\tilde{\theta }_k)\) of the starting candidates (line 4). The idea is to mine the dataset with the highest minimum frequency threshold in order to obtain a set of possible candidates that is as small as possible. Let us note that any efficient algorithm for mining frequent patterns can be used to obtain \(FP(\mathcal {D}_k,\tilde{\theta }_k)\). For each pattern \(p \in \mathcal {B}\), we then create an empty array \(\mathcal {F}_p\) of length n, initialize its k-th element with \(f_{\mathcal {D}_k}(p)\), and put the pair \((p,\mathcal {F}_p)\) in the set \(\mathcal {A}_P\) containing all possible candidates (lines 6-9).

Finally, we explore the remaining datasets (lines 10-14). For each \(\mathcal {D}_i \in \mathcal {D}_1^n \setminus \{\mathcal {D}_k\}\) and for each pattern \(p \in \mathcal {A}_P\), we compute its frequency \(f_{\mathcal {D}_i}(p)\) in \(\mathcal {D}_i\) and initialize the i-th element of \(\mathcal {F}_p\) with \(f_{\mathcal {D}_i}(p)\) (line 12), and we check whether \(cond_P(\mathcal {F}_{p},\mu _1^n)=1\), considering the subsequence of the frequencies \(\mathcal {F}_p\) that has already been computed. If \(cond_P(\mathcal {F}_{p},\mu _1^n)=0\), there are no guarantees that \(cond(\mathcal {T}_{p})=1\), and we remove such a pattern from the set of the possible candidates (lines 13-14). The output consists of the patterns that have not been removed from the set of the possible candidates (line 15).
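The control flow described above can be sketched as follows for itemsets. This is a simplified illustration, not the authors' implementation: the deviation bounds `mus` and the per-dataset thresholds `thresholds` are assumed to be precomputed and passed in, `mine_frequent` is a brute-force stand-in for any frequent pattern miner, and `cond_p_emerging` is a hypothetical FPF check for the emerging case that only tests adjacent pairs of frequencies that are already known.

```python
from itertools import combinations


def frequency(pattern, dataset):
    """Fraction of transactions (frozensets) in `dataset` containing `pattern`."""
    return sum(1 for t in dataset if pattern <= t) / len(dataset)


def mine_frequent(dataset, theta):
    """Brute-force stand-in for an efficient frequent itemset miner."""
    items = sorted(set().union(*dataset))
    found = {}
    for size in range(1, len(items) + 1):
        for candidate in map(frozenset, combinations(items, size)):
            f = frequency(candidate, dataset)
            if f >= theta:
                found[candidate] = f
    return found


def cond_p_emerging(freqs, mus, eps=0.0):
    """Hypothetical FPF check: worst-case gap between known adjacent datasets."""
    for i in range(len(freqs) - 1):
        cur, nxt = freqs[i], freqs[i + 1]
        if cur is None or nxt is None:
            continue  # frequency not computed yet, nothing to test
        if not nxt - mus[i + 1] > cur + mus[i] + eps:
            return False
    return True


def grosso(datasets, mus, thresholds, cond_p):
    n = len(datasets)
    # Mine the dataset with the highest threshold to keep the candidate set small.
    k = max(range(n), key=lambda i: thresholds[i])
    candidates = {}
    for p, f in mine_frequent(datasets[k], thresholds[k]).items():
        freqs = [None] * n
        freqs[k] = f
        candidates[p] = freqs
    # Explore the remaining datasets, pruning candidates that fail the check.
    for i in range(n):
        if i == k:
            continue
        for p in list(candidates):
            candidates[p][i] = frequency(p, datasets[i])
            if not cond_p(candidates[p], mus):
                del candidates[p]
    return candidates


a, b = frozenset("a"), frozenset("b")
datasets = [[a] * 1 + [b] * 9, [a] * 5 + [b] * 5, [a] * 9 + [b] * 1]
result = grosso(datasets, mus=[0.05, 0.05, 0.05],
                thresholds=[0.0, 0.1, 0.2], cond_p=cond_p_emerging)
print(result)
```

In this toy run only the itemset {a}, whose frequency grows from 0.1 to 0.5 to 0.9, survives; {b} is never a candidate because its frequency in the last dataset is below that dataset's threshold.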

Theorem 3

The set \(\mathcal {A}_P\) returned by gRosSo is a FPF approximation of \(SRP(\varPi _1^n)\) with probability \(\ge 1-\delta \).

Proof

From the definition of \(cond_P(\mathcal {F}_p,\mu _1^n)\), we know that \(cond(\mathcal {T}_p)=0 \implies cond_P(\mathcal {F}_p,\mu _1^n)=0\) if \(\sup _{p\in \mathbb {U}}|t_{\pi _i}(p)-f_{\mathcal {D}_i}(p)|\le \mu _i\) holds \(\forall \, i \in \{1,\dots ,n\}\). In such a scenario, only the patterns \(p \in SRP(\varPi _1^n)\) can appear in \(\mathcal {A}_P\), and thus \(\mathcal {A}_P\) is a FPF approximation of \(SRP(\varPi _1^n)\). Now, let us define the event \(E_i\) as the event in which \(\sup _{p\in \mathbb {U}}|t_{\pi _i}(p)-f_{\mathcal {D}_i}(p)|>\mu _i\), with \(i \in \{1,\dots ,n\}\). From the choice of the confidence parameter used to compute the upper bounds on the maximum deviation, we know that \(\Pr (E_i) \le \delta /n\). So, by the union bound, we have \(\Pr (\exists i\in \{1,\dots ,n\} : \sup _{p\in \mathbb {U}}|t_{\pi _i}(p)-f_{\mathcal {D}_i}(p)|>\mu _i) = \Pr (\cup _{i=1}^nE_i) \le \sum _{i=1}^n \Pr (E_i) \le \delta .\) Thus, the set \(\mathcal {A}_P\) returned by gRosSo is a FPF approximation of \(SRP(\varPi _1^n)\) with probability \(\ge 1-\delta \). \(\square \)

4.1 FPF approximation of the EP

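Now, we apply the strategy defined above to find a FPF approximation of the EP. Starting from \(cond^{E}(\mathcal {T}_p)\) (Equation 1), we define \(cond^{E}_P(\mathcal {F}_p,\mu _1^n)\) as

\[cond^{E}_P(\mathcal {F}_p,\mu _1^n) = \begin{cases} 1 & \text {if } f_{\mathcal {D}_{i+1}}(p) - \mu _{i+1} > f_{\mathcal {D}_{i}}(p) + \mu _{i} + \varepsilon , \ \forall \, i \in \{1,\dots ,n-1\}, \\ 0 & \text {otherwise.} \end{cases}\]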

For a given \(i \in \{1,\dots ,n-1\}\), this condition represents the scenario in which \(t_{\pi _{i+1}}(p)\) and \(t_{\pi _{i}}(p)\) assume the values \(f_{\mathcal {D}_{i+1}}(p) - \mu _{i+1}\) and \(f_{\mathcal {D}_{i}}(p) + \mu _{i}\), respectively, which are the values at which their distance is minimum over all possible values that they can assume. Only if this condition is true are we guaranteed that \(t_{\pi _{i+1}}(p) > t_{\pi _{i}}(p) + \varepsilon \). (See Fig. 1.) Then, starting from this condition, we compute the minimum frequency threshold for each dataset. Since it must hold that \(f_{\mathcal {D}_2}(p) - \mu _2 > f_{\mathcal {D}_1}(p) + \mu _1 + \varepsilon \) and \(f_{\mathcal {D}_3}(p) - \mu _3 > f_{\mathcal {D}_2}(p) + \mu _2 + \varepsilon \), and thus \(f_{\mathcal {D}_3}(p) > f_{\mathcal {D}_1}(p) + 2 \cdot \varepsilon + \mu _1 + \mu _3 + 2 \cdot \mu _2\), iterating this reasoning over all the n datasets and considering \(f_{\mathcal {D}_1}(p) \ge 0\), we obtain the minimum frequency threshold \(\tilde{\theta }^E_n\) for the dataset \(\mathcal {D}_n\),

\[\tilde{\theta }^E_n = (n-1) \cdot \varepsilon + \mu _1 + \mu _n + 2 \cdot \sum _{i=2}^{n-1} \mu _i ,\]

which is the highest over all the n datasets. Thus, the set \(FP(\mathcal {D}_n,\tilde{\theta }^E_n)\) provides the starting candidates. Finally, starting from \(\mathcal {D}_{n-1}\) and ending with \(\mathcal {D}_{1}\), we analyze the remaining datasets and check whether the candidates verify \(cond^{E}_P(\mathcal {F}_p,\mu _1^n)\).
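As an illustration, the check and the threshold for the EP can be written in a few lines. The sketch below assumes the condition used in the proof of Theorem 4, namely \(f_{\mathcal {D}_{i+1}}(p) - \mu _{i+1} > f_{\mathcal {D}_{i}}(p) + \mu _{i} + \varepsilon \) for every pair of consecutive datasets, and the threshold obtained by chaining these \(n-1\) inequalities with \(f_{\mathcal {D}_1}(p) \ge 0\). The function names and the numeric values are hypothetical.

```python
def cond_e_p(freqs, mus, eps):
    """FPF emerging check on consecutive frequencies.

    Entries of freqs may be None (dataset not scanned yet); pairs with a
    missing value are skipped, so partial sequences can be tested too.
    """
    for i in range(len(freqs) - 1):
        low, high = freqs[i], freqs[i + 1]
        if low is None or high is None:
            continue
        if not (high - mus[i + 1] > low + mus[i] + eps):
            return False
    return True

def theta_e_n(mus, eps):
    """Threshold for the last dataset; assumes at least two datasets."""
    n = len(mus)
    return (n - 1) * eps + mus[0] + mus[-1] + 2 * sum(mus[1:-1])

# Hypothetical bounds and frequencies for n = 3 datasets.
mus = [0.01, 0.02, 0.01]
eps = 0.05
threshold = theta_e_n(mus, eps)                  # about 0.16
accepted = cond_e_p([0.10, 0.20, 0.30], mus, eps)
rejected = cond_e_p([0.10, 0.16, 0.30], mus, eps)
partial = cond_e_p([None, 0.20, 0.30], mus, eps)
```

The second sequence is rejected because the observed increase from 0.10 to 0.16 is too small to rule out a true increase of at most \(\varepsilon \) once both deviations are accounted for.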

Theorem 4

\(cond^{E}(\mathcal {T}_p)=0 \implies cond^{E}_P(\mathcal {F}_p,\mu _1^n)=0\).

Proof

Let us consider that \(\sup _{p\in \mathbb {U}}|t_{\pi _i}(p)-f_{\mathcal {D}_i}(p)| \le \mu _i\), \(\forall \, i \in \{1,\dots ,n\}\). Thus, for all patterns \(p \in \mathbb {U}\), we have \(t_{\pi _i}(p) \in [f_{\mathcal {D}_i}(p)-\mu _i,f_{\mathcal {D}_i}(p)+\mu _i]\), \(\forall \, i \in \{1,\dots ,n\}\). Let \(p'\) be a pattern s.t. \(cond^{E}(\mathcal {T}_{p'})=0\). From Equation 1, there is at least one pair of consecutive distributions \(\pi _j\), \(\pi _{j+1}\), with \(j \in \{1,\dots ,n-1\}\), s.t. \(t_{\pi _{j+1}}(p') \le t_{\pi _{j}}(p') + \varepsilon \). Since we know that \(t_{\pi _{j+1}}(p') \in [f_{\mathcal {D}_{j+1}}(p')-\mu _{j+1},f_{\mathcal {D}_{j+1}}(p')+\mu _{j+1}]\) and that \(t_{\pi _{j}}(p') \in [f_{\mathcal {D}_{j}}(p')-\mu _{j},f_{\mathcal {D}_{j}}(p')+\mu _{j}]\), the condition \(f_{\mathcal {D}_{j+1}}(p') - \mu _{j+1} - (f_{\mathcal {D}_{j}}(p') + \mu _{j}) > \varepsilon \) cannot be verified for such \(p'\), and thus \(cond^{E}_P(\mathcal {F}_{p'},\mu _1^n)=0\). \(\square \)

If one is interested in patterns with a true frequency above a value \(\theta \in (0,1)\), i.e., \(t_{\pi _i}(p) \ge \theta \), \(\forall \, i \in \{1,\dots ,n\}\), the following strategy can be used to reduce the set of starting candidates. Since we require that \(f_{\mathcal {D}_1}(p) \ge \theta + \mu _1\) to discard possible false positives, a term \(\theta + \mu _1\) must be added to \(\tilde{\theta }^E_n\). Instead, if one is interested in patterns p with \(t_{\pi _n}(p) \ge \theta \), the minimum frequency threshold \(\tilde{\theta }^E_n\) for dataset \(\mathcal {D}_n\) is the maximum between \(\theta + \mu _n\) and the minimum frequency threshold obtained above without frequency constraints.

4.2 FPF approximation of the DP

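Using the same approach proposed to approximate the EP, it is possible to approximate the DP. Starting from \(cond^{D}(\mathcal {T}_p)\) (Equation 2), we define \(cond^{D}_P(\mathcal {F}_p,\mu _1^n)\) as

\[cond^{D}_P(\mathcal {F}_p,\mu _1^n) = \begin{cases} 1 & \text {if } f_{\mathcal {D}_{i}}(p) - \mu _{i} > f_{\mathcal {D}_{i+1}}(p) + \mu _{i+1} + \varepsilon , \ \forall \, i \in \{1,\dots ,n-1\}, \\ 0 & \text {otherwise.} \end{cases}\]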

Iterating such a condition for all the n datasets, we obtain the minimum frequency threshold \(\tilde{\theta }^D_1 = \tilde{\theta }^E_n\) for the dataset \(\mathcal {D}_1\), that is the highest over all the n datasets. Thus, the set \(FP(\mathcal {D}_1,\tilde{\theta }^D_1)\) provides the starting candidates. Finally, starting from \(\mathcal {D}_{2}\) and ending with \(\mathcal {D}_{n}\), we analyze the remaining datasets and check whether the candidates verify \(cond^{D}_P(\mathcal {F}_p,\mu _1^n)\). In the case of a minimum frequency threshold \(\theta \in (0,1)\), reasoning analogous to the EP can be applied.

Theorem 5

\(cond^{D}(\mathcal {T}_p)=0 \implies cond^{D}_P(\mathcal {F}_p,\mu _1^n)=0\).

Proof

The proof is analogous to the proof of Theorem 4. \(\square \)

4.3 FPF approximation of the SP

Finally, we apply the strategy defined above to find an approximation of the SP. Starting from \(cond^{S}(\mathcal {T}_p)\) (Equation 3), we define \(cond^{S}_P(\mathcal {F}_p,\mu _1^n)\) as

Given \(i \ne j \in \{1,\dots ,n\}\), the first two conditions represent the scenario in which \(t_{\pi _{i}}(p)\) and \(t_{\pi _{j}}(p)\) assume the values \(f_{\mathcal {D}_{i}}(p) - \mu _{i}\) and \(f_{\mathcal {D}_{j}}(p) + \mu _{j}\), respectively, if \(f_{\mathcal {D}_{i}}(p) < f_{\mathcal {D}_{j}}(p)\), or, respectively, the values \(f_{\mathcal {D}_{j}}(p) - \mu _{j}\) and \(f_{\mathcal {D}_{i}}(p) + \mu _{i}\) if \(f_{\mathcal {D}_{j}}(p) < f_{\mathcal {D}_{i}}(p)\), that are the values at which their distance is maximum over all possible values that they can assume. Only if such conditions are true, we can prove that \(|t_{\pi _{i}}(p) - t_{\pi _{j}}(p)| \le \alpha \). The third condition, instead, represents the scenario in which \(t_{\pi _{i}}(p)\) assumes the value \(f_{\mathcal {D}_{i}}(p) - \mu _{i}\), that is the minimum value that it can assume. Only if such a condition is true, we can prove that \(t_{\pi _{i}}(p) \ge \theta \). (See Fig. 1.) The only condition that affects the minimum frequency thresholds \(\tilde{\theta }^S_i\) is \(f_{\mathcal {D}_i}(p) \ge \theta + \mu _i\), \(\forall \, i \in \{1,\dots ,n\}\). So, we have \(\tilde{\theta }^S_i = \theta +\mu _i, \, \forall \, i \in \{1,\dots ,n\}\), and the set \(FP(\mathcal {D}_k,\tilde{\theta }^S_k)\), with \(k = {{\,\mathrm{arg\,max}\,}}_{i \in \{1,\dots ,n\}}{\tilde{\theta }^S_i}\), provides the starting candidates. Finally, we analyze the remaining datasets \(\mathcal {D}_i \in \mathcal {D}_1^n \setminus \mathcal {D}_k\) and check whether the candidates verify \(cond^{S}_P(\mathcal {F}_p,\mu _1^n)\).
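The three conditions discussed above can be checked together as in the following sketch. It assumes the form used in the proof of Theorem 6: every lower end \(f_{\mathcal {D}_i}(p) - \mu _i\) must be at least \(\theta \), and for every ordered pair \(i \ne j\) the largest possible gap \(f_{\mathcal {D}_i}(p) + \mu _i - (f_{\mathcal {D}_j}(p) - \mu _j)\) must not exceed \(\alpha \). Checking all ordered pairs is an implementation choice that also covers ties in the observed frequencies. Names and numbers are hypothetical.

```python
def cond_s_p(freqs, mus, alpha, theta):
    """FPF stable check; None entries (unscanned datasets) are ignored."""
    known = [(f, mu) for f, mu in zip(freqs, mus) if f is not None]
    # Minimum value each true frequency can take must reach theta.
    if any(f - mu < theta for f, mu in known):
        return False
    # Maximum possible distance between any two true frequencies.
    for i, (fi, mi) in enumerate(known):
        for j, (fj, mj) in enumerate(known):
            if i != j and (fi + mi) - (fj - mj) > alpha:
                return False
    return True

# Hypothetical bounds and parameters for n = 3 datasets.
mus = [0.01, 0.02, 0.01]
alpha, theta = 0.1, 0.2

# Per-dataset thresholds theta + mu_i and the dataset to mine first.
thresholds = [theta + mu for mu in mus]
k = max(range(len(mus)), key=lambda i: thresholds[i])

stable = cond_s_p([0.25, 0.27, 0.26], mus, alpha, theta)
too_spread = cond_s_p([0.25, 0.35, 0.26], mus, alpha, theta)
too_rare = cond_s_p([0.205, 0.22, 0.21], mus, alpha, theta)
```

Here the dataset with the largest bound \(\mu _i\) is mined first, since it yields the highest threshold and hence the smallest set of starting candidates.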

Theorem 6

\(cond^{S}(\mathcal {T}_p)=0 \implies cond^{S}_P(\mathcal {F}_p,\mu _1^n)=0\).

Proof

Let us consider that \(\sup _{p \in \mathbb {U}}|t_{\pi _i}(p)-f_{\mathcal {D}_i}(p)| \le \mu _i\), \(\forall \, i \in \{1,\dots ,n\}\). Thus, we have that for all patterns \(p \in \mathbb {U}\), it results \(t_{\pi _i}(p) \in [f_{\mathcal {D}_i}(p)-\mu _i,f_{\mathcal {D}_i}(p)+\mu _i]\), \(\forall \, i \in \{1,\dots ,n\}\). Let \(p'\) be a pattern s.t. \(cond^{S}(\mathcal {T}_{p'})=0\). From Equation 3, there is at least a distribution \(\pi _i\), with \(i \in \{1,\dots ,n\}\) s.t. \(t_{\pi _{i}}(p') < \theta \) and/or there is at least a couple of distributions \(\pi _k,\pi _j\), with \(k\ne j \in \{1,\dots ,n\}\), s.t. \(|t_{\pi _j}(p')-t_{\pi _k}(p')|>\alpha \). First, let us consider the case in which there is a distribution \(\pi _i\), with \(i \in \{1,\dots ,n\}\), s.t. \(t_{\pi _{i}}(p') < \theta \). Since we know that \(t_{\pi _{i}}(p') \in [f_{\mathcal {D}_{i}}(p')-\mu _{i},f_{\mathcal {D}_{i}}(p')+\mu _{i}]\), the condition \(f_{\mathcal {D}_{i}}(p') - \mu _{i} \ge \theta \) cannot be verified, and thus \(cond^{S}_P(\mathcal {F}_{p'},\mu _1^n)=0\). Now, let us consider the case in which there is a couple of distributions \(\pi _k,\pi _j\), with \(k\ne j \in \{1,\dots ,n\}\) s.t. \(|t_{\pi _j}(p')-t_{\pi _k}(p')|>\alpha \). Since we know that \(t_{\pi _{j}}(p') \in [f_{\mathcal {D}_{j}}(p')-\mu _{j},f_{\mathcal {D}_{j}}(p')+\mu _{j}]\) and that \(t_{\pi _{k}}(p') \in [f_{\mathcal {D}_{k}}(p')-\mu _{k},f_{\mathcal {D}_{k}}(p')+\mu _{k}]\), the condition \(f_{\mathcal {D}_{j}}(p') + \mu _{j} - (f_{\mathcal {D}_{k}}(p') - \mu _{k}) \le \alpha \) cannot be verified, if \(f_{\mathcal {D}_{j}}(p')> f_{\mathcal {D}_{k}}(p')\), while the condition \(f_{\mathcal {D}_{k}}(p') + \mu _{k} - (f_{\mathcal {D}_{j}}(p') - \mu _{j}) \le \alpha \) cannot be verified if \(f_{\mathcal {D}_{j}}(p')< f_{\mathcal {D}_{k}}(p')\), and thus \(cond^{S}_P(\mathcal {F}_{p'},\mu _1^n)=0\). \(\square \)

4.4 Guarantees on false negatives

In this section, we explain how gRosSo can be modified to obtain an approximation without false negatives with high probability. Analogously to what was done in Sect. 3, we first define a false negatives free (FNF) approximation \(\mathcal {A}_N\) of \(SRP(\varPi _1^n)\) as

The approximation \(\mathcal {A}_N\) does not contain false negatives, that is, it contains all patterns \(p \in SRP(\varPi _1^n)\).

Our algorithm gRosSo can be used to obtain FNF approximations. The procedure is the same as that described above (see Algorithm 1), but we need to define a new condition \(cond_N(\mathcal {F}_{p},\mu _1^n)\), a FNF condition that takes into account the uncertainty in the data in order to avoid false negatives: for a pattern p, it must satisfy \(cond(\mathcal {T}_{p})=1 \implies cond_N(\mathcal {F}_{p},\mu _1^n)=1\) whenever \(\sup _{p\in \mathbb {U}}|t_{\pi _i}(p)-f_{\mathcal {D}_i}(p)|\le \mu _i\) holds \(\forall i \in \{1,\dots ,n\}\). Figure 2 shows such a condition for the EP scenario as an example. Then, we compute the minimum frequency threshold \(\hat{\theta }_i\) for each dataset \(\mathcal {D}_i\), with \(i \in \{1,\dots ,n\}\), considering \(cond_N(\mathcal {F}_p,\mu _1^n)\), and we mine the set of the starting candidates from the dataset with the highest minimum frequency threshold.

Theorem 7

The set \(\mathcal {A}_N\) returned by gRosSo using \(cond_N(\mathcal {F}_p,\mu _1^n)\) is a FNF approximation of \(SRP(\varPi _1^n)\) with probability \(\ge 1-\delta \).

Proof

From the definition of \(cond_N(\mathcal {F}_p,\mu _1^n)\), we know that \(cond(\mathcal {T}_p)=1 \implies cond_N(\mathcal {F}_p,\mu _1^n)=1\) if \(\sup _{p\in \mathbb {U}}|t_{\pi _i}(p)-f_{\mathcal {D}_i}(p)|\le \mu _i\) holds \(\forall \, i \in \{1,\dots ,n\}\). In such a scenario, all the patterns \(p \in SRP(\varPi _1^n)\) appear in \(\mathcal {A}_N\), and thus \(\mathcal {A}_N\) is a FNF approximation of \(SRP(\varPi _1^n)\). The remainder of the proof is analogous to the proof of Theorem 3. \(\square \)

4.4.1 FNF approximation of the EP

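Now, we apply the strategy defined above to find a FNF approximation of the EP. With a similar reasoning, it is possible to mine a FNF approximation of the descending and stable patterns. Starting from \(cond^{E}(\mathcal {T}_p)\) (Equation 1), we define \(cond^{E}_N(\mathcal {F}_p,\mu _1^n)\) as

\[cond^{E}_N(\mathcal {F}_p,\mu _1^n) = \begin{cases} 1 & \text {if } f_{\mathcal {D}_{i+1}}(p) + \mu _{i+1} > f_{\mathcal {D}_{i}}(p) - \mu _{i} + \varepsilon , \ \forall \, i \in \{1,\dots ,n-1\}, \\ 0 & \text {otherwise.} \end{cases}\]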

For a given \(i \in \{1,\dots ,n-1\}\), such a condition represents the scenario in which \(t_{\pi _{i+1}}(p)\) and \(t_{\pi _{i}}(p)\) assume the values \(f_{\mathcal {D}_{i+1}}(p) + \mu _{i+1}\) and \(f_{\mathcal {D}_{i}}(p) - \mu _{i}\), respectively, that are the values at which their distance is maximum over all possible values that they can assume. Only if such a condition is false, we can prove that \(t_{\pi _{i+1}}(p) \le t_{\pi _{i}}(p) + \varepsilon \). (See Fig. 2.) Then, starting from such a condition, we compute the minimum frequency threshold for each dataset. Since it must be \(f_{\mathcal {D}_2}(p) + \mu _2 > f_{\mathcal {D}_1}(p) - \mu _1 + \varepsilon \) and \(f_{\mathcal {D}_3}(p) + \mu _3 > f_{\mathcal {D}_2}(p) - \mu _2 + \varepsilon \), and thus \(f_{\mathcal {D}_3}(p) > f_{\mathcal {D}_1}(p) + 2 \cdot \varepsilon - \mu _1 - \mu _3 - 2 \cdot \mu _2\), iterating such a reasoning for all the n datasets and considering \(f_{\mathcal {D}_1}(p) \ge 0\), we obtain the minimum frequency threshold

for each dataset \(\mathcal {D}_i\), \(i \in \{2,\dots ,n\}\), and \(\hat{\theta }^E_1 = 0\). Thus, the set \(FP(\mathcal {D}_k,\hat{\theta }^E_k)\), with \(k = {{\,\mathrm{arg\,max}\,}}_{i \in \{1,\dots ,n\}}{\hat{\theta }^E_i}\), provides the starting candidates. Then, we analyze the remaining datasets \(\mathcal {D}_i \in \mathcal {D}_1^n \setminus \mathcal {D}_k\) and check whether the candidates verify \(cond^{E}_N(\mathcal {F}_p,\mu _1^n)\). Let us note that, depending on the values of \(\varepsilon \) and \(\mu _1^n\), the highest minimum frequency threshold \(\hat{\theta }^E_k\) can be equal to or very close to 0, resulting in a huge number of starting candidates, which may be infeasible to mine. Thus, to obtain FNF approximations, a reasonable solution is to only consider patterns with a minimum true frequency. If one is interested in patterns with a true frequency above a value \(\theta \in (0,1)\), i.e., \(t_{\pi _i}(p) \ge \theta \), \(\forall \, i \in \{1,\dots ,n\}\), the following strategy can be used to reduce the set of starting candidates. Since we require that \(f_{\mathcal {D}_i}(p) \ge \theta - \mu _i\) for all \(i\in \{1,\dots ,n\}\) to discard possible false negatives, we obtain the minimum frequency threshold

for each dataset \(\mathcal {D}_i\), \(i \in \{1,\dots ,n\}\). Instead, if one is interested in patterns p with \(t_{\pi _n}(p) \ge \theta \), the highest minimum frequency threshold is the maximum between \(\theta - \mu _n\), for dataset \(\mathcal {D}_n\), and the minimum frequency threshold described above without frequency constraints.
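The FNF check for the EP can be sketched in the same way. The condition below is the one used in the proof of Theorem 8, \(f_{\mathcal {D}_{i+1}}(p) + \mu _{i+1} > f_{\mathcal {D}_{i}}(p) - \mu _{i} + \varepsilon \) for all consecutive pairs. The threshold function is our reading of the chaining argument above without frequency constraints (the first \(i-1\) inequalities combined with \(f_{\mathcal {D}_1}(p) \ge 0\)), clipped at 0 because a frequency is never negative; this clipping, the names, and the numbers are assumptions.

```python
def cond_e_n(freqs, mus, eps):
    """FNF emerging check; pairs with a missing (None) value are skipped."""
    for i in range(len(freqs) - 1):
        low, high = freqs[i], freqs[i + 1]
        if low is None or high is None:
            continue
        if not (high + mus[i + 1] > low - mus[i] + eps):
            return False
    return True

def theta_hat_e(mus, eps):
    """Assumed per-dataset thresholds: entry i (0-based) is for dataset i+1."""
    out = [0.0]
    for i in range(1, len(mus)):
        bound = i * eps - mus[0] - mus[i] - 2 * sum(mus[1:i])
        out.append(max(0.0, bound))
    return out

# Hypothetical bounds for n = 3 datasets.
mus = [0.01, 0.02, 0.01]
eps = 0.05
thresholds = theta_hat_e(mus, eps)               # about [0, 0.02, 0.04]

kept = cond_e_n([0.10, 0.16, 0.30], mus, eps)
dropped = cond_e_n([0.10, 0.11, 0.30], mus, eps)
```

The sequence (0.10, 0.16, 0.30) is kept here because the true frequencies may still be emerging given the deviations, even though it would not pass the stricter FPF check. The low thresholds illustrate why a minimum true frequency \(\theta \) is useful in practice.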

Theorem 8

\(cond^{E}(\mathcal {T}_p)=1 \implies cond^{E}_N(\mathcal {F}_p,\mu _1^n)=1\).

Proof

Let us consider that \(\sup _{p\in \mathbb {U}}|t_{\pi _i}(p)-f_{\mathcal {D}_i}(p)| \le \mu _i\), \(\forall \, i \in \{1,\dots ,n\}\). Thus, we have that for all patterns \(p \in \mathbb {U}\), it results \(t_{\pi _i}(p) \in [f_{\mathcal {D}_i}(p)-\mu _i,f_{\mathcal {D}_i}(p)+\mu _i]\), \(\forall \, i \in \{1,\dots ,n\}\). Let \(p'\) be a pattern s.t. \(cond^{E}(\mathcal {T}_{p'})=1\). From Equation 1, we have \(t_{\pi _{j+1}}(p') > t_{\pi _{j}}(p') + \varepsilon \) for all couples of consecutive distributions \(\pi _j\), \(\pi _{j+1}\), with \(j \in \{1,\dots ,n-1\}\). Since we know that \(t_{\pi _{j+1}}(p') \in [f_{\mathcal {D}_{j+1}}(p')-\mu _{j+1},f_{\mathcal {D}_{j+1}}(p')+\mu _{j+1}]\) and that \(t_{\pi _{j}}(p') \in [f_{\mathcal {D}_{j}}(p')-\mu _{j},f_{\mathcal {D}_{j}}(p')+\mu _{j}]\), the condition \(f_{\mathcal {D}_{j+1}}(p') + \mu _{j+1} - (f_{\mathcal {D}_{j}}(p') - \mu _{j}) > \varepsilon \) is verified for such \(p'\) for all \(j \in \{1,\dots ,n-1\}\), and thus \(cond^{E}_N(\mathcal {F}_{p'},\mu _1^n)=1\). \(\square \)

4.5 Additional guarantees of gRosSo

In this section, we provide additional guarantees of gRosSo for both types of approximations. In particular, it is possible to derive guarantees on the false negatives that can appear in a FPF approximation returned by gRosSo, and, vice versa, guarantees on the false positives that can appear in a FNF approximation. Such guarantees differ considering different types of patterns (i.e., emerging, descending, and stable), and thus, they must be separately derived for each type of pattern. Here, we prove additional guarantees for the emerging patterns but, with a similar reasoning, it is possible to obtain analogous guarantees for the descending and stable patterns.

Theorem 9

For any pattern p with \(t_{\pi _{i+1}}(p) > t_{\pi _i}(p) + \varepsilon + 2\cdot \mu _i + 2\cdot \mu _{i+1}\) \(\forall i \in \{1,\dots ,n-1\}\), \(cond^{E}_P(\mathcal {F}_p,\mu _1^n)=1\).

Proof

Let us consider that \(\sup _{p\in \mathbb {U}}|t_{\pi _i}(p)-f_{\mathcal {D}_i}(p)| \le \mu _i\), \(\forall \, i \in \{1,\dots ,n\}\). Thus, we have that for all patterns \(p \in \mathbb {U}\), it results \(t_{\pi _i}(p) \in [f_{\mathcal {D}_i}(p)-\mu _i,f_{\mathcal {D}_i}(p)+\mu _i]\), \(\forall \, i \in \{1,\dots ,n\}\). Let \(p'\) be a pattern s.t. \(t_{\pi _{i+1}}(p') > t_{\pi _i}(p') + \varepsilon + 2\cdot \mu _i + 2\cdot \mu _{i+1}\) \(\forall i \in \{1,\dots ,n-1\}\). Since we know that \(t_{\pi _{i+1}}(p') \in [f_{\mathcal {D}_{i+1}}(p')-\mu _{i+1},f_{\mathcal {D}_{i+1}}(p')+\mu _{i+1}]\) and that \(t_{\pi _{i}}(p') \in [f_{\mathcal {D}_{i}}(p')-\mu _{i},f_{\mathcal {D}_{i}}(p')+\mu _{i}]\) \(\forall i \in \{1,\dots ,n-1\}\), then we have that \(f_{\mathcal {D}_{i+1}}(p')-\mu _{i+1} > f_{\mathcal {D}_{i}}(p')+\mu _{i} + \varepsilon \) \(\forall i \in \{1,\dots ,n-1\}\), and thus \(cond^{E}_P(\mathcal {F}_{p'},\mu _1^n)=1\). \(\square \)

Theorem 10

For any pattern p with \(t_{\pi _{i+1}}(p) \le t_{\pi _i}(p) + \varepsilon - 2\cdot \mu _i - 2\cdot \mu _{i+1}\) \(\forall i \in \{1,\dots ,n-1\}\), \(cond^{E}_N(\mathcal {F}_p,\mu _1^n)=0\).

Proof

Let us consider that \(\sup _{p\in \mathbb {U}}|t_{\pi _i}(p)-f_{\mathcal {D}_i}(p)| \le \mu _i\), \(\forall \, i \in \{1,\dots ,n\}\). Thus, we have that for all patterns \(p \in \mathbb {U}\), it results \(t_{\pi _i}(p) \in [f_{\mathcal {D}_i}(p)-\mu _i,f_{\mathcal {D}_i}(p)+\mu _i]\), \(\forall \, i \in \{1,\dots ,n\}\). Let \(p'\) be a pattern s.t. \(t_{\pi _{i+1}}(p') \le t_{\pi _i}(p') + \varepsilon - 2\cdot \mu _i - 2\cdot \mu _{i+1}\) \(\forall i \in \{1,\dots ,n-1\}\). Since we know that \(t_{\pi _{i+1}}(p') \in [f_{\mathcal {D}_{i+1}}(p')-\mu _{i+1},f_{\mathcal {D}_{i+1}}(p')+\mu _{i+1}]\) and that \(t_{\pi _{i}}(p') \in [f_{\mathcal {D}_{i}}(p')-\mu _{i},f_{\mathcal {D}_{i}}(p')+\mu _{i}]\) \(\forall i \in \{1,\dots ,n-1\}\), then we have that \(f_{\mathcal {D}_{i+1}}(p')+\mu _{i+1} \le f_{\mathcal {D}_{i}}(p')-\mu _{i} + \varepsilon \) and thus \(cond^{E}_N(\mathcal {F}_{p'},\mu _1^n)=0\). \(\square \)

Theorem 9 and Theorem 10 provide additional guarantees for the emerging patterns returned by gRosSo. In particular, Theorem 9 provides additional guarantees for a FPF approximation returned by gRosSo, stating that a pattern p with \(t_{\pi _{i+1}}(p) > t_{\pi _i}(p) + \varepsilon + 2\cdot \mu _i + 2\cdot \mu _{i+1}\) \(\forall i \in \{1,\dots ,n-1\}\) is certainly included in a FPF approximation. Instead, Theorem 10 provides additional guarantees for a FNF approximation returned by gRosSo, stating that a pattern p with \(t_{\pi _{i+1}}(p) \le t_{\pi _i}(p) + \varepsilon - 2\cdot \mu _i - 2\cdot \mu _{i+1}\) \(\forall i \in \{1,\dots ,n-1\}\) cannot appear in a FNF approximation.
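A small randomized check can make Theorems 9 and 10 concrete. The sketch below draws hypothetical true frequencies, perturbs each by at most \(\mu _i\) to obtain observed frequencies, and verifies that whenever the premise of a theorem holds, the corresponding condition evaluates as stated. The conditions are those used in the proofs of Theorems 4 and 8; the helper name, the seed, and all numeric values are assumptions, and the check is an illustration, not a proof.

```python
import random

def cond_e(f, mus, eps, sign):
    """sign=-1 gives the FPF condition cond^E_P, sign=+1 the FNF condition cond^E_N."""
    return all(
        f[i + 1] + sign * mus[i + 1] > f[i] - sign * mus[i] + eps
        for i in range(len(f) - 1)
    )

rng = random.Random(0)
mus, eps = [0.01, 0.02, 0.015], 0.05
gaps = [2 * mus[i] + 2 * mus[i + 1] for i in range(len(mus) - 1)]
checked_9 = checked_10 = 0

for _ in range(20000):
    # True frequencies and observed frequencies within the deviation bounds.
    t = [rng.uniform(0.0, 1.0) for _ in mus]
    f = [ti + rng.uniform(-mu, mu) for ti, mu in zip(t, mus)]
    # Premise of Theorem 9: clearly emerging, so the FPF check must pass.
    if all(t[i + 1] > t[i] + eps + gaps[i] for i in range(len(gaps))):
        checked_9 += 1
        assert cond_e(f, mus, eps, sign=-1)
    # Premise of Theorem 10: clearly not emerging, so the FNF check must fail.
    if all(t[i + 1] <= t[i] + eps - gaps[i] for i in range(len(gaps))):
        checked_10 += 1
        assert not cond_e(f, mus, eps, sign=+1)
```

Patterns whose true frequencies fall between the two premises are the only ones for which the outcome of the checks depends on the particular sample.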

5 Application: mining statistically robust sequential patterns

In this section, we introduce the task of sequential pattern mining, as a concrete realization of the general framework of pattern mining we introduced in Sect. 2.1. Then, we introduce a novel algorithm to compute an upper bound on the capacity of a sequence and we use such an algorithm to compute an upper bound on the empirical VC-dimension of sequential patterns. Finally, we discuss a VC-dimension-based strategy to bound the maximum deviation of the true frequencies of sequential patterns, which can be used in the SRP mining scenario.

5.1 Sequential pattern mining

Let \(\mathcal {I} = \{i_1,i_2,\ldots ,i_p\}\) be a finite set of items. An itemset X is a non-empty subset of \(\mathcal {I}\), i.e., \(X\subseteq \mathcal {I}\), \(X \ne \emptyset \). A sequential pattern (or sequence) \(s=\left\langle S_1,S_2,\ldots ,S_k \right\rangle \) is a finite ordered sequence of itemsets, with \(S_i \subseteq \mathcal {I}\), \(S_i \ne \emptyset \) for all \(i \in \{1,\dots ,k\}\). We say that such a sequence s is built on \(\mathcal {I}\) and we denote by \(\mathbb {S}\) the set of all such sequences. The length |s| of s is the number of itemsets in s. The item-length ||s|| of s is the sum of the sizes of the itemsets in it, i.e., \(||s|| = \sum _{i=1}^{|s|} |S_i|\), where the size \(|S_i|\) of an itemset \(S_i\) is the number of items in it. A sequential pattern \(y =\left\langle Y_1,Y_2,\ldots ,Y_a \right\rangle \) is a subsequence of another sequential pattern \(w =\left\langle W_1,W_2,\ldots ,W_b \right\rangle \), denoted by \(y \sqsubseteq w\), if and only if there exists a sequence of naturals \(1 \le i_1< i_2< \cdots < i_a \le b\) s.t. \(Y_1 \subseteq W_{i_1}, Y_2 \subseteq W_{i_2},\ldots ,Y_a \subseteq W_{i_a}\). Let us note that an item can occur only once in an itemset, but it can occur multiple times in different itemsets of the same sequence. The capacity c(s) of a sequence s is the number of distinct subsequences of s, that is, \(c(s) = | \{a: a \sqsubseteq s \} |\).
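The definitions above translate directly into code. The following sketch represents a sequence as a list of sets; the function names are illustrative, and the capacity is computed by brute-force enumeration, which is exponential in the item-length and therefore suitable only for very short sequences.

```python
from itertools import combinations, product

def is_subsequence(y, w):
    """True if y is a subsequence of w; greedy leftmost embedding."""
    pos = 0
    for itemset in y:
        while pos < len(w) and not itemset <= w[pos]:
            pos += 1
        if pos == len(w):
            return False
        pos += 1
    return True

def item_length(s):
    """Sum of the sizes of the itemsets of s."""
    return sum(len(itemset) for itemset in s)

def capacity(s):
    """Number of distinct subsequences of s, by exhaustive enumeration."""
    choices = []
    for itemset in s:
        items = sorted(itemset)
        subsets = [frozenset(c) for r in range(len(items) + 1)
                   for c in combinations(items, r)]
        choices.append(subsets)
    distinct = set()
    for pick in product(*choices):
        sub = tuple(x for x in pick if x)  # drop empty itemsets
        if sub:
            distinct.add(sub)
    return len(distinct)

# A sequence with two itemsets sharing the item 2.
tau = [{2, 6, 7}, {2}]
length = len(tau)
n_items = item_length(tau)
cap = capacity(tau)
```

For this sequence the capacity is 14 rather than \(2^4 - 1 = 15\), because the single-itemset subsequence containing only item 2 can be obtained from either itemset.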

Example 1

Let us consider the following sequential dataset \(\mathcal {D}=\{\tau _1,\tau _2,\tau _3,\tau _4\}\) as an example:

The dataset above has 4 transactions. The first one, \(\tau _1 = \langle \{2,6,7\},\{2\}\rangle \) has length \(|\tau _1| = 2\), item-length \(||\tau _1|| = 4\) and capacity \(c(\tau _1) = 14\). The frequency \(f_{\mathcal {D}}(\langle \{7\},\{2\}\rangle )\) of the sequence \(\langle \{7\},\{2\}\rangle \) in \(\mathcal {D}\), is 3/4, since it is contained in all transactions but \(\tau _3\). Let us note that the sequence \(\langle \{7\},\{2\}\rangle \) occurs three times as a subsequence of \(\tau _4\), but \(\tau _4\) contributes only once to the frequency of \(\langle \{7\},\{2\}\rangle \).

5.2 VC-dimension of sequential patterns

Given a dataset \(\mathcal {D}\) for the sequential pattern mining task, that is, a finite bag of transactions sampled from \(\mathbb {S}\) according to \(\pi \), we aim to compute the empirical VC-dimension \(EVC(RS,\mathcal {D})\) of the range space (see Definition 3) associated with \(\mathbb {S}\) w.r.t. \(\pi \) on the dataset \(\mathcal {D}\) in order to find a probabilistic bound \(\mu \in (0,1)\) on the maximum deviation \(\sup _{s \in \mathbb {S}}|t_\pi (s) - f_{\mathcal {D}}(s)|\). In particular, given \(EVC(RS,\mathcal {D})\) and using Theorem 2, it is possible to compute a \(\mu \in (0,1)\) s.t. \(\sup _{s \in \mathbb {S}}|t_\pi (s) - f_{\mathcal {D}}(s)|\le \mu \) holds with high probability.

The exact computation of the empirical VC-dimension \(EVC(RS,\mathcal {D})\) of sequential patterns on the dataset \(\mathcal {D}\), required by Theorem 2, is computationally expensive. The s-index introduced by Servan-Schreiber et al. [21] provides an efficiently computable upper bound on \(EVC(RS,\mathcal {D})\).

Definition 4

[21] Let \(\mathcal {D}\) be a sequential dataset. The s-index of \(\mathcal {D}\) is the maximum integer d such that \(\mathcal {D}\) contains at least d different sequential transactions with capacity at least \(2^d-1\), such that none of them is a subsequence of another, i.e., the d transactions form an anti-chain.
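To give a feel for Definition 4, the sketch below computes a simple upper bound on the s-index from a list of capacities, one per distinct transaction. It deliberately ignores the anti-chain requirement; dropping a constraint can only increase the maximum, so the result is an upper bound on the s-index, not necessarily the s-index itself. The function name and the capacities are hypothetical, and this is not the procedure of [21].

```python
def s_index_upper_bound(capacities):
    """Largest d such that at least d capacities are >= 2**d - 1."""
    caps = sorted(capacities, reverse=True)
    d = 0
    # The (d+1)-th largest capacity must reach 2**(d+1) - 1 to extend d.
    while d < len(caps) and caps[d] >= 2 ** (d + 1) - 1:
        d += 1
    return d

# Hypothetical capacities of four distinct transactions.
bound = s_index_upper_bound([14, 3, 7, 1])
```

Passing upper bounds on the capacities instead of exact values keeps the result a valid upper bound, since larger capacities can only increase d.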

5.3 New upper bound on the capacity

Definition 4 requires computing the capacity of each transaction \(\tau \in \mathcal {D}\). The exact capacity c(s) of a sequence s can be computed using the algorithm described in [31], but it is computationally expensive and may be prohibitive for large datasets. Thus, we are interested in efficiently computable upper bounds on c(s). A first naïve bound, which we denote by \(\tilde{c}_n(s) \ge c(s)\), is given by \(2^{||s||}-1\), but it may be a loose upper bound since \(c(s)=2^{||s||}-1\) if and only if all the items contained in all the itemsets of the sequence s are different.

The second upper bound was introduced in [21]. This upper bound, which we denote by \(\tilde{c}(s) \ge c(s)\), can be computed as follows. When s contains, among others, two itemsets A and B s.t. \(A \subseteq B\), subsequences of the form \(\langle C \rangle \) with \(C \subseteq A\) are considered twice in \(2^{||s||}-1\), “generated” once from A and once from B. To avoid over-counting such \(2^{|A|}-1\) subsequences, [21] proposes to consider only the ones “generated” from the longest itemset that can generate them.

In this work, we introduce a novel, tighter upper bound \(\hat{c}(s)\ge c(s)\). Our upper bound is based on the following observation. Let itemsets A and B be, respectively, the i-th and j-th itemset of the sequence s with \(i < j\), that is, A comes before B in s, and let \(T = A \cap B \ne \emptyset \) be their intersection. Let D be a subset of the bag-union of the itemsets in s that come before A, that is \(D \subseteq \bigcup _{S_k \in s : k < i} S_k\), and let E be a subset of the bag-union of the itemsets in s that come after B, that is \(E \subseteq \bigcup _{S_{\ell } \in s : \ell > j} S_{\ell }\). The sequences of the form \(\langle D C E \rangle \), with \(C \subseteq T\), are also considered twice, for the same reasons explained above. Given \(a = \sum _{k=1}^{i-1}|S_k|\) the sum of the sizes of the itemsets before A in the sequence s and \(b = \sum _{\ell =j+1}^{|s|}|S_{\ell }|\) the sum of the sizes of the ones that come after B, the number of over-counted sequences of this form is \(2^a \cdot (2^{|T|}-1) \cdot 2^b\). Let us note that this new formula also includes the sequences of the form \(\langle C \rangle \), since D and E may be the empty set.

An algorithm to compute an upper bound \(\hat{c}(s)\) based on the observation above is given in Algorithm 2. Let \(s = \langle S_1,S_2,\ldots ,S_{|s|}\rangle \) be a sequence and assume that we re-label the itemsets in s by increasing size, with ties broken arbitrarily (e.g., following the original order). Let \(\hat{s} = \langle S_1,S_2,\ldots ,S_{|\hat{s}|}\rangle \) be the sequence in the new order, s.t. \(|S_{i}| \le |S_{i+1}|, \forall \, i \in \{1,\dots ,|\hat{s}|-1\}\). Let \(N = [n_1,n_2,\ldots ,n_{|\hat{s}|}]\) be a vector s.t. its i-th element \(n_i\) is the sum of the sizes of the itemsets that in the original ordered sequence s come before the i-th itemset of the new ordered sequence \(\hat{s}\). The inputs of our algorithm are the new ordered sequence \(\hat{s}\) and the vector N. First, \(\hat{c}(\hat{s})\) is set to \(2^{||\hat{s}||} -1\) (line 2). For each itemset \(S_i \in \hat{s}\), we check whether there exists an itemset \(S_j\), with \(j>i\), s.t. the set \(T_{ij} = S_i \cap S_j \) is non-empty (line 6). For such \(S_j\), we compute the number of over-counted subsequences with the formula above (line 7). In Algorithm 2 (line 7), the min and max functions are used to check which itemset comes first in the original ordered sequence. After checking the entire sequence \(\hat{s}\) for a single itemset \(S_i\), we remove the maximum number of over-counted subsequences found for such \(S_i\) (line 9). Then, we update the vector N, subtracting the size of \(S_i\) from each \(n_m\) such that the itemset m comes after the itemset i in the original ordered sequence s (lines 11-13).

Example 2

Let us consider the sequence \(s = \langle \{1\},\{2,5,7\},\{4\},\{2,3,5\},\{1,8\} \rangle \). The inputs of our algorithm are \(\hat{s} = \langle \{1\},\{4\},\{1,8\},\{2,5,7\},\{2,3,5\} \rangle \) and \(N = [0,4,8,1,5]\). The naïve upper bound \(\tilde{c}_n(s)\) is \(2^{10}-1 = 1023\). The upper bound \(\tilde{c}(s)\) defined in [21] is 1022, since it only removes once the sequence \(\langle \{1\} \rangle \). The upper bound \(\hat{c}(s)\) obtained with our algorithm is 1010, since we remove the sequence \(\langle \{1\} \rangle \) but also sequences generated by the intersection of \(\{2,5,7\}\) and \(\{2,3,5\}\) combined with other itemsets (e.g., the sequence \(\langle \{2,5\},\{1,8\} \rangle \)).
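The steps described for Algorithm 2 can be sketched as follows. This is a reconstruction from the prose, not the authors' implementation. In particular, the exponent b (the item count after the later itemset) is taken from the original positions and is never updated, an assumption chosen because it reproduces the value reported in Example 2, while the vector N is updated as described in the text. The function name is illustrative.

```python
def capacity_upper_bound(s):
    """Sketch of the capacity bound for a sequence given as a list of sets."""
    n = len(s)
    # Process itemsets by increasing size; sorted() is stable, so ties
    # keep the original order.
    order = sorted(range(n), key=lambda i: len(s[i]))
    # Vector N, indexed by original position: item count before each itemset.
    before = [sum(len(x) for x in s[:i]) for i in range(n)]
    # Assumption: item count after each itemset, never updated.
    after = [sum(len(x) for x in s[i + 1:]) for i in range(n)]
    bound = 2 ** sum(len(x) for x in s) - 1  # naive bound
    for rank, i in enumerate(order):
        worst = 0
        for j in order[rank + 1:]:
            common = s[i] & s[j]
            if common:
                first, last = (i, j) if i < j else (j, i)
                over = (2 ** before[first]
                        * (2 ** len(common) - 1)
                        * 2 ** after[last])
                worst = max(worst, over)
        # Remove the maximum over-count found for this itemset.
        bound -= worst
        # Update N for the itemsets that follow i in the original order.
        for m in range(i + 1, n):
            before[m] -= len(s[i])
    return bound

s = [{1}, {2, 5, 7}, {4}, {2, 3, 5}, {1, 8}]
naive = 2 ** sum(len(x) for x in s) - 1
improved = capacity_upper_bound(s)
```

On the sequence of Example 2, the naive bound is 1023 and the sketch returns 1010: one subsequence is removed for the shared item 1, and twelve for the intersection {2, 5} combined with subsets of the final itemset {1, 8}.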

5.4 Bound on the maximum deviation

Using Algorithm 2, one can compute upper bounds on the capacities of the transactions of \(\mathcal {D}\), which can be used to obtain an upper bound on the s-index. This bound can be used in Theorem 2 as an upper bound on the empirical VC-dimension of sequential patterns, in order to compute a bound on the maximum deviation of the true frequencies of sequential patterns. With such a bound on the maximum deviation, we can use gRosSo to find FPF and FNF approximations of the statistically robust sequential patterns.

6 Application: mining statistically robust itemsets

In this section, we introduce the task of itemset mining, as another concrete realization of the general framework of pattern mining we introduced in Sect. 2.1. Then, we apply the VC-dimension to itemsets and we discuss a VC-dimension-based strategy to bound the maximum deviation of the true frequencies of itemsets, which can be used in the SRP mining scenario.

6.1 Itemset mining

Let \(\mathcal {I} = \{i_1,i_2,\ldots ,i_p\}\) be a finite set of items. Let us recall that an itemset X is a non-empty subset of \(\mathcal {I}\), i.e., \(X\subseteq \mathcal {I}\), \(X \ne \emptyset \). We denote by \(\mathbb {I}\) the set of all possible itemsets composed of items from \(\mathcal {I}\). The length |X| of X is the number of items in X, and an itemset X is contained in another itemset Y if and only if \(X\subseteq Y\).

Example 3

Let us consider the following dataset \(\mathcal {D}=\{\tau _1,\tau _2,\tau _3,\tau _4\}\) as an example:

The dataset above has 4 transactions. The first one, \(\tau _1 = \{2,6,7\}\), has length \(|\tau _1| = 3\). The frequency \(f_{\mathcal {D}}(\{6,7\})\) of the itemset \(\{6,7\}\) in \(\mathcal {D}\) is 3/4, since it is contained in all transactions but \(\tau _3\).

6.2 VC-dimension of itemsets and bound on the maximum deviation

As described in Sect. 5.2 for the sequential patterns, given a dataset \(\mathcal {D}\) for the itemset mining task, that is, a finite bag of transactions sampled from \(\mathbb {I}\) according to \(\pi \), we aim to compute the empirical VC-dimension \(EVC(RS,\mathcal {D})\) of the range space (see Definition 3) associated with \(\mathbb {I}\) w.r.t. \(\pi \) on the dataset \(\mathcal {D}\) in order to find a probabilistic bound \(\mu \in (0,1)\) on the maximum deviation \(\sup _{X \in \mathbb {I}}|t_\pi (X) - f_{\mathcal {D}}(X)|\). In particular, given \(EVC(RS,\mathcal {D})\) and using Theorem 2, it is possible to compute a \(\mu \in (0,1)\) s.t. \(\sup _{X \in \mathbb {I}}|t_\pi (X) - f_{\mathcal {D}}(X)|\le \mu \) holds with high probability. The d-index introduced by Riondato and Upfal [23] provides an efficiently computable upper bound on \(EVC(RS,\mathcal {D})\).

Definition 5

[23] Let \(\mathcal {D}\) be a dataset for the itemset mining task. The d-index of \(\mathcal {D}\) is the maximum integer d such that \(\mathcal {D}\) contains at least d different transactions of length at least d, such that none of them is a subset of another, i.e., the d transactions form an anti-chain.

The d-index can be used in Theorem 2 as an upper bound on the empirical VC-dimension of itemsets in order to compute a bound on the maximum deviation of the true frequencies of itemsets. With such a bound on the maximum deviation, we can use gRosSo to find FPF and FNF approximations of the statistically robust itemsets.
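As a rough illustration of Definition 5, the following sketch computes an upper bound on the d-index directly from the transactions. It removes duplicate transactions but does not enforce the anti-chain requirement, so the value returned can exceed the true d-index; it is not the procedure of [23]. The function name and the toy dataset are hypothetical.

```python
def d_index_upper_bound(transactions):
    """Largest d such that at least d distinct transactions have length >= d."""
    distinct = set(frozenset(t) for t in transactions)
    lengths = sorted((len(t) for t in distinct), reverse=True)
    d = 0
    # The (d+1)-th longest distinct transaction must have length >= d+1.
    while d < len(lengths) and lengths[d] >= d + 1:
        d += 1
    return d

# Hypothetical dataset with one repeated transaction.
dataset = [{2, 6, 7}, {1, 4, 6, 7}, {1, 3}, {2, 6, 7}, {5}]
bound = d_index_upper_bound(dataset)
```

Since the bound is used only as an upper bound on the empirical VC-dimension, overestimating the d-index leaves the guarantees intact and only makes the resulting bound on the maximum deviation looser.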

7 Experimental evaluation

In this section, we report the results of our experimental evaluation on multiple pseudo-artificial datasets to assess the performance of gRosSo for approximating the statistically robust sequential patterns and itemsets. Then, we execute gRosSo on multiple real datasets to approximate the statistically robust sequential patterns and we analyze the sequential patterns mined. To bound the maximum deviations, as required by gRosSo, we use Theorem 2. The VC-dimension of sequential patterns is bounded using the s-index obtained by using our algorithm (Algorithm 2) to compute the upper bound on the capacity of each sequential transaction, while the VC-dimension of itemsets is bounded using the d-index (Definition 5).

The goals of the evaluation are the following:

- Assess the performance of our algorithm to compute an upper bound on the capacity c(s) of a sequence s, comparing our upper bound with the naïve bound and with the one proposed by [21] (see Sect. 5.3).
- Assess the performance of gRosSo on pseudo-artificial datasets to mine statistically robust sequential patterns and itemsets, checking whether, with probability \(1-\delta \), the set of patterns returned by gRosSo does not contain false positives or false negatives.
- Assess the performance of gRosSo to mine statistically robust sequential patterns on real datasets.

Since this is the first work that considers the problem of mining SRPs, there are no existing methods to compare against.

7.1 Implementation, environment, and real datasets

We implemented gRosSo for mining statistically robust sequential patterns and itemsets, and our algorithm to compute an upper bound on the capacity of a sequence in Java. To mine the frequent sequential patterns and frequent itemsets, we used, respectively, the PrefixSpan [20] and the FP-Growth [18] implementations both provided by the SPMF library [32]. We performed all experiments on the same machine with 512 GB of RAM and 2 Intel(R) Xeon(R) CPU E5-2698 v3 @ 2.3GHz, using Java 1.8.0_201. Our open-source implementation and the code developed for the tests and to generate the datasets are available at https://github.com/VandinLab/gRosSo. In all experiments, we fixed \(\delta = 0.1\).

Here, we provide the details on the generation of the real datasets for the sequential pattern mining task. The details on the generation of pseudo-artificial datasets for the sequential pattern and itemset mining tasks are, respectively, in Sects. 7.3.1 and 7.3.2. To obtain sequences of real sequential datasets, we generated multiple datasets starting from the Netflix Prize data (see Footnote 1), which contains over 100 million ratings from 480 thousand randomly chosen anonymous Netflix customers over 17 thousand movie titles collected between October 1998 and December 2005.

To generate a single dataset, we collected all the movies that have been rated by the users in a given time interval (e.g., in 2004). Each transaction is the temporally ordered sequence of movies rated by a single user, with the movies sorted by rating date. Movies rated by such a user on the same day form an itemset, and each movie is represented by its year of release. Considering consecutive time intervals, we obtained a sequence of datasets, where each dataset only contains data generated in a single time interval. From the original data we removed movies whose year of release is not available and movies that have been rated in a year that precedes their year of release. The latter are due to one of the perturbations introduced in the data to preserve the privacy of the users (see Footnote 2).
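The construction above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not our Java implementation: the input layout (triples of user, movie, and rating date, plus a dictionary of release years) and the name `build_sequential_dataset` are hypothetical, and the sketch drops the individual ratings that violate the filtering conditions.

```python
from collections import defaultdict
from datetime import date


def build_sequential_dataset(ratings, release_year, start, end):
    """Build one sequential dataset from ratings in the interval [start, end].

    ratings: iterable of (user_id, movie_id, rating_date) triples (assumed layout).
    release_year: dict movie_id -> year of release, or None if unavailable.
    Returns a list of transactions, each a list of frozensets of release years.
    """
    per_user_day = defaultdict(lambda: defaultdict(set))
    for user, movie, day in ratings:
        if not (start <= day <= end):
            continue  # rating outside the time interval of this dataset
        year = release_year.get(movie)
        if year is None or day.year < year:
            continue  # missing release year, or rated before its release year
        # Movies rated by the same user on the same day form one itemset,
        # and each movie is represented by its year of release.
        per_user_day[user][day].add(year)
    dataset = []
    for user in sorted(per_user_day):
        days = per_user_day[user]
        # Itemsets are ordered by rating date.
        dataset.append([frozenset(days[d]) for d in sorted(days)])
    return dataset


if __name__ == "__main__":
    years = {"m1": 1999, "m2": 2001, "m3": None}
    rows = [
        ("u1", "m1", date(2004, 1, 5)),
        ("u1", "m2", date(2004, 1, 5)),
        ("u1", "m1", date(2004, 2, 1)),
        ("u2", "m3", date(2004, 3, 1)),
    ]
    print(build_sequential_dataset(rows, years, date(2004, 1, 1), date(2004, 12, 31)))
```

Calling the function once per consecutive interval (e.g., per quarter) yields a sequence of datasets in which each dataset only contains data generated in a single time interval.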

We considered the data collected between January 2003 and December 2005. For each of the years 2004 and 2005, we generated two types of sequences: the first one composed of 4 datasets, e.g., 2004(Q1-Q4) (each dataset contains the data generated in 3 months), and the second one composed of 3 datasets, e.g., 2004(T1-T3) (each dataset contains the data generated in 4 months). Finally, we generated another sequence of datasets, 2003-2005, considering the entire data between 2003 and 2005 (each dataset contains the data generated in one year).

The characteristics of the generated real datasets are reported in Table 1.

7.2 Upper bound on the capacity

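In this section, we report the results of Algorithm 2, which computes the upper bound \(\hat{c} (s)\) on the capacity of a sequence, and compare it with the naïve upper bound \(\tilde{c}_{n} (s) = 2^{||s||}-1\) and with the upper bound \(\tilde{c} (s)\) from [21] (see Sect. 5.3). Table 1 shows the averages (over all transactions) of the relative differences between our novel upper bound \(\hat{c} (s)\) and the previously proposed ones, which, for a dataset \(\mathcal {D}\), are computed as

\[\frac{1}{|\mathcal {D}|}\sum _{\tau \in \mathcal {D}} \frac{\tilde{c}_{n} (\tau ) - \hat{c} (\tau )}{\tilde{c}_{n} (\tau )}\]

and

\[\frac{1}{|\mathcal {D}|}\sum _{\tau \in \mathcal {D}} \frac{\tilde{c} (\tau ) - \hat{c} (\tau )}{\tilde{c} (\tau )}.\]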

In all the datasets, our novel bound is (on average) tighter than the other bounds, with a maximum improvement of \(13.81\%\) on the naïve method and \(13.37\%\) on the method proposed by [21].
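To make the comparison concrete, the following minimal Python sketch computes an average relative difference between two capacity bounds over the transactions of a dataset. It rests on two assumptions that are labeled in the code: each difference is normalized by the previously proposed bound, and \(||s||\) is the total number of items of the sequence s. The tighter bounds are passed in as plain numbers, since Algorithm 2 is not reproduced here, and the function names are hypothetical.

```python
def naive_capacity_bound(item_length):
    """Naive upper bound 2^{||s||} - 1 on the capacity of a sequence.

    Assumption: item_length is ||s||, the total number of items in s.
    """
    return 2 ** item_length - 1


def average_relative_difference(new_bounds, old_bounds):
    """Average over transactions of (old - new) / old.

    Assumption: the relative difference is normalized by the older bound.
    """
    if len(new_bounds) != len(old_bounds) or not new_bounds:
        raise ValueError("expected two non-empty lists of equal length")
    total = sum((old - new) / old for new, old in zip(new_bounds, old_bounds))
    return total / len(new_bounds)


if __name__ == "__main__":
    # Two transactions with item-lengths 2 and 3; the tighter bounds are
    # illustrative values, not outputs of Algorithm 2.
    naive = [naive_capacity_bound(k) for k in (2, 3)]
    tighter = [3, 6]
    print(average_relative_difference(tighter, naive))
```

A value of 0 means that the two bounds coincide on every transaction, while larger values indicate a tighter new bound on average.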

7.3 Results with pseudo-artificial datasets

In this section, we report the results of our evaluation on pseudo-artificial datasets. First, we describe the experimental evaluation using pseudo-artificial datasets for the sequential pattern mining task (Sect. 7.3.1), and then for the itemset mining task (Sect. 7.3.2).

7.3.1 Sequential patterns

Here, we report the results of our experimental evaluation using pseudo-artificial datasets for the sequential pattern mining task. We considered the 2005(T1-T3) sequence of datasets as ground truth for the sequential patterns, and we generated random datasets taking random samples from each of the datasets in the sequence. In such a way, we know the true frequencies of the sequential patterns (the probability that a pattern belongs to a transaction sampled from a dataset is exactly the frequency that such a pattern has in that dataset). Then, we executed gRosSo on the pseudo-artificial datasets and, knowing the true frequencies of the patterns, we assessed its performance in terms of false positives, false negatives, and correctly reported patterns. Since it is not feasible to obtain all the statistically robust sequential patterns due to the very large number of candidates to consider in such datasets, for the EP and DP scenarios, we only considered patterns with true frequency above a minimum threshold \(\theta \) in the last and first dataset, respectively, while for the SP scenario we only considered patterns with true frequency above \(\theta \) in all the datasets, as defined in Sect. 3.

From each of the three original datasets, 2005T1, 2005T2 and 2005T3, we generated a random dataset of the same size as the corresponding original one, obtaining a sequence of three random datasets. From such a sequence, we mined the set of statistically robust sequential patterns without considering the uncertainty of the data, i.e., directly using Equation 1, Equation 2, or Equation 3 with the observed frequencies of the patterns in the random datasets. This allows us to verify whether the set of sequential patterns obtained considering only the observed frequencies (i.e., without taking the uncertainty into account) results in false positives or in false negatives.

We then ran gRosSo on the sequence of random datasets to mine an FPF or an FNF approximation of the statistically robust sequential patterns, and checked whether the returned approximation contained, respectively, false positives or false negatives. We also reported what fraction of the statistically robust sequential patterns is reported by gRosSo. (For both gRosSo and the observed frequency-based approach above, we only considered patterns with frequency greater than \(\theta \) as explained above, matching our ground truth.)
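The evaluation protocol above can be sketched in a few lines of Python. This is a minimal illustration, not our Java code: it assumes that random datasets are drawn uniformly with replacement from the original dataset (so that the frequency of a pattern in the original dataset is its true frequency), it represents a sequential pattern as a tuple of frozensets, and all function names are hypothetical.

```python
import random


def contains(transaction, pattern):
    """True if pattern is a subsequence of transaction, i.e., its itemsets can be
    matched, in order, to supersets at increasing positions of the transaction."""
    pos = 0
    for itemset in pattern:
        # Greedily advance to the earliest position that contains this itemset.
        while pos < len(transaction) and not itemset <= transaction[pos]:
            pos += 1
        if pos == len(transaction):
            return False
        pos += 1
    return True


def frequency(pattern, dataset):
    """Fraction of transactions of the dataset that contain the pattern."""
    return sum(1 for t in dataset if contains(t, pattern)) / len(dataset)


def sample_dataset(dataset, size, rng):
    """Random dataset of the given size (assumption: sampling with replacement)."""
    return [rng.choice(dataset) for _ in range(size)]


def false_positives_and_negatives(reported, truly_robust):
    """Patterns reported but not robust, and robust patterns not reported."""
    return reported - truly_robust, truly_robust - reported


if __name__ == "__main__":
    fs = frozenset
    original = [[fs({1}), fs({2})], [fs({2}), fs({1})], [fs({1, 2})]]
    pattern = (fs({1}), fs({2}))
    random_dataset = sample_dataset(original, 2 * len(original), random.Random(0))
    print(frequency(pattern, original), frequency(pattern, random_dataset))
```

Comparing `frequency(pattern, original)` with `frequency(pattern, random_dataset)` shows the gap between true and observed frequencies that the bound on the maximum deviation has to account for.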

Table 2 reports the average results, over 5 different random sequences, denoted by \(\mathcal {S}_1^n\), for mining FPF approximations of the EP and DP with \(\varepsilon \in \{0,0.01,0.05\}\), Table 3 reports the average results for mining FPF approximations of the SP with \(\alpha \in \{0.05,0.1\}\), while Table 4 reports the average results for mining FNF approximations of the EP. We repeated the entire procedure with 5 sequences of random datasets, denoted by \(\mathcal {S}_1^{n\times 2}\), where each random dataset had size twice the original one, and then with five sequences of random datasets, denoted by \(\mathcal {S}_1^{n\times 3}\), with size three times the original one. For all the experiments, we used \(\theta \in \{0.2,0.3\}\). Here, we report only a representative subset of the results, with \(\varepsilon =0.01\) and \(\alpha =0.1\). Other results are analogous and discussed below.

The results show that, for almost all parameters, the sets of patterns mined in the pseudo-artificial datasets only considering the observed frequency of the patterns (i.e., without considering the uncertainty) contain false positives or false negatives with high probability. In addition, such a probability increases with a lower \(\theta \), and thus with a larger number of patterns. Instead, the patterns returned by gRosSo do not contain false positives or false negatives in any of the runs, for all the parameters. The results are even better than the theoretical guarantees, since the theory only guarantees a probability of at least \(1-\delta = 0.9\) of obtaining a set without false positives or without false negatives. Let us note that in some cases the percentage of reported SRPs is small, in particular for the SP. However, such a percentage increases with larger datasets, since techniques from statistical learning theory, such as the VC-dimension, perform better when larger collections of data are available. In the EP scenario, for both types of approximations, FPF and FNF, we also checked whether the approximations returned by gRosSo had the additional guarantees described in Sect. 4.5, and, in all the runs, we found that such additional guarantees were always respected.

For the EP and DP with guarantees on the false positives, the results obtained with \(\varepsilon = 0\) are very close to the ones reported in Table 2, in many cases even better, while with \(\varepsilon = 0.05\) gRosSo reported a lower percentage (between 0.003 and 0.23) of statistically robust sequential patterns, in particular for the DP scenario. For the SP instead, using \(\alpha = 0.05\), we found only a few real SRPs in the original data, and gRosSo did not report any of them. For the EP with guarantees on the false negatives, the results obtained with \(\varepsilon = 0\) and \(\varepsilon = 0.05\) are very close to the ones reported in Table 4, with a percentage of reported patterns between 0.06 and 0.78.

For the EP and DP scenarios with guarantees on the false positives, we also performed an additional experiment to verify the absence of false positives in the output of gRosSo. We generated a random sequence of datasets taking three random samples from the same original dataset 2005T1. In such a way, the random sequence did not contain any EP or DP, since each pattern had the same true frequency in all the datasets. Then, we executed gRosSo on such a sequence using \(\theta = 0\) and \(\varepsilon = 0\). Let us note that this choice of parameters is the most challenging scenario, since we searched for all the EP and DP we were able to find. Again, we repeated such an experiment with five different random sequences where each dataset had the same size as the original one, five sequences with double size, and five sequences with datasets that had three times the size of the original one. In all the runs, gRosSo correctly did not report any EP or DP.

These results show that, in general, considering the observed frequencies of the patterns is not enough to find sets of SRPs that do not contain false positives or false negatives. Thus, techniques like the one introduced in this work are necessary to find large sets of SRPs without false positives or false negatives. In addition, gRosSo is an effective tool to find rigorous approximations of the statistically robust sequential patterns.

7.3.2 Itemsets

Here, we report the results of our experimental evaluation using pseudo-artificial datasets for the itemset mining task. Starting from the 2005(T1-T3) sequence of datasets for the sequential pattern mining task, we first generated the corresponding sequence of datasets, 2005(T1-T3)IT, for the itemset mining task. For each dataset in the sequence 2005(T1-T3), we generated a new dataset taking the union of the items in each transaction of the dataset, e.g., a sequential transaction \(\tau =\langle \{1\},\{2\},\{6,7\},\{2\} \rangle \) becomes a transaction \(\tau '= \{1,2,6,7\}\). Then, we considered the 2005(T1-T3)IT sequence as ground truth for the itemsets, and we performed the same experimental evaluation described in Sect. 7.3.1 for the sequential patterns. We denote by \(\mathcal {Q}_1^n\), \(\mathcal {Q}_1^{n\times 2}\), and \(\mathcal {Q}_1^{n\times 3}\) the analogues of \(\mathcal {S}_1^n\), \(\mathcal {S}_1^{n\times 2}\), and \(\mathcal {S}_1^{n\times 3}\), but for itemsets. Table 5 reports the average results for mining FPF approximations of the EP and DP, Table 6 reports the average results for mining FPF approximations of the SP, while Table 7 reports the average results for mining FNF approximations of the EP. The results show that, for almost all parameters, the sets of patterns mined in the pseudo-artificial datasets only considering the observed frequency of the patterns contain false positives or false negatives with high probability. Instead, the patterns returned by gRosSo do not contain false positives or false negatives in any of the runs, as observed for the sequential patterns. In addition, all the approximations of the EP reported by gRosSo respected the additional guarantees introduced in Sect. 4.5.
All these results emphasize that considering the observed frequency is not enough to find large sets of SRPs without false positives or false negatives, and that gRosSo is also an effective tool to find rigorous approximations of the statistically robust itemsets. Comparing these results with the ones obtained for the sequential patterns, it is interesting to notice that in the EP and DP scenarios the returned statistically robust itemsets are almost always fewer than the corresponding statistically robust sequential patterns, in particular for the DP, and that the percentages of reported patterns are also lower for the itemsets. Instead, in the SP scenario, more statistically robust itemsets are returned, always with higher percentages of reported patterns.
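The conversion from sequential transactions to itemset transactions used to build 2005(T1-T3)IT amounts to taking the union of the itemsets of each transaction. A minimal Python sketch, with hypothetical function names, is the following.

```python
def to_itemset_transaction(sequential_transaction):
    """Union of all the itemsets of a sequential transaction."""
    return frozenset().union(*sequential_transaction)


def to_itemset_dataset(sequential_dataset):
    """Apply the conversion to every transaction, keeping the dataset a bag."""
    return [to_itemset_transaction(t) for t in sequential_dataset]


if __name__ == "__main__":
    tau = [{1}, {2}, {6, 7}, {2}]
    print(sorted(to_itemset_transaction(tau)))
```

The conversion discards both the order of the itemsets and repeated items, so different sequential transactions may map to the same itemset transaction.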

7.4 Results with real datasets