Abstract

A key problem in mathematical imaging, signal processing and computational statistics is the minimization of non-convex objective functions that may be non-differentiable at the relative boundary of the feasible set. This paper proposes a new family of first- and second-order interior-point methods for non-convex optimization problems with linear and conic constraints, combining logarithmically homogeneous barriers with quadratic and cubic regularization respectively. Our approach is based on a potential-reduction mechanism and, under the Lipschitz continuity of the corresponding derivative with respect to the local barrier-induced norm, attains a suitably defined class of approximate first- or second-order KKT points with worst-case iteration complexity \(O(\varepsilon ^{-2})\) (first-order) and \(O(\varepsilon ^{-3/2})\) (second-order), respectively. Based on these findings, we develop new path-following schemes attaining the same complexity, modulo adjusting constants. These complexity bounds are known to be optimal in the unconstrained case, and our work shows that they are upper bounds in the case with complicated constraints as well. To the best of our knowledge, this work is the first which achieves these worst-case complexity bounds under such weak conditions for general conic constrained non-convex optimization problems.

1 Introduction

Non-convex optimization is an active area of research in optimization with one of the goals being to establish complexity guarantees for finding approximate stationary points, see the review [40] and the references therein. Two large groups of algorithms that allow one to achieve this goal are first-order methods [2, 20, 23, 32, 51] and second-order methods [18, 26, 27, 29, 30, 31, 32, 37, 38, 42, 79]. Higher-order algorithms are also analyzed, e.g., in [17, 28, 33, 74]. One of the challenges in the development and analysis of algorithms for non-convex optimization is dealing with constraints. The class of optimization problems we consider in this paper is described as follows.

Let \(\mathbb {E}\) be a finite dimensional vector space with inner product \(\langle \cdot ,\cdot \rangle \) and Euclidean norm \(\Vert \cdot \Vert \). We are concerned with solving constrained conic optimization problems of the form

$$\begin{aligned} \min _{x\in \mathbb {E}} f(x)\quad \text {s.t. } {\textbf{A}}x=b,\; x\in \bar{\textsf{K}}. \qquad \text {(Opt)} \end{aligned}$$

The main working assumption underlying our developments is as follows:

Assumption 1

1. \(\bar{\textsf{K}}\subset \mathbb {E}\) is a pointed (i.e., \(\bar{\textsf{K}}\cap (-\bar{\textsf{K}})=\{0\}\)) closed convex cone with nonempty interior \(\textsf{K}\);

2. \({\textbf{A}}:\mathbb {E}\rightarrow \mathbb {R}^{m}\) is a linear operator assigning each element \(x\in \mathbb {E}\) to a vector in \(\mathbb {R}^m\) and having full rank, i.e., \({{\,\textrm{im}\,}}({\textbf{A}})=\mathbb {R}^{m}\), and \(b \in \mathbb {R}^{m}\);

3. The feasible set \(\bar{\textsf{X}}=\bar{\textsf{K}}\cap \textsf{L}\), where \(\textsf{L}=\{x\in \mathbb {E}\vert {\textbf{A}}x=b\}\), is compact and has nonempty relative interior denoted by \(\textsf{X}=\textsf{K}\cap \textsf{L}\);

4. \(f:\mathbb {E}\rightarrow \mathbb {R}\) is possibly non-convex, continuous on \(\bar{\textsf{X}}\) and continuously differentiable on \(\textsf{X}\);

5. Problem (Opt) admits a global solution. We let \(f_{\min }(\textsf{X})=\min \{f(x)\vert x\in \bar{\textsf{X}}\}\).

Note that f is not assumed to be globally differentiable, but only over the relative interior of the feasible set. Problem (Opt) contains many important classes of optimization problems as special cases. We summarize the three most important ones below.

Example 1

(NLP with non-negativity constraints) For \(\mathbb {E}=\mathbb {R}^n\) and \(\bar{\textsf{K}}\equiv \bar{\textsf{K}}_{\text {NN}}=\mathbb {R}^n_{+}\) we recover non-linear programming problems with linear equality constraints and non-negativity constraints: \(\bar{\textsf{X}}=\{x\in \mathbb {R}^n\vert {\textbf{A}}x=b,\text { and } x_{i}\ge 0\text { for all }i=1,\ldots ,n\}.\) \(\Diamond \)

Example 2

(Optimization over the second-order cone) Consider \(\mathbb {E}=\mathbb {R}^{n}\) and \(\bar{\textsf{K}}\equiv \bar{\textsf{K}}_{\text {SOC}}=\{x=(x_{0},\underline{x})\in {\mathbb {R}\times \mathbb {R}^{n-1}}\vert x_{0}\ge \Vert \underline{x}\Vert _2\}\), the second-order cone (SOC). In this case problem (Opt) becomes a non-linear second-order conic optimization problem. Such problems have a huge number of applications, including energy systems [75], network localization [84], among many others [3]. \(\Diamond \)

Example 3

(Semi-definite programming) If \(\mathbb {E}=\mathbb {S}^{n}\) is the space of real symmetric \(n\times n\) matrices and \(\bar{\textsf{K}}\equiv \bar{\textsf{K}}_{\text {SDP}}=\mathbb {S}^{n}_{+}\) is the cone of positive semi-definite matrices, we obtain a non-linear semi-definite programming problem. Endow this space with the standard inner product \(\langle a,b\rangle ={{\,\textrm{tr}\,}}(ab)\). In this case, the linear operator \({\textbf{A}}\) assigns a matrix \(x\in \mathbb {S}^{n}\) to a vector \({\textbf{A}}x = [\langle a_{1},x\rangle , \ldots , \langle a_{m},x\rangle ]^\top \). Such mathematical programs have received enormous attention due to the large number of applications in control theory, combinatorial optimization, and engineering [13, 41, 70]. \(\Diamond \)

Example 4

(Exponential cone programming) Consider the exponential cone defined as

$$\begin{aligned} \textsf{K}_{\exp }=\left\{ (x_{1},x_{2},x_{3})\in \mathbb {R}^{3}\vert x_{1}\ge x_{2}e^{x_{3}/x_{2}},\;x_{2}>0\right\} , \end{aligned}$$

with the closure \(\bar{\textsf{K}}_{\exp }={{\,\textrm{cl}\,}}(\textsf{K}_{\exp })=\textsf{K}_{\exp }\cup \{(x_{1},0,x_{3})\vert x_{1}\ge 0,x_{3}\le 0\}\). \(\textsf{K}_{\exp }\) is an important convex cone that is implemented in standard numerical solution packages like YALMIP, MOSEK, and CVX, as it can be used to represent a lot of interesting convex sets arising in optimization; see [34] and the PhD thesis [35]. \(\Diamond \)

1.1 Motivating applications

1.1.1 Inverse problems with non-convex regularization

An important instance of (Opt) is the composite optimization problem

$$\begin{aligned} \min _{x\in \mathbb {R}^{n}}\; f(x)\triangleq \ell (x)+\lambda \sum _{i=1}^{n}\varphi (x_{i}^{p})\quad \text {s.t. } {\textbf{A}}x=b,\; x\in \bar{\textsf{K}}_{\text {NN}}, \qquad (1) \end{aligned}$$

where \(\ell :\mathbb {R}^n\rightarrow \mathbb {R}\) is a smooth data fidelity function, \(\varphi :\mathbb {R}\rightarrow \mathbb {R}\) is a convex function, \(p\in (0,1)\), and \(\lambda >0\) is a regularization parameter. A common use of this problem formulation is the regularized empirical risk-minimization problem in high-dimensional statistics, or the variational regularization technique in inverse problems. Non-negativity constraints can be motivated by prior knowledge about the observed object which needs to be reconstructed. For example, the true signal may represent an image with positive pixel intensities, or one may consider a Poisson inverse problem as in [59]. Common specifications for the regularizing function are \(\varphi (s)=s\), or \(\varphi (s)=s^{2/p}\). In the first case, we obtain \(\sum _{i=1}^{n}\varphi (x^{p}_{i})=\sum _{i=1}^{n}x_{i}^{p}=\Vert x\Vert ^{p}_{p}\) on \(\textsf{K}_{\text {NN}}\), whereas in the second case, we get \(\sum _{i=1}^{n}\varphi (x^{p}_{i})=\sum _{i=1}^{n}x_{i}^{2}=\Vert x\Vert ^{2}\). Note that the first case yields an objective f that is non-convex and non-differentiable at the boundary of the feasible set. It has been reported in imaging sciences that the use of such non-convex and non-differentiable regularizers has advantages in the restoration of piecewise constant images. Bian and Chen [15] survey studies supporting this observation. Moreover, in variable selection, the \(L_{p}\) penalty function with \(p\in (0,1)\) enjoys the oracle property [44] in statistics, while the \(L_{1}\) penalty (called the LASSO) does not; problem (1) with \(p\in (0,1)\) can be used for variable selection at the group and individual variable levels simultaneously, while the very same problem with \(p=1\) can only work for individual variable selection [66]. See [36, 50] for a complexity-theoretic analysis of this problem.

1.1.2 Low rank matrix recovery

On the space of symmetric matrices \(\mathbb {E}=\mathbb {S}^{n}\), together with the feasible set \(\bar{\textsf{X}}=\{x\in \mathbb {E}\vert {\textbf{A}}x=b,x\in \bar{\textsf{K}}_{\text {SDP}}\}\) we can consider the composite model \(f(x)=\ell (x)+r(x)\), with smooth loss function \(\ell :\mathbb {E}\rightarrow \mathbb {R}\), and with regularizer \(r:\mathbb {E}\rightarrow \mathbb {R}\cup \{+\infty \}\). In applications the regularizer is given in the form of a matrix function \(r(x)=\sum _{i}\sigma _{i}(x)^{p}\) on \(x\in \textsf{K}_{\text {SDP}}\), where \(p\in (0,1)\) and \(\sigma _{i}(x)\) is the i-th singular value of the matrix x. The resulting optimization problem is a matrix version of the non-convex regularized problem (1). See [67, 86] for a wealth of optimization problems following this description.

1.2 Challenges and contribution

One of the challenges in approaching problem (Opt) algorithmically is to deal with the feasible set \(\textsf{L}\cap \bar{\textsf{K}}\). A projection-based approach faces the computational bottleneck of projecting onto the intersection of a cone with an affine subspace, which makes the majority of the existing first-order [2, 20, 23, 32, 51, 57, 77] and second-order [18, 26, 27, 31, 32, 37, 38, 42, 79] methods practically less attractive, as they either are designed for unconstrained problems or use proximal steps in the updates. When primal feasibility is not a major concern, augmented Lagrangian algorithms [5, 7, 19, 54] are an alternative, though they do not always come with complexity guarantees. These observations motivate us to focus on primal barrier-penalty methods that allow us to decompose the feasible set and treat \(\bar{\textsf{K}}\) and \(\textsf{L}\) separately. Barrier methods are classical and powerful for convex optimization in the form of interior-point methods [78]. In the non-convex optimization setting results are in a sense fragmentary, with many different algorithms existing for different particular instantiations of (Opt). In particular, the main focus of barrier methods for non-convex optimization has been on particular cases, such as non-negativity constraints [16, 22, 58, 82, 85, 87] and quadratic programming [47, 73, 87]. In this paper we develop a flexible and unifying algorithmic framework that is able to accommodate first- and second-order interior-point algorithms for (Opt) with objective functions that are potentially non-convex and non-smooth at the relative boundary, and with general, possibly non-symmetric, conic constraints. To the best of our knowledge, our framework is the first one providing complexity results for first- and second-order algorithms to reach points satisfying, respectively, suitably defined approximate first- and second-order necessary optimality conditions, under such weak assumptions and for such a general setting.

1.2.1 Our approach

At the core of our approach is the assumption that the cone \(\bar{\textsf{K}}\) admits a logarithmically homogeneous self-concordant barrier (LHSCB) h(x) ([78], cf. Definition 1), for which we can retrieve information about the function value h(x), the gradient \(\nabla h(x)\) and the Hessian \(H(x)=\nabla ^{2}h(x)\) with relative ease. This is not a very restrictive assumption, since all standard conic restrictions in optimization (i.e., \(\bar{\textsf{K}}_{\text {NN}},\bar{\textsf{K}}_{\text {SOC}},\bar{\textsf{K}}_{\text {SDP}}\) and \(\bar{\textsf{K}}_{\exp }\)) have this property. Using this barrier function, our algorithms are designed to reduce the potential function

$$\begin{aligned} F_{\mu }(x)\triangleq f(x)+\mu h(x), \end{aligned}$$

where \(\mu >0\) is a (typically) small penalty parameter. By definition, the domain of the potential function \(F_{\mu }\) is the interior of the cone \(\bar{\textsf{K}}\). Therefore, any algorithm designed to reduce the potential will automatically respect the conic constraints, and the satisfaction of the linear constraints \(\textsf{L}\) can be ensured by choosing search directions from the nullspace of the linear operator \({\textbf{A}}\). Our target is to find points satisfying suitably defined approximate necessary first- and second-order optimality conditions for problem (Opt) expressed in terms of \(\varepsilon \)-KKT and \((\varepsilon _1,\varepsilon _2)\)-2KKT points respectively (cf. Sect. 3 for a precise definition).

1.2.2 Finding points satisfying approximate necessary first-order conditions

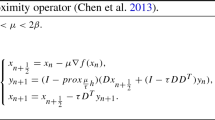

To produce an \(\varepsilon \)-KKT point for the model problem (Opt), we construct a novel gradient-based method, which we call the first-order adaptive Hessian barrier algorithm (\({{\,\mathrm{\textbf{FAHBA}}\,}}\), Algorithm 1). The main computational steps involved in \({{\,\mathrm{\textbf{FAHBA}}\,}}\) are the identification of a search direction and a step-size policy, guaranteeing feasibility and sufficient decrease in the potential function value. The algorithm starts from an approximate analytic center of the feasible set. To find a search direction, we employ a linear model for \(F_{\mu }\), regularized by the squared local norm induced by the Hessian of the barrier function h, which is then minimized over the tangent space of the affine subspace \(\textsf{L}\). The step-size is adaptively chosen to ensure feasibility and sufficient decrease in the objective function value f. For a judiciously chosen value of \(\mu \), we prove that this gradient-based method enjoys the upper iteration complexity bound \(O(\varepsilon ^{-2})\) for reaching an \(\varepsilon \)-KKT point when a “descent Lemma” holds relative to the local norm induced by the Hessian of h (cf. Assumption 3 and Theorem 1 in Sect. 4). We then prove that \({{\,\mathrm{\textbf{FAHBA}}\,}}\) can be embedded within a path-following scheme that iteratively reduces the value of \(\mu \). This renders our first-order interior-point method parameter-free and any-time convergent, with essentially the same iteration complexity of \(O(\varepsilon ^{-2})\).
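As a rough illustration of the search-direction step described above, the following sketch minimizes a linear model of \(F_{\mu }\) plus half the squared local norm over the nullspace of \({\textbf{A}}\), for the non-negative orthant with the log-barrier. The objective, the data, the value of \(\mu \), and the function name `null_space_direction` are hypothetical choices made for this example; the regularization weights and the step-size rule of Algorithm 1 are not reproduced here.

```python
import numpy as np

def null_space_direction(g, H, A):
    """Minimize <g, v> + 0.5 * <H v, v> over {v : A v = 0}, for positive definite H."""
    Hinv_g = np.linalg.solve(H, g)
    Hinv_At = np.linalg.solve(H, A.T)
    # Multiplier of the constraint A v = 0, obtained from the KKT system of the model.
    y = np.linalg.solve(A @ Hinv_At, A @ Hinv_g)
    return -(Hinv_g - Hinv_At @ y)

# Hypothetical data: f(x) = 0.5 * ||x - c||^2 on the simplex {x > 0, sum(x) = 1}.
x = np.array([0.2, 0.3, 0.5])
c = np.array([1.0, 0.0, 0.0])
A = np.ones((1, 3))
mu = 0.1

grad_F = (x - c) - mu / x   # gradient of F_mu = f + mu * h with h(x) = -sum(log(x))
H = np.diag(1.0 / x**2)     # Hessian of the log-barrier at x
v = null_space_direction(grad_F, H, A)

print("A v =", A @ v)                            # numerically zero
print("model slope <grad_F, v> =", grad_F @ v)   # non-positive
```

Because the direction lies in the nullspace of \({\textbf{A}}\), any step along it preserves \({\textbf{A}}x=b\); the slope equals minus the squared local norm of the direction, so the linear model decreases along it.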

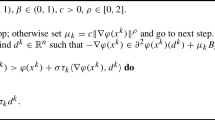

1.2.3 Finding points satisfying approximate necessary second-order conditions

To obtain \((\varepsilon _1,\varepsilon _2)\)-2KKT points, we construct a second-order adaptive Hessian barrier algorithm (\({{\,\mathrm{\textbf{SAHBA}}\,}}\), Algorithm 3). As for \({{\,\mathrm{\textbf{FAHBA}}\,}}\), the search direction subroutine minimizes a local model of the potential function \(F_{\mu }\) over the tangent space of the affine subspace \(\textsf{L}\). The minimized model is composed of the linear model for \(F_{\mu }\), augmented by second-order information on the objective function f and regularized by the cube of the local norm induced by the Hessian of h. The regularization parameter is chosen adaptively to allow for potentially larger steps in the areas of small curvature. For a judiciously chosen value of \(\mu \), we establish (cf. Theorem 3) the worst-case upper bound \(O(\max \{\varepsilon _1^{-3/2},\varepsilon _2^{-3/2}\})\) on the number of iterations for reaching an \((\varepsilon _1,\varepsilon _2)\)-2KKT point, under a weaker version of the assumption that the Hessian of f is Lipschitz relative to the local norm induced by the Hessian of h (cf. Assumption 4 in Sect. 5 for a precise definition). We then propose a path-following version of \({{\,\mathrm{\textbf{SAHBA}}\,}}\) that iteratively reduces the value of \(\mu \) making the algorithm parameter-free and any-time convergent, with \(O(\max \{\varepsilon _1^{-3/2},\varepsilon _2^{-3/2}\})\) complexity.

1.3 Related work

To the best of our knowledge, \({{\,\mathrm{\textbf{FAHBA}}\,}}\) and \({{\,\mathrm{\textbf{SAHBA}}\,}}\) are the first interior-point algorithms that achieve such complexity bounds for the general non-convex problem template (Opt). Closest to our approach, but within the trust-region framework, are the works [58, 65, 82]. All these papers focus on the special case of non-negativity constraints with \(\bar{\textsf{K}}=\bar{\textsf{K}}_{\text {NN}}\) (Example 1) and fix \(\mu \) before the start of the algorithm based on the desired accuracy \(\varepsilon \), which may require some hyperparameter tuning in practice and may not work if the desired accuracy is not yet known. Interestingly, for the special case \(\bar{\textsf{K}}=\bar{\textsf{K}}_{\text {NN}}\), our algorithms provide stronger results under weaker assumptions, compared to the first- and second-order methods in [58], the second-order method in [65] specified to our setting of linear constraints, and the first-order implementation of the second-order method in [82]. We make this claim precise in Sects. 4.4 and 5.3.

1.3.1 First-order methods

In the unconstrained setting, when the gradient is Lipschitz continuous, the standard gradient descent achieves the lower iteration complexity bound \(O(\varepsilon ^{-2})\) to find a first-order \(\varepsilon \)-stationary point \(\hat{x}\) such that \(\Vert \nabla f(\hat{x})\Vert \leqslant \varepsilon \) [24, 25, 76]. The original inspiration for the construction of our methods comes from the paper [22] on Hessian barrier algorithms, which in turn was strongly influenced by continuous-time techniques [4, 21]. We extend the first-order method of [22] to general conic constraints, and develop a unified complexity analysis, which goes far beyond the quadratic optimization case studied in detail in that reference.

1.3.2 Second-order methods

In unconstrained optimization with Lipschitz continuous Hessian, cubic-regularized Newton methods [55, 79] and second-order trust region algorithms [27, 37, 38] achieve the lower iteration complexity bound \(O(\max \{\varepsilon _1^{-3/2},\varepsilon _2^{-3/2}\})\) [24, 25] to find a second-order \((\varepsilon _1,\varepsilon _2)\)-stationary point, i.e., a point \(\hat{x}\) satisfying \(\Vert \nabla f(\hat{x})\Vert \le \varepsilon _1\) and \(\lambda _{\min } \left( \nabla ^2 f(\hat{x})\right) \ge - \sqrt{\varepsilon _2}\), where \(\lambda _{\min }(\cdot )\) denotes the minimal eigenvalue of a matrix. The existing literature on non-convex problems with non-linear constraints either considers only equality constraints [39], only inequality constraints [65], or both, but requires projection [32]. Moreover, these works do not consider general conic constraints as we do in this paper.

1.3.3 Approximate optimality conditions

Bian et al. [16] consider box-constrained minimization of the same objective as in (1) and propose a notion of \(\varepsilon \)-scaled KKT points. Their definition is tailored to the geometry of the optimization problem, mimicking the complementarity slackness condition of the classical KKT theorem for the non-negative orthant. In particular, their first-order condition consists of feasibility of x along with a scaled gradient condition. Haeser et al. and O’Neill–Wright [58, 82] convincingly argue that, without additional assumptions on the objective function, points that satisfy the scaled gradient condition may not approach KKT points as \(\varepsilon \) decreases. Thus, [58, 82] provide alternative notions of approximate first- and second-order KKT conditions for the domain \(\textsf{K}_{\text {NN}}\). Inspired by [58], we define the corresponding notions for general cones. Our first-order conditions turn out to be stronger than those of [58, 82] and the second-order condition is equivalent to theirs in the particular case of non-negativity constraints (cf. Sects. 3.3, 4.4, and 5.3). The proof that our algorithms are guaranteed to find such approximate KKT points requires some fine analysis exploiting the structural properties of logarithmically homogeneous barriers associated to the cone \(\textsf{K}\), and is not a simple extension of the arguments used for \(\textsf{K}_{\text {NN}}\).

We remark that after the first release of our preprint on arXiv on October 29, 2021 (https://arxiv.org/abs/2111.00100v1), the paper [61] appeared in July 2022, of which we became aware during the revision of our paper after its submission. The authors of [61] also consider the model problem (Opt) and also build their algorithmic developments on a barrier construction. They propose a Newton-CG based method for finding \((\varepsilon ,\varepsilon )\)-second-order approximate KKT points with an iteration complexity guarantee of \(O(\varepsilon ^{-3/2})\), similar to ours, yet the dependence of their complexity bound on the barrier parameter is not clear. Their algorithm also runs for a fixed parameter \(\mu \), and thus is not parameter-free, unlike our path-following versions. Moreover, unlike [61], we also propose a first-order algorithm.

1.4 Notation

In what follows, \(\mathbb {E}\) denotes a finite-dimensional real vector space, and \(\mathbb {E}^{*}\) the dual space, which is formed by all linear functions on \(\mathbb {E}\). The value of \(s\in \mathbb {E}^{*}\) at \(x\in \mathbb {E}\) is denoted by \(\langle s,x\rangle \). In the particular case where \(\mathbb {E}=\mathbb {R}^n\), we have \(\mathbb {E}=\mathbb {E}^{*}\). The gradient vector of a differentiable function \(f:\mathbb {E}\rightarrow \mathbb {R}\) is denoted as \(\nabla f(x)\in \mathbb {E}^{*}\). For an operator \(\textbf{H}:\mathbb {E}\rightarrow \mathbb {E}^{*}\), denote by \(\textbf{H}^{*}\) its adjoint operator, defined by the identity

$$\begin{aligned} \langle \textbf{H}u,v\rangle =\langle \textbf{H}^{*}v,u\rangle \qquad \forall u,v\in \mathbb {E}. \end{aligned}$$

Thus, \(\textbf{H}^{*}:\mathbb {E}\rightarrow \mathbb {E}^{*}\). It is called self-adjoint if \(\textbf{H}=\textbf{H}^{*}\). We use \(\lambda _{\max }(\textbf{H})\; (\lambda _{\min }(\textbf{H}))\), to denote the maximum (minimum) eigenvalue of such operators. Important examples of such self-adjoint operators are Hessians of twice differentiable functions \(f:\mathbb {E}\rightarrow \mathbb {R}\):

$$\begin{aligned} \langle \nabla ^{2}f(x)u,v\rangle =\langle \nabla ^{2}f(x)v,u\rangle \qquad \forall x,u,v\in \mathbb {E}. \end{aligned}$$

Operator \(\textbf{H}:\mathbb {E}\rightarrow \mathbb {E}^{*}\) is positive semi-definite if \(\langle \textbf{H}u,u\rangle \ge 0\) for all \( u\in \mathbb {E}\). If the inequality is always strict for non-zero u, then \(\textbf{H}\) is called positive definite. These attributes are denoted as \(\textbf{H}\succeq 0\) and \(\textbf{H}\succ 0\), respectively. By fixing a positive definite self-adjoint operator \(\textbf{H}:\mathbb {E}\rightarrow \mathbb {E}^{*}\), we can define the following Euclidean norms

$$\begin{aligned} \Vert u\Vert \triangleq \langle \textbf{H}u,u\rangle ^{1/2},\quad u\in \mathbb {E},\qquad \Vert s\Vert _{*}\triangleq \langle s,\textbf{H}^{-1}s\rangle ^{1/2},\quad s\in \mathbb {E}^{*}. \end{aligned}$$

In some cases we use notation \(\Vert u\Vert _{\textbf{H}}\) to explicitly indicate the operator used to define the norm. If \(\mathbb {E}=\mathbb {R}^n\), then \(\textbf{H}\) is usually taken as the identity matrix \(\textbf{H}=\textbf{I}\). The \(L_{\infty }\)-norm of \(x \in \mathbb {R}^n\) is denoted as \(\Vert x\Vert _{\infty } = \max _{i=1,\ldots ,n} |x_i|\). The directional derivative of function \(f:\mathbb {E}\rightarrow \mathbb {R}\) is defined in the usual way:

$$\begin{aligned} Df(x)[v]\triangleq \lim _{\varepsilon \rightarrow 0+}\frac{1}{\varepsilon }\left[ f(x+\varepsilon v)-f(x)\right] . \end{aligned}$$

More generally, for \(v_{1},\ldots ,v_{p}\in \mathbb {E}\), we denote by \(D^{p}f(x)[v_{1},\ldots ,v_{p}]\) the p-th directional derivative at x along the directions \(v_{i}\in \mathbb {E}\). In that way we define \(\nabla f(x)\in \mathbb {E}^{*}\) by \(Df(x)[u]=\langle \nabla f(x),u\rangle \) and the Hessian \(\nabla ^{2}f(x):\mathbb {E}\rightarrow \mathbb {E}^{*}\) by \(\langle \nabla ^{2}f(x)u,v\rangle =D^{2}f(x)[u,v]\). We denote by \(\textsf{L}_{0}\triangleq \{ v \in \mathbb {E}\vert {\textbf{A}}v = 0\}=\ker ({\textbf{A}})\) the tangent space associated with the affine subspace \(\textsf{L}\subset \mathbb {E}\).

2 Preliminaries

2.1 Cones and their self-concordant barriers

Let \(\bar{\textsf{K}}\subset \mathbb {E}\) be a regular cone: \(\bar{\textsf{K}}\) is closed convex with nonempty interior \(\textsf{K}={{\,\textrm{int}\,}}(\bar{\textsf{K}})\), and pointed (i.e. \(\bar{\textsf{K}}\cap (-\bar{\textsf{K}})=\{0\}\)). Any such cone admits a self-concordant logarithmically homogeneous barrier h(x) with finite parameter value \(\theta \) [78].

Definition 1

A function \(h:\bar{\textsf{K}}\rightarrow (-\infty ,\infty ]\) with \({{\,\textrm{dom}\,}}h=\textsf{K}\) is called a \(\theta \)-logarithmically homogeneous self-concordant barrier (\(\theta \)-LHSCB) for the cone \(\bar{\textsf{K}}\) if:

(a) h is a \(\theta \)-self-concordant barrier for \(\bar{\textsf{K}}\), i.e., for all \(x \in \textsf{K}\) and \(u\in \mathbb {E}\)

$$\begin{aligned}&|D^{3}h(x)[u,u,u]|\le 2D^{2}h(x)[u,u]^{3/2},\text { and } \\&\sup _{u\in \mathbb {E}}\left( 2 Dh(x)[u]-D^{2}h(x)[u,u]\right) \le \theta . \end{aligned}$$

(b) h is logarithmically homogeneous:

$$\begin{aligned} h(tx)=h(x)-\theta \ln (t)\qquad \forall x\in \textsf{K},t>0. \end{aligned}$$

We denote the set of \(\theta \)-logarithmically homogeneous self-concordant barriers by \(\mathcal {H}_{\theta }(\textsf{K})\).

The constant \(\theta \) is called the parameter of the barrier function. Just like in standard interior point methods, it affects the iteration complexity of our methods. Given \(h\in \mathcal {H}_{\theta }(\textsf{K})\), from [76, Thm 5.1.3] we know that for any \(\bar{x}\in {{\,\textrm{bd}\,}}(\bar{\textsf{K}})\), any sequence \((x_{k})_{k \ge 0}\) with \(x_{k}\in \ \textsf{K}\) and \(\lim _{k\rightarrow \infty }x_{k}=\bar{x}\) satisfies \(\lim _{k\rightarrow \infty }h(x_{k})=+\infty \). For a pointed cone \(\bar{\textsf{K}}\), we have \(\theta \ge 1\) and the Hessian \(H(x)\triangleq \nabla ^{2}h(x):\mathbb {E}\rightarrow \mathbb {E}^{*}\) is a positive definite linear operator defined by \(\langle H(x)u,v\rangle \triangleq D^{2}h(x)[u,v]\) for all \(u,v\in \mathbb {E}\), see [76, Thm. 5.1.6]. The Hessian gives rise to a (primal) local norm

$$\begin{aligned} \Vert u\Vert _{x}\triangleq \langle H(x)u,u\rangle ^{1/2}\qquad \forall (x,u)\in \textsf{K}\times \mathbb {E}. \end{aligned}$$

We also define a dual local norm on \(\mathbb {E}^{*}\) as

$$\begin{aligned} \Vert s\Vert _{x}^{*}\triangleq \langle [H(x)]^{-1}s,s\rangle ^{1/2}\qquad \forall (x,s)\in \textsf{K}\times \mathbb {E}^{*}. \end{aligned}$$

The Dikin ellipsoid with center \(x\in \textsf{K}\) and radius \(r>0\) is defined as the open set \(\mathcal {W}(x;r)\triangleq \{u\in \mathbb {E}\vert \;\Vert u-x\Vert _{x}<r\}\). The usage of the local norm adapts the unit ball to the local geometry of the set \(\textsf{K}\). Indeed, the following classical results are key to the development of our methods, since they allow us to ensure feasibility of the iterates and sufficient decrease of the potential function in each iteration of our algorithms.

Lemma 1

(Theorem 5.1.5 [76]) For all \(x\in \textsf{K}\) we have \(\mathcal {W}(x;1)\subseteq \textsf{K}\).
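A small numerical illustration of Lemma 1, assuming the log-barrier \(h(x)=-\sum _{i}\ln (x_{i})\) on the positive orthant, for which \(H(x)=\mathrm{diag}(1/x_{i}^{2})\) and the local norm is \(\Vert u\Vert _{x}=\langle H(x)u,u\rangle ^{1/2}\). The helper names and the test point are hypothetical. The sketch samples points from the Dikin ellipsoid \(\mathcal {W}(x;1)\) and checks that they stay strictly positive, even when x is close to the boundary.

```python
import numpy as np

def local_norm(x, u):
    """Local norm ||u||_x for the log-barrier on the positive orthant."""
    return float(np.sqrt(np.sum((u / x) ** 2)))

def sample_dikin(x, rng, r=1.0):
    """Draw a point u with ||u - x||_x < r."""
    z = rng.standard_normal(x.size)
    z *= r * rng.uniform() / np.linalg.norm(z)   # Euclidean norm of z is below r
    return x + x * z                             # H(x)^{-1/2} z equals x * z componentwise

rng = np.random.default_rng(0)
x = np.array([1e-3, 0.5, 2.0])                   # first coordinate is near the boundary
samples = [sample_dikin(x, rng) for _ in range(1000)]

print("all samples inside the cone:", all(np.all(u > 0) for u in samples))
print("largest local distance:", max(local_norm(x, u - x) for u in samples))
```

The ellipsoid shrinks automatically in the coordinates that are close to zero, which is why a unit step in the local norm can never leave the cone.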

Proposition 1

(Theorem 5.1.9 [76]) Let \(h\in \mathcal {H}_{\theta }(\textsf{K})\), \(x\in {{\,\textrm{dom}\,}}h\), and a fixed direction \(d \in \mathbb {E}\). For all \(t \in [0,\frac{1}{\Vert d\Vert _x})\), with the convention that \(\frac{1}{\Vert d\Vert _{x}}=+\infty \) if \(\Vert d\Vert _x=0\), we have:

$$\begin{aligned} h(x+td)\le h(x)+t\langle \nabla h(x),d\rangle +t^{2}\Vert d\Vert _{x}^{2}\,\omega (t\Vert d\Vert _{x}), \end{aligned}$$

where \(\omega (t)=\frac{-t-\ln (1-t)}{t^2}\).
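The following sketch checks the upper bound of Proposition 1 numerically, in the form \(h(x+td)\le h(x)+t\langle \nabla h(x),d\rangle +t^{2}\Vert d\Vert _{x}^{2}\omega (t\Vert d\Vert _{x})\), which is how the bound of [76, Thm. 5.1.9] reads with the function \(\omega \) defined above. It assumes the log-barrier on the positive orthant; the point x, the direction d, and the helper names are hypothetical.

```python
import numpy as np

def h(x):
    """Log-barrier for the positive orthant."""
    return -np.sum(np.log(x))

def omega(t):
    """omega(t) = (-t - ln(1 - t)) / t^2 for 0 < t < 1."""
    return (-t - np.log1p(-t)) / t**2

def bound_gap(x, d, t):
    """Right-hand side minus left-hand side of the bound; non-negative if the bound holds."""
    norm_d = np.sqrt(np.sum((d / x) ** 2))   # local norm ||d||_x
    grad_h = -1.0 / x
    lhs = h(x + t * d)
    rhs = h(x) + t * (grad_h @ d) + (t * norm_d) ** 2 * omega(t * norm_d)
    return rhs - lhs

x = np.array([0.5, 1.0, 2.0])
d = np.array([-0.4, 0.3, 1.0])
norm_d = np.sqrt(np.sum((d / x) ** 2))

# Step sizes covering the admissible interval (0, 1 / ||d||_x).
gaps = [bound_gap(x, d, s / norm_d) for s in np.linspace(0.05, 0.95, 10)]
print("bound holds on the grid:", min(gaps) >= 0.0)
```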

We will also use the following inequality for the function \(\omega (t)\) [76, Lemma 5.1.5]:

$$\begin{aligned} \omega (t)\le \frac{1}{2(1-t)}\qquad \forall t\in [0,1). \end{aligned}$$

Appendix A contains some more technical properties of LHSCBs which are used in the proofs. We close this section with important examples of conic domains to which our methods can be directly applied.

Example 5

(Non-negativity constraints) For \(\mathbb {E}=\mathbb {R}^n\) and \(\bar{\textsf{K}}=\bar{\textsf{K}}_{\text {NN}}\), we define the log-barrier \(h(x)=-\sum _{i=1}^{n}\ln (x_{i})\) for all \(x\in \textsf{K}_{\text {NN}}=\mathbb {R}^n_{++}\). It is readily seen that \(h\in \mathcal {H}_{n}(\textsf{K})\). \(\Diamond \)

Example 6

(SOC constraints) Let \(\mathbb {E}=\mathbb {R}^{n}\) and \(\bar{\textsf{K}}=\bar{\textsf{K}}_{\text {SOC}}\), defined in Example 2. For \(x=(x_{0},\underline{x})\in \bar{\textsf{K}}_{\text {SOC}}\), we define the barrier \(h(x)=-\ln (x_{0}^{2}-\underline{x}^{\top }\underline{x})\). It is well known that \(h\in \mathcal {H}_{2}(\textsf{K}_{\text {SOC}})\) [78]. \(\Diamond \)

Example 7

(SDP constraints) Let \(\mathbb {E}=\mathbb {S}^{n}\) and \(\bar{\textsf{K}}=\bar{\textsf{K}}_{\text {SDP}}\), defined in Example 3. Consider the barrier \(h(x)=-\ln \det (x)\). It is well known that \(h\in \mathcal {H}_{n}(\textsf{K}_{\text {SDP}})\). \(\Diamond \)
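The logarithmic homogeneity property \(h(tx)=h(x)-\theta \ln (t)\) of the three barriers in Examples 5 to 7 can be checked numerically. The sketch below does this with \(\theta =n\), \(\theta =2\), and \(\theta =n\), respectively; the test points and the helper names are hypothetical.

```python
import numpy as np

def h_nn(x):
    """Log-barrier for the non-negative orthant."""
    return -np.sum(np.log(x))

def h_soc(x):
    """Barrier for the second-order cone, with x = (x0, x_bar)."""
    return -np.log(x[0] ** 2 - x[1:] @ x[1:])

def h_sdp(x):
    """Barrier -ln det(x) for the cone of positive semi-definite matrices."""
    _, logdet = np.linalg.slogdet(x)
    return -logdet

def homogeneity_defect(h, x, theta, t):
    """|h(t x) - (h(x) - theta * ln t)|, which vanishes for a theta-LHSCB."""
    return abs(h(t * x) - (h(x) - theta * np.log(t)))

n = 4
rng = np.random.default_rng(1)
x_nn = rng.uniform(0.1, 2.0, n)
x_soc = np.concatenate(([3.0], rng.uniform(-1.0, 1.0, n - 1)))   # x0 > ||x_bar||_2
b = rng.standard_normal((n, n))
x_sdp = b @ b.T + np.eye(n)                                      # positive definite

t = 2.5
defects = [
    homogeneity_defect(h_nn, x_nn, n, t),
    homogeneity_defect(h_soc, x_soc, 2, t),
    homogeneity_defect(h_sdp, x_sdp, n, t),
]
print("largest defect:", max(defects))
```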

Example 8

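(The exponential cone) Consider the exponential cone \(\textsf{K}_{\exp }\) with closure \(\bar{\textsf{K}}_{\exp }\) introduced in Example 4. This set admits a 3-LHSCB

$$\begin{aligned} h(x)=-\ln \left( x_{2}\ln (x_{1}/x_{2})-x_{3}\right) -\ln (x_{1})-\ln (x_{2}). \end{aligned}$$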

We remark that this cone is not self-dual (cf. Definition 2), but

$$\begin{aligned} \bar{\textsf{K}}_{\exp }^{*}=G\bar{\textsf{K}}_{\exp } \end{aligned}$$

under the linear transformation \( G=\left[ \begin{array}{ccc} 1/e &{} 0 &{} 0 \\ 0 &{} 0 &{} -\,1 \\ 0 &{} -\,1 &{} 0 \end{array}\right] . \) There are many convex sets that can be represented using the exponential cone. We list some examples below, but refer to the PhD thesis [35] for further details.

- Exponential: \(\{(t,u)\vert t\ge e^{u}\}\iff (t,1,u)\in \bar{\textsf{K}}_{\exp }\);

- Logarithm: \(\{(t,u)\vert t\le \ln (u)\}\iff (u,1,t)\in \bar{\textsf{K}}_{\exp }\);

- Entropy: \(t\le -u\ln (u)\iff t\le u\ln (1/u)\iff (1,u,t)\in \bar{\textsf{K}}_{\exp }\);

- Relative Entropy: \(t\ge u\ln (u/w)\iff (w,u,-t)\in \bar{\textsf{K}}_{\exp }\);

- Softplus function: \(t\ge \ln (1+e^{u})\iff a+b\le 1,(a,1,u-t)\in \bar{\textsf{K}}_{\exp },(b,1,-t)\in \bar{\textsf{K}}_{\exp }\).

\(\Diamond \)
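Several of the representations listed in Example 8 can be verified with a direct membership test. The sketch below assumes the standard convention \(\bar{\textsf{K}}_{\exp }={{\,\textrm{cl}\,}}\{(x_{1},x_{2},x_{3})\vert x_{1}\ge x_{2}e^{x_{3}/x_{2}},x_{2}>0\}\), which is consistent with the closure given in Example 4; the function name `in_exp_cone`, the tolerance, and the numerical values are hypothetical.

```python
import math

def in_exp_cone(x1, x2, x3, tol=1e-12):
    """Membership test for the closed exponential cone, up to a small tolerance."""
    if x2 > 0:
        return x1 >= x2 * math.exp(x3 / x2) - tol
    if x2 == 0:
        return x1 >= 0 and x3 <= 0
    return False

u = 0.3

# Exponential: t >= e^u  <=>  (t, 1, u) in the cone.
exp_in = in_exp_cone(math.exp(u) + 0.1, 1.0, u)
exp_out = in_exp_cone(math.exp(u) - 0.1, 1.0, u)

# Logarithm: t <= ln(w)  <=>  (w, 1, t) in the cone, here with w = 2.
log_in = in_exp_cone(2.0, 1.0, math.log(2.0) - 0.1)
log_out = in_exp_cone(2.0, 1.0, math.log(2.0) + 0.1)

# Entropy: t <= -w ln(w)  <=>  (1, w, t) in the cone, here with w = 0.5.
ent = -0.5 * math.log(0.5)
ent_in = in_exp_cone(1.0, 0.5, ent - 0.1)
ent_out = in_exp_cone(1.0, 0.5, ent + 0.1)

# Softplus: t >= ln(1 + e^u)  <=>  a + b <= 1 with (a, 1, u - t) and (b, 1, -t) in the cone.
t = math.log1p(math.exp(u)) + 0.1
a, b = math.exp(u - t), math.exp(-t)
softplus_in = a + b <= 1.0 and in_exp_cone(a, 1.0, u - t) and in_exp_cone(b, 1.0, -t)

print(exp_in, exp_out, log_in, log_out, ent_in, ent_out, softplus_in)
```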

2.2 Exploiting the structure of symmetric cones

Nesterov and Todd [80] introduced self-scaled barriers, which later were realized as LHSCBs for symmetric cones. Such barriers are nowadays key to define primal–dual interior point methods with potentially larger step-sizes for convex problems. Our methods can also exploit the structure of self-scaled barriers, leading to potentially larger step-sizes and eventual faster convergence in practice. For a given closed convex nonempty cone \(\bar{\textsf{K}}\), its dual cone is the closed convex and nonempty cone \(\bar{\textsf{K}}^{*}\triangleq \{s\in \mathbb {E}^{*}\vert \langle s,x\rangle \ge 0\;\forall x\in \bar{\textsf{K}}\}\). If \(h\in \mathcal {H}_{\theta }(\textsf{K})\), then the dual barrier is defined as \(h_{*}(s)\triangleq \sup _{x\in \textsf{K}}\{\langle -s,x\rangle -h(x)\}\) for \(s\in \bar{\textsf{K}}^{*}\). Note that \(h_{*}\in \mathcal {H}_{\theta }(\textsf{K}^{*})\) [78, Theorem 2.4.4].

Definition 2

An open convex cone is self-dual if \(\textsf{K}^{*}=\textsf{K}\). \(\textsf{K}\) is homogeneous if for all \(x,y\in \textsf{K}\) there exists a linear bijection \(G:\mathbb {E}\rightarrow \mathbb {E}\) such that \(Gx=y\) and \(G\textsf{K}=\textsf{K}\). An open convex cone \(\textsf{K}\) is called symmetric if it is self-dual and homogeneous.

The class of symmetric cones can be characterized within the language of Euclidean Jordan algebras [45, 46, 63, 64]. For optimization, the three symmetric cones of most relevance are \(\bar{\textsf{K}}_{\text {NN}},\bar{\textsf{K}}_{\text {SOC}}\), and \(\bar{\textsf{K}}_{\text {SDP}}\).

Definition 3

([80]) \(h\in \mathcal {H}_{\theta }(\textsf{K})\) is a \(\theta \)-self-scaled barrier (\(\theta \)-SSB) if for all \(x,w\in \textsf{K}\) we have \(H(w)x\in \textsf{K}\) and \(h_{*}(H(w)x)=h(x)-2h(w)-\theta \). Let \(\mathcal {B}_{\theta }(\textsf{K})\) denote the class of \(\theta \)-SSBs.

Clearly, \(\mathcal {B}_{\theta }(\textsf{K})\subset \mathcal {H}_{\theta }(\textsf{K})\). Hauser and Güler [60] showed that every symmetric cone admits a \(\theta \)-SSB for some \(\theta \ge 1\), while a characterization of the barrier parameter \(\theta \) has been obtained in [56]. The main advantage of working with SSBs instead of LHSCBs is that we can make potentially longer steps in the interior of the cone \(\textsf{K}\) towards the direction of its boundary, and have larger decrease of the potential. Let \(x \in \textsf{K}\) and \(d \in \mathbb {E}\). Denote

$$\begin{aligned} \sigma _{x}(d)\triangleq \left( \sup \{t\ge 0\vert x-td\in \textsf{K}\}\right) ^{-1}. \end{aligned}$$

Since \(\mathcal {W}(x;1) \subseteq \textsf{K}\) for all \(x\in \textsf{K}\), we have that \(\sigma _x(d) \le \Vert d\Vert _{x}\) and \(\sigma _{x}(-d)\le \Vert d\Vert _{x}\) for all \(d\in \mathbb {E}\). Therefore \([0,\frac{1}{\Vert d\Vert _{x}})\subseteq [0,\frac{1}{\sigma _{x}(d)})\). Hence, if the scalar quantity \(\sigma _{x}(d)\) can be computed efficiently, it would allow us to make a larger step without violating feasibility.

Example 9

For \(\bar{\textsf{K}}=\bar{\textsf{K}}_{\text {NN}}\), to guarantee \(x-td\in \textsf{K}_{\text {NN}}\), we need \(x_{i}-t d_{i}>0\) for all \(i\in \{1,\ldots ,n\}\). Hence, if \(d_{i}\le 0\), this is satisfied for all \(t\ge 0\). If \(d_{i}>0\), we obtain the restriction \(t<\frac{x_{i}}{d_{i}}\). Therefore, \(\sigma _{x}(d)=\max \{\frac{d_{i}}{x_{i}}:d_{i}>0\}\), with \(\sigma _{x}(d)=0\) if no component of d is positive. \(\Diamond \)

Example 10

For \(\bar{\textsf{K}}=\bar{\textsf{K}}_{\text {SDP}}\), we see that \(x-td\succ 0\) if and only if \({{\,\textrm{Id}\,}}\succ t x^{-1/2}dx^{-1/2}\), where \({{\,\textrm{Id}\,}}\) is the identity matrix and \(x^{1/2}\) denotes the square root of a matrix \(x\in \textsf{K}_{\text {SDP}}\). Hence, if \(\lambda _{\max }(x^{-1/2}dx^{-1/2})>0\), then \(t<\frac{1}{\lambda _{\max }(x^{-1/2}dx^{-1/2})}\). Thus, \(\sigma _{x}(d)=\max \{\lambda _{\max }(x^{-1/2}dx^{-1/2}),0\}\). \(\Diamond \)
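The two examples above can be checked numerically. The following is a minimal sketch, assuming that \(\sigma _{x}(d)\) is the reciprocal of the largest step t for which \(x-td\) stays in the open cone, and that the barrier on the orthant is the standard logarithmic one, so that \(H(x)={{\,\textrm{diag}\,}}(1/x_{i}^{2})\). The function names `sigma_nn`, `sigma_sdp` and `local_norm_nn` are illustrative and do not appear in the source.

```python
import numpy as np

def sigma_nn(x, d):
    """sigma_x(d) on the positive orthant (assumed definition):
    reciprocal of the largest t with x - t*d > 0 componentwise."""
    x = np.asarray(x, dtype=float)
    d = np.asarray(d, dtype=float)
    return max(float(np.max(d / x)), 0.0)

def sigma_sdp(x, d):
    """sigma_x(d) on the positive definite cone:
    max(lambda_max(x^{-1/2} d x^{-1/2}), 0)."""
    x = np.asarray(x, dtype=float)
    d = np.asarray(d, dtype=float)
    w, q = np.linalg.eigh(x)                 # x is symmetric positive definite
    x_inv_sqrt = (q / np.sqrt(w)) @ q.T      # x^{-1/2} from the eigendecomposition
    scaled = x_inv_sqrt @ d @ x_inv_sqrt
    scaled = 0.5 * (scaled + scaled.T)       # symmetrize against round-off
    return max(float(np.linalg.eigvalsh(scaled)[-1]), 0.0)

def local_norm_nn(x, d):
    """||d||_x for the log-barrier h(x) = -sum(log x_i) (assumed barrier)."""
    return float(np.linalg.norm(np.asarray(d, float) / np.asarray(x, float)))
```

For instance, with \(x=(1,2)\) and \(d=(0.5,-1)\) the sketch returns \(\sigma _{x}(d)=0.5\), which is below the local norm \(\Vert d\Vert _{x}\approx 0.707\); the admissible step interval is correspondingly longer.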

The analogous result to Proposition 1 for barriers \(h\in \mathcal {B}_{\theta }(\textsf{K})\) reads as follows:

Proposition 2

(Theorem 4.2 [80]) Let \(h\in \mathcal {B}_{\theta }(\textsf{K})\) and \(x \in \textsf{K}\). Let \(d \in \mathbb {E}\) be such that \(\sigma _x(-d)>0\). Then, for all \(t \in [0,\frac{1}{\sigma _x(-d)})\), we have:

2.3 Unified notation

Our algorithms work on any conic domain on which we can efficiently evaluate a \(\theta \)-LHSCB.

Assumption 2

\(\bar{\textsf{K}}\) is a regular cone admitting an efficient barrier setup \(h\in \mathcal {H}_{\theta }(\textsf{K})\). By this we mean that, at a given query point \(x\in \textsf{K}\), we have access to an oracle that returns the values \(h(x),\nabla h(x)\) and \(H(x)=\nabla ^{2}h(x)\) at low computational cost.

Note that when \(h \in \mathcal {B}_{\theta }(\textsf{K})\), we have the flexibility to treat h either as \(h \in \mathcal {H}_{\theta }(\textsf{K})\) or as \(h \in \mathcal {B}_{\theta }(\textsf{K})\). Given the potential advantages when working on symmetric cones, it is useful to develop a unified notation handling the cases \(h\in \mathcal {H}_{\theta }(\textsf{K})\) and \(h\in \mathcal {B}_{\theta }(\textsf{K})\) at the same time. We therefore introduce the notation

Note that

Propositions 1 and 2, together with Eq. (4), give us the once-and-for-all Bregman bound

valid for all \((x,d)\in \textsf{X}\times \mathbb {E}\) and \(t \in \left[ 0,\frac{1}{\zeta (x,d)}\right) \).

3 Approximate optimality conditions

Consider the non-convex optimization problem (Opt). If \(x^{*}\) is a local solution of the optimization problem at which the objective function f is continuously differentiable, then there exists \(y^{*}\in \mathbb {R}^{m}\) such that

This is equivalent to the standard local optimality condition [81]

Remark 1

Since \(-{{\,\mathrm{\textsf{NC}}\,}}_{\bar{\textsf{K}}}(x^{*})\subseteq \bar{\textsf{K}}^{*}\), the inclusion (8) implies \(s^{*}\triangleq \nabla f(x^{*})-{\textbf{A}}^{*}y^{*}\in \bar{\textsf{K}}^{*}\).\(\Diamond \)

3.1 First-order approximate KKT conditions

The next definition specifies our notion of an approximate first-order KKT point for problem (Opt).

Definition 4

Given \(\varepsilon {\ge }0\), a point \(\bar{x}\in \mathbb {E}\) is an \(\varepsilon \)-KKT point for problem (Opt) if there exists \(\bar{y}\in \mathbb {R}^{m}\) such that

Note that conditions (10) and (11) give \(\bar{x}\in \textsf{K}\) and \(\bar{s}\in \bar{\textsf{K}}^{*}\), i.e., both points are feasible, and hence \(\langle \bar{s},\bar{x}\rangle \ge 0\). Thus, condition (12) is reasonable since it recovers the standard complementary slackness when \(\varepsilon \rightarrow 0\). Although our definition explicitly requires strict primal feasibility, similar approximate KKT conditions have been introduced for candidates \(\bar{x}\) which may be infeasible. This is relevant in primal–dual settings and in sequential optimality conditions for Lagrangian-based methods. In such setups a projection should be used [7, 9].

Definition 4 can be motivated as an approximate version of the exact first-order KKT condition stated as Theorem 5 in Appendix B. It is also easy to show that the above definition readily implies the standard approximate first-order stationarity condition \(\langle \nabla f(\bar{x}), x - \bar{x}\rangle \ge -\varepsilon \), for all \(x \in \bar{\textsf{X}}\) (cf. (9)). Indeed, let \(x \in \bar{\textsf{X}}\) be arbitrary and \(\bar{x} \) satisfy Definition 4 with \(\bar{s}=\nabla f(\bar{x})-{\textbf{A}}^{*}\bar{y}\). Then,

$$\begin{aligned} \langle \nabla f(\bar{x}),x-\bar{x}\rangle =\langle \bar{s}+{\textbf{A}}^{*}\bar{y},x-\bar{x}\rangle =\langle \bar{s},x\rangle -\langle \bar{s},\bar{x}\rangle +\langle \bar{y},{\textbf{A}}(x-\bar{x})\rangle \ge -\varepsilon , \end{aligned}$$

where we used that \({\textbf{A}}x = {\textbf{A}}\bar{x}=b\), \(\langle \bar{s}, x \rangle \ge 0\) since \(x \in \bar{\textsf{K}}\) and \(\bar{s} \in \bar{\textsf{K}}^{*}\), and \(\langle \bar{s}, \bar{x} \rangle \le \varepsilon \).
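The conditions discussed above can be tested mechanically on the non-negative orthant. The sketch below assumes that Definition 4 consists of \({\textbf{A}}\bar{x}=b\), \(\bar{x}\in \textsf{K}\), \(\bar{s}=\nabla f(\bar{x})-{\textbf{A}}^{*}\bar{y}\in \bar{\textsf{K}}^{*}\) and \(\langle \bar{s},\bar{x}\rangle \le \varepsilon \), as the surrounding text suggests; the function name `is_eps_kkt_nn` and the tolerance `tol` are illustrative choices, not part of the source.

```python
import numpy as np

def is_eps_kkt_nn(grad_f, A, b, x, y, eps, tol=1e-9):
    """Check the (assumed) epsilon-KKT conditions on the non-negative orthant.

    grad_f is the gradient of f evaluated at x; y is the candidate multiplier.
    """
    A = np.asarray(A, dtype=float)
    x = np.asarray(x, dtype=float)
    s = np.asarray(grad_f, dtype=float) - A.T @ np.asarray(y, dtype=float)
    primal_ok = np.allclose(A @ x, b, atol=tol) and np.all(x > 0)  # A x = b, x in K
    dual_ok = np.all(s >= -tol)          # the orthant is self-dual, so s >= 0
    compl_ok = float(s @ x) <= eps       # approximate complementarity
    return bool(primal_ok and dual_ok and compl_ok)
```

As a usage example, for the linear objective with gradient \((1,2)\), the constraint \(x_{1}+x_{2}=1\), the point \(\bar{x}=(0.99,0.01)\) and \(\bar{y}=1\), one gets \(\bar{s}=(0,1)\) and \(\langle \bar{s},\bar{x}\rangle =0.01\), so the check passes for \(\varepsilon =0.02\) and fails for \(\varepsilon =0.005\).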

The next result uses a perturbation argument based on an interior penalty approach, inspired by [58], and shows that our definition of an \(\varepsilon \)-KKT point is attainable and can be read as an approximate KKT condition.

Proposition 3

Let \(x^{*}\in \bar{\textsf{X}}\) be a local solution of problem (Opt) where f is continuously differentiable on \(\textsf{X}\). Then, there exist sequences \((x_{k})_{k\ge 1}\subset \textsf{X},(y_{k})_{k\ge 1}\subset \mathbb {R}^{m}\) and \(s_{k}=\nabla f(x_{k})-{\textbf{A}}^{*}y_{k}\), \(k\ge 1\) satisfying the following:

- (i) \(x_{k}\rightarrow x^{*}\).

- (ii) For all \(\varepsilon >0\) we have \(|\langle s_{k},x_{k}\rangle |\le \varepsilon \) for all k sufficiently large.

- (iii) All accumulation points of the sequence \((s_{k})_{k\ge 1}\) are contained in \(-{{\,\mathrm{\textsf{NC}}\,}}_{\bar{\textsf{K}}}(x^{*})\subseteq \bar{\textsf{K}}^{*}\).

Proof

- (i): Consider the following perturbed version of problem (Opt), for which \(x^*\) is the unique global solution if we take \(\delta >0\) sufficiently small,

$$\begin{aligned} \min _{x} f(x) + \frac{1}{2}\Vert x-x^*\Vert ^2 \quad \text {s.t.: } {\textbf{A}}x=b, \; x\in \bar{\textsf{K}}, \; \Vert x-x^*\Vert ^2 \le \delta . \end{aligned}\tag{13}$$

Further, consider the penalty function

$$\begin{aligned} \varphi _{k}(x)\triangleq f(x)+\mu _{k}h(x)+\frac{1}{2}\Vert x-x^{*}\Vert ^{2} \end{aligned}$$

for a sequence \(\mu _{k}\rightarrow 0^{+}\) as \(k\rightarrow \infty \) and the optimization problem

$$\begin{aligned} \min _{x}\varphi _{k}(x) \quad \text {s.t.: } {\textbf{A}}x=b,\; x\in \textsf{K},\; \Vert x-x^{*}\Vert ^{2}\le \delta . \end{aligned}$$

It is well known [48] that a global solution \(x_{k}\) exists for this problem for all \(k\ge 1\), and that cluster points of the sequence \((x_{k})_{k\ge 1}\) are global solutions of (13). Since \(\Vert x_k-x^*\Vert ^2 \le \delta \), the sequence \((x_{k})_{k\ge 1}\) is bounded and therefore has cluster points. Each of them is a global solution of (13), and \(x^{*}\) is the unique such solution, so \(x_k \rightarrow x^*\) as \(k\rightarrow \infty \).

- (ii): For k large enough, \(x_{k}\) is a local solution of

$$\begin{aligned} \min _{x}\varphi _{k}(x) \quad \text {s.t.: } {\textbf{A}}x=b, \end{aligned}$$

because \(x_{k}\) lies in the open cone \(\textsf{K}\) and, as \(x_{k}\rightarrow x^{*}\), the constraint \(\Vert x-x^{*}\Vert ^{2}\le \delta \) is inactive at \(x_{k}\). The optimality conditions for this problem read as

$$\begin{aligned} \nabla f(x_{k})+(x_{k}-x^{*})+\mu _{k}\nabla h(x_{k})-{\textbf{A}}^{*}y_{k}=0, \end{aligned}$$

where \(y_k \in \mathbb {R}^m\) is a Lagrange multiplier. Setting \(s_{k}\triangleq \nabla f(x_{k})-{\textbf{A}}^{*}y_{k}\), we can continue with

$$\begin{aligned} |\langle s_{k},x_{k}\rangle |=|\langle x^{*}-x_{k},x_{k}\rangle -\mu _{k}\langle \nabla h(x_{k}),x_{k}\rangle | {\mathop {=}\limits ^{\tiny (81)}} |\langle x^{*}-x_{k},x_{k}\rangle +\mu _{k}\theta |. \end{aligned}$$

As \(x_{k}\rightarrow x^{*}\) and \(\mu _k \rightarrow 0\), we conclude that \(\lim _{k\rightarrow \infty }|\langle s_{k},x_{k}\rangle |=0\).

- (iii): Let \(x\in \bar{\textsf{K}}\) be arbitrary. Using part (ii) and the Cauchy–Schwarz inequality, we see that

$$\begin{aligned} \langle s_{k},x\rangle&=\langle s_{k},x-x_{k}\rangle +\langle s_{k},x_{k}\rangle \\&=\langle x^{*}-x_{k},x-x_{k}\rangle +\langle s_{k},x_{k}\rangle -\mu _{k}\langle \nabla h(x_{k}),x-x_{k}\rangle \\&{\mathop {\ge }\limits ^{\tiny (83)}} -\Vert x^{*}-x_{k}\Vert \cdot \Vert x-x_{k}\Vert +\langle s_{k},x_{k}\rangle -\mu _{k}\theta \rightarrow 0. \end{aligned}$$

Hence, \(\liminf _{k\rightarrow \infty }\langle s_{k},x\rangle \ge 0\) for all \(x\in \bar{\textsf{K}}\), which proves that accumulation points of \((s_{k})_{k\ge 1}\) are contained in the dual cone \(\bar{\textsf{K}}^{*}\).

Recall that \(x_{k}\rightarrow x^{*}\). Assume first that \(x^{*}\in \textsf{X}=\textsf{K}\cap \textsf{L}\). Then the sequence \((s_{k})_{k\ge 1}\) constructed as in part (ii) satisfies \(s_{k}=-\mu _{k}\nabla h(x_{k})+(x^{*}-x_{k})\) for all \(k\ge 1\). Consequently, \(s_{k}\rightarrow 0\) as \(k\rightarrow \infty \).

Now assume that \(x^{*}\in {{\,\textrm{bd}\,}}(\textsf{X})\). Choosing \(\mu _{k}\triangleq \frac{1}{\Vert \nabla h(x_{k})\Vert }\rightarrow 0\) as \(k\rightarrow \infty \), gives \(s_{k}=x^{*}-x_{k}-\frac{1}{\Vert \nabla h(x_{k})\Vert }\nabla h(x_{k})\). Lemma 4 in Appendix A shows that the sequence \(\left( \frac{\nabla h(x_{k})}{\Vert \nabla h(x_{k})\Vert }\right) _{k\ge 1}\) is bounded with all its accumulation points contained in \({{\,\mathrm{\textsf{NC}}\,}}_{\bar{\textsf{K}}}(x^{*})\). This readily implies that accumulation points of the sequence \((s_{k})_{k\ge 1}\) must be contained in \(-{{\,\mathrm{\textsf{NC}}\,}}_{\bar{\textsf{K}}}(x^{*})\).

\(\square \)

3.2 Second-order approximate KKT conditions

Our definition of an approximate second-order KKT point is motivated by the exact definition in Theorem 6, stated in Appendix B. The natural inexact version of this definition reads as follows.

Definition 5

Given \(\varepsilon _1,\varepsilon _2 \ge 0\), a point \(\bar{x}\in \mathbb {E}\) is an \((\varepsilon _1,\varepsilon _2)\)-2KKT point for problem (Opt) if there exists \(\bar{y}\in \mathbb {R}^{m}\) such that for \(\bar{s}=\nabla f(\bar{x})-{\textbf{A}}^{*}\bar{y}\) conditions (10) and (11) hold, as well as

Given \(x\in \textsf{X}\), define the set of feasible directions as \(\mathcal {F}_{x}\triangleq \{v\in \mathbb {E}\vert x+v\in \textsf{X}\}.\) Lemma 1 implies that

Upon defining \(d=[H(x)]^{1/2}v\) for \(v\in \mathcal {T}_{x}\), we obtain a direction d satisfying \({\textbf{A}}[H(x)]^{-1/2}d=0\) and \(\Vert d\Vert =\Vert v\Vert _{x}\). Hence, for \(x\in \textsf{K}\), we can equivalently characterize the set \(\mathcal {T}_{x}\) as \(\mathcal {T}_{x}=\{[H(x)]^{-1/2}d\vert {\textbf{A}}[H(x)]^{-1/2}d=0,\Vert d\Vert <1\}\). In terms of feasible directions contained in the set \(\mathcal {T}_{x}\), we observe that condition (15) can be rewritten as follows:

The last line connects our approximate KKT condition with the exact condition stated in Theorem 6 in Appendix B. The next Proposition gives a justification of Definition 5. We again use a perturbation argument based on an interior penalty approach, inspired by [58].

Proposition 4

Let \(x^{*}\in \bar{\textsf{X}}\) be a local solution of problem (Opt), where f is twice continuously differentiable on \(\textsf{X}\). Then, there exist sequences \((x_{k})_{k\ge 1}\subset \textsf{X},(y_{k})_{k\ge 1}\subset \mathbb {R}^{m}\) and \(s_{k}=\nabla f(x_{k})-{\textbf{A}}^{*}y_{k}\), \(k \ge 1\), satisfying the following:

- (i) \(x_{k}\rightarrow x^{*}\).

- (ii) For all \(\varepsilon _{1}>0\) we have \(|\langle s_{k},x_{k}\rangle |\le \varepsilon _{1}\) for all k sufficiently large.

- (iii) All accumulation points of the sequence \((s_{k})_{k\ge 1}\) are contained in \(-{{\,\mathrm{\textsf{NC}}\,}}_{\bar{\textsf{K}}}(x^{*})\subseteq \bar{\textsf{K}}^{*}\).

- (iv) For all sequences \(v_{k}\in \mathcal {T}_{x_{k}}\) we have

$$\begin{aligned} \liminf _{k\rightarrow \infty }\langle \nabla ^{2}f(x_{k})v_{k},v_{k}\rangle \ge 0. \end{aligned}$$

Proof

Consider the penalty function

It is easy to see that the proof of Proposition 3 still applies, mutatis mutandis, showing that items (i)–(iii) of the current Proposition hold.

(iv) The point \(x_{k}\) satisfies the necessary second-order condition

for all \(v\in \textsf{L}_{0}\). Here \({{\,\textrm{Id}\,}}_{\mathbb {E}}\) is the identity operator on \(\mathbb {E}\). This reads as

where \(\rho _k\) is the largest eigenvalue of \(\Vert x_{k}-x^{*}\Vert ^{2}{{\,\textrm{Id}\,}}_{\mathbb {E}}+2(x_{k}-x^{*})\otimes (x_{k}-x^{*})\). We know that \(x_{k}\rightarrow x^{*}\). Let \((v_{k})_{k\ge 1}\) be an arbitrary sequence with \(v_{k}\in \mathcal {T}_{x_{k}}\) for all \(k\ge 1\). Then, there exists a sequence \((u_{k})_{k\ge 1}\) satisfying \(v_{k}=[H(x_{k})]^{-1/2}u_{k}\) and \(\Vert u_{k}\Vert <1\). Therefore, \(\Vert v_{k}\Vert ^{2}_{x_{k}}=\Vert u_{k}\Vert ^{2}<1\), and \(\Vert v_{k}\Vert =\Vert u_{k}\Vert ^{*}_{x_{k}}\le \Vert x_{k}\Vert \texttt{e}_{*}(u_{k})\), where we have used Lemma 5 and the definition \(\texttt{e}_{*}(v)\triangleq \sup _{x\in \textsf{K}:\Vert x\Vert =1}\Vert v\Vert _{x}^{*}\). We thus obtain the bound

Since the sequence \((u_{k})_{k\ge 1}\) is bounded, \(\mu _{k}\rightarrow 0^{+}\) and \(\rho _k\rightarrow 0^{+}\) as \(k \rightarrow \infty \), the claim follows.\(\square \)

3.3 Discussion

3.3.1 Comparison with approximate KKT conditions for interior-point methods

To compare our approximate KKT conditions with the ones previously formulated in the literature we consider the particular case \(\bar{\textsf{K}}=\bar{\textsf{K}}_{\text {NN}}\subset \mathbb {E}=\mathbb {R}^n\) as in [58, 82]. The exact optimality condition for problem (Opt), assuming that f is continuously differentiable at \(x^{*}\), reads in this case as the complementarity system for the primal–dual triple \((x^{*},y^{*},s^{*})\in \bar{\textsf{K}}_{\text {NN}}\times \mathbb {R}^m \times \bar{\textsf{K}}_{\text {NN}}\) given by

These conditions directly motivate the approximate optimality conditions used in the interior-point method of [58], which are \({\textbf{A}}\bar{x}=b\), \(\bar{x}>0\), and

where \(\textbf{1}_{n} \in \mathbb {R}^n\) is the vector of all ones and \(\textbf{I}={{\,\textrm{Id}\,}}_{\mathbb {R}^n}\) is the \(n\times n\) identity matrix. Note that, in this particular case, our first-order conditions (10)–(12) are similar but slightly stronger, since they imply (17) and (18). Note also that the change of variable \( v=[H(\bar{x})]^{-1/2} d = {{\,\textrm{diag}\,}}[\bar{x}] d\) shows the equivalence between our second-order condition (15) and the condition (19). These observations provide additional motivation for our definitions of approximate KKT points, since definitions similar in spirit were previously used in the literature, e.g., in [58].

As pointed out in [82], conditions (18) and (19) are commonly used approximate optimality conditions for (Opt) [15, 36]. However, these two conditions alone do not guarantee that a sequence of points satisfying them as \(\varepsilon \rightarrow 0\) converges to a KKT point [58]. For this reason, condition (17) is added in [58]. These conditions can be overly stringent for coordinates i in which \(\bar{x}_{i}\) is positive and numerically large (under our compactness assumption, this can happen only if the bound on the norm of feasible points is not too restrictive in the concrete instance). In this case, the complementarity condition (18) requires the corresponding dual variable \(\bar{s}_{i}\) to be very small. Similarly, (19) allows only minimal negative curvature of the Hessian in the subspace spanned by these coordinates. Such requirements contrast sharply with the case of unconstrained minimization. In the limiting scenario in which all coordinates of \(\bar{x}\) are far from the boundary, these approximate first-order conditions are significantly harder to satisfy than in the (equivalent) unconstrained formulation. To remedy this potential problem, O’Neill and Wright [82] proposed to apply the scaling in Eqs. (18) and (19) only when \(\bar{x}_{i}\in (0,1]\). This operation interpolates between the bound-constrained case (when \(\bar{x}_{i}\) is small) and the unconstrained case (when \(\bar{x}_{i}\) is large), while also controlling the norm of the matrix used in their optimality conditions. Since [82] considers only non-negativity constraints, without linear equality constraints or further upper bounds on the decision variables, this clipping of variables is natural in that setting. In our setting, however, compactness implies that the coordinates of approximate solutions can become large only up to a pre-defined and known upper bound. Hence, the hardness issue of identifying an approximate KKT point is less pronounced in our work. Moreover, we prove that our algorithms produce approximate KKT points with standard scaling and with a similar iteration complexity as in the setting of [82]. Finally, it is easy to show that the conditions with interpolating scaling of [82] follow in our setting for \(\bar{\textsf{K}}=\bar{\textsf{K}}_{\text {NN}}\) as well, since \(0 \le \min \{x_i,1\}\le x_i\) in this case.

3.3.2 Complementarity conditions for symmetric cones

If \(\bar{\textsf{K}}\) is a symmetric cone, the approximate complementarity conditions (12) and (14) are equivalent to approximate complementarity conditions formulated in terms of the multiplication \(\circ \) under which \(\textsf{K}\) becomes a Euclidean Jordan algebra. [72, Prop. 2.1] shows that \(x\circ y=0\) if and only if \(\langle x,y\rangle =0\), where \(\langle \cdot ,\cdot \rangle \) is the inner product of the space \(\mathbb {E}\). Moreover, if \(\bar{\textsf{K}}\) is a primitive symmetric cone, then by [45, Prop. III.4.1], there exists a constant \(a>0\) such that \(a{{\,\textrm{tr}\,}}(x\circ y)=\langle x,y\rangle \) for all \(x,y\in \textsf{K}\). In view of this relation, our approximate complementarity conditions can be written in the form of condition \(\bar{s}\circ \bar{x}\le \varepsilon \). Hence, our approximate KKT conditions reduce to the ones reported in [6]. In particular, for \(\bar{\textsf{K}}=\bar{\textsf{K}}_{\text {NN}}\) we recover the standard approximate complementary slackness condition \(\bar{s}^{k}_{i}\bar{x}^{k}_{i} \le \varepsilon \) for all i, as in this case the Jordan product \(\circ \) gives rise to the Hadamard product. See [5] for more details.

3.3.3 On the relation to scaled critical points

In the absence of differentiability at the boundary, a popular formulation of necessary optimality conditions involves the definition of scaled critical points. Indeed, at a local minimizer \(x^{*}\), the scaled first-order optimality condition \(x_{i}^{*}[\nabla f(x^{*})]_{i}=0,1\le i\le n\) holds, where the product is taken to be 0 when the derivative does not exist. Based on this characterization, one may call a point \(x\in \textsf{K}_{\text {NN}}\) with \(|x_{i}[\nabla f(x)]_{i}|\le \varepsilon \) for all \(i=1,\ldots ,n\) an \(\varepsilon \)-scaled first-order point. Algorithms designed to produce \(\varepsilon \)-scaled first-order points, with some small \(\varepsilon >0\), have been introduced in [15, 16]. As reported in [58], there are several problems associated with this weak definition of a critical point. First, when derivatives are available on \(\bar{\textsf{K}}_{\text {NN}}\), the standard definition of a critical point would entail the inequality \(\langle \nabla f(x),x'-x\rangle \ge 0\) for all \(x'\in \bar{\textsf{K}}_{\text {NN}}.\) Hence, \([\nabla f(x)]_{i}=0\) for \(x_{i}>0\) and \([\nabla f(x)]_{i}\ge 0\) for \(x_{i}=0\). It follows that \(\nabla f(x)\in \bar{\textsf{K}}_{\text {NN}}\), a condition that is absent in the definition of a scaled critical point. Second, scaled critical points come with no measure of strength, as they hold trivially when \(x=0\), regardless of the objective function. Third, there is a general gap between local minimizers and limits of \(\varepsilon \)-scaled first-order points, when \(\varepsilon \rightarrow 0^{+}\) (see [58]). Similar remarks apply to the scaled second-order condition, considered in [15]. Our definitions of approximate KKT points overcome these issues. In fact, our definitions of approximate first- and second-order KKT points are continuous in \(\varepsilon \), and therefore in the limit our approximate KKT conditions coincide with first- and weak second-order necessary conditions for a local minimizer. This is achieved without assuming global differentiability of the objective function or performing an additional smoothing of the problem data as in [14, 15].

3.3.4 Second-order conditions in the literature on interior-point methods compared to standard second-order conditions

As discussed above, our necessary second-order optimality conditions stated in Proposition 4, and their approximate counterpart given in Definition 5, are in alignment with the existing body of work that studies non-convex conic optimization [58, 61, 82]. In particular, condition (15) coincides with those used in [58, 82] for the particular case of non-negativity constraints, and in [61] for general conic constraints. However, the necessary second-order optimality conditions in Proposition 4, [58, Theorem 1], [82, Theorem 3.1], [61, Theorem 4] are weaker than the standard ones that we refer to as strong conditions. This can be easily illustrated with the following example.

Example 11

(Strong vs. weak necessary second-order optimality conditions) Consider \(\mathbb {E}= \mathbb {R}\) and \(\bar{\textsf{K}}=\bar{\textsf{K}}_{\text {NN}} = \mathbb {R}_{+}\). Also, assume that there are no linear equality constraints. Consider the function \(f:\mathbb {R}_{+}\rightarrow \mathbb {R}\) defined by

This function is continuously differentiable on \(\mathbb {R}_{++}\), bounded from below, and has Lipschitz gradient. The minimization problem \(\min \{f(x)\vert x\in \mathbb {R}_{+}\}\) has two first-order KKT points \(x\in \{0,2\}\), both supported by Lagrange multiplier \(s=0\). A strong second-order necessary optimality condition involves the strong critical cone [81, Section 12.5], which in the current example reads as

A strong second-order necessary condition is then

Since \(C^{s}(0)=[0,\infty )\), but \(\nabla ^{2}f(0)=-1\), it follows that \(\bar{x}=0\) does not satisfy the strong second-order necessary condition. However, \(C^{s}(2)=\mathbb {R}\), and \(\nabla ^{2}f(2)=1\). In fact \((x^*,s^*)=(2,0)\) is the global solution to the problem. When the strong critical cone is replaced by the weak critical cone [52, 53]

the weak second-order necessary condition reads as

Clearly \(\bar{x}=0\) satisfies this weak second-order condition.

At the same time, \(\bar{x}=0\) is a second-order stationary point in the sense of Proposition 4 since the necessary conditions (i)–(iv) of that Proposition hold. Indeed, let us consider the sequence \(x_k=\frac{1}{k}\), \(k\ge 1\) and the corresponding sequence \(s_k = \nabla f(x_k) = -x_k = -\frac{1}{k}\). Then, clearly \(x_k \rightarrow 0=\bar{x}\) and condition (i) holds. Condition (ii) also holds since \(|\langle s_k,x_k\rangle | = \frac{1}{k^2} \le \varepsilon _1\) for sufficiently large k. Since \(s_k \rightarrow 0\) as \(k \rightarrow \infty \), we have that condition (iii) holds as well. We finally show that (iv) holds. Let us consider arbitrary \(v_k \in \mathcal {T}_{x_k}\). Then, by the Definition (16) of \(\mathcal {T}_{x_k}\) we have that \(1 > \Vert v_k\Vert _{x_k} = \sqrt{\langle H(x_k)v_k,v_k\rangle } = |v_k/x_k| = |k v_k|\), which implies \(|v_k| \le \frac{1}{k}\) and \(\langle \nabla ^2 f(x_k) v_k, v_k\rangle = - v_k^2 \rightarrow 0\) as \(k\rightarrow \infty \), which finishes the proof of (iv). Thus, we proved that \(\bar{x}=0\) is a second-order stationary point in the sense of Proposition 4. Importantly, one can show that in this example the point \(\bar{x}=0\) satisfies also second-order necessary optimality conditions in [58, Theorem 1], [82, Theorem 3.1], [61, Theorem 4]. Overall, in this example we see that \(\bar{x}=0\) is not a strong second-order stationary point, but is a weak second-order stationary point since (20) as well as conditions in Proposition 4, [58, Theorem 1], [82, Theorem 3.1], [61, Theorem 4] hold. \(\Diamond \)
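The scaling argument in this example can be traced with a few lines of arithmetic. The sketch below only uses the quantities quoted in the text, namely \(x_{k}=1/k\), \(s_{k}=\nabla f(x_{k})=-x_{k}\), \(\nabla ^{2}f(x_{k})=-1\) and \(H(x)=1/x^{2}\); the function name `example11_quantities` is illustrative.

```python
def example11_quantities(k, v):
    """Return (|<s_k, x_k>|, <hess f(x_k) v, v>) for x_k = 1/k in Example 11.

    Uses the values quoted in the text: s_k = -x_k and hess f(x_k) = -1
    near the origin. The direction v must satisfy ||v||_{x_k} = |k v| < 1.
    """
    x = 1.0 / k
    if abs(v / x) >= 1.0:
        raise ValueError("v is not in T_{x_k}: need |k * v| < 1")
    s = -x                      # s_k = grad f(x_k)
    complementarity = abs(s * x)
    curvature = -1.0 * v * v    # <hess f(x_k) v, v>
    return complementarity, curvature
```

For \(k=10\) and \(v=0.05\) this gives a complementarity gap of \(10^{-2}\) and a curvature term of \(-2.5\cdot 10^{-3}\ge -1/k^{2}\), so both quantities vanish as k grows even though the Hessian itself stays at \(-1\).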

Our second-order necessary conditions involve the set \(\mathcal {T}_{x}\). Theorem 3.2 in [73] demonstrates that the cone generated by \(\mathcal {T}_{x}\) coincides with the weak critical cone for conic programming, which is used in weak second-order necessary conditions [8, 52]. At the same time, weak second-order necessary conditions are appropriate notions for barrier algorithms. Indeed, [53] gives an explicit example in which trajectories generated by barrier algorithms converge to stationary points satisfying the weak second-order necessary condition, but not the strong version. Later, Andreani and Secchin [10] made a small modification in Gould and Toint’s counterexample to come to the same conclusion for augmented Lagrangian-type algorithms. Hence, the most we can expect from our method is that it generates points that approximately satisfy the weak second-order necessary optimality conditions.

4 A first-order Hessian barrier algorithm

In this section we introduce a first-order potential reduction method for solving (Opt) that uses a barrier \(h \in \mathcal {H}_{\theta }(\textsf{K})\) and potential function (2). We assume that we are able to compute an approximate analytic center at low computational cost. Specifically, our algorithm relies on the availability of a \(\theta \)-analytic center, i.e. a point \(x^{0}\in \textsf{X}\) such that

To obtain such a point \(x^{0}\), one can apply interior-point methods to the convex programming problem \(\min _{x\in \textsf{X}}h(x)\). Moreover, since \(\theta \ge 1\), we do not need to solve it with high precision, which makes a computationally cheap first-order method, such as [43], an appealing choice for this preprocessing step.

4.1 Local properties

Our complexity analysis relies on the ability to control the behavior of the objective function along the set of feasible directions and with respect to the local norm.

Assumption 3

(Local smoothness) \(f:\mathbb {E}\rightarrow \mathbb {R}\cup \{+\infty \}\) is continuously differentiable on \(\textsf{X}\) and there exists a constant \(M>0\) such that for all \(x\in \textsf{X}\) and \(v\in \mathcal {T}_{x}\), where \(\mathcal {T}_{x}\) is defined in (16), we have

Remark 2

If the set \(\bar{\textsf{X}}\) is bounded, we have \(\lambda _{\min }(H(x)) \ge \sigma \) for some \(\sigma >0\). In this case, assuming f has an M-Lipschitz continuous gradient, the classical descent lemma [76] implies Assumption 3. Indeed,

\(\Diamond \)

Remark 3

We emphasize that the local Lipschitz smoothness condition (22) does not require global differentiability. Consider the composite non-smooth and non-convex model (1) on \(\bar{\textsf{K}}_{\text {NN}}\), with \(\varphi (s)=s\) for \(s \ge 0\). This means \(\sum _{i=1}^{n}\varphi (x_{i}^{p})=\Vert x\Vert _{p}^{p}\) for \(p\in (0,1)\) and \(x\in \bar{\textsf{K}}_{\text {NN}}\). As a concrete example for the smooth part of the problem let us consider the \(L_{2}\)-loss \(\ell (x)=\frac{1}{2}\Vert \textbf{N}x-\textbf{p}\Vert ^{2}\). This gives rise to the \(L_{2}-L_{p}\) minimization problem, an important optimization formulation arising in phase retrieval, mathematical statistics, signal processing and image recovery [36, 49, 50, 71]. Setting \(M=\lambda _{\max }(\textbf{N}^{*}\textbf{N})\), the descent lemma yields

for \(x,x^{+}\in \textsf{K}_{\text {NN}}\). Since \(t\mapsto t^{p}\) is concave for \(t>0\) and \(p\in (0,1)\), we have, for \(x,x^{+}\in \textsf{K}_{\text {NN}}\),

Adding these inequalities, we immediately arrive at condition (22) in terms of the Euclidean norm. Over a bounded feasible set \(\bar{\textsf{X}}\), this implies Assumption 3 (cf. Remark 2). At the same time, f is not differentiable for \(x \in {{\,\textrm{bd}\,}}(\textsf{K}_{\text {NN}})=\{x\in \mathbb {R}^n_{+}\vert x_i=0\text { for some } i\}\). \(\Diamond \)
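The Euclidean inequality obtained in this remark can be verified numerically. The following sketch assumes the objective \(f(x)=\frac{1}{2}\Vert \textbf{N}x-\textbf{p}\Vert ^{2}+\sum _{i}x_{i}^{p}\) on the positive orthant; to avoid the clash between the data vector and the exponent, the code calls them `target` and `power`, and the function names are illustrative.

```python
import numpy as np

def f_and_grad(N, target, power, x):
    """Value and gradient of 0.5*||N x - target||^2 + sum(x_i^power), x > 0."""
    r = N @ x - target
    val = 0.5 * (r @ r) + np.sum(x ** power)
    grad = N.T @ r + power * x ** (power - 1.0)
    return float(val), grad

def descent_gap(N, target, power, x, x_plus):
    """Quadratic upper model at x minus f(x_plus).

    With M = lambda_max(N^T N), the descent lemma for the quadratic part and
    concavity of t -> t^power make this gap non-negative for x, x_plus > 0.
    """
    M = np.linalg.eigvalsh(N.T @ N)[-1]
    fx, g = f_and_grad(N, target, power, x)
    fx_plus, _ = f_and_grad(N, target, power, x_plus)
    step = x_plus - x
    return float(fx + g @ step + 0.5 * M * (step @ step) - fx_plus)
```

Evaluating `descent_gap` on random positive pairs should return non-negative values up to round-off, although the gradient of the second term blows up as any coordinate approaches zero.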

We emphasize that the Lipschitz modulus M in Assumption 3 is rarely known exactly in practice, and that it is not an easy task to obtain universal upper bounds that can be used efficiently in the algorithm. Therefore, adaptive techniques should be used to estimate it; they are also likely to improve the practical performance of the method.

Considering \(x\in \textsf{X},v\in \mathcal {T}_{x}\) and combining Eq. (22) with Eq. (7) (with \(d=v\) and \(t=1< \frac{1}{\Vert v\Vert _x} {\mathop {\le }\limits ^{\tiny (5)}} \frac{1}{\zeta (x,v)}\)) reveals a suitable quadratic model, to be used in the design of our first-order algorithm.

Lemma 2

(Quadratic overestimation) For all \(x\in \textsf{X},v\in \mathcal {T}_{x}\) and \(L\ge M\), we have

4.2 Algorithm description and its complexity

4.2.1 Defining the step direction

Let \(x \in \textsf{X}\) be given. Our first-order method employs a quadratic model \( Q^{(1)}_{\mu }(x,v)\) to compute a search direction \(v_{\mu }(x)\), given by

For the above problem, we have the following system of optimality conditions involving the dual variable \(y_{\mu }(x)\in \mathbb {R}^{m}\):

Since \(H(x)\succ 0\) for \(x\in \textsf{X}\), any standard solution method [81] can be applied to the above linear system. Moreover, this system can be solved explicitly. Indeed, since \(H(x)\succ 0\) for \(x\in \textsf{X}\), and \({\textbf{A}}\) has full row rank, the linear operator \({\textbf{A}}[H(x)]^{-1}{\textbf{A}}^{*}\) is invertible. Hence, \(v_{\mu }(x)\) is given explicitly as

To give some intuition behind this expression, observe that we can give an alternative representation of \(\textbf{S}_{x}\) as \(\textbf{S}_{x}v = [H(x)]^{-1/2}\Pi _{x}[H(x)]^{-1/2}v\), where

This shows that \(\textbf{S}_{x}\) is just the \(\Vert \cdot \Vert _{x}\)-orthogonal projection operator onto \(\ker ({\textbf{A}}[H(x)]^{-1/2})\).
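The explicit solution of the search-direction subproblem can be sketched in a few lines. The code below assumes that the subproblem minimizes \(\langle \nabla F_{\mu }(x),v\rangle +\frac{1}{2}\Vert v\Vert _{x}^{2}\) subject to \({\textbf{A}}v=0\), with optimality system \(\nabla F_{\mu }(x)+H(x)v-{\textbf{A}}^{*}y=0\), \({\textbf{A}}v=0\), which is consistent with the identities used later in the text; the function name `search_direction` is illustrative.

```python
import numpy as np

def search_direction(grad_F, H, A):
    """Solve min <grad_F, v> + 0.5 * v^T H v  s.t.  A v = 0.

    Returns the direction v and the multiplier y of the (assumed) system
    grad_F + H v - A^T y = 0, A v = 0. H must be positive definite and
    A must have full row rank.
    """
    Hinv_g = np.linalg.solve(H, grad_F)             # H^{-1} grad_F
    Hinv_At = np.linalg.solve(H, A.T)               # H^{-1} A^T
    y = np.linalg.solve(A @ Hinv_At, A @ Hinv_g)    # (A H^{-1} A^T) y = A H^{-1} grad_F
    v = -(Hinv_g - Hinv_At @ y)                     # v = -S_x grad_F
    return v, y
```

With the log-barrier Hessian \(H(x)={{\,\textrm{diag}\,}}(1/x_{i}^{2})\) on the orthant, the returned direction satisfies \({\textbf{A}}v=0\) and \(\langle \nabla F_{\mu }(x),v\rangle =-\Vert v\Vert _{x}^{2}\), which is the descent identity used in the step-size analysis. Solving with `np.linalg.solve` instead of forming \([H(x)]^{-1}\) explicitly is a numerical choice of this sketch, not something prescribed by the source.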

4.2.2 Defining the step-size

To determine an acceptable step-size, consider a point \(x \in \textsf{X}\). The search direction \(v_{\mu }(x)\) gives rise to a family of parameterized arcs \(x^{+}(t)\triangleq x+tv_{\mu }(x)\), where \(t\ge 0\). Our aim is to choose this step-size to ensure feasibility of iterates and decrease of the potential. By (6) and (26), we know that \(x^{+}(t)\in \textsf{X}\) for all \(t\in I_{x,\mu } \triangleq [0,\frac{1}{\zeta (x,v_{\mu }(x))})\). Multiplying (25) by \(v_{\mu }(x)\) and using (26), we obtain \(\langle \nabla F_{\mu }(x),v_{\mu }(x)\rangle =-\Vert v_{\mu }(x)\Vert _{x}^{2}\). Choosing \(t \in I_{x,\mu }\), we bound

Therefore, if \(t\zeta (x,v_{\mu }(x))\le 1/2\), we readily see from (23) that

The function \(t \mapsto \eta _{x}(t)\) is strictly concave with the unique maximum at \( \frac{1}{M+2\mu }\), and two real roots at \(t\in \left\{ 0,\frac{2}{M+2\mu }\right\} \). Thus, maximizing the per-iteration decrease \(\eta _{x}(t)\) under the restriction \(0\le t\le \frac{1}{2\zeta (x,v_{\mu }(x))}\), we choose the step-size
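The step-size choice described here amounts to maximizing a concave quadratic over an interval. The sketch below assumes \(\eta _{x}(t)=t\Vert v_{\mu }(x)\Vert _{x}^{2}\left( 1-\frac{M+2\mu }{2}t\right) \), which is consistent with the stated maximizer \(\frac{1}{M+2\mu }\) and roots \(\{0,\frac{2}{M+2\mu }\}\), although the exact scaling is an assumption; `eta` and `step_size` are illustrative names.

```python
def eta(t, M, mu, v_norm_sq=1.0):
    """Assumed per-iteration decrease: concave in t, roots at 0 and 2/(M + 2*mu)."""
    return t * v_norm_sq * (1.0 - 0.5 * (M + 2.0 * mu) * t)

def step_size(M, mu, zeta):
    """Maximizer of eta over 0 <= t <= 1/(2*zeta).

    Since eta is concave with unconstrained maximizer 1/(M + 2*mu), the
    constrained maximizer is the smaller of that value and the cap.
    """
    t_free = 1.0 / (M + 2.0 * mu)
    if zeta <= 0.0:              # no feasibility cap in this case
        return t_free
    return min(t_free, 1.0 / (2.0 * zeta))
```

For \(M=2\), \(\mu =1\) the unconstrained maximizer is 0.25; with \(\zeta =10\) the feasibility cap \(1/(2\zeta )=0.05\) is active, while with \(\zeta =0.5\) it is not.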

4.2.3 Backtracking on the Lipschitz modulus

The above step-size rule, however, requires knowledge of the parameter M. To boost numerical performance, we employ a backtracking scheme in the spirit of [79] to estimate the constant M at each iteration. This procedure generates a sequence of positive numbers \((L_{k})_{k\ge 0}\) for which the local Lipschitz smoothness condition (22) holds. More specifically, suppose that \(x^{k}\) is the current position of the algorithm with the corresponding initial local Lipschitz estimate \(L_{k}\) and \(v^{k}=v_{\mu }(x^{k})\) is the corresponding search direction. To determine the next iterate \(x^{k+1}\), we iteratively try step-sizes \(\alpha _k\) of the form \(\texttt{t}_{\mu ,2^{i_k}L_k}(x^{k})\) for \(i_k\ge 0\) until the local smoothness condition (22) holds with \(x=x^{k}\), \(v= \alpha _k v^{k}\) and local Lipschitz estimate \(M=2^{i_k}L_k\), see (30). This process must terminate in finitely many steps, since when \(2^{i_k}L_k \ge M\), inequality (22) with M changed to \(2^{i_k}L_k\), i.e., (30), follows from Assumption 3.
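The backtracking procedure just described can be sketched as follows. The code assumes that the acceptance test is \(f(x+\alpha v)\le f(x)+\alpha \langle \nabla f(x),v\rangle +\frac{L}{2}\alpha ^{2}\Vert v\Vert _{x}^{2}\) with trial step \(\alpha =\min \{\frac{1}{L+2\mu },\frac{1}{2\zeta }\}\); the function name `backtrack`, its argument list and the small additive tolerance are illustrative choices rather than the algorithm's actual listing.

```python
import numpy as np

def backtrack(f, grad_f, hess_h, x, v, zeta, mu, L):
    """Double the estimate L until the (assumed) local smoothness test holds.

    f, grad_f: objective and its gradient on the interior of the cone.
    hess_h:    barrier Hessian H(x), defining the local norm ||v||_x.
    Returns the accepted step alpha and the accepted estimate L.
    """
    fx, g = f(x), grad_f(x)
    v_norm_sq = float(v @ hess_h(x) @ v)          # ||v||_x^2
    while True:
        alpha = 1.0 / (L + 2.0 * mu)
        if zeta > 0.0:
            alpha = min(alpha, 1.0 / (2.0 * zeta))  # keeps x + alpha*v feasible
        model = fx + alpha * float(g @ v) + 0.5 * L * alpha**2 * v_norm_sq
        if f(x + alpha * v) <= model + 1e-12:
            return alpha, L
        L *= 2.0                                   # try the next estimate 2^i * L_k
```

Only function values along the fixed direction are recomputed inside the loop; the direction itself is not. A caller following the source's description would pass a halved estimate to the next iteration, so that the sequence \((L_{k})\) is allowed to decrease.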

4.2.4 First-order algorithm and its complexity result

Combining the search direction finding problem (24) with the backtracking strategy just outlined yields a First-order Adaptive Hessian Barrier Algorithm (\({{\,\mathrm{\textbf{FAHBA}}\,}}\), Algorithm 1).

Our main result on the iteration complexity of Algorithm 1 is the following Theorem, whose proof is given in Sect. 4.3.

Theorem 1

Let Assumptions 1–3 hold. Fix the error tolerance \(\varepsilon >0\), the regularization parameter \(\mu =\frac{\varepsilon }{\theta }\), and some initial guess \(L_0>0\) for the Lipschitz constant in (22). Let \((x^{k})_{k\ge 0}\) be the trajectory generated by \({{\,\mathrm{\textbf{FAHBA}}\,}}(\mu ,\varepsilon ,L_{0},x^{0})\), where \(x^{0}\) is a \(\theta \)-analytic center satisfying (21). Then the algorithm stops in no more than \(\mathbb {K}_{I}(\varepsilon ,x^{0})\) outer iterations, where \(\mathbb {K}_{I}(\varepsilon ,x^{0})\) denotes the bound (31), and the number of inner iterations is no more than \(2(\mathbb {K}_{I}(\varepsilon ,x^{0})+1)+\max \{\log _{2}(M/L_{0}),0\}\). Moreover, the last iterate obtained from \({{\,\mathrm{\textbf{FAHBA}}\,}}(\mu ,\varepsilon ,L_{0},x^{0})\) constitutes a \(2\varepsilon \)-KKT point for problem (Opt) in the sense of Definition 4.

Remark 4

The line-search process of finding the appropriate \(i_k\) is simple since only recalculating \(z^k\) is needed, and repeatedly solving problem (28) is not required. Furthermore, the sequence of constants \(L_k\) is allowed to decrease along subsequent iterations, which is achieved by the division by the constant factor 2 in the final updating step of each iteration. This potentially leads to longer steps and faster decrease of the potential. \(\Diamond \)

Remark 5

Since \(\theta \ge 1\), \(f(x^{0}) - f_{\min }(\textsf{X})\) is expected to be larger than \(\varepsilon \), and the constant M is potentially large, we see that the main term in the complexity bound (31) is \(O\left( \frac{M\theta ^2(f(x^{0}) - f_{\min }(\textsf{X}))}{\varepsilon ^2}\right) =O\left( \frac{\theta ^{2}}{\varepsilon ^{2}}\right) \), i.e., has the same dependence on \(\varepsilon \) as the standard complexity bounds [24, 25, 69] of first-order methods for non-convex problems under the standard Lipschitz-gradient assumption, which on bounded sets is subsumed by our Assumption 3. Further, if the function f is linear, Assumption 3 holds with \(M=0\) and we can take \(L_0=0\). In this case, the complexity bound (31) improves to \(O\left( \frac{\theta (f(x^{0}) - f_{\min }(\textsf{X}))}{\varepsilon }\right) \). \(\Diamond \)

Remark 6

Just like classical interior-point methods, the iteration complexity of \({{\,\mathrm{\textbf{FAHBA}}\,}}\) depends on the barrier parameter \(\theta \ge 1\). For conic domains, the characterization of this parameter has therefore been an active line of research. [56] demonstrated that, for symmetric cones, the optimal barrier parameter is determined by algebraic properties of the cone and coincides with the rank of the cone (see [45] for a definition of the rank of a symmetric cone). This deep analysis gives an exact characterization of the optimal barrier parameter for the most important conic domains in optimization. For \(\textsf{K}_{\text {NN}}\) and \(\textsf{K}_{\text {SDP}}\), it is known that \(\theta =n\) is optimal, whereas for \(\textsf{K}_{\text {SOC}}\) the optimal barrier parameter is \(\theta =2\) (and therefore independent of the dimension n). \(\Diamond \)
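The two barrier parameters quoted above can be checked numerically through logarithmic homogeneity, \(h(tx)=h(x)-\theta \ln t\). The sketch below does this for the standard log-barriers of the non-negative orthant and of the second-order cone. The function names are hypothetical, and the convention that the last coordinate of a second-order cone point is the "radius" is an assumption made for this example only.

```python
import math

def h_orthant(x):
    # Standard log-barrier of the positive orthant.
    return -sum(math.log(xi) for xi in x)

def h_soc(x):
    # Standard barrier of the second-order cone, with x = (u_1, ..., u_{n-1}, r)
    # and interior points satisfying r > ||u||_2 (ordering assumed for this sketch).
    *u, r = x
    return -math.log(r * r - sum(ui * ui for ui in u))

def homogeneity_degree(h, x, t=3.0):
    # If h(t x) = h(x) - theta * ln(t), this ratio recovers theta.
    return (h(x) - h([t * xi for xi in x])) / math.log(t)

theta_nn = homogeneity_degree(h_orthant, [0.5, 2.0, 1.5, 4.0])     # dimension n = 4
theta_soc = homogeneity_degree(h_soc, [0.3, -0.4, 0.2, 1.0])       # dimension n = 4
```

In this example the orthant barrier yields a degree equal to the dimension, while the second-order cone barrier yields 2 regardless of the dimension.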

4.2.5 Connection with interior point flows on polytopes

Consider \(\bar{\textsf{K}}=\bar{\textsf{K}}_{\text {NN}}\), and \(\textsf{X}=\textsf{K}_{\text {NN}}\cap \textsf{L}\). We are given a function \(f:\bar{\textsf{X}}\rightarrow \mathbb {R}\) which is the restriction of a smooth function \(f:\mathbb {R}^n\rightarrow \mathbb {R}\). The canonical barrier for this setting is \(h(x)=-\sum _{i=1}^{n}\ln (x_{i})\), so that \(H(x)={{\,\textrm{diag}\,}}[x_{1}^{-2},\ldots ,x_{n}^{-2}]\triangleq \textbf{X}^{-2}\) for \(x\in \textsf{X}\). Applying our first-order method on this domain gives the search direction \(v_{\mu }(x)=-\textbf{S}_{x}\nabla F_{\mu }(x)=-\textbf{X}(\textbf{I}-\textbf{X}{\textbf{A}}^{\top }({\textbf{A}}\textbf{X}^{2}{\textbf{A}}^{\top })^{-1}{\textbf{A}}\textbf{X})\textbf{X}\nabla F_{\mu }(x)\). This explicit formula yields various interesting connections between our approach and classical methods. First, for \(f(x)=c^{\top }x\) and \(\mu =0\), we obtain from this formula the search direction employed in affine scaling methods for linear programming [1, 11, 12]. Second, [22] partly motivated their algorithm as a discretization of the Hessian-Riemannian gradient flows introduced in [4, 21]. Heuristically, we can think of \({{\,\mathrm{\textbf{FAHBA}}\,}}\) as an explicit Euler discretization (with non-monotone adaptive step-size policies) of the gradient-like flow \(\dot{x}(t)=-\textbf{S}_{x(t)}\nabla F_{\mu }(x(t))\), which closely resembles the class of dynamical systems introduced in [21]. This gives an immediate connection to a large class of interior point flows on polytopes, heavily studied in control theory [62].
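As an illustration of the explicit formula for \(v_{\mu }(x)\), the sketch below evaluates it when \({\textbf{A}}\) consists of a single row \(a\), so that \({\textbf{A}}\textbf{X}^{2}{\textbf{A}}^{\top }\) is a scalar and no matrix inversion is needed. The single-row restriction, the function name and the numerical data are assumptions made for this example; with a linear objective and \(\mu =0\) the result is an affine-scaling direction on the simplex \(\{x>0:\sum _i x_i=1\}\).

```python
def scaled_projected_direction(x, g, a):
    """Return v = -S_x g, S_x = X (I - X a^T (a X^2 a^T)^{-1} a X) X, for one row a."""
    w = [xi * xi * gi for xi, gi in zip(x, g)]   # X^2 g
    q = [xi * xi * ai for xi, ai in zip(x, a)]   # X^2 a^T
    num = sum(ai * wi for ai, wi in zip(a, w))   # a X^2 g
    den = sum(ai * qi for ai, qi in zip(a, q))   # a X^2 a^T (a positive scalar)
    return [-(wi - qi * num / den) for wi, qi in zip(w, q)]

x = [0.2, 0.3, 0.5]          # interior point of the simplex
a = [1.0, 1.0, 1.0]          # single equality constraint sum(x) = 1
c = [1.0, 2.0, 3.0]          # gradient of the linear objective c^T x
v = scaled_projected_direction(x, c, a)
```

The direction lies in the null space of the constraint, so a step along it preserves \(\sum _i x_i=1\), and it is a descent direction for \(c^{\top }x\).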

4.3 Proof of Theorem 1

Our proof proceeds in several steps. First, we show that procedure \({{\,\mathrm{\textbf{FAHBA}}\,}}(\mu ,\varepsilon ,L_{0},x^{0})\) produces points in \(\textsf{X}\), and, thus, is indeed an interior-point method. Next, we show that the line-search process of finding appropriate \(L_k\) in each iteration is finite, and estimate the total number of trials in this process. Then we enter the core of our analysis where we prove that if the stopping criterion does not hold at iteration k, i.e., \(\Vert v^{k}\Vert _{x^{k}} \ge \tfrac{\varepsilon }{\theta }\), then the objective f is decreased by a quantity \(O(\varepsilon ^{2})\), and, since the objective is globally lower bounded, we conclude that the method stops in at most \(O(\varepsilon ^{-2})\) iterations. Finally, we show that when the stopping criterion holds, the method has generated a \(2\varepsilon \)-KKT point.

4.3.1 Interior-point property of the iterates

By construction \(x^{0}\in \textsf{X}\). Proceeding inductively, let \(x^{k}\in \textsf{X}\) be the k-th iterate of the algorithm, delivering the search direction \(v^{k}\triangleq v_{\mu }(x^{k})\). By Eq. (29), the step-size \(\alpha _k\) satisfies \(\alpha _{k}\le \frac{1}{2\zeta (x^{k},v^{k})}\), and, hence, \(\alpha _{k}\zeta (x^{k},v^{k})\le 1/2\) for all \(k\ge 0\). Thus, by (6) \(x^{k+1}=x^{k}+\alpha _{k}v^{k} \in \textsf{K}\). Since, by (28), \({\textbf{A}}v^{k} =0\), we have that \(x^{k+1} \in \textsf{L}\). Thus, \(x^{k+1}\in \textsf{K}\cap \textsf{L}=\textsf{X}\). By induction, we conclude that \((x^{k})_{k\ge 0}\subset \textsf{X}\).
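For intuition, the feasibility step can be verified by hand in the special case \(\textsf{K}=\mathbb {R}^n_{++}\) with the log-barrier, where \(\Vert v\Vert _{x}=\Vert \textbf{X}^{-1}v\Vert _{2}\). The computation below is a sketch under the assumption that the local norm \(\Vert v\Vert _{x}\) is used in place of \(\zeta (x,v)\); the general statement is the one given by (6). If \(t\Vert v\Vert _{x}\le 1/2\), then for every coordinate i, using \(|v_i/x_i|\le \Vert \textbf{X}^{-1}v\Vert _{2}\),

\[ x_i+tv_i = x_i\left( 1+t\frac{v_i}{x_i}\right) \ge x_i\left( 1-t\Vert v\Vert _{x}\right) \ge \tfrac{1}{2}x_i>0. \]

Hence each coordinate shrinks by at most a factor of two per step, and the new point remains in the open orthant.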

4.3.2 Bounding the number of backtracking steps

Let us fix iteration k. Since the sequence \(2^{i_k} L_k \) is increasing in \(i_k\) and Assumption 3 holds, the line-search process is guaranteed to stop once \(2^{i_k} L_k \ge \max \{M,L_k\}\), because inequality (30) then holds. Hence, \(2^{i_k} L_k \le 2\max \{M,L_k\}\) must be the case, and, consequently, \(L_{k+1} = 2^{i_k-1} L_k \le \max \{M,L_k\}\), which, by induction, gives \(L_{k+1} \le \bar{M}\triangleq \max \{M,L_0\}\). At the same time, \(\log _{2}\left( \frac{L_{k+1}}{L_{k}}\right) = i_{k}-1\), \(\forall k\ge 0\). Let N(k) denote the number of inner line-search iterations up to the \(k\)-th iteration of \({{\,\mathrm{\textbf{FAHBA}}\,}}(\mu ,\varepsilon ,L_{0},x^{0})\). Then, since iteration j performs \(i_{j}+1\) trials and \(L_{k+1} \le \bar{M}=\max \{M,L_0\}\), we obtain \(N(k)=\sum _{j=0}^{k}(i_{j}+1)=\sum _{j=0}^{k}\left( \log _{2}\left( \frac{L_{j+1}}{L_{j}}\right) +2\right) =2(k+1)+\log _{2}\left( \frac{L_{k+1}}{L_{0}}\right) \le 2(k+1)+\max \{\log _{2}(M/L_{0}),0\}\).

This shows that on average the inner loop ends after two trials.
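The counting argument can be replayed on an idealized line search. The sketch below assumes, purely for illustration, that a trial estimate \(2^{i}L_k\) is accepted exactly when it reaches a fixed constant M (in the actual method the test (30) may succeed earlier), and it records the total number of trials together with the final estimate. The function name `count_trials` is hypothetical.

```python
def count_trials(M, L0, num_iters):
    """Count line-search trials when 2^i * L is accepted iff 2^i * L >= M (idealized)."""
    L, trials = L0, 0
    for _ in range(num_iters):
        i = 0
        while (2 ** i) * L < M:   # idealized acceptance test
            i += 1
        trials += i + 1           # trials i = 0, 1, ..., i_k
        L = (2 ** (i - 1)) * L    # L_{k+1} = 2^{i_k - 1} L_k
    return trials, L

trials_small_L0, L_small = count_trials(M=10.0, L0=1.0, num_iters=20)
trials_large_L0, L_large = count_trials(M=1.0, L0=8.0, num_iters=10)
```

In both runs the total equals \(2\cdot \texttt{num\_iters}+\log _{2}(L_{\text {final}}/L_{0})\): an initial underestimate costs a few extra trials once, an initial overestimate saves trials while the estimate is being halved, and afterwards every iteration uses two trials.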

4.3.3 Per-iteration analysis and a bound for the number of iterations

Let us fix iteration counter k. Since \(L_{k+1} = 2^{i_k-1}L_k\), the step-size (29) reads as \(\alpha _{k}=\min \left\{ \frac{1}{2L_{k+1} + 2 \mu },\frac{1}{2\zeta (x^k,v^k)} \right\} \). Hence, \(\alpha _{k}\zeta (x^{k},v^{k})\le 1/2\), and (27) with the specification \(t=\alpha _k = \texttt{t}_{\mu ,2L_{k+1}}(x^{k})\), \(M=2L_{k+1}\), \(x=x^{k}\), \(v_{\mu }(x^{k}) \triangleq v^k\) gives:

where we used that \(\alpha _k \le \frac{1}{2(L_{k+1}+\mu )}\) in the last inequality. Substituting into (32) the two possible values of the step-size \(\alpha _k\) in (29) gives

Recalling \(L_{k+1} \le \bar{M}\) (see Sect. 4.3.2), we obtain that

Rearranging and summing these inequalities for k from 0 to \(K-1\) gives

where we used that, by the assumptions of Theorem 1, \(x^{0}\) is a \(\theta \)-analytic center defined in (21) and \(\mu = \varepsilon /\theta \), implying that \(h(x^{0}) - h(x^{K}) \le \theta = \varepsilon /\mu \). Thus, up to passing to a subsequence, \(\delta _{k}\rightarrow 0\), and consequently \(\Vert v^{k}\Vert _{x^{k}} \rightarrow 0\) as \(k \rightarrow \infty \). This shows that the stopping criterion in Algorithm 1 is achievable.

Assume now that the stopping criterion \(\Vert v^k\Vert _{x^k} < \frac{\varepsilon }{ \theta }\) does not hold for K iterations of \({{\,\mathrm{\textbf{FAHBA}}\,}}\). Then, for all \(k=0,\ldots ,K-1,\) it holds that \(\delta _{k}\ge \min \left\{ \frac{\varepsilon }{4 \theta },\frac{\varepsilon ^{2}}{4 \theta ^{2}(\bar{M}+\mu )}\right\} \). Together with the parameter coupling \(\mu =\frac{\varepsilon }{\theta }\), it follows from (34) that

Hence, recalling that \(\bar{M}=\max \{M,L_0\}\), we obtain

i.e., the algorithm is guaranteed to stop after no more than this number of iterations. This, combined with the bound for the number of inner steps in Sect. 4.3.2, proves the first statement of Theorem 1.
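The count can be retraced as follows. This is a sketch that assumes (34) takes the form \(\sum _{k=0}^{K-1}\delta _{k}\le f(x^{0})-f_{\min }(\textsf{X})+\varepsilon \), which is what the explanation following (34) suggests; it is not a verbatim restatement of the displayed bounds. If the stopping criterion fails for K iterations, the lower bound on \(\delta _{k}\) gives

\[ K\cdot \min \left\{ \frac{\varepsilon }{4\theta },\frac{\varepsilon ^{2}}{4\theta ^{2}(\bar{M}+\mu )}\right\} \le \sum _{k=0}^{K-1}\delta _{k}\le f(x^{0})-f_{\min }(\textsf{X})+\varepsilon . \]

With \(\mu =\varepsilon /\theta \) we have \(4\theta ^{2}(\bar{M}+\mu )=4\theta ^{2}\bar{M}+4\theta \varepsilon \ge 4\theta \varepsilon \), so the minimum is attained by the second term, and therefore

\[ K\le 4\left( f(x^{0})-f_{\min }(\textsf{X})+\varepsilon \right) \cdot \frac{\theta ^{2}\left( \max \{M,L_{0}\}+\varepsilon /\theta \right) }{\varepsilon ^{2}}. \]

For \(\max \{M,L_{0}\}>0\) the leading term is of order \(\theta ^{2}/\varepsilon ^{2}\), while for \(\max \{M,L_{0}\}=0\) the right-hand side reduces to \(4\theta (f(x^{0})-f_{\min }(\textsf{X})+\varepsilon )/\varepsilon \), in agreement with the orders stated in Remark 5.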

4.3.4 Generating \(\varepsilon \)-KKT point

To finish the proof of Theorem 1, we now show that when Algorithm 1 stops for the first time, it returns a \(2\varepsilon \)-KKT point of (Opt) according to Definition 4.

Let the stopping criterion hold at iteration k. By the optimality condition (25) and the definition of the potential (2), we have

Denoting \(g^{k}\triangleq -\mu \nabla h(x^{k})\), multiplying both equations, and using the stopping criterion \(\Vert v^{k}\Vert _{x^{k}} < \frac{\varepsilon }{\theta }\), we conclude

Whence, setting \(s^{k}\triangleq \nabla f(x^{k})-{\textbf{A}}^{*}y^{k}\in \mathbb {E}^{*}\), the definition of the dual norm yields

where in the last equality we used the fact \(h_{*}\in \mathcal {H}_{\theta }(\textsf{K}^{*})\), so that Eq. (80) delivers the identity \(\nabla ^{2}h_{*}(\frac{1}{\mu }g^{k})=\mu ^{2}\nabla ^{2}h_{*}(g^{k})\). Since we set \(\mu =\frac{\varepsilon }{\theta }\), it follows

Thus, since, by (78), \(g^{k}=-\mu \nabla h(x^{k})\in {{\,\textrm{int}\,}}(\textsf{K}^{*})\), applying Lemma 1, we can now conclude that \(\nabla f(x^{k})-{\textbf{A}}^{*}y^{k}=s^{k}\in {{\,\textrm{int}\,}}(\textsf{K}^{*})\) and therefore (11) holds. By construction, \(x^{k}\in \textsf{K}\) and \({\textbf{A}}x^{k} = b\). Thus, (10) holds as well. Finally, since \((x^{k},s^{k})\in \textsf{K}\times \textsf{K}^{*}\), we see

where the last inequality uses \(\theta \ge 1\). Hence, the complementarity condition (12) holds as well. This finishes the proof of Theorem 1.

4.4 Discussion

4.4.1 Special case of non-negativity constraints

For \(\bar{\textsf{K}}=\bar{\textsf{K}}_{\text {NN}}=\mathbb {R}^n_{+}\), i.e., a particular case covered by our general problem template (Opt), [58] consider a first-order potential reduction method employing the standard log-barrier \(h(x)=-\sum _{i=1}^n \ln (x_i)\) and using a trust-region subproblem for obtaining the search direction. For \(x\in \textsf{K}_{\text {NN}}\), we have \(\nabla h(x)=[-x_{1}^{-1},\ldots ,-x_{n}^{-1}]^{\top }\), \(H(x)={{\,\textrm{diag}\,}}[x_{1}^{-2},\ldots ,x_{n}^{-2}]\triangleq \textbf{X}^{-2}\). This makes the problem in a sense simpler: there is a natural coupling between the variable x and the Hessian H(x), expressed as \([H(x^{k})^{-\frac{1}{2}}s^{k}]_i=x_i^{k}s_i^{k}\), which simplifies the derivation of the complementarity condition. More precisely, combining (35), the information \([H(x^{k})]^{-1/2}\nabla h(x^{k})= - \textbf{1}_{n},\;\theta =n\), and the stopping criterion of Algorithm 1 at iteration k, saying that \(\Vert v^{k}\Vert _{x^{k}}<\frac{\varepsilon }{\theta }\), we see

Therefore, since \(\mu =\varepsilon /n\) and \(s^{k}=\nabla f(x^{k})-{\textbf{A}}^{*}y^{k}\), we have \(H(x^{k})^{-\frac{1}{2}}s^{k}= \textbf{X}^{k}s^{k} > 0\), and, since \(x^{k}\in \mathbb {R}^n_{++}\), it follows that \(s^{k} \in \mathbb {R}^n_{++}\). Additionally, from the triangle inequality, we have