Abstract

The first part of this work established the foundations of a radial duality between nonnegative optimization problems, inspired by the work of Renegar (SIAM J Optim 26(4): 2649-2676, 2016). Here we use our radial duality theory to design and analyze projection-free optimization algorithms that operate by solving a radially dual problem. In particular, we consider radial subgradient, smoothing, and accelerated methods that are capable of solving a range of constrained convex and nonconvex optimization problems and that can scale up more efficiently than their classic counterparts. In this broader context, these algorithms enjoy the same benefits as their predecessors: they avoid Lipschitz continuity assumptions and costly orthogonal projections. Radial duality further allows us to understand the effects and benefits of smoothness and growth conditions on the radial dual and, consequently, on our radial algorithms.

Notes

Instead of using a re-parameterization, one can explicitly include equality constraints in our model. The details of this approach are given in Sect. 2.2.1, where we see that equality constraints are unaffected by the radial dual.

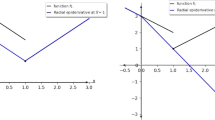

Our calculation of the radial dual of the quadratic objective follows directly from the definition:

$$\begin{aligned} (1-\frac{1}{2}x^T Qx - c^Tx)^\varGamma _+(y)&= \sup \left\{ v>0 \mid v\left( 1-\frac{y^T Qy}{2v^2} - \frac{c^Ty}{v}\right) \le 1 \right\} \\&= \sup \left\{ v>0 \mid v^2-\frac{1}{2}y^T Qy - (c^Ty+1)v\le 0 \right\} \\&=\left( \frac{c^Ty+1 + \sqrt{(c^Ty+1)^2 +2y^TQy}}{2}\right) _+. \end{aligned}$$

Quadratic programming was the original motivating setting for Frank-Wolfe [13].
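To sanity-check the closed form for the radial dual of the quadratic objective, the following minimal Python sketch evaluates it two ways: by the final formula, and by bisection directly on the definition \(\sup \{v>0 \mid v f(y/v)\le 1\}\) with \(f(x)=1-\frac{1}{2}x^TQx-c^Tx\). The function names are hypothetical illustrations, not taken from the linked repository. The bisection assumes the feasible values of v form an interval \((0,v^*]\) that contains very small v, which holds when Q is positive semidefinite and \(y^TQy>0\), since the quadratic in v then has a nonpositive smaller root.

```python
import math


def dot(u, v):
    """Inner product of two equal-length lists."""
    return sum(a * b for a, b in zip(u, v))


def matvec(M, v):
    """Dense matrix-vector product, with M given as a list of rows."""
    return [dot(row, v) for row in M]


def quad_objective(x, Q, c):
    """f(x) = 1 - (1/2) x^T Q x - c^T x."""
    return 1.0 - 0.5 * dot(x, matvec(Q, x)) - dot(c, x)


def radial_dual_closed_form(y, Q, c):
    """((c^T y + 1 + sqrt((c^T y + 1)^2 + 2 y^T Q y)) / 2)_+."""
    s = dot(c, y) + 1.0
    val = (s + math.sqrt(s * s + 2.0 * dot(y, matvec(Q, y)))) / 2.0
    return max(val, 0.0)


def radial_dual_bisection(f, y, iters=200):
    """sup{v > 0 : v * f(y / v) <= 1}, computed by bisection.

    Assumption: the feasible v form an interval (0, v*] that contains
    v = 1e-9 (true for the quadratic above when y^T Q y > 0).
    """
    feasible = lambda v: v * f([yi / v for yi in y]) <= 1.0
    lo, hi = 1e-9, 1.0
    while feasible(hi):          # double hi until it becomes infeasible
        lo, hi = hi, 2.0 * hi
    for _ in range(iters):       # invariant: lo feasible, hi infeasible
        mid = 0.5 * (lo + hi)
        if feasible(mid):
            lo = mid
        else:
            hi = mid
    return lo
```

For example, with \(Q=\mathrm{diag}(2,1)\), \(c=(0.5,-1)\), and \(y=(1,2)\), we have \(c^Ty+1=-0.5\) and \(y^TQy=6\), so the closed form gives \((-0.5+\sqrt{12.25})/2=1.5\), and the bisection converges to the same value.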

The source code is available at github.com/bgrimmer/Radial-Duality-QP-Example.

For example, if either \(\{a_i\}\) spans \(\mathbb {R}^n\) or the regularizer r(x) has bounded level sets.

Note that this hypograph is not actually star-convex, since \((0,0)\not \in \mathrm {hypo\ }f\).

This holds essentially by definition, as \(v\cdot \hat{\iota }_S(y/v)\) is nondecreasing in v if and only if S is star-convex with respect to the origin. It is then simple to check that this function is upper semicontinuous and is vacuously strictly increasing on its effective domain, which is empty: \(\mathrm {dom\ }\hat{\iota }_S = \emptyset \).

References

Bauschke, H.H., Bolte, J., Teboulle, M.: A descent lemma beyond Lipschitz gradient continuity: first-order methods revisited and applications. Math. Oper. Res. 42(2), 330–348 (2017). https://doi.org/10.1287/moor.2016.0817

Beck, A., Teboulle, M.: A fast iterative shrinkage-thresholding algorithm for linear inverse problems. SIAM J. Imag. Sci. 2(1), 183–202 (2009)

Beck, A., Teboulle, M.: Smoothing and first order methods: a unified framework. SIAM J. Optim. 22, 557–580 (2012)

Bertero, M., Boccacci, P., Desiderà, G., Vicidomini, G.: Image deblurring with Poisson data: from cells to galaxies. Inverse Prob. 25(12), 123006 (2009). https://doi.org/10.1088/0266-5611/25/12/123006

Bolte, J., Daniilidis, A., Lewis, A.: The Łojasiewicz inequality for nonsmooth subanalytic functions with applications to subgradient dynamical systems. SIAM J. Optim. 17(4), 1205–1223 (2007). https://doi.org/10.1137/050644641

Bolte, J., Nguyen, T.P., Peypouquet, J., Suter, B.W.: From error bounds to the complexity of first-order descent methods for convex functions. Math. Program. 165(2), 471–507 (2017). https://doi.org/10.1007/s10107-016-1091-6

Burke, J.V., Ferris, M.C.: Weak sharp minima in mathematical programming. SIAM J. Control. Optim. 31(5), 1340–1359 (1993). https://doi.org/10.1137/0331063

Chandrasekaran, K., Dadush, D., Vempala, S.: Thin Partitions: Isoperimetric Inequalities and a Sampling Algorithm for Star Shaped Bodies, pp. 1630–1645. https://doi.org/10.1137/1.9781611973075.133

Clarke, F.H., Ledyaev, Y.S., Stern, R.J., Wolenski, P.R.: Nonsmooth Analysis and Control Theory. Springer-Verlag, Berlin, Heidelberg (1998)

Davis, D., Drusvyatskiy, D.: Stochastic model-based minimization of weakly convex functions. SIAM J. Optim. 29(1), 207–239 (2019). https://doi.org/10.1137/18M1178244

Dorn, W.S.: Duality in quadratic programming. Q. Appl. Math. 18(2), 155–162 (1960)

Fan, J., Li, R.: Variable selection via nonconcave penalized likelihood and its oracle properties. J. Am. Stat. Assoc. 96(456), 1348–1360 (2001). https://doi.org/10.1198/016214501753382273

Frank, M., Wolfe, P.: An algorithm for quadratic programming. Naval Res. Logist. Q. 3(1–2), 95–110 (1956). https://doi.org/10.1002/nav.3800030109

Freund, R.M.: Dual gauge programs, with applications to quadratic programming and the minimum-norm problem. Math. Program. 38, 47–67 (1987). https://doi.org/10.1007/BF02591851

Gao, H.Y., Bruce, A.G.: Wave shrink with firm shrinkage. Stat. Sin. 7(4), 855–874 (1997)

Grimmer, B.: Radial subgradient method. SIAM J. Optim. 28(1), 459–469 (2018). https://doi.org/10.1137/17M1122980

Grimmer, B.: Radial Duality Part I: Foundations. arXiv e-prints arXiv:2104.11179 (2021)

Guminov, S., Gasnikov, A.: Accelerated Methods for \(\alpha \)-Weakly-Quasi-Convex Problems. arXiv e-prints arXiv:1710.00797 (2017)

Guminov, S., Nesterov, Y., Dvurechensky, P., Gasnikov, A.: Accelerated primal-dual gradient descent with linesearch for convex, nonconvex, and nonsmooth optimization problems. Dokl. Math. 99, 125–128 (2019). https://doi.org/10.1134/S1064562419020042

He, N., Harchaoui, Z., Wang, Y., Song, L.: Fast and simple optimization for Poisson likelihood models. CoRR abs/1608.01264 (2016). http://arxiv.org/abs/1608.01264

Hinder, O., Sidford, A., Sohoni, N.: Near-optimal methods for minimizing star-convex functions and beyond. In: J. Abernethy, S. Agarwal (eds.) Proceedings of Thirty Third Conference on Learning Theory, Proceedings of Machine Learning Research, vol. 125, pp. 1894–1938. PMLR (2020). http://proceedings.mlr.press/v125/hinder20a.html

Johnstone, P.R., Moulin, P.: Faster subgradient methods for functions with Hölderian growth. Math. Program. 180(1), 417–450 (2020). https://doi.org/10.1007/s10107-018-01361-0

Klein Haneveld, W.K., van der Vlerk, M.H., Romeijnders, W.: Chance Constraints, pp. 115–138. Springer, Cham (2020). https://doi.org/10.1007/978-3-030-29219-5_5

Kurdyka, K.: On gradients of functions definable in o-minimal structures. Annales de l’institut Fourier 48(3), 769–783 (1998). http://eudml.org/doc/75302

Lacoste-Julien, S., Schmidt, M., Bach, F.R.: A simpler approach to obtaining an O(1/t) convergence rate for the projected stochastic subgradient method. CoRR abs/1212.2002 (2012). http://arxiv.org/abs/1212.2002

Lee, J.C., Valiant, P.: Optimizing star-convex functions. In: 2016 IEEE 57th Annual Symposium on Foundations of Computer Science (FOCS), pp. 603–614 (2016). https://doi.org/10.1109/FOCS.2016.71

Liu, M., Yang, T.: Adaptive accelerated gradient converging method under Hölderian error bound condition. In: Advances in Neural Information Processing Systems, vol. 30 (2017). https://proceedings.neurips.cc/paper/2017/file/2612aa892d962d6f8056b195ca6e550d-Paper.pdf

Łojasiewicz, S.: Une propriété topologique des sous-ensembles analytiques réels. Les équations aux dérivées partielles 117, 87–89 (1963)

Łojasiewicz, S.: Sur la géométrie semi- et sous-analytique. In: Annales de l’institut Fourier, vol. 43, pp. 1575–1595 (1993)

Mukkamala, M.C., Fadili, J., Ochs, P.: Global convergence of model function based Bregman proximal minimization algorithms. arXiv e-prints arXiv:2012.13161 (2020)

Nemirovski, A., Shapiro, A.: Convex approximations of chance constrained programs. SIAM J. Optim. 17(4), 969–996 (2007). https://doi.org/10.1137/050622328

Nesterov, Y.: A method for unconstrained convex minimization problem with the rate of convergence \(O(1/k^2)\). Soviet Math. Doklady 27(2), 372–376 (1983)

Nesterov, Y.: Smooth minimization of non-smooth functions. Math. Program. 103(1), 127–152 (2005). https://doi.org/10.1007/s10107-004-0552-5

Nesterov, Y.: Universal gradient methods for convex optimization problems. Math. Program. 152(1–2), 381–404 (2015). https://doi.org/10.1007/s10107-014-0790-0

Nesterov, Y., Polyak, B.: Cubic regularization of Newton method and its global performance. Math. Program. 108, 177–205 (2006). https://doi.org/10.1007/s10107-006-0706-8

Polyak, B.T.: Minimization of unsmooth functionals. USSR Comput. Math. Math. Phys. 9(3), 14–29 (1969). https://doi.org/10.1016/0041-5553(69)90061-5

Polyak, B.T.: Sharp minima. Institute of Control Sciences Lecture Notes, Moscow, USSR. Presented at the IIASA Workshop on Generalized Lagrangians and Their Applications, IIASA, Laxenburg, Austria (1979)

Renegar, J.: “Efficient” subgradient methods for general convex optimization. SIAM J. Optim. 26(4), 2649–2676 (2016). https://doi.org/10.1137/15M1027371

Renegar, J.: Accelerated first-order methods for hyperbolic programming. Math. Program. 173(1–2), 1–35 (2019). https://doi.org/10.1007/s10107-017-1203-y

Renegar, J., Grimmer, B.: A simple nearly-optimal restart scheme for speeding-up first order methods. To appear in Foundations of Computational Mathematics (2021)

Roulet, V., d’Aspremont, A.: Sharpness, restart, and acceleration. SIAM J. Optim. 30(1), 262–289 (2020). https://doi.org/10.1137/18M1224568

Rousseeuw, P.J.: Least median of squares regression. J. Am. Stat. Assoc. 79(388), 871–880 (1984). https://doi.org/10.1080/01621459.1984.10477105

Rubinov, A., Yagubov, A.: The space of star-shaped sets and its applications in nonsmooth optimization. Math. Program. Stud. 29 (1986). https://doi.org/10.1007/BFb0121146

Stellato, B., Banjac, G., Goulart, P., Bemporad, A., Boyd, S.: OSQP: an operator splitting solver for quadratic programs. Math. Program. Comput. 12(4), 637–672 (2020). https://doi.org/10.1007/s12532-020-00179-2

Wen, F., Chu, L., Liu, P., Qiu, R.C.: A survey on nonconvex regularization-based sparse and low-rank recovery in signal processing, statistics, and machine learning. IEEE Access 6, 69883–69906 (2018). https://doi.org/10.1109/ACCESS.2018.2880454

Yang, T., Lin, Q.: RSG: Beating subgradient method without smoothness and strong convexity. J. Mach. Learn. Res. 19(6), 1–33 (2018). http://jmlr.org/papers/v19/17-016.html

Yu, J., Eriksson, A., Chin, T.J., Suter, D.: An adversarial optimization approach to efficient outlier removal. J. Math. Imag. Vis. 48, 451–466 (2014). https://doi.org/10.1007/s10851-013-0418-7

Yuan, Y., Li, Z., Huang, B.: Robust optimization approximation for joint chance constrained optimization problem. J. Glob. Optim. 67, 805–827 (2017). https://doi.org/10.1007/s10898-016-0438-0

Zhang, C.H.: Nearly unbiased variable selection under minimax concave penalty. Ann. Stat. 38(2), 894–942 (2010). https://doi.org/10.1214/09-AOS729

Acknowledgements

The author thanks Jim Renegar broadly for inspiring this work and concretely for providing feedback on an early draft, and Rob Freund for constructive thoughts that helped focus this work. Additionally, two anonymous referees and the associate editor provided useful feedback that much improved this work’s presentation and clarity.

Additional information

This material is based upon work supported by the National Science Foundation Graduate Research Fellowship under Grant No. DGE-1650441. This work was partially done while the author was visiting the Simons Institute for the Theory of Computing. It was partially supported by the DIMACS/Simons Collaboration on Bridging Continuous and Discrete Optimization through NSF grant #CCF-1740425.

Appendices

Appendix A: LogSumExp gradients and QP optimality certificates

In our quadratic programming example (8) and our generalized setting (37), we consider smoothings of a finite maximum. Given smooth convex functions \(f_i:\mathcal {E}\rightarrow \mathbb {R}\) for \(i=0,\dots ,n\), we considered the smoothing of \(\max _i \{f_i\}\) with parameter \(\eta >0\) given by \( f_\eta (x):= \eta \log \left( \sum _{i=0}^n \exp (f_i(x)/\eta )\right) .\) Its gradient is given by \( \nabla f_\eta (x) = \sum _{i=0}^n \lambda _i \nabla f_i(x)\), where \(\lambda _i = \exp (f_i(x)/\eta ) / \sum _j \exp (f_j(x)/\eta )\). Evaluating this numerically requires mild care, since exponentiating potentially large numbers can overflow. It is numerically stable to instead compute these coefficients via the equivalent formula

$$\lambda _i = \frac{\exp \left( (f_i(x)-f_{\max }(x))/\eta \right) }{\sum _{j=0}^n \exp \left( (f_j(x)-f_{\max }(x))/\eta \right) }, \qquad f_{\max }(x) := \max _{j} f_j(x),$$

whose exponents are all nonpositive.
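A minimal Python sketch of the max-shifted evaluation, assuming the values \(f_i(x)\) and gradients \(\nabla f_i(x)\) have already been computed; the function names `logsumexp_weights` and `smoothed_gradient` are hypothetical. Multiplying the numerator and denominator of \(\lambda _i\) by \(\exp (-f_{\max }(x)/\eta )\) leaves the coefficients unchanged but keeps every exponent nonpositive, and the same shift gives \(f_\eta (x) = f_{\max }(x) + \eta \log \sum _j \exp ((f_j(x)-f_{\max }(x))/\eta )\).

```python
import math


def logsumexp_weights(values, eta):
    """Return (f_eta, lambdas) for f_eta = eta * log(sum_i exp(values[i] / eta)).

    Shifting by the maximum makes every exponent <= 0, so math.exp never
    overflows; terms far below the maximum simply underflow to 0.0.
    """
    if eta <= 0:
        raise ValueError("eta must be positive")
    m = max(values)
    weights = [math.exp((v - m) / eta) for v in values]
    total = sum(weights)  # >= 1, since the maximizing term contributes exp(0) = 1
    f_eta = m + eta * math.log(total)
    lambdas = [w / total for w in weights]
    return f_eta, lambdas


def smoothed_gradient(lambdas, grads):
    """grad f_eta(x) = sum_i lambda_i * grad f_i(x), with each gradient a list."""
    dim = len(grads[0])
    return [sum(lam * g[k] for lam, g in zip(lambdas, grads)) for k in range(dim)]
```

For instance, `logsumexp_weights([1000.0, 1000.0], 1.0)` returns `1000.0 + math.log(2.0)` with weights `[0.5, 0.5]`, whereas evaluating `math.exp(1000.0)` directly raises `OverflowError`.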

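Next, we specialize this formula to the setting of quadratic programming for \(g_\eta \) in (8). Observe that the gradient of the objective component is given by

$$\nabla \left( \frac{c^Ty+1 + \sqrt{(c^Ty+1)^2 +2y^TQy}}{2}\right) _+ = \frac{Qx+c}{1+\frac{1}{2}x^TQx},$$

where \(x=y/\left( \frac{c^Ty+1 + \sqrt{(c^Ty+1)^2 +2y^TQy}}{2}\right) _+\), by using the gradient formula (25). The gradients of the transformed constraints are simply \( \nabla (a_i^T y /b_i) = a_i/b_i \). Then the gradient of the smoothing overall is given by

$$\nabla g_\eta (y) = \lambda _0\frac{Qx+c}{1+\frac{1}{2}x^TQx} + \sum _{i=1}^n \lambda _i \frac{a_i}{b_i},$$

where \(\lambda _0\) is the coefficient of the objective component and \(\lambda _i\) is the coefficient of the ith transformed constraint.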

This gradient can be computed using two matrix multiplications with A: Ay is needed to compute the coefficients \(\lambda _i\), and then \(A^T[\lambda _1/b_1 \dots \lambda _n/b_n]^T\) is needed for the summation above. This gradient formula suggests a reasonable selection of dual multipliers \( v_i = \frac{\lambda _i (1+\frac{1}{2}x^TQx)}{\lambda _0b_i}\), since we then have \(\nabla g_\eta (y)\) proportional to \(Qx+c + A^Tv\).
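The QP gradient and multiplier formulas can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the code from the linked repository: the name `qp_smoothing` is hypothetical, matrices are plain lists of rows, Q is assumed positive semidefinite, every \(b_i>0\), and the objective component is assumed positive at y so that x is well defined. It forms Ay once for the coefficients \(\lambda _i\) (using the max-shifted formula) and \(A^T[\lambda _1/b_1 \dots \lambda _n/b_n]^T\) once for the sum, then returns the suggested multipliers \(v_i\).

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def matvec(M, v):
    return [dot(row, v) for row in M]


def qp_smoothing(y, Q, c, A, b, eta):
    """Value and gradient of g_eta(y) = eta*log(exp(f_0/eta) + sum_i exp(f_i/eta)).

    f_0(y) is the radially dual objective component, f_i(y) = a_i^T y / b_i.
    Assumptions: Q is PSD, each b_i > 0, and f_0(y) > 0.
    """
    Qy = matvec(Q, y)
    s = dot(c, y) + 1.0
    f0 = (s + math.sqrt(s * s + 2.0 * dot(y, Qy))) / 2.0
    x = [yi / f0 for yi in y]
    Qx = [q / f0 for q in Qy]
    scale = 1.0 + 0.5 * dot(x, Qx)                      # 1 + x^T Q x / 2
    grad_f0 = [(qx + ci) / scale for qx, ci in zip(Qx, c)]

    Ay = matvec(A, y)                                   # first product with A
    vals = [f0] + [ay / bi for ay, bi in zip(Ay, b)]
    m = max(vals)                                       # max-shift for stability
    w = [math.exp((val - m) / eta) for val in vals]
    total = sum(w)
    lam = [wi / total for wi in w]

    coeff = [li / bi for li, bi in zip(lam[1:], b)]
    # Second product with A: A^T [lam_1/b_1, ..., lam_n/b_n]^T.
    AT_coeff = [sum(A[i][j] * coeff[i] for i in range(len(A)))
                for j in range(len(y))]
    grad = [lam[0] * g + t for g, t in zip(grad_f0, AT_coeff)]

    # Suggested dual multipliers v_i = lam_i (1 + x^T Q x / 2) / (lam_0 b_i).
    mult = [li * scale / (lam[0] * bi) for li, bi in zip(lam[1:], b)]
    value = m + eta * math.log(total)
    return value, grad, x, mult, lam
```

A central finite-difference check of `value` against `grad`, together with a check that `grad` scaled by \((1+\frac{1}{2}x^TQx)/\lambda _0\) equals \(Qx+c+A^Tv\), is a cheap way to validate such an implementation.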

Appendix B: Calculation of nonconvex subgradient method guarantee (41)

Here we derive (41) from the convergence theory of [10, Theorem 3.1], which assumes that the function g being minimized is uniformly M-Lipschitz and \(\rho \)-weakly convex (meaning \(g+\frac{\rho }{2}\Vert \cdot \Vert ^2\) is convex). Equation [10, (3.4)] ensures that the subgradient method \(y_{k+1} = y_k - \alpha \zeta _k\) with \(\zeta _k\in \partial _P g(y_k)\) has some \(y_k\) with

where \(g_{1/\bar{\rho }}\) is the Moreau envelope of g (and we have used that \(g_{1/\bar{\rho }}(y_0) \le g(y_0)\)). Since the method will be run for T steps, setting \(\bar{\rho }= 2\rho \) and using the constant stepsize \(\alpha = \sqrt{\frac{g(y_0)-\inf g}{\rho M^2(T+1)}}\), this bound becomes

Following the discussion in [10, p. 210], this implies that \(y_k\) has a nearby point y that is nearly stationary for g. In particular, \(\Vert y-y_k\Vert \le \frac{1}{2\rho }\Vert \nabla g_{1/2\rho }(y_k)\Vert \) and \(\textrm{dist}(0,\partial _P g(y))\le \Vert \nabla g_{1/2\rho }(y_k)\Vert \). Combining this with the above bound, we conclude
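The subgradient iteration analyzed in this appendix is short enough to sketch directly. The Python below (hypothetical names `constant_stepsize` and `subgradient_method`) runs \(y_{k+1}=y_k-\alpha \zeta _k\) for T steps with the constant stepsize \(\alpha =\sqrt{(g(y_0)-\inf g)/(\rho M^2(T+1))}\). It assumes a subgradient oracle for g and a known value (or lower bound) for \(\inf g\); it does not compute the Moreau envelope or verify (41). For illustration only, the test problem is \(g(y)=\Vert y\Vert _1\), which is convex (hence \(\rho \)-weakly convex for every \(\rho >0\)) and \(\sqrt{n}\)-Lipschitz.

```python
import math


def constant_stepsize(gap, rho, M, T):
    """alpha = sqrt((g(y_0) - inf g) / (rho * M^2 * (T + 1)))."""
    return math.sqrt(gap / (rho * M * M * (T + 1)))


def subgradient_method(g, subgrad, y0, T, alpha):
    """Run y_{k+1} = y_k - alpha * zeta_k for T steps (iterates y_0, ..., y_T).

    Returns the iterate with the smallest g value and that value; tracking
    the best value is an illustrative choice, not part of the guarantee.
    """
    y = list(y0)
    best_y, best_val = list(y), g(y)
    for _ in range(T):
        zeta = subgrad(y)
        y = [yi - alpha * zi for yi, zi in zip(y, zeta)]
        val = g(y)
        if val < best_val:
            best_y, best_val = list(y), val
    return best_y, best_val


def l1_norm(y):
    return sum(abs(yi) for yi in y)


def l1_subgrad(y):
    # sign(y_i), using 0 as the subgradient component at y_i = 0
    return [(yi > 0) - (yi < 0) for yi in y]
```

With \(y_0=(3,-4)\), \(\inf g = 0\), \(\rho =1\), \(M=\sqrt{2}\), and \(T=9999\), the stepsize is \(\sqrt{7/20000}\approx 0.0187\), and each coordinate eventually oscillates within \(\alpha \) of zero.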

About this article

Cite this article

Grimmer, B. Radial duality part II: applications and algorithms. Math. Program. 205, 69–105 (2024). https://doi.org/10.1007/s10107-023-01974-0

Keywords

- Optimization

- Projection-free methods

- Convex

- Nonconvex

- Nonsmooth

- First-order methods

- Projective transformations