Abstract

The softmax policy gradient (PG) method, which performs gradient ascent under softmax policy parameterization, is arguably one of the de facto implementations of policy optimization in modern reinforcement learning. For \(\gamma \)-discounted infinite-horizon tabular Markov decision processes (MDPs), remarkable progress has recently been achieved towards establishing global convergence of softmax PG methods in finding a near-optimal policy. However, prior results fall short of delineating clear dependencies of convergence rates on salient parameters such as the cardinality of the state space \({\mathcal {S}}\) and the effective horizon \(\frac{1}{1-\gamma }\), both of which could be excessively large. In this paper, we deliver a pessimistic message regarding the iteration complexity of softmax PG methods, despite assuming access to exact gradient computation. Specifically, we demonstrate that the softmax PG method with stepsize \(\eta \) can take \(\frac{1}{\eta }\, |{\mathcal {S}}|^{2^{\Omega \big (\frac{1}{1-\gamma }\big )}}\) iterations to converge, even in the presence of a benign policy initialization and an initial state distribution amenable to exploration (so that the distribution mismatch coefficient is not exceedingly large). This is accomplished by characterizing the algorithmic dynamics over a carefully-constructed MDP with at most three actions per state. Our exponential lower bound hints at the necessity of carefully adjusting update rules or enforcing proper regularization in accelerating PG methods.


1 Introduction

Despite their remarkable empirical popularity in modern reinforcement learning [35, 41], theoretical underpinnings of policy gradient (PG) methods and their variants [20, 23, 37, 43, 47] remain severely obscured. Due to the nonconcave nature of value function maximization induced by complicated dynamics of the environments, it is in general highly challenging to pinpoint the computational efficacy of PG methods in finding a near-optimal policy. Motivated by their practical importance, a recent strand of work sought to make progress towards demystifying the effectiveness of policy gradient type methods (e.g., [1, 7,8,9, 11, 18, 22, 24, 31, 32, 34, 40, 53,54,55, 57, 59]), focusing primarily on canonical settings such as tabular Markov decision processes (MDPs) for discrete-state problems and linear quadratic regulators for continuous-state problems.

The current paper studies PG methods with softmax parameterization (commonly referred to as softmax policy gradient methods), which are among the de facto implementations of PG methods in practice. An intriguing theoretical result was recently obtained by Agarwal et al. [1], who established asymptotic global convergence of softmax PG methods for infinite-horizon \(\gamma \)-discounted tabular MDPs. Subsequently, Mei et al. [34] strengthened the theory by demonstrating that softmax PG methods are capable of finding an \(\varepsilon \)-optimal policy with an iteration complexity proportional to \(1/\varepsilon \) (see Table 1 for the precise form). While these results take an important step towards understanding the effectiveness of softmax PG methods, caution needs to be exercised before declaring fast convergence of the algorithms. In particular, the iteration complexity derived by Mei et al. [34] falls short of delineating clear dependencies on salient parameters of the MDP, such as the size of the state space \({\mathcal {S}}\) and the effective horizon \(1/(1-\gamma )\). These parameters are, more often than not, enormous in contemporary RL applications, and might play a pivotal role in determining the scalability of softmax PG methods.

Additionally, it is worth noting that the existing literature has largely concentrated on developing algorithm-dependent upper bounds on the iteration complexities. However, caution is needed when directly comparing computational upper bounds for distinct algorithms: a better computational upper bound for one algorithm does not necessarily imply that this algorithm outperforms the others, unless the tightness of all upper bounds being compared can be certified. As a more concrete example, it is of practical interest to benchmark softmax PG methods against natural policy gradient (NPG) methods with softmax parameterization, the latter of which is a variant of policy optimization underlying several mainstream RL algorithms such as proximal policy optimization (PPO) [39] and trust region policy optimization (TRPO) [38]. While it is tempting to claim superiority of NPG methods over softmax PG methods, given the appealing convergence properties of NPG methods [1] (see Table 1), existing theory falls short of reaching such a conclusion, due to the absence of convergence lower bounds for softmax PG methods in prior literature.

The above considerations thus lead to a natural question that we aim to address in the present paper:

Can we develop a lower bound on the iteration complexity of softmax PG methods that reflects explicit dependency on salient parameters of the MDP of interest?

1.1 Main result

As an attempt to address the question posed above, our investigation delivers a somewhat surprising message that can be described in words as follows:

Softmax PG methods can take (super-)exponential time to converge, even in the presence of a benign initialization and an initial state distribution amenable to exploration.

Our finding, which is concerned with a discounted infinite-horizon tabular MDP, is formally stated in the following theorem. Here and throughout, \(|{\mathcal {S}}|\) denotes the size of the state space \({\mathcal {S}}\), \(0<\gamma <1\) stands for the discount factor, \(V^{\star }\) indicates the optimal value function, \(\eta >0\) is the learning rate or stepsize, whereas \(V^{(t)}\) represents the value function estimate of softmax PG methods in the t-th iteration. All immediate rewards are assumed to fall within \([-1,1]\). See Sect. 2 for formal descriptions.

Theorem 1

Assume that the softmax PG method adopts a uniform initial state distribution, a uniform policy initialization, and has access to exact gradient computation. Suppose that \(0<\eta < (1-\gamma )^2/5\). Then there exist universal constants \(c_1, c_2, c_3>0\) such that: for any \(0.96< \gamma < 1\) and \( |{\mathcal {S}}| \ge c_3 (1-\gamma )^{-6}\), one can find a \(\gamma \)-discounted MDP with state space \({\mathcal {S}}\) that takes the softmax PG method at least

to reach

Remark 1

(Action space) The MDP we construct contains at most three actions for each state.

Remark 2

(Stepsize range) Our lower bound operates under the assumption that \(\eta < (1-\gamma )^2/5\). In comparison, prior convergence guarantees for PG-type methods with softmax parameterization (e.g., Agarwal et al. [1, Theorem 5.1] and Mei et al. [34, Theorem 6]) required \(\eta <(1-\gamma )^3/8\), a range of stepsizes fully covered by our theorem. In fact, prior works could only guarantee monotonicity of softmax PG methods (in terms of the value function) within the range \(\eta <(1-\gamma )^2/5\) (see Agarwal et al. [1, Lemma C.2]).
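The containment claimed in Remark 2 can be checked in one line. The short derivation below is our own illustration, not reproduced from the cited works. It compares the two stepsize ceilings and, in the regime \(\gamma > 0.96\) of Theorem 1, quantifies how narrow the previously analyzed range is:

```latex
\begin{aligned}
\frac{(1-\gamma)^3}{8}
  &= \frac{(1-\gamma)^2}{5}\cdot \frac{5(1-\gamma)}{8}
   < \frac{(1-\gamma)^2}{5},
  &&\text{since } 0 < \tfrac{5(1-\gamma)}{8} < 1 \text{ for } 0<\gamma<1,\\
\gamma > 0.96 \;&\Longrightarrow\; \frac{5(1-\gamma)}{8} < \frac{5\times 0.04}{8} = 0.025 .
\end{aligned}
```

In words, for \(\gamma > 0.96\) the stepsizes treated in [1, 34] occupy less than 2.5% of the interval \((0, (1-\gamma)^2/5)\).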

Remark 3

While we can also provide explicit numbers for the constants \(c_1, c_2, c_3>0\), these numbers are not informative, and hence we omit them here to streamline the presentation.

For simplicity of presentation, Theorem 1 is stated for the long-effective-horizon regime where \(\gamma > 0.96\); it continues to hold when \(\gamma > c_0\) for some smaller constant \(c_0>0\). Our result is obtained by exhibiting a hard MDP instance (a properly augmented chain-like MDP) on which softmax PG methods converge extremely slowly even when exact gradients are available. Several remarks and implications of our result are in order.

Comparisons with prior results. Table 1 provides an extensive comparison of the iteration complexities—including both upper and lower bounds—of PG and NPG methods under softmax parameterization. As suggested by our result, the iteration complexity \(O({\mathcal {C}}^2_{\textsf{spg}}({\mathcal {M}}) \frac{1}{\varepsilon })\) derived in Mei et al. [34] (see Table 1) might not be as rosy as it seems for problems with large state space and long effective horizon; in fact, the crucial quantity \({\mathcal {C}}_{\textsf{spg}}({\mathcal {M}})\) therein could scale in a prohibitive manner with both \(|{\mathcal {S}}|\) and \(\frac{1}{1-\gamma }\). Mei et al. [34] also developed a lower bound on the iteration complexity of softmax PG methods, which falls short of capturing the influence of the state space dimension and might become smaller than 1 unless \(\varepsilon \) is very small (e.g., \(\varepsilon \lesssim (1-\gamma )^3\)) for problems with long effective horizons. In addition, Mei et al. [33] provided some interesting evidence that a poorly-initialized softmax PG algorithm can get stuck at suboptimal policies for a single-state MDP (i.e., the bandit problem). This result, however, fell short of providing a complete runtime analysis and did not look into the influence of a large state space. By contrast, our theory reveals that softmax PG methods can take exponential time to reach even a moderate accuracy level.

Slow convergence even with benign distribution mismatch. Existing computational complexities for policy gradient type methods (e.g., [1, 34]) typically scale polynomially in the so-called distribution mismatch coefficient (Footnote 1) \(\big \Vert \frac{d^{\pi }_{\rho }}{\mu } \big \Vert _{\infty } \), where \(d^{\pi }_{\rho }\) stands for a certain discounted state visitation distribution (see (13) in Sect. 2), and \(\mu \) denotes the distribution over initial states. It is thus natural to wonder whether the exponential lower bound in Theorem 1 is a consequence of an exceedingly large distribution mismatch coefficient. This, however, is not the case. In fact, our theory chooses \(\mu \) to be a benign uniform distribution, so that \(\Vert \frac{d^{\pi }_{\rho }}{\mu }\Vert _{\infty } \le \Vert \frac{1}{\mu }\Vert _{\infty } \le |{\mathcal {S}}|\) (the first inequality holds because \(d^{\pi }_{\rho }(s)\le 1\) for every s), which scales at most linearly in \(|{\mathcal {S}}|\).
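The bound on the mismatch coefficient relies only on the fact that \(d^{\pi }_{\rho }\) is itself a probability distribution. The following minimal NumPy sketch makes this concrete under a few assumptions of ours. A randomly generated state-to-state kernel `P_pi` stands in for the kernel induced by some policy (it is not the MDP of Sect. 3). A point-mass \(\rho \) is chosen to be deliberately concentrated. The function names are hypothetical. The sketch computes \(d^{\pi }_{\rho }\) via the identity \(\sum _k \gamma ^k \rho ^\top P_\pi ^k = \rho ^\top (I-\gamma P_\pi )^{-1}\) and then evaluates the coefficient against a uniform \(\mu \).

```python
import numpy as np


def discounted_visitation(P_pi, rho, gamma):
    """d_rho^pi(s) = (1 - gamma) * sum_k gamma^k Pr(s^k = s | s^0 ~ rho).

    Uses sum_k gamma^k rho^T P_pi^k = rho^T (I - gamma * P_pi)^{-1},
    solved as a linear system with the transposed matrix.
    """
    num_states = len(rho)
    return (1 - gamma) * np.linalg.solve((np.eye(num_states) - gamma * P_pi).T, rho)


def mismatch_coefficient(d, mu):
    """||d / mu||_inf = max_s d(s) / mu(s)."""
    return float(np.max(d / mu))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    S, gamma = 50, 0.99
    P_pi = rng.random((S, S))
    P_pi /= P_pi.sum(axis=1, keepdims=True)  # hypothetical policy-induced kernel
    rho = np.zeros(S)
    rho[0] = 1.0                             # deliberately concentrated rho
    mu = np.full(S, 1.0 / S)                 # uniform mu, as in Theorem 1
    d = discounted_visitation(P_pi, rho, gamma)
    print(mismatch_coefficient(d, mu), "<=", S)
```

However concentrated \(\rho \) is, the coefficient cannot exceed \(|{\mathcal {S}}|\) once \(\mu \) is uniform.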

Benchmarking with softmax NPG methods. Our algorithm-specific lower bound suggests that softmax PG methods—in their vanilla form—might take a prohibitively long time to converge when the state space and effective horizon are large. This is in stark contrast to the convergence rate of NPG type methods, whose iteration complexity is dimension-free and scales only polynomially with the effective horizon [1, 11]. Consequently, our results shed light on the practical superiority of NPG-based algorithms such as PPO [39] and TRPO [38].

Crux of our design. As we shall elucidate momentarily in Sect. 3, our exponential lower bound is obtained through analyzing the trajectory of softmax PG methods on a carefully-designed MDP instance with no more than 3 actions per state, when a uniform initialization scheme and a uniform initial state distribution are adopted. Our construction underscores the critical challenge of credit assignment [42] in RL, compounded by the presence of delayed rewards, long horizons, and intertwined interactions across states. While it is difficult to elucidate the source of the exponential lower bound without presenting our MDP construction, we take a moment to point out some critical properties that underlie our design. To be specific, we seek to design a chain-like MDP containing \(H=O\big ( \frac{1}{1-\gamma } \big )\) key primary states \(\{1,\ldots ,H\}\) (each coupled with many auxiliary states), for which the softmax PG method satisfies the following properties.

-

For the first two key primary states (states 1 and 2), we have

$$\begin{aligned} \min \big \{ \textsf{convergence}\text {-}\textsf{time}\text {(state 1)}, \, \textsf{convergence}\text {-}\textsf{time}\text {(state 2)} \big \} \ge \frac{|{\mathcal {S}}|}{\eta } . \end{aligned}$$(3)

-

(A blowing-up phenomenon) For each key primary state \(3\le s\le H=O\big (\frac{1}{1-\gamma }\big )\), one has

$$\begin{aligned} \textsf{convergence}\text {-}\textsf{time}\text {(state }s\,) \gtrsim \big ( \textsf{convergence}\text {-}\textsf{time}\text {(state }s-2\,) \big )^{1.5}, \qquad 3\le s\le H. \end{aligned}$$(4)

Here, \(\textsf{convergence}\text {-}\textsf{time}\text {(state }s\,)\) informally represents the time taken for the value function of state s to get sufficiently close to its optimal value. The blowing-up phenomenon described above is precisely the source of our (super-)exponential lower bound.
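To see how (3) and (4) compound, one can take both relations with equality and set the hidden constant in (4) to 1. This is a simplification of ours for illustration, not the precise statement proved later. Writing \(T_s\) (a hypothetical shorthand) for the convergence time of state s, the recursion gives \(\log T_s = 1.5^{\lfloor (s-1)/2\rfloor }\log (|{\mathcal {S}}|/\eta )\). Consequently, \(\log T_H\) grows like \((\sqrt{1.5})^{H}=2^{\Theta (H)}\), which for \(H\asymp \frac{1}{1-\gamma }\) is doubly exponential in the effective horizon. The sketch works with \(\log _{10} T_s\) because \(T_s\) itself quickly overflows floating-point numbers.

```python
import math


def log10_convergence_times(num_states, eta, H, exponent=1.5):
    """Unroll (3)-(4) with equality and the hidden constant set to 1 (illustration only).

    Start from log T_1 = log T_2 = log(|S| / eta), as in (3), and apply
    log T_s = exponent * log T_{s-2} for 3 <= s <= H, as in (4).
    Logarithms are used because T_s itself overflows floating point quickly.
    """
    base = math.log10(num_states / eta)
    logs = {1: base, 2: base}
    for s in range(3, H + 1):
        logs[s] = exponent * logs[s - 2]
    return logs


if __name__ == "__main__":
    gamma = 0.97
    eta = (1 - gamma) ** 2 / 10   # within the range eta < (1 - gamma)^2 / 5
    H = 20                        # hypothetical; the construction takes H = c_h / (1 - gamma)
    logs = log10_convergence_times(num_states=10**6, eta=eta, H=H)
    for s in (1, 5, 10, 15, 20):
        print(f"state {s:2d}: log10 T_s = {logs[s]:.3g}")
```

Already at \(H=20\), the exponent has been multiplied by \(1.5^{9}\approx 38\) relative to states 1 and 2.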

1.2 Other related works

Non-asymptotic analysis of (natural) policy gradient methods. Moving beyond tabular MDPs, finite-time convergence guarantees of PG / NPG methods and their variants have recently been studied for control problems (e.g., [18, 19, 44, 58]), regularized MDPs (e.g., [11, 24, 54]), constrained MDPs (e.g., [15, 50]), robust MDPs (e.g., [29, 60]), MDPs with function approximation (e.g., [1, 2, 10, 25, 30, 45]), Markov games (e.g., [13, 14, 46, 49, 61]), and their use in actor-critic methods (e.g., [3, 12, 48, 51]).

Other policy parameterizations. In addition to softmax parameterization, several other policy parameterization schemes have also been investigated in the context of policy optimization and reinforcement learning at large. For example, [1, 24, 54, 56] studied the convergence of projected PG methods and policy mirror descent with direct parameterization, [4] introduced the so-called mallow parameterization, while [33] studied the escort parameterization. Some of these parameterizations were proposed in response to the ineffectiveness of softmax parameterization observed in practice.

Lower bounds. Establishing information-theoretic or algorithm-specific lower bounds on the statistical and computational complexities of RL algorithms (often achieved by constructing hard MDP instances) plays an instrumental role in understanding the bottlenecks of RL algorithms. To give a few examples, Azar et al. [5], Domingues et al. [16], Li et al. [28], Yan et al. [52] developed information-theoretic lower bounds on the sample complexity of RL under multiple sampling mechanisms (e.g., sampling with a generative model, online RL, and offline/batch RL), Li et al. [27] established an algorithm-dependent lower bound on the sample complexity of Q-learning, whereas Khamaru et al. [21] and Pananjady and Wainwright [36] developed instance-dependent lower bounds for policy evaluation. Additionally, Agarwal et al. [1] constructed a chain-like MDP whose value function under direct parameterization might contain very flat saddle points under a certain initial state distribution, highlighting the role of distribution mismatch coefficients in policy optimization. Finally, exponential-time convergence of gradient descent has been observed in other nonconvex problems as well (e.g., [17]) despite its asymptotic convergence [26], although the context and analysis therein are drastically different from what happens in RL settings.

1.3 Paper organization

The rest of this paper is organized as follows. In Sect. 2, we introduce the basics of Markov decision processes, and describe the softmax policy gradient method along with several key functions/quantities. Section 3 constructs a chain-like MDP, which is the hard MDP instance underlying our computational lower bound for PG methods. In Sect. 4, we outline the proof of Theorem 1, starting with the proof of a weaker version before establishing Theorem 1. The proofs of all technical lemmas are deferred to the appendix. We conclude the paper in Sect. 5 with a summary of our findings.

2 Background

In this section, we introduce the basics of MDPs, and formally describe the softmax PG method. Here and throughout, we denote by \(\Delta ({\mathcal {X}})\) the probability simplex over a set \({\mathcal {X}}\), and let \(|{\mathcal {X}}|\) represent the cardinality of the set \({\mathcal {X}}\). Given two probability distributions p and q over \({\mathcal {S}}\), we adopt the notation \(\big \Vert \frac{p}{q} \big \Vert _{\infty } = \max _{s\in {\mathcal {S}}} \frac{p(s)}{q(s)}\) and \(\big \Vert \frac{1}{q} \big \Vert _{\infty } = \max _{s\in {\mathcal {S}}} \frac{1}{q(s)}\). Throughout this paper, the notation \(f({\mathcal {M}})\gtrsim g({\mathcal {M}})\) (resp. \(f({\mathcal {M}})\lesssim g({\mathcal {M}})\)) means there exists some universal constant \(c>0\) independent of the parameters of the MDP \({\mathcal {M}}\) such that \(f({\mathcal {M}})\ge c g({\mathcal {M}})\) (resp. \(f({\mathcal {M}})\le c g({\mathcal {M}})\)), while the notation \(f({\mathcal {M}})\asymp g({\mathcal {M}})\) means that \(f({\mathcal {M}})\gtrsim g({\mathcal {M}})\) and \(f({\mathcal {M}})\lesssim g({\mathcal {M}})\) hold simultaneously.

Infinite-horizon discounted MDP. Let \({\mathcal {M}} = ({\mathcal {S}}, \{{\mathcal {A}}_s \}_{s\in {\mathcal {S}}}, P, r,\gamma )\) be an infinite-horizon discounted MDP. Here, \({\mathcal {S}}\) represents the state space, \({\mathcal {A}}_s\) denotes the action space associated with state \(s\in {\mathcal {S}}\), \(\gamma \in (0,1)\) indicates the discount factor, P is the probability transition kernel (namely, for each state-action pair (s, a), \(P(\cdot \,|\,{s,a})\in \Delta ({\mathcal {S}})\) denotes the transition probability from state s to the next state when action a is taken), and r stands for a deterministic reward function (namely, r(s, a) is the immediate reward received in state s upon executing action a). Throughout this paper, we assume normalized rewards such that \(-1 \le r(s,a)\le 1\) for any state-action pair (s, a). In addition, we concentrate on the scenario where \(\gamma \) is quite close to 1, and often refer to \(\frac{1}{1-\gamma }\) as the effective horizon of the MDP.

Policy, value function, Q-function and advantage function. The agent operates by adopting a policy \(\pi \), which is a (randomized) action selection rule based solely on the current state of the MDP. More precisely, for any state \(s\in {\mathcal {S}}\), we use \(\pi (\cdot \,|\,s)\in \Delta ({\mathcal {A}}_s)\) to specify a probability distribution, with \(\pi (a \,|\,s)\) denoting the probability of executing action \(a\in {\mathcal {A}}_s\) when in state s. The value function \(V^{\pi }: {\mathcal {S}}\rightarrow {\mathbb {R}}\) of a policy \(\pi \), which indicates the expected discounted cumulative reward induced by policy \(\pi \), is defined as

$$\begin{aligned} V^{\pi }(s) {:=}\, {\mathbb {E}}\left[ \sum _{k=0}^{\infty }\gamma ^{k}\, r(s^{k},a^{k}) \,\Big |\, s^{0}=s \right] , \qquad s\in {\mathcal {S}}. \end{aligned}$$

Here, the expectation is taken over the randomness of the MDP trajectory \(\{(s^k,a^k)\}_{k\ge 0}\) and the policy, where \(s^0=s\) and, for all \(k\ge 0\), \(a^k \sim \pi (\cdot \,|\,s^k)\) follows the policy \(\pi \) and \(s^{k+1}\sim P(\cdot \,|\,s^k, a^k)\) is generated by the transition kernel P. Analogously, we shall also define the value function \(V^{\pi }(\mu )\) of a policy \(\pi \) when the initial state is drawn from a distribution \(\mu \) over \({\mathcal {S}}\), namely,

$$\begin{aligned} V^{\pi }(\mu ) {:=}\, {\mathbb {E}}_{s\sim \mu }\big [ V^{\pi }(s) \big ] . \end{aligned}$$

Additionally, the Q-function \(Q^{\pi }\) of a policy \(\pi \) (namely, the expected discounted cumulative reward under policy \(\pi \) given an initial state-action pair \((s^0,a^0)=(s,a)\)) is formally defined by

$$\begin{aligned} Q^{\pi }(s,a) {:=}\, {\mathbb {E}}\left[ \sum _{k=0}^{\infty }\gamma ^{k}\, r(s^{k},a^{k}) \,\Big |\, s^{0}=s, a^{0}=a \right] , \end{aligned}$$

where the expectation is again over the randomness of the MDP trajectory \(\{(s^k,a^k)\}_{k\ge 1}\) when policy \(\pi \) is adopted. In addition, the advantage function of policy \(\pi \) is defined as

$$\begin{aligned} A^{\pi }(s,a) {:=}\, Q^{\pi }(s,a) - V^{\pi }(s) \end{aligned}$$

for every state-action pair (s, a).

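A major goal is to find a policy that optimizes the value function and the Q-function. Throughout this paper, we denote respectively by \(V^{\star }\) and \(Q^{\star }\) the optimal value function and optimal Q-function, namely,

$$\begin{aligned} V^{\star }(s) {:=}\, \max _{\pi } V^{\pi }(s), \qquad Q^{\star }(s,a) {:=}\, \max _{\pi } Q^{\pi }(s,a), \qquad \forall (s,a). \end{aligned}$$

Softmax parameterization and policy gradient methods. The family of policy optimization algorithms attempts to identify optimal policies by resorting to optimization-based algorithms. To facilitate differentiable optimization, a widely adopted scheme is to parameterize policies using softmax mappings. Specifically, for any real-valued parameter \(\theta =[\theta (s,a)]_{s\in {\mathcal {S}}, a\in {\mathcal {A}}_s}\), the corresponding softmax policy \(\pi _\theta {:=}\, \textsf{softmax}(\theta )\) is defined such that

$$\begin{aligned} \pi _\theta (a\,|\,s) = \frac{\exp \big (\theta (s,a)\big )}{\sum _{a'\in {\mathcal {A}}_s}\exp \big (\theta (s,a')\big )}, \qquad \forall s\in {\mathcal {S}}, \; a\in {\mathcal {A}}_s. \end{aligned}$$(10)

With the aim of maximizing the value function under softmax parameterization, namely,

$$\begin{aligned} \max _{\theta }\; V^{\pi _\theta }(\mu ), \end{aligned}$$

the softmax PG method proceeds by adopting gradient ascent update rules w.r.t. \(\theta \):

$$\begin{aligned} \theta ^{(t+1)} = \theta ^{(t)} + \eta \, \nabla _{\theta } V^{\pi _{\theta }}(\mu )\big |_{\theta =\theta ^{(t)}}, \qquad t=0,1,2,\ldots \end{aligned}$$

Here and throughout, we let \({V}^{(t)} = V^{\pi ^{(t)}}\) and \({Q}^{(t)} = Q^{\pi ^{(t)}}\) abbreviate respectively the value function and Q-function of the policy iterate \(\pi ^{(t)}{:=}\pi _{\theta ^{(t)}}\) in the t-th iteration, and \(\eta >0\) denotes the stepsize or learning rate. Interestingly, the gradient \( \nabla _{\theta } {V}^{\pi _{\theta }}\) under softmax parameterization (10) admits a closed-form expression [1], that is, for any state-action pair (s, a),

$$\begin{aligned} \frac{\partial V^{\pi _\theta }(\mu )}{\partial \theta (s,a)} = \frac{1}{1-\gamma }\, d_{\mu }^{\pi _\theta }(s)\, \pi _\theta (a\,|\,s)\, A^{\pi _\theta }(s,a). \end{aligned}$$

Here, \(d_{\mu }^{\pi _\theta }(s)\) represents the discounted state visitation distribution of the policy \(\pi _\theta \) given the initial state \(s^0\sim \mu \), where for a generic policy \(\pi \),

$$\begin{aligned} d_{\mu }^{\pi }(s) {:=}\, (1-\gamma )\, {\mathbb {E}}\left[ \sum _{k=0}^{\infty }\gamma ^{k}\, \mathbb {1}\{s^{k}=s\} \right] , \end{aligned}$$(13)

with the expectation taken over the randomness of the MDP trajectory \(\{(s^k,a^k)\}_{k\ge 0}\) under the policy \(\pi \) and the initial state distribution \(\mu \). In words, \(d_{\mu }^{\pi }(s)\) measures how frequently state s will be visited, in a properly discounted fashion, when starting from the initial distribution \(\mu \). Throughout this paper, we shall denote \({A}^{(t)} {:=}\, A^{\pi ^{(t)}}\) and \(d_{\mu }^{(t)}(s) {:=}\,d_{\mu }^{\pi ^{(t)}}(s)\) for notational simplicity.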
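The following minimal NumPy sketch makes the update rule and the closed-form gradient concrete by running exact-gradient softmax PG on a generic tabular MDP. For simplicity, it assumes that every state shares the same action set, unlike the construction in Sect. 3, where the action sets vary with the state. It stores the kernel as an array with `P[s, a, s']` \(=P(s'\,|\,s,a)\) and uses hypothetical helper names of our own. Values come from solving the Bellman linear system. The visitation distribution \(d_\mu ^{\pi }\) comes from the identity \(\sum _k\gamma ^k\mu ^\top P_\pi ^k=\mu ^\top (I-\gamma P_\pi )^{-1}\). The gradient uses the closed-form expression above.

```python
import numpy as np


def softmax_policy(theta):
    """Row-wise softmax: pi_theta(a|s) = exp(theta(s,a)) / sum_{a'} exp(theta(s,a'))."""
    z = theta - theta.max(axis=1, keepdims=True)  # shift by the row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def evaluate(pi, P, r, gamma):
    """Exact V^pi and Q^pi; P[s, a, s'] = P(s'|s, a) and r[s, a] = r(s, a)."""
    num_states = r.shape[0]
    P_pi = np.einsum("sa,sat->st", pi, P)  # state-to-state kernel induced by pi
    r_pi = (pi * r).sum(axis=1)            # expected immediate reward under pi
    V = np.linalg.solve(np.eye(num_states) - gamma * P_pi, r_pi)  # Bellman equation
    Q = r + gamma * P @ V                  # Q(s,a) = r(s,a) + gamma * E[V(s')]
    return V, Q, P_pi


def visitation(P_pi, mu, gamma):
    """d_mu^pi(s) = (1 - gamma) * sum_k gamma^k Pr(s^k = s | s^0 ~ mu)."""
    num_states = len(mu)
    return (1 - gamma) * np.linalg.solve((np.eye(num_states) - gamma * P_pi).T, mu)


def value_at(theta, P, r, gamma, mu):
    """Objective V^{pi_theta}(mu)."""
    V, _, _ = evaluate(softmax_policy(theta), P, r, gamma)
    return float(mu @ V)


def pg_gradient(theta, P, r, gamma, mu):
    """Closed form: dV(mu)/dtheta(s,a) = d_mu(s) * pi(a|s) * A(s,a) / (1 - gamma)."""
    pi = softmax_policy(theta)
    V, Q, P_pi = evaluate(pi, P, r, gamma)
    d = visitation(P_pi, mu, gamma)
    advantage = Q - V[:, None]
    return d[:, None] * pi * advantage / (1 - gamma)


def softmax_pg(P, r, gamma, mu, eta, num_iters):
    """Exact-gradient ascent from the uniform initialization theta^(0) = 0."""
    theta = np.zeros_like(r, dtype=float)
    history = [value_at(theta, P, r, gamma, mu)]
    for _ in range(num_iters):
        theta = theta + eta * pg_gradient(theta, P, r, gamma, mu)
        history.append(value_at(theta, P, r, gamma, mu))
    return theta, history


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    S, A, gamma = 5, 3, 0.9
    P = rng.random((S, A, S))
    P /= P.sum(axis=2, keepdims=True)        # normalize into transition probabilities
    r = rng.uniform(-1.0, 1.0, size=(S, A))  # rewards in [-1, 1]
    mu = np.full(S, 1.0 / S)                 # uniform initial state distribution
    _, history = softmax_pg(P, r, gamma, mu, eta=(1 - gamma) ** 2 / 10, num_iters=500)
    print(f"V(mu): {history[0]:.4f} -> {history[-1]:.4f}")
```

A central finite difference of `value_at` in a single entry of `theta` should match the corresponding entry of `pg_gradient`, which gives a cheap sanity check on the closed form. With a sufficiently small stepsize, such as those covered by the prior guarantees discussed in Remark 2, the recorded values are expected to be nondecreasing.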

3 Construction of a hard MDP

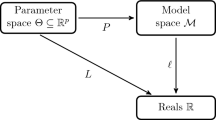

This section constructs a discounted infinite-horizon MDP \({\mathcal {M}}=({\mathcal {S}}, \{{\mathcal {A}}_s\}_{s\in {\mathcal {S}}}, P, r, \gamma )\), as depicted in Fig. 1, which forms the basis of the exponential lower bound claimed in this paper. In addition to the basic notation already introduced in Sect. 2, we remark on the action space as follows.

-

For each state \(s\in {\mathcal {S}}\), we have \({\mathcal {A}}_s \subseteq \{a_0,a_1, a_2\}\). For convenience of presentation, we allow the action space to vary with \(s\in {\mathcal {S}}\), but it always comprises no more than 3 actions.

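State space partitioning. The states of our MDP exhibit a certain group structure. To be precise, we partition the state space \({\mathcal {S}}\) into a few disjoint subsets

$$\begin{aligned} {\mathcal {S}} = \{0\} \cup {\mathcal {S}}_1 \cup {\mathcal {S}}_2 \cup \{3,\ldots ,H\} \cup \{{\overline{1}},\ldots ,{\overline{H}}\} \cup \widehat{{\mathcal {S}}}_1 \cup \cdots \cup \widehat{{\mathcal {S}}}_H \cup \widehat{{\mathcal {S}}}_{{\overline{1}}} \cup \cdots \cup \widehat{{\mathcal {S}}}_{{\overline{H}}}, \end{aligned}$$(14)

which comprise: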

-

State 0 (an absorbing state);

-

Two key “buffer” state subsets \({\mathcal {S}}_1\) and \({\mathcal {S}}_2\);

-

A set of \(H-2\) key primary states \({\mathcal {S}}_{\textsf{primary}}{:=}\{3, \ldots , H \}\) (Footnote 2);

-

A set of H key adjoint states \({\mathcal {S}}_{\textsf{adj}}{:=}\{ {\overline{1}}, {\overline{2}}, \ldots , {\overline{H}} \}\);

-

2H “booster” state subsets \(\widehat{{\mathcal {S}}}_1, \ldots , {\widehat{{\mathcal {S}}}}_H\), \({\widehat{{\mathcal {S}}}}_{{\overline{1}}}, \ldots , {\widehat{{\mathcal {S}}}}_{{\overline{H}}}\).

Remark 4

Our subsequent analysis largely concentrates on the subsets \({\mathcal {S}}_1\), \({\mathcal {S}}_2\), \({\mathcal {S}}_{\textsf{primary}}\) and \({\mathcal {S}}_{\textsf{adj}}\). In particular, each state \(s\in \{3,\ldots , H\}\) is paired with what we call an adjoint state \({\overline{s}}\), whose role will be elucidated shortly. In addition, state \({\overline{1}}\) (resp. state \({\overline{2}}\)) can be viewed as the adjoint state of the set \({\mathcal {S}}_1\) (resp. \({\mathcal {S}}_2\)). The sets \({\mathcal {S}}_{\textsf{primary}}\) and \({\mathcal {S}}_{\textsf{adj}}\) comprise a total number of \(2H-2\) states; in comparison, \({\mathcal {S}}_1\) and \({\mathcal {S}}_2\) are chosen to be much larger and contain a number of replicated states, a crucial design component that helps ensure the property (3) under a uniform initial state distribution. As we shall make clear momentarily, the “booster” state sets are introduced mainly to help boost the discounted visitation distribution of the states in \({\mathcal {S}}_1\), \({\mathcal {S}}_2\), \({\mathcal {S}}_{\textsf{primary}}\), and \({\mathcal {S}}_{\textsf{adj}}\) at the initial stage.

We shall also specify below the size of these state subsets as well as some key parameters, where the choices of the quantities \(c_{\textrm{h}},c_{\textrm{b},1},c_{\textrm{b},2},c_{\textrm{m}}\asymp 1\) will be made clear in the analysis (cf. (35)).

-

H is taken to be on the same order as the “effective horizon” of this discounted MDP, namely,

$$\begin{aligned} H = \frac{c_{\textrm{h}}}{1-\gamma }. \end{aligned}$$(15)

-

The two buffer state subsets \({\mathcal {S}}_1\) and \({\mathcal {S}}_2\) have size

$$\begin{aligned} |{\mathcal {S}}_1| = c_{\textrm{b},1}(1-\gamma )|{\mathcal {S}}| \qquad \text {and}\qquad |{\mathcal {S}}_2| = c_{\textrm{b},2}(1-\gamma )|{\mathcal {S}}|. \end{aligned}$$(16)

-

All 2H booster state sets have the same size, namely,

$$\begin{aligned} |{\widehat{{\mathcal {S}}}}_1|= \cdots = |{\widehat{{\mathcal {S}}}}_H| = |{\widehat{{\mathcal {S}}}}_{{\overline{1}}}|= \cdots =| {\widehat{{\mathcal {S}}}}_{{\overline{H}}}| = c_{\textrm{m}}(1-\gamma )|{\mathcal {S}}|. \end{aligned}$$(17)
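Tallying the sizes in (15)-(17) shows how the subsets share the state budget. Because \(H=c_{\textrm{h}}/(1-\gamma )\), the \(2H\) booster sets jointly contain about \(2c_{\textrm{h}}c_{\textrm{m}}|{\mathcal {S}}|\) states regardless of \(\gamma \). Each buffer set, by contrast, holds a \(\Theta (1-\gamma )\) fraction of \(|{\mathcal {S}}|\). The sketch below performs this bookkeeping. The constants passed in are hypothetical placeholders (the actual choices are fixed in (35)), and rounding to integers is our own assumption.

```python
def subset_sizes(num_states, gamma, c_h, c_b1, c_b2, c_m):
    """Tally the subset sizes prescribed by (15)-(17).

    The constants are placeholders; rounding to the nearest integer is an
    assumption made only for this illustration.
    """
    one_minus_gamma = 1.0 - gamma
    H = round(c_h / one_minus_gamma)                         # (15)
    buffer_1 = round(c_b1 * one_minus_gamma * num_states)    # (16), |S_1|
    buffer_2 = round(c_b2 * one_minus_gamma * num_states)    # (16), |S_2|
    booster = round(c_m * one_minus_gamma * num_states)      # (17), size of each booster set
    used = (1                       # absorbing state 0
            + buffer_1 + buffer_2   # buffer sets S_1 and S_2
            + (H - 2)               # primary states 3, ..., H
            + H                     # adjoint states 1bar, ..., Hbar
            + 2 * H * booster)      # the 2H booster sets
    return {"H": H, "S1": buffer_1, "S2": buffer_2,
            "booster_each": booster, "used": used}


if __name__ == "__main__":
    sizes = subset_sizes(num_states=10**6, gamma=0.99,
                         c_h=0.1, c_b1=1.0, c_b2=1.0, c_m=1.0)  # hypothetical constants
    print(sizes)
    print("fraction of |S| in boosters:", 2 * sizes["H"] * sizes["booster_each"] / 10**6)
```

With these placeholder constants, the boosters alone take up \(2c_{\textrm{h}}c_{\textrm{m}} = 20\%\) of the state space.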

Probability transition kernel and reward function. We now describe the probability transition kernel and the reward function for each state subset. Before continuing, we find it helpful to isolate a few key parameters that will be used frequently in our construction:

where \(s\in \{1,2,\ldots ,H\}\), and \(c_{\textrm{p}}>0\) is some small constant that shall be specified later (see (35)). To facilitate understanding, we shall often treat \(\tau _s\) and \(r_s\) (\(s\le H\)) as quantities that are all fairly close to 0.5 (which would happen if \(\gamma \) is close to 1 and \(H=\frac{c_{\textrm{h}}}{1-\gamma }\) for \(c_{\textrm{h}}\) sufficiently small).

We are now positioned to make precise descriptions of both P and r as follows.

-

Absorbing state 0: singleton action space \(\{a_0\}\),

$$\begin{aligned} P(0 \,|\,0, a_0)=1, \qquad \qquad r(0,a_0)=0. \end{aligned}$$(19)

This is an absorbing state, namely, the MDP will stay in this state permanently once entered. As we shall see below, taking action \(a_0\) in any state leads to state 0 immediately.

-

Key primary states \(s\in \{ 3,\ldots , H \}\): action space \(\{a_0,a_1, a_2\}\),

(20a)-(20d)

where p, \(\tau _s\) and \(r_s\) are all defined in (18).

-

Key adjoint states \({\overline{s}} \in \{ {\overline{3}},\ldots , {\overline{H}} \}\): action space \(\{a_0, a_1\}\),

(21a)-(21b)

where \(\tau _s\) is defined in (18a).

-

Key buffer state subsets \({\mathcal {S}}_1\) and \({\mathcal {S}}_2\): action space \(\{a_0,a_1\}\),

(22a)-(22d)

Given the homogeneity of the states in \({\mathcal {S}}_1\) (resp. \({\mathcal {S}}_2\)), we shall often use the shorthand notation \(P(\cdot \,|\,1, a)\) (resp. \(P(\cdot \,|\,2, a)\)) to abbreviate \(P(\cdot \,|\,s_{1}, a)\) (resp. \(P(\cdot \,|\,s_{2}, a)\)) for any \(s_1\in {\mathcal {S}}_1\) (resp. \(s_2\in {\mathcal {S}}_2\)) for the sake of convenience.

-

Other adjoint states \({\overline{1}}\) and \({\overline{2}}\): action space \(\{a_0,a_1\}\),

(23a)-(23b)

where \(\tau _1\) and \(\tau _2\) are defined in (18a).

-

Booster state subsets \({\widehat{{\mathcal {S}}}}_1\), \(\ldots \), \({\widehat{{\mathcal {S}}}}_H\), \({\widehat{{\mathcal {S}}}}_{{\overline{1}}}\), \(\ldots \), \({\widehat{{\mathcal {S}}}}_{{\overline{H}}}\): singleton action space \(\{a_1\}\),

(24a)-(24b)

for any \(s\in \{3,\ldots , H\}\),

$$\begin{aligned} \forall s'\in \widehat{{\mathcal {S}}}_{s}:\qquad&P(s\,|\,s',a_{1})=1, \end{aligned}$$(24c)

and for any \({\overline{s}} \in \{{\overline{1}},\ldots ,{\overline{H}}\}\),

$$\begin{aligned} \forall s'\in \widehat{{\mathcal {S}}}_{{\overline{s}}}:\qquad&P({\overline{s}}\,|\,s',a_{1})=1. \end{aligned}$$(24d)

The rewards in all these cases are set to 0 (in fact, they do not even appear in the analysis). In addition, any transition probability that has not been specified is equal to zero.

Convenient notation for buffer state subsets \({\mathcal {S}}_1\) and \({\mathcal {S}}_2\). By construction, it is easily seen that the states in \({\mathcal {S}}_1\) (resp. \({\mathcal {S}}_2\)) have identical characteristics; in fact, all states in \({\mathcal {S}}_1\) (resp. \({\mathcal {S}}_2\)) share exactly the same value functions and Q-functions throughout the execution of the softmax PG method. As a result, we introduce the following convenient notation whenever it is clear from the context:

Optimal values and optimal actions of the constructed MDP. Before concluding this section, we find it convenient to determine the optimal value functions and the optimal actions of the constructed MDP, which would be particularly instrumental when presenting our analysis. This is summarized in the lemma below, whose proof can be found in Appendix A.3.

Lemma 1

Suppose that \(\gamma ^{2H}\ge 2/3\) and \(H\ge 2\).

Then one has

and the optimal policy is to take action \(a_1\) in all non-absorbing states. In addition, for any policy \(\pi \) and any state-action pair (s, a), one has \(Q^{\pi }(s,a) \ge - \gamma ^2\).

Lemma 1 tells us that for this MDP, the optimal policy for all non-absorbing states takes a simple form: sticking to action \(a_{1}\). In particular, when \(\gamma \approx 1\) and \(\gamma ^H\approx 1\), Lemma 1 reveals that the optimal values of all non-absorbing major states are fairly close to 1, namely,

Additionally, the above lemma directly implies that the Q-function (and hence the value function) is always bounded below by \(-1\), a property that will be used several times in our analysis.

4 Analysis: proof outline

In this section, we present the main steps for establishing our computational lower bound in Theorem 1. Before doing so, we find it convenient to start by presenting and proving a weaker version as follows.

Theorem 2

Consider the MDP \({\mathcal {M}}\) constructed in Sect. 3 (and Fig. 1). Assume that the softmax PG method adopts a uniform initial state distribution, a uniform policy initialization, and has access to exact gradient computation. Suppose that \(0<\eta <(1-\gamma )^2/5\). There exist universal constants \(c_1, c_2, c_3>0\) such that: for any \(0.96< \gamma < 1\) and \( |{\mathcal {S}}| \ge c_3 (1-\gamma )^{-6}\), one has

provided that the iteration number satisfies

In what follows, we shall concentrate on establishing Theorem 2, on the basis of the MDP instance constructed in Sect. 3. Once this theorem is established, we shall revisit Theorem 1 (towards the end of Sect. 4.3) and describe how the proof of Theorem 2 can be adapted to prove Theorem 1.

4.1 Preparation: crossing times and choice of constants

Crossing times. To investigate how long it takes for softmax PG methods to converge to the optimal policy, we shall pay particular attention to a family of key quantities: the number of iterations needed for \(V^{(t)}(s)\) to surpass a prescribed threshold \(\tau \) (\(\tau <1\)) before it reaches its optimal value. To be precise, for each \(s \in \{3,\ldots ,H\} \cup \{{\overline{1}},\ldots ,{\overline{H}}\}\) and any given threshold \(\tau >0\), we introduce the following crossing time:

When it comes to the buffer state subsets \({\mathcal {S}}_1\) and \({\mathcal {S}}_2\), we define the crossing times analogously as follows

where we recall the notation \(V^{(t)}(1)\) and \(V^{(t)}(2)\) introduced in (25).
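Crossing times can be read off directly from a recorded trajectory of values. The helper below is a hypothetical sketch. It assumes the convention "first iteration at which the value reaches the threshold", i.e., \(V^{(t)}(s)\ge \tau \); the precise definition is the one given in (30). Two properties are worth noting. A higher threshold can never be crossed earlier than a lower one. In addition, a threshold left uncrossed within a finite window certifies only that the crossing time exceeds the window length, which is exactly the form a lower bound takes.

```python
from typing import Optional, Sequence


def crossing_time(values: Sequence[float], tau: float) -> Optional[int]:
    """First iteration t with values[t] >= tau, a discrete analogue of t_s(tau).

    Returns None if the threshold is not reached within the recorded iterations;
    in that case the true crossing time exceeds len(values) - 1.
    """
    for t, v in enumerate(values):
        if v >= tau:
            return t
    return None


if __name__ == "__main__":
    trajectory = [0.10, 0.12, 0.31, 0.48, 0.55, 0.71]  # hypothetical V^(t)(s) values
    print(crossing_time(trajectory, 0.5))  # prints 4
    print(crossing_time(trajectory, 0.9))  # prints None
```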

Monotonicity of crossing times. Recalling the definition (30) of the crossing time \(t_s(\cdot )\), we know that

with \(\tau _{s}\) defined in expression (18a). We immediately make note of the following crucial monotonicity property that will be justified later in Remark 8:

It will also be shown in Lemma 4 that \(t_1({\tau }_1)\le t_2({\tau }_2)\) when the constants \(c_{\textrm{b},1}, c_{\textrm{b},2}\) and \(c_{\textrm{m}}\) are properly chosen.

Remark 5

As we shall see later (cf. Part (iii) of Lemma 8), one has \(t_{{\overline{s}}}(\gamma \tau _s) = t_{s}(\tau _s)\) for any \({\overline{s}}\in \{{\overline{1}},\ldots ,{\overline{H}}\}\), which combined with (33) leads to

Choice of parameters. We assume the following choice of parameters throughout the proof:

In the sequel, we outline the key steps that underlie the proof of our main results, with the proofs of the key lemmas postponed to the appendix.

4.2 A high-level picture

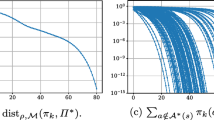

Although our proof is highly technical, it is helpful to point out a few key features that paint a high-level picture of the slow convergence of the algorithm. Recall that \(a_1\) is the optimal action in the constructed MDP. The chain-like structure of our MDP induces a sequential dependency: the dynamics of any primary state \(s\in \{3,\ldots ,H\}\) depend heavily on what happens in the states preceding s, particularly states \(s-1\) and \(s-2\) as well as the associated adjoint states. By carefully designing the immediate rewards, we can ensure that for any \(s\in \{3,\ldots ,H\}\), the iterate \(\pi ^{(t)}(a_1\,|\,s)\) corresponding to the optimal action \(a_1\) keeps decreasing until \(\pi ^{(t)}(a_1\,|\,s-2)\) gets reasonably close to 1. As illustrated in Fig. 2, this feature implies that the time taken for \(\pi ^{(t)}(a_1\,|\,s)\) to get close to 1 grows (at least) geometrically as s increases, as will be formalized in (46).

An illustration of the dynamics of \(\{\theta ^{(t)}(s,a)\}_{a\in \{a_0,a_1,a_2\}}\) vs. iteration number t. The blue, red and yellow lines represent the dynamics of \(\theta ^{(t)}(s,a_0)\), \(\theta ^{(t)}(s,a_1)\) and \(\theta ^{(t)}(s,a_2)\), respectively. Here, we use solid lines to emphasize the time ranges for which the dynamics of \(\theta ^{(t)}(s,a)\) play the most crucial roles in our lower bound analysis

Furthermore, we summarize below the typical dynamics of the iterates \(\theta ^{(t)}(s,a)\) before they converge, which should help the reader follow the proof. We start with the key buffer state sets \({\mathcal {S}}_1\) and \({\mathcal {S}}_2\), which are the easiest to describe.

Next, the dynamics of \(\theta ^{(t)}(s,a)\) for the key primary states \(3\le s \le H\) are much more complicated, and rely heavily on the status of several prior states \(s-1\), \(s-2\) and \(\overline{s-1}\). This motivates us to divide the dynamics into several stages based on the crossing times of these prior states, which are illustrated in Fig. 3 as well. Here, we remind the reader of the definition of \(\tau _s\) in (18).

4.3 Proof outline

We are now in a position to outline the main steps of the proof of Theorem 1 and Theorem 2, with details deferred to the appendix. In the following, Steps 1-6 are devoted to analyzing the dynamics of softmax PG methods when applied to the constructed MDP \({\mathcal {M}}\), which in turn establish Theorem 2. Step 7 describes how these can be easily adapted to prove Theorem 1, by slightly modifying the MDP construction.

4.4 Step 1: bounding the discounted state visitation distributions

In view of the PG update rule (12), the size of the policy gradient relies heavily on the discounted state visitation distribution \(d_{\mu }^{(t)}(s)\). In light of this observation, this step aims to quantify the magnitudes of \(d_{\mu }^{(t)}(s)\), for which we start with several universal lower bounds regardless of the policy in use.
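For intuition about the quantity that drives the gradient size, the sketch below computes \(d_{\mu}^{\pi}(s) = (1-\gamma)\sum_{k\ge 0}\gamma^k \Pr(s_k = s \mid s_0\sim\mu, \pi)\) for a small tabular Markov chain by iterating the fixed-point equation \(d = (1-\gamma)\mu + \gamma P_\pi^\top d\). The three-state chain is a made-up example, not the MDP of Sect. 3, and `discounted_visitation` is a hypothetical helper. It also illustrates the elementary policy-independent bound \(d_{\mu}^{\pi}(s)\ge(1-\gamma)\mu(s)\), which is much cruder than the bounds of Lemma 2.

```python
from typing import List


def discounted_visitation(P: List[List[float]], mu: List[float], gamma: float,
                          tol: float = 1e-12, max_iter: int = 100_000) -> List[float]:
    """Solve d = (1 - gamma) * mu + gamma * P^T d by fixed-point iteration.

    P[s][s2] is the probability of moving from s to s2 under a fixed policy, so
    d[s] = (1 - gamma) * sum_k gamma^k * Pr(s_k = s | s_0 ~ mu).
    """
    n = len(mu)
    d = list(mu)
    for _ in range(max_iter):
        new_d = [(1 - gamma) * mu[s2] + gamma * sum(d[s] * P[s][s2] for s in range(n))
                 for s2 in range(n)]
        if max(abs(a - b) for a, b in zip(new_d, d)) < tol:
            return new_d
        d = new_d
    return d


# Hypothetical 3-state chain: state 0 is absorbing, states 1 and 2 drift towards it.
P = [[1.0, 0.0, 0.0],
     [0.5, 0.5, 0.0],
     [0.0, 0.5, 0.5]]
mu = [1 / 3, 1 / 3, 1 / 3]
gamma = 0.9
d = discounted_visitation(P, mu, gamma)
print([round(x, 4) for x in d])
```

In this toy chain most of the mass piles up on the absorbing state 0; the hard instance exploits a similar effect, with state 0 and the booster states absorbing most of the visitation mass.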

Lemma 2

For any policy \(\pi \), the following lower bounds hold true:

As it turns out, the above lower bounds are order-wise tight estimates prior to certain crucial crossing times. This is formalized in the following lemma, where we recall the definition of \(\tau _s\) in (18).

Lemma 3

Under the assumption (35), the following results hold:

Remark 6

As will be demonstrated in Lemma 4, one has \(t_1({\tau }_1)\le t_2({\tau }_2)\) for properly chosen constants \(c_{\textrm{b},1}, c_{\textrm{b},2}\) and \(c_{\textrm{m}}\). Therefore, we shall bear in mind that the properties (37d) and (37e) hold for any \(t \le t_1({\tau }_1)\).

The proofs of these two lemmas are deferred to Appendix B. The sets of booster states, whose cardinality is controlled by \(c_{\textrm{m}}\), play an important role in sandwiching the initial distribution of the states in \({\mathcal {S}}_1\), \({\mathcal {S}}_2\), \({\mathcal {S}}_{\textsf{primary}}\), and \({\mathcal {S}}_{\textsf{adj}}\). Combining these bounds, we uncover the following properties, which hold before \(V^{(t)}(s)\) exceeds \({\tau }_s\):

-

For any key primary state \(s\in \{3,\ldots ,H\} \) or any adjoint state \(s\in \{{\overline{1}},\ldots , {\overline{H}}\} \), one has

$$\begin{aligned} d_{\mu }^{(t)}(s) \asymp (1-\gamma )^2. \end{aligned}$$

-

For any state s contained in the buffer state subsets \({\mathcal {S}}_1\) and \({\mathcal {S}}_2\), we have

$$\begin{aligned} d_{\mu }^{(t)}(1) \asymp \frac{(1-\gamma )^2}{|{\mathcal {S}}_1|} \qquad \text {and} \qquad d_{\mu }^{(t)}(2) \asymp \frac{(1-\gamma )^2}{|{\mathcal {S}}_2|}, \end{aligned}$$

where we recall the sizes of \({\mathcal {S}}_1\) and \({\mathcal {S}}_2\) specified in (16). In other words, the discounted state visitation probability of any buffer state is substantially smaller than that of any key primary state \(3,\ldots ,H\) or adjoint state. In principle, the size of each buffer state subset plays a crucial role in determining the associated \(d_{\mu }^{(t)}(s)\): the larger the buffer state subset, the smaller the resulting state visitation probability.

-

Further, the aggregate discounted state visitation probability of the above states is no more than the order of

$$\begin{aligned} (1-\gamma )^2 \cdot H \asymp 1-\gamma = o(1), \end{aligned}$$

which is vanishingly small. In fact, state 0 and the booster states account for the dominant fraction of state visitations at the initial stage of the algorithm.
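As a quick check of the last display, the booster-state count used in Step 7, \(2Hc_{\textrm{m}}(1-\gamma)|{\mathcal {S}}| = 2c_{\textrm{m}}c_{\textrm{h}}|{\mathcal {S}}|\), amounts to \(H = c_{\textrm{h}}/(1-\gamma)\). Summing the per-state estimates above over the at most 2H key primary and adjoint states and over the two buffer subsets then gives

$$\begin{aligned} \sum _{s\in \{3,\ldots ,H\}\cup \{{\overline{1}},\ldots ,{\overline{H}}\}} d_{\mu }^{(t)}(s) + \sum _{s\in {\mathcal {S}}_1\cup {\mathcal {S}}_2} d_{\mu }^{(t)}(s) &\lesssim 2H(1-\gamma )^2 + |{\mathcal {S}}_1|\cdot \frac{(1-\gamma )^2}{|{\mathcal {S}}_1|} + |{\mathcal {S}}_2|\cdot \frac{(1-\gamma )^2}{|{\mathcal {S}}_2|} \\ &= 2c_{\textrm{h}}(1-\gamma ) + 2(1-\gamma )^2 \lesssim 1-\gamma , \end{aligned}$$

which tends to zero as \(\gamma \rightarrow 1\).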

4.5 Step 2: characterizing the crossing times for the first few states (\({\mathcal {S}}_1\), \({\mathcal {S}}_2\), and \({\overline{1}}\))

Armed with the bounds on \(d_{\mu }^{(t)}\) developed in Step 1, we can now study the crossing times for the key states. In this step, we focus on the crossing times for the buffer states \({\mathcal {S}}_1, {\mathcal {S}}_2\) as well as the first adjoint state \({\overline{1}}\), which form a crucial starting point towards understanding the behavior of the subsequent states. Specifically, the following lemma develops lower and upper bounds on these quantities; its proof can be found in Appendix C.

Lemma 4

Suppose that (35) holds. If \(|{\mathcal {S}}| \ge 1/(1-\gamma )^2\), then the crossing times satisfy

In addition, if \(|{\mathcal {S}}| \ge \frac{320\gamma ^3}{c_{\textrm{m}}(1-\gamma )^{2}}\), then one has

For properly chosen constants \(c_{\textrm{b},1}\), \(c_{\textrm{b},2}\) and \(c_{\textrm{m}}\), Lemma 4 delivers the following important messages:

-

The crossing times of these first few states are already fairly large; for instance,

$$\begin{aligned} t_1(\tau _1) \,\asymp \, t_2(\tau _2) \,\asymp \, \frac{ |{\mathcal {S}}| }{ \eta }, \end{aligned}\tag{39}$$

which scale linearly with the size of the state space. As we shall see momentarily, while \(t_1(\tau _1)\) and \(t_2(\tau _2)\) are only polynomially large, they play a pivotal role in triggering the rapid explosion of the crossing times of the subsequent states (namely, the states \(\{3,\ldots ,H\}\)).

-

We can guarantee an ordering in which the crossing time of state 2 is at least as large as those of both state 1 and state \({\overline{1}}\). This property is also helpful for the subsequent analysis.
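The \(|{\mathcal {S}}|/\eta\) scaling in (39) reflects the fact that a buffer state receives gradient weight proportional to its small visitation probability. As a toy illustration only (a single state with three actions and made-up rewards, not the buffer states of Sect. 3), the sketch below runs exact softmax gradient ascent where the per-step gradient is multiplied by `step`, a stand-in for \(\eta\) times the state's visitation weight. Since the update depends on these quantities only through their product, halving `step` should roughly double the time needed to cross a fixed value threshold. The helper `bandit_crossing_time` is hypothetical.

```python
import math
from typing import List


def softmax(theta: List[float]) -> List[float]:
    m = max(theta)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in theta]
    z = sum(exps)
    return [e / z for e in exps]


def bandit_crossing_time(rewards: List[float], step: float, tau: float,
                         max_iter: int = 1_000_000) -> int:
    """Iterations of softmax gradient ascent on a one-state problem until value >= tau.

    `step` plays the role of eta times the (small) visitation weight of the state.
    """
    theta = [0.0] * len(rewards)  # uniform initial policy
    for t in range(max_iter):
        pi = softmax(theta)
        value = sum(p * r for p, r in zip(pi, rewards))
        if value >= tau:
            return t
        # Exact gradient of sum_a pi(a) r(a) w.r.t. theta(a) is pi(a) * (r(a) - value).
        theta = [th + step * p * (r - value) for th, p, r in zip(theta, pi, rewards)]
    raise RuntimeError("threshold not reached")


rewards = [0.5, 1.0, 0.0]  # hypothetical rewards; index 1 plays the role of a_1
t_fast = bandit_crossing_time(rewards, step=0.02, tau=0.9)
t_slow = bandit_crossing_time(rewards, step=0.01, tau=0.9)
print(t_fast, t_slow, t_slow / t_fast)
```

In the hard instance the effective step of a buffer state is of order \(\eta/|{\mathcal {S}}|\), which is one way to read the linear dependence in (39).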

4.6 Step 3: understanding the dynamics of \(\theta ^{(t)}(s, a)\) before \(t_{s-2}({\tau }_{s-2})\)

With the above characterization of the crossing times for the first few states, we are ready to investigate the dynamics of \(\theta ^{(t)}(s, a)\) (\(3 \le s \le H\)) at the initial stage, that is, the duration prior to the threshold \(t_{s-2}({\tau }_{s-2})\). Our finding for this stage is summarized in the following lemma, with the proof deferred to Appendix D.

Lemma 5

Suppose that (35) holds. For any \(3 \le s \le H\) and any \(0 \le t \le t_{s-2}({\tau }_{s-2})\), one has

Lemma 5 makes clear the behavior of \(\theta ^{(t)}(s, a)\) during this initial stage:

-

The iterate \(\theta ^{(t)}(s, a_1)\) associated with the optimal action \(a_1\) keeps dropping at a rate of \(\log \big (O(\frac{1}{\sqrt{t}})\big )\), and remains the smallest of the three iterates (since \(\theta ^{(t)}(s, a_1) \le 0 \le \theta ^{(t)}(s, a_2) \le \theta ^{(t)}(s, a_0) \)).

-

The other two iterates \(\theta ^{(t)}(s, a_0)\) and \(\theta ^{(t)}(s, a_2)\) stay non-negative throughout this stage, with \(a_0\) being perceived as more favorable than the other two actions.

-

In fact, a closer inspection of the proof in Appendix D reveals that \(\theta ^{(t)}(s, a_2)\) keeps increasing throughout this stage, albeit at a slower rate than \(\theta ^{(t)}(s, a_0)\) (see (134) and the gradient expression (12b)).

In particular, around the threshold \(t_{s-2}({\tau }_{s-2})\), the iterate \(\theta ^{(t)}(s, a_1)\) becomes as small as

In fact, an inspection of the proof of this lemma reveals that

This means that \(\pi ^{(t)}(a_1 \,|\,s)\) becomes smaller for a larger \(t_{s-2}({\tau }_{s-2})\), making it more difficult for it to climb back towards 1 afterwards.

4.7 Step 4: understanding the dynamics of \(\theta ^{(t)}(s, a)\) between \(t_{s-2}({\tau }_{s-2})\) and \(t_{\overline{s-1}}(\tau _{s})\)

Next, we investigate, for any \(3\le s\le H\), the behavior of the iterates during an “intermediate” stage, namely, the duration when the iteration count t is between \(t_{s-2}({\tau }_{s-2})\) and \(t_{\overline{s-1}}(\tau _{s})\). This is summarized in the following lemma, whose proof can be found in Appendix E.

Lemma 6

Consider any \(3 \le s \le H\). Assume that (35) holds. Suppose that

Then one has

In particular, when \(s = 3\), the results in (43) hold true without requiring the assumption (42).

Remark 7

Condition (42a) only requires \(t_{s-1}({\tau }_{s-1})\) to be slightly larger than \(t_{\overline{s-2}}(\tau _{s-1})\), which will be justified using an induction argument when proving the main theorem.

As revealed by the claim (43) of Lemma 6, the iterate \(\theta ^{(t)}(s, a_2)\) remains sufficiently large during this intermediate stage. In the meantime, Lemma 6 guarantees that during this stage, \(\theta ^{(t)}(s, a_1)\) lies below the level of \(\theta ^{(t_{s-2}({\tau }_{s-2}))}(s, a_1)\) that has been pinned down in Lemma 5 (which has been shown to be quite small). Both of these properties make clear that the iterates \(\theta ^{(t)}(s, a) \) remain far from optimal at the end of this intermediate stage.

4.8 Step 5: establishing a blowing-up phenomenon

The next lemma, which plays a pivotal role in developing the desired exponential lower bound on the convergence time, demonstrates that the crossing times explode at an extremely fast rate. The proof is postponed to Appendix F.

Lemma 7

Consider any \(3 \le s \le H\). Suppose that (35) holds and

Then there exists a time instance \(t_{\textsf{ref}}\) obeying \(t_{\overline{s-1}}(\tau _s) \le t_{\textsf{ref}}< t_{s}({\tau }_s)\) such that

and at the same time,

The most important message of Lemma 7 lies in property (45c). In a nutshell, this property uncovers that the crossing time \(t_{s}({\tau }_s)\) is substantially larger than \(t_{s-2}({\tau }_{s-2})\), namely,

thus leading to explosion at a super-linear rate. By contrast, the other two properties unveil some important features happening between \(t_{\overline{s-1}}(\tau _s) \) and \(t_{s}({\tau }_s)\) that in turn lead to property (45c). In words, property (45a) requires \(\theta ^{(t_{\textsf{ref}})}(s, a_0)\) to be not much larger than \(\theta ^{(t_{\textsf{ref}})}(s, a_1)\); property (45b) indicates that: when \(t_{s-2}({\tau }_{s-2})\) is large, both \(\theta ^{(t_{\textsf{ref}})}(s, a_1)\) and \(\theta ^{(t_{\textsf{ref}})}(s, a_0)\) are fairly small, with \(\theta ^{(t_{\textsf{ref}})}(s, a_2)\) being the dominant one (due to the fact \(\sum _a \theta ^{(t_{\textsf{ref}})}(s, a) = 0\) as will be seen in Part (vii) of Lemma 8).
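To see why a relation of the form \(t_s(\tau_s) \gtrsim \kappa\,\big(t_{s-2}(\tau_{s-2})\big)^{3/2}\) produces explosive growth, the sketch below iterates the idealized recursion \(T_s = \kappa T_{s-2}^{3/2}\) with \(T_1 = T_2 = A\), working with logarithms to avoid overflow. The values of \(\kappa\) and \(A\) are arbitrary illustrative choices (the only requirement is \(\kappa A^{1/2} > 1\)), not the constants of Lemma 7, and `log_crossing_times` is a hypothetical helper. Since \(\log T_s - L^\star = 1.5\,(\log T_{s-2} - L^\star)\) with \(L^\star = -2\log\kappa\), the logarithm of \(T_s\) itself grows geometrically in s.

```python
import math
from typing import List


def log_crossing_times(log_A: float, log_kappa: float, H: int) -> List[float]:
    """Return [log T_1, ..., log T_H] for T_s = kappa * T_{s-2}**1.5 with T_1 = T_2 = A."""
    logs = [log_A, log_A]
    for _ in range(3, H + 1):
        logs.append(log_kappa + 1.5 * logs[-2])  # logs[-2] holds log T_{s-2}
    return logs


# Illustrative constants: A = 1e4 and kappa = 1e-1, so kappa * sqrt(A) = 10 > 1.
logs = log_crossing_times(math.log(1e4), math.log(1e-1), H=12)
for s, lt in enumerate(logs, start=1):
    print(s, f"T_s is about 10^{lt / math.log(10):.1f}")
```

Step 6 below carries out a rigorous version of this calculation with the constants supplied by Lemmas 4 and 7.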

The reader might naturally wonder what the above results imply about \(\pi ^{(t_{\textsf{ref}})}(a_1 \,|\,s)\) (as opposed to \(\theta ^{(t_{\textsf{ref}})}(s, a_1 )\)). Towards this end, we make the observation that

where (i) holds true since \(\sum _a \theta ^{(t_{\textsf{ref}})}(s,a)=0\) (a well-known property of policy gradient methods as recorded in Lemma 8(vii)), and (ii) follows from the properties (45a) and (45b). In other words, \(\pi ^{(t_{\textsf{ref}})}(a_1\,|\,s)\) is at most on the order of \(\big ( t_{s-2}({\tau }_{s-2}) \big )^{-3/2}\). As we shall see, the time taken for \(\pi ^{(t)}(a_1 \,|\,s)\) to converge to 1 is proportional to the inverse policy iterate \(\big (\pi ^{(t_{\textsf{ref}})}(a_1\,|\,s)\big )^{-1}\), meaning that it is expected to take on the order of \(\big (t_{s-2}({\tau }_{s-2})\big )^{3/2}\) iterations for it to increase from \(\pi ^{(t_{\textsf{ref}})}(a_1\,|\,s)\) to 1.
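Step (i) relies on the invariant \(\sum_a \theta^{(t)}(s,a) = 0\). Assuming the PG update (12) takes the standard softmax form \(\partial V^{(t)}(\mu)/\partial\theta(s,a) = \frac{1}{1-\gamma}d_\mu^{(t)}(s)\,\pi^{(t)}(a\,|\,s)\big(Q^{(t)}(s,a) - V^{(t)}(s)\big)\) with a zero initialization, the invariant follows because \(\sum_a \pi^{(t)}(a\,|\,s)\big(Q^{(t)}(s,a)-V^{(t)}(s)\big) = 0\), so every step leaves the per-state sum of logits unchanged. The sketch below checks this numerically on random Q-values, together with one elementary consequence for three zero-sum logits: \(\pi(a_1\,|\,s)\le \exp(\theta(s,a_1)-\theta(s,a_2)) = \exp(2\theta(s,a_1)+\theta(s,a_0))\). The helper `pg_step_one_state` and its inputs are hypothetical.

```python
import math
import random
from typing import List


def softmax(theta: List[float]) -> List[float]:
    m = max(theta)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in theta]
    z = sum(exps)
    return [e / z for e in exps]


def pg_step_one_state(theta: List[float], q: List[float], d_s: float,
                      eta: float, gamma: float) -> List[float]:
    """One softmax PG step restricted to a single state s.

    Uses dV/dtheta(s, a) = d_s * pi(a|s) * (Q(s, a) - V(s)) / (1 - gamma).
    """
    pi = softmax(theta)
    v = sum(p * qa for p, qa in zip(pi, q))
    return [th + eta * d_s * p * (qa - v) / (1 - gamma)
            for th, p, qa in zip(theta, pi, q)]


random.seed(0)
theta = [0.0, 0.0, 0.0]  # zero initialization: uniform policy over (a0, a1, a2)
for _ in range(1000):
    q = [random.random() for _ in range(3)]  # arbitrary Q-values at each step
    theta = pg_step_one_state(theta, q, d_s=0.3, eta=0.1, gamma=0.9)

print(abs(sum(theta)) < 1e-9)  # zero-sum invariant preserved up to rounding
pi = softmax(theta)
# With sum(theta) = 0: pi(a1) <= exp(theta(a1) - theta(a2)) = exp(2*theta(a1) + theta(a0)).
print(pi[1] <= math.exp(2 * theta[1] + theta[0]) * (1 + 1e-9))
```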

4.9 Step 6: putting all this together to establish Theorem 2

With the above steps in place, we are ready to combine them to establish the following result. As can be easily seen, Theorem 2 is an immediate consequence of Theorem 3.

Theorem 3

Suppose that (35) holds. There exist some universal constants \(c_1, c_2, c_3 > 0\) such that

provided that

Proof of Theorem 3

Let us define two universal constants \(C_1 {:=}\frac{\log 3}{1+17c_{\textrm{m}}/c_{\textrm{b},1}}\) and \(C_2 {:=} \frac{10^{-20}c_{\textrm{p}}^2c_{\textrm{m}}\log 3}{1+17c_{\textrm{m}}/c_{\textrm{b},1}}\). We claim that if one can show that

then the desired bound (48) holds true directly. In order to see this, recall that \(\tau _{s} \le 1/2\) by definition, and therefore,

Here, (i) follows from (50) in conjunction with the assumption (49), whereas (ii) holds true by setting \(c_1 = C_1/C_2\) and \(c_2 = C_2^3\).

It is then sufficient to prove the inequality (50), towards which we shall resort to mathematical induction in conjunction with the following induction hypothesis

-

We start with the cases with \(s=1,2,3\). It follows from Lemma 4 that

$$\begin{aligned} t_2({\tau }_2) \ge t_1({\tau }_1) \ge \frac{\log 3}{1+17c_{\textrm{m}}/c_{\textrm{b},1}} \frac{|{\mathcal {S}}|}{\eta } = C_1 \frac{|{\mathcal {S}}|}{\eta }, \end{aligned}\tag{52}$$

which validates the above claim (50) for \(s = 1\) and \(s=2\). In addition, Lemma 7 ensures that

$$\begin{aligned} t_{3}({\tau }_3) - \max \Big \{ t_{{\overline{1}}}(\gamma ^{3}-1/4),~ t_{{\overline{2}}}(\tau _{3}) \Big \}&\ge 10^{-10}c_{\textrm{p}}c_{\textrm{m}}^{0.5}\eta ^{0.5}(1-\gamma )^2\Big (t_{1}({\tau }_{1}) \Big )^{1.5} \\&\ge \frac{9776}{c_{\textrm{m}}\gamma \eta (1-\gamma )^2}, \end{aligned}\tag{53}$$

where the last inequality is guaranteed by (52) and the assumption \(|{\mathcal {S}}| \ge \max \Big \{\frac{4888}{C_1c_{\textrm{m}}\gamma (1-\gamma )^2}, \frac{4}{C_2(1-\gamma )^4}\Big \}\). This implies that the inequality (51) is satisfied when \(s = 3.\)

-

Next, suppose that the inequality (50) holds true up to state \(s-1\) and the inequality (51) holds up to state s for some \(3\le s\le H\). For the induction argument, it suffices to show that the inequality (50) continues to hold for state s and the inequality (51) remains valid for \(s+1\). This will be accomplished by taking advantage of Lemma 7.

Given that the inequality (50) holds true for every state up to \(s-1\), one has

$$\begin{aligned} t_{s-1}({\tau }_{s-1})&\ge t_{s-2}({\tau }_{s-2}) \ge C_1\frac{|{\mathcal {S}}|}{\eta }\Big (C_2(1-\gamma )^4|{\mathcal {S}}| \Big )^{1.5^{\lfloor (s-3)/2\rfloor }-1} \\&\ge \Big ( \frac{6300 e}{c_{\textrm{p}}(1-\gamma )} \Big )^4\frac{1}{\frac{c_{\textrm{m}}\gamma }{35} \eta (1-\gamma )^2}, \end{aligned}$$

where the last inequality is satisfied provided that \(|{\mathcal {S}}| > \max \Big \{\big ( \frac{6300 e}{c_{\textrm{p}}} \big )^4\frac{35}{C_1c_{\textrm{m}}\gamma (1-\gamma )^6}, \frac{4}{C_2(1-\gamma )^4}\Big \}.\) Therefore, Lemma 7 is applicable for both s and \(s+1\), thus leading to

$$\begin{aligned} t_{s}({\tau }_{s}) - t_{\overline{s-1}}(\tau _{s})&\ge 10^{-10}c_{\textrm{p}}c_{\textrm{m}}^{0.5}\eta ^{0.5}(1-\gamma )^2\Big (t_{s-2}({\tau }_{s-2}) \Big )^{1.5} \\&\ge 10^{-10}c_{\textrm{p}}c_{\textrm{m}}^{0.5}\eta ^{0.5}(1-\gamma )^2\\&\quad \left( C_1\frac{|{\mathcal {S}}|}{\eta }\Big (C_2(1-\gamma )^4|{\mathcal {S}}| \Big )^{1.5^{\lfloor (s-3)/2\rfloor }-1}\right) ^{1.5} \\&\ge C_1\frac{|{\mathcal {S}}|}{\eta }\Big (C_2(1-\gamma )^4|{\mathcal {S}}| \Big )^{1.5^{\lfloor (s-1)/2\rfloor }-1}. \end{aligned}$$

Here, the last step holds since \(10^{-10}c_{\textrm{p}}c_{\textrm{m}}^{0.5}\eta ^{0.5}(1-\gamma )^2 (C_1\frac{|{\mathcal {S}}|}{\eta })^{0.5} = (C_2(1-\gamma )^4|{\mathcal {S}}|)^{0.5}\), an identity that follows directly from the definitions of \(C_1\) and \(C_2\). This in turn establishes the property (50) for state s (given that \(t_{\overline{s-1}}(\tau _{s})\ge 0\)). In addition, Lemma 7, when applied to \(s+1\), gives

$$\begin{aligned} t_{s+1}({\tau }_{s+1}) - t_{{\overline{s}}}(\tau _{s+1})&\ge 10^{-10}c_{\textrm{p}}c_{\textrm{m}}^{0.5}\eta ^{0.5}(1-\gamma )^2\Big (t_{s-1}({\tau }_{s-1}) \Big )^{1.5} \\&\ge 10^{-10}c_{\textrm{p}}c_{\textrm{m}}^{0.5}\eta ^{0.5}(1-\gamma )^2\\&\quad \left( C_1\frac{|{\mathcal {S}}|}{\eta }\Big (C_2(1-\gamma )^4|{\mathcal {S}}| \Big )^{1.5^{\lfloor (s-2)/2\rfloor }-1}\right) ^{1.5} \\&\ge C_1\frac{|{\mathcal {S}}|}{\eta }\Big (C_2(1-\gamma )^4|{\mathcal {S}}| \Big )^{1.5^{\lfloor s/2\rfloor }-1}\\&\ge \frac{2444(s+2)}{c_{\textrm{m}}\gamma \eta (1-\gamma )^2}, \end{aligned}$$

where the last step follows as long as \(|{\mathcal {S}}| > \max \left\{ \frac{4888}{C_1c_{\textrm{m}}\gamma (1-\gamma )^2}, \frac{4}{C_2(1-\gamma )^4}\right\} \). We have thus established the property (51) for state \(s+1\).

Putting all the above pieces together, we arrive at the inequality (50), thus establishing Theorem 3. \(\square \)
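The algebra behind the last step of each chain in the induction can be sanity-checked numerically. The sketch below uses arbitrary placeholder values for \(c_{\textrm{p}}, c_{\textrm{m}}, c_{\textrm{b},1}, \eta, \gamma, |{\mathcal {S}}|\) (assumptions for illustration, not the constants fixed in (35)), evaluates \(C_1, C_2\) as defined in the proof, and checks two things in log-space: the identity \(10^{-10}c_{\textrm{p}}c_{\textrm{m}}^{0.5}\eta^{0.5}(1-\gamma)^2 (C_1|{\mathcal {S}}|/\eta)^{0.5} = (C_2(1-\gamma)^4|{\mathcal {S}}|)^{0.5}\), and that raising the right-hand side of (50) for state \(s-2\) to the power 1.5 and multiplying by the Lemma 7 prefactor reproduces the right-hand side of (50) for state s. The helper `log_bound` is hypothetical.

```python
import math


def log_bound(s: int, log_A: float, log_B: float) -> float:
    """log of the right-hand side of (50): A * B**(1.5**floor((s - 1) / 2) - 1)."""
    return log_A + (1.5 ** ((s - 1) // 2) - 1) * log_B


# Placeholder constants, chosen only for illustration (not the values fixed in (35)).
c_p, c_m, c_b1 = 0.5, 0.05, 1.0
eta, gamma, n_states = 1e-3, 0.97, 1e30

C1 = math.log(3) / (1 + 17 * c_m / c_b1)
C2 = 1e-20 * c_p ** 2 * c_m * math.log(3) / (1 + 17 * c_m / c_b1)
log_A = math.log(C1 * n_states / eta)                # log(C1 |S| / eta)
log_B = math.log(C2 * (1 - gamma) ** 4 * n_states)   # log(C2 (1 - gamma)^4 |S|)
log_kappa = math.log(1e-10 * c_p * c_m ** 0.5 * eta ** 0.5 * (1 - gamma) ** 2)

# Identity behind the last step: kappa * A**0.5 == B**0.5, so this gap should be ~0.
identity_gap = (log_kappa + 0.5 * log_A) - 0.5 * log_B

# kappa * (bound for s - 2)**1.5 versus the bound for s; these gaps should also be ~0.
recursion_gaps = [log_kappa + 1.5 * log_bound(s - 2, log_A, log_B) - log_bound(s, log_A, log_B)
                  for s in range(3, 12)]
print(identity_gap, max(abs(g) for g in recursion_gaps))
```

The value of \(|{\mathcal {S}}|\) here is chosen so that \(C_2(1-\gamma)^4|{\mathcal {S}}|\ge 4\), mirroring the size condition used in the proof; the identity itself holds for any positive choice.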

4.10 Step 7: adapting the proof to establish Theorem 1

Thus far, we have established Theorem 2, and are well equipped to return to the proof of Theorem 1. As a remark, Theorem 2 and its analysis posit that for a large fraction of the key primary states (as well as their associated adjoint states), softmax PG methods can take a prohibitively large number of iterations to converge. The issue, however, is that there are in total only O(H) key primary states and adjoint states, accounting for a vanishingly small fraction of all \(|{\mathcal {S}}|\) states. In order to extend Theorem 2 to Theorem 1 (the latter of which is concerned with the error averaged over the entire state space), we need to show that the value functions associated with the booster states, which account for a large fraction of the state space, also converge slowly.

In the MDP instance constructed in Sect. 3, however, the action space associated with each booster state is a singleton, meaning that its only action is trivially optimal. As a result, we first need to modify/augment the action space of the booster states, so as to ensure that their learned actions remain suboptimal before the algorithm converges for the associated key primary states and adjoint states.

A modified MDP instance. We now augment the action space for all booster states in the MDP \({\mathcal {M}}\) constructed in Sect. 3, leading to a slightly modified MDP denoted by \({\mathcal {M}}_{\textsf{modified}}\):

-

for any key primary state \(s\in \{3,\ldots , H\}\) and any associated booster state \({\widehat{s}}\in \widehat{{\mathcal {S}}}_{s}\), take the action space of \({\widehat{s}}\) to be \(\{a_0, a_1\}\) and let

$$\begin{aligned}&P(0\,|\,{\widehat{s}},a_0) = 0.9, ~~ P(s\,|\,{\widehat{s}},a_0) = 0.1, ~~ r({\widehat{s}},a_0)=0.9\gamma \tau _s,~~ \\&P(s\,|\,{\widehat{s}},a_{1}) = 1, ~~ r({\widehat{s}},a_1)=0; \end{aligned}\tag{54}$$

-

for any key adjoint state \({\overline{s}} \in \{{\overline{1}},\ldots ,{\overline{H}}\}\) and any associated booster state \({\widehat{s}}\in \widehat{{\mathcal {S}}}_{{\overline{s}}}\), take the action space of \({\widehat{s}}\) to be \(\{a_0, a_1\}\) and let

$$\begin{aligned}&P(0\,|\,{\widehat{s}},a_0) = 0.9, ~~P({\overline{s}}\,|\,{\widehat{s}},a_0) = 0.1,~~ r({\widehat{s}},a_0)=0.9\gamma ^2\tau _s,~~ \\&P({\overline{s}}\,|\,{\widehat{s}},a_{1}) = 1, ~~ r({\widehat{s}},a_1)=0; \end{aligned}\tag{55}$$

-

all other components of \({\mathcal {M}}_{\textsf{modified}}\) remain identical to those of the original \({\mathcal {M}}\).

Analysis for the new booster states. Given that the dynamics of the non-booster states are unaffected by the booster states, it suffices to analyze the booster states. Let us first consider any key primary state s and any associated booster state \({\widehat{s}}\).

-

As can be easily seen,

$$\begin{aligned} Q^{(t)}({\widehat{s}},a_{0})&=r({\widehat{s}},a_{0})+\gamma P(s\,|\,{\widehat{s}},a_{0})V^{(t)}(s)+\gamma P(0\,|\,{\widehat{s}},a_{0})V^{(t)}(0)\\&=0.9\gamma \tau _{s}+0.1\gamma V^{(t)}(s),\\ Q^{(t)}({\widehat{s}},a_{1})&=r({\widehat{s}},a_{1})+\gamma P(s\,|\,{\widehat{s}},a_{1})V^{(t)}(s)=\gamma V^{(t)}(s), \end{aligned}$$

where we have used the basic fact \(V^{(t)}(0)=0\) (see (73) in Lemma 8). If \(V^{(t)}(s) < \tau _s\), then both \(Q^{(t)}({\widehat{s}},a_{0}) < 0.9\gamma \tau _s + 0.1\gamma \tau _s = \gamma \tau _s\) and \(Q^{(t)}({\widehat{s}},a_{1}) < \gamma \tau _s\). Since \(V^{(t)}({\widehat{s}})\) is a convex combination of \(Q^{(t)}({\widehat{s}},a_{0})\) and \(Q^{(t)}({\widehat{s}},a_{1})\), it follows that \(V^{(t)}({\widehat{s}}) < \gamma \tau _s\) whenever \(V^{(t)}(s) < \tau _s\).

-

Similarly, the optimal Q-function w.r.t. \({\widehat{s}}\) is given by

$$\begin{aligned} Q^{\star }({\widehat{s}},a_{0})&=r({\widehat{s}},a_{0})+\gamma P(s\,|\,{\widehat{s}},a_{0})V^{\star }(s)+\gamma P(0\,|\,{\widehat{s}},a_{0})V^{\star }(0)\\&=0.9\gamma \tau _{s}+0.1\gamma V^{\star }(s),\\ Q^{\star }({\widehat{s}},a_{1})&=r({\widehat{s}},a_{1})+\gamma P(s\,|\,{\widehat{s}},a_{1})V^{\star }(s)=\gamma V^{\star }(s), \end{aligned}$$

which, together with Lemma 1 and the definition (18) of \(\tau _s\), indicates that \(V^{\star }({\widehat{s}})=Q^{\star }({\widehat{s}},a_{1})=\gamma ^{2s+1}\).

-

The above facts taken collectively imply that: if \(V^{(t)}(s) < \tau _s\), then

$$\begin{aligned} V^{\star }({\widehat{s}}) - V^{(t)}({\widehat{s}})> \gamma ^{2s+1} - \gamma \tau _s = \gamma \big (\gamma ^{2s} - 0.5\gamma ^{\frac{2s}{3}}\big ) > 0.22, \end{aligned}\tag{56}$$

provided that \(\gamma \) is sufficiently large (which is satisfied under the condition (35)).
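The booster-state argument can be checked mechanically on a concrete example. The sketch below encodes the transitions (54) for a booster state \({\widehat{s}}\) attached to a key primary state s, uses \(V^{(t)}(0)=0\), and verifies for placeholder values of \(\gamma\) and s that (a) every policy at \({\widehat{s}}\) yields a value below \(\gamma\tau_s\) whenever \(V^{(t)}(s)<\tau_s\), and (b) the optimal action is \(a_1\) and the gap in (56) exceeds 0.22. Here \(\tau_s = 0.5\gamma^{2s/3}\) and \(V^\star(s)=\gamma^{2s}\) are read off from (56) and from \(V^{\star}({\widehat{s}}) = \gamma V^\star(s) = \gamma^{2s+1}\); the helper `booster_q` is hypothetical.

```python
from typing import Tuple


def booster_q(gamma: float, tau_s: float, v_s: float, v_0: float = 0.0) -> Tuple[float, float]:
    """Q-values (a0, a1) of a booster state attached to key primary state s, following (54).

    a0: reward 0.9 * gamma * tau_s, moves to state 0 w.p. 0.9 and to s w.p. 0.1.
    a1: reward 0, moves to s w.p. 1.
    """
    q0 = 0.9 * gamma * tau_s + gamma * (0.9 * v_0 + 0.1 * v_s)
    q1 = gamma * v_s
    return q0, q1


gamma, s = 0.99, 3                      # placeholder values
tau_s = 0.5 * gamma ** (2 * s / 3)      # tau_s as implied by (56)
v_star_s = gamma ** (2 * s)             # V*(s) as implied by V*(hat s) = gamma^(2s+1)

# (a) If V(s) < tau_s, every mixture of the two Q-values stays below gamma * tau_s.
v_s = 0.999 * tau_s
q0, q1 = booster_q(gamma, tau_s, v_s)
worst = max(p * q0 + (1 - p) * q1 for p in (0.0, 0.25, 0.5, 0.75, 1.0))
print(worst < gamma * tau_s)

# (b) The optimal value is attained by a1, and the gap in (56) exceeds 0.22.
q0_star, q1_star = booster_q(gamma, tau_s, v_star_s)
v_star_hat = max(q0_star, q1_star)
print(q1_star > q0_star, v_star_hat - gamma * tau_s)
```

The adjoint-state boosters in (55) can be handled the same way, with \(\tau_s\) replaced by \(\gamma\tau_s\) in the reward.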

Similarly, for any key adjoint state \({\overline{s}}\) and any associated booster state \({\widehat{s}}\), if \(V^{(t)}({\overline{s}}) < \gamma \tau _s\), then one must have

Repeating the same proof as for Theorem 2, one can easily show that (with slight adjustment of the universal constants)

This taken together with the above analysis suffices to establish Theorem 1, given the following two simple facts: (i) there are \(2 Hc_{\textrm{m}}(1-\gamma )|{\mathcal {S}}|= 2c_{\textrm{m}}c_{\textrm{h}}|{\mathcal {S}}|\) booster states, and (ii) more than \(90\%\) of them need a prohibitively large number of iterations (cf. (58)) to reach 0.22-optimality. Here, we can take \(c_{\textrm{m}}c_{\textrm{h}}> 0.18\) which satisfies (35). The proof is thus complete.

5 Discussion

This paper has developed an algorithm-specific lower bound on the iteration complexity of the softmax policy gradient method, obtained by analyzing its trajectory on a carefully-designed hard MDP instance. We have shown that the iteration complexity of softmax PG methods can scale pessimistically, in fact (super-)exponentially, with the dimension of the state space and the effective horizon of the discounted MDP of interest. Our finding makes apparent the potential inefficiency of softmax PG methods in solving high-dimensional and long-horizon problems. In turn, this suggests the necessity of carefully adjusting update rules and/or enforcing proper regularization in accelerating policy gradient methods (Table 2).

Our work relies heavily on proper exploitation of the structural properties of the MDP in algorithm-dependent analysis, which might shed light on lower bound construction for other algorithms as well. For instance, if the objective function (i.e., the value function) is augmented by a regularization term, how does the choice of regularization affect the global convergence behavior? While Agarwal et al. [1] demonstrated polynomial-time convergence of PG methods in the presence of log-barrier regularization, non-asymptotic analysis of PG methods with other popular regularization (particularly entropy regularization) remains unavailable in existing literature. How to understand the (in)effectiveness of entropy-regularized PG methods is of fundamental importance in the theory of policy optimization. Additionally, the current paper concentrates on the use of constant learning rates; it falls short of accommodating more adaptive learning rates, which might offer a way to accelerate vanilla PG methods. Furthermore, our strategy for lower bound construction might be extended to unveil algorithmic bottlenecks of policy optimization in multi-agent Markov games as well. All this is worthy of future investigation.

Notes

Here and throughout, the division of two vectors represents componentwise division.

While we do not include states 1 and 2 here, any state in \({\mathcal {S}}_1\) (resp. \({\mathcal {S}}_2\)) can essentially be viewed as a (replicated) copy of state 1 (resp. state 2).

References

Agarwal, A., Kakade, S.M., Lee, J.D., Mahajan, G.: On the theory of policy gradient methods: optimality, approximation, and distribution shift. J. Mach. Learn. Res. 22(98), 1–76 (2021)

Agazzi, A., Lu, J.: Global optimality of softmax policy gradient with single hidden layer neural networks in the mean-field regime. In: International Conference on Learning Representations (ICLR) (2021)

Alacaoglu, A., Viano, L., He, N., Cevher, V.: A natural actor-critic framework for zero-sum Markov games. In: International Conference on Machine Learning, pp. 307–366. PMLR (2022)

Asadi, K., Littman, M.L.: An alternative softmax operator for reinforcement learning. In: International Conference on Machine Learning, pp. 243–252 (2017)

Azar, M.G., Munos, R., Kappen, H.J.: Minimax PAC bounds on the sample complexity of reinforcement learning with a generative model. Mach. Learn. 91(3), 325–349 (2013)

Beck, A.: First-Order Methods in Optimization. SIAM, Philadelphia, PA (2017)

Bhandari, J.: Optimization foundations of reinforcement learning. Ph.D. thesis, Columbia University (2020)

Bhandari, J., Russo, D.: Global optimality guarantees for policy gradient methods. arXiv preprint arXiv:1906.01786 (2019)

Bhandari, J., Russo, D.: On the linear convergence of policy gradient methods for finite MDPs. In: International Conference on Artificial Intelligence and Statistics, pp. 2386–2394. PMLR (2021)

Cai, Q., Yang, Z., Jin, C., Wang, Z.: Provably efficient exploration in policy optimization. In: International Conference on Machine Learning, pp. 1283–1294 (2020)

Cen, S., Cheng, C., Chen, Y., Wei, Y., Chi, Y.: Fast global convergence of natural policy gradient methods with entropy regularization. Oper. Res. 70(4), 2563–2578 (2022)

Cen, S., Chi, Y., Du, S.S., Xiao, L.: Faster last-iterate convergence of policy optimization in zero-sum Markov games. arXiv preprint arXiv:2210.01050 (2022)

Cen, S., Wei, Y., Chi, Y.: Fast policy extragradient methods for competitive games with entropy regularization. Adv. Neural Inf. Process. Syst. 34, 27952–27964 (2021)

Daskalakis, C., Foster, D.J., Golowich, N.: Independent policy gradient methods for competitive reinforcement learning. Adv. Neural Inf. Process. Syst. 33, 5527–5540 (2020)

Ding, D., Zhang, K., Basar, T., Jovanovic, M.: Natural policy gradient primal–dual method for constrained Markov decision processes. Adv. Neural Inf. Process. Syst. 33, 8378–8390 (2020)

Domingues, O.D., Ménard, P., Kaufmann, E., Valko, M.: Episodic reinforcement learning in finite MDPs: minimax lower bounds revisited. In: Algorithmic Learning Theory, pp. 578–598 (2021)

Du, S., Jin, C., Jordan, M., Póczos, B., Singh, A., Lee, J.: Gradient descent can take exponential time to escape saddle points. Adv. Neural Inf. Process. Syst. 30, 1068–1078 (2017)

Fazel, M., Ge, R., Kakade, S., Mesbahi, M.: Global convergence of policy gradient methods for the linear quadratic regulator. In: International Conference on Machine Learning, pp. 1467–1476 (2018)

Jansch-Porto, J.P., Hu, B., Dullerud, G.E.: Convergence guarantees of policy optimization methods for Markovian jump linear systems. In: American Control Conference, pp. 2882–2887. IEEE (2020)

Kakade, S.M.: A natural policy gradient. Adv. Neural Inf. Process. Syst. 14, 1531–1538 (2002)

Khamaru, K., Pananjady, A., Ruan, F., Wainwright, M.J., Jordan, M.I.: Is temporal difference learning optimal? An instance-dependent analysis. SIAM J. Math. Data Sci. 3(4), 1013–1040 (2021)

Khodadadian, S., Doan, T.T., Romberg, J., Maguluri, S.T.: Finite sample analysis of two-time-scale natural actor-critic algorithm. IEEE Trans. Automatic Control. (2022). https://doi.org/10.1109/TAC.2022.3190032

Konda, V.R., Tsitsiklis, J.N.: Actor-critic algorithms. Adv. Neural Inf. Process. Syst. 12, 1008–1014 (2000)

Lan, G.: Policy mirror descent for reinforcement learning: linear convergence, new sampling complexity, and generalized problem classes. Math. Program. (2022). https://doi.org/10.1007/s10107-022-01816-5

Lan, G.: Policy optimization over general state and action spaces. arXiv preprint arXiv:2211.16715 (2022)

Lee, J.D., Simchowitz, M., Jordan, M.I., Recht, B.: Gradient descent only converges to minimizers. In: Conference on Learning Theory, pp. 1246–1257 (2016)

Li, G., Cai, C., Chen, Y., Wei, Y., Chi, Y.: Is Q-learning minimax optimal? A tight sample complexity analysis. arXiv preprint arXiv:2102.06548 (2021)

Li, G., Shi, L., Chen, Y., Chi, Y., Wei, Y.: Settling the sample complexity of model-based offline reinforcement learning. arXiv preprint arXiv:2204.05275 (2022)

Li, Y., Zhao, T., Lan, G.: First-order policy optimization for robust Markov decision process. arXiv preprint arXiv:2209.10579 (2022)

Liu, B., Cai, Q., Yang, Z., Wang, Z.: Neural proximal/trust region policy optimization attains globally optimal policy. Adv. Neural Inf. Process. Syst. 32, 10565–10576 (2019)

Liu, Y., Zhang, K., Basar, T., Yin, W.: An improved analysis of (variance-reduced) policy gradient and natural policy gradient methods. Adv. Neural Inf. Process. Syst. 33, 7624–7636 (2020)

Mei, J., Gao, Y., Dai, B., Szepesvári, C., Schuurmans, D.: Leveraging non-uniformity in first-order non-convex optimization. In: International Conference on Machine Learning, pp. 7555–7564 (2021)

Mei, J., Xiao, C., Dai, B., Li, L., Szepesvári, C., Schuurmans, D.: Escaping the gravitational pull of softmax. Adv. Neural Inf. Process. Syst. 33, 21130–21140 (2020)

Mei, J., Xiao, C., Szepesvári, C., Schuurmans, D.: On the global convergence rates of softmax policy gradient methods. In: International Conference on Machine Learning, pp. 6820–6829 (2020)

Mnih, V., Kavukcuoglu, K., Silver, D., Rusu, A.A., Veness, J., Bellemare, M.G., Graves, A., Riedmiller, M., Fidjeland, A.K., Ostrovski, G., et al.: Human-level control through deep reinforcement learning. Nature 518(7540), 529–533 (2015)

Pananjady, A., Wainwright, M.J.: Instance-dependent \(\ell _{\infty }\)-bounds for policy evaluation in tabular reinforcement learning. IEEE Trans. Inf. Theory 67(1), 566–585 (2020)

Peters, J., Schaal, S.: Natural actor-critic. Neurocomputing 71(7–9), 1180–1190 (2008)

Schulman, J., Levine, S., Abbeel, P., Jordan, M., Moritz, P.: Trust region policy optimization. In: International Conference on Machine Learning, pp. 1889–1897 (2015)

Schulman, J., Wolski, F., Dhariwal, P., Radford, A., Klimov, O.: Proximal policy optimization algorithms. arXiv preprint arXiv:1707.06347 (2017)

Shani, L., Efroni, Y., Mannor, S.: Adaptive trust region policy optimization: global convergence and faster rates for regularized MDPs. In: AAAI Conference on Artificial Intelligence, vol. 34, pp. 5668–5675 (2020)

Silver, D., Huang, A., Maddison, C.J., Guez, A., Sifre, L., Van Den Driessche, G., Schrittwieser, J., Antonoglou, I., Panneershelvam, V., Lanctot, M., et al.: Mastering the game of Go with deep neural networks and tree search. Nature 529(7587), 484–489 (2016)

Sutton, R.S.: Temporal credit assignment in reinforcement learning. Ph.D. thesis, University of Massachusetts (1984)

Sutton, R.S., McAllester, D.A., Singh, S.P., Mansour, Y.: Policy gradient methods for reinforcement learning with function approximation. Adv. Neural Inf. Process. Syst. 12, 1057–1063 (2000)

Tu, S., Recht, B.: The gap between model-based and model-free methods on the linear quadratic regulator: an asymptotic viewpoint. In: Conference on Learning Theory, pp. 3036–3083 (2019)

Wang, L., Cai, Q., Yang, Z., Wang, Z.: Neural policy gradient methods: global optimality and rates of convergence. In: International Conference on Learning Representations (2019)

Wei, C.-Y., Lee, C.-W., Zhang, M., Luo, H.: Last-iterate convergence of decentralized optimistic gradient descent/ascent in infinite-horizon competitive Markov games. In: Conference on Learning Theory, pp. 4259–4299. PMLR (2021)

Williams, R.J.: Simple statistical gradient-following algorithms for connectionist reinforcement learning. Mach. Learn. 8(3–4), 229–256 (1992)

Wu, Y.F., Zhang, W., Xu, P., Gu, Q.: A finite-time analysis of two time-scale actor-critic methods. Adv. Neural Inf. Process. Syst. 33, 17617–17628 (2020)

Xie, Q., Yang, Z., Wang, Z., Minca, A.: Provable fictitious play for general mean-field games. arXiv preprint arXiv:2010.04211 (2020)

Xu, T., Liang, Y., Lan, G.: A primal approach to constrained policy optimization: global optimality and finite-time analysis. arXiv preprint arXiv:2011.05869 (2020)

Xu, T., Wang, Z., Liang, Y.: Non-asymptotic convergence analysis of two time-scale (natural) actor-critic algorithms. arXiv preprint arXiv:2005.03557 (2020)

Yan, Y., Li, G., Chen, Y., Fan, J.: Model-based reinforcement learning is minimax-optimal for offline zero-sum Markov games. arXiv preprint arXiv:2206.04044 (2022)

Yang, W., Li, X., Xie, G., Zhang, Z.: Finding the near optimal policy via adaptive reduced regularization in MDPs. arXiv preprint arXiv:2011.00213 (2020)

Zhan, W., Cen, S., Huang, B., Chen, Y., Lee, J.D., Chi, Y.: Policy mirror descent for regularized reinforcement learning: a generalized framework with linear convergence. arXiv preprint arXiv:2105.11066 (2021)

Zhang, J., Kim, J., O’Donoghue, B., Boyd, S.: Sample efficient reinforcement learning with REINFORCE. In: AAAI Conference on Artificial Intelligence, vol. 35, pp. 10887–10895 (2021)

Zhang, J., Koppel, A., Bedi, A.S., Szepesvari, C., Wang, M.: Variational policy gradient method for reinforcement learning with general utilities. Adv. Neural Inf. Process. Syst. 33, 4572–4583 (2020)

Zhang, J., Ni, C., Szepesvari, C., Wang, M.: On the convergence and sample efficiency of variance-reduced policy gradient method. Adv. Neural Inf. Process. Syst. 34, 2228–2240 (2021)

Zhang, K., Hu, B., Basar, T.: Policy optimization for \(\mathcal{H}_2\) linear control with \(\mathcal{H}_{\infty }\) robustness guarantee: implicit regularization and global convergence. In: Learning for Dynamics and Control, pp. 179–190 (2020)

Zhang, K., Koppel, A., Zhu, H., Basar, T.: Global convergence of policy gradient methods to (almost) locally optimal policies. SIAM J. Control. Optim. 58(6), 3586–3612 (2020)

Zhang, X., Chen, Y., Zhu, X., Sun, W.: Robust policy gradient against strong data corruption. In: International Conference on Machine Learning, pp. 12391–12401 (2021)

Zhao, Y., Tian, Y., Lee, J., Du, S.: Provably efficient policy gradient methods for two-player zero-sum Markov games. In: International Conference on Artificial Intelligence and Statistics (2021)

Acknowledgements

Y. Wei is supported in part by the NSF grants CCF-2106778 and CAREER award DMS-2143215. Y. Chi is supported in part by the grants ONR N00014-18-1-2142 and N00014-19-1-2404, ARO W911NF-18-1-0303, NSF CCF-1806154, CCF-2007911 and CCF-2106778. Y. Chen is supported in part by the Alfred P. Sloan Research Fellowship, the Google Research Scholar Award, the AFOSR grant FA9550-22-1-0198, the ONR grant N00014-22-1-2354, and the NSF grants CCF-2221009, CCF-1907661, IIS-2218713 and IIS-2218773.


Additional information


This work was presented in part at the Conference on Learning Theory (COLT) 2021.

Appendices

A Preliminary facts

A.1 Basic properties of the constructed MDP

In this section, we collect further basic properties of the MDP constructed in Sect. 3. Specifically, we present a miscellaneous collection of basic relations regarding more general policies, deferring the proof to Appendix A.4.

Lemma 8

Consider any policy \(\pi \), and recall the quantities defined in (18). Suppose that \(\gamma ^{2H}\ge 1/2\) and \(0<c_{\textrm{p}}\le 1/6\).

(i) For any state \(s\in \{3,\ldots , H\}\), one has

$$\begin{aligned} \gamma ^{\frac{3}{2}}\tau _{s-1} \le Q^{\pi }(s,a_{0})&= r_{s}+\gamma ^{2}p\tau _{s-2} \le \gamma ^{\frac{1}{2}}\tau _{s}, \end{aligned}\tag{59a}$$

$$\begin{aligned} Q^{\pi }(s,a_{1})&=\gamma V^{\pi }(\overline{s-1}), \end{aligned}\tag{59b}$$

$$\begin{aligned} Q^{\pi }(s,a_{2})&=r_{s}+\gamma pV^{\pi }(\overline{s-2}) \le \gamma ^{\frac{1}{2}}\tau _{s}. \end{aligned}\tag{59c}$$

If one further has \(V^{\pi }(\overline{s-2})\ge 0\), then \(Q^{\pi }(s,a_{2}) \ge \gamma ^{\frac{3}{2}}\tau _{s-1}\).

(ii) If \(V^{\pi }(s) \ge \tau _s\) for some \(s\in \{3,\ldots , H\}\), then we necessarily have

$$\begin{aligned} \pi (a_{1}\,|\,s) \ge \frac{1-\gamma }{2}. \end{aligned}\tag{60}$$

(iii)

For any \({\overline{s}}\in \{{\overline{1}},\ldots ,{\overline{H}}\}\), one has

$$\begin{aligned} Q^{\pi }({\overline{s}},a_{0})&=\gamma \tau _{s} \qquad \text {and} \qquad Q^{\pi }({\overline{s}},a_{1}) = \gamma V^{\pi }(s), \end{aligned}$$(61)where we recall the definition of \(V^{\pi }(1)\) and \(V^{\pi }(2)\) in (25). In addition, if \(\pi (a_1\,|\,{\overline{s}})>0\), then

$$\begin{aligned} V^{\pi }({\overline{s}}) \ge \gamma \tau _s \qquad \text {holds if and only if} \qquad V^{\pi }(s) \ge \tau _{s}. \end{aligned}$$(62)This means that: if \(\pi ^{(t)}(a_1\,|\,{\overline{s}})>0\) holds for all \(t\ge 0\), then one necessarily has

$$\begin{aligned} t_{{\overline{s}}}(\gamma \tau _s) = t_{s}(\tau _s). \end{aligned}$$(63) -

(iv)

For any policy \(\pi \), we have

$$\begin{aligned}&Q^{\pi }(1,a_{0})=-\gamma ^{2},\quad Q^{\pi }(1,a_{1})=\gamma ^{2}, \quad V^{\pi }(1)=-\gamma ^{2}\pi (a_{0}\,|\,1)+\gamma ^{2}\pi (a_{1}\,|\,1), \end{aligned}$$(64a)$$\begin{aligned}&Q^{\pi }(2,a_{0})=-\gamma ^{4},\quad Q^{\pi }(2,a_{1})=\gamma ^{4}, \quad V^{\pi }(2)=-\gamma ^{4}\pi (a_{0}\,|\,2)+\gamma ^{4}\pi (a_{1}\,|\,2). \end{aligned}$$(64b) -

(v) Consider any policy \(\pi \) obeying \(\min _{a,s}\pi (a\,|\,s)>0\). For every \(s\in \{3,\ldots , H\}\), if \(V^{\pi }(s) \ge \gamma ^{\frac{1}{2}}\tau _{s}\), then one necessarily has \(V^{\pi }(s-1) \ge \tau _{s-1}\).

(vi) If \(V^{\pi }(s-2) < \tau _{s-2}\) and \(\pi (a_1 \,|\,\overline{s-2}) >0\), then

$$\begin{aligned} Q^{\pi }(s,a_0) - Q^{\pi }(s,a_2) = \gamma p \big (\gamma \tau _{s-2} - V^\pi (\overline{s-2}) \big ) > 0. \end{aligned}$$

If \(V^{\pi }(s-1) \le \tau _{s-1}\) and \(V^{\pi }(\overline{s-2})\ge 0\), then

$$\begin{aligned} \min \big \{Q^{\pi }(s, a_0), Q^{\pi }(s, a_2) \big \} - Q^{\pi }(s, a_1) \ge (1-\gamma )/8. \end{aligned}$$

(vii) Consider the softmax PG update rule (12). For any \(s \in {\mathcal {S}}\), any \(\theta \) and any iteration \(t\ge 0\), one has

$$\begin{aligned} \sum _a \frac{\partial V^{\pi _{\theta }}(\mu )}{\partial \theta (s, a)} = 0 \qquad \text {and}\qquad \sum _a \theta ^{(t)}(s,a) = 0. \end{aligned}\tag{65}$$
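The first identity in (65) does not depend on the specific MDP: under the softmax parameterization \(\pi _\theta (a\,|\,s)\propto \exp (\theta (s,a))\), adding a constant to \(\theta (s,\cdot )\) leaves \(\pi _\theta \) unchanged, so the gradient has zero sum over actions at every state, and gradient ascent started from a zero-sum \(\theta ^{(0)}\) keeps every row sum at zero. The Python sketch below illustrates both facts on a small random tabular MDP, not the MDP of Sect. 3. It assumes the standard exact-gradient expression \(\partial V^{\pi _\theta }(\mu )/\partial \theta (s,a) = (1-\gamma )^{-1} d_{\mu }^{\pi _\theta }(s)\,\pi _\theta (a\,|\,s)\,A^{\pi _\theta }(s,a)\) for the update (12) and the zero initialization \(\theta ^{(0)}=0\) (uniform policy). The helper names, the test MDP and the parameter values are hypothetical choices made for illustration.

```python
import math
import random

def softmax(row):
    m = max(row)  # subtract the max for numerical stability
    w = [math.exp(v - m) for v in row]
    z = sum(w)
    return [x / z for x in w]

def random_mdp(S, A, seed=0):
    """Hypothetical test MDP: random kernel P[s][a][s'] and rewards R[s][a] in [0, 1]."""
    rng = random.Random(seed)
    P = []
    for _ in range(S):
        rows = []
        for _ in range(A):
            w = [rng.random() for _ in range(S)]
            z = sum(w)
            rows.append([x / z for x in w])
        P.append(rows)
    R = [[rng.random() for _ in range(A)] for _ in range(S)]
    return P, R

def evaluate(P, R, pi, gamma, iters=400):
    """Approximate V^pi and Q^pi by iterating the Bellman equation to its fixed point."""
    S, A = len(R), len(R[0])
    V = [0.0] * S
    for _ in range(iters):
        Q = [[R[s][a] + gamma * sum(P[s][a][t] * V[t] for t in range(S))
              for a in range(A)] for s in range(S)]
        V = [sum(pi[s][a] * Q[s][a] for a in range(A)) for s in range(S)]
    return V, Q

def visitation(P, pi, mu, gamma, iters=400):
    """Discounted visitation d(s) = (1 - gamma) * sum_t gamma^t * Pr(s_t = s | s_0 ~ mu)."""
    S, A = len(mu), len(pi[0])
    d = list(mu)
    for _ in range(iters):
        d = [(1 - gamma) * mu[t]
             + gamma * sum(d[s] * pi[s][a] * P[s][a][t] for s in range(S) for a in range(A))
             for t in range(S)]
    return d

def softmax_pg_gradient(P, R, theta, mu, gamma):
    """Exact gradient dV(mu)/dtheta(s, a) = d(s) * pi(a|s) * (Q(s, a) - V(s)) / (1 - gamma)."""
    pi = [softmax(row) for row in theta]
    V, Q = evaluate(P, R, pi, gamma)
    d = visitation(P, pi, mu, gamma)
    return [[d[s] * pi[s][a] * (Q[s][a] - V[s]) / (1 - gamma) for a in range(len(row))]
            for s, row in enumerate(theta)]

def objective(P, R, theta, mu, gamma):
    """V^{pi_theta}(mu) = sum_s mu(s) * V(s); handy for finite-difference checks."""
    V, _ = evaluate(P, R, [softmax(row) for row in theta], gamma)
    return sum(m * v for m, v in zip(mu, V))

S, A, gamma = 4, 3, 0.8
P, R = random_mdp(S, A)
mu = [1.0 / S] * S
theta = [[0.0] * A for _ in range(S)]  # theta^(0) = 0: uniform policy, zero row sums
eta = 0.9 * (1 - gamma) ** 2 / 5
for _ in range(10):  # ten exact-gradient ascent steps
    g = softmax_pg_gradient(P, R, theta, mu, gamma)
    theta = [[theta[s][a] + eta * g[s][a] for a in range(A)] for s in range(S)]

row_grad_sums = [sum(row) for row in softmax_pg_gradient(P, R, theta, mu, gamma)]
row_theta_sums = [sum(row) for row in theta]
print(max(map(abs, row_grad_sums)), max(map(abs, row_theta_sums)))  # both ~0 up to round-off
```

The `objective` helper allows a central finite-difference check of any single gradient entry, which guards against an error in the assumed gradient expression.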

Remark 8

As it turns out, invoking Part (v) of Lemma 8 recursively reveals that: for any \(2\le s \le H\) and any \(t< t_s({\tau }_s)\), we have

This in turn implies that \(t_2({\tau }_2)\le t_3({\tau }_3)\le \cdots \le t_H({\tau }_H)\) according to the definition (30).

Let us point out some implications of Lemma 8 that help guide our lower bound analysis. Once again, it is helpful to examine this lemma in the regime where \(\gamma \approx 1\) and \(\gamma ^{H} \approx 1\). In this case, the quantities defined in (18) obey \(\tau _s\approx r_s \approx 1/2\), which leads to the following takeaways:

- Lemma 8(i) implies that, under mild conditions,

$$\begin{aligned} Q^{\pi }(s,a_0)\approx Q^{\pi }(s,a_2) \approx 1/2 \end{aligned}$$

holds for any \(s\in \{3,\ldots , H\}\) and any policy \(\pi \). In comparison to the optimal values (27), this result uncovers the strict sub-optimality of actions \(a_0\) and \(a_2\), and indicates that one cannot possibly approach the optimal values unless \(\pi (a_{1}\,|\,s)\approx 1\).

- As further revealed by Lemma 8(ii), one needs a sufficiently large \(\pi (a_{1}\,|\,s)\), namely \(\pi (a_{1}\,|\,s) \ge (1- \gamma )/2\), in order to achieve \(V^{\pi }(s) \gtrapprox 1/2\).

- Lemma 8(iii) establishes an intimate connection between \(V^{\pi }(s)\) and \(V^{\pi }({\overline{s}})\): to attain \(V^{\pi }({\overline{s}})\gtrapprox 1/2\) for an adjoint state \({\overline{s}}\), one first needs to ensure that its associated primary state achieves \(V^{\pi }(s)\gtrapprox 1/2\). The equivalence property (63) allows one to transfer the crossing time of state s to that of state \({\overline{s}}\).

- Lemma 8(iv) makes clear that the Q-functions of the buffer states are independent of the policy in use.

- Lemma 8(v) further establishes an intriguing connection between the crossing time of state s and that of the preceding state \(s-1\).

- Lemma 8(vi) reveals that: (a) if \(V^{\pi }(s-2)\) is not sufficiently large, then the Q-value associated with \((s,a_0)\) dominates the one associated with \((s,a_2)\); (b) if \(V^{\pi }(s-1)\) is not large enough, then the Q-value associated with \((s,a_1)\) is dominated by those of the other two actions.

- As indicated by Lemma 8(vii), the sum of the iterates \(\theta ^{(t)}(s,a)\) over a remains unchanged (and equal to zero) throughout the execution of the algorithm.

Another key feature that permeates our analysis is a certain monotonicity property of value function estimates as the iteration count t increases, which we discuss in the sequel. To begin with, akin to the monotonicity properties of gradient descent [6], the softmax PG update is known to achieve monotonic performance improvement in a pointwise manner, as summarized in the following lemma. The interested reader is referred to Agarwal et al. [1, Lemma C.2] for details.

Lemma 9

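Consider the softmax PG method (12). One has

$$\begin{aligned} V^{(t+1)}(s) \ge V^{(t)}(s) \qquad \text {and} \qquad Q^{(t+1)}(s,a) \ge Q^{(t)}(s,a) \end{aligned}$$

for any state-action pair (s, a) and any \(t\ge 0\), provided that \(0<\eta < (1-\gamma )^2 / 5\).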
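Lemma 9 can also be observed numerically. The Python sketch below runs exact-gradient softmax PG from the uniform policy (\(\theta ^{(0)}=0\)) on a small random tabular MDP with rewards in [0, 1], using a stepsize inside the range \(0<\eta <(1-\gamma )^2/5\), and checks that every \(V^{(t)}(s)\) and \(Q^{(t)}(s,a)\) is non-decreasing in t. The test MDP, the helper names, the policy evaluation by fixed-point iteration and the gradient expression \((1-\gamma )^{-1}d_\mu (s)\pi (a\,|\,s)A(s,a)\) are illustrative assumptions, not the construction analyzed in this paper.

```python
import math
import random

def softmax(row):
    m = max(row)  # subtract the max for numerical stability
    w = [math.exp(v - m) for v in row]
    z = sum(w)
    return [x / z for x in w]

def random_mdp(S, A, seed=0):
    """Hypothetical test MDP: random kernel P[s][a][s'] and rewards R[s][a] in [0, 1]."""
    rng = random.Random(seed)
    P = []
    for _ in range(S):
        rows = []
        for _ in range(A):
            w = [rng.random() for _ in range(S)]
            z = sum(w)
            rows.append([x / z for x in w])
        P.append(rows)
    R = [[rng.random() for _ in range(A)] for _ in range(S)]
    return P, R

def evaluate(P, R, pi, gamma, iters=400):
    """Approximate V^pi and Q^pi by iterating the Bellman equation to its fixed point."""
    S, A = len(R), len(R[0])
    V = [0.0] * S
    for _ in range(iters):
        Q = [[R[s][a] + gamma * sum(P[s][a][t] * V[t] for t in range(S))
              for a in range(A)] for s in range(S)]
        V = [sum(pi[s][a] * Q[s][a] for a in range(A)) for s in range(S)]
    return V, Q

def visitation(P, pi, mu, gamma, iters=400):
    """Discounted visitation d(s) = (1 - gamma) * sum_t gamma^t * Pr(s_t = s | s_0 ~ mu)."""
    S, A = len(mu), len(pi[0])
    d = list(mu)
    for _ in range(iters):
        d = [(1 - gamma) * mu[t]
             + gamma * sum(d[s] * pi[s][a] * P[s][a][t] for s in range(S) for a in range(A))
             for t in range(S)]
    return d

def run_softmax_pg(P, R, mu, gamma, eta, T):
    """T exact-gradient softmax PG steps from theta = 0; returns [(V^(t), Q^(t)) for t = 0..T]."""
    S, A = len(R), len(R[0])
    theta = [[0.0] * A for _ in range(S)]
    history = []
    for t in range(T + 1):
        pi = [softmax(row) for row in theta]
        V, Q = evaluate(P, R, pi, gamma)
        history.append((V, Q))
        if t == T:
            break
        d = visitation(P, pi, mu, gamma)
        theta = [[theta[s][a] + eta * d[s] * pi[s][a] * (Q[s][a] - V[s]) / (1 - gamma)
                  for a in range(A)] for s in range(S)]
    return history

def pointwise_monotone(history, tol=1e-10):
    """True if V^(t+1)(s) >= V^(t)(s) and Q^(t+1)(s, a) >= Q^(t)(s, a) for all t, s, a."""
    for (V0, Q0), (V1, Q1) in zip(history, history[1:]):
        if any(v1 < v0 - tol for v0, v1 in zip(V0, V1)):
            return False
        if any(q1 < q0 - tol for r0, r1 in zip(Q0, Q1) for q0, q1 in zip(r0, r1)):
            return False
    return True

gamma = 0.8
P, R = random_mdp(S=5, A=3, seed=1)
mu = [0.2] * 5
eta = 0.9 * (1 - gamma) ** 2 / 5  # inside 0 < eta < (1 - gamma)^2 / 5
history = run_softmax_pg(P, R, mu, gamma, eta, T=30)
print(pointwise_monotone(history))
```

A check on one instance is of course not a proof; the tolerance `tol` only absorbs floating-point round-off in the approximate policy evaluation.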

The preceding monotonicity feature, in conjunction with the uniform initialization scheme, ensures non-negativity of value function estimates throughout the execution of the algorithm.

Lemma 10

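Consider the softmax PG method (12), and suppose that the initial policy \(\pi ^{(0)}(\cdot \,|\,s)\) is the uniform distribution over the action space \({\mathcal {A}}_s\) for every \(s\in {\mathcal {S}}\), and that \(0< \eta < (1-\gamma )^2/5\). Then one has

$$\begin{aligned} V^{(t)}(s) \ge 0 \qquad \text {for all } s\in {\mathcal {S}} \text { and all } t\ge 0. \end{aligned}$$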

Proof

The only negative rewards in our constructed MDP are \(r(s_1,a_0)\) for \(s_1\in {\mathcal {S}}_1\) and \(r(s_2,a_0)\) for \(s_2\in {\mathcal {S}}_2\). When \(\pi ^{(0)}(\cdot \,|\,s_1)\) is uniformly distributed, the MDP specification (22) gives

Similarly, one has \(V^{(0)}(s_2)=0\) for all \(s_2\in {\mathcal {S}}_2\). Applying Lemma 9 then yields \(V^{(t)}(s)\ge V^{(0)}(s) \ge 0\) for any \(s\in {\mathcal {S}}_1 \cup {\mathcal {S}}_2\) and any \(t\ge 0\). From the Bellman equation, it is easily seen that the value function \(V^{(t)}\) of any other state is a linear combination, with non-negative coefficients, of \(\{r(s,a)\,|\,s\notin {\mathcal {S}}_1, s\notin {\mathcal {S}}_2\}\), \(\{V^{(t)}(s_1) \,|\,s_1\in {\mathcal {S}}_1 \}\) and \(\{V^{(t)}(s_2)\,|\,s_2\in {\mathcal {S}}_2\}\), all of which are non-negative. It thus follows that \(V^{(t)}(s)\ge 0\) for any \(s\in {\mathcal {S}}\) and any \(t\ge 0\). \(\square \)
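For the two buffer states, the initial values can be computed directly. Assuming, as the form of (64a) and (64b) indicates, that the action space at states 1 and 2 is \(\{a_0,a_1\}\), the uniform initialization places probability 1/2 on each action, and Lemma 8(iv) gives

$$\begin{aligned} V^{(0)}(1)&=-\gamma ^{2}\cdot \tfrac{1}{2}+\gamma ^{2}\cdot \tfrac{1}{2}=0, \\ V^{(0)}(2)&=-\gamma ^{4}\cdot \tfrac{1}{2}+\gamma ^{4}\cdot \tfrac{1}{2}=0. \end{aligned}$$

By Lemma 9, \(V^{(t)}(1)\ge 0\) and \(V^{(t)}(2)\ge 0\) then hold for every \(t\ge 0\), in agreement with Lemma 10.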

A.2 A type of recursive relation

In addition, we record a type of recursive relation that commonly arises when studying the dynamics of gradient descent [6]. The proof of the following lemma can be found in Appendix A.5.
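The bounds in parts (i) to (iii) of Lemma 11, stated next, can be sanity-checked numerically. The Python sketch below iterates the extremal recursions \(x_t = x_{t-1} \mp c\,x_{t-1}^2\), which satisfy (67a) and (68a) with equality for \(c_{\textrm{l}}=c_{\textrm{u}}=c\), and (69a) with equality for \(c_-=c\), and then compares the iterates with (67b), (68b) and (69b). It is an illustration only, not part of the proof; the helper names and parameter values are hypothetical.

```python
def quadratic_recursion(x0, c, sign, T):
    """Return [x_0, ..., x_T] with x_t = x_{t-1} + sign * c * x_{t-1}**2 (equality case)."""
    xs = [x0]
    for _ in range(T):
        xs.append(xs[-1] + sign * c * xs[-1] ** 2)
    return xs

def check_parts_i_ii(x0, c, T):
    """Check (67b) with c_l = c and (68b) with c_u = c along the decreasing recursion."""
    assert c * x0 <= 0.5, "part (i) needs c_l * x_0 <= 1/2"
    xs = quadratic_recursion(x0, c, -1, T)
    lower_ok = all(x >= 1 / (2 * c * t + 1 / x0) for t, x in enumerate(xs))
    upper_ok = all(x <= 1 / (c * t + 1 / x0) for t, x in enumerate(xs))
    return lower_ok, upper_ok

def first_crossing(x0, c, cx, max_iter=10**6):
    """Smallest t0 with x_{t0} >= cx for the increasing recursion x_t = x_{t-1} + c * x_{t-1}**2."""
    x, t = x0, 0
    while x < cx:
        x += c * x * x
        t += 1
        if t > max_iter:
            raise RuntimeError("threshold not reached")
    return t

print(check_parts_i_ii(x0=0.5, c=0.8, T=1000))
x0, c, cx = 0.01, 0.5, 1.0
t0 = first_crossing(x0, c, cx)
print(t0, "<=", (1 + c * cx) / (c * x0))  # part (iii): t0 <= (1 + c_- c_x) / (c_- x_0)
```

Since each recursion holds with equality, the generated sequence meets the hypotheses of the corresponding parts; any other positive sequence satisfying the stated inequalities could be substituted.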

Lemma 11

Consider a positive sequence \(\{x_t\}_{t\ge 0}\).

(i) Suppose that \(x_t\le x_{t-1}\) for all \(t>0\). If there exists some quantity \(c_{\textrm{l}} >0\) obeying \(c_{\textrm{l}} x_0\le 1/2\) and

$$\begin{aligned} x_t \ge x_{t-1} - c_{\textrm{l}} x_{t-1}^2 \qquad \text {for all }t > 0, \end{aligned}\tag{67a}$$

then one has

$$\begin{aligned} x_{t}\ge \frac{1}{2c_{\textrm{l}} t + \frac{1}{x_{0}}} \qquad \text {for all }t \ge 0. \end{aligned}\tag{67b}$$

(ii) If there exists some quantity \(c_{\textrm{u}} >0\) obeying

$$\begin{aligned} x_t \le x_{t-1} - c_{\textrm{u}} x_{t-1}^2 \qquad \text {for all }t > 0, \end{aligned}\tag{68a}$$

then it follows that

$$\begin{aligned} x_{t}\le \frac{1}{c_{\textrm{u}} t + \frac{1}{x_{0}}} \qquad \text {for all }t \ge 0. \end{aligned}\tag{68b}$$

(iii) Suppose that \(0<x_{t} < c_{x}\) for all \(t<t_{0}\) and \(x_{t_0}\ge c_{x}\) for some quantity \(c_x>0\). Assume that

$$\begin{aligned} x_{t}\ge x_{t-1}+c_{-}x_{t-1}^{2}\qquad \text {for all }0<t\le t_0 \end{aligned}\tag{69a}$$

for some quantity \(c_- >0\). Then one necessarily has

$$\begin{aligned} t_0 \le \frac{1+c_{-}c_x}{c_- x_{0}}. \end{aligned}\tag{69b}$$

(iv)