Abstract

The question whether the Simplex Algorithm admits an efficient pivot rule remains one of the most important open questions in discrete optimization. While many natural, deterministic pivot rules are known to yield exponential running times, the random-facet rule was shown to have a subexponential running time. For a long time, Zadeh’s rule remained the most prominent candidate for the first deterministic pivot rule with subexponential running time. We present a lower bound construction that shows that Zadeh’s rule is in fact exponential in the worst case. Our construction is based on a close relation to the Strategy Improvement Algorithm for Parity Games and the Policy Iteration Algorithm for Markov Decision Processes, and we also obtain exponential lower bounds for Zadeh’s rule in these contexts.


1 Introduction

The quest for discovering the best pivot rule for the Simplex Algorithm [5] remains one of the most important challenges in discrete optimization. In particular, while several other weakly polynomial algorithms for solving Linear Programs have been proposed in the past [3, 9, 27,28,29], no fully “combinatorial” algorithm with strongly polynomial running time is known to date. In fact, the question whether such an algorithm exists is contained in Smale’s list of 18 mathematical problems for the 21st century, among other famous unsolved problems like the Riemann hypothesis and the P versus NP problem [36]. The Simplex Algorithm is inherently combinatorial and may yield a strongly polynomial algorithm if a suitable pivot rule exists. The question of which theoretical worst-case running time can be achieved with a pivot rule for the Simplex Algorithm is closely related to the question of how large the (combinatorial) diameter of a polytope can be, and, in particular, to the weak Hirsch conjecture, which states that the diameter is polynomially bounded [5, 35, 41].

For a variety of natural pivot rules, exponential worst-case examples were found soon after the Simplex Algorithm was proposed [1, 20, 30]. These examples are highly imbalanced in the sense that they cause some improving directions to be selected by the pivot rule only rarely, while others are selected often. Randomized pivot rules were proposed as a way to average out the behavior of the Simplex Algorithm and to thus avoid imbalanced behavior. The hope that this may lead to a better worst-case performance was met when subexponential upper bounds were eventually established for the random-facet pivot rule [22, 25, 32]. Other promising candidates for efficient pivot rules were deterministic “pseudo-random” rules that balance the behavior of the algorithm explicitly by considering all past decisions in each step, instead of obliviously deciding for improvements independently. The two most prominent examples of such pivot rules are Cunningham’s rule [4], which fixes an order of all possible improvement directions at the start and, in each step, picks the next improving direction in this order in round-robin fashion, and Zadeh’s rule [43], which, in each step, picks an improving direction chosen least often so far. By design, bad examples are much more difficult to construct for these more balanced pivot rules, and it took more than 30 years until the first lower bounds were established. Eventually, a subexponential lower bound was shown for the random-facet rule [17, 18, 21] and the random-edge rule [17, 33]. Most recently, a subexponential lower bound was shown for Zadeh’s rule [7, 15], and an exponential lower bound for Cunningham’s rule [2]. An exponential lower bound for Zadeh’s rule is known on Acyclic Unique Sink Orientations [39], but it is unclear whether the corresponding construction can be realized as a Linear Program.
Consequently, Zadeh’s rule remained the only promising candidate for a deterministic pivot rule to match the subexponential running time of the random-facet rule.

Local search algorithms similar to the Simplex Algorithm are important in other domains, like Vöge and Jurdziński’s Strategy Improvement Algorithm for Parity Games [42] and Howard’s Policy Iteration Algorithm for Markov Decision Processes [23]. Much like the Simplex Algorithm, these algorithms rely on a pivot rule that determines which local improvement to perform in each step. And much like for the Simplex Algorithm, many natural deterministic pivot rules for these algorithms have been shown to be exponential [2, 10, 12, 13], while a subexponential upper bound has been shown for the random-facet rule [16, 18, 25, 26, 32]. Again, Zadeh’s rule remained as a promising candidate for a deterministic subexponential pivot rule.

Our results and techniques. In this paper, we give the first exponential lower bound for Zadeh’s pivot rule for the Strategy Improvement Algorithm for Parity Games, for the Policy Iteration Algorithm for Markov Decision Processes, and for the Simplex Algorithm. This closes a long-standing open problem by eliminating Zadeh’s pivot rule as a candidate for a deterministic, subexponential pivot rule in each of these three areas (up to tie-breaking). We note that while the lower bound for the Simplex Algorithm is arguably our most important result, the lower bounds for Parity Games and Markov Decision Processes are important in their own right and complement previous results in these areas [10, 12, 13, 16].

Our lower bound construction is based on the technique used in [2, 15] (among others). In particular, we construct a Parity Game that forces the Strategy Improvement Algorithm to emulate a binary counter by enumerating strategies corresponding to the natural numbers 0 to \(2^{n}-1\). The construction is then converted into a Markov Decision Process that behaves similarly (but not identically) regarding the Policy Iteration Algorithm. Finally, using a well-known transformation, the Markov Decision Process can be turned into a Linear Program for which the Simplex Algorithm mimics the behavior of the Policy Iteration Algorithm. We remark that we use an artificial, but systematic and polynomial time computable, tie-breaking rule for the pivot step whenever Zadeh’s rule does not yield a unique improvement direction. Importantly, while the tie-breaking rule is carefully crafted to simplify the analysis, conceptually, our construction is not based on exploiting the tie-breaking rule. Note that fixing a tie-breaking rule cannot be avoided when analyzing Zadeh’s pivot rule, since for every Markov Decision Process of size n there exists a tie-breaking rule tailored to this instance such that the Policy Iteration Algorithm takes at most n steps [14, Cor. 4.79].

Roughly speaking, much like the subexponential construction in [15], our construction consists of multiple levels, one for each bit of the counter. The subexponential construction of [15] requires each level to connect to the level of the least significant set bit of the currently represented number. This yields a quadratic number \(m=\varTheta (n^2)\) of edges, so the resulting lower bound of \(2^{\varOmega (n)} = 2^{\varOmega (\sqrt{m})}\) is only subexponential in the size \(\varTheta (m)\) of the construction. In contrast, our construction only needs each level to connect to one of the first two levels, depending on whether the currently represented number is even or odd. Very roughly, this is the key idea of our result, since it allows us to reduce the size of the construction to \(\varTheta (n)\), which leads to an exponential lower bound. However, to make this change possible, many other technical details have to be addressed; in particular, we are no longer able to carry the construction for Parity Games over to Markov Decision Processes as-is.

A challenge when constructing a lower bound for Zadeh’s rule is to keep track not only of the exact sets of improving directions in each step, but also of the exact number of times every improving direction was selected in the past. In contrast, the exponential lower bound construction for Cunningham’s rule [2] “only” needs to keep track of the next improving direction in the fixed cyclic order. As a consequence, the full proof of our result is very technical, because it requires us to consider all possible improvements in every step, and there are many transitional steps between configurations representing natural numbers. In this paper, we give an exact description of our construction and an outline of our proof. A complete and detailed proof can be found in the full version [6]. Importantly, our construction has been implemented and tested empirically for consistency with our formal treatment, see Appendix A. The resulting animations of the execution for \(n=3\) resp. \(n=4\), which take 160 resp. 466 steps, are available online [24].

2 Parity games and strategy improvement

A Parity Game (PG) is a two-player game that is played on a directed graph where every vertex has at least one outgoing edge. Formally, it is defined as a tuple \(G=(V_0,V_1,E,\varOmega )\), where \(V_0\cap V_1=\emptyset \), \((V_0\cup V_1,E)\) is a directed graph and \(\varOmega :V_0\cup V_1\rightarrow \mathbb {N}\) is the priority function. The set \(V_p\) is the set of vertices of player \(p\in \{0,1\}\) and the set \(E_p{:}{=}\{(v,w)\in E:v\in V_p\}\) is the set of edges of player \(p\in \{0,1\}\). We let \(V{:}{=}V_0\cup V_1\). A play in G is an infinite walk in the graph. The winner of a play is determined by the highest priority that occurs infinitely often along the walk. If this priority is even, player 0 wins, otherwise, player 1 wins.

Formally, a play in G can be described by a pair of strategies. A strategy for player p is a function that chooses one outgoing edge for each vertex of player p. To be precise, a (deterministic positional) strategy for player p is a function \(\sigma :V_p\rightarrow V\) that selects for each vertex \(v\in V_p\) a target vertex \(\sigma (v)\) such that \((v,\sigma (v))\in E_p\) for all \(v\in V_p\). Throughout this paper we only consider deterministic positional strategies and henceforth simply refer to them as strategies. Two strategies \(\sigma ,\tau \) for players 0,1 and a starting vertex v then define a unique play starting at v, whose walk is determined by the strategies of the players. A play can thus fully be described by a tuple \((\sigma ,\tau ,v)\) and is denoted by \(\pi _{\sigma ,\tau ,v}\). A player 0 strategy \(\sigma \) is winning for player 0 at vertex v if player 0 is the winner of every play \(\pi _{\sigma ,\tau ,v}\), regardless of \(\tau \). Winning strategies for player 1 are defined analogously. A fundamental result in the theory of Parity Games is that, for every starting vertex, there is always a winning strategy for exactly one of the two players. The computational problem of solving a parity game consists of finding the corresponding partition of V.
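To make the notion of a play concrete, the following Python sketch (an illustration, not part of the construction) follows two positional strategies from a start vertex. Since every vertex then has exactly one chosen successor, the walk consists of a finite prefix followed by a cycle that repeats forever, so the vertices occurring infinitely often are exactly those on the cycle. The function names and the dictionary encoding of \(\sigma \), \(\tau \) and \(\varOmega \) are assumptions made for illustration.

```python
def play_lasso(sigma: dict, tau: dict, start) -> tuple:
    """Follow sigma (player 0) and tau (player 1) from `start`.

    Every vertex has exactly one chosen successor, so the walk eventually
    revisits a vertex; return (prefix, cycle) of the resulting lasso.
    """
    succ = {**sigma, **tau}       # V_0 and V_1 are disjoint, so no key clashes
    first_visit = {}              # vertex -> position of its first visit
    walk = []
    v = start
    while v not in first_visit:
        first_visit[v] = len(walk)
        walk.append(v)
        v = succ[v]
    k = first_visit[v]            # the walk re-enters its cycle at position k
    return walk[:k], walk[k:]


def winner(omega: dict, sigma: dict, tau: dict, start) -> int:
    """Return 0 if player 0 wins the play pi_{sigma,tau,start}, else 1.

    Only the cycle is visited infinitely often, so the parity of the highest
    priority on the cycle decides the play.
    """
    _, cycle = play_lasso(sigma, tau, start)
    return max(omega[v] for v in cycle) % 2
```

For instance, with priorities 2, 3 and 4 on vertices a, b and c, the strategies a to b, b to c, c to a produce the cycle a, b, c with highest priority 4, so player 0 wins; if player 1 instead keeps b on a self-loop, the highest recurring priority is 3 and player 1 wins.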

Theorem 1

(e.g. [11, 31]) In every Parity Game, V can be partitioned into winning sets \((W_0,W_1)\), where player p has a positional winning strategy for each starting vertex \(v\in W_p\).

2.1 Vertex valuations, the strategy improvement algorithm, and sink games

We now discuss the Strategy Improvement Algorithm of Vöge and Jurdziński [42] and its theoretical background. We introduce the concept of vertex valuations, define a special class of games called sink games, to which our construction belongs, and specify vertex valuations for this class of games. We refer to [2, 14] for a more in-depth and general discussion of these topics.

Fix a pair \(\sigma ,\tau \) of strategies for players 0,1, respectively. The idea of vertex valuations is to assign a valuation to every \(v\in V\) that encodes how “profitable” vertex v is for player 0. By defining a suitable pre-order on these valuations, this enables us to compare the valuations of vertices and “improve” the strategy \(\sigma \) by changing the target \(\sigma (v)\) of a vertex v to a more “profitable” vertex \(w\ne \sigma (v)\) with \((v,w)\in E\). Since there are only finitely many strategies and vertices, the strategy of player 0 can only be improved a finite number of times, eventually resulting in a so-called optimal strategy for player 0. It is known (e.g., [14, 42]) that an optimal strategy can then be used to determine the winning sets \(W_0,W_1\) of the Parity Game and thus to solve the game.

Formally, vertex valuations are given as a totally ordered set \((U,\preceq )\). For every pair of strategies \(\sigma ,\tau \), we are given a function \(\varXi _{\sigma ,\tau }:V\rightarrow U\) assigning vertex valuations to vertices. Since U is totally ordered, this induces a preorder of the vertices for fixed strategies \(\sigma ,\tau \). To eliminate the dependency on the player 1 strategy, we define the vertex valuation of v with respect to \(\sigma \) by \(\varXi _{\sigma }(v){:}{=}\min _{\preceq }\varXi _{\sigma ,\tau }(v)\), where the minimum is taken over all player 1 strategies \(\tau \). Formally, if \(\varXi _{\sigma ,\tau }(\tau (v))\preceq \varXi _{\sigma ,\tau }(u)\) for all \((v,u)\in E_1\), then the player 1 strategy \(\tau \) is called a counterstrategy for \(\sigma \). It is well-known that counterstrategies exist and can be computed efficiently [42]. For a strategy \(\sigma \), an arbitrary but fixed counterstrategy is denoted by \(\tau ^{\sigma }\).

We can extend this ordering to a partial ordering of strategies by defining \(\sigma \unlhd \sigma '\) if and only if \(\varXi _{\sigma }(v)\preceq \varXi _{\sigma '}(v)\) for all \(v\in V\). We write \(\sigma \lhd \sigma '\) if \(\sigma \unlhd \sigma '\) and \(\sigma \ne \sigma '\). Given a strategy \(\sigma \), a strategy \(\sigma '\) with \(\sigma \lhd \sigma '\) can be obtained by applying an improving switch. Intuitively, an improving switch is an edge e such that including e in \(\sigma \) improves the strategy with respect to \(\unlhd \). Formally, let \(e=(v,u)\in E_0\) with \(\sigma (v)\ne u\). We define the strategy \(\sigma e\) via \(\sigma e(v'){:}{=}\sigma (v')\) if \(v'\ne v\) and \(\sigma e(v){:}{=}u\). The edge e is improving for \(\sigma \) if \(\sigma \lhd \sigma e\), and we denote the set of improving switches for \(\sigma \) by \(I_{\sigma }\).

The Strategy Improvement Algorithm now operates as follows. Given an initial strategy \(\iota \), it applies improving switches until it reaches a strategy \(\sigma ^*\) with \(I_{\sigma ^*}=\emptyset \). Such a strategy is called optimal, and a strategy \(\sigma ^*\) is optimal if and only if \(\sigma \unlhd \sigma ^*\) for all player 0 strategies \(\sigma \) [42]. The running time of this algorithm highly depends on the order in which improving switches are applied, a point that we discuss in more detail later.
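Once the improving switches are available, the algorithm is a short loop. The Python sketch below assumes a hypothetical oracle `improving_switches(sigma)` that returns \(I_{\sigma }\) (for Sink Games it could be derived from Theorem 2) and combines it with Zadeh’s rule as described in the introduction: among all improving switches, apply one that has been applied least often so far. The default tie-breaking by `min` is only a placeholder and is not the tie-breaking rule used for the lower bound.

```python
from collections import Counter


def strategy_improvement_zadeh(initial, improving_switches, tie_break=min):
    """Strategy improvement with Zadeh's least-entered pivot rule (sketch).

    initial            -- dict: player 0 vertex -> chosen successor (iota)
    improving_switches -- hypothetical oracle: callable(sigma) -> set of edges (v, w)
    tie_break          -- placeholder rule choosing among equally rarely used edges
    Returns the final strategy and the sequence of applied switches.
    """
    sigma = dict(initial)
    usage = Counter()                  # how often each edge has been applied so far
    trace = []
    while True:
        candidates = improving_switches(sigma)
        if not candidates:             # I_sigma is empty, so sigma is optimal
            return sigma, trace
        fewest = min(usage[e] for e in candidates)
        least_used = [e for e in candidates if usage[e] == fewest]
        v, w = tie_break(least_used)
        sigma[v] = w                   # sigma becomes sigma e for e = (v, w)
        usage[(v, w)] += 1
        trace.append((v, w))
```

As a toy check, an oracle over choices 0, 1, 2 for two vertices a and b, in which a switch is improving whenever it raises a vertex's choice, terminates after four switches when started from choice 0 everywhere. The counter matters only when an edge becomes improving again after having been left, which is exactly what happens repeatedly in the lower bound construction.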

This terminology allows us to introduce a special class of Parity Games, called sink games. This class allows for an easy definition of the vertex valuations as discussed after the definition.

Definition 1

A Parity Game \(G=(V_0,V_1,E,\varOmega )\) together with an initial player 0 strategy \(\iota \) is a Sink Game if the following two statements hold.

1. There is a vertex \(t\in V_1\) with \((t,t)\in E\) and \(\varOmega (t)=1\) reachable from all vertices. In addition, \(\varOmega (v)>\varOmega (t)\) for all \(v\in V{\setminus }\{t\}\). This unique vertex t is called the sink of the sink game.

2. For each player 0 strategy \(\sigma \) with \(\iota \unlhd \sigma \) and each vertex v, every play \(\pi _{\sigma ,\tau ^{\sigma },v}\) ends in t.

Let \(G=(V_0,V_1,E,\varOmega )\) and \(\iota \) define a Sink Game. To simplify the presentation, assume that \(\varOmega \) is injective. Since G is a Sink Game, every play \(\pi _{\sigma ,\tau ^\sigma ,v}\) in G can be represented as the walk \(\pi _{\sigma ,\tau ^\sigma ,v}=v,v_2,\dots ,v_k,(t)^{\infty }\). In particular, a play can be identified with its path component \(v,v_2,\dots ,v_k\). Now, defining \(\varXi _{\sigma }(v)\) as the path component of \(\pi _{\sigma ,\tau ^\sigma ,v}\) is a well-studied choice of vertex valuations. Since the strategies are positional, the path component visits each vertex at most once and can be identified with its set of vertices. To give a total ordering of the vertex valuations, it thus suffices to give an ordering of all subsets of V.

Let \(M,N\subseteq V, M\ne N\). Intuitively, M is better than N for player 0 if it contains a vertex with large even priority not contained in N, or if there is a vertex with large odd priority contained in N but not in M. Formally, \(v\in M\varDelta N\) is called the most significant difference of M and N if \(\varOmega (v)>\varOmega (w)\) for all \(w\in M\varDelta N, w\ne v\). The most significant difference of M and N is denoted by \(\varDelta (M,N)\) and allows us to define an ordering \(\prec \) on the subsets of V. For \(M,N\subseteq V, M\ne N\) we define
\[ M\prec N \;:\Longleftrightarrow \; \bigl (\varDelta (M,N)\in N \text { and } \varOmega (\varDelta (M,N)) \text { is even}\bigr ) \text { or } \bigl (\varDelta (M,N)\in M \text { and } \varOmega (\varDelta (M,N)) \text { is odd}\bigr ). \]

Note that \(\prec \) is a total ordering as we assume \(\varOmega \) to be injective. We mention here that injectivity is not necessary – the most significant difference of any two vertex valuations being unique suffices.
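The ordering \(\prec \) is easy to evaluate mechanically. The following Python sketch (function names are illustrative assumptions) computes the most significant difference and decides whether \(M\prec N\), following the intuition stated above: a large even priority favours the set containing it, while a large odd priority penalises the set containing it. It assumes that \(\varOmega \) is injective, or at least that the most significant difference is unique.

```python
def most_significant_difference(M, N, omega):
    """Vertex of the symmetric difference of M and N with the highest priority."""
    diff = set(M) ^ set(N)
    if not diff:
        raise ValueError("M and N must differ")
    return max(diff, key=lambda v: omega[v])   # unique if omega is injective


def precedes(M, N, omega):
    """Return True iff M < N, i.e. N is better than M for player 0."""
    d = most_significant_difference(M, N, omega)
    if omega[d] % 2 == 0:
        return d in N      # the even priority favours the set containing it
    return d in M          # the odd priority penalises the set containing it
```

With priorities x: 4, y: 3, z: 2, for example, the set {z} is worse than {x}, and any set containing y is worse than the same set without y.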

The following theorem summarizes the most important aspects related to Parity Games, vertex valuations and improving switches. Note that the construction of vertex valuations given here is a simplified version of the general concept of vertex valuations used for Parity Games. It is, however, in accordance with the general construction and we refer to [14] for a more detailed discussion.

Theorem 2

[42] Let \(G=(V_0,V_1,E,\varOmega )\) be a Sink Game and \(\sigma \) be a player 0 strategy.

1. The vertex valuations of a player 0 strategy are polynomial-time computable.

2. There is an optimal player 0 strategy \(\sigma ^*\) with respect to the ordering \(\lhd \).

3. If \(I_{\sigma }=\emptyset \), then \(\sigma \) is optimal.

4. We have \(I_{\sigma }=\{(v,w)\in E_0:\varXi _{\sigma }(\sigma (v))\prec \varXi _{\sigma }(w)\}\) and \(\sigma \lhd \sigma e\) for all \(e\in I_{\sigma }\).

5. Given an optimal player 0 strategy, the winning sets \(W_0\) and \(W_1\) of player 0 and player 1 can be computed in polynomial time.

3 Lower bound construction

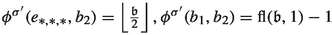

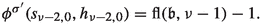

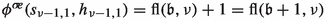

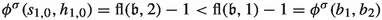

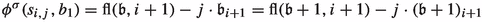

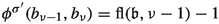

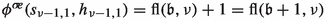

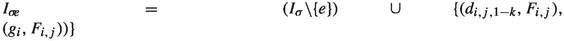

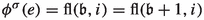

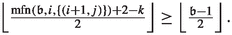

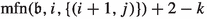

In this section, we describe a PG \(S_n=(V_0,V_1,E,\varOmega )\) such that the Strategy Improvement Algorithm performs at least \(2^n\) iterations when using Zadeh’s pivot rule and a specific tie-breaking rule. Before giving a formal definition, we give a high-level intuition of the main idea of the construction. A simplified visualization of the construction is given in Fig. 1.

Fig. 1 Visualization of the general structure of the binary counter for \(n=4\). Level 1 encodes the least significant bit and level 4 encodes the most significant bit. The left picture shows the cycle centers and their distribution across the levels. The two pictures on the right show settings of the cycles representing the numbers 11 and 3, respectively.

The key idea is that \(S_n\) simulates an n-digit binary counter. We thus introduce notation related to binary counting. It will be convenient to consider counter configurations with more than n bits, where unused bits are zero. In particular, we always interpret bit \(n+1\) as 0. Formally, we denote the set of n-bit configurations by \(\mathcal {B}_n{:}{=}\{\mathfrak {b}\in \{0,1\}^{\infty }:\mathfrak {b}_i=0\quad \forall i>n\}\). We start with index one, hence a counter configuration \(\mathfrak {b}\in \mathcal {B}_n\) is a tuple \((\mathfrak {b}_{n},\ldots ,\mathfrak {b}_1)\). Here, \(\mathfrak {b}_1\) is the least and \(\mathfrak {b}_n\) is the most significant bit. The integer value of \(\mathfrak {b}\in \mathcal {B}_n\) is \(\sum _{i=1}^n\mathfrak {b}_i2^{i-1}\). We identify the integer value of \(\mathfrak {b}\) with its counter configuration and use the natural ordering of \(\mathbb {N}\) to order counter configurations. For \(\mathfrak {b}\in \mathcal {B}_n,\mathfrak {b}\ne 0\), we define \(\nu (\mathfrak {b}) {:}{=}\min \{i\in \{1,\dots ,n\}:\mathfrak {b}_i = 1\}\) to be the least significant set bit of \(\mathfrak {b}\).
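The counter notation can be mirrored directly in code. This Python sketch stores a configuration as a tuple \((\mathfrak {b}_n,\dots ,\mathfrak {b}_1)\), most significant bit first as in the text; the function names `value` and `nu` are illustrative.

```python
def value(bits):
    """Integer value sum_i b_i * 2^(i-1) of bits = (b_n, ..., b_1)."""
    n = len(bits)
    # pair each entry with its 1-based index i, which runs from n down to 1
    return sum(b << (i - 1) for i, b in zip(range(n, 0, -1), bits))


def nu(bits):
    """Least significant set bit nu(b), 1-indexed; undefined for b = 0."""
    n = len(bits)
    for i in range(1, n + 1):
        if bits[n - i] == 1:      # bits[n - i] holds b_i
            return i
    raise ValueError("nu is undefined for the zero configuration")
```

For example, the configuration (1, 0, 1, 1) has value 11 and least significant set bit 1, while (0, 1, 0, 0) has value 4 and least significant set bit 3.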

The PG \(S_n\) consists of n (nearly) identical levels and each level encodes one bit of the counter. Certain strategies and corresponding counterstrategies in \(S_n\) are then interpreted as binary numbers. If the Strategy Improvement Algorithm enumerates at least one player 0 strategy per \(\mathfrak {b}\in \mathcal {B}_n\) before finding the optimal strategy, it enumerates at least \(2^n\) strategies. Since the game has size linear in n, this then establishes the exponential lower bound.

The main challenge is to obey Zadeh’s pivot rule, as it forces the algorithm to always apply an improving switch that has been applied least often so far. Intuitively, a counter obeying this rule needs to switch bits in a “balanced” way. However, counting from 0 to \(2^n-1\) in binary does not switch individual bits equally often. For example, the least significant bit is switched in every step, while the most significant bit is switched only once. The key idea to overcome this obstacle is to have a substructure in each level that contains two gadgets, called cycle centers. In every iteration of the algorithm, only one of the cycle centers is interpreted as encoding the bit of the current level. This enables us to perform operations within the other cycle center without losing the interpretation of the bit being equal to 0 or 1. This is achieved by an alternating encoding of the bit by the two cycle centers.
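The imbalance is easy to quantify: when counting from 0 to \(2^n-1\), bit i changes exactly when the counter reaches a multiple of \(2^{i-1}\), that is, \(2^{n-i+1}-1\) times. The short Python check below (an illustration, not part of the construction) counts these changes directly.

```python
def flip_counts(n):
    """Return [f_1, ..., f_n], where f_i counts the changes of bit i
    while counting 0, 1, ..., 2^n - 1."""
    flips = [0] * (n + 1)                 # index 0 is unused
    for k in range(1, 2 ** n):
        changed = (k - 1) ^ k             # bits that differ between k - 1 and k
        for i in range(1, n + 1):
            if (changed >> (i - 1)) & 1:
                flips[i] += 1
    return flips[1:]
```

For n = 4 this yields [15, 7, 3, 1]: the least significant bit changes fifteen times, the most significant bit once.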

We now provide more details. Consider some level i, some \(\mathfrak {b}\in \mathcal {B}_n\) and denote the cycle centers of level i by \(F_{i,0}\) and \(F_{i,1}\). One of them now encodes \(\mathfrak {b}_i\). Which of them represents \(\mathfrak {b}_i\) depends on \(\mathfrak {b}_{i+1}\), since we always consider \(F_{i,\mathfrak {b}_{i+1}}\) to encode \(\mathfrak {b}_i\). This cycle center is called the active cycle center of level i, while \(F_{i,1-\mathfrak {b}_{i+1}}\) is called inactive. A cycle center can additionally be closed or open. These terms are used to formalize when a bit is interpreted as 0 or 1. To be precise, \(\mathfrak {b}_i\) is interpreted as 1 if and only if \(F_{i,\mathfrak {b}_{i+1}}\) is closed. In this way, cycle centers encode binary numbers. Since bit \(i+1\) switches every second time bit i switches, counting from 0 to \(2^n-1\) in binary then results in an alternating and balanced usage of both cycle centers of any level as required by Zadeh’s pivot rule.
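To see why the alternating encoding balances the work, the following Python sketch records, for each increment of the counter, which cycle center encodes the bit that becomes 1. When the counter reaches \(\mathfrak {b}\), bit \(i=\nu (\mathfrak {b})\) is set and its active cycle center is \(F_{i,\mathfrak {b}_{i+1}}\). The sketch only counts these events and ignores all intermediate switches of the actual algorithm, so it is an idealised illustration rather than a model of Zadeh’s rule.

```python
from collections import Counter


def closures(n):
    """counts[(i, j)] = how often bit i becomes 1 while F_{i,j} is the active
    cycle center, when counting from 0 to 2^n - 1."""
    counts = Counter()
    for value in range(1, 2 ** n):
        i = (value & -value).bit_length()   # nu(value): the bit that becomes 1
        j = (value >> i) & 1                # b_{i+1} of the new value (0 if i = n)
        counts[(i, j)] += 1
    return counts
```

For n = 3, each of \(F_{1,0}\) and \(F_{1,1}\) is used twice and each of \(F_{2,0}\) and \(F_{2,1}\) once, whereas level n only ever uses \(F_{n,0}\), since bit \(n+1\) is always 0.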

We now describe the construction of a parity game that implements this idea in detail. Fix some \(n\in \mathbb {N}\). The vertex sets \(V_0,V_1\) of the underlying graph are composed as follows:

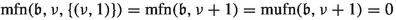

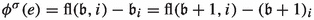

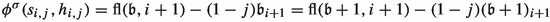

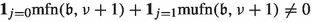

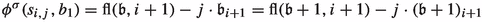

The priorities of the vertices and their sets of outgoing edges are given by Table 1. Note that every vertex \(v\in V_0\) has at most two outgoing edges. For convenience of notation, we henceforth identify the node names \(b_i\) and \(g_i\) for \(i > n\) with t. The graph can be separated into n levels, where the levels \(i<n-1\) are structurally identical and the levels \(n-1\) and n differ slightly from the other levels. The i-th level is shown in Fig. 2, the complete graph of \(S_3\) is shown in Fig. 3.

Fig. 3 The graph \(S_3\) together with a canonical strategy representing the number 3. The dashed copies of the vertices \(g_1,b_1\) and \(b_2\) all refer to the corresponding vertices of levels 1 and 2. Red edges belong to the strategy of player 0, blue edges belong to the counterstrategy of player 1. The dashed edges indicate the spinal path.

The general idea of the construction is the following. Certain pairs of player 0 strategies \(\sigma \) and counterstrategies \(\tau ^{\sigma }\) are interpreted as representing a number \(\mathfrak {b}\in \mathcal {B}_n\). Such a pair of strategies induces a path starting at \(b_1\) and ending at t, traversing the levels \(i\in \{1,\dots ,n\}\) with \(\mathfrak {b}_i=1\) while ignoring levels with \(\mathfrak {b}_i=0\). This path is called the spinal path with respect to \(\mathfrak {b}\in \mathcal {B}_n\). Ignoring and including levels in the spinal path is controlled by the entry vertex \(b_i\) of each level \(i\in \{1,\dots ,n\}\). To be precise, when \(\mathfrak {b}\) is represented, the entry vertex of level i is intended to point towards the selector vertex \(g_i\) of level i if and only if \(\mathfrak {b}_i=1\). Otherwise, i.e., when \(\mathfrak {b}_i=0\), level i is ignored and the entry vertex \(b_i\) points towards the entry vertex of the next level.

Consider a level \(i\in \{1,\dots ,n-2\}\). Attached to the selector vertex \(g_i\) are the cycle centers \(F_{i,0}\) and \(F_{i,1}\) of level i. As described at the beginning of this section, these player 1 vertices are the main structures used for interpreting whether the bit i is equal to one. They alternate in encoding bit i. As discussed before, this is achieved by interpreting the active cycle center \(F_{i,\mathfrak {b}_{i+1}}\) as encoding \(\mathfrak {b}_i\) while the inactive cycle center \(F_{i,1-\mathfrak {b}_{i+1}}\) does not interfere with the encoding. This enables us to manipulate the inactive part of a level without losing the encoded value of \(\mathfrak {b}_i\). To this end, the selector vertex \(g_i\) is used to ensure that the active cycle center is contained in the spinal path.

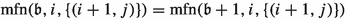

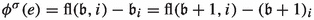

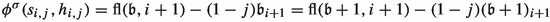

As discussed previously, a cycle center \(F_{i,j}\) can have different configurations. To be precise, it can be closed, halfopen, or open. The configuration of \(F_{i,j}\) is defined via the cycle vertices \(d_{i,j,0}\) and \(d_{i,j,1}\) of the cycle center and the two cycle edges \((d_{i,j,0},F_{i,j})\) and \((d_{i,j,1},F_{i,j})\). More precisely, \(F_{i,j}\) is closed with respect to a player 0 strategy \(\sigma \) if both cycle vertices point towards the cycle center, i.e., when \(\sigma (d_{i,j,0})=\sigma (d_{i,j,1})=F_{i,j}\). If this is the case for exactly one of the two cycle edges, the cycle center \(F_{i,j}\) is called halfopen. A cycle center that is neither closed nor halfopen is called open. An example of the different configurations is given in Fig. 4.
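The three configurations depend only on how many of the two cycle edges the strategy uses. The Python sketch below classifies a cycle center accordingly; the tuple encoding of vertices, such as `("d", i, j, k)` for \(d_{i,j,k}\) and `("F", i, j)` for \(F_{i,j}\), is a hypothetical choice made for illustration.

```python
def configuration(sigma, i, j):
    """Classify the cycle center F_{i,j} under sigma as closed, halfopen or open.

    sigma maps (hypothetically encoded) player 0 vertices to their targets.
    """
    center = ("F", i, j)
    # number of cycle vertices d_{i,j,0}, d_{i,j,1} that point to the cycle center
    pointing = sum(sigma[("d", i, j, k)] == center for k in (0, 1))
    return {2: "closed", 1: "halfopen", 0: "open"}[pointing]
```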

In addition, the cycle center is connected to its upper selection vertex \(s_{i,j}\). This vertex connects the cycle center \(F_{i,j}\) with the first level via the edge \((s_{i,j},b_1)\) and, via the edge \((s_{i,j},h_{i,j})\), with level \(i+2\) through \((h_{i,0},b_{i+2})\) if \(j=0\), or with level \(i+1\) through \((h_{i,1},g_{i+1})\) if \(j=1\). This vertex is thus central in allowing \(F_{i,j}\) to access either the beginning of the spinal path or the next level of the spinal path.
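Combining the entry vertices, the selector vertices and the upper selection vertices, the spinal path can be traced mechanically. The following Python sketch does so for a configuration \((\mathfrak {b}_n,\dots ,\mathfrak {b}_1)\). It rests on simplifying assumptions that are not part of the formal construction: levels \(n-1\) and n are treated like all other levels, the player 0 choices at \(b_i\), \(g_i\) and \(s_{i,j}\) are the intended ones described in this section, and player 1 is assumed to leave a closed cycle center \(F_{i,j}\) towards \(s_{i,j}\).

```python
def spinal_path(bits):
    """Trace the spinal path from b_1 to t for bits = (b_n, ..., b_1).

    Simplified sketch: levels n-1 and n are treated like all other levels,
    and player 1 is assumed to leave a closed cycle center towards s_{i,j}.
    """
    n = len(bits)

    def bit(i):                      # b_i, with b_i = 0 for i > n
        return bits[n - i] if 1 <= i <= n else 0

    path, kind, i = [], "b", 1
    while i <= n:                    # b_i and g_i with i > n are identified with t
        path.append(f"{kind}{i}")
        if kind == "b":              # entry vertex: enter level i iff b_i = 1
            kind, i = ("g", i) if bit(i) == 1 else ("b", i + 1)
        else:                        # selector vertex: use the active cycle center
            j = bit(i + 1)
            path += [f"F{i},{j}", f"s{i},{j}", f"h{i},{j}"]
            # h_{i,1} leads to g_{i+1}, h_{i,0} leads to b_{i+2}
            kind, i = ("g", i + 1) if j == 1 else ("b", i + 2)
    return path + ["t"]
```

For \(\mathfrak {b}=(0,1,1)\) in \(S_3\), the sketch visits b1, g1, F1,1, s1,1, h1,1, g2, F2,0, s2,0, h2,0 and then t: levels 1 and 2 are traversed and level 3 is skipped.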

We next discuss the cycle vertices. If their cycle centers are not closed, these vertices still need to be able to access the spinal path. The valuation of vertices along this path is usually very high and it is almost always very profitable for player 0 vertices to get access to this path. Since the cycle vertices cannot obtain access via the cycle center (as this would, by definition, close the cycle center) they need to “escape” the level in another way. This is handled by the escape vertices \(e_{i,j,0}\) and \(e_{i,j,1}\). The escape vertices are used to connect the levels with higher indices to the first two levels and thus enable each vertex to access the spinal path. To be precise, they are connected with the entry vertex of level 2 and the selector vertex of level 1. In principle, the escape vertices will point towards \(g_1\) when the least significant set bit of the currently represented number has the index 1 and towards \(b_2\) otherwise.

We now formalize the idea of a strategy encoding a binary number by defining the notion of a canonical strategy. Note that the definition also includes some aspects that are purely technical, i.e., solely required for some proofs, and do not have an immediate intuitive interpretation.

Definition 2

Let \(\mathfrak {b}\in \mathcal {B}_n\). A player 0 strategy \(\sigma \) for the Parity Game \(S_n\) is called a canonical strategy for \(\mathfrak {b}\) if it has the following properties.

1. All escape vertices point to \(g_1\) if \(\mathfrak {b}_1=1\) and to \(b_2\) if \(\mathfrak {b}_1=0\).

2. The following hold for all levels \(i\in \{1,\dots ,n\}\) with \(\mathfrak {b}_i=1\):
   (a) Level i needs to be accessible, i.e., \(\sigma (b_i)=g_i\).
   (b) The cycle center \(F_{i,\mathfrak {b}_{i+1}}\) needs to be closed while \(F_{i,1-\mathfrak {b}_{i+1}}\) must not be closed.
   (c) The selector vertex of level i needs to select the active cycle center, i.e., \(\sigma (g_i)=F_{i,\mathfrak {b}_{i+1}}\).

3. The following hold for all levels \(i\in \{1,\dots ,n\}\) with \(\mathfrak {b}_i=0\):
   (a) Level i must not be accessible and needs to be “avoided”, i.e., \(\sigma (b_i)=b_{i+1}\).
   (b) The cycle center \(F_{i,\mathfrak {b}_{i+1}}\) must not be closed.
   (c) If the cycle center \(F_{i,1-\mathfrak {b}_{i+1}}\) is closed, then \(\sigma (g_i)=F_{i,1-\mathfrak {b}_{i+1}}\).
   (d) If neither of the cycle centers \(F_{i,0},F_{i,1}\) is closed, then \(\sigma (g_i)=F_{i,0}\).

4. Let \(\mathfrak {b}_{i+1}=0\). Then, level \(i+1\) is not accessible from level i, i.e., \(\sigma (s_{i,0})=h_{i,0}\) and \(\sigma (s_{i,1})=b_1\).

5. Let \(\mathfrak {b}_{i+1}=1\). Then, level \(i+1\) is accessible from level i, i.e., \(\sigma (s_{i,0})=b_1\) and \(\sigma (s_{i,1})=h_{i,1}\).

6. If \(\mathfrak {b}<2^n-1\), then both cycle centers of level \(\nu (\mathfrak {b}+1)\) are open.

We use \(\sigma _{\mathfrak {b}}\) to denote a canonical strategy for \(\mathfrak {b}\in \mathcal {B}_n\). A canonical strategy representing (0, 1, 1) in \(S_3\) is shown in Fig. 3.
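Items 1 to 3 of Definition 2 are local conditions on \(\sigma \) and can be checked mechanically. The Python sketch below does so; it omits items 4 to 6 for brevity, assumes that every level contains the full set of escape and cycle vertices (ignoring the slight differences of levels \(n-1\) and n), and uses a hypothetical tuple encoding of the vertices. The helper `example_sigma` builds one strategy satisfying items 1 to 3, in which open cycle vertices point to a stand-in target (their escape vertex); it is not claimed to be canonical in the full sense of Definition 2.

```python
def bit(bits, i):
    """b_i for bits = (b_n, ..., b_1); bits beyond n are 0."""
    n = len(bits)
    return bits[n - i] if 1 <= i <= n else 0


def entry(i, n):
    """Entry vertex b_i; for i > n it is identified with the sink t."""
    return ("b", i) if i <= n else "t"


def is_closed(sigma, i, j):
    return sigma[("d", i, j, 0)] == sigma[("d", i, j, 1)] == ("F", i, j)


def canonical_violations(sigma, bits):
    """Labels of the items 1-3 of Definition 2 that sigma violates for bits."""
    n, bad = len(bits), []
    escape_target = ("g", 1) if bit(bits, 1) == 1 else entry(2, n)
    for i in range(1, n + 1):
        if any(sigma[("e", i, j, k)] != escape_target for j in (0, 1) for k in (0, 1)):
            bad.append(f"1@{i}")
        act = bit(bits, i + 1)                    # index of the active cycle center
        if bit(bits, i) == 1:
            if sigma[("b", i)] != ("g", i):
                bad.append(f"2a@{i}")
            if not is_closed(sigma, i, act) or is_closed(sigma, i, 1 - act):
                bad.append(f"2b@{i}")
            if sigma[("g", i)] != ("F", i, act):
                bad.append(f"2c@{i}")
        else:
            if sigma[("b", i)] != entry(i + 1, n):
                bad.append(f"3a@{i}")
            if is_closed(sigma, i, act):
                bad.append(f"3b@{i}")
            if is_closed(sigma, i, 1 - act) and sigma[("g", i)] != ("F", i, 1 - act):
                bad.append(f"3c@{i}")
            if (not is_closed(sigma, i, 0) and not is_closed(sigma, i, 1)
                    and sigma[("g", i)] != ("F", i, 0)):
                bad.append(f"3d@{i}")
    return bad


def example_sigma(bits):
    """Hypothetical strategy satisfying items 1-3 (open cycle vertices use a stand-in)."""
    n, sigma = len(bits), {}
    esc = ("g", 1) if bit(bits, 1) == 1 else entry(2, n)
    for i in range(1, n + 1):
        act = bit(bits, i + 1)
        for j in (0, 1):
            for k in (0, 1):
                sigma[("e", i, j, k)] = esc
                close = bit(bits, i) == 1 and j == act
                sigma[("d", i, j, k)] = ("F", i, j) if close else ("e", i, j, k)
        sigma[("b", i)] = ("g", i) if bit(bits, i) == 1 else entry(i + 1, n)
        sigma[("g", i)] = ("F", i, act) if bit(bits, i) == 1 else ("F", i, 0)
    return sigma
```

For the configuration (0, 1, 1) shown in Fig. 3, `example_sigma` yields no violations, whereas redirecting \(b_3\) to \(g_3\) reports a violation of item 3(a) at level 3.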

As mentioned before, the main structure that is used to determine whether a bit is interpreted as being set are the cycle centers. In fact, any configuration of the cycle centers can be interpreted as an encoded number in the following way.

Definition 3

Let \(\sigma \) be a player 0 strategy for \(S_n\). Then, the induced bit state \(\beta ^\sigma =(\beta _n^\sigma ,\dots ,\beta _1^\sigma )\) is defined inductively, starting from the most significant bit, as follows. We define \(\beta _n^{\sigma }=1\) if and only if \(\sigma (d_{n,0,0})=\sigma (d_{n,0,1})=F_{n,0}\) and, for \(i<n\), \(\beta _i^\sigma =1\) if and only if \(\sigma (d_{i,\beta _{i+1}^{\sigma },0})=\sigma (d_{i,\beta _{i+1}^{\sigma },1})=F_{i,\beta _{i+1}^{\sigma }}\).

This definition agrees with our interpretation of how numbers are encoded: if \(\sigma _{\mathfrak {b}}\) is a canonical strategy for \(\mathfrak {b}\), then \(\beta ^{\sigma _{\mathfrak {b}}}=\mathfrak {b}\).
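The induced bit state can be read off a strategy level by level, starting at the top. The following Python sketch uses a hypothetical tuple encoding of the vertices (`("d", i, j, k)` for \(d_{i,j,k}\), `("F", i, j)` for \(F_{i,j}\)) and treats \(\beta _{n+1}^\sigma \) as 0, so that level n is read from \(F_{n,0}\) as in the definition.

```python
def induced_bit_state(sigma, n):
    """beta^sigma as a tuple (beta_n, ..., beta_1), computed from the top level down."""
    def closed(i, j):
        return sigma[("d", i, j, 0)] == sigma[("d", i, j, 1)] == ("F", i, j)

    beta = {n + 1: 0}                       # level n is read from F_{n,0}
    for i in range(n, 0, -1):
        beta[i] = int(closed(i, beta[i + 1]))   # only the cycle center selected by beta_{i+1} counts
    return tuple(beta[i] for i in range(n, 0, -1))
```

Closing only the cycle center that is not selected by \(\beta _{i+1}^\sigma \) leaves \(\beta _i^\sigma =0\), which reflects why the inactive cycle center can be manipulated without changing the encoded number.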

4 Lower bound for policy iteration on MDPs

In this section we discuss the Markov Decision Process (MDP) that is constructed analogously to the PG \(S_n\). We discuss how this MDP allows the construction of a Linear Program (LP) such that the results obtained for the MDP carry over to the LP formulation. The main idea is to replace player 1 by the “random player” and to choose the probabilities in such a way that applying improving switches in the MDP behaves nearly the same way as in the PG. Note that we continue to use the same language for valuations, strategies and so on in the MDP context, although other notions (like policy instead of strategy) are more common.

We give a brief introduction to the theory of MDPs (see also [2]). Similarly to a PG, an MDP is formally defined as a tuple \((V_0,V_R,E,r,p)\) with underlying directed graph \((V_0\cup V_R,E)\). Here, \(V_0\) is the set of vertices controlled by player 0 and \(V_R\) is the set of randomization vertices. We let \(V{:}{=}V_0\cup V_R\). For \(p\in \{0,R\}\), we define \(E_{p}{:}{=}\{(v,w)\in E:v\in V_p\}\). The set \(E_0\) then corresponds to possible choices that player 0 can make, and each such choice is assigned a reward by the reward function \(r:E_0\rightarrow \mathbb {R}\). The set \(E_R\) corresponds to probabilistic transitions, and transition probabilities are specified by the function \(p:E_R\rightarrow [0,1]\) with \(\sum _{u:(v,u)\in E_R}p(v,u)=1\) for all \(v\in V_R\).

As for \(S_n\), a (player 0) strategy is a function \(\sigma :V_0\rightarrow V\) that selects for each vertex \(v\in V_0\) a target corresponding to an edge, i.e., such that \((v,\sigma (v))\in E_0\). There are several computational tasks that can be investigated for MDPs. They are typically described via an objective. We consider the expected total reward objective for MDPs, which can be formulated using vertex valuations in the following sense. Given an MDP, we define the vertex valuations \(\varXi _{\sigma }^\mathrm {M}(*)\) with respect to a strategy \(\sigma \) as the solution (if it exists) of the following set of equations:

\[
\varXi _{\sigma }^\mathrm {M}(u)={\left\{ \begin{array}{ll} r(u,\sigma (u))+\varXi _{\sigma }^\mathrm {M}(\sigma (u)), & \text {if } u\in V_0,\\ \sum _{v:(u,v)\in E_R}p(u,v)\cdot \varXi _{\sigma }^\mathrm {M}(v), & \text {if } u\in V_R.\end{array}\right. }
\]

We also impose the condition that the values sum up to 0 on each irreducible recurrent class of the Markov chain defined by \(\sigma \), yielding uniqueness [2]. We intentionally use very similar notation as for vertex valuations in the context of Parity Games since this allows for a unified treatment.

We now discuss the Policy Iteration Algorithm and refer to [23] for further details. Similarly to the Strategy Improvement Algorithm for PGs, this algorithm starts with some initial strategy \(\iota =\sigma _0\). In each step i, it generates a strategy \(\sigma _i\) by changing the target vertex \(\sigma _{i-1}(v)\) of some vertex \(v\in V_0\) to some vertex w with \(\varXi _{\sigma _{i-1}}^\mathrm {M}(w)>\varXi _{\sigma _{i-1}}^\mathrm {M}(\sigma _{i-1}(v))\). For an arbitrary strategy \(\sigma \), an edge \((v,w)\in E_0\) with \(w\ne \sigma (v)\) but \(\varXi _{\sigma }^\mathrm {M}(w)>\varXi _{\sigma }^\mathrm {M}(\sigma (v))\) is called an improving switch, and the set of improving switches is denoted by \(I_{\sigma }\). The term optimal strategy is defined as in PG context. In particular, a strategy \(\sigma \) is optimal if and only if \(I_{\sigma }=\emptyset \). Moreover, applying an improving switch cannot decrease the valuation of any vertex. That is, if \(e=(v,w)\in I_{\sigma }\) and \(\sigma e\) denotes the strategy obtained after applying e to \(\sigma \), then \(\varXi _{\sigma e}^\mathrm {M}(v')\ge \varXi _{\sigma }^\mathrm {M}(v')\) for all \(v'\in V\) and \(\varXi _{\sigma e}^\mathrm {M}(v)>\varXi _{\sigma }^\mathrm {M}(v)\). Since there are only finitely many strategies, the algorithm thus generates a finite sequence \(\sigma _0,\sigma _1,\dots ,\sigma _N\) with \(I_{\sigma _N}=\emptyset \).
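To make the valuation equations and the notion of an improving switch concrete, here is a small, self-contained Python sketch of Policy Iteration on a toy MDP. It solves the valuation equations exactly with fractions, assuming (as a simplification not taken from the construction) that every strategy reaches the sink t with probability 1, so that normalizing the value of t's zero-reward self-loop to 0 makes the system uniquely solvable. The helper names `solve`, `valuations`, `improving_switches` and `policy_iteration`, the toy MDP, and the placeholder pivot rule `choose=min` (lexicographically smallest switch, not Zadeh's rule) are all hypothetical.

```python
from fractions import Fraction


def solve(a, b):
    """Solve the linear system a x = b exactly by Gauss-Jordan elimination."""
    size = len(a)
    m = [list(row) + [b[idx]] for idx, row in enumerate(a)]
    for col in range(size):
        piv = next(row for row in range(col, size) if m[row][col] != 0)
        m[col], m[piv] = m[piv], m[col]
        head = m[col][col]
        m[col] = [x / head for x in m[col]]
        for row in range(size):
            if row != col and m[row][col] != 0:
                factor = m[row][col]
                m[row] = [x - factor * y for x, y in zip(m[row], m[col])]
    return [m[row][size] for row in range(size)]


def valuations(V0, VR, r, p, sigma):
    """Vertex valuations Xi_sigma^M for the expected total reward objective.

    Assumes every play under sigma reaches the sink, whose self-loop has
    reward 0; its value is normalised to 0 (sum-to-zero on that class).
    """
    verts = sorted(V0 | VR)
    idx = {v: k for k, v in enumerate(verts)}
    a = [[Fraction(0)] * len(verts) for _ in verts]
    b = [Fraction(0)] * len(verts)
    for v in verts:
        k = idx[v]
        a[k][k] = Fraction(1)
        if v in V0:
            w = sigma[v]
            if w == v:                      # sink t with its 0-reward self-loop
                continue
            a[k][idx[w]] -= 1               # Xi(v) - Xi(sigma(v)) = r(v, sigma(v))
            b[k] = Fraction(r[(v, w)])
        else:
            for (u, w), q in p.items():     # Xi(v) - sum_w p(v, w) Xi(w) = 0
                if u == v:
                    a[k][idx[w]] -= Fraction(q)
    return dict(zip(verts, solve(a, b)))


def improving_switches(E0, sigma, xi):
    """Edges (v, w) with w != sigma(v) and Xi(w) > Xi(sigma(v)), as in the text."""
    return {(v, w) for (v, w) in E0 if w != sigma[v] and xi[w] > xi[sigma[v]]}


def policy_iteration(V0, VR, E0, r, p, sigma, choose=min):
    """Apply improving switches until none is left; `choose` is a placeholder pivot rule."""
    sigma, steps = dict(sigma), 0
    while True:
        xi = valuations(V0, VR, r, p, sigma)
        improving = improving_switches(E0, sigma, xi)
        if not improving:
            return sigma, xi, steps
        v, w = choose(improving)
        sigma[v] = w
        steps += 1


# Toy MDP: a chooses between the sink t and the randomisation vertex c,
# which moves to b or t with probability 1/2 each; b collects reward 4.
V0, VR = {"a", "b", "t"}, {"c"}
E0 = {("a", "t"), ("a", "c"), ("b", "t"), ("t", "t")}
r = {("a", "t"): 1, ("a", "c"): 1, ("b", "t"): 4, ("t", "t"): 0}
p = {("c", "b"): Fraction(1, 2), ("c", "t"): Fraction(1, 2)}
sigma0 = {"a": "t", "b": "t", "t": "t"}

final, xi, steps = policy_iteration(V0, VR, E0, r, p, sigma0)
print(final["a"], xi["a"], steps)  # c 3 1
```

Comparing \(\varXi (w)\) with \(\varXi (\sigma (v))\) alone, as the text does, is adequate here because every edge leaving a vertex carries the same reward; for general rewards one would compare \(r(v,w)+\varXi (w)\) instead.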

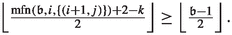

We now discuss how the counter introduced in Sect. 3 is altered to obtain an MDP \(M_n\). A sketch of level i of \(M_n\) can be found in Fig. 5. First, all player 1 vertices are replaced by randomization vertices. This is a common technique used for obtaining MDPs that behave similarly to given PGs and was used before (e.g., [2, 10]). While the ideas used in the transformations are similar, there is no standard reduction from PGs to MDPs preserving all properties.

Level i of the MDP. Circular vertices are player 0 vertices, rectangular vertices are randomization vertices. Numbers below vertex names, if present, encode priorities \(\varOmega \). If a vertex has priority \(\varOmega (v)\), then a reward of \(\langle v\rangle {:}{=}(-N)^{\varOmega (v)}\) is associated with every edge leaving this vertex.

In our construction, all cycle centers \(F_{i,j}\) and all vertices \(h_{i,j}\) are now randomization vertices. As vertices of the type \(h_{i,j}\) have only one outgoing edge, the probability of this edge is set to 1. For defining the probabilities of the cycle edges, we introduce a small parameter \(\varepsilon >0\) and defer its exact definition to later. The idea is to use \(\varepsilon \) to make the probabilities of edges \((F_{i,j},s_{i,j})\) very small by setting \(p(F_{i,j},s_{i,j})=\varepsilon \) and \(p(F_{i,j},d_{i,j,k})=\frac{1-\varepsilon }{2}\) for \(k\in \{0,1\}\). Then, the valuation of \(s_{i,j}\) can only contribute significantly to the valuation of \(F_{i,j}\) if the cycle center is closed. If the cycle center is not closed, then the contribution of this vertex can often be neglected. However, there are situations in which even this very low contribution has a significant impact on the valuation of the cycle center. For example, if \(F_{i,0}\) and \(F_{i,1}\) are both open for \(\sigma \), then \(\varXi _{\sigma }^\mathrm {M}(F_{i,0})>\varXi _{\sigma }^\mathrm {M}(F_{i,1})\) if and only if \(\varXi _{\sigma }^\mathrm {M}(s_{i,0})>\varXi _{\sigma }^\mathrm {M}(s_{i,1})\). This sometimes results in a different behavior of the MDP when compared to the PG. We discuss this later in more detail.
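The claim for two open cycle centers can be made explicit with a short derivation. Purely for illustration, we add the simplifying assumption (ours, not taken from the construction) that the cycle vertices of both cycle centers have the same total valuation, i.e., \(\varXi _{\sigma }^\mathrm {M}(d_{i,0,0})+\varXi _{\sigma }^\mathrm {M}(d_{i,0,1})=\varXi _{\sigma }^\mathrm {M}(d_{i,1,0})+\varXi _{\sigma }^\mathrm {M}(d_{i,1,1})\). Using only the transition probabilities defined above, the valuation equation of the randomization vertex \(F_{i,j}\) gives

\[
\varXi _{\sigma }^\mathrm {M}(F_{i,j})=\varepsilon \,\varXi _{\sigma }^\mathrm {M}(s_{i,j})+\frac{1-\varepsilon }{2}\bigl (\varXi _{\sigma }^\mathrm {M}(d_{i,j,0})+\varXi _{\sigma }^\mathrm {M}(d_{i,j,1})\bigr ),
\]

and subtracting the equations for \(j=0\) and \(j=1\) yields

\[
\varXi _{\sigma }^\mathrm {M}(F_{i,0})-\varXi _{\sigma }^\mathrm {M}(F_{i,1})=\varepsilon \bigl (\varXi _{\sigma }^\mathrm {M}(s_{i,0})-\varXi _{\sigma }^\mathrm {M}(s_{i,1})\bigr ).
\]

Since \(\varepsilon >0\), both differences have the same sign under this assumption, so the upper selection vertices decide the comparison even though their contribution is scaled by the tiny factor \(\varepsilon \).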

Second, all player 0 vertices remain player 0 vertices. Each player 0 vertex is assigned the same priority as in \(S_n\). This priority is now used to define the rewards of the edges leaving a vertex. More precisely, if we denote the priority of \(v\in V_0\) by \(\varOmega (v)\), then we define the reward of any edge leaving v as \(\langle v\rangle {:}{=}(-N)^{\varOmega (v)}\), where \(N\ge 7n\) is a large and fixed parameter. Note that the reward of an edge thus only depends on its starting vertex. With this reward function, vertices with an even priority are profitable, while vertices with an odd priority are not. Moreover, the higher its priority, the more profitable a vertex with even priority is, and the less profitable a vertex with odd priority is. By choosing a sufficiently large parameter N, it is also ensured that rewards are sufficiently separated. For example, the profitability of a vertex v with even priority cannot be dominated by traversing many vertices with lower, odd priorities. In principle, this ensures that the MDP behaves very similarly to the PG.
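The sign pattern and the separation of the rewards \(\langle v\rangle =(-N)^{\varOmega (v)}\) can be checked numerically. The sketch below is only an illustration: the function name `reward` is hypothetical, and the bound "fewer than N edges of lower priority" is an illustrative assumption, not the exact counting argument used in the proofs. Since every lower priority contributes at most \(N^{p-1}\) in absolute value, fewer than N such edges sum to less than \((N-1)N^{p-1}<N^p\).

```python
def reward(priority: int, big_n: int) -> int:
    """Reward <v> = (-N)^priority of every edge leaving a vertex v."""
    return (-big_n) ** priority


n = 3
big_n = 7 * n  # smallest value allowed by N >= 7n

# Even priorities give positive rewards, odd priorities negative ones,
# and the absolute value grows with the priority.
for prio in range(1, 6):
    print(prio, reward(prio, big_n))

# Fewer than N edges of priority p - 1 cannot outweigh one edge of priority p.
p = 4
worst_lower = (big_n - 1) * abs(reward(p - 1, big_n))
print(worst_lower < reward(p, big_n))  # True
```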

Having introduced the parameter N, we now fix the parameter \(\varepsilon \) such that \(\varepsilon <(N^{2n+11})^{-1}\). Note that both parameters can be encoded by a polynomial number of bits with respect to the parameter n. By defining the reward of the edge (t, t) as 0, this completely describes the MDP.
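The claim that both parameters have polynomial encoding length can be illustrated by picking concrete admissible values. The sketch below assumes the hypothetical choices \(N=7n\) and \(\varepsilon =1/(2N^{2n+11})\), which satisfy the stated constraints but are not necessarily the values used in the paper; the function names are likewise hypothetical. The number of bits grows like \(O(n\log n)\).

```python
from fractions import Fraction


def parameters(n: int):
    """One admissible (hypothetical) choice of N and epsilon for a given n."""
    big_n = 7 * n                         # satisfies N >= 7n
    bound = big_n ** (2 * n + 11)         # epsilon must stay below 1 / bound
    eps = Fraction(1, 2 * bound)          # any value below 1 / bound would do
    return big_n, eps


def encoding_bits(n: int) -> int:
    """Bits needed to write N and the denominator of epsilon in binary."""
    big_n, eps = parameters(n)
    return big_n.bit_length() + eps.denominator.bit_length()


for n in (2, 4, 8, 16, 32):
    print(n, encoding_bits(n))
```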

We now provide more details on the aspects where the PG and the MDP differ. One of the main differences between the PG and the MDP is the set of canonical strategies. Consider a strategy \(\sigma \) representing some \(\mathfrak {b}\in \mathcal {B}_n\), some level i and the two cycle centers \(F_{i,0}, F_{i,1}\). In PG context, both vertices have an even priority and the priority of \(F_{i,0}\) is larger than the priority of \(F_{i,1}\). Thus, if both cycle centers escape the level, the valuation of \(F_{i,0}\) is better than the valuation of \(F_{i,1}\). Consequently, if \(\sigma (g_i)\ne F_{i,0}\), then \((g_i,F_{i,0})\) is improving for \(\sigma \). In some sense, this can be interpreted as the PG “preferring” \(F_{i,0}\) over \(F_{i,1}\). A similar, but not the same, phenomenon occurs in MDP context. If both cycle centers \(F_{i,0}\) and \(F_{i,1}\) are in the same “state”, then the valuation of the two upper selection vertices \(s_{i,0}, s_{i,1}\) determines which cycle center has the better valuation. It turns out that the valuation of \(s_{i,\mathfrak {b}_{i+1}}\) is typically better than the valuation of \(s_{i,1-\mathfrak {b}_{i+1}}\). It is in particular not true that the valuation of \(s_{i,0}\) is typically better than the valuation of \(s_{i,1}\). Hence, the MDP “prefers” vertices \(F_{i,\mathfrak {b}_{i+1}}\) over vertices \(F_{i,1-\mathfrak {b}_{i+1}}\). We thus adjust the definition of a canonical strategy in MDP context in the following way.

Definition 4

Let \(\mathfrak {b}\in \mathcal {B}_n\). A player 0 strategy \(\sigma \) for the MDP \(M_n\) is called a canonical strategy for \(\mathfrak {b}\) if it has the properties defined in Definition 2 where Property 3.(d) is replaced by the following: If neither of the cycle centers \(F_{i,0}, F_{i,1}\) is closed, then \(\sigma (g_i)=F_{i,\mathfrak {b}_{i+1}}\).

5 Lower bound for the simplex algorithm and linear programs

Following the arguments of [2, 15], we now discuss how the MDP can be transformed into an LP such that the results obtained for the Policy Iteration Algorithm carry over to the Simplex Algorithm. This transformation makes use of the unichain condition. This condition (see [34]) states that the Markov chain obtained from each strategy \(\sigma \) has a single irreducible recurrent class. Unfortunately, the MDP constructed previously does not fulfill the unichain condition. As we prove in Lemma 1, it does, however, fulfill a weak version of the unichain condition. This weak version only demands that the optimal policy has a single irreducible recurrent class, not that this holds for every strategy. This implies that the same LP that is obtained by transforming an MDP fulfilling the unichain condition can be used. We refer to [14, 40] for more details.

We thus return to the discussion for MDPs fulfilling the unichain condition. Optimal policies for MDPs fulfilling this condition can be found by solving the following Linear Program:

The variable x(u, v) for \((u,v)\in E_0\) represents the probability (or frequency) of using the edge (u, v). The constraints of (P) ensure that the probability of entering a vertex u is equal to the probability of exiting u. It is not difficult to see that the basic feasible solutions of (P) correspond directly to strategies of the MDP, see, e.g., [2]. For each strategy \(\sigma \) we can define a feasible setting of the variables x(u, v) with \((u,v)\in E_0\) such that \(x(u,v)>0\) only if \(\sigma (u)=v\). Conversely, for every basic feasible solution of (P), we can define a corresponding policy \(\sigma \). It is well-known that the policy corresponding to an optimal basic feasible solution of (P) is an optimal policy for the MDP (see, e.g., [2, 34]).

Our MDP only fulfills the weak unichain condition. If the algorithm is started from an initial strategy that has the same single irreducible recurrent class as the optimal policy, then the Linear Program introduced above can still be used [14]. This follows since, by monotonicity, all basic feasible solutions considered have the same irreducible recurrent class. We refer to [40] for more details.

6 Lower bound proof

6.1 The approach and basic definitions

In this section we outline the proof of the exponential lower bound on the running time of the Strategy Improvement resp. Policy Iteration Algorithm using Zadeh’s pivot rule and a strategy-based tie-breaking rule. We discuss the following key components separately before combining them into our main result. In the following, we use the notation \(G_n\) to simultaneously refer to \(S_n\) and \(M_n\). If a statement or definition only holds for either \(S_n\) or \(M_n\), we explicitly state this.

1. We first define an initial strategy \(\iota \) such that the pair \((G_n,\iota )\) defines a sink game in PG context resp. satisfies the weak unichain condition in MDP context. We also formalize the idea of counting how often an edge has been applied as an improving switch.

2. We then state and discuss the tie-breaking rule. Together with the initial strategy, this completely determines which improving switches the Strategy Improvement resp. Policy Iteration Algorithm applies. The further statements, proofs and explanations provided in Appendix C thus only serve to prove that the algorithms and the tie-breaking rule indeed behave as intended.

3. We then focus on a single transition from a canonical strategy \(\sigma _{\mathfrak {b}}\) to the next canonical strategy \(\sigma _{\mathfrak {b}+1}\). During such a transition, many improving switches need to be applied and thus many intermediate strategies need to be considered. These strategies are divided into five phases, depending on the configuration of \(G_n\) induced by the encountered strategies.

4. To prove that the tie-breaking rule indeed proceeds along the described phases, we need to specify how often player 0 edges are applied as improving switches, which is formalized by an occurrence record. We explicitly describe the occurrence records for canonical strategies.

5. Finally, we combine the previous aspects to prove that applying the respective algorithms with Zadeh’s pivot rule and our tie-breaking rule yields an exponential number of iterations.

We begin by providing the initial strategy \(\iota \) for \(G_n\). In principle, the initial strategy is a canonical strategy for 0 in the sense of Definition 2 resp. 4.

Definition 5

The initial player 0 strategy \(\iota :V_0\rightarrow V\) is defined as follows:

| \(v\) | \(b_i\ (i<n)\) | \(b_n\) | \(g_i\) | \(d_{i,j,k}\) | \(e_{i,j,k}\) | \(s_{i,0}\) | \(s_{i,1}\ (i<n)\) |
|---|---|---|---|---|---|---|---|
| \(\iota (v)\) | \(b_{i+1}\) | \(t\) | \(F_{i,0}\) | \(e_{i,j,k}\) | \(b_2\) | \(h_{i,0}\) | \(b_1\) |
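The table of Definition 5 can be turned into a small generator, which also makes visible why \(\iota \) represents the number 0: no cycle vertex points to its cycle center, so every cycle center is open. The sketch below uses a hypothetical tuple encoding of vertices and a hypothetical function name. It assumes \(n\ge 2\) (so that \(b_2\) exists) and, as the definition of \(\beta _n^\sigma \) and the restriction \(i<n\) for \(s_{i,1}\) suggest but do not state explicitly, that level n only contains the cycle center \(F_{n,0}\).

```python
def initial_strategy(n: int) -> dict:
    """Sketch of iota from Definition 5 (hypothetical tuple encoding).

    Assumptions: n >= 2, and level n only has the cycle center F_{n,0}.
    """
    iota = {}
    for i in range(1, n + 1):
        iota[("b", i)] = ("b", i + 1) if i < n else "t"   # entry vertices form a path to t
        iota[("g", i)] = ("F", i, 0)                      # selection vertices choose F_{i,0}
        iota[("s", i, 0)] = ("h", i, 0)
        if i < n:
            iota[("s", i, 1)] = ("b", 1)
        for j in ((0, 1) if i < n else (0,)):
            for k in (0, 1):
                iota[("d", i, j, k)] = ("e", i, j, k)     # cycle vertex -> escape vertex
                iota[("e", i, j, k)] = ("b", 2)           # escape vertex -> b_2
    return iota


iota = initial_strategy(3)
# No cycle vertex points to its cycle center, so all cycle centers are open.
print(all(target != ("F", v[1], v[2]) for v, target in iota.items() if v[0] == "d"))
```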

We further introduce the notion of a reachable strategy. A strategy \(\sigma '\) is reachable from some strategy \(\sigma \) if it can be produced by the Strategy Improvement Algorithm starting from \(\sigma \) and applying a finite number of improving switches. Note that the notion of reachability does not depend on the pivot rule or the tie-breaking rule and that every strategy calculated by the Strategy Improvement resp. Policy Iteration Algorithm is reachable by definition.

Definition 6

Let \(\sigma \) be a player 0 strategy for \(G_n\). The set of all strategies that can be obtained from \(\sigma \) by applying an arbitrary sequence of improving switches is denoted by \(\varLambda _{\sigma }\). A strategy \(\sigma '\) is reachable from \(\sigma \) if \(\sigma '\in \varLambda _{\sigma }\).

Note that reachability is a transitive property and that we include \(\sigma \in \varLambda _{\sigma }\) for convenience. The i-th level of the initial strategy is shown in Fig. 6. The initial strategy is chosen such that \(G_n\) and \(\iota \) define a sink game in \(S_n\) resp. satisfy the weak unichain condition in \(M_n\).

Lemma 1

For all \(n\in \mathbb {N}\), the game \(G_n\) and the initial player 0 strategy \(\iota \) define a Sink Game with sink t in PG context, resp. satisfy the weak unichain condition in MDP context.

As Zadeh’s pivot rule is a memorizing pivot rule, the algorithms need to maintain information about how often edges have been applied as improving switches. During the execution of the algorithms, we thus maintain an occurrence record \(\phi ^{\sigma }:E_0\rightarrow \mathbb {N}_0\) that specifies how often each edge has been applied as an improving switch since the beginning of the algorithms. Formally, we define \(\phi ^{\iota }(e)\,{:}{=}\, 0\) for every edge \(e\in E_0\), i.e., the occurrence record with respect to the initial strategy is equal to 0. Then, whenever the algorithms apply an edge e, the occurrence record of e is increased by 1.
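Maintaining the occurrence record and extracting the improving switches that Zadeh’s rule may choose from is straightforward. The following Python sketch uses `collections.Counter`, whose missing keys count as 0, which matches \(\phi ^{\iota }\equiv 0\). The class name `OccurrenceRecord` and the string encoding of edges are hypothetical.

```python
from collections import Counter


class OccurrenceRecord:
    """phi: how often each player 0 edge has been applied as an improving switch."""

    def __init__(self):
        self.phi = Counter()          # phi^iota(e) = 0 for every edge e

    def apply(self, edge):
        self.phi[edge] += 1           # applying e increases phi(e) by 1

    def least_entered(self, improving):
        """The improving switches minimising the occurrence record."""
        if not improving:
            return set()
        low = min(self.phi[e] for e in improving)
        return {e for e in improving if self.phi[e] == low}


record = OccurrenceRecord()
record.apply(("b1", "b2"))
record.apply(("b1", "b2"))
record.apply(("d", "F"))
print(record.least_entered({("b1", "b2"), ("d", "F"), ("g", "F")}))
```

When this set contains more than one edge, a tie-breaking rule such as the one discussed next is needed.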

6.2 The tie-breaking rule

We now discuss the tie-breaking rule. It specifies which edge to apply if there are multiple improving switches that minimize the occurrence record for the current strategy. Note that, in contrast to many classical pivot rules, fixing a specific tie-breaking rule cannot easily be avoided for Zadeh’s pivot rule, since the occurrence records of several improving switches inevitably coincide from time to time. Intuitively, asking for a lower bound construction that works for all tie-breaking rules might be comparable in difficulty to asking for a construction that works for all pivot rules in the first place.

We rely on a structurally simple tie-breaking rule that is in principle implemented as an ordering of the set \(E_0\) and depends on the current strategy \(\sigma \) as well as the occurrence records. In fact, it turns out to be sufficient to specify a pre-order of \(E_0\) that can be extended to a total order arbitrarily. Whenever the algorithms have to break ties, they then choose the first edge according to this ordering. For convenience, there is one small exception to this behavior. During the transition from the canonical strategy representing 1 towards the canonical strategy representing 2, one improving switch e (which we do not specify yet) has to be applied earlier than during other transitions. The reason is that the occurrence records of several edges, including e, are still zero at this point in time, which leads to unwanted behavior. In later iterations, e is the unique improving switch minimizing the occurrence record whenever it has to be applied, so no special treatment is necessary.

Let \(\sigma \) be a player 0 strategy for \(G_n\). Henceforth, we use the symbol \(*\) as a wildcard. More precisely, when using the symbol \(*\), this means any suitable index or vertex (depending on the context) can be inserted for \(*\) such that the corresponding edge exists. For example, the set \(\{(e_{*,*,*},*)\}\) would then denote the set of all edges starting in escape vertices. Using this notation, we define the following sets of edges.

- \(\mathbb {G}{:}{=}\{(g_{i},F_{i,*})\}\) is the set of all edges leaving selection vertices.
- \(\mathbb {E}^0{:}{=}\{(e_{i,j,k},*):\sigma (d_{i,j,k})\ne F_{i,j}\}\) is the set of edges leaving escape vertices whose cycle vertices do not point towards their cycle center. Similarly, \(\mathbb {E}^1{:}{=}\{(e_{i,j,k},*):\sigma (d_{i,j,k})=F_{i,j}\}\) is the set of edges leaving escape vertices whose cycle vertices point towards their cycle center.
- \(\mathbb {D}^1{:}{=}\{(d_{*,*,*},F_{*,*})\}\) is the set of cycle edges and \(\mathbb {D}^0{:}{=}\{(d_{*,*,*},e_{*,*,*})\}\) is the set of the other edges leaving cycle vertices.
- \(\mathbb {B}^0{:}{=}\bigcup _{i=1}^{n-1}\{(b_i,b_{i+1})\}\cup \{(b_n,t)\}\) is the set of all edges between entry vertices. The set \(\mathbb {B}^1{:}{=}\{(b_*,g_*)\}\) of all edges leaving entry vertices and entering selection vertices is defined analogously, and \(\mathbb {B}{:}{=}\mathbb {B}^0\cup \mathbb {B}^1\) is the set of all edges leaving entry vertices.
- \(\mathbb {S}{:}{=}\{(s_{*,*},*)\}\) is the set of all edges leaving upper selection vertices.

We next define two pre-orders based on these sets. However, we need to define finer pre-orders for the sets \(\mathbb {E}^0,\mathbb {E}^1,\mathbb {S}\) and \(\mathbb {D}^1\) first.

Informally, the pre-order on \(\mathbb {E}^0\) forces the algorithms to favor switches of higher levels and to favor \((e_{i,0,k},*)\) over \((e_{i,1,k},*)\) in \(S_n\) and \((e_{i,\beta ^{\sigma }_{i+1},k},*)\) over \((e_{i,1-\beta ^{\sigma }_{i+1},k},*)\) in \(M_n\). For a formal description let \((e_{i,j,x},*),(e_{k,l,y},*)\in \mathbb {E}^0\). In \(S_n\), we define \((e_{i,j,x},*)\prec _\sigma (e_{k,l,y},*)\) if either \(i>k\), or \(i=k\) and \(j<l\). In \(M_n\), we define \((e_{i,j,x},*)\prec _\sigma (e_{k,l,y},*)\) if either \(i>k\), or \(i=k\) and \(j=\beta ^{\sigma }_{i+1}\).
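Both variants of the pre-order on \(\mathbb {E}^0\) can be expressed as a lexicographic sort key, where a smaller key means "applied earlier" and edges with equal keys remain incomparable. In the sketch below, an edge \((e_{i,j,x},*)\) is encoded as the hypothetical tuple `(i, j, x)`, `beta` maps a level to its induced bit, and \(\beta ^{\sigma }_{n+1}\) is taken to be 0 by a convention of ours; the function name `e0_key` is also hypothetical.

```python
def e0_key(edge, game, beta):
    """Sort key realising the pre-order on E^0 (smaller key = applied first).

    edge = (i, j, x) stands for (e_{i,j,x}, *); beta[level] is the induced bit,
    with beta_{n+1} taken as 0 (hypothetical convention).
    """
    i, j, _x = edge
    if game == "S":
        return (-i, j)                                  # higher level, then j = 0
    return (-i, 0 if j == beta.get(i + 1, 0) else 1)    # higher level, then j = beta_{i+1}


edges = [(1, 0, 0), (2, 1, 0), (2, 0, 1), (1, 1, 1)]
beta = {3: 0, 2: 1, 1: 0}
print(sorted(edges, key=lambda e: e0_key(e, "S", beta)))
print(sorted(edges, key=lambda e: e0_key(e, "M", beta)))
```

In level 1 the two games differ: \(S_n\) always prefers \(j=0\), whereas \(M_n\) prefers \(j=\beta ^{\sigma }_{2}=1\).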

Similarly, the pre-order on \(\mathbb {S}\) also forces the algorithms to favor switches of higher levels. Thus, for \((s_{i,j},*),(s_{k,l},*)\in \mathbb {S}\), we define \((s_{i,j},*)\prec _{\sigma } (s_{k,l},*)\) if \(i>k\).

We now describe the pre-order for \(\mathbb {E}^1\). Let \((e_{i,j,x},*),(e_{k,l,y},*)\in \mathbb {E}^1\).

1. The first criterion encodes that switches contained in higher levels are applied first. Thus, if \(i>k\), then \((e_{i,j,x},*)\prec _{\sigma }(e_{k,l,y},*)\).

2. If \(i=k\), then we consider the states of the cycle centers \(F_{i,j}\) and \(F_{k,l}=F_{i,1-j}\). If exactly one cycle center of level i is closed, then the improving switches within this cycle center are applied first.

3. Consider the case where \(i=k\) but no cycle center of level i is closed. Let \(t^{\rightarrow }{:}{=}b_2\) if \(\nu (\mathfrak {b}+1)>1\) and \(t^{\rightarrow }{:}{=}g_1\) if \(\nu (\mathfrak {b}+1)=1\). If there is exactly one halfopen cycle center escaping to \(t^{\rightarrow }\) in level i, then switches within this cycle center have to be applied first.

4. Assume that none of the prior criteria applies. This includes the case where \(i=k\) and both cycle centers of level i are in the same state. Then, the order of application depends on whether we consider \(S_n\) or \(M_n\). In \(S_n\), improving switches within \(F_{i,0}\) are applied first. In \(M_n\), improving switches within \(F_{i,\beta ^{\sigma }_{i+1}}\) are applied first.

We next give a pre-order for \(\mathbb {D}^1\). Let \((d_{i,j,x},F_{i,j}),(d_{k,l,y},F_{k,l})\in \mathbb {D}^1\).

1. The first criterion states that improving switches that are part of open cycles are applied first. We thus define \((d_{i,j,x},F_{i,j})\prec _\sigma (d_{k,l,y},F_{k,l})\) if \(\sigma (d_{k,l,1-y})=F_{k,l}\) but \(\sigma (d_{i,j,1-x})\ne F_{i,j}\).

2. The second criterion states the following. Among all halfopen cycle centers, improving switches in cycle centers whose level has a bit equal to zero are applied first. If the first criterion does not apply, we thus define \((d_{i,j,x},F_{i,j})\prec _\sigma (d_{k,l,y},F_{k,l})\) if \(\beta _k^{\sigma }>\beta _i^{\sigma }\).

3. The third criterion states that among all partially closed cycle centers, improving switches inside cycle centers contained in lower levels are applied first. If neither of the first two criteria applies, we thus define \((d_{i,j,x},F_{i,j})\prec _\sigma (d_{k,l,y},F_{k,l})\) if \(k>i\).

4. The fourth criterion states that, within one level, improving switches within the active cycle center are applied first. If none of the previous criteria applies, we thus define \((d_{i,j,x},F_{i,j})\prec _\sigma (d_{k,l,y},F_{k,l})\) if \(\beta ^\sigma _{k+1}\ne l\) and \(\beta ^{\sigma }_{i+1}= j\).

5. The last criterion states that edges with last index equal to zero are preferred within one cycle center. That is, if none of the previous criteria applies, we define \((d_{i,j,x},F_{i,j})\prec _{\sigma }(d_{k,l,y},F_{k,l})\) if \(x<y\). If this criterion does not apply either, the edges are incomparable.
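Since each criterion only applies when all previous ones do not, the five criteria for \(\mathbb {D}^1\) amount to comparing a tuple lexicographically. The following sketch encodes \((d_{i,j,x},F_{i,j})\) as the hypothetical tuple `(i, j, x)`, represents the relevant part of \(\sigma \) by a dict `closed[(i, j, x)]` that is `True` if \(\sigma (d_{i,j,x})=F_{i,j}\), and takes \(\beta ^{\sigma }_{n+1}=0\) by a convention of ours. The function name `d1_key` and the example data are hypothetical.

```python
def d1_key(edge, closed, beta):
    """Sort key realising the five criteria for D^1 (smaller key = applied first).

    edge = (i, j, x) stands for (d_{i,j,x}, F_{i,j}); closed[(i, j, x)] is True
    if d_{i,j,x} points to F_{i,j}; beta[level] is the induced bit
    (beta_{n+1} taken as 0, a hypothetical convention).
    """
    i, j, x = edge
    return (
        1 if closed[(i, j, 1 - x)] else 0,     # 1. open cycle centers first
        beta[i],                               # 2. levels whose bit is 0 first
        i,                                     # 3. lower levels first
        0 if beta.get(i + 1, 0) == j else 1,   # 4. active cycle center first
        x,                                     # 5. last index 0 first
    )


closed = {(i, j, x): False for i in (1, 2) for j in (0, 1) for x in (0, 1)}
closed[(1, 0, 1)] = True                        # F_{1,0} is halfopen
beta = {1: 0, 2: 1}
candidates = [(1, 0, 0), (2, 0, 0), (2, 0, 1), (1, 1, 0), (1, 1, 1)]
print(sorted(candidates, key=lambda e: d1_key(e, closed, beta)))
```

Here \((d_{1,0,0},F_{1,0})\) comes last because its cycle center is halfopen, while the open cycle center \(F_{1,1}\) of the active side in level 1 comes first.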

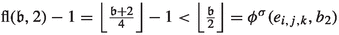

We now define the pre-order \(\prec _{\sigma }\) and the tie-breaking rule, implemented by an ordering of \(E_0\).

Definition 7

Let \(\sigma \) be a player 0 strategy for \(G_n\) and \(\phi ^{\sigma }:E_0\rightarrow \mathbb {N}_0\) be an occurrence record. We define the pre-order \(\prec _\sigma \) on \(E_0\) by defining the set-based pre-order

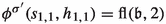

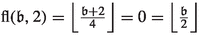

where the sets \(\mathbb {E}^0,\mathbb {E}^1,\mathbb {S}\) and \(\mathbb {D}^1\) are additionally pre-ordered as described before. We extend the pre-order to an arbitrary but fixed total ordering and denote the corresponding order also by \(\prec _\sigma \). We define the following tie-breaking rule: Let \(I_\sigma ^{\min }\) denote the set of improving switches with respect to \(\sigma \) that minimize the occurrence record. Apply the first improving switch contained in \(I_\sigma ^{\min }\) with respect to the ordering \(\prec _\sigma \) with the following exception: If \(\phi ^{\sigma }(b_1,b_2)=\phi ^{\sigma }(s_{1,1}, h_{1,1})=0\) and \((b_1,b_2),(s_{1,1}, h_{1,1}) \in I_\sigma \), apply \((s_{1,1}, h_{1,1})\) immediately.

We usually just use the notation \(\prec \) to denote the ordering if it is clear from the context which strategy is considered and whether \(\prec \) is defined via the default or special pre-order.

Lemma 2

Given a strategy \(\sigma \in \varLambda _{\iota }\) and an occurrence record \(\phi ^{\sigma }:E_0\rightarrow \mathbb {N}_0\), the tie-breaking rule can be evaluated in polynomial time.
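The selection step of Definition 7 is a single pass over the improving switches, which is in line with Lemma 2. The sketch below is only an illustration: the edge names `("b1", "b2")` and `("s11", "h11")` stand for \((b_1,b_2)\) and \((s_{1,1},h_{1,1})\), and `order_key` is a hypothetical key function that would have to realise the total order \(\prec _\sigma \) (the set-based part of that order is not reproduced here).

```python
def select_switch(improving, phi, order_key):
    """Pick the next switch: minimise phi, then break ties by order_key.

    improving: non-empty set of improving switches I_sigma.
    phi:       dict edge -> number of previous applications (missing = 0).
    order_key: hypothetical key function realising the total order prec_sigma.
    """
    b1_b2 = ("b1", "b2")      # stands for the edge (b_1, b_2)
    s11_h11 = ("s11", "h11")  # stands for the edge (s_{1,1}, h_{1,1})
    if ({b1_b2, s11_h11} <= improving
            and phi.get(b1_b2, 0) == 0 and phi.get(s11_h11, 0) == 0):
        return s11_h11        # the exception of Definition 7
    low = min(phi.get(e, 0) for e in improving)
    least = [e for e in improving if phi.get(e, 0) == low]  # I_sigma^min
    return min(least, key=order_key)


rank = {("b1", "b2"): 0, ("g1", "F10"): 1, ("s11", "h11"): 2}
print(select_switch({("b1", "b2"), ("s11", "h11")}, {}, rank.__getitem__))
print(select_switch({("b1", "b2"), ("g1", "F10")}, {("b1", "b2"): 1}, rank.__getitem__))
```

The first call triggers the exception, although \((b_1,b_2)\) comes first in the order; the second call selects \((g_1,F_{1,0})\) because its occurrence record is smaller.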

6.3 The phases of a transition and the application of improving switches

As explained earlier, the goal is to prove that Zadeh’s pivot rule with our tie-breaking rule enumerates at least one strategy per number \(\mathfrak {b}\in \mathcal {B}_n\). This is proven in an inductive fashion. That is, we prove that given a canonical strategy \(\sigma _{\mathfrak {b}}\) for \(\mathfrak {b}\in \mathcal {B}_n\), the algorithms eventually calculate a canonical strategy \(\sigma _{\mathfrak {b}+1}\) for \(\mathfrak {b}+1\). This process is called a transition and each transition is partitioned into up to five phases. In each phase, a different “task” is performed in order to obtain the strategy \(\sigma _{\mathfrak {b}+1}\). These tasks are, for example, the opening and closing of cycle centers, updating the escape vertices or adjusting some of the selection vertices.

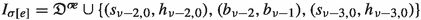

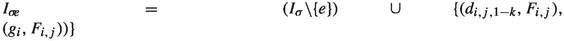

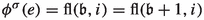

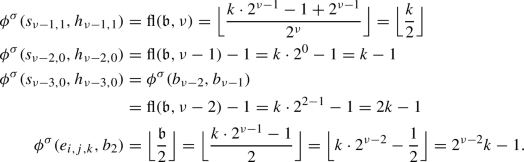

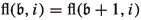

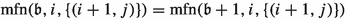

Visualization of the phases encountered when transitioning from \(\sigma _{11}\) to \(\sigma _{12}\) in \(S_4\). Each subfigure shows the state of the counter at the beginning of a phase. Red and green edges correspond to the strategy of player 0, blue edges to the strategy of player 1. Green edges show improving switches that were applied in the preceding phase. The edges of vertices with an outdegree of 1 are not marked as these are chosen in every strategy. All occurrences of vertices with the same label refer to the same unique vertex

Depending on whether we consider \(S_n\) or \(M_n\) and on \(\nu (\mathfrak {b}+1)\), there can be 3, 4 or 5 different phases. Phases 1, 3 and 5 always take place, while Phase 2 only occurs if \(\nu (\mathfrak {b}+1)>1\), as it updates the target vertices of some selection vertices \(s_{i,j}\) with \(i<\nu (\mathfrak {b}+1)\). The same holds for Phase 4, although this phase only exists if we consider \(S_n\). In \(M_n\), we apply the corresponding switches already in Phase 3 and there is no separate Phase 4.
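Which phases occur can be read off \(\nu (\mathfrak {b}+1)\), the index of the least significant set bit of \(\mathfrak {b}+1\). The following sketch (with hypothetical function names) computes \(\nu \) with the usual two's-complement trick `b & -b`, which isolates the lowest set bit, and lists the phases of the transition from \(\mathfrak {b}\) to \(\mathfrak {b}+1\).

```python
def nu(b: int) -> int:
    """Index (1-based) of the least significant set bit of b > 0."""
    return (b & -b).bit_length()


def phases(b: int, game: str) -> list:
    """Phases of the transition from b to b + 1 in S_n ("S") or M_n ("M")."""
    v = nu(b + 1)
    result = [1]
    if v > 1:
        result.append(2)
    result.append(3)
    if v > 1 and game == "S":
        result.append(4)          # in M_n these switches happen in Phase 3
    result.append(5)
    return result


print(phases(11, "S"))  # [1, 2, 3, 4, 5]
print(phases(11, "M"))  # [1, 2, 3, 5]
print(phases(12, "S"))  # [1, 3, 5]
```

For the transition from 11 to 12 we have \(\nu (12)=3\), so \(S_n\) passes through all five phases, in line with Fig. 7.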

We now give a detailed description of the phases. For the sake of the presentation we only describe the main function of each phase and omit switches that are applied for technical reasons. Furthermore, we abbreviate \(\nu {:}{=}\nu (\mathfrak {b}+1)\) whenever \(\mathfrak {b}\) is clear from the context. See Fig. 7 for a full example of the phases in a transition between two canonical strategies.

1. During Phase 1, cycle centers are closed such that the induced bit state of the final strategy is \(\mathfrak {b}+1\). Furthermore, several cycle edges are switched such that the occurrence records of these switches are as balanced as possible. At the end of the phase, the cycle center \(F_{\nu ,(\mathfrak {b}+1)_{\nu +1}}\) is closed and either Phase 2 or Phase 3 begins.

2. During Phase 2, the upper selection vertices \(s_{i,j}\) for \(i\in \{1,\dots ,\nu -1\}\) and \(j=(\mathfrak {b}+1)_{i+1}\) change their targets to \(h_{i,j}\). This is necessary as the induced bit state of the strategy is now equal to \(\mathfrak {b}+1\). Also, the entry vertices of these levels are switched towards the entry vertex of the next level. Since \((\mathfrak {b}+1)_i=\mathfrak {b}_i\) for all \(i\ne 1\) if \(\nu (\mathfrak {b}+1)=1\), these operations only need to be performed if \(\nu (\mathfrak {b}+1)>1\).

3. Phase 3 is partly responsible for applying improving switches involving escape vertices. Since \(\nu (\mathfrak {b})\ne \nu (\mathfrak {b}+1)\), all escape vertices need to change their target vertices. In Phase 3, some (but not all) escape vertices perform the corresponding switch. Also, for some of these escape vertices \(e_{i,j,k}\), the switch \((d_{i,j,k},e_{i,j,k})\) is applied. This later enables the application of the switch \((d_{i,j,k},F_{i,j})\), which is necessary to balance the occurrence records of the cycle edges. At the end of this phase, depending on \(\nu \), either \((b_{1},g_{1})\) or \((b_1,b_2)\) is applied. In \(M_n\), the switches described for Phase 4 are also applied during Phase 3.

4. During Phase 4, the upper selection vertices \(s_{i,j}\) for \(i\in \{1,\dots ,\nu -1\}\) and \(j\ne (\mathfrak {b}+1)_{i+1}\) change their targets to \(b_1\). Updating the upper selection vertices is necessary since they need to give their cycle centers access to the spinal path. Similar to Phase 2, these switches are only performed if \(\nu (\mathfrak {b}+1)>1\).

5. During Phase 5, the remaining escape vertices switch their targets and some of the cycle vertices switch to their cycle centers. This phase ends once all improving switches at the escape vertices are performed, yielding a canonical strategy for \(\mathfrak {b}+1\).

We next give the formal definition of the different phases. For this, we need to introduce a strategy-based parameter \(\mu ^{\sigma }\in \{1,\dots ,n+1\}\). This parameter \(\mu ^{\sigma }\) is called the next relevant bit of the strategy \(\sigma \). Before defining this parameter formally, we briefly explain its importance and how it can be interpreted.

As described in Sect. 3, both cycle centers of level i alternate in encoding bit i. Therefore, the selection vertex \(g_i\) needs to select the correct cycle center, and the entry vertex \(b_i\) should point towards \(g_i\) if and only if bit i is equal to one (see Definition 2). In particular, the selection vertex \(g_{i-1}\) of level \(i-1\) needs to be in accordance with the entry vertex \(b_i\) of level i if bit \(i-1\) is equal to one. That is, it should not happen that \(\sigma (b_i)=g_i\) and \(\sigma (b_{i+1})=g_{i+1}\) but \(\sigma (g_{i})=F_{i,0}\). However, we cannot guarantee that this does not happen for some intermediate strategies. Therefore, we need to perform some operations within the levels i and \(i-1\), and we define \(\mu ^{\sigma }\) as the lowest level higher than any level that is set “incorrectly” in that sense. If there are no such levels, the parameter denotes the index of the lowest level with \(\sigma (b_i)=b_{i+1}\). The parameter can thus be interpreted as an indicator of where “work needs to be done next”. As its formal definition is rather complex, we introduce an additional notation \({\bar{\sigma }}\) that encodes \(\sigma \) using integers. As this notation is purely a formal tool, we do not fully introduce it here; its definition is provided in Appendix B. Formally, the next relevant bit is now defined as follows.

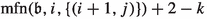

Definition 8

Let \(\sigma \in \varLambda _{\iota }\). The set \(\mathfrak {I}^{\sigma }{:}{=}\{i\in \{1,\dots ,n\}:{\bar{\sigma }}(b_{i})\wedge {\bar{\sigma }}(g_{i})\ne {\bar{\sigma }}(b_{i+1})\}\) is the set of incorrect levels. The next relevant bit \(\mu ^{\sigma }\) of the strategy \(\sigma \) is defined by

Note that we interpret expressions of the form \(x\wedge y=z\) as \(x\wedge (y=z)\). Using the next relevant bit \(\mu ^{\sigma }\), we now give a formal definition of the phases. Formally, a strategy belongs to one of the five phases if it has a certain set of properties. These properties can be partitioned into several categories and are described in Table 5 in Appendix B. Each of these properties depends on either the level or the cycle center. Properties Bac1, Bac2 and Bac3 involve the entry vertices \({{\varvec{b}}}\), Properties Usv1 and Usv2 involve the Upper Selection Vertices and Properties Esc1, Esc2, Esc3, Esc4 and Esc5 the Escape vertices. In addition, Properties Rel1 and Rel2 involve the next relevant bit \(\mu ^{\sigma }\), Properties Cc1, Cc2 the Cycle Centers and Property Sv1 the Selection Vertices.

For most of the phases, there are additional special conditions that need to be fulfilled. The corresponding conditions simplify the distinction between different phases and allow for an easier argumentation or description of statements. However, we do not discuss these here as they are solely needed for technical reasons and we refer to Appendix B for details. We also provide the table used for the exact definition of the phases there.

Definition 9

(Phase-k-strategy) Let \(\mathfrak {b}\in \mathcal {B}_n, \sigma \in \varLambda _{\iota }\) and \(k\in \{1,\dots ,5\}\). The strategy \(\sigma \) is a Phase-k-strategy for \(\mathfrak {b}\) if it has the properties of the k-th column of Table 4 for the respective indices as well as the special conditions of the respective phase (if there are any).

6.4 The occurrence records

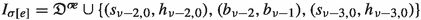

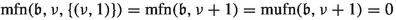

We next describe the occurrence records that arise when applying the Strategy Improvement resp. Policy Iteration Algorithm. To do so, we need to introduce some notation related to binary counting.

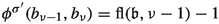

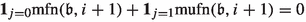

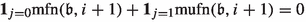

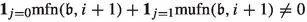

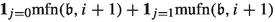

The number of applications of specific edges in level i as improving switches depends on the last time the corresponding cycle centers were closed or on how often they were closed. We thus define  as the number of numbers smaller than \(\mathfrak {b}\) with least significant set bit having index i. To quantify how often a specific cycle center was closed, we introduce the maximal flip number and the maximal unflip number. Let \(\mathfrak {b}\in \mathcal {B}_n, i\in \{1,\dots ,n\}\) and \(j\in \{0,1\}\). Then, we define the maximal flip number \(\mathrm {mfn}(\mathfrak {b},i,j)\) as the largest \(\tilde{\mathfrak {b}}\le \mathfrak {b}\) with \(\nu (\tilde{\mathfrak {b}})=i\) and \(\tilde{\mathfrak {b}}_{i+1}=j\). Similarly, we define the maximal unflip number \(\mathrm {mufn}(\mathfrak {b},i,j)\) as the largest \(\tilde{\mathfrak {b}}\le \mathfrak {b}\) with \(\tilde{\mathfrak {b}}_1=\dots =\tilde{\mathfrak {b}}_i=0\) and \(\tilde{\mathfrak {b}}_{i+1}=j\). If there are no such numbers, then  . If we do not impose the condition that bit \(i+1\) needs to be equal to j, then we omit the term j in the notation, i.e., \(\mathrm {mfn}(\mathfrak {b},i)\) is the largest \(\tilde{\mathfrak {b}}\le \mathfrak {b}\) with \(\nu (\tilde{\mathfrak {b}})=i\), and \(\mathrm {mufn}(\mathfrak {b},i)\) is defined analogously.
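For small numbers, these quantities can be computed directly. The following Python sketch assumes that bits are indexed from 1 (least significant bit first) and that \(\nu \) returns the index of the least significant set bit. The helper names `nu`, `bit` and `count_lsb_below` are hypothetical, and returning `None` when no suitable number exists is only a placeholder for the convention fixed in the text.

```python
from typing import Optional


def nu(b: int) -> int:
    """1-based index of the least significant set bit of b (requires b >= 1)."""
    if b < 1:
        raise ValueError("nu(b) is only defined for b >= 1")
    return (b & -b).bit_length()


def bit(b: int, i: int) -> int:
    """Bit i of b, where bit 1 is the least significant bit."""
    return (b >> (i - 1)) & 1


def count_lsb_below(b: int, i: int) -> int:
    """Number of b' with 1 <= b' < b and nu(b') = i.

    Such b' are exactly the numbers 2**(i-1) * (2m + 1) with m >= 0,
    which yields this closed form.
    """
    return (b - 1 + 2 ** (i - 1)) // 2 ** i


def mfn(b: int, i: int, j: Optional[int] = None) -> Optional[int]:
    """Maximal flip number: largest t <= b with nu(t) = i and,
    if j is given, bit i+1 of t equal to j."""
    for t in range(b, 0, -1):
        if nu(t) == i and (j is None or bit(t, i + 1) == j):
            return t
    return None  # placeholder for the "no such number" convention


def mufn(b: int, i: int, j: Optional[int] = None) -> Optional[int]:
    """Maximal unflip number: largest t <= b whose bits 1..i are all 0 and,
    if j is given, whose bit i+1 equals j."""
    for t in range(b, -1, -1):
        if t % 2 ** i == 0 and (j is None or bit(t, i + 1) == j):
            return t
    return None  # placeholder for the "no such number" convention


print(mfn(10, 1), mfn(10, 1, 1), mufn(10, 1, 0))  # 9 7 8
```

The brute-force searches in `mfn` and `mufn` mirror the definitions literally; they are meant for checking small cases rather than for efficiency.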

These notations enable us to describe the occurrence records properly. However, we do not describe the occurrence record for every strategy \(\sigma \) produced by the considered algorithms, but only for canonical strategies. When discussing the application of the improving switches, we later prove the following: assuming that the occurrence records are described correctly for \(\sigma _{\mathfrak {b}}\), they are also described correctly for \(\sigma _{\mathfrak {b}+1}\) when improving switches are applied according to Zadeh’s pivot rule and our tie-breaking rule.

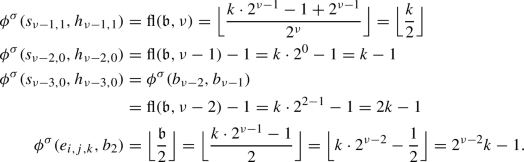

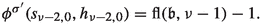

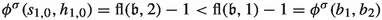

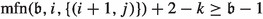

Theorem 3

Let \(\sigma _{\mathfrak {b}}\) be a canonical strategy for \(\mathfrak {b}\in \mathcal {B}_n\) and assume that improving switches are applied as described in Sect. 6.3. Then Table 2 describes the occurrence records with respect to \(\sigma _{\mathfrak {b}}\).

We now give some intuition for some of the occurrence records in Table 2. As the occurrence records of most of the edges are considerably more complicated to explain, we omit an intuitive description of them here. Let \(\sigma _{\mathfrak {b}}\in \varLambda _{\iota }\) be a canonical strategy for \(\mathfrak {b}\in \mathcal {B}_n\).

Consider some edge \((b_i,g_i)\). This edge is applied as an improving switch whenever bit i switches from 0 to 1. That is, it is applied if and only if we transition towards some \(\mathfrak {b}'\in \mathcal {B}_n\) with \(\nu (\mathfrak {b}')=i\) and \(\mathfrak {b}'\le \mathfrak {b}\). Therefore, \(\phi (b_i,g_i)=|\{\mathfrak {b}'\in \{1,\dots ,\mathfrak {b}\}:\nu (\mathfrak {b}')=i\}|\). Now consider \((b_i,b_{i+1})\). This edge is only applied as an improving switch when bit i switches from 1 to 0. This can, however, only happen if bit i switched from 0 to 1 earlier. That is, \((b_i,b_{i+1})\) can only be applied if \((b_i,g_i)\) was applied before. Also, the switch \((b_i,g_i)\) can only be applied again after bit i has been switched back to 0, i.e., after \((b_i,b_{i+1})\) was applied. Consequently, the two switches alternate, and \(\phi (b_i,b_{i+1})=\phi (b_i,g_i)-\mathfrak {b}_i\).
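The alternation argument for the entry vertex edges can be checked with a small simulation of a binary counter. This is a sketch under the simplifying assumption, taken from the argument above, that a transition \(t-1\rightarrow t\) applies \((b_i,g_i)\) exactly when bit i goes from 0 to 1 and \((b_i,b_{i+1})\) exactly when it goes from 1 to 0. It models only the bit level, not the strategy iteration itself, and the tuple labels for edges are hypothetical.

```python
from collections import Counter


def entry_edge_records(b: int, n: int) -> Counter:
    """Count applications of (b_i, g_i) and (b_i, b_{i+1}) while counting from 0 to b.

    Bit-level model: in a transition t-1 -> t, (b_i, g_i) is applied iff bit i
    goes from 0 to 1, and (b_i, b_{i+1}) iff bit i goes from 1 to 0.
    """
    records = Counter()
    for t in range(1, b + 1):
        for i in range(1, n + 1):
            old = ((t - 1) >> (i - 1)) & 1
            new = (t >> (i - 1)) & 1
            if (old, new) == (0, 1):
                records[("b", i, "g")] += 1    # edge (b_i, g_i)
            elif (old, new) == (1, 0):
                records[("b", i, "b+1")] += 1  # edge (b_i, b_{i+1})
    return records


b, n = 45, 6
rec = entry_edge_records(b, n)
for i in range(1, n + 1):
    # phi(b_i, b_{i+1}) = phi(b_i, g_i) - (bit i of b)
    assert rec[("b", i, "b+1")] == rec[("b", i, "g")] - ((b >> (i - 1)) & 1)
```

Since the counter starts at 0, the difference between the two counts is exactly the current value of bit i.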

Next, consider some edge \((s_{i,j},h_{i,j})\) and fix \(j=1\) for now. This edge is applied as an improving switch if and only if bit \(i+1\) switches from 0 to 1. Hence, as discussed before, \(\phi (s_{i,1},h_{i,1})=|\{\mathfrak {b}'\in \{1,\dots ,\mathfrak {b}\}:\nu (\mathfrak {b}')=i+1\}|\). Now let \(j=0\). The switch \((s_{i,0},h_{i,0})\) is applied whenever bit \(i+1\) switches from 1 to 0. This requires the bit to have switched from 0 to 1 before. Therefore, \(\phi (s_{i,0},h_{i,0})=\phi (s_{i,1},h_{i,1})-\mathfrak {b}_{i+1}\). Further note that the switch \((s_{i,j},b_1)\) is applied in the same transitions in which the switch \((s_{i,1-j},h_{i,1-j})\) is applied. Hence, \(\phi (s_{i,0},b_1)=\phi (s_{i,1},h_{i,1})\) and \(\phi (s_{i,1},b_1)=\phi (s_{i,0},h_{i,0})\).
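The same kind of bit-level simulation illustrates the records at the upper selection vertices. The sketch assumes, following the text, that whenever bit \(i+1\) switches to the value j, the switch \((s_{i,j},h_{i,j})\) is applied together with \((s_{i,1-j},b_1)\). Again, this does not simulate the algorithms themselves, and the edge labels are hypothetical.

```python
from collections import Counter


def selection_edge_records(b: int, n: int) -> Counter:
    """Count switches at the upper selection vertices s_{i,j} (i = 1..n-1)
    while counting from 0 to b.

    Bit-level model: whenever bit i+1 switches to the value j, the switch
    (s_{i,j}, h_{i,j}) is applied together with (s_{i,1-j}, b_1).
    """
    records = Counter()
    for t in range(1, b + 1):
        for i in range(1, n):
            old = ((t - 1) >> i) & 1  # bit i+1 before the transition
            new = (t >> i) & 1        # bit i+1 after the transition
            if old != new:
                records[("s", i, new, "h")] += 1       # edge (s_{i,new}, h_{i,new})
                records[("s", i, 1 - new, "b1")] += 1  # edge (s_{i,1-new}, b_1)
    return records


rec = selection_edge_records(45, 6)
print(rec[("s", 1, 1, "h")], rec[("s", 1, 0, "h")])  # 11 11
```

Here bit 2 of 45 is 0, so both switches at \(s_{1,*}\) have been applied equally often, in line with \(\phi (s_{i,0},h_{i,0})=\phi (s_{i,1},h_{i,1})-\mathfrak {b}_{i+1}\).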

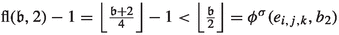

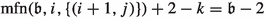

Finally, consider some edge \((e_{i,j,k},g_1)\). This edge is applied as an improving switch whenever the first bit switches from 0 to 1. Since we start counting at 0, this happens exactly in the transitions towards odd numbers, i.e., once for every odd number smaller than or equal to \(\mathfrak {b}\). Hence, \(\phi (e_{i,j,k},g_1)=\left\lceil \frac{\mathfrak {b}}{2}\right\rceil \). Since the switch \((e_{i,j,k},b_2)\) is applied during each transition in which the switch \((e_{i,j,k},g_1)\) is not applied, we have \(\phi (e_{i,j,k},b_2)=\mathfrak {b}-\left\lceil \frac{\mathfrak {b}}{2}\right\rceil = \left\lfloor \frac{\mathfrak {b}}{2}\right\rfloor \) as \(\mathfrak {b}\in \mathbb {N}\).
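As a quick sanity check of the two counts for the escape vertex edges, the following sketch counts transitions towards odd and even numbers. It assumes, as in the argument above, that exactly one of \((e_{i,j,k},g_1)\) and \((e_{i,j,k},b_2)\) is applied in every transition; the function name is hypothetical.

```python
from typing import Tuple


def escape_edge_records(b: int) -> Tuple[int, int]:
    """Return (phi(e_{i,j,k}, g_1), phi(e_{i,j,k}, b_2)) after the transitions 0 -> 1 -> ... -> b.

    Bit-level model: (e_{i,j,k}, g_1) is applied exactly when bit 1 switches
    from 0 to 1 (transitions towards odd numbers), (e_{i,j,k}, b_2) otherwise.
    """
    to_g1 = sum(1 for t in range(1, b + 1) if t % 2 == 1)
    to_b2 = b - to_g1
    return to_g1, to_b2


# Agrees with ceil(b/2) and floor(b/2) from the text.
assert all(escape_edge_records(b) == ((b + 1) // 2, b // 2) for b in range(100))
```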

6.5 Proving the lower bound

We now sketch our proof that applying the Strategy Improvement resp. Policy Iteration Algorithm with Zadeh’s pivot rule and our tie-breaking rule introduced in Definition 7 takes an exponential number of iterations. The proof is inductive. Assume that we are given a canonical strategy \(\sigma _{\mathfrak {b}}\) for some \(\mathfrak {b}\in \mathcal {B}_n\) that fulfills a certain set of conditions. Then, applying improving switches according to Zadeh’s pivot rule and our tie-breaking rule produces a canonical strategy \(\sigma _{\mathfrak {b}+1}\) for \(\mathfrak {b}+1\) that fulfills the same conditions. If we can then show that the initial strategy \(\iota \) is a canonical strategy for 0 having the desired properties, our bound on the number of iterations follows. However, most of these properties are rather complicated and only needed for technical reasons. We thus do not discuss them in detail here and refer to Appendix B for an overview. We call these conditions the canonical conditions; they are the following:

1. The occurrence records \(\phi ^{\sigma _{\mathfrak {b}}}\) are described correctly by Table 2.
2. \(\sigma _{\mathfrak {b}}\) has Properties (Or1)\(_{*,*,*}\) to (Or4)\(_{*,*,*}\) related to the occurrence records.
3. Any improving switch was applied at most once per previous transition.

As a basis for the following proofs and statements, we give a characterization of the set of improving switches for canonical strategies. We furthermore prove that canonical strategies are Phase-1-strategies for the corresponding number. We use the notation \(\sigma \rightarrow \sigma '\) to denote the sequence of strategies calculated by the algorithms when starting with \(\sigma \) until they eventually reach \(\sigma '\). Throughout this section let \(\nu \, {:}{=}\,\nu (\mathfrak {b}+1)\).

Lemma 3

Let \(\sigma _{\mathfrak {b}}\in \varLambda _{\iota }\) be a canonical strategy for \(\mathfrak {b}\in \mathcal {B}_n\). Then \(\sigma _{\mathfrak {b}}\) is a Phase-1-strategy for \(\mathfrak {b}\) and \(I_{\sigma _{\mathfrak {b}}}=\{(d_{i,j,k},F_{i,j}):\sigma _{\mathfrak {b}}(d_{i,j,k})\ne F_{i,j}\}\).

As claimed, \(\iota \) is in fact a canonical strategy and fulfills the canonical conditions.

Lemma 4

The initial strategy \(\iota \) is a canonical strategy for 0 fulfilling the canonical conditions.

We now discuss how the main statement is proven. Consider some canonical strategy \(\sigma _{\mathfrak {b}}\) for some \(\mathfrak {b}\in \mathcal {B}_n\). We prove that applying improving switches to \(\sigma _{\mathfrak {b}}\) using Zadeh’s pivot rule and our tie-breaking rule produces a specific Phase-k-strategy for \(\mathfrak {b}\) for every \(k\in \{1,\dots ,5\}\). Each of these strategies is typically the first strategy of the corresponding phase and has several nice properties that help in proving the statement. The properties of these strategies and their sets of improving switches are summarized in Tables 6 and 7 in Appendix B. Finally, we prove that applying improving switches to the Phase-5-strategy computed by the Strategy Improvement resp. Policy Iteration Algorithm yields a canonical strategy \(\sigma _{\mathfrak {b}+1}\) for \(\mathfrak {b}+1\) fulfilling the canonical conditions. Since \(\iota \) is a canonical strategy for 0 fulfilling the canonical conditions, we can then apply the statement iteratively to prove the main theorem of this paper.

Before formally stating this idea as a theorem, we discuss the individual phases. Consider Phase 1. In this phase, (mainly) cycle edges \((d_{i,j,k},F_{i,j})\) are applied. The last switch of this phase closes the cycle center \(F_{\nu ,(\mathfrak {b}+1)_{\nu +1}}\). We will show that after the application of this switch, the induced bit state of the strategy is \(\mathfrak {b}+1\). As a consequence, the resulting strategy is not a Phase-1-strategy. Whether it belongs to Phase 2 or Phase 3 then depends on the parity of \(\mathfrak {b}\): if \(\mathfrak {b}\) is odd, then \(\nu >1\) and the next strategy is a Phase-2-strategy; if \(\mathfrak {b}\) is even, then \(\nu =1\) and the next strategy is a Phase-3-strategy.

Now consider the beginning of Phase 2. The properties of the corresponding strategy are given in Tables 6 and 7. Since the induced bit state of the strategy changed from \(\mathfrak {b}\) to \(\mathfrak {b}+1\), the entry vertices of all levels \(i\le \nu \) need to be adjusted. Accordingly, the upper selection vertices of all levels \(i<\nu \) need to be adjusted. This is reflected by the set of improving switches, which contains the edges \((b_{\nu },g_{\nu })\) and \((s_{\nu -1,1}, h_{\nu -1,1})\). Moreover, edges \((d_{*,*,*},F_{*,*})\) that were improving for \(\sigma _{\mathfrak {b}}\) and have not been applied yet remain improving, and these edges have relatively high occurrence records. Also, Table 2 describes the occurrence records of the edges that were just applied when interpreted for \(\mathfrak {b}+1\). Note that we explicitly exclude the switches \((g_i,F_{i,j})\) here. The reason is that it is hard to show that the bound on the occurrence records of these edges is valid by considering only a single transition. We will thus show that these switches cannot be applied too often during \(\iota \rightarrow \sigma _{\mathfrak {b}}\) for any \(\mathfrak {b}\in \mathcal {B}_n\) after discussing the individual phases in detail.

As discussed previously, the targets of the upper selection vertices are not set correctly for \(\mathfrak {b}+1\) if \(\nu >1\). This is partly handled during Phase 2. More precisely, the improving switches \((s_{i,(\mathfrak {b}+1)_{i+1}},h_{i,(\mathfrak {b}+1)_{i+1}})\) are applied during Phase 2 for all \(i<\nu \). Furthermore, the target vertices of all entry vertices \(b_2\) to \(b_\nu \) are updated during Phase 2. After applying all of these switches, we then have a Phase-3-strategy. Since none of these switches needs to be applied if \(\nu =1\), we reach such a Phase-3-strategy directly after Phase 1 in that case.
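The case distinction after Phase 1 and the switches applied in Phase 2 depend only on \(\nu =\nu (\mathfrak {b}+1)\). The sketch below lists them for a given \(\mathfrak {b}\), assuming (per Definition 2) that for the bit state \(\mathfrak {b}+1\) the entry vertex \(b_i\) points to \(g_i\) if bit i is one and to \(b_{i+1}\) otherwise. It returns the switches as an unordered collection of hypothetical string labels and does not reproduce the order in which Zadeh’s rule and the tie-breaking rule apply them.

```python
from typing import List


def nu(x: int) -> int:
    """1-based index of the least significant set bit of x (x >= 1)."""
    return (x & -x).bit_length()


def phase_after_phase1(b: int) -> int:
    """Phase entered once Phase 1 has closed the cycle center for b+1:
    Phase 2 if nu(b+1) > 1, otherwise Phase 3."""
    return 2 if nu(b + 1) > 1 else 3


def phase2_switches(b: int) -> List[str]:
    """Switches applied during Phase 2 for the transition b -> b+1
    (empty if nu(b+1) = 1). The list order is arbitrary."""
    nxt = b + 1
    v = nu(nxt)
    switches = []
    for i in range(1, v):  # upper selection vertices of the levels i < nu
        j = (nxt >> i) & 1  # bit i+1 of b+1
        switches.append(f"(s[{i},{j}], h[{i},{j}])")
    for i in range(2, v + 1):  # entry vertices b_2, ..., b_nu
        target = f"g[{i}]" if (nxt >> (i - 1)) & 1 else f"b[{i + 1}]"
        switches.append(f"(b[{i}], {target})")
    return switches


print(phase_after_phase1(3), phase2_switches(3))
# 2 ['(s[1,0], h[1,0])', '(s[2,1], h[2,1])', '(b[2], b[3])', '(b[3], g[3])']
```

For \(\mathfrak {b}=3\) we have \(\nu =3\), and the output contains the two switches \((b_{\nu },g_{\nu })\) and \((s_{\nu -1,1},h_{\nu -1,1})\) named in the discussion of Phase 2.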

The Phase-3-strategy obtained at this point shares several properties with the Phase-2-strategy described earlier. For example, if \((d_{*,*,*}, F_{*,*})\) was improving for \(\sigma _{\mathfrak {b}}\), it is still improving at the beginning of Phase 3 and has a “high” occurrence record. Furthermore, all edges \((e_{*,*,*}, b_2)\) resp. \((e_{*,*,*}, g_1)\) are now improving. The reason is that the spinal path already contains the correct levels with respect to \(\mathfrak {b}+1\) (with the exception of the corresponding edge starting at \(b_1\)) and thus has a very good valuation. Note that this requires that the vertices \(s_{i,(\mathfrak {b}+1)_{i+1}}\) were updated for \(i<\nu \). The vertices \(b_2\) resp. \(g_1\) are thus very profitable, implying that all edges leading directly towards these vertices become improving.

It turns out that the switches \((e_{*,*,*},*)\) then have minimal occurrence records and are applied next. Due to the tie-breaking rule, the algorithms then only apply switches \((e_{i,j,k},*)\) with \(\sigma (d_{i,j,k})=F_{i,j}\). If \((\mathfrak {b}+1)_i\ne 1\) or \((\mathfrak {b}+1)_{i+1}\ne j\), applying a switch \((e_{i,j,k},*)\) can then make the edge \((d_{i,j,k},e_{i,j,k})\) improving. The occurrence record of such an edge is bounded from above by the occurrence record of the just applied switch \((e_{i,j,k},*)\). Consequently, this switch is applied next.
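The selection step underlying this discussion can be sketched generically: among the improving switches, Zadeh’s rule applies one with the smallest occurrence record, and ties are resolved by a fixed rule. The sketch below assumes switches are hashable labels and takes the tie-breaking as an arbitrary key function; the function names and the example priorities are hypothetical and do not implement the tie-breaking rule of Definition 7.

```python
from typing import Callable, Dict, Hashable, Iterable


def zadeh_select(improving: Iterable[Hashable],
                 occurrence: Dict[Hashable, int],
                 tie_key: Callable[[Hashable], object]) -> Hashable:
    """Return an improving switch with minimal occurrence record, ties broken by tie_key."""
    candidates = list(improving)
    if not candidates:
        raise ValueError("no improving switch: the current strategy is optimal")
    return min(candidates, key=lambda e: (occurrence.get(e, 0), tie_key(e)))


def apply_switch(e: Hashable, occurrence: Dict[Hashable, int]) -> None:
    """Record that switch e has been applied once more."""
    occurrence[e] = occurrence.get(e, 0) + 1


# Hypothetical switches and priorities (not the tie-breaking rule of Definition 7).
occ = {"x": 2, "y": 1, "z": 1}
priority = {"x": 0, "y": 2, "z": 1}
first = zadeh_select(["x", "y", "z"], occ, priority.__getitem__)
apply_switch(first, occ)
second = zadeh_select(["x", "y", "z"], occ, priority.__getitem__)
print(first, second)  # z y
```

In the actual algorithms, the set of improving switches is recomputed after every applied switch; the example reuses a fixed set only to show how the occurrence records and the tie-breaking key interact.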

At this point, there is a major difference between \(S_n\) and \(M_n\). In \(S_n\), the application of such a switch has no influence on the valuation of the corresponding cycle center \(F_{i,j}\), since the player-1-controlled cycle center can simply choose the other cycle vertex (or, if the cycle center was closed, \(s_{i,j}\)). This is not true in \(M_n\), where the valuation of \(F_{i,j}\) is directly affected if the valuation of the cycle vertices changes. Thus, the valuation of cycle centers increases in \(M_n\). This might unlock improving switches involving upper selection vertices in lower levels, which are then applied immediately as they have low occurrence records. The set of switches applied in this fashion corresponds exactly to the set of switches applied during Phase 4 in \(S_n\), and such switches can only occur if \(\nu >1\).