Abstract

We consider a hierarchy of upper approximations for the minimization of a polynomial f over a compact set \(K \subseteq \mathbb {R}^n\) proposed recently by Lasserre (arXiv:1907.09784, 2019). This hierarchy relies on using the push-forward measure of the Lebesgue measure on K by the polynomial f and involves univariate sums of squares of polynomials with growing degrees 2r. Hence it is weaker, but cheaper to compute, than an earlier hierarchy by Lasserre (SIAM Journal on Optimization 21(3), 864–885, 2011), which uses multivariate sums of squares. We show that this new hierarchy converges to the global minimum of f at a rate in \(O(\log ^2 r / r^2)\) whenever K satisfies a mild geometric condition, which holds, e.g., for convex bodies and for compact semialgebraic sets with dense interior. As an application, this rate of convergence also applies to the stronger hierarchy based on multivariate sums of squares, which improves and extends earlier convergence results to a wider class of compact sets. Furthermore, we show that our analysis is near-optimal by proving a lower bound on the convergence rate in \(\varOmega (1/r^2)\) for a class of polynomials on \(K=[-1,1]\), obtained by exploiting a connection to orthogonal polynomials.


1 Introduction

Consider the problem of finding the minimum value taken by an n-variate polynomial \(f\in \mathbb {R}[x]\) over a compact set \(K\subseteq \mathbb {R}^n\), i.e., computing the parameter:

\[ f_{\min } = \min _{x\in K} f(x). \tag{1} \]

Throughout we also set \(f_{\max }=\max _{x\in K}f(x)\). Computing the parameter \(f_{\min }\) (or \(f_{\max }\)) is a hard problem in general; it includes, for instance, the maximum stable set problem as a special case. For a general reference on polynomial optimization and its applications, we refer, e.g., to [14, 16].

If we fix a Borel measure \(\lambda \) with support K, problem (1) may be reformulated as minimizing the integral \(\int _K f(x) \sigma (x) d\lambda (x)\) over all sum-of-squares polynomials \(\sigma \in \varSigma [x]\) that provide a probability density on K with respect to the measure \(\lambda \). By bounding the degree of \(\sigma \), we obtain the following hierarchy of upper bounds on \(f_{\min }\) proposed by Lasserre [15]:

\[ f^{(r)} := \min _{\sigma \in \varSigma [x]_r} \Big \{ \int _K f(x)\sigma (x)\,d\lambda (x) : \int _K \sigma (x)\,d\lambda (x) = 1 \Big \}. \tag{2} \]

Here \(\varSigma [x]\) denotes the set of polynomials that can be written as a sum of squares of polynomials and we set \(\varSigma [x]_{r}=\varSigma [x]\cap \mathbb {R}[x]_{2r}\). Since sums of squares of polynomials can be expressed using semidefinite programming, for any fixed \(r\in \mathbb {N}\) the parameter \(f^{(r)}\) can be computed efficiently by semidefinite programming or, even simpler, as the smallest eigenvalue of an appropriate matrix of size \(\binom{n+r}{r}\) ([15], see also [5]).
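As a rough illustration of this eigenvalue formulation, the following Python sketch (our own, not code from [15] or [5]) evaluates \(f^{(r)}\) on the box \(K=[-1,1]^n\) with the Lebesgue measure. Since the objective defining \(f^{(r)}\) is linear in \(\sigma \), the minimum over sums of squares is attained at a single square \(\sigma =p^2\) with \(p\in \mathbb {R}[x]_r\). Hence \(f^{(r)}\) is the smallest generalized eigenvalue of the pair of moment matrices \(\big (\int _K f x^{\alpha +\beta }d\lambda \big )\) and \(\big (\int _K x^{\alpha +\beta }d\lambda \big )\), indexed by the monomials of degree at most r. The function names and the dictionary encoding of f are hypothetical choices, and the monomial basis is only numerically reasonable for small r.

```python
import itertools
from math import comb

import numpy as np


def box_moment(alpha):
    """Lebesgue moment of x^alpha over [-1, 1]^n (product of 1D moments)."""
    m = 1.0
    for a in alpha:
        m *= 0.0 if a % 2 else 2.0 / (a + 1)
    return m


def smallest_gen_eig(A, B):
    """Smallest mu with A v = mu B v, for A symmetric and B positive definite."""
    L = np.linalg.cholesky(B)                           # B = L L^T
    C = np.linalg.solve(L, np.linalg.solve(L, A).T)     # L^{-1} A L^{-T}
    return np.linalg.eigvalsh((C + C.T) / 2)[0]


def lasserre_upper_bound(f, n, r):
    """Sketch of f^{(r)} on K = [-1, 1]^n with the Lebesgue measure.

    f maps exponent tuples to coefficients. We minimise the Rayleigh quotient
    int f p^2 / int p^2 over p in R[x]_r, written in the monomial basis.
    """
    basis = [a for a in itertools.product(range(r + 1), repeat=n) if sum(a) <= r]
    size = len(basis)
    A = np.zeros((size, size))
    B = np.zeros((size, size))
    for i, a in enumerate(basis):
        for j, b in enumerate(basis):
            ab = tuple(x + y for x, y in zip(a, b))
            B[i, j] = box_moment(ab)
            A[i, j] = sum(c * box_moment(tuple(x + y for x, y in zip(ab, g)))
                          for g, c in f.items())
    return smallest_gen_eig(A, B), size


# f(x) = x_1 on [-1, 1]^2 with r = 3: a matrix of size binom(2 + 3, 3) = 10.
value, size = lasserre_upper_bound({(1, 0): 1.0}, n=2, r=3)
assert size == comb(2 + 3, 3)
print(size, value)  # value agrees with the smallest root of Legendre P_4 (about -0.8611)
```

For \(f(x)=x_1\), the matrices split into blocks according to the degree in \(x_2\). This is why the value coincides with the univariate bound for \(K=[-1,1]\), namely the smallest root of the Legendre polynomial \(P_{r+1}\).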

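Recently, Lasserre [17] introduced new, weaker but more economical, upper bounds on \(f_{\min }\) that are based on a univariate approach to the problem. For this purpose, he considers the push-forward measure \(\lambda _f\) of \(\lambda \) by f, which is defined by

\[ \lambda _f(A) := \lambda \big (f^{-1}(A)\big ) = \lambda \big (\{x\in K : f(x)\in A\}\big ) \quad \text{for all Borel sets } A\subseteq \mathbb {R}. \tag{3} \]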

Note that for any measurable function \(g:\mathbb {R}\rightarrow \mathbb {R}\), we thus have

\[ \int _{\mathbb {R}} g(t)\,d\lambda _f(t) = \int _K g(f(x))\,d\lambda (x). \tag{4} \]

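We can then define the following hierarchy of upper bounds on \(f_{\min }\):

\[ f_\mathrm{pfm}^{(r)} := \min _{s\in \varSigma [t]_r} \Big \{ \int _{\mathbb {R}} t\,s(t)\,d\lambda _f(t) : \int _{\mathbb {R}} s(t)\,d\lambda _f(t) = 1 \Big \} = \min _{s\in \varSigma [t]_r} \Big \{ \int _K f(x)\,s(f(x))\,d\lambda (x) : \int _K s(f(x))\,d\lambda (x) = 1 \Big \}. \tag{5} \]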

The difference with the parameter \(f^{(r)}\) is that we now restrict the search to univariate sums of squares \(s\in \varSigma [t]_r\), which we then evaluate at the polynomial f, leading to the multivariate sum of squares \(\sigma _\mathrm{pfm}:=s\circ f\in \varSigma [x]_{rd}\) if f has degree d. Therefore we have the inequality

\[ f_{\min } \le f^{(rd)} \le f_\mathrm{pfm}^{(r)}. \tag{6} \]

Again, the parameter \(\smash {f_\mathrm{pfm}^{(r)}}\) can be computed efficiently for any fixed r. But now it can be computed as the smallest eigenvalue of an appropriate matrix of much smaller size \(r+1\) (see (8) below). Asymptotic convergence of the parameters \(\smash {f_\mathrm{pfm}^{(r)}}\) to \(f_{\min }\) is shown in [17], but no quantitative results are given there. In this paper, we are interested in analyzing the convergence rate of the parameters \(\smash {f_\mathrm{pfm}^{(r)}}\) to the global minimum \(f_{\min }\) in terms of the degree r.
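The univariate formulation can be sketched in the same way. Writing \(s=p^2\) with \(p(t)=\sum _{i=0}^r v_it^i\), the bound \(\smash {f_\mathrm{pfm}^{(r)}}\) is the smallest generalized eigenvalue of the two \((r+1)\times (r+1)\) Hankel matrices \((m_{i+j+1})\) and \((m_{i+j})\). These are built from the moments \(m_k=\int _K f^k d\lambda \) of \(\lambda _f\). In an orthonormal basis, this becomes the smallest eigenvalue of a single matrix of size \(r+1\). The Python sketch below is illustrative only, and its names are hypothetical. As a sanity check, it uses \(K=[-1,1]\) and \(f(x)=x^2\). In that case, the classical identity \(P_{2n}(x)=P_n^{(0,-1/2)}(2x^2-1)\) between Legendre and Jacobi polynomials predicts that \(\smash {f_\mathrm{pfm}^{(r)}}\) equals the square of the smallest positive root of \(P_{2r+2}\). Hankel matrices are badly conditioned, so this floating-point version is only meant for small r.

```python
import numpy as np


def pfm_bound(moment, r):
    """Sketch of f_pfm^{(r)} from the moments m_k = int_K f^k dlambda, k = 0..2r+1.

    With s = p^2 and p = sum_i v_i t^i, the objective is the Rayleigh quotient
    v^T A v / v^T B v with Hankel matrices A = (m_{i+j+1}) and B = (m_{i+j}).
    """
    m = [moment(k) for k in range(2 * r + 2)]
    B = np.array([[m[i + j] for j in range(r + 1)] for i in range(r + 1)])
    A = np.array([[m[i + j + 1] for j in range(r + 1)] for i in range(r + 1)])
    L = np.linalg.cholesky(B)                           # B = L L^T
    C = np.linalg.solve(L, np.linalg.solve(L, A).T)     # L^{-1} A L^{-T}
    return np.linalg.eigvalsh((C + C.T) / 2)[0]


# K = [-1, 1], f(x) = x^2: m_k = int_{-1}^{1} x^{2k} dx = 2 / (2k + 1).
def square_moment(k):
    return 2.0 / (2 * k + 1)


for r in range(1, 5):
    nodes, _ = np.polynomial.legendre.leggauss(2 * r + 2)
    x_plus = nodes[nodes > 0].min()                     # smallest positive root of P_{2r+2}
    print(r, pfm_bound(square_moment, r), x_plus ** 2)  # the two columns agree
```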

1.1 Previous work

In what follows we always consider for \(\lambda \) the Lebesgue measure on K (unless specified otherwise). Several results exist on the convergence rate of the parameters \(f^{(r)}\) to the global minimum \(f_{\min }\), depending on the set K. The best rates in \(O(1/r^2)\) were shown in [5, 6, 23] when K belongs to special classes of convex bodies, including the hypercube \([-1, 1]^n\), the ball \(B^n\), the sphere \(S^{n-1}\), the standard simplex \(\varDelta ^n\) and compact sets that are locally ‘ball-like’. Furthermore, it was shown in [5] that this analysis is best possible in general (already for \(K=[-1,1]\) and \(f(x)=x\)). The starting point for each of these results is a connection between the parameters \(f^{(r)}\) and the smallest roots of certain orthogonal polynomials (see [5, Sect. 2] and the short recap below).

In [23, Theorems 10–11], a rate in \(O(\log ^2 r / r^2)\) was shown for general convex bodies K, as well as a rate in \(O(\log r / r)\) for general compact sets K that satisfy a minor geometric condition (a strengthening of Assumption 1 below). There the analysis relied on constructing explicit sum-of-squares densities that approximate the Dirac delta function at a global minimizer of f well, making use of the so-called ‘needle’ polynomials from [12]. An improved rate in \(O(\log ^k r / r^k)\) was shown in [23, Theorem 14] when the partial derivatives of f up to order \(k-1\) vanish at one of its global minimizers on K.

When K is a convex body, a convergence rate in O(1/r) had been shown earlier in [4], by exploiting a link to simulated annealing. There the authors considered sum-of-squares densities of (roughly) the form \(\sigma =s\circ f\), where \(s(t)=\sum _{k=0}^{2r}(-t/T)^k/k!\in \varSigma [t]_{r}\) is the truncated Taylor expansion of the exponential \(e^{-t/T}\). Hence this specific choice of s (or \(\sigma \)) provides an upper bound not only for the parameter \(f^{(rd)}\) (as exploited in [4]) but also for the parameter \(\smash {f_\mathrm{pfm}^{(r)}}\) and thus the result of [4] gives directly \(\smash {f_\mathrm{pfm}^{(r)}}-f_{\min }=O(1/r)\) when K is a convex body.
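The density used in [4] is easy to inspect numerically. Every even-degree Taylor polynomial \(e_{2r}(u)=\sum _{k=0}^{2r}u^k/k!\) of the exponential is positive on \(\mathbb {R}\). To see this, note that at a critical point \(u^*\) we have \(e_{2r}'(u^*)=e_{2r-1}(u^*)=0\), so \(e_{2r}(u^*)=(u^*)^{2r}/(2r)!>0\). A nonnegative univariate polynomial is a sum of squares, which is why \(s\in \varSigma [t]_r\). The short Python check below evaluates s on a grid. The temperature T = 0.5 and the grid are arbitrary illustrative choices.

```python
from math import factorial


def annealing_density(t, T, r):
    """s(t) = sum_{k=0}^{2r} (-t/T)^k / k!, the degree-2r Taylor polynomial of exp(-t/T)."""
    u = -t / T
    return sum(u ** k / factorial(k) for k in range(2 * r + 1))


T = 0.5
grid = [i / 100 for i in range(-1000, 1001)]    # t in [-10, 10], i.e. t/T in [-20, 20]
minima = {r: min(annealing_density(t, T, r) for t in grid) for r in (1, 2, 5, 10)}
print(minima)   # every minimum is strictly positive
```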

The result above gives a first quantitative analysis of the parameters \(\smash {f_\mathrm{pfm}^{(r)}}\) for convex bodies. In this paper, we improve this result in two directions. First, we sharpen the analysis and show the stronger convergence rate \(O(\log ^2 r/r^2)\). Second, we show that this analysis applies to a large class of compact sets (those satisfying Assumption 1), which includes all compact semialgebraic sets that have a dense interior.

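We also briefly mention another hierarchy of bounds when K is a semialgebraic set, of the form

\[ K=\{x\in \mathbb {R}^n : g_1(x)\ge 0,\ldots ,g_m(x)\ge 0\}, \qquad g_1,\ldots ,g_m\in \mathbb {R}[x]. \]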

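Then lower bounds for the minimum of f over K can be obtained as

\[ f_{(r)} := \sup \Big \{ \tau \in \mathbb {R} : f-\tau = \sum _{j=0}^m s_jg_j,\ s_j\in \varSigma [x],\ \deg (s_jg_j)\le 2r \Big \} \]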

(setting \(g_0=1\)). This hierarchy has been widely studied in the literature (see, e.g., [14, 16] and references therein). Asymptotic convergence to \(f_{\min }\) holds when the semialgebraic set K satisfies the Archimedean condition (which implies that K is compact) [13]; it relies on the positivity certificate of Putinar [20]. (The Archimedean condition requires the existence of \(R>0\) such that \(R-\sum _{i=1}^nx_i^2\) lies in the quadratic module generated by the \(g_j\)’s, which consists of the polynomials \(\sum _js_jg_j\) with sum-of-squares polynomials \(s_j\).) A natural question is to analyze the quality of the bounds \(f_{(r)}\). A convergence rate in \(O(1/(\log (r/c))^{1/c})\) is shown in [18], where c is a constant depending only on K. If in the definition of the bounds \(f_{(r)}\) we allow decompositions in the preordering, which consists of the polynomials \(\sum _{J\subseteq [m]} \sigma _J \prod _{j\in J}g_j\) with \(\sigma _J\) sum-of-squares polynomials, then, based on Schmüdgen’s positivity certificate [21], asymptotic convergence holds for any compact K, and a stronger convergence rate in \(O(1/r^c)\) was shown in [22] (where c again depends only on K). When allowing decompositions in the preordering, a stronger convergence rate in O(1/r) was shown for special sets like the simplex (in [1]) and the hypercube (in [2]). (See also [7] for an overview.) For the minimization of a homogeneous polynomial f over the unit sphere, an improved convergence rate in \(O(1/r^2)\) for the bounds \(f_{(r)}\) was shown recently in [11] (improving the earlier rate in O(1/r) from [9]). It turns out that this analysis relies (implicitly) on the convergence rate of the upper bounds for a special class of polynomials. This indicates that there are intimate links between the upper and lower bounds \(f^{(r)}\) and \(f_{(r)}\), which forms an additional motivation for better understanding the upper bounds \(f^{(r)}\). 
Showing an improved convergence analysis for the bounds \(f_{(r)}\) for broader classes of semialgebraic sets remains an important research question.

1.2 New results

The main contribution of this paper is the following bound on the convergence rate of the parameter \(\smash {f_\mathrm{pfm}^{(r)}}\) that holds whenever K satisfies a minor geometric condition.

Theorem 1

Let \(K \subseteq \mathbb {R}^n\) be a compact connected set satisfying Assumption 1 below. Then we have

\[ f_\mathrm{pfm}^{(r)} - f_{\min } = O\Big (\frac{\log ^2 r}{r^2}\Big ). \]

In view of (6), we immediately get the following corollary, extending the rate in \(O(\log ^2 r / r^2)\), shown in [23] for convex bodies, to all connected compact sets K satisfying Assumption 1.

Corollary 1

Let \(K \subseteq \mathbb {R}^n\) be a compact connected set satisfying Assumption 1. Then we have

\[ f^{(r)} - f_{\min } = O\Big (\frac{\log ^2 r}{r^2}\Big ). \]

In light of the following special case of [5, Corollary 3.2], our result on the convergence rate of \(\smash {f_\mathrm{pfm}^{(r)}}\) is best possible in general, up to the log-factor.

Theorem 2

[5] Let \(K = [-1, 1]\) and let \(f(x) = x\). Then \(f^{(r)} =-1+ \varTheta (1/r^2)\). As a direct consequence, we have \(\smash {f_\mathrm{pfm}^{(r)}}(=f^{(r)}) = -1+\varOmega (1/r^2)\).

As an additional result, we extend the lower bound \(\varOmega (1/r^2)\) on the error range \(\smash {f_\mathrm{pfm}^{(r)}}-f_{\min }\) to the class of functions \(f(x)=x^{2k}\) with integer \(k\ge 1\).

Theorem 3

Let \(K = [-1, 1]\) and let \(f(x) = x^{2k}\) for \(k\ge 1\) integer. Then we have \(\smash {f_\mathrm{pfm}^{(r)}}= \varOmega (1/r^2)\).

Combining Theorem 3 with the fact that \(f^{(r)} = O(\log ^{2k} r / r^{2k})\) when \(f(x) = x^{2k}\) (using [23, Theorem 14]), we thus show a large separation between the asymptotic quality of the bounds \(f^{(r)}\) and \(\smash {f_\mathrm{pfm}^{(r)}}\) for this class of functions.

1.3 Approach and discussion

As already mentioned above, a crucial ingredient in the analysis of the parameters \(f^{(r)}\) for special compact sets like the hypercube \([-1,1]^n\), the ball, the sphere, or the simplex, is the analysis in the univariate case when \(K=[-1,1]\) (equipped with the Lebesgue measure or, more generally, a weight of Jacobi type) and the special polynomial \(f(t)=t\). Let \(\{p_i \in \mathbb {R}[t]_i :i\in \mathbb {N}\}\) be the (unique) orthonormal basis of \(\mathbb {R}[t]\) with respect to the inner product \(\langle \cdot , \cdot \rangle _\lambda \) given by

\[ \langle p, q\rangle _\lambda = \int _{-1}^{1} p(t)\,q(t)\,d\lambda (t) \qquad (p,q\in \mathbb {R}[t]). \tag{7} \]

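Then, as is shown in [5], the parameter \(f^{(r)}\) coincides with the smallest eigenvalue of the (truncated) moment matrix \(M_{\lambda ,r}\) of \(\lambda \), which is defined as

\[ M_{\lambda ,r} := \Big ( \int _{-1}^{1} t\, p_i(t)\, p_j(t)\, d\lambda (t) \Big )_{i,j=0}^{r}. \]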

A classical result on orthogonal polynomials (cf., e.g., [24]) shows that the eigenvalues of \(M_{\lambda ,r}\) are the roots of \(p_{r+1}\). Hence, the parameter \(f^{(r)}\) is equal to the smallest root of \(p_{r+1}\). The asymptotic behaviour of this root is well understood and is known to be in \(-1+ \varTheta (1/r^2)\) when \(\lambda \) is a measure of Jacobi type ([5], see also Lemma 2 below).
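For \(K=[-1,1]\) with the Lebesgue measure, the \(p_i\) are the normalized Legendre polynomials and \(M_{\lambda ,r}\) is the familiar tridiagonal Jacobi matrix. It has zero diagonal and off-diagonal entries \(k/\sqrt{4k^2-1}\) (the coefficients used in the Golub–Welsch algorithm for Gauss–Legendre quadrature). The Python sketch below is an illustration rather than code from [5]. It checks numerically that the smallest eigenvalue of this matrix is the smallest root of \(P_{r+1}\), and that \((r+1)^2(1+f^{(r)})\) stays bounded, in line with the \(-1+\varTheta (1/r^2)\) behaviour.

```python
import numpy as np


def legendre_jacobi_matrix(r):
    """M_{lambda,r} for lambda = Lebesgue measure on [-1, 1].

    In the orthonormal Legendre basis the diagonal entries vanish and the
    off-diagonal entries are b_k = k / sqrt(4k^2 - 1), k = 1..r.
    """
    k = np.arange(1, r + 1)
    b = k / np.sqrt(4.0 * k ** 2 - 1.0)
    return np.diag(b, 1) + np.diag(b, -1)


for r in (1, 2, 4, 8, 16, 32):
    xi = np.linalg.eigvalsh(legendre_jacobi_matrix(r))[0]    # smallest eigenvalue
    root = np.polynomial.legendre.leggauss(r + 1)[0].min()   # smallest root of P_{r+1}
    print(r, xi, root, (r + 1) ** 2 * (1 + xi))              # last column stays bounded
```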

Recall that \(\lambda _f\) is the push-forward measure of \(\lambda \) by f, as defined in (3), and \(f(K)=[f_{\min },f_{\max }]\) since we assume K is compact and connected. Let \(\{p_{f,i}: i\in \mathbb {N}\}\) denote the orthonormal basis of \(\mathbb {R}[t]\) with respect to the inner product \(\langle \cdot ,\cdot \rangle _{\lambda _f}\) on the interval \([f_{\min },f_{\max }]\). In view of the above discussion, if we use the first (univariate) formulation of \(\smash {f_\mathrm{pfm}^{(r)}}\) in (5), we can immediately conclude that \(\smash {f_\mathrm{pfm}^{(r)}}\) is equal to the smallest eigenvalue of the matrix

\[ M_{\lambda _f,r} := \Big ( \int _{f_{\min }}^{f_{\max }} t\, p_{f,i}(t)\, p_{f,j}(t)\, d\lambda _f(t) \Big )_{i,j=0}^{r} = \Big ( \int _K f(x)\, p_{f,i}(f(x))\, p_{f,j}(f(x))\, d\lambda (x) \Big )_{i,j=0}^{r} \tag{8} \]

and also to the smallest root of the orthogonal polynomial \(p_{f,r+1}\). However it is not clear how to exploit this connection in order to gain information about the convergence rate of the parameters \(\smash {f_\mathrm{pfm}^{(r)}}\) since the orthogonal polynomials \(p_{f,i}\) are not known explicitly in general.

In this paper, we will go back to the idea of trying to find a good sum-of-squares polynomial approximation of the Dirac delta function. As in [23], we make use of the needle polynomials from [12] for this purpose. The difference with the approach in [23] is that we now work on the interval \([f_{\min }, f_{\max }]\); so we need an approximation of the Dirac delta function centered at \(f_{\min }\), which is on the boundary of this interval. As is already noted in [12], this special setting allows for better approximations than would be available in general.

1.4 Outline

The rest of the paper is organized as follows. In Sect. 2 we give a proof of Theorem 1. Then, in Sect. 3, we prove Theorem 3. We provide some numerical examples that illustrate the practical behaviour of the bounds \(f^{(r)}\) and \(\smash {f_\mathrm{pfm}^{(r)}}\) in Sect. 4. Finally, in Sect. 5, we briefly discuss the geometric Assumption 1 below and show that it is satisfied by the compact semialgebraic sets with a dense interior.

2 Convergence analysis for the new hierarchy

We first state the precise geometric condition alluded to in Theorem 1.

Assumption 1

There exist constants \(\epsilon _K,\eta _K>0\) and \(N \ge n\) such that, for all \(x\in K\) and \(0<\delta \le \epsilon _K\), we have

\[ \lambda \big (B^n_\delta (x)\cap K\big ) \ge \eta _K\, \delta ^N \lambda (B^n). \tag{9} \]

Here, \(B^n_\delta (x)\) is the Euclidean ball centered at x with radius \(\delta \) and \(B^n=B^n_1(0)\).

A slightly stronger version of Assumption 1 (requiring \(N=n\)) was introduced in [3], where it was used to give the first error analysis in \(O(1/\sqrt{r})\) for the bounds \(f^{(r)}\). The condition of [3] is satisfied, e.g., when K is a convex body, or more generally when K satisfies an interior cone condition, or when K is star-shaped with respect to a ball (see also [3] for a more complete discussion). The weaker condition (9) is additionally satisfied by the compact semialgebraic sets that have a dense interior; in particular, it allows K to have certain types of cusps. We discuss Assumption 1 in more detail in Sect. 5 below.

We show the following restatement of Theorem 1.

Theorem 4

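Assume K is connected, compact and satisfies the above geometric condition (9). Then there exists a constant C (depending only on n, the Lipschitz constant of f and K) such that

\[ f_\mathrm{pfm}^{(r)} - f_{\min } \le C\,\frac{\log ^2 r}{r^2} \quad \text{for all sufficiently large } r. \]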

The rest of this section is devoted to the proof of Theorem 4. We will make the following assumptions in order to simplify notation in our arguments. Let a be a global minimizer of f in K. After applying a suitable translation (replacing K by \(K-a\) and the polynomial f by the polynomial \(x\mapsto f(x+a)\)), we may assume that \(a=0\), that is, we may assume that the global minimum of f over K is attained at the origin. Furthermore, it suffices to work with the rescaled polynomial

\[ F(x) := \frac{f(x)-f_{\min }}{f_{\max }-f_{\min }}, \]

which satisfies \(F(K)=[0,1]\), with \(F_{\min }=0\) and \(F_{\max }=1\). Indeed, one can easily check that

\[ f_\mathrm{pfm}^{(r)} - f_{\min } = (f_{\max }-f_{\min })\, F_\mathrm{pfm}^{(r)}. \]

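Then, for this polynomial F, we know that the support of the push-forward measure \(\lambda _F\) is equal to [0, 1], and (5) gives

\[ F_\mathrm{pfm}^{(r)} = \min _{s\in \varSigma [t]_r} \Big \{ \int _0^1 t\,s(t)\,d\lambda _F(t) : \int _0^1 s(t)\,d\lambda _F(t) = 1 \Big \}. \tag{10} \]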

In order to analyze the bound \(F_\mathrm{pfm}^{(r)}\), we follow a similar strategy to the one employed in [23] to analyze the bound \(F^{(r)}\). Namely, we construct a univariate sum-of-squares polynomial s which approximates the Dirac delta centered at the origin on the interval [0, 1] well, making use of the so-called \(\frac{1}{2}\)-needle polynomials from [12].

Lemma 1

[12] Let \(h\in (0,1)\) be a scalar and let \(r \in \mathbb {N}\). Then there exists a univariate polynomial \(\nu ^h_r \in \varSigma [t]_{2r}\) satisfying the following properties:

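We consider the sum-of-squares polynomial \(s(t):= C \nu ^h_r(t),\) where \(h \in (0, 1)\) will be chosen later, and C is chosen so that s is a density on [0, 1] with respect to the measure \(\lambda _F\). That is,

\[ C = \Big (\int _0^1 \nu ^h_r(t)\,d\lambda _F(t)\Big )^{-1} = \Big (\int _K \nu ^h_r(F(x))\,d\lambda (x)\Big )^{-1}. \]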

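As s is a feasible solution to (10), we obtain

\[ F_\mathrm{pfm}^{(r)} \le \int _0^1 t\,s(t)\,d\lambda _F(t) = \frac{\int _K F(x)\,\nu ^h_r(F(x))\,d\lambda (x)}{\int _K \nu ^h_r(F(x))\,d\lambda (x)}. \tag{12} \]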

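Our goal is thus to show that

\[ \frac{\int _K F(x)\,\nu ^h_r(F(x))\,d\lambda (x)}{\int _K \nu ^h_r(F(x))\,d\lambda (x)} = O\Big (\frac{\log ^2 r}{r^2}\Big ). \]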

Define the set

\[ K_h := \{x\in K : F(x)\le h\}. \]

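We first work out the numerator of (12), which we split into two terms, depending on whether we integrate over \(K_h\) or over its complement:

\[ \int _K F(x)\,\nu ^h_r(F(x))\,d\lambda (x) = \int _{K_h} F(x)\,\nu ^h_r(F(x))\,d\lambda (x) + \int _{K\setminus K_h} F(x)\,\nu ^h_r(F(x))\,d\lambda (x) \le h\int _{K_h}\nu ^h_r(F(x))\,d\lambda (x) + \int _{K\setminus K_h}\nu ^h_r(F(x))\,d\lambda (x). \]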

Here we have upper bounded F(x) by h on \(K_h\) and by 1 on \(K\setminus K_h\). On the other hand, we can lower bound the denominator in (12) as follows:

\[ \int _K \nu ^h_r(F(x))\,d\lambda (x) \ge \int _{K_h}\nu ^h_r(F(x))\,d\lambda (x). \]

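Combining the above two inequalities on numerator and denominator we get

\[ \frac{\int _K F(x)\,\nu ^h_r(F(x))\,d\lambda (x)}{\int _K \nu ^h_r(F(x))\,d\lambda (x)} \le h + \frac{\int _{K\setminus K_h}\nu ^h_r(F(x))\,d\lambda (x)}{\int _{K_h}\nu ^h_r(F(x))\,d\lambda (x)}. \]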

Thus we only need to upper bound the second term above. We first work on the numerator. For any \(x\in K\setminus K_h\) we have \(F(x)>h\) and thus, using (11), we get \(\nu ^h_r(F(x)) \le 4e^{-{1\over 2}r\sqrt{h}}\). This implies

\[ \int _{K\setminus K_h}\nu ^h_r(F(x))\,d\lambda (x) \le 4e^{-\frac{1}{2}r\sqrt{h}}\,\lambda (K). \]

Next, we bound the denominator. In [23, Corollary 4], it is observed that

Set \(\rho = \frac{1}{64r^2}\). We will later choose \(h \ge \rho \), so that \(K_h \supseteq K_{\rho } := \{ x \in K : F(x) \le \rho \}\) and \(\nu ^h_r(F(x)) \ge \frac{1}{2}\) for all \(x \in K_\rho \). As K is compact, there exists a Lipschitz constant \(C_F>0\) such that

\[ |F(x)-F(y)| \le C_F\,\Vert x-y\Vert \quad \text{for all } x,y\in K. \]

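Note that \(K \cap B^n_{\rho /C_F}\subseteq K_\rho \) (balls centered at the origin, where \(F(0)=0\)). By the geometric assumption (9) we have

\[ \lambda (K_\rho ) \ge \lambda \big (K\cap B^n_{\rho /C_F}(0)\big ) \ge \eta _K\Big (\frac{\rho }{C_F}\Big )^N\lambda (B^n) \]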

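for all r large enough such that \(\rho /C_F \le \epsilon _K\). We can then lower bound the denominator as follows:

\[ \int _{K_h}\nu ^h_r(F(x))\,d\lambda (x) \ge \int _{K_\rho }\nu ^h_r(F(x))\,d\lambda (x) \ge \frac{1}{2}\lambda (K_\rho ) \ge \frac{\eta _K\lambda (B^n)}{2}\Big (\frac{\rho }{C_F}\Big )^N. \]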

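Combining the above inequalities, we obtain

\[ \frac{\int _K F(x)\,\nu ^h_r(F(x))\,d\lambda (x)}{\int _K \nu ^h_r(F(x))\,d\lambda (x)} \le h + \frac{8\lambda (K)}{\eta _K\lambda (B^n)}\Big (\frac{C_F}{\rho }\Big )^N e^{-\frac{1}{2}r\sqrt{h}} = h + \frac{8\lambda (K)(64C_F)^N}{\eta _K\lambda (B^n)}\, r^{2N} e^{-\frac{1}{2}r\sqrt{h}}. \]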

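If we now select \(h=\left( 4({N}+1){\log r\over r}\right) ^2\), we have \(h \ge \rho \) and a straightforward computation shows that \(\frac{1}{2}r\sqrt{h}=2(N+1)\log r\), so that \(r^{2N}e^{-\frac{1}{2}r\sqrt{h}}=r^{-2}\) and

\[ F_\mathrm{pfm}^{(r)} \le \frac{16(N+1)^2\log ^2 r}{r^2} + \frac{8\lambda (K)(64C_F)^N}{\eta _K\lambda (B^n)}\cdot \frac{1}{r^2} = O\Big (\frac{\log ^2 r}{r^2}\Big ). \]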

Here, the constant in the big O depends on n, N, \(C_F\), \(\eta _K\) and \(\lambda (K)\). This concludes the proof of Theorem 4.
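The choice of h in the proof can be checked with a few lines of arithmetic. Since \(\rho =1/(64r^2)\), the factor \(\rho ^{-N}\) contributes \(64^Nr^{2N}\). The choice \(h=\left( 4(N+1)\log r/r\right) ^2\) gives \(\frac{1}{2}r\sqrt{h}=2(N+1)\log r\), i.e., \(e^{-\frac{1}{2}r\sqrt{h}}=r^{-2(N+1)}\). Consequently, \(\rho ^{-N}\) times the tail bound \(4e^{-\frac{1}{2}r\sqrt{h}}\) from (11) equals \(4\cdot 64^N r^{-2}\), and the remaining term \(h=16(N+1)^2\log ^2r/r^2\) dominates. The Python sketch below uses a hypothetical helper name. It confirms numerically that \(\rho \le h<1\) and that \(r^2\cdot 4e^{-\frac{1}{2}r\sqrt{h}}\rho ^{-N}=4\cdot 64^N\) for a few values of N and r.

```python
import math


def proof_constants(r, N):
    """Evaluates the quantities from the choice of h in the proof of Theorem 4."""
    h = (4 * (N + 1) * math.log(r) / r) ** 2
    rho = 1.0 / (64 * r ** 2)
    tail = 4 * math.exp(-0.5 * r * math.sqrt(h))    # bound on nu_r^h(t) for t > h, cf. (11)
    blowup = rho ** (-N)                            # 1 / rho^N from the lower bound on the denominator
    return h, rho, tail * blowup * r ** 2           # last entry should equal 4 * 64^N


for N in (2, 3, 5):
    for r in (10 ** 3, 10 ** 4, 10 ** 5):
        h, rho, scaled = proof_constants(r, N)
        print(N, r, h >= rho, h < 1, scaled / (4 * 64 ** N))   # ratio is 1 up to rounding
```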

3 Separation for a special class of polynomials

In this section we consider in more detail the behaviour of the bounds \(f^{(r)}\) and \(\smash {f_\mathrm{pfm}^{(r)}}\) for the class of polynomials \(f(x)=x^{2k}\) (with \(k\ge 1\) integer) on the interval \(K=[-1, 1]\). Then \(f([-1,1])=[0,1]\) and, by applying (6) to the polynomial \(f(x)=x^{2k}\) (of degree 2k), we have the following inequality:

\[ f^{(2rk)} \le f_\mathrm{pfm}^{(r)}. \]

Note that for any \(i\le 2k-1\), the ith derivative of f vanishes at its global minimizer 0 on \([-1, 1]\). Using [23, Theorem 14], we therefore have that \(f^{(2rk)} \le f^{(r)} = O(\log ^{2k} r/ r^{2k})\). On the other hand, the convergence rate in \(O(\log ^2 r/r^2)\) for \(\smash {f_\mathrm{pfm}^{(r)}}\) shown in Theorem 1 is optimal up to the log-factor. Indeed, we will show here a lower bound for \(\smash {f_\mathrm{pfm}^{(r)}}\) in \(\varOmega (1/r^2)\).

Let \(\lambda _k:=\lambda _{f}\) denote the push-forward measure (3) of the Lebesgue measure on \([-1, 1]\) by the function \(f(x) = x^{2k}\), and let \(\{p_{k,i}(t): i\in \mathbb {N}\}\subseteq \mathbb {R}[t]\) denote the family of orthogonal polynomials that provide an orthonormal basis for \(\mathbb {R}[t]\) w.r.t. the inner product \(\langle \cdot , \cdot \rangle _{\lambda _k}\) (cf. (7)). Then, as shown in [5] and as recalled above, the parameter \(\smash {f_\mathrm{pfm}^{(r)}}\) is equal to the smallest root of the polynomial \(p_{k,r+1}(t)\). As it turns out, here we can find explicitly the push-forward measure \(\lambda _k\), which can be shown to be of Jacobi type. Hence, we have information about the corresponding orthogonal polynomials \(p_{k,i}\), whose extremal roots are well understood. First we introduce the classical Jacobi polynomials (see, eg., [24] for a general reference).

Lemma 2

Let \(a, b > -1\). Consider the weight function \(w_{a,b}(x)=(1-x)^a(1+x)^b\) on the interval \([-1,1]\) and let \(\{p^{a,b}_i(x): i\in \mathbb {N}\}\) be the corresponding family of orthogonal polynomials. Then \(p^{a, b}_i\) is known as the degree i Jacobi polynomial (with parameters a, b), and its smallest root \(\xi _i^{a, b}\) satisfies:

\[ \xi _i^{a,b} = -1 + \varTheta \Big (\frac{1}{i^2}\Big ) \quad \text{as } i\to \infty . \tag{14} \]

Proof

A proof of this fact based on results in [8, 10] is given in [5]. \(\square \)

Lemma 3

For any integrable function g on [0, 1] we have the identity

\[ \int _{-1}^{1} g(x^{2k})\,dx = \frac{1}{k}\int _0^1 g(t)\,t^{-1+\frac{1}{2k}}\,dt. \]

Hence, the push-forward measure \(\lambda _k\) is given by \(d\lambda _k(t) :={1\over k}t^{-1+{1\over 2k}}dt\) for \(t \in [0,1]\).

Proof

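It suffices to show the first claim. Using the symmetry of \(x\mapsto x^{2k}\) and then the change of variables \(t=x^{2k}\) (so that \(dx=\frac{1}{2k}t^{\frac{1}{2k}-1}dt\)), we get

\[ \int _{-1}^{1} g(x^{2k})\,dx = 2\int _0^1 g(x^{2k})\,dx = 2\int _0^1 g(t)\,\frac{1}{2k}\,t^{\frac{1}{2k}-1}\,dt = \frac{1}{k}\int _0^1 g(t)\,t^{-1+\frac{1}{2k}}\,dt. \]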

\(\square \)
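For monomials \(g(t)=t^m\), both sides of the identity in Lemma 3 have closed forms, namely \(2/(2km+1)\) and \(\frac{1}{k}\big /\big (m+\frac{1}{2k}\big )\). This allows the lemma to be spot-checked in exact rational arithmetic. The small Python sketch below does this with the standard fractions module. It is a sanity check on polynomials only, not a substitute for the change-of-variables proof.

```python
from fractions import Fraction


def lhs(m, k):
    """int_{-1}^{1} g(x^{2k}) dx for g(t) = t^m, i.e. int_{-1}^{1} x^{2km} dx."""
    return Fraction(2, 2 * k * m + 1)


def rhs(m, k):
    """(1/k) int_0^1 g(t) t^{-1 + 1/(2k)} dt for g(t) = t^m."""
    exponent = m - 1 + Fraction(1, 2 * k)          # > -1, so the integral converges
    return Fraction(1, k) / (exponent + 1)


checks = [lhs(m, k) == rhs(m, k) for m in range(12) for k in range(1, 6)]
print(all(checks))   # True
```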

Proof of Theorem 3

By applying the change of variables \(x=2t-1\), we see that the Jacobi-type measure \((1-x)^a(1+x)^b dx\) on \([-1,1]\) corresponds to the measure \(2^{a+b+1}(1-t)^at^b dt\) on [0, 1], and that (up to scaling) the orthogonal polynomials for the latter measure on [0, 1] are given by \(t \mapsto p^{a,b}_i(2t-1)\) for \(i\in \mathbb {N}\).

If we set \(a=0\) and \(b=-1+1/(2k)\) (note that \(b>-1\)), then the measure obtained in this way on [0, 1] is, up to a positive constant factor, precisely the push-forward measure \(\lambda _k\) (see Lemma 3). Hence, we can conclude that (up to scaling) the orthogonal polynomials \(p_{k,i}\) for \(\lambda _k\) on [0, 1] are given by \(p_{k,i}(t) = p^{a,b}_i(2t-1)\) for each \( i\in \mathbb {N}\). Therefore, the smallest root of \(p_{k,r+1}(t)\) is equal to \((\xi ^{a,b}_{r+1} +1 ) / 2 =\varTheta (1/r^2)\) by (14). In particular, since \(f_{\min }=0\), we can conclude that \(\smash {f_\mathrm{pfm}^{(r)}}= \varOmega ({1 / r^2})\) for any \(k \ge 1\). \(\square \)
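Theorem 3 can also be observed numerically. The Python sketch below is an illustration with hypothetical names, not the code behind Sect. 4. It computes \(\smash {f_\mathrm{pfm}^{(r)}}\) for \(f(x)=x^4\) (that is, k = 2) from the moments \(\int _{-1}^1 x^{2kj}dx=2/(2kj+1)\) of \(\lambda _k\), again as the smallest generalized eigenvalue of the Hankel pair \((m_{i+j+1})\), \((m_{i+j})\). The bounds decrease with r, while \(r^2\smash {f_\mathrm{pfm}^{(r)}}\) stays bounded away from zero, as the \(\varOmega (1/r^2)\) lower bound predicts. Because Hankel matrices are ill-conditioned, r is kept small.

```python
import numpy as np


def pfm_bound_power(k, r):
    """Sketch of f_pfm^{(r)} for f(x) = x^{2k} on K = [-1, 1] (Lebesgue measure).

    Uses the moments m_j = int_{-1}^{1} x^{2kj} dx = 2 / (2kj + 1) of lambda_k and
    the smallest generalized eigenvalue of the Hankel pair (m_{i+j+1}), (m_{i+j}).
    """
    m = [2.0 / (2 * k * j + 1) for j in range(2 * r + 2)]
    B = np.array([[m[i + j] for j in range(r + 1)] for i in range(r + 1)])
    A = np.array([[m[i + j + 1] for j in range(r + 1)] for i in range(r + 1)])
    L = np.linalg.cholesky(B)                           # B = L L^T
    C = np.linalg.solve(L, np.linalg.solve(L, A).T)     # L^{-1} A L^{-T}
    return np.linalg.eigvalsh((C + C.T) / 2)[0]


values = [pfm_bound_power(2, r) for r in range(1, 7)]
for r, v in enumerate(values, start=1):
    print(r, v, r ** 2 * v)    # r^2 * f_pfm^{(r)} stays away from 0
```

For r = 1, the smallest root of \(117\mu ^2-90\mu +5=0\), i.e., \((90-\sqrt{5760})/234\approx 0.0603\), can be checked by hand.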

4 Numerical examples

In this section, we illustrate the practical behaviour of the bounds \(\smash {f_\mathrm{pfm}^{(r)}}\) and \(f^{(r)}\) using some numerical examples. Recall from (6) that \(f^{(dr)}\le \smash {f_\mathrm{pfm}^{(r)}}\) if f has degree d. However, we will see that in many of the examples below the parameter \( \smash {f_\mathrm{pfm}^{(r)}}\) provides in fact a better bound than \(f^{(r)}\).

Comparison of \(\smash {f_\mathrm{pfm}^{(r)}}\) and \(f^{(r)}\) for polynomial test functions. First, we consider the polynomial test functions listed in Table 1. These are all well-known in optimization, and were already used to test the behaviour of the bounds \(f^{(r)}\) in [3, 23]. We compare the bounds \(f^{(r)}\) and \(\smash {f_\mathrm{pfm}^{(r)}}\) directly for \(f \in \{f_\mathrm{bo}, f_\mathrm{ma},f_\mathrm{ca}, f_\mathrm{mo} \}\), computed for the unit box \([-1, 1]^2\) and the unit ball \(B^{2}\). For \(1 \le r \le 20\), we compute the values of the fraction:

\[ \rho _r(f) := \frac{f_\mathrm{pfm}^{(r)} - f_{\min }}{f^{(r)} - f_{\min }}. \]

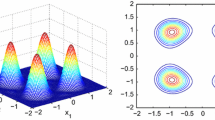

So, values of \(\rho _{r}({f})\) smaller than 1 indicate good performance of the bounds \(\smash {f_\mathrm{pfm}^{(r)}}\) in comparison to \(f^{(r)}\). The results can be found in Fig. 3. Remarkably, it appears that the performance of the bound \(\smash {f_\mathrm{pfm}^{(r)}}\) is comparable to (or better than) the performance of \(f^{(r)}\) in each instance, except for the Camel function. Additionally, we note that the performance of \(\smash {f_\mathrm{pfm}^{(r)}}\) for the Motzkin polynomial is comparatively much better on the unit ball than on the unit box. Figure 1 shows a plot of the Camel function, as well as the sum-of-squares densities corresponding to \(f^{(6)}\) and \(f_\mathrm{pfm}^{(6)}\) on the unit box. Note that while the density corresponding to \(f^{(6)}\) resembles the Dirac delta function centered at the global minimizer (0, 0) of the Camel function, the density corresponding to \(f_\mathrm{pfm}^{(6)}\) instead mirrors the Camel function itself.

Comparison of \(\smash {f_\mathrm{pfm}^{(r)}}\) and \(f^{(r)}\) for the special class of polynomials \(f(x)=x^{2k}\). Next, we consider the polynomials \(f(x) = x^{2k}\) for \(k \ge 1\) on the interval \([-1, 1]\), which were treated in Sect. 3. In Fig. 4, the values of \(\rho _{r}({f})\) are shown for \(1 \le r \le 20\) and \(1 \le k \le 5\). It can be seen that the performance of \(\smash {f_\mathrm{pfm}^{(r)}}\) is comparable to the performance of \(f^{(r)}\) for \(k = 1\) (indeed, in this case we have \(\smash {f_\mathrm{pfm}^{(r)}}= f^{(2r)}\)), but it is much worse for \(k > 1\), which matches our earlier findings (Theorem 3). In Fig. 2, the optimal sum-of-squares densities \(\sigma \) (corresponding to \(f^{(r)}\)) and \(\sigma _\mathrm{pfm}\) (corresponding to \(\smash {f_\mathrm{pfm}^{(r)}}\)) are depicted for \(k =1,3,5\) and \(r = 6\). Note that while the density \(\sigma \) changes very little as we increase k, the density \(\sigma _\mathrm{pfm}\) grows increasingly ‘flat’ around the minimizer 0 of f (mirroring the behavior of f itself). As such, the density \(\sigma _\mathrm{pfm}\) is a comparatively much worse approximation of the Dirac delta function centered at 0 than \(\sigma \). Note also that in this instance \(f^{(r)} = f^{(r+1)}\) for even r, explaining the ‘zig-zagging’ behaviour of the ratio \(\rho _{r}({f})\).

Comparison of \(\smash {f_\mathrm{pfm}^{(r)}}\) and \(f^{(r)}\) for random instances of maximum cut. Finally, we consider some polynomial maximization problems on \([-1, 1]^n\) coming from small instances of MaxCut. An instance of MaxCut with vertex set [n] and edge weights \(w_{ij} \ge 0\) can be written as:

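Note that while f is usually maximized over the discrete cube \(\{-1, 1\}^n\), the formulation (15) is equivalent: since f is convex, its maximum over the box \([-1,1]^n\) is attained at a vertex, i.e., at a point of \(\{-1,1\}^n\).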

Following [15], we create our instances by setting \(w_{ij} = 0\) with probability p, and sampling \(w_{ij}\) uniformly from [0, 1] otherwise. In Table 2, we list values of \(\smash {f_\mathrm{pfm}^{(r)}}\) and \(f^{(r)}\) for a few such random instances with \(p=1/2\) and \(n=8\). In each case, \(\smash {f_\mathrm{pfm}^{(r)}}\) provides a better bound than \(f^{(r)}\). In Table 3, we list the average over 50 randomly generated instances of the ratios:

for \(r \le 4\) and \(p \in \{1/4,~ 1/2,~ 3/4 \}\). Although it seems \(\smash {f_\mathrm{pfm}^{(r)}}\) is more sensitive to changes in the density of the instances, we find again that it provides a better bound in general than \(f^{(r)}\).

Comparison of the bounds \(f^{(r)}\) and \(\smash {f_\mathrm{pfm}^{(r)}}\) for the first four functions in Table 1, computed on the unit box (left) and unit ball (right)

5 On the geometric assumption

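As mentioned above, the condition (9) is a weaker version of a condition introduced in [3]. There, the authors demand that there exist constants \(\eta _K,\epsilon _K>0\) such that

\[ \lambda \big (B^n_\delta (x)\cap K\big ) \ge \eta _K\, \delta ^n \lambda (B^n) \quad \text{for all } x\in K \text{ and } 0<\delta \le \epsilon _K. \tag{16} \]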

The difference is that the power of \(\delta \) in (16) is fixed to be the dimension n of K, whereas it is allowed to be an arbitrary \(N\ge n\) in (9).

Condition (9) is satisfied by a significantly larger class of sets K than (16). In particular, as we will observe below, sets satisfying (9) may have polynomial cusps, whereas sets satisfying (16) may not have any cusps at all.

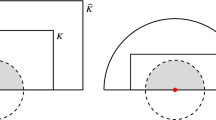

Example 1

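Consider the set \(K = \{x \in \mathbb {R}^2 : 0 \le x_1 \le 1, \ 0 \le x_2 \le x_1^2 \}\) (see Fig. 5). This set K satisfies (9) (with \(N=3\)), but it does not satisfy (16). Indeed, for the point \(0 \in K\) and \(0<\delta \le 1\) we have

\[ \lambda \big (B^2_\delta (0)\cap K\big ) \le \int _0^\delta x_1^2\,dx_1 = \frac{\delta ^3}{3}, \]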

and conclude (16) cannot be satisfied at \(x = 0\). Note that the point 0 is indeed a polynomial cusp of the set K.

Example 2

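Consider the set \(K = \{x \in \mathbb {R}^2: 0 \le x_1 \le 1, \ 0 \le x_2 \le \exp (-1/x_1) \}\) (see Fig. 5). This set K does not satisfy (9) (and, as a consequence, does not satisfy (16)). Indeed, for the point \(0 \in K\) and \(0<\delta \le 1\) we have

\[ \lambda \big (B^2_\delta (0)\cap K\big ) \le \int _0^\delta e^{-1/x_1}\,dx_1 \le \delta \, e^{-1/\delta }. \]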

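Now note that for any \(N, \eta > 0\) fixed, we have:

\[ \lim _{\delta \rightarrow 0^+} \frac{\delta \, e^{-1/\delta }}{\eta \, \delta ^N \lambda (B^2)} = 0, \]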

and so (9) cannot be satisfied at \(x = 0\). Note that the point 0 is an exponential cusp of K.
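The two examples can be illustrated by computing the area of \(B^2_\delta (0)\cap K\) numerically. The Python sketch below uses a plain midpoint rule, with an arbitrarily chosen resolution n. For the polynomial cusp of Example 1, the area behaves like \(\delta ^3/3\), so it is \(o(\delta ^2)\) but of order \(\delta ^3\). For the exponential cusp of Example 2, the area divided by \(\delta ^N\) tends to zero; this is shown here for N = 10.

```python
import math


def area_near_origin(delta, upper, n=100_000):
    """Midpoint-rule area of B_delta(0) ∩ {0 <= x1 <= 1, 0 <= x2 <= upper(x1)}, delta <= 1."""
    dx = delta / n
    total = 0.0
    for i in range(n):
        x1 = (i + 0.5) * dx
        total += min(upper(x1), math.sqrt(delta ** 2 - x1 ** 2)) * dx
    return total


def polynomial_cusp(x1):          # Example 1: 0 <= x2 <= x1^2
    return x1 ** 2


def exponential_cusp(x1):         # Example 2: 0 <= x2 <= exp(-1/x1)
    return math.exp(-1.0 / x1)


for delta in (0.1, 0.03, 0.01):
    a_poly = area_near_origin(delta, polynomial_cusp)
    a_exp = area_near_origin(delta, exponential_cusp)
    # a_poly / delta^2 -> 0 while a_poly / delta^3 -> 1/3; a_exp / delta^10 -> 0
    print(delta, a_poly / delta ** 2, a_poly / delta ** 3, a_exp / delta ** 10)
```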

It turns out that compact semialgebraic sets which have a dense interior (also called fat sets) satisfy Assumption 1, as is shown essentially in [19].

Definition 1

A set \(K \subseteq \mathbb {R}^n\) is called fat if \(K \subseteq \overline{{{\,\mathrm{int}\,}}K}\), i.e., the interior of K is dense in K.

Theorem 5

([19], Theorem 6.4, see also Remark 6.5) Let \(K \subseteq \mathbb {R}^n\) be a compact, fat semialgebraic\(^{1}\) set. Then there exist constants \(\eta >0\), \(N \ge 1\) and a positive integer \(d \in \mathbb {N}\) such that one may find a polynomial \(h_x\) of degree d for each \(x \in K\) satisfying:

Furthermore, the polynomials \(h_x\) may be chosen such that \(\Vert x - h_x(t)\Vert \le t\) for all \(x \in K, t \in [0, 1]\).

Corollary 2

Let \(K \subseteq \mathbb {R}^n\) be a compact, fat semialgebraic set. Then K satisfies Assumption 1.

Proof

For \(x \in K\), let \(\eta , N\) and \(h_x\) be as in Theorem 5. We may assume that \(h_t := h_x(t) \in B^n_t(x)\) for all \(t \in [0, 1]\). For clarity, we write \(B(y, a) := B^n_a(y)\) in the rest of the proof.

Using the triangle inequality and (19) we find that

\[ B(h_t, \eta t^N) \subseteq B\big (x, (1+\eta )t\big ) \cap K \]

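for all \(t \in [0, 1]\), noting that \(t^N \le t\) in this case. But now substituting \(\delta = (1 + \eta )t\) yields:

\[ \lambda \big (B(x,\delta )\cap K\big ) \ge \lambda \big (B(h_t,\eta t^N)\big ) = \eta ^n t^{Nn}\lambda (B^n) = \eta ^n(1+\eta )^{-Nn}\,\delta ^{Nn}\lambda (B^n), \]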

showing that (9) holds (with exponent Nn in place of N) for \(0 < \delta \le \epsilon _K := 1 + \eta \) and \(\eta _K = \eta ^n (1+ \eta )^{-Nn}\). \(\square \)

6 Conclusions

We have shown a convergence rate in \(O(\log ^2 r/ r^2)\) for the approximations \(\smash {f_\mathrm{pfm}^{(r)}}\) of the minimum of a polynomial f over a compact connected set K satisfying the minor geometric assumption (9). Furthermore, we have shown that this analysis is near-optimal, in the sense that the asymptotic behaviour of the error range \(\smash {f_\mathrm{pfm}^{(r)}}-f_{\min }\) is in \(O(\log ^2 r/r^2)\) in general and in \( \varOmega (1/r^2)\) for an infinite class of polynomials.

This latter result shows that although the worst-case guarantees on the convergence of the bounds \(f^{(r)}\) and \(\smash {f_\mathrm{pfm}^{(r)}}\) are very similar, a large separation may exist for certain polynomials (e.g., when \(f(x) = x^{2k}\)). Of course, it should be noted that the parameter \(\smash {f_\mathrm{pfm}^{(r)}}\) can be obtained via a much smaller eigenvalue computation than the parameter \(f^{(r)}\), namely by computing the smallest eigenvalue of a matrix of size \(r+1\) for the former in comparison to a matrix of size \(\binom{n+r}{r}\) for the latter.

From a computational point of view, one should also observe that while the computation of \(\smash {f_\mathrm{pfm}^{(r)}}\) involves a smaller matrix, it requires knowing the moments \(\int _K f^k d\lambda \) of the powers of f for \(k\le 2r+1\). If f has many terms, this computation can be demanding for large values of r. This has to be taken into account when comparing the computational burden of the bounds \(f^{(r)}\) and \(\smash {f_\mathrm{pfm}^{(r)}}\).
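To give an idea of this moment computation, the Python sketch below expands the powers \(f^k\) naively and integrates them monomial by monomial over the box \(K=[-1,1]^n\), in exact rational arithmetic. It is only an illustration under these assumptions: a box domain, a dictionary encoding of f, and hypothetical function names. For f of degree d, the number of terms of \(f^k\) can be as large as \(\binom{n+kd}{n}\), which is where the cost comes from.

```python
from collections import defaultdict
from fractions import Fraction


def poly_mul(p, q):
    """Product of two polynomials stored as {exponent tuple: coefficient}."""
    out = defaultdict(Fraction)
    for a, c in p.items():
        for b, d in q.items():
            out[tuple(x + y for x, y in zip(a, b))] += c * d
    return {e: c for e, c in out.items() if c != 0}   # drop cancelled terms


def box_integral(p):
    """Exact integral of p over [-1, 1]^n with respect to the Lebesgue measure."""
    total = Fraction(0)
    for alpha, c in p.items():
        term = c
        for a in alpha:
            term *= 0 if a % 2 else Fraction(2, a + 1)
        total += term
    return total


def power_moments(f, n, kmax):
    """Moments int_K f^k dlambda for k = 0..kmax, plus the number of terms of each f^k."""
    power = {(0,) * n: Fraction(1)}                   # f^0 = 1
    moments, sizes = [], []
    for _ in range(kmax + 1):
        moments.append(box_integral(power))
        sizes.append(len(power))
        power = poly_mul(power, f)
    return moments, sizes


# f(x) = x_1 + x_2 on [-1, 1]^2
f = {(1, 0): Fraction(1), (0, 1): Fraction(1)}
moments, sizes = power_moments(f, n=2, kmax=4)
print(moments)   # 4, 0, 8/3, 0, 64/15 (as Fraction objects)
print(sizes)     # [1, 2, 3, 4, 5]
```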

Lastly, as a surprising consequence of Theorem 1, we are able to extend the bound in \(O(\log ^2 r / r^2)\) on the convergence rate of \(f^{(r)}\) to all compact connected sets K satisfying the geometric condition (9), whereas it was previously only known for convex bodies [23]. In this sense, the arguments of Sect. 2 can be seen as a refinement (and simplification) of the ones given in [23].

As said above, the analysis in this paper is near-optimal: we can show an upper bound in \(O(\log ^2 r/r^2)\) and a lower bound in \(\varOmega (1/r^2)\) for a certain class of polynomials. Deciding what is the right regime and whether the \(\log \)-factor can be avoided in the convergence analysis is the main research question left open by this work.

The \(\log \)-factor arises from our analysis technique, which is based on polynomial approximation by the needle polynomials. We had to use this analysis technique since the behaviour of the orthogonal polynomials for the push-forward measure \(\lambda _f\) is not known for general f. On the other hand, our results may be interpreted as giving back some information for general push-forward measures \(\lambda _f\) and their corresponding orthogonal polynomials \(p_{f,i}\) on the interval \([f_{\min },f_{\max }]\). Indeed, our results imply that for any polynomial f and any compact connected K satisfying (9), the smallest root of \(p_{f,r+1}\) is asymptotically in \(f_{\min }+O(\log ^2 r/r^2)\).

Notes

In fact, the result is shown for subanalytic sets, of which semialgebraic sets are a special case.

References

de Klerk, E., Laurent, M., Parrilo, P.: A PTAS for the minimization of polynomials of fixed degree over the simplex. Theor. Comput. Sci. 361(2–3), 210–225 (2006)

de Klerk, E., Laurent, M.: Error bounds for some semidefinite programming approaches to polynomial minimization on the hypercube. SIAM J. Optim. 20(6), 3104–3120 (2010)

de Klerk, E., Laurent, M., Sun, Z.: Convergence analysis for Lasserre’s measure-based hierarchy of upper bounds for polynomial optimization. Math. Program. Ser. A 162(1), 363–392 (2017)

de Klerk, E., Laurent, M.: Comparison of Lasserre’s measure-based bounds for polynomial optimization to bounds obtained by simulated annealing. Math. Oper. Res. 43, 1317–1325 (2018)

de Klerk, E., Laurent, M.: Worst-case examples for Lasserre’s measure-based hierarchy for polynomial optimization on the hypercube. Math. Oper. Res. 45(1), 86–98 (2020)

de Klerk, E., Laurent, M.: Convergence analysis of a Lasserre hierarchy of upper bounds for polynomial minimization on the sphere. Math. Program. (2020). https://doi.org/10.1007/s10107-019-01465-1

de Klerk, E., Laurent, M.: A survey of semidefinite programming approaches to the generalized problem of moments and their error analysis. In: Araujo, C., Benkart, G., Praeger, C., Tanbay, B. (eds.) World Women in Mathematics 2018. Association for Women in Mathematics Series, vol. 20. Springer, Cham (2019)

Dimitrov, D.K., Nikolov, G.P.: Sharp bounds for the extreme zeros of classical orthogonal polynomials. J. Approx. Theory 162, 1793–1804 (2010)

Doherty, A.C., Wehner, S.: Convergence of SDP hierarchies for polynomial optimization on the hypersphere (2013). arXiv:1210.5048v2

Driver, K., Jordaan, K.: Bounds for extreme zeros of some classical orthogonal polynomials. J. Approx. Theory 164, 1200–1204 (2012)

Fang, K., Fawzi, H.: The sum-of-squares hierarchy on the sphere and applications in quantum information theory. Math. Program. (2020). https://doi.org/10.1007/s10107-020-01537-7

Kroó, A.: Multivariate needle polynomials with application to norming sets and cubature formulas. Acta Math. Hung. 147(1), 46–72 (2015)

Lasserre, J.B.: Global optimization with polynomials and the problem of moments. SIAM J. Optim. 11, 796–817 (2001)

Lasserre, J.B.: Moments, Positive Polynomials and Their Applications. Imperial College Press, London (2009)

Lasserre, J.-B.: A new look at nonnegativity on closed sets and polynomial optimization. SIAM J. Optim. 21(3), 864–885 (2011)

Lasserre, J.-B.: An Introduction to Polynomial and Semi-algebraic Optimization (Cambridge Texts in Applied Mathematics). Cambridge University Press, Cambridge (2015)

Lasserre, J.B.: Connecting optimization with spectral analysis of tri-diagonal Hankel matrices (2019). arXiv:1907.09784

Nie, J., Schweighofer, M.: On the complexity of Putinar’s Positivstellensatz. J. Complex. 23, 135–150 (2007)

Pawłucki, W., Pleśniak, W.: Markov’s inequality and C\(^\infty \) functions on sets with polynomial cusps. Math. Ann. 275, 467–480 (1986)

Putinar, M.: Positive polynomials on compact semi-algebraic sets. Indiana Univ. Math. J. 42, 969–984 (1993)

Schmüdgen, K.: The \(K\)-moment problem for compact semi-algebraic sets. Math. Ann. 289, 203–206 (1991)

Schweighofer, M.: On the complexity of Schmüdgen’s Positivstellensatz. J. Complex. 20(4), 529–543 (2004)

Slot, L., Laurent, M.: Improved convergence analysis of Lasserre’s measure-based upper bounds for polynomial minimization on compact sets. Math. Program. (2020). https://doi.org/10.1007/s10107-020-01468-3

Szegö, G.: Orthogonal Polynomials. American Mathematical Society Colloquium Publications, Providence (1975)

Acknowledgements

We wish to thank Edouard Pauwels for bringing to our attention the needle polynomials, as well as the anonymous referees for their helpful suggestions.


This work is supported by the European Union’s EU Framework Programme for Research and Innovation Horizon 2020 under the Marie Skłodowska-Curie Actions Grant Agreement No 764759 (MINOA).

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Slot, L., Laurent, M. Near-optimal analysis of Lasserre’s univariate measure-based bounds for multivariate polynomial optimization. Math. Program. 188, 443–460 (2021). https://doi.org/10.1007/s10107-020-01586-y

DOI: https://doi.org/10.1007/s10107-020-01586-y

Keywords

- Polynomial optimization

- Sum-of-squares polynomial

- Lasserre hierarchy

- Push-forward measure

- Semidefinite programming

- Needle polynomial