Abstract

We develop an algorithm for minimax problems that arise in robust optimization in the absence of objective function derivatives. The algorithm utilizes an extension of methods for inexact outer approximation in sampling a potentially infinite-cardinality uncertainty set. Clarke stationarity of the algorithm output is established alongside desirable features of the model-based trust-region subproblems encountered. We demonstrate the practical benefits of the algorithm on a new class of test problems.

Notes

Our selection of such an approach is informed by a recent study [5] highlighting merits of cutting-plane methods in various robust optimization settings.

We remark that in [23], a variant of Algorithm 1 is proposed in which points of \(\mathfrak {U}^{k}\) added in earlier iterations may eventually be removed from \(\mathfrak {U}^{k}\), and the same convergence results that apply to Algorithm 1 are proven. Although such a constraint-dropping scheme may have a practical benefit, it is easier to analyze Algorithm 1 as stated. We have also chosen to implement our novel method without a constraint-dropping scheme, but this is a potential topic of future work.

We note that the proposed algorithm and its analysis could also employ inexact gradient values, provided that these gradients satisfy the approximation condition specified in Assumption 3.

References

Ben-Tal, A., den Hertog, D., Vial, J.P.: Deriving robust counterparts of nonlinear uncertain inequalities. Math. Program. 149(1), 265–299 (2015). https://doi.org/10.1007/s10107-014-0750-8

Ben-Tal, A., El Ghaoui, L., Nemirovski, A.: Robust Optimization. Princeton University Press, Princeton (2009)

Ben-Tal, A., Hazan, E., Koren, T., Mannor, S.: Oracle-based robust optimization via online learning. Oper. Res. 63(3), 628–638 (2015). https://doi.org/10.1287/opre.2015.1374

Bertsimas, D., Brown, D., Caramanis, C.: Theory and applications of robust optimization. SIAM Rev. 53(3), 464–501 (2011). https://doi.org/10.1137/080734510

Bertsimas, D., Dunning, I., Lubin, M.: Reformulation versus cutting-planes for robust optimization. Comput. Manag. Sci. 13(2), 195–217 (2016). https://doi.org/10.1007/s10287-015-0236-z

Bertsimas, D., Nohadani, O.: Robust optimization with simulated annealing. J. Glob. Optim. 48(2), 323–334 (2010). https://doi.org/10.1007/s10898-009-9496-x

Bertsimas, D., Nohadani, O., Teo, K.M.: Robust optimization in electromagnetic scattering problems. J. Appl. Phys. 101(7), 074507 (2007)

Bertsimas, D., Nohadani, O., Teo, K.M.: Robust optimization for unconstrained simulation-based problems. Oper. Res. 58(1), 161–178 (2010). https://doi.org/10.1287/opre.1090.0715

Bigdeli, K., Hare, W.L., Tesfamariam, S.: Configuration optimization of dampers for adjacent buildings under seismic excitations. Eng. Optim. 44(12), 1491–1509 (2012). https://doi.org/10.1080/0305215x.2012.654788

Calafiore, G., Campi, M.: Uncertain convex programs: randomized solutions and confidence levels. Math. Program. 102(1), 25–46 (2005). https://doi.org/10.1007/s10107-003-0499-y

Cheney, E.W., Goldstein, A.A.: Newton’s method for convex programming and Tchebycheff approximation. Numer. Math. 1, 253–268 (1959). https://doi.org/10.1007/bf01386389

Ciccazzo, A., Latorre, V., Liuzzi, G., Lucidi, S., Rinaldi, F.: Derivative-free robust optimization for circuit design. J. Optim. Theory Appl. 164(3), 842–861 (2015). https://doi.org/10.1007/s10957-013-0441-2

Conn, A.R., Gould, N.I.M., Toint, P.L.: Trust-Region Methods. Society for Industrial and Applied Mathematics (2000)

Conn, A.R., Scheinberg, K., Vicente, L.N.: Introduction to Derivative-Free Optimization. Society for Industrial and Applied Mathematics (2009)

Conn, A.R., Vicente, L.N.: Bilevel derivative-free optimization and its application to robust optimization. Optim. Methods Softw. 27(3), 561–577 (2012). https://doi.org/10.1080/10556788.2010.547579

Curtis, F.E., Que, X.: An adaptive gradient sampling algorithm for non-smooth optimization. Optim. Methods Softw. 28(6), 1302–1324 (2013). https://doi.org/10.1080/10556788.2012.714781

Diehl, M., Bock, H.G., Kostina, E.: An approximation technique for robust nonlinear optimization. Math. Program. 107(1–2), 213–230 (2006). https://doi.org/10.1007/s10107-005-0685-1

Duran, M.A., Grossmann, I.E.: An outer-approximation algorithm for a class of mixed-integer nonlinear programs. Math. Program. 36(3), 307–339 (1986). https://doi.org/10.1007/BF02592064

Fiege, S., Walther, A., Kulshreshtha, K., Griewank, A.: Algorithmic differentiation for piecewise smooth functions: a case study for robust optimization. Optim. Methods Softw. pp. 1–16 (2018). https://doi.org/10.1080/10556788.2017.1333613

Fletcher, R., Leyffer, S.: Solving mixed integer nonlinear programs by outer approximation. Math. Program. 66(1), 327–349 (1994). https://doi.org/10.1007/BF01581153

Garmanjani, R., Júdice, D., Vicente, L.N.: Trust-region methods without using derivatives: worst case complexity and the nonsmooth case. SIAM J. Optim. 26(4), 1987–2011 (2016). https://doi.org/10.1137/151005683

Goldfarb, D., Iyengar, G.: Robust convex quadratically constrained programs. Math. Program. 97(3), 495–515 (2003). https://doi.org/10.1007/s10107-003-0425-3

Gonzaga, C., Polak, E.: On constraint dropping schemes and optimality functions for a class of outer approximations algorithms. SIAM J. Control Optim. 17(4), 477–493 (1979). https://doi.org/10.1137/0317034

Grapiglia, G.N., Yuan, J., Yuan, Y.X.: A derivative-free trust-region algorithm for composite nonsmooth optimization. Comput. Appl. Math. 35(2), 475–499 (2016). https://doi.org/10.1007/s40314-014-0201-4

Griewank, A., Walther, A.: Evaluating Derivatives: Principles and Techniques of Algorithmic Differentiation. SIAM, Philadelphia (2008)

Hare, W.: Compositions of convex functions and fully linear models. Optim. Lett. 11(7), 1217–1227 (2017). https://doi.org/10.1007/s11590-017-1117-x

Hare, W., Nutini, J.: A derivative-free approximate gradient sampling algorithm for finite minimax problems. Comput. Optim. Appl. 56(1), 1–38 (2013). https://doi.org/10.1007/s10589-013-9547-6

Hare, W., Sagastizábal, C.: Computing proximal points of nonconvex functions. Math. Program. 116(1), 221–258 (2009). https://doi.org/10.1007/s10107-007-0124-6

Hare, W., Sagastizábal, C.: A redistributed proximal bundle method for nonconvex optimization. SIAM J. Optim. 20(5), 2442–2473 (2010). https://doi.org/10.1137/090754595

Hettich, R., Kortanek, K.O.: Semi-infinite programming: theory, methods, and applications. SIAM Rev. 35(3), 380–429 (1993). https://doi.org/10.1137/1035089

Kelley Jr., J.E.: The cutting-plane method for solving convex programs. J. Soc. Ind. Appl. Math. 8(4), 703–712 (1960). https://doi.org/10.1137/0108053

Khan, K., Larson, J., Wild, S.M.: Manifold sampling for optimization of nonconvex functions that are piecewise linear compositions of smooth components. Preprint ANL/MCS-P8001-0817, Argonne National Laboratory, MCS Division (2017). http://www.mcs.anl.gov/papers/P8001-0817.pdf

Kiwiel, K.: An ellipsoid trust region bundle method for nonsmooth convex minimization. SIAM J. Control Optim. 27(4), 737–757 (1989). https://doi.org/10.1137/0327039

Larson, J., Menickelly, M., Wild, S.M.: Manifold sampling for L1 nonconvex optimization. SIAM J. Optim. 26(4), 2540–2563 (2016). https://doi.org/10.1137/15M1042097

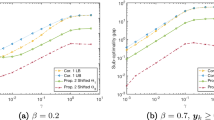

Moré, J.J., Wild, S.M.: Benchmarking derivative-free optimization algorithms. SIAM J. Optim. 20(1), 172–191 (2009). https://doi.org/10.1137/080724083

Polak, E.: Optimization. Springer, New York (1997). https://doi.org/10.1007/978-1-4612-0663-7

Postek, K., den Hertog, D., Melenberg, B.: Computationally tractable counterparts of distributionally robust constraints on risk measures. SIAM Rev. 58(4), 603–650 (2016). https://doi.org/10.1137/151005221

Wild, S.M., Regis, R.G., Shoemaker, C.A.: ORBIT: optimization by radial basis function interpolation in trust-regions. SIAM J. Sci. Comput. 30(6), 3197–3219 (2008). https://doi.org/10.1137/070691814

Wild, S.M., Shoemaker, C.A.: Global convergence of radial basis function trust-region algorithms for derivative-free optimization. SIAM Rev. 55(2), 349–371 (2013). https://doi.org/10.1137/120902434

Acknowledgements

This material was based upon work supported by the U.S. Department of Energy, Office of Science, Office of Advanced Scientific Computing Research, applied mathematics program under Contract No. DE-AC02-06CH11357.

Additional information

The submitted manuscript has been created by UChicago Argonne, LLC, Operator of Argonne National Laboratory (“Argonne”). Argonne, a U.S. Department of Energy Office of Science laboratory, is operated under Contract No. DE-AC02-06CH11357. The U.S. Government retains for itself, and others acting on its behalf, a paid-up nonexclusive, irrevocable worldwide license in said article to reproduce, prepare derivative works, distribute copies to the public, and perform publicly and display publicly, by or on behalf of the Government. The Department of Energy will provide public access to these results of federally sponsored research in accordance with the DOE Public Access Plan. http://energy.gov/downloads/doe-public-access-plan.

Appendices

Appendix A: Optimality measure properties

Here we collect proofs for Section 2.

A.1 Proof of Proposition 2

For fixed \(\hat{x}\in \mathbb {R}^n\), we have that \(\theta (\hat{x},h)\) from (3) can be written as

which is a maximization of a linear function over

Thus, its optimal value is equal to the optimal value of

since an extreme point of \(\mathcal {D}_{f,\mathcal {U}}(\hat{x})\), which is necessarily in \(\mathcal {E}(\hat{x})\) by definition of the convex hull, is an optimal solution of (30). Thus, we have established that

Letting \(b(h,(\xi _0,\xi )) \triangleq -\xi _0 + \langle \xi ,h\rangle + \displaystyle \frac{1}{2}\Vert h\Vert ^2\), the function involved in the minimax expression of (31), we note that

\(b(h,(\xi _0,\xi ))\) is continuous on \(\mathbb {R}^{n}\times \mathbb {R}^{n+1}\);

\(b(h,(\hat{\xi }_0,\hat{\xi }))\) is strictly convex in h for any \((\hat{\xi }_0,\hat{\xi })\in \mathcal {D}_{f,\mathcal {U}}(\hat{x})\);

\(b(\hat{h},(\xi _0,\xi ))\) is concave in \((\xi _0,\xi )\) for any \(\hat{h}\in \mathbb {R}^n\);

\(\mathcal {D}_{f,\mathcal {U}}(\hat{x})\) is, by definition, a convex set; and

\(b(h,(\xi _0,\xi ))\rightarrow \infty \) as \(\Vert h\Vert \rightarrow \infty \) uniformly in \((\xi _0,\xi )\in \mathcal {D}_{f,\mathcal {U}}(\hat{x})\).

Thus, the conditions of von Neumann’s theorem apply, and so we conclude that (31) is equivalent to

Now, for a fixed \((\hat{\xi }_0,\hat{\xi })\in \mathcal {D}_{f,\mathcal {U}}(\hat{x})\), the solution to the unconstrained convex inner minimization problem of (32) satisfies (by sufficient and necessary first-order conditions) \(h = -\hat{\xi }\). Thus, the inner minimization in (32) can be replaced with \(-\hat{\xi }_0 - \displaystyle \frac{\Vert \hat{\xi }\Vert ^2}{2}\), yielding the desired result

\(\square \)
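For reference, the final step of the proof can be written out explicitly. The display below is a reconstruction from the definition of \(b\) given above and from the concave quadratic \(q\) used in the proof of Proposition 4; it is a sketch of the calculation, not a reproduction of the paper's numbered equations.

```latex
\begin{aligned}
\nabla_h\, b\bigl(h,(\hat{\xi}_0,\hat{\xi})\bigr) &= \hat{\xi} + h = \mathbf{0}
  \quad\Longrightarrow\quad h = -\hat{\xi}, \\
\min_{h\in\mathbb{R}^n} b\bigl(h,(\hat{\xi}_0,\hat{\xi})\bigr)
  &= -\hat{\xi}_0 - \|\hat{\xi}\|^2 + \tfrac{1}{2}\|\hat{\xi}\|^2
   = -\hat{\xi}_0 - \tfrac{1}{2}\|\hat{\xi}\|^2, \\
\varTheta(\hat{x})
  &= \max_{(\xi_0,\xi)\in\mathcal{D}_{f,\mathcal{U}}(\hat{x})}
     \Bigl\{ -\xi_0 - \tfrac{1}{2}\|\xi\|^2 \Bigr\}.
\end{aligned}
```

The first line is the first-order condition for the strictly convex function \(b(\cdot ,(\hat{\xi }_0,\hat{\xi }))\), the second substitutes \(h=-\hat{\xi }\), and the third takes the outer maximization over \(\mathcal {D}_{f,\mathcal {U}}(\hat{x})\).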

A.2 Proof of Proposition 3

Clearly, \(\xi _0 = \varPsi (\hat{x}) - f(\hat{x},u) \ge 0\) for all \((\xi _0,\xi )\in \mathcal {D}_{f,\mathcal {U}}(\hat{x})\). Combined with the nonnegativity of norms, it follows immediately from the definition of \(\varTheta (\hat{x})\) in (5) that \(\varTheta (\hat{x})=0\) if and only if \(\mathbf {0}\in \mathcal {D}_{f,\mathcal {U}}(\hat{x})\). Thus, it suffices to show that \(\mathbf {0}\in \partial \varPsi (\hat{x})\) if and only if \(\mathbf {0}\in \mathcal {D}_{f,\mathcal {U}}(\hat{x})\).

Suppose that \(\mathbf {0}\in \partial \varPsi (\hat{x})\). Let \(u^*(\hat{x})\in \mathcal {U}^*(\hat{x})\), where we have defined

Then, for any such \(u^*(\hat{x})\), \(\varPsi (\hat{x}) - f(\hat{x},u^*(\hat{x}))=0\). It follows that the set

satisfies \(D^*(\hat{x})\subseteq \mathcal {E}(\hat{x})\subseteq \mathcal {D}_{f,\mathcal {U}}(\hat{x})\). Thus, \(\mathbf {0}\in \mathcal {D}_{f,\mathcal {U}}(\hat{x})\).

Now suppose that \(\mathbf {0}\in \mathcal {D}_{f,\mathcal {U}}(\hat{x})\). By Carathéodory’s theorem and the convex hull definition of \(\mathcal {D}_{f,\mathcal {U}}(\hat{x})\) in (4), there exist \(q\le n+2\); \(u^1,\dots ,u^q\in \mathcal {U}\); and weights \(\lambda \in \mathbb {R}^{q}_{+}\) with \(\lambda _1 + \dots + \lambda _q = 1\) such that

Clearly, \(\varPsi (\hat{x})-f(\hat{x},\hat{u}) = \displaystyle \max \nolimits _{u\in \mathcal {U}}f(\hat{x},u)-f(\hat{x},\hat{u})\ge 0\) for all \(\hat{u}\in \mathcal {U}\). Thus, projecting the convex combination (33) into its first coordinate, we must have that all q vectors satisfy \(\varPsi (\hat{x})-f(\hat{x},u^j)=0\); that is,

Likewise, projecting (33) into its last n coordinates,

Together, (34) and (35) imply that \(\mathbf {0}\in \partial \varPsi (\hat{x})\). \(\square \)
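The projection argument used in the second half of the proof can be spelled out as follows. This is a hedged reconstruction: the shorthand \(a_j\) is introduced here for illustration, and the last implication relies on the characterization of \(\partial \varPsi (\hat{x})\) as the convex hull of gradients at maximizers, which we assume matches the paper's setting.

```latex
% a_j is illustrative shorthand, not notation from the paper.
a_j \triangleq \varPsi(\hat{x}) - f(\hat{x},u^j) \ge 0, \qquad
\sum_{j=1}^{q} \lambda_j a_j = 0
\;\Longrightarrow\; \lambda_j a_j = 0 \ \text{for each } j
\;\Longrightarrow\; a_j = 0 \ \text{whenever } \lambda_j > 0 .
% Indices with \lambda_j = 0 contribute nothing and can be dropped,
% so every remaining u^j is a maximizer, i.e. u^j \in \mathcal{U}^*(\hat{x}).
\sum_{j=1}^{q} \lambda_j \nabla_x f(\hat{x},u^j) = \mathbf{0},
\qquad u^j \in \mathcal{U}^*(\hat{x})
\;\Longrightarrow\;
\mathbf{0} \in \operatorname{co}\bigl\{ \nabla_x f(\hat{x},u) : u \in \mathcal{U}^*(\hat{x}) \bigr\}.
```

A sum of nonnegative terms vanishes only if every term vanishes, which is what forces each active \(u^j\) to attain the maximum defining \(\varPsi (\hat{x})\).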

A.3 Proof of Proposition 4

For completeness, we state the definition of a continuous set-valued mapping.

Definition 1

Consider a sequence of sets \(\{S_j\}_{j=0}^\infty \subset \mathbb {R}^{n}\).

1. The point \(x^*\in \mathbb {R}^n\) is a limit point of \(\{S_j\}\) provided \(dist(x^*,S_j)\rightarrow 0\).

2. The point \(x^*\in \mathbb {R}^n\) is a cluster point of \(\{S_j\}\) if there exists a subsequence \(\mathcal {K}\) such that \(dist(x^*,S_j)\rightarrow _\mathcal {K}0\).

3. We denote the set of limit points of \(\{S_j\}\) by \(\liminf S_j\) and refer to it as the inner limit.

4. We denote the set of cluster points of \(\{S_j\}\) by \(\limsup S_j\) and refer to it as the outer limit.
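The difference between limit points and cluster points in Definition 1 can be seen in a small numerical sketch. The sequence of singleton sets `S(j)` and the helper `dist` below are hypothetical choices made only for illustration; they do not come from the paper.

```python
import numpy as np

def dist(x, S):
    """Euclidean distance from the point x to a finite set S (one point per row)."""
    S = np.atleast_2d(S)
    return float(np.min(np.linalg.norm(S - x, axis=1)))

def S(j):
    """Hypothetical singleton set S_j = {((-1)^j, 1/(j+1))} in R^2."""
    return np.array([[(-1.0) ** j, 1.0 / (j + 1)]])

x_star = np.array([1.0, 0.0])

# Distances along the full sequence and along the even-index subsequence.
d_all = [dist(x_star, S(j)) for j in range(200)]
d_even = d_all[0::2]
d_odd = d_all[1::2]

# Along even indices the distance is 1/(j+1), which tends to zero, so x_star
# is a cluster point. Along odd indices the distance stays at least 2, so
# dist(x_star, S_j) does not tend to zero and x_star is not a limit point.
print(d_even[-1], min(d_odd))
```

In the terminology of Definition 1, `x_star` belongs to the outer limit of this sequence but not to its inner limit.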

Definition 2

We say that a set-valued mapping \(\varGamma : \mathbb {R}^{n}\rightarrow 2^{\mathbb {R}^{m}}\) is

1. outer semicontinuous (o.s.c.) at \(\hat{x}\) provided for all sequences \(\{x^j\}\rightarrow \hat{x}\), \(\displaystyle \limsup \varGamma (x^j) \subseteq \varGamma (\hat{x})\),

2. inner semicontinuous (i.s.c.) at \(\hat{x}\) provided for all sequences \(\{x^j\}\rightarrow \hat{x}\), \(\displaystyle \liminf \varGamma (x^j) \supseteq \varGamma (\hat{x})\), and

3. continuous at \(\hat{x}\) provided \(\varGamma \) is o.s.c. and i.s.c. at \(\hat{x}\).

Without proof, we state Corollary 5.3.9 from [36].

Proposition 7

Suppose that \(g:\mathbb {R}^{n}\times \mathbb {R}^{m}\rightarrow \mathbb {R}^{p}\) is continuous and that \(\varGamma : \mathbb {R}^{n}\rightarrow 2^{\mathbb {R}^{m}}\) is a continuous set-valued mapping. Then, the set-valued mapping \(G: \mathbb {R}^{n}\rightarrow 2^{\mathbb {R}^{p}}\) defined by

is continuous.

By using Proposition 7, we get the following intermediate result needed to prove continuity of \(\varTheta \).

Proposition 8

Let Assumption 1 hold; then, the set-valued mapping \(\mathcal {D}_{f,\mathcal {U}}(\cdot ):\mathbb {R}^n\rightarrow 2^{\mathbb {R}^{n+1}}\) is continuous.

Proof

We look to (36) in Proposition 7 as a template. In the definition of \(\mathcal {D}_{f,\mathcal {U}}(\cdot )\), \(\varGamma (x) = \mathcal {U}\) for all \(x\in \mathbb {R}^n\), and as such, \(\mathcal {U}\) is trivially a continuous set-valued mapping. We have only to show that \(D:\mathbb {R}^{n}\times \mathbb {R}^m\rightarrow \mathbb {R}^{n+1}\) defined by

is continuous. Continuity follows since, by Assumption 1, \(\varPsi (x)-f(x,u)\) is a continuous function on \(\mathbb {R}^n\times \mathcal {U}\), and \(\nabla _x f(x,u):\mathbb {R}^{n}\times \mathcal {U}\rightarrow \mathbb {R}^n\) is a Lipschitz continuous function on \(\mathbb {R}^n\times \mathcal {U}\). \(\square \)

We can now prove Proposition 4:

Proof

Consider the equivalent form of \(\varTheta \) from Proposition 2 in (5),

where we have defined the concave quadratic \(q(\xi _0,\xi )\triangleq -\xi _0 - \displaystyle \frac{1}{2}\Vert \xi \Vert ^2.\)

Let \(\hat{x}\) be arbitrary, and let \(\{x^j\}_{j=0}^\infty \) be an arbitrary sequence satisfying \(x^j\rightarrow \hat{x}\). For \(j=0,1,\dots \), let \((\xi ^j_0,\xi ^j)\) be any \((\xi ^j_0,\xi ^j)\in \mathcal {D}_{f,\mathcal {U}}(x^j)\) such that \(\varTheta (x^j) = -\xi _0^j - \displaystyle \frac{1}{2}\Vert \xi ^j\Vert ^2.\)

The sequence \(\{x^j\}_{j=0}^\infty \) is bounded (it is convergent by assumption); we can also show that despite the arbitrary selection, there exists \(M\ge 0\) such that \(\Vert (\xi ^j_0,\xi ^j)\Vert \le M\) uniformly for \(j=0,1,\dots \). To see this, suppose instead that \(\Vert (\xi ^j_0,\xi ^j)\Vert \rightarrow \infty \). Since \(\mathcal {D}_{f,\mathcal {U}}(\hat{x})\) is a compact set, there exists \(M\ge 0\) such that \(\displaystyle \max \nolimits _{(\xi _0,\xi )\in \mathcal {D}_{f,\mathcal {U}}(\hat{x})} \Vert (\xi _0,\xi )\Vert = M\). By our contradiction hypothesis, there exists \(\underline{j}\) sufficiently large so that \(\Vert (\xi ^j_0,\xi ^j)\Vert >2M\) for all \(j\ge \underline{j}\). From Proposition 8, \(\mathcal {D}_{f,\mathcal {U}}(\cdot )\) is a continuous set-valued mapping. Thus, for any \(\epsilon >0\), there exists \(\underline{j}(\epsilon )\ge \underline{j}\) sufficiently large so that \(\mathbf {dist} \left( (\xi ^j_0,\xi ^j), \mathcal {D}_{f,\mathcal {U}}(\hat{x})\right) < \epsilon \) for all \(j > \underline{j}(\epsilon )\); this means that \(\Vert (\xi ^j_0,\xi ^j)\Vert \le M + \epsilon \). This is impossible for all \(\epsilon \in (0,M]\), yielding a contradiction.

Thus, since \(\Vert (\xi ^j_0,\xi ^j)\Vert \le M\) for \(j=0,1,\dots \) and because \(q(\cdot )\) is a continuous function of \((\xi _0,\xi )\), \(\displaystyle \limsup \nolimits _{j\rightarrow \infty } q(\xi ^j_0,\xi ^j)\) exists by the Bolzano–Weierstrass theorem. Let \(\mathcal {K}\) denote a subsequence witnessing

and let \((\hat{\xi }_0,\hat{\xi }) = \displaystyle \lim \nolimits _{j\in \mathcal {K}} (\xi ^j_0,\xi ^j)\) denote the corresponding accumulation point. Again using the fact that \(\mathcal {D}_{f,\mathcal {U}}(\cdot )\) is o.s.c., we conclude that \((\hat{\xi }_0,\hat{\xi })\in \mathcal {D}_{f,\mathcal {U}}(\hat{x})\). Using the definition of \(\varTheta (\hat{x})\), we have

As written, (37) means that \(\varTheta (\cdot )\) is upper semicontinuous. We now demonstrate that \(\varTheta (\cdot )\) is also lower semicontinuous, which will complete the proof of the continuity of \(\varTheta (\cdot )\). To establish a contradiction, we suppose that there exist \(\hat{x}\in \mathbb {R}^n\) and a sequence \(\{x^j\}_{j=0}^\infty \) satisfying \(x^j\rightarrow \hat{x}\) such that \(\varTheta (x^j)\) exists for all j and

Let \((\hat{\xi }_0,\hat{\xi })\in \mathcal {D}_{f,\mathcal {U}}(\hat{x})\) satisfy \(\varTheta (\hat{x})=q(\hat{\xi }_0,\hat{\xi })\). Since \(\mathcal {D}_{f,\mathcal {U}}(\cdot )\) is a continuous set-valued mapping by Proposition 8, there exists a \((\xi ^j_0,\xi ^j)\in \mathcal {D}_{f,\mathcal {U}}(x^j)\) satisfying \(\varTheta (x^j)=q(\xi ^j_0,\xi ^j)\) for all \(j=0,1,\dots \) such that \((\xi ^j_0,\xi ^j)\rightarrow (\hat{\xi }_0,\hat{\xi })\). Since \(q(\cdot )\) is a continuous function in \((\xi _0,\xi )\), \(\displaystyle \lim \nolimits _{j\rightarrow \infty } q(\xi ^j_0,\xi ^j) = q(\hat{\xi }_0,\hat{\xi })\). Thus, by using the contradiction hypothesis (38), we have

the desired contradiction. \(\square \)

Appendix B: Convergence of inexact method of outer approximation

We now establish intermediate results needed to prove Theorem 1.

For brevity of notation, we use the following shorthand for the quadratic objective that appears in the definition of the optimality measure (7):

Consistent with our previous notation, we write q(x, h) in (39) in the case where \(\hat{\mathcal {U}}=\mathcal {U}\).

Lemma 6

Let \(\mathcal {S}\subset \mathbb {R}^{n}\) be a bounded subset. Suppose Assumptions 1 and 2 hold, and let \(L\in [0,\infty )\) be a Lipschitz constant valid for \(f(\cdot ,\cdot )\) and \(\nabla _x f(\cdot ,\cdot )\) on \(\mathcal {S}\times \mathcal {U}\). Then, there exists \(\kappa _1<\infty \) such that for all \(x\in \mathcal {S}\) and for all \(k=0,1,\dots \),

Moreover, for the \(\delta :\mathbb {N}\rightarrow \mathbb {R}\) from Assumption 2, there exists \(\kappa _2 \in (\kappa _1,\infty )\) such that

Proof

Since \(\varOmega ^k\subseteq \mathcal {U}\), we have that \(\varPsi _{\varOmega ^k}(\hat{x})\le \varPsi (\hat{x})\) for all \(\hat{x}\in \mathcal {S}\) and all \(k=0,1,\dots .\)

Fix \(\hat{x}\in \mathcal {S}\) and \(u^*(\hat{x})\in \mathcal {U}^*(\hat{x})\). Then, by definition of \(\varPsi \), \(\varPsi (\hat{x})=f(\hat{x},u^*(\hat{x})).\) By Assumption 2, for all k, there exist \([u^*(\hat{x})]'\in \varOmega ^k\) and \(\kappa _0>0\) such that \(\Vert u^*(\hat{x})-[u^*(\hat{x})]'\Vert \le \kappa _0\delta (k)\). Thus,

proving the first part of the lemma, with \(\kappa _1=L \kappa _0 \).

For the second part, let \(\hat{x}\in \mathcal {S}\) and \(\hat{h}\in \mathbb {R}^n\) be arbitrary. By the definition of q in (39),

for any \(k=0,1,\ldots \). Since \(\hat{h}\) was arbitrary, we can replace it with a minimizer of the convex \(q(\hat{x},\cdot )\); that is,

Observing that \(\varTheta \) in (2) and \(\varTheta _{\varOmega ^k}\) in (7) can be written, respectively, as

we conclude from (40) and (41) that

Denote the minimizer of \(\varTheta _{\varOmega ^k}(\hat{x})\) by

Then, from the dual characterization of \(\varTheta _{\varOmega ^k}(\hat{x})\) in Proposition 2, we have

By Assumption 1 and since we supposed \(\mathcal {S}\) and \(\varOmega ^k\) are bounded, \(\nabla _x f(\cdot ,u)\) is continuous over \(\mathcal {S}\) for each \(u\in \varOmega ^k\); furthermore, by (43), there exists \(M\in [0,\infty )\) such that \(\Vert h_k(x)\Vert \le M\) for all \(x\in \mathcal {S}\). Let \(u^*(\hat{x})\in \mathcal {U}\) be a maximizer in the definition of \(q\left( \hat{x},h_k(\hat{x})\right) \) in (39) such that

By Assumption 2, for all k, there exists \([u^*(\hat{x})]'\in \varOmega ^k\) such that \(\Vert u^*(\hat{x}) -[u^*(\hat{x})]'\Vert \le \kappa _0\delta (k)\). Combining that with the Lipschitz continuity of Assumption 1, we obtain both

and

Combining these Lipschitz bounds with (44), we obtain

Using the definition of \(\varTheta _{\varOmega ^k}(\hat{x})\), we can rewrite (45) as

Likewise, by using the fact that \(\varTheta (\hat{x}) = \displaystyle \min \nolimits _{h\in \mathbb {R}^n} q(\hat{x},h) - \varPsi (\hat{x}) \le q(\hat{x},h_k(\hat{x})) - \varPsi (\hat{x})\), (46) is equivalent to

Inserting the bound from (40) into (47), we obtain

Combining the bounds in (42) and (48), we have proved the second part of the lemma, with \(\kappa _2=(M+2)L \kappa _0 \), since \(\kappa _2 > \kappa _1 = L \kappa _0\). \(\square \)
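The first bound of Lemma 6 can be checked numerically on a toy instance. Everything below is an illustrative assumption rather than the paper's test problem: the function `f`, the uncertainty set \([0,1]\), the uniform grid playing the role of \(\varOmega ^k\), and the constant `L_u`, which bounds \(|\partial f/\partial u|\) on the region considered. With a uniform grid of `N` cells, every point of \([0,1]\) is within `0.5 / N` of a grid point, so `0.5 / N` plays the role of \(\kappa _0\delta (k)\).

```python
import numpy as np

def f(x, u):
    """Hypothetical smooth objective f(x, u) = x*u - u^2 (not from the paper)."""
    return x * u - u ** 2

def psi_exact(x):
    """Exact worst case over u in [0, 1]; the maximizer is x/2 clipped to [0, 1]."""
    u_star = np.clip(x / 2.0, 0.0, 1.0)
    return float(f(x, u_star))

def psi_sampled(x, omega):
    """Worst case over the finite sample omega of the uncertainty set."""
    return float(np.max(f(x, omega)))

def worst_gap(num_cells, xs):
    """Largest value of Psi(x) - Psi_Omega(x) over the test points xs."""
    omega = np.linspace(0.0, 1.0, num_cells + 1)
    return max(psi_exact(x) - psi_sampled(x, omega) for x in xs)

xs = np.linspace(-1.0, 1.0, 201)
L_u = 3.0  # |df/du| = |x - 2u| <= 3 for x in [-1, 1] and u in [0, 1]

# Observed gap and the Lipschitz-type bound L_u * (covering radius) per grid size.
gaps = {N: worst_gap(N, xs) for N in (4, 16, 64)}
bounds = {N: L_u * 0.5 / N for N in gaps}
for N in gaps:
    print(N, gaps[N], bounds[N])
```

On this instance the observed gap is much smaller than the bound because `f` is smooth and concave in `u`; the lemma only guarantees the Lipschitz-rate bound.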

The next lemma demonstrates that, under our assumptions, the accumulation points \(x^*\) of a sequence \(\{x^k\}\) generated by Algorithm 1 satisfy (on the same subsequence \(\mathcal {K}\) defining the accumulation) \(\varPsi _{\mathfrak {U}^{k}}(x^k) \rightarrow _\mathcal {K}\varPsi (x^*)\).

Lemma 7

Suppose that Assumptions 1 and 2 hold and that both

1. \(\{x^k\}_{k=0}^\infty \subset \mathbb {R}^n\) and

2. \(\mathfrak {U}^{k}\subseteq \varOmega ^k\) are constructed recursively with \(\mathfrak {U}^{0} \ne \emptyset \), \(\mathfrak {U}^{0}\subseteq \mathcal {U}\), and \(\mathfrak {U}^{k+1} = \mathfrak {U}^{k}\cup \{u{'}\}\), where \(u{'}\in (\varOmega ^{k+1})^*(x^{k+1})\).

If \(x^*\) is an accumulation point of \(\{x^k\}_{k=0}^\infty \) (i.e., for some infinite subset \(\mathcal {K}\subset \mathbb {N}\), \(x^k\rightarrow _\mathcal {K}x^*\)), then \(\varPsi _{\mathfrak {U}^{k}}(x^k)\rightarrow _\mathcal {K}\varPsi (x^*)\).

Proof

For any \(k\in \{1,2,\dots \}\), let \(\underline{k} \triangleq \max \{k'\in \mathcal {K}: k' \le k\}\). Then, by our recursive construction, for any k, \(u^{\underline{k}}\in \mathfrak {U}^{k}\). Since \(\mathfrak {U}^{k}\subseteq \mathcal {U}\) for \(k=0,1,\dots \),

By the triangle inequality,

Because \(x^k\rightarrow _\mathcal {K}x^*\) and because \(\varPsi (\cdot )\) is a continuous function as a result of Assumption 1, the second summand in (50) satisfies \(|\varPsi (x^{\underline{k}}) - \varPsi (x^*)|\rightarrow 0\). By Lemma 6 and the continuity of \(\varPsi (\cdot )\), we also conclude that the first summand in (50) satisfies \(|\varPsi _{\varOmega ^{\underline{k}}} (x^{\underline{k}}) - \varPsi (x^{\underline{k}})|\rightarrow 0\). Thus,

Since from Assumption 1, \(f(\hat{x},u)\) is a uniformly continuous function in u over a compact set, and since \(\Vert x^k-x^{\underline{k}}\Vert \rightarrow 0\) (by accumulation), we have

By definition, \(\varPsi _{\varOmega ^{\underline{k}}}(x^{\underline{k}}) = f(x^{\underline{k}},u^{\underline{k}})\), and so the above can be written as

It follows immediately from (51) and (52) that \(f(x^k,u^{\underline{k}})\rightarrow \varPsi (x^*)\). So, by (49) and an application of the sandwich theorem, we conclude \(\varPsi _{\mathfrak {U}^{k}}(x^k)\rightarrow _\mathcal {K}\varPsi (x^*)\), as we intended to show. \(\square \)
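The recursive construction of \(\mathfrak {U}^{k}\) in Lemma 7 can be illustrated with a deliberately simplified outer-approximation loop. This is a sketch under strong assumptions and not Algorithm 1 itself: the objective `f`, the uncertainty set \([-1,1]\), and the dyadic grids standing in for \(\varOmega ^k\) are hypothetical, and the finite minimax subproblem is solved exactly in closed form, whereas Algorithm 1 only requires an approximate stationarity condition.

```python
import numpy as np

def f(x, u):
    """Hypothetical objective f(x, u) = (x - u)^2 with u in [-1, 1]."""
    return (x - u) ** 2

def solve_finite_minimax(U_k):
    """Exact minimizer of max_{u in U_k} (x - u)^2: the midpoint of the extremes."""
    return 0.5 * (min(U_k) + max(U_k))

def worst_case(x, omega):
    """A maximizer of f(x, .) over the finite sample omega (first one if tied)."""
    return float(omega[int(np.argmax(f(x, omega)))])

def outer_approximation(u0=0.3, iters=6):
    U_k = [u0]                       # nonempty initial subset of the uncertainty set
    history = []
    for k in range(iters):
        x_next = solve_finite_minimax(U_k)
        omega = np.linspace(-1.0, 1.0, 2 ** (k + 1) + 1)  # stands in for Omega^{k+1}
        u_new = worst_case(x_next, omega)
        psi_U = max(f(x_next, u) for u in U_k)            # worst case over U^k
        psi_omega = f(x_next, u_new)                      # worst case over the sample
        history.append((x_next, psi_U, psi_omega))
        if u_new not in U_k:                              # set union: U^{k+1} = U^k ∪ {u'}
            U_k.append(u_new)
    return history, U_k

history, U_final = outer_approximation()
for x_k, psi_U, psi_omega in history:
    print(x_k, psi_U, psi_omega)
```

For this toy problem the true worst-case function is \((|x|+1)^2\), minimized at \(x=0\) with value 1. The gap between the second and third columns closes once both endpoints of the uncertainty set have been added, which mirrors the convergence \(\varPsi _{\mathfrak {U}^{k}}(x^k)\rightarrow \varPsi (x^*)\) established in the lemma.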

By using Lemma 7, we can now give a proof of Theorem 1.

Proof of Theorem 1

Recalling the definition of \(q_{\hat{\mathcal {U}}}\) in (39), and since \(\mathfrak {U}^{k}\subseteq \mathcal {U}\) for \(k=0,1,\dots \), we have that for all k and for all \(\hat{h}\in \mathbb {R}^n\), \(q_{\mathfrak {U}^{k}}(x^k,\hat{h})\le q(x^k,\hat{h})\). Then, by the definition of \(\varTheta _{\mathfrak {U}^{k}}\) in (7), we have that

By using the criteria imposed on \(\varTheta _{\mathfrak {U}^{k}}(x^{k+1})\) in Line 5 of Algorithm 1 and (53), we have that for \(k=0,1,\dots \),

Let \(\mathcal {K}\) be a subsequence defining the accumulation \(\{x^k\}\rightarrow _\mathcal {K}x^*\). Taking the limit with respect to \(\mathcal {K}\) in (54), we obtain

By the continuity of \(\varTheta \) from Proposition 4, the result follows from the sandwich theorem. \(\square \)

Appendix C: Availability of a generalized Cauchy point

We refer the reader to [13, Chapter 12.2] for a detailed discussion of generalized Cauchy decrease in trust-region subproblems with convex (here, linear) constraints, but we provide some necessary details here, beginning with

Definition 3

Let \(p(r):\mathbb {R}\rightarrow \mathbb {R}^{n+1}\) denote the projection \(\mathcal {P}_{\mathcal {C}}([-r;\mathbf {0}])\), where

Use the notation \(p(r) = [p_z(r); p_d(r)]\) to indicate the separation of p(r) into the scalar z component and the n-dimensional d component. Then, the generalized Cauchy point for (P) is defined as \(p(r^*)\), where

The generalized Cauchy point is the global minimizer of the objective in (P) restricted to an arc described by the projected steepest descent direction at \((z,d) = (0,\mathbf {0})\). Algorithm 3 (see [13, Algorithm 12.2.2]) computes an approximate generalized Cauchy point for (P) via a Goldstein-type line search. The notation \(\mathcal {T}_{\mathcal {C}}(y)\) denotes the tangent cone to a convex set \(\mathcal {C}\) at a point y (and we remark that, given a linear polytope \(\mathcal {C}\), this set is easily computable).

We further remark that the computation of p(r) for a given r involves the solution of the convex quadratic program

Although we anticipate that Algorithm 3 has benefits in many real-world settings, here it is merely of theoretical convenience, and we do not use it in the implementation tested.

Appendix D: Global maximization of (28)

First, we remark that the objective function of (28) is separable with respect to the variables L and b. Thus, it is evident that the optimal value of b is given by

We now consider the optimal value of L. After deleting rows and columns of \(I_n \otimes xx^\top \) corresponding to the entries \(L_{ij}\) where \(L_{ij}=0\), we are left with a matrix of the form

where \(x_{\bar{i}}\) denotes the truncated vector \([x_1,\dots ,x_i]\). Exploiting this block structure, the maximization of the quadratic decomposes into n bound-constrained quadratic maximization problems of the form

for \(i=1,\dots ,n\). In turn, solving (55) is equivalent to solving the problem

which can be cast as a mixed-integer linear program with exactly one binary variable; that is, solving (56) to global optimality entails the solution of two linear programs with \(\mathcal {O}(i)\) variables and \(\mathcal {O}(i)\) constraints each. Thus, the total cost of solving (28) to global optimality through this reformulation is bounded by the cost of solving 2n linear programs, the largest of which has \(\mathcal {O}(n)\) variables and constraints, and the smallest of which has \(\mathcal {O}(1)\) variables and constraints.
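The "two linear programs" idea can be sketched on a generic instance. Since (55) and (56) are not reproduced here, the code assumes, purely for illustration, a subproblem of the form: maximize \((c^\top y)^2\) over a box. Maximizing the square is then equivalent to maximizing \(|c^\top y|\), which is handled by solving one LP that maximizes \(c^\top y\) and one that minimizes it. The names `box_lp` and `max_squared_linear` are hypothetical, and the box LPs are solved in closed form; a general polytope would need an LP solver instead.

```python
import itertools
import numpy as np

def box_lp(c, lo, hi, maximize=True):
    """Solve max (or min) of c^T y over the box lo <= y <= hi in closed form."""
    c, lo, hi = (np.asarray(v, dtype=float) for v in (c, lo, hi))
    if maximize:
        y = np.where(c >= 0, hi, lo)   # push each coordinate toward larger c_i * y_i
    else:
        y = np.where(c >= 0, lo, hi)   # push each coordinate toward smaller c_i * y_i
    return y, float(c @ y)

def max_squared_linear(c, lo, hi):
    """Globally maximize (c^T y)^2 over a box using two LPs."""
    y_max, v_max = box_lp(c, lo, hi, maximize=True)
    y_min, v_min = box_lp(c, lo, hi, maximize=False)
    if abs(v_max) >= abs(v_min):
        return y_max, v_max ** 2
    return y_min, v_min ** 2

# Small hypothetical instance, checked against enumeration of the box vertices.
c = [1.0, -2.0, 0.5]
lo = [-1.0, -1.0, 0.0]
hi = [2.0, 0.5, 1.0]
y_opt, val_opt = max_squared_linear(c, lo, hi)
brute = max(float(np.dot(c, v)) ** 2 for v in itertools.product(*zip(lo, hi)))
print(y_opt, val_opt, brute)
```

The vertex enumeration is valid as a check because a convex function attains its maximum over a box at a vertex; it costs \(2^n\) evaluations, whereas the two-LP route stays polynomial.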

Cite this article

Menickelly, M., Wild, S.M. Derivative-free robust optimization by outer approximations. Math. Program. 179, 157–193 (2020). https://doi.org/10.1007/s10107-018-1326-9

DOI: https://doi.org/10.1007/s10107-018-1326-9