Abstract

Classifying retinal diseases is challenging because the early lesions of retinal disorders are small and subtle. In recent years, Transformer architectures have been applied successfully to a variety of retina-related health problems. Age-related macular degeneration (AMD) and diabetic macular edema (DME), two prevalent retinal diseases, can cause partial or total blindness, so they require early and accurate detection. In this study, we apply the Vision Transformer (ViT), Tokens-To-Token Vision Transformer (T2T-ViT) and Mobile Vision Transformer (Mobile-ViT) to classify optical coherence tomography (OCT) images as choroidal neovascularization (CNV), drusen, DME or normal. ViT, T2T-ViT and Mobile-ViT achieved classification accuracies of 95.14%, 96.07% and 99.17%, respectively, on the dataset. Among the three approaches, Mobile-ViT gave the highest classification accuracy. Overall, the results indicate that ViT architectures can classify retinal diseases with high accuracy.

Introduction

Age-related macular degeneration (AMD) occurs in people aged 50 years and older and is one of the leading causes of central vision loss and permanent blindness worldwide [1]. AMD affects the central region of the retina, known as the macula, and is classified as dry or wet. Drusen are a typical sign of dry AMD. They are extracellular deposits that contain lipids and various proteins associated with inflammation; they lie between the retinal pigment epithelium (RPE) and Bruch's membrane and cause focal elevations in the RPE [2]. Choroidal neovascularization (CNV) characterizes wet AMD. CNV is defined as the growth of new blood vessels from the choroid into the sub-RPE space through a break in Bruch's membrane [3]. Diabetic macular edema (DME) results from deterioration of the neurovascular structure in people with diabetes. DME is one of the primary causes of vision loss in people aged 20–79 worldwide [4]. Early diagnosis and prompt treatment of these diseases are therefore very important in ophthalmology [5].

OCT is a biomedical imaging technique that exploits the coherence properties of light and supports the early diagnosis and treatment of retinal diseases such as AMD and DME. It is also critical because it serves as a preliminary step in diagnostic imaging [6]. OCT provides high-resolution, non-invasive cross-sectional views of the retina and its lesions, which are used to diagnose and follow up AMD and DME [7].

Accurate automated classification of these images is difficult. Manual diagnosis is also becoming harder: the number of patients in medical centers is rising, and specialists may disagree on a diagnosis. An important advantage of classification models is that they can deliver fast and accurate results, mitigating the speed, time and accuracy problems caused by growing patient numbers and differing expert opinions. Deep learning-based classification models can therefore be used to classify OCT images automatically.

Most classification studies in the literature use classical deep learning [8,9,10] or traditional machine learning algorithms [11]. Fu et al. proposed a deep learning-based model for the automatic detection of angle closure in anterior segment optical coherence tomography (AS-OCT) images obtained from a time-domain OCT device, and reported a sensitivity of 90% ± 0.02 [12]. In another study on the detection of macular diseases and the segmentation of lesion areas, Liu et al. used a one-stage attention-based convolutional neural network (CNN). Across the four classes, they achieved accuracies of 93.6% for CNV, 94.8% for DME, 94.6% for drusen and 97.1% for normal [13]. Sunija et al. proposed a CNN-long short-term memory (CNN-LSTM) model for glaucoma detection from raw spectral-domain OCT (SD-OCT) images and obtained an accuracy of 99.64% [14]. Altan reported an accuracy of 99.20% with the DeepOCT model for the classification of macular edema [15]. Lo et al. presented a federated learning framework for classifying diabetic retinopathy from OCT and OCT angiography (OCTA) images, and obtained AUROC values of 95.4% and 96% for OCT and OCTA, respectively [16]. Naz et al. classified OCT images to differentiate individuals with DME from normal ones using a support vector machine (SVM) classifier and reported an accuracy of 79.65% [17]. Venkatraman et al. presented a machine learning-based method to detect abnormalities in the retinal layer with an SVM classifier and obtained an accuracy of 87.98% [18]. Sugmk et al. classified abnormalities in OCT images as AMD or DME using an image segmentation technique [19]. Das et al. devised a deep multiscale fusion CNN (DFM-CNN) and obtained accuracies of 96.03% and 99.60% on the University of California San Diego (UCSD) and Noor Eye Hospital (NEH) datasets, respectively [20]. Najeeb et al. classified retinal abnormalities in OCT images using a single-layer CNN with an accuracy of 95.62% [21]. Serener et al. evaluated the ResNet and AlexNet architectures for detecting dry and wet age-related macular degeneration [22]. Kang et al. classified pachychoroid and non-pachychoroid eyes from OCT B-scan images using ResNet50 and InceptionV3 models and achieved accuracies of 96.1% and 95.25%, respectively [23]. Perdomo et al. presented OCT-NET, a custom CNN-based model for the automatic detection of DME, which reached an accuracy of 93.75% [24]. Kim et al. used the VGG-16 architecture for automatic segmentation and classification of retinal layers [25].

The novelty of this study lies in a comparative analysis of how different transformer methods affect classification performance. The diagnostic efficacy of ViT has rarely been verified for retinal disorders such as CNV, drusen and DME.

The main contributions of this study are as follows:

- We propose an automatic diagnosis system to avoid the delays that arise when medical professionals must analyze the growing number of OCT scans taken daily in medical centers.
- Going beyond existing methods in the literature, we demonstrate that transformers can be used successfully to classify retinal diseases.
- We indicate that the method is applicable to the analysis and classification of other biomedical images.
- We demonstrate the computational efficiency and accuracy of transformers.
- We compare the evaluation results of the proposed models with results reported in the literature.
- We conduct comparative studies with ViT, T2T-ViT and Mobile-ViT on retinal OCT images. To the best of our knowledge, no similar comparative analysis exists.

Materials and methods

Dataset

We used the publicly available, open-access Labeled Optical Coherence Tomography (OCT) and Chest X-Ray Images dataset for the experiments [26]. The original dataset has 84,495 OCT images divided into four categories: CNV, drusen, DME and normal. The classes in the dataset are imbalanced. For the predictive model to succeed, each class label should be represented by an equal or approximately equal amount of data. We therefore took a total of 34,464 images, 8,616 per class, for the training and testing process. The total number of images in the original dataset and the number of images used are given in Table 1, and the characteristics of the patients are given in Table 2.
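The balancing step can be sketched as follows. This is a minimal illustration, not the authors' code: the function name `balance_classes`, the seed and the toy file names are assumptions, and random undersampling is only one plausible way to reach equal class sizes (the text does not say how the 8,616 images per class were selected).

```python
import random
from collections import Counter


def balance_classes(samples, per_class, seed=0):
    """Randomly keep `per_class` items of every label.

    `samples` is a list of (item, label) pairs. Random undersampling is an
    assumption; the paper only states the resulting class sizes.
    """
    rng = random.Random(seed)
    by_label = {}
    for item, label in samples:
        by_label.setdefault(label, []).append(item)

    balanced = []
    for label in sorted(by_label):
        items = by_label[label]
        if len(items) < per_class:
            raise ValueError(f"class {label!r} has only {len(items)} items")
        for item in rng.sample(items, per_class):
            balanced.append((item, label))
    return balanced


# Toy, made-up class sizes (NOT the real distribution from Table 1).
toy = [(f"{label}_{i}.jpeg", label)
       for label, n in [("CNV", 50), ("DME", 20), ("DRUSEN", 12), ("NORMAL", 40)]
       for i in range(n)]
balanced_toy = balance_classes(toy, per_class=10)
toy_counts = Counter(label for _, label in balanced_toy)

# Arithmetic from the text: 4 classes x 8,616 images each.
total_used = 4 * 8616
```

The last line reproduces the total stated in the text: four classes of 8,616 images give 34,464 images.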

A main source of problems with deep learning models is overfitting or underfitting during training. Validation data, obtained by splitting the training data, are not used to fit the model; they are used to measure its performance during training. For this reason, validation data were created with a split ratio of 0.1 so that the results obtained on them could inform the learning process. The test set comprised 968 images in total, 242 from each class. The distribution of training and test data used in the study is shown in Fig. 1.
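A hold-out split with ratio 0.1 can be sketched as below. The helper name `train_val_split`, the shuffling and the seed are assumptions made for illustration; the text gives only the split ratio and the test-set sizes.

```python
import random


def train_val_split(samples, val_ratio=0.1, seed=0):
    """Shuffle `samples` and hold out a `val_ratio` fraction for validation."""
    if not 0.0 < val_ratio < 1.0:
        raise ValueError("val_ratio must be between 0 and 1")
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)
    n_val = int(round(len(shuffled) * val_ratio))
    # The first n_val shuffled items become validation data, the rest training data.
    return shuffled[n_val:], shuffled[:n_val]


# Toy data: 1,000 placeholder sample ids (not the real dataset).
train_ids, val_ids = train_val_split(range(1000), val_ratio=0.1)

# Test-set arithmetic from the text: 4 classes x 242 images each.
test_total = 4 * 242
```

The validation items never appear in the training list, which is what allows them to expose overfitting during training.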

Proposed model

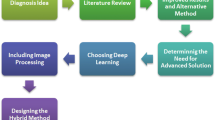

To evaluate how different networks classify optical coherence tomography images, we use three attention-based transformer architectures: ViT, T2T-ViT and Mobile-ViT. The transformer models were trained with sufficient data to support more accurate predictions. Figure 2 shows the framework of the proposed approaches for retinal disease detection.

Vision transformer model

The Vision Transformer (ViT) model processes an input image as a sequence of image patches and predicts its class label from that sequence. The pixel is the most basic unit of an image, but modeling the relations between every pair of pixels is computationally expensive. ViT instead splits the image into patches, which greatly reduces the number of pairwise relations that must be computed and therefore the cost. The patches are arranged in a sequence, and each flattened patch is multiplied by a learnable embedding matrix to obtain a linear projection. Position embeddings are added to these patch embeddings, and the result is passed to the transformer encoder. The transformer encoder comprises a multi-head self-attention layer, a multi-layer perceptron (MLP) layer and a normalization layer. The self-attention layer allows information to be integrated across the whole image. From the training data, the model learns to reconstruct the structure of the image. An MLP classification head performs the image classification [27]. The ViT architecture is shown in Fig. 3.
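To make the patching step concrete, the sketch below splits a 128×128×3 image (the input size reported in the experiments) into non-overlapping patches using plain Python lists. The patch size of 16 and the helper name `image_to_patches` are assumptions; the text does not state the patch size used.

```python
def image_to_patches(image, patch):
    """Split an H x W x C nested-list image into flattened, non-overlapping patches.

    Returns the patches in row-major order; each patch is a flat list of
    patch * patch * C values.
    """
    height, width = len(image), len(image[0])
    if height % patch or width % patch:
        raise ValueError("image sides must be divisible by the patch size")
    patches = []
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            flat = []
            for row in range(top, top + patch):
                for col in range(left, left + patch):
                    flat.extend(image[row][col])
            patches.append(flat)
    return patches


# 128 x 128 x 3 input as in the experiments; patch size 16 is an assumption.
H = W = 128
C = 3
PATCH = 16
image = [[[(r + c + k) % 256 for k in range(C)] for c in range(W)] for r in range(H)]
patches = image_to_patches(image, PATCH)

num_tokens = len(patches)        # (128 / 16) ** 2 patches
patch_dim = len(patches[0])      # 16 * 16 * 3 values per patch
pixel_pairs = (H * W) ** 2       # pairwise relations between pixels
token_pairs = num_tokens ** 2    # pairwise relations between patches
```

Under these assumed settings the image becomes 64 tokens of 768 values each, so self-attention compares 4,096 token pairs instead of the roughly 2.7 × 10^8 pairs that pixel-level attention would require.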

The T2T-ViT model is based on the ViT structure. It treats the image like a language and encodes groups of image pixels as tokens. This tokenization is the main feature that distinguishes T2T-ViT from ViT [28]. The T2T-ViT architecture is shown in Fig. 4.
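As a rough illustration of how progressive tokenization shrinks the token grid, the sketch below applies the usual sliding-window size formula over three overlapping "soft split" stages. The (kernel, stride, padding) values are assumptions chosen to resemble the configuration commonly described for T2T-ViT [28]; the text does not state which settings were used, and the helper names are hypothetical.

```python
def unfold_grid(size, kernel, stride, padding):
    """Number of sliding-window positions along one side of a square map."""
    return (size + 2 * padding - kernel) // stride + 1


def t2t_token_grids(image_size, stages):
    """Side length of the token grid after each soft-split stage."""
    grids = []
    size = image_size
    for kernel, stride, padding in stages:
        size = unfold_grid(size, kernel, stride, padding)
        grids.append(size)
    return grids


# (kernel, stride, padding) per stage: assumed values, not taken from the source.
ASSUMED_STAGES = [(7, 4, 2), (3, 2, 1), (3, 2, 1)]
grids = t2t_token_grids(128, ASSUMED_STAGES)
tokens_per_stage = [g * g for g in grids]
```

Because each kernel is larger than its stride, neighboring windows overlap, so adjacent tokens share pixels and local structure is carried into the tokens.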

Mobile-ViT is a lightweight ViT structure. Its main goal is to learn global representations by using transformers as convolutions. As a result, we are able to implicitly add convolution-like features into the network, learn representations using straightforward training recipes, and easily integrate Mobile-ViT with downstream architectures [29]. The Mobile-ViT architecture is shown in Fig. 5.

Experimental results

In this study, we evaluated the classification performance of transformer-based methods on a dataset with four classes: CNV, DME, drusen and normal retina. Precision, recall, accuracy and F1-score were used to measure model performance. Taking the drusen class as an example, true positive (TP) is the number of drusen images correctly classified as drusen. False positive (FP) is the number of images predicted as drusen that are not drusen. False negative (FN) is the number of drusen images incorrectly classified as CNV, DME or normal retina. True negative (TN) is the number of non-drusen images correctly classified as not drusen. The formulas for the performance metrics in terms of TP, FP, FN and TN, and for the entropy, are given in Eqs. (1–5).
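The one-vs-rest definitions above can be checked with a short sketch. It uses the standard formulas precision = TP / (TP + FP), recall = TP / (TP + FN), F1 = 2 · precision · recall / (precision + recall) and accuracy = (TP + TN) / (TP + TN + FP + FN). The confusion matrix is made up for illustration and the function name `per_class_metrics` is an assumption; these are not the paper's results.

```python
def per_class_metrics(confusion, class_index):
    """One-vs-rest metrics for one class.

    `confusion[i][j]` counts samples whose true class is i and predicted class is j.
    """
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    tp = confusion[class_index][class_index]
    fn = sum(confusion[class_index]) - tp
    fp = sum(confusion[i][class_index] for i in range(n)) - tp
    tn = total - tp - fn - fp

    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    accuracy = (tp + tn) / total
    return {"TP": tp, "FP": fp, "FN": fn, "TN": tn,
            "precision": precision, "recall": recall,
            "f1": f1, "accuracy": accuracy}


# Made-up 4-class confusion matrix (rows: true, columns: predicted).
# Class order: CNV, DME, Drusen, Normal. These are NOT the paper's results.
toy_confusion = [
    [8, 1, 1, 0],
    [0, 9, 0, 1],
    [2, 0, 8, 0],
    [0, 0, 0, 10],
]
drusen = per_class_metrics(toy_confusion, class_index=2)
```

For the toy drusen class this gives TP = 8, FP = 1, FN = 2 and TN = 29, so precision is 8/9, recall is 0.8 and the one-vs-rest accuracy is 37/40.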

We applied three different approaches. First, classification was carried out with the ViT model. Hyperparameter values were determined through a selection analysis. A learning rate that is too high lets each batch of data influence the weights too strongly, whereas a value that is too small makes training take too long, which hurts both speed and cost. For this reason, a learning rate of 0.001 was chosen for all three methods. Adaptive algorithms typically converge faster than SGD, so AdamW, which adapts its step sizes dynamically, was chosen as the optimizer for all methods. Categorical cross-entropy was selected as the loss function and the number of epochs was set to 100. With a very high number of epochs, the model's learning was observed to decline after a certain point.
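The reported settings and the loss function can be written down as a small sketch. The `CONFIG` dictionary only restates the values given in the text; the helper names and the example logits are assumptions, and the code illustrates categorical cross-entropy for a single sample rather than the authors' training loop.

```python
import math

# Hyperparameters reported in the text.
CONFIG = {"learning_rate": 0.001, "optimizer": "AdamW",
          "loss": "categorical_cross_entropy", "epochs": 100}


def softmax(logits):
    """Numerically stable softmax over a list of raw scores."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def categorical_cross_entropy(one_hot, probs, eps=1e-12):
    """Loss for one sample: -sum(y_i * log(p_i))."""
    return -sum(y * math.log(max(p, eps)) for y, p in zip(one_hot, probs))


# Made-up logits for one image over (CNV, DME, Drusen, Normal).
probs = softmax([2.0, 0.5, 0.1, -1.0])
loss_correct = categorical_cross_entropy([1, 0, 0, 0], probs)  # true class CNV
loss_wrong = categorical_cross_entropy([0, 0, 0, 1], probs)    # true class Normal
uniform_loss = categorical_cross_entropy([1, 0, 0, 0], [0.25] * 4)
```

The loss is small when the highest probability falls on the true class and large otherwise; a model that guesses uniformly over four classes has a loss of ln 4.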

On 968 test samples of size 128×128×3, ViT obtained an accuracy of 95.14%. The T2T-ViT approach, which was applied in the second stage, obtained an accuracy of 96.07%. In the last phase of the study, we applied the Mobile-ViT approach to retinal disease classification and obtained an accuracy of 99.17%. The performance metrics obtained for each class label with all the approaches applied are shown in Table 3. The confusion matrices obtained by the three proposed approaches are shown in Fig. 6.
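As a consistency check on the reported figures, the sketch below searches for the number of correct predictions out of 968 that matches each reported accuracy to two decimals. The resulting counts are inferred from the percentages and are not stated in the text; the confusion matrices in Fig. 6 remain the authoritative source. The function name `matching_correct_counts` is an assumption.

```python
TEST_SIZE = 968  # 4 classes x 242 test images, as stated in the text

REPORTED = {"ViT": 95.14, "T2T-ViT": 96.07, "Mobile-ViT": 99.17}


def matching_correct_counts(accuracy_pct, total):
    """All counts k for which 100 * k / total is within 0.005 of the reported value."""
    return [k for k in range(total + 1)
            if abs(100 * k / total - accuracy_pct) < 0.005]


inferred = {name: matching_correct_counts(acc, TEST_SIZE)
            for name, acc in REPORTED.items()}
errors = {name: TEST_SIZE - counts[0] for name, counts in inferred.items()}
```

Each accuracy matches exactly one count (921, 930 and 960 correct predictions), which would correspond to 47, 38 and 8 misclassified test images, respectively.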

In classifying OCT images into four groups, the suggested approaches performed best among the systems compared in Table 4. The Mobile-ViT model achieves a higher classification rate than the alternative methods. Transformer-based models classify the four retinal classes more accurately on the OCT image dataset.

Conclusions

In the medical sector, the need for automatic diagnosis systems is increasing day by day. As the population grows, rising patient density makes it difficult for experts to make quick and accurate decisions. For these reasons, various machine learning approaches have been explored as solutions to this problem.

In this study, we focused on classifying retinal diseases with the transformer approach. The novelty of the study is a comparative examination of the impact of transformer-based architectures: ViT, T2T-ViT and Mobile-ViT were compared on a retinal OCT image dataset. To the best of our knowledge, no similar comparative analysis is available. The results point to the practical applicability of the study in the health sector. The accuracy of 99.17% and the other results obtained with Mobile-ViT show that transformer structures based on the attention mechanism perform well in image classification, a core computer vision task. We thus show that transformer approaches suit classification because they capture global and long-range feature relationships. The results show that transformers can be used for medical image classification in particular, as an alternative to the other deep learning approaches in the literature. They also show that, contrary to common belief, transformers succeed not only when trained on large datasets but also on smaller ones. Finally, the results suggest that lightweight transformer-based classifiers with fewer parameters provide high speed and classification performance on small-scale datasets.

Standards and frameworks for assessing the quality of OCT images are important for accurate imaging-based diagnosis. In future studies, we plan to apply these approaches to datasets with images at different magnification levels to evaluate image quality. Designing new transformer-based classifiers is another direction for future research.

References

Liu L, Li C, Yu H, Yang X (2022) A critical review on air pollutant exposure and age-related macular degeneration. Sci Total Environ 840:156717

Sakurada Y, Tanaka K, Fragiotta S (2023) Differentiating drusen and drusenoid deposits subtypes on multimodal imaging and risk of advanced age-related macular degeneration. Jpn J Ophthalmol 67(1):1–13

Monis MD, Ali SM, Bhutto IA, Mahar PS, Ali S, Mahar S (2023) Idiopathic choroidal neovascularization in pregnancy: a case report. Cureus 15(2):e34611

Madjedi K, Pereira A, Ballios BG, Arjmand P, Kertes PJ, Brent M, Yan P (2022) Switching between anti-VEGF agents in the management of refractory diabetic macular edema: A systematic review. Surv Ophthalmol 67(5):1364–1372

Karabaş VL, Tokuç EÖ, Şermet F (2022) Survey of Intravitreal injection preferences for the treatment of age-related macular degeneration and macular edema among members of the Turkish ophthalmological association. Turk J Ophthalmol 52(3):179

Schmitt JM (1999) Optical coherence tomography (OCT): a review. IEEE J Sel Top Quantum Electron 5(4):1205–1215

Diao S, Su J, Yang C, Zhu W, Xiang D, Chen X, ... Shi F (2023) Classification and segmentation of OCT images for age-related macular degeneration based on dual guidance networks. Biomedical Signal Processing and Control 84:104810

Nandy Pal M, Roy S, Banerjee M (2021) Content based retrieval of retinal OCT scans using twin CNN. Sādhanā 46(3):174

Daanouni O, Cherradi B, Tmiri A (2021) Automatic detection of diabetic retinopathy using custom cnn and grad-cam. In Advances on Smart and Soft Computing: Proceedings of ICACIn 2020 (pp. 15–26). Springer Singapore

Kepp T, Ehrhardt J, Heinrich MP, Hüttmann G, Handels H (2019) Topology-preserving shape-based regression of retinal layers in OCT image data using convolutional neural networks. In 2019 IEEE 16th International Symposium on Biomedical Imaging (ISBI 2019) (pp. 1437–1440). IEEE

Santos AM, Paiva AC, Santos AP, Mpinda SA, Gomes DL, Silva AC, ... Gattass M (2018) Semivariogram and Semimadogram functions as descriptors for AMD diagnosis on SD-OCT topographic maps using Support Vector Machine. Biomed Eng Online 17(1):1–20

Fu H, Baskaran M, Xu Y, Lin S, Wong DWK, Liu J, ... Aung T (2019) A deep learning system for automated angle-closure detection in anterior segment optical coherence tomography images. Am J Ophthalmol 203:37–45

Liu X, Bai Y, Cao J, Yao J, Zhang Y, Wang M (2022) Joint disease classification and lesion segmentation via one-stage attention-based convolutional neural network in OCT images. Biomed Signal Process Control 71:103087

Sunija AP, Gopi VP, Palanisamy P (2022) Redundancy reduced depthwise separable convolution for glaucoma classification using OCT images. Biomed Signal Process Control 71:103192

Altan G (2022) DeepOCT: an explainable deep learning architecture to analyze macular edema on OCT images. Eng Sci Technol Int J 34:101091

Lo J, Timothy TY, Ma D, Zang P, Owen JP, Zhang Q, ... Sarunic MV (2021) Federated learning for microvasculature segmentation and diabetic retinopathy classification of OCT data. Ophthalmol Sci 1(4):100069

Naz S, Hassan T, Akram MU, Khan SA (2017) A practical approach to OCT based classification of diabetic macular edema. In 2017 international conference on signals and systems (ICSigSys) (pp. 217–220). IEEE

Venkatraman K, Sumathi M (2020) A Study on Fluid based Retinal abnormalities Analysis from OCT Images using SVM Classifier. In 2020 6th International Conference on Advanced Computing and Communication Systems (ICACCS) (pp. 86–89). IEEE

Sugmk J, Kiattisin S, Lasantitham AL (2014) Automated classification between age-related macular degeneration and diabetic macular edema in OCT image using image segmentation. In IEEE Biomedical Engineering International Conference

Das V, Dandapat S, Bora PK (2021) Automated classification of retinal OCT images using a deep multi-scale fusion CNN. IEEE Sens J 21(20):23256–23265

Najeeb S, Sharmile N, Khan MS, Sahin I, Islam MT, Bhuiyan MIH (2018) Classification of retinal diseases from OCT scans using convolutional neural networks. In 2018 10th International conference on electrical and computer engineering (ICECE) (pp. 465–468). IEEE

Serener A, Serte S (2019) Dry and wet age-related macular degeneration classification using oct images and deep learning. In 2019 Scientific meeting on electrical-electronics & biomedical engineering and computer science (EBBT) (pp. 1–4). IEEE

Kang NY, Ra H, Lee K, Lee JH, Lee WK, Baek J (2021) Classification of pachychoroid on optical coherence tomography using deep learning. Graefes Arch Clin Exp Ophthalmol 259:1803–1809

Perdomo O, Otálora S, González FA, Meriaudeau F, Müller H (2018) Oct-net: A convolutional network for automatic classification of normal and diabetic macular edema using sd-oct volumes. In 2018 IEEE 15th international symposium on biomedical imaging (ISBI 2018) (pp. 1423–1426). IEEE

Kim J, Tran L (2021) Retinal disease classification from oct images using deep learning algorithms. In 2021 IEEE Conference on Computational Intelligence in Bioinformatics and Computational Biology (CIBCB) (pp. 1–6). IEEE

Kermany D, Zhang K, Goldbaum M (2018) Labeled Optical Coherence Tomography (OCT) and Chest X-Ray Images for Classification, Mendeley Data, V2. https://doi.org/10.17632/rscbjbr9sj.2

Dosovitskiy A, Beyer L, Kolesnikov A, Weissenborn D, Zhai X, Unterthiner T, ... Houlsby N (2020) An image is worth 16x16 words: Transformers for image recognition at scale. arXiv preprint arXiv:2010.11929

Yuan L, Chen Y, Wang T, Yu W, Shi Y, Jiang ZH, ... Yan S (2021) Tokens-to-token vit: Training vision transformers from scratch on imagenet. In Proceedings of the IEEE/CVF international conference on computer vision (pp. 558–567)

Mehta S, Rastegari M (2021) Mobilevit: Light-weight, general-purpose, and mobile-friendly vision transformer. arXiv preprint arXiv:2110.02178

Rajagopalan N, Narasimhan V, Kunnavakkam Vinjimoor S, Aiyer J (2021) Deep CNN framework for retinal disease diagnosis using optical coherence tomography images. J Ambient Intell Humaniz Comput 12:7569–7580

Paul D, Tewari A, Ghosh S, Santosh KC (2020) Octx: Ensembled deep learning model to detect retinal disorders. In 2020 IEEE 33rd International Symposium on Computer-Based Medical Systems (CBMS) (pp. 526–531). IEEE

Kim J, Tran L (2020) Ensemble learning based on convolutional neural networks for the classification of retinal diseases from optical coherence tomography images. In 2020 IEEE 33rd International Symposium on Computer-Based Medical Systems (CBMS) (pp. 532–537). IEEE

Kermany DS, Goldbaum M, Cai W, Valentim CC, Liang H, Baxter SL, ... Zhang K (2018) Identifying medical diagnoses and treatable diseases by image-based deep learning. Cell 172(5):1122–1131

Funding

Open access funding provided by the Scientific and Technological Research Council of Türkiye (TÜBİTAK).

Author information

Contributions

S.A.: Software; Z.G.: Conception, Design, Supervision, Writing & Editing; E.E.: Conception, Design, Supervision, Writing & Editing; F.A.: Literature Review.

Ethics declarations

Informed consent

Not Applicable.

Competing interest

Not Applicable.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Akça, S., Garip, Z., Ekinci, E. et al. Automated classification of choroidal neovascularization, diabetic macular edema, and drusen from retinal OCT images using vision transformers: a comparative study. Lasers Med Sci 39, 140 (2024). https://doi.org/10.1007/s10103-024-04089-w
