Abstract

Haptic feedback, a natural component of our everyday interactions in the physical world, requires careful design in virtual environments. However, feedback location can vary from the fingertip to the finger, hand, and arm due to heterogeneous input/output technology used for virtual environments, from joysticks to controllers, gloves, armbands, and vests. In this work, we report on the user experience of touch interaction with virtual displays when vibrotactile feedback is delivered on the finger, wrist, and forearm. In a first controlled experiment with fourteen participants and virtual displays rendered through a head-mounted device, we report a user experience characterized by high perceived enjoyment, confidence, efficiency, and integration as well as low perceived distraction, difficulty, and confusion. Moreover, we highlight participants’ preferences for vibrotactile feedback on the finger compared to other locations on the arm or through the VR controller, respectively. In a follow-up experiment with fourteen new participants and physical touchscreens, we report a similar preference for the finger, but also specific nuances of the self-reported experience, not observed in the first experiment with virtual displays. Overall, our results depict an enhanced user experience when distal vibrotactile feedback is available over no vibrations at all during interactions with virtual and physical displays, for which we propose future work opportunities for augmented interactions in virtual worlds.

1 Introduction

Displays come in various form factors, including PC monitors, personal mobile devices, visual interfaces for wearables, public ambient displays, and large-scale immersive installations. They also vary in nature, ranging from physical screens to a diversity of extended reality (XR) displays in augmented and virtual reality (AR/VR) environments (Pamparău et al. 2023; Itoh et al. 2021). Whether physical or virtual, displays serve as the primary means of organizing and presenting visual information to users of interactive systems, convenient in a diversity of contexts of use. Additionally, they enable direct interaction with visual information through touch, multitouch, and hand gestures in both physical (Wigdor and Wixon 2011; Cirelli and Nakamura 2014; Vatavu 2023) and virtual (Sagayam and Hemanth 2017; Li et al. 2019) environments.

During direct manipulation, the haptic sensation of touching the information presented on a display creates a distinctive user experience (UX) compared to indirect manipulation through an intermediate device, such as a joystick or VR controller. While tactile feedback is inherent when interacting with a physical display, it must be explicitly designed in virtual environments and delivered through VR controllers (Sinclair et al. 2019; Zenner et al. 2020; Degraen et al. 2021), numerical gloves (Gu et al. 2016; Kovacs et al. 2020; Bickmann et al. 2019), or finger-augmentation devices (Maeda et al. 2022; Preechayasomboon and Rombokas 2021; Catană and Vatavu 2023). In this context, a recent variation of haptic technology has been targeting distal locations on the user’s body with respect to the location where the finger lands on the display. Examples include vibrations through a smartwatch while the user is interacting with the smartphone (Henderson et al. 2019), a ring during tabletop interactions (Le et al. 2016), or custom forearm wearables when interacting with large displays (Terenti and Vatavu 2022). Empirical evaluations of distal vibrotactile feedback for physical displays have revealed not only better user performance (Henderson et al. 2019), but also a distinctive type of user experience (Terenti and Vatavu 2022) resulting from decoupling the point of touch and the location where confirmatory tactile sensations are felt on the body. However, the user experience of distal vibrotactile feedback on the arm has been little examined in virtual environments, where vibrations delivered through VR controllers or head-mounted displays (HMDs) have represented the norm.

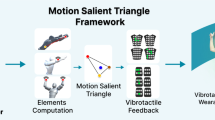

In this work, we examine the perceived user experience of distal vibrotactile feedback delivered at different locations on the arm with the purpose of augmenting interactions with a virtual display. To this end, we present empirical results about users’ preferences for vibrotactile feedback delivered on the finger, wrist, and forearm while interacting with visual content organized on a virtual display. Furthermore, we contrast vibrotactile feedback delivered at those locations against the conventional approach of delivering vibrations through VR controllers from the perspective of the user experience created by such augmented interactions; see Fig. 1. Our contributions are as follows:

a A user, wearing a head-mounted device, interacts with a virtual display in a virtual environment; b the same user interacting with a physical touchscreen display in the physical world; c locations on the interactive arm, progressively distant from the point of contact with the display, addressed in this work for implementing and examining the effect of distal vibrotactile feedback during interactions with both virtual and physical displays

1. We report empirical results from a controlled experiment conducted with N = 14 participants, who self-reported their experience of interacting with visual content presented on virtual displays rendered in HMDs while receiving vibrotactile feedback at various locations on the interactive arm and through the VR controller, respectively. Our findings show a user experience that is characterized by high levels of perceived enjoyment (M = 3.9, SD = 0.8 on a scale from 1, low, to 5, high), efficiency (M = 3.6, SD = 0.9), confidence (M = 3.9, SD = 0.8), and integration (M = 4.2, SD = 0.6) between input on the display and vibrations delivered at various locations on the interactive arm. This experience is complemented by a low perceived distraction (M = 2.1, SD = 0.9), difficulty (M = 1.9, SD = 0.9), and confusion (M = 2.0, SD = 0.7) of distal vibrotactile feedback. However, although we detected a statistically significant preference for vibrotactile feedback delivered on the finger compared to the wrist, forearm, and even through the VR controller, this preference could not be pinned down by any of the specific UX measures used in our evaluation.

2. To gain more insight into the user experience of distal vibrotactile feedback during interactions with displays, we conducted a follow-up experiment in a physical environment with a new sample of N = 14 participants, who underwent the same procedure and performed the same task, but with physical touchscreens. The findings of this second experiment reveal a similar user experience, characterized by high perceived enjoyment (M = 3.5, SD = 0.6), efficiency (M = 3.7, SD = 0.8), confidence (M = 4.1, SD = 0.7), and integration (M = 4.1, SD = 0.6), as well as low perceived distraction (M = 2.3, SD = 0.7), difficulty (M = 1.9, SD = 0.9), and confusion (M = 1.7, SD = 0.8). Moreover, the results confirm the previously observed preference for vibrotactile feedback on the finger compared to the wrist and forearm, but also highlight nuanced differences between these conditions, e.g., more perceived enjoyment, efficiency, confidence, and integration when vibrotactile feedback is delivered on the finger in the condition where the display is physical, not virtual.

The findings from our two experiments, conducted with virtual and physical displays, provide insights into the perceived user experience of distal vibrotactile feedback delivered on the interactive arm when the user is engaged in interactions with displays located at the two extremes of the Reality-Virtuality continuum (Milgram and Kishino 1994). While the impact of location on the interactive arm is not evident during interactions in the virtual environment, it becomes significant when interacting with physical touchscreens in the real world, a finding that confirms, from the user experience perspective, prior results about user performance being augmented by distal vibrotactile feedback (Henderson et al. 2019; McAdam and Brewster 2009). This particular nuance in our discoveries, examined within the context of an otherwise comparable user experience with both virtual and physical displays, highlights perceptual distinctions between the physical and immersed body. These contributions are presented in detail in Sects. 3 and 4, after an overview of related work about vibrotactile feedback designed for interactions with physical and virtual displays, respectively, presented in Sect. 2. We conduct a comparative discussion of the findings of our two experiments in Sect. 5, address limitations of our work, and propose future work opportunities for distal vibrotactile feedback provided on the interactive arm for enhancing interactions in AR/VR environments.

2 Related work

We connect in this section to prior research that examined vibrotactile feedback with the purpose of enhancing user interactions with content presented in virtual environments, e.g., when grasping virtual objects, as well as in physical environments, e.g., vibrations accompanying touch input on a physical display. We also connect to prior work that characterized diverse aspects of the user perception of vibrotactile feedback delivered at various locations on the body, the user experience of the corresponding perceptions, and measures and metrics used for this purpose. We start our discussion by identifying the scope of our work precisely and providing our definition of virtual displays.

2.1 Physical versus virtual displays

Because virtual displays do not yet approach the familiarity of physical ones, the concept of a display in augmented, virtual, or extended reality requires clarification and a definition. To this end, we adopt the approach of Pamparău et al. (2023), based on an overview of display taxonomies from the scientific literature, and define a virtual display as a specific virtual object that features spatial and visual realism (Itoh et al. 2021), multiscale and multiuser characteristics (Lantz 2007), and criteria for augmentable user screens (Grübel et al. 2021), while it can be created and destroyed on the fly, and its properties, e.g., size, location, orientation, can be dynamically changed; see more details in Pamparău et al. (2023). Unlike physical displays, characterized by the rigidity of their form factors, sizes, and locations in the physical environment, virtual displays are flexible in terms of these characteristics. Thus, they enable new functionality that emerges from the capability of positioning at different points across the Reality-Virtuality continuum (Milgram and Kishino 1994) to create UX journeys (Pamparău and Vatavu 2022) for their users. For the scope of this work and in the context of the above definition, we see virtual displays as the counterpart of physical displays, but in a virtual environment rendered through an HMD; see Fig. 1a, b. This approach enables a direct comparison between the perceived user experience of distal vibrotactile feedback delivered at various locations on the interactive arm, next to the point of touch (Fig. 1c), when interacting with a virtual display compared to a physical one from the real world.

2.2 Vibrotactile feedback as a haptic output modality for interactive computer systems

Researchers have leveraged haptic technology to create a more realistic user experience of direct interaction with computer systems by simulating physical contact with, touching, and manipulating physical objects and, consequently, to induce sensations of weight, rigidity, or surface texture for digital content (Bau and Poupyrev 2012; Massie and Salisbury 1994; Völkel et al. 2008; Wu et al. 2012; Park et al. 2020). For example, one pioneering work is the PHANToM haptic interface (Massie and Salisbury 1994), a device that applies force to the user’s fingers to induce the sensation of being in physical contact with the virtual object presented on the computer display. BrailleDis (Völkel et al. 2008) is a device with mechanical pins that enables people with visual impairments to form a mental image of a picture. In the same application area, Wu et al. (2012) introduced a vibrotactile vest that projects the contours of a near-by physical object onto a vibromotor array, enabling feedback to be delivered directly on the user’s back. The technique also enables tactile display of the alphabet letters, for which user recognition accuracy rates were as high as 82%, indicating the feasibility of a portable vibrotactile system for non-visual image representation. Poupyrev et al. (2004) presented a digital pen augmented with vibrotactile feedback designed to enrich the experience of pen-based computing, and reported that vibrotactile feedback improved user performance in terms of task completion time, but also that users preferred vibrotactile feedback over no vibrations at all in both drawing and tapping tasks. Jansen (2010) proposed a technology that leveraged magnetic fluid beneath a multitouch display that, through manipulation of the fluid viscosity, delivers active haptic feedback. 
As a result, users feel a relief when running their fingers over the surface of the display, a sensation that lends itself to contexts of use involving eyes-free interaction.

Park et al. (2020) proposed augmentation of physical buttons with vibration actuators to deliver a diversity of vibrotactile sensations enabled by varying the vibration frequency, amplitude, duration, and envelope. Moreover, prior work has equally examined user perception of augmented buttons, and created mappings between the felt experience and descriptive adjectives, organized as dichotomous pairs, of that experience. Within the context of such mappings, users associate the dimensions of vibrotactile feedback patterns with the sensations they perceive in terms of the sharpness, smoothness, and softness of those patterns, among many other descriptive adjectives. The results of a perceptual study (Park et al. 2020) revealed that the sharp-blunt and soft-hard continua represent two main perceptual dimensions for augmented buttons. In the same direction, Dariosecq et al. (2020) conducted a user study with an ultrasonic-based haptic display to examine the semantic perceptions of thirty-two tactile textures of different waveforms and amplitudes. The findings and corresponding analysis resulted in the identification of a continuum spanning from roughness to smoothness, along which vibrotactile feedback can be characterized, informing the design of such feedback.
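To make the four vibration parameters mentioned above concrete, the following minimal sketch synthesizes one vibrotactile burst from a frequency, an amplitude, a duration, and a simple linear attack/release envelope. It is an illustration under our own assumptions and not the implementation of Park et al. (2020): the function name, the sample rate, and the envelope shape are hypothetical choices.

```python
import math

def vibration_samples(frequency_hz, amplitude, duration_s,
                      sample_rate_hz=8000, attack_s=0.01, release_s=0.01):
    """Return samples of a sine burst shaped by a linear attack/release envelope.

    All parameter names and defaults are illustrative assumptions.
    """
    n = round(duration_s * sample_rate_hz)  # number of samples in the burst
    samples = []
    for i in range(n):
        t = i / sample_rate_hz
        # Envelope ramps up during the attack, holds at 1, ramps down at the end.
        envelope = min(1.0, t / attack_s, (duration_s - t) / release_s)
        samples.append(amplitude * envelope * math.sin(2 * math.pi * frequency_hz * t))
    return samples

# Example: a 200 Hz burst at half amplitude lasting 100 ms.
burst = vibration_samples(frequency_hz=200.0, amplitude=0.5, duration_s=0.1)
```

Changing any one argument while holding the others fixed produces a different pattern, which is the kind of design space that perceptual studies of augmented buttons then map onto descriptive adjectives.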

These examples illustrate many research efforts, creative approaches, and technological advances for incorporating haptics in the design of user interactions with computer systems of various kinds, in both physical and virtual environments. In this large space of application opportunities for haptics technology, vibrotactile feedback has been used to deliver various tactile effects, from simulating physical deformation (Heo et al. 2019) to creating the sensation of surface texture (Zhao et al. 2019; Kato et al. 2018), rendering properties of virtual objects, such as roughness (Ito et al. 2019; Asano et al. 2015) or smoothness (Punpongsanon et al. 2015) directly on the user’s skin, accompanying mid-air gesture input (Schönauer et al. 2015), and augmenting the user experience of interacting with touchscreen displays (Terenti and Vatavu 2022). For instance, Strohmeier and Hornbæk (2017) investigated the perceived texture of virtual surfaces using a physical slider augmented with vibrotactile feedback. By playing with the parameters of the vibrotactile patterns, particular conditions were identified for virtual surfaces to be perceived and described as rough, adhesive, sharp, or bumpy, just like physical surfaces.

The sense of touch is also known to impact people’s emotional states (Hertenstein et al. 2006; Bertheaux et al. 2020) and, consequently, there have been many efforts to design vibrotactile feedback for inducing emotional reactions in users, such as enjoyment and engagement (Levesque et al. 2011), or communicating physical touch at a distance through mediating digital devices (Smith and MacLean 2007). For example, Mullenbach et al. (2014) used haptics generated by the variable friction of a tablet display to study affective interaction between partners. Cabibihan and Chauhan (2017) compared the body’s reaction to touch delivered directly or mediated by a digital device over the Internet, and found that the body reacts similarly in terms of heartbeats, but differently when the experience is measured with respect to galvanic skin response. Other applications include exchanging haptic messages (Israr et al. 2015), encoded with “feelgits” (feel widgets) and “feelbits,” representing definitions of haptic patterns and their instantiations based on particular parameter values, and wearing haptic players at various locations on the body (Boer et al. 2017), for which the temporal form of the vibrotactile feedback was identified as key to the aesthetic experience reported by users.

Besides development of new technology and applications to various areas, researchers have equally looked at users’ discrimination abilities in identifying vibrotactile feedback delivered at various body locations. For example, Seim et al. (2015) found that placements of vibration motors on the dorsal side of the hand determine consistent recognition accuracy of the respective location, whereas placements on the ventral side resulted in higher accuracy as the distance from the fingertip increased. Regarding vibrotactile feedback delivered at wrist level, Liao et al. (2016) found that users can effectively discriminate between twenty-six spatiotemporal vibrotactile stimuli delivered through a watch-back vibrotactile array display. At the head level, vibrotactile feedback has been largely studied for navigation assistance (Kaul and Rohs 2017; Berning et al. 2015; Kaul et al. 2021). Finally, at the level of the whole body, high recognition accuracy of the body location used for the delivery of vibrotactile feedback was reported for vibrations on the arm compared to the palm, thigh, and waist (Alvina et al. 2015), but also for the body extremities (Elsayed et al. 2020). For more details about user perception of vibrotactile stimuli, we refer to Cholewiak and Collins (2003) for an overview and empirical results of vibrotactile localization on the arm, and Cholewiak et al. (2004) for a detailed discussion of vibrotactile localization on the abdomen. For an overview of other body locations examined in the scientific literature for vibrotactile feedback, we refer readers to the summary table from Elsayed et al. (2020), p. 125:3.

2.3 Vibrotactile feedback for interactions with physical displays in physical environments

Displays are used for both output and input. However, except for the displays integrated into mobile and wearable devices, such as smartphones, tablets, and smartwatches, the majority of the displays from our physical environments lack haptic feedback even though prior work has repeatedly highlighted the positive impact of the vibrotactile information channel on user performance (Brewster et al. 2007; Liao et al. 2017; Hoggan et al. 2008).

One approach to adding vibrotactile feedback to a touchscreen display is to integrate the vibration mechanism into the display itself. For instance, HapTable (Emgin et al. 2019) is a multimodal interactive tabletop combining electromechanical and electrostatic actuation by utilizing four piezo patches positioned at the tabletop edges. Bau et al. (2010) introduced TeslaTouch, a customization of a 3M MicroTouch panel for enhancing touch input with electro-vibrations, and carried out a study to measure user perception and report the frequency and amplitude thresholds at which vibrotactile feedback could be discriminated on the touch surface. Their results revealed that the sensations created with TeslaTouch were closely related to the perception of forces lateral to the skin, and that the amplitude just-noticeable difference was roughly similar, at 1.16 dB relative to the reference voltage, across frequencies between 80 and 400 Hz. Carter et al. (2013) explored ultrasound technology to produce haptic feedback above a surface, and examined the user performance in identifying different tactile properties, e.g., 86% accuracy for the perception of two focal points of different modulation frequencies at a separation distance of 3 cm. Although these display prototypes have integrated creative solutions, their technology is not available at scale. In this context, turning to wearable devices, one form of computing that is becoming mainstream, may represent a feasible technical design solution to augment interactions with physical displays with vibrotactile feedback (Terenti and Vatavu 2022).
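To put the 1.16 dB amplitude just-noticeable difference reported for TeslaTouch into perspective, the short sketch below converts a decibel difference into a voltage ratio. It assumes the common 20·log10 convention for amplitude quantities, which is our assumption and is not stated in the text above; the function name is hypothetical.

```python
def db_to_amplitude_ratio(db: float) -> float:
    """Convert a level difference in dB to an amplitude (voltage) ratio.

    Assumes dB = 20 * log10(V / V_ref), the usual convention for amplitudes.
    """
    return 10.0 ** (db / 20.0)

JND_DB = 1.16  # amplitude JND reported by Bau et al. (2010)
ratio = db_to_amplitude_ratio(JND_DB)
print(f"voltage ratio: {ratio:.3f}, increase: {100 * (ratio - 1):.1f}%")
```

Under this assumption, the just-noticeable difference corresponds to a voltage change of roughly 14% relative to the reference.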

A few works have considered vibrotactile feedback delivered by mobile and wearable devices to accompany touch-based interactions. For instance, McAdam and Brewster (2009) studied the performance of distal vibrotactile output provided by the smartphone when entering text on an interactive tabletop. Their results showed an increased typing speed when vibrotactile feedback was provided on the upper arm and wrist of the user’s dominant arm. Improvements in user performance were also reported by Henderson et al. (2019), who compared target acquisition task times and error rates when touch input was augmented with vibrotactile feedback on a smartphone, under the user’s finger, or on the wrist of the nondominant hand using a smartwatch. Le et al. (2016) introduced Ubitile, a smart ring device designed to deliver vibrotactile feedback during tabletop interactions. Other authors have investigated vibrotactile feedback delivered by wearables for augmenting smartphone input and output. For example, Schönauer et al. (2015) examined vibrotactile feedback on the upper arm for gestures performed in mid-air to interact with the smartphone, and focused on aspects of user perception when the vibrations were physically decoupled from the smartphone. They reported user perception of vibrotactile stimuli that was up to 80% accurate for various combinations of vibration patterns and intensities. Vatavu et al. (2016) reported a finger-augmentation device that renders vibrations on the index finger to create the illusion of holding digital content from the smartphone: for example, a photograph picked up from the smartphone display with a pinch gesture is taken out of the smartphone and brought into the physical world, where it manifests via vibrations. In a user study, these digital vibrons were characterized by the participants as fun, attractive, easy to use, and useful, while consensus also emerged for various vibrotactile patterns to be associated with particular digital content formats, e.g., images (42% consensus), PDF files (37%), short text messages (26%), and music files (25%). Next, we look at vibrotactile feedback designed for interactions with content presented to users in virtual environments.

2.4 Vibrotactile feedback for interactions in virtual environments

Vibrotactile feedback has been leveraged in virtual environments to induce the feeling of being in physical contact with, grasping, and manipulating virtual objects. For example, Cheng et al. (1997) evaluated the effect of vibrotactile feedback, delivered on top of visual and audio feedback, on user performance when grasping virtual objects. Their results revealed that vibrotactile feedback led to faster training times, although at the cost of an increased pressure observed for the grasped object over time. Nukarinen et al. (2018) investigated the temples and wrist for delivering vibrotactile feedback during virtual object picking tasks, and found that the wrist gave better results. To this end, they compared visual feedback with combined visual and vibrotactile feedback where vibrations were presented on the user’s wrists, temples, or at both locations simultaneously. Pezent et al. (2020) explored wrist haptics for VR/AR interaction using Tasbi, a bracelet device designed to render complex multisensory squeezing and vibrotactile feedback. Their results showed that on-wrist feedback substantially improved virtual hand-based interactions in VR/AR compared to no haptics. Moon (2022) reported the results of two experiments conducted to investigate the effect of the interaction method—VR controller or free-hand interaction—and vibrotactile feedback on the user experience of VR games by focusing on the perceived sense of presence, engagement, usability, and task performance. Their results showed that vibrotactile feedback increased users’ sense of presence and engagement in the virtual environment. Kreimeier et al. (2019) examined the influence of haptic feedback on user performance involving manual action in virtual environments, including throwing, stacking, and object identification. They found that haptic feedback increased the feeling of presence, decreased execution time of specific tasks, and improved detection rates for identification tasks, respectively.

Other researchers have focused on tools for designing and implementing vibrotactile feedback. For example, Weirding Haptics (Degraen et al. 2021) is an in-situ prototyping system of vibrotactile feedback for virtual environments based on vocalization, where the frequency and amplitude of the user’s voice are rendered by the VR controller in the form of vibrations. We refer readers to Bouzbib et al. (2022), Wang et al. (2019), and Tong et al. (2023) for surveys of haptics accompanying interactions in virtual environments, and Bermejo and Hui (2021) for haptic technologies used for augmented reality, respectively.

2.5 The user experience of vibrotactile feedback

Besides increasing user performance, vibrotactile feedback has also been explored for its significant role in enriching the user experience of interactions with or mediated by computer systems, a role that has been examined from various perspectives and using a diversity of methods. For instance, Suhonen et al. (2012) investigated the user experience of haptic feedback delivered as vibrotactile, thermal, and squeezing of the user’s finger and wrist for the purpose of interpersonal communication mediated by wearables. By employing semi-structured interviews and Likert-scale questionnaires to evaluate participants’ willingness to employ such technology and understand their preferences for haptic feedback mediating interpersonal communication, the authors identified applications involving emotional messages that mimic touch, and documented preferences for the delivery of haptics stimuli to the hand. Shim and Tan (2020) were specifically interested in the design of vibrotactile feedback patterns that could support a more engaging and delightful user experience. Their prototype, palmScape, consisting of a four-tactor display, was used to deliver sensations reflective of natural phenomena, such as breathing, heartbeat, and earthquake, designed to convey aliveness in a calm manner. The corresponding user experience was evaluated in terms of valence and arousal ratings, for which half of the vibrotactile patterns under evaluation were attributed calm and pleasant ratings. Singhal and Schneider (2021) defined “haptic embellishments” as haptic feedback designed to reinforce information already provided using other modalities, and “juicy haptics” as excessive positive haptic feedback. A set of design principles for haptic embellishments was presented and examined in the context of computer games and interactive media with the Player Experience Inventory (Abeele et al. 2020) and Haptic Experience model (Kim and Schneider 2020). 
Results indicated that juicy haptics improved player experience in terms of perceived enjoyability, aesthetic appeal, immersion, and meaning.

One common application of vibrotactile feedback to virtual environments is represented by simulating the sensation of touching virtual objects and surfaces to increase the perceived realism and immersion (Kronester et al. 2021; Friesen and Vardar 2023) and, consequently, the user experience of haptics has equally been examined in this regard. For example, van Beek et al. (2023) examined soft pneumatic displays for interactions in virtual environments, and compared haptic feedback delivered through a pneumatic unit cell at fingertip level against vibrotactile feedback and no feedback at all for tasks involving pressing buttons on a virtual number pad. Their results, obtained using various metrics of task performance, kinematics, and cognitive load, revealed that the participants moved more smoothly when receiving pneumatic feedback, felt more successful at performing the task when pneumatic or vibrotactile feedback were available, and reported the lowest stress level in the presence of pneumatic haptic feedback. Another example is Walking Vibe (Peng et al. 2020), a system designed for reducing sickness and improving the realism of walking experiences in virtual environments by means of vibrotactile feedback delivered through the HMD. The authors compared various vibrotactile feedback designs, represented by combinations of locations at the head level and synchronization techniques, against audio-visual feedback. Results showed that two-sided, footstep-synchronized vibrations can improve the user experience of virtual walking, while also mitigating motion sickness and discomfort.

Prior research has relied on questionnaires and interviews to unveil the user experience of haptic feedback for various applications involving both the physical and virtual world. In some cases, specific tools were developed and applied to this purpose, such as the Haptic Experience model (Kim and Schneider 2020), consisting of dedicated design parameters (timeliness, intensity, density, and timbre), usability requirements (utility, causality, consistency, and saliency), experiential dimensions (expressivity, harmony, autotelics, immersion, and realism), and support for personalization. Anwar et al. (2023) focused on a four-factor model consisting of realism (i.e., the degree to which the haptic effect is convincing, believable, and realistic), harmony (the degree to which haptics do not distract, feel out of place, or seem disconnected from the rest of the experience), involvement (the experience of focusing energy and attention on a coherent set of stimuli), and expressivity (the degree to which users feel the haptic stimuli to distinguishably reflect varying user input and system events). In other cases, generic tools have been employed to evaluate user experience aspects, such as the System Usability Scale (Brooke 1996), Usability Metric for User Experience (Finstad 2010), Task Load Index (Hart and Staveland 1988), or evaluations based on SEQ, the Single Ease Question (Sauro and Dumas 2009), regarding task satisfaction.

2.6 Lessons learned

An extensive body of work is available on haptics for virtual environments, including vibrotactile feedback, to increase users’ sense of presence, engagement, and immersion in the virtual world, but also user performance at interacting with virtual objects. At the same time, interactions with physical devices and displays, e.g., smartphones, smartwatches, and large touchscreens, have been augmented with vibrotactile feedback with beneficial effects on user performance and experience of the interaction. In this large space of application opportunities for haptics, we have identified gaps between vibrotactile feedback delivered to accompany interactions with content presented on virtual and physical displays. Whereas physical touchscreen devices enable on-screen vibrotactile feedback, the feeling of touching virtual displays is simulated with distal vibrations at various locations on the interactive arm and, conventionally, through off-the-shelf VR controllers. In this context, distal vibrotactile feedback, characterized by a physical decoupling between the location where the vibrations are delivered on the arm and the point of touch where the user’s finger lands on the display, has received less attention for physical displays. Furthermore, the user experience of distal vibrotactile feedback has not been documented for interactions with virtual displays, and the differences in comparison to physical displays have not been explored. In this work, we present empirical results from two experiments designed to unveil the user experience of distal vibrotactile feedback across both virtual and physical displays.

3 Experiment #1: Distal vibrotactile feedback for interactions with virtual displays

We conducted a controlled experiment to measure the user experience of distal vibrotactile feedback delivered at various locations on the user’s arm (at the finger, wrist, and forearm level) during input with visual content presented on a virtual display, compared to the baseline condition of receiving vibrotactile feedback through a conventional VR controller. Given the little research available on documenting the user experience of distal vibrotactile feedback, as discussed in Sect. 2, we designed our experiment to be exploratory in nature, rather than hypothesis-driven (Hessels and Hooge 2021). This approach offered us increased flexibility to focus on examining elicited subjective perceptions of the user experience created by distal vibrotactile feedback from an analysis perspective guided by a search for discovery rather than confirmation of preregistered hypotheses (Rubin and Donkin 2022).

3.1 Participants

Fourteen participants (of which ten self-identified as male and four as female), representing young adults aged between 19 and 33 years old (M = 25.7, SD = 3.1 years), were recruited for our experiment via convenience sampling. All of the participants were smartphone users, three (21.4%) were also using tablets on a regular basis, eight (57.1%) were using smartwatches or fitness trackers, two participants (14.3%) reported using smart earbuds, and six participants (42.9%) had used VR applications before our experiment, mostly represented by VR games. Six participants (42.9%) reported that the keyboard vibration feature was enabled on their smartphones.

3.2 Apparatus

We developed a custom interactive map application to support the task of the experiment. Our primary requirements for the task, involving presentation of visual content on a display, were effective use of visual attention (to locate specific visual targets) and visuomotor coordination (to touch and select those targets), respectively, for which an interactive map application fulfilled both. Furthermore, maps are ubiquitous in both desktop applications and virtual environments, and empirical results (Dong et al. 2020) have shown that user accuracy and reported satisfaction and readability levels are similar when interacting with maps in desktops and virtual environments alike.

Fig. 2 Apparatus used in our experiment: a screenshot of the virtual display showing an interactive map; b the wearable device for delivering vibrotactile feedback on the interactive arm; see Fig. 3 for participants wearing the HMD and wearable device

Fig. 3 Participants interacting with the vertical (a, b) and horizontal (c, d) virtual displays in the virtual environment; see Fig. 2 for a detailed view of the apparatus

For the implementation, we used Leaflet, a popular open-source JavaScript library for developing interactive maps. At startup, the application zooms into a city map with pinpoints shown next to selected targets, e.g., the Museum of Contemporary Art of Barcelona; see Fig. 2a for a screenshot from the virtual environment. We used the HTC Vive Cosmos HMD (\(2880\times 1700\) pixel resolution with 90 Hz refresh rate and 110\(^{\circ }\) field of view) to render the interactive map as a virtual display window floating in an empty room at an arm-reach distance from the user immersed in the virtual environment. The virtual display was implemented as a SteamVR overlay, on which we projected the map application using OVRDrop running in the Google Chrome web browser of the desktop PC (Intel Core i9-9900KF CPU, 64 GB RAM, NVIDIA GeForce RTX 3070) to which the HTC Vive HMD was connected. When a target is selected with the raycast, a popup window shows a brief description of the target, and vibrotactile feedback is delivered through the VR controller or at various locations on the user’s interactive arm through a wearable device that we specifically designed and prototyped for the experiment; see Fig. 2b for a close-up photograph. The wearable device incorporates three 10 mm DC coin vibration motors into a velcro band with adjustable length so that the motors can be affixed comfortably to the index finger, wrist, and forearm, respectively, as shown in Figs. 2b and 3 (see Notes). The vibration motors are commanded by a CH340G NodeMcu V3 board based on the ESP8266 Wi-Fi module, a self-contained System-on-a-Chip with integrated TCP/IP protocol stack, which communicates with the map application via the WebSocket protocol.
During the experiment, vibrations were delivered by each motor independently for a fixed duration of 150 ms, a value that we determined empirically during our technical prototyping so that the vibrations could be felt and localized unambiguously by the participants on the various parts of the arm (Terenti and Vatavu 2022). Vibrations were delivered at the maximum intensity of the coin motors used to implement our device (1.40 G nominal amplitude vibe force at rated voltage of 3.0 V DC) and at the default setting of the VR controller of the HTC Vive HMD, respectively.

3.3 Design

Our experiment was a within-subjects design with the following two independent variables:

1. Location, nominal variable with four explicit conditions: controller, finger, wrist, and forearm, specifying various locations where distal vibrotactile feedback was provided during input with the virtual display, which we contrasted against the implicit condition of none, representing the absence of vibrotactile feedback during the interaction; see the specific design and formulation of our UX measures in Sect. 3.5.

2. Orientation, nominal variable with two conditions, corresponding to horizontal and vertical displays.

While the Location variable enables examination of the effect of either holding or wearing a device, Orientation covers potential effects of visual content presentation mode in the virtual environment with respect to the user’s body; see Fig. 3. The dependent variables are represented by several UX measures described in detail in Sect. 3.5.

3.4 Task

After signing the consent form and filling out a demographic questionnaire, the participants were presented with the virtual environment, the interactive map application, and the wearable device. During a training stage, the participants familiarized themselves with the task by selecting targets from the map using the VR controller, confirming that they were feeling the vibrations delivered by the controller and our wearable device, respectively. The participants were provided the option to either sit or stand, choosing whichever felt more comfortable for them while visualizing and interacting with the content presented on the virtual displays; see Fig. 3a–d for photographs from the experiment. For the experimental task, a different city map was presented for each combination of Location \(\times\) Orientation, and the participants were asked a question about the targets indicated with pinpoints on the map, e.g., “What is the year of the oldest building from this city?” To answer the question, the participants had to select all of the targets to access their associated descriptions. Vibrotactile feedback was delivered upon target selection either by the VR controller or the wearable device affixed to the dominant hand, according to the current condition. The order of Orientation was randomized per participant as was the order of Location for each display orientation. The first condition of Location was always no vibrotactile feedback (none), representing our implicit control condition against which we elicited participants’ preferences for distal vibrotactile feedback delivered at various locations on the interactive arm; see Sect. 3.5 for our UX measures. In order to minimize motion sickness effects, we introduced breaks between the administration of the different Location conditions, during which the participants filled out a questionnaire with UX measures designed to describe their experience with distal vibrotactile feedback; see next for details.
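The ordering of conditions described above can be illustrated with a minimal Python sketch. It assumes that the no-vibration control (none) is administered first for each display orientation, which is one reading of the procedure; the function name, the seed parameter, and the condition labels are hypothetical and are not taken from the software used in the experiment.

```python
import random

# Explicit Location conditions and Orientation conditions from Sect. 3.3.
LOCATIONS = ["controller", "finger", "wrist", "forearm"]
ORIENTATIONS = ["horizontal", "vertical"]


def condition_order(participant_seed: int) -> list[tuple[str, str]]:
    """Return the (orientation, location) sequence for one participant.

    Assumption: the 'none' control comes first for each orientation,
    followed by the explicit Location conditions in random order.
    """
    rng = random.Random(participant_seed)  # seeded so the order is reproducible
    orientations = ORIENTATIONS[:]
    rng.shuffle(orientations)  # order of Orientation randomized per participant

    order: list[tuple[str, str]] = []
    for orientation in orientations:
        locations = LOCATIONS[:]
        rng.shuffle(locations)  # order of Location randomized per orientation
        order.append((orientation, "none"))  # control condition always first
        order.extend((orientation, location) for location in locations)
    return order


if __name__ == "__main__":
    for step, (orientation, location) in enumerate(condition_order(7), start=1):
        print(step, orientation, location)
```

Under these assumptions, each participant receives ten blocks: one control block and four vibrotactile blocks per display orientation.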

3.5 Measures

We collected participants’ experience of distal vibrotactile feedback with several UX measures representing the dependent variables in our experiment. The measures were collected with 5-point Likert-scale ratings encoding participants’ level of agreement, from 1 (“strongly disagree”) to 2 (“disagree”), 3 (“neither agree nor disagree”), 4 (“agree”), and 5 (“strongly agree”), with various statements centered on words having either positive or negative connotations. The specific formulation that we chose for the statements employed to elicit participants’ perceptions of distal vibrotactile feedback contrasted each of the four explicit conditions of the Location independent variable against the implicit control condition, none, where vibrations were absent, as follows:

- Perceived-Enjoyment, in response to the statement “Interacting with the display felt more enjoyable with vibrotactile feedback than without.”

- Perceived-Distracteness, in response to “Interacting with the display felt more distracting with vibrotactile feedback than without.”

- Perceived-Efficiency, “Interacting with the display felt more efficient with vibrotactile feedback than without.”

- Perceived-Difficulty, “Interacting with the display felt more difficult with vibrotactile feedback than without.”

- Perceived-Confidence, in response to the statement “Vibrotactile feedback made me feel more confident when interacting with the display compared to when vibrations were absent.”

- Perceived-Confusion, “Vibrotactile feedback created confusion for me when interacting with the display.”

- Perceived-Integration, “Touching the display and feeling the vibrations integrated well into one experience.”

This set of measures was designed to elicit user perception regarding specific dimensions previously identified in the scientific literature as important for the UX construct (Pamparău and Vatavu 2020; Garrett 2011) with its multiple valences: perceived usability, affect, trust, value, workload (Sonderegger et al. 2019), usability requirements and experiential dimensions (Kim and Schneider 2020). In relation to these dimensions, we employed descriptive words with positive connotation, such as “enjoyment,” representative of the short-term affective response category of UX qualities (Law et al. 2014), and “efficiency,” from the instrumental UX category (Law et al. 2014), respectively, which we contrasted with two negatively connoted items, “distractedness” and “difficulty.” Furthermore, the opposite pair of “confidence” and “confusion” descriptors, and the perception of the “integration” between touch input and vibrotactile feedback address explicitly the experience of a confirmatory response following on-screen input, but also connect to aspects of harmony and involvement (Anwar et al. 2023). We collected participants’ ratings for these measures for each combination of Location (four explicit conditions, since the absence of vibrotactile feedback was considered as the baseline in each of the statements formulating the UX measures) and Orientation (two conditions). Furthermore, we aggregated the individual measures corresponding to positively and negatively connoted descriptors into two overall measures of contentment and discontentment regarding the experience of distal vibrotactile feedback, reflective of both usability and experiential dimensions (Kim and Schneider 2020), as follows:

- Perceived-Contentment = (Perceived-Enjoyment + Perceived-Efficiency + Perceived-Confidence)/3

- Perceived-Discontentment = (Perceived-Distracteness + Perceived-Difficulty + Perceived-Confusion)/3
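As an illustration, the two aggregated measures can be computed from one participant's ratings with the short Python sketch below. The dictionary keys and the example ratings are hypothetical and serve only to show the arithmetic; they are not data from the experiment.

```python
from statistics import mean

# Hypothetical short keys for the positively and negatively connoted measures.
POSITIVE = ("enjoyment", "efficiency", "confidence")
NEGATIVE = ("distractedness", "difficulty", "confusion")


def aggregate(ratings: dict[str, int]) -> tuple[float, float]:
    """Return (Perceived-Contentment, Perceived-Discontentment).

    Each aggregate is the mean of three 5-point Likert ratings.
    """
    for name in POSITIVE + NEGATIVE:
        if not 1 <= ratings[name] <= 5:
            raise ValueError(f"{name} must be a rating between 1 and 5")
    contentment = mean(ratings[name] for name in POSITIVE)
    discontentment = mean(ratings[name] for name in NEGATIVE)
    return contentment, discontentment


if __name__ == "__main__":
    # Made-up ratings for one Location x Orientation combination.
    example = {
        "enjoyment": 4, "efficiency": 4, "confidence": 5,
        "distractedness": 2, "difficulty": 1, "confusion": 2,
        "integration": 5,  # collected, but not part of either aggregate
    }
    contentment, discontentment = aggregate(example)
    print(f"Contentment = {contentment:.2f}, Discontentment = {discontentment:.2f}")
```

For the made-up ratings above, the aggregates are (4 + 4 + 5)/3 ≈ 4.33 and (2 + 1 + 2)/3 ≈ 1.67. Perceived-Integration does not enter either aggregate, in line with the two formulas.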

In addition to this set of measures focused on the perception of vibrations for each condition, we also collected participants’ preferences for all of the combinations of Location \(\times\) Orientation. However, unlike the 1 to 5 scale used for the former, we expanded the preference scale to a range from 1 to 9. This adjustment ensured that all combinations of Location \(\times\) Orientation had an equal opportunity to be ranked differently, particularly since their total number exceeded five.

3.6 Statistical analysis

To analyze the ordinal data resulting from our two-factor experiment, we employed ANOVA with the Aligned-Rank Transform (ART) procedure, a technique specifically designed for nonparametric factorial data analysis using the F-test, implemented with ARTool (Wobbrock et al. 2011). For post-hoc pairwise comparisons, we employed the ART-C algorithm (Elkin et al. 2021) proposed as an extension for ART, and applied FDR adjustments for p-values.
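To make the p-value adjustment step concrete, the following Python sketch implements the Benjamini-Hochberg step-up procedure. This is an assumption: the text states only that FDR adjustments were applied, and Benjamini-Hochberg is a widely used FDR method, not necessarily the one used here. The function name and the example p-values are hypothetical.

```python
def fdr_adjust(p_values: list[float]) -> list[float]:
    """Return Benjamini-Hochberg adjusted p-values, in the input order."""
    m = len(p_values)
    # Indices of the p-values sorted from smallest to largest.
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_min = 1.0
    # Walk from the largest p-value down, enforcing monotonicity.
    for rank in range(m, 0, -1):
        i = order[rank - 1]
        running_min = min(running_min, p_values[i] * m / rank)
        adjusted[i] = running_min
    return adjusted


if __name__ == "__main__":
    # Made-up raw p-values for three pairwise contrasts (not from the study).
    raw = [0.01, 0.04, 0.03]
    print([round(p, 3) for p in fdr_adjust(raw)])
```

With the made-up inputs above, the smallest p-value is scaled by 3/1, the middle one by 3/2, and the largest by 3/3, after which each adjusted value is capped by the adjusted values of the larger p-values.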

Fig. 4 User experience of distal vibrotactile feedback delivered on the controller, finger, wrist, and forearm for interacting with virtual displays, measured relative to the implicit control condition of no vibrations. Notes: mean values are shown; error bars denote 95% CIs; see Table 1 for the corresponding statistical tests

3.7 Results

We did not find a statistically significant effect of Orientation on Overall-Preference (\(F_{(1,91)}=1.726, p=.192, n.s.\)), but we detected a significant effect of Location (\(F_{(3,91)}=5.472, p<.01\)) of a medium to large effect size (\(\eta ^2_p=.15\)); see Fig. 4, bottom right. Post-hoc tests (FDR-adjusted p-values) revealed that distal vibrotactile feedback delivered on the finger (Mdn = 3.5, M = 4.2, SD = 3.1) was preferred to feedback on the wrist (Mdn = 7, M = 6.2, SD = 2.6, \(t_{(91)}=-2.538, p<.05, \eta ^2_p=.07\)), forearm (Mdn = 7, M = 6.8, SD = 2.2, \(t_{(91)}=-3.688, p<.01, \eta ^2_p=.13\)), and controller (Mdn = 7, M = 6.3, SD = 3.1, \(t_{(91)}=3.279, p<.01, \eta ^2_p=.11\)), respectively.
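The reported effect sizes can be related to the test statistics through the standard conversion \(\eta ^2_p = F \cdot df_{effect} / (F \cdot df_{effect} + df_{error})\), with \(F = t^2\) and \(df_{effect} = 1\) for a t-test. The Python sketch below applies this conversion to the values reported above; whether the effect sizes in this work were obtained in this way is an assumption, and the function name is hypothetical.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Convert an F statistic and its degrees of freedom to partial eta squared."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


if __name__ == "__main__":
    # Main effect of Location on Overall-Preference: F(3, 91) = 5.472.
    print(round(partial_eta_squared(5.472, 3, 91), 2))

    # Post-hoc contrasts reported as t(91); a t-test is an F-test with F = t^2.
    for label, t in (("wrist", -2.538), ("forearm", -3.688), ("controller", 3.279)):
        print(label, round(partial_eta_squared(t ** 2, 1, 91), 2))
```

Under this conversion, the computed values round to .15 for the main effect and to .07, .13, and .11 for the three contrasts, which agrees with the effect sizes reported in the text.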

To find out more, we looked at the individual UX measures; see Fig. 4 for the mean ratings of participants’ perceptions of distal vibrotactile feedback delivered on the finger, wrist, forearm, and through the controller, respectively, measured with respect to none, our implicit baseline condition of no vibrotactile feedback. Overall, the experience reported by our participants was characterized by high perceived enjoyment (Mdn = 4.0, M = 3.9, SD = 0.8), efficiency (Mdn = 4.0, M = 3.6, SD = 0.9), confidence (Mdn = 4.0, M = 3.9, SD = 0.9), and integration (Mdn = 4.0, M = 4.2, SD = 0.6), complemented by low perceived distraction (Mdn = 1.0, M = 2.1, SD = 0.9), difficulty (Mdn = 1.0, M = 1.9, SD = 0.9), and confusion (Mdn = 2.0, M = 1.9, SD = 0.7). However, none of our specific UX measures could help explain participants’ overall preference for distal vibrotactile feedback on the finger. We found that neither the Orientation of the virtual display nor the Location where vibrations were delivered influenced participants’ perceptions of the experience of distal vibrotactile feedback (all p-values were above the \(\alpha =.05\) level of statistical significance); see Table 1 for details. These results were corroborated by no significant effects of Location or Orientation on the aggregated UX measures of Perceived-Contentment and Perceived-Discontentment, respectively (all \(p{>}.05, n.s.\)). The mean ratings of Perceived-Contentment stayed roughly the same, between 3 and 4, for all four conditions of distal vibrotactile feedback: 3.8 (finger), 3.9 (wrist), 3.7 (forearm), and 3.9 (controller). Furthermore, the average ratings for Perceived-Discontentment were relatively low on the scale: 2.0 (finger), 2.0 (wrist), 2.1 (forearm), and 1.9 (controller). Nevertheless, the Overall-Preference results indicate that vibrations on the finger are preferred to alternative locations on the body and the VR controller, respectively.
To gain more insight, we reconducted the experiment with physical displays in a physical environment; see the next section.

4 Experiment #2: Distal vibrotactile feedback for interactions with physical displays

We conducted a follow-up experiment to evaluate the user experience of vibrations delivered at distal locations on the arm during interactions with physical displays. Just like in the previous experiment, we adopted a design and approach that were exploratory in nature rather than hypothesis-driven.

4.1 Participants

We recruited another sample of fourteen participants (of which ten self-identified as male and four as female), representing young adults aged between 19 and 34 years old (M = 25.0, SD = 4.1 years). All of the participants were smartphone users, five (35.7%) were also using tablets on a regular basis, eight (57.1%) were using smartwatches or fitness trackers, and four participants (28.6%) reported using smart earbuds. Eight participants (57.1%) had the keyboard vibration feature enabled on their smartphones. None of the participants from this second experiment were involved in the first one conducted with virtual displays, described in Sect. 3. However, we made sure that the two samples of participants were similar in terms of their age and gender distribution.

4.2 Apparatus

We reused the interactive map application, which ran in the Google Chrome web browser (full screen mode) of two touchscreen displays: a horizontal 46-inch Ideum Platform (\(1920\times 1080\) pixel resolution, 12 ms touch response time, integrated CPU Intel Core i7-4790S 3.2 GHz, RAM 16 GB DDR3) and a vertical 55-inch Samsung UE55D display with a CY-TD55LDAH touchscreen overlay (\(1920\times 1080\) pixel resolution, 13 ms touch response time, connected to a Dell laptop with Intel Core i5-4300U 2 GHz, RAM 8 GB DDR3); see Fig. 5.

Fig. 5 Participants interacting with the vertical (a, b) and horizontal (c, d) physical displays in the physical environment; see Fig. 3 for correspondences with the participants from the first experiment, who interacted with virtual displays

4.3 Design

Following the design adopted for the first experiment, our second study was also a within-subjects design with two independent variables:

1. Location, nominal variable with three explicit conditions: finger, wrist, and forearm, specifying locations on the arm where distal vibrotactile feedback was provided during input with the physical display, which we contrasted against the implicit condition of none, representing the absence of vibrotactile feedback during the interaction.

2. Orientation, nominal variable, two conditions: horizontal and vertical.

The dependent variables were represented by the same UX measures used in the first experiment, which were described in detail in Sect. 3.5.

4.4 Task

After signing the consent form, the participants were presented with the two physical displays, the interactive map application, and the wearable device, and were briefed about the specifics of the task. The task was the same as in the first experiment, and was practiced during a training phase. The order of Orientation was randomized per participant as was the order of the finger, wrist, and forearm vibrotactile feedback conditions of the Location independent variable for each display orientation. The first condition for each display was always touch input without vibrotactile feedback (none), which was our control, just like in the first experiment.

Fig. 6 User experience of distal vibrotactile feedback delivered on the finger, wrist, and forearm for interacting with physical displays, measured relative to the implicit control condition of no vibrations. Notes: mean values are shown; error bars denote 95% CIs; see Table 2 for the corresponding statistical tests

4.5 Results

We did not find a statistically significant effect of Orientation on Overall-Preference (\(F_{(1,65)}=0.243, p=.623, n.s.\)), but we detected a significant effect of Location (\(F_{(2,65)}=12.493, p<.001\)) of a large size (\(\eta ^2_p=.28\)); see Fig. 6, bottom right. The finger (Mdn = 1, M = 2.9, SD = 2.9) condition was preferred to the wrist (Mdn = 4, M = 4.2, SD = 2.6, \(t_{(65)}=-2.788, p<.05, \eta ^2_p=.11\)) and forearm (Mdn = 5, M = 5.5, SD = 2.4, \(t_{(65)}=-4.987, p<.001, \eta ^2_p=.28\)), and the wrist was preferred to the forearm (\(t_{(65)}=-2.198, p<.05, \eta ^2=.05\)), respectively (FDR adjustments were applied to the p-values).

Figure 6 also shows nuances of participants’ perceptions of distal vibrotactile feedback delivered on the finger, wrist, and forearm with respect to the implicit baseline condition of no vibrations (none). Except for the Perceived-Enjoyment measure (\(p=.041<.05\)), Orientation did not influence participants’ perceptions of the experience they felt and reported with distal vibrotactile feedback. However, the Location where vibrations were delivered to the arm influenced significantly the user experience according to six of our UX measures: Perceived-Enjoyment (\(\eta ^2_p=.28\)), Perceived-Efficiency (\(\eta ^2_p=.42\)), Perceived-Confidence (\(\eta ^2_p=.28\)), Perceived-Integration (\(\eta ^2_p=.33\)), Perceived-Confusion (\(\eta ^2_p=.13\)), and Perceived-Distracteness (\(\eta ^2_p=.21\)); see Table 2 for details. Post-hoc contrast tests (with FDR adjustments for p-values) revealed statistically significant differences between vibrations delivered on the finger compared to the wrist and forearm, but no difference between wrist and forearm. Overall, vibrotactile feedback on the finger was preferred in terms of Perceived-Enjoyment (Mdn = 5 and 4.5 for the horizontal and vertical displays), Perceived-Efficiency (Mdn = 5 and 5), Perceived-Confidence (Mdn = 5 and 5), and Perceived-Integration (Mdn = 5 and 4.5), where higher values, closer to 5, denote a better user experience; see Fig. 6. Furthermore, vibrations delivered on the finger were preferable to the wrist and forearm when the experience was described in terms of descriptors with negative connotations, Perceived-Distracteness (Mdn = 1.5 and Mdn = 1), Perceived-Difficulty (Mdn = 1 and Mdn = 1.5), and Perceived-Confusion (Mdn = 1 and Mdn = 1), for which lower ratings, closer to 1, denote a better user experience.

These results were corroborated by statistically significant effects of Location (\(p<.001\)) on both the aggregated measures of Perceived-Contentment and Perceived-Discontentment with significant differences (\(p<.01\)) between finger and wrist and finger and forearm, respectively; see Table 2. In order, the mean Perceived-Contentment ratings of the distal vibrotactile feedback experience decreased from the finger (M = 4.4, Mdn = 5, SD = 0.8) to the wrist (M = 3.6, Mdn = 3.8, SD = 1.0) and forearm (M = 3.2, Mdn = 3.3, SD = 1.0), while Perceived-Discontentment decreased from the forearm (M = 2.3, Mdn = 2.3, SD = 0.8) to the wrist (M = 2.1, Mdn = 1.8, SD = 0.8) and finger (M = 1.6, Mdn = 1.3, SD = 0.7), respectively.

5 Discussion

In this section, we comparatively present the findings of our two experiments, which we discuss in the broader context of immersion in physical and virtual worlds. Additionally, we address the limitations of our experiments and propose future work opportunities to address them.

5.1 The user experience of distal vibrotactile feedback between virtual and physical worlds

Unlike for the experience of interacting with virtual displays, specific nuances were detected by the majority of our UX measures when the displays were physical in nature and the participants could touch them directly; see Tables 1 and 2 from the previous two sections. This finding suggests that the location of distal vibrotactile feedback on the interactive arm, ranging from the finger to the forearm, may be of less significance to users when they are immersed in a virtual environment compared to when they interact in the physical world and are in front of a physical display.

The primary distinction between these two conditions lies in the presentation of the world, whether virtual or physical, and the users’ sense of immersion in each, respectively. In the virtual world, our participants could observe a virtual representation of their hand interacting with the content presented on the virtual display, facilitated by the VR controller. In the physical world, the hand was real, familiar, and directly in contact with the display without any intermediary device. These differences in the perceptual nature of immersion in each world may have influenced the additional perception of vibrations delivered on the arm during interactions in those worlds. While the level of immersion and the perception of the physical body’s connection and belonging to the world were at their peak during interactions with the physical display, the attention to additional perceptual cues was likely given greater consideration than in the virtual environment. In the latter case, where a disconnection likely existed between the perceived immersion into a different, new world and the delivery of additional perceptual cues in the form of vibrations at various locations on the body, reminiscent of the physical world, the importance of those specific locations on the interactive arm was diminished when reporting their experience. The statistically significant effect detected on the Perceived-Efficiency measure (M = 4.4 for physical displays vs. M = 3.5 for virtual displays, \(p<.05\), see the second row in Table 3) supports this observation of a difference of perceptual nature between being in a virtual world and experiencing vibrations on the physical body.

Nevertheless, the overall preference for distal vibrotactile feedback delivered on the finger implementing the interaction was a common outcome of both experiments. The finger was systematically preferred to the wrist and forearm and, in the virtual displays experiment, the VR controller, respectively. This additional finding indicates that, while distal vibrotactile feedback accompanying interactions with virtual and physical displays is perceived as equally valuable across various UX dimensions, such as perceived enjoyment, confidence, distractedness, and others, the finger is consistently rated higher compared to other locations on the interactive arm. Notably, the preference for vibrations delivered on the finger was significantly stronger for physical displays than when interacting with virtual displays (M = 2.9 vs. M = 5.1, \(p<.05\), see the last row of Table 3, values closer to 1 denote higher preference). This finding corroborates our earlier observation regarding our participants’ greater consideration of the specific location where additional perceptual cues were delivered on their arm, as part of the overall contrasting experience, at the level of immersion, of being in a physical or virtual world.

5.2 Limitations and future work

We focused in our two experiments on vibrotactile feedback delivered to users during interactions with displays located at the two extremes of the Reality-Virtuality Continuum (Milgram and Kishino 1994). Specifically, our displays were either purely virtual or effectively physical, but not in between. Interesting future work is recommended to examine user perception of the experience of distal vibrotactile feedback delivered during interactions with content presented on mixed reality (MR) displays, such as through optical see-through HMDs. Unlike our choice of HMD technology from the virtual displays experiment, which was meant to immerse our participants completely in the virtual world in terms of visual perception, see-through HMDs keep users anchored in the physical world, while presenting virtual content on top. In this context, interesting options for further investigations include augmented reality (AR) displays, presented on top of the substratum of the physical world, e.g., interacting with virtual content structured according to the geometry of a physical surface from the physical world, but also augmented virtuality (AV) displays, where content from a physical display is streamed into the virtual world. Such follow-up investigations, enabled by optical see-through HMDs, may reveal new nuances in the user experience of haptics providing confirmatory sensations of the on-screen interaction, when screens are of a mixed, physical-virtual nature.

Another limitation of our experiments refers to body pose while interacting with displays. In the VR experiment, the participants chose to sit down while interacting with the vertical display, but opted to stand up in the horizontal display condition. In the physical displays experiment, however, all the participants stood up to comfortably reach the various parts of the horizontal and vertical displays. This observation highlights an interesting difference between virtual and physical displays, where the former, unrestricted by physical constraints, allow for a more comfortable body posture during interaction. In contrast, both our physical displays from the second experiment required participants to stand up to physically reach various targets. This aspect also suggests opportunities for future work. For example, exploring other locations on the body at which to deliver vibrotactile feedback could be interesting for displays involving interactions with the feet (Velloso et al. 2015), head (Yan et al. 2018), and the whole body (Vatavu 2017), respectively, for new interactive experiences with large public displays (Ardito et al. 2015) or large-scale immersive installations (Lantz 2007), and for which other body augmentation devices should be explored, including haptic vests, shoes, and suits.

Lastly, we reported empirical findings based on small sample sizes, i.e., fourteen participants in each experiment. Although small, our sample size is similar to those from previous studies about haptic feedback. For example, Brewster et al. (2007) involved twelve participants in their study about user performance of tactile feedback during mobile interactions; Carter et al. (2013) used nine participants in a study concerned with users’ capacity to recognize and discriminate between focal point conditions of the UltraHaptics technology; Bickmann et al. (2019) involved eighteen participants in a usability study of the Haptic Illusion Glove employed during grasping objects; Cho et al. (2016) used twelve participants to understand perceptions about RealPen, a device for input on touchscreens incorporating auditory-tactile feedback; and Hoggan et al. (2008) involved twelve participants in their experiment evaluating the effectiveness of tactile feedback for mobile touchscreens, to give just a few examples. Nevertheless, reconducting our experiments with a larger sample size may lead to more nuanced findings about the user experience of interacting with physical and virtual displays, which we leave for future work.

We also recommend conducting further investigations into the user experience of distal vibrotactile feedback for other application types. In our experiments, we employed an interactive map, an application that successfully fulfilled our requirements for visual search and visuomotor coordination when interacting with on-screen content. However, other applications that involve content presentation on a display, such as web browsing (Cibelli et al. 1999), interactive television (Popovici and Vatavu 2019), learning and education (Radianti et al. 2020), with different requirements, may lead to new discoveries about the user experience of distal vibrotactile feedback. The user experience of other user groups, such as people with visual or motor impairments, is equally interesting to examine in future work towards practical applications of distal vibrotactile feedback matching specific user abilities (Tennison et al. 2020; Vatavu et al. 2022).

6 Conclusion

We focused in this work on measuring the user experience of distal vibrotactile feedback delivered on the arm during interactions with visual content presented on virtual displays, which we contrasted with the more common experience of interacting with physical touchscreens. In both cases, we found a similar user experience, complemented by a preference for vibrations delivered on the finger rather than other parts of the arm, yet with specific nuances according to the nature, virtual or physical, of the display. Also, user preference for vibrotactile feedback delivered on the finger was larger than for vibrations delivered through a conventional VR controller. Based on these results, interesting future work lies ahead for physically decoupling, by design, the location of vibrotactile feedback and the point of interaction with virtual displays towards augmented interactions in VR/AR worlds.

7 Open data

To foster future work in the area of distal vibrotactile feedback, we release our dataset, representing the self-reported user experience of the twenty-eight participants from our two experiments, as a free resource for researchers.

Notes

An alternative design approach would have been to implement three devices, one for the finger, one for the wrist, and one for the forearm, but the participants would have had to don and doff the devices repeatedly when moving from one experimental condition to the next; see the next subsections for more details about the task.

References

Abeele VV, Spiel K, Nacke L et al (2020) Development and validation of the player experience inventory: a scale to measure player experiences at the level of functional and psychosocial consequences. Int J Hum Comput Stud 135:102370. https://doi.org/10.1016/j.ijhcs.2019.102370

Alvina J, Zhao S, Perrault ST et al (2015) Omnivib: towards cross-body spatiotemporal vibrotactile notifications for mobile phones. In: Proceedings of the 33rd annual ACM conference on human factors in computing systems (CHI’15). ACM, New York, pp 2487–2496. https://doi.org/10.1145/2702123.2702341

Anwar A, Shi T, Schneider O (2023) Factors of haptic experience across multiple haptic modalities. In: Proceedings of the 2023 CHI conference on human factors in computing systems (CHI’23). ACM, New York. https://doi.org/10.1145/3544548.3581514

Ardito C, Buono P, Costabile MF et al (2015) Interaction with large displays: a survey. ACM Comput Surv 47(3):66. https://doi.org/10.1145/2682623

Asano S, Okamoto S, Yamada Y (2015) Vibrotactile stimulation to increase and decrease texture roughness. IEEE Trans Hum–Mach Syst 45(3):393–398. https://doi.org/10.1109/THMS.2014.2376519

Bau O, Poupyrev I (2012) Revel: tactile feedback technology for augmented reality. ACM Trans Graph 31(4):66. https://doi.org/10.1145/2185520.2185585

Bau O, Poupyrev I, Israr A et al (2010) Teslatouch: electrovibration for touch surfaces. In: Proceedings of the 23rd annual ACM symposium on user interface software and technology (UIST’10). ACM, New York, pp 283–292. https://doi.org/10.1145/1866029.1866074

Bermejo C, Hui P (2021) A survey on haptic technologies for mobile augmented reality. ACM Comput Surv 54(9):66. https://doi.org/10.1145/3465396

Berning M, Braun F, Riedel T et al (2015) ProximityHat: a head-worn system for subtle sensory augmentation with tactile stimulation. In: Proceedings of the 2015 ACM international symposium on wearable computers (ISWC’15). ACM, New York, pp 31–38. https://doi.org/10.1145/2802083.2802088

Bertheaux C, Toscano R, Fortunier R et al (2020) Emotion measurements through the touch of materials surfaces. Front Hum Neurosci 13:455. https://doi.org/10.3389/fnhum.2019.00455

Bickmann R, Tran C, Ruesch N et al (2019) Haptic illusion glove: a glove for illusionary touch feedback when grasping virtual objects. In: Proceedings of Mensch Und Computer 2019 (MuC’19). ACM, New York, pp 565–569. https://doi.org/10.1145/3340764.3344459

Boer L, Vallgårda A, Cahill B (2017) Giving form to a hedonic haptics player. In: Proceedings of the 2017 conference on designing interactive systems (DIS’17). ACM, New York, pp 903–914. https://doi.org/10.1145/3064663.3064792

Bouzbib E, Bailly G, Haliyo S et al (2022) “Can I Touch This?”: survey of virtual reality interactions via haptic solutions. In: Proceedings of the 32nd conference on l’interaction homme–machine (IHM’21). ACM, New York. https://doi.org/10.1145/3450522.3451323

Brewster S, Chohan F, Brown L (2007) Tactile feedback for mobile interactions. In: Proceedings of the SIGCHI conference on human factors in computing systems (CHI’07). ACM, New York, pp 159–162. https://doi.org/10.1145/1240624.1240649

Brooke J (1996) SUS: a ’Quick and Dirty’ usability scale. In: Jordan PW, Thomas B, McClelland IL et al (eds) Usability evaluation in industry. CRC Press, London, pp 189–194. https://doi.org/10.1201/9781498710411-35

Cabibihan JJ, Chauhan SS (2017) Physiological responses to affective tele-touch during induced emotional stimuli. IEEE Trans Aff Comput 8(1):108–118. https://doi.org/10.1109/TAFFC.2015.2509985

Carter T, Seah SA, Long B et al (2013) Ultrahaptics: multi-point mid-air haptic feedback for touch surfaces. In: Proceedings of the 26th annual ACM symposium on user interface software and technology (UIST’13). ACM, New York. https://doi.org/10.1145/2501988.2502018

Catană AV, Vatavu RD (2023) Fingerhints: understanding users’ perceptions of and preferences for on-finger kinesthetic notifications. In: Proceedings of the 2023 CHI conference on human factors in computing systems (CHI’23). ACM, New York. https://doi.org/10.1145/3544548.3581022

Cheng LT, Kazman R, Robinson J (1997) Vibrotactile feedback in delicate virtual reality operations. In: Proceedings of the 4th ACM international conference on multimedia (MULTIMEDIA’96). ACM, New York, pp 243–251. https://doi.org/10.1145/244130.244220

Cho Y, Bianchi A, Marquardt N et al (2016) RealPen: providing realism in handwriting tasks on touch surfaces using auditory-tactile feedback. In: Proceedings of the 29th annual symposium on user interface software and technology (UIST’16). ACM, New York, pp 195–205. https://doi.org/10.1145/2984511.2984550

Cholewiak RW, Collins AA (2003) Vibrotactile localization on the arm: effects of place, space, and age. Percept Psychophys 65:1058–1077. https://doi.org/10.3758/BF03194834

Cholewiak RW, Brill JC, Schwab A (2004) Vibrotactile localization on the abdomen: effects of place and space. Percept Psychophys 66:970–987. https://doi.org/10.3758/BF03194989

Cibelli M, Costagliola G, Polese G et al (1999) A virtual reality environment for web browsing. In: Proceedings of the 10th international conference on image analysis and processing (ICIAP’99). IEEE Computer Society, USA, p 1009

Cirelli M, Nakamura R (2014) A survey on multi-touch gesture recognition and multi-touch frameworks. In: Proceedings of the ninth ACM international conference on interactive tabletops and surfaces (ITS’14). ACM, New York, pp 35–44. https://doi.org/10.1145/2669485.2669509

Dariosecq M, Plénacoste P, Berthaut F et al (2020) Investigating the semantic perceptual space of synthetic textures on an ultrasonic based haptic tablet. In: HUCAPP 2020, pp 45–52. https://hal.archives-ouvertes.fr/hal-02434298

Degraen D, Fruchard B, Smolders F et al (2021) Weirding haptics: in-situ prototyping of vibrotactile feedback in virtual reality through vocalization. In: Proceedings of the 34th annual ACM symposium on user interface software and technology (UIST’21). ACM, New York, pp 936–953. https://doi.org/10.1145/3472749.3474797

Dong W, Yang T, Liao H et al (2020) How does map use differ in virtual reality and desktop-based environments? Int J Digit Earth 13(12):1484–1503. https://doi.org/10.1080/17538947.2020.1731617

Elkin LA, Kay M, Higgins JJ et al (2021) An aligned rank transform procedure for multifactor contrast tests. In: Proceedings of the 34th annual ACM symposium on user interface software and technology (UIST’21). ACM, New York, pp 754–768. https://doi.org/10.1145/3472749.3474784

Elsayed H, Weigel M, Müller F et al (2020) Vibromap: understanding the spacing of vibrotactile actuators across the body. Proc ACM Interact Mob Wearable Ubiq Technol 4(4):66. https://doi.org/10.1145/3432189

Emgin SE, Aghakhani A, Sezgin TM et al (2019) HapTable: an interactive tabletop providing online haptic feedback for touch gestures. IEEE Trans Visual Comput Graph 25(9):2749–2762. https://doi.org/10.1109/TVCG.2018.2855154

Finstad K (2010) The usability metric for user experience. Interact Comput 22(5):323–327. https://doi.org/10.1016/j.intcom.2010.04.004

Friesen RF, Vardar Y (2023) Perceived realism of virtual textures rendered by a vibrotactile wearable ring display. IEEE Trans Haptics 66:1–11. https://doi.org/10.1109/TOH.2023.3304899

Garrett JJ (2011) The elements of user experience: user-centered design for the web and beyond, 2nd edn. New Riders, Berkeley

Grübel J, Gath-Morad M, Aguilar L et al (2021) Fused twins: a cognitive approach to augmented reality media architecture. In: Media Architecture Biennale 20 (MAB20). ACM, New York, pp 215–220. https://doi.org/10.1145/3469410.3469435

Gu X, Zhang Y, Sun W et al (2016) Dexmo: an inexpensive and lightweight mechanical exoskeleton for motion capture and force feedback in VR. In: Proceedings of the 2016 CHI conference on human factors in computing systems (CHI’16). ACM, New York, pp 1991–1995. https://doi.org/10.1145/2858036.2858487

Hart SG, Staveland LE (1988) Development of NASA-TLX (Task Load Index): results of empirical and theoretical research. Adv Psychol 52:139–183. https://doi.org/10.1016/S0166-4115(08)62386-9

Henderson J, Avery J, Grisoni L et al (2019) Leveraging distal vibrotactile feedback for target acquisition. In: Proceedings of the 2019 CHI conference on human factors in computing systems. ACM, New York. https://doi.org/10.1145/3290605.3300715

Heo S, Lee J, Wigdor D (2019) PseudoBend: producing haptic illusions of stretching, bending, and twisting using grain vibrations. In: Proceedings of the 32nd annual ACM symposium on user interface software and technology (UIST’19). ACM, New York, pp 803–813. https://doi.org/10.1145/3332165.3347941

Hertenstein MJ, Keltner D, App B et al (2006) Touch communicates distinct emotions. Emotion 6(3):528–533. https://doi.org/10.1037/1528-3542.6.3.528

Hessels RS, Hooge IT (2021) Dogmatic modes of science. Perception 50(11):913–916. https://doi.org/10.1177/03010066211047826

Hoggan E, Brewster SA, Johnston J (2008) Investigating the effectiveness of tactile feedback for mobile touchscreens. In: Proceedings of the SIGCHI conference on human factors in computing systems (CHI’08). ACM, New York, pp 1573–1582. https://doi.org/10.1145/1357054.1357300

Israr A, Zhao S, Schneider O (2015) Exploring embedded haptics for social networking and interactions. In: Proceedings of the 33rd annual ACM conference extended abstracts on human factors in computing systems (CHI EA’15). ACM, New York, pp 1899–1904. https://doi.org/10.1145/2702613.2732814

Ito K, Okamoto S, Yamada Y et al (2019) Tactile texture display with vibrotactile and electrostatic friction stimuli mixed at appropriate ratio presents better roughness textures. ACM Trans Appl Percept. https://doi.org/10.1145/3340961

Itoh Y, Langlotz T, Sutton J et al (2021) Towards indistinguishable augmented reality: a survey on optical see-through head-mounted displays. ACM Comput Surv. https://doi.org/10.1145/3453157

Jansen Y (2010) Mudpad: fluid haptics for multitouch surfaces. In: Proceedings of the CHI extended abstracts on human factors in computing systems (CHI EA’10). ACM, New York, pp 4351–4356. https://doi.org/10.1145/1753846.1754152

Kato K, Ishizuka H, Kajimoto H et al (2018) Double-sided printed tactile display with electro stimuli and electrostatic forces and its assessment. In: Proceedings of the 2018 CHI conference on human factors in computing systems (CHI’18). ACM, New York. https://doi.org/10.1145/3173574.3174024

Kaul OB, Rohs M (2017) HapticHead: a spherical vibrotactile grid around the head for 3D guidance in virtual and augmented reality. In: Proceedings of the 2017 CHI conference on human factors in computing systems (CHI’17). ACM, New York, pp 3729–3740. https://doi.org/10.1145/3025453.3025684

Kaul OB, Rohs M, Mogalle M et al (2021) Around-the-head tactile system for supporting micro navigation of people with visual impairments. ACM Trans Comput–Hum Interact 28(4):66. https://doi.org/10.1145/3458021

Kim E, Schneider O (2020) Defining haptic experience: foundations for understanding, communicating, and evaluating HX. In: Proceedings of the 2020 CHI conference on human factors in computing systems (CHI’20). ACM, New York, pp 1–13. https://doi.org/10.1145/3313831.3376280

Kovacs R, Ofek E, Gonzalez Franco M et al (2020) Haptic pivot: on-demand handhelds in VR. In: Proceedings of the 33rd annual ACM symposium on user interface software and technology (UIST’20). ACM, New York, pp 1046–1059. https://doi.org/10.1145/3379337.3415854

Kreimeier J, Hammer S, Friedmann D et al (2019) Evaluation of different types of haptic feedback influencing the task-based presence and performance in virtual reality. In: Proceedings of the 12th ACM international conference on PErvasive technologies related to assistive environments (PETRA’19). ACM, New York, pp 289–298. https://doi.org/10.1145/3316782.3321536

Kronester MJ, Riener A, Babic T (2021) Potential of wrist-worn vibrotactile feedback to enhance the perception of virtual objects during mid-air gestures. In: Extended abstracts of the 2021 CHI conference on human factors in computing systems (CHI EA’21). ACM, New York. https://doi.org/10.1145/3411763.3451655

Lantz E (2007) A survey of large-scale immersive displays. In: Proceedings of the 2007 workshop on emerging displays technologies: images and beyond: the future of displays and interacton (EDT’07). ACM, New York, p 1-es. https://doi.org/10.1145/1278240.1278241

Law ELC, van Schaik P, Roto V (2014) Attitudes towards user experience (UX) measurement. Int J Hum Comput Stud 72(6):526–541. https://doi.org/10.1016/j.ijhcs.2013.09.006

Le KD, Zhu K, Kosinski T et al (2016) Ubitile: a finger-worn I/O device for tabletop vibrotactile pattern authoring. In: Proceedings of the 9th Nordic conference on human–computer interaction (NordiCHI’16). ACM, New York. https://doi.org/10.1145/2971485.2996721