Abstract

Distance learning has become a popular learning channel today. However, while various distance learning tools are available, most of them only support a single platform, offer only the trainer’s perspective, and do not facilitate student-instructor interaction. As a result, distance learning systems tend to be inflexible and less effective. To address the limitations of existing distance learning systems, this study developed a cross-platform hands-on virtual lab within the Metaverse that enables multi-user participation and interaction for distance education. Four platforms, HTC VIVE Pro, Microsoft HoloLens 2, PC, and Android smartphone, are supported. The virtual lab allows trainers to demonstrate operation steps and engage with multiple trainees simultaneously. Meanwhile, trainees have the opportunity to practice their operational skills on their virtual machines within the Metaverse, utilizing their preferred platforms. Additionally, participants can explore the virtual environment and interact with each other by moving around within the virtual space, similar to a physical lab setting. The user test compares the levels of presence and usability in the hands-on virtual lab across different platforms, providing insights into the challenges associated with each platform within the Metaverse for training purposes. Furthermore, the results of the user test highlight the promising potential of the architecture due to its flexibility and adaptability.

1 Introduction

Distance learning offers the advantages of overcoming time and space constraints. However, it also has some drawbacks, such as the lack of face-to-face interaction and hands-on experimentation (Dickson-Karn 2020; Faulconer et al. 2018). Studies have reported that students are less engaged in distance learning (Chen et al. 2021), which can negatively impact their academic performance (Jaggars 2013). While some instructors use screen-sharing to simulate face-to-face interaction, communication is often delayed and unclear (May et al. 2022).

Previous studies have found that virtual presence with a high level of immersion plays a critical role in the success and satisfaction of online learners (Annetta et al. 2008, 2009). As extended reality (XR) technology, including virtual reality (VR), augmented reality (AR), and mixed reality (MR), has become more accessible and affordable, it has been increasingly utilized in job training and industrial applications, and it is now also being adopted in distance learning to achieve a high level of presence and immersion. For instance, Monahan et al. (2008) developed a web-based e-learning system that employed VR and multimedia to create a 3D virtual classroom where students can attend group meetings and discussions. McFaul and FitzGerald (2020), as well as Kondratiuk et al. (2022), created 3D virtual environments for users to practice presentation skills. Similarly, Harfouche and Nakhle (2020) designed a virtual lecture hall for bioethics education. Chan et al. (2023) found that VR games significantly enhanced learner motivation and engagement in chemical safety training compared to a video lecture-only approach. In science, technology, engineering, and mathematics (STEM) education, Mystakidis et al. (2022) indicated that students who used AR achieved better outcomes than their counterparts in traditional training formats.

While the XR applications mentioned above have proven effective in enhancing students’ distance learning, their capabilities have been limited to presentation or viewing functions, without online hands-on capabilities or multi-user interaction. Yet hands-on practice and multi-user interaction are crucial in STEM disciplines, and providing virtual laboratories or training in a distance or non-traditional format has remained a challenge (Faulconer et al. 2018).

1.1 XR-based hands-on distance learning

Some researchers have made attempts to incorporate hands-on functions into XR-based distance learning. For example, Potkonjak et al. (2010) created a mechatronics and robotics virtual lab for distance learning. Students could operate and observe the virtual robots. Aziz et al. (2014) developed a game-based hands-on virtual lab for gear design. Xu and Wang (2022) proposed a virtual lab for wafer preparation training. Hurtado-Bermúdez and Romero-Abrio (2023) used an online virtual lab suite to teach students how to operate a scanning electron microscope. Chan et al. (2021) demonstrated that virtual labs have the capability to deliver training effects comparable to those of real hands-on labs. Therefore, virtual labs have been used as a preparatory tool to enhance students’ learning in real laboratories (Dalgarno et al. 2009; Elfakki et al. 2023; Kiourt et al. 2020; Manyilizu 2023; Potkonjak et al. 2016; Sung and Chin 2023).

Conventional virtual labs are typically regarded as a teaching approach that lacks any human-to-human interaction (Reeves and Crippen 2021). Consequently, although online hands-on labs provide a safe and time-efficient learning platform, research has shown that students often consider them less intuitive than physical labs, find it more difficult to ask for help and to diagnose errors, and experience a lack of communication (Li et al. 2020).

Many of the prior hands-on virtual labs were limited to a single platform, which restricted their usability. To cater to the diverse needs of learners, there is a growing demand for cross-platform distance learning solutions. For example, Bao et al. (2022) used WebXR to build a multi-user cross-platform system for construction safety training that was designed to support desktop, mobile devices, and head-mounted displays (HMDs). Kambili-Mzembe and Gordon (2022) used Unreal Engine to build a multi-user cross-platform system for secondary school teaching that supported desktop, HMDs, and Android mobile devices. Delamarre et al. (2020) developed a cross-platform virtual classroom that simulates students’ emotional and socially disruptive behaviors to facilitate teacher training, supporting desktop, HMDs, and cave automatic virtual environment (CAVE) setups. They noted that the main difference between the platforms lies in the navigation techniques. Furthermore, Buttussi and Chittaro (2018) demonstrated that different types of VR displays can influence users’ engagement and sense of presence.

Modern virtual labs should be compatible with multiple platforms. This ensures that students can access the labs from various devices and places, enhancing flexibility and usability. To enhance collaboration and engagement, virtual labs should also support multi-user interactions. This allows students to work together in real-time, fostering teamwork and communication, similar to a physical lab setting.

1.2 Metaverse education

With advances in computing technology, the concept of the Metaverse has emerged as an innovative social and interactive tool built upon XR technology. The Metaverse is a 3D online multi-user environment that combines physical reality with digital virtuality (Mystakidis 2022). Its advent opens up new possibilities for distance learning (Inceoglu and Ciloglugil 2022). Under this definition, some of the aforementioned XR-based distance learning systems in fact fall into the category of the Metaverse.

For example, Siyaev and Jo (2021) used a Metaverse in HoloLens 2 for MR aircraft maintenance training, in which trainees without HoloLens 2 could use mobile devices to view the maintenance lessons simultaneously. Khan et al. (2022) created a Metaverse in Quest VR goggles to deliver engineering technology courses, giving the instructor two presentation tools: a whiteboard and the ability to share a computer screen in the VR classroom. Hwang (2023) implemented a Metaverse on PC to showcase artworks.

The aforementioned research shows that, although numerous online virtual labs have been developed, to the best of our knowledge none of them supports both cross-platform access and multi-user interaction. To address this research gap, this study developed a novel cross-platform multi-user hands-on virtual lab within the Metaverse that supports HTC VIVE Pro, Microsoft HoloLens 2, PC, and Android smartphone. The Metaverse implementation enables trainers and trainees to immerse themselves in the same virtual lab from different platforms and to interact with each other and with the virtual objects, replicating the experience of a physical lab. This study addresses the following two research questions:

- RQ 1. What is the level of presence of each platform in the multi-user cross-platform hands-on virtual lab within the Metaverse?
- RQ 2. What is the usability of each platform in the multi-user cross-platform hands-on virtual lab within the Metaverse?

Since machining training not only requires extensive hands-on practice but also often involves close interaction between trainers and trainees, in this study, machining training was used as an example to demonstrate the feasibility and capability of the developed tool. This paper is organized as follows: Sect. 2 introduces the system architecture. Section 3 describes the training environment. Section 4 discusses the interaction designs. Section 5 presents the user test. Section 6 provides discussions. Finally, Sect. 7 offers conclusions.

2 System architecture

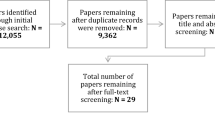

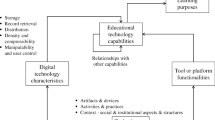

In this study, Unity and the Photon Unity Networking (PUN) SDK were selected to create a cross-platform Metaverse. A key advantage of PUN is that the Photon cloud server provides client-server connections, enabling communication between clients within the same virtual environment. As depicted in Fig. 1, the client’s system architecture comprises six modules: the Photon Network Module, Operation Simulation Module, Lab Manager Module, Interaction Module, Rendering Module, and Information Module.

The Photon Network Module communicates with the Photon cloud server by sending the local machine status and receiving lab information, such as the number of users and their names. The machine status includes the positions of virtual objects, the operation process, and the user’s speech data. The Photon Network Module also forwards non-local machine status to the Operation Simulation module, forwards lab status to the Lab Manager module, and receives local machine status from the Operation Simulation module. The Operation Simulation module is the core of the system and contains all virtual machines. It manages the local machine’s status, including the location of each component, operation status, operation process, interactive functions, and the user’s speech data. Speech data from a non-local machine are transmitted to virtual objects, which play the speech back; combined with each user’s microphone and audio output device, this allows users to talk to one another.
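The routing of machine status described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors’ Unity/PUN implementation: the class and field names (`MachineStatus`, `NetworkModule`, `OperationSimulation`) are hypothetical, and the `outbox` list merely stands in for whatever call actually uploads data to the Photon cloud server.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class MachineStatus:
    """Hypothetical status payload for one virtual machine (field names are assumptions)."""
    owner_id: str                          # user who owns this virtual machine
    component_positions: Dict[str, Vec3]   # position of each virtual component
    step_index: int                        # current step of the operation process
    speech_chunk: bytes = b""              # latest encoded speech data, if any


class OperationSimulation:
    """Keeps the latest status of every virtual machine in the lab."""

    def __init__(self, local_id: str) -> None:
        self.local_id = local_id
        self.machines: Dict[str, MachineStatus] = {}
        self.played_speech: List[Tuple[str, bytes]] = []

    def apply_remote(self, status: MachineStatus) -> None:
        self.machines[status.owner_id] = status
        if status.speech_chunk:
            # The real system plays speech through a virtual object; here it is only recorded.
            self.played_speech.append((status.owner_id, status.speech_chunk))


class NetworkModule:
    """Sends the local status out and forwards non-local statuses to the simulation."""

    def __init__(self, sim: OperationSimulation) -> None:
        self.sim = sim
        self.outbox: List[MachineStatus] = []  # stand-in for the upload to the cloud server

    def send_local(self, status: MachineStatus) -> None:
        self.outbox.append(status)

    def on_receive(self, status: MachineStatus) -> None:
        # Ignore echoes of our own status; only non-local machines are updated from the network.
        if status.owner_id != self.sim.local_id:
            self.sim.apply_remote(status)
```

Keeping each client authoritative for its own machine and only mirroring the others mirrors the local/non-local split described in the text.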

The Lab Manager module oversees the lab status, including the number of clients in the lab and their names and representative colors. When a trainee enters the lab, this module creates a new virtual machine in the Operation Simulation module; when a trainee leaves, it deletes the corresponding virtual machine.
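The join/leave bookkeeping of the Lab Manager can be sketched as follows. This is a hypothetical Python illustration, not the authors’ code: the trainer’s blue color comes from Sect. 3, while the trainee color palette, the `LabManager` name, and the dictionary-based machine record are assumptions.

```python
from typing import Dict, List


class LabManager:
    """Hypothetical sketch: one virtual machine per participant, created on join, deleted on leave."""

    TRAINER_COLOR = "blue"  # the trainer's local machine is always blue
    TRAINEE_COLORS = ["red", "green", "yellow", "purple", "orange"]  # assumed palette

    def __init__(self) -> None:
        self.users: Dict[str, str] = {}      # user name -> representative color
        self.machines: Dict[str, dict] = {}  # user name -> virtual machine record

    def join(self, name: str, is_trainer: bool = False) -> str:
        if is_trainer:
            color = self.TRAINER_COLOR
        else:
            used = set(self.users.values())
            free = [c for c in self.TRAINEE_COLORS if c not in used]
            if not free:
                raise RuntimeError("no color left for a new trainee")
            color = free[0]
        self.users[name] = color
        # Create the participant's virtual machine, starting at the first operation step.
        self.machines[name] = {"owner": name, "color": color, "step": 0}
        return color

    def leave(self, name: str) -> None:
        # Delete the participant and their virtual machine; unknown names are ignored.
        self.users.pop(name, None)
        self.machines.pop(name, None)

    def user_list(self) -> List[str]:
        """Entries for the on-screen user list, e.g. 'Alice (red)'."""
        return [f"{n} ({c})" for n, c in self.users.items()]
```

Freed colors are reused by later trainees, so the user list stays consistent with the machine colors in the scene.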

The Interaction module collects the user’s operation information and user’s speech data, and then sends them to the Operation Simulation module. User’s operation information is also sent to the Rendering module. The Rendering module includes a virtual camera, which captures the images of the virtual environment and projects the virtual object accurately on the display hardware. The Information module retrieves information from the Operation Simulation module and creates visual feedback to users, such as message texts, operation status, warning signals, and indication pointers.

3 Training environment

The system includes four platforms: HTC VIVE Pro, Microsoft HoloLens 2, PC, and Android smartphone. Due to the differences in input interfaces across the platforms, it is necessary to utilize different software development kits (SDKs) to manage the diverse input data. In this study, SteamVR was used to handle the VIVE Pro input, while the Mixed Reality Toolkit (MRTK) was used to manage the HoloLens 2 input. The PC and smartphone platforms use the Unity input utilities.

The LC-1/2 series milling machines were used to demonstrate the capabilities of the multi-user cross-platform online hands-on virtual lab. The Metaverse consists of a virtual factory and several virtual milling machines, as depicted in Fig. 2. The trainer’s local machine is always in blue, while other trainees’ local machines are in other colors. Since all machines are situated in the same scene, users can observe each other’s operations freely without any restrictions. A user list is used to display all users participating in the lab, along with the respective colors representing their local machines, as shown in Fig. 2.

In addition to the user list, the system uses virtual heads in different colors to denote the locations of different users. For example, Fig. 3(a) shows trainees viewing the trainer’s demonstration from different locations. Unlike in the physical world, multiple users in the Metaverse can occupy the same space, as shown in Fig. 3(b). Fig. 3(c) depicts users operating their own machines.

The training process is shown in Fig. 4. Once a user joins a virtual lab, the system loads the lab scene, which has both VR and AR versions: HoloLens 2 uses the AR version, while the other devices use the VR version. The Lab Manager module is then initialized for each client. In the VR version, users can move around the virtual scene without physically moving their bodies. In the AR version, by contrast, users must physically move to maintain the relative position between the virtual objects and real objects. Because rendering multiple machines imposes a heavy computing load on HoloLens 2, only one machine is displayed on this device, at 40 frames per second.

Once all trainees are ready, the trainer can initiate the training. VR users will be transported to the front of the trainer’s machine, while AR users will have the local machine hidden and only the trainer’s machine displayed. The system then proceeds to the “Hands-on Demo” session, where the trainer demonstrates how to operate the machine. All trainees have the ability to change their viewpoint to observe the hands-on demo operation from different angles.
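The scene selection and the transition into the hands-on demo can be summarized in a short Python sketch. It only restates the rules given above (HoloLens 2 loads the AR version; VR users are moved to the trainer’s machine; AR users have their local machine hidden); the `Client` class, `load_scene`, `start_demo`, and the `demo_spot` coordinates are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Client:
    name: str
    platform: str                        # "VIVE Pro", "HoloLens 2", "PC" or "Android"
    position: Vec3 = (0.0, 0.0, 0.0)
    local_machine_visible: bool = True
    scene: Optional[str] = None


def load_scene(client: Client) -> None:
    # HoloLens 2 uses the AR version of the lab; every other platform uses the VR version.
    client.scene = "AR" if client.platform == "HoloLens 2" else "VR"


def start_demo(client: Client, demo_spot: Vec3) -> None:
    """Apply the 'Hands-on Demo' transition to one trainee (hypothetical helper)."""
    if client.scene == "VR":
        # VR users are moved in front of the trainer's machine.
        client.position = demo_spot
    else:
        # AR users keep their physical position; their local machine is hidden
        # so that only the trainer's machine is displayed.
        client.local_machine_visible = False
```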

After the hands-on demo session, each trainee is transported back to their own local machine to practice the operation process, as depicted in Fig. 5. Trainees are required to replicate each operation step demonstrated by the trainer, and the system advances to the next step only when trainees perform the required action correctly. The Operation Simulation module in each client also simulates the status of non-local machines, allowing the trainer and trainees to move to other machines and observe other users’ operations. Storing milling data requires at least 2.28 MB of memory per second. Because some platforms lack the capacity to accommodate this volume of data, the system was not designed to log or record practice milling data.
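The step gating described above (advance only on the correct action) is essentially a linear state machine. The Python sketch below illustrates it under assumptions: the step names are hypothetical labels loosely based on the steps listed in Sect. 5.1, and `storage_mb` simply multiplies a duration by the reported 2.28 MB/s.

```python
from typing import List


class PracticeSession:
    """Advances only when the trainee's action matches the current required step."""

    def __init__(self, steps: List[str]) -> None:
        self.steps = steps
        self.current = 0  # index of the step the trainee must perform next

    @property
    def done(self) -> bool:
        return self.current >= len(self.steps)

    def attempt(self, action: str) -> bool:
        """Return True and advance if `action` is the required step; otherwise stay put."""
        if self.done or action != self.steps[self.current]:
            return False
        self.current += 1
        return True


def storage_mb(seconds: float, rate_mb_per_s: float = 2.28) -> float:
    """Minimum storage needed to log milling data at the reported rate."""
    return seconds * rate_mb_per_s
```

For scale, assuming a 30-minute practice session, logging would need at least 1800 × 2.28 = 4104 MB (about 4.1 GB), which is consistent with the capacity concern above.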

4 Interaction design

As noted in Sect. 3, the platforms differ in their user interface (UI) and input hardware, so each relies on a different SDK to handle its inputs. The following subsections describe the interaction design for each platform.

4.1 VIVE pro interaction

VIVE Pro utilizes the SteamVR SDK to manage the inputs of the platform. SteamVR enables the system to synchronize the position and rotation of the virtual camera with the HMD. Additionally, SteamVR generates a virtual hand at the controller position to assist users in locating their hands in the virtual environment, as illustrated in Fig. 6. The red sphere with a radius of 30 mm in Fig. 6 is referred to as the trainer pointer. When the trainer uses the VIVE Pro platform, trainees on their respective platforms can only see the red trainer pointer instead of the virtual hand. This is due to the complexity of the virtual hand coordinates, which involve tracking over 20 points to record the hand status. Transmitting excessive data to synchronize the virtual hands’ status can lead to an unstable connection and delays, which would undermine the effectiveness of the training.
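A back-of-the-envelope comparison shows why sending a single pointer is much lighter than synchronizing a full virtual hand. The Python sketch below rests on assumptions not stated in the text: 4-byte floats, 21 tracked hand points (the text says only “over 20”), a position plus rotation quaternion per point, and no compression or protocol overhead.

```python
FLOAT_BYTES = 4  # assumption: single-precision floats


def pointer_payload_bytes() -> int:
    """Trainer pointer: only a 3D position (x, y, z)."""
    return 3 * FLOAT_BYTES


def hand_payload_bytes(joints: int) -> int:
    """Virtual hand: position (3 floats) + rotation quaternion (4 floats) per tracked point."""
    return joints * 7 * FLOAT_BYTES


def bytes_per_second(payload: int, updates_per_second: int) -> int:
    """Raw upstream bandwidth for one synchronized object at a given update rate."""
    return payload * updates_per_second
```

Under these assumptions one hand costs 588 bytes per update versus 12 bytes for the pointer, a factor of 49, before multiplying by the update rate and the number of connected clients.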

4.2 HoloLens 2 interaction

HoloLens 2 utilizes MRTK to manage the inputs of the platform, including the position of the HMD, hand tracking, and gesture recognition. Gesture recognition is limited to the pinch gesture, as shown in Fig. 7, where a pinch gesture and the trainer pointer are used to grab an object. MRTK creates virtual hands based on hand-tracking input, which are superimposed on the real hands in the virtual environment. Since MRTK has the capability to detect objects touching the virtual index fingertip, users can interact with virtual buttons by pressing them as they would with actual buttons. This allows users to interact with virtual objects using their bare hands.
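MRTK handles fingertip-button contact itself; the Python sketch below only illustrates the underlying geometric idea and is not MRTK’s algorithm. It treats a button as a disc with an outward normal and registers a press when the tracked index fingertip pushes past the face, with a release threshold (hysteresis) to avoid flicker. The button radius and both depth thresholds are assumptions.

```python
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


@dataclass
class VirtualButton:
    center: Vec3                  # center of the button face (m)
    normal: Vec3                  # unit normal pointing out of the face, toward the user
    radius: float = 0.015         # assumed 15 mm button radius
    press_depth: float = 0.005    # fingertip must go 5 mm past the face to press
    release_depth: float = 0.002  # and come back within 2 mm of the face to release
    pressed: bool = False

    def update(self, fingertip: Vec3) -> bool:
        """Return True exactly once, on the frame the button becomes pressed."""
        d = sub(fingertip, self.center)
        height = dot(d, self.normal)               # > 0 in front of the face, < 0 behind it
        lateral_sq = dot(d, d) - height * height   # squared distance from the button axis
        inside = lateral_sq <= self.radius ** 2
        if not self.pressed and inside and height <= -self.press_depth:
            self.pressed = True
            return True
        if self.pressed and height >= -self.release_depth:
            self.pressed = False
        return False
```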

The user’s viewpoint corresponds to the position and rotation of the HMD, enabling natural control of the viewpoint by moving their heads. However, HoloLens 2 only moves the viewpoint through head tracking, so users must ensure they have enough physical space to move around and operate the virtual machine.

4.3 PC interaction

PC and smartphone platforms utilize the Unity input utilities to manage the interactions. PC uses a mouse and a keyboard as the input interfaces. Users can grab a virtual object at the location of the mouse cursor by pressing the left mouse button. They can release the grabbed object by releasing the left mouse button. When users click the left mouse button, the mouse cursor typically provides the 2D position in the image plane using pixel units. On the other hand, the position of virtual objects within the virtual environment is defined in the 3D global coordinate system using meter units. The 2D mouse position needs to be translated to the corresponding 3D position in the virtual environment.
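The 2D-to-3D translation amounts to unprojecting a pixel through a pinhole camera model. Unity exposes this as `Camera.ScreenToWorldPoint` and `Camera.ScreenPointToRay`; the text does not say which the authors used or how the depth of a grabbed object is chosen, so the Python sketch below simply takes the depth as an input and spells out the math. The parameter names are hypothetical.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def screen_to_world(u: float, v: float, depth: float,
                    width: int, height: int, vfov_deg: float,
                    cam_pos: Vec3, cam_rot: Sequence[Sequence[float]]) -> Vec3:
    """Map pixel (u, v) to the 3D point `depth` meters in front of a pinhole camera.

    (u, v) has its origin at the bottom-left corner, as in Unity screen space.
    cam_rot is a 3x3 rotation matrix whose columns are the camera's right, up and
    forward axes expressed in world coordinates.
    """
    f = (height / 2) / math.tan(math.radians(vfov_deg) / 2)  # focal length in pixels
    # Point in camera coordinates (x right, y up, z forward), in meters.
    x = (u - width / 2) / f * depth
    y = (v - height / 2) / f * depth
    p_cam = (x, y, depth)
    # Rotate into world axes, then translate by the camera position.
    return tuple(cam_pos[i] + sum(cam_rot[i][j] * p_cam[j] for j in range(3))
                 for i in range(3))
```

For example, with a 1920 × 1080 screen and identity rotation, the screen center at depth 2 maps to the point 2 m straight ahead of the camera.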

4.4 Smartphone interaction

The primary input interface of a smartphone is its touchscreen, which also serves as the image plane. Consequently, the smartphone platform uses the same interaction algorithm as the PC platform, except that users adjust the distance of a grabbed object with two fingers. The UI on smartphones is identical to that on PC, since Unity UI elements are also compatible with smartphones. Moreover, because both platforms use the position on the image plane as the primary input, a unified UI design is possible.
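The text does not specify how the two-finger gesture maps to object distance. One plausible mapping, shown here as a hypothetical Python sketch, scales the grabbed object’s depth by the ratio of the current to the initial finger separation and clamps it to assumed limits; the direction of the mapping (spread pushes away) and the 0.2 to 5 m range are assumptions.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def pinch_distance(start_a: Point, start_b: Point, now_a: Point, now_b: Point,
                   start_depth: float, min_depth: float = 0.2, max_depth: float = 5.0) -> float:
    """Scale the grabbed object's distance by how far the two fingers have spread.

    Spreading the fingers (ratio > 1) pushes the object away; pinching pulls it closer.
    """
    d0 = math.dist(start_a, start_b)  # finger separation when the gesture began (pixels)
    d1 = math.dist(now_a, now_b)      # current finger separation (pixels)
    if d0 == 0:
        return start_depth            # degenerate start: leave the depth unchanged
    depth = start_depth * (d1 / d0)
    return max(min_depth, min(max_depth, depth))
```

The resulting depth could then be fed to the same screen-to-world mapping used on PC, which keeps the two platforms on one interaction algorithm.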

5 User test

5.1 Method

The user test was conducted in two stages to evaluate the effectiveness of the cross-platform hands-on virtual lab within the Metaverse. The VIVE Pro, HoloLens 2, and smartphone used their built-in speakers and microphones to record and play users’ voices. The smartphone was a SAMSUNG Galaxy S8+, and the PC was equipped with a HONG JIN HJ-MX3 multimedia microphone and a DIKE DSM230 speaker.

In the first stage, the trainer demonstrated how to set up a milling machine and machine a 60 × 60 × 60 mm³ workpiece to the target shape, as shown in Fig. 8. In the second stage, trainees were asked to repeat the operation from the first stage. The milling operation included several steps, such as installing a workpiece and a milling tool, moving an object to a specific position, rotating a handwheel to a particular angle, tightening a nut, and machining the workpiece into a specific shape. Because the system advances to the next step only when users complete the required actions correctly, the time taken in the second stage was used to assess the trainees’ performance. After completing the second stage, trainees were asked to fill out the presence questionnaire (PQ) version 3 (Witmer et al. 2005), the system usability scale (SUS) questionnaire (Brooke 1996), and a subjective questionnaire, and to provide comments about the system.

The PQ evaluates each platform’s involvement, sensory fidelity, adaptation/immersion, and interface quality. For enhanced reliability and validity, irrelevant questions were excluded; in particular, as the system neither simulates the environmental sounds of milling machines nor provides haptic feedback, the PQ questions related to audio and haptic aspects were removed. The resulting PQ comprised 25 questions measuring presence in the virtual lab on a 7-point Likert scale (1 for strongly disagree, 7 for strongly agree). Higher scores indicate better involvement, sensory fidelity, and adaptation/immersion, whereas a lower score is preferable for interface quality. Since participants can communicate with each other verbally, a subjective questionnaire was additionally designed to evaluate the verbal audio quality in the system.

The SUS is used to evaluate the usability of each platform. It consists of ten questions (Brooke 1996) answered on a 5-point Likert scale ranging from 1 (strongly disagree) to 5 (strongly agree). The responses are then converted to a single SUS score ranging from 0 to 100. A system is considered usable if its SUS score is higher than the average score of 70 (Bangor et al. 2008).
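The conversion to a 0 to 100 score follows Brooke’s (1996) standard procedure: odd-numbered (positively worded) items contribute (response − 1), even-numbered (negatively worded) items contribute (5 − response), and the 0 to 40 sum is multiplied by 2.5. The Python sketch below implements that standard rule; the function name is ours.

```python
from typing import Sequence


def sus_score(responses: Sequence[int]) -> float:
    """Standard SUS scoring for ten 1-5 responses given in questionnaire order."""
    if len(responses) != 10 or any(not 1 <= r <= 5 for r in responses):
        raise ValueError("SUS needs ten responses on a 1-5 scale")
    total = 0
    for i, r in enumerate(responses, start=1):
        # Odd items are positively worded, even items negatively worded.
        total += (r - 1) if i % 2 == 1 else (5 - r)
    return total * 2.5  # rescale the 0-40 raw sum to 0-100
```

A respondent who answers 3 (neutral) to every item scores exactly 50, which is why the benchmark of 70 sits well above the scale midpoint.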

The subjective questionnaire was designed to assess participants’ communication and navigation capabilities within the virtual lab across different platforms. It comprised twelve questions. Questions 1 to 4 evaluated the clarity of the audio communication for each specific platform, while Questions 5 to 8 focused on participants’ perception of audio delays. Questions 9 to 11 assessed satisfaction with the navigation function on the different platforms, excluding HoloLens 2, whose limited computing power prevents it from rendering all machines in the virtual lab at the same time.

To determine the minimum sample size, this study used the G*Power tool, with the statistical power P, type I error α, and effect size d set to 0.8, 0.05, and 0.833, respectively (Cohen 1988). The result showed that at least 11 participants were needed for each platform. A total of 52 participants aged between 20 and 30 were recruited, with 13 using VIVE Pro, 13 using HoloLens 2, 13 using PC, and 13 using smartphones. None of the participants were professional milling machine operators, and all had less than one year of operating experience. Participants using VIVE Pro and HoloLens 2 had no prior experience with these devices, whereas those in the PC and smartphone groups had over two years of experience with their respective devices.

5.2 Results

5.2.1 Operation time

Table 1 presents the average operation time for participants on each platform. The smartphone platform had the longest average operation time, and the VIVE Pro platform the shortest. However, a one-way ANOVA showed that operation time did not differ significantly across platforms (p = .097).

5.2.2 Presence questionnaire

Figure 9 displays the PQ results for each factor category. For ease of comparison, the interface quality scores were transposed so that higher values indicate better performance. The VIVE Pro platform received the highest involvement score, which Tukey’s test showed to be significantly higher than that of the smartphone platform. The ANOVA results for the sensory fidelity score (F(3,48) = 0.219, p = .882) and the adaptation/immersion score (F(3,48) = 2.734, p = .054) showed no significant difference among platforms. The smartphone platform tended to score lower in involvement, sensory fidelity, and adaptation/immersion. Tukey’s test indicated that the interface quality of HoloLens 2 was significantly lower than that of the other platforms.
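The degrees of freedom in F(3,48) follow directly from the design: four platforms give k − 1 = 3 between-group degrees of freedom, and 52 participants give N − k = 48 within-group degrees of freedom. The Python sketch below computes the one-way ANOVA F statistic from first principles; it returns no p-value, for which a library routine such as `scipy.stats.f_oneway` is normally used. The inputs in the usage check are arbitrary and are not study data.

```python
from typing import Sequence, Tuple


def one_way_anova(groups: Sequence[Sequence[float]]) -> Tuple[float, int, int]:
    """Return (F, df_between, df_within) for a one-way ANOVA."""
    k = len(groups)
    n = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n
    means = [sum(g) / len(g) for g in groups]
    # Between-group variation: how far each group mean lies from the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Within-group variation: spread of observations around their own group mean.
    ss_within = sum(sum((x - m) ** 2 for x in g) for g, m in zip(groups, means))
    df_between, df_within = k - 1, n - k
    f = (ss_between / df_between) / (ss_within / df_within)
    return f, df_between, df_within
```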

Table 2 provides a breakdown of the detailed results for each PQ factor. Bold numbers indicate a significant difference from the neutral point of 4. Questions 1 to 12 assess the level of involvement, reflecting participants’ interaction and engagement with the virtual lab. The sensory fidelity questions (13 and 14) measure the realism of the sensory experience in the virtual lab. Questions 15 to 22 evaluate adaptation and immersion, indicating whether participants adapted to the virtual lab and felt immersed. Questions 23 to 25 assess interface quality, that is, the impact of device functionality and interface design quality.
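Using the item ranges listed above, per-factor scores can be computed as item means. The Python sketch below does this for one participant’s 25 answers. The text states that interface quality scores were transposed so that higher is better; reversing a 1 to 7 item as 8 − x is the usual way to do that and is an assumption here. Because 8 − mean(x) equals mean(8 − x), transposing items or the factor mean gives the same result.

```python
from statistics import mean
from typing import Dict, Sequence

# Item ranges per factor for the 25-item PQ used in this study (1-based, inclusive).
FACTORS = {
    "involvement": range(1, 13),
    "sensory_fidelity": range(13, 15),
    "adaptation_immersion": range(15, 23),
    "interface_quality": range(23, 26),
}


def pq_factor_scores(answers: Sequence[int]) -> Dict[str, float]:
    """Mean 7-point score per PQ factor; interface quality is transposed (8 - x) so higher is better."""
    if len(answers) != 25:
        raise ValueError("expected 25 answers")
    scores = {}
    for name, items in FACTORS.items():
        values = [answers[i - 1] for i in items]
        if name == "interface_quality":
            values = [8 - v for v in values]  # assumed reversal of the 1-7 scale
        scores[name] = mean(values)
    return scores
```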

The results of the PQ for VIVE Pro platform indicated that participants exhibited high levels of involvement in the training environment and demonstrated effective interaction with virtual objects (Q 1–12). The system was found to be responsive (Q 1 and 2) and provided a natural interaction mechanism for participants (Q 3, 5, 6, and 9), allowing them to engage effectively with the virtual lab (Q 11).

Participants also reported positive opinions on sensory fidelity, indicating that they could examine virtual objects closely from multiple viewpoints (Q 13, 14). Participants adapted well to the virtual environment and were able to operate virtual machines with a strong sense of immersion (Q 15–22). They could quickly adjust to the control devices and the system (Q 16 and 21), and the system’s mechanisms allowed them to focus on their tasks and have an immersive experience (Q 18 and 20). However, some participants occasionally experienced unclear images with the VIVE Pro headset (Q 24), although responses to the other questions did not reveal any impact of this display issue.

Compared to the VIVE Pro, participants rated the HoloLens 2 lower in involvement and adaptation/immersion, suggesting that they found it challenging to interact with virtual objects on the HoloLens 2 platform (Q 1–3 and 10). These interaction issues affected how consistent the virtual lab experience felt with real-world scenarios (Q 7). Consequently, some participants felt that their ability to fully engage with tasks and maintain a high level of focus was compromised. Users also indicated dissatisfaction with the interface quality of the HoloLens 2 platform (Q 23–25), which might be attributed to occasional tracking issues and gesture recognition failures that participants found distracting and disruptive to their concentration. However, HoloLens 2 users reported a level of sensory fidelity comparable to that of VIVE Pro users, indicating a generally positive experience when examining virtual objects with HoloLens 2 (Q 13–14).

The PQ results show that the PC platform achieved levels of involvement, sensory fidelity, adaptation/immersion, and interface quality comparable to those of the VIVE Pro, except for the visual and motion aspects (Q 4 and 9). This might be attributed to the stationary 2D screen display and the constrained spatial movement inherent in the PC platform. Surprisingly, the PC platform received the highest sensory fidelity scores among the four platforms (Q 13 and 14), which may be attributed to participants’ familiarity with the PC platform.

The PQ results for the smartphone platform suggest that participants felt a lower level of involvement than on the VIVE Pro platform, likely owing to the difficulty of interacting with virtual objects and controlling the virtual camera on a small hand-held device. These challenges may also explain why the smartphone platform had the lowest sensory fidelity. However, owing to participants’ familiarity with smartphone interfaces (Q 23–25), they felt they could predict the system’s response and adapt to it quickly (Q 15 and 16).

5.2.3 System usability scale

The results of the SUS are presented in Table 3. On the VIVE Pro platform, the average SUS score was 87.69, significantly higher than the average score of 70 (t = 6.397, p < .001). Similarly, the SUS score of the PC platform (79.42) was significantly greater than the average score (t = 2.59, p = .024). All participants strongly agreed that the system on the VIVE Pro and PC platforms was easy to use and that they would like to use it.
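Each comparison against the benchmark of 70 is a one-sample t-test, t = (x̄ − 70) / (s / √n), with n − 1 = 12 degrees of freedom for 13 participants per platform. The Python sketch below computes the statistic only; the p-value requires the t distribution (for example `scipy.stats.ttest_1samp`). The sample values in the usage check are arbitrary, not study data.

```python
import math
from statistics import mean, stdev
from typing import Sequence


def one_sample_t(sample: Sequence[float], mu0: float = 70.0) -> float:
    """t statistic for H0: population mean == mu0 (df = len(sample) - 1)."""
    n = len(sample)
    standard_error = stdev(sample) / math.sqrt(n)  # stdev uses the n - 1 denominator
    return (mean(sample) - mu0) / standard_error
```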

However, the SUS score of the HoloLens 2 platform (70.77) was not significantly different from the average score (t = 0.214, p = .834). This implies that participants could accept the system on HoloLens 2 but had some issues using it. Responses to SUS Questions 7 to 9 indicated that participants found HoloLens 2 difficult to operate. The reported challenges with hand tracking and gesture recognition may have lowered the overall SUS score, leaving it not significantly different from the average.

The SUS score of the smartphone platform (70.77) was also not significantly different from the average SUS score (t = 0.385, p = .7). Responses to Questions 3, 7, and 9 revealed that some participants found the system challenging to use, leading to a lack of confidence. The primary cause was the difficulty of placing objects at the designated location after grabbing them.

5.2.4 Subjective questionnaire

The results of the subjective questionnaire, rated on a 7-point Likert scale (1 for strongly disagree, 7 for strongly agree), are presented in Table 4. The Wilcoxon signed-rank test was applied to all questions because the data were not normally distributed. The results of Questions 1 to 4 were significantly different from the neutral point of 4, suggesting that participants found the audio communication on all platforms to be clear, and the ANOVA result (F(3,204) = 0.8233, p = .48) indicated that audio clarity did not differ significantly among platforms. This implies that all platforms transmitted clear audio. The results of Questions 5 to 8 showed that participants perceived no delay in communicating with others in the virtual lab, and the ANOVA result (F(3,48) = 0.426, p = .732) showed no significant difference among the platforms. Questions 9 to 11 indicated that participants found the navigation function easy to use on all platforms, and the ANOVA result (F(2,36) = 0.142, p = .868) indicated no significant difference.
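Testing Likert responses against the neutral point of 4 with the Wilcoxon signed-rank test works on the differences x − 4: zero differences are dropped, the remaining absolute differences are ranked (ties get average ranks), and the ranks are summed separately for positive and negative differences. The Python sketch below computes these rank sums; the text does not state which variant or p-value method was used, and in practice a routine such as `scipy.stats.wilcoxon` supplies the p-value. The usage data are arbitrary.

```python
from typing import Sequence, Tuple


def wilcoxon_signed_rank(sample: Sequence[float], neutral: float = 4.0) -> Tuple[float, float, int]:
    """Return (W_plus, W_minus, n_used) for a signed-rank test against `neutral`.

    Zero differences are dropped; tied absolute differences receive average ranks.
    """
    diffs = [x - neutral for x in sample if x != neutral]
    order = sorted(range(len(diffs)), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * len(diffs)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of equal absolute differences.
        while j + 1 < len(order) and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        avg_rank = (i + j) / 2 + 1  # ranks are 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    return w_plus, w_minus, len(diffs)
```

A W_minus much smaller than W_plus, as in a sample like [6, 5, 7, 4, 3, 6], indicates responses concentrated above the neutral point.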

6 Discussion

After the user test, participants were asked about their experience using the system. They found it exciting to operate virtual milling machines with other participants in the same virtual factory. Because the interaction designs vary across platforms, a fair comparison is challenging; however, specific platforms may be more suitable for certain applications (Delamarre et al. 2020). Although operation time did not differ significantly among the platforms, VIVE Pro users were the quickest, followed by PC users and then HoloLens 2 users, while smartphone users took the longest. This pattern might be associated with the usability of the platforms: VIVE Pro received the highest SUS score, while the smartphone and HoloLens 2 received the lowest.

Prior research has demonstrated that clear communication and verbal instruction have a significant impact on online virtual lab instruction (Li et al. 2020; May et al. 2022). According to the subjective questionnaire results, participants reported no audio communication issues on any platform. The navigation technique used on the VIVE Pro is teleportation (moving directly from one location to another without an intermediate path), whereas the PC and smartphone platforms use steering (moving rapidly from one location to another along an intermediate path). Although prior research indicated that steering might lead to more cybersickness than teleportation (Chin et al. 1988), in this study the steering time was very short. Participants did not even notice the intermediate path, and they could easily navigate to different locations in the virtual lab without difficulty or cybersickness.
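The difference between the two navigation techniques can be made concrete as the sequence of viewpoint positions each produces. In the hypothetical Python sketch below, teleportation yields a single jump, while steering yields one interpolated position per rendered frame; the text says only that the steering time was very short, so the duration and the 60 fps frame rate used here are assumptions.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def teleport(start: Vec3, target: Vec3) -> List[Vec3]:
    """Teleportation: the viewpoint jumps straight to the target (start is not revisited)."""
    return [target]


def steer(start: Vec3, target: Vec3, duration_s: float, fps: int = 60) -> List[Vec3]:
    """Steering: the viewpoint moves along a straight path, one position per rendered frame."""
    frames = max(1, round(duration_s * fps))
    return [tuple(s + (t - s) * k / frames for s, t in zip(start, target))
            for k in range(1, frames + 1)]
```

With an assumed 0.1 s steering time at 60 fps, the intermediate path lasts only six frames, which is in line with participants not noticing it.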

Prior research showed that VR head-mounted displays provide higher presence and engagement than a PC (Buttussi and Chittaro 2018). The success and satisfaction of online learners are critically linked to virtual presence and a high level of immersion (Annetta et al. 2008, 2009). In this study, the VIVE Pro platform yielded the highest levels of involvement and adaptation/immersion, followed by the PC platform.

Although the VIVE Pro controllers could not fully replicate real hand actions, users considered the platform intuitive and immersive. However, users encountered display problems with the VIVE Pro: owing to the optical characteristics of the headset, the edges of the display may appear blurred, and wearing the headset in a suboptimal position could blur the image further. These unclear images made it harder for participants to operate the virtual machine and hindered their ability to perceive and interact with the virtual environment effectively.

Using HoloLens 2 with bare hands allowed participants to learn the operations quickly. The AR scene gave participants a sense of immersion: they could perceive the actual size of the milling machine and its tools and felt as if they were operating a real milling machine. However, the scores for the SUS and the PQ interface quality factor were relatively low. Users occasionally had difficulty with hand tracking when interacting with virtual objects. Because HoloLens 2 tracks hands only within the user’s field of view (FOV), users had to keep looking at their hands, which made it difficult to read text messages while operating the machine. Some participants found the grabbing operation confusing, as HoloLens 2 supports only the “pinch” gesture. Moreover, the device’s limited computing power restricted it to displaying only one machine at a time, and it lacked teleportation functionality, further constraining user interaction.
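The field-of-view constraint can be modeled, very roughly, as a cone around the gaze direction: a hand counts as trackable only if the angle between the gaze vector and the head-to-hand vector is within a half-angle. The sketch below assumes this simplified cone model and a hypothetical `half_angle_deg` parameter; it does not reproduce the actual HoloLens 2 tracking volume or any Mixed Reality Toolkit API.

```python
import math


def within_fov(head_pos, gaze_dir, hand_pos, half_angle_deg):
    """Return True if the hand lies inside a cone of half_angle_deg around the gaze direction."""
    to_hand = [h - p for h, p in zip(hand_pos, head_pos)]
    norm_hand = math.sqrt(sum(v * v for v in to_hand))
    norm_gaze = math.sqrt(sum(v * v for v in gaze_dir))
    if norm_hand == 0 or norm_gaze == 0:
        return False
    cos_angle = sum(a * b for a, b in zip(to_hand, gaze_dir)) / (norm_hand * norm_gaze)
    cos_angle = max(-1.0, min(1.0, cos_angle))  # guard acos against rounding error
    return math.degrees(math.acos(cos_angle)) <= half_angle_deg
```

Under this model, a hand held low while the user looks up at a text panel falls outside the cone, which matches the reported need to keep looking at one's hands.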

The PC platform generally received relatively high scores on both the PQ and the SUS, although a few participants noted a lack of immersion due to the 2D display. The larger image size on the PC gave participants a clear view, greatly facilitating their virtual machine operations. Participants could grab objects without additional practice, and they highlighted the straightforward UI on the PC platform. However, the message panel occasionally covered virtual objects, causing participants minor inconvenience.
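One way to keep a message panel from covering virtual objects is to test candidate panel positions against the objects' screen-space bounding boxes and choose the first free one. The sketch below is hypothetical, since the paper does not describe how its panel is positioned: rectangles are `(x, y, width, height)` in pixels with the origin at the top-left, and the four screen corners are tried in a fixed order.

```python
Rect = tuple[float, float, float, float]  # x, y, width, height in screen pixels


def overlaps(a: Rect, b: Rect) -> bool:
    """Axis-aligned rectangle intersection; rectangles that only touch do not overlap."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    return ax < bx + bw and bx < ax + aw and ay < by + bh and by < ay + ah


def place_panel(panel_w, panel_h, screen_w, screen_h, objects):
    """Return the first screen corner where the panel covers no object, or None."""
    corners = [(0, 0), (screen_w - panel_w, 0),
               (0, screen_h - panel_h), (screen_w - panel_w, screen_h - panel_h)]
    for x, y in corners:
        rect = (x, y, panel_w, panel_h)
        if not any(overlaps(rect, obj) for obj in objects):
            return rect
    return None  # every corner is blocked; the caller could shrink or fade the panel
```

For a 400 x 200 panel on a 1920 x 1080 screen with an object at `(0, 0, 500, 300)`, the top-left corner is rejected and the panel moves to `(1520, 0, 400, 200)`.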

Participants using the smartphone platform appreciated its mobility and freedom from space constraints. However, the SUS score and the involvement, sensory fidelity, and adaptation/immersion factors of the PQ were relatively low, indicating that participants were not entirely satisfied with the virtual lab on smartphones. This may be attributed to the small smartphone screen: participants reported that virtual objects appeared much smaller than the actual objects, even when they tried to enlarge them. Users’ fingers sometimes obstructed their view of the virtual objects, hindering full engagement with the virtual lab. As a result, participants spent more time relocating objects to their designated locations and found it challenging to interact with virtual objects. This issue did not occur on the PC platform, where virtual objects appeared larger than the mouse cursor.
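The small-screen effect can be made concrete with a pinhole-camera estimate of how large a virtual object appears on a physical display. All numbers here are illustrative assumptions, not measurements from the study: a 5 cm tool 1.5 m from the camera, a 60° vertical field of view, and a 1080-pixel-high viewport on a 400 ppi phone and on a 92 ppi desktop monitor.

```python
import math


def on_screen_size_mm(object_size_m, distance_m, vertical_fov_deg, screen_height_px, ppi):
    """Approximate physical height (mm) of a virtual object on a display (pinhole camera model)."""
    # focal length in pixels for the given vertical field of view
    focal_px = (screen_height_px / 2) / math.tan(math.radians(vertical_fov_deg) / 2)
    size_px = object_size_m / distance_m * focal_px  # projected height in pixels
    return size_px / ppi * 25.4  # pixels -> inches -> millimetres


phone_mm = on_screen_size_mm(0.05, 1.5, 60, 1080, ppi=400)
monitor_mm = on_screen_size_mm(0.05, 1.5, 60, 1080, ppi=92)
```

Under these assumptions the tool spans about 31 pixels on both displays, but only about 2.0 mm on the phone versus about 8.6 mm on the monitor: on the phone a fingertip can cover it entirely, whereas a mouse cursor occludes far less.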

7 Conclusions

Distance learning has become a global trend because it allows students to acquire knowledge at any time and from any location. Modern virtual labs must be compatible across platforms so that students can access them from different devices and locations, promoting flexibility and usability. Supporting multi-user interaction also enhances collaboration, mirroring the teamwork and communication found in physical labs. This study developed a multi-user cross-platform hands-on virtual lab within the Metaverse. The approach aims to make distance learning applications more ubiquitous, providing students with more interactive and practical hands-on learning opportunities.

In this study, a virtual lab for machining training was developed as a case study. To support multiple trainees, the system employed Photon Unity Networking to connect users across four platforms: HTC VIVE Pro, Microsoft HoloLens 2, PC, and Android smartphone. Each platform offered its own interactive interface, allowing multiple users to work in the virtual lab and interact with each other.
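The multi-user connection can be pictured as a room that relays each client's state changes to every other client, whatever platform it runs on. Photon organizes players into rooms, but the sketch below does not use Photon's API: `RelayRoom`, `Client`, and `publish` are hypothetical names, and late joiners receive a snapshot of the latest shared state so that, for example, a trainee joining on HoloLens 2 sees the machine state set by a trainer on a PC.

```python
from dataclasses import dataclass, field


@dataclass
class Client:
    user_id: str
    platform: str  # e.g. "VIVE Pro", "HoloLens 2", "PC", "Android"
    inbox: list = field(default_factory=list)  # updates received from the room


class RelayRoom:
    """Hypothetical room that forwards state updates to all other clients."""

    def __init__(self):
        self.clients: dict[str, Client] = {}
        self.state: dict[str, dict] = {}  # latest merged state per networked object

    def join(self, client: Client) -> None:
        self.clients[client.user_id] = client
        # late joiners get a snapshot of every object's current state
        for obj_id, obj_state in self.state.items():
            client.inbox.append((obj_id, dict(obj_state)))

    def leave(self, user_id: str) -> None:
        self.clients.pop(user_id, None)

    def publish(self, sender_id: str, obj_id: str, update: dict) -> None:
        self.state.setdefault(obj_id, {}).update(update)
        # the relay is platform-agnostic: every other client receives the same update
        for uid, client in self.clients.items():
            if uid != sender_id:
                client.inbox.append((obj_id, dict(update)))
```

Keeping the platform only as metadata is the design point: each client renders and interacts through its own interface, while the shared state stays identical for all.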

In the user test of the multi-user cross-platform hands-on virtual lab, participants were satisfied with the presence and usability of the VIVE Pro platform. The PC platform provided comparable levels of presence and usability satisfaction. However, participants were dissatisfied with the interface quality and usability of the HoloLens 2 platform because of occasional tracking issues and gesture recognition failures. In addition, owing to limited computing power and the nature of AR displays, HoloLens 2 can display only one machine at a time and lacks teleportation functionality. Although the smartphone interface was similar to the PC interface, participants were dissatisfied with the smartphone’s usability, and they considered the smartphone platform lacking in involvement, sensory fidelity, and immersion due to its small display.

The architecture and user interfaces developed in this study serve as a roadmap for implementing a multi-user cross-platform online hands-on interactive virtual lab. The study is subject to limitations arising from the diverse range of AR, VR, PC, and smartphone devices used, as levels of presence and usability may vary among these devices. Because interaction designs differ across platforms, a fair comparison is challenging, and certain platforms may prove more suitable for specific applications. In future work, different devices and multi-user hands-on training tasks will be assessed, and the learning effectiveness of the different platforms will be compared. The interaction functions of each platform should also be optimized to improve hands-on effectiveness. Finally, a more compact data structure for recording hands-on working data will be developed.
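As an illustration of what a compact record for hands-on working data could look like, the sketch below packs each operation event into a fixed 13-byte binary layout with Python's `struct` module. The paper does not specify its recording format; the field set (timestamp in milliseconds, user index, object index, action code, one float parameter) and any action codes are hypothetical.

```python
import struct
from typing import NamedTuple

# Hypothetical layout, little-endian with no padding:
# uint32 time_ms, uint16 user, uint16 obj, uint8 action, float32 value -> 13 bytes
RECORD = struct.Struct("<IHHBf")


class Event(NamedTuple):
    time_ms: int  # milliseconds since the session started
    user: int     # index into a session-level user table
    obj: int      # index into a session-level object table
    action: int   # hypothetical action code, e.g. 1 = grab, 2 = release
    value: float  # action parameter, stored as float32 (precision is reduced)


def pack_events(events):
    """Serialize events into one contiguous byte string."""
    return b"".join(RECORD.pack(*e) for e in events)


def unpack_events(blob):
    """Deserialize a byte string produced by pack_events."""
    return [Event(*fields) for fields in RECORD.iter_unpack(blob)]
```

A fixed-width layout keeps every event the same size and allows random access by offset; the trade-off is that adding a field changes the format, so a version number in a file header would be a sensible extension.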

Data availability

The datasets generated and/or analyzed during the current study are available from the corresponding author.

References

Annetta L, Klesath M, Holmes S (2008) V-Learning: how Gaming and avatars are engaging online students. Innovate 4.

Annetta L, Klesath M, Meyer J (2009) Taking Science Online: evaluating Presence and Immersion through a Laboratory experience in a virtual learning environment for Entomology Students. J Coll Sci Teach 39:27–33

Aziz E-SS, Chang Y, Esche SK, Chassapis C (2014) A multi-user virtual laboratory environment for gear train design. Comput Appl Eng Educ 22:788–802. https://doi.org/10.1002/cae.21573

Bangor A, Kortum PT, Miller JT (2008) An empirical evaluation of the system usability scale. Int J Human–Computer Interact 24:574–594. https://doi.org/10.1080/10447310802205776

Bao L, Tran SV-T, Nguyen TL, Pham HC, Lee D, Park C (2022) Cross-platform virtual reality for real-time construction safety training using immersive web and industry foundation classes. Autom Constr 143:104565. https://doi.org/10.1016/j.autcon.2022.104565

Brooke J (1996) SUS: a quick and dirty usability scale. In: Usability evaluation in industry, 1st edn. CRC Press, London, pp 189–194

Buttussi F, Chittaro L (2018) Effects of different types of virtual reality Display on Presence and Learning in a Safety Training scenario. IEEE Trans Vis Comput Graph 24:1063–1076. https://doi.org/10.1109/TVCG.2017.2653117

Chan P, Van Gerven T, Dubois J-L, Bernaerts K (2021) Virtual chemical laboratories: a systematic literature review of research, technologies and instructional design. Computers Educ Open 2:100053. https://doi.org/10.1016/j.caeo.2021.100053

Chan P, Van Gerven T, Dubois J-L, Bernaerts K (2023) Study of motivation and engagement for chemical laboratory safety training with VR serious game. Saf Sci 167:106278. https://doi.org/10.1016/j.ssci.2023.106278

Chen E, Kaczmarek K, Ohyama H (2021) Student perceptions of distance learning strategies during COVID-19. J Dent Educ 85:1190–1191. https://doi.org/10.1002/jdd.12339

Chin JP, Diehl VA, Norman KL (1988) Development of an instrument measuring user satisfaction of the human-computer interface. In: Proceedings of the SIGCHI Conference on Human Factors in Computing Systems. Association for Computing Machinery, Washington, D.C., USA, pp 213–218. https://doi.org/10.1145/57167.57203

Cohen J (1988) Statistical power analysis for the behavioral sciences, 2nd edn. Routledge, New York. https://doi.org/10.4324/9780203771587

Dalgarno B, Bishop AG, Adlong W, Bedgood DR (2009) Effectiveness of a virtual laboratory as a preparatory resource for Distance Education chemistry students. Comput Educ 53:853–865. https://doi.org/10.1016/j.compedu.2009.05.005

Delamarre A, Lisetti C, Buche C (2020) A cross-platform classroom training simulator: interaction design and evaluation. In: 2020 International Conference on Cyberworlds (CW), Caen, France, pp 86–93. https://doi.org/10.1109/CW49994.2020.00020

Dickson-Karn NM (2020) Student Feedback on Distance Learning in the Quantitative Chemical Analysis Laboratory. J Chem Educ 97:2955–2959. https://doi.org/10.1021/acs.jchemed.0c00578

Elfakki AO, Sghaier S, Alotaibi AA (2023) An efficient system based on experimental laboratory in 3D virtual environment for students with Learning Disabilities. Electronics 12:989

Faulconer E, Gruss A (2018) A review to weigh the pros and cons of online, remote, and distance science laboratory experiences. Int Rev Res Open Distrib Learn 19:155–168. https://doi.org/10.19173/irrodl.v19i2.3386

Harfouche AL, Nakhle F (2020) Creating Bioethics Distance Learning through virtual reality. Trends Biotechnol 38:1187–1192. https://doi.org/10.1016/j.tibtech.2020.05.005

Hurtado-Bermúdez S, Romero-Abrio A (2023) The effects of combining virtual laboratory and advanced technology research laboratory on university students’ conceptual understanding of electron microscopy. Interact Learn Environ 31:1126–1141. https://doi.org/10.1080/10494820.2020.1821716

Hwang Y (2023) When makers meet the metaverse: effects of creating NFT metaverse exhibition in maker education. Comput Educ 194:104693. https://doi.org/10.1016/j.compedu.2022.104693

Inceoglu MM, Ciloglugil B (2022) Use of Metaverse in Education. Springer International Publishing, Cham, pp 171–184. https://doi.org/10.1007/978-3-031-10536-4_12

Jaggars SS, Edgecombe N, Stacey GW (2013) Creating an effective online instructor presence. Community College Research Center, Columbia University. Retrieved 7 November 2022 from https://eric.ed.gov/?id=ED542146

Kambili-Mzembe F, Gordon NA (2022) Synchronous Multi-User Cross-Platform Virtual Reality for School Teachers. In: 2022 8th International Conference of the Immersive Learning Research Network (iLRN), Vienna, Austria, pp 1–5. https://doi.org/10.23919/iLRN55037.2022.9815966

Khan V, Basith II, Boyd M (2022) Application of Metaverse as an Immersive Teaching Tool in Engineering Technology Classrooms. 2022 ASEE Illinois-Indiana Section Conference. Anderson, Indiana: ASEE Conferences, 36131

Kiourt C, Kalles D, Lalos AS, Papastamatiou NP, Silitziris P, Paxinou E, Theodoropoulou H, Zafeiropoulos V, Papadopoulos A, Pavlidis G (2020) XRLabs: Extended Reality Interactive Laboratories. In: International Conference on Computer Supported Education, pp 601–608. https://doi.org/10.5220/0009441606010608

Kondratiuk L, Musiichuk S, Zuienko N, Sobkov Y, Trebyk O, Yefimov D (2022) Distance Learning of Foreign languages through virtual reality. BRAIN Broad Res Artif Intell Neurosci 13:22–38. https://doi.org/10.18662/brain/13.2/329

Li R, Morelock J, May D (2020) A comparative study of an online lab using Labsland and Zoom during COVID-19. Adv Eng Educ 8:1–10

Manyilizu MC (2023) Effectiveness of virtual laboratory vs. paper-based experiences to the hands-on chemistry practical in Tanzanian secondary schools. Educ Inform Technol 28:4831–4848. https://doi.org/10.1007/s10639-022-11327-7

May D, Morkos B, Jackson A, Hunsu NJ, Ingalls A, Beyette F (2022) Rapid transition of traditionally hands-on labs to online instruction in engineering courses. Eur J Eng Educ 48:842–860. https://doi.org/10.1080/03043797.2022.2046707

McFaul H, FitzGerald E (2020) A realist evaluation of student use of a virtual reality smartphone application in undergraduate legal education. Br J Edu Technol 51:572–589. https://doi.org/10.1111/bjet.12850

Monahan T, McArdle G, Bertolotto M (2008) Virtual reality for collaborative e-learning. Comput Educ 50:1339–1353. https://doi.org/10.1016/j.compedu.2006.12.008

Mystakidis S (2022) Metaverse. Encyclopedia 2:486–497. https://doi.org/10.3390/

Mystakidis S, Christopoulos A, Pellas N (2022) A systematic mapping review of augmented reality applications to support STEM learning in higher education. Educ Inform Technol 27:1883–1927. https://doi.org/10.1007/s10639-021-10682-1

Potkonjak V, Vukobratović M, Jovanović K, Medenica M (2010) Virtual Mechatronic/Robotic laboratory – a step further in distance learning. Comput Educ 55:465–475. https://doi.org/10.1016/j.compedu.2010.02.010

Potkonjak V, Gardner M, Callaghan V, Mattila P, Guetl C, Petrović VM, Jovanović K (2016) Virtual laboratories for education in science, technology, and engineering: a review. Comput Educ 95:309–327. https://doi.org/10.1016/j.compedu.2016.02.002

Reeves SM, Crippen KJ (2021) Virtual Laboratories in Undergraduate Science and Engineering courses: a systematic review, 2009–2019. J Sci Edu Technol 30:16–30. https://doi.org/10.1007/s10956-020-09866-0

Siyaev A, Jo G-S (2021) Towards aircraft maintenance metaverse using speech interactions with virtual objects in mixed reality. Sensors 21:2066. https://doi.org/10.3390/s21062066

Sung C, Chin S (2023) Contactless Multi-user virtual hair design synthesis. Electronics 12:3686. https://doi.org/10.3390/electronics12173686

Witmer BG, Jerome CJ, Singer MJ (2005) The factor structure of the Presence Questionnaire. Presence: Teleoperators Virtual Environ 14:298–312

Xu X, Wang F (2022) Engineering Lab in Immersive VR—An Embodied Approach to Training Wafer Preparation. J Educ Comput Res 60:455–480. https://doi.org/10.1177/07356331211036492

Acknowledgements

The authors would like to thank the Ministry of Science and Technology, Taiwan, Republic of China for financially supporting this research under Contract MOST 110-2221-E-002-145.

Author information

Contributions

All authors wrote the main manuscript text and reviewed the manuscript.

Ethics declarations

Ethical approval

All procedures carried out in studies involving human participants adhered to the ethical standards of the institutional and/or national research committee, with an IRB-approved consent form.

Competing interests

The authors declare no competing interests.

Consent for Publication

Informed consent was obtained from all individual participants included in the study.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Chuang, TJ., Smith, S. A Multi-user Cross-platform hands-on virtual lab within the Metaverse – the case of machining training. Virtual Reality 28, 62 (2024). https://doi.org/10.1007/s10055-024-00974-5

DOI: https://doi.org/10.1007/s10055-024-00974-5