Abstract

Lower-limb position sense is a complex and poorly understood mechanism influenced by many factors. We therefore investigated lower-limb position sense through feet orientation using Immersive Virtual Reality (IVR). Participants had to indicate how they perceived the real orientation of their feet by orienting a virtual representation of the feet shown in an IVR scenario. After a high-knee step-in-place task, we calculated the angle between the two virtual feet (α-VR) and simultaneously recorded the real angle between the two feet (α-R) (T1). To assess whether acute fatigue affected position sense, the same procedure was repeated after inducing muscle fatigue (T2) and 10 min after T2 (T3). We also recorded the time needed to confirm the perceived position before and after the fatiguing protocol. Thirty healthy adults (age 27.5 ± 3.8 years; 57% women, 43% men) were immersed in an IVR scenario with a representation of two feet. The mean difference between α-VR and α-R was 20.89° [95% CI: 14.67°, 27.10°] at T1, 16.76° [9.57°, 23.94°] at T2, and 16.34° [10.00°, 22.68°] at T3. Participants spent 12.59, 17.50, and 17.95 s confirming the perceived position of their feet at T1, T2, and T3, respectively. Participants perceived their feet as parallel and pointing forward even though they were actually divergent, revealing a mismatch between the perceived and the actual position of the feet. Fatigue did not seem to affect position sense, but it increased the time needed to accomplish the task.



1 Introduction

Position sense is the ability to perceive the location of different parts of our body in space, even in the absence of vision. It relies on the integration of information retrieved from the ‘postural schema’ and the ‘body model’, which result from complex bottom-up and top-down mechanisms, respectively (Ganea and Longo 2017). Bottom-up mechanisms depend on different types of peripheral afferent signals, such as those from joint mechanoreceptors signalling flexion or extension movements, from muscle spindles signalling contraction and lengthening, and from stretch-sensitive receptors in the skin (Longo et al. 2010; Proske and Gandevia 2012). Together, these are called ‘proprioceptive afferent signals’. Their integration with the efferent signals from the motor system that specify movements provides information about joint angles, leading to the ‘postural schema’. This schema encodes the angular orientation of our body segments in space. The top-down mechanisms, on the other hand, lead to the ‘body model’, an internal body representation containing information about the length and shape of body segments that is not available through any afferent signal (Longo and Haggard 2010a). The integration of the ‘postural schema’ and the ‘body model’ provides ‘position sense’. Despite the important role of the ‘body model’ in position sense, little is known about its specific nature (Longo et al. 2010).

Given the multifactorial nature of position sense processing, its assessment is not straightforward: the individual sources of information cannot be accessed separately, only the final output, i.e. the location and orientation (position) of body segments reported by the person. Many authors have assessed the distance between the actual and the judged location of a single landmark, defined as ‘localisation error’ (Longo 2015), while others have mapped the ‘body model’ representations of entire limbs by assessing the distance between the judged locations of two adjacent landmarks (Longo and Haggard 2010b). However, many sources can still affect the position sense output, such as misperceptions of joint angles or the indirect use of vision, which can help build spatial references related to the body (Nieto-Guisado et al. 2022; Radziun and Ehrsson 2018). Experiments with participants with visual sensory deficits or with prism spectacles induced a conflict in position sense tasks (Mon-Williams et al. 1997; Rossetti et al. 1995; Stenneken et al. 2006a, b). Visual information, when available, is thought to bypass the afferent signals of the body, leading the brain to integrate it automatically even when it is not congruent (Touzalin-Chretien et al. 2010).

Moreover, position sense has been shown to be altered in several conditions, such as ageing (Ferlinc et al. 2019; Herter et al. 2014), central (Ateş and Ünlüer 2020; Rand 2018) and peripheral (Goldberg et al. 2008; Li et al. 2019) nervous system injuries, movement disorders (Abbruzzese et al. 2014), musculoskeletal disorders (Mohammadi et al. 2013; Röijezon et al. 2015a), and fatigue (Proske 2019; Vafadar et al. 2012; Verschueren et al. 2020). In particular, fatigue seems to be associated with changes in neuromuscular control that lead to proprioceptive and executive function deficits, joint instability, and musculoskeletal injuries (Abd-Elfattah et al. 2015; Steib et al. 2013). Possible mechanisms are thought to involve acute workload and the perturbation of feedback loops or signal processing (Jahjah et al. 2018; Johnston et al. 2018), but further research is needed. Similarly, the connection between fatigue and position sense remains unclear because it is difficult to quantify the extent to which each factor involved in proprioception influences position sense (Proske 2019; Romero-Franco and Jiménez-Reyes 2017).

A possible solution for controlling sensory inputs, such as visual bodily and spatial cues, when assessing position sense is Immersive Virtual Reality (IVR). Through IVR, the investigator can reduce external stimuli by immersing the person in an ad hoc scenario partially devoid of visual cues (Fogg 2002; Valori et al. 2020; Witmer and Singer 1998), where vision cannot help build any reference to the self-body or to space. For instance, Valori et al. used IVR to investigate the extent to which the presence or absence of visual cues aids the accuracy of proprioception across the lifespan (Valori et al. 2020). Similarly, Bayramova et al. studied the accuracy of reproducing a rotation angle in a self-rotation task in IVR, as well as the memory component of the task, reporting that position sense is more accurate when vision and proprioception are optimally integrated (Bayramova et al. 2021). Moreover, by displaying a virtual user interface, IVR allows participants to quantify their position sense synchronously and subjectively without relying on visual bodily or spatial cues. These strengths of IVR, together with its versatility, point to a new role for this technology in this research field (Sanchez-Vives and Slater 2005; Valori et al. 2020). On these premises, we asked whether the real orientation of the lower limbs differs from the perceived one, measuring this possible discrepancy inside an IVR environment deprived of visual references. Considering the preliminary findings on the effect of visual sensory inputs on position sense, we expected a discrepancy between the actual and the perceived orientation of the lower limbs. Furthermore, we hypothesised that this discrepancy, along with the time needed to process it, might change under acute fatigue as a result of reduced position sense accuracy.
Hence, the main aims of this study were (1) to assess lower-limb position sense by quantifying the difference between the real and the perceived orientation of the feet, and the time required to confirm it, and (2) to investigate how fatigue influences these parameters in healthy people immersed in an ad hoc virtual environment devoid of visuospatial contextual factors.

2 Methods

2.1 Trial design

A pre–post study was performed at the Rehabilitation and Engineering Laboratory (REHELab) at the Savona Campus (Savona, Italy) of the Department of Neuroscience, Rehabilitation, Ophthalmology, Genetics, Maternal and Child Health, University of Genova (Genova, Italy). Ethical approval was obtained from the Ethics Committee for University Research (Comitato Etico per la Ricerca di Ateneo [CERA], University of Genova, Genova, Italy; code: 2020.12; approval date: 09/07/2020).

2.2 Participants

Healthy people aged 18 to 50 years were eligible to take part in this study if they did not report any acute injuries or musculoskeletal and/or neurological disorders in the previous 6 months. People taking psychotropic drugs, those who had taken non-steroidal anti-inflammatory drugs or corticosteroids in the 48 h before the experimental session, and those unable to understand the tasks were not allowed to join the study. Participants were also excluded if they had drunk caffeinated or alcoholic beverages within 6 h before the beginning of the session. The use of spectacles or contact lenses was allowed, and all participants had to sign the informed consent.

Participants were informed that the trial would be performed barefoot in comfortable sports clothing, and they were given full information and explanations about the aims, phases, instrumentation, and risks of the experimental trial. All questions were answered, and participants were told that they could withdraw from the study at any moment.

2.3 Interventions

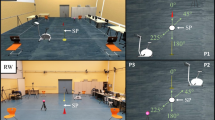

The HTC VIVE Pro IVR system was adopted for the trial. The apparatus was installed in a 7 × 5 m room with no reflective surfaces, where exposure to natural light could be completely avoided. The system included a Vive Pro head-mounted display (HMD), two Vive controllers (2018), and two SteamVR 1.0 ‘Lighthouse’ base stations. The choice of a simple set-up (HMD + 2 controllers) was made for two main reasons. First, since our experiment was aimed at studying proprioception, we wanted to minimise the invasiveness of the set-up, especially for the lower limbs. Second, the size of the rather cumbersome trackers (Vive Trackers 2018, Ø = 10 cm) (HTC Vive 2018) and the difficulty of positioning them stably and repeatably on the instep raised concerns about the accuracy of the measurements obtainable from additional devices.

The two lighthouses were connected through a ‘sync cable’, placed 5 m apart, and fixed to the ceiling at a height of 3 m with an inclination of 40° relative to it. This system uses robust room-scale tracking technology and records the position and orientation of all trackable components across a 2.5 × 2.5 m space (recording area) (Sansone et al. 2021). Specifically, the tracked position and orientation are updated primarily through inertial measurement units (Niehorster et al. 2017), while the lighthouses limit and correct the intrinsic drift of the inertial measurements by providing additional kinematic data. The system derives position and orientation from a set of photodetectors on the trackable device, which are illuminated by the lighthouses: each lighthouse emits an IR synchronisation flash followed by two IR laser sweeps, repeatedly scanning the tracking area from left to right and then from top to bottom. Knowing the angular velocity of the sweeping laser and the time between the flash and the detection of the laser, the system determines the direction in which each photodetector is located. The directions of four non-coplanar photodetectors are the basis for solving the so-called perspective-n-point (PnP) problem (Maciejewski et al. 2020), which improves tracking accuracy.
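The sweep-timing principle described above can be illustrated with a minimal Python sketch. It assumes a Lighthouse 1.0 rotor speed of 60 revolutions per second and a hypothetical convention in which each laser plane crosses the base station’s optical axis a quarter revolution after the sync flash; the function names are ours, and the actual SteamVR pipeline (which also fuses IMU data and solves the PnP problem) is not reproduced here.

```python
import math

ROTOR_HZ = 60.0  # assumption: Lighthouse 1.0 rotor speed (revolutions per second)


def sweep_angle(dt_s: float, rotor_hz: float = ROTOR_HZ) -> float:
    """Angle (rad) of the laser plane from the station's optical axis.

    dt_s is the time between the sync flash and the laser hitting the
    photodetector. Hypothetical convention: the plane crosses the optical
    axis a quarter revolution after the flash.
    """
    omega = 2.0 * math.pi * rotor_hz  # angular velocity of the rotor, rad/s
    return omega * dt_s - math.pi / 2.0


def photodetector_direction(dt_horizontal: float, dt_vertical: float,
                            rotor_hz: float = ROTOR_HZ) -> tuple:
    """Unit vector from the base station towards one photodetector.

    The horizontal sweep constrains the sensor to the plane x = z*tan(az),
    the vertical sweep to the plane y = z*tan(el); their intersection is
    this ray (valid only for |az|, |el| < 90 degrees).
    """
    az = sweep_angle(dt_horizontal, rotor_hz)
    el = sweep_angle(dt_vertical, rotor_hz)
    v = (math.tan(az), math.tan(el), 1.0)
    norm = math.sqrt(sum(c * c for c in v))
    return tuple(c / norm for c in v)


# A horizontal hit 1/160 s after the flash lies 45 degrees off-axis;
# a vertical hit at 1/240 s lies on the axis.
print(photodetector_direction(1 / 160, 1 / 240))
```

Four or more such rays, combined with the known layout of the photodetectors on the device, form the input to a PnP solver.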

The trial was divided into phases set in different virtual scenarios, developed in Unreal Engine (v4.25): (1) Welcome Scenario; (2) Adaptation Scenario; (3) Stepping Scenario; (4) Fatiguing Scenario; and (5) Measuring Scenario (Fig. 1). During the virtual simulation, the investigator stayed close to the participants to guide them throughout the experiment.

The participants were instructed to move to the centre of the recording area, marked by a 60 × 60 cm scaled tile with 12 parallel lines, spaced 4 cm apart, drawn on its surface (Fig. 2). They then put on the HMD and took one controller in each hand to interact with the virtual world. Next, the participants were immersed in the ‘Welcome Scenario’, a white virtual environment without any spatial references, showing a virtual disclaimer that read ‘Welcome to the body perception test. In this period, feel free to get accustomed to the virtual world. Follow the directions of the researcher and press START to begin’ (Fig. 3A).

At this stage, we checked that participants could read the text, to ensure that the HMD was correctly positioned. Pressing the trigger button of the controllers then started the next phase of the experiment, and the participants were transferred to the ‘Adaptation Scenario’. In this scenario, they were asked to orient two feet icons, represented by two arrows (one for each foot), towards specific coloured zones, using the two controllers simultaneously (Fig. 3B). Here, they were free to practise and become confident with the controllers. Specifically, the system registered the finger position on the touchpad and calculated the angle of the vector from the centre of the touch surface to the point of contact.
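The touchpad-to-angle mapping described above can be sketched in Python as follows. We assume touchpad coordinates normalised to [-1, 1], as is typical for SteamVR trackpad axes, a heading measured from straight ahead (+y) and positive clockwise, and a hypothetical dead zone near the pad centre where no direction is defined; none of these details are taken from the study’s Unreal Engine implementation.

```python
import math
from typing import Optional

DEAD_ZONE = 0.2  # hypothetical radius below which the touch is ignored


def touch_to_heading(x: float, y: float,
                     dead_zone: float = DEAD_ZONE) -> Optional[float]:
    """Heading (deg) of the vector from the pad centre to the contact point.

    0 deg = straight ahead (+y), positive = clockwise (towards +x);
    the result lies in (-180, 180]. Returns None inside the dead zone.
    """
    if math.hypot(x, y) < dead_zone:
        return None  # too close to the centre to define a direction
    return math.degrees(math.atan2(x, y))


print(touch_to_heading(0.0, 1.0))  # thumb at the top edge: arrow straight ahead
print(touch_to_heading(0.5, 0.5))  # thumb towards the upper right: arrow rotated right
```

Using `atan2` rather than `atan(x / y)` keeps the heading well defined in all four quadrants and when `y` is zero.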

Once participants were familiar with the controls, the experimental trial started with the ‘Stepping Scenario’ and the ‘Measuring Scenario’. In the ‘Stepping Scenario’, after an acoustic signal, participants had to perform a high-knee step-in-place task while staring at a black point displayed in front of them in the virtual environment. This point served as an external focus of attention to help them keep their balance. After a second acoustic signal, the black point disappeared, the participants had to stop in the position reached without moving their feet any further, and the ‘Measuring Scenario’ appeared. The time between the two acoustic signals was randomly set between 10 and 15 s to prevent participants from anticipating the stop. In the ‘Measuring Scenario’ (Fig. 3D), the participants saw the virtual feet icons in front of them, as in the ‘Adaptation Scenario’, but without the coloured dial, to avoid influencing their assessments. Participants had to indicate the perceived orientation of their feet through the touchpads of the controllers.

When the participants pressed both controllers’ triggers simultaneously, the program recorded the angle between the two feet icons (α-VR) and the time to confirm it, defined as the time spent with the finger on the touchpad. Once α-VR had been acquired, participants were instructed to keep their feet still so that the investigator could record the actual feet angle (α-R). This angle was obtained from the intersection of the straight lines connecting the II metatarsal to the calcaneal tuberosity of each foot, traced on the tile surface (Fig. 4).

The figure shows the technique used to calculate the actual feet angle: angle a is the angle between the axis connecting the II metatarsal to the calcaneal tuberosity of the right foot and the first perpendicular line on the tile; angle b is the same for the left foot. Their sum gives angle c, the angle between the median axes of the two feet.
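The angle construction in the figure can be expressed compactly. In the Python sketch below, which is ours and not the authors’ procedure, each foot axis runs from the calcaneal tuberosity to the II metatarsal landmark, given as hypothetical (x, y) tile coordinates in cm, with +y taken as the tile’s perpendicular (forward) line and headings measured clockwise from it. With this convention, an out-toed right foot has a positive heading (angle a) and an out-toed left foot a negative heading (angle b is its opposite), so c = a + b equals the right heading minus the left heading. The same function can be applied to the two virtual arrow headings to obtain α-VR.

```python
import math


def foot_heading(heel: tuple, toe: tuple) -> float:
    """Heading (deg) of the heel-to-toe axis, clockwise from the tile's +y line."""
    dx, dy = toe[0] - heel[0], toe[1] - heel[1]
    return math.degrees(math.atan2(dx, dy))


def feet_angle(right_heading: float, left_heading: float) -> float:
    """Angle c between the two foot axes (a + b in the figure's terms).

    a = right_heading (outward rotation of the right foot is positive);
    b = -left_heading (outward rotation of the left foot is negative).
    """
    return right_heading - left_heading


# Hypothetical landmarks (cm): both feet toed out symmetrically.
alpha_r = feet_angle(foot_heading((10, 0), (15, 20)),
                     foot_heading((-10, 0), (-15, 20)))
# Hypothetical icon headings (deg) from a participant who perceives near-parallel feet.
alpha_vr = feet_angle(2.0, -1.0)
# Difference printed as alpha-R minus alpha-VR (sign convention ours).
print(round(alpha_r, 2), alpha_vr, round(alpha_r - alpha_vr, 2))
```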

After α-VR and α-R had been acquired, participants pressed the trigger button of the controllers and started the fatiguing protocol in the ‘Fatiguing Scenario’. It consisted of a 90° squat exercise with an elastic band around both knees, aimed at straining the hip external rotator muscles. During the descent phase of the squat, the hip and knee joints flexed (approximately 90°) while the ankle joints dorsiflexed. Conversely, during the ascent phase, the hip and knee joints extended and the ankle joints plantarflexed. Once the lowest point of the squat was reached, the participants had to rotate the hips externally and internally for a set of 15 repetitions. In this virtual scenario, participants were instructed to follow a video, shown inside the VR, of a physiotherapist repeatedly performing the movement set (Fatiguing Scenario, Fig. 3C), which also helped them pace their movements. Each set was followed by a 10-s rest period, during which the video was stopped and participants were asked to rate their perceived exertion on the Borg CR-10 scale (Ferguson 2014; Lamb et al. 1999). The number of repetitions per set was identical for all participants (15), but the total number of sets performed by each participant depended on their fatigue assessment.

The participants thus continued the fatiguing protocol until one of the following stop conditions was met: they reported a Borg rating of 10, or they could no longer fully externally rotate their legs for three consecutive movements. Meanwhile, the investigator checked the correct execution of the task and encouraged the participants to continue the exercise, which helped moderate the mental fatigue associated with participants’ motivation (Marcora et al. 2009). Once a stop condition was met, the participants pressed the trigger button of the controllers and performed the stepping and measuring scenarios again. After this second measurement, participants removed the HMD and recovered from the fatiguing protocol for 10 min. After this recovery time, participants were immersed again in the ‘Stepping Scenario’ followed by the ‘Measuring Scenario’, and the third measurement was taken for comparison with the baseline. The duration of each experiment depended on the fatiguing protocol and on the participant’s endurance, but it never exceeded 45 min.
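The two stop conditions can be written as a simple rule. The Python sketch below is a hypothetical reconstruction, not the study’s software: each set is given as a list of per-repetition flags (True when full external rotation was achieved) plus the Borg CR-10 rating collected in the following rest, and we assume that the count of consecutive incomplete repetitions carries over from one set to the next.

```python
from typing import List, Optional, Sequence, Tuple

# (full-rotation flag per repetition, Borg CR-10 rating given after the set)
ExerciseSet = Tuple[List[bool], int]


def fatigue_stop(sets: Sequence[ExerciseSet]) -> Optional[Tuple[int, str]]:
    """Return (1-based set number, reason) at which the protocol stops, or None."""
    consecutive_incomplete = 0
    for number, (reps_ok, borg) in enumerate(sets, start=1):
        for ok in reps_ok:
            consecutive_incomplete = 0 if ok else consecutive_incomplete + 1
            if consecutive_incomplete >= 3:
                return number, "three consecutive incomplete rotations"
        # The Borg rating is collected during the 10-s rest after the set.
        if borg >= 10:
            return number, "Borg CR-10 rating of 10"
    return None


full_set = [True] * 15
print(fatigue_stop([(full_set, 5), (full_set, 8), (full_set, 10)]))
```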

2.4 Statistical methods

An a priori analysis was run with G*Power 3.1 to calculate the sample size. Based on a 3 × 2 mixed ANOVA, a sample of 30 participants per group (VR and R) was determined to achieve a power of 95% at a significance level of 0.05 for an effect size of 0.4 (Cohen 1992). Descriptive statistics, including means and standard deviations, were computed. SPSS 26.0 (IBM SPSS Statistics, version 26.0, 2019, Armonk, NY, USA) and Stata 17 were used for the analysis.

2.4.1 Primary outcome—feet angular differences between the real and the perceived orientation

The primary outcome of this study was the difference between the angle formed by the virtual feet (α-VR group) and the angle formed by the real feet (α-R group) before (T1), immediately after (T2), and 10 min after (T3) the fatiguing protocol. Data were normally distributed on inspection of q–q plots. A 3 × 2 mixed ANOVA was used to test for an interaction between times (T1, T2, T3) and groups (α-VR and α-R). There were no outliers, as assessed by boxplots. Testing the homogeneity of variance (Levene’s test) was deemed unnecessary since the two groups had equal sample sizes. Mauchly’s test of sphericity indicated that the assumption of sphericity was not violated for the two-way interaction, p = 0.89. The magnitude of the effects of time and group on the differences between α-VR and α-R was quantified through Cohen’s d and mean differences (MDs) with their 95% confidence intervals (95% CI).
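As an illustration of how an MD with its 95% CI and a standardised effect size can be obtained at a single time point, the Python sketch below treats α-R and α-VR as paired measurements from the same participants. This is a simpler paired computation swapped in for illustration, not the mixed ANOVA used in the study, and the effect size shown is the paired-samples d_z (MD divided by the SD of the differences), which may differ from the Cohen’s d variant the authors computed. The default critical value, 2.0452, is the two-sided 95% t quantile for 29 degrees of freedom (n = 30).

```python
import math
import statistics
from typing import Sequence, Tuple

T_CRIT_DF29 = 2.0452  # two-sided 95% t quantile for df = 29 (n = 30)


def paired_md_ci(x: Sequence[float], y: Sequence[float],
                 t_crit: float = T_CRIT_DF29
                 ) -> Tuple[float, Tuple[float, float], float]:
    """Mean of the paired differences x - y, its t-based CI, and Cohen's d_z.

    t_crit must match df = n - 1; the default is only valid for n = 30.
    """
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two paired samples of equal length (n >= 2)")
    diffs = [a - b for a, b in zip(x, y)]
    md = statistics.mean(diffs)
    sd = statistics.stdev(diffs)  # sample SD, n - 1 denominator
    se = sd / math.sqrt(len(diffs))
    ci = (md - t_crit * se, md + t_crit * se)
    return md, ci, md / sd
```

Called as `paired_md_ci(alpha_r_t1, alpha_vr_t1)`, where the two hypothetical lists hold the 30 participants’ angles at T1, it returns the MD, its 95% CI, and d_z.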

2.4.2 Secondary outcome—time to confirm the perceived orientation at the virtual reality task

The secondary outcome of this study was the time needed to confirm the perceived orientation in the virtual reality task before (T1), immediately after (T2), and 10 min after (T3) the fatiguing protocol. In this case, data were not normally distributed on inspection of q–q plots, being positively skewed. Johnson’s modified t test for skewed data (Stata command ‘johnson’) was used to compute MDs and 95% CIs across times.
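Johnson’s (1978) modified t statistic adds skewness corrections to the ordinary one-sample t, and it can be applied, for instance, to the paired time differences (e.g. T2 − T1). The Python sketch below is our own implementation of the statistic as we understand it; it uses the simple n-denominator estimator of the third central moment (an assumption, since small-sample variants exist) and is not the code of the Stata ‘johnson’ command, whose confidence intervals may be computed differently. With symmetric data the correction terms vanish and the ordinary t is recovered.

```python
import math
from typing import Sequence


def johnson_t(x: Sequence[float], mu0: float = 0.0) -> float:
    """Johnson's (1978) skewness-adjusted t statistic for H0: mean == mu0.

    t_J = [d + m3 / (6 s^2 n) + m3 / (3 s^4) * d^2] / (s / sqrt(n)),
    with d = mean - mu0, s^2 the sample variance and m3 the third
    central moment (n-denominator estimator; an assumption).
    """
    n = len(x)
    if n < 3:
        raise ValueError("need at least three observations")
    mean = sum(x) / n
    s2 = sum((v - mean) ** 2 for v in x) / (n - 1)  # sample variance
    m3 = sum((v - mean) ** 3 for v in x) / n       # skewness term
    d = mean - mu0
    numerator = d + m3 / (6 * s2 * n) + m3 / (3 * s2 ** 2) * d ** 2
    return numerator / math.sqrt(s2 / n)
```

The statistic is then referred to a t distribution with n − 1 degrees of freedom.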

3 Results

3.1 Descriptive analysis

Thirty Italian participants were recruited for this study: 17 (57%) identified themselves as women and 13 (43%) as men. Their mean age was 27.5 ± 3.8 years, and their mean BMI was 21.9 ± 2.4 kg/m².

3.2 Primary outcome—feet angular differences between the real and the perceived orientation

Table 1 reports α-VR and α-R and their mean differences. There was no statistical interaction between time (within-subject factor) and group (between-subject factor) in determining the differences between α-VR and α-R (p = 0.26). Time had no effect on the differences between α-VR and α-R: the 95% CI ranged from −5.20° to 1.87° for T1 vs T2, from −5.03° to 0.49° for T1 vs T3, and from −4.70° to 3.48° for T2 vs T3. Conversely, a group effect was found: α-VR and α-R differed at T1 (MD = 20.89°, 95% CI [14.67°, 27.10°]), T2 (16.76°, [9.57°, 23.94°]), and T3 (16.34°, [10.00°, 22.68°]) (Table 1).

3.3 Secondary outcome—time to confirm the perceived orientation at the virtual reality task

Table 2 reports the mean and SD of the time (s) taken to define the feet orientation in VR, together with the mean differences and their 95% CIs. An effect was found between T2 and T1 (MD = 5.10 s, 95% CI [2.82, 7.39]) and between T3 and T1 (4.97 s [1.64, 8.30]), but not between T3 and T2 (0.13 s [− 3.40, 3.14]) (Table 2).

4 Discussion

The present study investigated lower-limb position sense in a perceptually deprived virtual environment. Specifically, it assessed the difference between participants’ perceived and real orientation of the feet in space, the time needed to confirm it, and the extent to which this difference might be affected by a fatiguing task. Our first hypothesis was that there would be a discrepancy between the actual and the perceived orientation of the lower limbs. Our results appear to confirm that the perceived position of the feet generally differs from the actual one, as participants tended to perceive their feet as parallel and pointing forward regardless of their real position, which on average was externally oriented. Second, we expected this discrepancy, along with the time needed to process it, to change under acute fatigue as a result of reduced position sense accuracy. However, participants’ tendency to perceive their feet as parallel and pointing forward did not change after the fatiguing protocol, although the time needed to confirm the orientation increased.

With our protocol, we could study only the position sense output, through feet orientation, in the total absence of visual bodily or spatial cues. Therefore, we cannot know how the lower-limb ‘postural schema’ or ‘body model’ behaved, but this phenomenon raises several hypotheses, which are discussed hereafter. Participants had no visual cues, since they were immersed in an IVR scenario devoid of any spatial references. Assuming that they actually reached acute fatigue, as they reached a Borg rating of 10 or could no longer continue the exercise in the fatiguing protocol, we expected a decrease in proprioceptive afferent signals (Abdelkader et al. 2020; Larson and Brown 2018). Nevertheless, the final position sense output did not change across the three time points, as all participants indicated their feet to be parallel and pointing forward, although they were generally divergent. According to predictive processing theory, the brain generates inferences by comparing and integrating personal beliefs, cultural influences, and expected inputs with afferent multisensory stimulation. On this basis, it selects the most functional action in response to the environment (Beierholm et al. 2009; Körding et al. 2007; Magnotti et al. 2013; Rohe and Noppeney 2015; Wozny et al. 2008). Since the brain cannot directly access the position of the limbs, it can only make inferences from sensory afferent inputs and ‘body representation’ data (Samad et al. 2015). Hence, uncertainty increases as sensory input decreases, for example when there are no visual bodily or spatial cues and proprioceptive information is reduced because the participant cannot move. Both conditions were created in the present study. Von Castell et al. (2021) studied the integration of visual and postural eye-height information in a similar virtual reality context.
They reported that observers receiving incongruent perceptual information calibrate visual eye-height information relying on an internalised rather than a flexibly updated posture (von Castell et al. 2021). Accordingly, other authors have suggested that the visual experience of the body might play a role in shaping the ‘body model’ (Longo et al. 2010; Kimmel 2013; Lagopoulos 2019; Spurgas 2005). Moreover, environmental and cultural factors might influence ‘body representations’, both the ‘postural schema’ and the ‘body model’, contributing to a stereotyped picture of ourselves, just as they can influence emotions (Immordino-Yang et al. 2016; Kitayama et al. 2006). Repeated exposure to anatomical content through different media (e.g. websites, books) might also play a role.

Under the conditions of our study, the brain is likely to rely on cultural and cognitive components (i.e. personal and cultural beliefs, expectations, previous experiences) that lead it to place the feet orientation closer to the body’s midline (Paillard and Brouchon 1968; Wann and Ibrahim 1992; Wozny et al. 2008). However, this hypothesis needs to be explored in further studies with more precise fatigue protocols, perhaps also investigating populations with different cultural backgrounds. Another possible explanation for our results is that participants relied mostly on their ‘body model’ representation, in which the feet are internally represented as parallel. The ‘body model’ representations of the hand, as well as of the leg, are known to be distorted (Longo and Haggard 2010b; Stone et al. 2018). Stone et al. (2018) described a distortion of the ‘body model’ representation of the leg, showing that participants overestimated the width of their lower limbs by about 10%, particularly at the ankles, which were perceived as significantly more ‘swollen’ than the knees and thighs (Stone et al. 2018). All these experiments involved perceptual tasks performed without seeing the bones and joints, as in the present study. Hence, a distorted representation of the tibiotarsal joint may also be present.

As for the effects of muscle fatigue on position sense, although many researchers state that fatigue alters muscle spindle discharge and thus proprioception and position sense (Gear 2011; Myers et al. 1999; Röijezon et al. 2015b; Vuillerme et al. 2002), our participants’ position sense performance did not worsen after the exercise session. It could be that, in our IVR scenario, fatigue was not sufficient to affect the integration of the ‘postural schema’ and the ‘body model’, so position sense remained unaltered. Another possible explanation is that our fatiguing task focussed on the hip external rotators, so we could not induce general acute fatigue, which the literature suggests affects position sense more than local fatigue (Abd-Elfattah et al. 2015). The proposed fatigue protocol should therefore be interpreted cautiously, as it might not be the most suitable one. Nonetheless, after the fatiguing session, participants reached the same results as at baseline but took longer to confirm the orientation. The exercise may have induced an overload of local information, caused by fatigue, requiring greater computational effort from the brain. This may hinder motor learning, since the cognitive effort, expressed as the time required to construct position sense, increases (Batson 2013; Eddy et al. 2015). This temporal-shift hypothesis is in line with the results of Vuillerme et al. (2002), who observed that ankle fatigue increases the attentional demand of regulating static postural control in healthy adults, and with related findings (Bisson et al. 2014; Harkins et al. 2005; Hatami Bahmanbegloo et al. 2021; Jo et al. 2022).

This study has some limitations. The current data preclude any conclusions about the validity of our assessment methods for α-VR and α-R, which limits the interpretation of our results. Regarding the estimated virtual orientation of the feet, this parameter depends on each participant’s perceptual sensitivity and representation capacity, and it is therefore intrinsically variable. Future studies in a larger population could use the methodology proposed here to compare the estimated virtual orientation against a set of predefined real feet angles, extracting normative values for the position sense error of the lower limbs. As for the real orientation of the feet, we could not explore the reliability of this specific method, since no suitable gold standard was available for comparison. Nonetheless, we adopted several strategies to reduce measurement error and standardise the procedure as much as possible: (1) we used specific bony landmarks to clearly identify the reference vector for measuring the feet angle; (2) the assessment and landmark identification were always performed by the same operator; (3) we used a fine-point ink pen (0.7 mm) to draw the lines on the scaled tile. Considering the sensitivity of the goniometer used during our experimental sessions and the width of the drawn lines, we estimate a measurement standard deviation of about ±2°. This error could be reduced with more advanced (and expensive) solutions integrating VR and optoelectronic motion capture systems.
Another limitation is that we focussed our analysis solely on the position sense output derived from feet orientation, without exploring broader aspects of ‘body representations’ such as the ‘postural schema’, which concerns the angular configuration of all lower-limb joints, and the ‘body model’. Future research should also address these representations, possibly adopting a higher-quality design (e.g. a randomised controlled trial to test fatigue effects). Moreover, the fatigue protocol has some critical issues: it has not been validated, and the exercise might have fatigued only the hip external rotators without inducing general acute fatigue. This may have reduced the fatigue effects, limiting the interpretability and magnitude of our results. Nevertheless, a strength of this study is the use of a new measurement system (i.e. IVR) to assess position sense in the absence of any visuospatial cues, without influence from the experimenter.

5 Conclusion

Healthy people, without direct vision of their body or environmental cues, tend to perceive their feet as parallel and pointing forward even though they are divergent. Moreover, muscle fatigue did not affect task performance, but it increased the time needed to accomplish the task. IVR is an interesting and easy-to-use tool for tasks aimed at studying position sense, thanks to its ability to control the impact of visual bodily and spatial cues. Future studies should test the hypotheses of this work and further investigate position sense and the effect of fatigue, adopting physiological measurements such as electromyography. It would also be important to examine possible changes in our results in clinical conditions where the processing of body representations is hindered by central nervous system damage and high fatigability (e.g. multiple sclerosis, myasthenia gravis, fibromyalgia, stroke).

Data availability

Data are available upon reasonable request to the corresponding author.

References

Abbruzzese G, Trompetto C, Mori L, Pelosin E (2014) Proprioceptive rehabilitation of upper limb dysfunction in movement disorders: a clinical perspective. Front Hum Neurosci 8:961. https://doi.org/10.3389/fnhum.2014.00961

Abd-Elfattah HM, Abdelazeim FH, Elshennawy S (2015) Physical and cognitive consequences of fatigue: a review. J Adv Res 6(3):351–358. https://doi.org/10.1016/J.JARE.2015.01.011

Abdelkader NA, Mahmoud AY, Fayaz NA, El-Din Mahmoud LS (2020) Decreased neck proprioception and postural stability after induced cervical flexor muscles fatigue. J Musculoskelet Neuronal Interact 20(3):421–428

Ateş Y, Ünlüer NÖ (2020) The relationship of pain, anxiety, and fatigue with knee position sense, balance, and dual task performance during menstrual cycle in females with multiple sclerosis. Somatosens Mot Res 37(4):307–312. https://doi.org/10.1080/08990220.2020.1828057

Batson G (2013) Exercise-induced central fatigue: a review of the literature with implications for dance science research. J Dance Med Sci 17(2):53–62. https://doi.org/10.12678/1089-313X.17.2.53

Bayramova R, Valori I, McKenna-Plumley PE, Callegher CZ, Farroni T (2021) The role of vision and proprioception in self-motion encoding: an immersive virtual reality study. Atten Percept Psychophys 83(7):2865–2878. https://doi.org/10.3758/S13414-021-02344-8

Beierholm UR, Quartz SR, Shams L (2009) Bayesian priors are encoded independently from likelihoods in human multisensory perception. J Vis. https://doi.org/10.1167/9.5.23

Bisson EJ, Lajoie Y, Bilodeau M (2014) The influence of age and surface compliance on changes in postural control and attention due to ankle neuromuscular fatigue. Exp Brain Res 232(3):837–845. https://doi.org/10.1007/S00221-013-3795-7

Eddy MD, Hasselquist L, Giles G, Hayes JF, Howe J, Rourke J, Coyne M, O’Donovan M, Batty J, Brunyé TT, Mahoney CR (2015) The effects of load carriage and physical fatigue on cognitive performance. PLoS ONE. https://doi.org/10.1371/JOURNAL.PONE.0130817

Ferguson B (2014) ACSM’s guidelines for exercise testing and prescription 9th Ed. 2014. J Can Chiropr Assoc 58(3):328

Ferlinc A, Fabiani E, Velnar T, Gradisnik L (2019) The importance and role of proprioception in the elderly: a short review. Mater Socio Med 31(3):219–221. https://doi.org/10.5455/msm.2019.31.219-221

Fogg BJ (2002) Persuasive technology. Ubiquity 200:2. https://doi.org/10.1145/764008.763957

Ganea N, Longo MR (2017) Projecting the self outside the body: body representations underlying proprioceptive imagery. Cognition 162:41–47. https://doi.org/10.1016/J.COGNITION.2017.01.021

Gear WS (2011) Effect of different levels of localized muscle fatigue on knee position sense. J Sports Sci Med 10(4):725

Goldberg A, Russell JW, Alexander NB (2008) Standing balance and trunk position sense in impaired glucose tolerance (IGT)-related peripheral neuropathy. J Neurol Sci 270(1–2):165–171. https://doi.org/10.1016/J.JNS.2008.03.002

Harkins KM, Mattacola CG, Uhl TL, Malone TR, McCrory JL (2005) Effects of 2 ankle fatigue models on the duration of postural stability dysfunction. J Athl Train 40(3):191

Hatami Bahmanbegloo Z, Farsi A, Hassanlouie H, Tilp M (2021) Effect of central and peripheral muscle fatigue contribution after ankle submaximal fatiguing contractions on muscle synergies and postural control. Mot Behav. https://doi.org/10.22089/MBJ.2021.10287.1959

Herter TM, Scott SH, Dukelow SP (2014) Systematic changes in position sense accompany normal aging across adulthood. J Neuroeng Rehabil. https://doi.org/10.1186/1743-0003-11-43

HTC Vive (2018) HTC VIVE Tracker (2018) developer guidelines, ver. 1.0. https://dl.vive.com/Tracker/Guideline/HTC_Vive_Tracker(2018)_Developer+Guidelines_v1.0.pdf

Immordino-Yang MH, Yang XF, Damasio H (2016) Cultural modes of expressing emotions influence how emotions are experienced. Emotion 16(7):1033–1039. https://doi.org/10.1037/EMO0000201

Jahjah A, Seidenspinner D, Schüttler K, Klasan A, Heyse TJ, Malcherczyk D, El-Zayat BF (2018) The effect of ankle tape on joint position sense after local muscle fatigue: a randomized controlled trial. BMC Musculoskelet Disord. https://doi.org/10.1186/S12891-017-1909-2

Jo D, Goubran M, Bilodeau M (2022) Sex differences in central and peripheral fatigue induced by sustained isometric ankle plantar flexion. J Electromyogr Kinesiol 65:102676. https://doi.org/10.1016/J.JELEKIN.2022.102676

Johnston W, Dolan K, Reid N, Coughlan GF, Caulfield B (2018) Investigating the effects of maximal anaerobic fatigue on dynamic postural control using the Y-balance test. J Sci Med Sport 21(1):103–108. https://doi.org/10.1016/J.JSAMS.2017.06.007

Kimmel M (2013) The arc from the body to culture: how affect, proprioception, kinesthesia, and perceptual imagery shape cultural knowledge (and vice versa). Integr Rev 9(2):300–348

Kitayama S, Mesquita B, Karasawa M (2006) Cultural affordances and emotional experience: socially engaging and disengaging emotions in Japan and the United States. J Pers Soc Psychol 91(5):890–903. https://doi.org/10.1037/0022-3514.91.5.890

Körding KP, Beierholm U, Ma WJ, Quartz S, Tenenbaum JB, Shams L (2007) Causal inference in multisensory perception. PLoS ONE. https://doi.org/10.1371/JOURNAL.PONE.0000943

Lagopoulos AP (2019) The cultural transformation of the proprioceptive senses. Semiotica 2019(231):193–223. https://doi.org/10.1515/SEM-2018-0041

Lamb KL, Eston RG, Corns D (1999) Reliability of ratings of perceived exertion during progressive treadmill exercise. Br J Sports Med 33(5):336. https://doi.org/10.1136/BJSM.33.5.336

Larson DJ, Brown SHM (2018) The effects of trunk extensor and abdominal muscle fatigue on postural control and trunk proprioception in young, healthy individuals. Hum Mov Sci 57:13–20. https://doi.org/10.1016/J.HUMOV.2017.10.019

Li L, Zhang S, Dobson J (2019) The contribution of small and large sensory afferents to postural control in patients with peripheral neuropathy. J Sport Health Sci 8(3):218–227. https://doi.org/10.1016/j.jshs.2018.09.010

Longo MR (2015) Implicit and explicit body representations. Eur Psychol 20(1):6–15. https://doi.org/10.1027/1016-9040/A000198

Longo MR, Azañón E, Haggard P (2010) More than skin deep: body representation beyond primary somatosensory cortex. Neuropsychologia 48(3):655–668. https://doi.org/10.1016/J.NEUROPSYCHOLOGIA.2009.08.022

Longo MR, Haggard P (2010a) An implicit body representation underlying human position sense. Proc Natl Acad Sci USA 107(26):11727–11732. https://doi.org/10.1073/PNAS.1003483107

Maciejewski M, Piszczek M, Pomianek M, Palka N (2020) Design and evaluation of a SteamVR tracker for training applications: simulations and measurements. Metrol Meas Syst 27(4):601–614. https://doi.org/10.24425/MMS.2020.134841

Magnotti JF, Ma WJ, Beauchamp MS (2013) Causal inference of asynchronous audiovisual speech. Front Psychol. https://doi.org/10.3389/FPSYG.2013.00798

Mohammadi F, Azma K, Naseh I, Emadifard R, Etemadi Y (2013) Military exercises, knee and ankle joint position sense, and injury in male conscripts: a pilot study. J Athl Train 48(6):790–796. https://doi.org/10.4085/1062-6050-48.3.06

Mon-Williams M, Wann JP, Jenkinson M, Rushton K (1997) Synaesthesia in the normal limb. Proc Biol Sci 264(1384):1007–1010. https://doi.org/10.1098/RSPB.1997.0139

Myers JB, Guskiewicz KM, Schneider RA, Prentice WE (1999) Proprioception and neuromuscular control of the shoulder after muscle fatigue. J Athl Train 34(4):362. https://doi.org/10.17615/41by-0c70

Niehorster DC, Li L, Lappe M (2017) The accuracy and precision of position and orientation tracking in the HTC Vive virtual reality system for scientific research. i-Perception 8(3):1–23. https://doi.org/10.1177/2041669517708205

Nieto-Guisado A, Solana-Tramunt M, Marco-Ahulló A, Sevilla-Sánchez M, Cabrejas C, Campos-Rius J, Morales J (2022) The mediating role of vision in the relationship between proprioception and postural control in older adults, as compared to teenagers and younger and middle-aged adults. Healthcare 10(1):103. https://doi.org/10.3390/HEALTHCARE10010103

Paillard J, Brouchon M (1968) Active and passive movements in the calibration of position sense. In: Freedman SJ (ed) The neuropsychology of spatially oriented behavior, chap 3. Dorsey Press, Homewood, IL, pp 37–55

Proske U (2019) Exercise, fatigue and proprioception: a retrospective. Exp Brain Res 237(10):2447–2459. https://doi.org/10.1007/S00221-019-05634-8

Proske U, Gandevia SC (2012) The proprioceptive senses: their roles in signaling body shape, body position and movement, and muscle force. Physiol Rev 92(4):1651–1697. https://doi.org/10.1152/PHYSREV.00048.2011

Radziun D, Ehrsson HH (2018) Short-term visual deprivation boosts the flexibility of body representation. Sci Rep. https://doi.org/10.1038/S41598-018-24496-8

Rand D (2018) Proprioception deficits in chronic stroke-Upper extremity function and daily living. PLoS ONE 13(3):e0195043. https://doi.org/10.1371/journal.pone.0195043

Rohe T, Noppeney U (2015) Cortical hierarchies perform Bayesian causal inference in multisensory perception. PLoS Biol. https://doi.org/10.1371/JOURNAL.PBIO.1002073

Röijezon U, Clark NC, Treleaven J (2015b) Proprioception in musculoskeletal rehabilitation. Part 1: basic science and principles of assessment and clinical interventions. Man Ther 20(3):368–377. https://doi.org/10.1016/J.MATH.2015.01.008

Röijezon U, Clark NC, Treleaven J (2015a) Proprioception in musculoskeletal rehabilitation: part 1: basic science and principles of assessment and clinical interventions. Man Ther 20(3):368–377. https://doi.org/10.1016/j.math.2015.01.008

Romero-Franco N, Jiménez-Reyes P (2017) Effects of warm-up and fatigue on knee joint position sense and jump performance. J Mot Behav 49(2):117–122. https://doi.org/10.1080/00222895.2016.1152222

Rossetti Y, Desmurget M, Prablanc C (1995) Vectorial coding of movement: vision, proprioception, or both? J Neurophysiol 74(1):457–463. https://doi.org/10.1152/JN.1995.74.1.457

Samad M, Chung AJ, Shams L (2015) Perception of body ownership is driven by Bayesian sensory inference. PLoS ONE. https://doi.org/10.1371/JOURNAL.PONE.0117178

Sanchez-Vives MV, Slater M (2005) From presence to consciousness through virtual reality. Nat Rev Neurosci 6(4):332–339. https://doi.org/10.1038/nrn1651

Sansone LG, Stanzani R, Job M, Battista S, Signori A, Testa M (2021) Robustness and static-positional accuracy of the SteamVR 1.0 virtual reality tracking system. Virtual Real 26:1–22. https://doi.org/10.1007/S10055-021-00584-5

Spurgas AK (2005) Body image and cultural background. Sociol Inq 75(3):297–316. https://doi.org/10.1111/J.1475-682X.2005.00124.X

Steib S, Zech A, Hentschke C, Pfeifer K (2013) Fatigue-induced alterations of static and dynamic postural control in athletes with a history of ankle sprain. J Athl Train 48(2):203–208. https://doi.org/10.4085/1062-6050-48.1.08

Stenneken P, Prinz W, Bosbach S, Aschersleben G (2006a) Visual proprioception in the timing of movements: evidence from deafferentation. NeuroReport 17(5):545–548. https://doi.org/10.1097/01.WNR.0000209013.01470.F8

Stenneken P, Prinz W, Cole J, Paillard J, Aschersleben G (2006b) The effect of sensory feedback on the timing of movements: evidence from deafferented patients. Brain Res 1084(1):123–131. https://doi.org/10.1016/J.BRAINRES.2006.02.057

Stone KD, Keizer A, Dijkerman HC (2018) The influence of vision, touch, and proprioception on body representation of the lower limbs. Acta Psychol 185:22–32. https://doi.org/10.1016/J.ACTPSY.2018.01.007

Touzalin-Chretien P, Ehrler S, Dufour A (2010) Dominance of vision over proprioception on motor programming: evidence from ERP. Cereb Cortex 20(8):2007–2016. https://doi.org/10.1093/CERCOR/BHP271

Vafadar AK, Côté JN, Archambault PS (2012) The effect of muscle fatigue on position sense in an upper limb multi-joint task. Mot Control 16(2):265–283. https://doi.org/10.1123/mcj.16.2.265

Valori I, McKenna-Plumley PE, Bayramova R, Callegher CZ, Altoè G, Farroni T (2020) Proprioceptive accuracy in immersive virtual reality: a developmental perspective. PLoS ONE 15(1):e0222253. https://doi.org/10.1371/JOURNAL.PONE.0222253

Verschueren J, Tassignon B, De Pauw K, Proost M, Teugels A, Van Cutsem J, Roelands B, Verhagen E, Meeusen R (2020) Does acute fatigue negatively affect intrinsic risk factors of the lower extremity injury risk profile? A systematic and critical review. Sports Med 50(4):767–784. https://doi.org/10.1007/S40279-019-01235-1

Von Castell C, Oberfeld D, Hecht H (2021) Visual and postural eye-height information is flexibly coupled in the perception of virtual environments. J Exp Psychol Hum Percept Perform 47(8):1132–1148. https://doi.org/10.1037/XHP0000933

Vuillerme N, Forestier N, Nougier V (2002) Attentional demands and postural sway: the effect of the calf muscles fatigue. Med Sci Sports Exerc 34(12):1907–1912. https://doi.org/10.1097/00005768-200212000-00008

Wann JP, Ibrahim SF (1992) Does limb proprioception drift? Exp Brain Res 91:162–166. https://doi.org/10.1007/BF00230024

Witmer BG, Singer MJ (1998) Measuring presence in virtual environments: a presence questionnaire. Presence Teleoperators Virtual Environ 7(3):225–240

Wozny DR, Beierholm UR, Shams L (2008) Human trimodal perception follows optimal statistical inference. J Vis. https://doi.org/10.1167/8.3.2

Acknowledgements

This work was developed within the DINOGMI Department of Excellence framework of MIUR 2018-2022 (Legge 232 del 2016).

Funding

Open access funding provided by Università degli Studi di Genova within the CRUI-CARE Agreement. The authors have no relevant financial or non-financial interests to disclose.

Author information

Contributions

All authors made substantial contributions to the conception and design, data acquisition, or analysis and interpretation of data. All authors participated in drafting the article or revising it critically for important intellectual content. All authors gave final approval of the version to be published. All authors agreed to be accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved.

Ethics declarations

Conflict of interest

The authors report no conflicts of interest. All authors certify that they have no affiliations with or involvement in any organisation or entity with any financial interest or non-financial interest in the subject matter or materials discussed in this manuscript.

Ethical approval

Ethical approval was obtained from the Ethics Committee for University Research (Comitato Etico per la Ricerca di Ateneo [CERA], University of Genova, Genova, Italy; code: 2020.12; approval date: 09/07/2020).

Consent to participate

The participants signed informed consent before participation.

Consent for publication

The participants signed informed consent for publication.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Giardulli, B., Battista, S., Sansone, L.G. et al. Real and perceived feet orientation under fatiguing and non-fatiguing conditions in an immersive virtual reality environment. Virtual Reality 27, 2371–2381 (2023). https://doi.org/10.1007/s10055-023-00809-9

DOI: https://doi.org/10.1007/s10055-023-00809-9