Abstract

Potentially habitable icy Ocean Worlds, such as Enceladus and Europa, are among the most scientifically compelling bodies in the solar system and are high-priority exploration targets. Future in-situ robotic exploration of Enceladus and Europa would require a detailed understanding of the surface material and of the complex lander-surface interactions during locomotion or sampling. To date, numerical models providing insight into the mechanical behavior of icy terrain have been lacking. In this work, we present a Discrete Element Model of porous planetary ice analogs that explicitly describes the microstructure and its evolution upon sintering. The model dimensions are selected through a Pareto-optimality analysis, the influence of the model parameters on sample strength is investigated with a sensitivity analysis, and the model parameters are calibrated to experiments using a probabilistic method. The results indicate that the particle-scale friction coefficient and cohesion energy density govern the macroscopic properties of the porous ice. Our model shows a good correspondence between the evolution of the macroscopic strength and that of the bond strength, suggesting that the strengthening of porous ice results from the development of a large-scale network formed by inter-particle bonding. This work sheds light on the multi-scale nature of the mechanics of planetary ice analogs and highlights the importance of understanding the evolution of surface strength upon sintering for designing robust robotic systems.

1 Introduction

One of the main challenges in developing robots for in-situ space exploration is the uncertainty associated with the surface properties of planetary bodies. Properties such as ultimate bearing capacity and penetration resistance affect the ability of space robots to land, sample, and explore their environment. Hence, estimating these properties is critical for designing and optimizing the robots’ hardware and control systems, and for developing suitable sampling systems.

Several robotic mission concepts are under development to explore Ocean Worlds [10, 34]. Ocean Worlds are bodies in our solar system that are considered potentially habitable or inhabited. Saturn’s moon Enceladus and Jupiter’s moon Europa have recently gained significant interest in the scientific community and are now regarded as two of the Ocean Worlds most likely to harbor extraterrestrial life. Indeed, they appear to contain all the essential ingredients for life, namely liquid water, energy, and nutrients.

Enceladus and Europa are likely to harbor an internal ocean of liquid water beneath their thick ice shells [66, 82]. Enceladus’ internal ocean vents material through large surface fractures near its south pole, known as the Tiger Stripes. The plume formed by the confluence of these geysers is composed primarily of micron-sized particles dominated by water ice [83], which are partly deposited back onto the surface to form the outermost crust [44, 50, 81]. This unique geological context provides direct access to subsurface material, making Enceladus a high-priority target for planetary missions in the search for biosignatures [54]. Europa is also thought to be geologically active with plumes, though definitive data remain elusive [41]. The upcoming Europa Clipper mission [65] may confirm the presence of such features.

After the plume material is deposited on the surface, the size and microstructure of the ice particles are expected to evolve via sintering, transforming initially unconsolidated deposits into consolidated porous ice [50]. Ice sintering is a metamorphic process involving the diffusion of water molecules within and between ice particles, together with the evolution of inter-particle bonds. Sintering increases the inter-particle neck size and densifies the aggregates, so that the material as a whole becomes stronger over time [5]. Sintering is a temperature- and particle-size-dependent process whose rate increases monotonically with increasing temperature and decreasing particle size. While earlier work improved our understanding of sintering timescales in planetary environments [60], planetary ice sintering remains poorly understood and is now the subject of active research.

In a recent study, laboratory icy plume deposit analogs were produced and left to sinter over extended periods of time and at several temperatures [16]. Cone penetration tests were performed at frequent intervals to measure the mechanical strength of the samples. [16] showed that the observed temperature dependence of the strength evolution is consistent with a mechanism dominated by the diffusion of water molecules on the surface of the ice particles. Their study revealed a link between thermodynamic processes and sample strength, but was not designed to understand and quantify the mechanical properties of planetary ice analogs across length scales, from the particle scale to the macro-scale.

In this work, we develop a numerical model that aims to unravel the role of the particle-scale properties of porous ice in its overall mechanical behavior. We use the Discrete Element Method (DEM) to represent the sintered ice particles and simulate cone penetration tests on ice plume deposit analogs at different consolidation levels. DEM captures complex collective behavior through simple particle-particle interactions. Alternative approaches to model this ice are presented in the Supplementary Material (Text S1). Our model, composed of homogeneous particles that interact via contacts, mimics the material’s microstructure and reflects the ice properties both at the particle and at the contact level. As a result, the macroscopic mechanical behavior emerges from the microstructure and micromechanical interactions. The contact laws and the representation of sintering are likewise based on the physics of ice.

DEM has seen rapid development both in academic research [64, 86] and in engineering, where it is used to solve problems involving granular materials, such as in the mining, food, and pharmaceutical industries. DEM has also been recognized as an accurate and computationally efficient tool to simulate terrain–tool interactions [55, 79]. The method has been used to simulate ice, particularly for applications involving interactions with offshore structures and ship hulls [45, 68, 85]. [46] developed a DEM-based model for sintered snow. However, to the best of our knowledge, no previous study has attempted to model sintered porous granular ice.

In this work, the ice particles are treated as rigid bodies that interact via contacts. The contact parameters are calibrated against experimental cone penetration data. The inter-particle solid bonds are modeled with a cohesive force following a Simplified Johnson-Kendall-Roberts (SJKR) model [48]. Unlike other bond models [2, 7, 23, 26, 33, 52, 55, 67, 74], the SJKR model allows bonds to fracture and reform dynamically throughout the simulation, which permits indefinitely long simulations with large deformations and arbitrary interactions.

The paper is organized as follows. In Sect. 2, we briefly describe the ice preparation and testing methods. We also describe the fundamentals of DEM and detail the contact models used in our study. In Sect. 3, we introduce the simulation setup, the modeling procedure, and the parameters of our model. In Sect. 4, we investigate how to reduce the model complexity in an optimal and rigorous manner. In Sect. 5, we calibrate the model to fit experimental data following a proposed probabilistic method. Finally, we present and interpret the results in Sect. 6.

2 Methodologies

2.1 Laboratory ice analog preparation and testing

To create a laboratory analog of the ice plume deposits, deionized water was atomized into liquid nitrogen, where the droplets froze instantly into ice particles. The resulting fine-grained ice was stored in sealed containers and left to sinter at four different temperatures (193 K, 223 K, 233 K, and 243 K) for up to 14 months. The initial ice particles had a log-normal size distribution with a median diameter of 12 μm. The samples had a porosity of \(51.5\% \pm 1.6\%\), which remained approximately constant throughout the aging process. The cone penetration resistance was measured at regular intervals using the in-house experimental setup illustrated in Fig. 2a. Each cone penetration test consisted of driving a rod with a 10 mm diameter conical tip into the sample at a constant speed of \({10}\,{\hbox {mm.s}^{-1}}\). The vertical force acting on the cone tip is recorded as a function of penetration depth and used to derive the ice strength. A complete description of the material preparation and measurement procedure, together with a summary of the experimental results, is given in [16].

2.2 DEM modeling

In the DEM framework, as first described by [21], discrete rigid-body particles (or elements) interact with each other, transferring forces and torques via contacts. One appealing feature of DEM is that no assumptions about the material behavior at the continuum scale are required; instead, the continuum behavior of the material emerges from the large number of interactions at the particle scale [64]. In that sense, it is a faithful representation of the underlying physics. However, DEM is computationally expensive, which limits the simulation time and the number of particles in a given simulation.

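In DEM, the translational and rotational motion of each individual particle is calculated by solving Newton’s second law of motion at each time step, as summarized in the following equations:

\[ m_i \frac{d\varvec{v_i}}{dt} = m_i \varvec{g} + \sum _j \left( \varvec{F}^n_{ij} + \varvec{F}^t_{ij} \right) \tag{1} \]

\[ I_i \frac{d\varvec{\omega _i}}{dt} = \sum _j \left( \varvec{R}_{ij} \times \varvec{F}^t_{ij} + \varvec{\tau }^r_{ij} \right) \tag{2} \]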

where \(m_i\), \(I_i\), \(\varvec{v_i}\), \(\varvec{\omega _i}\) are respectively the mass, the moment of inertia, the translational velocity vector, and the angular velocity vector of a particle i. \(\varvec{g}\) is the gravity vector, \(\varvec{F}^n_{ij}\), \(\varvec{F}^t_{ij}\), and \(\varvec{\tau }^r_{ij}\) are respectively the normal force, the tangential force, and the rolling resistance applied on particle i from the interaction with particle j. \(\varvec{R}_{ij}\) is the vector between the center of particle i and the contact point with the particle j. In this work, Eqs. (1) and (2) are solved using the Velocity Verlet scheme. More details on integration schemes are presented in the Supplementary Material (Text S2).
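As a minimal sketch of the Velocity Verlet scheme mentioned above, the Python snippet below advances the translational part of Eq. (1) for a single particle. To keep it self-contained, contact forces are omitted: the hypothetical `gravity_only` function returns only \(\varvec{g}\), so the result can be checked against the exact free-fall solution. In a DEM code, the acceleration at the new position would instead be recomputed from all contact forces acting on the particle.

```python
import numpy as np

G_VEC = np.array([0.0, 0.0, -9.81])  # terrestrial gravity (m/s^2)


def gravity_only(x):
    """Hypothetical acceleration function: free fall, no contact forces."""
    return G_VEC


def velocity_verlet_step(x, v, a, dt, accel_fn):
    """Advance position x and velocity v by one step dt.

    a is the acceleration at the current position; accel_fn(x) returns the
    acceleration at a new position (total force divided by particle mass).
    """
    x_new = x + v * dt + 0.5 * a * dt**2   # position update
    a_new = accel_fn(x_new)                # forces evaluated at the new position
    v_new = v + 0.5 * (a + a_new) * dt     # velocity update with averaged acceleration
    return x_new, v_new, a_new


x, v = np.zeros(3), np.zeros(3)
a = gravity_only(x)
dt, n_steps = 1e-4, 1000                   # 0.1 s of simulated time
for _ in range(n_steps):
    x, v, a = velocity_verlet_step(x, v, a, dt, gravity_only)

print(v[2], x[2])  # approximately -0.981 m/s and -0.04905 m, the exact free-fall values
```

For a constant acceleration the scheme reproduces the exact trajectory, which is why free fall is a convenient sanity check.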

Prior to solving Eqs. (1) and (2), the forces and torques exerted on each particle by neighboring particles or boundaries are calculated using the three contact models depicted in Fig. 1. The normal and tangential forces at the contact point, \(\varvec{F}_n\) and \(\varvec{F}_t\) respectively, are modeled using the Hertz-Mindlin no-slip contact model with a spring-dashpot representation [59], as shown in Fig. 1a. In this framework, the normal and tangential forces each have an elastic and a viscous component, denoted by the superscripts e and v, and are decomposed as follows:

\[ \varvec{F}_n = \varvec{F}_n^e + \varvec{F}_n^v \tag{3} \]

\[ \varvec{F}_t = \varvec{F}_t^e + \varvec{F}_t^v \tag{4} \]

Additionally, the tangential force is bounded by the Coulomb law of friction, \(|\varvec{F}_t| \le \mu |\varvec{F}_n|\), where \(\mu\) is the friction coefficient; beyond this limit, the particles slide relative to each other. The elastic force in the normal direction \(\varvec{F}_n^e\) is based on Hertz’s classical theory of contact between two spheres [36, 47], which assumes small elastic strains, small contact areas, and an ellipsoidal distribution of contact stresses. The elastic force in the tangential direction \(\varvec{F}_t^e\) is based on Mindlin’s theory [59] and depends on both the normal and tangential overlaps, \(\varvec{\delta }_n\) and \(\varvec{\delta }_t\) respectively. The tangential overlap \(\varvec{\delta }_t\) accumulates from the tangential velocity mismatch of the two particles at their contact point. These models yield non-linear elastic force-displacement relationships, expressed as follows:

\[ \varvec{F}_n^e = k_n \varvec{\delta }_n, \qquad k_n = \tfrac{4}{3} E^* \sqrt{R^* |\varvec{\delta }_n|} \]

\[ \varvec{F}_t^e = k_t \varvec{\delta }_t, \qquad k_t = 8 G^* \sqrt{R^* |\varvec{\delta }_n|} \]

where \(k_n\) and \(k_t\) are the contact’s normal and tangential elastic constants, \(R^*=\frac{R_i R_j}{R_i+R_j}\) the equivalent radius of the two bodies in contact, \(R_i\) the radius of the i-th particle, \(G^*=1/(\frac{2(2-\nu _i)(1+\nu _i)}{E_i}+\frac{2(2-\nu _j)(1+\nu _j)}{E_j})\) the equivalent shear modulus, \(E^*=1/(\frac{1-\nu _i^2}{E_i}+\frac{1-\nu _j^2}{E_j})\) the equivalent Young’s modulus, \(\nu _i\) the Poisson’s ratio of the i-th particle, and \(E_i\) its Young’s modulus.
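The equivalent properties defined above and the overlap-dependent stiffnesses can be sketched as follows. The expressions \(k_n = \tfrac{4}{3}E^*\sqrt{R^*|\varvec{\delta }_n|}\) and \(k_t = 8G^*\sqrt{R^*|\varvec{\delta }_n|}\) are the standard Hertz-Mindlin forms and are assumed here; the material values are illustrative placeholders, not the calibrated parameters of Table 1. The loop shows the characteristic Hertzian scaling of the normal elastic force with \(|\varvec{\delta }_n|^{3/2}\).

```python
import math


def equivalent_properties(R_i, R_j, E_i, E_j, nu_i, nu_j):
    """Equivalent radius R*, Young's modulus E*, and shear modulus G*, as defined in the text."""
    R_star = R_i * R_j / (R_i + R_j)
    E_star = 1.0 / ((1.0 - nu_i**2) / E_i + (1.0 - nu_j**2) / E_j)
    G_star = 1.0 / (2.0 * (2.0 - nu_i) * (1.0 + nu_i) / E_i
                    + 2.0 * (2.0 - nu_j) * (1.0 + nu_j) / E_j)
    return R_star, E_star, G_star


def hertz_mindlin_stiffness(delta_n, R_star, E_star, G_star):
    """Assumed standard forms: k_n = 4/3 E* sqrt(R* d_n), k_t = 8 G* sqrt(R* d_n)."""
    root = math.sqrt(R_star * abs(delta_n))
    return 4.0 / 3.0 * E_star * root, 8.0 * G_star * root


# Illustrative values only: two identical 2 mm-radius particles, E = 1e8 Pa, nu = 0.3.
R_star, E_star, G_star = equivalent_properties(2e-3, 2e-3, 1e8, 1e8, 0.3, 0.3)
for delta in (1e-6, 2e-6):
    k_n, k_t = hertz_mindlin_stiffness(delta, R_star, E_star, G_star)
    print(f"overlap {delta:.0e} m: |F_n^e| = {k_n * delta:.3e} N")
```

Doubling the overlap multiplies the normal elastic force by \(2^{3/2} \approx 2.83\) rather than 2, which is the non-linearity referred to above.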

The viscous components in Eqs. (3) and (4) allow the system to dissipate energy and reach a steady-state packing in reasonable time. In their original formulation, [21] proposed an expression for the damping ratio \(\beta\) based on the critical damping time of a single-degree-of-freedom spring-mass-dashpot system. [84] proposed instead to derive \(\beta\) from the coefficient of restitution e, a physical parameter of the particles that characterizes the energy lost during collision and plastic straining, such that \(\beta = -\ln (e)/\sqrt{\ln ^2(e) + \pi ^2}\). When \(\beta =1\), the system is critically damped and reaches a steady-state packing in the shortest achievable time. The normal and tangential viscous forces are expressed as follows:

\[ \varvec{F}_n^v = -\gamma _n \varvec{v}^n, \qquad \gamma _n = 2\sqrt{\tfrac{5}{6}}\, \beta \sqrt{S_n m^*} \]

\[ \varvec{F}_t^v = -\gamma _t \varvec{v}^t, \qquad \gamma _t = 2\sqrt{\tfrac{5}{6}}\, \beta \sqrt{S_t m^*} \]

where \(\gamma _n\) and \(\gamma _t\) are the normal and tangential viscoelastic damping constants, \(\varvec{v}^n\) and \(\varvec{v}^t\) are the normal and tangential components of the relative velocity at the contact point, and \(m^*=\frac{m_i m_j}{m_i+m_j}\) is the equivalent mass. \(S_n\) and \(S_t\) are, respectively, the normal and tangential stiffness, defined by \(S_n = 2 E^* \sqrt{R^* |\varvec{\delta }_n|}\) and \(S_t = 8 G^* \sqrt{R^* |\varvec{\delta }_n|}\).
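The mapping from the coefficient of restitution e to the damping ratio \(\beta\), and from there to the damping constants, takes only a few lines. In the sketch below the function names are hypothetical, and the prefactor \(2\sqrt{5/6}\) in \(\gamma _n\) and \(\gamma _t\) is an assumption borrowed from the standard Hertz-Mindlin formulation implemented in LIGGGHTS.

```python
import math


def damping_ratio(e):
    """beta = -ln(e) / sqrt(ln(e)^2 + pi^2) for a restitution coefficient 0 < e <= 1."""
    ln_inv = math.log(1.0 / e)  # equals -ln(e); avoids a signed zero when e = 1
    return ln_inv / math.sqrt(ln_inv**2 + math.pi**2)


def damping_coefficients(beta, S_n, S_t, m_star):
    """gamma_n and gamma_t, assuming the 2*sqrt(5/6) prefactor of the LIGGGHTS Hertz model."""
    factor = 2.0 * math.sqrt(5.0 / 6.0) * beta
    return factor * math.sqrt(S_n * m_star), factor * math.sqrt(S_t * m_star)


for e in (1.0, 0.5, 0.1):
    print(e, round(damping_ratio(e), 3))  # beta grows towards 1 as e decreases
```

A perfectly elastic collision (e = 1) gives \(\beta = 0\), i.e., no damping, while \(\beta\) approaches the critically damped value of 1 only as e tends to zero.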

The rolling resistance represents the torques transmitted through the contact region of two particles. When two particles are pushed together under a normal load, their contact region can transfer torque owing to the frictional forces distributed over it. The magnitude of the rolling resistance is therefore proportional to the normal force, which creates the contact surface, and to a friction coefficient. It is calculated using a rolling friction model, as shown in Fig. 1b. While several rolling models exist [1, 43, 93], the Constant Directional Torque (CDT) model is the most commonly used in DEM research owing to its relatively accurate and efficient calculations. In this model, the resistive torque \(\varvec{\tau }_r\) is proportional to the normal force \(\varvec{F}_n\) and acts along the direction of the relative rolling motion \(\frac{\varvec{\omega }_{ij}}{|\varvec{\omega }_{ij}|}\), opposing it. With \(R^*\) the equivalent radius defined above, it is expressed as follows:

\[ \varvec{\tau }_r = -\mu _r R^* |\varvec{F}_n| \frac{\varvec{\omega }_{ij}}{|\varvec{\omega }_{ij}|} \]

where \(\mu _r\) is the coefficient of rolling friction and \(\varvec{\omega }_{ij}\) is the relative angular velocity of particle i with respect to particle j. Although rolling friction has been used with some success to mimic the effect of particle shape [88], it remains unclear whether it represents a physical property [88, 93].
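A minimal sketch of a Constant Directional Torque follows. It assumes a torque magnitude of \(\mu _r R^* |\varvec{F}_n|\) (the effective radius as the lever arm is an assumption) directed against the relative angular velocity; the function name is hypothetical. The two calls illustrate the defining property of the model: the torque magnitude does not depend on how fast the particles roll.

```python
import numpy as np


def cdt_rolling_torque(mu_r, R_star, F_n, omega_i, omega_j, eps=1e-12):
    """Resistive rolling torque on particle i in a Constant Directional Torque sketch.

    Magnitude mu_r * R_star * |F_n|, independent of the rolling speed; the torque
    points opposite to the relative angular velocity omega_ij = omega_i - omega_j.
    """
    omega_ij = np.asarray(omega_i, dtype=float) - np.asarray(omega_j, dtype=float)
    norm = np.linalg.norm(omega_ij)
    if norm < eps:  # no relative rotation: this simple sketch returns zero torque
        return np.zeros(3)
    return -mu_r * R_star * np.linalg.norm(F_n) * omega_ij / norm


slow = cdt_rolling_torque(0.1, 1e-3, [0.0, 0.0, 2.0], [0.0, 1.0, 0.0], [0.0, 0.0, 0.0])
fast = cdt_rolling_torque(0.1, 1e-3, [0.0, 0.0, 2.0], [0.0, 100.0, 0.0], [0.0, 0.0, 0.0])
print(slow, fast)  # identical: the magnitude does not depend on the rolling speed
```

Because the torque switches direction abruptly when \(\varvec{\omega }_{ij}\) changes sign, models of this type can make particles oscillate around zero relative rotation; the `eps` cutoff here is a simplification of the sketch, not part of the model described above.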

To represent inter-particle bonding due to sintering, we consider a supplemental cohesion model, shown in Fig. 1c. Several bond models have been proposed, such as the dual spring [23], the Euler-Bernoulli [7, 26], the cohesive beam [2, 74], the bonded particle [33, 55], the vector-based [52], and the parallel bond [67] models. These models typically describe a rigid bond that forms between particles at the start of the simulation and persists until it breaks. As a result, they are limited to short simulations with restricted interaction types.

In contrast, the Johnson-Kendall-Roberts (JKR) model [48] adds an attractive normal force, also known as the pull-off force, which tends to maintain the contact. The pull-off force is the force needed to separate two particles at zero overlap. It can vanish and reappear throughout the simulation depending on the particle contacts, allowing bonds to fracture and reform dynamically. The JKR model was originally developed from the observation of necks forming around the contact area of adhesive solids. It accounts for intermolecular interactions such as van der Waals forces and for other physical and chemical surface effects, and its formulation therefore depends on the material’s surface energy \(\gamma\) [48].

In our work, we employ the simplified version of the JKR model (SJKR), in which the area increase caused by neck formation is ignored and the effective contact area is simply taken as the area of the circular intersection of the two overlapping particles. The cohesive force in the SJKR model is calculated as follows:

\[ |\varvec{F}_{coh}| = \Omega \, \pi a^2 \]

where \(\Omega\) is the cohesion energy density (energy per unit volume, J/\(\hbox {m}^3\) or Pa) and \(\pi a^2\) is the circular contact area of radius a. This cohesive force also implicitly represents the resistance of the inter-particle bond to shear and bending: because the maximal tangential force \(\varvec{F}_t\) and the resistive torque \(\varvec{\tau }_r\) are proportional to the normal force, their magnitudes increase with the additional normal force \(|\varvec{F}_{coh}|\). The contact configuration used in this study is summarized in the Supplementary Material (Table S1).
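The SJKR cohesive force only requires the area of the circle where two overlapping spheres intersect. The sketch below computes that area from sphere-sphere intersection geometry, matching the "intersection of the two particles" described above. The function names and the value of \(\Omega\) are hypothetical placeholders, and the functions assume a positive centre distance r.

```python
import math


def intersection_area(R_i, R_j, r):
    """Area pi*a^2 of the circle where spheres of radii R_i, R_j (centre distance r) intersect."""
    if r >= R_i + R_j:  # spheres not touching: no contact area
        return 0.0
    a_sq = ((R_i + R_j - r) * (r - R_i + R_j) * (r + R_i - R_j) * (r + R_i + R_j)) / (4.0 * r**2)
    return math.pi * max(a_sq, 0.0)  # clip tiny negative values from round-off


def sjkr_cohesive_force(Omega, R_i, R_j, r):
    """Magnitude of the SJKR cohesive force |F_coh| = Omega * pi * a^2 (Omega in J/m^3 = Pa)."""
    return Omega * intersection_area(R_i, R_j, r)


R, overlap = 2e-3, 2e-5  # illustrative: two 2 mm-radius particles overlapping by 20 micrometres
print(sjkr_cohesive_force(1e5, R, R, 2 * R - overlap))  # hypothetical Omega = 1e5 Pa
```

Since the contact area grows with the overlap, the cohesive force vanishes as soon as the particles separate and reappears when they touch again, which is what allows bonds to break and reform.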

3 Simulation of porous granular ice

3.1 Simulation setup

The DEM simulations are performed using the open-source code \(\hbox {LIGGGHTS}^{\copyright }\) [51]. The cone geometry is replicated at true scale, with a diameter of \(D_{\text{cone}} = {10}\,\hbox {mm}\). The ice samples are cylinders with a diameter \(D_{cyl}\) and a height \(H = {130}\,{\hbox {mm}}\), approximately equal to the height of the experimental samples. The cone was meshed using a non-uniform grid, with an average mesh size of \({0.2}\,\hbox {mm}\) around the cone tip and \({2}\,\hbox {mm}\) around the cone base and along the shaft. The mesh size was chosen to be approximately an order of magnitude smaller than the particle size to ensure an accurate transfer of forces between the particles and the solid. The particle diameter in our study ranged from \({1.5}\,\hbox {mm}\) to \({8}\,\hbox {mm}\). Visualization was performed using ParaView (version 5.2.0) and postprocessing using custom code implemented in \(\hbox {MATLAB}^{\copyright }\) (version R2018b). The simulations were run on Lonestar5, a high-performance supercomputer at the Texas Advanced Computing Center (TACC), and took advantage of the parallel processing capabilities of LIGGGHTS. To this end, a parallel version of LIGGGHTS was compiled with MPI (Message Passing Interface). Up to four nodes, each with two 12-core Intel Xeon E5-2690 v3 (Haswell, 2.60 GHz) processors, were used for the various simulations. With this setup, a typical simulation takes about two hours.

To simulate the cone penetration test, the ice particles are poured into a cylinder from a height approximately equal to the final height of the sample and are allowed sufficient time to settle under terrestrial gravity. The cone is then driven towards the bottom of the sample at a constant velocity of \({100}\,{\hbox {mm.s}^{-1}}\). Due to computational limitations, this penetration velocity is ten times the experimental penetration speed. The simulation is terminated when the cone tip is \({20}\,\hbox {mm}\) above the bottom of the sample. Fig. 2b shows the simulation at \(\hbox {t} = {0}\,\hbox {s}\) and a cut through the middle of the sample at \(\hbox {t} = {0.5}\,\hbox {s}\).

a Custom cone penetrometer apparatus used in [16] to measure the cone penetration resistance of sintered ice samples. Dimensions are in mm. b (left) DEM simulation of a cone penetration test of sintered ice at \(\hbox {t} ={0}\,{\hbox {s}}\); (right) cut-off view at the sample median at \(\hbox {t} = {0.5}\,{\hbox {s}}\) showing the particles in contact with the cone, along with the cone dimensions. The cone geometry is replicated at scale. In the presented case, the sample diameter is \(D_{cyl} = {100}\,\hbox {mm}\) and the particle radius is \(R = {2}\,\hbox {mm}\).

3.2 Model input parameters

In our study, we varied six parameters, namely the particle Young’s modulus \(E_p\), the friction coefficient \(\mu\), the rolling friction coefficient \(\mu _{r}\), the cohesion energy density \(\Omega\), the particle radius R, and the sample diameter \(D_{cyl}\). The parameter ranges and all other model input parameters are summarized in Table 1. The parameters \(\mu\), \(\mu _r\), \(\Omega\), and \(E_p\) are the degrees of freedom of the model. The particle Young’s modulus is taken to be 10 to 1000 times lower than that of bulk ice, owing to the peculiar microstructure of our porous ice [25, 63, 76]. The model’s sensitivity to these parameters is investigated in detail in Sect. 4.3. The particle radius R and the sample diameter \(D_{cyl}\) are the hyper-parameters of the model and are investigated in detail in Sect. 4.1.

To reduce the complexity of the model, we set the particle-cone friction coefficients equal to the particle-particle friction coefficients, i.e., \(\mu _{pp} = \mu _{pc}\) and \(\mu _{r,pp} = \mu _{r,pc}\). The particle density \(\rho\) was derived from the density of homogeneous, isotropic polycrystalline ice \(\mathrm {Ih}\). Although the density of ice decreases slightly with increasing temperature, by \(\sim\) \({0.1}\,{\hbox {kg.m}^{-3}/\hbox {K}}\) [39], for simplicity we assume a constant density at the reference temperature (i.e., T = 233 K). The Poisson’s ratio \(\nu _p\) was taken as the average over our experimental temperature range [24, 25, 61, 63, 76, 80]. The particle-particle and particle-cone coefficients of restitution, \(e_{pp}\) and \(e_{pc}\), were obtained from previous studies on collisions between two ice particles [38] and between an ice particle and an ice block [37], respectively.
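A quick check of why the constant-density simplification is reasonable: across the experimental temperature range, a slope of about 0.1 kg/m³/K changes the density by only a few kg/m³. The reference density of 920 kg/m³ used below is an assumed round value for ice Ih, not the value listed in Table 1.

```python
RHO_REF = 920.0            # assumed reference density of ice Ih near 233 K (kg/m^3), illustrative
DRHO_DT = 0.1              # magnitude of the density change per kelvin quoted in the text (kg/m^3/K)
T_MIN, T_MAX = 193.0, 243.0  # lowest and highest sintering temperatures (K)

delta_rho = DRHO_DT * (T_MAX - T_MIN)   # total density change across the range
relative_change = delta_rho / RHO_REF   # as a fraction of the reference density
print(f"{delta_rho:.1f} kg/m^3, {100 * relative_change:.2f} %")  # 5.0 kg/m^3, 0.54 %
```

Measured from the 233 K reference, the deviation is even smaller, since the reference lies inside the range.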

Table 1 also reports the range of time steps used in the Velocity Verlet integration scheme. Because the critical time step depends on both the particle properties and the particle size, the time step is adjusted for each simulation to match the critical time step, ensuring numerical stability. The calculation of the critical time step is discussed in detail in the Supplementary Material (Text S3).
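One widely used estimate of the critical time step for Hertz-based DEM is the Rayleigh time step, sketched below. Whether Text S3 uses exactly this expression is an assumption, and the inputs (in particular \(E_p\) and \(\nu _p\)) are hypothetical rather than the values of Table 1. In practice, DEM simulations commonly run at a fraction of this value.

```python
import math


def rayleigh_time_step(R, rho, E, nu):
    """Rayleigh time step pi * R * sqrt(rho / G) / (0.1631 * nu + 0.8766), with G = E / (2 (1 + nu))."""
    G = E / (2.0 * (1.0 + nu))  # shear modulus from Young's modulus and Poisson's ratio
    return math.pi * R * math.sqrt(rho / G) / (0.1631 * nu + 0.8766)


# Hypothetical inputs: R = 2 mm, rho = 920 kg/m^3, E_p = 1e8 Pa, nu = 0.3.
dt_R = rayleigh_time_step(2e-3, 920.0, 1e8, 0.3)
print(f"Rayleigh time step: {dt_R:.2e} s")
```

The estimate scales linearly with the particle radius and with \(E_p^{-1/2}\), which is why both the particle properties and the particle size enter the choice of time step.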

3.3 Model response variables

To characterize the response of the ice sample, we measure the reaction force on the cone during penetration, which, by Newton’s third law, equals the force required to drive the cone at the given velocity. The force is extracted along three directions, with the Z-axis aligned with the cone axis. The X- and Y-axes are orthogonal to each other, and their orientation relative to the sample is arbitrary owing to the symmetry of the problem. The stress components \(\sigma _x\), \(\sigma _y\) and \(\sigma _z\) are derived by dividing the components of the force vector by the largest cross-sectional area of the cone (\(A_{cone} = {78.5}\,{\hbox {mm}^2}\)).

Typical stress response of a cone penetration test simulation. a Stress components measured at the cone tip as a function of the penetration depth. b Description of the summary statistics: the cone penetration energy density \(E_i\), the cone penetration resistance \(\overline{\sigma _i}\), and the dispersion factor \(s_{\sigma _i}\)

Figure 3a shows a typical stress response of a cone penetration simulation. We note that the stress components along the X- and Y-axes are not exactly equal, meaning that the problem is not perfectly symmetric. This is primarily due to the granular nature of the material and the asymmetry of the particle configuration.

Stress data from the top three centimeters of the samples are excluded from the analysis, as the strength derived in this region is likely not representative of the sample strength: the tip influence zone has not yet fully developed [73]. Similarly, stress data from the bottom three centimeters of the sample are excluded, since in this region the tip influence zone interacts with the bottom of the sample, which distorts the strength measurements. The stress data in the remaining proof region are the most representative of the true strength of the specimen, as the tip influence zone there is considered nearly undistorted and uniform. Figure 3a summarizes the extent of these regions.

To reduce the dimensionality of the model response, we extract a number of summary statistics from the stress profiles, namely the cone penetration resistance \(\overline{\sigma _i}\), the dispersion factor \(s_{\sigma _i}\), and the penetration energy density \(E_i\) along each of the three axes, together with the strength-depth correlation factor \(\kappa\). These metrics can be calculated as follows:

where \(\sigma _{i,k}\) is the stress component along the i-axis measured at depth \(z_k\). Figure 3b provides a visual depiction of the extracted summary statistics. \(\overline{\sigma _i}\) is indicative of the average sample strength, \(s_{\sigma _i}\) of the dispersion of the stress, \(E_i\) of the total energy required for the cone to penetrate through the sample, and \(\kappa\) of any depth dependence in the stress profile.

The coefficient \(\kappa\) takes values between − 1 and 1. A zero value corresponds to a plateau in the cone penetration strength profile. A positive \(\kappa\) value reflects a depth-strengthening behavior, while a negative \(\kappa\) reflects a depth-weakening behavior. The higher the absolute value of \(\kappa\), the more pronounced the depth dependence.
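To make the summary statistics concrete, the sketch below converts a force profile into stress using \(A_{cone}\), restricts it to the proof region (top and bottom 3 cm excluded), and computes the mean and the dispersion. Two choices are assumptions rather than the paper’s stated definitions: \(s_{\sigma _i}\) is taken as the sample standard deviation, and \(\kappa\) as the Pearson correlation coefficient between stress and depth, which is consistent with its stated range of −1 to 1. The energy density \(E_i\) is left out, and the force profile is hypothetical.

```python
import numpy as np

# Largest cone cross-section for D_cone = 10 mm, in m^2 (about 78.5 mm^2).
A_CONE = np.pi * (10e-3 / 2.0) ** 2


def summary_statistics(depth, force_i, z_top=0.03, z_bottom=0.03, H=0.13):
    """Mean stress, its standard deviation, and a strength-depth correlation factor.

    depth   : cone-tip penetration depths z_k (m)
    force_i : force component on the cone along one axis at each depth (N)
    Only the proof region, excluding the top z_top and bottom z_bottom of a sample
    of height H, is used. kappa is the Pearson correlation (an assumption).
    """
    depth = np.asarray(depth, dtype=float)
    sigma = np.asarray(force_i, dtype=float) / A_CONE       # stress = force / cone area
    in_proof = (depth >= z_top) & (depth <= H - z_bottom)  # proof-region mask
    s, z = sigma[in_proof], depth[in_proof]
    mean_sigma = s.mean()                                   # cone penetration resistance
    s_sigma = s.std(ddof=1)                                 # dispersion factor (sample std)
    kappa = np.corrcoef(z, s)[0, 1]                         # > 0 means depth strengthening
    return mean_sigma, s_sigma, kappa


# Hypothetical profile: force rising linearly with depth, sampled every 0.5 mm down to 110 mm.
depth = np.linspace(0.0, 0.11, 221)
mean_sigma, s_sigma, kappa = summary_statistics(depth, 0.5 + 10.0 * depth)
print(mean_sigma, s_sigma, kappa)  # kappa is numerically 1 for a perfectly linear increase
```

A perfectly flat plateau would make the Pearson coefficient undefined (zero variance), so a practical implementation would need to treat that case separately.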

4 Statistical study of the model parameters

4.1 Sample dimensions

Due to computational limitations, DEM samples are typically downscaled relative to their experimental counterparts, while particles are typically upscaled relative to the true particle size [31]. However, the choice of the downscaling and upscaling factors is not trivial and is usually made heuristically. Here, we propose a method for rigorously selecting the optimal factors such that the numerical sample represents the true boundary conditions at a relatively low computational cost. We simulate different sample configurations with a range of relative sample diameters \({D_{cyl}/D_{cone}}\) and relative particle diameters \({D_{cone}/d}\), as shown in Fig. 4. The sample diameter \({D_{cyl}}\) is expressed relative to the cone diameter \(D_{cone}\) because this ratio is indicative of the extent of the boundary influence zone. An infinitely large bed would effectively mimic the true sample boundary conditions; thus, large sample diameters are desirable. The cone diameter \(D_{cone}\) is expressed relative to the particle diameter d because this ratio is indicative of the number of active particle-cone contacts. The total number of particles N needed for each configuration can be estimated using the formula \(N=\frac{3}{2} (1-\phi ) {D_{cyl}^2 H/d^3}\), where \(\phi\) is the sample porosity and H is the sample height. In this work, the sample porosity and height are taken to be equal to their experimental values, i.e., \(\phi =0.42\) and H = \({130}\,\hbox {mm}\). With the available computational resources, samples ranging from \(\sim \! 300\) to \(\sim \! 65,000\) particles can be simulated in 3 minutes to 100 hours, respectively.
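The particle-count estimate follows from dividing the solid volume of the cylinder, \((1-\phi )\,\pi D_{cyl}^2 H/4\), by the volume of one sphere, \(\pi d^3/6\). The sketch below evaluates it, assuming monodisperse spheres, for the selected configuration (\(D_{cyl} = 100\) mm, d = 4 mm) and for the physical configuration quoted in the caption of Fig. 4 (d ≈ 25 μm, \(D_{cyl}\) ≈ 160 mm). The latter gives roughly \(1.85 \times 10^{11}\) particles, of the same order as the \(170 \times 10^9\) reported for the physical configuration.

```python
def particle_count(D_cyl, H, d, phi):
    """Number of monodisperse spheres of diameter d in a cylinder (D_cyl, H) at porosity phi.

    Solid volume (1 - phi) * pi * D_cyl**2 * H / 4 divided by the sphere volume
    pi * d**3 / 6 gives 3/2 * (1 - phi) * D_cyl**2 * H / d**3. Any consistent
    length unit works, since the units cancel.
    """
    return 1.5 * (1.0 - phi) * D_cyl**2 * H / d**3


n_selected = particle_count(D_cyl=100.0, H=130.0, d=4.0, phi=0.42)    # lengths in mm
n_physical = particle_count(D_cyl=160.0, H=130.0, d=0.025, phi=0.42)  # ~25 micrometre particles
print(round(n_selected))    # 17672
print(f"{n_physical:.2e}")  # 1.85e+11
```

The cubic dependence on d is what makes the physical configuration prohibitive: halving the particle diameter multiplies the particle count by eight.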

The computational time per node \(\tau\) is defined as the time taken to complete a simulation multiplied by the number of nodes used. \(\tau\) provides a measure that can be compared across simulations under some equivalence assumptions, i.e., constant simulation performance, linear speed-up, little overhead time, and fully parallelizable instructions. \(\tau\) tends to increase with the number of particles, which itself increases with the sample diameter and decreases with the particle diameter. Hence, there is a trade-off between model fidelity (fine particles and large samples) and computational cost, as illustrated by Fig. 4. We note that the physical configuration corresponds to an estimated particle count of \(N=170 \times 10^9\) and an extrapolated computation time of \(\tau =3 \times 10^{11}\) h, a prohibitively expensive cost that reaffirms the need for scaling factors.

Matrix of relative sample dimensions \({D_{cyl}/D_{cone}}\) as a function of the relative particle dimensions \({D_{cone}/d}\). \(\tau\) represents the computational time per node. Starred values are estimated computation times derived from an exponential fit of the executed simulations. N refers to the number of particles in the sample, and starred values are estimated numbers. The case corresponding to the physical condition is reported in the top-right cell (particle diameter \(\sim \! 25\) μm, sample diameter \(\sim \! 160\) mm). The color scale represents the computational time: red cells correspond to simulations whose computational requirements exceed the resources available for this study, while green to orange cells represent computationally feasible cases with a varying need for resources

To select the optimal configuration, we qualitatively analyze the sample displacement fields. Figure 5 shows the displacement maps of the particles in the vertical direction \(\delta _z\) (left) and in the radial direction \(\delta _r\) (right), averaged azimuthally around the main axis. We observe that a lower relative sample diameter leads to a higher vertical displacement, which is consistent with mass conservation: in a narrower sample, the material displaced by the cone has less room to move radially and is pushed vertically instead. No significant change in the vertical displacement map can be seen beyond \({D_{cyl}/D_{cone}}=10\), indicating that this value might be an appropriate threshold. We also observe that finer particles yield higher-resolution radial displacement maps, although no significant change can be seen beyond \({D_{cone}/d}=2.5\), indicating that this ratio might be an appropriate choice.

Matrix of the particle displacement field as a function of relative sample dimensions \({D_{cyl}/D_{cone}}\) and relative particle dimensions \({D_{cone}/d}\). In each panel, the left map represents the vertical displacement \(\delta _z\) while the right map represents the radial displacement \(\delta _r\) averaged azimuthally around the main sample axis

To formally study the trade-off between sample size and computational time, we perform a Pareto analysis relating the displacement at the boundary to the computational cost, as shown in Fig. 6. The Pareto front connects the efficient (non-dominated) configurations, for which neither objective can be improved without degrading the other. The objectives in our study are minimal displacement at the boundary and minimal computational cost. The displacement at the boundary is calculated as the average total displacement in the outer \({10}\,\hbox {mm}\) layer of the sample. The computational cost, measured in service units (SUs), represents the computational resources needed in terms of time and number of cores. The preferred Pareto-optimal configuration corresponds to the point closest to the origin of the graph shown in Fig. 6. By inspection, and considering the available computational resources, we select \({D_{cyl}/D_{cone}}=10\) and \({D_{cone}/d}=2.5\). Henceforth, a sample diameter \(D_{cyl} = {100}\,\hbox {mm}\) and a particle radius \(R = d/2 = {2}\,\hbox {mm}\) are used in all simulations.
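The Pareto selection can be sketched as a non-dominance filter followed by a closest-to-origin choice. Because displacement and cost have different units, each objective is scaled by its maximum before the distance to the origin is measured; that normalization, the function names, and the five configurations below are hypothetical.

```python
import numpy as np


def pareto_front(costs):
    """Indices of non-dominated rows when every column of `costs` is to be minimized."""
    costs = np.asarray(costs, dtype=float)
    front = []
    for i, c in enumerate(costs):
        # c is dominated if some point is no worse in all objectives and strictly better in one.
        dominated = np.any(np.all(costs <= c, axis=1) & np.any(costs < c, axis=1))
        if not dominated:
            front.append(i)
    return front


def closest_to_origin(costs, front):
    """Front member nearest the origin after scaling each objective by its maximum."""
    scaled = np.asarray(costs, dtype=float) / np.max(costs, axis=0)
    distances = np.linalg.norm(scaled[front], axis=1)
    return front[int(np.argmin(distances))]


# Hypothetical configurations: (boundary displacement in mm, cost in SUs).
configs = [(0.50, 10), (0.20, 40), (0.10, 400), (0.30, 60), (0.05, 2000)]
front = pareto_front(configs)
print(front, closest_to_origin(configs, front))  # [0, 1, 2, 4] 2
```

Configuration 3 is dropped because configuration 1 is both cheaper and displaces less; among the remaining ones, configuration 2 offers the most balanced compromise under this normalization.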

Finally, while varying the particle size in the above analysis, we observed that the penetration energy density decreases with decreasing particle size. Since the particle size is fixed in all subsequent simulations, this effect does not affect our results, and an in-depth study of its causes is beyond the scope of this work.

Computational cost versus average total displacement in the outer 1 cm layer of the sample. The displacement is calculated as \(\delta =\sqrt{\delta _x^2+\delta _y^2+\delta _z^2}=\sqrt{\delta _r^2+\delta _z^2}\). The dashed line is the estimated Pareto front, where optimal solutions correspond to the joint minimization of the two objectives (displacement and computational cost)

4.2 Sample bed randomness

The sample bed is produced by pluviation, which has an inherent random component. The particles can assume a virtually infinite number of packing configurations while statistically retaining consistent macroscopic properties. This randomness implies a non-unique stress profile even for identical input parameters. To evaluate the effect of packing randomness on the model response, we compare the results of ten sample beds having arbitrarily different particle arrangements but the same input parameters. The results are summarized in Fig. 7.

Box plots representing the distribution of the summary metrics of ten beds having arbitrarily different particle configurations but the same particle and interaction properties. The spread of the data points illustrates the variance in the model due to variations in particle arrangements. The interquartile ranges for this parameter configuration are \(IQ_{\sigma _i} = [2.08\times 10^{3}, 1.76\times 10^{3}, 2.58\times 10^{3}]\) MPa, \(IQ_{s_{\sigma _i}} = [1.55\times 10^{3}, 1.18\times 10^{3}, 1.67\times 10^{3}]\) MPa, and \(IQ_{E_i} = [2.07\times 10^{3}, 1.75\times 10^{3}, 2.61\times 10^{3}]\) MPa for \(i \in \{x,y,z\}\). The spread in the strength-depth correlation factor is \(IQ_\kappa =0.25\).

We observe that the spread in the model outputs, measured by the interquartile range IQ, is of comparable magnitude across the three directions. In particular, the interquartile ranges are smaller than the experimental confidence bounds derived from the laboratory tests [16], which allows a single representative numerical sample with given parameters to be used for each experiment.

To obtain a scale-independent measure of the dispersion, we calculate the coefficient of variation, defined as \(C_v (x)={s_x/{\overline{x}}}\), where \(s_x\) is the standard deviation and \({\overline{x}}\) is the mean of the metric x. We obtain \(C_v({\overline{\sigma _z}}) = 4\%\), \(C_v(s_{\sigma _z})=14\%\), and \(C_v(E_z)=9\%\), indicating that the effect of sample bed randomness is limited. The coefficient of variation is not applicable to \(\kappa\) because it is a bounded measure (i.e., \(-1< \kappa < 1\)). We instead take \(IQ_\kappa\) as an estimate of the uncertainty associated with the sample bed randomness; it also indicates a limited spread relative to the experimental confidence bounds. We conclude that the stochasticity introduced by the sample bed configuration will not significantly affect the subsequent model calibration, and that a single representative sample bed is sufficient.
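The two dispersion measures can be computed as follows. This is an illustrative sketch with hypothetical values; it assumes the sample standard deviation (`ddof=1`) for \(s_x\) and NumPy's default linear interpolation for the quartiles, neither of which is specified in the text.

```python
import numpy as np


def coefficient_of_variation(x):
    """C_v = s_x / mean(x), with s_x the sample standard deviation (ddof=1)."""
    x = np.asarray(x, dtype=float)
    return np.std(x, ddof=1) / np.mean(x)


def interquartile_range(x):
    """IQ = Q3 - Q1, using NumPy's default linear percentile interpolation."""
    q1, q3 = np.percentile(x, [25, 75])
    return q3 - q1


# Hypothetical E_z values (MPa) from ten beds with identical input parameters.
e_z = np.array([3.00, 3.10, 2.90, 3.20, 2.80, 3.00, 3.10, 2.90, 3.05, 2.95])
# Hypothetical kappa values: only the IQ is used, since kappa is bounded.
kappa = np.array([-0.30, -0.25, -0.40, -0.20, -0.35, -0.28, -0.33, -0.22, -0.31, -0.27])

cv_e_z = coefficient_of_variation(e_z)
iq_e_z = interquartile_range(e_z)
iq_kappa = interquartile_range(kappa)
```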

This limited effect of sample bed randomness also considerably reduces the computational cost. Only one simulation is needed for each parameter set, and the associated confidence bounds accounting for sample bed randomness can be inferred from the ten-bed comparison above. This reduces the number of simulations by a factor of approximately ten. Furthermore, creating the sample bed can take as long as running the virtual cone penetration test. Hence, using the same sample bed for all simulations further halves the total computation time, for an overall reduction by a factor of about 20.

4.3 Sensitivity analysis

Before proceeding with the sensitivity analysis, we need to derive a lower-dimensional representation of the model output, i.e., a limited set of metrics that comprehensively capture the output characteristics. As seen in Sect. 3.3, the DEM simulations output ten variables. The degree of interdependence between these output variables is quantified with their correlation matrix, shown in the Supplementary Material (Fig. S1). Highly correlated outputs may be considered redundant, while outputs with a correlation close to zero may be considered orthogonal. We find that the penetration energy density \(E_z\) is poorly correlated with the strength-depth correlation factor \(\kappa\) (\(r = -0.17\)), indicating that \(\kappa\) and \(E_z\) capture largely different characteristics of the ice. Henceforth, the output of the numerical test of any sample is described by the pair (\(E_z\), \(\kappa\)).
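As an illustration of this redundancy screening, the following sketch computes a correlation matrix over a set of outputs and reports the least correlated pair. The synthetic outputs and the use of Pearson's r are assumptions; the actual ten outputs and their correlation matrix are given in the Supplementary Material (Fig. S1).

```python
import numpy as np


def least_correlated_pair(outputs, names):
    """outputs: array (n_simulations, n_metrics). Return the pair with the smallest |r|."""
    r = np.corrcoef(outputs, rowvar=False)   # Pearson correlation matrix
    i, j = np.triu_indices_from(r, k=1)      # each unordered pair once
    m = np.argmin(np.abs(r[i, j]))
    return names[i[m]], names[j[m]], r[i[m], j[m]]


# Hypothetical outputs: sigma_z nearly redundant with E_z, kappa independent.
rng = np.random.default_rng(0)
e_z = rng.normal(size=500)
sigma_z = 2.0 * e_z + 0.01 * rng.normal(size=500)
kappa = rng.normal(size=500)
outputs = np.column_stack([e_z, sigma_z, kappa])

pair = least_correlated_pair(outputs, ["E_z", "sigma_z", "kappa"])
```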

As described in Sect. 3.2, the DEM model has six input parameters (i.e., six degrees of freedom). In this section, we assess the impact of each input parameter on the model response in an attempt to reduce the model complexity without significantly affecting its flexibility and predictive capability. To this end, we conduct a sensitivity analysis in which the output response is determined by sequentially varying one input parameter while fixing all other parameters to nominal values. Fig. 8 shows the variation of the penetration energy density \(E_z\) for a range of particle input parameters \(E_p\), \(\mu\), \(\Omega\), and \(\mu _r\).
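The one-at-a-time procedure can be sketched as below. `toy_model`, its nominal values, and the sweep values are hypothetical placeholders for a DEM run; only the looping structure (one parameter varied, all others held at nominal values) reflects the procedure described in the text.

```python
import numpy as np


def one_at_a_time(model, nominal, sweeps):
    """Vary each parameter over its sweep values, all others held at nominal."""
    results = {}
    for name, values in sweeps.items():
        responses = []
        for v in values:
            params = dict(nominal)   # fresh copy of the nominal set
            params[name] = v         # perturb a single parameter
            responses.append(model(**params))
        results[name] = np.array(responses)
    return results


def toy_model(E_p, mu, Omega, mu_r):
    # Hypothetical stand-in for the DEM response E_z; it ignores mu_r on purpose.
    return mu**1.5 * Omega**0.4 * E_p**-0.1


nominal = {"E_p": 1.0, "mu": 0.6, "Omega": 2.0, "mu_r": 0.75}   # hypothetical
sweeps = {"mu": [0.2, 0.6, 1.0], "mu_r": [0.5, 0.75, 1.0]}
res = one_at_a_time(toy_model, nominal, sweeps)
```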

Qualitative sensitivity analysis of the model micromechanical parameters on the penetration energy density \(E_z\) a Effect of the particle Young’s Modulus \(E_p\) b Effect of the friction coefficient \(\mu\) c Effect of the cohesion energy density \(\Omega\) d Effect of the rolling friction coefficient \(\mu _r\)

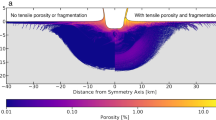

In Fig. 8a, we observe that the penetration energy density \(E_z\) decreases with increasing Young’s modulus \(E_p\), which can be explained by the fact that stiffer particles deform less and thus have a smaller contact area. Furthermore, since the cohesive forces are proportional to the contact area (see Eq. (10)), they are also reduced, making it easier for the cone to penetrate the sample. Figure 8b shows that the penetration energy density \(E_z\) increases monotonically with the friction coefficient \(\mu\), which can be explained by considering that more friction requires more energy for the particles to slide past each other. The strength appears to reach a plateau at low and high friction values. At low friction values (\(\mu \approx\) 0.2), the strength is only a few kPa. In this regime, the particles can easily slide past each other and the strength is primarily determined by the resistance to rolling, which simulates the interlocking effect [88]. The relatively low contribution of the rolling resistance (a few kPa) to the total sample strength (a few MPa for a typical sample) supports the relative unimportance of the rolling friction parameter in our case. At high friction values (\(\mu > 2\)), the Coulomb threshold is high and particles cannot easily slide past each other. Instead, the inter-particle bonds rupture and contacts continuously re-form as the simulation progresses. In this case, the strength is limited by the bond strength and thus by the cohesion value, which points to the relative importance of this parameter. Figure 8c shows a large influence of the cohesion energy density \(\Omega\) on the model response, although the dependence is not monotonic. A higher inter-particle cohesion implies a higher energy for the cone to penetrate through the sample. However, a peak strength is observed at \(\Omega\) \(\sim \! {80} \,\hbox {MPa}\).
Visualization of the simulations beyond this threshold indicates the formation of cracks (Supplementary Material, Fig. S2), which could explain the apparent decrease in strength beyond this limit. Finally, Fig. 8d shows the influence of the rolling friction coefficient \(\mu _r\) on the penetration energy density \(E_z\). The increase in strength for \(\mu _r < 1\) can be explained by the fact that the particles require an increasing amount of torque to roll over each other. The maximum strength is reached when the rolling resistance becomes equal to the Coulomb friction limit, i.e., \(\mu _r R^* {|\varvec{F}_n|/R}=\mu |\varvec{F}_n|\). For particles of equal size, this condition reduces to \(\mu _r = 2 \mu\). Beyond this limit, the particles slip past each other before the rolling torque limit is exceeded, and the strength therefore decreases.
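The reduction to \(\mu_r = 2\mu\) can be made explicit as follows, assuming that \(R^*\) denotes the usual effective radius \(R_1 R_2/(R_1+R_2)\) of two contacting particles (its definition is given earlier in the paper and is assumed here):

\[
\begin{aligned}
R^* &= \frac{R_1 R_2}{R_1 + R_2} = \frac{R}{2} \quad \text{for } R_1 = R_2 = R,\\
\mu_r R^* \frac{|\mathbf{F}_n|}{R} &= \mu |\mathbf{F}_n|
\;\Longrightarrow\; \mu_r \,\frac{R}{2}\,\frac{1}{R} = \mu
\;\Longrightarrow\; \mu_r = 2\mu .
\end{aligned}
\]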

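To quantify the influence of each parameter, we compute the parameter sensitivity matrix \({\overline{S}}_k\), whose formula, adapted from [89], reads as follows:

\[ {\overline{S}}_k^{\,i} = \frac{dy}{dx_k}\bigg|_{\varvec{x^i}} \, \frac{x_k^i}{y(\varvec{x^i})} \]
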
where \(y=E_z\) is the response variable and \(x_k^i\) is the value of the k-th parameter in the i-th simulation. To obtain a dimensionless, comparable metric, we scale the sensitivity values \(\frac{dy}{dx_k}\) by the value of each parameter in each simulation \(x_k^i\) and by the model response \(y(\varvec{x^i})\). We run a set of 81 simulations (a full-factorial design with three levels for each of the four parameters) with the input parameters \(E_p = [0.5,1,5]\) GPa, \(\mu = [0.2,0.6,1]\), \(\Omega = [0.2,2,20]\) MPa, and \(\mu _r = [0.5,0.75,1]\). Several statistics of the sensitivity matrix are reported in Fig. 9.
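A sketch of the scaled sensitivity \((dy/dx_k)\,x_k/y\) evaluated on the 81-run full-factorial design, assuming central finite differences for \(dy/dx_k\) (the text does not state how the derivatives are estimated). The DEM model is replaced by a hypothetical power-law `toy_model`, for which the exact scaled sensitivity of each parameter equals its exponent, so the sketch can be checked.

```python
import itertools

import numpy as np

# Full-factorial design from the text: 3 levels x 4 parameters = 81 runs.
levels = {
    "E_p": [0.5, 1.0, 5.0],     # GPa
    "mu": [0.2, 0.6, 1.0],
    "Omega": [0.2, 2.0, 20.0],  # MPa
    "mu_r": [0.5, 0.75, 1.0],
}
names = list(levels)
grid = np.array(list(itertools.product(*levels.values())), dtype=float)


def toy_model(x):
    # Hypothetical stand-in for E_z(x); NOT the DEM model.
    E_p, mu, Omega, mu_r = x
    return mu**1.5 * Omega**0.4 * E_p**-0.1


def scaled_sensitivity(model, x, k, rel_step=1e-6):
    """S_k^i = (dy/dx_k) * x_k^i / y(x^i), via a central finite difference."""
    h = rel_step * x[k]
    x_plus, x_minus = x.copy(), x.copy()
    x_plus[k] += h
    x_minus[k] -= h
    dydx = (model(x_plus) - model(x_minus)) / (2.0 * h)
    return dydx * x[k] / model(x)


S = np.array([[scaled_sensitivity(toy_model, x, k) for k in range(len(names))]
              for x in grid])                      # shape (81, 4)
median_S = dict(zip(names, np.median(S, axis=0)))
```

With this toy model, the column medians of `S` recover the exponents 1.5, 0.4, −0.1, and 0 for \(\mu\), \(\Omega\), \(E_p\), and \(\mu_r\); these are properties of the toy, not results of the paper. With a real DEM model, each derivative would require additional simulations or differences between neighboring grid points.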

Yan et al. [89] proposed using the norm of the parameter sensitivity matrix \(norm({\overline{S}}_k)\) in DEM sensitivity studies to determine the most influential parameter. However, this metric is biased towards large values due to the quadratic nature of the norm. Moreover, it does not provide information on the direction of the response. As an alternative metric, we could take the mean value of the distribution \(< {\overline{S}}_k>\), which accounts for the direction of the response and gives all simulations the same weight, thereby addressing both pitfalls of the norm. The mean value, however, is sensitive to extreme values. As shown in Fig. 9, the distributions of \(E_p\), \(\mu\), and \(\Omega\) are highly skewed, which biases the mean value. To mitigate this, we base our analysis on the median value of the parameter sensitivity matrix \(median({\overline{S}}_k)\). The median values, shown in Fig. 9, are consistent in both direction and magnitude with the findings in Fig. 8. The friction coefficient \(\mu\) exhibits a positive relationship, where a 100% increase in \(\mu\) leads to an increase of about 153% in penetration energy density. Similarly, a 100% increase in the cohesion energy density \(\Omega\) leads to an increase of about 36% in the penetration energy density. For Young’s modulus \(E_p\), a 100% increase leads to a decrease of about 12% in penetration energy density. The coefficient of rolling friction \(\mu _r\) appears to be the least influential parameter, with a distribution almost symmetric around −8%, consistent with the non-monotonic relationship observed in Fig. 8.
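The following hypothetical example contrasts the three summary statistics on a right-skewed sample of scaled sensitivities: the norm is dominated by the single large value and is unchanged when all signs are flipped, the mean is pulled toward the outlier, and the median stays with the bulk of the data while keeping the sign.

```python
import numpy as np

# Hypothetical, right-skewed sample of scaled sensitivities for one parameter.
s = np.array([0.10, 0.12, 0.15, 0.20, 5.00])

summary = {
    "norm": np.linalg.norm(s),           # dominated by the largest entry
    "norm_flipped": np.linalg.norm(-s),  # identical: the sign is lost
    "mean": np.mean(s),                  # pulled toward the outlier
    "median": np.median(s),              # robust to the outlier, keeps the sign
}
```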

We conclude from our sensitivity study that the coefficient of friction \(\mu\) and the cohesion energy density \(\Omega\) are the most influential parameters; henceforth, they are treated as the free model parameters. Both Young’s modulus \(E_p\) and the coefficient of rolling friction \(\mu _r\) have a relatively low influence and can thus be held constant. For the subsequent simulations, we choose a Young’s modulus \(E_p = 1\) GPa, which is close to Young’s modulus of snow [27] but 5–10 times lower than Young’s modulus of isotropic polycrystalline ice [25, 63, 90]. While we expect Young’s modulus of this type of granular ice to differ from those of snow and isotropic ice due to differences in microstructure, the low sensitivity values observed suggest that an exact estimate is not required. The coefficient of rolling friction is fixed to the value that maximizes the strength, i.e., \(\mu _r=1\), to emulate the interlocking effect of particle aggregates.

5 Model response and calibration

5.1 Model response maps

The model response is estimated by simulating a wide range of model input parameters. This step corresponds to the evaluation of the implicit function F that represents our DEM model:

\[ G_i = (E_{z,i}, \kappa _i) = F(\theta _i) \]

where \(\theta _i\) is the vector of model input parameters for the i-th simulation \((\mu _i, \Omega _i)\). We evaluate the function F at 110 parameter combinations distributed on a uniform grid. Then, the results are linearly interpolated to obtain a continuous map over the whole parameter space as shown in Fig. 10. We note that this interpolation is considered a “model of a model” and falls under the general framework of surrogate models [4, 6, 12, 33, 62, 69, 71, 92]. We choose a linear interpolation for its simplicity and the relatively good approximation it provides for fine grids.
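A minimal sketch of such a linear surrogate using SciPy's `RegularGridInterpolator`. The split of the 110 points into a 10 × 11 grid, the parameter ranges, and `toy_response` are hypothetical; in practice the grid values would be the DEM outputs \(E_z\) or \(\kappa\) at each \((\mu, \Omega)\).

```python
import numpy as np
from scipy.interpolate import RegularGridInterpolator

# Hypothetical 10 x 11 grid (110 points); the actual grid layout is not given here.
mu_vals = np.linspace(0.2, 1.0, 10)
omega_vals = np.linspace(2.0, 120.0, 11)   # MPa, hypothetical range
MU, OM = np.meshgrid(mu_vals, omega_vals, indexing="ij")


def toy_response(mu, omega):
    # Stand-in for DEM results E_z(mu, Omega); replace with simulation outputs.
    return 1.0 + 8.0 * mu + 0.03 * omega


E_grid = toy_response(MU, OM)              # shape (10, 11)
surrogate = RegularGridInterpolator((mu_vals, omega_vals), E_grid, method="linear")

query = np.array([[0.33, 42.0], [0.69, 87.0]])
E_interp = surrogate(query)                # piecewise-linear estimates
```

Because bilinear interpolation is exact for a response that is linear in each parameter, this surrogate reproduces `toy_response` at the query points; for the real, nonlinear maps the accuracy depends on the grid spacing.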

Figure 10a shows the response map of the cone penetration energy density \(E_z\) while Fig. 10b shows the response map of the strength-depth correlation factor \(\kappa\). We see that the model can represent a broad range of ice conditions with varying strength values and depth-strengthening behavior. The modeled strength map, as seen in Fig. 10a, is regular and smooth, with values ranging from \(\sim \! 1\) MPa (unconsolidated ice) to \(\sim \! 13\) MPa (heavily consolidated ice). The cone penetration energy density increases with increasing values of cohesion energy density \(\Omega\) and friction coefficient \(\mu\), consistent with the expectation that stronger particle-particle interactions yield a higher macroscopic strength. We also note a decrease in penetration energy density beyond a critical value of \(\Omega \sim \! 80\) MPa, which, as discussed in Sect. 4.3, might be due to the formation of large cracks in the sample. As shown in Fig. 10b, the strength-depth correlation map is less smooth. In particular, at low \(\Omega\) and high \(\mu\) values, the model predicts a strong depth-strengthening behavior. This observation is similar to the behavior exhibited by viscous liquids and is consistent with a loose frictional granular material. To better understand the depth-strengthening and depth-weakening behavior observed in our study (i.e., the value of the correlation factor \(\kappa\)), future work will investigate the scaling laws of the penetration force with depth, similar to other work on impact in granular materials [8]. These model response maps will subsequently be used in the calibration process to solve the inverse problem of finding the pair of model input parameters (\(\Omega\), \(\mu\)) for a given output value (\(E_z\), \(\kappa\)) obtained experimentally.

5.2 Model calibration

DEM parameters are typically difficult to measure in the laboratory and cannot be easily related to measurable physical material parameters [35]. As a result, calibration is required to select the appropriate parameters for use in simulations. Calibration is considered one of the most challenging steps in DEM modeling and is an active area of research [20]. It corresponds to solving the inverse problem of finding the model parameters \(\tilde{\theta _i}\) that match the experimental bulk properties \(G_i^{exp}\) for the i-th experiment, as shown in the following equation:

\[ \tilde{\theta _i} = F^{-1}\left(G_i^{exp}\right) \]

In previous DEM studies, \(G_i^{exp}\) has typically been obtained from biaxial and triaxial tests [42], angle of repose tests [72], and Brazilian tests [33, 62]. Cone penetration tests are less commonly used for DEM calibration and have only been used to calibrate non-cohesive materials in chambers with well-controlled boundary conditions [9, 18, 58]. Hence, to our knowledge, calibrating this cohesive ice with cone penetration tests has not been attempted before.

Multiple methods have been developed to solve the inverse problem in DEM [20, 71]. However, these methods either require numerous experiments [30, 35] or good a priori knowledge of the parameter dependencies [15, 19, 49, 56, 91], tend to find local minima rather than global optima [69], or are computationally intensive [4, 22]. Probability-based calibration methods such as sequential quasi-Monte Carlo [14], iterative Bayesian filtering [15], Transitional Markov Chain Monte Carlo [32], and Sequential Monte Carlo [13, 14] are state-of-the-art and have been used successfully. However, they require expert knowledge and remain difficult to implement for many DEM users.

We propose a novel Bayesian parameter calibration method that optimally incorporates experimental uncertainties and uses a single calibration experiment. This method yields a set of candidate DEM input parameters, together with their likelihood of producing the given experimental outcome. Considering the complete parameter space ensures that our method satisfies global optimality. To obtain a unique parameter set, we use a weighted mean approach in which each parameter set is weighted by its likelihood. We run our method on each experiment independently and estimate the corresponding posterior distribution on the model parameter space. The method makes use of Bayes’ theorem such that:

\[ p(\tilde{\theta _i}|E_{z,i}^{exp}, \kappa _i^{exp}) \propto p(\tilde{\theta _i}|E_{z,i}^{exp})\, p(\tilde{\theta _i}| \kappa _i^{exp}) \]

where \(p(\tilde{\theta _i}|E_{z,i}^{exp}, \kappa _i^{exp})\) is the joint posterior probability distribution, \(p(\tilde{\theta _i}|E_{z,i}^{exp})\) is the posterior probability distribution on the parameter space given the experimental strength value \(E_z\), and \(p(\tilde{\theta _i}| \kappa _i^{exp})\) is the posterior probability distribution on the parameter space given the experimental strength-depth correlation factor \(\kappa\). This method assumes that the strength value and the strength-depth correlation factor are conditionally independent given the model input parameters. In other words, if the model input parameters were known, knowledge of the sample strength would not provide any information on its depth-strengthening behavior. The product form additionally corresponds to a uniform prior over the parameter space.

The novelty of our method lies in the estimation approach. We estimate the posterior distributions by solving the inverse problem described in Eq. (17) for a selected set of values within the experimental confidence bounds \(\{G_k | G_k= \mu _{G^{exp}}+\alpha _k \sigma _{G^{exp}}, k=1..N\}\), where \(\mu _{G^{exp}}\) and \(\sigma _{G^{exp}}\) are, respectively, the mean and standard deviation of the experimental measurement of the property G, N is the number of selected values in the set, and \(\alpha _k\) is the offset of the k-th value from the mean, expressed in units of the standard deviation. We then search for each value \(G_k\) on the response maps shown in Fig. 10 and record the associated parameter set \(\{{\tilde{\theta }}_{i,k}\}\). To each parameter \({\tilde{\theta }}_{i,k}\) in this set, we associate a probability \(p({\tilde{\theta }}_{i,k})\) equal to the corresponding value of the probability density function of a standard normal distribution, \(\varphi (\alpha _k)\). For example, values at either end of the experimental confidence interval yield parameters with a low probability (i.e., a low weight), whereas values at the center of the interval yield parameters with a high probability (i.e., a high weight). The algorithmic implementation of this procedure is presented in detail in the Supplementary Material (Algorithm S1).
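The procedure can be sketched on gridded response maps as follows, together with the product of the two posteriors and the likelihood-weighted mean used to obtain a unique parameter set. This is a minimal sketch under stated assumptions, not the authors' Algorithm S1: the matching tolerance `tol`, the 61 values of \(\alpha_k \in [-3, 3]\), the rule that a grid cell matched by several targets keeps the largest weight, and the toy response maps are all hypothetical.

```python
import numpy as np
from scipy.stats import norm


def level_set_posterior(response, mean, std, alphas, tol):
    """Approximate p(theta | G_exp) on a gridded response map.

    For each alpha_k, the target is G_k = mean + alpha_k * std; grid cells whose
    response lies within `tol` of G_k receive the weight phi(alpha_k).
    """
    post = np.zeros_like(response, dtype=float)
    for a in alphas:
        hit = np.abs(response - (mean + a * std)) < tol
        # Assumption: a cell matched by several targets keeps the largest weight.
        post[hit] = np.maximum(post[hit], norm.pdf(a))
    total = post.sum()
    return post / total if total > 0 else post


def joint_posterior(p_e, p_kappa):
    """Product of the two posteriors (conditional independence given theta)."""
    joint = p_e * p_kappa
    return joint / joint.sum()


# Hypothetical response maps on a (mu, Omega) grid; real maps come from the DEM.
mu_vals = np.linspace(0.2, 1.0, 81)
omega_vals = np.linspace(2.0, 120.0, 119)          # MPa
MU, OM = np.meshgrid(mu_vals, omega_vals, indexing="ij")
E_map = 10.0 * MU        # toy E_z (MPa), depends on mu only
K_map = OM / 10.0        # toy transformed kappa, depends on Omega only

alphas = np.linspace(-3.0, 3.0, 61)
p_e = level_set_posterior(E_map, mean=5.0, std=0.2, alphas=alphas, tol=0.05)
p_k = level_set_posterior(K_map, mean=6.0, std=0.2, alphas=alphas, tol=0.05)
joint = joint_posterior(p_e, p_k)

# Likelihood-weighted mean parameter set.
mu_hat = float((joint * MU).sum())
omega_hat = float((joint * OM).sum())
```

With these symmetric toy maps, the weighted means fall at the centers of the matched regions (\(\mu \approx 0.5\), \(\Omega \approx 60\) MPa); on real, irregular maps the joint posterior can be multimodal, which is why the full distribution is retained alongside the weighted mean.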

The experimental strength measurements \(E^{exp}_z\) are represented by a Gaussian distribution with a mean and standard deviation equal to the experimental values, i.e., \(\mu =\mu _{E^{exp}_z}\) and \(\sigma =s_{E^{exp}_z}\). For the correlation factor \(\kappa\), we cannot assume a Gaussian distribution since \(\kappa\) is a bounded measure. We therefore transform the bounded correlation values to unbounded values using the Fisher transformation \(F(r)=\frac{1}{2} \ln \left( \frac{1+r}{1-r}\right) = \operatorname{arctanh}(r)\), commonly used for correlation factors. The mean and standard deviation of the Gaussian distribution are derived from the mean and standard deviation of the transformed experimental values, i.e., \(\mu =\mu _{F(\kappa ^{exp})}\) and \(\sigma ={s_{F(\kappa ^{exp})}/\sqrt{n-3}}\), where n is the sample size.
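A sketch of the transformation step, following the formulas in the text. The interpretation of n as the number of \(\kappa\) values, the use of the sample standard deviation (`ddof=1`), and the example values are assumptions.

```python
import numpy as np


def fisher_gaussian(kappa_samples):
    """Gaussian parameters in Fisher-z space for bounded correlation factors.

    mean  = mean(arctanh(kappa))
    sigma = s(arctanh(kappa)) / sqrt(n - 3), as stated in the text.
    """
    z = np.arctanh(np.asarray(kappa_samples, dtype=float))  # F(r) = arctanh(r)
    n = z.size
    if n <= 3:
        raise ValueError("sqrt(n - 3) requires more than three samples")
    return z.mean(), z.std(ddof=1) / np.sqrt(n - 3)


# Hypothetical kappa values from repeated tests on one sample (all in (-1, 1)).
kappa_exp = np.array([-0.5, 0.5, -0.2, 0.2, 0.0])
z_mean, z_std = fisher_gaussian(kappa_exp)
kappa_back = np.tanh(z_mean)   # map the Fisher-space mean back to (-1, 1)
```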

To assess the normality assumption, we compare the quantiles of the experimental and theoretical distributions in a Q–Q plot shown in the Supplementary Material (Fig. S2). The results indicate a good overall match between the experimental and theoretical distributions, which suggests that the data reasonably satisfy the normality assumption and justifies the choice of the Gaussian distribution.
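A Q–Q check of this kind can be produced with SciPy's `stats.probplot`, which pairs theoretical normal quantiles with the ordered data and returns a least-squares fit whose correlation r is close to 1 for approximately normal data. The measurements below are synthetic and only illustrate the call; they are not the data of [16].

```python
import numpy as np
from scipy import stats

# Hypothetical repeated E_z measurements (MPa); the real data are in [16].
rng = np.random.default_rng(42)
measurements = rng.normal(loc=3.06, scale=0.12, size=30)

# Theoretical normal quantiles vs ordered data, plus a least-squares line.
(theoretical_q, ordered_data), (slope, intercept, r) = stats.probplot(
    measurements, dist="norm"
)
# r close to 1 means the points lie near a straight line on the Q-Q plot.
```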

6 Results and discussion

6.1 Comparison between experimental and simulation results

We first examine the cone penetration test results of ice samples that sintered at four different temperatures (193 K, 223 K, 233 K, and 243 K) for time periods up to 14 months [16]. Figure 11 shows the experimental strength-depth correlation factor \(\kappa\) as a function of the penetration energy density \(E_z\). We observe that the ice samples exhibit strength values up to 14 MPa and correlation factor values spanning almost the whole \([-1,1]\) interval. The data points are mostly scattered throughout the range of possible values, indicating that the ice can take a large number of physical configurations and have a rather large, unrestricted state space. A closer inspection of the data shows a cluster of very high positive strength-depth correlation factor values at penetration energy density below 2 MPa. Such high values of \(\kappa\) correspond to a linear increase in resistance with depth. For penetration energy density values higher than 2 MPa, the strength-depth correlation factor is uniformly distributed, which indicates an absence of apparent relationship between cone penetration resistance and depth. In addition, for \(E_z > 2\) MPa, the cone penetration profiles (e.g., Fig. 3) exhibit a jerky behavior. These findings are indicative of a brittle mechanical behavior for samples with resistance above 2 MPa, a value comparable to the brittle compressive strength of ice at strain rates greater than approximately \(10^{-2}\) \(\hbox {s}^{-1}\) [17].

The purple region in Fig. 11 corresponds to the ice states that are numerically reproducible with our current DEM model. It is derived from a smoothed representation of the combination of the model response maps shown in Fig. 10 and indicates that our DEM model can represent approximately 70% of the observed experimental states.

Strength-depth correlation factor \(\kappa\) as a function of the cone penetration energy density \(E_z\) extracted from the experimental cone penetration tests. Markers color-coded according to the sintering temperatures. The colored surface corresponds to the numerically feasible region. (Adapted from [16])

For each experiment presented as a data point in Fig. 11, we follow the calibration procedure described in Sect. 5.2. Figure 12 shows an example of the posterior probability distribution for an ice sample aged for 4 days at \(T=\) 243 K. The sample response is characterized by a cone penetration energy density \(E_z=\) 3.06 ± 0.24 MPa (95% confidence interval) and a strength-depth correlation factor \(\kappa =-0.29\) (\(CI_{\kappa }=[-0.62;\,0.14]\) 95% confidence interval). Figure 12a shows the posterior distribution given the strength value \(p(\tilde{\theta _i}|E_{z,i}^{exp})\). The distribution spans a large set of possible model input parameters. The most likely parameters form a smooth line owing to the regularity of the underlying strength map. Figure 12b shows the posterior probability distribution given the strength-depth correlation factor \(p(\tilde{\theta _i}|\kappa _i^{exp})\). The distribution still spans a large set of possible model input parameters but is less regular due to the unevenness of the underlying response map. Together, these two distributions restrict the possible set of parameters. Figure 12c shows the joint posterior distribution \(p(\tilde{\theta _i}|E_{z,i}^{exp},\kappa _i^{exp})\), which depicts the most likely set of input parameters for a given experiment. This map can be intuitively understood by considering that input parameters that are declared highly likely by both probability distributions, \(p(\tilde{\theta _i}|E_{z,i}^{exp})\) and \(p(\tilde{\theta _i}|\kappa _i^{exp})\), are highly likely overall. Input parameters that are declared likely by only one of the distributions are only moderately likely overall, and parameters that are declared impossible by one of the distributions (i.e., \(p(\tilde{\theta _i}|E_{z,i}^{exp}) = 0\) or \(p(\tilde{\theta _i}|\kappa _i^{exp}) = 0\)) are considered impossible overall.

In this particular example, where the sample sintered for 4 days at \(T=\) 243 K, the most probable input parameters lie at the lower end of the parameter space, with a friction coefficient \(\mu \approx 0.33\) and a cohesion \(\Omega \approx\) 42 MPa, which is consistent with the low level of consolidation of the sample and its relatively young age. In the case of more consolidated ice, for example, a sample that aged for 28 days at \(T=\) 243 K developed a cone penetration energy density \(E_z=9.33 \pm 2.28\) MPa (95% confidence interval) and a strength-depth correlation factor \(\kappa =-0.69\) (\(CI_{\kappa }=[-0.93;-0.03]\) 95% confidence interval). Our analysis yields a most probable friction coefficient of \(\mu \approx 0.69\) and cohesion parameter of \(\Omega \approx\) 87 MPa.

Posterior probability distributions on the model parameters’ space for a cone penetration test performed on an ice sample aged for 4 days at \(T=\) 243 K. The colormap gives the likelihood of each parameter set a Posterior distribution \(p(\tilde{\theta _i}|E_{z,i}^{exp})\) for an experimental penetration energy density value \(E_z\) = 3.06 ± 0.24 MPa (95% confidence interval) b Posterior distribution \(p(\tilde{\theta _i}|\kappa _i^{exp})\) for a strength-depth correlation factor \(\kappa =-0.29\) (\(CI_{\kappa }=[-0.62;0.14]\) 95% confidence interval) c Joint posterior distribution \(p(\tilde{\theta _i}|E_{z,i}^{exp}, \kappa _i^{exp})\sim p(\tilde{\theta _i}|E_{z,i}^{exp}) p(\tilde{\theta _i}| \kappa _i^{exp})\)

6.2 Time evolution of particle-scale parameters

Figure 13 shows the evolution of the calibrated set of parameters as a function of sintering time and temperature for all tests performed. Figures 13a and b show, respectively, the evolution of the calibrated cohesion energy density parameter \(\Omega\) and of the calibrated friction parameter \(\mu\), both of which exhibit a clear temperature dependence. At each temperature, we fit the evolution of each parameter to first order with a linear regression, weighting each parameter set by its probability. The dashed lines represent the best-fit trend lines.
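A minimal weighted least-squares sketch for one parameter at one temperature. It assumes that the probability of each calibrated parameter set enters directly as the weight \(w_i\) on the squared residuals, which is one reasonable reading of the text; all data values are hypothetical.

```python
import numpy as np


def weighted_linear_fit(t, y, w):
    """Minimize sum_i w_i * (y_i - (a + b * t_i))**2; return (a, b)."""
    t, y, w = (np.asarray(v, dtype=float) for v in (t, y, w))
    t_bar = np.sum(w * t) / np.sum(w)          # weighted mean time
    y_bar = np.sum(w * y) / np.sum(w)          # weighted mean parameter value
    b = np.sum(w * (t - t_bar) * (y - y_bar)) / np.sum(w * (t - t_bar) ** 2)
    a = y_bar - b * t_bar
    return a, b


# Hypothetical calibrated Omega values (MPa) vs sintering time (days), with probabilities.
days = [4, 7, 14, 28]
omega = [40.0, 48.0, 60.0, 85.0]
prob = [0.30, 0.15, 0.25, 0.20]
intercept, slope = weighted_linear_fit(days, omega, prob)   # slope = rate dOmega/dt
```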

Evolution of the calibrated model input parameters a Cohesion energy density \(\Omega\) and b Friction coefficient \(\mu\) as a function of sintering time and temperature. The mean and standard deviation of the probability distribution of the calibrated parameters are shown. The dashed lines represent the weighted linear regression and are color-coded with respect to temperature. Arrhenius plot of the calibrated model input parameters c Cohesion energy density \(\Omega\) and d Friction coefficient \(\mu\) as a function of inverse temperature

We observe from Fig. 13a and b that the evolution of the particle-scale parameters closely matches the evolution of the continuum-scale cone penetration resistance of the samples presented in [16]. The model parameters increase monotonically with time, and their rates of increase grow with temperature. This correspondence points to a link between the micro- and macro-mechanical properties and suggests that the evolution of the particle-scale interactions upon sintering is an important strengthening mechanism of the ice. As in [16], we notice that the linear fits do not share the same y-intercept, which represents the parameters associated with a fresh ice sample. This may be an artifact of the simplistic linear evolution model assumed, or may be due to a different strengthening rate at earlier sintering times.

To further investigate the temperature dependence, we extract the evolution rates of the model input parameters. Figures 13c and d show the natural logarithm of the rates at which the cohesion energy density \(\frac{d\Omega }{dt}\) and the friction coefficient \(\frac{d\mu }{dt}\) evolve as a function of inverse temperature, a representation known as an Arrhenius plot, which is widely used to study thermally activated processes. The evolution rates for each parameter appear to follow a linear trend, which supports an Arrhenius-type temperature dependence. The slope of the linear regression is given by \(-{Q/R}\), where Q is the activation energy of the underlying process and \(R = {8.314}\,\hbox {J}\,\hbox {mol}^{-1}\,\hbox {K}^{-1}\) is the ideal gas constant. The linear regression yields an activation energy of \(Q = 26.8 \pm 15.0\) kJ/mol for the cohesion energy density \(\Omega\) and \(Q = 17.9 \pm 5.5\) kJ/mol for the friction coefficient \(\mu\).
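The activation energy follows from the slope of \(\ln(\text{rate})\) versus \(1/T\). The sketch below uses `scipy.stats.linregress` and checks itself on synthetic rates generated with a hypothetical \(Q = 25\) kJ/mol at the four sintering temperatures; it does not reproduce the paper's fitted values, and with only four temperatures the standard error on real data can be large.

```python
import numpy as np
from scipy.stats import linregress

R_GAS = 8.314  # ideal gas constant, J/(mol K)


def activation_energy(temperatures_k, rates):
    """Fit ln(rate) = ln(A) - Q / (R T); return Q and its standard error in kJ/mol."""
    inv_t = 1.0 / np.asarray(temperatures_k, dtype=float)
    ln_rate = np.log(np.asarray(rates, dtype=float))   # rates must be positive
    fit = linregress(inv_t, ln_rate)                     # slope = -Q / R
    return -fit.slope * R_GAS / 1e3, fit.stderr * R_GAS / 1e3


# Synthetic check: rates generated with a hypothetical Q = 25 kJ/mol.
temps = np.array([193.0, 223.0, 233.0, 243.0])
rates = 1e5 * np.exp(-25e3 / (R_GAS * temps))
q_kj, q_err = activation_energy(temps, rates)
```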

For comparison, [16] derived an activation energy of \(Q= 24.3 \pm 3.3\) kJ/mol for the rate of strengthening of the experimental samples and found that it is consistent with diffusion of water molecules on the surface of ice particles. Within their confidence intervals, the activation energies representing the temperature evolution of both the cohesion energy density and the friction coefficient are broadly consistent with the experimentally-derived value for the strengthening rate. This suggests that the particle-scale interaction parameters in the DEM model, despite larger uncertainties, reflect the underlying thermodynamics and represent well the effect of temperature that had been derived experimentally at the macroscopic scale.

6.3 Implications for mechanical properties from particle- to macro-scale

The correspondence between the calibrated model parameters and the physical properties of ice supports the view that the model parameters \(\mu\) and \(\Omega\) are representative of the physical micro-mechanical properties of the ice. The calibrated friction coefficient \(\mu\) of a sample that sintered for 4 days at \(T=\) 243 K (i.e., \(\mu \approx 0.33\)) is in good agreement with published experimental measurements: [77] measured the kinetic friction coefficient of fresh ice to be \(\mu =0.29\pm 0.03\) for a sliding velocity of \(V=10^{-3}\,\hbox {m}\,\hbox {s}^{-1}\) at a temperature of \(T=\) 223 K. Furthermore, the increase in the calibrated friction coefficient, as seen in the example above (from \(\mu \approx 0.33\) to \(\mu \approx 0.69\) in 24 days at \(T=\) 243 K), is consistent with increased inter-particle interactions due to sintering. The evolution of the model friction coefficient, as shown in Fig. 13b, exhibits a clear positive trend and is reminiscent of static strengthening, a phenomenon observed in compact ice held under constant normal stress [77, 78].

The cohesion energy density \(\Omega\) is tightly linked to the material’s surface energy \(\gamma\), which has been established as the primary driver of ice sintering [5]. In fact, the JKR model, which represents various electrostatic, physical, and chemical surface interactions, expresses the cohesion force as a function of the material’s surface energy \(\gamma\) (energy per unit area). The SJKR model, which makes a geometrical simplification to the JKR model, expresses the cohesion force as a function of \(\Omega\) (energy per unit volume). Furthermore, since increasingly sintered materials require an increasingly high pull-out force to separate the particles, and since \(F_{pull-out}=F_{coh}=\Omega \pi a^2\), enhanced sintering implies higher \(\Omega\) values, a feature that is evident in our results on the evolution of the model cohesion energy densities (Fig. 13a).
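To make the SJKR relation concrete, the sketch below evaluates \(F_{coh}=\Omega \pi a^2\) for the two calibrated cohesion values reported above (4 and 28 days at 243 K). The contact radius a = 0.2 mm is a hypothetical value held fixed to isolate the effect of \(\Omega\); in the model, the contact area also evolves.

```python
import math


def sjkr_cohesion_force(omega_pa, contact_radius_m):
    """SJKR cohesion (pull-out) force F_coh = Omega * pi * a**2, in SI units."""
    return omega_pa * math.pi * contact_radius_m ** 2


# Hypothetical fixed contact radius a = 0.2 mm (for illustration only).
a = 0.2e-3
f_4_days = sjkr_cohesion_force(42e6, a)    # Omega ~ 42 MPa, 4 days at 243 K (~5.28 N)
f_28_days = sjkr_cohesion_force(87e6, a)   # Omega ~ 87 MPa, 28 days at 243 K
ratio = f_28_days / f_4_days               # equals 87 / 42 when a is held fixed
```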

This modeling study, together with prior work, suggests interrelationships between the particle-scale interaction parameters, the evolution of a mesoscopic network of ice, and the macroscopic mechanical properties that govern the brittle compressive failure of ice. As discussed in Sect. 6.1, the evolution in cone penetration resistance of macroscopic samples of ice microspheres suggested a transition in mechanical behavior from loose unconsolidated ice particles at low cone penetration resistance values (\(< 1\, \hbox {MPa}\)) to brittle compressive failure for values around 2 MPa or greater. This consolidation is not accompanied by any discernible change in bulk porosity, implying that sintering may be responsible for the development of an inter-particle network that binds the starting ice particles together. Once this network is established, the ice particles form consolidated aggregates that behave as a coherent medium and fail in the brittle regime when compressed at strain rates greater than approximately \(10^{-2}\) \(\hbox {s}^{-1}\) [17].

Interestingly, the combination of friction and cohesive energy, and its apparently dominant role in the brittle failure of porous ice, has also emerged from experiments on the brittle compressive failure of fully dense ice. There, the frictional-sliding wing-crack mechanism [3, 29, 40, 57] accounts quantitatively for the observed behavior [11, 28, 70, 75, 87]. In this mechanism, sliding across the opposing faces of closed parent (primary) cracks that are inclined to the principal loading direction induces tensile stress at the crack tips. When this tension is sufficiently high, it is relieved through the initiation of out-of-plane secondary cracks termed wings (or extensile cracks). As sliding continues, the wing-crack mouths open, increasing the mode-I stress intensity factor at their tips until a critical level is reached, at which point the wings begin to grow in a stable, albeit jerky, manner. With further sliding, the secondary (wing) cracks lengthen, interact with other secondary cracks, and eventually form a fault, at which point the material collapses. In this model, resistance to sliding is set by the coefficient of friction, and resistance to wing-crack growth is set by the fracture toughness, to which surface energy, and hence cohesive energy, is a major contributor in ice [76, pp. 207–208].
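The roles of friction and surface energy in this mechanism can be illustrated with a textbook linear-elastic sketch. It assumes (i) the shear stress driving sliding on a parent crack is \(\tau _{\text{eff}}=\tau -\mu \sigma _n\), (ii) \(K_{II}=\tau _{\text{eff}}\sqrt{\pi c}\) for a parent crack of half-length \(c\), (iii) the maximum-tangential-stress estimate of the tensile intensity at a kinked wing, \(K_I \approx (2/\sqrt{3})K_{II}\) at roughly 70.5°, and (iv) the plane-strain Griffith toughness \(K_{Ic}=\sqrt{2\gamma E/(1-\nu ^2)}\). This is not the specific formulation of [3, 11, 40], the function names are hypothetical, and the input values are placeholders rather than ice properties.

```python
import math


def resolved_stresses(sigma1: float, sigma3: float, psi: float) -> tuple[float, float]:
    """Normal and shear stress on a plane whose normal makes angle psi (rad)
    with the major compressive stress sigma1 (compression taken positive)."""
    mean = 0.5 * (sigma1 + sigma3)
    dev = 0.5 * (sigma1 - sigma3)
    return mean + dev * math.cos(2.0 * psi), dev * math.sin(2.0 * psi)


def effective_shear(sigma1: float, sigma3: float, psi: float, mu: float) -> float:
    """Shear stress left to drive sliding once friction is overcome: max(tau - mu*sigma_n, 0)."""
    sigma_n, tau = resolved_stresses(sigma1, sigma3, psi)
    return max(tau - mu * sigma_n, 0.0)


def griffith_toughness(gamma: float, youngs_modulus: float, poisson: float) -> float:
    """Plane-strain K_Ic = sqrt(2 * gamma * E / (1 - nu**2)) from surface energy gamma."""
    return math.sqrt(2.0 * gamma * youngs_modulus / (1.0 - poisson**2))


def wing_cracks_initiate(sigma1: float, sigma3: float, psi: float, mu: float,
                         half_length: float, k_ic: float) -> bool:
    """True if sliding on the parent crack can kink a wing crack (MTS estimate)."""
    k_ii = effective_shear(sigma1, sigma3, psi, mu) * math.sqrt(math.pi * half_length)
    return (2.0 / math.sqrt(3.0)) * k_ii >= k_ic


# Placeholder, nondimensional inputs: higher friction suppresses wing-crack initiation
for mu in (0.0, 0.5):
    print(mu, wing_cracks_initiate(1.0, 0.0, math.pi / 4, mu, 1.0 / math.pi, 0.5))
```

In this picture, raising \(\mu\) lowers the driving shear stress, while raising \(\gamma\) raises \(K_{Ic}\); both delay failure, which parallels the two dominant DEM parameters \(\mu\) and \(\Omega\).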

The fact that two different approaches, DEM of sintered ice on the one hand and experiments combined with physical modeling of pore-free ice on the other, point to the same underlying physical processes suggests that an intermediate level of porosity, while increasing microstructural complexity, may not change the underlying physics of brittle compressive failure.

7 Conclusion

In this study, we present a physics-based numerical model of planetary ice plume deposit analogs that explicitly represents the microstructure and its evolution upon sintering. We calibrated our DEM model using 100 published experiments of cone penetration tests on ice plume deposit analog samples that sintered at different temperatures for time periods up to 14 months. We tuned the sample dimension and particle size (\(D_{cyl}\), d) following a Pareto-optimality approach and investigated the effect of the particle and bond parameters (\(E_p\), \(\mu\), \(\mu _r\), and \(\Omega\)) using a sensitivity analysis. We found that the friction coefficient \(\mu\) and the cohesion energy density \(\Omega\) are the primary model parameters. Furthermore, we proposed a novel easy-to-implement Bayesian probabilistic calibration method for numerically replicating experimental conditions while optimally incorporating experimental uncertainties.
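The Pareto-optimality idea used to choose \((D_{cyl}, d)\) can be sketched as a non-dominated filter over candidate configurations scored on two objectives to be minimized, here assumed to be computational cost and calibration error. The objectives, the numbers, and the names below are hypothetical and only illustrate the selection principle; they are not the study's actual values or procedure.

```python
from typing import NamedTuple


class Candidate(NamedTuple):
    d_cyl: float  # sample (cylinder) diameter, hypothetical units
    d: float      # particle diameter, hypothetical units
    cost: float   # assumed objective 1, e.g. wall-clock time per simulation
    error: float  # assumed objective 2, e.g. deviation from a reference resistance curve


def dominates(a: Candidate, b: Candidate) -> bool:
    """a dominates b if it is no worse in both objectives and strictly better in one."""
    no_worse = a.cost <= b.cost and a.error <= b.error
    strictly_better = a.cost < b.cost or a.error < b.error
    return no_worse and strictly_better


def pareto_front(cands: list[Candidate]) -> list[Candidate]:
    """Keep only candidates that no other candidate dominates."""
    return [c for c in cands if not any(dominates(o, c) for o in cands)]


cands = [
    Candidate(10, 1.0, cost=1.0, error=0.30),
    Candidate(20, 1.0, cost=4.0, error=0.12),
    Candidate(20, 0.5, cost=16.0, error=0.10),
    Candidate(10, 0.5, cost=5.0, error=0.25),  # dominated by (20, 1.0)
]
for c in pareto_front(cands):
    print(c.d_cyl, c.d, c.cost, c.error)
```

A single configuration is then picked from the front, for instance the cheapest one whose error falls below an accepted tolerance; that final rule is a design choice, not something the filter itself decides.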

Our DEM model has proven capable of reproducing experimental results on the strengthening of porous ice and of representing the physical micromechanics of ice and their evolution upon sintering. The evolution of the bond strength matches that of the macroscopic sample, suggesting that ice sintering increases the interaction at the particle scale, which manifests itself in the formation of a mesoscale network structure that behaves mechanically in a manner akin to fully dense ice.

Our findings show that this methodology can provide a critical link between theoretical and experimental studies, allowing a better understanding of the effect of sintering on the mechanical properties of plume deposits on icy worlds. In the future, this model could be used to study ice features that are impossible or extremely difficult to observe experimentally, such as the force network, ice fabric, micro-dynamics, and fracture behavior. It would thereby enable a more detailed exploration of ice mechanics and, potentially, the discovery of new fundamental insights into this unique icy material.

This numerical modeling approach can also be extended to robot-terrain applications, where such models help engineers optimize the design of planetary exploration robots and sampling systems. For this purpose, the model would need to be interfaced with multi-body dynamics software to simulate complex robotic interactions such as traversing or sampling. As our model is tuned to cone penetration tests, its performance should first be evaluated under other loading conditions and then generalized to realistic robotic systems, such as landing pads.

Finally, the model can be extrapolated to Ocean World surface conditions, accounting for the reduced gravity, reduced pressure, plume deposition rate, and differential sintering with depth. This extrapolation would allow the prediction of surface conditions on icy worlds and the identification of suitable landing and sampling sites.

Availability of data and material

All data used in this paper are available upon request.

References

Ai, J., Chen, J.F., Rotter, J.M., Ooi, J.Y.: Assessment of rolling resistance models in discrete element simulations. Powder Technol. 206(3), 269–282 (2011)

André, D., Iordanoff, I., Charles, J.-L., Néauport, J.: Discrete element method to simulate continuous material by using the cohesive beam model. Comput. Methods Appl. Mech. Eng. 213–216, 113–125 (2012). https://doi.org/10.1016/j.cma.2011.12.002

Ashby, M.F., Hallam, S.: The failure of brittle solids containing small cracks under compressive stress states. Acta Metallurgica 34(3), 497–510 (1986)

Benvenuti, L., Kloss, C., Pirker, S.: Identification of DEM simulation parameters by artificial neural networks and bulk experiments. Powder Technol. 291, 456–465 (2016). https://doi.org/10.1016/j.powtec.2016.01.003

Blackford, J.R.: Sintering and microstructure of ice: a review. J. Phys. D: Appl. Phys. 40(21), R355 (2007)

Boikov, A.V., Savelev, R.V., Payor, V.A.: DEM calibration approach: random forest. J. Phys.: Conf. Series 1118, 012009 (2018). https://doi.org/10.1088/1742-6596/1118/1/012009

Brown, N.J., Chen, J.F., Ooi, J.Y.: A bond model for DEM simulation of cementitious materials and deformable structures. Granular Matter 16(3), 299–311 (2014)

Brzinski III, T.A., Mayor, P., Durian, D.J.: Depth-dependent resistance of granular media to vertical penetration. Phys. Rev. Lett. 111(16), 168002 (2013)

Butlanska, J., Arroyo, M., Gens, A., O’Sullivan, C.: Multi-scale analysis of cone penetration test (CPT) in a virtual calibration chamber. Canadian Geotech. J. 51(1), 51–66 (2014)

Cable, M., Clark, K., Lunine, J., Postberg, K., Spilker, L., Waite, J.: Enceladus Life Finder: The search for life in a habitable moon. In: IEEE Aerospace Conference, Big Sky, MT, USA (2016)

Cannon, N., Schulson, E.M., Smith, T.R., Frost, H.: Wing cracks and brittle compressive fracture. Acta Metallurgica et Materialia 38(10), 1955–1962 (1990)

Chehreghani, S., Noaparast, M., Rezai, B., Shafaei, S.Z.: Bonded-particle model calibration using response surface methodology. Particuology 32, 141–152 (2017). https://doi.org/10.1016/j.partic.2016.07.012

Cheng, H., Luding, S., Magnanimo, V., Shuku, T., Thoeni, K., Tempone, P.: An iterative sequential Monte Carlo filter for Bayesian calibration of DEM models. In: Numerical Methods in Geotechnical Engineering IX, Volume 1: Proceedings of the 9th European Conference on Numerical Methods in Geotechnical Engineering (NUMGE 2018), June 25–27, 2018, Porto, Portugal, p. 381. CRC Press (2018a)

Cheng, H., Shuku, T., Thoeni, K., Yamamoto, H.: Probabilistic calibration of discrete element simulations using the sequential quasi-Monte Carlo filter. Granular Matter 20(1), 11 (2018b)

Cheng, H., Shuku, T., Thoeni, K., Tempone, P., Luding, S., Magnanimo, V.: An iterative Bayesian filtering framework for fast and automated calibration of DEM models. Comput. Methods Appl. Mech. Eng. 350, 268–294 (2019)

Choukroun, M., Molaro, J.L., Hodyss, R., Marteau, E., Backes, P.G., Carey, E.M., Dhaouadi, W., Moreland, S.J., Schulson, E.M.: Strength evolution of ice plume deposit analogs of Enceladus and Europa. Geophys. Res. Lett. (2020). https://doi.org/10.1029/2020GL088953

Choukroun, M., Backes, P., Cable, M., Fayolle, E., Hodyss, R., Murdza, A., Schulson, E., Badescu, M., Malaska, M., Marteau, E., Molaro, J., Moreland, S.J., Noell, A., Nordhein, T., Okamoto, T., Riccobono, D., Zacny, K.: Sampling plume deposits on Enceladus’s surface to explore ocean materials and search for traces of life or biosignatures. Planet. Sci. J. (2021)

Ciantia, M.O., Arroyo, M., Butlanska, J., Gens, A.: DEM modelling of cone penetration tests in a double-porosity crushable granular material. Comput. Geotech. 73, 109–127 (2016)

Coetzee, C.: Calibration of the discrete element method and the effect of particle shape. Powder Technol. 297, 50–70 (2016). https://doi.org/10.1016/j.powtec.2016.04.003

Coetzee, C.: Calibration of the discrete element method. Powder Technol. 310, 104–142 (2017)

Cundall, P.A., Strack, O.D.L.: A discrete numerical model for granular assemblies. Géotechnique 29(1), 47–65 (1979)

Do, H.Q., Aragón, A.M., Schott, D.L.: A calibration framework for discrete element model parameters using genetic algorithms. Adv. Powder Technol. 29(6), 1393–1403 (2018). https://doi.org/10.1016/j.apt.2018.03.001

Fakhimi, A., Villegas, T.: Application of dimensional analysis in calibration of a discrete element model for rock deformation and fracture. Rock Mech. Rock Eng. 40(2), 193 (2007)

Gagnon, R.E., Kiefte, H., Clouter, M.J., Whalley, E.: Pressure dependence of the elastic constants of ice Ih to 2.8 kbar by Brillouin spectroscopy. J. Chem. Phys. 89(8), 4522–4528 (1988)

Gammon, P.H., Kiefte, H., Clouter, M.J., Denner, W.W.: Elastic constants of artificial and natural ice samples by Brillouin spectroscopy. J. Glaciol. 29(103), 433–460 (1983)

Ge, R., Ghadiri, M., Bonakdar, T., Zheng, Q., Zhou, Z., Larson, I., Hapgood, K.: Deformation of 3D printed agglomerates: Multiscale experimental tests and DEM simulation. Chem. Eng. Sci. 217, 115526 (2020). https://doi.org/10.1016/j.ces.2020.115526

Gerling, B., Löwe, H., van Herwijnen, A.: Measuring the elastic modulus of snow. Geophys. Res. Lett. 44(21), 11,088 (2017)

Golding, N., Schulson, E.M., Renshaw, C.: Shear localization in ice: mechanical response and microstructural evolution of p-faulting. Acta Materialia 60(8), 3616–3631 (2012)

Griffith, A.: The theory of rupture. In: First Int. Cong. Appl. Mech, pp. 55–63 (1924)

Grima, A.P., Wypych, P.W.: Development and validation of calibration methods for discrete element modelling. Granular Matter 13(2), 127–132 (2011a)

Grima, A.P., Wypych, P.W.: Investigation into calibration of discrete element model parameters for scale-up and validation of particle-structure interactions under impact conditions. Powder Technol. 212(1), 198–209 (2011b)

Hadjidoukas, P., Angelikopoulos, P., Rossinelli, D., Alexeev, D., Papadimitriou, C., Koumoutsakos, P.: Bayesian uncertainty quantification and propagation for discrete element simulations of granular materials. Comput. Methods Appl. Mech. Eng. 282, 218–238 (2014)

Han, Z., Weatherley, D., Puscasu, R.: A relationship between tensile strength and loading stress governing the onset of mode I crack propagation obtained via numerical investigations using a bonded particle model. Int. J. Numer. Anal. Methods Geomech. 41(18), 1979–1991 (2017). https://doi.org/10.1002/nag.2710

Hand, K.P.: Report of the Europa Lander science definition team. National Aeronautics and Space Administration (2017)

Hanley, K.J., O’Sullivan, C., Oliveira, J.C., Cronin, K., Byrne, E.P.: Application of Taguchi methods to DEM calibration of bonded agglomerates. Powder Technol. 210(3), 230–240 (2011). https://doi.org/10.1016/j.powtec.2011.03.023

Hertz, H.: On the contact of elastic solids. J. Reine Angew. Math. 92, 156–171 (1881)

Higa, M., Arakawa, M., Maeno, N.: Measurements of restitution coefficients of ice at low temperatures. Planet. Space Sci. 44(9), 917–925 (1996)

Hill, C., Heißelmann, D., Blum, J., Fraser, H.: Collisions of small ice particles under microgravity conditions. Astronom. Astrophys. 573, A49 (2015)

Hobbs, P.V., Chang, S., Locatelli, J.D.: The dimensions and aggregation of ice crystals in natural clouds. J. Geophys. Res. 79(15), 2199–2206 (1974)

Horii, H., Nemat-Nasser, S.: Brittle failure in compression: splitting, faulting and brittle-ductile transition. Philos. Trans. Royal Soc. London Series A, Math. Phys. Sci. 319(1549), 337–374 (1986)

Huybrighs, H.L.F., Roussos, E., Blöcker, A., Krupp, N., Futaana, Y., Barabash, S., Hadid, L.Z., Holmberg, M.K.G., Lomax, O., Witasse, O.: An active plume eruption on Europa during Galileo flyby E26 as indicated by energetic proton depletions. Geophys. Res. Lett. 47(10), e2020GL087806 (2020). https://doi.org/10.1029/2020GL087806

Ismail, M.K.A., Mohamed, Z., Razali, M.: Contact stiffness parameters of soil particles model for discrete element modeling using static packing pressure test. AIP Conference Proceedings, AIP Publishing LLC 1, 020014 (2018)

Iwashita, K., Oda, M.: Rolling resistance at contacts in simulation of shear band development by DEM. J. Eng. Mech. 124(3), 285–292 (1998)

Jaumann, R., Clark, R.N., Nimmo, F., Hendrix, A.R., Buratti, B.J., Denk, T., Moore, J.M., Schenk, P.M., Ostro, S.J., Srama, R.: Icy Satellites: Geological Evolution and Surface Processes, pp. 637–681. Springer, Netherlands (2009). https://doi.org/10.1007/978-1-4020-9217-6_20

Ji, S., Liu, L.: DEM Analysis of Ice Loads on Offshore Structures and Ship Hull, pp. 237–310. Springer, Singapore (2020). https://doi.org/10.1007/978-981-15-3304-4_8

Johnson, J.B., Hopkins, M.A.: Identifying microstructural deformation mechanisms in snow using discrete-element modeling. J. Glaciol. 51(174), 432–442 (2005)

Johnson, K.L.: Contact mechanics. Cambridge University Press, Cambridge (1987)

Johnson, K.L., Kendall, K., Roberts, A.D.: Surface energy and the contact of elastic solids. Proc. Royal Soc. London A 324(1558), 301–313 (1971)

Johnstone, M.W.: Calibration of DEM Models for Granular Materials Using Bulk Physical Tests. The University of Edinburgh, Edinburgh (2010)

Kempf, S., Beckmann, U., Schmidt, J.: How the Enceladus dust plume feeds Saturn’s E ring. Icarus 206(2), 446–457 (2010)

Kloss, C., Goniva, C.: LIGGGHTS: open source discrete element simulations of granular materials based on LAMMPS. In: Supplemental Proceedings: Materials Fabrication, Properties, Characterization, and Modeling, vol. 2, pp. 781–788. Wiley Online Library (2011)

Kuzkin, V.A., Asonov, I.E.: Vector-based model of elastic bonds for simulation of granular solids. Phys. Rev. E 86(5), 051301 (2012)

Ledbetter, H.M.: Stainless-steel elastic constants at low temperatures. J. Appl. Phys. 52(3), 1587–1589 (1981)

Lunine, J.I.: Ocean worlds exploration. Acta Astronautica 131, 123–130 (2017)

Mak, J., Chen, Y., Sadek, M.: Determining parameters of a discrete element model for soil-tool interaction. Soil Tillage Res. 118, 117–122 (2012). https://doi.org/10.1016/j.still.2011.10.019

Marigo, M., Stitt, E.H.: Discrete element method (DEM) for industrial applications: comments on calibration and validation for the modelling of cylindrical pellets. KONA Powder Particle J. 32, 236–252 (2015)