Abstract

In this paper we consider the spatial semi-discretization of conservative PDEs. Such finite-dimensional approximations of infinite-dimensional dynamical systems can be described as flows in suitable matrix spaces, which in turn leads to the need to solve polynomial matrix equations, a classical and important topic in both theoretical and applied mathematics. Solving these equations numerically is challenging due to the presence of several conservation laws, which our finite models incorporate and which must be retained while integrating the equations of motion. In the last thirty years, the theory of geometric integration has provided a variety of techniques to tackle this problem. These numerical methods require solving both direct and inverse problems in matrix spaces. We present three algorithms to solve a cubic matrix equation arising in the geometric integration of isospectral flows. This class of ODEs includes finite models of ideal hydrodynamics, plasma dynamics, and spin particles, which we use as test problems for our algorithms.

1 Introduction

The numerical solution of polynomial matrix equations is a well-studied and active field of research [2, 3, 8]. Its relevance clearly goes beyond pure mathematics, and the development of efficient algorithms is crucial in several areas of computational science. Linear matrix equations have been studied since the 19th century, beginning with Sylvester [9]. They admit a variety of formulations, and different techniques can be used to solve them depending on their specific structure [3, 8]. One degree higher, we find quadratic matrix equations. For such problems the theory is more intricate and various numerical issues may appear [2]. Among the quadratic matrix equations, one of the most studied is the continuous-time algebraic Riccati equation (CARE) [2], which will appear in Section 3.

In this paper, we study the numerical solution of the cubic matrix equation

\[ X - h[{\mathscr{L}} X, X] - h^{2}({\mathscr{L}} X)X({\mathscr{L}} X) = Y, \tag{1} \]

where X is unknown and Y is given in \(\mathbb {M}(N,\mathbb {R})\) or \(\mathbb {M}(N,\mathbb {C})\) (the spaces of N × N real or complex matrices), \({\mathscr{L}}\neq 0 \) is a linear operator acting on matrices and h > 0. Equation (1) appears in the geometric integration of matrix flows of the form:

\[ \dot{Y} = [{\mathscr{L}} Y, Y], \tag{2} \]

where Y = Y(t) is a curve in some subspace of the space \(\mathbb {M}(N,\mathbb {R})\) or \(\mathbb {M}(N,\mathbb {C})\) and the square brackets denote the commutator of two matrices: [A,B] = AB − BA. The flow of (2) is isospectral, which means that the eigenvalues of Y(t) do not depend on t. Furthermore, when \({\mathscr{L}}\) is self-adjoint with respect to the pairing 〈A,B〉 = Tr(AB), (2) is Hamiltonian (another term is “Lie–Poisson”), with Hamiltonian function given by

A discrete approximation of the solution of (2) is determined, for h > 0 sufficiently small, by the implicit-explicit iteration defined in [10] as:

In this scheme, \(Y_{n}\) denotes the approximate solution at time \(t_{n}\). The scheme preserves the spectrum of \(Y_{0}\) and nearly conserves the Hamiltonian (3); indeed, it is a Lie–Poisson integrator (see [10]). We observe that whenever Y belongs to a quadratic matrix Lie algebra

\[ \mathfrak {g}_{J} = \{A\in \mathbb {M}(N,\mathbb {C})\text { s.t. } A^{*}J + JA = 0\} \]

for some fixed \(J\in \mathbb {M}(N,\mathbb {C})\), and \({\mathscr{L}} :\mathfrak {g}_{J}\rightarrow \mathfrak {g}_{J}\), (1) admits solutions in \(\mathfrak {g}_{J}\). Indeed, the left-hand side of (1) is the differential of the inverse of the Cayley transform, which is known to preserve quadratic Lie algebras [4]. Of particular interest for applications to PDEs is the case J = I, for which \(\mathfrak {g}_{J}=\mathfrak {u}(N)\), the Lie algebra of skew-Hermitian matrices. In Section 4, we consider the Lie algebra \(\mathfrak {su}(N)\), which consists of skew-Hermitian matrices with zero trace.

Remark 1

It is not hard to check that the following equalities hold:

Hence, up to a term of order \(\mathcal {O}(h^{2})\), \(Y_{n}\) evolves via the midpoint scheme, whereas \(X_{n}\) evolves via the trapezoidal scheme. It is known that the midpoint and the trapezoidal methods are conjugate symplectic [4]. Hence, \(X_{n}\) evolves according to a scheme \({\phi _{h}^{T}}:X_{n}\mapsto X_{n+1}\) which is conjugate isospectral to the scheme \({\phi _{h}^{M}}:Y_{n}\mapsto Y_{n+1}\) as defined in (4), i.e. there exists an invertible map \(\chi _{h}\) such that:

The map is clearly given by the left-hand side of the first equation in (4), which we denote as \(\chi _{h}=\phi _{h/2}^{EE}\). We observe that \(\chi _{h}=I + \mathcal {O}(h)\). Hence, if \(Y_{n}\) evolves on a compact set, then \(X_{n}\) evolves on a compact set for any n ≥ 0 [4, VI.8]. We illustrate this relationship in Fig. 1 below.

Fig. 1 Illustration of the schemes (4)–(5). Here \({\phi _{h}^{M}}\) denotes the map in the first line of (5), \({\phi _{h}^{T}}\) the map in the second line of (5), \(\phi _{h/2}^{IE}\) the map in the first line of (4) and \(\phi _{h/2}^{EE}\) the map in the second line of (4). Up to \(\mathcal {O}(h^{2})\) terms, these correspond respectively to the implicit midpoint, trapezoidal, implicit Euler and explicit Euler schemes.

The need for an efficient solver for (1) stems from the fact that conservative PDEs, such as the vorticity equation of fluid dynamics [11, 12] or the drift-Alfvén model for a quasineutral plasma [6], admit a spatial discretization in \(\mathfrak {su}(N)\), for N = 1,2,…. The crucial aspect of these finite-dimensional models is that they retain the conservation laws of the original equations. In order to retain these features, the resulting semi-discretized equations can be integrated in time using the scheme (4). Clearly, to get good spatial accuracy, N has to be quite large (at least \(10^{3}\)). An efficient solver for (1) is also needed for spin systems with many interacting particles. In this case, equations (2) are posed in the product Lie algebra \(\mathfrak {su}(2)^{N}\), where N is the number of particles. We stress that in a typical simulation, hundreds of these cubic matrix equations have to be solved to high accuracy.

This paper is devoted to devising an efficient way to solve (1). First we prove existence and uniqueness of the solution of (1), for h sufficiently small. Then, we propose and investigate three possible algorithms to solve (1), which intrinsically preserve the quadratic matrix Lie algebras. In Section 3.1 we consider an explicit fixed point iteration scheme, whose convergence follows from the existence and uniqueness result. In Section 3.2, we consider a linear scheme, again based on a fixed point iteration, which requires the solution of a linear matrix equation; the existence and uniqueness result again guarantees its convergence. We will see in Section 3.3 that a scheme based on the Riccati equation is not practical, due to the non-uniqueness of the solution. Finally, we will see in Section 3.4 that a suitable inexact Newton method applied to (1) is also convergent, at least locally.
In the last section, we show the results of numerical experiments aimed at assessing the efficiency of the different schemes for various linear operators \({\mathscr{L}} \), which correspond to different physical models.

We mention in passing that a different cubic matrix equation has been studied in [1].

2 Existence and Uniqueness

In this section, we show the existence and uniqueness of the solution of equation (1) when the time-step h is sufficiently small.

Theorem 1

Given \(Y\in \mathbb {M}(N,\mathbb {C})\), (1) has a unique solution for sufficiently small h > 0 in some neighbourhood of Y. Furthermore, when (1) takes place in \(\mathfrak {su}(N)\), the solution is unique in some neighbourhood of Y ≠ 0 for any \(h< \frac {1}{3\|{\mathscr{L}}\|_{op}\|Y\|}\).

Proof

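We rewrite (1) as a fixed point problem \(F_{h}(X) = X\), for

\[ F_{h}(X) := Y + h[{\mathscr{L}} X, X] + h^{2}({\mathscr{L}} X)X({\mathscr{L}} X). \tag{6} \]

We first show the existence of a solution for the fixed point problem \(F_{h}(X) = X\). Let us introduce the following Cauchy problem: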

where

which is invertible for h sufficiently small, since \(\|\frac {\partial F_{h}}{\partial X}\|_{op}=\mathcal {O}(h)\). Hence, the Cauchy problem (7) has a solution X(h) as long as \(\|\frac {\partial F_{h}}{\partial X}\|_{op}<1\), the right-hand side being continuous. This ensures the existence of a solution for the fixed point problem \(F_{h}(X) = X\), because of the equality

In order to get uniqueness for the fixed point problem \(F_{h}(X) = X\), we show that there exists a neighbourhood of a fixed point X containing Y in which \(F_{h}\) is a contraction. Let us calculate

Hence

Therefore, given a fixed point X and 0 < ε < 1, in the neighbourhood of (0,X)

we find \(\{h\}\times B_{r}(X)\subset \mathfrak {U}_{\varepsilon }\), with h, r > 0 determined by (8), such that \(F_{h}:B_{r}(X)\rightarrow B_{r}(X)\) is a contraction. In \(\overline {B_{r}(X)}\) we can then apply the Banach–Caccioppoli theorem and get a unique solution to the fixed point problem \(F_{h}(X) = X\). Taking X + Z = Y, we get the neighbourhood of Y in which we have a unique solution for (1).

Let us now assume that (1) takes place in \(\mathfrak {su}(N)\). Then

The matrix \((I - (h{\mathscr{L}} X)^{2})(I - (h{\mathscr{L}} X)^{2})\) is Hermitian positive definite for any X. Indeed, the eigenvalues of \(-(h{\mathscr{L}} X)^{2}\) are real and non-negative, since \({\mathscr{L}} X\in \mathfrak {su}(N)\). Therefore, ∥X∥≤∥Y ∥. Hence, replacing ∥X∥, ∥X + Z∥ with ∥Y ∥ in (8) and letting \(\varepsilon \rightarrow 0\), we can find, for Y ≠ 0, the following bound for h:

□
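The step-size bound of Theorem 1 can be evaluated numerically for small test problems. The following is a minimal sketch, not part of the original paper: the helper names `operator_norm` and `step_size_bound` are hypothetical, the norms are assumed to be the Frobenius norm on matrices and the induced operator norm, and \({\mathscr{L}}\) is assumed to be complex-linear and supplied as a Python callable. The operator norm is computed on the whole matrix space rather than on \(\mathfrak {su}(N)\), so the returned value can only be smaller than the bound of the theorem.

```python
import numpy as np

def operator_norm(L, n):
    """Operator norm of a linear map L on n-by-n matrices (Frobenius norm).

    The n^2-by-n^2 matrix of L is assembled column by column from the
    action of L on the elementary matrices E_ij; its largest singular
    value is the induced norm. Only sensible for small n.
    """
    columns = []
    for i in range(n):
        for j in range(n):
            E = np.zeros((n, n), dtype=complex)
            E[i, j] = 1.0
            columns.append(np.asarray(L(E)).ravel())
    return np.linalg.norm(np.column_stack(columns), 2)

def step_size_bound(L, Y):
    """Evaluate 1 / (3 ||L||_op ||Y||) for a nonzero matrix Y."""
    n = Y.shape[0]
    return 1.0 / (3.0 * operator_norm(L, n) * np.linalg.norm(Y))
```

For large N, assembling the full matrix of \({\mathscr{L}}\) is impractical, and an estimate of the operator norm (for instance by a power iteration) would be used instead.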

3 Numerical Schemes

In this section, we present three possible numerical schemes to solve (1).

3.1 Explicit Fixed Point

Theorem 1 gives a first scheme to solve (1):

\[ X_{k+1} = F_{h}(X_{k}) = Y + h[{\mathscr{L}} X_{k}, X_{k}] + h^{2}({\mathscr{L}} X_{k})X_{k}({\mathscr{L}} X_{k}), \tag{9} \]

for k = 0,1,2,…. From Theorem 1 we have that \(F_{h}\) is a contraction for h sufficiently small. Hence, the fixed point iteration converges to the unique solution for h small. When we look for solutions in \(\mathfrak {su}(N)\), we can take any \(h<\frac {1}{3\|{\mathscr{L}}\|_{op}\|Y\|}\). The resulting Algorithm 1 is given below. We observe that the cost per iteration is \({\mathcal O}(N^{3})\).
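As an illustration, the explicit fixed point iteration can be sketched in a few lines of NumPy. This is a sketch under stated assumptions rather than a transcription of Algorithm 1: the function name `explicit_fixed_point`, the initial guess \(X_{0} = Y\), and the stopping rule on the difference of successive iterates are choices made here, and \({\mathscr{L}}\) is passed as a Python callable.

```python
import numpy as np

def explicit_fixed_point(Y, L, h, tol=1e-10, max_iter=1000):
    """Iterate X <- Y + h [L X, X] + h^2 (L X) X (L X).

    Returns the last iterate and the number of iterations performed.
    """
    X = Y.copy()  # assumed initial guess X_0 = Y
    for k in range(1, max_iter + 1):
        P = L(X)
        # Three matrix products per step, hence O(N^3) cost per iteration.
        X_next = Y + h * (P @ X - X @ P) + h**2 * (P @ X @ P)
        if np.linalg.norm(X_next - X) <= tol:
            return X_next, k
        X = X_next
    raise RuntimeError("explicit fixed point iteration did not converge")
```

If Y and \({\mathscr{L}} X\) are skew-Hermitian, every iterate is skew-Hermitian as well, since both the commutator and the triple product preserve this property.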

3.2 Linear Scheme

Equation (1) can be decomposed into two coupled matrix equations. This splitting induces the following scheme:

\[ \begin{aligned} P_{k} &= {\mathscr{L}} X_{k},\\ X_{k+1} - h[P_{k}, X_{k+1}] - h^{2}P_{k}X_{k+1}P_{k} &= Y, \end{aligned} \tag{10} \]

for k = 0,1,2,…. Note that the second matrix equation in (10) is linear in the unknown matrix \(X_{k+1}\). It is straightforward to check that \(X_{k+1} = S_{h}(X_{k})\), for \(S_{h}(X)=(I - h{\mathscr{L}} X)^{-1}Y(I + h{\mathscr{L}} X)^{-1}\). Theorem 1 gives existence and uniqueness of a solution \(\overline {X}\) of (1) for h sufficiently small. Hence, since \(S_{h}\) is analytic in the set \(\{(h,X)|\|h{\mathscr{L}} X\|<1\}\), we can conclude that the fixed point iteration \(X_{k+1} = S_{h}(X_{k})\) converges to \(\overline {X}\), when \(X_{0}\) is taken in a closed neighbourhood of \(\overline {X}\) in which \(S_{h}\) is a contraction.

When \(P\in \mathfrak {su}(N)\), we have \((I - hP)^{*} = I + hP\). Hence, it is enough to calculate the LU-factorization of \(I - hP\) to obtain the one of \(I + hP\). The resulting Algorithm 2 is given below. We observe that the cost per iteration is \({\mathcal O}(N^{3})\).
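The linear scheme can be sketched as follows for the skew-Hermitian case. This is a minimal illustration and not the authors' Algorithm 2: the name `linear_scheme`, the initial guess \(X_{0} = Y\) and the stopping rule are assumptions, and two calls to `numpy.linalg.solve` with the same matrix stand in for the reuse of a single LU-factorization.

```python
import numpy as np

def linear_scheme(Y, L, h, tol=1e-10, max_iter=1000):
    """Iterate X <- (I - h P)^{-1} Y (I + h P)^{-1} with P = L(X).

    Assumes that L(X) is skew-Hermitian, so that I + h P = (I - h P)^*.
    Returns the last iterate and the number of iterations performed.
    """
    n = Y.shape[0]
    identity = np.eye(n)
    X = Y.copy()  # assumed initial guess X_0 = Y
    for k in range(1, max_iter + 1):
        A = identity - h * L(X)
        T = np.linalg.solve(A, Y)  # T = A^{-1} Y
        # T A^{-*} = (A^{-1} T^*)^*, so the right factor needs only A again.
        X_next = np.linalg.solve(A, T.conj().T).conj().T
        if np.linalg.norm(X_next - X) <= tol:
            return X_next, k
        X = X_next
    raise RuntimeError("linear scheme did not converge")
```

In a production code the factorization of \(I - hP_{k}\) would be computed once per iteration and applied to both solves.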

3.3 Quadratic Scheme

Similarly, we can consider the same decomposition of the previous section for (1), but reversing the roles of the known and unknown variables. This splitting induces the following scheme:

where the unknown in the first equation is Pk. The first quadratic equation can be put in the form

which is a type of CARE.

Consider the case when (12) is posed in \(\mathfrak {su}(N)\). Then we have two main issues concerning its solvability. On the one hand, defining Z := I − hP, we see that the first equation can be written as:

\[ ZXZ^{*} = Y. \tag{13} \]

Therefore, in order for (13) to have a solution, X and Y must be congruent. Hence, \(X_{0}\) must be defined via some congruence transformation of Y. On the other hand, the following proposition shows that (13) admits infinitely many solutions. Let \(I_{p,N-p}\) denote the diagonal matrix with the first p entries equal to 1 and the remaining N − p entries equal to − 1, where 0 ≤ p ≤ N. We denote by U(p,N − p) the Lie group of matrices that leave the bilinear form \(b(x,y) = x^{*}I_{p,N-p}\,y\) invariant.

Proposition 2

Let \(A,B\in \mathfrak {su}(N)\) be non-singular, with signature matrix equal to \(I_{p,N-p}\). Then, the equation

\[ ZAZ^{*} = B \tag{14} \]

has a solution \(Z\in GL(N,\mathbb {C})\) (the Lie group of invertible complex matrices) if and only if Z = CUD, for some U ∈ U(p,N − p) and C, D non-singular such that \(B = CI_{p,N-p}C^{*}\) and \(DAD^{*} = I_{p,N-p}\).

Proof

Let A, B, C, D be as in the hypotheses. Then, we can rewrite (14) as

\[ (C^{-1}ZD^{-1})\,I_{p,N-p}\,(C^{-1}ZD^{-1})^{*} = I_{p,N-p}. \]

Therefore, \(C^{-1}ZD^{-1}\) is an element of U(p,N − p). On the other hand, for any U ∈ U(p,N − p), we have that Z = CUD is a solution of (14). Hence, all the solutions of (14) have this form and are parametrized by U ∈ U(p,N − p). □

In our particular situation, we are interested in solutions of the form Z = I + P, for P skew-Hermitian. For instance, taking \(A,B\in \mathfrak {su}(N)\) diagonal such that i(A − B) ≥ 0 and iA < 0, any diagonal skew-Hermitian (i.e., purely imaginary) P such that \(P^{2} = I - BA^{-1}\) is a solution. Hence, for generic A,B as above, we get \(2^{N}\) solutions, one for each choice of sign in the diagonal entries of P, making the iteration (11) not well-defined.

We can see that the Riccati equation (12) has a non-uniqueness issue also in the following way. The equation can be split into two orthogonal components, one parallel to X and one orthogonal to it with respect to the Frobenius inner product:

where \(\pi _{X}\) is the orthogonal projection onto \(\text {stab}_{X}:=\{A\in \mathbb {M}(N,\mathbb {C})\text { s.t. } [A,X]=0\}\). If we write \(P_{\parallel } := \pi _{X}P\) and \(P_{\bot }:={\Pi }^{\bot }_{X} P\), we get:

If these equations have a solution (P∥,P⊥), then we also have a solution \((-P_{\parallel },P^{\prime }_{\bot })\), where \(P^{\prime }_{\bot }=P_{\bot }+\mathcal {O}(h^{2})\). In \(\mathfrak {su}(2)\cong \mathbb {R}^{3}\) this is easily seen, since the above scheme reads [10]:

where × denotes the vector product and the matrices in \(\mathfrak {su}(2)\) have been represented as vectors in \(\mathbb {R}^{3}\), via the standard isomorphism. Hence, we have the solutions:

where \(R\in \mathbb {M}(3,\mathbb {R})\) is such that \(Rp_{\bot } = h^{2}p_{\bot }(x\cdot p_{\parallel }) + hp_{\bot }\times x\). Hence, the ambiguity of the sign in \(p_{\parallel }\) causes a non-uniqueness of the solution.
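The identification of \(\mathfrak {su}(2)\) with \(\mathbb {R}^{3}\) used above can be checked numerically. The sketch below assumes one common normalization of the isomorphism, namely the basis \(e_{k} = -\tfrac {i}{2}\sigma _{k}\) built from the Pauli matrices; the paper does not state which normalization it uses, and the names `hat` and `vee` are hypothetical. With this choice the matrix commutator corresponds exactly to the vector product.

```python
import numpy as np

# Pauli matrices and the basis e_k = -(i/2) sigma_k of su(2).
SIGMA = np.array([
    [[0, 1], [1, 0]],
    [[0, -1j], [1j, 0]],
    [[1, 0], [0, -1]],
], dtype=complex)
BASIS = -0.5j * SIGMA

def hat(x):
    """Map a vector x in R^3 to the matrix sum_k x_k e_k in su(2)."""
    return np.tensordot(np.asarray(x, dtype=float), BASIS, axes=1)

def vee(A):
    """Inverse of hat, using Tr(e_j e_k) = -delta_jk / 2."""
    return np.array([(-2.0 * np.trace(A @ e)).real for e in BASIS])

def commutator(A, B):
    return A @ B - B @ A
```

With these definitions, `vee(commutator(hat(x), hat(y)))` agrees with `np.cross(x, y)` for any vectors x and y, which is the property that turns the matrix scheme into its vector form.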

3.4 Cubic Scheme

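Newton’s method can be directly applied to solve (1). A practical implementation is obtained by rewriting (1) in the following way:

\[ F(X) := X - h[{\mathscr{L}} X, X] - h^{2}({\mathscr{L}} X)X({\mathscr{L}} X) - Y = 0. \]

The Jacobian of F applied to a matrix Z is given by:

\[ DF(X)Z = Z - h\left ([{\mathscr{L}} Z, X] + [{\mathscr{L}} X, Z]\right ) - h^{2}\left (({\mathscr{L}} Z)X({\mathscr{L}} X) + ({\mathscr{L}} X)Z({\mathscr{L}} X) + ({\mathscr{L}} X)X({\mathscr{L}} Z)\right ). \]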

Remark 2

Here we consider an inexact Newton approach. Hence, in order to apply Newton’s method, we consider some approximation for \(DF(X)^{-1}\). We notice that \(DF(X)=I - h({\mathscr{B}}_{1} + h{\mathscr{B}}_{2}) \), for \({\mathscr{B}}_{1}=[{\mathscr{L}} \cdot ,X]+[{\mathscr{L}} X,\cdot ]\) and \({\mathscr{B}}_{2}=({\mathscr{L}} \cdot )X({\mathscr{L}} X)+({\mathscr{L}} X)\cdot ({\mathscr{L}} X)+({\mathscr{L}} X)X({\mathscr{L}} \cdot )\). Hence, expanding the inverse in a Neumann series, we get the following third order approximation of \(DF(X)^{-1}\):

\[ DF(X)^{-1} = I + h{\mathscr{B}}_{1} + h^{2}({\mathscr{B}}_{1}^{2} + {\mathscr{B}}_{2}) + \mathcal {O}(h^{3}). \]

At least four reasonable approximations of \(DF(X)^{-1}\) can be chosen:

1. \(DF(X)^{-1}\approx I + h{\mathscr{B}}_{1}\),
2. \(DF(X)^{-1}\approx I + h{\mathscr{B}}_{1} + h^{2}{\mathscr{B}}_{2}\),
3. \(DF(X)^{-1}\approx I + h{\mathscr{B}}_{1} + h^{2}{\mathscr{B}}_{1}^{2}\),
4. \(DF(X)^{-1}\approx I + h{\mathscr{B}}_{1} + h^{2}({\mathscr{B}}_{1}^{2}+{\mathscr{B}}_{2})\).

We have found that, among these, the second one generally performs best. Indeed, the first one might have convergence issues for large h, and even for large matrices its performance is at most comparable to that of the second one. The third and the fourth do not perform better than the second, because the norm of \({\mathscr{B}}_{1}^{2}\) is in general much smaller than that of \({\mathscr{B}}_{2}\). Hence, the fourth one is computationally more expensive than the second one, without any gain in convergence, whereas the third one converges more slowly than the second one.

Then, we consider the following approximation for the inverse of the Jacobian evaluated in F(X):

\[ DF(X)^{-1}F(X) \approx F(X) + h{\mathscr{B}}_{1}F(X) + h^{2}{\mathscr{B}}_{2}F(X). \tag{15} \]

This approximation leads to the inexact Newton scheme (Algorithm 3) described below.

We observe that the inexact Newton method above has its main computational cost in the evaluation of the approximated Jacobian (15), due to the several matrix-matrix multiplications required. Hence, for large N, the lower complexity of the scheme defined in Section 3.2 makes it more advantageous than the inexact Newton scheme in terms of cost per iteration.
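An inexact Newton iteration based on the second approximation, \(DF(X)^{-1}\approx I + h{\mathscr{B}}_{1} + h^{2}{\mathscr{B}}_{2}\), can be sketched as follows. This is an illustration under stated assumptions and not a transcription of Algorithm 3: the name `inexact_newton`, the initial guess \(X_{0} = Y\) and the stopping rule on the residual norm are choices made here, and \({\mathscr{L}}\) is passed as a Python callable.

```python
import numpy as np

def inexact_newton(Y, L, h, tol=1e-10, max_iter=100):
    """Solve X - h [L X, X] - h^2 (L X) X (L X) = Y approximately.

    Uses the update X <- X - (I + h B1 + h^2 B2) F(X).
    Returns the last iterate and the number of updates performed.
    """
    X = Y.copy()  # assumed initial guess X_0 = Y
    for k in range(max_iter):
        P = L(X)
        F = X - h * (P @ X - X @ P) - h**2 * (P @ X @ P) - Y
        if np.linalg.norm(F) <= tol:
            return X, k
        LF = L(F)
        # B1 F = [L F, X] + [L X, F]
        B1F = (LF @ X - X @ LF) + (P @ F - F @ P)
        # B2 F = (L F) X (L X) + (L X) F (L X) + (L X) X (L F)
        B2F = LF @ X @ P + P @ F @ P + P @ X @ LF
        X = X - (F + h * B1F + h**2 * B2F)
    raise RuntimeError("inexact Newton iteration did not converge")
```

Each update requires considerably more matrix products than one step of the fixed point schemes, which reflects the cost remark above.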

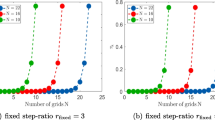

4 Numerical Experiments

In this section, we test our algorithms on three concrete examples arising from the numerical solution of spatially semi-discretized conservative PDEs: the incompressible Euler equations, the Drift-Alfvén plasma model, and the Heisenberg spin chain. To integrate the equations of motion in time, we apply the numerical scheme (4). For each equation, we test the performance of the schemes defined in Sections 3.2 and 3.4. Here we briefly summarize our findings. For large time-step h, the scheme of Section 3.2 is more efficient when solving (1) for large matrices. This makes the linear scheme more suitable for solving the Euler equations or the Drift-Alfvén plasma model. Analogously, we observe that for spin systems, for large time-step and many particles, the scheme of Section 3.2 is faster and has better convergence properties than the one of Section 3.4.

We observe that for both the Euler equations and the Drift-Alfvén plasma model, the number of iterations tends to decrease with increasing N. This is due to the fact that we are normalizing the initial values of the vorticity and that we are absorbing into the time-step the factor \(N^{3/2}\) which should multiply the matrix bracket in order to have spatial convergence of the right-hand side of (17) and (19) (see [7]). Indeed, the same phenomenon is not observed for the Heisenberg spin chain, where the spatial discretization is kept fixed while the number of particles is increased.

Another observation is that both in the Euler equations and in the Drift-Alfvén plasma model, the small number of Newton iterations for large N prevents any benefit from combining in series two different algorithms, i.e., using a few steps of a fixed point iteration to get a good initial guess for the inexact Newton scheme. Analogously, we have not observed any improvement in the convergence speed using a mixed scheme for the Heisenberg spin chain.

The simulations are run in Matlab R2020a on a Dell laptop with an Intel(R) Core(TM) i7-1065G7 CPU @ 1.30GHz and 16.0 GB of RAM. For each simulation, a tolerance of \(10^{-10}\) has been used as the stopping criterion of the iteration. The results in the tables have been obtained as the average of 10 runs of the respective algorithm for solving (1), each run with respect to a different randomly generated Y. The CPU time is measured in seconds.
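The measurement protocol described above (average over 10 randomly generated Y) can be mimicked with a small harness. This is only a sketch in Python and not the authors' Matlab code: the names `random_su` and `average_runs` are hypothetical, the random Y is assumed to be a normalized traceless skew-Hermitian matrix with Gaussian entries, and `time.process_time` is used as a stand-in for the CPU time measurement.

```python
import time
import numpy as np

def random_su(n, rng):
    """Random traceless skew-Hermitian n-by-n matrix with unit Frobenius norm."""
    A = rng.standard_normal((n, n)) + 1j * rng.standard_normal((n, n))
    Y = (A - A.conj().T) / 2               # skew-Hermitian part
    Y = Y - (np.trace(Y) / n) * np.eye(n)  # remove the trace
    return Y / np.linalg.norm(Y)

def average_runs(solver, n, runs=10, seed=0):
    """Average CPU time (seconds) and iteration count of solver(Y).

    `solver` is any callable mapping Y to a pair (X, iterations).
    """
    rng = np.random.default_rng(seed)
    times, iterations = [], []
    for _ in range(runs):
        Y = random_su(n, rng)
        start = time.process_time()
        _, its = solver(Y)
        times.append(time.process_time() - start)
        iterations.append(its)
    return float(np.mean(times)), float(np.mean(iterations))
```

Any of the iterative solvers for (1) can be wrapped in a function of Y alone, with the operator \({\mathscr{L}}\), the step h and the tolerance fixed, and passed as `solver`.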

4.1 2D Euler Equations

The 2D Euler equations on a compact surface \(S\subset \mathbb {R}^{3}\) can be expressed in the vorticity formulation as:

where ω is the vorticity field, ψ is the stream function, the curly brackets denote the Poisson brackets, and Δ is the Laplace–Beltrami operator on S. Let us fix \(S=\mathbb {S}^{2}\), the 2-sphere. Equations (16) admit a spatial discretization called consistent truncation (see [11, 12]), which takes the form:

where \(P,W\in \mathfrak {su}(N)\), for N = 1,2,… and for a suitable operator \({\Delta }_{N}:\mathfrak {su}(N)\rightarrow \mathfrak {su}(N)\). Since \({\Delta }_{N}\) can be chosen to be invertible, we get that (17) are of the form (2), with \({\mathscr{L}} W={\Delta }_{N}^{-1}W\). Equations (17) are also a Lie–Poisson system, hence the scheme (4) is well-suited to retain its qualitative properties [4]. Clearly, in order to get a good approximation of the equations (16), we have to take N large (at least around \(10^{3}\), see [7]).

In Table 1 we show the performance of the different schemes proposed in the previous section. We notice that for large N the linear scheme performs somewhat better than the inexact Newton one in terms of CPU time. However, the most efficient scheme is the explicit fixed point iteration.

4.2 Drift-Alfvén Plasma Model

The Drift-Alfvén plasma model [6] can be formulated in terms of the so-called generalized vorticities ω±, ω0. Although these are not directly physical quantities, they are linear combinations of the generalized parallel momentum and the plasma density. In particular, neglecting the third order non-linearities, and absorbing the parameters in the time variable, we get the following equations:

where λ is the ratio between the electron inertial skin depth and the ion sound gyroradius [6]. Analogously to the Euler equations, equations (18) have a matrix representation in \(\mathfrak {su}(N)\times \mathfrak {su}(N)\times \mathfrak {su}(N)\), for any N ≥ 1. If we assume ω0 = 0 (which physically means excluding the electrostatic drift vortices), we get:

With the same notation of the previous section, we have the matrix equations:

Equations (19) can be cast in the form (2) in \(\mathfrak {su}(N)\oplus \mathfrak {su}(N)\), for \({\mathscr{L}}:\mathfrak {su}(N)\oplus \mathfrak {su}(N)\rightarrow \mathfrak {su}(N)\oplus \mathfrak {su}(N)\), defined by

In Table 2, the performance of the three algorithms applied component-wise to the Drift-Alfvén model is reported. Analogously to the Euler equations, the linear scheme performs a bit better than the inexact Newton scheme, especially for large matrices. As in the previous example, the most efficient scheme is the explicit fixed point iteration.

4.3 Heisenberg Spin Chain

The Heisenberg spin chain is a conservative model of spin particle dynamics. This model arises from the spatial discretization of the Landau–Lifshitz–Gilbert Hamiltonian PDE [5]:

where \(\sigma : \mathbb {R}\times \mathbb {S}^{1}\rightarrow \mathbb {S}^{2}\) is a closed smooth curve. Each value taken by σ in \(\mathbb {S}^{2}\) represents a spin of an infinitesimal particle. We notice that unlike the previous examples of hyperbolic PDEs, (20) is a parabolic PDE. In Tables 3 and 4, we see that this requires a smaller time-step in order to have a comparable number of iterations of the two schemes as for the previous two examples.

Discretizing \(\sigma \approx \{s_{i}\}_{i=1}^{N+1}\) on an evenly spaced grid \(\{x_{i}\}_{i=1}^{N+1}\) of \(\mathbb {S}^{1}\) with step size Δx, with the conditions \(s_{N+1} = s_{1}\) and \(x_{N+1} = x_{1}\), we obtain

Each spin vector \(s_{i}\) can be represented by a matrix \(S_{i}\) with unit norm in \(\mathfrak {su}(2)\cong \mathbb {R}^{3}\). The equations of motion are given by:

for i = 1,…,N. Hence, each spin interacts only with its neighbours (which explains the name “chain”), and the matrices involved remain very sparse. A chain of N particles is a Hamiltonian system in \(\mathfrak {su}(2)^{N}\), with Hamiltonian given by:

The operator \({\mathscr{L}}: \mathfrak {su}(2)^{N}\rightarrow \mathfrak {su}(2)^{N}\) is defined by

In Tables 3 and 4, the results for the three algorithms applied component-wise are reported. In the numerical simulations, we set Δx = 1. We observe that neither the explicit fixed point scheme nor the inexact Newton method converges for spin systems with many particles and large time-step h = 0.5. On the other hand, the linear scheme does converge for any \(N \leq 2^{10} + 1\), making it more suitable for long-time simulations. For the smaller time-step h = 0.1, the three algorithms perform almost equally well.

5 Conclusions

In this paper we have proposed and investigated some iterative schemes for the solution of cubic matrix equations arising in the numerical solution of certain conservative PDEs by means of (Lie–Poisson) geometrical integrators. These types of schemes enable the preservation of important physical features of the original infinite-dimensional flows, which is generally not the case when more standard discretizations and numerical integrators are used. Both the fixed point iterations and the inexact Newton type scheme we have investigated tend to work well, but we found that the fixed point method which requires the solution of a linear matrix equation is the most robust with respect to the time step and requires a comparable CPU time with the fully explicit scheme.

Change history

24 January 2023

The original version of this article was revised: Missing Open Access funding information has been added in the Funding Note.

References

[1] Bankmann, D., Mehrmann, V., Nesterov, Y., Van Dooren, P.: Computation of the analytic center of the solution set of the linear matrix inequality arising in continuous- and discrete-time passivity analysis. Vietnam J. Math. 48, 633–659 (2020)

[2] Bini, D., Iannazzo, B., Meini, B.: Numerical Solution of Algebraic Riccati Equations. SIAM, Philadelphia (2012)

[3] Gohberg, I., Lancaster, P., Rodman, L.: Invariant Subspaces of Matrices with Applications. SIAM, Philadelphia (2006)

[4] Hairer, E., Lubich, C., Wanner, G.: Geometric Numerical Integration. Springer, Berlin Heidelberg (2006)

[5] Lakshmanan, M.: The fascinating world of the Landau–Lifshitz–Gilbert equation: an overview. Philos. Trans. R. Soc. A Math. Phys. Eng. Sci. 369, 1280–1300 (2011)

[6] Mentink, J.H., Bergmans, J., Kamp, L.P.J., Schep, T.J.: Dynamics of plasma vortices: the role of the electron skin depth. Phys. Plasmas 12, 052311 (2005)

[7] Modin, K., Viviani, M.: A Casimir preserving scheme for long-time simulation of spherical ideal hydrodynamics. J. Fluid Mech. 884, 22 (2020)

[8] Simoncini, V.: Computational methods for linear matrix equations. SIAM Rev. 58, 377–441 (2016)

[9] Sylvester, J.: Sur l’équation en matrices px = xq. C. R. Acad. Sci. Paris 99, 67–71 (1884)

[10] Viviani, M.: A minimal-variable symplectic method for isospectral flows. BIT Num. Math. 60, 741–758 (2020)

[11] Zeitlin, V.: Finite-mode analogues of 2D ideal hydrodynamics: coadjoint orbits and local canonical structure. Phys. D 49, 353–362 (1991)

[12] Zeitlin, V.: Self-consistent finite-mode approximations for the hydrodynamics of an incompressible fluid on nonrotating and rotating spheres. Phys. Rev. Lett. 93, 264501 (2004)

Acknowledgements

The authors would like to thank the anonymous referees for their useful comments and suggestions. Special thanks to prof. Bruno Iannazzo for his observations on the manuscript and for pointing out reference [1].

Funding

Open access funding provided by Scuola Normale Superiore within the CRUI-CARE Agreement.

Additional information

Dedicated to Alfio Quarteroni for his 70th Birthday.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Benzi, M., Viviani, M. Solving Cubic Matrix Equations Arising in Conservative Dynamics. Vietnam J. Math. 51, 113–126 (2023). https://doi.org/10.1007/s10013-022-00578-z

DOI: https://doi.org/10.1007/s10013-022-00578-z