Abstract

Motivated by applications in hyperspectral imaging, we investigate methods for approximating a high-dimensional non-negative matrix Y by a product of two lower-dimensional, non-negative matrices K and X. This so-called non-negative matrix factorization is based on defining suitable Tikhonov functionals, which combine a discrepancy measure for Y ≈KX with penalty terms for enforcing additional properties of K and X. The minimization is based on alternating minimization with respect to K and X, where in each iteration step one replaces the original Tikhonov functional by a locally defined surrogate functional. The choice of surrogate functionals is crucial: It should allow a comparatively simple minimization and simultaneously its first-order optimality condition should lead to multiplicative update rules, which automatically preserve non-negativity of the iterates. We review the most standard construction principles for surrogate functionals for Frobenius-norm and Kullback–Leibler discrepancy measures. We extend the known surrogate constructions by a general framework, which allows to add a large variety of penalty terms. The paper finishes by deriving the corresponding alternating minimization schemes explicitly and by applying these methods to MALDI imaging data.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Matrix factorization methods for large scale data sets have seen increasing scientific interest recently due to their central role for a large variety of machine learning tasks. The main aim of such approaches is to obtain a low-rank approximation of a typically large data matrix by factorizing it into two smaller matrices. One of the most widely used matrix factorization methods is the principal component analysis (PCA), which uses the singular value decomposition (SVD) of the given data matrix.

In this work, we review the particular case of non-negative matrix factorization (NMF), which is favorable for a range of applications where the data under investigation naturally satisfies a non-negativity constraint. These include dimension reduction, data compression, basis learning, and feature extraction as well as higher level tasks such as classification or clustering [11, 26, 27, 30]. PCA-based approaches without any non-negativity constraints would not lead to satisfactory results in this case since possible negative entries of the computed matrices cannot be easily interpreted for naturally non-negative datasets.

Typically, the NMF problem is formulated as a minimization problem. The corresponding cost function includes a suitable discrepancy term, which measures the difference between the data matrix and the calculated factorization, as well as penalty terms to tackle the non-uniqueness of the NMF, to deal with numerical instabilities but also to provide the matrices with desirable properties depending on the application task. The NMF cost functions are commonly non-convex and require tailored minimization techniques to ensure the minimization but also the non-negativity of the matrix iterates. This leads us to the so-called surrogate minimization approaches, which are also known as majorize-minimization algorithms [18, 23, 36]. Such surrogate methods have been investigated intensively for some of the most interesting discrepancy measures and penalty terms [11, 13, 16, 23, 25, 33, 36]. The idea is to replace the original cost function by a so-called surrogate functional, such that its minimization induces a monotonic decrease of the objective function. It should be constructed in such a way that it is easier to minimize and that the deduced update rules should preserve the non-negativity of the iterates, which typically leads to alternating, multiplicative update rules.

It appears that these constructions are obtained case-by-case employing different analytical approaches and different motivations for their derivation. The purpose of this paper, first of all, is to give a unified approach to surrogate constructions for NMF discrepancy functionals. This general construction principle is then applied to a wide class of functionals obtained by different combinations of divergence measures and penalty terms, thus extending the present state of the art for surrogate-based NMF constructions.

Secondly, one needs to develop minimization schemes for these functionals. Here, we develop concepts for obtaining multiplicative minimization schemes, which automatically preserve non-negativity without the need for further projections.

Finally, we exemplify some characteristic properties of the different functionals with MALDI imaging data, which are particularly high-dimensional and challenging hyperspectral datasets.

The paper is organized as follows. Section 2 introduces the basic definition of the considered NMF problems. Section 3 gives an overview about the theory of surrogate functionals as well as the construction principles. This is then exemplified in Section 4 for the most important cases of discrepancy terms, namely the Frobenius norm and the Kullback–Leibler divergence as well as for a variety of penalty terms. Section 5 discusses alternating minimization schemes for these general functionals with the aim to obtain non-negativity-preserving, multiplicative iterations. Finally, Section 6 contains numerical results for MALDI imaging data.

1.1 Notation

Throughout this work, we will denote matrices in bold capital Latin or Greek letters (e.g., Y, K, Ψ, Λ) while vectors will be written in small bold Latin or Greek letters (e.g. c, d, β, ζ). The entries of matrices and vectors will be indicated in a non-bold format to distinguish between the i th entry xi of a vector x and n different vectors xj for j = 1,…,n. In doing so, we write for the entry of a matrix M in the i th row and the j th column Mij and the i th entry of a vector x the symbol xi. The same holds for an entry of a matrix product: the ij th entry of the matrix product MN will be indicated as (MN)ij.

Furthermore, we will use a dot notation to indicate rows and columns of matrices. For a matrix M, we will write M∙,j for the j th column and Mi,∙ for the i th row of the matrix.

What is more, we will use ∥⋅∥ for the usual Euclidean norm, \(\|\boldsymbol {M}\|_{1}:= {\sum }_{ij} |M_{ij}|\) for the 1-norm, and ∥M∥F for the Frobenius norm of a matrix M.

Besides that, we will use equivalently the terms function and functional for a mapping into the real numbers.

Finally, the dimensions of the matrices in the considered NMF problem are reused in this work and will be introduced in the following section.

2 Non-negative Matrix Factorization

Before we introduce the basic NMF problem, we give the following definition to clarify the meaning of a non-negative matrix.

Definition 1

A matrix \(\boldsymbol {M}\in \mathbb {R}^{m\times n}\) is called non-negative if \(\boldsymbol {M}\in \mathbb {R}_{\geq 0}^{m\times n}\), where \( \mathbb {R}_{\geq 0} := \{x\in \mathbb {R} : x\geq 0\}\).

The non-negativity of an arbitrary matrix M will be abbreviated for simplicity as M ≥ 0 in the later sections of this work.

The basic NMF problem requires to approximately decompose a given non-negative matrix \(\boldsymbol {Y}\in \mathbb {R}_{\geq 0}^{n\times m}\) into two smaller non-negative matrix factors \(\boldsymbol {K}\in \mathbb {R}_{\geq 0}^{n\times p}\) and \(\boldsymbol {X}\in \mathbb {R}_{\geq 0}^{p\times m}\), such that p ≪ min(n, m) and

For an interpretation, let us assume that we are given m data vectors \(\boldsymbol {Y}_{\bullet , j} \in \mathbb {R}^{n}\) for j = 1,…,m, which are stored column-wise in the matrix Y. Similarly for k = 1,…,p, we denote by K∙,k, respectively Xk,∙, the column vectors of K, respectively the row vectors of X. We then obtain the following approximation for the column vectors Y∙,j as well as the row vectors Yi,∙:

Note that the product K∙,k Xk,∙ on the right-hand side of the third equation yields rank-one matrices for every k.

By these representations, we can regard the rows Xk,∙ as a low-dimensional set of basis vectors, which are tailored for approximating the high-dimensional data vectors, i.e., NMF solves the task of basis learning with non-negativity constraints.

Following the interpretation given above, we can also regard NMF as a basis for compression. K and X are determined by storing (n + m) ⋅ p coefficients, as opposed to n ⋅ m coefficients for Y. The columns of K can be regarded as characteristic components of the given data set {Y∙,j}j. If these data vectors are input for a classification task, one can use the p correlation values with the column vectors of K as features for constructing the classification scheme.

The standard variational approach for constructing an NMF is to define a suitable discrepancy measure D(⋅,⋅) between Y and KX and to minimize the resulting functional. Despite their seemingly simple structure, NMF problems are ill-posed, non-linear, and non-convex, i.e., they require stabilization techniques as well as tailored approaches for minimization. In this paper, we consider discrepancy measures based on divergences [17].

Definition 2

(Divergence) Let Ω be an arbitrary set. A divergence D is a map \(D: {\Omega } \times {\Omega } \to \mathbb {R}\), which fulfills the following properties:

-

(i)

D(x, y) ≥ 0 ∀(x, y) ∈ Ω×Ω,

-

(ii)

D(x, y) = 0 ⇔ x = y.

Definition 3

(β-divergence) The β-divergence \(d_{\beta } : \mathbb {R}_{>0} \times \mathbb {R}_{>0} \rightarrow \mathbb {R}_{\geq 0}\) for \(\beta \in \mathbb {R}\) is defined as

Furthermore, we define accordingly \(D_{\beta }: \mathbb {R}_{>0}^{n\times m} \times \mathbb {R}_{>0}^{n\times m} \rightarrow \mathbb {R}\) for arbitrary \(m, n\in \mathbb {N}\) as

The corresponding matrix divergences are defined componentwise, i.e., β = 2 yields the Frobenius norm and β = 1 the Kullback–Leibler divergence.

These discrepancy measures are typically amended by so-called penalty terms for stabilization and for enforcing additional properties such as sparsity or orthogonality. This yields the following general minimization task.

Definition 4

(NMF minimization problem) For a data matrix \(\boldsymbol {Y}\in \mathbb {R}_{\geq 0}^{n\times m}\), we consider the following generalized NMF minimization task

The functional

is called the cost functional. Furthermore, we call

-

(i)

Dβ(Y, KX) the discrepancy term,

-

(ii)

αℓ the regularization parameters or weights,

-

(iii)

and φℓ(K, X) the penalty terms.

The functional in (2) is typically non-convex in (K, X). Hence, algorithms based on alternating minimization with respect to K and X are favorable, i.e.,

where the index d denotes the iteration index of the corresponding matrices.

This yields simpler, often convex restricted problems with respect to either K or X. Considering for example the minimization of the NMF functional with Frobenius norm and without any penalty term yields a high-dimensional linear system \(\boldsymbol {K}^{\intercal } \boldsymbol {Y} = \boldsymbol {K}^{\intercal }\boldsymbol {K} \boldsymbol {X}\), which, however, would need to be solved iteratively.

Instead, the so-called surrogate methods for computing NMF decompositions have been proposed recently and are introduced in the next section. They also consider alternating minimization steps for K and X, but they replace the restricted minimization problems in (3) and (4) by simpler minimization tasks, which are obtained by locally replacing F by surrogate functionals for K and X separately.

3 Surrogate Functionals

In this section, we discuss general surrogate approaches for minimizing general non-convex functionals, which are then exemplified for specific NMF functionals in later sections.

Let us consider a general functional \(F: {\Omega } \rightarrow \mathbb {R}\) where \({\Omega } \subset \mathbb {R}^{N}\) and the minimization problem

We will later add suitable conditions guaranteeing the existence of minimizers or at least the existence of stationary points. Surrogate concepts replace this task by solving a sequence of comparatively simpler and convex surrogate functionals, which can be minimized efficiently. These methods are also commonly referred to as surrogate minimization (or maximization) algorithms (SM) or also as MM algorithms, where the first M stands for majorize and the second M for minimize (see also [18, 23, 36]). Such approaches have been demonstrated to be very useful in many fields of inverse problems, in particular for hyperspectral imaging [11], medical imaging applications such as transmisson tomography [13, 14] and MALDI imaging and tumor typing applications [26].

Replacing a non-convex functional by a series of convex problems is the main motivation for such surrogate approaches. However, if constructed appropriately, they can also be used to replace non-differentiable functionals by a series of differentiable problems and they can be tailored such that gradient descent methods for minimization yield multiplicative update rules which automatically incorporate non-negativity constraints without further projections.

From this point on, it is important to note that possible zero denominators during the derivation of the NMF algorithms as well as in the multiplicative update rules themselves will not be discussed explicitly throughout this work. Usually, this issue is handled in practice by adding a small positive constant in the denominator during the iteration scheme. In fact, the instability of NMF algorithms due to the convergence of some entries in the matrices to zero has not been sufficiently discussed in the literature and still needs proper solution techniques. We will not focus on this problem and turn now to the basic definition and properties of surrogate functionals.

3.1 Definitions and Basic Properties

As in [25], we use the following definition of a surrogate functional.

Definition 5

(Surrogate functional) Let \({\Omega }\subseteq \mathbb {R}^{N}\) denote an open set and \(F: {\Omega }\rightarrow \mathbb {R}\) a functional defined on Ω. Then, \(Q_{F} : {\Omega } \times {\Omega } \rightarrow \mathbb {R}\) is called a surrogate functional or a surrogate for F, if it satisfies the following conditions:

-

(i)

QF(x, a) ≥ F(x) for all x, a ∈ Ω,

-

(ii)

QF(x, x) = F(x) for all x ∈ Ω.

This is the most basic definition, which does not require any convexity or differentiability of the functional. However, it already allows to prove that the iteration

yields a sequence which monotonically decreases F (see also Fig. 1).

Lemma 1

(Monotonic decrease by surrogate functionals) Let \({\Omega }\subseteq \mathbb {R}^{N}\) denote an open set, \(F: {\Omega }\rightarrow \mathbb {R}\) a given function and QF a surrogate functional for F. Assume that argminx∈ Ω QF(x, a) is well defined for all a ∈ Ω. Define the iterated updates by

with x[0] := argminx∈ Ω QF(x, a) for an arbitrary a ∈ Ω. Then, F(x[d]) is a monotonically decreasing sequence, i.e.,

Proof

The monotone decrease (6) follows directly from the defining properties of surrogate functionals, see Definition 5: We obtain

where (⋆) follows from the definition of x[d+ 1] in (5). □

Remark 1

(Addition of surrogate functionals) Let \({\Omega }\subseteq \mathbb {R}^{n}\) be an open set, \(F, G:{\Omega }\rightarrow \mathbb {R}\) pointwise defined functionals and QF, QG corresponding surrogates. Then, QF + QG is a surrogate functional for F + G.

For each functional F, there typically exist a large variety of surrogate functionals and we can aim at optimizing their structure. The following additional property is the key to simple and efficient minimization schemes for surrogate functionals.

Definition 6

(Separability of a surrogate functional) Let \({\Omega }\subseteq \mathbb {R}^{N}\) denote an open set, \(F: {\Omega }\rightarrow \mathbb {R}\) a functional and QF a surrogate for F. The surrogate QF is called separable, if there exist functions \(g_{i}:\mathbb {R} \times {\Omega } \rightarrow \mathbb {R}\), such that

Lemma 1 above only ensures the monotonic decrease of the cost functional, which is not sufficient to guarantee convergence of the sequence {x[d]} to a minimizer of F or at least to a stationary point of F. The convergence theory for surrogate functionals is far from complete (see also the works [23] and [36]).

Despite this lack of theoretical foundation, surrogate-based minimization yields strictly decreasing sequences for a large variety of applications. In particular, surrogate-based methods can be constructed such that first-order optimality conditions lead to multiplicative update rules, which—in view of the NMF—is a very desirable property.

We now turn to discussing three different construction principles for surrogate functionals.

3.2 Jensen’s Inequality

The starting point is the well-known Jensen’s inequality for convex functions (see [10]).

Lemma 2

(Jensen’s inequality) Let \({\Omega } \subseteq \mathbb {R}^{N}\) denote a convex set, \(F:{\Omega } \rightarrow \mathbb {R}\) a convex function and λi ∈ [0,1] non-negative numbers for i ∈{1,…,k} with \({\sum }_{i = 1}^{k} \lambda _{i} = 1\). Then, for all xi ∈ Ω, it holds that

In this subsection, we consider functionals F which are derived from continuously differentiable and convex functions \( f:\mathbb {R}_{>0} \rightarrow \mathbb {R}\) via

for \({\Omega } \subseteq \mathbb {R}_{\geq 0}^{N}\) and some auxiliary variable c ∈ Ω. This also implies that F is convex, since

We now choose λi ∈ [0,1] with \({\sum }_{i = 1}^{N} \lambda _{i} = 1\) and \(\boldsymbol {\alpha } \in \mathbb {R}^{N}\) and define

for some b ∈ Ω. This implies

The functional \(Q_{F}: {\Omega } \times {\Omega } \rightarrow \mathbb {R}\) defines a separable and convex surrogate for F, which can be seen by the inequality above and by observing

3.3 Low Quadratic Bound Principle

This concept is based on a Taylor expansion of F in combination with a majorization of the quadratic term. This so-called low quadratic bound principle (LQBP) has been introduced in [5] and was used in particular for the computation of maximum likelihood estimators. These methods do not require that F itself is convex and its construction is based on the following lemma.

Lemma 3

(Low quadratic bound principle) Let \({\Omega } \subseteq \mathbb {R}^{N}\) denote an open and convex set and \(f:{\Omega } \rightarrow \mathbb {R}\) a twice continuously differentiable functional. Assume that a matrix \(\boldsymbol {{\Lambda }} (\boldsymbol {x}) \in \mathbb {R}^{N\times N}\) exists, such that Λ(x) −∇2f(x) is positive semi-definite for all x ∈ Ω. We then obtain a quadratic majorization

and Qf is a surrogate functional for f.

Proof

The proof of this classical result is based on the second-order Taylor polynomial of f and shall be left to the reader. □

The related update rule for surrogate minimization can be stated explicitly under natural assumptions on the matrix Λ.

Corollary 1

Assume that the assumptions of Lemma 3 hold. In addition, assume that Λ is a positive definite and symmetric matrix. Then, the corresponding surrogate Qf is strictly convex in its first variable and we have from (5)

Proof

For an arbitrary α ∈{1,…,N}, we have that

where (⋆) utilizes the symmetry of Λ. Hence, it holds that ∇x Qf(x, a) = ∇f(a) + Λ(a)(x −a). The Hessian matrix of Qf then satisfies

This implies the positive definiteness of the functional; hence, it has a unique minimizer, which is given by

This is the update rule above. □

The computation of the inverse of Λ is particularly simple if Λ is a diagonal matrix. Furthermore, the diagonal structure ensures the separability of the surrogate functional mentioned in Definition 6. Therefore, we consider matrices of the form

where κi ≥ 0 has to be chosen individually depending on the considered cost function. We will see that an appropriate choice of κi will lead finally to the desired multiplicative update rules of the NMF algorithm.

The matrix Λ(a) in (9) fulfills the conditions in Corollary 1 as it can be seen by the following lemma. Therefore, if Λ is constructed as in (9), the update rule in (8) can be applied immediately.

Lemma 4

Let \(\boldsymbol {M}\in \mathbb {R}_{\geq 0}^{N \times N}\) denote a symmetric matrix. With \(\boldsymbol {a}\in \mathbb {R}_{>0}^{N}\) and κi ≥ 0, we define the diagonal matrix Λ by

for i = 1,…,N. Then, Λ and Λ −M are positive semi-definite.

Proof

Let \( \boldsymbol {\zeta }\in \mathbb {R}^{N}\) denote an arbitrary vector and let δ denote the Kronecker symbol. Then,

The positive semi-definiteness of Λ follows from its diagonal structure. □

3.4 Further Construction Principles

So far we have discussed two major construction principles based on either Jensen’s inequality or upper bounds for the quadratic term in Taylor expansions. Lange [23] lists further construction principles, which however will not be used for NMF constructions in the subsequent sections of this paper. For completeness, we briefly list their main properties.

A relaxation of the approach based on Jensen’s inequality is achieved by choosing αi ≥ 0,i ∈{1,…,N} such that \({\sum }_{i = 1}^{N} \alpha _{i} = 1\) and αi > 0 if ci≠ 0, which yields

A typical choice is

which leads to surrogate functionals for p ≥ 0. This type of surrogate was originally introduced in the context of medical imaging (see [12]), for positron emission tomography.

Another approach is based on combining arithmetic with geometric means and can be used for constructing surrogates for posynomial functions. For \( \boldsymbol {\alpha }, \boldsymbol {v}, \boldsymbol {a}\in \mathbb {R}_{>0}^{N}\), we obtain

4 Surrogates for NMF Functionals

In this section, we apply the general construction principles of Section 3 to the NMF problem as stated in (1). The resulting functional F(K, X) depends on both factors of the matrix decomposition and minimization is attempted by alternating minimization with respect to K and X as described in (3) and (4).

However, we replace the functional F in each iteration by suitable surrogate functionals, which allow an explicit minimization. Hence, we avoid the minimization of F itself, which even for the most simple quadratic formulation requires solving a high-dimensional linear system.

We start by considering the discrepancy terms for β = 2 (Frobenius norm) and β = 1 (Kullback–Leibler divergence) and determine surrogate functionals with respect to X and K. We then add several penalty terms and develop surrogate functionals accordingly. With regard to the construction of surrogates for the case of β = 0 (Itakura–Saito divergence), we refer to the works [15, 16, 32].

4.1 Frobenius Discrepancy and Low Quadratic Bound Principle

We start by constructing a surrogate for the minimization with respect to X for the Frobenius discrepancy

Let Y∙,j, resp. X∙,j, denote the column vectors of Y, resp. X. The separability of F yields

Hence, the minimization separates for the different \(f_{\boldsymbol {Y}_{\bullet , j}}\) terms. The Hessian of these terms is given by

and the LQBP construction principle of the previous section with κk = 0 yields

leading to the surrogate functionals

An appropriate choice of κk ensures the multiplicativity of the final NMF algorithm. In the case of the Frobenius discrepancy term, we will see that suitable κk can be chosen dependent on ℓ1 regularization terms in the cost function, which are not included up to now (see Section 4.4 and Appendix 1.1 for more details on this issue). Due to the absent ℓ1 terms, we set κk = 0 to get the desired multiplicative update rules. Summing up the contributions of the columns of X yields the final surrogate

The equivalent construction for K can be obtained by regarding the rows of K separately, which for

yields \(\nabla ^{2} g_{\boldsymbol {y}}(\boldsymbol {a})=\boldsymbol {XX}^{\intercal }\). Putting

leads to the surrogate

We summarize this surrogate construction in the following theorem.

Theorem 1

(Surrogate functional for the Frobenius norm with LQBP) We consider the cost functionals \(F(\boldsymbol {X}) := 1/2 \|\boldsymbol {Y}-\boldsymbol {KX}\|_{F}^{2}\) and \(G(\boldsymbol {K}) := 1/2 \|\boldsymbol {Y}-\boldsymbol {KX}\|_{F}^{2}\). Then,

define separable and convex surrogate functionals.

4.2 Frobenius Discrepancy and Jensen’s Inequality

Again, we focus on deriving a surrogate functional for X; the construction for K will be very similar. Expanding the Frobenius discrepancy yields

Putting \(\boldsymbol {v}:= \boldsymbol {X}_{\bullet , j} \in \mathbb {R}_{\geq 0}^{p}\) and \( \boldsymbol {c}:= {\boldsymbol {K}_{i, \bullet }}^{\intercal } \in \mathbb {R}_{\geq 0}^{p} \) allows us to define

such that

Hence, we have separated the Forbenius discrepancy suitably for applying Jensen’s inequality. Following the construction principle in Section 3.2, we define

with the auxiliary variable \(\boldsymbol {A}\in \mathbb {R}_{\geq 0}^{p\times m}\) and \(\boldsymbol {b}:= \boldsymbol {A}_{\bullet , j}\in \mathbb {R}_{\geq 0}^{p}\), which yields the inequality

Inserting this into the decomposition of the Frobenius discrepancy yields the surrogate QF,2(X, A) by

The construction of a surrogate for K proceeds in the same way. We summarize the results in the following theorem.

Theorem 2

(Surrogate functional for the Frobenius norm with Jensen’s inequality) We consider the cost functionals \(F(\boldsymbol {X}) := 1/2 \|\boldsymbol {Y}-\boldsymbol {KX}\|_{F}^{2} \) and \(G(\boldsymbol {K}) := 1/2 \|\boldsymbol {Y}-\boldsymbol {KX}\|_{F}^{2} \). Then,

define separable and convex surrogate functionals.

These surrogates are equal to the ones proposed in [11]. We will later use first-order necessary conditions of the surrogate functionals for obtaining algorithms for minimization. We already note

i.e., despite the rather different derivations, the update rules for the surrogates obtained by LQBP and Jensen’s inequality will be identical.

4.3 Surrogates for Kullback–Leibler Divergence

The case β = 1 in Definition 3 yields the so-called Kullback–Leibler divergence (KLD). For matrices \(\boldsymbol {M}, \boldsymbol {N}\in \mathbb {R}^{n\times m}_{>0}, \) it is defined as

and has been investigated intensively in connection with non-negative matrix factorization methods [11, 16, 24, 25]. In our context, we define the cost functional for the NMF decomposition by

We will focus in this subsection on Jensen’s inequality for constructing surrogates for the KLD since they will lead to the known classical NMF algorithms (see also [11, 24, 25]). However, it is also possible to use the LQBP principle to construct a suitable surrogate functional for the KLD which leads to different, multiplicative update rules (see Appendix 2).

We start by deriving a surrogate for the minimization with respect to X, i.e., we consider

Using the same λk and αk as in the section above and applying it to the convex function f(t) := −ln(t), we obtain

Multiplication with Y ij ≥ 0 and the addition of appropriate terms yield

The condition QF,1(X, X) = F(X) follows by simple algebraic manipulations, such that QF,1 is a valid surrogate functional for F.

The approach by Jensen’s inequality is very flexible and we obtain different surrogate functionals QF,2 and QF,3 by using, i.e., f1(t) = Y ij ln(Y ij/t) − Y ij + t or f2(t) = −Y ij ln(t) + t instead of f. Inserting the same λk and αk as before in (7), we obtain immediately the surrogates

It is easy to check that the partial derivatives for all three variants are the same; hence, the update rules obtained in the next section based on first-order optimality conditions will be identical. Applying the same approach for obtaining a surrogate for K yields the following theorem.

Theorem 3

(Surrogate functional for the KLD with Jensen’s inequality) We consider the cost functionalsF(X) := KL(Y, KX) and G(K) := KL(Y, KX). Then,

define separable and convex surrogate functionals.

4.4 Surrogates for ℓ 1- and ℓ 2-Norm Penalties

Computing an NMF is an ill-posed problem (see [11]), hence, one needs to add stabilizing penalty terms for obtaining reliable matrix decompositions. The most standard penalties are ℓ1- and ℓ2-terms for the matrix factors leading to

for β ∈{1,2}.

The ℓ2-penalty prohibits exploding norms for each matrix factor and the ℓ1-term promotes sparsity in the minimizing factors (see [21, 28] for a general exposition). Combinations of ℓ1- and ℓ2-norms are sometimes called elastic net regularization [20] due to their importance in medical imaging.

These penalty terms are convex and they separate; hence, they can be used as surrogates themselves. For the case of the KLD, this leads to the following surrogate for minimization with respect to X:

where QKL is the surrogate for the KLD of Theorem 3 for X.

The Frobenius case cannot be treated in the same way. If we use the penalty terms as surrogates themselves and obtain the standard minimization algorithm by first-order optimality conditions, then this does not lead to a multiplicative algorithm, which preserves the non-negativity of the iterates. It can be easily seen that the ℓ1-penalty term causes this difficulty. For a more extended discussion on this, see Appendix 1.1.

Hence, we have to construct a different surrogate. Similar to the discussion in Section 4.1, we consider here \(f_{\boldsymbol {y}}:\mathbb {R}_{\geq 0}^{p} \rightarrow \mathbb {R}\) with

which yields the Hessian \(\nabla ^{2}f_{\boldsymbol {y}}(\boldsymbol {a})=\boldsymbol {K}^{\intercal }\boldsymbol {K} +\nu \boldsymbol {I}\). The choice of κk is done dependent on the ℓ1 regularization term of the cost function fy as already described in Section 4.1. It can be shown in the derivation of the NMF algorithm that κk = λ for all k leads to multiplicative update rules. A more general cost function is considered in Appendix 1.1, where the concrete effect of κk is described in more detail.

This yields the following diagonal matrix \(\boldsymbol {{\Lambda }}_{f_{\boldsymbol {y}}}(\boldsymbol {a})\):

The surrogate for minimization with respect to X is then

Similar, for minimization with respect to K we obtain the surrogate by using the diagonal matrix

4.5 Surrogates for Orthogonality Constraints

The observation that a non-negative matrix with pairwise orthogonal rows has at most one non-zero entry per column is the motivation for introducing orthogonality constraints for K or X. This will lead to strictly uncorrelated feature vectors, which is desirable in several applications, e.g., for obtaining discriminating biomarkers from mass spectra (see Section 6 on MALDI imaging).

We could add the orthogonality constraint \(\boldsymbol {K}^{\intercal }\boldsymbol {K}=\boldsymbol {I}\) as an additional penalty term \(\sigma _{\boldsymbol {K}} \|\boldsymbol {K}^{\intercal } \boldsymbol {K}-\boldsymbol {I}\|^{2}\). However, this would introduce fourth order terms. Hence, we introduce additional variables V and W and split the orthogonality condition into two second-order terms leading to

Surrogates for the terms \(\|\boldsymbol {I} - \boldsymbol {V}^{\intercal } \boldsymbol {K} \|_{F}^{2}\) and \(\|\boldsymbol {I} - \boldsymbol {X} \boldsymbol {W}^{\intercal } \|_{F}^{2}\) can be calculated via Jensen’s inequality (see Section 4.2). The other penalties can be used as surrogates themselves and therefore, we obtain the following theorem.

Theorem 4

(Surrogate functionals for orthogonality constraints) We consider the cost functionals

with σX,1, σX,2, σK,1, σK,2 ≥ 0. Then,

define separable and convex surrogate functionals.

4.6 Surrogates for Total Variation Penalties

Total variation (TV) penalty terms are the second important class of regularization terms besides ℓp-penalty terms. TV penalties aim at smooth or even piecewise constant minimizers; hence, they are defined in terms of first-order or higher-order derivatives [7].

Originally, they were introduced for denoising applications in image processing [31] but have since been applied to inpainting, deconvolution, and other inverse problems (see, e.g., [8]). The precise mathematical formulation of the total variation in the continuous case is described in the following definition.

Definition 7

(Total variation (continuous)) Let \({\Omega } \subset \mathbb {R}^{N}\) be open and bounded. The total variation of a function \(u\in L^{1}_{\text {loc}}({\Omega })\) is defined as

There exist several analytic relaxations of TV based on ℓ1-norms of the gradient, which are more tractable for analytical investigations. For numerical implementations, one rather uses the L1-norm of the gradient \(\|\nabla f\|_{L_{1}}\) as a more computationally tractable substitute. For discretization, the gradient is typically replaced by a finite difference approximation [9].

For applying TV norms to data, we assume that the row index in the data matrix Y refers to spatial locations and the column index to so-called channels. In this case, we consider the most frequently used isotropic TV for applying it to measured, discretized hyperspectral data.

Definition 8

(Total variation (discrete)) For fixed εTV > 0, the total variation of a matrix \(\boldsymbol {K}\in \mathbb {R}_{\geq 0}^{n\times p}\) is defined as

where \(\psi _{k} \in \mathbb {R}_{\geq 0}\) is a weighting of the k th data channel and Ni ⊆{1,…,n}∖{i} denotes the index set referring to spatially neighboring pixels.

We will use the following short hand notation

which can be seen as a finite difference approximation of the gradient magnitude of the image K∙,k at pixel Kik for some neighborhood pixels defined by Ni. A typical choice for neighborhood pixels in two dimensions for the pixel (0,0) is N(0,0) := {(1,0),(0,1)} to get an estimate of the gradient components along both axes. Finally, by introducing the positive constant εTV > 0, we get a differentiable approximation of the total variation penalty.

In Section 6, we will discuss the application of NMF methods to hyperspectral MALDI imaging datasets, which has a natural “spatial structure” in its columns.

In this section, we stay with a generic choice of Ni as well as of the ψk and we construct a surrogate following the approach of the groundbreaking works of [13] and [29].

For t ≥ 0 and s > 0, we use the inequality (linear majorization)

and apply it in order to compare ∇ik K with values obtained by an arbitrary non-negative matrix A:

Summation with respect to i, multiplication with ψk, and summation with respect to k lead to

This yields a candidate for a surrogate \(Q_{\text {TV}}^{\text {Oli}}\) for the TV penalty term, which is the same as the one used in [29]. However, it is not separable; hence, we aim at a second, separable approximation. For arbitrary \(a,b,c,d \in \mathbb {R}\), we have

This leads to

Therefore, we have the following theorem.

Theorem 5

(Surrogate functional for TV penalty term) We consider the cost functionalF(K) := TV(K) with the total variation defined in (10). Then,

defines a separable and convex surrogate functional.

The separability of the surrogate is not obvious. The proof (see Appendix 3) delivers the following notation, which we also need for an description of the algorithms in the next section. First of all, we need the definition of the so-called adjoint neighborhood pixels \(\bar {N}_{i}\) given by

One then introduces matrices \(P(\boldsymbol {A})\in \mathbb {R}^{n\times p}_{\geq 0}\) and \(Z(\boldsymbol {A})\in \mathbb {R}^{n\times p}_{\geq 0}\) via

Using these notations, it can be shown that the surrogate can be written as

such that we obtain the desired separability. Here, C(A) denotes some function depending on A. The description of QTV with the help of P(A)ik and Z(A)ik will also allow us to compute the partial derivatives in a more comfortable way (see also Appendix 1.2).

4.7 Surrogates for Supervised NMF

As a motivation for this section, we consider classification tasks. We view the data matrix Y as a collection of n data vectors, which are stored in the rows of Y. Moreover, we do have an expert annotation ui for i = 1,…,n,which assigns a label to each data vector. For a classification problem with two classes, we have ui ∈{0,1}.

As already stated, the rows of the matrix X of an NMF decomposition can be regarded as a basis for approximating the rows of Y. Hence, one assumes that the correlations between a row Yi,∙ of Y and all row vectors of X, i.e., computing \(\boldsymbol {Y}_{i,\bullet }\boldsymbol {X}^{\intercal }\), contain the relevant information of Yi,∙. The vector of correlations yields the so-called feature vector of length p. A classical linear regression model, which uses these feature vectors, then asks to compute weights βk for k = 1,…,p, such that \(\boldsymbol {Y}_{i,\bullet } \boldsymbol {X}^{\intercal }\boldsymbol {\beta } \approx u_{i}\) (for more details on linear discriminant analysis methods, we refer to [4, Chapter 4]).

In matrix notation and using least squares, this is equivalent to computing β as a minimizer of

We now use X and β to define

In tumor typing classifications, where the data matrix Y is obtained by MALDI measurements, the vector x∗ can be interpreted as a characteristic mass spectrum of some specific tumor type and can be directly used for classification tasks in the arising field of digital pathology (see also Section 6 and [26]).

The classification of a new data vector y is then simply obtained by computing the scalar product \(w={\boldsymbol {x}^{\ast }}^{\intercal } \boldsymbol {y}\) and assigning either the class label 0 or 1 by comparing w with a pre-assigned threshold s. This threshold is typically obtained in the training phase of the classification procedure by computing \(\boldsymbol {YX}^{\intercal }\boldsymbol {\beta }\) for some given training data Y and choosing s, such that a performance measure of the classifier is optimized.

The approach we have described is based on first computing an NMF, i.e., K and X, and then computing the weights β of the classifier. Hence, the computation of the NMF is done independently of the class labels u, which is also referred to as an unsupervised NMF approach. We might expect that computing the NMF by minimizing a functional which includes the class labels, i.e.,

will lead to an improved classifier. In the application field of MALDI imaging, this supervised approach yields an extraction of features from the given training data, which allow a better distinction between spectra obtained from different tissue types such as tumorous and non-tumorous regions (see also [26]).

Surrogates for the first term have been determined in the previous section for the case of the Frobenius norm and the Kullback–Leibler divergence. Hence, we need to determine surrogates of the new penalty term for minimization with respect to X and β:

Surrogates can be obtained by extending Jensen’s inequality to the matrix valued case. Here, we consider a convex subset \({\Omega } \subset \mathbb {R}^{N\times M}_{>0}\) and define

with a convex and continuously differentiable function f and auxiliary variables \(\boldsymbol {c}\in \mathbb {R}^{N}_{>0}\) and \(\boldsymbol {d}\in \mathbb {R}^{M}_{>0}\). We now use the following generalized Jensen’s inequality

Setting

for some i ∈{1,…,n} yields by inserting λjk and αjk into (11)

The computation of a surrogate for minimization with respect to β proceeds analogously. We summarize the results in the following theorem.

Theorem 6

(Surrogate functionals for linear regression) Let \( F(\boldsymbol {X}) := 1/2\|\boldsymbol {u} - \boldsymbol {YX}^{\intercal } \boldsymbol {\beta }\|^{2}\) and \( G(\boldsymbol {\beta }) := 1/2\|\boldsymbol {u} - \boldsymbol {YX}^{\intercal } \boldsymbol {\beta } \|^{2} \) denote a cost functional with repect to X and β. Then,

define separable and convex surrogate functionals.

A big advantage of linear regression models is their simplicity and manageability. However, they are by far not the optimal approach to approximate the binary output data u with a continuous input. Logistic regression models offer a way more natural method for binary classification tasks. Together with the supervised NMF as a feature extraction method, this overall workflow leads in [26] to excellent classification results and outperformed classical approaches.

However, the proposed model is based on a gradient descent approach, such that the non-negativity of the iterates can only be guaranteed by a projection step. Appropriate surrogate functionals for this workflow are still ongoing research and could lead to even better outcomes (see also [35, 36]).

5 Surrogate-Based NMF Algorithms

In the previous section, we have defined surrogate functionals for various NMF cost functions. Besides the necessary surrogate properties, we also expect that the minimization of these surrogates is straightforward and can be computed efficiently.

In our case, we demand additionally that the minimization schemes based on solving the first-order optimality conditions leads to a separable algorithm and that it only requires multiplicative updates, which automatically preserve the non-negativity of its iterates. Let us start with denoting the most general functional with Kullback–Leibler divergence, the Frobenius case follows similarly.

For constructing non-negative matrix factorizations, we incorporate ℓ2-, sparsity-, orthogonality-, and TV-constraints and also the penalty terms coming from the supervised NMF. Of course, in most applications, one only uses a subset of these constraints for stabilization and for enhancing certain properties. These algorithms can readily be obtained from the general case by putting the respective regularization parameters to zero. The corresponding update rules are classical results and can be found in numerous works [11, 24, 25].

Definition 9

(NMF problem) For \(\boldsymbol {Y}\in \mathbb {R}_{\geq 0}^{n\times m}\), \(\boldsymbol {K}, \boldsymbol {V} \in \mathbb {R}_{\geq 0}^{n\times p}\), \(\boldsymbol {X}, \boldsymbol {W} \in \mathbb {R}_{\geq 0}^{p\times m}\), \( \boldsymbol {\beta }\in \mathbb {R}_{\geq 0}^{p}\) and a set of regularization parameters λ, μ, ν, ω, τ, σK,1, σK,2, σX,1, σX,2, ρ ≥ 0, we define the NMF minimization problem by

The choice of the various regularization parameters occurring in Definition 9 is often based on heuristic approaches. We will not focus on that issue in this work and refer instead to [19] and the references therein, where two methods are investigated for the general case of multi-parameter Tikhonov regularization.

The algorithms studied in this section will start with positive initializations for K, X, V, W, and β. These matrices are updated alternatingly, i.e., all matrices except one matrix are kept fixed and only the selected matrix is updated by solving the respective first-order optimality condition.

We will focus in this section on the derivation of the update rules of K (see also Appendix 1.2). The iteration schemes for the other matrices follow analogously.

For that, we only have to consider those terms in the general functional which depend on K, i.e., we aim at determining a minimizer for

Instead of minimizing this functional, we exchange it with the previously constructed surrogate functionals, which leads to

with the surrogates QKL for the Kullback–Leibler divergence in Theorem 3, QTV for the TV penalty term in Theorem 5 and QOrth for the orthogonality penalty terms in Theorem 4.

The computation of the partial derivatives leads to a system of equations

for ξ ∈{1,…,n} and ζ ∈{1,…,p}. This leads to a system of quadratic equations

Solving for Kξζ and denoting the Hadamard product by ∘ as well as the matrix division for each entry separately by a fraction line yield the following update rule for K. (Note that the notation for P(A) and Z(A) was introduced in the section on TV regularization above.)

In the above update rule, 1n×p denotes an n × p matrix with ones in every entry and \(\boldsymbol {\Psi }\in \mathbb {R}^{n\times p}_{\geq 0}\) is defined as

The exponents are applied on the matrix entries componentwise. Details on the derivation can be found in Appendix 1.2.

The partial derivatives with respect to X are computed similarly. Defining

leads to the update

The updates for V, W are straight forward and we obtain the following theorem.

Theorem 7

(Alternating algorithm for the NMF problem in Definition 9) The initializations \(\boldsymbol {K}^{[0]}, \boldsymbol {V}^{[0]} \in \mathbb {R}_{> 0}^{n\times p}\), \(\boldsymbol {X}^{[0]}, \boldsymbol {W}^{[0]} \in \mathbb {R}_{> 0}^{p\times m}\), \(\boldsymbol {\beta }^{[0]}\in \mathbb {R}_{> 0}^{p}\) and the iterative updates

lead to a monotonic decrease of the cost functional

It is easy to see that the classical, regularized NMF algorithms described in [11, 24, 25] can be regained by putting the corresponding regularization parameters to zero. In the case of ℓ1- and ℓ2-regularized NMF, this leads to the cost function

The classical update rule for X is obtained by setting

which—in connection with the update rule for X of the previous theorem—leads to

which is the update rule described in [11].

By the same approach and with the surrogate functionals derived in Section 4, we obtain the update rules for the Frobenius discrepancy term, i.e., we consider the functional

A monotonic decrease of this functional is obtained by the following iteration in combination with the update rules for V, W, β as in Theorem 7 (see also Appendix 1.1 for more details on the derivation of these algorithms.)

6 MALDI Imaging

As a test case, we analyze MALDI imaging data (matrix-assisted laser desorption/ionization) of a rat brain. MALDI imaging is a comparatively novel modality, which unravels the molecular landscape of tissue slices and allows a subsequent proteomic or metabolic analysis [1, 6, 22]. Clustering this data reveals for example different metabolic regions of the tissue, which can be used for supporting pathological diagnosis of tumors.

The data used in this paper was obtained by a MALDI imaging experiment (see Fig. 2 for a schematic experimental setup).

Structure of MALDI imaging data: A mass spectrum is obtained at different positions of a tissue slice. The full dataset is a data cube, which can be visualized with different perspectives. Fixing a position of the tissue slice gives the mass spectrum at this position. Fixing a particular molecular weight reveals the distribution of molecules across the tissue slice with this weight

In our numerical experiments, we used a classical rat brain dataset which has been used in several data processing papers before [2, 3, 22]. It constitutes a standard test set for hyperspectral data analysis.

The tissue slice was scanned at 20,185 positions. At each position, a full mass spectrum with 2974 m/z (mass over charge) values was collected, i.e., instead of three color channels, as it is usual in image processing, this data has 2974 channels, each channel containing the spatial distribution of molecules having the same m/z value.

The following numerical examples were obtained with the multiplicative algorithms described in the previous section. We just illustrate the effect of the different penalty terms for some selected functionals. One can display either the columns of K as the pseudo channels of the NMF decomposition or the rows of X as pseudo spectra characterizing the different metabolic processes present in the tissue slice (see the Figs. 3, 4, 5, and 6).

NMF of the rat brain dataset for p = 6. Orthogonality constraints on the channels with σK,1 = 10 and σK,2 = 10 and sparsity penalty term on X with λ = 0.06. The sparsity penalty term has in connection with the orthogonality constraint a comparatively strong influence on the NMF computation: The sparsity in the spectra increases significantly and thus their biological interpretability, whereas the anatomic structure in the pseudo channels diminishes

Both ways of visualization do have their respective value. Looking at the pseudo spectra in connection with orthogonality constraints leads to a clustering of the spectra and to a subdivision of the tissue slice in regions with potentially different metabolic activities (see [22]). Considering instead the different pseudo spectra, which were constructed in order to have a base which allows a low-dimensional approximation of the dataset, is the basis for subsequent proteomic analysis, e.g., one may target pseudo spectra where the related pseudo channels are concentrated in regions, which were annotated by pathological experts. Mass values which are dominant in those spectra may stem from proteins/peptides relating to biomarkers as indicators for certain diseases. Hence, classification schemes based on NMF decompositions have been widely investigated [26, 30, 34].

7 Conclusion

In this paper, we investigated methods based on surrogate minimization approaches for the solution of NMF problems. The interest in NMF methods is related to its importance for several machine learning tasks. Application for large datasets requires that the resulting algorithms are very efficient and that iteration schemes only need simple matrix-vector multiplications.

The state of the art for constructing appropriate surrogates is based on case-by-case studies for the different considered NMF models. In this paper, we embedded the different approaches in a general framework, which allowed us to analyze several extensions to the NMF cost functional, including ℓ1- and ℓ2-regularization, orthogonality constraints, and total variation penalties as well as extensions, which led to supervised NMF concepts.

Secondly, we analyzed surrogates in the context of the related iteration schemes, which are based on first-order optimality conditions. The requirement of separability as well as the need of having multiplicative updates, which preserve non-negativity without additional projections, was analyzed. This resulted in a general description of algorithms for alternating minimization of constrained NMF functionals. The potential of these methods is confirmed by numerical tests using hyperspectral data from a MALDI imaging experiment.

Several further directions of research would be of interest. First of all, besides the most widely used penalty terms discussed in this paper, further penalty terms, e.g., higher-order TV terms, could be considered. Secondly, construction principles for more general discrepancy terms could be analyzed (see also [16]).

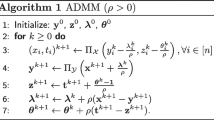

Potentially more importantly, this paper contains only very first results for combining NMF constructions directly with subsequent classification tasks. The question of an appropriate surrogate functional for the supervised NMF model with logistic regression used in [26] remains unanswered and also the comparison with algorithmic alternatives such as ADMM methods needs to be explored.

References

Aebersold, R., Goodlett, D.R.: Mass spectrometry in proteomics. Chem. Rev. 101, 269–296 (2001)

Alexandrov, T., Bartels, A.: Testing for presence of known and unknown molecules in imaging mass spectrometry. Bioinformatics 29, 2335–2342 (2013)

Alexandrov, T., Becker, M., Deininger, S.-O., Ernst, G., Wehder, L., Grasmair, M., von Eggeling, F., Thiele, H., Maass, P.: Spatial segmentation of imaging mass spectrometry data with edge-preserving image denoising and clustering. J. Proteome Res. 9, 6535–6546 (2010)

Bishop, C.: Pattern Recognition and Machine Learning. Springer-Verlag, New York (2006)

Böhning, D., Lindsay, B.G.: Monotonicity of quadratic-approximation algorithms. Ann. Inst. Stat. Math. 40, 641–663 (1988)

Caprioli, R.M., Farmer, T.B., Gile, J.: Molecular imaging of biological samples: localization of peptides and proteins using MALDI-TOF MS. Anal. Chem. 69, 4751–4760 (1997)

Chambolle, A., Caselles, V., Cremers, D., Novaga, M., Cremers, D., Pock, T.: An introduction to total variation for image analysis. In: Fornasier, M. (ed.) Theoretical Foundations and Numerical Methods for Sparse Recovery. Radon Series on Computational and Applied Mathematics, vol. 9, pp 263–340. Walter de Gruyter, Berlin (2010)

Chan, T., Esedoglu, S., Park, F., Yip, A.: Total variation image restoration: Overview and recent developments. In: Paragios, N., Chen, Y., Faugeras, O. (eds.) Handbook of Mathematical Models in Computer Vision, pp 17–31. Springer, Boston (2006)

Condat, L.: Discrete total variation: New definition and minimization. SIAM J. Imaging Sci. 10, 1258–1290 (2017)

Cvetkovski, Z.: Inequalities - Theorems, Techniques and Selected Problems. Springer-Verlag, Berlin Heidelberg (2012)

De Mol, C.: Regularized Multiplicative Algorithms for Nonnegative Matrix Factorization. Methodological Aspects of Hyperspectral Imaging Workshop, Nice (2013)

De Pierro, A.R.: On the relation between the ISRA and the EM algorithm for positron emission tomography. IEEE Trans. Méd. Imaging 12, 328–333 (1993)

Defrise, M., Vanhove, C., Liu, X.: An algorithm for total variation regularization in high-dimensional linear problems. Inverse Probl. 27, 065002 (2011)

Fessler, J.A.: Statistical image reconstruction methods for transmission tomography. In: Sonka, M., Fitzpatrick, J. (eds.) Medical Image Processing and Analysis. Handbook of Medical Imaging, vol. 2, pp 1–70. SPIE Press, Bellingham (2000)

Févotte, C., Bertin, N., Durrieu, J.-L.: Nonnegative matrix factorization with the Itakura-Saito divergence: With application to music analysis. Neural Comput. 21, 793–830 (2009)

Févotte, C., Idier, J.: Algorithms for nonnegative matrix factorization with the β-divergence. Neural Comput. 23, 2421–2456 (2011)

Hennequin, R., David, B., Badeau, R.: Beta-divergence as a subclass of Bregman divergence. IEEE Signal Process. Lett. 18, 83–86 (2011)

Hunter, D.R., Lange, K.: A tutorial on MM algorithms. Am. Stat. 58, 30–37 (2004)

Ito, K., Jin, B., Takeuchi, T.: Multi-parameter Tikhonov regularization—an augmented approach. Chin. Ann. Math. Ser. B 35, 383–398 (2014)

Jin, B., Lorenz, D.A., Schiffler, S.: Elastic-net regularization: error estimates and active set methods. Inverse Probl. 25, 115022 (2009)

Jin, B., Maass, P.: Sparsity regularization for parameter identification problems. Inverse Probl. 28, 123001 (2012)

Kobarg, J.H., Maass, P., Oetjen, J., Trop, O., Hirsch, E., Sagiv, C., Golbabaee, M., Vandergheynst, P.: Numerical experiments with MALDI imaging data. Adv. Comput. Math. 40, 667–682 (2014)

Lange, K.: Optimization, 2nd edn. Springer Texts in Statistics, vol. 95. Springer-Verlag, New York (2013)

Lecharlier, L., De Mol, C.: Regularized blind deconvolution with Poisson data. J. Phys.: Conf. Ser. 464, 012003 (2013)

Lee, D.D., Seung, H.S.: Learning the parts of objects by non-negative matrix factorization. Nature 401, 788–791 (1999)

Leuschner, J., Fernsel, P., Schmidt, M., Lachmund, D., Boskamp, T., Maass, P.: Supervised non-negative matrix factorization methods with MALDI-imaging applications. Bioinformatics (in review) (2018)

Li, T., Ding, C.: Non-negative matrix factorization for clustering: a survey. In: Aggarwal, C. C., Reddy, C. (eds.) Data Clustering: Algorithms and Applications, pp 149–176. CRC Press, Boca Raton (2013)

Louis, A.K.: Inverse und Schlecht Gestellte Probleme. Vieweg+Teubner, Verlag (1989)

Oliveira, J.P., Bioucas-Dias, J.M., Figueiredo, M.A.T.: Review: Adaptive total variation image deblurring: a majorization–minimization approach. Signal Process. 89, 1683–1693 (2009)

Phon-Amnuaisuk, S.: Applying non-negative matrix factorization to classify superimposed handwritten digits. Proced. Comput. Sci. 24, 261–267 (2013)

Rudin, L.I., Osher, S., Fatemi, E.: Nonlinear total variation based noise removal algorithms. Phys. D 60, 259–268 (1992)

Sun, D.L., Févotte, C.: Alternating direction method of multipliers for non-negative matrix factorization with the beta-divergence. In: 2014 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 6201–6205 (2014)

Tan, V.Y.F., Févotte, C.: Automatic relevance determination in nonnegative matrix factorization with the β-divergence. arXiv:1111.6085v3 (2012)

Tang, J., Ceng, X., Peng, B.: New methods of data clustering and classification based on NMF. In: 2011 International Conference on Business Computing and Global Informatization, pp. 432–435 (2011)

Zhang, Z., Kwok, J.T., Yeung, D.-Y.: Surrogate maximization/minimization algorithms for AdaBoost and the logistic regression model. In: Proceedings of the Twenty-first International Conference on Machine Learning, ICML ’04 (2004)

Zhang, Z., Kwok, J.T., Yeung, D.-Y.: Surrogate maximization/minimization algorithms and extensions. Mach. Learn. 69, 1–33 (2007)

Acknowledgements

The authors want to thank Christine De Mol for her excellent presentations at several conferences and our joint discussions, which were the starting point for this paper. The presented results are based on the Master Thesis of the first author.

Funding

This project was supported by the Deutsche Forschungsgemeinschaft (DFG) within the framework of GRK 2224/1 “π3: Parameter Identification - Analysis, Algorithms, Applications”.

Author information

Authors and Affiliations

Corresponding author

Additional information

Hans-Georg is always a source of scientific inspiration. This paper is dedicated to him on the occasion of his 70th birthday.

Appendices

Appendix 1: Details on the Derivation of the Algorithms in Section 5

In this section, we give a more detailed derivation of the algorithms presented in Section 5. We start with the less complex case of the Frobenius norm as discrepancy term and then turn to the Kullback–Leibler divergence. To cover both aspects, we derive the update rules of X for the Frobenius discrepancy term and of K in the case of the KLD. We will also take a closer look at the effect of κ in (9) with respect to the LQBP construction principle.

1.1 1.1 Frobenius Norm

We consider the general cost function described in Section 5 for the case of the Frobenius norm. To compute the update rules for X, it is enough to examine the function

where all terms independent from X are omitted. Based on Remark 1 and following the discussion of Section 4.4, the construction of a surrogate for F can be done separately for F1 and the remaining penalty terms.

The construction of a surrogate for F1 with the LQBP principle as it has been done similarly in Section 4.4 is essential. If we would use instead a surrogate for the discrepancy term \(1/2 \|\boldsymbol {Y}-\boldsymbol {KX}\|_{F}^{2}\) from Section 4.1 or 4.2 and take the ℓ1-penalty term λ∥X∥1 as surrogate itself, it is easy to see that this would not lead to multiplicative update rules. It is the ℓ1-penalty term which causes the difficulty. Computing the first-order optimality condition for the corresponding surrogate \(\tilde {Q}_{F}(\boldsymbol {X}, \boldsymbol {A}) =: \lambda \|\boldsymbol {X}\|_{1} + \hat {Q}_{F}(\boldsymbol {X}, \boldsymbol {A})\) with respect to X would lead to

where the second term on the right-hand side does not depend on λ. Hence, we get a sign in front of λ by solving the equation for Xξζ and we will not obtain multiplicative updates for X.

A correct surrogate is obtained by using the LQBP principle to F1 and leads to

with

where \(f_{\boldsymbol {Y}_{\bullet ,j}}:\mathbb {R}_{\geq 0}^{p} \to \mathbb {R}\) is defined as

and with the diagonal matrix

The functionals QOrth resp. QLR are the surrogates obtained from Theorem 4 resp. Theorem 6. It will turn out that an appropriate choice of κk will ensure a multiplicative NMF algorithm.

The computation of the first-order optimality condition for QF leads to

One can see immediately that the choice of κξ := λ for all ξ ∈{1,…,p} is appropriate to get rid of the problematic term λ. Hence, we obtain

Reordering the terms leads to

Solving for Xξζ and extending the equation to the whole matrix X yields finally

By exploiting the surrogate minimization principle as described in Lemma 1, we get finally the update rule for X presented in Section 5.

1.2 1.2 Kullback–Leibler Divergence

We take (12) as our starting point. The computation of the first-order optimality condition gives

Multiplying on both sides with Kξζ and sorting the terms already give the system of quadratic equations mentioned in Section 5, namely

Taking into account that

we obtain the explicit solution of Kξζ by completing the square and get

This equation holds for arbitrary ξ ∈{1,…,n} and ζ ∈{1,…,p}. We therefore can extend this relation to the whole matrix K and obtain

which is exactly the described update rule in Section 5.

Appendix 2: Kullback–Leibler Divergence Discrepancy and LQBP

In this section, we will use the LQBP construction principle to derive a multiplicative algorithm for the cost function

Similar to the approach in Appendix 1.2, we define according to the LQBP principle the surrogate

with the diagonal matrix

It follows for the partial derivatives of f

The first-order optimality condition of the surrogate functional leads then to

Setting \(\kappa _{\xi } := {\sum }_{i = 1}^{n} K_{i\xi }\) and solving for Xξζ leads finally to the multiplicative update rule

which differs from the classical update rule for the KLD described in [11, 24, 25].

Appendix 3: Surrogate of the TV Penalty—Separability

In this section, we will prove the separability of the surrogate functional

described in Theorem 5. Furthermore, we choose an arbitrary s ∈{1,…,n} and t ∈{1,…,p}. The aim is now to find all terms in (18) with \( K_{st}^{2}\) and Kst.

To find all quadratic terms \(K_{st}^{2}\) in (18), we see that we have to fix the index k, such that k = t. The remaining indices in (18), which have to be analyzed, are i and ℓ.

For the case i = s, we find that the preceding coefficient is

The case ℓ = s can only occur for those indices i, which satisfy s ∈ Ni. The definition of the adjoint neighborhood pixels gives

Therefore, the corresponding preceding coefficient is here

Altogether, we obtain for the quadratic terms \(K_{st}^{2}\) the coefficient

such that \(\tilde {P}_{s t}(\boldsymbol {A}) \cdot K_{s t}^{2}\) takes all quadratic terms of the matrix entries of K in the surrogate functional into account.

The same can be done with the linear terms Kst, which leads to the coefficient

Therefore, the surrogate QTV can be written as

for some function \(\tilde {C}\), which only depends on A. This shows the separability of the surrogate.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Fernsel, P., Maass, P. A Survey on Surrogate Approaches to Non-negative Matrix Factorization. Vietnam J. Math. 46, 987–1021 (2018). https://doi.org/10.1007/s10013-018-0315-x

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10013-018-0315-x

Keywords

- Non-negative matrix factorization

- Multi-parameter regularization

- Majorize-minimization algorithms

- Imaging mass spectrometry