Abstract

The parameterized verification problem seeks to verify all members of some collection of systems. We consider the parameterized verification problem applied to systems that are composed of an arbitrary number of component processes, together with some fixed processes. The components are taken from one or more families, each family representing one role in the system; all components within a family are symmetric to one another. Processes communicate via synchronous message passing. In particular, each component process has an identity, which may be included in messages, and passed to third parties. We extend Abdulla et al.’s technique of view abstraction, together with techniques based on symmetry reduction, to this setting. We give an algorithm and implementation that allows such systems to be verified for an arbitrary number of components: we do this for both safety and deadlock-freedom properties. We apply the techniques to a number of examples. We can model both active components, such as threads, and passive components, such as nodes in a linked list: thus our approach allows the verification of unbounded concurrent datatypes operated on by an unbounded number of threads. We show how to combine view abstraction with additional techniques in order to deal with other potentially infinite aspects of the analysis: for example, we deal with potentially infinite specifications, such as a datatype being a queue; and we deal with unbounded types of data stored in a datatype.

1 Introduction

The parameterized verification problem considers a collection of systems P(x) where the parameter x ranges over a potentially infinite set, and asks whether such systems are correct for all values of x. In this paper we consider the following instance of the parameterized verification problem. Each system is built from some number of component processes, together with some fixed processes. The components may be taken from one or more families; for instance, we will consider examples representing a concurrent datatype based on a linked list: one family of components will represent threads operating on the datatype and another family will represent nodes in the linked list. All components from a particular family will be symmetric to one another. Thus these systems are parameterized by the number of components from each family. The fixed processes are independent of the number of components; we use them to model shared resources such as shared variables or locks; we also normally include a watchdog as a fixed process, which monitors the execution and signals an error if the desired specification is not met.

The components and fixed processes communicate via (CSP-style) synchronous message passing; we call each message an event. In particular, each component has an identity, drawn from some potentially infinite set. These identities can be included in events; thus a process can obtain the identity of a component process and possibly pass it on to a third process. This means that each process has a potentially infinite state space (a finite control state combined with identities from a potentially infinite set). We describe the setting for our work more formally in the next section. The problem (of showing that a system with n components is correct, for all n) is undecidable in general [9, 44]; however, verification techniques prove effective on a number of specific instances.

We adapt the technique of view abstraction of Abdulla et al. [4] to this setting. The idea of view abstraction is that we abstract each system state by its views, where each view records the states of the fixed processes, but just some subset of the components. For each analysis, we fix a set of views of interest; for example, we might consider all views containing precisely two component states (plus the fixed processes). We then calculate an upper bound on the set of such views of all reachable states of all systems of arbitrary size. By reasoning about this set of views, we can show that all such systems are correct.

The calculation proceeds as follows. Given a set S of views, we can (in principle) calculate the set of concrete states consistent with those views, i.e. those states st such that every view of st is in S; we call these concretizations. Informally (using the terminology of [6]), we “piece together” the views in S to create concretizations. We then calculate concrete transitions from those concretizations, and form the views of the resulting states. We repeat this process until we reach a fixed point: this fixed point provides the required upper bound described in the previous paragraph.
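The consistency condition on concretizations can be sketched in Python. This is only an illustration, not the algorithm of this paper: the encoding of a system state as a pair (fixed-process state, frozenset of component states), the representation of a component state as a (control state, identity) pair, and the names `views_of` and `is_concretization` are all assumptions introduced here, and only views of a single size k are considered.

```python
from itertools import combinations

def views_of(state, k):
    """All views of `state`: the fixed-process state plus exactly k component states."""
    fixed, components = state
    return {(fixed, frozenset(v)) for v in combinations(sorted(components), k)}

def is_concretization(state, views, k):
    """True iff every size-k view of `state` is in the set `views`."""
    return views_of(state, k) <= views

# Hypothetical data: fixed-process state "wd0"; components are (control state, identity).
S = {
    ("wd0", frozenset({("s1", 0), ("s0", 1)})),
    ("wd0", frozenset({("s1", 0), ("s0", 2)})),
    ("wd0", frozenset({("s0", 1), ("s0", 2)})),
}
# Piecing the three views together gives a state with three components.
candidate = ("wd0", frozenset({("s1", 0), ("s0", 1), ("s0", 2)}))
# This state has the view {s1(0), s1(1)}, which is not in S.
bad = ("wd0", frozenset({("s1", 0), ("s1", 1), ("s0", 2)}))

print(is_concretization(candidate, S, 2))
print(is_concretization(bad, S, 2))
```

Here `candidate` is consistent with S, whereas `bad` is rejected because one of its views is missing from S.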

In fact, rather than considering all concretizations consistent with the set of views, it is enough to consider only concretizations that contain two components more than the views (so if all views are of size k, then the concretizations are of size \(k+2\)). We can improve the efficiency of the approach in the case that there are no three-way synchronizations between two components and a fixed process: it is enough to calculate concretizations that have a single component more than the views (so if all views are of size k, then the concretizations are of size \(k+1\)).

In [4] and our earlier work [40], the abstraction recorded all views of some size k, i.e. recording the states of k components in all possible ways. This approach works fine in principle, but on some examples suffers from a state space explosion: the number of views becomes prohibitive. In this paper, we generalise the approach to consider all views of certain profiles; for example, we might consider all views that record the states of two threads and one node, or one thread and two nodes. We present our use of view abstraction in Sects. 3 and 4.

Our setting is made more complicated by the use of component identities. These mean that the set of views (for some fixed collection of profiles) is potentially infinite. However, in Sect. 5 we use techniques from symmetry reduction [15, 17, 18, 24, 29, 37, 53] to reduce the views that need to be considered to a finite set. The idea is that if two views are equivalent modulo a uniform renaming of component identities, then the algorithm needs to consider only one of them.
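The idea of identifying views that differ only by a uniform renaming of identities can be illustrated as follows. This is a brute-force sketch under stated assumptions, not the technique of Sect. 5: states are encoded as (control state, tuple of identities), the function name `canonical` is hypothetical, subtypes and distinguished values are ignored, and every ordering of the components is tried, which is only feasible for small views.

```python
from itertools import permutations

def apply_renaming(state, mapping):
    """Rename the identities in a state encoded as (control state, parameters)."""
    control, params = state
    return (control, tuple(mapping[p] for p in params))

def canonical(view):
    """Least renamed form of a view (fixed state, frozenset of component states).

    For each ordering of the components, identities are replaced by 0, 1, 2, ...
    in order of first occurrence; the smallest result is the representative.
    """
    fixed, components = view
    best = None
    for order in permutations(components):
        mapping = {}
        for _, params in (fixed, *order):
            for p in params:
                mapping.setdefault(p, len(mapping))
        renamed = (apply_renaming(fixed, mapping),
                   tuple(sorted(apply_renaming(c, mapping) for c in order)))
        if best is None or renamed < best:
            best = renamed
    return best

# Hypothetical views: v2 is v1 under the renaming N3->N5, N7->N1, T2->T9.
v1 = (("Top", ("N3",)), frozenset({("Node", ("N3", "N7")), ("Thread", ("T2",))}))
v2 = (("Top", ("N5",)), frozenset({("Node", ("N5", "N1")), ("Thread", ("T9",))}))
# v3 is not a renaming of v1: its node refers to itself.
v3 = (("Top", ("N3",)), frozenset({("Node", ("N3", "N3")), ("Thread", ("T2",))}))

print(canonical(v1) == canonical(v2))
print(canonical(v1) == canonical(v3))
```

Two views receive the same representative exactly when one is a renaming of the other, so a set of views can be stored as a set of representatives.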

We present the main algorithm for verifying safety properties in Sect. 6, and prove its correctness.

In Sect. 7, we extend our techniques to verify deadlock-freedom properties, i.e. that in every reachable state, at least one process is able to perform an action. Verifying such properties within view abstraction is not straightforward, since each concretization records only part of the global state: it might be that no component in the concretization can perform an action, but components outside the concretization can. We show that it is enough to consider only certain concretizations, which we term significant: if an action is possible in every significant concretization, then the same is true of every global state.

We present our implementation in Sect. 8: this is based upon the process algebra Communicating Sequential Processes (CSP) [50]. We use the model checker Failures Divergences Refinement (FDR) [28] as a back-end, to produce state machines for the components and fixed processes; this allows us to support all of machine-readable CSP. We stress, though, that the main ideas of this paper are not CSP-specific: they apply to any formalism with a similar computational model, and, we believe, could be adapted to other computational models.

In Sect. 9, we present a range of examples, and experimental results. Being able to model process identities (or something equivalent) is necessary for all but one of the examples. Many examples present challenges beyond those of the unbounded number of processes. For instance, many examples operate on an unbounded domain of data values. For others, the requirements, such as being a queue, are naturally captured by an infinite-state specification. And in some cases, individual components may be infinite state and need to be abstracted. A number of techniques exist for tackling these challenges. We adapt and extend these techniques, using them in the models that we analyse with our tool.

A typical analysis combines abstractions in the model with the abstraction provided by the tool. Different examples require different abstraction techniques: we leave it up to the analyst to select the appropriate techniques in each case (but we hope our examples will provide guidance). It is our thesis that having the tool concentrate on just view abstraction makes that analysis more efficient, while the analyst can craft the model, tailoring it to the details of the example. (Of course, one could imagine another tool that helps the analyst craft the model, as part of a tool chain.)

In some examples, we use techniques from data independence, to capture the specification of the datatypes in a finite-state way. In particular, we adapt Wolper’s specification technique for queues [60] and use a similar technique for stacks. We give techniques that can deal with non-fixed linearization points. In other examples, we adapt techniques of Henzinger et al. [16, 33].

Our examples include reference-linked data structures, such as linked lists. Each node in the data structure is modelled by a component process; such a process can hold the identity of another such process, modelling a reference to that node. Our examples include concurrent queues, stacks and sets, each based on a linked list (both lock-based and lock-free).

Other examples include a synchronous channel [41, 54], a multiplexed buffer, an elimination stack [32], a termination protocol for a ring, and a timestamp-based queue (inspired by [22]), that uses a number of subqueues internally (modelled as a family of components). The final example, in particular, requires the abstraction of the domain of timestamps, the abstraction of the subqueues, and a way for a thread to iterate over the subqueues.

By including views of a particular size k, our approach automatically captures invariants that concern the relationship between the states of (at most) k components and the fixed processes. For example, taking \(k=2\) in an example using linked lists, we can capture the relationship between the states of any pair of nodes, including between any pair of adjacent nodes. This allows us to capture various invariants concerning the “shape” of the list; for example it can capture the invariant that the list holds a sequence of data from the language \(A^*B^*\) (every pair of adjacent nodes hold data values (A, A), (A, B) or (B, B)).
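The claim that a condition on adjacent pairs characterises the language \(A^*B^*\) can be checked mechanically on short sequences. The following Python sketch is an illustration only; the function names are introduced here and are not part of the tool.

```python
import re
from itertools import product

# The pairs of data values allowed in adjacent nodes.
ALLOWED_PAIRS = {("A", "A"), ("A", "B"), ("B", "B")}

def adjacent_pairs_ok(values):
    """True iff every pair of adjacent values is one of the allowed pairs."""
    return all(pair in ALLOWED_PAIRS for pair in zip(values, values[1:]))

def in_a_star_b_star(values):
    """True iff the sequence of values is a word of the language A*B*."""
    return re.fullmatch(r"A*B*", "".join(values)) is not None

# Compare the two characterisations on every sequence over {A, B} of length at most 6.
agree = all(adjacent_pairs_ok(seq) == in_a_star_b_star(seq)
            for n in range(7) for seq in product("AB", repeat=n))
print(agree)
```

The local condition forbids only the pair (B, A), which is exactly what is needed to rule out an A after a B anywhere in the list.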

In our examples based on linked lists, we will ensure that the sequence of data values held in the list comes from a language that: (1) can be captured using the type of invariant described in the previous paragraph, i.e. relating the values in successive nodes; and (2) allows us to prove that the relevant specification is met, using the data-independence techniques mentioned earlier. The approach does not automatically capture all properties, particularly transitivity properties such as that one node is reachable from another; however, we have developed modelling techniques that can often work around this.

Our approach is rather general and supports the analysis of a wide range of examples beyond those based on linked lists. The generality has a down-side though: the approach is not as fast as special-purpose techniques that are optimized for particular classes of problems, such as techniques targeted towards linked lists. Nevertheless, our approach gives acceptable performance.

We see our main contributions as follows.

-

An adaptation of view abstraction to synchronous message passing (this is mostly a straightforward adaptation of the techniques of [4]);

-

An extension of view abstraction to include systems where components have identities, and these identities can be passed around, using techniques based on symmetry reduction to produce a finite-state abstraction;

-

The extension of view abstraction to consider just specific profiles of views;

-

The extension of view abstraction in order to analyse for deadlock freedom;

-

The implementation of these techniques in a powerful tool, using FDR so as to support all of machine-readable CSP;

-

The application to a wide range of examples. These examples illustrate: (1) the adaptation and extension of techniques, using data independence, for specifying several datatypes; (2) the adaptation and extension of other abstraction techniques, to deal with other aspects of the models that would otherwise be infinite; (3) techniques for ensuring that the abstractions capture sufficiently strong invariants to prove correctness.

Compared to our earlier work [40], the main advances are: the extension to deal with deadlock freedom; a greatly improved implementation (reducing checking times by a factor of several hundred, and increasing the size of models checkable by a factor of several tens of thousands); extensions to allow multiple families of components, to consider just specific profiles, and to support three-way synchronizations between two components and a fixed process; and the application to a wider range of examples, together with the development of suitable specification and abstraction techniques.

1.1 Related work

There have been many approaches to the parameterized model checking problem.

Much recent work has been based on regular model checking, e.g. [14, 20, 39, 58]. Here, the state of each individual process is from some finite set, and each system state is considered as a word over this finite set; the set of initial states is a regular set; and the transition relation is a regular relation, normally defined by a transducer. An excellent survey is in [2]. Techniques include widening [55], acceleration [1] and abstraction [13].

The work [4] that the current paper builds on falls within this class. However, our setting is outside this class: the presence of component identities means that each individual process has a potentially infinite state space.

German and Sistla [27] consider a setting similar to ours. They capture the correctness property via an LTL formula that considers the execution of the fixed processes and at most one component. They model a system using a vector addition system with states (VASS), which models explicitly the state of the fixed processes and of the specification automaton, and counts the number of components in each state. Again this is possible because each component is finite-state, unlike in our case. Existing algorithms can be used to decide relevant properties of a VASS, albeit in double-exponential time. They show that in the case of there being no controller, and for a specification automaton that monitors a single component, the problem is decidable in polynomial time. Despite the poor complexity result for the general case, subsequent works have produced tools that can cope with a number of examples, e.g. [3, 11, 21, 25, 38].

Other approaches include induction [23, 51], network invariants [59], and counter abstraction [19, 42, 44, 49]. In particular, [44] applied counter abstraction to systems, like in the current paper, where components had identities which could be passed from one process to another: some number B of the identities were treated faithfully, and the remainder were abstracted; the approach of the current paper seems better able to capture relationships between components, as required for the analysis of many examples.

Most approaches to symmetry reduction in model checking [15, 18, 24, 29, 37] work by identifying symmetric states, and, during exploration, replace each state encountered with a representative member of its symmetry-equivalence class: if several states map to the same representative, this reduces the work to be done. This representative might not be unique, since finding unique representatives is hard, in general; however, such approaches work well in most cases. Our approach follows this style, using unique representatives.

We discuss other techniques for specifying linearizability of various datatypes, different abstraction techniques, and other techniques for analysing datatypes based on a linked list in Sects. 9.14 and 9.15, where we are better able to compare with our own techniques.

2 The framework

In this section, we introduce more formally the class of systems that we consider, and our framework. Recall that we are interested in systems with an unbounded number of component processes, perhaps from different families, and some fixed processes.

We introduce two examples to illustrate the ideas. In our first, toy, example, the components run a simple token-based mutual exclusion protocol. Component j can receive the token from component i via a transition with event pass.i.j; it can then enter and leave the critical section, before passing the token to another component. In the initial state, a single component holds the token.

A watchdog (fixed) process observes components entering and leaving the critical section, and signals with event error if mutual exclusion is violated. Our correctness conditions will be that the event error does not occur and that the system does not deadlock. (The idea of using a watchdog is essentially the same as the automata-theoretic approach to model checking [57], where an automaton defines the allowable executions.)

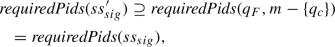

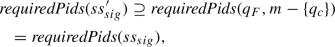

Figure 1 illustrates state machines for these processes.

Fig. 1 Illustration of the state machines for the toy example: a component (above) and the watchdog (below). The diagrams are symbolic, and parameterized by the set of component identities. For example, the latter state diagram has a state \(wd_1(id)\) for each identity id; there is a transition labelled enter.id from \(wd_0\) to \(wd_1(id)\) for each identity id. In the events labelling transitions, “?” indicates that the following field can take an arbitrary value; a parameter in the subsequent state may be bound to this value.

Each process’s state is the combination of a control state and a vector of zero or more parameters, each of which is a component identity, either its own identity or that of another component. In particular, each component process stores its own identity as its first parameter. Likewise, each event is the combination of a channel name and zero or more component identities. Processes synchronise on common events; for example, components id and \(id'\) synchronise on the event \(pass.id.id'\). We make the restriction that at most two components and maybe some fixed processes synchronise on each event. We want to verify such systems for an arbitrary number of components.

Figure 3 gives a more interesting example, representing a lock-based stack that uses a linked list internally; pseudo-code is in Fig. 2. Here the components come from two families: nodes that make up the linked list; and threads that operate on the stack.

Each node holds a piece of data x, of some type D, and a reference next to the next node in the linked list, which might be a special value null. In the state machine, each node has an identity me from the type of node identities; x is treated as part of the control state and of the initializing channel, since it does not correspond to the identity of a component. We assume that the type D of data is finite (in Sect. 9 we use techniques from data independence to justify the use of finite types of data within our models).

The node can be initialized by a thread. Subsequently, a thread can get the value of next or x. In other similar examples, there might be additional transitions corresponding to a thread updating the next reference.

The datatype uses a lock, and a variable Top that points to the top node on the stack. Each thread performs push and pop operations upon the stack: the exact details aren’t important here. In the state machine, each thread component has an identity me from a type of thread identities. It synchronises with node components to initialise them, or to get their next or x fields. It synchronises with the lock to lock and unlock the datatype, and with Top to get or set a reference to the current top node in the stack. It performs additional signal events, \(push_x.me\), \(pop_x.me\) and popEmpty.me to indicate the operation it is performing and the result; we later use these to capture the property that the system implements a stack. In each state, each thread holds its own identity; in some states, it also holds a reference to a node.

The fixed processes are the lock and Top. For the purposes of the formal development, it is simplest to assume a single fixed process, which we can take as the product of the two parts. The state machine for the lock allows the datatype to be locked and unlocked. The state machine for Top stores a reference to the current top node, which threads may get or set. In Sect. 9, we describe how to extend the fixed processes to include a watchdog part that checks that the system does indeed implement a stack.

2.1 Processes

Formally, each process (a component or a fixed process) is represented by a parameterized state machine.

Definition 1

A state machine is a tuple \((Q, \Sigma , \delta )\), where: Q is a set of states; \(\Sigma \) is a set of visible events with \(\tau \notin \Sigma \) (\(\tau \) represents an internal event); and \(\delta \subseteq Q \times (\Sigma \cup \{\tau \}) \times Q\) is a transition relation.

Let T be some potentially infinite set of component identities. A parameterized state machine over T is a state machine where:

-

the states Q are a subset of \(S \times T^*\), for some finite set S of control states;

-

the events \(\Sigma \) are a subset of \(Chan \times T^*\), for some finite set Chan of channels.

We sometimes write a state \((s, \mathbf{x} )\) as \(s(\mathbf{x} )\): s is a control state, and \(\mathbf{x} \) records the values of its parameters (cf. Figs. 1 and 3). Similarly, we write an event \((c, \mathbf{y} )\) as \(c.\mathbf{y} \), and write \(s(\mathbf{x} ) \xrightarrow{c.\mathbf{y} } s'(\mathbf{z} )\) to denote \(((s, \mathbf{x} ), (c, \mathbf{y} ), (s', \mathbf{z} )) \in \delta \).

The type T of identities may be partitioned into one or more subtypes: in the linked-list-based stack example, T is partitioned into the subtypes of node identities and thread identities. Further, certain values may be distinguished, such as the value null representing the null node reference.

We assume that the states of a state machine are well typed in the sense that two states with the same control state have the same number and types of parameters: if \(cs(x_1,\ldots ,x_n), cs(x_1',\ldots ,x_{n'}') \in Q\), then \(n = n'\), and \(x_i\) and \(x_i'\) are from the same subtype, for each i. Likewise, we assume that the set of visible events is well typed.

We assume that the component identities are treated data independently: they can be received, stored, sent, and tested for equality; but no other operations, such as arithmetic operations, can be performed on them. Processes defined in this way are naturally symmetric, in a way that we now make clear.

Let \(\pi \) be a permutation on T that maps each value to a value of the same subtype, and maps each distinguished value to itself; we write Sym(T) for the set of all such permutations. We lift \(\pi \) to vectors from \(T^*\) by point-wise application; we then lift it to states and events by \(\pi (s(\mathbf{x} )) = s(\pi (\mathbf{x} ))\) and \(\pi (c.\mathbf{x} ) = c.\pi (\mathbf{x} )\); we lift it to sets, etc., by point-wise application. We require each state \(s(\pi (\mathbf{x} ))\) to be equivalent to \(s(\mathbf{x} )\) but with all events renamed by \(\pi \): formally the states are \(\pi \)-bisimilar [47].

Definition 2

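Let \(M = (Q, \Sigma , \delta )\) be a state machine, and let \(\pi \in Sym(T)\). We say that \(\mathord {\sim } \subseteq Q \times Q\) is a \(\pi \)-bisimulation iff \(\pi (\Sigma ) = \Sigma \), and whenever \((q_1, q_2) \in \mathord {\sim }\) and \(a \in \Sigma \cup \{\tau \}\):

-

If \(q_1 \xrightarrow{a} q_1'\) then \(\exists q_2' \in Q \cdot q_2 \xrightarrow{\pi (a)} q_2' \wedge q_1' \sim q_2'\);

-

If \(q_2 \xrightarrow{a} q_2'\) then \(\exists q_1' \in Q \cdot q_1 \xrightarrow{\pi ^{-1}(a)} q_1' \wedge q_1' \sim q_2'\).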

Definition 3

A parameterized state machine \((Q, \Sigma , \delta )\) is symmetric if for every \(\pi \in Sym(T)\), \(\{(q, \pi (q)) \mid q \in Q\}\) is a \(\pi \)-bisimulation.

The node and thread state machines in Fig. 3 are symmetric. For example, the states \(Node_A(N_0, N_1)\) and \(Node_A(N_1,N_4)\) are \(\pi \)-bisimilar for each \(\pi \in Sym(T)\) with \(\pi (N_0) = N_1\) and \(\pi (N_1) = N_4\).
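The lifting of a permutation to states and events, and a simple check related to Definition 3, can be sketched in Python for a finite fragment of a machine. The encoding of states and events as (name, parameters) pairs, the function names, and the simplified token-passing transitions (which omit entering and leaving the critical section) are assumptions made for illustration. The check tests that \(\pi \) maps the transition relation onto itself, which is a sufficient condition for \(\{(q, \pi (q)) \mid q \in Q\}\) to be a \(\pi \)-bisimulation.

```python
def lift(pi, x):
    """Apply pi to a state s(x) or an event c.x, both encoded as (name, params).

    pi is a dict describing a permutation; identities it omits are left unchanged.
    """
    name, params = x
    return (name, tuple(pi.get(p, p) for p in params))

def closed_under(pi, delta):
    """True iff renaming every transition of delta by pi yields delta again."""
    return {(lift(pi, q), lift(pi, a), lift(pi, q2)) for (q, a, q2) in delta} == delta

# Hypothetical fragment of the toy example over identities 0, 1, 2.
IDS = (0, 1, 2)
delta = set()
for i in IDS:
    for j in IDS:
        if i != j:
            delta.add((("s1", (i,)), ("pass", (i, j)), ("s0", (i,))))  # i hands the token to j
            delta.add((("s0", (j,)), ("pass", (i, j)), ("s1", (j,))))  # j receives it from i

swap = {0: 1, 1: 0}  # the permutation exchanging identities 0 and 1
broken = delta - {(("s1", (0,)), ("pass", (0, 1)), ("s0", (0,)))}

print(closed_under(swap, delta))
print(closed_under(swap, broken))
```

Removing a single transition destroys the symmetry, because its image under the permutation no longer has a counterpart.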

This notion of symmetry is a natural condition. In [29], we proved that an arbitrary process defined using machine-readable CSP will be symmetric in this sense under rather mild syntactic conditions, principally that the definition of the process contains no non-distinguished constant from the type T.

2.2 Systems

Each system will contain a fixed process and some number of components. We assume a single fixed process here, for simplicity: a system with multiple fixed processes can be modelled by considering the parallel composition of those processes as a single process.

Each system state contains a state for the fixed process, and a finite set containing the state for each component. For example, one state of the toy mutual-exclusion example is \((wd_0, \{s_1(T_0), s_0(T_1), s_0(T_2)\})\) where \(T_0, T_1, T_2 \in T\). We start by defining the semantics of the components.

Definition 4

A component definition is a pair (Cpt, Sync) where

-

1.

\(Cpt = (Q_c, \Sigma _c, \delta _c)\) is a symmetric parameterized state machine over T, representing each component. Each component has an identity, represented by its first parameter; we define \(\mathop {\mathsf {id}}(cs, p.ps) = p\) (footnote 1). We require that the identity is a non-distinguished value, and that the identity does not change: if \(q \xrightarrow{a} q'\) in \(\delta _c\) then \(\mathop {\mathsf {id}}(q) = \mathop {\mathsf {id}}(q')\).

-

2.

\(Sync \subseteq \Sigma _c\) is a set of events that require the synchronization of two components; we require \(\pi (Sync) = Sync\) for each \(\pi \in Sym(T)\), and

.


A component definition defines a state machine \((\mathbb {P}(Q_c), \Sigma _c, \delta )\) (footnote 2) that represents all the components. The transition relation \(\delta \) is defined by the following two rules, where \(\rightarrow _c\) corresponds to \(\delta _c\); the rules represent, respectively, an event of just one component, and a synchronization between two components.
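One reading of these two rules can be sketched in Python. This is an illustration under stated assumptions rather than a transcription of the formal rules: the set of component states is a frozenset, \(\delta _c\) is a finite set of triples, the function name `component_steps` is hypothetical, and the example transitions (with control states s0, s1, s2 and channels pass and enter) are a guess at the shape of the toy example.

```python
def component_steps(m, delta_c, sync):
    """Transitions of the set m of component states, as (event, new set) pairs.

    Rule 1: one component performs an event outside `sync` on its own.
    Rule 2: two distinct components both perform the same event from `sync`.
    """
    steps = set()
    for (q, a, q2) in delta_c:
        if q not in m:
            continue
        if a not in sync:
            steps.add((a, (m - {q}) | {q2}))
        else:
            for (r, b, r2) in delta_c:
                if b == a and r in m and r != q:
                    steps.add((a, (m - {q, r}) | {q2, r2}))
    return steps

# Hypothetical fragment of the toy example over identities 0, 1, 2.
IDS = (0, 1, 2)
DELTA_C = set()
for i in IDS:
    DELTA_C.add((("s1", (i,)), ("enter", (i,)), ("s2", (i,))))  # enter the critical section
    for j in IDS:
        if i != j:
            DELTA_C.add((("s1", (i,)), ("pass", (i, j)), ("s0", (i,))))  # i gives the token to j
            DELTA_C.add((("s0", (j,)), ("pass", (i, j)), ("s1", (j,))))  # j takes it from i
SYNC = {a for (_, a, _) in DELTA_C if a[0] == "pass"}

m = frozenset({("s1", (0,)), ("s0", (1,)), ("s0", (2,))})
steps = component_steps(m, DELTA_C, SYNC)
for event, target in sorted(steps, key=lambda s: s[0]):
    print(event, sorted(target))
```

From this state the token holder can enter the critical section alone, or pass the token to either of the other two components by a two-way synchronization.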

Note that the components may be of different families (such as threads and nodes): the different families correspond to different sets of states within \(Q_c\). We assume that all components of the same family will have the same type for their identities (such as thread identities and node identities). However, in some examples it is necessary to have two families that use the same type for their identities; for example, in Sect. 9.3 we use two families, Thread and LockSupport, that use the same type ThreadID for their identities. The following definition captures the idea of families.

Definition 5

A family function is a function \(\mathsf {family} : Q_c \rightarrow F\), where F is a finite set, and:

-

The family of a component is preserved by transitions: if \(q \xrightarrow{a} q'\) in \(\delta _c\) then \(\mathsf {family}(q) = \mathsf {family}(q')\);

-

For each family f, the identities of states of family f all have the same type; we denote this type \(T_f\) (a sub-type of T): if \(\mathsf {family}(q) = f\) then \(\mathop {\mathsf {id}}(q) \in T_f\).

Each component can be identified by the combination of its family and identity, which we term a process identity, of type \(F \times T\). The function \(\mathsf {pid}\) returns the process identity of a component: \(\mathsf {pid}(q) = (\mathsf {family}(q), \mathop {\mathsf {id}}(q))\).

(Including the family is necessary in the case that two families use the same types for their identities.)

Definition 6

A system definition is a tuple \((T, Fixed, Cpts, \mathsf {family}, Init)\), where

-

1.

T is a type, partitioned into subtypes \(T_1,\ldots , T_n\);

-

2.

\(Fixed = (Q_F, \Sigma _F, \delta _F)\) is a symmetric parameterized state machine over T representing the fixed process;

-

3.

\(Cpts = ((Q_c, \Sigma _c, \delta _c), Sync)\) is a component definition over T;

-

4.

\(\mathsf {family}\) is a family function over \(Q_c\);

-

5.

\(Init \subseteq \mathcal {SS}\) is a set of initial states, where \(\mathcal {SS} = Q_F \times \mathbb {P}(Q_c)\) denotes all possible system states.

Given such a system, a system state is a pair \((q_F, m) \in \mathcal {SS}\), where \({q_F \in Q_F}\) gives the state of the fixed process, and \(m \in \mathbb {P}(Q_c)\) gives the states of the components, where different components in m have distinct process identities.

A system definition defines a state machine \((\mathcal {SS}, \Sigma _F \cup \Sigma _c, \delta )\), where \(\delta \) is defined by the following three rules (where \(\rightarrow _F\) and \(\rightarrow _C\) correspond to \(\delta _F\) and the transition relation of Cpts, respectively). The rules represent, respectively: events of just the fixed process; synchronizations between the fixed process and the components; and events of just the components.
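The three kinds of system transition can be sketched in Python as follows. This is an illustration under assumptions, not a transcription of the formal rules: it assumes that an event in both alphabets must be performed jointly (CSP-style), that an internal event is encoded as `("tau", ())`, and that the transitions of the components are supplied by a function from a set of component states to (event, new set) pairs. All names and the watchdog fragment are hypothetical.

```python
TAU = ("tau", ())  # assumed encoding of the internal event

def system_steps(state, fixed_delta, comp_steps, sigma_f, sigma_c):
    """Transitions of a system state (q_f, m), as (event, new state) pairs."""
    q_f, m = state
    fixed = {(a, q2) for (q, a, q2) in fixed_delta if q == q_f}
    comps = comp_steps(m)
    steps = set()
    for a, q2 in fixed:
        if a == TAU or a not in sigma_c:
            steps.add((a, (q2, m)))  # event of just the fixed process
        else:
            # synchronization between the fixed process and the components
            steps |= {(a, (q2, m2)) for b, m2 in comps if b == a}
    for a, m2 in comps:
        if a == TAU or a not in sigma_f:
            steps.add((a, (q_f, m2)))  # event of just the components
    return steps

# Hypothetical fragment: a watchdog observing enter and leave events.
IDS = (0, 1)
COMP_DELTA, FIXED_DELTA = set(), set()
for i in IDS:
    COMP_DELTA.add((("s1", (i,)), ("enter", (i,)), ("s2", (i,))))
    COMP_DELTA.add((("s2", (i,)), ("leave", (i,)), ("s1", (i,))))
    FIXED_DELTA.add((("wd0", ()), ("enter", (i,)), ("wd1", (i,))))
    FIXED_DELTA.add((("wd1", (i,)), ("leave", (i,)), ("wd0", ())))
SIGMA = {a for (_, a, _) in COMP_DELTA}  # here both alphabets coincide

def comp_steps(m):
    """Component transitions for this fragment: each component moves on its own."""
    return {(a, (m - {q}) | {q2}) for (q, a, q2) in COMP_DELTA if q in m}

start = (("wd0", ()), frozenset({("s1", (0,)), ("s0", (1,))}))
steps = system_steps(start, FIXED_DELTA, comp_steps, SIGMA, SIGMA)
print(steps)
```

In this fragment the only transition from `start` is the joint event enter.0, after which the watchdog records that component 0 is in the critical section.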

Each initial state \(init \in Init\) defines a rooted state machine; it represents a particular system, for example with particular numbers of components of different families.

For instance, in the toy mutual-exclusion example, we can take Cpts and Fixed to be the state machines illustrated in Fig. 1; Sync is the set of all events on channel pass; Init is all states with the watchdog in state \(wd_0\), a single component in state \(s_1\), and the remaining components in state \(s_0\) (and with components having distinct identities): \(Init = \{(wd_0, \{s_1(id_1), s_0(id_2), \ldots , s_0(id_n)\}) \mid n \ge 1,\ id_1, \ldots , id_n \in T \text{ distinct}\}\).

In the lock-based stack example, we can take Cpts to be the union of the node and thread state machines, and Fixed to be the product of the lock and top state machines; Sync is all events representing synchronizations between a thread and a node, i.e. events on the initNode, getNext and getValue channels; Init is all states where every component is in either state InitNode(n) for n a (non-null) node identity, or state Thread(t) for t a thread identity (with components having distinct identities), and where the fixed process is in the state corresponding to Lock and Top(null).

Note that Init can be an arbitrary set of system states. In the implementation, the user defines the initial abstract states AInit, which must be related to Init according to a condition that we give in Sect. 6. In practice, the condition allows Init to be system states where, for example: (1) all components of a family have the same initial control state \(q_0\); or (2) some fixed number of components of a family have initial control state \(q_0\), and the remainder have initial control state \(q_0'\).

Definition 7

We define the reachable states \(\mathcal {R}\) of a system to be those system states reachable from an initial state by zero or more transitions.

Our normal correctness condition will be that the distinguished event error cannot occur. We will also sometimes verify deadlock freedom.

Definition 8

A system is error-free if there are no reachable states ss and \(ss'\) such that \(ss \xrightarrow{error} ss'\).

A system is deadlock-free if for every reachable state ss, there is at least one transition from ss.
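For a single system with finitely many reachable states, both conditions of Definition 8 can be checked by a direct search. The following Python sketch shows that baseline; it does not address the parameterized problem, and the function name `check`, the representation of transitions by a successor function, and the two small example graphs are assumptions made for illustration.

```python
from collections import deque

def check(initial_states, successors, error="error"):
    """Breadth-first search of a finite rooted state machine.

    `successors(state)` returns the (event, next state) pairs from `state`.
    Returns the pair (error_free, deadlock_free) in the sense of Definition 8.
    """
    seen = set(initial_states)
    queue = deque(seen)
    error_free = deadlock_free = True
    while queue:
        state = queue.popleft()
        steps = successors(state)
        if not steps:
            deadlock_free = False  # a reachable state with no transition
        for event, nxt in steps:
            if event == error:
                error_free = False  # a reachable transition labelled error
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return error_free, deadlock_free

# Hypothetical graphs: state -> list of (event, next state).
faulty = {0: [("a", 1)], 1: [("b", 0), ("error", 2)], 2: []}
correct = {0: [("a", 1)], 1: [("b", 0)]}

print(check({0}, faulty.__getitem__))
print(check({0}, correct.__getitem__))
```

The search terminates only because each example has finitely many states; the abstraction developed in the following sections is what replaces this enumeration when the number of components is unbounded.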

Our normal style will be to include a watchdog as a fixed process, that observes (some) events by other processes, and performs the event error after an erroneous trace. In [30], it is shown that an arbitrary CSP traces refinement can be encoded in this way. Hence this technique can capture an arbitrary finite-state safety property.

3 Using view abstraction

In this section, we describe our application of view abstraction, adapting the techniques from [4] to our setting of synchronous message-passing with component identities. Fix a system definition, as in Definition 6, and let \(Q_c\) be the states of Cpts.

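A particular system state \((q_F,m)\) will be abstracted by a set of system states \((q_F,v)\) that are included in \((q_F,m)\), as captured by the following relation:

$$\begin{aligned} (q_F, v) \sqsubseteq (q_F', m) \iff q_F = q_F' \wedge v \subseteq m. \end{aligned}$$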

We call v a view, and call \((q_F,v)\) a system view: each gives a restricted view of the whole system state.

The approach in [4, 40] was to fix a value k, and then to abstract each system state to all its views of size k. We generalise this approach, in order to reduce the state space that our algorithm will explore.

A profile is defined by the number of components of each family. Given families \(f_1,\ldots ,f_n\), we write \((f_1\,: c_1, f_2\,:c_2, \ldots , f_n\,: c_n)\) to denote all system states containing \(c_i\) components of family \(f_i\), for each i. For example, (Thread : 1, Node : 2) represents all system states containing one thread and two nodes.

Definition 9

A profile is a tuple of natural numbers \(\mathbf{c} = (c_1, \ldots , c_n)\); we often denote this by \((f_1\,: c_1, f_2\,: c_2, \ldots , f_n\,: c_n)\). This represents all system states \((q_F,m)\) in which m contains exactly \(c_i\) components of family \(f_i\), for each i (see footnote 3).

We define the size of such a profile to be the total number of components, i.e. \(\sum _{i = 1}^n c_i\).

Note that profiles are preserved by system transitions (since families are preserved).
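As a small illustration of Definition 9, the following sketch computes the profile and size of a collection of component states. The encoding of a component state as a `(family, control_state, identity)` triple, and the function names, are assumptions made for this example only; they are not taken from the implementation.

```python
from collections import Counter

def profile(components, families):
    """Return the profile (c_1, ..., c_n) of a collection of component states.

    Each component state is assumed to be a (family, control_state, identity)
    triple; `families` fixes the order of the coordinates.
    """
    counts = Counter(family for family, _state, _ident in components)
    return tuple(counts[f] for f in families)

def size(p):
    """The size of a profile is its total number of components."""
    return sum(p)
```

For example, one thread and two nodes give the profile (Thread : 1, Node : 2), of size 3. Since a transition changes only control states, not families, the profile computed this way is unchanged by transitions.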

We will perform an abstraction onto some set A of system views, defined as follows.

Definition 10

An abstraction set is a set \(A \subseteq \mathcal {SS}\) that is formed as the union of a finite number of profiles, such that all profiles have the same size.

In the implementation, the user defines the abstraction set by giving a list of the corresponding profiles. In Sect. 9, we discuss the profiles necessary for certain examples, in particular to ensure that the abstraction captures necessary invariants.

The assumption that all elements of the abstraction set have the same size simplifies both the theory and the implementation. We leave as future work the question of whether this assumption can be dropped.

Example 1

We will use abstraction sets of the following forms later.

- Let \(k \in \mathbb {Z}^+\). Let \(\mathcal {SV}_k\) be all system states containing precisely k components; that is, \(\mathcal {SV}_k\) is the union of all profiles with a total of k components. This is the form of abstraction we considered in [40].

- Consider a setting where the component identities are partitioned into thread identities Thread and node identities Node. Then the abstraction set consisting of system states containing precisely two threads and one node, or one thread and two nodes, is denoted \((Thread\,: 2, Node\,: 1) \cup (Thread\,: 1, Node\,: 2)\).
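The first form of abstraction set in Example 1 is a union of profiles, which can be enumerated mechanically. The sketch below lists all profiles over n families with a total of k components; the function name is an assumption for this example, and n is assumed to be at least 1.

```python
def profiles_of_size(n, k):
    """All n-tuples of natural numbers that sum to k (assumes n >= 1)."""
    if n == 1:
        return [(k,)]
    return [(c,) + rest
            for c in range(k + 1)
            for rest in profiles_of_size(n - 1, k - c)]
```

With two families (Thread, Node) and k = 3, this yields four profiles; the second abstraction set of Example 1 keeps just two of them, (2, 1) and (1, 2).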

Fix an abstraction set A. We write \(sv \sqsubseteq _A ss\) as shorthand for \(sv \sqsubseteq ss \wedge sv \in A\). The abstraction function \(\alpha _A\) abstracts a system state by its system views in A:

$$\begin{aligned} \alpha _A(ss) = \{ sv \mid sv \sqsubseteq _A ss \}. \end{aligned}$$

We lift \(\alpha _A\) to sets of system states by pointwise application. Informally, the idea of the algorithm is to calculate an upper bound on the abstraction of all reachable states, \(\alpha _A(\mathcal {R})\).

The concretization function \(\gamma _A\) takes a set SV of system views and produces those system states that are consistent with SV, i.e. such that all views of the state are in SV:

$$\begin{aligned} \gamma _A(SV) = \{ ss \in \mathcal {SS} \mid \alpha _A(ss) \subseteq SV \}. \end{aligned}$$
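The abstraction and concretization functions can be illustrated for the special case \(A = \mathcal {SV}_k\). In this sketch a system state is assumed to be a pair of a fixed-process state and a `frozenset` of component states, each component state being a `(control_state, identity)` pair; these encodings and the names `alpha` and `in_gamma` are choices made for this example. Since \(\gamma _A(SV)\) is in general infinite, the sketch tests membership rather than enumerating it.

```python
from itertools import combinations

def alpha(ss, k):
    """Views of size k of a system state (abstraction onto SV_k)."""
    q_f, m = ss
    return {(q_f, frozenset(v)) for v in combinations(sorted(m), k)}

def in_gamma(ss, sv_set, k):
    """True if ss is consistent with sv_set, i.e. all its k-views are in sv_set."""
    return alpha(ss, k) <= sv_set
```

A state with three components has three views of size 2; it is consistent with a set of views exactly when all three are present.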

For the moment, we concentrate on systems that are of at least some minimum sizes. We fix a set C that is formed as the union of a finite number of profiles. (In the next section, we will impose further constraints on C.) Our main result of this section, Theorem 14, will consider only systems where every initial state contains an element of C, i.e. is an element of

$$\begin{aligned} \mathcal {SS}^{\sqsupseteq C} = \{ ss \in \mathcal {SS} \mid \exists c \in C \,.\, c \sqsubseteq ss \}. \end{aligned}$$

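Since profiles are preserved by transitions, every subsequent state will also be an element of \(\mathcal {SS}^{\sqsupseteq C}\). It is convenient to define the concretizations of SV from \(\mathcal {SS}^{\sqsupseteq C}\):

$$\begin{aligned} \gamma _A^{\sqsupseteq C}(SV) = \gamma _A(SV) \cap \mathcal {SS}^{\sqsupseteq C}. \end{aligned}$$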

Further, in Lemma 15 we show that when these techniques are applied to prove error freedom, results can immediately be extended to smaller systems, with an initial state not in \(\mathcal {SS}^{\sqsupseteq C}\).

The following lemmas are proved as in [4].

Lemma 11

Suppose \(X, Y \subseteq \mathcal {SS}^{\sqsupseteq C}\) and \(V, W \subseteq A\). Then

1. \(X \subseteq Y \implies \alpha _A(X) \subseteq \alpha _A(Y)\);

2. \(V \subseteq W \implies \gamma _A^{\sqsupseteq C}(V) \subseteq \gamma _A^{\sqsupseteq C}(W)\);

3. \(\alpha _A(\gamma _A^{\sqsupseteq C}(V)) \subseteq V\);

4. \(X \subseteq \gamma _A^{\sqsupseteq C}(\alpha _A(X))\).

Lemma 12

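\((\alpha _A, \gamma _A^{\sqsupseteq C})\) forms a Galois connection: if \(X \subseteq \mathcal {SS}^{\sqsupseteq C}\) and \(Y \subseteq A\), then

$$\begin{aligned} \alpha _A(X) \subseteq Y \iff X \subseteq \gamma _A^{\sqsupseteq C}(Y). \end{aligned}$$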

We define an abstract transition relation. If \(SV \subseteq A\) and \(sv' \in A\), then define

This captures that some concretization of SV can perform a transition to a system state consistent with the abstraction \(sv'\). For example, in the mutual exclusion example we have the transition

corresponding to the concrete transition (for \(C = \mathcal {SV}_3\))

We then define the abstract post-image of a set of system views \(SV \subseteq A\) by

where post gives the concrete post-image of a set \(X \subseteq \mathcal {SS}^{\sqsupseteq C}\):

The following lemma relates abstract and concrete post-images.

Lemma 13

If \(SV \subseteq A\) and \(X \subseteq \gamma _A^{\sqsupseteq C}(SV)\), then \(post(X) \subseteq \gamma _A^{\sqsupseteq C}(aPost_A(SV))\).

Proof

If \(X \subseteq \gamma _A^{\sqsupseteq C}(SV)\) then

The result then follows from Lemma 12. \(\square \)

The following theorem shows how the reachable states \(\mathcal {R}\) can be over-approximated by iterating the abstract post-image. We write \(f^*(X)\) for \(\bigcup _{i \ge 0} f^i(X)\).
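For a function over a finite universe, the iteration \(f^*\) can be computed directly. The sketch below is a generic illustration rather than part of the algorithm of this paper: it accumulates the iterates X, f(X), f(f(X)), ... and stops once an iterate repeats, which must happen when only finitely many sets are possible. The name `star` is an assumption for this example.

```python
def star(f, xs):
    """Union of xs, f(xs), f(f(xs)), ... for f over a finite universe.

    The iterates are tracked as frozensets so that a repeated iterate
    (and hence the end of any new contributions) can be detected.
    """
    cur = frozenset(xs)
    seen = set()      # iterates encountered so far
    result = set()    # union of all iterates
    while cur not in seen:
        seen.add(cur)
        result |= cur
        cur = frozenset(f(cur))
    return result
```

The sketch deliberately applies f to the whole previous iterate rather than to a frontier of new elements: an abstract post-image need not distribute over unions, because a single concretization may combine views drawn from different parts of the set.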

Theorem 14

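If \(Init \subseteq \mathcal {SS}^{\sqsupseteq C}\), and \(AInit \subseteq A\) is such that \(\alpha _A(Init) \subseteq AInit\) then

$$\begin{aligned} \mathcal {R}\subseteq \gamma _A((aPost_A^{\sqsupseteq C})^*(AInit)). \end{aligned}$$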

Proof

The assumption implies \(Init \subseteq \gamma _A^{\sqsupseteq C}(AInit)\), from Lemma 12 (using the assumption \(Init \subseteq \mathcal {SS}^{\sqsupseteq C}\)). Then Lemma 13 implies

via a trivial induction. The result then follows from the fact that \(\mathcal {R}= post^*(Init)\). \(\square \)

Hence, if we can show that all states in \(\gamma _A((aPost_A^{\sqsupseteq C})^*(AInit))\) are error-free, then we will be able to deduce that all systems are error-free.

In the mutual exclusion example, we can take \(A = \mathcal {SV}_2\), \(C = \mathcal {SV}_3\), and (for the moment) redefine Init to contain just initial states of size at least 3 (so \(Init \subseteq \mathcal {SS}^{\sqsupseteq C}\)). Then we can define AInit to contain all system views of size 2 with the watchdog in state \(wd_0\), zero or one components in state \(s_1\), and the remaining components in state \(s_0\) (and with components having distinct identities).

This satisfies that \(\alpha _A(Init) \subseteq AInit\) (for Init defined earlier). Then \(\gamma _{\mathcal {SV}_2}^{\sqsupseteq \mathcal {SV}_3} ((aPost_{\mathcal {SV}_2}^{\mathcal {SV}_3})^*(AInit))\) contains all system views as follows: (1) at most one component is in state \(s_1\), \(s_2\) or \(s_3\), and the remainder are in \(s_0\); and (2) if component id is in \(s_2\) then the watchdog is in \(wd_1(id)\); if every component is in \(s_0\) then the watchdog is in either \(wd_0\) or \(wd_1(id)\) where component id is not in the view; and otherwise the watchdog is in \(wd_0\). This approximates the invariant that a single component holds the token, and the watchdog records the component in the critical region. In particular, the event error is not available from any such state. The above theorem then shows that all systems of size at least 3 are error-free. Finally, systems of size 1 and 2 can be shown to be error-free using the following lemma.

Lemma 15

If a system starting from state \((q_F, m \uplus m')\) is error-free, then the system starting from state \((q_F, m)\) is error-free.

Proof

We prove the contra-positive. Suppose the system starting in state \((q_F, m)\) has an execution leading to error. Then the system starting in state \((q_F, m \uplus m')\) has a similar execution, where the components from \(m'\) perform no transitions, again leading to error. \(\square \)

However, Theorem 14 does not immediately give an algorithm. The application of \(\gamma _A^{\sqsupseteq C}\) within \(aPost_A^{\sqsupseteq C}\) can produce an infinite set, for two reasons:

- It can give system states with an arbitrary number of components;

- The parameters of type T within system states can range over a potentially infinite set.

We tackle the former problem in Sect. 4: by imposing conditions on C, we show that it is enough to build only concretizations from C itself. We tackle the latter problem in Sect. 5, using symmetry.

4 Bounding the concretizations

In this section, we develop bounds on the concretizations that it is necessary to consider when calculating \(aPost_A\). We impose conditions on the set C of concretizations such that it is enough to consider concretizations from C: the result of every abstract transition will also be the result of an abstract transition using concretizations from C.

We will show that it is enough to consider concretizations that add at most two additional component states to system views in the abstraction set. For example, if \(A = \mathcal {SV}_k\), it will be enough to take \(C = \mathcal {SV}_{k+2}\). We also show that in some circumstances adding just a single additional component state is enough. For example, if again \(A = \mathcal {SV}_k\), it will be enough to take \(C = \mathcal {SV}_{k+1}\). We give the necessary properties of C in Definition 16, and prove the result described above in Proposition 19.

In each concrete state, all components have different process identities; we ensure that the concretizations respect this. We write \(\mathop {\mathsf {disjointPids}}(q, v)\) to mean that the process identity in component state q is disjoint from those in v (i.e. for all \(q_c \in v\), the identity of q differs from that of \(q_c\)). We write \(\mathop {\mathsf {disjointPids}}(q_1, q_2, v)\) to mean that the process identities in \(q_1\) and \(q_2\) are disjoint from those in v and from each other.

We write \(\mathop {\mathsf {threeWaySync}}(q_F, q_1, q_2)\) to indicate that the system can perform a three-way synchronization between fixed process state \(q_F\) and component states \(q_1\) and \(q_2\).

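It is convenient to define \(\mathcal {SS}^{\sqsubseteq C}\) to be elements of C and their subviews:

$$\begin{aligned} \mathcal {SS}^{\sqsubseteq C} = \{ ss \in \mathcal {SS} \mid \exists c \in C \,.\, ss \sqsubseteq c \}. \end{aligned}$$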

Definition 16

Let A be an abstraction set. Then a set \(C \subseteq \mathcal {SV}\) is an adequate concretization set for A if:

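1. C is formed as the union of a finite number of profiles, all of the same size.

2. Every element of A is a subview of an element of C:

$$\begin{aligned} A \subseteq \mathcal {SS}^{\sqsubseteq C}. \end{aligned}$$

3. Every extension of an element of A with a single component state (with a disjoint identity) is a subview of an element of C:

$$\begin{aligned} \forall (q_F, v) \in A, q \in Q_c \,.\, \mathop {\mathsf {disjointPids}}(q, v) \implies (q_F, v \uplus \{q\}) \in \mathcal {SS}^{\sqsubseteq C}. \end{aligned}$$

4. Whenever a pair of concrete states \(q_1\) and \(q_2\) can take part in a three-way synchronization with the fixed process, if we extend an element of A with those states, the resulting system state is a subview of an element of C:

$$\begin{aligned} \forall (q_F, v) \in A, q_1, q_2 \in Q_c \,.\, \mathop {\mathsf {disjointPids}}(q_1, q_2, v) \wedge \mathop {\mathsf {threeWaySync}}(q_F, q_1, q_2) \implies (q_F, v \uplus \{q_1, q_2\}) \in \mathcal {SS}^{\sqsubseteq C}. \end{aligned}$$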

(Item 3 logically implies item 2; but it is useful to have the latter stated explicitly.)

Example 2

We consider adequate concretizations for the abstraction sets from Example 1.

- If \(A = \mathcal {SV}_k\) then \(C = \mathcal {SV}_{k+2}\) is adequate.

- If \(A = (Thread\,: 2, Node\,: 1) \cup (Thread\,: 1, Node\,: 2)\), and all three-way synchronizations with the fixed process involve one thread and one node, then \(C = (Thread\,: 3, Node\,: 2) \cup (Thread\,: 2, Node\,: 3)\) is adequate.

In some examples we consider, there are no three-way synchronizations involving the fixed process and two components. In such cases, condition 4 holds vacuously, and so we can use smaller concretization sets.

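- If \(A = \mathcal {SV}_k\) then \(C = \mathcal {SV}_{k+1}\) is adequate.

- If \(A = (Thread\,: 2, Node\,: 1) \cup (Thread\,: 1, Node\,: 2)\), then \(C = (Thread\,: 3, Node\,: 1) \cup (Thread\,: 2, Node\,: 2) \cup (Thread\,: 1, Node\,: 3)\) is adequate.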
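The conditions of Definition 16 can be checked at the level of profiles. The sketch below is an approximation made for illustration: it assumes that a system state is a subview of an element of C exactly when its profile is coordinate-wise at most some profile in C (which presumes enough fresh identities are available), and it takes as an input `sync_pairs`, a hypothetical list of the pairs of family indices that can take part in a three-way synchronization with the fixed process.

```python
def leq(p, c):
    """Coordinate-wise comparison of two profiles."""
    return all(x <= y for x, y in zip(p, c))

def covered(p, C):
    """Profile-level test that p is the profile of a subview of an element of C."""
    return any(leq(p, c) for c in C)

def bump(p, *families):
    """Profile p extended by one component of each listed family index."""
    q = list(p)
    for f in families:
        q[f] += 1
    return tuple(q)

def is_adequate(A, C, sync_pairs=()):
    """Check conditions 1-4 of Definition 16 on sets of profiles A and C."""
    n = len(next(iter(C)))
    cond1 = len({sum(c) for c in C}) == 1
    cond2 = all(covered(a, C) for a in A)
    cond3 = all(covered(bump(a, f), C) for a in A for f in range(n))
    cond4 = all(covered(bump(a, f, g), C) for a in A for (f, g) in sync_pairs)
    return cond1 and cond2 and cond3 and cond4
```

With families ordered (Thread, Node), the abstraction set {(2, 1), (1, 2)} is covered by {(3, 2), (2, 3)} when three-way synchronizations involve one thread and one node, and by {(3, 1), (2, 2), (1, 3)} when there are none.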

For the remainder of this section, fix an adequate concretization set C for A. We show that it is enough to consider concretizations that are elements of C. We define

$$\begin{aligned} \gamma _A^{C}(SV) = \gamma _A(SV) \cap C. \end{aligned}$$

Then for \(SV \subseteq A\) and \(sv' \in A\), we define the abstract transitions involving such concretizations, and the corresponding abstract post-image, as follows:

In Proposition 19, we will require that the concretization set C is convex. Informally, this means that if C contains two profiles, then it contains any profile in between them (in a geometrical sense). For example, if it contains the profiles \((Thread \,: 2, Node \,: 0)\) and \((Thread\,: 0, Node \,: 2)\), then it must also contain the profile \((Thread\,: 1, Node \,: 1)\). Each of the concretization sets from Example 2 is convex.

Definition 17

Let \(S \subseteq \mathbb {N}^n\) be a set of profiles, all of which have the same size s. For \(i = 1,\ldots ,n\), let \(min_i\) and \(max_i\) be, respectively, the minimum and maximum of the ith coordinates:

$$\begin{aligned} min_i = \min \{ c_i \mid (c_1, \ldots , c_n) \in S \}, \qquad max_i = \max \{ c_i \mid (c_1, \ldots , c_n) \in S \}. \end{aligned}$$

We say that S is convex if S contains all n-tuples of size s that lie coordinate-wise between \((min_1,\ldots ,min_n)\) and \((max_1, \ldots , max_n)\).
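Definition 17 translates directly into a check on a finite set of profiles. The following sketch enumerates the box between the coordinate-wise minima and maxima and tests that every tuple of the common size belongs to the set; the function name is an assumption for this example.

```python
from itertools import product

def is_convex(S):
    """Check convexity (Definition 17) of a set of equal-sized profiles."""
    S = set(S)
    sizes = {sum(p) for p in S}
    if len(sizes) != 1:
        raise ValueError("all profiles must have the same size")
    s = sizes.pop()
    n = len(next(iter(S)))
    lo = [min(p[i] for p in S) for i in range(n)]
    hi = [max(p[i] for p in S) for i in range(n)]
    # Every tuple of size s inside the bounding box must be in S.
    box = product(*(range(lo[i], hi[i] + 1) for i in range(n)))
    return all(p in S for p in box if sum(p) == s)
```

For instance, {(2, 0), (0, 2)} is not convex, because (1, 1) lies between the two profiles and has the same size, whereas adding (1, 1) makes the set convex.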

We will require the following technical lemma. We will use it in Proposition 19 to deduce the existence of concrete transitions using elements of C from the existence of corresponding transitions using elements of \(\mathcal {SS}^{\sqsubseteq C}\) and \(\mathcal {SS}^{\sqsupseteq C}\). Its proof is in Appendix A.

Lemma 18

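Suppose C is a convex set of concretizations. Then whenever \(ss, ss'\) are such that

$$\begin{aligned} ss \in \mathcal {SS}^{\sqsubseteq C}, \qquad ss' \in \mathcal {SS}^{\sqsupseteq C}, \qquad ss \sqsubseteq ss', \end{aligned}$$

then

$$\begin{aligned} \exists c \in C \,.\, ss \sqsubseteq c \sqsubseteq ss'. \end{aligned}$$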

Example 3

Consider the non-convex set \(C = (Thread\,: 2, Node\,: 0) \cup (Thread\,: 0, Node\,: 2)\), and let \(ss \in (Thread\,: 0, Node\,: 1)\) and \(ss' \in (Thread\,: 2, Node\,: 1)\) be such that \(ss \sqsubseteq ss'\). These satisfy the premise of Lemma 18. However, they don’t satisfy the consequent, since the concretization c would have to contain precisely one Node component, and no element of C does so. If we add \((Thread\,: 1, Node\,: 1)\) to C it becomes convex, and so the property of Lemma 18 holds.

The following proposition is the main result of this section. It shows that states resulting from abstract transitions can be found by considering just abstract transitions that use concretizations from C.

Proposition 19

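Suppose C is convex, \(SV \subseteq A\), and SV has an abstract transition, labelled with an event a, to \(sv'\) using a concretization from \(\mathcal {SS}^{\sqsupseteq C}\). Then either (a) SV has an abstract transition to \(sv'\), labelled a, using a concretization from C, or (b) \(sv' \in SV\); in particular, the former disjunct holds whenever \(a \notin Sync - \Sigma _F\).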

Proof

Suppose SV has such an abstract transition to \(sv' = (q_F', v')\). Then for some \((q_F, m) \in \gamma _A^{\sqsupseteq C}(SV)\) and some \((q_F', m') \in \mathcal {SS}^{\sqsupseteq C}\) we have \((q_F, m) \xrightarrow{a} (q_F', m')\) and \(sv' \sqsubseteq _A (q_F', m')\). Summarizing:

Let \({\hat{m}}'\) be the smallest subset of \(m'\) that includes \(v'\) and each of the (at most two) components that change state in the transition; and let \({{\hat{m}} \subseteq m}\) be the pre-transition states of the components in \({\hat{m}}'\). For example, suppose the transition corresponds to the second rule in Definition 4, combined with either the second or third rule of Definition 6; so, for some \(m_0\), \(m = m_0 \uplus \{q_{c,1}, q_{c,2}\}\) and \(m' = m_0 \uplus \{q_{c,1}', q_{c,2}'\}\); and suppose \(v'\) contains \(q_{c,1}'\) but not \(q_{c,2}'\); then \({\hat{m}}' = v' \uplus \{q_{c,2}'\}\); and \({\hat{m}} \subseteq m\) is the same as \({\hat{m}}'\) but with \(q_{c,1}\) and \(q_{c,2}\) in place of \(q_{c,1}'\) and \(q_{c,2}'\).

In each case, it is easy to see that \((q_F, {\hat{m}}) \xrightarrow{a} (q_F', {\hat{m}}')\) via the same transition rules that produced the original transition. Also \(sv' = (q_F', v') \sqsubseteq (q_F', {\hat{m}}')\). And \({\hat{m}} \subseteq m\), so \(\alpha _A(q_F, {\hat{m}}) \subseteq \alpha _A(q_F, m) \subseteq SV\), so \((q_F, {\hat{m}}) \in \gamma _A(SV)\). Summarizing:

We now perform a case analysis. In case 3, below, we directly prove part (b) of the proposition. In the other three cases, we show that \((q_F, {\hat{m}}) \in \mathcal {SS}^{\sqsubseteq C}\); we will subsequently show that the transition is also reflected by a transition using concretizations from C, which will give us part (a) of the proposition.

1. Suppose \({\hat{m}}' = v'\), i.e. \(v'\) contains all the components taking part in the transition. Then \((q_F',{\hat{m}}') = sv' \in A\), so \((q_F,{\hat{m}}) \in A\) (since \((q_F',{\hat{m}}')\) and \((q_F,{\hat{m}})\) have the same profile). So \((q_F,{\hat{m}}) \in \mathcal {SS}^{\sqsubseteq C}\), by condition 2 of Definition 16.

2. Suppose \(v'\) contains all the components taking part in the transition except for one, \(q'\), so \({\hat{m}}' = v' \uplus \{q'\}\). Let q be the pre-transition state corresponding to \(q'\), and let v be the pre-transition states corresponding to \(v'\). So \({\hat{m}} = v \uplus \{q\}\). Now, \((q_F', v') \in A\) so \((q_F, v) \in A\), since they have the same profile. Hence \((q_F,{\hat{m}}) \in \mathcal {SS}^{\sqsubseteq C}\), by condition 3 of Definition 16.

3. Suppose the transition involves two components whose post-transition states are not included in \(v'\), and the fixed process is not involved in the transition, so \(q_F = q_F'\), and \(a \in Sync - \Sigma _F\). So, writing \(q_1,q_2,q_1',q_2'\) for the pre- and post-states of the relevant components, \({\hat{m}} = v' \uplus \{q_1, q_2\}\) and \({\hat{m}}' = v' \uplus \{q_1', q_2'\}\). But \(sv' = (q_F', v') \sqsubseteq _A (q_F', {\hat{m}}) \sqsubseteq (q_F',m)\), so \(sv' \in \alpha _A(q_F',m)\); but \((q_F',m) \in \gamma _A(SV)\), so \(sv' \in SV\), as required for part (b) of the proposition.

4. Finally suppose the transition involves two components whose post-transition states are not included in \(v'\), and the fixed process is involved in the transition. So, naming states as in the previous item, \({\hat{m}} = v' \uplus \{q_1, q_2\}\), \({\hat{m}}' = v' \uplus \{q_1', q_2'\}\), and \(\mathop {\mathsf {threeWaySync}}(q_F, q_1, q_2)\). Now, \((q_F', v') \in A\), so \((q_F, v') \in A\), since they have the same profile. So \((q_F,{\hat{m}}) \in \mathcal {SS}^{\sqsubseteq C}\), by condition 4 of Definition 16.

In cases 1, 2 and 4, we had

$$\begin{aligned} (q_F, {\hat{m}}) \in \mathcal {SS}^{\sqsubseteq C}, \qquad (q_F, {\hat{m}}) \sqsubseteq (q_F, m) \in \mathcal {SS}^{\sqsupseteq C}. \end{aligned}$$

Hence, by Lemma 18, there exists \(c \in C\) such that

$$\begin{aligned} (q_F, {\hat{m}}) \sqsubseteq c \sqsubseteq (q_F, m). \end{aligned}$$

From the latter inclusion and \((q_F,m) \in \gamma _A(SV)\), we have \(c \in \gamma _A(SV)\). Let \(c'\) be the post-state corresponding to c, i.e. replacing the states that take part in the transition. Then \(c \xrightarrow{a} c'\), using the same transition rules that produced the original transition; and \(c' \sqsupseteq (q_F', {\hat{m}}') \sqsupseteq _A sv'\). Summarizing:

$$\begin{aligned} c \in \gamma _A^{C}(SV), \qquad c \xrightarrow{a} c', \qquad sv' \sqsubseteq _A c', \end{aligned}$$

so SV has an abstract transition to \(sv'\) using a concretization from C, as required.

Finally, note that case 3, above, corresponds precisely to \(a \in Sync - \Sigma _F\); hence part (a) of the result holds whenever \(a \notin Sync - \Sigma _F\). \(\square \)

Abdulla et al. [4] prove a similar result in their setting, where all abstraction sets consist of all views of size k or less. They show that in the case of binary synchronizations, it is enough to consider concretizations of size \(k+1\) or less. They further show that in the case of \((m+1)\)-way synchronizations, it is enough to consider concretizations of size \(k+m\) or less. Our result of requiring concretizations from \(\mathcal {SV}_{k+2}\) when there are three-way synchronizations can be seen as an adaptation of an instance of this to our setting. Note that we consider abstractions and concretizations of a single size, and, in particular, do not require concretizations to be closed under sub-views; this gives a state-space saving in our implementation.

5 Using symmetry

The abstract transition relation from the previous section still produces a potentially infinite state space, because of the potentially unbounded set of component identities. In this section, we use techniques based on symmetry reduction to reduce this to a finite state space. We fix a system, as in Definition 6. We also fix an abstraction set A, and a concretization set C that is adequate for A.

Recall (Definitions 3 and 6) that we assume that the fixed process and each component is symmetric. We show that this implies that the system as a whole is symmetric. We lift permutations to system states by point-wise application: \(\pi (q_F, m) = (\pi (q_F), \{ \pi (q_c) \mid q_c \in m \})\).

Lemma 20

The state machine defined by a system is symmetric: if \((q, m) \in \mathcal {SS}\) and \(\pi \in Sym(T)\), then \((q, m) \sim _\pi \pi (q, m)\).

Proof

We show that the relation \(\{ ((q, m), \pi (q, m)) \mid (q, m) \in \mathcal {SS} \}\) is a \(\pi \)-bisimulation. Suppose \((q, m) \xrightarrow{a} (q', m')\). We show that \(\pi (q, m) \xrightarrow{\pi (a)} \pi (q', m')\) by a case analysis on the rule used to produce the former transition. For example, suppose the transition is produced by the first rule of Definition 4 and the second rule of Definition 6. Then since Fixed and Cpts are symmetric, and \(\pi (Sync) = Sync\), \(\pi (\Sigma _c) = \Sigma _c\) and \(\pi (\Sigma _F) = \Sigma _F\), each premise of those rules for the event a gives the corresponding premise for the event \(\pi (a)\), from the \(\pi \)-images of the states involved. But then \(\pi (q, m) \xrightarrow{\pi (a)} \pi (q', m')\) using the same rules. The cases for other rules are similar. And conversely, we can check that each transition of \(\pi (q, m)\) is matched by a transition of (q, m). \(\square \)

We now show a similar result for the abstract transition relation. We lift \(\pi \) to system views and sets of system views by point-wise application. The following straightforward lemma captures properties of permutations and the abstraction and concretization functions. Recall that we assumed that the abstraction set A and the concretization set C are each a union of profiles; this implies that each is closed under each permutation \(\pi \in Sym(T)\).

Lemma 21

Let \(\pi \in Sym(T)\). Then

1. If \(ss \in \mathcal {SS}\), \(sv \in A\) and \(sv \sqsubseteq _A ss\), then \(\pi (sv) \sqsubseteq _A \pi (ss)\);

2. If \(ss \in \mathcal {SS}\), then \(\pi (\alpha _A(ss)) = \alpha _A(\pi (ss))\);

3. If \(SV \subseteq \mathcal {SV}\), then \(\pi (\gamma _A^{C}(SV)) = \gamma _A^{C}(\pi (SV))\).

Our approach will be to treat symmetric system views as equivalent, requiring the exploration of only one system view in each equivalence class. We will need the following definition.

Definition 22

Let \(sv_1, sv_2 \in \mathcal {SV}\). We write \(sv_1 \approx sv_2\) if \(sv_1 = \pi (sv_2)\) for some \(\pi \in Sym(T)\). Note that this is an equivalence relation. We say that \(sv_1\) and \(sv_2\) are equivalent in this case. We write \({\overline{SV}}\) for the set of views that are equivalent to an element of SV:

$$\begin{aligned} {\overline{SV}} = \{ sv \in \mathcal {SV} \mid \exists sv' \in SV \,.\, sv \approx sv' \}. \end{aligned}$$
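Equivalence of two small system views can be tested by brute force, as sketched below. The encoding is an assumption made for this example: every state (fixed or component) is a pair of a control-state name and a tuple of identity parameters, and a view pairs a fixed state with a `frozenset` of component states. The sketch also assumes a single identity type with no distinguished values (such as null) that permutations must leave fixed. It tries every bijection between the identities of the two views, which is practical only for small views; it is not the representation used in the implementation (Sect. 8).

```python
from itertools import permutations

def ids_of(sv):
    """Sorted list of the identities occurring anywhere in a system view."""
    q_f, cpts = sv
    found = set(q_f[1])
    for _control, params in cpts:
        found.update(params)
    return sorted(found)

def rename(sv, pi):
    """Apply the identity renaming pi (a dict) point-wise to a system view."""
    q_f, cpts = sv
    ren = lambda st: (st[0], tuple(pi[x] for x in st[1]))
    return (ren(q_f), frozenset(ren(c) for c in cpts))

def equivalent(sv1, sv2):
    """True if some bijection on identities maps sv2 onto sv1."""
    ids1, ids2 = ids_of(sv1), ids_of(sv2)
    if len(ids1) != len(ids2):
        return False
    return any(rename(sv2, dict(zip(ids2, image))) == sv1
               for image in permutations(ids1))
```

Any bijection between two finite sets of identities of the same size extends to a permutation of T, so searching over such bijections suffices under the stated assumptions.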

The following lemma follows immediately from Lemmas 20 and 21.

Lemma 23

For any set X of system views,

$$\begin{aligned} \overline{\alpha _A(post(X))} = \alpha _A(post({\overline{X}})). \end{aligned}$$

6 The algorithm

We now present our algorithm and prove its correctness. The algorithm is in Fig. 4. It takes as inputs a system, an abstraction set A, a convex adequate concretization set C, and a set AInit of initial system views such that \(\alpha _A(Init) \subseteq {\overline{AInit}}\). The algorithm maintains a set \(SV \subseteq A\) of system views encountered so far, up to equivalence; in other words, SV represents all system views equivalent to an element of SV, i.e. \({\overline{SV}}\). On each iteration, the algorithm applies \(aPost_A^{C}\) to \({\overline{SV}}\) (we show in Lemma 24 that \(\overline{\alpha _A(post(X))} = aPost_A^{C}({\overline{SV}})\) where \({\overline{X}} = \gamma _A^{C}({\overline{SV}})\)). This continues until either a transition on error is found, or a fixed point is reached.
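The overall shape of the iteration can be sketched on a deliberately simplified toy, which is not the algorithm of Fig. 4. The simplifying assumptions are: components are anonymous, so a system state is a watchdog state plus a sorted tuple of control states and symmetry is handled by that canonical form; \(A = \mathcal {SV}_k\) and \(C = \mathcal {SV}_{k+1}\); and the control states, watchdog states and transitions below are hypothetical stand-ins for a token-passing protocol, not the state machines of Fig. 1.

```python
from itertools import combinations, combinations_with_replacement

CPT_STATES = ["idle", "tok", "crit", "done"]  # hypothetical component states
WD_STATES = ["free", "busy"]                  # hypothetical watchdog states
ERROR = "error"

def views(ss, k):
    """Abstraction onto SV_k: the fixed state with each k-subcollection."""
    wd, cpts = ss
    return {(wd, tuple(sorted(v))) for v in combinations(cpts, k)}

def concretizations(sv_set, k, extra):
    """States of size k+extra all of whose k-views are in sv_set."""
    for wd in WD_STATES:
        for cpts in combinations_with_replacement(CPT_STATES, k + extra):
            if views((wd, cpts), k) <= sv_set:
                yield (wd, cpts)

def replace(cpts, changes):
    """Update the components at the given indices; return a sorted tuple."""
    new = list(cpts)
    for i, q in changes.items():
        new[i] = q
    return tuple(sorted(new))

def step(ss):
    """Concrete transitions of the toy system, as (event, successor) pairs."""
    wd, cpts = ss
    for i, q in enumerate(cpts):
        if q == "tok" and wd == "free":
            yield "enter", ("busy", replace(cpts, {i: "crit"}))
        if q == "tok" and wd == "busy":
            yield ERROR, ss  # a second entry to the critical region
        if q == "crit" and wd == "busy":
            yield "leave", ("free", replace(cpts, {i: "done"}))
        if q == "done":
            for j, r in enumerate(cpts):
                if j != i and r == "idle":
                    yield "pass", (wd, replace(cpts, {i: "idle", j: "tok"}))

def verify(a_init, k=2, extra=1):
    """Iterate the abstract post-image until error or a fixed point."""
    sv_set = set(a_init)
    while True:
        new = set()
        for ss in concretizations(sv_set, k, extra):
            for event, succ in step(ss):
                if event == ERROR:
                    return False, sv_set
                new |= views(succ, k)
        if new <= sv_set:
            return True, sv_set
        sv_set |= new
```

Starting from views in which at most one component holds the token, this toy iteration reaches a fixed point without finding error; adding an initial view with two token holders leads to failure.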

When this algorithm is run on the mutual exclusion example with \(A = \mathcal {SV}_2\) and \(C = \mathcal {SV}_3\), it encounters just five system views:

(or equivalent system views), the first two being the initial system views. In particular, none of these has an abstract transition for error, so the algorithm returns success.

In Sect. 8, we describe the implementation of this algorithm. In particular, we describe how we implement the set \({\overline{SV}}\) by storing suitable representatives of each element, and how we calculate the set X of representative concretizations.

6.1 Correctness

It is convenient to define

$$\begin{aligned} aPostId_A^{C}(SV) = SV \cup aPost_A^{C}(SV). \end{aligned}$$

Note that in the algorithm, the set SV represents all system views that are equivalent to any member of SV, i.e. \({\overline{SV}}\). In effect, each iteration of the algorithm updates \({\overline{SV}}\) with \(aPostId_A^{C}({\overline{SV}})\). We will show below (Lemma 25) that, if the algorithm does not return failure, then it reaches a fixed point with \({\overline{SV}} = (aPostId_A^{C})^*({\overline{AInit}})\).

The algorithm calculates \(\gamma _A^{C}({\overline{SV}})\) up to \(\approx \)-equivalence (i.e. it calculates at least one element of each equivalence class). The following lemma shows that the subsequent iteration is over representative elements of \(aPost_A^{C}({\overline{SV}})\).

Lemma 24

If \({\overline{X}} = \gamma _A^{C}({\overline{SV}})\), then

$$\begin{aligned} \overline{\alpha _A(post(X))} = aPost_A^{C}({\overline{SV}}). \end{aligned}$$

Proof

By Lemma 23, \(\overline{\alpha _A(post(X))} = \alpha _A(post({\overline{X}}))\). But this equals \(aPost_A^{C}({\overline{SV}})\) by the assumption about X. \(\square \)

Lemma 25

If the algorithm does not return failure then SV reaches a fixed point \(SV_{fix}\) such that

$$\begin{aligned} {\overline{SV}}_{fix} = (aPostId_A^{C})^*({\overline{AInit}}). \end{aligned}$$

Proof

We show that after n iterations,

$$\begin{aligned} {\overline{SV}} = (aPostId_A^{C})^n({\overline{AInit}}) \end{aligned}$$

by induction on n. The base case is trivial. For the inductive case, suppose that at the start of an iteration, \({\overline{SV}} = (aPostId_A^{C})^n({\overline{AInit}})\). Each element \(sv'\) of \(\overline{\alpha _A(post(X))}\) is added to \({\overline{SV}}\) (unless SV already contains an equivalent system view). But this set equals \(aPost_A^{C}({\overline{SV}})\), by Lemma 24. Hence the subsequent value of \({\overline{SV}}\) equals the value of \({\overline{SV}} \cup aPost_A^{C}({\overline{SV}}) = aPostId_A^{C}({\overline{SV}})\) at the beginning of the iteration. But, by the inductive hypothesis,

$$\begin{aligned} aPostId_A^{C}({\overline{SV}}) = aPostId_A^{C}((aPostId_A^{C})^n({\overline{AInit}})) = (aPostId_A^{C})^{n+1}({\overline{AInit}}), \end{aligned}$$

as required.

The set A contains a finite number of equivalence classes. Hence the iteration must reach a fixed point \(SV_{fix}\) such that

$$\begin{aligned} {\overline{SV}}_{fix} = (aPostId_A^{C})^*({\overline{AInit}}). \end{aligned}$$

\(\square \)

The following lemma and corollary relate the fixed point to the set \(\mathcal {R}\) of reachable states.

Lemma 26

Suppose \(SV \subseteq A\). Then

$$\begin{aligned} (aPost_A^{\sqsupseteq C})^*(SV) \subseteq (aPostId_A^{C})^*(SV). \end{aligned}$$

Proof

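Proposition 19 shows that \(aPost_A^{\sqsupseteq C}(SV') \subseteq aPostId_A^{C}(SV')\) for every \(SV' \subseteq A\). Then we can show

$$\begin{aligned} (aPost_A^{\sqsupseteq C})^n(SV) \subseteq (aPostId_A^{C})^n(SV) \quad \text{for each } n \end{aligned}$$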

by a straightforward induction, using the monotonicity of \(aPost_A\). The result then follows. \(\square \)

Corollary 27

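If \(Init \subseteq \mathcal {SS}^{\sqsupseteq C}\) then

$$\begin{aligned} \mathcal {R}\subseteq \gamma _A((aPostId_A^{C})^*({\overline{AInit}})). \end{aligned}$$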

Proof

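By Lemma 26,

$$\begin{aligned} (aPost_A^{\sqsupseteq C})^*({\overline{AInit}}) \subseteq (aPostId_A^{C})^*({\overline{AInit}}). \end{aligned}$$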

And by Theorem 14, \(\mathcal {R}\subseteq \gamma _A((aPost_A^{\sqsupseteq C})^*({\overline{AInit}}))\). The result follows. \(\square \)

The following theorem states the correctness of the algorithm. Note that, in contrast to previous results, it does not assume \(Init \subseteq \mathcal {SS}^{\sqsupseteq C}\).

Theorem 28

If the algorithm returns success, then the system is error-free, for all systems starting in an initial state in Init.

Proof

Start by considering systems with initial states in \(\mathcal {SS}^{\sqsupseteq C}\). We prove the contra-positive: suppose there is some reachable system state \(ss \in \mathcal {R}\) such that \(ss \xrightarrow{error} ss'\) for some \(ss'\); we show that the algorithm returns failure. From Corollary 27 and Lemma 25, for the fixed point \(SV_{fix}\), we have \(ss \in \gamma _A({\overline{SV}}_{fix})\). Hence \({\overline{SV}}_{fix}\) has an abstract transition on error (using Lemma 20, and the fact \(\pi (error) = error\)). Then by Proposition 19, \({\overline{SV}}_{fix}\) has an abstract transition on error using a concretization from C, making use of the assumption (Definition 4) which ensures that \(error \notin Sync - \Sigma _F\). Hence the algorithm returns failure.

Finally, Lemma 15 shows that all systems starting from states outside \(\mathcal {SS}^{\sqsupseteq C}\) are also error-free. \(\square \)

Of course, the algorithm may sometimes return failure when, in fact, all systems are error-free: a false positive. This might just mean that it is necessary to re-run the algorithm with a larger value of A: the current value of A is not large enough to capture relevant properties of the system. Or it might be that the algorithm would fail for all values of A. This should not be surprising, since the problem is undecidable in general.

7 Detecting deadlock

In this section, we discuss how to extend the algorithm from Sect. 6 so as to verify that a system is deadlock-free.

Our approach will only verify systems whose states are at least as large as the concretizations considered, i.e. from the set \(\mathcal {SS}^{\sqsupseteq C}\). Indeed, many families of systems deadlock in trivially small instances, but will be deadlock-free for larger instances. For example, the token-based mutual exclusion protocol will deadlock with a single component (since that component won’t be able to pass on the token), but is deadlock-free for larger systems. Systems with states not in \(\mathcal {SS}^{\sqsupseteq C}\) can be analysed directly: for suitable choices of C, there are finitely many such instances, up to symmetric equivalence.

We start by considering an approach that appears feasible, but does not work in practice. Suppose we were to check whether any concretization of the set SV can deadlock, signalling an error if so. That is, we augment the main loop of the algorithm with:

This approach would be sound. However, it would produce far too many false positives to be useful in practice. Consider, again, the token-based mutual exclusion protocol, which is deadlock-free (assuming there are at least two components). However, for the fixed point \(SV_{fix}\) of SV, \(\gamma _{\mathcal {SV}_2}^{\mathcal {SV}_3}(SV_{fix})\) would include states such as  , where no component has the token. This state is deadlocked, because no component in the state can receive the token from another. However, this clearly isn’t representative of any reachable state, because it doesn’t include the component with the token.

Instead, our approach is to identify a set of significant concretizations, and signal an error only if a significant concretization can deadlock. The following definition captures the property necessary for this approach to be sound, in particular that every reachable system state larger than C has a significant sub-state. We write \(\mathcal {R}^{\sqsupseteq C}\) for \(\mathcal {R} \cap \mathcal {SS}^{\sqsupseteq C}\).

Definition 29

A set \(SC \subseteq C\) is significant if:

1. for every system state \(ss \in \mathcal {R}^{\sqsupseteq C}\) there is some \(ss_{sig} \in SC\) such that \(ss_{sig} \sqsubseteq ss\); and

2. SC is closed under all permutations \(\pi \in Sym(T)\).

Given such a significant set SC, we extend our algorithm to check whether any significant concretization can deadlock:

We describe in Sect. 7.1 how, within the implementation, we define significant sets of concretizations, so as to avoid false positives. First we prove the soundness of this approach.

Lemma 30

Suppose SC is a significant set of concretizations. Let \(SV_{fix} = (aPostId_A^C)^*(AInit)\), i.e. the fixed point of SV in the algorithm. If the system can deadlock in some reachable state \(ss \in \mathcal {R}^{\sqsupseteq C}\), then there is a concretization in \(\gamma _A^C(SV_{fix}) \cap SC\) that deadlocks.

Proof

Suppose the reachable state \(ss \in \mathcal {R}^{\sqsupseteq C}\) can deadlock. Let \(ss_{sig} \in SC\) be the significant concretization, implied by Definition 29, such that \(ss_{sig} \sqsubseteq ss\). Clearly \(ss_{sig}\) deadlocks. Now \(ss \in \mathcal {R}\) so \(ss \in \gamma _A(\overline{SV_{fix}})\), by Corollary 27. We show \(ss_{sig} \in \gamma _A(\overline{SV_{fix}})\): suppose \(sv \sqsubseteq _A ss_{sig}\); then \(sv \sqsubseteq _A ss\), and so \(sv \in \overline{SV_{fix}}\) (since \(ss \in \gamma _A(\overline{SV_{fix}})\)), as required. Hence also \(ss_{sig} \in \gamma _A^C(\overline{SV_{fix}})\).

Now, there is some \(ss_{sig}' \in \gamma _A^C(SV_{fix})\) such that \(ss_{sig}' \approx ss_{sig}\). Clearly deadlocks are preserved by symmetric equivalence, so \(ss_{sig}'\) deadlocks. Further, \(ss_{sig}' \in SC\), since SC is closed under symmetric equivalence. \(\square \)

Theorem 31

Let SC be a significant set of concretizations. Suppose the enhanced algorithm, with the check for deadlocks in significant concretizations, returns success. Then the system is deadlock-free for all systems with initial states \(Init \subseteq \mathcal {SS}^{\sqsupseteq C}\).

Proof

Suppose that \(Init \subseteq \mathcal {SS}^{\sqsupseteq C}\). Then necessarily every reachable state is in \(\mathcal {SS}^{\sqsupseteq C}\).

We argue by contradiction: suppose the system can deadlock, but, nevertheless, the algorithm returns success. Then by Lemma 30, there is a deadlock in some concretization in \(\gamma _A^C(SV_{fix}) \cap SC\), where \(SV_{fix} = (aPostId_A^C)^*(AInit)\). By Lemma 25, \(SV_{fix}\) is the fixed point of SV. Hence the check for significant deadlocks will detect this deadlock. This gives a contradiction. \(\square \)

Note that the condition \(Init \subseteq \mathcal {SS}^{\sqsupseteq C}\) implies that we can only deduce deadlock freedom for systems that are at least as big as elements of C: other systems can be analysed directly.

7.1 Defining significant concretizations

Our normal way to define a significant set of concretizations is to identify certain components within each system state as being required: informally, the idea will be that these components are relevant to the state not being deadlocked. We will then define significant concretizations to be those that include all required components. The following examples illustrate the ideas.

Example 4

In the token-based mutual exclusion protocol, we will define the component holding the token to be required, and define significant concretizations to be those that include this component. This avoids the false positive described at the start of this section.
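The difference between the naive check and the check restricted to significant concretizations can be illustrated with a toy model. Everything here is an assumption made for illustration: the control states `"Holder"` and `"Waiting"`, the field `holder_id` (modelling a fixed process that records who holds the token), and the simplified semantics in which the only event is the holder passing the token to a waiting component.

```python
from typing import Iterable, NamedTuple, Tuple


class Comp(NamedTuple):
    control: str  # "Holder" (has the token) or "Waiting"
    ident: str


class State(NamedTuple):
    holder_id: str            # assumed: fixed process records the token holder
    comps: Tuple[Comp, ...]   # the components present in this concretization


def deadlocked(s: State) -> bool:
    # Toy semantics: progress needs a holder and someone to pass the token to.
    has_holder = any(c.control == "Holder" for c in s.comps)
    has_waiter = any(c.control == "Waiting" for c in s.comps)
    return not (has_holder and has_waiter)


def significant(s: State) -> bool:
    # The token holder is the only required component.
    return any(c.ident == s.holder_id for c in s.comps)


def naive_check(concs: Iterable[State]) -> bool:
    """Report a deadlock if any concretization at all is deadlocked."""
    return any(deadlocked(s) for s in concs)


def significant_check(concs: Iterable[State]) -> bool:
    """Report a deadlock only for significant concretizations."""
    return any(significant(s) and deadlocked(s) for s in concs)


concs = [
    State("T0", (Comp("Holder", "T0"), Comp("Waiting", "T1"))),
    # Spurious: the holder T0 is not part of this concretization.
    State("T0", (Comp("Waiting", "T1"), Comp("Waiting", "T2"))),
]
```

On this input the naive check reports a deadlock because of the second concretization, whereas the restricted check ignores it, since it does not contain the required component.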

Example 5

Consider a system where the fixed process models a lock, and has a reference to the thread holding the lock (if any). In most such systems, any concretization that does not include the thread holding the lock will be deadlocked: only the thread holding the lock can perform an event. However, this would again be a false positive. We therefore define the thread holding the lock to be required, and so only the concretizations that include this process would be significant.

Example 6

Now consider a system using a lock, as in the previous example, but where also the thread that holds the lock can perform updates upon nodes to which it has a reference. However, those updates will be possible only in concretizations that include those nodes, so we need to define those nodes as required to avoid false positives. We therefore define the required components to be the thread holding the lock, and any node to which that thread has a reference.

We formally define the notion of required components, and the corresponding significant concretizations, in Definition 32. We then describe how the required components are defined in the implementation in Definition 33. We present two additional requirements, and then state the correctness of the approach in Proposition 36.

Recall that a component can be identified by its process identity, comprising its family and identity. We write  for the process identities of the components of ss. We define the required components via their process identities.

Definition 32

The required components of a system state are defined via a function

Given a definition of \(requiredPids\), a concretization is significant if it contains a process for each required process identity:

We give example definitions for \(requiredPids\) below. We say that a process \(q = cs(ids)\) (either the fixed process or a component) references a component with identity id in system state ss if q has a parameter with value id, i.e. \(id \in ids\), but id is not a distinguished value (such as the value null in the linked-list-based stack example). We will often define the required components via the references held to components by other processes. We write \(\mathop {\mathsf {references}}(q)\) for the set of identities referenced by q:

We lift \(\mathop {\mathsf {references}}\) to system states by point-wise application.
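As an informal illustration of \(\mathop {\mathsf {references}}\) and its point-wise lifting, the following sketch uses a hypothetical encoding: a process is a control state plus a tuple of typed parameters, each parameter being a pair (family, identity), and the string `"null"` is the only distinguished value. The names `Proc`, `DISTINGUISHED` and `references_state` are assumptions, not part of the formal development.

```python
from typing import NamedTuple, Set, Tuple

Pid = Tuple[str, str]  # hypothetical encoding: (family, identity)


class Proc(NamedTuple):
    control: str             # control state cs
    params: Tuple[Pid, ...]  # typed parameters ids


DISTINGUISHED = {"null"}  # assumed set of distinguished values


def references(q: Proc) -> Set[Pid]:
    """Identities held as parameters of q, excluding distinguished values."""
    return {(fam, i) for (fam, i) in q.params if i not in DISTINGUISHED}


def references_state(fixed: Proc, comps: Tuple[Proc, ...]) -> Set[Pid]:
    """Point-wise lifting: the union of references over all processes."""
    refs = references(fixed)
    for q in comps:
        refs |= references(q)
    return refs


# A lock held by thread T0, and a list node N0 whose next pointer is null.
fixed = Proc("lock", (("Thread", "T0"),))
node = Proc("node", (("Node", "N0"), ("Node", "null")))
```

Note that, under this reading, a component's own identity counts as one of its parameters, so `references(node)` contains `("Node", "N0")` but not the null value.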

The following examples illustrate two patterns for defining the required process identities.

Example 7

We could define the required components to be all components of family f that are referenced by the fixed process. Example 5 was an instance of this pattern with \(f = Thread\). In this case we define (see Footnote 4):

For example, assuming \(id_1 \in \mathop {\mathsf {idType}}(f)\) but \(id_2 \notin \mathop {\mathsf {idType}}(f)\), for any state of the form \((q_F,m)\) where \(q_F = cs_F(id_1, id_2)\), we have  , because the fixed process references such a component. If m contains a component with process identity \((f,id_1)\) then \((q_F,m)\) is significant.

Example 8

We could define the required components to be all components of family \(f_1\) that are referenced by the fixed process, and also those components of family \(f_2\) that are referenced by one of those \(f_1\) components. Example 6 followed this pattern with \(f_1 = Thread\) and \(f_2 = Node\). (We use this pattern again in Sect. 9.3.) In this case, we define:

Below we indicate the family of a component via a subscript on the control state. Let  and  . For the concretization

we have that  contains \((f_1,id_1)\) (because the fixed process references such a component) and \((f_2,id_2)\) (because the component with process identity \((f_1,id_1)\) references such a component). This concretization is not significant because it has no component with process identity \((f_2,id_2)\).

In the implementation, the analyst defines the required process identities by giving chains (i.e. sequences) of families. A chain \(\langle f_1, f_2, f_3, \ldots \rangle \) represents that the required components are all components of family \(f_1\) that are referenced by the fixed process, and also those components of family \(f_2\) that are referenced by one of those \(f_1\) components, and also those components of family \(f_3\) that are referenced by one of those \(f_2\) components, and so on. Thus Example 7 corresponds to the chain \(\langle f \rangle \), and Example 8 corresponds to the chain \(\langle f_1, f_2 \rangle \). The following definition captures this notion.

Definition 33

Given a chain \(\mathbf{f} \) of families, the corresponding required process identities are defined by

where the subsidiary function  traverses the chain \(\mathbf{f} \), extracting process identities, starting with the state q:

(Note that the depth of the above recursion is bounded by the length of the chain; also the resulting process identities are a subset of those appearing in the concretization.) This is lifted pointwise to sets of chains of families:
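The chain traversal can be sketched in code as follows. This is one plausible reading of the prose description, not the implementation itself: the encoding of processes, the convention that a component's first parameter is its own identity, and the names `required_pids`, `required_pids_all` and `is_significant` are all assumptions.

```python
from typing import Iterable, List, NamedTuple, Sequence, Set, Tuple

Pid = Tuple[str, str]  # hypothetical encoding: (family, identity)


class Proc(NamedTuple):
    control: str
    params: Tuple[Pid, ...]  # for a component, params[0] is its own identity


DISTINGUISHED = {"null"}  # assumed distinguished values


def references(q: Proc) -> Set[Pid]:
    return {(fam, i) for (fam, i) in q.params if i not in DISTINGUISHED}


def required_pids(chain: Sequence[str], fixed: Proc,
                  comps: Sequence[Proc]) -> Set[Pid]:
    """Follow references along the chain, starting from the fixed process."""
    by_pid = {q.params[0]: q for q in comps}
    required: Set[Pid] = set()
    frontier: List[Proc] = [fixed]
    for fam in chain:  # one step per family, so depth <= len(chain)
        found = {pid for q in frontier for pid in references(q)
                 if pid[0] == fam}
        required |= found
        # Only components actually present can be followed further.
        frontier = [by_pid[pid] for pid in found if pid in by_pid]
    return required


def required_pids_all(chains: Iterable[Sequence[str]], fixed: Proc,
                      comps: Sequence[Proc]) -> Set[Pid]:
    """Point-wise lifting to a set of chains."""
    out: Set[Pid] = set()
    for chain in chains:
        out |= required_pids(chain, fixed, comps)
    return out


def is_significant(chains: Iterable[Sequence[str]], fixed: Proc,
                   comps: Sequence[Proc]) -> bool:
    present = {q.params[0] for q in comps}
    return required_pids_all(chains, fixed, comps) <= present


# A state in the style of Example 8: the fixed process references (f1, id1),
# which in turn references (f2, id2); no f2 component is present.
fixed = Proc("csF", (("f1", "id1"),))
comps = (Proc("cs1", (("f1", "id1"), ("f2", "id2"))),
         Proc("cs3", (("f1", "id3"),)))
```

With the two-family chain `["f1", "f2"]` this state is not significant, since no component has process identity `("f2", "id2")`; with the single-family chain `["f1"]` it is.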

In order to use the result of Theorem 31, we need to prove that the definition of SC in Definition 32 satisfies the requirements for being significant from Definition 29, in particular that every state in \(\mathcal {SS}^{\sqsupseteq C}\) has a significant sub-state. Informally, this comes down to checking that the concretizations are large enough to contain a component process for each required process identity.

Our approach is as follows. During a deadlock-freedom check, if the search reaches a concretization c that is not significant, by dint of not including a process with a particular required process identity pid, it calculates whether it is possible to add an arbitrary such process \(q_c'\) with process identity pid, to replace another process \(q_c\) with an unrequired process identity, while remaining inside C. That is, we check the following property.

Definition 34

We say that C is large enough for \(requiredPids\) if:

Example 9

Recall Example 7, corresponding to chain \(\langle f \rangle \), and suppose \(C = \mathcal {SV}_2\).

Suppose we encounter the concretization

where \(id_1, id_2 \in f\). This is not significant because it is missing a component with process identity \(pid = (f,id_2)\). However, it satisfies the condition of Definition 34, since we can replace the second component state \(q_c = cs_3(id_3)\) by an arbitrary component state \(q_c'\) with process identity \((f,id_2)\), say \(q_c' = cs_2(id_2)\), to produce a new concretization  . Informally, C is large enough to include both the f-components referenced by the fixed process.
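The replacement argument of this example can be approximated by a simple counting check. This is a simplified sketch rather than the condition of Definition 34 itself: it assumes that C consists of all states with a fixed number of components, so that swapping one component for another always stays inside C, and it represents a concretization only by the process identities of its components. The name `can_swap_in` is hypothetical.

```python
from typing import List, Set, Tuple

Pid = Tuple[str, str]  # hypothetical encoding: (family, identity)


def can_swap_in(required: Set[Pid], present: List[Pid]) -> bool:
    """Can every missing required identity replace an unrequired component?

    `present` lists the process identities of the components in the
    concretization; components whose identity is not required are spare.
    """
    missing = required - set(present)
    spare = [p for p in present if p not in required]
    return len(spare) >= len(missing)


# The fixed process references two f-components, id1 and id2.
required = {("f", "id1"), ("f", "id2")}

# Two components, as with C = SV_2: id3 is spare and can make way for id2.
two_components = [("f", "id1"), ("f", "id3")]

# One component only: there is no spare component to replace.
one_component = [("f", "id1")]
```

Under these assumptions the check succeeds for `two_components` and fails for `one_component`, matching the informal observation that C must be large enough to hold both referenced components.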