Abstract

The non-Markovian nature of rough volatility makes Monte Carlo methods challenging, and it is in fact a major challenge to develop fast and accurate simulation algorithms. We provide an efficient one for stochastic Volterra processes, based on an extension of Donsker’s approximation of Brownian motion to the fractional Brownian case with arbitrary Hurst exponent \(H \in (0,1)\). Some of the most relevant consequences of this ‘rough Donsker (rDonsker) theorem’ are functional weak convergence results in Skorokhod space for discrete approximations of a large class of rough stochastic volatility models. This justifies the validity of simple and easy-to-implement Monte Carlo methods, for which we provide detailed numerical recipes. We test these against the current benchmark hybrid scheme and find remarkable agreement (for a large range of values of \(H\)). Our rDonsker theorem further provides a weak convergence proof for the hybrid scheme itself and allows constructing binomial trees for rough volatility models, the first available scheme (in the rough volatility context) for early exercise options such as American or Bermudan options.


1 Introduction

Fractional Brownian motion has a long and famous history in probability, stochastic analysis and their applications to diverse fields; see Hurst [39], Kolmogorov [44] and Mandelbrot and Van Ness [52]. Recently, it has experienced a renaissance in the form of fractional volatility models in mathematical finance. These were first introduced by Comte and Renault [18] and later studied theoretically by Djehiche and Eddahbi [20], Alòs et al. [2] and Fukasawa [29], and given financial motivation and data consistency by Gatheral et al. [32] and Bayer et al. [6]. Since then, a vast literature has pushed the analysis in many directions (see Bayer et al. [5], Bayer et al. [7], Bennedsen et al. [11], El Euch and Rosenbaum [24], Forde and Zhang [26], Fukasawa et al. [30], Guennoun et al. [33], Gulisashvili [34], Horvath et al. [38], Jacquier et al. [41] and Neuman and Rosenbaum [58]), raising theoretical and practical challenges in understanding and implementing these models. One of the main issues, at least from a practical point of view, is on the numerical side: the absence of Markovianity rules out PDE-based schemes, and simulation is the only possibility. However, classical simulation methods for fractional Brownian motion (based on Cholesky decomposition or circulant matrices) are notoriously slow, and faster techniques are needed. The state of the art so far is the recent hybrid scheme developed by Bennedsen et al. [10] and its turbocharged version in McCrickerd and Pakkanen [54]. We rise here to this challenge and propose an alternative tree-based approach, mathematically rooted in an extension of Donsker’s theorem to rough volatility.

Donsker [21] (and later Lamperti [48]) proved a functional central limit theorem for Brownian motion, thereby providing a theoretical justification of its random walk approximation. Many extensions have been studied in the literature, and we refer the interested reader to Dudley [23, Chap. 10] for an overview. In the fractional case, Sottinen [66] and Nieminen [59] constructed – following Donsker’s ideas of using i.i.d. sequences of random variables – an approximating sequence converging to fractional Brownian motion with Hurst parameter \(H>1/2\). In order to deal with the non-Markovian behaviour of fractional Brownian motion, Taqqu [67] considered sequences of non-i.i.d. random variables, again with the restriction \(H>1/2\). Unfortunately, these methodologies do not seem to carry over to the ‘rough’ case \(H<1/2\), mainly because of the topologies involved. The recent development of rough paths theory (see Friz and Victoir [28, Sect. 13.4] and Lyons [51]) provided an appropriate framework to extend Donsker’s results to processes with sample paths of Hölder regularity strictly smaller than \(1/2\). For \(H \in (1/3,1/2)\), Bardina et al. [3] used rough paths to show that functional central limit theorems (in the spirit of Donsker) apply. This in particular suggests that the natural topology to use for fractional Brownian motion with \(H<1/2\) is the topology induced by the Hölder norm of the sample paths. Indeed, switching the topology from the Skorokhod one used by Donsker to the (stronger) Hölder topology is the right setting for rough central limit theorems, as we outline in this paper. Recent results by Bender and Parczewski [9] and Parczewski [60, 61] provide convergence for (geometric) fractional Brownian motions with general \(H \in (0,1)\) using Wick calculus, assuming that the approximating sequences are Bernoulli random variables.
We extend this (Theorem 2.11) to a universal functional central limit theorem, involving general (discrete or continuous) random variables as approximating sequences, only requiring finiteness of moments.

We consider a general class of continuous processes with any Hölder regularity, including fractional Brownian motion with \(H\in (0,1)\), truncated Brownian semi-stationary processes, Gaussian Volterra processes, as well as rough volatility models recently proposed in the financial literature. The fundamental novelty here is an approximating sequence capable of simultaneously keeping track of the approximated rough volatility process (fractional Brownian motion, Brownian semistationary process, or any continuous path functional thereof) and of the underlying Brownian motion. This is crucial in order to take into account the correlation of the two processes, the so-called leverage effect in financial modelling. While approximations of two-dimensional (correlated) semimartingales are well understood in the standard case, the rough case has so far remained an open problem. Our analysis easily generalises beyond Brownian drivers to more general semimartingales, emphasising that the subtle, yet essential, difficulties lie in the passage from the semimartingale setup to the rough case. This is the first Monte Carlo method available in the literature, specifically tailored to two-dimensional rough systems, based on an approximating sequence for which we prove a Donsker–Lamperti-type functional central limit theorem (FCLT). This further provides a pathwise justification of the hybrid scheme by Bennedsen et al. [10], and allows the use of tree-based schemes, opening the door to pricing early-exercise options such as American options. In Sect. 2, we present the class of models we are considering and state our main results. The proof of the main theorem is developed in Sect. 3 in several steps. We reserve Sect. 4 for applications of the main result, namely weak convergence of the hybrid scheme, binomial trees as well as numerical examples.
We present simple numerical recipes, providing a pedestrian alternative to the advanced hybrid schemes in [10, 54], and develop a simple Monte Carlo scheme with low implementation complexity, for which we provide comparison charts against [10] in terms of accuracy and against [54] in terms of speed. Reminders on Riemann–Liouville operators and additional technical proofs are postponed to the Appendix.

Notations: For the interval \(\mathbb{I}:=[0,1]\), we denote by \(\mathcal {C}(\mathbb{I})\) and \(\mathcal {C}^{\alpha}(\mathbb{I})\) the spaces of continuous and \(\alpha \)-Hölder-continuous functions on \(\mathbb{I}\) with Hölder regularity \(\alpha \in (0,1)\), respectively. We also need \(\mathcal {C}^{1}(\mathbb{I}):=\{f:\mathbb{I}\to \mathbb{R}: f'\text{ exists and is continuous on } \mathbb{I}\} \). For \(0 \le \alpha \leq 1\), we define \(\mathcal {C}^{\alpha}_{+}(\mathbb{I}):=\{f \in \mathcal {C}^{\alpha }(\mathbb{I}) : f \geq 0\}\). Since \(\mathbb{I}\) is compact, functions in \(\mathcal {C}^{1}(\mathbb{I})\) and \(\mathcal {C}^{1}_{+}(\mathbb{I})\) have bounded first-order derivatives on \(\mathbb{I}\). We use \(C\), \(\widetilde{C}\), \(\widehat{C}\), \(C_{1}\), \(C_{2}\), \(\overline{C}\), \(\underline{C}\) as strictly positive real constants which may change from line to line and whose exact values do not matter.

2 Weak convergence of rough volatility models

Donsker’s invariance principle, also termed ‘functional central limit theorem’, ensures the weak convergence in the Skorokhod space of an approximating sequence to a Brownian motion. As opposed to the central limit theorem, Donsker’s theorem is a pathwise statement which ensures that convergence takes place for all times. This result is particularly important for Monte Carlo methods which aim to approximate pathwise functionals of a given process (an essential requirement to price path-dependent financial securities for example). We prove here a version of Donsker’s result not only in the Skorokhod topology, but also in the stronger Hölder topology, for a general class of continuous stochastic processes.

2.1 Hölder spaces and fractional operators

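For \(\beta \in (0,1)\), the \(\beta \)-Hölder space \(\mathcal {C}^{\beta}(\mathbb{I})\) with the norm
\[
\|f\|_{\beta} := |f|_{\beta} + \sup_{t\in\mathbb{I}}|f(t)|, \qquad |f|_{\beta} := \sup_{s,t\in\mathbb{I},\, s\neq t}\frac{|f(t)-f(s)|}{|t-s|^{\beta}},
\]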
is a non-separable Banach space; see Krylov [45, Chap. 3]. In the spirit of Riemann–Liouville fractional operators recalled in Appendix A, we introduce generalised fractional operators. For \(\lambda \in (0,1)\) and any \(\alpha \in (-\lambda , 1-\lambda )\), we define the space \(\mathcal {L}^{\alpha }:= \{ g\in \mathcal {C}^{2}((0,1]) : |\frac{g(u)}{u^{\alpha}} |, | \frac{g^{\prime}(u)}{u^{\alpha -1}} | \text{ and }|\frac{g^{\prime \prime}(u)}{u^{\alpha -2}} |\text{ are bounded} \}\). When \(\alpha >0\), we define the function \(g\) at the origin to be \(g(0)=0\) by continuous extension.
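As a small numerical illustration of the \(\beta \)-Hölder norm above, and assuming it is the Hölder seminorm plus the supremum norm, the following Python sketch evaluates a grid proxy for \(\|f\|_{\beta}\) from samples of \(f\). The function name `holder_norm` is hypothetical. Since only pairs of grid points are compared, the result is a lower bound for the true norm rather than the norm itself.

```python
def holder_norm(times, values, beta):
    """Grid proxy for ||f||_beta = |f|_beta + sup|f| (assumed form of the norm).

    times[k] are sample times in [0, 1] and values[k] = f(times[k]).
    Only grid pairs are compared, so this is a lower bound for the true norm.
    """
    if not times or len(times) != len(values):
        raise ValueError("times and values must be non-empty and of equal length")
    seminorm = 0.0
    for j in range(len(times)):
        for i in range(j):
            dt = abs(times[j] - times[i])
            if dt > 0:
                # Hölder quotient |f(t) - f(s)| / |t - s|^beta for this pair
                seminorm = max(seminorm, abs(values[j] - values[i]) / dt**beta)
    sup_norm = max(abs(v) for v in values)
    return seminorm + sup_norm
```

For \(f(t)=t\) sampled on \(\{0,0.1,\dots ,1\}\) and \(\beta =\frac{1}{2}\), the largest quotient is attained at the pair \((0,1)\), so the proxy equals \(1+1=2\).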

Definition 2.1

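For any \(\lambda \in (0,1)\) and \(\alpha \in (-\lambda , 1-\lambda )\), the generalised fractional operator (GFO) associated to \(g\in \mathcal {L}^{\alpha }\) is defined on \(\mathcal {C}^{\lambda}(\mathbb{I})\) as
\[
(\mathcal{G}^{\alpha}f)(t) := \begin{cases} \displaystyle\int_{0}^{t}\big(f(s)-f(0)\big)\,\frac{\mathrm{d}}{\mathrm{d}t}g(t-s)\,\mathrm{d}s, & \alpha\in(0,1-\lambda),\\[2mm] \displaystyle\frac{\mathrm{d}}{\mathrm{d}t}\int_{0}^{t}\big(f(s)-f(0)\big)\,g(t-s)\,\mathrm{d}s, & \alpha\in(-\lambda,0]. \end{cases} \tag{2.1}
\]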
We further use the notation \(G(t):=\int _{0}^{t}g(u)\mathrm {d}u\) for any \(t\in \mathbb{I}\). Of particular interest in mathematical finance are the kernels

The result below generalises the classical mapping properties of Riemann–Liouville fractional operators first proved by Hardy and Littlewood [36] and is of fundamental importance in the rest of our analysis.

Proposition 2.2

For any \(\lambda \in (0,1)\) and \(\alpha \in (-\lambda , 1-\lambda )\), the operator \(\mathcal {G}^{\alpha}\) is continuous from \(\mathcal {C}^{\lambda}(\mathbb{I})\) to \(\mathcal {C}^{\lambda +\alpha}(\mathbb{I})\).

The proof can be found in Appendix C. This result is analogous to the classical Schauder estimates, phrased in terms of convolution with a suitable regularising kernel, as e.g. treated in Friz and Hairer [27, Theorem 14.17] and Broux et al. [15, Theorem 2.13 and Lemma 2.9], but in settings that are slightly different from ours.

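We develop here an approximation scheme for the following system, which generalises the concept of rough volatility introduced in Alòs et al. [2], Fukasawa [29] and Gatheral et al. [32] in the context of mathematical finance. The process \(X\) represents the dynamics of the logarithm of a stock price process, under a given reference pricing measure, with
\[
\begin{aligned}
X_{t} &= -\frac{1}{2}\int_{0}^{t} V_{s}\,\mathrm{d}s + \int_{0}^{t}\sqrt{V_{s}}\,\mathrm{d}B_{s}, \qquad X_{0}=0,\\
V_{t} &= \Phi\big(\mathcal{G}^{\alpha}Y\big)(t),
\end{aligned}
\tag{2.3}
\]
with \(\alpha \in (-\frac{1}{2},\frac{1}{2})\) and where \(Y\) is the (strong) solution to the stochastic differential equation
\[
\mathrm{d}Y_{t} = b(Y_{t})\,\mathrm{d}t + a(Y_{t})\,\mathrm{d}W_{t}, \qquad Y_{0}\in\mathcal{D}_{Y}, \tag{2.4}
\]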

where \(\mathcal {D}_{Y}\) denotes the state space of \(Y\), usually ℝ or \(\mathbb{R}_{+}\). The two Brownian motions \(B\) and \(W\) are defined on a common filtered probability space \((\Omega , \mathcal {F}, (\mathcal {F}_{t})_{t\in \mathbb{I}}, \mathbb{P})\) and are correlated by the parameter \(\rho \in [-1,1]\), and we introduce \(\overline {\rho }:=\sqrt{1-\rho ^{2}}\). We further let \(\Phi \) be any operator such that for all \(\gamma \in (0,1)\), we have \(\Phi :\mathcal {C}^{\gamma}( \mathbb{I})\to \mathcal {C}^{\gamma}_{+}(\mathbb{I})\) with \(\Phi \) continuous from \((\mathcal {C}^{\gamma}(\mathbb{I}),\|\cdot \|_{\gamma })\) to itself. This in particular ensures that whenever \(Y \in \mathcal {C}^{\lambda}(\mathbb{I})\), then \(V \in \mathcal {C}_{+}^{\alpha +\lambda}(\mathbb{I})\), i.e., \(V\) is nonnegative and belongs to \(\mathcal {C}^{\alpha +\lambda}(\mathbb{I})\). As an example, one can consider a so-called Nemyckij operator \(\Phi (f):=\phi \circ f\) given by composition with some \(\phi :\mathbb{R} \rightarrow \mathbb{R}_{+}\), in which case Drábek [22] has shown that the operator \(\Phi \) is continuous from \((\mathcal {C}^{\gamma}(\mathbb{I}),\|\cdot \|_{\gamma })\) to \((\mathcal {C}^{\gamma}(\mathbb{I}),\|\cdot \|_{\gamma })\) for all \(\gamma \in (0,1)\) if and only if \(\phi \in \mathcal {C}^{1}(\mathbb{R})\). It remains to formulate a precise definition for \(\mathcal {G}^{\alpha }W\) (Proposition 2.4) and for \(\mathcal {G}^{\alpha }Y\) (Corollary 2.5) to fully specify the system (2.3) and clarify the existence of solutions.

Assumption 2.3

There exist \(C_{b}, C_{a}>0\) such that for all \(y \in \mathcal {D}_{Y}\),
\[
|b(y)| \le C_{b}\,(1+|y|) \qquad\text{and}\qquad |a(y)| \le C_{a}\,(1+|y|),
\]
where \(a\) and \(b\) are continuous functions such that there is a unique strong solution to (2.4).

Existence of solutions to (2.4), as well as Assumption 2.3, needs to be checked case by case beyond standard Lipschitz and linear growth conditions (Examples 2.6–2.9 below showcase models satisfying existence and pathwise uniqueness conditions). The solution to (2.4) is not only continuous but also \(( \frac{1}{2}-\varepsilon )\)-Hölder-continuous for any \(\varepsilon \in (0, \frac{1}{2})\), as a consequence of the Kolmogorov–Čentsov theorem in Čentsov [16]. The existence and precise meaning of \(\mathcal {G}^{ \alpha }Y\) are delicate and treated below.

2.2 Examples

Before constructing our approximation scheme, let us discuss a few examples of processes within our framework. As a first useful application, these generalised fractional operators provide a (continuous) mapping between a standard Brownian motion and its fractional counterpart.

Proposition 2.4

For any \(\alpha \in (-\frac{1}{2},\frac{1}{2} )\), the equality \((\mathcal {G}^{\alpha }W)(t)=\int _{0}^{t} g(t-s)\mathrm {d}W_{s}\) holds almost surely for all \(t\in \mathbb{I}\).

Proof

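Since the paths of Brownian motion are \((\frac{1}{2}-\varepsilon )\)-Hölder-continuous for any \(\varepsilon \in (0,\frac{1}{2})\), existence (and continuity) of \(\mathcal {G}^{\alpha }W\) is guaranteed for all \(\alpha \in (-\frac{1}{2},\frac{1}{2} )\). When \(\alpha \in (0,\frac{1}{2} )\), the kernel is smooth and square-integrable, so that Itô’s product rule yields by Proposition 2.2 (since \(g(0)=0\) and \(\alpha >0\)) that
\[
\int_{0}^{t} g(t-s)\,\mathrm{d}W_{s} = g(0)W_{t} - g(t)W_{0} + \int_{0}^{t} W_{s}\,\frac{\mathrm{d}}{\mathrm{d}t}g(t-s)\,\mathrm{d}s = \int_{0}^{t} W_{s}\,\frac{\mathrm{d}}{\mathrm{d}t}g(t-s)\,\mathrm{d}s = (\mathcal{G}^{\alpha}W)(t),
\]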
and the claim holds. For \(\alpha \in (-\frac{1}{2}, 0)\) and any \(\varepsilon >0\), introduce the operator

which satisfies \(\frac{\mathrm {d}}{\mathrm {d}t}\lim _{\varepsilon \downarrow 0} (\mathcal {G}^{1+\alpha}_{\varepsilon }f )(t) = \left (\mathcal {G}^{\alpha}f\right )(t)\) pointwise. Now for any \(t\in \mathbb{I}\), almost surely,

Then as \(\varepsilon \) tends to zero, the right-hand side of (2.5) tends to \(\int _{0}^{t}g(t-s)\mathrm {d}W_{s}\) and furthermore, the convergence is uniform. On the other hand, the equalities

hold since convergence is uniform on compacts, and the fundamental theorem of calculus concludes the proof. □

Minimal changes to the proof of Proposition 2.4 also yield the following result.

Corollary 2.5

If \(Y\) solves (2.4), then \((\mathcal {G}^{\alpha }Y)(t)=\int _{0}^{t} g(t-s)\mathrm {d}Y_{s}\) almost surely for all \(t\in \mathbb{I}\) and \(\alpha \in (-\frac{1}{2}, \frac{1}{2})\).

Up to a constant multiplicative factor \(C_{\alpha}\), the (left) fractional Riemann–Liouville operator (see Appendix A) is identical to the GFO with kernel \(g(u)=u^{\alpha}\) from (2.2), so that the Riemann–Liouville (or type-II) fractional Brownian motion can be written as \(C_{\alpha }\mathcal {G}^{\alpha }W\). Proposition 2.2 then implies that the Riemann–Liouville operator is continuous from \(\mathcal {C}^{1/2}( \mathbb{I})\) to \(\mathcal {C}^{1/2+\alpha}(\mathbb{I})\) for \(\alpha \in (-\frac{1}{2},\frac{1}{2} )\). Each kernel in (2.2) gives rise to processes proposed by Barndorff-Nielsen and Schmiegel [4] for turbulence and financial modelling.

Example 2.6

The rough Bergomi model by Bayer et al. [6] reads

with \(V_{0}, \nu ,\xi _{0}(\,\cdot \,)>0\), \(\alpha \in (-\frac{1}{2},\frac{1}{2} )\) and \(\mathcal {E}(\,\cdot \,)\) is the stochastic exponential. (We remark that this coincides here with the Wick stochastic exponential.) This corresponds exactly to (2.3) with \(g(u) = u^{\alpha}\), \(Y = W\) and

Example 2.7

A truncated Brownian semistationary (TBSS) process is defined as \(\int _{0}^{t} g(t-s)\sigma (s)\mathrm {d}W_{s}\) for \(t\in \mathbb{I}\), where \(\sigma \) is \((\mathcal {F}_{t})_{t\in \mathbb{I}}\)-predictable with locally bounded trajectories and finite second moments, and \(g: \mathbb{I}\setminus \{0\}\to \mathbb{R}\) is Borel-measurable and square-integrable. If \(\sigma \in \mathcal {C}^{1}(\mathbb{I})\), this class falls within the GFO framework.

Example 2.8

Bennedsen et al. [11] added a Gamma kernel to the volatility process, which yields the truncated Brownian semistationary (Bergomi-type) model

with \(\beta >0\), \(\alpha \in (-\frac{1}{2},\frac{1}{2} )\). This corresponds to (2.3) with \(Y=W\), Gamma fractional kernel \(g(u) = u^{\alpha} \mathrm {e}^{-\beta u}\) in (2.2) and

Example 2.9

The version of the rough Heston model introduced by Guennoun et al. [33] reads

with \(Y_{0}\), \(\kappa \), \(\xi \), \(\theta \) all \(>0\), \(2\kappa \theta >\xi ^{2}\) and \(\eta >0\), \(\alpha \in (-\frac{1}{2},\frac{1}{2} )\). This corresponds exactly to (2.3) with \(g(u) = u^{\alpha}\), \(\Phi (\varphi )(t) := \eta + \varphi (t)\), and the coefficients of (2.4) read \(b(y) = \kappa (\theta -y)\) and \(a(y) = \xi \sqrt{y}\). This model is markedly different from the rough Heston model introduced by El Euch and Rosenbaum [24] (for which the characteristic function is known in semiclosed form). Unfortunately, the latter (El Euch–Rosenbaum) version of the rough Heston model is outside the scope of our invariance principle.

2.3 The approximation scheme

We now move on to the core of the project, namely an approximation scheme for the system (2.3), (2.4). The basic ingredient to construct approximating sequences will be suitable families of i.i.d. random variables which satisfy the following assumption.

Assumption 2.10

The family \((\xi _{i})_{i\geq 1}\) forms an i.i.d. sequence of centered random variables with finite moments of all orders and \(\mathbb{E}[\xi _{1}^{2}] = \sigma ^{2}>0\).

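Given a sequence \((\zeta _{i})_{i\geq 1}\) satisfying Assumption 2.10 (with \(\zeta \) in place of \(\xi \)), Lamperti’s [49] generalisation of Donsker’s invariance principle in Donsker [21] tells us that a Brownian motion \(W\) can be approximated weakly in Hölder space (Theorem 3.1) by processes of the form
\[
W_{n}(t) := \frac{1}{\sigma\sqrt{n}}\bigg(\sum_{i=1}^{\lfloor nt\rfloor}\zeta_{i} + (nt-\lfloor nt\rfloor)\,\zeta_{\lfloor nt\rfloor+1}\bigg), \tag{2.6}
\]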
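defined pathwise for any \(\omega \in \Omega \), \(n\geq 1\) and \(t\in \mathbb{I}\). As we show in Sect. 3.2, a similar construction holds to weakly approximate the process \(Y\) from (2.4) in Hölder space via
\[
Y_{n}(t) := Y_{n}^{\lfloor nt\rfloor} + (nt-\lfloor nt\rfloor)\big(Y_{n}^{\lfloor nt\rfloor+1}-Y_{n}^{\lfloor nt\rfloor}\big), \qquad Y_{n}^{k} = Y_{n}^{k-1} + \frac{b(Y_{n}^{k-1})}{n} + \frac{a(Y_{n}^{k-1})\,\zeta_{k}}{\sigma\sqrt{n}}, \quad Y_{n}^{0}=Y_{0}, \tag{2.7}
\]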
where \(Y_{n}^{k}:=Y_{n}(t_{k})\) and \(\mathcal {T}_{n}:=\{t_{k} = \frac{k}{n} : k=0,1,\dots ,n\}\). Here the \(\zeta _{k}\) correspond to the innovations of the Brownian motion \(W\) in (2.4). Similarly, we use \(\xi _{k}\) when referring to the innovations of the Brownian motion \(B\) from (2.3), which enter into the approximations of the log-stock price in (2.8) below. Throughout the paper, we assume that the innovations \((\xi _{i})_{i=1, \dots ,\lfloor nt \rfloor }\) and \((\zeta _{i})_{i=1, \dots ,\lfloor nt \rfloor }\) come from two sequences \((\xi _{i})_{i\geq 1}\) and \((\zeta _{i})_{i\geq 1}\) satisfying Assumption 2.10 and such that \(((\xi _{i},\zeta _{i}))_{i\geq 1}\) is i.i.d. with \(\mathrm{corr}(\xi _{i},\zeta _{i})=\rho \) for all \(i\geq 1\). Naturally, the approximations in (2.7) and in (2.8) below should be understood pathwise, but we omit the \(\omega \)-dependence in the notation for clarity.
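The grid values of the schemes (2.6) and (2.7) can be generated by a simple recursion. The sketch below assumes that (2.7) is the Euler-type recursion \(Y_{n}^{k} = Y_{n}^{k-1} + \frac{1}{n}b(Y_{n}^{k-1}) + \frac{1}{\sigma\sqrt{n}}a(Y_{n}^{k-1})\zeta_{k}\) started at \(Y_{n}^{0}=Y_{0}\), with linear interpolation between grid points; the function name `euler_donsker_grid` is hypothetical. With \(b\equiv 0\), \(a\equiv 1\) and \(Y_{0}=0\), it returns the grid values of the Donsker walk \(W_{n}\) in (2.6).

```python
import math


def euler_donsker_grid(zeta, sigma, y0, b, a):
    """Grid values Y_n^k, k = 0..n, with n = len(zeta), of the assumed recursion
    Y_n^k = Y_n^{k-1} + b(Y_n^{k-1})/n + a(Y_n^{k-1}) * zeta_k / (sigma * sqrt(n)).

    Off-grid values follow by linear interpolation between consecutive entries.
    """
    n = len(zeta)
    if n == 0 or sigma <= 0:
        raise ValueError("need at least one innovation and sigma > 0")
    scale = sigma * math.sqrt(n)
    ys = [y0]
    for z in zeta:
        y = ys[-1]  # left-point value Y_n^{k-1}
        ys.append(y + b(y) / n + a(y) * z / scale)
    return ys
```

For instance, drawing `zeta` as independent \(\pm 1\) signs with `random.Random(seed).choice((-1.0, 1.0))` gives Rademacher innovations with \(\sigma =1\), which satisfy Assumption 2.10.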

Regarding the approximation scheme for the process \(X\) given by (2.3), we follow a typical route in weak convergence analysis (see Billingsley [13, Sect. 13] and Ethier and Kurtz [25, Chap. 3]) and establish convergence in the Skorokhod space \(\left (\mathcal {D}(\mathbb{I}),d_{\mathcal {D}}\right )\). Here \(\mathcal {D}(\mathbb{I})=\mathcal {D}(\mathbb{I};\mathbb{R})\) denotes the space of ℝ-valued càdlàg functions on \(\mathbb{I}\) and \(d_{\mathcal {D}}\) denotes a metric inducing Skorokhod’s \(J_{1}\)-topology. To approximate \(X\) in this space, we then consider the process
\[
X_{n}(t) := -\frac{1}{2n}\sum_{k=1}^{\lfloor nt\rfloor}\Phi(\mathcal{G}^{\alpha}Y_{n})(t_{k-1}) + \frac{1}{\sigma\sqrt{n}}\sum_{k=1}^{\lfloor nt\rfloor}\sqrt{\Phi(\mathcal{G}^{\alpha}Y_{n})(t_{k-1})}\;\xi_{k}, \qquad t\in\mathbb{I}. \tag{2.8}
\]
Analogously to (2.7), one could rewrite these as continuous processes via linear interpolation, but the additional interpolating term would tend to zero in probability by Chebyshev’s inequality. The following result, proved in Sect. 3.4, confirms the functional convergence of the approximating sequence \((X_{n})_{n\geq 1}\).

Theorem 2.11

The sequence \((X_{n})_{n\geq 1}\) converges in \((\mathcal {D}(\mathbb{I}),d_{\mathcal {D}})\) weakly to \(X\).

The construction of the proof allows extending the convergence to the case where \(Y\) is a \(d\)-dimensional diffusion without additional work. The proof of the theorem requires a certain number of steps: we start with the convergence of the approximations \((Y_{n})\) in some Hölder space, which we then translate into convergence of the sequence \((\Phi (\mathcal {G}^{\alpha }Y_{n}))\) by suitable continuity properties of the operations \(\mathcal {G}^{\alpha}\) and \(\Phi \), before finally deducing also the convergence of the corresponding stochastic integrals for the approximations of (2.3). These steps are carried out in Sects. 3.2–3.4 below.
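To make the piecewise-constant approximation of the log-price concrete, here is a sketch on the grid \(\mathcal {T}_{n}\). It assumes that (2.8) is the left-point Euler sum \(X_{n}(t)=\sum _{k\le nt}\big(-\frac{1}{2n}V_{k-1}+\frac{1}{\sigma\sqrt{n}}\sqrt{V_{k-1}}\,\xi _{k}\big)\) with \(X_{0}=0\), where \(V_{k-1}\) stands for \(\Phi (\mathcal {G}^{\alpha }Y_{n})(t_{k-1})\). These variance values are treated as given inputs (computing them is a separate step), and the name `log_price_grid` is hypothetical.

```python
import math


def log_price_grid(v_left, xi, sigma):
    """Values X_n(t_k), k = 0..n, of the assumed left-point scheme
    X_n(t_k) = X_n(t_{k-1}) - V_{k-1}/(2n) + sqrt(V_{k-1}) * xi_k / (sigma*sqrt(n)),
    started at X_n(0) = 0; v_left[k-1] plays the role of V_{k-1} >= 0.
    """
    n = len(xi)
    if n == 0 or len(v_left) != n:
        raise ValueError("need one left-point variance per innovation")
    if any(v < 0 for v in v_left):
        raise ValueError("variances must be nonnegative")
    scale = sigma * math.sqrt(n)
    xs = [0.0]
    for v, x in zip(v_left, xi):
        # drift correction -V/2 dt plus diffusion increment sqrt(V) dB
        xs.append(xs[-1] - 0.5 * v / n + math.sqrt(v) * x / scale)
    return xs
```

Because \(X_{n}\) is piecewise constant, \(X_{n}(t)\) is the entry at index \(\lfloor nt\rfloor \) of the returned list.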

3 Functional CLT for a family of Hölder-continuous processes

3.1 Weak convergence of Brownian motion in Hölder spaces

Donsker’s classical convergence result was proved under the Skorokhod topology. We concentrate here on convergence in the Hölder topology, due to Lamperti [49]. The standard convergence result for Brownian motion can be stated as follows.

Theorem 3.1

For \(\lambda <\frac{1}{2}\), the sequence \((W_{n})\) in (2.6) converges in \((\mathcal {C}^{\lambda}(\mathbb{I}),\|\cdot \|_{\lambda })\) weakly to a Brownian motion.

The proof relies on finite-dimensional convergence and tightness of the approximating sequence. Not surprisingly, the tightness criteria for the Skorokhod space \(\mathcal {D}(\mathbb{I})\) in [13, Sect. 13] and for a Hölder space are different. In fact, the tightness criterion in a Hölder space is closely related to the Kolmogorov–Čentsov continuity result [16]. Note in passing that the approximating sequence (2.6) is piecewise differentiable in time for each \(n\geq 1\), even though its limit is not. The proof of Theorem 3.1 follows from Theorem 3.3 below under Assumption 2.10.

Theorem 3.2

Let \(Z\in \mathcal {C}^{\lambda}(\mathbb{I})\) and \((Z_{n})_{n\geq 1}\) be an approximating sequence in the sense that for any sequence \((\tau _{k})_{k \geq 1}\) in \(\mathbb{I}\), the sequence \(((Z_{n}(\tau _{k}))_{k \geq 1})_{n \geq 1}\) converges in distribution to \((Z(\tau _{k}))_{k \geq 1}\) as \(n \to \infty \). Assume further that
\[
\mathbb{E}\big[|Z_{n}(t)-Z_{n}(s)|^{\gamma}\big] \le C\,|t-s|^{1+\beta} \tag{3.1}
\]
holds for all \(n\geq 1\), \(t,s\in \mathbb{I}\) and some \(C\), \(\gamma \), \(\beta \) all \(>0\) with \(\frac{\beta}{\gamma}\leq \lambda \). Then \((Z_{n})_{n\geq 1}\) converges in \(\mathcal {C}^{\mu}(\mathbb{I})\) weakly to \(Z\) for \(\mu <\frac{\beta}{\gamma}\leq \lambda \).

The proof of this theorem relies on results of Račkauskas and Suquet [63], who prove the convergence in the Hölder space \(\mathcal {C}_{0}^{\lambda}(\mathbb{I})\) with the norm \(\|f\|^{0}_{\lambda}:=|f|_{ \lambda}+|f(0)|\) for all functions that satisfy
\[
\lim_{\delta\downarrow 0}\;\sup_{s,t\in\mathbb{I},\,0<|t-s|<\delta}\frac{|f(t)-f(s)|}{|t-s|^{\lambda}} = 0.
\]
From this point, the proof of Theorem 3.2 is a straightforward consequence because \((\mathcal {C}_{0}^{\lambda}( \mathbb{I}),\|\cdot \|^{0}_{\lambda })\) is a separable closed subspace of \((\mathcal {C}^{\lambda}(\mathbb{I}),\|\cdot \|_{\lambda })\) (see Hamadouche [35] and [63] for details), and one can then use (3.1) to establish tightness as in [49]. Moreover, as the identity map from \(\mathcal {C}_{0}^{\lambda}(\mathbb{I})\) into \(\mathcal {C}^{\lambda}(\mathbb{I})\) is continuous, weak convergence in the former implies weak convergence in the latter. To conclude our review of weak convergence in Hölder spaces, the following theorem due to Račkauskas and Suquet [63] provides necessary and sufficient conditions ensuring convergence in Hölder space.

Theorem 3.3

Račkauskas–Suquet [63, Theorem 1]

For \(\lambda \in (0, \frac{1}{2})\), the sequence \((W_{n})_{n\geq 1}\) in (2.6) converges in \(\mathcal {C}^{\lambda}(\mathbb{I})\) weakly to a Brownian motion if and only if \(\mathbb{E}[\xi _{1}] = 0\) and \(\lim \limits _{t\uparrow \infty}t^{\frac{1}{1-2\lambda}}\mathbb{P}[| \xi _{1}|\geq t] = 0\).
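Under Assumption 2.10, the tail condition in Theorem 3.3 follows from Markov’s inequality alone. For any exponent \(q>\frac{1}{1-2\lambda}\),
\[
t^{\frac{1}{1-2\lambda}}\,\mathbb{P}\big[|\xi_{1}|\geq t\big] \;\le\; t^{\frac{1}{1-2\lambda}}\,\frac{\mathbb{E}\big[|\xi_{1}|^{q}\big]}{t^{q}} \;=\; \mathbb{E}\big[|\xi_{1}|^{q}\big]\,t^{\frac{1}{1-2\lambda}-q} \;\xrightarrow[t\uparrow\infty]{}\; 0,
\]
since \(\mathbb{E}[|\xi_{1}|^{q}]<\infty \) for every \(q\) and the exponent \(\frac{1}{1-2\lambda}-q\) is negative, while \(\mathbb{E}[\xi_{1}]=0\) because the innovations are centred.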

Assumption 2.10 ensures the conditions in Theorem 3.3. The following statement allows us to apply Theorem 3.2 on \(\mathbb{I}\) and extend the Hölder convergence result via linear interpolation to a sequence of continuous processes.

Theorem 3.4

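Let \(Z\in \mathcal {C}^{\lambda}(\mathbb{I})\) and \((Z_{n})_{n\geq 1}\) be an approximating sequence such that finite-dimensional convergence holds as \(n \to \infty \). Suppose moreover that
\[
\mathbb{E}\big[|Z_{n}(t_{j})-Z_{n}(t_{i})|^{\gamma}\big] \le C\,|t_{j}-t_{i}|^{1+\beta} \tag{3.2}
\]
for any \(t_{i}, t_{j} \in \mathcal {T}_{n}\) and some \(\beta \), \(\gamma \), \(C\) all \(>0\) with \(\frac{\beta}{\gamma}\leq \lambda \) and \(\gamma \geq 1+\beta \). Then the linearly interpolating sequence
\[
\overline{Z}_{n}(t) := Z_{n}(t_{\lfloor nt\rfloor}) + (nt-\lfloor nt\rfloor)\big(Z_{n}(t_{\lfloor nt\rfloor+1})-Z_{n}(t_{\lfloor nt\rfloor})\big), \qquad t\in\mathbb{I},
\]
satisfies (3.1). In particular, \((\overline{Z}_{n})_{n \geq 1}\) converges in \(\mathcal {C}^{\mu}(\mathbb{I})\) weakly to \(Z\) for \(\mu <\frac{\beta}{\gamma}\leq \lambda \).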

Proof

It suffices to confirm (3.1) for \(\overline{Z}_{n}\). The final claim then follows from Theorem 3.2. Take \(t, s \in \mathbb{I}\) and suppose first that \(t-s\geq \frac{1}{n}\). Letting \(Z_{n}^{k} := Z_{n}(t_{k})\) and \(\overline{Z}_{n}^{k} := \overline{Z}_{n}(t_{k})\), we can then write

where we used (3.2), the bound \(nt -\lfloor nt \rfloor \leq 1\) for \(t\geq 0\), and the inequality \(\frac{\lfloor nt \rfloor -\lfloor ns \rfloor }{n}\leq 2(t-s)\), which holds since \(t-s \geq \frac{1}{n}\).

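It remains to consider the case \(t-s < \frac{1}{n} \). There are two possible scenarios. If \(\lfloor nt \rfloor =\lfloor ns \rfloor =:k\), then \(\overline{Z}_{n}(t)-\overline{Z}_{n}(s)=n(t-s)(Z_{n}^{k+1}-Z_{n}^{k})\), and using (3.2), \(n(t-s)<1\) and \(\gamma \geq 1+\beta \) gives
\[
\mathbb{E}\big[|\overline{Z}_{n}(t)-\overline{Z}_{n}(s)|^{\gamma}\big] = \big(n(t-s)\big)^{\gamma}\,\mathbb{E}\big[|Z_{n}^{k+1}-Z_{n}^{k}|^{\gamma}\big] \le C\,\big(n(t-s)\big)^{\gamma}\,n^{-1-\beta} = C\,\big(n(t-s)\big)^{\gamma-1-\beta}(t-s)^{1+\beta} \le C\,(t-s)^{1+\beta}.
\]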
If \(\lfloor nt \rfloor \neq \lfloor ns \rfloor \), then either \(\lfloor nt \rfloor +1= \lfloor ns \rfloor \) or \(\lfloor nt \rfloor = \lfloor ns \rfloor +1\). Without loss of generality, consider the second case. Then

and the result follows as before since \(t-\frac{\lfloor nt \rfloor }{n}<|t-s|\) and \(|s-\frac{\lfloor nt \rfloor }{n}|\leq |t-s|\). □
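The linear interpolation \(\overline{Z}_{n}\) in Theorem 3.4 only needs the grid values \(Z_{n}(t_{k})\). A minimal sketch with a hypothetical helper `interpolate_grid`, in which the last interval is used at \(t=1\) so that no value beyond \(t_{n}\) is required:

```python
import math


def interpolate_grid(grid_values, t):
    """Piecewise-linear interpolant on T_n = {k/n : k = 0..n}, n = len(grid_values) - 1:
    Zbar_n(t) = Z_n^{floor(nt)} + (nt - floor(nt)) * (Z_n^{floor(nt)+1} - Z_n^{floor(nt)}).
    """
    n = len(grid_values) - 1
    if n < 1 or not 0.0 <= t <= 1.0:
        raise ValueError("need at least two grid values and t in [0, 1]")
    k = min(math.floor(n * t), n - 1)  # at t = 1 use the last interval
    frac = n * t - k  # weight in [0, 1] on the right endpoint
    return grid_values[k] + frac * (grid_values[k + 1] - grid_values[k])
```

For example, with grid values \((0,1,4)\) on \(\{0,\frac{1}{2},1\}\), the interpolant at \(t=\frac{3}{4}\) is \(1+\frac{1}{2}(4-1)=2.5\).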

3.2 Weak convergence of Itô diffusions in Hölder spaces

The first important step in our analysis is to extend the Donsker–Lamperti weak convergence from Brownian motion to the Itô diffusion \(Y\) in (2.4).

Theorem 3.5

The sequence \((Y_{n})_{n\geq 1}\) in (2.7) converges in \((\mathcal {C}^{\lambda}(\mathbb{I}),\|\cdot \|_{\lambda })\) weakly to \(Y\) in (2.4) for all \(\lambda <\frac{1}{2}\).

Proof

Finite-dimensional convergence is a classical result by Kushner [47]; so only tightness needs to be checked. In particular, using Theorem 3.4, we need only consider the partition \(\mathcal {T}_{n}\). Thus we get

where we have used the discrete version of the BDG inequality (see Beiglböck and Siorpaes [8, Theorem 6.3]) in the martingale term \(\sum _{k=i+1}^{j}\frac{1}{\sigma \sqrt{n}}a (Y_{n}^{k-1} )\zeta _{k}\) with the constant \(C(p):=6^{p}(p-1)^{p-1}\). Indeed, if we consider the discrete-time martingale process \((x^{i,j}_{n})_{u}:=\sum _{k=1}^{u} \frac{1}{\sigma \sqrt{n}}a (Y_{n}^{k+i-1} )\zeta _{i+k}\) for \(u\in \{1,\dots ,j-i\}\), we have \(|(x^{i,j}_{n})_{j-i}|\leq \max _{u\in \{1,\dots , j-i\}} |(x^{i,j}_{n})_{u}|\), so the BDG inequality also applies to \(|(x^{i,j}_{n})_{j-i}|\). We also used the independence of \(\zeta _{k}\) and \(Y_{n}^{k-1}\), the linear growth of \(b(\,\cdot \,)\) and \(a( \,\cdot \,)\) from Assumption 2.3, Hölder’s inequality and the discrete version of Gronwall’s lemma (see Clark [17]) in the last step. Since \(\mathbb{E}[\zeta _{k}^{2p}]\) is bounded by Assumption 2.10 and the constant \(\mathfrak {C}(p)\) depends only on \(p\) and not on \(n\), the criterion (3.2) of Theorem 3.4 holds for \(p>1\) with \(\gamma =2p\) and \(\beta =p-1\). □
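To see which Hölder exponents this choice of \(\gamma \) and \(\beta \) reaches, note that
\[
\frac{\beta}{\gamma} = \frac{p-1}{2p} = \frac{1}{2}-\frac{1}{2p}, \qquad \frac{p-1}{2p} > \lambda \iff p\,(1-2\lambda) > 1 \iff p > \frac{1}{1-2\lambda},
\]
and the side condition \(\gamma = 2p \geq p = 1+\beta \) of Theorem 3.4 holds automatically. Hence, for a given \(\lambda <\frac{1}{2}\), any \(p>\frac{1}{1-2\lambda}\) makes \(\lambda \) an admissible exponent \(\mu <\frac{\beta}{\gamma}\); the threshold \(\frac{1}{1-2\lambda}\) is the same exponent that appears in the tail condition of Theorem 3.3.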

Corollary 3.6

Let \((Y_{n})_{n\geq 1}\) be as in Theorem 3.5 with innovations \((\zeta _{i})_{i\geq 1}\), and suppose \((B_{n})_{n\geq 1}\) is defined by the Donsker approximations (2.6) for some innovations \((\xi _{i})_{i\geq 1}\) satisfying Assumption 2.10 such that \(((\zeta _{i},\xi _{i}))_{i\geq 1}\) is i.i.d. with \(\mathrm{corr}(\zeta _{i},\xi _{i})=\rho \) for all \(i\geq 1\). Then there is joint weak convergence in \((\mathcal {C}^{\lambda}(\mathbb{I},\mathbb{R}^{2}),\|\cdot \|_{\lambda })\) of \((B_{n},Y_{n})\) to \((B,Y)\), for all \(\lambda <\frac{1}{2}\), for a standard Brownian motion \(B\) such that \([B,W]_{t}=\rho t\) for \(t\in \mathbb{I}\), where \(W\) is the standard Brownian motion driving the dynamics of the weak limit \(Y\) in (2.4).

Proof

Let \((\zeta ^{\perp}_{i})_{i\geq 1}\) satisfy Assumption 2.10 and be independent of the innovations \((\zeta _{i})_{i\geq 1}\) defining \((Y_{n})_{n\geq 1}\). Then set \(\xi _{i}:=\rho \zeta _{i} + \overline {\rho }\zeta ^{\perp}_{i}\) for \(i\geq 1\) and let \(B_{n}\) be defined in terms of \((\xi _{i})_{i\geq 1}\). This yields the same finite-dimensional distributions of \((B_{n},Y_{n})\) as for the general \((\xi _{i})_{i\geq 1}\) in the statement of Corollary 3.6. Consider now the drift vector \(\textbf{b}(y)=(0,b(y))\) and the \(2\times 2\) diffusion matrix \(\textbf{a}(y)\) with rows \((\rho , \overline {\rho })\) and \((a(y),0)\), where the columns correspond to the noises with innovations \((\zeta _{i})_{i\geq 1}\) and \((\zeta ^{\perp}_{i})_{i\geq 1}\), respectively. Then the result of Kushner [47] applies directly to give finite-dimensional convergence with the desired limit. Finally, tightness of \(((B_{n},Y_{n}))_{n \geq 1}\) follows analogously to the proof of Theorem 3.5, and the claim follows. □
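The construction \(\xi _{i}=\rho \zeta _{i}+\overline {\rho }\zeta ^{\perp}_{i}\) in the proof above is easy to reproduce. The sketch below uses Rademacher (\(\pm 1\)) variables for both \(\zeta _{i}\) and \(\zeta ^{\perp}_{i}\); this is an assumption made only because they are the simplest innovations satisfying Assumption 2.10 with \(\sigma =1\). The resulting \(\xi _{i}\) takes four values but still has mean \(0\), variance \(\rho ^{2}+\overline {\rho }^{2}=1\) and correlation \(\rho \) with \(\zeta _{i}\). The function name `correlated_rademacher` is hypothetical.

```python
import math
import random


def correlated_rademacher(n, rho, rng=None):
    """Return lists (zeta, xi) of length n with xi_i = rho*zeta_i + rhobar*zeta_perp_i,
    where zeta_i and zeta_perp_i are independent Rademacher (+/-1) variables.
    Then E[xi_i] = 0, Var(xi_i) = 1 and corr(zeta_i, xi_i) = rho.
    """
    if not -1.0 <= rho <= 1.0:
        raise ValueError("rho must lie in [-1, 1]")
    if rng is None:
        rng = random.Random()
    rho_bar = math.sqrt(1.0 - rho * rho)
    zeta = [rng.choice((-1.0, 1.0)) for _ in range(n)]
    zeta_perp = [rng.choice((-1.0, 1.0)) for _ in range(n)]  # independent of zeta
    xi = [rho * z + rho_bar * zp for z, zp in zip(zeta, zeta_perp)]
    return zeta, xi
```

Passing a seeded `random.Random` instance makes the innovations reproducible; the pairs can then drive the walks \(Y_{n}\) (through `zeta`) and \(B_{n}\) (through `xi`).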

3.3 Invariance principle for rough processes

We have now laid the groundwork to extend our results to processes that are not necessarily \((\frac{1}{2}-\varepsilon )\)-Hölder-continuous, Markovian or semimartingales. More precisely, we are interested in \(\alpha \)-Hölder-continuous paths with \(\alpha \in (0,1)\), such as Riemann–Liouville fractional Brownian motion or some TBSS processes. A key tool is the continuous mapping theorem, first proved by Mann and Wald [53], which establishes the preservation of weak convergence under continuous operators.

Theorem 3.7

Let \((\mathcal {X},\|\cdot \|_{\mathcal {X}})\) and \((\mathcal {Y},\|\cdot \|_{\mathcal {Y}})\) be two normed spaces and assume that \(g:\mathcal {X}\to \mathcal {Y}\) is a continuous operator. If the sequence \((Z_{n})_{n\geq 1}\) of random variables converges in \((\mathcal {X},\|\cdot \|_{\mathcal {X}})\) weakly to \(Z\), then \((g(Z_{n}))_{n\geq 1}\) converges in \((\mathcal {Y},\|\cdot \|_{\mathcal {Y}})\) weakly to \(g(Z)\).

Many authors have exploited the combination of Theorems 3.1 and 3.7 to prove weak convergence; see e.g. Pollard [62, Chap. IV]. This path avoids the lengthy computations of tightness and finite-dimensional convergence in classical proofs. In fact, Hamadouche [35] already realised that Riemann–Liouville fractional operators are continuous on Hölder spaces, so that Theorem 3.7 applies to them. In contrast, the novelty here is to consider the family of GFOs applied to Brownian motion together with the extension of Brownian motion to Itô diffusions.

The analogue of Theorem 3.5 for \(\mathcal {G}^{ \alpha }Y\) reads as follows.

Theorem 3.8

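For \((Y_{n})_{n \geq 1}\) in (2.7) and its weak limit \(Y\) in \((\mathcal {C}^{\lambda}(\mathbb{I}),\|\cdot \|_{\lambda} )\) for \(\lambda <\frac{1}{2}\), the representation
\[
(\mathcal{G}^{\alpha}Y_{n})(t) = n\sum_{i=1}^{\lfloor nt\rfloor}\big(G(t-t_{i-1})-G(t-t_{i})\big)\big(Y_{n}^{i}-Y_{n}^{i-1}\big) + n\,G\big(t-t_{\lfloor nt\rfloor}\big)\big(Y_{n}^{\lfloor nt\rfloor+1}-Y_{n}^{\lfloor nt\rfloor}\big) \tag{3.3}
\]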
holds for \(t\in \mathbb{I}\). Furthermore, this sequence \(\left (\mathcal {G}^{\alpha }Y_{n}\right )_{n\geq 1}\) converges in \((\mathcal {C}^{\alpha +\lambda}(\mathbb{I}),\|{\cdot} \|_{\alpha +\lambda} )\) weakly to \(\mathcal {G}^{\alpha }Y\) for any \(\alpha \in (-\lambda , 1-\lambda )\).

Proof

Recall that the sequence (2.7) is piecewise differentiable in time. First, for \(\alpha \in (0, 1-\lambda )\), note that \(g(0)=0\) since \(\alpha >0\) and therefore by integration by parts (where \(Y_{n}\) is piecewise differentiable), for \(n\geq 1\) and \(t\in \mathbb{I}\),

and (3.3) follows since \(G(0)=0\) in the last line. When \(\alpha \in (-\lambda ,0)\), using \(G(0)=0\), we similarly get

and from there it readily follows that

as desired; note that when \(t=\frac{k}{n}\), the difference quotients pick up an extra term, but this vanishes in the limit. Finally, the claimed convergence follows analogously to that in Theorem 3.5 by continuous mapping, along with the fact that \(\mathcal {G}^{\alpha}\) is a continuous operator from \((\mathcal {C}^{\lambda}(\mathbb{I}),\|\cdot \|_{\lambda} )\) to \((\mathcal {C}^{\lambda +\alpha}(\mathbb{I}),\|\cdot \|_{\lambda +\alpha} )\) for all \(\lambda \in (0,1)\) and \(\alpha \in (-\lambda , 1-\lambda )\). □

Notice here that the mean value theorem implies

where \(t^{*}_{i}\in [t-t_{i},t-t_{i-1}]\) and \(t^{*}_{\lfloor nt \rfloor +1}\in [0,t-t_{\lfloor nt \rfloor }]\), and we use that \(G(0)=0\). This expression is closer to the usual left-point forward Euler approximation. For numerical purposes, (3.4) is much more efficient, since the integral \(G\) required in (3.3) is not necessarily available in closed form. However, not every choice of \(t^{*}_{i}\) yields the desired convergence from the above argument. We present a suitable candidate for an optimal \(t_{i}^{*}\) in Sect. 4.3.1, which guarantees weak convergence in the Hölder sense.
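On the grid, the left-point approximation (3.4) is a discrete convolution of kernel weights with the increments of \(Y_{n}\). The sketch below is a minimal illustration under stated assumptions: the uniform grid \(t_{i}=\frac{i}{n}\), evaluation points \(t^{*}_{k}=t_{i}-t_{k}+\frac{\varepsilon}{n}\) (the parametrisation used in the next paragraph), and a hypothetical function name `left_point_gfo`. With \(\varepsilon =1\) the kernel is evaluated at \(t_{i-k+1}\), which avoids the singularity of \(g\) at zero when \(\alpha <0\); \(\varepsilon =0\) would require \(g(0)\).

```python
import numpy as np


def left_point_gfo(dY, g, eps=1.0):
    """Left-point approximation (3.4) of G^alpha Y_n on the grid t_i = i/n.

    dY  : array of shape (..., n) holding the increments Delta Y_n(t_k), k = 1..n
    g   : vectorised kernel, e.g. lambda u: u ** alpha
    eps : kernel evaluated at t*_k = t_i - t_k + eps/n, inside [t_{i-k}, t_{i-k+1}]
    Returns an array of shape (..., n + 1) whose entry i approximates G^alpha Y_n(t_i).
    """
    dY = np.asarray(dY, dtype=float)
    n = dY.shape[-1]
    # w[m] = g(t_m + eps/n): kernel weight for the lag m = i - k, m = 0..n-1
    w = g((np.arange(n) + eps) / n)
    out = np.zeros(dY.shape[:-1] + (n + 1,))
    for i in range(1, n + 1):
        # sum_{k=1}^{i} g(t*_k) dY_k; increment k (0-based k-1) pairs with w[i - k]
        out[..., i] = dY[..., :i] @ w[:i][::-1]
    return out
```

With \(g\equiv 1\) the sum telescopes to \(Y_{n}(t_{i})-Y_{n}(0)\), which gives a quick sanity check of the indexing.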

As one would expect, the Hurst parameter influences the speed of convergence of the scheme. We leave a formal proof for future work, but the following argument provides some intuition about the correct normalising factor. Given \(g \in \mathcal {L}^{\alpha}\), we can write \(g(u)=u^{\alpha}L(u)\), where \(L\) is a bounded function on \(\mathbb{I}\). At time \(t=t_{i}\), take \(t^{*}_{k}=t_{i}-t_{k}+\frac{\varepsilon}{n}\) for \(\varepsilon \in [0,1]\). For \(\alpha \in (-\lambda ,0)\), since \(g \in \mathcal {L}^{\alpha}\), we can rewrite the approximation (3.4) as

Here, \((i-k+\varepsilon )^{\alpha}\leq \varepsilon ^{\alpha}\) is bounded in \(n\geq 1\) as long as \(\varepsilon \in (0,1]\); so the normalisation factor is of the order \(n^{-\alpha -1/2}\). When \(\alpha \in (0, 1-\lambda )\), the approximation (3.4) instead reads

in which case \((t_{i}-t_{k}+\frac{\varepsilon}{n})^{\alpha}\leq t_{i}^{\alpha}\) is bounded in \(n\geq 1\), and hence the normalisation factor is of the order \(n^{-1/2}\). This intuition is consistent with the result of Neuenkirch and Shalaiko [57], who found the strong rate of convergence of the Euler scheme for the fractional Ornstein–Uhlenbeck process to be of the order \(\mathcal{O}(n^{-H})\) for \(H<\frac{1}{2}\); indeed, with \(\alpha =H-\frac{1}{2}<0\), the factor \(n^{-\alpha -1/2}\) above is exactly \(n^{-H}\).

So far, our results hold for \(\alpha \)-Hölder-continuous functions; however, for practical purposes, the volatility process \((V_{t})_{t\in \mathbb{I}}\) often needs to remain strictly positive at all times, which the stochastic integral \(\mathcal {G}^{\alpha }Y\) need not be in general. A simple transformation (e.g. exponential) overcomes this. The remaining question is whether \(\alpha \)-Hölder continuity is preserved under this composition.

Proposition 3.9

Let \((Y_{n})_{n\geq 1}\) be the approximating sequence (2.7) in \(\mathcal {C}^{\lambda}(\mathbb{I})\) for fixed \(\lambda <1/2\). Then \((\Phi (\mathcal {G}^{\alpha }Y_{n} ) )_{n \geq 1}\) converges in \((\mathcal {C}^{\alpha +\lambda}(\mathbb{I}),\|\cdot \|_{\alpha +\lambda} )\) weakly to \(\Phi (\mathcal {G}^{\alpha }Y )\) for all \(\alpha \in (-\lambda , 1-\lambda )\).

Proof

By Theorem 3.8, \((\mathcal {G}^{\alpha }Y_{n})_{n \geq 1}\) converges in \((\mathcal {C}^{\lambda +\alpha}(\mathbb{I}),\|\cdot \|_{\lambda +\alpha} )\) weakly to \(\mathcal {G}^{\alpha }Y\). Furthermore, under our assumptions, \(\Phi \) is continuous from \((\mathcal {C}^{\lambda +\alpha}(\mathbb{I}),\|\cdot \|_{\lambda +\alpha} )\) to \((\mathcal {C}^{\lambda +\alpha}(\mathbb{I}),\|\cdot \|_{\lambda +\alpha} )\). The proposition thus follows from the continuous mapping theorem. The diagram below summarises the steps with \(\lambda <1/2\). The double arrows show weak convergence, and we indicate next to them the topology in which it takes place.

□

3.4 Extending the weak convergence to the Skorokhod space and proof of Theorem 2.11

The Skorokhod space \((\mathcal {D}(\mathbb{I}),d_{\mathcal {D}} )\) of càdlàg functions equipped with the Skorokhod topology has been widely used to prove weak convergence. It simplifies markedly when restricted to continuous functions (as is the case in our framework with Hölder-continuous processes). Billingsley [13, Sect. 12] proved that the identity \(\left (\mathcal {D}(\mathbb{I})\cap \mathcal {C}(\mathbb{I}),d_{\mathcal {D}}\right )=\left (\mathcal {C}(\mathbb{I}), \|\cdot \|_{\infty}\right )\) holds, i.e., on continuous functions the Skorokhod topology coincides with the uniform topology. This seemingly simple statement allows us to reduce proofs of weak convergence of continuous processes in the Skorokhod topology to proofs in the supremum norm, which are usually much simpler. We start with the following straightforward observation.

Lemma 3.10

For \(\lambda \in (0,1)\), the identity map is continuous from \((\mathcal {C}^{\lambda}(\mathbb{I}),\|\cdot \|_{\lambda })\) to \((\mathcal {D}(\mathbb{I}),d_{\mathcal {D}} )\).

Proof

Taking the identity time change in the definition of the Skorokhod metric shows that \(d_{\mathcal {D}}(f,h)\leq \| f-h\|_{\infty}\) for all \(f,h\in \mathcal {D}(\mathbb{I})\). Moreover, \(\| f\|_{\infty}\leq |f|_{\lambda}+ \| f\|_{\infty}=\| f\|_{\lambda}\) for all \(f\in \mathcal {C}^{\lambda}(\mathbb{I})\). Hence \(d_{\mathcal {D}}(f,h)\leq \| f-h\|_{\lambda}\), so the identity map is Lipschitz and in particular continuous. □

Applying the continuous mapping theorem twice, first with the generalised fractional operator (Theorem 3.8) and then with the identity map, directly yields the following result.

Theorem 3.11

For any \(\alpha \in (-\frac{1}{2},\frac{1}{2} )\), the sequence \((\Phi (\mathcal {G}^{\alpha }Y_{n}) )_{n \geq 1}\) converges in \((\mathcal {D}(\mathbb{I}),d_{\mathcal {D}} )\) weakly to \(\Phi (\mathcal {G}^{\alpha }Y )\). Moreover, the sequence is tight in \((\mathcal {C}(\mathbb{I}),\|\cdot \|_{\infty} )\).

The final step in the proof of our main theorem is to extend the functional weak convergence to the log-stock price \(X\). For this, we rely on the weak convergence theory for stochastic integrals due to Jakubowski et al. [42] and further developed by Kurtz and Protter [46]. Throughout, we write \(H \bullet N:=\int H \mathrm {d}N \) and use the notation \(H_{-}\) for the process \(H_{-}(t):=H(t-)\) obtained by taking left limits. The next result is a restatement of [46, Theorem 2.2] in the special case \(\delta =\infty \) (in their notation) and restricted to real-valued processes on \(\mathbb{I}\).

Theorem 3.12

For each \(n\geq 1\), let \(N_{n}=M_{n}+A_{n}\) be an \((\mathcal{F}^{n}_{t})\)-semimartingale and \(H_{n}\) an \((\mathcal{F}^{n}_{t})\)-adapted càdlàg process on \(\mathbb{I}\). Suppose that for all \(\gamma >0\), there are \((\mathcal{F}^{n}_{t})\)-stopping times \((\tau _{n}^{\gamma})_{n\geq 1}\) with the property that \(\sup _{n \geq 1}\mathbb{P}[\tau _{n}^{\gamma }\leq \gamma ] \leq 1/\gamma \) and \(\sup _{n\geq 1}\mathbb{E}[ [M_{n}]_{\tau _{n}^{\gamma}\land 1}+T_{ \tau _{n}^{\gamma}\land 1}(A_{n})]<\infty \), where \(T_{t}\) denotes the total variation on \([0,t]\). If \((H_{n},N_{n} )_{n \geq 1}\) converges in \((\mathcal {D}(\mathbb{I}, \mathbb{R}^{2}),d_{\mathcal {D}})\) weakly to \((H,N)\), then \(N\) is a semimartingale in the filtration generated by \((H,N)\) and \((H_{n}, N_{n}, (H_{n})_{-}\bullet N_{n} )_{n \geq 1}\) converges in \((\mathcal {D}(\mathbb{I}, \mathbb{R}^{3}),d_{\mathcal {D}})\) weakly to \((H, N, H_{-} \bullet N)\).

With this, we can now give the proof of Theorem 2.11 which asserts the functional weak convergence of the approximations \((X_{n})_{n \geq 1}\) from (2.8) to the desired log-price \(X\) from (2.3).

Proof of Theorem 2.11

We begin by considering, for all \(n\geq 1\), the particular approximations

for \(t\in \mathbb{I}\), of the driving Brownian motion \(B\) in the dynamics of \(X\). Here the \(\xi _{i}\) satisfy Assumption 2.10, and so do the \(\zeta _{i}\) in the construction of \(Y_{n}\) from (2.7). Although \(\xi _{i}\) and \(\zeta _{i}\) are correlated within each pair, the pairs \(((\zeta _{i},\xi _{i}))_{i\geq 1}\) form an i.i.d. sequence. It is then straightforward to see that each \(M_{n}\) is a martingale on \(\mathbb{I}\) for the filtration \((\mathcal{F}^{n}_{t})\) defined by \(\mathcal{F}^{n}_{t}:=\sigma (\zeta _{i},\xi _{i}:i=1,\ldots ,\lfloor nt \rfloor )\). Moreover, we have

for \(t\in \mathbb{I}\) and for all \(n\geq 1\). Consequently, we can simply take \(\tau _{n}^{\gamma }\equiv +\infty \) for all \(\gamma >0\) and \(n\geq 1\) to satisfy the required control on the integrators \(N_{n}:=M_{n}\) in Theorem 3.12. By Ethier and Kurtz [25, Theorem 7.1.4], the \(M_{n}\) converge in \((\mathcal {D}(\mathbb{I}),d_{\mathcal {D}})\) weakly to a Brownian motion \(B\). Now fix \(\alpha \in (-\frac{1}{2}, \frac{1}{2})\) and define a sequence \((H_{n})_{n \geq 1}\) of càdlàg processes on \(\mathbb{I}\) by setting \(H_{n}(1):=\Phi (\mathcal {G}^{\alpha }Y_{n})(1)\) and \(H_{n}(t):=\Phi (\mathcal {G}^{\alpha }Y_{n})(t_{k-1})\) for \(t\in [t_{k-1},t_{k})\) and \(k=1,\ldots ,n\). In view of Theorem 3.11, the Arzelà–Ascoli characterisation of tightness (see [13, Theorem 8.2]) for the space \((\mathcal {C}(\mathbb{I}),\|\cdot \|_{\infty} )\) allows us to conclude that the \(H_{n}\) converge in \((\mathcal {D}(\mathbb{I}),d_{\mathcal {D}})\) weakly to \(H:=\Phi (\mathcal {G}^{\alpha }Y)\). Furthermore, recalling the definition of \(Y_{n}\) in (2.7), each \(H_{n}\) is adapted to the filtration \((\mathcal{F}^{n}_{t})\) introduced above. By Corollary 3.6, we readily deduce that there is joint weak convergence in \((\mathcal {D}(\mathbb{I}),d_{\mathcal{D}})\times (\mathcal {D}(\mathbb{I}),d_{\mathcal{D}}) \times (\mathcal {D}(\mathbb{I}),d_{\mathcal{D}}) \) of \((Y_{n},H_{n},M_{n})\) to \((Y,H,B)\), where \(Y\) satisfies (2.4) for a Brownian motion \(W\) with \([ W, B ]_{t} = \rho t\) for all \(t\in \mathbb{I}\). As noted in [46], the Skorokhod topology on \(\mathcal {D}(\mathbb{I},\mathbb{R}^{2})\) is stronger than the product topology on \(\mathcal {D}(\mathbb{I})\times \mathcal {D}(\mathbb{I})\), but here it automatically follows that we have weak convergence in \((\mathcal {D}(\mathbb{I}, \mathbb{R}^{2}),d_{\mathcal {D}} )\) of the pairs \((H_{n},M_{n})\) to \((H,B)\) by standard properties of the Skorokhod topology (see e.g. [25, Theorem 3.10.2]), since the limiting pair \((H,B)\) is continuous. Consequently, we are in a position to apply Theorem 3.12. To this end, observe that

which is precisely the second term on the right-hand side of (2.8). Therefore, Theorem 3.12 gives that the stochastic integral \(H \bullet M = \sqrt{\Phi (\mathcal {G}^{\alpha }Y)}\bullet B\) is, in \((\mathcal {D}(\mathbb{I}),d_{\mathcal {D}})\), the weak limit of the second term on the right-hand side of (2.8). For the first term on the right-hand side of (2.8), \(-\frac{1}{2}\int _{0}^{\cdot }H_{n}(s) \mathrm {d}s\) converges weakly to \(-\frac{1}{2}\int _{0}^{\cdot }H(s)\mathrm {d}s\) by the continuous mapping theorem, as the integral is a continuous operator from \((\mathcal {D}(\mathbb{I}),d_{\mathcal {D}})\) to itself. Since there is weak convergence in \((\mathcal {D}(\mathbb{I},\mathbb{R}^{2}),d_{\mathcal {D}})\) of the pairs \((H_{n},(H_{n})_{-} \bullet M_{n})\) to \((H, H \bullet B)\), the sum of the two terms on the right-hand side of (2.8) is then also weakly convergent in \((\mathcal {D}(\mathbb{I}),d_{\mathcal {D}})\). Recalling that the limit \(Y\) satisfies (2.4) for a Brownian motion \(W\) such that \(W\) and \(B\) are correlated with parameter \(\rho \), we conclude that \(X_{n}\) converges in \((\mathcal {D}(\mathbb{I}),d_{\mathcal {D}})\) weakly to the desired limit \(X\). □

4 Applications

4.1 Weak convergence of the hybrid scheme

The hybrid scheme introduced by Bennedsen et al. [10] (and its turbocharged version in McCrickerd and Pakkanen [54]) is the current state of the art for simulating TBSS processes. However, only mean-square convergence has been proved for it, not (functional) weak convergence, which is what justifies using the scheme for path-dependent options. Unless otherwise stated, we denote by \(\mathcal {T}_{n}:=\{t_{k} = \frac{k}{n}:k=0,1,\dots ,n\}\) the uniform grid on \(\mathbb{I}\). We show that Hölder convergence also holds in the case \(g(x)=x^{\alpha}\).

Proposition 4.1

The hybrid scheme sequence \((\widetilde{\mathcal {G}}^{\alpha }W_{n})_{n \geq 1}\) is defined as

for \(t\in \mathbb{I}\), where for \(i = 0,\ldots ,n\) and \(\kappa \geq 1\),

with \(\xi _{k} := \int _{t_{k-1}}^{t_{k}}\mathrm {d}W_{s}\sim \mathcal {N}(0,\frac{1}{n})\). It converges in \((\mathcal {C}^{\alpha +1/2},\|\cdot \|_{\alpha +1/2} )\) weakly to \(\mathcal {G}^{\alpha }W\) for \(\alpha \in (-\frac{1}{2},\frac{1}{2} )\) and \(\kappa =1\).

Proof

Finite-dimensional convergence is straightforward: the approximating and target processes are both centred Gaussian, so convergence of the covariance matrices ensures finite-dimensional convergence. It therefore remains to show that the approximating sequence is tight, which, by Theorem 3.4, amounts to showing that

for all \(t_{i}, t_{j} \in \mathcal {T}_{n}\), for \(p\geq 1\) and some constant \(C\geq 0\). Without loss of generality, assume \(t_{j}< t_{i}\) and take \(\kappa =1\). Define

The statement is trivial if \(j=0\) or \(i=1\), so we assume otherwise. Writing ≲ for an inequality that holds up to a multiplicative constant, the definition of \(\widetilde{\mathcal{G}}^{\alpha}W_{n}(t_{i})\) with \(\kappa =1\) gives

For the first term, since the sequence \((\xi _{k})\) is i.i.d. \(\sim \mathcal{N}(0,\frac{1}{n})\), we can write

For the second term, we have by Jensen’s inequality that

Finally, for the last term,

Thus by standard moment properties of Gaussian random variables (see Boucheron et al. [14, Theorem 2.1]), we obtain

which gives the desired result. □
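For comparison with the rDonsker scheme, here is a minimal sketch of the hybrid scheme with \(\kappa =1\) for \(g(x)=x^{\alpha}\), following the construction of Bennedsen et al. [10] as we understand it (the display defining \(\widetilde{\mathcal {G}}^{\alpha }W_{n}\) is not reproduced above, so treat the details as an assumption). The most recent cell is simulated exactly through the Gaussian pair \((\xi _{i},\int _{t_{i-1}}^{t_{i}}(t_{i}-s)^{\alpha}\mathrm {d}W_{s})\), and older cells use a Riemann sum with evaluation points \(b_{k}=\big((k^{\alpha +1}-(k-1)^{\alpha +1})/(\alpha +1)\big)^{1/\alpha}\). The function name is hypothetical, and \(\alpha =0\) is excluded because of the exponent \(1/\alpha \).

```python
import numpy as np


def hybrid_scheme_paths(n, alpha, M, rng):
    """Hybrid scheme with kappa = 1 for G^alpha W, g(x) = x**alpha, on t_i = i/n.

    Valid for alpha in (-1/2, 1/2), alpha != 0. Returns shape (M, n + 1), zero at t_0.
    """
    dt = 1.0 / n
    # Covariance of (xi_i, int_{t_{i-1}}^{t_i} (t_i - s)^alpha dW_s)
    c12 = dt ** (alpha + 1) / (alpha + 1)
    cov = np.array([[dt, c12],
                    [c12, dt ** (2 * alpha + 1) / (2 * alpha + 1)]])
    Z = rng.multivariate_normal(np.zeros(2), cov, size=(M, n))  # shape (M, n, 2)
    xi, near = Z[..., 0], Z[..., 1]
    # Riemann-sum weights g(b_k / n) for the lags k = 2..n
    k = np.arange(2, n + 1, dtype=float)
    b = ((k ** (alpha + 1) - (k - 1) ** (alpha + 1)) / (alpha + 1)) ** (1.0 / alpha)
    w = (b / n) ** alpha  # w[k - 2] is the weight for lag k
    out = np.zeros((M, n + 1))
    for i in range(1, n + 1):
        # exact part: the most recent cell [t_{i-1}, t_i]
        out[:, i] = near[:, i - 1]
        if i >= 2:
            # sum_{k=2}^{i} g(b_k / n) xi_{i-k+1}, i.e. increments xi_1..xi_{i-1}
            out[:, i] += xi[:, : i - 1] @ w[: i - 1][::-1]
    return out
```

A simple check compares the sample variance at \(t=1\) with the exact value \(\int _{0}^{1}u^{2\alpha}\mathrm {d}u=\frac{1}{2\alpha +1}\).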

We further note that Proposition 4.1 and Theorem 2.11 ensure the weak convergence of the log-stock price for the hybrid scheme as well.

Remark 4.2

Proposition 4.1 may easily be extended to a \(d\)-dimensional Brownian motion \(W\) (for example for multifactor volatility models), also providing a weak convergence result for the \(d\)-dimensional version of the hybrid scheme recently developed by Heinrich et al. [37].

4.2 Application to fractional binomial trees

We consider a binomial setting for the Riemann–Liouville fractional Brownian motion \(\mathcal {G}^{H-1/2} W\) with \(g(u):= u^{H-1/2}\), \(H\in (0,1)\), for which Theorem 3.8 provides a weakly converging sequence. On the partition \(\mathcal {T}_{n}\) and with a Bernoulli sequence \((\zeta _{i})_{i=1, \dots ,n}\) satisfying \(\mathbb{P}[\zeta _{i}=1]=\mathbb{P}[\zeta _{i}=-1]=\frac{1}{2}\) for all \(i\) (which satisfies the assumptions of Theorem 2.11), the approximating sequence reads

Figure 1 shows a fractional binomial tree structure for \(H=0.75\) and \(H=0.1\). Despite being symmetric, such trees do not recombine, owing to the (non-Markovian) path-dependent nature of the process. It might be possible in principle to modify the tree at each step to make it recombining, following the procedure developed in Akyıldırım et al. [1] for Markovian stochastic volatility models. This is not straightforward, though, and requires a thorough analysis which we leave for future research.
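Because the tree does not recombine, the number of nodes doubles at every step. The minimal sketch below enumerates all \(2^{n}\) paths; it assumes the left-point weights \(g(t_{i-k+1})\) of Step 3 of Algorithm 4.3 (Sect. 4.3) with \(\Delta Y_{n}(t_{k})=\zeta _{k}/\sqrt{n}\), since the display for the approximating sequence is not reproduced above. Both function names are hypothetical.

```python
import itertools

import numpy as np


def fractional_binomial_tree(n, H):
    """All 2**n paths of a left-point rDonsker approximation of Riemann-Liouville
    fBm, g(u) = u**(H - 1/2), driven by fair +/-1 coin flips on t_i = i/n.

    Returns an array of shape (2**n, n + 1); each row is one path of the tree.
    """
    w = (np.arange(1, n + 1) / n) ** (H - 0.5)        # w[m - 1] = g(t_m)
    signs = np.array(list(itertools.product([1.0, -1.0], repeat=n)))
    paths = np.zeros((2 ** n, n + 1))
    for i in range(1, n + 1):
        # sum_{k=1}^{i} g(t_{i-k+1}) * zeta_k / sqrt(n)
        paths[:, i] = signs[:, :i] @ w[:i][::-1] / np.sqrt(n)
    return paths


def distinct_nodes(paths, decimals=10):
    """Number of distinct node values at each time step of the tree."""
    return [len(np.unique(np.round(paths[:, i], decimals)))
            for i in range(paths.shape[1])]
```

For \(H=\frac{1}{2}\) the kernel is constant and the tree recombines into \(i+1\) nodes at step \(i\), whereas for \(H=0.1\) all \(2^{i}\) nodes are distinct.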

4.3 Monte Carlo

Theorem 2.11 lays the theoretical foundations for Monte Carlo methods (in particular for path-dependent options) in rough volatility models. In this section, we give a general and easy-to-understand recipe to implement the class of rough volatility models (2.3). For the numerical recipe, we consider the general time partition \(\mathcal {T}:=\{t_{i}=\frac{iT}{n} : i=0,1,\ldots ,n\}\) on \([0,T]\) with \(T>0\).

Algorithm 4.3

1) Simulate, for \(j =1,\dots ,M \) and \(i = 1, \dots ,n\), random variables \(\xi _{j,i}\), i.i.d., and \(\zeta _{j,i}\), i.i.d., all \(\sim \mathcal {N}(0,1)\) and with \(\mathrm{corr}(\xi _{j,i},\zeta _{j,i})=\rho \).

2) Simulate \(M\) paths of \(Y_{n}\) via

$$ Y_{n}^{j}(t_{i}) = \frac{T}{n}\sum _{k=1}^{i}b\big(Y_{n}^{j}(t_{k-1})\big) + \sqrt{\frac{T}{n}}\sum _{k=1}^{i}a\big(Y_{n}^{j}(t_{k-1})\big)\zeta _{j,k} $$

for \(i=1,\ldots ,n\) and \(j=1,\ldots ,M\). Here \(Y_{n}^{j}(t_{i})\) denotes the \(j\)th path of \(Y_{n}\) evaluated at the time point \(t_{i}\); this differs from the notation \(Y_{n}^{j}\) in the theoretical framework above, but should not create any confusion. We also compute, for \(i=1,\ldots ,n\) and \(j=1,\ldots ,M\),

$$ \Delta Y^{j}_{n}(t_{i}) := Y^{j}_{n}(t_{i}) - Y^{j}_{n}(t_{i-1}). $$

3) Simulate \(M\) paths of the fractional driving process \(((\mathcal {G}^{\alpha }Y_{n})(t))_{t \in \mathcal {T}}\) using

$$ (\mathcal {G}^{\alpha }Y_{n})^{j}\left (t_{i}\right ) := \sum _{k=1}^{i}g(t_{i-k+1})\Delta Y^{j}_{n}(t_{k}) = \sum _{k=1}^{i}g(t_{k})\Delta Y^{j}_{n}(t_{i-k+1}) $$

for \(i=1,\ldots ,n\) and \(j=1,\ldots ,M\). Evaluated directly, this step has complexity of the order \(\mathcal {O}(n^{2})\) (see Appendix B for details). However, it is easily implemented using discrete convolutions with complexity \(\mathcal {O}(n\log n)\) (see Algorithm B.4 in Appendix B for implementation details). With \(\mathfrak {g} := (g(t_{i}))_{i=1,\ldots ,n}\) and \(\Delta Y^{j}_{n} := (\Delta Y_{n}^{j}(t_{i}))_{i=1,\ldots ,n}\) for \(j=1,\ldots ,M\), we can write \((\mathcal {G}^{\alpha }Y_{n})^{j}(\mathcal {T})=\mathfrak {g}\ast \Delta Y^{j}_{n}\) (keeping the first \(n\) entries) for \(j=1,\ldots ,M\), where ∗ represents the discrete convolution operator.

4) Use the forward Euler scheme to simulate, for \(i=1,\ldots ,n\) and \(j=1,\ldots ,M\), the log-stock process as

$$ X^{j}(t_{i}) = X^{j}(t_{i-1}) - \frac{1}{2}\frac{T}{n}\Phi (\mathcal {G}^{\alpha }Y_{n} )^{j}(t_{i-1}) + \sqrt{\frac{T}{n}}\sqrt{\Phi (\mathcal {G}^{\alpha }Y_{n} )^{j}(t_{i-1})}\,\xi _{j,i}. $$
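A compact NumPy sketch of Algorithm 4.3 follows, for illustration only. The drift `b`, diffusion coefficient `a`, kernel `g` and transformation `Phi` are user-supplied callables, all function names are hypothetical, and \(Y_{n}(0)=0\) as in Step 2. Step 3 uses a zero-padded FFT so that the discrete convolution costs \(\mathcal {O}(n\log n)\) per path; this is our own choice and not necessarily identical to Algorithm B.4 of Appendix B.

```python
import numpy as np


def causal_conv_fft(w, x):
    """c[..., i] = sum_{k=0}^{i} w[i - k] * x[..., k] for i = 0..n-1, computed by FFT."""
    n = x.shape[-1]
    size = 2 * n  # zero-padding to >= 2n - 1 avoids circular wrap-around
    prod = np.fft.rfft(w, size) * np.fft.rfft(x, size, axis=-1)
    return np.fft.irfft(prod, size, axis=-1)[..., :n]


def rdonsker_log_price(n, M, T, rho, g, a, b, Phi, rng):
    """Sketch of Algorithm 4.3: returns M paths of X on t_i = i*T/n, shape (M, n + 1)."""
    dt = T / n
    # Step 1: standard normals with corr(xi_{j,i}, zeta_{j,i}) = rho
    xi = rng.standard_normal((M, n))
    zeta = rho * xi + np.sqrt(1.0 - rho ** 2) * rng.standard_normal((M, n))
    # Step 2: forward Euler for Y_n with Y_n(0) = 0, and its increments
    Y = np.zeros((M, n + 1))
    for i in range(1, n + 1):
        y = Y[:, i - 1]
        Y[:, i] = y + b(y) * dt + a(y) * np.sqrt(dt) * zeta[:, i - 1]
    dY = np.diff(Y, axis=1)
    # Step 3: (G^alpha Y_n)(t_i) = sum_{k=1}^{i} g(t_{i-k+1}) dY(t_k), a discrete convolution
    G = np.zeros((M, n + 1))
    G[:, 1:] = causal_conv_fft(g(np.arange(1, n + 1) * dt), dY)
    # Step 4: forward Euler for the log-price with variance v = Phi(G^alpha Y_n)
    v = Phi(G)
    X = np.zeros((M, n + 1))
    for i in range(1, n + 1):
        vi = v[:, i - 1]
        X[:, i] = X[:, i - 1] - 0.5 * vi * dt + np.sqrt(vi * dt) * xi[:, i - 1]
    return X
```

With a constant \(\Phi \equiv \sigma ^{2}\), the simulated \(X(T)\) should be close to \(\mathcal {N}(-\frac{1}{2}\sigma ^{2}T,\sigma ^{2}T)\), which makes a convenient sanity check.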

Remark 4.4

1) When \(Y=W\), we may skip Step 2 in Algorithm 4.3 and replace \(\Delta Y^{j}_{n}(t_{i})\) by \(\sqrt{\frac{T}{n}}\zeta _{j,i}\) in Step 3.

2) Step 3 may be replaced by the hybrid scheme from [10] only when \(Y=W\).

Antithetic variates in Algorithm 4.3 are easy to implement: it suffices to consider, for \(j=1,\ldots ,M\), the independent standard Gaussian random vectors \(\zeta _{j} := (\zeta _{j,1},\zeta _{j,2},\ldots ,\zeta _{j,n})\) and \(\xi _{j} := (\xi _{j,1},\xi _{j,2},\ldots ,\xi _{j,n})\). Then, with \(\overline {\rho }:=\sqrt{1-\rho ^{2}}\), the pairs \((\rho \xi _{j} + \overline {\rho }\zeta _{j},\xi _{j})\), \((\rho \xi _{j} - \overline {\rho }\zeta _{j},\xi _{j})\), \((-\rho \xi _{j} - \overline {\rho }\zeta _{j},-\xi _{j})\) and \((-\rho \xi _{j} + \overline {\rho }\zeta _{j},-\xi _{j})\) for \(j=1,\ldots ,M\) constitute the antithetic variates. They significantly improve the performance of Algorithm 4.3: they reduce memory requirements and variance, and accelerate execution by exploiting the symmetry of the antithetic random variables.
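The four antithetic pairs can all be generated from a single draw of \((\zeta _{j},\xi _{j})\). In the minimal sketch below (hypothetical function name), the first returned array drives the volatility and the second drives the log-price. Summing the four copies gives exactly zero, and the cross terms \(\pm \rho \overline {\rho }\xi _{j}\zeta _{j}\) cancel, so the pooled correlation between the two arrays stays close to \(\rho \).

```python
import numpy as np


def antithetic_quadruples(zeta, xi, rho):
    """Four antithetic copies from independent N(0,1) arrays zeta, xi of shape (M, n).

    Returns (vol_noise, price_noise), each of shape (4M, n); the row blocks are
    (rho*xi + rb*zeta, xi), (rho*xi - rb*zeta, xi), (-rho*xi - rb*zeta, -xi) and
    (-rho*xi + rb*zeta, -xi), with rb = sqrt(1 - rho**2).
    """
    rb = np.sqrt(1.0 - rho ** 2)
    vol = np.concatenate([rho * xi + rb * zeta, rho * xi - rb * zeta,
                          -rho * xi - rb * zeta, -rho * xi + rb * zeta])
    price = np.concatenate([xi, xi, -xi, -xi])
    return vol, price
```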

4.3.1 Enhancing the performance

A standard practice in Monte Carlo simulation is to match moments of the approximating sequence with the target process. In particular, when the process is Gaussian, matching first and second moments suffices. We only illustrate this approximation for Brownian motion: the left-point approximation (3.4) (with \(Y=W\)) may be modified to match moments as

where \(t^{*}_{k}\) is chosen optimally. The first two moments of \(\mathcal {G}^{ \alpha }W\) read

The first moment of the approximating sequence (4.1) is always zero, and the second moment reads

Equating theoretical and approximating variances gives \(\frac{1}{n}g(t^{*}_{k})^{2}=\int _{t_{k-1}}^{t_{k}} g(t-s)^{2}\mathrm {d}s\) for \(k=1,\ldots ,n\), so that the optimal evaluation point can be computed via

With the optimal evaluation point, the scheme is still a convolution, so Algorithm B.4 in Appendix B can again be used for faster computations. In the Riemann–Liouville fractional Brownian motion case, \(g(u) = u^{H-1/2}\), and the optimal point can be computed in closed form as

Proposition 4.5

The moment matching sequence \((\widehat{\mathcal {G}}^{\alpha }W_{n})_{n \geq 1}\) is defined as

for \(t\in \mathbb{I}\), where

with \((\xi _{k})_{k \geq 1}\) an i.i.d. family of centered sub-Gaussian random variables satisfying \(\mathbb{E}[\xi _{k}^{2}]=\frac{1}{n}\) (namely, \(\mathbb{P}[|\xi _{k}|>x]\leq C \mathrm {e}^{-vx^{2}}\) for all \(x>0\) and some \(C,v>0\)). Then weak convergence to \(\mathcal {G}^{\alpha }W\) holds in \((\mathcal {C}^{\alpha +1/2},\|\cdot \|_{\alpha +1/2} )\) for \(\alpha \in (-\frac{1}{2},\frac{1}{2} )\).

Proof

Finite-dimensional convergence follows from the central limit theorem as the target process is centered Gaussian; thus convergence of the covariance matrices is enough. It then suffices to prove that the approximating sequence is tight in the desired space, which in view of Theorem 3.4 can be deduced by establishing the control

for all \(t_{i},t_{j}\in \mathcal{T}_{n}\), for \(p\geq 1\) and some constant \(C\geq 0\). We have

We note that

and by Cauchy–Schwarz, we also have

We then obtain \(\widetilde{\sigma}^{2}\leq \mathbb{E}[ |\mathcal {G}^{\alpha }W(t_{i})-\mathcal {G}^{ \alpha }W(t_{j}) |^{2} ]\). Finally, \(\widehat{\mathcal {G}}^{\alpha }W_{n}(t_{i})-\widehat{\mathcal {G}}^{\alpha }W_{n}(t_{j})\) is sub-Gaussian as a linear combination of independent sub-Gaussian random variables, and the Gaussian moment inequality (see Boucheron et al. [14, Theorem 2.1]) with the variance estimate \(\widetilde{\sigma}^{2}\) yields

□
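For the Riemann–Liouville kernel \(g(u)=u^{H-1/2}\), the variance-matching condition of Sect. 4.3.1 can be solved explicitly. At \(t=t_{i}\) and with lag \(m=i-k+1\), \(\int _{t_{k-1}}^{t_{k}}(t_{i}-s)^{2H-1}\mathrm {d}s=(t_{m}^{2H}-t_{m-1}^{2H})/(2H)\), so \(g(t^{*}_{m})=\sqrt{n\,(t_{m}^{2H}-t_{m-1}^{2H})/(2H)}\) and \(t^{*}_{m}=g(t^{*}_{m})^{1/(H-1/2)}\) for \(H\neq \frac{1}{2}\). The sketch below computes these weights on the unit grid \(t_{m}=\frac{m}{n}\); the function names are hypothetical. The weights depend only on the lag, so the scheme remains a convolution.

```python
import numpy as np


def moment_matched_weights(n, H):
    """Weights g(t*_m), m = 1..n, for g(u) = u**(H - 1/2) on t_m = m/n, chosen so
    that g(t*_m)**2 / n equals the integral of g(u)**2 over [t_{m-1}, t_m]."""
    t = np.arange(n + 1) / n
    cell = (t[1:] ** (2 * H) - t[:-1] ** (2 * H)) / (2 * H)
    return np.sqrt(n * cell)


def optimal_points(n, H):
    """Evaluation points t*_m solving g(t*_m) = weight; requires H != 1/2."""
    return moment_matched_weights(n, H) ** (1.0 / (H - 0.5))
```

The variance of the matched scheme telescopes to the exact \(t_{i}^{2H}/(2H)\), each \(t^{*}_{m}\) lies inside \((t_{m-1},t_{m})\), and the naive left-point weights \(g(t_{m})\) underestimate the variance when \(H<\frac{1}{2}\).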

4.3.2 Reducing variance

As Bayer et al. [6] and Bennedsen et al. [10] pointed out, a major drawback in simulating rough volatility models is the very high variance of the estimators, so that a large number of simulations is needed to produce a decent price estimate. Nevertheless, the rDonsker scheme admits a very simple conditional expectation technique which reduces both memory requirements and variance while also admitting antithetic variates. This approach is best suited for calibration to European-type options. We consider \(\mathcal {F}^{B}_{t}=\sigma (B_{s}:s\leq t)\) and \(\mathcal {F}^{W}_{t}=\sigma (W_{s}:s\leq t)\), the natural filtrations generated by the Brownian motions \(B\) and \(W\). Since the volatility is driven by \(W\) alone, the conditional variance process \((V_{t}|\mathcal {F}^{W}_{t})\) is deterministic. As discussed by Romano and Touzi [64] and recently adapted to the rBergomi case by McCrickerd and Pakkanen [54], we can decompose the stock price process as

and notice that

Thus, conditionally on \(\mathcal {F}^{W}_{t}\), \(\exp (X_{t})\) is lognormal, and the Black–Scholes closed-form formulae are valid here (European, barrier options, maximum, etc.). The advantage of this approach is that the orthogonal Brownian motion \(W^{ \bot}\) is not needed in the simulation, so the number of random numbers to generate is halved, with a proportional memory saving. This simple trick also reduces the variance of the Monte Carlo estimate, so fewer simulations are needed to obtain the same precision. The following algorithm implements this idea, assuming that \(Y = W\).

Algorithm 4.6

On the equidistant grid \(\mathcal {T}\):

1) Simulate i.i.d. random variables \(\zeta _{j,i} \sim \mathcal {N}(0,1)\) and create the antithetic variates \(-\zeta _{j,i}\) for \(j=1,\ldots ,M/2\), \(i=1,\ldots ,n\).

2) Simulate \(M\) paths of the fractional driving process \(\mathcal {G}^{\alpha }W\) using discrete convolution (see Algorithm B.4 in Appendix B for details) to get, with \(\zeta _{j}:=(\zeta _{j,1},\ldots ,\zeta _{j,n})\),

$$ (\mathcal {G}^{\alpha }W)^{j}(\mathcal {T})=\sqrt{\frac{T}{n}}(\mathfrak {g}\ast \zeta _{j}),\qquad j=1,\ldots ,M, $$

and store in memory \(\overline {\rho }^{2}\int _{0}^{T}\Phi (\mathcal {G}^{\alpha }W)^{j}(s)\mathrm {d}s \approx \overline {\rho }^{2}\frac{T}{n}\sum _{k=0}^{n-1} \Phi (\mathcal {G}^{\alpha }W)^{j}(t_{k}) =: \Sigma ^{j}\) for each \(j=1,\ldots ,M\).

3) Use the forward Euler scheme to simulate, for \(i=1,\ldots ,n\) and \(j=1,\ldots ,M\), the log-stock process as

$$ X^{j}(t_{i}) = X^{j}(t_{i-1}) - \frac{\rho ^{2}}{2}\frac{T}{n}\Phi (\mathcal {G}^{\alpha }W )^{j}(t_{i-1}) + \rho \sqrt{\frac{T}{n}}\sqrt{\Phi (\mathcal {G}^{\alpha }W )^{j}(t_{i-1})}\,\zeta _{j,i}. $$

4) Finally, we may compute any option price using the Black–Scholes formula. For instance, the price of a call option with strike \(K\) and maturity \(T\in \mathbb{I}\) is given by \(C^{j}(K)=\exp (X^{j}(T))\mathcal {N}(d^{j}_{1})-K\mathcal {N}(d^{j}_{2})\) for \(j=1,\ldots ,M\), with \(d^{j}_{1}:=\frac{1}{\sqrt{\Sigma ^{j}}}(X^{j}(T)-\log K +\frac{1}{2}\Sigma ^{j})\) and \(d^{j}_{2}:=d^{j}_{1}-\sqrt{\Sigma ^{j}}\). The output of the model is then \(C(K)=\frac{1}{M}\sum _{j=1}^{M}C^{j}(K)\).
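A minimal NumPy sketch of Algorithm 4.6, for illustration only. It assumes \(S_{0}=1\), zero interest rates, \(|\rho |<1\) (so that \(\Sigma ^{j}>0\)) and an even \(M\); `g` and `Phi` are user-supplied callables, the function names are hypothetical, and Step 2 uses a direct \(\mathcal {O}(n^{2})\) sum rather than Algorithm B.4. The standard normal CDF is built from `math.erf` to avoid a SciPy dependency.

```python
import math

import numpy as np

_erf = np.vectorize(math.erf)


def norm_cdf(x):
    """Standard normal CDF via math.erf."""
    return 0.5 * (1.0 + _erf(np.asarray(x, dtype=float) / math.sqrt(2.0)))


def conditional_mc_call(K, n, M, T, rho, g, Phi, rng):
    """Sketch of Algorithm 4.6 (Y = W, |rho| < 1, S_0 = 1, zero rates, M even)."""
    dt = T / n
    # Step 1: M/2 draws plus their antithetic copies
    half = rng.standard_normal((M // 2, n))
    zeta = np.concatenate([half, -half])
    # Step 2: (G^alpha W)(t_i) = sqrt(dt) * sum_{k=1}^{i} g(t_{i-k+1}) zeta_k
    w = g(np.arange(1, n + 1) * dt)
    G = np.zeros((M, n + 1))
    for i in range(1, n + 1):  # direct O(n^2) sum; an FFT convolution is faster
        G[:, i] = np.sqrt(dt) * (zeta[:, :i] @ w[:i][::-1])
    v = Phi(G)[:, :-1]  # variance at t_{i-1}, i = 1..n
    Sigma = (1.0 - rho ** 2) * dt * v.sum(axis=1)
    # Step 3: forward Euler for the W-driven part of the log-price
    XT = np.sum(-0.5 * rho ** 2 * v * dt + rho * np.sqrt(v * dt) * zeta, axis=1)
    # Step 4: Black-Scholes call price conditional on W, averaged over paths
    d1 = (XT - math.log(K) + 0.5 * Sigma) / np.sqrt(Sigma)
    d2 = d1 - np.sqrt(Sigma)
    return float(np.mean(np.exp(XT) * norm_cdf(d1) - K * norm_cdf(d2)))
```

With a constant \(\Phi \), the model collapses to Black–Scholes, so the averaged conditional prices should reproduce the Black–Scholes price; the check below uses this.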

The algorithm is easily adapted to general diffusions \(Y\) as drivers of the volatility (Algorithm 4.3, Step 2). Algorithm 4.3 is obviously faster than Algorithm 4.6, especially when using control variates. Nevertheless, with the same number of paths, Algorithm 4.6 remarkably reduces the Monte Carlo variance, meaning in turn that fewer simulations are needed, making it very competitive for calibration.

4.4 Numerical example: rough Bergomi model

Figures 2–5 present a numerical analysis of the Monte Carlo convergence as a function of \(n\). We observe that the lower \(H\), the larger \(n\) needs to be to achieve convergence. However, for the Cholesky, rDonsker (naive and moment-matching) and hybrid schemes with \(H\geq 0.1\), \(n=252\) already achieves a precision of the order \(10^{-4}\), which is equivalent to a basis point in financial terms. For \(H<0.1\), \(n\) larger than 252 may be required if precision beyond \(10^{-4}\) is needed. We also observe in Fig. 5 that the naive rDonsker approximation converges extremely slowly for small \(H\). Additionally, Figs. 6–11 compare the price estimates with the Cholesky method, which is taken as benchmark. The hybrid scheme tends to be closer to this benchmark, especially for \(H< 0.1\). When \(H\geq 0.1\), both the hybrid scheme and rDonsker with moment matching give an error below \(10^{-4}\) for \(n\geq 252\). Note that the naive rDonsker scheme has substantially worse convergence (by at least an order of magnitude) than the other methods. The black lines in all figures represent the \(99\%\) Monte Carlo standard deviations; hence errors below that threshold should be interpreted as noise.

Rough Bergomi call option price convergence using the Cholesky method with \(\xi _{0}=0.04\), \(\nu =2.3\), \(\rho =-0.9\), \(S_{0}=1\), \(T=1\) with \(2 \times 10^{6}\) simulations and antithetic variates. Absolute error represents the difference between subsequent approximations, where \(n\) represents the time grid size. For \(n=252\), the previous discretisation is \(n=126\)

Rough Bergomi call option price convergence using the hybrid scheme with \(\xi _{0}=0.04\), \(\nu =2.3\), \(\rho =-0.9\), \(S_{0}=1\), \(T=1\) with \(2\times 10^{6}\) simulations and antithetic variates. Absolute error represents the difference between subsequent approximations, where \(n\) represents the time grid size. For \(n=252\), the previous discretisation is \(n=126\)

Rough Bergomi call option price convergence using rDonsker with moment matching and \(\xi _{0}=0.04\), \(\nu =2.3\), \(\rho =-0.9\), \(S_{0}=1\), \(T=1\) with \(2 \times 10^{6}\) simulations and antithetic variates. Absolute error represents the difference between subsequent approximations, where \(n\) represents the time grid size. For \(n=252\), the previous discretisation is \(n=126\)

Rough Bergomi call option price convergence using the rDonsker method with left-point Euler and \(\xi _{0}=0.04\), \(\nu =2.3\), \(\rho =-0.9\), \(S_{0}=1\), \(T=1\) with \(2 \times 10^{6}\) simulations and antithetic variates. Absolute error represents the difference between subsequent approximations, where \(n\) represents the time grid size. For \(n=252\), the previous discretisation is \(n=126\)

4.5 Speed benchmark against Markovian stochastic volatility models

In this section, we benchmark the speed of the rDonsker scheme against the hybrid scheme and a classical Markovian stochastic volatility model, using \(10^{5}\) simulations and averaging the timings over 10 trials. For the two rough schemes, we simulate the rBergomi model from Bayer et al. [6], whereas for the Markovian benchmark, we use the classical Bergomi [12] model with a forward Euler scheme in both volatility and stock price. All three schemes are implemented in Cython to make the comparison fair and to obtain speeds comparable to C++. Figure 12 shows that rDonsker is about 2 times slower than the Markovian case, whereas the hybrid scheme is approximately 2.5 times slower, as expected from the complexities of the two schemes. Remarkably, despite the \(\mathcal {O}(n\log n)\) complexity of the FFT, these ratios stay almost constant in the grid size \(n\), so the computational time grows almost linearly, as in the Markovian case. We presume that this is because \(n\ll 10\,000\) is relatively small. Figure 12 also shows that rough volatility models can be implemented very efficiently and are not much slower than classical stochastic volatility models.

Computational time benchmark using hybrid scheme, rDonsker and Markovian (forward Euler) for different grid sizes \(n\). The left-hand figure shows the ratios ‘hybrid scheme / Markovian case’ and ‘rDonsker scheme / Markovian case’, where the Markovian case refers to a classical Euler scheme in a non-rough setting (i.e., with \(H=\frac{1}{2}\)). The right-hand figure shows the computational time of the Markovian case only, normalised at 1 for \(n=300\)

4.6 Implementation guidelines and conclusion

The numerical analysis above suggests some guidelines to implement rough volatility models driven by TBSS processes of the form \(\mathcal {G}^{H-1/2} Y\) for some Itô diffusion \(Y\).

Regarding empirical estimates, Gatheral et al. [32] suggest that \(H\approx 0.15\). Bennedsen et al. [11] give an exhaustive analysis of more than 2000 equities, for which \(H\in [0.05,0.2]\). On the pricing side, Bayer et al. [6] and Jacquier et al. [40] found that calibration yields \(H\in [0.05,0.10]\). Finally, Livieri et al. [50] found evidence in options data that \(H \approx 0.3\). Despite the diverse ranges found so far, there is general agreement that \(H<\frac{1}{2}\). Table 1 provides recommendations about which numerical scheme to use depending on the value of the Hurst parameter.

Remark 4.7

The rough Heston model of Guennoun et al. [33] lies outside the scope of the hybrid scheme. More generally, processes of the form \(\mathcal {G}^{\alpha }Y\) for an Itô diffusion \(Y\) under Assumption 2.3 are, unless \(Y=W\), outside the scope of the hybrid scheme (cf. Remark 4.4). This leaves the rDonsker scheme as the only option, and it achieves reasonable accuracy at least for Hölder regularities greater than 0.05.

4.7 Bushy trees and binomial markets

Binomial trees have attracted a lot of attention from both academics and practitioners, as their apparent simplicity provides easy intuition about the dynamics of a given asset. Moreover, they are arbitrage-free by construction and allow computing prices of path-dependent options together with their hedging strategies. Early-exercise options, such as Bermudan or American options, are usually priced using trees rather than Monte Carlo methods. The convergence stated in Theorem 2.11 lays the theoretical foundations to construct fractional binomial trees (note that Bernoulli random variables satisfy the conditions of the theorem). Figure 1 already showed binomial trees for fractional Brownian motion, but we ultimately need trees describing the dynamics of the stock price.

4.7.1 A binary market

We invoke Theorem 2.11 with the independent sequences \((\zeta _{i})_{i=1,\dots ,n}\), \((\zeta _{i}^{\bot})_{i=1,\dots ,n}\) such that \(\mathbb{P}[\zeta _{i}=1]=\mathbb{P}[\zeta ^{\bot}_{i}=1]=\mathbb{P}[\zeta _{i}=-1]=\mathbb{P}[ \zeta ^{\bot}_{i}=-1]=\frac{1}{2}\) for all \(i\). We further define on \(\mathcal {T}\) for any \(i=1, \ldots , n\) the quantities

the approximating sequences to \(B\) and \(Y\) in (2.3). The approximation for \(X\) is then given by

To construct the tree, we have to consider all possible realisations of the random vectors \((\zeta _{i})_{i = 1,\dots ,n}\) and \((\zeta ^{ \bot}_{i})_{i = 1,\dots ,n}\). Since each of these \(2n\) random variables takes only two values, there are \(2^{2n}=4^{n}\) possible combinations; hence the ‘bushy tree’ terminology. When \(\rho \in \{-1,1\}\), the orthogonal sequence \((\zeta ^{\bot}_{i})\) no longer enters the dynamics and the number of paths reduces to \(2^{n}\).
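The counting argument can be checked by brute force on a small tree. The sketch below is purely illustrative: since the approximating sequences for \(B\) and \(Y\) are not reproduced here, it assumes (hypothetically) that the volatility driver is built from \((\zeta_{i})\) alone and the price driver from the correlated combination \(\rho\zeta_{i}+\sqrt{1-\rho^{2}}\,\zeta^{\bot}_{i}\). It enumerates all \(2^{2n}\) sign patterns and counts how many distinct (volatility-driver, price-driver) path pairs they generate.

```python
import itertools
import math


def count_bushy_tree_paths(n, rho):
    """Return (number of leaves, number of distinct driver-path pairs) for an n-step tree.

    Hypothetical drivers: the volatility driver is the path of (zeta_i), and the price
    driver is the partial sums of (rho * zeta_i + sqrt(1 - rho**2) * zeta_perp_i) / sqrt(n).
    """
    c = math.sqrt(1.0 - rho**2)
    leaves = 0
    distinct = set()
    # every joint outcome of the 2n Rademacher variables is one leaf of the bushy tree
    for signs in itertools.product((1, -1), repeat=2 * n):
        zeta, zeta_perp = signs[:n], signs[n:]
        increments = [(rho * a + c * b) / math.sqrt(n) for a, b in zip(zeta, zeta_perp)]
        # round to absorb floating-point noise before comparing paths
        price_path = tuple(round(x, 12) for x in itertools.accumulate(increments))
        distinct.add((zeta, price_path))
        leaves += 1
    return leaves, len(distinct)


print(count_bushy_tree_paths(4, 0.3))   # (256, 256): 4**4 distinct paths
print(count_bushy_tree_paths(4, -1.0))  # (256, 16): only 2**4 distinct paths
```

For \(|\rho|<1\) every leaf carries a different pair of paths, whereas for \(\rho=\pm 1\) the factor \(\sqrt{1-\rho^{2}}\) vanishes and the leaves collapse onto \(2^{n}\) distinct paths, in line with the reduction stated above.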

4.8 American options in rough volatility models

There is so far no available scheme for American options (or any early-exercise options for that matter) under rough volatility models, but the fractional trees constructed above provide a framework to do so. In the Black–Scholes model, American options can be priced using binomial trees by backward induction. A key ingredient is the Snell envelope and the following representation.

Definition 4.8

Let \((X(t))_{t\in \mathbb{I}}\) be an \((\mathcal{F}_{t})_{t\in \mathbb{I}}\)-adapted process. The Snell envelope \(\mathcal{J}\) of \(X\) with respect to \((\mathcal{F}_{t})_{t\in \mathbb{I}}\) is defined as \(\mathcal{J}(X)(t) := \text{esssup}_{\tau \in \tilde{\mathbb{I}}_{t}} \mathbb{E}[X(\tau )| \mathcal{F}_{t}]\) for all \(t\in \mathbb{I}\), where \(\tilde{\mathbb{I}}_{t}\) denotes the set of stopping times with values in \([t,1] \subseteq \mathbb{I}\).
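For intuition, recall the standard discrete-time counterpart of Definition 4.8 (a textbook dynamic-programming fact, stated here as a sketch rather than taken from this paper): on a finite grid \(t_{0}<\dots <t_{N}=1\) and for an integrable adapted \(X\), the Snell envelope is obtained by backward induction, and the first time it meets \(X\) is an optimal stopping time:

$$ \mathcal{J}(X)(t_{N}) = X(t_{N}), \qquad \mathcal{J}(X)(t_{i}) = X(t_{i}) \vee \mathbb{E}\big[\mathcal{J}(X)(t_{i+1}) \,\big|\, \mathcal{F}_{t_{i}}\big], \quad i=N-1,\ldots ,0, $$

$$ \tau ^{*}_{t_{i}} = \min \{ t_{j}\geq t_{i} : \mathcal{J}(X)(t_{j}) = X(t_{j}) \}. $$

Applied to the discounted payoffs \(C\) and \(P\) of Definition 4.9, this is the recursion that Algorithm 4.11 carries out on the tree.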

Definition 4.9

For a log-strike \(k\) and price process \(S\), write \(C(t):=\mathrm {e}^{-rt}(S(t)- \mathrm {e}^{k})^{+}\) and \(P(t):=\mathrm {e}^{-rt}(\mathrm {e}^{k}-S(t))^{+}\). Then the discounted time-\(t\) prices of an American call and put with log-strike \(k\) and maturity \(T\) are given by, respectively,

$$ \mathcal{J}(C)(t) \qquad \text{and} \qquad \mathcal{J}(P)(t). $$

Preservation of weak convergence under the Snell envelope map is due to Mulinacci and Pratelli [55], who proved that convergence takes place in the Skorokhod topology provided that the Snell envelope is continuous. In our setting, the scheme for American options is justified by the following result. Recall that a filtration is said to be immersed in a larger filtration if all local martingales in the smaller filtration are also local martingales in the larger filtration.

Theorem 4.10

Let \(V\) and \(X\) in (2.3) be such that \(\mathbb{E}[\sup _{t\in \mathbb{I}}\mathrm {e}^{X_{t}}] <\infty \) and the natural filtration of \(X\) is immersed in the natural filtration of \((B,W)\) from (2.3) and (2.4). With the sequence \((X_{n})_{n\geq 1}\) converging in \((\mathcal{D}(\mathbb{I}),d_{\mathcal{D}})\) weakly to \(X\), as per Theorem 2.11, assume additionally that \((X_{n})_{n\geq 1}\) satisfies the conditions of Mulinacci and Pratelli [55, Theorem 3.5]. Let \(S=\mathrm {e}^{X}\) and \(S_{n}=\mathrm {e}^{X_{n}}\). If for each \(n\geq 1\), \(C_{n}\) and \(P_{n}\) are defined as in Definition 4.9 for the price process \(S_{n}\), then \((S_{n},\mathcal{J}(C_{n}),\mathcal{J}(P_{n}))\) converges in \((\mathcal{D}(\mathbb{I};\mathbb{R}^{3}),d_{\mathcal{D}})\) weakly to \((S,\mathcal{J}(C),\mathcal{J}(P))\), where the Snell envelopes are defined with respect to the natural filtrations of the corresponding price processes.

Proof

By the continuous mapping theorem, the weak convergence of \((X_{n})\) implies that \((S_{n},C_{n},P_{n})\) converges in \((\mathcal{D}(\mathbb{I};\mathbb{R}^{3}),d_{\mathcal{D}})\) weakly to \((S,C,P)\). Because \(C\) and \(P\) are continuous and \(\mathbb{E}[\sup _{t\in \mathbb{I}}\mathrm {e}^{X_{t}}] <\infty \), it follows from Karatzas and Shreve [43, Theorem D.13] that for both \(\mathcal{J}(C)\) and \(\mathcal{J}(P)\), the predictable finite-variation part of the Doob–Meyer decomposition is continuous. Moreover, since the natural filtration of \(X\) is immersed in that of \((B,W)\), the martingale part is also a local martingale in the Brownian filtration of \((B,W)\), in which all local martingales are continuous; hence the martingale part is continuous as well. Therefore \(\mathcal{J}(C)\) and \(\mathcal{J}(P)\) are continuous processes. In view of the additional assumptions, the claim now follows from [55, Theorem 5.5]. □

Mulinacci and Pratelli [55] also gave explicit conditions for weak convergence to be preserved in the Markovian case. It is straightforward to check that the pricing of American options in the rough tree scheme coincides with the classical backward induction procedure. We consider a continuously compounded interest rate \(r\) and dividend yield \(d\).

Algorithm 4.11

On the equidistant grid \(\mathcal {T}\):

1) Construct the binomial tree using the explicit construction in Sect. 4.7.1 and obtain \((S^{j}_{t})_{t\in \mathcal {T},j=1,\dots ,4^{n}}\).

2) The backward recursion for the American option price with payoff function \(h(\,\cdot \,)\) is given by \(\widetilde{h}_{t_{N}} := h(S_{t_{N}})\) and

$$ \widetilde{h}_{t_{i}} := \mathrm {e}^{(d-r)/n}\mathbb{E}[\widetilde{h}_{t_{i+1}}|\mathcal {F}_{t_{i}} ] \vee h(S_{t_{i}}) \qquad \textit{for } i=N-1,\ldots ,0, $$

where \(\mathbb{E}[\widetilde{h}_{t_{i+1}}|\mathcal {F}_{t_{i}}] = \frac{1}{4} (\widetilde{h}^{\mathrm{++}}_{t_{i+1}}+\widetilde{h}^{\mathrm{+-}}_{t_{i+1}}+\widetilde{h}^{\mathrm{-+}}_{t_{i+1}}+\widetilde{h}^{\mathrm{--}}_{t_{i+1}} )\) and \(\widetilde{h}_{t_{i+1}}^{\mathrm{\pm \pm}}\) denotes the value at the child node reached via the outcome \((\zeta _{i+1},\zeta ^{\bot}_{i+1})=(\pm 1,\pm 1)\) of the driving binomials, following the construction in Sect. 4.7.1.

3) Finally, \(\widetilde{h}_{0}\) is the price of the American option at the inception of the contract.

The main computational cost of the scheme is the construction of the tree in Step 1). Once the tree is constructed, computing American prices for different options is a fast routine.
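To illustrate Steps 1) to 3) and the point that pricing is cheap once the tree exists, here is a minimal NumPy sketch. The stock dynamics in `build_bushy_tree` are a hypothetical toy stand-in (a left-point discretised Riemann–Liouville-type volatility driver with illustrative parameters `xi`, `eta`, `H`, `rho`), not the construction of Sect. 4.7.1, which is not reproduced here; only `price_on_tree` follows Algorithm 4.11, including its one-step factor \(\mathrm{e}^{(d-r)/n}\). The four children of node \(k\) are stored at positions \(4k,\dots ,4k+3\) of the next level, so the conditional expectation becomes a reshape followed by an equally weighted mean.

```python
import numpy as np


def build_bushy_tree(n, s0=100.0, xi=0.04, eta=1.5, H=0.1, rho=-0.7, r=0.05, d=0.0):
    """Return a list whose entry i holds the 4**i node prices at t_i = i/n.

    Toy, hypothetical dynamics (not the construction of Sect. 4.7.1): the variance at t_i is
    xi * exp(eta * Y_i - 0.5 * eta**2 * t_i**(2H)), with Y_i a left-point kernel sum of the
    zeta's, and the log-price is driven by rho * zeta + sqrt(1 - rho**2) * zeta_perp.
    """
    dt = 1.0 / n
    zeta_hist = np.zeros((1, 0))  # zeta history, one row per node of the current level
    logs = np.array([np.log(s0)])
    levels = [np.exp(logs)]
    for i in range(n):
        t = i * dt
        lags = t - np.arange(i) * dt  # t_i - t_{j-1} for j = 1, ..., i (all >= dt)
        kernel = np.sqrt(2 * H) * lags ** (H - 0.5)
        y = (zeta_hist * kernel).sum(axis=1) * np.sqrt(dt)
        var = xi * np.exp(eta * y - 0.5 * eta**2 * t ** (2 * H))
        # each node branches into four children, ordered (1,1), (1,-1), (-1,1), (-1,-1)
        m = zeta_hist.shape[0]
        z = np.tile([1.0, 1.0, -1.0, -1.0], m)
        z_perp = np.tile([1.0, -1.0, 1.0, -1.0], m)
        zeta_hist = np.hstack([np.repeat(zeta_hist, 4, axis=0), z[:, None]])
        var, logs = np.repeat(var, 4), np.repeat(logs, 4)
        dw = (rho * z + np.sqrt(1.0 - rho**2) * z_perp) * np.sqrt(dt)
        logs = logs + (r - d - 0.5 * var) * dt + np.sqrt(var) * dw
        levels.append(np.exp(logs))
    return levels


def price_on_tree(levels, payoff, r, d=0.0, american=True):
    """Backward recursion of Algorithm 4.11 on a tree stored level by level."""
    n = len(levels) - 1
    step = np.exp((d - r) / n)  # one-step factor e^{(d-r)/n}, as written in Algorithm 4.11
    values = payoff(levels[n])  # terminal condition h(S_{t_N})
    for i in range(n - 1, -1, -1):
        # conditional expectation = equally weighted mean over the four children
        cont = step * values.reshape(-1, 4).mean(axis=1)
        values = np.maximum(cont, payoff(levels[i])) if american else cont
    return float(values[0])


def put(s):
    return np.maximum(100.0 - s, 0.0)


levels = build_bushy_tree(n=6)  # built once: 4**6 = 4096 leaves
american = price_on_tree(levels, put, r=0.05)
european = price_on_tree(levels, put, r=0.05, american=False)
print(american, european)
```

The expensive part is `build_bushy_tree`, whose memory grows like \(4^{n}\); every further payoff only costs one backward pass through `price_on_tree`, and the American value dominates the European one node by node.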

4.8.1 Numerical example: rough Bergomi model

We note that, as proved by Gassiat [31, Theorem 1.1(1)], the price process in the rough Bergomi model is a true martingale when \(\rho \leq 0\). We construct a rough volatility tree for the rough Bergomi model (introduced in [6]) and check the accuracy of the scheme. Figures 13 and 14 show the fractional trees for different values of \(H\) and for \(\rho \in \{-1,1\}\). The two figures display markedly different behaviour; a common feature, however, is that the tree structure becomes simpler as \(H\) tends to \(1/2\).

4.8.2 European options

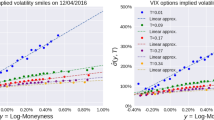

Figure 15 displays volatility smiles obtained using the tree scheme. Although the number of time steps is insufficient for small \(H\), the fit improves markedly for \(H\geq 0.15\) and always remains within the \(95\%\) confidence interval of the hybrid scheme. Moreover, the moment-matching approach from Sect. 4.3.1 is more accurate than the standard rDonsker scheme when \(H \leq 0.1\). In Fig. 16, a detailed error analysis corroborates these observations: the relative error is smaller than \(3\%\) for \(H\geq 0.15\).

4.8.3 American options

For American options, there is no benchmark against which to compare our results. However, the accurate results found in the previous section (at least for \(H\geq 0.15\)) justify the use of trees to price American options. Figure 17 shows American and European put prices with interest rate \(r=5\%\). Interestingly, the rougher the process (the smaller \(H\)), the larger the difference between in-the-money American and European put prices.

References

Akyıldırım, E., Dolinsky, Y., Soner, H.M.: Approximating stochastic volatility by recombinant trees. Ann. Appl. Probab. 24, 2176–2205 (2014)

Alòs, E., León, J., Vives, J.: On the short-time behavior of the implied volatility for jump-diffusion models with stochastic volatility. Finance Stoch. 11, 571–589 (2007)

Bardina, X., Nourdin, I., Rovira, C., Tindel, S.: Weak approximation of a fractional SDE. Stoch. Process. Appl. 120, 39–65 (2010)

Barndorff-Nielsen, O.E., Schmiegel, J.: Ambit processes: with applications to turbulence and tumour growth. In: Benth, F.E., et al. (eds.) Stochastic Analysis and Applications. Abel Symp., vol. 2, pp. 93–124. Springer, Berlin (2007)

Bayer, C., Friz, P., Gassiat, P., Martin, J., Stemper, B.: A regularity structure for rough volatility. Math. Finance 30, 782–832 (2020)

Bayer, C., Friz, P., Gatheral, J.: Pricing under rough volatility. Quant. Finance 16, 887–904 (2016)

Bayer, C., Friz, P., Gulisashvili, A., Horvath, B., Stemper, B.: Short-time near the money skew in rough fractional stochastic volatility models. Quant. Finance 19, 779–798 (2019)

Beiglböck, M., Siorpaes, P.: Pathwise versions of the Burkholder–Davis–Gundy inequality. Bernoulli 21, 360–373 (2015)

Bender, C., Parczewski, P.: On the connection between discrete and continuous Wick calculus with an application to the fractional Black–Scholes model. In: Cohen, S.N., et al. (eds.) Stochastic Processes, Finance and Control: A Festschrift in Honor of Robert J. Elliott, pp. 3–40. World Scientific, Singapore (2012)

Bennedsen, M., Lunde, A., Pakkanen, M.S.: Hybrid scheme for Brownian semistationary processes. Finance Stoch. 21, 931–965 (2017)

Bennedsen, M., Lunde, A., Pakkanen, M.S.: Decoupling the short- and long-term behavior of stochastic volatility. J. Financ. Econom. 20, 961–1006 (2022)

Bergomi, L.: Smile dynamics II. Risk, October, 67–73 (2005)

Billingsley, P.: Convergence of Probability Measures, 2nd edn. Wiley, New York (1999)

Boucheron, S., Lugosi, G., Massart, P.: Concentration Inequalities: A Nonasymptotic Theory of Independence. Oxford University Press, London (2013)

Broux, L., Caravenna, F., Zambotti, L.: Hairer’s multilevel Schauder estimates without regularity structures. Preprint (2023). Available online at https://arxiv.org/abs/2301.07517

Čentsov, N.N.: Weak convergence of stochastic processes whose trajectories have no discontinuities of the second kind and the ‘heuristic’ approach to the Kolmogorov–Smirnov tests. Theory Probab. Appl. 1, 140–144 (1956)

Clark, D.S.: Short proof of a discrete Gronwall inequality. Discrete Appl. Math. 16, 279–281 (1987)

Comte, F., Renault, É.: Long memory continuous time models. J. Econom. 73, 101–149 (1996)

Cooley, J.W., Tukey, J.W.: An algorithm for the machine calculation of complex Fourier series. Math. Comput. 19, 297–301 (1965)

Djehiche, B., Eddahbi, M.: Hedging options in market models modulated by the fractional Brownian motion. Stoch. Anal. Appl. 19, 753–770 (2001)

Donsker, M.D.: An invariance principle for certain probability limit theorems. In: Memoirs of the AMS, vol. 6. Am. Math. Soc., Providence (1951)

Drábek, P.: Continuity of Nemyckij’s operator in Hölder spaces. Comment. Math. Univ. Carol. 16, 37–57 (1975)

Dudley, R.M.: Uniform Central Limit Theorems. Cambridge University Press, Cambridge (1999)

El Euch, O., Rosenbaum, M.: The characteristic function of rough Heston models. Math. Finance 29, 3–38 (2019)

Ethier, S.N., Kurtz, T.G.: Markov Processes: Characterization and Convergence. Wiley, New York (1986)

Forde, M., Zhang, H.: Asymptotics for rough stochastic volatility models. SIAM J. Financ. Math. 8, 114–145 (2017)

Friz, P.K., Hairer, M.: A Course on Rough Paths. Springer, Berlin (2021)

Friz, P.K., Victoir, N.: Multidimensional Stochastic Processes as Rough Paths: Theory and Applications. Cambridge University Press, Cambridge (2010)

Fukasawa, M.: Asymptotic analysis for stochastic volatility: martingale expansion. Finance Stoch. 15, 635–654 (2011)

Fukasawa, M., Takabatake, T., Westphal, R.: Consistent estimation for fractional stochastic volatility model under high-frequency asymptotics (Is volatility rough?). Math. Finance 32, 1086–1132 (2022)

Gassiat, P.: On the martingale property in the rough Bergomi model. Electron. Commun. Probab. 24, 1–9 (2019)

Gatheral, J., Jaisson, T., Rosenbaum, M.: Volatility is rough. Quant. Finance 18, 933–949 (2018)

Guennoun, H., Jacquier, A., Roome, P., Shi, F.: Asymptotic behaviour of the fractional Heston model. SIAM J. Financ. Math. 9, 1017–1045 (2018)

Gulisashvili, A.: Large deviation principle for Volterra type fractional stochastic volatility models. SIAM J. Financ. Math. 9, 1102–1136 (2018)

Hamadouche, D.: Invariance principles in Hölder spaces. Port. Math. 57, 127–151 (2000)

Hardy, G.H., Littlewood, J.E.: Some properties of fractional integrals I. Math. Z. 27, 565–606 (1928)

Heinrich, C., Pakkanen, M., Veraart, A.E.D.: Hybrid simulation scheme for volatility modulated moving average fields. Math. Comput. Simul. 166, 224–244 (2019)

Horvath, B., Jacquier, A., Lacombe, C.: Asymptotic behaviour of randomised fractional volatility models. J. Appl. Probab. 56, 496–523 (2019)

Hurst, H.E.: Long-term storage capacity of reservoirs. Trans. Am. Soc. Civ. Eng. 116, 770–799 (1951)

Jacquier, A., Martini, C., Muguruza, A.: On VIX futures in the rough Bergomi model. Quant. Finance 18, 45–61 (2018)

Jacquier, A., Pakkanen, M., Stone, H.: Pathwise large deviations for the rough Bergomi model. J. Appl. Probab. 55, 1078–1092 (2018)

Jakubowski, A., Mémin, J., Pagès, G.: Convergence en loi des suites d’intégrales stochastiques sur l’espace \(D^{1}\) de Skorokhod. PTRF 81, 111–137 (1989)

Karatzas, I., Shreve, S.E.: Methods of Mathematical Finance. Springer, New York (1998)

Kolmogorov, A.: Wienersche Spiralen und einige andere interessante Kurven im Hilbertschen Raum. C.R. (Dokl.) Acad. URSS (N.S.) 26, 115–118 (1940)

Krylov, N.V.: Lectures on Elliptic and Parabolic Equations in Hölder Spaces. Graduate Studies in Mathematics, vol. 12. Am. Math. Soc., Providence (1996)

Kurtz, T.G., Protter, P.E.: Weak convergence of stochastic integrals and stochastic differential equations. In: Talay, D., Tubaro, L. (eds.) CIME School in Probability. Lecture Notes in Mathematics, vol. 1627, pp. 1–41. Springer, Berlin (1996)