Abstract

We consider a government that aims at reducing the debt-to-(gross domestic product) (GDP) ratio of a country. The government observes the level of the debt-to-GDP ratio and an indicator of the state of the economy, but does not directly observe the development of the underlying macroeconomic conditions. The government’s criterion is to minimise the sum of the total expected costs of holding debt and of debt reduction policies. We model this problem as a singular stochastic control problem under partial observation. The contribution of the paper is twofold. Firstly, we provide a general formulation of the model in which the level of the debt-to-GDP ratio and the value of the macroeconomic indicator evolve as a diffusion and a jump-diffusion, respectively, with coefficients depending on the regimes of the economy. The latter are described through a finite-state continuous-time Markov chain. We reduce the original problem via filtering techniques to an equivalent one with full information (the so-called separated problem), and we provide a general verification result in terms of a related optimal stopping problem under full information. Secondly, we specialise to a case study in which the economy faces only two regimes and the macroeconomic indicator has a suitable diffusive dynamics. In this setting, we provide the optimal debt reduction policy. This is given in terms of the continuous free boundary arising in an auxiliary fully two-dimensional optimal stopping problem.



1 Introduction

The question of optimally managing the debt-to-GDP ratio (also called “debt ratio”) of a country has become particularly important in recent years. Indeed, concurrently with the financial crisis that started in 2007, debt-to-GDP ratios in developed countries exploded from an average of 53% to circa 80%. Clearly, the debt management policy of a government depends heavily on the underlying macroeconomic conditions; these affect, for example, the growth rate of the GDP, which in turn determines the growth rate of the debt-to-GDP ratio of a country. However, in practice, it is typically neither possible to measure the growth rate of the GDP in real time, nor to directly observe the underlying business cycles. On August 24, 2018, in a speech at the symposium “Changing Market Structure and Implications for Monetary Policy”, sponsored by the Federal Reserve Bank of Kansas City in Jackson Hole, Wyoming, the chairman of the Federal Reserve, Jerome H. Powell, said:

…In conventional models of the economy, major economic quantities such as inflation, unemployment and the growth rate of the gross domestic product fluctuate around values that are considered “normal” or “natural” or “desired”. The FOMC (Federal Open Market Committee) has chosen a 2 percent inflation objective as one of these desired values. The other values are not directly observed, nor can they be chosen by anyone…

Following an idea that dates back to Hamilton [38], we assume in this paper that the GDP growth rate of a country is modulated by a continuous-time Markov chain that is not directly observable. The Markov chain has \(Q\geq 2\) states modelling the different business cycles of the economy, so that a shift in the macroeconomic conditions induces a change in the value of the growth rate of the GDP. The government can observe only the current levels of the debt-to-GDP ratio and of a macroeconomic indicator. The latter might be, e.g., one of the so-called “Big Four”, which are usually considered proxies of the industrial production index, hence of business conditions. These indicators constitute the Conference Board’s Index of Coincident Indicators: employment in non-agricultural businesses, industrial production, real personal income less transfers, and real manufacturing and trade sales. We refer e.g. to Stock and Watson [60], who present a wide range of economic indicators and examine the forecasting performance of several of them in the recession of 2001.

Motivated by the aforementioned debt crisis, we consider a government whose priority is to return debt to less dangerous levels, that is, to move away from the dark corners (O. Blanchard, former chief economist of the International Monetary Fund (2014)), e.g. through fiscal policies or austerity policies in the form of spending cuts. In our model, we thus preclude the possibility for the government to increase the level of the debt ratio and we neglect any potential benefit resulting from holding debt, even though we acknowledge that a policy of debt reduction might not always be the most sensible approach, as also observed by Ostry et al. [55] (see also the discussion in Remark 2.6 below). We further assume that the loss resulting from holding debt can be measured through a convex and nondecreasing cost function, and that the debt ratio is instantaneously affected by any reduction. Reductions need not be performed at rates; lump-sum actions are also allowed, and the cumulative amount of the debt ratio’s decrease is the government’s control variable. Any decrease of the debt ratio results in proportional costs, and the government aims at choosing a debt-reduction policy that minimises the total expected loss of holding debt plus the total expected costs of interventions on the debt ratio. In line with recent papers on stochastic control methods for optimal debt management (see Cadenillas and Huamán-Aguilar [8, 9], Ferrari [31] and Ferrari and Rodosthenous [32]), we model this problem as a singular stochastic control problem. However, unlike all previous works, our problem is formulated in a partial observation setting, which leads to a completely different mathematical analysis. In our model, the observations consist of the debt ratio and the macroeconomic indicator.
The debt ratio is a linearly controlled geometric Brownian motion whose drift is given in terms of the GDP growth rate, which is modulated by the unobservable continuous-time Markov chain \(Z\). The macroeconomic indicator is a real-valued jump-diffusion which is correlated with the debt ratio process and whose drift, jump intensity and jump sizes depend on \(Z\).

Our contributions. Our study of the optimal debt reduction problem is performed through three main steps.

First of all, via advanced filtering techniques with mixed-type observations, we reduce the original problem to an equivalent problem under full information, the so-called separated problem. This is a classical procedure used to handle optimal stochastic control problems under partial information (see e.g. Fleming and Pardoux [33], Bensoussan [4, Chap. 7.1] and Ceci and Gerardi [12]). The filtering problem consists in characterising the conditional distribution of the unobservable Markov chain \(Z\) at any time \(t\), given observations up to time \(t\). The case of diffusion observations has been widely studied in the literature, and textbook treatments can be found in Elliott et al. [29, Chap. 8], Kallianpur [44, Chap. 8] and Liptser and Shiryaev [49, Chap. 8]. There are also known results for pure-jump observations (see e.g. Brémaud [7, Chap. IV], Ceci and Gerardi [13, 14], Kliemann et al. [47] and references therein). More recently, filtering problems with mixed-type information which involve pure-jump processes and diffusions have been studied by Ceci and Colaneri [15, 16], among others.

Notice that the economic and financial literature also contains papers on models under partial observation in which a reduction to a complete information setting is performed via filtering techniques, so that the problem is split according to the so-called “two-step procedure”. We refer e.g. to the literature on portfolio selection in the seminal papers by Detemple [25] and Gennotte [36] (in a continuous-time setting, with diffusive observations leading to a Gaussian filter process); to Veronesi [62] for an equilibrium model with uncertain dividend drift in the field of market over- and under-reaction to information; and to the more recent work by Luo [51], where different uncertainty models are analysed in a Gaussian setting with the aim of studying strategic consumption–portfolio rules in the presence of precautionary savings. Generally, the economic and financial literature relies on well-known results in filtering theory, either in the case of diffusive observation processes such as additive Gaussian white noise (e.g. Detemple [25], Gennotte [36] and Luo [51]) or in the case of pure-jump observations (see Bäuerle and Rieder [3] and Ceci [11], among others). A few papers consider mixed-type information (see e.g. Callegaro et al. [10] and Frey and Schmidt [35], among others). In our paper, we deal with a more general setting with a two-dimensional observation process allowing jumps, for which known results cannot be invoked.

Usually, two filtering approaches are followed: the so-called reference probability approach (see the seminal paper by Zakai [65] and the more recent papers Frey and Runggaldier [34], Ceci and Colaneri [16] and Colaneri et al. [18], among others) and the innovation approach (see e.g. Brémaud [7, Chap. IV.1], Ceci and Colaneri [15], Eksi and Ku [27] and Frey and Schmidt [35]). Due to the general structure of our observations’ dynamics, the innovation approach is more suitable to handle our filtering problem, and this leads to the so-called Kushner–Stratonovich equation. In particular, it turns out that the dynamics of our filter and of the observation process are coupled, thus making the proof of uniqueness of the solution to the Kushner–Stratonovich system more delicate. After providing such a result, we are then able to show that the original problem under partial observation and the separated problem are equivalent, that is, they share the same value and the same optimal control.

Secondly, we exploit the convex structure of the separated problem and provide a general probabilistic verification theorem. This result – which is in line with findings in Baldursson and Karatzas [2], De Angelis et al. [20] and Ferrari [31], among others – relates the optimal control process to the solution to an auxiliary optimal stopping problem. Moreover, it proves that the value function of the separated problem is the integral – with respect to the controlled state variable – of the value function of the optimal stopping problem. The stopping problem thus gives the optimal timing at which debt should be marginally reduced.

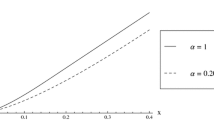

Finally, by specifying a setting in which the continuous-time Markov chain faces only two regimes (a fast growth or slow growth phase) and the macroeconomic indicator is a suitable diffusion process, we are able to characterise the optimal debt reduction policy. In this framework, the filter process is a two-dimensional process \((\pi _{t},1-\pi _{t})_{t\geq 0}\), where \(\pi _{t}\) is the conditional probability at time \(t\) that the economy enjoys the fast growth phase. We prove that the optimal control prescribes keeping the debt ratio at all times below an endogenously determined curve that is a function of the government’s belief about the current state of the economy. Such a debt ceiling is the free boundary of the fully two-dimensional optimal stopping problem that is related to the separated problem (in the sense of the previously discussed verification theorem). By using almost exclusively probabilistic arguments, we are able to show that the value function of the auxiliary optimal stopping problem is a \(C^{1}\)-function of its arguments, and thus enjoys the so-called smooth-fit property. Moreover, the free boundary is a continuous, bounded and increasing function of the filter process. This last monotonicity property also has a clear economic interpretation: the more the government believes that the economy enjoys a regime of fast growth, the less strict the optimal debt reduction policy should be.

As a remarkable byproduct of the regularity of the value function of the optimal stopping problem, we also obtain that the value function of the singular stochastic control problem is a classical solution to its associated Hamilton–Jacobi–Bellman (HJB) equation. The latter takes the form of a variational inequality involving an elliptic second-order partial differential equation (PDE). It is worth noticing that the \(C^{2}\)-regularity of the value function implies the validity of a second-order principle of smooth fit, usually observed in one-dimensional problems.

We believe that the study of the auxiliary fully two-dimensional optimal stopping problem is a valuable contribution to the literature in its own right. Indeed, while the literature on one-dimensional optimal stopping problems is very rich, the problem of characterising the optimal stopping rule in multi-dimensional settings has so far rarely been explored (see the recent works by Christensen et al. [17] and De Angelis et al. [20], as well as Johnson and Peskir [43], among the very few papers dealing with multi-dimensional stopping problems). This discrepancy is due to the fact that a standard guess-and-verify approach, based on the construction of an explicit solution to the variational inequality arising in the considered optimal stopping problem, is no longer applicable in multi-dimensional settings where the variational inequality involves a PDE rather than an ordinary differential equation.

Related literature. As already noticed above, our paper is placed among those recent works addressing the problem of optimal debt management via continuous-time stochastic control techniques. In particular, Cadenillas and Huamán-Aguilar [8, 9] model an optimal debt reduction problem as a one-dimensional control problem with singular and bounded-velocity controls, respectively. In the work by Ferrari and Rodosthenous [32], the government is allowed to increase and decrease the current level of the debt ratio, and the interest rate on debt is modulated by a continuous-time observable Markov chain. The mathematical formulation leads to a one-dimensional bounded-variation stochastic control problem with regime switching. In the model by Ferrari [31], when optimally reducing the debt ratio, the government takes into consideration the evolution of the inflation rate of the country. The latter evolves as an uncontrolled diffusion process and affects the growth rate of the debt ratio, which is a process of bounded variation. In this setting, the debt reduction problem is formulated as a two-dimensional singular stochastic control problem whose HJB equation involves a second-order linear parabolic partial differential equation. All the previous papers are formulated in a full information setting, while ours is under partial observation.

The literature on singular stochastic control problems under partial observation is also still quite limited. Theoretical results on the PDE characterisation of the value function of a two-dimensional optimal correction problem under partial observation are obtained by Menaldi and Robin [53], whereas a general maximum principle for a not necessarily Markovian singular stochastic control problem under partial information has more recently been derived by Øksendal and Sulem [54]. We also refer to De Angelis [19] and Decámps and Villeneuve [23] who provide a thorough study of the optimal dividend strategy in models in which the surplus process evolves as a drifted Brownian motion with unknown drift that can take only two constant values, with given probabilities.

Outline of the paper. The rest of the paper is organised as follows. In Sect. 2, we introduce the setting and formulate the problem. The reduction of the problem under partial observation to the separated problem is performed in Sect. 3; in particular, the filtering results are presented in Sect. 3.1. The probabilistic verification theorem connecting the separated problem to one of optimal stopping is then proved in Sect. 3.3. In Sect. 4, we consider a case study in which the economy faces only two regimes. Its solution, presented in Sects. 4.2 and 4.3, hinges on the study of a two-dimensional optimal stopping problem that is performed in Sect. 4.1. Finally, Appendix A collects the proofs of some technical filtering results.

2 Setting and problem formulation

2.1 The setting

Consider the complete filtered probability space \((\Omega ,\mathcal{F},\mathbb{F},\mathbb{P})\) capturing all the uncertainty of our setting. Here, \(\mathbb{F}:={(\mathcal{F}_{t})}_{t \ge 0}\) denotes the full information filtration. We suppose that it satisfies the usual hypotheses of completeness and right-continuity.

We denote by \(Z\) a continuous-time finite-state Markov chain describing the different states of the economy. For \(Q\geq 2\), let \(S := \{1, 2,\dots , Q\}\) be the state space of \(Z\) and \((\lambda _{ij})_{1 \leq i,j \leq Q}\) its generator matrix. Here \(\lambda _{ij}\), \(i \neq j\), gives the intensity of a transition from state \(i\) to state \(j\) and is such that \(\lambda _{ij} \geq 0\) for \(i \neq j\) and \(\sum _{j=1, j\ne i}^{Q} \lambda _{ij} = - \lambda _{ii}\). For any time \(t\geq 0\), \(Z_{t}\) is \(\mathcal{F}_{t}\)-measurable.
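For readers who want to experiment numerically, the following Python sketch simulates one path of the chain \(Z\) from a generator matrix \((\lambda_{ij})\), using exponential holding times with rate \(-\lambda_{ii}\) and jump probabilities \(\lambda_{ij}/(-\lambda_{ii})\). The function name `simulate_ctmc` and the two-state generator in the usage note are illustrative assumptions, not part of the model.

```python
import numpy as np


def simulate_ctmc(generator, z0, horizon, rng):
    """Simulate one path of a continuous-time Markov chain on {1, ..., Q}.

    generator: (Q, Q) array with nonnegative off-diagonal rates lambda_ij
               and rows summing to zero (lambda_ii = -sum_{j != i} lambda_ij).
    z0:        initial state in {1, ..., Q}.
    Returns the switching times (starting with 0.0) and the state held from each time on.
    """
    lam = np.asarray(generator, dtype=float)
    q = lam.shape[0]
    off_diag = lam - np.diag(np.diag(lam))
    if np.any(off_diag < 0) or not np.allclose(lam.sum(axis=1), 0.0):
        raise ValueError("not a valid generator matrix")
    times, states = [0.0], [z0]
    t, z = 0.0, z0
    while True:
        exit_rate = -lam[z - 1, z - 1]
        if exit_rate <= 0.0:                      # absorbing state: no further switches
            break
        t += rng.exponential(1.0 / exit_rate)     # holding time ~ Exp(-lambda_zz)
        if t >= horizon:
            break
        probs = off_diag[z - 1] / exit_rate       # jump to j with prob lambda_zj / (-lambda_zz)
        z = int(rng.choice(q, p=probs)) + 1
        times.append(t)
        states.append(z)
    return np.array(times), np.array(states)
```

For instance, `simulate_ctmc(np.array([[-0.5, 0.5], [1.0, -1.0]]), 1, 50.0, np.random.default_rng(0))` returns the switching times and the regimes visited on \([0,50)\) for a hypothetical two-regime economy.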

In the absence of any intervention by the government, we assume that the (uncontrolled) debt-to-GDP ratio evolves as
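\[ dX^{0}_{t} = \big(r-g(Z_{t})\big)X^{0}_{t}\,dt + \sigma X^{0}_{t}\,dW_{t}, \quad X^{0}_{0}=x>0, \tag{2.1} \]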
where \(W\) is a standard \(\mathbb{F}\)-Brownian motion on \((\Omega , \mathcal{F})\) independent of \(Z\), \(r\geq 0\) and \(\sigma >0\) are constants and \(g: S \rightarrow \mathbb{R}\). The constant \(r\) is the real interest rate on debt, \(\sigma \) is the debt’s volatility and \(g(i) \in \mathbb{R}\) is the rate of the GDP growth when the economy is in state \(i\in S\).

It is clear that (2.1) admits a unique strong solution, and when needed, we denote it by \(X^{x,0}\) for any \(x>0\). The current level of the debt-to-GDP ratio is known to the government at any time \(t\), and \(X^{x,0}\) is therefore the first component of the so-called observation process.
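A minimal simulation sketch of the uncontrolled debt ratio is given below, assuming that (2.1) has the geometric form \(dX^{0}_{t}=(r-g(Z_{t}))X^{0}_{t}\,dt+\sigma X^{0}_{t}\,dW_{t}\) (the geometric Brownian motion described in the introduction). The regime path is taken as given on a uniform grid and frozen over each step, so that the log-increment is exact whenever \(Z\) does not switch inside a step; this discretisation and the function name are illustrative choices.

```python
import numpy as np


def simulate_uncontrolled_debt_ratio(x, r, sigma, g, z_path, dt, rng):
    """Simulate X^{x,0} on a uniform grid, given the regime path on that grid.

    g: dict mapping each regime i to the GDP growth rate g(i).
    z_path: regimes Z at the grid points t_0, ..., t_n (frozen over each step).
    Returns the simulated path and the Brownian increments of W used.
    """
    z_path = np.asarray(z_path)
    n = len(z_path) - 1
    beta = np.array([r - g[int(z)] for z in z_path[:-1]])   # beta(Z) = r - g(Z)
    dw = rng.normal(0.0, np.sqrt(dt), size=n)
    log_incr = (beta - 0.5 * sigma**2) * dt + sigma * dw     # exact GBM log-step
    path = x * np.exp(np.concatenate(([0.0], np.cumsum(log_incr))))
    return path, dw
```

Returning the increments `dw` makes it possible to drive the macroeconomic indicator with the same Brownian motion \(W\).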

The government also observes a macroeconomic stochastic indicator \(\eta \), e.g. one of the so-called “Big Four”, which we interpret as a proxy of the business conditions. We assume that \(\eta \) is a jump-diffusion process solving the stochastic differential equation
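\[ d\eta_{t} = b_{1}(\eta_{t},Z_{t})\,dt + \sigma_{1}(\eta_{t})\,dW_{t} + \sigma_{2}(\eta_{t})\,dB_{t} + c(\eta_{t-},Z_{t-})\,dN_{t}, \quad \eta_{0}=q\in\mathcal{I}, \tag{2.2} \]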
where \(\sigma _{1} >0\), \(\sigma _{2}>0\), \(b_{1}\) and \(c\) are measurable functions of their arguments and \(\mathcal{I}\subseteq \mathbb{R}\) is the state space of \(\eta \). Here, \(B\) is an \(\mathbb{F}\)-standard Brownian motion independent of \(W\) and \(Z\). Moreover, \(N\) is an \(\mathbb{F}\)-adapted point process that has no common jump times with \(Z\) and is independent of \(W\) and \(B\). The predictable intensity of \(N\) is denoted by \((\lambda ^{N}(Z_{t-}))_{t\geq 0}\) and depends on the current state of the economy, with \(\lambda ^{N}(\,\cdot \,)>0\) a measurable function. From now on, we make the following assumptions, which ensure strong existence and uniqueness of the solution to (2.2). (By a standard localising argument, one can indeed argue e.g. as in the proof of Xi and Zhu [64, Theorem 2.1], which is carried out in a more general setting than ours and relies on Ikeda and Watanabe [40, Theorem IV.9.1].)

Assumption 2.1

The functions \(b_{1}: \mathcal{I}\times S \to \mathbb{R}\), \(\sigma _{1}: \mathcal{I}\to (0,\infty )\), \(\sigma _{2}: \mathcal{I}\to (0,\infty )\) and \(c: \mathcal{I}\times S \to \mathbb{R}\) are such that for any \(i \in S\),

(i) (continuity) \(b_{1}(\cdot , i)\), \(\sigma _{1}(\cdot )\), \(\sigma _{2}(\cdot )\) and \(c(\cdot , i)\) are continuous;

(ii) (local Lipschitz conditions) for any \(R>0\), there exists a constant \(L_{R}>0\) such that if \(|q|< R\), \(|q'|< R\), \(q, q' \in \mathcal{I}\), then
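\[ |b_{1}(q,i)-b_{1}(q',i)| + |\sigma_{1}(q)-\sigma_{1}(q')| + |\sigma_{2}(q)-\sigma_{2}(q')| + |c(q,i)-c(q',i)| \leq L_{R}\,|q-q'|; \]

(iii) (growth conditions) there exists a constant \(C>0\) such that

\[ |b_{1}(q,i)| + |\sigma_{1}(q)| + |\sigma_{2}(q)| + |c(q,i)| \leq C\,(1+|q|), \quad q\in\mathcal{I}. \]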
The dynamics proposed in (2.2) is of jump-diffusive type and allows the size and the intensity of the jumps to depend on the state of the economy. It is therefore flexible enough to describe a large class of stochastic factors which may exhibit jumps.
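An Euler-type sketch of (2.2) follows. It assumes that the SDE has the form \(d\eta_t=b_1(\eta_t,Z_t)\,dt+\sigma_1(\eta_t)\,dW_t+\sigma_2(\eta_t)\,dB_t+c(\eta_{t-},Z_{t-})\,dN_t\), and it approximates the jumps of \(N\) on each step by a Poisson count with mean \(\lambda^N(Z_{t_k})\,\Delta t\), all charged with the same size \(c(\eta_{t_k},Z_{t_k})\). Passing the same increments `dw` that drive the debt ratio reproduces the correlation between \(\eta\) and \(X^0\) through \(W\). The coefficient functions are hypothetical placeholders supplied by the user.

```python
import numpy as np


def simulate_indicator(q0, z_path, dt, dw, b1, sigma1, sigma2, c, lam_n, rng):
    """Euler-type scheme for eta on a uniform grid (illustrative sketch).

    z_path: regimes Z at the grid points t_0, ..., t_n (frozen over each step).
    dw:     Brownian increments of W (the same ones that drive the debt ratio).
    b1, sigma1, sigma2, c, lam_n: hypothetical coefficient functions.
    Returns the eta path and the number of jumps of N in each step.
    """
    z_path = np.asarray(z_path)
    n = len(z_path) - 1
    db = rng.normal(0.0, np.sqrt(dt), size=n)    # increments of B, independent of W
    eta = np.empty(n + 1)
    eta[0] = q0
    jumps = np.zeros(n, dtype=int)
    for k in range(n):
        q, i = eta[k], int(z_path[k])
        jumps[k] = rng.poisson(lam_n(i) * dt)    # approximate number of jumps of N in the step
        eta[k + 1] = (q + b1(q, i) * dt + sigma1(q) * dw[k]
                      + sigma2(q) * db[k] + c(q, i) * jumps[k])
    return eta, jumps
```

For example, `b1=lambda q, i: (0.1 if i == 1 else -0.1) - 0.5 * q` would give a mean-reverting indicator whose long-run level depends on the regime.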

The observation filtration \(\mathbb{H} = {(\mathcal{H}_{t})}_{t \ge 0}\) is defined as
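\[ \mathcal{H}_{t} := \mathcal{F}^{X^{0}}_{t} \vee \mathcal{F}^{\eta}_{t}, \quad t\geq 0, \]

where \(\mathbb{F}^{X^{0}}\) and \(\mathbb{F}^{\eta }\) denote the natural filtrations generated by \(X^{0}\) and \(\eta \), respectively, as usual augmented by ℙ-null sets. Clearly, \((X^{0}, \eta )\) is adapted to both ℍ and \(\mathbb{F}\), and

\[ \mathcal{H}_{t} \subseteq \mathcal{F}_{t}, \quad t\geq 0. \]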
The above inclusion means that the government cannot directly observe the state \(Z\) of the economy, but that this has to be inferred through the observation of \((X^{0}, \eta )\). We are therefore working in a partial information setting.

2.2 The optimal debt reduction problem

The government can reduce the level of the debt-to-GDP ratio by intervening on the primary budget balance (i.e., the overall difference between government revenues and spending), for example through austerity policies in the form of spending cuts. When doing so, the debt ratio dynamics becomes
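\[ dX^{\nu}_{t} = \big(r-g(Z_{t})\big)X^{\nu}_{t}\,dt + \sigma X^{\nu}_{t}\,dW_{t} - d\nu_{t}, \quad X^{\nu}_{0-}=x>0. \tag{2.3} \]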
The process \(\nu \) is the control that the government chooses based on the information at its disposal. More precisely, \(\nu _{t}\) defines the cumulative reduction of the debt-to-GDP ratio made by the government up to time \(t\), and \(\nu \) is therefore a nondecreasing process belonging to the set

for any given and fixed initial value \(x \in (0, \infty )\) of \(X^{\nu }\), initial value \(q \in \mathcal{I}\) of \(\eta \), and \({\underline{y}} \in \mathcal{Y}\). Here
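\[ \mathcal{Y} := \Big\{\underline{y}=(y_{1},\dots,y_{Q})\in\mathbb{R}^{Q}:\ y_{i}\geq 0,\ i=1,\dots,Q,\ \sum_{i=1}^{Q}y_{i}=1\Big\} \]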
is the probability simplex on \(\mathbb{R}^{Q}\), representing the space of initial distributions of the process \(Z\). From now on, we set \(\nu _{0-}=0\) a.s. for any \(\nu \in \mathcal{M}(x, \underline{y},q)\).

Remark 2.2

Notice that in the definition of the set ℳ above, as well as in (2.4) and in (2.5) below, we have stressed the dependency on the initial data \((x,\underline{y},q)\) just for notational convenience, not to indicate any Markovian nature of the considered problem, which is in fact not given.

For any \((x,\underline{y},q) \in (0,\infty ) \times \mathcal{Y} \times \mathcal{I}\) and \(\nu \in \mathcal{M}(x,\underline{y},q)\), there exists a unique solution to (2.3), denoted by \(X_{t}^{x,\nu }\), that is given by
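\[ X^{x,\nu}_{t} = X^{1,0}_{t}\Big(x-\int_{0}^{t} \frac{d\nu_{s}}{X^{1,0}_{s}}\Big), \quad t\geq 0, \]

where

\[ X^{1,0}_{t} := \exp\Big(\int_{0}^{t} \big(r-g(Z_{s})\big)\,ds-\frac{\sigma^{2}}{2}\,t+\sigma W_{t}\Big), \quad t\geq 0. \]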
Here and in the rest of this paper, we use the notation \(\int _{0}^{t} (\,\cdot \,)d\nu _{s} = \int _{[0,t]} (\,\cdot \,) d \nu _{s}\) for the Lebesgue–Stieltjes integral with respect to the random measure \(d\nu _{\cdot }\) induced by the nondecreasing process \(\nu \) on \([0,\infty )\).
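The representation \(X^{x,\nu}=X^{1,0}\big(x-\int_0^{\cdot} d\nu_s/X^{1,0}_s\big)\), with \(X^{1,0}\) the uncontrolled solution started at 1, makes simple policies easy to simulate. As a sketch, the Python code below implements on a time grid the hypothetical policy “keep the debt ratio at or below a constant level \(b\)”: the factor \(x-\int_0^t d\nu_s/X^{1,0}_s\) is decreased only when the barrier would otherwise be exceeded, and a lump-sum reduction \(x-b\) occurs at time 0 if \(x>b\). The constant barrier is purely illustrative; it is not the optimal policy of the paper, whose debt ceiling depends on the government’s belief about the regime.

```python
import numpy as np


def reflect_below_barrier(x, uncontrolled_unit_path, barrier):
    """Grid version of the policy 'keep X^{x,nu} <= barrier' (illustrative sketch).

    uncontrolled_unit_path: X^{1,0} sampled on a grid, with X^{1,0}_0 = 1.
    The factor Y_k = x - int_0^{t_k} dnu / X^{1,0} is set to
    min(Y_{k-1}, barrier / X^{1,0}_k), so it decreases only when needed.
    Returns the controlled path X^{x,nu} and the cumulative reduction nu.
    """
    x1 = np.asarray(uncontrolled_unit_path, dtype=float)
    y = np.minimum(x, np.minimum.accumulate(barrier / x1))   # nonincreasing factor
    y_prev = np.concatenate(([x], y[:-1]))
    dnu = x1 * (y_prev - y)                                  # increments of nu, all >= 0
    return x1 * y, np.cumsum(dnu)
```

Since the factor is nonincreasing, the resulting \(\nu\) is nondecreasing, and the policy uses only the observed debt ratio, so it is ℍ-adapted.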

Remark 2.3

The dynamics (2.3) might be justified in the following way. Suppose that the public debt (in real terms) \(D\) and the GDP \(Y\) follow the classical dynamics
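\[ dD_{t} = rD_{t}\,dt - d\xi_{t}, \quad D_{0-}=d>0, \qquad dY_{t} = g(Z_{t})\,Y_{t}\,dt + \sigma Y_{t}\,d\widetilde{W}_{t}, \quad Y_{0}=y>0, \]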
where \(\xi _{t}\) is the cumulative real budget balance up to time \(t\) and \(\widetilde{W}\) is a Brownian motion. An easy application of Itô’s formula and a change of measure then gives that the ratio \(X:=D/Y\) evolves as in (2.3), upon setting \(\nu _{\cdot }:=\int _{0}^{\cdot } d\xi _{s}/Y_{s}\) and \(x:=d/y\).
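The Itô computation behind Remark 2.3 can be sketched as follows, under the assumed specification \(dD_t=rD_t\,dt-d\xi_t\) and \(dY_t=g(Z_t)Y_t\,dt+\sigma Y_t\,d\widetilde W_t\), with \(\widetilde W\) independent of \(Z\); other specifications are possible. Since \(D\) has finite variation and \(1/Y\) is continuous, no covariation term appears in the product rule.

```latex
\begin{aligned}
d\Big(\frac{1}{Y_t}\Big) &= \frac{1}{Y_t}\Big(\big(\sigma^{2}-g(Z_t)\big)\,dt-\sigma\,d\widetilde{W}_t\Big),\\
dX_t &= \frac{dD_t}{Y_t}+D_t\,d\Big(\frac{1}{Y_t}\Big)
      = \big(r-g(Z_t)+\sigma^{2}\big)X_t\,dt-\sigma X_t\,d\widetilde{W}_t-\frac{d\xi_t}{Y_t},\\
W_t &:= \sigma t-\widetilde{W}_t
 \quad\Longrightarrow\quad
dX_t = \big(r-g(Z_t)\big)X_t\,dt+\sigma X_t\,dW_t-d\nu_t,
\qquad \nu_t := \int_0^t \frac{d\xi_s}{Y_s}.
\end{aligned}
```

On any finite horizon \(T\), Girsanov’s theorem shows that \(W\) is a Brownian motion under \(\mathbb{Q}\) with \(d\mathbb{Q}/d\mathbb{P}=\exp(\sigma\widetilde W_T-\sigma^2T/2)\); since this density depends only on \(\widetilde W\), the law of \(Z\) and its independence from the Brownian noise are preserved.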

The government aims at reducing the level of the debt ratio. Having a level of \(X_{t}=x\) at time \(t\geq 0\) when the state of the economy is \(Z_{t}=i\), the government incurs an instantaneous cost (loss) \(h(x,i)\). This may be interpreted as an opportunity cost resulting from the crowding out of private investments, less room for financing public investments, and a tendency to suffer low subsequent growth (see the technical report [30] and the work by Woo and Kumar [63], among others). The cost function \(h:\mathbb{R} \times S \to \mathbb{R}_{+}\) fulfils the following requirements (see also Cadenillas and Huamán-Aguilar [8] and Ferrari [31]).

Assumption 2.4

(i) For any \(i\in S\), the mapping \(x \mapsto h(x,i)\) is strictly convex, continuously differentiable and nondecreasing on \(\mathbb{R}_{+}\). Moreover, \(h(0,i)=0\).

(ii) For any given \(x\in (0,\infty )\) and \(i\in S\), one has
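\[ \mathbb{E}\Big[\int_{0}^{\infty} e^{-\rho t}\,h(X^{x,0}_{t},Z_{t})\,dt\,\Big|\,Z_{0}=i\Big]<\infty \quad\text{and}\quad \mathbb{E}\Big[\int_{0}^{\infty} e^{-\rho t}\,X^{1,0}_{t}\,h_{x}(X^{x,0}_{t},Z_{t})\,dt\,\Big|\,Z_{0}=i\Big]<\infty, \]

where \(h_{x}\) denotes the derivative of \(h\) with respect to \(x\).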
Remark 2.5

1) As an example, the power function given by \(h(x,i) = \vartheta _{i} x^{n_{i} + 1}\) for \((x,i) \in [0,\infty )\times S\), \(\vartheta _{i}>0\), \(n_{i} \geq 1\) satisfies Assumption 2.4 (for a suitable \(\rho >0\) taking care of requirement (ii) above). Following the careful discussion of Cadenillas and Huamán-Aguilar in [8, Sect. 2], \(n_{i}\) can be interpreted as a subjective regime-dependent parameter capturing the government’s aversion/intolerance towards the debt ratio. On the other hand, the parameter \(\vartheta _{i}\) can be thought of as a measure (in monetary terms) of the importance of debt: the better the debt’s characteristics (for example, a larger portion of debt is domestic rather than external, cf. Japan), the lower the parameter \(\vartheta _{i}\) (relative to the marginal cost of intervention, see below). A power cost function such as the one above is in line with the usual quadratic loss function adopted in the economic literature (see the influential paper by Tabellini [61], among many others).

2) Notice that the integrability conditions in Assumption 2.4 (ii) ensure that the expected cost and marginal cost of having debt and not intervening on it are finite for any possible regime of the economy. In particular, the finiteness of the second expectation in Assumption 2.4 (ii) guarantees that the stopping functional considered in Sect. 3.3 below is finite.
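For the power cost of item 1), a sufficient condition on \(\rho\) for Assumption 2.4 (ii) can be computed explicitly. Let \(\bar\beta:=\max_i (r-g(i))\) and \(p_i:=n_i+1\). Since \(W\) is independent of \(Z\), one has \(\mathbb{E}[(X^{x,0}_t)^{p}]\le x^{p}\exp\big((p\bar\beta+\sigma^2p(p-1)/2)\,t\big)\) for \(p>0\), and \(X^{1,0}_t\,h_x(X^{x,0}_t,i)=\vartheta_i p_i x^{p_i-1}(X^{1,0}_t)^{p_i}\). Hence both expectations are finite whenever \(\rho\) exceeds the value returned by the sketch below. This is a back-of-the-envelope sufficient bound, not a sharp condition and not one stated in the paper; the function name is hypothetical.

```python
def discount_threshold(r, g, sigma, ns):
    """Sufficient lower bound on rho for h(x, i) = theta_i * x**(n_i + 1).

    r: real interest rate, g: iterable of growth rates g(i),
    sigma: debt volatility, ns: iterable of exponents n_i.
    Uses E[(X_t^{x,0})**p] <= x**p * exp((p*beta_max + sigma**2*p*(p-1)/2) * t).
    """
    beta_max = max(r - gi for gi in g)
    rates = [(n + 1) * beta_max + 0.5 * sigma**2 * (n + 1) * n for n in ns]
    return max(0.0, max(rates))
```

For example, with \(r=0.02\), growth rates \(0.03\) and \(-0.01\), \(\sigma=0.1\) and exponents \(n_i\in\{1,2\}\), the bound is \(0.12\), so any \(\rho>0.12\) suffices.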

Whenever the government intervenes in order to reduce the debt-to-GDP ratio, it incurs a proportional cost. This might be seen as a measure of the social and financial consequences deriving from a debt-reduction policy, and the associated regime-dependent marginal cost \(\kappa (Z_{t})\) allows one to express it in monetary terms (we refer e.g. to Lukkezen and Suyker [50] for an empirical evaluation of those costs). We assume that \(\kappa (\,\cdot \,)>0\) is a measurable finite function.

Given an intertemporal discount rate \(\rho >0\), for any given and fixed triple \((x,\underline{y},q) \in (0,\infty ) \times \mathcal{Y} \times \mathcal{I}\), the government thus aims to minimise the expected total cost functional
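\[ \mathcal{J}_{x,\underline{y},q}(\nu) := \mathbb{E}\Big[\int_{0}^{\infty} e^{-\rho t}\,h(X^{x,\nu}_{t},Z_{t})\,dt+\int_{0}^{\infty} e^{-\rho t}\,\kappa(Z_{t})\,d\nu_{t}\Big] \tag{2.4} \]

for \(\nu \in \mathcal{M}(x, \underline{y}, q)\). The government’s problem under partial observation can therefore be defined as

\[ V_{\mathrm{po}}(x,\underline{y},q) := \inf_{\nu\in\mathcal{M}(x,\underline{y},q)} \mathcal{J}_{x,\underline{y},q}(\nu). \tag{2.5} \]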
Remark 2.6

1) We provide here some comments on our formulation of the optimal debt reduction problem. In line with the recent literature [8, 9, 31, 32] on stochastic control models for debt management, the cost/loss function \(h\) appearing in the government’s objective functional is nondecreasing and null when the debt level is zero. While the latter requirement can be made without loss of generality, the former implicitly means that the government believes that the disadvantages arising from debt far outweigh the advantages, and therefore neglects any potential social and financial benefit arising from having debt (cf. Holmström and Tirole [39]). This assumption is arguably more appropriate for those countries that faced severe debt crises during the last financial crisis and whose governments believe that high government debt has a negative effect on long-term economic growth, makes the economy less resilient to macroeconomic shocks (e.g. sovereign default risks and liquidity shocks) and limits the adoption of counter-cyclical fiscal policies (see e.g. the book by Blanchard [5, Chap. 22], the technical report [30] and Woo and Kumar [63] for empirical studies).

However, it is also worth noting that the general results of Sect. 3 of this paper still hold if we take \(x \mapsto h(x,i)\) convex and bounded from below and drop the requirement that it be nondecreasing on \(\mathbb{R}_{+}\) (thus allowing potential benefits arising from debt). On the other hand, the monotonicity of \(h(\cdot ,i)\) plays an important role in our analysis in Sects. 3.3 and 4 (see Propositions 4.4 and 4.6).

2) In our model, we do not allow policies that might lead to an increase of the debt, such as investments in infrastructure, healthcare, education and research, and we neglect any possible social and financial benefit that those economic measures might induce (see Ostry et al. [55]). From a mathematical point of view, allowing policies of debt increase would lead to a singular stochastic control problem with controls of bounded variation, where the two nondecreasing processes giving the minimal decomposition of any admissible control represent the cumulative amounts of the debt’s increase and decrease. In this case, one might also include in the government’s objective functional the total expected social and financial benefits arising from a policy of debt expansion. We refer to Ferrari and Rodosthenous [32], where a similar setting has been considered in a problem of debt management under complete observation.

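The function \(V_{\mathrm{po}}\) is well defined and finite. Indeed, it is nonnegative due to the nonnegativity of \(h\) and \(\kappa \). Moreover, the admissible policy “instantaneously reduce the debt ratio to 0 at the initial time” is a priori suboptimal and, since \(h(0,i)=0\), has expected cost \(x\sum_{i=1}^{Q}\kappa(i)\,y_{i}\), where \(\underline{y}=(y_{1},\dots,y_{Q})\) is the initial distribution of \(Z\); hence \(V_{\mathrm{po}}(x,\underline{y},q) \leq x\sum_{i=1}^{Q}\kappa(i)\,y_{i}<\infty \).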
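This bound can be checked numerically, and compared with other admissible policies, by Monte Carlo. The sketch below estimates the cost (2.4) of the hypothetical constant-barrier policy “keep \(X^{x,\nu}\le b\)”, which uses only the observed debt ratio and is therefore ℍ-adapted; \(b=0\) recovers the policy of immediately reducing the debt ratio to zero. The time grid, the truncation of the infinite horizon, the first-order transition matrix \(I+(\lambda_{ij})\,\Delta t\) for \(Z\), the coding of regimes as \(0,\dots,Q-1\) and all parameter values are simplifying assumptions of this sketch.

```python
import numpy as np


def estimate_barrier_cost(x, barrier, y0, gen, r, g, sigma, kappa, h, rho,
                          horizon=30.0, dt=0.01, n_paths=2000, seed=0):
    """Monte Carlo estimate of the discounted cost of the policy 'keep X <= barrier'."""
    rng = np.random.default_rng(seed)
    gen = np.asarray(gen, dtype=float)
    g = np.asarray(g, dtype=float)
    kappa = np.asarray(kappa, dtype=float)
    q = gen.shape[0]
    trans = np.eye(q) + gen * dt                  # first-order transition matrix of Z
    if np.any(trans < 0):
        raise ValueError("dt too large for the first-order approximation")
    cum = np.cumsum(trans, axis=1)
    n_steps = int(round(horizon / dt))
    z = rng.choice(q, size=n_paths, p=y0)         # Z_0 ~ y0, regimes coded 0..Q-1
    x1 = np.ones(n_paths)                         # X^{1,0} on each path
    fac = np.full(n_paths, float(x))              # x - int_0^t dnu_s / X^{1,0}_s
    total = np.zeros(n_paths)
    for k in range(n_steps + 1):
        disc = np.exp(-rho * k * dt)
        new_fac = np.minimum(fac, barrier / x1)   # reduce debt only above the barrier
        total += disc * kappa[z] * x1 * (fac - new_fac)   # proportional intervention cost
        fac = new_fac
        if k == n_steps:
            break
        total += disc * h(x1 * fac, z) * dt       # running cost of holding debt
        shocks = rng.standard_normal(n_paths)
        x1 *= np.exp((r - g[z] - 0.5 * sigma**2) * dt + sigma * np.sqrt(dt) * shocks)
        u = rng.random(n_paths)
        z = np.minimum((u[:, None] > cum[z]).sum(axis=1), q - 1)   # next regime
    return total.mean()
```

With `y0 = [1.0, 0.0]` and `barrier = 0.0`, the estimate equals \(x\kappa\) of the initial regime exactly, i.e. the cost of the immediate-reduction policy; a positive barrier trades intervention costs against holding costs.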

We should like to stress once more that any \(\nu \in \mathcal{M}(x,\underline{y},q)\) is ℍ-adapted, and therefore (2.5) is a stochastic control problem under partial observation. In particular, it is a singular stochastic control problem under partial observation, that is, an optimal control problem in which the random measures induced by the nondecreasing control processes on \([0,\infty )\) might be singular with respect to Lebesgue measure, and in which one component \(Z\) of the state variable is not directly observable by the controller.

In its current formulation, the optimal debt reduction problem is not Markovian and the dynamic programming approach via an HJB equation is not applicable. In the next section, by using techniques from filtering theory, we introduce an equivalent problem under complete information, the so-called separated problem. This enjoys a Markovian structure, and its solution is characterised in Sect. 3.3 through a Markovian optimal stopping problem.

3 Reduction to an equivalent problem under complete information

In this section, we derive the separated problem. To this end, we first study the filtering problem arising in our model. As already discussed in the introduction, results on such a filtering problem cannot be directly obtained from the existing literature due to the structure of our dynamics.

3.1 The filtering problem

The filtering problem consists in finding the best mean-squared estimate of \(f(Z_{t})\), for any \(t\) and any measurable function \(f\), on the basis of the information available up to time \(t\). In our setting, that information flow is given by the filtration ℍ. The estimate can be described through the filter process \({(\pi _{t})}_{t \ge 0}\) which provides the conditional distribution of \(Z_{t}\) given \(\mathcal{H}_{t}\) for any time \(t\) (see for instance Liptser and Shiryaev [49, Chap. 8]). For any probability measure \(\mu \) on \(S=\{1,\dots ,Q\}\) and any function \(f\) on \(S\), we write \(\mu (f):= \int _{S} f d \mu = \sum _{i=1}^{Q} f(i)\mu (\{i\})\). It is known that there exists a càdlàg (right-continuous with left limits) process taking values in the space of probability measures on \(S=\{1,\dots ,Q\}\) such that for any measurable function \(f\) on \(S\),
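\[ \pi_{t}(f) = \mathbb{E}\big[f(Z_{t})\,\big|\,\mathcal{H}_{t}\big] \quad \mathbb{P}\text{-a.s.},\ t\geq 0; \tag{3.1} \]

see Kurtz and Ocone [48, Lemma 1.1] for further details. Moreover, since \(Z\) takes only finitely many values, the filter is completely described by the vector

\[ \big(\pi_{t}(f_{1}),\dots,\pi_{t}(f_{Q})\big), \quad t\geq 0, \]

where \(f_{i}(z) := \mathbf{1}_{\{z=i\}}\), \(z\in S\), \(i \in S\). With a slight abuse of notation, we denote in the following by \(\pi (i)\) the process \(\pi (f_{i})\), so that for all measurable functions \(f\), (3.1) gives

\[ \pi_{t}(f) = \sum_{i=1}^{Q} f(i)\,\pi_{t}(i), \quad t\geq 0. \]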

Set \(\beta (Z_{t}):=r-g(Z_{t})\) and \(\beta (i):= r - g(i)\), \(i \in S\); since \(S\) is finite, \(\beta \) is bounded. We then define two processes \(I\) and \(I^{1}\) such that for any \(t\geq 0\),

where

Henceforth, we work under the following Novikov condition.

Assumption 3.1

Under Assumption 3.1, by classical results from filtering theory (see e.g. [49, Chap. 7]), the innovation processes \(I\) and \(I^{1}\) are Brownian motions with respect to the filtration ℍ. Moreover, since \(B\) and \(W\) are assumed to be independent, \(I\) and \(I^{1}\) are independent as well.
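The claim that the innovations are ℍ-Brownian motions lends itself to a quick numerical check. The Python sketch below is illustrative only and rests on simplifying assumptions that are ours, not the paper's: two regimes, a single observed process \(dR_{t} = \beta (Z_{t})\,dt + \sigma \,dB_{t}\) playing a role analogous to the debt-ratio observation (the indicator \(\eta \) is left out), hypothetical parameter values, an Euler scheme for the standard Wonham filter and a first-order approximation of the regime switches. The function `innovation_paths` is hypothetical. It simulates the hidden regime, filters it from the observations and accumulates \(I_{T} = \sigma ^{-1}(R_{T} - \int _{0}^{T} \pi _{s}(\beta )\,ds)\); for a correct filter started from the true initial distribution, \(I_{T}\) should have mean close to 0 and variance close to \(T\).

```python
import numpy as np


def innovation_paths(n_paths, T, dt, lam12, lam21, beta, sigma, y0, rng):
    """Simulate hidden regime, observation and filter; return the innovations I_T.

    Hypothetical observation model: dR_t = beta[Z_t] dt + sigma dB_t, where Z is a
    two-state chain (index 0 = regime 1, index 1 = regime 2) jumping 0 -> 1 at rate
    lam12 and 1 -> 0 at rate lam21, with P(Z_0 = regime 1) = y0.
    """
    beta = np.asarray(beta, dtype=float)
    n_steps = int(round(T / dt))
    z = (rng.random(n_paths) >= y0).astype(int)  # regime 1 (index 0) with prob. y0
    pi = np.full(n_paths, float(y0))             # pi_t = P(Z_t = regime 1 | observations)
    innov = np.zeros(n_paths)
    gain = (beta[0] - beta[1]) / sigma
    for _ in range(n_steps):
        dR = beta[z] * dt + sigma * rng.normal(0.0, np.sqrt(dt), n_paths)
        # innovation increment: observation minus its filtered prediction
        dI = (dR - (pi * beta[0] + (1.0 - pi) * beta[1]) * dt) / sigma
        # Euler step of the Wonham filter: prior dynamics + correction by the innovation
        pi = pi + (lam21 - (lam12 + lam21) * pi) * dt + pi * (1.0 - pi) * gain * dI
        pi = np.clip(pi, 0.0, 1.0)               # numerical safeguard only
        innov += dI
        # first-order approximation of the regime switches on [t, t + dt)
        u = rng.random(n_paths)
        switch = np.where(z == 0, u < lam12 * dt, u < lam21 * dt)
        z = np.where(switch, 1 - z, z)
    return innov


rng = np.random.default_rng(0)
I_T = innovation_paths(5_000, T=1.0, dt=1e-3, lam12=0.8, lam21=0.5,
                       beta=[-0.2, 0.8], sigma=0.5, y0=0.4, rng=rng)
print(f"mean {I_T.mean():.3f} (expect ~0), variance {I_T.var():.3f} (expect ~T = 1)")
```

With a fixed guess in place of the filter, \(I_{T} - B_{T}\) would be independent of \(B\) (the regime is independent of \(B\)), so the variance of \(I_{T}\) could only increase; the centring by \(\pi _{t}(\beta )\) is what restores the Brownian behaviour.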

The integer-valued random measure associated to the jumps of \(\eta \) is defined as

where \(\delta _{(a_{1},a_{2})}\) denotes the Dirac measure at the point \((a_{1},a_{2}) \in \mathbb{R}_{+} \times \mathbb{R}\). Notice that the ℍ-adapted random measure \(m\) is such that

To proceed further we need the following useful definitions.

Definition 3.2

For any filtration \(\mathbb{G}\), we denote by \({\mathcal{P}}(\mathbb{G})\) the predictable \(\sigma \)-field on the product space \((0,\infty )\times \Omega \). Moreover, let \({\mathcal{B}}(\mathbb{R})\) be the Borel \(\sigma \)-algebra on ℝ. Any mapping \(H: (0,\infty ) \times \Omega \times \mathbb{R} \to \mathbb{R}\) which is \({\mathcal{P}}(\mathbb{G}) \otimes {\mathcal{B}}(\mathbb{R})\)-measurable is called a \(\mathbb{G}\)-predictable process indexed by ℝ.

Letting

we denote by \(\mathbb{F}^{m}:=(\mathcal{F}^{m}_{t})_{t\geq 0}\) the filtration which is generated by the random measure \(m(dt, dq)\). It is right-continuous by [7, Theorem T25 in Appendix A2].

Definition 3.3

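Given any filtration \(\mathbb{G}\) with \(\mathbb{F}^{m} \subseteq \mathbb{G}\), the \(\mathbb{G}\)-dual predictable projection of \(m\), denoted by \(m^{p, \mathbb{G}}(dt, dq)\), is the unique positive \(\mathbb{G}\)-predictable random measure such that for any nonnegative \(\mathbb{G}\)-predictable process \(\Phi \) indexed by ℝ,

\[ \mathbb{E}\bigg[\int _{0}^{\infty }\!\!\int _{\mathbb{R}} \Phi (t,q)\, m(dt,dq)\bigg] = \mathbb{E}\bigg[\int _{0}^{\infty }\!\!\int _{\mathbb{R}} \Phi (t,q)\, m^{p,\mathbb{G}}(dt,dq)\bigg]. \tag{3.6} \]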

To prove that a given positive \(\mathbb{G}\)-predictable random measure is the \(\mathbb{G}\)-dual predictable projection of \(m\), it suffices to verify (3.6) for any process of the form \(\Phi (t,q) = C_{t}\mathbf{1}_{A}(q)\), with \(C\) a nonnegative \(\mathbb{G}\)-predictable process and \(A \in {\mathcal{B}}(\mathbb{R})\). For further details, we refer to the books by Brémaud [7, Sect. VIII.4] and Jacod [41, Sect. III.1].

We now aim at deriving an equation for the evolution of the filter (the filtering equation). To this end, we use the so-called innovation approach (see Brémaud [7, Chap. IV.1], Liptser and Shiryaev [49, Chaps. 7.4 and 10.1.5] and Ceci and Colaneri [15], among others), which in our setting requires the introduction of the ℍ-compensated jump measure of \(\eta \),

\[ m^{\pi }(dt,dq) := m(dt,dq) - m^{p,\mathbb{H}}(dt,dq). \tag{3.7} \]

The triplet \((I, I^{1}, m^{\pi })\) also represents a building block for the construction of ℍ-martingales, as shown in Proposition 3.5 below. We start by determining the form of \(m^{p, \mathbb{H}}\).

Proposition 3.4

The ℍ-dual predictable projection of \(m\) is given by

where \(\delta _{a}\) denotes the Dirac measure at the point \(a \in \mathbb{R}\).

Proof

1) We first prove that the \(\mathbb{F}\)-dual predictable projection of \(m\) is given by

Let \(A\in {\mathcal{B}}(\mathbb{R})\) and introduce

Then \(\mathcal{N}(A)\) is the point process counting the number of jumps of \(\eta \) up to time \(t\) with jump size in the set \(A\). Since (2.2) implies that \(\Delta \eta _{s} = c(\eta _{s-},Z_{s-})\,\Delta N_{s}\) for \(s\geq 0\), and \(N\) is a point process with \(\mathbb{F}\)-predictable intensity given by \((\lambda ^{N}(Z_{t-}))_{t\geq 0}\), we obtain for each nonnegative \(\mathbb{F}\)-predictable process \(C\) that

So for any \(A\in {\mathcal{B}}(\mathbb{R})\), the \(dt\)-density of \(m^{p, \mathbb{F}}(dt,A)\), with \(m^{p, \mathbb{F}}\) as in (3.9), provides the \(\mathbb{F}\)-predictable intensity of the counting process \(\mathcal{N}(A)\). Recalling (3.10) and Definition 3.3, this implies that \(m^{p, \mathbb{F}}(dt,dq)\) in (3.9) coincides with the \(\mathbb{F}\)-dual predictable projection of \(m\), since (3.6) holds with the choice \(\mathbb{G} = \mathbb{F}\) and \(\Phi (t,q) = C_{t}\mathbf{1}_{A}(q)\).

2) As in Ceci [11, Proposition 2.3], we can now derive the ℍ-dual predictable projection of \(m\) by projecting \(m^{p, \mathbb{F}}\) onto the observation flow ℍ. More precisely, the ℍ-predictable intensity of the point process \(\mathcal{N}(A)\), \(A\in {\mathcal{B}}(\mathbb{R})\), is given by

This implies that \(m^{p, \mathbb{H}}(dt, dq)\) is given by (3.8), since (3.6) is satisfied with the choice \(\mathbb{G} = \mathbb{H}\) and \(\Phi (t,q) = C_{t}\mathbf{1}_{A}(q)\), where \(C\) is a nonnegative ℍ-predictable process. □
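Step 1) of the proof identifies the \(\mathbb{F}\)-intensity of \(\mathcal{N}(A)\): while the regime is \(i\), jumps of \(\eta \) with size in \(A\) arrive at rate \(\lambda ^{N}(i)\mathbf{1}_{A}(c(\cdot ,i))\). A Monte Carlo check of the resulting compensator identity \(\mathbb{E}[\mathcal{N}_{T}(A)] = \mathbb{E}[\int _{0}^{T} \lambda ^{N}(Z_{s})\mathbf{1}_{A}(c(Z_{s}))\,ds]\) is sketched below under simplifying assumptions that are ours, not the paper's: two regimes, a nonzero jump size \(c(i)\) that does not depend on \(\eta \), hypothetical rates and \(A = (0,\infty )\). The helper `simulate_counts` is hypothetical. Because the regime is piecewise constant, \(N\) can be simulated exactly as a Poisson count over each sojourn.

```python
import numpy as np


def simulate_counts(T, leave_rate, lam_N, jump_size, in_A, rng):
    """One path of (N_T(A), compensator at T) for a regime-modulated point process.

    Hypothetical simplified model: two regimes (index 0 = regime 1, 1 = regime 2);
    regime z is left at rate leave_rate[z]; N jumps at rate lam_N[z]; every jump of
    N moves eta by jump_size[z] (nonzero and independent of eta).
    """
    z = 0                      # start in regime 1 (arbitrary choice)
    remaining = T
    count, compensator = 0, 0.0
    while remaining > 0.0:
        stay = min(rng.exponential(1.0 / leave_rate[z]), remaining)
        if in_A(jump_size[z]):
            # given the regime path, the number of jumps on this sojourn is Poisson
            count += rng.poisson(lam_N[z] * stay)
            compensator += lam_N[z] * stay
        remaining -= stay
        z = 1 - z              # with two regimes, leaving one means entering the other
    return count, compensator


def positive(q):
    """Indicator of the Borel set A = (0, infinity)."""
    return q > 0.0


rng = np.random.default_rng(1)
samples = np.array([
    simulate_counts(5.0, [0.5, 1.0], [2.0, 0.7], [1.0, -0.5], positive, rng)
    for _ in range(20_000)
])
diff = samples[:, 0] - samples[:, 1]          # N_T(A) minus its compensator
mean_diff = diff.mean()
stderr = diff.std(ddof=1) / np.sqrt(len(diff))
print(f"E[N_T(A)] ~ {samples[:, 0].mean():.3f}, E[compensator] ~ {samples[:, 1].mean():.3f}")
```

The difference between the count and its compensator is a martingale, so its sample mean should vanish up to Monte Carlo error.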


An essential tool to prove that the original problem under partial information is equivalent to the separated one is the characterisation of the filter as the unique solution to the filtering equation (see El Karoui et al. [28], Mazliak [52] and Ceci and Gerardi [12]). In order to derive the filtering equation solved by \(\pi \), we first give a representation theorem for ℍ-martingales. The proof of the following technical result is given in Appendix A.

Proposition 3.5

Under Assumptions 2.1 and 3.1, every ℍ-local martingale \(M\) admits the decomposition

where \(\varphi \) and \(\psi \) are ℍ-predictable processes and \(w\) is an ℍ-predictable process indexed by ℝ such that a.s.

We are now in the position to prove the following fundamental result, whose proof is postponed to Appendix A.

Theorem 3.6

Recall (3.7), let \({\underline{y}} \in \mathcal{Y} \) be the initial distribution of \(Z\) and let Assumptions 2.1 and 3.1 hold. Then the filter \(({\underline{\pi }}_{t})_{t \geq 0} := (\pi _{t}(i); i\in S)_{t \geq 0}\) solves the Kushner–Stratonovich system

for any \(i\in S\). Here, \(\beta (i) = r -g(i)\) and

denotes the Radon–Nikodým derivative of  with respect to  .

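Let us introduce the sequence of jump times and jump sizes of the process \(\eta \), denoted by \((T_{n}, \zeta _{n} )_{n\geq 1}\) and recursively defined, with \(T_{0} := 0\), as

\[ T_{n} := \inf \{t > T_{n-1} : \Delta \eta _{t} \neq 0\}, \qquad \zeta _{n} := \Delta \eta _{T_{n}} \ \text{ on } \{T_{n} < \infty \}, \qquad n \geq 1. \]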

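We use the standard convention that \(\inf \emptyset = +\infty \). Then the integer-valued measure associated to the jumps of \(\eta \) (cf. (3.4)) can also be written as

\[ m(dt,dq) = \sum _{n\geq 1} \delta _{(T_{n},\zeta _{n})}(dt,dq)\, \mathbf{1}_{\{T_{n}<\infty \}}. \]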

The filtering system (3.11) has a natural recursive structure in terms of the sequence \(( T_{n} )_{n\geq 1}\), as shown in the next proposition.

Proposition 3.7

Between two consecutive jump times, i.e., for \(t \in [T_{n}, T_{n+1})\), the filtering system (3.11) reads as

for any \(i\in S\). At a jump time \(T_{n}\) of \(\eta \), \(({\underline{\pi }}_{t})_{t \geq 0}= (\pi _{t}(i); i\in S)_{t \geq 0}\) jumps as well, and its value is given by

Proof

First, recalling that \(m^{\pi }(dt, dq)= m(dt, dq) - m^{p, \mathbb{H}}(dt, dq)\) and

we obtain that

which from (3.11) implies that \(\pi _{t}(i)\) solves (3.14) for any \(t \in [T_{n}, T_{n+1})\). Finally, (3.15) follows by (3.12) and

We want to stress that (3.15) shows that the vector \({\underline{\pi }}_{T_{n}}\) is completely determined by the observed data \(\eta \) and the knowledge of \({\underline{\pi }}_{t}\) for \(t \in [T_{n-1}, T_{n})\), since \(\pi _{{T_{n} -}}(i) := \lim _{t \uparrow T_{n}} \pi _{t}(i)\), \(i \in S\).

Example 3.8

1) In the case \(c(q,i) \equiv c \neq 0\) for any \(i \in S\) and \(q \in \mathcal{I}\), the sequences of jump times of \(\eta \) and \(N\) coincide and the filtering system (3.11) reduces to (for \(i \in S\))

2) In the case \(\alpha (q,i)=\alpha (i)\) and \(c(q,i) \equiv 0\) for any \(i \in S\) and \(q \in \mathcal{I}\), the filtering system (3.11) does not depend explicitly on the process \(\eta \). In particular, one has

where we have set \(\alpha (i) := \sigma _{2}^{-1} (b_{1}(i) - \sigma ^{-1} \beta (i) \sigma _{1})\). In Sect. 4, we provide the explicit solution to the optimal debt reduction problem within this setting. With reference to (2.2) and (3.3), this setting corresponds e.g. to the purely diffusive arithmetic case \(c(q,i) = 0\), \(b_{1}(q,i) = b_{1}(i)\) and \(\sigma _{1}(q) = \sigma _{1} >0\), \(\sigma _{2}(q) = \sigma _{2} >0\) for any \(i \in S\) and \(q \in \mathcal{I}\), or to the purely diffusive geometric case \(c(q,i) = 0\), \(b_{1}(q,i) = b_{1}(i)q\) and \(\sigma _{1}(q) = \sigma _{1} q\), \(\sigma _{2}(q) = \sigma _{2} q\) for any \(i \in S\) and \(q \in \mathcal{I}\).
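In case 1) of Example 3.8, every jump of \(N\) is a jump of \(\eta \) of the same size \(c\), so a jump only reveals that \(N\) has jumped. Under this reading (ours; the general update (3.15) is not reproduced here), the Radon–Nikodým derivative in Theorem 3.6 should reduce to a ratio of intensities, and the jump of the filter at \(T_{n}\) becomes a Bayes update, \(\pi _{T_{n}}(i) = \pi _{T_{n}-}(i)\lambda ^{N}(i) / \sum _{j}\pi _{T_{n}-}(j)\lambda ^{N}(j)\). A minimal sketch with a hypothetical helper `filter_jump_update` (regimes indexed from 0, values hypothetical):

```python
import numpy as np


def filter_jump_update(pi_minus, lam_N):
    """Filter right after a jump of eta, assuming c(q, i) == c != 0 for all regimes.

    pi_minus : conditional probabilities pi_{T_n-}(i) just before the jump
    lam_N    : intensities lambda^N(i) of the jump process N in each regime
    """
    weights = np.asarray(pi_minus, dtype=float) * np.asarray(lam_N, dtype=float)
    return weights / weights.sum()   # pi_{T_n}(i), again a probability vector


print(filter_jump_update([0.5, 0.5], [2.0, 0.5]))  # [0.8 0.2]
```

The regime with the higher jump intensity gains weight; if all intensities coincide, a jump carries no information about the regime and the filter is unchanged.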

3.2 The separated problem

Thanks to the introduction of the filter, (2.1)–(2.3) can now be rewritten in terms of observable processes. In particular, we have that

Notice that for any \(\nu \in \mathcal{M}(x,\underline{y},q)\), the process \(X^{\nu }\) turns out to be ℍ-adapted and depends on the vector \(({\underline{\pi }}_{t})_{t \geq 0} =(\pi _{t}(i); i\in S)_{t \geq 0}\) with \({\underline{\pi }}_{0}= {\underline{y}} \in \mathcal{Y}\).

Definition 3.9

We say that a process \(( { \underline{\widetilde{\pi }}}_{t}, \widetilde{\eta }_{t} )_{t \geq 0}\) with values in \(\mathcal{Y} \times \mathcal{I}\) is a strong solution to (3.11) and (3.17) if it satisfies those equations pathwise. We say that strong uniqueness for the system (3.11) and (3.17) holds if for any strong solution \(( { \underline{\widetilde{\pi }}}_{t}, \widetilde{\eta }_{t} )_{t \geq 0}\) to (3.11) and (3.17), one has \({\underline{\widetilde{\pi }}}_{t} = {\underline{\pi }}_{t}\) and \(\widetilde{\eta }_{t} = \eta _{t}\) a.s. for all \(t \geq 0\).

Proposition 3.10

Let Assumptions 2.1 and 3.1 hold and suppose that \(\alpha (\cdot ,i)\) is locally Lipschitz for any \(i\in S\) and there exists \(M>0\) such that \(|\alpha (q,i)|\leq M(1 + |q|)\) for any \(q \in \mathcal{I}\) and any \(i\in S\). Then the system (3.11) and (3.17) admits a unique strong solution.

The proof of Proposition 3.10 is postponed to Appendix A. Notice that under Assumption 2.1, the requirement on \(\alpha \) of Proposition 3.10 is verified e.g. whenever \(\sigma _{2}(q) \geq \underline{\sigma }\) for some \(\underline{\sigma }>0\) and for any \(q \in \mathcal{I}\), or if \(b_{1}/\sigma _{2}\) and \(\sigma _{1}/\sigma _{2}\) are locally Lipschitz in \(q \in \mathcal{I}\) and have sublinear growth. As a byproduct of Proposition 3.10, we also have strong uniqueness of the solution to (3.18). In the following, when there is a need to stress the dependence with respect to the initial value \(x>0\), we denote the solution to (3.16) and (3.18) by \(X^{x,0}\) and \(X^{x,\nu }\), respectively. Since

an application of the Fubini–Tonelli theorem allows us to write

where \(\mathbb{E}_{(x,\underline{y},q)}\) denotes the expectation conditioned on \(X^{\nu }_{0^{-}}=x>0\), \(\underline{\pi }_{0}=\underline{y} \in \mathcal{Y}\), and \(\eta _{0}=q \in \mathcal{I}\). Also, because \(\pi (\kappa )\) is the ℍ-optional projection of the process \(\kappa (Z)\) (cf. [48, Lemma 1.1]) and any admissible control \(\nu \) is increasing and ℍ-adapted, an application of Dellacherie and Meyer [24, Theorem VI.57, in particular (VI.57.1)] yields

Hence, the cost functional of (2.4) can be rewritten in terms of observable quantities as

Notice that the latter expression does not depend on the unobservable process \(Z\) any more, and this allows us to introduce a control problem with complete information, the separated problem, in which the new state variable is given by the triplet \((X^{\nu }, \underline{\pi }, \eta )\). For this problem, we introduce the set \(\mathcal{A}(x,{\underline{y}},q) \) of admissible controls, given in terms of the observable processes in (3.11), (3.17) and (3.18) as

for every initial value \(x \in (0,\infty )\) of \(X^{x,\nu }\) defined in (3.18), any initial value \({\underline{y}} \in \mathcal{Y}\) of the process \(({\underline{\pi }}_{t} )_{t \geq 0}= (\pi _{t}(i); i\in S)_{t \geq 0}\) solving (3.11) and any initial value \(q \in \mathcal{I}\) of \(\eta \). In the following, we set \(\nu _{0-}=0\) a.s. for any \(\nu \in \mathcal{A}(x,{\underline{y}},q)\).

Given \(\nu \in \mathcal{A}(x, {\underline{y}}, q)\), the triplet \((X_{t}^{x,\nu }, {\underline{\pi }}_{t}, \eta _{t}) _{t \geq 0}\) solves (3.18), (3.11) and (3.17) and the jump measure associated to \(\eta \) has ℍ-predictable dual projection given by (3.8). Hence, the process \((X_{t}^{x,\nu }, {\underline{\pi }}_{t}, \eta _{t}) _{t \geq 0}\) is an ℍ-Markov process and we therefore define the Markovian separated problem as

This is now a singular stochastic control problem under complete information, since all the processes involved are ℍ-adapted.

The next proposition immediately follows from the construction of the separated problem and the strong uniqueness of the solutions to (3.11), (3.17) and (3.18).

Proposition 3.11

Assume strong uniqueness for the system (3.11) and (3.17), and let \((x,\underline{y},q) \in (0,\infty ) \times \mathcal{Y} \times \mathcal{I}\) be the initial data of the process \((X,Z,\eta )\) in the problem (2.5) under partial observation (with \(\underline{y}\) the initial distribution of \(Z\)). Then

Moreover, \(\mathcal{A}(x,\underline{y},q) = \mathcal{M}(x,\underline{y},q)\) and \(\nu ^{*}\) is an optimal control for the separated problem (3.19) if and only if it is optimal for the original problem (2.5) under partial observation.

Remark 3.12

Notice that in the setting of Example 3.8, 2), the pair \((X^{x,\nu }, {\underline{\pi }})\) solving (3.18) and (3.11) is an ℍ-Markov process for any given control \(\nu \in \mathcal{A}(x,\underline{y},q)\), \((x,\underline{y},q) \in (0,\infty ) \times \mathcal{Y} \times \mathcal{I}\). As a consequence, since the cost functional and the set of admissible controls do not depend explicitly on the process \(\eta \), the value function of the separated problem (3.19) does not depend on the variable \(q\). We consider this setting in Sect. 4.

3.3 A probabilistic verification theorem via reduction to optimal stopping

In this section, we relate the separated problem to a Markovian optimal stopping problem and show that the solution to the latter is directly related to the optimal control of the former. The following analysis is fully probabilistic and based on a change-of-variable formula for Lebesgue–Stieltjes integrals that has already been employed in singular control problems (see e.g. Baldursson and Karatzas [2] and Ferrari [31]). The result of this section is then employed in Sect. 4 where in a case study, we determine the optimal debt reduction policy by solving an auxiliary optimal stopping problem.

With regard to (3.19), notice that we can write \(\pi _{t} (\kappa (\cdot ) ) = \sum _{i=1}^{Q} \pi _{t}(i) \kappa (i)\) as well as \(\pi _{t} (h(X_{t}^{x,\nu }, \cdot ) ) = \sum _{i=1}^{Q} \pi _{t}(i) h(X_{t}^{x, \nu }, i)\) a.s. for any \(t\geq 0\). For any \((x,\underline{\pi })\) in \((0,\infty ) \times \mathcal{Y}\), set

and given \(z \in (0,\infty )\), we introduce the optimal stopping problem

where the optimisation is taken over all ℍ-stopping times \(\tau \geq t\).

Under Assumption 2.4, the expectation in (3.20) is finite for any ℍ-stopping time \(\tau \geq t\), for any \(t\geq 0\). Observing that \(\kappa (i) < \infty \) for any \(i \in S\), in order to take care of the event \(\{\tau = \infty \}\), we use in (3.20) the convention

Denote by \(U(z)\) a càdlàg modification of \(\widetilde{U}(z)\) (which under our assumptions exists due to the results in Karatzas and Shreve [46, Appendix D]), and observe that \(0 \leq U_{t}(z) \leq \widehat{\kappa }(\underline{\pi }_{t}) X^{1,0}_{t}\) for any \(t \geq 0\), a.s. Also, define the stopping time

with the usual convention \(\inf \emptyset = \infty \). Then by [46, Theorem D.12], \(\tau _{t}^{*}(z)\) is an optimal stopping time for (3.20). In particular, \(\tau ^{*}(z):= \tau _{0}^{*}(z)\) is optimal for the problem

Notice that since \(h_{x}(\cdot , \underline{\pi })\) is a.s. increasing, \(z \mapsto \tau ^{*}(z)\) is a.s. decreasing. This monotonicity of \(\tau ^{*}(\,\cdot \,)\) will be important in the sequel as we need to consider its generalised inverse. Moreover, since the triplet \((X^{z,0}, \underline{\pi }, \eta )\) is a homogeneous ℍ-Markov process, there exists a measurable function \(U: (0,\infty ) \times \mathcal{Y} \times \mathcal{I}\to \mathbb{R}\) such that \(U_{t}(z) = U (X^{z,0}_{t}, \underline{\pi }_{t}, \eta _{t})\) for any \(t \geq 0\), a.s. Hence \(U_{0}(z)=U(z,\underline{y},q)\), and for any \((x,\underline{y},q) \in (0,\infty ) \times \mathcal{Y} \times \mathcal{I}\), we define

Moreover, we introduce the nondecreasing right-continuous process

and then also the process

Notice that \(\overline{\nu }^{{*}}_{\cdot }\) is the right-continuous inverse of \(\tau ^{*}(\,\cdot \,)\).
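The passage between a nondecreasing control and its inverse, used in the proof below, relies on a change-of-variable formula for Lebesgue–Stieltjes integrals of the type in Revuz and Yor [59, Proposition 0.4.9]: for a nondecreasing right-continuous \(A\) with \(A_{0-}=0\) and \(C_{s} := \inf \{t \geq 0 : A_{t} > s\}\), one has \(\int _{[0,\infty )} f(t)\,dA_{t} = \int _{0}^{A_{\infty }} f(C_{s})\,ds\) for nonnegative Borel \(f\). The sketch below checks this numerically for a hypothetical path with an absolutely continuous part and three jumps; the helper `right_inverse` is hypothetical, and the example illustrates the generic formula, not the paper's construction of \(\overline{\nu }^{*}\).

```python
import numpy as np


def right_inverse(t_grid, A_vals, s):
    """C_s = inf{t : A_t > s} for a nondecreasing path sampled on t_grid (+inf if none)."""
    idx = np.searchsorted(A_vals, s, side="right")  # first index with A_vals[idx] > s
    out = np.full(np.shape(s), np.inf)
    ok = idx < len(t_grid)
    out[ok] = t_grid[idx[ok]]
    return out


# Hypothetical path on [0, 1]: A_t = t plus jumps of sizes a_k at times t_k.
t_grid = np.linspace(0.0, 1.0, 100_001)
jump_times, jump_sizes = [0.2, 0.5, 0.9], [1.0, 0.5, 2.0]
A_vals = t_grid + sum(a * (t_grid >= tk) for tk, a in zip(jump_times, jump_sizes))

f = np.cos
# Left-hand side: integral of the continuous part plus the atoms, computed exactly.
lhs = np.sin(1.0) + sum(a * f(tk) for tk, a in zip(jump_times, jump_sizes))
# Right-hand side: midpoint rule in the inverse time s on [0, A_1).
n_s = 400_000
ds = A_vals[-1] / n_s
s_mid = (np.arange(n_s) + 0.5) * ds
rhs = f(right_inverse(t_grid, A_vals, s_mid)).sum() * ds
print(lhs, rhs)
```

Each jump of \(A\) becomes a flat stretch of \(C\) whose length equals the jump size, which is how the atoms of \(dA\) are recovered on the right-hand side.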

Theorem 3.13

Let \(\widetilde{V}\) be as in (3.23) and \(V\) as in (3.19). Then \(\widetilde{V} = V\), and \(\nu ^{*}\) is the (unique) optimal control for (3.19).

Proof

1) Let \(x > 0\), \(\underline{y} \in \mathcal{Y}\) and \(q \in \mathcal{I}\) be given and fixed. For \(\nu \in \mathcal{A}(x, \underline{y}, q)\), we introduce the process \(\overline{\nu }\) such that \(\overline{\nu }_{t}:= \int _{0}^{t} \frac{d{\nu }_{s}}{X^{1,0}_{s}}\), \(t\geq 0\), and define its inverse (see e.g. Revuz and Yor [59, Sect. 0.4]) by

Notice that the process \((\tau ^{\overline{\nu }}(z))_{z \leq x}\) has decreasing left-continuous sample paths, and hence it admits right limits

Moreover, for a.e. \(\omega \in \Omega \), the set of points \(z\in \mathbb{R}\) at which \(\tau ^{\overline{\nu }}(z)(\omega ) \neq \tau ^{\overline{\nu }}_{+}(z)( \omega )\) is countable. The random time \(\tau ^{\overline{\nu }}(z)\) is an ℍ-stopping time because it is the entry time of the right-continuous process \(\overline{\nu }\) into an open set, and ℍ is right-continuous. Similarly, since \(\tau ^{\overline{\nu }}_{+}(z)\) is the first entry time of the right-continuous process \(\overline{\nu }\) into a closed set, it is an ℍ-stopping time as well for any \(z \leq x\).

Proceeding then as in Ferrari [31, Step 1 of the proof of Theorem 3.1], by employing the change-of-variable formula in [59, Proposition 0.4.9], one finds that

Hence, since \(\nu \) was arbitrary, we find that

2) To complete the proof, we have to show the reverse inequality. Let \(x \in (0,\infty )\), \(\underline{y} \in \mathcal{Y}\) and \(q \in \mathcal{I}\) be initial values of \(X^{x,\nu }\), \(\underline{\pi }\) and \(\eta \). We first notice that \(\nu ^{*} \in \mathcal{A}(x, \underline{y},q)\). Indeed, \(\nu ^{*}\) is nondecreasing, right-continuous and such that \(X^{x,\nu ^{*}}_{t} = X^{1,0}_{t}(x - \overline{\nu }^{{*}}_{t}) \geq 0\) a.s. for all \(t\geq 0\), since \(\overline{\nu }^{{*}}_{t} \leq x\) a.s. by definition. Moreover, for any \(0< z \leq x\), we can write by (3.24) and (3.25) that

Then recalling that \(\tau ^{\overline{\nu }^{{{*}}}}_{+}(z)=\tau ^{\overline{\nu }^{{{*}}}}(z)\) ℙ-a.s. for almost every \(z\le x\), we pick \(\nu =\nu ^{*}\) (equivalently, \(\overline{\nu }=\overline{\nu }^{{*}}\)) and following [31, Step 2 in the proof of Theorem 3.1], we obtain \(\widetilde{V}(x,\underline{y},q) = \mathcal{J}_{x,\underline{y},q}( \nu ^{*}) \geq V(x,\underline{y},q)\), where the last inequality is due to the admissibility of \(\nu ^{*}\). Hence, by (3.26), we have \(\widetilde{V}=V\) and \(\nu ^{*}\) is optimal. In fact, by strict convexity of \(\mathcal{J}_{x,\underline{y}, q}(\,\cdot \,)\), \(\nu ^{*}\) is the unique optimal control in the class of controls belonging to \(\mathcal{A}(x, \underline{y}, q)\) and such that \(\mathcal{J}_{x,\underline{y}, q}(\nu ) < \infty \). □

Remark 3.14

For any given \((x,\underline{y},q) \in (0,\infty ) \times \mathcal{Y} \times \mathcal{I}\), define the Markovian optimal stopping problem

where \(\underline{\pi }^{\,\underline{y}}\) denotes the filter process starting at time zero from \(\underline{y} \in \mathcal{Y}\). Then, since \(X^{x,0}=x X^{1,0}\) by (3.16) and \(U_{0}(z)= U(z, \underline{y}, q)\) for some measurable function \(U\), one can easily see that \(v(x, \underline{y}, q)= x U(x, \underline{y}, q)\). Moreover, the previous considerations together with (3.22) (evaluated at \(t=0\)) ensure that the stopping time

is optimal for \(v(x,\underline{y}, q)\), where \(q=\eta _{0}\).

4 The solution in a case study with \(Q=2\) economic regimes

In this section, we build on the general filtering analysis developed in the previous sections and on the result of Theorem 3.13, and provide the form of the optimal debt reduction policy in a case study defined through the following standing assumption.

Assumption 4.1

1) \(Z\) takes values in \(S=\{1, 2\}\), and with reference to (2.3), we assume \(g_{2}:=g(2)< g(1)=:g_{1}\).

2) For any \(q\in \mathcal{I}\) and \(i\in \{1, 2\}\), one has \(c(q,i) = 0\), and for \(\alpha \) as in (3.3), we assume \(\alpha (q,i)=\alpha (i)\).

3) \(h(x,i)=h(x)\) for all \((x,i) \in (0,\infty ) \times \{1,2\}\), with \(h:\mathbb{R} \to \mathbb{R}\) such that

(i) \(x \mapsto h(x)\) is strictly convex, twice continuously differentiable and nondecreasing on \(\mathbb{R}_{+}\) with \(h(0)=0\) and \(\lim _{x \uparrow \infty }h(x)=\infty \);

(ii) there exist \(\gamma > 1\), \(0< K_{o}< K\) and \(K_{1},K_{2}>0\) such that

4) \(\kappa (i)=1\) for \(i \in \{1,2\}\).

Notice that under Assumption 4.1, 2), the macroeconomic indicator \(\eta \) has a suitable diffusive dynamics whose coefficients \(b_{1}\), \(\sigma _{1}\), \(\sigma _{2}\) are such that the function \(\alpha \) is independent of \(q\). As discussed in Example 3.8, 2), this is the case of a geometric or arithmetic diffusive dynamics for \(\eta \). In this setting, the Kushner–Stratonovich system (3.11) reduces to

and \(\pi _{t}(2) = 1- \pi _{t}(1)\). Here, \(\lambda _{1}:= \lambda _{12} >0\) and \(\lambda _{2} := \lambda _{21} >0\).
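A simulation sketch of the reduced filter may help to visualise its behaviour. It assumes that (4.1) has the standard two-state Wonham form \(d\pi _{t} = (\lambda _{2} - (\lambda _{1}+\lambda _{2})\pi _{t})\,dt + \pi _{t}(1-\pi _{t})[\sigma ^{-1}(g_{2}-g_{1})\,dI_{t} + (\alpha _{1}-\alpha _{2})\,dI^{1}_{t}]\), which is consistent with the definition of \(\theta ^{2}\) and with the process \(\Theta \) in the proof of Proposition 4.6, but this exact form is our assumption. The function `simulate_filter` and all parameter values are hypothetical. Several starting points are driven by the same Brownian increments, which illustrates the comparison property used in Proposition 4.4 (ii); switching off learning recovers the prior dynamics.

```python
import numpy as np


def simulate_filter(y0, T, dt, lam1, lam2, g1, g2, sigma, alpha1, alpha2, rng):
    """Euler-Maruyama paths of pi_t = pi_t(1) in the two-regime case.

    ASSUMED form of (4.1):
        d pi = (lam2 - (lam1 + lam2) pi) dt
               + pi (1 - pi) [ (g2 - g1) / sigma dI + (alpha1 - alpha2) dI1 ],
    with I, I1 independent Brownian motions. y0 may hold several starting points;
    all of them are driven by the same increments of (I, I1).
    """
    n_steps = int(round(T / dt))
    pi = np.atleast_1d(np.array(y0, dtype=float))
    path = np.empty((n_steps + 1, pi.size))
    path[0] = pi
    for n in range(n_steps):
        dI, dI1 = rng.normal(0.0, np.sqrt(dt), size=2)   # common noise
        shock = (g2 - g1) / sigma * dI + (alpha1 - alpha2) * dI1
        pi = pi + (lam2 - (lam1 + lam2) * pi) * dt + pi * (1.0 - pi) * shock
        pi = np.clip(pi, 0.0, 1.0)  # numerical safeguard; the exact filter stays in (0, 1)
        path[n + 1] = pi
    return path


rng = np.random.default_rng(42)
paths = simulate_filter([0.2, 0.6], T=5.0, dt=1e-3, lam1=0.4, lam2=0.6,
                        g1=0.03, g2=-0.02, sigma=0.05, alpha1=1.0, alpha2=-0.5, rng=rng)
# Without learning (g1 == g2, alpha1 == alpha2) the filter follows the prior ODE
# and converges to the stationary probability lam2 / (lam1 + lam2) of regime 1.
prior = simulate_filter(0.2, T=20.0, dt=1e-3, lam1=0.4, lam2=0.6,
                        g1=0.0, g2=0.0, sigma=1.0, alpha1=0.0, alpha2=0.0, rng=rng)
print(paths[-1], prior[-1, 0])
```

The noise coefficient vanishes at 0 and 1 while the drift points inwards there, which is the mechanism behind the boundary behaviour discussed after (4.3).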

Setting \(\pi _{t} := \pi _{t}(1)\), \(t\geq 0\), (3.19) then reads as

with initial conditions \(X^{x,y,\nu }_{0-}=x >0\), \(\pi _{0} =y \in (0,1)\), and where \(g_{i}= r -\beta _{i}\) denotes the rate of economic growth in the state \(i\), \(i=1,2\). Note that we switch here from arguments \((i)\) to subscripts \(i\).

It is worth noticing that there is no need to involve the process \(\eta \) in the Markovian formulation (4.2). This is because the pair \((X^{\nu },\pi )\) solving the two stochastic differential equations above is a strong Markov process, and the cost functional and the set of admissible controls \(\mathcal{A}(x,y,q)\) do not depend explicitly on \(\eta \); hence we simply write \(\mathcal{A}(x,y)\) instead of \(\mathcal{A}(x,y,q)\) (cf. (4.2) above). For this reason, the value function of (4.2) does not depend on the initial value \(q\) of the process \(\eta \). However, the information carried by the macroeconomic indicator \(\eta \) still enters the filter \(\pi \) through the constant \(\alpha _{1} - \alpha _{2}\) in its dynamics.

Since (4.1) admits a unique strong solution, Proposition 3.11 implies the following result.

Proposition 4.2

Under Assumption 4.1, solving (4.2) is equivalent to solving the original problem (2.5). That is,

and a control is optimal for the separated problem (4.2) if and only if it is optimal for the original problem (2.5) under partial observation.

In the following analysis, we need (for technical reasons due to the infinite horizon of our problem) to take a sufficiently large discount factor. Namely, defining

with \(\theta ^{2}:=\frac{1}{2} (\frac{(g_{1}-g_{2})^{2}}{\sigma ^{2}} + ( \alpha _{1}-\alpha _{2})^{2} )\), we assume the following.

Assumption 4.3

One has \(\rho > \rho _{o}^{+}\).

Notice that Assumption 4.3 in particular ensures that \(\rho > \gamma \beta _{2} + \frac{1}{2}\sigma ^{2}\gamma (\gamma -1)\), so that, by the growth condition on \(h\), the (trivial) admissible control \(\nu \equiv 0\) has a finite total expected cost.
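Where this bound on \(\rho \) comes from can be seen with a short computation. As a sketch, assume that without intervention the debt ratio solves \(dX_{t} = X_{t}[(\beta _{2} + (g_{2}-g_{1})\pi _{t})\,dt + \sigma \,dI_{t}]\) (our reading of the dynamics in (4.2)). Since \(g_{2} - g_{1} < 0\) and \(\pi _{t} \geq 0\), \(X^{x,0}\) is then dominated pathwise by the geometric Brownian motion \(Y_{t} = x\exp ((\beta _{2} - \frac{1}{2}\sigma ^{2})t + \sigma I_{t})\), whose \(\gamma \)-th moment is \(x^{\gamma }e^{(\gamma \beta _{2} + \frac{1}{2}\sigma ^{2}\gamma (\gamma -1))t}\). If the growth condition in Assumption 4.1, 3)(ii) bounds \(h\) by a multiple of \(1 + x^{\gamma }\) (its exact form is not reproduced here), the cost of \(\nu \equiv 0\) is finite as soon as \(\int _{0}^{\infty } e^{-\rho t}\mathbb{E}[Y_{t}^{\gamma }]\,dt < \infty \), which is exactly the condition above. The helpers and parameter values below are hypothetical; the sketch checks the moment formula by Monte Carlo and the discounted integral by trapezoidal quadrature.

```python
import numpy as np


def gbm_power_moment(x, t, beta2, sigma, gamma):
    """E[Y_t^gamma] for Y_t = x exp((beta2 - sigma^2/2) t + sigma I_t), I a Brownian motion."""
    growth = gamma * beta2 + 0.5 * sigma**2 * gamma * (gamma - 1.0)
    return x**gamma * np.exp(growth * t)


def discounted_moment_integral(x, rho, beta2, sigma, gamma):
    """int_0^infty e^{-rho t} E[Y_t^gamma] dt (np.inf when the integral diverges)."""
    growth = gamma * beta2 + 0.5 * sigma**2 * gamma * (gamma - 1.0)
    return x**gamma / (rho - growth) if rho > growth else np.inf


x, beta2, sigma, gamma, rho = 1.2, 0.01, 0.1, 2.0, 0.08   # hypothetical values
rng = np.random.default_rng(7)

# Monte Carlo check of the moment formula at t = 2.
t = 2.0
I_t = rng.normal(0.0, np.sqrt(t), size=200_000)
Y_t = x * np.exp((beta2 - 0.5 * sigma**2) * t + sigma * I_t)
mc_moment = np.mean(Y_t**gamma)
exact_moment = gbm_power_moment(x, t, beta2, sigma, gamma)

# Trapezoidal quadrature of the discounted moment on a long horizon.
s = np.linspace(0.0, 400.0, 40_001)
vals = np.exp(-rho * s) * gbm_power_moment(x, s, beta2, sigma, gamma)
quad = (s[1] - s[0]) * (vals.sum() - 0.5 * (vals[0] + vals[-1]))
closed_form = discounted_moment_integral(x, rho, beta2, sigma, gamma)
print(mc_moment, exact_moment, quad, closed_form)
```

For \(\rho \) at or below the growth rate \(\gamma \beta _{2} + \frac{1}{2}\sigma ^{2}\gamma (\gamma -1)\), the integrand no longer decays and `discounted_moment_integral` returns infinity.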

4.1 The related optimal stopping problem

Motivated by the results of the previous sections (in particular Theorem 3.13), we now aim at solving (4.2) through the study of an auxiliary optimal stopping problem whose value function can be interpreted as the marginal value of the optimal debt reduction problem (cf. (3.23) and Theorem 3.13). Therefore, we can think informally of the solution to that optimal stopping problem as the optimal time at which the government should marginally reduce the debt ratio. The optimal stopping problem involves a two-dimensional diffusive process, and in the sequel, we provide an almost exclusively probabilistic analysis.

4.1.1 Formulation and preliminary results

Recall that \((I_{t},I^{1}_{t})_{t\geq 0}\) is a two-dimensional standard ℍ-Brownian motion, and introduce the two-dimensional diffusion process \((\widehat{X},\pi ):=(\widehat{X}_{t},\pi _{t})_{t\geq 0}\) solving the stochastic differential equations (SDEs)

with initial conditions \(\widehat{X}_{0}=x\), \(\pi _{0}=y\) for any \((x,y ) \in \mathcal{O} :=(0,\infty ) \times (0,1)\). Recall that \(\beta _{2}= r - g_{2}\).

Since the process \(\pi \) is bounded, classical results on SDEs ensure that (4.3) admits a unique strong solution that, when needed, we denote by \((\widehat{X}^{x,y},\pi ^{y})\) to stress its dependence on the initial datum \((x,y) \in \mathcal{O}\). In particular, one easily obtains

Moreover, it can be shown that Feller’s test of explosion (see e.g. Karatzas and Shreve [45, Chap. 5.5]) gives \(1= \mathbb{P}[\pi ^{y}_{t} \in (0,1), \forall t\geq 0]\) for all \(y \in (0,1)\). In fact, the boundary points 0 and 1 are classified as “entrance-not-exit”, hence are unattainable for the process \(\pi \). In other words, the diffusion \(\pi \) can start from 0 or 1, but it cannot reach either of these two points when starting from \(y \in (0,1)\) (we refer to Borodin and Salminen [6, Sect. II.6] for further details on boundary classification).
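The non-attainability of 0 can be read off the scale function. As a sketch, assume that the filter has drift \(b(y) = \lambda _{2} - (\lambda _{1}+\lambda _{2})y\) and squared diffusion coefficient \(2\theta ^{2}y^{2}(1-y)^{2}\) (the standard two-state Wonham form, consistent with the definition of \(\theta ^{2}\) above; this form is our assumption). Expanding near \(y = 0\) and integrating gives, for some constant \(C>0\):

```latex
\begin{align*}
\frac{b(u)}{\theta^{2}u^{2}(1-u)^{2}}
  &= \frac{\lambda_{2}}{\theta^{2}u^{2}} + \frac{\lambda_{2}-\lambda_{1}}{\theta^{2}u} + O(1),
  \qquad u \downarrow 0,\\
p'(y) := \exp\Big(-\int_{1/2}^{y} \frac{b(u)}{\theta^{2}u^{2}(1-u)^{2}}\,du\Big)
  &\sim C\, y^{(\lambda_{1}-\lambda_{2})/\theta^{2}}\, e^{\lambda_{2}/(\theta^{2}y)},
  \qquad y \downarrow 0,\\
\Longrightarrow\quad p(0+) = -\int_{0}^{1/2} p'(y)\,dy &= -\infty .
\end{align*}
```

By symmetry (near \(y=1\) the drift is close to \(-\lambda _{1}\)), one also gets \(p(1-) = +\infty \), so neither boundary is reached from \((0,1)\). Showing that both are entrance boundaries additionally requires the speed measure, which this sketch does not cover.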

With regard to Remark 3.14, we study here the fully two-dimensional Markovian optimal stopping problem with value function

In (4.5), the optimisation is taken over all ℍ-stopping times, and \(\mathbb{E}_{(x,y)}\) denotes the expectation under the probability measure \(\mathbb{P}_{(x,y)}[\,\cdot \,]:=\mathbb{P}[\,\cdot \,|\widehat{X}_{0}=x, \pi _{0}=y]\).

Because \(\pi \) is positive, \(g_{2} - g_{1} <0\) and \(\rho >\beta _{2}\) by Assumption 4.3, (4.4) gives

which implies via the convention (3.21) that \(e^{-\rho \tau } \widehat{X}_{\tau }=0\) on \(\{\tau =\infty \}\) for any ℍ-stopping time \(\tau \).
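For the reader's convenience, the computation behind the last display is sketched below, under the assumption (consistent with the process \(\Theta \) in the proof of Proposition 4.6) that the first equation in (4.3) reads \(d\widehat{X}_{t} = \widehat{X}_{t}[(\beta _{2} + (g_{2}-g_{1})\pi _{t})\,dt + \sigma \,dI_{t}]\):

```latex
\begin{align*}
\widehat{X}_{t} &= x\exp\Big(\int_{0}^{t}\big(\beta_{2} + (g_{2}-g_{1})\pi_{s} - \tfrac{1}{2}\sigma^{2}\big)\,ds + \sigma I_{t}\Big),\\
0 \le e^{-\rho t}\widehat{X}_{t} &\le x\exp\Big(t\Big[\beta_{2} - \rho - \tfrac{1}{2}\sigma^{2} + \sigma\,\frac{I_{t}}{t}\Big]\Big)
\xrightarrow[t\to\infty]{} 0 \quad \text{a.s.}
\end{align*}
```

The inequality uses \(\pi _{s} \geq 0\) and \(g_{2} - g_{1} < 0\); the limit uses \(I_{t}/t \to 0\) a.s. (strong law of large numbers for Brownian motion) together with \(\rho > \beta _{2}\).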

Clearly, \(v\geq 0\) since \(\widehat{X}\) is positive and \(h\) is increasing on \(\mathbb{R}_{+}\). Also, \(v \leq x\) on \(\mathcal{O}\) (take \(\tau = 0\) in (4.5)), and we can therefore define the continuation region and the stopping region as

Notice that integrating by parts the term \(e^{-\rho \tau } \widehat{X}_{\tau }\), taking expectations and exploiting that \(\mathbb{E}[\int _{0}^{\tau } e^{-\rho s} \widehat{X}_{s} dI_{s}]=0\) for any ℍ-stopping time \(\tau \) (because \(\rho >\beta _{2} + \frac{1}{2}\sigma ^{2}\) by Assumption 4.3), we can equivalently rewrite (4.5) as

for any \((x,y) \in \mathcal{O}\). From (4.7), it is readily seen that

which implies

Moreover, since \(\rho \) satisfies Assumption 4.3 and \(0 \leq \pi _{t} \leq 1\) for any \((x,y) \in \mathcal{O}\), one has that

and the family of random variables

is therefore ℍ-uniformly integrable under \(\mathbb{P}_{(x,y)}\).
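The rewriting of (4.5) as (4.7) is a one-line Itô computation. As a sketch, assume (following Remark 3.14 with \(\kappa \equiv 1\) and \(h(x,i)=h(x)\)) that (4.5) reads \(v(x,y) = \inf _{\tau }\mathbb{E}_{(x,y)}[\int _{0}^{\tau } e^{-\rho s}\widehat{X}_{s}h'(\widehat{X}_{s})\,ds + e^{-\rho \tau }\widehat{X}_{\tau }]\), and that \(d\widehat{X}_{t} = \widehat{X}_{t}[(\beta _{2} + (g_{2}-g_{1})\pi _{t})\,dt + \sigma \,dI_{t}]\). Using that the stochastic integral has zero expectation, as noted before (4.7), one obtains

```latex
\begin{align*}
d\big(e^{-\rho t}\widehat{X}_{t}\big)
  &= e^{-\rho t}\widehat{X}_{t}\Big[\big(\beta_{2} + (g_{2}-g_{1})\pi_{t} - \rho\big)\,dt + \sigma\, dI_{t}\Big],\\
\mathbb{E}_{(x,y)}\big[e^{-\rho\tau}\widehat{X}_{\tau}\big]
  &= x - \mathbb{E}_{(x,y)}\Big[\int_{0}^{\tau} e^{-\rho s}\widehat{X}_{s}\big(\rho - \beta_{2} - (g_{2}-g_{1})\pi_{s}\big)\,ds\Big],\\
v(x,y) &= x + \inf_{\tau}\mathbb{E}_{(x,y)}\Big[\int_{0}^{\tau} e^{-\rho s}\widehat{X}_{s}\Big(h'(\widehat{X}_{s}) - \big(\rho - \beta_{2} - (g_{2}-g_{1})\pi_{s}\big)\Big)\,ds\Big].
\end{align*}
```

Stopping is therefore attractive where the running integrand is positive, that is, where the marginal holding cost \(h'(\widehat{X})\) exceeds the filtered effective discount rate \(\rho - \beta _{2} - (g_{2}-g_{1})\pi \).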

Preliminary properties of \(v\) are given in the next proposition.

Proposition 4.4

The following hold:

(i) \(x \mapsto v(x,y)\) is increasing for any \(y \in (0,1)\).

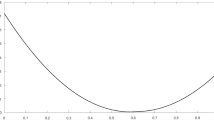

(ii) \(y \mapsto v(x,y)\) is decreasing for any \(x \in (0,\infty )\).

(iii) \((x,y) \mapsto v(x,y)\) is continuous in \(\mathcal{O}\).

Proof

(i) Recall (4.5). By the strict convexity and monotonicity of \(h\) and (4.4), it follows that \(x \mapsto \widehat{\mathcal{J}}_{(x,y)}(\tau )\) is increasing for any ℍ-stopping time \(\tau \) and any \(y \in (0,1)\). Hence the claim is proved.

(ii) This is due to the fact that \(y \mapsto \widehat{\mathcal{J}}_{(x,y)}(\tau )\) is decreasing for any stopping time \(\tau \) and \(x \in (0,\infty )\). Indeed, the mapping \(y \mapsto \widehat{X}^{x,y}_{t}\) is a.s. decreasing for any \(t\geq 0\) because \(y \mapsto \pi ^{y}_{t}\) is a.s. increasing by the comparison theorem of Yamada and Watanabe (see e.g. Karatzas and Shreve [45, Proposition 5.2.18]) and \(g_{2}-g_{1}<0\), and \(x \mapsto xh'(x)\) is increasing.

(iii) Since \((x,y) \mapsto (\widehat{X}^{x,y}_{t}, \pi ^{y}_{t})\) is a.s. continuous for any \(t\geq 0\), it is not hard to verify that \((x,y) \mapsto \widehat{\mathcal{J}}_{(x,y)}(\tau )\) is continuous for any given ℍ-stopping time \(\tau \). Hence \(v\), as an infimum of continuous functions, is upper semicontinuous. We now show that it is also lower semicontinuous.

Let \((x,y) \in \mathcal{O}\) and let \((x_{n},y_{n})_{n \in \mathbb{N}} \subseteq \mathcal{O}\) be any sequence converging to \((x,y)\). Without loss of generality, we may take \((x_{n},y_{n}) \in (x-\delta ,x+\delta ) \times (y-\delta ,y+\delta )\) for a suitable \(\delta >0\). Letting \(\tau ^{n}_{\varepsilon }:=\tau ^{n}_{\varepsilon }(x_{n},y_{n})\) be \(\varepsilon \)-optimal for \(v(x_{n},y_{n})\), but suboptimal for \(v(x,y)\), we can then write

Notice now that a.s.

where we have used that \(x \mapsto \widehat{X}^{x,y}\) is increasing, \(y \mapsto \widehat{X}^{x,y}\) is decreasing and \(x \mapsto xh'(x)\) is positive and increasing. The random variable on the right-hand side above is independent of \(n\) and integrable due to (4.9). Also, using integration by parts and performing standard estimates, we can write that a.s.

and the last integral above is independent of \(n\) and has finite expectation due to (4.9). Then taking limits as \(n\uparrow \infty \), invoking the dominated convergence theorem thanks to the previous estimates and using that \((x,y) \mapsto (\widehat{X}^{x,y}_{t}, \pi ^{y}_{t})\) is a.s. continuous for any \(t\geq 0\), we find (after rearranging terms) that

We thus conclude that \(v\) is lower semicontinuous at \((x,y)\) by arbitrariness of \(\varepsilon \). Since \((x,y) \in \mathcal{O}\) was arbitrary as well, \(v\) is lower semicontinuous on \(\mathcal{O}\). □

Due to Proposition 4.4 (iii), the stopping region is closed whereas the continuation region is open. Moreover, thanks to (4.9) and the \(\mathbb{P}_{(x,y)}\)-a.s. continuity of the paths of the process \(( \int _{0}^{t} e^{- \rho s} \widehat{X}_{s} (h'(\widehat{X}_{s})-( \rho - \beta _{2} - (g_{2}-g_{1})\pi _{s}) ) ds )_{t\geq 0}\), we can apply Karatzas and Shreve [46, Theorem D.12] to obtain that the first entry time of \((\widehat{X},\pi )\) into \(\mathcal{S}\) is optimal for (4.5), that is,

attains the infimum in (4.5) (with the usual convention \(\inf \emptyset = \infty \)). Also, standard arguments based on the strong Markov property of \((\widehat{X},\pi )\) (see e.g. Peskir and Shiryaev [57, Theorem I.2.4]) show that, under \(\mathbb{P}_{(x,y)}\), the process \(S:= (S_{t} )_{t\geq 0}\) with

is an ℍ-submartingale, and the stopped process \((S_{t\wedge \tau ^{\star }} )_{t\geq 0}\) is an ℍ-martingale. The latter two conditions are usually referred to as the subharmonic characterisation of the value function \(v\).

We now rule out the possibility of an empty stopping region.

Lemma 4.5

The stopping region of (4.6) is not empty.

Proof

We argue by contradiction and suppose that \(\mathcal{S} = \emptyset \). Hence for any \((x,y) \in \mathcal{O}\), we can write

where the inequality \(xh'(x) \geq h(x)\), due to convexity of \(h\), and the growth condition assumed on \(h\) (cf. Assumption 4.1) have been used. Now by taking \(x\) sufficiently large, we reach a contradiction since \(\gamma >1\) by assumption. Hence \(\mathcal{S} \neq \emptyset \). □

Proposition 4.6

For any \(y \in (0,1)\), let

\[
\overline{x}(y):=\inf \{x\in (0,\infty ): (x,y)\in \mathcal{S}\}, \tag{4.11}
\]

with the convention \(\inf \emptyset = \infty \). Then:

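(i) We have

\[
\mathcal{C}=\{(x,y)\in \mathcal{O}: x<\overline{x}(y)\}, \qquad \mathcal{S}=\{(x,y)\in \mathcal{O}: x\geq \overline{x}(y)\}. \tag{4.12}
\]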

(ii) \(y \mapsto \overline{x}(y)\) is increasing and left-continuous.

(iii) There exist \(0 < x_{\star } < x^{\star } < \infty \) such that for any \(y \in [0,1]\),

\[
x_{\star }\leq \overline{x}(y)\leq x^{\star }.
\]

Proof

(i) To show (4.12), it suffices to show that if \((x_{1},y) \in \mathcal{S}\), then \((x_{2},y) \in \mathcal{S}\) for any \(x_{2} \geq x_{1}\). Let \(\tau ^{\varepsilon }:= \tau ^{\varepsilon }(x_{2},y)\) be an \(\varepsilon \)-optimal stopping time for \(v(x_{2},y)\). Then, exploiting \(\widehat{X}^{x_{2},y}_{t} = \frac{x_{2}}{x_{1}}\widehat{X}^{x_{1},y}_{t} \geq \widehat{X}^{x_{1},y}_{t}\) a.s. and the monotonicity of \(h'\), (4.7) yields

Since \(\varepsilon \) was arbitrary, we conclude that \((x_{2},y) \in \mathcal{S}\) as well; hence \(\overline{x}\) in (4.11) separates \(\mathcal{C}\) and \(\mathcal{S}\) as in (4.12).
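The identity \(\widehat{X}^{x_{2},y}_{t} = \frac{x_{2}}{x_{1}}\widehat{X}^{x_{1},y}_{t}\) reflects the fact that \(\widehat{X}\) is linear in its initial value when driven by the same innovation path and the same filter path. The Python sketch below checks this numerically. It assumes, in line with the process \(\Theta^{x}\) introduced in part (iii) below (the case \(\pi\equiv 1\)), that \(d\widehat{X}_{t}=\widehat{X}_{t}((\beta_{2}+(g_{2}-g_{1})\pi_{t})dt+\sigma dI_{t})\); this dynamics, the placeholder path for \(\pi\), the parameter values and the name `simulate_x` are hypothetical.

```python
import math
import random
from typing import List, Sequence


def simulate_x(x0: float, pis: Sequence[float], dIs: Sequence[float],
               dt: float, beta2: float, dg: float, sigma: float) -> List[float]:
    """Exact log-scheme for the assumed dynamics
    dX = X((beta2 + dg * pi) dt + sigma dI), with pi frozen on each step.
    dg stands for g_2 - g_1."""
    xs = [x0]
    for p, dI in zip(pis, dIs):
        log_drift = beta2 + dg * p - 0.5 * sigma ** 2
        # The multiplicative factor does not depend on the current level of X.
        xs.append(xs[-1] * math.exp(log_drift * dt + sigma * dI))
    return xs


rng = random.Random(0)
dt, n = 0.01, 500
dIs = [rng.gauss(0.0, math.sqrt(dt)) for _ in range(n)]  # common innovation increments
pis = [0.3 + 0.4 * k / n for k in range(n)]              # placeholder filter path
x1, x2 = 0.8, 1.2
path1 = simulate_x(x1, pis, dIs, dt, beta2=0.02, dg=-0.05, sigma=0.1)
path2 = simulate_x(x2, pis, dIs, dt, beta2=0.02, dg=-0.05, sigma=0.1)
ratios = [b / a for a, b in zip(path1, path2)]  # stays at x2 / x1 up to rounding
```

Because each step multiplies both paths by the same factor, the ordering \(\widehat{X}^{x_{2},y}_{t}\geq \widehat{X}^{x_{1},y}_{t}\) holds pathwise, which is what the comparison in (i) uses.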

(ii) Let \((x,y_{1})\in \mathcal{C}\). Since \(y \mapsto v(x,y)\) is decreasing by Proposition 4.4 (ii), it follows that \((x,y_{2})\in \mathcal{C}\) for any \(y_{2} \geq y_{1}\). This in turn implies that \(y \mapsto \overline{x}(y)\) is increasing. The monotonicity of \(y \mapsto \overline{x}(y)\) together with the fact that \(\mathcal{S}\) is closed then gives the claimed left-continuity by standard arguments.

(iii) Let \(\Theta ^{x}_{t}:= x \exp ((\beta _{2} - \frac{1}{2}\sigma ^{2} + (g_{2}-g_{1}))t + \sigma I_{t})\) and introduce the one-dimensional optimal stopping problem

Because \(g_{2} - g_{1} <0\), \(h'\) is increasing and \(\pi ^{y}_{t} \leq 1\) a.s. for all \(t\geq 0\) and \(y\in (0,1)\), it is not hard to see that \(v(x,y) \geq v^{\star }(x)\) for any \((x,y) \in \mathcal{O}\).

By arguments similar to those employed to prove (i), one can show that there exists \(x^{\star }\) such that \(\{x \in (0,\infty ): v^{\star }(x) \geq x\} = \{x \in (0,\infty ): x \geq x^{\star }\}\). Moreover, arguing as in the proof of Lemma 4.5 shows that the latter set is not empty, so that \(x^{\star }<\infty \). Then we have the inclusions

which in turn show that \(\overline{x}(y) \leq x^{\star }\) for all \(y \in (0,1)\). Setting \(\overline{x}(0) :=\lim _{y\downarrow 0}\overline{x}(y)\) and \(\overline{x}(1):=\lim _{y\uparrow 1}\overline{x}(y)\) (both limits exist by monotonicity, and the latter choice is consistent with left-continuity), we also have \(\overline{x}(y)\leq x^{\star }\) for all \(y \in [0,1]\). As for the lower bound of \(\overline{x}\), notice that (4.8) implies

where \((h')^{-1}(\,\cdot \,)\) is the inverse of the strictly increasing function \(h': [0,\infty ) \to (0,\infty )\) (notice that \(\rho - \beta _{2} - (g_{2}-g_{1})y \geq 0 \) since \(\rho >\beta _{2}\), \(g_{2}-g_{1}<0\) and \(y >0\)). Since \((h')^{-1}\) is strictly increasing and \(-(g_{2}-g_{1})y \geq 0 \), we can conclude from (4.13) that \(\overline{x}(y) \geq (h')^{-1} (\rho - \beta _{2})\) for every \(y\in [0,1]\). Moreover, setting

and introducing the one-dimensional optimal stopping problem

one has \(v(x,y) \leq v_{\star }(x)\) for any \((x,y) \in \mathcal{O}\). Following arguments similar to those employed above and defining \(x_{\star }:=\inf \{x>0: v_{\star }(x) \geq x\} \in (0,\infty )\), the last inequality implies that \(\overline{x}(y) \geq x_{\star }\) for all \(y \in [0,1]\). □
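Proposition 4.6 suggests a simple way to locate \(\overline{x}(y)\) numerically once a membership test for \(\mathcal{S}\) is available: by (4.12) membership is monotone in \(x\), and (iii) provides a bracket \([x_{\star},x^{\star}]\), so bisection applies. The Python sketch below also evaluates the explicit lower bound \((h')^{-1}(\rho-\beta_{2}-(g_{2}-g_{1})y)\) discussed in the proof of (iii). The marginal cost \(h'(x)=0.05+x\), the parameter values, the toy region \(\{x\geq 1+y\}\) and all function names are hypothetical choices made only for illustration.

```python
from typing import Callable


def inverse_increasing(f: Callable[[float], float], z: float, tol: float = 1e-12) -> float:
    """Solve f(x) = z on [0, inf) for a continuous, strictly increasing f.

    Returns 0.0 when z <= f(0), i.e. when no x >= 0 satisfies f(x) < z.
    """
    if z <= f(0.0):
        return 0.0
    lo, hi = 0.0, 1.0
    for _ in range(200):  # enlarge the bracket until f(hi) >= z
        if f(hi) >= z:
            break
        hi *= 2.0
    else:
        raise ValueError("f does not reach z on the explored range")
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if f(mid) < z:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def free_boundary(in_S: Callable[[float, float], bool], y: float,
                  lo: float, hi: float, tol: float = 1e-10) -> float:
    """Bisection for xbar(y) = inf{x : (x, y) in S}, assuming S = {x >= xbar(y)}
    and lo <= xbar(y) <= hi (e.g. lo = x_star, hi = x^star)."""
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if in_S(mid, y):
            hi = mid  # mid lies in S, so xbar(y) <= mid
        else:
            lo = mid  # mid lies in C, so xbar(y) > mid
    return hi


def h_prime(x: float) -> float:
    """Hypothetical marginal cost h'(x) = 0.05 + x, i.e. h(x) = 0.05 x + x^2 / 2."""
    return 0.05 + x


rho, beta2, dg = 0.06, 0.01, -0.04  # hypothetical: rho > beta2, dg = g2 - g1 < 0
lower_bounds = {y: inverse_increasing(h_prime, rho - beta2 - dg * y)
                for y in (0.25, 0.5, 0.75)}


def toy_in_S(x: float, y: float) -> bool:
    """Toy stopping region {x >= 1 + y}, used only to exercise free_boundary."""
    return x >= 1.0 + y


xbar_half = free_boundary(toy_in_S, 0.5, lo=0.0, hi=4.0)
```

In an actual computation, `in_S` would come from a numerical approximation of \(v\) (checking \(v(x,y)\geq x\)), which is outside the scope of this sketch.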

4.1.2 Smooth-fit property and continuity of the free boundary

We now aim at proving further regularity of \(v\) and the free boundary \(\overline{x}\).

The second-order linear elliptic differential operator

acting on any function \(f \in C^{2}(\mathcal{O})\) is the infinitesimal generator of the process \((\widehat{X},\pi )\). Standard arguments (see e.g. [57, Sect. 3.7.1]) based on the nondegeneracy of \((\widehat{X},\pi )\), the smoothness of the coefficients in (4.14) and the subharmonic characterisation of \(v\), combined with classical regularity results for elliptic partial differential equations (see e.g. Gilbarg and Trudinger [37, Sect. 6.6.3]), yield the following result.

Lemma 4.7

The value function \(v\) of (4.5) belongs to \(C^{2}\) separately in the interior of \(\mathcal{C}\) and in the interior of \(\mathcal{S}\) (i.e., away from the boundary \(\partial \mathcal{C}\) of \(\mathcal{C}\)). Moreover, in the interior of \(\mathcal{C}\), it satisfies

with \(\mathbb{L}\) as in (4.14).

We continue our analysis by proving that the value function of (4.5) belongs to the class \(C^{1}((0,\infty ) \times (0,1))\). This will be obtained through probabilistic methods that rely on the regularity (in the sense of diffusions) of the stopping set \(\mathcal{S}\) for the process \((\widehat{X},\pi )\) (see De Angelis and Peskir [22], where this methodology was recently developed in a general setting; for further examples, see De Angelis et al. [21] and Johnson and Peskir [43]). Recall that the points of \(\partial \mathcal{C}\) are regular for \(\mathcal{S}\) with respect to \((\widehat{X},\pi )\) if (cf. Karatzas and Shreve [45, Definition 4.2.9])

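\[
\mathbb{P}\big[\widehat{\tau }(x_{o},y_{o})=0\big]=1 \quad \text{for every } (x_{o},y_{o})\in \partial \mathcal{C}, \qquad \widehat{\tau }(x_{o},y_{o}):=\inf \{t>0: (\widehat{X}^{x_{o},y_{o}}_{t},\pi ^{y_{o}}_{t})\in \mathcal{S}\}. \tag{4.15}
\]

Here \(\widehat{\tau }(x_{o},y_{o})\) is the first hitting time of \(\mathcal{S}\) by \((\widehat{X}^{x_{o},y_{o}},\pi ^{y_{o}})\).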

Notice that, defining \(U_{t}:=\ln \widehat{X}_{t}\), one has

as well as \(\mathbb{E}_{(x,y)} [f(\widehat{X}_{t},\pi _{t}) ]=\mathbb{E}_{(u,y)} [f(e^{U_{t}},\pi _{t}) ]\) for every bounded Borel function \(f: \mathbb{R}^{2} \to \mathbb{R}\), where \(u:=\ln x\). Due to the nondegeneracy of the process \((U,\pi )\) and the smoothness and boundedness of its coefficients, the pair \((U,\pi )\) has a continuous transition density \(\widehat{p}(\cdot ,\cdot ,\cdot ;u,y)\), \((u,y) \in \mathbb{R} \times (0,1)\), such that for any \((u',y') \in \mathbb{R} \times (0,1)\) and \(t> 0\) (see e.g. Aronson [1]),

for some constants \(M>m>0\) and \(\Lambda > \lambda >0\). It thus follows that the mapping \((u,y) \mapsto \mathbb{E}_{(u,y)} [f(e^{U_{t}}, \pi _{t}) ]\) is continuous, so that \((U,\pi )\) is a strong Feller process. Hence \((\widehat{X},\pi )\) is strong Feller as well, and we can therefore conclude that (4.15) holds if and only if (see Dynkin [26, Chap. 13.1-2])

where \(\tau ^{\star }\) is as in (4.10).
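For the reader's convenience, here is the Itô computation behind the log-transform. It is a sketch that assumes, consistently with the definition of \(\Theta^{x}\) in the proof of Proposition 4.6 (iii) (the case \(\pi\equiv 1\)) and with the drift terms in the running cost, that \(d\widehat{X}_{t}=\widehat{X}_{t}((\beta_{2}+(g_{2}-g_{1})\pi_{t})dt+\sigma dI_{t})\) with \(I\) the innovation Brownian motion; this form should be checked against the system (4.3).

```latex
\begin{aligned}
dU_{t} &= \frac{d\widehat{X}_{t}}{\widehat{X}_{t}} - \frac{1}{2}\,\frac{d\langle \widehat{X}\rangle_{t}}{\widehat{X}_{t}^{2}}
        = \big(\beta_{2} + (g_{2}-g_{1})\pi_{t}\big)\,dt + \sigma\, dI_{t} - \tfrac{1}{2}\sigma^{2}\,dt\\
       &= \big(\beta_{2} - \tfrac{1}{2}\sigma^{2} + (g_{2}-g_{1})\pi_{t}\big)\,dt + \sigma\, dI_{t},
        \qquad U_{0} = u = \ln x .
\end{aligned}
```

Under this assumption, the drift of \(U\) is bounded because \(\pi_{t}\in(0,1)\), which is the boundedness of coefficients invoked for the Aronson estimates, and setting \(\pi\equiv 1\) recovers \(\ln\Theta^{x}_{t}\).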

The next proposition shows the validity of (4.15).

Proposition 4.8

The boundary points in \(\partial \mathcal{C}\) are regular for \(\mathcal{S}\) with respect to \((\widehat{X},\pi )\), that is, (4.15) holds.

Proof

Let \((x_{o},y_{o})\in \partial \mathcal{C}\) and set \(u_{o}:=\ln x_{o}\). We set \(\widehat{\sigma }(u_{o},y_{o}):=\widehat{\tau }(e^{u_{o}},y_{o})\) for any given \((u_{o},y_{o}) \in \mathbb{R} \times (0,1)\) and equivalently rewrite (4.15) in terms of the process \((U,\pi )\), with \(U\) as defined above, as

Given that \(y \mapsto \ln \overline{x}(y)\) is increasing like \(y \mapsto \overline{x}(y)\), the region

enjoys the so-called cone property (see Karatzas and Shreve [45, Definition 4.2.18]). In particular, we can always construct a cone \(C_{o}\) with vertex at \((u_{o},y_{o})\) and aperture \(0 < \phi \leq \pi /2\) such that \(C_{o} \cap (\mathbb{R} \times (0,1)) \subseteq \widehat{\mathcal{S}}\) and for any \(t_{o}\geq 0\), we have

Then using (4.16), one has

where we have used that the change of variables \(u':= (u-u_{o})/\sqrt{t_{o}}\) and \(y':= (y-y_{o})/\sqrt{t_{o}}\) maps the cone \(C_{o}\) into itself. The number \(\ell \) above depends on \(u_{o}\) and \(y_{o}\), but not on \(t_{o}\). From (4.17) and (4.18), we thus have \(\mathbb{P}[\widehat{\sigma }(u_{o},y_{o}) \leq t_{o}] \geq \ell \), and letting \(t_{o} \downarrow 0\) yields \(\mathbb{P}[\widehat{\sigma }(u_{o},y_{o}) = 0] \geq \ell > 0\). Since \(\{\widehat{\sigma }(u_{o},y_{o}) = 0\} \in \mathcal{H}_{0}\), the Blumenthal 0–1 law gives \(\mathbb{P}[\widehat{\sigma }(u_{o},y_{o}) = 0] =1\), which completes the proof. □
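The key point in the scaling step of the proof is that a cone with vertex at the starting point is mapped into itself by the change of variables, so a Gaussian-type kernel centred at the vertex assigns to the cone a mass that does not depend on \(t_{o}\). The Python sketch below checks this for a standard two-dimensional Brownian motion started at the vertex, used as a simple stand-in for the Gaussian bounds (4.16); the sector parametrisation and the names `in_cone` and `cone_mass` are ours. For this isotropic kernel, the mass of a sector of aperture \(\phi\) is \(\phi/(2\pi)\) for every \(t_{o}>0\).

```python
import math
import random


def in_cone(du: float, dy: float, theta0: float, phi: float) -> bool:
    """True if the displacement (du, dy) from the vertex lies in the sector of
    directions [theta0, theta0 + phi] (angles in radians, 0 < phi < 2*pi)."""
    if du == 0.0 and dy == 0.0:
        return True  # the vertex itself
    angle = (math.atan2(dy, du) - theta0) % (2.0 * math.pi)
    return angle <= phi


def cone_mass(t: float, theta0: float, phi: float, n: int, seed: int = 1) -> float:
    """Monte Carlo estimate of P[W_t in cone] for a 2-d Brownian motion W
    started at the vertex of the cone."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n):
        z1, z2 = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
        # W_t has the law of sqrt(t) * Z; scaling by sqrt(t) keeps the direction.
        if in_cone(math.sqrt(t) * z1, math.sqrt(t) * z2, theta0, phi):
            hits += 1
    return hits / n


short_time = cone_mass(1e-4, 0.3, math.pi / 2, 20000)
long_time = cone_mass(1.0, 0.3, math.pi / 2, 20000)
```

With the same random draws, both estimates coincide and are close to \(1/4\), which is the mechanism that makes the constant \(\ell\) in the proof independent of \(t_{o}\).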

Theorem 4.9

One has that \(v \in C^{1}(\mathcal{O})\).

Proof

The value function belongs to \(C^{2}\) in the interior of the continuation region due to Lemma 4.7, and it is \(C^{\infty }\) in the interior of the stopping region where \(v(x,y)=x\). It thus only remains to prove that \(v\) is continuously differentiable across \(\partial \mathcal{C}\). In the sequel, we prove that (i) the function \(\overline{w}( x,y) :=\frac{1}{x}(v(x,y) - x)\) has a continuous derivative with respect to \(x\) across \(\partial \mathcal{C}\) (and this clearly implies the continuity of \(v_{x}\) across \(\partial \mathcal{C}\)); (ii) the function \(v_{y}\) is continuous across \(\partial \mathcal{C}\).

(i) Continuity of \(v_{x}\) across \(\partial \mathcal{C}\): For the subsequent arguments, it is useful to notice that the function \(\overline{w}\) admits the representation (recall (4.7))

and to bear in mind that the optimal stopping time \(\tau ^{\star }\) for \(v\) in (4.10) is also optimal for \(\overline{w}\) since \(v \geq x\) if and only if \(\overline{w}\geq 0\). We now prove that \(\overline{w}_{x}\) is continuous across \(\partial \mathcal{C}\), thus implying continuity of \(v_{x}\) across \(\partial \mathcal{C}\).

Take \((x,y) \in \mathcal{C}\) and let \(\varepsilon >0\) be such that \(x-\varepsilon >0\). Since \(x \mapsto \overline{w}(x,y)\) is increasing due to the monotonicity of \(h'\), it is clear that \((x-\varepsilon ,y) \in \mathcal{C}\) as well. Denote by \(\tau ^{\star }_{\varepsilon }(x,y):=\tau ^{\star }(x-\varepsilon ,y)\) the optimal stopping time for \(\overline{w}(x-\varepsilon ,y)\) and notice that \(\tau ^{\star }_{\varepsilon }(x,y)\) is suboptimal for \(\overline{w}(x,y)\) and \(\tau ^{\star }_{\varepsilon }(x,y)\rightarrow \tau ^{\star }(x,y)\) a.s. as \(\varepsilon \downarrow 0\). To simplify the exposition, we write \(\tau ^{\star }_{\varepsilon }:=\tau ^{\star }_{\varepsilon }(x,y)\) and \(\tau ^{\star }:=\tau ^{\star }(x,y)\) in the sequel. We then have from (4.19) that

for some \(\xi _{\varepsilon } \in (x-\varepsilon ,x)\), where we have used in the last step the mean value theorem and the fact that \(\widehat{X}^{x,y}_{t} - \widehat{X}^{x-\varepsilon ,y}_{t} = \varepsilon \widehat{X}^{1,y}_{t}\). Letting \(\varepsilon \downarrow 0\), invoking dominated convergence (thanks to the fact that \(\rho > (\gamma \beta _{2} + \frac{1}{2}\sigma ^{2} \gamma (\gamma -1) ) \vee (2\beta _{2} + \sigma ^{2} )\) by Assumption 4.3) and using that \(\overline{w} \in C^{1}(\mathcal{C})\) (since \(v \in C^{1}(\mathcal{C})\)), we then find from (4.20) that

Now let \((x_{o},y_{o})\) be an arbitrary point belonging to \(\partial \mathcal{C}\). Taking limits \((x,y) \rightarrow (x_{o},y_{o})\) in (4.21), using dominated convergence and Proposition 4.8, we obtain

thus proving that \(\overline{w}_{x}\) is continuous across \(\partial \mathcal{C}\). This immediately implies the continuity of \(v_{x}\) across \(\partial \mathcal{C}\), upon recalling that \(v(x,y)=x(\overline{w}(x,y)+1)\).
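The role of the integrability condition \(\rho > (\gamma \beta _{2} + \frac{1}{2}\sigma ^{2} \gamma (\gamma -1) ) \vee (2\beta _{2} + \sigma ^{2} )\) can be seen from a moment bound. The computation below is a sketch that assumes \(d\widehat{X}_{t}=\widehat{X}_{t}((\beta_{2}+(g_{2}-g_{1})\pi_{t})dt+\sigma dI_{t})\) with \(I\) a standard Brownian motion under the measure used for the expectation, and a generic exponent \(p>0\). Since \(g_{2}-g_{1}<0\) and \(\pi^{y}_{s}\geq 0\), the process is dominated pathwise by a geometric Brownian motion with drift \(\beta_{2}\):

```latex
\begin{aligned}
\widehat{X}^{1,y}_{t} &= \exp\Big(\int_{0}^{t}\big(\beta_{2}-\tfrac{1}{2}\sigma^{2}+(g_{2}-g_{1})\pi^{y}_{s}\big)\,ds+\sigma I_{t}\Big)
  \leq \exp\big((\beta_{2}-\tfrac{1}{2}\sigma^{2})t+\sigma I_{t}\big),\\
\mathbb{E}\big[(\widehat{X}^{1,y}_{t})^{p}\big] &\leq e^{p(\beta_{2}-\frac{1}{2}\sigma^{2})t}\,\mathbb{E}\big[e^{p\sigma I_{t}}\big]
  = e^{p(\beta_{2}-\frac{1}{2}\sigma^{2})t+\frac{1}{2}p^{2}\sigma^{2}t}
  = e^{(p\beta_{2}+\frac{1}{2}\sigma^{2}p(p-1))t},\\
\int_{0}^{\infty}e^{-\rho t}\,\mathbb{E}\big[(\widehat{X}^{1,y}_{t})^{p}\big]\,dt &< \infty
  \quad\text{whenever}\quad \rho>p\beta_{2}+\tfrac{1}{2}\sigma^{2}p(p-1).
\end{aligned}
```

Taking \(p=\gamma\) and \(p=2\) gives the two terms in the maximum, which is presumably what makes the dominating functions integrable when passing to the limit \(\varepsilon\downarrow 0\).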

(ii) Continuity of \(v_{y}\) across \(\partial \mathcal{C}\): Take again \((x,y) \in \mathcal{C}\) and \(\varepsilon >0\) such that \(y+\varepsilon <1\). Since \(y \mapsto v(x,y)\) is decreasing by Proposition 4.4 (ii), it is clear that \((x,y+\varepsilon ) \in \mathcal{C}\) as well. Denote by \(\tau ^{\star }_{\varepsilon }(x,y):= \tau ^{\star } (x, y+\varepsilon )\) the optimal stopping time for \(v(x, y +\varepsilon )\) and notice that \(\tau ^{\star }_{\varepsilon }(x,y)\) is suboptimal for \(v(x,y)\) and \(\tau ^{\star } (x, y+\varepsilon ) \rightarrow \tau ^{\star } (x,y) \) a.s. as \(\varepsilon \downarrow 0\). To simplify the notation, we write \(\tau ^{\star }_{\varepsilon }\) instead of \(\tau ^{\star }_{\varepsilon }(x,y)\) in the sequel. From Proposition 4.4 (ii) and (4.7), we then have

Now add and subtract on the right-hand side both \(\mathbb{E}[\int _{0}^{\tau ^{\star }_{\varepsilon }} e^{- \rho t} \widehat{X}^{x,y+\varepsilon }_{t} h'( \widehat{X}^{x,y}_{t}) dt]\) and \((g_{2}-g_{1})\mathbb{E}[\int _{0}^{\tau ^{\star }_{\varepsilon }} e^{- \rho t} \widehat{X}^{x,y+\varepsilon }_{t} \pi ^{y}_{t} dt]\) and recall that \(g_{2} - g_{1} <0\), \(\widehat{X}^{x,y}_{t} \ge 0\) a.s. and \(\pi ^{y+\varepsilon }_{t} - \pi ^{y}_{t} \ge 0\) a.s., for every \(t \ge 0\). Then after rearranging terms and using the integral mean value theorem for some \(L_{t}^{\varepsilon } \in (\widehat{X}^{x,y+\varepsilon }_{t}, \widehat{X}^{x,y}_{t})\) a.s., we obtain that

In the last inequality, we have used that \(\rho - \beta _{2} - \pi ^{y}_{t} (g_{2} - g_{1}) \geq 0\) since \(\rho >\beta _{2}\) by Assumption 4.3, that \(g_{2}-g_{1}<0\) and that \(\widehat{X}^{x,y+\varepsilon }_{t} \leq \widehat{X}^{x,y}_{t}\).

Define now \(\Delta \pi ^{y}_{t}:=\frac{1}{\varepsilon }(\pi ^{y+\varepsilon }_{t} - \pi ^{y}_{t})\), \(t\geq 0\), and notice that by using the second equation in (4.3), we can write for any \(t\geq 0\) that

With the help of Itô’s formula, it can easily be shown that

with \(\theta ^{2}:=\frac{1}{2} (\frac{(g_{2}-g_{1})^{2}}{\sigma ^{2}} + ( \alpha _{1}-\alpha _{2})^{2} )\). Also, by (4.4) and simple algebra,

Using the definition of \(\Delta \pi ^{y}_{t}\) and (4.24) in (4.22), together with \(\widehat{X}^{x,y+\varepsilon }_{t} \leq \widehat{X}^{x,y}_{t}\), one finds

We now aim at taking limits as \(\varepsilon \downarrow 0\) in (4.25). To this end, notice that \(\Delta \pi ^{y}_{t} \rightarrow Z^{y}_{t}\) a.s. for all \(t\geq 0\) as \(\varepsilon \downarrow 0\), where by Protter [58, Theorem V.7.39], \((Z^{y}_{t})_{t\geq 0}\) is the unique strong solution to

with \(Z_{0}^{y} = 1\). Then, if we are allowed to invoke the dominated convergence theorem when taking limits as \(\varepsilon \downarrow 0\) in (4.25), we obtain that

upon recalling that \(v\in C^{2}(\mathcal{C})\). Therefore, letting \((x_{o},y_{o})\) be an arbitrary point belonging to \(\partial \mathcal{C}\), by taking limits in (4.26) as \((x,y) \rightarrow (x_{o},y_{o})\) and using dominated convergence and Proposition 4.8, we obtain that

thus proving that \(v_{y}\) is continuous across \(\partial \mathcal{C}\).
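The convergence \(\Delta \pi^{y}_{t}\to Z^{y}_{t}\) used above is an instance of differentiability of a stochastic flow with respect to its initial condition: the derivative solves the linearised (first-variation) equation started at 1. The Python sketch below illustrates this for an Euler discretisation of a hypothetical filter-type equation \(d\pi_{t}=\kappa(\bar{p}-\pi_{t})dt+\eta\,\pi_{t}(1-\pi_{t})dW_{t}\); the coefficients \(\kappa\), \(\bar{p}\), \(\eta\), the Brownian motion \(W\) and the function names are placeholders of ours and do not reproduce (4.3). Along one common noise path, it compares the difference quotient \((\pi^{y+\varepsilon}-\pi^{y})/\varepsilon\) with the discrete first-variation process \(Z_{k+1}=Z_{k}(1-\kappa\,\Delta t+\eta(1-2\pi_{k})\Delta W_{k})\), \(Z_{0}=1\).

```python
import math
import random
from typing import List


def euler_filter(y: float, dWs: List[float], dt: float,
                 kappa: float, pbar: float, eta: float) -> List[float]:
    """Euler scheme for the hypothetical SDE
    d pi = kappa (pbar - pi) dt + eta pi (1 - pi) dW,  pi_0 = y."""
    ps = [y]
    for dW in dWs:
        p = ps[-1]
        ps.append(p + kappa * (pbar - p) * dt + eta * p * (1.0 - p) * dW)
    return ps


def first_variation(ps: List[float], dWs: List[float], dt: float,
                    kappa: float, eta: float) -> List[float]:
    """Derivative of the Euler map with respect to pi_0:
    Z_{k+1} = Z_k (1 - kappa dt + eta (1 - 2 pi_k) dW_k),  Z_0 = 1."""
    zs = [1.0]
    for p, dW in zip(ps, dWs):  # zip stops after the n noise increments
        zs.append(zs[-1] * (1.0 - kappa * dt + eta * (1.0 - 2.0 * p) * dW))
    return zs


rng = random.Random(7)
dt, n = 1e-3, 1000
dWs = [rng.gauss(0.0, math.sqrt(dt)) for _ in range(n)]  # common noise path
kappa, pbar, eta = 1.0, 0.5, 0.5
y, eps = 0.4, 1e-6
base = euler_filter(y, dWs, dt, kappa, pbar, eta)
bumped = euler_filter(y + eps, dWs, dt, kappa, pbar, eta)
diff_quotient = [(b - a) / eps for a, b in zip(base, bumped)]
zs = first_variation(base, dWs, dt, kappa, eta)
```

In this example \(Z\) stays positive, mirroring the ordering \(\pi^{y+\varepsilon}_{t}\geq\pi^{y}_{t}\) used in the proof.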

To complete the proof, it only remains to show that the dominated convergence theorem can be applied when taking limits as \(\varepsilon \downarrow 0\) in (4.25). We show this in the following two technical steps.

1) To prove that the dominated convergence theorem can be invoked when taking \(\varepsilon \downarrow 0\) in the first expectation on the right-hand side of (4.25), we set