Abstract

Requirements are elicited from the customer and other stakeholders through an iterative process of interviews, prototyping, and other interactive sessions. Then, requirements can be further extended, based on the analysis of the features of competing products available on the market. Understanding how this process takes place can help to identify the contribution of the different elicitation phases, thereby allowing requirements analysts to better distribute their resources. In this work, we empirically study how requirements get transformed from initial ideas into documented needs, and then evolve based on the inspiration coming from similar products. To this end, we select 30 subjects who act as requirements analysts, and we perform interview-based elicitation sessions with a fictional customer. After the sessions, the analysts produce a first set of requirements for the system. Then, they are required to search for similar products in the app stores and extend the requirements, inspired by the identified apps. The requirements documented at each step are evaluated to assess to what extent and in which way the initial idea evolved throughout the process. Our results show that only between 30% and 38% of the requirements produced after the interviews include content that can be fully traced to the customer’s initial ideas. The rest of the content is dedicated to new requirements, and up to 21% of it belongs to completely novel topics. Furthermore, up to 42% of the requirements inspired by the app stores cover additional features compared to the ones identified after the interviews. The results empirically show that requirements are not elicited in the strict sense, but actually co-created through interviews, with analysts playing a crucial role in the process. In addition, we show evidence that app store-inspired elicitation can be particularly beneficial to complete the requirements.

1 Introduction

Requirements are elicited from the customer and other stakeholders through an iterative process of interviews, prototyping, and other interactive sessions [1,2,3,4]. The iterations transform the initial ideas of the customer into more explicit needs, normally expressed in the form of a requirements document to be used for system specification. Then, the analysis of competing products in the market [5,6,7,8,9]—typically retrieved from app stores [9]—as well as the collection of feedback from users [10,11,12,13], can lead to further updates of the requirements, bug fixing and product enhancement. Throughout the elicitation process, the initial customer’s ideas can go through a radical transformation. Relevant needs may have remained unexpressed, others may have been discarded through early negotiation, and some requirements may be introduced to mimic successful features of existing products. Understanding how this process takes place can help to properly allocate resources for the elicitation activities and also address possible communication problems typically occurring during the early elicitation phases [14,15,16].

Previous literature in requirements engineering (RE) has studied the different elicitation phases and supporting techniques individually. Inquisitive elicitation strategies, such as interviews and focus groups, are recognized as extremely common in industrial practice [3, 4]. Some researchers studied the impact of domain knowledge [17, 18], communication issues [16, 19, 20] and other factors [21,22,23] on the success of these strategies. Concerning requirements elicitation through app store analysis, the survey by Al-Subaihin et al. [9] highlighted that 51% of mobile app developers frequently perform product maintenance based on users’ public feedback in the app stores, and 56% elicit requirements by browsing similar apps. In the field of app store analysis, a large body of work is dedicated to the development of automatic tools, often based on natural language processing (NLP), to support these tasks [8, 10, 13, 24]. Despite the vast literature, however, it is unclear how much the different elicitation activities actually contribute to the final content of the requirements document. Furthermore, the recent survey by Dabrowski et al. [25] highlighted that, except for the study by Al-Subaihin et al. [9], there is limited evidence of the practical utility of app store analysis for RE and software engineering activities.

In this work, we perform an exploratory study of descriptive nature to understand in which way requirements get transformed from initial ideas into documented needs, and then further evolve based on the analysis of existing similar products available on the market in general, and in app stores in particular. The study focuses on software solutions that can have a mobile version, or include a relevant mobile app-oriented component, so that a comparison with products in the app store market is possible. The study consists of two phases. In Phase I, which we call interview-based elicitation, we investigate the difference between customer ideas and documented requirements after a set of interview sessions. In Phase II, which we call app store-inspired elicitation, we compare the requirements produced as output of Phase I with additional ones created by analysts after taking inspiration from similar products identified in the app stores.

To collect data for the study, we recruit 58 subjects who act as requirements analysts. In the interview-based elicitation phase, the analysts perform a set of elicitation sessions with a fictional customer. The fictional customer is required to study a set of about 50 user stories for a system, which are regarded as the initial customer ideas for the experiment. Then, each analyst performs two requirements elicitation interview sessions with the customer, who is required to answer based on the user stories, and on novel ideas that can be triggered during the conversation. The sessions are separated by a period of 2 weeks, during which the analyst works on the data collected in the first interview through notes, diagrams, or mockups. After the elicitation sessions, each analyst documents the requirements into 50 to 60 user stories.

Then, the app store-inspired elicitation phase takes place. The analysts are required to imagine that the initial idea needs to be evolved to reach a wider market, and thus additional requirements are to be added. To take inspiration for additional features, they are required to look into the app stores, e.g., Apple App Store and Google Play, and identify further requirements by analyzing five similar products of their choice. After this analysis, they need to document the additional requirements with 20 user stories and to explain why certain apps have been chosen as inspiration for the additional requirements.

The user stories produced by a sample of 30 subjects are compared with the original ones by two researchers, to assess to what extent and in which way the initial requirements evolved throughout the interactive sessions. A comparison is also carried out between the user stories initially produced by the analysts and those added after app store-inspired elicitation. In the comparison of user stories, we also consider differences in the user story roles, i.e., the actors explicitly mentioned in the user stories. Our results quantitatively show that there is a substantial gap between initial ideas and documented requirements, and that searching for similar apps is also crucial to identify novel features and eventually enhance the product.

More specifically, we provide the following main findings, in relation to interview-based elicitation:

- Only between 30% and 38% of the user stories produced after interview-based elicitation include content that was already present in the initial ones;
- Most of the user stories produced, specifically between 54% and 63%, are refinements of the initial ideas, while between 12% and 20% are related to completely novel ideas;
- Most of the user story roles considered, between 58% and 72%, are the same as the ones initially planned, but between 18% and 29% are entirely novel roles;
- The relevance given to certain requirement categories is different between initial ideas and documented needs;
- The original user story roles are mostly preserved in the analysts’ user stories, but the distribution of the analysts’ stories among roles differs;
- Analysts introduce non-functional requirements, which were not initially considered by the customer, especially concerning security and privacy.

In addition, we provide the following findings in relation to app store-inspired elicitation:

- Between 28% and 42% of the user stories inspired by the app stores cover entirely novel topics with respect to the ones written after the interviews;
- Between 8% and 17% of the roles in the app store-inspired user stories are novel with respect to those used after the interviews;
- There is increasing attention to the “user”, i.e., the target subject who will be using the software, and to usability requirements, rather than to the initial ideas of the customer, i.e., the subject commissioning the initial development.

This study contributes to theory in RE, as it empirically shows that: (1) in interview-based elicitation, requirements are not elicited but co-created by stakeholders and analysts; (2) interviews and app store analysis play complementary roles in requirements definition; (3) app store analysis provides a relevant contribution in practice. This last point addresses the limited evidence about the practical utility of app store analysis observed by the survey of Dabrowski et al. [25].

The data of our study are shared in a replication package [26] made available at: https://doi.org/10.5281/zenodo.6475039.

This paper is an extension of a previous conference contribution [27]. The previous manuscript focused solely on the evolution of requirements during the interview sessions. The current one also includes an analysis of the contribution of app store-inspired elicitation to the final requirements. More specifically, the current paper provides the following additional content: (i) an extended description of the design of the study, including data collection and analysis for app store-inspired elicitation; (ii) corresponding results and discussion related to app store-inspired elicitation; (iii) an extended related work section; (iv) additional statistical tests for the data of the previous contribution. The formatting and presentation of data, figures and original content have also been revised.

The remainder of the paper is organised as follows. Section 2 summarizes the related work. Section 3 describes the adopted methodology, Sections 4 and 5 present the results of our analysis, and Section 6 discusses their implications. Section 7 describes the threats to the validity of our results and how they have been mitigated and Section 8 concludes the paper.

2 Related work

Our work focuses on the evolution of requirements during elicitation. In the following, we report related work on requirements evolution in relation to early interview-based elicitation, and in relation to later phases, including app store-inspired elicitation. As the creativity of the analyst can influence the final requirements document, we also report related work on creativity in requirements engineering (RE). Finally, we discuss our contribution with respect to the literature in these research areas.

Early Requirements Evolution. Requirements evolution is a well-recognized phenomenon that can have critical effects on software systems [28,29,30]. A few studies in the literature focus on requirements evolution during the early stages, towards the production of a first requirements document. Among them, Zowghi and Gervasi [31] analyze how the initial incomplete knowledge about a system evolves and identify consistency, completeness, and correctness (the “three Cs”) as the driving factors. The main idea behind this conclusion is that the goal of an analyst is to produce a consistent, complete and consequently correct set of requirements. Thus, analysts keep working with the collected knowledge and their expertise, trying to get closer to the three Cs at every step of the process.

Grubb and Chechik [30, 32] focus on the early stage of requirements evolution, but they look at the modeling phase. In particular, they propose to use goal model analysis to help stakeholders answer “what if” questions, to support the evolution of a system under different scenarios, and to help the customer understand trade-offs among different decisions. In their approach, the authors augment goal models with the capability of explicitly modeling time, to provide a more useful analysis for the stakeholders. Still within the field of model-driven RE, Ali et al. [33] identify “assumptions” as one of the main reasons behind requirements evolution. Indeed, assumptions might be, or can become, inaccurate or incorrect. Once a problem with an assumption is identified, requirements need to evolve accordingly. The authors develop a system to monitor the assumptions and evolve the model of the system every time they are violated. Other authors have looked into the concept of pre-requirements [34], intended as information available prior to requirements specification (including system concepts, user expectations, and the environment of the system), and their tracing to expressed needs. Studies in this field, and in particular Hayes et al. [34], specifically focus on automatic tracing by means of cluster analysis.

Another stream of works on early requirements elicitation is concerned with empirical studies on interviews and focus groups. These are the most common techniques used by companies, as shown by the NaPiRE survey [3] and by the recent study by Palomares et al. [4]. Hadar et al. [17] show the double-sided impact of the analyst’s domain knowledge on the interview process. On the one hand, domain knowledge can facilitate the creation of a shared understanding; on the other hand, it can lead to tunnel vision, with the analyst discarding relevant information. Niknafs and Berry [18] show that domain ignorance can play a complementary role in requirements elicitation: when a subject who is domain ignorant is included together with a software engineering expert in a requirements focus group, the process of idea generation appears to be more effective. Other studies on requirements elicitation interviews focus on communication aspects. Among them, Ferrari et al. [19] study the role of ambiguity, while Bano et al. [16] present a list of typical communication mistakes made by novice requirements analysts, which can have an impact on the resulting requirements document.

Late Requirements Evolution. The term “requirements evolution” often refers to the evolution of requirements once the system is deployed, and a wide body of work exists in the area. In particular, a set of studies consider the evolution of requirements based on the analysis of similar products, with a particular focus on app stores. Fu et al. [35] acknowledge that a market-wide analysis across the entire app store can allow developers to find undiscovered requirements. In this line of research, Sarro et al. [5] empirically show that features migrate through the app store, passing from one product to another, and suggest that identifying the features that have a strong migratory tendency can lead to the identification of undiscovered requirements. Other works are concerned with the development of recommender systems, e.g., [6,7,8, 36]. Among them, Chen et al. [6] recommend software features for mobile apps, based on the comparison of the user interface (UI) of an existing app with the UIs of similar ones. Liu et al. [7], instead, leverage textual data in the form of app feature descriptions to support feature recommendation. Jiang et al. [8] extend descriptions with API names, to better inform the recommendation system. Finally, the recent work of Liu et al. [36] combines UI information and textual descriptions of apps. Besides the field of app store analysis, the topic of requirements elicitation based on similar products has also been studied in software product line engineering. The majority of the works in the area focus on the automatic identification of product features from existing documents (brochures or requirements) by means of natural language processing (NLP) techniques [37,38,39]. A literature review on similarity-based analysis of software applications is presented by Auch et al. [40].

Another well-studied factor of late requirements evolution is user feedback [11, 41], in the form of app reviews, tweets or other media. Carreño and Winbladh [41] created a system to automatically extract topics from user feedback and generate new requirements for future versions of the app. Feedback in the form of app reviews is analyzed by a stream of works from Maalej and his team (e.g., [10, 42]). Along the same line of research, Guzman et al. [43] proposed to use the information mined from Twitter to guide the evolution of requirements. Additional similar approaches are discussed by Khan et al. [44] and by Morales-Ramirez et al. [45].

Creativity in RE. Creativity plays an important role in many RE activities, including the requirements evolution process [46,47,48]. According to Sternberg, creativity can be described as the ability to produce work that is both novel and appropriate [49]. Nguyen [50] states that creativity can be attributed to five factors: product, process, domain, people, and context. To analyze the impact of creativity in discovering new requirements, Maiden et al. [51] performed a study consisting of a series of creative workshops to discover new requirements for an Air Traffic Management System. This work provides empirical evidence of the impact of creativity and creative processes in identifying new requirements. Inspired by these seminal contributions on creativity and RE, the CreaRE workshop was established (see Footnote 1), and it is currently at its 10th edition, indicating the interest of the community in the topic. Several approaches have been explored in the literature; among them, the EPMcreate technique [52], theoretical frameworks for understanding creativity in RE [50], platforms to support collaboration for distributed teams [53], toolboxes for selecting the appropriate creativity technique [54, 55], and the combination of goal modeling and creativity techniques [56, 57].

2.1 Contribution

With respect to the literature on early requirements evolution, our work is among the first to consider requirements elicitation performed with traditional interviews, which are extremely common in practice [3, 4, 22]. The closest work to ours is the contribution of Hayes et al. [34], which focuses on pre-requirements information. Their concept of pre-requirement is analogous to our notion of “initial idea”. However, their goal is to aggregate and automatically cluster pre-requirements from multiple stakeholders, and to support traceability. Instead, in our paper we want to evaluate how the initial ideas get transformed through the elicitation and documentation process.

Most of the work on late requirements evolution focuses on automatic tools for app store mining, supporting feature extraction or app review analysis. Instead, in this paper we consider a manual approach to app store-inspired elicitation, and we measure its impact with respect to existing requirements. Our paper also differs from most of the existing works because we do not consider the evolution of requirements after a product has been developed. Instead, we study the extension of existing requirements based on a market analysis performed before releasing the product.

Our study also differs from the literature on creativity. Instead of providing a novel technique to stimulate creativity, it gives quantitative evidence on the impact of early elicitation and documentation activities. In particular, it shows that (a) creativity takes place as a natural phenomenon, without introducing specific triggering techniques; and (b) a quantitative evaluation is possible, and can be used to compare different creativity techniques for requirements elicitation.

3 Research design

3.1 Research questions

The overarching objective of this research is to explore in which way requirements evolve from ideas to expressed needs. More specifically, we first want to understand the difference between initial ideas and user stories documented after initial interactions with a customer. Then, we want to understand the difference between these user stories and those produced after an analysis of similar products on the market. This way, we can understand how different requirements elicitation strategies contribute to the definition of a product. Two main research questions, with associated sub-questions, are considered:

- RQ1: What is the difference between the initial customer ideas and the requirements documented by an analyst after customer-analyst interview sessions?
  - RQ1.1: How large is the difference in terms of documented requirements and roles with respect to initial ideas? With this question, we want to quantitatively evaluate how different the user stories are with respect to the initial customer ideas. This gives a numerical indication of how much the elicitation sessions contribute to the documented requirements.
  - RQ1.2: What is the relevance given to the different categories of requirements and roles with respect to initial ideas? The question aims to understand whether there is a difference in the relevance given to categories of requirements with respect to the initial customer ideas. By the term “relevance”, we intend the percentage of the stories dedicated to a certain category or role (see Footnote 2). In other terms, we want to understand whether the elicitation sessions gave more prominence to certain aspects than to others, compared to the initial ideas.
  - RQ1.3: What are the emerging categories and roles? This question aims to understand whether there are typical categories of user stories and roles that were not originally present in the ideas of the customer, and therefore what the actual contribution of the elicitation process is in terms of content.
- RQ2: What is the additional contribution of requirements produced after an analysis of products available from the app stores?
  - RQ2.1: How large is the difference in terms of covered requirements categories and roles with respect to the requirements documented after the interview sessions? This question aims to quantitatively evaluate how large the contribution of the analysis of products from the app stores is, and whether the additional requirements cover different categories with respect to the ones documented in the previous phase.
  - RQ2.2: What is the relevance given to the different categories of requirements and roles with respect to the requirements documented after the interview sessions? This question investigates which specific requirements categories and roles are considered in the additional requirements, and whether their distributions differ from those of the previously documented requirements. In other terms, we want to understand whether the focus of the analysts shifted in this second phase.
  - RQ2.3: What are the additional categories and roles? This question aims to identify whether there are categories and roles that are common across analysts and that emerged in this specific phase. This allows us to understand the contribution of app store-inspired elicitation.
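As a concrete reading of “relevance” in RQ1.2 and RQ2.2 (the percentage of stories dedicated to a category or role), the following minimal Python sketch computes the share of stories per category for two sets of annotated stories, and the percentage-point shift between them. The function names and the toy labels are hypothetical and are not taken from the study data; the sketch only illustrates the arithmetic.

```python
from collections import Counter
from typing import Dict, List


def relevance(categories: List[str]) -> Dict[str, float]:
    """Share (in %) of user stories assigned to each category."""
    counts = Counter(categories)
    total = sum(counts.values())
    return {cat: 100.0 * n / total for cat, n in counts.items()}


def relevance_shift(before: List[str], after: List[str]) -> Dict[str, float]:
    """Percentage-point change in relevance per category (after minus before)."""
    rel_before, rel_after = relevance(before), relevance(after)
    cats = set(rel_before) | set(rel_after)
    # A category missing from one set counts as 0% relevance in that set
    return {c: rel_after.get(c, 0.0) - rel_before.get(c, 0.0) for c in sorted(cats)}


# Hypothetical toy labels (not data from the study)
initial = ["Camp"] * 4 + ["Customers"] * 4 + ["Communication"] * 2
analyst = ["Camp"] * 3 + ["Customers"] * 5 + ["Security"] * 2

print(relevance(initial))  # {'Camp': 40.0, 'Customers': 40.0, 'Communication': 20.0}
print(relevance_shift(initial, analyst))
# {'Camp': -10.0, 'Communication': -20.0, 'Customers': 10.0, 'Security': 20.0}
```

Because absent categories are treated as 0%, a newly emerging category (here the illustrative `Security`) appears as a positive shift, and a dropped one as a negative shift.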

3.2 Data collection

To answer the questions, we perform an experiment with one fictional customer (3rd author of the current paper) and a set of 58 different student analysts recruited from Kennesaw State University. In particular, the analysts were graduate students enrolled in the first or second semester of the MSc in Software Engineering and, at the time of the experiment, were all taking a course on Requirements Engineering. In that course, they had been introduced to elicitation techniques and user stories through standard teaching material, which also included examples from very common systems such as an event ticket store and a bookstore. During the first semesters of the program, students have usually taken, or are taking, an introductory course on software engineering and a course on project management. Elective specialized courses (e.g., User Interaction Engineering and courses on Privacy) are in general taken later in the program.

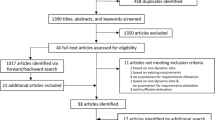

The steps of the experiment (approved, together with the recruitment process, by the Institutional Review Board of Kennesaw State University) are described in the following. Please refer to Fig. 1 for an overview of the steps. Overall, the study is composed of two phases. Phase I, which we call interview-based elicitation, is associated with RQ1 and includes all the steps that go from initial customer ideas to documented requirements. Phase II, called app store-inspired elicitation, is associated with RQ2 and includes the steps that lead to an extension of the requirements based on the analysis of app stores.

Phase I - Analyst and Customer Steps

1. Preparation. Analysts are given a brief description of a product to develop and are asked to prepare questions for a customer whom they will have to interview to elicit the product’s requirements. The product is a system for the management of a summer camp. The brief description has an initial part that describes the company’s current practice, followed by a set of briefly described needs about (1) managing the information, registration, and activities of the participants, (2) giving the participants’ guardians the opportunity to register and follow their children, (3) managing the employees’ performance and schedule, (4) communicating with parents and employees, and (5) managing facilities. The fictional customer is required to study a set of about 50 user stories for the system, which are regarded as the initial customer ideas for the experiment. The initial user stories are taken from the dataset by Dalpiaz [58], file g21.badcamp.txt. The use of the same customer for all the interviews is in line with similar experiments, such as [16] and [59].

2. Interview. Each analyst performs a 15-minute interview with the customer, possibly asking additional questions beyond the ones that they prepared. The fictional customer answers their questions based on the set of user stories that describe the product, which are not shown to the analysts. Overall, the customer is required to stick to the content of the user stories as much as possible. However, he is allowed to answer freely when he does not find a reasonable answer in the user story document, to keep the interview as realistic as possible and to capture novel ideas that emerge in the dialogue. The analysts are required to record their interviews and take notes.

3. Requirements Analysis. Based on the recording and their notes, the analysts have to: (a) perform an initial analysis of the requirements, and, based on this analysis, (b) produce additional questions for the customer to be asked in a follow-up interview. The initial analysis can be performed with the support of a graphical prototype, use cases, or written form. Analysts can adopt the method they find most suitable.

4. Follow-up Interview. Then, they perform a follow-up interview with the customer, which also lasts 15 minutes, to ask the additional questions prepared. During the interview, they can use the graphical prototype, the use cases, or any material produced as a support to ask questions to the customer. In practice, they can show the material to the customer and discuss it together.

5. Requirements Documentation. After the second interview, they are required to write down from 50 to 60 user stories for the system. We constrain the number of user stories to between 50 and 60 to be consistent with the number of user stories in the original set and, thus, to better compare and analyze the collected data. About 50 user stories is also the typical number in the dataset by Dalpiaz [58], which we deem representative of user story sets used for research purposes.
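Both the 50 to 60 constraint and the later analysis of roles presuppose that each documented story can be parsed. A minimal sketch, assuming the stories follow the common “As a <role>, I want <goal>, so that <benefit>” template (an assumption: the exact template is not stated in this section), could check the count bound and extract the role from each story. `extract_role` and `check_story_count` are hypothetical helpers, not tools used in the study.

```python
import re
from typing import List, Optional

# Assumed template: "As a <role>, I want <goal>[, so that <benefit>]"
STORY_PATTERN = re.compile(r"^\s*As an?\s+(?P<role>[^,]+),\s*I want", re.IGNORECASE)


def extract_role(story: str) -> Optional[str]:
    """Return the (lower-cased) role of a user story, or None if the template does not match."""
    match = STORY_PATTERN.match(story)
    return match.group("role").strip().lower() if match else None


def check_story_count(stories: List[str], low: int = 50, high: int = 60) -> bool:
    """True if the number of documented user stories lies within [low, high]."""
    return low <= len(stories) <= high


story = "As a camp worker, I want to see my weekly schedule, so that I can plan my activities."
print(extract_role(story))  # camp worker
```

Stories that deviate from the assumed template yield `None` and would have to be handled manually, which is consistent with the manual annotation adopted in the study.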

Phase II - Analyst and Customer Steps

6. Analysis of App Stores. Each analyst starts from the user stories that they developed in the previous phase. They are now asked to produce an enhanced set of user stories, which take into account possible competing products available on the market, as commonly done by app developers [9]. To this end, the analysts are required to perform an informal market analysis based on the app stores. In particular, they are required to:

- Select at least 5 mobile apps from Google Play or the Apple App Store that are in some way related to the developed product (for example, apps for summer camps, apps for trekking, or anything that they consider related).
- Try out the apps to get an idea of their features compared with their product.
- Go through the app reviews to identify desired features and additional requirements that may also be appropriate for their product.

No automated tool, except for the default app store search engines, was provided for the market analysis task. The analysts were free to browse the app stores following their intuition, ability and preferred strategy.

7. Requirements Extension. Based on the analysis of the app stores, the analysts are required to list the selected apps and their links, together with a brief description that (1) outlines the main features of the product, and (2) explains in which way the product is related to the original one and why they have chosen it. Furthermore, they are asked to add 20 user stories to the original list, based on the analysis of the app stores.
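The Phase II deliverable (steps 6 and 7) has a simple shape: at least five apps, each with a link and the two-part description, plus 20 new user stories. A hypothetical Python sketch of a check one might run on submissions could look as follows; the class and field names are illustrative assumptions, and the study does not report any automated check of this kind.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class SelectedApp:
    name: str
    link: str
    main_features: str  # (1) outline of the app's main features
    rationale: str      # (2) relation to the original product and reason for the choice


@dataclass
class ExtensionDeliverable:
    apps: List[SelectedApp] = field(default_factory=list)
    new_stories: List[str] = field(default_factory=list)

    def problems(self, min_apps: int = 5, required_stories: int = 20) -> List[str]:
        """List the ways in which a submission deviates from the Phase II instructions."""
        issues = []
        if len(self.apps) < min_apps:
            issues.append(f"only {len(self.apps)} apps selected, at least {min_apps} required")
        if len(self.new_stories) != required_stories:
            issues.append(f"{len(self.new_stories)} new user stories, {required_stories} required")
        for app in self.apps:
            if not app.main_features or not app.rationale:
                issues.append(f"missing description for {app.name}")
        return issues


submission = ExtensionDeliverable(apps=[], new_stories=["As a parent, I want ..."] * 20)
print(submission.problems())  # ['only 0 apps selected, at least 5 required']
```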

Phase I - Researchers’ Steps

8. Comparison with Initial Ideas. Among the 58 participants, some did not consent to the use of their work for publication. In addition, as the analysis was performed manually, to keep it manageable and consistent we reduced the number of analysts whose work was analyzed to 30, a number that still allowed us to obtain significant results. These 30 analysts were randomly selected, and their work was inspected by two researchers (2nd and 3rd author) to identify:

- User stories that express content that was already entirely present in the initial set of user stories (marked as “existing”, E).
- User stories that express content that is novel with respect to the initial set of user stories, but that belongs to one of the existing high-level categories of the initial set (marked as “refinement”, R).
- User stories that express content that is novel and belongs to a novel category not initially present (marked as “new”, N).
- The names of the novel categories of user stories introduced.
- Recurrent themes in R and N stories.
- Roles that were also used in the original stories (E\(_\rho\)).
- Roles that represented a refinement of roles used in the original stories (R\(_\rho\)).
- Roles that were novel and never considered in the original stories (N\(_\rho\)).
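The random selection of 30 analysts in step 8 can be made reproducible with a seeded draw without replacement. The sketch below is only an illustration: the analyst identifiers, the size of the consenting pool, and the seed are hypothetical, since this section does not report them.

```python
import random
from typing import List, Optional, Sequence


def select_analysts(consenting_ids: Sequence[str], k: int = 30, seed: Optional[int] = None) -> List[str]:
    """Draw k analysts uniformly at random, without replacement, from those who consented."""
    if k > len(consenting_ids):
        raise ValueError("not enough consenting analysts")
    rng = random.Random(seed)  # a fixed seed makes the draw reproducible
    return sorted(rng.sample(list(consenting_ids), k))


# Hypothetical pool: the actual number of consenting participants is not given here
consenting = [f"A{i:02d}" for i in range(1, 51)]
sample = select_analysts(consenting, seed=2022)
print(len(sample), sample[:3])
```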

The identification described above was carried out by means of a template spreadsheet used to annotate the user stories. Given a list of user stories produced by one of the analysts, a researcher went through the list and marked each user story with E, R, or N. For E and R, the researcher was asked to mark the high-level original category to which the user story belonged. The high-level original categories were extracted according to the Validity Procedure, reported later in this section, and are Customers, Facilities, Personnel, Camp, and Communication. Whenever a user story was marked with N, the researcher was asked to report the name of the new category identified. An excerpt of the data analysis for one of the user story documents is reported in Fig. 2. It should be noted that, to make the annotation task practically feasible, the evaluation was carried out considering high-level, domain-specific categories of user stories, rather than by linking individual user stories with original ones. Such fine-grained linking was considered hardly feasible, since single user stories could be traced to multiple original ones, or to subsets of existing ones, and it would have led to major disagreements between annotators, thus hampering the validity of the results.
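The annotation scheme can be sketched as one record per user story, with consistency rules mirroring the instructions given to the annotators (E and R stories point to an original category, N stories carry a new category name), plus a summary of the E/R/N shares for one analyst. Class and function names are hypothetical, and the spreadsheet used in the study (Fig. 2) may contain further columns.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, List, Optional

# Original high-level categories identified through the Validity Procedure
ORIGINAL_CATEGORIES = {"Customers", "Facilities", "Personnel", "Camp", "Communication"}


@dataclass
class Annotation:
    story: str
    label: str               # "E" (existing), "R" (refinement) or "N" (new)
    category: Optional[str]  # original category for E/R, new category name for N

    def __post_init__(self):
        if self.label not in ("E", "R", "N"):
            raise ValueError(f"unknown label {self.label!r}")
        if self.label in ("E", "R") and self.category not in ORIGINAL_CATEGORIES:
            raise ValueError(f"E/R story needs an original category: {self.story!r}")
        if self.label == "N" and (not self.category or self.category in ORIGINAL_CATEGORIES):
            raise ValueError(f"N story needs a new category name: {self.story!r}")


def label_shares(annotations: List[Annotation]) -> Dict[str, float]:
    """Percentage of one analyst's stories marked E, R and N."""
    counts = Counter(a.label for a in annotations)
    return {lab: 100.0 * counts[lab] / len(annotations) for lab in ("E", "R", "N")}


# Hypothetical annotation of ten stories (toy data, not from the study)
sheet = (
    [Annotation(f"e{i}", "E", "Camp") for i in range(3)]
    + [Annotation(f"r{i}", "R", "Customers") for i in range(5)]
    + [Annotation(f"n{i}", "N", "Security") for i in range(2)]
)
print(label_shares(sheet))  # {'E': 30.0, 'R': 50.0, 'N': 20.0}
```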

Subsequently, the roles used in the stories were extracted and marked as E\(_\rho\), R\(_\rho\) or N\(_\rho\). For the case of E\(_\rho\) and R\(_\rho\) roles, the researcher also indicated the corresponding role in the original story. For N\(_\rho\), the role was added to the list of roles.

Validity Procedure. To produce the data analysis scheme presented in Fig. 2, we needed to identify the original categories (i.e., a categorisation of the original user stories) and the interview categories (i.e., the names of the novel categories emerging from the user stories produced after the interviews). The latter are the possible values of the cells under the column “Interview Category” in Fig. 2. To identify these categories, a validity procedure was set up, comprising the following steps.

-

Identification of original categories: The initial set of user stories used as preparation material for the interviewee was analyzed independently by the three authors to identify the high-level categories emerging from the original user stories, referred to in the following as original categories. These categories are Customers, Facilities, Personnel, Camp, and Communication, and are defined in more detail in Sect. 4.2.1.

-

Validation of original categories: To validate the original categories, each researcher independently used them to label a set of 107 user stories sampled from different analysts. If a user story did not belong to any of the original categories, it was marked with “N” to indicate a new category, and a preliminary name was provided. After the labeling process, the researchers held a 30-minute meeting to reconcile the disagreements. For 9 of the user stories (about \(8.4\%\) of the total), they either assigned different labels or were not sure which label to assign and wanted to discuss the user story in the meeting.

-

Identification of interview categories: The researchers held a 1-hour meeting to agree on the names of the new categories emerging from the data, referred to in the following as interview categories. As one of the researchers had also played the role of the customer in the interviews, he provided additional insights that helped to name the new categories. When further input was needed, the researchers also referred to the recordings of the interviews. After the meeting, the spreadsheet model used for the comparison procedure was produced; it is shown in Fig. 2.

-

Consolidation of the schema: Using the spreadsheet model, the 2nd and 3rd authors independently labeled more than 100 user stories and then met to reconcile. In this activity, novel interview categories were also introduced. The disagreement on the category to assign to the user stories was minimal (less than \(4\%\) of the cases) and was mostly related to the names assigned by the researchers to new interview categories. The 1st author analyzed the spreadsheet model and approved the consolidated schema.

-

Application of the Schema: The schema was applied to the whole set of user stories by the 2nd author to carry out the comparison activity. In this activity, the author could use the original categories, the interview categories identified in the previous steps, or add further interview categories. The user stories that raised doubts (about 2%) were marked as “to be discussed”.

-

Final Reconciliation: After the analysis, a final meeting was held between the 2nd and 3rd authors to consolidate the names of additional interview categories and resolve doubts. This meeting was split over two days, for a total of more than 6 hours.

The analysis of the original roles did not require a similar effort, as the original user stories clearly identified three well-separated roles, namely Administrator, Worker and Parent. For the new roles emerging from interviews, the user stories also clearly identified role names, and these were discussed and confirmed in the final meetings.

Phase II - Researchers’ Steps

9. Comparison between Interview-based Elicitation and App Store-inspired Elicitation. The additional user stories of the 30 analysts already considered in Step 8 are evaluated by two researchers (the 2nd and 3rd authors) to identify, for each analyst:

-

The user stories that express content that belonged to novel categories not considered in the previous phase by the specific analyst (referred in the following as “app-inspired”, A).

-

Roles that were novel with respect to those previously considered by the specific analyst (A\(_\rho\)).

This process is supported by a template spreadsheet that is used to annotate the user stories. An example is reported in Fig. 3. For each list of user stories belonging to an analyst, a researcher went through the list and assigned a category to each user story. The category could be selected from the five original ones or from the whole set of categories already identified in the user stories of all the analysts in the previous phase, i.e., all the interview categories. If an appropriate category was not present in the list, the researcher could add it in an additional column (“App-inspired Category”, in Fig. 3). Since the researchers could choose among all the categories, including the original ones, they could also select categories that the specific analyst had not considered in the initial phase. Therefore, to determine whether a user story should be marked as A, we automatically extracted the list of categories already present in the interview-based user stories of the specific analyst. A user story produced in this second phase was considered as A if its category did not appear in the extracted list. This way, even if a category had already been considered by some other analyst, or was part of the original set, it would be counted as “app-inspired” for the specific analyst.
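The per-analyst rule for marking a Phase II story as A amounts to a set-membership check against that analyst's own Phase I categories. The Python sketch below illustrates it under a hypothetical data layout: annotations as (analyst, phase, category) tuples with exactly one category per story. The function name and layout are illustrative and do not reproduce the authors' spreadsheet.

```python
from collections import defaultdict


def mark_app_inspired(rows):
    """rows: iterable of (analyst_id, phase, category) tuples, with phase 1 or 2.
    Returns (analyst_id, category, is_A) for every Phase II story."""
    rows = list(rows)
    # Categories each analyst used in the interview-based phase (Phase I).
    phase1 = defaultdict(set)
    for analyst, phase, category in rows:
        if phase == 1:
            phase1[analyst].add(category)
    # A Phase II story is "A" iff its category is absent from *that analyst's*
    # Phase I set, even if other analysts (or the original set) already used it.
    return [
        (analyst, category, category not in phase1[analyst])
        for analyst, phase, category in rows
        if phase == 2
    ]


rows = [
    ("X", 1, "Customers"), ("X", 1, "Camp"),
    ("Y", 1, "Availability"),
    ("X", 2, "Camp"), ("X", 2, "Availability"),
]
print(mark_app_inspired(rows))
# [('X', 'Camp', False), ('X', 'Availability', True)]
```

In this toy example, “Availability” counts as app-inspired for analyst X even though analyst Y had already used it in Phase I, which mirrors the rule described above.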

After this analysis, the roles were extracted and marked as A\(_\rho\), with the same rationale and approach used for the marking of user stories.

Validity Procedure. The alignment between researchers in terms of categories of user stories was already achieved in the previous step. In this step, we needed to ensure that the newly introduced categories, referred in the following as app-inspired categories, were uniform and agreed upon. Therefore, the 2nd and 3rd authors independently annotated half of the complete list of user stories produced by the 30 analysts, around 300 user stories for each author. Then, they cross-checked each other’s work, to identify disagreements in assigning labels. In total, they discussed 7% of the user stories, for which disagreement was observed. This reconciliation meeting lasted one hour and 20 minutes. The names of the new categories introduced, which form the app-inspired categories, were homogenized and consolidated in another meeting that lasted around 3 hours. The consolidated set was finally assessed by the 1st author.

To summarize, in both Phase I and Phase II, the researchers categorised each individual user story. In Phase I, the categories could be original ones or novel ones emerging from interview-based elicitation (interview categories). In Phase II, the categories could be original categories, interview categories, or novel ones that emerged during app store-inspired elicitation (app-inspired categories). In Phase I, researchers were also asked to indicate whether a user story in an original category was something novel for that specific category (marked as R) or something already existing in that category (marked as E). This distinction between E and R was not adopted in Phase II, where only the category was specified. At this stage, such a fine-grained comparison would not have been practical, considering all the interview categories plus the original categories, for a total of 25 categories.

3.3 Data analysis

Data analysis is carried out based on the results of the comparison activities, and on a thematic analysis of the selected apps and associated rationales provided by analysts.

To answer RQ1.1 and RQ2.1, we perform a quantitative statistical analysis, as these questions are specifically concerned with measures of differences. We thus consider the following study variables, oriented to give a quantitative representation of the concepts of initial customer ideas, documented requirements, and extended requirements, as well as their differences.

The dependent variables of the first phase of the study are:

-

Conservation rate: rate of produced user stories that include content that can be traced directly to the original set of user stories. More formally, given a set of user stories produced by a certain analyst, let e be the number of user stories that are marked as E by the researchers for any of the original categories, and let T be the total number of user stories for the analyst; the conservation rate c is defined as \(c = e / T\).

-

Refinement rate: rate of produced user stories including content that can be mapped to the original categories, but that provide novel content within one or more of those categories. Formally, the refinement rate r is \(r = en / T\), where en is the number of user stories marked as R.

-

Novelty rate: rate of produced user stories including content that is novel, and cannot be traced to existing categories. Formally, the novelty rate is \(v = n / T\), where n is the number of user stories marked as N. These user stories belong to interview categories.

To understand the rates, consider the following case. An analyst wrote 50 user stories: 12 of them can be mapped to the original categories and do not add content with respect to the original user stories (marked as E); 30 can be mapped to the original categories, e.g., Communication, but also add novel content, e.g., related to social media (marked as R); 8 introduce entirely new categories (marked as N), e.g., Company Information or Usability. In this case, the conservation rate is \(24\%\), the refinement rate is \(60\%\), and the novelty rate is \(16\%\).
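The three rates are simple proportions over the per-story labels. The Python sketch below assumes, as a hypothetical representation of the annotation spreadsheet, that each story carries exactly one label among E, R, and N, and reproduces the worked example above.

```python
def phase1_rates(labels):
    """Return (c, r, v): conservation, refinement and novelty rates computed
    from a list of per-story labels, each one of 'E', 'R' or 'N'."""
    total = len(labels)  # T in the definitions
    if total == 0:
        raise ValueError("analyst produced no user stories")
    c = labels.count("E") / total  # e / T
    r = labels.count("R") / total  # en / T
    v = labels.count("N") / total  # n / T
    return c, r, v


# Worked example from the text: 12 E, 30 R and 8 N out of 50 stories.
labels = ["E"] * 12 + ["R"] * 30 + ["N"] * 8
c, r, v = phase1_rates(labels)
print(f"c={c:.0%}, r={r:.0%}, v={v:.0%}")  # c=24%, r=60%, v=16%
```

Applied to a list of role labels instead of story labels, the same function would yield the role rates \(c_\rho\), \(r_\rho\), and \(v_\rho\).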

For the roles, we have analogous variables, Role Conservation, Refinement and Novelty Rate, defined respectively as: \(c_\rho = e_\rho / T_\rho\), \(r_\rho = en_\rho / T_\rho\), and \(v_\rho = n_\rho / T_\rho\), where:

-

\(T_\rho\) is the number of roles identified by the analyst;

-

\(e_\rho\), \(en_\rho\), and \(n_\rho\) are the numbers of roles that can be traced to the initial roles, that express a refinement of the original ones, and that are new, respectively.

Other dependent variables are those related to the second phase of the study, in which the analysts took inspiration from the app stores to produce additional user stories:

-

App Store Novelty rate: rate of user stories, produced after the analysis of similar apps, that belong to novel categories introduced in this phase by the analyst. Formally, the app store novelty rate is \(v^{App} = a / S\), where a is the number of user stories belonging to entirely novel categories for the specific analyst, and S is the total number of user stories produced by the analyst in this second phase. This rate indicates to what extent the additional activity helped the analysts to identify novel features not explored in the previous phases. Consider, for example, the following scenario. The user stories that analyst X defines after the interviews belong to 4 out of 5 original categories and to the interview category “Usability”. After the analysis of the apps, X defines 20 user stories. Of these, 16 belong to the 4 original categories already used and to “Usability”. The other 4 user stories belong to the remaining original category and to the category “Availability”, which had already been introduced by other analysts in Phase I. In this case, \(v^{App}\) is \(20\%\), as 4 out of 20 user stories belong to categories that X did not explore in Phase I.

-

App Store Refinement rate: this rate represents the rate of user stories that belong to the same categories already used by the analyst. Formally, this rate is \(r^{App} = o / S\), where o indicates the number of user stories belonging to existing categories used by the specific analystFootnote 3. This rate indicates to what extent the additional activity helped in refining the features identified in the previous phase. In the previous example, \(r^{App}\) is \(80\%\), as 16 out of 20 user stories belong to categories already considered by the analyst in Phase I.

Note that in this phase there is no conservation rate, as we are not comparing with existing user stories. Concerning roles, we consider the app store role novelty rate \(v^{App}_\rho = a_\rho / S_\rho\), where \(a_\rho\) is the number of new roles introduced in this phase by the specific analyst and \(S_\rho\) is the total number of roles used by the analyst in the whole set of user stories. We do not consider other rates for roles, as we want to focus solely on the additional profiles introduced after app store-inspired elicitation.
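Under the same one-category-per-story assumption, the Phase II rates and the app store role novelty rate can be sketched in Python as follows. The function names are hypothetical. The split of analyst X's stories across categories is also invented for illustration, since the text only fixes the totals (16 and 4 out of 20).

```python
def app_store_rates(interview_categories, phase2_categories):
    """Return (v_app, r_app) for one analyst.

    v_app = a / S: share of Phase II stories whose category the analyst did not use in Phase I.
    r_app = o / S: share of Phase II stories in categories the analyst already used.
    Assumes each story has exactly one category, so a + o == S."""
    seen = set(interview_categories)
    S = len(phase2_categories)
    if S == 0:
        raise ValueError("analyst produced no Phase II user stories")
    a = sum(1 for cat in phase2_categories if cat not in seen)
    o = S - a
    return a / S, o / S


def app_store_role_novelty(phase1_roles, phase2_roles):
    """v_app_rho = a_rho / S_rho: S_rho counts the distinct roles in the analyst's
    whole set of stories, a_rho those that first appear in Phase II."""
    phase1 = set(phase1_roles)
    all_roles = phase1 | set(phase2_roles)
    if not all_roles:
        raise ValueError("analyst used no roles")
    new_roles = set(phase2_roles) - phase1
    return len(new_roles) / len(all_roles)


# Analyst X from the example: 4 original categories plus "Usability" in Phase I.
phase1_cats = ["Customers", "Facilities", "Personnel", "Camp", "Usability"]
# Assumed split: 16 stories in already-used categories, then 2 + 2 stories
# in "Communication" (the missing original category) and "Availability".
phase2_cats = (["Customers"] * 6 + ["Camp"] * 4 + ["Usability"] * 6
               + ["Communication"] * 2 + ["Availability"] * 2)
v_app, r_app = app_store_rates(phase1_cats, phase2_cats)
print(f"v_app={v_app:.0%}, r_app={r_app:.0%}")  # v_app=20%, r_app=80%
```

For instance, under these assumptions, a hypothetical analyst who used Administrator, Worker, and Parent in Phase I and added Attendee in Phase II would obtain \(v^{App}_\rho = 1/4\).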

Based on these rate variables, we want to identify, for a confidence level of 0.95 (\(\alpha = 0.05\)), the interval within which the rate variable Y lies, i.e., a lower bound \(L_Y\) and an upper bound \(U_Y\). This can be achieved by computing the confidence interval of the mean of the sample of each rate variable Y, or the confidence interval of its median when the data are not normally distributed. To test the normality assumption, we use the Shapiro-Wilk test. When the assumption is met, we apply the one-sample t-test; otherwise, we apply the percentile method.
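The interval-estimation procedure can be sketched in Python with NumPy and SciPy. This is a minimal illustration, not the authors' analysis script. It assumes that “percentile method” refers to a percentile bootstrap of the median. The number of resamples (`n_boot`), the random seed, and the example data are arbitrary, hypothetical choices.

```python
import numpy as np
from scipy import stats


def rate_confidence_interval(rates, alpha=0.05, n_boot=10_000, seed=0):
    """Return (method, lower, upper) for a rate variable Y at level 1 - alpha."""
    y = np.asarray(rates, dtype=float)
    n = y.size
    # Shapiro-Wilk: a p-value above alpha means normality is not rejected.
    _, p_normal = stats.shapiro(y)
    if p_normal > alpha:
        # t-based confidence interval of the mean (the one-sample t-test interval).
        mean = y.mean()
        sem = y.std(ddof=1) / np.sqrt(n)
        t_crit = stats.t.ppf(1 - alpha / 2, df=n - 1)
        return "t", mean - t_crit * sem, mean + t_crit * sem
    # Otherwise: percentile bootstrap confidence interval of the median (assumed method).
    rng = np.random.default_rng(seed)
    resamples = rng.choice(y, size=(n_boot, n), replace=True)
    medians = np.median(resamples, axis=1)
    lower, upper = np.percentile(medians, [100 * alpha / 2, 100 * (1 - alpha / 2)])
    return "percentile", lower, upper


# Hypothetical conservation rates for 30 analysts (not the study's data).
example = np.linspace(0.25, 0.43, 30)
print(rate_confidence_interval(example))
```

Returning the method name alongside the bounds makes it explicit whether an interval refers to the mean or to the median, which matters when reporting the results.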

The labeling procedure and theme extraction performed by the 2nd and 3rd authors (Steps 8 and 9 in Data Collection) produced additional information that can help to answer RQ1.2 and RQ1.3, as well as RQ2.2 and RQ2.3. Indeed, these questions are concerned with the categories of user stories and roles, and their evolution in the different steps.

In particular, we are interested in analyzing the following indicators, with associated analysis.

-

Categories and Roles Frequency: frequency of user stories belonging to each category and role, considered as a proxy to evaluate their relevance.

-

To answer RQ1.2, we compare the frequency in the original user stories with the frequency in the user stories produced in Phase I. This comparison will be performed based on the original categories, and we will use a one-sample t-test, or a Wilcoxon signed-rank test if the normality assumption is not satisfied, with \(\alpha = 0.05\), to evaluate the differences. The goal will be to identify whether there is a difference between the original frequencies and those observed in the data produced after the interviews. Additional statistics will also be provided to complement the statistical tests.

-

To answer RQ2.2, we compare the frequencies in the user stories produced in Phase I with the frequencies in the user stories produced in Phase II by the same analysts. The comparison will be performed considering original, interview, and app-inspired categories and roles. We will use a paired t-test, or a Wilcoxon signed-rank test if the normality assumption is not satisfied, with \(\alpha = 0.05\), to evaluate the differences. The goal is to understand whether there is a difference between the distributions of the different categories in Phase I and in Phase II. This helps to understand whether the focus of the analysts changed between the two phases. Additional statistics will also be provided to complement the statistical tests.

-

Emerging Categories and Roles: specific names and associated frequencies of interview categories and roles (to answer RQ1.3) and app-inspired categories and roles (to answer RQ2.3).
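The test selection described for RQ1.2 and RQ2.2 can be sketched in Python with SciPy. The function names and inputs are hypothetical. Checking normality on the paired differences, rather than on each sample, is an assumption, because the text does not state which quantity is tested.

```python
import numpy as np
from scipy import stats


def compare_with_original(shares, original_share, alpha=0.05):
    """RQ1.2-style test: analysts' shares of one category vs. its share in the
    original user stories (one-sample test)."""
    x = np.asarray(shares, dtype=float)
    _, p_normal = stats.shapiro(x)
    if p_normal > alpha:
        return "one-sample t-test", stats.ttest_1samp(x, popmean=original_share).pvalue
    # One-sample Wilcoxon signed-rank test on the differences from the original value.
    return "Wilcoxon signed-rank", stats.wilcoxon(x - original_share).pvalue


def compare_phases(shares_phase1, shares_phase2, alpha=0.05):
    """RQ2.2-style test: each analyst's share of a category in Phase I vs. Phase II."""
    a = np.asarray(shares_phase1, dtype=float)
    b = np.asarray(shares_phase2, dtype=float)
    # Assumption: normality is checked on the paired differences.
    _, p_normal = stats.shapiro(a - b)
    if p_normal > alpha:
        return "paired t-test", stats.ttest_rel(a, b).pvalue
    return "Wilcoxon signed-rank", stats.wilcoxon(a, b).pvalue


# Hypothetical customer-category shares (%) for 30 analysts vs. the original 64.2%.
shares = np.linspace(18.0, 34.0, 30)
test_name, p_value = compare_with_original(shares, 64.2)
print(test_name, p_value < 0.05)
```

Both functions return the name of the test actually applied, so that the reported statistics can state which test was used for each category, as done in the result tables.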

Given the exploratory nature of the study, we do not explicitly define all the variables involved and all the hypotheses tested throughout the evaluation, as this would suggest a confirmatory study design. Instead, in the following results sections, we focus on presenting multiple statistics that provide evidence of the evolution of requirements throughout the different elicitation phases.

4 RQ1: Interview-based elicitation—execution and results

In this section, we report the results of the activities related to interview-based elicitation, evaluating the confidence intervals for the different rates (RQ1.1), the variations in terms of distributions of categories and roles with respect to initial ideas (RQ1.2), and the novel categories and roles introduced by the analysts (RQ1.3).

4.1 RQ1.1: How large is the difference in terms of documented requirements and roles with respect to initial ideas?

Boxplots of the different rates (cf. Sect. 3.3 for definitions): conservation = conservation rate; refinement = refinement rate; novelty = novelty rate. The non-overlapping notches indicate that the differences between the medians of the rates are significant at a confidence level of 95%. From the figure, we observe that most of the user stories produced are refinements, followed by existing user stories and by novel ones.

Boxplots of the different role rates (cf. Sect. 3.3 for definitions): role cons. = role conservation rate; role ref. = role refinement rate; role nov. = role novelty rate. The non-overlapping notches between role conservation and the other two rates indicate that the differences between the medians are significant at a confidence level of 95%. Instead, the difference between role refinement and role novelty is not significant. From the figure, we also observe that most of the roles belong to the initial set (role cons. is the highest), while novel roles or refinements are less frequent.

In Figs. 6 and 7, we report the values of the different rates for each analyst, while the boxplots in Figs. 4 and 5 give an overview of the distribution of the rates. In general, the highest values are observed for the refinement rate, followed by the conservation rate and by the novelty rate. Conversely, for roles, the conservation rate dominates over the novelty and refinement rates. Looking at Figs. 6 and 7, we see that each rate varies considerably from one analyst to another. This suggests that each analyst produces user stories with different content and thus, depending on the analyst, different systems may be developed. Some analysts lean more towards the refinement of user stories in the existing categories, while others focus on completely novel features, as one can observe, e.g., for analysts 7 and 16. In other cases, e.g., analysts 2 or 12, the elicitation process tends to be more conservative, with less space for creativity.

In Table 1, we answer RQ1.1 by identifying the confidence intervals for each rate variable.

Based on the test results, the following statements can be made, for a confidence level of 95%:

-

The conservation rate c is between 30% and 38%, meaning that between 30% and 38% of the produced user stories (i.e., roughly up to 19 out of 50) can be fully traced to user stories belonging to the initial ideas.

-

The refinement rate r is between 54% and 62%, meaning that between 54% and 62% of the produced user stories (i.e., roughly up to 31 out of 50) are refinements of initial ideas. Specifically, they belong to categories of features already conceived by the customer, but provide novel content.

-

The novelty rate v is between 12% and 20%, meaning that between 12% and 20% of the produced user stories (i.e., roughly up to 10 out of 50) identify entirely novel categories of features, which did not belong to the initial ideas.

-

The role conservation rate \(c_\rho\) is between \(58\%\) and \(72\%\), meaning that between \(58\%\) and \(72\%\) of the roles used in the produced user stories match the initial roles identified by the customer.

-

The role refinement rate \(r_\rho\) is between \(6\%\) and \(17\%\), meaning that between \(6\%\) and \(17\%\) of the roles used in the produced user stories are refinements of the initial roles.

-

The role novelty rate \(v_\rho\) is between \(18\%\) and \(29\%\), meaning that between \(18\%\) and \(29\%\) of the roles are entirely novel with respect to the initial ones.

4.2 RQ1.2: What is the relevance given to the categories of requirements and roles with respect to initial ideas?

To answer RQ1.2, we analyze how the relevance given to each category and each role changes in the analysts’ stories with respect to the original ones. By “relevance”, we mean the fraction of the stories dedicated to a certain category or role. This analysis allows us to identify what is important for the analysts and to reflect on the meaning of these preferences.

4.2.1 Analysis of categories

Through the process described in Sect. 3.2, the researchers identified 5 different categories:

-

Administrative procedure related to customers (labeled as customers): this category includes features such as registration to a camp, creation of new camper and parent profiles, and modification and elimination of profiles.

-

Management of facilities (facilities): this category includes the tracking of the facilities’ status both in terms of usage and maintenance needs and the management of the inventory.

-

Administrative procedure related to personnel (personnel): This category includes features related to assigning tasks to workers and evaluating them. In its more general interpretation, it can also include the ability to create and modify personnel profiles and other administrative needs.

-

Individual camp management (camp): This category includes features such as scheduling activities within a specific camp, managing its participants, and dealing with additional planning details.

-

Communication (communication): This category includes everything related to communication, from one-to-one messaging and broadcasting to specific categories of users, to posting information online and on social media.

Initial Categories Distribution. In the original stories, and thus in the mind of the fictional customer, great relevance is given to the administrative activities on the customer side (i.e., stories belonging to customers), such as “As a camp administrator, I want to be able to add campers so that I can keep track of each individual camper”. In particular, \(64.2\%\) of the stories belong to this category, and the remaining ones are distributed among the other categories as follows: 5.7% belong to facilities, 9.4% to personnel, 7.6% to camp, and 15.1% to communication.

Categories Distribution in the Analysts’ Stories

The category distribution of the analysts’ stories differs from that of the original stories. Indeed, looking at Table 2, we observe that the mean percentage of stories belonging to customers is less than half of the value in the original stories, while the mean for communication is double the original value.

Box plots of the distributions for each category, measured as the percentage of user stories in the category. The black asterisks * represent the percentage in the original set. The figure shows higher interest in communication-related user stories with respect to the original set, and much less interest in customer-related user stories.

The box plots in Fig. 8, in which the black asterisks represent the percentage for each category in the original stories, show that the relevance given by the analysts to facilities, personnel, and camp is comparable with that in the original stories. Instead, for customers and communication there are strong differences. This suggests that the analysts focused their attention on aspects that were not the original focus of their customer. Table 3 reports the statistical tests assessing whether there is a difference between the means of the distributions and the relevance in the original user stories. The tests show that the differences between the observed mean (Obs \(\mu\)) and the expected one (Exp. \(\mu\)) are significant in all cases for \(\alpha = 0.05\). The tests are all one-sample tests: we use the t-test when the normality assumption is fulfilled, and the Wilcoxon signed-rank test in the other cases. It is rather striking that the relevance of the customer category decreases by about 59% in relative terms (i.e., 37.81 percentage points with respect to the original \(64.2\%\)).

4.2.2 Analysis of roles

Similar considerations can be made about the perspective adopted in imagining the system and, thus, about the roles used in the user stories. In the original set of user stories, there are three roles: camp administrator, parent, and camp worker.

Initial Roles Distribution. The majority of stories are dedicated to the camp administrator (66.0% of the user stories), followed by the parents of the participants (24.5%) and the camp worker (9.4%). This distribution also eased the preparation of the fictional customer, who acted as the camp administrator, since most of the available stories looked at the system to be developed from his perspective.

Roles Distribution in the Analysts’ Stories. The analysts only had the chance to talk with the administrator and, nevertheless, 30% of them in their stories were dedicated to other roles, and only 16.67% of them had more than half of their stories focused on the administrator.

Table 4 shows the descriptive statistics for the distribution of the analysts’ user stories among the different roles. It is interesting to observe that the mean for camp workers is much higher than the one for parents. This could be connected to the decreased attention to the category customers in the analysts’ requirements. Notice that, while all the analysts considered the role of camp administrator, two of them did not consider the role of camp workers, and four did not consider the role of parents.

As shown in the box plots in Fig. 9, in which the black asterisks represent the percentage for each role in the original stories, only one analyst, an outlier, focused more on the camp administrator role than the original stories did. Similarly, the relevance given to the parents’ perspective is in general much smaller than in the original stories.

Box plots of the user story distribution for each role, measured as the percentage of user stories that used that role. The asterisks * represent the percentage of user stories for the specific role in the original set. The figure shows higher interest in the role of Workers with respect to the original set, and much less interest in the Administrator role, which is also the customer that has been interviewed.

The impact of new roles is limited (mean of 13.8%). All the analysts considered at least 2 of the original roles, and 80% of them considered all the 3 original roles. However, the relevance given to the perspectives and roles in the analysts’ user stories is considerably different from that in the original ones. This is confirmed by the statistical tests in Table 5, which show that the percentage of the original stories for each role is significantly different from the one observed in our data.

4.3 RQ1.3: What are the emerging categories and roles?

To answer RQ1.3, we analyze the new categories and new roles, their recurrence among analysts, and their weight within the set of stories of the analysts who included them. This analysis provides insight into what is generated by the analysts’ expertise, background, preparation, and analysis.

4.3.1 Analysis of new categories

In their stories, every analyst included new categories, up to a maximum of 8 and with a mean of 3.73. In total, they introduced 20 new categories, reported in Fig. 10. Among them, 4 have been used by a single analyst, but were very specific and could not be mapped onto any other existing or newly created category.

Table 6 reports descriptive statistics on new categories. The most recurrent new category, used by 53% of the analysts, is Security/Privacy. Among the analysts who used it, the share of their user stories in this category varies from 1.79% (one story) to 10% (5 stories). An example of such a story is “As an employee, I want this app to not have access to my personal phone data so that what I have on there stays private”.

The second most recurrent new category is Data Aggregation/Analysis. This category collects all the features that aim at aggregating and analyzing information at company level. An example is “As a business owner, I want to be able to analyze the data that is entered into the system so that I can see trends in the information that I receive.”. The mean usage is 3.9% (2 stories), with a standard deviation of 2.1%, as the relevance given varies from 1.9% to 7.8%.

Notice that there is a fundamental difference between this category and the previous one. “Security/Privacy” includes nonfunctional requirements that many of the analysts decided to investigate during their conversation with the customer, while “Data Aggregation/Analysis” includes functional requirements that describe global operations at a company level.

The third most recurrent new category is Advertisement, used once or twice by almost a third of the analysts. This category includes the functionalities that the analysts identified to advertise the camps to potential guests and their parents. An example is “As a camp administrator, I want to have the ability to advertise scheduled activities so that potential camp attendees and their guardians will know what activities are upcoming”.

Among the other nonfunctional requirements categories that emerged in the analysis are Usability and Portability, which have both been considered by 8 participants (26.7% of the total). An example that belongs to the Usability category is “As an employee, I wish for this app to be simple to use so that our older staffers can still use it”. Notice that, when present, usability receives considerable attention, with an average of 6.9% of the stories. Portability has a lower impact on the stories of the analysts who use it (4.2%). An example in this category is “As a camp administrator, I want users to be able to use the system on all platforms so that no users are excluded from seeing what the campsites have to offer.”.

The functionalities related to publishing and accessing general information about the company (e.g., its contact information) were not present in the original user stories and have been collected in the category Company Information. This category has also been considered by 7 participants.

In synthesis, we observe that new categories emerged for every analyst and some of them are highly predominant both in terms of number of analysts who considered them and in impact on the stories of the analysts who considered them. The new categories are almost equally divided into new functionalities and nonfunctional requirements.

4.3.2 Analysis of new roles

Differently from the case of categories, not all the analysts added new roles to their stories, but a large number did (22 analysts out of 30). Six new roles emerged from the analysis, namely, in order of frequency, attendee (16), visitor (8), user (7)Footnote 4, consultant (3), system administrator (2), and investor (1). Table 7 reports descriptive statistics on new roles (excluding system administrators and investors, which appear just once in the stories of 2 and 1 analysts, respectively). The most frequent among these roles, attendee, refers to the children participating in the camps and thus assumes that they all will have access to the system, which was not possible in the original system’s idea. When used, this role has a high impact, with a mean of 15.8% (standard deviation of 8.4%), meaning that the analysts who introduced this role used it in 15.8% of their stories on average. Notice that the analyst who used it most dedicated 38% of the stories to this role, which was the most frequent role in that analyst’s set. Summarizing, we observe that many analysts consider additional roles with respect to the ones in the original set.

5 RQ2: App store-inspired elicitation—execution and results

In this section, we report the results of the activities related to app store-inspired elicitation, evaluating the confidence intervals for the different rates (RQ2.1), the variations in terms of distributions of categories and roles with respect to the previous phase (RQ2.2), and the novel categories and roles introduced (RQ2.3).

5.1 RQ2.1: How large is the difference in terms of covered requirements categories and roles with respect to the requirements documented after the interview sessions?

Figure 11 reports the relative percentages of the app store novelty and app store refinement rates for each analyst. Again, there are several differences between analysts: some of them (e.g., analysts 2 and 15) include a large percentage of novel categories, while others (e.g., analysts 7 and 9) stick mostly to the original ones. Nevertheless, all analysts included at least some novel categories in their user stories. The app store role novelty rate, shown in Fig. 12, varies even more: some analysts (13, 15, 17) introduce different roles, while the majority (17 subjects out of 30) keep the originally identified ones. Figure 13 reports the boxplots of the rates, further highlighting the high variance of the app store role novelty rate. The non-overlapping notches indicate that the difference between the medians of the rates is significant at a 95% confidence level.
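For readers less familiar with notched boxplots: a common convention (McGill, Tukey and Larsen, 1978) draws the notch at median ± 1.58·IQR/√n, so non-overlapping notches are rough evidence that two medians differ at about the 95% level. The sketch below assumes that convention and Python's default quartile method; the tool used to draw Fig. 13 is not stated here, and the sample rates are invented.

```python
import math
from statistics import median, quantiles


def notch_interval(values: list[float]) -> tuple[float, float]:
    """Notch extent of a boxplot: median +/- 1.58 * IQR / sqrt(n)."""
    q1, _, q3 = quantiles(values, n=4)  # default 'exclusive' quartile method (an assumption)
    half_width = 1.58 * (q3 - q1) / math.sqrt(len(values))
    m = median(values)
    return m - half_width, m + half_width


def notches_overlap(a: list[float], b: list[float]) -> bool:
    """True if the notches of two boxplots overlap (no evidence that the medians differ)."""
    lo_a, hi_a = notch_interval(a)
    lo_b, hi_b = notch_interval(b)
    return lo_a <= hi_b and lo_b <= hi_a


# Invented per-analyst rates for 20 analysts.
novelty = [0.1, 0.2, 0.3, 0.4] * 5
refinement = [0.7, 0.8, 0.9, 0.85] * 5
print(notches_overlap(novelty, refinement))
```

The notch is a visual approximation; a formal test on the paired rates gives a more precise answer.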

Table 8 reports the results of the tests performed to identify upper and lower bounds of the different rates. From the tests, we conclude the following, with a confidence level of 95%:

- The app store novelty rate \(v^{App}\) is between 28% and 42%. This means that up to 42% of the user stories produced after the analysis of similar apps (i.e., roughly up to 8 out of 20) belong to categories that the analyst had not identified in the earlier elicitation phases.
- The app store refinement rate \(r^{App}\) is between 68% and 87%, meaning that the majority of the user stories produced after the analysis of similar apps (i.e., roughly up to 17 out of 20) refine ideas already identified during the previous elicitation activities.
- The app store role novelty rate \(v^{App}_\rho\) is between 8% and 17%, meaning that individual analysts identify few additional roles after app store-inspired elicitation, although their number is not negligible.
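The bounds above can be read as confidence intervals on the mean per-analyst rate. One common way to obtain such bounds, not necessarily the procedure behind Table 8, is a t-based interval. The sketch assumes exactly 30 analysts (hence a hard-coded critical value of 2.045 for 29 degrees of freedom) and uses invented rates; it also shows the conversion behind phrases such as "roughly up to 8 out of 20" (0.42 × 20 = 8.4).

```python
import math
from statistics import mean, stdev

T_975_DF29 = 2.045  # two-sided 95% critical value of Student's t, 29 degrees of freedom


def t_interval_30(rates: list[float]) -> tuple[float, float]:
    """95% t-based confidence interval for the mean rate of exactly 30 analysts."""
    if len(rates) != 30:
        raise ValueError("the hard-coded critical value assumes n = 30")
    half_width = T_975_DF29 * stdev(rates) / math.sqrt(len(rates))
    m = mean(rates)
    return m - half_width, m + half_width


def stories_out_of(rate: float, total: int = 20) -> float:
    """Express a rate as an expected number of stories in a set of `total` stories."""
    return rate * total


# Invented rates for 30 analysts.
rates = [0.3] * 15 + [0.4] * 15
print(t_interval_30(rates))
print(stories_out_of(0.42), stories_out_of(0.87))  # about 8.4 and 17.4
```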

Boxplots of the different app store-related rates (cf. Sect. 3.3 for definitions): app store nov. = app store novelty rate; app store ref. = app store refinement rate; app store role nov. = app store role novelty rate. The figure shows that most of the user stories belong to categories already considered (app store ref. is higher than app store nov.). Furthermore, novel roles are generally limited with respect to those already discovered (app store role nov. is below 0.3).

5.2 RQ2.2: What is the relevance given to the different categories of requirements and roles with respect to the requirements documented after the interview sessions?

To answer this RQ, we first look at the relevance of the categories by group, and then at the variation of individual categories. We then do the same for roles.

5.3 Analysis of categories

To facilitate the analysis, we group the categories into three groups: Original, i.e., the set of categories that were part of the initial ideas; Interview-based, i.e., the categories that emerged after interview-based elicitation; and App store-inspired, i.e., the categories that emerged after app store-inspired elicitation. Figure 14 shows the boxplots of the distribution of the three category groups (abbreviated as Original-CAT, Interview-CAT, and App-CAT) in the two phases, identified as Ph-I and Ph-II. The plot clearly shows that the original categories received substantially less attention in Ph-II, whereas more relevance was given to categories in the Interview-based group. Overall, the novel categories of the App group received less attention than the others, but a non-negligible share of user stories (mean 14%) was still dedicated to them.

Boxplots that represent the fraction of user stories in each category group for the different phases, identified as Ph-I and Ph-II. The category groups are: Original-CAT = initial set of categories; Interview-CAT = new categories introduced in the interview phase; App-CAT = new categories introduced after the app store-inspired phase. The boxplots show that the original categories still dominate in Phase II. However, they receive less attention than in Phase I (Original-CAT Ph-II is lower than Original-CAT Ph-I), while attention to categories introduced in the interview phase increases (Interview-CAT Ph-II is higher than Interview-CAT Ph-I). Categories introduced in Phase II (i.e., App-CAT Ph-II) are the least frequent in Phase II and, obviously, do not exist in Phase I.

The statistical tests reported in Table 9 show that the differences between the means of Original-CAT Ph-I and Original-CAT Ph-II, and of Interview-CAT Ph-I and Interview-CAT Ph-II, are statistically significant for \(\alpha = 0.05\). Similarly, the difference from zero of the App group is also statistically significant. Therefore, we can conclude that the analysts give relevance to different category groups in the different elicitation phases. More specifically, we see a mean decrease of 0.21 for the Original group, which is 23% of the mean in Phase I (0.92), and a mean increase of 0.14 for the Interview group, which is 45% of the mean in Phase II (0.31). Furthermore, 14% of the user stories in Phase II cover novel categories (App group, mean = 0.14).
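The relative changes quoted above are simple ratios (for the Original group, 0.21 / 0.92 ≈ 0.23). The sketch below shows how such per-group figures could be derived from per-analyst fractions, together with a paired t statistic as one possible significance check; the test actually reported in Table 9 may differ, and the function name and input values are hypothetical.

```python
import math
from statistics import mean, stdev

T_975_DF29 = 2.045  # two-sided 5% critical value of Student's t, 29 degrees of freedom


def paired_change(ph1: list[float], ph2: list[float]) -> dict[str, float]:
    """Per-group change between phases, from one fraction per analyst and phase."""
    if len(ph1) != len(ph2) or len(ph1) < 2:
        raise ValueError("need matching Phase I / Phase II values for at least 2 analysts")
    diffs = [b - a for a, b in zip(ph1, ph2)]  # Phase II minus Phase I
    d_mean = mean(diffs)
    # Raises ZeroDivisionError if all differences are identical (zero spread).
    t_stat = d_mean / (stdev(diffs) / math.sqrt(len(diffs)))
    return {"mean_diff": d_mean, "relative_to_ph1": d_mean / mean(ph1), "t": t_stat}


# Invented fractions of stories in the Original group for 30 analysts.
ph1 = [0.90] * 15 + [0.95] * 15
ph2 = [0.70] * 15 + [0.72] * 15
res = paired_change(ph1, ph2)
significant = len(ph1) == 30 and abs(res["t"]) > T_975_DF29
print(res, significant)
```

A paired design is used because each analyst contributes one value per phase; a nonparametric alternative (e.g., a signed-rank test) would be a reasonable substitute for non-normal differences.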

To look into individual categories, we consider, for each analyst, their individual rate of user stories in a certain category during Phase I and during Phase II. We then compute the (non-relative) difference between the Phase II and Phase I rates, to check whether the relevance of that category changed. The boxplots of the differences are reported in Fig. 15. Several categories have a limited number of data points, indicating that only some of the analysts focused on certain categories in either phase (e.g., Filtering Feedback or Legal Reqs). On the other hand, interesting variations occur for some categories with sufficient data. In particular, most of the Original categories, namely Customer, Facilities, Workers, and Camp, tend to decrease, whereas the relevance of Communication increases. Among the other categories, notable increases are observed for Search/Filter and Usability, while the relevance of Security/Privacy and Advertisement decreases.
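A minimal sketch of the per-analyst, per-category difference described above, assuming (for illustration only) that each user story is labelled with exactly one category. In this sketch, a category yields a data point only for analysts who used it in at least one phase, and its share is 0 in the phase where it is absent. The category labels come from the text; the story lists are invented.

```python
from collections import Counter


def category_shares(story_categories: list[str]) -> dict[str, float]:
    """Fraction of one analyst's stories in each category (one label per story)."""
    counts = Counter(story_categories)
    return {cat: n / len(story_categories) for cat, n in counts.items()}


def phase_differences(ph1: list[str], ph2: list[str]) -> dict[str, float]:
    """Phase II share minus Phase I share, for every category used in either phase.

    Negative values mean the category lost relevance for this analyst.
    """
    s1, s2 = category_shares(ph1), category_shares(ph2)
    return {cat: s2.get(cat, 0.0) - s1.get(cat, 0.0) for cat in s1.keys() | s2.keys()}


# Invented stories of a single analyst.
ph1 = ["Camp", "Camp", "Customer", "Communication"]
ph2 = ["Camp", "Communication", "Communication", "Search/Filter"]
print(phase_differences(ph1, ph2))
```

Collecting these dictionaries across analysts and grouping the values by category gives one boxplot per category.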

Figure 16 provides a different perspective, indicating the absolute number of analysts focusing on a certain category in Phase I and in Phase II. The diagram shows that more analysts dedicated attention to features that were only marginally considered before, such as Live-chat, Settings, and Availability, while some topics, e.g., Ticket System or Filtering Feedback, were abandoned by some analysts in this phase, either because they were considered completely addressed by the previously written requirements, or because these aspects did not appear relevant in the retrieved apps.

Boxplots of the fraction of user stories after interviews (Ph-I) and after app store-inspired elicitation (Ph-II), considering different role groups, i.e., those in the original set (Original-Roles), and those identified after interviews (Interview-Roles). Original-Roles-Ph-I/Original-Roles-Ph-II = fraction of user stories using roles in the original set, in Ph-I and Ph-II, respectively; Interview-Roles-Ph-I/Interview-Roles-Ph-II = fraction of user stories using roles introduced after interviews, in Ph-I and Ph-II, respectively. In a few cases, the fraction is higher than 1.0 because certain user stories were dedicated to more than one role. The figure indicates that, in both Phase I and Phase II, more user stories are dedicated to original roles than to new roles identified after interviews. Furthermore, in Phase II, user stories dedicated to original roles decrease in favor of those dedicated to roles identified after interviews.

5.4 Analysis of roles

Concerning roles, Fig. 17 reports, for each role group, the rate of user stories dedicated to it in Phase I and Phase II. The grouping is analogous to the one already used for user story categories. We do not report the boxplot for novel roles introduced after app store-inspired elicitation, since only three subjects out of 30 introduced novel roles. The figure shows that the relevance of the original roles decreased in favor of the roles identified after interview-based elicitation. The statistical tests reported in Table 10 show that the difference is significant for \(\alpha = 0.05\) for the Interview roles. Therefore, there is a statistically significant variation in the relevance given to the novel roles already identified in the interview phase. More specifically, the share of user stories dedicated to interview roles increases by 100% (the mean difference is 0.14, and the value in Phase I was 0.14). Conversely, the mean decrease of 0.12 in user stories dedicated to roles of the original set is not significant (p-value = 0.07).
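Role-group fractions can exceed 1.0 when a story is dedicated to more than one role. One way to obtain such values, assumed here for illustration rather than taken from the study, is to count the role mentions of a group and divide by the number of stories; the 100% increase reported for interview roles is then the mean difference divided by the Phase I mean (0.14 / 0.14 = 1.0). The composition of the role group and the stories below are hypothetical.

```python
def role_group_fraction(story_roles: list[set[str]], group: set[str]) -> float:
    """Role mentions from `group` divided by the number of stories.

    A story naming two roles of the group is counted twice, so the result can exceed 1.0.
    """
    if not story_roles:
        raise ValueError("no stories")
    mentions = sum(len(roles & group) for roles in story_roles)
    return mentions / len(story_roles)


original_roles = {"Admin", "Worker", "Parent"}  # hypothetical composition of the original set
stories = [{"Admin", "Parent"}, {"Parent"}, {"Admin", "Worker"}, {"Attendee"}]
print(role_group_fraction(stories, original_roles))  # (2 + 1 + 2 + 0) / 4
```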

Concerning the relevance of specific roles, we can qualitatively observe some variations in Fig. 18, which reports the distribution of the difference between Phase II and Phase I in the fraction of user stories belonging to a certain role. We consider only the most frequent roles. The Admin and Worker roles decreased, while some increase is observed for the other roles. In particular, the generic role of User, intended as app user, received more attention, together with Attendee and Parent. Overall, the app store analysis led the analysts to focus more on the user side of the business than on the internal view, represented by the Admin and Worker roles.

Boxplots of the difference between the fraction of user stories after app store-inspired elicitation (Phase II) and after interviews (Phase I), considering the different roles. The plot reports only the most common roles. Boxplots below 0 (Admin, Worker) indicate a decrease in relevance for that role in Phase II; boxplots above 0 (Attendee, User) indicate an increase in relevance, as a greater fraction of user stories is dedicated to these roles.

5.5 RQ2.3: What are the emerging categories and roles?

After app store-driven requirements elicitation, 80% of the analysts introduced stories that did not belong to any of the previously identified categories, and we grouped these stories into 16 novel categories. In Fig. 19, we report the histogram of these categories, considering the number of analysts who wrote at least one user story in each category.
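The histogram counts analysts, not stories: an analyst contributes at most once to a category, however many stories they wrote in it. The following minimal sketch shows this counting, together with the share of analysts who wrote at least one story in a novel category (80% in the study). It assumes each analyst's Phase II stories are available as a list of category labels; the data and the function name are invented for illustration.

```python
from collections import Counter


def analysts_per_category(
    labels_by_analyst: dict[str, list[str]], novel: set[str]
) -> tuple[Counter, float]:
    """Count analysts with at least one story in each novel category, and return
    the share of analysts with at least one story in any novel category."""
    per_category: Counter = Counter()
    with_novel = 0
    for labels in labels_by_analyst.values():
        used = set(labels) & novel  # set(): each analyst counts once per category
        per_category.update(used)
        with_novel += bool(used)
    return per_category, with_novel / len(labels_by_analyst)


# Invented Phase II labels for four analysts.
novel = {"Maps and Directions", "Payment/Discounts", "Personalization"}
data = {
    "A1": ["Maps and Directions", "Maps and Directions", "Camp"],
    "A2": ["Payment/Discounts", "Maps and Directions"],
    "A3": ["Camp", "Communication"],
    "A4": ["Personalization"],
}
counts, share = analysts_per_category(data, novel)
print(counts.most_common(), share)
```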

The most frequent category, used by almost half of the analysts (13 out of 30), is Maps and Directions, which contains all the stories related to identifying positions on a map (e.g., of a camp, an event, or an individual) or to getting directions. An example is “As a visitor, I want to be able to look for the event location using the application so that I do not have to drive through the campground looking for the best site available.”

Other frequent new categories are Payment/Discounts and Training/Tutorials, each used by 20% of the analysts. Both introduce new functionalities in the system: Payment/Discounts groups functionalities related to acquiring and using coupons, or understanding the costs of camps and activities (e.g., “As a site user, I can add promotion code for the camp so that I can get discount on camp event”); Training/Tutorials includes educational functionalities to train both the staff and the participants on the use of the app and on camp activities (e.g., “As a site user, I should see tutorial guide on how to book for app and how to use the system so it will help me to understand how to find camp events and make booking.”).

Finally, 5 analysts included stories that introduce the possibility to personalize the user experience in different ways. We grouped these functionalities under Personalization. The descriptive statistics of these four most frequent categories are reported in Table 11.

Concerning roles, we have already observed that the role novelty rate is not negligible, meaning that individual analysts considered roles that were new to them. However, most of these roles had already been introduced by other analysts in Phase I, and the number of entirely novel roles is limited to four (Outdoorsman, Camping enthusiast, Developer, and Tourist). Furthermore, only three analysts identified novel roles. Given these results, we cannot draw meaningful conclusions about the qualitative contribution of app store-driven elicitation to the discovery of novel roles.

6 Discussion

The analysis of the data collected in our study suggests interesting patterns in the analysts’ behaviour that empirically confirm the intuition that requirements are not only elicited but co-created through the interview process. Requirements are then substantially extended when the analyst looks at similar products on the market. Overall, our analysis shows that the original idea evolves throughout the elicitation process. While this evolution might be partially driven by the three Cs [31], our data show that it does not only go in the direction of completing the existing information, but often changes the focus of the requirements and the roles, adds new functionalities that were not part of the initial ideas, and introduces nonfunctional requirements. In the following, we answer the RQs and provide observations in relation to existing literature. Our study contributes to theory in RE and is mainly oriented to researchers. We use the \(\Rightarrow\) symbol to highlight take-away messages intended to trigger future studies by the RE community.

RQ1.1