Abstract

Requirements engineering has traditionally been stakeholder-driven. Beyond the domain knowledge that stakeholders provide, widespread digitalization has generated vast amounts of data (Big Data) from heterogeneous digital sources such as the Internet of Things (IoT), mobile devices, and social networks. The digital transformation has spawned new opportunities to consider such data as potentially valuable sources of requirements, although they are not intentionally created for requirements elicitation. A challenge to data-driven requirements engineering concerns the lack of methods to facilitate seamless and autonomous requirements elicitation from such dynamic and unintended digital sources. Processing such data effectively, so that organizations can fully exploit them, also poses numerous challenges. This article, thus, reviews the current state-of-the-art approaches to data-driven requirements elicitation from dynamic data sources and identifies research gaps. We obtained 1848 hits when searching six electronic databases. Through a two-level screening and a complementary forward and backward reference search, 68 papers were selected for final analysis. The results reveal that existing automated requirements elicitation primarily focuses on utilizing human-sourced data, especially online reviews, as requirements sources, and on supervised machine learning for data processing. The outcomes of automated requirements elicitation are often limited to the identification and classification of requirements-related information or the identification of features, without eliciting requirements in a ready-to-use form. This article highlights the need for developing methods to leverage process-mediated and machine-generated data for requirements elicitation and for addressing the issues related to the variety, velocity, and volume of Big Data for efficient and effective software development and evolution.

Introduction

Requirements elicitation is one of the most critical activities in requirements engineering, which, in turn, is a major determinant of successful development of information systems [1]. In conventional requirements engineering, requirements are elicited from domain knowledge obtained from stakeholders, relying primarily on qualitative data collection methods (e.g., interviews, workshops, and focus group discussions) [2]. The ongoing digitalization of organizations and society at large—as seen, for instance, by the proliferation of e-commerce and the advent of IoT—has led to an unprecedented and increasing amount of high-velocity and heterogeneous data, which is often referred to as Big Data [3].

The digital transformation has spawned new opportunities to consider this type of dynamic data from digital sources as potentially valuable sources of requirements, in addition to domain knowledge. Harnessing both traditional and new data sources in a complementary fashion may help improve the quality of existing or facilitate the development of new software systems. Nevertheless, conventional elicitation techniques are often time-consuming and not sufficiently scalable for processing such fast-growing data or capable of considering stakeholder groups that are becoming increasingly large and global. This highlights the need for a data-driven approach to support continuous and automated requirements engineering from ever-growing amounts of data.

There have been numerous efforts to automate requirements elicitation from static data, i.e., data that are generated with a relatively low velocity and rarely updated. These efforts can be grouped according to the following three aims: (1) eliciting requirements from static domain knowledge (e.g., documents written in natural languages [4, 5], ontologies [6, 7], and various types of models, e.g., business process models [8], UML use cases and sequence diagrams [9]), (2) performing specific requirements engineering activities based on requirements that have been already elicited (e.g., requirements prioritization [10], classification of natural language requirements [11], management of requirements traceability [12], requirements validation [13], generation of a conceptual model from natural language requirements [14]), or (3) developing tools to enhance stakeholders’ ability to perform requirements engineering activities based on static domain knowledge or existing requirements (e.g., tool-support for collaborative requirements prioritization [15] and requirements negotiation with rule-based reasoning [16]).

Several systematic reviews have been conducted on automated requirements elicitation from static domain knowledge. Meth et al. conducted a systematic review on tool support for automated requirements elicitation from domain documents written in natural language, where they analyzed and categorized the identified studies according to an analytical framework which consists of tool categories, technological concepts, and evaluation approaches [17]. Nicolás and Toval conducted a systematic review of the methods and techniques for transforming domain models (e.g., business models, UML models, and user interface models), use cases, scenarios, and user stories into textual requirements [18]. In both of these reviews, the requirements sources contained static domain knowledge.

Much less focus has been placed on eliciting requirements from dynamic data and from data that were not intentionally collected for the purpose of requirements elicitation. There are four main advantages to focusing on dynamic data from such “unintended” digital sources. First, dynamic data-driven requirements engineering facilitates secondary use of data, which eliminates the need for collecting data specifically for requirements engineering, in turn enhancing scalability. Second, unintended digital sources can include data relevant for new system requirements that otherwise would not be discovered, since utilizing such data sources allows for the collection of data from larger, global stakeholder groups that are beyond the reach of an organization relying on traditional elicitation methods [19]. Including such requirements, which a current software system does not support, can bring business value in the form of improved customer satisfaction, cost and time reduction, and optimized operations [20]. Third, focusing on dynamic data allows for capturing up-to-date user requirements, which in turn enables timely and effective operational decision making. Finally, dynamic data from unintended digital sources are machine-readable, which facilitates automated and continuous requirements engineering. A fitting requirements elicitation approach provides new opportunities and competitive advantages in a fast-growing market by extracting real-time business insights and knowledge from a variety of digital sources.

Crowd-based requirements engineering (CrowdRE) is a good example of an approach that takes advantage of dynamic data from unintended digital sources. A primary focus of CrowdRE has been on eliciting requirements from explicit user feedback from crowd users (e.g., app reviews and data from social media) by applying various techniques based on machine learning and natural language processing [21]. Genc-Nayebi and Abran conducted a systematic review on opinion mining from mobile app store user reviews to identify existing solutions and challenges for mining app reviews, as well as to propose future research directions [22]. They focused on specific data-mining techniques used for review analysis, domain adaptation methods, evaluation criteria to assess the usefulness and helpfulness of the reviews, techniques for filtering out spam reviews, and application features. Martin et al. [26] surveyed studies that performed app store analysis to extract both technical and non-technical attributes for software engineering. Tavakoli et al. [27] conducted a systematic review on techniques and tools for extracting useful software development information through mobile app review mining. The aforementioned literature reviews focus only on app reviews, leaving out other types of human-sourced data that are potentially useful as requirements sources. There is also a growing interest in embracing contextual and usage data of crowd users (i.e., implicit user feedback) for requirements elicitation. This systematic review, thus, broadens the scope of previous literature reviews by considering more diverse data sources than merely app reviews for requirements elicitation.

Another relevant approach to data-driven requirements engineering is the application of process mining capabilities for requirements engineering. Process mining is an evidence-based approach to infer valuable process-related insights primarily from event logs, discovered models, and pre-defined process models. Process mining can be divided into three types: process discovery, conformance checking, and process enhancement [23]. Ghasemi and Amyot performed a systematic review on goal-oriented process modeling in which the selected studies were categorized into three areas: (1) goal modeling and requirements elicitation, (2) intention mining (i.e., the discovery of intentional process models going beyond mere activity process models), and (3) key performance indicators (i.e., means for monitoring goals) [23]. Their findings indicate that the amount of research on goal-oriented process mining is still limited. In addition to explicit and implicit user feedback, as well as event logs and process models, there may be more opportunities to leverage a broader range of dynamic data sources for requirements engineering, such as sensor readings.

Zowghi and Coulin [24] performed a comprehensive survey on techniques, approaches, and tools used for requirements elicitation. However, their work exclusively focused on conventional, stakeholder-driven requirements elicitation methods. Our study instead investigates data-driven requirements elicitation. More recently, Arruda and Madhavji [25] systematically reviewed the literature on requirements engineering to develop Big Data applications. They identified the process and type of requirements needed for developing Big Data applications, identified challenges associated with requirements engineering in the context of Big Data applications, discussed the available requirements engineering solutions for the development of Big Data applications, and proposed future research directions. This study differs from their work because we studied methods to elicit requirements from Big Data rather than methods to elicit requirements for Big Data applications.

To our knowledge, no systematic review has been performed with an explicit focus on automated requirements elicitation for information systems from three types of dynamic data sources: human-sourced data sources, process-mediated data sources, and machine-generated data sources. The aim of this study is, therefore, to perform a comprehensive and systematic review of the research literature on existing state-of-the-art methods for facilitating automatic requirements elicitation for information systems driven by dynamic data from unintended digital sources.

This review may help requirements engineers and researchers understand the existing data-driven requirements elicitation techniques and the gaps that need to be addressed to facilitate data-driven requirements elicitation. Those insights may provide a basis for further development of algorithms and methods to leverage the increasing availability of Big Data as requirements sources.

Definitions and Scope

In this study, dynamic data are defined as raw data available in a digital form that change frequently and have not already been analyzed or aggregated. Dynamic data include but are not limited to Big Data, which in itself is challenging to define [28]. In addition to Big Data, dynamic data also include data that do not strictly meet the 4 Vs of Big Data (i.e., Volume, Variety, Veracity, and Velocity) but are still likely to contain relevant requirements-related information. Domain knowledge includes, for example, intellectual property, business documents, existing system specifications, goals, standards, conferences, and knowledge from customers or external providers.

This study excludes static domain knowledge that is less frequently created or modified and has been the primary focus of existing automated requirements engineering. Unintended digital sources are defined as sources of data generated via digital technologies that are unintended with respect to requirements elicitation. Thus, dynamic data from unintended digital sources are the digital data pulled from data sources that are created/modified frequently without the intention of eliciting requirements.

Of note is that the two terms “dynamic data” and “unintended digital source” together define the scope of this systematic review. For example, although domain documents are often created without the intention of performing requirements engineering, they are not considered to be dynamic data and are, therefore, outside the scope of this study.

Dynamic data from unintended digital sources extend the notion of explicit and implicit user feedback defined by Morales-Ramirez et al. [29]. In their study, user feedback is considered as “a reaction of users, which roots in their perceived quality of experience”, which assumes the existence of a specific user. However, many devices collect Big Data without interacting with users, such as environmental IoT sensors that measure temperature, humidity, and pollution level. Since we foresee the possibility of eliciting requirements from such data sources, we decided to use a term other than “implicit user feedback”. To categorize the sources of data, we used human-sourced, process-mediated, and machine-generated data, following Firmani et al. [30].

Research Questions

To achieve the aim of the study, we formulated the main research question as follows: how can requirements elicitation from dynamic data be supported through automation? The main research question has been further divided into the following sub-research questions:

- RQ1: What types of dynamic data are used for automated requirements elicitation?
  - We focus on describing the sources of the data, but also study whether there have been attempts to integrate multiple types of data sources and whether domain knowledge has been used in addition to dynamic data.
- RQ2: What types of techniques and technologies are used for automating requirements elicitation?
  - We are interested in learning which underlying techniques and technologies are used in the proposed methods, as well as how they are put together and evaluated.
- RQ3: What are the outcomes of automated requirements elicitation?
  - We assess how far the proposed methods go in automating requirements elicitation, the form of the outputs generated by the data-driven elicitation method, and what types of requirements are elicited.

This systematic review will advance scientific knowledge on data-driven requirements engineering for continuous system development and evolution by (1) providing a holistic analysis of the state-of-the-art methods that support automatic requirements elicitation from dynamic data, (2) identifying associated research gaps, and (3) providing directions for future research. The paper is structured as follows: the second section presents the research methods used in our study; the third section presents an overview of the selected studies and the results based on our analytical framework; the fourth section provides a detailed analysis and discussion of each component of the analytical framework; the fifth section describes potential threats to validity; finally, the last section concludes the paper and suggests directions for future work.

Methods

A systematic literature review aims to answer a specific research question using systematic methods to consolidate all relevant evidence that meets pre-defined eligibility criteria [3]. It consists of three main phases: planning, conducting, and reporting the review. The main activities of the planning phase are problem formulation and protocol development. Before the actual review process started, we formulated research questions. The study protocol was then developed, conforming to the guidelines for systematic literature reviews proposed by Kitchenham and Charters [31]. The protocol included the following contents: background, the aim of the study, research questions, selection criteria, data sources (i.e., electronic databases), search strategy, data collection, data synthesis, and the timeline of the study. The protocol was approved by the research group, which consists of the first author and two research experts: one expert in requirements engineering and one expert in data science. The actual review process starts during the conducting phase. This phase includes the following activities: identifying potentially eligible studies based on title, abstract, and keywords, selecting eligible studies through full-text screening, extracting and synthesizing data that are relevant to answering the defined research question(s), performing a holistic analysis, and interpreting the findings. During the reporting phase, the synthesized findings are documented and disseminated through an appropriate channel.

Selection Criteria

Inclusion and exclusion criteria were developed to capture the most relevant articles for answering our research questions.

Inclusion Criteria

We included articles that met all the following inclusion criteria:

- Requirements elicitation is supported through automation.
- Requirements are elicited from digital and dynamic data sources.
- Digital and dynamic data sources are created without intention with respect to requirements engineering.
- Changes in requirements should involve the elicitation of new requirements.
- The article has been peer-reviewed.
- The full text of the article is written in English.

Exclusion Criteria

We excluded articles that met at least one of the following exclusion criteria:

- Requirements are elicited solely from non-dynamic data.
- The proposed method is performed based on existing requirements.
- The study merely presents the proposed artifact without any, or without sufficient, description of evaluation methods.
- The article is a review paper, keynote talk, or abstract of conference proceedings.

Data Sources

We performed a comprehensive search in six electronic databases (Table 1). In the first iteration, we searched Scopus, Web of Science, ACM Digital Library, and IEEE Xplore. Those databases were selected because they together cover the top ten information systems journals and conferences [17]. In addition, EBSCOhost and ProQuest, which are two major databases in the field of information systems, were searched to maximize the coverage of relevant publications, in line with a previous systematic review in the area [17]. ProQuest and EBSCOhost include both peer-reviewed and non-peer-reviewed articles. We, however, considered only peer-reviewed articles to be consistent with our inclusion criteria. The differences in the search field across databases are due to the different search functionalities of each electronic database.

Search Strategy

A comprehensive search strategy was developed in consultation with a librarian and the two co-authors who are experts in the fields of requirements engineering and data science, respectively. First, we extracted three key components from the first research question: requirements elicitation, automation, and Big Data sources and related analytics (Table 2). These components formed the basis for creating a logical search string. Big Data can refer either to data sources or to analytics/data-driven techniques to process Big Data. The term is also closely related to data-mining/machine-learning/data science/artificial intelligence techniques. We thus included keywords and synonyms that cover both Big Data sources and related analytics.

To construct a search string, keywords and synonyms that were grouped in the same component were connected by OR-operators, while the key components were connected by AND-operators, which means that at least one keyword from each component must be present. The search string was adapted to the specific syntax of each database’s search function. The search string was iteratively tested and refined through trial searches to optimize the search results.
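The construction rule described above (OR within a component, AND between components) can be illustrated with a minimal Python sketch. The function name `build_search_string` and the example keywords are hypothetical and chosen only for illustration; the actual keywords are those listed in Table 2, and each database requires its own syntax adaptation.

```python
from typing import Dict, List


def build_search_string(components: Dict[str, List[str]]) -> str:
    """Join synonyms with OR inside a component and components with AND."""
    groups = []
    for keywords in components.values():
        # Quote multi-word keywords so they are treated as exact phrases.
        quoted = [f'"{kw}"' if " " in kw else kw for kw in keywords]
        groups.append("(" + " OR ".join(quoted) + ")")
    return " AND ".join(groups)


# Hypothetical keywords for the three key components (not the study's actual list).
example_components = {
    "requirements elicitation": ["requirements elicitation", "requirements discovery"],
    "automation": ["automated", "automatic"],
    "big data sources and analytics": ["big data", "machine learning"],
}

query = build_search_string(example_components)
print(query)
```

Because every component contributes one parenthesized OR-group, a record matches only if it contains at least one keyword from each component.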

Study Selection

The entire search was performed by the first author (SL). Before starting the review process, we tested a small number of articles to establish agreement and consistency among reviewers. We then conducted a pilot study in which three reviewers independently assessed 50 randomly selected papers to estimate the sample size needed to ensure a substantial level of agreement (i.e., a Kappa of 0.61–0.80) based on the Landis and Koch benchmark scale for Kappa [32]. Each paper was screened by assessing its title, abstract, and keywords against our selection criteria (level 1 screening). During level 1 screening, articles were classified into one of three categories: (1) included, (2) excluded, or (3) uncertain. Studies that fell into categories 1 and 3 proceeded to full-text screening (level 2 screening), since the aim of the level 1 screening was to identify articles that were potentially relevant or that lacked sufficient information to be excluded.

After each reviewer had assessed 50 publications, we computed Fleiss’ Kappa to measure the inter-rater reliability. We did not, however, discuss the results of each reviewer’s assessment. Fleiss’ Kappa was used because there were more than two reviewers. It was computed to be 0.786. Sample size estimation was performed following the confidence interval approach suggested by Rotondi and Donner [33]. Using 0.786 as the point estimate of Kappa and 0.61 as the expected lower bound, the required minimum sample size was estimated to be 139. The value of 0.61 was used as the lower bound of Kappa because it is the lower limit of “substantial” inter-rater reliability on the Landis and Koch benchmark scale [32], which is what we had aimed for. Since we had achieved a substantial level of agreement and had not discussed the results, so as not to influence each other’s decisions, each of the three reviewers independently continued to screen the remaining 89 randomly chosen publications (139 minus the 50 already assessed) based on titles, abstracts, and keywords (level 1 screening). The overall Fleiss’ Kappa for the 139 articles was 0.850, which indicates “almost perfect” agreement according to the benchmark scale proposed by Landis and Koch [32]. Since we were able to achieve a very high inter-rater reliability, the rest of the level 1 screening was conducted by a single reviewer (SL). However, all three reviewers discussed and reached a consensus on the articles that SL classified as uncertain or could not decide on with sufficient confidence.
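For readers unfamiliar with the agreement statistic used above, the following is a minimal sketch of the standard Fleiss' Kappa computation together with the Landis and Koch labels. The function names and the toy rating table are assumptions made for illustration; the reviewers' raw ratings are not reported in the text, so the sketch does not reproduce the values 0.786 or 0.850. The degenerate case in which all ratings fall into a single category (chance agreement of 1) is not handled.

```python
from typing import Sequence


def fleiss_kappa(counts: Sequence[Sequence[int]]) -> float:
    """Fleiss' Kappa from a papers x categories table of rating counts.

    counts[i][j] is the number of reviewers who assigned paper i to
    category j; every row must sum to the same number of reviewers.
    """
    n_items = len(counts)
    n_raters = sum(counts[0])
    if any(sum(row) != n_raters for row in counts):
        raise ValueError("every paper must be rated by the same number of reviewers")

    n_categories = len(counts[0])
    # Share of all ratings that fall into each category.
    p_j = [
        sum(row[j] for row in counts) / (n_items * n_raters)
        for j in range(n_categories)
    ]
    # Per-paper agreement: share of reviewer pairs that agree on the paper.
    p_i = [
        (sum(c * c for c in row) - n_raters) / (n_raters * (n_raters - 1))
        for row in counts
    ]
    p_bar = sum(p_i) / n_items     # mean observed agreement
    p_e = sum(p * p for p in p_j)  # agreement expected by chance
    return (p_bar - p_e) / (1 - p_e)


def landis_koch_label(kappa: float) -> str:
    """Map a Kappa value to the Landis and Koch benchmark scale."""
    if kappa < 0:
        return "poor"
    if kappa <= 0.20:
        return "slight"
    if kappa <= 0.40:
        return "fair"
    if kappa <= 0.60:
        return "moderate"
    if kappa <= 0.80:
        return "substantial"
    return "almost perfect"


# Toy table (hypothetical): 4 papers, 3 reviewers,
# columns = included, excluded, uncertain.
toy_counts = [[3, 0, 0], [0, 3, 0], [2, 1, 0], [1, 2, 0]]
kappa = fleiss_kappa(toy_counts)
print(round(kappa, 3), landis_koch_label(kappa))
```

Applied to the values reported in the text, `landis_koch_label(0.786)` falls in the "substantial" band and `landis_koch_label(0.850)` in the "almost perfect" band.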

Before conducting the level 2 screening, we discussed which information should be extracted from the eligible articles. Based on the discussion, we developed a preliminary analytical framework to standardize the information to be extracted. We tested this framework on a small number of full-text papers and refined the data extraction form accordingly. In the level 2 screening, at least two authors reviewed the full text of each paper identified in the level 1 screening to assess its eligibility for the final analysis. In addition to the keyword-based search of the databases, we also performed forward and backward reference searching for all the included studies. SL extracted data from all the eligible studies, while AH and JZ each extracted data from half of them. This was done to ensure that the data extracted by SL could be cross-checked by at least one of the two reviewers with more experience and knowledge. Any disagreements between the two reviewers were referred to the third reviewer and resolved by consensus.

To update the search results, an additional search was performed on July 3, 2020, using the same search query and applying the same two-level screening process (i.e., keyword-based search followed by full-text screening). While filtering can be performed by specifying the publication date and year in some databases, in other databases the search can only be filtered by publication year. Thus, we manually excluded the studies that had been published before the date of the initial search. However, we did not perform a backward and forward reference search during the updating phase. We then applied the same selection criteria used for the initial search to identify the relevant studies.

Analytical Framework and Data Collection

After considering the selected articles, we iteratively developed and refined an analytical framework, which covers both design and evaluation perspectives, to answer our research questions. The framework consists of three components: types of dynamic data sources used for automated requirements elicitation, techniques and technologies used for automated requirements elicitation, and the outcomes of automated requirements elicitation. Table 3 summarizes the extracted data that are associated with each component of the analytical framework. Each component of the analytical framework is described in detail below.

Types of Dynamic Data Sources Used for Automated Requirements Elicitation

To answer RQ1, we extracted the following information: (1) types of dynamic data sources, (2) types of dynamic data, (3) integration of data sources, (4) relation of dynamic data to a given organization, and (5) additional domain knowledge that is used to elicit system requirements.

Types of Dynamic Data Sources

Dynamic data sources are categorized into one or a combination of human-sourced data sources, process-mediated data sources, and machine-generated data sources [30]. This provides insights into which types of data sources have drawn the most or the least attention as potential requirements sources in the existing literature. The categorization also helps to analyze whether there exists any process pattern in the automated requirements elicitation within each data source type.

Human-sourced data sources refer to the digitized records of human experiences. Examples include social media, blogs, and content from mobile phones. Process-mediated data sources are records of monitored business processes and business events, which include electronic health records, commercial transactions, banking records, and credit card payments. Machine-generated data sources are the records of fixed and mobile sensors and machines that are used to measure events and situations in the physical world. They include, for example, readings from environmental and barometric pressure sensors, outputs of medical devices, satellite image data, and location data such as RFID chip readings and GPS outputs.

Types of Dynamic Data

To understand what types of dynamic data have been used for eliciting system requirements in the existing literature, we extracted the specific types of dynamic data that were used in each of the selected studies and grouped them into seven categories. Those categories are online reviews (e.g., app reviews, expert reviews, and user reviews), micro-blogs (e.g., Twitter), online discussions/forums, software repositories (e.g., issue tracking systems and GitHub), usage data, sensor readings, and mailing lists.

Integration of Data Sources

We explored whether the study integrates multiple types of dynamic data sources (i.e., any combination of human-sourced, process-mediated, and machine-generated data sources). We classified a selected study as “yes” if it used multiple dynamic data sources and as “no” otherwise.

Relation of Dynamic Data to a Given Organization

Understanding whether requirements are elicited from data sources that are external or internal to a given organization is important for requirements engineers to identify potential sources that can bring innovations into the requirements engineering process and facilitate software evolution and the development of new promising software systems. We thus classified a selected study as “yes” if the platform is owned by the organization and as “no” if it is owned by a third party.

Additional Domain Knowledge that was Used to Elicit System Requirements

We assessed whether the study uses any domain knowledge in combination with dynamic data to explore the possible ways of integrating dynamic data and domain knowledge. A selected study was classified as “yes” if it uses any domain knowledge in addition to dynamic data and as “no” otherwise.

Techniques Used for Automated Requirements Elicitation

To answer RQ2, the following four types of information were extracted: (1) technique(s) used for automated requirements elicitation, including process pattern of automating requirements elicitation, (2) use of aggregation/summarization, (3) use of visualization, and (4) evaluation methods.

Technique(s) Used for Automation

Implementing promising algorithms is a prerequisite for effective and efficient automation of the requirements elicitation process. To identify the state-of-the-art algorithms, specific methods that were used for automating requirements elicitation were extracted and categorized into machine learning, rule-based classification, model-oriented approach, topic modeling, and traditional clustering.

Aggregation/Summarization

Summarization helps requirements engineers efficiently pinpoint the relevant information in the ever-growing amount of data. We thus assessed whether the study summarizes/aggregates requirements-related information to obtain high-level requirements. If summarization/aggregation is performed, we also extracted the specific techniques used for it.

Visualization

Visualization helps requirements engineers interpret the results of data analysis efficiently and effectively, as well as gain (new) insights into the data. We assessed whether the study visualizes its output to enhance interpretability. If visualization is provided, the specific method used for visualization was also extracted.

Evaluation Methods

To understand how rigorously the performance of the proposed artifact was evaluated, we extracted the methods that were used to assess the artifact. Evaluation methods were further divided into two dimensions: evaluation approach, and evaluation concepts and metrics [17]. The evaluation approach of each selected study was categorized into one of the following groups: controlled experiment, case study, proof of concept, and other approaches. In a controlled experiment, the proposed artifact is evaluated in a controlled environment [34]. A case study aims to assess the artifact in-depth in a real-world context [34]. A proof of concept is defined as a demonstration of the proposed artifact to verify its feasibility for a real-world application. Other approaches refer to studies that evaluate their artifact in a way that does not fall into any of the aforementioned categories. We also extracted the evaluation concepts and metrics used for the artifact evaluation. Evaluation concepts were classified into one or more of the following categories: completeness, correctness, efficiency, and other evaluation concepts.

The Outcomes of Automated Requirements Elicitation

To answer RQ3, we assessed the outcomes of automated requirements elicitation by extracting the following information: (1) types of requirements, (2) expression of the elicited requirements (i.e., in what form outputs that were generated by automated requirements elicitation were expressed), and (3) additional requirements engineering activity supported through automation.

Expression of the Elicited Requirements

To understand how the obtained requirements are expressed and how far the elicitation activity reached, outputs of automated requirements elicitation were extracted, which were grouped into the following categories: identification and classification of requirements-related information, identification of candidate features related to requirements, and elicitation of requirements.

Intended Degree of Automation

Based on the degree of the proposed automated method, the selected studies were classified into either full automation or semi-automation. We classified the study as full automation if the study fulfilled either of the following conditions: (1) the proposed artifact automated the entire requirements elicitation process without human interaction, or (2) the proposed artifact only supports the partial process of requirements elicitation; however, the part it addressed was fully automated. Semi-automation refers to having a human-in-the-loop for automating requirements elicitation, thus requirements are directed by human interactions.

Additional Requirements Engineering Activity Supported Through Automation

Understanding to what extent the entire requirements engineering process has already been automated is essential to clarify the direction of future research that aims at increasing the level of automation in performing the requirements engineering process. We thus extracted the requirements engineering activity that was supported through automation other than requirements elicitation, if any.

Quality Assessment

We assessed the quality of the selected studies based on CORE Conference Rankings for conferences, workshops, and symposia, and SCImago Journal Rank (SJR) indicators for journal papers. We assumed that a study with a higher CORE or SJR score has higher quality than one with a lower score. Papers ranked A*, A, B, or C in the CORE index receive 1.5, 1.5, 1, and 0.5 points, respectively. Papers ranked Q1 or Q2 for the SJR indicator receive 2 and 1.5 points, respectively, while papers ranked Q3 or Q4 receive 1 point. If a conference/journal paper is not included in the CORE/SJR ranking, the paper scores 0 points.
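The scoring rule can be written down as a simple lookup. The following is a minimal sketch; the function name `quality_score` and the `venue_type` labels are hypothetical and not taken from the review.

```python
# Points per ranking, as stated in the quality assessment description.
CORE_POINTS = {"A*": 1.5, "A": 1.5, "B": 1.0, "C": 0.5}
SJR_POINTS = {"Q1": 2.0, "Q2": 1.5, "Q3": 1.0, "Q4": 1.0}


def quality_score(venue_type, rank=None):
    """Return the quality points for a paper.

    venue_type: "journal" uses SJR quartiles; anything else
    (conference, workshop, symposium) uses CORE ranks.
    Unranked venues score 0 points.
    """
    table = SJR_POINTS if venue_type == "journal" else CORE_POINTS
    return table.get(rank, 0.0)
```

For instance, under these assumptions `quality_score("journal", "Q2")` and `quality_score("conference", "A")` both yield 1.5 points.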

Data Synthesis

We narratively synthesized the findings of this systematic review, which includes basic descriptive statistics and qualitative analyses of (semi-)automated elicitation methods that are sub-grouped by dynamic data source as well as identified research gap(s), and implications and recommendations for future research.

Results

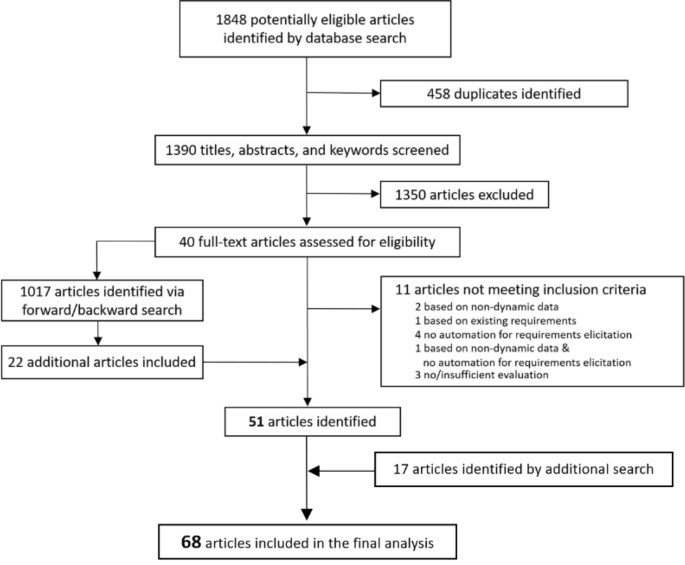

Figure 1 shows a flow diagram of the article selection. We obtained 1,848 hits when searching the 6 electronic databases. We removed 458 duplicates, leaving 1,390 articles for level 1 screening (Table 4). After level 1 screening, we identified 40 articles to proceed to level 2 screening. The level 2 screening resulted in the inclusion of 29 articles for data extraction. We excluded the remaining eleven papers due to: the study not using dynamic data for requirements elicitation; the study being based on existing requirements that had already been elicited; the study not automating requirements elicitation to any degree; and the study proposing a method for automated requirements elicitation without sufficient evaluation.

In addition, a forward and backward reference search identified 1017 additional articles. Out of these, 22 articles met our inclusion criteria. Thus, a total of 51 papers were considered in the final analysis. Reasons for similar numbers of articles being identified in the query-based search and the backward/forward search include: the studies using terms such as “elicit requirements”, “requirements”, “requirements evolution” instead of “requirements elicitation”; using keywords which cover only one or two of the three keyword blocks despite being relevant; using only the name of a specific analytics technique (e.g., Long Short-term Memory) and not more general terms included in the identified keywords, e.g., machine learning.

To update the search results, we performed an additional search and two-level screening, using the same search query process. The updated search identified 401 articles after removing duplicates (Table 4). Two-level screening resulted in including 16 additional studies. However, we did not perform a backward and forward reference search during this phase. We also included one study that was not captured by the search query but was recommended by an expert due to its relevance to our research question. We, therefore, selected a total of 68 studies to be included in this review.

General Characteristics of the Selected Studies

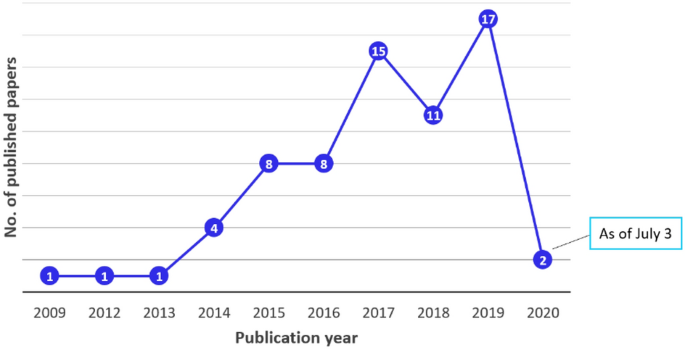

Of the 68 selected articles, conference proceedings are the most frequent publication type (n = 41), followed by journal articles (n = 16), workshop papers (n = 7), and symposium papers (n = 4). All selected studies except one (2009) were published between 2012 and 2020. Figure 2 depicts the total number of the included papers per publication year. Although the number of publications dropped in 2018, in general, there is an increasing trend of publications between 2012 and 2019. For the year 2020, the result is shown as of July 3. A further observation is thus needed to confirm the increasing trend at the end of the year. The median score for study quality was 1 with the interquartile range of 0–1.5 (Appendix 2).

Types of Dynamic Data Sources Used for Requirements Elicitation

Dynamic Data Sources Used for Automated Requirements Elicitation

Among the three types of dynamic data sources, human-sourced data have been the primary source of requirements: the vast majority (93%, n = 63) of the studies used human-sourced data sources for eliciting requirements. Only four studies (6%) explored using either machine-generated (n = 2) or process-mediated (n = 2) data sources. Almost all the studies focused on a single type of dynamic data source. We identified only one study attempting to integrate multiple types of dynamic data sources (1%).

The Specific Types of Dynamic Data Used for Automated Requirements Elicitation

The following eight types of data sources have been used for automated requirements elicitation: online reviews, micro-blogs, online discussions/forums, software repositories, software/app product descriptions, sensor readings, usage data from system–user interactions, and mailing lists (Table 5). Online reviews are reviews of a product or service that are posted and shown publicly online by people who have purchased the given service or product. Micro-blogs, which are typically published on social media sites, are a type of blog in which users can post messages in different content formats such as short texts, audio, video, and images. They are designed for quick conversational interactions among users. Online discussions/forums are online discussion sites where people can post messages to exchange knowledge. Software repositories are platforms for sharing software packages or source code, which primarily contain three elements: a trunk, branches, and tags. This study also considered issue-tracking systems, which contain detailed reports of bugs or complaints written as free text, as software repositories. Sensor readings are electrical outputs of devices that detect and respond to inputs from a physical phenomenon, which results in a large amount of streaming data. Usage data are run-time data collected when users are interacting with a given system. Mailing lists are a type of electronic discussion forum. E-mail messages sent by specific subscribers are shared with everyone on a mailing list.

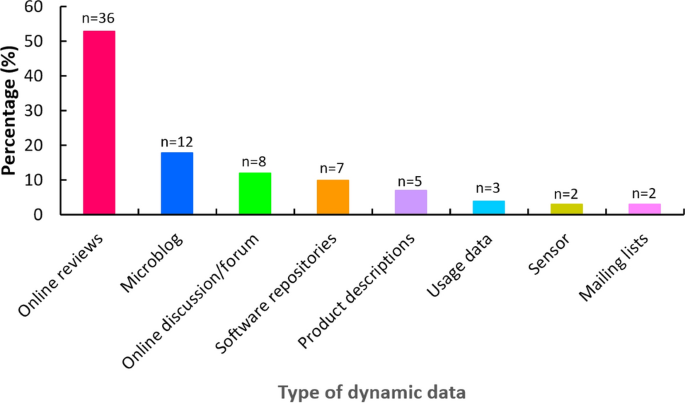

Figure 3 depicts the types of dynamic data that have been used for automated requirements elicitation. Online reviews are the most frequently used type of dynamic data for eliciting requirements (53%), followed by micro-blogs (18%) and online discussions/forums (12%), software repositories (10%), and software/app product descriptions (7%). Other types of dynamic data include sensor readings (3%), usage data from system–user interactions (4%), and mailing lists (3%).

Several studies used multiple types of human-sourced data to gain complementary information and improve the quality of the analysis. Wang et al. [92] assessed whether the use of app changelogs improves the accuracy of identifying and classifying functional and non-functional requirements from app reviews, compared to the results obtained from the mere use of app reviews. Although app changelogs had no additional positive effect on the accuracy of automatic requirements classification in that study, their subsequent study [93] shows that the accuracy of classifying requirements in app reviews improved when the reviews were augmented with the text feature words extracted from app changelogs.

Takahashi et al. [100] used the Apache Commons User List and App Store reviews. However, those two datasets were used independently, without being integrated, to evaluate their proposed elicitation process. Moreover, Stanik et al. [65] used three datasets: app reviews, tweets written in English, and tweets written in Italian. On the other hand, Johann et al. [94] integrated both app reviews and descriptions to provide information on which app features are or are not actually reviewed. In addition, Ali et al. [66] combined tweets about a smartwatch with Facebook comments about wearables and smartwatches.

Some studies used multiple types of software repositories. Morales-Ramirez et al. [84] used two types of datasets obtained from the issue tracking system of the Apache OpenOffice community and the feedback gathering system of SEnerCON, which is an industrial project in the home energy management domain. In a different study [79], they used open-source software mailing lists and OpenOffice online discussions to identify relevant requirements information. Nyamawe et al. [87] used commits from a GitHub repository and feature requests from the JIRA issue tracker, while Oriol et al. [89] and Franch et al. [88] considered heterogeneous software repositories.

Only one study used multiple types of data sources (e.g., human-sourced data and machine-generated data). Wüest et al. in [99] used both app user feedback (i.e., human-sourced data) and app usage data (i.e., process-mediated data).

Relation of Dynamic Data to an Organization of Interest

The majority of the studies used dynamic data that was external to the organization of interest. Of the 68 studies included in the analysis, 57 studies (84%) used dynamic data which was externally related to a given organization (i.e., data were collected outside of an organization’s platforms) [36,37,38,39,40,41,42,43,44,45,46,47,48,49,50,51,52,53,54,55,56,57,58,59,60,61,62,63,64,65,66,67,68,69,70,71,72,73,74,75,76,77, 80,81,82, 86,87,88,89,90,91,92,93,94, 96, 101]. Nine studies (13%) used dynamic data that were collected from platforms belonging to the organization: issue tracking systems [84, 85, 102]; user feedback from the online discussion and open-source software mailing lists [79]; sensors embedded in an intelligent product, also known as a product embedded information device (PEID) [95]; software production forum [103]; user feedback tool [99]. On the other hand, only two studies (3%) used both internal and external dynamic data [78, 100].

Additional Use of Domain Knowledge Used for Requirements Elicitation

Only one study additionally included domain knowledge in eliciting requirements. Abad et al. [44] combined app review analysis and the Wizard-of-Oz technique for the requirements elicitation process. The results indicate that the two sources complement each other, eliciting more comprehensive requirements than can be obtained from either source alone.

Approaches for Automated Requirements Elicitation

Approaches Used for Human-Sourced Data

Since human-sourced data are typically expressed in natural language, natural language processing (NLP) is commonly used for analyzing this type of data. All of the 63 studies which used human-sourced information started the requirements elicitation process by preprocessing the raw data using NLP techniques. Data preprocessing typically involves removing noise (e.g., HTML tags) to retain only text data. Another critical data preparation activity is tokenization, which means splitting the text into sentences and tokens (words, punctuation marks, and digits), respectively.

Further analysis of the text using NLP typically involves syntactic analysis, such as part-of-speech tagging. Two studies have used speech-acts, which are acts performed by a speaker when making an utterance, as parameters to train supervised learning algorithms [79, 84]. For eliciting requirements, nouns, verbs, and adjectives are often identified since they are more likely used for describing requirements-related information than other parts of speech, including adverbs, numbers, and quantifiers [40].

A common preprocessing activity is stopword filtering, which involves removing tokens that are common but carry little meaning, including function words (e.g., “the”, “and”, and “this”), punctuation marks (e.g., “.”, “?”, and “!”), special characters (e.g., “#” and “@”), and numbers. Normalization is also often carried out by lowercasing (i.e., converting all text data to lowercase), stemming (i.e., reducing inflectional word forms to their root form, such as reducing “play”, “playing”, and “played” to their common root form of “play”), and lemmatization (i.e., grouping the different inflected forms of a word, which are syntactically different but semantically equal, so that they can be analyzed as a single base form, called a lemma, such as grouping “sees” and “saw” into the single base form “see”).
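The preprocessing steps described so far (noise removal, tokenization, lowercasing, stopword filtering, and stemming) can be illustrated with a minimal sketch. The stopword list and the suffix-stripping `toy_stem` function are deliberately simplistic assumptions for illustration; the reviewed studies typically rely on established NLP toolkits and proper stemmers or lemmatizers.

```python
import re

# Tiny illustrative stopword list (an assumption; real lists are far longer).
STOPWORDS = {"the", "and", "this", "a", "is", "it", "to"}


def strip_html(text):
    """Remove HTML tags so that only text content remains."""
    return re.sub(r"<[^>]+>", " ", text)


def tokenize(text):
    """Lowercase the text and keep alphabetic tokens only.

    Punctuation, digits, and special characters such as '#' or '@'
    are dropped as a side effect of the pattern.
    """
    return re.findall(r"[a-z]+", text.lower())


def toy_stem(token):
    """Strip a few common suffixes; a crude stand-in for a real stemmer."""
    for suffix in ("ing", "ed", "s"):
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            return token[: -len(suffix)]
    return token


def preprocess(text):
    """Run noise removal, tokenization, stopword filtering, and stemming."""
    tokens = tokenize(strip_html(text))
    return [toy_stem(t) for t in tokens if t not in STOPWORDS]
```

With these assumptions, `preprocess("<p>The app keeps crashing and this is annoying!</p>")` reduces the review to the content-bearing stems `app`, `keep`, `crash`, and `annoy`.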

Once the text data have been preprocessed, features are typically extracted for the subsequent modeling phase. Feature extraction can be done using a bag of words (i.e., simply counting occurrences of tokens without considering word order or normalizing the counts), n-grams (i.e., extracting contiguous sequences of n tokens, such as bi-grams, which are token pairs), and collocations (i.e., extracting sequences of words that co-occur more often than by chance, for example, “strong tea”). To evaluate how important a word is for a given document, a bag of words is often weighted using a weighting scheme such as term frequency-inverse document frequency (tf-idf), which gives high weights to words that have a high frequency in a particular document while having a low frequency in the entire set of documents. Other common features are based on syntactic or semantic analysis of the text (e.g., part-of-speech tags). Sentiment analysis, which is the automated process of identifying and quantifying the opinion or emotional tone of a piece of text through NLP, was used in 18 studies (38%), either as features fed into algorithms to increase their accuracy or to understand user satisfaction.
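As a rough illustration of these feature types, the sketch below computes bag-of-words counts, n-grams, and tf-idf weights for tokenized documents. It uses one common tf-idf variant (relative term frequency multiplied by an unsmoothed logarithmic inverse document frequency); this choice is an assumption, and libraries often apply different smoothing or normalization.

```python
import math
from collections import Counter


def bag_of_words(tokens):
    """Count token occurrences, ignoring word order."""
    return Counter(tokens)


def ngrams(tokens, n):
    """Return all contiguous sequences of n tokens."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def tf_idf(docs):
    """Compute tf-idf weights for a list of tokenized documents.

    Returns one {term: weight} dict per document. A term that occurs
    in every document gets weight 0 because log(N / N) = 0.
    """
    n_docs = len(docs)
    # Document frequency: in how many documents each term occurs.
    doc_freq = Counter(term for doc in docs for term in set(doc))
    weights = []
    for doc in docs:
        counts = Counter(doc)
        weights.append({
            term: (count / len(doc)) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
        })
    return weights
```

In a toy corpus of two reviews, `["app", "crash", "crash"]` and `["app", "login"]`, the term `app` occurs in both documents and receives a weight of zero, while `crash` is frequent in the first review only and is weighted highly there.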

After preprocessing the human-sourced data and extracting features for data modeling, the next step of requirements elicitation was to perform either classification or clustering. Classification refers to classifying (text) data into pre-defined categories related to requirements, for example, classifying app reviews into bug reports, feature requests, user experiences, and text ratings [38]. Classification has been performed using three approaches: machine learning (ML), rule-based classification, or model-oriented approaches. In the ML approach, classification is performed by a model built by a learning algorithm based on pre-labeled data.

In the ML approach, various learning algorithms automatically learn statistical patterns within a set of training data, such that a predictive model is able to predict a class for unseen data. In most studies, ML relied on supervised ML. In supervised ML, a predictive model is built based on instances that were pre-assigned with known class labels (i.e., training set). The model is then used to predict a label associated with unseen instances (i.e., test set). A downside with supervised ML is that it typically requires a large amount of labeled data (i.e., ground-truth set) to learn accurate predictive models.
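To make the supervised setting concrete, the following minimal sketch trains a multinomial Naïve Bayes classifier with add-one smoothing on a handful of labeled token lists and predicts the class of an unseen one. The class name, the labels, and the toy data are assumptions for illustration; this is not the implementation of any reviewed study.

```python
import math
from collections import Counter, defaultdict


class NaiveBayes:
    """Multinomial Naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, docs, labels):
        """Learn class priors and per-class word counts from labeled data."""
        self.class_counts = Counter(labels)
        self.word_counts = defaultdict(Counter)
        for tokens, label in zip(docs, labels):
            self.word_counts[label].update(tokens)
        self.vocab = {t for counts in self.word_counts.values() for t in counts}
        return self

    def predict(self, tokens):
        """Return the label with the highest log-posterior score."""
        total_docs = sum(self.class_counts.values())
        best_label, best_score = None, -math.inf
        for label, n_docs in self.class_counts.items():
            counts = self.word_counts[label]
            denom = sum(counts.values()) + len(self.vocab)
            score = math.log(n_docs / total_docs)  # log prior
            for t in tokens:
                if t in self.vocab:  # ignore words never seen in training
                    score += math.log((counts[t] + 1) / denom)
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Toy training set (hypothetical labels and reviews).
train_docs = [
    ["app", "crash", "login"],
    ["crash", "freeze", "startup"],
    ["please", "add", "dark", "mode"],
    ["add", "export", "option"],
]
train_labels = ["bug report", "bug report", "feature request", "feature request"]
model = NaiveBayes().fit(train_docs, train_labels)
```

Even this toy example shows the dependence on labeled data noted above: the model can only separate classes whose vocabulary it has seen in the training set.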

To reduce the cost of labeling a large amount of data, a few studies used the active learning paradigm and semi-supervised machine learning for classification. Active learning enables machines to wisely select unlabeled data points to be labeled next in a way that optimizes a decision boundary created by a given learning algorithm and interactively queries the user to label the selected data points to improve classification accuracy. Semi-supervised learning is an intermediate technique between supervised and unsupervised ML, which utilizes both labeled and unlabeled data in the training process.

Rule-based classification is a classification scheme that uses certain rules, such as language patterns. Rule-based classification excels at simpler tasks where domain experts can define rules, while classification using ML works well for tasks that are easily performed by humans but where (classification) rules are hard to formulate. However, listing all the rules can be tedious, and the rules need to be hand-crafted by skilled experts with abundant domain knowledge. Moreover, rules might need to be refined as new datasets become available, which requires additional resources and limits scalability [77]. A model-oriented approach, which utilizes conceptual or meta-models, is applied to define and relate the mined terms and drive classification.
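A rule-based classifier built on language patterns can be sketched in a few lines. The patterns and labels below are hypothetical examples chosen for illustration; real rule sets are hand-crafted by domain experts and are considerably larger.

```python
import re

# Hypothetical language patterns, checked in order.
RULES = [
    ("feature request",
     re.compile(r"\b(please add|would be nice|wish|should have)\b", re.I)),
    ("bug report",
     re.compile(r"\b(crash(es|ed|ing)?|freez(e|es|ing)|error|bug)\b", re.I)),
]


def classify(sentence, default="other"):
    """Return the label of the first matching rule, else the default label."""
    for label, pattern in RULES:
        if pattern.search(sentence):
            return label
    return default
```

The sketch also hints at the maintenance cost mentioned above: every new phrasing that users adopt requires another hand-written pattern.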

On the other hand, clustering has been performed using either topic modeling or more traditional clustering techniques. Topic modeling is an unsupervised (i.e., learning from unlabeled instances) dimension reduction and clustering technique, which aims to discover hidden semantic patterns in a collection of documents. Topic modeling is used to represent an extensive collection of documents as abstract topics, each consisting of a set of keywords. In automated requirements elicitation, topic modeling is mainly used for either discovering system features or grouping similar fine-grained features that are extracted using different approaches into high-level features. Traditional clustering is an unsupervised ML technique that aims to discover the intrinsic structure of the data by partitioning a set of data into groups based on their similarity and dissimilarity. Among the selected studies, traditional clustering has been mainly used to discover inherent groupings of features in requirements-related information.
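As an example of traditional clustering, the sketch below implements K-means (Lloyd's algorithm) on numeric feature vectors. To keep the example deterministic, the initial centroids are passed in explicitly, which is an assumption made for illustration; practical implementations usually choose them randomly or with a seeding heuristic.

```python
def kmeans(points, centroids, iterations=10):
    """Partition points into clusters around the nearest centroid.

    points and centroids are tuples of floats with equal dimensions.
    Returns the final centroids and the clusters from the last
    assignment step.
    """
    for _ in range(iterations):
        # Assignment step: attach each point to its closest centroid.
        clusters = [[] for _ in centroids]
        for p in points:
            dists = [sum((a - b) ** 2 for a, b in zip(p, c)) for c in centroids]
            clusters[dists.index(min(dists))].append(p)
        # Update step: move each centroid to the mean of its cluster.
        # An empty cluster keeps its previous centroid.
        centroids = [
            tuple(sum(dim) / len(cluster) for dim in zip(*cluster)) if cluster else c
            for cluster, c in zip(clusters, centroids)
        ]
    return centroids, clusters


# Four 2-D points forming two obvious groups (toy data).
points = [(0.0, 0.0), (0.0, 1.0), (5.0, 5.0), (6.0, 5.0)]
centroids, clusters = kmeans(points, centroids=[(0.0, 0.0), (5.0, 5.0)])
```

In a text-mining setting, the points would be document or feature vectors, for example tf-idf weights, rather than 2-D coordinates.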

Some studies have performed clustering after classification. Classification was first performed to identify and classify requirements-related information, using machine learning or rule-based classification. Clustering is then applied to the identified requirements-related information (e.g., improvement requests), while ignoring data irrelevant to requirements, to discover inherent groupings of features, using topic modeling or traditional clustering. Table 6 provides a more detailed description of the automated approaches proposed in each study.

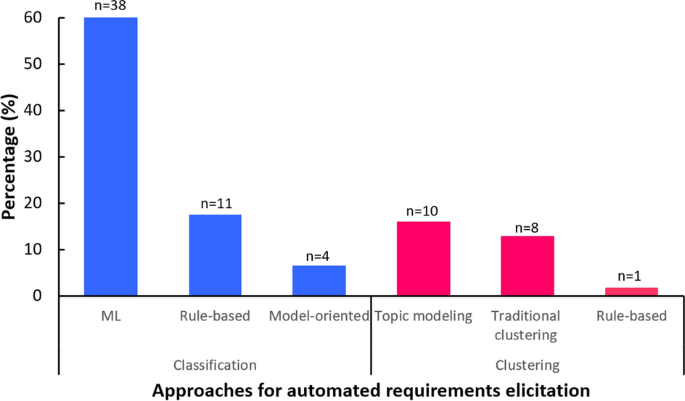

Figure 4 depicts the descriptive statistics of the approaches for automated requirements elicitation used in the selected studies. For classification, the most commonly used approach was based on the ML approach (60%), followed by rule-based classification (17%) and model-oriented approach (6%). For clustering, topic modeling (16%) was the most commonly used approach, followed by more traditional clustering techniques (13%) and unsupervised rule-based clustering (2%).

In nine studies, two different approaches have been combined. Two studies performed classification with supervised ML for filtering and subsequently conducted clustering with topic modeling [47, 68]. Guzman et al. [68] first ran Multinomial Naïve Bayes and Random Forest, which are both supervised learning algorithms, to extract tweets that request software improvement. Biterm Topic Model, which is a topic model designed for short texts, was then used to group semantically similar tweets for software evolution. Zhao and Zhao [47] first used a supervised deep-learning neural network to extract software features and their corresponding sentiments; hierarchical LDA was subsequently used to extract hierarchical software features with positive and negative sentiments.

Two studies performed classification using ML, which was followed by unsupervised clustering analysis [53, 58]. Jiang et al. [58] used Support Vector Machine, a supervised machine-learning algorithm, for pruning incorrect software features that were extracted from online reviews. K-means clustering, an unsupervised clustering analysis, was then performed to categorize the extracted features into semantically similar system aspects. Sun and Peng [53] first used Naïve Bayes, a supervised machine-learning algorithm, for filtering informative comments, which were subsequently clustered using K-means.

Jiang et al. [41] first performed rule-based classification based on syntactic parsing and sentiment analysis to extract opinions about software features and their corresponding sentiment words. Subsequently, S-GN, whose base algorithms are a type of K-means clustering, was performed to cluster similar opinion expressions about software features into a category which represents an overall, functional, or quality requirement. On the other hand, Bakar et al. [63] combined unsupervised clustering analysis and topic modeling: K-means was first run to identify similar documents, and latent semantic analysis, which is a type of topic modeling, was then performed to group similar software features within the documents.

Guzman and Maalej [40] and Dalpiaz and Parente [46] first extracted software features using rule-based classification with a collocation finding algorithm; LDA was subsequently applied to group similar software features. Zhang et al. [60] first used linear regression, a supervised ML technique, to select helpful online reviews. Then conjoint analysis (i.e., a statistical technique used in market research to assess and quantify the value consumers place on the features of a product or service) was performed to assess the impact of the features from helpful online reviews on the consumers’ overall rating.

In several studies, visualization has been provided to help requirements engineers efficiently sift through and effectively interpret the most important requirements-related information. Bakiu and Guzman [55] first performed the aggregation of features. The results were then visualized at two levels of granularity (i.e., high-level and detailed). Sun and Peng [53] first extracted scenario information from similar user comments, which was then aggregated and visualized as aggregated scenario models. Software features [52] and technically informative information from the potential requirements sources [64, 86] were summarized, ranked, and visualized using word clouds. Luiz et al. [49] summarized overall user evaluation of the mobile applications, their features, and the corresponding user sentiment polarity and scores in a single graphical interface. Oriol et al. [89] implemented a quality-aware strategic dashboard, which has various functionalities (e.g., quality assessment, forecasting techniques, and what-if analysis) and allows for maintaining traceability of the quality requirements generation and documentation process. Wüest et al. [99] fused user feedback with correlated GPS data and visualized the fused data on a map, equipping the parking app with context-awareness.

Techniques Used for Process-Mediated Data

The two studies that used process-mediated data focused on eliciting emerging requirements through observations and analysis of time-series user behavior (i.e., run-time observation of system–user interactions) and the corresponding environmental context values [97, 98]. In both studies, Conditional Random Fields (CRF), which is a statistical modeling method, was used to infer goals (i.e., high-level requirements).

Xie et al. [97] proposed a method to elicit requirements consisting of three steps. First, a computational model is trained and built based on pre-defined user goals in the domain knowledge, using supervised CRF to infer the user’s implicit goals (i.e., outputs) from the observation and analysis of run-time user behavior and the corresponding environmental values (i.e., inputs). After the goal inference, the user’s intention (i.e., the execution path) for achieving a given goal is obtained by connecting the situations (i.e., time-stamped sequences of user behavior that are labeled with a goal and environmental context values) labeled with the same goal into a sequence. Finally, an emerging intention, which is a new sequence pattern of user behavior that has not been pre-defined or captured in the domain knowledge base, is detected.

An emerging intention can occur in three cases: when a user has a new goal; when a user has a new strategy for achieving an existing goal; and when a user cannot perform operations in an intended way due to system flaws. Requirements can thus be elicited by having domain experts validate emerging intentions based on the analyses of goal transition, divergent behaviors from the optimal usage, and erroneous behavior.

In the analysis of goal transition, domain experts look at two goals that frequently appear consecutively based on the results of goal inference with a high confidence level assigned by the CRF and elicit requirements that make the goal transition smoother.

In the analysis of divergent behavior, domain experts focus on user behaviors that deviate from an expected way to operate the system because the user’s irregular behavior may indicate user’s misunderstanding of required operational procedures, dissatisfaction with the system, and emerging desires. Those divergent behaviors are given a low confidence level by the CRF model.

In the analysis of erroneous behavior, requirements can be elicited by investigating the error reports with high occurrences that may reflect users’ emerging desires that are not supported by the current system. In addition, requirements can be elicited from user behaviors, which are actually normal behavior but are mistakenly considered as erroneous due to the system flaws. The proposed method is assumed to be used in a sensor-laden computer application domain. Thus, it may also be applicable to machine-generated data. The main challenge, however, is to increase the level of automation for analyzing potential emerging intentions and users’ emerging requirements.

Yang et al. in [98] used CRF to infer goals based on a time-stamped sequence of user behavior that is labeled with a goal and environmental context values, which is called a situation. Based on the results of goal inference, intention inference was performed by relating a sequence of situations that are labeled as the same goal. When an intention has not been pre-defined in the domain knowledge base, the intention is detected as an emerging intention and exported as possible new requirements for future system development or evolution.

However, the methods proposed in both studies still rely substantially on human oracles; this reliance needs to be reduced in future research to increase scalability and promote the implementation of these approaches in real-life settings. In addition, the proposed methods do not yet support diverse requirements. The method proposed by Xie et al. [97] captures only emerging functional requirements, not non-functional ones. The approach proposed in [98] can only support the identification of low-level design alternatives (i.e., new ways of fulfilling a given intention).

Notably, Wüest et al. [99] proposed using both human-sourced and process-mediated data. Their approach is based on the control loop for self-adaptive systems and collects and analyzes user feedback (i.e., human-sourced data) as well as system usage and location data (i.e., GPS data). The analysis is driven by rules or models of expected system usage. The system decides how to interpret the results of the analysis and modifies its behavior at run-time, which allows for understanding changing user requirements for software evolution.

Techniques Used for Machine-Generated Data

Voet et al. [95] first extracted goal-relevant usage elements as features, from the data recorded via a handheld grinder, a type of product embedded information devices (PEID) equipped with sensors and onboard capabilities. Feature selection was then performed to reduce system workload and improve the prediction accuracy of the machine-learning algorithm, compared to using raw sensor data. Specifically, the support vector machine classifier, which is a supervised machine-learning algorithm, was used to build and train the model to predict the four different usage element states. The model was then tested on the sensor data from the two different usage scenarios that have not been used for training. The collection of the predicted usage element states, or user profiles, can be analyzed manually or by clustering to identify the deviation from the intended optimal usage profile. Requirements can be inferred by analyzing users’ deviant behaviors.

Liang et al. [96] mined user behavior patterns from instances of user behavior, which consist of the user context (i.e., the time, the location, and the motion state of the crowd mobile users) and the currently running apps, using the Apriori-M algorithm, an efficient algorithm based on the Apriori algorithm, which is used for frequent itemset mining. User behavior patterns, which imply emergent requirements or requirements changes, are ranked and used for service recommendation. Service recommendation is performed periodically, using the service recommendation algorithm. The algorithm takes mined user behavior patterns as inputs and outputs the apps of which the user should be reminded. In service recommendation, the current user context is matched against the context of the user behavior patterns mined from mobile crowd users, according to the ranking order. If the two match, the mobile app(s) in the user behavior patterns are automatically recommended to the user as solutions to meet the requirements inferred from the user behavior patterns.
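The idea of frequent itemset mining behind this approach can be illustrated with the classic Apriori scheme. The sketch below is plain Apriori, not the Apriori-M variant used by Liang et al. [96], and the item names (context values and apps) are hypothetical.

```python
from itertools import combinations


def frequent_itemsets(transactions, min_support):
    """Return {itemset: support count} for itemsets meeting min_support."""
    transactions = [frozenset(t) for t in transactions]
    items = {item for t in transactions for item in t}
    current = {frozenset([i]) for i in items}  # candidate 1-itemsets
    result = {}
    k = 1
    while current:
        # Count in how many transactions each candidate is contained.
        counts = {c: sum(1 for t in transactions if c <= t) for c in current}
        frequent = {c: n for c, n in counts.items() if n >= min_support}
        result.update(frequent)
        k += 1
        # Join step: unions of frequent (k-1)-itemsets with exactly k items.
        candidates = {a | b for a in frequent for b in frequent if len(a | b) == k}
        # Prune step: every (k-1)-subset of a candidate must be frequent.
        current = {
            c for c in candidates
            if all(frozenset(s) in frequent for s in combinations(c, k - 1))
        }
    return result


# Each transaction is one observed behavior instance: context plus running app.
observations = [
    {"morning", "commuting", "news_app"},
    {"morning", "commuting", "news_app"},
    {"evening", "home", "video_app"},
    {"morning", "commuting", "music_app"},
]
patterns = frequent_itemsets(observations, min_support=2)
```

Here the itemset combining `morning`, `commuting`, and `news_app` is frequent, which corresponds to a behavior pattern that could be matched against a user's current context.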

In summary, most of the existing solutions support the elicitation of requirements from a single data source, primarily human-sourced data. There is a lack of methods to support requirements elicitation from heterogeneous data sources. In addition, only a few studies have supported context-awareness and real-time data processing and analysis. Those features are crucial to enable continuous and dynamic elicitation of requirements, which is especially important for context-aware applications and time-critical systems such as health systems. Moreover, many studies do not discuss how the proposed solution helps process a large volume of data.

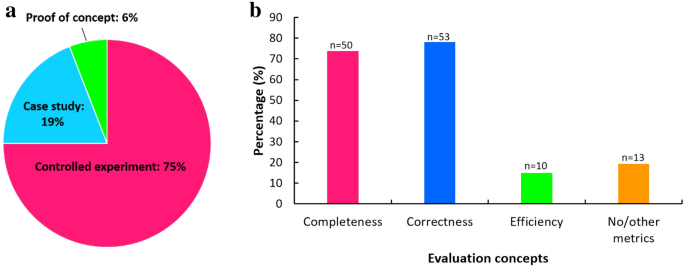

Evaluation Methods

Evaluation methods include three components: evaluation approach, concept, and metrics. Of the 68 selected studies, controlled experiments were the most frequently applied approach for evaluating the proposed artifact (75%), followed by a case study (19%) and a proof of concept (6%) (Fig. 5a).

Among the 51 studies that used controlled experiments, 46 studies compared the results produced by the proposed artifacts against a manually annotated ground-truth set. For example, Bakiu and Guzman [55] compared the performance of multi-label classification against a manually created golden standard in classifying features extracted from unseen user reviews into different dimensions of usability and user experience.

Only three studies compared the performance of the proposed artifact with the results of manual analysis without the aid of automation [57, 62, 78]. For example, Bakar et al. [62] compared the software features that were extracted using their proposed semi-automated method with those that were obtained manually.

Two studies conducted their experiments differently. Liang et al. [96] used a longitudinal approach: they compared the obtained user behavior patterns with those collected after some time interval to confirm the correctness of the Apriori-M algorithm. Abad et al. [44] compared Wizard-of-Oz (WOz) and user review analysis qualitatively. In a few studies [46, 88, 90], the proposed techniques were evaluated with intended users. The rest of the studies used a case study or a proof of concept as an evaluation approach.

The most frequently used evaluation concept was correctness (78%), followed by completeness (74%), no/other metrics (13%), and efficiency (10%) (Fig. 5b). Other metrics include, for example, usability, creativity, the intended user’s perceived usefulness, and satisfaction. Most of the studies combined several evaluation concepts. Three different combinations of the concepts were identified: (1) completeness and correctness (n = 42), (2) completeness, correctness, and efficiency (n = 7), and (3) correctness and efficiency (n = 2). In most cases, correctness and completeness were assessed using precision (i.e., the fraction of correctly predicted positive instances among all instances predicted as positive) and recall (i.e., the fraction of correctly predicted positive instances among all instances that actually belong to the positive class), respectively. In addition, the F-measure was used to address the trade-off between precision and recall.
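As an illustration of these metrics, the sketch below computes precision, recall, and the balanced F-measure (F1) for a set of items that a tool flagged as requirements-related against a manually annotated ground-truth set. The function name and the example identifiers are hypothetical; the reviewed studies may have used other F-measure weightings or averaging schemes.

```python
def precision_recall_f1(predicted, actual):
    """Compute precision, recall, and F1 for one positive class.

    predicted: items the tool labeled as positive
    actual:    items labeled as positive in the ground-truth set
    """
    predicted, actual = set(predicted), set(actual)
    true_positives = len(predicted & actual)
    precision = true_positives / len(predicted) if predicted else 0.0
    recall = true_positives / len(actual) if actual else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Hypothetical review IDs: the tool flags four reviews, three are truly relevant.
p, r, f1 = precision_recall_f1(predicted={1, 2, 3, 4}, actual={2, 3, 5})
print(f"precision={p:.2f} recall={r:.2f} F1={f1:.2f}")
```

Here two of the four flagged reviews are correct (precision 0.5) and two of the three relevant reviews were found (recall about 0.67), which F1 combines into a single harmonic-mean score.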

Efficiency has been assessed in terms of the size of training data [38, 39], the time to recognize and classify software features [76], the time required to identify relevant requirements information for both manual and automated analysis [57], the time taken to complete the extraction of software features [63], the time and space needed to build the classification model [48, 50, 51], and the total execution time of the machine-learning algorithm [78]. The user’s perceived efficiency was measured using a 5-point Likert scale [89]. (Fig. 6)

The Outcomes of the Automated Requirements Elicitation

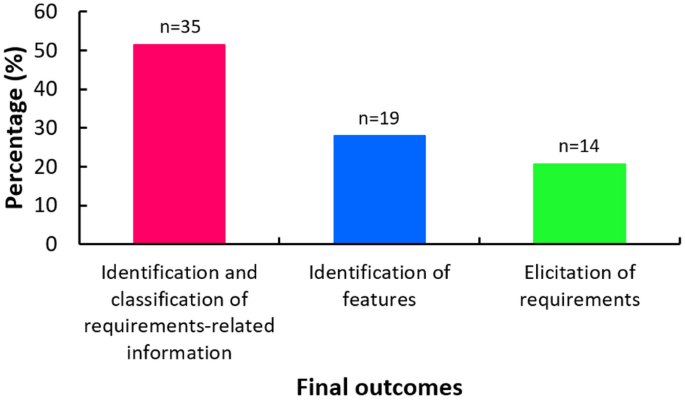

Expression of Final Outcomes Produced by the Automated Part of Requirements Elicitation

Outcomes of the automated requirements elicitation have been classified into the following three categories: (1) identification and classification of requirements-related information, (2) identification of candidate features related to requirements, and (3) elicitation of requirements (Table 7). Only 21% of the studies have enabled the automated elicitation of requirements. A majority of the studies have resulted in automated identification and classification of requirements-related information (51%), or identification of candidate features related to requirements (28%) (Fig. 5).

Identification and classification of requirements-related information have been performed by classifying dynamic data into different classes of issues based on: relevance to different stakeholders for identifying responsibilities; technical relevance for filtering only relevant data (e.g., classifying into either feature request or other); and types of technical issues to be inspected (e.g., classifying into feature requests, bug reports, user experiences, and user ratings, or classifying into functional or non-functional requirements). Some studies performed classification at a deeper level (e.g., classifying into functional requirements or one of four types of non-functional requirements, i.e., usability, reliability, portability, or performance).

Identification of candidate features related to requirements refers to discovering functional components of a system. Features, however, typically have less granularity than requirements and do not tell what behavior, conditions, and details would be needed to obtain the full functionality. They, thus, need to be further processed to become full requirements.

Elicitation of requirements has mostly been done at a high level, in the form of goals, aggregated scenarios, or high-level textual requirements. Franch et al. [88] and Oriol et al. [89] semi-automated the elicitation of complete requirements in the form of user stories and requirements specified in semi-formal language.

Degree of Intended Automation

Each proposed artifact was classified into one of two levels of intended automation: full automation or semi-automation. Of note is that we considered artifacts that support the automation of requirements elicitation either entirely or partially. Artifacts were classified as intended full automation in the following two circumstances: (1) when the proposed part is automated without human intervention for completion or (2) when only minimum interactions are needed for completion. Minimum human interaction is defined as human oracles being in the loop once at the initial stage of the elicitation process, which includes the creation of the ground-truth set and conceptual models as well as the specification of a set of keywords and language patterns. Based on these definitions, the majority of the proposed methods (84%) were intended to be fully automated, while the rest were semi-automated methods that require human oracles to be in the loop for each iteration of the process.

Additional Requirements Engineering Activity Supported Through Automation

The majority of the selected studies exclusively focused on enabling requirements elicitation from dynamic data, without considering other requirements engineering activities. Of the 68 studies included in the analysis, 50 studies (74%) exclusively proposed methods to enable automated requirements elicitation, while 18 studies (26%) supported other requirements engineering activities in addition to requirements elicitation. Prioritization was the most frequently supported additional requirements engineering activity (n = 11), followed by elicitation for change management (n = 7), and documentation (n = 2). More detailed information is provided in Table 8.

Discussion

We conducted a systematic literature review on the existing data-driven methods for automated requirements elicitation. The main motivations for this review were two-fold: (1) using dynamic data has the potential to enrich stakeholder-driven requirements elicitation by eliciting new requirements which cannot be obtained from other sources, and (2) no systematic review has been conducted on the state-of-the-art methods to elicit requirements from dynamic data from unintended digital sources. Of 1848 records retrieved from searches of six electronic databases and 1017 articles identified through backward and forward reference search, we selected 68 studies that met our inclusion criteria and included them in the final analysis to answer the following three research questions. RQ1: What types of dynamic data are used for automated requirements elicitation? RQ2: What types of techniques and technologies are used for automating requirements elicitation? RQ3: What are the outcomes of automated requirements elicitation? In the following sections, we provide a discussion of the main findings, the identified research gaps, and issues to be addressed in future research.

RQ1: What Types of Dynamic Data Are Used for Automated Requirements Elicitation?

Existing research on data-driven requirements elicitation from dynamic data sources has primarily focused on utilizing human-sourced data in the form of online reviews, micro-blogs, online discussions/forums, software repositories, and mailing lists. The use of online reviews was substantially more prevalent than that of other types of human-sourced data. This result indicates that current data-driven requirements elicitation is largely crowd-based. In contrast, process-mediated and machine-generated data sources have been explored as potential sources of requirements in only a few instances. The predominance of human-sourced information is rather expected and can be explained by the following two reasons: (1) users’ preferences and needs regarding a system are typically explicitly expressed in natural language, from which it is relatively straightforward to obtain requirements compared to process-mediated and machine-generated data, and (2) there are abundant sources of human-sourced data that are publicly available and readily accessible.

Much more research is, thus, needed to develop methods capable of eliciting requirements from process-mediated and machine-generated data that are not expressed in natural language and from which requirements need to be inferred. There is still a lack of methods to infer requirements, as well as of evidence regarding the applicability of the proposed approaches to more diverse types of process-mediated and machine-generated data. Process-mediated and machine-generated data enable run-time requirements elicitation [19]. They also help system developers to understand usage data and the corresponding context, which allows elicitation of performance-related as well as context-dependent requirements [19]. In addition, almost all of the studies have focused on using only a single type of dynamic data and typically also a single data source.

A few studies have utilized multiple human-sourced data sources; however, there has been only one attempt to combine different types of dynamic data sources. As such, there is currently insufficient evidence that using multiple types of data leads to more effective requirements elicitation, but it remains an open issue that merits investigation. We believe that research in this direction would be highly interesting in an attempt to improve data-driven requirements elicitation, both in terms of the coverage and quality of the elicited requirements. Utilizing semantic technologies can be useful for enabling the integration of heterogeneous data sources [107].

In addition, only one study integrated dynamic data and domain knowledge to elicit requirements [44]. The results from that study indicate the potential benefits of using dynamic data together with domain knowledge to elicit requirements that cannot be captured using either one of the data sources. It is likely that domain knowledge, which is typically relatively static but of high quality, can help to enrich data-driven requirements elicitation efforts from dynamic data sources. A larger number of studies are needed to confirm the impacts of integrating domain knowledge with dynamic data on the quality and diversity of outcomes obtained from the automated requirements process.

RQ2: What Types of Techniques and Technologies Are Used for Automating Requirements Elicitation?

Techniques Used for the Automated Requirement Elicitation

Human-sourced data are typically expressed in natural language, which is inherently difficult to analyze computationally due to its ambiguous nature and lack of rigid structure. In all the selected studies, human-sourced data have been (pre-)processed using natural language processing techniques to facilitate subsequent analysis. Although the techniques used for preprocessing vary across studies, data cleaning, text normalization, and feature extraction for data modeling are frequently performed preprocessing steps in automated requirements engineering. Commonly used features include surface-level tokens, words, and phrases, but also syntactic features (e.g., part-of-speech tags) and semantic features (e.g., the positive/negative/neutral sentiment of a sentence). After data preparation and feature extraction, data modeling or analysis for the purpose of requirements elicitation is typically performed using classification or clustering, or classification followed by clustering.
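The preprocessing steps named above can be sketched as follows. This is a deliberately small, standard-library-only illustration: the stopword list, the cleaning rules, and the choice of unigram and bigram counts as surface-level features are assumptions, and the reviewed studies typically relied on full NLP toolkits and richer syntactic or semantic features.

```python
import re
from collections import Counter

# Illustrative stopword list; real pipelines use much larger ones.
STOPWORDS = {"the", "a", "an", "is", "it", "to", "and", "of", "i"}


def preprocess(text):
    """Clean and normalize a review, returning a list of tokens."""
    text = text.lower()                        # normalization: case folding
    text = re.sub(r"https?://\S+", " ", text)  # cleaning: drop URLs
    text = re.sub(r"[^a-z\s]", " ", text)      # cleaning: drop digits and punctuation
    return [t for t in text.split() if t not in STOPWORDS]


def surface_features(tokens):
    """Extract surface-level features: word (unigram) and phrase (bigram) counts."""
    unigrams = Counter(tokens)
    bigrams = Counter(zip(tokens, tokens[1:]))
    return unigrams, bigrams


tokens = preprocess("The app CRASHES!! See http://x.io it is slow")
print(tokens)
print(surface_features(tokens))
```

The resulting counts could then be turned into feature vectors for a classifier or a clustering algorithm.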

Classification in the context of automated requirements elicitation involves one of the following three tasks: (1) filtering out data irrelevant to requirements, (2) classifying text based on the relevance to different stakeholder groups, or (3) classifying text into different categories of technical issues, such as bug reports and feature requests. The classification tasks have been tackled using either rule-based approaches or machine learning, mostly within the supervised learning paradigm. Although supervised machine learning can achieve high predictive performance in a well-defined classification task, it requires access to a sufficient amount of human-annotated data. As a result, many studies involved humans annotating data into pre-defined classes. The labeling task, however, is labor-intensive, time-consuming, and error-prone due to a considerable amount of noise and the ambiguous nature inherent in natural language [35].
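For contrast with the supervised approach, a rule-based classifier for task (3) can be sketched with a handful of keyword and language patterns. The patterns and labels below are hypothetical and far too small for practical use; they only show the shape of the approach, in which no annotated training data is needed but the rules must be specified by hand.

```python
import re

# Hypothetical keyword/language patterns, checked in order.
RULES = [
    ("bug report",
     re.compile(r"\b(crash(es|ed)?|bug|error|freez(e|es)|doesn'?t work)\b")),
    ("feature request",
     re.compile(r"\b(please add|would be nice|wish|should have|add (a|an|the))\b")),
]


def classify(review):
    """Return the label of the first matching rule, or 'other' if none matches."""
    text = review.lower()
    for label, pattern in RULES:
        if pattern.search(text):
            return label
    return "other"


for review in [
    "The app crashes on startup",
    "Please add a dark mode",
    "Great app, love it",
]:
    print(review, "->", classify(review))
```

Because the first matching rule wins, the order of the rules acts as a simple priority scheme, which is one of the design decisions a rule author has to make explicitly.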

Two solutions have been proposed to reduce the cost of labeling a large amount of data: active learning [35] and semi-supervised machine learning [43]. Dhinakaran et al. [35] showed that classifiers trained with active learning strategies outperformed baseline classifiers that were passively trained on a randomly selected dataset in classifying app reviews into feature requests, bug reports, user ratings, or user experiences. Deocadez et al. [43] demonstrated that three semi-supervised algorithms (i.e., Self-training, RASCO, and Rel-RASCO) with four base classifiers achieved predictive performance comparable to that of classical supervised machine learning in classifying app reviews into functional or non-functional requirements. Although there is not a sufficient number of studies to draw a generalizable conclusion, classification using active learning and semi-supervised machine-learning strategies may have similar potential to conventional supervised machine learning in identifying and classifying requirements-related information, while requiring a much smaller amount of labeled data.
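The core idea of active learning, asking annotators to label only the most informative items, can be sketched as follows. The example uses least-confidence uncertainty sampling, one common query strategy; it is not necessarily the strategy used in [35]. The function name, the `predict_proba` callback, and the probability values are hypothetical stand-ins for a real classifier.

```python
def select_most_uncertain(pool, predict_proba, k):
    """Pick the k unlabeled items the current model is least confident about.

    pool:          unlabeled items
    predict_proba: function mapping an item to a list of class probabilities
    k:             number of items to send to human annotators
    """
    # Least-confidence sampling: a low top-class probability means high uncertainty.
    ranked = sorted(pool, key=lambda item: max(predict_proba(item)))
    return ranked[:k]


# Hypothetical class probabilities from a partially trained review classifier.
fake_probabilities = {
    "review a": [0.90, 0.10],
    "review b": [0.55, 0.45],
    "review c": [0.60, 0.40],
    "review d": [0.99, 0.01],
}
to_label = select_most_uncertain(
    list(fake_probabilities), fake_probabilities.get, k=2
)
print(to_label)
```

In a full loop, the selected items would be labeled, added to the training set, and the classifier retrained before the next selection round, whereas passive training would pick the items at random.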