Abstract

In Rao et al. (Comptes Rendus Acad Sci Paris Ser I(342):191–196, 2006), Rao–Ruan–Xi solved an open question posed by David and Semmes and gave a complete Lipschitz classification of self-similar sets on \(\mathbb R\) with touching structure. In this short note, by applying a matrix rearrangeable condition introduced in Luo (J Lond Math Soc 99(2):428–446, 2019), we generalize their result to self-similar sets with overlapping structure.


1 Introduction

Two compact metric spaces \((E,d_1)\) and \((F,d_2)\) are said to be Lipschitz equivalent, denoted by \(E\simeq F\), if there exist a bijection f from E onto F and a constant \(C>0\) such that for all \(x,y\in E\),

$$\begin{aligned} C^{-1}d_1(x,y)\le d_2(f(x),f(y))\le C\,d_1(x,y). \end{aligned}$$
It follows immediately from the definition that two Lipschitz equivalent spaces have the same Hausdorff dimension, but the converse is not true in general. Hence, beyond the Hausdorff dimension, Lipschitz equivalence plays an important role in the classification of fractal sets.

Around 1990, from different viewpoints, Cooper–Pignataro [2] and Falconer–Marsh [5] made initial attempts at the Lipschitz equivalence of Cantor sets. In 2006, Rao–Ruan–Xi [16] achieved a breakthrough for self-similar sets with touching structure: using the technique of graph-directed systems, they answered an open question of David and Semmes [3]. This result triggered considerable interest, and since then there has been extensive research on this topic [4, 10,11,12,13, 15, 17, 20, 21]. However, very few results concern self-similar sets with overlapping structure (in the absence of the open set condition). It is known that the topological structure of self-similar sets with overlaps is quite complicated (see [14]), which makes their Lipschitz equivalence more difficult to study.

Recently, Guo et al. [7] constructed the first Lipschitz equivalence class of self-similar sets with complete overlaps on \(\mathbb R\). Other sufficient or necessary conditions for the Lipschitz classification of self-similar sets on \(\mathbb R\) were studied in [1, 8]. In parallel, Lau and Luo [10, 12] introduced Gromov hyperbolic graphs into the study of Lipschitz equivalence and developed a new, tractable approach to the problem. In particular, for self-similar sets with overlaps, Luo [10] provided a very general sufficient condition for Lipschitz equivalence, which we will call the matrix rearrangeable condition (MRC).

The main purpose of this note is to apply the MRC to classify the following collection of self-similar sets. Let \(I=[0,1]\) be the unit interval, let \(n\ge 3\) be an integer and let \(0<r<1/n\). Denote by \(\mathcal {C}_{n,r, \ell }\) the collection of self-similar sets K satisfying:

(1) \(K=\bigcup _{i=1}^{n}S_i(K)\), where \(S_i(x)=rx+b_i\) for \(i=1,\ldots ,n\).

(2) \(0=b_1<b_2<\cdots <b_n=1-r\).

(3) If \(S_i(I)\cap S_{i+1}(I)\ne \emptyset \) for some \(1\le i<n\), then the intersection is either a one-point set (touching) or satisfies \(|S_i(I)\cap S_{i+1}(I)|=r^2\) (overlapping).

(4) \(\ell =\#\{i\in \{1,\ldots ,n-1\}: \ |S_i(I)\cap S_{i+1}(I)|=r^2\}\) (the number of overlaps).
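The following Python sketch (an illustration, not part of the original note) checks conditions (2) and (3) for given translations \(b_1,\ldots ,b_n\) and returns the number of overlaps \(\ell \) from condition (4). It uses exact rational arithmetic (`fractions.Fraction`) so that the test \(|S_i(I)\cap S_{i+1}(I)|=r^2\) is exact; the function name `classify_overlaps` and the sample system n=3, r=1/4, b=(0, 3/16, 3/4) are hypothetical.

```python
from fractions import Fraction


def classify_overlaps(b, r):
    """Return ell (condition (4)) for the IFS S_i(x) = r*x + b_i on I = [0, 1].

    Raises ValueError if n >= 3 and 0 < r < 1/n fail, or if condition (2) or (3) fails.
    """
    n = len(b)
    if n < 3 or not 0 < r < Fraction(1, n):
        raise ValueError("need n >= 3 and 0 < r < 1/n")
    # condition (2): 0 = b_1 < b_2 < ... < b_n = 1 - r
    if b[0] != 0 or b[-1] != 1 - r or any(b[i] >= b[i + 1] for i in range(n - 1)):
        raise ValueError("condition (2) fails")
    ell = 0
    for i in range(n - 1):
        # S_i(I) = [b_i, b_i + r]; a negative gap means the intervals overlap by -gap
        gap = b[i + 1] - (b[i] + r)
        if gap >= 0:
            continue  # disjoint (gap > 0) or touching in one point (gap == 0)
        if -gap == r * r:
            ell += 1  # overlap of length r^2
        else:
            raise ValueError(f"condition (3) fails for S_{i + 1}(I) and S_{i + 2}(I)")
    return ell


r = Fraction(1, 4)
print(classify_overlaps([Fraction(0), Fraction(3, 16), Fraction(3, 4)], r))  # 1
```

For the sample system, \(S_1(I)=[0,1/4]\) and \(S_2(I)=[3/16,7/16]\) overlap in an interval of length \(1/16=r^2\), while \(S_3(I)=[3/4,1]\) is disjoint from \(S_2(I)\), so \(\ell =1\).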

We prove that

Theorem 1.1

Any two self-similar sets in \(\mathcal {C}_{n,r,\ell }\) are Lipschitz equivalent.

Note that every self-similar set \(K\in \mathcal {C}_{n,r, \ell }\) is totally disconnected since \(nr<1\). Rao–Ruan–Xi [16] proved the case \(\ell =0\) (i.e., K has no overlaps). Let \(\mathcal {C}_{n,r}=\bigcup _{\ell =0}^{n-1}\mathcal {C}_{n,r,\ell }\) be the collection of self-similar sets K satisfying conditions (1), (2) and (3) above. Then the Lipschitz classification of \(\mathcal {C}_{n,r}\) depends only on the number of overlaps \(\ell \).

Corollary 1.2

Let \(K, K'\in \mathcal {C}_{n,r}\). Then \(K\simeq K'\) if and only if \(K, K'\in \mathcal {C}_{n,r, \ell }\) for some \(\ell \).

In Sect. 2 we recall some well-established results on Gromov hyperbolic graphs and hyperbolic boundaries, together with the definition of the MRC. In Sect. 3 we verify the MRC for the hyperbolic graph induced by the iterated function system in condition (1), and then prove Theorem 1.1 and Corollary 1.2.

2 Preliminaries

In this section, we follow the standard notation in [10]. Let \((X,\mathcal {E})\) be a graph, where X is a set of vertices and \(\mathcal {E}\) is a set of edges, i.e., \(\mathcal {E}\subset (X\times X)\setminus \{(x,x): x\in X\}\). A path in \((X,\mathcal {E})\) from x to y is a finite sequence \(x=x_0,x_1,\ldots ,x_n=y\) such that \((x_i,x_{i+1})\in \mathcal {E}\) for \(i=0,1,\ldots ,n-1\). If the path has minimal length among all paths from x to y, we call it a geodesic and denote it by \(\pi (x,y)\). We call X a tree if any two distinct vertices are connected by a unique path. We always assume that the graph X is connected, i.e., any two vertices can be joined by a path, and we equip \((X,\mathcal {E})\) with the integer-valued metric d(x, y), the length of a geodesic \(\pi (x,y)\) from x to y. Let \(\vartheta \in X\) be a fixed vertex, called the root (or reference point) of the graph. We write |x| for \(d(\vartheta ,x)\) and say that x belongs to the n-th level of the graph if \(|x|=n\).

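A graph \((X,\mathcal {E})\) is called a Gromov hyperbolic graph (with respect to \(\vartheta \)) if there is \(\delta \ge 0\) such that

$$\begin{aligned} |x\wedge y|\ge \min \{|x\wedge z|,|z\wedge y|\}-\delta \quad \text {for all } x,y,z\in X, \end{aligned}$$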
where \(|x\wedge y|:=\frac{1}{2}(d(\vartheta ,x)+d(\vartheta ,y)-d(x,y))\) is the Gromov product [6]. We choose \(a>0\) with \(\exp (3\delta a)<\sqrt{2}\), and define for \(x,y\in X\), \(\rho _a(x,y)=\delta _{x,y}\exp (-a|x\wedge y|)\), where \(\delta _{x,y}=0\) or 1 according to \(x=y\) or \(x\ne y\). This is not necessarily a metric unless X is a tree. However \(\rho _a\) is equivalent to a metric, hence we usually regard \(\rho _a\) as a (visual) metric [19]. Let \(\overline{X}\) be the completion of a hyperbolic graph X under \(\rho _a\). The hyperbolic boundary of X is defined by \(\partial X:=\overline{X}\setminus X\) which is a compact metric space under the metric \(\rho _a\). A tree is a hyperbolic graph with \(\delta =0\) and its hyperbolic boundary is a Cantor-type set.
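To make the Gromov product and the visual metric \(\rho _a\) concrete, the sketch below computes them by breadth-first search on a finite graph given as an adjacency dictionary. It is our own illustration; the helper names (including `word_tree`) and the value a=0.5 are hypothetical. On the word tree \(\Sigma ^*\) (where \(\delta =0\)), \(|x\wedge y|\) reduces to the length of the longest common prefix of x and y.

```python
from collections import deque
from math import exp


def bfs_distances(adj, source):
    """Graph distances from source in an unweighted, connected graph (adjacency dict)."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        v = queue.popleft()
        for w in adj[v]:
            if w not in dist:
                dist[w] = dist[v] + 1
                queue.append(w)
    return dist


def gromov_product(adj, root, x, y):
    """|x ^ y| = (d(root, x) + d(root, y) - d(x, y)) / 2."""
    d_root = bfs_distances(adj, root)
    d_x = bfs_distances(adj, x)
    return (d_root[x] + d_root[y] - d_x[y]) / 2


def visual_rho(adj, root, x, y, a):
    """rho_a(x, y) = exp(-a |x ^ y|) for x != y, and 0 for x == y."""
    return 0.0 if x == y else exp(-a * gromov_product(adj, root, x, y))


def word_tree(alphabet, depth):
    """Hypothetical helper: the tree of words of length <= depth, rooted at the empty word."""
    adj = {"": []}
    frontier = [""]
    for _ in range(depth):
        next_frontier = []
        for w in frontier:
            for s in alphabet:
                adj[w].append(w + s)
                adj[w + s] = [w]
                next_frontier.append(w + s)
        frontier = next_frontier
    return adj


tree = word_tree("12", 2)
print(gromov_product(tree, "", "11", "12"))  # 1.0 (common prefix "1")
print(gromov_product(tree, "", "11", "21"))  # 0.0 (no common prefix)
```

So in this tree \(\rho _a(11,12)=e^{-a}\), while \(\rho _a(11,21)=1\).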

Let \(K=\cup _{i=1}^{n}S_i(K)\) be a self-similar set in \(\mathcal {C}_{n,r, \ell }\) as in the introduction. Let \(\Sigma =\{1,2,\ldots ,n\}\) and \(\Sigma ^*=\cup _{k\ge 0}\Sigma ^k\), where \(\Sigma ^0=\{\vartheta \}\) consists of the empty word \(\vartheta \) (the root). For \(x=i_1i_2\ldots i_k\in \Sigma ^k\), we write \(|x|=k\) for its length and \(S_x=S_{i_1}\circ \cdots \circ S_{i_k}\) for the composition of maps. We say that \(x,y\in \Sigma ^*\) are equivalent, denoted by \(x\sim y\), if \(S_x=S_y\); clearly \(\sim \) is an equivalence relation on \(\Sigma ^*\). Let \(X_k=\Sigma ^k/\sim \) be the set of equivalence classes on level k. Then \(X=\cup _{k=0}^\infty X_k\) is the quotient space of \(\Sigma ^*\) under \(\sim \). We write [x] for the equivalence class containing x, and occasionally write x instead of [x] when no confusion can arise.
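The quotient \(X_k=\Sigma ^k/\sim \) can be computed directly for a concrete system: since every \(S_x\) with \(|x|=k\) has ratio \(r^k\), two words of length k are equivalent exactly when their maps have the same translation \(S_x(0)\). The sketch below is our own illustration; the function name `level_classes` and the sample system n=3, r=1/4, b=(0, 3/16, 3/4) are hypothetical.

```python
from fractions import Fraction
from itertools import product


def level_classes(b, r, k):
    """Group the words of Sigma^k into the classes of X_k = Sigma^k / ~.

    Every S_x with |x| = k has ratio r**k, so S_x is determined by its translation
    S_x(0) = b_{i_1} + r*b_{i_2} + ... + r**(k-1)*b_{i_k}.
    Returns a dict mapping each translation to the words (tuples of letters 1..n) realizing it.
    """
    classes = {}
    for word in product(range(1, len(b) + 1), repeat=k):
        t = sum(r ** j * b[i - 1] for j, i in enumerate(word))
        classes.setdefault(t, []).append(word)
    return classes


b = [Fraction(0), Fraction(3, 16), Fraction(3, 4)]
r = Fraction(1, 4)
print([len(level_classes(b, r, k)) for k in range(4)])  # [1, 3, 8, 21]
print(level_classes(b, r, 2)[Fraction(3, 16)])  # [(1, 3), (2, 1)]
```

For the sample system the words 13 and 21 are identified, since \(S_{13}(0)=S_{21}(0)=3/16\); this is the only identification on level 2.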

There is a natural graph structure on X given by the standard concatenation of indices; we denote the corresponding (vertical) edge set by \({\mathcal {E}}_v\). That is, \((x,y)\in {\mathcal {E}}_v\) if and only if there exist \(x'\in [x], y'\in [y]\) and some \(z\in \Sigma \) such that \(y'=x'z\) or \(x'=y'z\). To reflect the geometric structure of K, we also need to define a horizontal edge set on X:
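
$$\begin{aligned} {\mathcal {E}}_h=\{(x,y)\in X_k\times X_k:\ k\ge 1,\ x\ne y,\ S_x(I)\cap S_y(I)\ne \emptyset \}. \end{aligned}$$
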
Let \({\mathcal {E}}={\mathcal {E}}_v\cup {\mathcal {E}}_h\), then \((X, {\mathcal {E}})\) resembles Kaimanovich’s augmented tree [9], and is a Gromov hyperbolic graph (due to Theorem 1.2 of [18]). Moreover, we have

Proposition 2.1

The hyperbolic boundary of \((X, {\mathcal {E}})\) is Hölder equivalent to K.

Proof

By Theorem 1.3 of [18], it suffices to prove that the iterated function system \(\{S_i\}_{i=1}^n\) of K satisfies the following condition (H):

There exists a constant \(c>0\) such that for any integer \(k>0\) and any \(x,y\in X_k\), either \(S_x(K)\cap S_y(K)\ne \emptyset \) or \(|S_x(p)-S_y(q)|\ge cr^k\) for any \(p,q\in K\).

Since \(r<\frac{1}{n}\), we have \(I\ne \cup _{i=1}^nS_i(I)\). Let \(I_1, \ldots , I_m\) be the open intervals of \(I\setminus \cup _{i=1}^nS_i(I)\), listed from left to right. Then the shortest length of these open intervals, \(c:=\min _{1\le i\le m}|I_i|\), is the desired constant in condition (H). \(\square \)
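The constant c in the proof above is easy to compute: because \(b_1=0\) and \(b_n=1-r\), the open intervals \(I_1,\ldots ,I_m\) are exactly the positive gaps between consecutive intervals \(S_i(I)\) and \(S_{i+1}(I)\). A minimal Python sketch, with the hypothetical sample system n=3, r=1/4, b=(0, 3/16, 3/4) and a hypothetical function name:

```python
from fractions import Fraction


def gap_constant(b, r):
    """c = min |I_j| over the open gaps I_j of I minus the union of S_i(I) = [b_i, b_i + r].

    Assumes 0 = b_1 < ... < b_n = 1 - r, so the gaps lie between consecutive intervals.
    """
    gaps = [b[i + 1] - (b[i] + r) for i in range(len(b) - 1)]
    return min(g for g in gaps if g > 0)  # nonempty because n*r < 1


print(gap_constant([Fraction(0), Fraction(3, 16), Fraction(3, 4)], Fraction(1, 4)))  # 5/16
```

Here the only gap is \((7/16,3/4)\), so \(c=5/16\).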

For \(T\subset X\), we say that T is a horizontal component if \(T\subset X_k\) for some k and T is a maximal connected subset of \(X_k\) with respect to \({{\mathcal {E}}}_h\). Note that if \({\mathcal {E}}_h=\emptyset \), then every horizontal component is a single vertex. Geometrically, T is a horizontal component of X if and only if \(\cup _{x\in T}S_x(I)\) is a connected component of \(\cup _{x\in X_k}S_x(I)\). We use \(T_{\mathcal D}\) to denote the union of T and all its descendants, that is,

$$\begin{aligned} T_{\mathcal D}=\{xz:\ x\in T,\ z\in \Sigma ^*\}. \end{aligned}$$

Then \(T_{\mathcal D}\), equipped with the edge set \({\mathcal {E}}\) restricted on \(T_{\mathcal D}\), is a subgraph of X. For any \(x\in X\), we define \(xT:=\{xy:y\in T\}\).
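Using the geometric description above (T is a horizontal component if and only if \(\cup _{x\in T}S_x(I)\) is a connected component of \(\cup _{x\in X_k}S_x(I)\)), horizontal components can be listed by sorting the level-k intervals and merging those that meet. Since all level-k intervals have the same length \(r^k\), it suffices to compare each interval with the previous one. The sketch is our own illustration on the hypothetical sample system n=3, r=1/4, b=(0, 3/16, 3/4); vertices are represented by their translations \(S_x(0)\).

```python
from fractions import Fraction
from itertools import product


def horizontal_components(b, r, k):
    """Horizontal components of X_k, each given as a sorted list of translations S_x(0)."""
    length = r ** k
    starts = sorted({sum(r ** j * b[i - 1] for j, i in enumerate(w))
                     for w in product(range(1, len(b) + 1), repeat=k)})
    components, current = [], [starts[0]]
    for s in starts[1:]:
        # equal lengths: the interval starting at s meets the chain iff it meets its last interval
        if s <= current[-1] + length:
            current.append(s)
        else:
            components.append(current)
            current = [s]
    components.append(current)
    return components


b = [Fraction(0), Fraction(3, 16), Fraction(3, 4)]
r = Fraction(1, 4)
print([len(T) for T in horizontal_components(b, r, 1)])  # [2, 1]
print([len(T) for T in horizontal_components(b, r, 2)])  # [2, 2, 1, 2, 1]
```

For the sample system the first level splits into the components \(\{1,2\}\) and \(\{3\}\), and the second level into components of sizes 2, 2, 1, 2, 1.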

Let \(T\subset X_k, T'\subset X_m\) be two horizontal components of X. We say that T and \(T'\) are equivalent, denoted by \(T \sim T'\), if there exists a graph isomorphism \(g: T_{\mathcal D}\rightarrow T'_{\mathcal D}\), i.e., a bijection g such that g and its inverse \(g^{-1}\) preserve the vertical and horizontal edges of \(T_{\mathcal D}\) and \(T'_{\mathcal D}\). Denote by [T] the equivalence class of T, and by \({\mathcal {F}}\) the family of all horizontal components of X.

Lemma 2.2

Let \(T_1\subset X_{k_1}\) and \(T_2\subset X_{k_2}\) be two horizontal components with \(\#T_1=\#T_2\). If there exists a similitude \(\phi (x)=r^{k_2-k_1}Rx+c\), where \(R=\pm 1\) and \(c\in {\mathbb R}\), such that

then \(T_1 \sim T_2\).

Proof

Without loss of generality, we may assume that \(T_1=\{x_1,\ldots , x_m\}\) and \(T_2=\{y_1, \ldots , y_m\}\) and \(\phi \circ S_{x_i}= S_{y_i}\) where \(i=1,\ldots , m\). Then for any finite word \(z\in \Sigma ^*\), we have
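
$$\begin{aligned} \phi \circ S_{x_iz}=\phi \circ S_{x_i}\circ S_z=S_{y_i}\circ S_z=S_{y_iz}. \end{aligned}$$
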
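It follows that for any \(z,w\in \Sigma ^*\) and \(1\le i,j\le m\), \(S_{x_iz}=S_{x_jw}\) if and only if \(S_{y_iz}=S_{y_jw}\), and \( S_{x_iz}(I)\cap S_{x_jw}(I)\ne \emptyset \) if and only if \(S_{y_iz}(I)\cap S_{y_jw}(I)\ne \emptyset \), since \(\phi \) is a bijection of \(\mathbb R\). Hence \([x_iz]\mapsto [y_iz]\) is a well-defined graph isomorphism from \((T_1)_{\mathcal D}\) onto \((T_2)_{\mathcal D}\), which proves \(T_1 \sim T_2\). \(\square \)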

Definition 2.3

We call the graph \((X,\mathcal {E})\) simple if \(\mathcal {F}\) has only finitely many equivalence classes. Let \([T_1],[T_2],\ldots ,[T_d]\) be the equivalence classes, and let \(a_{ij}\) denote the number of horizontal components among the offspring of \(T\in [T_i]\) that belong to \([T_j]\). We call \(A=[a_{ij}]_{d\times d}\) the incidence matrix of \((X,\mathcal {E})\).

Since \(S_x=S_y\) may happen for \(x\ne y\), the natural graph \((X,\mathcal {E}_v)\) may not be a tree: a vertex of X may have several parents, which destroys the tree structure. However, we can reduce it to a tree \((X,\mathcal {E}_v^{*})\) as follows. For a vertex \(y\in X\setminus \{\vartheta \}\), let \(x_1,x_2,\ldots ,x_k\) be all the parents of y, i.e., \(|x_i|=|y|-1\) and \((x_i, y)\in {\mathcal {E}}_v\) for \(i=1,2,\ldots ,k\), ordered so that \(x_1<x_2<\cdots <x_k\) in the lexicographical order of finite indices. We keep only the edge \((x_1,y)\) and remove the other vertical edges \((x_i,y)\), \(i\ge 2\). We denote by \(\mathcal {E}_v^{*}\) the set of remaining edges. Then \((X,\mathcal {E}_v^{*})\) is the reduced tree.
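The reduction to \((X,\mathcal {E}_v^{*})\) can be sketched in Python as follows. The sketch is our own illustration and makes one assumption explicit: parents (which are equivalence classes) are compared through their lexicographically smallest representative words. It counts how many children each level-k vertex keeps in the reduced tree, for the hypothetical sample system n=3, r=1/4, b=(0, 3/16, 3/4); function names are hypothetical.

```python
from collections import Counter
from fractions import Fraction
from itertools import product


def level_classes(b, r, k):
    """Classes of X_k as sorted lists of words; words are equivalent iff S_x(0) agrees."""
    out = {}
    for w in product(range(1, len(b) + 1), repeat=k):
        t = sum(r ** j * b[i - 1] for j, i in enumerate(w))
        out.setdefault(t, []).append(w)
    return [sorted(ws) for ws in out.values()]


def reduced_child_counts(b, r, k):
    """Children kept by each level-k vertex in the reduced tree (X, E_v^*).

    Each level-(k+1) vertex keeps only the vertical edge to its smallest parent,
    a parent class being represented (assumption) by its smallest word.
    Returns the counts for the level-k vertices, sorted by representative word.
    """
    rep = {w: ws[0] for ws in level_classes(b, r, k) for w in ws}
    counts = Counter()
    for ws in level_classes(b, r, k + 1):
        parents = {rep[w[:-1]] for w in ws}  # classes obtained by deleting the last letter
        counts[min(parents)] += 1
    return [counts[p] for p in sorted(set(rep.values()))]


b = [Fraction(0), Fraction(3, 16), Fraction(3, 4)]
r = Fraction(1, 4)
print(reduced_child_counts(b, r, 1))  # [3, 2, 3]
```

On level 1 the vertex 2 loses the child 21, which is identified with 13 and kept under the smaller parent 1; every vertex keeps either n=3 or n-1=2 children.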

Similarly, for the tree \((X,\mathcal {E}_v^{*})\), we say that two vertices x, y of X are equivalent (written \(x\sim y\)) if the two subtrees \(\{x\}_{\mathcal {D}}\) and \(\{y\}_{\mathcal {D}}\) are graph isomorphic. We call \((X,\mathcal {E}_v^{*})\) a simple tree if X has only finitely many non-equivalent vertices. In this case, we denote by \([t_1],[t_2],\ldots ,[t_k]\) the equivalence classes of vertices (with \(\vartheta \in [t_1]\) for convenience) and by \(B=[b_{ij}]_{k\times k}\) the corresponding incidence matrix. Clearly, if \((X,\mathcal {E})\) is simple, then so is its subgraph \((X,\mathcal {E}_v^{*})\). Therefore, any horizontal component of \((X,\mathcal {E})\) can be represented by the equivalence classes of its vertices as follows. For \(T\in [T_i]\), \(1\le i\le d\), suppose that T consists of \(u_{ij}\) vertices belonging to \([t_j]\), \(j=1,\ldots ,k\). We represent T by the vector \(\mathbf {u}_i=[u_{i1},\ldots ,u_{ik}]\) (relative to \([t_1],\ldots ,[t_k]\)) and write \(\mathbf {u}=[\mathbf {u}_1,\ldots ,\mathbf {u}_d]\). The vector \(\mathbf {u}\) can then be regarded as a representation of the classes \([T_1],\ldots ,[T_d]\) with respect to the classes \([t_1],\ldots ,[t_k]\).

Theorem 2.4

[10] Let \((X,\mathcal {E}_v^{*})\) and \((Y,\mathcal {E}_v^{'*})\) be two simple trees defined as above. If they have the same incidence matrix B, then they are graph isomorphic.

Definition 2.5

Let B be a \(k\times k\) non-negative matrix, let \(\mathbf {u}=[\mathbf {u}_1,\ldots ,\mathbf {u}_d]\) where \(\mathbf {u}_i=[u_{i1},\ldots ,u_{ik}]\in \mathbb {Z}_{+}^{k}\). A \(d\times d\) matrix A is said to be \((B,\mathbf {u})\)-rearrangeable (or to satisfy the matrix rearrangeable condition) if for each row vector \(\mathbf {a}_i\) of A, there exists a non-negative matrix \(C=[c_{ij}]_{p\times d}\) where \(p=\sum _{j=1}^{k}u_{ij}\) such that
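
$$\begin{aligned} \mathbf {1}C=\mathbf {a}_i\quad \text {and}\quad C\mathbf {u}^*=\left[ \begin{array}{c} \mathbf {c}_1\\ \vdots \\ \mathbf {c}_p\end{array}\right] , \end{aligned}$$
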
and \(\#\{t\in \{1,\ldots ,p\}:\ \mathbf {c}_t=\mathbf {b}_j\} =u_{ij}\) for \(j=1,\ldots ,k\), where \(\mathbf {c}_t\) is the t-th row of \(C\mathbf {u}^*\), \(\mathbf {b}_j\) is the j-th row vector of B, \(\mathbf {1}=[1,\ldots ,1]\) is the row vector of length p, and \( \mathbf {u}^*=\left[ \begin{array}{c} \mathbf {u}_1\\ \vdots \\ \mathbf {u}_d\end{array}\right] \) is the \(d\times k\) matrix whose rows are \(\mathbf {u}_1,\ldots ,\mathbf {u}_d\).
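Definition 2.5 can be tested mechanically on small examples. The brute-force sketch below (our own illustration, not the construction used in [10]) distributes the offspring components counted by \(\mathbf {a}_i\) among p rows in every possible way and returns a matrix C satisfying both conditions, if one exists. The sample data are our hand computation for the hypothetical system n=3, r=1/4, b=(0, 3/16, 3/4), which has \(\ell =1\) and no touching, so only three classes occur: \([T_0]=[\{1,2\}]\), \([T_1]=[\{3\}]\) and \([T_2]=[\{13,22\}]\) (here \(13\sim 21\)).

```python
from itertools import product


def find_mrc_witness(a_row, u_row, U, B):
    """Brute-force search for C as in Definition 2.5 (small examples only).

    a_row: the row a_i of A (length d); u_row: u_i (length k);
    U: the d x k matrix u^* (rows u_1, ..., u_d); B: the k x k incidence matrix.
    Returns a p x d matrix C with 1C = a_row whose product C u^* has exactly
    u_row[j] rows equal to b_j for each j, or None if no such C exists.
    """
    d, k = len(U), len(B)
    p = sum(u_row)
    # one item per offspring component counted in a_row: class j appears a_row[j] times
    items = [j for j in range(d) for _ in range(a_row[j])]
    target = sorted(tuple(B[j]) for j in range(k) for _ in range(u_row[j]))
    for assignment in product(range(p), repeat=len(items)):
        C = [[0] * d for _ in range(p)]
        for t, j in zip(assignment, items):
            C[t][j] += 1  # column sums of C equal a_row, i.e. 1C = a_i
        rows = [tuple(sum(C[t][s] * U[s][c] for s in range(d)) for c in range(k))
                for t in range(p)]  # rows c_t of C u^*
        if sorted(rows) == target:
            return C
    return None


# Hand-computed data for the hypothetical system n = 3, r = 1/4, b = (0, 3/16, 3/4)
A = [[1, 1, 1], [1, 1, 0], [1, 1, 1]]
U = [[1, 1], [1, 0], [1, 1]]
B = [[2, 1], [1, 1]]
print(find_mrc_witness(A[0], U[0], U, B))  # [[1, 1, 0], [0, 0, 1]]
```

For the first row, the witness groups the components in \([T_0]\) and \([T_1]\) under a vertex of class \([t_1]\) (giving \(\mathbf {b}_1=[2,1]\)) and the component in \([T_2]\) under a vertex of class \([t_2]\) (giving \(\mathbf {b}_2=[1,1]\)). The exhaustive search grows exponentially, so it only serves as a check on tiny instances.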

The matrix rearrangeable condition (MRC) is the key technical device for constructing the required bi-Lipschitz map. It was introduced and systematically studied by Lau and Luo in a series of papers [4, 10, 12]. Many matrices, such as primitive matrices, satisfy the MRC.

With the above notion and Proposition 2.1, the main theorems of [10] can be reformulated as follows.

Theorem 2.6

Suppose the graph \((X,\mathcal {E})\) of a self-similar set K is simple and suppose the incidence matrix A is \((B,\mathbf {u})\)-rearrangeable. Then \(\partial (X, {\mathcal {E}})\simeq \partial (X, {\mathcal {E}}_v^*)\).

Moreover, since \((X, {\mathcal {E}}_v^*)\) is a tree, its hyperbolic boundary is a Cantor-type set. Hence, under the assumptions of Theorem 2.6, the self-similar set K is Lipschitz equivalent to a Cantor-type set.

3 The proof of Theorem 1.1

By Theorem 2.6, and since the case \(\ell =0\) was settled in [16], to prove Theorem 1.1 it suffices to show that for any self-similar set \(K\in \mathcal {C}_{n,r,\ell }\) with \(\ell \ge 1\), the induced graph \((X,\mathcal {E})\) is simple and its incidence matrix A is \((B,\mathbf {u})\)-rearrangeable.

Lemma 3.1

Let \(K\in \mathcal {C}_{n,r,\ell }, \ell \ge 1\). Then the hyperbolic graph \((X,\mathcal {E})\) is simple.

Proof

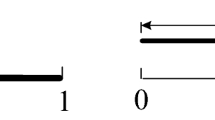

Obviously, \(0, 1\in K\) and \(K\subset I\). Let \(X=\cup _{k=0}^\infty X_k\) and \({\mathcal {E}}={\mathcal {E}}_v\cup {\mathcal {E}}_h\) be defined as in Sect. 2. By conditions (1), (2) and (3), every horizontal component of X is generated either by one vertex (see Fig. 1b, where \(1T_0\) is generated by the vertex 1) or by two vertices joined by a horizontal edge in the previous level (see Fig. 1b, where \(T_3\) is generated by the two vertices \(\{1, 2\}\)). In the latter case, condition (3) leaves only two types: let \((x,y)\in {\mathcal {E}}_h\) be a horizontal edge with \(x<y\). Then either
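
$$\begin{aligned} &\text {(Type A)}\quad |S_x(I)\cap S_y(I)|=r^{|x|+1}, \text { i.e., } S_{xn}=S_{y1}\ \text {(overlapping), or}\\ &\text {(Type B)}\quad S_x(I)\cap S_y(I)\text { is a one-point set (touching).} \end{aligned}$$
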
Write

Since \(r<1/n\), the union \(\cup _{i\in \Sigma }S_i(I)\) is not all of I, while it contains 0 and 1 by condition (2); hence the first iteration \(\cup _{i\in \Sigma }S_i(I)\) has at least two connected components (see Fig. 1a). Thus there are at least two horizontal components in the first level \(X_1\) of the hyperbolic graph \((X,\mathcal {E})\). We denote them by \(T_0,T_1,\ldots ,T_k\), where \(k\ge 1\), labelled so that \(1\in T_0\) and \(n\in T_1\).

Let \(m_i=\#(T_i\cap V_1)\) and \(\ell _i=\#(T_i\cap V_2)\), then \(\#T_i=m_i+\ell _i+1\) where \(i=0,1,\ldots ,k\). Moreover, we have

For any two vertices \(x,y\in T_i\) with \(x<y\), if \((x,y)\in {\mathcal {E}}_h\), then we need to consider the following two cases:

(i) The edge (x, y) is of Type A. Since \(S_{xn}=S_{y1}\), the offspring of the vertices \(\{x,y\}\) give rise to a new horizontal component \(T_{k+1}:=xT_1\cup yT_0\) with \(\#T_{k+1}=\#T_0+\#T_1-1\) (see Fig. 1b). All the horizontal components generated by \(\{x,y\}\) are

$$\begin{aligned} xT_0,xT_2,\ldots ,xT_k,T_{k+1},yT_2,\ldots ,yT_k,yT_1. \end{aligned}$$

It can be seen that \(\#T_{k+1}=m_{k+1}+\ell _{k+1}+1\), where \(m_{k+1}:=\#(T_{k+1}\cap V_1)\) and \(\ell _{k+1}:=\#(T_{k+1}\cap V_2)\). Hence

$$\begin{aligned} m_{k+1}=m_0+m_1, \quad \ell _{k+1}=\ell _0+\ell _1. \end{aligned}\tag{3.2}$$

(ii) The edge (x, y) is of Type B. Among the offspring of \(\{x,y\}\), we find a new horizontal component \(T_{k+2}:=xT_1\cup yT_0\) with \(\#T_{k+2}=\#T_0+\#T_1\) (see Fig. 1c). All the horizontal components generated by \(\{x,y\}\) are

$$\begin{aligned} xT_0,xT_2,\ldots ,xT_k,T_{k+2},yT_2,\ldots ,yT_k,yT_1. \end{aligned}$$

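Let \(m_{k+2}=\#(T_{k+2}\cap V_1)\) and \(\ell _{k+2}=\#(T_{k+2}\cap V_2)\). Then \(\#T_{k+2}=m_{k+2}+\ell _{k+2}+1\), and since the only new horizontal edge, the one joining \(xn\) and \(y1\), is of Type B, we obtain

$$\begin{aligned} m_{k+2}=m_0+m_1, \quad \ell _{k+2}=\ell _0+\ell _1+1. \end{aligned}\tag{3.3}$$
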
By self-similarity and Lemma 2.2, it is easy to check that \(xT_i\sim T_i\) and \(yT_i\sim T_i\) for \(i=0,\ldots ,k\). It is possible that \([T_i]=[T_j]\) for some \(i\ne j\in \{0,1,\ldots ,k+2\}\), but this does not affect the finiteness of the equivalence classes.

Next, consider the offspring of the components \(T_i\), \(i=0,1,\ldots ,k+2\), up to the third level \(X_3\). For each i, we have \(\#T_i=m_i+\ell _i+1\) with \(m_i=\#(T_i\cap V_1)\) and \(\ell _i=\#(T_i\cap V_2)\), so \(T_i\) contains \(m_i\) pairs \(\{x,y\}\) for which (x, y) is of Type A and \(\ell _i\) pairs for which (x, y) is of Type B. By the same arguments as above and Lemma 2.2, the offspring of the vertices in \(T_i\) consist of one component in \([T_0]\), one in \([T_1]\), \(m_i\) components in \([T_{k+1}]\), \(\ell _i\) components in \([T_{k+2}]\), and \(m_i+\ell _i+1\) components in \([T_j]\) for each \(j=2,\ldots , k\). Hence the equivalence classes of horizontal components in the first three levels of X are exactly \([T_0],[T_1],\ldots ,[T_{k+2}]\).

Based on the previous arguments, no new classes appear among the offspring of any component \(T\in [T_i]\), \(i=0,1,\ldots ,k+2\). Therefore, \((X,{\mathcal {E}})\) is simple and the incidence matrix A is of the form
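
$$\begin{aligned} A=\left[ \begin{array}{ccccccc} 1 & 1 & m_0+\ell _0+1 & \cdots & m_0+\ell _0+1 & m_0 & \ell _0\\ 1 & 1 & m_1+\ell _1+1 & \cdots & m_1+\ell _1+1 & m_1 & \ell _1\\ \vdots & \vdots & \vdots & & \vdots & \vdots & \vdots \\ 1 & 1 & m_{k+2}+\ell _{k+2}+1 & \cdots & m_{k+2}+\ell _{k+2}+1 & m_{k+2} & \ell _{k+2} \end{array}\right] , \end{aligned}\tag{3.4}$$

where rows and columns are indexed by \([T_0],[T_1],\ldots ,[T_{k+2}]\).
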
\(\square \)

Lemma 3.2

Let \(K\in \mathcal {C}_{n,r,\ell }, \ell \ge 1\). Then the reduced tree \((X,\mathcal {E}_v^{*})\) is simple with incidence matrix
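
$$\begin{aligned} B=\left[ \begin{array}{cc} n-\ell & \ell \\ n-\ell -1 & \ell \end{array}\right] . \end{aligned}\tag{3.5}$$
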
Proof

Using arguments similar to those in the proof of Lemma 3.1, we determine the incidence matrix B. Let \(x,y\in \Sigma ^*\) with \(|x|=|y|\) and \(x<y\). If \(|S_x(I)\cap S_{y}(I)|=r^{|x|+1}\), then \(S_{xn}=S_{y1}\), so \(xn\) and \(y1\) are equivalent (i.e., \(xn\sim y1\)); hence \(\{xn, y1\}\) forms an identified vertex of X (\(=\Sigma ^*/\sim \)). On the other hand, conditions (1), (2) and (3) show that every identified vertex of X has at most two members, which are of the form \(\{xn, y1\}\).

Let \(t_1=\vartheta \) be the root of X and \([t_1]\) the equivalence class containing \(\vartheta \). By condition (4), let \(i_1,\ldots , i_\ell \) be the indices in the first level \(X_1\) such that \(|S_{i_j}(I)\cap S_{i_j+1}(I)|=r^2\), \(j=1,\ldots , \ell \). Then there are \(\ell \) identified vertices in the second level \(X_2\), namely \(x_j:=\{i_jn, (i_{j}+1)1\}\), \(j=1,\ldots , \ell \). In the construction of the reduced tree \((X,\mathcal {E}_v^{*})\), we remove the vertical edge \((i_{j}+1, x_j)\) and keep \((i_j, x_j)\) from \((X,\mathcal {E}_v)\), since \(i_j<i_j+1\). Hence each vertex \(i_j+1\) has \(n-1\) children in the reduced tree; we denote by \([t_2]\) the equivalence class containing the vertices \(i_j+1\). Continuing this process to the third level \(X_3\), one sees that \((X,\mathcal {E}_v^{*})\) has only two classes of vertices, \([t_1]\) and \([t_2]\): a vertex in \([t_1]\) has n children, \(\ell \) of which belong to \([t_2]\), and a vertex in \([t_2]\) has \(n-1\) children, \(\ell \) of which belong to \([t_2]\). This gives the incidence matrix B. \(\square \)
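The spectral radius used in the proof of Corollary 1.2 can be read off from the characteristic polynomial of B. Assuming B has the two-class form described in the proof above (a vertex of \([t_1]\) has n children, \(\ell \) of them in \([t_2]\); a vertex of \([t_2]\) has \(n-1\) children, \(\ell \) of them in \([t_2]\)), a short computation gives

$$\begin{aligned} \det (\lambda I-B)&=\det \left[ \begin{array}{cc} \lambda -n+\ell & -\ell \\ -(n-\ell -1) & \lambda -\ell \end{array}\right] \\ &=(\lambda -n+\ell )(\lambda -\ell )-\ell (n-\ell -1)=\lambda ^2-n\lambda +\ell , \end{aligned}$$

so the largest eigenvalue of B is \(\frac{n+\sqrt{n^2-4\ell }}{2}\). The discriminant is non-negative because \(\ell \le n-1\) gives \(n^2-4\ell \ge (n-2)^2\ge 0\).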

Lemma 3.3

A is \((B,\mathbf {u})\)-rearrangeable, where \(\mathbf {u}\) is the representation defined in Sect. 2.

Proof

Matrices A and B have been determined in Lemmas 3.1 and 3.2; it remains to determine the vector \(\mathbf {u}\). For \(i=0,1,\ldots ,k+2\), we have \(\#T_i=m_i+\ell _i+1\), where \(m_i=\#(T_i\cap V_1)\) and \(\ell _i=\#(T_i\cap V_2)\). Hence \(m_i\) vertices of \(T_i\) belong to the class \([t_2]\), and the remaining \(\ell _i+1\) vertices belong to \([t_1]\). We can therefore write \(\mathbf {u}\) as
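
$$\begin{aligned} \mathbf {u}=[\mathbf {u}_0,\mathbf {u}_1,\ldots ,\mathbf {u}_{k+2}],\qquad \mathbf {u}_i=[\ell _i+1,\ m_i],\quad i=0,1,\ldots ,k+2. \end{aligned}$$
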
Let \(\mathbf {a}_i\) be the i-th row vector of the matrix A in (3.4) and let \(p=1+\ell _i+m_i\). By (3.1), (3.2) and (3.3), we obtain a non-negative matrix C such that \(\mathbf {1}C=\mathbf {a}_i\) and

Consequently, A is \((B,\mathbf {u})\)-rearrangeable by Definition 2.5. \(\square \)
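Summing the rows of \(C\mathbf {u}^*\) in Definition 2.5 shows that the MRC forces \(\mathbf {a}_i\mathbf {u}^*=(\ell _i+1)\mathbf {b}_1+m_i\mathbf {b}_2\) for every row, since \(T_i\) has \(\ell _i+1\) vertices in \([t_1]\) and \(m_i\) in \([t_2]\). The Python sketch below (our own consistency check, not a proof of Lemma 3.3) assembles A, \(\mathbf {u}^*\) and B from the level-1 data \((m_i,\ell _i)\), \(0\le i\le k\), and verifies this necessary condition. It assumes the row structure of A described in the proof of Lemma 3.1, relation (3.2), relation (3.3) in the form \(m_{k+2}=m_0+m_1\), \(\ell _{k+2}=\ell _0+\ell _1+1\), and \(n=\sum _i(m_i+\ell _i+1)\), \(\ell =\sum _i m_i\); the helper names are hypothetical.

```python
def build_data(ms, ells, n):
    """Hypothetical helper: A, u^* and B from the level-1 data (m_i, ell_i), i = 0..k.

    Rows/columns of A are indexed by [T_0], [T_1], ..., [T_k], [T_{k+1}], [T_{k+2}].
    """
    k = len(ms) - 1
    ell = sum(ms)
    if n != sum(m + e + 1 for m, e in zip(ms, ells)):
        raise ValueError("the components T_0, ..., T_k must partition X_1")
    ms = ms + [ms[0] + ms[1], ms[0] + ms[1]]  # (3.2) and (3.3)
    ells = ells + [ells[0] + ells[1], ells[0] + ells[1] + 1]
    A = [[1, 1] + [m + e + 1] * (k - 1) + [m, e] for m, e in zip(ms, ells)]
    U = [[e + 1, m] for m, e in zip(ms, ells)]  # vertices in [t_1], [t_2]
    B = [[n - ell, ell], [n - ell - 1, ell]]
    return A, U, B


def rows_consistent(A, U, B):
    """Check a_i u^* == (ell_i + 1) b_1 + m_i b_2 for every row i."""
    for a, (t1, t2) in zip(A, U):
        lhs = [sum(a[s] * U[s][c] for s in range(len(U))) for c in range(2)]
        rhs = [t1 * B[0][c] + t2 * B[1][c] for c in range(2)]
        if lhs != rhs:
            return False
    return True


A, U, B = build_data([1, 0], [0, 0], 3)
print(A)  # [[1, 1, 1, 0], [1, 1, 0, 0], [1, 1, 1, 0], [1, 1, 1, 1]]
print(rows_consistent(A, U, B))  # True
```

The row and column for \([T_{k+2}]\) are included even when, as in this n=3 instance, no Type B edge occurs.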

Proof of Theorem 1.1

Let \(K, K'\in \mathcal {C}_{n,r,\ell }\) with \(\ell \ge 1\) (the case \(\ell =0\) is due to [16]), and let X, Y be their hyperbolic graphs, respectively. By Lemma 3.2, their reduced trees are both simple with the common incidence matrix B as in (3.5), hence they are graph isomorphic by Theorem 2.4. Lemma 3.1 implies that both X and Y are simple. We denote their incidence matrices by A and \(A'\), respectively. According to Lemma 3.3, A is \((B,\mathbf {u})\)-rearrangeable and \(A'\) is \((B,\mathbf {u}')\)-rearrangeable, where \(\mathbf {u},\mathbf {u}'\) are the corresponding representations. Then, by Theorem 2.6, K and \(K'\) are Lipschitz equivalent to a common Cantor-type set. Therefore \(K\simeq K'\). \(\square \)

Proof of Corollary 1.2

Suppose \(K\in \mathcal {C}_{n,r,\ell }\) with \(\ell \ge 1\), and let \((X,{\mathcal {E}})\) be the hyperbolic graph induced by K. By Lemma 3.2, the reduced tree \((X, {\mathcal {E}}_v^*)\) is simple with the incidence matrix B as in (3.5). The spectral radius of B is \(\rho := \frac{n+\sqrt{n^2-4\ell }}{2}\), the largest root of the characteristic polynomial \(\lambda ^2-n\lambda +\ell \). Let \(\mathbf {e}_1=[1,0], \mathbf {1}=[1, 1]\) and let \(\mathbf {1}^t\) be the transpose of \(\mathbf {1}\). Then the number of vertices in the k-th level is \(\#X_k=\mathbf {e}_1B^k\mathbf {1}^t\). Since B is non-negative and its first row \([n-\ell ,\ell ]\) dominates its second row \([n-\ell -1,\ell ]\) entrywise, the first row of \(B^k\) also dominates the second, so \(\mathbf {e}_1B^k\mathbf {1}^t=||B^k||_{\infty }\); hence \(\lim _{k\rightarrow \infty } (\mathbf {e}_1B^k\mathbf {1}^t)^{1/k}=\lim _{k\rightarrow \infty }(||B^k||_{\infty })^{1/k}=\rho \) by Gelfand's formula. Moreover, \(\#X_k\) also counts the number of distinct pieces of K in the k-th iteration. Since the corresponding IFS \(\{S_i\}_{i=1}^n\) of K satisfies the weak separation property [18], Theorem 2 in [22] yields
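
$$\begin{aligned} \dim _H K=\frac{\log \rho }{-\log r}=\frac{\log \frac{n+\sqrt{n^2-4\ell }}{2}}{-\log r}. \end{aligned}$$
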
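If \(K\in \mathcal {C}_{n,r,0}\), then K has no overlaps and \(\dim _H K=\frac{\log n}{-\log r}\), which is \(\frac{\log \rho }{-\log r}\) with \(\rho =n\), the value of \(\frac{n+\sqrt{n^2-4\ell }}{2}\) at \(\ell =0\). Since \(\frac{n+\sqrt{n^2-4\ell }}{2}\) is strictly decreasing in \(\ell \in \{0,1,\ldots ,n-1\}\), the Hausdorff dimension of \(K\in \mathcal {C}_{n,r}\) determines \(\ell \). Now suppose \(K, K'\in \mathcal {C}_{n,r}(=\bigcup _{\ell =0}^{n-1}\mathcal {C}_{n,r,\ell })\) and \(K\simeq K'\). Then K and \(K'\) have the same Hausdorff dimension, so they belong to the same collection \(\mathcal {C}_{n,r,\ell }\) for some \(\ell \). The converse follows from Theorem 1.1. \(\square \)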
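The counting step of the proof can be checked numerically. The sketch below (our own illustration; function names hypothetical) iterates \(\mathbf {e}_1B^k\) with the two-class matrix of Lemma 3.2, \(B=\left[ \begin{array}{cc} n-\ell & \ell \\ n-\ell -1 & \ell \end{array}\right] \), and evaluates \(\frac{\log \rho }{-\log r}\).

```python
from math import log, sqrt


def level_counts(n, ell, kmax):
    """#X_k = e_1 B^k 1^t for k = 0, ..., kmax, with B = [[n-ell, ell], [n-ell-1, ell]]."""
    row, counts = [1, 0], []  # row holds e_1 B^k
    for _ in range(kmax + 1):
        counts.append(row[0] + row[1])
        row = [row[0] * (n - ell) + row[1] * (n - ell - 1),
               (row[0] + row[1]) * ell]
    return counts


def dim_formula(n, ell, r):
    """log(rho) / (-log r) with rho = (n + sqrt(n^2 - 4*ell)) / 2."""
    rho = (n + sqrt(n * n - 4 * ell)) / 2
    return log(rho) / -log(r)


print(level_counts(3, 1, 4))  # [1, 3, 8, 21, 55]
```

For n=3 and \(\ell =1\), the ratios of consecutive counts approach \(\rho =(3+\sqrt{5})/2\). For the hypothetical system b=(0, 3/16, 3/4), r=1/4, a direct enumeration of the distinct maps \(S_x\) also gives 1, 3, 8, 21 on levels 0 to 3. Evaluating `dim_formula` for \(\ell =0,1,\ldots ,n-1\) shows the strict decrease in \(\ell \) used above.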

References

Chen, X., Jiang, K., Li, W.X.: Lipschitz equivalence of a class of self-similar sets. Ann. Acad. Sci. Fenn. Math. 42(1), 1–7 (2017)

Cooper, D., Pignataro, T.: On the shape of Cantor sets. J. Differ. Geom. 28, 203–221 (1988)

David, G., Semmes, S.: Fractured Fractals and Broken Dreams: Self-Similar Geometry Through Metric and Measure. Oxford University Press, Oxford (1997)

Deng, G.T., Lau, K.S., Luo, J.J.: Lipschitz equivalence of self-similar sets and hyperbolic boundaries II. J. Fract. Geom. 2, 53–79 (2015)

Falconer, K.J., Marsh, D.T.: On the Lipschitz equivalence of Cantor sets. Mathematika 39, 223–233 (1992)

Gromov, M.: Hyperbolic Groups. MSRI Publications 8, pp. 75–263. Springer, New York (1987)

Guo, Q.L., Li, H., Wang, Q., Xi, L.-F.: Lipschitz equivalence of a class of self-similar sets with complete overlaps. Ann. Acad. Sci. Fenn. Math. 37(1), 229–243 (2012)

Jiang, K., Wang, S., Xi, L.: Lipschitz equivalence of self-similar sets with exact overlaps. Ann. Acad. Sci. Fenn. Math. 43, 905–912 (2018)

Kaimanovich, V.: Random walks on Sierpiński graphs: hyperbolicity and stochastic homogenization. Fractals in Graz 2001, Trends Math., Birkhäuser, pp. 145–183 (2003)

Luo, J.J.: Self-similar sets, simple augmented trees, and their Lipschitz equivalence. J. Lond. Math. Soc. 99(2), 428–446 (2019)

Luo, J.J.: On the Lipschitz equivalence of self-affine sets. Math. Nachr. 292(5), 1032–1042 (2019)

Luo, J.J., Lau, K.S.: Lipschitz equivalence of self-similar sets and hyperbolic boundaries. Adv. Math. 235, 555–579 (2013)

Luo, J.J., Ruan, H.J., Wang, Y.L.: Lipschitz equivalence of Cantor sets and irreducibility of polynomials. Mathematika 64, 730–741 (2018)

Luo, J.J., Wang, L.: Topological structure and Lipschitz classification of self-similar sets with overlaps, preprint (2020)

Rao, H., Ruan, H.J., Wang, Y.: Lipschitz equivalence of Cantor sets and algebraic properties of contraction ratios. Trans. Am. Math. Soc. 364, 1109–1126 (2012)

Rao, H., Ruan, H.J., Xi, L.-F.: Lipschitz equivalence of self-similar sets. C. R. Acad. Sci. Paris Ser. I 342, 191–196 (2006)

Ruan, H.J., Wang, Y., Xi, L.-F.: Lipschitz equivalence of self-similar sets with touching structures. Nonlinearity 27, 1299–1321 (2014)

Wang, X.Y.: Graphs induced by iterated function systems. Math. Z. 277, 829–845 (2014)

Woess, W.: Random walks on infinite graphs and groups. Cambridge Tracts in Mathematics 138 (2000)

Xi, L.-F., Ruan, H.J.: Lipschitz equivalence of generalized \(\{1,3,5\}\)-\(\{1,4,5\}\) self-similar sets. Sci. China Ser. A 50, 1537–1551 (2007)

Xi, L.-F., Xiong, Y.: Lipschitz equivalence of fractals generated by nested cubes. Math. Z. 271, 1287–1308 (2012)

Zerner, M.P.W.: Weak separation properties for self-similar sets. Proc. Am. Math. Soc. 124, 3529–3539 (1996)


Additional information

Communicated by H. Bruin.


The research is supported by the Fundamental Research Funds for the Central Universities (Project No. 2019CDXYST0015), the Venture & Innovation Support Program for Chongqing Overseas Returnees (Project No. cx2018065) and Natural Science Foundation of Chongqing (No. cstc2019jcyj-msxmX0030).

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Wang, L., Xiong, DH. Lipschitz classification of self-similar sets with overlaps. Monatsh Math 195, 343–352 (2021). https://doi.org/10.1007/s00605-021-01542-8
