Abstract

In this paper we prove Gamma-convergence of a nonlocal perimeter of Minkowski type to a local anisotropic perimeter. The nonlocal model describes the regularizing effect of adversarial training in binary classification. The energy essentially depends on the interaction between two distributions modelling likelihoods for the associated classes. We overcome typical strict regularity assumptions for the distributions by only assuming that they have bounded BV densities. In the natural topology coming from compactness, we prove Gamma-convergence to a weighted perimeter with weight determined by an anisotropic function of the two densities. Despite being local, this sharp interface limit reflects classification stability with respect to adversarial perturbations. We further apply our results to deduce Gamma-convergence of the associated total variations, to study the asymptotics of adversarial training, and to prove Gamma-convergence of graph discretizations for the nonlocal perimeter.

1 Introduction

While modern machine learning methods and in particular deep learning [37] are known to be effective tools for difficult tasks like image classification, they are prone to adversarial attacks [45]. The latter are imperceptible perturbations of the input which destroy classification accuracy. As a way to mitigate the effects of adversarial attacks, Madry et al. [38] suggested a robust optimization algorithm to train more stable classifiers. Given a metric space \(\mathcal {X}\) acting as feature space, a set \(\mathcal {Y}\) acting as label space, a probability measure \(\mu \in \mathcal {M}(\mathcal {X}\times \mathcal {Y})\), which models the distribution of training data, a loss function \(\ell : \mathcal {Y}\times \mathcal {Y}\rightarrow \mathbb {R}\), and a collection of classifiers \(\mathcal {C}\), adversarial training takes the form of the minimization problem

\[
\inf _{u\in \mathcal {C}} \mathbb {E}_{(x,y)\sim \mu }\left[ \sup _{\tilde{x}\in B(x,\varepsilon )} \ell (u(\tilde{x}),y)\right] . \tag{1.1}
\]

Here we use the notation \(\mathbb {E}_{z\sim \mu }[f(z)]:=\int f(z)\,\textrm{d}\mu (z)\). Adversarial training seeks a classifier for which adversarial attacks in the ball of radius \(\varepsilon \) around x (with respect to the metric on \(\mathcal {X}\)) have the least possible impact, as measured through the function \(\ell \). Here \(\varepsilon >0\) is referred to as the adversarial budget and will play an important role in this article.
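
To make the inner supremum concrete, the following minimal sketch evaluates an empirical version of the adversarial objective for the 0-1 loss in one dimension. It assumes, purely for illustration, that the classifier is the characteristic function of an open interval \((a,b)\), that the data are a finite list of labeled points, and that \(\varepsilon >0\); the function name and the sample values are hypothetical and not taken from the paper.

```python
def adversarial_zero_one_risk(points, labels, a, b, eps):
    """Empirical mean of sup_{|x' - x| < eps} |chi_A(x') - y| for A = (a, b).

    Illustrative helper (not from the paper); assumes a < b and eps > 0.
    """
    total = 0
    for x, y in zip(points, labels):
        if y == 0:
            # A class-0 point can be attacked if the open ball
            # (x - eps, x + eps) intersects A.
            hit = (x - eps < b) and (x + eps > a)
        else:
            # A class-1 point can be attacked if the open ball
            # is not contained in A.
            hit = not (a <= x - eps and x + eps <= b)
        total += int(hit)
    return total / len(points)


# Hypothetical sample: two points per class, classifier A = (-1, 0).
points = [-0.5, -0.05, 0.05, 0.5]
labels = [1, 1, 0, 0]
large_budget = adversarial_zero_one_risk(points, labels, -1.0, 0.0, eps=0.1)
small_budget = adversarial_zero_one_risk(points, labels, -1.0, 0.0, eps=0.01)
```

In this toy sample only the two points within distance \(\varepsilon \) of the decision boundary contribute, so the adversarial risk shrinks as the budget does.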

Since its introduction a significant body of literature has evolved around adversarial training, both focusing on its empirical performance and improvement (see the survey [5]), and its theoretical understanding. Since the purpose of this article is primarily of theoretical nature, we restrict our discussion to the latter developments. As it turns out, adversarial training has intriguing connections within mathematics. Firstly, it was noted in different works that adversarial training is strongly connected to optimal transport. This was explored in the binary classification case, where \(\mathcal {Y}= \{0,1\}\) and \(\ell \) equals the 0-1-loss, in a series of works, see [9, 32, 41, 42] and the references therein. Only recently these results were generalized to the multi-class case where \(\mathcal {Y}=\{1,2,\dots ,K\}\) by García Trillos et al. [31] who characterize the associated adversarial training problem in terms of a multi-marginal optimal transport problem. Secondly, it was already observed by Finlay and Oberman [26] that asymptotically, meaning for very small values of \(\varepsilon >0\) in (1.1), adversarial training is related to a regularization problem, where the gradient of the loss function is penalized in a suitable norm:

\[
\inf _{u\in \mathcal {C}} \mathbb {E}_{(x,y)\sim \mu }\left[ \ell (u(x),y) + \varepsilon \left\| \nabla _x \ell (u(x),y)\right\| _*\right] . \tag{1.2}
\]

Here \(\mathcal {X}\) is assumed to be a Banach space and \(\left\| \cdot \right\| _*\) is the corresponding dual norm. While these connections were mostly formal, García Trillos and García Trillos [30] made them rigorous in the context of adversarial training for residual neural networks. Still even there the relation between adversarial training and regularization was of asymptotic type, in particular, not allowing statements about the relation of minimizers of (1.1) and (1.2). Furthermore, in [32] García Trillos and Murray regard adversarial training in the form of (1.1) for binary classifiers as evolution with artificial time \(\varepsilon \) and relate it to (1.2) with a perimeter regularization at time \(\varepsilon =0\). A different approach was taken in their work together with the first author of this paper [12] where, again in the binary classification case and for \(\ell \) the 0-1-loss, it was shown that adversarial training is equivalent to a non-local regularization problem:

\[
\inf _{u\in \mathcal {C}} \mathbb {E}_{(x,y)\sim \mu }\left[ \left| u(x)-y\right| \right] + \varepsilon {{\,\textrm{TV}\,}}_\varepsilon (u;\varvec{\rho }). \tag{1.3}
\]

Here \({{\,\textrm{TV}\,}}_\varepsilon (\cdot ;\varvec{\rho })\) denotes a nonlocal total variation functional depending on the measures \(\varvec{\rho }:=(\rho _0,\rho _1)\) defined as \(\rho _i:= \mu (\cdot \times \{i\})\) for \(i\in \{0,1\}\), which are, up to normalization, the conditional distributions of the two classes describing their respective likelihoods. The set of classifiers can be the set of characteristic functions of Borel sets \(\mathcal {C}_\textrm{char} = \{\chi _A \,:\,A \in \mathfrak B(\mathcal {X})\}\) or the set of “soft classifiers” \(\mathcal {C}_\textrm{soft} = \{u: \mathcal {X}\rightarrow [0,1]\}\) which live in a Lebesgue space equipped with a suitable measure on \(\mathcal {X}\). In [12], existence of solutions for adversarial training was proven, which included suitable relaxations of the objective function in (1.3) to a lower semi-continuous function, the construction of precise representatives, and the insight that the model with \(\mathcal {C}_\textrm{soft}\) is a convex relaxation of the model with \(\mathcal {C}_\textrm{char}\). Furthermore, regularity properties of the decision boundaries of solutions were investigated.

We would like to emphasize that the results in [12] are proved for open balls \(B(x,\varepsilon )\) in (1.1) and this will also be the setting of the present paper. Usually, adversarial training is defined using closed balls which does not change the model drastically but requires more care with respect to measurability of the underlying functions, see the discussion in [12, Remark 1.3, Appendix B.1]. For a different approach to proving existence—using a closed ball model—we refer to the work [4] by Awasthi et al., and the follow-up paper [3] studying consistency of adversarial risks.

The focus of this paper will be on the asymptotics of the functional \({{\,\textrm{TV}\,}}_\varepsilon (\cdot ;\varvec{\rho })\) in (1.3). In fact, we will work with the associated perimeter functional \({{\,\textrm{Per}\,}}_\varepsilon (A;\varvec{\rho }):= {{\,\textrm{TV}\,}}_\varepsilon (\chi _A;\varvec{\rho })\) for \(A\subset \mathcal {X}\) since all statements, in particular Gamma-convergence, proved for the perimeter directly carry over to the total variation (see Sect. 4). This perimeter was shown in [12] to be of the form

\[
{{\,\textrm{Per}\,}}_\varepsilon (A;\varvec{\rho }) = \frac{1}{\varepsilon }\int _{\mathcal {X}}\Big ( \nu \text {-}\mathop {\mathrm {ess\,sup}}\limits _{B(x,\varepsilon )}\chi _A - \chi _A(x)\Big ) \,\textrm{d}\rho _0(x) + \frac{1}{\varepsilon }\int _{\mathcal {X}}\Big ( \chi _A(x) - \nu \text {-}\mathop {\mathrm {ess\,inf}}\limits _{B(x,\varepsilon )}\chi _A\Big ) \,\textrm{d}\rho _1(x), \tag{1.4}
\]

where the essential supremum and infimum are taken with respect to a suitable measure \(\nu \) with sufficiently large support. When \(\mathcal {X}=\mathbb {R}^d\) and \(\rho _i\) equal the Lebesgue measure, the perimeter (1.4) can be used to recover the Minkowski content of a subset of \(\mathbb {R}^d\) by sending \(\varepsilon \rightarrow 0\), see (2.1) below. In this simplified setting, the nonlocal perimeter has applications in image processing [6] and is mathematically well-understood. A thorough study of its properties like isoperimetric inequalities or compactness was undertaken in [16, 17], Gamma-convergence of related variants to local perimeters was investigated in [18, 19], and associated curvature flows were analyzed in [20, 21]. We note that Chambolle et al. [19] introduce anisotropy into the perimeter by replacing the ball B(x, r) in the definition of (1.4) by a scaled convex set C(x, r).

In this paper, we study the asymptotic behavior of the nonlocal perimeter (1.4) as \(\varepsilon \rightarrow 0\), using the framework of Gamma-convergence (see, e.g., [11, 23]). This approach is widely used in applications to materials science (see, e.g., [1, 22, 29, 40]) and is particularly applicable to the study of energy minimization problems depending on singular perturbations, where it can describe complex energy landscapes in terms of simpler, better-understood effective energies. In the case of phase separation in binary alloys, energetic minimizers closely approximate minimal surfaces [39]. Likewise, we will relate local minimizers of the perimeter (1.4) to a weighted minimal surface, which has a transparent geometric interpretation.

Though the nonlocal perimeter (1.4) is not a phase field approximation of the classical perimeter, similar analytic tools are helpful. The Ambrosio–Tortorelli functional was introduced as an elliptic regularization of the Mumford–Shah energy for image segmentation with the nature of approximation made precise via Gamma-convergence [1, 10]. From the technical perspective, our work is related to the results of Fonseca and Liu [28] where Gamma-convergence of a weighted Ambrosio–Tortorelli functional is proven. In their setting, they consider a density described by a bounded SBV function with density uniformly bounded away from 0. In contrast to Chambolle et al. [19] and Fonseca and Liu [28], a principal challenge in our setting will be understanding the interaction between the densities \(\rho _0\) and \(\rho _1\) in the energy (1.4) and how these give rise to preferred directions.

We assume that \(\mathcal {X}= \Omega \subset \mathbb {R}^d\) and that the measures \(\rho _i\) have densities with respect to the Lebesgue measure and are supported on some subset of the domain \(\Omega \). While for smooth densities Gamma-convergence is proven relatively easily (as in [19]), we only assume that the densities are bounded BV functions. In this case, we prove that the Gamma-limit is an anisotropic and weighted perimeter of the form

\[
{{\,\textrm{Per}\,}}(A;\varvec{\rho }) = \int _{\partial ^*A} \beta (\nu _A;\varvec{\rho }) \,\textrm{d}\mathcal {H}^{d-1},
\]

where \(\nu _A\) denotes the unit normal vector to the boundary of A. More rigorous definitions are given in Sect. 2. Here, BV functions are a natural space for the distributions as discontinuities are allowed, but they are sufficiently regular to be well-defined on surfaces and thereby prescribe interfacial weights. We note that while the anisotropic dependence on the normal \(\nu _A\) vanishes for continuous densities \(\rho _i\), in the discontinuous case, the anisotropy provides a direct interpretation of the asymptotic regularization effect coming from adversarial training (1.1) for small adversarial budgets (see Examples 1 and 2).

An interesting consequence of our Gamma-convergence result is the convergence of adversarial training (1.1) as \(\varepsilon \rightarrow 0\) to a solution of the problem with \(\varepsilon =0\) with minimal perimeter. Furthermore, the primary result in the continuum setting can be used to recover a Gamma-convergence result for graph discretizations of the nonlocal perimeter; a setting that is especially relevant in the context of graph-based machine learning [33, 34, 36]. Our approach for this discrete to continuum convergence is in the spirit of these works and relies on \(TL^p\) (transport \(L^p\)) spaces which were introduced for the purpose of proving Gamma-convergence of a graph total variation by García Trillos and Slepčev in [34], but have also been used to prove quantitative convergence statements for graph problems, see, e.g., the works [14, 15] by Calder et al.

The rest of the paper is structured as follows: In Sect. 2 we introduce our notation, state our main results, and list some important properties of the nonlocal perimeter. Section 3 is devoted to proving a compactness result as well as Gamma-convergence of the nonlocal perimeters. In Sect. 4, we finally apply our results to deduce Gamma-convergence of the corresponding total variations, prove conditional convergence statements for adversarial training, and prove Gamma-convergence of graph discretizations.

2 Setup and main results

2.1 Notation

The most important bits of our notation are collected in the following.

Balls and cubes For \(x\in \mathbb {R}^d\) and \(r>0\) we denote by \(B(x,r):=\{y\in \mathbb {R}^d\,:\,\left| x-y\right| <r\}\) the open ball with radius r around x. Furthermore, \(Q_{\nu }(x,r)\) is an open cube centered at \(x\in \mathbb {R}^d\) with sides of length r and two faces orthogonal to \(\nu \). If \(\nu \) is absent, the cube is assumed to be oriented along the axes. We also define \(Q_{\nu }^{\pm }(x,r):= \{y\in Q_{\nu }(x,r): \pm \langle y-x,\nu \rangle > 0 \}\) and let \(Q'(x,r)\) denote the \(d-1\) dimensional cube centered at x with sides of length r.

Measures and sets The d-dimensional Lebesgue measure in \(\mathbb {R}^d\) is denoted by \(\mathcal {L}^d\) and the k-dimensional Hausdorff measure in \(\mathbb {R}^d\) by \(\mathcal {H}^k\). For a set \(A\subset \mathbb {R}^d\) we denote its complement by \(A^c:= \mathbb {R}^d{\setminus } A\). If \(A\subset \Omega \subset \mathbb {R}^d\) is a subset of some fixed other set \(\Omega \), we let \(A^c\) denote its relative complement \(\Omega {\setminus } A\). The symmetric difference of two sets \(A,B\subset \mathbb {R}^d\) is denoted by \(A\triangle B:= (A{\setminus } B)\cup (B{\setminus } A)\). Furthermore, the characteristic function of a set \(A\subset \mathbb {R}^d\) is denoted by

\[
\chi _A(x):= {\left\{ \begin{array}{ll} 1 &{} \text {if } x\in A,\\ 0 &{} \text {otherwise,} \end{array}\right. }
\]

and by definition it holds \(\mathcal {L}^d(A\triangle B) = \int _{\mathbb {R}^d}\left| \chi _A-\chi _B\right| \,\textrm{d}x\). The distance function to a set \(A\subset \mathbb {R}^d\) is defined as

\[
{{\,\textrm{dist}\,}}(x,A):= \inf _{y\in A}\left| x-y\right| .
\]

Measure-theoretic set quantities For \(t\in [0,1]\) the points where a measurable set \(A\subset \mathbb {R}^d\) has density t are defined as

\[
A^t:= \left\{ x\in \mathbb {R}^d \,:\,\lim _{r\downarrow 0}\frac{\mathcal {L}^d(A\cap B(x,r))}{\mathcal {L}^d(B(x,r))} = t\right\} .
\]

The Minkowski content \(\mathcal {M}(A)\) of \(A\subset \mathbb {R}^d\) is defined as the following limit (in case it exists):

\[
\mathcal {M}(A):= \lim _{\varepsilon \rightarrow 0}\frac{\mathcal {L}^d\left( \{x\in \mathbb {R}^d \,:\,{{\,\textrm{dist}\,}}(x,A)<\varepsilon \}\right) }{2\varepsilon }. \tag{2.1}
\]

We denote by \(\partial ^*A\) the reduced boundary of a set A. This is the set of points where the measure-theoretic normal exists on the boundary of A [2, Definition 3.54].

Functions of bounded variation For an open set \(\Omega \subset \mathbb {R}^d\) we let \(BV(\Omega )\) denote the space of functions of bounded variation [2]. Let \(u\in BV(\Omega )\) and \(M \subset \Omega \) be an \(\mathcal {H}^{d-1}\)-rectifiable set with normal \(\nu \) (defined \(\mathcal {H}^{d-1}\)-a.e.). For \(\mathcal {H}^{d-1}\)-a.e. point \(x\in M\), the measure-theoretic traces in the directions \(\pm \nu \) exist and are denoted by \(u^{\pm \nu }(x)\) [2, Theorem 3.77]. These are the values approached by u as the input tends to x within the half-space \(\{y\,:\,\langle y-x,\pm \nu \rangle >0\}\), precisely,

\[
\lim _{r\downarrow 0}\frac{1}{r^d}\int _{B(x,r)\cap \{y\,:\,\langle y-x,\pm \nu \rangle >0\}}\left| u(y)-u^{\pm \nu }(x)\right| \,\textrm{d}y = 0.
\]

We typically write \(u^\nu \) instead of \(u^{+\nu }\). We denote by \(u^+(x):= \max \{u^{\nu }(x),u^{-\nu }(x)\}\) the maximum of the trace values, and likewise \(u^-(x):=\min \{u^{\nu }(x),u^{-\nu }(x)\}\) for the minimum. Note that this notation is different from the one used in [2, Definition 3.67]. The standard total variation of a function \(u\in L^1(\Omega )\) is denoted by \({{\,\textrm{TV}\,}}(u)\) and satisfies \({{\,\textrm{TV}\,}}(u)=|Du|(\Omega )\) for Du the measure representing the distributional derivative (\(+\infty \) if it is not a finite measure). For \(u\in BV(\Omega )\) we let \(J_u\) denote its jump set, see, for example, [2] for a definition.

2.2 Main results

Let \(\Omega \subset \mathbb {R}^d\) be an open domain. We consider two non-negative measures \(\rho _0,\rho _1\) which are absolutely continuous with respect to the d-dimensional Lebesgue measure. To simplify our notation we shall identify these measures with their densities from now on, meaning that \(\,\textrm{d}\rho _i(x) = \rho _i(x) \,\textrm{d}x\) and \(\rho _i\in L^1(\Omega )\) for \(i\in \{0,1\}\).

We define the nonlocal perimeter of a measurable set \(A\subset \Omega \) with respect to the measures \(\varvec{\rho }:=(\rho _0,\rho _1)\) and a parameter \(\varepsilon >0\) as

\[
{{\,\textrm{Per}\,}}_\varepsilon (A;\varvec{\rho }):= \frac{1}{\varepsilon }\int _\Omega \Big ( \mathop {\mathrm {ess\,sup}}\limits _{B(x,\varepsilon )\cap \Omega }\chi _A - \chi _A(x)\Big ) \rho _0(x)\,\textrm{d}x + \frac{1}{\varepsilon }\int _\Omega \Big ( \chi _A(x) - \mathop {\mathrm {ess\,inf}}\limits _{B(x,\varepsilon )\cap \Omega }\chi _A\Big ) \rho _1(x)\,\textrm{d}x. \tag{2.2}
\]

It arises as a special case of (1.4) by choosing \(\mathcal {X}=\Omega \) and \(\nu \) as the Lebesgue measure. Our main result is Gamma-convergence of the nonlocal perimeters (2.2) to a localized version which preserves the apparent anisotropy of the energy. To motivate the correct topology for Gamma-convergence we first state a compactness property of the energies.

Theorem 2.1

Let \(\Omega \subset \mathbb {R}^d\) be an open and bounded Lipschitz domain, and let \(\rho _0,\rho _1\in BV(\Omega )\cap L^\infty (\Omega )\) satisfy \(\mathop {\mathrm {ess\,inf}}\limits _\Omega (\rho _0+\rho _1)>0\). Then for any sequence \((\varepsilon _k)_{k\in \mathbb {N}}\) with \(\lim _{k\rightarrow \infty }\varepsilon _k=0\) and collection of sets \(A_k \subset \Omega \) with \(\limsup _{k\rightarrow \infty }{{\,\textrm{Per}\,}}_{\varepsilon _k}(A_{k};\varvec{\rho }) < \infty \), we have that up to a subsequence (not relabeled)

Remark 2.2

The assumption that \(\rho _0\) and \(\rho _1\) belong to \(BV(\Omega )\) is crucial for regularity of the set A following from Theorem 2.1. To see this, fix a set \(A\subset \Omega \) which is not a set of finite perimeter. Defining \(\rho _0 = \chi _A\) and \(\rho _1 = \chi _{A^c}\), we have that \({{\,\textrm{Per}\,}}_\varepsilon (A;\varvec{\rho }) \equiv 0\), showing that (2.3) cannot hold. If one enforces the constraint \(\mathop {\mathrm {ess\,inf}}\limits _\Omega \rho _i > 0\) on both densities, this problem is resolved, however this is an unreasonable constraint in the context of classification since it would require both classes to be entirely mixed.

Supposing now that A is a set of finite perimeter (i.e., \(\chi _A \in BV(\Omega )\)) and \(\rho _0,\rho _1 \in BV(\Omega )\), we define the function

\[
\beta (\nu ;\varvec{\rho }):= \min \left\{ \rho _0^{\nu }+\rho _1^{\nu },\; \rho _0^{-\nu }+\rho _1^{\nu },\; \rho _0^{-\nu }+\rho _1^{-\nu }\right\} , \tag{2.4}
\]

where \(\nu = \frac{D\chi _A}{|D\chi _A|}\) is the measure-theoretic inner normal for A. We suppress dependence of \(\beta (\nu ;\varvec{\rho })\) on A as \(\beta (\nu ;\varvec{\rho })\) is uniquely prescribed, in the sense that if \(A_0\) and \(A_1\) are two sets of finite perimeter then the definition (2.4) is \(\mathcal {H}^{d-1}\)-a.e. equivalent in \(\left\{ x\in \Omega : \frac{D\chi _{A_0}}{|D\chi _{A_0}|}(x) = \frac{D\chi _{A_1}}{|D\chi _{A_1}|}(x)\in S^{d-1}\right\} .\) Note that if \(\rho _0\) and \(\rho _1\) are also continuous, then it holds for all \(\nu \) that

\[
\beta (\nu ;\varvec{\rho }) = \rho _0+\rho _1, \tag{2.5}
\]

but for general BV-densities \(\beta \) may be anisotropic.

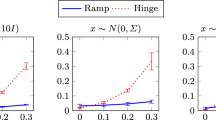

Example 1

To understand the behavior of \(\beta \), it is informative to consider two simple 1-dimensional examples. Supposing that

we can ask what the minimum energy of A should be in the limit. Note that \({{\,\textrm{Per}\,}}_\varepsilon (A;\varvec{\rho }) = 2\); this is the case for \(\rho _0^{-\nu } + \rho _1^{\nu } =2\) in the definition of \(\beta \). But if we shift A to the right and define \(A_\delta = (-1,\delta )\) with \(\delta > 0\), for sufficiently small \(\varepsilon \), then \({{\,\textrm{Per}\,}}_\varepsilon (A_\delta ;\varvec{\rho }) = 1\), which amounts to picking up the minimum \(\rho _0^{-\nu } + \rho _1^{-\nu } = 1\) in the definition of \(\beta .\) Likewise, one could shift to the left to recover \(\rho _0^{\nu } + \rho _1^{\nu } = 1\). However, we can never recover a minimum energy like \(\rho _0^{\nu } + \rho _1^{-\nu } = 0\) as this would require orienting the boundary of set A like the boundary of the set (0, 1), which it is not close to in an \(L^1\) sense. Consequently, the limit perimeter of A is \({{\,\textrm{Per}\,}}(A;\varvec{\rho })=\min \{1,1,2\}=1\). To see that the minimum of \(\rho _0^{-\nu } + \rho _1^{\nu }\) is needed in the definition of \(\beta \), one can simply swap the roles of \(\rho _0\) and \(\rho _1\) in the previous example. Precisely, for

we have that \({{\,\textrm{Per}\,}}_\varepsilon (A;\varvec{\rho }) = 0 = \rho _0^{-\nu } + \rho _1^\nu \), and shifting A increases the energy to \(\rho _0^{\nu } + \rho _1^\nu = \rho _0^{-\nu } + \rho _1^{-\nu } = 1\). Consequently, we have \({{\,\textrm{Per}\,}}(A;\varvec{\rho })=\min \{1,1,0\}=0\).
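
The values quoted in Example 1 can be reproduced with exact arithmetic. The sketch below is an illustration under stated assumptions rather than the paper's own computation: it takes \(\Omega =(-1,1)\), \(A=(-1,t)\), and indicator densities \(\rho _0=\chi _{(0,1)}\), \(\rho _1=\chi _{(-1,0)}\) for the first case (swapped for the second), which is one concrete choice consistent with the numbers in the text. It also assumes the layer form of the nonlocal perimeter, in which \(\rho _0\) weights the strip of width \(\varepsilon \) outside A and \(\rho _1\) the strip of width \(\varepsilon \) inside A. All function names are hypothetical.

```python
from fractions import Fraction


def mass(support, a, b):
    """Length of (a, b) intersected with `support`: mass of an indicator density."""
    lo, hi = support
    return max(Fraction(0), min(b, hi) - max(a, lo))


def nonlocal_perimeter(t, eps, supp0, supp1, omega=(Fraction(-1), Fraction(1))):
    """Assumed layer form of Per_eps for A = (omega[0], t), indicator densities."""
    outer = mass(supp0, t, min(t + eps, omega[1]))  # rho_0 on the strip outside A
    inner = mass(supp1, max(t - eps, omega[0]), t)  # rho_1 on the strip inside A
    return (outer + inner) / eps


def beta(rho0_in, rho0_out, rho1_in, rho1_out):
    """Minimum over the three trace combinations discussed in Example 1.

    '_in' is the trace in direction nu (inside A), '_out' the trace in -nu.
    """
    return min(rho0_in + rho1_in, rho0_out + rho1_in, rho0_out + rho1_out)


eps = Fraction(1, 100)
delta = Fraction(1, 10)

# First case: rho_0 = chi_(0,1), rho_1 = chi_(-1,0).
print(nonlocal_perimeter(Fraction(0), eps, (0, 1), (-1, 0)))  # 2
print(nonlocal_perimeter(delta, eps, (0, 1), (-1, 0)))        # 1
print(beta(0, 1, 1, 0))                                       # 1

# Second case: roles of rho_0 and rho_1 swapped.
print(nonlocal_perimeter(Fraction(0), eps, (-1, 0), (0, 1)))  # 0
print(nonlocal_perimeter(delta, eps, (-1, 0), (0, 1)))        # 1
print(beta(1, 0, 0, 1))                                       # 0
```

In the first case the unshifted interface sees both densities and has energy 2, while any shift by more than \(\varepsilon \) sees only one of them; in the second case the unshifted interface sees neither.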

With the density \(\beta \) defined, we may state the principal Gamma-convergence result of the paper.

Theorem 2.3

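Let \(\Omega \subset \mathbb {R}^d\) be an open and bounded Lipschitz domain, and let \(\rho _0,\rho _1\in BV(\Omega )\cap L^\infty (\Omega )\) satisfy \(\mathop {\mathrm {ess\,inf}}\limits _\Omega (\rho _0+\rho _1)>0\). Then

\[
{{\,\textrm{Per}\,}}_\varepsilon (\cdot ;\varvec{\rho }) \overset{\Gamma }{\longrightarrow } {{\,\textrm{Per}\,}}(\cdot ;\varvec{\rho }) \quad \text {as } \varepsilon \rightarrow 0
\]

in the \(L^1(\Omega )\) topology, where the weighted perimeter is defined by

\[
{{\,\textrm{Per}\,}}(A;\varvec{\rho }):= {\left\{ \begin{array}{ll} \displaystyle \int _{\partial ^*A}\beta \left( \frac{D\chi _A}{\left| D\chi _A\right| };\varvec{\rho }\right) \,\textrm{d}\mathcal {H}^{d-1} &{} \text {if } \chi _A\in BV(\Omega ),\\ +\infty &{} \text {otherwise.} \end{array}\right. } \tag{2.8}
\]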

We note that Example 1 and Theorem 2.3 provide a direct interpretation for how the nonlocal perimeter (2.2) selects a minimal surface for adversarial training (1.1). The fidelity term \(\mathbb {E}_{(x,y)\sim \mu }\left[ \ell (u(x), y)\right] \) in (1.1) roughly wants to align A with \({{\,\textrm{supp}\,}}\rho _1\), for which a simple case is given by (2.6). In this situation, the cost function in (1.1) for \(A=(-1,0)\) takes the value \(2\varepsilon \) and the perimeter picks up the value \(\beta =2\). In contrast, for the sets \(A_\delta =(-1,\pm \delta )\) for \(\delta >\varepsilon \) the cost function has the value \(\delta +\varepsilon \) and these sets recover the limiting perimeter with \(\beta =1\). Hence, adversarial regularization reduces the cost by effectively performing a preemptive stabilizing perturbation of the classification region.
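
The cost values \(2\varepsilon \) and \(\delta +\varepsilon \) can be checked directly. The sketch below again assumes the hypothetical setting \(\Omega =(-1,1)\), \(\rho _0=\chi _{(0,1)}\), \(\rho _1=\chi _{(-1,0)}\) (densities are not normalized to a probability measure here), and uses the fact that for the 0-1 loss a class-0 point is misclassified under attack when it lies in A or within \(\varepsilon \) of A, and a class-1 point when it lies in \(A^c\) or within \(\varepsilon \) of \(A^c\). Function names are illustrative only.

```python
from fractions import Fraction


def mass(support, a, b):
    """Length of (a, b) intersected with `support`: mass of an indicator density."""
    lo, hi = support
    return max(Fraction(0), min(b, hi) - max(a, lo))


def adversarial_cost(t, eps, supp0, supp1, omega=(Fraction(-1), Fraction(1))):
    """Adversarial 0-1 risk of A = (omega[0], t) for indicator densities."""
    left, right = omega
    # Class 0 is attacked successfully on A together with its outer eps-strip.
    cost0 = mass(supp0, left, min(t + eps, right))
    # Class 1 is attacked successfully on A^c together with the inner eps-strip of A.
    cost1 = mass(supp1, max(t - eps, left), right)
    return cost0 + cost1


eps = Fraction(1, 100)
delta = Fraction(1, 10)
supp0, supp1 = (0, 1), (-1, 0)

aligned = adversarial_cost(Fraction(0), eps, supp0, supp1)  # equals 2 * eps
shifted_right = adversarial_cost(delta, eps, supp0, supp1)  # equals delta + eps
shifted_left = adversarial_cost(-delta, eps, supp0, supp1)  # equals delta + eps
```

The aligned set pays \(\varepsilon \) for each class, whereas a shifted set pays \(\delta \) in clean risk but only \(\varepsilon \) for the single class whose mass meets the interface.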

Remark 2.4

(Decomposition of the limit perimeter) We remark that the limit perimeter may be decomposed into an isotropic perimeter plus an anisotropic energy living only on the intersection of the jump sets \(J_{\rho _0}\cap J_{\rho _1}\) of the two densities. In particular, one can directly verify that

where \(\nu _A:= \frac{D\chi _A}{\left| D\chi _A\right| }\) is the measure-theoretic inner unit normal of the boundary of A and we use the notation \(t_+:=\max (t,0)\) for \(t\in \mathbb {R}\). Note that the only case for which the non-isotropic term is different from zero is the case that \(\rho _0^{\nu _A}<\rho _0^{-\nu _A}\) and \(\rho _1^{\nu _A}>\rho _1^{-\nu _A}\). In particular, if at least one of the densities is continuous, the anisotropy vanishes.
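
The pointwise structure behind this decomposition can be verified by brute force. The sketch below assumes that \(\beta \) is the minimum of the three trace combinations discussed in Example 1, and tests the candidate splitting \(\beta = \rho _0^- + \rho _1^- + \min \{(\rho _0^{-\nu }-\rho _0^{\nu })_+, (\rho _1^{\nu }-\rho _1^{-\nu })_+\}\). This specific splitting is our reading of the remark, not a formula quoted from the paper, and the function names are hypothetical.

```python
from itertools import product


def beta(a, b, c, d):
    """a, b: traces of rho_0 in directions nu, -nu; c, d: the same for rho_1."""
    return min(a + c, b + c, b + d)


def decomposed(a, b, c, d):
    """Isotropic part (sum of the smaller traces) plus the anisotropic correction."""
    isotropic = min(a, b) + min(c, d)
    anisotropic = min(max(b - a, 0), max(c - d, 0))
    return isotropic + anisotropic


def identity_holds(values=range(5)):
    """Compare both expressions on every 4-tuple drawn from `values`."""
    return all(beta(*t) == decomposed(*t) for t in product(values, repeat=4))


def anisotropy_only_on_common_jumps(values=range(5)):
    """The correction is nonzero only if rho_0 jumps up and rho_1 jumps down across -nu."""
    for a, b, c, d in product(values, repeat=4):
        correction = min(max(b - a, 0), max(c - d, 0))
        if correction != 0 and not (a < b and c > d):
            return False
    return True
```

On the tested integer grid the two expressions agree, and the correction term is nonzero exactly in the configuration singled out in the remark, where both densities jump at the same point.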

The most important consequence of Theorems 2.1 and 2.3 is a convergence statement as \(\varepsilon \rightarrow 0\) for the adversarial training problem for binary classifiers, which takes the form

For this we need some mild regularity for the so-called Bayes classifiers, i.e., the solutions of (2.10) for \(\varepsilon =0\). The condition is derived in Sect. 4.2 and stated in precise form in (SC) there. Sufficient for it to hold is that the Bayes classifier with minimal value of the limiting perimeter \({{\,\textrm{Per}\,}}(A;\varvec{\rho })\) defined in (2.8) has a sufficiently smooth boundary. We have the following convergence statement for minimizers of adversarial training.

Theorem 2.5

(Conditional convergence of adversarial training) Under the conditions of Theorems 2.1 and 2.3 and assuming the source condition (SC), any sequence of solutions to (2.10) possesses a subsequence converging to a minimizer of

Example 2

Another one-dimensional example highlights the effect of the anisotropy for the statement of Theorem 2.5. For this let \(\Omega :=(-2,2)\) and define the piecewise constant densities

Note that all the sets \(A\in \{(\alpha ,2)\,:\,\alpha \in [-1,1]\}\) minimize the Bayes risk \(\mathbb E_{(x,y)\sim \mu }\left[ \left| \chi _A(x)-y\right| \right] \). However, easy computations show that \({{\,\textrm{Per}\,}}((\alpha ,2);\varvec{\rho })=\frac{4}{16}\) for \(\alpha \in (-1,1]\) and \({{\,\textrm{Per}\,}}((-1,2);\varvec{\rho })=\frac{3}{16}\). Hence, \(A=(-1,2)\) has the smallest perimeter among these minimizers and therefore solves (2.11). In this case the optimal Bayes classifier saturates the entire support of \(\rho _1\) at the cost of picking up the small jump \(\lim _{x\uparrow -1}\rho _0(x)-\lim _{x\downarrow -1}\rho _0(x)=\frac{1}{16}\).

Note that if one symmetrizes the situation by setting the values of \(\rho _0\) on \((-2,1)\) and of \(\rho _1\) on (1, 2) to \(\frac{4}{16}\), all the perimeters are the same. So in the previous situation it is the imbalance of the two classes which picks the support of \(\rho _1\) as perimeter-minimal Bayes classifier and hence favors class 1.

Theorem 2.3 is a direct consequence of Theorem 3.2 for the \(\liminf \) inequality and Theorem 3.3 for the \(\limsup \) inequality. To prove Theorem 3.2, we proceed via a slicing method, which allows us to reduce the argument to an elementary, though technical, treatment of the \(\liminf \) inequality in dimension \(d=1\). To prove the \(\limsup \) inequality in Theorem 3.3, we apply a density result of De Philippis et al. [24] to reduce to the case of a regular interface. In this setting, with the target energy in mind, we can locally perturb the interface to recover the appropriate minimum in the definition of \(\beta (\nu ;\varvec{\rho })\) for the given orientation.

As is well known, Theorem 2.3 implies convergence of minimization problems involving \({{\,\textrm{Per}\,}}_\varepsilon (\cdot ;\varvec{\rho })\) to problems defined in terms of the local perimeter \({{\,\textrm{Per}\,}}(\cdot ;\varvec{\rho })\) (see, e.g., [11]). Further, this result has a couple of important applications which we discuss in Sect. 4: Firstly, it immediately implies Gamma-convergence of the corresponding total variation. Secondly, as seen in Theorem 2.5, it has important implications for the asymptotic behavior of adversarial training (1.1) as \(\varepsilon \rightarrow 0\). Lastly, we can use it to prove Gamma-convergence of discrete perimeters on weighted graphs in the \(TL^p\) topology, see Theorem 4.7 in Sect. 4.

Future work will include using the results of this paper to study dynamical versions of adversarial training. We remark that one way to think of the anisotropy in the limiting energy is that trace discontinuities pick up derivative information (in the spirit of BV). To recover an effective sharp interface model with anisotropy even for smooth densities, one could then look to the “gradient flow” of the energy. For this one can interpret (1.3) as the first step in a minimizing movement discretization of the gradient flow of the perimeter, where \(\varepsilon >0\) is interpreted as the time step. Iterating this and sending \(\varepsilon \rightarrow 0\), one expects to arrive at a weighted mean curvature flow depending on the densities \(\rho _i\). Furthermore, preliminary calculations also indicate that the next order Gamma-expansion of the nonlocal perimeter, i.e., the expression \(\frac{1}{\varepsilon }\big ({{\,\textrm{Per}\,}}_\varepsilon (A;\varvec{\rho }) - {{\,\textrm{Per}\,}}(A;\varvec{\rho })\big )\), relies on the gap between the trace values of \(\rho _0\) and \(\rho _1\) on \(\partial A\), which induces anisotropy even for smooth densities.

2.3 Auxiliary definitions and reformulations of the perimeter

To work with the perimeter (2.2), it is convenient to reformulate the energy in a way such that it resembles the thickened sets introduced in the definition of the \(d-1\) dimensional Minkowski content (2.1). Recalling that \(A^t\) denotes the points of density t in A, for our setting, we have the following lemma, which may be directly verified (see also [19]).

Lemma 2.6

The perimeter (2.2) of a measurable set \(A\subset \Omega \) admits the following equivalent representation:

and furthermore it holds

meaning that \({{\,\textrm{Per}\,}}_\varepsilon (A;\varvec{\rho })\) can be expressed in terms of \(A^1\).

The above formulations provide a clear way to define restricted (localized) versions of the nonlocal perimeter. For measurable subsets A and \(\Omega ' \subset \Omega \), we define outer and inner nonlocal perimeters (respectively) of A in \(\Omega '\) as

Note that by definition the monotonicity property \({{\,\textrm{Per}\,}}_\varepsilon ^i(A;\varvec{\rho }, \Omega _1)\le {{\,\textrm{Per}\,}}_\varepsilon ^i(A;\varvec{\rho },\Omega _2)\) holds for subsets \(\Omega _1\subset \Omega _2\subset \Omega \) and \(i\in \{0,1\}\). Furthermore, we define the restricted nonlocal perimeter of A in \(\Omega '\) as the sum

3 Gamma-convergence and compactness

In this section we will prove that the Gamma-limit of the nonlocal perimeters \({{\,\textrm{Per}\,}}_\varepsilon (A;\varvec{\rho })\), defined in (2.2), is given by \({{\,\textrm{Per}\,}}(A;\varvec{\rho })\), defined in (2.8), thereby completing the proof of Theorem 2.3.

3.1 Compactness

We can directly turn to the proof of compactness. The argument adapts the approach introduced in [19, Theorem 3.1], but takes care to account for the fact that the densities \(\rho _i\) are allowed to vanish.

Proof of Theorem 2.1

Let us define the following sequences of functions \((u_k)_{k\in \mathbb {N}}\) and \((v_k)_{k\in \mathbb {N}}\) by

Here \(u_k\) changes value in a small layer outside \(A_k\), and similarly, \(v_k\) transitions to 1 inside \(A_k\). Recalling that the gradient of the distance function has norm 1 almost everywhere outside of the 0 level-set (see, e.g., [25]), up to a null-set, these functions satisfy

Since

it follows

By the hypothesis \(\mathop {\mathrm {ess\,inf}}\limits _\Omega (\rho _0+\rho _1)>0\), we have \(\rho _0 + \rho _1 >c_\rho \) almost everywhere in \(\Omega \) for some constant \(c_\rho >0\). Hence, the sets \(\Omega _i: =\{\rho _i > \delta \}\) cover \(\Omega \) for \(0<\delta <c_\rho /2\), i.e., \(\Omega =\Omega _0\cup \Omega _1\). Applying the coarea formula to \(\rho _0\) and \(\rho _1\), we may choose \(\delta \) such that \(\Omega _i\) for \(i=0,1\) are sets of finite perimeter.

Applying the chain rule for BV functions [2, Theorem 3.96] with \(f:(y,z)\mapsto yz\), we find

Note that by construction \({{\,\textrm{TV}\,}}(\chi _{\Omega _0})<\infty \). Using this together with (3.2) shows that \(u_k \chi _{\Omega _0}\) is bounded uniformly in \(BV(\Omega )\). Similarly, we also have that \(v_k \chi _{\Omega _1}\) is bounded uniformly in \(BV(\Omega )\). Consequently, we apply BV-compactness (see, e.g., [2, Theorem 3.23]) to both sequences, to find that \(u_k \chi _{\Omega _0} \rightarrow u\) and \(v_k \chi _{\Omega _1} \rightarrow v \) in \(L^1(\Omega )\) where \(u,v \in BV(\Omega )\).

Taking into account that

and a similar computation for \(\left\| v_k-\chi _{A_k}\right\| _{L^1(\Omega _1)}\), we further have \(\chi _{A_k}\rightarrow u\) in \(L^1(\Omega _0)\) and \(\chi _{A_k}\rightarrow v\) in \(L^1(\Omega _1)\). Necessarily, it follows that \(u = \chi _U \) and \(v = \chi _V \) where \(U\subset \Omega _0\) and \(V \subset \Omega _1\) are sets with \(\chi _U = \chi _V\) in \(\Omega _0\cap \Omega _1\). Using lower semi-continuity of the total variation we get

and similarly \({{\,\textrm{TV}\,}}(\chi _V)<\infty \), meaning that both sets have finite perimeter in \(\Omega \).

Consequently, the set \(A:= U\cup V\) is of finite perimeter and satisfies \(\chi _{A_k}\rightarrow \chi _A\) in \(L^1(\Omega )\) since \(\chi _{U} = \chi _V\) on \(\Omega _0\cap \Omega _1\), and therefore

\(\square \)

3.2 Liminf bound

We now prove the associated \(\liminf \) bound in the definition of Gamma-convergence for Theorem 2.3. The argument relies on slicing techniques, which allow the general d-dimensional case to be reduced to dimension one. To keep this part of the argument relatively self-contained and notationally unencumbered, we perform the slicing argument locally, while remarking that the approach developed in [10] could also be applied.

In our proof of the 1-dimensional case, we will need the following auxiliary lemma, which is a direct consequence of Reshetnyak’s lower semi-continuity theorem.

Lemma 3.1

Let \((\varepsilon _k)_{k\in \mathbb {N}}\subset (0,\infty )\) be a sequence of numbers with \(\varepsilon _k \rightarrow 0\). Consider an interval \(I\subset \mathbb {R}\), \(\rho _0,\rho _1\in BV(I)\), and a sequence of sets \((A_k)_{k\in \mathbb {N}}\) with A a set of finite perimeter in I such that \(\chi _{A_k} \rightarrow \chi _{A}\) in \(L^1(I)\). It follows that

Proof

We restrict our attention to \(i = 0\). Note, up to an equivalent representative, we may assume that \(\rho _0 = \rho ^-_0\) is a lower semi-continuous function defined everywhere (see [2, Section 3.2]). Recall the function \(u_k\) introduced in the proof of Theorem 2.1 in (3.1) for which we have

As \(u_k \rightarrow \chi _A\) in \(L^1(\{x:\rho _0>\delta \})\) for all \(\delta > 0\), we may apply the Reshetnyak lower semi-continuity theorem [44, Theorem 1.7] in each open set \(\{x\in I:\rho _0>\delta \}\) to find

Letting \(\delta \rightarrow 0\) and applying (3.4) concludes the result. \(\square \)

Theorem 3.2

Let \(\Omega \subset \mathbb {R}^d\) be an open, bounded subset with Lipschitz boundary. Assume that \(\rho _0,\rho _1\) belong to \( BV(\Omega ) \cap L^\infty (\Omega )\). Let \(A\subset \mathbb {R}^d\) be a subset and \(\{A_k\}_{k\in \mathbb {N}}\subset \mathbb {R}^d\) be a sequence of sets such that \(\chi _{A_k}\rightarrow \chi _A\) in \(L^1(\Omega )\) and \(\chi _A \in BV_{loc}(\Omega )\). Let \(\{\varepsilon _k\}_{k\in \mathbb {N}}\subset (0,\infty )\) be a sequence of numbers with \(\varepsilon _k \rightarrow 0\). Then it holds that

Proof

For notational convenience, we suppress dependence on \(\varvec{\rho }\) and write \({{\,\textrm{Per}\,}}_\varepsilon (A)\) instead of \({{\,\textrm{Per}\,}}_\varepsilon (A;\varvec{\rho })\). Likewise, we consider \(\varepsilon \rightarrow 0,\) with the knowledge that this refers to a specific subsequence. Furthermore we define \(\nu :=\frac{D\chi _A}{\left| D\chi _A\right| }\) and write \(\beta _\nu \) instead of \(\beta \left( \frac{D\chi _A}{\left| D\chi _A\right| };\varvec{\rho }\right) \).

We split the proof into two steps. In Step 1, we show that the result holds in dimension \(d=1.\) In this setting, a good representative of a BV function possesses one-sided limits everywhere, which will effectively allow us to reduce to the consideration of densities given by Heaviside functions and an elementary analysis. To recover the \(\liminf \) in general dimension, in Step 2, we use a slicing and covering argument along with fine properties of both BV functions and sets of finite perimeter.

Step 1: Dimension \(d=1\). We may without loss of generality suppose that \(\Omega \) is a single connected open interval and \(\chi _A \in BV(\Omega ; \{0,1\})\). Consequently, the reduced boundary \(\partial ^* A\) of A is a finite set. Supposing \(x_0 \in \partial ^* A \), we show for sufficiently small \(\eta \) that

With this in hand, one can cover each element of \(\partial ^* A\) by pairwise-disjoint neighborhoods to apply the inequality (3.5) to conclude the theorem in the case \(d=1\).

We first suppose without loss of generality that \(x_0 = 0\), \(\nu (x_0) = -1,\) and that for any \(\eta \le \eta _0\) we have \(\partial ^* A \cap (-\eta , \eta ) = \{0\}\). Up to choosing a smaller \(\eta _0,\) we proceed by contradiction and suppose that there is a subsequence such that

for all \(\eta \le \eta _0.\) Applying Lemma 3.1, it follows that

This implies

Checking all the cases for the two minima on the right hand side shows that we must have

and

Using (3.9), we see that

and so, without loss of generality, we may suppose that

Using (3.10) inside (3.6) and applying (3.7) with the minimum identified by (3.9), we have that

thereby showing that

for some \(\delta >0.\) As (3.6) and (3.11) are unaffected by choosing \(\eta \) smaller, we now restrict \(\eta \) to be \(\eta <\delta /4\) and sufficiently small such that, for \(i=0,1\), the one-sided limits are approximately satisfied, precisely,

Using Lemma 2.6 it holds

with the key point being that \({{\,\textrm{Per}\,}}_\varepsilon ^1\) can also be expressed in terms of the same underlying set \(A^1_\varepsilon \). Using the above representation, by (3.11) and (3.12), for all \(\varepsilon \) sufficiently small, there is \(x_\varepsilon \in A_\varepsilon ^1\) such that \(x_\varepsilon \in (0,\eta ).\) As \(\chi _{A_\varepsilon } \rightarrow \chi _A\) in \(L^1(-\eta ,\eta ),\) we have that \(|(A_\varepsilon ^1)^c\cap (0,\eta )| >\eta /2\) for all sufficiently small \(\varepsilon .\) Using \(x_\varepsilon \), the representation (3.13), and (3.12), it follows that

Using this in (3.6) and applying (3.7) for \(i=1\) with minimum determined via (3.9), we have

for all \(\eta >0.\) Taking \(\eta \rightarrow 0\), we have

contradicting the definition of \(\beta _\nu \) in (2.4).
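The role of the three competitors in \(\beta _\nu \) can be illustrated numerically in dimension one. The following is a minimal sketch, not the argument of the proof. It assumes that for a half-line \(A=(t,\infty )\) the nonlocal perimeter takes the form \(\frac{1}{\varepsilon }\int _{t-\varepsilon }^{t}\rho _0 + \frac{1}{\varepsilon }\int _{t}^{t+\varepsilon }\rho _1\); this formula is an assumption, since the definition (2.2) is not restated in this section. It also assumes densities that are constant on either side of a single jump at 0. The names `step_integral`, `per_eps_halfline`, and `beta_halfline` are hypothetical helpers introduced only for this illustration.

```python
from typing import Tuple

Step = Tuple[float, float]  # (value on x < 0, value on x > 0)


def step_integral(lo: float, hi: float, density: Step) -> float:
    """Integrate a density with a single jump at 0 over the interval [lo, hi]."""
    left, right = density
    neg_len = max(0.0, min(hi, 0.0) - min(lo, 0.0))  # length of [lo, hi] in x < 0
    pos_len = max(0.0, max(hi, 0.0) - max(lo, 0.0))  # length of [lo, hi] in x > 0
    return left * neg_len + right * pos_len


def per_eps_halfline(t: float, eps: float, rho0: Step, rho1: Step) -> float:
    """ASSUMED form of the nonlocal perimeter of A = (t, infinity) in 1D."""
    outer = step_integral(t - eps, t, rho0)  # rho_0 on the eps-layer just outside A
    inner = step_integral(t, t + eps, rho1)  # rho_1 on the eps-layer just inside A
    return (outer + inner) / eps


def beta_halfline(rho0: Step, rho1: Step) -> float:
    """beta for inner normal nu = +1: rho^{nu} is the right trace, rho^{-nu} the left."""
    return min(rho0[1] + rho1[1], rho0[0] + rho1[0], rho0[0] + rho1[1])


# Example densities (illustrative values only).
rho0, rho1 = (3.0, 1.0), (0.5, 2.0)
eps = 0.01
shifts = [k * eps / 4 for k in range(-12, 13)]  # interface positions near the jump
energies = [per_eps_halfline(t, eps, rho0, rho1) for t in shifts]
print(min(energies), beta_halfline(rho0, rho1))
```

In this example the unshifted interface picks up \(\rho _0^{-\nu }+\rho _1^{\nu }\), while shifting the interface by more than \(\varepsilon \) to either side picks up both traces from that side. The combination \(\rho _0^{\nu }+\rho _1^{-\nu }\) is not attained by any shift, in line with its absence from the minimum defining \(\beta _\nu \).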

Step 2: Dimension \(d> 1\). For any \(\eta > 0\), we show that for \(\mathcal {H}^{d-1}\)-almost every \(x_0\in \partial ^* A\) there is \(r_0: =r_0(x_0,\eta )>0\) such that for every \(r<r_0\) we have

where we recall that \(Q_\nu (x_0,r)\) is a cube oriented along \(\nu \).

Supposing we have proven this, we may apply the Morse covering theorem [27] to find a countable collection of disjoint cubes \(\{Q_{\nu (x_i)}(x_i,r_i)\}_{i\in \mathbb {N}}\) satisfying (3.14) and covering \(\partial ^* A\) up to an \(\mathcal {H}^{d-1}\)-null set. Directly estimating, we find that

Taking \(n \rightarrow \infty \) and then \(\eta \rightarrow 0\) concludes the theorem.

Turning now to prove (3.14), we apply the De Giorgi structure theorem to conclude that up to a \(\mathcal {H}^{d-1}\)-null set, we have

where each \(K_i\) is a subset of a \(C^1\) manifold. Consequently, for \(\mathcal {H}^{d-1}\)-almost every \(x_0\in \partial ^* A\), there is \(i\in \mathbb {N}\) such that the density relations

hold and the normals are aligned with

Fixing \(x_0\) as above, choose a scale \(r_0\) such that the density relations in (3.15) hold up to error \(\eta ,\) precisely,

and such that

After some algebraic manipulation, one sees that (3.16) implies

Without loss of generality, we may assume \(x_0 = 0\) and \(\nu _{\partial ^*A} (0) =e_d\).

We perform a slicing argument. For notational convenience, we choose \(A_\varepsilon \) to be given by the equivalent representative \(A^1_\varepsilon \), allowing us to use the representation (3.13) without writing the superscript. Defining

note that for any set

where the distance is directly in \(\mathbb {R}\) for the first set. We now use the representation (3.13), Fubini’s theorem with \(x = (x',x_d)\), and (3.20) to estimate

Applying Fatou’s lemma and Step 1 in the previous inequality, we have

We note for almost every \(x'\in Q'(0,r)\), if \(x_d\in \partial ^* A^{x'}\), then \(x = (x',x_d)\in \partial ^* A\) and \(\langle (0,\nu _{A^{x'}}(x_d)), \nu (x)\rangle >0\) by [2, Theorem 3.108 (a) and (b)] applied to \(\chi _A\). It then follows from [2, Theorem 3.108 (b)] applied to \(\rho _0\) and \(\rho _1\) that \( \beta _{\nu _{A^{x'}}}(x_d) = \beta _{\nu }(x)\) for \(\,\textrm{d}x' \otimes \mathcal {H}^0\)-a.e. \(x= (x',x_d).\) Using this, and subsequently the coarea formula [2], (3.17), (3.18), and the \(L^\infty \) bound on the densities (\(\left\| \rho _i\right\| _{L^\infty }\le C\)), we have

which together with (3.21) concludes (3.14) and the theorem. \(\square \)

3.3 Limsup bound

In this section, we show that for a given target classification region \(A\subset \Omega \), we can construct a recovery sequence with the optimal asymptotic energy. The result is precisely stated in the following theorem.

Theorem 3.3

Let the hypotheses of Theorem 3.2 hold. For any measurable set \(A \subset \Omega \) and sequence \((\varepsilon _k)_{k\in \mathbb {N}}\) with \(\varepsilon _k\rightarrow 0\) as \(k\rightarrow \infty \), there is a sequence of sets \(A_{k}\) such that \(\chi _{A_k}\rightarrow \chi _A\) in \(L^1(\Omega )\) and the following bound holds

The proof of this theorem relies on technical properties of BV functions, but is conceptually simple. Our approach is outlined in the following steps:

1. We use a recent approximation result of De Philippis et al. [24] to approximate the BV function \(\chi _A\) by a function \(u \in BV(\Omega )\) having higher regularity. We select a level-set, given by \(A_\eta \), of u such that \(\partial A_\eta = \partial ^*A_\eta \), \(\mathcal {H}^{d-1}(\partial A_\eta \triangle \partial ^* A) \ll 1\), and a large portion of \(\partial A_\eta \) is locally given by a \(C^1\) graph.

2. We then break \(\partial A_\eta \) into a good set, with smooth boundary, and a small bad set, with controllable error.

3. In the good set, \(A_\eta \) has \(C^1\) boundary, and here we construct an almost optimal improvement by perturbing the smooth interface. This is the result of Proposition 3.7.

(a) Using a covering argument, we localize the construction of a near optimal sequence and introduce improved approximations in balls centered on points \(x_0 \in \partial A_\eta \).

(b) Depending on which term attains the minimum in \(\beta (\nu ;\varvec{\rho }) = \min \{\rho _0^{\nu } + \rho _1^{\nu },\rho _0^{-\nu } + \rho _1^{-\nu },\rho _0^{- \nu } + \rho _1^{ \nu } \}\), where \(\nu \) is the inner normal of \(A_\eta \), we either shift the interface up or down slightly or, when the last term attains the minimum, leave it unperturbed to recover the optimal trace energies. The essence of this is to approximately satisfy \(\rho _0 + \rho _1 \approx \beta (\nu ;\varvec{\rho })\) on the modified interface.

4. Diagonalizing on approximations for \(A_\eta \) and then on \(\eta \), one obtains a recovery sequence for the original set A.
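The case distinction in Step 3(b) can be sketched in a few lines. This is only an illustration of the bookkeeping, assuming the one-sided traces of \(\rho _0\) and \(\rho _1\) at an interface point are already known as numbers. The names `Traces` and `beta_with_strategy` are hypothetical, and the strategy labels paraphrase the outline above rather than the precise construction of Proposition 3.7.

```python
from typing import NamedTuple, Tuple


class Traces(NamedTuple):
    """One-sided traces of a density at an interface point.

    plus:  trace on the side the inner normal nu points to (rho^{nu}),
    minus: trace on the opposite side (rho^{-nu}).
    """
    plus: float
    minus: float


def beta_with_strategy(rho0: Traces, rho1: Traces) -> Tuple[float, str]:
    """Return beta(nu; rho) and a label for the term attaining the minimum."""
    candidates = [
        (rho0.plus + rho1.plus, "move interface to the +nu side (into A)"),
        (rho0.minus + rho1.minus, "move interface to the -nu side (out of A)"),
        (rho0.minus + rho1.plus, "leave interface unperturbed"),
    ]
    # On ties, the first listed candidate is returned.
    return min(candidates, key=lambda c: c[0])


# Illustrative values only.
value, strategy = beta_with_strategy(Traces(plus=1.0, minus=0.2),
                                     Traces(plus=0.3, minus=2.0))
print(value, strategy)
```

Note that the fourth combination \(\rho _0^{\nu } + \rho _1^{-\nu }\) is deliberately absent from the candidate list, matching the definition of \(\beta \).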

We begin with the proof of Proposition 3.7 for Step 3, and for this, a couple of auxiliary lemmas will make the argument easier. In our construction, we will select an appropriate level-set using the following lemma, which says that given control on an integral one can control the integrand in a large region.

Lemma 3.4

Let \(f:(a,b) \rightarrow [0,\infty )\) be integrable and \(\theta \in (0,1)\). Then

Proof

Using Markov’s inequality one computes

\(\square \)
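For orientation, the Markov-type estimate behind Lemma 3.4 can be sketched as follows. The threshold \(\lambda \) and the resulting constant are assumptions chosen for illustration; the normalization used in the lemma itself may differ. For any \(\lambda >0\), Markov’s inequality gives

$$\begin{aligned} \left| \{s\in (a,b): f(s)>\lambda \}\right| \le \frac{1}{\lambda }\int _a^b f(s)\,\textrm{d}s. \end{aligned}$$

If \(\int _a^b f\,\textrm{d}s>0\), choosing \(\lambda := \frac{1}{(1-\theta )(b-a)}\int _a^b f(s)\,\textrm{d}s\) bounds the left-hand side by \((1-\theta )(b-a)\), and hence

$$\begin{aligned} \left| \left\{ s\in (a,b): f(s)\le \frac{1}{(1-\theta )(b-a)}\int _a^b f(\tau )\,\textrm{d}\tau \right\} \right| \ge \theta (b-a). \end{aligned}$$

In words, on at least a \(\theta \)-fraction of the interval the integrand is controlled by its average, up to the factor \(1/(1-\theta )\). If the integral vanishes, then \(f=0\) almost everywhere and the statement is trivial.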

To control errors arising in our interface construction, we will take advantage of the assumption \(\rho _i \in L^\infty (\Omega )\). Specifically, energetic contributions of \(\mathcal {H}^{d-1}\)-small pieces of our construction will be thrown away using the following proposition for the classical Minkowski content.

Proposition 3.5

(Theorem 2.106 [2]) Suppose that \(f:\mathbb {R}^{d-1}\rightarrow \mathbb {R}^d\) is a Lipschitz map. Then for a compact set \(K\subset \subset \mathbb {R}^{d-1}\) it holds

where \(\mathcal {M}\) is the \(d-1\)-dimensional Minkowski content defined in (2.1).

In the proof of Step 3 and in the case that the optimal energy sees the traces on both sides of the interface, we will need a recovery sequence with non-flat interface. We will apply the following lemma to show that the weighted perimeters converge on either side of the interface.

Lemma 3.6

Suppose that \(A\subset Q(0,r)\) is given by the sub-graph of a function in \(C^1(\overline{Q'(0,r)})\) which does not intersect the top and bottom of the cube Q(0, r). Then

Proof

Again, we suppress the dependency on \(\varvec{\rho }\). It suffices to show convergence for the outer perimeter \({{\,\textrm{Per}\,}}_\varepsilon ^0 (A;Q(0,r))\) defined in (2.14).

Let \(g_0:Q'(0,r)\rightarrow (-r/2,r/2)\) be the function prescribing the graph associated with \(\partial A\). Let \(x \in \mathbb {R}^{d} \mapsto {\text {sdist}}(x, A)\) denote the signed distance from A, with the convention that it is non-negative outside of A. Note first that \({\text {sdist}}^{-1}(s)\cap Q(0,r-2s)\) is the graph of a Lipschitz function \(g_s:Q'(0,r-2s)\rightarrow (-r/2,r/2)\) with uniformly bounded gradient depending on \(g_0\) for all \(s>0\) sufficiently small. To see this, note that the level set of \({\text {sdist}}\) can be expressed by translating the graph of the boundary, that is,

which is the supremum of equicontinuous bounded functions. In fact, we have that

where \(\omega \) is the modulus of continuity of \(\nabla g_0\) in Q(0, r). To see this, we note that for sufficiently small \(t\in \mathbb {R}^{d-1}\) and \(x' \in Q'(0,r-2\,s)\), by (3.22), there is always a \(\nu _{t}\in S^{d-1}\) such that \(g_s(x'+t) = g_0(x'+t+s\nu '_{t})- s\nu _{t,d}\). Using the mean value theorem, we can estimate

where \(\theta \in (0,1);\) the same bound holds from below. Now assuming that \(x'\) is a point of differentiability for \(g_s,\) we insert \(g_s(x'+t) - g_s(x') = \langle \nabla g_s(x'),t\rangle + o(|t|)\) into the above inequality to find

Fixing \(r \in (0,1)\), taking the supremum over \(t \in rS^{d-1}\), dividing by r, and then sending \(r\rightarrow 0\), this inequality becomes

and as Lipschitz functions are differentiable almost everywhere, we recover (3.23).

Define \(\phi (x',s): = \phi _s(x'):= (x',g_s(x'))\). We now apply the coarea and area formulas to rewrite the perimeter as

Using the area formula once again, we have

Taking the difference, we can estimate

Taking the \(\limsup \) as \(\varepsilon \rightarrow 0\) and then letting \(\delta \rightarrow 0 \) in the above estimate, we see that the lemma will be concluded if we show that

Note that \((x',s)\mapsto \phi (x',s)\) is a bi-Lipschitz function on \(Q'(0,r(1-\delta ))\times (0,\varepsilon )\) for sufficiently small \(\varepsilon .\) By [2, Theorem 3.16] on the composition of BV functions with Lipschitz maps, it follows that \((x',s)\mapsto \rho _0 \circ \phi (x',s)\) is a BV function with

Further, by fine properties of BV functions (see [2, Theorem 3.108 (b)]), it follows that

for \(x'\in Q'(0,r)\) almost everywhere.

Rewriting [2, Eq. (3.88)], for \(f \in BV(Q(0,r(1-\delta )))\), we have

Inserting \(f = \rho _0\circ \phi \) into the above equation and using (3.25) and (3.26) concludes the proof of (3.24) and thereby the lemma. \(\square \)

We now prove that smooth sets have near optimal approximations, completing the proof of Step 3. As a matter of notation, we will typically consider the closure and boundary of a set A relative to \(\Omega \) and denote this as \(\overline{A}\) and \(\partial A\), respectively. However, to denote the closure of a set A in \(\mathbb {R}^d,\) we will write \(\overline{A}^{\mathbb {R}^d}\). This distinction will be important to ensure that the energy does not charge the boundary of \(\Omega .\)

Proposition 3.7

Let \(\Omega \subset \mathbb {R}^d\) be an open, bounded set with Lipschitz boundary, \(M\subset \mathbb {R}^d\) a \(C^1\)-manifold without boundary, and \(A \subset \Omega \) a set. Suppose that \(\overline{\partial A}^{\mathbb {R}^d}\) is a submanifold of M, with the additional properties that

Then for any \(\eta >0,\) there is \(A_\eta \) such that \(A_\eta = A\) in a neighborhood of \(\partial \Omega \) and the following inequalities hold:

Proof

The primary challenge in this construction is controlling the interaction between the interfaces given by \(\partial A\) and by \(J_\rho : = J_{\rho _0}\cup J_{\rho _1}\), denoting the jump set of \(\rho _0\) and \(\rho _1\), with a secondary challenge being to ensure that \(\partial A\) does not charge the boundary \(\partial \Omega \). We denote the inner normal of A by \(\nu (x): =\frac{D\chi _A}{|D\chi _A|}(x)\), abbreviate \(\beta _\nu =\beta \left( \tfrac{D\chi _A}{\left| D\chi _A\right| };\varvec{\rho }\right) \), and suppress the dependency on \(\varvec{\rho }\) for notational simplicity.

We write \(\partial A\) as the union of a good surface and a bad surface

We will select neighborhoods of Lebesgue points useful to our construction, and as such, we are only interested in keeping track of properties of \(S_G\) and \(S_B\) up to an \(\mathcal {H}^{d-1}\)-null set. We may assume the following are satisfied up to \(\mathcal {H}^{d-1}\)-null sets:

-

1.

For each point \(x\in \partial A\), there is \(r_x > 0\) such that up to a rotation and translation, \(Q_{\nu (x)}(x,r_x)\cap A\) is given by the subgraph of a \(C^1\) function \(g_x\) centered at the origin with \(\nabla g_x(0) = 0\).

-

2.

For each point \(x \in \partial A\),

$$\begin{aligned} \lim _{r \rightarrow 0}\frac{\mathcal {H}^{d-1}( \partial A \cap Q_{\nu (x)}(x,r))}{r^{d-1}}= 1, \end{aligned}$$ where we recall that \(Q_{\nu }(x,r)\) is a cube oriented in the direction \(\nu \).

-

3.

For \(x \in \partial A\),

-

4.

For \(x\in S_G\), we have

-

5.

For \(x \in S_G\),

-

6.

For \(x\in S_B\), \(\nu (x) = \pm \nu _{J_\rho }(x) \), where \(\nu _{J_\rho }\) denotes a unit normal of the \(d-1\)-rectifiable set \(J_{\rho }.\)

-

7.

For \(x\in S_B\), we may assume

where \(Q_{\nu }^{\pm }(x,r):= \{y\in Q_{\nu }(x,r): \pm \langle y-x,\nu \rangle > 0 \}.\)

-

8.

For \(x \in S_B\),

To prove the proposition, we will use a covering argument to select cubes containing the majority of points in \(S_G\) and \(S_B\), for which the limits in the above list are approximately satisfied up to small relative error \(\eta \ll 1\). Inside each of these nice cubes, we will modify the interface or leave it alone depending on the target energy.

We begin by showing that for fixed \(\eta > 0\), for \(\mathcal {H}^{d-1}\)-almost every \(x\in \partial A\) there is \(r_0(x,\eta ) > 0\) such that for any \(r<r_0\), there is a modification of A given by \(A_\eta \) for which \( A_\eta = A\) in a neighborhood of \(\partial Q_{\nu (x)}(x,r)\) and the inequalities

hold. As the idea is similar for \(x\in S_G\), we focus on the more complicated situation where \(x\in S_B.\)

Without loss of generality, we suppose that \(x = 0 \in S_B\), that \(\nu (0) = -e_d\), and the relevant properties of Hypothesis 1.)– are satisfied. Recalling the definition of \(\beta _\nu \) in (2.4), to construct a modification of A in a small box centered at 0, we break into cases: Either

In the first case, we shift the interface up or down to recover the optimal trace. In the second case, the energy is best when picking up the traces from either side of A, and consequently we will not modify A, but must show this suffices.

Case 1 \(\beta _\nu (0) = \rho _0^{\pm \nu }(0) + \rho _1^{\pm \nu }(0)\). Without loss of generality, we suppose that \(\beta _\nu (0) = \rho _0^{-\nu }(0) + \rho _1^{-\nu }(0)\), which means that the energy is optimal slightly outside of A.

By Hypothesis 1.), the relative height satisfies

where \(Q'\) denotes the \(d-1\) dimensional cube. Consequently, also using that \(\nabla g_0\) is continuous, we may assume that \(r_0 = r(0,\eta )\ll 1\) is such that

Further by Hypothesis 7.), we may take \(r_0 \ll 1\) such that for \(r<r_0\) we have

which gives

Thus by Lemma 3.4, for \(\theta \in (0,1)\), we may find a \(\theta \)-fraction of \(a\in (\eta r,2\eta r)\) such that

Further, as \(J_\rho \) is \(\sigma \)-finite with respect to \(\mathcal {H}^{d-1}\) (as the absolute value of the jump is positive and integrable on this set), we can assume that for such choices of the value a we also have \(\mathcal {H}^{d-1} (J_\rho \cap (Q'(0,r) \times \{a\}) ) = 0,\) and thus

By Lemma 3.6 and (3.30), for all \(r' <r,\) we have

Now, we introduce a second small parameter \(0< \delta <1\) (that can be taken equal to \(\eta \)), which we use to shrink the cube under consideration.

Defining \(A_\eta \) in the cube Q(0, r) to be \(A \cup (Q'(0,r(1-\delta ))\times (-a,a)),\) the \(L^1\) estimate of (3.28) follows immediately. By Proposition 3.5, we have

and one can additionally see that

where j sums over the faces \(F_{\pm j} = \partial A_\eta \cap \{x \in \overline{Q(0,r(1-\delta ))}:\pm x\cdot e_j = r(1-\delta )\}.\)

Consequently, we may apply (3.31) and (3.29), the coarea formula [2], and Hypotheses 2.) and 3.) to find

As the constant \(C>0\) arising in the previous inequality is independent of x and r, taking \(\delta =\eta \), up to redefinition of \(A_\eta \) by \(A_{\eta /C}\), the proof of this case is concluded.

Case 2 \(\beta _\nu (0) = \rho _0^{-\nu }(0) + \rho _1^{\nu }(0)\). For \(A = A_\eta \), the \(L^1\) estimate of (3.28) is trivially satisfied. By Lemma 3.6, we have that

Assuming that the limits Hypotheses and 3.) are satisfied up to error \(\eta \) for \(r<r_0 = r(0,\eta )\), as in (3.32) we can estimate

Once again, as \(\eta >0\) is arbitrary, the proof of (3.28) is complete.

By the Morse measure covering theorem [27], we may choose a finite collection of disjoint cubes \(\{Q_i\}\) satisfying (3.28) compactly contained in \(\Omega \) such that

We define \(A_\eta \) to be the set satisfying (3.28) in each of the finitely many disjoint cubes \(Q_i\), and the same as A outside of these cubes. The \(L^1\) estimate within the proposition statement is satisfied. For each point \(x\in F:=\overline{\partial A_\eta {\setminus } \bigcup _i Q_i}^{\mathbb {R}^d}=\overline{\partial A {\setminus } \bigcup _i Q_i}^{\mathbb {R}^d}\), there is an open cube \(C_x\) such that \(F\cap \overline{C_x}^{\mathbb {R}^d}\) is given as the \(C^1\)-graph of a compact set up to rotation. Applying the Besicovitch covering theorem [27], we may find a finite subset of \(\{C_x\}\), given by \(\{C_j\}\), covering F such that each point x belongs to \(C_j\) for at most C(d) many j.

Noting that

by Proposition 3.5 and using the properties of the selected \(Q_i,\) we then estimate

As \(\eta > 0 \) is arbitrary, we conclude the proof of the proposition. \(\square \)

We now complete the proof of the \(\limsup \) bound in Theorem 3.3, and thereby the Gamma-convergence result of Theorem 2.3.

Proof of Theorem 3.3

As usual, we denote \(\varepsilon _k\) by \(\varepsilon .\) By Theorem 2.1, we may without loss of generality assume that \(\chi _A \in BV(\Omega ).\)

Step 1: Construction of an approximating “smooth” set. For \(\eta >0\), we apply the approximation result [24, Theorem C] to find \(u\in SBV(\Omega )\) such that

We note that the approximation is found by applying [24, Theorem C] to \(\chi _A-1/2\), and the \(L^\infty \) bound follows from the comment at the top of page 372 therein (in fact, \(\mathcal {H}^{d-1}(M{\setminus } J_u) = 0\) at this point).

We will select a level-set of u to approximate A, and to do this, we will need to know that the boundary of the level-set is well-behaved: away from M, it will suffice to apply Sard’s theorem to see this is a \(C^1\)-manifold; however, it is possible the level-set boundary oscillates as it approaches M and creates a large intersection. To ensure this doesn’t happen, we begin by modifying u so that

for which the precise meaning will become apparent.

To modify u to satisfy (3.34), we locally use reflections to regularize. We remark that the trace is well defined on M, and up to a small extension of the manifold at \(\partial M\), we can assume that \(u^{+} = u^-\) in a neighborhood of \(\partial M\) (meaning the manifold boundary). We modify u as follows: For each \(x \in M \cap \overline{\{u^+ \ne u^-\}}\) we choose \(r_x>0\) such that \(M\cap B(x,r_x)\) is a graph and \(\overline{B(x,r_x)} \cap \partial M = \emptyset \). Consider a partition of unity \(\{\psi _i\}\) with respect to a (finite) cover \(\{B(x_i,r_{i})\}\) of \(\overline{\{u^+ \ne u^-\}}.\) For each ball \(B(x_i,r_i)\), define \(M_i^\pm \) to be the ball intersected with the sub- or super-graph. In \(M_i^\pm \), we can reflect and mollify \(u\psi _i\). Choosing fine enough mollifications, restricting to \(M_i^\pm \), and adding together the mollified functions (and \(u(1-\sum _i\psi _i)\)) provides the desired approximation satisfying (3.34) and preserving the relations in (3.33). Similarly, one may smoothly extend u to \(\mathbb {R}^d\) as \(M \subset \subset \Omega \).

Substep 1.1: \(\partial \{u>s\}\) approximates \(\partial A\). Fixing \(\theta \in (0,1)\), we show there is a \(\theta \)-fraction of \(s\in (3/8,5/8)\) such that \(A_s:=\{u>s\}\) is a good level-set approximating A, in the sense that \(\partial A_s\) is sufficiently regular (as for the next step) and

First, for any \(s \in (3/8,5/8),\) we bound the \(L^1\)-norm by (3.33) as

To control the gradient of \(\chi _{A_s} - \chi _A\), first note that

where we let \(\nu _A\) and \(\nu _{A_s}\) denote the measure-theoretic inner normals. To control the first term on the right-hand side of (3.37), we have

We show that this symmetric difference can be chosen to be small. To control (3.38), note that by the coarea formula (see [2])

Consequently, by Lemma 3.4, for a \(\theta \)-fraction of \(s \in (3/8,5/8)\), we have that

To estimate (3.39) and (3.40), we have

Finally, to control (3.41), note that

To see this, we prove the converse inclusion and suppose \(x \in \partial ^* A \cap J_u \cap \{|(u^+ - u^-) - 1|\le 1/4\};\) in particular, at such a point we have \(u^+(x)\ge u^-(x) + 3/4\). Given the \(L^\infty \) bound of (3.33), we have \(u^-(x) \ge 0\) and \(1 \ge u^+(x)\). Putting these facts together, we see that \(u^+(x) \ge 3/4 >1/4\ge u^-(x)\), showing that for \(s \in (3/8,5/8)\) we have \(x\in \partial ^*\{u>s\}\). Thus, we can estimate (3.41) as follows:

With the above estimates, up to redefinition of \(\theta \), we have that

for a \(\theta \) portion of \(s \in (3/8,5/8).\)

We control the second right-hand side term in (3.37) as follows. As in (3.42), we have \(\mathcal {H}^{d-1}(\partial ^* \{u>s\} \cap \partial ^* A \cap \{\nu _A \ne \nu _{\{u>s\}}\} {\setminus } J_u)\le \mathcal {H}^{d-1}( J_u \triangle \partial ^* A) < \eta \). Then we note that

Consequently by Lemma 3.4, \(u^{\nu _A} - u^{-\nu _A} > 3/4\) in \(J_u\cap \partial ^* A\) outside of a set with \(\mathcal {H}^{d-1}\)-measure less than \(C\eta .\) As the normal \(\nu _{A_s}\) coincides with the direction of positive change for u, for \(s \in (3/8,5/8),\) we have that \(\nu _A = \nu _{A_s}\) outside of a small set controlled by \(\eta .\) In other words, we have that

Putting this estimate together with the bounds (3.36) and (3.44), we conclude (3.35).

Substep 1.2: Regularity of \(\partial A_s\). We claim that for almost every choice of \(s \in (3/8,5/8)\), \(\overline{\partial A_s}^{\mathbb {R}^d}\) is contained in the compact image of a Lipschitz function \(f:\mathbb {R}^d\rightarrow \overline{\Omega }\), i.e., \(f(K) = \overline{\partial A_s}^{\mathbb {R}^d}\) for some \(K\subset \subset \mathbb {R}^d\), and there is a compact set \(N \subset \overline{\Omega }\) with \(\mathcal {H}^{d-1}(N) = 0\), such that for any point \(x\in \overline{\partial A_s}^{\mathbb {R}^d} {\setminus } N\), there is a radius \(r_x\) such that \(\overline{\partial A_s}^{\mathbb {R}^d} \cap B(x,r_x) = \partial A_s \cap B(x,r_x)\) is a \(C^1\) surface.

Note first that by Sard’s theorem, for almost every \(s\in (0,1)\), the set \(\partial A_s \cap \Omega {\setminus } \overline{\{u^+\ne u^-\}}\) is a \(C^1\) surface away from \(M\cup \partial \Omega \) (i.e., locally in \(\Omega {\setminus } M\)). We will show that the claim holds locally in \(\overline{\{u^+\ne u^-\}}\cup \partial \Omega \), meaning that: for any \(x_0 \in \overline{\{u^+\ne u^-\}}\cup \partial \Omega \), there is a radius \(r>0\) such that, for almost every \(s \in (0,1)\), \(\overline{\partial A_s}^{\mathbb {R}^d} \cap \overline{B(x_0,r)}\) satisfies the claim, with the amendment that for \(x\in (\overline{\partial A_s}^{\mathbb {R}^d} {\setminus } N)\cap B(x_0,r)\), there is \(r_x>0\) such that \(\overline{\partial A_s}^{\mathbb {R}^d} \cap B(x,r_x) = \partial A_s \cap B(x,r_x)\) is a \(C^1\) surface for some compact set \(N\subset \overline{\Omega }\) (this allows us to avoid proving anything on \(\partial B(x_0,r)\)). With the claim satisfied locally, a covering argument concludes the proof of the claim.

We will assume that \(x_0\in \overline{\{u^+\ne u^-\}}\), as the case of \(x_0\in \partial \Omega \) is simpler. Recall that we chose M so that \(\textrm{dist}(\overline{\{u^+\ne u^-\}},\partial M)>0\), so that there is \(r>0\) such that \(B(x_0,2r) \cap M\) is a \(C^1\) surface. Let \(M^+\) and \(M^-\) be the associated super-graph and sub-graph in \(B(x_0,2r)\), respectively. By (3.34), \(u|_{M^\pm }\) has a \(C^1\) extension to \(\mathbb {R}^d\), which we denote by \(u^{\textrm{ext}, \pm }\). Similarly, denoting the trace of u from \(M^\pm \) onto \(M\cap B(x_0,2r)\) by \(u^\pm ,\) we have that \(u^\pm \) belongs to \(C^1(M \cap B(x_0,2r))\). Applying Sard’s theorem three times, in \(M^\pm \) and in \(M \cap B(x_0,2r)\), we find that for almost every \(s\in (0,1)\)

are \(C^1\) surfaces of dimension \(d-1\) and \(d-2\), respectively, and further

where \(\partial _M\) denotes the boundary with respect to the topology relative to M. Clearly we have

and taking \(N: = (\partial _M \{u^+>s\} \cup \partial _M \{u^- >s\}) \cap \overline{B(x_0,r)}\), we have that the claim is locally satisfied.

Step 2: Good and bad parts of \(\partial A_s\). We now fix \(s \in (3/8,5/8)\) such that the previous step holds, with both the regularity of Substep 1.2 and the estimate (3.35), for \(A_s: = \{u>s\}.\) We construct open sets \(U_1\) and \(U_2\) which cover \(\overline{\partial A_s}^{\mathbb {R}^d}.\) The set \(U_1\) will only contain points of \(\partial A_s\) where it is a smooth manifold. The set \(U_2\) will contain the \(\mathcal {H}^{d-1}\)-small collection of points in \(\overline{\Omega }\) for which \(\overline{\partial A_s}^{\mathbb {R}^d}\) is not given by a smooth manifold, i.e., containing N.

We choose \(N \subset U_2 \subset \{y \,:\,{{\,\textrm{dist}\,}}(y,N)\le \delta \}\) for \(0<\delta \ll 1\) such that

this is possible as \(\bigcap _{\delta >0} \{y \,:\,{{\,\textrm{dist}\,}}(y,N)\le \delta \} = N\) and \(\mathcal {H}^{d-1}(N) = 0.\)

For every \(x \in \partial A_s {\setminus } U_2\), there is a local neighborhood contained in \(\Omega \) for which the boundary is a graph. Consequently, we may choose \(U_1 \subset \subset \Omega \) to be an open set with smooth boundary such that the boundary is covered with \(\overline{\partial A_s}^{\mathbb {R}^d}\subset U_1\cup U_2\), the boundary of \(U_1\) is not charged, that is, \(\mathcal {H}^{d-1}(\partial U_1 \cap \partial A_s) = 0\), and \(U_1\) is well separated from the sets arising from interface intersections with \(\overline{U_1} \cap N = \emptyset \).

Step 3: Near optimal approximation. We will now construct a new set \(A_\eta \) by modifying \(A_s\) in \(U_1\) while in \(U_2\), we leave the surface unchanged. We will show that this set \(A_\eta \) satisfies

Within \(U_i\) the constructed \(A_\eta \) will leave the set \(A_s\) unchanged in a neighborhood of \(\partial U_i\). Consequently, for sufficiently small \(\varepsilon > 0\), we have

where \(\mathcal {M}_\varepsilon \) is defined in (2.1). By properties (3.45), one can argue as at the end of the proof of Proposition 3.7 (with \(\{C_j\}\)) to find that

Further, by Proposition 3.7, we can construct \(A_\eta \) in \(U_1\) such that \(A_\eta = A_s\) in a neighborhood of \(\partial U_1\),

With this, using (3.35), we see that the \(L^1\) estimate in (3.46) immediately follows. To obtain the \(\limsup \) inequality, we apply the decomposition (3.47), the estimate of the Minkowski content (3.48), and the difference between \(\partial A_s\) and \(\partial ^* A\) in (3.37) to find

Up to redefinition of \(\eta \) to absorb the constant C, this concludes (3.46), which by a diagonalization argument concludes the theorem. \(\square \)

For use in the next section, we highlight that the approximation introduced in the above proof may be used as a near optimal constant recovery sequence.

Corollary 3.8

If the conditions of Theorem 2.3 hold true, then for all measurable sets \(A\subset \mathbb {R}^d\) with \({{\,\textrm{Per}\,}}(A;\varvec{\rho })<\infty \) and for all \(\eta >0\) there is a set \(A_\eta \) with smooth boundary away from a finite union of \(d-2\)-dimensional manifolds having transverse intersection with the domain boundary, in the sense that

and such that

4 Applications

Having proved our main statement Theorem 2.3, we now turn towards applications. In Sect. 4.1 we deduce Gamma-convergence of the total variation functional associated to \({{\,\textrm{Per}\,}}_{\varepsilon }(\cdot ;\varvec{\rho })\); in Sect. 4.2 we discuss the asymptotic behavior of adversarial training as \(\varepsilon \rightarrow 0\); and in Sect. 4.3 we define discretizations of the nonlocal perimeter on random geometric graphs and prove their Gamma-convergence.

4.1 Gamma-convergence of total variation

We define the nonlocal total variation of \(u\in L^1(\Omega )\):

One can check easily (see also [12, Proposition 3.13] for \(L^\infty \)-functions) that \({{\,\textrm{TV}\,}}_\varepsilon \) satisfies the generalized coarea formula

For any integrable function, the measure of the set of values t such that level-sets \(\{u=t\}\) have positive mass is zero. Consequently, as definition (2.2) is invariant under modification by null-sets, we may rewrite this as

This motivates us to define a limiting version of this total variation as

which is identified as the Gamma-limit of \({{\,\textrm{TV}\,}}_\varepsilon \) in the following theorem.

Theorem 4.1

Under the conditions of Theorem 2.3 it holds

as \(\varepsilon \rightarrow 0\) in the topology of \(L^1(\Omega )\).

Proof

The result is a consequence of Theorem 2.3, (4.2), and [18, Proposition 3.5]—a generic Gamma-convergence result for functionals satisfying a coarea formula. \(\square \)

We further provide a natural integral characterization of the limit energy (4.3), where we cannot directly argue via a density argument as \(\beta \)’s behavior on \((d-1)\)-dimensional sets is not “continuous” when approximated from the bulk.

Proposition 4.2

Under the conditions of Theorem 2.3 and for \({{\,\textrm{TV}\,}}\) defined as in (4.3), the following representation holds

Proof

For u fixed, we may define \(\beta : = \beta \left( \frac{D u}{\left| D u\right| };\varvec{\rho }\right) \) for \(\mathcal {H}^{d-1}\)-almost every point and treat it as a fixed function. Given the properties of the jump set [2, Section 3.6], it follows that \(\beta \) has an \(\mathcal {H}^{d-1}\)-equivalent Borel representative. We can then rewrite the equality in the proposition as

where \(\beta \) is a generic positive, bounded Borel measurable function. By a standard approximation argument, (4.4) will follow if we show that it holds for \(\beta := \chi _{A}\) for any Borel measurable subset \(A\subset \Omega .\)

To extend to a generic Borel subset, define the class \(\mathcal {S}:=\{A\subset \Omega : (4.4) \text { holds for }\beta :=\chi _A\}.\) For A, an open subset of \(\Omega \), and \(\beta := \chi _A\), (4.4) reduces to the standard coarea formula for BV functions [2]. Consequently \(\mathcal {S}\) contains all open subsets. Noting that \(\mathcal {S}\) satisfies the hypothesis of the monotone class (or \(\pi -\lambda \)) theorem [25, Theorem 1.4], it follows that \(\mathcal {S}\) contains all Borel subsets, concluding the proposition. \(\square \)

4.2 Asymptotics of adversarial training

In this section we would like to apply our Gamma-convergence results to adversarial training (1.1). For this we let \(\mu \in \mathcal M(\Omega \times \{0,1\})\) be the measure characterized through

and consider the following version of adversarial training

which arises from (1.1) by choosing \(\mathcal {X}=\Omega \) equipped with the Euclidean distance and \(\mathcal {C}:= \{\chi _A \,:\,A \in \mathfrak B(\Omega )\}\) as the collection of characteristic functions of Borel sets. As proved in [12] the problem can equivalently be reformulated as

where \({{\,\textrm{Per}\,}}_\varepsilon (A;\varvec{\rho })\) is the nonlocal perimeter (2.2) for which we proved Gamma-convergence in Sect. 3.
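The reformulation can be illustrated numerically on a finite labeled sample. The following is a minimal sketch, not the construction of [12]: it assumes that (4.7) has the form "standard risk plus \(\varepsilon \) times the nonlocal perimeter", replaces the densities \(\rho _0,\rho _1\) by an empirical sample in one dimension, takes \(A=[a,b]\) to be a closed interval, and uses open balls for the attack. The function name `risks_on_interval` and all parameters are hypothetical.

```python
import numpy as np

def risks_on_interval(x, y, a, b, eps):
    """Empirical standard risk, adversarial risk and nonlocal perimeter
    for the classifier chi_A with A = [a, b] (illustrative assumption)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=int)
    inside = (x >= a) & (x <= b)

    # Distance of each sample point to A and to its complement.
    dist_to_A = np.maximum(np.maximum(a - x, x - b), 0.0)
    dist_to_Ac = np.where(inside, np.minimum(x - a, b - x), 0.0)

    # An open ball of radius eps around x meets A (resp. A^c)
    # exactly when the corresponding distance is below eps.
    can_reach_A = dist_to_A < eps
    can_reach_Ac = dist_to_Ac < eps

    # Standard 0-1 risk of chi_A.
    standard = np.mean(np.abs(inside.astype(int) - y))

    # Worst-case loss: a label-0 point is lost if it can be pushed into A,
    # a label-1 point is lost if it can be pushed into A^c.
    adversarial = np.mean(np.where(y == 0, can_reach_A, can_reach_Ac))

    # Nonlocal perimeter: label-0 points in the exterior strip plus
    # label-1 points in the interior strip, normalized by n * eps.
    exterior = (~inside) & can_reach_A & (y == 0)
    interior = inside & can_reach_Ac & (y == 1)
    perimeter = (exterior.sum() + interior.sum()) / (len(x) * eps)

    return standard, adversarial, perimeter
```

On any sample, the returned values satisfy `adversarial == standard + eps * perimeter` up to floating-point error, which is the empirical counterpart of the reformulation under the assumptions stated above.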

Before we turn to convergence of minimizers of this problem, we first discuss an alternative and simpler model for adversarial training which arises from fixing the regularization parameter in front of the nonlocal perimeter in (4.7) to \(\alpha >0\):

Unless \(\alpha =\varepsilon \), this problem is no longer equivalent to adversarial training, but it can be interpreted as an affine combination of the non-adversarial and the adversarial risk:

Gamma-convergence as \(\varepsilon \rightarrow 0\) is an easy consequence of Theorem 2.3 since (4.8) is a continuous perturbation of a Gamma-converging sequence of functionals.
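The affine-combination interpretation can be verified in one line. The sketch below assumes that the reformulation (4.7) expresses the adversarial risk as the standard risk plus \(\varepsilon {{\,\textrm{Per}\,}}_\varepsilon (A;\varvec{\rho })\); the shorthands \(R\) and \(R_\varepsilon ^{\textrm{adv}}\) are introduced here for illustration only.

```latex
% Shorthands (assumptions for this sketch):
%   R(A)                 := E_{(x,y)~mu} |chi_A(x) - y|                          (standard risk)
%   R_eps^adv(A)         := E_{(x,y)~mu} sup_{x' in B_eps(x)} |chi_A(x') - y|    (adversarial risk)
% Assumed form of (4.7):  R_eps^adv(A) = R(A) + eps * Per_eps(A; rho).
\[
  R(A) + \alpha \operatorname{Per}_\varepsilon(A;\boldsymbol{\rho})
  = R(A) + \frac{\alpha}{\varepsilon}\bigl(R_\varepsilon^{\mathrm{adv}}(A) - R(A)\bigr)
  = \Bigl(1 - \frac{\alpha}{\varepsilon}\Bigr) R(A)
    + \frac{\alpha}{\varepsilon}\, R_\varepsilon^{\mathrm{adv}}(A).
\]
```

The two coefficients sum to one, and the combination is convex precisely when \(\alpha \le \varepsilon \).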

Corollary 4.3

Under the conditions of Theorem 2.3, the functionals

Gamma-converge in the \(L^1(\Omega )\) topology as \(\varepsilon \rightarrow 0\) to the functional

Let us now continue the discussion of the original adversarial training problem (4.6) (or equivalently (4.7)). In order to preserve the regularizing effect of the perimeter, it is natural to take the approach of [7, 13], developed in the context of Tikhonov regularization for inverse problems, and to consider the rescaled functional

Obviously, this functional has the same minimizers as the original problem (4.6) and (4.7). Judging from the results in [7, 13] one might hope that the Gamma-limit of \(J_\varepsilon \) is the functional

Indeed the \(\liminf \) inequality is trivially satisfied:

Lemma 4.4

Under the conditions of Theorem 2.3 it holds for any sequence of measurable sets \((A_k)_{k\in \mathbb {N}}\subset \Omega \) and any sequence \((\varepsilon _k)_{k\in \mathbb {N}}\subset (0,\infty )\) with \(\chi _{A_k}\rightarrow \chi _A\) in \(L^1(\Omega )\) and \(\lim _{k\rightarrow \infty }\varepsilon _k=0\) that

Proof

If we assume that \(\alpha :=\mathbb {E}_{(x,y)\sim \mu }[\left| \chi _A(x)-y\right| ]-\inf _{B\in \mathfrak B(\Omega )}\mathbb {E}_{(x,y)\sim \mu }[\left| \chi _B(x)-y\right| ]>0\) (and hence \(J(A)=\infty \)) then we have by continuity

Hence, we see

Otherwise, if \(\alpha =0\), we use the \(\liminf \) inequality from Theorem 2.3 to find

since the first term in \(J_{\varepsilon _k}\) is non-negative. \(\square \)

The \(\limsup \) inequality is non-trivial and potentially even false. Letting \((A_k)_{k\in \mathbb {N}}\) be a recovery sequence for the perimeter \({{\,\textrm{Per}\,}}(A;\varvec{\rho })\) of \(A\in \mathop {\mathrm {arg\,min}}\limits _{B\in \mathfrak B(\Omega )}\mathbb {E}_{(x,y)\sim \mu }[\left| \chi _B(x)-y\right| ]\) one has

It obviously holds that

and hence, for the \(\limsup \) inequality to be satisfied, we would need to make sure that

This requires that the recovery sequence converges sufficiently fast to A in \(L^1(\Omega )\), namely

and is not obvious from our proof of Theorem 3.3. Even for smooth densities \(\rho _0,\rho _1\) where the construction of the recovery sequences is much simpler, (4.12) is not obvious.

On the other hand, condition (4.12) for the validity of the \(\limsup \) inequality only has to be satisfied for the minimizers (so-called Bayes classifiers) of the unregularized problem \(\inf _{B\in \mathfrak B(\Omega )}\mathbb {E}_{(x,y)\sim \mu }[\left| \chi _B(x)-y\right| ]\) which have finite weighted perimeter.

This motivates assuming a so-called “source condition”, which demands some regularity of these Bayes classifiers. In the field of inverse problems source conditions are well-studied and known to be necessary for proving convergence of variational regularization schemes [8, 13]. Our first source condition, referred to as the strong source condition, takes the following form:

Note that a Bayes classifier \(A^\dagger \) admits a recovery sequence satisfying (4.12), for instance, if \(\partial A^\dagger \) is sufficiently smooth and the densities \(\rho _0\) and \(\rho _1\) are continuous. In this case the constant sequence, which trivially satisfies (4.12), is a recovery sequence. Under this strong condition we obtain the following Gamma-convergence result:

Proposition 4.5

(Conditional Gamma-convergence) Under the conditions of Theorem 2.3 and assuming (sSC) it holds that

as \(\varepsilon \rightarrow 0\) in the \(L^1(\Omega )\) topology.

In fact, a substantially weaker source condition suffices for compactness of solutions as \(\varepsilon \rightarrow 0\):

We get the following compactness statement assuming validity of this source condition.

Proposition 4.6

(Conditional compactness) Under the conditions of Theorems 2.1 and 2.3 and assuming the source condition (wSC), any sequence of solutions to (4.6) is precompact in \(L^1(\Omega )\) as \(\varepsilon \rightarrow 0\).

Proof

Let us take a sequence of solutions \(A_\varepsilon \) of (4.6) for \(\varepsilon \rightarrow 0\). Furthermore let \(A_\varepsilon ^\dagger \) denote a recovery sequence for the Bayes classifier \(A^\dagger \) which satisfies (wSC). Using the minimization property of \(A_\varepsilon \) it holds

Subtracting the Bayes risk and rescaling by \(\varepsilon \), we have

Using that the leftmost term is non-negative, taking the \(\limsup \), and using (wSC) yields

Hence, we can apply Theorem 2.1 and conclude. \(\square \)
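The chain of inequalities in this proof can be sketched as follows. This is a hedged reconstruction from the surrounding text, not a verbatim restatement: it assumes that (4.6) is equivalent to minimizing the standard risk plus \(\varepsilon {{\,\textrm{Per}\,}}_\varepsilon \), and that (wSC) guarantees that the right-hand side of the second line stays bounded as \(\varepsilon \rightarrow 0\). The shorthands \(R\) and \(R^*\) are introduced here for illustration only.

```latex
% Shorthands (assumptions for this sketch):
%   R(A) := E_{(x,y)~mu} |chi_A(x) - y|,   R^* := inf_{B in B(Omega)} R(B)   (Bayes risk)
\begin{align*}
  R(A_\varepsilon) + \varepsilon \operatorname{Per}_\varepsilon(A_\varepsilon;\boldsymbol{\rho})
  &\le R(A_\varepsilon^\dagger) + \varepsilon \operatorname{Per}_\varepsilon(A_\varepsilon^\dagger;\boldsymbol{\rho}),
  \\
  \frac{R(A_\varepsilon) - R^*}{\varepsilon} + \operatorname{Per}_\varepsilon(A_\varepsilon;\boldsymbol{\rho})
  &\le \frac{R(A_\varepsilon^\dagger) - R^*}{\varepsilon} + \operatorname{Per}_\varepsilon(A_\varepsilon^\dagger;\boldsymbol{\rho}),
  \\
  \limsup_{\varepsilon\to 0} \operatorname{Per}_\varepsilon(A_\varepsilon;\boldsymbol{\rho})
  &\le \limsup_{\varepsilon\to 0}
     \left( \frac{R(A_\varepsilon^\dagger) - R^*}{\varepsilon}
            + \operatorname{Per}_\varepsilon(A_\varepsilon^\dagger;\boldsymbol{\rho}) \right)
   < \infty .
\end{align*}
```

The last line drops the non-negative term \((R(A_\varepsilon )-R^*)/\varepsilon \) and yields the uniform perimeter bound to which the compactness result of Theorem 2.1 is applied.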

So far we have introduced the strong source condition (sSC) for proving Gamma-convergence and the weak one (wSC) for showing compactness. As it turns out, for proving Theorem 2.5, concerning convergence of minimizers of adversarial training (4.6), it suffices to assume the source condition (SC), which lies between the two and is only slightly stronger than (wSC), i.e.,

This condition is the following:

Under this condition we can prove our last main result.

Proof of Theorem 2.5

Since (SC) implies (wSC), by Proposition 4.6 up to a subsequence it holds \(A_\varepsilon \rightarrow A\) in \(L^1(\Omega )\). Let \(A_\varepsilon ^\dagger \) denote a recovery sequence for \(A^\dagger \) satisfying (SC). Lemma 4.4 implies

Since \({{\,\textrm{Per}\,}}(A^\dagger ;\varvec{\rho })<\infty \) we get that \(J(A)<\infty \) and hence \(A\in \mathop {\mathrm {arg\,min}}\limits _{B\in \mathfrak B(\Omega )}\mathbb {E}_{(x,y)\sim \mu }[\left| \chi _B(x)-y\right| ]\) and \({{\,\textrm{Per}\,}}(A;\varvec{\rho })=J(A)\le {{\,\textrm{Per}\,}}(A^\dagger ;\varvec{\rho })\). This shows that A is a minimizer of the problem in (2.11). \(\square \)

4.3 Gamma-convergence of graph discretizations

In this section we discuss a discretization of the nonlocal perimeter \({{\,\textrm{Per}\,}}_\varepsilon (\cdot ;\varvec{\rho })\) on a random geometric graph and prove Gamma-convergence in a suitable topology. For this, let \(G_n=(X_n,W_n)\) for \(n\in \mathbb {N}\) be a weighted random geometric graph with vertex set \(X_n\) and weights \(W_n\). This means that the vertex set \(X_n:= \{x_1,\dots ,x_n\}\subset \Omega \) is a collection of independent and identically distributed (i.i.d.) random variables with law \(\rho := \rho _0 + \rho _1\), and the weights are defined as \(W_n(x,y):= \phi (\left| x-y\right| /\varepsilon _n)\) for some parameter \(\varepsilon _n>0\) and \(\phi (t):=\chi _{[0,1]}(t)\) for \(t\in \mathbb {R}\).

We can identify the graph vertices with their empirical measure \(\nu _n:= \frac{1}{n}\sum _{i=1}^n\delta _{x_i}\). Thanks to [35] (see also [34, Theorem 2.5]) and the fact that \(\rho \) is strictly positive on \(\Omega \), with probability one (almost surely) there exist maps \(T_n: \Omega \rightarrow \Omega \) such that

Here the quantity \(\delta _n>0\) is given by

which for \(d\ge 3\) coincides with the asymptotic connectivity threshold for random geometric graphs. We can use these transport maps to consider the measures

which are the empirical measures of the graph points that are associated with the i-th density \(\rho _i\).

We define a graph discretization of \({{\,\textrm{Per}\,}}_\varepsilon (A;\varvec{\rho })\) for \(A\subset X_n\) as

which effectively counts the number of points in an exterior strip around A carrying the label 0 and the number of points in an interior strip in A carrying the label 1. Note that, although the complement \(A^c\) of a subset A of the graph vertices \(X_n\) is not a subset of \(X_n\) anymore, the empirical measure \(\nu _n^1\) only considers points in \(A^c\cap X_n\) so one can just as well replace \(A^c\) by \(X_n {\setminus } A\). Note also that this graph model using (4.14) assumes that the label distribution is performed according to the ground truth distributions \(\rho _0\) and \(\rho _1\). One can also treat more general labeling models such that (4.14) is asymptotically satisfied as \(n\rightarrow \infty \), but for the sake of simplicity we limit the discussion to the model above.
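The counting described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions rather than the exact functional \(E_n\): it assumes the normalization \(1/(n\varepsilon _n)\), assigns labels directly to the vertices instead of through the transport maps \(T_n\), and measures the strips via graph neighbors with the weight \(\phi =\chi _{[0,1]}\). The function name `graph_perimeter` and its arguments are hypothetical.

```python
import numpy as np

def graph_perimeter(points, labels, in_A, eps):
    """Count label-0 vertices in the exterior strip of A and label-1
    vertices in the interior strip of A, normalized by n * eps."""
    points = np.asarray(points, dtype=float)
    if points.ndim == 1:
        points = points[:, None]  # treat a flat list as points in R^1
    labels = np.asarray(labels, dtype=int)
    in_A = np.asarray(in_A, dtype=bool)
    n = len(points)

    # Weight matrix W(x, y) = phi(|x - y| / eps) with phi = chi_[0,1].
    diff = points[:, None, :] - points[None, :, :]
    W = np.linalg.norm(diff, axis=-1) <= eps

    # A vertex is near A (resp. near X_n \ A) if it has a neighbor there.
    near_A = (W & in_A[None, :]).any(axis=1)
    near_Ac = (W & ~in_A[None, :]).any(axis=1)

    exterior = (~in_A) & near_A & (labels == 0)  # attackable label-0 points
    interior = in_A & near_Ac & (labels == 1)    # attackable label-1 points
    return (exterior.sum() + interior.sum()) / (n * eps)
```

For instance, for the four vertices 0, 1, 2, 3 on the real line with labels 0, 0, 1, 1, the subset consisting of the vertices 2 and 3, and `eps = 1.0`, one vertex lies in each strip, so the sketch returns 2/4 = 0.5. The pairwise distance matrix costs \(O(n^2)\) memory, which is acceptable for small illustrations only.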

Using the weight function \(W_n(x,y)\) we can equivalently express \(E_n(A)\) as

This graph perimeter functional combines elements of the graph perimeter studied in [36] and the graph Lipschitz constant studied in [43]. Correspondingly, the following Gamma-convergence proof also bears similarities with both of these works. For proving Gamma-convergence of these graph perimeters to the continuum perimeter we employ the \(TL^p\)-framework, developed in [36]. Here, we do not go into too much detail regarding the definition and the properties of these metric spaces. We just define the space as the set of pairs of \(L^p\) functions and measures \(TL^p(\Omega ):= \{(f,\mu ) \,:\,\mu \in \mathcal {P}(\Omega ),\; f\in L^p(\Omega ,\mu )\}\), where \(\mathcal {P}(\Omega )\) is the set of probability measures on \(\Omega \). We highlight [36, Proposition 3.12, 4.] which says that in our specific situation with \(\rho \) being a strictly positive absolutely continuous measure, convergence of \((u_n,\nu _n)\rightarrow (u,\rho )\) in the topology of \(TL^p(\Omega )\) is equivalent to \(u_n \circ T_n \rightarrow u\) in \(L^p(\Omega )\) for the maps \(T_n\) satisfying (4.13). Furthermore, we would like to emphasize that the functionals \(E_n\) are random variables since they depend on the given realization of the random variables which constitute the vertices \(X_n\) of the graph. Still, it is possible to prove Gamma-convergence of these functionals with probability one, meaning that Gamma-convergence might be violated only for a set of graph realizations which has zero probability. We refer the interested reader to [36, Definition 2.11] for precise definitions.

The following is the main result of this section and asserts Gamma-convergence of the functionals \(E_n\) to the Gamma-limit from Theorem 2.3.

Theorem 4.7

Let the assumptions of Theorem 2.3 be satisfied. If \(\varepsilon _n>0\) satisfies

then with probability one it holds

as \(n\rightarrow \infty \) in the \(TL^1(\Omega )\) topology, and the following compactness property holds:

Proof