Abstract

We characterise all linear maps \({\mathscr {A}}:\mathbb R^{n\times n}\rightarrow \mathbb R^{n\times n}\) such that, for \(1\le p<n\),

$$\begin{aligned} \left\Vert P\right\Vert _{{\text {L}}^{\frac{np}{n-p}}(\mathbb R^{n})}\le c\,\Big (\left\Vert {\mathscr {A}}[P]\right\Vert _{{\text {L}}^{\frac{np}{n-p}}(\mathbb R^{n})}+\left\Vert {\text {Curl}}P\right\Vert _{{\text {L}}^{p}(\mathbb R^{n})}\Big ) \end{aligned}$$

holds for all compactly supported \(P\in {\text {C}}_{c}^{\infty }(\mathbb R^{n};\mathbb R^{n\times n})\), where \({\text {Curl}}P\) denotes the matrix curl. Being applicable to incompatible, that is, non-gradient matrix fields as well, such inequalities generalise the usual Korn-type inequalities used e.g. in linear elasticity. Unlike previous contributions, the results gathered in this paper apply in all dimensions and are optimal. In particular, this requires distinguishing between different combinations of the ellipticity properties of \({\mathscr {A}}\), the integrability p and the space dimension n, and calls for a finer analysis in the two-dimensional situation.


1 Introduction

Korn-type inequalities are among the most fundamental tools in (linear) elasticity and fluid mechanics. Such inequalities are pivotal for coercive estimates, leading to well-posedness and regularity results in spaces of weakly differentiable functions; see [12, 14, 16, 25, 27, 29, 33, 34, 39, 40, 48, 61] for an incomplete list. In their most basic form they assert that for each \(n\ge 2\) and each \(1<{q}<\infty \) there exists a constant \(c=c({q},n)>0\) such that

$$\begin{aligned} \left\Vert {\text {D}}\!u\right\Vert _{{\text {L}}^{{q}}(\mathbb R^{n})}\le c\left\Vert \varepsilon (u)\right\Vert _{{\text {L}}^{{q}}(\mathbb R^{n})} \end{aligned}\tag{1.1}$$

holds for all \(u\in {\text {C}}_{c}^{\infty }(\mathbb R^{n};\mathbb R^{n})\). Here, \(\varepsilon (u)={\text {sym}}{\text {D}}\!u\) is the symmetric part of the gradient. Within linearised elasticity, where \(\varepsilon (u)\) takes the role of the infinitesimal strain tensor for some displacement \(u:\Omega \rightarrow \mathbb R^{n}\), variants of inequality (1.1) imply the existence of minimisers for elastic energies

in certain subsets of Sobolev spaces \({\text {W}}^{1,{q}}(\Omega ;\mathbb R^{n})\) provided the elastic energy density W satisfies suitable growth and semiconvexity assumptions, see e.g. Fonseca and Müller [24]. Variants of (1.1) also prove instrumental in the study of (in)compressible fluid flows [4, 23, 48] or in the momentum constraint equations from general relativity and trace-free infinitesimal strain measures [2, 17, 26, 43, 44, 63, 64].

Originally, (1.1) was derived by Korn [39] in the \({\text {L}}^{2}\)-setting and later generalised to all \(1<{q}<\infty \). Inequality (1.1) is non-trivial because it strongly relies on gradients not being arbitrary matrix fields; note that there is no constant \(c>0\) such that

$$\begin{aligned} \left\Vert P\right\Vert _{{\text {L}}^{{q}}(\mathbb R^{n})}\le c\left\Vert {\text {sym}}P\right\Vert _{{\text {L}}^{{q}}(\mathbb R^{n})} \end{aligned}\tag{1.3}$$

holds for all \(P\in {\text {C}}_{c}^{\infty }(\mathbb R^{n};\mathbb R^{n\times n})\). The failure of (1.3) stems from the fact that general matrix fields need not be \({\text {Curl}}\)-free. It is therefore natural to consider variants of (1.3) that quantify the lack of \({\text {Curl}}\)-freeness, that is, the incompatibility. Such inequalities prove crucial in infinitesimal (strain-)gradient plasticity, where functionals typically involve integrands \({\text {D}}\!u\mapsto W({\text {sym}}e)+|{\text {sym}}P|^2+V({\text {Curl}}P)\) based on the additive decomposition \({\text {D}}\!u=e+P\) of the displacement gradient into incompatible elastic and irreversible plastic parts, with \({\text {sym}}e\) representing a measure of (infinitesimal) elastic strain while \({\text {sym}}P\) quantifies the plastic strain; see Garroni et al. [28], Müller et al. [42, 51] and Neff et al. [21, 52, 54, 57, 58, 60] for related models. Another field of application is given by generalised continuum models, e.g. the relaxed micromorphic model, cf. [55, 56, 65]. A key tool in the treatment of such problems is provided by the incompatible Korn–Maxwell–Sobolev inequalities which, in the context of (1.3), read as

$$\begin{aligned} \left\Vert P\right\Vert _{{\text {L}}^{{q}}(\mathbb R^{n})}\le c\,\Big (\left\Vert {\text {sym}}P\right\Vert _{{\text {L}}^{{q}}(\mathbb R^{n})}+\left\Vert {\text {Curl}}P\right\Vert _{{\text {L}}^{p}(\mathbb R^{n})}\Big ) \end{aligned}\tag{1.4}$$

where the reader is referred to Sect. 3.1 for the definition of the n-dimensional matrix curl operator. For brevity, we simply speak of KMS-inequalities. Scaling directly determines q in terms of p (or vice versa); for instance, if \(1\le p<n\), then q is the Sobolev conjugate \(q=\frac{n\,p}{n-p}\). Variants of (1.4) on bounded domains have been studied in several contributions [2, 15, 28, 32, 42,43,44,45,46, 59]. Inequality (1.4) asserts that the symmetric part of P and the curl of P together suffice to control the entire matrix field P. However, it does not identify which feature of the symmetric part makes inequalities such as (1.4) work, and thus leaves open the question of the sharp underlying mechanism.
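The scaling argument can be made explicit. For \(\lambda >0\) and \(P_{\lambda }{:=}P(\lambda \,\cdot )\) one has \(\Vert P_{\lambda }\Vert _{{\text {L}}^{q}}=\lambda ^{-n/q}\Vert P\Vert _{{\text {L}}^{q}}\), likewise for \({\text {sym}}P_{\lambda }\), and \(\Vert {\text {Curl}}P_{\lambda }\Vert _{{\text {L}}^{p}}=\lambda ^{1-n/p}\Vert {\text {Curl}}P\Vert _{{\text {L}}^{p}}\). If the two powers differed, letting \(\lambda \rightarrow 0\) or \(\lambda \rightarrow \infty \) would reduce (1.4) to the false inequality (1.3), so necessarily \(\frac{1}{q}=\frac{1}{p}-\frac{1}{n}\). The following Python sketch (the helper names are ours and purely illustrative) solves this relation in exact rational arithmetic:

```python
from fractions import Fraction

def scaling_powers(p, q, n):
    """Powers of lambda picked up by ||P(lambda .)||_{L^q} and ||Curl P(lambda .)||_{L^p}."""
    p, q, n = Fraction(p), Fraction(q), Fraction(n)
    return -n / q, 1 - n / p

def kms_exponent(p, n):
    """The unique q for which both powers agree, i.e. 1/q = 1/p - 1/n."""
    p, n = Fraction(p), Fraction(n)
    if not 1 <= p < n:
        raise ValueError("a finite exponent q exists only for 1 <= p < n")
    return 1 / (1 / p - 1 / n)

# Example: (p, n) = (1, 3) gives q = 3/2, and (p, n) = (2, 3) gives q = 6.
for p, n in [(1, 3), (2, 3), (Fraction(3, 2), 2)]:
    q = kms_exponent(p, n)
    lhs_power, curl_power = scaling_powers(p, q, n)
    assert lhs_power == curl_power
    print(f"p = {p}, n = {n}: q = {q}")
```

Exact fractions are used so that the equality of the two scaling powers can be tested without rounding.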

In this paper, we aim to close this gap. Unlike previous contributions, where such inequalities were studied for specific combinations (p, n) or particular choices of matrix parts \({\mathscr {A}}[P]\) such as \({\text {sym}}P\) or \({\text {dev}}{\text {sym}}P\) (see Footnote 1), the purpose of the present paper is to classify those parts \({\mathscr {A}}[P]\) of matrix fields P for which (1.4) holds with \({\text {sym}}P\) replaced by \({\mathscr {A}}[P]\), for all possible choices of \(1\le p\le \infty \) depending on the underlying space dimension n. We now state the main results and place each of them in context.

2 Main results

2.1 All dimensions estimates

To approach (1.4) in light of the failure of (1.3), one may employ a Helmholtz decomposition to represent P as the sum of a gradient (hence curl-free) part and a curl (hence divergence-free) part. Under suitable assumptions on \({\mathscr {A}}\), the gradient part can be treated by a general version of Korn-type inequalities implied by the usual Calderón–Zygmund theory [9]. The divergence-free part, on the other hand, is dealt with by the fractional integration theorem (cf. Lemma 3.4 below) in the superlinear growth regime \(p>1\). If \(p=1\), then the particular structure of the divergence-free term in \(n\ge 3\) dimensions allows one to reduce to the Bourgain–Brezis theory [3]. As a starting point, we thus first record the solution of the classification problem for combinations \((p,n)\ne (1,2)\):

Theorem 2.1

(KMS-inequalities for \((p,n)\ne (1,2)\)) Let \(n=2\) and \(1<p<2\) or \(n\ge 3\) and \(1\le p<n\). Given a linear map \({\mathscr {A}}:\mathbb R^{{m}\times n}\rightarrow \mathbb R^{{N}}\), with \(m, N\in \mathbb N\), the following are equivalent:

-

(a)

There exists a constant \(c=c(p,n,{\mathscr {A}})>0\) such that the inequality

$$\begin{aligned} \left\Vert P\right\Vert _{{\text {L}}^{p^{*}}(\mathbb R^{n})}\le c\,\Big (\left\Vert {\mathscr {A}}[P]\right\Vert _{{\text {L}}^{p^{*}}(\mathbb R^{n})}+\left\Vert {\text {Curl}}P\right\Vert _{{\text {L}}^{p}(\mathbb R^{n})}\Big ) \end{aligned}\tag{2.1}$$

holds for all \(P\in {\text {C}}_{c}^{\infty }(\mathbb R^{n};\mathbb R^{{m}\times n})\), where \(p^{*}=\frac{np}{n-p}\).

-

(b)

\({\mathscr {A}}\) induces an elliptic differential operator, meaning that \({\mathbb {A}}u{:=}{\mathscr {A}}[{\text {D}}\!u]\) is an elliptic differential operator; see Sect. 3.2 for this terminology.

For the particular choice \({\mathscr {A}}[P]={\text {sym}}P\), this gives a global variant of [15, Theorem 2] by Conti and Garroni. Specifically, Theorem 2.1 is established by generalising the approach of Spector and the first author [32] from \(n=3\) to \(n\ge 3\) dimensions, and its short proof is given in Sect. 5. As a key point, though, we emphasise that ellipticity of \({\mathbb {A}}\) suffices for (2.1) to hold.
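The Helmholtz decomposition behind this approach is simplest to see on a single Fourier mode. For a frequency \(\xi \in \mathbb R^{n}\backslash \{0\}\) and a coefficient matrix \(M\in \mathbb R^{m\times n}\), the splitting \(M=(M\xi )\otimes \xi /|\xi |^{2}+M(\mathbbm {1}_{n}-\xi \otimes \xi /|\xi |^{2})\) separates a rank-one part \(w\otimes \xi \), the symbol of a gradient and hence curl-free, from a part annihilating \(\xi \), the symbol of a row-wise divergence-free field. The Python sketch below checks this with exact rational arithmetic. It treats one real frequency only and is not a full Fourier-multiplier implementation; the function names are hypothetical, and the curl test uses the components \(r_{i}\xi _{j}-r_{j}\xi _{i}\), which agree with the symbol of \(r\times _{n}\cdot \) up to the sign and ordering conventions of the generalised cross product.

```python
from fractions import Fraction

def helmholtz_symbol_split(M, xi):
    """Split M into (M xi) (x) xi / |xi|^2 plus a remainder R with R xi = 0."""
    xi = [Fraction(x) for x in xi]
    norm2 = sum(x * x for x in xi)
    if norm2 == 0:
        raise ValueError("xi must be nonzero")
    grad, sol = [], []
    for row in M:
        row = [Fraction(r) for r in row]
        w = sum(r * x for r, x in zip(row, xi)) / norm2  # k-th entry of M xi / |xi|^2
        g = [w * x for x in xi]                          # k-th row of w (x) xi
        grad.append(g)
        sol.append([r - gk for r, gk in zip(row, g)])
    return grad, sol

def curl_symbol_vanishes(M, xi):
    """Every row r satisfies r_i xi_j - r_j xi_i = 0, i.e. r is parallel to xi."""
    n = len(xi)
    return all(r[i] * xi[j] - r[j] * xi[i] == 0
               for r in M for i in range(n) for j in range(i + 1, n))

def div_symbol_vanishes(M, xi):
    """Every row r satisfies r . xi = 0."""
    return all(sum(rk * x for rk, x in zip(r, xi)) == 0 for r in M)

M = [[1, 2, 0], [3, -1, 4], [0, 5, 2]]
xi = [1, 2, -1]
grad, sol = helmholtz_symbol_split(M, xi)
assert curl_symbol_vanishes(grad, xi) and div_symbol_vanishes(sol, xi)
assert all(g + s == m for gr, sr, mr in zip(grad, sol, M) for g, s, m in zip(gr, sr, mr))
```

The matrix M itself is neither curl-free nor divergence-free at this frequency; only the two summands are.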

As mentioned above, if \(n\ge 3\), the borderline estimate for \(p=1\) is a consequence of a Helmholtz decomposition and the Bourgain-Brezis estimate

Even for \(n=3\), \({\text {div}}\) and \({\text {curl}}\) may not be interchanged in (2.2), as can be seen by considering regularisations of the gradients of the fundamental solution \(\Phi (\cdot )=\frac{1}{3\omega _{3}}|\cdot |^{-1}\) of the Laplacian (with \(\rho _{\varepsilon }(\cdot )=\frac{1}{\varepsilon ^{3}}\rho (\frac{\cdot }{\varepsilon })\) for a standard mollifier \(\rho \)): Let \((\varepsilon _{i}),(r_{i})\subset (0,\infty )\) satisfy \(\varepsilon _{i}\searrow 0\) and \(r_{i}\nearrow \infty \), and choose \(\varphi _{r_{i}}\in {\text {C}}_{c}^{\infty }(\mathbb R^{3})\) with \(\mathbbm {1}_{{\text {B}}_{r_{i}}(0)}\le \varphi _{r_{i}}\le \mathbbm {1}_{{\text {B}}_{2r_{i}}(0)}\) and \(|\nabla ^{l}\varphi _{r_{i}}|\le 4/r_{i}^{l}\) for \(l\in \{1,2\}\). Putting

we then have \(\sup _{i\in \mathbb N}\left\Vert \Delta g_{i}\right\Vert _{{\text {L}}^{1}(\mathbb R^{3})}<\infty \), and validity of the corresponding modified inequality would imply the contradictory estimate \(\sup _{i\in \mathbb N}\left\Vert f_{i}\right\Vert _{{\text {L}}^{\frac{3}{2}}(\mathbb R^{3})}=\sup _{i\in \mathbb N}\left\Vert \nabla g_{i}\right\Vert _{{\text {L}}^{\frac{3}{2}}(\mathbb R^{3})}<\infty \).
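Heuristically, the contradiction stems from the logarithmic failure of \(\nabla \Phi \) to be \({\text {L}}^{3/2}\)-integrable, both at the origin and at infinity. Since \(3\omega _{3}=4\pi \), one has \(|\nabla \Phi (x)|=(4\pi )^{-1}|x|^{-2}\), and polar coordinates give the following computation. It is only a sketch for \(\Phi \) itself and does not track the mollification and cut-off errors in the definition of \(g_{i}\); its right-hand side blows up as \(\delta \searrow 0\) or \(R\nearrow \infty \):

$$\begin{aligned} \int _{{\text {B}}_{R}(0)\setminus {\text {B}}_{\delta }(0)}|\nabla \Phi |^{\frac{3}{2}}\,\textrm{d}x&=(4\pi )^{-\frac{3}{2}}\int _{{\text {B}}_{R}(0)\setminus {\text {B}}_{\delta }(0)}\frac{\textrm{d}x}{|x|^{3}}\\&=(4\pi )^{-\frac{3}{2}}\cdot 4\pi \int _{\delta }^{R}\frac{\textrm{d}t}{t}=\frac{\log (R/\delta )}{\sqrt{4\pi }}\qquad (0<\delta <R). \end{aligned}$$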

However, as can be seen from Example 2.2 below, inequality (2.2) fails to extend to \(n=2\) dimensions. Still, Garroni et al. [28, §5] proved validity of a variant of inequality (1.4) in \(n=2\) dimensions for the particular choice of the symmetric part of a matrix, and so one might wonder whether Theorem 2.1 remains valid for the remaining case \((p,n)=(1,2)\) as well. This, however, is not the case, as can be seen from

Example 2.2

Consider the trace-free symmetric part of a matrix

where \({\text {dev}}{\text {sym}}\) induces the usual trace-free symmetric gradient \(\varepsilon ^{D}(u)={\text {dev}}{\text {sym}}{\text {D}}\!u\), which is elliptic (cf. Sect. 3.2). Let us now consider, for \(f\in {\text {C}}_{c}^{\infty }(\mathbb R^{2})\), the matrix field

Then we have

If Theorem 2.1 remained valid for the combination \((p,n)=(1,2)\), the ellipticity of \(\varepsilon ^{D}\) would give us

and this inequality is again easily seen to be false by taking regularisations and smooth cut-offs of the fundamental solution of the Laplacian, \(\Phi (x)=\frac{1}{2\pi }\log (|x|)\).

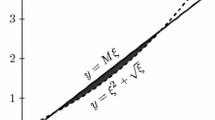

In conclusion, Theorem 2.1 does not extend to the case \((p,n)=(1,2)\). As the strength of \({\text {Curl}}\) decreases when passing from \(n\ge 3\) to \(n=2\), one expects that validity of inequalities

in general requires stronger conditions on \({\mathscr {A}}\), see Fig. 1. In this regard, our second main result asserts that the critical case \((p,n)=(1,2)\) necessitates very strong algebraic conditions on the matrix parts \({\mathscr {A}}\) indeed:

Theorem 2.3

(KMS-inequalities in the case \((p,n)=(1,2)\)) Let \(n=2\) and \(p=1\). Then the following are equivalent:

-

(a)

The critical Korn–Maxwell–Sobolev estimate (2.4) holds.

-

(b)

The part map \({\mathscr {A}}\) induces a \({\mathbb {C}}\)-elliptic differential operator.

-

(c)

The part map \({\mathscr {A}}\) induces an elliptic and cancelling differential operator.

-

(d)

The part map \({\mathscr {A}}\) induces an elliptic differential operator and we have \(\dim ({\mathscr {A}}[\mathbb R^{2\times 2}])\in \{3,4\}\).

Here, we understand by inducing a differential operator \({\mathbb {A}}\) that the associated differential operator \({\mathbb {A}}u={\mathscr {A}}[{\text {D}}\!u]\), \(u\in {\text {C}}_{c}^{\infty }(\mathbb R^{2};\mathbb R^{2})\), satisfies the corresponding properties. For the precise meaning of \({\mathbb {C}}\)-ellipticity and ellipticity and cancellation, the reader is referred to Sect. 3.2.

Properties (b) and (c) express in which sense mere ellipticity has to be strengthened in order to yield the critical Korn–Maxwell–Sobolev inequality (2.4). In view of Example 2.2, condition (c) is natural from the perspective of limiting \({\text {L}}^{1}\)-estimates on the entire space (cf. Van Schaftingen [71]). It is then due to the specific dimensional choice \(n=2\) that \({\mathbb {C}}\)-ellipticity, which usually plays a crucial role in boundary estimates, coincides with ellipticity and cancellation; see Raiţă and the first author [30] and Lemma 3.1 below for more detail. In establishing that these conditions are necessary and sufficient for (a), however, we will employ direct consequences of the corresponding features of the induced differential operators, which is why Theorem 2.3 is stated in the above form. Lastly, the dimensional description of \({\mathbb {C}}\)-ellipticity in the sense of (d) is a by-product of the proof, which might be of independent interest.

Since \({\mathscr {A}}={\text {sym}}\) induces a \({\mathbb {C}}\)-elliptic differential operator in \(n=2\) dimensions whereas \({\mathscr {A}}={\text {dev}}{\text {sym}}\) does not, this result clarifies the validity of (2.4) for \({\mathscr {A}}={\text {sym}}\) and its failure for \({\mathscr {A}}={\text {dev}}{\text {sym}}\). If \((p,n)=(1,2)\), then, as (2.2) is not available, inequalities (2.4) cannot be approached by invoking Helmholtz decompositions and using critical estimates on the solenoidal parts. As known from [28], in the particular case \({\mathscr {A}}[P]={\text {sym}}P\) one may use the specific structure of symmetric matrices (and of their complementary parts, the skew-symmetric matrices) to deduce estimate (2.4). This particularly simple structure also enters the proofs of various variants of Korn–Maxwell inequalities. A typical instance is the use of Nye’s formula, which expresses \({\text {D}}\!P\) in terms of \({\text {Curl}}P\) in three dimensions provided P takes values in the set \(\mathfrak {so}(3)\) of skew-symmetric matrices; also see the discussion in [42, Sect. 1.4] by Müller and the second named authors.
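Once ellipticity is known, criterion (d) of Theorem 2.3 reduces \({\mathbb {C}}\)-ellipticity to a rank computation. The following Python sketch (hypothetical helper names; \(2\times 2\) matrices stored as lists of rows; exact rational arithmetic) computes \(\dim ({\mathscr {A}}[\mathbb R^{2\times 2}])\) from the images of the four standard basis matrices for the parts underlying the operators of Example 3.3. All four induce elliptic operators, so by (d) the part \({\text {dev}}{\text {sym}}\) is the only one among them that fails to induce a \({\mathbb {C}}\)-elliptic operator.

```python
from fractions import Fraction
from itertools import product

def units():
    """The standard basis matrices e_i (x) e_j of R^{2x2}."""
    for i, j in product(range(2), repeat=2):
        yield [[Fraction(int((a, b) == (i, j))) for b in range(2)] for a in range(2)]

def sym(P):
    return [[(P[i][j] + P[j][i]) / 2 for j in range(2)] for i in range(2)]

def dev(P):
    t = (P[0][0] + P[1][1]) / 2
    return [[P[i][j] - (t if i == j else 0) for j in range(2)] for i in range(2)]

def rank(vectors):
    """Rank of a list of rational vectors via Gauss-Jordan elimination."""
    rows = [list(v) for v in vectors]
    r = 0
    for c in range(len(rows[0]) if rows else 0):
        pivot = next((k for k in range(r, len(rows)) if rows[k][c] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        for k in range(len(rows)):
            if k != r and rows[k][c] != 0:
                f = rows[k][c] / rows[r][c]
                rows[k] = [x - f * y for x, y in zip(rows[k], rows[r])]
        r += 1
    return r

def image_dim(part):
    """dim part[R^{2x2}], computed from the images of the standard basis."""
    return rank([[x for row in part(E) for x in row] for E in units()])

PARTS = {"D": lambda P: P, "dev": dev, "sym": sym, "dev sym": lambda P: dev(sym(P))}
dims = {name: image_dim(part) for name, part in PARTS.items()}
# dims == {"D": 4, "dev": 3, "sym": 3, "dev sym": 2}
```

Note that the dimension count alone says nothing about ellipticity; (d) requires both pieces of information.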

However, in view of classifying the parts \({\mathscr {A}}[P]\) for which (2.4) holds, we cannot utilise the simple structure of symmetric matrices. To resolve this issue, we will introduce so-called almost complementary parts for the sharp class of parts \({\mathscr {A}}[P]\) for which (2.4) can hold at all, and establish that these almost complementary parts have a sufficiently simple structure to make strong Bourgain–Brezis estimates accessible.

2.2 Subcritical KMS-inequalities and other variants

The inequalities considered so far are scaling invariant, and thus the exponent \(p^{*}\) cannot be improved for a given p. On balls \({\text {B}}_{r}(0)\), one might wonder which conditions on \({\mathscr {A}}\) need to be imposed for inequalities

for all \(P\in {\text {C}}_{c}^{\infty }({\text {B}}_{r}(0);\mathbb R^{m\times n})\) to hold with \(c=c(p,q,n,{\mathscr {A}})>0\), where q is strictly less than the optimal exponent \(p^{*}\). In this subcritical regime, one might anticipate that even in the case \(n=2\) ellipticity of the operator \({\mathbb {A}}u{:=}{\mathscr {A}}[{\text {D}}\!u]\) alone suffices. Indeed, we have the following

Theorem 2.4

(Subcritical KMS-inequalities) Let \(n\ge 2\), \(m, N \in \mathbb N\), \(1\le p<\infty \) and \(1\le q<p^{*}\). Then the following hold:

-

(a)

Let \(q=1\). Writing \({\text {D}}\!u=\sum _{i=1}^{n}{\mathbb {E}}_{i}\,\partial _{i}u\) and \({\mathbb {A}}u{:=}{\mathscr {A}}[{\text {D}}\!u]=\sum _{i=1}^{n}{\mathbb {A}}_{i}\,\partial _{i}u\) for suitable \({\mathbb {E}}_{i}\in \mathscr {L}(\mathbb R^{m};\mathbb R^{m\times n})\), \({\mathbb {A}}_{i}\in \mathscr {L}(\mathbb R^{m};\mathbb R^{N})\) and all \(u\in {\text {C}}_{c}^{\infty }(\mathbb R^{n};\mathbb R^{m})\), (2.5) holds if and only if there exists a linear map \(L :\mathbb R^{N}\rightarrow \mathbb R^{m\times n}\) such that \({\mathbb {E}}_{i}=L\circ {\mathbb {A}}_{i}\) for all \(1\le i\le n\).

-

(b)

Let \(1<q<p^{*}\) if \(p<n\) and \(1<q<\infty \) otherwise. Then (2.5) holds if and only if \({\mathscr {A}}\) induces an elliptic differential operator by virtue of \({\mathbb {A}}u={\mathscr {A}}[{\text {D}}\!u]\) (Fig. 2).
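Unwinding the definitions makes the algebraic condition in (a) concrete: since \({\mathbb {E}}_{i}v=v\otimes {{\textbf {e}}}_{i}\) and \({\mathbb {A}}_{i}v={\mathscr {A}}[v\otimes {{\textbf {e}}}_{i}]\), the identities \({\mathbb {E}}_{i}=L\circ {\mathbb {A}}_{i}\) say that \(L({\mathscr {A}}[v\otimes {{\textbf {e}}}_{i}])=v\otimes {{\textbf {e}}}_{i}\) for all \(v\in \mathbb R^{m}\) and \(1\le i\le n\). As the matrices \(v\otimes {{\textbf {e}}}_{i}\) span \(\mathbb R^{m\times n}\), this is the same as \(L\circ {\mathscr {A}}=\text {Id}\), i.e. injectivity of \({\mathscr {A}}\). A minimal Python sketch of this check by a rank computation follows (hypothetical names; matrices as lists of rows):

```python
from fractions import Fraction
from itertools import product

def rank(vectors):
    """Rank of a list of rational vectors via Gauss-Jordan elimination."""
    rows = [list(v) for v in vectors]
    r = 0
    for c in range(len(rows[0]) if rows else 0):
        pivot = next((k for k in range(r, len(rows)) if rows[k][c] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        for k in range(len(rows)):
            if k != r and rows[k][c] != 0:
                f = rows[k][c] / rows[r][c]
                rows[k] = [x - f * y for x, y in zip(rows[k], rows[r])]
        r += 1
    return r

def unit(m, n, k, j):
    """The matrix e_k (x) e_j in R^{m x n}."""
    return [[Fraction(int((a, b) == (k, j))) for b in range(n)] for a in range(m)]

def satisfies_condition_a(part, m, n):
    """True iff E_i = L o A_i (1 <= i <= n) is solvable for a linear L.

    By the reduction above, this is injectivity of part on R^{m x n};
    part may return any matrix-shaped list of rows."""
    images = [[x for row in part(unit(m, n, k, j)) for x in row]
              for k, j in product(range(m), range(n))]
    return rank(images) == m * n

def sym(P):
    n = len(P)
    return [[(P[i][j] + P[j][i]) / 2 for j in range(n)] for i in range(n)]

def transpose(P):
    return [list(col) for col in zip(*P)]
```

In particular, no part with a nontrivial kernel, such as \({\text {sym}}\), satisfies the condition in (a).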

Let us remark that the critical inequalities of Theorems 2.1 and 2.3 deal with exponents \(p<n\). Inequalities addressing the case \(p\ge n\), which involve different canonical function spaces, are discussed in Sects. 5 and 6. We conclude this introductory section by discussing several results and generalisations which appear as special cases of the results displayed in the present paper:

Remark 2.5

-

(i)

For the particular choice \({\mathscr {A}}[P]={\text {sym}}P\) and \(q=p>1\), Theorem 2.4 yields with \(n=3\) the result from [46, Theorem 3] and for \(n\ge 2\) the result from [45, Theorem 8].

-

(ii)

For the particular choice of \({\mathscr {A}}[P]={\text {sym}}P\) and \((p,n)=(1,2)\), Theorem 4.8 yields [28, Theorem 11] as a special case.

-

(iii)

For \({\mathscr {A}}[P]={\text {dev}}{\text {sym}}P\) and \(q=p>1\), Theorem 2.4 yields [44, Theorem 3.5] and moreover supplies the missing proof of the trace-free Korn–Maxwell inequality in \(n=2\) dimensions.

-

(iv)

For \({\mathscr {A}}[P]\in \{{\text {sym}}P,{\text {dev}}{\text {sym}}P\}\), Theorems 2.1–2.4 yield the sharp, scaling-optimal generalisation of [44, Theorem 3.5], [45, Theorem 8] and [46, Theorem 3].

-

(v)

The part map \({\mathscr {A}}[P]={\text {skew}}P +{{\,\textrm{tr}\,}}P\cdot \mathbbm {1}_{n}\), as arising e.g. in the study of time-incremental infinitesimal elastoplasticity [57], now appears as a special case, cf. Example 5.1.
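The case distinctions of Theorems 2.1, 2.3 and 2.4 can be summarised as a small decision function. The Python sketch below is only a bookkeeping aid under the hypotheses stated there (\(n\ge 2\), \(1\le p<\infty \), and part maps on \(\mathbb R^{2\times 2}\) in the case \((p,n)=(1,2)\)); the function names and returned labels are ours, and the critical case \(p\ge n\), which involves other function spaces, is deliberately rejected.

```python
import math
from fractions import Fraction

def sobolev_conjugate(p, n):
    """p^* = n p / (n - p) for p < n, and +infinity otherwise."""
    p, n = Fraction(p), Fraction(n)
    return n * p / (n - p) if p < n else math.inf

def kms_condition(p, n, q=None):
    """Condition on the part map characterising the KMS-type inequality.

    q=None: critical exponent q = p^* on R^n (Theorems 2.1 and 2.3);
    1 <= q < p^*: subcritical inequality on balls (Theorem 2.4)."""
    if n < 2 or not 1 <= p < math.inf:
        raise ValueError("requires n >= 2 and 1 <= p < infinity")
    if q is None:
        if p >= n:
            raise ValueError("the critical case p >= n is not covered here")
        return "C-elliptic" if (p, n) == (1, 2) else "elliptic"
    if not 1 <= q < sobolev_conjugate(p, n):
        raise ValueError("the subcritical case requires 1 <= q < p^*")
    return "E_i = L o A_i for some linear L" if q == 1 else "elliptic"
```

For instance, `kms_condition(1, 2)` returns `"C-elliptic"`, whereas `kms_condition(1, 2, q=Fraction(3, 2))` returns `"elliptic"`, reflecting that the subcritical estimate in two dimensions only needs ellipticity.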

2.3 Organisation of the paper

Besides the introduction, the paper is organised as follows: In Sect. 3 we fix notation and gather preliminary results on differential operators and other auxiliary estimates. In line with the above discussion, we first address the most intricate case \((p,n)=(1,2)\) and thus establish Theorem 2.3 in Sect. 4. Theorems 2.1 and 2.4, which do not require the use of almost complementary parts, are then established in Sects. 5 and 6. Finally, the appendix gathers specific Helmholtz decompositions used in the main part of the paper, contextualises the approaches and results displayed in the main part with previous contributions and provides auxiliary material on weighted Lebesgue and Orlicz spaces.

3 Preliminaries

In this preliminary section we fix notation, gather auxiliary notions and results and provide several examples that we shall refer to throughout the main part of the paper.

3.1 General notation

We denote by \(\omega _{n}{:=}{\mathscr {L}}^{n}({\text {B}}_{1}(0))\) the n-dimensional Lebesgue measure of the unit ball. For \(m\in \mathbb N_{0}\) and a finite-dimensional (real) vector space X, we denote the space of X-valued polynomials on \(\mathbb R^{n}\) of degree at most m by \({\mathscr {P}}_{m}(\mathbb R^{n};X)\); moreover, for \(1<p<\infty \), we denote by \({\dot{{\text {W}}}}{^{1,p}}(\mathbb R^{n};X)\) the homogeneous Sobolev space (i.e., the closure of \({\text {C}}_{c}^{\infty }(\mathbb R^{n};X)\) with respect to the gradient norm \(\left\Vert {\text {D}}\!u\right\Vert _{{\text {L}}^{p}(\mathbb R^{n})}\)) and by \({\dot{{\text {W}}}}{^{-1,p'}}(\mathbb R^{n};X):={\dot{{\text {W}}}}{^{1,p}}(\mathbb R^{n};X)'\) its dual. Finally, to define the matrix curl \({\text {Curl}}P\) for \(P:\mathbb R^{n}\rightarrow \mathbb R^{n\times n}\), we recall from [41, 44] the generalised cross product \(\times _{n}:\mathbb R^n \times \mathbb R^n\rightarrow \mathbb R^{\frac{n(n-1)}{2}}\). Letting

we inductively define

The generalised cross product \(a\times _{n}\cdot \) is linear in the second component and thus can be identified with multiplication by a matrix, denoted by \(\llbracket a\rrbracket _{\times _{n}}\in \mathbb R^{\frac{n(n-1)}{2}\times n}\), so that

For a vector field \(a:\mathbb R^{n}\rightarrow \mathbb R^{n}\) and matrix field \(P:\mathbb R^{n}\rightarrow \mathbb R^{m\times n}\), with \(m\in \mathbb N\), we finally declare \({\text {curl}}a\) and \({\text {Curl}}P\) via

3.2 Notions for differential operators

Let \({\mathbb {A}}\) be a first order, linear, constant coefficient differential operator on \(\mathbb R^{n}\) between two finite-dimensional real inner product spaces V and W. Hence there exist linear maps \({\mathbb {A}}_{i}:V\rightarrow W\), \(i\in \{1,...,n\}\), such that

For \(\Omega \subseteq \mathbb R^n\) open and \(1\le q\le \infty \), we set

With an operator of the form (3.4), we associate the symbol map

Following [7], we denote

In the specific case where \(V=\mathbb R^{m}\), \(W=\mathbb R^{m\times n}\) and \({\mathbb {A}}={\text {D}}\!\) is the derivative, this gives back the usual tensor product \(v\otimes \xi =v\, \xi ^{\top }\); if \(V=\mathbb R^{n}\), \(W={\text {Sym}}(n)=\mathbb R_{{\text {sym}}}^{n\times n}\) and \({\mathbb {A}}=\varepsilon \) is the symmetric gradient, it gives back the usual symmetric tensor product \(v\odot \xi =\frac{1}{2}(v\otimes \xi +\xi \otimes v)\).
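Assuming, as the two special cases above indicate, that the notation recalled from [7] is \(v\otimes _{{\mathbb {A}}}\xi {:=}{\mathbb {A}}[\xi ]v\) with \({\mathbb {A}}[\xi ]=\sum _{i}\xi _{i}{\mathbb {A}}_{i}\), both cases can be checked mechanically: for an operator induced by a part map, \({\mathbb {A}}u={\mathscr {A}}[{\text {D}}\!u]\), linearity gives \({\mathbb {A}}[\xi ]v={\mathscr {A}}[v\otimes \xi ]\). A minimal Python sketch (hypothetical helper names; matrices as lists of rows; exact rational arithmetic):

```python
from fractions import Fraction

def outer(v, xi):
    """Usual tensor product v (x) xi = v xi^T, as a list of rows."""
    return [[Fraction(a) * Fraction(b) for b in xi] for a in v]

def sym(P):
    n = len(P)
    return [[(P[i][j] + P[j][i]) / 2 for j in range(n)] for i in range(n)]

def induced_symbol(part, xi, v):
    """A[xi] v for the operator A u = part[D u]; by linearity this is part[v (x) xi]."""
    return part(outer(v, xi))

def sym_tensor(v, xi):
    """Symmetric tensor product v (.) xi = (v (x) xi + xi (x) v) / 2."""
    a, b = outer(v, xi), outer(xi, v)
    return [[(x + y) / 2 for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]

v, xi = [1, 2, -1], [3, 0, 5]
assert induced_symbol(lambda P: P, xi, v) == outer(v, xi)   # A = D
assert induced_symbol(sym, xi, v) == sym_tensor(v, xi)      # A = epsilon
```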

Elements \(w\in W\) of the form \(w=\,v\otimes _{{\mathbb {A}}}\xi \) are called pure \({\mathbb {A}}\)-tensors. We now define

Moreover, if \(\{{{\textbf {a}}}_{1},...,{{\textbf {a}}}_{N}\}\) is a basis of \(\mathbb R^{N}\) and \(\{{{\textbf {e}}}_{1},...,{{\textbf {e}}}_{n}\}\) is a basis of \(\mathbb R^{n}\), then by linearity the set \(\{{{\textbf {a}}}_{j}\otimes _{{\mathbb {A}}}{{\textbf {e}}}_{i}\mid 1\le i\le n,\;1\le j \le N\}\) spans \({\mathscr {R}}({\mathbb {A}})\) and hence contains a basis of \({\mathscr {R}}({\mathbb {A}})\). For our future objectives, it is important to note that this holds irrespective of the particular choice of bases.

We now recall some notions on differential operators gathered from Breit et al. [7], Hörmander [35], Smith [66], Spencer [67] and Van Schaftingen [71]. An operator of the form (3.4) is called elliptic provided the Fourier symbol \({\mathbb {A}}[\xi ]:V\rightarrow W\) is injective for every \(\xi \in \mathbb R^{n}\backslash \{0\}\). For operators \({\mathbb {A}}u={\mathscr {A}}[{\text {D}}\!u]\), this is equivalent to the Legendre–Hadamard ellipticity of \(W(P)=\frac{1}{2}|{\mathscr {A}}[P]|^2\) in the sense that \({\text {D}}\!^2_P W(P)(\xi \otimes \eta ,\xi \otimes \eta )=|{\mathscr {A}}[\xi \otimes \eta ]|^2>0\) for all nonzero \(\xi \) and \(\eta \). A strengthening of this notion is that of \({\mathbb {C}}\)-ellipticity. Here we require that for every \(\xi \in {\mathbb {C}}^{n}\backslash \{0\}\) the associated symbol map \({\mathbb {A}}[\xi ]:V+{\text {i}}V\rightarrow W+{\text {i}}W\) is injective.
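For \(n=2\) and \(V=\mathbb R^{2}\), both notions can be decided exactly. Since \({\mathbb {A}}[\xi ]\) is a matrix with two columns whose entries are linear in \(\xi \), it fails to be injective precisely at the common zeros of its \(2\times 2\) minors, which are binary quadratic forms in \(\xi \). Ellipticity excludes real common zeros \(\xi \ne 0\), and \({\mathbb {C}}\)-ellipticity excludes complex ones; the latter amounts to the dehomogenised minors having a constant greatest common divisor, together with a separate check at \(\xi =(1,0)\). The Python sketch below implements this test in exact rational arithmetic for operators \({\mathbb {A}}u={\mathscr {A}}[{\text {D}}\!u]\) induced by a part map on \(\mathbb R^{2\times 2}\). It assumes the symbol convention \({\mathbb {A}}[\xi ]v=\sum _{i}\xi _{i}{\mathbb {A}}_{i}v\), so that \({\mathbb {A}}[\xi ]v={\mathscr {A}}[v\otimes \xi ]\), and all helper names are hypothetical.

```python
from fractions import Fraction
from itertools import combinations

def unit(i, j):
    """The matrix e_i (x) e_j in R^{2x2}."""
    return [[Fraction(int((a, b) == (i, j))) for b in range(2)] for a in range(2)]

def flat(P):
    return [x for row in P for x in row]

def minor_forms(part):
    """Coefficients (c0, c1, c2) of the 2x2 minors c0*xi2^2 + c1*xi1*xi2 + c2*xi1^2 of A[xi].

    Column i of A[xi] is part[e_i (x) xi] = xi1*part[e_i (x) e_1] + xi2*part[e_i (x) e_2]."""
    a, b = flat(part(unit(0, 0))), flat(part(unit(0, 1)))  # column 1 = a*xi1 + b*xi2
    c, d = flat(part(unit(1, 0))), flat(part(unit(1, 1)))  # column 2 = c*xi1 + d*xi2
    return [(b[k] * d[l] - d[k] * b[l],
             a[k] * d[l] + b[k] * c[l] - c[k] * b[l] - d[k] * a[l],
             a[k] * c[l] - c[k] * a[l])
            for k, l in combinations(range(len(a)), 2)]

def trim(p):
    """Drop vanishing leading coefficients (lists store the lowest degree first)."""
    p = list(p)
    while p and p[-1] == 0:
        p.pop()
    return p

def poly_mod(a, b):
    """Remainder of a modulo a nonzero trimmed polynomial b."""
    a = trim(a)
    while len(a) >= len(b):
        f, shift = a[-1] / b[-1], len(a) - len(b)
        for i, bi in enumerate(b):
            a[i + shift] -= f * bi
        a = trim(a)
    return a

def poly_gcd(a, b):
    a, b = trim(a), trim(b)
    while b:
        a, b = b, poly_mod(a, b)
    return a

def classify(part):
    """Return (elliptic, C_elliptic) for A u = part[D u], u: R^2 -> R^2."""
    forms = minor_forms(part)
    # xi = (1, 0) is a common zero of all minors iff every xi1^2-coefficient vanishes.
    zero_at_infinity = all(f[2] == 0 for f in forms)
    g = []
    for f in forms:
        g = poly_gcd(g, f)  # common zeros of the form xi = (t, 1) are the roots of g
    if zero_at_infinity or not g:  # g == [] means every t is a common zero
        return False, False
    if len(g) == 1:  # constant gcd: no common zero, not even a complex one
        return True, True
    if len(g) == 2:  # a linear common factor has a real root
        return False, False
    c0, c1, c2 = g  # quadratic common factor: elliptic iff its roots are non-real
    return c1 * c1 - 4 * c0 * c2 < 0, False

def sym(P):
    return [[(P[i][j] + P[j][i]) / 2 for j in range(2)] for i in range(2)]

def dev(P):
    t = (P[0][0] + P[1][1]) / 2
    return [[P[i][j] - (t if i == j else 0) for j in range(2)] for i in range(2)]
```

For the parts underlying Example 3.3 below, `classify` returns `(True, True)` for \({\text {D}}\!\), \({\text {dev}}\) and \({\text {sym}}\), and `(True, False)` for \({\text {dev}}{\text {sym}}\): there the common factor of the minors is a multiple of \(\xi _{1}^{2}+\xi _{2}^{2}\), which has no real but complex zeros such as \(\xi =(1,{\text {i}})\).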

Lemma 3.1

([30, 31, 71]) Let \({\mathbb {A}}\) be a first order differential operator of the form (3.4) with \(n=2\). Then the following are equivalent:

-

(a)

\({\mathbb {A}}\) is \({\mathbb {C}}\)-elliptic.

-

(b)

\({\mathbb {A}}\) is elliptic and cancelling, meaning that \({\mathbb {A}}\) is elliptic in the above sense and

$$\begin{aligned} \bigcap _{\xi \in \mathbb R^{2}\backslash \{0\}}{\mathbb {A}}[\xi ](V)=\{0\}. \end{aligned}$$

-

(c)

There exists a constant \(c>0\) such that the Sobolev estimate \(\left\Vert u\right\Vert _{{\text {L}}^{2}(\mathbb R^{2})}\le c\left\Vert {\mathbb {A}}u\right\Vert _{{\text {L}}^{1}(\mathbb R^{2})}\) holds for all \(u\in {\text {C}}_{c}^{\infty }(\mathbb R^{2};V)\).
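The cancellation condition in (b) can be probed concretely for operators \({\mathbb {A}}u={\mathscr {A}}[{\text {D}}\!u]\), using \({\mathbb {A}}[\xi ]v={\mathscr {A}}[v\otimes \xi ]\). For \({\mathscr {A}}={\text {dev}}{\text {sym}}\) one has \(\dim ({\mathscr {A}}[\mathbb R^{2\times 2}])=2\), so for every \(\xi \ne 0\) the two columns \({\mathbb {A}}[\xi ]{{\textbf {e}}}_{1},{\mathbb {A}}[\xi ]{{\textbf {e}}}_{2}\), which are linearly independent by ellipticity, span all of \({\mathscr {A}}[\mathbb R^{2\times 2}]\); hence all spaces \({\mathbb {A}}[\xi ](V)\) coincide and their intersection is nonzero. For \({\mathscr {A}}={\text {sym}}\), already the two coordinate directions produce different spaces. The Python sketch below (hypothetical names, exact arithmetic) checks these rank statements; it samples only finitely many \(\xi \), so it illustrates rather than proves the claim.

```python
from fractions import Fraction
from itertools import product

def rank(vectors):
    """Rank of a list of rational vectors via Gauss-Jordan elimination."""
    rows = [list(v) for v in vectors]
    r = 0
    for c in range(len(rows[0]) if rows else 0):
        pivot = next((k for k in range(r, len(rows)) if rows[k][c] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        for k in range(len(rows)):
            if k != r and rows[k][c] != 0:
                f = rows[k][c] / rows[r][c]
                rows[k] = [x - f * y for x, y in zip(rows[k], rows[r])]
        r += 1
    return r

def flat(P):
    return [x for row in P for x in row]

def sym(P):
    return [[(P[i][j] + P[j][i]) / 2 for j in range(2)] for i in range(2)]

def dev(P):
    t = (P[0][0] + P[1][1]) / 2
    return [[P[i][j] - (t if i == j else 0) for j in range(2)] for i in range(2)]

def dev_sym(P):
    return dev(sym(P))

def symbol_columns(part, xi):
    """Flattened A[xi] e_1 and A[xi] e_2 for A u = part[D u], via A[xi] v = part[v (x) xi]."""
    cols = []
    for k in range(2):
        e_k_outer_xi = [[Fraction(xj) if a == k else Fraction(0) for xj in xi] for a in range(2)]
        cols.append(flat(part(e_k_outer_xi)))
    return cols

def full_image(part):
    """Flattened images of the standard basis of R^{2x2}; they span part[R^{2x2}]."""
    return [flat(part([[Fraction(int((a, b) == (i, j))) for b in range(2)] for a in range(2)]))
            for i, j in product(range(2), repeat=2)]

full = full_image(dev_sym)
for xi in [(1, 0), (0, 1), (3, -2), (1, 5)]:
    cols = symbol_columns(dev_sym, xi)
    # A[xi](R^2) is two-dimensional, and adding the whole image does not enlarge it.
    assert rank(cols) == 2 and rank(cols + full) == 2

# For sym, the coordinate directions already give different two-dimensional spaces.
assert rank(symbol_columns(sym, (1, 0)) + symbol_columns(sym, (0, 1))) == 3
```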

This equivalence does not hold true in \(n\ge 3\) dimensions (see [31, Sect. 3] for a discussion; for general dimensions \(n\ge 2\), one has \({(b)}\Leftrightarrow {(c)}\), see [71], but only \({(a)}\Rightarrow {(b)}\), see [30, 31]). The following lemma is essentially due to Smith [66]; also see [19, 37] for the explicit forms of the underlying Poincaré-type inequalities:

Lemma 3.2

An operator \({\mathbb {A}}\) of the form (3.4) is \({\mathbb {C}}\)-elliptic if and only if there exists \(m\in {\mathbb {N}}_{0}\) such that \(\ker ({\mathbb {A}})\subset {\mathscr {P}}_{m}(\mathbb R^{n}; V)\). Moreover, if \({\mathbb {A}}\) is \({\mathbb {C}}\)-elliptic, then for any open, bounded and connected Lipschitz domain \(\Omega \subset \mathbb R^{n}\) there exists \(c=c({q},n,{\mathbb {A}},\Omega )>0\) such that for any \(u\in {\text {W}}^{{\mathbb {A}},{q}}(\Omega )\) there is \(\Pi _{{\mathbb {A}}}u\in \ker ({\mathbb {A}})\) such that

We conclude with the following example to be referred to frequently, putting the classical operators \(\nabla \), \(\varepsilon \) and \(\varepsilon ^{D}\) into the framework of (3.4). For this, it is convenient to make the identification

Example 3.3

(Gradient, deviatoric gradient, symmetric gradient and deviatoric symmetric gradient) In the following, let \(n=2\), \(V=\mathbb R^{2}\) and \(W=\mathbb R^{2\times 2}\). The derivative fits into the framework (3.4) by taking

the deviatoric gradient \(\nabla ^{D}u{:=}{\text {dev}}{\text {D}}\!u ={\text {D}}\!u-\frac{1}{n}{\text {div}}u\cdot \mathbbm {1}_{n}\) by taking

whereas the symmetric gradient is recovered by taking

Finally, the deviatoric symmetric gradient \(\varepsilon ^{D}(u){:=}{\text {dev}}{\text {sym}}{\text {D}}\!u={\text {sym}}{\text {D}}\!u -\frac{1}{n}{\text {div}}u\cdot \mathbbm {1}_{n}\) is retrieved by

Similar representations can be found in higher dimensions. However, let us remark that whereas \(\nabla ,\nabla ^{D}\) and \(\varepsilon \) are \({\mathbb {C}}\)-elliptic in all dimensions \(n\ge 2\), the trace-free symmetric gradient is \({\mathbb {C}}\)-elliptic precisely in \(n\ge 3\) dimensions (cf. [7, Ex. 2.2]). Another class of operators, which arises in the context of infinitesimal elastoplasticity and is handled most conveniently by the results displayed below, is discussed in Example 5.1.
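Lemma 3.2 makes the failure of \({\mathbb {C}}\)-ellipticity of \(\varepsilon ^{D}\) for \(n=2\) visible at the level of kernels. In two dimensions, \(\varepsilon ^{D}(u)=0\) amounts to \(\partial _{1}u_{1}=\partial _{2}u_{2}\) and \(\partial _{2}u_{1}=-\partial _{1}u_{2}\), the Cauchy–Riemann equations for \(u_{1}+{\text {i}}u_{2}\). Thus \(u=({\text {Re}}\,z^{k},{\text {Im}}\,z^{k})\) with \(z=x_{1}+{\text {i}}x_{2}\) lies in \(\ker (\varepsilon ^{D})\) for every \(k\in \mathbb N\), so \(\ker (\varepsilon ^{D})\) is contained in no \({\mathscr {P}}_{m}(\mathbb R^{2};\mathbb R^{2})\). The Python sketch below (hypothetical names; floating-point evaluation of the exact complex derivatives) checks \(\varepsilon ^{D}(u)=0\) at sample points and, for contrast, that \(\varepsilon (u)=k\,\mathbbm {1}_{2}\ne 0\) at \(x=(1,0)\):

```python
import math

def jacobian(k, x1, x2):
    """D u(x1, x2) for u = (Re z^k, Im z^k), z = x1 + i x2, with k >= 1.

    Uses d/dx1 z^k = k z^(k-1) and d/dx2 z^k = i k z^(k-1)."""
    if k < 1:
        raise ValueError("k must be at least 1")
    w = k * complex(x1, x2) ** (k - 1)
    d1, d2 = w, 1j * w
    # Row r of D u is the gradient of u_r, where u_1 = Re z^k and u_2 = Im z^k.
    return [[d1.real, d2.real], [d1.imag, d2.imag]]

def sym(P):
    return [[(P[i][j] + P[j][i]) / 2 for j in range(2)] for i in range(2)]

def dev(P):
    t = (P[0][0] + P[1][1]) / 2
    return [[P[i][j] - (t if i == j else 0) for j in range(2)] for i in range(2)]

def frob(P):
    return math.sqrt(sum(x * x for row in P for x in row))

for k in range(1, 8):
    for x in [(0.3, -1.2), (2.0, 0.5), (-1.1, 0.7)]:
        D = jacobian(k, *x)
        assert frob(dev(sym(D))) <= 1e-12 * (1 + frob(D))  # eps^D(u) = 0
    # At x = (1, 0) one has D u = k * identity, so eps(u) = k * identity != 0.
    assert abs(frob(sym(jacobian(k, 1.0, 0.0))) - k * math.sqrt(2)) < 1e-12 * k
```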

3.3 Miscellaneous bounds

In this section, we record some auxiliary material on integral operators. Given \(0<s<n\), the s-th order Riesz potential \({\mathcal {I}}_{s}(f)\) of a locally integrable function f is defined by

where \(c_{s,n}>0\) is a suitable finite constant, the precise value of which shall not be required in the sequel. We now have

Lemma 3.4

(Fractional integration [1, Theorem 3.1.4], [18, §2.7], [68, Sect. V.1]) Let \(n\ge 2\) and \(0<s<n\).

-

(a)

For any \(1<p<\infty \) with \(sp<n\) the Riesz potential \({\mathcal {I}}_{s}\) is a bounded linear operator \({\mathcal {I}}_{s}:{\text {L}}^{p}(\mathbb R^{n})\rightarrow {\text {L}}^{\frac{np}{n-sp}}(\mathbb R^{n})\).

-

(b)

Let \(1\le p<\infty \). For any \(1\le q <\frac{np}{n-sp}\) if \(sp<n\) or all \(1\le q<\infty \) if \(sp\ge n\) and any \(r>0\) there exists \(c=c(p,q,n,s,r)>0\) such that we have

$$\begin{aligned} \left\Vert {\mathcal {I}}_{s}(\mathbbm {1}_{{\text {B}}_{r}(0)}f)\right\Vert _{{\text {L}}^{q}({\text {B}}_{r}(0))}\le c\left\Vert f\right\Vert _{{\text {L}}^{p}({\text {B}}_{r}(0))}\quad \mathrm{for \,all}\;f\in {\text {C}}^{\infty }(\mathbb R^{n}). \end{aligned}$$

In the regime \(s=0\) we require two other ingredients as follows. The first one is

Lemma 3.5

(Nečas–Lions [53, Theorem 1]) Let \(\Omega \subset \mathbb R^{n}\) be open and bounded with Lipschitz boundary and let \(N\in {\mathbb {N}}\). Then for any \(1<p<\infty \) there exists a constant \(c=c(p,n,N,\Omega )>0\) such that we have

Whereas the preceding lemma proves particularly useful in the context of bounded domains (also see Theorem 4.8 below), the situation on the entire \(\mathbb R^{n}\) can be handled by Calderón–Zygmund estimates [9] (see [71, Proposition 4.1] or [16, Proposition 4.1] for related arguments), which imply

Lemma 3.6

(Calderón–Zygmund–Korn) Let \({\mathbb {A}}\) be a differential operator of the form (3.4) and let \(1<p<\infty \). Then \({\mathbb {A}}\) is elliptic if and only if there exists a constant \(c=c(p,n,{\mathbb {A}})>0\) such that we have

4 Sharp KMS-inequalities: The case \((p,n)=(1,2)\)

4.1 Almost complementary parts

Throughout this section, let \(n=2\). The complementary part of P with respect to \({\mathscr {A}}:\mathbb R^{2\times 2}\rightarrow \mathbb R^{2\times 2}\) is given by \(P-{\mathscr {A}}[P]\). In the present section we establish that, if \({\mathscr {A}}\) induces a \({\mathbb {C}}\)-elliptic differential operator, then for a suitable linear map \({\mathcal {L}}:\mathbb R^{2\times 2}\rightarrow \mathbb R^{2\times 2}\) the image \((\text {Id}-{\mathcal {L}}{\mathscr {A}}[\cdot ])(\mathbb R^{2\times 2})\) is one-dimensional, namely a line spanned by some invertible matrix. We shall then refer to \(P-{\mathcal {L}}{\mathscr {A}}[P]\) as the almost complementary part.
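Two simple instances illustrate the notion. For \({\mathscr {A}}={\text {sym}}\) one can take \({\mathcal {L}}=\text {Id}\): the complementary part \(P-{\text {sym}}P={\text {skew}}P\) ranges over the line spanned by the invertible matrix \(\begin{pmatrix}0&1\\-1&0\end{pmatrix}\). Similarly, for \({\mathscr {A}}={\text {dev}}\) the complementary part \(\frac{1}{2}{{\,\textrm{tr}\,}}P\cdot \mathbbm {1}_{2}\) ranges over the line spanned by \(\mathbbm {1}_{2}\). Conversely, writing \(\text {Id}=(\text {Id}-{\mathcal {L}}{\mathscr {A}})+{\mathcal {L}}{\mathscr {A}}\) shows \({\text {rank}}(\text {Id}-{\mathcal {L}}{\mathscr {A}})\ge 4-\dim ({\mathscr {A}}[\mathbb R^{2\times 2}])\) for every linear \({\mathcal {L}}\), so no choice of \({\mathcal {L}}\) produces a line when \(\dim ({\mathscr {A}}[\mathbb R^{2\times 2}])=2\), as for \({\text {dev}}{\text {sym}}\). The Python sketch below (hypothetical helper names, exact rational arithmetic) checks these statements for \({\mathcal {L}}=\text {Id}\):

```python
from fractions import Fraction
from itertools import product

def rank(vectors):
    """Rank of a list of rational vectors via Gauss-Jordan elimination."""
    rows = [list(v) for v in vectors]
    r = 0
    for c in range(len(rows[0]) if rows else 0):
        pivot = next((k for k in range(r, len(rows)) if rows[k][c] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        for k in range(len(rows)):
            if k != r and rows[k][c] != 0:
                f = rows[k][c] / rows[r][c]
                rows[k] = [x - f * y for x, y in zip(rows[k], rows[r])]
        r += 1
    return r

def sym(P):
    return [[(P[i][j] + P[j][i]) / 2 for j in range(2)] for i in range(2)]

def dev(P):
    t = (P[0][0] + P[1][1]) / 2
    return [[P[i][j] - (t if i == j else 0) for j in range(2)] for i in range(2)]

def complementary_images(part):
    """Flattened images of the standard basis of R^{2x2} under P -> P - part[P]."""
    images = []
    for i, j in product(range(2), repeat=2):
        E = [[Fraction(int((a, b) == (i, j))) for b in range(2)] for a in range(2)]
        A = part(E)
        images.append([E[a][b] - A[a][b] for a in range(2) for b in range(2)])
    return images

def det2(flat):
    """Determinant of the 2x2 matrix whose row-wise entries are flat."""
    return flat[0] * flat[3] - flat[1] * flat[2]

for name, part in {"sym": sym, "dev": dev}.items():
    images = complementary_images(part)
    spanning = next(v for v in images if any(v))
    assert rank(images) == 1 and det2(spanning) != 0, name
assert rank(complementary_images(lambda P: dev(sym(P)))) == 2
```

The sketch only covers \({\mathcal {L}}=\text {Id}\); the general construction of \({\mathcal {L}}\) is the content of Proposition 4.1.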

To this end, note that if \({\mathbb {A}}u={\mathscr {A}}[{\text {D}}\!u]\) for all \(u\in {\text {C}}_{c}^{\infty }(\mathbb R^{2};\mathbb R^{2})\) with some linear map \({\mathscr {A}}:\mathbb R^{2\times 2}\rightarrow \mathbb R^{2\times 2}\), then the algebraic relation

holds. Within this framework, we now have

Proposition 4.1

Suppose that \({\mathbb {A}}\) is \({\mathbb {C}}\)-elliptic. Then there exists a linear map \({\mathcal {L}}:\mathbb R^{2\times 2}\rightarrow \mathbb R^{2\times 2}\) and some \({\mathfrak {G}}\in \text {GL}(2)\) such that

Proof

Let \(\{{{\textbf {e}}}_{1},{{\textbf {e}}}_{2}\}\) be a basis of \(\mathbb R^{2}\). As \(\{{{\textbf {e}}}_{i}\otimes {{\textbf {e}}}_{j}\mid (i,j)\in \{1,2\}\times \{1,2\}\}\) spans \(\mathbb R^{2\times 2}\), it suffices to show that there exist a linear map \({\mathcal {L}}:\mathbb R^{2\times 2}\rightarrow \mathbb R^{2\times 2}\), \({\mathfrak {G}}\in \text {GL}(2)\) and numbers \(\gamma _{ij}\in \mathbb R\) not all equal to zero such that

If we can establish (4.3), we express \(X\in \mathbb R^{2\times 2}\) as

and use (4.3) in conjunction with (4.1) to find

Since not all \(\gamma _{ij}\) vanish, this gives (4.2).

Let us therefore establish (4.3). We approach (4.3) by distinguishing several dimensional cases. First note that \({\mathscr {A}}[\mathbb R^{2\times 2}]\) is at least two-dimensional. In fact,

and since \({\mathbb {A}}\) is elliptic, \(\ker ({\mathbb {A}}[{{\textbf {e}}}_{1}])=\{0\}\), whence \(\dim ({\mathbb {A}}[{{\textbf {e}}}_{1}](\mathbb R^{2}))=2\) by the rank-nullity theorem. Now, if \(\dim ({\mathscr {A}}[\mathbb R^{2\times 2}])=2\), we conclude from (4.5) that \({\mathscr {A}}[\mathbb R^{2\times 2}]={\mathbb {A}}[{{\textbf {e}}}_{1}](\mathbb R^{2})\). The same reasoning applies to any \(\xi \in \mathbb R^{2}\backslash \{0\}\) in place of \({{\textbf {e}}}_{1}\): by ellipticity, \({\mathbb {A}}[\xi ](\mathbb R^{2})\subset {\mathscr {A}}[\mathbb R^{2\times 2}]\) is two-dimensional, so that \({\mathbb {A}}[\xi ](\mathbb R^{2})={\mathscr {A}}[\mathbb R^{2\times 2}]={\mathbb {A}}[{{\textbf {e}}}_{1}](\mathbb R^{2})\), and hence \({\mathbb {A}}\) fails to be cancelling. Since ellipticity together with cancellation is equivalent to \({\mathbb {C}}\)-ellipticity for first order operators in \(n=2\) dimensions by Lemma 3.1, we infer that \(\dim ({\mathscr {A}}[\mathbb R^{2\times 2}])\in \{3,4\}\).

If \(\dim ({\mathscr {A}}[\mathbb R^{2\times 2}])=4\), we note that \(\{{{\textbf {e}}}_{i}\otimes _{{\mathbb {A}}}{{\textbf {e}}}_{j}\mid 1\le i,j\le 2\}\) spans \({\mathscr {A}}[\mathbb R^{2\times 2}]\). Consequently, the vectors \({{\textbf {e}}}_{i}\otimes _{{\mathbb {A}}}{{\textbf {e}}}_{j}\), \(1\le i,j\le 2\), form a basis of \({\mathscr {A}}[\mathbb R^{2\times 2}]\). For any given \({\mathfrak {G}}\in \text {GL}(2)\) we may simply declare \({\mathcal {L}}\) by its action on these basis vectors via

Then (4.3) is fulfilled with \(\gamma _{ij}=1\) for all \((i,j)\in \{1,2\}\times \{1,2\}\), and we conclude in this case.

It remains to discuss the case \(\dim ({\mathscr {A}}[\mathbb R^{2\times 2}])=3\). Then there exists \((i_{0},j_{0})\in \{1,2\}\times \{1,2\}\) such that

for some \(a_{ij}\in \mathbb R\) not all equal to zero, \((i,j)\ne (i_{0},j_{0})\). In what follows, we assume that \((i_{0},j_{0})=(2,1)\); for the other index combinations, the argument is analogous. Our objective is to show that

is invertible. By assumption, not all coefficients \(a_{11},a_{12},a_{22}\) vanish. We distinguish four options:

-

(i)

If \(a_{22}=0\), \(a_{12}\ne 0\), then the matrix \({\mathfrak {G}}\) from (4.8) is invertible.

-

(ii)

If \(a_{22}\ne 0\), \(a_{12}=0\), then necessarily \(a_{11}\ne 0\) and so \({\mathfrak {G}}\) is invertible. Indeed, if \(a_{11}=0\), then (4.1) and (4.7) yield \({\mathbb {A}}[{{\textbf {e}}}_{1}]{{\textbf {e}}}_{2}=a_{22}{\mathbb {A}}[{{\textbf {e}}}_{2}]{{\textbf {e}}}_{2}\). Since \({\mathbb {A}}\) is of first order and homogeneous, the map \(\xi \mapsto {\mathbb {A}}[\xi ]v\) is linear for any fixed v. We conclude that \({\mathbb {A}}[{{\textbf {e}}}_{1}-a_{22}{{\textbf {e}}}_{2}]{{\textbf {e}}}_{2}=0\), and since \({{\textbf {e}}}_{1}-a_{22}{{\textbf {e}}}_{2}\ne 0\) independently of \(a_{22}\), this is impossible by ellipticity of \({\mathbb {A}}\).

-

(iii)

If \(a_{12}=a_{22}=0\), then (4.1) and (4.7) yield \({\mathbb {A}}[{{\textbf {e}}}_{1}]{{\textbf {e}}}_{2}=a_{11}{\mathbb {A}}[{{\textbf {e}}}_{1}]{{\textbf {e}}}_{1}\), that is, \({\mathbb {A}}[{{\textbf {e}}}_{1}]({{\textbf {e}}}_{2}-a_{11}{{\textbf {e}}}_{1})=0\). Since \({{\textbf {e}}}_{2}-a_{11}{{\textbf {e}}}_{1}\ne 0\) regardless of the value of \(a_{11}\), this is impossible by ellipticity of \({\mathbb {A}}\), so this case cannot occur.

-

(iv)

If \(a_{22},a_{12}\ne 0\), then \({\mathfrak {G}}\) fails to be invertible if and only if there exists a constant \(\lambda \ne 0\) such that

$$\begin{aligned} -a_{11}=-\lambda a_{12},\;\;\;1=-\lambda a_{22}. \end{aligned}\tag{4.9}$$

Again using linearity of \(\xi \mapsto {\mathbb {A}}[\xi ]v\) for any fixed v, (4.7) becomes

$$\begin{aligned} -\lambda a_{22}{\mathbb {A}}[{{\textbf {e}}}_{1}]{{\textbf {e}}}_{2}&= a_{11}{\mathbb {A}}[{{\textbf {e}}}_{1}]{{\textbf {e}}}_{1} + a_{12}{\mathbb {A}}[{{\textbf {e}}}_{2}]{{\textbf {e}}}_{1} + a_{22}{\mathbb {A}}[{{\textbf {e}}}_{2}]{{\textbf {e}}}_{2} \\&\!\!\!{\mathop {=}\limits ^{(4.9)}} \lambda a_{12}{\mathbb {A}}[{{\textbf {e}}}_{1}]{{\textbf {e}}}_{1} + a_{12}{\mathbb {A}}[{{\textbf {e}}}_{2}]{{\textbf {e}}}_{1} + a_{22}{\mathbb {A}}[{{\textbf {e}}}_{2}]{{\textbf {e}}}_{2}\\&= \lambda a_{12}{\mathbb {A}}[{{\textbf {e}}}_{1}]{{\textbf {e}}}_{1} + {\mathbb {A}}[{{\textbf {e}}}_{2}](a_{12}{{\textbf {e}}}_{1}+a_{22}{{\textbf {e}}}_{2}), \end{aligned}$$

whereby

$$\begin{aligned} {\mathbb {A}}[-\lambda {{\textbf {e}}}_{1}](a_{12}{{\textbf {e}}}_{1}+a_{22}{{\textbf {e}}}_{2})={\mathbb {A}}[{{\textbf {e}}}_{2}](a_{12}{{\textbf {e}}}_{1}+a_{22}{{\textbf {e}}}_{2}), \end{aligned}$$

so that

$$\begin{aligned} {\mathbb {A}}[\lambda {{\textbf {e}}}_{1}+{{\textbf {e}}}_{2}](a_{12}{{\textbf {e}}}_{1}+a_{22}{{\textbf {e}}}_{2})=0. \end{aligned}\tag{4.10}$$

By assumption, \(a_{12},a_{22}\ne 0\) and hence \(a_{12}{{\textbf {e}}}_{1}+a_{22}{{\textbf {e}}}_{2}\ne 0\). But \(\lambda {{\textbf {e}}}_{1}+{{\textbf {e}}}_{2}\ne 0\) independently of \(\lambda \), and hence (4.10) is at variance with the ellipticity of \({\mathbb {A}}\).

In conclusion, \({\mathfrak {G}}\) defined by (4.8) satisfies \({\mathfrak {G}}\in \text {GL}(2)\) and moreover

where we recall that \((i_{0},j_{0})=(2,1)\). By assumption and because of (4.7), \({{\textbf {e}}}_{1}\otimes _{{\mathbb {A}}}{{\textbf {e}}}_{1},{{\textbf {e}}}_{1}\otimes _{{\mathbb {A}}}{{\textbf {e}}}_{2}\) and \({{\textbf {e}}}_{2}\otimes _{{\mathbb {A}}}{{\textbf {e}}}_{2}\) form a basis of \({\mathscr {A}}[\mathbb R^{2\times 2}]\), and we may extend these three basis vectors by a vector \({{\textbf {f}}}\in \mathbb R^{2\times 2}\) to a basis of \(\mathbb R^{2\times 2}\). We then declare the linear map \({\mathcal {L}}\) by its action on these basis vectors via

Combining our above findings, we obtain

and so, in light of (4.12), we may choose

to see that (4.3) is fulfilled; in particular, not all \(\gamma _{ij}\)’s vanish, and \({\mathfrak {G}}\in \text {GL}(2)\). The proof is complete. \(\square \)
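For the two \({\mathbb {C}}\)-elliptic operators \({\text {sym}}\) and \({\text {dev}}\) treated in Example 4.3 below, a pointwise representation \(P={\mathcal {L}}({\mathscr {A}}[P])+\gamma (P)\,{\mathfrak {G}}\) of the kind used in Sects. 4.2 and 4.5 can be written down by hand: for \({\mathscr {A}}={\text {sym}}\) one may take \({\mathcal {L}}={\text {id}}\), \(\gamma (P)=\frac{1}{2}(P_{12}-P_{21})\) and \({\mathfrak {G}}=\begin{pmatrix} 0 & 1\\ -1 & 0\end{pmatrix}\), and for \({\mathscr {A}}={\text {dev}}\) one may take \({\mathcal {L}}={\text {id}}\), \(\gamma (P)=\frac{1}{2}{\text {tr}}P\) and \({\mathfrak {G}}\) the identity matrix. These choices are ours and need not coincide with the maps produced by the proof above; the following Python sketch merely checks the identities in exact rational arithmetic on random integer matrices.

```python
import random
from fractions import Fraction

def sym(P):
    return [[(P[r][c] + P[c][r]) / 2 for c in range(2)] for r in range(2)]

def dev(P):
    tr = P[0][0] + P[1][1]
    return [[P[r][c] - (tr / 2 if r == c else 0) for c in range(2)] for r in range(2)]

def identity(Q):
    return Q

J = [[0, 1], [-1, 0]]   # rotation by pi/2, an element of GL(2)
I = [[1, 0], [0, 1]]    # identity matrix

def decompose(P, A, L, gamma, G):
    """Return L(A[P]) + gamma(P) * G, to be compared with P."""
    LA, g = L(A(P)), gamma(P)
    return [[LA[r][c] + g * G[r][c] for c in range(2)] for r in range(2)]

# Hand-picked (A, L, gamma, G); hypothetical choices, not taken from the proof.
choices = {
    "sym": (sym, identity, lambda P: (P[0][1] - P[1][0]) / 2, J),
    "dev": (dev, identity, lambda P: (P[0][0] + P[1][1]) / 2, I),
}

rng = random.Random(1)
for name, (A, L, gamma, G) in choices.items():
    for _ in range(100):
        P = [[Fraction(rng.randint(-9, 9)) for _ in range(2)] for _ in range(2)]
        assert decompose(P, A, L, gamma, G) == P
    print(name, "passes")
```

For \({\text {dev}}{\text {sym}}\) no such single \({\mathfrak {G}}\) exists, as Example 4.4 below shows.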

The next lemma shows that in two dimensions the operator \({\text {dev}}{\text {sym}}\) is indeed a typical example of an elliptic but not \({\mathbb {C}}\)-elliptic operator:

Lemma 4.2

(Description of first order \({\mathbb {C}}\)-elliptic operators in two dimensions) Suppose that \({\mathbb {A}}\) is elliptic. Then the operator \({\mathbb {A}}\) given by \({\mathbb {A}}u:={\mathscr {A}}[{\text {D}}\!u]\) for \(u:\mathbb R^{2}\rightarrow \mathbb R^{2}\) is \({\mathbb {C}}\)-elliptic if and only if \(\dim ({\mathscr {A}}[\mathbb R^{2\times 2}]) \in \{3,4\}\).

Proof

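One implication is already contained in the proof of the previous Proposition 4.1. For the other, we show that \(\dim ({\mathscr {A}}[\mathbb R^{2\times 2}])\in \{3,4\}\) implies \({\mathbb {C}}\)-ellipticity; to this end, consider for \(a,b\in {\mathbb {C}}^2\) with \(a=(a_1,a_2)^\top \), \(b=(b_1,b_2)^\top \):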

If \(\dim ({\mathscr {A}}[\mathbb R^{2\times 2}])=4\), then all \(\,{{\textbf {e}}}_{i}\otimes _{{\mathbb {A}}}{{\textbf {e}}}_{j}\) are linearly independent over \(\mathbb R\), and the condition \(\,a\otimes _{{\mathbb {A}}}b=0\) implies

and

Thus, with \(a_i,b_i\in {\mathbb {C}}\) we have

Hence, for \(b\ne 0\) we deduce \(a=0\), meaning that the operator \({\mathbb {A}}\) is \({\mathbb {C}}\)-elliptic in this case.

In the case \(\dim ({\mathscr {A}}[\mathbb R^{2\times 2}])=3\) we assume without loss of generality that there exist \(\alpha ,\beta ,\gamma \in \mathbb R\) such that

It follows from the ellipticity assumption on \({\mathbb {A}}\) (cf. the invertibility of \({\mathfrak {G}}\) from (4.8) in the proof of Proposition 4.1) that these coefficients satisfy

The condition \(\,a\otimes _{{\mathbb {A}}}b=0\) with \(a,b\in {\mathbb {C}}^{2}\) then implies

Hence, \(a_i,b_i\in {\mathbb {C}}\) satisfy

If \(b_2=0\), then by \(b\ne 0\) we must have \(b_1\ne 0\) and we obtain \(a_1=a_2=0\), meaning that the operator \({\mathbb {A}}\) is \({\mathbb {C}}\)-elliptic. Otherwise, with \(b_2\ne 0\) we have \(a_1+\beta \,a_2=0\). If \(a_2=0\), then also \(a_1=0\) and we are done. We show that the case \(a_2\ne 0\) cannot occur. Indeed, if \(a_2\ne 0\) then (4.15) yields:

which cannot be fulfilled since \(b\ne 0\) and \(\alpha +\beta \gamma \ne 0\). \(\square \)

Let us now be more precise and explain in detail where the proof of Proposition 4.1 fails if \({\mathbb {A}}\) is elliptic but not \({\mathbb {C}}\)-elliptic. Ellipticity implies that \(\dim ({\mathbb {A}}[\xi ](\mathbb R^{2}))=2\) for any \(\xi \in \mathbb R^{2}\backslash \{0\}\), whence \(\dim ({\mathscr {A}}[\mathbb R^{2\times 2}])\ge 2\); if \({\mathbb {A}}\) is not \({\mathbb {C}}\)-elliptic, the foregoing lemma thus forces \(\dim ({\mathscr {A}}[\mathbb R^{2\times 2}])=2\). Hence, precisely two pure \({\mathbb {A}}\)-tensors \({{\textbf {e}}}_{i}\otimes _{{\mathbb {A}}}{{\textbf {e}}}_{j}\), \((i,j)\in {\mathcal {I}}{:=}\{(i_{0},j_{0}),(i_{1},j_{1})\}\), are linearly independent. In order to introduce the linear map \({\mathcal {L}}\), we define it via its action on these basis vectors. At first glance, this seems to impose only two conditions. This is not so, because (4.3) poses compatibility conditions for all indices: the definition of \({\mathcal {L}}\) on the fixed basis vectors must be compatible with (4.3) also for \((i,j)\notin {\mathcal {I}}\), and since (4.3) involves all rank-one matrices \({{\textbf {e}}}_{i}\otimes {{\textbf {e}}}_{j}\), \(1\le i,j\le 2\), this is non-trivial. As Example 4.4 below shows explicitly, this compatibility fails for the trace-free symmetric gradient, which is elliptic but not \({\mathbb {C}}\)-elliptic.

Example 4.3

(Gradient and symmetric gradient) For the (\({\mathbb {C}}\)-elliptic) gradient, the set \(\{{{\textbf {e}}}_{i}\otimes _{{\mathbb {A}}}{{\textbf {e}}}_{j}\}\) is just

and so the gradient falls into the case \(\dim ({\mathscr {A}}[\mathbb R^{2\times 2}])=4\) in the case distinction of the above proof. For the (\({\mathbb {C}}\)-elliptic) deviatoric or symmetric gradients, the set \(\{{{\textbf {e}}}_{i}\otimes _{{\mathbb {A}}}{{\textbf {e}}}_{j}\}\) is just

and so both the deviatoric and the symmetric gradient fall into the case \(\dim ({\mathscr {A}}[\mathbb R^{2\times 2}])=3\) in the case distinction of the above proof.
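The dimension count behind Lemma 4.2 and Examples 4.3 and 4.4 can be checked mechanically. Since the matrix units \({{\textbf {e}}}_{i}\otimes {{\textbf {e}}}_{j}\) form a basis of \(\mathbb R^{2\times 2}\), \(\dim ({\mathscr {A}}[\mathbb R^{2\times 2}])\) equals the rank of the four matrices \({\mathscr {A}}[{{\textbf {e}}}_{i}\otimes {{\textbf {e}}}_{j}]\). The following Python sketch, with helper names of our own choosing, computes this rank in exact rational arithmetic for the gradient, the symmetric and deviatoric parts, and the trace-free symmetric part \({\text {dev}}{\text {sym}}\). It does not test ellipticity, which Lemma 4.2 presupposes and which holds for all four operators.

```python
from fractions import Fraction

def unit(i, j):
    """Matrix unit e_i (x) e_j in R^{2x2}, with 0-based indices."""
    return [[Fraction(int(r == i and c == j)) for c in range(2)] for r in range(2)]

def grad(P):
    return [row[:] for row in P]  # A[P] = P, i.e. the full gradient

def sym(P):
    return [[(P[r][c] + P[c][r]) / 2 for c in range(2)] for r in range(2)]

def dev(P):
    tr = P[0][0] + P[1][1]
    return [[P[r][c] - (tr / 2 if r == c else 0) for c in range(2)] for r in range(2)]

def dev_sym(P):
    return dev(sym(P))

def rank(rows):
    """Rank of a list of equal-length rows via exact Gaussian elimination."""
    rows = [list(r) for r in rows]
    rk = 0
    for col in range(len(rows[0])):
        pivot = next((r for r in range(rk, len(rows)) if rows[r][col] != 0), None)
        if pivot is None:
            continue
        rows[rk], rows[pivot] = rows[pivot], rows[rk]
        for r in range(len(rows)):
            if r != rk and rows[r][col] != 0:
                factor = rows[r][col] / rows[rk][col]
                rows[r] = [x - factor * y for x, y in zip(rows[r], rows[rk])]
        rk += 1
    return rk

def image_dim(op):
    """dim of op[R^{2x2}]: rank of the flattened images op[e_i (x) e_j]."""
    images = [op(unit(i, j)) for i in range(2) for j in range(2)]
    return rank([[x for row in M for x in row] for M in images])

for name, op in [("grad", grad), ("sym", sym), ("dev", dev), ("dev sym", dev_sym)]:
    print(name, image_dim(op))
```

The loop prints the dimensions 4, 3, 3 and 2. Combined with Lemma 4.2, the first three operators are \({\mathbb {C}}\)-elliptic, whereas \({\text {dev}}{\text {sym}}\) is not.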

Example 4.4

(Trace-free symmetric gradient) For the (non-\({\mathbb {C}}\)-elliptic) trace-free symmetric gradient, the set \(\{{{\textbf {e}}}_{i}\otimes _{{\mathbb {A}}}{{\textbf {e}}}_{j}\}\) is given by

and so the first and the third element are linearly dependent. We now verify explicitly that the relation (4.3) cannot be achieved in this case for any \({\mathfrak {G}}\in \text {GL}(2)\). Suppose towards a contradiction that (4.3) can be achieved. Then

and

From (4.16) we infer that \({\mathfrak {G}}\) needs to be a multiple of the identity matrix, whereas (4.17) forces \({\mathfrak {G}}\) to be skew-symmetric. Since the zero matrix is the only skew-symmetric multiple of the identity, this contradicts \({\mathfrak {G}}\in \text {GL}(2)\).

4.2 The implication ‘\({(b)}\Rightarrow {(a)} \)’ of Theorem 2.3

Proposition 4.1 crucially allows us to adapt the reduction to Bourgain–Brezis-type estimates as pursued in [28] in the symmetric gradient case. We state a version of the result that will prove useful in later sections too:

Lemma 4.5

([3, 8, 70]) Let \(n\ge 2\). Then the following hold:

-

(a)

There exists a constant \(c_{n}>0\) such that

$$\begin{aligned} \left\Vert f\right\Vert _{\dot{{\text {W}}}^{-1,\frac{n}{n-1}}(\mathbb R^{n})}\le c_{n}\Big (\left\Vert {\text {div}}f\right\Vert _{\dot{{\text {W}}}^{-2,\frac{n}{n-1}}(\mathbb R^{n})}+\left\Vert f\right\Vert _{{\text {L}}^{1}(\mathbb R^{n})} \Big ) \end{aligned}\tag{4.18}$$

holds for all \(f\in {\text {C}}_{c}^{\infty }(\mathbb R^{n};\mathbb R^{n})\). Moreover, if \(\Omega \subset \mathbb R^{n}\) is an open and bounded domain with Lipschitz boundary, estimate (4.18) persists for all \(f\in {\text {C}}^{\infty }(\Omega ;\mathbb R^{n})\) with \(\mathbb R^{n}\) being replaced by \(\Omega \).

-

(b)

If \(n\ge 3\), there exists a constant \(c_{n}>0\) such that

$$\begin{aligned} \left\Vert f\right\Vert _{{\text {L}}^{\frac{n}{n-1}}(\mathbb R^{n})}\le c_{n}\left\Vert {\text {curl}}f\right\Vert _{{\text {L}}^{1}(\mathbb R^{n})}\quad \text {for all}\;f\in {\text {C}}_{c,{\text {div}}}^{\infty }(\mathbb R^{n};\mathbb R^{n}). \end{aligned}\tag{4.19}$$

For completeness, let us note that by embedding \({\text {C}}_{c}^{\infty }(\mathbb R^{n};\mathbb R^{n})\) into \({\text {W}}^{-1,p'}(\mathbb R^{n};\mathbb R^{n})\) via the dual pairing \(\langle f,\varphi \rangle _{\dot{{\text {W}}}^{-1,p'}\times \dot{{\text {W}}}^{1,p}} = \int _{\mathbb R^{n}}\langle f,\varphi \rangle _{\mathbb R^n}{\text {d}}\!x\), for any \(1<p<\infty \) and \(A\in \mathbb R^{n\times n}\) there exists \(c=c(p,n,A)>0\) such that we have the elementary inequality

Based on the preceding lemma and the results from the previous section we may now pass on to

Proof of the implication ‘\({(b)}\Rightarrow {(a)}\)’ of Theorem 2.3. Let \(P\in {\text {C}}_{c}^{\infty }(\mathbb R^{2};\mathbb R^{2\times 2})\). By Proposition 4.1, there exist a linear map \({\mathcal {L}}:\mathbb R^{2\times 2}\rightarrow \mathbb R^{2\times 2}\), a matrix \({\mathfrak {G}}\in \text {GL}(2)\) and a linear function \(\gamma :\mathbb R^{2\times 2}\rightarrow \mathbb R\) such that

Realising that

the pointwise relation (4.21) gives

whence the invertibility of \({\mathfrak {G}}\) yields

In consequence,

for some scalar, linear, homogeneous second order differential operator \({\mathbb {B}}\). Now, based on inequality (4.18) and recalling \(n=2\), we infer

By definition of \({\mathfrak {f}}\), cf. (4.23), the previous inequality implies

and (4.20) then yields by virtue of \({\mathfrak {G}}\in \text {GL}(2)\)

Next, put \(f{:=}{\text {Curl}}P\in {\text {C}}_{c}^{\infty }(\mathbb R^{2};\mathbb R^{2})\) and consider the solution \(u=(u_{1},u_{2})^{\top }\) of the equation \(-\Delta u= f\) obtained as \(u=\Phi _{2}*f\), where \(\Phi _{2}\) is the two-dimensional Green’s function for the negative Laplacian. By classical elliptic regularity estimates we have on the one hand

On the other hand, setting

and

we may thus write \(T-P={\text {D}}\!v\) for some \(v:\mathbb R^{2}\rightarrow \mathbb R^{2}\), for which Lemma 3.6 yields

The proof is then concluded by splitting

and using (4.30), (4.29) for the first and (4.29) for the second term. The proof is complete. \(\square \)
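The step \(u=\Phi _{2}*f\) rests on the two defining features of the fundamental solution of \(-\Delta \) in the plane. Assuming the standard normalisation \(\Phi _{2}(x)=-\frac{1}{2\pi }\log |x|\) (the text does not display \(\Phi _{2}\) explicitly), the following Python sketch checks by finite differences that \(\Phi _{2}\) is harmonic away from the origin and that the outward flux of \(-\nabla \Phi _{2}\) through a circle centred at the origin equals 1, which is what \(-\Delta \Phi _{2}=\delta _{0}\) amounts to. The step sizes and the number of quadrature nodes are ad hoc choices for illustration.

```python
import math

def phi2(x, y):
    """Assumed fundamental solution of -Laplace in R^2: -(1/(2 pi)) log|x|."""
    return -math.log(math.hypot(x, y)) / (2 * math.pi)

def laplacian_fd(f, x, y, h=1e-3):
    """Five-point finite-difference approximation of the Laplacian of f at (x, y)."""
    return (f(x + h, y) + f(x - h, y) + f(x, y + h) + f(x, y - h) - 4 * f(x, y)) / h**2

def flux_of_minus_grad(f, r=0.5, m=2000, h=1e-6):
    """Midpoint-rule approximation of the outward flux of -grad f through |x| = r."""
    total = 0.0
    for k in range(m):
        t = 2 * math.pi * (k + 0.5) / m
        ux, uy = math.cos(t), math.sin(t)            # outward unit normal
        # radial derivative of f by central differences along the normal
        d_r = (f((r + h) * ux, (r + h) * uy) - f((r - h) * ux, (r - h) * uy)) / (2 * h)
        total += -d_r * r * (2 * math.pi / m)        # arc-length element r dt
    return total

print(laplacian_fd(phi2, 0.7, 0.3))   # close to 0: harmonic away from the origin
print(flux_of_minus_grad(phi2))       # close to 1: total mass of -Laplace(phi2)
```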

Remark 4.6

Note that, in contrast to the special situation in three dimensions, in \(n=2\) dimensions we have in general

In fact, it is a crucial step in the previous proof to express \({\text {div}}{\text {Curl}}P\) by a linear combination of second derivatives of \({\mathscr {A}}[P]\). This is clearly the case if \({\mathscr {A}}[P]={\text {dev}}P\) but is not fulfilled for general \({\mathbb {C}}\)-elliptic operators \({\mathbb {A}}\). For this reason we need Proposition 4.1 to ensure that there exists an invertible matrix \({\mathfrak {G}}\) such that \( {\text {div}}{\mathfrak {G}}^{-1}{\text {Curl}}P = {\mathcal {L}}({\text {D}}\!^2 {\mathscr {A}}[P])\).

4.3 The implication ‘\({(a)}\Rightarrow {(b)} \)’ of Theorem 2.3

Again, let \(n=2\) and \(p=1\). As in [32], one directly obtains the necessity of \({\mathscr {A}}\) being induced by an elliptic differential operator for (2.4) to hold; in fact, testing (2.4) with gradient fields \(P={\text {D}}\!u\) gives us the usual Korn-type inequality \(\left\Vert {\text {D}}\!u\right\Vert _{{\text {L}}^{2}(\mathbb R^{2})}\le c\left\Vert {\mathbb {A}}u\right\Vert _{{\text {L}}^{2}(\mathbb R^{2})}\) for all \(u\in {\text {C}}_{c}^{\infty }(\mathbb R^{2};\mathbb R^{2})\). Then one uses Lemma 3.6 to conclude the ellipticity; hence, in all of the following we may tacitly assume \({\mathscr {A}}\) to be induced by an elliptic differential operator.

The necessity of \({\mathbb {C}}\)-ellipticity requires a refined argument, which we address now:

Lemma 4.7

In the situation of Theorem 2.3, validity of (2.4) implies that \(\mathscr {A}\) induces a \({\mathbb {C}}\)-elliptic differential operator.

Proof

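Suppose that \({\mathbb {A}}\) is not \({\mathbb {C}}\)-elliptic, meaning that there exist \(\xi \in {\mathbb {C}}^{2}\backslash \{0\}\) and \(v=\text {Re}(v)+\mathrm{i\, Im}(v)\in {\mathbb {C}}^{2}\backslash \{0\}\) such that \({\mathbb {A}}[\xi ]v = 0\). Separating real and imaginary parts in this equation and using that \({\mathbb {A}}[\xi ]v\) is linear in both \(\xi \) and \(v\), we obtain

$$\begin{aligned} {\mathbb {A}}[\text {Re}(\xi )]\text {Re}(v)&={\mathbb {A}}[\text {Im}(\xi )]\text {Im}(v),\\ {\mathbb {A}}[\text {Im}(\xi )]\text {Re}(v)&=-{\mathbb {A}}[\text {Re}(\xi )]\text {Im}(v). \end{aligned}\tag{4.32}$$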

For \(f\in {\text {C}}_{c}^{\infty }(\mathbb R^{2})\), we then define \(x_{\xi }{:=}(\langle x,\text {Re}(\xi )\rangle ,\langle x,\text {Im}(\xi )\rangle )\) and

We first claim that there exists a constant \(c>0\) such that

To this end, we first note that \(\text {Re}(\xi )\) and \(\text {Im}(\xi )\) are necessarily linearly independent over \(\mathbb R\). This is certainly clear to experts, but since it is crucial for our argument, we give the quick proof. Suppose that \(\text {Re}(\xi )\) and \(\text {Im}(\xi )\) are linearly dependent. Then there are three options:

-

(i)

If \(\text {Im}(\xi )=0\), then \(\text {Re}(\xi )\ne 0\) (as otherwise \(\xi =0\)). Then \((4.32)_{1}\) implies \(\text {Re}(v)=0\) by ellipticity of \({\mathbb {A}}\), and \((4.32)_{2}\) implies \(\text {Im}(v)=0\) by ellipticity of \({\mathbb {A}}\). Thus \(v=0\in {\mathbb {C}}^{2}\), contradicting our assumption \(v\in {\mathbb {C}}^{2}\backslash \{0\}\).

-

(ii)

If \(\text {Re}(\xi )=0\), we may imitate the argument from (i) to arrive at a contradiction.

-

(iii)

Based on (i) and (ii), we may assume that \(\text {Im}(\xi ),\text {Re}(\xi )\ne 0\) and that there exists \(\lambda \ne 0\) such that \(\text {Re}(\xi )=\lambda \text {Im}(\xi )\). Inserting this relation into (4.32), we arrive at

$$\begin{aligned} \lambda {\mathbb {A}}[\text {Im}(\xi )]\text {Re}(v)&= {\mathbb {A}}[\text {Im}(\xi )]\text {Im}(v) &&\Rightarrow \quad {\mathbb {A}}[\text {Im}(\xi )](\lambda \text {Re}(v)-\text {Im}(v))=0,\\ {\mathbb {A}}[\text {Im}(\xi )]\text {Re}(v)&= -\lambda {\mathbb {A}}[\text {Im}(\xi )]\text {Im}(v) &&\Rightarrow \quad {\mathbb {A}}[\text {Im}(\xi )](\text {Re}(v)+\lambda \text {Im}(v))=0. \end{aligned}$$

Since \(\text {Im}(\xi )\ne 0\), ellipticity of \({\mathbb {A}}\) implies that

$$\begin{aligned} \lambda \text {Re}(v)=\text {Im}(v)\quad \text {and}\quad \text {Re}(v)+\lambda \text {Im}(v)=0,\quad \text {so}\;(1+\lambda ^{2})\text {Re}(v)=0. \end{aligned}$$

Hence \(\text {Re}(v)=0\) and \(\text {Im}(v)=\lambda \text {Re}(v)=0\), which is at variance with our assumption \(v\ne 0\).

Secondly, we similarly note that \(\text {Re}(v)\) and \(\text {Im}(v)\) are necessarily linearly independent over \(\mathbb R\). Suppose that this is not the case. Then there are three options:

-

(i’)

If \(\text {Re}(v)=0\), then necessarily \(\text {Im}(v)\ne 0\). Then \((4.32)_{1}\) implies that \(\text {Im}(\xi )=0\). Inserting this into \((4.32)_{2}\) yields \(\text {Re}(\xi )=0\) and so \(\xi =0\), which is at variance with our assumption of \(\xi \in {\mathbb {C}}^{2}\backslash \{0\}\).

-

(ii’)

If \(\text {Im}(v)=0\), we may imitate the argument from (i’) to arrive at a contradiction.

-

(iii’)

Based on (i’) and (ii’), we may assume that \(\text {Im}(v),\text {Re}(v)\ne 0\) and that there exists \(\lambda \ne 0\) such that \(\text {Re}(v)=\lambda \text {Im}(v)\). Inserting this relation into (4.32) yields

$$\begin{aligned} \lambda {\mathbb {A}}[\text {Re}(\xi )]\text {Im}(v)&= {\mathbb {A}}[\text {Im}(\xi )]\text {Im}(v) &&\Rightarrow \quad {\mathbb {A}}[\lambda \text {Re}(\xi )-\text {Im}(\xi )]\text {Im}(v)= 0,\\ \lambda {\mathbb {A}}[\text {Im}(\xi )]\text {Im}(v)&= - {\mathbb {A}}[\text {Re}(\xi )]\text {Im}(v) &&\Rightarrow \quad {\mathbb {A}}[\lambda \text {Im}(\xi )+\text {Re}(\xi )]\text {Im}(v)=0. \end{aligned}$$

Since \(\text {Im}(v)\ne 0\) by assumption, ellipticity of \({\mathbb {A}}\) yields

$$\begin{aligned} \lambda \text {Re}(\xi )=\text {Im}(\xi )\;\text {and}\;-\lambda \text {Im}(\xi )=\text {Re}(\xi ) \Rightarrow (1+\lambda ^{2})\text {Im}(\xi )=0. \end{aligned}$$

But then \(\text {Im}(\xi )=0\) and thus \(\text {Re}(\xi )=-\lambda \text {Im}(\xi )=0\), so \(\xi =0\), in turn being at variance with \(\xi \ne 0\).

Based on (i)–(iii) and (i’)–(iii’), we conclude that the set

is a basis of \(\mathbb R^{2\times 2}\), and that

is a norm on \(\mathbb R^{2\times 2}\). This norm is equivalent to any other norm on \(\mathbb R^{2\times 2}\), and hence (4.34) follows by the definition of \(P_{f}\) and a change of variables, again recalling that \(\text {Re}(\xi )\), \(\text {Im}(\xi )\) and \(\text {Re}(v)\), \(\text {Im}(v)\) are linearly independent.

Next, we record that for any \(f\in {\text {C}}_{c}^{\infty }(\mathbb R^{2})\)

To conclude the proof, we will now establish that the Korn–Maxwell–Sobolev inequality in this situation yields the contradictory estimate

To this end, note that for any \(\varphi \in {\text {C}}_{c}^{\infty }(\mathbb R^{2})\) and any \(X\in \mathbb R^{2\times 2}\) we have as in (4.22):

Moreover, writing \(\text {Re}(\xi )=(\xi _{11},\xi _{12})^{\top }\) and \(\text {Im}(\xi )=(\xi _{21},\xi _{22})^{\top }\), we find

As a consequence of (4.38), we have for \(j\in \{1,2\}\)

Based on (4.37), the previous two identities imply by definition of \(P_{f}\) [cf. (4.33)]

Now define \(\displaystyle \alpha {:=}\det \begin{pmatrix} \xi _{11} & \xi _{12} \\ \xi _{21} & \xi _{22}\end{pmatrix} \) so that

Hence, in view of (4.34), (4.35) and (4.40), starting with the Korn–Maxwell–Sobolev inequality and a change of variables (recall that \(\text {Re}(\xi ),\text {Im}(\xi )\) are linearly independent), we end up at the contradictory estimate (4.36). Thus, \({\mathbb {A}}\) has to be \({\mathbb {C}}\)-elliptic and the proof is complete. \(\square \)
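To make the objects in the preceding proof concrete, the following Python sketch takes the trace-free symmetric gradient of Example 4.4 and uses the symbol convention \({\mathbb {A}}[\xi ]v={\mathscr {A}}[v\otimes \xi ]\), which we assume to be the one induced by \({\mathbb {A}}u={\mathscr {A}}[{\text {D}}\!u]\). It checks the splitting of \({\mathbb {A}}[\xi ]v\) into real and imaginary parts underlying (4.32); that the pair \(\xi =(1,\mathrm{i})^{\top }\), \(v=(1,-\mathrm{i})^{\top }\) (our choice, for illustration) lies in the complex kernel; that a \(2\times 2\) minor of \(v\mapsto {\mathbb {A}}[\xi ]v\), equal to \(\frac{1}{4}(\xi _{1}^{2}+\xi _{2}^{2})\) for this operator, is positive for real \(\xi \ne 0\) but vanishes at this complex \(\xi \); and that \(\text {Re}(\xi ),\text {Im}(\xi )\) as well as \(\text {Re}(v),\text {Im}(v)\) are linearly independent, so that \(\alpha \ne 0\). All function names are hypothetical.

```python
def dev_sym_symbol(xi, v):
    """A[xi]v := dev sym(v (x) xi) in 2D; entries may be complex."""
    P = [[v[r] * xi[c] for c in range(2)] for r in range(2)]            # v (x) xi
    S = [[(P[r][c] + P[c][r]) / 2 for c in range(2)] for r in range(2)]  # symmetric part
    tr = S[0][0] + S[1][1]
    return [[S[r][c] - (tr / 2 if r == c else 0) for c in range(2)] for r in range(2)]

def re(z):
    return tuple(complex(c).real for c in z)

def im(z):
    return tuple(complex(c).imag for c in z)

def split_residual(xi, v):
    """Deviation of A[xi]v from A[Re xi]Re v - A[Im xi]Im v + i(A[Re xi]Im v + A[Im xi]Re v)."""
    full = dev_sym_symbol(xi, v)
    rr, ii = dev_sym_symbol(re(xi), re(v)), dev_sym_symbol(im(xi), im(v))
    ri, ir = dev_sym_symbol(re(xi), im(v)), dev_sym_symbol(im(xi), re(v))
    return max(
        abs(complex(full[r][c]) - complex(rr[r][c] - ii[r][c], ri[r][c] + ir[r][c]))
        for r in range(2) for c in range(2)
    )

def minor(xi):
    """2x2 minor of v -> A[xi]v built from the (1,1) and (1,2) entries."""
    a = dev_sym_symbol(xi, (1, 0))
    b = dev_sym_symbol(xi, (0, 1))
    return a[0][0] * b[0][1] - a[0][1] * b[0][0]

def det2(x, y):
    """Determinant of the 2x2 matrix with rows x and y."""
    return x[0] * y[1] - x[1] * y[0]

xi, v = (1, 1j), (1, -1j)
print(max(abs(x) for row in dev_sym_symbol(xi, v) for x in row))  # vanishes: complex kernel
print(abs(minor(xi)), minor((0.3, -1.7)))   # vanishes at complex xi, positive at real xi
print(det2(re(xi), im(xi)), det2(re(v), im(v)))  # 1.0 and -1.0: both pairs independent
```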

4.4 Proof of Theorem 2.3

We now briefly gather the arguments required for the proof of Theorem 2.3. The direction ‘(a) \(\Rightarrow \) (b)’ is given by Lemma 4.7, whereas the equivalence of (b) and (c) is a direct consequence of Lemma 3.1. In turn, the equivalence ‘(b)\(\Leftrightarrow \)(d)’ is established in Lemma 4.2. Finally, the remaining implication ‘(b) \(\Rightarrow \) (a)’ is proved at the end of Sect. 4.2 above. In conclusion, the proof of Theorem 2.3 is complete.

4.5 KMS inequalities with non-zero boundary values

Even though the present paper concentrates on compactly supported maps, we would like to comment on how Proposition 4.1 can be used to derive variants for maps with non-zero boundary values; the generalisation to higher space dimensions shall be pursued elsewhere.

Theorem 4.8

(Non-zero boundary values, \((p,n)=(1,2)\)) The following are equivalent:

-

(a)

The linear map \({\mathscr {A}}:\mathbb R^{2\times 2}\rightarrow \mathbb R^{2\times 2}\) induces a \({\mathbb {C}}\)-elliptic differential operator \({\mathbb {A}}\) in \(n=2\) dimensions via \({\mathbb {A}}u {:=}{\mathscr {A}}[{\text {D}}\!u]\).

-

(b)

There exists a finite-dimensional subspace \({\mathcal {K}}\) of the \(\mathbb R^{2\times 2}\)-valued polynomials such that for any open and bounded, simply connected domain \(\Omega \subset \mathbb R^{2}\) with Lipschitz boundary \(\partial \Omega \) there exists \(c=c({\mathscr {A}},\Omega )>0\) such that

$$\begin{aligned} \min _{\Pi \in {\mathcal {K}}}\left\Vert P-\Pi \right\Vert _{{\text {L}}^{2}(\Omega )}\le c\,\Big (\left\Vert {\mathscr {A}}[P]\right\Vert _{{\text {L}}^{2}(\Omega )}+\left\Vert {\text {Curl}}P\right\Vert _{{\text {L}}^{1}(\Omega )}\Big ) \end{aligned}\tag{4.41}$$

holds for all \(P\in {\text {C}}^{\infty }(\Omega ;\mathbb R^{2\times 2})\).

Proof of Theorem 4.8

Ad ‘(a) \(\Rightarrow \) (b)’. As at the end of Sect. 4.2, we use Proposition 4.1 to write \(P={\mathcal {L}}({\mathscr {A}}[P])+\gamma (P)\,{\mathfrak {G}}\) pointwise for all \(P\in {\text {C}}^{\infty }(\Omega ;\mathbb R^{2\times 2})\), with suitable linear maps \({\mathcal {L}}:\mathbb R^{2\times 2}\rightarrow \mathbb R^{2\times 2}\), \(\gamma :\mathbb R^{2\times 2}\rightarrow \mathbb R\) and a fixed matrix \({\mathfrak {G}}\in \text {GL}(2)\). Let \(1<q<\infty \). Since \(\Omega \subset \mathbb R^{2}\) has Lipschitz boundary, we may invoke the Nečas estimate from Lemma 3.5 to obtain for all \(P\in {\text {C}}^{\infty }(\Omega ;\mathbb R^{2\times 2})\)

with a constant \(c=c(q,\Omega )>0\). We then equally have (4.23), whereby

Combining (4.42) and (4.43), we arrive at

where \(c=c(q,{\mathscr {A}},\Omega )>0\). Next let, adopting the notation from (3.5),

Since \(\Omega \subset \mathbb R^{2}\) is simply connected and has Lipschitz boundary, \({\text {Curl}}P=0\) implies that \(P={\text {D}}\!u\) for some \(u\in {\text {W}}^{1,q}(\Omega ;\mathbb R^{2})\). Then \({\mathscr {A}}[P]=0\) yields \({\mathbb {A}}u {:=}{\mathscr {A}}[{\text {D}}\!u]=0\). In conclusion, \({\mathbb {C}}\)-ellipticity of \({\mathbb {A}}\) yields by virtue of Lemma 3.2 that there exists \(m\in \mathbb N\) such that \({\mathcal {N}}\subset \nabla {\mathscr {P}}_{m}(\mathbb R^{2};\mathbb R^{2})\hookrightarrow {\text {L}}^{2}(\Omega ;\mathbb R^{2\times 2})\). We denote \(m_{0}{:=}\dim ({\mathcal {N}})\) and choose an \({\text {L}}^{2}\)-orthonormal basis \(\{{{\textbf {g}}}_{1},...,{{\textbf {g}}}_{m_{0}}\}\) of \({\mathcal {N}}\); furthermore, slightly abusing notation, we set

Our next claim is that there exists a constant \(c=c(q,{\mathscr {A}},\Omega )>0\) such that

holds for all \(P\in {\text {W}}^{{\text {Curl}},q}(\Omega )\). If (4.45) were false, for each \(i\in \mathbb N\) we would find \(P_{i}\in {\text {W}}^{{\text {Curl}},q}(\Omega )\) with \(\left\Vert P_{i}\right\Vert _{{\text {L}}^{q}(\Omega )}=1\) and

Since \(1<q<\infty \), the Banach–Alaoglu theorem allows us to pass to a non-relabeled subsequence such that \(P_{i}\rightharpoonup P\) weakly in \({\text {L}}^{q}(\Omega ;\mathbb R^{2\times 2})\) for some \(P\in {\text {L}}^{q}(\Omega ;\mathbb R^{2\times 2})\). Lower semicontinuity of norms with respect to weak convergence and (4.46) then imply \({\mathscr {A}}[P]=0\). Moreover, for all \(\varphi \in {\text {W}}_{0}^{1,q'}(\Omega ;\mathbb R^{2})\), the formal adjoint of \({\text {Curl}}\) is given by \({\text {Curl}}^{*} \varphi =\varphi \llbracket \nabla \rrbracket _{\times _{n}}\in {\text {L}}^{q'}(\Omega ;\mathbb R^{2\times 2})\), and therefore

as \(i\rightarrow \infty \) because of \(P_{i}\rightharpoonup P\) in \({\text {L}}^{q}(\Omega ;\mathbb R^{2\times 2})\). Thus, \({\text {Curl}}P_{i}{\mathop {\rightharpoonup }\limits ^{*}}{\text {Curl}}P\) in \({\text {W}}^{-1,q}(\Omega ;\mathbb R^{2})\). By lower semicontinuity of norms for weak*-convergence and (4.46), we have \({\text {Curl}}P=0\) in \({\text {W}}^{-1,q}(\Omega ;\mathbb R^{2})\). On the other hand, since the \({{\textbf {g}}}_{j}\)’s are polynomials and hence trivially belong to \({\text {L}}^{q'}(\Omega ;\mathbb R^{2\times 2})\), we deduce that

so that \(P\in {\mathcal {N}}\cap {\mathcal {N}}^{\bot }=\{0\}\). Finally, since \({\mathcal {L}}\circ \,{\mathscr {A}}:\mathbb R^{2\times 2}\rightarrow \mathbb R^{2\times 2}\) is linear, we have \(P_{i}-{\mathcal {L}}({\mathscr {A}}[P_{i}])\rightharpoonup P-{\mathcal {L}}({\mathscr {A}}[P]) = 0\) weakly in \({\text {L}}^{q}(\Omega ;\mathbb R^{2\times 2})\), and by compactness of the embedding \({\text {L}}^{q}(\Omega ;\mathbb R^{2\times 2})\hookrightarrow \hookrightarrow {\text {W}}^{-1,q}(\Omega ;\mathbb R^{2\times 2})\), we may assume that \(P_{i}-{\mathcal {L}}({\mathscr {A}}[P_{i}])\rightarrow 0\) strongly in \({\text {W}}^{-1,q}(\Omega ;\mathbb R^{2\times 2})\). Inserting \(P_{i}\) into (4.44), the left-hand side is bounded below by 1 whereas the right-hand side tends to zero as \(i\rightarrow \infty \). This yields the requisite contradiction and (4.45) follows.

Based on (4.45), we may conclude the proof as follows. If \(P\in {\mathcal {N}}^{\bot }\), we use Lemma 4.5(a) for Lipschitz domains and imitate (4.23) to find with \({\mathfrak {f}}{:=}{\mathfrak {G}}^{-1}{\text {Curl}}P\)

Then (4.45) with \(q=2\) becomes

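In the general case, we apply inequality (4.47) to \(P-\sum _{j=1}^{m_{0}}\int _{\Omega }\langle {{\textbf {g}}}_{j},P\rangle {\text {d}}\!x\,{{\textbf {g}}}_{j}\in {\mathcal {N}}^{\bot }\). Since the subtracted field belongs to \({\mathcal {N}}\subset \nabla {\mathscr {P}}_{m}(\mathbb R^{2};\mathbb R^{2})\), it leaves both \({\mathscr {A}}[P]\) and \({\text {Curl}}P\) unchanged. Hence (b) follows.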

Ad ‘(b) \(\Rightarrow \) (a)’. Inserting gradients \(P={\text {D}}\!u\) into (4.41) yields the Korn-type inequality

By routine smooth approximation, this inequality extends to all \(u\in {{\text {W}}}{^{{\mathbb {A}},2}}(\Omega )\). In particular, if \({\mathbb {A}}u\equiv 0\) in \(\Omega \), then the right-hand side vanishes, so that \({\text {D}}\!u\) belongs to \({\mathcal {K}}\) and u must coincide with a polynomial of bounded degree. Since this is possible only if \({\mathbb {A}}\) is \({\mathbb {C}}\)-elliptic by Lemma 3.2, (a) follows and the proof is complete. \(\square \)

5 Sharp KMS-inequalities: the case \((p,n)\ne (1,2)\)

For the conclusions of Theorem 2.1 we only have to establish the sufficiency parts; note that the necessity of ellipticity follows as in Sect. 4.3.

As in [32], the proof of the sufficiency part is based on a suitable Helmholtz decomposition. In view of the explicit expressions of the parts of this decomposition [cf. (A.10) in the appendix], we have in all dimensions \(n\ge 2\) for all \(a\in {\text {C}}_{c}^{\infty }(\mathbb R^{n};\mathbb R^{n})\) and all \(x\in \mathbb R^{n}\):

where we have used \(|\llbracket b\rrbracket _{\times _{n}}|=\sqrt{n-1}\,|b|\) for any \(b\in \mathbb R^{n}\) as a direct consequence of (3.2). Now, we also decompose \(P\in {\text {C}}_{c}^{\infty }(\mathbb R^{n};\mathbb R^{m\times n})\) into its divergence-free part \(P_{{\text {Div}}}\) and its curl-free part \(P_{{\text {Curl}}}\); for the following, it is important to note that these matrix differential operators act row-wise. Thus we may write \(P_{{\text {Curl}}} = {\text {D}}\!u\) for some \(u:\mathbb R^n\rightarrow \mathbb R^m\). Then by Lemma 3.6 we have

The remaining proofs then follow after establishing

To this end, we consider the rows of our incompatible field \(P=(P^1,\ldots ,P^m)^{\top }\). Using Lemma 3.4 with \(s=1\) yields

Combining (5.2) and (5.3) then yields Theorem 2.1 for \(1<p<n\). If \(n\ge 3\) and \(p=1\), estimate (5.3) is now a consequence of Lemma 4.5(b). Indeed, using (4.19), we have

where in the penultimate step we added \(P^j_{{\text {curl}}}\) and used the fact that \({\text {curl}}P^j_{{\text {curl}}}=0\). Summarising, the proof of Theorem 2.1 is now complete.
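The identity \(|\llbracket b\rrbracket _{\times _{n}}|=\sqrt{n-1}\,|b|\) used above and the area property invoked in Example 5.1 below, read here as Lagrange's identity \(|a\times _{n}b|^{2}=|a|^{2}|b|^{2}-\langle a,b\rangle ^{2}\), are easy to test once a concrete model of the generalised cross product is fixed. Since (3.2) is not reproduced here, the following Python sketch assumes the model \(a\times _{n}b=(a_{i}b_{j}-a_{j}b_{i})_{1\le i<j\le n}\) together with the matrix \(\llbracket a\rrbracket _{\times _{n}}\) satisfying \(\llbracket a\rrbracket _{\times _{n}}b=a\times _{n}b\); a different ordering or sign convention of the components, as may be used in (3.2) and [41], changes neither identity. The helper names are hypothetical.

```python
import itertools
import random

def cross_matrix(a):
    """Model of [[a]]_{x n}: one row per pair i < j, so that applying it
    to b gives the entries a_i b_j - a_j b_i."""
    n = len(a)
    rows = []
    for i, j in itertools.combinations(range(n), 2):
        row = [0] * n
        row[j] = a[i]
        row[i] = -a[j]
        rows.append(row)
    return rows

def gen_cross(a, b):
    """Generalised cross product a x_n b as a vector of length n(n-1)/2."""
    return [sum(rk * bk for rk, bk in zip(row, b)) for row in cross_matrix(a)]

def sq_norm(vec):
    return sum(x * x for x in vec)

def frob_sq(M):
    """Squared Frobenius norm of a matrix given as a list of rows."""
    return sum(sq_norm(row) for row in M)

rng = random.Random(0)
for n in range(2, 7):
    a = [rng.randint(-9, 9) for _ in range(n)]
    b = [rng.randint(-9, 9) for _ in range(n)]
    dot = sum(x * y for x, y in zip(a, b))
    # |[[a]]|^2 = (n-1)|a|^2: each a_k appears in exactly n-1 rows
    assert frob_sq(cross_matrix(a)) == (n - 1) * sq_norm(a)
    # Lagrange's identity: |a x_n b|^2 = |a|^2 |b|^2 - <a, b>^2
    assert sq_norm(gen_cross(a, b)) == sq_norm(a) * sq_norm(b) - dot**2
print("both identities hold for n = 2, ..., 6")
```

Integer inputs keep the comparisons exact, so the assertions test the identities rather than a floating-point approximation of them.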

Example 5.1

(Incompatible Maxwell/div-curl-inequalities) In the context of time-incremental infinitesimal elastoplasticity, the authors of [57] investigated the operator \({\mathscr {A}}:\mathbb R^{n\times n}\rightarrow \mathbb R^{n\times n}\) given by:

In \(n=1\) dimensions this operator clearly induces an elliptic differential operator. We show that the induced operator \({\mathbb {A}}\) is also elliptic in \(n\ge 2\) dimensions. To this end, consider for \(\xi \in \mathbb R^n\backslash \{0\}\):

Hence,

Since the generalised cross product and the skew-symmetric part of the dyadic product consist of the same entries, cf. [41, Eq. (3.9)], the first condition (5.8)\(_1\) is equivalent to

Furthermore, the generalised cross product satisfies the area property, cf. [41, Eq. (3.13)], so that

meaning that \({\mathscr {A}}\) induces an elliptic operator \({\mathbb {A}}\). But since \(\dim {\mathscr {A}}[\mathbb R^{2\times 2}]=2\) we infer from Lemma 4.2 that \({\mathbb {A}}\) is not \({\mathbb {C}}\)-elliptic in \(n=2\) dimensions. Hence, our Theorem 2.1 and (anticipating the proof from Sect. 6 below) Theorem 2.4 are applicable to this \({\mathscr {A}}\):

provided \(( n\ge 3, 1\le p<n )\) or \(( n=2, 1< p<2 )\). Inserting \(P={\text {D}}\!u\) and using that \(\text {skew}{\text {D}}\!u\) and the generalised \({\text {curl}}u\) consist of the same entries, (5.11) generalises the usual \({\text {div}}\)-\({\text {curl}}\)-inequality to incompatible fields. If \(n=2\) and \(p=1\), inequality (5.11) fails since \({\mathbb {A}}\) fails to be \({\mathbb {C}}\)-elliptic. In fact, the induced elliptic operator \({\mathbb {A}}\) is not cancelling in any dimension \(n\in \mathbb N\) since:

We conclude this section by addressing inequalities that (i) involve other function space scales and (ii) also deal with the case \(p\ge n\) left out so far. As such, we provide three exemplary results on the validity of inequalities

for \(n\ge 2\) that can be approached analogously to (5.1)–(5.4) by combining the Helmholtz decomposition with suitable versions of the fractional integration theorem. To state these results, we require some notions from the theory of weighted Lebesgue and Orlicz spaces; to keep the paper self-contained, the underlying background terminology is collected in Appendix A.3. For the following, let \({\mathbb {A}}u:={\mathscr {A}}[{\text {D}}\!u]\) be elliptic.

-

Weighted Lebesgue spaces. Let \(n\ge 2\), let w be a weight that belongs to the Muckenhoupt class \(A_{1}\) on \(\mathbb R^{n}\) (see e.g. [49]) and denote by \({\text {L}}^{p}(\mathbb R^{n};w)\) the corresponding weighted Lebesgue space. Given \(1<p<n\), the Korn-type inequality \(\left\Vert {\text {D}}\!u\right\Vert _{{\text {L}}^{p^{*}}(\mathbb R^{n},w)}\le c\,\left\Vert {\mathbb {A}}u\right\Vert _{{\text {L}}^{p^{*}}(\mathbb R^{n},w)}\) for \(u\in {\text {C}}_{c}^{\infty }(\mathbb R^{n};\mathbb R^{n})\) (see e.g. [36, 37] or [16, Cor. 4.2]) and the boundedness of \({\mathcal {I}}_{1}:{\text {L}}^{p}(\mathbb R^{n};w^{p/p^{*}})\rightarrow {\text {L}}^{p^{*}}(\mathbb R^{n};w)\) (see [50, Theorem 4]) combine to give (5.13) with \(X(\mathbb R^{n})={\text {L}}^{p^{*}}(\mathbb R^{n},w)\) and \(Y(\mathbb R^{n})={\text {L}}^{p}(\mathbb R^{n};w^{p/p^{*}})\).

-

Orlicz spaces. Let \(A,B:[0,\infty )\rightarrow [0,\infty )\) be two Young functions such that B is of class \(\Delta _{2}\) and \(\nabla _{2}\). Adopting the notation from (A.14) and (A.15), let us further suppose that A dominates \(B_{(n)}\) globally and that \(A^{(n)}\) dominates B globally, together with

$$\begin{aligned} \int _{0}\frac{B(t)}{t^{1+\frac{n}{n-1}}}{\text {d}}\!t<\infty \;\;\;\text {and}\;\;\;\int _{0}\frac{{\widetilde{A}}(t)}{t^{1+\frac{n}{n-1}}}{\text {d}}\!t<\infty . \end{aligned}\tag{5.14}$$

Under these assumptions, Cianchi [11, Theorem 2(ii)] showed that \({\mathcal {I}}_{1}:{\text {L}}^{A}(\mathbb R^{n})\rightarrow {\text {L}}^{B}(\mathbb R^{n})\) is a bounded linear operator. In conclusion, if A, B are as above, we may then invoke the Korn-type inequality [16, Proposition 4.1] (or [6] in the particular case of the symmetric gradient) to deduce (5.13) with \(X={\text {L}}^{B}(\mathbb R^{n})\) and \(Y={\text {L}}^{A}(\mathbb R^{n})\).

-

\({\text {BMO}}\) and homogeneous Hölder spaces for \(p\ge n\). If \(p= n\) and \(Y(\mathbb R^{n})={\text {L}}^{n}(\mathbb R^{n})\), scaling suggests \(X={\text {L}}^{\infty }(\mathbb R^{n})\), but for this choice inequality (1.4) is readily seen to be false for general elliptic operators \({\mathbb {A}}\) by virtue of Ornstein’s Non-Inequality on \({\text {L}}^{\infty }\) [62]. Let \(\left\Vert \cdot \right\Vert _{{\text {BMO}}(\mathbb R^{n})}\) denote the \({\text {BMO}}\)-seminorm. We may use the fact that the Korn-type inequality from Lemma 3.6 also holds in the form \(\left\Vert {\text {D}}\!u\right\Vert _{{\text {BMO}}(\mathbb R^{n})}\le c\,\left\Vert {\mathbb {A}}u\right\Vert _{{\text {BMO}}(\mathbb R^{n})}\); this follows by realising that the map \({\mathbb {A}}u\mapsto {\text {D}}\!u\) is a multiple of the identity plus a Calderón–Zygmund operator of convolution type. As observed by Spector and the first author in the three-dimensional case [32, Sect. 2.2], and since \({\text {Curl}}\) as defined in Sect. 3.1 acts row-wise, we may combine this with the boundedness of \({\mathcal {I}}_{1}:{\text {L}}^{n}(\mathbb R^{n})\rightarrow {\text {BMO}}(\mathbb R^{n})\). This gives us (5.13) with \(X(\mathbb R^{n})={\text {BMO}}(\mathbb R^{n})\) and \(Y(\mathbb R^{n})={\text {L}}^{n}(\mathbb R^{n})\). If \(n<p<\infty \), one may argue similarly to obtain the Korn-type inequality with \(\left\Vert \cdot \right\Vert _{X(\mathbb R^{n})}\) being the homogeneous \(\alpha \)-Hölder seminorm, \(\alpha =1-\frac{n}{p}\). Combining this with the boundedness of \({\mathcal {I}}_{1}:{\text {L}}^{p}(\mathbb R^{n})\rightarrow {\dot{{\text {C}}}}{^{0,1-n/p}}(\mathbb R^{n})\) yields (5.13) with \(X(\mathbb R^{n})={\dot{{\text {C}}}}{^{0,1-n/p}}(\mathbb R^{n})\) and \(Y(\mathbb R^{n})={\text {L}}^{p}(\mathbb R^{n})\).
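The target spaces of \({\mathcal {I}}_{1}\) in the unweighted cases above follow a single scaling rule. With \(s:=\frac{n}{p}-1\), the Riesz potential \({\mathcal {I}}_{1}\) maps \({\text {L}}^{p}(\mathbb R^{n})\) into \({\text {L}}^{n/s}(\mathbb R^{n})={\text {L}}^{p^{*}}(\mathbb R^{n})\) if \(s>0\), into \({\text {BMO}}(\mathbb R^{n})\) if \(s=0\), and into \({\dot{{\text {C}}}}{^{0,-s}}(\mathbb R^{n})\) with \(-s=1-\frac{n}{p}\) if \(s<0\). The following Python sketch, whose function name is our own, merely encodes this exponent bookkeeping in exact arithmetic; it says nothing about the constants involved.

```python
from fractions import Fraction

def riesz_target(p, n):
    """Target space of I_1 on L^p(R^n), 1 < p < infinity, as (name, exponent)."""
    p, n = Fraction(p), Fraction(n)
    if p <= 1 or n < 1:
        raise ValueError("expects 1 < p < infinity and n >= 1")
    s = n / p - 1                      # scaling exponent
    if s > 0:
        return ("L", n / s)            # n/s = np/(n-p) = p*
    if s == 0:
        return ("BMO", None)
    return ("C^{0,alpha}", -s)         # alpha = 1 - n/p

print(riesz_target(Fraction(3, 2), 2))   # ('L', Fraction(6, 1))
print(riesz_target(2, 2))                # ('BMO', None)
print(riesz_target(4, 2))                # ('C^{0,alpha}', Fraction(1, 2))
```

In the first case the returned exponent satisfies \(\frac{1}{p^{*}}=\frac{1}{p}-\frac{1}{n}\), the usual Sobolev relation.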

Remark 5.2

(\(n=1\)) We now briefly comment on the case \(n=1\), which has been left out so far. In this case, the only linear maps \({\mathscr {A}}:\mathbb R\rightarrow \mathbb R\) that induce elliptic operators are the non-zero multiples of the identity. Since every \(\varphi \in {\text {C}}_{c}^{\infty }(\mathbb R)\) is a gradient, the corresponding KMS-inequalities read \(\left\Vert P\right\Vert _{{\text {L}}^{\infty }(\mathbb R)}\le c\left\Vert {\mathscr {A}}[P]\right\Vert _{{\text {L}}^{\infty }(\mathbb R)}\) for \(P\in {\text {C}}_{c}^{\infty }(\mathbb R)\) and hold subject to the ellipticity assumption on \({\mathscr {A}}\); if \({\mathscr {A}}=a\,{\text {Id}}\) with \(a\ne 0\), one may take \(c=|a|^{-1}\).

These results only display a selection; other scales, e.g. smoothness spaces à la Triebel–Lizorkin [69], seem equally natural but are beyond the scope of this paper. Returning to the above examples, we single out the following

Open Question 5.3

(On weighted Lebesgue and Orlicz spaces)

(a) If one aims to generalise the above result for weighted Lebesgue spaces to the case \(p=1\) in \(n\ge 3\) dimensions, a suitable weighted version of Lemma 4.5(b) is required. To the best of our knowledge, the only available weighted Bourgain–Brezis estimates are due to Loulit [47], but they require assumptions on the weights other than membership in \(A_{1}\). In this sense, it would be interesting to know whether (5.13) extends to \(X(\mathbb R^{n})={\text {L}}^{\frac{n}{n-1}}(\mathbb R^{n};w)\) and \(Y(\mathbb R^{n})={\text {L}}^{1}(\mathbb R^{n};w^{\frac{n-1}{n}})\) for \(w\in A_{1}\).

(b) The reader will notice that a slightly weaker bound can be obtained in the above Orlicz scenario provided one keeps the \(\Delta _{2}\)- and \(\nabla _{2}\)-assumptions on B but weakens the assumptions on A. By Cianchi [11, Theorem 2(i)], the Riesz potential \({\mathcal {I}}_{1}\) maps \({\text {L}}^{A}(\mathbb R^{n})\rightarrow {\text {L}}_{\text {w}}^{B}(\mathbb R^{n})\) boundedly (with the weak Orlicz space \({\text {L}}_{\text {w}}^{B}(\mathbb R^{n})\)) precisely if \((5.14)_{2}\) holds and \(A^{(n)}\) dominates B globally. For \(A(t)=t\) and hence

$$\begin{aligned} {\widetilde{A}}(t)={\left\{ \begin{array}{ll} 0&{}\;\text {if}\;0\le t\le 1,\\ \infty &{}\;\text {otherwise}, \end{array}\right. } \end{aligned}$$

together with the \(\Delta _{2}\cap \nabla _{2}\)-function \(B(t)=t^{\frac{n}{n-1}}\), this gives us the well-known boundedness of \({\mathcal {I}}_{1}:{\text {L}}^{1}(\mathbb R^{n})\rightarrow {\text {L}}_{\text {w}}^{\frac{n}{n-1}}(\mathbb R^{n})\). Just as this boundedness property can be improved on divergence-free maps to yield estimate (4.19), it would be interesting to know whether estimates of the form \(\left\Vert u\right\Vert _{{\text {L}}^{B}(\mathbb R^{n})}\le c \left\Vert {\text {curl}}u\right\Vert _{{\text {L}}^{A}(\mathbb R^{n})}\) hold for \(u\in {\text {C}}_{c,{\text {div}}}^{\infty }(\mathbb R^{n};\mathbb R^{n})\) if \(n\ge 3\), provided \((5.14)_{2}\) holds (which is the case e.g. if A has almost linear growth) and \(A^{(n)}\) dominates the \(\Delta _{2}\cap \nabla _{2}\)-function B. This would imply (5.13) under slightly weaker conditions on A, B than displayed above.
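For the reader's convenience, here is the computation behind the formula for \({\widetilde{A}}\) in (b). It uses the standard definition of the complementary Young function as a Legendre transform, which is assumed here since the definition is not restated in this section:

$$\begin{aligned} {\widetilde{A}}(t)=\sup _{s\ge 0}\big (st-A(s)\big )=\sup _{s\ge 0}s\,(t-1)={\left\{ \begin{array}{ll} 0&{}\;\text {if}\;0\le t\le 1,\\ \infty &{}\;\text {if}\;t>1. \end{array}\right. } \end{aligned}$$

In particular, \({\widetilde{A}}\) vanishes on \([0,1]\), so \((5.14)_{2}\) holds trivially for \(A(t)=t\). By contrast, \((5.14)_{1}\) fails for \(B(t)=t^{\frac{n}{n-1}}\), because then \(B(t)/t^{1+\frac{n}{n-1}}=t^{-1}\) is not integrable near 0; this is consistent with only a weak-type bound being available in this example.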

6 Subcritical KMS-inequalities

We conclude the paper by giving the quick proof of Theorem 2.4. As to Theorem 2.4(a), let \(q=1\), let \(\varphi \in {\text {C}}_{c}^{\infty }(\mathbb R^{n};\mathbb R^{m})\) be arbitrary and choose \(r>0\) so large that \({\text {spt}}(\varphi )\subset {\text {B}}_{r}(0)\). Since \(c>0\) as in (2.5) is independent of \(r>0\), we may apply this inequality to \(P={\text {D}}\!\varphi \). Sending \(r\rightarrow \infty \), we obtain \(\left\Vert {\text {D}}\!\varphi \right\Vert _{{\text {L}}^{1}(\mathbb R^{n})}\le c\left\Vert {\mathbb {A}}\varphi \right\Vert _{{\text {L}}^{1}(\mathbb R^{n})}\) for all \(\varphi \in {\text {C}}_{c}^{\infty }(\mathbb R^{n};\mathbb R^{m})\). By the sharp version of Ornstein’s Non-Inequality as given by Kirchheim and Kristensen [38, Theorem 1.3] (also see [13, 22]), the existence of a linear map \({L}\in {\mathscr {L}}(\mathbb R^{N\times m};\mathbb R^{N\times m})\) with \({\mathbb {E}}_{i}={L}\circ \,{\mathbb {A}}_{i}\) for all \(1\le i\le n\) follows at once. If \(1<q<n\), we may similarly reduce to the situation on the entire \(\mathbb R^{n}\) and use the argument given at the beginning of Sect. 4.3 to find that ellipticity of \({\mathbb {A}}u{:=}{\mathscr {A}}[{\text {D}}\!u]\) is necessary for (2.5) to hold.

For the sufficiency parts of Theorem 2.4(a) and (b), we note that by scaling it suffices to consider the case \(r=1\). Given \(P\in {\text {C}}_{c}^{\infty }({\text {B}}_{1}(0);\mathbb R^{m\times n})\), we argue as in Sect. 5 and Helmholtz decompose \(P=P_{{\text {Curl}}}+P_{{\text {Div}}}\). Writing \(P_{{\text {Curl}}}={\text {D}}\!u\), we have \(\left\Vert P_{{\text {Curl}}}\right\Vert _{{\text {L}}^{q}({\text {B}}_{1}(0))}\le c\,(\left\Vert {\mathscr {A}}[P]\right\Vert _{{\text {L}}^{q}({\text {B}}_{1}(0))}+\left\Vert P_{{\text {Div}}}\right\Vert _{{\text {L}}^{q}({\text {B}}_{1}(0))})\); for \(q=1\) this is a trivial consequence of \({\mathbb {E}}_{i}={L}\circ \,{\mathbb {A}}_{i}\) for all \(1\le i\le n\), whereas for \(q>1\) this follows as in Sect. 5, cf. (5.2). Finally, the part \(P_{{\text {Div}}}\) is treated by use of the subcritical fractional integration theorem, cf. Lemma 3.4(b). This completes the proof of Theorem 2.4.
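The row-wise Helmholtz step can be illustrated numerically. The following Python/NumPy sketch is not the construction used in Sect. 5: it assumes periodic boundary conditions on an \(N^{n}\) grid of \([0,2\pi )^{n}\) (a torus) in place of \(\mathbb R^{n}\) and compactly supported P, and the helpers `wavevectors` and `helmholtz_rowwise` are hypothetical names introduced only for this illustration. Each row of P is split by Fourier projection into a gradient part (the analogue of \(P_{{\text {Curl}}}={\text {D}}\!u\)) and a divergence-free part (the analogue of \(P_{{\text {Div}}}\)).

```python
import numpy as np


def wavevectors(n, N):
    """Integer frequency vectors on an n-dimensional periodic grid with N points per axis.

    Returns an array of shape (n, N, ..., N); entry [j] holds the j-th frequency component.
    """
    k = np.fft.fftfreq(N, d=1.0 / N)  # integer frequencies in FFT order
    return np.stack(np.meshgrid(*([k] * n), indexing="ij"))


def helmholtz_rowwise(P):
    """Hypothetical helper: row-wise Helmholtz split P = P_curl + P_div on the torus.

    P has shape (m, n, N, ..., N): m rows, each an R^n-valued field sampled on an
    N^n periodic grid. Row i of P_curl is the Fourier projection
    xi (xi . P_hat[i]) / |xi|^2, i.e. a gradient field; P_div = P - P_curl has
    divergence-free rows. The constant mode xi = 0 is kept in P_div.
    """
    n = P.shape[1]
    N = P.shape[2]
    if P.ndim != 2 + n or N % 2 == 0:
        # odd N avoids the unpaired Nyquist mode, so the projection of a real field stays real
        raise ValueError("expected shape (m, n, N, ..., N) with odd N")
    axes = tuple(range(2, 2 + n))
    xi = wavevectors(n, N)
    xi2 = np.sum(xi**2, axis=0)  # |xi|^2 on the grid
    P_hat = np.fft.fftn(P, axes=axes)
    # (xi . P_hat[i]) / |xi|^2 for each row i, set to zero at xi = 0
    coeff = np.einsum("j...,ij...->i...", xi, P_hat) / np.where(xi2 == 0, 1.0, xi2)
    coeff = np.where(xi2 == 0, 0.0, coeff)
    P_curl = np.fft.ifftn(xi[None] * coeff[:, None], axes=axes).real
    return P_curl, P - P_curl
```

Applied to a spectrally generated gradient field, the sketch returns that field as `P_curl` and a vanishing `P_div` up to round-off, mirroring \(P_{{\text {Curl}}}={\text {D}}\!u\) above; the periodic setting is chosen only because the projection is then an explicit Fourier multiplier.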

Remark 6.1

(Variants and generalisations of Theorem 2.4) If \(n=2\), Korn’s inequality in the form of Theorem 4.8 (under the same assumptions on \(\Omega \subset \mathbb R^{2}\))

persists for all \(1< p<2\) and all \(1< q\le p^{*}=\frac{2p}{2-p}\). One starts from (4.45) and estimates

by Hölder’s inequality and \(\left\Vert \varphi \right\Vert _{{\text {L}}^{p'}(\Omega )} \le c\left\Vert {\text {D}}\!\varphi \right\Vert _{{\text {L}}^{q'}(\Omega )}\) for \(\varphi \in {\text {W}}_{0}^{1,q'}(\Omega ;\mathbb R^{2})\). If \(q<2\) and thus \(q'>2\), this follows from Morrey’s embedding theorem; if \(q=2\), it follows from the estimate \(\left\Vert \varphi \right\Vert _{{\text {L}}^{s}(\Omega )}\le c\left\Vert {\text {D}}\!\varphi \right\Vert _{{\text {L}}^{2}(\Omega )}\), which is valid for all \(1<s<\infty \). If \(2<q\le \frac{2p}{2-p}\), we require \(p'\le (q')^{*}\), and this is equivalent to \(q\le \frac{2p}{2-p}\).
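The equivalence used in the last step can be checked directly. For \(2<q<\infty \) one has \(1<q'<2\), so the two-dimensional Sobolev exponent of \(q'\) is finite, and

$$\begin{aligned} (q')^{*}=\frac{2q'}{2-q'}=\frac{2q}{q-2},\qquad p'\le \frac{2q}{q-2}\;&\Longleftrightarrow \;1-\frac{1}{p}\ge \frac{1}{2}-\frac{1}{q}\\ &\Longleftrightarrow \;\frac{1}{q}\ge \frac{1}{p}-\frac{1}{2}=\frac{2-p}{2p}\;\Longleftrightarrow \;q\le \frac{2p}{2-p}. \end{aligned}$$

In particular, the endpoint \(q=p^{*}=\frac{2p}{2-p}\) corresponds to \(p'=(q')^{*}\), so the Sobolev embedding \({\text {W}}_{0}^{1,q'}(\Omega )\hookrightarrow {\text {L}}^{(q')^{*}}(\Omega )\) is then used exactly at its critical exponent.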

We conclude with a link between the Orlicz scenario from Sect. 5 and Theorem 2.4:

Remark 6.2

As discussed by Cianchi et al. [5, 10] for the (trace-free) symmetric gradient, if the Young function B is not of class \(\Delta _{2}\cap \nabla _{2}\), then Korn-type inequalities persist with a certain loss of integrability on the left-hand side. For instance, by Cianchi [10, Ex. 3.8] one has for \(\alpha \ge 0\) the inequality

with the Zygmund classes \(\text {L}\log ^{\alpha }{\text {L}}\). Inspecting the method of proof, one sees that the underlying inequalities equally apply to general elliptic operators. One then obtains e.g. the refined subcritical KMS-type inequality

for all \(P\in {\text {C}}_{c}^{\infty }({\text {B}}_{1}(0);\mathbb R^{n\times n})\), where \(\alpha \ge 0\), \(p\ge 1\) and \(c=c(p,\alpha ,n,{\mathscr {A}})>0\) is a constant. The reader will notice that this inequality holds if and only if \({\mathscr {A}}\) induces an elliptic operator \({\mathbb {A}}\). Note that for \(\alpha =0\), the additional logarithm on the right-hand side of (6.3) is the key ingredient for (6.3) to hold for such \({\mathscr {A}}\); without it, one is directly in the situation of Theorem 2.4(a), which not only forces \({\mathbb {A}}u={\mathscr {A}}[{\text {D}}\!u]\) to be elliptic but even trivial, in the sense that \({\mathbb {E}}_{i}={L}\circ \,{\mathbb {A}}_{i}\) for all \(1\le i\le n\) and some linear map L.

Data Availability

Data sharing not applicable to this article as no datasets were generated or analysed.

Notes

\({\text {dev}}X{:=}X-\frac{{{\,\textrm{tr}\,}}X}{n}\cdot \mathbbm {1}_{n}\) denotes the deviatoric (trace-free) part of an \(n\times n\) matrix X.

Note that T is not compactly supported (and neither is v), but \(v\in {\dot{{\text {W}}}}{^{1,2}}(\mathbb R^{2};\mathbb R^{2})\) and then the Korn-type inequality underlying the first inequality in (4.30) follows by smooth approximation, directly using the definition of \({\dot{{\text {W}}}}{^{1,2}}(\mathbb R^{2})\).

References

Adams, D.R., Hedberg, L.: Function Spaces and Potential Theory. Grundlehren der mathematischen Wissenschaften (A Series of Comprehensive Studies in Mathematics), vol. 314. Springer, Berlin-Heidelberg-New York (1996). https://doi.org/10.1007/978-3-662-03282-4

Bauer, S., Neff, P., Pauly, D., Starke, G.: Dev-Div- and DevSym-DevCurl-inequalities for incompatible square tensor fields with mixed boundary conditions. ESAIM Control Optim. Calc. Var. 22(1), 112–133 (2016). https://doi.org/10.1051/cocv/2014068

Bourgain, J., Brezis, H.: New estimates for elliptic equations and Hodge type systems. J. Eur. Math. Soc. (JEMS) 9(2), 277–315 (2007). https://doi.org/10.4171/JEMS/80

Breit, D.: Existence Theory for Generalized Newtonian Fluids. Mathematics in Science and Engineering. Academic Press, Elsevier (2017). https://www.sciencedirect.com/book/9780128110447/existence-theory-for-generalized-newtonian-fluids

Breit, D., Cianchi, A., Diening, L.: Trace-free Korn inequalities in Orlicz spaces. SIAM J. Math. Anal. 49(4), 2496–2526 (2017). https://doi.org/10.1137/16M1073662

Breit, D., Diening, L.: Sharp conditions for Korn inequalities in Orlicz spaces. J. Math. Fluid Mech. 14, 565–573 (2012). https://doi.org/10.1007/s00021-011-0082-x

Breit, D., Diening, L., Gmeineder, F.: On the trace operator for functions of bounded \({\mathbb{A} }\)-variation. Anal. PDE 13(2), 559–594 (2020). https://doi.org/10.2140/apde.2020.13.559

Brezis, H., Van Schaftingen, J.: Boundary estimates for elliptic systems with \({{\rm L}}^{1}\)-data. Calc. Var. PDE 30, 369–388 (2007). https://doi.org/10.1007/s00526-007-0094-9

Calderón, A.P., Zygmund, A.: On singular integrals. Am. J. Math. 78, 289–309 (1956). https://doi.org/10.2307/2372517

Cianchi, A.: Korn type inequalities in Orlicz spaces. J. Funct. Anal. 267(7), 2313–2352 (2014). https://doi.org/10.1016/j.jfa.2014.07.012

Cianchi, A.: Strong and weak type inequalities for some classical operators in Orlicz spaces. J. Lond. Math. Soc. (2) 60(1), 187–202 (1999). https://doi.org/10.1112/S0024610799007711

Ciarlet, P.G.: Linear and Nonlinear Functional Analysis with Applications. Society for Industrial and Applied Mathematics, Philadelphia (2013)

Conti, S., Faraco, D., Maggi, F.: A new approach to counterexamples to \({{\rm L}}^{1}\) estimates: Korn’s inequality, geometric rigidity, and regularity for gradients of separately convex functions. Arch. Ration. Mech. Anal. 175(2), 287–300 (2005). https://doi.org/10.1007/s00205-004-0350-5

Conti, S., Focardi, M., Iurlano, F.: A note on the Hausdorff dimension of the singular set of solutions to elasticity type systems. Commun. Contemp. Math. 21(06), 1950026 (2019). https://doi.org/10.1142/S0219199719500263

Conti, S., Garroni, A.: Sharp rigidity estimates for incompatible fields as consequence of the Bourgain Brezis div-curl result. C. R. Math. Acad. Sci. Paris 359(2), 155–160 (2021). https://doi.org/10.5802/crmath.161

Conti, S., Gmeineder, F.: \({\mathscr {A}}\)-quasiconvexity and partial regularity. Calc. Var. PDE 61, 215 (2022). https://doi.org/10.1007/s00526-022-02326-0

Dain, S.: Generalized Korn’s inequality and conformal Killing vectors. Calc. Var. PDE 25(4), 535–540 (2006). https://doi.org/10.1007/s00526-005-0371-4

DiBenedetto, E.: Partial Differential Equations, 2nd edn. Birkhäuser, Boston (2010). https://doi.org/10.1007/978-0-8176-4552-6

Diening, L., Gmeineder, F.: Sharp trace and Korn inequalities for differential operators. arXiv:2105.09570

Duoandikoetxea, J.: Forty Years of Muckenhoupt Weights. In: Lukeš, J., Pick, L. (eds.) Function Spaces and Inequalities. Lecture Notes Paseky nad Jizerou, pp. 23–75. Matfyzpress, Prague (2013)

Ebobisse, F., Hackl, K., Neff, P.: A canonical rate-independent model of geometrically linear isotropic gradient plasticity with isotropic hardening and plastic spin accounting for the Burgers vector. Contin. Mech. Thermodyn. 31(5), 1477–1502 (2019). https://doi.org/10.1007/s00161-019-00755-5

Faraco, D., Guerra, A.: Remarks on Ornstein’s non-inequality in \({\mathbb{R} }^{2\times 2}\). Q. J. Math. 73(1), 17–21 (2022). https://doi.org/10.1093/qmath/haab016

Feireisl, E.: Dynamics of Viscous Compressible Fluids. Oxford Lecture Series in Mathematics and its Applications, vol. 36. Oxford University Press, Oxford (2004). https://doi.org/10.1093/acprof:oso/9780198528388.001.0001

Fonseca, I., Müller, S.: \({\cal{A} }\)-quasiconvexity, lower semicontinuity, and Young measures. SIAM J. Math. Anal. 30(6), 1355–1390 (1999). https://doi.org/10.1137/S0036141098339885

Friedrichs, K.O.: On the boundary value problems of the theory of elasticity and Korn’s inequality. Ann. Math. 48(2), 441–471 (1947). https://doi.org/10.2307/1969180