Abstract

This paper deals with the large-scale behaviour of dynamical optimal transport on \(\mathbb {Z}^d\)-periodic graphs with general lower semicontinuous and convex energy densities. Our main contribution is a homogenisation result that describes the effective behaviour of the discrete problems in terms of a continuous optimal transport problem. The effective energy density can be explicitly expressed in terms of a cell formula, which is a finite-dimensional convex programming problem that depends non-trivially on the local geometry of the discrete graph and the discrete energy density. Our homogenisation result is derived from a \(\Gamma \)-convergence result for action functionals on curves of measures, which we prove under very mild growth conditions on the energy density. We investigate the cell formula in several cases of interest, including finite-volume discretisations of the Wasserstein distance, where non-trivial limiting behaviour occurs.

1 Introduction

In the past decades there has been intense research activity in the field of optimal transport, both in pure mathematics and in applied areas [35, 39, 41, 42]. In continuous settings, a central result in the field is the Benamou–Brenier formula [6], which establishes the equivalence of static and dynamical optimal transport. It asserts that the classical Monge–Kantorovich problem, in which a cost functional is minimised over couplings of given probability measures \(\mu _0\) and \(\mu _1\), is equivalent to a dynamical transport problem, in which an energy functional is minimised over all solutions to the continuity equation connecting \(\mu _0\) and \(\mu _1\).

In discrete settings, the equivalence between static and dynamical optimal transport breaks down, and it turns out that the dynamical formulation [11, 30, 32] is essential in applications to evolution equations, discrete Ricci curvature, and functional inequalities [15,16,17,18,19,20, 33]. Therefore, it is an important problem to analyse the discrete-to-continuum limit of dynamical optimal transport in various settings.

This limit passage turns out to be highly nontrivial. In fact, seemingly natural discretisations of the Benamou–Brenier formula do not necessarily converge to the expected limit, even in one-dimensional settings [25]. The main result in [26] asserts that, for a sequence of meshes on a bounded convex domain in \({\mathbb {R}}^d\), an isotropy condition on the meshes is required to obtain the convergence of the discrete dynamical transport distances to \({\mathbb {W}}_2\). This is in sharp contrast to the scaling behaviour of the corresponding gradient flow dynamics, for which no additional symmetry on the meshes is required to ensure the convergence of discretised evolution equations to the expected continuous limit [12, 21].

The goal of this paper is to investigate the large-scale behaviour of dynamical optimal transport on graphs with a \(\mathbb {Z}^d\)-periodic structure. Our main contribution is a homogenisation result that describes the effective behaviour of the discrete problems in terms of a continuous optimal transport problem, in which the effective energy density depends non-trivially on the geometry of the discrete graph and the discrete transport costs.

1.1 Main results

We give here an informal presentation of the main results of this paper, ignoring several technicalities for the sake of readability. Precise formulations and a more general setting can be found from Sect. 2 onwards.

1.1.1 Dynamical optimal transport in the continuous setting

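For \(1 \le p < \infty \), let \(\mathbb {W}_p\) be the Wasserstein–Kantorovich–Rubinstein distance between probability measures on a metric space \((X,\textsf{d})\): for \(\mu ^0, \mu ^1 \in \mathcal {P}(X)\),

$$\begin{aligned} \mathbb {W}_p(\mu ^0,\mu ^1) := \inf _{\gamma \in \Gamma (\mu ^0,\mu ^1)} \bigg ( \int _{X \times X} \textsf{d}(x,y)^p \, \textrm{d}\gamma (x,y) \bigg )^{1/p}, \end{aligned}$$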

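where \(\Gamma (\mu ^0,\mu ^1)\) denotes the set of couplings of \(\mu ^0\) and \(\mu ^1\), i.e., all measures \(\gamma \in \mathcal {P}(X \times X)\) with marginals \(\mu ^0\) and \(\mu ^1\). On the torus \(\mathbb {T}^d\) (or more generally, on Riemannian manifolds), the Benamou–Brenier formula [3, 6] provides an equivalent dynamical formulation for \(p > 1\), namely

$$\begin{aligned} \mathbb {W}_p^p(\mu ^0,\mu ^1) = \inf _{(\rho ,j)} \bigg \{ \int _0^1 \int _{\mathbb {T}^d} \frac{|j_t(x)|^p}{\rho _t(x)^{p-1}} \, \textrm{d}x \, \textrm{d}t \bigg \}, \end{aligned}$$ (1.1)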

where the infimum runs over all solutions \((\rho , j)\) to the continuity equation \(\partial _t \rho + \nabla \cdot j = 0\) with boundary conditions \(\rho _0(x) \, \textrm{d}x = \mu ^0(\textrm{d}x)\) and \(\rho _1(x) \, \textrm{d}x = \mu ^1(\textrm{d}x)\).

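In this paper we consider general lower semicontinuous and convex energy densities \(f: \mathbb {R}_+ \times \mathbb {R}^d \rightarrow \mathbb {R}\cup \{ + \infty \}\) under suitable (super-)linear growth conditions. (The Benamou–Brenier formula above corresponds to the special case \(f(\rho ,j) = \frac{|j|^p}{\rho ^{p-1}}\).) For sufficiently regular curves of measures \( {\varvec{\mu }}: (0,1) \rightarrow \mathcal {M}_+(\mathbb {T}^d) \), we consider the continuous action

$$\begin{aligned} \mathbb {A}({\varvec{\mu }}) := \inf _{{\varvec{\nu }}} \bigg \{ \int _0^1 \int _{\mathbb {T}^d} f\bigg ( \frac{\textrm{d}\mu _t}{\textrm{d}x}, \frac{\textrm{d}\nu _t}{\textrm{d}x} \bigg ) \, \textrm{d}x \, \textrm{d}t \bigg \}. \end{aligned}$$ (1.2)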

Here, the infimum runs over all time-dependent vector-valued measures \({\varvec{\nu }}: (0,1) \rightarrow \mathcal {M}^d(\mathbb {T}^d)\) satisfying the continuity equation \((\mathbb{C}\mathbb{E})\) \(\partial _t \mu _t + \nabla \cdot \nu _t = 0\) in the sense of distributions.
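As a worked illustration of the continuity equation and of the special case \(f(\rho ,j) = \frac{|j|^p}{\rho ^{p-1}}\), consider a smooth probability density transported at constant velocity. This is only a sketch: the velocity \(a \in \mathbb {R}^d\) and the smooth, strictly positive profile \(\rho _0\) on \(\mathbb {T}^d\) are illustrative assumptions. Setting

$$\begin{aligned} \rho _t(x) := \rho _0(x - ta), \qquad j_t(x) := a \, \rho _t(x), \end{aligned}$$

we find

$$\begin{aligned} \partial _t \rho _t(x) + \nabla \cdot j_t(x) = - a \cdot \nabla \rho _0(x - ta) + a \cdot \nabla \rho _0(x - ta) = 0, \end{aligned}$$

so that \((\rho , j)\) solves the continuity equation. Its energy is

$$\begin{aligned} \int _0^1 \int _{\mathbb {T}^d} \frac{|a \, \rho _t(x)|^p}{\rho _t(x)^{p-1}} \, \textrm{d}x \, \textrm{d}t = |a|^p \int _0^1 \int _{\mathbb {T}^d} \rho _t(x) \, \textrm{d}x \, \textrm{d}t = |a|^p . \end{aligned}$$

By the Benamou–Brenier formula, this gives the upper bound \(\mathbb {W}_p^p(\mu ^0,\mu ^1) \le |a|^p\) for \(\mu ^0 = \rho _0 \, \textrm{d}x\) and \(\mu ^1 = \rho _1 \, \textrm{d}x\). On the torus this is in general only an upper bound, since other admissible curves may be cheaper.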

1.1.2 Dynamical optimal transport in the discrete setting

A natural discrete counterpart to (1.2) can be defined on finite (undirected) graphs \((\mathcal {X}, \mathcal {E})\). For each edge \((x,y) \in \mathcal {E}\) we fix a lower semicontinuous and convex energy density \(F_{xy}: \mathbb {R}_+ \times \mathbb {R}_+ \times \mathbb {R}\rightarrow \mathbb {R}_+\). For sufficiently regular curves \({\pmb {m}}: (0,1) \rightarrow \mathcal {M}_+(\mathcal {X})\) we then consider the discrete action

$$\begin{aligned} \mathcal {A}({\pmb {m}}) := \inf _{\pmb {J}} \bigg \{ \int _0^1 \sum _{(x,y) \in \mathcal {E}} F_{xy}\big ( m_t(x), m_t(y), J_t(x,y) \big ) \, \textrm{d}t \bigg \}. \end{aligned}$$ (1.3)

Here, the infimum runs over all time-dependent “discrete vector fields”, i.e., all anti-symmetric functions \(\pmb {J}: (0,1) \rightarrow \mathbb {R}^\mathcal {E}\) satisfying the discrete continuity equation \((\mathcal{C}\mathcal{E})\) \(\partial _t m_t(x) + {{\,\mathrm{\text {\textsf{div}}}\,}}J_t(x) = 0 \) for all \(x \in \mathcal {X}\), where \({{\,\mathrm{\text {\textsf{div}}}\,}}J_t(x):= \sum _{y: (x,y) \in \mathcal {E}} J_t(x,y)\) denotes the discrete divergence. Variational problems of the form (1.3) arise naturally in the formulation of jump processes as generalised gradient flows [37].
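The discrete divergence, and the conservation of mass encoded in the discrete continuity equation, can be checked numerically. The following is a minimal sketch, assuming a hypothetical cycle graph on three nodes and an arbitrarily chosen anti-symmetric flux; the names `edges`, `J`, and `divergence` are illustrative and do not come from the paper.

```python
# Hypothetical example: a cycle graph on three nodes with a symmetric edge set.
nodes = [0, 1, 2]
edges = [(0, 1), (1, 0), (1, 2), (2, 1), (2, 0), (0, 2)]

# An anti-symmetric discrete vector field: J(x, y) = -J(y, x).
J = {(0, 1): 2.0, (1, 2): -0.5, (2, 0): 1.0}
J.update({(y, x): -v for (x, y), v in list(J.items())})


def divergence(J, x):
    """Discrete divergence: sum of J(x, y) over all edges (x, y) starting at x."""
    return sum(J[(a, b)] for (a, b) in edges if a == x)


div = {x: divergence(J, x) for x in nodes}

# One explicit Euler step of d/dt m(x) = -div J(x). The total mass is unchanged
# because anti-symmetry makes the divergences sum to zero over all nodes.
m = {0: 1.0, 1: 1.0, 2: 1.0}
dt = 0.1
m_next = {x: m[x] - dt * div[x] for x in nodes}
```

The Euler step is used only to illustrate mass conservation; it is not the variational problem itself, in which the flux is optimised.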

1.1.3 Dynamical optimal transport on \(\mathbb {Z}^d\)-periodic graphs

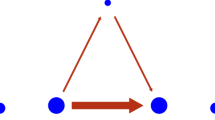

In this work we fix a \(\mathbb {Z}^d\)-periodic graph \((\mathcal {X},\mathcal {E})\) embedded in \(\mathbb {R}^d\), as in Fig. 1. For sufficiently small \(\varepsilon > 0\) with \(1/\varepsilon \in \mathbb {N}\), we then consider the finite graph \((\mathcal {X}_\varepsilon , \mathcal {E}_\varepsilon )\) obtained by scaling \((\mathcal {X}, \mathcal {E})\) by a factor \(\varepsilon \), and wrapping the resulting graph around the torus, so that the resulting graph is embedded in \(\mathbb {T}^d\). We are interested in the behaviour of the rescaled discrete action, defined for curves \({\pmb {m}}: (0,1) \rightarrow \mathcal {M}_+(\mathcal {X}_\varepsilon )\) by

$$\begin{aligned} \mathcal {A}_\varepsilon ({\pmb {m}}) := \inf _{\pmb {J}} \bigg \{ \int _0^1 \sum _{(x,y) \in \mathcal {E}_\varepsilon } \varepsilon ^d F_{xy} \bigg ( \frac{m_t(x)}{\varepsilon ^d}, \frac{m_t(y)}{\varepsilon ^d}, \frac{J_t(x,y)}{\varepsilon ^{d-1}} \bigg ) \, \textrm{d}t \bigg \}. \end{aligned}$$ (1.4)

As above, the infimum runs over all time-dependent “discrete vector fields” \(\pmb {J}: (0,1) \rightarrow \mathbb {R}^{\mathcal {E}_\varepsilon }\) satisfying the discrete continuity equation \((\mathcal{C}\mathcal{E}_\varepsilon )\) on the rescaled graph \((\mathcal {X}_\varepsilon , \mathcal {E}_\varepsilon )\).

1.1.4 Convergence of the action

One of our main results (Theorem 5.1) asserts that, as \(\varepsilon \rightarrow 0\), the action functionals \(\mathcal {A}_\varepsilon \) converge to a limiting functional \(\mathbb {A}= \mathbb {A}_\textrm{hom}\) of the form (1.2), with an effective energy density \(f = f_\textrm{hom}\) which depends non-trivially on the geometry of the graph \((\mathcal {X},\mathcal {E})\) and the discrete energy densities \(F_{xy}\). We only require a very mild linear growth condition on the energy densities \(F_{xy}\):

As \(\varepsilon \rightarrow 0\), the functionals \(\mathcal {A}_\varepsilon \) \(\Gamma \)-converge to \(\mathbb {A}_{\textrm{hom}}\) in the weak (and vague) topology of \(\mathcal {M}_+\big ((0,1) \times \mathbb {T}^d\big )\).

The precise formulation of this result involves an extension of \(\mathbb {A}_\textrm{hom}\) to measures on \((0,1) \times \mathbb {T}^d\); see Sect. 3 below.

Let us now explain the form of the effective energy density \(f_\textrm{hom}\), which is given by a cell formula. For given \(\rho \ge 0\) and \(j \in \mathbb {R}^d\), \(f_\textrm{hom}(\rho , j)\) is obtained by minimising the discrete energy per unit cube among all periodic mass distributions \(m: \mathcal {X}\rightarrow \mathbb {R}_+\) representing \(\rho \), and all periodic divergence-free discrete vector fields \(J: \mathcal {E}\rightarrow \mathbb {R}\) representing \(j\) in the following sense. Set \(\mathcal {X}^Q:= \mathcal {X}\cap [0,1)^d\) and \(\mathcal {E}^Q:= \big \{ (x,y) \in \mathcal {E}\ : \ x \in \mathcal {X}^Q \big \}\). Then \(f_\textrm{hom}:\mathbb {R}_+ \times \mathbb {R}^d \rightarrow \mathbb {R}_+\) is given by

$$\begin{aligned} f_\textrm{hom}(\rho ,j) := \inf _{m,J} \bigg \{ \sum _{(x,y) \in \mathcal {E}^Q} F_{xy}\big ( m(x), m(y), J(x,y) \big ) \ : \ (m,J) \in {{\,\mathrm{{\textsf{Rep}}}\,}}(\rho ,j) \bigg \}, \end{aligned}$$ (1.5)

where the set of representatives \({{\,\mathrm{{\textsf{Rep}}}\,}}(\rho ,j)\) consists of all \(\mathbb {Z}^d\)-periodic functions \(m: \mathcal {X}\rightarrow \mathbb {R}_+\) and all \(\mathbb {Z}^d\)-periodic discrete vector fields satisfying

1.1.5 Boundary value problems

Our second main result deals with the corresponding boundary value problems, which arise by minimising the action functional among all curves with given boundary conditions, as in the Benamou–Brenier formula (1.1). We define

We then obtain the following result (Theorem 5.10):

As \(\varepsilon \rightarrow 0\), the minimal actions \(\mathcal{M}\mathcal{A}_\varepsilon \) \(\Gamma \)-converge to \(\mathbb{M}\mathbb{A}_{\textrm{hom}}\)

in the weak topology of \(\mathcal {M}_+(\mathbb {T}^d) \times \mathcal {M}_+(\mathbb {T}^d)\).

This result is proved under a superlinear growth condition on the discrete energy densities, which holds for discretisations of the Wasserstein distance \(\mathbb {W}_p\) for \(p > 1\).

A special case of interest is the case where \(\mathcal{M}\mathcal{A}_\varepsilon \) is a Riemannian transport distance associated to a gradient flow structure for Markov chains as in [30, 32]. In this situation, we show that the discrete transport distances converge to a 2-Wasserstein distance on the torus (Corollary 5.3). Interestingly, the underlying distance is induced by a Finsler metric, which is not necessarily Riemannian.

We also investigate transport distances with nonlinear mobility [13, 29] and their finite-volume discretisations on the torus \(\mathbb {T}^d\). In the spirit of [26], we give a geometric characterisation of finite-volume meshes for which the discretised transport distances converge to the expected limit.

1.1.6 Compactness

The results for boundary value problems are obtained by combining our first main result with a compactness result for sequences of measures with bounded action, which is of independent interest. We obtain two results of this type.

In the first compactness result (Theorem 5.4) we assume at least linear growth of the discrete energies \(F_{xy}\) at infinity. Under this condition we prove compactness in the space \(\textrm{BV}_{\textrm{KR}}\big ((0,1); \mathcal {M}_+(\mathbb {T}^d) \big )\), which consists of curves of bounded variation, with respect to the Kantorovich–Rubinstein (\(\textrm{KR}\)) norm on the space of measures. The convergence holds for almost every \(t\in (0,1)\).

In the second compactness result (Theorem 5.9), which is used in the analysis of the boundary value problems, we assume a stronger condition of at least superlinear growth on the energy densities \(F_{xy}\). We then obtain compactness in the space \(W_\textrm{KR}^{1,1}\big ((0,1); \mathcal {M}_+(\mathbb {T}^d)\big )\), which consists of absolutely continuous curves with respect to the \(\textrm{KR}\)-norm. The convergence is uniform for \(t \in (0,1)\). We refer to the “Appendix” for precise definitions of these spaces.

1.1.7 Related works

For a classical reference to the study of flows on networks, we refer to Ford and Fulkerson [22].

Many works are devoted to discretisations of continuous energy functionals in the framework of Sobolev and BV spaces, e.g., [1, 4, 5, 36]. Cell formulas appear in various discrete and continuous variational homogenisation problems; see, e.g., [4, 7, 9, 27, 31].

The large scale behaviour of optimal transport on random point clouds has been studied by Garcia–Trillos, who proved convergence to the Wasserstein distance [23].

1.2 Organisation of the paper

Sects. 2 and 3 contain the necessary definitions as well as the assumptions we use throughout the article in the discrete and continuous settings. Section 4 deals with the definition of the homogenised action functional. In Sect. 5 we present the rigorous statements of our main results, including the \(\Gamma \)-convergence of the discrete energies to the effective homogenised limit and the compactness theorems for curves of bounded discrete energies. The proof of our main results can be found in Sect. 6 (compactness and convergence of the boundary value problems) and Sects. 7 and 8 (\(\Gamma \)-convergence of \(\mathcal {A}_\varepsilon \)). Finally, in Sect. 9, we discuss several examples and apply our results to some common finite-volume and finite-difference discretisations.

1.3 Sketch of the proof of Theorem 5.1

In the last part of this section, we sketch the proof of our main result on the convergence of \(\mathcal {A}_\varepsilon \) to the homogenised limit (Theorem 5.1). Crucial tools to show both the lower bound and the upper bound in Theorem 5.1 are regularisation procedures for solutions to the continuity equation, both at the discrete and at the continuous level.

In this section, we use the informal notation \(\lessapprox \) and \(\gtrapprox \) to mean that the corresponding inequality holds up to a small error in \(\varepsilon >0\), e.g., \(A_\varepsilon \lessapprox B_\varepsilon \) means that \(A_\varepsilon \le B_\varepsilon + o_\varepsilon (1)\) where \(o_\varepsilon (1) \rightarrow 0\) as \(\varepsilon \rightarrow 0\).

For \(\varepsilon > 0\) and \(z \in \mathbb {Z}^d\) (or more generally, for \(z \in \mathbb {R}^d\)), we set \(Q_\varepsilon ^z:= \varepsilon z + [0, \varepsilon )^d \subseteq \mathbb {T}^d\). For \(x \in \mathcal {X}_\varepsilon \subset \mathbb {T}^d\), we denote by \(x_\textsf{z}\) the unique element of \(\mathbb {Z}_\varepsilon ^d\) satisfying \(x \in Q_\varepsilon ^{x_\textsf{z}}\). Note that \(\{Q_\varepsilon ^z \ : \ z \in \mathbb {Z}_\varepsilon ^d\}\) defines a partition of \(\mathbb {T}^d\).
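The map \(x \mapsto x_\textsf{z}\) can be sketched in code. The snippet below is a minimal illustration under two assumptions: points of \(\mathbb {T}^d\) are given by real coordinates taken modulo 1, and \(\mathbb {Z}_\varepsilon ^d\) is represented by \(\{0, \ldots , n-1\}^d\) with \(n = 1/\varepsilon \). The function name `cell_index` is hypothetical.

```python
import math


def cell_index(x, n):
    """Return z in {0, ..., n-1}^d with x in eps*z + [0, eps)^d, where eps = 1/n.

    The point x is given by real coordinates and is reduced modulo 1,
    i.e. it is interpreted as a point of the torus T^d.
    """
    # The trailing "% n" guards against floating-point round-off in "c % 1.0",
    # which can return exactly 1.0 for tiny negative inputs.
    return tuple(math.floor((c % 1.0) * n) % n for c in x)
```

For instance, with \(n = 4\) the points \((0.3, 0.95)\) and \((1.3, -0.05)\) represent the same point of \(\mathbb {T}^2\) and lie in the same cube.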

To compare discrete and continuous objects, we consider embeddings of probability measures \(m \in \mathcal {P}(\mathcal {X}_\varepsilon )\) and anti-symmetric functions \(J: \mathcal {E}_\varepsilon \rightarrow \mathbb {R}\) defined by

These embeddings preserve the continuity equation in the following sense: if \(({\pmb {m}}, \pmb {J}) \in \mathcal{C}\mathcal{E}_\varepsilon \), then \((\iota _\varepsilon {\pmb {m}}, \iota _\varepsilon \pmb {J}) \in \mathbb{C}\mathbb{E}\).

We also use the notation \(\mathcal {F}_\varepsilon (m,J):= \sum _{(x,y) \in \mathcal {E}_\varepsilon } \varepsilon ^d F_{xy} \Big ( \frac{m(x)}{\varepsilon ^d}, \frac{m(y)}{\varepsilon ^d}, \frac{J(x,y)}{\varepsilon ^{d-1}} \Big ) \).
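The scaling in \(\mathcal {F}_\varepsilon \) can be made concrete with a small computation. The following sketch evaluates \(\mathcal {F}_\varepsilon (m,J)\) on a one-dimensional ring with \(n = 1/\varepsilon \) nodes. The edge density `F` (quadratic in the flux, weighted by the arithmetic mean of the densities) is a hypothetical choice made for illustration only, as are all function and variable names.

```python
def rescaled_energy(m, J, edges, F, eps, d):
    """Sum of eps^d * F(m(x)/eps^d, m(y)/eps^d, J(x,y)/eps^(d-1)) over all edges."""
    total = 0.0
    for (x, y) in edges:
        total += eps**d * F(m[x] / eps**d, m[y] / eps**d, J[(x, y)] / eps ** (d - 1))
    return total


# Hypothetical edge density (an assumption, not taken from the paper):
# quadratic in the flux, weighted by the arithmetic mean of the two densities.
def F(rho_x, rho_y, j):
    mean = 0.5 * (rho_x + rho_y)
    if mean > 0:
        return 0.5 * j * j / mean
    return 0.0 if j == 0 else float("inf")


# One-dimensional ring with n = 1/eps nodes and nearest-neighbour edges,
# listed in both directions.
n, d = 4, 1
eps = 1.0 / n
forward = [(k, (k + 1) % n) for k in range(n)]
edges = forward + [(y, x) for (x, y) in forward]

rho, j = 2.0, 3.0
m = {k: eps**d * rho for k in range(n)}                # uniform mass with density rho
J = {(x, y): eps ** (d - 1) * j for (x, y) in forward}  # constant flux j
J.update({(y, x): -v for (x, y), v in list(J.items())})  # anti-symmetric extension

energy = rescaled_energy(m, J, edges, F, eps, d)
```

For this particular choice of `F` and this uniform configuration, the value equals \(j^2/\rho \) for every \(n\), since the \(2n\) edges each contribute \(\varepsilon \, j^2 / (2\rho )\).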

Sketch of the liminf inequality. For \(\varepsilon > 0\) with \(\frac{1}{\varepsilon } \in \mathbb {N}\), consider the curve \((m_t^\varepsilon )_{t \in (0,1)} \subseteq \mathcal {M}_+(\mathcal {X}_\varepsilon )\) and let \({\pmb {m}}^\varepsilon \in \mathcal {M}_+\big ((0,1) \times \mathcal {X}_\varepsilon \big )\) be the corresponding measure on space-time defined by \({\pmb {m}}^\varepsilon (\textrm{d}x, \textrm{d}t) = m_t^\varepsilon (\textrm{d}x) \, \textrm{d}t\). Suppose that \(\iota _\varepsilon {\pmb {m}}^\varepsilon \rightarrow {\varvec{\mu }}\) vaguely in \(\mathcal {M}_+\big ((0,1) \times \mathbb {T}^d\big )\) as \(\varepsilon \rightarrow 0\). The goal is to show the liminf inequality

$$\begin{aligned} \liminf _{\varepsilon \rightarrow 0} \mathcal {A}_\varepsilon ({\pmb {m}}^\varepsilon ) \ge \mathbb {A}_\textrm{hom}({\varvec{\mu }}). \end{aligned}$$ (1.7)

Without loss of generality we assume that \(\mathcal {A}_\varepsilon ({\pmb {m}}^\varepsilon ) = \mathcal {A}_\varepsilon ({\pmb {m}}^\varepsilon , \pmb {J}^\varepsilon ) \le C < \infty \) for every \(\varepsilon >0\), for some sequence of vector fields \(\pmb {J}^\varepsilon \) such that \(({\pmb {m}}^\varepsilon , \pmb {J}^\varepsilon ) \in \mathcal{C}\mathcal{E}_\varepsilon \). As we will see in (4.11), the embedded solutions to the continuity equation \((\iota _\varepsilon {\pmb {m}}^\varepsilon , \iota _\varepsilon \pmb {J}^\varepsilon ) \in \mathbb{C}\mathbb{E}\) define curves of measures with densities with respect to \(\mathscr {L}^d\) on \(\mathbb {T}^d\) of the form

for every \(u \in Q_\varepsilon ^{\bar{z}} \subset \mathbb {T}^d\). Here, \(J_{t,u}^\varepsilon \in \mathbb {R}^{\mathcal {E}_\varepsilon }\) is a convex combination of the functions \( \big \{ J_t^\varepsilon \big ( \cdot -\varepsilon z \big ) \,: \, z \in \mathbb {Z}_\varepsilon ^d, \, |z|_\infty \le R_0 + 1 \big \}\).

As we will estimate the discrete energies at any time \(t \in (0,1)\), for simplicity we drop the time dependence and write \(\rho = \rho _t\), \(j= j_t\), \(m^\varepsilon =m_t^\varepsilon \), \(J^\varepsilon =J_t^\varepsilon \), \(J_u^\varepsilon = J_{t,u}^\varepsilon \). A crucial step is to construct, for every \(u \in Q_\varepsilon ^{\bar{z}}\), a representative

which is approximately equal to the values of \((m^\varepsilon , J^\varepsilon )\) close to \(\mathcal {X}\cap \{ x_\textsf{z}= \bar{z} \}\). The lower bound (1.7) would then follow by time-integration of the static estimate

together with the lower semicontinuity of \(\mathbb {A}_\textrm{hom}\). In the last inequality we used the definition of the homogenised density \(f_\textrm{hom}\big (\rho (u),j(u)\big )\), which corresponds to the minimal microscopic cost with total mass \(\rho (u)\) and flux \(j(u)\).

To find the sought representatives in (1.8), it may seem natural to define \(\widehat{m}_u \in \mathbb {R}_+^{\mathcal {X}}\) and \(\widetilde{J}_u \in \mathbb {R}_a^{\mathcal {E}}\) by taking the values of \(m\) and \(J_u\) in the \(\varepsilon \)-cube at \(\bar{z}\) and inserting these values at every cube in \((\mathcal {X}, \mathcal {E})\), so that the result is \(\mathbb {Z}^d\)-periodic. Precisely:

where \(\bar{x}:= x - x_\textsf{z}+ \bar{z}\). This would ensure that \(\varepsilon ^{-d} \widehat{m}_u \in {{\,\mathrm{{\textsf{Rep}}}\,}}\big ( \rho (u) \big )\). Unfortunately, this construction produces a vector field \(\varepsilon ^{-(d-1)}\widetilde{J}_u\) which does not in general belong to \({{\,\mathrm{{\textsf{Rep}}}\,}}\big (j(u)\big )\): indeed, while \(\widetilde{J}_u\) has the desired effective flux (i.e., \({{\,\mathrm{\textsf{Eff}}\,}}(\varepsilon ^{-(d-1)}\widetilde{J}_u) = j(u)\), as given in (1.6)), it is not in general divergence-free.

To deal with this complication, we introduce a corrector field \(\bar{J}_u\) associated to \(\widetilde{J}_u\), i.e., an anti-symmetric and \(\mathbb {Z}^d\)-periodic function \( \bar{J}_u: \mathcal {E}\rightarrow \mathbb {R}\) satisfying

whose existence we prove in Lemma 7.3.

If we set \(\widehat{J}_u:= \widetilde{J}_u + \bar{J}_u\), then by construction we have \({{\,\mathrm{\text {\textsf{div}}}\,}}\widehat{J}_u = 0\) and \({{\,\mathrm{\textsf{Eff}}\,}}\big (\varepsilon ^{-(d-1)}\widehat{J}_u\big ) = j(u)\), thus

To carry out this program and prove a lower bound of the form (1.9), we need to quantify the error incurred in passing from \((m^\varepsilon , J^\varepsilon )\) to \(\big \{ (\widehat{m}_u, \widehat{J}_u) \ : \ u \in \mathbb {T}^d\big \}\). It is evident by construction and from (1.10) that spatial and time regularity of \((m^\varepsilon , J^\varepsilon )\) are crucial for this purpose. For example, an \(\ell ^\infty \)-bound on the time derivative of the form \(\Vert \partial _t m_t^\varepsilon \Vert _\infty \le C \varepsilon ^d\) (or, in other words, a Lipschitz bound in time for \(\rho _t\)) together with \(({\pmb {m}}^\varepsilon , \pmb {J}^\varepsilon ) \in \mathcal{C}\mathcal{E}_\varepsilon \) would imply a control on \({{\,\mathrm{\text {\textsf{div}}}\,}}J\) and thus a control of the error in (1.10) of the form \(\Vert \varepsilon ^{1-d}\bar{J}_u \Vert _\infty \le C \varepsilon \).

This is why the key first step in our proof is a regularisation procedure at the discrete level: for any given sequence of curves \(\big \{ ({\pmb {m}}^\varepsilon , \pmb {J}^\varepsilon ) \in \mathcal{C}\mathcal{E}_\varepsilon \ : \ \varepsilon >0 \big \}\) of (uniformly) bounded action \(\mathcal {A}_\varepsilon \), we can exhibit another sequence \(\big \{ (\widetilde{{\pmb {m}}{}}^\varepsilon , \widetilde{\pmb {J}{}}^\varepsilon ) \in \mathcal{C}\mathcal{E}_\varepsilon \ : \ \varepsilon >0 \big \}\), quantitatively close as measures and in action \(\mathcal {A}_\varepsilon \) to the first one, which enjoys good Lipschitz and \(\ell ^\infty \) properties and for which the program explained above can be carried out.

This result is the content of Proposition 7.1 and it is based on a three-fold regularisation, namely in energy, in time, and in space (see Sect. 7.1).

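Sketch of the limsup inequality. Fix \(({\varvec{\mu }},{\varvec{\nu }}) \in \mathbb{C}\mathbb{E}\). The goal is to find \({\pmb {m}}^\varepsilon \in \mathcal {M}_+((0,1) \times \mathcal {X}_\varepsilon )\) such that \(\iota _\varepsilon {\pmb {m}}^\varepsilon \rightarrow {\varvec{\mu }}\) weakly in \(\mathcal {M}_+((0,1) \times \mathbb {T}^d)\) and

$$\begin{aligned} \limsup _{\varepsilon \rightarrow 0} \mathcal {A}_\varepsilon ({\pmb {m}}^\varepsilon ) \le \mathbb {A}_\textrm{hom}({\varvec{\mu }},{\varvec{\nu }}). \end{aligned}$$ (1.11)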

As in the proof of the liminf inequality, the first step is a regularisation procedure, this time at the continuous level (Proposition 8.26). Thanks to this approximation result, we can assume without loss of generality that

where \((\rho _t,j_t)_t\) are the smooth densities of \(({\varvec{\mu }},{\varvec{\nu }})\in \mathbb{C}\mathbb{E}\) with respect to \(\mathscr {L}^{d+1}\) on \((0,1) \times \mathbb {T}^d\), and \({{\,\mathrm{\textsf{D}}\,}}(f_{\textrm{hom}})^\circ \) denotes the interior of the domain of \(f_{\textrm{hom}}\) (see “Appendix 1”). The convexity of \(f_\textrm{hom}\) ensures its Lipschitz-continuity on every compact set \(K \Subset {{\,\mathrm{\textsf{D}}\,}}(f_{\textrm{hom}})^\circ \), hence the assumption (1.12) allows us to assume such regularity for the rest of the proof.

We split the proof of the upper bound into several steps. In short, we first discretise the continuous measures \(({\varvec{\mu }},{\varvec{\nu }})\) and identify an optimal discrete microstructure, i.e., minimisers of the cell problem described by \(f_\textrm{hom}\), on each \(\varepsilon \)-cube \(Q_\varepsilon ^z\), \(z \in \mathbb {Z}_\varepsilon ^d\). A key difficulty at this stage is that the optimal selection does not preserve the continuity equation, hence an additional correction is needed. For this purpose, we first apply the discrete regularisation result Proposition 7.1 to obtain regular discrete curves and then find suitable small correctors that provide discrete competitors for \(\mathcal {A}_\varepsilon \), i.e., solutions to \(\mathcal{C}\mathcal{E}_\varepsilon \) which are close to the optimal selection.

Let us explain these steps in more detail.

Step 1: For every \(z \in \mathbb {Z}_\varepsilon ^d\), \(t \in (0,1)\), and each cube \(Q_\varepsilon ^z\) we consider the natural discretisation of \(({\varvec{\mu }},{\varvec{\nu }})\), that we denote by \( \big ( \textrm{P}_\varepsilon \mu _t(z),\textrm{P}_\varepsilon \nu _t(z) \big )_{t,z} \subset \mathbb {R}_+ \times \mathbb {R}^d \), given by

An important feature of this construction is that the continuity equation is preserved from \(\mathbb {T}^d\) to \(\mathbb {Z}_\varepsilon ^d\), in the sense that

for \(t \in (0,1)\) and \(z \in \mathbb {Z}_\varepsilon ^d\).

Step 2: We build the associated optimal discrete microstructure for the cell problem for each cube \(Q_\varepsilon ^z\), meaning we select \( ({\pmb {m}},\pmb {J}) = \big ( m_t^z, J_t^z \big )_{t\in (0,1), z \in \mathbb {Z}_\varepsilon ^d} \) such that

where \({{\,\mathrm{{\textsf{Rep}}}\,}}_o\) denotes the set of optimal representatives in the definition of the cell-formula (1.5). Using the smoothness of \({\varvec{\mu }}\) and \({\varvec{\nu }}\), one can in particular show that

Step 3: The next step is to glue together the microstructures \(({\pmb {m}}, \pmb {J})\) defined for every \(z \in \mathbb {Z}_\varepsilon ^d\) via a gluing operator \(\mathcal {G}_\varepsilon \) (Definition 8.4) to produce a global microstructure \((\widehat{{\pmb {m}}{}}^\varepsilon , \widehat{\pmb {J}{}}^\varepsilon ) \in \mathcal {M}_+((0,1) \times \mathcal {X}_\varepsilon ) \times \mathcal {M}((0,1) \times \mathcal {E}_\varepsilon )\). As the gluing operators are mass preserving and \(m_t^z \in {{\,\mathrm{{\textsf{Rep}}}\,}}(\textrm{P}_\varepsilon \mu _t(z))\), it is not hard to see that \(\iota _\varepsilon \widehat{{\pmb {m}}{}}^\varepsilon \rightarrow {\varvec{\mu }}\) weakly in \(\mathcal {M}_+((0,1) \times \mathbb {T}^d)\) as \(\varepsilon \rightarrow 0\).

Step 4: In contrast to \(\textrm{P}_\varepsilon \), the latter operation produces curves \((\widehat{{\pmb {m}}{}}^\varepsilon , \widehat{\pmb {J}{}}^\varepsilon )\) which do not in general solve the discrete continuity equation \(\mathcal{C}\mathcal{E}_\varepsilon \). Therefore, we seek to find suitable corrector vector fields in order to obtain a discrete solution, and thus a candidate for \(\mathcal {A}_\varepsilon (\widehat{{\pmb {m}}{}}^\varepsilon )\). For this purpose we regularise \((\widehat{{\pmb {m}}{}}^\varepsilon , \widehat{\pmb {J}{}}^\varepsilon )\) using Proposition 7.1 below. This yields a regular curve which is close in the sense of measures and in energy to the original one. Note that no discrete regularity is guaranteed for \((\widehat{{\pmb {m}}{}}^\varepsilon , \widehat{\pmb {J}{}}^\varepsilon )\), despite the smoothness assumption on \(({\varvec{\mu }},{\varvec{\nu }})\), due to possible singularities of \(F_{xy}\).

For the sake of the exposition, we shall discuss the last steps of the proof assuming that \((\widehat{{\pmb {m}}{}}^\varepsilon , \widehat{\pmb {J}{}}^\varepsilon )\) already enjoy the Lipschitz and \(\ell ^\infty \)–regularity properties ensured by Proposition 7.1.

Step 5: For sufficiently regular \((\widehat{{\pmb {m}}{}}^\varepsilon , \widehat{\pmb {J}{}}^\varepsilon )\), we seek a discrete competitor for \(\mathcal {A}_\varepsilon (\widehat{{\pmb {m}}{}}^\varepsilon )\) which is close to \((\widehat{{\pmb {m}}{}}^\varepsilon , \widehat{\pmb {J}{}}^\varepsilon )\). As the latter does not necessarily belong to \(\mathcal{C}\mathcal{E}_\varepsilon \), we find suitable correctors \(\pmb {V}^\varepsilon \) such that the corrected curves \((\widehat{{\pmb {m}}{}}^\varepsilon , \widehat{\pmb {J}{}}^\varepsilon + \pmb {V}^\varepsilon )\) belong to \(\mathcal{C}\mathcal{E}_\varepsilon \), with \(\pmb {V}^\varepsilon \) small in the sense that it satisfies the bound

The proof of existence of the corrector \(\pmb {V}^\varepsilon \), together with the quantitative bound, relies on a localisation argument (Lemma 8.22) and a study of the divergence equation on periodic graphs (Lemma 8.16), performed at the level of each cube \(Q_\varepsilon ^z\), for every \(z \in \mathbb {Z}_\varepsilon ^d\). The regularity of \((\widehat{{\pmb {m}}{}}^\varepsilon , \widehat{\pmb {J}{}}^\varepsilon )\) is crucial in order to obtain the estimate (1.14).

Step 6: The final step consists of estimating the action of the measures \({\pmb {m}}^\varepsilon := \widehat{{\pmb {m}}{}}^\varepsilon \), which converge weakly to \({\varvec{\mu }}\) as \(\varepsilon \rightarrow 0\), together with the vector fields \(\pmb {J}^\varepsilon := \widehat{\pmb {J}{}}^\varepsilon + \pmb {V}^\varepsilon \).

Using the regularity assumption on \((\widehat{{\pmb {m}}{}}^\varepsilon , \widehat{\pmb {J}{}}^\varepsilon )\), the smoothness (1.12) of \(({\varvec{\mu }},{\varvec{\nu }})\), and the convexity of \(f_\textrm{hom}\), together with the bounds (1.13) and (1.14) for the corrector, we obtain

Using this bound and the fact that \(({\pmb {m}}^\varepsilon , \pmb {J}^\varepsilon ) \in \mathcal{C}\mathcal{E}_\varepsilon \), an integration in time yields

which is the sought upper bound (1.11).

2 Discrete dynamical optimal transport on \(\mathbb {Z}^d\)-periodic graphs

This section contains the definition of the optimal transport problem in the discrete periodic setting. In Sect. 2.1 we introduce the basic objects: a \(\mathbb {Z}^d\)-periodic graph \((\mathcal {X}, \mathcal {E})\) and an admissible cost function F. Given a triple \((\mathcal {X}, \mathcal {E}, F)\), we introduce a family of discrete transport actions on rescaled graphs \((\mathcal {X}_\varepsilon , \mathcal {E}_\varepsilon )\) in Sect. 2.2.

2.1 Discrete \(\mathbb {Z}^d\)-periodic setting

Our setup consists of the following data:

Assumption 2.1

\((\mathcal {X}, \mathcal {E})\) is a locally finite and \(\mathbb {Z}^d\)-periodic connected graph of bounded degree.

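More precisely, we assume that

$$\begin{aligned} \mathcal {X}= \mathbb {Z}^d \times V, \end{aligned}$$

where \(V\) is a finite set. The coordinates of \(x = (z, v) \in \mathcal {X}\) will be denoted by

$$\begin{aligned} x_\textsf{z}:= z, \qquad x_\textsf{v}:= v. \end{aligned}$$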

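The set of edges \(\mathcal {E}\subseteq \mathcal {X}\times \mathcal {X}\) is symmetric and \(\mathbb {Z}^d\)-periodic, in the sense that

$$\begin{aligned} (x,y) \in \mathcal {E}\quad \text {if and only if} \quad \big ( S^{\bar{z}}(x), S^{\bar{z}}(y) \big ) \in \mathcal {E}\quad \text {for all } \bar{z} \in \mathbb {Z}^d. \end{aligned}$$

Here, \(S^{\bar{z}}: \mathcal {X}\rightarrow \mathcal {X}\) is the shift operator defined by

$$\begin{aligned} S^{\bar{z}}(x) := (\bar{z} + z, v) \quad \text {for } x = (z,v) \in \mathcal {X}. \end{aligned}$$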

We write \(x \sim y\) whenever \((x,y) \in \mathcal {E}\).

Let \(R_0:= \max _{(x,y) \in \mathcal {E}} | x_\textsf{z}- y_\textsf{z}|_{\ell _\infty ^d} \) be the maximal edge length, measured with respect to the supremum norm \(|\cdot |_{\ell _\infty ^d}\) on \(\mathbb {R}^d\). It will be convenient to use the notation

Remark 2.2

(Abstract vs. embedded graphs) Rather than working with abstract \(\mathbb {Z}^d\)-periodic graphs, it is possible to regard \(\mathcal {X}\) as a \(\mathbb {Z}^d\)-periodic subset of \(\mathbb {R}^d\), by choosing \(V\) to be a subset of \([0,1)^d\) and using the identification \((z,v) \equiv z + v\), see Fig. 2. Since the embedding plays no role in the formulation of the discrete problem, we work with the abstract setup. Note that edges between nodes that are not in adjacent cells are also allowed.

Assumption 2.3

(Admissible cost function) The function \(F: \mathbb {R}_+^\mathcal {X}\times \mathbb {R}_a^\mathcal {E}\rightarrow \mathbb {R}\cup \{+\infty \}\) is assumed to have the following properties:

(a) \(F\) is convex and lower semicontinuous.

(b) \(F\) is local in the sense that there exists \(R_1 < \infty \) such that \(F(m,J) = F(m',J')\) whenever \(m, m' \in \mathbb {R}_+^\mathcal {X}\) and \(J, J' \in \mathbb {R}_a^\mathcal {E}\) agree within a ball of radius \(R_1\), i.e.,

$$\begin{aligned} m(x)&= m'(x)&&\text {for all } x \in \mathcal {X}\text { with } |x_\textsf{z}|_{\ell _\infty ^d} \le R_1, \quad \text {and} \\ J(x,y)&= J'(x,y)&&\text {for all } (x,y) \in \mathcal {E}\text { with } |x_\textsf{z}|_{\ell _\infty ^d}, |y_\textsf{z}|_{\ell _\infty ^d} \le R_1. \end{aligned}$$

(c) \(F\) is of at least linear growth, i.e., there exist \(c > 0\) and \(C < \infty \) such that

$$\begin{aligned} F(m,J) \ge c \sum _{ (x, y) \in \mathcal {E}^Q} |J(x,y)| - C \Bigg ( 1 + \sum _{\begin{array}{c} x\in \mathcal {X}\\ |x|_{\ell _\infty ^d} \le R \end{array}} m(x) \Bigg ) \end{aligned}$$ (2.1)

for any \(m \in \mathbb {R}_+^\mathcal {X}\) and \(J \in \mathbb {R}_a^\mathcal {E}\). Here, \(R:= \max \{R_0, R_1\}\).

(d) There exist a \(\mathbb {Z}^d\)-periodic function \(m^\circ \in \mathbb {R}_+^\mathcal {X}\) and a \(\mathbb {Z}^d\)-periodic and divergence-free vector field \(J^\circ \in \mathbb {R}_a^\mathcal {E}\) such that

$$\begin{aligned} ( m^\circ , J^\circ ) \in {{\,\mathrm{\textsf{D}}\,}}(F)^\circ . \end{aligned}$$ (2.2)

Remark 2.4

As F is local, it depends on finitely many parameters. Therefore, \({{\,\mathrm{\textsf{D}}\,}}(F)^\circ \), the topological interior of the domain \({{\,\mathrm{\textsf{D}}\,}}(F)\) of F is defined unambiguously.

Remark 2.5

In many examples, the function F takes one of the following forms, for suitable functions \(F_x\) and \(F_{xy}\):

We then say that F is vertex-based (respectively, edge-based).

Remark 2.6

Of particular interest are edge-based functions of the form

where \(1 \le p < \infty \), the constants \(q_{xy}, q_{yx} > 0\) are fixed parameters defined for \((x,y)\in \mathcal {E}^Q\), and \(\Lambda \) is a suitable mean (i.e., \(\Lambda : \mathbb {R}_+ \times \mathbb {R}_+ \rightarrow \mathbb {R}_+\) is a jointly concave and 1-homogeneous function satisfying \(\Lambda (1,1) = 1\)). Functions of this type arise naturally in discretisations of Wasserstein gradient-flow structures [11, 30, 32].

We claim that these cost functions satisfy the growth condition (2.1). Indeed, using Young’s inequality \(|J| \le \tfrac{1}{p} \tfrac{|J|^p}{\Lambda ^{p-1}} + \tfrac{p-1}{p} \Lambda \) we infer that

with constant \(C>0\) depending on \(\max _{x,y} (q_{xy} + q_{yx})\). This shows that (2.1) is satisfied.
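The pointwise inequality used in this remark can be sanity-checked numerically. The following is a minimal sketch, assuming \(\Lambda > 0\) is treated as a plain positive number; the function names `young_rhs` and `check_young` and the sampling ranges are illustrative choices, not part of the paper.

```python
import random

def young_rhs(J, lam, p):
    """Right-hand side (1/p) |J|^p / lam^(p-1) + ((p-1)/p) lam, for lam > 0."""
    return abs(J) ** p / (p * lam ** (p - 1)) + (p - 1) / p * lam

def check_young(num_samples=10_000, seed=0):
    """Return the smallest observed gap  rhs - |J|  over random samples."""
    rng = random.Random(seed)
    worst = float("inf")
    for _ in range(num_samples):
        p = rng.uniform(1.0, 6.0)      # exponent 1 <= p < infinity
        J = rng.uniform(-10.0, 10.0)   # flux value on one edge
        lam = rng.uniform(0.1, 10.0)   # value of the mean, assumed positive
        worst = min(worst, young_rhs(J, lam, p) - abs(J))
    return worst

worst_gap = check_young()
print("smallest gap rhs - |J|:", worst_gap)
```

The gap vanishes when \(|J| = \Lambda\), which is the equality case of Young's inequality.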

2.2 Rescaled setting

Let \((\mathcal {X}, \mathcal {E})\) be a locally finite and \(\mathbb {Z}^d\)-periodic graph as above. Fix \(\varepsilon > 0\) such that \(\frac{1}{\varepsilon }\in \mathbb {N}\). The assumption that \(\frac{1}{\varepsilon }\in \mathbb {N}\) remains in force throughout the paper.

The rescaled graph. Let \(\mathbb {T}_\varepsilon ^d = (\varepsilon \mathbb {Z}/ \mathbb {Z})^d\) be the discrete torus of mesh size \(\varepsilon \). The corresponding equivalence classes are denoted by \([\varepsilon z]\) for \(z \in \mathbb {Z}^d\). To improve readability, we occasionally omit the brackets. Alternatively, we may write \(\mathbb {T}_\varepsilon ^d = \varepsilon \mathbb {Z}_\varepsilon ^d\) where \(\mathbb {Z}_\varepsilon ^d = \big (\mathbb {Z}/ \tfrac{1}{\varepsilon }\mathbb {Z}\big )^d\).

The rescaled graph \((\mathcal {X}_\varepsilon , \mathcal {E}_\varepsilon )\) is constructed by rescaling the \(\mathbb {Z}^d\)-periodic graph \((\mathcal {X}, \mathcal {E})\) and wrapping it around the torus. More formally, we consider the finite sets

where, for \(\bar{z} \in \mathbb {Z}_\varepsilon ^d\),

Throughout the paper we always assume that \(\varepsilon R_0 < \frac{1}{2}\), to avoid that edges in \(\mathcal {E}\) “bite themselves in the tail” when wrapped around the torus. For \(x = \big ([\varepsilon z], v\big ) \in \mathcal {X}_\varepsilon \) we will write

The rescaled energies. Let \(F: \mathbb {R}_+^\mathcal {X}\times \mathbb {R}_a^\mathcal {E}\rightarrow \mathbb {R}\cup \{ + \infty \}\) be a cost function satisfying Assumption 2.3. For \(\varepsilon > 0\) satisfying the conditions above, we shall define a corresponding energy functional \(\mathcal {F}_\varepsilon \) in the rescaled periodic setting.

First we introduce some notation, which we use to transfer functions defined on \(\mathcal {X}_\varepsilon \) to \(\mathcal {X}\) (and from \(\mathcal {E}_\varepsilon \) to \(\mathcal {E}\)). Let \(\bar{z} \in \mathbb {Z}_\varepsilon ^d\). Each function \(\psi : \mathcal {X}_\varepsilon \rightarrow \mathbb {R}\) induces a \(\frac{1}{\varepsilon }\mathbb {Z}^d\)-periodic function

see Fig. 3. Similarly, each function \(J: \mathcal {E}_\varepsilon \rightarrow \mathbb {R}\) induces a \(\frac{1}{\varepsilon }\mathbb {Z}^d\)-periodic function

Definition 2.7

(Discrete energy functional) The rescaled energy is defined by

Remark 2.8

We note that \(\mathcal {F}_\varepsilon (m,J)\) is well-defined as an element in \(\mathbb {R}\cup \{ + \infty \}\). Indeed, the (at least) linear growth condition (2.1) yields

For \({\bar{z}} \in \mathbb {Z}_\varepsilon ^d\) it will be useful to consider the shift operator \(S^{\bar{z}}_\varepsilon : \mathcal {X}_\varepsilon \rightarrow \mathcal {X}_\varepsilon \) and \(S^{\bar{z}}_\varepsilon : \mathcal {E}_\varepsilon \rightarrow \mathcal {E}_\varepsilon \) defined by

Moreover, for \(\psi :\mathcal {X}_\varepsilon \rightarrow \mathbb {R}\) and \(J: \mathcal {E}_\varepsilon \rightarrow \mathbb {R}\) we define

Definition 2.9

(Discrete continuity equation) A pair \(({\pmb {m}}, \pmb {J})\) is said to be a solution to the discrete continuity equation if \({\pmb {m}}: \mathcal {I}\rightarrow \mathbb {R}_+^{\mathcal {X}_\varepsilon }\) is continuous, \(\pmb {J}: \mathcal {I}\rightarrow \mathbb {R}_a^{\mathcal {E}_\varepsilon }\) is Borel measurable, and

for all \(x \in \mathcal {X}_\varepsilon \) in the sense of distributions. We use the notation

Remark 2.10

We may write (2.6) as \( \partial _t m_t + {{\,\mathrm{\text {\textsf{div}}}\,}}J_t = 0 \) using the notation (B.15).

Lemma 2.11

(Mass preservation) Let \(({\pmb {m}}, \pmb {J}) \in \mathcal{C}\mathcal{E}_\varepsilon ^\mathcal {I}\). Then we have \(m_s(\mathcal {X}_\varepsilon ) = m_t(\mathcal {X}_\varepsilon )\) for all \(s, t \in \mathcal {I}\).

Proof

Without loss of generality, suppose that \(s, t \in \mathcal {I}\) with \(s < t\). Approximating the characteristic function \(\chi _{[s,t]}\) by smooth test functions, we obtain, for all \(x \in \mathcal {X}_\varepsilon \),

Summing the above over \(x\in \mathcal {X}_\varepsilon \) and using the anti-symmetry of \(\pmb {J}\), the result follows.
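The cancellation behind this lemma can be illustrated on a small finite graph. The sketch below assumes the convention \({{\,\mathrm{\text {\textsf{div}}}\,}}J(x) = \sum _{y \sim x} J(x,y)\) and uses an explicit Euler step with randomly chosen anti-symmetric fluxes on a cycle graph; the graph, the step size, and all names are hypothetical, and nonnegativity of the mass is not enforced.

```python
import numpy as np

def random_antisymmetric_flux(adjacency, rng):
    """Random J supported on the edges with J(x, y) = -J(y, x)."""
    A = rng.standard_normal(adjacency.shape) * adjacency
    return A - A.T

def divergence(J):
    """Assumed convention: div J(x) = sum over y of J(x, y)."""
    return J.sum(axis=1)

n = 6                                    # cycle graph with n nodes
adjacency = np.zeros((n, n))
for i in range(n):
    adjacency[i, (i + 1) % n] = 1.0
    adjacency[(i + 1) % n, i] = 1.0

rng = np.random.default_rng(0)
m = np.full(n, 1.0 / n)                  # initial mass, total mass 1
dt = 1e-3
masses = [m.sum()]
for _ in range(100):
    J = random_antisymmetric_flux(adjacency, rng)
    m = m - dt * divergence(J)           # Euler step for d/dt m + div J = 0
    masses.append(m.sum())

max_mass_drift = max(abs(total - masses[0]) for total in masses)
print("maximal drift of total mass:", max_mass_drift)
```

The total mass stays constant up to rounding because the entries of an anti-symmetric matrix sum to zero.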

We are now ready to define one of the main objects in this paper.

Definition 2.12

(Discrete action functional) For any continuous function \({\pmb {m}}: \mathcal {I}\rightarrow \mathbb {R}_+^{\mathcal {X}_\varepsilon }\) such that \(t \mapsto \sum _{x \in \mathcal {X}_\varepsilon } m_t(x) \in L^1(\mathcal {I})\) and any Borel measurable function \(\pmb {J}: \mathcal {I}\rightarrow \mathbb {R}_a^{\mathcal {E}_\varepsilon }\), we define

Furthermore, we set

Remark 2.13

We claim that \(\mathcal {A}_\varepsilon ^\mathcal {I}({\pmb {m}}, \pmb {J})\) is well-defined as an element in \(\mathbb {R}\cup \{ + \infty \}\). Indeed, the (at least) linear growth condition (2.1) yields as in Remark 2.8

for any \(t \in \mathcal {I}\). Since \(t \mapsto \sum _{x \in \mathcal {X}_\varepsilon } m_t(x) \in L^1(\mathcal {I})\), the claim follows.

In particular, \(\mathcal {A}_\varepsilon ^\mathcal {I}({\pmb {m}}, \pmb {J})\) is well-defined whenever \(({\pmb {m}}, \pmb {J}) \in \mathcal{C}\mathcal{E}_\varepsilon ^\mathcal {I}\), since \(t \mapsto \sum _{x \in \mathcal {X}_\varepsilon } m_t(x)\) is constant by Lemma 2.11.

Remark 2.14

If the time interval is clear from the context, we often simply write \(\mathcal{C}\mathcal{E}_\varepsilon \) and \(\mathcal {A}_\varepsilon \).

The aim of this work is to study the asymptotic behaviour of the energies \(\mathcal {A}_\varepsilon ^\mathcal {I}\) as \(\varepsilon \rightarrow 0\).

3 Dynamical optimal transport in the continuous setting

We shall now define a corresponding class of dynamical optimal transport problems on the continuous torus \(\mathbb {T}^d\). We start in Sect. 3.1 by defining the natural continuous analogues of the discrete objects from Sect. 2. In Sect. 3.2 we define generalisations of these objects that have better compactness properties.

3.1 Continuous continuity equation and action functional

First we define solutions to the continuity equation on a bounded open time interval \(\mathcal {I}\).

Definition 3.1

(Continuity equation) A pair \(({\varvec{\mu }}, {\varvec{\nu }})\) is said to be a solution to the continuity equation \(\partial _t {\varvec{\mu }}+ \nabla \cdot {\varvec{\nu }}= 0 \) if the following conditions hold:

-

(i)

\({\varvec{\mu }}: \mathcal {I}\rightarrow \mathcal {M}_+(\mathbb {T}^d)\) is vaguely continuous;

-

(ii)

\({\varvec{\nu }}: \mathcal {I}\rightarrow \mathcal {M}^d(\mathbb {T}^d)\) is a Borel family satisfying \(\int _\mathcal {I}|\nu _t|(\mathbb {T}^d) \, \textrm{d}t < \infty \);

-

(iii)

The equation

$$\begin{aligned} \partial _t \mu _t(x) + \nabla \cdot \nu _t(x) = 0 \end{aligned}$$(3.1)

holds in the sense of distributions, i.e., for all \(\varphi \in \mathcal {C}_c^1\big (\mathcal {I}\times \mathbb {T}^d \big )\),

$$\begin{aligned} \int _\mathcal {I}\int _{\mathbb {T}^d} \partial _t \varphi _t(x) \, \textrm{d}\mu _t(x) \, \textrm{d}t + \int _\mathcal {I}\int _{\mathbb {T}^d} \nabla \varphi _t(x) \cdot \, \textrm{d}\nu _t(x) \, \textrm{d}t = 0. \end{aligned}$$

We use the notation

We will consider energy densities f with the following properties.

Assumption 3.2

Let \(f: \mathbb {R}_+ \times \mathbb {R}^d \rightarrow \mathbb {R}\cup \{+\infty \}\) be a lower semicontinuous and convex function, whose domain has nonempty interior. We assume that there exist constants \(c > 0\) and \(C < \infty \) such that the (at least) linear growth condition

holds for all \(\rho \in \mathbb {R}_+\) and \(j \in \mathbb {R}^d\).

The corresponding recession function \(f^\infty : \mathbb {R}_+ \times \mathbb {R}^d \rightarrow \mathbb {R}\cup \{+\infty \}\) is defined by

where \((\rho _0, j_0) \in {{\,\mathrm{\textsf{D}}\,}}(f)\) is arbitrary. It is well known that the function \(f^\infty \) is lower semicontinuous and convex, and it satisfies

We refer to [2, Section 2.6] for a proof of these facts.

Let \(\mathscr {L}^d\) denote the Lebesgue measure on \(\mathbb {T}^d\). For \(\mu \in \mathcal {M}_+(\mathbb {T}^d)\) and \(\nu \in \mathcal {M}^d(\mathbb {T}^d)\) we consider the Lebesgue decompositions given by

for some \(\rho \in L_+^1(\mathbb {T}^d)\) and \(j \in L^1(\mathbb {T}^d; \mathbb {R}^d)\). It is always possible to introduce a measure \(\sigma \in \mathcal {M}_+(\mathbb {T}^d)\) such that

for some \(\rho ^\perp \in L_+^1(\sigma )\) and \(j^\perp \in L^1(\sigma ;\mathbb {R}^d)\). (Take, for instance, \(\sigma = \mu ^\perp + |\nu ^\perp |\).) Using this notation we define the continuous energy as follows.

Definition 3.3

(Continuous energy functional) Let \(f: \mathbb {R}_+ \times \mathbb {R}^d \rightarrow \mathbb {R}\cup \{ + \infty \}\) satisfy Assumption 3.2. We define the continuous energy functional by

Remark 3.4

By 1-homogeneity of \(f^\infty \), this definition does not depend on the choice of the measure \(\sigma \in \mathcal {M}_+(\mathbb {T}^d)\).

Definition 3.5

(Action functional) For any curve \({\varvec{\mu }}: \mathcal {I}\rightarrow \mathcal {M}_+(\mathbb {T}^d)\) with \(\int _\mathcal {I}\mu _t(\mathbb {T}^d) \, \textrm{d}t < \infty \) and any Borel measurable curve \({\varvec{\nu }}: \mathcal {I}\rightarrow \mathcal {M}^d(\mathbb {T}^d)\) we define

Furthermore, we set

Remark 3.6

As \(f(\rho , j) \ge - C (1 + \rho )\) by (3.2), the assumption \(\int _\mathcal {I}\mu _t(\mathbb {T}^d) \, \textrm{d}t < \infty \) ensures that \(\mathbb {A}^\mathcal {I}({\varvec{\mu }}, {\varvec{\nu }})\) is well-defined in \(\mathbb {R}\cup \{ + \infty \}\).

Remark 3.7

(Dependence on time intervals) Remark 2.14 applies in the continuous setting as well. If the time interval is clear from the context, we often simply write \(\mathbb{C}\mathbb{E}\) and \(\mathbb {A}\).

Under additional assumptions on the function f, it is possible to prove compactness for families of solutions to the continuity equation with bounded action; see [13, Corollary 4.10]. However, in our general setting, such a compactness result fails to hold, as the following example shows.

Example 3.8

(Lack of compactness) To see this, let \(y^\varepsilon (t)\) be the position of a particle of mass m that moves from 0 to \(\bar{y} \in [0,\frac{1}{2}]^d\) in the time interval \((a_\varepsilon , b_\varepsilon ):= \big (\tfrac{1-\varepsilon }{2}, \tfrac{1+\varepsilon }{2}\big )\) with constant speed \(\frac{|\bar{y}|}{\varepsilon }\). At all other times in the time interval \(\mathcal {I}= (0,1)\) the particle is at rest:

The associated solution \(({\varvec{\mu }}^\varepsilon , {\varvec{\nu }}^\varepsilon )\) to the continuity equation \(\partial _t {\varvec{\mu }}^\varepsilon + \nabla \cdot {\varvec{\nu }}^\varepsilon = 0\) is given by

Let \(f(\rho , j) = |j|\) be the total momentum, which satisfies Assumption 3.2. We then have \(\mathbb {F}(\mu _t^\varepsilon , \nu _t^\varepsilon ) = \frac{m|\bar{y}|}{\varepsilon } {\textbf{1}}_{(a_\varepsilon , b_\varepsilon )}(t) \), hence \(\mathbb {A}^\mathcal {I}({\varvec{\mu }}^\varepsilon , {\varvec{\nu }}^\varepsilon ) = m |\bar{y}|\), independently of \(\varepsilon \).

However, as \(\varepsilon \rightarrow 0\), the motion converges to the discontinuous curve given by \(\mu _t = \delta _0\) for \(t \in [0,\tfrac{1}{2})\) and \(\mu _t = \delta _{\bar{y}}\) for \(t \in (\tfrac{1}{2}, 1]\). In particular, it does not satisfy the continuity equation in the sense above.
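The computation in this example can be checked with a few lines of code. The sketch below assumes that the particle moves by linear interpolation between 0 and \(\bar{y}\) on \((a_\varepsilon , b_\varepsilon )\), which is what constant speed \(|\bar{y}|/\varepsilon \) suggests; the function names are illustrative.

```python
import math

def position(t, ybar, eps):
    """Position at time t in (0, 1): at rest outside (a_eps, b_eps), linear inside."""
    a, b = (1.0 - eps) / 2.0, (1.0 + eps) / 2.0
    s = min(max((t - a) / (b - a), 0.0), 1.0)   # fraction of the path covered
    return [s * c for c in ybar]

def action_total_momentum(mass, ybar, eps):
    """Time integral of mass * speed for f(rho, j) = |j|."""
    speed = math.sqrt(sum(c * c for c in ybar)) / eps   # constant on (a_eps, b_eps)
    duration = eps                                       # length of (a_eps, b_eps)
    return mass * speed * duration

mass, ybar = 2.0, [0.3, 0.4]
actions = [action_total_momentum(mass, ybar, eps) for eps in (0.5, 0.1, 0.01)]
print(actions)
```

Every entry equals the mass times the Euclidean length of \(\bar{y}\), while the trajectories approach a jump at \(t = \tfrac{1}{2}\).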

3.2 Generalised continuity equation and action functional

In view of this lack of compactness, we will extend the definition of the continuity equation and the action functional to more general objects.

Definition 3.9

(Continuity equation) A pair of measures \(({\varvec{\mu }}, {\varvec{\nu }}) \in \mathcal {M}_+\big (\mathcal {I}\times \mathbb {T}^d\big ) \times \mathcal {M}^d\big (\mathcal {I}\times \mathbb {T}^d\big )\) is said to be a solution to the continuity equation

if, for all \(\varphi \in \mathcal {C}_c^1\big (\mathcal {I}\times \mathbb {T}^d\big )\), we have

As above, we use the notation \(({\varvec{\mu }}, {\varvec{\nu }}) \in \mathbb{C}\mathbb{E}^\mathcal {I}\).

Clearly, this definition is consistent with Definition 3.1.

Let us now extend the action functional \(\mathbb {A}^\mathcal {I}\) as well. For this purpose, let \(\mathscr {L}^{d+1}\) denote the Lebesgue measure on \(\mathcal {I}\times \mathbb {T}^d\). For \({\varvec{\mu }}\in \mathcal {M}_+\big ( \mathcal {I}\times \mathbb {T}^d\big )\) and \({\varvec{\nu }}\in \mathcal {M}^d\big ( \mathcal {I}\times \mathbb {T}^d\big )\) we consider the Lebesgue decompositions given by

for some \(\rho \in L_+^1\big ( \mathcal {I}\times \mathbb {T}^d \big )\) and \(j \in L^1\big ( \mathcal {I}\times \mathbb {T}^d; \mathbb {R}^d\big )\). As above, it is always possible to introduce a measure \({\varvec{\sigma }}\in \mathcal {M}_+( \mathcal {I}\times \mathbb {T}^d)\) such that

for some \(\rho ^\perp \in L_+^1({\varvec{\sigma }})\) and \(j^\perp \in L^1({\varvec{\sigma }};\mathbb {R}^d)\).

Definition 3.10

(Action functional) We define the action by

Furthermore, we set

Remark 3.11

This definition does not depend on the choice of \({\varvec{\sigma }}\), in view of the 1-homogeneity of \(f^\infty \). As \(f(\rho ,j) \ge - C(1+\rho )\) and \(f^\infty (\rho ,j) \ge - C \rho \) from (3.2) and (3.3), the fact that \({\varvec{\mu }}(\mathcal {I}\times \mathbb {T}^d) <\infty \) ensures that \(\mathbb {A}^\mathcal {I}({\varvec{\mu }}, {\varvec{\nu }})\) is well-defined in \(\mathbb {R}\cup \{ + \infty \}\).

Example 3.12

(Lack of compactness) Continuing Example 3.8, we can now describe the limiting jump process as a solution to the generalised continuity equation. Consider the measures \({\varvec{\mu }}^\varepsilon \in \mathcal {M}_+(\mathcal {I}\times \mathbb {T}^d)\) and \({\varvec{\nu }}^\varepsilon \in \mathcal {M}^d(\mathcal {I}\times \mathbb {T}^d)\) defined by

Then we have \({\varvec{\mu }}^\varepsilon \rightarrow {\varvec{\mu }}\) and \({\varvec{\nu }}^\varepsilon \rightarrow {\varvec{\nu }}\) weakly, respectively, in \(\mathcal {M}_+(\mathcal {I}\times \mathbb {T}^d)\) and \(\mathcal {M}^d(\mathcal {I}\times \mathbb {T}^d)\), where \({\varvec{\mu }}\) represents the discontinuous curve

The measure \({\varvec{\nu }}\) does not admit a disintegration with respect to the Lebesgue measure on \(\mathcal {I}\); in other words, it is not associated to a curve of measures on \(\mathbb {T}^d\). We have

Here \(\mathscr {H}^1|_{[0,\bar{y}]}\) denotes the 1-dimensional Hausdorff measure on the (shortest) line segment connecting 0 and \(\bar{y}\).

Note that \(({\varvec{\mu }}, {\varvec{\nu }})\) solves the continuity equation, as \(\mathbb{C}\mathbb{E}^\mathcal {I}\) is stable under joint weak convergence. Furthermore, we have \(\mathbb {A}^\mathcal {I}({\varvec{\mu }}, {\varvec{\nu }}) = m |\bar{y}|\).

The next result shows that any solution to the continuity equation \(({\varvec{\mu }}, {\varvec{\nu }}) \in \mathbb{C}\mathbb{E}^\mathcal {I}\) induces a (not necessarily continuous) curve of measures \((\mu _t)_{t \in \mathcal {I}}\). The measure \({\varvec{\nu }}\) is not always associated to a curve of measures on \(\mathcal {I}\); see Example 3.12. We refer to “Appendix 1” for the definition of \(\textrm{BV}_{\textrm{KR}}(\mathcal {I}; \mathcal {M}_+(\mathbb {T}^d))\).

Lemma 3.13

(Disintegration of solutions to \(\mathbb{C}\mathbb{E}^\mathcal {I}\)) Let \(({\varvec{\mu }}, {\varvec{\nu }}) \in \mathbb{C}\mathbb{E}^{\mathcal {I}}\). Then \(\, \textrm{d}{\varvec{\mu }}(t,x) = \, \textrm{d}\mu _t(x) \, \textrm{d}t\) for some measurable curve \(t \mapsto \mu _t \in \mathcal {M}_+(\mathbb {T}^d)\) with finite constant mass. If \(\mathbb {A}^{\mathcal {I}}({\varvec{\mu }}) < \infty \), then this curve belongs to \(\textrm{BV}_{\textrm{KR}}(\mathcal {I}; \mathcal {M}_+(\mathbb {T}^d))\) and

Proof

Let \(\lambda \in \mathcal {M}_+(\mathcal {I})\) be the time-marginal of \({\varvec{\mu }}\), i.e., \(\lambda := (e_1)_{\#} {\varvec{\mu }}\) where \(e_1: \mathcal {I}\times \mathbb {T}^d\rightarrow \mathcal {I}\), \(e_1(t,x) = t\). We claim that \(\lambda \) is a constant multiple of the Lebesgue measure on \(\mathcal {I}\). By the disintegration theorem (see, e.g., [3, Theorem 5.3.1]), this implies the first part of the result.

To prove the claim, note that the continuity equation \(\mathbb{C}\mathbb{E}^{\mathcal {I}}\) yields

for all \(\varphi \in C_c^\infty (\mathcal {I})\).

Write \(\mathcal {I}= (a,b)\), let \(\psi \in C_c^\infty (\mathcal {I})\) be arbitrary, and set \(\bar{\psi }:= \frac{1}{|\mathcal {I}|}\int _\mathcal {I}\psi (t) \, \textrm{d}t\). We define \(\varphi (t) = \int _a^t \psi (s) \, \textrm{d}s - (t-a) \bar{\psi }\). Then \(\varphi \in C_c^\infty (\mathcal {I})\) and \(\partial _t \varphi = \psi - \bar{\psi }\). Applying (3.7) we obtain \(\int _\mathcal {I}(\psi - \bar{\psi })\, \textrm{d}\lambda = 0\), which implies the claim, and hence the first part of the result.

To prove the second part, suppose that \({\varvec{\mu }}\in \mathcal {M}_+(\mathcal {I}\times \mathbb {T}^d)\) has finite action, and let \({\varvec{\nu }}\in \mathcal {M}^d\big ( \mathcal {I}\times \mathbb {T}^d\big )\) be a solution to the continuity equation (3.4). Applying (3.4) to a test function \(\varphi \in \mathcal {C}_c^1\big ( \mathcal {I}; \mathcal {C}^1(\mathbb {T}^d) \big ) \subseteq \mathcal {C}_c^1\big ( \mathcal {I}\times \mathbb {T}^d\big )\) such that \(\max _{t\in \mathcal {I}}\Vert \varphi _t\Vert _{\mathcal {C}^1(\mathbb {T}^d)} \le 1\), we obtain

which implies the desired bound in view of (B.14).

The next lemma deals with regularity properties for curves of measures with finite action and fine properties for the functionals \(\mathbb {A}\) defined in Definition 3.10 with \(f=f_\textrm{hom}\).

Lemma 3.14

(Properties of \(\mathbb {A}^\mathcal {I}\)) Let \(\mathcal {I}\subset \mathbb {R}\) be a bounded open interval. The following statements hold:

-

(i)

The functionals \(({\varvec{\mu }}, {\varvec{\nu }}) \mapsto \mathbb {A}^\mathcal {I}({\varvec{\mu }}, {\varvec{\nu }})\) and \({\varvec{\mu }}\mapsto \mathbb {A}^\mathcal {I}({\varvec{\mu }})\) are convex.

-

(ii)

Let \({\varvec{\mu }}\in \mathcal {M}_+(\mathcal {I}\times \mathbb {T}^d)\). Let \(\{\mathcal {I}_n\}_n\) be a sequence of bounded open intervals such that \(\mathcal {I}_n \subseteq \mathcal {I}\) and \(|\mathcal {I}\setminus \mathcal {I}_n| \rightarrow 0\) as \(n \rightarrow \infty \). Let \({\varvec{\mu }}^n \in \mathcal {M}_+(\mathcal {I}_n \times \mathbb {T}^d)\) be such that

$$\begin{aligned} {\varvec{\mu }}^n \rightarrow {\varvec{\mu }}\ \text {vaguely in} \ \mathcal {M}_+(\mathcal {I}\times \mathbb {T}^d) \ \text { and } \ {\varvec{\mu }}^n(\mathcal {I}_n \times \mathbb {T}^d) \rightarrow {\varvec{\mu }}(\mathcal {I}\times \mathbb {T}^d) \end{aligned}$$

as \(n \rightarrow \infty \). Then

$$\begin{aligned} \liminf _{n \rightarrow \infty } \mathbb {A}^{\mathcal {I}_n}({\varvec{\mu }}^n) \ge \mathbb {A}^{\mathcal {I}}({\varvec{\mu }}). \end{aligned}$$(3.9)

If, additionally, \({\varvec{\nu }}\in \mathcal {M}^d(\mathcal {I}\times \mathbb {T}^d)\) and \({\varvec{\nu }}^n \in \mathcal {M}^d(\mathcal {I}_n \times \mathbb {T}^d)\) satisfy \({\varvec{\nu }}^n \rightarrow {\varvec{\nu }}\) vaguely in \(\mathcal {M}^d(\mathcal {I}\times \mathbb {T}^d)\), then we have

$$\begin{aligned} \liminf _{n \rightarrow \infty } \mathbb {A}^{\mathcal {I}_n}({\varvec{\mu }}^n, {\varvec{\nu }}^n) \ge \mathbb {A}^{\mathcal {I}}({\varvec{\mu }}, {\varvec{\nu }}). \end{aligned}$$(3.10)

In particular, the functionals \(({\varvec{\mu }}, {\varvec{\nu }}) \mapsto \mathbb {A}^\mathcal {I}({\varvec{\mu }}, {\varvec{\nu }})\) and \({\varvec{\mu }}\mapsto \mathbb {A}^\mathcal {I}({\varvec{\mu }})\) are lower semicontinuous with respect to (joint) vague convergence.

Proof

(i): Convexity of \(\mathbb {A}^\mathcal {I}\) follows from convexity of f, \(f^\infty \), and the linearity of the constraint (3.4).

(ii): First we show (3.10). Consider the convex energy density \(g(\rho ,j):= f(\rho ,j) + C(\rho + 1)\), which is nonnegative by (3.2). Let \(\mathbb {A}_g\) be the corresponding action functional defined using g instead of f. Using the nonnegativity of g, the fact that \(|\mathcal {I}{\setminus } \mathcal {I}_n| \rightarrow 0\), and the lower semicontinuity result from [2, Theorem 2.34], we obtain

for every open interval \(\widetilde{\mathcal {I}} \Subset \mathcal {I}\). Taking the supremum over \(\widetilde{\mathcal {I}}\), we obtain

Since we have \({\varvec{\mu }}^n\big ( \mathcal {I}_n \times \mathbb {T}^d\big ) \rightarrow {\varvec{\mu }}\big ( \mathcal {I}\times \mathbb {T}^d\big )\) by assumption, the desired result (3.10) follows from (3.11) and the identity

Let us now show (3.9). Let \(\{{\varvec{\mu }}^n\}_n \subseteq \mathcal {M}_+\big ( \mathcal {I}_n \times \mathbb {T}^d\big ) \) be such that \(\sup _n \mathbb {A}^{\mathcal {I}_n}({\varvec{\mu }}^n) < \infty \) and \({\varvec{\mu }}^n \rightarrow {\varvec{\mu }}\) vaguely in \(\mathcal {M}_+(\mathcal {I}\times \mathbb {T}^d)\). Let \({\varvec{\nu }}^n \in \mathcal {M}^d(\mathcal {I}_n \times \mathbb {T}^d)\) be such that \(({\varvec{\mu }}^n, {\varvec{\nu }}^n) \in \mathbb{C}\mathbb{E}^{\mathcal {I}_n}\) and

From Lemma 3.13, we infer that \(\, \textrm{d}{\varvec{\mu }}^n(t,x) = \, \textrm{d}\mu _t^n(x) \, \textrm{d}t\) where \((\mu _t^n)_{t \in \mathcal {I}_n}\) is a curve of constant total mass \(c_n:= \mu _t^n(\mathbb {T}^d)\). Moreover, \(M:= \sup _n c_n <+\infty \), since \({\varvec{\mu }}^n \rightarrow {\varvec{\mu }}\) vaguely. The growth condition (3.2) implies that

Hence, by the Banach–Alaoglu theorem, there exists a subsequence of \(\{{\varvec{\nu }}^n\}_n\) (still indexed by n) such that \({\varvec{\nu }}^n \rightarrow {\varvec{\nu }}\) vaguely in \(\mathcal {M}^d(\mathcal {I}\times \mathbb {T}^d)\) and \(({\varvec{\mu }}, {\varvec{\nu }}) \in \mathbb{C}\mathbb{E}^{\mathcal {I}}\). Another application of Lemma 3.13 ensures that \(\, \textrm{d}{\varvec{\mu }}(t,x) = \, \textrm{d}\mu _t(x) \, \textrm{d}t\) where \((\mu _t)_{t \in \mathcal {I}}\) is of constant mass \(c:= \mu _t(\mathbb {T}^d) = \lim _{n \rightarrow \infty } c_n \).

We can thus apply the first part of (ii) to obtain

which ends the proof.

4 The homogenised transport problem

Throughout this section we assume that \((\mathcal {X},\mathcal {E})\) satisfies Assumption 2.1 and F satisfies Assumption 2.3.

4.1 Discrete representation of continuous measures and vector fields

To define \(f_\textrm{hom}\), the following definition turns out to be natural.

Definition 4.1

(Representation)

-

(i)

We say that \(m\in \mathbb {R}_+^{\mathcal {X}}\) represents \(\rho \in \mathbb {R}_+\) if m is \(\mathbb {Z}^d\)-periodic and

$$\begin{aligned} \sum _{x \in \mathcal {X}^Q} m(x) = \rho . \end{aligned}$$

-

(ii)

We say that \(J\in \mathbb {R}_a^{\mathcal {E}}\) represents a vector \(j \in \mathbb {R}^d\) if

-

(a)

J is \(\mathbb {Z}^d\)-periodic;

-

(b)

J is divergence-free (i.e., \({{\,\mathrm{\text {\textsf{div}}}\,}}J(x) = 0\) for all \(x \in \mathcal {X}\));

-

(c)

The effective flux of J equals j; i.e., \({{\,\mathrm{\textsf{Eff}}\,}}(J) = j\), where

$$\begin{aligned} {{\,\mathrm{\textsf{Eff}}\,}}(J) := \frac{1}{2} \sum _{(x,y) \in \mathcal {E}^Q} J(x,y) \big ( y_\textsf{z}- x_\textsf{z}\big ). \end{aligned}$$(4.1)


We use the (slightly abusive) notation \(m \in {{\,\mathrm{{\textsf{Rep}}}\,}}(\rho )\) and \(J \in {{\,\mathrm{{\textsf{Rep}}}\,}}(j)\). We will also write \({{\,\mathrm{{\textsf{Rep}}}\,}}(\rho ,j) = {{\,\mathrm{{\textsf{Rep}}}\,}}(\rho ) \times {{\,\mathrm{{\textsf{Rep}}}\,}}(j)\).
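As a concrete instance of formula (4.1), consider the nearest-neighbour lattice \(\mathbb {Z}^2\) with a single vertex per cell. The sketch below assumes that \(\mathcal {E}^Q\) consists of the edges \((x,y)\) with \(x_\textsf{z}= 0\) and that \({{\,\mathrm{\text {\textsf{div}}}\,}}J(x) = \sum _{y \sim x} J(x,y)\); the data layout and function names are hypothetical.

```python
import numpy as np

def effective_flux(cell_edges):
    """Eff(J) = 1/2 * sum over (x, y) in E^Q of J(x, y) * (y_z - x_z).

    cell_edges is a list of pairs (displacement y_z - x_z, value J(x, y)).
    """
    return 0.5 * sum(J * np.asarray(dz, dtype=float) for dz, J in cell_edges)

def lattice_flux(a, b):
    """Periodic anti-symmetric flux on Z^2: a along e_1 and b along e_2.

    Periodicity and anti-symmetry force J(0, -e_i) = -J(0, e_i).
    """
    return [((1, 0), a), ((-1, 0), -a), ((0, 1), b), ((0, -1), -b)]

edges = lattice_flux(2.0, -3.0)
eff = effective_flux(edges)
div_at_origin = sum(J for _, J in edges)   # divergence at the vertex in cell 0
print("Eff(J) =", eff, " div J(0) =", div_at_origin)
```

In this example the flux is divergence-free and \({{\,\mathrm{\textsf{Eff}}\,}}(J) = (a, b)\), so J represents the vector \(j = (a,b)\); the factor \(\tfrac{1}{2}\) compensates for each undirected edge being counted in both directions.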

Remark 4.2

Let us remark that \(x_\textsf{z}= 0\) in the formula for \({{\,\mathrm{\textsf{Eff}}\,}}(J)\), since \(x \in \mathcal {X}^Q\) whenever \((x,y) \in \mathcal {E}^Q\).

Remark 4.3

The definition of the effective flux \({{\,\mathrm{\textsf{Eff}}\,}}(J)\) is natural in view of Lemmas 4.9 and 4.11 below. These results show that a solution to the continuous continuity equation can be constructed starting from a solution to the discrete continuity equation, with a vector field of the form (4.1).

Clearly, \({{\,\mathrm{{\textsf{Rep}}}\,}}(\rho ) \ne \emptyset \) for every \(\rho \in \mathbb {R}_+\). It is also true, though less obvious, that \({{\,\mathrm{{\textsf{Rep}}}\,}}(j) \ne \emptyset \) for every \(j \in \mathbb {R}^d\). We will show this in Lemma 4.5 using the \(\mathbb {Z}^d\)-periodicity and the connectivity of \((\mathcal {X}, \mathcal {E})\).

To prove the result, we will first introduce a natural vector field associated to each simple directed path P on \((\mathcal {X},\mathcal {E})\). For an edge \(e =(x,y) \in \mathcal {E}\), the corresponding reversed edge will be denoted by \(\overline{e} = (y,x) \in \mathcal {E}\).

Definition 4.4

(Unit flux through a path; see Fig. 4) Let \(P:= \{x_i\}_{i=0}^m\) be a simple path in \((\mathcal {X}, \mathcal {E})\), thus \(e_i = (x_{i-1}, x_i) \in \mathcal {E}\) for \(i = 1, \ldots , m\), and \(x_i \ne x_k\) for \(i \ne k\). The unit flux through P is the discrete field \(J_{P} \in \mathbb {R}^{\mathcal {E}}_a\) given by

The periodic unit flux through P is the vector field \(\widetilde{J}_P \in \mathbb {R}^{\mathcal {E}}_a\) defined by

In the next lemma we collect some key properties of these vector fields. Recall the definition of the discrete divergence in (B.15).

Lemma 4.5

(Properties of \(J_P\)) Let \(P:= \{x_i\}_{i=0}^m\) be a simple path in \((\mathcal {X}, \mathcal {E})\).

-

(i)

The discrete divergence of the associated unit flux \(J_P: \mathcal {E}\rightarrow \mathbb {R}\) is given by

$$\begin{aligned} {{\,\mathrm{\text {\textsf{div}}}\,}}J_P = {\textbf{1}}_{\{x_0\}} - {\textbf{1}}_{\{x_m\}}. \end{aligned}$$(4.4)

-

(ii)

The discrete divergence of the periodic unit flux \(\widetilde{J}_P: \mathcal {E}\rightarrow \mathbb {R}\) is given by

$$\begin{aligned} {{\,\mathrm{\text {\textsf{div}}}\,}}\widetilde{J}_P(x) = {\textbf{1}}_{\{(x_0)_\textsf{v}\}}(x_\textsf{v}) - {\textbf{1}}_{\{(x_m)_\textsf{v}\}}(x_\textsf{v}), \quad x \in \mathcal {X}. \end{aligned}$$(4.5)

In particular, \({{\,\mathrm{\text {\textsf{div}}}\,}}\widetilde{J}_P \equiv 0\) iff \((x_0)_\textsf{v}= (x_m)_\textsf{v}\).

-

(iii)

The periodic unit flux \(\widetilde{J}_P: \mathcal {E}\rightarrow \mathbb {R}\) satisfies \({{\,\mathrm{\textsf{Eff}}\,}}(\widetilde{J}_P) = (x_m)_\textsf{z}- (x_0)_\textsf{z}\).

-

(iv)

For every \(j \in \mathbb {R}^d\) we have \({{\,\mathrm{{\textsf{Rep}}}\,}}(j) \ne \emptyset \).

Proof

(i) is straightforward to check, and (ii) is a direct consequence.

To prove (iii), we use the definition of \(\widetilde{J}_P\) to obtain

By construction, we have

which yields the result.

For (iv), taking \(j = e_i\), we use the connectivity and nonemptiness of \((\mathcal {X},\mathcal {E})\) to find a simple path connecting some \((z,v) \in \mathcal {X}\) to \((z+e_i,v) \in \mathcal {X}\). The resulting \(\widetilde{J}_P \in \mathbb {R}_a^\mathcal {E}\) is divergence-free by (ii) and \({{\,\mathrm{\textsf{Eff}}\,}}(\widetilde{J}_P)= e_i\) by (iii), so that \(\widetilde{J}_P \in {{\,\mathrm{{\textsf{Rep}}}\,}}(e_i)\). For a general \(j=\sum _{i=1}^d j_i e_i\) we have \({{\,\mathrm{{\textsf{Rep}}}\,}}(j) \supseteq \sum _{i=1}^d j_i {{\,\mathrm{{\textsf{Rep}}}\,}}(e_i) \ne \emptyset \).
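Identity (4.4) is easy to verify on a small example. The sketch below assumes that the unit flux \(J_P\) takes the value \(+1\) on each edge \(e_i\) of the path, \(-1\) on each reversed edge \(\overline{e_i}\), and 0 elsewhere, and that \({{\,\mathrm{\text {\textsf{div}}}\,}}J(x) = \sum _{y \sim x} J(x,y)\); the node labels and function names are hypothetical.

```python
from collections import defaultdict

def unit_flux(path):
    """Anti-symmetric unit flux along a simple path, stored as a dictionary."""
    J = {}
    for x, y in zip(path, path[1:]):
        J[(x, y)] = 1.0     # forward edge e_i
        J[(y, x)] = -1.0    # reversed edge
    return J

def divergence(J):
    """Assumed convention: div J(x) = sum over y of J(x, y)."""
    div = defaultdict(float)
    for (x, _y), value in J.items():
        div[x] += value
    return dict(div)

# Nodes are pairs (cell index z, vertex label v); the path ends one cell to the right.
path = [(0, "a"), (0, "b"), (1, "a")]
div = divergence(unit_flux(path))
print(div)
```

The divergence equals \(+1\) at the start node, \(-1\) at the end node, and 0 at the interior node. Since both endpoints carry the same vertex label, the periodised flux is divergence-free according to part (ii) of the lemma.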

4.2 The homogenised action

We are now in a position to define the homogenised energy density.

Definition 4.6

(Homogenised energy density) The homogenised energy density \(f_{\textrm{hom}}: \mathbb {R}_+ \times \mathbb {R}^d \rightarrow \mathbb {R}\cup \{ +\infty \}\) is defined by the cell formula

For \((\rho , j)\in \mathbb {R}_+ \times \mathbb {R}^d\), we say that \((m,J) \in {{\,\mathrm{{\textsf{Rep}}}\,}}(\rho ,j)\) is an optimal representative if \(F(m,J) = f_{\textrm{hom}}(\rho ,j)\). The set of optimal representatives is denoted by

In view of Lemma 4.5, the set of representatives \({{\,\mathrm{{\textsf{Rep}}}\,}}(\rho , j)\) is nonempty for every \((\rho , j)\in \mathbb {R}_+ \times \mathbb {R}^d\). The next result shows that \({{\,\mathrm{{\textsf{Rep}}}\,}}_o(\rho , j)\) is nonempty as well.
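To make the cell formula tangible, here is a toy computation, reading the formula as the infimum of \(F(m,J)\) over \((m,J) \in {{\,\mathrm{{\textsf{Rep}}}\,}}(\rho ,j)\), which is how it is used in the proofs below. Everything specific is an assumption made for illustration: a one-dimensional graph with two vertices a, b per cell, edges from (z,a) to (z,b) and from (z,b) to (z+1,a), hypothetical weights `w1`, `w2`, and a hypothetical cost \(J_1^2/(w_1 m_a) + J_2^2/(w_2 m_b)\). On this graph a periodic divergence-free flux with effective flux j carries the value j on both edges, so only the split of \(\rho \) between \(m_a\) and \(m_b\) remains to be optimised.

```python
import numpy as np

def toy_cost(m_a, m_b, j, w1, w2):
    """Hypothetical cost of one cell when both edges carry the flux j."""
    return j**2 / (w1 * m_a) + j**2 / (w2 * m_b)

def f_hom_grid(rho, j, w1, w2, num=20001):
    """Minimise the toy cost over m_a + m_b = rho by a grid search."""
    m_a = np.linspace(0.0, rho, num)[1:-1]      # drop endpoints, where the cost blows up
    values = toy_cost(m_a, rho - m_a, j, w1, w2)
    k = int(np.argmin(values))
    return float(values[k]), float(m_a[k])

def f_hom_closed_form(rho, j, w1, w2):
    """Exact minimiser value for the toy cost, obtained from the first-order condition."""
    return j**2 * (w1**-0.5 + w2**-0.5) ** 2 / rho

value, m_a_opt = f_hom_grid(rho=1.0, j=1.0, w1=1.0, w2=4.0)
exact = f_hom_closed_form(1.0, 1.0, 1.0, 4.0)
print("grid:", value, " closed form:", exact, " optimal m_a:", m_a_opt)
```

In this toy model the optimal mass distribution is not uniform: more mass is placed at the vertex attached to the edge with the smaller weight, so the effective density depends on the weights through \((w_1^{-1/2} + w_2^{-1/2})^2\) rather than through a simple average.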

Lemma 4.7

(Properties of the cell formula) Let \((\rho , j)\in \mathbb {R}_+ \times \mathbb {R}^d\). If \(f_\textrm{hom}(\rho ,j) < + \infty \), then the set of optimal representatives \({{\,\mathrm{{\textsf{Rep}}}\,}}_o(\rho , j)\) is nonempty, closed, and convex.

Proof

This follows from the coercivity of F and the direct method of the calculus of variations.

Lemma 4.8

(Properties of \(f_\textrm{hom}\) and \(f_\textrm{hom}^\infty \)) The following properties hold:

-

(i)

The functions \(f_\textrm{hom}\) and \(f_\textrm{hom}^\infty \) are lower semicontinuous and convex.

-

(ii)

There exist constants \(c > 0\) and \(C < \infty \) such that, for all \(\rho \ge 0\) and \(j \in \mathbb {R}^d\),

$$\begin{aligned} f_\textrm{hom}(\rho ,j) \ge c|j| - C (\rho + 1), \qquad f_\textrm{hom}^\infty (\rho , j) \ge c|j| - C \rho . \end{aligned}$$(4.7)

-

(iii)

The domain \({{\,\mathrm{\textsf{D}}\,}}(f_\textrm{hom}) \subseteq \mathbb {R}_+ \times \mathbb {R}^d\) has nonempty interior. In particular, for any pair \((m^\circ , J^\circ )\) satisfying (2.2), the element \((\rho ^\circ , j^\circ ) \in (0,\infty ) \times \mathbb {R}^d\) defined by

$$\begin{aligned} (\rho ^\circ , j^\circ ) := \bigg ( \sum _{x \in \mathcal {X}^Q} m^\circ (x) , \frac{1}{2}\sum _{(x,y) \in \mathcal {E}^Q} J^\circ (x,y) \big ( y_\textsf{z}- x_\textsf{z}\big ) \bigg ) \end{aligned}$$(4.8)

belongs to \({{\,\mathrm{\textsf{D}}\,}}(f_\textrm{hom})^\circ \).

Proof

(i): The convexity of \(f_\textrm{hom}\) follows from the convexity of F and the affinity of the constraints. Let us now prove lower semicontinuity of \(f_\textrm{hom}\).

Take \((\rho , j) \in \mathbb {R}_+ \times \mathbb {R}^d\) and sequences \(\{\rho _n\}_n \subseteq \mathbb {R}_+\) and \(\{j_n\}_n \subseteq \mathbb {R}^d\) converging to \(\rho \) and j respectively. Without loss of generality we may assume that \(L:= \sup _n f_\textrm{hom}(\rho _n, j_n) < \infty \). By definition of \(f_\textrm{hom}\), there exist \((m_n, J_n) \in {{\,\mathrm{{\textsf{Rep}}}\,}}(\rho _n, j_n)\) such that \(F(m_n, J_n) \le f_\textrm{hom}(\rho _n, j_n) + \frac{1}{n}\). From the growth condition (2.1) we deduce that, for some \(C < \infty \),

From the Bolzano–Weierstrass theorem we infer subsequential convergence of \(\{(m_n, J_n)\}_n\) to some \(\mathbb {Z}^d\)-periodic pair \((m, J) \in \mathbb {R}_+^\mathcal {X}\times \mathbb {R}^\mathcal {E}\). Therefore, by lower semicontinuity of F, it follows that

Since \((m,J) \in {{\,\mathrm{{\textsf{Rep}}}\,}}(\rho ,j)\), we have \(f_\textrm{hom}(\rho , j) \le F(m, J)\), which yields the desired result. Convexity and lower semicontinuity of \(f_\textrm{hom}^\infty \) follow from the definition, see [2, Section 2.6].

(ii) Take \(\rho \in \mathbb {R}_+\) and \(j \in \mathbb {R}^d\). If \(f_\textrm{hom}(\rho , j) = + \infty \), the assertion is trivial, so we assume that \(f_\textrm{hom}(\rho , j) < + \infty \). Then there exists a competitor \((m, J) \in {{\,\mathrm{{\textsf{Rep}}}\,}}(\rho ,j)\) such that \(F(m,J) \le f_\textrm{hom}(\rho ,j) + 1\). The growth condition (2.1) asserts that

Therefore, the claim follows from the fact that

where \(R_0 = \max _{(x,y) \in \mathcal {E}} | x_\textsf{z}- y_\textsf{z}|_{\ell _\infty ^d} \).

(iii): Let \(( m^\circ , J^\circ ) \in {{\,\mathrm{\textsf{D}}\,}}(F)^\circ \) satisfy Assumption 2.3, and define \((\rho ^\circ , j^\circ ) \in (0,\infty ) \times \mathbb {R}^d\) by (4.8). For \(i = 1, \ldots , d\), let \(e_i\) be the coordinate unit vector. Using Lemma 4.5(iv) we take \(J^i \in {{\,\mathrm{{\textsf{Rep}}}\,}}(e_i)\). For \(\alpha \in \mathbb {R}\) with \(|\alpha |\) sufficiently small, and \(\beta = \sum _{i=1}^d \beta _i e_i \in \mathbb {R}^d\) we define

It follows that \((m_\alpha , J_\beta ) \in {{\,\mathrm{{\textsf{Rep}}}\,}}(\rho ^\circ + \alpha , j^\circ + \beta )\), and therefore, \(f_\textrm{hom}(\rho ^\circ + \alpha , j^\circ + \beta ) \le F(m_\alpha , J_\beta )\). By Assumption 2.3, the right-hand side is finite for \(|\alpha | + |\beta |\) sufficiently small. This yields the result.
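To make the last step of (iii) concrete, here is one way the perturbed pair \((m_\alpha , J_\beta )\) could be chosen. This is a sketch under assumptions, not a quotation of the definition: we assume that \({{\,\mathrm{{\textsf{Rep}}}\,}}(\rho , j)\) is described by constraints that are linear in (m, J) (total cell mass \(\rho \), vanishing discrete divergence, and effective flux j computed as in (4.8)), and that each \(J^i\) has effective flux \(e_i\). The rescaling of \(m^\circ \) is our choice; any perturbation with cell mass \(\rho ^\circ + \alpha \) that stays inside \({{\,\mathrm{\textsf{D}}\,}}(F)^\circ \) would serve equally well.

$$\begin{aligned} m_\alpha := \Big ( 1 + \frac{\alpha }{\rho ^\circ } \Big ) m^\circ , \qquad J_\beta := J^\circ + \sum _{i=1}^d \beta _i J^i . \end{aligned}$$

With this choice, \(\sum _{x \in \mathcal {X}^Q} m_\alpha (x) = \big (1 + \frac{\alpha }{\rho ^\circ }\big ) \rho ^\circ = \rho ^\circ + \alpha \), and linearity of the map \(J \mapsto \frac{1}{2}\sum _{(x,y) \in \mathcal {E}^Q} J(x,y) ( y_\textsf{z}- x_\textsf{z})\) gives the effective flux \(j^\circ + \sum _{i=1}^d \beta _i e_i = j^\circ + \beta \). Moreover, \((m_\alpha , J_\beta ) \rightarrow (m^\circ , J^\circ )\) as \(|\alpha | + |\beta | \rightarrow 0\), so the pair remains in the open set \({{\,\mathrm{\textsf{D}}\,}}(F)^\circ \) for small perturbations.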

The homogenised action \(\mathbb {A}^\mathcal {I}_\textrm{hom}\) can now be defined by taking \(f = f_\textrm{hom}\) in Definition 3.10.

4.3 Embedding of solutions to the discrete continuity equation

For \(\varepsilon > 0\) and \(z \in \mathbb {Z}^d\) (or more generally, for \(z \in \mathbb {R}^d\)) let \(Q_\varepsilon ^z:= \varepsilon z + [0, \varepsilon )^d \subseteq \mathbb {T}^d\) denote the cube of side-length \(\varepsilon \) based at \(\varepsilon z\). For \(m \in \mathbb {R}_+^{\mathcal {X}_\varepsilon }\) and \(J \in \mathbb {R}_a^{\mathcal {E}_\varepsilon }\) we define \(\iota _\varepsilon m \in \mathcal {M}_+(\mathbb {T}^d)\) and \(\iota _\varepsilon J \in \mathcal {M}^d(\mathbb {T}^d)\) by

The embeddings (4.10) are chosen to ensure that solutions to the discrete continuity equation are mapped to solutions to the continuity equation on \(\mathbb {T}^d\), as the following result shows.

Lemma 4.9

Let \(({\pmb {m}}, \pmb {J}) \in \mathcal{C}\mathcal{E}_\varepsilon ^\mathcal {I}\) solve the discrete continuity equation and define \(\mu _t = \iota _\varepsilon m_t\) and \(\nu _t = \iota _\varepsilon J_t\). Then \(({\varvec{\mu }}, {\varvec{\nu }})\) solves the continuity equation (i.e., \(({\varvec{\mu }}, {\varvec{\nu }}) \in \mathbb{C}\mathbb{E}^\mathcal {I}\)).

Proof

Let \(\varphi : \mathcal {I}\times \mathbb {T}^d \rightarrow \mathbb {R}\) be smooth with compact support. Then:

On the other hand, the discrete continuity equation yields

Comparing both expressions, we obtain the desired identity \(\partial _t {\varvec{\mu }}+ \nabla \cdot {\varvec{\nu }}= 0\) in the sense of distributions.

The following result provides a useful bound for the norm of the embedded flux.

Lemma 4.10

For \(J \in \mathbb {R}_a^{\mathcal {E}_\varepsilon }\) we have

Proof

This follows immediately from (4.11), since \(\mathscr {L}^d \big (Q_\varepsilon ^{(1-s)x_\textsf{z}+ s y_\textsf{z}}\big ) = \varepsilon ^d \) and \(|y_\textsf{z}- x_\textsf{z}| \le R_0 \sqrt{d}\) for \((x,y) \in \mathcal {E}_\varepsilon \).

Note that both measures in (4.10) are absolutely continuous with respect to the Lebesgue measure. The next result provides an explicit expression for the density of the momentum field. Recall the definition of the shifting operators \(\sigma _\varepsilon ^{\bar{z}}\) in (2.5).

Lemma 4.11

(Density of the embedded flux) Fix \(\varepsilon <\frac{1}{2R_0}\). For \(J \in \mathbb {R}_a^{\mathcal {E}_\varepsilon }\) we have \(\iota _\varepsilon J = j_\varepsilon \mathscr {L}^d\) where \(j_\varepsilon : \mathbb {T}^d\rightarrow \mathbb {R}^d\) is given by

Here, \(J_u(x, y)\) is a convex combination of \(\big \{ \sigma _\varepsilon ^{\bar{z}} J (x,y )\big \}_{{\bar{z}}\in \mathbb {Z}_\varepsilon ^d}\), i.e.,

where \(\lambda _u^{\varepsilon , {\bar{z}}}(x,y) \ge 0\) and \(\sum _{{\bar{z}} \in \mathbb {Z}_\varepsilon ^d} \lambda _u^{\varepsilon ,{\bar{z}}}(x,y) = 1\). Moreover,

Proof

Fix \(\varepsilon < \frac{1}{2R_0}\), let \(z \in \mathbb {Z}_\varepsilon ^d\) and \(u \in Q_\varepsilon ^z\). We have

which is the desired form (4.11) with

for \((x, y) \in \mathcal {E}_\varepsilon \) with \(x_\textsf{z}= z\). Since the family of cubes \( \big \{ Q_\varepsilon ^{\bar{z} + sy_\textsf{z}+(1-s)x_\textsf{z}} \big \}_{\bar{z} \in \mathbb {Z}_\varepsilon ^d} \) is a partition of \(\mathbb {T}^d\), it follows that \(\sum _{{\bar{z}} \in \mathbb {Z}_\varepsilon ^d} \lambda _u^{\varepsilon ,{\bar{z}}}(x,y) = 1\).

To prove the final claim, let \((x, y) \in \mathcal {E}_\varepsilon \) with \(x_\textsf{z}= z\) as above and take \(\bar{z} \in \mathbb {Z}_\varepsilon ^d\) with \(|{\bar{z}}|_\infty > R_0 + 1\). Since \(|x_\textsf{z}- y_\textsf{z}| \le R_0\), the triangle inequality yields

for \(s \in [0,1]\). Therefore, \(u \in Q_\varepsilon ^z\) implies \(\chi _{Q_\varepsilon ^{\bar{z} + (1-s)x_\textsf{z}+ s y_\textsf{z}}}(u) = 0\), hence \(\lambda _u^{\varepsilon , {\bar{z}}}(x,y) = 0\) as desired.
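The partition argument used in this proof can be checked numerically on a small torus. The following Python sketch is illustrative only: the function names, the choice \(\varepsilon = 1/n\), and the parametrisation of the shifted cubes by \(s w\) (with `w` standing in for \(y_\textsf{z}- x_\textsf{z}\)) are assumptions made for this example, and the weights are approximated by a midpoint rule in s rather than computed exactly.

```python
import itertools
import math
import random


def cube_index(u, shift, n):
    """Index zbar in (Z/nZ)^d with u in eps*(zbar + shift) + [0, eps)^d, eps = 1/n."""
    return tuple(math.floor(uk * n - ck) % n for uk, ck in zip(u, shift))


def count_cubes_containing(u, shift, n):
    """Brute-force count of shifted cubes containing u; a partition gives exactly 1."""
    hits = 0
    for zbar in itertools.product(range(n), repeat=len(u)):
        # Coordinates of u relative to the cube corner, wrapped on the torus.
        rel = [(uk * n - zk - ck) % n for uk, zk, ck in zip(u, zbar, shift)]
        if all(r < 1.0 for r in rel):
            hits += 1
    return hits


def weights(u, w, n, samples=1000):
    """Midpoint-rule weights: fraction of s in [0, 1] with u in the cube shifted by s*w."""
    counts = {}
    for k in range(samples):
        s = (k + 0.5) / samples
        zbar = cube_index(u, [s * wk for wk in w], n)
        counts[zbar] = counts.get(zbar, 0) + 1
    return {zbar: c / samples for zbar, c in counts.items()}


def torus_dist(a, b, n):
    """Sup-norm distance between two indices in (Z/nZ)^d."""
    return max(min((ak - bk) % n, (bk - ak) % n) for ak, bk in zip(a, b))


if __name__ == "__main__":
    random.seed(0)
    n, w, r0 = 8, (2, -1), 2  # eps = 1/8 is below 1/(2*r0)
    u = (random.random(), random.random())
    lam = weights(u, w, n)
    z = cube_index(u, (0, 0), n)
    # Total weight and the largest index offset carrying positive weight.
    print(sum(lam.values()), max(torus_dist(zb, z, n) for zb in lam))
```

In this toy setting the weights sum to one because, for each fixed s, exactly one shifted cube contains u, and only indices within sup-norm distance \(R_0 + 1\) of the base cube receive positive weight.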

5 Main results

In this section we present the main result of this paper, which asserts that the discrete action functionals \(\mathcal {A}_\varepsilon \) converge to a continuous action functional \(\mathbb {A}= \mathbb {A}_\textrm{hom}\) with the nontrivial homogenised action density function \(f = f_\textrm{hom}\) defined in Sect. 4.

5.1 Main convergence result

We are now ready to state our main result. We use the embedding \(\iota _\varepsilon : \mathbb {R}_+^{\mathcal {X}_\varepsilon }\rightarrow \mathcal {M}_+(\mathbb {T}^d)\) defined in (4.10a). The proof of this result is given in Sects. 7 and 8.

Theorem 5.1

(\(\Gamma \)-convergence) Let \((\mathcal {X}, \mathcal {E})\) be a locally finite and \(\mathbb {Z}^d\)-periodic connected graph of bounded degree (see Assumption 2.1). Let \(F: \mathbb {R}_+^\mathcal {X}\times \mathbb {R}_a^\mathcal {E}\rightarrow \mathbb {R}\cup \{+\infty \}\) be a cost function satisfying Assumption 2.3. Then the functionals \(\mathcal {A}^\mathcal {I}_\varepsilon \) \(\Gamma \)-converge to \(\mathbb {A}^\mathcal {I}_{\textrm{hom}}\) as \(\varepsilon \rightarrow 0\) with respect to the weak (and vague) topology. More precisely:

-

(i)

(liminf inequality) Let \({\varvec{\mu }}\in \mathcal {M}_+(\mathcal {I}\times \mathbb {T}^d)\). For any sequence of curves \(\{{\pmb {m}}^\varepsilon \}_{\varepsilon }\) with \({\pmb {m}}^\varepsilon = (m_t^\varepsilon )_{t \in \mathcal {I}} \subseteq \mathbb {R}_+^{\mathcal {X}_\varepsilon }\) such that \(\iota _\varepsilon {\pmb {m}}^\varepsilon \rightarrow {\varvec{\mu }}\) vaguely in \(\mathcal {M}_+(\mathcal {I}\times \mathbb {T}^d)\) as \(\varepsilon \rightarrow 0\), we have the lower bound

$$\begin{aligned} \liminf _{\varepsilon \rightarrow 0} \mathcal {A}^\mathcal {I}_\varepsilon ({\pmb {m}}^\varepsilon ) \ge \mathbb {A}^\mathcal {I}_{\textrm{hom}}({\varvec{\mu }}). \end{aligned}$$(5.1)

-

(ii)

(limsup inequality) For any \({\varvec{\mu }}\in \mathcal {M}_+(\mathcal {I}\times \mathbb {T}^d)\) there exists a sequence of curves \(\{{\pmb {m}}^\varepsilon \}_{\varepsilon }\) with \({\pmb {m}}^\varepsilon = (m_t^\varepsilon )_{t \in \mathcal {I}} \subseteq \mathbb {R}_+^{\mathcal {X}_\varepsilon }\) such that \(\iota _\varepsilon {\pmb {m}}^\varepsilon \rightarrow {\varvec{\mu }}\) weakly in \(\mathcal {M}_+(\mathcal {I}\times \mathbb {T}^d)\) as \(\varepsilon \rightarrow 0\), and we have the upper bound

$$\begin{aligned} \limsup _{\varepsilon \rightarrow 0} \mathcal {A}^\mathcal {I}_\varepsilon ({\pmb {m}}^\varepsilon ) \le \mathbb {A}^\mathcal {I}_{\textrm{hom}}({\varvec{\mu }}). \end{aligned}$$(5.2)

Remark 5.2

(Necessity of the interior domain condition) Assumption 2.3 is crucial in order to obtain the \(\Gamma \)-convergence of the discrete energies. To see this, let us consider the one-dimensional graph \(\mathcal {X}=\mathbb {Z}\) and the edge-based cost associated with

Clearly F satisfies conditions (a)–(c) from Assumption 2.3, but (d) fails to hold. The constraint \(m(x)=m(y)\) on neighbouring \(x,y \in \mathcal {X}\) forces every \({\pmb {m}}: \mathcal {I}\rightarrow \mathbb {R}_+^{\mathcal {X}_\varepsilon }\) with \(\mathcal {A}_\varepsilon ({\pmb {m}})<\infty \) to be constant in space (and hence in time, by mass preservation). Therefore, the \(\Gamma \)-limit of the \(\mathcal {A}_\varepsilon \) is finite only on constant measures \({\varvec{\mu }}= \alpha \mathscr {L}^{d+1}\), with \(\alpha \in \mathbb {R}_+\). On the other hand, we have (see Footnote 3) that \(f_\textrm{hom}(\rho ,j)=\frac{|j|^2}{\rho }\), which corresponds to the \(\mathbb {W}_2\) action on the line; in particular, the homogenised action does not coincide with this \(\Gamma \)-limit.

It is interesting to note that if the constraint “\(m(x) = m(y)\)” is replaced by a weaker one of the form “\(|m(x)-m(y)|\le \delta \)” for some \(\delta >0\), then all the assumptions are satisfied and our theorem can be applied. Intuitively speaking, the constraint which forces admissible curves to be constant is replaced by a constraint that merely forces admissible curves to be Lipschitz; in this case the limit coincides with the \(\mathbb {W}_2\) action.

See also Sect. 9.2 for a general treatment of the cell formula on the integer lattice \(\mathcal {X}=\mathbb {Z}^d\).

5.2 Scaling limits of Wasserstein transport problems

For \(1 \le p < \infty \), recall that the energy density associated to the Wasserstein metric \(\mathbb {W}_p\) on \(\mathbb {R}^d\) is given by \(f(\rho , j) = \frac{|j|^p}{\rho ^{p-1}}\). This function satisfies the scaling relations \(f(\lambda \rho , \lambda j) = \lambda f(\rho , j)\) for \(\lambda > 0\) and \(f(\rho , \lambda j) = |\lambda |^p f(\rho , j)\) for \(\lambda \in \mathbb {R}\).

In discrete approximations of \(\mathbb {W}_p\) on a periodic graph \((\mathcal {X},\mathcal {E})\), it is reasonable to assume analogous scaling relations for the function F, namely \(F(\lambda m, \lambda J) = \lambda F(m, J)\) and \(F(m, \lambda J) = |\lambda |^p F(m, J)\). The next result shows that if such scaling relations are imposed, we always obtain convergence to \(\mathbb {W}_p\) with respect to some norm on \(\mathbb {R}^d\). This norm does not have to be Hilbertian (even in the case \(p=2\)) and is characterised by the cell problem (4.6).

Corollary 5.3

Let \(1 \le p < \infty \), and suppose that F has the following scaling properties for \(m \in \mathbb {R}_+^\mathcal {X}\) and \(J \in \mathbb {R}_a^\mathcal {E}\):

-

(i)

\(F(\lambda m, \lambda J) = \lambda F(m, J)\) for all \(\lambda \ge 0\);

-

(ii)

\(F(m, \lambda J) = |\lambda |^p F(m, J)\) for all \(\lambda \in \mathbb {R}\).

Then \(f_\textrm{hom}(\rho , j) = \frac{\Vert j \Vert ^p}{\rho ^{p-1}}\) for some norm \(\Vert \cdot \Vert \) on \(\mathbb {R}^d\).

Proof

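Fix \(\rho > 0\) and \(j \in \mathbb {R}^d\). The scaling assumptions imply that

$$\begin{aligned} f_\textrm{hom}(\lambda \rho , \lambda j) = \lambda f_\textrm{hom}(\rho , j) \quad \text {for } \lambda > 0, \qquad f_\textrm{hom}(\rho , \lambda j) = |\lambda |^p f_\textrm{hom}(\rho , j) \quad \text {for } \lambda \in \mathbb {R}. \end{aligned}$$(5.3)

Consequently,

$$\begin{aligned} f_\textrm{hom}(\rho , j) = \rho f_\textrm{hom}\Big ( 1, \frac{j}{\rho } \Big ) = \frac{f_\textrm{hom}(1, j)}{\rho ^{p-1}}. \end{aligned}$$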

We claim that \(f_\textrm{hom}(1, j) > 0\) whenever \(j \ne 0\). Indeed, it follows from (4.7) that \(f_\textrm{hom}(1, j) > 0\) whenever |j| is sufficiently large. By homogeneity (5.3), the same holds for every \(j \ne 0\). It also follows from (5.3) that \(f_\textrm{hom}(1, 0) = 0\).

We can thus define \(\Vert j\Vert := f_\textrm{hom}(1,j)^{1/p} \in [0,\infty )\). In view of the previous comments, we have \(\Vert 0\Vert = 0\) and \(\Vert j\Vert > 0\) for all \(j \in \mathbb {R}^d \setminus \{0\}\). The homogeneity (5.3) implies that \(\Vert \lambda j\Vert = |\lambda |\, \Vert j \Vert \) for \(j \in \mathbb {R}^d\) and \(\lambda \in \mathbb {R}\).

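It remains to show the triangle inequality \(\Vert j_1 + j_2 \Vert \le \Vert j_1\Vert + \Vert j_2\Vert \) for \(j_1, j_2 \in \mathbb {R}^d\). Since the inequality is trivial if \(j_1 = 0\) or \(j_2 = 0\), we may assume that \(\Vert j_1\Vert > 0\) and \(\Vert j_2\Vert > 0\). For \(\lambda \in (0,1)\), the convexity of \(f_\textrm{hom}\) (see Lemma 4.8) and the homogeneity (5.3) yield

$$\begin{aligned} \Vert j_1 + j_2 \Vert ^p = f_\textrm{hom}\Big ( 1, (1-\lambda ) \frac{j_1}{1-\lambda } + \lambda \frac{j_2}{\lambda } \Big ) \le (1-\lambda ) f_\textrm{hom}\Big ( 1, \frac{j_1}{1-\lambda } \Big ) + \lambda f_\textrm{hom}\Big ( 1, \frac{j_2}{\lambda } \Big ) = (1-\lambda )^{1-p} \Vert j_1\Vert ^p + \lambda ^{1-p} \Vert j_2\Vert ^p. \end{aligned}$$

Substitution of \(\lambda = \frac{\Vert j_2\Vert }{\Vert j_1\Vert + \Vert j_2\Vert }\) turns the right-hand side into \((\Vert j_1\Vert + \Vert j_2\Vert )^p\), which yields the triangle inequality.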
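The construction \(\Vert j\Vert := f_\textrm{hom}(1,j)^{1/p}\) can be illustrated numerically. The Python sketch below does not evaluate the cell formula (4.6): `f_toy` is an assumed density, chosen only because it is convex and satisfies the scaling relations of (i) and (ii), and all function names are hypothetical.

```python
def f_toy(rho, j, p):
    """Assumed toy density sum_i |j_i|^p / rho^(p-1); it has the scaling of (i) and (ii)."""
    return sum(abs(jk) ** p for jk in j) / rho ** (p - 1)


def norm_from_density(j, p):
    """Candidate norm ||j|| := f(1, j)^(1/p), as in the proof of Corollary 5.3."""
    return f_toy(1.0, j, p) ** (1.0 / p)


def parallelogram_defect(j1, j2, p):
    """||j1+j2||^2 + ||j1-j2||^2 - 2(||j1||^2 + ||j2||^2); zero for Hilbertian norms."""
    plus = [a + b for a, b in zip(j1, j2)]
    minus = [a - b for a, b in zip(j1, j2)]
    lhs = norm_from_density(plus, p) ** 2 + norm_from_density(minus, p) ** 2
    rhs = 2.0 * (norm_from_density(j1, p) ** 2 + norm_from_density(j2, p) ** 2)
    return lhs - rhs


if __name__ == "__main__":
    j1, j2 = (1.0, 0.0), (0.0, 1.0)
    for p in (1, 2, 3):
        n1, n2 = norm_from_density(j1, p), norm_from_density(j2, p)
        n12 = norm_from_density((1.0, 1.0), p)
        # Exponent, triangle inequality check, and parallelogram defect.
        print(p, n12 <= n1 + n2 + 1e-12, parallelogram_defect(j1, j2, p))
```

For this toy density the resulting norm is the \(\ell _p\) norm on \(\mathbb {R}^d\), so the parallelogram law fails for \(p \ne 2\) and holds for \(p = 2\). The example therefore does not reproduce the non-Hilbertian behaviour for \(p = 2\) mentioned before Corollary 5.3, which requires a cell problem with nontrivial local geometry.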

5.3 Compactness results

As we frequently need to compare measures with unequal mass in this paper, it is natural to work with the Kantorovich–Rubinstein norm. This norm is closely related to the transport distance \(\mathbb {W}_1\); see “Appendix 1”.

The following compactness result holds for solutions to the continuity equation with bounded action. As usual, we use the notation \({\varvec{\mu }}(\textrm{d}x, \textrm{d}t) = \mu _t(\textrm{d}x) \, \textrm{d}t\).

Theorem 5.4

(Compactness under linear growth) Let \({\pmb {m}}^\varepsilon :\mathcal {I}\rightarrow \mathbb {R}_+^{\mathcal {X}_\varepsilon }\) be such that

Then there exists a curve \((\mu _t)_{t \in \mathcal {I}} \in \textrm{BV}_{\textrm{KR}}(\mathcal {I}; \mathcal {M}_+(\mathbb {T}^d))\) such that, up to extracting a subsequence,

-

(i)

\(\iota _\varepsilon {\pmb {m}}^\varepsilon \rightarrow {\varvec{\mu }}\) weakly in \(\mathcal {M}_+(\mathcal {I}\times \mathbb {T}^d)\);

-

(ii)

\(\iota _\varepsilon m_t^\varepsilon \rightarrow \mu _t\) weakly in \(\mathcal {M}_+(\mathbb {T}^d)\) for almost every \(t\in \mathcal {I}\);

-

(iii)

\(t \mapsto \mu _t(\mathbb {T}^d)\) is constant.

The proof of this result is given in Sect. 6.

Under a superlinear growth condition on the cost function F, the following stronger compactness result holds.

Assumption 5.5