Abstract

We study a free boundary isometric embedding problem for abstract Riemannian two-manifolds with the topology of the disc. Under the assumption of positive Gauss curvature and geodesic curvature of the boundary being equal to one, we show that every such disc may be isometrically embedded into the Euclidean three-space \(\mathbb {R}^3\) such that the image of the boundary meets the unit sphere \(\mathbb {S}^2\) orthogonally. We also show that the embedding is unique up to rotations and reflections through planes containing the origin. Finally, we define a new Brown-York type quasi-local mass for certain free boundary surfaces and discuss its positivity.

1 Introduction

It is a fundamental problem in differential geometry to understand which abstract Riemannian manifolds can be realised as embedded submanifolds of a Euclidean space. In a seminal work, J. Nash showed that every sufficiently smooth Riemannian manifold can be isometrically embedded in a higher dimensional Euclidean space, see [27]. A similar result was later obtained by M. Günther, see [11]. While these results are of broad generality, they give little information about the dimension of the ambient Euclidean space and the extrinsic geometry of the embedded manifold.

By contrast, stronger results can be obtained in more restrictive settings. In 1916, H. Weyl conjectured that every sufficiently smooth Riemannian metric h defined on the unit sphere \({\mathbb {S}}^2\) with positive Gauss curvature \(K_h\) may be realised as a convex surface in \({\mathbb {R}}^3\). This problem, which is now known as the Weyl problem, was solved by H. Lewy in 1938 if h is analytic, see [18], and in a landmark paper by L. Nirenberg if h is of class \(C^4\), see [28].

As had been proposed by H. Weyl, L. Nirenberg used the continuity method in his proof. Namely, he constructed a smooth one-parameter family of positive curvature metrics \(h_t,\) where \(t\in [0,1],\) such that \(h_1\) equals h and \(h_0\) is the round metric. The round metric is realised by the round sphere \({\mathbb {S}}^2\subset {\mathbb {R}}^3\) and it thus suffices to show that the set of parameters t for which the Weyl problem can be solved is open and closed in [0, 1]. In order to show that this set is open, L. Nirenberg used a fixed point argument which is based on proving existence and estimates for a linearised equation. To show that the set is closed, he established a global \(C^2\)-estimate for solutions of fully non-linear equations of Monge-Ampère type. Here, the positivity of the Gauss curvature translates into ellipticity of the equation. We remark that a similar result was independently obtained by A. Pogorelov using different techniques, see [30].

L. Nirenberg’s result has subsequently been generalized in various ways. In the degenerate case \(K_h\ge 0\), P. Guan and Y. Li as well as J. Hong and C. Zuily showed that there exists a \(C^{1,1}\)-embedding into the Euclidean space, see [8, 17]. Regarding the regularity required on the metric h, E. Heinz established an interior \(C^2\)-estimate which allowed him to relax the regularity assumption in [28] to h being of class \(C^3\), see [12, 13]. Using similar techniques, F. Schulz further weakened the assumption to h being of class \( C^{2,\alpha }\) for some \(\alpha \in (0,1)\), see [32]. We note that the isometric embedding problem for more general target manifolds, particularly with warped product metrics, has also been studied. We refer to the works of P. Guan and S. Lu, see [9], S. Lu, see [22], as well as C. Li and Z. Wang, see [23].

A natural extension of the Weyl problem consists in the consideration of isometric embedding problems for manifolds with boundary. In [16], J. Hong considered Riemannian metrics on the disc (D, h) with both positive Gauss curvature \(K_h\) and positive geodesic curvature of the boundary \(k_h\) and showed that (D, h) can be isometrically embedded into \({\mathbb {R}}^3\) such that the image of the boundary is contained in a half space. In [10], B. Guan studied a similar embedding problem in the Minkowski space. These boundary value problems are reminiscent of the classical free boundary problem for minimal surfaces, see for instance [29]. However, in the case of minimal surfaces, the variational principle forces the contact angle to be \(\pi /2\). By contrast, there is no additional information in [16] about the contact angle between the embedding of \(\partial D\) and the supporting half-space. In order to make this distinction precise, we call surfaces that meet a supporting surface at a contact angle of \(\pi /2\) free boundary surfaces (with respect to the supporting surface). Moreover, we call geometric boundary problems which require the solution to be a free boundary surface free boundary problems.

If the supporting surface is a half-space, free boundary problems can often be solved using a reflection argument. Consequently, it is more interesting to consider more general supporting surfaces, the simplest non-trivial example being the unit sphere. In recent years, there has been considerable activity in the study of free boundary problems with respect to the unit sphere. For example, A. Fraser and R. Schoen studied free boundary minimal surfaces in the unit ball, see [5, 6, 7]. B. Lambert and J. Scheuer studied the free boundary inverse mean curvature flow and derived a geometric inequality for convex free boundary surfaces, see [20, 21] and also [37]. G. Wang and C. Xia proved the uniqueness of stable capillary surfaces in the unit ball, see [40], while J. Scheuer, G. Wang and C. Xia proved certain Alexandrov-Fenchel type inequalities, see [35].

In this paper, we study a free boundary isometric embedding problem with respect to the unit sphere. More precisely, we prove the following theorem.

Theorem 1.1

Let \(k\ge 4\), \(\alpha \in (0,1)\) and \(h\in C^{k,\alpha }({\bar{D}},{\text {Sym}}({\mathbb {R}}^2))\) be a Riemannian metric on the closed unit disc \({{\bar{D}}}\) with positive Gauss curvature \(K_h\) and geodesic curvature \(k_h\) along \(\partial D\) equal to one. Then there exists an isometric embedding

$$\begin{aligned} F:({\bar{D}},h)\rightarrow {\mathbb {R}}^3 \end{aligned}$$

of class \(C^{k+1,\alpha }\) such that \(F(\partial D)\subset {\mathbb {S}}^2\) and \(F({\bar{D}})\) meets \({\mathbb {S}}^2\) orthogonally along \(F(\partial D)\). This embedding is unique up to rotations and reflections through planes that contain the origin.

Remark: As pointed out by the anonymous referee, the proof of Theorem 1.1 still works if h is only of class \(C^{3,1}\). In this case, the presented argument shows that F is of class \(C^{4,\beta }\) for every \(\beta \in (0,1)\).

One may check that the condition \(k_h=1\) is necessary. However, we expect that the regularity assumption and the condition \(K_h>0\) may be weakened in a similar way as for the classical Weyl problem. Before we give an overview of the proof, we provide some motivation to study this problem besides its intrinsic geometric interest.

In general relativity, the resolution of the classical Weyl problem is used to define the so-called Brown-York mass, see [1]. Let (M, g) be a compact Riemannian three-manifold with boundary \(\partial M\) and assume that \(\partial M\) is a convex sphere. L. Nirenberg’s theorem implies that \(\partial M\) may be isometrically embedded into \({\mathbb {R}}^3\). We denote the mean curvature of \(\partial M\) as a subset of \({\mathbb {R}}^3\) by \({{\bar{H}}}\) and the mean curvature of \(\partial M\) as a subset of M by H. The Brown-York mass is then defined to be

$$\begin{aligned} m_{BY}(\partial M)=\frac{1}{8\pi }\int _{\partial M}({{\bar{H}}}-H)\,\text {d}vol_h, \end{aligned}$$

where h is the metric of \(\partial M\). It was already proved by S. Cohn-Vossen in 1927 that an isometric embedding of a convex surface is unique up to rigid motions provided it exists, see [2]. It follows that the Brown-York mass is well-defined. Under the assumption that \(\partial M\) is strictly mean convex and that (M, g) satisfies the dominant energy condition \(R\ge 0\), where R denotes the scalar curvature of (M, g), Y. Shi and L.-F. Tam proved in [33] that the Brown-York mass is non-negative and that equality holds precisely if (M, g) is isometric to a smooth domain in \({\mathbb {R}}^3\). In fact, they proved that the positive mass theorem for asymptotically flat manifolds is equivalent to the positivity of the Brown-York mass of every compact and convex domain. A weaker inequality, which still implies the positive mass theorem, was proven by O. Hijazi and S. Montiel using spinorial methods, see [15]. We would also like to mention that C.-C. Liu and S.-T. Yau introduced a quasi-local mass in the space-time case and proved positivity thereof, see [24, 25]. Their mass was later on generalized and further studied by M.-T. Wang and S.-T. Yau, see [41, 42]. Moreover, S. Lu and P. Miao derived a quasi-local mass type inequality in the Schwarzschild space, see [19].

In the setting of free boundary surfaces, we instead consider a compact three-manifold (M, g) with a non-smooth boundary \(\partial M=S\cup \Sigma \). Here, S and \(\Sigma \) are compact smooth surfaces meeting orthogonally along their common boundary \(\partial \Sigma =\partial S\). We assume that \(\Sigma \) is strictly mean convex, has positive Gauss curvature and geodesic curvature along the boundary equal to one. The latter requirement is for instance satisfied if S is a totally umbilical surface with mean curvature H(S) equal to two. We may then isometrically embed \(\Sigma \) into \({\mathbb {R}}^3\) with free boundary in the unit sphere. It is natural to ask if we can expect a geometric inequality in the spirit of [33] to hold on the free boundary surface \(\Sigma \). In Appendix B, we provide some numerical evidence that this might indeed be the case. The condition \(H(S)\ge 2\) in the following conjecture is natural in the context of positive mass type theorems, see for instance [26].

Conjecture 1.2

Let (M, g) be a manifold with boundary \(\partial M=S\cup \Sigma \) where S and \(\Sigma \) are smooth discs meeting orthogonally along \(\partial \Sigma =\partial S\). Assume that \(\Sigma \) is strictly mean convex, has positive Gauss curvature as well as geodesic curvature along \(\partial \Sigma \) equal to one and that \(R\ge 0\) on M and \(H(S)\ge 2\) on S. Then there holds

Equality holds if and only if (M, g) is isometric to a domain in \({\mathbb {R}}^3\) and if the isometry maps S to a subset of \({\mathbb {S}}^2\).

A positive answer to this conjecture would mean that in certain cases, mass can be detected with only incomplete information about the geometry of the boundary of the domain under consideration. It turns out that proving such an inequality is not straightforward. On the one hand, there does not seem to be an obvious approach to adapt spinorial methods such as in [15] to the free boundary setting. On the other hand, arguing as in [33], we observe that a positive answer to the conjecture is equivalent to a positive mass type theorem for asymptotically flat manifolds which are modeled on a solid cone.

We now describe the proof of Theorem 1.1. As in [28], we use the continuity method and smoothly connect the metric h to the metric \(h_0\) of the spherical cap whose boundary has azimuthal angle \(\pi /4\). In particular, the free boundary isometric embedding problem can be solved for \(h_0\). We then need to show that the solution space is open and closed. Contrary to the argument by Nirenberg, the non-linearity of the boundary condition does not allow us to use a fixed point argument. We instead use a power series of maps, as H. Weyl had initially proposed, where the maps are obtained as solutions of a linearised first-order system. This system was previously studied by C. Li and Z. Wang in [23]. Another difficulty arises from the fact that the prescribed contact angle makes the problem seemingly overdetermined. We thus solve the linearised problem without prescribing the contact angle and recover the additional boundary condition from the constancy of the geodesic curvature and a lengthy algebraic computation. Regarding the convergence of the power series, we prove a-priori estimates using the Nirenberg trick. Here, it turns out that a recurrence relation for the Catalan numbers plays an important part. In order to show that the solution space is closed, we observe that the Codazzi equations imply a certain algebraic structure for the normal derivative of the mean curvature at the boundary. This allows us to use the maximum principle to prove a global \(C^2\)-estimate for every isometric embedding.
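For orientation, we record the standard convolution recurrence for the Catalan numbers \(C_l\), which is presumably the recurrence alluded to above, together with the growth bound that makes it useful for majorising power series. How exactly it enters the estimates of this paper is not specified in this overview, so the second display is only an illustration: the sequence \(a_l\) and the constant c are hypothetical and not taken from the paper.

$$\begin{aligned} C_0=1,\qquad C_{l+1}=\sum _{i=0}^{l}C_iC_{l-i},\qquad C_l=\frac{1}{l+1}\left( {\begin{array}{c}2l\\ l\end{array}}\right) \le 4^l. \end{aligned}$$

Suppose that non-negative numbers \(a_l\), \(l\ge 1\), satisfy \(a_1\le c\) and \(a_l\le \sum _{i=1}^{l-1}a_ia_{l-i}\) for \(l\ge 2\). Then induction and the recurrence give

$$\begin{aligned} a_l\le \sum _{i=1}^{l-1}C_{i-1}C_{l-i-1}\,c^{l}=C_{l-1}\,c^l\le 4^{l-1}c^l, \end{aligned}$$

so that \(\sum _{l}a_lt^l\) converges for \(|t|<(4c)^{-1}\). A quadratic non-linearity therefore only costs a geometric factor in the coefficients.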

The rest of this paper is organized as follows. In Sect. 2, we define the so-called solution space and show that the space of metrics on D with positive Gauss curvature and geodesic curvature along \(\partial D\) being equal to 1 is path-connected. In Sect. 3, we study the linearised problem and show that the solution space is open. In Sect. 4, we prove a global curvature estimate and show that the solution space is closed. In Sect. 5, we prove Theorem 1.1.

2 Basic properties of the solution space

Let \(\alpha \in (0,1)\) and fix an integer \(k\ge 2\). Let \(D\subset {\mathbb {R}}^2\) be the unit two-disc and consider a \(C^{k,\alpha }\)-Riemannian metric h defined on the closure \({\bar{D}}\). We assume that the Gauss curvature \(K_h\) is positive and that the geodesic curvature of \(\partial D\) satisfies \(k_h=1\).

We would like to find a map \( F\in C^{k+1,\alpha }({\bar{D}},{\mathbb {R}}^3)\) which isometrically embeds the Riemannian manifold \(({\bar{D}},h)\) into \({\mathbb {R}}^3\) such that the boundary \( F(\partial D)\) meets the unit sphere \({\mathbb {S}}^2\) orthogonally. To this end, we consider the space \({\mathcal {G}}^{k,\alpha }\) of all Riemannian metrics \({\tilde{h}}\in C^{k,\alpha }({\bar{D}},{\text {Sym}}({\mathbb {R}}^2))\) with positive Gauss curvature and geodesic curvature along \(\partial D\) equal to one. Up to a diffeomorphism of \({\bar{D}}\), we may write the metric \({\tilde{h}}\) in isothermal coordinates, see [3]. This means that there exists a positive function \({\tilde{E}}\in C^{k,\alpha }({\bar{D}})\) such that

$$\begin{aligned} {\tilde{h}}={\tilde{E}}^2(dx_1^2+dx_2^2)={\tilde{E}}^2(r^2d\varphi ^2+dr^2). \end{aligned}$$

Here, \((x_1,x_2)\) denote the standard Euclidean coordinates on D while \((\varphi ,r)\) denote polar coordinates centred at the origin. A map \({\tilde{F}}\) isometrically embeds \(({\bar{D}},{\tilde{h}})\) into \({\mathbb {R}}^3\) with free boundary in the unit sphere if and only if it solves the following boundary value problem

We will prove the existence of such a map F corresponding to the metric h using the continuity method. More precisely, we define the solution space \({\mathcal {G}}_*^{k,\alpha }\subset {\mathcal {G}}^{k,\alpha }\) to be the space which contains all metrics \({\tilde{h}}\in {\mathcal {G}}^{k,\alpha }\) for which there exists a map \({\tilde{F}}\in C^{k+1,\alpha }({\bar{D}},{\mathbb {R}}^3)\) solving the problem (1). One may construct explicit examples to see that the space \({\mathcal {G}}_*^{k,\alpha }\) is non-empty. In order to show that \({\mathcal {G}}_*^{k,\alpha }={\mathcal {G}}^{k,\alpha }\), we equip \({\mathcal {G}}^{k,\alpha }\) with the \(C^{k,\alpha }({\bar{D}},{\text {Sym}}({\mathbb {R}}^2))\)-topology and show that \({\mathcal {G}}^{k,\alpha }\) is path-connected and that \({\mathcal {G}}_*^{k,\alpha }\) is both open and closed in \({\mathcal {G}}^{k,\alpha }\). It actually turns out that \({\mathcal {G}}^{k,\alpha }\) is not only path-connected but that the paths can be chosen to be analytic maps from the unit interval to \({\mathcal {G}}^{k,\alpha }\).
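One family of explicit examples showing that the solution space is non-empty is given by spherical caps. The following computation is a sketch; the radius \(\rho \), the centre c and the cap \(S_\rho \) are notation introduced here for illustration only. Given \(\rho >0\), let \(c=\sqrt{1+\rho ^2}\,e_3\) and \(S_\rho =\{x\in {\mathbb {R}}^3:|x-c|=\rho ,\ |x|\le 1\}\). For \(x\in \partial S_\rho \subset {\mathbb {S}}^2\), expanding \(|x-c|^2=\rho ^2\) with \(|x|=1\) yields

$$\begin{aligned} x\cdot c=1\qquad \text {and hence}\qquad x\cdot (x-c)=0, \end{aligned}$$

so the normal \((x-c)/\rho \) of the cap is orthogonal to the normal x of \({\mathbb {S}}^2\) and \(S_\rho \) meets \({\mathbb {S}}^2\) orthogonally. The Gauss curvature of \(S_\rho \) equals \(\rho ^{-2}>0\). The boundary is a circle of Euclidean radius \(\rho /\sqrt{1+\rho ^2}\), which corresponds to the polar angle \(\theta _0\) on the sphere of radius \(\rho \) with \(\sin \theta _0=1/\sqrt{1+\rho ^2}\) and \(\cos \theta _0=\rho /\sqrt{1+\rho ^2}\). Its geodesic curvature in \(S_\rho \) is therefore

$$\begin{aligned} k=\frac{\cot \theta _0}{\rho }=\frac{\rho }{\rho }=1. \end{aligned}$$

For \(\rho =1\), the boundary circle lies at height \(x_3=1/\sqrt{2}\), that is, at angle \(\pi /4\) from the axis of symmetry on \({\mathbb {S}}^2\).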

In the proof of the next lemma, the connection and Laplacian of the Euclidean metric \({{\bar{g}}}\) on D will be denoted by \({\bar{\nabla }}\) and \({\bar{\Delta }}\), respectively.

Lemma 2.1

Given \(h_0,\) \(h_1\in {\mathcal {G}}^{k,\alpha }\), there exists an analytic map

$$\begin{aligned} h:[0,1]\rightarrow {\mathcal {G}}^{k,\alpha } \end{aligned}$$

such that \(h(0)=h_0\) and \(h(1)=h_1\). In particular, the space \({\mathcal {G}}^{k,\alpha }\) is path-connected.

Proof

Let \(h_0,\) \(h_1\in {\mathcal {G}}^{k,\alpha }\) and choose isothermal coordinates with conformal factors \(E_0,E_1\), respectively. Given \(t\in [0,1]\), we define a one-parameter family of Riemannian metrics \(h_t\) connecting \(h_0\) and \(h_1\) to be

where

denotes the conformal factor of the metric \(h_t\). Since both \(E_0\) and \(E_1\) are positive, \(E_t\) is well-defined. A straightforward computation reveals that given a metric h, the Gauss curvature and the geodesic curvature of \(\partial D\) satisfy the formulae

see [14]. This implies that

for every \(t\in [0,1]\). In order to see that the Gauss curvature is positive, we abbreviate \(K_0=K_{h_0}\), \(K_1=K_{h_1}\) and compute

In the last inequality, we used Young’s inequality and the positivity of \(K_0 \), \(K_1,\) \(E_0\) and \(E_1\) as well as \(t\in [0,1]\). In particular, \(K_{h_t}\) is positive for every \(t\in [0,1]\). Clearly, \(h_t\) is analytic with respect to t. \(\square \)
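The following sketch spells out one interpolation that is consistent with every statement made in the proof above. It assumes the isothermal form \(h=E^2(dx_1^2+dx_2^2)\) and should be read as an illustration under this assumption, not necessarily as the exact formulae used by the authors; the function \(u=1/E\) is notation introduced here. For such a metric, the standard formulae read

$$\begin{aligned} K_h=-E^{-2}{\bar{\Delta }}\log E=u{\bar{\Delta }}u-|{\bar{\nabla }}u|^2,\qquad k_h=E^{-1}+E^{-2}\partial _rE=u-\partial _ru\quad \text {on }\partial D. \end{aligned}$$

Since \(k_h\) is affine in u, the choice \(u_t=(1-t)u_0+tu_1\), that is, \(E_t=E_0E_1/((1-t)E_1+tE_0)\), gives \(k_{h_t}=(1-t)k_{h_0}+tk_{h_1}=1\). Inserting \({\bar{\Delta }}u_i=(K_i+|{\bar{\nabla }}u_i|^2)/u_i\) for \(i\in \{0,1\}\), one finds

$$\begin{aligned} K_{h_t}&=(1-t)^2K_0+t^2K_1+t(1-t)\left( \frac{u_1}{u_0}K_0+\frac{u_0}{u_1}K_1\right) \\&\quad +t(1-t)\left( \frac{u_1}{u_0}|{\bar{\nabla }}u_0|^2+\frac{u_0}{u_1}|{\bar{\nabla }}u_1|^2-2{\bar{\nabla }}u_0\cdot {\bar{\nabla }}u_1\right) . \end{aligned}$$

The last bracket is non-negative by Young's inequality, and the remaining terms are positive for every \(t\in [0,1]\) because \(K_0\), \(K_1\), \(u_0\) and \(u_1\) are positive.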

3 Openness of the solution space

In this section, we show that the solution space \({\mathcal {G}}_*^{k,\alpha }\) is open. Let \(h\in {\mathcal {G}}^{k,\alpha }\) and suppose that there is a map \(F\in C^{k+1,\alpha }({\bar{D}},{\mathbb {R}}^3)\) satisfying (1) with respect to the metric h. Since the Gaussian curvature \(K=K_h\) is strictly positive, it follows that \(F({\bar{D}})\) is strictly convex. We will now show that for every \({\tilde{h}}\in {\mathcal {G}}^{k,\alpha }\) which is sufficiently close to h in the \(C^{2,\alpha }({\bar{D}},{\text {Sym}}({\mathbb {R}}^2))\)-topology for some \(\alpha \in (0,1)\) there exists a solution \({\tilde{F}}\in C^{3,\alpha }({\bar{D}},{\mathbb {R}}^3)\) of (1). To this end, we use Lemma 2.1 to find a path \(h_t\) connecting h and \({\tilde{h}}\) in \({\mathcal {G}}^{k,\alpha }\) and observe that \(h_t\) and all of its spatial derivatives are analytic with respect to t.

3.1 The linearised problem

We define a family of \(C^{3,\alpha }({\bar{D}},{\mathbb {R}}^3)\)-maps

which is analytic with respect to t and satisfies \(F_0=F\). This means that

where \(\Psi ^0=F_0\) and \(\Psi ^l\in C^{3,\alpha }({\bar{D}},{\mathbb {R}}^3)\) are solutions to certain linearised equations if \(l\ge 1\). Clearly, if (1) is solvable up to infinite order at \(t=0\) and if \(F_t\) and all its time derivatives converge in \(C^{2,\alpha }(\bar{{D}},{\mathbb {R}}^3)\) for every \(t\in [0,1]\), then it follows that \({\tilde{F}}=F_1\) is a solution of (1) with respect to \({\tilde{h}}\). More precisely, we have the following lemma.

Lemma 3.1

Consider two metrics h, \({\tilde{h}}\in {\mathcal {G}}^{k,\alpha }\) with conformal factors E, \({\tilde{E}}\) and let \(h_t:[0,1]\rightarrow {\mathcal {G}}^{k,\alpha }\) be the connecting path from Lemma 2.1 with conformal factor \(E_t\). Let F be a solution of the free boundary problem (1) with respect to h and suppose that \(F_t\) as defined in (5) converges in \(C^{2,\alpha }({\bar{D}})\) uniformly. Then the following identities hold

We therefore consider the following boundary value problem. For every \(l\in {\mathbb {N}}\), we seek to find a map \(\Psi ^l\) satisfying

The first equation is understood in the sense of symmetric two-tensors, which means that we use the convention \(d^1d^2=d^2d^1={\text {Sym}}(d^1\otimes d^2)\), where \(d^1,d^2\) are the dual one-forms of the given coordinate system.

Lemma 3.2

Consider two metrics h, \({\tilde{h}}\in {\mathcal {G}}^{k,\alpha }\) with conformal factors E, \({\tilde{E}}\) and let \(h_t:[0,1]\rightarrow {\mathcal {G}}^{k,\alpha }\) be the connecting path from Lemma 2.1. Let F be a solution of the free boundary problem (1) with respect to h and suppose that there exists a family \(\{\Psi ^l\in C^{2,\alpha }({\bar{D}}):l\in {\mathbb {N}}\}\) satisfying (6) for every \(l\in {\mathbb {N}}\). Furthermore, assume that \(F_t\) as defined in (5) converges in \(C^{2,\alpha }({\bar{D}})\) uniformly. Then there holds

for every \(t\in [0,1]\). Here, \(E_t\) is the conformal factor of the metric \(h_t\).

Proof

This follows from the previous lemma and well-known facts about analytic functions. \(\square \)

We now modify the approach used in [23] to find solutions of (7). For ease of notation, we fix an integer \(l\in {\mathbb {N}}\) and define \(\Psi =\Psi ^l\). Given a coordinate frame \(\partial _1,\) \(\partial _2\) with dual one-forms \(d^1,\) \(d^2\), we define

and

where \(\nu \) is the unit normal of the surface \(F({\bar{D}})\) pointing inside the larger component of \(B_1(0)\setminus F(D)\). Here and in the following, the Latin indices i, j, m, n always range over \(\{1,2\}\) unless specified otherwise. We consider the one-form

We denote the second fundamental form of the surface F with respect to our choice of unit normal \(\nu \) by \(A=(A_{ij})_{ij}\) and compute

where \(\nabla _h\) and \(\Gamma ^m_{ij}\) denote the Levi-Civita connection and Christoffel symbols of the metric h, respectively. In the second equation, we used that

Hence, if \({\tilde{q}} \in C^{k,\alpha }({\bar{D}},{\text {Sym}}({\mathbb {R}}^2))\) is a symmetric covariant two-tensor, \(\psi \in C^{k+1,\alpha }({\bar{D}})\) and \(\Phi \in C^{k,\alpha }({\bar{D}},{\mathbb {R}}^3)\), the system

is equivalent to

This system is over-determined. However, it turns out that it suffices to solve the first four lines, as in the special situation of (6), the last equation will be automatically implied by the constancy of the geodesic curvature along \(\partial D\). Another way of writing the first three lines of (7) is

Since \(F({\bar{D}})\) is strictly convex, the second fundamental form A defines a Riemannian metric on \({\bar{D}}\). We can thus take the trace with respect to A to find

Given \(b\in \{0,1\}\), we define

to be the sections of the bundle of trace-free (with respect to A) symmetric (0,2)-tensors of class \(C^{b,\alpha }\). Moreover, we define

to be the one-forms of class \(C^{b,\alpha }\). Next, we define the operator

We are then left to find a solution w of the equation

We abbreviate the right hand side by q. We note that \({\mathcal {A}}^{b,\alpha }_A\) and \({\mathcal {B}}^{b,\alpha }_A\) are both isomorphic to \({\mathcal {C}}^{b,\alpha }=C^{b,\alpha }({\bar{D}},{\mathbb {R}}^2)\) via the isomorphisms

Here, \(x_1,x_2\) are the standard Euclidean coordinates. At this point, we emphasize that \((A^{ij})_{ij}\) denotes the inverse tensor of the second fundamental form A and not the quantity \(h^{im}h^{jn}A_{mn}\). We also define inner products on \({\mathcal {A}}^{b,\alpha }_{A}\) and \({\mathcal {B}}^{b,\alpha }_{A}\), respectively. Namely,

where \(q^0,\) \(q^1\in {\mathcal {A}}^{b,\alpha }_A\) and \(\omega ^0,\) \(\omega ^1\in {\mathcal {B}}^{b,\alpha }_A\). The particular choice of the inner product does not really matter as the strict convexity implies that these inner products are equivalent to the standard \(L^2\)-product with respect to the metric h. However, our choice implies a convenient form for the adjoint operator of L.

As was observed by C. Li and Z. Wang in [23], the operator L is elliptic. For the convenience of the reader, we provide a brief proof.

Lemma 3.3

The operator L is a linear elliptic first-order operator.

Proof

We regard L as an operator from \({\mathcal {C}}^{1,\alpha }\) to \({\mathcal {C}}^{0,\alpha }\). The leading order part of the operator is given by

In order to compute the principal symbol at a point \(p\in D\), we may rotate the coordinate system such that A is diagonal. It then follows that

Given \(\xi \in {\mathbb {S}}^1\), the principal symbol thus equals

Consequently,

as F(D) is strictly convex. \(\square \)
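The ellipticity can be made explicit as follows. This is a sketch under the assumption, suggested by the surrounding text, that L(w) is the trace-free part (with respect to A) of \({\text {Sym}}(\nabla _hw)\); the identification of trace-free tensors with the pair \((q_{11},q_{12})\) is a choice made here for illustration and may differ from the isomorphisms used in the paper. At a point where \(A={\text {diag}}(a_1,a_2)\), the leading order part of L is

$$\begin{aligned} L(w)_{11}\simeq \frac{1}{2}\left( \partial _1w_1-\frac{a_1}{a_2}\partial _2w_2\right) ,\qquad L(w)_{12}\simeq \frac{1}{2}\left( \partial _2w_1+\partial _1w_2\right) , \end{aligned}$$

while \(L(w)_{22}=-(a_2/a_1)L(w)_{11}\) carries no further information. Replacing \(\partial _i\) by \(\xi _i\), the principal symbol acting on \((w_1,w_2)\) and its determinant are

$$\begin{aligned} \sigma (\xi )=\frac{1}{2}\begin{pmatrix} \xi _1 &{} -\frac{a_1}{a_2}\xi _2\\ \xi _2 &{} \xi _1 \end{pmatrix},\qquad \det \sigma (\xi )=\frac{1}{4}\left( \xi _1^2+\frac{a_1}{a_2}\xi _2^2\right) . \end{aligned}$$

Strict convexity means that \(a_1\) and \(a_2\) have the same sign, so the determinant is positive for every \(\xi \ne 0\).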

We proceed to calculate the adjoint of L denoted by \({L}^*\). Let \(\omega \in {\mathcal {B}}^{1,\alpha }_A\) and \(q\in {\mathcal {A}}^{1,\alpha }_A\). We denote the outward co-normal of \(\partial D\) by \(\mu =\mu ^i\partial _i\) and the formal adjoint of \(\nabla _h\), regarded as an operator mapping (0, 1)-tensors to (0, 2)-tensors, by \(-{\text {div}}_h\), regarded as an operator mapping (0, 2)-tensors to (0, 1)-tensors. Moreover, we denote the musical isomorphisms of h and A by \(\sharp _A, \) \(\sharp _h, \) \(\flat _A\) and \(\flat _h\). Using integration by parts and the fact that q is trace free with respect to A, we obtain

Consequently, the adjoint operator

is given by

As \({L}^*\) is the adjoint of an elliptic operator, it is elliptic itself. We now take a closer look at the boundary term. We consider isothermal polar coordinates \((\varphi ,r)\) centred at the origin with conformal factor E and compute at \(\partial D\), using the free boundary condition, that

The last equality holds since F, \(\partial _\varphi F\) are both tangential at \(\partial D\). Consequently, we have \(A^{r\varphi }\equiv 0\) on \(\partial D\). Combining this with \({\text {tr}}_A(q)=0\) and writing \(\omega =\omega _id^i\) we obtain

This leads us to define the boundary operators

to be

Here, \(e:\partial D\rightarrow \bar{{D}}\) denotes the inclusion map. Clearly, given \(b\in \{0,1\}\), the spaces \(C^{b,\alpha }(\partial D)\) and \(C^{b,\alpha }(\partial D,T^*\partial D)\) are isomorphic.

Next, we define the operators

and

Given \(\omega ^0,\) \(\omega ^1\in {\mathcal {B}}^{0,\alpha }_A\), \(q^0,\) \(q^1\in {\mathcal {A}}^{0,\alpha }_A\), \(\psi \in C^{0,\alpha }(\partial D)\) and \(\zeta \in C^{0,\alpha }(\partial D, T^*\partial D)\), we define the inner products

According to (10) and (13), there holds

for every \(\omega \in {\mathcal {B}}_A^{1,\alpha }\) and \(q\in {\mathcal {A}}_A^{1,\alpha }\).

3.2 Existence of solutions to the linearised problem

First-order elliptic boundary problems satisfy a version of the Fredholm alternative if the boundary operator satisfies a certain compatibility condition, the so-called Lopatinski-Shapiro condition; cf. [38]. A definition of this condition can be found in Chapter 4 of [38] for instance. It can be proved that both \({\mathcal {L}}\) and \({\mathcal {L}}^*\) satisfy this condition. We postpone the proof to the appendix.

We proceed to prove the following solvability criterion. From now on, all norms are computed with respect to the isothermal Euclidean coordinates on D.

Lemma 3.4

Let \(q\in {\mathcal {A}}^{0,\alpha }_A\) and \(\psi \in C^{1,\alpha }(\partial D)\). Then there exists a one-form \(w\in {\mathcal {B}}^{1,\alpha }_{A}\) with \({\mathcal {L}}(w)=(q,\psi )\) if and only if

for every \({\hat{q}}\in \ker ({\mathcal {L}}^*)\). If (15) holds, then w satisfies the estimate

where c depends on \(\alpha \), \(|A|_{C^{0,\alpha }({\bar{D}})}\) and \(|h|_{C^{1,\alpha }({\bar{D}})}\). Moreover, if q is of class \(C^{b,\alpha }\) and \(\psi \) of class \(C^{b+1,\alpha }\) for some integer \(1\le b\le k-1\), then w is of class \(C^{b+1,\alpha }\).

Proof

We can regard the operators \({\mathcal {L}}\) and \({\mathcal {L}}^*\) as mappings from

According to Lemma 3.3 and Lemma A.1, the operators \({\mathcal {L}}\) and \({\mathcal {L}}^*\) are elliptic operators that satisfy the Lopatinski-Shapiro condition. Consequently, they are Fredholm operators, see [38, Theorem 4.2.1]. The existence of a \(C^{1,\alpha }\)-solution under the given hypothesis now follows from the identity (14) and [38, Theorem 1.3.4]. Moreover, [38, Theorem 4.1.2] provides us with the a-priori estimate. The claimed regularity follows from standard elliptic theory. \(\square \)

In order to complete the proof of the existence of solutions to the first four lines of the linearised equation (7), we proceed to show that the kernel of \({\mathcal {L}}^*\) is trivial. Let \({\hat{q}}\in {\mathcal {A}}_A^{1,\alpha }\) such that \({\mathcal {L}}^*({\hat{q}})=0\). We first transform (11) into a more useful form.

Lemma 3.5

The (0, 3) tensor \(\nabla _h {\hat{q}}\) is symmetric. In particular, in any normal coordinate frame, there holds

as well as

Proof

See [23, p. 10]. \(\square \)

Let \(\times :{\mathbb {R}}^3\times {\mathbb {R}}^3\rightarrow {\mathbb {R}}^3\) be the Euclidean cross product. Taking the cross product with the normal \(\nu \) defines a linear map on the tangent bundle of \(F({\bar{D}})\). We define the (1, 1)-tensor Q via

Using the properties of the cross product, we find that

The next lemma is a variation of Lemma 7 in [23]. In its statement and its proof, \({\bar{\nabla }}\) denotes the flat connection of \({\mathbb {R}}^3\).

Lemma 3.6

Let \(Y\in C^1({\bar{D}},{\mathbb {R}}^3)\) be a vector field satisfying \(Y\cdot \partial _r F=0\) on \(\partial D\) and

If Y satisfies

in the sense of symmetric (0, 2)-tensors, then \(\omega = 0\).

Proof

We compute

We fix a point \(p\in D\) and choose normal coordinates centred at p. Equation (21) reads

Together with (19) and (20), this implies that

Moreover, we may choose the directions of the normal coordinates to be principal directions, that is, \(A_{12}=0\). Using (19) and (20), we then find

where we have used (17), (18) and \({\text {tr}}_A({\hat{q}})=0\). It follows that \(\omega \) is closed. Since the disc is contractible, \(\omega \) is also exact and consequently, there exists a function \(\zeta \in C^{2,\alpha }({\bar{D}})\) satisfying

This implies that \(\partial _l\zeta =X_l\cdot Y\). As \(R^*_2({\hat{q}})=0\), we have \({\hat{q}}_{r\varphi }=0\) on \(\partial D \) where \(\varphi ,r\) denote isothermal polar coordinates. It follows that

on \(\partial D\). In particular, \(\zeta \) is constant on \(\partial D\). We can now argue as in the proof of Lemma 7 in [23] to show that \(\zeta \) satisfies a strongly elliptic equation with bounded coefficients. The maximum principle implies that \(\zeta \) attains its maximum and minimum on the boundary. Since \(\zeta \) is constant on \(\partial D\), \(\zeta \) is constant on all of \({\bar{D}}\) and consequently \(\omega =d\zeta =0\). \(\square \)

We now prove the following existence result.

Lemma 3.7

Let \({\tilde{q}}\in C^{k-1,\alpha }({\bar{D}},{\text {Sym}}(T^*D\otimes T^*D))\) and \(\psi \in C^{k,\alpha }(\partial D)\). Then there exists a map \(\Psi \in C^{k,\alpha }({\bar{D}},{\mathbb {R}}^3)\) which satisfies

Moreover, the one-form \(w=u_id^i\) where \(u_i=\Psi \cdot \partial _iF\) satisfies the estimate

where c depends on \(|h|_{C^{2}({\bar{D}})}\) as well as \(|A|_{C^{1}({\bar{D}})}\).

Proof

We have seen that it is sufficient to prove the existence of a one-form w solving \({\mathcal {L}}(w)=(q,\psi )\), where \(q={\tilde{q}}-\frac{1}{2} {\text {tr}}_A({\tilde{q}})A\). The one-form w then uniquely determines the map \(\Psi \). Moreover, there holds \(|q|_{C^{0,\alpha }({\bar{D}})}\le c |{\tilde{q}}|_{C^{0,\alpha }({\bar{D}})}\), where c solely depends on \(|A|_{C^1({\bar{D}})}\). Hence, according to Lemma 3.4 it suffices to show that \(\ker {({\mathcal {L}}^*)}\) is trivial.

Let \({\hat{q}}\in \ker ({\mathcal {L}}^*)\) and Q be defined as above. Denote the standard basis of \({\mathbb {R}}^3\) by \(\{e_1,e_2,e_3\}\) and define the vector fields \(Y_a=e_a\times F\) for \(a\in \{1,2,3\}\). Since \(\partial _rF=EF\) on \(\partial D\), there holds \(Y_a\cdot \partial _rF=0\) on \(\partial D\). We denote the group of orthogonal matrices by O(3). It is well-known that \(T_{{\text {Id}}}(O(3))={\text {Skew}}(3)\) which is the space of skew-symmetric matrices. The operator \(Y\mapsto e_a\times Y\) is skew-symmetric and it follows that there exists a \(C^1\)-family of orthogonal matrices \({\mathcal {I}}(t)\), where \(t\in (-\epsilon ,\epsilon )\) for some \(\epsilon >0\), such that \({\mathcal {I}}(0)={\text {Id}}\) and \({\mathcal {I}}'(0)(F)=Y_a\). In particular, every \({\mathcal {I}}(t)(F)\) is a solution to the free boundary value problem (1) for the metric h. It follows that

as the connection \(\bar{\nabla }\) and the vector-valued differential d on \({\mathbb {R}}^3\) can be identified with each other. Hence the hypotheses of Lemma 3.6 are satisfied and \(Y_a\cdot Q \) vanishes identically on D. Clearly, \(Q\cdot \nu = 0\) and \(Q=0\) implies \({\hat{q}}=0\). Thus, it suffices to show that

on D.

At any point \(p\in D\), the vector fields \(Y_1, Y_2, Y_3\) span the space \(F(p)^\perp \) so we need to show that \(\nu \notin F(p)^\perp \) on D. Applying the Gauss-Bonnet formula we obtain

that is, \({\text {Length}}(\partial D)< 2\pi \). According to the Crofton formula, we have

The set of great circles which intersect \(F(\partial D)\) exactly once is of measure zero. It follows that F has to avoid a great circle and, after a rotation, we may assume that \(F(\partial D)\) is contained in the upper hemisphere. Now, the strict convexity of F implies that F lies above the cone
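For orientation, the two formulas used in this step can be written out as follows. This is a sketch under standard normalisations and not necessarily the exact form of the original displays: it uses \(\chi (D)=1\), \(k_h=1\), \(K>0\) and the fact that F is an isometry, and it writes \(\xi ^\perp \) for the plane through the origin orthogonal to \(\xi \in {\mathbb {S}}^2\), so that \(\xi ^\perp \cap {\mathbb {S}}^2\) is a great circle.

\[
\int _D K\,{\text {d}}vol_h+\int _{\partial D}k_h\,{\text {d}}s=2\pi \chi (D)=2\pi
\quad \Longrightarrow \quad
{\text {Length}}(\partial D)=2\pi -\int _D K\,{\text {d}}vol_h<2\pi ,
\]

\[
{\text {Length}}(F(\partial D))=\frac{1}{4}\int _{{\mathbb {S}}^2}\#\big (F(\partial D)\cap \xi ^\perp \big )\,{\text {d}}\xi .
\]

If every great circle met \(F(\partial D)\), then almost every great circle would meet it at least twice, and the right hand side would be at least \(\frac{1}{4}\cdot 2\cdot 4\pi =2\pi \), contradicting the length bound.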

and only touches C on the boundary. On \(\partial D\), there holds \(\nu \cdot F=0\). If there was an interior point p such that \(\nu (p)\cdot F(p)=0\), then the tangent plane \(T_{F(p)}{F({\bar{D}})}\) would meet a part of \({\mathbb {S}}^2\) above the cone C. However, strict convexity then implies that F has to lie on one side of that tangent plane. This contradicts \(F(\partial D)=\partial C\). \(\square \)

By induction, we are now able to find \(C^2\)-solutions \(\Psi ^l\) to the first four lines of (7) which however do not yet satisfy the condition

We now show that this additional boundary condition is implied by the constancy of the geodesic curvature in the t-direction.

Lemma 3.8

Let \(\Psi ^l\), \(l\in {\mathbb {N}}\), be \(C^2\)-solutions of

Then there also holds

Proof

We choose isothermal polar coordinates \((\varphi ,r)\) centred at the origin and abbreviate \(\partial _1=\partial _\varphi \) as well as \(\partial _2=\partial _r\). Differentiating the boundary condition in (23) tangentially we find

At every point \(p\in \partial D\), we need to show the following three equations

where we have used that \(|F|^2=1\), \(F\cdot \Psi ^1=0\) and \(F\cdot \partial _1F=F\cdot \nu =0\) on \(\partial D\). We prove the statement by induction and start with \(l=1\). Since \(\partial _2 F=EF\) we find

by the differential Eq. (23). This proves (25). Next, we have

where we have used the Eq. (23), the free boundary condition of F and (24). This proves (26). Now let \(\zeta =rE_t\). Since \(k_h(\partial D)= 1\) for all \(t\in [0,1]\), there holds \(1=-\partial _2(\zeta ^{-1})\) on \(\partial D\). Differentiating in time, we obtain

Since \(\partial _2 \zeta =\zeta ^2\) at \(t=0\), there holds

According to (23), we have \(\zeta \partial _t\zeta =\partial _1F\cdot \partial _1\Psi ^1\) at \(t=0\). Differentiating yields

at \(t=0\). Using that \(\zeta =E_t\) on \(\partial D\), \(E_t=E\) for \(t=0\) and

we obtain

We compute

which together with (28) implies that

A calculation shows that \(\partial _1\partial _1F=-A_{11}\nu +\partial _1EE^{-1}\partial _1F-E\partial _2F\). Furthermore, we have already shown that

Together with the above, \(F\cdot \partial _2F=E\) and \(F\cdot \partial _1F=0\), this implies that

This proves the claim since \(A_{11}>0\) and \(E=E_0\).

Now, let \(l>1\) and suppose that the assertion has already been shown for every \(i<l\). At \(t=0\), there holds

which proves (25). In the first equation, we used that F satisfies the free boundary condition. In the second equation, we used the (2, 2)-component of the differential equation for \(\Psi ^l\). In the third equation, we used that the claim holds for every \(i<l\). The fourth equation follows from extracting the terms involving F and using \(|F|^2=1\) and \(\Psi ^0=F\). The fifth equation follows from rearranging the sums, changing indices and rewriting the binomial coefficients. In the sixth equation, we used the boundary condition for each \(\Psi ^{l-i-j-m}\) and cancel the terms which appear twice. Finally, we used that \(\Psi ^1\cdot F=0\). We proceed to show (26). We have

which proves (26). In the first equation, we used the (1, 2) component of the differential equation for \(\Psi ^l\). In the second equation, we used the free boundary condition. The third equation follows from the differentiated boundary condition (24) for \(\Psi ^l\) and the induction hypothesis. The fourth equation follows from using the \(j=0\) terms to cancel the second term. The fifth equation follows from changing indices and recomputing the binomial coefficients. The last equation follows from the differentiated boundary condition for \(\Psi ^{l-1}\).

We recall that \(\zeta =rE_t\). Differentiating the identity \( \partial _2\zeta =\zeta ^2 \) l times with respect to t, we obtain

Moreover,

Hence, using \(\zeta =E\) on \(\partial D\), the identity \(\partial _2\zeta =\zeta ^2\) for the lower order terms and the (1, 1) component of the differential equation for \(\Psi ^l\), we obtain, at \(t=0\), that

Consequently, at \(t=0\), we have

We calculate

as well as

In the second equation, we used the (1, 2) component of the differential equation for \(\Psi ^l\) and in the fourth equation, we used the differentiated boundary condition. Now, I and the first term on the left hand side of (31) cancel because of the differentiated boundary condition, while V cancels the third term on the left hand side of (30). Moreover, at \(t=0\), we have

Combining all of this and noting that \(E=\zeta \) on \(\partial D\), we are left with, again at \(t=0\),

We now proceed to calculate the term \(II+IV\). Using the induction hypothesis and changing the order of summation, we deduce at \(t=0\)

Differentiating the boundary condition (24) again and using the (1, 1) component of the differential equation for \(\Psi ^{l-j}\) we conclude

which is exactly the right hand side of (34). Hence, we conclude, at \(t=0\), that

Since we already know that the only potentially non-zero component of the second term in the product is the normal component, we conclude as before that

\(\square \)

3.3 A-priori estimates and convergence of the power series

The final ingredient to prove that the solution space is open is an a-priori estimate for the map \(\Psi ^l\). This will establish the convergence of the power series.

Lemma 3.9

Let \(l\in {\mathbb {N}}\) and \(\Psi ^l\) be a solution of (6). Then \(\Psi ^l\) satisfies the estimate

where c depends on \(\alpha \), the \(C^{3,\alpha }\)-data of F and the \(C^{2,\alpha }\)-data of h. As usual, all norms are taken with respect to Euclidean isothermal coordinates on D.

Proof

We fix an integer \(l\in {\mathbb {N}}\), abbreviate \(\Psi =\Psi ^l\) and define

as well as

Let \(\partial _1,\partial _2\) be any coordinate system on D. We recall that

The functions \(\psi \) and \(\Phi \) are of class \(C^2\) while q is of class \(C^1\). Thus, Lemma 3.7 implies the a-priori estimate

where c depends on \(\alpha \), \(|A|_{C^1({\bar{D}})}\) and \(|h|_{C^1({\bar{D}})}\). After choosing w to be orthogonal to the kernel of \({\mathcal {L}}\), a standard compactness argument implies the improved estimate

We rewrite (7) as

and

For the sake of readability, we define Q to be a quantity which can be written in the form

where \(\rho ^{ijm}\) and \(\zeta _{ijm}\) are functions defined on D. In practice, \(\zeta \) will be one of \(u_i\), \(q_{ij},\) \(\partial _i u_j\), \(\partial _i q_{jm}\) and for every \(\rho \) under consideration, we have the uniform estimate

where c only depends on \(|h|_{C^{2,\alpha }({\bar{D}})}\) and \(|A|_{C^{1,\alpha }({\bar{D}})}\). Differentiating the first equation of (36) with respect to \(\partial _1\) and the second equation with respect to \(\partial _2 \), we obtain

Multiplying the second equation by \(A_{11}/A_{22}\) and adding the two equations, we infer

Similarly, one obtains

Since strict convexity implies that these equations are strongly elliptic, we may choose Euclidean coordinates and appeal to the Schauder theory for elliptic equations to obtain the interior estimate

where c depends on \(\alpha \), \(|h|_{C^{2,\alpha }({\bar{D}})}\), \(|A|_{C^{1,\alpha }({\bar{D}})}\) and \(|A^{-1}|_{C^{0}({\bar{D}})}\). Next, we choose polar coordinates \((\varphi ,r)\) and note that

are well-defined functions on the annulus \(D\setminus D_{1/4}\). Here, \((x_1,x_2)\) denote Euclidean coordinates on D. The ellipticity of (38) and (39) remains unchanged if we write \(\partial _\varphi ,\) \(\partial _r\) in terms of \(\partial _{x_1},\) \(\partial _{x_2}\). Consequently, \(u_r\) and \(u_\varphi \) satisfy a strongly elliptic equation with respect to Euclidean coordinates. Moreover, for every \(b\in {\mathbb {N}}\), there is a constant \(c_b>1\) which only depends on b such that

We now apply the Schauder theory for elliptic equations to \(u_r\) and \(u_\varphi \). Since \(u_r=\psi \) on \(\partial D\), we find

where c has the same dependencies as before. Conversely, equation (36) yields that

on \(\partial D\). Consequently, the Schauder estimates for equations with Neumann boundary conditions give

Combining this with (42), we may remove the \(u_r\) term on the right hand side. Then, the interior estimate (40), the estimate (35) and (41) imply

Finally, we need an estimate for v. There holds

and consequently

In order to proceed, we use the so-called Nirenberg trick in the following way. We differentiate the first equation of (7) twice in \(\partial _1\) direction, the second equation in \(\partial _1\) and \(\partial _2\) direction and the third equation twice in \(\partial _2\) direction. We then multiply the second equation by \((-1)\) and add all three equations. The terms involving third derivatives cancel out and we obtain

As before, strict convexity translates into strong ellipticity of this equation. Moreover, the Gauss-Codazzi equations imply that the second order derivatives of the second fundamental form on the left hand side cancel out. Similarly, one may check that no third order terms of the metric h appear on the right hand side. On the other hand, in polar coordinates, we have

where we used Lemma 3.8 and \(A_{\varphi r}=0\) on \(\partial D\). Hence the Schauder estimates with Neumann boundary conditions imply

where the derivatives are taken with respect to Euclidean coordinates. Combining this with the previous estimates, we obtain the final estimate

The term \(2\partial _1\partial _2q_{12}-\partial _1\partial _1q_{22}-\partial _2\partial _2q_{11}\) does not contain any third derivatives of \(\Psi ^i\) where \(i< l\) and it follows that

\(\square \)

We now iteratively use this a-priori estimate to show that the power series (5) converges in \(C^{2,\alpha }({\bar{D}})\). To this end, recall that the conformal factors of the metrics h and \({\tilde{h}}\) are given by E and \({\tilde{E}}\), respectively.

Lemma 3.10

Given \(\epsilon >0\) small enough, there exists a constant \(\Lambda >0\) depending only on \(|F|_{C^{3,\alpha }({\bar{D}})},\) \(|E|_{C^{2,\alpha }({\bar{D}})},\) \(|{\tilde{E}}|_{C^{2,\alpha }({\bar{D}})},\) \( \alpha \) and a number \(\delta >0\) which additionally depends on \(\epsilon \) such that the following holds. If

then

Proof

Let us define

Now, Lemma 3.9 becomes

We may assume that \(c\ge 1\). In order to proceed, we use the following recursive estimate.

Lemma 3.11

Let \(\{y_i\}_{i=1}^\infty \) be a sequence of positive numbers, \(\epsilon \), \(\gamma \), \(c>0\) and assume that

for every \(i<l\), where \(l\in {\mathbb {N}}\). Then there holds

We will prove the lemma later on. We now show that for every number \(l\in {\mathbb {N}}\), the following two estimates hold

Using the explicit definition of \(E_t\) from Lemma 2.1, we compute

Hence, given \({\tilde{\epsilon }}>0\), we can choose \(\delta \) small enough such that

and consequently

provided

Moreover,

Increasing c if necessary, we may arrange that \(|F|_{C^{2,\alpha }({\bar{D}})}\le c\). Together with (44) this implies, decreasing \({\tilde{\epsilon }}\) appropriately, that

In particular, for every \(\gamma \ge 1\) we have

This proves (45) for \(l=1\) and every \(\Lambda ,\) \(\gamma >1\).

Now given \(l\ge 2\), let us assume that we have already shown that

for every \(i<l\) and some suitable choice of \(\gamma >1\). Then the a-priori estimate (44), Lemma 3.11 as well as (46) and (47) imply

If we also ensure that \(\tilde{\epsilon }<\epsilon /2,\) then

Furthermore, we have the trivial estimate

provided \(c\ge 2\). Combining this with (48), we obtain

provided \(\gamma \ge 3\). Thus, we can choose \(\Lambda =4\gamma c\) to obtain

\(\square \)

Proof of Lemma 3.11

We may assume that \(\epsilon =c=\gamma =1\). We are then left to show the following identity

There holds

where \({\tilde{y}}_{j}\) is the j-th Catalan number. For the Catalan numbers, the well-known recurrence relation

holds, see for instance [34]. This implies the above identity. \(\square \)
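The recurrence behind this step is easy to check by machine. The following sketch assumes that the normalised recursion reads \(y_1=1\) and \(y_l=\sum _{i=1}^{l-1}y_iy_{l-i}\) for \(l\ge 2\), which is the standard convolution recurrence for the Catalan numbers and is our reading of the omitted displays rather than a quotation of them. It compares the recursively defined numbers with the closed form \(\frac{1}{n+1}\binom{2n}{n}\) and with the bound \(4^{n}\). The function names are illustrative.

```python
from math import comb

def catalan_closed_form(n):
    """n-th Catalan number via the closed form binom(2n, n) / (n + 1)."""
    return comb(2*n, n) // (n + 1)

def convolution_sequence(count):
    """Return [y_1, ..., y_count] with y_1 = 1 and y_l = sum_{i=1}^{l-1} y_i * y_{l-i}."""
    y = [1]
    for l in range(2, count + 1):
        # The list is 0-indexed, so y[i - 1] holds y_i.
        y.append(sum(y[i - 1] * y[l - i - 1] for i in range(1, l)))
    return y

def check(count=30):
    """Check y_l = C_{l-1} and y_l <= 4^(l-1) for l = 1, ..., count."""
    y = convolution_sequence(count)
    return all(
        y[l - 1] == catalan_closed_form(l - 1) and y[l - 1] <= 4 ** (l - 1)
        for l in range(1, count + 1)
    )
```

Under the stated assumption, the geometric bound \(y_l\le 4^{l-1}\) is the kind of growth that makes a choice such as \(\Lambda =4\gamma c\) in Lemma 3.10 natural.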

We are now in the position to prove that the solution space is open.

Proposition 3.12

Let h, \({\tilde{h}}\in {\mathcal {G}}^{k,\alpha }\) and \(F\in C^{k+1,\alpha }({\bar{D}})\) be a solution of the free boundary problem (1) for h. Then there exists a constant \({\delta }>0\) depending only on \(\alpha \), the \(C^{2,\alpha }\)-data of E and the \(C^{3,\alpha }\)-data of F such that the following holds. There exists a solution \({\tilde{F}}\in C^{k+1,\alpha }({\bar{D}})\) of (1) for \({\tilde{h}}\) provided

In particular, the space \({\mathcal {G}}_*^{k,\alpha }\) is open with respect to the \(C^{2,\alpha }\)-topology.

Proof

Let E and \({\tilde{E}}\) be the conformal factors of h and \({\tilde{h}}\), respectively, and \(h_t\) the connecting analytic path from Lemma 2.1. We solve (6) to obtain the maps \(\Psi ^l\) and define \(F_t:{\bar{D}}\times [0,1]\rightarrow {\mathbb {R}}^3\) by

First, we choose \(\delta >0\) such that \(|{\tilde{E}}|_{C^{2,\alpha }({\bar{D}})}<2|E|_{C^{2,\alpha }({\bar{D}})},\) provided \(|E-{\tilde{E}}|_{C^{2,\alpha }({\bar{D}})}<\delta .\) Let \(\Lambda \) be the constant from Lemma 3.10 and \(\epsilon =(2\Lambda )^{-1}\). Lemma 3.10 then implies that we may decrease \(\delta \) appropriately such that

for every \(l\in {\mathbb {N}}\) provided \(|E-{\tilde{E}}|_{C^{2,\alpha }({\bar{D}})}< \delta \). It follows that \(F_t\) converges uniformly in \(C^{2,\alpha }({\bar{D}})\). Now, Lemma 3.2 implies that \({\tilde{F}}=F_1\) is a solution of (1) with respect to \({\tilde{h}}\). Standard elliptic theory yields the claimed regularity for \({\tilde{F}}\). \(\square \)

4 Closedness of the solution space

In this section, we will prove the closedness of the solution space \({\mathcal {G}}_*^{k,\alpha }\) with respect to the \(C^{4}\)-topology, provided \(k\ge 4\). We suspect that this requirement can be weakened to \(k\ge 3\) using a more refined \(C^2\)-estimate; cf. [13, 32].

Let \(\{h_l\}_{l=1}^\infty \) be a sequence of metrics \(h_l\in {\mathcal {G}}_*^{k,\alpha }\) converging to a metric \({\tilde{h}}\in {\mathcal {G}}^{k,\alpha }\) in \(C^{4}\). We use a compactness argument to show that \({\tilde{h}}\in {\mathcal {G}}_*^{k,\alpha }\). To this end, we prove an a-priori estimate for \(|F_l|_{C^{2,\alpha }({\bar{D}})}\), where \(F_l\) is the solution of (1) with respect to \(h_l\).

4.1 A global curvature estimate

We fix \(l\in {\mathbb {N}}\) and abbreviate \(F=F_l\) as well as \(h=h_l\). Since F is an isometric embedding of a compact disc, it is bounded in \(C^1\) in terms of the \(C^0\)-norm of the metric h, that is,

Furthermore, in every coordinate chart, there holds

The second fundamental form can be expressed in terms of the Gauss curvature and the mean curvature. In fact, if \(\kappa _1,\kappa _2\) are the principal curvatures, then

This implies the following estimate.
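For reference, the identity referred to above is presumably the standard one. With \(H=\kappa _1+\kappa _2\) and \(K=\kappa _1\kappa _2\) (the normalisation of H as the sum of the principal curvatures is an assumption here), one has

\[
|A|^2=\kappa _1^2+\kappa _2^2=(\kappa _1+\kappa _2)^2-2\kappa _1\kappa _2=H^2-2K\le H^2,
\]

where the last inequality uses \(K>0\). The norm \(|A|\) is taken with respect to h, which is how the dependence on the metric enters the coordinate estimate.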

Lemma 4.1

Let \(h\in {\mathcal {G}}_*^{k,\alpha }\) and F be a solution of (1) with respect to h. Then there is a constant c which only depends on \(|h|_{C^1({\bar{D}})}\) and \(|H|_{C^0({\bar{D}})}\) such that

In order to bound H in terms of the intrinsic geometry, we use the maximum principle. To this end, we observe that the free boundary condition implies a useful formula for the normal derivative of the mean curvature at the boundary \(\partial D\).

Lemma 4.2

Let \(h\in {\mathcal {G}}_*^{k,\alpha }\) and let F be a solution of (1) with respect to h. Then there exists a constant c which only depends on \(|h|_{C^{4}({\bar{D}})}\) and \(|1/K|_{C^0({\bar{D}})}\) such that

Proof

We choose Fermi-coordinates \(h=\zeta ^2ds^2+dt^2\) adapted to the boundary \(\partial D\) with \(\zeta (s,0)=1\). Since the geodesic curvature is equal to 1, it follows that \(\partial _t \zeta (\cdot ,0)=-1\). One may compute that the only non-zero Christoffel symbols at the boundary are

In this coordinate chart, the mean curvature is given by

Since \(A_{st}\) vanishes on \(\partial D\), see (12), the Gauss-Codazzi equations imply

Consequently, differentiating the Gauss equation and using \(\partial _t \zeta =-1\), we find

on \(\partial D\), where we used that \(A_{st}=0 \). In particular,

on \(\partial D\). It follows that

where

Now, let \(\gamma >0\) be such that \(A_{11}=\gamma A_{22}\). It follows that

unless

Now, suppose that H attains its global maximum at \(p\in {\bar{D}}\). If \(p\in \partial D\), then

and it follows that (50) holds. We may assume that \(A_{ss}\le A_{tt}\) and estimate

as claimed. So let us assume that \(p\in D\). We choose normal coordinates \(\partial _1\), \(\partial _2\) centred at p. Clearly, we have

As has been shown in Lemma 9.3.3 in [14], there also holds

Since the Hessian of H is non-positive at p, strict convexity, (51) and (52) imply that

The claim follows. \(\square \)

4.2 A Krylov-Evans type estimate

Now, we improve the \(C^2\)-estimate to a \(C^{2,\alpha }\)-estimate. The potential function

can be used to estimate the second fundamental form A; cf. [14]. Namely, there holds

It follows that \(|A|_{C^{0,\alpha }({\bar{D}})}\) can be estimated in terms of \(|h|_{C^{1,\alpha }({\bar{D}})}\) and \(|f|_{C^{2,\alpha }({\bar{D}})}\) provided that \(|F\cdot \nu |\) is uniformly bounded from below. Taking the determinant of both sides of the equation, we find that f satisfies the following Monge-Ampere type equation

This suggests that a Krylov-Evans type estimate might be applicable. The major obstacle in this regard is that the free boundary condition implies that \(F\cdot \nu =0 \) on \(\partial D\).

Lemma 4.3

Let \(h\in {{\mathcal {G}}}_*^{k,\alpha }\) and F be a solution of the free boundary problem (1) with respect to h. Then there exists a constant c which only depends on \( |h|_{C^{4}({\bar{D}})},|1/K|_{C^0({\bar{D}})}\) and \(\alpha \in (0,1)\) such that

Proof

Let \((\varphi ,r)\) be isothermal polar coordinates centred at the origin with conformal factor E. We consider the Gauss map \(\nu :{\bar{D}}\rightarrow {\mathbb {S}}^2\subset {\mathbb {R}}^3\). Since F is strictly convex, \(\nu \) is a strictly convex embedding, too. Let \({\hat{h}}=\nu ^*{{\bar{g}}}\) be the pull-back metric of the Euclidean metric \({{\bar{g}}}\). There holds \(A_{r\varphi }=0\) on \(\partial D\); cf. (12). This implies that

on \(\partial D\). It follows that \({{\hat{\mu }}}=E^{-1}\partial _r F=\mu \) is the outward co-normal of \(\partial D\) with respect to \({\hat{h}}\). Consequently, the geodesic curvature of \({\hat{h}}\) along \(\partial D\) is given by

where we used the fact that \(k_h=1\). Thus, the previous lemma implies that

for some constant \(\eta >0\) which can be uniformly bounded from below in terms of \(|h|_{C^{4}({\bar{D}})}\) and \(|1/K|_{C^0({\bar{D}})}\). Arguing as in the proof of Lemma 3.7, we may assume, after a suitable rotation, that \(\nu ({\bar{D}})\) is contained in the lower hemisphere \({\mathbb {S}}^2_-\) and that the function \(\nu \cdot e_3\) attains its maximum \(s<0\) in at least two points \(p_1,p_2\in \nu (\partial D)\). It then follows that there has to be another point \(p\in \nu (\partial D)\) where \(k_{{\hat{h}}}\le k_{s}=-s/\sqrt{1-s^2}\). Here, \(k_{s}\) is the geodesic curvature of the circle \(\{ p\in {\mathbb {S}}^2:p\cdot e_3=s\}\) in \({\mathbb {S}}^2\). Consequently (see the Notes below),

As we have seen in the proof of Lemma 3.7, \(F\cdot \nu \) vanishes precisely at the boundary. This implies that \( F\cdot \nu \le 0\) and it follows that \({\hat{F}}\cdot \nu \le s<0\), where \({\hat{F}}=F+e_3\). Clearly, \({\hat{F}}\) satisfies estimates comparable to F. We define the function \(\hat{f}=\frac{1}{2} {\hat{F}}\cdot {\hat{F}}\) and obtain

Since \({\hat{F}}\cdot \nu \le s<0\), the equation is uniformly elliptic and the ellipticity constant can be estimated in terms of \(|h|_{C^{4}({\bar{D}})}\) and \(|1/K|_{C^0({\bar{D}})}\). Now, the Krylov-Evans type estimate [36, Theorem 6] implies

where c depends on \(\alpha \), \(|h|_{C^{4,\alpha }({\bar{D}})}\), the ellipticity constant, \(|f|_{C^2({\bar{D}})}\) and the boundary data. All these terms can be estimated in terms of \(|h|_{C^{4}({\bar{D}})}\) and \(|1/K|_{C^0({\bar{D}})}\). \(\square \)

We now prove the main result of this section.

Proposition 4.4

Let \(\{h_l\}_{l=1}^\infty \) be a sequence of Riemannian metrics \(h_l\in {\mathcal {G}}_*^{k,\alpha }\) converging in \(C^{4}\) to a Riemannian metric \({\tilde{h}}\in {\mathcal {G}}^{k,\alpha }\), where \(k\ge 4\). Then \({\tilde{h}}\in {\mathcal {G}}_*^{k,\alpha }\).

Proof

Let \(K_l\) denote the curvature of the metric \(h_l\). The convergence implies that there is a number \(\Lambda \) such that \( |h_l|_{C^{4}({\bar{D}})}\), \(|1/K_l|_{C^0({\bar{D}})}\le \Lambda \) for all \(l\in {\mathbb {N}}\). Lemma 4.3 then implies the uniform estimate

where \(F_l\) are the respective solutions of (1). According to the Arzela-Ascoli theorem, we can extract a subsequence converging in \(C^2({\bar{D}})\) to a map \(F\in C^2({\bar{D}})\) which is a solution of the free boundary problem (1) with respect to \({\tilde{h}}\). Standard elliptic theory implies the claimed regularity. \(\square \)

5 Proof of Theorem 1.1

5.1 Existence.

It suffices to show that \({\mathcal {G}}_*^{k,\alpha }={\mathcal {G}}^{k,\alpha }\). According to Lemma 2.1, \({{\mathcal {G}}}^{k,\alpha }\) is path-connected while Proposition 3.12 and Proposition 4.4 imply that \({\mathcal {G}}_*^{k,\alpha }\) is open and closed. We define the map

The image of \(F_0\) has positive curvature, geodesic curvature along the boundary equal to 1 and meets the unit sphere orthogonally. Consequently, \({\mathcal {G}}_*^{k,\alpha }\) is a non-empty, open and closed subset of a path-connected space which implies that \({\mathcal {G}}_*^{k,\alpha }={\mathcal {G}}^{k,\alpha }\).
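The properties of such a model disc can be checked numerically. The sketch below does not reproduce the map \(F_0\) from the text. Instead it assumes, for illustration, a spherical cap of radius \(\rho \) centred at \(d\,e_3\) with \(d^2=1+\rho ^2\), and verifies that the cap meets the unit sphere orthogonally and that its boundary circle has geodesic curvature 1. All names are hypothetical.

```python
import math

def cap_data(rho):
    """Boundary data of the cap {|x - d e_3| = rho, |x| <= 1} with d^2 = 1 + rho^2."""
    d = math.sqrt(1.0 + rho*rho)
    height = 1.0 / d   # x_3-coordinate of the boundary circle
    radius = rho / d   # Euclidean radius of the boundary circle
    return d, height, radius

def boundary_checks(rho):
    """Return (|x|, |x - c|, (x - c) . x, geodesic curvature) at a boundary point x."""
    d, height, radius = cap_data(rho)
    x = (radius, 0.0, height)   # a point on the boundary circle
    centre = (0.0, 0.0, d)
    diff = tuple(xi - ci for xi, ci in zip(x, centre))
    norm_x = math.sqrt(sum(xi*xi for xi in x))
    norm_diff = math.sqrt(sum(v*v for v in diff))
    # x - c is normal to the cap and x is normal to the unit sphere,
    # so the two surfaces meet orthogonally iff this product vanishes.
    normal_product = sum(v*xi for v, xi in zip(diff, x))
    # A circle of Euclidean radius r on a sphere of radius rho has
    # geodesic curvature sqrt(1/r^2 - 1/rho^2).
    geodesic_curvature = math.sqrt(1.0/radius**2 - 1.0/rho**2)
    return norm_x, norm_diff, normal_product, geodesic_curvature
```

The cap has constant Gauss curvature \(\rho ^{-2}>0\), and the geodesic curvature of its boundary comes out as 1 for every \(\rho \), in line with the assumption \(k_h=1\) in the definition of the space of metrics.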

5.2 Uniqueness.

Suppose that \({\tilde{F}}\) is another solution of (1). We denote the Gauss curvature of h by K, and the second fundamental forms of F and \({\tilde{F}}\) by \(A=(A_{ij})_{ij}\) and \({\tilde{A}}=({\tilde{A}}_{ij})_{ij}\), respectively. The respective normals \(\nu \) and \({\tilde{\nu }}\) are chosen in a way such that the respective mean curvatures, denoted by H and \({\tilde{H}}\), share the same sign. We now use a variation of the argument in [31].

After a rotation and reflection, we may assume that both F and \({\tilde{F}}\) are contained in the open upper hemisphere, that \(\nu \) and \({\tilde{\nu }}\) both point downwards and that \(F\cdot \nu \) and \({\tilde{F}} \cdot {\tilde{\nu }}\) vanish precisely at the boundary. Next, we choose a local orthonormal frame \(e_1,e_2\) with respect to h and define the following two vector fields

It can be checked that these definitions do not depend on the choice of the orthonormal frame. Using the conformal property of the position vector field in \({\mathbb {R}}^3\), one computes as in [31, Proposition 1.2 and Proposition 1.8] that

Let \((\varphi ,r)\) denote isothermal polar coordinates, \(\mu \) the outward co-normal of the Riemannian manifold (D, h) and E the conformal factor of h. Using the free boundary condition and \(A_{r\varphi }={\tilde{A}}_{r\varphi }=0\), we find

on \(\partial D\). Hence, integrating (57) and (58) over D, applying the divergence theorem and subtracting both equations we find that

Interchanging the roles of F and \({\tilde{F}}\) and performing the same computation again, we conclude

\(F\cdot \nu ,\) \({\tilde{F}} \cdot {\tilde{\nu }}\) are both positive on D. Conversely, \(A,{\tilde{A}}>0\) and

imply that \(\det (A-{\tilde{A}})\le 0\). It follows that

and thus

Hence, F and \({\tilde{F}}\) share the same second fundamental form and consequently only differ by a rigid motion. Since both of their boundaries are contained in the unit sphere, this rigid motion must be a composition of a rotation and a reflection through a plane containing the origin.

Notes

A similar result is also due to W. Fenchel, see [4].

References

Brown, J. David, York, James W., Jr.: Quasilocal energy in general relativity. Math. Asp. Class. Field. Theory. (Seattle, WA, 1991) 132, 129–142 (1992)

Cohn-Vossen, Stephan: Zwei Sätze über die Starrheit der Eiflächen. Nachrichten Göttingen 1927, 125–137 (1927)

DeTurck, Dennis M., Kazdan, Jerry L.: Some regularity theorems in Riemannian geometry. Ann. Sci. École Norm. Sup. 14(3), 249–260 (1981)

Fenchel, Werner: Über Krümmung und Windung geschlossener Raumkurven. Math. Ann. 101(1), 238–252 (1929)

Fraser, Ailana, Schoen, Richard: The first Steklov eigenvalue, conformal geometry, and minimal surfaces. Adv. Math. 226(5), 4011–4030 (2011)

Fraser, Ailana, Schoen, Richard: Minimal surfaces and eigenvalue problems. Geom. Anal. Math. Relat. Nonlin. Partial. Diff. Eq. 599, 105–121 (2013)

Fraser, Ailana, Schoen, Richard: Sharp eigenvalue bounds and minimal surfaces in the ball. Invent. Math. 203(3), 823–890 (2016)

Guan, Pengfei, Li, Yan Yan: The Weyl problem with nonnegative Gauss curvature. J. Differential Geom. 39(2), 331–342 (1994)

Guan, Pengfei, Lu, Siyuan: Curvature estimates for immersed hypersurfaces in Riemannian manifolds. Invent. Math. 208(1), 191–215 (2017)

Guan, Bo: Isometric embedding of negatively curved disks in the Minkowski space. Pure Appl. Math. Q. 3(3), 827–840 (2007)

Günther, Matthias: Isometric embeddings of Riemannian manifolds. In: Proceedings of the International Congress of Mathematicians, Vol. I, II (Kyoto, 1990), pp. 1137–1143. Math. Soc. Japan, Tokyo (1991)

Heinz, Erhard: On elliptic Monge-Ampère equations and Weyl’s embedding problem. J. Analyse Math. 7, 1–52 (1959)

Heinz, Erhard: On Weyl’s embedding problem. J. Math. Mech. 11, 421–454 (1962)

Han, Qing, Hong, Jia-Xing: Isometric embedding of Riemannian manifolds in Euclidean spaces. Mathematical Surveys and Monographs, vol. 130. American Mathematical Society, Providence, RI (2006)

Hijazi, Oussama, Montiel, Sebastián: A holographic principle for the existence of parallel spinor fields and an inequality of Shi-Tam type. Asian J. Math. 18(3), 489–506 (2014)

Hong, Jiaxing: Darboux equations and isometric embedding of Riemannian manifolds with nonnegative curvature in \({ R}^3\). Chin. Ann. Math. Ser. B 20(2), 123–136 (1999)

Hong, J., Zuily, C.: Isometric embedding of the \(2\)-sphere with nonnegative curvature in \({ R}^3\). Math. Z. 219(3), 323–334 (1995)

Lewy, Hans: On the existence of a closed convex surface realizing a given Riemannian metric. Proceedings of the National Academy of Sciences of the United States of America 24(2), 104 (1938)

Lu, Siyuan, Miao, Pengzi: Minimal hypersurfaces and boundary behavior of compact manifolds with nonnegative scalar curvature. J. Differential Geom. 113(3), 519–566 (2019)

Lambert, Ben, Scheuer, Julian: The inverse mean curvature flow perpendicular to the sphere. Math. Ann. 364(3–4), 1069–1093 (2016)

Lambert, Ben, Scheuer, Julian: A geometric inequality for convex free boundary hypersurfaces in the unit ball. Proc. Amer. Math. Soc. 145(9), 4009–4020 (2017)

Lu, Siyuan: On Weyl’s embedding problem in Riemannian manifolds. Int. Math. Res. Not. IMRN 11, 3229–3259 (2020)

Li, Chunhe, Wang, Zhizhang: The Weyl problem in warped product spaces. J. Differential Geom. 114(2), 243–304 (2020)

Liu, Chiu-Chu Melissa, Yau, Shing-Tung: Positivity of quasilocal mass. Phys. Rev. Lett. 90(23), 231102 (2003)

Liu, Chiu-Chu Melissa, Yau, Shing-Tung: Positivity of quasi-local mass II. J. Amer. Math. Soc. 19(1), 181–204 (2006)

Miao, Pengzi: Positive mass theorem on manifolds admitting corners along a hypersurface. Adv. Theor. Math. Phys. 6(6), 1163–1182 (2002)

Nash, John: The imbedding problem for Riemannian manifolds. Ann. Math. 2(63), 20–63 (1956)

Nirenberg, Louis: The Weyl and Minkowski problems in differential geometry in the large. Comm. Pure Appl. Math. 6, 337–394 (1953)

Nitsche, Johannes C. C.: Stationary partitioning of convex bodies. Arch. Rational Mech. Anal. 89(1), 1–19 (1985)

Pogorelov, A. V.: Extrinsic geometry of convex surfaces. Translations of Mathematical Monographs, vol. 35. American Mathematical Society, Providence, RI (1973). Translated from the Russian by Israel Program for Scientific Translations

Pigola, Stefano, Rigoli, Marco, Setti, Alberto G.: Some applications of integral formulas in Riemannian geometry and PDE’s. Milan J. Math. 71, 219–281 (2003)

Schulz, Friedmar: Regularity theory for quasilinear elliptic systems and Monge-Ampère equations in two dimensions. Lecture Notes in Mathematics, vol. 1445. Springer-Verlag, Berlin (1990)

Shi, Yuguang, Tam, Luen-Fai: Positive mass theorem and the boundary behaviors of compact manifolds with nonnegative scalar curvature. J. Differential Geom. 62(1), 79–125 (2002)

Stanley, Richard P.: Catalan numbers. Cambridge University Press, New York (2015)

Scheuer, Julian, Wang, Guofang, Xia, Chao: Alexandrov-Fenchel inequalities for convex hypersurfaces with free boundary in a ball. arXiv preprint arXiv:1811.05776 (2018), to appear in J. Differential Geom

Trudinger, Neil S.: Boundary value problems for fully nonlinear elliptic equations. In: Miniconference on nonlinear analysis (Canberra, 1984), Proc. Centre Math. Anal. Austral. Nat. Univ., vol. 8, pp. 65–83. Austral. Nat. Univ., Canberra (1984)

Volkmann, Alexander: A monotonicity formula for free boundary surfaces with respect to the unit ball. Comm. Anal. Geom. 24(1), 195–221 (2016)

Wendland, W.L.: Elliptic systems in the plane. Monographs and Studies in Mathematics, vol. 3. Pitman (Advanced Publishing Program), Boston, Mass., London (1979)

Wloka, J.T., Rowley, B., Lawruk, B.: Boundary value problems for elliptic systems. Cambridge University Press, Cambridge (1995)

Wang, Guofang, Xia, Chao: Uniqueness of stable capillary hypersurfaces in a ball. Math. Ann. 374(3–4), 1845–1882 (2019)

Wang, Mu-Tao, Yau, Shing-Tung: A generalization of Liu-Yau’s quasi-local mass. Comm. Anal. Geom. 15(2), 249–282 (2007)

Wang, Mu-Tao, Yau, Shing-Tung: Isometric embeddings into the Minkowski space and new quasi-local mass. Comm. Math. Phys. 288(3), 919–942 (2009)

Acknowledgements

The author would like to thank his PhD advisor Guofang Wang for suggesting the problem and for many helpful conversations. The author would also like to thank the anonymous referee for clarifying the regularity assumptions in Theorem 1.1.

Open Access

This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

Funding

Open access funding provided by University of Vienna.

Additional information

Communicated by R. M. Schoen.

Appendices

Appendix A. The Lopatinski-Shapiro condition

In this section, we verify the Lopatinski-Shapiro condition. We refer to [38] for its definition.

Lemma A.1

\({\mathcal {L}}\) satisfies the Lopatinski-Shapiro condition.

Proof

We interpret L as an operator from \({\mathcal {C}}^{1,\alpha }\) to \({\mathcal {C}}^{0,\alpha }\) and let \(\partial _1, \partial _2\) be any local coordinate system on D. We compute \(L(u)={\mathcal {M}}_1\partial _1 u +{\mathcal {M}}_2\partial _2(u)+{\mathcal {M}}_3u\), where

and \({\mathcal {M}}_3\) is a matrix which we do not need to determine. Calculating the inverse of A we find

Let \(p\in \partial D\) and choose polar coordinates \((\varphi ,r)\) centred at the origin with \(\partial _1=\partial _\varphi \) and \(\partial _2=\partial _r\). The free boundary condition implies \(A_{12}=0\), see (12). Furthermore, there holds \(\det (A)=A_{11}A_{22}\). Consequently,

where \(\psi ^2=\frac{A_{11}}{A_{22}}\). The eigenvalues of this matrix are given by \(\pm i\psi \). In the chosen coordinate chart, the boundary operator \(R_1\) is given by

According to [39, 16.1], the Lopatinski-Shapiro condition is satisfied if the matrix

has rank 1 for every closed path \(\gamma \) in the upper half plane containing \(i\psi \). We compute

The residue theorem implies

\(\square \)

Appendix B. Explicit examples in the Schwarzschild space

In this section, we consider explicit free boundary surfaces supported on the spheres of symmetry in the Schwarzschild space and provide some numerical evidence for the validity of Conjecture 1.2.

On \({\mathbb {R}}^3\setminus \{0\}\), we consider the Schwarzschild metric \(g_m\) with mass \(m>0\) defined by

\[ g_m=\phi _m^4\,{{\bar{g}}},\qquad \phi _m(x)=1+\frac{m}{2|x|}, \]

where \({{\bar{g}}}\) denotes the Euclidean metric and \(\phi _m\) the conformal factor of \(g_m\). The continuous function

vanishes at \(\lambda =m/2\) and approaches 0 as \(\lambda \rightarrow \infty \). A lengthy but straightforward calculation shows that

Let \(m\in (0,3^{-\frac{3}{2}})\) and \(\lambda _m>m/2\) be maximal such that

We consider the embedded disc \(\Sigma ={\text {Im}}(\Phi )\), where

Let h be the metric induced by the Schwarzschild background metric. One may check that the geodesic curvature of \(\partial \Sigma \) satisfies

Similarly, the Gauss equation shows that

Here, we recall that

Let M be the closure of the component of \(B_{\lambda _m}(0)\setminus \Sigma \) with the smaller volume. Since the scalar curvature of the Schwarzschild manifold vanishes, M satisfies the assumptions of Conjecture 1.2.
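The restriction \(m<3^{-3/2}\) can be made plausible numerically. The following is only a sketch under two assumptions that are not confirmed by the text above: that \(\phi _m(x)=1+m/(2|x|)\), and that the function of \(\lambda \) vanishing at \(\lambda =m/2\) is the mean curvature of the coordinate sphere of radius \(\lambda \) with respect to \(g_m\), with \(\lambda _m\) the largest radius at which it equals 2. The helper names `mean_curvature`, `lambda_of_max` and `lambda_m` are hypothetical.

```python
import math


def phi(lam, m):
    # Assumed conformal factor of the Schwarzschild metric on |x| = lam.
    return 1.0 + m / (2.0 * lam)


def mean_curvature(lam, m):
    # Mean curvature of the coordinate sphere |x| = lam with respect to
    # phi^4 * (Euclidean metric); it vanishes at lam = m/2 and tends to 0
    # as lam -> infinity.
    return 2.0 * (1.0 - m / (2.0 * lam)) / (lam * phi(lam, m) ** 3)


def lambda_of_max(m):
    # Radius outside the horizon at which the mean curvature is maximal;
    # it corresponds to area radius lam * phi^2 = 3m.
    return m * (1.0 + math.sqrt(3.0) / 2.0)


def lambda_m(m):
    # Largest radius with mean curvature equal to 2 (assumed meaning of
    # lambda_m), found by bisection on the decreasing branch.
    if not 0.0 < m < 3.0 ** -1.5:
        raise ValueError("need 0 < m < 3**(-3/2)")
    lo, hi = lambda_of_max(m), 1.0  # H(lo) > 2 > H(hi) for admissible m
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if mean_curvature(mid, m) > 2.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


if __name__ == "__main__":
    for m in (0.05, 0.1, 0.15):
        lam = lambda_m(m)
        print(m, lam, mean_curvature(lam, m))
```

Under these assumptions the maximal value of the mean curvature is \(2/(3\sqrt{3}\,m)\), which exceeds 2 precisely when \(m<3^{-3/2}\); this is why a radius \(\lambda _m\) with the stated property exists only in that range.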

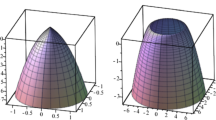

Figure 1. A plot of the total mean curvature of the free boundary surface \(\Sigma \) with respect to the Euclidean background metric and the Schwarzschild background metric for different values of m. The dotted line, corresponding to the total Euclidean mean curvature, lies above the solid line, corresponding to the Schwarzschild background metric.

In order to check whether Conjecture 1.2 holds for these examples, we explicitly solve the isometric embedding problem. To this end, we first observe that

Consequently, there exists a function \(\eta :[0,\pi /4]\rightarrow (0,\infty )\) such that

for every \(\varphi \in (0,2\pi )\). Moreover, we notice that

We then consider the map

The isometric embedding equation becomes

It can be checked that, as predicted by Theorem 1.1, the solution to this system can be chosen such that \(\Psi (\Sigma )\) meets \({\mathbb {S}}^2\) orthogonally along \(\Psi (\partial \Sigma )\). Let \({\bar{\nu }}\) and \({{\bar{H}}}\) be the normal and mean curvature of \(\Psi (\Sigma )\subset {\mathbb {R}}^3\). A direct computation gives

as well as

Conversely, we have

A numerical computation for several sample values of m suggests that

for every \(m\in (0,3^{-3/2})\), see Figure 1.
