Abstract

We consider the homogenization of a Poisson problem or a Stokes system in a randomly punctured domain with Dirichlet boundary conditions. We assume that the holes are spherical and have random centres and radii. We impose that the average distance between the balls is of size \(\varepsilon \) and their average radius is \(\varepsilon ^{\alpha }\), \(\alpha \in (1; 3)\). We prove that, as in the periodic case (Allaire, G., Arch. Rational Mech. Anal. 113(3):261–298, 1991), the solutions converge to the solution of Darcy’s law (or its scalar analogue in the case of Poisson). In the same spirit as (Giunti, A., Höfer, R., Ann. Inst. H. Poincaré Anal. Non Linéaire 36(7):1829–1868, 2019; Giunti, A., Höfer, R., Velázquez, J.J.L., Comm. PDEs 43(9):1377–1412, 2018), we work under minimal conditions on the integrability of the random radii. These ensure that the problem is well-defined but do not rule out the onset of clusters of holes.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

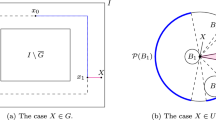

We are interested in the effective behaviour of a Stokes system or a Poisson equation in a bounded domain \(D^\varepsilon \subseteq {\mathbb {R}}^3\), perforated by many random small holes \(H^\varepsilon \). We impose Dirichlet boundary conditions on the boundary of the holes and of the domain. Problems like the one studied in this paper arise mostly in fluid dynamics, where a Stokes system in a punctured domain models the flow of a viscous and incompressible fluid through many disjoint obstacles. We focus on the regime where the effective equation is given by Darcy’s law or its scalar analogue in the case of the Poisson problem. For the latter, this corresponds to the case where the average density of harmonic capacity of the holes \(H^\varepsilon \) goes to infinity in the limit \(\varepsilon \downarrow 0\). In the case of Stokes the same is true, this time with the harmonic capacity being replaced by the so-called Stokes capacity. This is a vectorial version of the harmonic capacity where the class of minimizers further satisfies the incompressibility constraint (see (4.8)).

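We construct the randomly punctured domain \(D^\varepsilon \) as follows: Given \(\alpha \in (1, 3)\) and a bounded \(C^{1,1}\)-domain \(D\subseteq {\mathbb {R}}^3\), we define

$$\begin{aligned} H^\varepsilon := \bigcup _{z \in \Phi \cap \frac{1}{\varepsilon }D} B_{\varepsilon ^\alpha \rho _z}(\varepsilon z), \ \ \ \ D^\varepsilon := D \backslash H^\varepsilon . \end{aligned}\tag{0.1}$$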

Here, the set of centres \(\Phi \) is a Poisson point process of intensity \(\lambda >0\) and the set \(\frac{1}{\varepsilon }D :=\{ x \in {\mathbb {R}}^3 \, :\, \varepsilon x \in D \}\). The radii \({\mathcal {R}}= \{ \rho _z\}_{z\in \Phi } \subseteq [1; +\infty )\) are independent and identically distributed random variables satisfying for a constant \(C< +\infty \)

$$\begin{aligned} {\mathbb {E}}\left[ \rho ^{\frac{3}{\alpha }} \right] \leqslant C. \end{aligned}\tag{0.2}$$

This condition is minimal in order to ensure that, \({\mathbb {P}}\)-almost surely, the sets \(H^\varepsilon \) do not asymptotically cover the whole domain D, which would imply that \(D^\varepsilon = \emptyset \) (see Lemma 1.1). However, condition (0.2) does not prevent the balls in \(H^\varepsilon \) from overlapping with high probability.

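For \(\varepsilon >0\) and \(D^\varepsilon \) as above, we consider the (weak) solution to either

$$\begin{aligned} {\left\{ \begin{array}{ll} -\Delta u_\varepsilon = f &{}\text {in }D^\varepsilon \\ u_\varepsilon = 0 &{}\text {on }\partial D^\varepsilon \end{array}\right. } \end{aligned}\tag{0.3}$$

or to

$$\begin{aligned} {\left\{ \begin{array}{ll} -\Delta u_\varepsilon + \nabla p_\varepsilon = f &{}\text {in }D^\varepsilon \\ \nabla \cdot u_\varepsilon = 0 &{}\text {in }D^\varepsilon \\ u_\varepsilon = 0 &{}\text {on }\partial D^\varepsilon . \end{array}\right. } \end{aligned}\tag{0.4}$$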

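In the case of the Stokes system, we further assume that, for some \(\beta >0\),

$$\begin{aligned} {\mathbb {E}}\left[ \rho ^{\frac{3}{\alpha } + \beta } \right] \leqslant C. \end{aligned}\tag{0.5}$$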

We refer to the next section for a more detailed discussion on what conditions (0.2) and (0.5) entail in terms of the geometric properties of the set \(H^\varepsilon \).

It is easy to see that in the case of spherical periodic holes having distance \(\varepsilon \) and radius \(\varepsilon ^\alpha \), \(\alpha \in (1, 3]\), the density of harmonic capacity of \(H^\varepsilon \) is asymptotically of order \(\varepsilon ^{-3+ \alpha }\); the same is true in the case of the Stokes capacity. When \(\alpha =3\) these limits are thus finite. In the case of the Poisson problem, the solutions to (0.3) thus converge to the solution \(u \in H^1_0(D)\) to \(-\Delta u +\mu u = f\) in D, where the constant \(\mu >0\) is the limit of the capacity density [7]. Similarly, the limit problem for (0.4) is given by a Brinkman system, namely a Stokes system in D with no-slip boundary conditions and with the additional term \({\tilde{\mu }} u\) in the system of equations [1]. The term \({\tilde{\mu }} >0\) is likewise closely related to the limit of the Stokes capacity density. We also mention that, for holes that are periodic but not spherical, the term \({\tilde{\mu }}\) is a positive-definite matrix. For \(\alpha \in (1; 3)\) as in the present paper, the solutions to (0.3) or (0.4) need to be rescaled by the factor \(\varepsilon ^{-3+ \alpha }\) in order to converge to a non-trivial limit. The effective equations, in this case, are either \(u = k f\) in D or Darcy’s law \(u=K( f - \nabla p)\) in D [2, 26]. Here, k, K are related to the rescaled limit of the density of capacity and admit a representation in terms of a corrector problem solved in the exterior domain \({\mathbb {R}}^3 \backslash B_1(0)\).
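As a rough sanity check of this scaling (a back-of-the-envelope computation, not taken from the cited references), recall that the harmonic capacity of a ball of radius \(r\) in \({\mathbb {R}}^3\) is \(4\pi r\). Assuming one hole of radius \(\varepsilon ^\alpha \) per periodicity cell of volume \(\varepsilon ^3\), and neglecting the interaction between distinct holes, the capacity per unit volume is

$$\begin{aligned} \varepsilon ^{-3} \cdot 4\pi \varepsilon ^{\alpha } = 4\pi \varepsilon ^{-3+\alpha }, \end{aligned}$$

which stays bounded for \(\alpha =3\) and diverges for \(\alpha <3\). Since the solutions are then expected to be of size \(\varepsilon ^{3-\alpha }\), multiplying them by \(\varepsilon ^{-3+\alpha }\) compensates exactly for this divergence.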

When \(\alpha =1\), namely when the distance between holes and their size have the same order \(\varepsilon \), the effective equations for (0.3) and (0.4) are as in the case \(\alpha \in (1, 3)\); the effective constants k, K obtained in the limit, however, are determined by a corrector problem of a different nature. In this case indeed, there is only one microscopic scale \(\varepsilon \) and the relative distance between the connected components of the holes \(H^\varepsilon \) does not tend to infinity for \(\varepsilon \rightarrow 0\). This yields that the corrector equations are solved in the periodic cell and not in the exterior domain \({\mathbb {R}}^3\backslash B_1(0)\) [3].

For holes that are not periodic, the extremal regimes \(\alpha \in \{1, 3\}\) have been rigorously studied both in deterministic and random settings. For \(\alpha =3\) we mention, for instance [6, 9, 16,17,18, 23,24,25] and refer to the introductions in [12] and [14] for a detailed overview of these results. We stress that the homogenization of (0.3) and (0.4) when \(H^\varepsilon \) is as in (0.1) with \(\alpha =3\) has been studied in the series of papers [12,13,14]. These works prove the convergence to the effective equation under the minimal assumption that \(H^\varepsilon \) has finite averaged capacity density. There is no additional condition on the minimal distance between the balls in the set of \(H^\varepsilon \).

There are also many works devoted to the regime \(\alpha =1\). For periodic holes, we refer to [20, 21] for the homogenization of compressible and incompressible Navier-Stokes systems, respectively. In the random setting, we refer to [4] where (0.3) and (0.4) are studied for a very general class of stationary and ergodic punctured domains. For these domains, the corrector equation for the effective quantities k, K is solved in the probability space \((\Omega , {\mathcal {F}}, {\mathbb {P}})\) generating the holes.

There is less mathematical literature concerning the homogenization of (0.3) or (0.4) in the regime \(\alpha \in (1; 3)\). For periodic holes, this has been studied in [2, 26]. These results have been extended for certain regimes to compressible Navier-Stokes systems [15] or to elliptic systems in the context of linear elasticity [19]. We are not aware of analogous results when the holes \(H^\varepsilon \) are not periodic. The present paper considers this problem when \(H^\varepsilon \) is random and, in the same spirit as [12, 14], the balls in \(H^\varepsilon \) may overlap and cluster.

The main result of this paper is the following:

Theorem 0.1

Let \(\sigma _\varepsilon := \varepsilon ^{-\frac{3 - \alpha }{2}}\) and let \(H^\varepsilon \) and \(D^\varepsilon \) be the random sets defined in (0.1).

(a) Let \(u_\varepsilon \in H^1_0(D^\varepsilon )\) solve (0.3) with \(f \in L^q(D)\) for \(q \in (2; +\infty ]\). Then, if the marked point process \((\Phi , {\mathcal {R}})\) satisfies (0.2), for every \(p \in [1; 2)\) we have that

$$\begin{aligned} \lim _{\varepsilon \downarrow 0}{\mathbb {E}}\left[ \int _D | \sigma _\varepsilon ^2 u_\varepsilon - k f|^p \right] = 0, \ \ \ \text {with }k:= (4\pi \lambda {\mathbb {E}}\left[ \rho \right] )^{-1}. \end{aligned}$$

Here, and in the rest of the paper, \({\mathbb {E}}\left[ \, \cdot \, \right] \) denotes the expectation under the probability measure for \((\Phi , {\mathcal {R}})\).

(b) Let \(u_\varepsilon \in H^1_0(D^\varepsilon ; {\mathbb {R}}^3)\) solve (0.4) with \(f \in L^q(D; {\mathbb {R}}^3)\) for \(q \in (2 ; +\infty ]\). If \((\Phi , {\mathcal {R}})\) satisfies (0.5), then for every \(p \in [1; 2)\) we have

$$\begin{aligned} \lim _{\varepsilon \downarrow 0}{\mathbb {E}}\left[ \int _D | \sigma _\varepsilon ^2 u_\varepsilon - K (f - \nabla p^*)|^p \right] = 0, \ \ \ \text {with }K:= (6\pi \lambda {\mathbb {E}}\left[ \rho \right] )^{-1} \end{aligned}$$

and \(p^* \in H^1(D)\) (weakly) solving

$$\begin{aligned} {\left\{ \begin{array}{ll} -\nabla \cdot ( \nabla p^* - f) = 0 &{}\text {in }D\\ (\nabla p^*-f) \cdot \nu = 0 &{}\text {on }\partial D \end{array}\right. }\ \ \ \ \ \ \fint _D p^*= 0. \end{aligned}$$
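The values of the constants k and K can be guessed by a formal computation. The sketch below assumes that the holes are well separated and that their contributions simply add up; neither is true in general, which is why the actual proof requires more care. The centres in \(\frac{1}{\varepsilon }D\) have density \(\lambda \), so after rescaling there are about \(\lambda \varepsilon ^{-3}\) holes per unit volume, and a ball of radius \(\varepsilon ^\alpha \rho _z\) in \({\mathbb {R}}^3\) has harmonic capacity \(4\pi \varepsilon ^\alpha \rho _z\). Averaging over the radii, the capacity density is formally

$$\begin{aligned} \lambda \varepsilon ^{-3} \cdot 4\pi \varepsilon ^{\alpha } {\mathbb {E}}\left[ \rho \right] = 4\pi \lambda {\mathbb {E}}\left[ \rho \right] \sigma _\varepsilon ^2 . \end{aligned}$$

Balancing this zeroth-order term against the source, that is \(4\pi \lambda {\mathbb {E}}\left[ \rho \right] \sigma _\varepsilon ^2 u_\varepsilon \approx f\), suggests \(\sigma _\varepsilon ^2 u_\varepsilon \approx k f\) with k as in (a). In the Stokes case the same heuristic applies with \(4\pi \) replaced by \(6\pi \), the coefficient in Stokes' formula for the drag on a sphere of unit radius, which is consistent with the value of K in (b).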

As mentioned above, condition (0.2) is minimal in order to ensure that the set \(D^\varepsilon \) is non-empty for \({\mathbb {P}}\)-almost every realization. A weaker stochastic integrability assumption for the radii, indeed, yields that, in the limit \(\varepsilon \downarrow 0\) and \({\mathbb {P}}\)-almost surely, \(H^\varepsilon \) covers the full set D (see Lemma 1.1 in the next section). By the Strong Law of Large Numbers, condition (0.2) implies that the density of capacity is almost surely of order \(\varepsilon ^{-3+\alpha }\) as in the periodic case. As already remarked in [12] in the case \(\alpha =3\), with (0.4) we require that the radii satisfy the slightly stronger assumption (0.5). While (0.2) seems to be the optimal condition in order to control the density of harmonic capacity, the lack of subadditivity of the Stokes capacity calls for a better control on the geometry of the set \(H^\varepsilon \).

The ideas used in the proof of Theorem 0.1 are an adaptation of the techniques used in [2, 7] for the periodic case. They are combined with the tools developed in [12, 14] to tackle the case of domains having holes that may overlap. As shown in [2], the uniform bounds on the sequences \(\{ \sigma _\varepsilon ^2 u_\varepsilon \}_{\varepsilon >0}\), \(\{ \sigma _\varepsilon \nabla u_\varepsilon \}_{\varepsilon >0}\) are obtained by means of a Poincaré inequality for functions that vanish on \(\partial D^\varepsilon \). If \(v \in H^1_0(D^\varepsilon )\), since the function vanishes on the holes \(H^\varepsilon \), the constant in the Poincaré inequality is of order \(\sigma _\varepsilon ^{-1} \ll 1\). If \(v \in H^1_0(D)\), this would instead be of order 1 (dependent on the domain D). Note that, since for \(\alpha =3\) we have \(\sigma _\varepsilon = 1\), there is no gain in using a Poincaré inequality in \(H^1_0(D^\varepsilon )\) instead of in \(H^1_0(D)\) in this regime. When the centres of \(H^\varepsilon \) are distributed like a Poisson point process, there is a small but positive probability that some regions of \(D^\varepsilon \) contain few holes, which leads to a worse Poincaré constant. This causes the lack of uniform bounds for the family \(\{\sigma _\varepsilon ^2 u_\varepsilon \}_{\varepsilon >0}\) in \(L^2(D)\).

Equipped with uniform bounds for the rescaled solutions of (0.3), one may prove Theorem 0.1, (a) by constructing suitable oscillating test functions \(\{w_\varepsilon \}_{\varepsilon >0}\). These allow one to pass to the limit in the equation and identify the effective problem. We stress that a crucial ingredient in these arguments is given by the quantitative bounds obtained in [11] in the case \(\alpha =3\). These bounds may indeed also be extended to the current setting so that the rate of convergence of the measures \(-\sigma _\varepsilon ^{-2}\Delta w_\varepsilon \in H^{-1}(D)\) is quantified. This makes it possible to control the convergence of the duality term \(\langle -\Delta w_\varepsilon ; u_\varepsilon \rangle _{H^{-1}(D) ; H^1_0(D)}\). In contrast with the periodic case, the unboundedness of \(\{ \sigma _\varepsilon ^2 u_\varepsilon \}_{\varepsilon >0}\) in \(L^2(D)\) requires a careful study of the duality term above. For the precise statements, we refer to (3.3) in Lemma 3.1 and Lemma 3.3. The same ideas sketched here apply also to the case of solutions to (0.4). This time, the oscillating test functions \(\{ w_\varepsilon \}_{\varepsilon >0}\) are replaced by the reduction operator \(R_\varepsilon \) of Lemma 4.1.

We comment below on some variations and corollaries of Theorem 0.1:

(i) If \(\Phi = {\mathbb {Z}}^d\) or is a stationary point process satisfying for a finite constant \(C < +\infty \)

$$\begin{aligned} \max _{z_i, z_j \in \Phi } |z_i - z_j | < C \ \ \ {\mathbb {P}}-\text {almost surely,} \end{aligned}$$

then the convergence of Theorem 0.1 holds also with \(p=2\). In this case, indeed, we may drop the logarithmic factor in the bounds of Lemma 2.1. The assumption \({\mathcal {R}}\subseteq [1; +\infty )\) may be also weakened to \({\mathcal {R}}\subseteq [0; +\infty )\), provided that

$$\begin{aligned} {\mathbb {E}}\left[ \rho ^{-\gamma }\right] < +\infty , \end{aligned}$$

for an exponent \(\gamma \in (1; +\infty ]\). In this case, the convergence of Theorem 0.1 holds in \(L^p(D)\) for \(p \in [1; {\bar{p}})\) with \({\bar{p}}= {\bar{p}}(\gamma ) \in [1; 2)\) such that \({\bar{p}}(\gamma ) \rightarrow 2\) when \(\gamma \rightarrow +\infty \).

(ii) A careful inspection of the proof of Theorem 0.1 yields that, under assumption (0.5) and for a source \(f\in W^{1,\infty }\), the convergences in both (a) and (b) may be upgraded to

$$\begin{aligned} {\mathbb {E}}\left[ \int _D | \sigma _\varepsilon ^2 u_\varepsilon - u|^p \right] \lesssim \varepsilon ^\kappa , \end{aligned}$$

for an exponent \(\kappa >0\) depending on \(\alpha , \beta \).

(iii) The quenched version of Theorem 0.1, namely the \({\mathbb {P}}\)-almost sure convergence of the families in \(L^p(D)\), holds as well provided that we restrict to any vanishing sequence \(\{ \varepsilon _j\}_{j\in {\mathbb {N}}}\) that converges fast enough. For instance, it suffices that \(j^{\frac{1}{3} +\epsilon } \varepsilon _j \rightarrow 0\), \(\epsilon >0\). It is a technical but easy argument to observe that, under this assumption, limits (3.3) of Lemma 3.1 and (4.1)-(4.2) of Lemma 4.1 vanish also \({\mathbb {P}}\)-almost surely. From these, the quenched version of Theorem 0.1 may be shown as done in the annealed case. To control the limits in (3.3), (4.1) and (4.2) without taking the expectation, one may follow the same lines of the current proof and control most of the terms by the Strong Law of Large Numbers. The condition \(j^{\frac{1}{3} + \epsilon } \varepsilon _j \rightarrow 0\) on the speed of convergence of \(\{\varepsilon _j\}_{j\in {\mathbb {N}}}\) is needed in order to obtain quenched bounds for the term in (3.31) by means of the Borel-Cantelli lemma.

(iv) The analogue of Theorem 0.1 holds also for a general dimension \(d \geqslant 3\) if we consider the values \(\alpha \in (1; \frac{d}{d-2})\) and rescale the solutions by \(\sigma _\varepsilon ^2= \varepsilon ^{-\frac{d}{d-2} + \alpha }\). In this case, (0.2) and (0.5) hold with the exponent \(\frac{3}{\alpha }\) replaced by \(\frac{d}{\alpha }\).

The paper is structured as follows: In the next section we describe the setting and introduce the notation that we use throughout the proofs. Subsection 1.2 is devoted to discussing the minimality of assumption (0.2) and what condition (0.5) implies on the geometry of the holes \(H^\varepsilon \). In Sect. 2, we show the uniform bounds on the family \(\{ \sigma _\varepsilon ^2 u_\varepsilon \}_{\varepsilon >0}\), with \(u_\varepsilon \) solving (0.3) or (0.4). In Sect. 3 we prove Theorem 0.1 in case (a), while in Sect. 4 we adapt the argument to case (b). The proof of case (b) is conceptually similar to the one for (a), but it is technically more challenging. It heavily relies on the geometric properties of the holes implied by condition (0.5). Finally, Sect. 5 contains the proof of the main auxiliary results used throughout the paper.

1 Setting and notation

Let \(D \subseteq {\mathbb {R}}^3\) be an open set having \(C^{1,1}\)-boundary. We assume that D is star-shaped with respect to a point \(x_0 \in {\mathbb {R}}^3\). This assumption is purely technical and allows us to give an easier formulation for the set of holes \(H^\varepsilon \). With no loss of generality we assume that \(x_0=0\).

The process \((\Phi ; {\mathcal {R}})\) is a stationary marked point process on \({\mathbb {R}}^3\) having independent and identically distributed marks on \([1; +\infty )\). In other words, \((\Phi ; {\mathcal {R}})\) may be seen as a Poisson point process on the space \({\mathbb {R}}^3 \times [1;+\infty )\), having intensity \({\tilde{\lambda }}(x, \rho )= \lambda f(\rho )\). The expectation in (0.2) or (0.5) is therefore taken with respect to the measure \(f(\rho ) {\mathrm {d}}\rho \). We denote by \((\Omega , {\mathcal {F}}, {\mathbb {P}})\) the probability space associated to \((\Phi , {\mathcal {R}})\), so that the random sets in (0.1) and the random fields solving (0.3) or (0.4) may be written as \(H^\varepsilon = H^\varepsilon (\omega )\), \(D^\varepsilon =D^\varepsilon (\omega )\) and \(u_\varepsilon (\omega ; \cdot )\), respectively. The set of realizations \(\Omega \) may be seen as the set of atomic measures \(\sum _{n \in {\mathbb {N}}} \delta _{(z_n, \rho _n)}\) in \({\mathbb {R}}^3 \times [1; +\infty )\) or, equivalently, as the set of (unordered) collections \(\{ (z_n , \rho _n) \}_{ n\in {\mathbb {N}}} \subseteq {\mathbb {R}}^3 \times [1; +\infty )\).

We choose as \({\mathcal {F}}\) the smallest \(\sigma \)-algebra such that the random variables \(N(B): \Omega \rightarrow {\mathbb {N}}\), \(\omega \mapsto \#\{ \omega \cap B \}\) are measurable for every set \(B \subseteq {\mathbb {R}}^4\) in the Borel \(\sigma \)-algebra \({\mathcal {B}}_{{\mathbb {R}}^{4}}\). Here and throughout the paper, \(\#\) stands for the cardinality of the set considered. For every \(p \in [1; +\infty )\) we define the space \(L^p(\Omega )\) as the space of (\({\mathcal {F}}\)-measurable) random variables \(F: \Omega \rightarrow {\mathbb {R}}\) endowed with the norm \({\mathbb {E}}\left[ |F(\omega )|^p \right] ^{\frac{1}{p}}\). For \(p=+\infty \), we set \(L^\infty (\Omega )\) as the space of \({\mathbb {P}}\)-essentially bounded random variables. We denote by \(L^p(\Omega \times D)\), \(p \in [1; +\infty )\), the space of random fields \(F: \Omega \times {\mathbb {R}}^3 \rightarrow {\mathbb {R}}\) that are measurable with respect to the product \(\sigma \)-algebra and such that \({\mathbb {E}}\left[ \int _D |F(\omega , x)|^p {\mathrm {d}}x \right] ^{\frac{1}{p}} < +\infty \). The spaces \(L^p(\Omega ), L^p(\Omega \times {\mathbb {R}}^3)\) are separable for \(p \in [1, +\infty )\) and reflexive for \(p \in (1, +\infty )\) (see e.g. [5][Section 13,4]). The same definition, with obvious modifications, holds when the target space \({\mathbb {R}}\) is replaced by \({\mathbb {R}}^3\).

We often appeal to the Strong Law of Large Numbers (SLLN) for averaged sums of the form

where \(\{X_z \}_{z\in \Phi ^\varepsilon (D)}\) are identically distributed random variables that have sufficiently decaying correlations. Here, we send the radius of the ball \(B_R\) to infinity. It is well-known that such results hold and we refer to [14][Section 5] for a detailed proof of the result that is tailored to the current setting.
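For orientation, the normalisation of such averages can be read off from the first moment. The following is a minimal sketch in the special case \(X_z = g(\rho _z)\), where g is a hypothetical function with \({\mathbb {E}}\left[ |g(\rho )| \right] < +\infty \) introduced only for this illustration. Since \((\Phi ; {\mathcal {R}})\) is a Poisson point process on \({\mathbb {R}}^3 \times [1; +\infty )\) with intensity \(\lambda f(\rho )\), Campbell's formula gives

$$\begin{aligned} {\mathbb {E}}\left[ \varepsilon ^3 \sum _{z \in \Phi \cap \frac{1}{\varepsilon }D} g(\rho _z) \right] = \varepsilon ^3 \lambda \left| \tfrac{1}{\varepsilon }D \right| \int _1^{+\infty } g(\rho ) f(\rho ) {\mathrm {d}}\rho = \lambda |D| \, {\mathbb {E}}\left[ g(\rho ) \right] , \end{aligned}$$

so that \(\lambda |D| \, {\mathbb {E}}\left[ g(\rho ) \right] \) is the natural candidate for the almost sure limit of the rescaled sums.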

1.1 Notation

We use the notation \(\lesssim \) or \(\gtrsim \) for \(\leqslant C\) or \(\geqslant C\) where the constant depends only on \(\alpha \), \(\lambda \), D and, in case (b), also on \(\beta \) in (0.5). Given a parameter \(p \in {\mathbb {R}}\), we use the notation \(\lesssim _p\) if the implicit constant also depends on the value p. For \(r>0\), we write \(B_r\) for the ball of radius r centred at the origin of \({\mathbb {R}}^3\). We denote by \(\langle \, \cdot \, ; \, \cdot \, \rangle \) the duality bracket between the spaces \(H^{-1}(D)\) and \(H^1_0(D)\).

When no ambiguity occurs, we skip the argument \(\omega \in \Omega \) in all the random objects considered in the paper. If \((\Phi ; {\mathcal {R}})\) is as in the previous subsection, for a set \(A \subseteq {\mathbb {R}}^d\), we define

For \(x \in {\mathbb {R}}^3\), we define the random variables

1.2 On the assumptions on the radii

In this subsection we discuss the choice of assumptions (0.2) and (0.5) in Theorem 0.1. We postpone the proofs of the statements to the Appendix. The next result states that assumption (0.2) is sufficient to have only microscopic holes whose size vanishes in the limit \(\varepsilon \downarrow 0\). Moreover, it is also necessary in order to ensure that the holes \(H^\varepsilon \) do not cover the full domain D.

Lemma 1.1

The following conditions are equivalent:

(i) The process satisfies (0.2);

(ii) For \({\mathbb {P}}\)-almost every realization and for every \(\varepsilon \) small enough the set \(D^\varepsilon \ne \emptyset \).

Furthermore, (i) (or (ii)) implies that for \({\mathbb {P}}\)-almost every realization \(\lim _{\varepsilon \downarrow 0}|D^\varepsilon | =|D|\).
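To see heuristically where the exponent \(\frac{3}{\alpha }\) comes from, one may count the holes whose rescaled radius \(\varepsilon ^\alpha \rho _z\) exceeds a fixed threshold \(\delta > 0\). The sketch below assumes that (0.2) is the moment bound \({\mathbb {E}}\left[ \rho ^{\frac{3}{\alpha }} \right] < +\infty \) (the exponent \(\frac{3}{\alpha }\) is the one mentioned in the remark on general dimensions). It only controls an expectation, so it is an illustration and not a proof of Lemma 1.1. Since the expected number of centres in \(\frac{1}{\varepsilon }D\) is \(\lambda |D| \varepsilon ^{-3}\) and the radii are independent of the centres, Markov's inequality yields

$$\begin{aligned} {\mathbb {E}}\left[ \# \left\{ z \in \Phi \cap \tfrac{1}{\varepsilon }D \, :\, \varepsilon ^\alpha \rho _z > \delta \right\} \right] = \lambda |D| \varepsilon ^{-3} \, {\mathbb {P}}\left( \rho ^{\frac{3}{\alpha }} > \delta ^{\frac{3}{\alpha }} \varepsilon ^{-3} \right) \leqslant \lambda |D| \delta ^{-\frac{3}{\alpha }} \, {\mathbb {E}}\left[ \rho ^{\frac{3}{\alpha }} \mathbf {1}_{\rho ^{\frac{3}{\alpha }} > \delta ^{\frac{3}{\alpha }} \varepsilon ^{-3}} \right] . \end{aligned}$$

By dominated convergence the right-hand side vanishes as \(\varepsilon \downarrow 0\), so on average no hole of macroscopic size survives in the limit.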

In the following result we provide the geometric information on \(H^\varepsilon \) that may be inferred by strengthening condition (0.2) to (0.5). Roughly speaking, the next lemma states that, under condition (0.5), we have a control on the maximum number of holes of comparable size that intersect. More precisely, we may discretize the range of the size of the radii \(\{ \rho _z\}_{z\in \Phi ^\varepsilon (D)}\) and partition the set of centres \(\Phi ^\varepsilon (D)\) according to the order of magnitude of the associated radii. The next statement says that there exists an \(M\in {\mathbb {N}}\) (that is independent of the realization \(\omega \in \Omega \)) such that, provided that the step-size of the previous discretization is small enough, each sub-collection contains at most M holes that overlap when dilated by a factor 4. This result allows us to treat also the case of the Stokes system in Theorem 0.1, (b) and motivates the need for the stronger assumption (0.5) in that setting.

Lemma 1.2

Let \((\Phi , {\mathcal {R}})\) satisfy (0.5). Then:

(i) There exists \(\kappa = \kappa (\alpha , \beta ) > 0\), \(k_{\text {max}}= k_{\text {max}}(\alpha , \beta ), M=M(\alpha , \beta ) \in {\mathbb {N}}\) and disjoint sets \(\{ I_{\varepsilon ,i}\}_{i=1}^{k_{\text {max}}} \subseteq \Phi ^\varepsilon (D)\) such that for \({\mathbb {P}}\)-almost every realization and for every \(\varepsilon \) small enough it holds

$$\begin{aligned} \sup _{z \in \Phi ^\varepsilon (D)} \varepsilon ^\alpha \rho _z \leqslant \varepsilon ^{\kappa } \end{aligned}\tag{1.2}$$

and we may rewrite

$$\begin{aligned} H^\varepsilon = \bigcup _{i=1}^{k_{\text {max}}} \bigcup _{ z \in I_{i,\varepsilon }} B_{\varepsilon ^\alpha \rho _z}(\varepsilon z), \ \ \ \ \inf _{z \in I_{i,\varepsilon }} \varepsilon ^\alpha \rho _z \geqslant \varepsilon ^\kappa \sup _{z \in I_{i-2,\varepsilon }} \varepsilon ^\alpha \rho _z \ \ \ \text {for }i=1, \cdots , k_{\text {max}} \end{aligned}\tag{1.3}$$

such that for every \(i=1, \ldots , k_{\text {max}}\)

$$\begin{aligned} \{ B_{4\varepsilon ^\alpha \rho _z}(\varepsilon z)\}_{z \in I_{i,\varepsilon } \cup I_{i-1,\varepsilon }}, \ \ \text {contains at most }M\hbox { elements that intersect.} \end{aligned}\tag{1.4}$$

(ii) For every \(\delta > 0\) there exists \(\varepsilon _0=\varepsilon _0(\delta )> 0\) and a set \(B \in {\mathcal {F}}\) such that \({\mathbb {P}}(B) \geqslant 1-\delta \) and for every \(\omega \in B\) and \(\varepsilon \leqslant \varepsilon _0\) inequality (1.2) holds and there exists a partition of \(H^\varepsilon \) satisfying (1.3)–(1.4).

2 Uniform bounds

In this section we provide uniform bounds for the family \(\{ \sigma _\varepsilon ^2 u_\varepsilon \}_{\varepsilon >0}\) and \(\{ \sigma _\varepsilon \nabla u_\varepsilon \}_{\varepsilon >0}\), where \(u_\varepsilon \) is as in Theorem 0.1, (a) or (b). We stress that, as in [2], this is done by relying on a Poincaré inequality for functions that vanish in the holes \(H^\varepsilon \). The order of magnitude of the typical size (i.e. \(\varepsilon ^\alpha \)) and distance (i.e. \(\varepsilon \)) of the holes yields that the Poincaré constant scales as the inverse of the factor \(\sigma _\varepsilon \) introduced in Theorem 0.1. This, combined with the energy estimate for (0.3) or (0.4), allows us to obtain the bounds on the rescaled solutions. We mention that the next results contain both annealed and quenched uniform bounds. The quenched versions are not needed to prove Theorem 0.1, but may be used to prove the quenched analogue described in Remark 0.2, (iii).
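The mechanism can be sketched in an idealised situation. Assume, only for this illustration, that \(f \in L^2(D)\) and that a deterministic Poincaré inequality \(\Vert v \Vert _{L^2(D)} \leqslant C_P \, \sigma _\varepsilon ^{-1} \Vert \nabla v \Vert _{L^2(D)}\) holds for all \(v \in H^1_0(D^\varepsilon )\) with a constant \(C_P\) independent of \(\varepsilon \); in the random setting the constant is random and the estimate only holds in a weaker form. Testing (0.3), read as \(-\Delta u_\varepsilon = f\) in \(D^\varepsilon \), with \(u_\varepsilon \) gives

$$\begin{aligned} \int _D |\nabla u_\varepsilon |^2 = \int _D f u_\varepsilon \leqslant \Vert f \Vert _{L^2(D)} \Vert u_\varepsilon \Vert _{L^2(D)} \leqslant C_P \, \sigma _\varepsilon ^{-1} \Vert f \Vert _{L^2(D)} \Vert \nabla u_\varepsilon \Vert _{L^2(D)} , \end{aligned}$$

so that \(\Vert \sigma _\varepsilon \nabla u_\varepsilon \Vert _{L^2(D)} \leqslant C_P \Vert f \Vert _{L^2(D)}\). A second application of the Poincaré inequality then yields \(\Vert \sigma _\varepsilon ^2 u_\varepsilon \Vert _{L^2(D)} \leqslant C_P^2 \Vert f \Vert _{L^2(D)}\).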

Lemma 2.1

Let the process \((\Phi , {\mathcal {R}})\) satisfy (0.2). For \(\varepsilon > 0\), let \(u_\varepsilon \) be as in Theorem 0.1, (a) or (b). Then for every \(p \in [1; 2)\)

Furthermore, for \({\mathbb {P}}\)-almost every realization, the sequences \(\{ \sigma _\varepsilon ^{2} u_\varepsilon \}_{\varepsilon >0}\) and \(\{\sigma _\varepsilon \nabla u_\varepsilon \}_{\varepsilon >0}\) are bounded in \(L^p(D)\), \(p \in (1; 2)\), and in \(L^2(D)\), respectively.

This, in turn, is a consequence of

Lemma 2.2

If \((\Phi , {\mathcal {R}})\) satisfies (0.2), then for every \(p \in [1; 2]\) and for every \(v \in H^1_0(D^\varepsilon )\) we have

where the random variables \(\{ C_\varepsilon (p) \}_{\varepsilon > 0}\) satisfy

Proof of Lemma 2.2

As a first step, we argue that the following Poincaré inequality holds: Let V be a convex domain. Assume that \(V \subseteq B_r\) for some \(r> 0\). Let \(s < r\). Then, for every \(q \in [1; 2]\) and \(u \in H^1(V \backslash B_s)\) such that \(u=0\) on \(\partial B_s\) it holds

This result is standard and may be easily proven by writing the integrals in spherical coordinates. We stress that the assumptions on V allow us to write the domain \(V \backslash B_s\) as \(\{ (\omega , r) \in {\mathbb {S}}^{2} \times {\mathbb {R}}_+, \ \ s \wedge R(\omega ) \leqslant r < R(\omega ) \}\) for some function \(R: {\mathbb {S}}^{2} \rightarrow {\mathbb {R}}\) satisfying \(\Vert R \Vert _{L^\infty ({\mathbb {S}}^{2})} \leqslant r\).

As a second step, we construct an appropriate random tessellation for D: We consider the Voronoi tessellation \(\{ V_z\}_{z\in \Phi }\) associated to the point process \(\Phi \), namely the sets

We define

Note that, by the previous rescaling, we have that, if \(\operatorname {diam}(V_z):= r_z\), then \(\operatorname {diam}(V_{\varepsilon ,z})=\varepsilon r_z\).

It is immediate to see that, for every realization \(\omega \in \Omega \), the sets \(\{ V_{\varepsilon ,z} \}_{z\in A^\varepsilon }\) are essentially disjoint, convex and cover the set D. Since \(\Phi \) is stationary, the random variables \(\{r_z\}_{z\in \Phi }\) are identically distributed. Furthermore, they are distributed according to a generalized Gamma distribution having density \(g(r)= C(\lambda ) r^{8} \exp \left( - c(d,\lambda ) r^3 \right) \) [22][Proposition 4.3.1.]. From this, it is a standard computation to show that

and that there exists a constant \(c=c(\lambda )>0\) such that for every function \(F: {\mathbb {R}}_+ \rightarrow {\mathbb {R}}\) (that is integrable with respect to the measure g(r)dr)

Equipped with \(\{ V_{\varepsilon ,z} \}_{z \in A^\varepsilon }\), we argue that for every realization of \(H^\varepsilon \) and all \(p \in [1 ; 2)\) it holds

with \(C^\varepsilon (p)^p:= \left( \varepsilon ^3 \sum _{z\in A^\varepsilon } r_z^{\frac{6}{2-p}} \right) ^{\frac{2-p}{2}}\). Note that by (2.4), (2.5) and the Law of Large Numbers the family \(\{ C^\varepsilon (p)\}_{\varepsilon >0}\) satisfies (2.3). We show (2.6) as follows: For every \(v\in H^1_0(D^\varepsilon )\), we rewrite

Since \(\rho _z \geqslant 1\), we have that \(B_{\varepsilon ^\alpha }(\varepsilon z) \subseteq B_{\varepsilon ^\alpha \rho _z}(\varepsilon z)\) so that the function \(v \in H^1_0(D^\varepsilon )\) vanishes on \(B_{\varepsilon ^\alpha }(\varepsilon z)\). Hence, thanks to the choice of \(\{ V_{\varepsilon ,z}\}_{z\in A^\varepsilon }\), we apply Lemma 2 in each set \(V_z^\varepsilon \) with \(B_s= B_{\varepsilon ^\alpha }(\varepsilon z)\) and \(B_r= B_{\varepsilon r_z}(\varepsilon z)\) and infer that

Since \(p\in [1, 2)\), we may appeal to Hölder’s inequality and conclude that

i.e. inequality (2.6). This concludes the proof of (2.2) in the case \( p \in [1; 2)\).
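For the reader's convenience, the chain of inequalities leading to (2.6) can be sketched as follows. The sketch assumes that the Poincaré inequality of the first step takes the standard form \(\int _{V \backslash B_s} |u|^2 \lesssim \frac{r^3}{s} \int _{V \backslash B_s} |\nabla u|^2\) and that \(|V_{\varepsilon ,z}| \lesssim (\varepsilon r_z)^3\); both are assumptions of this illustration, and constants are not tracked. With \(r = \varepsilon r_z\) and \(s = \varepsilon ^\alpha \) one has \(\frac{r^3}{s} = \sigma _\varepsilon ^{-2} r_z^3\), so that Hölder's inequality in each cell gives, for \(p \in [1; 2)\),

$$\begin{aligned} \int _{V_{\varepsilon ,z}} |v|^p \leqslant |V_{\varepsilon ,z}|^{\frac{2-p}{2}} \left( \int _{V_{\varepsilon ,z}} |v|^2 \right) ^{\frac{p}{2}} \lesssim \sigma _\varepsilon ^{-p} \, \varepsilon ^{\frac{3(2-p)}{2}} r_z^{3} \left( \int _{V_{\varepsilon ,z}} |\nabla v|^2 \right) ^{\frac{p}{2}} . \end{aligned}$$

Summing over \(z \in A^\varepsilon \) and applying the discrete Hölder inequality with exponents \(\frac{2}{2-p}\) and \(\frac{2}{p}\), we get

$$\begin{aligned} \sum _{z \in A^\varepsilon } \varepsilon ^{\frac{3(2-p)}{2}} r_z^{3} \left( \int _{V_{\varepsilon ,z}} |\nabla v|^2 \right) ^{\frac{p}{2}} \leqslant \left( \varepsilon ^3 \sum _{z \in A^\varepsilon } r_z^{\frac{6}{2-p}} \right) ^{\frac{2-p}{2}} \left( \int _{D} |\nabla v|^2 \right) ^{\frac{p}{2}} , \end{aligned}$$

where v is extended by zero outside \(D^\varepsilon \) and we used that the cells are essentially disjoint. The first factor on the right-hand side is precisely \(C^\varepsilon (p)^p\).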

To tackle the case \(p=2\) we need a further manipulation: we distinguish between points \(z \in A^\varepsilon \) having \(r_z > - \log \varepsilon \) or \(r_z \leqslant - \log \varepsilon \):

We apply Poincaré’s inequality in \(H^1_0(D)\) on every integral of the second sum above. This implies that

so that Chebyshev’s inequality and (2.5) yield

where we set \(C_\varepsilon (2) := \left( \varepsilon ^3 \sum _{z \in A^\varepsilon } \exp \left( r_z^2 \right) \right) \). Note that, again by (2.4)-(2.5) and the Law of Large Numbers, this definition of \(C_\varepsilon (2)\) satisfies (2.3). Inserting the previous display into (2.10) implies that

We now apply Lemma 2 in the remaining sum and obtain (2.7) with \(p=2\), where the sum is restricted to the points \(z \in A^\varepsilon \) such that \(r_z \leqslant -\log \varepsilon \). From this, we infer that

By redefining \(C_\varepsilon (2)^2 = \min \left( \varepsilon ^3 \sum _{z \in A^\varepsilon } \exp \left( r_z^2 \right) ; 1 \right) \), the above inequality immediately implies (2.2) for \(p=2\). The proof of Lemma 2.2 is complete. \(\square \)

Proof of Lemma 2.1

We prove Lemma 2.1 for \(u_\varepsilon \) solving (0.3). The case (0.4) is analogous. Since \(f \in L^q(D)\) with \(q \in (2 ; +\infty ]\), we may test (0.3) with \(u_\varepsilon \) and use Hölder’s inequality to control

We thus appeal to (2.2) with \(p= \frac{q}{q-1}\) and obtain that

Thanks to (2.3) of Lemma 2.2, this yields that the sequence \(\{ \sigma _\varepsilon \nabla u_\varepsilon \}_{\varepsilon >0}\) is bounded in \(L^2(D)\) for \({\mathbb {P}}\)-almost every realization. Similarly, we infer (2.1) by taking the expectation and applying Hölder’s inequality.

We argue the remaining bounds for the terms of \(u_\varepsilon \) in a similar way: We combine Lemma 2.2 with the same calculation above for (2.11) and apply Hölder’s inequality. This establishes Lemma 2.1.

3 Proof of Theorem 0.1, (a)

Lemma 3.1

Let \((\Phi , {\mathcal {R}})\) satisfy (0.2). Then there exists an \(\varepsilon _0=\varepsilon _0(d)\) such that for every \(\varepsilon < \varepsilon _0\) and \({\mathbb {P}}\)-almost every realization there exists a family \(\{ w_\varepsilon \}_{\varepsilon < \varepsilon _0} \subseteq W^{1,+\infty }({\mathbb {R}}^3)\) such that \(\Vert w_\varepsilon \Vert _{L^\infty ({\mathbb {R}}^3)} = 1\), \(w_\varepsilon = 0\) in \(H^\varepsilon \) and

In addition,

and for every \(\phi \in C^\infty _0(D)\) and \(v_\varepsilon \in H^1_0(D^\varepsilon )\) satisfying the bounds of Lemma 2.1 and such that \(\sigma _\varepsilon ^2 v_\varepsilon \rightharpoonup v\) in \(L^1(\Omega \times D)\), it holds

Here, the constant k is as in Theorem 0.1, (a).

Proof of Theorem 0.1, (a)

We recall that, for part (a) of Theorem 0.1, \((\Phi , {\mathcal {R}})\) satisfies (0.2).

The proof is similar to the one in [2]. We first show that \(\sigma _\varepsilon ^2 u_\varepsilon \rightharpoonup u\) in \(L^p(D \times \Omega )\), \(p\in [1, 2)\): We may appeal to Lemma 2.1 and infer that, up to a subsequence, there exists a weak limit \(u^* \in L^p(\Omega \times {\mathbb {R}}^d)\), \(p \in [1,2)\). We prove that, \({\mathbb {P}}\)-almost surely, the function \(u^*= k f\) in D. This, in particular, also implies that the full family \(\{\sigma _\varepsilon ^2u_\varepsilon \}_{\varepsilon >0}\) weakly converges to \(u^*\).

We restrict to the converging subsequence \(\{ \sigma _{\varepsilon _j}^2 u_{\varepsilon _j}\}_{j\in {\mathbb {N}}}\). However, for the sake of a lean notation, we forget about the subsequence \(\{\varepsilon _j \}_{j\in {\mathbb {N}}}\) and continue using the notation \(u_\varepsilon \) and \(\varepsilon \downarrow 0\). Let \(\varepsilon _0\) and \(\{w_\varepsilon \}_{\varepsilon >0}\) be as in Lemma 3.1. For every \(\varepsilon < \varepsilon _0\), \(\chi \in L^\infty (\Omega )\) and \(\phi \in C^\infty _0(D)\) we test equation (0.3) with \(\chi w_\varepsilon \phi \) and take the expectation:

Using Leibniz’s rule, integration by parts and the bounds for \(u_\varepsilon \) and \(w_\varepsilon \) in Lemmas 2.1 and 3.1 we reduce to

We now appeal to (3.3) in Lemma 3.1 applied to the converging subsequence \(\{u_\varepsilon \}_{\varepsilon >0}\) and conclude that

Since \(\chi \in L^\infty (\Omega )\) and \(\phi \in C^\infty _0(D)\) are arbitrary, we infer that for \({\mathbb {P}}\)-almost every realization \(u^*= k f\) for (Lebesgue-)almost every \(x \in D\). We stress that in this last statement we used the separability of \(L^p(D)\), \(p \in [1, \infty )\). This establishes that the full family \(\sigma _\varepsilon ^2 u_\varepsilon \rightharpoonup k f\) in \(L^p(\Omega \times D)\), \(p \in [1, 2)\).

To conclude Theorem 0.1, (a) it remains to upgrade the previous convergence from weak to strong. We fix \(p \in [1, 2)\). By the assumption on f, the function \(u^* \in L^q(D)\), for some \(q \in (2; +\infty ]\). Let \(\{ u_n \}_{n\in {\mathbb {N}}} \subseteq C^\infty _0(D)\) be an approximating sequence for \(u^*\) in \(L^q(D)\).

Since \(w_\varepsilon \in W^{1,\infty }(D)\), the function \(w_\varepsilon u_n \in H^1_0(D)\). Hence, by Lemma 2.2 applied to \(u_\varepsilon - w_\varepsilon u_n\) we obtain

and, since \(p< 2\) and C(p) satisfies (2.3) of Lemma 2.2, also

We claim that

so that

Provided this holds, we establish Theorem 0.1, (a), as follows: By the triangle inequality we have that

Since \(u^*\) and \(u_n \in C^\infty _0(D)\) are deterministic, we take the expectation and use Lemma 3.1 with (3.6) to get

This implies the statement of Theorem 0.1, (a), since \(p< 2\) and \(\{ u_n \}_{n\in {\mathbb {N}}}\) converges to \(u^*\) in \(L^2(D)\).
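For the reader’s convenience, we recall the elementary bound behind the restriction \(p < 2\) in the last step. It is stated only as a sketch for a generic function \(g \in L^2(D)\); the symbol \(|D|\) (the Lebesgue measure of D, which is finite since D is bounded) is notation introduced for this remark. By Hölder’s inequality with exponents \(\frac{2}{p}\) and \(\frac{2}{2-p}\),

$$\begin{aligned} \Vert g \Vert _{L^p(D)} \leqslant |D|^{\frac{1}{p} - \frac{1}{2}} \, \Vert g \Vert _{L^2(D)} \ \ \ \ \text {for every } p \in [1, 2). \end{aligned}$$

Applied to \(g = u_n - u^*\), this controls the \(L^p\)-distance between \(u_n\) and \(u^*\) by their \(L^2\)-distance.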

We thus turn to (3.5): We skip the lower index \(n \in {\mathbb {N}}\) and write u instead of \(u_n\). If we expand the inner square, we write

For the first term on the right-hand side we use (0.3) and the fact that \(\sigma _\varepsilon ^2 u_\varepsilon \rightharpoonup u^*\) in \(L^p(\Omega \times D)\) with \(p \in [1, 2)\). Hence,

We focus on the remaining two terms in (3.7): Using Leibniz’s rule and an integration by parts we have that

Thanks to Lemma 2.1, Lemma 3.1 and since \(u \in C^{\infty }_0(D)\), the first and second term vanish in the limit \(\varepsilon \downarrow 0\). Hence,

By Lemma 2.1 and since \(u_\varepsilon \rightharpoonup u^*\), we may apply (3.3) of Lemma 3.1 with \(\phi = u\) and \(v_\varepsilon = u_\varepsilon \) to the limit on the right-hand side above. This yields

We now turn to the last term in (3.7). Also here, we use Leibniz’s rule to compute

By an argument similar to the one for (3.10), we reduce to

We now apply (3.3) of Lemma 3.1 to \(v_\varepsilon = w_\varepsilon u\) and \(\phi =u\). This implies that

Inserting (3.8), (3.10) and (3.11) into (3.7) we have that

Since \(u^* = k f\), it is easy to see that the right-hand side above equals the right-hand side of (3.5). This establishes (3.5) and concludes the proof of Theorem 0.1, case (a). \(\square \)
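We remark that the expansion used for (3.7) rests on two pointwise identities, recorded here as a sketch without the expectation and without claiming to reproduce the exact form of the paper’s display. For \(u \in C^\infty _0(D)\),

$$\begin{aligned} |\nabla (u_\varepsilon - w_\varepsilon u)|^2 = |\nabla u_\varepsilon |^2 - 2\, \nabla u_\varepsilon \cdot \nabla (w_\varepsilon u) + |\nabla (w_\varepsilon u)|^2 , \ \ \ \ \nabla (w_\varepsilon u) = u \nabla w_\varepsilon + w_\varepsilon \nabla u . \end{aligned}$$

The three terms on the right-hand side of the first identity correspond, in this order, to the terms treated in (3.8), (3.10) and (3.11). For the first one, under the assumption that (0.3) is the Poisson problem \(-\Delta u_\varepsilon = f\) in \(D^\varepsilon \) with \(u_\varepsilon = 0\) on \(\partial D^\varepsilon \) (this equation is not restated in the present section), testing with \(u_\varepsilon \) itself and multiplying by \(\sigma _\varepsilon ^2\) gives

$$\begin{aligned} \int _{D} |\sigma _\varepsilon \nabla u_\varepsilon |^2 = \int _{D} f \, \sigma _\varepsilon ^2 u_\varepsilon , \end{aligned}$$

where \(u_\varepsilon \) is extended by zero to the holes. This is the point where the weak convergence of \(\sigma _\varepsilon ^2 u_\varepsilon \) enters.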

3.1 Proof of Lemma 3.1

Throughout this section, we assume that the process \((\Phi , {\mathcal {R}})\) satisfies assumption (0.2).

Lemma 3.1 may be proven in a way that is similar to [14][Lemma 3.1]. The first crucial ingredient is the following lemma, which allows us to find a suitable partition of the holes \(H^\varepsilon \) by dividing this set into a part containing well separated holes and another one containing the clusters. The next result is the analogue of [14][Lemma 4.2] with the different rescaling of the radii of the balls generating the set \(H^\varepsilon \).

For every \(x\in {\mathbb {R}}^3\), we recall the definition of \(R_{\varepsilon ,x}\) in (1.1). We have:

Lemma 3.2

Let \(\gamma \in (0, \alpha -1)\). Then there exists a partition \(H^\varepsilon := H^\varepsilon _{g} \cup H^\varepsilon _{b}\), with the following properties:

- There exists a subset of centres \(n^\varepsilon (D) \subseteq \Phi ^\varepsilon (D)\) such that

$$\begin{aligned} H^\varepsilon _g : = \bigcup _{z \in n^\varepsilon (D)} B_{\varepsilon ^\alpha \rho _z}( \varepsilon z ), \ \ \ \ \min _{z \in n^\varepsilon (D)}R_{\varepsilon , z}\geqslant \varepsilon ^{1+\frac{\gamma }{2}}, \ \ \ \ \ \max _{z \in n^\varepsilon (D)}\varepsilon ^\alpha \rho _z \leqslant \varepsilon ^{1+\gamma }. \end{aligned}$$(3.13)

- There exists a set \(D^\varepsilon _b(\omega ) \subseteq {\mathbb {R}}^3\) satisfying

$$\begin{aligned} H^\varepsilon _{b} \subseteq D^\varepsilon _b, \ \ \ {{\,\mathrm{Cap}\,}}( H^\varepsilon _b, D_b^\varepsilon ) \lesssim C(\gamma ) \varepsilon ^\alpha \sum _{z \in \Phi ^\varepsilon (D) \backslash n^\varepsilon (D)} \rho _z \end{aligned}$$

and for which

$$\begin{aligned} B_{\frac{R_{\varepsilon ,z}}{2}}(\varepsilon z) \cap D^\varepsilon _b = \emptyset , \ \ \ \ \ \ \ \text {for every }z \in n^\varepsilon (D). \end{aligned}$$

Finally, we have that

Let \(\gamma \) in Lemma 3.2 be fixed. We construct \(w_\varepsilon \) as done in [14]: we set \(w_\varepsilon = w_\varepsilon ^g \wedge w_\varepsilon ^b\) with

where for each \(z\in n^\varepsilon (D)\), the function \(w_{\varepsilon ,z}\) vanishes in the hole \(B_{\varepsilon ^\alpha \rho _z}(\varepsilon z)\) and solves

We also define the measure

We stress that all the previous objects depend on the choice of the parameter \(\gamma \) in Lemma 3.2. The next result states that this parameter may be chosen so that the norm \(\Vert \mu _\varepsilon - 4\pi \lambda {\mathbb {E}}\left[ \rho \right] \Vert _{H^{-1}(D)}\) is suitably small. This, together with Lemma 3.2, provides the crucial tool to show Lemma 3.1:

Lemma 3.3

There exists \(\gamma \in (0, \alpha -1)\) such that if \(\mu _\varepsilon \) is as in (3.17) there exists \(\kappa >0\) such that for every random field \(v \in H^1_0(D)\)

Proof of Lemma 3.1

By construction, it is clear that, for \({\mathbb {P}}\)-almost every realization, the functions \(w_\varepsilon \in W^{1,\infty }({\mathbb {R}}^3) \cap H^1({\mathbb {R}}^3)\), vanish in \(H^\varepsilon \) and are such that \(\Vert w_\varepsilon \Vert _{L^\infty ({\mathbb {R}}^3)}=1\).

We now turn to (3.1). Using the definitions of \(w_g^\varepsilon \) and \(w^\varepsilon _b\) and Lemma 3.2 we have that

By Poincaré’s inequality in each ball \(\{ B_{R_{\varepsilon ,z}}(\varepsilon z) \}_{z\in n^\varepsilon (D)}\) we bound

Thanks to (0.2) and the Strong Law of Large Numbers, for \({\mathbb {P}}\)-a.e. realization the right-hand side vanishes in the limit \(\varepsilon \downarrow 0\).

We now turn to the second term: Since by the maximum principle \(|w_\varepsilon ^b -1| \leqslant 1\), we may use the definition of \(D^\varepsilon _b\) to bound

Thanks to (3.14) in Lemma 3.2, the right-hand side vanishes in the limit \(\varepsilon \downarrow 0\) for \({\mathbb {P}}\)-almost every realization. Combining this with (3.19) and (3.18) yields (3.1) for \(w_\varepsilon - 1\). Inequality (3.1) for \(\sigma _\varepsilon ^{-1}\nabla w_\varepsilon \) follows by Lemma 3.2 and the definition (3.15) of \(w_\varepsilon \) as done in [14][Lemma 3.1]. Limit (3.2) may be argued as done above for (3.1), this time appealing to the bound (0.2) and the stationarity of \((\Phi , {\mathcal {R}})\).

It thus remains to show (3.3). Using (3.15), (3.17) and the fact that \(\phi u_\varepsilon \in H^1_0(D^\varepsilon )\), we may decompose

Since \(v_\varepsilon \) is assumed to satisfy the bounds in Lemma 2.1, Hölder’s inequality, definition (3.15) and (3.14) of Lemma 3.2 imply that

This and (3.20) thus yield that

Using the triangle inequality and the assumption \(v_\varepsilon \rightharpoonup v\) in \(L^1(\Omega \times D)\), we further reduce to

By Lemma 3.3, there exists \(\kappa > 0\) such that

Thanks to the assumptions on \(v_\varepsilon \), we infer that the right-hand side is zero. This, together with (3.21), yields (3.3). The proof of Lemma 3.1 is thus complete. \(\square \)

Proof of Lemma 3.3

We divide the proof into steps. The strategy of this proof is similar to the one for [11][Theorem 2.1, (b)].

Step 1: (Construction of a partition for D) Let \(Q := [-\frac{1}{2} ;\frac{1}{2}]^3\); for \(k \in {\mathbb {N}}\) and \(x\in {\mathbb {R}}^3\) we define

Let \(N_{k,\varepsilon } \subseteq {\mathbb {Z}}^3\) be a collection of points such that \(|N_{k,\varepsilon }| \lesssim \varepsilon ^{-3}\) and \(D \subseteq \bigcup _{x \in N_{k,\varepsilon }} Q_{\varepsilon , k,x}\). For each \(x \in N_{k,\varepsilon }\) we consider the collection of points \(N_{\varepsilon , k, x}:= \{ z \in n^\varepsilon (D) \, :\, \varepsilon z \in Q_{\varepsilon , k,x} \} \subseteq \Phi ^\varepsilon (D)\) and define the set

Since by definition of \(n^\varepsilon (D)\) in Lemma 3.2 the cubes \(\{ Q_{\varepsilon ,z} \}_{z \in {\tilde{\Phi }}^\varepsilon (D)}\) are all disjoint, we have that

Note that the previous properties hold for every realization \(\omega \in \Omega \).

Step 2. For \(k \in {\mathbb {N}}\) fixed, let \(\{ K_{\varepsilon , x,k} \}_{x \in N_{k,\varepsilon }}\) be the covering of D constructed in the previous step. We define the random variables

and construct the random step function

Let v be as in the statement of the lemma and \(m_\varepsilon (k)\) as above. The triangle and Cauchy-Schwarz inequalities imply that

so that the proof of the lemma reduces to estimating the norms

We now claim that there exist \(\gamma >0\) and \(k \in {\mathbb {N}}\) such that

for a positive exponent \(\kappa >0\). Combining these two inequalities with (3.24) establishes Lemma 3.3.

In the remaining part of the proof we tackle inequalities (3.25). We follow the same lines of [11][Theorem 1.1, (b)] and thus only sketch the main steps of the argument.

Step 3. We claim that

We first argue that

This follows by Lemma 4.3 applied to the measure \(\sigma _\varepsilon ^{-2}\mu _\varepsilon \): In this case, the random set of centres is \({\mathcal {Z}}={\tilde{\Phi }}^\varepsilon (D)\), the random radii are \({\mathcal {R}}= \{ R_{\varepsilon ,z}\}_{z\in {\tilde{\Phi }}^\varepsilon (D)}\), the functions are \(g_i = \sigma _{\varepsilon }^{-2} \nabla _\nu w_{\varepsilon ,z}\), \(z\in {\tilde{\Phi }}^\varepsilon (D)\), and the partition is \(\{ K_{\varepsilon ,k,x}\}_{x \in N_{\varepsilon ,k}}\) of the previous step. Note that, by construction, this partition satisfies the assumptions of Lemma 4.3. The explicit formulation of the harmonic functions \(\{ w_{\varepsilon ,z}\}_{z\in n^\varepsilon (D)}\) defined in (3.16) (cf. also [11][(2.24)]) implies that for every \(z \in n^\varepsilon (D)\)

Therefore, Lemma 4.3 and the bounds (3.28) yield that

which implies (3.27) thanks to (3.22).

It thus remains to pass from (3.27) to (3.26): We do this by taking the expectation and arguing as for [11][Inequality (4.22)]. We rely on the stationarity of \((\Phi , {\mathcal {R}})\), the properties of the Poisson point process and the fact that \(z \in n^\varepsilon (D)\) implies that \(\varepsilon ^\alpha \rho _z \leqslant \varepsilon ^{1 + \gamma }\) and \(R_{\varepsilon ,z} \geqslant \varepsilon ^{1+\frac{1}{2}\gamma }\).
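To illustrate the explicit formulation of the harmonic functions used in this step, we record the standard capacitary potential of a ball inside a concentric ball in \({\mathbb {R}}^3\). This is a sketch under assumptions: problem (3.16) is not restated in this section, and we assume that it is the Dirichlet problem with boundary values 0 on the inner sphere and 1 on the outer one. The radii \(a< R\) and the centre y are placeholders introduced here; in the setting above they would presumably correspond to \(a = \varepsilon ^\alpha \rho _z\), \(y = \varepsilon z\) and an outer radius comparable to \(R_{\varepsilon ,z}\). The function

$$\begin{aligned} w(x) = \frac{\frac{1}{a} - \frac{1}{|x-y|}}{\frac{1}{a} - \frac{1}{R}} \ \ \ \ \text {for } a \leqslant |x - y| \leqslant R \end{aligned}$$

is harmonic in the annulus \(B_R(y) \backslash B_a(y)\), vanishes on \(\partial B_a(y)\) and equals 1 on \(\partial B_R(y)\). Its outer normal derivative on \(\partial B_R(y)\) is constant, and the associated flux is

$$\begin{aligned} \nabla _\nu w = \frac{a}{R (R-a)} \ \ \text {on } \partial B_R(y), \ \ \ \ \ \int _{\partial B_R(y)} \nabla _\nu w = \frac{4\pi a}{1 - \frac{a}{R}} . \end{aligned}$$

If \(a \leqslant \varepsilon ^{1+\gamma }\) and \(R \geqslant \varepsilon ^{1+\frac{\gamma }{2}}\), as for the centres in \(n^\varepsilon (D)\), then \(\frac{a}{R} \leqslant \varepsilon ^{\frac{\gamma }{2}}\), so that the flux equals \(4\pi a\) up to a relative error of order \(\varepsilon ^{\frac{\gamma }{2}}\). This is consistent with the factor \(4\pi \) appearing in the limit \(4\pi \lambda {\mathbb {E}}\left[ \rho \right] \).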

Step 4. We now turn to the left-hand side in the second inequality of (3.25) and show that

The proof of this step is similar to [11][Theorem 2.1, (b)]: Using the explicit formulation of \(m_\varepsilon (k)\) we reduce to

If \(\mathring{N}_{\varepsilon ,k}:= \{ x \in N_{\varepsilon ,k} \, :\, \text {dist}(Q_{\varepsilon ,k, x} ; \partial D) > 2\varepsilon \}\), we split

Since \(\partial D\) is \(C^1\) and compact, for \(\varepsilon \) small enough (depending on D) we have

By stationarity, the second term in (3.30) is controlled by

Hence,

The remaining term on the right-hand side may be controlled by the right-hand side in (3.29) by means of standard CLT arguments as done in [11][Inequality (4.23)] for the analogous term. We stress that the crucial observation is that the random variables \(S_{k,\varepsilon ,x} - \lambda {\mathbb {E}}\left[ \rho \right] \) are centred up to an error term. We mention that in this case the set \(K_{\varepsilon ,k,x}\) has been defined in a different way from [11] and we use properties (3.22) instead of [11][(4.13)]. This yields (3.29).

Step 5. We show that, given (3.26) and (3.29) of the previous two steps, we may pick \(\gamma \) and \(k\in {\mathbb {N}}\) such that inequalities (3.25) hold: Thanks to the definition of \(\sigma _\varepsilon \) and since \(\alpha \in (1; 3)\), we may find \(\gamma \) close enough to \(\alpha -1\), e.g. \(\gamma = \frac{20}{21}(\alpha -1)\), and a \(k \in {\mathbb {N}}\), e.g. \(k=-\frac{9}{20}(\alpha -1)\), such that

This, thanks to (3.26), implies that the first inequality in (3.25) holds with the choice \(\kappa =\frac{1}{20} (\alpha -1) > 0\). The same values of \(\gamma \) and \(\kappa \) yield that also the right-hand side of (3.29) is bounded by \(\varepsilon ^{1-\frac{1}{2} (\alpha -1)}\). This yields also the remaining inequality in (3.25) and thus concludes the proof of Lemma 3.3.

Proof of Lemma 3.2

The proof of this lemma follows the same construction implemented in the proof of [11][Lemma 4.1] with \(d=3\), \(\delta = \gamma \) and with the radii \(\{\rho _z \}_{z \in \Phi ^\varepsilon (D)}\) rescaled by \(\varepsilon ^\alpha \) instead of \(\varepsilon ^3\). Note that the constraint for \(\gamma \) is due to this different rescaling. In the current setting, we replace \(\varepsilon ^2\) by \(\varepsilon ^{1+\frac{1}{2} \gamma }\) in the definition of the set \(K_b^\varepsilon \) in [11][(4.7)]. Estimate (3.14) may be argued as in [11][Lemma 4.4] by relying on (0.2). \(\square \)
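One way to read the constraint \(\gamma \in (0, \alpha -1)\) is through the size condition in (3.13). This is offered only as a heuristic sketch and not as part of the construction in [11]. For a centre z, the condition on the rescaled radius is equivalent to a bound on the unscaled radius:

$$\begin{aligned} \varepsilon ^\alpha \rho _z \leqslant \varepsilon ^{1+\gamma } \ \ \Longleftrightarrow \ \ \rho _z \leqslant \varepsilon ^{-(\alpha - 1 - \gamma )} . \end{aligned}$$

If \(\gamma < \alpha - 1\), the threshold on the right-hand side diverges as \(\varepsilon \downarrow 0\), so that any fixed value of \(\rho _z\) eventually satisfies the size condition. If instead \(\gamma \geqslant \alpha -1\), the threshold stays bounded, and radii above it would violate the size condition for every small \(\varepsilon \). Moreover, for \(z \in n^\varepsilon (D)\) the two bounds in (3.13) give the scale separation

$$\begin{aligned} \frac{\varepsilon ^\alpha \rho _z}{R_{\varepsilon ,z}} \leqslant \frac{\varepsilon ^{1+\gamma }}{\varepsilon ^{1+\frac{\gamma }{2}}} = \varepsilon ^{\frac{\gamma }{2}} . \end{aligned}$$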

4 Proof of Theorem 0.1, (b)

The next lemma plays, in the case of the Stokes system in Theorem 0.1, (b), the same role that Lemma 3.1 plays for the Poisson problem in Theorem 0.1, (a):

Lemma 4.1

Assume that \((\Phi , {\mathcal {R}})\) satisfies (0.5). Then, for every \(\delta > 0\), there exists an \(\varepsilon _0 >0\) and a set \(A_\delta \in {\mathcal {F}}\), having \({\mathbb {P}}(A_\delta ) \geqslant 1-\delta \), such that for every \(\omega \in A_\delta \) and \(\varepsilon \leqslant \varepsilon _0\) there exists a linear map

satisfying \(R_\varepsilon \phi =0\) in \(H^\varepsilon \), \(\nabla \cdot R_\varepsilon \phi =0\) in D and such that

Furthermore, if \(v_\varepsilon \) satisfies the bounds of Lemma 2.1 and \(\sigma _\varepsilon ^2 v_\varepsilon \rightharpoonup v\) in \(L^1(\Omega \times D)\), then

Proof of Theorem 0.1, (b)

The proof of this statement is very similar to the one for case (a) and we only emphasize the few technical differences. We recall that, in contrast with (a), in this case the process \((\Phi , {\mathcal {R}})\) satisfies the stronger condition (0.5). Using the bounds of Lemma 2.1, that we may apply since (0.5) implies (0.2), we have that, up to a subsequence, \(u_{\varepsilon _j} \rightharpoonup u^*\) in \(L^p(\Omega \times D)\), \(1 \leqslant p < 2\). We prove that

where \(p \in H^{1}(D)\) is the unique weak solution to

Identity (4.3) also implies that the full family \(\{u_\varepsilon \}_{\varepsilon >0}\) converges to \(u^*\).

As for the proof of Theorem 0.1, case (a), we restrict to the converging subsequence \(\{ u_{\varepsilon _j} \}_{j\in {\mathbb {N}}}\) but we skip the index \(j\in {\mathbb {N}}\) in the notation. We start by noting that, using the divergence-free condition for \(u_\varepsilon \) and that \(u_\varepsilon \) vanishes on \(\partial D\), we have that for every \(\phi \in C^\infty (D)\) and \(\chi \in L^\infty (\Omega )\)

Let \(\chi \in L^\infty (\Omega )\) and \(\phi \in C^\infty _0(D)\) with \(\nabla \cdot \phi =0\) in D be fixed. For every \(\delta > 0\), we appeal to Lemma 4.1 to infer that there exists an \(\varepsilon _\delta >0\) and a set \(A_\delta \in {\mathcal {F}}\), having \({\mathbb {P}}(A_\delta ) \geqslant 1-\delta \), such that for every \(\omega \in A_\delta \) and for every \(\varepsilon \leqslant \varepsilon _\delta \) we may consider the function \(R_\varepsilon \phi \in H^1_0(D^\varepsilon )\) of Lemma 4.1. Testing equation (0.4) with \(R_\varepsilon \phi \), and using that the vector field \(R_\varepsilon \phi \) is divergence-free, we infer that

Using Lemma 4.1 and the bounds of Lemma 2.1 this implies that in the limit \(\varepsilon \downarrow 0\) we have

We now send \(\delta \downarrow 0\) and appeal to the Dominated Convergence Theorem to infer that

Since D has \(C^{1,1}\)-boundary and is simply connected, the spaces \(L^p(D)\), \(p \in (1, +\infty )\) admit an \(L^p\)-Helmholtz decomposition \(L^p(D)= L^p_{\text {div}}(D) \oplus L^p_{\text {curl}}(D)\) [10][Section III.1]. This, the separability of \(L^p(D)\), \(p \in [1, +\infty )\), and the arbitrariness of \(\chi \) and \(\phi \) in (4.6), allow us to infer that for \({\mathbb {P}}\)-almost every realization the function \(u^*\) satisfies \(u^* = K f + \nabla p(\omega ; \cdot )\) for \(p(\omega ; \cdot ) \in W^{1,p}(D)\), \(p \in [1; 2)\). By a similar argument, we may use (4.5) to infer that for \({\mathbb {P}}\)-almost every realization and for every \(v\in W^{1,q}(D)\), \(q > 2\) we have

Since (4.4) admits a unique mean-zero solution, we conclude that \(p(\omega , \cdot )\) does not depend on \(\omega \). Finally, since D is regular enough and \(f \in L^q(D)\), standard elliptic regularity yields that \(p \in H^1(D)\). This concludes the proof of (4.3).

We now upgrade the convergence of the family \(\{ u_\varepsilon \}_{\varepsilon >0}\) to \(u^*\) from weak to strong: We claim that for every \(\delta >0\) we may find a set \(A_\delta \subseteq \Omega \) with \({\mathbb {P}}(A_\delta ) > 1-\delta \) such that

Here, \(q \in [1, 2)\). The proof of this inequality follows the same lines of the proof for (3.12) in case (a): In this case, we rely on Lemma 4.1 instead of Lemma 3.1 and use that, thanks to the definition (4.4), it holds

From (4.7), the statement of Theorem 0.1, (b) easily follows: Let, indeed, \(q \in [1, 2)\) be fixed. For every \(\delta >0\), let \(A_\delta \) be as above. We rewrite

and, given an exponent \(p \in (q; 2)\), we use Hölder’s inequality and the assumption on \(A_\delta \) to control

Since by Lemma 2.1 the family \(\sigma _\varepsilon ^2 u_\varepsilon \) is uniformly bounded in every \(L^p(\Omega \times D)\) for \(p \in [1, 2)\), we establish

Since \(\delta \) is arbitrary, we conclude the proof of Theorem 0.1, (b). \(\square \)
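For completeness, we sketch the Hölder step used above to control the contribution of the realizations outside \(A_\delta \). The inequality is written for a generic function \(g \in L^p(\Omega \times D)\) and may differ in form from the display in the proof; the symbol \(|D|\) for the Lebesgue measure of D is notation introduced here. For \(1 \leqslant q< p\), Hölder’s inequality on \(\Omega \times D\) with exponents \(\frac{p}{q}\) and \(\frac{p}{p-q}\) yields

$$\begin{aligned} {\mathbb {E}}\left[ \mathbf {1}_{\Omega \backslash A_\delta } \int _D |g|^q \right] \leqslant \bigl ( {\mathbb {P}}(\Omega \backslash A_\delta ) \, |D| \bigr )^{1 - \frac{q}{p}} \, {\mathbb {E}}\left[ \int _D |g|^p \right] ^{\frac{q}{p}} \leqslant ( \delta |D| )^{1-\frac{q}{p}} \, \Vert g \Vert _{L^p(\Omega \times D)}^q , \end{aligned}$$

where the last inequality uses \({\mathbb {P}}(A_\delta ) > 1-\delta \). With \(g = \sigma _\varepsilon ^2 u_\varepsilon - u^*\), which is uniformly bounded in \(L^p(\Omega \times D)\) for \(p \in (q; 2)\), the right-hand side is small uniformly in \(\varepsilon \) when \(\delta \) is small.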

4.1 Proof of Lemma 4.1

Throughout this section we assume that the process \((\Phi , {\mathcal {R}})\) satisfies assumption (0.5). We recall that this assumption is stronger than (0.2). Therefore, all the previous results that relied on (0.2) (e.g. Lemma 3.1, Lemma 3.3) hold also in this case.

We argue Lemma 4.1 by leveraging the geometric information on the clusters of holes \(H^\varepsilon \) contained in Lemma 1.2. The idea behind this proof is, in spirit, very similar to the one for Lemma 3.1 in case (a): As in that setting, indeed, we aim at partitioning the holes of \(H^\varepsilon \) into a subset \(H^\varepsilon _g\) of disjoint and “small enough” holes and \(H^\varepsilon _b\) where the clustering occurs. The main difference with case (a), however, is due to the fact that we need to ensure that the so-called Stokes capacity of the set \(H^\varepsilon _b\), namely the vector

vanishes in the limit \(\varepsilon \downarrow 0\). The divergence-free constraint implies that, in contrast with the harmonic capacity of case (a), the Stokes capacity is not subadditive. This yields that, if \(H^\varepsilon _b\) is constructed as in Lemma 3.2, then we cannot simply control its Stokes-capacity by the sum of the capacity of each ball of \(H^\varepsilon _b\).
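To make the contrast explicit, we recall why the harmonic capacity is subadditive. This is a sketch under an assumption: we take the capacity of a set A to be the infimum of \(\int |\nabla v|^2\) over scalar functions v in a Sobolev class closed under pointwise maxima and satisfying \(v \geqslant 1\) on A; the relative capacity \({{\,\mathrm{Cap}\,}}(\cdot , D^\varepsilon _b)\) used above is not redefined in this section. If \(v_A\) and \(v_B\) are admissible for A and B, then \(v := \max (v_A, v_B)\) is admissible for \(A \cup B\) and, since \(\nabla v\) coincides almost everywhere with either \(\nabla v_A\) or \(\nabla v_B\),

$$\begin{aligned} \int |\nabla v|^2 \leqslant \int |\nabla v_A|^2 + \int |\nabla v_B|^2 , \ \ \ \ \text {so that} \ \ \ \ {{\,\mathrm{Cap}\,}}(A \cup B) \leqslant {{\,\mathrm{Cap}\,}}(A) + {{\,\mathrm{Cap}\,}}(B) . \end{aligned}$$

For vector fields subject to the divergence-free constraint this truncation argument is not available, since a componentwise maximum of two divergence-free fields is in general not divergence-free.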

We circumvent this issue by relying on the information on the length of the clusters given by Lemma 1.2. We do this by adopting the exact same strategy used to tackle the same issue in the case of the Brinkman scaling in [12]. The following result is a simple generalization of [12][Lemma 3.2] and upgrades the partition of Lemma 3.2 in such a way that we may control the Stokes-capacity of the clustering holes in \(H^\varepsilon _b\). For a detailed discussion on the main ideas behind this construction, we refer to [12][Subsection 2.3].

Lemma 4.2

Let \(\gamma > 0\) be as chosen in Lemma 3.3. For every \(\delta > 0\) there exists \(\varepsilon _0 > 0\) and \(A_\delta \subseteq \Omega \) with \({\mathbb {P}}(A_\delta ) > 1-\delta \) such that for every \(\omega \in A_\delta \) and \(\varepsilon \leqslant \varepsilon _0\) we may choose \(H^\varepsilon _g, H^\varepsilon _b\) of Lemma 3.2 as follows:

- There exist \( \Lambda (\beta )> 0\), a sub-collection \(J^\varepsilon \subseteq {\mathcal {I}}^\varepsilon \) and constants \(\{ \lambda _l^\varepsilon \}_{z_l\in J^\varepsilon } \subseteq [1, \Lambda ]\) such that

$$\begin{aligned} H_b^\varepsilon \subseteq {\bar{H}}^\varepsilon _b := \bigcup _{z_j \in J^\varepsilon } B_{\lambda _j^\varepsilon \varepsilon ^\alpha \rho _j}( \varepsilon z_j), \ \ \ \lambda _j^\varepsilon \varepsilon ^\alpha \rho _j \leqslant \Lambda \varepsilon ^{\kappa }. \end{aligned}$$

- There exists \(k_{max}= k_{max}(\beta , d)>0\) such that we may partition

$$\begin{aligned} {\mathcal {I}}^\varepsilon = \bigcup _{k=-3}^{k_{max}} {\mathcal {I}}_k^\varepsilon , \ \ \ J^\varepsilon = \bigcup _{k=-3}^{k_{max}} J_k^\varepsilon , \end{aligned}$$

with \({\mathcal {I}}^\varepsilon _k \subseteq J^\varepsilon _k\) for all \(k= 1, \ldots , k_{\text {max}}\) and

$$\begin{aligned} \bigcup _{z_i \in {\mathcal {I}}_k^\varepsilon } B_{\varepsilon ^\alpha \rho _i}( \varepsilon z_i) \subseteq \bigcup _{z_j \in J_k^\varepsilon } B_{\lambda _j^\varepsilon \varepsilon ^\alpha \rho _j}( \varepsilon z_j); \end{aligned}$$

- For all \(k=-3, \ldots , k_{max}\) and every \(z_i, z_j \in J_k^\varepsilon \), \(z_i \ne z_j\)

$$\begin{aligned} B_{\theta ^2 \lambda _i^\varepsilon \varepsilon ^\alpha \rho _i}(\varepsilon z_i) \cap B_{\theta ^2 \lambda _j^\varepsilon \varepsilon ^\alpha \rho _j}(\varepsilon z_j) = \emptyset ; \end{aligned}$$

- For each \(k=-3, \ldots , k_{max}\) and \(z_i \in {\mathcal {I}}_k^\varepsilon \) and for all \( z_j \in \bigcup _{l=-3}^{k-1} J_l^\varepsilon \) we have

$$\begin{aligned} B_{\varepsilon ^\alpha \rho _i}(\varepsilon z_i) \cap B_{\theta \lambda _j^\varepsilon \varepsilon ^\alpha \rho _j}(\varepsilon z_j) = \emptyset . \end{aligned}$$(4.9)

Finally, the set \(D^\varepsilon _b\) of Lemma 3.2 may be chosen as

The same statement is true for \({\mathbb {P}}\)-almost every \(\omega \in \Omega \) for every \(\varepsilon \leqslant \varepsilon _0\) (with \(\varepsilon _0\) depending, in this case, also on the realization \(\omega \)).

Proof of Lemma 4.2

The proof of this result follows the exact same lines of [12][Lemma 3.2]. We thus refer to it for the proof and to [12][Subsection 3.1] for a sketch of the ideas behind the quite technical argument. We stress that the different scaling of the radii does not affect the argument since the necessary requirement is that \(\varepsilon ^\alpha \ll \varepsilon \). This holds for every choice of \(\alpha \in (1, 3)\). We also emphasize that in the current setting, Lemma 1.2 plays the role of [12][Lemma 5.1]. This result is crucial as it provides information on the length of the overlapping balls of \(H^\varepsilon \). For every \(\delta >0\), we thus select the set \(A_\delta \) of Lemma 1.2 containing those realizations where the partition of \(H^\varepsilon \) satisfies (1.2) and (1.4). Once restricted to the set \(A_\delta \), the construction of the set \(H^\varepsilon _b\) is as in [12][Lemma 3.1]. \(\square \)

Equipped with the previous result, we may now proceed to prove Lemma 4.1:

Proof of Lemma 4.1

The proof of this is similar to the one in [12][Lemma 2.5] for the analogous operator and we sketch below the main steps and the main differences in the argument. For \(\delta >0\), let \(\varepsilon _0>0\) and \(A_\delta \subseteq \Omega \) be as in Lemma 4.2. From now on, we restrict to realizations \(\omega \in A_\delta \). For every \(\varepsilon < \varepsilon _0\) we appeal to Lemma 3.2 and Lemma 4.2 to partition \(H^\varepsilon = H^\varepsilon _b \cup H^\varepsilon _g\). We recall the definitions of the set \(n^\varepsilon \subseteq \Phi ^\varepsilon (D)\) in (3.13) in Lemma 3.2 and of the subdomain \(D^\varepsilon _b \subseteq D\) in (4.10) of Lemma 4.2.

Step 1. (Construction of \(R_\varepsilon \)) For every \(\phi \in C^\infty _0(D)\), we define \(R_\varepsilon \phi \) as

where the functions \(\phi ^\varepsilon _b\) and \(\phi ^\varepsilon _g\) satisfy

and

Step 2. (Construction of \(\phi ^b_\varepsilon \)) We construct \(\phi ^\varepsilon _b\) as done in [12][Proof of Lemma 2.5, Step 2]: For every \(z \in J^\varepsilon \), we define

It is clear that the previous quantities also depend on \(\varepsilon \). However, in order to keep a leaner notation, we skip it in the notation. We use the same understanding for the function \(\phi ^\varepsilon _b\) and the sets \(\{ I_{\varepsilon ,i}\}_{i=-3}^{k_{\text {max}}}\) and \(\{ J_{\varepsilon ,i} \}_{i=-3}^{k_{\text {max}}}\) of Lemma 4.2.

We define \(\phi ^b\) by solving a finite number of boundary value problems in the annuli

We stress that, thanks to Lemma 4.2, for every \(k= -3, \ldots , k_{\text {max}}\), each one of the above collections contains only disjoint annuli. Let \(\phi ^{(k_{\text {max}}+1)}= \phi \). Starting from \(k= k_{\text {max}}\), at every iteration step \(k= k_{\text {max}}, \ldots , -3\), we solve for every \(z \in I_{\varepsilon ,k}\) the Stokes system

We then extend \(\phi ^{(k)}\) to \(\phi ^{(k+1)}\) outside \(\bigcup _{z \in I_k} B_{\theta ,z}\) and to zero in \(\bigcup _{z \in I_{k}} B_{z}\).

The analogue of inequalities of [12][(4.12)-(4.14)], this time with the factor \(\varepsilon ^{\frac{d-2}{d}}\) replaced by \(\varepsilon ^\alpha \) and with \(d=3\), is

and

Moreover,

These inequalities may be proven exactly as in [12]. We stress that condition (4.9) in Lemma 4.2 is crucial in order to ensure that this construction satisfies the right boundary conditions. In other words, the main role of Lemma 4.2 is to ensure that, if at step k the function \(\phi ^{(k)}\) vanishes on a certain subset of \(H^\varepsilon _b\), then \(\phi ^{(k+1)}\) also vanishes in that set (and actually vanishes on a bigger set).

We set \(\phi ^\varepsilon _b = \phi ^{(-3)}\) obtained by the previous iteration. The first property in (4.11) is an easy consequence of (4.13) and the first identity in (4.14). We recall, indeed, that thanks to Lemma 4.2 we have that

The second property in (4.11) follows immediately from (4.13). The third line in (4.11) is an easy consequence of the first line in (4.11) and the second inequality in (4.12). Finally, the last inequality in (4.11) follows by multiplying the last inequality in (4.14) with the factor \(\sigma _\varepsilon \) and using that, since \(\phi \in C^\infty \), we have that

Thanks to Lemma 4.2 and the definition of the set \(n^\varepsilon \) in Lemma 3.2, the previous inequality yields the last bound in (4.11).

Step 3. (Construction of \(\phi ^\varepsilon _g\)) Equipped with \(\phi ^\varepsilon _b\) satisfying (4.11), we now turn to the construction of \(\phi _g^\varepsilon \). Also in this case, we follow the same lines of [12][Proof of Lemma 2.5, Step 3] and exploit the fact that the set \(H^\varepsilon _g\) is only made by balls that are disjoint and have radii \(\varepsilon ^\alpha \rho \) that are sufficiently small. We define the function \(\phi _g^\varepsilon \) exactly as in [12][Proof of Lemma 2.5, Step 3] with the radius \(a_{i,\varepsilon }\) in [12][(4.18)] being defined as \(a_{\varepsilon ,z}= \varepsilon ^\alpha \rho _z\) instead of \(\varepsilon ^{\frac{d-2}{d}}\rho _z\). More precisely, for every \(z \in n^\varepsilon \), we write

and we set

With this notation, we define the function \(\phi ^\varepsilon _g\) as in [12][(4.19)-(4.21)]. Also in this case, identities [12][(4.22)-(4.23)] hold. By Lemma 3.2, it is immediate to see that this construction satisfies the first two properties in (4.12).

We now turn to show the remaining part of (4.12): We remark that, since \(z \in n^\varepsilon (D)\), Lemma 3.2 and definition (4.15) yield that

where \(\gamma >0\) is as in Lemma 4.2 and \(\beta >0\) is as in (0.5). Equipped with the previous bounds, the analogue of estimates [12][(4.26)-(4.30)] yield that for every \(z \in n^\varepsilon (D)\)

Since \(B_{2,z} = D_z \cup C_z\cup T_z\) and the function \(\phi ^\varepsilon _g - \phi \) is supported only on \(\bigcup _{z\in n^\varepsilon (D)}B_{2,z}\), we infer that for every \(z \in n^\varepsilon (D)\), it holds

Summing over \(z \in n^\varepsilon \) we obtain the last two inequalities in (4.12). We thus established (4.12) and completed the proof of Step 1.

Step 4. (Properties of \(R_\varepsilon \)) We now argue that \(R_\varepsilon \) defined in Step 1 satisfies all the properties enumerated in Lemma 4.1. It is immediate to see from (4.12) and (4.11) that \(R_\varepsilon \phi \) vanishes on \(H^\varepsilon \) and is divergence-free in D. Inequalities (4.1) also follow easily from the inequalities in (4.12) and (4.11) and arguments analogous to the ones in Lemma 3.1. We stress that, in this case, we appeal to condition (0.5) and, in the expectation, we need to restrict to the subset \(A_\delta \subseteq \Omega \) of the realizations for which \(R_\varepsilon \) may be constructed as in Step 1.

To conclude the proof, it only remains to tackle (4.2). We do this by relying on the same ideas used in Lemma 3.1 in the case of the Poisson equation. We use the same notation introduced in Step 2. We begin by claiming that (4.2) reduces to showing that for every \(i=1, 2, 3\)

where

with \(({\bar{w}}_i , {\bar{q}}_i)\) solving

We use the definition of \(R_\varepsilon \phi \) to rewrite for every \(\omega \in A_\delta \)

We claim that, after multiplying by \(\mathbf {1}_{A_\delta }\) and taking the expectation, the last two integrals on the right-hand side vanish in the limit. In fact, using the triangle and Cauchy-Schwarz’s inequalities and combining them with (4.11) and the uniform bounds for \(\{v_\varepsilon \}_{\varepsilon >0}\) we have that

Hence, we show (4.2) provided that

Furthermore, since \(\sigma _\varepsilon ^{2}v_\varepsilon \rightharpoonup v\) in \(L^p(\Omega \times D)\), \(p \in [1, 2)\) and \(\phi \in C^\infty _0(D)\), it suffices to prove that

We further reduce this to (4.19) if

An argument analogous to the one outlined in [12] to pass from the left-hand side of [12][(4.34)] to the one in [12][(4.39)] yields that

We stress that in the current setting we use again the uniform bounds on the sequence \(\sigma _\varepsilon ^{-1}\nabla u_\varepsilon \) and we rely on estimates (4.18) instead of [12][(4.26)-(4.30)]. To pass from (4.20) to (4.19) it suffices to use the smoothness of \(\phi \) and, again, the bounds on the family \(\{v_\varepsilon \}_{\varepsilon >0}\). We thus established that (4.2) reduces to (4.19).

We finally turn to the proof of (4.19). By the triangle inequality it suffices to show that

where the measures \(\mu _{\varepsilon ,i} \in H^{-1}(D)\), \(i=1, 2 ,3\), are defined as

We focus on the limit above in the case \(i=1\). The other values of i follow analogously. We skip the index \(i=1\) in all the previous objects. As done in the proof of (3.3) in Lemma 3.1, it suffices to show that there exists a positive exponent \(\kappa >0\) such that

with \(\lim _{\varepsilon \downarrow 0} r_\varepsilon = 0\). From this, (4.21) follows immediately thanks to the bounds assumed for \(\{v_\varepsilon \}_{\varepsilon >0}\).

The proof of (4.23) is similar to (3.3): For \(k\in {\mathbb {N}}\) to be fixed, we apply once Lemma 4.3 to this new measure \(\sigma _{\varepsilon }^{-2}{\tilde{\mu }}_{\varepsilon }\), with \({\mathcal {Z}}= \{\varepsilon z\}_{z \in n^\varepsilon (D)}\), \({\mathcal {R}} = \{ d_{\varepsilon ,z}\}_{z\in {\tilde{\Phi }}^\varepsilon (D)}\), \(\{g_{z,\varepsilon }\}_{z \in {\tilde{\Psi }}^\varepsilon (D)}\) and with the partition \(\{ K_{\varepsilon ,z,k}\}_{z\in N_{k,\varepsilon }}\) constructed in Step 1 in the proof of Lemma 3.3. This implies that

Appealing to the definition of \(g_{\varepsilon ,z}\) and to the bounds for \(({\bar{w}}, {\bar{q}})\) obtained in [1][Appendix], for each \(z \in n^\varepsilon (D)\) it holds that

This, (4.24), (4.22), the triangle inequality and the definition of \(K^{-1}\), imply that

where \(\mu _\varepsilon (k)\) is as in Step 2 of Lemma 3.3 and k is as in Theorem 0.1, (a). From this, we argue (4.2) exactly as done in Steps 2-5 of Lemma 3.3. This establishes Lemma 4.1.

References

Allaire, G.: Homogenization of the Navier-Stokes equations in open sets perforated with tiny holes. I. Abstract framework, a volume distribution of holes. Arch. Rational Mech. Anal. 113(3), 209–259 (1990)

Allaire, G.: Homogenization of the Navier-Stokes equations in open sets perforated with tiny holes II: Non-critical sizes of the holes for a volume distribution and a surface distribution of holes. Arch. Rational Mech. Anal. 113(3), 261–298 (1991)

Allaire, G.: Continuity of the Darcy’s law in the low-volume fraction limit. Ann. Della Scuola Norm Sup. di Pisa 18(4), 475–499 (1991)

Beliaev, A.Y., Kozlov, S.M.: Darcy equation for random porous media. Comm. Pure App Math. 49(1), 1–34 (1996)

Bruckner, A. M., Bruckner J. B. and Thomson B. S.: Elementary Real Analysis, Prentice Hall (Pearson) (2001)

Brillard A.: Asymptotic analysis of incompressible and viscous fluid flow through porous media. Brinkman’s law via epi-convergence methods, Annales de la Faculté des sciences de Toulouse : Mathématiques 8 (1986-1987), no. 2, 225–252

Cioranescu, D., Murat, F.: A strange term coming from nowhere, topics in the mathematical modelling of composite materials. Progress Nonlinear Differ. Equ. Appl. 31, 45–93 (1997)

Daley, D. J. and Vere-Jones D.: An introduction to the theory of point processes. vol.II: General theory and structures, probability and its applications, Springer-Verlag New York (2008)

Desvillettes, L., Golse, F., Ricci, V.: The mean-field limit for solid particles in a Navier-Stokes flow. J. Stat. Phys. 131(5), 941–967 (2008)

Galdi, G.P.: An Introduction to the Mathematical Theory of the Navier-Stokes Equations: Steady-State Problems, 2nd edn. Springer-Verlag, New York (2011)

Giunti, A.: Convergence rates for the homogenization of the Poisson problem in randomly perforated domains, ArXiv preprint (2020)

Giunti, A., Höfer, R.: Homogenization for the Stokes equations in randomly perforated domains under almost minimal assumptions on the size of the holes. Ann. Inst. H. Poincaré Anal. Non Linéaire 36(7), 1829–1868 (2019)

Giunti A. and Höfer, R.: Convergence of the pressure in the homogenization of the Stokes equations in randomly perforated domains, ArXiv preprint (2020)

Giunti, A., Höfer, R., Velázquez, J.J.L.: Homogenization for the Poisson equation in randomly perforated domains under minimal assumptions on the size of the holes. Comm. PDEs 43(9), 1377–1412 (2018)

Höfer, R. M., Kowalczyk, K. and Schwarzacher, S.: Darcy’s law as low Mach and homogenization limit of a compressible fluid in perforated domains, ArXiv preprint (2020)

Hillairet, M.: On the homogenization of the Stokes problem in a perforated domain. Arch. Ration. Mech. Anal. 230(3), 1179–1228 (2018)

Lévy, T.: Fluid flow through an array of fixed particles. Int. J. Eng. Sci. 21(1), 11–23 (1983)

Marchenko, V.A., Khruslov, E.Y.: Homogenization of partial differential equations, Progress in Mathematical Physics, vol. 46. Birkhäuser Boston Inc., Boston, MA (2006)

Jing W.: Layer potentials for Lamé systems and homogenization of perforated elastic medium with clamped holes, ArXiv preprint (2020)

Masmoudi, N.: Homogenization of the compressible Navier-Stokes equations in a porous medium, ESAIM: Control. Opt. Calc. Var 8, 885–906 (2002)

Mikelić, A.: Homogenization of nonstationary Navier-Stokes equations in a domain with a grained boundary. Ann. Mat. Pura Appl. 158, 167–179 (1991)

Moller J.: Poisson-Voronoi tessellations, In Lectures on Random Voronoi Tessellations, Lecture Notes in Statistics, 87 (1994), Springer, New York, NY

Papanicolaou, G. C. and Varadhan, S. R. S.: Diffusion in regions with many small holes, Springer Berlin Heidelberg (1980), Berlin, Heidelberg, pp. 190–206

Rubinstein, J.: On the macroscopic description of slow viscous flow past a random array of spheres. J. Stat. Phys. 44(5–6), 849–863 (1986)

Sanchez-Palencia, E.: On the asymptotics of the fluid flow past an array of fixed obstacles. Int. J. Eng. Sci. 20, 1291–1301 (1982)

Tartar, L.: Incompressible fluid flow in a porous medium: Convergence of the homogenization process, in Nonhom. media and vibration theory, edited by E. Sanchez-Palencia (1980), 368–377

Additional information

Communicated by L. Caffarelli.

5 Appendix

Proof of Lemma 1.1

Without loss of generality, we assume that \(\mathop {diam}(D) =1\).

\((i) \Rightarrow (ii)\): We prove that

We do this by bounding

and, for \(0< \delta < \alpha -1\),

Since \(\Phi \) is a Poisson point process and we assumed (0.2), the right-hand side above vanishes \({\mathbb {P}}\)-almost surely in the limit \(\varepsilon \downarrow 0\). This concludes the proof of (5.1) and immediately yields (ii).

\((ii) \Rightarrow (i)\): This is equivalent to showing that if \({\mathbb {E}}\left[ \rho ^{\frac{3}{\alpha }} \right] = +\infty \) then for \({\mathbb {P}}\)-almost every realization there exists a sequence \(\{\varepsilon _k \}_{k\in {\mathbb {N}}}\) satisfying \(\varepsilon _k \rightarrow 0\) and such that \(D^{\varepsilon _k} =\emptyset \) for all \(k\in {\mathbb {N}}\). We claim that if \(\varepsilon _j:= 2^{-j}\), \(j\in {\mathbb {N}}\), then the events

satisfy

Since the events are independent, this implies by the (second) Borel-Cantelli Lemma that

By the definition of the events \(A_j\), this in particular yields that, \({\mathbb {P}}\)-almost surely, there exists a subsequence of \(\{\varepsilon _j \}_{j\in {\mathbb {N}}}\) along which the sets \(D^{\varepsilon _j}\) are empty.
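For the reader's convenience, we recall the standard form of the second Borel-Cantelli lemma used in this step. The statement below is the textbook one and is not specific to the events \(A_j\) of this proof:

```latex
% Second Borel-Cantelli lemma (standard statement, recalled for convenience).
\[
  \{A_j\}_{j\in\mathbb{N}} \ \text{independent}, \qquad
  \sum_{j\in\mathbb{N}} \mathbb{P}(A_j) = +\infty
  \quad\Longrightarrow\quad
  \mathbb{P}\Bigl( \bigcap_{n\in\mathbb{N}} \bigcup_{j\geqslant n} A_j \Bigr) = 1 .
\]
```

In words: almost surely, infinitely many of the events \(A_j\) occur, which is what produces the subsequence of \(\{\varepsilon _j\}_{j\in {\mathbb {N}}}\) mentioned above.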

We argue (5.2) as follows: Let

then, if \(\Psi = (\Phi ; {\mathcal {R}})\) denotes the extended point process on \({\mathbb {R}}^d \times [1; +\infty )\) with intensity \({\tilde{\lambda }} (x, \rho ) = \lambda f(\rho )\) (cf. Section 1), we rewrite

Since

we bound

Recalling that \(\varepsilon _j = 2^{-j}\), we may sum over \(j \in {\mathbb {N}}\) in the previous inequality and get that

We may assume that \(\varepsilon _j^{-3} {\mathbb {P}}( 12\varepsilon _{j}^{-\alpha }\leqslant \rho \leqslant 24\varepsilon _{j+1}^{-\alpha }) \rightarrow 0\). Indeed, if this is not the case, then (5.2) follows immediately. Since \(\varepsilon _j = 2^{-j}\), we have that

By the assumption \({\mathbb {E}}\left[ \rho ^{\frac{3}{\alpha }}\right] = + \infty \), this establishes (5.2). The proof of Lemma 1.1 is complete.
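The link between the divergence of the moment \({\mathbb {E}}\left[ \rho ^{\frac{3}{\alpha }}\right] \) and the divergence of a dyadic series can be illustrated by the following elementary decomposition. This is only a simplified sketch: it assumes \(\rho \geqslant 1\) (as for the marks of \(\Psi \)), it indexes the sum from \(j = 0\), and it ignores the constants 12 and 24 appearing in the events above.

```latex
% Dyadic decomposition of the moment, with \varepsilon_j = 2^{-j} and \rho \geqslant 1.
% On the event \{\varepsilon_j^{-\alpha} \leqslant \rho < \varepsilon_{j+1}^{-\alpha}\}
% we have \rho^{3/\alpha} < \varepsilon_{j+1}^{-3} = 8\,\varepsilon_j^{-3}.
\[
  \mathbb{E}\bigl[ \rho^{\frac{3}{\alpha}} \bigr]
  = \sum_{j \geqslant 0} \mathbb{E}\Bigl[ \rho^{\frac{3}{\alpha}}
      \mathbf{1}_{\{ \varepsilon_j^{-\alpha} \leqslant \rho < \varepsilon_{j+1}^{-\alpha} \}} \Bigr]
  \leqslant \sum_{j \geqslant 0} \varepsilon_{j+1}^{-3}\,
      \mathbb{P}\bigl( \varepsilon_j^{-\alpha} \leqslant \rho < \varepsilon_{j+1}^{-\alpha} \bigr)
  = 8 \sum_{j \geqslant 0} \varepsilon_j^{-3}\,
      \mathbb{P}\bigl( \varepsilon_j^{-\alpha} \leqslant \rho < \varepsilon_{j+1}^{-\alpha} \bigr) .
\]
```

In particular, if the left-hand side is infinite, then so is the series on the right-hand side.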

Proof of Lemma 1.2

The proof of this lemma relies on an application of the Borel-Cantelli lemma and follows along the same lines as the one in [12][Lemma 5.1].

For \(\kappa > 0\), let \(k_{\text {max}}= \lfloor \frac{1}{\kappa }\rfloor +1\). We partition the set of centres \(\Phi ^\varepsilon (D)\) according to the magnitude of the associated radii: We write \(\Phi ^\varepsilon (D)=\bigcup _{k=-3}^{k_{\text {max}}} I_{\varepsilon , k}\) with

Note that, up to a relabelling of the indices \(k=-3, \ldots , k_{\text {max}}\), the previous partition satisfies (1.3) of Lemma 1.2.

For any set \(\chi \subseteq \Phi ^\varepsilon (D)\), we say that \(\chi \) contains a chain of length \(M\in {\mathbb {N}}\), \(M \geqslant 2\), if there exist \(z_1, \ldots , z_M \in \chi \) such that \(B_{4\varepsilon ^\alpha \rho _{z_i}}(\varepsilon z_i) \cap B_{4\varepsilon ^\alpha \rho _{z_j}}(\varepsilon z_j)\ne \emptyset \), for all \(i, j = 1, \ldots , M\). We say that \(\chi \) contains a chain of size 1 if and only if \(\chi \ne \emptyset \).
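To make the notion of chain concrete, the following is a minimal brute-force sketch in Python that checks whether a finite configuration contains a chain of length \(M\). The function names `balls_intersect` and `has_chain` are hypothetical, the balls are treated as open (so two balls intersect if and only if the distance between their centres is strictly smaller than the sum of their radii), and the points \(z_1, \ldots , z_M\) are taken to be distinct; these are our assumptions and not part of the definition above.

```python
from itertools import combinations
import math


def balls_intersect(c1, r1, c2, r2):
    """Open balls B_{r1}(c1) and B_{r2}(c2) intersect iff |c1 - c2| < r1 + r2."""
    return math.dist(c1, c2) < r1 + r2


def has_chain(centres, rhos, eps, alpha, M, factor=4.0):
    """Return True if the configuration contains a chain of length M.

    centres: unrescaled centres z (3-tuples); rhos: unrescaled radii rho_z.
    The enlarged balls are B_{factor * eps**alpha * rho_z}(eps * z).
    Brute force over all M-subsets: intended for small illustrative inputs only.
    """
    n = len(centres)
    if M == 1:
        # A chain of size 1 exists iff the set is non-empty.
        return n > 0
    scaled = [tuple(eps * x for x in z) for z in centres]
    radii = [factor * eps**alpha * rho for rho in rhos]
    for subset in combinations(range(n), M):
        # A chain requires ALL pairs of enlarged balls in the subset to intersect.
        if all(
            balls_intersect(scaled[i], radii[i], scaled[j], radii[j])
            for i, j in combinations(subset, 2)
        ):
            return True
    return False
```

Since the condition is required for all pairs \(i, j\), a chain of length \(M\) corresponds to a clique of size \(M\) in the intersection graph of the enlarged balls; the brute-force search over subsets is exponential in \(M\) and is only meant to illustrate the definition.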

Equipped with this notation, (1.2) follows provided we argue that for \(\kappa \) suitably chosen, there exists \(k_0 < k_{\text {max}}-1\) such that, \({\mathbb {P}}\)-almost surely and for \(\varepsilon \) small, the sets \(\{ I_{\varepsilon ,k} \cup I_{\varepsilon , k+1} \}_{k=k_0}^{k_{\text {max}}}\) are empty. This is equivalent to proving that they do not contain any chain of size at least 1. Similarly, (1.4) is obtained if we find an \(M \in {\mathbb {N}}\) such that \({\mathbb {P}}\)-almost surely and for \(\varepsilon \) small enough, all the sets \(\{ I_{\varepsilon ,k} \cup I_{\varepsilon , k+1} \}_{k=-3}^{k_0}\) contain chains of length at most \(M-1\).

For \(M \in {\mathbb {N}}\) and \(k= -3, \ldots , k_{\text {max}}\), we define the events

We claim that if \(\kappa < \min \left( \frac{\beta }{6} ; \frac{\alpha ^2\beta }{6+ 2\alpha \beta } \right) \), then there exists \(k_0 \in {\mathbb {N}}\), \(k_0 < k_{\text {max}}\) such that for every \(k \in \{ k_0, \ldots , k_{\text {max}}\}\)

and there exists \(M=M(\alpha , \beta ) \in {\mathbb {N}}\) such that for every \(k = -3, \ldots , k_0 -1\), also

These claims immediately yield (1.2) and (1.4) and conclude the proof of Lemma 1.2, (i).

The argument for (5.3) and (5.4) relies on an application of the Borel-Cantelli lemma and is analogous to the one for [12][Lemma 5.1]. We thus only sketch the proof. As shown in [12][Proof of Lemma 5.1, (5.5) to (5.6)], up to changing the constant 4 in the definition of chain, we may reduce to proving (5.3)-(5.4) for a sequence \(\{ \varepsilon _j \}_{j \in {\mathbb {N}}}= \{ r^j\}_{j \in {\mathbb {N}}}\), with \(r \in (0,1)\).

Using stationarity and the independence properties of the Poisson point process \((\Phi , {\mathcal {R}})\), it is easy to see that

where

Using the moment condition (0.5) and provided \(\kappa < \frac{\beta }{6}\) this yields

Hence, by (5.5), we have that

On the one hand, if \(\kappa < \min \left( \frac{\beta }{6} ; \frac{\alpha ^2\beta }{6+ 2\alpha \beta }\right) \), then we may pick \(k_0:= \lfloor \frac{1}{\kappa } \frac{\alpha ^2\beta }{6 + 2 \alpha \beta } \rfloor \) and observe that for every \(k \in \{ k_0, \ldots , k_{\text {max}}\}\) we have that

On the other hand, if \(M \in {\mathbb {N}}\) is chosen big enough, for every \(k=-3, \ldots , k_0\) also

Using these two bounds, we may apply Borel-Cantelli to the family of events \(\{ A_{k, \varepsilon _j, M}\}\) and conclude (5.3) and (5.4).
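The mechanism behind this last step can be summarized as follows. We assume, purely for illustration, that the two bounds take the form \({\mathbb {P}}(A_{k, \varepsilon _j, M}) \leqslant C \varepsilon _j^{\gamma }\) for some exponent \(\gamma > 0\) and some constant \(C < +\infty \); both \(\gamma \) and \(C\) are hypothetical placeholders and not quantities from the statement. Along the geometric sequence \(\varepsilon _j = r^j\), \(r \in (0,1)\), the probabilities are then summable and the first Borel-Cantelli lemma applies:

```latex
% Summability along \varepsilon_j = r^j and the first Borel-Cantelli lemma.
% \gamma > 0 and C are hypothetical placeholders for the exponents and constants in the bounds.
\[
  \sum_{j \geqslant 0} \mathbb{P}\bigl( A_{k,\varepsilon_j,M} \bigr)
  \leqslant C \sum_{j \geqslant 0} r^{\gamma j}
  = \frac{C}{1 - r^{\gamma}} < +\infty
  \quad\Longrightarrow\quad
  \mathbb{P}\Bigl( \bigcap_{n \in \mathbb{N}} \bigcup_{j \geqslant n} A_{k,\varepsilon_j,M} \Bigr) = 0 .
\]
```

In other words, \({\mathbb {P}}\)-almost surely only finitely many of the events \(A_{k, \varepsilon _j, M}\) occur. No independence is needed for this direction of the lemma.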

We now turn to case (ii). Identity (5.4) may be rewritten as

This implies that for every \(\delta > 0\), we may pick \(\varepsilon _0>0\) such that \({\mathbb {P}}(\bigcap _{\varepsilon < \varepsilon _0} (\bigcup _{k =-3}^{k_{\text {max}}} A_{k, \varepsilon ,M})^c )> 1-\delta \). Statement (ii) follows immediately if we set \(A_\delta := \bigcap _{\varepsilon < \varepsilon _0} (\bigcup _{k =-3}^{k_{\text {max}}} A_{k, \varepsilon ,M})^c\). The same argument applied to (5.3) implies the same statement for (1.2). \(\square \)
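The passage from the almost sure statement to the sets \(A_\delta \) is an instance of continuity from below of the probability measure. We sketch it under the assumption that the rewritten form of (5.4) states that the union over \(\varepsilon _0 > 0\) of the events below has full probability; the notation \(E_{\varepsilon _0}\) is ours and is introduced only for this illustration.

```latex
% Continuity from below; E_{\varepsilon_0} is notation introduced only for this sketch.
% The sets E_{\varepsilon_0} increase as \varepsilon_0 decreases, so it suffices to take
% \varepsilon_0 = 1/n, n \in \mathbb{N}.
\[
  E_{\varepsilon_0} := \bigcap_{\varepsilon < \varepsilon_0}
    \Bigl( \bigcup_{k=-3}^{k_{\max}} A_{k,\varepsilon,M} \Bigr)^{c},
  \qquad
  \mathbb{P}\Bigl( \bigcup_{n \in \mathbb{N}} E_{1/n} \Bigr) = 1
  \quad\Longrightarrow\quad
  \lim_{n \to \infty} \mathbb{P}\bigl( E_{1/n} \bigr) = 1 .
\]
```

Hence, for every \(\delta > 0\) there is an \(n\) with \({\mathbb {P}}(E_{1/n}) > 1 - \delta \), and one may take \(\varepsilon _0 = 1/n\).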

Lemma 4.3

Let \({\mathcal {Z}}:= \{ z_i \}_{i \in I} \subseteq D\) be a collection of points and let \({\mathcal {R}}:=\{ r_i \}_{i\in I} \subseteq {\mathbb {R}}_+\) such that the balls \(\{ B_{r_i}(z_i) \}_{i\in I}\) are disjoint. We define the measure

where \(g_i \in L^2( \partial B_{r_i}(z_i) )\). Then, there exists a constant \(C< +\infty \) such that for every Lipschitz and (essentially) disjoint covering \(\{ K_j\}_{j \in J}\) of D such that

we have that

with

Proof of Lemma 4.3

This lemma is a simple generalization of [11][Lemma 5.1], where the harmonic functions \(\{ \partial _n v_i \}_{i\in I}\) are replaced by a more general collection of functions \(\{ g_i \}_{i\in I}\). \(\square \)

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.