Abstract

Breast cancer detection is considered a challenging task for the average experienced radiologist due to the variation of the lesions’ size and shape, especially with the existence of high fibro-glandular tissues. The revolution of deep learning and computer vision contributes recently in introducing systems that can provide an automated diagnosis for breast cancer that can act as a second opinion for doctors/radiologists. The most of previously proposed deep learning-based Computer-Aided Diagnosis (CAD) systems mainly utilized Convolutional Neural Networks (CNN) that focuses on local features. Recently, vision transformers (ViT) have shown great potential in image classification tasks due to its ability in learning the local and global spatial features. This paper proposes a fully automated CAD framework based on YOLOv4 network and ViT transformers for mass detection and classification of Contrast Enhanced Spectral Mammography (CESM) images. CESM is an evolution type of Full Field Digital Mammography (FFDM) images that provides enhanced visualization for breast tissues. Different experiments were conducted to evaluate the proposed framework on two different datasets that are INbreast and CDD-CESM that provides both FFDM and CESM images. The model achieved at mass detection a mean Average Precision (mAP) score of 98.69%, 81.52%, and 71.65% and mass classification accuracy of 95.65%, 97.61%, and 80% for INbreast, CE-CESM, and DM-CESM, respectively. The proposed framework showed competitive results regarding the state-of-the-art models in INbreast. It outperformed the previous work in the literature in terms of the F1-score by almost 5% for mass detection in CESM. Moreover, the experiments showed that the CESM could provide more morphological features that can be more informative, especially with the highly dense breast tissues.

Similar content being viewed by others

Explore related subjects

Find the latest articles, discoveries, and news in related topics.Avoid common mistakes on your manuscript.

1 Introduction

Cancer diseases are considered one of the most common diseases worldwide; breast cancer is one of the cancers that hits women hugely. Breast cancer develops in the breast tissues and occurs when the cells grow abnormally, forming a mass or lump, as these cells divide themselves rapidly compared to the other healthy ones.

The American Cancer Society (ACS) published an estimation for the breast cancer cases among females in the USA only (2022), and it stated that about 290,560 new cases would be diagnosed with breast cancer and 43,780 would die. Most cases mainly occur between 45 and 62 years old [1]. The substantial support for awareness about the risks of breast cancer helped a lot in the early diagnosis of this disease. This happens through encouraging regular check-ups, which can be done via different approaches, such as regular screening. There are various modalities for breast screening, but mammogram is the most widely used and effective one. Although screening cannot prevent breast cancer, it can help a lot in detecting the lesions early, even before the symptoms start to develop.

Mammograms are X-ray pictures of the breast with two different views, Medio Lateral Oblique (MLO) and Carnio Caudal (CC), for each breast side. Various abnormalities can be detected through mammogram interpretation; these abnormalities can be classified into masses and calcifications. Those abnormalities are diagnosed as benign or malignant according to their appearance and morphological features such as shape and pattern. Benign masses have an oval or circular shape with well-defined edges, while malignant masses look like it has spikes out from their center.

The accurate interpretation of the mammogram leads to precise diagnosis; Radiologists spend a lot of time and effort in the interpretation process. Interpretation of many cases can cause inaccurate diagnosing for some cases, especially with radiologists with fewer years of experience [2].

With the rapid development in machine learning and computer vision techniques, and because of the above problems, Computer-Aided Diagnosis (CAD) systems appeared and developed in the past decades. These systems are developed to process different forms of data such as medical images, clinical data, and genetic data. Recently, deep learning models have made these systems work more consistently, especially now there are a variety of neural network architectures that can be used in different ways depending on the data type in order to get the most out of them. For example, CNN models fit more the medical imaging tasks [3], while RNN [4, 5] models seem to be more effective with the sequential data such as genetic data [6]. Therefore, the data type is critical in determining which architecture will be used to design the CAD system.

This work mainly focuses on the mammographic CAD systems. Mammographic CAD systems are divided into Computer-Aided Diagnosis and Computer-Aided Detection systems; the first one mainly focuses on interpreting the predefined abnormality (benign or malignant). On the other hand, the second type of these systems aims to detect and localize the abnormality within mammography. Different factors make detecting and classifying the masses very challenging for the CAD system, such as shape, mass size variation ranging from too small to very large, and the nature of the breast tissues that sometimes mask the masses, especially with the highly dense breast tissues.

Mammograms were generated using systems based on phosphorescent screen-film technology until the US Food and Drug Administration approved the Full Field Digital Mammography (FFDM) systems. The FFDM systems have significantly improved the quality of mammographic images and the sensitivity of breast cancer detection. Even the models that were trained on FFDM images provided better results than the ones provided through the Screen Film Mammography technique (SFM) [7]. However, the contrast of the FFDM images depends on the differences between breast tissues; those images can provide just structural information. One of the limitations of this type of mammographic image is that the highly dense breast tissues can mask the mass, as most of the fibro-glandular tissues have the same image gray levels of the lesions. This can lower the sensitivity in detecting tumors when breast density increases [8]. Recently, Contrast Enhanced Spectral Mammography (CESM) was introduced in 2011 as a new image technique for mammogram screening. CESM provides improved visualization for mammographic images by combining low and high-energy breast images. Figure 1 illustrates the difference between the FFDM and CESM images and how the CESM can provide more morphological features that can enhance breast cancer detection, especially with masked masses in highly dense breasts. Although the crucial information that these images can provide clinically, few studies have been proposed for developing deep learning-based CAD systems in CESM images. Almost all of the previously introduced studies by researchers used FFDM images or SFM images [9, 10].

a Low-energy FFDM image shows negative findings; b recombination CESM image that clearly shows the existence of mass [8]

These various studies proposed different models for mass detection and classification based on different deep learning techniques and with the use of transfer learning concept. As deep learning has great capability to automatically extract the deep features from the mammograms with no need for hand-crafted features, in addition to that, transfer learning reduces the computational cost and the need of large datasets for training.

Convolutional Neural Networks (CNN) ruled the detection and classification tasks in medical imaging diagnosis through the last few years in the most of these studies, relying on the idea of the dependency on the immediate neighboring pixels which represent the local features of the image (such as color, contrast, etc.). This allows the model to learn and extract the essential features and edges only without learning the details of each pixel and the global context of the features. However, learning the entire image, rather than the parts that the filter extracts, may increase the chances of obtaining better performance from the model.

Recently, vision transformers have shown competitive performance compared to CNNs; vision transformers are built on the attention mechanism, which focuses on the local and global spatial features [11]. Few studies adopted the ViT transformers in medical imaging tasks [12,13,14] especially in breast cancer diagnosis. Furthermore, few works proposed a fully automated model for detecting and classifying the masses. Based on the aforementioned points, this work proposes a fully automated framework in an end-to-end training fashion for mass detection and classification based on YOLOv4 and ViT transformers in CESM mammographic images and FFDM images. This work leverages the ViT transformer to extract the global features alongside with the local features to enhance the accuracy of the diagnosis.

Moreover, the experiments are designed to explore the efficacy of the automated interpretation of CESM and how it can increase the sensitivity of breast cancer detection more than FFDM.

The novelty and contributions of this work can be summarized in the following points:

-

1.

Integrate the YOLOv4 with ViT transformers for mass detection and classification, to utilize the capability of ViT in learning the local and global spatial features in the mass classification instead of the CNN models.

-

2.

To the best of our knowledge, this is the first fully automated CAD framework for mass detection and classification in CESM images, specifically in the CDD-CESM dataset [15].

-

3.

Evaluate how CESM can have the potential to enhance the diagnostic accuracy comparing to Digital Mammography (DM).

-

4.

Assess the performance of YOLOv4, YOLOv7, and YOLOv8 in mass detection on CDD-CESM and INbreast dataset based on a comprehensive evaluation through different experiments.

-

5.

Conduct different experiments to evaluate and compare the performance of different vision transformer models and different CNN models at mass classification in mammograms.

-

6.

Utilize the newly introduced CDD-CESM dataset [15] for mass detection and classification to evaluate the performance of the proposed model.

This paper is organized as follows: Sect. 2 presents the literature survey, while Sect. 3 demonstrates the methods and materials employed in this work. Section 4 shows the experimental design and results, and Sect. 5 discusses the results. Finally, Sect. 6 presents the conclusion, while Sect. 7 discusses the advantages, limitations, and directions for future work.

2 Related work

The rapid development of deep learning techniques hugely affected the researchers' contribution in developing more accurate CAD systems. Over the past years, many attempts have been made to introduce reliable systems for breast cancer diagnosis and prognosis, especially with the dependency on using mammograms as a first tool for the initial diagnosis. The proposed techniques in this literature mainly focused on one or more of three tasks; detection, segmentation, and classification.

Detection can be described as the process of localizing the abnormal area or spots within the mammogram images, while segmentation mainly targets the pixel-by-pixel annotation of the abnormal findings; finally, the classification is considered as the process of classifying the findings into (Normal/Abnormal) or (Benign/Malignant). Some studies focused on observing the morphological features (texture, color, brightness, etc.) in their works to extract the ROIs and then classify them into benign or malignant using feature-based/conventional machine learning techniques [16,17,18,19,20].

However, feature-based techniques have been used for a long time. Still, they have some drawbacks as those techniques mainly depend on classical feature engineering that is affected by different factors such as the subject knowledge of the developer, his intuition, and his skills in the mathematical models. This process is considered a time-consuming process; moreover, this may not capture all the relevant features in the image as those techniques cannot automatically learn the most discriminant features [21]. Furthermore, feature-based techniques are often designed to capture specific characteristics or patterns through the training phase. Accordingly, this may not generalize well to unseen data, especially since those techniques struggle with large and high-dimensional feature space datasets; and this can lead to more computational overhead.

On the other side, with the appearance of deep learning, CNNs replaced the traditional hand engineering approaches for feature extraction, as the CNNs can automatically extract and learn complex features in more detail and in a more efficient way that fits the required task [22, 23]. The initial convolutional layers in the deep CNN can effectively extract the low-level features, while subsequent layers propagate these features to extract more complex and abstract features. Through the training process, the filters and pooling operations automatically select the most discriminant and informative features. Using deep learning in feature extraction provides benefits that can overcome the problems of feature-based techniques. Automating the feature extraction process through deep learning saves the time and effort needed to extract hand-crafted features. The deep learning techniques capture the features at multiple levels of abstraction, and this hierarchal representation allows more informative features to be extracted. Moreover, transfer learning reduces the computational cost of learning the features from scratch; as the pre-trained models can be used as a feature extractor for related tasks [24].

Different studies adopted various architectures in their developed systems that integrates CNN with conventional machine learning techniques. In [25], they proposed a system based on CNN and SVM for mass and microcalcification segmentation and classification that achieved a classification accuracy of 80.5% and 87.2% on DDSM and CBIS-DDSM, respectively. One of the points that needs to be investigated in this work is employing a deep learning model for the segmentation phase to allow the model to learn more discriminant features about masses and calcifications; and accordingly, this may enhance the results.

In [26], the authors also proposed a CAD system for whole mammogram classification based on deep CNNs for feature extraction and SVM for classification. They conducted their experiments on two different datasets, MIAS and INbreast, that is composed of FFDM images; their approach achieved an accuracy of 97.93% and 96.64%, respectively.

In 2015, a giant leap occurred in object detection techniques, especially with the appearance of new deep learning-based models that can detect multiple objects within one image. Those models are categorized into one-shot and two-shot detectors; the most well known among them is You Look Only Once (YOLO) [27] because of its performance at both accuracy and computational time levels. Al-Mansi et al. [28] were one of the pioneers who exploited YOLO in their work; they introduced a YOLO-based CAD system that achieved an accuracy of 85.2% for detection. In [29], they proposed a CAD system for mass detection, segmentation, and classification; they utilized YOLO for detection, then segmented the detected masses using Full Resolution Convolutional Network (FRCN) and AlexNet architecture-based classifier for classification. Their approach achieved a detection accuracy of 97.2%, segmentation accuracy of 92.97%, and classification accuracy of 95.3%. However, their model was straggling with small mass detection, moreover, one of the drawbacks in their model is the manual elimination of the false localized masses before segmentation phase, which is impractical for automated diagnosis.

In [30], the authors developed a YOLO-based CAD system to detect the tumors and classify them into masses and calcification; their experiments were conducted on two different datasets, INbreast and CBIS-DDSM. The model achieved 98.1% and 95.7%, respectively. Their model isn’t providing a diagnosis about the malignancy of the detected masses. Additionally, the model has high inference rate/image relative to the other recent similar studies.

Also, Faster-RCNN [31] was one of the object detection models that showed promising performance in some studies. In [32], Ribli et al. also utilized the two-shot detector Faster-RCNN in their developed system for mass detection and classification. Their system detected 90% of the malignant masses with a classification accuracy of 95%. Agarwal et al. [33] also proposed a Faster-RCNN-based model for mass detection; the model achieved a sensitivity of 95–71% and a specificity of 70%. Comparing to the results of the models that adopted the YOLO, these models have lower detection sensitivity. Additionally, Faster-RCNN consumes more time at detection than YOLO.

Cao et al. [34] developed a novel model that detects breast masses based on anchor-free technique named FSAF, an enhanced model of RetinaNet [35]. Moreover, they proposed a new augmentation method to increase the size of the dataset. This augmentation technique enhanced their results; however, it has more computational cost rather than the traditional augmentation techniques. The model attained 0.495 False Positive Rate (FPR)/image for INbreast and 0.599 FPR/image for DDSM.

Shen et al. [36] proposed a framework for mass detection with an attempt to automate the process of mass annotation in mammographic images. The model mainly depended on adversarial learning; the experiments were done over a private dataset and INbreast; it achieved an AUC of 0.9083 and 0.8522 for each dataset, respectively. However, their training strategy addressed the problem of oscillation and the limitation of small batch size, this approach needs to be experimented on more different medical imaging datasets.

2.1 Insights from related work

The mammograms' quality affected breast cancer detection sensitivity; most of the proposed work was done over mammographic datasets of SFM or FFDM mammographic images. FFDM images provide better quality, so this type of image has replaced the SFM images in recent years; however, one of the problems still exists is the masked masses in cases with high breast density.

In FFDM, the gray levels of the glandular tissues have similar values as the masses. Accordingly, this makes the mass hide within the dense tissues and decreases the visibility of the masses. CESM is a relatively new technique for obtaining mammographic images; this technique depends on getting a new mammographic image by subtracting the low-energy image from the high-energy image.

Studies showed that the CESM could provide more morphological features and higher sensitivity in detecting lesions than the FFDM and the SFM [8, 37] especially for cases with highly dense tissues. Song et al. [38] proposed a deep-information bottleneck-based network for classifying the CESM images; their model aimed to learn the relevant features between the images. Their approach achieved an accuracy of 97.2%; they used the CDD-CESM dataset in their experiments. Other studies [39,40,41] introduced different classification models based on deep learning and conventional machine learning for CESM images. From Table 1, it can be noticeable that most of the proposed work was done mainly on the FFDM images, few studies only have been proposed CAD systems for CESM. There is not enough evaluation of the performance of CAD systems on CESM images, specifically in mass detection.

Moreover, based on the previously reviewed work, the proposed techniques in the literature mainly did not yet explore the potential of the transformers in learning the global context of the pixels in the classification task of abnormal findings in mammographic images. Therefore, a comprehensive assessment has been done between CNN models and vision transformers models to exploit the ability of the vision transformers in learning the long-range dependencies and the relationship between image pixels in mass classification.

Consequently, this work proposes a fully automated framework for mass detection and classification in CESM images by integrating the power of YOLO with the ViT transformer. Furthermore, the performance of the model was also evaluated on FFDM images. Also, the work provides a comprehensive study of the potential of CESM vs. FFDM in mass detection and classification. Table 1 summarizes recent studies in FFDM and CESM images for mass detection and/or (mass/whole image) classification.

3 Methods and materials

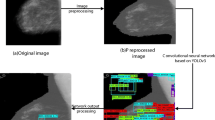

This work proposes a fully automated framework for mass detection and classification in end-to-end training strategy in CESM and FFDM images; deep learning models are adopted in both phases. The proposed CAD system can be divided into three parts, as shown in Fig. 2; pre-processing, detection, and classification. The pre-processing steps were inspired by the technique that was used by [34]; three methods were applied at this phase Gaussian Filter [44], Otsu's thresholding [45] and clip limit adaptive histogram equalization (CLAHE) [46] as shown in Fig. 2. YOLOv4 architecture was adopted for mass detection while the classification network of YOLO was replaced with the ViT-transformer network; Fig. 3 illustrates the proposed framework.

3.1 Pre-processing phase

3.1.1 Gaussian filter and Otsu's thresholding

Firstly, the Gaussian filter was used to reduce the noise and blurring of the images. Gaussian filter is a linear filter with a symmetric kernel with an odd size that passes through each pixel in the image. The values inside the kernels are calculated as shown in Eq. 1, where (x, y) represents the pixel coordinate, \(\sigma\) is the standard deviation of the Gaussian distribution.

Otsu thresholding was also used to find the suitable threshold for separating the foreground pixels and the background pixels to minimize the area of the background to crop the Region of Interest (RoI) of the breast.

3.1.2 Clip limit adaptive histogram equalization (CLAHE)

CLAHE was used to enhance the contrast of the mammographic image; CLAHE mainly enhances the local contrast of the image. It works at small tiles of the image rather than the whole image. This algorithm is used to enhance the contrast of the medical images to improve the visual appearance of the mammogram. The clip limit (CL) is an essential parameter for CLAHE, as this parameter controls the image's brightness level. In the pre-processing phase, two clip limits were used, as shown in Fig. 2.

3.2 Detection phase

At this phase, You Look Only Once (YOLO) model has been selected for mass detection. YOLO is a well-known object detection architecture known as a single-shot detector, as the image is processed in one shot to detect multiple objects within it. One of the advantages of YOLO is looking at the complete image, which means less information loss, and this is considered one of the crucial points that affect the interpretation of medical images. Furthermore, the detection and classification are done simultaneously, making the YOLO faster than other detectors. Figure 4 illustrates the architecture of YOLO.

YOLO v4 Architecture [33]

There are different official versions of YOLO, which are YOLOv1, YOLOv2 [47], YOLOv3 [48], YOLOv4 [49], YOLOv7 [50], and YOLOv8 [51]. Different studies [7, 52, 53] show that YOLOv4 performs better than the other elder versions, YOLOv1, YOLOv2, and YOLOv3. The improvements that were introduced in YOLOv4 enhanced the accuracy and detection time. However, the recent versions, YOLOv7 and YOLOv8, were introduced with some improvements to enhance the trade-off between accuracy and time.

3.2.1 YOLOv4 vs. YOLOv7 and YOLOv8

An experiment was conducted to select the most suitable version of YOLO that fit the used datasets in this work among the recently introduced YOLO versions. The experiments use the same datasets for YOLOv4, YOLOv7, and YOLOv8 to evaluate the performance of these recent models on medical images, specifically mammograms. According to the experiments, YOLOv4 outperformed YOLOv7 and YOLOv8 regarding mAP, recall, and precision; however, YOLOv7 showed competitive results on the INbreast dataset. On the other side, YOLOv7 and YOLOv8 provide faster performance than YOLOv4, as shown in Table 2.

Based on the conducted experiments, YOLOv7 struggles in small mass detection, especially with crowded mammographic scenes, whether false mass detection or missed mass detection, especially with CESM-CDD dataset. It is not performed well in detecting masses at different scales, as it struggles with masses that are very large or very small regarding the other masses in the mammographic image. In addition, lighting changes can cause significant variations in the appearance of masses; YOLOv7 is adversely affected by the changes in lighting [54], which makes it inconvenient for mass detection, especially in mammographic scanning, where lighting variations are common.

Regarding YOLOv8, the main change is adopting a new anchor-free detection mechanism [55]. Also, the model is built based on a new modified version of CSP-DarkNet-53 as the backbone [51] such as YOLOv4. On the other hand, the model is still under construction and development, so its performance is not stable yet. Also, the model struggles with small object detection in complex scenes [56], especially with the probability of the overlapping between the small-size objects and other size objects that may partially block its appearance.

Due to the consequences of the above reasons, at the detection phase, YOLOv4 was used to mainly detect the masses existing in the mammograms. It splits the input mammographic image into grid cells (s × s) cells; if the mass falls within the cell, it is considered responsible for detecting this mass. A fixed number of bounding boxes is predicted for each cell with their confidence score; each box's confidence score represents the probability of containing a mass multiplied by the Intersection over Union (IoU) between the ground truth and the predicted box.

Many different configurations were introduced for YOLO; these configurations are set up according to the application domain and the used datasets in the experiments. The most important and effective step before training the YOLO is adjusting the anchor boxes according to the dataset and the resolution of the input image; accordingly, the K-Means clustering algorithm was used for that in all conducted experiments. The anchors were generated in these experiments for each dataset separately and based on the different resolutions used.

3.3 Classification phase

3.3.1 Vision transformers

The transformer is considered a de facto architecture for Natural Language Processing (NLP) tasks. The transformers generally are built on the self-attention mechanism that allows the model to learn the global dependencies between the inputs and outputs. This mechanism mainly lets the inputs interact with each other to know which features the model should pay more attention to.

The promising results achieved with transformers in NLP tasks, especially with the transfer learning on downstream tasks, opened the door to introducing the transformers to the computer vision tasks. Many versions of vision transformers have been introduced recently; Dosovitskiy et al. [57] were the first ones who introduced the ViT transformer. After that, many were proposed, such as DeiT (Data Efficient Transformer) [58], Swin–Transformer [59], and ConvNeXt [60]. Furthermore, different studies recently utilized transformers in various medical imaging tasks such as classification, segmentation, and detection. In [61], the authors proposed a model for predicting breast tumor malignancy using a convNeXt transformer over ultrasound images. Van et al. [62] also introduced a model that utilized transformers to build a cross-view transformer model that was tested on multi-view medical images from two datasets CBIS-DDSM for breast cancer mammography and CheXpert for chest X-rays. In addition, different models based on transformers were proposed for COVID-19 diagnosis [63, 64]. Accordingly, and due to its success in prediction and classification, one of the main objectives of this work is to utilize the transformers in the detected masses classification.

3.3.2 Why transformers?

The kernels of the convolutional networks focus only on the local texture, which represents a local subset of pixels from the image, and that enforces the network to ignore the global context of the features as the network fails to encode the relative position of the features; accordingly, different studies were proposed recently to overcome this problem by utilizing attention mechanisms and pyramid networks.

Transformers are one of the recent architectures that built on the concept of the self-attention mechanism. The significant advantage of transformers that can be exploited in medical image diagnosis is the model's ability to understand the pixels' global context through learning the long-term dependencies between the data. For example, in this work, the surrounding area of the mass provides more information that can help the model observe more discriminant features about the global context and the correlation between the masses and its surrounding tissues; this can provide a more accurate diagnosis.

3.3.3 How vision transformer (ViT) works

The ViT Transformer is used for classification in this work; as shown in Fig. 5, at the classification phase, there are three main components of the ViT network; patch embedding, feature extraction using stacked transformer encoders, and the classification head that is built with Multilayer Perceptron (MLP).

The mammography image is reshaped into a sequence of patches. Then, the patches are flattened and mapped to dimensions with linear projection, as a constant latent vector is used through all the layers of the transformer network. Each image \({\mathbf{x}} \in {\mathbb{R}}^{H \times W \times C}\) (where H, W, and C are representing height, width, and number of channels of the image) is split into N non-overlapped patches, each of size 16 × 16. These patches are reshaped into this form \({\mathbf{x}}_{p} \in {\mathbb{R}}^{{N\; \times \;\left( {P^{2} \cdot C} \right)}}\) to be in sequence of 2D patches in n line vectors of the shape of (1, \(P^{2} \cdot C)\); where P is the resolution of each patch, while N represents the input sequence length (number of patches) that will be fed to the transformer after applying linear projection according to Eq. 2.

At the patch embedding phase and after splitting the image into n2 patches of shape (\(P\), \(P\), \(C\)), the flattened patches are multiplied by a trainable embedding tensor which is in a shape of (\(P^{2} \cdot C, d\)) to learn how to project each patch linearly into d dimension (where d is a constant value with the network architecture). The embedded patches are aggregated with the positional embeddings generated through \({\mathbf{E}}_{{\text{pos }}}\), a trainable positional embedding tensor. The resultant \({\mathbf{z}}_{0}\) is fed into the transformer encoders; the encoders learn the global features from the embedded patches of the mass through multi-headed self-attention layers. The encoders generate a sequence of tokens. Those tokens are fed into the MLP to generate the prediction of the class label if it is a benign mass or a malignant mass.

3.4 Transfer learning

This work adopted the transfer learning concept due to the lack of publicly available mammograms and to minimize the training time. Pre-trained weights for different networks were used to initialize the parameters of these networks through the training phase.

The YOLO network was fine-tuned for detection, and the pre-trained weights on the COCO dataset were transferred to initialize the network weights for training on the two mammographic datasets. On the other side, the models that were used for classification were trained on ImageNet and fine-tuned to predict the class of each mass.

In the conducted experiments for classification, six classification models were used; three of them are transformer based, which are ViT, SWIN, and ConvNeXt, while the other three are CNN-based, which are VGG16, ReseNet50, and Inception v3.

The proposed model used ViT transformer; this model was pre-trained on ImageNet21K. The input image size of the model is 224 × 224, and the input images in this model are divided into 16 × 16 patches. The Adam optimizer was used through training. A linear layer was added on the top of the pre-trained encoder to downstream the model for the mass classification task. Additionally, the MLP head was modified to just generate two outputs that are malignant and benign.

Furthermore, for the other classification models, some modifications have been done to transfer the pre-trained weights of those models into the required task. The base model of SWIN was used; the model was pre-trained on ImageNet1K, and the model took an input image size of 224 × 224. Also, the Adam optimizer was used with weight decay to avoid the overfitting problem, and the MLP head was also modified to output malignant or benign.

ConvNeXt architecture was adopted in the experiments to explore the potential of this model, as the architecture of this model integrates the ConvNeXt based on ResNet50 with the design of the training approaches of the vision transformer, the network was pre-trained on ImageNet22K, with an input image size of 224 × 224.

ResNet50 was modified; the last dense layer was excluded from the feature extraction layers. Moreover, the layers of ResNet were frozen to use the pre-trained weights during the training phase. A fully connected layer was added to obtain the final prediction. Binary cross-entropy loss function and Adam optimizer were used.

VGG16 was also modified as the classification layer was removed and replaced by a fully connected layer to classify the masses into malignant and benign. Furthermore, the base layers of VGG16 were frozen to restore the pre-trained weights of the ImageNet.

The final tested model was Inception v3, used in the classification phase; the model took input images of size 224 × 224. The base layers were set to be not trainable, and the last layer was also replaced with a fully connected layer to fit the mass classification task. For ResNet50, VGG16, and Inception v3, the Adam optimizer was used with binary cross-entropy loss function; also, the three models were pre-trained on ImageNet.

4 Experimental design and results

The experiments were conducted through three main phases; the first phase is the pre-processing of the datasets, followed by the mass detection phase, and finally, the mass classification phase. For mass detection, the YOLOv4 model was trained to detect the masses regardless of their type; then, the detected masses are fed into a model based on the vision transformers architecture to classify those masses into benign and malignant. Furthermore, different classification models were used through the experiments to evaluate the performance of the transformer-based models versus the CNN-based models on mammographic diagnosing.

4.1 Dataset

This work used two datasets: INbreast for FFDM images and CDD-CESM for both FFDM and CESM images. Each dataset was split into training, validation, and testing sets using the splitting ratio of 70%–10% and 20%, respectively. During the splitting process, the images for the same patients were included in the same set to prevent the results from being biased. The same sets were used across both detection and classification tasks to guarantee fair evaluation for the whole model.

4.1.1 INbreast

This dataset is composed of mammographic images that were produced using the FFDM technique. It includes 410 images in DICOM format for 115 cases with both views, Medio Lateral Oblique (MLO) and Carnio Caudal (CC). However, the dataset did not provide both views for each patient. Ninety cases of this dataset were diagnosed with cancer; 107 images were diagnosed with benign and malignant masses, and those are included in this work's experiments. The masses were classified into benign and malignant based on the BI-RADS score that is provided with the dataset, where 1, 2, and 3 are considered benign; on the other hand, 3, 4, and 5 are counted as malignant. The mass annotations were extracted from the XML files that are provided with the dataset, as those annotations were converted to be in the accepted form for YOLO annotation. As each image should be attached with its corresponding txt file that has the bounding boxes of the existing masses, where each box is represented by (Cx, Cy, w, h); (Cx, Cy) represents the coordinates of the center point of the bounding box, w is the width, and h is the height of this box. Table 3 illustrates the distribution of the dataset over training, validation, and testing sets that have been used for both detection and classification.

4.1.2 CDD-CESM

CDD-CESM is a newly introduced publicly available dataset that provides images in two types FDDM and CESM. It is the first publicly available dataset that contains CESM images. The dataset includes 2006 images divided into 1003 low-energy images (FDDM) and 1003 subtracted images representing the (CESM). The dataset has MLO and CC view images; the images were acquired using two different scanners that are G.E. Healthcare Senographe DS and Hologic Selenia Dimensions Mammography Systems. The average resolution of the images is 2355 × 1315; the images were obtained from 326 patients, all of whom are females between 18 and 90 years old. The dataset provides manual segmentation annotations for the existing abnormalities in the mammographic images, which are provided according to the ACR-BIRADS lexicon. The images are available in JPEG format with a CSV file for the annotations; the medical reports are also attached to the dataset. The dataset includes 310 FFDM images with masses and 333 CESM images with masses; in the conducted experiments, we used 310 images for the experiments on FFDM and CESM. Table 4 shows the data distribution of CESM in the training, validation, and testing sets. The corresponding images (cases) to those used in the CESM modality were included in the experiments conducted on the FFDM modality to guarantee a fair evaluation of each modality's efficacy in the automated breast cancer diagnosis process.

As the provided annotations in this dataset were done for segmentation, there are three forms for those annotations, polygon, circle, and ellipse. Accordingly, some pre-processing was done to convert those annotations into bounding boxes; the polygon points for X and Y were used to get Xmin, Xmax, Ymin, and Ymax from those lists, and those coordinates were used as coordinates for obtaining the bounding boxes.

For the circle annotations, the dataset provides center x (cx), center y (cy), and the radius r; So Eqs. (3) and (4) were used to get, \(x_{1}\), and, \(y_{1}\) of the box; to get the width and the height the r multiplied by 2 then the width were added to \(x_{1}\) to get, \(x_{2}\) and height to \(y_{1}\) to get \(y_{2}\).

To get the bounding box of the ellipse segmentation annotation, the provided values for cx, cy, rx, and ry were used to get \(x_{1}\), \(y_{1}\), \(x_{2}\), and, \(y_{2}\) according to Eqs. (5), (6), (7), and (8).

After converting the segmentation annotations into bounding boxes, those boxes' coordinates are altered to fit the format of the YOLO annotations, as mentioned before. Table 4 illustrates the distribution of the dataset over training, validation, and testing sets that have been used for both detection and classification.

4.2 Implementation environment

The experiments were conducted on a single machine with NVIDIA GeForce RTX3080Ti GPU with 12 vRAM, Intel® Core i7-11700 k processor with 3.200 GHz frequency, and 32 GB RAM. C + + , python 3.8, and TensorFlow were used to implement the proposed system on Windows 10 operating system.

4.3 Implementation set-up

The proposed framework was implemented over different phases: pre-processing, augmentation, detection, and classification. The following subsections demonstrate the implementation set-up of each phase.

4.3.1 Pre-processing and augmentation

For INbreast images, the images were converted from DICOM images into JPEG images; however, this step was skipped for CDD-CESM as the images are already in the JPEG format. The breast region was cropped to minimize the background area. The images were normalized to make the pixel intensity distribution in the range from (0–255). Then CLAHE was used at two different clip limits (1 and 2), and the generated images were mixed up with the normalized image to reconstruct new colored images. This step was done for both datasets to obtain new images with enhanced visibility for the mammographic images in addition to the original images in the training phase. The images were augmented to increase the number of images in the training phase, especially with the existence of imbalanced distribution between malignant and benign cases; four augmentation techniques were applied (vertical flip. Horizontal flip, multiplicative noise, and random rotation) for both images the original and the newly reconstructed one. At the detection phase, one more augmentation technique (mosaic) was used and selected based on previous experiments by [65], as it showed better performance in detection than other techniques that are used with YOLO. Algorithms 1 and 2 show the pre-processing algorithmic steps for CDD-CESM and INbreast.

4.3.2 Mass detection

The YOLOv4 is used in this work for the detection task; Darknet with CSP53 is used as a backbone for the network. Some network parameters were set up and modified at the network layers based on the domain and the used datasets in the experiments.

Table 5 shows the set-up configurations that were used in this work for YOLOv4 layers. In this work, different experiments were conducted to select the suitable input image size for the network, so the experiments were done for two input sizes (416 × 416) and (640 × 640). Moreover, the model performance was evaluated by two different scenarios; the first one was to detect the existence of the masses regardless of their type (benign/malignant), while the other one was designed to detect the benign masses and malignant masses separately. Accordingly, the number of classes in some experiments was 1 class, and in other experiments was 2. This consequently affects the number of filters to be 18 with the 1 class detection and 21 with the 2 classes detection based on the equation mentioned in [10]. The learning rate was selected to be 0.001 based on different experiments. Two scales, 0.1 and 0.1, were used to change the learning rate at two different steps that are 3200 and 3600. Finally, the max batches were set up to be 4000 as recommended in [27] (max batches = number of classes × 2000). Also, K-means clustering was used to select the anchors' sizes based on the dataset; nine anchors were used for those experiments.

4.3.3 Mass classification

Six experiments were conducted for classification with the same sets for training, validation, and testing that were used in detection for each dataset. The experiments were done to evaluate the performance of transformers versus the CNN-based networks. The selected CNN-based networks are VGG16, ResNet50, and Inception v3, as those networks showed promising results in other studies for mass classification. This work uses three transformer-based networks: ViT transformer, SWIN, and ConvNeXt. The input size for all networks was 224 × 224, Adam optimizer was used with binary cross-entropy loss function, and all the models were modified to transfer their pre-trained weights to fit the mass classification task. Furthermore, weight decay was used for all transformer models to avoid the overfitting problem. Algorithm 3 shows the algorithmic steps for the mass detection and classification process.

4.4 Evaluation metrics

Different evaluation metrics were used to evaluate the performance of the proposed model. The Intersection over Union (IoU), mean Average Precision (mAP), F1-score, precision, and recall are used for detection. Moreover, the True Positive Rate (TPR) and False Negative Rate (FNR) were calculated using True Positive (TP), False Negative (FN), and False Positive (FP). The performance was evaluated for classification through accuracy, sensitivity, specificity, confusion matrix, Area Under the Curve AUC, and Receiver Operating Characteristics (ROC).

IoU was used in evaluating the detection; IoU represents the overlapping between the predicted bounding box and the ground truth at a specific threshold; the threshold used in the proposed model is 0.5.

For detection, only TP, FP, and FN were defined as the detected mass is considered to be TP if the IoU > = 0.5 and considered to be FP if the IoU is < 0.5. If the model fails to detect an existing mass, this is counted as FN.

The mAP is also calculated to estimate the mean of all average precisions over all classes in the used dataset, where mAP is calculated using the following Equation.

Also, the precision was measured to define the percentage of the truly detected masses regarding the total number of actual existing masses, as shown in Eq. (10).

Furthermore, the recall (sensitivity) and FNR were calculated as shown in Eqs. (11) and (13).

The dataset sets suffer from an imbalanced class problem, as shown from the data distribution in Tables 3 and 4 in Sects. 4.1.1 and 4.1.2. Accordingly, F1-score was calculated as it is used as one of the useful evaluation matrices in this case, as shown in Eq. (12).

Different classification metrics were used for evaluation, and the accuracy score of the model was calculated for both validation and testing according to Eq. (14). The confusion matrix was used to represent mainly four attributes based on the classification results that are:

-

True Positive (TP): this represents the number of benign masses that were classified correctly as benign.

-

True Negative (TN): this indicates the number of malignant masses that were classified as malignant.

-

False Positive (FP): this represents the number of the misclassified masses as benign, and they are malignant.

-

False Negative (FN): this represents the number of the misclassified masses as malignant, and they are benign.

Moreover, specificity was calculated as shown in Eq. (15), also FPR and TPR were calculated to plot the ROC curve and calculate the AUC score. The ROC curve represents the trade-off between FPR and TPR; the higher the AUC score, the better the ability of the model to differentiate between benign and malignant masses.

4.5 Mass detection results

This section illustrates the detection results on both datasets INbreast and CDD-CESM, with its two image types (DM-CESM). Table 6 shows the Benign vs. malignant mass detection results on INbreast. In contrast, Tables 7 and 8 show the results of mass detection regardless of its type on INbreast and CESM, respectively, at different resolution input sizes. Moreover, Fig. 6 presents false detection on a mammographic image from the INbreast dataset. At the same time, Fig. 7 illustrates detection results on some images on FFDM vs. its corresponding CESM images from the CDD-CESM dataset.

Tables 6 and 7 show that the performance of YOLO is better at detecting the existence of masses regardless its type. Also, Tables 7 and 8 show that the higher input image size improves the detection accuracy as the best mAP is achieved at input size of 640. The 640-input size achieved mAP of 98.96% in INbreast dataset, 81.52% on CESM images and 71.65% on DM images from CDD_CESM dataset. Moreover, the experimental results showed that DM lower detection sensitivity than CESM.

It can be seen from Fig. 6 an example of a false detection mass of a mammographic image from the INbreast dataset. The ground truth based on the provided annotations has only one mass for this case with patient_id (22,614,236), while the model detected two masses. One of them is truly detected with confidence score of 94% and the other one is a false detected mass.

For further demonstration of the impact of mass detection on CESM, Fig. 7 illustrates the effectiveness of using CESM in enhancing the sensitivity of mass detection rather than FFDM images. The figure provides samples of images from CDD-CESM dataset in forms of CESM and their corresponding DM to illustrate the performance of the model at mass detection in these images regarding their ground truth.

4.6 Mass classification results

This section provides the results of the mass classification using six modified models; three of them are CNN based, and the other three are vision transformer-based models. Table 9 shows each network's results in different evaluation metrics. Moreover, Figs. 8, 9, 10, 11, 12, and 13 show the ROC and Confusion matrix for the results on each dataset; INbreast, CE-CESM, and CDD-CESM, respectively.

Table 9 shows that ViT transformer outperforms the other networks, specifically the CNN-based ones. It can be noticed that model achieved competitive accuracy scores on the testing set with 95.65% in INbreast, 97.61% in CESM, and 80% in DM. Also, it provides the highest AUC score among the other experimented networks, it achieved 88%, 90%, and 70% in INbreast CESM and DM, respectively. Figures 8, 10, and 12 show that ViT has the ability to differentiate better between benign and malignant masses comparing to the other networks. However, SWIN transformer and ConvNext also provide higher AUC scores than the CNN-based models (ResNet50, VGG16, and Inceptionv3). Furthermore, Confusion matrix in Figs. 9, 11, and 13 demonstrates that most of the misclassified masses is benign masses and this may have happened because the datasets lacked sufficient samples of benign masses.

4.6.1 INbreast mass classification results in terms of ROC and confusion matrix

4.6.2 CESM-CDD mass classification results in terms of ROC and confusion matrix

4.6.3 DM-CDD mass classification results in terms of ROC and confusion matrix

5 Discussion

This section provides a discussion of the results. This work conducted several experiments to evaluate the model at mass detection and classification. For mass detection, various experiments were carried on to explore the significance of the higher-resolution input size of YOLO. In addition, evaluating the network's performance at detecting the existence of the masses versus detecting benign and malignant masses in both CE and FFDM images. At the classification phase, the classification network of YOLO was replaced by different Networks. Furthermore, the experiments aimed to explore the potential of the transformers regarding the CNN-based models that were used for mass classification.

5.1 Mass detection

Four experiments are conducted on INbreast. The first two experiments were done to evaluate the model in two cases; the first was to detect the benign and malignant masses, while the other aimed to detect the existence of the mass regardless it was benign or malignant. Considering the results of Tables 6 and 7, it can be deduced that the model showed better results at detecting the masses regardless of its type. The performance enhanced significantly by almost \(\simeq\) 13% in terms of mAP. Moreover, this approach improved the number of the true detected masses from 19 to 22 with less FP and FN, as shown in Tables 6 and 7.

Table 7 also shows that the input image size affected the model's performance as the higher resolution showed better mAP. The experiments are done for an input image size of 416 × 416 and 640 × 640; the proposed model in Trial 3 detected all 23 existing masses in the testing set; however, there were 2 FP masses. This model achieved 98.96% mAP with a sensitivity of 100%. In Trial 1, the model achieved mAP of 97.78%, as the model failed to detect one of the existing masses, and there was one falsely detected mass. Higher resolution means less information loss, and this affected the detection, especially with the existence of small masses and high fibro-glandular tissues. Accordingly, this can clarify why the model performed better in Trial 3 than in Trial 1. Table 8 also showed that the higher input resolution provides higher mAP with more TP and less FN for both trials on DM and CE images from CDD-CESM. However, the higher resolution needs more time through training as this affects the batch size and subdivision values to allow enough memory through training.

The model in Trial 2 from Table 7 was trained with some normal images within the training set; it showed lower mean Average Precision than in Trial 1. However, the model succeeded in detecting 22 masses out of the 23 from the testing set, but it detected 1 extra false detection more than in Trial 1. This may explain why the mAP became lower, as this false detection was in one of the normal images.

The CESM is considered a relatively recent technique for screening mammograms; based on the literature, no CAD system model for mass detection in CESM images has been introduced yet, specifically on the CDD-CESM dataset. Accordingly, in this work, we conducted experiments to explore the potential of using those images in detecting the masses. Moreover, the experiments evaluate the detection in contrast-enhanced mammography and its equivalent digital mammography for the same dataset. The results of Table 8 showed that mass detection in CESM has promising results and outperformed the model's performance on the FFDM images. In Table 8, Trials 2 and 4 showed that the mAP of the detection model was improved by 3.4%–9.8% on the CESM images for an input image size of 416 and 640, respectively. Furthermore, the model succeeded in enhancing the true mass detections, sensitivity, and precision.

Moreover, Fig. 7 illustrates the results on FFDM images and their corresponding CESM images, and it can be noticed that according to ground truth, CESM provides more accurate detection results. Apparently, from those conducted experiments, this can show that the CESM can reveal more morphological features rather than FFDM images. And accordingly, this helped the model to learn more discriminant features during the training phase. These improvements can be demonstrated from even the trials that were done on the images of size 416 × 416.

5.2 Mass classification

For classification, six models were experimented on each dataset. From Table 9, it can be deduced that the vision transformer (ViT) outperformed the other classifiers in classifying the detected masses. As shown in Table 9, the vision transformers achieved the highest accuracy for INbreast, DM images, and CE images from the CDD-CESM dataset. The model achieved a classification accuracy of 95.65% for INbreast, 97.61% for CE images, and 80% for FFDM. For INbreast and CE images, the results of ViT outperformed the other classifiers in all terms (accuracy, sensitivity, specificity, and AUC).

The ResNet 50 achieved the highest accuracy score for the D.M. images with 82%; however, it showed a lower AUC score than ViT. The ViT achieved 80% on DM images, but its performance is still considered the best among the other classifiers regarding the ROC and AUC values. The model achieved the highest score of 70%, as shown in Fig. 12. This means that the model can differentiate between benign and malignant masses more than the other classifiers. On the other hand, ResNet50 achieved an AUC of 57% and sensitivity of 100%, which means that the model is useless because it considered most of the cases as malignant. It showed bad performance at predicting the benign masses rightly, as shown in the confusion matrix from Fig. 13. Moreover, the results show that the ViT model can be generalized; based on the validation accuracy and testing accuracy scores in all trials, as the model provided a higher performance on the testing set.

SWIN and ConvNeXt performed relatively better than the CNN-based classifiers, especially in AUC scores. The transformer interprets the images as a matrix of patches, not a matrix of pixels, and this allowed the model to preserve long global relationships between the patches and obtain more semantic information rather than CNN-based models. However, the SWIN transformer provides some improvements on the regular vision transformer (ViT); it showed lower performance than the ViT. The architecture of ViT is mainly based on observing the relationships between each patch (image token) and all of the rest patches of the input image. On the other hand, SWIN is built on the idea of the shifted window design; accordingly, it looks only at the relationships between the patch and only the other patches in the windowed area. The main task for the classification part of this work is to classify the cropped mass from the detection phase into benign or malignant. In this case, the input image is the masses itself, not the whole mammographic images. Accordingly, the relationship between each patch and all other patches for the mass matters; it is not only about the relation of the patch and its neighboring patches of the same window. And so, this can clarify why ViT outperformed the SWIN in the conducted experiments. Maybe the approach of SWIN can increase the computation efficiency of the model; however, this was not so influential in these experiments as the images were not with high resolution, especially with the fact that they represent a cropped part of the original images. ConvNeXt architecture is mainly based on ResNet architecture with the same training approaches as the basic vision transformer. As shown in Table 9, ResNet provides low performance compared to the other transformer-based classifiers; which means that the architecture of the ResNet was not performed well with the mass classification task, and this can justify why the performance of ConvNeXt is lower than ViT and SIWN. However, it showed better performance than ResNet50.

From Fig. 9, it can be deduced that only one benign mass was misclassified with ViT in INbreast Dataset; the model succeeded in classifying all the malignant masses. Also, from Fig. 11 for CE images, the proposed model rightly predicted all the malignant masses. Only two benign masses were misclassified; Fig. 14 shows the misclassified masses in INbreast and CE images from CESM-CDD.

It can be noticed from Fig. 13 that the most significant ratio of the misclassified masses was the benign ones compared to the ratio of the misclassified malignant masses. This may happen because the existing datasets do not have enough cases with benign masses compared to the number of existing malignant masses, and accordingly, the model trained in a better way to classify the malignant masses. However, augmentation techniques were used to overcome this problem, but those techniques do not provide too much realistic transformation for the images. And this can be considered one of the limitations facing breast cancer CAD systems.

ViT transformers utilize the idea of parallel processing, making the transformers provide more computational efficiency than CNN-based models. Table 10 shows that the inference time that the proposed framework took per image is less than the time YOLOv4 took before replacing the classification layers of YOLO with the ViT.

Tables 11 and 12 show a comparison between the proposed work and other recent studies on INbreast and CDD-CESM. The results in those tables illustrate that the proposed work shows promising and competitive results regarding the previous work. The proposed model achieved a detection accuracy of 98.96% and a classification accuracy of 95.64% in INbreast, which is almost the same result provided by the model introduced by [66]. Moreover, Table 12 shows that the proposed model outperformed the proposed model by [15] in terms of F1-score for mass detection in CE images by almost 5%; however, it achieved the same score as DM images.

6 Conclusion

Vision transformers are considerably revolutionizing computer vision tasks, especially image classification. Utilizing the power of transformers in medical image interpretation can help in enhancing the performance of CAD systems. This work proposed a novel framework for mass detection and classification based on integrating the YOLOV4 with the basic architecture of the vision transformer (ViT).

CESM images are a relatively new type of mammographic images that need more investigation in the direction of developing CAD systems that can utilize the morphological features of these images. Accordingly, this work introduces the first automated CAD system for mass detection and classification in CESM images. Furthermore, the model also was evaluated on FFDM images. The INbreast and the newly introduced CDD-CESM datasets were used in the experiments. The conducted experiments showed that the CESM images could improve the CAD system's performance at both detection and classification levels, as they showed better results than FFDM images. The proposed model achieved detection accuracy of 98.96% and 81.52%; moreover, it achieved a classification accuracy of 95.65% and 97.61% for INbreast and CESM, respectively.

The experiments also showed that the image size affected the detection results specifically for the CDD-CESM dataset as the image size of 640 enhanced the mAP for DM images by 3.4% and 9.8% for CE images compared to the image size of 416.

The proposed model utilized the potential of the vision transformers with mammographic images in classifying the masses detected using YOLOv4. Integrating the ViT architecture into our model has not only boosted its performance, but also revealed its potential in learning global and semantic features, crucial for the task at hand. ViT transformer showed very promising results compared to the other experimented models; it shows the best AUC score for INbreast and CDD-CESM datasets. Vit achieved AUC scores of 88%, 90%, 70%, and F1-score of 97.43%, 98.66%, 87.39% for INbreast, CE-CESM, and DM-CESM, respectively.

7 Advantages, limitations, and future work

Based on the conducted experiments, transformers showed better results than the CNN-based models; the transformers showed great capability at learning the global features along with the semantic features, especially with the existence of the attention mechanism that can help the model to learn which features to pay attention to at the classification task. Moreover, these experiments showed the efficacy of CESM images in developing breast cancer CAD systems.

However, some limitations need to be considered in future work; the available datasets suffer from the imbalance class problem even with using augmentation techniques as they did not provide natural transformations. Furthermore, the size of the publicly available datasets is relatively small. Moreover, the proposed model's computational time can be considered another limitation that can be enhanced in the future regarding the performance of the recent versions of YOLO.

Based on the results, this work can be extended in different directions; CESM images can be used along with FDDM for introducing a multimodal CAD system that can utilize both of these types to improve the performance of the CAD systems for breast cancer diagnosis. Also, the newly introduced YOLO models configurations need more investigations, especially with the significant improvement in the computational time and performance, so this work can be extended to investigate the potential of using these models on medical images, especially with the benefit of the ability to use them for detection or segmentation.

Data availability

The datasets analyzed during the current study are available in the Cancer Imaging archive repository, https://wiki.cancerimagingarchive.net/pages/viewpage.action?pageId=109379611, and in the Kaggle repository, https://www.kaggle.com/datasets/ramanathansp20/inbreast-dataset.

Abbreviations

- ACR-BIRADS:

-

American College of Radiology Breast Imaging Reporting and Data System

- ACS:

-

American Cancer Society

- AUC:

-

Area Under Curve

- CAD:

-

Computer-Aided (Diagnoses/Detection)

- CBIS-DDSM:

-

Curated Breast Imaging Subset of DDSM

- CC:

-

Carnio Caudal

- CDD-CESM:

-

Categorized Digital Database for Low energy and Subtracted Contrast Enhanced Spectral Mammography

- CE:

-

Contrast Enhanced

- CESM:

-

Contrast Enhanced Spectral Mammography

- CL:

-

Clip limit

- CLAHE:

-

Clip limit adaptive histogram equalization

- CNN:

-

Convolutional Neural Networks

- CSP-Darknet:

-

Cross Stage Partial Darknet

- CSV:

-

Comma-Separated Values

- DEiT:

-

Data Efficient Transformer

- DM:

-

Digital Mammography

- ELM:

-

Extreme Learning Machine

- Faster-RCNN:

-

Faster Region-Convolutional Neural Network

- FFDM:

-

Full Field Digital Mammography

- FN:

-

False Negative

- FNR:

-

False Positive Rate

- FP:

-

False Positive

- FPR:

-

False Positive Rate

- FRCN:

-

Full Resolution Convolutional Network

- GLCM:

-

Gray Level Co-occurrence Matrix

- IoU:

-

Intersection over Union

- LWT:

-

Lifting Wavelet Transform

- mAP:

-

Mean Average Precision

- MIAS:

-

Ammographic Image Analysis Society

- MLO:

-

Medio Lateral Oblique

- MLP:

-

Multilayer Perceptron

- NLP:

-

Nature Language Processing

- ROC:

-

Receiver Operating Characteristics

- ROI:

-

Region of Interest

- SFM:

-

Screen Film Mammography

- SVM:

-

Support Vector Machine

- TN:

-

True Negative

- TP:

-

True Positive

- TPR:

-

True Positive Rate

- ViT:

-

Vision transformer

- YOLO:

-

You Look Only Once

References

Giaquinto AN, Sung H, Miller KD et al (2022) Breast cancer statistics, 2022. CA Cancer J Clin 72:524–541. https://doi.org/10.3322/CAAC.21754

Miglioretti DL, Smith-Bindman R, Abraham L et al (2007) Radiologist characteristics associated with interpretive performance of diagnostic mammography. J Natl Cancer Inst 99:1854–1863. https://doi.org/10.1093/JNCI/DJM238

Alzubaidi L, Zhang J, Humaidi AJ et al (2021) Review of deep learning: concepts, CNN architectures, challenges, applications, future directions. J Big Data 8:53. https://doi.org/10.1186/s40537-021-00444-8

Kumar R (2023) Memory recurrent elman neural network-based identification of time-delayed nonlinear dynamical system. IEEE Trans Syst Man Cybern Syst 53:753–762. https://doi.org/10.1109/TSMC.2022.3186610

Sherstinsky A (2020) Fundamentals of recurrent neural network (RNN) and long short-term memory (LSTM) network. Phys D Nonlinear Phenom 404:132306

Nasser M, Yusof UK (2023) Deep learning based methods for breast cancer diagnosis: a systematic review and future direction. Diagnostics 13:161. https://doi.org/10.3390/DIAGNOSTICS13010161

Aly GH, Marey M, El-Sayed SA, Tolba MF (2021) YOLO based breast masses detection and classification in full-field digital mammograms. Comput Methods Programs Biomed 200:105823. https://doi.org/10.1016/J.CMPB.2020.105823

Sensakovic WF, Carnahan MB, Czaplicki CD et al (2021) Contrast-enhanced mammography: how does it work? Radiographics 41:829–839. https://doi.org/10.1148/RG.2021200167/ASSET/IMAGES/LARGE/RG.2021200167.TBL2.JPEG

Wei J, Hadjiiski LM, Sahiner B et al (2007) Computer-aided detection systems for breast masses: comparison of performances on full-field digital mammograms and digitized screen-film mammograms. Acad Radiol 14:659–669. https://doi.org/10.1016/J.ACRA.2007.02.017

Hassan NM, Hamad S, Mahar K (2022) Mammogram breast cancer CAD systems for mass detection and classification: a review. Multimed Tools Appl 81:20043–20075. https://doi.org/10.1007/S11042-022-12332-1/FIGURES/5

Raghu M, Unterthiner T, Kornblith S et al (2021) Do vision transformers see like convolutional neural networks? Neural Inf Process Syst 34:12116–12128

He K, Gan C, Li Z et al (2022) Transformers in medical image analysis: a review. Intell Med. https://doi.org/10.1016/J.IMED.2022.07.002

Gheflati B, Rivaz H (2022) Vision transformers for classification of breast ultrasound images. In: Proceedings of the annual international conference of the IEEE engineering in medicine and biology society, EMBS 2022-July, pp 480–483. https://doi.org/10.1109/EMBC48229.2022.9871809

Shamshad F, Khan S, Zamir SW et al (2023) Transformers in medical imaging: a survey. Med Image Anal 88:102802. https://doi.org/10.1016/j.media.2023.102802

Khaled R, Helal M, Alfarghaly O et al (2022) Categorized contrast enhanced mammography dataset for diagnostic and artificial intelligence research. Sci Data 9:122. https://doi.org/10.1038/S41597-022-01238-0

Suhail Z, Denton ERE, Zwiggelaar R (2018) Classification of micro-calcification in mammograms using scalable linear Fisher discriminant analysis. Med Biol Eng Comput 56:1475–1485. https://doi.org/10.1007/S11517-017-1774-Z/TABLES/2

Punitha S, Amuthan A, Joseph KS (2018) Benign and malignant breast cancer segmentation using optimized region growing technique. Future Comput Inform J 3:348–358. https://doi.org/10.1016/J.FCIJ.2018.10.005

Mughal B, Sharif M, Muhammad N (2017) Bi-model processing for early detection of breast tumor in CAD system. Eur Phys J Plus 132:1–14. https://doi.org/10.1140/EPJP/I2017-11523-8

Rouhi R, Jafari M, Kasaei S, Keshavarzian P (2015) Benign and malignant breast tumors classification based on region growing and CNN segmentation. Expert Syst Appl 42:990–1002. https://doi.org/10.1016/J.ESWA.2014.09.020

Dong M, Lu X, Ma Y et al (2015) An efficient approach for automated mass segmentation and classification in mammograms. J Digit Imaging 28:613–625. https://doi.org/10.1007/S10278-015-9778-4

Montenegro L, Abreu M, Fred A, Machado JM (2022) Human-assisted vs. deep learning feature extraction: an evaluation of ECG features extraction methods for arrhythmia classification using machine learning. Appl Sci (Switzerland) 12:7404. https://doi.org/10.3390/app12157404

Dara S, Tumma P (2018) Feature extraction by using deep learning: a survey. In: Proceedings of the 2nd international conference on electronics, communication and aerospace technology, ICECA 2018, pp 1795–1801. https://doi.org/10.1109/ICECA.2018.8474912

LeCun Y, Bengio Y, Hinton G (2015) Deep learning. Nature 521:436–444. https://doi.org/10.1038/nature14539

Bengio Y, Courville A, Vincent P (2012) Representation learning: a review and new perspectives. IEEE Trans Pattern Anal Mach Intell 35(8):1798–1828

Ragab DA, Sharkas M, Marshall S, Ren J (2019) Breast cancer detection using deep convolutional neural networks and support vector machines. PeerJ 7:e6201. https://doi.org/10.7717/PEERJ.6201

Sannasi Chakravarthy SR, Bharanidharan N, Rajaguru H (2022) Multi-deep CNN based experimentations for early diagnosis of breast cancer. IETE J Res. https://doi.org/10.1080/03772063.2022.2028584

Redmon J, Divvala S, Girshick R, Farhadi A (2016) You only look once: unified, real-time object detection

Al-Masni MA, Al-Antari MA, Park JM et al (2017) Detection and classification of the breast abnormalities in digital mammograms via regional convolutional neural network. In: Annual international conference IEEE engineering medicine and biology society, pp 1230–1233. https://doi.org/10.1109/EMBC.2017.8037053

Al-antari MA, Al-masni MA, Kim TS (2020) Deep learning computer-aided diagnosis for breast lesion in digital mammogram. Adv Exp Med Biol 1213:59–72. https://doi.org/10.1007/978-3-030-33128-3_4

Baccouche A, Garcia-Zapirain B, Olea CC, Elmaghraby AS (2021) Breast lesions detection and classification via YOLO-based fusion models. Comput Mater Contin 69:1407–1425. https://doi.org/10.32604/CMC.2021.018461

Ren S, He K, Girshick R, Sun J (2017) Faster R-CNN: towards real-time object detection with region proposal networks. IEEE Trans Pattern Anal Mach Intell 39:1137–1149. https://doi.org/10.1109/TPAMI.2016.2577031

Ribli D, Horváth A, Unger Z et al (2018) Detecting and classifying lesions in mammograms with deep learning. Sci Rep 8:1–7. https://doi.org/10.1038/s41598-018-22437-z

Agarwal R, Díaz O, Yap MH et al (2020) Deep learning for mass detection in full field digital mammograms. Comput Biol Med 121:103774. https://doi.org/10.1016/J.COMPBIOMED.2020.103774

Cao H, Pu S, Tan W, Tong J (2021) Breast mass detection in digital mammography based on anchor-free architecture. Comput Methods Programs Biomed 205:106033. https://doi.org/10.1016/J.CMPB.2021.106033

Zhu C, He Y, Savvides M (2019) Feature selective anchor-free module for single-shot object detection

Shen R, Yao J, Yan K et al (2020) Unsupervised domain adaptation with adversarial learning for mass detection in mammogram. Neurocomputing 393:27–37. https://doi.org/10.1016/j.neucom.2020.01.099

Mohamed SAS, Moftah SG, Chalabi NAEM, Salem MAAW (2021) Added value of contrast-enhanced spectral mammography in symptomatic patients with dense breasts. Egypt J Radiol Nuclear Med 52:1–10. https://doi.org/10.1186/S43055-020-00372-2/FIGURES/4

Song J, Zheng Y, Wang J et al (2022) Multi-feature deep information bottleneck network for breast cancer classification in contrast enhanced spectral mammography. Pattern Recognit 131:108858. https://doi.org/10.1016/J.PATCOG.2022.108858

Danala G, Patel B, Aghaei F et al (2018) Classification of breast masses using a computer-aided diagnosis scheme of contrast enhanced digital mammograms. Ann Biomed Eng 46:1419–1431. https://doi.org/10.1007/s10439-018-2044-4

Gao F, Wu T, Li J et al (2018) SD-CNN: a shallow-deep CNN for improved breast cancer diagnosis. Comput Med Imaging Graph 70:53–62. https://doi.org/10.1016/J.COMPMEDIMAG.2018.09.004

Perek S, Kiryati N, Zimmerman-Moreno G et al (2019) Classification of contrast-enhanced spectral mammography (CESM) images. Int J Comput Assist Radiol Surg 14:249–257. https://doi.org/10.1007/s11548-018-1876-6

Berbar MA (2018) Hybrid methods for feature extraction for breast masses classification. Egypt Inform J 19:63–73. https://doi.org/10.1016/j.eij.2017.08.001

Muduli D, Dash R, Majhi B (2020) Automated breast cancer detection in digital mammograms: a moth flame optimization based ELM approach. Biomed Signal Process Control 59:10192. https://doi.org/10.1016/j.bspc.2020.101912

D’Haeyer JPF (1989) Gaussian filtering of images: a regularization approach. Signal Process 18:169–181. https://doi.org/10.1016/0165-1684(89)90048-0

Otsu N (1979) Threshold selection method from gray-level histograms. IEEE Trans Syst Man Cybern SMC 9:62–66. https://doi.org/10.1109/TSMC.1979.4310076

Pizer SM, Amburn EP, Austin JD et al (1987) Adaptive histogram equalization and its variations. Comput Vis Graph Image Process 39:355–368. https://doi.org/10.1016/S0734-189X(87)80186-X

Redmon J, Farhadi A (2016) YOLO9000: better, faster, stronger. In: Proceedings–30th IEEE conference on computer vision and pattern recognition, CVPR 2017-January, pp 6517–6525. https://doi.org/10.1109/CVPR.2017.690

Redmon J, Farhadi A (2018) YOLOv3: an incremental improvement

Bochkovskiy A, Wang C-Y, Liao H-YM (2020) YOLOv4: optimal speed and accuracy of object detection

Wang C-Y, Bochkovskiy A, Liao H-YM (2022) YOLOv7: trainable bag-of-freebies sets new state-of-the-art for real-time object detectors

Reis D, Kupec J, Hong J, Daoudi A (2023) Real-time flying object detection with YOLOv8

Nepal U, Eslamiat H (2022) Comparing YOLOv3, YOLOv4 and YOLOv5 for autonomous landing spot detection in faulty UAVs. Sensors 22:464. https://doi.org/10.3390/S22020464

Ismail A, Mehri M, Sahbani A et al (2021) Performance benchmarking of YOLO architectures for vehicle license plate detection from real-time videos captured by a mobile robot. Sorbonne University, Paris

Zhou S, Cai K, Feng Y et al (2023) An accurate detection model of Takifugu rubripes using an improved YOLO-V7 network. J Mar Sci Eng 11:1051. https://doi.org/10.3390/jmse11051051

Tian Z, Shen C, Chen H, He T (2019) FCOS: fully convolutional one-stage object detection

Lou H, Duan X, Guo J et al (2023) DC-YOLOv8: small-size object detection algorithm based on camera sensor. Electronics (Switzerland) 12:2323. https://doi.org/10.3390/electronics12102323

Dosovitskiy A, Beyer L, Kolesnikov A et al (2022) An image is worth 16 × 16 words: transformers for image recognition at scale

Touvron H, Cord M, Douze M et al (2021) Training data-efficient image transformers & distillation through attention

Liu Z, Lin Y, Cao Y et al (2021) Swin transformer: hierarchical vision transformer using shifted windows. In: Proceedings of the IEEE international conference on computer vision 9992–10002. https://doi.org/10.48550/arxiv.2103.14030

Liu Z, Mao H, Wu C-Y et al (2022) A ConvNet for the 2020s

Hassanien MA, Singh VK, Puig D, Abdel-Nasser M (2022) Predicting breast tumor malignancy using deep ConvNeXt radiomics and quality-based score pooling in ultrasound sequences. Diagnostics (Basel) 12:1053. https://doi.org/10.3390/DIAGNOSTICS12051053

van Tulder G, Tong Y, Marchiori E (2021) Multi-view analysis of unregistered medical images using cross-view transformers. Lecture notes in computer science (including subseries lecture notes in artificial intelligence and lecture notes in bioinformatics) 12903 LNCS. Springer, Cham, pp 104–113

Fan X, Feng X, Dong Y, Hou H (2022) COVID-19 CT image recognition algorithm based on transformer and CNN. Displays 72:102150. https://doi.org/10.1016/J.DISPLA.2022.102150

Al-Rahhal MM, Bazi Y, Jomaa RM et al (2022) COVID-19 detection in CT/X-ray imagery using vision transformers. J Pers Med 12:310. https://doi.org/10.3390/JPM1202031