Abstract

Very sensitive loads, such as hospitals, nuclear and radiation installations, and diverse industries, require the safe operation of electrical distribution networks. To address this issue, this paper proposes an innovative method for determining the optimal allocation of the Distribution STATic COMpensator (DSTATCOM) in power distribution grids (PDGs). The goals are to reduce total power losses, mitigate voltage deviation, and lower the total annual cost. A new approach, the modified capuchin search algorithm (mCapSA), is introduced for the first time to handle several aspects of the optimal DSTATCOM allocation problem. The analytic hierarchy process (AHP) is used to generate the most suitable weighting factors for the objective function. The method is tested on two standard systems, the 33-bus and the 118-bus PDGs, to verify the feasibility of mCapSA and the performance of DSTATCOM. A load-modeling case study based on real measurements from the Middle Egyptian power distribution grid is also included. The accuracy of mCapSA is evaluated against seven other recent optimization algorithms, including the original CapSA. The Wilcoxon signed-rank test is used to assess the statistical significance of the results. The simulation results show that optimal DSTATCOM allocation substantially reduces power losses, improves the voltage profile, and lowers the total annual cost. Optimized DSTATCOM allocation in PDGs can also reduce distribution-level uncertainties.



1 Introduction

A typical power distribution grid (PDG) has a radial structure because this is easier to protect and has a lower short-circuit level. A distribution system delivers power to customers to meet their demand. It has higher losses than the generation and transmission systems because of its low voltage, high current flow, high R/X ratio, and radial nature [1, 2]. According to the literature, about 10–13% of the total generated power is lost as I²R losses in PDGs [3].

Researchers have paid close attention to distribution networks in recent years because of their important role in the quality and planning of power systems. Power quality degradation affects a number of critical loads, including diverse heavy industries, hospitals, emergency systems, and nuclear and radiation installations. For example, according to the international atomic authority [2, 4], research reactors suffer from voltage interruptions that may cause a Loss of Off-site Power Supply (LOPS). A reliable on-site power supply with adequate power quality is therefore crucial. It ensures the safety of nuclear reactors and protects the public and the environment from the radiological hazards posed by LOPS.

Distribution system losses can be minimized by installing appropriate compensating devices. Capacitors are important in this regard: they reduce power losses in PDGs and can also raise bus voltages. However, a capacitor cannot easily provide a variable reactive power source [5, 6]. Distribution utilities must therefore account for capacitor costs and install capacitors at appropriate locations with optimal sizes. Capacitor placement also does not allow load balancing, because of capacitor operating issues such as resonance.
Another key complication of using capacitors in PDGs is their oscillatory behavior when they interact with the inductive components of the circuit [7]. To address these issues, Distributed Flexible Alternating Current Transmission System (D-FACTS) devices are used in PDGs to minimize power losses and raise bus voltages. D-FACTS devices offer a higher-performance, lower-cost way to improve network controllability and reliability, increase asset utilization, and improve end-user power quality. They do so while minimizing environmental impact and system cost [8,9,10,11]. Power quality issues in electrical networks are becoming more complex at all levels, so maintaining appropriate levels of power quality is a considerable task [10].

The Distribution Static Compensator (DSTATCOM) and the Static Var Compensator (SVC) are the most commonly used D-FACTS devices. Previous studies [12,13,14,15,16,17] show that DSTATCOM is the preferred device, because it provides excellent reactive power support while preventing unbalanced loading in PDGs. It also has outstanding characteristics, such as freedom from resonance and transient harmonic problems, low harmonic output, and a small footprint [12, 13].

A DSTATCOM is a shunt-connected device built around a voltage source converter, together with a DC capacitor and a coupling transformer. It is used to regulate bus voltages and to control the power factor and the real and reactive power. It can provide fast, continuous capacitive and inductive compensation [14]. DSTATCOM is the most effective device for eliminating harmonic distortion from the system and avoiding imbalance [15]. Its deployment maximizes annual cost savings, lowers power losses, increases loadability, improves stability, compensates reactive power, and maintains power quality [16]. In summary, DSTATCOM offers low power losses, low harmonic output, robust regulation capability, relatively low cost, and compact size [17].

Consequently, the allocation of DSTATCOM has a significant influence on PDG performance. Table 1 outlines the primary advantages and purposes of DSTATCOM and SVC.

1.1 Literature review

DSTATCOM allocation has received relatively little attention from researchers. The strategies adopted so far fall into three groups: (1) modal analysis, (2) analytical techniques, and (3) optimization algorithms.

Early studies employed modal analysis and time-domain simulation to solve the DSTATCOM allocation problem in distribution systems (DS) and improve power quality [18].

Analytical approaches have been used to solve DSTATCOM placement problems in PDGs for loss reduction and bus voltage improvement [19]:

- The power loss index (PLI) has been considered in DSTATCOM allocation problems in PDGs [20].
- The voltage stability index (VSI) has been computed before optimally locating DSTATCOM devices in different PDGs [21].
- Both the PLI and the VSI have been considered in [22, 23].
- In [24], the VSI was used to select the candidate nodes for DSTATCOM placement.
- In [25], the Monte Carlo simulation (MCS) technique was applied to handle the uncertain attributes of loads.
- An analytical technique has been used to determine the allocation of distributed generators (DGs) and DSTATCOM based on the VSI and the loss sensitivity factor (LSF) [26].

Numerous studies have focused on more recent techniques and methodologies. These studies can be divided into two categories.

In the first category, researchers studied the optimal allocation of DSTATCOM alone.

- Particle swarm optimization (PSO) [27], the bacterial foraging optimization algorithm (BFOA) [28], and the echolocation-based bat algorithm (EBA) [29] have been applied to minimize losses and operational costs and to improve the voltage profile in PDGs.
- A multi-objective genetic algorithm (MOGA) [30] was developed with the average current total harmonic distortion (THD) and the overall operational cost as the objective function (ObjF).
- Differential evolution (DE) has been applied to the long-term planning of DSTATCOM allocation. The aim was to maximize the total net profit (TNP), including annual savings, and to minimize the energy cost [31].
- A modified crow search algorithm (MCSA) [32] was developed to minimize system losses, the pollution index (PI, calculated from pollution levels), and operating costs while improving voltage levels. In [32], MCSA was compared with the crow search algorithm (CSA), DE, and the harmony search algorithm (HSA).
- HSA has also been used to allocate DSTATCOM in PDGs to reduce total power losses [33].
- A multi-objective seeker optimization algorithm (MOSOA) was developed that uses Pareto-optimal solutions to determine the final DSTATCOM allocation [34]. The same study used the contingency load-loss index (CLLI) to estimate reliability as a planning tool.
- The dragonfly algorithm (DA) was introduced in [35] to allocate DSTATCOM in PDGs. It was validated through a comprehensive comparison with PSO and the genetic algorithm (GA).
- In [36], an improved bacterial foraging algorithm (IBFA) was used to raise bus voltages and stability while minimizing system losses through DSTATCOM compensation.
- In [37], DSTATCOMs were sited and sized using a discrete–continuous vortex search algorithm (DCVSA) to minimize energy losses and annual investment.
- In [38], multi-objective PSO (MOPSO) was used to allocate DSTATCOM optimally in a DS. It minimized system losses and improved voltage levels and line transfer capability while respecting node-voltage and line-loading limits.
- The authors of [39] introduced a hybrid analytical–coyote optimization algorithm (COA) to minimize power losses and improve the voltage levels of the DS through DSTATCOM compensation.
- The location and capacity of DSTATCOM under different load conditions were optimized using the bat algorithm (BA) [40].
- The immune algorithm (IA) was proposed to ensure system power quality, minimize power losses, and reduce the installation cost of DSTATCOM in PDGs [41].
- Noori et al. [42] implemented the multi-objective improved golden ratio optimization method (MOIGROM) for the simultaneous allocation of capacitors and DSTATCOM in PDGs. They used the power loss reduction factor (PLRF) to identify candidate nodes for the capacitors and DSTATCOM.

In the second category, the optimal allocation of DSTATCOM together with distributed generators (DGs) has been considered.

- An improved PSO was used in [43] to allocate DGs and DSTATCOM in PDGs. It focused on three indices: the voltage profile enhancement index (VPEI), the benefit–cost ratio (BCR), and the emission cost benefit index (ECBI).
- In [44], a modified ant lion optimizer (MALO) was used to allocate photovoltaic DG (PVDG) and DSTATCOM in PDGs, minimizing system cost and improving the voltage profile and stability.
- In [45], DG and DSTATCOM were allocated in PDGs using multi-objective functions (MObjF) and several hybrid optimizers, mainly the firefly algorithm (FA) combined with PSO. The PSO acceleration-coefficient strategies considered were:
  - adaptive acceleration coefficients (FA-AAC-PSO);
  - autonomous particle groups (FA-APG-PSO);
  - time-varying acceleration (FA-TVA-PSO);
  - nonlinear dynamic acceleration coefficients (FA-NDAC-PSO);
  - sine cosine acceleration coefficients (FA-SCAC-PSO).

Table 2 summarizes previous DSTATCOM allocation approaches.

Table 2 shows three trends.

1. Most objective functions (ObjFs) used to determine the optimal location and capacity of DSTATCOM in power distribution grids (PDGs) focus on minimizing power/energy losses and improving voltage levels. Few consider investment and operating costs.
2. The latest methodologies include the investment and operational costs of DSTATCOM and take daily load curves into account.
3. Many metaheuristic optimizers have been applied to DSTATCOM allocation in PDGs in an attempt to escape local optima.

In addition, the No-Free-Lunch theorem [46] implies that no single optimizer performs best on every problem. Some of the methods above may therefore give unsuitable results in other applications because they become trapped in local optima.

Accordingly, this article proposes an effective algorithm, mCapSA, which combines the Capuchin Search Algorithm (CapSA) [47] with a centroid-based fuzzy mutation. The main role of mCapSA is to prevent premature convergence of the search agents. It does so by balancing the exploration and exploitation phases and avoiding stagnation in local optima.

The algorithm is evaluated on the CEC'2020 test suite and compared with the following optimization algorithms:

- particle swarm optimization (PSO) [48];
- evolution strategy with covariance matrix adaptation (CMA-ES) [49];
- the gravitational search algorithm (GSA) [50];
- the whale optimization algorithm (WOA) [51];
- Harris hawks optimization (HHO) [52];
- the Archimedes optimization algorithm (AOA) [53];
- the original CapSA.

The Wilcoxon signed-rank test is used to verify the significance of the results.

For these reasons, and based on the specialized literature, mCapSA is used to locate and size DSTATCOM optimally in PDGs through a multi-objective function (MObjF) approach. The paper also applies the analytic hierarchy process (AHP) to select the weighting factors (WFs), which improves the performance and effectiveness of the MObjF [54, 55]. The scheme is implemented on the 33-bus and 118-bus standard systems. It is further applied to a practical model of the power demand of the Middle Egyptian power distribution grid (MEPDG), for which the 33-bus standard system is used to model the MEPDG demand.
In addition, a comprehensive comparison is carried out on the 33-bus and 118-bus standard PDGs. It compares the proposed mCapSA with the original CapSA and with the following optimizers from the literature:

- basic PSO [45];
- the basic firefly algorithm (FA) [45];
- hybrid FA-PSO [45];
- FA-Sine Cosine Acceleration Coefficients-PSO (FA-SCAC-PSO) [45];
- FA-Nonlinear Dynamic Acceleration Coefficients-PSO (FA-NDAC-PSO) [45];
- FA-Adaptive Acceleration Coefficients-PSO (FA-AAC-PSO) [45];
- FA-Autonomous Particles Groups-PSO (FA-APG-PSO) [45];
- FA-Time-Varying Acceleration-PSO (FA-TVA-PSO) [45];
- the bacterial foraging optimization algorithm (BFOA) [28];
- multi-objective PSO (MOPSO) [42];
- the multi-objective golden ratio optimization method (MOGROM) [42];
- its improved version, MOIGROM [42].

The main contributions are summarized as follows:

- Developing an effective optimizer, mCapSA, that enhances the performance of the original CapSA.
- Validating the performance of mCapSA on the CEC'2020 test suite.
- Using the analytic hierarchy process (AHP) to select the most suitable weighting factors (WFs). This enhances the performance and effectiveness of the multi-objective function (MObjF) within the mCapSA optimization process.
- Conducting a comprehensive comparison between mCapSA and seven recent optimizers, including the original CapSA.
- Comparing the performance of the proposed approach with several other optimization algorithms in the literature that solved the same problem.
- Applying real-time measurements of the load curve of the Middle Egyptian distribution grid to the 33-bus grid, to demonstrate the efficiency and validity of the proposed scheme.

The remainder of the paper is organized as follows:

- Sect. 2 formulates the mathematical model.
- Sect. 3 describes the basic concept of CapSA.
- Sect. 4 presents the mCapSA algorithm.
- Sect. 5 evaluates the performance of mCapSA.
- Sect. 6 presents and discusses the simulation results.
- Sect. 7 draws the conclusions.

2 Mathematical scheme formulation

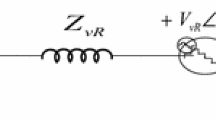

Over the last two decades, utilities have faced new problems in voltage stability, power quality, and effective energy use. Two developments drive this: the growing use of renewable-energy-based distributed generators (DGs) in the grid, and rapid demand growth. DSTATCOM technology and its impact on the distribution system (DS) have therefore become a critical consideration. Determining the most appropriate allocation of DSTATCOMs is essential to address these problems. The allocation must satisfy the network constraints, including the load-flow equations, with the aim of enhancing overall system performance. Figure 1 presents a sample distribution network with a DSTATCOM connected.

This paper uses the backward-forward sweep method (BFSM) instead of standard load-flow methods. The BFSM suits PDGs because of their low X/R ratio, unbalanced loads, and radial structure [2, 56].
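The BFSM mentioned above can be illustrated with a minimal single-phase sketch in Python. It is not the authors' implementation, and it rests on these assumptions:

- a balanced per-unit model with constant-power loads;
- bus 0 as the substation (slack) bus;
- branches listed so that each branch's sending bus appears before any branch leaving its receiving bus, i.e., ordered outward from the substation.

The function name `bfsm` and the data layout are hypothetical.

```python
def bfsm(branches, loads, v_slack=1.0 + 0j, tol=1e-8, max_iter=100):
    """Backward-forward sweep load flow for a balanced radial feeder in per unit.

    branches: (from_bus, to_bus, R, X) tuples, ordered from the substation outward.
    loads:    (P, Q) constant-power demand per bus; bus 0 is the slack/substation.
    Returns (bus voltages, branch currents, iterations used).
    """
    v = [v_slack] * len(loads)  # flat start
    for it in range(max_iter):
        # Backward sweep: bus load currents I = conj(S / V), summed toward the root.
        acc = [(complex(p, q) / v[k]).conjugate() for k, (p, q) in enumerate(loads)]
        i_branch = [0j] * len(branches)
        for b in reversed(range(len(branches))):
            f, t = branches[b][0], branches[b][1]
            i_branch[b] = acc[t]  # current drawn at bus t and everything beyond it
            acc[f] += acc[t]
        # Forward sweep: apply branch voltage drops from the substation outward.
        v_new = list(v)
        v_new[0] = v_slack
        for b, (f, t, r, x) in enumerate(branches):
            v_new[t] = v_new[f] - complex(r, x) * i_branch[b]
        err = max(abs(vn - vo) for vn, vo in zip(v_new, v))
        v = v_new
        if err < tol:
            return v, i_branch, it + 1
    raise RuntimeError("backward-forward sweep did not converge")


# Illustrative 3-bus feeder (hypothetical data, per unit).
branches = [(0, 1, 0.01, 0.02), (1, 2, 0.02, 0.04)]
loads = [(0.0, 0.0), (0.5, 0.2), (0.3, 0.1)]
v, i_br, n_it = bfsm(branches, loads)
p_loss = sum(abs(i) ** 2 * r for i, (_, _, r, _) in zip(i_br, branches))
```

Branch active losses follow directly as |I|²R. At convergence, the power injected at the slack bus equals the total load plus these losses, which gives a quick sanity check.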

2.1 Modelling of DSTATCOM

The DSTATCOM belongs to the FACTS family of devices. At the point of common coupling (PCC), it can absorb or inject reactive current. As illustrated in Fig. 1, the DSTATCOM is connected, with its optimal capacity, to the bus selected by the optimization algorithm. Its voltage amplitude and angle are regulated by a PID controller to control the node voltage and improve the power factor (PF) [11]. In this paper, the DSTATCOM can either produce or absorb reactive power. The phasor diagram after DSTATCOM integration is presented in [41]. The reactive power exchanged with the grid at node i is estimated by the equations in [45]. The reactive power injected by the DSTATCOM is computed by the following formula,

where \(\left| {V_{{{\text{new}},i}} } \right|\) is the new (compensated) voltage magnitude at node \(i\).

2.2 Multi-objective function (MObjF) approach

The proposed MObjF approach combines four objective functions: power losses (ObjF1), voltage deviation (ObjF2), voltage stability (ObjF3), and the capital annual price (ObjF4). It is evaluated by the following formula,

where κ1, κ2, κ3, and κ4 are the weighting factors. In this paper, they are determined using the AHP method explained in Sect. 2.4.
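The combined objective can be written as a weighted sum of the four objectives. This form is an assumption, as is the normalization of the AHP weights to sum to one. ObjF2 is defined as a relative improvement, while ObjF1 is a loss ratio, so the sign convention applied to each term should follow the paper's own MObjF equation.

```latex
\mathrm{ObjF} = \kappa_1\,\mathrm{ObjF}_1 + \kappa_2\,\mathrm{ObjF}_2 + \kappa_3\,\mathrm{ObjF}_3 + \kappa_4\,\mathrm{ObjF}_4,
\qquad \sum_{m=1}^{4} \kappa_m = 1
```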

Based on Fig. 1, the active and reactive power losses, PLoss(i,j) and QLoss(i,j), of the branch between bus i and bus j are estimated by the following equations,

where Vi is the voltage at bus i, and R and X are the resistance and reactance of the branch, respectively.

The total base-case active power loss of the PDG is evaluated as,

where N is the number of branches.

Hence, ObjF1 is the ratio of the total active losses with DSTATCOMs (PDST,TLoss) to the base-case losses without DSTATCOMs:
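The branch-loss and ObjF1 definitions above can be sketched in Python. The sketch uses the loss terms R(P²+Q²)/V² and X(P²+Q²)/V² that also appear in the power-balance constraints, Eqs. (22) and (23). The function names are hypothetical, and all quantities are assumed to be in per unit.

```python
def branch_losses(p_ij, q_ij, v_i, r, x):
    """Active and reactive loss of branch i-j: R(P^2+Q^2)/|V_i|^2 and X(P^2+Q^2)/|V_i|^2."""
    s_sq = p_ij ** 2 + q_ij ** 2
    return r * s_sq / v_i ** 2, x * s_sq / v_i ** 2


def total_active_loss(flows):
    """Sum of active losses over all N branches; flows holds (P_ij, Q_ij, |V_i|, R, X)."""
    return sum(branch_losses(*f)[0] for f in flows)


def objf1(loss_with_dst, loss_base):
    """ObjF1: total active loss with DSTATCOMs over the base-case loss (below 1 is better)."""
    return loss_with_dst / loss_base
```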

The ObjF2 approach is formulated as follows:

The voltage deviation index for the base case without DSTATCOMs (IVDb) is formulated as follows,

The voltage deviation index with DSTATCOMs allocated (IVDDST) can be written as follows,

where the voltage limits Vmax and Vmin are 1.05 p.u. and 0.95 p.u., respectively, and nb is the number of nodes. ObjF2 is the difference between the base-case index and the index with DSTATCOM allocation, divided by the base-case index:
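The relative-improvement form of ObjF2 described above can be sketched in Python. The per-node deviation index used here is a placeholder: a hypothetical sum of squared deviations from 1 p.u. The paper's own IVD definition, which involves Vmin and Vmax, should be substituted for it. Only the ratio (IVDb − IVDDST)/IVDb is taken from the text.

```python
def ivd(v_mags, v_ref=1.0):
    """Hypothetical voltage-deviation index: sum of squared deviations from v_ref (p.u.).

    Placeholder only; replace with the paper's IVD definition (Vmin = 0.95, Vmax = 1.05 p.u.).
    """
    return sum((v_ref - v) ** 2 for v in v_mags)


def objf2(ivd_base, ivd_dst):
    """ObjF2 as described in the text: (IVD_b - IVD_DST) / IVD_b; larger means more improvement."""
    return (ivd_base - ivd_dst) / ivd_base
```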

The main purpose of the voltage stability (VS) evaluation is to assess the level of system security. Based on the power-flow solution, the VS of the network nodes can be maximized, which avoids potential voltage collapse. The VS is derived from Fig. 1 as follows,

where Pj,eff and Qj,eff are the effective active and reactive loads, respectively, fed beyond bus j.
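A voltage stability index built from the effective loads fed beyond a receiving bus is commonly written in the form of Chakravorty and Das. The Python sketch below assumes that form. It should be checked against the VS equation above before use, and the function name is hypothetical. A value near zero signals proximity to voltage collapse, while a larger value indicates a more stable node.

```python
def vsi(v_i, p_eff, q_eff, r, x):
    """Voltage stability index of bus j fed from bus i (assumed Chakravorty-Das form).

    v_i: sending-end voltage magnitude (p.u.); p_eff, q_eff: total load beyond bus j (p.u.);
    r, x: resistance and reactance of branch i-j (p.u.).
    """
    return (v_i ** 4
            - 4.0 * (p_eff * x - q_eff * r) ** 2
            - 4.0 * (p_eff * r + q_eff * x) * v_i ** 2)
```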

ObjF3 is defined from two quantities: the voltage stability deviation index of the base case and the corresponding index with DSTATCOM integration. It is given as follows:

The voltage stability deviation index for the base case without DSTATCOM (IVSDb) can be expressed by,

The voltage stability deviation index with DSTATCOM (IVSDDST) can be derived by,

Finally, the ObjF3 is given as follows,

The capital annual price (CAPt) of the proposed scheme, used as ObjF4, consists of two parts:

(I) The first part is the energy cost after allocating the DSTATCOM, which can be written as follows,

where:

- Cen is the energy price rate (60 $/MWh) [45];
- Tp is the planning period in years (Tp = 30 years);
- tr is the year index;
- Th is the number of hours per year.

Equation (17) gives the present worth factor (PWF) as follows,

where the inflation rate (InfR) and the interest rate (IntR) are 9% and 12.5%, respectively [2].
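The cost parameters above can be checked numerically with a short Python sketch. It computes the present worth factor and a present-worth energy cost, and it relies on three assumptions:

- PWF = Σ_{tr=1}^{Tp} ((1 + InfR)/(1 + IntR))^tr, a common definition;
- Th = 8760 h/year;
- energy cost = Cen × PWF × Th × P_loss.

Check each of these against Eq. (17) and the energy-price equation. The function names are hypothetical.

```python
def present_worth_factor(inf_r=0.09, int_r=0.125, years=30):
    """Assumed PWF: sum over tr = 1..Tp of ((1 + InfR) / (1 + IntR)) ** tr."""
    ratio = (1.0 + inf_r) / (1.0 + int_r)
    return sum(ratio ** tr for tr in range(1, years + 1))


def energy_cost(p_loss_mw, c_en=60.0, hours=8760.0, pwf=None):
    """Assumed present-worth energy cost in $: C_en ($/MWh) * PWF * T_h (h/yr) * P_loss (MW)."""
    if pwf is None:
        pwf = present_worth_factor()
    return c_en * pwf * hours * p_loss_mw
```

With the stated rates (InfR = 9%, IntR = 12.5%, Tp = 30 years), this definition gives a PWF of about 19.08.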

The energy cost in the base case without DSTATCOM can be estimated by the following equation,

(II) The second part is the total operational and location cost of the DSTATCOM (TOLDST), formulated as follows:

where:

- α = 0.1 is the expected rate of return and TDST is the lifetime of the DSTATCOM [41];
- βDST is the operational cost of the DSTATCOM reactive power QDSTATCOM (βDST = 5.3 $/kVAR) [42];
- Di is the number of DSTATCOM locations;
- βLoc is the investment cost per DSTATCOM location (βLoc = 1600 $/location) [42];
- nDST is the number of DSTATCOMs;
- Ty is the time period of the ith load level (LL);
- nLL is the number of load levels;
- λi is the ratio of the duration of the ith LL to the full time period.

λi is evaluated as follows [41],

Then, the ObjF4 can be estimated as the ratio of CAPt by the following equation,

2.3 Constraints

The following constraints ensure proper operation of the proposed system:

- Power balance constraints, according to Fig. 1:

$$P_{j} = P_{i,j} - P_{L,j} - R_{i,j} \frac{P_{i,j}^{2} + Q_{i,j}^{2}}{V_{i}^{2}}$$(22)

$$Q_{j} = Q_{i,j} - Q_{L,j} - X_{i,j} \frac{P_{i,j}^{2} + Q_{i,j}^{2}}{V_{i}^{2}} + \left( \psi_{\text{DST}} \times Q_{\text{DSTATCOM},j} \right)$$(23)

where ψDST is a binary variable (0 or 1) indicating whether a DSTATCOM injects reactive power at bus j.

- Voltage limits:

$$\left| V_{i}^{\min} \right| \le \left| V_{i} \right| \le \left| V_{i}^{\max} \right|, \quad \forall i \in nb$$(24)

- DSTATCOM size:

$$Q_{\text{DSTATCOM}}^{\min} \le Q_{\text{DSTATCOM}} \le Q_{\text{DSTATCOM}}^{\max}$$(25)

- DSTATCOM location:

$$2 \le \text{DSTATCOM}_{\text{Location}} \le nb$$(26)
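Constraints (24)–(26) can be enforced inside a metaheuristic in several ways. The Python sketch below shows one common approach, which is not necessarily the authors':

- round and clip the continuous location to an integer bus in [2, nb];
- clip the size to [Qmin, Qmax];
- measure the total voltage-limit violation so that it can be added to the objective as a penalty.

The function names and the penalty idea are hypothetical.

```python
def decode_candidate(loc, size, nb, q_min, q_max):
    """Map a continuous agent position to a feasible (bus, size) pair per Eqs. (25)-(26)."""
    bus = min(max(int(round(loc)), 2), nb)  # bus 1 is the substation, so location >= 2
    q = min(max(size, q_min), q_max)
    return bus, q


def voltage_violation(v_mags, v_min=0.95, v_max=1.05):
    """Total violation of Eq. (24) in p.u.; zero means every node is within limits."""
    return sum(max(0.0, v_min - v) + max(0.0, v - v_max) for v in v_mags)
```

A penalized fitness could then be `objective + penalty_weight * voltage_violation(v)`, where `penalty_weight` is a tuning choice.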

2.4 AHP method for weighting factors assignment

Assigning a weight to each objective function (ObjF) can enhance the performance of the scheme. The AHP is a suitable tool for determining the optimal weights of the objectives; its construction, steps, and definitions are given in [54, 55].

The first step, as described in [55], is to build a reciprocal pairwise comparison matrix [A] from the decision maker's judgments on a scale from 1 to 9. Each entry compares the importance of one index against another; in this paper, the indices are the objectives. The larger the scale value, the more important the index.

In this paper, power losses are of the utmost importance, followed by the voltage level, voltage stability, and the total annual cost. Accordingly, power losses receive the highest weighting factor, followed by the weighting factors of voltage level, voltage stability, and total annual cost.

The size of [A] is set by the number of selected objective functions (NF). Each ObjF is represented by a row, and a higher element value in that row indicates greater importance of that ObjF relative to the other one. Equation (27) defines the square matrix [A] as follows [54, 55],

where aii = 1 is the self-comparison of objective i. The reciprocal value aij = 1/aji gives the importance of objective i relative to objective j, with i, j ∈ [1, NF].

The weighting factor (WF) of each objective i is then determined using Eq. (28),

where the WFs form the vector \(\kappa = \left[ {\kappa_{1} ,\kappa_{2} , \ldots ,\kappa_{NF} } \right]^{{\text{T}}}\).

Finally, the consistency of the matrix [A] is verified using the consistency ratio (\(I_{{{\text{CR}}}}\)) as follows,

where \(I_{{{\text{CI}}}}\) and \(I_{{{\text{RI}}}}\) are the consistency and random indices, respectively. If \(I_{{{\text{CR}}}}\) is below 0.1, the weights are considered acceptable. The random index \(I_{{{\text{RI}}}}\) depends on NF; its values are given in Table 3 [54, 55].

The maximum eigenvalue (λmax) is evaluated as follows,
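The AHP steps above can be sketched in pure Python as follows:

1. Take the weights as the principal eigenvector of [A], obtained by power iteration.
2. Compute λmax from A·κ.
3. Compute the consistency index as (λmax − NF)/(NF − 1).
4. Divide it by the random index to obtain the consistency ratio.

The random index values in the sketch are Saaty's commonly tabulated ones and are assumed to match Table 3. The comparison matrix in the example is hypothetical; it only reflects the priority order stated earlier in this section and is not the authors' actual judgments.

```python
# Random index values as commonly tabulated by Saaty (assumed to match Table 3).
RANDOM_INDEX = {1: 0.0, 2: 0.0, 3: 0.58, 4: 0.90, 5: 1.12,
                6: 1.24, 7: 1.32, 8: 1.41, 9: 1.45, 10: 1.49}


def ahp_weights(a, max_iter=1000, tol=1e-12):
    """Principal-eigenvector AHP weights, lambda_max, and consistency ratio.

    a: reciprocal pairwise comparison matrix (list of rows) with a[i][j] = 1 / a[j][i].
    """
    n = len(a)
    w = [1.0 / n] * n
    for _ in range(max_iter):  # power iteration converges to the Perron eigenvector
        aw = [sum(a[i][j] * w[j] for j in range(n)) for i in range(n)]
        total = sum(aw)
        w_new = [x / total for x in aw]  # normalize so the weights sum to 1
        converged = max(abs(p - q) for p, q in zip(w_new, w)) < tol
        w = w_new
        if converged:
            break
    aw = [sum(a[i][j] * w[j] for j in range(n)) for i in range(n)]
    lam_max = sum(aw[i] / w[i] for i in range(n)) / n
    ci = (lam_max - n) / (n - 1) if n > 1 else 0.0  # consistency index
    ri = RANDOM_INDEX[n]
    cr = ci / ri if ri > 0 else 0.0                  # consistency ratio
    return w, lam_max, cr


# Hypothetical judgments: losses > voltage level > voltage stability > annual cost.
A = [[1, 2, 3, 4],
     [1 / 2, 1, 2, 3],
     [1 / 3, 1 / 2, 1, 2],
     [1 / 4, 1 / 3, 1 / 2, 1]]
weights, lam_max, cr = ahp_weights(A)
```

For this matrix, the weights come out in descending order and the consistency ratio is well below the 0.1 threshold.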

2.5 Sensitivity index algorithm (SIA)

In Fig. 1, the active loss of line i–j (Pline(i,j)) can be expressed in terms of the effective active and reactive loads, Pj,eff and Qj,eff, fed beyond bus j, by Eq. (31),

The first derivative of Eq. (31) with respect to Pj,eff is defined as the Sensitivity Index of Losses (SIL), expressed by Eq. (32) [2],

The SIL values of all lines are sorted in descending order, in conjunction with the voltage stability index (VSI) values given by Eq. (12). To reduce the search space of the proposed technique, two-thirds (2/3) of the nodes ranked by the SIA are selected as candidate nodes for the optimization.
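The candidate-node screening described above can be sketched in Python under three assumptions:

- Eq. (31) has the form R(Pj,eff² + Qj,eff²)/|Vj|², so its derivative with respect to Pj,eff is 2·Pj,eff·R/|Vj|².
- Nodes are ranked by SIL alone.
- The top two-thirds of the ranked nodes are kept.

The sketch does not reproduce how the paper combines the SIL ordering with the VSI values. The function names are hypothetical.

```python
def loss_sensitivity(p_eff, r, v_j):
    """SIL: derivative of R * (P_eff^2 + Q_eff^2) / |V_j|^2 with respect to P_eff."""
    return 2.0 * p_eff * r / v_j ** 2


def candidate_nodes(sil_by_node, fraction=2 / 3):
    """Rank nodes by SIL in descending order and keep the top `fraction` as candidates."""
    ranked = sorted(sil_by_node, key=sil_by_node.get, reverse=True)
    k = max(1, round(fraction * len(ranked)))
    return ranked[:k]
```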

3 Capuchin search algorithm (CapSA)

This section describes the design of the capuchin search algorithm (CapSA) in detail. CapSA models the foraging behavior of capuchin monkeys, a social animal, as they search for food [47]. It consists of three main stages: leaping motion (global search), swinging motion (local search), and climbing motion (local search).

3.1 Stage 1: Leaping motion

The leaping (jumping) motion corresponds to global search. The distance covered by a leaping capuchin equals the horizontal distance, \(x\), between the source tree branch and the target tree. Mathematically, \(x\) is defined by the third equation of motion as follows,

where \(x\) and \(x_{0}\) are the new and initial positions of the capuchin, \(v\) and \(v_{0}\) are its current and initial velocities, \(a\) is the acceleration, and \(t\) is the time instant.

The velocity \(v\) during the jumping motion is defined by the first equation of motion as follows,

3.2 Swinging motion

This movement simulates a local search procedure and is described by two models. From trigonometric relations, the position \(x\) during the swinging motion of the capuchin is given by Eq. (35),

where \(\theta\) is the angle.

3.3 Climbing motion

The climbing motion is described by a descriptive model and a theoretical model. The capuchin position while climbing or sliding on a tree is evaluated via Eqs. (33) and (34) as follows,

where \(t\) = 1 is the time difference between consecutive iterations.

3.4 Arithmetical concept of CapSA

Like other swarm intelligence methods, CapSA is a population-based technique that starts from a randomly initialized set of search agents. The individuals in a capuchin swarm are of two types: the leader (alpha) and the followers. Eq. (37) assigns the initial position of each capuchin in the swarm as follows,

where \(ub_{j}\) and \(lb_{j}\) are the upper and lower bounds of the \(j\)th dimension for the \(i\)th capuchin, respectively, and \(r \in \left[ {0,1} \right]\) is a random number.
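The random initialization described above (position = lower bound + r × range, per dimension) can be sketched in Python. The two-dimensional search space in the example, DSTATCOM bus and size in kvar, and its bounds are hypothetical, chosen only for illustration. An explicit random generator is passed in so that runs are reproducible.

```python
import random


def init_population(n_agents, lb, ub, rng=None):
    """Uniform random initialization: x_j = lb_j + r * (ub_j - lb_j) with r ~ U[0, 1)."""
    rng = rng or random.Random()
    return [[lo + rng.random() * (hi - lo) for lo, hi in zip(lb, ub)]
            for _ in range(n_agents)]


# Hypothetical 2-D search space: [DSTATCOM bus, DSTATCOM size in kvar].
population = init_population(30, lb=[2, 0], ub=[33, 2000], rng=random.Random(0))
```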

The CapSA population thus includes both the alpha of the group and its followers. The position of the leader capuchins is defined by the following formula,

The jumping angle of the capuchins \(\theta\) is formulated as,

During global and local search in CapSA, the factor \(\tau\) is responsible for balancing exploration and exploitation. It is defined by Eq. (40),

where \(k\) and \(K\) are the current and maximum iteration numbers, and the factors \(\beta_{0}\), \(\beta_{1}\), and \(\beta_{2}\) are set to 2, 21, and 2, respectively.
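To show how the three β parameters shape the exploration–exploitation schedule, here is a Python sketch. It assumes that Eq. (40) is a decaying factor of the form τ = β0·exp(−(β1·k/K)^β2). This form is an assumption and should be checked against Eq. (40) and [47] before use. With β0 = 2, β1 = 21, and β2 = 2, τ starts at 2 and drops sharply within the first fraction of the run.

```python
import math


def tau(k, K, beta0=2.0, beta1=21.0, beta2=2.0):
    """Assumed CapSA balance factor: beta0 * exp(-(beta1 * k / K) ** beta2)."""
    return beta0 * math.exp(-((beta1 * k / K) ** beta2))


schedule = [tau(k, 100) for k in range(0, 101, 10)]  # decays from 2.0 toward 0
```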

The velocity of the CapSA is estimated using the following formula,

The updated positions of the alpha and follower capuchins are derived as follows,

where \(P_{{{\text{bf}}}}\) and \(P_{{{\text{ef}}}}\) are the balance and elasticity factors of the capuchin motion on the ground. The updated position of leader capuchins that use normal walking is defined by Eq. (43),

The factors \(P_{{{\text{bf}}}}\) and \(P_{{{\text{ef}}}}\) govern exploitation and exploration, which improves the efficiency of both local and global search. Their values, \(P_{{{\text{bf}}}} = 0.7\) and \(P_{{{\text{ef}}}} = 9\), were determined by extensive analysis.

Some capuchins swing on branches and move over short distances, so the leader and followers perform a local search for food on and around the branches. These capuchins use two mechanisms: gripping a branch with their tails, and swinging to detect food sources on the other side of the branch. Their positions are estimated by Eq. (44) as follows,

Other capuchins climb trees and branches repeatedly while foraging, which is also a local search process. Their positions are estimated by Eq. (45) as follows,

where \(v_{j}^{i}\) is the current velocity of the \(i\)th capuchin in the \(j\)th dimension, and \(v_{j - 1}^{i}\) is its previous velocity.

Under some random conditions, capuchins reposition themselves and try new paths to find better food sources. This behavior lets them explore the forest more effectively and evaluate new regions for food. During foraging, these random repositions are expressed by Eq. (46).

where the constant \({\text{Pr}} = 0.1\) is the probability that a capuchin performs a random search.
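The role of Pr can be illustrated with a Python sketch. It assumes that Eq. (46) re-samples an agent uniformly within the search bounds with probability Pr and otherwise leaves it unchanged; the exact form of Eq. (46) may differ. The function name is hypothetical.

```python
import random


def random_reposition(position, lb, ub, pr=0.1, rng=None):
    """With probability pr, re-sample the agent uniformly inside the bounds (assumed form)."""
    rng = rng or random.Random()
    if rng.random() < pr:
        return [lo + rng.random() * (hi - lo) for lo, hi in zip(lb, ub)]
    return list(position)  # otherwise keep the current position
```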

During the iterations of the optimizer, the difference between successive iterations, \(t_{i} - t_{i - 1}\), equals 1. Hence, the behavior of the follower capuchins that track the alphas can be expressed as follows,

4 Modified capuchin search algorithm (mCapSA)

This section details the proposed mCapSA, which embeds a fuzzy mutation in the capuchin search algorithm. It explains how mCapSA enhances search capability and balances the exploration and exploitation phases compared with the original CapSA.

CapSA is an efficient metaheuristic, but like many classical metaheuristics it suffers from stagnation in local optima and a low convergence rate. It may also converge prematurely, skip the true solution, or fail to balance exploration and exploitation. To address these shortcomings, we propose two enhancements:

1. injecting a fuzzy mutation;
2. adding a decreasing time-dependent factor that balances exploration and exploitation.

4.1 Injection of fuzzy mutation

In fuzzy set theory, fuzziness is described by membership functions (MFs), which are usually represented graphically. To address the issues discussed above, CapSA is improved with a centroid-based fuzzy mutation, which prevents the premature convergence of the search agents. The mutation is applied according to a probability; the probability of mutation \(P_{i}\) is given by

where ρ = 0.6 and φ = 0.4, and \(P_{d}\) and \(P_{c}\) are given by

where dist is the distance of the agent from the centroid, a = 0.5, b = 0.5, α = 4, β = 5, and unchanged is the number of iterations for which the global best agent has remained unchanged.

If \(P_{c}\) is large enough to trigger the mutation of an agent, the mutation is performed as follows:

where \(U\) and \(L\) are the upper and lower bounds of the problem, \(t\) is the current iteration, and \(T\) is the total number of iterations.
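The centroid-based mutation gate can be sketched as follows. This is a minimal Python sketch, not the authors' implementation: the formulas for \(P_{d}\), \(P_{c}\), and the mutation step are not reproduced in this text, so the sketch only computes dist (assumed here to be the Euclidean distance to the population centroid), combines the two probabilities with the stated weights ρ = 0.6 and φ = 0.4 as an assumed weighted sum, and draws a Bernoulli trial to decide whether an agent mutates. All function names are hypothetical.

```python
import math
import random
from typing import List, Sequence


def centroid(population: Sequence[Sequence[float]]) -> List[float]:
    """Coordinate-wise mean of all agents (the population centroid)."""
    n = len(population)
    return [sum(col) / n for col in zip(*population)]


def centroid_distances(population: Sequence[Sequence[float]]) -> List[float]:
    """'dist' for every agent, ASSUMED to be the Euclidean distance to the centroid."""
    c = centroid(population)
    return [math.dist(agent, c) for agent in population]


def mutation_probability(p_d: float, p_c: float,
                         rho: float = 0.6, phi: float = 0.4) -> float:
    """ASSUMPTION: P_i as a weighted sum of the distance-based term P_d and the
    stagnation-based term P_c; the paper's exact equation is not shown here."""
    return rho * p_d + phi * p_c


def should_mutate(p_i: float, rng: random.Random) -> bool:
    """Bernoulli trial: the agent mutates with probability P_i."""
    return rng.random() < p_i
```

In use, `centroid_distances` would be recomputed every iteration, since the centroid moves with the population.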

4.2 Exploration–exploitation balance

CapSA relies heavily on random parameters to move from exploration to exploitation. Because it draws a large number of random values, it cannot reliably avoid stagnation at local optima. In mCapSA, these random parameters are therefore replaced with a decreasing time-dependent factor, given by

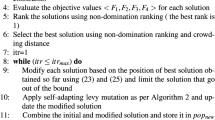

Because \(d^{t + 1}\) decreases over the iterations, the randomization decreases with time, and the search shifts dynamically from the global (exploration) phase to the local (exploitation) phase. The pseudocode of the proposed mCapSA is given in Algorithm 1.

In summary, the proposed mCapSA overcomes the limitations of the original CapSA: the decreasing time-dependent factor of Eq. (52) helps it avoid local optima and better balances exploration and exploitation, and the fuzzy mutation of Eqs. (48) to (51) further strengthens the search.
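Eq. (52) is not reproduced in this text, so the following Python sketch uses a hypothetical linear schedule purely to illustrate the mechanism described above. A factor that shrinks with the iteration counter scales the random steps, so early iterations explore widely and late iterations refine locally. The schedule, the step form, and the function names are assumptions, not the mCapSA update.

```python
import random
from typing import List, Sequence


def decreasing_factor(t: int, T: int, d_max: float = 1.0, d_min: float = 0.0) -> float:
    """HYPOTHETICAL stand-in for Eq. (52): d^{t+1} falls linearly, reaching
    d_min at the last iteration (t = T - 1)."""
    return d_max - (d_max - d_min) * (t + 1) / T


def perturb(x: Sequence[float], t: int, T: int,
            lower: Sequence[float], upper: Sequence[float],
            rng: random.Random) -> List[float]:
    """Random step scaled by the decreasing factor, clipped to [lower, upper].
    Large early steps favor exploration; small late steps favor exploitation."""
    d = decreasing_factor(t, T)
    return [min(max(xi + d * rng.uniform(-1.0, 1.0) * (u - l), l), u)
            for xi, l, u in zip(x, lower, upper)]
```

Any monotonically decreasing schedule (linear, exponential, and so on) produces the same qualitative shift from global to local search; the choice mainly affects how long the exploration phase lasts.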

5 Performance estimation of mCapSA

The CEC'2020 test suite is used to evaluate the developed mCapSA with quantitative and qualitative metrics. The quantitative metrics are the mean and standard deviation (STD) of the best results, computed for mCapSA and each competing algorithm; the qualitative metrics are boxplots and convergence curves. mCapSA is compared with seven other techniques, namely particle swarm optimization (PSO) [48], evolution strategy with covariance matrix adaptation (CMA-ES) [49], gravitational search algorithm (GSA) [50], whale optimization algorithm (WOA) [51], Harris hawks optimization (HHO) [52], Archimedes optimization algorithm (AOA) [53], and the original CapSA [47].

5.1 Parameter settings

As noted in [57], algorithm parameters (e.g., the population size) affect the performance of an optimization algorithm and the quality of its search. To obtain a fair and statistically meaningful comparison, and given the stochastic nature of the algorithms, the parameters of each compared algorithm are set to their default values.

For a fair evaluation, mCapSA and its counterparts are run under the same common settings, and the default parameter values of all algorithms are reported in Table 4. The population size N is set to 30 and the problem dimension Dim to 10; each algorithm performs 30 runs on each function, and the maximum number of function evaluations (FEs) is 100,000.
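The common experimental settings can be collected in one configuration object, as sketched below. The derived iteration budget assumes that each iteration costs one objective evaluation per agent and that the 100,000-FE limit applies per run; neither assumption is stated explicitly in the text, and the class name is hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BenchmarkSettings:
    """Common settings applied to every algorithm in the CEC'2020 comparison."""
    population_size: int = 30          # N
    dimension: int = 10                # Dim
    runs_per_function: int = 30        # independent runs per test function
    max_function_evals: int = 100_000  # FEs budget, ASSUMED to be per run

    def iteration_budget(self) -> int:
        # ASSUMPTION: one objective evaluation per agent per iteration.
        return self.max_function_evals // self.population_size

    def total_evals_per_function(self) -> int:
        # Evaluations spent by one algorithm on one function over all runs.
        return self.runs_per_function * self.max_function_evals
```

Fixing the FE budget rather than the iteration count keeps the comparison fair between algorithms that evaluate a different number of candidates per iteration.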

5.2 Concept of CEC'2020 benchmark functions

This subsection evaluates mCapSA, the new variant of CapSA, on test problems from the IEEE Congress on Evolutionary Computation (CEC) [58]. The CEC'2020 benchmark suite contains 10 test functions, including unimodal, multimodal, hybrid, and composition functions; for more details see [59]. Figure 2 shows 2D visualizations of the CEC'2020 functions, which make the nature of each problem and the differences between them easier to understand.

5.3 Statistical results

Table 5 presents the statistical results of mCapSA and the competing algorithms on the CEC'2020 benchmark functions with Dim = 10, with the best (lowest) values shown in bold red. mCapSA outperforms its counterparts on six of the ten CEC'2020 functions and ranks first overall in terms of mean and STD.
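The mean and STD metrics of Table 5 summarize, for each algorithm and function, the best objective value reached in each of the 30 runs. The minimal sketch below assumes the sample standard deviation and a ranking by mean with STD as a tie-breaker; the paper's exact ranking rule is not stated, and the function names are hypothetical.

```python
import statistics
from typing import Dict, List, Tuple


def summarize_runs(best_per_run: List[float]) -> Tuple[float, float]:
    """Mean and sample standard deviation of the best value found in each run."""
    return statistics.mean(best_per_run), statistics.stdev(best_per_run)


def rank_algorithms(results: Dict[str, List[float]]) -> List[str]:
    """Order algorithm names by mean best value (lower is better); ties broken by STD."""
    summary = {name: summarize_runs(runs) for name, runs in results.items()}
    # Tuples compare element-wise, so (mean, std) sorts by mean first, then std.
    return sorted(summary, key=lambda name: summary[name])
```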

5.4 Boxplot performance analysis

Figure 3 shows boxplots of the results of mCapSA and the competing algorithms on the ten CEC'2020 functions for Dim = 10, depicting the distribution of the data in quartiles. The ends of the whiskers mark the minimum and maximum values reached by each algorithm, and the edges of the box mark the lower and upper quartiles. A narrow box indicates that the results are closely clustered. For most functions, mCapSA achieves the tightest distribution with the lowest values compared with the other algorithms.
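The boxplot statistics described above can be computed from the per-run results of one algorithm on one function. The sketch uses the min/max whisker convention stated in the text, whereas plotting libraries often default to 1.5 × IQR whiskers. It also uses the inclusive quartile method of Python's `statistics.quantiles`. The quartile convention used for Fig. 3 is not stated, so this choice is an assumption, and the function name is hypothetical.

```python
import statistics
from typing import Dict, List


def box_summary(values: List[float]) -> Dict[str, float]:
    """Five-number summary for one boxplot: whiskers at min/max, box from Q1 to Q3."""
    # quantiles() sorts its input internally; n=4 returns [Q1, median, Q3].
    q1, median, q3 = statistics.quantiles(values, n=4, method="inclusive")
    return {"min": min(values), "q1": q1, "median": median,
            "q3": q3, "max": max(values), "iqr": q3 - q1}
```

A small interquartile range (`iqr`) corresponds to the "narrow box" in the text, that is, results that vary little from run to run.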

5.5 Convergence performance analysis

Figure 4 shows the convergence curves of mCapSA and its counterparts on the ten CEC'2020 functions for Dim = 10. mCapSA attains the lowest ObjF values for most functions, and its curves show fast convergence. This makes mCapSA a promising tool for online optimization problems that require fast computation.
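Convergence curves such as those in Fig. 4 typically plot the best-so-far objective value against the number of function evaluations. The sketch below computes that running minimum from a per-evaluation history. It also counts the evaluations needed to reach a target value, which is one simple way to quantify how quickly an algorithm converges. The helper names are hypothetical.

```python
from itertools import accumulate
from typing import List, Optional, Sequence


def best_so_far(history: Sequence[float]) -> List[float]:
    """Running minimum of the objective values (minimization problem)."""
    return list(accumulate(history, min))


def evals_to_reach(history: Sequence[float], target: float) -> Optional[int]:
    """Evaluations until the best-so-far value first reaches target, or None."""
    for k, best in enumerate(best_so_far(history), start=1):
        if best <= target:
            return k
    return None
```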

5.6 Statistical analysis using Wilcoxon’s rank sum test

To assess the significance of the results and complement the statistical analysis, Wilcoxon rank sum tests are performed to check that the observed performance is stable and not due to chance; the test is described in detail in [59]. Table 6 reports pairwise comparisons between mCapSA and each competitor based on the average best-so-far solutions, at a 5% significance level. Since the p-values for most functions are ≤ 0.05, the results across the four classes of CEC'2020 functions are statistically significant. This confirms that the performance of mCapSA is not random but results from its systematic set of search rules.
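The rank sum test used for Table 6 compares two independent samples, for example the 30 best values of mCapSA and those of one competitor on the same function. Below is a minimal sketch of the two-sided test using the usual large-sample normal approximation, with average ranks for ties and no tie correction of the variance. The function names are hypothetical, and in practice `scipy.stats.ranksums` implements the same approximation.

```python
import math
from typing import List, Sequence, Tuple


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values receive the average of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of the 1-based ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def rank_sum_test(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Two-sided Wilcoxon rank sum test; returns (z statistic, p-value)."""
    n1, n2 = len(x), len(y)
    ranks = average_ranks(list(x) + list(y))
    w = sum(ranks[:n1])                      # rank sum of the first sample
    mean_w = n1 * (n1 + n2 + 1) / 2
    sd_w = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (w - mean_w) / sd_w
    p = math.erfc(abs(z) / math.sqrt(2))     # equals 2 * (1 - Phi(|z|))
    return z, p
```

A p-value at or below 0.05 indicates, at the 5% level used in Table 6, that the two algorithms' result distributions differ. A negative z means the first sample tends to have lower (better) values.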

6 Results

The proposed mCapSA-based scheme was implemented in MATLAB R2021a and run on an Intel® Core™ i5-5200U CPU @ 2.20 GHz with 6.00 GB of memory and a 64-bit operating system. Following the methodology described above, the AHP yields the optimal weights κ1, κ2, κ3, and κ4 of the MObjF as 0.4498, 0.2950, 0.1973, and 0.0579, respectively. The maximum eigenvalue is λmax = 4.1155, the consistency index is \(I_{{{\text{CI}}}}\) = 0.0385, and the consistency ratio is \(I_{{{\text{CR}}}}\) = 0.0428. The reciprocal matrix [A] is as follows:

The results of this work cover four cases. In the first and second cases, the proposed scheme is applied to the 33-bus and 118-bus PDGs to compare the studied optimization techniques with the proposed mCapSA. In the third case, mCapSA is validated through a comprehensive comparison with recently published optimizers for DSTATCOM allocation. Finally, in the fourth case, the DSTATCOM performance obtained with mCapSA is evaluated over 24 h on the 33-bus PDG using a real load model from the Egyptian case study.
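The AHP consistency figures reported in this section can be checked from λmax alone. Because the reciprocal matrix [A] is not reproduced in this text, the sketch below demonstrates the general procedure on a toy matrix. The weights are taken as the principal eigenvector of [A], computed here by power iteration; this is one common choice, and the paper's exact procedure is not shown. The consistency measures are \(I_{\text{CI}} = (\lambda_{\max} - n)/(n - 1)\) and \(I_{\text{CR}} = I_{\text{CI}}/I_{\text{RI}}\), with Saaty's random index \(I_{\text{RI}} = 0.90\) for n = 4 assumed. With λmax = 4.1155 and n = 4, this gives \(I_{\text{CI}} \approx 0.0385\) and \(I_{\text{CR}} \approx 0.0428\), matching the reported values. Function names are hypothetical.

```python
from typing import List, Sequence, Tuple

# Saaty's random consistency index I_RI for matrix orders 1..10.
RANDOM_INDEX = {1: 0.0, 2: 0.0, 3: 0.58, 4: 0.90, 5: 1.12,
                6: 1.24, 7: 1.32, 8: 1.41, 9: 1.45, 10: 1.49}


def ahp_weights(A: Sequence[Sequence[float]],
                iterations: int = 100) -> Tuple[List[float], float]:
    """Principal eigenvector of reciprocal matrix A (normalized to sum 1)
    and the lambda_max estimate, both obtained by power iteration."""
    n = len(A)
    w = [1.0 / n] * n
    for _ in range(iterations):
        Aw = [sum(A[i][j] * w[j] for j in range(n)) for i in range(n)]
        total = sum(Aw)
        w = [v / total for v in Aw]
    Aw = [sum(A[i][j] * w[j] for j in range(n)) for i in range(n)]
    lambda_max = sum(Aw[i] / w[i] for i in range(n)) / n
    return w, lambda_max


def consistency(lambda_max: float, n: int) -> Tuple[float, float]:
    """Consistency index I_CI and consistency ratio I_CR = I_CI / I_RI (n >= 3)."""
    ci = (lambda_max - n) / (n - 1)
    return ci, ci / RANDOM_INDEX[n]
```

By Saaty's usual rule of thumb, judgments with \(I_{\text{CR}} < 0.1\) are considered acceptably consistent. The reported 0.0428 satisfies this threshold, and the four reported weights sum to 1.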

6.1 Case 1: The results of the 33-bus standard power distribution grid (PDG)

The proposed methodology is tested on the 33-bus standard PDG, whose rated line voltage and base power are 12.66 kV and 100 MVA, respectively; the system data are given in [60]. Figure 5 shows the single-line diagram of the 33-bus system. As listed in Table 7, the total active (Ploss) and reactive (Qloss) power losses in the base case are 210.9678 kW and 143.013 kVAr, respectively. In addition, the minimum voltage (Vmin) is 0.9038 p.u., the minimum voltage stability index (VSImin) is 0.6672 p.u., and the capital annual price (CAPt) is 2,115,286.523 $. The results of the SIA, based on the SIL and VSI indices, are shown in Table 8. From the candidate set formed by 2/3 of the SIL results, mCapSA selected nodes 24, 30, and 14 as the optimal DSTATCOM locations.

Table 7 compares mCapSA with the other recent optimizers in allocating DSTATCOMs at the most appropriate locations and sizes (MVAr); the optimal locations found by mCapSA are 24 (0.540), 30 (1.0549), and 14 (0.4086). The comparison shows that mCapSA is superior in solving the proposed problem: it reduces the active power loss by 34.4305% (LR) and saves 31.1725% of the capital annual price (CAPt). AOA achieves the highest net saving (31.1787%) but a lower LR (34.4157%) than mCapSA. All the other methods (original CapSA, PSO, WOA, CMA-ES, AOA, HHO, and GSA) achieve higher Vmin and VSImin than mCapSA. However, mCapSA yields the lowest active (138.3305 kW) and reactive (94.3241 kVAr) power losses and requires only 108.878 s for 200 function evaluations, the shortest CPU time. Furthermore, mCapSA achieves the lowest energy cost (1,386,982.639 $) and CAPt (1,455,899.207 $) of all methods except AOA.
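The Case 1 percentages follow from the base-case and mCapSA values by a simple relative reduction, (base − new)/base × 100. The short check below reproduces the 34.4305% LR and the 31.1725% CAPt saving from the numbers quoted in this section. The helper name is hypothetical.

```python
def percent_reduction(base: float, new: float) -> float:
    """Relative reduction (%) of a quantity with respect to the base case."""
    return 100.0 * (base - new) / base


# 33-bus PDG, base case vs. mCapSA (values quoted from Table 7)
P_LOSS_BASE_KW, P_LOSS_MCAPSA_KW = 210.9678, 138.3305
Q_LOSS_BASE_KVAR, Q_LOSS_MCAPSA_KVAR = 143.013, 94.3241
CAPT_BASE, CAPT_MCAPSA = 2_115_286.523, 1_455_899.207

lr_active = percent_reduction(P_LOSS_BASE_KW, P_LOSS_MCAPSA_KW)        # about 34.4305 %
lr_reactive = percent_reduction(Q_LOSS_BASE_KVAR, Q_LOSS_MCAPSA_KVAR)  # reactive-loss reduction
capt_saving = percent_reduction(CAPT_BASE, CAPT_MCAPSA)                # about 31.1725 %
```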

Figures 6 and 7 show the effect of the DSTATCOM devices on the voltage and voltage stability profiles. The convergence of the MObjF with respect to the number of function evaluations is shown in Fig. 8. Figure 9, a zoomed view of Fig. 8, highlights the superior convergence of mCapSA, which reaches the optimal MObjF value of 0.571240.

6.2 Case 2: The results of the 118-bus standard power distribution grid (PDG)

The 118-bus standard PDG is shown in Fig. 10; its rated line voltage is 11 kV and its base power is 100 MVA. Detailed system data can be found in [61]. According to Table 7, in the base case of the 118-bus PDG, Ploss and Qloss are 1297.7 kW and 978.471 kVAr, respectively, Vmin is 0.8688 p.u., VSImin is 0.5698 p.u., and CAPt is 13,011,688.314 $.

In Table 9, the results of the SIA method are used to reduce the search space of the optimizers by identifying the most critical locations for DSTATCOM devices. The search of each optimizer is thus restricted to 2/3 of the candidate nodes identified by the SIA method. As reported in Table 7, the optimal locations selected are nodes {101, 31, 104, 42, 23, 110, 50, 74, 80, 96}. The results in Table 7 demonstrate the superiority of mCapSA, which achieves the highest LR (36.1914%) and CAP net savings (32.8958%) with the lowest total DSTATCOM capacity (13.0000 MVAr). Although AOA and WOA achieve the highest Vmin and VSImin, they do not reach the best values of the other metrics. Figures 11 and 12 show the influence of the DSTATCOM allocation on the voltage and stability index profiles. Figure 13 shows the MObjF versus the number of function evaluations: mCapSA reaches 0.556862, the lowest value among the algorithms, and completes its run in 230.4002 s. These results indicate that mCapSA can obtain optimal solutions for distribution grids of different sizes.

6.3 Case 3: Comprehensive comparison with recent studies from the literature

In Case 3, the proposed mCapSA is compared comprehensively with the original CapSA and with the most recently published optimizers, to confirm its ability to reach the global optimum of the current problem. Table 10 summarizes this comparison for two scenarios: the 33-bus and 118-bus distribution systems.

For the 33-bus PDG, mCapSA was compared with the original CapSA as well as with PSO, FA, hybrid FA-PSO, FA-AAC-PSO, FA-APG-PSO, FA-TVA-PSO, FA-NDAC-PSO, and FA-SCAC-PSO [45]. In Table 10, the optimization methods are ordered from the best solution, obtained by mCapSA, downward. mCapSA reduces the power system losses by 34.4305% and reduces the total energy cost to 1,386,982.639 $, which is lower than the previous optimization methods, including the original CapSA. Furthermore, mCapSA raises the minimum voltage to 0.9347 p.u., the highest value in this comparison.

For the 118-bus PDG, mCapSA was compared with the original CapSA as well as with BFO [28], MOPSO [42], MOGROM [42], and MOIGROM [42]. In Table 10, the compared optimizers are sorted from the best (mCapSA) to the worst (BFO). Although MOPSO, MOGROM, and MOIGROM achieve the highest minimum voltage level, mCapSA achieves the lowest energy cost (8,302,576.958 $) and the highest power loss reduction (LR = 36.1914%).

The comprehensive comparison in Table 10 shows that mCapSA successfully finds the optimal solution of the proposed scheme. It is also important to examine the performance of DSTATCOM under varying load levels, which is the subject of the fourth case.

6.4 Case 4: DSTATCOM performance study at different load levels of Egyptian case study

In this case, the performance of DSTATCOM is studied using a load model based on practical measurements and analysis of the Middle Egyptian power distribution grid (MEPDG). As depicted in Fig. 14, a power analyzer was connected for 24 h at different load points of the MEPDG to assess the power quality of the measured system and determine its load demand curve. The resulting real load demand model, shown in Fig. 15, is applied to the 33-bus standard PDG. As Fig. 15 shows, the nominal load demand amounts to 84% of the load at 10:00 and 22:00.

In the base case, the bus voltage and the voltage stability profiles are shown in Figs. 16a and 17a, respectively.

After the DSTATCOMs are optimally installed at buses 14, 24, and 30, as illustrated in Fig. 18, the bus voltage and voltage stability profiles improve as shown in Figs. 16b and 17b, respectively. Figure 18 also shows that the DSTATCOMs at nodes 24 and 30 inject different amounts of reactive power at all hours as the load demand varies. The DSTATCOM at bus 14 absorbs reactive power from the grid at light load, from 01:00 to 08:00, and injects reactive power from 09:00 to 00:00. These devices, allocated using mCapSA, have a decisive impact on grid performance. mCapSA minimized the active and reactive losses as well as the installation and operating costs of the installed devices. At the same time, it maximized the net savings in the total annual price of the devices and their energy consumption, as depicted in Figs. 19, 20, and 21, respectively.

Table 11 shows that the active and reactive power losses were reduced to 2926.5481 kW (a 33.4673% reduction) and 2000.489 kVAr (a 32.9098% reduction), respectively. The total energy cost was reduced to 1,222,636.99 $, a net saving of 615,012.06 $, and the total capital annual cost was reduced to 1,284,197.56 $, a net saving of 553,451.49 $.
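The Table 11 figures are internally consistent. Adding each net saving back to the corresponding post-DSTATCOM cost gives the same implied base-case cost, 1,837,649.05 $, for both the energy cost and CAPt. The check below recovers the 30.1173% CAPt net saving quoted in the Conclusion to within rounding. It also shows that the energy-cost saving, about 33.467%, matches the active loss reduction. This would be expected if the energy cost is proportional to the energy losses, but that is an inference, not a statement from the paper. Helper names are hypothetical.

```python
def percent_of(part: float, whole: float) -> float:
    """Share of `part` in `whole`, in percent."""
    return 100.0 * part / whole


# Case 4 values (Table 11, as quoted in the text), in $
ENERGY_COST_DST = 1_222_636.99
ENERGY_NET_SAVING = 615_012.06
CAPT_DST = 1_284_197.56
CAPT_NET_SAVING = 553_451.49

# Base-case cost implied by each (cost, saving) pair; both give 1,837,649.05 $.
base_from_energy = ENERGY_COST_DST + ENERGY_NET_SAVING
base_from_capt = CAPT_DST + CAPT_NET_SAVING

energy_saving_pct = percent_of(ENERGY_NET_SAVING, base_from_energy)  # about 33.467 %
capt_saving_pct = percent_of(CAPT_NET_SAVING, base_from_capt)        # about 30.117 %
```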

In conclusion, mCapSA proved effective in finding the optimal allocation of DSTATCOM devices within the PDG at all the load levels studied.

7 Conclusion

This article presents an approach based on the modified capuchin search algorithm (mCapSA) combined with a sensitivity index algorithm (SIA) to determine the optimal siting and capacity of DSTATCOM devices in power distribution grids (PDGs). The power flow is computed with the backward/forward sweep (BFS) algorithm. The SIA is employed to identify the most suitable candidate nodes, which reduces the search space of the proposed optimizer; within this reduced space, mCapSA determines the optimal capacity and siting of the DSTATCOMs among the existing buses. A multi-objective function (MObjF) is designed to improve the performance of the proposed system and provide globally optimal solutions. The overall scheme has been verified on the 33-bus and 118-bus PDGs. To evaluate the DSTATCOM devices under practical conditions, the scheme has also been applied to the 33-bus distribution system using the real load demand of the Middle Egyptian distribution grid (MEPDG). In this case study, the net savings in capital annual price (CAPt) over 30 years are 30.1173%, and the active and reactive loss reductions of the modeled distribution system are 33.4673% and 32.9098%, respectively. A comprehensive comparison with newly and previously published techniques shows that mCapSA is superior in finding the optimal allocation of DSTATCOM devices in PDGs, enhancing voltage levels and minimizing both system losses and CAPt. Future work will address the operational management of distribution grids under different load levels using DSTATCOMs together with distributed generators and distributed battery storage.

Data availability

Data sharing is not applicable to this article, as no datasets were generated or analyzed during the current study.

Abbreviations

- \(Q_{\text{DSTATCOM},i}\): Reactive power injected by DSTATCOM
- MObjF: Multi-objective function
- \(V_{\text{new},i}\): Absolute voltage at node \(i\)
- \(\kappa_{1}\), \(\kappa_{2}\), \(\kappa_{3}\), and \(\kappa_{4}\): Weighting factors of the objective function
- \(P_{\text{Loss}}(i,j)\), \(Q_{\text{Loss}}(i,j)\): Active and reactive power losses, respectively, in the branch between buses \(i\) and \(j\)
- \(P_{\text{TLoss},b}\): Total base power losses
- \(P_{\text{DST,TLoss}}\): System active losses including DSTATCOMs
- \(\text{IVD}_{b}\): Index of the voltage deviation in the base case
- \(\text{IVD}_{\text{DST}}\): Index of the voltage deviation with DSTATCOM allocation
- \(V_{\max}\), \(V_{\min}\): Maximum and minimum voltage levels, respectively
- \(V_{i,\text{DST}}\), \(V_{i,b}\): Voltage with DSTATCOM and in the base case, respectively
- \(\text{IVSD}_{b}\): Index of the voltage stability deviation in the base case
- \(\text{IVSD}_{\text{DST}}\): Index of the voltage stability deviation with DSTATCOM
- VS, \(\text{VS}_{\max}\): Voltage stability and its maximum value, respectively
- \(\text{En}_{\text{b}}\), \(\text{En}_{\text{DST}}\): Energy price before and after allocating the DSTATCOM, respectively
- \(C_{\text{en}}\): Coefficient of the energy price rate (60 $/MWh)
- \(\beta_{\text{DST}}\): Operational cost of \(Q_{\text{DSTATCOM}}\)
- \(\beta_{\text{Loc}}\): Investment price of the \(Q_{\text{DSTATCOM}}\) location
- \(\Psi_{\text{DST}}\): Binary (0 or 1) reactive power indicator
- \(I_{\text{CI}}\) and \(I_{\text{RI}}\): Consistency and random indices, respectively
- \(P_{j,\text{eff}}\) and \(Q_{j,\text{eff}}\): Effective values of active and reactive loads
- \(\lambda_{\max}\): Maximum eigenvalue

References

Ali MH, Mehanna M, Othman E (2020) Optimal planning of RDGs in electrical distribution networks using hybrid SAPSO algorithm. Int J Electr Comput Eng 10:6153. https://doi.org/10.11591/ijece.v10i6.pp6153-6163

Tolba MA, Rezk H, Al-Dhaifallah M, Eisa AA (2020) Heuristic optimization techniques for connecting renewable distributed generators on distribution grids. Neural Comput Appl 32:14195–14225. https://doi.org/10.1007/s00521-020-04812-y

Rohouma W, Balog RS, Peerzada AA, Begovic MM (2020) D-STATCOM for harmonic mitigation in low voltage distribution network with high penetration of nonlinear loads. Renew Energy 145:1449–1464. https://doi.org/10.1016/j.renene.2019.05.134

International Atomic Energy Agency (2017) Industrial applications of nuclear energy. IAEA Nuclear Energy Series No. NP-T-4.3. IAEA, Vienna

Tolba MA, Zaki Diab AA, Tulsky VN, Abdelaziz AY (2019) VLCI approach for optimal capacitors allocation in distribution networks based on hybrid PSOGSA optimization algorithm. Neural Comput Appl. https://doi.org/10.1007/s00521-017-3327-7

Tolba MA, Tulsky VN, Vanin AS, Diab AAZ (2017) Comprehensive analysis of optimal allocation of capacitor banks in various distribution networks using different hybrid optimization algorithms. In: Conference proceedings—2017 17th IEEE international conference on environment and electrical engineering and 2017 1st IEEE industrial and commercial power systems europe, EEEIC/I and CPS Europe 2017

Ahmad AA, Sirjani R (2020) Optimal placement and sizing of multi-type FACTS devices in power systems using metaheuristic optimisation techniques: An updated review. Ain Shams Eng J 11:611–628. https://doi.org/10.1016/j.asej.2019.10.013

Hemeida MG, Rezk H, Hamada MM (2018) A comprehensive comparison of STATCOM versus SVC-based fuzzy controller for stability improvement of wind farm connected to multi-machine power system. Electr Eng 100:935–951. https://doi.org/10.1007/s00202-017-0559-6

Divan DM, Brumsickle WE, Schneider RS et al (2007) A distributed static series compensator system for realizing active power flow control on existing power lines. IEEE Trans Power Deliv 22:642–649. https://doi.org/10.1109/TPWRD.2006.887103

Ali MS, Haque MM, Wolfs P (2019) A review of topological ordering based voltage rise mitigation methods for LV distribution networks with high levels of photovoltaic penetration. Renew Sustain Energy Rev 103:463–476. https://doi.org/10.1016/j.rser.2018.12.049

Shehata AA, Tolba MA, El-Rifaie AM, Korovkin NV (2022) Power system operation enhancement using a new hybrid methodology for optimal allocation of FACTS devices. Energy Rep. https://doi.org/10.1016/j.egyr.2021.11.241

Bayat A, Bagheri A (2019) Optimal active and reactive power allocation in distribution networks using a novel heuristic approach. Appl Energy 233–234:71–85. https://doi.org/10.1016/j.apenergy.2018.10.030

Kazmi SAA, Ameer Khan U, Ahmad HW et al (2020) A techno-economic centric integrated decision-making planning approach for optimal assets placement in meshed distribution network across the load growth. Energies 13:1444. https://doi.org/10.3390/en13061444

Kumar C, Mishra MK (2012) A control algorithm for flexible operation of DSTATCOM for power quality improvement in voltage and current control mode. In: 2012 IEEE international conference on power electronics, drives and energy systems (PEDES). IEEE, pp 1–6

Wasiak I, Mienski R, Pawelek R, Gburczyk P (2007) Application of DSTATCOM compensators for mitigation of power quality disturbances in low voltage grid with distributed generation. In: 2007 9th international conference on electrical power quality and utilisation. IEEE, pp 1–6

Gandoman FH, Ahmadi A, Sharaf AM et al (2018) Review of FACTS technologies and applications for power quality in smart grids with renewable energy systems. Renew Sustain Energy Rev 82:502–514. https://doi.org/10.1016/j.rser.2017.09.062

Gitibin R, Hoseinzadeh F (2015) Comparison of D-SVC and D-STATCOM for performance enhancement of the distribution networks connected WECS including voltage dependent load models. In: 2015 20th conference on electrical power distribution networks conference (EPDC). IEEE, pp 90–100

Gupta AR, Kumar A (2016) Energy saving using D-STATCOM placement in radial distribution system under reconfigured network. Energy Procedia 90:124–136. https://doi.org/10.1016/j.egypro.2016.11.177

Hussain SMS, Subbaramiah M (2013) An analytical approach for optimal location of DSTATCOM in radial distribution system. In: 2013 international conference on energy efficient technologies for sustainability. IEEE, pp 1365–1369

Gupta AR, Kumar A (2018) Optimal placement of D-STATCOM using sensitivity approaches in mesh distribution system with time variant load models under load growth. Ain Shams Eng J 9:783–799. https://doi.org/10.1016/j.asej.2016.05.009

Yuvaraj T, Ravi K, Devabalaji KR (2017) Optimal allocation of DG and DSTATCOM in radial distribution system using cuckoo search optimization algorithm. Model Simul Eng. https://doi.org/10.1155/2017/2857926

Jain A, Gupta AR, Kumar A (2014) An efficient method for D-STATCOM placement in radial distribution system. In: 2014 IEEE 6th India international conference on power electronics (IICPE). IEEE, pp 1–6

Gupta AR, Kumar A (2015) Energy savings using D-STATCOM placement in radial distribution system. Procedia Comput Sci 70:558–564. https://doi.org/10.1016/j.procs.2015.10.100

Sannigrahi S, Roy Ghatak S, Acharjee P (2019) Strategically incorporation of RES and DSTATCOM for techno-economic-environmental benefits using search space reduction-based ICSA. IET Gener Transm Distrib 13:1369–1381. https://doi.org/10.1049/iet-gtd.2018.5220

Shahryari E, Shayeghi H, Moradzadeh M (2018) Probabilistic and multi-objective placement of D-STATCOM in distribution systems considering load uncertainty. Electr Power Compon Syst 46:27–42. https://doi.org/10.1080/15325008.2018.1431819

Weqar B, Khan MT, Siddiqui AS (2018) Optimal placement of distributed generation and D-STATCOM in radial distribution network. Smart Sci 6:125–133. https://doi.org/10.1080/23080477.2017.1405625

Devi S, Geethanjali M (2014) Optimal location and sizing determination of distributed generation and DSTATCOM using particle swarm optimization algorithm. Int J Electr Power Energy Syst 62:562–570. https://doi.org/10.1016/j.ijepes.2014.05.015

Devabalaji KR, Ravi K (2016) Optimal size and siting of multiple DG and DSTATCOM in radial distribution system using Bacterial Foraging Optimization Algorithm. Ain Shams Eng J 7:959–971. https://doi.org/10.1016/j.asej.2015.07.002

Khorram-Nia R, Baziar A, Kavousi-Fard A (2013) A novel stochastic framework for the optimal placement and sizing of distribution static compensator. J Intell Learn Syst Appl 05:90–98. https://doi.org/10.4236/jilsa.2013.52010

Nazari MH, Khodadadi A, Lorestani A et al (2018) Optimal multi-objective D-STATCOM placement using MOGA for THD mitigation and cost minimization. J Intell Fuzzy Syst 35:2339–2348. https://doi.org/10.3233/JIFS-17698

Sanam J, Ganguly S, Panda AK, Hemanth C (2017) Optimization of energy loss cost of distribution networks with the optimal placement and sizing of DSTATCOM using differential evolution algorithm. Arab J Sci Eng 42:2851–2865. https://doi.org/10.1007/s13369-017-2518-y

Sannigrahi S, Acharjee P (2018) Implementation of crow search algorithm for optimal allocation of DG and DSTATCOM in practical distribution system. In: 2018 international conference on power, instrumentation, control and computing (PICC). IEEE, pp 1–6

Yuvaraj T, Devabalaji KR, Ravi K (2015) Optimal placement and sizing of DSTATCOM using harmony search algorithm. Energy Procedia 79:759–765. https://doi.org/10.1016/j.egypro.2015.11.563

Kumar D, Samantaray SR (2016) Implementation of multi-objective seeker-optimization-algorithm for optimal planning of primary distribution systems including DSTATCOM. Int J Electr Power Energy Syst 77:439–449. https://doi.org/10.1016/j.ijepes.2015.11.047

Tejaswini V, Susitra D (2020) Dragonfly algorithm for optimal allocation of D-STATCOM in distribution systems, pp 213–228

Khan B, Redae K, Gidey E et al (2022) Optimal integration of DSTATCOM using improved bacterial search algorithm for distribution network optimization. Alex Eng J 61:5539–5555. https://doi.org/10.1016/j.aej.2021.11.012

Montoya OD, Gil-González W, Hernández JC (2021) Efficient operative cost reduction in distribution grids considering the optimal placement and sizing of D-STATCOMs using a discrete-continuous VSA. Appl Sci 11:2175. https://doi.org/10.3390/app11052175

Rezaeian Marjani S, Talavat V, Galvani S (2019) Optimal allocation of D-STATCOM and reconfiguration in radial distribution network using MOPSO algorithm in TOPSIS framework. Int Trans Electr Energy Syst 29:e2723. https://doi.org/10.1002/etep.2723

Amin A, Kamel S, Selim A, Nasrat L (2019) Optimal placement of distribution static compensators in radial distribution systems using hybrid analytical-coyote optimization technique. In: 2019 21st international middle east power systems conference (MEPCON). IEEE, pp 982–987

Yuvaraj T, Ravi K, Devabalaji KR (2017) DSTATCOM allocation in distribution networks considering load variations using bat algorithm. Ain Shams Eng J 8:391–403. https://doi.org/10.1016/j.asej.2015.08.006

Taher SA, Afsari SA (2014) Optimal location and sizing of DSTATCOM in distribution systems by immune algorithm. Int J Electr Power Energy Syst 60:34–44. https://doi.org/10.1016/j.ijepes.2014.02.020

Noori A, Zhang Y, Nouri N, Hajivand M (2020) Hybrid allocation of capacitor and distributed static compensator in radial distribution networks using multi-objective improved golden ratio optimization based on fuzzy decision making. IEEE Access 8:162180–162195. https://doi.org/10.1109/ACCESS.2020.2993693

Roy Ghatak S, Sannigrahi S, Acharjee P (2018) Comparative performance analysis of DG and DSTATCOM using improved PSO based on success rate for deregulated environment. IEEE Syst J 12:2791–2802. https://doi.org/10.1109/JSYST.2017.2691759

Oda ES, El HAMA, Ali A et al (2021) Stochastic optimal planning of distribution system considering integrated photovoltaic-based dg and dstatcom under uncertainties of loads and solar irradiance. IEEE Access 9:26541–26555. https://doi.org/10.1109/ACCESS.2021.3058589

Zellagui M, Lasmari A, Settoul S et al (2021) Simultaneous allocation of photovoltaic DG and DSTATCOM for techno-economic and environmental benefits in electrical distribution systems at different loading conditions using novel hybrid optimization algorithms. Int Trans Electr Energy Syst 31:1–35. https://doi.org/10.1002/2050-7038.12992

Wolpert DH, Macready WG (1997) No free lunch theorems for optimization. IEEE Trans Evol Comput 1:67–82. https://doi.org/10.1109/4235.585893

Braik M, Sheta A, Al-Hiary H (2021) A novel meta-heuristic search algorithm for solving optimization problems: capuchin search algorithm. Neural Comput Appl 33:2515–2547. https://doi.org/10.1007/s00521-020-05145-6

Kennedy J, Eberhart R (1995) Particle swarm optimization. In: Proceedings of ICNN’95—international conference on neural networks. IEEE, pp 1942–1948

Hansen N, Müller SD, Koumoutsakos P (2003) Reducing the time complexity of the derandomized evolution strategy with covariance matrix adaptation (CMA-ES). Evol Comput 11:1–18. https://doi.org/10.1162/106365603321828970

Rashedi E, Nezamabadi-pour H, Saryazdi S (2009) GSA: a gravitational search algorithm. Inf Sci (Ny) 179:2232–2248. https://doi.org/10.1016/j.ins.2009.03.004

Mirjalili S, Lewis A (2016) The whale optimization algorithm. Adv Eng Softw 95:51–67. https://doi.org/10.1016/j.advengsoft.2016.01.008

Heidari AA, Mirjalili S, Faris H et al (2019) Harris hawks optimization: algorithm and applications. Futur Gener Comput Syst 97:849–872. https://doi.org/10.1016/j.future.2019.02.028

Hashim FA, Hussain K, Houssein EH et al (2021) Archimedes optimization algorithm: a new metaheuristic algorithm for solving optimization problems. Appl Intell 51:1531–1551. https://doi.org/10.1007/s10489-020-01893-z

Jin J, Rothrock L, McDermott PL, Barnes M (2010) Using the analytic hierarchy process to examine judgment consistency in a complex multiattribute task. IEEE Trans Syst Man Cybern Part A Syst Humans 40:1105–1115. https://doi.org/10.1109/TSMCA.2010.2045119

Liu Z, Wen F, Ledwich G (2011) Optimal siting and sizing of distributed generators in distribution systems considering uncertainties. IEEE Trans Power Deliv 26:2541–2551. https://doi.org/10.1109/TPWRD.2011.2165972

Ali MH, Mehanna M, Othman E (2020) Optimal network reconfiguration incorporating with renewable energy sources in radial distribution networks

Arcuri A, Fraser G (2013) Parameter tuning or default values? An empirical investigation in search-based software engineering. Empir Softw Eng 18:594–623. https://doi.org/10.1007/s10664-013-9249-9

Mohamed AW, Hadi AA, Mohamed AK, Awad NH (2020) Evaluating the performance of adaptive gaining sharing knowledge based algorithm on CEC 2020 benchmark problems. In: 2020 IEEE congress on evolutionary computation (CEC). IEEE, pp 1–8

Wilcoxon F (1992) Individual comparisons by ranking methods. In: Kotz S, Johnson NL (eds) Breakthroughs in statistics. Springer, New York, pp 196–202

Dolatabadi SH, Ghorbanian M, Siano P, Hatziargyriou ND (2021) An enhanced IEEE 33 bus benchmark test system for distribution system studies. IEEE Trans Power Syst 36:2565–2572. https://doi.org/10.1109/TPWRS.2020.3038030

Zhang D, Fu Z, Zhang L (2007) An improved TS algorithm for loss-minimum reconfiguration in large-scale distribution systems. Electr Power Syst Res 77:685–694. https://doi.org/10.1016/j.epsr.2006.06.005

Funding

Open access funding provided by The Science, Technology & Innovation Funding Authority (STDF) in cooperation with The Egyptian Knowledge Bank (EKB).

Author information

Contributions

MAT: conceptualization, methodology, formal analysis, data curation, investigation, writing—original draft, writing—review and editing. EHH: supervision, methodology, visualization, writing—review and editing, project administration. MHA: formal analysis, investigation, visualization, writing—review and editing. FAH: conceptualization, methodology, data curation, writing—review and editing. All authors read and approved the final paper.

Ethics declarations

Conflict of interest

The authors declare that there is no conflict of interest. They have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Tolba, M.A., Houssein, E.H., Ali, M.H. et al. A new robust modified capuchin search algorithm for the optimum amalgamation of DSTATCOM in power distribution networks. Neural Comput & Applic 36, 843–881 (2024). https://doi.org/10.1007/s00521-023-09064-0

DOI: https://doi.org/10.1007/s00521-023-09064-0