Abstract

In this paper, we propose an Intelligent Decision Support System (IDSS) for the design of new textile fabrics. The IDSS uses predictive analytics to estimate fabric properties (e.g., elasticity) and composition values (% cotton) and then prescriptive techniques to optimize the fabric design inputs that feed the predictive models (e.g., types of yarns used). Using thousands of data records from a Portuguese textile company, we compared two distinct Machine Learning (ML) predictive approaches: Single-Target Regression (STR), via an Automated ML (AutoML) tool, and Multi-target Regression, via a deep learning Artificial Neural Network. For the prescriptive analytics, we compared two Evolutionary Multi-objective Optimization (EMO) methods (NSGA-II and R-NSGA-II) when optimizing 100 new fabrics, aiming to simultaneously minimize the physical property predictive error and the distance of the optimized values when compared with the learned input space. The two EMO methods were applied to design of 100 new fabrics. Overall, the STR approach provided the best results for both prediction tasks, with Normalized Mean Absolute Error values that range from 4% (weft elasticity) to 11% (pilling) in terms of the fabric properties and a textile composition classification accuracy of 87% when adopting a small tolerance of 0.01 for predicting the percentages of six types of fibers (e.g., cotton). As for the prescriptive results, they favored the R-NSGA-II EMO method, which tends to select Pareto curves that are associated with an average 11% predictive error and 16% distance.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In the textile and clothing industry there is a frequent need to design new fabrics in order to meet the fashion market trends. The Fabric Design (FD) process, often termed as fabric engineering, begins with the definition of a new prototype design, which includes several components that affect the physical properties and aesthetics of the textile product. Initially, the textile designer often uses her/his experience and intuition to select fabrics that have been previously manufactured and that are similar to the desired product specifications. Aiming to reach these specifications, she/he then reshapes the selected fabric by altering one or more of the design elements (e.g., the type and number of yarns used). Next, the prototype goes into a production stage, in order to check if the desired physical properties are met. If not, then a new prototype design iteration is attempted, in which the designer sets a new fabric configuration that is then produced and tested. Typically, success is reached only after a larger number of fabric prototype developments.

The development of the fabric prototypes involves specialized equipment and personnel, manufacturing lines and several cycles of adjustments before reaching the final prototype (the order to be mass-produced), making it a time-consuming and expensive process [1]. Each time a prototype is produced, laboratory quality tests are required, to assure regulatory compliance of the physical properties [2]. In some cases, there are also validation sessions with customers, which might require the production of replicas of the prototype in a larger quantity.

This research is set within a R &D project involving a Portuguese textile company that is being transformed under the Industry 4.0 context. The company expressed the need for an Intelligent Decision Support System (IDSS) that could enhance the design of new fabrics, aiming to reduce the number of fabric development attempts, which would highly reduce costs and fabric development time. An IDSS is a decision support system that incorporates Artificial Intelligence (AI) methods, such as Machine Learning (ML) and metaheuristics, to improve decision-making [3].

As shown in Sect. 2, there are few research works that address the FD task. In particular, there are only two studies that combine predictive and prescriptive analytics for FD [4, 5] and these studies contain several limitations, such as: optimization of a reduced set of fabric design features and number of designed fabrics; usage of a single ML algorithm; and use of a single objective optimization. In a preliminary previous work [6], we have obtained some interesting fabric property prediction results by using an Automated ML (AutoML). However, we did not address two important FD tasks that were requested by the Portuguese textile company: the prediction of the final textile composition (e.g., % of cotton) and the search for the best set of FD features. Aiming to provide a full FD solution, in this work we propose a novel data-driven IDSS that is based on the Adaptive Business Intelligence (ABI) [7] concept, which assumes a combination of predictive models, based on ML, with prescriptive analytics, based on metaheuristics.

In particular, the proposed IDSS targets two nontrivial predictive goals: first ML goal—the estimation of four relevant fabric physical properties (bias distortion, warp and weft elasticity, and pilling); and second ML goal—the prediction of the final textile composition (e.g., % of cotton and % of polyester). The physical property ML model can be used as a substitute for the fabric production and laboratory testing, thus reducing time and costs. As for the second ML goal (within our knowledge, first attempted here, see Sect. 2), it allows to automatically compute the final textile composition from the same set of inputs, which is a relevant information to be shown to the textile clients and customers. Both predictive goals are modeled as regression tasks that depend on several design inputs (e.g., the type of yarns). To solve these goals, historical data, including around 8.6 thousands of fabric creation records, are used to adapt and compare two ML main approaches: Single-Target Regression (STR), based on an AutoML tool [8]; and Multi-Target Regression (MTR) [9], based on a deep Multilayer Perceptron Artificial Neural Network (ANN) [10].

As for the IDSS prescriptive analytics, they use the first ML goal best prediction models. The aim is to search for the model inputs that match the desired textile properties, thus minimizing the ML predictive error. However, when adopting only this objective, the optimization methods often select inputs that are distant from the learned input space, performing a prediction that is more an extrapolation than an interpolation, thus less reliable. To solve this issue, we also minimize the input vector distance when compared with the ML training dataset. Both objectives are simultaneously optimized by adapting two Evolutionary Multi-Objective Optimization (EMO) methods [11]: NSGA-II and R-NSGA-II. The EMO methods are compared by using 100 additional records of designed fabrics (not used for the training and testing of the predictive ML models). The EMO result is a Pareto front of fabric designs, each related with a particular predictive error and input distance trade-off. For the optimized solutions, the IDSS also presents the final textile composition values by using the second ML goal predictions.

The rest of the paper is organized as follows. Section 2 presents the related work. Section 3 describes the fabric design task. Section 4 presents the textile data and the proposed IDSS. Section 5 details the experiments conducted and the obtained results. Finally, Sect. 6 discusses the main conclusions and also presents future research directions.

2 Related work

In the textile industry, even for the production of basic fabrics or garments, a large amount of data is typically created and stored (e.g., yarn characteristics, machine settings and quality tests). In recent years, the increase in the volume of data being stored has enabled AI tools to enhance textile production processes. In effect, several AI methods were applied successfully to the industry, with different approaches being employed to optimize different textile stages. Table 1 summarizes the most relevant related works, assuming a chronological order and the following characterizing elements: TS—the textile stage addressed by the study, Data—the type of data used, Pred.—if used, the type of predictive ML approach, MH—the type of metaheuristic used, Obj.—a description of the optimization objective, and M—the number of objective functions used by the metaheuristic (if \(M>2\) then the study assumes a Multi-Objective approach).

Most of the analyzed studies are focused on the manufacturing of fabrics or garments (as shown in column TS of Table 1). There are only four-related works that directly address the FD process. When compared with our approach (last row of Table 1), the FD studies only optimize a reduced set of fabric features (F), ranging from four to six inputs. For example, Majumdar et al. [5] only optimized the loop length, carriage speed, yarn input tension and yarn count. In contrast, our work assumes a richer and more complex set of \(F=44\) input parameters (see Sect. 4.1), reflecting diverse elements such as machine settings, warp and weft yarns and finishing operations. Within our knowledge, this work is the first to use finishing operations data (e.g., dyeing, washing, drying). Moreover, our study targets four desired physical properties (P), which is a higher number when compared with the related works that range from one [13] to three targets [4, 5, 16]. In addition, only two of the related FD works assumed a combination of predictive and prescriptive analytics [4, 16]. Regarding the number of optimized fabrics (V), the FD works optimize a reduced number of new fabrics, ranging from 1 to 4. In contrast, this work optimizes a substantially higher number of 100 new fabrics. Furthermore, none of the related works with predictive approaches (column Pred from Table 1) have modeled the final textile composition (e.g., % of cotton, % of polyester) based on the fabric design features, which is addressed in our work. Regarding the two FD studies that performed a prediction of the physical properties, they used only one ML algorithm, a simpler ANN structure composed of a Multilayer Perceptron with only one hidden layer. In a previous work [6], we have obtained interesting fabric property prediction results by using an AutoML STR modeling. In this research, we compare this AutoML STR approach with an alternative MTR based on a deep learning ANN. Finally, while all four FD studies have employed metaheuristics in order to optimize the fabric design elements, only one work adopted a EMO approach [4], via the NSGA-II method. In this paper, we propose a novel multi-objective approach that simultaneously minimizes the physical properties predictive error and also the distance of the selected inputs when compared with the learned input space. To perform the multi-objective task, we compare two EMO algorithms, NSGA-II and R-NSGA-II.

3 Problem statement

This work was carried out with collaboration of a Portuguese textile company that produces high-quality fabrics, based on natural, synthetic, artificial and recycled fibers, focusing on polyester, viscose and elastane blends. The company presents a vertical production system comprised of the following areas: Research and Development, Spinning, Dyeing, Twisting, Weaving and Finishing, with a production capacity of 700,000 ms per month. Currently, the development of new fabrics consists of several trial-and-error cycles that are executed until the client requirements are met. This process is heavily sustained in the knowledge and intuition of the textile designer. When a designer leaves the company, all this knowledge is lost.

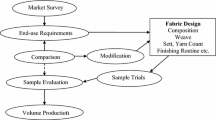

Figure 1 presents the process flow for the development of a new fabric. It starts with the selection of a previously developed fabric that is similar to new fabric physical property requirements. Based on the designer experience, she/he will then alter several parameters of the selected fabric, aiming to obtain the desired characteristics of the new fabric. Next, a fabric physical prototype is produced and inspected for its quality by running several laboratory tests. If the prototype passes the tests, it is presented to the client. Often, this is not the case and thus a new cycle is executed, where the designer attempts a new fabric design based on the previous prototype tests.

Typically, a larger number of design cycles is needed (e.g., 20) until the fabric is ready for mass production, with each cycle requiring costs and time.

Under this context, is essential to reduce the number of fabric development cycles. This work proposes a data-driven IDSS approach to solve this task, which first uses ML to model the fabric physical properties and final textile composition, and then performs an EMO to select the best set of fabric design values based on the previously obtained ML models. This is a nontrivial task due to several reasons. Firstly, there is a high number of fabric design features combinations, thus the search space is quite large. Secondly, there are interactions between the features, where a desired physical property change might only occur if there are simultaneous alterations in multiple design elements. Thirdly, producing changes that improve one physical property can prejudice other properties. For example, increasing the fabric elasticity can also increase the fabric bias distortion. Fourthly, the final composition of the fabric is a highly relevant information for textile clients and customers (e.g., % of cotton). Yet, it is not trivial to compute the precise final composition for a new fabric, since it can assume different types of yarns (each with a particular thickness) that can be repeated in different ways in the weft and warp elements of the fabric. In effect, the textile composition is often measured by using the produced fabric prototypes, assuming a manually counting of the number of weft and warp yarns for each fiber type contained in a fabric square of 2.54 cms.

4 Material and methods

4.1 Fabric data

The data was provided by a Portuguese textile company that creates and produces fabrics for fashion and clothing collections. We collected data from two main data sources: the Enterprise Resource Planning (ERP), which included all the data related to fabrics, such as yarns, finishing operations, machine settings and identification codes, and the laboratory testing database, which contained the fabric quality tests performed between 2012 and 2019. We implemented then an Extraction, Transformation, Load (ETL) process to merge the different data sources and clean the data. The ERP data included 34,998 fabric examples with 2391 features per row. Using manual analysis and domain expert knowledge, the ERP features were filtered into a total of 805 potential relevant attributes by removing irrelevant data (e.g., designer and customer identification codes) and data with a low variance (thus almost constant), such as the elasticity type of the fabric. Since the number of potential attributes was still high, we then performed several feature selection iterations by using domain knowledge (retrieved from textile experts) to discard more irrelevant features (e.g., color, falling weight and rapport), ending with 68 features. The final set of selected attributes is associated with a total of 8650 records, and it was obtained by executing several ML process iterations that involved the company designers, as described in our previous work [6]. In this work, 100 randomly selected fabrics are used to execute the prescriptive experiments. The remainder 8550 examples are used to perform the predictive experiments (e.g., external 10-fold cross-validation).

Table 2 summarizes the adopted set of fabric attributes in terms of their Attribute name, Description and Range of the domain values.

The first 13 rows of Table 2 are related to a fixed set of design attributes whose values can be changed by the fabric designer. They are common to all fabrics and therefore are used to create the 44 inputs of our predictive and prescriptive models (as explained below). The remainder rows of Table 2 are used as the targets of the predictive models. The physical properties are measured by laboratory tests that were executed on the final fabric prototypes (accepted for mass production). It should be noted that each fabric can have one or more distinct tests. For fabrics that had the same test repeated with a slight different value, we opted to compute the average values in order to get a single number per fabric and test. The analyzed dataset contained 15 different tests but only a tiny portion of the fabrics (27) had the full 15 test values. In this work, we model the most frequently measured physical properties, which correspond to the four tests shown in Table 2. Finally, the last 6 rows Table 2 denote the final textile composition attributes, representing the percentage of the 6 types of most common fibers that are used in the yarns. Each textile composition type includes a combination of the fiber percentages, where the sum equals to 100%. For instance, the most popular composition type includes 63% of PES, 27% of CV, 7% of CO and 3% of EL. In the analyzed dataset, there are a total of 95 different types of textile compositions that include the six types of fibers, corresponding to 93% of fabrics produced by the company. In this work, the textile composition prediction is also modeled as a regression task due to two main reasons. Firstly, it avoids dealing with a large multi-class (95) classification task that would produce poor results for the least represented classes. Secondly, it allows the reuse of the regression algorithms already developed for the physical property goal.

Figure 2 exemplifies some of the design features related to real textile fabrics. The left of Fig. 2 presents a yarn composed of four wool fibers. The middle of Fig. 2 presents a fabric where it is possible to distinguish between the yarns that compose the weft (horizontal yarns) and the ones that compose the warp (vertical yarns). The right of Fig. 2 presents a wool fabric with a higher number of reed dents per centimeter making the spaces between the weft and warp yarns much narrower.

Figure 3 presents two different components of a loom machine. In the left of Fig. 3, the coils contain the warp yarns used to produce the fabric. The right of Fig. 3 presents the loom that will raise alternate warp yarns to create a shed using a harness, which has a series of wires called heddles and the warp yarns pass through the heddle eyelets. The weft yarn is inserted through the shed by a carrier device. A single crossing of the filling from one side of the loom to the other is called a pick. The reed pushes each weft yarn against the portion of the fabric that has already been formed, resulting in a firm and compact fabric.

Figure 4 further exemplifies how some of the design features are related with the textile fabric and machine settings. Structurally, a fabric is made of two primary components: warp and weft. The left of Fig. 4 presents a view of a fabric that contains interlaced yarns related with the warp (blue color) and weft (gray color) components used in the weaving. In the example, the fabric has one type of yarn for the warp and another for the weft, both repeated five times. Since the warp is the set of yarns that is placed on the loom, it contains a minimum number of warp yarns to support the tension applied during the weaving process. A higher number of yarns per centimeter implies a better fabric quality. To obtain the number of finished threads per centimeter (t_pol), the designer must define how many warp yarns will be put in a centimeter of the fabric, and for the p_pol attribute, she/he defines how many weft yarns will be per centimeter. To obtain the finished width, the distance between the first and last warp yarn of the fabric is measured. The top right of Fig. 4 presents the weave design, where the spaces between the yarns are eliminated and only the squares where warp yarns are over the weft yarns are shown. This distribution defines the type of the fabric, which in this case is a twill. The bottom left image of Fig. 4 presents a representation of a loom machine, where the weft yarns (gray color) are inserted in a loom, going through the reed with a specific width, that will press the warp (blue color) that is inserted in between the warps yarn threads, according to the structure implemented in the weave design. The reed has two main characteristics: the number of the reed dents per centimeter (denting, in this case 5) and the reed width (in this case 15), which is the distance from the first reed dent to the last one. When designing the fabric, it is necessary to define how many of the weft yarns will pass in each dent (attribute ends/dent).

The warp and weft elements of the fabric can contain a variable combination of yarns, ranging from 1 to 21 in our dataset. Moreover, each fabric is processed with a varying sequence of finishing operations, ranging from 1 to 47 in our data. In the analyzed data, the most commonly used yarns assume combinations of the six fibers types that appear Table 2 (e.g., cotton, viscose, elastane), while the prevalent finishing operations are drying, finishing and dyeing. In order to represent these repeated elements, in previous work [6], we performed several feature engineering experiments, resulting in the final representation adopted in this work. For each fabric, the representation assumes a sequence with a maximum of \(\textrm{max}_y=6\) yarns for warp and then another similar sequence of \(\textrm{max}_y=6\) yarns for weft, allowing to encode 99.7% of the fabrics without any information loss. Each yarn is represented by two elements: a numeric unique code and the number of times the yarn appears in that specific element (warp or weft). When one element does not have up to \(\textrm{max}_y=6\) yarns, a zero padding is used to fill the empty yarn values. A similar approach was adopted to represent the finishing operations, defined in terms of a sequence of \(\textrm{max}_{op}=10\) operations, which represents 85% of the analyzed fabrics. Also, if a fabric is not processed with 10 operations, then a zero padding is used to fill the empty values. Thus, a total of 44 fabric design inputs are adopted by the predictive models, corresponding to: 6\(\times \)2 values to denote the warp and weft yarns (total of 24 inputs), 10 values to code the finishing operations, and the other 10 attributes from Table 2 (e.g., finished width, weave design). We note that in [6] several ML feature selection experiments were executed but worst predictive results were obtained when removing some of the 44 fabric input elements (e.g., finishing operations).

In terms of data preprocessing, the three categorical attributes in Table 2 (weave design, yarn code and op) were first transformed into numeric inputs. Then, all numeric inputs were standardized to a zero mean and one standard deviation (Z-score transformation). These transformations are needed for three main reasons. Firstly, several of the explored ML algorithms (e.g., ANN) only work with numeric inputs. Secondly, the ML is often more efficient when all inputs are standardized [29]. Thirdly, the computation of distance measures when comparing two sets of inputs is much simpler when all inputs are numeric and standardized (e.g., usage of the Euclidean distance).

Given that some of the categorical attributes present a high cardinality (e.g., the yarn code contains 1,730 distinct levels), we opted to transform all nominal attributes by using the Inverse Document Frequency (IDF) function [30]:

where \(x_i\) denotes a data attribute, n is the total number of instances and \(f_l\) is the number of occurrences (frequency) of the level \(l \in x_i\) in the analyzed data. The IDF mapping is computed using only training data and then stored, in order to enable a future encoding for unseen data. If a new level appears on the unseen data, it is replaced by the highest ln(n) value present on the training data, which represents the most infrequent IDF value.

The advantage of the IDF method is that encodes a nominal attribute into a single numeric value, where the levels with a higher frequency are set near 0 (but with a larger difference between them), while the less frequent levels are coded close to each other and near a IDF\((x_i)\) maximum value. Thus, more frequent levels are more easily distinguished by the ML algorithms. And while the one-hot encoding is a popular categorical transform, assigning one Boolean value per nominal level, its usage would highly increase the input space, resulting in a very sparse representation that would enlarge the computational memory and effort required by the ML algorithms [31]. Moreover, given that IDF produces a single numeric value for each categorical input, the computation of input distance measures is much straightforward than when adopting the one-hot method.

4.2 Intelligent decision support system

The proposed IDSS consists of three main modules (Fig. 5): data extraction and processing, predictive and prescriptive. All modules were implemented by using the Python programming language. The first module is responsible for extracting the relevant textile data, converting it into the adopted fabric design representation (with 44 inputs), leading to a stored fabric design database.

The prediction module receives the data, which is then divided into training and test sets, according to the adopted cross-validation method. Then, the features are preprocessed (IDF and standardization). Next, an ML algorithm is trained and evaluated (using validation data), allowing to perform a model selection. The best ML per task is stored, allowing a later computation of fabric physical properties (first ML goal) and textile composition (second ML goal). More ML modeling details are provided in Sect. 4.3.

The prescriptive module performs two main operations. It can search for previously manufactured fabrics that are similar to some desired product specifications. This search is executed by iterating all data examples, aiming to minimize the Euclidean distance between the desired values and the historical data, assuming a standardized multidimensional space. It can also execute a EMO search for the best set of design inputs, returning a Pareto front of solutions. Section 4.4 presents further EMO details.

The proposed IDSS includes also a friendly dashboard that sets the interaction with the textile designer. This interaction can assume several possibilities. First, the designer can inspect the historical data and search for the most similar fabrics, when assuming a desired set of physical properties. Second, the selected fabrics can be changed in some of their components, with the IDSS presenting the predicted physical properties and fabric composition. Third, a set of selected fabrics can be fed into the EMO search, thus using a seeding procedure to generate the initial population (instead of a purely random process). Fourth, Pareto front of solutions returned by the EMO can be further inspected, allowing to select one or more interesting candidate designs for prototype production. Fifth, once more prototypes are produced and tested, the predictive modules can be retrained using the recently acquired data. Sixth, if none of the suggested EMO designs are accepted by the designer, then the search can be rerun by reshaping some of previously obtained Pareto solutions and then seeding them into the next EMO population. All these interaction scenarios were found interesting by the analyzed textile company. However, in order to obtain an objective evaluation of the EMO results (independent of a specific designer), in this work we assume a pure automated IDSS usage, with no designer interaction. First we evaluate the predictive models (Sect. 4.3). After selecting the best ML approaches, we then evaluate the EMO methods (Sect. 4.4).

The proposed IDSS can be integrated into the textile information system by deploying it in the company computer server. If an optimized IDSS fabric design is accepted by the textile designer then a textile production order is created. Currently, any production order (manually designed or IDSS assisted) involves both automated and manual machine settings. For instance, the warp yarn coils (left of Fig. 3) are manually inserted but once the yarns are fed into the loom (right of Fig. 3), the fabric production process is automated. As mentioned in Sect. 1, we note that the main IDSS goal is to reduce the number of fabric prototype development attempts and not the fabric production order execution time. Nevertheless, in future it is possible to further optimize the production order execution time by adopting more Industry 4.0 components (e.g., usage of automated and digitally connected machines).

4.3 Predictive modeling

In this work, the two goals (physical properties and textile composition) contain multiple regression outputs for the same number of inputs. In particular, there are four targeted physical properties and six types of fiber percentages (Table 2). Under this context, performing a MTR can be interesting modeling approach, since it requires a single ML model to simultaneously model several equally important targets [9]. Thus, less effort is required to design and maintain a single MTR model when compared with several STR ones. In this work, we adopt a deep multilayer ANN, which is a natural ML model for MTR, since it can directly model several regression targets without requiring any learning algorithm changes by simply assigning a distinct output node for each target. Another interesting ML modeling possibility is to adopt an AutoML tool, which automates the search for the best ML algorithm and its associated set of hyperparameters. Thus, it alleviates the ML design, allowing future model updates to new data without a human effort. In this work, we adopt the H2O AutoML tool [32], which achieved good results in a recent AutoML benchmark study [8]. Since the H2O AutoML only performs a STR, the H2O tool is set to generate a distinct ML model for each output target (e.g., four distinct models will be searched for the first ML goal).

For the MTR experiments, we implemented a ANN using the Keras Python module [33]. Tabel 3 summarizes the main characteristics of the ANNs implemented for the MTR experiments. The ANN consists of a fully connected (thus dense) multilayer perceptrons [10]. Let \((I,L_1,...,L_h,O)\) denote a vector with the layer sizes, where I is the input layer size, h is the number of hidden layers, and O is the output layer. In this work, the ANNs assume a total of \(I=44\) fabric design inputs (see Sect. 4.1), while the number of outputs depends on the number of MTR tasks (\(O=4\) for the four physical properties; and \(O=6\) for the six textile composition percentages). Some preliminary experiments were held to set the ANN structure (in terms of number of hidden layers and their layer sizes) using older textile data and triangular-shaped multilayer perceptrons, in which each subsequent layer size is smaller [34]. As the result of these experiments, the MTR ANN was set to use the ReLU activation function under two types of structures: first ML goal (physical properties) (44, 28, 20, 12, 4) (\(h=3\) hidden layers); and second ML goal (textile composition) (44, 18, 12, 6) (\(h=2\) hidden layers). The popular ADAM optimizer was used to adjust the ANN weights during the training phase [35], assuming the Mean Absolute Error (MAE) loss function, an early stopping (with 10% of the training data being used as the validation set) and maximum of 1000 epochs.

Regarding the STR experiments, the adopted AutoML tool was configured to automatically select the optimal regression model and its hyperparameters for each validation fold data by minimizing the MAE measure, using an internal 10-fold cross-validation applied over the training data. The AutoML was run with its default configuration values, including a maximum execution duration of 1 h. We used the same ML search setup adopted in [8], which provided competitive results for the H2O tool when compared with seven other AutoML frameworks (e.g., TPOT, AutoGluon). Thus, a total of six different ML algorithms are automatically searched by the AutoML tool for each regression task: Random Forests (RF), Extremely Randomized Trees (XRT), Generalized Linear Models (GLM), Gradient Boosting Machines (GBM), XGBoost (XG) and Stacked Ensembles (SE). The H2O tool utilizes a grid search to set the hyperparameters for GLM (1 hyperparameter), GBM (9 hyperparameters), and XG (10 hyperparameters), while RF and XRT are configured using their default hyperparameters. As for the SE, the tool uses GLM as the second-level learner and compares three distinct ensemble methods: one with the best model of each individual approach (e.g., the best XG model), one with the best 100 models and one with all trained models.

To evaluate the ML models for both regression tasks, an external 10-fold cross-validation [29] was implemented, which is a standard ML evaluation procedure (e.g., used in [36]). The regression quality was assessed by using the MAE and the Normalized MAE (NMAE) metrics. The NMAE measure presents a standardized MAE result, thus scale independent, showing the error as a percentage of the response range. The MAE and NMAE errors are calculated as [37]:

where \(\mathcal {T}\) denotes the test set with a cardinality of \(\#\mathcal {T}\), \(y_{i,j}\) and \(\hat{y}_{i,j}\) represent the desired and predicted value for output target i and test example j, and \(\max {(y_i)}\) and \(\min {(y_i)}\) corresponds to the highest and the lowest values of the target \(y_i\) (considering all available data). For both metrics, the closer the value is to zero the better is the regression.

Regarding the specific second ML goal, both STR or MTR prediction models can return values such that the sum of the six fiber percentages is not equal to 100%, resulting in unfeasible textile compositions (this direct normal output usage is termed here as the N approach). To solve this issue, we explore two output post-processing strategies that return feasible compositions. The first strategy assumes a proportional normalization (P), where first all negative values are replaced by zero and then transformed according to:

where \(\hat{y}'_{i,j}\) is the transformed value of the predicted fiber target \(\hat{y}_{i,j}\), \(i \in \{1,2,...,K\}\) (one value for each fiber type, thus \(K=6\)). The second strategy works similarly to the previous one except that the zero or positive values are now transformed by using the softmax (S) function:

To select the best strategy and provide a final composition class value, we compute the overall classification accuracy for a given low tolerance T value, where a class composition is considered correct if all six fiber percentages are correctly predicted within the T absolute tolerance value. This measure is based on the Regression Error Characteristic (REC) curve concept, which allows an easy visual comparison of different prediction methods [38]. In this work, we selected 10 small tolerance values within the range \(T\in \{0.01,0.02,...,0.10\}\).

4.4 Prescriptive modeling

A possible solution \(s=(s_1,s_2,...,s_I)\) is represented as a sequence of \(I=44\) numeric values (as explained in Sect. 4.1), assuming the IDF standardized input space, as it allows to provide the same importance to each value when computing distance measures. Each \(s_i\) value is set within the range \([\min {(s_i)},\max {(s_i)}]\), where \(\min {(s_i)}\) and \(\max {(s_i)}\) denote the minimum and maximum values of the IDF standardized space using the training data. We repair solutions in two different types of design features: yarns and finishing operations. A yarn is represented by a code and its number of repetitions. Thus, when one of the two values is empty (thus 0 in the original space), then the other value is also set to zero. For instance, if a yarn code is 0 (“empty”), then the number of its repetitions is also set to 0. Moreover, we also assure that both the warp and weft have at least one yarn, set to the first yarn type with one repetition (if needed). In case of the finishing operations, any zero intermediate value (no operation followed by an operation) is shifted right, such that the initial part of the sequence contains concrete operating values. Furthermore, since a fabric needs to be processed by at least two finishing operations, we set the lower bound as \(\min {(s_i)}>0\) (in the original space) for the first two finishing operations values of the sequence.

The EMO goal is to simultaneously minimize the absolute error predictive error (\(f_p\)) and the training distance (\(f_d\)) associated with a candidate solution s. Let \(\mathcal {D}\) denote the data used to train \(\mathcal {M}_i\), where \(\mathcal {M}_i\) is the selected ML method to predict a desired physical property i, under the mapping: \(i=1\)—bias distortion; \(i=2\)—warp elasticity; \(i=3\)—weft elasticity; and \(i=4\)—pilling. Let \(\mathcal {Y}_i{(s)}\) and \(\hat{\mathcal {Y}}_i{(s)}\) denote normalized (using a min-max normalization within the [0,1] range) desired target and predicted values when using model \(\mathcal {M}_i\) and the input solution s. In this work we assume normalized objective functions, set within the [0,1] range. This facilitates the EMO result analysis, since each computed objective can be interpreted as a percentage value:

where \(f_p(s)\) corresponds to the MAE error for all four desired properties and \(f_d(s)\) is the minimum Euclidean distance of solution s when compared with the training set \(\mathcal {D}\). For the second objective, the term \(\sqrt{I}\) corresponds to a high distance value (deviation of 1 for each of the analyzed \(I=44\) inputs). As explained in Sect. 1, ideally both \(f_p\) and \(f_p\) should present lower values. A low predictive error (\(f_p\)) means that the desired target properties are reached, while a small distance (\(f_d\)) reflects that the selected inputs (s) are close to known input space, meaning that the predictions should be more reliable.

Two EMO approaches are compared in this work: NSGA-II and R-NSGA-II, as implemented in the pymoo Python module [39]. The Non-dominated Sorting Genetic Algorithm (NSGA)-II, adopts several distinct features (e.g., elitist strategy, fast crowded distance estimation procedure) and it is considered a parameterless approach [40]. When compared with other EMO algorithms, such as based on the hypervolume measure (e.g, SMS-EMOA), NSGA-II tends to obtain competitive results when only two or three objectives are optimized [41]. As for R-NSGA-II, it consists of a more recent NSGA variant that assumes a modified survival selection, which is based on one or more user defined reference points [42].

The EMO methods return a Pareto curve of optimized solutions, containing a set of trade-off points. Once the Pareto curve is optimized and for the user selected trade-offs, the IDSS computes the prediction of the textile composition (using the second ML goal prediction models), such that the user can further inspect the quality of the obtained solutions. In order to obtain a single measure per Pareto curve, we adopt the hypervolume measure, which is computed by defining a baseline reference point (anti-optimal) [43]. The higher the hypervolume value, the better is the Pareto curve optimization.

For the evaluation of the EMO methods, we use the selected 100 external fabric records, that are not used in the predictive experiments. The best physical property prediction models (as shown in Sect. 5.1) are selected and retained with all predictive experiment data (8550 records). The EMO methods are then run by adopting an initial random population of individuals. We highlight that each EMO method is executed for \(V=100\) times (one run for each new targeted fabric), which is a number that is substantially higher when compared with the state-of-the-art FD works (see Sect. 2).

5 Experiments and results

All experiments were conducted using code written in the Python programming language. The experiments were executed on a personal computer with a Intel Core i7 2.20GHz processor, with 6 cores, a NVIDIA GeForce GTX 1050 Ti, using a Windows operating system.

5.1 Physical property prediction results

Table 4 presents the physical property predictive performance results. For each predicted task (Property), we compare the two STR (AutoML) and MTR (deep ANN) approaches. The results are shown in terms of the mean MAE and NMAE values, associated with its student-t 95% confidence intervals, for the external 10 folds, with the best values being highlighted by using a boldface text font (statistical significance is measured by executing a paired t-test). When comparing both approaches it becomes clear that STR is the best regression strategy, achieving the lowest regression errors for all four targets, with the NMAE values ranging from 4.05 to 11.22%. Thus, the STR approach, as provided by the AutoML modeling, is the selected regression approach that is adopted by the EMO methods.

For demonstration purposes, Fig. 6 shows the Regression Error Characteristic (REC) curves for the four targets and the 6th external k-fold iteration. Each REC curve plots the percentage of correctly predicted examples (y-axis) for a given absolute error tolerance (x-axis) [38]. The curves confirm that the STR presents slight better results for the pilling and bias distortion tests, and much better results for both elasticity tests. For example, for the warp elasticity, the STR method correctly predicts around 80% of the examples when a small tolerance of \(T=0.1\) points.

To complement the visualization of the obtained STR results, Fig. 7 presents the scatter plots of the measured (x-axis) versus the predicted values (y-axis), complemented with coefficient of determination (R\(^2\)) for the specific 6th external fold. This metric has a positive orientation, that is, the closer the result is to 1 the better are the predictions.

Visually, it can be seen that the predictions for the elasticity warp and elasticity weft tests (top of Fig. 7) are close to the ideal prediction diagonal line and both present very good R\(^2\) values of 0.90 and 0.87 respectively. While the same effect is not that visible for the pilling predictions (bottom right of Fig. 7), it should be noted that the real values are mainly distributed in 6 clusters, and the predicted values variate within each cluster, resulting in an interesting R\(^2\) of 0.64. The bias distortion test presented the lowest R\(^2\) (0.32), with a higher concentration of values between 1.5 and 4, which confirms that this property is more difficult to be predicted. Nevertheless, the REC curve (bottom left plot of Fig. 6) shows that, for the same 6th external fold, the selected STR prediction model for the bias distortion is still capable of predicting around 50% of the examples for a 0.1 tolerance and around 80% of the examples for a 0.25 tolerance value.

5.2 Textile composition prediction results

Table 5 presents the predictive performance results of the test data regarding the fabric composition. For each predicted task (%Fiber), we compare the two regression approaches (STR, MTR). The results are shown in terms of the mean MAE values (with their student-t 95% confidence intervals), for the external 10-fold test sets, with the best values being highlighted by using a boldface text font. It should be noted that only the MAE error is computed, since the targets are already scaled within [0,1], thus the NMAE values are identical to MAE in this case.

Similarly to the physical property results, the STR approach presents lowest MAE values for all six types of fibers, ranging from 0.002 to 0.01. It also obtains the lowest average MAE value over all six fibers (difference of 0.007 points when compared with MTR).

Additional comparative results are shown in Table 6, which presents the composition classification accuracy (in %) when assuming a small tolerance (T). For each regression approach (STR and MTR), we compare the three output normalization strategies (N, P and S), with the best results for a given tolerance value being highlighted by using a boldface text font.

The results clearly favor the STR regression approach. As for the output normalization strategies, all three strategies provide high classification accuracy values even for very low tolerances (e.g., 87% when \(T=0.01\%\)). Given that there is a large number of different composition classes (95), this is a very interesting result, confirming the value of the proposed ML textile composition approach. In particular, the second output normalization strategy, based on proportions (P), obtained a slight increase of 1% point for the tolerance values of \(T=0.07\) and \(T=0.10\). Given that when compared with the no normalization strategy (N), it presents the additional advantage of always showing feasible compositions, the STR approach with the P output transformation method was selected to be used in the proposed IDSS system.

5.3 Fabric input optimization results

The selected 100 external fabrics for the EMO experiments (not used in the predictive experiments) present the following physical property ranges: Bias Distortion—[1.1, 8.9]; Elasticity Warp—[5.2, 45.5]; Elasticity Weft—[5.4, 44.3]; and Pilling—[2, 4.5]. A distinct EMO execution is performed for each target fabric, thus 100 runs were executed for each of the tested NSGA-II and R-NSGA-II methods. The initial populations were randomly generated (as explained in Sect. 4.4). Moreover, all solutions were repaired (e.g., removal of yarn codes when there are no repetitions) before computing the two objective functions.

The NSGA-II and R-NSGA-II algorithms were configured with a check procedure that eliminates duplicates, ensuring that the mating produces offspring that are different from themselves and the existing population regarding their design space values. The NSGA-II was set up with the default values provided by the pymoo Python module: population size of 100, two-point crossover with 90%, polynomial mutation probability of 20%. After some preliminary experiments, in which the hypervolume measure was monitored for 5 fabrics, the total number of generations was set to 200 generations, with an average execution time of 1750 s per fabric. The R-NSGA-II method was set up with the same configuration. Given that in this domain it is highly relevant to obtain a low predictive error and a low input to training set distance, two reference points were adopted close to the ideal (0,0) point: (0.05, 0.01) and (0.01, 0.05). The goal is to guide the R-NSGA-II to obtain trade-off points near the (0,0) region. R-NSGA-II contains an additional parameter, \(\epsilon \) that was set to a low value (0.01), which increases the search pressure to select points closer to the two reference points. The R-NSGA-II had an average execution time of 1852 s per fabric. Following a similar reasoning to the one used for the setting the R-NSGA-II reference points, we defined the baseline reference point for the hypervolume metric as (0.3, 0.3) when evaluating the EMO methods. For each optimized fabrics, we discard the solutions that are outside that area and calculate the hypervolume.

Table 7 presents the obtained hypervolume performance results for the two EMO methods (% values, where 100% denotes the perfect Pareto curve). For each Method, we present the mean hypervolume value associated with its student-t 95% confidence interval (column Mean), and the Median and its associated nonparametric Wilcoxon-Signed-Rank 95% confidence interval (since this interval is not symmetric, it is fully shown in a different column) [44, 45]. Statistical significance is measured by executing paired t-test (for the Mean values) and Wilcoxon tests (for the Median values). When comparing the EMO methods, it becomes clear that the R-NSGA-II is the best approach. It outperforms the NSGA-II in both aggregation measures, with a difference of around 5 points for the mean and around 10 points for the median.

To complement the hypervolume results, for each optimized Pareto front, the nearest points to the ideal (0,0) solution were computed, assuming an Euclidean distance under the optimized multi-objective space. These points represent potential interesting solutions that are associated with both low predictive error and distance values. Table 8 summarizes the obtained results in terms of the mean and median values of all 100 selected points for each individual objective (\(f_p\) and \(f_d\)). Table 8 also presents the mean and median values for the required CPU time (in s) and final number of optimized Pareto solutions. Similarly to Table 7, the student-t and Wilcoxon-Signed-Rank 95% confidence intervals and paired tests were also computed. The results confirm that R-NSGA-II is the best approach for the optimization process. In effect, R-NSGA-II presents lower mean and median values for both objectives, with a difference of 2% points for the distance (\(f_d\)) and 3% points for the predictive error (\(f_p\)) when compared with NSGA-II. The R-NSGA-II optimized points that are closer to the ideal (0,0) solution present similar mean and median values, which are 11% for the predictive error (\(f_p\)) and 16% for the inputs distance (\(f_d\)). Regarding the additional EMO evaluation criteria, R-NSGA-II requires a slight higher computational effort when compared with NSGA-II, returning an average execution of 1852 s. This value is still considered reasonable for a new fabric design optimization, since it corresponds to around 30 min. More importantly, R-NSGA-II tends to return a richer Pareto front, containing on average around 50 distinct solutions, which is substantially better when compared with NGSA-II (average of around 22 Pareto points).

For demonstration purposes, we selected two fabrics (#64 and #82) from the 100 external fabrics used to evaluate the optimization process. Figure 8 presents the evolution of both EMO methods in terms of a percentage hypervolume measure (y-axis) through the executed 200 generations. It should be noted that the measure is calculated for the baseline reference point (0.3, 0.3), thus it returns a value of zero for the first EMO generations, since these include worst solutions than the baseline. The plots show a substantial improvement that is obtained by R-NSGA-II when comparing with NSGA-II. In fact, R-NSGA-II requires fewer generations to start to obtain solutions that are within the evaluation range (e.g., 75 generations for fabric #64), and after 200 generations, the difference in the hypervolume % value of both fabrics is considerable, with an improvement of 28% points for fabric #64 and 33% points for fabric #82.

Another demonstration example is provided in Fig. 9, which presents the Pareto front of both algorithms after 200 generations when considering the #64 (left) and #82 (right) fabrics, with the defined reference points utilized by R-NSGA-II. Considering fabric #64, the Pareto front of NSGA-II contains 31 solutions, with the distance objective ranging from 0.172 to 0.290 and predictive error objective ranging from 0.023 to 0.060. As for R-NSGA-II, it returns a Pareto curve with 100 non-dominated solutions, with the distance measure ranging from 0.085 to 0.290 and the predictive error going from 0.015 to 0.055. The computed hypervolume (HV) is thus much higher for R-NSGA-II (66%) when compared with NSGA-II (38%). Regarding fabric #82, the Pareto front of NSGA-II contains 37 solutions (inputs distance ranging from 0.185 to 0.298; predictive error within 0.026 to 0.068; hypervolume of 32%), while the R-NSGA-II returns a Pareto front with 44 solutions (distance ranging from 0.077 to 0.229; predictive error from 0.023 to 0.076; hypervolume of 65%). The nearest point to the ideal point (0,0) is also shown in both plots. They belong to the R-NSGA-II optimized fronts and correspond to: fabric #64—(\(f_d=0.155\), \(f_p=0.026\)) and fabric #82—(\(f_d=0.097\), \(f_p=0.053\)).

These examples confirm that R-NSGA-II outperforms NSGA-II. In fact, R-NSGA-II tends to provide more Pareto front solutions and lower values for both optimized objectives. Thus, we select R-NSGA-II for the proposed IDSS.

To illustrate the R-NSGA-II convergence, Fig. 10 presents the evolution of the solutions toward the Pareto-optimal front. Each point represents a potential solution and line segments are used to connect the points that belong to the Pareto front. A color scheme is employed to facilitate the visual inspection of the plots, ranging from light gray (first generation) to full black (last generation). For both #64 and #82 fabrics, there is an initial fast R-NSGA-II convergence, with substantial movements of the Pareto front toward the interesting bottom left region.

6 Conclusions

Due to fashion trend dynamics, the textile and clothing industry is constantly designing new fabrics. However, the creation of a new fabric is a nontrivial, costly and time-consuming process, often based on the textile designer experience and requiring a large number of design, prototype production and laboratory testing cycles. Aiming to reduce the number of fabric prototype production attempts, this paper proposes a purely data-driven and automated Intelligent Decision Support System (IDSS) that combines predictive and prescriptive analytics.

Using a large and realistic set of 44 fabric design components (e.g., yarns used for the warp and weft components), two main predictive goals were used by the proposed IDSS, the estimation of four desired physical properties (e.g., warp and weft elasticity) and the detection of the final textile composition (e.g., % of cotton, % of polyester). Thousands of historical fabric production records, collected from a Portuguese textile company, were used to compare two distinct Machine Learning (ML) approaches, a Single-Target Regression (STR) using an Automated ML (AutoML) approach, and Multi-Target Regression (MTR) performed by a deep Artificial Neural Network (ANN). Overall, the STR approach provided the best results for both predictive goals, resulting in Normalized Mean Absolute Error (NMAE) values that ranged from 4% (weft elasticity) to 11% (pilling), when predicting the physical properties, and a textile composition classification accuracy of 87%, when assuming a small tolerance of 0.01 for predicting the percentages of six main types of fibers (e.g., cotton, polyester).

Regarding the prescriptive analytics, they assume an Evolutionary Multi-objective Optimization (EMO) search. Using the best physical property prediction models (provided by STR), the EMO optimizes the set of 44 fabric inputs that simultaneously minimizes the physical property predictive error and its distance to the training input space. Using 100 additional fabric records (not used in the predictive experiments), two EMO methods were compared: NSGA-II and R-NSGA-II. Thus, each method was executed 100 times, resulting in 100 distinct Pareto fronts. Several EMO measures, such as hypervolume and selection of the Pareto front points that were closer to the ideal solution, allowed to confirm the R-NSGA-II as the best EMO method, thus being included in the proposed IDSS. On average, the R-NSGA-II selected points closer to the perfect (0,0) value and that are associated with an 16% distance and 11% predictive error. Moreover, the R-NSGA-II method returned a richer set of Pareto points (average of around 50 distinct solutions for each new fabric).

We note that the obtained results can not be directly compared with the state-of-the-art works, since the four-related Fabric Design (FD) studies (Sect. 2) are more limited and target different FD goals. For instance, the ANNs used in [4] predicted different physical properties (ultraviolet protection factor, air permeability and moisture vapor transmission rate). The study used a smaller dataset (with just 42 fabric examples) and a simpler validation procedure (random holdout split and not the 10-fold cross-validation adopted in this work). Furthermore, in [4] a Genetic Algorithm was used to reduce the ANN predictive error (single objective) regarding the three physical properties and only 4 new fabrics were optimized, presenting a fabric property deviation that ranged from 2 to 20%. While these results are comparable to our average 11% predictive error, we note that our research contains substantial differences. For instance, we target four different physical properties (e.g., weft elasticity) and optimize a much larger set of 100 fabrics under a true EMO approach that also aims to reduce the distance to the learned input space.

In terms of scientific contributions, this research shows that the combination of predictive and prescriptive analytics can be a valuable tool to address the nontrivial FD task. Indeed, the predictive and prescriptive IDSS results were shown to the textile company experts, which provided a very positive feedback.

While interesting results were obtained, this research includes some limitations. As already mentioned in Sect. 4.2, this paper assumes an objective evaluation of the EMO results by using unseen test data. Thus, there was no assessment of the IDSS interaction with textile designers. Moreover, since the proposed IDSS was not deployed in a real environment, we have not directly measured the IDSS value in terms of reducing the number of FD prototype attempts. In effect, in future work, we intend to address these limitations by deploying the IDSS in the real textile company environment. This will allow us to gather further feedback from the textile designers by using the Technology Acceptance Model (TAM) model, such as executed in [46]. Also, the IDSS deployment will be used to confirm its potential to reduce the costs and time associated with each new fabric development. Also, we plan to study a many objective approach [47], which is more challenging than the approached bi-objective EMO, aiming to simultaneously minimize the individual predictive error when predicting a larger set of fabric physical properties (e.g., abrasion, seam slippage, stability to steam). Finally, although the combination of predictive and prescriptive analytics was targeted to support the creation of new textile fabrics, it can be adapted to other production domains that have similar prototype design processes, such the plastic and chemical industries.

Data availability

The datasets analyzed during the current study are not publicly available due to business privacy issues of the Portuguese textile company but are available from the corresponding author on reasonable request.

References

Studd R (2002) The textile design process. Des J 5(1):35–49

Hu J (2008) Introduction to fabric testing. In: Hu J (ed) Fabric testing. Woodhead publishing series in textiles. Woodhead Publishing, Cambridge, pp 1–26. https://doi.org/10.1533/9781845695064.1

Arnott D, Pervan G (2014) A critical analysis of decision support systems research revisited: the rise of design science. J Inf Technol 29(4):269–293. https://doi.org/10.1057/jit.2014.16

Majumdar A, Das A, Hatua P, Ghosh A (2016) Optimization of woven fabric parameters for ultraviolet radiation protection and comfort using artificial neural network and genetic algorithm. Neural Comput Appl 27(8):2567–2576. https://doi.org/10.1007/s00521-015-2025-6

Majumdar A, Mal P, Ghosh A, Banerjee D (2017) Multi-objective optimization of air permeability and thermal conductivity of knitted fabrics with desired ultraviolet protection. J Text Inst 108(1):110–116. https://doi.org/10.1080/00405000.2016.1159270

Ribeiro R, Pilastri A, Moura C, Rodrigues F, Rocha R, Morgado J, Cortez P (2020) Predicting physical properties of woven fabrics via automated machine learning and textile design and finishing features. In: Maglogiannis I, Iliadis L, Pimenidis E (eds) Artificial intelligence applications and innovations. Springer, Cham, pp 244–255. https://doi.org/10.1007/978-3-030-49186-4_21

Michalewicz Z, Schmidt M, Michalewicz M, Chiriac C (2006) Adaptive business intelligence. Springer, Berlin Heidelberg. https://doi.org/10.1007/978-3-540-32929-9

Ferreira L, Pilastri AL, Martins CM, Pires PM, Cortez P (2021) A comparison of automl tools for machine learning, deep learning and xgboost. In: International Joint Conference on Neural Networks, IJCNN 2021, Shenzhen, China, July 18–22, 2021, pp. 1–8. IEEE, NJ, USA. https://doi.org/10.1109/IJCNN52387.2021.9534091

Arashloo SR, Kittler J (2022) Multi-target regression via non-linear output structure learning. Neurocomputing 492:572–580. https://doi.org/10.1016/j.neucom.2021.12.048

Goodfellow IJ, Bengio Y, Courville AC (2016) Deep learning. Adaptive computation and machine learning. MIT Press

Cortez P (2021) Modern optimization with R. Springer, New York. https://doi.org/10.1007/978-3-030-72819-9

Ghosh A, Das S, Banerjee D (2013) Multi objective optimization of yarn quality and fibre quality using evolutionary algorithm. J Inst Eng Ser E 94(1):15–21. https://doi.org/10.1007/s40034-013-0015-8

Das S, Ghosh A, Banerjee D (2014) Designing of engineered fabrics using particle swarm optimization. Int J Cloth Sci Technol 26(1):48–57

Gloy Y-S, Renkens W, Herty M, Gries T (2015) Simulation and optimisation of warp tension in the weaving process. J Text Sci Eng 5:1000179. https://doi.org/10.4172/2165-8064.1000179

Junior AU, de Freitas Filho PJ, Silveira RA (2015) E-HIPS: an extention of the framework HIPS for stagger of distributed process in production systems based on multiagent systems and memetic algorithms. In: Sidorov G, Galicia-Haro SN (eds.), Advances in artificial intelligence and soft computing—14th Mexican international conference on artificial intelligence, MICAI 2015, Cuernavaca, Morelos, Mexico, October 25-31, 2015, Proceedings, Part I. Lecture Notes in Computer Science, vol. 9413, pp. 413–430. Springer, Cham. https://doi.org/10.1007/978-3-319-27060-9_34

Mitra A, Majumdar PK, Banerjee D (2015) Production of engineered fabrics using artificial neural network-genetic algorithm hybrid model. J Inst Eng Ser E 96(2):159–165. https://doi.org/10.1007/s40034-014-0048-7

Zhang R, Chang P-C, Song S, Wu C (2017) A multi-objective artificial bee colony algorithm for parallel batch-processing machine scheduling in fabric dyeing processes. Knowl-Based Syst 116:114–129. https://doi.org/10.1016/j.knosys.2016.10.026

Chakraborty S, Diyaley S (2018) Multi-objective optimization of yarn characteristics using evolutionary algorithms: a comparative study. J Inst Eng Ser E 2(99):129–140. https://doi.org/10.1007/s40034-018-0121-8

Huynh N, Chien C (2018) A hybrid multi-subpopulation genetic algorithm for textile batch dyeing scheduling and an empirical study. Comput Ind Eng 125:615–627. https://doi.org/10.1016/j.cie.2018.01.005

Lorente-Leyva LL, Murillo-Valle JR, Montero-Santos Y, Herrera-Granda ID, Herrera-Granda EP, Rosero-Montalvo PD, Peluffo-Ordóñez DH, Blanco-Valencia XP (2019) Optimization of the master production scheduling in a textile industry using genetic algorithm. In: Pérez García H, Sánchez González L, Castejón Limas M, Quintián Pardo H, Corchado Rodríguez E (eds) Hybrid artificial intelligent systems. Springer, Cham, pp 674–685

Liyanage I, Nuwanga S, Anjana R, Rankothge W, Gamage N (2020) Sustainable manufacturing: application of optimization to textile manufacturing plants. Glob J Comput Sci Technol. https://doi.org/10.34257/GJCSTHVOL20IS2PG11

Xu Y, Sébastien T, Xianyi Z (2020) Optimization of garment sizing and cutting order planning in the context of mass customization. Int J Adv Manuf Technol 106(7–8):3485–3503. https://doi.org/10.1007/s00170-019-04866-w

Jaouachi B, Khedher F (2021) Assessment of jeans sewing thread consumption by applying metaheuristic optimization methods. Int J Cloth Sci Technol 34(3):347–366. https://doi.org/10.1108/IJCST-01-2021-0005

Ferro R, Cordeiro GA, Ordóñez REC, Beydoun G, Shukla N (2021) An optimization tool for production planning: a case study in a textile industry. Appl Sci 11(18):8312. https://doi.org/10.3390/app11188312

Ribeiro R, Pilastri A, Carvalho H, Matta A, Pereira PJ, Rocha P, Alves M, Cortez P (2021) An intelligent decision support system for production planning in garments industry. In: Yin H, Camacho D, Tino P, Allmendinger R, Tallón-Ballesteros AJ, Tang K, Cho SB, Novais P, Nascimento S (eds.), Intelligent data engineering and automated learning—IDEAL 2021, pp. 378–386. Springer, Cham. https://doi.org/10.1007/978-3-030-91608-4_37

Tsao Y-C, Hung C-H, Vu T-L (2021) Hybrid heuristics for marker planning in the apparel industry. Arab J Sci Eng 46(10):10077–10096. https://doi.org/10.1007/s13369-020-05210-1

Zhang Z, Guo C, Wei Q, Guo Z, Gao L (2021) A bi-objective stochastic order planning problem in make-to-order multi-site textile manufacturing. Comput Ind Eng 158:107367. https://doi.org/10.1016/j.cie.2021.107367

Elahi I, Ali H, Asif M, Iqbal K, Ghadi Y, Alabdulkreem E (2022) An evolutionary algorithm for multi-objective optimization of freshwater consumption in textile dyeing industry. PeerJ Comput Sci 8:932. https://doi.org/10.7717/peerj-cs.932

Hastie T, Tibshirani R, Friedman JH (2009) The elements of statistical learning: data mining, inference, and prediction, 2nd edn. Springer series in statistics. Springer, USA. https://doi.org/10.1007/978-0-387-84858-7

Campos GO, Zimek A, Sander J, Campello RJGB, Micenková B, Schubert E, Assent I, Houle ME (2016) On the evaluation of unsupervised outlier detection: measures, datasets, and an empirical study. Data Min Knowl Disc 30(4):891–927. https://doi.org/10.1007/s10618-015-0444-8

Matos LM, Azevedo J, Matta A, Pilastri A, Cortez P, Mendes R (2022) Categorical attribute transformation environment (cane): a python module for categorical to numeric data preprocessing. Softw Impacts 13:100359. https://doi.org/10.1016/j.simpa.2022.100359

LeDell E, Poirier S (2020) H2O AutoML: scalable automatic machine learning. In: 7th ICML workshop on automated machine learning (AutoML)

Chollet F (2021) Deep learning with Python, 2nd edn. Simon and Schuster, USA

Matos LM, Cortez P, Mendes R, Moreau A (2019) Using deep learning for mobile marketing user conversion prediction. In: International joint conference on neural networks, IJCNN 2019 Budapest, Hungary, July 14–19, pp. 1–8. IEEE, NY, USA. https://doi.org/10.1109/IJCNN.2019.8851888

Kingma DP, Ba J (2015) Adam: a method for stochastic optimization. In: Bengio Y, LeCun Y (eds.) 3rd International conference on learning representations, ICLR 2015, San Diego, CA, USA, May 7–9, 2015, Conference Track Proceedings. arxiv:1412.6980

Sumeet Saurav Saini AK, Saini R, Singh S (2022) Deep learning inspired intelligent embedded system for haptic rendering of facial emotions to the blind. Neural Comput Appl 34(6):4595–4623. https://doi.org/10.1007/s00521-021-06613-3

Oliveira N, Cortez P, Areal N (2017) The impact of microblogging data for stock market prediction: using twitter to predict returns, volatility, trading volume and survey sentiment indices. Expert Syst Appl 73:125–144. https://doi.org/10.1016/j.eswa.2016.12.036

Bi J, Bennett KP (2003) Regression error characteristic curves. In: Fawcett T, Mishra N. (eds.) Machine learning, proceedings of the twentieth international conference (ICML 2003), August 21–24, Washington, DC, USA, pp. 43–50. AAAI Press, California, USA. http://www.aaai.org/Library/ICML/2003/icml03-009.php

Blank J, Deb K (2020) Pymoo: multi-objective optimization in python. IEEE Access 8:89497–89509. https://doi.org/10.1109/ACCESS.2020.2990567

Deb K, Agrawal S, Pratap A, Meyarivan T (2002) A fast and elitist multiobjective genetic algorithm: NSGA-II. IEEE Trans Evol Comput 6(2):182–197. https://doi.org/10.1109/4235.996017

Chiandussi G, Codegone M, Ferrero S, Varesio FE (2012) Comparison of multi-objective optimization methodologies for engineering applications. Comput Math Appl 63(5):912–942. https://doi.org/10.1016/j.camwa.2011.11.057

Deb K, Sundar J (2006) Reference point based multi-objective optimization using evolutionary algorithms. In: Proceedings of the 8th annual conference on genetic and evolutionary computation. GECCO ’06, pp. 635–642. Association for Computing Machinery, New York, NY, USA. https://doi.org/10.1145/1143997.1144112

While L, Hingston P, Barone L, Huband S (2006) A faster algorithm for calculating hypervolume. IEEE Trans Evol Comput 10(1):29–38. https://doi.org/10.1109/TEVC.2005.851275

Hollander M, Wolfe DA, Chicken E (2013) Nonparametric statistical methods. Wiley, USA

Cortez P, Pereira PJ, Mendes R (2020) Multi-step time series prediction intervals using neuroevolution. Neural Comput Appl 32(13):8939–8953. https://doi.org/10.1007/s00521-019-04387-3

António João Silva Cortez P (2022) An Industry 4.0 intelligent decision support system for analytical laboratories. In: Maglogiannis I, Iliadis L, MacIntyre J, Cortez P (eds.), Artificial intelligence applications and innovations—18th IFIP WG 12.5 international conference, AIAI 2022, Hersonissos, Crete, Greece, June 17–20, 2022, Proceedings, Part II. IFIP Advances in Information and Communication Technology, vol. 647, pp. 159–169. Springer, Cham, Switzerland. https://doi.org/10.1007/978-3-031-08337-2_14

Guo X (2022) A survey of decomposition based evolutionary algorithms for many-objective optimization problems. IEEE Access 10:72825–72838. https://doi.org/10.1109/ACCESS.2022.3188762

Acknowledgements

This work was carried out within the project “TexBoost: less Commodities more Specialities” reference POCI-01-0247-FEDER-024523, co-funded by Fundo Europeu de Desenvolvimento Regional (FEDER), through Portugal 2020 (P2020).

Funding

Open access funding provided by FCT|FCCN (b-on).

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ribeiro, R., Pilastri, A., Moura, C. et al. A data-driven intelligent decision support system that combines predictive and prescriptive analytics for the design of new textile fabrics. Neural Comput & Applic 35, 17375–17395 (2023). https://doi.org/10.1007/s00521-023-08596-9

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00521-023-08596-9