Abstract

We propose a system for calculating a “scaling constant” for layers and weights of neural networks. We relate this scaling constant to two important quantities that relate to the optimizability of neural networks, and argue that a network that is “preconditioned” via scaling, in the sense that all weights have the same scaling constant, will be easier to train. This scaling calculus results in a number of consequences, among them the fact that the geometric mean of the fan-in and fan-out, rather than the fan-in, fan-out, or arithmetic mean, should be used for the initialization of the variance of weights in a neural network. Our system allows for the off-line design & engineering of ReLU (Rectified Linear Unit) neural networks, potentially replacing blind experimentation. We verify the effectiveness of our approach on a set of benchmark problems.

1 Introduction

The design of neural networks is often considered a black art, driven by trial and error rather than foundational principles. This is exemplified by the success of recent architecture random-search techniques [22, 37], which take the extreme approach of applying no human guidance at all. Although as a field we are far from fully understanding the nature of learning and generalization in neural networks, this does not mean that we should proceed blindly.

In this work, we define a scaling quantity \(\gamma _{l}\) for each layer l that approximates two quantities of interest when considering the optimization of a neural network: the ratio of the gradient to the weights, and the average squared singular value of the corresponding diagonal block of the Hessian for layer l. This quantity is easy to compute from the (non-central) second moments of the forward-propagated values and the (non-central) second moments of the backward-propagated gradients. We argue that networks that have constant \(\gamma _{l}\) are better conditioned than those that do not, and we analyze how common layer types affect this quantity. We call networks that obey this rule preconditioned neural networks.

As an example of some of the possible applications of our theory, we:

- Propose a principled weight initialization scheme that can often provide an improvement over existing schemes;

- Show which common layer types automatically result in well-conditioned networks;

- Show how to improve the conditioning of common structures such as bottlenecked residual blocks by the addition of fixed scaling constants to the network.

2 Notation

Consider a neural network mapping \(x_{0}\) to \(x_{L}\) made up of L layers. These layers may be individual operations or blocks of operations. During training, a loss function is computed for each minibatch of data, and the gradient of the loss is back-propagated to each layer l and weight of the network. We prefix each quantity with \(\varDelta \) to represent the back-propagated gradient of that quantity. We assume a batch-size of 1 in our calculations, although all conclusions hold using mini-batches as well.

Each layer’s input activations are represented by a tensor \(x_{l}:n_{l}\times \rho _{l}\times \rho _{l}\) made up of \(n_{l}\) channels and spatial dimensions \(\rho _{l}\times \rho _{l}\), assumed to be square for simplicity (results can be adapted to the rectangular case by using \(h_{l}w_{l}\) in place of \(\rho _{l}^{2}\) everywhere).

3 A model of ReLU network dynamics

Our scaling calculus requires the use of simple approximations of the dynamics of neural networks, in the same way that simplifications are used in physics to make approximate calculations, such as the assumption of zero friction or ideal gases. These assumptions constitute a model of the behavior of neural networks that allows for easy calculation of quantities of interest, while still being representative enough of the real dynamics.

To this end, we will focus in this work on the behavior of networks at initialization. Furthermore, we will make strong assumptions on the statistics of forward and backward quantities in the network. These assumptions include:

1. The input to layer l, denoted \(x_{l}\), is a random tensor assumed to contain i.i.d. entries. We represent the element-wise uncentered second moment by \(E[x_{l}^{2}]\).

2. The back-propagated gradient of \(x_{l}\) is \(\varDelta x_{l}\) and is assumed to be i.i.d. and uncorrelated with \(x_{l}\). We represent the uncentered second moment of \(\varDelta x_{l}\) by \(E[\varDelta x_{l}^{2}]\).

3. All weights in the network are initialized i.i.d. from a centered, symmetric distribution.

4. All bias terms are initialized as zero.

Our calculations rely heavily on the uncentered second moments rather than the variance of weights and gradients. This is a consequence of the behavior of the ReLU activation, which zeros out entries. The effect of this zeroing operation is simple when considering uncentered second moments under a symmetric input distribution, as half of the entries will be zeroed, resulting in a halving of the uncentered second moment. In contrast, expressing the same operation in terms of variance is complicated by the fact that the mean after application of the ReLU is distribution-dependent. We will refer to the uncentered second moment just as the “second moment” henceforth.
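The halving argument above can be checked numerically. The following is a minimal sketch, assuming two illustrative symmetric input distributions (a standard Gaussian and a uniform on [-1, 1], neither taken from the paper): the second-moment ratio across the ReLU should be close to one half for both, while the variance ratio differs between them.

```python
import numpy as np

rng = np.random.default_rng(0)

def relu(z):
    return np.maximum(z, 0.0)

def second_moment(z):
    # Uncentered second moment E[z^2]
    return float(np.mean(z ** 2))

# Two symmetric, zero-mean inputs with different shapes (illustrative choices).
samples = {
    "gaussian": rng.normal(size=1_000_000),
    "uniform": rng.uniform(-1.0, 1.0, size=1_000_000),
}

ratios = {}
for name, z in samples.items():
    m2_ratio = second_moment(relu(z)) / second_moment(z)
    var_ratio = float(np.var(relu(z)) / np.var(z))
    ratios[name] = (m2_ratio, var_ratio)
    print(f"{name}: second-moment ratio {m2_ratio:.3f}, variance ratio {var_ratio:.3f}")
```

The second-moment ratio is the same for both inputs, whereas the variance ratio depends on the mean of the rectified values and therefore on the input distribution.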

4 Activation and layer scaling factors

The key quantity in our calculus is the activation scaling factor \(\varsigma _{l}\), of the input activations for a layer l, which we define as:

This quantity arises due to its utility in computing other quantities of interest in the network, such as the scaling factors for the weights of convolutional and linear layers. In ReLU networks, many, but not all operations maintain this quantity in the sense that \(\varsigma _{l}=\varsigma _{l+1}\) for a layer \(x_{l+1}=F(x_{l})\) with operation F, under the assumptions of Sect. 3. Table 1 contains a list of common operations and indicates if they maintain scaling. As an example, consider adding a simple scaling layer of the form \(x_{l+1}=\sqrt{2}x_{l}\) which doubles the second moment during the forward pass and doubles the backward second moment during back-propagation. We can see that:

Our analysis focuses on ReLU networks primarily because ReLU nonlinearities maintain this scaling factor.
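As an illustration of what "maintaining" the activation scaling factor means, the sketch below assumes the factor takes the form \(n_{l}\rho _{l}^{2}E[\varDelta x_{l}^{2}]E[x_{l}^{2}]\) (with \(\rho _{l}=1\) for a linear layer). This form is an assumption of the example, chosen because it is consistent with the scaling-layer argument above. The weight scale, widths, and batch size are arbitrary.

```python
import numpy as np

rng = np.random.default_rng(0)
n_in, n_out, batch = 256, 64, 4096

def activation_scaling(n, x, dx):
    # Assumed form: n * E[dx^2] * E[x^2] (spatial size rho = 1 for a linear layer).
    return n * np.mean(dx ** 2) * np.mean(x ** 2)

W = rng.normal(0.0, 0.05, size=(n_out, n_in))   # arbitrary centered initialization
x = rng.normal(size=(batch, n_in))               # forward input
y = x @ W.T                                      # forward output
dy = rng.normal(size=(batch, n_out))             # random back-propagated signal
dx = dy @ W                                      # gradient with respect to the input

linear_ratio = activation_scaling(n_out, y, dy) / activation_scaling(n_in, x, dx)

# Fixed scaling layer x_{l+1} = sqrt(2) x_l: forward and backward moments both double.
s = np.sqrt(2.0)
z, dz = s * x, rng.normal(size=(batch, n_in))
scale_ratio = activation_scaling(n_in, z, dz) / activation_scaling(n_in, x, s * dz)

print(f"linear layer: {linear_ratio:.3f}, scaling layer: {scale_ratio:.3f}")
```

Both ratios should be close to one even though the layer widths differ, because the forward gain and the backward gain cancel in the product.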

Using the activation scaling factor, we define the layer or weight scaling factor of a convolutional layer with kernel \(k_{l}\times k_{l}\) as:

Recall that \(n_{l}\) is the fan-in and \(n_{l+1}\) is the fan-out of the layer. This expression also applies to linear layers by taking \(k_{l}=1\). This quantity can also be defined extrinsically without reference to the weight initialization via the expression:

We establish this equivalence in the Appendix, under the assumptions of Sect. 3.

5 Motivations for scaling factors

We can motivate the utility of our scaling factor definition by comparing it to another simple quantity of interest. For each layer, consider the ratio of the second moments between the weights, and their gradients:

This ratio approximately captures the relative change that a single SGD step with unit step-size on \(W_{l}\) will produce. We call this quantity the weight-to-gradient ratio. When \(E[\varDelta W_{l}^{2}]\) is very small compared to \(E[W_{l}^{2}]\), the weights will stay close to their initial values for longer than when \(E[\varDelta W_{l}^{2}]\) is large. In contrast, if \(E[\varDelta W_{l}^{2}]\) is very large compared to \(E[W_{l}^{2}]\), then learning can be expected to be unstable, as the sign of the elements of \(W_{l}\) may change rapidly between optimization steps. A network with constant \(\nu _{l}\) is also well-behaved under weight decay, as the ratio of weight-decay second moments to gradient second moments will stay constant throughout the network, keeping the push-pull of gradients and decay constant across the network. This ratio also captures a relative notion of exploding or vanishing gradients: rather than consider whether the gradient is small or large in absolute value, we consider its magnitude relative to the weights.

Theorem 1

The weight-to-gradient ratio \(\nu _{l}\) is equal to the scaling factor \(\gamma _{l}\) under the assumptions of Sect. 3.

5.1 Conditioning of the Hessian

The scaling factor of a layer l is also closely related to the singular values of the diagonal block of the Hessian corresponding to that layer. We derive a correspondence in this section, providing further justification for our definition of the scaling factor above. We focus on non-convolutional layers for simplicity in this section, although the result extends to the convolutional case without issue.

ReLU networks have a particularly simple structure for the Hessian for any set of activations, as the network’s output is a piecewise-linear function g fed into a final layer consisting of a loss. This structure results in greatly simplified expressions for diagonal blocks of the Hessian with respect to the weights, and allows us to derive expressions involving the singular values of these blocks.

We will consider the output of the network as a composition of two functions, the current layer g, and the remainder of the network h. We write this as a function of the weights, i.e. \(f(W_{l})=h(g(W_{l}))\). The dependence on the input to the network is implicit in this notation, and the network below layer l does not need to be considered.

Let \(R_{l}=\nabla _{x_{l+1}}^{2}h(x_{l+1})\) be the Hessian of h, the remainder of the network after application of layer l (for a linear layer, \(x_{l+1}=W_{l}x_{l}\)). Let \(J_{l}\) be the Jacobian of the layer output \(y_{l}=x_{l+1}\) with respect to \(W_{l}\). The Jacobian has shape \(J_{l}:n_{l}^{\text {out}}\times \left( n_{l}^{\text {out}}n_{l}^{\text {in}}\right) \). Given these quantities, the diagonal block of the Hessian corresponding to \(W_{l}\) is equal to:

\[G_{l}=J_{l}^{T}R_{l}J_{l},\]

the lth diagonal block of the generalized Gauss-Newton matrix G [23]. We discuss this decomposition further in the appendix.

Assume that the input-output Jacobian \(\Phi \) of the remainder of the network above each block is initialized so that \(\left\| \Phi \right\| _{2}^{2}=O(1)\) with respect to \(n_{l+1}\). This assumption just encodes the requirement that the initialization used for the remainder of the network is sensible, so that the output of the network does not blow up for large widths.

Theorem 2

Under the assumptions outlined in Sect. 3, for linear layer l, the average squared singular value of \(G_{l}\) is equal to:

The Big-O term is with respect to \(n_{l}\) and \(n_{l+1}\); its precise value depends on properties of the remainder of the network above the current layer.

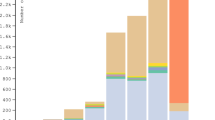

Despite the approximations required for its derivation, the scaling factor can still be close to the actual average squared singular value. We computed the ratio of the scaling factor (Eq. 2) to the actual expectation \(E[\left( G_{l}r\right) ^{2}]\) for a strided (rather than max-pooled, see Table 1) LeNet model, where we use random input data and a random loss (i.e. for outputs y we use \(y^{T}Ry\) for an i.i.d normal matrix R), with batch-size 1024, and \(32\times 32\) input images. The results are shown in Fig. 1 for 100 sampled setups; there is generally good agreement with the theoretical expectation.
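The quantity \(E[\left( G_{l}r\right) ^{2}]\) used in this comparison can be illustrated on a toy problem. The sketch below assumes a single linear layer \(y=Wx\) and a Gauss-Newton block of the form \(J^{T}RJ\), with a random symmetric matrix standing in for the Hessian of the remainder of the network. The sizes and sample count are arbitrary, and this is not the LeNet setup used for Fig. 1.

```python
import numpy as np

rng = np.random.default_rng(0)
n_in, n_out = 6, 4

x = rng.normal(size=n_in)                 # layer input
A = rng.normal(size=(n_out, n_out))
R = A + A.T                               # symmetric stand-in for the Hessian of h

# Jacobian of y = W x w.r.t. the row-major flattening of W: shape (n_out, n_out * n_in).
J = np.kron(np.eye(n_out), x[None, :])
G = J.T @ R @ J                           # Gauss-Newton block for this layer

dim = n_out * n_in
# Average squared singular value of G, computed exactly.
exact = np.sum(np.linalg.svd(G, compute_uv=False) ** 2) / dim

# Monte Carlo estimate: element-wise E[(G r)^2] for r with i.i.d. standard normal entries.
r = rng.normal(size=(dim, 5000))
estimate = np.mean((G @ r) ** 2)

print(f"exact {exact:.3f}, estimate {estimate:.3f}")
```

For large layers the explicit matrix would not be formed; the same estimate only needs products of \(G_{l}\) with random vectors.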

6 Initialization of ReLU networks

An immediate consequence of our definition of the scaling factor is a rule for the initialization of ReLU networks. Consider a network where the activation scaling factor is constant throughout. Then any two layers l and r have the same weight scaling factor, \(\gamma _{l}=\gamma _{r}\), when each layer is initialized with:

\[E[W_{l}^{2}]=\frac{c}{k_{l}\sqrt{n_{l}n_{l+1}}},\]

for some fixed constant c independent of the layer. Initialization using the geometric mean of the fan-in and fan-out ensures a constant layer scaling factor throughout the network, aiding optimization. Notice that the dependence on the kernel size is also unusual: rather than \(k_{l}^{2}\), we normalize by \(k_{l}\).
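The effect of this rule can be seen in a small simulation. The sketch below is illustrative only: it assumes a fully connected ReLU network (so \(k_{l}=1\)), takes the geometric rule as \(E[W^{2}]=c/\sqrt{n_{\text {in}}n_{\text {out}}}\) with \(c=2\), compares it against a fan-in rule \(E[W^{2}]=2/n_{\text {in}}\), and measures the per-layer ratio \(E[\varDelta W_{l}^{2}]/E[W_{l}^{2}]\) for per-sample gradients. The widths, batch size, and the helper name `grad_to_weight_ratios` are hypothetical.

```python
import numpy as np

def geometric(n_in, n_out):
    return 2.0 / np.sqrt(n_in * n_out)    # c = 2, k = 1 (assumed form)

def fan_in(n_in, n_out):
    return 2.0 / n_in

def grad_to_weight_ratios(widths, weight_second_moment, batch=256, seed=0):
    rng = np.random.default_rng(seed)
    # Weights drawn i.i.d. from a centered Gaussian with the requested second moment.
    Ws = [rng.normal(0.0, np.sqrt(weight_second_moment(a, b)), size=(b, a))
          for a, b in zip(widths[:-1], widths[1:])]
    x = rng.normal(size=(batch, widths[0]))
    inputs, masks = [], []
    for W in Ws:
        inputs.append(x)
        pre = x @ W.T
        masks.append(pre > 0)
        x = np.maximum(pre, 0.0)          # ReLU after every linear layer
    d = rng.normal(size=x.shape)          # random back-propagated signal at the output
    ratios = [0.0] * len(Ws)
    for l in reversed(range(len(Ws))):
        d_pre = d * masks[l]              # gradient at the pre-activation
        # A per-sample weight gradient is outer(d_pre, x); its element-wise
        # second moment is mean(d_pre^2) * mean(x^2), averaged here over samples.
        grad_m2 = np.mean(np.mean(d_pre ** 2, axis=1) * np.mean(inputs[l] ** 2, axis=1))
        ratios[l] = grad_m2 / np.mean(Ws[l] ** 2)
        d = d_pre @ Ws[l]                 # propagate to the layer input
    return np.array(ratios)

widths = [256, 1024, 128, 512]
geo = grad_to_weight_ratios(widths, geometric)
fin = grad_to_weight_ratios(widths, fan_in)
print("geometric:", np.round(geo, 1))
print("fan-in:   ", np.round(fin, 1))
```

Under these assumptions the geometric rule should give roughly equal ratios across layers, while the fan-in rule gives ratios that vary with \(n_{l}/n_{l+1}\).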

6.1 Other initialization schemes

The most common approaches are the Kaiming [11] (sometimes called He) and Xavier [6] (sometimes called Glorot) initializations. The Kaiming technique for ReLU networks is one of two approaches:

For the feed-forward network above, assuming random activations, the forward-activation variance will remain constant in expectation throughout the network if fan-in initialization of weights [21] is used, whereas the fan-out variant maintains a constant variance of the back-propagated signal. The constant factor 2 corrects for the variance-reducing effect of the ReLU activation. Although popularized by [11], similar scaling was in use in early neural network models that used tanh activation functions [1].

These two principles are clearly in conflict; unless \(n_{l}=n_{l+1}\), either the forward variance or backward variance will become non-constant. No prima facie reason for preferring one initialization over the other is provided. Unfortunately, there is some confusion in the literature as many works reference using Kaiming initialization without specifying if the fan-in or fan-out variant is used.

The Xavier initialization [6] is the closest to our proposed approach. They balance these conflicting objectives using the arithmetic mean:

to “... approximately satisfy our objectives of maintaining activation variances and back-propagated gradients variance as one moves up or down the network”. This approach to balancing is essentially heuristic, in contrast to the geometric mean approach that our theory directly guides us to.
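To make the comparison between schemes concrete, the following sketch collects the four rules in one hypothetical helper, `weight_second_moment`. The fan-in, fan-out, and arithmetic-mean forms are written as the usual ReLU-corrected (factor 2) variants, and the geometric form as \(c/(k\sqrt{n_{\text {in}}n_{\text {out}}})\); these exact forms are assumptions of the example rather than quotations from the text.

```python
import math

def weight_second_moment(scheme, n_in, n_out, k=1, c=None):
    """Second moment E[W^2] of a k x k convolution under several schemes.

    The fan-in, fan-out and arithmetic-mean forms are the usual
    ReLU-corrected (factor 2) variants and are assumptions of this sketch.
    """
    if scheme == "fan_in":
        return 2.0 / (k * k * n_in)
    if scheme == "fan_out":
        return 2.0 / (k * k * n_out)
    if scheme == "arithmetic":
        return 2.0 / (k * k * 0.5 * (n_in + n_out))
    if scheme == "geometric":
        c = 2.0 / k if c is None else c   # default follows the c = 2/k recommendation
        return c / (k * math.sqrt(n_in * n_out))
    raise ValueError(f"unknown scheme: {scheme}")

schemes = ["fan_in", "fan_out", "arithmetic", "geometric"]
square = {s: weight_second_moment(s, 128, 128, k=3) for s in schemes}
bottleneck = {s: weight_second_moment(s, 512, 64, k=3) for s in schemes}
print(square)
print(bottleneck)
```

When the fan-in and fan-out are equal, all four rules coincide; they differ only when the width changes, which is the case the scaling analysis is concerned with.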

Figure 2 shows heat maps of the average singular values for each block of the Hessian of a LeNet model under the initializations considered. The use of geometric initialization results in an equally weighted diagonal, in contrast to the other initializations considered.

Fig. 2 Average singular value heat maps for the strided LeNet model, where each square represents a block of the Hessian, with blocking at the level of weight matrices (biases omitted). Using geometric initialization maintains an approximately constant block-diagonal weight. The scale goes from yellow (larger) through green to blue (smaller).

6.2 Practical application

The dependence of the geometric initialization on kernel size rather than its square will result in a large increase in forward second moments if c is not carefully chosen. We recommend setting \(c=2/k\), where k is the typical kernel size in the network. Any other layer in the network with kernel size differing from this default should be preceded by a fixed scaling factor \(x_{l+1}=\alpha x_{l}\) that corrects for this. For instance, if the typical kernel size is 1, then a \(3\times 3\) convolution would be preceded by an \(\alpha =\sqrt{1/3}\) fixed scaling factor.
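The \(\sqrt{1/3}\) example can be reproduced with a short calculation. The sketch below assumes the geometric rule \(E[W^{2}]=c/(k_{l}\sqrt{n_{l}n_{l+1}})\) with \(c=2/k\), a forward gain of \(\frac{1}{2}n_{l}k_{l}^{2}E[W^{2}]\) for a convolution followed by a ReLU, and the general correction \(\alpha =\sqrt{k/k_{l}}\). The last formula is inferred from the single example in the text, and the helper names are hypothetical.

```python
import math

def forward_gain(n_in, n_out, k_layer, k_typical):
    # Forward second-moment gain of conv + ReLU under the assumed geometric
    # rule E[W^2] = c / (k_layer * sqrt(n_in * n_out)) with c = 2 / k_typical.
    c = 2.0 / k_typical
    w2 = c / (k_layer * math.sqrt(n_in * n_out))
    return 0.5 * n_in * k_layer ** 2 * w2   # 0.5 accounts for the ReLU

def pre_scale(k_layer, k_typical):
    # Fixed multiplier alpha applied before the layer so that its gain
    # matches that of a layer with the typical kernel size.
    return math.sqrt(k_typical / k_layer)

alpha = pre_scale(k_layer=3, k_typical=1)
target = forward_gain(64, 128, k_layer=1, k_typical=1)
corrected = alpha ** 2 * forward_gain(64, 128, k_layer=3, k_typical=1)
print(alpha, target, corrected)
```

Because \(\alpha \) multiplies the activations, it enters the second moment as \(\alpha ^{2}\), which is why the correction is a square root of the kernel-size ratio.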

In general, we have the freedom to modify the initialization of a layer, then apply a fixed multiplier before or after the layer to “undo” the increase. This allows us to change the behavior of a layer during learning by modifying the network rather than modifying the optimizer. Potentially, we can avoid the need for sophisticated adaptive optimizers by designing networks to be easily optimizable in the first place. In a sense, the need for maintaining the forward or backward variance that motivates the fan-in/fan-out initialization can be decoupled from the choice of initialization, allowing us to choose the initialization to improve the optimizability of the network.

Initialization by the principle of dynamical isometry [30, 35], a form of orthogonal initialization [24], has been shown to allow for the training of very deep networks. Such orthogonal initializations can be combined with the scaling in our theory without issue, by ensuring the input-output second moment scaling is equal to the scaling required by our theory. Our analysis is concerned with the correct initialization when layer widths change within a network, which is a separate concern from the behavior of a network in a large-depth limit, where all layers are typically taken to be the same width. In ReLU networks orthogonal initialization is less interesting, as “... the ReLU nonlinearity destroys the qualitative scaling advantage that linear networks possess for orthogonal weights versus Gaussian” [27].

7 Output second moments

A neural network’s behavior is also very sensitive to the second moment of the outputs. We are not aware of any existing theory guiding the choice of output variance at initialization for the case of log-softmax losses, where it has a non-trivial effect on the back-propagated signals, although output variances of 0.01 to 0.1 are reasonable choices to avoid saturating the nonlinearity while not being too close to zero. The output variance should always be checked and potentially corrected when switching initialization schemes, to avoid inadvertently large or small values.

In general, the variance at the last layer may easily be modified by inserting a fixed scalar multiplier \(x_{l+1}=\alpha x_{l}\) anywhere in the network, and so we have complete control over this variance independently of the initialization used. For a simple ReLU convolutional network with all kernel sizes the same and without pooling layers, we can compute the output second moment of each layer when using geometric-mean initialization (\(c=2/k\)) with the expression:

\[E[x_{l+1}^{2}]=\sqrt{\frac{n_{l}}{n_{l+1}}}E[x_{l}^{2}].\]

The application of a sequence of these layers gives a telescoping product:

\[E[x_{L}^{2}]=\left( \prod _{l=0}^{L-1}\sqrt{\frac{n_{l}}{n_{l+1}}}\right) E[x_{0}^{2}]=\sqrt{\frac{n_{0}}{n_{L}}}E[x_{0}^{2}],\]

so the output variance is independent of the interior structure of the network and depends only on the input and output channel sizes.
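The telescoping behaviour is easy to confirm for any width sequence. This sketch assumes the per-layer relation \(E[x_{l+1}^{2}]=\sqrt{n_{l}/n_{l+1}}\,E[x_{l}^{2}]\), which Sect. 8 also quotes for geometric initialization with \(c=2/k\). The channel counts are hypothetical.

```python
import math

def output_second_moment(widths, input_second_moment=1.0):
    m2 = input_second_moment
    for n_in, n_out in zip(widths[:-1], widths[1:]):
        m2 *= math.sqrt(n_in / n_out)   # assumed per-layer gain, geometric init with c = 2/k
    return m2

widths = [3, 64, 128, 256, 10]          # hypothetical channel counts
layerwise = output_second_moment(widths)
closed_form = math.sqrt(widths[0] / widths[-1])
print(layerwise, closed_form)
```

Changing any of the interior widths leaves the result unchanged, since every interior channel count appears once in a numerator and once in a denominator.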

8 Biases

The conditioning of the additive biases in a network is also crucial for learning. Since our model requires that biases be initialized to zero, we cannot use the gradient-to-weight ratio to capture the conditioning of the biases in the network. The average singular value notion of conditioning still applies, which leads to the following definition: the scaling of the bias of a layer l, \(x_{l+1}=C_{l}(x_{l})+b_{l}\), is defined as:

In terms of the activation scaling this is:

From Eq. 6 it’s clear that when geometric initialization is used with \(c=2/k\), then:

and so all bias terms will be equally scaled against each other. If kernel sizes vary in the ReLU network, then a setting of c following Sect. 6.2 should be used, combined with fixed scalar multipliers that ensure that at initialization \(E[x_{l+1}^{2}]=\sqrt{\frac{n_{l}}{n_{l+1}}}E[x_{l}^{2}]\).

8.1 Network input scaling balances weights against biases

It is traditional to normalize a dataset before applying a neural network so that the input vector has mean 0 and variance 1 in expectation. This scaling originated when neural networks commonly used sigmoid and tanh nonlinearities, which depended heavily on the input scaling. This principle is no longer questioned today, even though there is no longer a good justification for its use in modern ReLU based networks. In contrast, our theory provides direct guidance for the choice of input scaling.

Consider the scaling factors for the bias and weight parameters in the first layer of a ReLU-based network, as considered in previous sections. We assume the data is already centered. Then the scaling factors for the weight and bias layers are:

We can cancel terms to find the value of \(E\left[ x_{0}^{2}\right] \) that makes these two quantities equal:

In common computer vision architectures, the input planes are the 3 color channels and the kernel size is \(k=3\), giving \(E\left[ x_{0}^{2}\right] \approx 0.2\). Using the traditional variance-one normalization will result in the effective learning rate for the bias terms being lower than that of the weight terms. This will result in potentially slower learning of the bias terms than for the input scaling we propose. We recommend including an initial forward scaling factor in the network of \(1/(n_{0}k^{2})^{1/4}\) to correct for this (Table 2).
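The two numbers quoted above can be reproduced directly. The sketch assumes that the balancing value is \(E[x_{0}^{2}]=1/\sqrt{n_{0}k^{2}}\), which is the form consistent with both the quoted value of roughly 0.2 and the recommended factor \(1/(n_{0}k^{2})^{1/4}\) applied to inputs normalized to unit second moment.

```python
import math

n0, k = 3, 3   # colour channels and first-layer kernel size from the text

# Assumed balancing value of the input second moment.
balanced_second_moment = 1.0 / math.sqrt(n0 * k ** 2)

# Multiplier applied to inputs that already have unit second moment.
input_scale = (n0 * k ** 2) ** -0.25

print(f"E[x0^2] = {balanced_second_moment:.4f}, input scale = {input_scale:.4f}")
```

The multiplier is the square root of the target second moment, because scaling the input by a factor scales its second moment by the square of that factor.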

9 Experimental results on 26 LIBSVM datasets

We considered a selection of dense and moderate-sparsity multi-class classification datasets from the LibSVM repository, 26 in total, collated from a variety of sources [3, 4, 5, 13, 14, 16, 18, 19, 20, 25, 28, 34]. The same model was used for all datasets: a non-convolutional ReLU network with 3 weight layers in total. The two inner layer widths were fixed at 384 and 64 nodes, respectively. These widths (a \(6\times \) ratio) were chosen to accentuate the differences between the methods; smaller differences could be expected with a more typical \(2\times \) ratio between layer widths. Our results are otherwise generally robust to the choice of layer widths.

For every dataset, learning rate, and initialization combination we ran 10 seeds and picked the median loss after 5 epochs as the focus of our study (the largest differences can be expected early in training). Learning rates in the range \(2^{1}\) to \(2^{-12}\) (in powers of 2) were checked for each dataset and initialization combination, with the best learning rate chosen in each case based on the median of the 10 seeds. Training loss was used as the basis of our comparison, as we care primarily about convergence rate and are comparing identical network architectures. Some additional details concerning the experimental setup and which datasets were used are available in the appendix.
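The selection procedure described above can be summarized in a few lines. The sketch below uses placeholder random numbers in place of real training losses, so only the structure of the computation is meaningful: one median per learning rate over the seeds, then the learning rate with the lowest median.

```python
import numpy as np

rng = np.random.default_rng(0)
learning_rates = 2.0 ** np.arange(1, -13, -1)   # 2^1 down to 2^-12
n_seeds = 10

# Placeholder losses with shape (learning rate, seed); real values would come
# from training runs for one dataset and one initialization.
losses = rng.uniform(0.1, 2.0, size=(len(learning_rates), n_seeds))

median_per_lr = np.median(losses, axis=1)       # median over the 10 seeds
best = int(np.argmin(median_per_lr))            # learning rate with the lowest median
best_lr, best_loss = learning_rates[best], median_per_lr[best]
print(f"best learning rate 2^{int(np.log2(best_lr))}, median loss {best_loss:.3f}")
```

Using the median rather than the mean makes the comparison less sensitive to a single diverged seed.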

Table 2 shows that geometric initialization is the most consistent of the initialization approaches considered (the best value in each column is in bold). It has the lowest loss after normalizing each dataset, and it is never the worst of the 4 methods on any dataset. Interestingly, the fan-out method is most often the best method, but the per-dataset plots (Fig. 3) show that it completely fails to learn on some problems, which pulls up its average loss and results in it being the worst for 9 of the datasets.

10 Convolutional case: AlexNet experiments

To provide a clear idea of the effect of our scaling approach on larger networks we used the AlexNet architecture [17] as a test bench. This architecture has a large variety of filter sizes (11, 5, 3, linear), which according to our theory will affect the conditioning adversely, and which should highlight the differences between the methods. The network was modified to replace max-pooling with striding as max-pooling is not well-scaled by our theory.

Following Sect. 7, we normalize the output of the network at initialization by running a single batch through the network and adding a fixed scaling factor to the network to produce an output standard deviation of 0.05. We tested on CIFAR-10 following the standard practice as closely as possible, as detailed in the Appendix. We performed a geometric learning rate sweep over a power-of-two grid. Results are shown in Fig. 4 for an average of 40 seeds for each initialization. Preconditioning is a statistically significant improvement (\(p=3.9\times 10^{-6}\)) over arithmetic mean initialization and fan-in initialization; however, it only shows an advantage over fan-out at mid-iterations.
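The output normalization step can be sketched as follows. The function `output_scale` and the two-layer `toy_network` are hypothetical stand-ins, not the AlexNet implementation used in the experiments; the only element taken from the text is the target standard deviation of 0.05 measured on a single batch.

```python
import numpy as np

def output_scale(forward, batch, target_std=0.05):
    # Run one batch through the network and return the fixed multiplier
    # that brings the output standard deviation to target_std.
    outputs = forward(batch)
    return target_std / np.std(outputs)

rng = np.random.default_rng(0)
W1 = rng.normal(size=(32, 64))
W2 = rng.normal(size=(64, 10))

def toy_network(x):
    # Hypothetical stand-in for the real model.
    return np.maximum(x @ W1, 0.0) @ W2

batch = rng.normal(size=(128, 32))
scale = output_scale(toy_network, batch)
scaled_outputs = scale * toy_network(batch)
print(f"scale {scale:.5f}, output std {np.std(scaled_outputs):.3f}")
```

The multiplier is computed once at initialization and then kept fixed; it is not a learnable parameter.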

11 Case study: unnormalized residual networks

In the case of more complex network architectures, some care needs to be taken to produce well-scaled neural networks. We consider in this section the example of a residual network, a common architecture in modern machine learning. Consider a simplified residual architecture like the following, where we have omitted ReLU operations for our initial discussion:

where for some sequence of operations F:

we further assume that \(\alpha ^{2}+\beta ^{2}=1\) and that \(E[F(x)^{2}]=E[x^{2}]\) following [31]. The use of weighted residual blocks is necessary for networks that do not use batch normalization [7, 10, 32, 36].
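The role of the constraint \(\alpha ^{2}+\beta ^{2}=1\) can be checked numerically. The sketch below assumes a block output of the form \(y=\alpha x+\beta F(x)\) and, as a simplification not stated in the text, models \(F(x)\) as an independent random signal with the same second moment as x. The value of \(\alpha \) is arbitrary.

```python
import numpy as np

rng = np.random.default_rng(0)
alpha = 0.8
beta = np.sqrt(1.0 - alpha ** 2)       # enforces alpha^2 + beta^2 = 1

x = rng.normal(size=1_000_000)
f = rng.normal(size=1_000_000)         # stand-in for F(x): same second moment, uncorrelated with x

y = alpha * x + beta * f               # weighted residual combination
ratio = np.mean(y ** 2) / np.mean(x ** 2)
print(f"output / input second moment: {ratio:.3f}")
```

With the two branches uncorrelated, the cross term vanishes in expectation and the second moment passes through the block unchanged, so stacking blocks does not inflate the forward signal.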

If geometric initialization is used, then \(C_{0}\) and L will have the same scaling, however, the operations within the residual blocks will not. To see this, we can calculate the activation scaling factor within the residual block. We define the shortcut branch for the residual block as the \(\alpha x\) operation and the main branch as the C(x) operation. Let \(x_{R}=\beta C(x)\) and \(x_{S}=\alpha x\), and define \(y=x_{S}+x_{R}\).

Let \(\varsigma \) be the scaling factor at x

We will use the fact that:

From rewriting the scale factor for \(x_{R}\), we see that:

A similar calculation shows that the shortcut branch’s scaling factor is multiplied by \(\alpha ^{2}\). To ensure that convolutions within the main branch of the residual block have the same scaling as those outside the block, we must multiply their initialization by a factor c. We can calculate the value of c required when geometric scaling is used for an operation in layer l in the main branch:

For \(\gamma _{l}\) to match \(\gamma \) outside the block we thus need \(\gamma _{l}=\varsigma _{R}/a_{l}^{2}=(\beta ^{2}/c^{2})\varsigma _{R},\) i.e. \(c=\beta \). If the shortcut branch uses convolutions (such as for channel widening operations or down-sampling as in a ResNet-50 architecture) then they should be scaled by \(\alpha \). Modifying the initialization of the operations within the block changes \(E[F(x)^{2}],\) so a fixed scalar multiplier must be introduced within the main branch to undo the change, ensuring \(E[F(x)^{2}]=E[x^{2}]\).

11.1 Design of a pre-activation ResNet block

Using the principle above we can modify the structure of a standard pre-activation ResNet block to ensure all convolutions are well-conditioned both across blocks and against the initial and final layers of the network. We consider the full case now, where the shortcut path may include a convolution that changes the channel count or the resolution. Consider a block of the form:

We consider a block with fan-in n and fan-out m. There are two cases, depending on if the block is a downsampling block or not. In the case of a downsampling block, a well-scaled shortcut branch consists of the following sequence of operations:

In our notation, C is initialized with the geometric initialization scheme of Eq. 3 using numerator \(c=\alpha \). Here, op is output planes and ks is the kernel size. The constant 4 corrects for the downsampling, and the constant \(\alpha \) is used to correct the scaling factor of the convolution as described above. In the non-downsampled case, this simplifies to

For the main branch of a bottlenecked residual block in a pre-activation network, the sequence begins with a single scaling operation \(x_{0}=\sqrt{\beta }x\). The following pattern is then used, with w being the inner bottleneck width:

Followed by a 3x3 conv:

and the final sequence of operations mirrors the initial operation with a downscaling convolution instead of upscaling. At the end of the block, a learnable scalar \(x_{9}=\frac{v}{\sqrt{\beta }}x_{8}\) with \(v=\sqrt{\beta }\) is included following the approach of [31], and a fixed scalar corrects for any increases in the forward second moment from the entire sequence, in this case, \(x_{9}=\sqrt{\frac{m}{\beta n}}x_{8}\). This scaling is derived from Eq. 6 (\(\beta \) here undoes the initial beta from the first step in the block).

11.2 Experimental results

We ran a series of experiments on an unnormalized pre-activation ResNet-50 architecture using our geometric initialization and scaling scheme both within and outside of the blocks. We compared against the RescaleNet unnormalized ResNet-50 architecture. Following their guidelines, we added dropout which is necessary for good performance and used the same \(\alpha /\beta \) scheme that they used. Our implementation is available in the supplementary material. We performed our experiments on the ImageNet dataset [29], using standard data preprocessing pipelines and hyper-parameters. In particular, we use batch-size 256, decay 0.0001, momentum 0.9, and learning rate 0.1 with SGD, using a 30-60-90 decreasing scheme for 90 epochs. Following our recommendation in Sect. 7, we performed a sweep on the output scaling factor and found that a 0.05 final scalar gives the best results. Across 5 seeds, our approach achieved a test set accuracy of 76.18 (SE 0.04), which matches the performance of the RescaleNet within our test framework of 76.13 (SE 0.03). Our approach supersedes the “fixed residual scaling” that they propose as a way of balancing the contributions of each block.

12 Related work

Our approach of balancing the diagonal blocks of the Gauss-Newton matrix has close ties to a large literature studying the input-output Jacobian of neural networks. The singular values of the Jacobian are the focus of theoretical study in [30, 35], where it is shown that orthogonal initializations better control the spread of the spectrum compared to Gaussian initializations. The effect of layer width and depth on the spectrum is also studied in [8, 9]. Regularization of the Jacobian, where additional terms are added to the loss to minimize the Frobenius norm of the Jacobian, can be seen as another way to control the spectrum [12, 33], as the squared Frobenius norm is the sum of the squared singular values. The spectrum of the Jacobian captures the sensitivity of a network to input perturbations and is key to the understanding of adversarial machine learning, including generative modeling [26] and robustness [2, 15].

13 Conclusion

Although not a panacea, by using the scaling principle we have introduced, neural networks can be designed with a reasonable expectation that they will be optimizable by stochastic gradient methods, minimizing the amount of guess-and-check neural network design. Our approach is a step towards “engineering” neural networks, where aspects of the behavior of a network can be studied in an off-line fashion before use, rather than by a guess-implement-test-and-repeat experimental loop.

Availability of data and material

No unreleased data was used in this work.

References

Bottou L (1988) Reconnaissance de la parole par reseaux connexionnistes. In: Proceedings of Neuro Nimes 88

Chan A, Tay Y, Ong YS, Fu J (2020) Jacobian adversarially regularized networks for robustness. In: Eighth international conference on learning representations (ICLR2020)

Dua D, Graff C (2017) UCI machine learning repository. http://archive.ics.uci.edu/ml

Duarte M, Hu YH (2004) Vehicle classification in distributed sensor networks. J Parallel Distrib Comput

Feng C, Sutherland A, King S, Muggleton S, Henery R (1993) Comparison of machine learning classifiers to statistics and neural networks. AI & Stats Conf. 93

Glorot X, Bengio Y (2010) Understanding the difficulty of training deep feedforward neural networks. In: Proceedings of the thirteenth international conference on artificial intelligence and statistics

Hanin B (2018) Which neural net architectures give rise to exploding and vanishing gradients? In: Advances in neural information processing systems 31, pp. 582–591. Curran Associates, Inc

Hanin B, Nica M (2020) Products of many large random matrices and gradients in deep neural networks. Commun Math Phys

Hanin B, Paouris G (2020) Non-asymptotic results for singular values of Gaussian matrix products

Hanin B, Rolnick D (2018) How to start training: the effect of initialization and architecture. In: Advances in Neural Information Processing Systems, vol. 31

He K, Zhang X, Ren S, Sun J (2015) Delving deep into rectifiers: surpassing human-level performance on ImageNet classification. In: Proceedings of the 2015 IEEE international conference on computer vision (ICCV)

Hoffman J, Roberts DA, Yaida S (2019) Robust learning with jacobian regularization

Hsu CW, Chang CC, Lin CJ (2003) A practical guide to support vector classification. Technical report, Department of Computer Science, National Taiwan University

Hull JJ (1994) A database for handwritten text recognition research. IEEE Transactions on Pattern Analysis and Machine Intelligence

Jakubovitz D, Giryes R (2018) Improving dnn robustness to adversarial attacks using jacobian regularization. In: Computer Vision – ECCV 2018. Springer International Publishing

Krizhevsky A (2009) Learning multiple layers of features from tiny images

Krizhevsky A, Sutskever I, Hinton GE (2012) Imagenet classification with deep convolutional neural networks. In: Advances in neural information processing systems, pp 1097–1105

Lang K (1995) Newsweeder: Learning to filter netnews. In: Proceedings of the twelfth international conference on machine learning

LeCun Y, Bottou L, Bengio Y, Haffner P (1998) Gradient-based learning applied to document recognition. Proceedings of the IEEE

LeCun Y, Huang F, Bottou L (2004) Learning methods for generic object recognition with invariance to pose and lighting. IEEE computer society conference on computer vision and pattern recognition (CVPR)

LeCun YA, Bottou L, Orr GB, Müller KR (2012) Efficient BackProp. In: Neural networks: tricks of the trade. Springer

Li L, Talwalkar AS (2019) Random search and reproducibility for neural architecture search. CoRR

Martens J (2014) New insights and perspectives on the natural gradient method. In: ArXiv e-prints

Mishkin D, Matas J (2016) All you need is a good init

Netzer Y, Wang T, Coates A, Bissacco A, Wu B, Ng AY (2011) Reading digits in natural images with unsupervised feature learning. NIPS Workshop on Deep Learning and Unsupervised Feature Learning

Nie W, Patel A (2019) Towards a better understanding and regularization of gan training dynamics. In: UAI

Pennington J, Schoenholz SS, Ganguli S (2018) The emergence of spectral universality in deep networks. Proceedings of the 21st international conference on artificial intelligence and statistics (AISTATS)

Rocha A, Goldenstein S (2014) Multiclass from binary: expanding one-vs-all, one-vs-one and ecoc-based approaches. IEEE transactions on neural networks and learning systems

Russakovsky O, Deng J, Su H, Krause J, Satheesh S, Ma S, Huang Z, Karpathy A, Khosla A, Bernstein M, Berg AC, Fei-Fei L (2015) ImageNet large scale visual recognition challenge. Int J Comput Vis (IJCV) 115(3):211–252. https://doi.org/10.1007/s11263-015-0816-y

Saxe AM, McClelland JL, Ganguli S (2014) Exact solutions to the nonlinear dynamics of learning in deep linear neural networks. 2nd international conference on learning representations (ICLR 2014)

Shao J, Hu K, Wang C, Xue X, Raj B (2020) Is normalization indispensable for training deep neural networks? In: 34th conference on neural information processing systems (NeurIPS 2020)

Szegedy C, Ioffe S, Vanhoucke V, Alemi AA (2017) Inception-v4, inception-resnet and the impact of residual connections on learning. In: Proceedings of the Thirty-First AAAI conference on artificial intelligence

Varga D, Csiszárik A, Zombori Z (2018) Gradient regularization improves accuracy of discriminative models

Wang JY (2002) Application of support vector machines in bioinformatics. Master’s thesis, Department of Computer Science and Information Engineering, National Taiwan University

Xiao L, Bahri Y, Sohl-Dickstein J, Schoenholz SS, Pennington J (2018) Dynamical isometry and a mean field theory of cnns: How to train 10,000-layer vanilla convolutional neural networks. ICML

Zhang H, Dauphin YN, Ma T (2019) Residual learning without normalization via better initialization. In: International conference on learning representations

Zoph B, Le QV (2016) Neural architecture search with reinforcement learning. CoRR

Funding

This research was funded in whole by Facebook Inc.

Ethics declarations

Conflict of interest

The authors declare no conflicts of interest.

Code availability

An implementation of the modified rescale net is available upon request.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A: Forward and backward second moments

We make heavy use of the equations for forward propagation and backward propagation of second moments, under the assumption that the weights are uncorrelated with the activations and gradients. For a convolution

with input channels \(n_{l}\), output channels \(n_{l+1},\) and square \(k\times k\) kernels, these formulas are (recall our notation for the second moments is element-wise for vectors and matrices):

Appendix B: Scaling properties of common operations

Recall the scaling factor \(\varsigma \):

We show in this section how common neural network building blocks affect this factor.

2.1 Convolutions

Recall the rules for forward and back-propagated second moments for randomly initialized convolutional layers:

These relations require that W be initialized from a symmetric, zero-mean distribution. When applied to the scaling factor we see that:

2.2 Linear layers

These are a special case of convolutions with \(k=1\) and \(\rho =1\).
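As a quick numerical illustration of the fully connected case, the sketch below checks the second-moment relations for a randomly initialized linear layer. It assumes the standard forms \(E[x_{l+1}^{2}]=n_{l}E[W^{2}]E[x_{l}^{2}]\) and \(E[\varDelta x_{l}^{2}]=n_{l+1}E[W^{2}]E[\varDelta x_{l+1}^{2}]\) for zero-mean weights that are independent of the signals. The function name, layer sizes, and seed are illustrative choices and are not taken from our experiments.

```python
import numpy as np

def linear_moment_ratios(n_in=256, n_out=512, batch=2000, w_std=0.05, seed=0):
    """Return (forward, backward) ratios of empirical to predicted second moments."""
    rng = np.random.default_rng(seed)
    W = rng.normal(0.0, w_std, size=(n_out, n_in))        # zero-mean, symmetric init
    x = np.maximum(rng.normal(size=(batch, n_in)), 0.0)   # non-centred input, as after a ReLU
    dy = rng.normal(size=(batch, n_out))                  # stand-in for the back-propagated gradient

    y = x @ W.T     # forward pass
    dx = dy @ W     # backward pass with respect to the input

    m2 = lambda a: np.mean(a ** 2)   # element-wise non-central second moment
    fwd_pred = n_in * m2(W) * m2(x)
    bwd_pred = n_out * m2(W) * m2(dy)
    return m2(y) / fwd_pred, m2(dx) / bwd_pred

fwd, bwd = linear_moment_ratios()
print(f"forward ratio  {fwd:.3f}")
print(f"backward ratio {bwd:.3f}")
```

Both ratios should be close to one. The forward ratio fluctuates more for a single weight draw because the input is not mean zero, so the cross terms only vanish in expectation over W.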

2.3 Average pooling

If the kernel size is equal to the stride, then element-wise we have:

So:

2.4 ReLU

If the input to a ReLU is centered and symmetrically distributed, then during the forward pass, half of the inputs are zeroed out in expectation, meaning that \(E[x_{l+1}^{2}]=\frac{1}{2}E[x_{l}^{2}]\). The backward operation just multiplies \(\varDelta x_{l+1}\) by the zero pattern used during the forward pass, so it also zeros half of the entries, giving \(E[\varDelta x_{l}^{2}]=\frac{1}{2}E[\varDelta x_{l+1}^{2}]\). So:

2.5 Dropout

The reasoning for dropout is essentially the same as for the ReLU. If nodes are dropped out with probability p, then \(E[x_{l+1}^{2}]=(1-p)E[x_{l}^{2}]\) and \(E[\varDelta x_{l}^{2}]=\left( 1-p\right) E[\varDelta x_{l+1}^{2}]\). So scaling is maintained. Note that in the PyTorch implementation, during training the outputs are further multiplied by \(1/(1-p)\).
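The ReLU and dropout moment relations can be checked numerically. The following is a minimal sketch, assuming a standard normal input and an independent standard normal stand-in for the back-propagated gradient. The dropout variant shown omits the \(1/(1-p)\) rescaling, and the variable names and the value of p are illustrative.

```python
import numpy as np

rng = np.random.default_rng(0)
m2 = lambda a: np.mean(a ** 2)        # element-wise non-central second moment

x = rng.normal(size=1_000_000)        # centred, symmetric input
g_out = rng.normal(size=x.shape)      # stand-in for the gradient arriving from above

# ReLU: the forward pass zeroes negative inputs; the backward pass reuses the same mask.
relu_mask = x > 0
relu_fwd = m2(x * relu_mask) / m2(x)
relu_bwd = m2(g_out * relu_mask) / m2(g_out)

# Dropout without the 1/(1-p) rescaling: each unit is kept with probability 1-p.
p = 0.3
keep = rng.random(x.shape) >= p
drop_fwd = m2(x * keep) / m2(x)
drop_bwd = m2(g_out * keep) / m2(g_out)

print(f"ReLU    forward {relu_fwd:.3f}  backward {relu_bwd:.3f}  (expected about 0.5)")
print(f"Dropout forward {drop_fwd:.3f}  backward {drop_bwd:.3f}  (expected about {1 - p:.1f})")
```

In both cases the forward and backward second moments shrink by the same factor, which is why the scaling is unchanged.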

2.6 Scalar multipliers

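Consider a layer:

\[x_{l+1}=u_{l}x_{l}.\]

Then

\[E[x_{l+1}^{2}]=u_{l}^{2}E[x_{l}^{2}]\]

and

\[E[\varDelta x_{l}^{2}]=u_{l}^{2}E[\varDelta x_{l+1}^{2}],\]

so the second moments of the forward and backward signals are both multiplied by \(u_{l}^{2}\), which maintains scaling.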

2.7 Residual blocks

Consider a residual block of the form:

for some operation R. Suppose that \(E[R(x)^{2}]=s^{2}E[x^{2}]\) for some constant s. The forward signal second moment gets multiplied by \(\left( 1+s^{2}\right) \) after the residual block:

The backward signal second moment is also multiplied by \(\left( 1+s^{2}\right) \):

So:

Appendix C: Extrinsic and intrinsic form equivalence

Recall the extrinsic definition of \(\gamma _{l}\):

We rewrite this as:

Then by using the forward and backward relations Eqs. 9 and 10:

Appendix D: The weight gradient ratio is equal to GR scaling for MLP models

Theorem 3

The weight-gradient ratio \(\nu _{l}\) is equal to the scaling factor \(\gamma _{l}\) under the assumptions of Sect. 3.

Proof

First, we rewrite Eq. 9 to express \(E[W^{2}]\) in terms of forward moments:

For the gradient with respect to the weights of a convolutional layer, we have:

Therefore:

Appendix E: Scaling of scalar multipliers

Consider the layer:

with a single learnable scalar \(u_{l}\). Using:

we have:

Likewise from the equations of back-prop we have:

so

Therefore:

This equation is the same for a \(1\times 1\) convolutional layer. We can write the scaling factor in terms of the weight u more directly, by rearranging the scaling rule as:

and substituting it into Eq. 11:

To ensure that the scaling factor matches that of the convolutions used in the network, suppose that geometric initialization is used with global constant c. Then each convolution has \(\gamma _{l}=\varsigma _{l}/c^{2}\), so we need:

or just \(u_{l}=\sqrt{c}\). Notice that if we had used a per-channel scalar instead, then the layer scaling would not match the scaling of convolution weights, which would result in uneven layer scaling in the network. This motivates using scalar rather than channel-wise scaling factors.

Appendix F: The Gauss–Newton matrix

Standard ReLU classification and regression networks have a particularly simple structure for the Hessian with respect to the input, as the network’s output is a piecewise-linear function g fed into a final layer consisting of a convex log-softmax operation or a least-squares loss. This structure results in the Hessian with respect to the input being equivalent to its Gauss–Newton approximation. The Gauss–Newton matrix can be written in a factored form, which is used in the analysis we perform in this work. We emphasize that this is used only as a convenience when working with diagonal blocks; the GN representation is not an approximation in this case.

The (Generalized) Gauss–Newton matrix G is a positive semi-definite approximation of the Hessian of a non-convex function f, given by factoring f into the composition of two functions \(f(x)=h(g(x))\), where h is convex and g is approximated by its Jacobian matrix J at x for the purpose of computing G:

\[G=J^{T}\left( \nabla ^{2}h(g(x))\right) J.\]

The GN matrix also has close ties to the Fisher information matrix [23], providing another justification for its use.

Surprisingly, the Gauss–Newton decomposition can be used to compute diagonal blocks of the Hessian with respect to the weights \(W_{l}\) as well as the inputs [23]. To see this, note that for any activation \(y_{l}\), the layers above may be treated in a combined fashion as the h in a \(f(W_{l})=h(g(W_{l}))\) decomposition of the network structure, as they are the composition of a (locally) linear function and a convex function and thus convex. In this decomposition \(g(W_{l})=W_{l}x_{l}+b_{l}\) is a function of \(W_{l}\) with \(x_{l}\) fixed, and as this is linear in \(W_{l}\), the Gauss–Newton approximation to the block is thus not an approximation.
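The exactness of the Gauss–Newton form for a weight block can be verified on a small example. The sketch below assumes, for simplicity, that the layers above \(y_{l}\) reduce to a least-squares problem \(\frac{1}{2}\left\| \Phi y_{l}-t\right\| ^{2}\) with a fixed linearization \(\Phi \). The dimensions, the row-major vectorization of \(W_{l}\), and all variable names are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
n_in, n_out, n_top = 4, 3, 5
x = rng.normal(size=n_in)
b = rng.normal(size=n_out)
Phi = rng.normal(size=(n_top, n_out))   # assumed linearization of the layers above y_l
t = rng.normal(size=n_top)

def grad(w_flat):
    """Gradient of f(W) = 0.5 * ||Phi (W x + b) - t||^2 w.r.t. row-major vec(W)."""
    W = w_flat.reshape(n_out, n_in)
    dy = Phi.T @ (Phi @ (W @ x + b) - t)
    return np.outer(dy, x).ravel()

# Factored Gauss-Newton block G = J^T R J, with J = I (kron) x^T and R = Phi^T Phi.
J = np.kron(np.eye(n_out), x[None, :])
G = J.T @ (Phi.T @ Phi) @ J

# Hessian block obtained from central differences of the gradient.
w0 = rng.normal(size=n_out * n_in)
eps = 1e-4
H = np.stack([(grad(w0 + eps * e) - grad(w0 - eps * e)) / (2 * eps)
              for e in np.eye(n_out * n_in)], axis=1)

max_abs_diff = np.max(np.abs(H - G))
print(f"max |H - G| = {max_abs_diff:.2e}")
```

Because g is linear in \(W_{l}\), the two matrices agree up to floating-point rounding.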

Appendix G: GR scaling derivation

Our quantity of interest is the average squared singular value of \(G_{l}\), which is simply equal to the (element-wise) non-central second moment of the product of \(G_{l}\) with an i.i.d. normal random vector r:

Recall that our notation \(E[X^{2}]\) refers to the element-wise non-central second moment of the vector. To compute the second moment of the elements of \(G_{l}r\), we can calculate the second moment of matrix-random-vector products against \(J_{l}\), \(R_{l}\) and \(J_{l}^{T}\) separately since \(R_{l}\) is uncorrelated with \(J_{l}\), and the back-propagated gradient \(\varDelta y_{l}\) is uncorrelated with \(y_{l}\) (Assumption A3).
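The identity between the average squared singular value and the element-wise second moment of a random matrix-vector product can be illustrated with a Monte Carlo estimate. This is a sketch under the assumption that r is standard normal; the matrix below is an arbitrary symmetric positive semi-definite stand-in for \(G_{l}\), not a block taken from a network.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 50
A = rng.normal(size=(n, n))
G = A.T @ A                                   # symmetric PSD stand-in for G_l

# Average squared singular value, computed directly.
avg_sq_sv = np.mean(np.linalg.svd(G, compute_uv=False) ** 2)

# Element-wise non-central second moment of G r over many standard normal draws.
r = rng.normal(size=(n, 20_000))              # each column is one random vector
second_moment = np.mean((G @ r) ** 2)

rel_err = abs(second_moment - avg_sq_sv) / avg_sq_sv
print(f"average squared singular value: {avg_sq_sv:.1f}")
print(f"estimated E[(G r)^2]:           {second_moment:.1f}")
print(f"relative error:                 {rel_err:.4f}")
```

The agreement follows from \(E\left\| Gr\right\| ^{2}=\left\| G\right\| _{F}^{2}\), which is the sum of the squared singular values.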

5.1 Jacobian products \(J_{l}\) and \(J_{l}^{T}\)

Note that each row of \(J_{l}\) has \(n_{l}^{\text {in}}\) non-zero elements, each containing a value from \(x_{l}\). This structure can be written as a block matrix,

\[J_{l}=\begin{bmatrix}x_{l} & 0 & \cdots & 0\\ 0 & x_{l} & \cdots & 0\\ \vdots & \vdots & \ddots & \vdots \\ 0 & 0 & \cdots & x_{l}\end{bmatrix},\]

where each \(x_{l}\) is a \(1\times n_{l}^{\text {in}}\) row vector. This can also be written as a Kronecker product with an identity matrix as \(I_{n_{l}^{\text {out}}}\otimes x_{l}\). The value \(x_{l}\) is i.i.d. random at the bottom layer of the network. For layers further up, the multiplication by a random weight matrix from the previous layer ensures that the entries of \(x_{l}\) are identically distributed. So we have:

Note that we did not assume that the input \(x_{l}\) is mean zero, so \(Var[x_{l}]\ne E[x_{l}^{2}]\). This is needed because the input to a layer is often the output of a ReLU operation, which is not mean zero.
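The Kronecker structure of \(J_{l}\) and its effect on second moments can be illustrated numerically. The sketch below assumes row-major vectorization of the weights and standard normal vectors r and u that are independent of \(x_{l}\). Under these assumptions it compares the empirical moments with \(n_{l}^{\text {in}}E[x_{l}^{2}]\) for \(J_{l}r\) and with \(E[x_{l}^{2}]\) for \(J_{l}^{T}u\). Sizes and names are illustrative.

```python
import numpy as np

rng = np.random.default_rng(0)
n_in, n_out, trials = 64, 32, 1000
m2 = lambda a: np.mean(np.square(a))        # element-wise non-central second moment

# Structure check: with row-major vec(W), J vec(W) equals W x.
x = np.maximum(rng.normal(size=n_in), 0.0)
W = rng.normal(size=(n_out, n_in))
J = np.kron(np.eye(n_out), x[None, :])      # shape (n_out, n_out * n_in)
structure_ok = np.allclose(J @ W.ravel(), W @ x)

jr, jtu, xs = [], [], []
for _ in range(trials):
    x = np.maximum(rng.normal(size=n_in), 0.0)     # non-centred, as after a ReLU
    J = np.kron(np.eye(n_out), x[None, :])
    jr.append(J @ rng.normal(size=n_out * n_in))   # r: i.i.d. standard normal
    jtu.append(J.T @ rng.normal(size=n_out))       # u: i.i.d. standard normal
    xs.append(x)

jr_ratio = m2(jr) / (n_in * m2(xs))   # compared with n_in * E[x^2] * E[r^2], E[r^2] = 1
jtu_ratio = m2(jtu) / m2(xs)          # compared with E[x^2] * E[u^2], E[u^2] = 1
print(structure_ok, f"{jr_ratio:.3f}", f"{jtu_ratio:.3f}")
```

Both ratios should be close to one even though the input is not mean zero, since only the non-central second moment of \(x_{l}\) enters.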

For the transposed case, we have a single entry per column, so when multiplying by an i.i.d. random vector u we have:

5.2 Upper Hessian \(R_{l}\) product

Rather than computing \(R_{l}u\) for an arbitrary random u, we compute it for \(u=y_{l}/E[y_{l}^{2}]\); this has the same expectation since both \(J_{l}r\) and \(y_{l}\) are uncorrelated with \(R_{l}\). The piecewise linear structure of the network above \(y_{l}\) with respect to \(y_{l}\) makes the structure of \(R_{l}\) particularly simple. It is a least-squares problem \(g(y_{l})=\frac{1}{2}\left\| \Phi y_{l}-t\right\| ^{2}\) for some \(\Phi \) that is the linearization of the remainder of the network. The gradient is \(\varDelta y=\Phi ^{T}\left( \Phi y-t\right) \) and the Hessian is simply \(R=\Phi ^{T}\Phi \). So we have that

Applying this gives:

5.3 Combining

To compute \(E[\left( G_{l}r\right) ^{2}]=E[\left( J_{l}^{T}R_{l}J_{l}r\right) ^{2}]\) we then combine the simplifications from Eqs. 13, 14 and 15 to give:

Appendix H: Details of LibSVM dataset input/output scaling

To prevent the results from being skewed by the number of classes and the number of inputs affecting the output variance, the logit output of the network was scaled to have standard deviation 0.05 after the first minibatch evaluation for every method, with the scaling constant fixed thereafter. LayerNorm was used on the input to whiten the data. Weight decay of 0.00001 was used for every dataset. To aggregate the losses across datasets we divided by the worst loss across the initializations before averaging.
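The output scaling and loss aggregation steps can be sketched as follows. This is an illustrative reconstruction and not the code used in our experiments: the helper names are hypothetical, the standard deviation is assumed to be taken over all logits in the first minibatch, and "worst loss" is assumed to mean the largest loss for a dataset.

```python
import numpy as np

TARGET_STD = 0.05

def fit_logit_scale(first_batch_logits, target_std=TARGET_STD):
    """Scaling constant computed once from the first minibatch, then held fixed."""
    return target_std / np.std(first_batch_logits)

def aggregate_losses(losses):
    """losses[d][m] is the loss on dataset d with initialization m (hypothetical layout).

    Each dataset's losses are divided by the largest loss for that dataset,
    then averaged over datasets, giving one score per initialization.
    """
    losses = np.asarray(losses, dtype=float)
    normalised = losses / losses.max(axis=1, keepdims=True)
    return normalised.mean(axis=0)

rng = np.random.default_rng(0)
logits = rng.normal(scale=3.0, size=(128, 10))   # stand-in for first-minibatch logits
scale = fit_logit_scale(logits)
scaled_logits = scale * logits                   # later minibatches reuse the same scale

scores = aggregate_losses([[0.5, 1.0], [2.0, 1.0]])
print(np.std(scaled_logits), scores)
```

Normalizing by the worst loss keeps datasets with large loss values from dominating the average across datasets.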

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Defazio, A., Bottou, L. A scaling calculus for the design and initialization of ReLU networks. Neural Comput & Applic 34, 14807–14821 (2022). https://doi.org/10.1007/s00521-022-07308-z

DOI: https://doi.org/10.1007/s00521-022-07308-z