Abstract

To date, Artificial Intelligence systems for handwriting and drawing analysis have primarily targeted domains such as writer identification and sketch recognition. Conversely, the automatic characterization of graphomotor patterns as biomarkers of brain health remains relatively unexplored. Despite its importance, the work done in this direction is limited and sporadic. This paper surveys related work to guide novice researchers and to highlight relevant study contributions. The literature is grouped into “visual analysis techniques” and “procedural analysis techniques”. Visual analysis techniques evaluate offline samples of a graphomotor response after completion, whereas procedural analysis techniques focus on the dynamic processes involved in producing a graphomotor response. Since the primary goal of both families of strategies is to represent domain knowledge effectively, the paper also outlines the handwriting representation and estimation methods commonly employed in the literature and discusses their strengths and weaknesses. It also highlights the limitations of existing approaches and the challenges commonly faced when designing such systems. High-level directions for further research conclude the paper.

1 Introduction

Neuropsychology is an established discipline that investigates brain–behavior relationships [1]. A neuropsychologist attempts to determine the presence of brain dysfunction by examining abnormal behavioral patterns exhibited by a potentially at-risk individual [2]. Dysfunctional brain processing can result from an underlying neurological disorder, a psychiatric imbalance, or a syndrome [3]. A comprehensive neuropsychological assessment can help explain the consequential changes in an individual’s behavior, emotions, and executive functioning caused by an underlying dysfunction. By employing methodological procedures sensitive to specific functional changes, a neuropsychologist can identify a particular cognitive or behavioral impairment and suggest adequate rehabilitation [4]. Similar procedures can also be used to measure the effectiveness of treatment [5]. In this regard, neuropsychologists provide consultation services to both neurologists and psychiatrists.

Neuropsychological test batteries comprise a series of performance-based tests that require individuals to perform various verbal and non-verbal tasks. These tests are non-intrusive, easy to administer, and designed to assess an individual’s cognitive, perceptual, and motor skills. A popular category of neuropsychological assessment is the pen-and-paper test, which includes graphomotor tasks involving drawing or handwriting. Studies, e.g. [6,7,8], suggest that complex multi-component tasks such as drawing and handwriting require several graphomotor skills, including visual-perceptual maturity, orthographic (spelling) coding, motor planning and execution, kinesthetic feedback, and visual-motor coordination. A dysfunction in any of these skills caused by a brain disorder therefore affects an individual’s drawing and handwriting performance. Given their screening potential, graphomotor tasks have been employed as psychometric tools for detecting a variety of neuropsychological and neurological disorders such as apraxia, visuo-spatial neglect (VSN), dysgraphia, and dementia [9]. Some studies, e.g. [10], have also established the impact of a writer’s emotional state on their handwriting.

The conventional test protocol requires subjects to draw or write on a page using a pen or pencil. The test may involve copying or recalling a visual stimulus (reproduction method), completing a partially drawn or written task (completion method), or projecting a concept into words or graphics (projective method). Commonly used graphomotor tests include the Rey-Osterrieth complex figure (ROCF) test [11], the Clock Drawing test (CDT) [12], the Bender-Gestalt test (BGT) [13], the Archimedean spiral [14], and the Draw-a-Person (DAP) test [15]. These tests can be administered individually or in groups. Once the subject produces a response, a domain expert examines it visually to identify indicators of specific brain dysfunction, using standard scoring manuals [16,17,18]. The results of these assessments are then correlated with other clinical findings to diagnose associated disorders and to suggest adequate rehabilitation.

1.1 Motivations

Despite the importance of graphomotor-based neuropsychological assessments, their use has gradually declined over the years [19]. Among the contributing factors, two major concerns are time and standardization. Test administration and scoring are time-consuming and tedious: in a typical clinical setting, evaluating a patient’s response can take several hours [20]. Manual scoring and interpretation also lack precision and accuracy due to inter-scorer and test-retest reliability issues [21]. Additionally, manual evaluation may not capture subtle but relevant patterns that could improve predictive accuracy. Traditional tests are also not designed for repeated measurements and are practically inaccessible for home care. Another important factor is the lack of interdisciplinary research, because of which neuropsychologists have been relatively slow to embrace applied computer technologies in their work, despite widespread digitization. However, the growing interest of the Artificial Intelligence (AI) community in analyzing graphomotor samples for potential screening of cognitive impairments has encouraged practicing neuropsychologists to become less skeptical of adopting these emerging technologies in the quest to provide cost-effective and time-efficient services to the masses [22]. Figure 1 depicts the main high-level differences between a typical medical evaluation, which requires the simultaneous presence of patient and doctor, and an AI-assisted procedure.

There is a growing body of literature on the work carried out by the AI community, and more specifically by the Pattern Recognition community, e.g. [23, 24], ranging from feature extraction, selection, and validation for knowledge representation to graphomotor task analysis for the detection, diagnosis and monitoring of disease progression. This interest has been boosted by recent advances in machine learning and deep learning. However, the highly specific nature of the domain makes it difficult to compile and categorize a comprehensive review. Recently, there have been some attempts to compile the relevant literature. For example, [25] presented a review of recent work aimed at detecting and monitoring Parkinson’s disease (PD) using recent technologies. In another review, De Stefano et al. [26] surveyed handwriting modeling approaches used to support the early diagnosis, monitoring and tracking of neurodegenerative diseases, focusing mainly on Alzheimer’s disease (AD) and Mild Cognitive Impairment (MCI). Similar reviews are presented by Impedovo and Pirlo [27] and Vessio [28], who underlined the importance of dynamic handwriting attributes for the identification of AD and PD. Dynamic handwriting analysis has gained popularity in recent years with the advent of digital technology. Its importance is also highlighted in a very recent survey by Faundez-Zanuy et al. [29], which, however, is more generic and does not delve into the health domain. Impedovo et al. [30] also argue for the effectiveness of dynamic handwriting analysis for the early diagnosis of PD.

1.2 Contribution

Most reviews of the relevant literature focus on a specific disorder (PD or AD) or a particular technique (dynamic attributes or handwriting modeling). Although these provide an in-depth analysis in a particular direction, we believe that a study presenting a generic overview of possible directions for novice researchers is needed. The main contribution of this work is the compilation and systematization of prominent works on the computerized analysis of graphomotor tests for a variety of neuropsychological and neurological disorders. To this end, we have collected and categorized many studies that addressed various impairments and proposed various analytical techniques. We propose a novel categorization that classifies the literature into “visual analysis techniques” and “procedural analysis techniques”. Visual analysis techniques evaluate offline samples of a graphomotor response after completion, while procedural analysis techniques focus on the dynamic processes involved in producing a graphomotor response. On the one hand, this paper can guide computer science researchers in applying Artificial Intelligence techniques to the computer-aided analysis of different graphomotor tests. On the other hand, its findings may help neuropsychologists by providing an overview of computational methods that could be included in their practice to add objectivity. The key idea of such systems is to support, not replace, human experts, especially in the mass screening of at-risk subjects.

To guide the reader, we first present in Sect. 2 the proposed contextual categorization of the studies referred to in this work. In Sect. 3, we summarize the commonly used features and estimation methods and compare their strengths and weaknesses from a pattern recognition perspective. Section 4 discusses the visual analysis techniques in detail, while Sect. 5 introduces the procedural analysis techniques. Finally, Sect. 6 concludes the paper with a discussion of the main findings and directions for future research.

2 Proposed categorization of the literature

Computerized analysis of handwriting and hand-drawn shapes has remained an active area of research in the AI community for the past several decades. In general, a graphomotor impression contains both explicit and implicit information that can be used for a variety of applications. As a result, research in domains like handwriting recognition [31,32,33,34,35], character recognition [36, 37], binarization [38], segmentation [39], keyword spotting [40], manuscript dating [41], signature verification [42, 43], writer identification [44] and writer demographics prediction [45,46,47], has matured considerably, and is practically applied in fields like forensic investigation [48], document analysis [49], document preservation [50] and information retrieval [51].

In contrast to the aforementioned popular applications, the computerized analysis of handwriting and hand-drawn shapes to assess a person’s mental health or predict brain disorders still needs further investigation before it can reach clinical practice and achieve technology transfer. Nevertheless, following the renewed interest of AI experts in analyzing neuropsychological graphomotor tasks for diagnostic purposes, several attempts have been made in recent years. Given the sporadic nature of these works, existing systems could be categorized according to several criteria. For example, they could be grouped by goal (e.g., feature validation or disease diagnosis) or by mode of data acquisition (e.g., offline or online). However, we opted for a criterion based on the mode of analysis, i.e. whether the system analyzes the visual feedback of the completed graphomotor response or evaluates the procedure involved in its creation; hence the names “visual analysis techniques” and “procedural analysis techniques”.

This categorization criterion is motivated by the observation that research in this field has been driven primarily by advances in hardware and software technology. It therefore allows us to offer the reader not only a historical but also a technical perspective on the topic. We discuss the preliminary studies, which opened research directions that are now dormant after an initial surge of interest, as well as more recent contributions that have attracted increasing attention thanks to emerging machine learning and deep learning techniques.

Furthermore, a reader from the biometric community may notice a parallel between our proposed distinction between visual and procedural analysis techniques and the well-known distinction between “static” and “dynamic” handwriting analysis [29]. We believe that new terminology is needed because the static/dynamic distinction is traditionally tied to the biometric literature alone, whereas the focus of the current study encompasses many interdisciplinary problems. More general terminology, covering not only the type of analysis but also what domain experts expect from the administration of a given test, could enhance the acceptability of the related literature.

Visual analysis techniques include studies that analyze completed responses to graphomotor-based neuropsychological tasks such as the Necker’s cube [52], ROCF [53], BGT [54], and CDT [55]. Most of these tests include geometric shapes with linear, circular, curvilinear and angular components, and assess the visual-perceptual orientation of the subjects. The goal is to assess an individual’s ability to accurately perceive and reproduce such shapes. Common deformations considered by clinical professionals when evaluating drawn responses to these stimuli include rotation, fragmentation, cohesion, and perseveration. For example, the drawings of individuals with frontal lobe injuries are prone to perseveration (i.e., reproducing the same pattern over and over again), such as exceeding the number of dots or drawing extra columns of circles [13]. Closure difficulty, or the inability to join parts of a shape, is linked to emotional distress and visuo-spatial neglect [56]. Similarly, rotation of a complete figure by \(80^\circ\) to \(180^\circ\) has been associated with focal brain lesions and dementia in the elderly [57]. Computerized systems designed to identify such deformations are mainly based on static geometric and spatial features (such as size, angle, orientation or pixel-wise distance) extracted from digitized offline samples and, in some cases, from online samples of drawn or handwritten responses, e.g. [52, 58]. These features are then compared with those of the expected templates through template matching, machine learning, or extensive domain-specific heuristics.

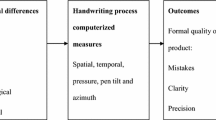

With the rise of technology, AI researchers are now exploring alternative or additional techniques such as dynamic handwriting and drawing analysis using specialized electronic devices, e.g. digitizing tablets and smart pens [59]. This has led to a paradigm shift from visual to procedural analysis techniques. Procedural analysis techniques focus on identifying and evaluating unconventional biomarkers such as hand movement, the pressure exerted, and the time an individual takes to perform a graphomotor task [30, 60, 61]. A conventional online acquisition tool such as a digitizing tablet usually records various attributes of pen movement, including (x, y) coordinates, timestamps, button status (indicating pen-up and pen-down movements), pen pressure and tilt. These raw signals can then be used to derive various dynamic features. All these values are sampled at consecutive time instants and therefore form sequences (time series). In most state-of-the-art studies, each sequence is condensed into a single value by computing statistics (such as the mean or standard deviation), which are then used to make diagnostic decisions.
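
As a minimal sketch of this pipeline, assuming a hypothetical tablet export with coordinates in device units and timestamps in seconds (the array layout is not tied to any specific device or SDK), a derived dynamic feature such as the tangential velocity can be computed from consecutive samples and then condensed into summary statistics:

```python
import numpy as np

def velocity_profile(x, y, t):
    """Tangential pen velocity between consecutive samples.

    x, y: pen coordinates (device units); t: timestamps in seconds,
    assumed strictly increasing. The result has one value fewer than
    the number of samples.
    """
    x, y, t = (np.asarray(a, dtype=float) for a in (x, y, t))
    dist = np.hypot(np.diff(x), np.diff(y))   # path length between samples
    dt = np.diff(t)                           # elapsed time between samples
    return dist / dt

def summarize(signal):
    """Collapse a variable-length sequence into a few fixed statistics."""
    s = np.asarray(signal, dtype=float)
    return {"mean": s.mean(), "std": s.std(), "median": np.median(s)}

# Hypothetical recording: four samples taken 0.01 s apart
x = [0.0, 3.0, 6.0, 6.0]
y = [0.0, 4.0, 8.0, 8.0]
t = [0.00, 0.01, 0.02, 0.03]
v = velocity_profile(x, y, t)   # roughly [500, 500, 0] units/s
print(v)
print(summarize(v))
```

The same pattern applies to other sampled signals (pressure, tilt, acceleration): each is a sequence whose length depends on how long the subject took, and the summary step is what makes recordings of different durations comparable.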

Both approaches have inherent strengths and weaknesses. Moreover, since they capture different patterns, they can complement each other successfully. Figure 2 shows a spectrum of tests used in the recent literature to assess various pathologies of the developing and degenerating neuromotor system through visual and procedural analysis of handwriting. The methods and techniques employed in both categories are discussed later in the paper. Before these details, we discuss the features that have been investigated for handwriting representation and estimation.

3 Representation and estimation

A thorough review of the techniques presented in the next sections highlights several issues that require attention from the community. The most significant ones, and those common to all studies, are representation and estimation, as outlined in Table 1 and detailed in the following. “Representation” refers to the characterization of clinical manifestations using computational features, while “estimation” concerns the measurement of deviations of a subject’s graphomotor impressions from the expected prototypes. It is worth pointing out that the term “features” in Table 1 is standard terminology in the machine learning literature [62] and, in the context of this study, refers to the mathematical representation of mainly physical observations in handwriting, which are subsequently used for classification, verification, or prediction purposes.

3.1 Representation

To effectively represent the clinical manifestations and graphomotor patterns being assessed, a series of computable feature classes has been proposed in the literature. These include various static and dynamic features specific to the objective of each study. Spatial and geometric features are extracted at the component level or globally, and have also been employed in a series of procedural analysis techniques [67, 68] to enhance the performance of dynamic features. Commonly used spatial and geometric features include height, width, tangential angle, size, dimension, and orientation. Spatial features are useful for characterizing visuo-spatial or visuo-perceptual patterns; however, because such patterns are hard to define exhaustively and free-hand responses are unconstrained, traditional hand-crafted features prove insufficient for a complete analysis.
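
A few of the global spatial and geometric features listed above can be sketched as follows. This is an illustrative implementation, not the one used in the cited studies; in particular, measuring orientation as the angle of the principal axis of the ink points is an assumption chosen for simplicity.

```python
import numpy as np

def geometric_features(points):
    """Global size and orientation features of a drawn shape.

    points: (N, 2) array of (x, y) coordinates of ink pixels or pen samples.
    """
    p = np.asarray(points, dtype=float)
    width = p[:, 0].max() - p[:, 0].min()
    height = p[:, 1].max() - p[:, 1].min()
    # Principal axis: eigenvector of the covariance matrix with the
    # largest eigenvalue (eigh returns eigenvalues in ascending order).
    cov = np.cov(p, rowvar=False)
    eigvals, eigvecs = np.linalg.eigh(cov)
    major = eigvecs[:, np.argmax(eigvals)]
    # An axis has no direction, so fold the angle into [0, 180) degrees
    orientation = np.degrees(np.arctan2(major[1], major[0])) % 180.0
    return {
        "width": width,
        "height": height,
        "aspect_ratio": width / height if height > 0 else np.inf,
        "orientation_deg": orientation,
    }

# A hypothetical straight stroke drawn at 45 degrees
line = np.column_stack([np.arange(10), np.arange(10)])
print(geometric_features(line))
```

Comparing such values with those of the expected stimulus (for instance, the orientation difference) gives a crude measure of deformations such as rotation; the limitation noted above remains, since a handful of global descriptors cannot capture all the deformations that clinicians score.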

Kinematic features are mainly acquired from a digitizing tablet or smart pen. They are used to characterize an individual’s fine motor skills and handwriting fluency, which can be affected by an underlying impairment such as PD. Kinematic features are highly task-dependent and can lead to poor performance if extracted from a non-relevant task [71, 72]. It is worth noting that their computation typically yields vectors whose length varies with the time the subject takes to complete a task. In most cases, these variable-length vectors cannot be used directly to train a machine learning model; therefore, several statistical features are computed from them, such as the number of changes of velocity or acceleration (NCV/NCA) or, more simply, the mean, median, etc. The main purpose of these statistical features is to map sequential time-series information into a single value. However, vital information can be lost by condensing a sequence into a single parameter [90].
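
The mapping from variable-length kinematic sequences to a fixed-length vector can be sketched as below. Here NCV/NCA are operationalized as the number of switches between increasing and decreasing segments of the velocity or acceleration signal; this is one common reading, and individual studies may define or normalize these counts differently (e.g., per unit of time). A uniform sampling period `dt` is assumed.

```python
import numpy as np

def n_changes(signal):
    """Count how often a signal switches between increasing and decreasing.

    Flat segments (zero difference) are ignored.
    """
    d = np.diff(np.asarray(signal, dtype=float))
    s = np.sign(d)
    s = s[s != 0]
    return int(np.count_nonzero(s[1:] != s[:-1]))

def kinematic_vector(velocity, dt):
    """Map a velocity series of any length to a fixed-length feature vector."""
    v = np.asarray(velocity, dtype=float)
    acc = np.diff(v) / dt                  # finite-difference acceleration
    return np.array([
        v.mean(), np.median(v), v.std(),
        n_changes(v),                      # NCV
        n_changes(acc),                    # NCA
    ], dtype=float)

# Two hypothetical recordings of different duration
short = kinematic_vector([1.0, 2.0, 1.0], dt=0.01)
longer = kinematic_vector([1.0, 2.0, 3.0, 2.0, 1.0, 2.0], dt=0.01)
print(short.shape, longer.shape)   # both vectors have five entries
```

Both recordings end up with vectors of the same length, which is what a conventional classifier needs; the price, as noted above, is that the temporal ordering of the original sequence is discarded.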

To evaluate different modalities, pressure signals have also been used in several studies, e.g. [67, 72, 74,75,76]. Two types of pressure are mainly evaluated: the pressure exerted by the pen on the writing surface [72] and the finger grip on the writing tool, usually a smart pen [74]. The pressure signals captured by the smart pen can also be transformed into 2D images from which visual features are extracted [74].

Temporal features have been used in several studies, e.g. [30, 68, 72, 77,78,79], as indicators of various cognitive impairments. Among the temporal measures, in-air time has shown particular promise in identifying cognitive impairments resulting from neurodegenerative diseases such as PD and AD. Despite the promising results of studies like [77, 78], temporal features may not be effective for the differential diagnosis of diseases with overlapping manifestations. For example, a time delay in PD can be due to motor impairments, while in AD and MCI it can be attributed to memory or executive planning impairments.
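
A minimal sketch of how in-air and on-surface time can be obtained from the button status, assuming the tablet also reports samples while the pen hovers within its proximity range (button status 0) and that each inter-sample interval is attributed to the pen state at its start; both are assumptions about the acquisition setup, not properties of any specific device:

```python
import numpy as np

def temporal_features(t, pen_down):
    """Split the task duration into on-surface and in-air time.

    t: sample timestamps in seconds (strictly increasing).
    pen_down: 1 when the pen touches the surface, 0 when it hovers.
    Each interval [t[i], t[i+1]) is attributed to the state at sample i.
    """
    t = np.asarray(t, dtype=float)
    state = np.asarray(pen_down, dtype=bool)[:-1]
    dt = np.diff(t)
    on_surface = dt[state].sum()
    in_air = dt[~state].sum()
    total = t[-1] - t[0]
    return {
        "total_time": total,
        "on_surface_time": on_surface,
        "in_air_time": in_air,
        "in_air_ratio": in_air / total if total > 0 else 0.0,
    }

# Hypothetical recording: one stroke, a pause in the air, then a new stroke
t = [0.0, 0.5, 1.0, 1.5, 2.0]
pen = [1, 1, 0, 0, 1]
print(temporal_features(t, pen))
```

As the paragraph above cautions, a long in-air time only says that the subject paused; whether the pause reflects motor slowness or planning difficulty cannot be read from this number alone.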

Nonlinear dynamic features such as entropy and energy can capture randomness and irregularities in a given signal. In a handwriting signal, these irregularities can reveal alterations of fine movements that are otherwise difficult to analyze using kinematic features alone. Based on this assumption, studies such as Impedovo et al. [30], Drotár et al. [60], Taleb et al. [68], Drotár et al. [72], Garre-Olmo et al. [79] and Drotár et al. [80] have extracted several nonlinear dynamic features from handwriting and drawing signals. As with all other dynamic feature vectors, statistical functionals of these vectors are computed prior to classification.
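
Two simple instances of such measures are sketched below: the Shannon entropy of a signal’s amplitude histogram and the mean Teager-Kaiser energy, \(\psi[n] = x[n]^2 - x[n-1]\,x[n+1]\). These are illustrative choices; the cited studies may use other variants (e.g., other entropy definitions or bin counts), and the number of histogram bins here is an arbitrary assumption.

```python
import numpy as np

def shannon_entropy(signal, bins=16):
    """Shannon entropy (bits) of the amplitude histogram of a signal."""
    counts, _ = np.histogram(np.asarray(signal, dtype=float), bins=bins)
    p = counts[counts > 0] / counts.sum()
    return float(-(p * np.log2(p)).sum())

def teager_kaiser_energy(signal):
    """Mean of the Teager-Kaiser energy operator over the signal."""
    x = np.asarray(signal, dtype=float)
    psi = x[1:-1] ** 2 - x[:-2] * x[2:]
    return float(psi.mean())

# For a pure sinusoid A*sin(w*n + phi) the operator equals A**2 * sin(w)**2
n = np.arange(200)
x = np.sin(0.3 * n + 0.1)
print(teager_kaiser_energy(x), np.sin(0.3) ** 2)
```

Applied to, say, a velocity or pressure sequence, irregular and tremulous movement spreads the amplitude histogram (higher entropy) and adds high-frequency energy, which these measures pick up even when average kinematics look normal.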

Neuromotor features are derived from stroke velocity profiles and capture the intrinsic properties of the neuromuscular system and the control strategy of an individual performing a graphomotor task. Within the Kinematic Theory of Rapid Human Movements, a stroke velocity profile is characterized by the parameters of either the Delta-lognormal or the Sigma-lognormal model [83]. These features have been adopted by studies like [84,85,86] to discriminate between normal and abnormal age-related changes in handwriting. Moreover, recent investigations based on this theory suggest common parameters for quantifying the deficits caused by Parkinson’s disease in handwriting and voice [98], as well as for shoulder fatigue detection [99].
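
To make these parameters concrete: in the Kinematic Theory, the speed of a single stroke \(i\) follows a lognormal profile, \(|\vec v_i(t)| = \frac{D_i}{\sigma_i\sqrt{2\pi}\,(t-t_{0i})}\exp\!\left(-\frac{[\ln(t-t_{0i})-\mu_i]^2}{2\sigma_i^2}\right)\) for \(t > t_{0i}\), where \(D_i\) is the stroke amplitude, \(t_{0i}\) the time of the motor command, \(\mu_i\) the log time delay and \(\sigma_i\) the log response time. The sketch below evaluates this profile. It only generates velocity profiles from given parameters; extracting the parameters from real handwriting requires a dedicated fitting procedure that is not shown. Summing speed magnitudes, as `sigma_lognormal_speed` does, is a simplification valid only for strokes with aligned directions, since the full Sigma-lognormal model adds velocity vectors whose direction also evolves over time.

```python
import numpy as np

def lognormal_speed(t, D, t0, mu, sigma):
    """Speed profile of a single stroke; zero for t <= t0."""
    t = np.asarray(t, dtype=float)
    tau = t - t0
    out = np.zeros_like(t)
    pos = tau > 0
    lt = np.log(tau[pos])
    out[pos] = (D / (sigma * np.sqrt(2 * np.pi) * tau[pos])
                * np.exp(-(lt - mu) ** 2 / (2 * sigma ** 2)))
    return out

def sigma_lognormal_speed(t, strokes):
    """Superimpose strokes given as (D, t0, mu, sigma) tuples.

    Simplification: speed magnitudes are added, i.e. strokes are
    assumed to share the same direction.
    """
    return sum(lognormal_speed(t, *s) for s in strokes)

dt = 1e-4
t = np.arange(0.0, 3.0, dt)
v = lognormal_speed(t, D=10.0, t0=0.0, mu=-1.5, sigma=0.3)
print(v.sum() * dt)          # distance covered, close to D
print(t[np.argmax(v)])       # peak time, close to exp(mu - sigma**2)
```

Because the profile integrates to \(D_i\) and peaks at \(t_{0i} + e^{\mu_i - \sigma_i^2}\), changes in these parameters (for instance a larger \(\sigma_i\) or a later peak) translate directly into slower or less well-controlled strokes, which is what makes them usable as features.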

Despite the success of deep learning in other areas, its applicability to the analysis of neuropsychological graphomotor tests has not been explored to its full potential. Two main concerns have limited its use in this domain: the scarcity of training data and the black-box nature of the techniques. However, recent studies, e.g. [74, 87,88,89], have shown the potential of machine-learned features for modeling different graphomotor tasks. On the one hand, the problem of limited data can be partially mitigated by transfer learning from larger natural image datasets [87]. On the other hand, some qualitative assessment of the importance of certain feature subsets over others can be made by feeding the network with features that have already been engineered in some way [90]. Explainability in deep learning is currently a hot topic, and work is underway to fill this gap. Nevertheless, some explainable systems for detecting neurodegenerative diseases have already been proposed [100].

3.2 Estimation

To evaluate the effectiveness of the proposed handwriting representations, several approaches (outlined in Table 1) have been proposed in recent years.

The simplest of these techniques is template matching, which can be useful for estimating primitive component-level patterns: a pixel-wise distance is computed between the drawn component and the expected stimulus. Although some techniques in the literature [52, 68] have employed a template matching-based approach, such approaches have limited applicability in this domain because free-hand drawings are unconstrained.
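
One possible pixel-wise distance is sketched below: a symmetric Chamfer-style distance that averages, in both directions, the distance from each ink pixel to the nearest ink pixel of the other image. This specific measure is an illustrative choice, not necessarily the one used in [52, 68], and the brute-force pairwise computation is only practical for small or downsampled images.

```python
import numpy as np

def chamfer_distance(drawn, template):
    """Symmetric mean nearest-pixel distance between two binary images.

    drawn, template: 2-D boolean arrays in the same coordinate frame
    (True = ink). Brute force, O(N * M) in the number of ink pixels.
    """
    a = np.argwhere(drawn).astype(float)
    b = np.argwhere(template).astype(float)
    if len(a) == 0 or len(b) == 0:
        raise ValueError("both images must contain ink pixels")
    d = np.linalg.norm(a[:, None, :] - b[None, :, :], axis=-1)
    return 0.5 * (d.min(axis=1).mean() + d.min(axis=0).mean())

# Hypothetical stimulus: a horizontal segment; response drawn one row lower
template = np.zeros((6, 8), dtype=bool)
template[2, 1:6] = True
drawn = np.zeros((6, 8), dtype=bool)
drawn[3, 1:6] = True
print(chamfer_distance(drawn, template))   # 1.0 pixel
```

The example also hints at the limitation noted above: the measure only makes sense once the response and the template share a coordinate frame, which is rarely the case for unconstrained free-hand drawings without prior alignment.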

Heuristic-based techniques have also been employed in the literature [53, 58, 63, 69, 91]. Their prime objective is to provide explainable solutions for the target users, i.e. clinical practitioners. Fuzzy logic is a preferred design strategy in this domain because, unlike a strict rule-based approach, it can grade imprecise and gradual deviations instead of forcing a binary decision. However, despite being advantageous for modeling clinical manifestations, heuristics are difficult to design and lack scalability.
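
The difference between a crisp rule and a fuzzy one can be illustrated on a single hypothetical indicator, the gap (in pixels) between two endpoints that should meet, as in the closure difficulty discussed earlier. The thresholds below are invented for illustration and are not clinical values.

```python
def ramp(x, lo, hi):
    """Fuzzy membership rising linearly from 0 at lo to 1 at hi."""
    if x <= lo:
        return 0.0
    if x >= hi:
        return 1.0
    return (x - lo) / (hi - lo)

def crisp_closure_rule(gap_px, threshold=6.0):
    """Strict rule: closure difficulty is either present (1) or absent (0)."""
    return 1.0 if gap_px > threshold else 0.0

def fuzzy_closure_degree(gap_px):
    """Degree (0..1) to which the gap is 'large' (hypothetical 2-10 px ramp)."""
    return ramp(gap_px, 2.0, 10.0)

for gap in (5.9, 6.1):
    print(gap, crisp_closure_rule(gap), round(fuzzy_closure_degree(gap), 4))
```

Two nearly identical drawings (gaps of 5.9 and 6.1 pixels) receive opposite crisp verdicts but almost the same fuzzy degree, which is the behavior that makes fuzzy rules attractive for scoring imprecise free-hand responses. The example also shows the scalability problem: every indicator needs its own hand-tuned membership function.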

Statistical analysis methods are mainly applied to determine the strength of the association between the computed features and clinical scores, and hence the effectiveness of a feature in representing the target handwriting. One commonly used method is the Pearson correlation test [101]. Because different features are combined into a high-dimensional feature set, some form of statistical ranking is performed to reduce dimensionality. In most cases, the Spearman correlation test [102] is used to select the most significant features for final model training. Depending on the distribution of the features, group comparisons such as the parametric t-test [103] or the non-parametric Mann-Whitney U test [104] are also used.
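
Spearman-based feature ranking can be sketched with NumPy alone: the Spearman coefficient is the Pearson correlation computed on ranks, and features are ordered by its absolute value with respect to a clinical score. The data below are synthetic, and keeping the top `k` features is one simple selection rule among many.

```python
import numpy as np

def average_ranks(a):
    """Ranks starting at 1; tied values share their average rank."""
    a = np.asarray(a, dtype=float)
    ranks = np.empty(len(a))
    ranks[np.argsort(a, kind="mergesort")] = np.arange(1, len(a) + 1)
    for value in np.unique(a):
        tied = a == value
        ranks[tied] = ranks[tied].mean()
    return ranks

def spearman_rho(x, y):
    """Spearman correlation: Pearson correlation of the ranks."""
    return float(np.corrcoef(average_ranks(x), average_ranks(y))[0, 1])

def rank_features(X, score, k):
    """Indices of the k features most monotonically related to score."""
    rho = np.array([spearman_rho(X[:, j], score) for j in range(X.shape[1])])
    return np.argsort(-np.abs(rho))[:k], rho

# Synthetic data: 50 subjects, three candidate features
rng = np.random.default_rng(0)
score = rng.normal(size=50)               # stand-in for a clinical score
X = np.column_stack([
    score ** 3,                           # monotonic, nonlinear relation
    -score,                               # monotonic, decreasing relation
    rng.normal(size=50),                  # unrelated noise
])
top, rho = rank_features(X, score, k=2)
print(top, np.round(rho, 3))
```

Because it works on ranks, Spearman correlation rewards any monotonic relation (the cubic feature scores as highly as a linear one), whereas Pearson correlation measures linear association only. In practice, `scipy.stats` provides `pearsonr`, `spearmanr`, `ttest_ind` and `mannwhitneyu` together with p-values; the version above just makes the computation explicit.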

Machine learning is probably the most popular approach in the related literature [30, 60, 61, 64, 65, 67, 68, 71,72,73, 76,77,78, 80, 86, 92,93,94,95]. Graphomotor-based analysis can be approached as a regression problem [77, 78] or a classification problem, e.g. [64, 65, 68, 72, 92, 93]. Commonly used machine learning models include Support Vector Machines (SVMs), K-Nearest Neighbours (K-NN), Decision Trees (DTs), Random Forests (RFs), Optimum-Path Forests (OPFs), and Linear Discriminant Analysis (LDA). Boosting approaches (AdaBoost and XGBoost) have also been used. As in many other pattern recognition problems, the literature suggests that no single model performs best in all scenarios for this problem either. For this reason, some studies, e.g. [30, 61, 75], evaluated the proposed features on different classifiers before selecting the best one (or the best subset of them for use in an ensemble scheme). Similarly, end-to-end feature learning (and classification) with deep learning models is gaining popularity in recent research, e.g. [74, 87, 90, 96, 97].
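
The practice of evaluating the same features on several classifiers before choosing one can be sketched with scikit-learn. The feature matrix below is synthetic (generated with `make_classification`), and the candidate models and their hyperparameters are illustrative assumptions, not those of the cited studies.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Synthetic stand-in for a feature matrix (rows = subjects, columns = features)
X, y = make_classification(n_samples=120, n_features=20, n_informative=5,
                           random_state=0)

candidates = {
    "SVM": make_pipeline(StandardScaler(), SVC(kernel="rbf")),
    "K-NN": make_pipeline(StandardScaler(), KNeighborsClassifier(n_neighbors=5)),
    "RF": RandomForestClassifier(n_estimators=200, random_state=0),
}

# The same stratified folds are used for every candidate model
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)
results = {name: cross_val_score(model, X, y, cv=cv, scoring="accuracy").mean()
           for name, model in candidates.items()}
best = max(results, key=results.get)

for name, acc in results.items():
    print(f"{name}: {acc:.3f}")
print("selected:", best)
```

Scaling sits inside each pipeline so that it is fitted on the training folds only. With real clinical data, splits should be made per subject (e.g., with `GroupKFold`) whenever a subject contributes several samples, and selecting the best model on the same folds used to report its score gives an optimistic estimate; nested cross-validation avoids this bias.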

3.3 Challenges and opportunities

It is evident from the literature that defining representations is the most challenging task in a computerized analysis system. The difficulty of finding an effective representation affects the performance of the estimation method and, ultimately, the outcome of the analysis. From complete graphomotor responses, both static and dynamic features can be extracted to represent visuo-motor, visuo-spatial and visuo-perceptual deformations due to a cognitive disorder. To this end, several features have been extracted from both offline (scanned images of paper-based responses) and online (acquired by digitizing tablets) samples. Because individual feature classes are insufficient on their own, various combinations have been proposed in the literature, resulting in high-dimensional feature vectors. As expected, combining features improves performance; however, given the highly task-specific nature of the features [105], feature selection must be performed. Machine-learned features have emerged as a viable alternative to classic hand-crafted approaches, but their applicability to handwriting representation and estimation has not been thoroughly investigated. These features, although not always intuitive to correlate or interpret, can provide useful information on the intrinsic properties of the writer or drawer.

3.4 Features (neuropsychology perspective)

To bridge the gap between computer-aided screening/diagnosis and clinical practice, the explainability of computational features plays a key role. Allowing domain experts to relate what they assess in conventional practice (to diagnose or monitor a disorder) to what automated solutions measure can also lead to greater acceptance of such systems by practitioners. To improve this explainability, we link the computational features employed by AI researchers in different studies to the corresponding high-level measures used by experts. These mappings are summarized in Table 2, which lists several quantitative features representing qualitative attributes used in neuropsychology.

For instance, “bradykinesia”, “micrographia”, “rigidity” and “tremor” are well-known manifestations observed by clinical practitioners when looking for signs of PD. AI researchers have measured these conditions computationally using electronic devices such as digitizing tablets, which allow the extraction of various temporal, kinematic, pressure, spatial and geometric features. Temporal features such as in-air time (when the pen tip is not touching the writing surface) have been used to quantify pauses between strokes; together with the total task completion time, this indicates slowness of movement. Similarly, spatial and geometric features such as changes in vertical and horizontal stroke size are computed to indicate micrographia. Sensors measuring the pressure exerted on the pen and on the writing surface can help analyze muscular rigidity, while kinematic features such as inversions in stroke acceleration can assess non-fluency. Naturally, this linkage is more intuitive for hand-crafted features than for machine-learned features, which are learned during training. For machine-learned features, a standard approach is to visualize the outputs of intermediate network layers to explain what the model learns; otherwise, their effectiveness is generally inferred from predictive performance.
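
As an illustration of the micrographia mapping, the sketch below segments a recording into pen-down strokes using the button status, measures the vertical extent of each stroke, and fits a line to stroke height versus stroke index; a negative slope suggests progressively shrinking writing. This is a simplified, hypothetical measure: the cited studies may instead compare, for example, the first and last letters of a repeated sequence, or include horizontal size.

```python
import numpy as np

def stroke_heights(y, pen_down):
    """Vertical extent of each stroke (maximal run of pen-down samples)."""
    y = np.asarray(y, dtype=float)
    down = np.asarray(pen_down, dtype=bool)
    heights, start = [], None
    for i, d in enumerate(down):
        if d and start is None:
            start = i                              # stroke begins
        elif not d and start is not None:
            seg = y[start:i]
            heights.append(seg.max() - seg.min())  # stroke ends
            start = None
    if start is not None:                          # recording ends mid-stroke
        seg = y[start:]
        heights.append(seg.max() - seg.min())
    return np.array(heights)

def size_decrement(heights):
    """Slope of stroke height vs. stroke index (negative = shrinking)."""
    idx = np.arange(len(heights))
    slope, _intercept = np.polyfit(idx, heights, deg=1)
    return float(slope)

# Hypothetical recording: four strokes of decreasing height
y = [0, 10, 5, 0, 9, 5, 0, 8, 5, 0, 7]
pen = [1, 1, 0, 1, 1, 0, 1, 1, 0, 1, 1]
h = stroke_heights(y, pen)
print(h, size_decrement(h))
```

Unlike a machine-learned feature, a value such as "stroke height decreases by one unit per stroke" can be read directly by a clinician as an index of micrographia.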

4 Visual analysis techniques

This section discusses notable studies that analyze a graphomotor response after completion. The inspiration for this kind of analysis comes from the conventional clinical procedures for scoring graphomotor-based tests. Although scoring is easy for a human expert, it is very difficult for a machine to determine, from visual analysis alone, the degree of deformation relative to healthy control behavior. Two common approaches have been used in the literature to address this:

- Techniques that evaluate deviations at the local level, i.e. at the primitive component level;
- Techniques that evaluate the response as a whole by extracting shape-based global features.

Both categories of methods are discussed in the next subsection.

4.1 Review of methods

A summarized overview of well-known visual analysis techniques (component as well as global-level) is presented in Table 3 listing the type of drawing used for the tasks, the disease studied and the type of subjects who participated in the experiments. From a computational point of view, the table also shows the methodology carried out in terms of features and classifiers, and the main findings.

Component-level techniques evaluate the quality of the whole figure by estimating the deformations in its constituent parts independently. Localizing and estimating primitive components makes it even harder to score tests that require comparing or correlating constituent components to evaluate the complete drawing. The Clock Drawing Test, commonly used to screen for cognitive disorders such as dementia [113], is one such example. Like ROCF, CDT drawings have complex scoring criteria. However, CDT scoring requires assessing the spatial organization of the components in addition to the quality of each component. This requires inference not only from the presence or absence of essential shapes, but also from their organization (i.e., the correct positioning of the clock digits and hands). Consequently, evaluating the individual components independently cannot provide a complete interpretation of a CDT drawing. To overcome the limitations of localizing and segmenting individual shape components in multi-component drawings (such as ROCF and CDT), some studies [58, 63] suggested the use of online sample acquisition methods.
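
The kind of spatial-organization check that CDT scoring requires can be sketched as follows, assuming the clock center and the centroid of each recognized digit are already available (segmentation and digit recognition, the hard parts discussed above, are not shown). The angular-error measure is a hypothetical illustration, not a published scoring rule; coordinates use a y-up convention, so image data (y-down) must be flipped first.

```python
import numpy as np

def digit_angle_errors(center, digit_centroids):
    """Angular deviation (degrees) of each digit from its ideal clock position.

    center: (x, y) of the clock face.
    digit_centroids: {hour: (x, y)} for the digits found in the drawing.
    """
    cx, cy = center
    errors = {}
    for hour, (x, y) in digit_centroids.items():
        observed = np.degrees(np.arctan2(y - cy, x - cx))
        expected = 90.0 - 30.0 * (hour % 12)       # 12 at the top, 3 at the right
        diff = (observed - expected + 180.0) % 360.0 - 180.0   # wrap to [-180, 180)
        errors[hour] = abs(diff)
    return errors

# Hypothetical drawing: 12, 6 and 9 well placed, 3 drawn where 6 should be
digits = {12: (0.0, 1.0), 3: (0.0, -1.0), 6: (0.0, -1.0), 9: (-1.0, 0.0)}
print(digit_angle_errors((0.0, 0.0), digits))
```

A check like this only becomes possible once every digit has been localized and recognized, which is exactly why independent component evaluation is insufficient for CDT and why some studies turned to online acquisition to ease segmentation.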

In global-level analysis, online sample acquisition methods were initially introduced to facilitate the segmentation of primitive components. However, researchers soon realized that these devices could also capture several spatio-temporal features. The approach gained popularity. Researchers began studying attributes extracted globally from the complete drawing rather than locally from its primitive components [109], in some cases using machine learning techniques [110].

4.2 Challenges and opportunities

We have observed that localization and segmentation are the main challenges for studies that evaluate the quality of primitive components [52, 53, 91] or analyze their spatial organization [58, 63]. Localizing the intended segment is difficult mainly because free-hand drawing is inherently imprecise and ambiguous. To overcome this, studies such as Harbi et al. [63] suggested overlaying a layout on the image or constraining drawers/writers with a pre-printed template. Such constraints can facilitate localization. However, they can add complexity to the analysis, because a pre-processing step is then needed to separate the drawn response from the pre-printed trace. Addressing all these issues calls for a holistic analysis approach that can identify local deformations without the complex step of primitive extraction.
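The pre-processing step mentioned above can be sketched, under simplifying assumptions, as a mask subtraction. The binarized scan and the binarized pre-printed template are assumed to be registered already, i.e., aligned and of equal size. Ink pixels lying within a small tolerance of the template are discarded. This is a hypothetical illustration in plain Python on nested lists, not the procedure of [63]. It also discards any genuine strokes that overlap the template, which is precisely why this step adds complexity to the analysis.

```python
def dilate(mask, radius=1):
    """Binary dilation of a 2-D boolean grid with a (2r+1) x (2r+1) square."""
    h, w = len(mask), len(mask[0])
    out = [[False] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            if mask[i][j]:
                # mark every pixel within `radius` of an ink pixel
                for ii in range(max(0, i - radius), min(h, i + radius + 1)):
                    for jj in range(max(0, j - radius), min(w, j + radius + 1)):
                        out[ii][jj] = True
    return out

def remove_template(scan, template, tolerance=1):
    """Keep only ink pixels of `scan` farther than `tolerance` pixels
    from the pre-printed `template` (both binary and already registered)."""
    forbidden = dilate(template, tolerance)
    return [[bool(s) and not f for s, f in zip(s_row, f_row)]
            for s_row, f_row in zip(scan, forbidden)]
```

The tolerance absorbs small registration and printing offsets; larger values remove more of the subject's own strokes near the template.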

Some studies discriminate between healthy controls and diseased subjects using simple geometric shapes, such as cubes [52], spirals [64] and meanders [65]. They analyze static geometric features to evaluate the quality of the drawn response, using template matching or statistical approaches. Such features may prove effective for simple shapes. However, they are not sufficient to characterize the complex graphomotor deformations scored in tasks such as the CDT, ROCF and BGT. Because static features alone are insufficient, these studies must rely on extensive heuristics to estimate the magnitude of the deviations. However exhaustive, a heuristic-based approach lacks the robustness of a statistical one. Machine-learned features and their statistical analysis can address both the insufficiency of the features and the lack of robustness. This holds especially for tasks with complex scoring criteria, such as the BGT.
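As an example of a static geometric feature for spiral templates, the sketch below fits an Archimedean model r = a + bθ to a drawn spiral by least squares. It returns the RMS radial residual, which grows with irregularities such as tremor. It assumes that the spiral center is known. It also assumes that consecutive points are less than half a turn apart, so that the polar angle can be unwrapped. This is one simple feature among many, offered as an illustration rather than as the method of the cited studies.

```python
import math

def unwrap(angles):
    """Remove 2*pi jumps so the angle grows continuously along the trace."""
    out = [angles[0]]
    for a in angles[1:]:
        # shortest signed step from the previous unwrapped angle
        step = (a - out[-1] + math.pi) % (2 * math.pi) - math.pi
        out.append(out[-1] + step)
    return out

def spiral_rms_deviation(points, center=(0.0, 0.0)):
    """Fit r = a + b*theta by least squares; return (a, b, rms_residual)."""
    cx, cy = center
    theta = unwrap([math.atan2(y - cy, x - cx) for x, y in points])
    r = [math.hypot(x - cx, y - cy) for x, y in points]
    n = len(points)
    mean_t, mean_r = sum(theta) / n, sum(r) / n
    s_tt = sum((t - mean_t) ** 2 for t in theta)
    b = sum((t - mean_t) * (ri - mean_r) for t, ri in zip(theta, r)) / s_tt
    a = mean_r - b * mean_t
    residuals = [ri - (a + b * t) for t, ri in zip(theta, r)]
    rms = math.sqrt(sum(e * e for e in residuals) / n)
    return a, b, rms
```

Spirals drawn in the opposite direction simply yield a negative b; the residual is unaffected.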

5 Procedural analysis techniques

Until recently, researchers focused on extracting effective biomarkers from graphomotor samples after their completion. However, new technologies (e.g., digitizing tablets, electronic pens, and wearable sensors) have made it convenient to also assess the processes involved in producing graphomotor impressions. The most prominent of these are the motor and executive planning skills of the subject. As discussed earlier, the acquisition devices capture the x- and y-coordinates of the pen position along with their time stamps. They also record pen orientation (azimuth and altitude) and pressure, and they track the pen trajectory both on the surface and in the air. These functional attributes are then used to compute several features that are difficult (and in some cases impossible) to compute by visual analysis of the offline graphomotor sample. Since the beginning of dynamic handwriting/drawing analysis, research in this field has moved in the following two directions:

- Hand movement analysis during writing or drawing;
- Analysis of the executive planning involved in completing a graphomotor task.
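Both directions build on features computed from the raw pen signals. For hand movement analysis, the most common are kinematic features, obtained from the sampled positions by numerical differentiation. The sketch below is a minimal illustration that assumes time-ordered samples with strictly increasing timestamps. It uses first-order finite differences and returns the magnitudes of velocity, acceleration and jerk. Real pipelines usually smooth the signal (e.g., with a low-pass filter) before differentiating, because differencing amplifies noise; that step is omitted here.

```python
import math

def _diff(values, times):
    """First-order finite difference of `values` with respect to `times`."""
    return [(values[i + 1] - values[i]) / (times[i + 1] - times[i])
            for i in range(len(values) - 1)]

def _midpoints(times):
    """Timestamps halfway between consecutive samples."""
    return [(times[i] + times[i + 1]) / 2 for i in range(len(times) - 1)]

def kinematics(xs, ys, ts):
    """Speed, acceleration and jerk magnitudes from pen samples (x, y, t).
    Each returned list is one element shorter than the previous one."""
    vx, vy = _diff(xs, ts), _diff(ys, ts)
    speed = [math.hypot(a, b) for a, b in zip(vx, vy)]
    t1 = _midpoints(ts)          # velocities live at sample midpoints
    ax, ay = _diff(vx, t1), _diff(vy, t1)
    accel = [math.hypot(a, b) for a, b in zip(ax, ay)]
    t2 = _midpoints(t1)
    jx, jy = _diff(ax, t2), _diff(ay, t2)
    jerk = [math.hypot(a, b) for a, b in zip(jx, jy)]
    return speed, accel, jerk
```

The acceleration here is the magnitude of the acceleration vector. Some studies instead use the tangential acceleration, i.e., the derivative of speed, which behaves differently on curved strokes.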

A discussion in the following section details both of these approaches with reference to notable studies in the literature.

5.1 Review of methods

We have summarized some of the most notable works in the literature in Table 4. We can observe the evolution of the works, the tasks used and the target pathology studied by the authors. We highlight the main findings of these works as well as the proposed features and classifiers.

In hand movement analysis, some authors, such as Nicolas et al. [114], suggest a strong correlation between motor and cognitive skills, mainly because the two share underlying processes such as sequencing, monitoring, and planning. Kinematic features are therefore among the drawing and handwriting attributes most commonly used to discriminate between healthy subjects and patients with various cognitive diseases. In a series of related studies [94, 115, 116], some authors compared various temporal and kinematic features against different static features. The aim was to identify drawings of patients with conditions such as VSN and dyspraxia. Simple geometric shapes, such as the Necker cube [117], were used as templates to capture both static and dynamic features. Principal Component Analysis was used to select the most effective features. Studies [94, 116] suggested that combining static and dynamic features further improves the analysis.
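Principal Component Analysis, as used in these studies, can be sketched without external libraries. The feature matrix is standardized, its correlation matrix is formed, and the leading eigenvector is extracted by power iteration. Strictly speaking, PCA builds new combined features rather than selecting original ones. Ranking the original features by the magnitude of their loadings on the leading components is one common heuristic for selection. The code below is an illustrative sketch that computes the leading component only, with a fixed iteration count. It is not the procedure of [94, 115, 116].

```python
import math
import random

def standardize(rows):
    """Z-score each column (sample std); constant columns map to 0."""
    n = len(rows)
    cols = list(zip(*rows))
    means = [sum(c) / n for c in cols]
    stds = [math.sqrt(sum((v - m) ** 2 for v in c) / (n - 1)) or 1.0
            for c, m in zip(cols, means)]
    return [[(v - m) / s for v, m, s in zip(r, means, stds)] for r in rows]

def first_principal_component(rows, iters=300, seed=0):
    """Power iteration on the correlation matrix of `rows` (subjects x features).
    Returns (loadings, explained_variance_ratio) of the leading component."""
    z = standardize(rows)
    n, d = len(z), len(z[0])
    cov = [[sum(r[i] * r[j] for r in z) / (n - 1) for j in range(d)]
           for i in range(d)]
    rng = random.Random(seed)
    v = [rng.uniform(-1.0, 1.0) for _ in range(d)]  # random start vector
    for _ in range(iters):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    # Rayleigh quotient gives the eigenvalue; the trace is the total variance
    eigenvalue = sum(v[i] * cov[i][j] * v[j] for i in range(d) for j in range(d))
    trace = sum(cov[i][i] for i in range(d))
    return v, eigenvalue / trace
```

In this toy setting, two perfectly correlated features share the leading component with equal loadings, while an uncorrelated third feature receives a loading near zero.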

Vessio [28] analyzed the visual and procedural information obtained from CDT drawings of healthy controls, PD patients and individuals with MCI. The visual analysis indicated that PD patients produced degraded clock components because of motor deficits, but showed no signs of poor cognition. In contrast, the drawings of MCI patients were qualitatively similar to those of healthy controls, but the incorrect positioning of the clock hands indicated a cognitive difficulty. The procedural analysis plotted dynamic features such as velocity and pressure. These plots showed more peaks for PD and MCI patients than for healthy controls, reflecting irregularities due to the underlying impairments. These attributes successfully discriminated between healthy and diseased samples. They were, however, inconclusive in differentiating between PD and MCI samples. The difference between the two conditions was instead most noticeable in the visual analysis, owing to the misplacement of the clock components by MCI patients.
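A simple way to quantify the irregularities visible in such plots is to count the local maxima of a dynamic profile, for instance speed or pressure sampled over time. Light smoothing beforehand keeps single-sample noise from being counted. The sketch below is a hypothetical illustration in plain Python. The window size is an assumption, and the exact peak definition used in [28] may differ.

```python
def moving_average(signal, window=3):
    """Centered moving average; the window shrinks at the borders."""
    half = window // 2
    out = []
    for i in range(len(signal)):
        lo, hi = max(0, i - half), min(len(signal), i + half + 1)
        out.append(sum(signal[lo:hi]) / (hi - lo))
    return out

def count_peaks(signal):
    """Number of strict local maxima (plateaus are not counted)."""
    return sum(1 for i in range(1, len(signal) - 1)
               if signal[i] > signal[i - 1] and signal[i] > signal[i + 1])
```

A smooth, bell-shaped speed profile yields a single peak, whereas an irregular profile of the same length yields several.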

Most of the assessments in the literature use handwriting and drawing samples from the elderly population. Most of these subjects suffer from reduced manual dexterity due to aging [118] and are often affected by neurodegenerative diseases such as PD and AD. Motor deficits become evident only in the later stages of AD, whereas they are among the first symptoms of PD. Parkinsonian signs that manifest in graphomotor output include bradykinesia (slowness of movement), tremor (irregularity) and micrographia (shrinking of letters). Among their several works (see Table 4), the main contribution of Drotár et al. has been the benchmark dataset PaHaW [71, 75]. It comprises several handwriting tasks together with the conventional Archimedean spiral. Studies that evaluate novel handwriting features for assessing PD samples commonly use this dataset.
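Micrographia lends itself to a simple quantitative sketch. The size of each successive stroke is approximated by its vertical extent, and a linear trend is fitted over the stroke index. A negative normalized slope indicates progressive shrinking. This hypothetical illustration assumes that strokes are already segmented and comparable in nominal size, e.g., repetitions of the same letter. Published micrographia measures are typically based on letter or word bounding boxes and are more elaborate.

```python
def stroke_heights(strokes):
    """Vertical extent of each stroke; strokes are lists of (x, y) points."""
    return [max(y for _, y in s) - min(y for _, y in s) for s in strokes]

def size_trend(heights):
    """Least-squares slope of height vs. stroke index, divided by mean height.
    Negative values indicate that successive strokes get smaller."""
    n = len(heights)
    if n < 2:
        raise ValueError("need at least two strokes")
    mean_i = (n - 1) / 2
    mean_h = sum(heights) / n
    s_ii = sum((i - mean_i) ** 2 for i in range(n))
    slope = sum((i - mean_i) * (h - mean_h) for i, h in enumerate(heights)) / s_ii
    return slope / mean_h
```

For heights 10, 9, 8, 7, 6 the slope is -1 per stroke. Dividing by the mean height of 8 gives -0.125, i.e., a shrinkage of about 12.5% of the average size per stroke.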

Both Tables 3 and 4 show that deep learning techniques have recently become popular. Competitive performance has been reported in the analysis of handwriting samples from PD patients, e.g., [74, 87, 89, 124, 125].

Another trend concerns the executive planning that the subject adopts while drawing or writing. For example, some subjects trace the contours of the figure to be copied, while others first place points and then connect them with segments. The idea is not new, but online data acquisition systems have renewed its popularity. Such analysis focuses on the subject's behavior and preferred drawing/writing strategy while producing a response, rather than on the final product or the hand movement. This is also referred to as the “grammar of action” [121]. Additionally, optoelectronic systems have been proposed to analyze the drawing gestures of patients with disabilities [126,127,128,129].
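One way to compare drawing strategies is to represent each response as the sequence of figure elements in the order they were drawn. That sequence can then be measured against one or more reference orders. The sketch below uses the Levenshtein edit distance, normalized by sequence length, and takes the minimum over several acceptable references. It assumes, hypothetically, that each stroke has already been assigned to a named element (e.g., "outer_rectangle" or "diagonal_cross" for the ROCF). It is one possible measure, not the formalism of [121].

```python
def edit_distance(a, b):
    """Levenshtein distance between two sequences (each edit costs 1)."""
    prev = list(range(len(b) + 1))
    for i, x in enumerate(a, start=1):
        cur = [i]
        for j, y in enumerate(b, start=1):
            cur.append(min(prev[j] + 1,              # delete x
                           cur[j - 1] + 1,           # insert y
                           prev[j - 1] + (x != y)))  # substitute or match
        prev = cur
    return prev[-1]

def strategy_distance(observed, references):
    """Normalized distance (0 = identical) to the closest acceptable order."""
    return min(edit_distance(observed, ref) / max(len(observed), len(ref), 1)
               for ref in references)
```

Plain Levenshtein counts a swap of two adjacent elements as two edits. A variant with a transposition operation could be preferable if swaps are considered a minor deviation.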

5.2 Challenges and opportunities

Neither hand movement nor executive planning techniques depend on the visual appearance of the final response. Instead, they rely on features that represent the motor and cognitive functions employed during the process. Procedural analysis provides additional information that may clarify the pathophysiological changes associated with a disease. However, research in this direction requires closer interdisciplinary collaboration to establish norms. Several studies extracted various hand movement-based features, such as Impedovo et al. [30], Drotár et al. [71] and Müller et al. [77, 78]. They showed that the nature of the task used to acquire these features has a significant impact on their predictive performance. The same features, extracted from two different templates, can lead to different outcomes for the same disease. For this reason, Drotár et al. [75] suggested using unconventional handwriting-based tasks, instead of just the traditional spiral drawing, to discriminate between PD patients and healthy controls. However, in Pereira et al. [74], spiral drawing outperformed an unconventional meander task when pressure signals were used. The feature-task relationship therefore requires careful consideration to avoid feature validity issues. Moreover, changing conventional test templates can meet resistance from the target users, namely clinical professionals. To bridge the gap between clinical practice and AI-based solutions, proposed techniques should first improve results on conventional tests. Only then should they suggest modifications, and the physician should decide on them.

Dynamic handwriting analysis makes it possible to evaluate various attributes, including kinematic, temporal and pressure measures. However, almost all the reviewed studies indicate that these features are insufficient when used independently. Consequently, many studies combined them into a single high-dimensional feature vector, e.g., Impedovo et al. [30], Taleb et al. [68], Garre-Olmo et al. [79] and Jerkovic et al. [92]. In some cases [66, 76, 94], these dynamic features were also combined with static visual features to improve performance. Such an early fusion approach can, however, degrade results because the combined features are of different natures. Indeed, feature selection and fusion have been implemented in different ways.
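A basic precaution in early fusion is to bring each feature onto a common scale before concatenation. Otherwise, features with large numeric ranges (e.g., speeds) dominate those with small ranges (e.g., normalized pressure). The sketch below z-scores every column of each feature group and then concatenates the groups per subject. It is an illustrative assumption, not the scheme of any cited study. In a real pipeline, the statistics should be estimated on the training split only and then reused on the test split (the optional `stats` argument); otherwise information leaks from the test data.

```python
import statistics

def zscore_columns(rows, stats=None):
    """Standardize each column; pass `stats` from the training set to
    apply the same transform to test data. Returns (scaled_rows, stats)."""
    if stats is None:
        stats = [(statistics.mean(c), statistics.pstdev(c) or 1.0)
                 for c in zip(*rows)]
    scaled = [[(v - m) / s for v, (m, s) in zip(row, stats)] for row in rows]
    return scaled, stats

def early_fusion(*groups):
    """Concatenate per-subject feature groups (same subjects, same order)
    after standardizing every group."""
    scaled = [zscore_columns(group)[0] for group in groups]
    return [[v for part in parts for v in part] for parts in zip(*scaled)]
```

Standardization fixes only the scale mismatch. It does not address redundancy or differing relevance across feature groups, which is where feature selection comes in.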

Although many studies recognize the impact of template selection on feature performance, most of them combine features extracted from multiple templates because data are scarce. As suggested in Impedovo et al. [30], this can degrade overall performance. A late fusion approach, such as majority voting, should be adopted instead of combining the features from all tasks to train a single classifier. In late fusion, a separate decision is made for each task, and the decisions are then combined.
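The late fusion scheme described above can be sketched as follows. Each task is assumed to have its own already-trained classifier producing one label per subject. A majority vote across tasks then gives the final decision. The task names and labels are hypothetical. The tie-breaking rule is an explicit policy choice, because with an even number of tasks a tie is possible; a conservative setting might, for example, favor the "diseased" label.

```python
from collections import Counter

def majority_vote(labels, tie_label):
    """Most frequent label; ties are resolved to `tie_label`."""
    counts = Counter(labels)
    top = max(counts.values())
    winners = [label for label, c in counts.items() if c == top]
    return winners[0] if len(winners) == 1 else tie_label

def late_fusion(per_task_predictions, tie_label):
    """per_task_predictions: {task: [label per subject]}, lists aligned by subject."""
    columns = zip(*per_task_predictions.values())
    return [majority_vote(subject_labels, tie_label) for subject_labels in columns]
```

For example, with three tasks predicting `["PD", "PD", "HC"]` for a subject, the fused decision is `"PD"`. Weighted voting or averaging class probabilities are common alternatives when per-task classifiers differ in reliability.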

Studies such as Drotár et al. [60, 70], Müller et al. [77, 78] and Jerkovic et al. [92] supported the effectiveness of a new modality, called “in-air”, in which kinematic and temporal features are computed while the pen is not touching the surface. The authors attribute its success to longer pauses between writing/drawing strokes, caused by underlying motor (in the case of PD) or cognitive (in the case of AD) dysfunction [130, 131]. The rationale is valid, and the modality is promising for discriminating between healthy and diseased samples. It remains unclear, however, whether it can distinguish between diseases with overlapping conditions. Vessio [28] discussed this concern: for CDT samples taken from a PD and an AD patient, visual analysis discriminated more effectively than procedural analysis. A main reason is that procedural techniques based on hand movement are not designed to characterize visuo-spatial and visuo-perceptual patterns. Assessing these patterns is necessary in most cognitive dysfunctions. Further exploration is needed in this direction, as a strong visual analysis technique may prove more effective in differential diagnosis.
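A minimal version of the in-air measure can be computed from the pen status that tablets report with each sample. It is assumed here to be a boolean "on surface" flag, which could equally be derived from pressure > 0. The sketch returns the duration of each in-air pause between consecutive strokes. It ignores hovering before the first contact and after the last one. It also gives the ratio of in-air to on-surface time. These are illustrative definitions rather than those of the cited studies. Tablets detect hovering only within a limited height above the surface, so in-air trajectories are always partially observed.

```python
def in_air_pauses(samples):
    """Durations of in-air gaps between consecutive on-surface strokes.
    samples: time-ordered (t, pen_down) pairs."""
    pauses = []
    last_down = None  # timestamp of the most recent on-surface sample
    lifted = False    # has the pen left the surface since last_down?
    for t, pen_down in samples:
        if pen_down:
            if last_down is not None and lifted:
                pauses.append(t - last_down)
            last_down, lifted = t, False
        else:
            lifted = True
    return pauses

def air_to_surface_ratio(samples):
    """Total in-air pause time divided by total on-surface time."""
    downs = [t for t, pen_down in samples if pen_down]
    if len(downs) < 2:
        raise ValueError("need at least two on-surface samples")
    air = sum(in_air_pauses(samples))
    on_surface = (downs[-1] - downs[0]) - air
    return air / on_surface
```

The kinematic features computed on-surface can be computed in the same way on the in-air segments, provided the device also reports positions while hovering.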

Techniques that evaluate the quality of a graphomotor response by analyzing the preferred drawing/writing strategy also offer a promising approach [66, 95, 119,120,121, 123]. However, models of correct strategies must be developed before such techniques can mature. These techniques are better suited to rehabilitation purposes. Their success in characterizing handwriting is not yet conclusive and calls for more in-depth investigation.

6 Concluding remarks and future directions

This paper presented a review of the various trends and approaches used to analyze graphomotor tasks for disease diagnosis. A contextual categorization of the relevant works has been proposed based on the method of analysis used. Popular features and estimation methods have also been discussed. Each technique has its inherent strengths and limitations. Beyond these, several high-level open issues need to be considered to integrate modern technology effectively into traditional clinical settings. We are, in fact, still a long way from an inclusive technological solution that doctors can use and trust in current medical practice.

Standardized data acquisition and system deployment

One of the main problems is the sample acquisition mode. Techniques that analyze traditional paper-based samples require digitization with a scanner or digital camera. The digitized images are then pre-processed to make them suitable for automated analysis. As in any handwritten document analysis system, preliminary localization, segmentation, and recognition tasks must be performed before the analysis itself. Techniques that support online sample acquisition, on the other hand, require modifying conventional test administration protocols. Because of this, target users, i.e., clinicians and patients, may be reluctant to adopt such systems. These problems are made worse by the lack of a commonly accepted, standardized data acquisition protocol. Such a protocol should cover not only the tasks but also the devices, since interoperability issues also arise [132, 133]. Computer scientists and clinicians need to work much more in synergy. Clinicians can then effectively communicate their practical needs, and implemented solutions can meet them. This raises many further questions: how well the final system performs, how its results are fed back to the physician, and how the physician interacts with it. Once implemented in current practice, the system should also be continuously monitored and updated to adapt to concept drift [134].

New analysis objectives

Each test has its own, in most cases extensive, scoring criteria. These require clinical manifestations (domain knowledge) to be effectively translated into computable features, so that the system can make inferences similar to those of a clinical practitioner. Two approaches are common in the literature: hand-crafted heuristics and supervised machine learning. Heuristics are mostly exhaustive and rigid. They may not prove sufficient in most real-life applications, because the responses are highly unconstrained. In contrast, machine learning approaches can generalize across a wide variety of situations but require a large amount of training data, a well-known problem in this domain. With this in mind, both approaches need to be explored more deeply before being applied to the problem at hand. In particular, two open problems for both data collection and classification are the so-called differential diagnosis and the prediction of the actual degree of disease severity. Most systems address only the binary healthy/diseased classification task. Yet the diagnosis itself is not always what matters most to doctors, since it can sometimes be quite simple for them. What matters more is the ability of the system to detect the stage of the disease, differentiate between diseases with overlapping conditions, and monitor how patients respond to therapy. Furthermore, most studies have dealt with Western scripts, although some, such as Ammour et al. [135], have recently analyzed combined French and Arabic tasks from bilingual subjects. Another open question is which methodologies could be successfully transferred from one script to another. Lessons learned from this type of research can also be transferred to systems that focus on related movement impairments rather than on handwriting. Important examples include finger tapping [136], keystroke dynamics [137], and touchscreen typing [138] for diagnostic purposes.
In summary, research efforts should be made in non-standard directions as well.

Benchmark dataset

As mentioned above, another important problem is data scarcity. As in most health-related problems, the lack of sufficient training data is a major limiting factor in designing a computerized analysis system for any neuropsychological test. Because the problem is highly domain-specific, domain experts must perform sample acquisition and ground-truth labeling. Otherwise, the validity of the system may be called into question. Small datasets are common in most real-life medical scenarios, so robust techniques need to be developed to overcome cardinality issues. The different communities should work much more in synergy to build a large benchmark dataset. This dataset should be periodically updated with new specimens and tasks that reflect human behavior: temporal evolution, aging, tasks, posture, emotions, multiple scripts, collection devices, protocols, and so on. Similar initiatives already exist in related health fields, such as neuroimaging; popular examples include ADNI [139] and OASIS [140]. A large unified dataset could finally emerge to support the community. This would require such synergy, the aforementioned standardized data acquisition method, and a culture of “transparency”, which this line of research still lacks. In other words, the field needs large projects that integrate joint data collection efforts across different sites. Furthermore, data augmentation and generative techniques are increasingly mature. Injecting synthetic but plausible samples into the training data can therefore also mitigate data scarcity. Promising directions have recently been explored in the more general context of written text generation [141, 142].
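The simplest form of sample injection is geometric augmentation of online trajectories. The sketch below applies a small random rotation, a scaling and optional positional jitter around the trajectory centroid. Its parameter values are assumptions. It is far simpler than the generative approaches cited above and should not be read as a substitute for them. In this domain, augmentation must be used with care: jitter, for instance, can resemble tremor and may blur the very signal that separates patients from controls. The parameters therefore need to be chosen so that they do not mimic pathological features.

```python
import math
import random

def augment(points, rng, max_rot_deg=5.0, scale_range=(0.9, 1.1), jitter=0.5):
    """Randomly rotate, scale and jitter an (x, y) trajectory about its centroid.
    rng: a random.Random instance, so results are reproducible."""
    cx = sum(x for x, _ in points) / len(points)
    cy = sum(y for _, y in points) / len(points)
    angle = math.radians(rng.uniform(-max_rot_deg, max_rot_deg))
    s = rng.uniform(*scale_range)
    c, si = math.cos(angle), math.sin(angle)
    out = []
    for x, y in points:
        dx, dy = x - cx, y - cy
        # rotate and scale about the centroid, then add Gaussian jitter
        nx = cx + s * (c * dx - si * dy) + rng.gauss(0.0, jitter)
        ny = cy + s * (si * dx + c * dy) + rng.gauss(0.0, jitter)
        out.append((nx, ny))
    return out
```

Timestamps and pressure are left untouched here. Augmenting them as well, e.g., by time warping, would require similar caution, since speed-related features are often the diagnostic ones.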

7 Conclusion

In conclusion, bridging the gap between the latest technology and conventional practice requires serious attention from both the AI community and domain experts. An acceptable trade-off between accuracy and explainability needs to be defined, so that emerging techniques can be integrated into this significant but less explored research area. Moreover, medical data contain sensitive information. Doctors are therefore obliged to keep patient medical records, which are often handwritten, safe and confidential. In a computerized context, applications that de-identify handwriting data are welcome [143]. Further ethical issues also deserve special attention, as the machine cannot completely replace the human expert (otherwise, who would be responsible for the final decision on the patient?). Above all, the most critical step is to give more attention to the research area itself. Due to the lack of direction and the highly domain-specific nature of the research, the state of the art is mostly sporadic and disconnected. More attention is needed for the domain to mature and to provide generic frameworks.

Change history

12 August 2022

Missing Open Access funding information has been added in the Funding Note

References

Heilman KM, Valenstein EE (2003) Clinical neuropsychology. Oxford University Press, Oxford

Hall J, O’Carroll RE, Frith CD (2010) 7 - neuropsychology. In: Johnstone EC, Owens DC, Lawrie SM et al (eds) Companion to psychiatric studies, 8th edn. Churchill Livingstone, St. Louis, New York, pp 121–140. https://doi.org/10.1016/B978-0-7020-3137-3.00007-3

Zhang X, Liu X (2020) Handwriting function in children with Tourette syndrome and neurodevelopmental disorders. Int J Psychiatry Neurol 9(3):48–52

Silat S, Sadath L (2021) Behavioural biometrics in feature profiles-engineering healthcare and rehabilitation systems. In: 2021 international conference on computational intelligence and knowledge economy (ICCIKE). IEEE, pp. 160–165

Lezak MD, Howieson DB, Loring DW et al (2004) Neuropsychological assessment. Oxford University Press, USA

Smits-Engelsman BC, Van Galen GP (1997) Dysgraphia in children: lasting psychomotor deficiency or transient developmental delay? J Exp Child Psychol 67(2):164–184

Weintraub N, Graham S (2000) The contribution of gender, orthographic, finger function, and visual-motor processes to the prediction of handwriting status. Occup Ther J Res 20(2):121–140

Ziviani JM, Wallen M (2006) The development of graphomotor skills. In: Henderson A, Pehoski C (eds) Hand function in the child: foundations for remediation. Elsevier, Philadelphia, USA, pp 217–236. https://doi.org/10.1016/B978-032303186-8.50014-9

Smith AD (2009) On the use of drawing tasks in neuropsychological assessment. Neuropsychology 23(2):231

Likforman-Sulem L, Esposito A, Faundez-Zanuy M et al (2017) EMOTHAW: a novel database for emotional state recognition from handwriting and drawing. IEEE Trans Hum-Mach Syst 47(2):273–284

Shin MS, Park SY, Park SR et al (2006) Clinical and empirical applications of the Rey-Osterrieth complex figure test. Nat Protoc 1(2):892–899

Mainland BJ, Shulman KI (2013) Clock drawing test. In: Cognitive screening instruments. Springer, London, pp 79–109. https://doi.org/10.1007/978-1-4471-2452-8_5

Bender L (1938) A visual motor gestalt test and its clinical use. Research Monographs, American Orthopsychiatric Association, Washington

Hsu AW et al (2009) Spiral analysis in Niemann-Pick disease type C. Mov Disord 24(13):1984–1990

Naglieri JA, McNeish TJ, Achilles N (2004) Draw a person test. In: Tools of the trade: A therapist’s guide to art therapy assessments, vol 124

Goodenough FL (1926) Measurement of intelligence by drawings. World Book Co, Chicago

Lacks P (1999) Bender Gestalt screening for brain dysfunction. John Wiley & Sons Inc, New York

Brannigan GG, Decker SL, Madsen DH (2004) Innovative features of the Bender-Gestalt II and expanded guidelines for the use of the global scoring system. In: Bender Visual-Motor Gestalt Test, 2nd edn. Assessment Service Bulletin, vol 1, pp 1–24

Camara WJ, Nathan JS, Puente AE (2000) Psychological test usage: implications in professional psychology. Prof Psychol Res Pract 31(2):141

Groth-Marnat G, Strub F, Black R et al (2000) Neuropsychological assessment in clinical practice: a guide to test interpretation and integration. Wiley and Sons, New York

Groth-Marnat G (2009) Handbook of psychological assessment. John Wiley & Sons, New York

Parsey CM, Schmitter-Edgecombe M (2013) Applications of technology in neuropsychological assessment. Clin Neuropsychol 27(8):1328–1361

Cilia N, et al (2019) An overview on handwriting protocols and features for the diagnosis of Alzheimer disease. In: Proceedings of 19th international graphonomics conference

Faundez-Zanuy M, Mekyska J, Impedovo D (2021) Online handwriting, signature and touch dynamics: tasks and potential applications in the field of security and health. Cogn Comput 13(5):1406–1421

Pereira CR, Pereira DR, Weber SA et al (2019) A survey on computer-assisted Parkinson’s disease diagnosis. Artif Intell Med 95:48–63

De Stefano C, Fontanella F, Impedovo D et al (2019) Handwriting analysis to support neurodegenerative diseases diagnosis: a review. Pattern Recognit Lett 121:37–45

Impedovo D, Pirlo G (2018) Dynamic handwriting analysis for the assessment of neurodegenerative diseases: a pattern recognition perspective. IEEE Rev Biomed Eng 12:209–220

Vessio G (2019) Dynamic handwriting analysis for neurodegenerative disease assessment: a literary review. Appl Sci 9(21):4666

Faundez-Zanuy M, Fierrez J, Ferrer MA et al (2020) Handwriting biometrics: applications and future trends in e-security and e-health. Cogn Comput 12(5):940–953

Impedovo D, Pirlo G, Vessio G (2018) Dynamic handwriting analysis for supporting earlier Parkinson’s disease diagnosis. Information 9(10):247

Plamondon R, Srihari SN (2000) Online and off-line handwriting recognition: a comprehensive survey. IEEE Trans Pattern Anal Mach Intell 22(1):63–84

Graves A, Schmidhuber J (2009) Offline handwriting recognition with multidimensional recurrent neural networks. In: Advances in neural information processing systems, pp. 545–552

Doermann D, Tombre K et al (2014) Handbook of document image processing and recognition. Springer, Berlin

Keysers D, Deselaers T, Rowley HA et al (2016) Multi-language online handwriting recognition. IEEE Trans Pattern Anal Mach Intell 39(6):1180–1194

Chherawala Y, Roy PP, Cheriet M (2017) Combination of context-dependent bidirectional long short-term memory classifiers for robust offline handwriting recognition. Pattern Recognit Lett 90:58–64

Kimura T, Premachandra C, Kawanaka H (2016) Simultaneous mixed vertical and horizontal handwritten Japanese character line detection. In: International conference on computer vision and graphics. Springer, pp. 564–572

Premachandra HWH, Premachandra C, Kimura T et al (2016) Artificial neural network based Sinhala character recognition. In: International conference on computer vision and graphics. Springer, pp 594–603

Ntirogiannis K, Gatos B, Pratikakis I (2014) A combined approach for the binarization of handwritten document images. Pattern Recognit Lett 35:3–15

Chen K, Wei H, Hennebert J et al (2014) Page segmentation for historical handwritten document images using color and texture features. In: 2014 14th international conference on Frontiers in handwriting recognition. IEEE, pp. 488–493

Zagoris K, Pratikakis I, Gatos B (2017) Unsupervised word spotting in historical handwritten document images using document-oriented local features. IEEE Trans Image Process 26(8):4032–4041

He S, Schomaker L (2017) Beyond OCR: multi-faceted understanding of handwritten document characteristics. Pattern Recognit 63:321–333

Sae-Bae N, Memon N (2014) Online signature verification on mobile devices. IEEE Trans Inform Forensics Secur 9(6):933–947

Diaz M, Fischer A, Ferrer MA et al (2016) Dynamic signature verification system based on one real signature. IEEE Trans Cybern 48(1):228–239

Djeddi C, Siddiqi I, Souici-Meslati L et al (2013) Text-independent writer recognition using multi-script handwritten texts. Pattern Recognit Lett 34(10):1196–1202

Siddiqi I, Djeddi C, Raza A et al (2015) Automatic analysis of handwriting for gender classification. Pattern Anal Appl 18(4):887–899

Mirza A, Moetesum M, Siddiqi I et al (2016) Gender classification from offline handwriting images using textural features. In: 2016 15th international conference on frontiers in handwriting recognition (ICFHR). IEEE, pp. 395–398

Moetesum M, Siddiqi I, Djeddi C et al (2018) Data driven feature extraction for gender classification using multi-script handwritten texts. In: 2018 16th international conference on frontiers in handwriting recognition (ICFHR). IEEE, pp. 564–569

Srihari S, Leedham G (2003) A survey of computer methods in forensic handwritten document examination. In: Proceedings of the eleventh international graphonomics society conference. Scottsdale, Arizona, pp 278–281

Premachandra HWH, Premachandra C, Parape CD et al (2017) Speed-up ellipse enclosing character detection approach for large-size document images by parallel scanning and hough transform. Int J Mach Learn Cybern 8(1):371–378

Chakraborty A, Blumenstein M (2016) Preserving text content from historical handwritten documents. In: 2016 12th IAPR workshop on document analysis systems (DAS). IEEE, pp. 329–334

Li Y, Li W (2018) A survey of sketch-based image retrieval. Mach Vis Appl 29(7):1083–1100

Smith SL, Hiller DL (1996) Image analysis of neuropsychological test responses. In: Medical imaging 1996: image processing, international society for optics and photonics, pp. 904–915

Canham R, Smith SL, Tyrrell AM (2000) Automated scoring of a neuropsychological test: the Rey-Osterrieth complex figure. In: Proceedings of the 26th Euromicro conference. EUROMICRO 2000. Informatics: inventing the future. IEEE, pp 406–413

Moetesum M, Siddiqi I, Masroor U et al (2015) Automated scoring of Bender Gestalt test using image analysis techniques. In: 2015 13th international conference on document analysis and recognition (ICDAR). IEEE, pp. 666–670

Bennasar M, Setchi R, Bayer A et al (2013) Feature selection based on information theory in the clock drawing test. Proced Comput Sci 22:902–911

Conson M, Nuzzaci C, Sagliano L et al (2016) Relationship between closing-in and spatial neglect: a case study. Cogn Behav Neurol 29(1):44–50

Molteni F, Traficante D, Ferri F et al (2014) Cognitive profile of patients with rotated drawing at copy or recall: a controlled group study. Brain Cognit 85:286–290

Harbi Z, Hicks Y, Setchi R (2017) Clock drawing test interpretation system. Proced Comput Sci 112:1641–1650

Lunardini F, Di Febbo D, Malavolti M et al (2020) A smart ink pen for the ecological assessment of age-related changes in writing and tremor features. IEEE Trans Instrum Meas 70:1–13

Drotár P, Mekyska J, Rektorová I et al (2014) Analysis of in-air movement in handwriting: a novel marker for Parkinson’s disease. Comput Methods Programs Biomed 117(3):405–411

Mucha J et al (2018) Fractional derivatives of online handwriting: a new approach of parkinsonic dysgraphia analysis. In: 2018 41st international conference on telecommunications and signal processing (TSP). IEEE, pp. 1–4

Miao J, Niu L (2016) A survey on feature selection. Proced Comput Sci 91:919–926

Harbi Z, Hicks Y, Setchi R (2016) Clock drawing test digit recognition using static and dynamic features. Proced Comput Sci 96:1221–1230

Pereira CR, Pereira DR, da Silva FA et al (2015) A step towards the automated diagnosis of Parkinson’s disease: analyzing handwriting movements. In: 2015 IEEE 28th international symposium on computer-based medical systems. IEEE, pp. 171–176

Pereira CR, Pereira DR, Silva FA et al (2016) A new computer vision-based approach to aid the diagnosis of Parkinson’s disease. Comput Methods Programs Biomed 136:79–88

Smith SL, Lones MA (2009) Implicit context representation Cartesian genetic programming for the assessment of visuo-spatial ability. In: 2009 IEEE congress on evolutionary computation. IEEE, pp. 1072–1078

Werner P, Rosenblum S, Bar-On G et al (2006) Handwriting process variables discriminating mild Alzheimer’s disease and mild cognitive impairment. J Gerontol Series B Psychol Sci Soc Sci 61(4):P228–P236

Taleb C, Khachab M, Mokbel C et al (2017) Feature selection for an improved Parkinson’s disease identification based on handwriting. In: 2017 1st international workshop on Arabic script analysis and recognition (ASAR). IEEE, pp. 52–56

Senatore R, Della Cioppa A, Marcelli A (2019) Automatic diagnosis of neurodegenerative diseases: an evolutionary approach for facing the interpretability problem. Information 10(1):30

Drotár P et al (2013b) A new modality for quantitative evaluation of parkinson’s disease: In-air movement. In: 13th IEEE international conference on bioInformatics and bioEngineering. IEEE, pp 1–4

Drotár P, Mekyska J, Smékal Z et al (2013a) Prediction potential of different handwriting tasks for diagnosis of parkinson’s. In: 2013 E-health and bioengineering conference (EHB), IEEE, pp 1–4

Drotár P, Mekyska J, Smékal Z et al (2015) Contribution of different handwriting modalities to differential diagnosis of parkinson’s disease. In: 2015 IEEE international symposium on medical measurements and applications (MeMeA) proceedings. IEEE, pp. 344–348

Kotsavasiloglou C, Kostikis N, Hristu-Varsakelis D et al (2017) Machine learning-based classification of simple drawing movements in Parkinson’s disease. Biomed Signal Process Control 31:174–180

Pereira CR, Weber SA, Hook C et al (2016) Deep learning-aided Parkinson’s disease diagnosis from handwritten dynamics. In: 2016 29th SIBGRAPI conference on graphics, patterns and images (SIBGRAPI). IEEE, pp. 340–346

Drotár P, Mekyska J, Rektorová I et al (2016) Evaluation of handwriting kinematics and pressure for differential diagnosis of Parkinson’s disease. Artif Intell Med 67:39–46

Heinik J, Werner P, Dekel T et al (2010) Computerized kinematic analysis of the clock drawing task in elderly people with mild major depressive disorder: an exploratory study. Int Psychogeriatr 22(3):479–488

Müller S, Preische O, Heymann P et al (2017) Increased diagnostic accuracy of digital vs. conventional clock drawing test for discrimination of patients in the early course of Alzheimer’s disease from cognitively healthy individuals. Front Aging Neurosci 9:101

Müller S, Preische O, Heymann P et al (2017) Diagnostic value of a tablet-based drawing task for discrimination of patients in the early course of Alzheimer’s disease from healthy individuals. J Alzheimer’s Dis 55(4):1463–1469

Garre-Olmo J et al (2017) Kinematic and pressure features of handwriting and drawing: preliminary results between patients with mild cognitive impairment, Alzheimer disease and healthy controls. Curr Alzheimer Res 14(9):960–968

Drotár P, Mekyska J, Rektorová I et al (2014) Decision support framework for Parkinson’s disease based on novel handwriting markers. IEEE Trans Neural Syst Rehabil Eng 23(3):508–516

Bromiley P, Thacker N, Bouhova-Thacker E (2004) Shannon entropy, Rényi entropy, and information. Stat Inf Ser 9:1–5

Kaiser JF (1990) On a simple algorithm to calculate the ‘energy’ of a signal. In: International conference on acoustics, speech, and signal processing. IEEE, pp. 381–384

Laniel P, Faci N, Plamondon R et al (2020) Kinematic analysis of fast pen strokes in children with ADHD. Appl Neuropsychol Child 9(2):125–140

Plamondon R, O’Reilly C, Rémi C et al (2013) The lognormal handwriter: learning, performing, and declining. Front Psychol 4:945

Duval T, Rémi C, Plamondon R et al (2015) Combining sigma-lognormal modeling and classical features for analyzing graphomotor performances in kindergarten children. Hum Mov Sci 43:183–200

Pirlo G, Diaz M, Ferrer MA et al (2015) Early diagnosis of neurodegenerative diseases by handwritten signature analysis. In: International conference on image analysis and processing. Springer, pp. 290–297

Diaz M, Ferrer MA, Impedovo D et al (2019) Dynamically enhanced static handwriting representation for Parkinson’s disease detection. Pattern Recognit Lett 128:204–210

Moetesum M, Siddiqi I, Vincent N (2019a) Deformation classification of drawings for assessment of visual-motor perceptual maturity. In: 2019 international conference on document analysis and recognition (ICDAR). IEEE, pp. 941–946

Moetesum M, Siddiqi I, Vincent N et al (2019) Assessing visual attributes of handwriting for prediction of neurological disorders: a case study on Parkinson’s disease. Pattern Recognit Lett 121:19–27

Diaz M, Moetesum M, Siddiqi I et al (2021) Sequence-based dynamic handwriting analysis for Parkinson’s disease detection with one-dimensional convolutions and BiGRUs. Expert Syst Appl 168:114405

Canham R, Smith S, Tyrrell A (2005) Location of structural sections from within a highly distorted complex line drawing. IEE Proc Vis Image Signal Process 152(6):741–749

Jerkovic VM, Kojic V, Miskovic ND et al (2019) Analysis of on-surface and in-air movement in handwriting of subjects with parkinson’s disease and atypical parkinsonism. Biomed Eng 64(2):187–194

Bennasar M, Setchi R, Hicks Y et al (2014) Cascade classification for diagnosing dementia. In: 2014 IEEE international conference on systems, man, and cybernetics (SMC). IEEE, pp. 2535–2540

Garbi A, Smith S, Heseltine D et al (1999) Automated and enhanced assessment of unilateral visual neglect. In: IET conference proceedings

Rémi C, Frélicot C, Courtellemont P (2002) Automatic analysis of the structuring of children’s drawings and writing. Pattern Recognit 35(5):1059–1069

Ribeiro LC, Afonso LC, Papa JP (2019) Bag of samplings for computer-assisted Parkinson’s disease diagnosis based on recurrent neural networks. Comput Biol Med 115:103477

Moetesum M, Siddiqi I, Javed F et al (2020b) Dynamic handwriting analysis for Parkinson’s disease identification using C-BiGRU model. In: 2020 17th international conference on frontiers in handwriting recognition (ICFHR). IEEE, pp. 115–120

Carmona-Duarte C, Ferrer MA, Gómez-Vilda P et al (2021) Evaluating Parkinson’s disease in voice and handwriting using the same methodology, Chap 7. In: Plamondon R, Marcelli A, Ferrer MA (eds) The lognormality principle and its applications. World Scientific, pp. 161–175. https://doi.org/10.1142/9789811226830_0007

Laurent A, Plamondon R, Begon M (2022) Reliability of the kinematic theory parameters during handwriting tasks on a vertical setup. Biomed Signal Process Control 71:103157

Della Cioppa A et al (2019) Explainable AI for automatic diagnosis of Parkinson’s disease by handwriting analysis: experiments and findings. In: Proceedings of 19th international graphonomics conference

Sedgwick P (2012) Pearson’s correlation coefficient. BMJ 345:e4483

Myers L, Sirois MJ (2004) Spearman correlation coefficients, differences between. Encycl Stat Sci 12

Hotelling H (1951) A generalized t test and measure of multivariate dispersion. In: Proceedings of the second Berkeley symposium on mathematical statistics and probability. The Regents of the University of California

McKnight PE, Najab J (2010) Mann–Whitney U test. Corsini Encycl Psychol pp. 1–1

Dentamaro V, Impedovo D, Pirlo G (2021) An analysis of tasks and features for neuro-degenerative disease assessment by handwriting. In: International conference on pattern recognition. Springer, pp. 536–545

Moetesum M et al (2016) Segmentation and classification of offline hand drawn images for the BGT neuropsychological screening test. In: Eighth international conference on digital image processing (ICDIP 2016), international society for optics and photonics, pp. 100334N

Nazar HB, Moetesum M, Ehsan S et al (2017) Classification of graphomotor impressions using convolutional neural networks: an application to automated neuro-psychological screening tests. In: 2017 14th IAPR international conference on document analysis and recognition (ICDAR). IEEE, pp. 432–437

Moetesum M, Zeeshan O, Siddiqi I (2019c) Multi-object sketch segmentation using convolutional object detectors. In: Tenth international conference on graphics and image processing (ICGIP 2018), international society for optics and photonics, pp. 1106929

Guest RM, Fairhurst MC (2002) A novel multi-stage approach to the detection of visuo-spatial neglect based on the analysis of figure-copying tasks. In: Proceedings of the fifth international ACM conference on assistive technologies. ACM, pp. 157–161

Glenat S, Heutte L, Paquet T et al (2008) The development of a computer-assisted tool for the assessment of neuropsychological drawing tasks. Int J Inf Technol Decis Mak 7(04):751–767

Moetesum M, Siddiqi I, Ehsan S, Vincent N (2020) Deformation modeling and classification using deep convolutional neural networks for computerized analysis of neuropsychological drawings. Neural Comput Appl 32(16):12909–12933

Gazda M, Hireš M, Drotár P (2021) Multiple-fine-tuned convolutional neural networks for Parkinson’s disease diagnosis from offline handwriting. IEEE Trans Syst Man Cybern Syst 52(1):78–89

Price CC et al (2011) Clock drawing in the Montreal Cognitive Assessment: recommendations for dementia assessment. Dement Geriatr Cogn Disord 31(3):179–187

Nicolas S, Andrieu B, Croizet JC et al (2013) Sick? or slow? on the origins of intelligence as a psychological object. Intelligence 41(5):699–711

Fairhurst M, Smith SL (1991) Application of image analysis to neurological screening through figure-copying tasks. Int J Bio-med Comput 28(4):269–287

Smith SL, Cervantes BR (1998) Dynamic feature analysis of vector-based images for neuropsychological testing. In: Medical imaging 1998: physiology and function from multidimensional images, international society for optics and photonics, pp. 304–313

Kornmeier J, Bach M (2005) The Necker cube: an ambiguous figure disambiguated in early visual processing. Vis Res 45(8):955–960

Fang Q et al (2019) Ageing reduces performance asymmetry between the hands in force production and manual dexterity. In: Proceedings of 19th international graphonomics conference

Chindaro S, Guest R, Fairhurst M et al (2004) Assessing visuo-spatial neglect through feature selection from shape drawing performance and sequence analysis. Int J Pattern Recognit Artif Intell 18(07):1253–1266

Renau-Ferrer N, Rémi C (2011) A generic approach for recognition and structural modelling of drawers’ sketching gestures. In: Proceedings of the international conference on image processing, computer vision, and pattern recognition (IPCV). Citeseer, pp. 1

Khalid PI, Yunus J, Adnan R et al (2010) The use of graphic rules in grade one to help identify children at risk of handwriting difficulties. Res Dev Disabil 31(6):1685–1693

Tabatabaey-Mashadi N, Sudirman R, Khalid PI (2012) An evaluation of children’s structural drawing strategies. Jurnal Teknologi 61(2):27–32

Tabatabaey-Mashadi N, Sudirman R, Guest RM et al (2015) Analyses of pupils’ polygonal shape drawing strategy with respect to handwriting performance. Pattern Anal Appl 18(3):571–586

Pereira CR, Passos LA, Lopes RR et al (2017) Parkinson’s disease identification using restricted Boltzmann machines. In: International conference on computer analysis of images and patterns. Springer, pp. 70–80

Passos LA et al (2018) Parkinson disease identification using residual networks and optimum-path forest. In: 2018 IEEE 12th international symposium on applied computational intelligence and informatics (SACI). IEEE, pp. 000325–000330

De Pandis MF, Galli M, Vimercati S et al (2010) A new approach for the quantitative evaluation of the clock drawing test: preliminary results on subjects with Parkinson’s disease. Neurol Res Int. https://doi.org/10.1155/2010/283890

Galli M, Vimercati SL, Stella G et al (2011) A new approach for the quantitative evaluation of drawings in children with learning disabilities. Res Dev Disabil 32(3):1004–1010

Beuvens F, Vanderdonckt J (2012) UsiGesture: an environment for integrating pen-based interaction in user interface development. In: 2012 sixth international conference on research challenges in information science (RCIS). IEEE, pp. 1–12

Vimercati S, Galli M, De Pandis M et al (2012) Quantitative evaluation of graphic gesture in subjects with Parkinson’s disease and in children with learning disabilities. Gait Posture 35:S23–S24

He Q, Chang K, Lim EP (2006) Anticipatory event detection via sentence classification. In: 2006 IEEE international conference on systems, man and cybernetics. IEEE, pp. 1143–1148

Smiley-Oyen A, Lowry K, Kerr J (2007) Planning and control of sequential rapid aiming in adults with Parkinson’s disease. J Mot Behav 39(2):103–114

Tolosana R, Vera-Rodriguez R, Fierrez J et al (2017) Benchmarking desktop and mobile handwriting across COTS devices: the e-BioSign biometric database. PLoS One 12(5):e0176

Impedovo D, Pirlo G, Sarcinella L et al (2019) An evolutionary approach to address interoperability issues in multi-device signature verification. In: 2019 IEEE international conference on systems, man and cybernetics (SMC). IEEE, pp. 3048–3053

Lu J, Liu A, Dong F et al (2018) Learning under concept drift: a review. IEEE Trans Knowl Data Eng 31(12):2346–2363

Ammour A et al (2021) Online Arabic and French handwriting of Parkinson’s disease: the impact of segmentation techniques on the classification results. Biomed Signal Process Control 66:102429

Roalf DR, Rupert P, Mechanic-Hamilton D et al (2018) Quantitative assessment of finger tapping characteristics in mild cognitive impairment, Alzheimer’s disease, and Parkinson’s disease. J Neurol 265(6):1365–1375

Lam K, Meijer K, Loonstra F et al (2021) Real-world keystroke dynamics are a potentially valid biomarker for clinical disability in multiple sclerosis. Mult Scler J 27(9):1421–1431

Iakovakis D, Hadjidimitriou S, Charisis V et al (2018) Motor impairment estimates via touchscreen typing dynamics toward Parkinson’s disease detection from data harvested in-the-wild. Front ICT 5:28

Jack CR Jr et al (2008) The Alzheimer’s Disease Neuroimaging Initiative (ADNI): MRI methods. J Magn Reson Imaging Off J Int Soc Magn Reson Med 27(4):685–691

LaMontagne PJ, Benzinger TLS, Morris JC, Keefe S, Hornbeck R, Xiong C, Grant E, Hassenstab J, Moulder K, Vlassenko A et al (2019) OASIS: longitudinal neuroimaging, clinical, and cognitive dataset for normal aging and Alzheimer disease. medRxiv, Cold Spring Harbor Laboratory Press. https://doi.org/10.1101/2019.12.13.19014902

Davis B, Tensmeyer C, Price B et al (2020) Text and style conditioned GAN for generation of offline handwriting lines. arXiv preprint arXiv:2009.00678

Bhunia AK, Khan S, Cholakkal H et al (2021) Handwriting transformers. In: Proceedings of the IEEE/CVF international conference on computer vision, pp. 1086–1094

Catelli R, Casola V, De Pietro G et al (2021) Combining contextualized word representation and sub-document level analysis through Bi-LSTM+CRF architecture for clinical de-identification. Knowl Based Syst 213:106649

Coates DR, Wagemans J, Sayim B (2017) Diagnosing the periphery: using the Rey–Osterrieth complex figure drawing test to characterize peripheral visual function. i-Perception 8(3):1–20

Funding

Open access funding provided by CRUI-CARE. Part of this research was funded by the Higher Education Commission (HEC), Pakistan, under grant number 8910/Federal/NRPU/R&D/HEC/2017. Additional funding was received from the Spanish government’s PID2019-109099RB-C41 research projects and the European Union FEDER program.

Author information

Contributions

MM, UM and IS wrote the first draft. MD and GV commented on the draft and prepared a revised manuscript. All authors have read and approved the final manuscript.

Ethics declarations

Conflict of interest

The authors declare they have no conflict of interest.
