Abstract

Image segmentation has attracted considerable attention because of its potential biomedical applications. Motivated by this, the present study explores object enhancement and segmentation of CT images of lungs infected with COVID-19. The images are first enhanced using Pythagorean fuzzy entropy. Then, Pythagorean fuzzy measures are constructed and combined with a thresholding technique to determine the threshold values required for segmentation in the proposed scheme. The object-extraction ability of five segmentation algorithms, four current state-of-the-art schemes and the proposed one, is evaluated with quality measurement factors. The proposed scheme gives the best object separation as well as the best quality measurement values.

1 Introduction

COVID-19, the disease caused by SARS-CoV-2, was declared a pandemic by the World Health Organization (WHO) [1, 2] in March 2020. COVID-19 is highly contagious and can lead to potentially fatal acute respiratory distress syndrome (ARDS). Because only a few COVID-19 vaccines are available, most unvaccinated people remain susceptible to infection. Early diagnosis and isolation of infected individuals by any appropriate means is one of the most effective strategies to prevent the spread of the infection among healthy people. An X-ray or CT scan of the patient's chest is one of the most effective ways to detect the infection, and lung inflammation can itself be dangerous to patients' health. The rising number of infected people in the community calls for more effective therapies as well as a cost-effective primary diagnostic process. The ability to recognize infected tissue quickly and precisely is critical for optimal patient treatment and survival [3,4,5,6].

CT provides insight into the pathophysiology of the disease, which may aid its diagnosis and the tracking of its progression through various stages. It has become a viable diagnostic tool for COVID-19-related lung infection in clinical practice [7]. According to early studies, chest CT offers high sensitivity for detecting COVID-19 lung disease. As reported in [8], several groups have demonstrated diagnosis with computer-aided diagnosis (CAD) systems at accuracies of up to 95%. Medical imaging is now exploited for the diagnosis of a variety of diseases and can serve as a key pathological tool for identifying possible diseases.

Digital images are commonly analyzed with computer-based image processing. In a digital image, the intensity of each picture element (pixel) is recorded as an integer. In general, the purpose of image processing is to transform the source image into a more informative one by mathematically manipulating these recorded integers. In practice, this is done by applying appropriate mathematical functions to the source image and saving the result of the computation as a new image. The mathematical mechanisms used in image processing are almost limitless, but most of them fall into one of four major functions: thematic classification, image restoration, image segmentation, and image enhancement. Among these, image enhancement mechanisms [9] aim to improve the detectability of patterns or objects in an image. Borra et al. [10] discussed image enhancement and analysis for satellite images. Ram et al. [11] proposed polarization-based spatial filtering for edge enhancement using an S-waveplate. Enhancement and classification of brain MRI images were carried out in [12]. Zhong et al. [13] proposed an image enhancement technique for black-and-white images based on wavelet analysis and a new pseudo-color image processing scheme. Chaira [14] proposed a contrast enhancement mechanism that improves image contrast, descriptive ability, and appearance by increasing the gray-level range. Enhanced images are also usually easier to interpret than the source images.

Image segmentation is the division of a digital image into several parts; it is a simple yet significant tool in digital image processing. Its main purpose is to transform the image into more meaningful parts by separating objects from the background and locating image edges. Important applications include image denoising, face detection, video surveillance, fingerprint recognition, iris recognition, machine vision, content-based image retrieval, brake light detection, locating objects (roads, forests, and crops) in satellite images [15], and, in particular, medical imaging [16]. Many segmentation methods have been reported in the literature, including edge detection, clustering, thresholding, region growing, dual clustering, histogram-based methods, and watershed transformation. Each of these methods partitions the image into regions according to its own methodology.

Thresholding is an attractive object-separation mechanism and the most widely used, well-known, and dependable technique for image segmentation. It converts a (possibly noisy) grayscale image into a binary image using a threshold value, and the choice of this threshold is the key feature of the mechanism. Over the past few decades, threshold selection has been based on statistical characteristics [17, 18], as in the minimum-error approach, the entropy approach, moment-based algorithms, and class-variance systems. Among these, class-variance systems suffer from high computational time and cost. Moreover, some thresholding approaches fail on unimodal distributions and cannot determine the threshold for imprecise data in an image; these issues have been addressed by non-linear thresholds based on (multi-dimensional) fuzzy rules. Vague or imprecise image objects can be separated using the fuzzy set (FS) theory introduced by Zadeh [19]. Bustince et al. [20] proposed a thresholding scheme based on FSs instead of crisp sets. In this approach, the entire image is represented as an FS, and each of its elements (gray pixels) has a membership value. The membership function therefore plays a vital role in separating the image object, and it is defined in terms of the specific characteristics of the image. As evidenced in [21, 22], there is a rich literature on FS-based image thresholding techniques. Later, Atanassov [23] introduced the Intuitionistic Fuzzy Set (IFS), which assigns each element both a degree of membership and a degree of non-membership whose sum is at most 1. Yager [24, 25] then proposed the Pythagorean Fuzzy Set (PFS), an extension of IFS in which each element is again represented by a pair of membership and non-membership degrees, but only the sum of their squares is required to be at most 1.
Image segmentation employs a variety of membership and non-membership functions [21, 26,27,28], including the Gamma, triangular, Sugeno, and Yager functions.

Notably, in these approaches the primary goal is to achieve image segmentation by optimizing the threshold with a fuzzy measure, after computing an appropriate membership function for the original image. Various fuzzy threshold-selection measures, such as the entropy measure [22], the similarity score function [29], and the divergence measure [30], have recently flourished and address the problem of separation in imprecise images. Thresholding based on a similarity measure is an appealing mechanism that serves as an elementary tool for determining the threshold of an image [29, 31]. PFS-based similarity, distance, and entropy measures are discussed in [32,33,34,35]. The main motivation for similarity-based thresholding is that a particular object is associated with similar gray levels in the image's pixels. Such thresholding mechanisms classify the pixels into foreground (Fg) and background (Bg) groups.

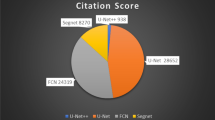

Inspired by these ideas, this article demonstrates PFS-based image enhancement and segmentation of two-dimensional biomedical images, namely CT images of lungs affected by COVID-19. The proposed scheme has two steps: image enhancement and segmentation. First, the clinical images are enhanced using Pythagorean fuzzy entropy. The enhanced images are then used in the extraction process, which determines the thresholds that separate the object from the background. The proposed scheme and four other object-extraction schemes, Method1 [4], Method2 [5], Method3 [6], and Method4 [7], are compared using quality measurement factors. According to this analysis, the proposed PFS-based object-extraction technique performs best among the compared methods in terms of both segmentation and factor values.

The rest of this paper is organized as follows. Section 2 presents the theoretical background, including PFS-based image enhancement and segmentation. Section 3 discusses the objective analysis. Section 4 presents the experimental results and analysis. Finally, Section 5 gives concluding remarks.

2 Theoretical background

2.1 Pythagorean fuzzy set

Pythagorean fuzzy theory (PFT), introduced by Yager [24], is a powerful framework that has recently attracted considerable interest. PFT is an extension of fuzzy set theory (FST) in which situations are categorized and explored in terms of three factors: membership (\(\mu\)), non-membership (\(\nu\)), and indeterminacy (\(\pi\)). For each element, \(\mu^2 + \nu^2 \le 1\) and \(\pi = \sqrt{1 - \mu^2 - \nu^2}\). The PFS is therefore a useful device for handling indeterminacy and has been employed in image processing applications such as edge detection and segmentation.

2.2 Pythagorean fuzzy image (PFI)

The source RGB image is converted to a grayscale image, which is then represented as a PFS characterized by the membership \(\mu\), indeterminacy \(\pi\), and non-membership \(\nu\) degrees. In the grayscale domain, the foreground (Fg) is associated with \(\mu\), the background (Bg) with \(\nu\), and ambiguities or edges (Ed) with \(\pi\). The Fg, Bg, and Ed regions are then obtained by evaluating the functions \(\mu\), \(\nu\), and \(\pi\), and the foreground is separated in the final stage.

2.3 Image enhancement

First, the PFI is formed, building a PFS from the IFS representation. The key step in constructing the PFI is formulating the membership and non-membership functions of the image. To begin, the grayscale image \({\mathbb {A}}\) is fuzzified by the following formula:

\[ \mu_{\mathbb{A}}(gl(r, c)) = \frac{gl(r, c) - gl_{min}}{gl_{max} - gl_{min}} \tag{1} \]

Here, gl(r, c) is the gray level of the pixel at location (r, c), and \({gl}_{{min}}\) and \({gl}_{{max}}\) are the minimum and maximum intensities of the image \({\mathbb {A}}\).
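As an illustration only, assuming the fuzzification is the usual min-max normalization that maps each gray level to \((gl - gl_{min}) / (gl_{max} - gl_{min})\), it can be sketched in plain Python. The sketch assumes the grayscale image is a list of rows of integer gray levels and that the image is not constant; the function name `fuzzify` is ours, not the paper's.

```python
def fuzzify(image):
    """Min-max fuzzification: gl -> (gl - gl_min) / (gl_max - gl_min), in [0, 1].

    ASSUMPTION: this is the standard min-max form; `image` is a list of rows.
    """
    flat = [g for row in image for g in row]
    gl_min, gl_max = min(flat), max(flat)
    if gl_max == gl_min:
        raise ValueError("constant image: gl_max equals gl_min")
    span = gl_max - gl_min
    return [[(g - gl_min) / span for g in row] for row in image]


if __name__ == "__main__":
    print(fuzzify([[10, 20], [30, 50]]))  # [[0.0, 0.25], [0.5, 1.0]]
```

The darkest pixel always maps to 0 and the brightest to 1, so the result does not depend on the absolute intensity range of the scan.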

2.3.1 \(\mu\), \(\nu\), and \(\pi\) degrees estimation

Based on the IFS, the degree of membership is estimated by applying the following generator [14]:

Then, using the fuzzy negation \(\Psi ({x})=\frac{1-{x}}{1+(e^{\lambda }-1){x}}\), \(\lambda > 0\), the degree of non-membership in the PFI is estimated as:

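Finally, the degree of indeterminacy in the PFI is obtained from its membership and non-membership degrees, as for any PFS:

\[ \pi(gl(r, c)) = \sqrt{1 - \mu^{2}(gl(r, c)) - \nu^{2}(gl(r, c))} \tag{4} \]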
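The sketch below implements the fuzzy negation \(\Psi\) exactly as stated above, together with the Pythagorean indeterminacy \(\pi = \sqrt{1 - \mu^2 - \nu^2}\). The membership generator of [14] is not reproduced in this text, so, as a clearly labelled assumption, the sketch applies \(\Psi\) directly to an already-computed membership \(\mu\) to obtain \(\nu\). Because \(\Psi(\mu) \le 1 - \mu\) whenever \(\lambda > 0\), this choice keeps \(\mu^2 + \nu^2 \le 1\), so \(\pi\) is always real.

```python
import math


def negation(x, lam):
    """Fuzzy negation Psi(x) = (1 - x) / (1 + (e^lam - 1) x), lam > 0."""
    if lam <= 0:
        raise ValueError("lam must be positive")
    return (1.0 - x) / (1.0 + (math.exp(lam) - 1.0) * x)


def pythagorean_triplet(mu, lam):
    """Return (mu, nu, pi) for one pixel.

    ASSUMPTION: nu = Psi(mu); the paper's own non-membership formula may differ.
    """
    nu = negation(mu, lam)
    # Pythagorean hesitancy; max() only guards against tiny negative rounding errors.
    pi = math.sqrt(max(0.0, 1.0 - mu * mu - nu * nu))
    return mu, nu, pi


if __name__ == "__main__":
    print(pythagorean_triplet(0.5, 1.0))
```

Larger \(\lambda\) shrinks \(\nu\) for a given \(\mu\) and therefore increases the indeterminacy \(\pi\); this is the knob that the entropy criterion of the next subsection tunes.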

2.3.2 Entropy

Entropy plays a significant role in image processing. De Luca and Termini [36] first proposed non-probabilistic entropy in the FS setting, and Peng et al. [35] proposed numerous entropy measures for PFSs. In this study, the Pythagorean Fuzzy Entropy (PFE) is used; its mathematical formula is as follows:

PFE is computed with equation (5) for each value of \(\lambda\). The \(\lambda\) value that yields the maximum PFE is taken as the optimum value:

\[ \lambda_{opt} = \arg\max_{\lambda} \, \text{PFE}(\lambda) \tag{6} \]

The optimum value \(\lambda _{{opt}}\) is then substituted into equation (2), which takes the following form:

Further, the Pythagorean fuzzy image is constructed.
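To make the \(\lambda\) search concrete, here is a minimal grid-search sketch. Neither the membership generator of equation (2) nor the PFE of equation (5) is reproduced in this text, so the sketch makes two explicit assumptions: the memberships \(\mu\) are taken as already computed, with \(\nu = \Psi(\mu)\); and a simple stand-in entropy \(E = \frac{1}{n}\sum (1 - |\mu^2 - \nu^2|)\) replaces PFE. This stand-in is 0 for crisp elements and 1 when \(\mu = \nu\); it is not the measure of [35]. Only the argmax structure of the search follows the text.

```python
import math


def negation(x, lam):
    # Psi(x) = (1 - x) / (1 + (e^lam - 1) x), as given in Section 2.3.1.
    return (1.0 - x) / (1.0 + (math.exp(lam) - 1.0) * x)


def stand_in_entropy(mus, lam):
    """ASSUMPTION: E = mean(1 - |mu^2 - nu^2|) with nu = Psi(mu).

    An illustrative Pythagorean entropy, NOT the PFE of equation (5).
    """
    total = 0.0
    for mu in mus:
        nu = negation(mu, lam)
        total += 1.0 - abs(mu * mu - nu * nu)
    return total / len(mus)


def optimal_lambda(mus, candidates):
    """Grid search: return the candidate lambda with the largest entropy."""
    return max(candidates, key=lambda lam: stand_in_entropy(mus, lam))


if __name__ == "__main__":
    memberships = [0.1, 0.3, 0.5, 0.7, 0.9]
    grid = [0.1 * k for k in range(1, 51)]  # lambda in (0, 5]
    lam_opt = optimal_lambda(memberships, grid)
    print(round(lam_opt, 2), stand_in_entropy(memberships, lam_opt))
```

A grid search is the simplest choice because the entropy is evaluated only a few dozen times per image; a finer grid or a one-dimensional optimizer could replace it without changing the idea.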

2.3.3 Enhancement

The PFI is then used for image enhancement, as follows:

Equation (8) yields the contrast-enhanced image.
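Equation (8) itself is not reproduced in this text. Whatever enhanced membership map it produces, turning that map back into a displayable image is typically a linear rescaling to the gray range. The sketch below shows only that generic defuzzification step, assuming enhanced memberships in [0, 1] and 256 gray levels; the function name `defuzzify` is hypothetical and the code is not the paper's equation (8).

```python
def defuzzify(membership, levels=256):
    """Linearly map membership values in [0, 1] to integer gray levels 0..levels-1."""
    top = levels - 1
    out = []
    for row in membership:
        # Clamp to [0, 1], scale to the gray range, then round half up.
        out.append([int(min(1.0, max(0.0, m)) * top + 0.5) for m in row])
    return out


if __name__ == "__main__":
    print(defuzzify([[0.0, 0.5], [1.0, 0.25]]))  # [[0, 128], [255, 64]]
```

Clamping first means that small numerical overshoots in an enhancement formula cannot produce gray levels outside 0..255.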

2.4 Image segmentation

Depending on its intensity distribution, an image may be unimodal, bimodal, trimodal, or multimodal. Bimodal images need a single threshold for segmentation, whereas trimodal and multimodal images need two or more thresholds. This work considers multimodal images. The segmentation algorithm is shown in Fig. 1, where the best threshold values are obtained using a PFS measure between the manually thresholded image and the enhanced thresholded image. In the manually thresholded image, each pixel strictly belongs to its respective region (object or background); that is, each pixel has a membership degree of 1 and non-membership and hesitation degrees of 0. The threshold selection algorithm proceeds as follows.

Step 1: The search for the optimal threshold requires only a single loop, with the threshold t varying from 0 to 255.

Step 2: Let the enhanced image \({\mathbb {A}}^{{enh}}\) have dimension \({\mathbb {R}} \times {\mathbb {C}}\), and let \({\mathscr {L}}=\{0, 1,...,{\mathbb {L}}-1\}\) denote its set of gray levels, where \({\mathbb {L}}\) is the number of gray levels, so that \({\mathbb {L}}-1\) is the maximum gray level of \({\mathbb {A}}^{{enh}}\). \({\mathbb {N}}({gl})\) denotes the frequency of the gray level gl.

Step 3: The pixels of the enhanced image \({\mathbb {A}}^{{enh}}\) are split into two classes, background (Bg) and foreground (Fg). Bg is the set of pixels with intensity values \(\{0, 1,..., {t}\}\), and Fg is the set of pixels with intensity values \(\{{t}+1, ..., {\mathbb {L}}-1\}\), where t is the threshold value.

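Step 4: The means of the Bg and Fg classes are:

\[ m_1 = \frac{\sum_{gl=0}^{t} gl \, \mathbb{N}(gl)}{\sum_{gl=0}^{t} \mathbb{N}(gl)}, \qquad m_2 = \frac{\sum_{gl=t+1}^{\mathbb{L}-1} gl \, \mathbb{N}(gl)}{\sum_{gl=t+1}^{\mathbb{L}-1} \mathbb{N}(gl)} \tag{9} \]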

Here \({m}_1\) and \({m}_2\), respectively, denote the average values of Bg and Fg classes.

Step 5: This paper uses the Gamma distribution in the experiments because of its symmetric nature. The membership value of each pixel (r, c) in the enhanced image \({\mathbb {A}}^{{enh}}\) is determined using the Gamma distribution [21] as follows:

Here, \({m}_1\) and \({m}_2\) are the mean intensities of the two regions to which the \(({r}, {c})^{th}\) pixel can belong, and the constant \(c_1^*=\frac{1}{\left( \text{ max }({gl}) - \text{ min } ( {gl}) \right) }\). For multilevel thresholding with n regions in the image, \(n-1\) threshold values \(t_1, t_2, \ldots, t_{n-1}\) are chosen such that \(0 \le t_1 < t_2 < \cdots < t_{n-1} \le {\mathbb {L}}-1\), where \({\mathbb {L}}-1\) is the image's maximum gray level. Following the bilevel case, the membership function for multilevel thresholding takes the form:

Here, \({m}_1\), \({m}_2\), ..., \({m}_n\) are the average gray levels of the n regions partitioned by the thresholds \(t_1, t_2, \ldots, t_{n-1}\), and the constant \(c_2^*\) is defined like \(c_1^*\) in Equation (10).

Step 6: The non-membership value of each pixel (r, c) in the enhanced image \({\mathbb {A}}^{{enh}}\) is computed using Sugeno's generator [26] as follows:

Step 7: The indeterminacy value of each pixel (r, c) in the enhanced image \({\mathbb {A}}^{{enh}}\) is obtained from equations (10) and (12) as

\[ \pi_{\mathbb{A}}^{enh}(gl(r, c)) = \sqrt{1 - \left(\mu_{\mathbb{A}}^{enh}(gl(r, c))\right)^{2} - \left(\nu_{\mathbb{A}}^{enh}(gl(r, c))\right)^{2}} \tag{13} \]

where \(\pi _{\mathbb {A}}^{{enh}}({gl}({{r, c}}))\) indicates the degree of indeterminacy of the image \({\mathbb {A}}^{{enh}}\).

Step 8: The similarity measure of [32], given below, is used to determine the degree of similarity between the manually thresholded image and the enhanced thresholded image at threshold t.

Now, if \({{\mathbb {A}}}^{{enh}}\) is the enhanced thresholded image and \({{\mathbb {B}}}^{{enh}}\) is the manually thresholded image, then \(\mu _{{\mathbb {B}}}^{{enh}}({gl(r, c)}) = 1\), \(\nu _{{\mathbb {B}}}^{{enh}}({gl(r, c)}) = 0\), and \(\pi _{{\mathbb {B}}}^{{enh}}({gl(r, c)}) = 0\). Hence, equation (14) reduces to

Step 9: Compute \({\mathbb {S}}({\mathbb {A}}, {\mathbb {B}})\) for every threshold t; the gray value at which it is maximal is taken as the optimal threshold \({\mathfrak {t}}\).

Step 10: Apply the optimal threshold \({\mathfrak {t}}\) to the enhanced image \({\mathbb {A}}^{{enh}}\) to produce the thresholded (segmented) image \({\mathbb {A}}^{{seg}}\).
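Steps 1 to 10 can be sketched as a single loop over candidate thresholds. Several formulas are not reproduced in this text, so the sketch below labels its stand-ins explicitly: a Chaira-style membership \(\mu = \exp(-c_1^*|gl - m_k|)\), with \(m_k\) the mean of the pixel's own class (an assumption about equation (10)); a Sugeno-type negation \(\nu = (1-\mu)/(1 + \lambda_s \mu)\) with a hypothetical parameter `sugeno_lam` (an assumption about equation (12)); and a normalized Hamming-type PFS similarity to the crisp image (\(\mu = 1, \nu = \pi = 0\)) in place of the measure of [32]. It covers the bilevel case only, works directly on the histogram \(\mathbb{N}(gl)\), and restricts t to values that leave both classes non-empty.

```python
import math


def histogram(image, levels=256):
    """N(gl): number of pixels at each gray level gl = 0, ..., levels - 1."""
    counts = [0] * levels
    for row in image:
        for g in row:
            counts[g] += 1
    return counts


def class_mean(counts, lo, hi):
    """Mean gray level of the pixels with lo <= gl <= hi (cf. Step 4)."""
    n = sum(counts[lo:hi + 1])
    return sum(g * counts[g] for g in range(lo, hi + 1)) / n


def pfs_triplet(gl, t, m1, m2, c, sugeno_lam):
    """(mu, nu, pi) of gray level gl at threshold t. All three forms are ASSUMED."""
    m = m1 if gl <= t else m2                            # mean of gl's own class
    mu = math.exp(-c * abs(gl - m))                      # Gamma-type membership
    nu = (1.0 - mu) / (1.0 + sugeno_lam * mu)            # Sugeno-type negation, lam >= 0
    pi = math.sqrt(max(0.0, 1.0 - mu * mu - nu * nu))    # Pythagorean hesitancy
    return mu, nu, pi


def similarity_to_crisp(counts, t, lo, hi, c, sugeno_lam):
    """Stand-in similarity to a crisp image (mu = 1, nu = pi = 0):
    S = 1 - sum_pixels(|mu^2 - 1| + nu^2 + pi^2) / (2 * n_pixels)."""
    m1, m2 = class_mean(counts, lo, t), class_mean(counts, t + 1, hi)
    total, n_pix = 0.0, 0
    for gl in range(lo, hi + 1):
        if counts[gl]:
            mu, nu, pi = pfs_triplet(gl, t, m1, m2, c, sugeno_lam)
            total += counts[gl] * (abs(mu * mu - 1.0) + nu * nu + pi * pi)
            n_pix += counts[gl]
    return 1.0 - total / (2.0 * n_pix)


def optimal_threshold(image, levels=256, sugeno_lam=1.0):
    """Steps 1-9: single loop over t, keeping the t with maximal similarity."""
    counts = histogram(image, levels)
    present = [g for g, n in enumerate(counts) if n]
    lo, hi = present[0], present[-1]
    if lo == hi:
        raise ValueError("constant image: no threshold splits it into two classes")
    c = 1.0 / (hi - lo)  # c_1^* = 1 / (max(gl) - min(gl))
    # Only t in [lo, hi - 1] leaves both Bg and Fg non-empty.
    return max(range(lo, hi),
               key=lambda t: similarity_to_crisp(counts, t, lo, hi, c, sugeno_lam))


def apply_threshold(image, t):
    """Step 10: background (gl <= t) -> 0, foreground (gl > t) -> 1."""
    return [[1 if g > t else 0 for g in row] for row in image]


if __name__ == "__main__":
    img = [[10, 10, 12, 200], [202, 200, 11, 201]]
    t = optimal_threshold(img)
    print(t, apply_threshold(img, t))
```

With this particular stand-in similarity, the identity \(\pi^2 = 1 - \mu^2 - \nu^2\) makes \(\nu\) cancel, so S reduces to the pixel-weighted mean of \(\mu^2\) and `sugeno_lam` does not change the chosen threshold; the measure of [32] need not behave this way.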

3 Objective analysis

Image quality evaluation measures are used to assess the efficacy of the proposed scheme in comparison with existing works.

3.1 Mean absolute error (MAE)

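MAE measures the deviation between the thresholded and the manually thresholded images, averaged over all \({\mathbb {R}} \times {\mathbb {C}}\) pixels:

\[ \text{MAE} = \frac{1}{\mathbb{R} \times \mathbb{C}} \sum_{r=1}^{\mathbb{R}} \sum_{c=1}^{\mathbb{C}} \left| \mathbb{B}^{seg}(gl(r, c)) - \mathbb{A}^{seg}(gl(r, c)) \right| \tag{16} \]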

Here, \({\mathbb {B}}^{{seg}} ({gl(r, c)})\) and \({\mathbb {A}}^{{seg}} ({gl(r, c)})\) denote the manually thresholded image and the image thresholded by the proposed scheme at pixel gl(r, c). If equation (16) yields a minimal deviation (zero or near zero), the images \({\mathbb {A}}^{{seg}}\) and \({\mathbb {B}}^{{seg}}\) are highly similar; otherwise, they differ.
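A minimal sketch of MAE, assuming the usual definition as the mean over all \({\mathbb {R}} \times {\mathbb {C}}\) pixels of \(|\mathbb{B}^{seg} - \mathbb{A}^{seg}|\), and assuming both images are equally sized lists of rows (for binary segmentations, values 0 and 1). The function name `mae` is ours.

```python
def mae(seg, ref):
    """Mean absolute error between two equally sized images given as lists of rows."""
    if len(seg) != len(ref) or any(len(a) != len(b) for a, b in zip(seg, ref)):
        raise ValueError("images must have the same shape")
    diffs = [abs(x - y) for row_s, row_r in zip(seg, ref) for x, y in zip(row_s, row_r)]
    return sum(diffs) / len(diffs)


# Usage: one mismatching pixel out of four gives MAE = 0.25.
print(mae([[0, 1], [1, 1]], [[0, 1], [0, 1]]))  # 0.25
```

For 0/1 masks, MAE is simply the fraction of misclassified pixels.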

3.2 Root-mean-square error (RMSE)

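RMSE quantifies the root-mean-square error between the thresholded and the manually thresholded images. For a high-quality result, the RMSE should be close to zero. It is defined as

\[ \text{RMSE} = \sqrt{\frac{1}{\mathbb{R} \times \mathbb{C}} \sum_{r=1}^{\mathbb{R}} \sum_{c=1}^{\mathbb{C}} \left( \mathbb{B}^{seg}(gl(r, c)) - \mathbb{A}^{seg}(gl(r, c)) \right)^{2}} \tag{17} \]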

where \({\mathbb {R}}\) and \({\mathbb {C}}\) denote the numbers of rows and columns of the images \({\mathbb {A}}^{{seg}}\) and \({\mathbb {B}}^{{seg}}\).
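A corresponding sketch of RMSE, assuming the standard square root of the mean squared pixel difference over the \({\mathbb {R}} \times {\mathbb {C}}\) pixels and the same list-of-rows image representation; the function name `rmse` is ours.

```python
import math


def rmse(seg, ref):
    """Root-mean-square error between two equally sized images (lists of rows)."""
    if len(seg) != len(ref) or any(len(a) != len(b) for a, b in zip(seg, ref)):
        raise ValueError("images must have the same shape")
    sq = [(x - y) ** 2 for row_s, row_r in zip(seg, ref) for x, y in zip(row_s, row_r)]
    return math.sqrt(sum(sq) / len(sq))


print(rmse([[0, 1], [1, 1]], [[0, 1], [0, 1]]))  # 0.5
```

For 0/1 masks every squared difference equals the absolute difference, so on such images RMSE is the square root of MAE.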

3.3 Correlation (CORR)

Another crucial factor, CORR, assesses the correlation between the two images \({\mathbb {A}}^{{seg}}\) and \({\mathbb {B}}^{{seg}}\). It is given by the following equation.

A larger CORR value indicates a better thresholding result. If equation (18) yields 1, the images \({\mathbb {A}}^{{seg}}\) and \({\mathbb {B}}^{{seg}}\) are identical; if it yields 0, the images are uncorrelated.
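Equation (18) is not reproduced in this text; a common choice for comparing two images, assumed here, is the Pearson (2-D) correlation coefficient computed over all pixels. The sketch below follows that assumption; the function name `corr` is ours.

```python
import math


def corr(seg, ref):
    """ASSUMPTION: Pearson correlation coefficient between two images' pixel values."""
    a = [v for row in seg for v in row]
    b = [v for row in ref for v in row]
    if len(a) != len(b):
        raise ValueError("images must have the same number of pixels")
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    if va == 0 or vb == 0:
        raise ValueError("correlation is undefined for a constant image")
    return cov / math.sqrt(va * vb)


print(corr([[0, 1], [1, 0]], [[0, 1], [1, 0]]))
```

The Pearson coefficient lies in [-1, 1]: it reaches 1 for identical non-constant images, is 0 for uncorrelated ones, and becomes negative when foreground and background are swapped.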

3.4 Signal to noise ratio (SNR)

A large SNR value indicates a good result, as it means that the images \({\mathbb {A}}^{{seg}}\) and \({\mathbb {B}}^{{seg}}\) are nearly identical.
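The SNR formula is not given in this text. A common definition, assumed here, treats the manually thresholded image \(\mathbb{B}^{seg}\) as the signal and the pixel-wise difference as noise: \(\text{SNR} = 10 \log_{10}\left(\sum \mathbb{B}^2 / \sum (\mathbb{B} - \mathbb{A})^2\right)\) in decibels. The function name `snr_db` is ours.

```python
import math


def snr_db(seg, ref):
    """ASSUMPTION: SNR = 10 log10(sum ref^2 / sum (ref - seg)^2), in dB."""
    a = [v for row in seg for v in row]
    b = [v for row in ref for v in row]
    if len(a) != len(b):
        raise ValueError("images must have the same number of pixels")
    signal = sum(y * y for y in b)
    noise = sum((y - x) ** 2 for x, y in zip(a, b))
    if noise == 0:
        return math.inf  # identical images: no error at all
    if signal == 0:
        raise ValueError("reference image has zero energy")
    return 10.0 * math.log10(signal / noise)


print(snr_db([[1, 1], [0, 0]], [[1, 1], [1, 0]]))
```

Returning infinity for identical images matches the intuition in the text that a larger SNR means a closer match, without dividing by zero.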

3.5 Peak signal to noise ratio (PSNR)

A high PSNR value indicates a good result, as it means that the images \({\mathbb {A}}^{{seg}}\) and \({\mathbb {B}}^{{seg}}\) are nearly equivalent.
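A sketch of PSNR, assuming the usual definition \(\text{PSNR} = 10 \log_{10}(\text{peak}^2 / \text{MSE})\) in decibels, where MSE is the mean squared pixel difference. The peak value is an assumption that depends on how the segmented images are stored: 1 for 0/1 masks, 255 for 8-bit images. The function name `psnr_db` is ours.

```python
import math


def psnr_db(seg, ref, peak=1.0):
    """PSNR = 10 log10(peak^2 / MSE) in dB; peak = 1 for 0/1 masks, 255 for 8-bit."""
    a = [v for row in seg for v in row]
    b = [v for row in ref for v in row]
    if len(a) != len(b):
        raise ValueError("images must have the same number of pixels")
    mse = sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(peak * peak / mse)


print(psnr_db([[0, 1], [1, 1]], [[0, 1], [0, 1]]))
```

Because PSNR is a fixed transform of MSE, it ranks methods in the same order as RMSE whenever all images share the same peak value.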

4 Experimental results and analysis

The capability of the proposed PFS-based thresholding scheme has been examined on several CT images of lungs affected by COVID-19, with infection above 50% and below 50%. To demonstrate its performance, the proposed scheme is compared with four methods, namely Method1 [4], Method2 [5], Method3 [6], and Method4 [7], using the quality measurement factors described in the previous section. The values of these factors show that the proposed method is more efficient than recent state-of-the-art methods. In addition, the proposed method yields minimal error and high similarity and is convenient for real-time applications. The proposed scheme is also highly beneficial in terms of image quality.

The dataset consists of CT scans of lungs affected by COVID-19 and is available at https://github.com/UCSD-AI4H/COVID-CT. For this investigation, images of lungs with more than 50% and with less than 50% infection were used. Fig. 2 presents the source RGB images of both groups. Their resolutions include \(210 \times 150\), \(275 \times 193\), \(273 \times 192\), \(343 \times 188\), and \(250 \times 190\), among others. The source images were then converted from RGB to grayscale; the converted images are shown in Fig. 3 (1a)–(4j). Fig. 4 (1a)–(2j) shows the histograms of the images with above 50% infection, and Fig. 4 (3a)–(4j) those of the images with below 50% infection. The PFS-based enhancement scheme is then applied to Fig. 3 (1a)–(4j), and the enhanced images are displayed in Fig. 5 (1a)–(4j).

The threshold values for the first enhanced image of the above-50% group (Fig. 5 (1a)), obtained with the other object-extraction schemes and with the proposed scheme, are given in the first row and the second to sixth columns of Table 1. Fig. 6 (1a)–(1e) shows the objects separated from this image by the current state-of-the-art methods and by the proposed PFS method, respectively. Fig. 6 (1e) shows that the proposed method clearly separates the foreground of this image. The object-extraction error is estimated by comparing each segmented image with the manually extracted object image. Two error measures, MAE and RMSE, are used; their values are given in the first row and the second to sixth columns of Tables 2 and 3, and the CORR, SNR, and PSNR values appear in the same positions of Tables 4, 5, and 6. Finally, the MAE, RMSE, SNR, PSNR, and CORR values obtained with the different methods are plotted in Fig. 8 (a)–(e).

Similarly, the threshold values for the first enhanced image of the below-50% group (Fig. 5 (3a)), the twenty-first image overall, are obtained by the same methods and given in the first row and the seventh to twelfth columns of Table 1. After applying these methods, the foreground of this enhanced image is extracted, and the resulting foregrounds are presented in Fig. 7 (1a)–(1e). From these foregrounds, the MAE, RMSE, SNR, PSNR, and CORR values are computed, arranged in the first row and the seventh to twelfth columns of Tables 2, 3, 4, 5, and 6, and plotted in Fig. 8 (a)–(e). As this row and these columns of Tables 2, 3, 4, 5, and 6 and Fig. 8 (a)–(e) show, the proposed scheme gives the best results, with smaller MAE and RMSE values and larger SNR, PSNR, and CORR values.

In the same manner, using the maximum similarity principle [32], the enhanced images of all remaining lungs with above 50% infection (Fig. 5 (1b)–(2j)) are thresholded, and the resulting thresholds are listed in the second to twentieth rows and the second to sixth columns of Table 1. The foregrounds of these enhanced images separated by Method1 [4], Method2 [5], Method3 [6], and Method4 [7] are shown in Fig. 6 [(2a)–(2d)]–[(20a)–(20d)], and Fig. 6 (2e)–(20e) shows the output images obtained with the proposed scheme. Comparing the outcomes of the four methods with those of the proposed method shows that the proposed PFS method yields better thresholded images than current state-of-the-art methods. The metric values of the existing object-extraction methods and the proposed PFS scheme are listed in the second to twentieth rows and the second to sixth columns of Tables 2, 3, 4, 5, and 6, and plotted in Fig. 8 (a)–(e). Tables 2, 3, 4, 5, and 6 and Fig. 8 show that the foreground separation achieved by the proposed PFS scheme exceeds that of the other methods.

Likewise, the remaining images of lungs with below 50% infection are enhanced, and the resulting images are given in Fig. 5 (3b)–(4j). These images are then used in the extraction process, and the resulting thresholds are shown in the second to twentieth rows and the seventh to twelfth columns of Table 1. The images segmented by recent state-of-the-art techniques and by the proposed one are depicted in Fig. 7 [(2a)–(2e)]–[(20a)–(20e)]. After segmentation, the MAE, RMSE, CORR, SNR, and PSNR values are computed, tabulated in the second to twentieth rows and the seventh to twelfth columns of Tables 2, 3, 4, 5, and 6, and plotted in Fig. 8 (a)–(e). Figs. 7 and 8 and Tables 2, 3, 4, 5, and 6 demonstrate the performance of the proposed method.

Overall, Tables 2 and 3 and Fig. 8 (a)–(b) present the quality measurement values obtained after applying the various segmentation schemes to the enhanced images of lungs affected by COVID-19. The values in these tables show that the proposed PFS scheme yields lower error values than the state-of-the-art methods. The maximum CORR values (Table 4) obtained with the proposed scheme confirm that the object in the enhanced images (above and below 50% infection) is clearly separated. Although Method1 and Method2 also attain large CORR values, the visual quality of the images obtained with the proposed scheme is better than that of the other methods. Furthermore, Tables 4, 5, and 6 show that the proposed method yields higher SNR, CORR, and PSNR values than the current state-of-the-art methods. These differences in favour of the proposed technique indicate that the proposed PFS thresholding scheme is the most suitable of the compared methods for all the tested images of lungs infected with COVID-19.

5 Conclusion

This paper has surveyed recent developments in digital image analysis, such as image enhancement and segmentation, within the framework of FS and PFS theory, and has presented a PFS-based segmentation scheme for CT images of lungs infected with COVID-19. The proposed object segmentation scheme combines image enhancement with a thresholding technique. Using PFS entropy, the images are enhanced to adequate quality. Moreover, the quality measurement factors show significant improvements with the PFS-based segmentation scheme, which makes the proposed scheme relevant even when the images are blurry or noisy. Such work may therefore contribute to the search for more effective image segmentation schemes.

References

Ahmadi M, Sharifi A, Dorosti S, Jafarzadeh Ghoushchi S, Ghanbari N (2020) Investigation of effective climatology parameters on COVID-19 outbreak in Iran. Sci Total Environ. 729:138705

Wang X, Deng X, Fu et al (2020) A weakly-supervised framework for COVID-19 classification and lesion localization from chest CT. IEEE Trans Med Imag. 39(8):2615–2625

Wang G, Liu X, Li et al (2020) A noise-robust framework for automatic segmentation of COVID-19 pneumonia lesions from CT images. IEEE Trans Med Imag. 39(8):2653–2663

Li L, Sun L, Xue Y, Li S, Mansour RF (2021) Fuzzy multilevel image thresholding based on improved coyote optimization algorithm. IEEE Access 9:33595–33607

Houssein EH, Emam MM, Ali AA (2021) Improved manta ray foraging optimization for multi-level thresholding using COVID-19 CT images. Neural Comput Appl. 33:16899–16919

Hassanien AE, Mahdy LN, Ezzat KA, Elmousalami HH, Ella HA (2020) Automatic x-ray covid-19 lung image classification system based on multi-level thresholding and support vector machine. medRxiv

Abualigah L, Diabat A, Sumari P, Gandomi AH (2021) A novel evolutionary arithmetic optimization algorithm for multilevel thresholding segmentation of COVID-19 CT images. Processes. 9:1155

Elaziz MA, Ewees AA, Yousri et al (2020) An improved marine predators algorithm with fuzzy entropy for multilevel thresholding: real world example of covid-19 CT image segmentation. IEEE Access. 8:125306–125330

Dibya JB, Thakur RS (2018) An Efficient Technique for Medical Image Enhancement Based on Interval Type-2 Fuzzy Set Logic. Progress Comput, Analyt Network. 710:667–678

Borra S, Thanki R, Dey N (2019) Satellite image enhancement and analysis. In: Satellite image analysis: clustering and classification. SpringerBriefs in Applied Sciences and Technology, Springer, Singapore. https://doi.org/10.1007/978-981-13-6424-2_2

Ram BSB, Senthilkumaran P, Sharma A (2017) Polarization based spatial filtering for directional and nondirectional edge enhancement using S-waveplate. Appl Opt. 56:3171–3178

Ullah Z, Farooq MU, Lee S-H, An D (2020) A hybrid image enhancement based brain MRI images classification technique. Med Hypoth. 143:109922

Zhong S, Jiang X, Wei J, Wei Z (2013) Image enhancement based on wavelet transformation and pseudo-color coding with phase-modulated image density processing. Infrared Phys Technol. 58:56–63

Chaira T (2014) Enhancement of medical images in an Atanassov's intuitionistic fuzzy domain using an alternative intuitionistic fuzzy generator with application to image segmentation. J Intell Fuzzy Syst. 27(3):1347–1359

Bhandari AK, Kumar A, Singh GK (2015) Improved knee transfer function and gamma correction based method for contrast and brightness enhancement of satellite image. AEU-Int J Electr Commun. 69(2):579–589

Yacin Sikkandar M, Alrasheadi BA, Prakash NB et al (2021) Deep learning based an automated skin lesion segmentation and intelligent classification model. J Ambient Intell Human Comput. 12:3245–3255

Nie F, Zhang P, Li J, Ding D (2017) A novel generalized entropy and its application in image thresholding. Signal Process. 134:23–34

Kaur T, Saini BS, Gupta S (2016) Optimized multi threshold brain tumor image segmentation using two dimensional minimum cross entropy based on co-occurrence matrix. In: Medical imaging in clinical applications, pp 461–486

Zadeh LA (1965) Fuzzy sets. Inf Control. 8:338–356

Bustince H, Barrenechea E, Pagola M (2007) Image thresholding using restricted equivalence functions and maximizing the measures of similarity. Fuzzy Sets Syst. 158(5):496–516

Chaira T (2010) Intuitionistic fuzzy segmentation of medical images. IEEE Trans Biomed Eng. 57(6):1430–1436

Ananthi VP, Balasubramaniam P (2016) A new thresholding technique based on fuzzy set as an application to leukocyte nucleus segmentation. Comput Methods Programs Biomed. https://doi.org/10.1016/j.cmpb.2016.07.002

Atanassov KT (1986) Intuitionistic fuzzy sets. Fuzzy Sets Syst. 20:87–96

Yager RR (2013) Pythagorean fuzzy subsets. In: Proceedings of the Joint IFSA World Congress and NAFIPS Annual Meeting, Edmonton, Canada, pp 57–61

Yager RR (2014) Pythagorean membership grades in multicriteria decision making. IEEE Trans Fuzzy Syst. 22:958–965

Raj S, Vinod DS, Mahanand BS et al (2020) Intuitionistic fuzzy C means clustering for lung segmentation in diffuse lung diseases. Sens Imag. 21(37):1–16

Yager RR (1979) On the measure of fuzziness and negation, part I: membership in the unit interval. Int J Gen Syst. 5:221–229

Yager RR (1980) On a general class of fuzzy connectives. Fuzzy Sets Syst. 4(3):235–242

Yanhui G, Abdulkadir SE (2014) A novel image segmentation algorithm based on neutrosophic similarity clustering. Appl Soft Comput. 25:391–398

Arindam J, Garima S, Rashmi M, Madhumala G, Amit K, Chandan C, Atulya KN (2014) Automatic leukocyte nucleus segmentation by intuitionistic fuzzy divergence based thresholding. Micron. 58:55–65

Yanhui G, Abdulkadir S, Ye J (2014) A novel image thresholding algorithm based on neutrosophic similarity score. Measurement. 58:175–186

Li Z, Lu M (2019) Some novel similarity and distance measures of Pythagorean fuzzy sets and their applications. J Intell Fuzzy Syst. 37(2):1781–1799

Qiang Z, Junhua H, Jinfu F, Liu A, Yongli L (2019) New similarity measures of Pythagorean fuzzy sets and their applications. IEEE Access. https://doi.org/10.1109/ACCESS.2019.2942766

Peng X (2019) New similarity measure and distance measure for Pythagorean fuzzy set. Complex Intell Syst. 5(5):101–111

Peng XD, Yuan H, Yang Y (2017) Pythagorean fuzzy information measures and their applications. Int J Intell Syst. 32(10):991–1029

De Luca A, Termini S (1972) A definition of a non-probabilistic entropy in the setting of fuzzy sets theory. Inf Control. 20(4):301–312

Acknowledgements

This paper is partly supported by the Department of Science and Technology (DST)-Promotion of University Research and Scientific Excellence (PURSE) Phase-II, Government of India, New Delhi (Memo No. BU/DST PURSE (II)/APPOINTMENT/515).

Ethics declarations

Conflict of interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Premalatha, R., Dhanalakshmi, P. Enhancement and segmentation of medical images through pythagorean fuzzy sets-An innovative approach. Neural Comput & Applic 34, 11553–11569 (2022). https://doi.org/10.1007/s00521-022-07043-5

DOI: https://doi.org/10.1007/s00521-022-07043-5