Abstract

We study integral input-to-state stability of bilinear systems with unbounded control operators and derive natural sufficient conditions. The results are applied to a bilinearly controlled Fokker–Planck equation.

1 Introduction

In this note, we continue recent developments on input-to-state stability (ISS) for systems governed by evolution equations. This concept unifies both asymptotic stability with respect to the initial values and robustness with respect to the external inputs such as controls or disturbances. Loosely, if a system \(\Sigma \) is viewed as a mapping which sends initial values \(x_{0}\in X\) and inputs \(u:[0,\infty )\rightarrow U\) to the time evolution \(x:[0,T)\rightarrow X\) for some maximal \(T>0\), then \(\Sigma \) is ISS if \(T=\infty \) and

$$\begin{aligned} \Vert x(t)\Vert _{X}\le \beta (\Vert x_{0}\Vert _{X},t)+\gamma \Big (\mathop {\mathrm {ess\,sup}}_{s\in [0,t]}\Vert u(s)\Vert _{U}\Big ),\qquad t\ge 0, \end{aligned} \tag{1}$$

where the continuous functions \(\beta : {\mathbb {R}}_{0}^{+}\times {\mathbb {R}}_{0}^{+}\rightarrow {\mathbb {R}}_{0}^{+} \) and \(\gamma :{\mathbb {R}}_{0}^{+}\rightarrow {\mathbb {R}}_{0}^{+}\) are of Lyapunov class \({\mathcal {K}}{\mathcal {L}}\) and \(\mathcal {K}\), respectively (see Sect. 2.1 for the definitions).

Here, X is called the state space and U the input space equipped with norms \(\Vert \cdot \Vert _{X}\) and \(\Vert \cdot \Vert _{U}\).

For linear systems of the form

$$\begin{aligned} {\dot{x}}(t)=Ax(t)+Bu(t),\quad t>0,\qquad x(0)=x_{0}, \end{aligned}$$

where A is the infinitesimal generator of a \(C_{0}\)-semigroup \((T(t))_{t\ge 0}\) on a Banach space X and \(B:U\rightarrow X\) is a bounded linear operator, ISS is equivalent to uniform exponential stability of the semigroup [4, 10]. If B is not bounded as an operator from U to X, which is typically the case for boundary controlled PDEs, the property of being ISS becomes non-trivial even for linear systems. In fact, this is closely related to suitable solution concepts, see, e.g., [10, 20, 29]. Along with the recent developments in ISS theory for infinite-dimensional systems [4, 5, 8, 15, 27], several partial results have been derived in the (semi)linear context, with a slight focus on parabolic equations, see, e.g., [13, 16, 21, 23, 25, 35]. We refer to the recent surveys on ISS for infinite-dimensional systems [26, 29] and the book [17].

The origin of ISS theory, introduced by Sontag in 1989 [30], is nonlinear systems, and we refer the reader to [31] for a survey on ISS for ODEs.

Already seemingly harmless system classes such as bilinear systems

$$\begin{aligned} {\dot{x}}(t)=Ax(t)+B(x(t),u(t)),\quad t>0,\qquad x(0)=x_{0}, \end{aligned} \tag{2}$$

where \(B(x,u)=\sum _{i=1}^{m}u_{i}B_{i}x\) and \(A, B_{i}\in {\mathbb {R}}^{d\times d}\), see [6], are typical counterexamples for ISS [32]. Nevertheless, such systems satisfy the following variant of ISS [32]: there exist functions \(\beta \in {\mathcal {K}}{\mathcal {L}}\) and \(\gamma _{1},\gamma _{2}\in \mathcal {K}\) such that

$$\begin{aligned} \Vert x(t)\Vert \le \beta (\Vert x_{0}\Vert ,t)+\gamma _{1}\left( \int _{0}^{t}\gamma _{2}(\Vert u(s)\Vert ) \, \mathrm {d}s\right) ,\qquad t\ge 0, \end{aligned} \tag{3}$$

a property called integral input-to-state stability (integral ISS). Clearly, there is no elementary implication between estimates (1) and (3).

Still, integral ISS and ISS are equivalent for infinite-dimensional linear systems with a bounded linear operator \(B:U\rightarrow X\) [10, 24], as both reduce to uniform exponential stability of the uncontrolled system. The corresponding question for general infinite-dimensional systems seems to be much harder and notorious questions remain open, see [10, 12, 28] and [13] for a negative result.

On the other hand, in [24] the equivalence of integral ISS and uniform exponential stability is shown for a natural infinite-dimensional version of (2), with A generating a \(C_{0}\)-semigroup and \(B:X\times U\rightarrow X\) satisfying a Lipschitz condition on bounded subsets of X uniformly in the second variable and being bounded in the sense that \(\Vert B(x,u)\Vert \lesssim \Vert x\Vert \gamma (\Vert u\Vert )\) for some \(\mathcal {K}\)-function \(\gamma \) and all x and u. As indicated above, the question of whether a system is ISS or integral ISS is more subtle when boundary controls are considered and, consequently, the involved input operators become unbounded. This also applies to bilinear systems, which, in the presence of boundary control, cannot be treated as in the references mentioned above.

In this article, we establish the abstract theory to overcome such issues. More precisely, we study infinite-dimensional control systems of the abstract form

$$\begin{aligned} {\dot{x}}(t)=Ax(t)+B_{1}F(x(t),u_{1}(t))+B_{2}u_{2}(t),\quad t>0,\qquad x(0)=x_{0}, \end{aligned}$$

where A generates a \(C_0\)-semigroup on a Banach space X and \(B_{1}\) and \(B_{2}\) are possibly unbounded linear operators defined on Banach spaces \(\bar{X}\) and \(U_{2}\), respectively. The nonlinearity \(F:X\times U_{1}\rightarrow \bar{X}\) is assumed to satisfy a Lipschitz condition and to be bounded. With a slight abuse of notation, but following the literature, e.g., [22], we call such systems “bilinear” because of the prototypical example given by \(F(x,u)=ux\) with \(U_{1}={\mathbb {C}}\), which already exhibits most of the interesting aspects. In Sect. 2, we present the details of this abstract framework and derive the main result, which, in terms of integral ISS, see also Definition 2.1, reads as follows.

Main Result (Theorem 2.9) The bilinear system \(\Sigma (A,[B_1,B_2],F)\) is integral ISS, if the linear systems \(\Sigma (A,B_{1})\) and \(\Sigma (A,B_{2})\) are integral ISS.

In order to prove this statement, we show existence of global mild solutions to \(\Sigma (A,[B_1,B_2],F)\) by classical fixed point arguments under the weak conditions on the operators \(B_{1}\), \(B_{2}\).

We conclude by applying our abstract result to the example of a bilinearly controlled Fokker–Planck equation with reflective boundary conditions, which has recently appeared in [3, 9].

2 Input-to-state stability for bilinear systems

2.1 System class and notions

In the following, we study bilinear control systems of the form

$$\begin{aligned} {\dot{x}}(t)=Ax(t)+B_{1}F(x(t),u_{1}(t))+B_{2}u_{2}(t),\quad t>0,\qquad x(0)=x_{0}, \end{aligned} \tag{$\Sigma (A,[B_1,B_2],F)$}$$

where

- X, \(\bar{X}\) and \(U_{1}, U_{2}\) are Banach spaces and \(x_{0}\in X\),

- A generates a \(C_0\)-semigroup \((T(t))_{t\ge 0}\) on X,

- the input functions \(u_1\) and \(u_2\) are locally integrable functions with values in \({U_{1}}\) and \(U_2\), respectively, that is, \(u_1 \in L^1_\mathrm{loc}(0,\infty ;U_{1})\) and \(u_2\in L^1_\mathrm{loc}(0,\infty ;U_2)\),

- the operators \(B_1\) and \(B_2\) are defined on \(\bar{X}\) and \(U_2\), respectively. Both operators map into a space (see below) in which X is densely embedded,

- the nonlinear operator \(F:X\times U_1 \rightarrow \bar{X}\) is bounded in the sense that there exists a constant \(m>0\) such that

$$\begin{aligned} \Vert F(x,u)\Vert _{\bar{X}}\le m \Vert x\Vert _X \Vert u\Vert _{U_{1}} \qquad \forall x\in X, u\in U_1 \end{aligned} \tag{4}$$

and Lipschitz continuous in the first variable on bounded subsets of X, where the Lipschitz constant depends on the \(U_{1}\)-norm of the second argument, that is, for all bounded subsets \(X_{\text {b}}\subset X\) there exists a constant \(L_{X_{\text {b}}}>0\), such that

$$\begin{aligned} \Vert F(x,u)-F(y,u) \Vert _{\bar{X}} \le L_{X_{\text {b}}} \Vert u\Vert _{U_1} \Vert x-y\Vert _X \qquad \forall x,y\in X_{\text {b}}, u\in U_1, \end{aligned} \tag{5}$$

- \(s\mapsto F(f(s),g(s))\) is measurable for any interval I and measurable functions \(f:I\rightarrow X\), \(g:I\rightarrow U_1\),

- we write \(\Sigma (A,B_{2})=\Sigma (A,[0,B_2],0)\) if \(B_{1}=0\) and thus \(\Sigma (A,B_{2})\) is linear.

Before explaining the details on the assumptions on \(B_{1}\) and \(B_{2}\) below, we list some examples for functions F and operators that fit our setting.

(a) \(\bar{X}=X\), \(U_{1}={\mathbb {C}}\) and \(F(x,u)=xu\),

(b) \(\bar{X}=U_{1}=X\), \(f\in X^{*}\), \(F(x,u)=f(x)u\),

(c) \(\bar{X}={\mathbb {C}}\), \(U_{1}=X^{*}\), \(F(x,u)=\langle x,u\rangle \).

Let \(X_{-1}\) be the completion of X with respect to the norm \( \Vert x\Vert _{X_{-1}}= \Vert (\beta -A)^{-1}x\Vert _X\) for some \(\beta \) in the resolvent set \(\rho (A)\) of A. For a reflexive Banach space, \(X_{-1}\) can be identified with \((D(A^*))'\), the continuous dual of \(D(A^*)\) with respect to the pivot space X. The operators \(B_{1}\) and \(B_{2}\) are assumed to map to \(X_{-1}\), more precisely, \(B_{1}\in L(\bar{X},X_{-1})\) and \(B_{2}\in L(U_2,X_{-1})\), where L(X, Y) refers to the bounded linear operators from X to Y. Only in the special case that \(B_{1}\) or \(B_{2}\) are in \(L(\bar{X},X)\) or \(L(U_{2},X)\), we say that the respective operator is bounded. The \(C_{0}\)-semigroup \((T(t))_{t\ge 0}\) extends uniquely to a \(C_{0}\)-semigroup \((T_{-1}(t))_{t\ge 0}\) on \(X_{-1}\) whose generator \(A_{-1}\) is the unique extension of A to an operator in \(L(X,X_{-1})\), see, e.g., [7]. Note that \(X_{-1}\) can be viewed as taking the role of a Sobolev space with negative index. With the above considerations, we may consider System \(\Sigma (A,[B_1,B_2],F)\) on the Banach space \(X_{-1}\). We want to emphasize that our interest is primarily in the situation where \(B_1\) and \(B_2\) are not bounded—something that typically happens if the control enters through point boundary actuation.

Note, however, that the assumptions imply that “the unboundedness of \(B_1\) and \(B_2\) is not worse than the one of A”—which particularly means that if \(A\in L(X,X)\), then \(B_1\in L(\bar{X},X)\) and \(B_2\in L(U_{2},X)\).

For zero-inputs \(u_1\) and \(u_2\), the solution theory for System \(\Sigma (A,[B_1,B_2],F)\) is fully characterized by the property that A generates a \(C_{0}\)-semigroup as this reduces to solving a linear, homogeneous equation. For non-trivial inputs, the solution concept is a bit more delicate.

More precisely, for given \(t_{0},t_{1}\in [0,\infty )\), \(t_{0}<t_{1}\), \(x_{0}\in X\), \(u_1\in L_{loc}^{1}(0,\infty ;U_1)\) and \(u_{2}\in L_{loc}^{1}(0,\infty ;U_{2})\), a continuous function \(x:[t_{0},t_{1}] \rightarrow X\) is called a mild solution of \(\Sigma (A,[B_1,B_2],F)\) on \([t_{0},t_{1}]\) if for all \(t\in [t_{0},t_{1}]\),

$$\begin{aligned} x(t)=T(t-t_{0})x_{0}+\int _{t_{0}}^{t}T_{-1}(t-s)\left[ B_{1}F(x(s),u_{1}(s))+B_{2}u_{2}(s)\right] \, \mathrm {d}s. \end{aligned} \tag{6}$$

We say that \(x:[0,\infty )\rightarrow X\) is a global mild solution or a mild solution on \([0,\infty )\) of \(\Sigma (A,[B_1,B_2],F)\) if \(x|_{[0,t_{1}]}\) is a mild solution on \([0,t_{1}]\) for every \(t_{1}>0\).

We stress that the existence of a mild solution is non-trivial, even when \(u_{1}=0\). In this case, it is easy to see that \(x\in C([0,\infty ); X_{-1})\), but not necessarily \(x(t)\in X\), \(t>0\), without further assumptions on \(B_{2}\). The existence of a mild solution to the linear System \(\Sigma (A,B_{2})\) is closely related to the notion of admissibility of the operator \(B_2\) for the semigroup \((T(t))_{t\ge 0}\), and various sufficient and necessary conditions are available, see, e.g., Proposition 2.4 and [10].

We need the following well-known function classes from Lyapunov theory.

$$\begin{aligned} {\mathcal {K}}&=\{\mu :{\mathbb {R}}_{0}^{+}\rightarrow {\mathbb {R}}_{0}^{+}\mid \mu (0)=0,\ \mu \text { continuous and strictly increasing}\},\\ {\mathcal {K}}_{\infty }&=\{\theta \in {\mathcal {K}}\mid \lim _{x\rightarrow \infty }\theta (x)=\infty \},\\ {\mathcal {L}}&=\{\gamma :{\mathbb {R}}_{0}^{+}\rightarrow {\mathbb {R}}_{0}^{+}\mid \gamma \text { continuous and strictly decreasing},\ \lim _{t\rightarrow \infty }\gamma (t)=0\},\\ {\mathcal {K}}{\mathcal {L}}&=\{\beta :{\mathbb {R}}_{0}^{+}\times {\mathbb {R}}_{0}^{+}\rightarrow {\mathbb {R}}_{0}^{+}\mid \beta (\cdot ,t)\in {\mathcal {K}}\ \forall t\ge 0,\ \beta (s,\cdot )\in {\mathcal {L}}\ \forall s>0\}. \end{aligned}$$

The following concept is central in this work. It originates from works by Sontag [30, 32]. We refer, e.g., to [26, 27] for the infinite-dimensional setting.

Definition 2.1

The system \(\Sigma (A,[B_1,B_2],F)\) is called

(i) input-to-state stable (ISS), if there exist \(\beta \in {\mathcal {K}}{\mathcal {L}}\), \( \mu _1, \mu _2 \in {{\mathcal {K}}}_\infty \) such that for every \(x_0\in X\), \(u_1 \in L^\infty (0,\infty ;U_1)\) and \(u_2 \in L^\infty (0,\infty ;U_2)\) there exists a unique global mild solution x of \(\Sigma (A,[B_1,B_2],F)\) and for every \(t\ge 0\)

$$\begin{aligned} \left\| x(t)\right\| \le \beta (\Vert x_0\Vert ,t)+ \mu _1 (\Vert u_1\Vert _{L^\infty (0,t;U_1)}) + \mu _2 (\Vert u_2\Vert _{L^\infty (0,t;U_2)}); \end{aligned}$$

(ii) integral input-to-state stable (integral ISS), if there exist \(\beta \in {\mathcal {K}}{\mathcal {L}}\), \( \theta _1, \theta _2 \in {{\mathcal {K}}}_\infty \) and \(\mu _1, \mu _2 \in {{\mathcal {K}}}\) such that for every \(x_0\in X\), \(u_1\in L^\infty (0,\infty ;U_1)\) and \(u_2 \in L^\infty (0,\infty ;U_2)\) System \(\Sigma (A,[B_1,B_2],F)\) has a unique global mild solution x and for every \(t\ge 0\)

$$\begin{aligned} \left\| x(t)\right\| \le \beta (\Vert x_0\Vert ,t) + \theta _1 \left( \int _0^t \mu _1 (\Vert u_1(s)\Vert ) \, \mathrm {d}s\right) + \theta _2 \left( \int _0^t \mu _2 (\Vert u_2(s)\Vert ) \, \mathrm {d}s\right) . \end{aligned}$$

One may define some mixed type of these definitions like (ISS, integral ISS) (and (integral ISS,ISS)), in the sense that one has an ISS-estimate for \(u_1\) and some integral ISS-estimate for \(u_2\) (and vice versa).

The terms involving \(u_{1}\) and \(u_{2}\) on the right-hand-side of the integral ISS estimate do not define norms in general. However, there are function spaces which are naturally linked to integral ISS [10].

In this context, we briefly introduce the Orlicz space \(E_\varPhi (I;Y)\) associated with a so-called Young function \(\varPhi \) for an interval \(I \subset {\mathbb {R}}\) and a Banach space Y in the Appendix. Note that the Orlicz space \(E_\varPhi \) corresponding to the Young function \(\varPhi (t)=t^p\), \(1<p<\infty \), is isomorphic to \(L^p\).
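The identification of \(E_\varPhi \) with \(L^p\) for \(\varPhi (t)=t^p\) can be checked numerically on step functions. The following is a minimal sketch, assuming that \(E_\varPhi \) carries the Luxemburg norm \(\inf \{k>0 : \int \varPhi (|u(s)|/k)\,\mathrm {d}s\le 1\}\) (the norm itself is fixed in the Appendix, which is not reproduced here) and that u is scalar-valued; the helper name `luxemburg_norm` is hypothetical.

```python
def luxemburg_norm(values, widths, young, tol=1e-12):
    """Luxemburg norm of a scalar step function.

    The function takes the value values[i] on an interval of length widths[i];
    `young` is the Young function Phi. Assumption: Luxemburg norm
    inf{k > 0 : int Phi(|u|/k) ds <= 1}.
    """
    def modular(k):
        return sum(w * young(abs(v) / k) for v, w in zip(values, widths))

    if all(v == 0 for v in values):
        return 0.0
    lo, hi = 0.0, 1.0
    while modular(hi) > 1.0:       # enlarge hi until the constraint holds
        hi *= 2.0
    while hi - lo > tol * hi:      # bisection: modular is non-increasing in k
        mid = 0.5 * (lo + hi)
        if modular(mid) > 1.0:
            lo = mid
        else:
            hi = mid
    return hi


if __name__ == "__main__":
    p = 3.0
    vals, widths = [1.0, -2.0, 0.5], [0.5, 1.0, 2.0]
    lp_norm = sum(w * abs(v) ** p for v, w in zip(vals, widths)) ** (1.0 / p)
    print(luxemburg_norm(vals, widths, lambda s: s ** p), lp_norm)
```

Under this normalization the two printed numbers agree up to the bisection tolerance, since \(\int |u/k|^p\,\mathrm {d}s\le 1\) is equivalent to \(k\ge \Vert u\Vert _{L^p}\).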

Definition 2.2

Let \((T(t))_{t\ge 0}\) be a \(C_0\)-semigroup.

(i) We say that \((T(t))_{t\ge 0}\) is of type \((M,\omega )\) if \(M\ge 1\) and \(\omega \in {\mathbb {R}}\) are such that

$$\begin{aligned} \Vert T(t)\Vert \le M\text {e}^{-\omega t},\qquad t\ge 0. \end{aligned} \tag{7}$$

(ii) We say that \((T(t))_{t\ge 0}\) is (uniformly) exponentially stable if \((T(t))_{t\ge 0}\) is of type \((M,\omega )\) for some \(\omega >0\).

(iii) Let \(Z=E_\varPhi \) or \(Z=L^\infty \). An operator \(B \in L(U,X_{-1})\) is called Z-admissible for \((T(t))_{t \ge 0}\), if for every \(t>0\) and \(u\in Z(0,t;U)\) it holds that

$$\begin{aligned} \int _0^t T_{-1}(t-s)B u(s) \, \mathrm {d}s\in X. \end{aligned}$$

We will omit the reference to \((T(t))_{t \ge 0}\) if this is clear from the context.

Recall that every \(C_0\)-semigroup is of type \((M,\omega )\) for some \(M\ge 1\) and \(\omega \in {\mathbb {R}}\). Note that any bounded operator B is Z-admissible for all Z considered above.
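To illustrate why a constant \(M>1\) may be needed in (7), consider the following finite-dimensional sketch. The matrix is an assumption chosen purely for illustration and does not appear in the text. On \(X={\mathbb {C}}^{2}\) with the Euclidean norm, let

$$\begin{aligned} A=\begin{pmatrix} -1 &{} 1\\ 0 &{} -1 \end{pmatrix},\qquad T(t)=\text {e}^{tA}=\text {e}^{-t}\begin{pmatrix} 1 &{} t\\ 0 &{} 1 \end{pmatrix},\qquad t\,\text {e}^{-t}\le \Vert T(t)\Vert \le (1+t)\,\text {e}^{-t}. \end{aligned}$$

For \(\omega \in (0,1)\), maximizing \((1+t)\text {e}^{-(1-\omega )t}\) over \(t\ge 0\) (the maximum is attained at \(t=\omega /(1-\omega )\)) shows that \((T(t))_{t\ge 0}\) is of type \((M,\omega )\) with \(M=\text {e}^{-\omega }/(1-\omega )\), for instance \(M=2\text {e}^{-1/2}\approx 1.21\) for \(\omega =\frac{1}{2}\). The lower bound shows that no finite M works for \(\omega =1\), so this semigroup is exponentially stable, but the bound on M degenerates as \(\omega \nearrow 1\).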

Remark 2.3

Let \(B\in L(U,X_{-1})\) be Z-admissible for \((T(t))_{t\ge 0}\) with \(Z=E_\varPhi \) or \(Z=L^\infty \). Then, for any \(t>0\) there exists a minimal constant \(C_{t,B}>0\) such that

$$\begin{aligned} \left\| \int _{0}^{t}T_{-1}(t-s)Bu(s) \, \mathrm {d}s\right\| _{X}\le C_{t,B}\Vert u\Vert _{Z(0,t;U)}\qquad \forall u\in Z(0,t;U). \end{aligned} \tag{8}$$

This is a consequence of the closed graph theorem. Also note that B is Z-admissible for \((\text {e}^{\delta t}T(t))_{t\ge 0}\) for any \(\delta \in {\mathbb {R}}\). Furthermore, the function \(t\mapsto C_{t,B}\) is non-decreasing and, if \((T(t))_{t\ge 0}\) is exponentially stable, even bounded, that is, \(C_{B}:=\sup _{t\ge 0} C_{t,B}<\infty \).

The following result clarifies the relation between admissibility and (integral) ISS. The interest to study admissibility with respect to Orlicz spaces follows by the natural connection to integral ISS for linear systems, see Proposition 2.4 (iii).

Note in particular that the existence of mild solutions for \(E_{\varPhi }\)-admissible operators \(B_{2}\) is based on the absolute continuity of the Orlicz norm with respect to the length of the interval and the strong continuity of the shift-semigroup on \(E_\varPhi (I;Y)\) for any interval I and any Banach space Y. The latter can be proven by methods similar to those used to prove the strong continuity of the shift-semigroup on \(L^p(I;Y)\).

Proposition 2.4

(Prop. 2.10 & Thm. 3.1 in [10]) Let A generate the \(C_{0}\)-semigroup \((T(t))_{t \ge 0}\) on X and \(B_{2}\in L(U_{2},X_{-1})\).

(i) If \(B_{2}\) is \(E_\varPhi \)-admissible, then for every \(x_0 \in X\) and \(u_{2} \in E_{\varPhi ,\mathrm{loc}}(0,\infty ;U_2)\) there exists a unique global mild solution x of System \(\Sigma (A,B_{2})\), which is given by (6) with \(B_1=0\).

(ii) System \(\Sigma (A,B_{2})\) is ISS if and only if \((T(t))_{t\ge 0}\) is exponentially stable and \(B_{2}\) is \(L^\infty \)-admissible.

(iii) \(\Sigma (A,B_{2})\) is integral ISS if and only if \((T(t))_{t \ge 0}\) is exponentially stable and \(B_{2}\) is \(E_\varPhi \)-admissible for some Young function \(\varPhi \).

2.2 Main Results

Whether ISS implies integral ISS for a general linear system \(\Sigma (A,B)\) is still an open question. The implication holds if B is bounded, see, e.g., [10, Prop. 2.14] or [24]. Moreover, in the case of boundary control, various conditions on A and the input space U are available under which integral ISS and ISS are equivalent [13].

The following proposition establishes an estimate between Orlicz norms and integral ISS estimates, which will be useful for the proof of the main result.

Proposition 2.5

Let \(\varPhi \) be a Young function. Then, there exist \(\mathcal {K}_{\infty }\)-functions \(\theta \) and \(\mu \) such that for any Banach space U and \(t>0\),

$$\begin{aligned} \Vert u\Vert _{E_{\varPhi }(0,t;U)}\le \theta \left( \int _{0}^{t}\mu (\Vert u(s)\Vert _{U}) \, \mathrm {d}s\right) \qquad \forall u\in L^{\infty }(0,t;U). \end{aligned} \tag{9}$$

Moreover, \(\theta \) and \(\mu \) can be chosen as

where \(\phi \) equals the right-derivative of \(\varPhi \) a.e. and, for \(\alpha >0\),

with \(\theta (0)=0\).

If \(\varPhi \) satisfies the \(\varDelta _2\)-condition (cf. the Appendix), then \(\mu =\varPhi \) can be chosen as well.

Proof

Note that we only need to show that \(\mu \) and \(\theta \) define \(\mathcal {K}_{\infty }\)-functions since (9) is immediate from the definition of \(\theta \). The proof is similar in spirit to an argument used in [28, Proof of Thm. 1], with the crucial fact being that \(\mu \) defined by (10) defines a Young function such that

for all \(c>0\), see [28, Lem. 1]. In the special case that \(\varPhi \) satisfies the \(\varDelta _{2}\)-condition (with \(s_{0}=0\)), the above properties also hold for \(\mu =\varPhi \), by the defining properties of the \(\varDelta _{2}\)-condition. This implies that whenever a sequence \((f_{n})_{n\in {\mathbb {N}}}\) with \(f_n\in L^\infty (0,t_n;U)\) is such that

it follows that \(\lim _{n\rightarrow \infty }\Vert f_{n}\Vert _{E_{\varPhi }(0,t_n;U)}=0\), see [28, Lem. 2]. Clearly, \(\mu \) is a \(\mathcal {K}_{\infty }\)-function, since \(\mu \) is a Young function. Therefore, it remains to consider \(\theta \). It is easy to see that \(\theta \) is well-defined, non-decreasing and unbounded, whence we are left to show continuity. Moreover, since \(\theta (\alpha )\) is of the form \(\sup M_{\alpha }\) with nested sets \((M_{\alpha })_{\alpha >0}\), it follows that \(\theta \) is right-continuous on \((0,\infty )\). To see that \(\theta \) is continuous at \(\alpha =0\), let \((\alpha _{n})_{n}\) be a decreasing sequence of positive numbers with \(\lim _{n\rightarrow \infty }\alpha _{n}=0\) and for every \(n \in {\mathbb {N}}\) let \(u_{n}\in L^\infty (0,t_n;U)\) be such that \(\int _{0}^{t_n}\mu (\Vert u_{n}(s)\Vert )\, \mathrm {d}s\le \alpha _{n}\) and \(0\le \theta (\alpha _{n})-\Vert u_{n}\Vert _{E_{\varPhi }(0,t_n;U)}<\frac{1}{n}\). By the above mentioned argument, we can conclude that \(\Vert u_{n}\Vert _{E_{\varPhi }(0,t_n;U)}\) converges to 0 as \(n\rightarrow \infty \). Thus, \(\lim _{n\rightarrow \infty }\theta (\alpha _{n})=0\).

We finish the proof by showing that \(\theta \) is left-continuous on \((0,\infty )\). Now, let \(\alpha >0\), \(\alpha _{n}\nearrow \alpha \) and let \(u_{k}\in L^\infty (0,t_k;U)\), \(k\in {\mathbb {N}}\), such that

For every \(n\in {\mathbb {N}}\), we aim to find \({\tilde{u}}_{n}\in L^\infty (0,t_n;U)\) such that \(\int _{0}^{t_n}\mu (\Vert {\tilde{u}}_{n}(s)\Vert ) \, \mathrm {d}s\le \alpha _{n}\) and \(\lim _{n\rightarrow \infty }\Vert u_{n}-{\tilde{u}}_{n}\Vert _{E_{\varPhi }(0,t_n;U)}=0\). Indeed, then

tends to 0 as \(n\rightarrow \infty \), which shows left-continuity. We define \({\tilde{u}}_{n}:=u_{n}\chi _{M_{n}}\) where the measurable set \(M_{n}\) is chosen such that

or \(M_{n}=(0,t_n)\) otherwise. It follows that

Thus, using the argument from the beginning of the proof again, we infer that \(\Vert u_{n}-{\tilde{u}}_{n}\Vert _{E_{\varPhi }(0,t_n;U)}\rightarrow 0\) as \(n\rightarrow \infty \). This concludes the proof. \(\square \)

Combining Proposition 2.5 with [10, Prop 2.10] allows us to formulate the following result:

Corollary 2.6

If System \(\Sigma (A,B_{2})\) possesses a unique mild solution x for every \(x_0 \in X\) and \(u_2 \in L^\infty (0,\infty ;U_2) \), then the following statements are equivalent.

(i) There exist functions \(\beta \in {\mathcal {K}}{\mathcal {L}}\) and \(\mu _2 \in \mathcal {K}_\infty \) such that

$$\begin{aligned} \Vert x(t) \Vert \le \beta (\Vert x_0 \Vert ,t) + \mu _2(\Vert u_2 \Vert _{E_\varPhi (0,t;U_2)}) \end{aligned} \tag{11}$$

holds for all \(t \ge 0\) and \(u_2 \in L^\infty (0,\infty ;U_2)\).

(ii) There exist functions \(\beta \in {\mathcal {K}}{\mathcal {L}}\), \(\theta _2 \in \mathcal {K}\) and \(\mu _2 \in \mathcal {K}_\infty \) such that

$$\begin{aligned} \Vert x(t) \Vert \le \beta ( \Vert x_0 \Vert , t ) + \theta _2 \left( \int _0^t \mu _2(\Vert u_2(s) \Vert _{U_2}) \, \mathrm {d} s \right) \end{aligned} \tag{12}$$

holds for all \(t \ge 0\) and \(u_2 \in L^\infty (0,\infty ;U_2)\).

Remark 2.7

Let us make the following comments on the construction of \(\mu \) and \(\theta \) in Proposition 2.5.

1. If \(\varPhi (s)=s^{p}\), \(s>0\), then \(\mu (s)=s^{p}\) and it is not hard to see that, up to a constant, \(\theta (r)\) is given by \(\varPhi ^{-1}(r)=r^{\frac{1}{p}}\). This shows that the choice of \(\theta \) is rather natural.

2. With similar techniques as in the proof of Proposition 2.5, it has been shown in [10, 28] that if a linear system \(\Sigma (A,B)\) satisfies (11), then it is integral ISS with the estimate

$$\begin{aligned} \Vert x(t)\Vert \le \beta (\Vert x_{0}\Vert ,t)+\theta \left( \int _{0}^{\infty }\mu (\Vert u(s)\Vert _{U}) \, \mathrm {d}s\right) , \end{aligned}$$

where

$$\begin{aligned} \theta (\alpha ) = {}\sup&\left\{ \left\| \int _{0}^{t}T_{-1}(s)Bu(s) \, \mathrm {d}s\right\| \Bigm |\right. \\ \quad&\left. u \in L^\infty (0,t;U),\,t \ge 0,\,\int _0^t \mu (\Vert u(s)\Vert _U) \, \mathrm {d}s \le \alpha \right\} . \end{aligned} \tag{13}$$

Proposition 2.5 shows that \(\theta \) can actually be chosen independent of the semigroup \((T(t))_{t \ge 0 }\) and B provided the system is \(E_{\varPhi }\)-admissible (which, however, depends on \((T(t))_{t \ge 0}\) and B, of course). In some sense, this fact simplifies the proofs in [10, 28]. On the other hand, the choice of \(\theta \) based on (13) is more refined: if the system were even \(E_{\varPsi }\)-admissible with some \(\varPsi \le \varPhi \), this would affect the choice of \(\theta \), even if \(\mu \) is constructed from \(\varPhi \) only.
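The power case in the first item of Remark 2.7 can be written out in one line. This is a sketch under the assumption that \(E_{\varPhi }(0,t;U)\) is normed by the usual \(L^{p}\)-norm (other equivalent normalizations only change the constant mentioned in the remark). With \(\mu (s)=s^{p}\) and \(\theta (r)=r^{\frac{1}{p}}\),

$$\begin{aligned} \Vert u\Vert _{L^{p}(0,t;U)}=\left( \int _{0}^{t}\Vert u(s)\Vert _{U}^{p} \, \mathrm {d}s\right) ^{\frac{1}{p}}=\theta \left( \int _{0}^{t}\mu (\Vert u(s)\Vert _{U}) \, \mathrm {d}s\right) , \end{aligned}$$

so in this case the norm is already an integral expression of the kind that appears in the integral ISS estimate, and both \(\mu \) and \(\theta \) are \(\mathcal {K}_{\infty }\)-functions.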

In contrast to linear systems, the existence of mild solutions is less clear for bilinear control systems of the form \(\Sigma (A,[B_1,B_2],F)\).

Sontag [32] showed that finite-dimensional bilinear systems are hardly ever ISS, but integral ISS if and only if the semigroup is exponentially stable. In [24], it was shown that this result generalizes to infinite-dimensional bilinear systems provided that \(B_{1}\) and \(B_{2}\) are bounded operators and \(\bar{X}=X\). The following results give sufficient conditions for integral ISS and some combination of ISS and integral ISS of \(\Sigma (A,[B_1,B_2],F)\). We start with a result on existence of local solutions to \(\Sigma (A,[B_1,B_2],F)\). The proof involves typical arguments in the context of mild solutions for semilinear equations.

A similar result for the existence of the unique mild solution as in the following Lemma 2.8 was proved under slightly stronger conditions in [2] for \(L^{p}\)-admissible \(B_{1}\), scalar-valued inputs \(u_1\), \(F(x,u_1)=u_1 x\) and \(B_2=0\). Our condition is more natural as the same condition guarantees the existence of continuous (and unique) global mild solutions of the linear systems \(\Sigma (A,B_{1})\) and \(\Sigma (A,B_{2})\), see Proposition 2.4.

Lemma 2.8

Let A generate a \(C_{0}\)-semigroup \((T(t))_{t \ge 0}\) on X. Suppose that \(B_{1}\in L(\bar{X},X_{-1})\) is \(E_\varPhi \)-admissible and that \(B_{2}\in L(U_{2},X_{-1})\) is \(E_\varPsi \)-admissible. Then, for every \(t_0\ge 0\), \(x_0\in X\), \(u_1\in E_\varPhi (0,\infty ;U_1)\) and \(u_2\in E_\varPsi (0,\infty ;U_2)\) there exists \(t_1>t_0\) such that System \(\Sigma (A,[B_1,B_2],F)\) possesses a unique mild solution x on \([t_0,t_1]\).

Moreover, if \(t_{\mathrm {max}} > t_0\) denotes the supremum of all \(t_1>t_0\) such that System \(\Sigma (A,[B_1,B_2],F)\) has a unique mild solution x on \([t_0,t_1]\), then \(t_{\mathrm {max}} < \infty \) implies that

$$\begin{aligned} \lim _{t\nearrow t_{\mathrm {max}}}\Vert x(t)\Vert _{X}=\infty . \end{aligned}$$

Proof

We first show that for every \(t_0 \ge 0, x_0\in X\), \(u_1\in E_\varPhi (0,\infty ;U_1) \) and \(u_2\in E_\varPsi (0,\infty ;U_2)\) there exists \(t_1>t_{0}\) such that System \(\Sigma (A,[B_1,B_2],F)\) possesses a unique mild solution on \([t_0,t_1]\) with initial condition \(x_0\) and input functions \(u_1\) and \(u_2\). Moreover, we show that \(t_{1}=t_{0}+\delta \) can be chosen such that \(\delta \) is uniform over bounded sets of initial data \(x_{0}\) and initial times \(t_{0}\). Let \(T>0\), \(r>0\), \(u_1\in E_\varPhi (0,\infty ;U_1) \) and \(u_2\in E_\varPsi (0,\infty ;U_2)\) be arbitrary. We first recall the following property of Orlicz spaces. For any \(\varepsilon >0\), there exists \(\delta >0\) such that

see, e.g., [19, Thm. 3.15.6]. Let \(t_{0}\in [0,T]\), \(t_1>t_0\) and \(x_{0}\in K_{r}(0)=\{x\in X:\Vert x\Vert \le r\}\) and define the mapping

$$\begin{aligned} \varPhi _{t_0,t_1}:C([t_0,t_1];X)&\rightarrow C([t_0,t_1];X),\\ (\varPhi _{t_0,t_1}(x))(t)&:=T(t-t_{0})x_{0}+\int _{t_{0}}^{t}T_{-1}(t-s)\left[ B_{1}F(x(s),u_{1}(s))+B_{2}u_{2}(s)\right] \, \mathrm {d}s. \end{aligned}$$

The strong continuity of \((T(t))_{t\ge 0}\) and Proposition 2.4 imply that \(\varPhi _{t_0,t_1}\) is well-defined, that is, \(\varPhi _{t_0,t_1}(x)\in C([t_0,t_1];X)\) for every \( x\in C([t_0,t_1];X)\). Note that we applied Proposition 2.4 twice: to System \(\Sigma (A,B_{2})\) with input \(u_2\) and to System \(\Sigma (A,B_{1})\) with input \(F(x(\cdot ),u_1(\cdot ))\), where we set \(u_1,u_2,x\) zero on \((0,t_0)\).

Let \(M \ge 1\) and \(\omega \in {\mathbb {R}}\) be such that \(\Vert T(t)\Vert \le M \text {e}^{-\omega t}\) for all \(t \ge 0\) and choose \(k=4Mr + 2M\). Set

$$\begin{aligned} M_k(t_0,t_1):=\left\{ x\in C([t_0,t_1];X)\Bigm | \sup _{t\in [t_0,t_1]}\Vert x(t)\Vert \le k\right\} . \end{aligned}$$

We will show next that \(t_1\) can be chosen such that \(\varPhi _{t_0,t_1}\) maps \(M_k(t_0,t_1)\) to \(M_k(t_0,t_1)\) and is contractive on this set. Let \(C_{t,B_{1}}\) and \(C_{t,B_{2}}\) refer to the admissibility constants such that (8) holds for \(B_1\) and \(B_2\) which can be chosen non-decreasing in t. Let m be the boundedness constant of F from (4) and let \(L_{K_{k}(0)}\) be the Lipschitz constant of F such that (5) holds for the bounded set \(X_{b}=\{ x(t) \mid x \in M_k(t_0,t_1), t \in [t_0,t_1] \} \subset X\) which is equal to \(K_{k}(0)=\{x\in X:\Vert x\Vert \le k\}\). Now, let \(t_1 = t_0 + \delta \) with \(\delta \in (0,1)\) be chosen such that for all \(t_{0}\in [0,T]\),

(i) \(\text {e}^{\omega (t_1-t_0)} =\text {e}^{\omega \delta } \le 2 \),

(ii) \(m C_{T+1,B_{1}}\Vert u_1\Vert _{E_\varPhi (t_{0},t_{0}+\delta ;U_1)} \le \frac{1}{2}\),

(iii) \(C_{T,B_{2}} \Vert u_2\Vert _{E_\varPsi (t_{0},t_{0}+\delta ;U_2)}\le M\) and

(iv) \(C_{T+\delta ,B_{1}} L_{K_k(0)} \Vert u_1\Vert _{E_\varPhi (t_{0},t_{0}+\delta ;U_1)} < 1\)

holds, where we used (14) in (ii)-(iv). Note that apart from the parameters of the operators \(B_{1},B_{2}, A,F\), the choice of \(\delta \) only depends on r and T, where the r-dependence of \(\delta \) arises from the r-dependence of k. It follows that for all \(t_{0}\in [0,T]\), \(x\in M_k(t_0,t_1)\) and \(x_0\in K_r(0)\)

where we used admissibility in the first inequality and (4) in the second inequality as well as the monotonicity of the Orlicz norm in both estimates. Hence, \(\varPhi _{t_0,t_1}\) maps \(M_k(t_0,t_1)\) to \(M_k(t_0,t_1)\). The contractivity follows by \(\mathrm {(iv)}\) since

where we used again admissibility, the Lipschitz property of F and the monotonicity of the Orlicz norm. By Banach’s fixed-point theorem, we conclude that System \(\Sigma (A,[B_1,B_2],F)\) possesses a unique mild solution on \([t_0,t_1]\) with initial condition \(x_0\) and input functions \(u_1\) and \(u_2\).

Now, let \(x_0 \in X\), \(u_1 \in E_\varPhi (0,\infty ;U_1)\) and \(u_2 \in E_\varPsi (0,\infty ;U_2)\) be given and let \(t_{\mathrm {max}}\) be the supremum of all \(t_{1}\) such that there exists a mild solution x of \(\Sigma (A,[B_1,B_2],F)\) on \([t_0,t_1]\). Suppose that \(t_{\mathrm {max}}\) is finite. We will show that then \(\lim _{t\rightarrow t_{\mathrm {max}}}\Vert x(t)\Vert =\infty \). If this is not the case, we have

Let \((t_n)_{n \in {\mathbb {N}}}\) be a sequence of positive real numbers converging to \(t_{\mathrm {max}}\) from below. Since \(t_{n}\in [0,t_{\mathrm {max}}]\) and \(\Vert x(t_{n})\Vert \le r\) for all \(n\in {\mathbb {N}}\), there exists \(\delta >0\) independent of \(n\in {\mathbb {N}}\) such that the equation

has a mild solution y on \([t_{n},t_{n}+\delta ]\). Therefore, we can extend x by \(x(t) =y(t)\), \(t \in (t_n,t_n+ \delta ]\), to a solution of \(\Sigma (A,[B_1,B_2],F)\) on \([t_0,t_n+ \delta ]\). This contradicts the maximality of \(t_{\mathrm {max}}\) and hence, x has to be unbounded in \(t_{\mathrm {max}}\). \(\square \)
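The fixed-point argument in the proof above can be illustrated on a scalar toy problem. The following is a minimal sketch, assuming \(X={\mathbb {R}}\), \(T(t)=\text {e}^{-t}\), \(B_{1}=1\) (hence bounded, unlike the situation of interest), \(F(x,u)=ux\), \(B_{2}=0\), and a left Riemann sum for the convolution integral; the names `picard_step` and `picard` are hypothetical.

```python
import math


def picard_step(x, x0, u, dt):
    """Apply the fixed-point map once on a uniform grid.

    Discretizes x -> T(t) x0 + int_0^t T(t-s) u(s) x(s) ds with T(t) = exp(-t)
    (scalar toy assumption) using a left Riemann sum.
    """
    decay = math.exp(-dt)
    out, integral = [x0], 0.0
    for n in range(1, len(x)):
        # integral at t_n from the value at t_{n-1} (semigroup property)
        integral = decay * (integral + u[n - 1] * x[n - 1] * dt)
        out.append(math.exp(-n * dt) * x0 + integral)
    return out


def picard(x0, u, dt, iterations=30):
    """Iterate the map starting from the constant function x0."""
    x = [x0] * (len(u) + 1)
    gaps = []  # sup-distance between successive iterates
    for _ in range(iterations):
        new = picard_step(x, x0, u, dt)
        gaps.append(max(abs(a - b) for a, b in zip(x, new)))
        x = new
    return x, gaps


if __name__ == "__main__":
    x, gaps = picard(1.0, [0.5] * 200, 0.005)
    # exact solution of x' = -x + 0.5 x at t = 1 is exp(-0.5)
    print(x[-1], math.exp(-0.5), gaps[0], gaps[-1])
```

The distances between successive iterates shrink geometrically, which is the discrete counterpart of the contraction property established via condition (iv); the remaining difference to the exact value stems from the Riemann-sum discretization.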

Theorem 2.9

Suppose that the linear systems \(\Sigma (A,B_{1})\) and \(\Sigma (A,B_{2})\) are integral ISS. Then the bilinear system \(\Sigma (A,[B_1,B_2],F)\) is integral ISS and (integral ISS,ISS).

The assumption that \(\Sigma (A,B_{2})\) is integral ISS is necessary.

Proof

The necessity of \(\Sigma (A,B_{2})\) being integral ISS follows by setting \(u_1=0\) in the bilinear system.

Proposition 2.4 says that integral ISS of the linear systems is equivalent to the exponential stability of the semigroup \((T(t))_{t \ge 0}\) generated by A and the admissibility of the control operators \(B_1\) and \(B_2\) with respect to some Orlicz spaces \(E_\varPhi \) and \(E_\varPsi \), respectively.

Using this characterization, we will give the proof in two steps. At first, we prove the existence of a global mild solution x of \(\Sigma (A,[B_1,B_2],F)\) (which does not need the exponential stability of \((T(t))_{t \ge 0}\)). Afterward, we prove the (integral) ISS properties.

STEP I. Let \((M,\omega )\) denote the type of \((T(t))_{t \ge 0}\). By Remark 2.3, there exist \(C_{t,B_1},C_{t,B_2}>0\) such that for every \(t\ge 0\), \(y\in E_\varPhi (0,\infty ;\bar{X})\) and \({\tilde{y}} \in E_\varPsi (0,\infty ;U_2)\), we have

and

Let \(x_0 \in X\), \(u_1 \in E_\varPhi (0,\infty ;U_1)\) and \(u_2 \in E_\varPsi (0,\infty ;U_2)\) and let \(t_{\mathrm {max}}\) be the supremum over all \(t_1\) such that \(\Sigma (A,[B_1,B_2],F)\) possesses a unique mild solution x on \([0,t_1]\). Lemma 2.8 yields \(t_{\mathrm {max}}>0\). For \(t \in [0,t_{\mathrm {max}})\) we have that

where \(C_{\omega ,u_{2},t}=C_{t,B_2}\text {e}^{-\frac{\omega }{2}t} \left\| \text {e}^{\frac{\omega }{2}\cdot }u_2 \right\| _{E_\varPsi (0,t;U_2)}\). The \(\Vert \cdot \Vert _{E_{\varPhi }}\)-norm in the second term can be estimated by the boundedness of F,

We pass over to the equivalent norm on \(E_{\varPhi }\) given in the Appendix, (24). Therefore, for \(\varepsilon >0\) there exists a function \(g \in L_{\tilde{\varPhi }}(0,t;{\mathbb {C}})\) with \(\Vert g\Vert _{L_{\tilde{\varPhi }}(0,t;{\mathbb {C}})} \le 1\) such that

Hence, combining this with (15) gives

Setting \(\alpha (t) := M \text {e}^{- \frac{\omega }{2}t}\Vert x_0\Vert + m C_{t,B_1} \varepsilon + \text {e}^{\frac{\omega }{2} t}C_{\omega ,u_{2},t}\), Gronwall’s inequality implies that

where we used the generalized Hölder inequality for Orlicz spaces, see (25) in the Appendix. Thus, by letting \(\varepsilon \) tend to 0, multiplying with \(\text {e}^{-\frac{\omega }{2}t}\) and using \(ab \le \frac{1}{2}a^2+\frac{1}{2}b^2\) for \(a,b\in {\mathbb {R}}\), we obtain

by monotonicity of the Orlicz norm,

Thus, we have shown

for all \(u_1 \in E_\varPhi (0,\infty ;U_1)\), \(u_2 \in E_\varPsi (0,\infty ;U_2)\) and functions \(\beta \in {\mathcal {K}}{\mathcal {L}}\) and \(\gamma _1, \gamma _2 \in \mathcal {K}_\infty \), which can be chosen as

Moreover, the mild solution exists on \([0,\infty )\). Indeed, if this is not the case, we have \(t_{\mathrm {max}}<\infty \) and Lemma 2.8 implies that x is unbounded in \(t_{\mathrm {max}}\). This contradicts (16) since the right-hand-side is uniformly bounded in t on finite intervals \([0,t_{\mathrm {max}})\).

STEP II. Since we are dealing with an exponentially stable semigroup, Remark 2.3 implies that \(C_{t,B_1}\) and \(C_{t,B_2}\) are uniformly bounded in t and we can choose \(\omega >0\). Hence, (16) yields for all \(u_1 \in E_\varPhi (0,\infty ;U_1)\) and \(u_2 \in E_\varPsi (0,\infty ;U_2)\) that

with \(C_{B_i}= \sup _{t \ge 0}C_{t,B_i}\), \(i=1,2\).

Using Proposition 2.5 for \(u_1\) and \(u_2\), we have shown that \(\Sigma (A,[B_1,B_2],F)\) is integral ISS since \(L^\infty \) is contained in any Orlicz space on compact intervals. If we apply Proposition 2.5 only for \(u_1\) in (16), \(\Sigma (A,[B_1,B_2],F)\) is (integral ISS,ISS) by realizing that there exists a constant \(C>0\) such that

for all \(u_{2}\in L^{\infty }(0,\infty ;U_{2})\) and \(t>0\). To see this, let \(\varepsilon >0\) be such that \(\varPsi (x)\le x\) for all \(x\in (0,\varepsilon )\); such an \(\varepsilon \) exists since \(\lim _{s\rightarrow 0}\frac{\varPsi (s)}{s}=0\). Therefore, choosing \(C=\max \{\frac{1}{\varepsilon },\frac{2}{\omega }\}\),

This implies that

by the definition of the \(E_{\varPsi }\)-norm. \(\square \)

The assumption in Theorem 2.9 that System \(\Sigma (A,B_{1})\) is integral ISS is not necessary as the choice \(F=0\) shows.

Remark 2.10

(1) In Theorem 2.9, one cannot expect the bilinear systems to be ISS, as the trivial finite-dimensional example \({\dot{x}}=-x+u_{1}x\) shows.

(2) Using the definitions of \(\gamma _1\), \(\gamma _2\) after (16) and the definitions of \(\mu \) and \(\theta \) from Proposition 2.5, up to constants the functions \(\mu _1\), \(\mu _2\), \(\theta _1\) and \(\theta _2\) in the integral ISS estimate for \(\Sigma (A,[B_1,B_2],F)\) can be given explicitly.

(3) The proof of Theorem 2.9 is easier in the case that the Orlicz spaces are \(L^p\) spaces, since the \(L^p\)-norm is already an integral of the form we are seeking in the integral ISS estimate (cf. Definition 2.1).

(4) Note that the assumptions of Lemma 2.8 already yield that the unique mild solution is global. This is the first step of the proof of Theorem 2.9.
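Item (1) of Remark 2.10 can be made concrete with a small numerical sketch. For the scalar equation \({\dot{x}}=-x+u_{1}x\), separation of variables gives \(x(t)=x_0\exp (\int _0^t (u_1(s)-1)\,\mathrm {d}s)\). The following Python snippet evaluates this formula for the bounded input \(u_1\equiv 2\) and for a pulse with finite \(L^1\)-norm; the function name `solution` and both test inputs are our own illustrative choices and do not appear in the article.

```python
import math

def solution(x0, integral_u, t):
    """x(t) = x0 * exp(int_0^t (u(s) - 1) ds) for x' = -x + u x.

    integral_u(t) must return int_0^t u(s) ds (supplied in closed form)."""
    return x0 * math.exp(integral_u(t) - t)

# Bounded constant input u = 2: int_0^t u = 2t, hence x(t) = x0 * e^t.
const_input = lambda t: 2.0 * t
# Integrable pulse u = 2 on [0, 1], u = 0 afterwards: int_0^t u = 2*min(t, 1).
pulse_input = lambda t: 2.0 * min(t, 1.0)

times = [0.0, 1.0, 5.0, 10.0]
bounded_case = [solution(1.0, const_input, t) for t in times]
pulse_case = [solution(1.0, pulse_input, t) for t in times]

print(bounded_case)  # grows although sup|u| = 2
print(pulse_case)    # decays once the pulse has ended
```

The first trajectory grows like \(\text {e}^{t}\) although \(\Vert u_1\Vert _{\infty }=2\), which rules out an ISS estimate. The second one satisfies \(|x(t)|\le \text {e}^{-t}|x_0|\,\text {e}^{\Vert u_1\Vert _{L^1}}\), which is the kind of bound that leads to an integral ISS estimate.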

In order to investigate integral ISS, it is thus sufficient to check that the linear systems \(\Sigma (A,B_{1})\) and \(\Sigma (A,B_{2})\) are integral ISS or, equivalently by Proposition 2.4, that A generates an exponentially stable \(C_0\)-semigroup and the control operators \(B_1\) and \(B_2\) are admissible. Note that there are control operators B which are \(E_\varPhi \)-admissible for some Young function \(\varPhi \) but not \(L^p\)-admissible for any \(p \in [1,\infty )\). In the context of linear systems, such an example was already given in [10, Ex. 5.2] for an operator B defined on \({\mathbb {C}}\) using the connection between a Carleson-measure criterion and admissibility stated in [10], see also [11]. The next example extends this result to control operators defined on \(\bar{X}\).

Example 2.11

Let \(X= \ell ^2({\mathbb {N}})\) and define \(F:X\times {\mathbb {C}}\rightarrow X\), by \(F(x,u):=ux\) and the diagonal operators

where \((e_n)_{n \in {\mathbb {N}}}\) is the canonical basis of X and A is defined on its maximal domain.

The general assumptions of Sect. 2.1 are satisfied with \(B_1=B\) and \(B_2=0\). Let \(x=(\frac{1}{n})_{n \in {\mathbb {N}}} \in X\) and \(p \in [1, \infty )\). Following [10, Ex. 5.2], the operator \(b=Bx\) defined on \({\mathbb {C}}\) is not \(L^p\)-admissible. Hence, B is not \(L^p\)-admissible.

Next, we show that B is \(E_\varPhi \)-admissible, where \(\varPhi \) is the complementary Young function to

It is easy to check that \(\tilde{\varPhi }\) is a Young function. Define the sequence \(k=(k_n)_{n \in {\mathbb {N}}}\) by \(k_n=\frac{\ln (Cn)}{n}\), \(n\in {\mathbb {N}}\), where \(C=\ln (2)+\ln (2\text {e})>1\). We choose n large enough, such that \(k_n n = \ln (Cn)\ge 1\) holds. Similar to [34, Ex. 4.2.13], one can show

We deduce

and hence \(\Vert \text {e}^{-2^n (t-\cdot )}\frac{2^n}{n}\Vert _{L_{\tilde{\varPhi }}(0,t;{\mathbb {C}})} \le k_n\) for sufficiently large n. Using the generalized Hölder inequality (25), we get for \(u \in E_\varPhi (0,t;\ell ^2({\mathbb {N}}))\) and sufficiently large n

where we used in the last inequality that

Therefore, for some \(M>0\),

which shows that B is \(E_\varPhi \)-admissible and thus \(\Sigma (A,[B,0],F)\) is integral ISS.
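The dichotomy in Example 2.11 can be explored numerically. The sketch below assumes, as suggested by the kernel \(\text {e}^{-2^n (t-\cdot )}\frac{2^n}{n}\) appearing above, that the diagonal operators act as \(Ae_n=-2^n e_n\) and \(Be_n=\frac{2^n}{n}e_n\); this reading and the helper name `kernel_lq_norm` are assumptions made for illustration. For an input of the form \(u=fe_n\) with scalar f, the classical Hölder duality shows that the best constant in an \(L^p\)-admissibility estimate is at least the \(L^q(0,t)\)-norm of the kernel, where \(\frac{1}{p}+\frac{1}{q}=1\). This is a heuristic consistency check and not the argument of [10, Ex. 5.2].

```python
import math

def kernel_lq_norm(n, q, t=1.0):
    """L^q(0,t)-norm of s -> (2**n / n) * exp(-2**n * s), in closed form."""
    lam = 2.0 ** n
    return (lam / n) * ((1.0 - math.exp(-q * lam * t)) / (q * lam)) ** (1.0 / q)

for p in (2.0, 4.0):
    q = p / (p - 1.0)  # conjugate exponent of p
    print(p, [kernel_lq_norm(n, q) for n in (5, 10, 20, 40)])

# q = 1 corresponds to essentially bounded inputs: the norms behave like 1/n.
print([kernel_lq_norm(n, 1.0) for n in (5, 10, 20, 40)])
```

Under the stated assumption the norms grow like \(2^{n/p}/n\) for every \(p<\infty \), so no uniform \(L^p\)-bound can hold, whereas they stay bounded for \(q=1\). The Orlicz space \(E_\varPhi \) of the example sits between these two regimes.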

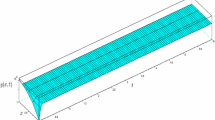

3 Controlled Fokker–Planck equation

Following [3, 9], we consider the following variant of the Fokker–Planck equation on a bounded domain \(\varOmega \subset {\mathbb {R}}^n\), with smooth boundary \(\partial \varOmega \),

where \( x\in \varOmega , t>0\), with reflective boundary conditions

Here, \(\mathbf {n}\) refers to the outward normal vector on the boundary, \(\rho _0\) denotes the initial probability distribution with \(\int _\varOmega \rho _0(x) \, \mathrm {d}x=1\) and \(\nu >0\). Furthermore, the potential V is assumed to be of the form

where \(W \in W^{2,\infty }(\varOmega )\) and \(\alpha \in W^{1,\infty } \cap H^2(\varOmega )\) satisfying the structural assumption \(\nabla \alpha \cdot \mathbf {n} =0\) on \(\partial \varOmega \). Thus, the scalar-valued input function u enters via the spatial profile \(\alpha \) in the potential.

In order to cast the equations in an abstract framework, we introduce the following operators:

where \({X}=L^{2}(\varOmega )\) and \(H^{1}(\varOmega )\), \(H^{2}(\varOmega )\) refer to the standard Sobolev spaces. By standard arguments, the operator A is seen to generate a bounded \(C_0\)-semigroup on X, with discrete spectrum \(\sigma (A) = \sigma _p(A) \subseteq (-\infty ,0]\) and \(\rho _\infty = c\text {e}^{-\varPhi }\) is an eigenfunction to the simple eigenvalue 0, where \(\varPhi \) is given by \(\varPhi = \ln \nu + \frac{W}{\nu }\) and \(c>0\) is such that \(\int _{\varOmega }\rho _{\infty }\mathrm {d}x=1\), see [3]. Furthermore, we will identify B with its extension from X to \(X_{-1}\).
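The claim that \(\rho _\infty = c\text {e}^{-\varPhi }\) is stationary can be checked numerically in a one-dimensional analogue. The sketch below assumes that A acts as \(A\rho = \nu \Delta \rho + \nabla \cdot (\rho \nabla W)\) with zero-flux boundary conditions, as in [3], so that stationarity amounts to a vanishing flux \(\nu \rho ' + \rho W'\). The domain \(\varOmega =(0,1)\), the potential \(W(x)=\cos (2\pi x)\), the value \(\nu =0.5\) and all identifiers are hypothetical choices made only for this illustration.

```python
import math

nu = 0.5
W = lambda x: math.cos(2.0 * math.pi * x)  # hypothetical potential on (0, 1)
dW = lambda x: -2.0 * math.pi * math.sin(2.0 * math.pi * x)

n = 2000
h = 1.0 / n
mid = [(i + 0.5) * h for i in range(n)]  # midpoints of a uniform grid

# rho_inf = c * exp(-Phi) with Phi = ln(nu) + W/nu; c normalises the mass to 1.
unnormalised = [math.exp(-(math.log(nu) + W(x) / nu)) for x in mid]
c = 1.0 / (h * sum(unnormalised))
rho = lambda x: c * math.exp(-(math.log(nu) + W(x) / nu))

def flux(x, d=1e-5):
    """nu * rho'(x) + rho(x) * W'(x), with rho' from a central difference."""
    drho = (rho(x + d) - rho(x - d)) / (2.0 * d)
    return nu * drho + rho(x) * dW(x)

mass = h * sum(rho(x) for x in mid)
max_flux = max(abs(flux(x)) for x in mid)
print(mass, max_flux)
```

The flux vanishes up to the finite-difference error, which is consistent with 0 being an eigenvalue of A with eigenfunction \(\rho _\infty \).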

We now consider the system around the stationary distribution \(\rho _{\infty }\) instead of the origin, see also [3], and decompose X according to the projections

Note that \(\text {ran}(Q)=\ker (P)=\mathrm {span}{\{\rho _\infty \}}\) and \(\ker (Q) = \text {ran}(P)\). Define \( \mathcal {X}=\text {ran}(P)\). Using \(y=\rho - \rho _\infty \) and \(y=y_P + y_Q\) with \(y_P=Py \in \mathcal {X}\) and \(y_Q=Qy\in \mathrm {span}{\{\rho _\infty \}}\) and following [3, Sec. 3.2], the Fokker–Planck equation can be rewritten as

where

and \(\mathcal {A}\) generates a strongly continuous semigroup as \(APf=PAf\) for \(f\in D(A)\), see also [3, Eq. (3.12)]. We emphasize that \(Q\rho _0 - \rho _\infty = 0\) follows by the assumption that \(\int _{\varOmega }\rho _{0}(x)\mathrm {d}x=1\). That \(\mathcal {B}_{1}\) and \(\mathcal {B}_{2}\) are well-defined will be argued below.

Theorem 3.1

System (21) is integral ISS.

In the remainder of the section, we will lay out the proof of Theorem 3.1 based on Theorem 2.9 and Proposition 2.4. This includes showing that \(\mathcal {A}\), \(\mathcal {B}_{1}\), \(\mathcal {B}_{2}\) satisfy the assumptions of the abstract system class from Sect. 2.1 and considering the nonlinearity

Let M be the multiplication operator by \(\text {e}^{\frac{\varPhi }{2}}\) on \(L^2(\varOmega )\). Clearly, M is bounded and invertible on \(L^2(\varOmega )\), leaves \(H^1(\varOmega )\) invariant, and the inverse \(M^{-1}\) is the multiplication operator by \(\text {e}^{-\frac{\varPhi }{2}}\). Hence, \({\tilde{A}}\) given by

is well-defined and self-adjoint.

To study admissibility of B, we introduce the following well-known abstract interpolation and extrapolation spaces, see, e.g., [33]. Let \({\tilde{X}}_{1}\) and \({\tilde{X}}_{-1}\) be defined in the same way as \(X_1\) and \(X_{-1}\), but using \({\tilde{A}}\) instead of A. We define \({\tilde{X}}_{\frac{1}{2}}\) as the completion of \(D({\tilde{A}})\) with respect to the norm given by

and we denote by \({\tilde{X}}_{-\frac{1}{2}}\) the dual space of \({\tilde{X}}_{\frac{1}{2}}\) with respect to the pivot space X, i.e., the completion of X with respect to the norm \( \sup _{\Vert v\Vert _{{\tilde{X}}_{\frac{1}{2}}}\le 1} |\langle z,v \rangle _X|\). The following embeddings are dense and continuous: \({\tilde{X}}_1 \hookrightarrow {\tilde{X}}_{\frac{1}{2}} \hookrightarrow X \hookrightarrow {\tilde{X}}_{-\frac{1}{2}} \hookrightarrow {\tilde{X}}_{-1}\).

We first prove that the operator \({\tilde{B}}:= M B M^{-1}\) defined on \(D({\tilde{A}})\) has a unique extension \({\tilde{B}} \in L(X,{\tilde{X}}_{-\frac{1}{2}})\) which is \(L^{2}\)-admissible for \({{\tilde{A}}}\). Integration by parts gives

For \(f \in D({\tilde{A}})\) and \(v \in D({\tilde{A}})\), \(\Vert v\Vert _{{\tilde{X}}_{\frac{1}{2}}} \le 1\), we have that

where \(\sigma \) is the surface measure on \(\partial \varOmega \). Thus, \({\tilde{B}}\in L(X,{\tilde{X}}_{-\frac{1}{2}})\) and \({\tilde{B}}\) is \(L^2\)-admissible for \({\tilde{A}}\) by [33, Prop. 5.1.3]. We have for \(\beta \in \rho (A)=\rho ({\tilde{A}})\) and \(f \in X\)

Thus, \(M^{-1}\) extends uniquely to an operator in \(L({\tilde{X}}_{-1},X_{-1})\). The same argument yields a unique extension \(M\in L(X_{-1},{\tilde{X}}_{-1})\). Note that these extensions are inverse to each other, so it is natural to denote the extensions again by M and \(M^{-1}\).

We claim that \(M^{-1} {\tilde{B}} M \in L(X,X_{-1})\) extends B to an \(L^{2}\)-admissible operator for A which we again denote by B. Indeed, if \((T(t))_{t \ge 0}\) is the semigroup generated by A, then \((S(t))_{t \ge 0}\) with \(S(t)=MT(t)M^{-1}\) is the semigroup generated by \({\tilde{A}}\) and for \(u \in L^2(0,t;X)\) we have \( M u \in L^2(0,t;X)\) and

As \(\mathcal {B}_2 \in L({\mathbb {C}},\mathcal {X})\), \(\mathcal {B}_2\) is clearly \(L^1\)-admissible. Since the operator P commutes with the \(C_0\)-semigroup generated by A [3, Eq. (3.12)], it follows from [14, Lem. 4.4] that the operator \(\mathcal {B}_1=B|_{\mathcal {X}}\in \mathcal {L}(\mathcal {X},\mathcal {X}_{-1})\) is well-defined and \(L^2\)-admissible for \(\mathcal {A}\).

Thus, the bilinearly controlled Fokker–Planck system given by (18)–(20) can be written as a system \(\Sigma (\mathcal {A},[\mathcal {B}_{1},\mathcal {B}_{2}],F)\).

Remark 2.10 implies that the Fokker–Planck system (18)–(20) has a unique global mild solution \(\rho \) for any initial value \(\rho _{0}\in L^{2}(\varOmega )\) and input function \(u\in L^{2}(0,\infty ;U)\). Further, in [3, Proposition 2.2] it is shown that \(\int _{\varOmega }\rho _{0}(x)\mathrm {d}x=1\) implies \(\int _{\varOmega }\rho (t,x)\mathrm {d}x=1\) for all \(t>0\).

Following the construction of the integral ISS estimate (cf. (16)), we deduce an explicit integral ISS estimate: There exist constants \(C,\omega >0\) such that for any \(\rho _{0}\in L^{2}(\varOmega )\) with \(\int _{\varOmega }\rho _{0}(x)\mathrm {d}x=1\) and \(u\in L^2(0,\infty ;U)\), the global mild solution of the Fokker–Planck system (18) satisfies

where \( \gamma (r) =C r\text {e}^{Cr^{\frac{1}{2}}}+Cr^{\frac{1}{2}}+Cr\).

4 Conclusion

Bilinear systems appear naturally in control theory, e.g., when considering multiplicative disturbances in feedback loops of linear systems. The results in this article draw a link between bilinear systems, which are a classical example class in (integral) ISS in finite dimensions, and recent progress in ISS for infinite-dimensional systems. We emphasize that the most natural example in this context,

with A generating a \(C_{0}\)-semigroup \((T(t))_{t \ge 0}\) on X, is covered by the system class considered here. By the results in Sect. 2, this system is integral ISS if and only if \((T(t))_{t \ge 0}\) is exponentially stable. More precisely, sufficiency follows since the identity is \(L^1\)-admissible and hence the system is integral ISS. It seems that prior works on integral ISS [24, Sec. 4.2] did not cover this comparably simple class, as the bilinearity \(x\mapsto xu\) fails to satisfy the Lipschitz condition uniform in u required there (see the footnote under Notes).

Moreover, our results generalize to integral ISS assessment for bilinearities arising from boundary control (or lumped control).

5 Appendix

We briefly introduce Orlicz spaces of functions \(f:I\rightarrow Y\) for an interval \(I\subset {\mathbb {R}}\) and a Banach space Y. For more details on Orlicz spaces, we refer to [1, 18, 19]. Let \(\varPhi :{\mathbb {R}}_{0}^{+} \rightarrow {\mathbb {R}}_{0}^{+}\) be a Young function, i.e., \(\varPhi \) is continuous, increasing, convex with \(\lim _{s \rightarrow 0}\frac{\varPhi (s)}{s}=0\) and \(\lim _{s \rightarrow \infty }\frac{\varPhi (s)}{s}= \infty \) and denote by \(L_\varPhi (I;Y)\) the set of Bochner-measurable functions \(u:I\rightarrow Y\) for which there exists a constant \(k>0\) such that \(\varPhi (k\Vert u(\cdot )\Vert )\) is integrable. We equip \(L_\varPhi (I;Y)\) with the norm

\[\Vert u\Vert _{L_\varPhi (I;Y)} := \inf \left\{ k>0 \,:\, \int _I \varPhi \left( \frac{\Vert u(s)\Vert }{k}\right) \mathrm {d}s \le 1 \right\} . \tag{23}\]

Despite the fact that \(L_\varPhi (I;Y)\) is typically referred to as “Orlicz space” in the literature, we prefer to call

the Orlicz space associated with the Young function \(\varPhi \). We write \(\Vert u\Vert _{E_\varPhi (I;Y)} = \Vert u\Vert _{L_\varPhi (I;Y)}\) for \(u \in E_\varPhi \). Note that \(u \in E_\varPhi (I;Y)\) implies that \(\varPhi \circ \Vert u(\cdot )\Vert \) is integrable. Typical examples of Orlicz spaces are \(L^{p}\)-spaces; for \(\varPhi (t)=t^{p}\) with \(p\in (1,\infty )\) it holds that \(E_{\varPhi }(I;Y)\) is isomorphic to \(L^{p}(I;Y)\).
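To make the norm concrete, the following Python sketch approximates it for scalar functions, assuming that the norm on \(L_\varPhi (I;Y)\) is the usual Luxemburg norm \(\inf \{k>0 : \int _I \varPhi (\Vert u(s)\Vert /k)\,\mathrm {d}s\le 1\}\). The function name `luxemburg_norm`, the midpoint quadrature and the bisection tolerance are our own choices for illustration.

```python
def luxemburg_norm(u, phi, a, b, n=2000, tol=1e-10):
    """Approximate inf{k > 0 : int_a^b phi(|u(s)| / k) ds <= 1}.

    The integral is replaced by a midpoint rule with n cells and the
    infimum is located by bisection (the modular is decreasing in k)."""
    h = (b - a) / n
    samples = [abs(u(a + (i + 0.5) * h)) for i in range(n)]
    if max(samples) == 0.0:
        return 0.0

    def modular(k):
        return h * sum(phi(v / k) for v in samples)

    lo, hi = 0.0, 1.0
    while modular(hi) > 1.0:      # enlarge hi until it is admissible
        hi *= 2.0
    while hi - lo > tol * hi:     # bisection on the threshold modular(k) = 1
        mid = 0.5 * (lo + hi)
        if modular(mid) > 1.0:
            lo = mid
        else:
            hi = mid
    return hi

# Phi(r) = r^2 should reproduce the L^2-norm of u(s) = s on (0, 1).
norm_p2 = luxemburg_norm(lambda s: s, lambda r: r * r, 0.0, 1.0)
# Phi(r) = r should reproduce the L^1-norm.
norm_l1 = luxemburg_norm(lambda s: s, lambda r: r, 0.0, 1.0)
print(norm_p2, norm_l1)
```

For \(\varPhi (r)=r^{p}\) the computed value agrees with the \(L^{p}\)-norm up to discretization error, in line with the identification of \(E_{\varPhi }(I;Y)\) with \(L^{p}(I;Y)\). For rapidly growing Young functions the evaluation of `phi` may overflow for small k, so the sketch is only meant for moderate growth.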

A Young function \(\varPhi \) is said to satisfy the \(\varDelta _2\)-condition if there exist \(K>0\) and \(s_{0}\ge 0\) such that

\[\varPhi (2s) \le K \varPhi (s) \quad \text {for all } s \ge s_0.\]

Note that \(E_\varPhi (I;Y) = L_\varPhi (I;Y)\) if and only if \(\varPhi \) satisfies the \(\varDelta _2\)-condition. Also note that \(\varPhi (s)=s^p\), \(p \in (1,\infty )\) satisfies the \(\varDelta _{2}\)-condition. For a Young function \(\varPhi \), the complementary Young function \(\tilde{\varPhi }\) is defined by

\[\tilde{\varPhi }(t) := \sup _{s \ge 0} \bigl ( st - \varPhi (s)\bigr ), \quad t \ge 0.\]

Again, this is a Young function and \(\varPhi \) can be recovered from \(\tilde{\varPhi }\) in the same manner. The complementary Young function to \(\varPhi (s)=\frac{s^p}{p}\), \(1<p<\infty \), is given by \(\tilde{\varPhi }(s)=\frac{s^q}{q}\) with \(\frac{1}{p}+ \frac{1}{q}=1\).

As for \(L^p\) spaces, an equivalent norm to \(\Vert \cdot \Vert _{L_\varPhi (I;Y)}\) is given by

Furthermore, for a Young function \(\varPhi \) and its complementary Young function \(\tilde{\varPhi }\) the following generalized Hölder inequality

holds. This also implies the continuity of the embeddings

if I is bounded. Although \(L^{1}\) is not an Orlicz space, we will explicitly allow for \(\varPhi (t)=t\) in our notation referring to \(E_\varPhi (I;Y)=L^1(I;Y)\). Note that the definition of the norm (23) is indeed consistent with the \(L^1\)-norm and that \(\varPhi \) satisfies the \(\varDelta _2\)-condition. However, we will not define a “complementary Young function” for this particular \(\varPhi \).
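For genuine Young functions the complementary function can be approximated numerically. The sketch below assumes the standard Legendre-type definition \(\tilde{\varPhi }(t)=\sup _{s\ge 0}(st-\varPhi (s))\) and uses a ternary search, which is applicable because \(s\mapsto st-\varPhi (s)\) is concave. The helper name `complementary` and the search bound `s_max` are hypothetical choices for this illustration.

```python
def complementary(phi, t, s_max=1e3, iters=200):
    """Approximate sup_{s in [0, s_max]} (s*t - phi(s)) by ternary search.

    Valid when phi is strictly convex and the maximiser lies in [0, s_max]."""
    lo, hi = 0.0, s_max
    for _ in range(iters):
        m1 = lo + (hi - lo) / 3.0
        m2 = hi - (hi - lo) / 3.0
        if m1 * t - phi(m1) < m2 * t - phi(m2):
            lo = m1   # the maximiser lies to the right of m1
        else:
            hi = m2   # the maximiser lies to the left of m2
    s = 0.5 * (lo + hi)
    return s * t - phi(s)

p = 3.0
q = p / (p - 1.0)              # conjugate exponent, here 1.5
phi = lambda s: s ** p / p

approx = complementary(phi, 2.0)
exact = 2.0 ** q / q           # closed form t^q / q at t = 2
print(approx, exact)

# Young's inequality s*t <= phi(s) + phi~(t), sampled on a small grid.
young_ok = all(s * t <= phi(s) + complementary(phi, t) + 1e-9
               for s in (0.1, 0.5, 1.0, 2.0, 5.0)
               for t in (0.1, 1.0, 3.0))
print(young_ok)
```

The numerical value matches the closed form \(\frac{t^q}{q}\) stated above for \(\varPhi (s)=\frac{s^p}{p}\). Young's inequality, which the check at the end samples, is the pointwise estimate behind generalized Hölder inequalities of the type (25).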

An essential property of Orlicz spaces is the absolute continuity of the \(E_\varPhi \)-norm with respect to the length of the interval (see, e.g., [19, Thm. 3.15.6]): for \(u \in E_\varPhi (I;Y)\) and \(\varepsilon > 0\) there exists \(\delta > 0\) such that the \(E_\varPhi \)-norm of the restriction of u to any subinterval of I whose Lebesgue measure is less than \(\delta \) is at most \(\varepsilon \).

Notes

However, it seems that this can be overcome with a carefully refined argument in the proof of [24, Thm. 4.2].

References

Adams R (1975) Sobolev spaces. Pure and applied mathematics. Academic Press, New York-London

Berrahmoune L (2009) A note on admissibility for unbounded bilinear control systems. Bull Belg Math Soc Simon Stevin 16(2):193–204

Breiten T, Kunisch K, Pfeiffer L (2018) Control strategies for the Fokker-Planck equation. ESAIM Control Optim Calc Var 24(2):741–763

Dashkovskiy S, Mironchenko A (2013) Input-to-state stability of infinite-dimensional control systems. Math Control Signals Syst 25(1):1–35

Dashkovskiy S, Mironchenko A (2013) Input-to-state stability of nonlinear impulsive systems. SIAM J Control Optim 51(3):1962–1987

Elliott DL (2009) Bilinear control systems, vol 169. Applied mathematical sciences. Springer, Dordrecht

Engel K-J, Nagel R (2000) One-parameter semigroups for linear evolution equations. Graduate texts in mathematics. Springer, New York

Guiver C, Logemann H, Opmeer MR (2019) Infinite-dimensional lure systems: input-to-state stability and convergence properties. SIAM J Control Optim 57(1):334–365

Hartmann C, Schäfer-Bung B, Thöns-Zueva A (2013) Balanced averaging of bilinear systems with applications to stochastic control. SIAM J Control Optim 51(3):2356–2378

Jacob B, Nabiullin R, Partington J, Schwenninger F (2018) Infinite-dimensional input-to-state stability and Orlicz spaces. SIAM J Control Optim 56(2):868–889

Jacob B, Partington JR, Pott S (2014) Applications of Laplace-Carleson embeddings to admissibility and controllability. SIAM J Control Optim 52(2):1299–1313

Jacob B, Schwenninger FL, Wintermayr J (2021) A refinement of Baillon’s theorem on maximal regularity. Available at arXiv:2008.00459, accepted for publication at Studia Math

Jacob B, Schwenninger FL, Zwart H (2019) On continuity of solutions for parabolic control systems and input-to-state stability. J Diff Equ 266(10):6284–6306

Jacob B, Zwart H (1999) Equivalent conditions for stabilizability of infinite-dimensional systems with admissible control operators. SIAM J Control Optim 37(5):1419–1455

Jayawardhana B, Logemann H, Ryan E (2008) Infinite-dimensional feedback systems: the circle criterion and input-to-state stability. Commun Inf Syst 8(4):413–444

Karafyllis I, Krstic M (2017) ISS in different norms for 1-D parabolic PDEs with boundary disturbances. SIAM J Control Optim 55(3):1716–1751

Karafyllis I, Krstic M (2019) Input-to-state stability for PDEs. Communications and control engineering series. Springer, Cham

Krasnoselskiĭ M, Rutickiĭ Y (1961) Convex functions and Orlicz spaces (translated by Boron LF). Noordhoff Ltd, Groningen

Kufner A, John O, Fučík S (1977) Function spaces. Monographs and Textbooks on Mechanics of Solids and Fluids. Noordhoff International Publishing, Leyden

Lhachemi H, Shorten R (2019) ISS Property with respect to boundary disturbances for a class of Riesz-spectral boundary control systems. Automatica J IFAC 109:108504

Mazenc F, Prieur C (2011) Strict Lyapunov functions for semilinear parabolic partial differential equations. Math Control Relat Fields 1(2):231–250

Mironchenko A, Ito H (2014) Integral input-to-state stability of bilinear infinite-dimensional systems. In: Proceedings of the 53rd IEEE conference on decision and control, pp 3155–3160

Mironchenko A, Ito H (2015) Construction of Lyapunov functions for interconnected parabolic systems: an iISS approach. SIAM J Control Optim 53(6):3364–3382

Mironchenko A, Ito H (2016) Characterizations of integral input-to-state stability for bilinear systems in infinite dimensions. Math Control Relat Fields 6(3):447–466

Mironchenko A, Karafyllis I, Krstic M (2019) Monotonicity methods for input-to-state stability of nonlinear parabolic PDEs with boundary disturbances. SIAM J Control Optim 57(1):510–532

Mironchenko A, Prieur C (2020) Input-to-state stability of infinite-dimensional systems: recent results and open questions. SIAM Rev 62(3):529–614

Mironchenko A, Wirth F (2018) Characterizations of input-to-state stability for infinite-dimensional systems. IEEE Trans Automat Control 63(6):1602–1617

Nabiullin R, Schwenninger FL (2018) Strong input-to-state stability for infinite-dimensional linear systems. Math Control Signals Syst 30(1):1–15

Schwenninger FL (2020) Input-to-state stability for parabolic boundary control: linear and semi-linear systems. In: Kerner J, Laasri L, Mugnolo D (eds) Control theory of infinite-dimensional systems. Birkhäuser, Cham, pp 83–116

Sontag E (1989) Smooth stabilization implies coprime factorization. IEEE Trans Automat Control 34(4):435–443

Sontag E (2008) Input to state stability: basic concepts and results. Nonlinear and optimal control theory, vol 1932. Lecture notes in mathematics. Springer, Berlin

Sontag E (1998) Comments on integral variants of ISS. Syst Control Lett 34(1–2):93–100

Tucsnak M, Weiss G (2009) Observation and control for operator semigroups. Advanced texts. Birkhäuser, Basel

Wintermayr J (2019) Positivity in perturbation theory and infinite-dimensional systems. Dissertation, University of Wuppertal

Zheng J, Zhu G (2018) Input-to-state stability with respect to boundary disturbances for a class of semi-linear parabolic equations. Automatica J IFAC 97:271–277

Funding

Open Access funding enabled and organized by Projekt DEAL.

Additional information

This work has been supported by the German Research Foundation (DFG) via the joint grant JA 735/18-1 / SCHW 2022/2-1.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Hosfeld, R., Jacob, B. & Schwenninger, F.L. Integral input-to-state stability of unbounded bilinear control systems. Math. Control Signals Syst. 34, 273–295 (2022). https://doi.org/10.1007/s00498-021-00308-9

DOI: https://doi.org/10.1007/s00498-021-00308-9

Keywords

- Input-to-state stability

- Integral input-to-state stability

- Bilinear systems

- \(C_0\)-semigroups

- Admissibility

- Orlicz spaces