Abstract

Available climate change projections, which can be used for quantifying future changes in marine and coastal ecosystems, usually consist of a few scenarios. Studies addressing ecological impacts of climate change often make use of a low- (RCP2.6), moderate- (RCP4.5) or high climate scenario (RCP8.5), without taking into account further uncertainties in these scenarios. In this research a methodology is proposed to generate further synthetic scenarios, based on existing datasets, for a better representation of climate change induced uncertainties. The methodology builds on Regional Climate Model scenarios provided by the EURO-CORDEX experiment. In order to generate new realizations of climate variables, such as radiation or temperature, a hierarchical Bayesian model is developed. In addition, a parameterized time series model is introduced, which includes a linear trend component, a seasonal shape with varying amplitude and time shift, and an additive residual term. The seasonal shape is derived with the non-parametric locally weighted scatterplot smoothing, and the residual term includes the smoothed variance of residuals and independent and identically distributed noise. The distributions of the time series model parameters are estimated through Bayesian parameter inference with Markov chain Monte Carlo sampling (Gibbs sampler). By sampling from the predictive distribution numerous new statistically representative synthetic scenarios can be generated including uncertainty estimates. As a demonstration case, utilizing these generated synthetic scenarios and a physically based ecological model (Delft3D-WAQ) that relates climate variables to ecosystem variables, a probabilistic simulation is conducted to further propagate the climate change induced uncertainties to marine and coastal ecosystem indicators.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

It is widely accepted that long term changes in climatic variables will cause shifts (phenological and biogeographic shifts) in species distributions, but the extent of these shifts is not yet well understood and any prediction will have a high level of associated uncertainty (Goberville et al. 2015). Climate change data in ecosystem assessments are used as forcing conditions for the numerous non-linear ecological processes. These ecological processes are influenced by changes in extreme values, or shifts in distributions and peaks of the climate forcings. Applicable methodologies for estimating ranges and expected changes in statistical properties of the climate scenarios are therefore essential for subsequent ecological impact assessment.

The uncertainty accumulated throughout the climate modelling chain, such as initial conditions, boundary conditions, parametric and model structure of both General Circulation Models (GCMs) and Regional Climate Models (RCMs) may further propagate and influence ecological impact estimates. Yet in most impact studies climate change induced uncertainty is only characterized by different GCM and Representative Concentration Pathway (RCP) configurations in a small ensemble of climate scenarios to anticipate potential trajectories (Vesely et al. 2019), but without a fully probabilistic uncertainty quantification.

If available time series of the climate variables are not sufficient to serve as stochastic input variables for ecological, agro-meteorological or hydro-meteorological assessment studies, one way to obtain better uncertainty estimates is to generate multiple realizations of the climate input variables. Numerous studies exist on generating new datasets of meteorological variables using probabilistic models. These models are often referred to as stochastic weather generators. Some well known examples of stochastic weather generators are LARS-WG (Semenov and Barrow 2002), WeaGETS (Chen et al. 2012), or CLIMAK (Danuso 2002). These widely used stochastic weather generators have been compared in various studies to assess their validity for long-term climate data simulation (Mehan et al. 2017), performance in different climatic regions (Vu et al. 2018), adequacy for water resources systems risk assessment (Alodah and Seidou 2019), or to quantify uncertainty due to the choice of the weather generator (Vesely et al. 2019). In short the aim of all stochastic weather generators is to simulate new synthetic sets of meteorological time series with statistical properties similar to the historical data or models (Birt et al. 2010). The expected impact of such methods on the relevant scientific community is to facilitate studying the effect of long-term changes in mean climate variables, climatic variability, and the frequency of extreme events (Vesely et al. 2019).

In the above mentioned weather generators the primary variable of interest is precipitation and the simulation of other variables, such as temperature and solar radiation, is conditioned on the occurrence of rainfall (wet or dry days). Thus, most of these stochastic weather generators are of Richardson type (Richardson 1981). The concept of these types of generators is that solar radiation and temperature are modeled jointly as a bivariate stochastic process with the daily means and standard deviations conditioned on the wet or dry state. First a ’residual’ time series is obtained by removing a periodic trend. This residual time series is assumed stationary and normally distributed, and the autocorrelation and cross-correlation coefficients are estimated using the residuals of the maximum temperature, minimum temperature, and solar radiation variables. Finally, the removed means and standard deviations are reintroduced to produce the generated daily values.

Recently, for the simulation of temperature improvements have been made to the Richardson type weather generators. One of the major improvements is simulating nonstationary temperature time series directly instead of simulating standardized residuals first and then adding them to the periodic mean and standard deviation (Smith et al. 2017). The proposed approach called Stochastic Harmonic Autoregressive Parametric weather generator (SHArP) allows for trends and seasonality in the temperature generation. Another extension, the Seasonal Functional Heteroscedastic Autoregressive (SFHAR) generator (Dacunha-Castelle et al. 2015), uses a decomposition of the temperature signal into trends and seasonality in the mean and the standard deviation, and a stochastic part. This was later applied to generate a long trajectory of past and near future (up to 2040) temperature by also incorporating GCM simulations (Parey 2019). This is an innovative feature considering that the commonly used weather generators focus on historical periods with observed climate characteristics and allow the inclusion of future climate projections only through change factors. Those change factors are then used to alter the observed statistics to account for the offset in the future projections and recalibrate the weather generators.

The lack of proper treatment of parameter uncertainty in previous weather generators gave rise to studies which employed Bayesian methods. These methods have a clear advantage as they better capture uncertainty by providing the full distribution of model parameters instead of a single best estimate. This enhanced parameter uncertainty characterization allows us to represent the full range of plausible climate scenarios and subsequently the full range of impacts, once climate input is propagated through process-based models (Verdin et al. 2019). For these reasons, hierarchical Bayesian frameworks have been increasingly applied for a range of purposes in the field of weather generators. Applications have primarily focused on precipitation and temperature modelling, such as spatial modelling of extreme precipitation (Reich and Shaby 2012), spatial modelling of daily precipitation and temperature (Verdin et al. 2019), statistical downscaling of precipitation (Hashmi et al. 2009), or to quantify future temperature and precipitation uncertainties from multiple climate models (Tebaldi et al. 2005; Tebaldi and Sansó 2009; Najafi and Moradkhani 2014; Katzfuss et al. 2017). Even though all these applications benefited from the hierarchical or multi-layered Bayesian model structure, their exact model formulations are not transferable to our case, in which the parameters of our proposed time series model are to be inferred in order to simulate long term traces of radiation, and making use of an ensemble of RCM simulations.

While our proposed method shares the primary objective of existing stochastic weather generators, in that we also aim to generate numerous gap-free time series of atmospheric variables using available climate data and with statistical characteristics similar to these, there are few important differences in the main concept. Firstly, we aim to directly simulate trajectories with long-term trend, avoiding the common practice of simulating residuals which are then added to climatology (historical or climate change adjusted). Moreover, simulating a very long future projection until the end of the century is not common in existing studies. Secondly, we use a high-resolution 0.11 degree (or 12.5 km) RCM ensemble from Euro-CORDEX as calibration data for our generator to quantify the temporal evolution of future uncertainty in regional climate change radiation projections, as opposed to most previous studies using GCMs and focusing on precipitation and temperature. Since we propose a single site generator, we do not make full use of the high spatial resolution of the RCM ensemble, on the other hand, we can argue that high-resolution RCMs describe regional and local processes more accurately than GCMs. In this regard, the novelty is not that the generator can create spatial fields, but rather that it is using input data from a climate modelling experiment that describes local processes the best, which was often not the case in existing stochastic climate generators. Thirdly, our hierarchical Bayesian model consist of a new time series model formulation and derived Gibbs parameter update formulas for the parameter inference. The proposed multi-layered Bayesian structure combines different climate scenarios into one model (rather than separately treating them), making the estimates statistically more robust. Lastly, we apply the generator to simulate marine water quality indicators, whereas previous weather generators were mainly focusing on land based impacts (hydrology, agriculture, ecosystem changes). While these conceptual elements separately exist in the field of stochastic weather generators or more broadly in the field of climate sciences and/or environmental sciences, the combination of these features can be considered innovative.

In summary, this paper presents a Bayesian approach to simulate climate variables in analogy with stochastic weather generators extended to a larger temporal scale. The generated ensemble of future radiation projections is used to characterize climate model uncertainties and to assess ecological response in marine and coastal ecosystems through a physically-based impact model.

2 Dataset

Numerous General Circulation Models (GCMs) and Regional Climate Models (RCMs) exist that produce long term predictions of climate variables. In this study the Surface Downwelling Shortwave Radiation dataset, hereafter referred to as radiation, was obtained from the high resolution 0.11 degree (or 12.5 km) EURO-CORDEX (Coordinated Regional Downscaling Experiment) (Jacob et al. 2014) which uses the Swedish Meteorological and Hydrological Institute Rossby Centre regional atmospheric model (SMHI-RCA4). Radiation was chosen for the demonstration case due to its high influence on ecological processes. In short, radiation is the measure of solar radiation energy received on a given surface area. Radiation influences light and energy availability for living organisms in the water column and therefore controls their growth and mortality among others, such as nutrient availability and temperature.

In order to produce various regionally downscaled scenarios, EURO-CORDEX applies a range of GCMs to drive the above mentioned RCM. The four driving GCMs in this study are the National Centre for Meteorological Research general circulation model (CNRM-CM5), the global climate model system from the European EC-Earth consortium (EC-EARTH), the Institut Pierre Simon Laplace Climate Model at medium resolution (IPSL-CM5A-MR), and the Max-Planck-Institute Earth System Model at base resolution (MPI-ESM-LR). These GCMs have been previously used in recent studies describing the impacts of climate change on ecosystem state and biodiversity (Goberville et al. 2015). In addition to the driving models, further scenarios are obtained by considering different socio-economic changes described in the Representative Concentration Pathways (RCPs). RCPs are labeled according to their specific radiative forcing pathway in 2100 relative to pre-industrial values. In this study we include RCP8.5 (high), and RCP4.5 (medium-low) (van Vuuren et al. 2011). Together the four different driving GCMs and two RCPs provide us with an ensemble of eight future radiation scenarios (see Fig. 1). We make use of these driving GCMs and RCP scenarios as they were previously selected and post-processed for climate change assessments for this study area by the Institute of Atmospheric Sciences and Climate (ISAC-CNR) within the EU H2020 ECOPOTENTIAL project.

Daily field observations of solar radiation energy were obtained from the Royal Netherlands Meteorological Institute (KNMI) at the closest weather station, De Kooy, from 1970 until August 2020. This time interval covers the entire Euro-CORDEX reference period (1970-2005) and more than 14 years of the projection period (2006–mid 2020). These observations were used for the bias correction of the RCM scenarios and for validation of the generated scenarios.

While this ensemble of \(GCM \times RCP\) combinations already encompasses a certain degree of uncertainty, the number of ensemble members might not be sufficient and information on the likelihood of its members is difficult to obtain. This is due to the fact that RCP scenarios have not been assigned a formal likelihood and it is generally assumed that each climate model is independent and of equal ability (Hayhoe et al. 2017).

Previously, attempts have been made to assess the likelihood of the different climate change pathways (Capellán-Pérez et al. 2016) and a number of studies use model weighting based on past performance, yet technological, economic, political and climatic factors underlying RCP scenarios remain largely uncertain and model weights based on historical performance might not be adequate for other regions, variables and for future projections (Knutti and Sedláček 2013). For this reason, in this study the given ensemble of scenarios is enriched to be used for comprehensive uncertainty quantification studies and for fully probabilistic simulations in assessment studies.

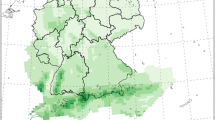

The used subset of the EURO-CORDEX dataset covers a domain between 2.0W-10E longitudes and 48-57N latitudes, as depicted in Fig. 2, with a resolution of 0.11 degree on curvilinear grid (cca. \(12\times 10\) km). For the purpose of this study, time series were extracted at a given location in the Dutch Wadden Sea (see red dot in Fig. 2) to reduce the data dimension and to be used as input for the single site stochastic generator. The original radiation time series is a high frequency dataset, with 3-h time step, which was aggregated to daily averages excluding zero radiation values during night time. Data aggregation was done to match the daily time step of the validation data and to reduce unnecessary noise (sub-daily variations) in the dataset as the sub-daily scale of the processes are not relevant for the purpose of this study. In other cases where smaller temporal scales are important the data aggregation step could be excluded.

3 Stochastic generator methodology

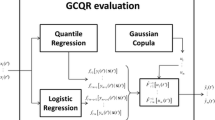

The methodological workflow depicted in Fig. 3 starts with the pre-processing of the time series of radiation data. This step includes bias correction of the Euro-CORDEX RCM scenarios, as well as extracting the seasonal shape \(\varphi ^S\), the seasonality in the variance of residuals \(\varphi ^V\), and deviations from the seasonal cycle (\(d_j\)). The stochastic generator uses a parameterized time series model as in Eq. (1) below which consists of a linear component (Eq. 2), seasonal component (Eq. 3), and a variance component (Eq. 4). Consequently, the model contains the following parameters: intercept \(\alpha\), slope \(\beta\), amplitudes of seasonal shapes \(\{A_j^S\}\), amplitudes of seasonality in the variance of residuals \(\{A_j^V\}\)) and a variance parameter (\(\sigma ^2\)). We will endow all parameters with a prior distribution and perform inference within the Bayesian setup. Sampling from the posterior distribution is done using the Gibbs sampler. Finally, the posterior samples can be used to sample from the predictive distribution and generate synthetic radiation signals. The temporal evolution of these generated synthetic scenarios is regular, meaning that the lengths of yearly cycles are always equal. Since we observe in the pre-processing step that in reality seasonal cycles should not be equally long, temporal deregularization is done using a time change function \(\tau (t_k)\) (see Eq. 5). The end result is numerous generated radiation scenarios, which are representative of the input Euro-CORDEX scnearios and have varying lengths of seasonal cycles.

3.1 Bias correction

The RCM simulations are subject to climate model structural error and boundary errors from the driving GCMs (Navarro-Racine et al. 2020), hence, they should be bias corrected before applying them in impact studies (Luo 2016). These systematic biases present in climate models are most commonly addressed using standard bias correction techniques, such as mean adjustment or quantile mapping. Nevertheless, due to the known problems with these bias correction techniques (Maraun et al. 2017), one can confidently apply them only if the relevant processes are reasonably well captured by the chosen climate models, since fundamental model biases cannot be corrected by the bias correction approaches. While a comprehensive validation of the RCM simulations was not conducted in this study, sufficient credibility of the future projections in representing local and large-scale processes is assumed since they are originated from a high-resolution regional downscaling experiment adhered to a coordinated model evaluation framework.

Based on this assumption, quantile mapping bias correction (Amengual et al. 2012) was applied using the RCM simulations for reference period (1976–2005) and daily historical radiation field measurements from KNMI for the same period. The quantile–quantile mapping transfer functions were established for the reference period and separately for each RCM simulation. The transfer functions were then applied for the bias correction of each future projections separately. Figure 4 depicts the histogram of observations together with the uncorrected and bias corrected RCM simulations in the projection time interval when field measurements are still available (2006–2020). While dissimilarities exist between modelled and observed distributions, these are not major, indicating that key processes are not misrepresented by this RCM (Maraun et al. 2017).

3.2 Temporal evolution

It was verified that significant differences in the temporal evolution of the selected RCM scenarios during the projection interval (2006–2100), which could be reflected in differences in trends, do not exist. Nevertheless, pre-processing steps were applied to remove identified minor differences in time evolution. Since it was observed that not all years had the same number of data points (within RCM scenarios and across scenarios), the time evolution was regularized by interpolation using nearest neighbor method. As the differences in the number of yearly data points were minor, the interpolation had limited impact on the dataset. After this regularization step all scenarios had uniform time evolution. Further considerations regarding the lengths of the yearly cycles is described in Sect. 3.3.3.

3.3 Time series model definition

For simplicity we first consider only one scenario in this section and later extend the time series model to all scenarios in its full form (Sect. 3.3.4). Suppose \(t_0<t_1<\cdots t_K\) are observation times (in years) and \(j\in \{1,\ldots , J\}\) indexes years. Let \(y(t_k)\) denote the radiation measurement at time \(t_k\). We assume

with \(T(t_k)\) the trend component, \(S(t_k)\) the seasonal component, \(V(t_k)\) the variance of noise and \(E(t_k)\) the noise component.

3.3.1 Seasonality

As a first step, we assume a linear trend in the data:

where \(\alpha\) and \(\beta\) are the intercept and slope parameters respectively. The detrended time series has a noisy but clearly distinguishable yearly cyclic pattern. Our goal is to estimate this cyclic behaviour and define a seasonal shape function \(\varphi ^S\) that represents the seasonality. In order to achieve this, the following steps are performed. After removing the trend \(T(t_k)\), the time series is smoothed using a non-parametric smoother LOWESS (Locally Weighted Scatterplot Smoothing) (Cleveland 1979) to remove noise. Then the local minima points (\(m_j\)) of the smooth time series are identified, see the upper plot in Fig. 5. Based on the identified local minima points the original detrended time series is split into yearly curves and the LOWESS smoother is applied again to estimate the seasonal shape (center plot in Fig. 5) separately for each scenarios.

In the LOWESS smoothing, the time window was chosen intuitively by plotting various LOWESS curves and comparing the fits graphically to avoid over- or under-fitting. The aim was to find a time window which allows us to obtain a seasonal curve with sufficient details to describe characteristic features, such as the two shoulders in the seasonal curve, but at the same time removing all noise. The fraction value found to be most appropriate for the yearly seasonality was 0.1 (\(10\%\) of the yearly data points) meaning that the time window is roughly one month. Another option could have been to choose a time window that optimizes the fit of the LOWESS curve through bias-corrected Akaike information criterion (AIC) method, Generalized Cross-Validation (GCV) method or similar. While these options might be more robust in other cases, we think that intuitively choosing the time window for the seasonal shape is preferable for the stochastic generator methodology as it is more flexible and allows us to incorporate domain knowledge and preferences. The averaged LOWESS smoothed seasonal shape (\(\varphi ^S\)) is depicted in the lower plot in Fig. 5.

Considering a time series with seasonal cycles (years) the seasonality \(S(t_k)\) in the time series model is defined by

where \(A_{j}^S\) is a scaling factor for year j, and the seasonal shape \(\varphi ^S: [0,1] \rightarrow \mathbb {R}\). As an example if \(t_k = 1.5\) then \(S(1.5) = A_{2}^S\varphi ^S(0.5)\) since it is in the second year. Note that \(\mathbb {1}_{[j,j+1)}(t_k)\) is an indicator function which is 1 for all elements within the interval \([j,j+1)\) and 0 otherwise.

3.3.2 Residuals and seasonal shape in the variance \(\varphi ^V\)

Apart from the linear- and seasonal trends there is an additive residual term \(\sqrt{V(t_k)} E(t_k)\) in the time series model. In this residual term the noise variables \(E(t_k)\), where \(0 \le k \le K\), are assumed to be independent and identically distributed (i.i.d) \(N(0,\sigma ^2)\) and the variance term \(V(t_k)\) is defined similarly to the seasonal component in Eq. (3):

where \(A_{j}^V\) is a scaling factor for year j and \(\varphi ^V: [0,1] \rightarrow \mathbb {R}\) is the LOWESS smoothed variance of residuals. The seasonal shape of the variance \(\varphi ^V\) depends on the specific scenario, same as for the seasonal shape \(\varphi ^S\). The seasonal variance shape is depicted in Fig. 6. The lower panel of Fig. 6 shows the comparison of the observed (in blue) and modeled (in red) residual, which show good agreement, meaning that the time series model is capable of representing the input signal. The time series model refinement process stops at this point when residuals are properly modeled.

Residuals and deriving seasonal shape in the variance \(\varphi ^V\). Seasonal signal and data (top), smoothed shape of the variance (middle), residual term including variance and independent and identically distributed noise (bottom). In the bottom panel the blue color represents the observed residuals while the red color represents the modeled residual

3.3.3 Modelling lengths of periods

In the radiation dataset the local minima points (upper panel of Fig. 5) are not equidistant, indicating that the seasonal cycle lengths have slight deviations over the years. Since the variation in the length of seasonal periods is an important feature, it should be incorporated in the stochastic generator. The deviations from the calendar year \(d_j\) are defined as:

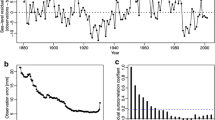

where \(m_j\) is the j-th local minimum location (in years). The upper panel of Fig. 7 shows the deviations from the calendar year and their autocorrelation, while the middle panel shows the distribution of these deviations. It can be observed that the deviations are centered around zero and have a negative lag 1 autocorrelation meaning that most positive deviations tend to be followed by negative deviations and vice versa. In this way the yearly cycle lengths remain close to the ideal cycle length (one calendar year) throughout the time series.

In order to account for these non-uniform cycle lengths when generating new synthetic scenarios, a time change function \(\tau (t_k)\) is introduced. For a visual representation of \(\tau (t_k)\) see the bottom panel of Fig. 7.

When a new synthetic scenario is generated, for each year j a deviation value \(d_j\) is produced by sampling from the observed deviation distribution of that scenario. Then, knowing the deviation for each year, we calculate the location of the end of the j-th period \(m_j\). Plotting \(m_j\) against the year j will result in a piecewise function as shown in the bottom panel of Fig. 7. When introducing \(\tau (t_k)\) we essentially create a piecewise linear function which provides for every time instance \(t_k\) the new continuous time value \(\tau (t_k)\) assuming that between \([m_j,m_{j+1}]\) the time is linearly increasing. Mathematically, the piecewise linear time change function is described as follows:

where \(m_j = j - d_j\), and the sequence \(\{d_j\}\) is modeled as independent and identically distributed random variables \(\mathrm {N}\left( 0,\sigma _{d}^2\right)\).

Deviations from the calendar year (a), together with their autocorrelation plot (b), and histogram (c). Sub-figure (d) depicts the \(\tau (t_k)\) time change piecewise linear function. This function is represented by the j-th local minimum location (in years) \(m_j\) on the y-axis, against the number of years j on the x-axis. Differences between \(m_j\) and the diagonal line are the deviations from the calendar years \(d_j\). Example shown for one Euro-CORDEX scenario

3.3.4 Full time series model

In this section we write the introduced time series model in full form, extended to all scenarios, without time change \(\tau (t_k)\).

Recall that j indexes years and k indexes days. Let \(\ell \in \{1, \cdots , L\}\) index the scenarios (we have \(L=8\)). For scenario \(\ell\) we have measurements

Define

and set

along with

and

The conditional distribution for the observation vector for scenario \(\ell\) is given by

where

denoting the vector obtained by stacking all components of its elements.

3.4 Prior specification

We choose partially conjugate priors to simplify MCMC-sampling with the Gibbs sampler. We denote the Normal, Inverse Gamma and Gamma distributions by N, IG and G respectively. Moreover, we denote by \(\mathrm {N}\left( x;\mu ,\sigma \right)\) the density of \(\mathrm {N}\left( \mu ,\sigma \right)\)-distribution, evaluated at x. Similar notation is used for the Gamma- and InverseGamma distributions. In the “Appendix” we specify the densities of these distributions to clarify the parametrisations used in their definitions. We take the following prior for \(\xi _\ell\)

for hyperparameters \(a_V\) and \(a_\sigma\). To tie together the laws for different scenarios, we complete the prior specification by another layer

for hyperparameters \(\delta _1, \delta _2, \lambda _1, \lambda _2\).

Since climate scenarios originate from a common genealogy (e.g. similar computational schemes, description of similar physical processes) (Steinschneider et al. 2015), our underlying idea is that scenarios can be assumed exchangeable rather than independent. This induced the well known phenomenom of “borrowing strength” where estimates for parameters over different scenarios are combined (“pooled”), see Fig. 8. This can correct outlier-like behaviour and makes the estimates statistically more robust (Gamerman and Lopes 2006; Gelman and Hill 2006).

In our case the reason to opt for a hierarchical setup is to enhance the Bayesian model by using all the data (all scenarios) to perform inferences for each group (scenario). This provides a trade off between the noisy within-group estimate, where parameters are estimated independently from the other groups, and an oversimplified parameter estimate that uses all data and ignores the presence of groups (Gelman and Hill 2006).

3.5 Gibbs sampler for drawing from the posterior distribution

Sampling from the posterior can be done using a blocked Gibbs sampler where for the parameters in the second layer we sample from the following full conditionals

-

\(\theta _\ell \sim \mathrm {N}\left( \Sigma (\Phi _\ell ^S)^T \sigma ^{-2}_\ell V_\ell ^{-1} y_\ell ,\Upsilon _\ell \right)\), where

$$\begin{aligned} {\scriptstyle \Upsilon _\ell ^{-1} = \Sigma _\ell ^{-1} + (\Phi _\ell ^S)^T \sigma ^{-2}_\ell V_\ell ^{-1} \Phi _\ell ^S,\, \text {with} \, V_\ell =\text{ diag }\,(\sigma ^2_\alpha , \sigma ^2_\beta , \sigma ^2_{SL},\ldots , \sigma ^2_{S\ell }}). \end{aligned}$$ -

\({\scriptstyle A_{j\ell }^V \sim \mathrm {IG}\left( a_v+|I_j|/2,b_{V\ell } + (2\sigma ^2_\ell \varphi _{\ell ,j,k}^V)^{-1} \sum _{k \in I_j} (y_\ell (t_k)-\mu _{\ell ,k})^2\right) },\)with \(\begin{aligned} I_j=\{k\, :\, t_k \in [j,j+1)\}\, \text {and} \, \mu _{\ell ,k}=\text{ row}_k(\Phi ^S_\ell ) \theta _\ell . \end{aligned}\)

-

\({\scriptstyle \sigma ^2_\ell \sim \mathrm {IG}\left( a_\sigma + K/2,b_\sigma + \tfrac{1}{2} (y_\ell - \Phi ^S_\ell \theta _\ell ) ^TV_\ell ^{-1}(y_\ell - \Phi ^S_\ell \theta _\ell )\right) }\)

Here, \(\ell =1,\ldots , L\), and \(j=1,\ldots , J\). For the third layer we sample from

-

\(\sigma ^2_\alpha \sim \mathrm {IG}\left( \delta _1 +L/2,\delta _2+\tfrac{1}{2} \sum _{\ell =1}^L \alpha _\ell ^2\right)\)

-

\(\sigma ^2_\beta \sim \mathrm {IG}\left( \delta _1 +L/2,\delta _2+\tfrac{1}{2} \sum _{\ell =1}^L \beta _\ell ^2\right)\)

-

\(\sigma ^2_{S\ell } \sim \mathrm {IG}\left( \delta _1+J/2,\tfrac{1}{2} \sum _{j=1}^J (A_{j\ell }^S)^2\right)\)

-

\(b_{V\ell } \sim \mathrm {G}\left( \lambda _1 + Ja_v,\lambda _2 + \sum _{j=1}^J \left( A_{j\ell }^V\right) ^{-1}\right)\)

-

\(b_\sigma \sim \mathrm {G}\left( \lambda _1 +La_\sigma ,\lambda _2+\sum _{\ell =1}^L \sigma ^{-2}_\ell \right)\)

Values of the hyperparameters were set to \(\delta _1 = \lambda _1 = 2\), \(\delta _2 = 1\), and \(\lambda _2 = 0.01\).

Derivation of these updates is straightforward as the hierarchical model implies that the posterior satisfies

Only derivation of the update for \(A_\ell ^V\) is slightly tedious and requires bookkeeping that any time \(t_k\) is only in 1 year (indexed by j). Note that the priors are chosen such that all update steps in the Gibbs sampler are partially conjugate. Due to the random error, generated values may fall below zero when radiation is low. In order to avoid this, results are truncated at zero to comply with physics, as solar radiation cannot be negative. For this reason, the above introduced model is an approximation. In the model formulation we neglect the impact of truncation.

4 Propagation of uncertainty: demonstration case

In this demonstration case, the generated radiation scenarios are used to drive a physically based model to investigate the effects on water quality (ecology) and further propagate climate related uncertainties to better characterize the response of the ecological system. The optimal number of stochastic generator realizations for environmental applications has been previously investigated (Guo et al. 2018; Alodah and Seidou 2020). These studies assessed the impact of output size of weather generators on statistical characteristics and indices as compared to historical data and try to reach a predefined accuracy. Apart from accuracy, for probabilistic impact studies one should also consider the impact of ensemble size on how well the predictive distribution of a weather-related variable, such as radiation, can be estimated (Leutbecher 2019). Based on these studies the authors conclude that an ensemble size of around 100 members is optimal for the demonstration case, while also computationally feasible.

For the impact modelling, the water quality sub-module of the Delft3D integrated modelling system, Delft3D-WAQ, is used with an existing model setup which has been previously calibrated and validated for the location of our demonstration case (Los et al. 2008). The spatial domain of the physical model covers the Southern North Sea with coarser horizontal resolution offshore and finer resolution along the Dutch coast, as shown in Fig. 9. The model comprises of twelve vertical layers, making it a three dimensional physical model.

Delft3D-WAQ is a comprehensive hybrid ecological model including an array of modules reproducing water quality processes that are then combined with a transport module to calculate advection and dispersion. The model most importantly calculates primary production and chlorophyll-a concentration while integrating dynamic process modules for dissolved oxygen, nutrient availability and phytoplankton species. Delft3D-WAQ can include a phytoplankton module (BLOOM) that simulates the growth, respiration and mortality of phytoplankton. Using this module the species competition and their adaptation to limiting nutrients or light can be simulated (Los et al. 2008). A graphical overview of the modelled ecological processes can be seen in Fig. 10. Without describing in details the formulation of these ecological processes we briefly introduce how our variable of interest, chlorophyll-a, is calculated and how solar radiation influences its concentration.

The chlorophyll-a content of algae is species specific. The total chlorophyll-a concentration is equal to the sum of the contributions of all algae species:

where \(C_\mathrm{chlfa}\) is the total chlorophyll-a concentration and \(C_\mathrm{alg,i}\) is the biomass concentration for algae species type i. The mass balances for algae types are based on growth, respiration and mortality which are influenced by factors such as nutrients (nitrogen, phosphorus, silicon, carbon) and light in the water column. BLOOM uses linear optimisation to calculate the species competition and the optimum distribution of biomass over all algae types. The goal of the optimization process is to maximize the net growth rate of the total of all algae types under nutrient availability, light availability, maximum growth rate, and maximum mortality rate constraints.

Light availability, therefore, is an important driving factor for phytoplankton processes, and this light availability is a function of solar radiation energy provided by the RCMs and the stochastic generator. More specifically, the available light at a particular water depth is calculated as a function of solar irradiation on the top layer and the light attenuation in the water column caused by extinction (scattering and absorption). This light extinction is modelled by the Lambert–Beer law (Eq. 7) which states that the light intensity in the water layers is exponentially decreasing with the water depth:

where \(I_b\) is the light intensity at bottom of the water column, \(I_t\) is the light intensity at the top of the water column (solar radiation forcing), K is the light extinction coefficient and H is the water depth. The extinction coefficient K is the sum of the background extinction and the extinction of all other light absorbing suspended organic or inorganic matter (the self-shading of phytoplankton, extinction of total suspended matter and the dissolved humic substances).

Consequently, projected change in solar radiation at the water surface, which translates into light intensity in the water column, is an influential factor to determine future changes in chlorophyll-a concentration. The demonstration case aims to showcase this cause-effect relationship and quantify the associated uncertainties.

5 Results

5.1 Results of stochastic generator

Initial states and hyperparameters were specified, and the Gibbs sampler was run for over a thousand iterations. Samples drawn from the posterior distributions for all scenarios are summarized in Fig. 11 as violin plots. For the interpretation of the results the reader is reminded that scenarios one to four are from different driving GCMs with RCP4.5, and scenarios five to eight are from the same GCMs as the first four scenarios, respectively, but with RCP8.5. It can be seen that the intercept and slope parameters of all scenarios are similar and their ranges are overlapping, even though, scenario four shows a slightly different behaviour. GCM number three (IPSL-CMSA-MR) and 4 (MPI-ESM-LR) have higher variances than the other GCMs as indicated by the \(\sigma ^2\) plot (see third plot in Fig. 11). It should be mentioned that before pooling was applied, through an additional layer in the hierarchical model, scenario four showed stronger outlier behaviour. This behaviour is reduced in the hierarchical scheme as estimates get pulled towards the overall mean of the various scenarios. We can also observe in the third panel of Fig. 11, where the \(\sigma ^2\) estimates are shown for each scenario, that dissimilarities between scenarios are dominated by driving GCMs. This is in line with previous finding that uncertainty in the RCM projection scenarios are primarily influenced by the driving GCMs while the impact of RCPs is less dominant (Morim et al. 2019).

Regarding the trend slope, it is a general expectation that RCP8.5 (scenarios 5-8) has steeper slope than RCP4.5 (scenarios 1-4). This expectation was confirmed for the temperature variable. While trend slopes for solar radiation under RCP8.5 are also slightly steeper, with an average difference of 0.014, it is less pronounced. This unexpected feature could be explained by the complexity of projecting solar radiation for this region, which has been previously discussed in literature. A study by Bartók et al. (2017) found remarkable discrepancy between RCMs and their driving GCMs, since GCMs consistently indicated increase in solar radiation over Europe until the end of the century, while most RCMs detected general decrease. Moreover, the difficulty of projecting cloud cover and solar radiation changes in coastal areas with sea-land-atmosphere boundaries, such as the study site, has also been highlighted.

By sampling from the predictive distribution new synthetic scenarios are generated. Posterior predictive checks (Gelman and Hill 2006) have been done visually by comparing the original and sampled data, together with observations, as shown in Fig. 14. It can be observed that the seasonal shape is well reproduced and the ensemble band of the new scenarios around the baseline scenario suggests the presence of uncertainties both in peak concentrations and phase shifts. This indicates benefits of using a larger ensemble of scenarios as input for ecological studies. Further validation of the stochastic generator, and especially its ability to accurately represent long-term trends, has been done by fitting it with the observations for the period between 1970 and 2020. The time series of the observations and generated scenarios have been decomposed and their trend, seasonal and residual components have been compared in Fig. 12. We can observe that the time series model performs as intended and able to closely reproduce the long-term trend, seasonal and residual signals of the observations. Consequently, we can conclude that the stochastic generator produces valid outputs including correct representation of the climate change signal.

For the demonstration case an ensemble of 120 new scenarios were generated by equally drawing from each baseline scenario (15 new scenarios of each type). The generated scenarios have been verified by comparing their empirical quantiles graphically to the baseline scenarios for the entire projection period (2006–2090), depicted in Fig. 13. The quantile–quantile plots of three example generated scenarios approximately lie on the diagonal line and there are no obvious discrepancies, except for the tale, which can be explained by the fact that we take normally distributed noise which is symmetric.

Finally, Fig. 15 shows boxplots of the generated scenarios for each month, with the corresponding monthly mean statistics of the baseline scenarios as solid lines. Since the temporal evolution of baseline and generated scenarios are similar, there is no problem with the long-term linear trend differences and therefore the figure covers the entire projection period (2006–2090). We can observe that each RCM climatological mean for all the months are well captured by the generated synthetic scenarios, as they fall within the interquartile range of the boxplots.

5.2 Results of probabilistic water quality simulation

The eight baseline Euro-CORDEX radiation scenarios and the 120 generated ones are used as input for the Delft3D-WAQ numerical model to drive ecological processes which calculate chlorophyll-a concentration, among others. The objective of this demonstration case is to illustrate the benefit of using a larger ensemble of radiation inputs and to assess the impacts of different radiation intensities towards the end of this century, during the early spring season when (solar) energy is the limiting factor to biomass growth. Consequently, further analysis focuses on the early spring months. In order to simulate ecological variables for the spring season the baseline Euro-CORDEX radiation scenarios and the outputs of the stochastic generator were post-processed. Seasonal averages of the first (2006–2015) and last (2081–2090) simulated decade were derived, thus obtaining a single year signal for each baseline and generated scenarios. These processed radiation signals were then used to force the deterministic physical model. The simulated chlorophyll-a concentrations therefore indicate the characteristic spring peak of the beginning and the end of the century, not a single event.

A subset of the simulation results are shown in Fig. 16 focusing on the spring peak. The figure depicts the chlorophyll-a concentration ensemble members and their medians derived from the baseline and generated scenarios for the first and last simulated decade, as well as the prediction intervals that can be computed using the generated scenarios. The figure aims at comparing the evolution and peak of the characteristic spring blooms. In the upper panel, it is visible that most of the baseline scenarios are close to each other and only one or two scenarios behave slightly differently. In the stochastic generator the parameter inference process favors the majority behavior as the data drives the process. Therefore, when generating new radiation traces it is more likely produce scenarios which are similar to the majority of the baseline scenarios rather than the one(s) with outlier behavior. For this reason, it may happen that a baseline ensemble member is outside of the generated ensemble band at few time steps. In addition to this, it should be noted that the more synthetic scenarios we produce with the stochastic generator, the larger the ensemble band will become since it can better cover the parameter space. Despite these facts, the generated ensemble has an uncertainty band which covers well the baseline ensemble members.

Regarding climate change impacts, we can observe from the baseline scenarios that the characteristic spring bloom of the end of the century (2081–2090) is consistently lower than the one representing the beginning of the century (2006–2015). Generated scenarios accurately reproduce this phenomena. This finding is in line with physical expectations since radiation projections show mild negative long-term trend for almost all scenarios (second panel of Fig. 11), and during the energy limited spring period radiation positively correlates with the chlorophyll-a concentration. It should be noted, however, that in this experiment we only consider the effect of radiation and assume all other climate forcing, such as temperature, unchanged. Consequently, this demonstration does not replace comprehensive climate change impact studies but rather showcases a possible use of the radiation generator.

In order to demonstrate the benefit of a larger ensemble, Fig. 17 depicts the histogram of the pointwise predictive distribution at the time of the characteristic spring peak concentration. One can argue that the eight baseline ensemble members may be used to derive an ensemble mean and width of the ensemble band (or spread), but not for full uncertainty characterization which also includes the predictive distribution. Looking at the basic uncertainty metrics the baseline ensemble has a mean of 25.42 mg/m3, standard deviation of 3.7 mg/m3, and 11.33 mg/m3 wide uncertainty band. The generated ensemble has comparable metrics with 25.58 mg/m3 mean, 3.13 mg/m3 standard deviation, and 14.13 mg/m3 uncertainty band. While the basic metrics remain similar the added value is that the larger ensemble permits us to derive predictive distribution and better express confidence in the predictions. Having only few ensemble members reduces the ability to resolve the unknown probability distribution that one tries to estimate, hence, higher number of ensemble members providing sufficient resolution in terms of probabilities is required (Leutbecher 2019).

Simulated chlorophyll-a concentrations. Ensemble members and median of the baseline scenarios for the first and last simulated decades (top), ensemble members and median of the generated scenarios for the first and last simulated decades (middle), and pointwise prediction intervals derived from the generated ensemble (bottom). Orange dashed line indicates the time of the spring peak concentration

6 Conclusions and recommendations

This paper presents an approach to complement existing regional climate projections by generating new synthetic scenarios with similar statistical properties. Due to the Bayesian hierarchical (multi-level) setup the proposed method offers flexibility and allows full characterisation of uncertainties. Thus, the main value of the proposed methodology is that we can compute predictive uncertainty conditional on all the data (considering all scenarios).

Moreover, the pre-processing step allows adaptability to other climate variables, such as temperature, or potentially to other environmental variables, noting that adjustments to the model formulation might be necessary as the current time series model was defined for time series with seasonality. The underlying parameterized time series model formulation therefore needs to be adjusted for non-seasonal signals with substantially different characteristics.

In addition, there is a practical limitation to the number of generated scenarios in cases when probabilistic simulations are performed using computationally expensive physical models. The three dimensional physical model, used in the demonstration case, covers a large spatial domain, hence, simulation times are long (approx. 12 h for 1 year simulation on a medium performance baremetal Linux computer cluster). Based on this we can conclude that while the stochastic generator itself has no computational time limitations, the subsequent model that is utilized to forward propagate uncertainties may present such limitations. On the other hand, if the synthetic radiation scenarios are used as input to surrogate models, this limitation on the number of scenarios is reduced.

Moreover, for future research the authors recommend to extend the current Bayesian hierarchical model to include spatial correlation (multi-site stochastic generator) and to incorporate other climate variables, since currently only one location and variable is considered. Extending the stochastic generator in this way would allow us to make use of the multi-dimensional data structure.

Finally, we conclude that the demonstration case, in which the generated synthetic radiation scenarios were utilized for probabilistic water quality simulation, could showcase the potential of the presented approach to express future likelihoods of predicted chlorophyll-a concentrations via pointwise predictive distributions. Since with smaller ensembles one may only derive ensemble mean and spread as a proxy of uncertainty, it is this added feature of simulating numerous chlorophyll-a concentration scenarios and subsequently deriving the pointwise predictive distribution, which helps to achieve better characterization of uncertainties. This enhanced uncertainty estimate in turn supports better informed and rational decision making which often brings socio-economic and monetary benefits.

Data Availability Statement

The data used for this study are available at the following URLs but might be subject to third party restrictions: http://data.dta.cnr.it/ecopotential/wadden_sea/; https://www.euro-cordex.net/; http://projects.knmi.nl/klimatologie/daggegevens/.

Code availability

The Python scripts used to produce the results can be accessed at: https://github.com/lorincmeszaros/stochastic-climate-generator.

References

Alodah A, Seidou O (2019) The adequacy of stochastically generated climate time series for water resources systems risk and performance assessment. Stoch Environ Res Risk Assess 33(1):253–269. https://doi.org/10.1007/s00477-018-1613-2

Alodah A, Seidou O (2020) Influence of output size of stochastic weather generators on common climate and hydrological statistical indices. Stoch Environ Res Risk Assess 34(7):993–1021. https://doi.org/10.1007/s00477-020-01825-w

Amengual A, Homar V, Romero R, Alonso S, Ramis C (2012) A statistical adjustment of regional climate model outputs to local scales: application to Platja de Palma, Spain. J Clim 25(3):939–957. https://doi.org/10.1175/JCLI-D-10-05024.1

Bartók B, Wild M, Folini D, Lüthi D, Kotlarski S, Schär C, Vautard R, Jerez S, Imecs Z (2017) Projected changes in surface solar radiation in CMIP5 global climate models and in EURO-CORDEX regional climate models for Europe. Clim Dyn 49(7–8):2665–2683. https://doi.org/10.1007/s00382-016-3471-2

Birt A, Valdez-Vivas M, Feldman R, Lafon C, Cairns D, Coulson R, Tchakerian M, Xi W, Guldin J (2010) A simple stochastic weather generator for ecological modeling. Environ Model Softw 25(10):1252–1255. https://doi.org/10.1016/J.ENVSOFT.2010.03.006

Capellán-Pérez I, Arto I, Polanco-Martínez JM, González-Eguino M, Neumann MB (2016) Likelihood of climate change pathways under uncertainty on fossil fuel resource availability. Energy Environ Sci 9(8):2482–2496. https://doi.org/10.1039/c6ee01008c

Chen J, Brissette F, Leconte R (2012) WeaGETS a Matlab-based daily scale weather generator for generating precipitation and temperature. Procedia Environ Sci 13:2222–2235. https://doi.org/10.1016/j.proenv.2012.01.211

Cleveland WS (1979) Robust locally weighted regression and smoothing scatterplots. J Am Stat Assoc 74(368):829–836. https://doi.org/10.1080/01621459.1979.10481038

Dacunha-Castelle D, Hoang T, Parey S (2015) Modeling of air temperatures: preprocessing and trends, reduced stationary process, extremes, simulation. Journal de la Société Française de Statistique 156(1):138–168

Danuso F (2002) Climak: a Stochastic model for weather data generation. Ital J Agron 6:57–71

Gamerman D, Lopes HF (2006) Markov chain Monte Carlo: Stochastic simulation for Bayesian Inference, vol 1, 2nd edn. Taylor & Francis, New York

Gelman A, Hill J (2006) Data analysis using regression and multilevel/hierarchical models. Analytical methods for social research. Cambridge University Press, Cambridge. https://doi.org/10.1017/CBO9780511790942

Goberville E, Beaugrand G, Hautekèete NC, Piquot Y, Luczak C (2015) Uncertainties in the projection of species distributions related to general circulation models. Ecol Evol 5(5):1100–1116. https://doi.org/10.1002/ece3.1411

Guo T, Mehan S, Gitau MW, Wang Q, Kuczek T, Flanagan DC (2018) Impact of number of realizations on the suitability of simulated weather data for hydrologic and environmental applications. Stoch Env Res Risk Assess 32(8):2405–2421. https://doi.org/10.1007/s00477-017-1498-510.1007/s00477-017-1498-5

Hashmi MZ, Shamseldin AY, Melville BW (2009) Statistical downscaling of precipitation: state-of-the-art and application of bayesian multi-model approach for uncertainty assessment. Hydrol Earth Syst Sci Discuss 6(5):6535–6579. https://doi.org/10.5194/hessd-6-6535-2009

Hayhoe K, Edmonds J, Kopp R, LeGrande A, Sanderson B, Wehner M, Wuebbles D (2017) Ch. 4: climate models, scenarios, and projections. Climate science special report: fourth national climate assessment, volume I. https://doi.org/10.7930/J0WH2N54.https://science2017.globalchange.gov/chapter/4/

Jacob D, Petersen J, Eggert B, Alias A, Christensen OB, Bouwer LM, Braun A, Colette A, Déqué M, Georgievski G, Georgopoulou E, Gobiet A, Menut L, Nikulin G, Haensler A, Hempelmann N, Jones C, Keuler K, Kovats S, Kröner N, Kotlarski S, Kriegsmann A, Martin E, van Meijgaard E, Moseley C, Pfeifer S, Preuschmann S, Radermacher C, Radtke K, Rechid D, Rounsevell M, Samuelsson P, Somot S, Soussana JF, Teichmann C, Valentini R, Vautard R, Weber B, Yiou P (2014) Euro-cordex: new high-resolution climate change projections for european impact research. Reg Environ Change 14(2):563–578. https://doi.org/10.1007/s10113-013-0499-2

Katzfuss M, Hammerling D, Smith RL (2017) A Bayesian hierarchical model for climate change detection and attribution. Geophys Res Lett 44(11):5720–5728. https://doi.org/10.1002/2017GL073688

Knutti R, Sedláček J (2013) Robustness and uncertainties in the new CMIP5 climate model projections. Nat Clim Change 3(4):369–373. https://doi.org/10.1038/nclimate1716

Leutbecher M (2019) Ensemble size: how suboptimal is less than infinity? Q J R Meteorol Soc 145(S1):107–128. https://doi.org/10.1002/qj.3387

Los FJ, Villars MT, Van der Tol MW (2008) A 3-dimensional primary production model (BLOOM/GEM) and its applications to the (southern) North Sea (coupled physical-chemical-ecological model). J Mar Syst 74(1–2):259–294. https://doi.org/10.1016/j.jmarsys.2008.01.002

Luo Q (2016) Necessity for post-processing dynamically down scaled climate projections for impact and adaptation studies. Stoch Environ Res Risk Assess 30(7):1835–1850. https://doi.org/10.1007/s00477-016-1233-7

Maraun D, Shepherd TG, Widmann M, Zappa G, Walton D, Gutiérrez JM, Hagemann S, Richter I, Soares PM, Hall A, Mearns LO (2017) Towards process-informed bias correction of climate change simulations. Nat Clim Change 7(11):764–773. https://doi.org/10.1038/nclimate3418

Mehan S, Guo T, Gitau M, Flanagan DC (2017) Comparative study of different Stochastic weather generators for long-term climate data simulation. Climate. https://doi.org/10.3390/cli5020026

Morim J, Hemer M, Wang XL, Cartwright N, Trenham C, Semedo A, Young I, Bricheno L, Camus P, Casas-Prat M, Erikson L, Mentaschi L, Mori N, Shimura T, Timmermans B, Aarnes O, Breivik Ø, Behrens A, Dobrynin M, Menendez M, Staneva J, Wehner M, Wolf J, Kamranzad B, Webb A, Stopa J, Andutta F (2019) Robustness and uncertainties in global multivariate wind-wave climate projections. Nat Clim Change 9(9):711–718. https://doi.org/10.1038/s41558-019-0542-5

Najafi MR, Moradkhani H (2014) A hierarchical Bayesian approach forthe analysis of climate change impact on runoff extremes. Hydrol Process 28(26):6292–6308. https://doi.org/10.1002/hyp.10113

Navarro-Racines C, Tarapues J, Thornton P, Jarvis A, Ramirez-Villegas J (2020) High-resolution and bias-corrected CMIP5 projections for climate change impact assessments. Sci Data 7(1):1–14. https://doi.org/10.1038/s41597-019-0343-8

Parey S (2019) Generating a set of temperature time series representative of recent past and near future climate. Front Environ Sci 7:99. https://doi.org/10.3389/fenvs.2019.00099

Reich BJ, Shaby BA (2012) A hierarchical max-stable spatial modelfor extreme precipitation. Ann Appl Stat 6(4):1430–1451. https://doi.org/10.1214/12-AOAS591

Richardson CW (1981) Stochastic simulation of daily precipitation, temperature, and solar radiation. Water Resour Res 17(1):182–190. https://doi.org/10.1029/WR017i001p00182

Semenov M, Barrow E (2002) LARS-WG a stochastic weather generator for use in climate impact studies. User Manual, Hertfordshire, UK, 0-27

Smith K, Strong C, Rassoul-Agha F (2017) A new method forgenerating stochastic simulations of daily air temperature for usein weather generators. J Appl Meteorol Climatol 56(4):953–963. https://doi.org/10.1175/JAMC-D-16-0122.1

Steinschneider S, McCrary R, Mearns LO, Brown C (2015) Theeffects of climate model similarity on probabilistic climateprojections and the implications for local, risk-based adaptationplanning. Geophys Res Lett 42(12):5014–5044. https://doi.org/10.1002/2015GL064529

Tebaldi C, Sansó B (2009) Joint projections of temperature andprecipitation change from multiple climate models: a hierarchicalBayesian approach. J R Stat Soc: Ser A (Stat Soc) 172(1):83–106. https://doi.org/10.1111/j.1467-985X.2008.00545.x

Tebaldi C, Smith RL, Nychka D, Mearns LO (2005) Quantifying uncertainty in projections of regional climate change: a Bayesian approach to the analysis of multimodel ensembles. J Clim 18(10):1524–1540. https://doi.org/10.1175/JCLI3363.1

van Vuuren DP, Edmonds J, Kainuma M, Riahi K, Thomson A, Hibbard K, Hurtt GC, Kram T, Krey V, Lamarque JF, Masui T, Meinshausen M, Nakicenovic N, Smith SJ, Rose SK (2011) Therepresentative concentration pathways: an overview. Clim Change 109(1):5. https://doi.org/10.1007/s10584-011-0148-z

Verdin A, Rajagopalan B, Kleiber W, Podestá G, Bert F (2019) BayGEN: a Bayesian spacetime stochastic weather generator. Water Resour Res 55(4):2900–2915. https://doi.org/10.1029/2017WR022473

Vesely FM, Paleari L, Movedi E, Bellocchi G, Confalonieri R (2019) Quantifying uncertainty due to Stochastic weather generators in climate change impact studies. Sci Rep. https://doi.org/10.1038/s41598-019-45745-4

Vu TM, Mishra AK, Konapala G, Liu D (2018) Evaluation of multiple stochastic rainfall generators in diverse climatic regions. Stoch Environ Res Risk Assess 32(5):1337–1353. https://doi.org/10.1007/s00477-017-1458-0

Acknowledgements

This research has received funding from the European Unions Horizon 2020 research and innovation programme under Grant agreement No. 727277. We are grateful to the anonymous reviewers for their insightful comments, which significantly improved the manuscript.

Author information

Authors and Affiliations

Contributions

The study was conducted within the PhD research of the first author. All authors contributed to the study conception, design and manuscript writing. All authors read and approved the final manuscript.

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

Appendix

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Mészáros, L., van der Meulen, F., Jongbloed, G. et al. A Bayesian stochastic generator to complement existing climate change scenarios: supporting uncertainty quantification in marine and coastal ecosystems. Stoch Environ Res Risk Assess 35, 719–736 (2021). https://doi.org/10.1007/s00477-020-01935-5

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00477-020-01935-5