Abstract

3D Concrete Printing (3DCP) is a rapidly evolving technology that allows for the efficient and accurate construction of complex concrete objects. In this paper, a numerical modelling approach based on the homogeneous fluid assumption is presented for the simulation of the printing process of cementitious materials. To cope with the large deformations of the domain and the nonlinearity resulting from the use of a non-Newtonian rheological law, the Navier–Stokes equations are solved in the framework of the Particle Finite Element Method (PFEM). Furthermore, tailored solutions have been formulated and implemented within the same PFEM framework for the time-dependent moving boundary conditions at the nozzle outlet and for the efficient handling of inter-layer contact. The overall computational cost is reduced by an adaptive de-refinement technique, which drastically decreases the number of degrees of freedom as the simulation progresses. The proposed modelling approach is finally validated by simulating the printing process of six rectilinear layers and of one multi-layer “wall”. The results show good agreement with the experimental data and provide valuable insights into the printing process, paving the way for the use of numerical modelling tools for the optimization of materials and processes in 3D Concrete Printing.

1 Introduction

Additive manufacturing (AM) techniques are nowadays well established in several industrial sectors, including automotive, aerospace, and healthcare, where they contribute greatly to the optimization of production processes. In the construction industry, however, these techniques are not yet widespread, and much research is ongoing to explore their potential and practical feasibility. Some of the most promising digital construction technologies are reported in [1]; among them, 3D Concrete Printing (3DCP) is probably the best known. In 3DCP, a cementitious mortar is extruded through a digitally controlled nozzle, and the target shape of the architectural or structural artefact is progressively built up layer by layer. Therefore, 3DCP makes it possible to construct free-form, topologically optimized shapes without any formwork [2]. Moreover, 3DCP can drastically reduce building times and costs with respect to traditional building technologies, and it could also become a key factor in lowering the environmental impact of concrete construction.

Nonetheless, this technology is not yet ready for large-scale adoption, because of the technical issues still to be addressed and the many uncertainties associated with the extrusion process and its outcomes. Progress in numerical modelling is expected to close this gap and to provide designers with better knowledge and control of 3DCP [3].

Modelling 3DCP is a complex task, because different physical phenomena occur at different spatial and temporal scales. As a consequence, a comprehensive numerical tool has not yet been developed, and different approaches, mainly derived from standard simulations of fresh concrete, are being investigated. Similarly to what is reported in [4] for standard concrete modelling, numerical simulation techniques for 3DCP can be subdivided into three main groups: single-phase continuum approaches treating the material as a solid, single-phase continuum approaches treating it as a fluid, and discrete methods. The first two groups model the material as a homogeneous continuum, either solid, if the focus of the simulation is on the structural behaviour of the printed object (e.g., buildability analysis), or fluid, if the interest lies in the extrusion process and in the fresh-state behaviour. In contrast, discrete approaches represent the material as a set of interacting discrete elements (such as particles) and, in principle, can be applied to simulate different stages of the 3DCP process.

The first attempts to simulate 3DCP were conducted with the FEM in a single-phase solid framework, with the aim of assessing the stability of the object during printing. In [5], the printing process of a cylindrical column was studied with the commercial software ABAQUS by sequentially activating the layers of material. Using a similar technique, implemented in the plugin of the commercial software ATENA dedicated to 3D printing, the authors of [6] carried out the stability analysis of a small set of 3D-printed intersecting walls.

Subsequently, other researchers focused on including uncertainties and time dependency in the material parameters governing 3DCP simulations. In [7], a 2D Stochastic Finite Element Method (SFEM) was formulated with this aim and applied to the virtual printing of a 2D rectilinear wall. To further improve the accuracy of the simulation, the finite elements were activated one by one. However, for three-dimensional objects with complex geometry, it is challenging to generate the finite elements in advance and to assign their correct order of activation. For this reason, two Grasshopper plugins were developed in [8] to automatically generate the ABAQUS input files needed for the analysis of the printing of arbitrary shapes.

A different approach, based on a discrete lattice model, was studied in [9]. The printed object was replaced by a network of Timoshenko beams, and the extrusion process was reproduced with an “element birth technique”, consisting of deactivating and reactivating the beams during the analysis. The above-mentioned solid continuum methods successfully predict failure during printing, but they cannot provide a comprehensive understanding of the material flow during extrusion. Indeed, from a physical point of view, fresh concrete is a suspension of particles in a fluid matrix.

Depending on its workability, fresh concrete can behave similarly to a granular material, which has led researchers to employ discrete particle approaches for its simulation. The Discrete Element Method (DEM), originally developed in [10], represents the material as a set of independent particles interacting through specific “contact laws”. DEM is often applied to simulate extrusion in 3DCP in order to capture specific aspects associated with the heterogeneity of the material. To name a few examples: assessing fibre orientation during extrusion [11]; accounting for the presence of aggregates of different sizes, according to the granulometric curve and the mix design [12]; and modelling the mixing and pumping phases [13]. In DEM simulations, a large number of particles is necessary to obtain accurate results, leading to a very high computational cost. Another drawback lies in the difficulty of determining proper contact laws that describe how the particles interact during the simulation. In fact, unlike continuum approaches, for which physically based constitutive equations are generally available, DEM requires the contact laws to be calibrated directly against experiments.

For this reason, methods relying on the single-phase continuum fluid approach have also been explored. The underlying assumption is that fresh concrete can be treated as a homogeneous viscous fluid. In this view, the Navier–Stokes equations govern the problem and Computational Fluid Dynamics (CFD) techniques are used to solve them. Overall, CFD methods can realistically reproduce the flow of the material, but they also require a rather high computational effort.

The commercial CFD software FLOW-3D, based on the Finite Volume Method (FVM), was adopted in [14, 15] to simulate the extrusion process and to predict the cross-sectional shapes of a few rectilinear layers. FLOW-3D is also employed in [16] to study the printing process and to assess the quality of the layers.

A different option is the Particle Finite Element Method (PFEM) [17, 18]. To cope with the large deformations typical of fluid simulations and to track the free surface, PFEM combines a standard Lagrangian FEM formulation with a re-meshing scheme. It has the advantage of being closely related to the standard and well-known FEM, which allows familiar constitutive models to be used. It has been extensively employed in a variety of applications (e.g., dam break, landslide simulations, metal forming, mould casting) and also to reproduce rheological tests on concrete [19,20,21]. More recently, PFEM has been applied to simulate 3DCP in [22, 23] and also in [24].

This work describes the formulation of a three-dimensional, single-phase fluid PFEM model of 3DCP. The Lagrangian nature of PFEM intrinsically allows the free surface to be tracked. In addition, to facilitate the imposition of the moving, time-dependent boundary conditions typical of 3DCP problems, an original Arbitrary Lagrangian–Eulerian formulation is presented and used for the boundary nodes. Unlike [22, 23], an explicit solver is chosen to cope with the nonlinearities associated with the large deformation regime, the non-Newtonian rheological law and the complex boundary conditions. Moreover, a new cost-effective way to treat contact and self-contact in PFEM is presented, which improves mass conservation and can reduce the errors associated with the re-meshing algorithm [22, 23]. Finally, an adaptive de-refinement technique is proposed and implemented to decrease the computational cost without significantly affecting the overall accuracy.

The paper is organized as follows. In Sect. 2, the theoretical framework is presented, starting with a general discussion of the main underlying assumptions and then detailing the governing equations. Subsequently, the numerical discretization of the equations is reported in Sect. 3, and the PFEM is introduced in Sect. 4. The specific computational tools developed to simulate the extrusion process of 3DCP are covered in more depth in Sect. 5. Section 6 presents the benchmarks used to validate the model, for which experimental data are available in the literature, and summarises the key findings derived from the simulation of the corresponding printing scenarios. Finally, in Sect. 7, conclusions are drawn, and potential issues and topics deserving further research are addressed.

2 Governing equations

Fresh concrete is a mixture of particles and aggregates suspended in a fluid matrix. This dual nature, between fluid and granular material, motivates the use of both continuum and discrete numerical approaches for concrete simulation. In [25], it was shown that both families of methods can successfully capture the experimental results of the slump and channel flow tests. More specifically, as stated in [4], treating fresh concrete as a homogeneous fluid is justified by choosing an appropriate scale of observation with respect to the phenomenon to be described. The 3DCP process can be subdivided into different phases, involving different physical phenomena and characteristic dimensions. According to [3], the most relevant phases in 3DCP are: mixing, pumping and extrusion, layer deposition, and stability and shape retention of the printed object. The focus of this work is on the simulation of the extrusion and filament deposition processes, which are associated with characteristic lengths of the order of the nozzle width and of the filament height (i.e., generally a few centimetres). Therefore, a scale of observation one order of magnitude larger than the coarsest particle size (a few millimetres) is sufficient to consider the material as a continuum while still capturing the main physics of the problem. Some examples of works adopting the single-phase fluid approach to simulate 3DCP extrusion are reported in [26].

Under the homogeneous fluid assumption, the Navier–Stokes equations govern the problem, and standard CFD techniques (e.g., FEM) can be used to compute the solution. The Navier–Stokes equations are a system of partial differential equations expressing the conservation of momentum, mass, and energy in fluid dynamics. The weakly-compressible fluid hypothesis is adopted, motivated by the fact that compressibility effects are almost negligible in standard fresh-concrete applications. Indeed, the Mach number (Ma), computed as the ratio between the average fluid velocity in a typical 3DCP application (\(v_f =\) 0.1 m/s) and the speed of a dilatational wave in fresh concrete (\(\sim 500\) m/s), is of the order of \(Ma = 2 \times 10^{-4}\) (the incompressible limit corresponds to \(Ma=0\)). Moreover, the weakly-compressible framework is preferred to the incompressible one because relaxing the incompressibility constraint facilitates the numerical solution. Because of the weakly-compressible assumption, an equation of state is required to link pressure and density.

2.1 Momentum balance and mass conservation

Standard CFD simulations usually adopt an Eulerian framework. Solving the governing equations on a fixed computational grid is particularly advantageous to avoid problems related to mesh distortion. Additionally, the Eulerian framework allows for a straightforward management of inflow and outflow boundary conditions. Based on these considerations, an Eulerian point of view seems convenient for studying 3DCP, where large deformations are present and a steady-state inflow condition must be specified at the nozzle outlet.

However, in the Eulerian description, it is difficult to deal with evolving domains and interfaces. A Lagrangian framework is generally preferred when a transient free surface needs to be tracked, such as the concrete–air interface in 3DCP. Moreover, the Lagrangian description facilitates the treatment of materials with history-dependent constitutive laws.

A third option is the Arbitrary Lagrangian–Eulerian (ALE) description, which combines the advantages of both the Eulerian and the Lagrangian frameworks [27]. In the ALE framework, neither the material coordinates nor the spatial coordinates are taken as reference; instead, the reference system is attached to the computational mesh. In this view, the motion of the material points is decoupled from the mesh movement. Let \({\textbf {v}}\) be the mesh velocity and \({\textbf {u}}\) the physical velocity at a certain material point. The ALE framework introduces a convective term in the balance equations, which depends on the convective (or relative) velocity \({\textbf {c}} = {\textbf {u}}-{\textbf {v}}\) between the material points and the mesh nodes. The Lagrangian and the Eulerian descriptions are recovered as particular cases of the ALE by imposing, respectively, that the convective velocity is null, \({\textbf {c}} ={\textbf {0}}\) (i.e., \({\textbf {v}}={\textbf {u}}\)), and that the convective velocity coincides with the physical one, \({\textbf {c}} = {\textbf {u}}\) (i.e., \({\textbf {v}}={\textbf {0}}\)).

In this work, the Navier–Stokes equations are written in the more general ALE framework. However, different mesh velocities are specified in different regions of the computational domain. In most of the fluid domain, the standard Lagrangian description is recovered, with all the advantages discussed above (see Sect. 5). At the nozzle outlet, to facilitate the imposition of the time-dependent moving boundary conditions, the ALE description is exploited by specifying a mesh velocity equal to the prescribed printing velocity (see Sect. 5.1 for further details).

Consider an evolving fluid domain \(\Omega _t\) over the time interval [0, T]. In this domain, \({\textbf {X}}\) denotes the position vectors in the material configuration, \({\textbf {x}}\) the position vectors at time t in the local (or current) configuration, and \(\varvec{\chi }\) the position vectors in a reference system attached to the mesh. The momentum balance and mass continuity equations, written in ALE form, then read:

where \(\varvec{\sigma }= \varvec{\sigma }(\varvec{x},t)\) is the Cauchy stress tensor, \(\varvec{b}\) is the body force vector in the local configuration, \(\varvec{u}=\varvec{u}(\varvec{x},t)\) is the velocity field, \(p=p(\rho ,t)\) is the pressure field and K is the bulk modulus of the material. Additionally, to formulate the rheological law, the Cauchy stress tensor is decomposed into an isotropic and a deviatoric part:

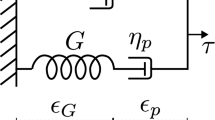

where \(\varvec{I}\) is the identity tensor and \(\varvec{\tau }\) is the deviatoric stress tensor. Once the governing equations are defined, a suitable set of initial and boundary conditions must be prescribed:

where \(\varvec{u_0}\), \(\widetilde{\varvec{u}}\), \(\varvec{h}\), \(p_0\) are prescribed known functions and \(\varvec{n}\) is the outward normal to the boundary \(\Gamma _t = \partial \Omega _t\), which is subdivided into two non-overlapping subsets \(\Gamma _D\) and \(\Gamma _N\), such that \(\Gamma _D \cup \Gamma _N = \Gamma _t\) and \(\Gamma _D \cap \Gamma _N = \emptyset \).

2.2 Rheological law

The rheological behaviour of fresh concrete is characterized by the existence of a yield stress [28, 29], consisting of a stress threshold which separates the perfectly rigid behaviour of the material from the flowing fluid regime. A non-Newtonian rheological law is necessary to accurately represent this characteristic. One commonly adopted model for this purpose is the Bingham law [30], which reads:

In Eqs. (8) and (9), \(\varvec{\tau }\) is the deviatoric stress tensor introduced in (3), \(\mu \) is the fluid viscosity, \(\tau _0\) is the yield stress and \(\varvec{\epsilon }(\varvec{u})\) is the deviatoric strain rate defined as:

In the Bingham law, the yield stress introduces a sharp discontinuity that prevents a straightforward numerical implementation. Therefore, to avoid possible instabilities and convergence issues, the regularization proposed in [31], consisting of an exponential approximation of the Bingham law, is adopted here:

Fig. 1 shows, for the one-dimensional case, that the Bingham law is approximated better as the exponent m in Eq. (11) increases. In this work, the value \(m=1000\) s is chosen, as it is high enough to reproduce the Bingham law quite accurately at low strain rates (see Fig. 1), while preserving the numerical stability of the model. Lower values of m could lead to a model exhibiting excessive deformability.
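
The regularized law can be sketched numerically as follows. This is a minimal illustration rather than the authors' implementation: the tensor form (a Papanastasiou-type expression written with the equivalent shear rate \(\dot{\gamma } = \sqrt{2\,\varvec{\epsilon }:\varvec{\epsilon }}\)) is an assumption, the function names are hypothetical, and the material values (\(\mu = 10\) Pa·s, \(\tau _0 = 100\) Pa) are illustrative only.

```python
import numpy as np


def deviatoric_strain_rate(grad_u):
    """Deviatoric part of the symmetric velocity gradient (3x3 array)."""
    d = 0.5 * (grad_u + grad_u.T)              # strain-rate tensor
    return d - np.trace(d) / 3.0 * np.eye(3)   # remove the volumetric part


def regularized_bingham_stress(grad_u, mu, tau0, m):
    """Deviatoric stress with an exponential (Papanastasiou-type) regularization.

    Assumed form: tau = 2 * mu_eff * eps, with
    mu_eff = mu + tau0 * (1 - exp(-m * g)) / g and g = sqrt(2 eps:eps).
    """
    eps = deviatoric_strain_rate(grad_u)
    g = np.sqrt(2.0 * np.sum(eps * eps))       # equivalent shear rate
    if g < 1e-12:
        mu_eff = mu + tau0 * m                 # limit of the expression as g -> 0
    else:
        mu_eff = mu + tau0 * (-np.expm1(-m * g)) / g   # -expm1(-x) = 1 - exp(-x)
    return 2.0 * mu_eff * eps


def shear_stress_1d(gamma_dot, mu, tau0, m):
    """One-dimensional (simple shear) form, the case discussed for Fig. 1."""
    return mu * gamma_dot + tau0 * (-np.expm1(-m * gamma_dot))


# Simple shear u_x = gamma_dot * y with gamma_dot = 1 1/s (illustrative values).
grad_u = np.zeros((3, 3))
grad_u[0, 1] = 1.0
tau = regularized_bingham_stress(grad_u, mu=10.0, tau0=100.0, m=1000.0)
print(tau[0, 1])   # close to mu * gamma_dot + tau0 = 110 Pa
```

For the 1D form with the same illustrative values, m = 1000 s gives about 100.1 Pa at \(\dot{\gamma } = 0.01\) s\(^{-1}\), essentially the Bingham value, whereas m = 10 s gives only about 9.6 Pa, which is consistent with the observation that low values of m make the material excessively deformable.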

2.3 Equation of state

The mass continuity Eq. (2) is written under the assumption of a weakly compressible fluid; therefore, an equation of state is needed to relate the pressure p and the density \(\rho \). The modified Tait equation of state is adopted since, according to [32], it gives a good approximation of the compressibility of a fluid with a density of the same order of magnitude as water, under pressures comparable to atmospheric pressure. Moreover, as reported in [33], if the modified Tait equation is used, the energy equation becomes decoupled from the continuity and momentum equations and, assuming isothermal conditions, it can be disregarded. The Tait equation directly links the pressure and density fields:

with \(p_0\) and \(\rho _0\) the pressure and the density in the reference configuration (atmospheric conditions), respectively, and \(\gamma \) the specific heat ratio. In the applications of Sect. 6, following [32], \(\gamma =7\) is used.
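
As a sketch, the equation of state and its inverse can be written as below. The expression \(p = p_0 + \frac{K_0}{\gamma }\left[ (\rho /\rho _0)^{\gamma } - 1\right] \) is assumed here as the modified Tait form, with \(K_0\) the bulk modulus at the reference state; the reference density of 2200 kg/m³ and the wave speed of 500 m/s used to derive \(K_0 = \rho _0 c_0^2\) are illustrative assumptions, and the function names are hypothetical.

```python
import math


def tait_pressure(rho, rho0, p0, K0, gamma=7.0):
    """Modified Tait equation (assumed form): p = p0 + K0/gamma * ((rho/rho0)**gamma - 1)."""
    return p0 + K0 / gamma * ((rho / rho0) ** gamma - 1.0)


def tait_density(p, rho0, p0, K0, gamma=7.0):
    """Inverse of tait_pressure, i.e. rho(p)."""
    return rho0 * (1.0 + gamma * (p - p0) / K0) ** (1.0 / gamma)


def sound_speed(rho, rho0, K0, gamma=7.0):
    """c = sqrt(dp/drho); equals sqrt(K0/rho0) at the reference density."""
    return math.sqrt(K0 / rho0 * (rho / rho0) ** (gamma - 1.0))


# Illustrative values (assumptions, not taken from the paper):
rho0 = 2200.0           # fresh-concrete reference density [kg/m^3]
p0 = 101325.0           # atmospheric pressure [Pa]
c0 = 500.0              # P-wave speed in fresh concrete [m/s]
K0 = rho0 * c0 ** 2     # bulk modulus consistent with c0 [Pa]

p = tait_pressure(1.01 * rho0, rho0, p0, K0)   # pressure for a 1 % density increase
```

With these values, a 1 % density increase already corresponds to a pressure rise of roughly 5.7 MPa, far above the hydrostatic pressure of a few printed layers (of the order of kPa), so density variations remain very small in practice, as expected for a weakly compressible model.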

3 Numerical solution

The Navier–Stokes equations can rarely be solved in closed form, and numerical solutions based on discretization techniques such as the Finite Element Method (FEM) are typically necessary for engineering applications. To compute an approximate numerical solution, the first step is to reformulate the balance Eqs. (1)–(2) in weak (or variational) form.

Following the standard Galerkin formulation, the spaces of trial functions for the velocity, \(S^u\), and for the pressure, \(S^p\), are introduced on the domain \(\Omega \) [34]. For the velocity, the space of test functions \(S_0^u\) is also defined, whose functions vanish on the Dirichlet portion of the boundary. In contrast, \(S^p\) also serves as the test space for the pressure, since no explicit boundary conditions are imposed on the pressure.

The weak form of the momentum balance is obtained by multiplying Eq. (1) by a generic vector test function \(\varvec{w} \in S_0^u\) and integrating over the computational domain \(\Omega \). Analogously, the weak form of the mass conservation is obtained by multiplying Eq. (2) by a generic scalar test function \(q \in S^p\) and integrating over \(\Omega \). The weak formulation of the problem therefore consists of finding \(\varvec{u} \in S^u\) and \(p \in S^p\) that satisfy:

From the weak forms, it is possible to obtain a finite-dimensional problem by introducing an isoparametric finite element discretization of the velocity and pressure fields:

where \(n_e\) is the number of nodes in the element, \(\varvec{U}_i\) is the vector of nodal velocities in the i-th direction, \(\varvec{P}\) is the vector of nodal pressures, and \(N_{a}^{u}\), \(N_{a}^{p}\) are the shape functions for the velocity and the pressure, respectively. The integrals in Eqs. (13) and (14) can then be evaluated element by element, leading to the space semi-discretized balance equations:

where \(\varvec{M_u}(t)\) and \(\varvec{M}_{p}(t)\) are the velocity and pressure mass matrices, \(\varvec{K}_c^{\varvec{u}}\) and \(\varvec{K}_c^p\) are the matrices containing the convective terms related to the velocity and to the pressure, \(\varvec{K}_{\mu }\) is the viscous matrix, \(\varvec{F_{ext}}\) is the vector of external forces and \(\varvec{D}\) is the discrete gradient operator matrix (see [35]).

The equation of state (12) instead does not need any space discretization, as it is evaluated point-wise at the FE nodes:

where \(P_{0a}\) and \(R_{0a}\) are the reference nodal values of pressure and density.

Subsequently, the time history can be subdivided into a finite set of time steps \(\Delta t\) and the equations are enforced only at discrete time instants. A forward Euler scheme is used to approximate time derivatives. The fully discretized counterparts of Eqs. (1), (2) and (12), at the generic discrete time instant \(t_n\), read:

where \(\varvec{F_{int}}\) is the vector of internal forces, which includes all the contributions written explicitly in Eq. (17). Since the forward Euler scheme is explicit, the Courant-Friedrichs-Lewy (CFL) condition must be satisfied to avoid instability. At each iteration, the stable time step is adapted based on:

where \(h_e\) is a characteristic size of the distorted elements, \(\beta \) is a safety scaling factor and \(c_e\) is the propagation speed of a dilatational pressure wave in the material. Studies on setting concrete [36] indicate that the P-wave velocity in fresh concrete is about 200–500 m/s. These values, which are rather low compared with the speed of sound in water (about 1400 m/s), are attributed to the granular nature of concrete.
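
The time-step update can be sketched as follows, assuming the simplest CFL-type form \(\Delta t = \beta \min _e (h_e / c_e)\); the form actually used in the model may contain further terms, and the element sizes, the wave speed and \(\beta = 0.5\) are illustrative values.

```python
import math


def stable_time_step(element_sizes, wave_speeds, beta=0.5):
    """Assumed CFL-type estimate: dt = beta * min_e(h_e / c_e).

    element_sizes: characteristic size h_e of each (possibly distorted) element [m]
    wave_speeds:   dilatational wave speed c_e in each element [m/s]
    beta:          safety factor (< 1)
    """
    return beta * min(h / c for h, c in zip(element_sizes, wave_speeds))


# Illustrative mesh with element sizes between 2.5 mm and 10 mm, c_e = 500 m/s.
sizes = [0.0025, 0.005, 0.01]
dt = stable_time_step(sizes, [500.0] * len(sizes))
n_steps_per_minute = math.ceil(60.0 / dt)      # steps needed for 60 s of printing
```

With these numbers, \(\Delta t = 2.5 \times 10^{-6}\) s, so about \(2.4 \times 10^7\) steps would be needed to simulate one minute of printing. Taking the lower bound \(c_e = 200\) m/s instead of 500 m/s enlarges the step by a factor of 2.5, which shows how strongly the cost of an explicit solver depends on the wave speed and on the smallest element.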

Finally, it is worth mentioning that an explicit solver allows nonlinearities to be treated effortlessly, since all terms are evaluated at the known state of the previous time instant. In addition, explicit solvers are generally easier to parallelize than implicit ones and, as the size of the problem increases, they tend to become computationally more efficient.
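
The following sketch shows why nonlinearities are easy to handle in an explicit scheme: with a forward Euler update of \(\varvec{M}\,\dot{\varvec{U}} = \varvec{F_{ext}} - \varvec{F_{int}}(\varvec{U})\), the internal forces are evaluated at the known state, so no linearization or Newton iteration is needed. The lumped (diagonal) mass matrix, the function names and the quadratic toy internal force are assumptions for illustration, not the discrete equations of the model.

```python
import numpy as np


def forward_euler_step(u, m_lumped, f_ext, internal_force, dt):
    """One explicit step of M du/dt = F_ext - F_int(u) with a lumped (diagonal) mass.

    F_int is evaluated at the known state u^n, so nonlinear terms (for example a
    strain-rate-dependent viscosity) enter the update without Newton iterations.
    """
    residual = f_ext - internal_force(u)
    return u + dt * residual / m_lumped


def quadratic_drag(u):
    """Toy nonlinear internal force, F_int = 4 u |u| (hypothetical)."""
    return 4.0 * u * np.abs(u)


u0 = np.array([1.0, -2.0])
u1 = forward_euler_step(u0, np.array([2.0, 2.0]), np.zeros(2), quadratic_drag, dt=0.01)
```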

4 Particle Finite Element Method

The numerical model of 3DCP has been formulated in the ALE framework to facilitate the imposition of the steady-state, moving boundary conditions at the nozzle outlet. However, except for a few nodes located in this region, the majority of the computational domain is treated in a standard Lagrangian fashion by specifying a zero convective velocity.

Problems characterized by large deformations, if studied in the Lagrangian framework, suffer from the distortion of the computational mesh. If the mesh becomes too distorted, accuracy and stability problems may arise. A possible solution is the so-called “Particle Finite Element Method (PFEM)” [17]. The PFEM is a mesh-based Lagrangian approach originally intended for the simulation of free surface flows and breaking wave problems [37].

Owing to its capacity to deal with large deformations and topology changes of the domain, it was subsequently applied in other fields as well, such as landslide simulations [38, 39], geotechnical tests on soil [40], fresh concrete flow tests [19,20,21] and multi-phase flows [41]. Additionally, PFEM can be efficiently coupled with other methods to study complex particle-laden flows [42] and fluid–structure interaction problems [43, 44].

Another advantage of PFEM lies in its ability to treat complex contact interactions, which explains the large number of PFEM works on the simulation of manufacturing processes (see, e.g., [45, 46]). The use of PFEM to model challenging additive manufacturing (AM) processes involving a fluid-to-solid phase transition was also explored in [47]. All these aspects make PFEM a promising candidate for the simulation of 3DCP. In particular, PFEM has recently been adopted in [22, 23] and in [24] to numerically reproduce the 3D printing process of a few rectilinear layers of cementitious mortar.

In the PFEM, the continuum is discretized with a finite element mesh whose nodes coincide with the material fluid particles. As the particles move according to their Lagrangian nature, the mesh deteriorates over time. The underlying idea of the PFEM is to adopt a re-meshing scheme that generates a new mesh every time the current one becomes overly distorted. The main phases of the re-meshing algorithm are illustrated in Fig. 2. During the analysis, the mesh distortion is monitored and, whenever it becomes excessive, the mesh is deleted and only the nodes are retained, as shown in Fig. 2a. Then, a new connectivity is generated with the Delaunay algorithm [48]. The triangulation resulting from this process fills the convex hull of the given set of points (Fig. 2b). For this reason, the “\(\alpha \)-shape method” [18, 49] is then applied to recover the physical fluid domain. Specifically, the \(\alpha \)-shape method distinguishes between physical and unphysical elements based on their distortion: the more distorted an element, the less likely it is to belong to the fluid domain. With the application of the \(\alpha \)-shape method, all unphysical elements are removed and the original boundary of the fluid domain is approximately reconstructed, as shown in Fig. 2c.
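
A common form of the \(\alpha \)-shape criterion in the PFEM literature removes every element whose circumradius exceeds \(\alpha h\), with h a characteristic nodal spacing. The sketch below assumes that form, a single global h and an illustrative \(\alpha = 1.2\); the connectivity would come from a Delaunay tessellation (for instance `scipy.spatial.Delaunay(points).simplices`) and is simply taken as given here. Function names are hypothetical.

```python
import numpy as np


def circumradius(tet):
    """Circumradius of a tetrahedron given as a (4, 3) array of vertex coordinates."""
    a = tet[0]
    # The circumcentre c satisfies 2 (p_i - a) . c = |p_i|^2 - |a|^2, i = 1, 2, 3.
    A = 2.0 * (tet[1:] - a)
    b = np.sum(tet[1:] ** 2, axis=1) - a @ a
    try:
        centre = np.linalg.solve(A, b)
    except np.linalg.LinAlgError:
        return np.inf                     # perfectly flat element: treat as unphysical
    return np.linalg.norm(centre - a)


def alpha_shape_filter(points, tets, h, alpha=1.2):
    """Keep only the tetrahedra whose circumradius is smaller than alpha * h.

    points: (n, 3) nodal coordinates; tets: iterable of 4 node indices
    (e.g. the Delaunay connectivity); h: characteristic nodal spacing.
    """
    kept = [list(t) for t in tets if circumradius(points[list(t)]) < alpha * h]
    return np.array(kept, dtype=int).reshape(-1, 4)


points = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [0.0, 1.0, 0.0],
                   [0.0, 0.0, 1.0], [0.5, 0.5, 0.01]])
tets = [[0, 1, 2, 3],     # well-shaped element, R = sqrt(3)/2
        [0, 1, 2, 4]]     # almost flat element, R of about 25
physical = alpha_shape_filter(points, tets, h=1.0)
```

Here the well-shaped element is kept, while the nearly flat one, whose circumsphere is very large, is discarded as unphysical.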

Sometimes, the re-meshing scheme alone may not be sufficient to improve the quality of the mesh, particularly in situations where node concentrations become too dense or too sparse in certain regions. Furthermore, in 3D, the mesh generated by the Delaunay tessellation is not optimal and can frequently include badly shaped tetrahedra, named “slivers”. In these cases, it is useful to perform additional operations aimed at repositioning some of the nodes in the domain, either locally (without modifying the connectivity) or globally (modifying the connectivity and thus requiring re-meshing) [18, 50].

One of the critical aspects of PFEM is that the elemental information stored at the Gauss points is lost during re-meshing. Therefore, all relevant elemental data must be stored at the nodes, which inevitably introduces some degree of approximation. To facilitate this operation, linear shape functions are commonly employed in PFEM. In this way, the finite element nodes have a one-to-one correspondence with the physical points (or particles), and no mapping is required between the old and the new mesh.

In this work, the domain is discretized with isoparametric tetrahedral finite elements using linear shape functions for the approximation of both the velocity and the pressure fields. However, equal-order finite elements violate the Ladyzhenskaya-Babuška-Brezzi (LBB) condition. Consequently, even if the weakly-compressible fluid assumption tends to mitigate the instability, an appropriate stabilization is still necessary. In the present work, the stabilizing effect is achieved by adding a term to the mass conservation equation, based on the local \(L_2\) polynomial projection of the pressure field onto a lower-order interpolation space [51, 52].
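
The equal-order linear interpolation on a tetrahedron can be sketched as follows. The routines are a hypothetical, minimal illustration (the pressure stabilization is not included): they evaluate the shape functions \(N_a\), their constant gradients, and the velocity gradient that a rheological law such as Eq. (11) would need. Because the interpolation is linear, nodal values fully describe the field, which is what makes the node-based data transfer between successive meshes straightforward.

```python
import numpy as np

# Derivatives of the reference shape functions N0 = 1 - xi - eta - zeta,
# N1 = xi, N2 = eta, N3 = zeta with respect to (xi, eta, zeta).
DN_DXI = np.array([[-1.0, -1.0, -1.0],
                   [1.0, 0.0, 0.0],
                   [0.0, 1.0, 0.0],
                   [0.0, 0.0, 1.0]])


def shape_functions(tet, x):
    """Linear shape functions N_a(x), a = 0..3, of a tetrahedron given as a (4, 3) array."""
    J = (tet[1:] - tet[0]).T                 # Jacobian of the map x = x0 + J @ xi
    xi = np.linalg.solve(J, x - tet[0])      # reference coordinates of x
    return np.concatenate(([1.0 - xi.sum()], xi))


def shape_gradients(tet):
    """Constant gradients dN_a/dx_j, returned as a (4, 3) array."""
    J = (tet[1:] - tet[0]).T
    return DN_DXI @ np.linalg.inv(J)


def velocity_gradient(tet, U):
    """grad_u[i, j] = sum_a U[a, i] * dN_a/dx_j, with U the (4, 3) nodal velocities."""
    return U.T @ shape_gradients(tet)


# Usage: a linear velocity field u(x) = A x + b is reproduced exactly.
tet = np.array([[0.0, 0.0, 0.0], [2.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 3.0]])
A = np.array([[1.0, 2.0, 3.0], [0.0, 1.0, 0.0], [4.0, 0.0, -2.0]])
b = np.array([1.0, -1.0, 0.5])
U = tet @ A.T + b                            # nodal values U_a = A x_a + b
N = shape_functions(tet, np.array([0.5, 0.25, 0.75]))
u_interp = N @ U                             # u(x) = sum_a N_a U_a
```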

5 Computational strategies for 3DCP

Within the PFEM framework, different computational strategies have been implemented to enhance the accuracy of the simulations of the printing process. Specifically, this section presents: the use of the ALE framework to simultaneously impose the boundary conditions on the material flow and the printing velocity at the nozzle outlet; two algorithms to improve contact with the ground and among different layers; and an adaptive de-refinement technique to reduce the total number of degrees of freedom of the model.

5.1 Continuous material flow during printing

This work focuses on the simulation of the extrusion and layer deposition processes in 3DCP. Since the mixing and pumping phases are disregarded, the question arises of how to obtain a continuous flow of material from the nozzle. A possible solution would be to impose a steady-state inflow boundary condition at the nozzle outlet by means of the mixed Lagrangian-Eulerian technique presented in [35], in which a layer of Eulerian nodes with a prescribed velocity profile is introduced at the nozzle outlet. However, because Eulerian nodes are fixed, this approach would make it impossible to assign the printing velocity (see Fig. 3) to the nozzle. Here, the ALE formulation, already described in Sect. 2.1, is proposed to overcome this problem. The key idea of the ALE is to introduce a computational mesh that can move independently of the material particles. The ALE description is particularly advantageous when a clear mesh-updating rule is available, which enables the automatic prescription of the mesh movement [27]. In 3DCP this is easily accommodated, as the nozzle motion (i.e., its velocity history) is prescribed and thus known a priori.

In this view, the discrete boundary \(\Gamma \) at the nozzle outlet (see Fig. 3b) is represented as a set of ALE nodes. The position of these nodes is updated in time with a mesh velocity equal to the printing velocity, \(\varvec{v} = \varvec{v_{print}}\). The remaining nodes of the computational domain, \(\Omega {\backslash } \Gamma \), are always treated in a standard Lagrangian fashion (Fig. 4a) by prescribing a mesh velocity coincident with the physical velocity, \(\varvec{v=u}\). The mesh velocity \(\varvec{v}\) is thus defined at each node i of the computational domain, at every time instant, as:

\[ \varvec{v}_i = \begin{cases} \varvec{v_{print}} & \text{if } i \in \Gamma \\ \varvec{u}_i & \text{if } i \in \Omega {\backslash } \Gamma \end{cases} \]

At each time step the position of the nodes is updated based on the mesh velocity \(\varvec{v}\):

Having modelled arbitrary nozzle translations by specifying an appropriate mesh velocity, the fluid inflow velocity \(\varvec{u}=\varvec{u_{flow}}\) can be assigned to the ALE nodes as a boundary condition. Therefore, regardless of the nozzle movements, a continuous vertical material flow from the nozzle outlet can always be obtained. In this view, the ALE description is exploited only to impose the time-dependent moving boundary condition at the nozzle outlet. Moreover, owing to their Lagrangian nature, the nodes connected to the inflow boundary move downward over time, causing a local stretching of the mesh (Fig. 4b). To cope with this issue, the layer of elements connected to the boundary nodes is monitored. Whenever these elements become overly stretched, a new set of Lagrangian nodes is added in the middle (Fig. 4c). The solution at the new nodes is computed by interpolation, using the shape functions and the nodal values of the current mesh. Finally, at the beginning of the next time step, a re-meshing operation is performed. The procedure described above is summarised in Algorithm 1.
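
Algorithm 1 can be sketched as below, under simplifying assumptions: the stretching of the inflow layer is measured on the edges that connect a nozzle (ALE) node to a Lagrangian node, an edge is considered overly stretched when it exceeds 1.5 times a reference size, and new nodes are placed at edge midpoints, where linear interpolation of the nodal values is exact for linear shape functions. The function names, the threshold and the numerical values are hypothetical, and the subsequent re-meshing is not shown.

```python
import numpy as np


def nozzle_step(x, u, is_ale, v_print, u_flow, dt):
    """ALE treatment of the nozzle outlet for one time step (sketch).

    Nozzle (ALE) nodes receive the inflow velocity u_flow as a Dirichlet condition
    and move with the printing velocity; all other nodes move with the fluid (v = u).
    """
    u = u.copy()
    u[is_ale] = u_flow                      # inflow boundary condition
    v = u.copy()                            # Lagrangian nodes: v = u
    v[is_ale] = v_print                     # ALE nodes: v = v_print
    return x + dt * v, u                    # explicit position update


def refill_inflow_layer(x, u, is_ale, edges, h_ref, stretch_max=1.5):
    """Insert a Lagrangian node at the midpoint of each over-stretched edge that
    links a nozzle (ALE) node to a Lagrangian node; a re-meshing should follow."""
    new_x, new_u = [], []
    for i, j in edges:
        if is_ale[i] == is_ale[j]:
            continue                        # only edges crossing the inflow layer
        if np.linalg.norm(x[i] - x[j]) > stretch_max * h_ref:
            new_x.append(0.5 * (x[i] + x[j]))   # midpoint of the stretched edge
            new_u.append(0.5 * (u[i] + u[j]))   # linear interpolation along the edge
    if not new_x:
        return x, u, is_ale
    is_new_ale = np.zeros(len(new_x), dtype=bool)
    return np.vstack([x, new_x]), np.vstack([u, new_u]), np.concatenate([is_ale, is_new_ale])


# One nozzle node and one Lagrangian node that has drifted 2 cm below it.
x = np.array([[0.0, 0.0, 0.0], [0.0, 0.0, -0.02]])
u = np.array([[0.0, 0.0, 0.0], [0.0, 0.0, -0.3]])
is_ale = np.array([True, False])
x, u = nozzle_step(x, u, is_ale, v_print=np.array([0.05, 0.0, 0.0]),
                   u_flow=np.array([0.0, 0.0, -0.1]), dt=0.01)
x, u, is_ale = refill_inflow_layer(x, u, is_ale, edges=[(0, 1)], h_ref=0.01)
```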

5.2 Contact with the base plane and inter-layer contact

As underlined in [53], PFEM re-meshing operations can affect mass conservation. The application of the \(\alpha \)-shape method to detect the new boundaries can alter the topology of the computational domain, causing an artificial change in the global volume. In general, refining the mesh may be sufficient to reduce these volume variations.
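To make the source of these volume variations concrete, the sketch below applies the common PFEM form of the \(\alpha \)-shape test to 2D triangles: an element is kept only if its circumradius is smaller than \(\alpha h\). The 2D simplification (the model in the text uses 3D tetrahedra) and the value `alpha=1.2` are assumptions for illustration. Every element accepted or rejected by this test adds or removes area, which is exactly the fictitious volume change discussed above.

```python
import numpy as np

def triangle_area(p0, p1, p2):
    """Area of a 2D triangle from the cross product of two edge vectors."""
    return 0.5 * abs((p1[0] - p0[0]) * (p2[1] - p0[1]) - (p2[0] - p0[0]) * (p1[1] - p0[1]))

def circumradius(p0, p1, p2):
    """Circumradius R = abc / (4 A); infinite for degenerate triangles."""
    a = np.linalg.norm(p1 - p2)
    b = np.linalg.norm(p0 - p2)
    c = np.linalg.norm(p0 - p1)
    area = triangle_area(p0, p1, p2)
    return np.inf if area == 0.0 else a * b * c / (4.0 * area)

def alpha_shape_filter(points, triangles, h, alpha=1.2):
    """Keep only the triangles whose circumradius is below alpha * h."""
    return [t for t in triangles if circumradius(*points[list(t)]) < alpha * h]

def total_area(points, triangles):
    """Total area of the retained mesh (the 2D analogue of the fluid volume)."""
    return sum(triangle_area(*points[list(t)]) for t in triangles)

# Usage: a well-shaped equilateral element and a flat sliver
points = np.array([[0.0, 0.0], [1.0, 0.0], [0.5, np.sqrt(3) / 2],
                   [2.0, 0.0], [1.5, 0.05]])
kept = alpha_shape_filter(points, [(0, 1, 2), (1, 3, 4)], h=1.0)
```

Here the sliver has a circumradius of about 2.5 and is discarded, while the equilateral element (circumradius about 0.58) is retained.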

As observed in [22], in 3DCP these fictitious volume variations tend to accumulate over time, progressively increasing the total volume and eventually affecting the geometry of the printed filament. This behaviour originates from the contact between different portions of the fluid, or between the fluid and the base plane. As noted above, it can be controlled by reducing the mesh size. However, to improve accuracy also with relatively coarse meshes, two techniques to minimize the volume gain in the contact regions are proposed hereafter.

The first technique concerns the contact of the first layer of cementitious mortar with the ground. In the PFEM the ground plane is typically materialized by a set of fixed nodes, and when the fluid nodes approach them, some unphysical elements can be generated (see Fig. 5a). In this work, no base-plane nodes are introduced; instead, the coordinates of the Lagrangian nodes are monitored during the analysis. As soon as their z-coordinates fall below a fixed threshold \(z_{tol}\), the nodes are “frozen” by prescribing zero-velocity Dirichlet boundary conditions, as shown in Fig. 5b. In this way only one object (the fluid) is present, which avoids contact and any related fictitious volume variation. The procedure is schematically reported in Algorithm 2. More complex base-plane conditions (e.g., inclined or non-planar surfaces) can be treated in a similar way, by defining the ground constraint with an analytical equation or approximating it through a suitable interpolation.
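A minimal sketch of the freezing step, assuming nodal coordinates and velocities stored as NumPy arrays and a persistent boolean `frozen` flag per node (hypothetical names). The optional `ground` callable illustrates the analytical-surface variant mentioned above; measuring the vertical rather than the normal distance to an inclined surface is a simplifying assumption.

```python
import numpy as np

def freeze_ground_nodes(x, u, frozen, z_tol, ground=None):
    """Freeze nodes closer than z_tol (vertically) to the ground and impose u = 0.

    ground: optional callable z_g(x, y) describing a non-planar base;
    if None, the base is the plane z = 0."""
    z_g = np.zeros(len(x)) if ground is None else ground(x[:, 0], x[:, 1])
    frozen = frozen | (x[:, 2] - z_g < z_tol)  # once frozen, a node stays frozen
    u = u.copy()
    u[frozen] = 0.0                            # zero-velocity Dirichlet condition
    return u, frozen

# Usage (units: mm): flat base, then an inclined base z_g = 0.1 x
x = np.array([[0.0, 0.0, 0.1], [0.0, 0.0, 5.0], [10.0, 0.0, 1.05]])
u = np.ones((3, 3))
none_frozen = np.zeros(3, dtype=bool)
u_flat, frozen_flat = freeze_ground_nodes(x, u, none_frozen, z_tol=0.2)
u_incl, frozen_incl = freeze_ground_nodes(x, u, none_frozen, z_tol=0.2,
                                          ground=lambda X, Y: 0.1 * X)
```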

Inter-layer contact, by contrast, requires a more structured treatment. To obtain a general algorithm, valid in any printing scenario and also with coarse meshes, the following preliminary operations are performed:

1. Refinement of the free surface of the current layer.

2. Removal of the internal nodes of the previous layer which are too close to the possible interface with the current one.

Both operations are performed throughout the analysis and prove quite helpful in preventing the formation of a locally degenerate mesh at the interface (elements in red in Fig. 6a), thus guaranteeing a clean, straight interface (Fig. 6b). The general idea of the technique for treating inter-layer contact is to modify the \(\alpha \)-shape algorithm so that it detects the elements forming between the outer surfaces of different layers. If a detected element has a volume above a fixed tolerance, it is simply deleted (green element in Fig. 6b). Otherwise, if its volume is below the tolerance (green element in Fig. 7a), it is still deleted, but its nodes belonging to the upper layer are also removed. In this second case, a re-meshing is then performed to obtain an updated interface between the two layers, as shown in Fig. 7b. Note that, after contact, the interface between the two layers consists only of nodes of the lower layer; these nodes are shared between the elements of the upper layer and those of the lower layer. The main steps of this technique are reported in Algorithm 3. The contact algorithms developed help to control the volume variations at a limited computational cost. In particular, the second technique, although the more elaborate of the two, does not increase the computational cost, because it relies on a modification of the \(\alpha \)-shape method, which is already applied at every re-meshing.
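The classification logic of the inter-layer contact treatment can be sketched in 2D as follows. Assumptions, all hypothetical: each node carries an integer `layer` label (higher means printed later), a contact element is taken to be one whose nodes all lie on free surfaces but belong to different layers, and the tolerance is an area rather than a volume. The actual detection in the text is embedded in the modified \(\alpha \)-shape step.

```python
import numpy as np

def triangle_area(p0, p1, p2):
    """Area of a 2D triangle."""
    return 0.5 * abs((p1[0] - p0[0]) * (p2[1] - p0[1]) - (p2[0] - p0[0]) * (p1[1] - p0[1]))

def treat_interlayer_contact(points, triangles, layer, on_surface, vol_tol):
    """Return the retained elements and the upper-layer nodes to remove.

    Contact elements (all nodes on free surfaces, more than one layer) are
    always deleted. If a contact element is smaller than vol_tol, its nodes on
    the upper layer are also flagged, so that after re-meshing the interface
    consists of lower-layer nodes only."""
    kept, remove_nodes = [], set()
    for tri in triangles:
        layers = {layer[n] for n in tri}
        is_contact = len(layers) > 1 and all(on_surface[n] for n in tri)
        if not is_contact:
            kept.append(tri)  # ordinary element, left untouched
            continue
        if triangle_area(*points[list(tri)]) < vol_tol:
            top = max(layers)
            remove_nodes.update(n for n in tri if layer[n] == top)
    return kept, remove_nodes

# Usage: a thin bridging element, a large bridging element, an interior element
points = np.array([[0.0, 0.0], [1.0, 0.0], [0.5, 0.1], [0.5, 1.0], [0.5, -1.0]])
layer = [0, 0, 1, 1, 0]
on_surface = [True, True, True, True, False]
triangles = [(0, 1, 2), (0, 1, 3), (0, 1, 4)]
kept, removed = treat_interlayer_contact(points, triangles, layer, on_surface, vol_tol=0.1)
```

Restricting the test to free-surface nodes matters: once the layers are joined, the shared interface nodes become interior, and elements spanning both layers are then kept as ordinary elements.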

5.3 Adaptive mesh de-refinement

Typically, adaptive mesh refinement consists of moving the nodes towards the zones of strong solution gradients [27]. In single-phase fluid simulations of 3D printing based on the FEM, a fairly fine mesh is needed from the outset to reproduce the geometry correctly and to capture the evolution of the free surface accurately. However, since the computational domain is constantly expanding, maintaining such a fine mesh everywhere throughout the analysis is excessively expensive.

A recent work [54] has shown the great potential of combining the PFEM with adaptive mesh refinement techniques. In the following, we present an adaptive mesh de-refinement algorithm, based on a geometric criterion, that has been integrated into the numerical model of 3DCP. The idea is to subdivide the computational domain into three portions, shown in Fig. 8a. Within each portion a different node density is maintained, so as to obtain different mesh sizes: \(h_1,h_2\) and \(h_3\). In particular, at each time step every node is assigned to one of the regions according to its distance r from the nozzle centre. In the first region (\(r \le r_1\)), which contains the nozzle outlet, the finest mesh is employed, with element size \(h_1=h\). This reference mesh size is chosen as a trade-off between accuracy (i.e., h must be small enough with respect to the characteristic dimensions of the problem) and computational performance; the comparison with the experimental data in Sect. 6 further justifies the choice of h. In the other two regions, defined by \(r_1<r<r_2\) and \(r>r_2\), mesh sizes that are multiples of the finest one are prescribed, namely \(h_2 = c_2 h\) and \(h_3 = c_3 h\), respectively. The constants \(c_2\) and \(c_3\) are both larger than one, with \(c_2<c_3\), and are selected to guarantee at least two finite elements across the height and width of the filament. To obtain the target mesh sizes, the average distance of each node from its adjacent neighbours is compared with the prescribed mesh size of its region multiplied by a parameter \(\zeta \) (taken between 0.6 and 0.8), and it is established whether the node must be removed. The use of this geometric criterion is motivated by the velocity field plotted in Fig. 8b.
The highest gradients occur close to the nozzle, while the variations of the velocity field fade moving away from it. Therefore, the radius \(r_1\) must be chosen so that the region with the finest mesh encloses the neighbourhood of the nozzle. The radius \(r_2\), in turn, should be selected to ensure a smooth transition region between the fine and the coarse mesh. The main steps of the adaptive de-refinement are summarized in Algorithm 4. The technique allows for a drastic reduction of the number of degrees of freedom of the model, leading to a substantial decrease in computational time. Moreover, because the distribution of mesh sizes reflects the gradient of the solution, the overall loss in accuracy is minimal. It is also important to note that the finest mesh size is prescribed everywhere on the free surface. However, over time, the adaptive de-refinement may degrade the quality of the free-surface mesh. To avoid any related problems, an automatic procedure adds and removes nodes on the free surface wherever the mesh quality is compromised.
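The geometric de-refinement criterion can be sketched as follows, assuming nodal coordinates in a NumPy array and a neighbour list per node (hypothetical inputs). The rule that a node is skipped when one of its neighbours was already removed in the same pass is an added safeguard of this sketch, not something stated in the text; it prevents clusters of adjacent nodes from disappearing at once before the next re-meshing. The example values follow the multi-layer benchmark (\(h_2=2h\), \(h_3=3h\), \(r_1=1.1h_{nozzle}\), \(r_2=1.3h_{nozzle}\) with \(h_{nozzle}=12.5\) mm), with \(h=1.5\) mm and \(\zeta =0.7\) assumed.

```python
import numpy as np

def target_size(r, h, r1, r2, c2, c3):
    """Target mesh size: h for r <= r1, c2*h for r1 < r < r2, c3*h otherwise."""
    r = np.asarray(r, dtype=float)
    return np.where(r <= r1, h, np.where(r < r2, c2 * h, c3 * h))

def derefine(x, neighbours, on_surface, nozzle, h, r1, r2, c2, c3, zeta=0.7):
    """Return a boolean mask of the nodes to keep.

    An interior node is removed when the mean distance to its neighbours is
    smaller than zeta times the target size of its region. Free-surface nodes
    are never removed here, so the finest size is kept on the free surface."""
    h_target = target_size(np.linalg.norm(x - nozzle, axis=1), h, r1, r2, c2, c3)
    removed = np.zeros(len(x), dtype=bool)
    for i, nbrs in enumerate(neighbours):
        # Safeguard (assumption): skip isolated nodes and nodes whose neighbour was just removed
        if on_surface[i] or not nbrs or removed[nbrs].any():
            continue
        mean_dist = np.linalg.norm(x[nbrs] - x[i], axis=1).mean()
        if mean_dist < zeta * h_target[i]:
            removed[i] = True
    return ~removed

# Usage: r1 = 1.1 * 12.5 = 13.75 mm, r2 = 1.3 * 12.5 = 16.25 mm
sizes = target_size([5.0, 15.0, 30.0], 1.5, 13.75, 16.25, 2, 3)
far = np.array([[100.0, 0.0, 0.0], [101.5, 0.0, 0.0], [103.0, 0.0, 0.0]])
nb = [[1], [0, 2], [1]]
keep_far = derefine(far, nb, [False] * 3, np.zeros(3), 1.5, 13.75, 16.25, 2, 3)
keep_near = derefine(far - [100.0, 0.0, 0.0], nb, [False] * 3, np.zeros(3),
                     1.5, 13.75, 16.25, 2, 3)
```

Far from the nozzle, nodes spaced at 1.5 mm are thinned towards the 4.5 mm target, while the same spacing near the nozzle is left untouched.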

6 Results and discussion

6.1 Single-layer filament deposition for different printing scenarios

The first benchmark consists of the extrusion of a single rectilinear filament of cementitious mortar, 300 mm long, under different printing conditions. The material and printing parameters needed for the simulations are taken from [14], which also provides experimental data.

The nozzle employed is cylindrical, with a diameter of 25 mm. The rheological behaviour of the cement paste was characterized in [14] by rotational rheometric tests, which yielded the following Bingham parameters: viscosity \(\mu = 7.5\) Pa \(\cdot \) s; yield stress \(\tau _0=750\) Pa. Six case studies have been obtained by varying the imposed flow velocity at the nozzle outlet \(u_{flow}\), the printing velocity \(v_{print}\) and the nozzle height above the ground \(h_{nozzle}\). The printing parameters for each of the six cases are reported in Table 1.
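A quick consistency check on these parameters follows from volume conservation: for an incompressible material deposited at steady state, the flow through the nozzle section equals the filament cross-section times the printing speed, \(A = (\pi d^2/4)\, u_{flow}/v_{print}\). The sketch assumes that \(u_{flow}\) is the mean outflow velocity over the nozzle section; the example uses the case 3 values (\(u_{flow}=33.6\) mm/s, \(v_{print}=30\) mm/s). Such a target area is a convenient reference for spotting the artificial volume gain discussed in Sect. 5.2.

```python
import math

def deposited_area(d_nozzle, u_flow, v_print):
    """Steady filament cross-section from volume conservation:
    (pi * d^2 / 4) * u_flow = A * v_print."""
    return math.pi * d_nozzle ** 2 / 4.0 * u_flow / v_print

# Case 3: d = 25 mm, u_flow = 33.6 mm/s, v_print = 30 mm/s
area = deposited_area(25.0, 33.6, 30.0)  # about 549.8 mm^2
```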

Fig. 9 Comparison of the cross-sectional shapes obtained for printing scenarios (a–f) from the present model, the experimental data (EXP) and the numerical models reported in [14]: generalized Newtonian fluid model (GNF) and elasto-visco-plastic model (EVP)

Regarding the numerical parameters, an initial mesh size of about \(h=\)1.5 mm was assigned in the region below the nozzle. The dimensions of the de-refinement regions and the associated characteristic mesh sizes were chosen depending on the nozzle height (\(h_{nozzle}\)). To ensure a correct discretization of the problem, it is advisable to always have at least two finite elements in the vertical direction. The prescribed mesh sizes \(h_2\), \(h_3\) and the radii \(r_1\), \(r_2\) defining the de-refinement regions are reported in dimensionless form in Table 2 for each of the six cases. The results were post-processed to extract the cross-section profiles at the mid-span of the filaments, a location chosen to minimize boundary disturbances. The results are compared with both the experimental and the numerical cross-sectional shapes reported in [14] in Fig. 9. The experimental data, reported as a single dashed line, approximately correspond to the average of the various experimental curves shown in [14]. Figure 9 indicates very good agreement between the numerical results of the present work and the experimental data, with maximum differences of a couple of millimetres. Overall, however, the present model tends to be slightly more deformable than the experiments: it underestimates the cross-sectional height and overestimates the width. This behaviour is also present in the results of the models employed in [14], suggesting a numerical origin of the phenomenon. Additionally, the present results seem to capture the geometry of the printed layer better, especially when compared with the outcomes of the Generalized Newtonian Fluid (GNF) model.
This could be due to differences between the numerical models, or it could be a consequence of how the Bingham law was implemented: with Papanastasiou exponential smoothing in the present work and with a bi-viscous regularization of the viscosity function in [14]. Figure 10 shows the top view of the filament for the different printing scenarios, and it is evident how much the overall layer shape can change when the printing parameters are varied. In cases 1 and 2, since the nozzle height is very low (\(h_{nozzle}=7.5\) mm) and the flow velocity is quite high, a very shallow and flat filament is obtained (Fig. 10a, b). By contrast, in cases 5 and 6 a much taller and rounder layer is achieved (Fig. 10e, f). This is mainly due to the increased nozzle height of \(h_{nozzle}=17.5\) mm, employed in conjunction with high printing speeds. In general, an optimal layer shape for 3DCP is obtained for intermediate values, such as those of cases 3 or 4 (Fig. 10c, d). Case 3 was also selected in [14] for the simulation of three superimposed layers.
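The two regularizations can be compared through the apparent viscosity they assign to the Bingham law with the parameters above (\(\mu =7.5\) Pa s, \(\tau _0=750\) Pa). The Papanastasiou form used below, \(\mu + \tau _0 (1-e^{-m\dot{\gamma }})/\dot{\gamma }\), is the standard one; the bi-viscous form (Bingham branch above a critical shear rate, constant viscosity below it) is one common variant, and the exact form used in [14] may differ. The regularization parameters `m=100` s and `gamma_c=0.01` 1/s are hypothetical choices for illustration.

```python
import numpy as np

MU = 7.5      # plastic viscosity [Pa s], from the text
TAU0 = 750.0  # yield stress [Pa], from the text

def mu_papanastasiou(gamma_dot, m=100.0):
    """Apparent viscosity mu + tau0 * (1 - exp(-m*g)) / g; limit mu + tau0*m at g = 0."""
    g = np.asarray(gamma_dot, dtype=float)
    safe = np.where(g > 0.0, g, 1.0)  # avoid division by zero
    frac = np.where(g > 0.0, -np.expm1(-m * safe) / safe, m)
    return MU + TAU0 * frac

def mu_biviscous(gamma_dot, gamma_c=0.01):
    """Bi-viscous form: Bingham branch mu + tau0/g above gamma_c, constant below."""
    g = np.asarray(gamma_dot, dtype=float)
    return MU + TAU0 / np.maximum(g, gamma_c)

rates = np.array([0.0, 0.001, 0.1, 10.0])  # shear rates [1/s]
mu_pap = mu_papanastasiou(rates)
mu_bi = mu_biviscous(rates)
```

Both forms recover the Bingham value \(\mu + \tau _0/\dot{\gamma }\) at high shear rates and differ only in how the unyielded, low-shear-rate region is smoothed, which is where the two approaches may produce slightly different layer shapes.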

While the geometry of the layers is a crucial aspect, it should not be the sole focus when assessing the outcome of the simulation. Figure 11 reports the velocity and pressure fields along a longitudinal section for all the printing scenarios. In cases 1 and 2 the pressure under the nozzle outlet rises up to 3500 Pa, while much lower values, of about 1000 Pa, are obtained in case 6. As observed in [23], the pressure increase below the nozzle outlet, due to the dynamic impact of the material flowing out of the nozzle, is a relevant parameter to monitor during printing.

6.2 Multi-layer filament deposition

The second application concerns the printing of three superimposed rectilinear layers, each 300 mm long. Again, the material and printing properties are taken from [14]. Specifically, a cementitious mortar with yield stress \(\tau _0=750\) Pa and viscosity \(\mu = 7.5\) Pa \(\cdot \) s has been employed. The printing parameters are those of case 3 in the previous benchmark: nozzle height \(h_{nozzle}=12.5\) mm, printing velocity \(v_{print}=30\) mm/s and flow velocity \(u_{flow}= 33.6\) mm/s. At the end of each layer, the nozzle was raised by \(\Delta z = h_{nozzle}\) without stopping the virtual printer, creating a single continuous filament wall (Fig. 12). For the mesh de-refinement, using the notation introduced in the previous benchmark, the following values were assigned: \(h_2=2 h\), \(h_3=3 h\), \(r_1 = 1.1 h_{nozzle}\) and \(r_2 = 1.3 h_{nozzle}\). Fig. 12 also shows that the contact algorithm illustrated in Sect. 5.2 performed reasonably well in maintaining a sharp outline between the different layers. Moreover, the good inter-layer bonding observed in the experiments is also reflected in the uniform and compact cross-sections obtained in the numerical simulations.

Fig. 13 Comparison of the cross-sections obtained from the printing of one (a), two (b) and three (c) layers in the present work with the experimental data (EXP) and the elasto-visco-plastic model (EVP) results presented in [15]

In Fig. 13 the extracted cross-sections are compared with the experimental data and the numerical results reported in [15]. Very good agreement is found for the single-layer and two-layer cross-sections. However, for the cross-section with three layers, the present model shows a particularly high deformability. This could be partly attributed to the fact that the rheological parameters are kept constant during the analysis, whereas in reality time-dependent effects, due to thixotropy or to the early-age formation of hydration products, may play a role. However, [15] reports that a retarder admixture was used to minimize time-dependent effects in the printed material. Therefore, the main causes of this discrepancy may instead lie in two other factors. The first is that [15] used an elasto-visco-plastic constitutive law, whereas the rheological law of the present model does not account for elastic contributions. The second is the uncertainty in the estimated velocity of the compression wave in fresh concrete, which may lead to an excessive compressibility of the model.

Finally, the velocity and pressure fields during the printing of the multi-layer wall are analysed. Fig. 14 reports the velocity field at different times: the velocity is close to zero almost everywhere, except in the vicinity of the nozzle. This is the target behaviour in 3DCP, as it indicates that the already extruded material is able to retain its shape and possibly also to sustain the subsequent layers. Fig. 15 provides some insights into the pressures that develop during the printing process. As the number of layers grows, the average pressure at the base increases up to about 500 Pa. Much higher, localized pressures are instead observed below the nozzle during printing. Interestingly, a larger portion of the material is affected by this pressure increase during the printing of the first layer. This is because, for the first layer, the distance between the nozzle and the ground is exactly \(h_{nozzle}\), whereas for the subsequent layers, owing to the squashing of the underlying filaments, the distance between the nozzle and the free surface of the previous layer becomes slightly larger than \(h_{nozzle}\), leaving the material more space to flow.

7 Conclusion

The work reported in this paper has presented a detailed single-phase fluid computational model for the simulation of the extrusion and layer deposition processes of cementitious materials. To handle the large deformations typically encountered in 3DCP simulations, the Navier–Stokes equations were discretized using the PFEM. Specifically, the presented model includes the following innovative aspects:

- The governing equations were formulated in the Arbitrary Lagrangian-Eulerian description to facilitate the imposition of the time-dependent moving boundary conditions at the nozzle outlet.

- An explicit time integration scheme was used, allowing for an easy treatment of the nonlinearities and simplifying the parallelization of the code.

- Mass conservation in the PFEM framework was improved through the implementation of a contact algorithm based on a modification of the \(\alpha \)-shape method.

- The computational cost was reduced by applying a mesh de-refinement technique.

The model was applied to the simulation of the printing of six rectilinear single-layer filaments and one multi-layer filament of cementitious mortar. The cross-sectional shapes were validated against the experimental data provided in [14, 15]. The simulations also demonstrated the efficiency of the numerical strategies described above.

The model can be exploited to gain further insight into the extrusion process through parametric studies covering a wider range of printing velocities and printing conditions. The knowledge gathered is fundamental to predicting and avoiding unwanted behaviours during extrusion, such as filament tearing or filament coiling, and to better controlling the filament shapes by calibrating the rheological properties. The model could also be a valuable substitute for experimental tests when developing new and improved printable cementitious mixes. Other possible applications include evaluating the effect of different nozzle shapes on the printed filament and studying more complex printing conditions, such as printing along curvilinear toolpaths and on non-planar layers. Since this study focused on the extrusion process and on early-age shape prediction at the scale of the filament, a rheological law with constant parameters was adopted. However, for simulations of longer printing times (i.e., on the scale of minutes or hours), the time-dependent behaviour of the material due to early-age creep [55], thixotropic effects [56] and the fluid-to-solid phase transition (i.e., the hydration reaction) may become relevant. Future works will address these issues and will focus on further reducing the computational cost, with the aim of enabling the model to simulate the printing process at the scale of the printed object and to study its structural properties.

References

Wangler T, Lloret E, Reiter L, Hack N, Gramazio F, Kohler M et al (2016) Digital concrete: opportunities and challenges. RILEM Tech Lett 1:67–75. https://doi.org/10.21809/rilemtechlett.2016.16

Mechtcherine V, Nerella VN, Will F, Näther M, Otto J, Krause M (2019) Large-scale digital concrete construction - CONPrint3D concept for on-site, monolithic 3D-printing. Autom Constr 107:102933. https://doi.org/10.1016/j.autcon.2019.102933

Perrot A, Pierre A, Nerella VN, Wolfs RJM, Keita E, Nair SAO et al (2021) From analytical methods to numerical simulations: a process engineering toolbox for 3D concrete printing. Cem Concr Compos 122:104164. https://doi.org/10.1016/j.cemconcomp.2021.104164

Roussel N, Geiker M, Dufour F, Thrane LN, Szabo P (2007) Computational modeling of concrete flow: general overview. Cem Concr Res 37:1298–1307. https://doi.org/10.1016/j.cemconres.2007.06.007

Wolfs RJM, Bos FP, Salet TAM (2018) Early age mechanical behaviour of 3D printed concrete: numerical modelling and experimental testing. Cem Concr Res 106:103–116. https://doi.org/10.1016/j.cemconres.2018.02.001

Vaitová M, Jendele L, Červenka J (2020) 3D printing of concrete structures modelled by FEM. Sol St Phen 309:261–266. https://doi.org/10.4028/www.scientific.net/SSP.309.261

Schmidt A, Lahmer T (2021) Numerical simulation of a 3D concrete printing process under polymorphic uncertainty. In: 9th International workshop on reliable engineering computing. Taormina, Italy, pp 118–126

Vantyghem G, Ooms T, Corte WD (2020) FEM modelling techniques for simulation of 3D concrete printing. In: Proceedings of the fib symposium 2020: concrete structures for resilient society. Shanghai, China, pp 964–972

Chang Z, Xu Y, Chen Y, Gan Y, Schlangen E, Šavija B (2021) A discrete lattice model for assessment of buildability performance of 3D-printed concrete. Comput-Aided Civ Inf 36:638–655. https://doi.org/10.1111/mice.12700

Cundall PA, Strack ODL (1979) A discrete numerical model for granular assemblies. Géotechnique 29:47–65. https://doi.org/10.1680/geot.1979.29.1.47

Mechtcherine V, Gram A, Krenzer K, Schwabe JH, Shyshko S, Roussel N (2014) Simulation of fresh concrete flow using Discrete Element Method (DEM): theory and applications. Mater Struct 47:615–630. https://doi.org/10.1617/s11527-013-0084-7

Ramyar E, Cusatis G (2022) Discrete fresh concrete model for simulation of ordinary, self-consolidating, and printable concrete flow. J Eng Mech 148. https://doi.org/10.1061/(asce)em.1943-7889.0002059

Krenzer K, Palzer U, Müller S, Mechtcherine V (2022) Simulation of 3D concrete printing using discrete element method. In: Buswell R, Blanco A, Cavalaro S, Kinnell P (eds) Third RILEM international conference on concrete and digital fabrication. Loughborough, UK, pp 161–166

Comminal R, da Silva WRL, Andersen TJ, Stang H, Spangenberg J (2020) Modelling of 3D concrete printing based on computational fluid dynamics. Cem Concr Res 138:106256. https://doi.org/10.1016/j.cemconres.2020.106256

Spangenberg J, da Silva WRL, Comminal R, Mollah MT, Andersen TJ, Stang H (2021) Numerical simulation of multi-layer 3D concrete printing. RILEM Tech Lett 6:119–123. https://doi.org/10.21809/RILEMTECHLETT.2021.142

Wolfs RJM, Salet TAM, Roussel N (2021) Filament geometry control in extrusion-based additive manufacturing of concrete: the good, the bad and the ugly. Cem Concr Res 150:106615. https://doi.org/10.1016/j.cemconres.2021.106615

Oñate E, Idelsohn SR, Pin FD, Aubry R (2004) The particle finite element method. An overview. Int J Comput Methods 1:267–307

Cremonesi M, Franci A, Idelsohn S, Oñate E (2020) A state of the art review of the particle finite element method (PFEM). Arch Comput Methods Eng 27:1709–1735. https://doi.org/10.1007/s11831-020-09468-4

Cremonesi M, Ferrara L, Frangi A, Perego U (2010) Simulation of the flow of fresh cement suspensions by a Lagrangian finite element approach. J Non-Newton Fluid Mech 165:1555–1563. https://doi.org/10.1016/j.jnnfm.2010.08.003

Ferrara L, Cremonesi M, Tregger N, Frangi A, Shah SP (2012) On the identification of rheological properties of cement suspensions: rheometry, computational fluid dynamics modeling and field test measurements. Cem Concr Res 42:1134–1146. https://doi.org/10.1016/j.cemconres.2012.05.007

Ferrara L, Cremonesi M, Faifer M, Toscani S, Sorelli L, Baril MA et al (2017) Structural elements made with highly flowable UHPFRC: correlating computational fluid dynamics (CFD) predictions and non-destructive survey of fiber dispersion with failure modes. Eng Struct 133:151–171. https://doi.org/10.1016/j.engstruct.2016.12.026

Reinold J, Nerella VN, Mechtcherine V, Meschke G (2019) Particle finite element simulation of extrusion processes of fresh concrete during 3D-concrete-printing. In: Sim-AM 2019: II international conference on simulation for additive manufacturing. CIMNE, Pavia, Italy, pp 428–439

Reinold J, Nerella VN, Mechtcherine V, Meschke G (2022) Extrusion process simulation and layer shape prediction during 3D-concrete-printing using the particle finite element method. Autom Constr 136:104173. https://doi.org/10.1016/j.autcon.2022.104173

Rizzieri G, Cremonesi M, Ferrara L (2022) A numerical model of 3D concrete printing. In: di Prisco M, Meda A, Balazs GL (eds) Proceedings of the 14th fib PhD symposium in civil engineering. Rome, Italy, pp 841–848

Roussel N, Gram A, Cremonesi M, Ferrara L, Krenzer K, Mechtcherine V et al (2016) Numerical simulations of concrete flow: a benchmark comparison. Cem Concr Res 79:265–271. https://doi.org/10.1016/j.cemconres.2015.09.022

Roussel N, Spangenberg J, Wallevik J, Wolfs R (2020) Numerical simulations of concrete processing: from standard formative casting to additive manufacturing. Cem Concr Res 135:106075. https://doi.org/10.1016/j.cemconres.2020.106075

Donea J, Huerta A, Ponthot JP, Rodríguez-Ferran A (2017) Arbitrary Lagrangian-Eulerian Methods. In: Stein E, de Borst R, Hughes TJR (eds) Encyclopedia of computational mechanics, 2nd edn. John Wiley & Sons, Ltd, pp 1–23

Banfill PFG (1991) Rheology of fresh cement and concrete: proceedings of an international conference, 1st ed. CRC Press, Liverpool

Tattersall GH (1991) Workability and quality control of concrete, 1st edn. CRC Press, London

Bingham EC (1922) Fluidity and plasticity. McGraw-Hill Book Company, Inc., New York

Papanastasiou TC (1987) Flows of materials with yield. J Rheol 31:385–404. https://doi.org/10.1122/1.549926

Bernard-Champmartin A, Vuyst FD (2014) A low diffusive Lagrange-remap scheme for the simulation of violent air-water free-surface flows. J Comput Phys 274:19–49. https://doi.org/10.1016/j.jcp.2014.05.032

Farhat C, Rallu A, Shankaran S (2008) A higher-order generalized ghost fluid method for the poor for the three-dimensional two-phase flow computation of underwater implosions. J Comput Phys 227:7674–7700. https://doi.org/10.1016/j.jcp.2008.04.032

Donea J, Huerta A (2003) Finite element methods for flow problems. John Wiley & Sons, Ltd, Chichester, England

Cremonesi M, Meduri S, Perego U (2020) Lagrangian-Eulerian enforcement of non-homogeneous boundary conditions in the particle finite element method. Comput Part Mech 7:41–56. https://doi.org/10.1007/s40571-019-00245-0

Reinhardt HW, Grosse C, Weiler B, Bohnert J, Windisch N (1996) P-wave propagation in setting and hardening concrete. Otto-Graf-J 7:181–189

Idelsohn SR, Oñate E, Pin FD (2004) The particle finite element method: A powerful tool to solve incompressible flows with free-surfaces and breaking waves. Int J Numer Methods Eng 61:964–989. https://doi.org/10.1002/nme.1096

Zhang X, Krabbenhoft K, Sheng D, Li W (2015) Numerical simulation of a flow-like landslide using the particle finite element method. Comput Mech 55:167–177. https://doi.org/10.1007/s00466-014-1088-z

Cremonesi M, Ferri F, Perego U (2017) A basal slip model for Lagrangian finite element simulations of 3D landslides. Int J Numer Anal Methods Geomech 41:30–53. https://doi.org/10.1002/nag.2544

Monforte L, Ciantia MO, Carbonell JM, Arroyo M, Gens A (2019) A stable mesh-independent approach for numerical modelling of structured soils at large strains. Comput Geotech 116:103215. https://doi.org/10.1016/j.compgeo.2019.103215

Idelsohn S, Mier-Torrecilla M, Oñate E (2009) Multi-fluid flows with the particle finite element method. Comput Methods Appl Mech Eng 198:2750–2767. https://doi.org/10.1016/j.cma.2009.04.002

Celigueta MA, Deshpande KM, Latorre S, Oñate E (2016) A FEM-DEM technique for studying the motion of particles in non-Newtonian fluids. Application to the transport of drill cuttings in wellbores. Comput Part Mech 3:263–276. https://doi.org/10.1007/s40571-015-0090-3

Cremonesi M, Frangi A, Perego U (2010) A Lagrangian finite element approach for the analysis of fluid-structure interaction problems. Int J Numer Methods Eng 84:610–630. https://doi.org/10.1002/nme.2911

Oñate E, Cornejo A, Zárate F, Kashiyama K, Franci A (2022) Combination of the finite element method and particle-based methods for predicting the failure of reinforced concrete structures under extreme water forces. Eng Struct 251:113510. https://doi.org/10.1016/j.engstruct.2021.113510

Ryzhakov PB, García J, Oñate E (2016) Lagrangian finite element model for the 3D simulation of glass forming processes. Comput Struct 177:126–140. https://doi.org/10.1016/j.compstruc.2016.09.007

Rodriguez Prieto JM, Carbonell JM, Cante JC, Oliver J, Jonsén P (2018) Generation of segmental chips in metal cutting modeled with the PFEM. Comput Mech 61:639–655. https://doi.org/10.1007/s00466-017-1442-z

Bobach BJ, Falla R, Boman R, Terrapon V, Ponthot JP (2022) Phase change driven adaptive mesh refinement in PFEM. ESAFORM 2021–24th international conference on material forming. Liège, Belgium, pp 3861–3869

Si H (2015) TetGen, a Delaunay-based quality tetrahedral mesh generator. ACM Trans Math Softw. https://doi.org/10.1145/2629697

Edelsbrunner H, Mücke EP (1994) Three-dimensional alpha shapes. ACM Trans Graph 13:43–72. https://doi.org/10.1145/174462.156635

Meduri S, Cremonesi M, Perego U (2019) An efficient runtime mesh smoothing technique for 3D explicit Lagrangian free-surface fluid flow simulations. Int J Numer Methods Eng 117:430–452. https://doi.org/10.1002/nme.5962

Bochev PB, Dohrmann CR, Gunzburger MD (2006) Stabilization of low-order mixed finite elements for the Stokes equations. SIAM J Numer Anal 44:82–101. https://doi.org/10.1137/S0036142905444482

Dohrmann CR, Bochev PB (2004) A stabilized finite element method for the Stokes problem based on polynomial pressure projections. Int J Numer Methods Fluids 46:183–201. https://doi.org/10.1002/fld.752

Franci A, Cremonesi M (2017) On the effect of standard PFEM remeshing on volume conservation in free-surface fluid flow problems. Comput Part Mech 4:331–343. https://doi.org/10.1007/s40571-016-0124-5

Falla R, Bobach BJ, Boman R, Ponthot JP, Terrapon VE (2023) Mesh adaption for two-dimensional bounded and free-surface flows with the particle finite element method. Comput Part Mech. https://doi.org/10.1007/s40571-022-00541-2

Esposito L, Casagrande L, Menna C, Asprone D, Auricchio F (2020) Early-age creep behaviour of 3D printable mortars: experimental characterisation and analytical modelling. Mater Struct. https://doi.org/10.1617/s11527-021-01800-z

Roussel N (2006) A thixotropy model for fresh fluid concretes: theory, validation and applications. Cem Concr Res 36:1797–1806. https://doi.org/10.1016/j.cemconres.2006.05.025

Acknowledgements

This research was partially supported by the Italian Ministry of University and Research through the project PRIN2017 XFASTSIMS: Extra fast and accurate simulation of complex structural systems (D41F19000080001). The second author acknowledges the support of the MUSA–Multilayered Urban Sustainability Action–project, funded by the European Union–NextGenerationEU, under the National Recovery and Resilience Plan (NRRP) Mission 4 Component 2 Investment Line 1.5: Strengthening of research structures and creation of R&D “innovation ecosystems”, set up of “territorial leaders in R&D”.

Funding

Open access funding provided by Politecnico di Milano within the CRUI-CARE Agreement.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Rizzieri, G., Ferrara, L. & Cremonesi, M. Numerical simulation of the extrusion and layer deposition processes in 3D concrete printing with the Particle Finite Element Method. Comput Mech 73, 277–295 (2024). https://doi.org/10.1007/s00466-023-02367-y

DOI: https://doi.org/10.1007/s00466-023-02367-y