Abstract

Physics-informed neural networks (PINNs) have been shown to be a promising method for approximating the solutions of partial differential equations (PDEs). PINNs approximate the PDE solution by minimizing physics-based loss functions over a given domain. Despite substantial progress in applying PINNs to a range of problem classes, the error estimation and convergence properties of PINNs, which are important for establishing the rationale behind their good empirical performance, have received little investigation. This paper presents a convergence analysis and error estimates of PINNs for a multi-physics problem governed by the thermally coupled incompressible Navier–Stokes equations. Through a model problem of Beltrami flow, it is shown that a small training error implies a small generalization error. A posteriori convergence rates of the total error with respect to the training residual and the number of collocation points are presented. This is of practical significance in determining an appropriate number of training parameters and appropriate training residual thresholds to obtain good PINN predictions of thermally coupled steady-state laminar flows. These convergence rates are then generalized to different spatial geometries as well as to different flow parameters that lie in the laminar regime. A pressure stabilization term in the form of a pressure Poisson equation is added to the PDE residuals for PINNs. This physics-informed augmentation is shown to improve the accuracy of the pressure field by an order of magnitude compared with the case without augmentation. Results from PINNs are compared with those obtained from a stabilized finite element method, and the favorable properties of PINNs are highlighted.

References

Ames WF (1967) Nonlinear partial differential equations, vol 1. Elsevier, Amsterdam

Finlayson BA (2013) The method of weighted residuals and variational principles. SIAM, New Delhi

Fletcher CA (1988) Computational techniques for fluid dynamics. Volume 1-fundamental and general techniques. Volume 2-specific techniques for different flow categories, Springer-Verlag, Berlin and New York

Dissanayake M, Phan-Thien N (1994) Neural-network-based approximations for solving partial differential equations. Commun Numer Methods Eng 10(3):195–201

Lagaris IE, Likas A, Fotiadis DI (1998) Artificial neural networks for solving ordinary and partial differential equations. IEEE Trans Neural Netw 9(5):987–1000

Raissi M, Wang Z, Triantafyllou MS, Karniadakis GE (2019) Deep learning of vortex-induced vibrations. J Fluid Mech 861:119–137

Raissi M, Perdikaris P, Karniadakis GE (2019) Physics-informed neural networks: a deep learning framework for solving forward and inverse problems involving nonlinear partial differential equations. J Comput Phys 378:686–707

Baydin AG, Pearlmutter BA, Radul AA, Siskind JM (2018) Automatic differentiation in machine learning: a survey. J Mach Learn Res 18:1–43

Hornik K, Stinchcombe M, White H (1989) Multilayer feedforward networks are universal approximators. Neural Netw 2(5):359–366

Pinkus A (1999) Approximation theory of the mlp model in neural networks. Acta Numer 8:143–195

Abadi M, Barham P, Chen J, Chen Z, Davis A, Dean J, Devin M, Ghemawat S, Irving G, Isard M, et al (2016) Tensorflow: a system for large-scale machine 377 learning. In: 12th USENIX symposium on operating systems design and Imple-378 mentation (OSDI’16), pp 265–283

Paszke A, Gross S, Chintala S, Chanan G, Yang E, DeVito Z, Lin Z, Desmaison A, Antiga L, Lerer A (2017) Automatic differentiation in pytorch

Shin Y, Darbon J, Karniadakis GE (2020) On the convergence of physics informed neural networks for linear second-order elliptic and parabolic type pdes. arXiv:2004.01806

Gao H, Sun L, Wang J-X (2021) Phygeonet: physics-informed geometry-adaptive convolutional neural networks for solving parameterized steady-state pdes on irregular domain. J Comput Phys 428:110079

Jin X, Cai S, Li H, Karniadakis GE (2021) Nsfnets (Navier–Stokes flow nets): physics-informed neural networks for the incompressible Navier–Stokes equations. J Comput Phys 426:109951

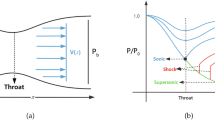

Mao Z, Jagtap AD, Karniadakis GE (2020) Physics-informed neural networks for high-speed flows. Comput Methods Appl Mech Eng 360:112789

Rao C, Sun H, Liu Y (2020) Physics-informed deep learning for incompressible laminar flows. Theor Appl Mech Lett 10(3):207–212

Sun L, Wang J-X (2020) Physics-constrained Bayesian neural network for fluid flow reconstruction with sparse and noisy data. Theor Appl Mech Lett 10(3):161–169

He Q, Barajas-Solano D, Tartakovsky G, Tartakovsky AM (2020) Physics-informed neural networks for multiphysics data assimilation with application to subsurface transport. Adv Water Resour 141:103610

Tripathy RK, Bilionis I (2018) Deep uq: learning deep neural network surrogate models for high dimensional uncertainty quantification. J Comput Phys 375:565–588

Zhu Y, Zabaras N (2018) Bayesian deep convolutional encoder-decoder networks for surrogate modeling and uncertainty quantification. J Comput Phys 366:415–447

Cai S, Wang Z, Wang S, Perdikaris P, Karniadakis GE (2021) Physics-informed neural networks for heat transfer problems. J Heat Transf 143(6):060801

Kissas G, Yang Y, Hwuang E, Witschey WR, Detre JA, Perdikaris P (2020) Machine learning in cardiovascular flows modeling: predicting arterial blood pressure from non-invasive 4d flow mri data using physics-informed neural networks. Comput Methods Appl Mech Eng 358:112623

Sahli Costabal F, Yang Y, Perdikaris P, Hurtado DE, Kuhl E (2020) Physics-informed neural networks for cardiac activation mapping. Front Phys 8:42

Zhang X, Garikipati K (2020) Machine learning materials physics: multi-resolution neural networks learn the free energy and nonlinear elastic response of evolving microstructures. Comput Methods Appl Mech Eng 372:113362

Karumuri S, Tripathy R, Bilionis I, Panchal J (2020) Simulator-free solution of high-dimensional stochastic elliptic partial differential equations using deep neural networks. J Comput Phys 404:109120

Patel DV, Ray D, Oberai AA (2022) Solution of physics-based Bayesian inverse problems with deep generative priors. Comput Methods Appl Mech Eng 400:115428

Mishra S, Molinaro R (2022) Estimates on the generalization error of physics-informed neural networks for approximating a class of inverse problems for pdes. IMA J Numer Anal 42(2):981–1022

Guo M, Haghighat E (2022) Energy-based error bound of physics-informed neural network solutions in elasticity. J Eng Mech 148(8):04022038

De Ryck T, Jagtap AD, Mishra S (2022) Error estimates for physics informed neural networks approximating the Navier–Stokes equations. arXiv:2203.09346

Masud A, Khurram R (2004) A multiscale/stabilized finite element method for the advection-diffusion equation. Comput Methods Appl Mech Eng 193(21–22):1997–2018

Masud A, Khurram R (2006) A multiscale finite element method for the incompressible Navier–Stokes equations. Comput Methods Appl Mech Eng 195(13–16):1750–1777

Masud A, Calderer R (2009) A variational multiscale stabilized formulation for the incompressible Navier–Stokes equations. Comput Mech 44(2):145–160

Masud A, Al-Naseem AA (2018) Variationally derived discontinuity capturing methods: fine scale models with embedded weak and strong discontinuities. Comput Methods Appl Mech Eng 340:1102–1134

Zhu L, Masud A (2021) Residual-based closure model for density-stratified incompressible turbulent flows. Comput Methods Appl Mech Eng 386:113931

Zhu L, Goraya SA, Masud A (2023) A variational multiscale method for natural convection of nanofluids. Mech Res Commun 127:103960

Masud A, Goraya SA (2022) Variational embedding of measured data in physics-constrained data-driven modeling. J Appl Mech 89(11):111001

Doering CR, Gibbon JD (1995) Applied analysis of the Navier-Stokes equations, vol 12. Cambridge University Press, Cambridge

Haghighat E, Raissi M, Moure A, Gómez H, Juanes R (2021) A physics-informed deep learning framework for inversion and surrogate modeling in solid mechanics. Comput Methods Appl Mech Eng 379:113741

Sirignano J, Spiliopoulos K (2018) Dgm: a deep learning algorithm for solving partial differential equations. J Comput Phys 375:1339–1364

Rao C, Sun H, Liu Y (2021) Physics-informed deep learning for computational elastodynamics without labeled data. J Eng Mech 147(8):04021043

Simard PY, Steinkraus D, Platt JC, et al (2003) Best practices for convolutional neural networks applied to visual document analysis. In: Icdar, Vol. 3

Yaeger L, Lyon R, Webb B (1996) Effective training of a neural network character classifier for word recognition, Advances in neural information processing systems, Vol. 9, MIT Press, Cambridge MA

Wong JC-F (2007) Numerical simulation of two-dimensional laminar mixed-convection in a lid-driven cavity using the mixed finite element consistent splitting scheme. Int J Numer Methods Heat Fluid Flow 17:46–93

Garnier C, Currie J, Muneer ET (2009) Integrated collector storage solar water heater: temperature stratification. Appl Energy 86(9):1465–1469

McKay MD, Beckman RJ, Conover WJ (2000) A comparison of three methods for selecting values of input variables in the analysis of output from a computer code. Technometrics 42(1):55–61

Raghu M, Poole B, Kleinberg J, GanguliS, Sohl-Dickstein J (2017) On the expressive power of deep neural networks. In: International conference on machine learning, PMLR, pp 2847–2854

Saarinen S, Bramley R, Cybenko G (1993) Ill-conditioning in neural network training problems. SIAM J Sci Comput 14(3):693–714

Strang G (2019) Linear algebra and learning from data, vol 4. Wellesley-Cambridge Press, Cambridge

Kutz JN (2013) Data-driven modeling & scientific computation: methods for complex systems & big data. Oxford University Press, Oxford

Acknowledgements

Computing resources for the numerical test cases were provided by the Teragrid/XSEDE Program under NSF Grant TG-DMS100004. This research was partially supported by NIH-USA Grant No. R01GN135921.

Ethics declarations

Conflicts of interest

The authors have no competing interests.

Appendices

Appendix A. Error estimates for a different problem domain

In this appendix, error estimates are presented for a problem whose geometry differs from that of the problem in Sect. 4. The new domain is half of the original domain in the x-direction, i.e., \(\Omega =\left[ {0,1} \right] \times \left[ {-1,1} \right] \), and the sinusoidal solution is no longer axisymmetric. The convergence rate of the total error with respect to the training error is shown in Fig. 22, and the convergence of the \(W^{0,\infty }\), \(W^{1,\infty }\), and \(W^{2,\infty }\) norms of the total error with respect to the total number of collocation points is shown in Figs. 23, 24 and 25. The convergence rates are comparable to those in Sect. 4.
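For reference, the \(W^{k,\infty }\) norms above can be read under the standard Sobolev definition. This is the textbook form, not a formula quoted from the paper, and the symbols \(e\), \(u\), and \(u_{\theta }\) (total error, exact solution, and PINN prediction) are notation assumed here for illustration:

\[
\Vert e \Vert _{W^{k,\infty }(\Omega )} = \max _{|\alpha | \le k} \; \operatorname*{ess\,sup}_{x \in \Omega } \left| D^{\alpha } e(x) \right| , \qquad e = u - u_{\theta } .
\]

Under this reading, \(W^{0,\infty }\) is the \(L^{\infty }\) norm of the error itself, while \(W^{1,\infty }\) and \(W^{2,\infty }\) additionally bound its first and second derivatives.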

Appendix B. Error estimates for higher Reynolds number

Here, error estimates are presented for a problem whose flow parameters differ from those of the problem in Sect. 4. The Reynolds number is increased from 1 to 10, and the gravitational acceleration is changed from \(-1\) to \(-9.8\). The convergence rate of the total error with respect to the training error is shown in Fig. 26, and the convergence of the \(W^{0,\infty }\), \(W^{1,\infty }\), and \(W^{2,\infty }\) norms of the total error with respect to the total number of collocation points is shown in Figs. 27, 28 and 29. The convergence rates are similar to those shown in Sect. 4.
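Convergence rates of this kind are commonly read off as the slope of a log-log plot. The following is a minimal sketch of that procedure, assuming the error behaves like \(C N^{-r}\) in the number of collocation points \(N\). The function name `fit_convergence_rate` and the sample data are hypothetical and synthetic; they are not the paper's code or results.

```python
import math


def fit_convergence_rate(ns, errors):
    """Fit error ~ C * n**(-rate) by least squares in log-log space.

    ns     : numbers of collocation points (positive)
    errors : corresponding error norms (positive)
    Returns (rate, C).
    """
    xs = [math.log(n) for n in ns]
    ys = [math.log(e) for e in errors]
    m = len(xs)
    x_mean = sum(xs) / m
    y_mean = sum(ys) / m
    # Ordinary least-squares slope and intercept of log(error) vs log(n).
    sxx = sum((x - x_mean) ** 2 for x in xs)
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = y_mean - slope * x_mean
    # A decaying error has a negative slope, so the rate is its negation.
    return -slope, math.exp(intercept)


# Synthetic illustration only: errors generated from 2 * n**(-0.5).
ns = [100, 400, 1600, 6400]
errors = [2.0 * n ** -0.5 for n in ns]
rate, constant = fit_convergence_rate(ns, errors)
print(f"estimated rate = {rate:.3f}, constant = {constant:.3f}")
```

The same fit applies with the training error on the horizontal axis in place of \(N\); in that case the error grows with the training error, so the fitted slope is used directly rather than negated.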

About this article

Cite this article

Goraya, S., Sobh, N. & Masud, A. Error estimates and physics informed augmentation of neural networks for thermally coupled incompressible Navier Stokes equations. Comput Mech 72, 267–289 (2023). https://doi.org/10.1007/s00466-023-02334-7

DOI: https://doi.org/10.1007/s00466-023-02334-7