Abstract

Background

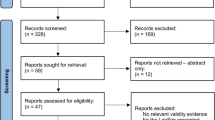

Simulation is increasingly being explored as an assessment modality. This study sought to develop and collate validity evidence for a novel simulation-based assessment of operative competence. We describe the approach to assessment design, development, pilot testing, and validity investigation.

Methods

Eight procedural stations were generated using both virtual reality and bio-hybrid models. Content was identified from a previously conducted Delphi consensus study of trainers. Trainee performance was scored using an equally weighted Objective Structured Assessment of Technical Skills (OSATS) tool and a modified Procedure-Based Assessment (PBA) tool. Validity evidence was analyzed in accordance with Messick’s validity framework. Both ‘junior’ (ST2–ST4) and ‘senior’ (ST5–ST8) trainees were included to allow for comparative analysis.

Results

Thirteen trainees were assessed by ten assessors across eight stations. Inter-station reliability was high (α = 0.81), and inter-rater reliability was acceptable (intra-class correlation coefficient 0.77). A significant difference in mean station score was observed between junior and senior trainees (44.82 vs 58.18, p = 0.004), while overall mean scores were moderately correlated with increasing training year (rs = 0.74, p = 0.004; Kendall’s tau-b 0.57, p = 0.009). A pass-fail score generated using borderline regression methodology resulted in all ‘senior’ trainees passing and 4/6 ‘junior’ trainees failing the assessment.

Conclusion

This study reports validity evidence for a novel simulation-based assessment, designed to assess the operative competence of higher specialist trainees in general surgery.

Graphical abstract

Introduction

The traditional Halstedian apprenticeship training model in surgery has undergone substantial change in recent decades [1]. Evolving patient expectations regarding the role of surgical trainees in their care [2], an increased emphasis on theater efficiency [3], and well-explored concerns regarding the perceived operative competence and confidence of graduating trainees [4,5,6] have led to a re-evaluation of this training paradigm. ‘Competency-based’ approaches to outcome-driven training and assessment are well established across training jurisdictions [7, 8], leading to the development of nominally time-independent postgraduate surgical programs [9]. The surgical specialties pose a unique challenge in this regard, due to the requirement for robust and reliable methods of teaching and assessing competence in operative skill [10].

Since August 2021, surgical training in Ireland and the United Kingdom (UK) has been explicitly outcomes based [11]. Trainees are measured against high-level outcomes (i.e., ‘Capabilities in Practice’). Some trainees who demonstrate accelerated development may complete training more rapidly than the indicative time [11]. A somewhat similar system of high-level ‘milestones’ and derived ‘Entrustable Professional Activity’ assessments now operates in the USA [8]. In order to transition to a truly competency-based, time-independent training paradigm, operative skill will need to be assessed using objective, standardized, and validated approaches. The primary tool for measuring operative competence in the UK and Ireland remains the Procedure-Based Assessment, a workplace-based procedure-specific assessment tool [12]. Though this tool has accrued substantial validity evidence, concerns exist regarding the opportunistic way in which these assessments are undertaken by trainees, as well as their potential for rater subjectivity [13]. Operative experience targets, though a traditional proxy measure of operative competence, have been de-emphasized in the updated curriculum and lack validity evidence in the UK and Irish context [13].

The role of simulation-based training and assessment in surgery has evolved in tandem with competency-based education [14]. Simulation is commonplace in surgical training curricula [15] and is increasingly being deployed as an assessment modality [16, 17]. Simulation has most notably been used in high-stakes assessment through the Colorectal Objective Structured Assessment of Technical Skill (COSATS) by the American Board of Colon and Rectal Surgery [16, 18]. Such simulation-based assessments have not yet been used in a high-stakes setting for higher specialist training in Ireland or the UK. We hypothesize that simulation could be used as a reliable, valid, objective, and standardizable method of summative operative competence assessment for trainees undertaking higher specialist training in general surgery.

This study sought to develop and validate a pilot simulation-based assessment of operative competence based on the common training curriculum of the UK and Ireland: the Joint Committee on Surgical Training (JCST)/Intercollegiate Surgical Curriculum Program (ISCP) curriculum in general surgery. Herein, we describe the approach to assessment design, development, and pilot testing and report on the validity of this assessment in line with Messick’s validity framework [19].

Methods

Initial development of the examination framework

The approach to assessment development and validation was derived from a primer for the validation of simulation-based educational assessment published by Cook and Hatala [20]. The primary interpretation of the intended construct was defined as “learners have operative skill sufficient to operate safely as an independent practitioner in General Surgery.” With reference to the UK and Ireland surgery curriculum, this construct relates to the following Capabilities in Practice (CiPs), Generic Professional Capabilities (GPCs), and syllabus requirements: ‘manages an operating list’ (CiP), ‘professional skills’ (GPC), and both ‘technical skills—general’ and ‘technical skills—index procedures’ (syllabus). The intended decision related to this examination was derived through an iterative process of literature review [21], semi-structured interviews with key stakeholder representatives [22], and a survey of surgical trainees regarding their perceptions and experience of simulation-based assessment (unpublished data). This pilot assessment was designed to explore whether the scores achieved could inform, as part of a multi-faceted assessment framework, whether a given trainee has sufficient procedural competence to proceed to the next stage of training or to independent practice.

This assessment was designed based on the well-established Objective Structured Assessment of Technical Skill (OSATS) examination [23] as followed by other high-stakes simulation-based assessments [16, 18]. Therefore, the initial design of this assessment was that of a time-limited eight-station framework [23]. Operative procedures for use in this assessment were selected using a modified Copenhagen Academy for Medical Education and Simulation Needs Assessment Framework (CAMESNAF) [24]. This resulted in a prioritized list of procedures suitable for simulation-based assessment of general surgery trainees at the end of phase 2 (ST6), from which eight were chosen for inclusion in the assessment model [25]. Future, varying assessment iterations could be created using the procedural lists generated from this Delphi process.

Assessment instruments

Performance was assessed at each station using a modified Procedure-Based Assessment (PBA) tool (consisting of a task-specific checklist and a global rating scale) [26] and the OSATS tool [27]. The OSATS tool is the most commonly used tool in surgical assessment research [28]. The PBA tool consists of a procedure-specific checklist containing pre-, intra- and post-operative domains, along with a global rating from 1a to 4b. A combination of an equally weighted checklist tool and OSATS tool is currently used in RCSI’s ‘Operative Surgical Skill’ assessments, which inform decisions on progression from Core to Higher specialist training [29]. These assessments undergo rigorous annual standard setting and validity testing, although they focus on core basic surgical skills and tasks rather than procedures. Due to time and feasibility constraints, key component tasks, rather than entire procedures, were simulated in some stations of this assessment. For this reason, the intra-operative domain of the PBA tool was adapted by a steering group of simulation and surgical education researchers (CT, MM, DOK). Items on the modified checklist were scored as ‘done,’ ‘partially done,’ or ‘not done,’ with total scores standardized and weighted equally to OSATS scores to calculate the total final score. An example assessment tool is available in the supplemental material (Fig. S1, supplemental data). The modified PBA checklists were trialed by a consultant surgeon and a surgical trainee to ensure that they could be completed on the proposed stations in the indicated time frame. Data from this trial were not included in subsequent analysis. As modifications to an assessment tool can affect its validity, this study also seeks to generate validity data for these modified tools.

The LapSim™ simulator was used to assess performance in three of the eight stations. This simulator reports a number of computer-measured metrics (Table S1, supplementary data). LapSim™ recorded metrics did not contribute to candidate scores, although statistical analyses are reported herein.

Participants

Previous literature has suggested that 8 stations provide a reliable indicator of performance in a multi-station OSCE [23]. While this pilot assessment may inform future sample size considerations in larger iterations, initial sample size was determined based on the number of participants required to demonstrate a difference in outcomes between ‘senior’ and ‘junior’ general surgery trainees (trainees in their last or first four years of surgical training, respectively). Studies reporting mean PBA scores awarded with standard deviations are lacking since the introduction of a revised global rating scale in 2017 [13]. Furthermore, a novel, modified PBA tool for use in simulation-based assessment is being used in this study. For these reasons, power calculations could only be conducted using the OSATS tool. Sample size was determined based on anticipated differences in overall scores across the eight-station OSCE format and not at the individual station level. Mean OSATS scores and standard deviations were derived from a prior study using a simulation-based assessment to assess surgeons at the end of training (mean score 0.75, SD 0.06) [30]. Assuming that a score difference of 20% or more was a relevant difference between groups, a sample size of six participants per assessment group was sufficient to minimize the risk of a type II error (α = 0.05). Again, it is important to emphasize the pilot nature of this assessment, and the lack of data reporting mean scores across simulated PBA assessment tools, let alone the modified checklists used in this study.
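The sample size reasoning above can be illustrated with a minimal sketch. It uses a normal-approximation formula for a two-sided, two-sample comparison of means, which is not necessarily the method used in this study. The target power (0.80 and 0.90) and the reading of ‘20%’ as a relative difference from the reference mean are assumptions, as neither is specified above; the function name is hypothetical.

```python
from math import ceil
from statistics import NormalDist


def n_per_group(mean, sd, relative_diff, alpha=0.05, power=0.80):
    """Approximate participants per group for a two-sided, two-sample
    comparison of means (normal approximation, equal group sizes)."""
    delta = mean * relative_diff      # assumed: 20% is relative to the mean
    effect_size = delta / sd          # standardized difference (Cohen's d)
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_beta = z.inv_cdf(power)         # assumed power; not stated in the text
    return ceil(2 * ((z_alpha + z_beta) / effect_size) ** 2)


# Reference values quoted above: mean OSATS score 0.75, SD 0.06 [30]
n_80 = n_per_group(0.75, 0.06, 0.20, power=0.80)
n_90 = n_per_group(0.75, 0.06, 0.20, power=0.90)
print(n_80, n_90)
```

Under these assumptions the required group size falls below six at either power level. The normal approximation tends to understate requirements in very small samples, so this should be read as a rough plausibility check only.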

Procedure

Ethical approval was granted by the University of Medicine and Health Sciences at the Royal College of Surgeons in Ireland. The assessment took place at the National Surgical Training Center, Royal College of Surgeons in Ireland, which contains a purpose-built ‘wet lab’ for simulation-based technical skills training and assessment. All assessors were registered consultant surgeon trainers with the Royal College of Surgeons in Ireland. Examiners received rater training and were familiarized with the format, tools, and models prior to the assessment, and pre-assessment standard setting was conducted using a modified Angoff method [31]. Examiners were not informed of the trainee’s level of training. Candidates were informed of the assessment format in a 30-min briefing session prior to commencement. This orientation session also allowed for introduction to the virtual reality models used (LapSim, Surgical Science, Sweden). Stations utilized a combination of bio-hybrid models (ileostomy reversal, operative management of a fistula-in-ano, pilonidal sinus excision, ventral hernia repair, emergency laparotomy, and management of a blunt liver injury) and virtual reality models (laparoscopic appendicectomy, laparoscopic cholecystectomy, and vessel ligation for right hemi-colectomy). The LapSim simulator was chosen for use in the virtual reality stations given the substantial validity evidence published with respect to its use in training and assessment [32]. Instructions regarding the procedure or task to be completed were given to participants before each station. Candidates rotated through the stations and were assessed by a single assessor using the modified PBA and OSATS tools. Two stations (ileostomy reversal and emergency laparotomy) were also scored by a second, independent rater. Performance in each station was video recorded in an anonymized fashion for quality assurance purposes. Scores were entered immediately into a secure database (QPERCOM).

Statistical analysis

Validity evidence was collated according to Messick’s validity framework [19]. Internal consistency was assessed using Cronbach’s alpha. Inter-rater reliability was calculated using intra-class correlation coefficients. Reliability was further explored using generalizability theory [33]; variance component analysis was used to determine the relative contribution of trainees, assessors, and stations to observed score variance, allowing for the estimation of the ‘true’ or ‘intended’ variance, i.e., the proportion of score variance attributable to individual trainees. For the purpose of this analysis, trainees, assessors, and stations were treated as a sample from an infinite universe of potential trainees, assessors, and stations [34]. Consequential validity evidence was explored by generating a pass-fail cut-off score using borderline regression methodology [35]. A linear regression model was used to plot the global competency score against both OSATS and PBA checklist scores. The global score representing the ‘borderline’ candidate was inserted into the linear equation, and the corresponding total OSATS and PBA checklist scores were extrapolated, representing the pass score for each station. The pass score for the assessment as a whole was the sum of the pass scores for each station. OSATS and modified PBA scores were equally weighted. Relationships with other variables (training year) were explored using Pearson correlation coefficients. Findings are reported according to the domains of Messick’s unified validity framework; evidence for the response process and content domains is outlined above, and no further evidence for these domains is reported.
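The borderline regression step described above can be sketched as follows. This is a minimal illustration under stated assumptions rather than the analysis code used in this study: the global ratings are assumed to be coded numerically, the data are invented, and the function name and the choice of 2 as the ‘borderline’ rating are hypothetical.

```python
def borderline_pass_mark(global_ratings, station_scores, borderline_rating):
    """Regress station scores on global ratings (ordinary least squares)
    and return the score predicted at the borderline rating."""
    n = len(global_ratings)
    mean_x = sum(global_ratings) / n
    mean_y = sum(station_scores) / n
    sxx = sum((x - mean_x) ** 2 for x in global_ratings)
    sxy = sum((x - mean_x) * (y - mean_y)
              for x, y in zip(global_ratings, station_scores))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept + slope * borderline_rating


# Invented data for two stations: (numeric global ratings, total station scores)
stations = [
    ([1, 2, 2, 3, 4], [35, 42, 46, 55, 66]),
    ([1, 2, 3, 4], [30, 40, 50, 60]),
]
BORDERLINE = 2  # hypothetical numeric code for the 'borderline' rating

station_marks = [borderline_pass_mark(x, y, BORDERLINE) for x, y in stations]
overall_pass_mark = sum(station_marks)  # sum of station pass scores
print([round(m, 2) for m in station_marks], round(overall_pass_mark, 2))
```

With few candidates per station, and therefore few ratings near the borderline category, the fitted line and the resulting pass mark can be unstable.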

Results

Thirteen candidates undertook the assessment across eight 15-min stations. Candidates were divided into ‘junior’ trainees in their first 4 years of surgical training (ST1–ST4, N = 6) and ‘senior’ trainees in their last 4 years of training (ST5–ST8, N = 7). Mean scores awarded are outlined in Table 1.

Internal structure

Inter-station reliability for the total score awarded was assessed using Cronbach’s alpha (α), calculated as 0.81 for the assessment overall. Removing station 1 (management of a fistula-in-ano) would result in a higher α (Table 2). Internal consistency of individual assessment tools is outlined in Table S2, supplementary data. Correlations between individual station scores and the total score, and between scores awarded at each station, are reported in Tables S3 and S4 (supplementary data).
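For readers less familiar with the statistic, the following minimal sketch shows how Cronbach’s alpha and the ‘alpha if station deleted’ value can be computed from a trainee-by-station score matrix. The scores are invented for illustration and are not study data; the function names are hypothetical.

```python
from statistics import variance


def cronbach_alpha(scores):
    """scores: one row per trainee, one column per station."""
    k = len(scores[0])
    station_vars = [variance([row[j] for row in scores]) for j in range(k)]
    total_var = variance([sum(row) for row in scores])
    return k / (k - 1) * (1 - sum(station_vars) / total_var)


def alpha_if_deleted(scores, j):
    """Alpha recomputed after dropping station j (0-indexed)."""
    reduced = [row[:j] + row[j + 1:] for row in scores]
    return cronbach_alpha(reduced)


# Invented scores: the third station ranks trainees differently from the others
scores = [
    [1, 2, 3],
    [2, 3, 1],
    [3, 4, 4],
    [4, 5, 2],
]
alpha = cronbach_alpha(scores)
alpha_without_third = alpha_if_deleted(scores, 2)
print(round(alpha, 2), round(alpha_without_third, 2))
```

In this toy example, dropping the discordant station raises alpha, which is the pattern reported above for station 1.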

Inter-rater reliability was assessed for two stations: emergency laparotomy (Station 7) and ileostomy reversal (Station 2). On intra-class correlation coefficient analysis, inter-rater reliability was 0.77. Individual station scores variably correlated with the total score awarded. Using generalizability theory, a reliability coefficient (G) of 0.7 was calculated for the overall 8-station assessment. Fourteen hypothetical stations would be required to increase the reliability coefficient to > 0.8. Further data relating to the model construction and variance component analysis are outlined in Table 3.
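The projection from 8 to 14 stations can be approximated with a simple decision-study style calculation. The sketch below assumes a single-facet model in which error variance shrinks in proportion to the number of stations, and takes the reported coefficient as exactly 0.70; the full variance component model in Table 3 is not reproduced here, and the function names are hypothetical.

```python
def projected_reliability(g_observed, n_observed, n_new):
    """Project a reliability coefficient to a different number of stations,
    assuming error variance scales as 1 / (number of stations)."""
    # Ratio of single-station error variance to trainee (true) variance
    error_ratio = n_observed * (1 - g_observed) / g_observed
    return n_new / (n_new + error_ratio)


def stations_needed(g_observed, n_observed, target):
    """Smallest number of stations whose projected coefficient exceeds target."""
    n = n_observed
    while projected_reliability(g_observed, n_observed, n) <= target:
        n += 1
    return n


required = stations_needed(0.70, 8, 0.80)
print(required, round(projected_reliability(0.70, 8, required), 3))
```

Under these simplifying assumptions the projection gives 14 stations, in line with the figure reported above.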

Relationships with other variables—differentiating between junior and more senior trainees

A significant difference in mean station score was observed between ‘junior’ and ‘senior’ trainees (44.82 vs 58.18, p = 0.004) (Fig. 1). Mean scores were moderately correlated with increasing training year (rs = 0.74, p = 0.004; Kendall’s tau-b 0.57, p = 0.009) (Fig. 2).

At an individual station level, five of eight stations could differentiate between ‘junior’ and ‘senior’ trainee candidates (Table 4).

A number of simulator-measured metrics were further recorded for the three laparoscopic skills (LapSim™) stations (Table S1, supplementary data). Correlations between simulator- and assessor-recorded metrics are outlined in Tables S5, S6, and S7 (supplementary data).

Consequences

A pass-fail standard for each station was generated using borderline regression methodology. Passing scores and passing rates for individual stations are outlined in Table 5. There was no difference in the pass rate (9/13) when using a compensatory model (where the candidate can fail an unlimited number of stations once the overall pass mark is achieved) or a conjunctive model (where the candidate is required to achieve the overall pass mark and pass at least 50% of stations). Either method resulted in all ‘senior’ trainees passing and 2/6 of ‘junior’ trainees passing. Only one trainee passed all eight stations.
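The two decision rules compared above can be expressed as a short sketch. The pass marks and candidate scores below are invented for illustration, the function names are hypothetical, and treating a score equal to the pass mark as a pass is an assumption.

```python
def passes_compensatory(scores, pass_marks):
    """Pass if the total score reaches the overall pass mark
    (the sum of the station pass marks), regardless of stations failed."""
    return sum(scores) >= sum(pass_marks)


def passes_conjunctive(scores, pass_marks, min_fraction=0.5):
    """Pass only if the overall pass mark is reached AND at least
    min_fraction of the individual stations are passed."""
    stations_passed = sum(s >= p for s, p in zip(scores, pass_marks))
    enough_stations = stations_passed >= min_fraction * len(pass_marks)
    return passes_compensatory(scores, pass_marks) and enough_stations


pass_marks = [50] * 8  # invented station pass marks

# Candidate A: three very strong stations offset five narrow fails
candidate_a = [80, 80, 80, 45, 45, 45, 45, 45]
# Candidate B: passes six of eight stations
candidate_b = [55, 60, 52, 48, 70, 65, 45, 58]

for name, scores in [("A", candidate_a), ("B", candidate_b)]:
    print(name,
          passes_compensatory(scores, pass_marks),
          passes_conjunctive(scores, pass_marks))
```

Candidate A illustrates the case in which the two rules diverge; no such candidate was observed in this cohort, which is why the pass rate was identical under both models.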

Feasibility

Two rounds of assessment were conducted. Each session was conducted over 2 h. Models and required equipment for open stations cost an estimated €4680, although much of the reusable equipment was already available within the simulation laboratory at RCSI. Rental and support costs for the LapSim laparoscopic simulators were €4305. Staffing costs were estimated at an additional €4000, bringing the estimated cost of the assessment to €12,985.

Discussion

This study outlines validity evidence for a novel pilot simulation-based assessment of operative competence, designed for assessment of higher specialist trainees in general surgery and centered around operative competency expectations derived from the intercollegiate surgical curriculum program of Ireland and the UK. The principles of assessment design and validity testing are not specific to this jurisdictional context and can be used to generate simulation-based assessments within competency-based training curricula internationally. High internal consistency metrics were generated, with acceptable inter-rater reliability. While a sufficient number of observations were made to reliably rank candidates (G > 0.8 with 6 stations), 14 stations would be required in order to reliably determine whether a candidate met the desired level of operative competence across procedures, due to the observed absolute error variance [34]. The assessment can differentiate between trainees according to level of training, with more senior trainees outperforming their junior counterparts across the management of a fistula-in-ano, ileostomy closure, right hemi-colectomy, laparoscopic appendicectomy, and emergency laparotomy stations. Mean station scores were moderately and significantly correlated with increasing training year (R² = 0.6). Using borderline regression methodology, a pass-fail mark was generated which resulted in all trainees in their last 4 years of training passing the assessment and 4/6 more junior trainees failing. The estimated cost of delivering this pilot assessment was €12,985.

Only one participant passed all eight stations. Given the absolute curricular (and indeed, clinical) requirements for competence across several key index procedures, it is arguable that an alternate approach to standard setting and pass/fail cut-off is required. In particular, it could be argued that candidates should be required to pass all stations in order to pass the entire assessment, without the capacity for inter-station score compensation. The ‘patient safety’ method of standard setting [36, 37] is an approach to developing defensible minimum passing standards whereby incorrect performance in critical items or procedures leads to failure without recourse to compensation.

The implementation of competency-based education principles across surgical training programs requires robust methods of trainee assessment. The newly updated general surgery curriculum of the UK and Ireland emphasizes two critical stages during higher specialist training with corresponding operative competency expectations: phase 2, outlining expectations in core elective and emergency procedures, and phase 3, outlining further competency expectations in a given trainee’s sub-specialty of interest. Assessment across these procedures is primarily conducted in the workplace. In other jurisdictions, high-stakes simulation-based assessments have been designed to inform end-of-training certification decisions [16,17,18, 38]. This study suggests that a similar assessment modality has sufficient validity evidence to support its use in the assessment of trainees in Ireland. However, it is important to emphasize that this study reports pilot data with a limited number of trainee participants and does not yet support the implementation of such a modality in informing high-stakes assessment outcomes. The role, if any, of simulation in informing high-stakes training decisions such as progression or end-of-training certification remains to be fully elucidated, particularly in the context of an existing longitudinal multi-faceted assessment program. Furthermore, this study does not explore the potential value of this assessment, or similar assessments, in a purely formative context.

In a 2012 Delphi study of members of the Association for Surgical Education, clarifying the role of simulation for the certification of residents and practicing surgeons was identified as a high priority for surgical education research [39]. Simulation-based assessments (SBA) currently play varying, though often significant, roles in the training and certification process across jurisdictions. For example, passing the Fundamentals of Laparoscopic Surgery assessment is a certification requirement in certain specialties in the USA [40,41,42,43,44]. For practicing surgeons, the General Medical Council of the UK uses simulated procedures, with cadaveric models, to inform performance assessments to support fitness-to-practice decisions [45]. At end-of-training certification level, studies to date suggest that SBA assesses a different construct to knowledge-based assessments and may therefore add validity to the certification process [18, 46]; de Montbrun et al. observed only a low positive correlation (r = 0.25) between scores awarded to trainees in a simulation-based certification exam and oral examination scores [18]. Despite published validity evidence for such assessments according to modern concepts of construct validity, the acceptability of such assessments remains a barrier to their widespread implementation. Stakeholders report lack of evidence and financial considerations as factors contributing to lack of SBA implementation [47].

These concerns, particularly with respect to cost, are not unfounded. The estimated costs of the reported assessment approached €13,000. This assessment took place in a well-equipped national training center with purpose-designed facilities and trained personnel. The implementation of such assessments across programs and local contexts will therefore likely vary. Furthermore, studies have yet to determine the appropriate role and weighting of simulation-based assessments in the context of programmatic assessment. Potential drawbacks of SBA when compared to workplace-based assessment include insufficient model fidelity, difficulty in simulating case complexity or variation, and further challenges associated with conducting longitudinal, repeated assessments. Finally, the acceptability of such assessments to key stakeholders in surgical education should be further explored by future studies prior to the implementation of any high-stakes assessment.

Limitations

This study is limited by its small sample size. In particular, pass rates calculated by borderline regression methodology are limited due to low numbers of ‘borderline’ performance scores, particularly in some individual stations. Initial power calculations were derived using published data on the OSATS tool, with limited published data available on mean PBA scores across procedures. The ultimate scoring tool used an equally weighted OSATS and PBA tool. Derived procedures, assessment tools, and competency expectations may differ across jurisdictions, training stages, and local contexts. For example, it may be inappropriate to assess the competence of breast surgery trainees to consultant-ready standard in colorectal procedures, such as ileostomy reversal or fistula-in-ano surgery. During standard setting, examiners were instructed to assess candidates to the level of a day-1 general surgery consultant regardless of sub-specialty interest. In future iterations it may be necessary to outline competency expectations for each procedure in more detail, and in particular to emphasize the need to assess to the minimum standard of competence required for all trainees regardless of intended or declared sub-specialty interest. The somewhat unique, highly centralized, nationally delivered, and relatively well-resourced simulation-based training curriculum in Ireland may mean that further implementation challenges would be encountered by other jurisdictions. A further potential limitation is the use of a compensatory scoring method over a non-compensatory method. Given that trainees at the end of phase 2 are expected to display competent performance in a series of core general and emergency procedures, it may be more appropriate to design an assessment that ensures a baseline procedure-specific competence in all included procedural stations.

Conclusion

This study reports validity evidence for a novel simulation-based assessment of operative competence, designed for assessment of Irish higher specialist trainees in general surgery. Further larger-scale validation studies, along with studies exploring the role of such assessments in a longitudinal training and evaluation program, will be required prior to the implementation of SBA in general surgery training.

Data availability

Data are available upon request.

References

Hurreiz H (2019) The evolution of surgical training in the UK. Adv Med Educ Pract 10:163–168

Brown E, Choi J, Sairi T (2020) Resident involvement in plastic surgery: divergence of patient expectations and experiences with surgeon’s attitudes and actions. J Surg Educ 77:291–299

Aggarwal R, Darzi A (2006) Training in the operating theatre: is it safe? Thorax 61:278–279

Elfenbein DM (2016) Confidence crisis among general surgery residents: a systematic review and qualitative discourse analysis. JAMA Surg 151:1166–1175

Poudel S, Hirano S, Kurashima Y, Stefanidis D, Akiyama H, Eguchi S, Fukui T, Hagiwara M, Hashimoto D, Hida K, Izaki T, Iwase H, Kawamoto S, Otomo Y, Nagai E, Saito M, Takami H, Takeda Y, Toi M, Yamaue H, Yoshida M, Yoshida S, Kodera Y et al (2020) Are graduating residents sufficiently competent? Results of a national gap analysis survey of program directors and graduating residents in Japan. Surg Today 50:995–1001

Vu JV, George BC, Clark M, Rivard SJ, Regenbogen SE, Kwakye G (2021) Readiness of graduating general surgery residents to perform colorectal procedures. J Surg Educ. https://doi.org/10.1016/j.jsurg.2020.12.015

Harris KA, Nousiainen MT, Reznick R (2020) Competency-based resident education: the Canadian perspective. Surgery 167:681–684

(2014) The general surgery milestone project. J Grad Med Educ 6:320–328

James HK, Gregory RJH (2021) The dawn of a new competency-based training era. Bone Joint Open 2:181–190

Schijven MP, Bemelman WA (2011) Problems and pitfalls in modern competency-based laparoscopic training. Surg Endosc 25:2159–2163

Cook T, Lund J (2021) General surgery curriculum—the Intercollegiate Surgical Curriculum Programme. https://www.iscp.ac.uk/media/1103/general-surgery-curriculum-aug-2021-approved-oct-20v3.pdf

Marriott J, Purdie H, Crossley J, Beard JD (2011) Evaluation of procedure-based assessment for assessing trainees’ skills in the operating theatre. Br J Surg 98:450–457

Toale C, Morris M, Kavanagh DO (2022) Assessing operative skill in the competency-based education era: lessons from the UK and Ireland. Ann Surg 275(4):e615–e625. https://doi.org/10.1097/SLA.0000000000005242

Agha RA, Fowler AJ (2015) The role and validity of surgical simulation. Int Surg 100:350–357

Milburn JA, Khera G, Hornby ST, Malone PSC, Fitzgerald JEF (2012) Introduction, availability and role of simulation in surgical education and training: review of current evidence and recommendations from the association of surgeons in training. Int J Surg 10:393–398

de Montbrun SL, Roberts PL, Lowry AC, Ault GT, Burnstein MJ, Cataldo PA, Dozois EJ, Dunn GD, Fleshman J, Isenberg GA, Mahmoud NN, Reznick RK, Satterthwaite L, Schoetz D Jr, Trudel JL, Weiss EG, Wexner SD, MacRae H (2013) A novel approach to assessing technical competence of colorectal surgery residents: the development and evaluation of the Colorectal Objective Structured Assessment of Technical Skill (COSATS). Ann Surg 258:1001–1006

Halwani Y, Sachdeva AK, Satterthwaite L, de Montbrun S (2019) Development and evaluation of the General Surgery Objective Structured Assessment of Technical Skill (GOSATS). Br J Surg 106:1617–1622

de Montbrun S, Roberts PL, Satterthwaite L, MacRae H (2016) Implementing and evaluating a national certification technical skills examination: the colorectal objective structured assessment of technical skill. Ann Surg 264:1–6

American Educational Research Association, American Psychological Association, National Council on Measurement in Education (1999) Standards for educational and psychological testing

Cook DA, Hatala R (2016) Validation of educational assessments: a primer for simulation and beyond. Adv Simul 1:31

Toale C, Morris M, Kavanagh D (2021) Perceptions and experiences of simulation-based assessment of technical skill in surgery: a scoping review. Am J Surg 222:723–730

Toale C, Morris M, Kavanagh DO (2022) Perspectives on simulation-based assessment of operative skill in surgical training. Med Teach. https://doi.org/10.1080/0142159X.2022.2134001

Martin JA, Regehr G, Reznick R, MacRae H, Murnaghan J, Hutchison C, Brown M (1997) Objective structured assessment of technical skill (OSATS) for surgical residents. Br J Surg 84:273–278

Nayahangan LJ, Clementsen PF, Paltved C, Lindorff-Larsen KG, Nielsen BU, Konge L (2016) Identifying technical procedures in pulmonary medicine that should be integrated in a simulation-based curriculum: a national general needs assessment. Respiration 91:517–522

Toale C, Morris M, Konge L, Nayahangan LJ, Roche A, Heskin L, Kavanagh DO (2024) Generating a prioritized list of operative procedures for simulation-based assessment of general surgery trainees through consensus. Ann Surg 279:900–905

Mayne A, Wilson L, Kennedy N (2020) The usefulness of procedure-based assessments in postgraduate surgical training within the intercollegiate surgical curriculum programme; a scoping review. J Surg Educ 77:1227–1235

Moorthy K, Munz Y, Sarker SK, Darzi A (2003) Objective assessment of technical skills in surgery. BMJ 327:1032–1037

Vaidya A, Aydin A, Ridgley J, Raison N, Dasgupta P, Ahmed K (2020) Current status of technical skills assessment tools in surgery: a systematic review. J Surg Res 246:342–378

de Blacam C, O’Keeffe DA, Nugent E, Doherty E, Traynor O (2012) Are residents accurate in their assessments of their own surgical skills? Am J Surg 204:724–731

Schijven MP, Reznick RK, ten Cate OTJ, Grantcharov TP, Regehr G, Satterthwaite L, Thijssen AS, MacRae HM (2010) Transatlantic comparison of the competence of surgeons at the start of their professional career. Br J Surg 97:443–449

Dwyer T, Wright S, Kulasegaram KM, Theodoropoulos J, Chahal J, Wasserstein D, Ringsted C, Hodges B, Ogilvie-Harris D (2016) How to set the bar in competency-based medical education: standard setting after an Objective Structured Clinical Examination (OSCE). BMC Med Educ 16:1

Toale C, Morris M, Kavanagh DO (2022) Training and assessment using the LapSim laparoscopic simulator: a scoping review of validity evidence. Surg Endosc. https://doi.org/10.1007/s00464-022-09593-0

Andersen SAW, Nayahangan LJ, Park YS, Konge L (2021) Use of generalizability theory for exploring reliability of and sources of variance in assessment of technical skills: a systematic review and meta-analysis. Acad Med 96:1609–1619

Yudkowsky R, Park YS, Downing SM (2019) Assessment in health professions education. Routledge

Kramer A, Muijtjens A, Jansen K, Düsman H, Tan L, van der Vleuten C (2003) Comparison of a rational and an empirical standard setting procedure for an OSCE: objective structured clinical examinations. Med Educ 37:132–139

Yudkowsky R, Tumuluru S, Casey P, Herlich N, Ledonne C (2014) A patient safety approach to setting pass/fail standards for basic procedural skills checklists. Simul Healthc 9:277–282

Barsuk JH, Cohen ER, Wayne DB, McGaghie WC, Yudkowsky R (2018) A comparison of approaches for mastery learning standard setting. Acad Med 93:1079–1084

Sousa J, Mansilha A (2019) European panorama on vascular surgery: results from 5 years of FEBVS examinations. Angiologia e Cirurgia Vascular 15:171–175

Stefanidis D, Arora S, Parrack DM, Hamad GG, Capella J, Grantcharov T, Urbach DR, Scott DJ, Jones DB (2012) Research priorities in surgical simulation for the 21st century. Am J Surg 203:49–53

Seaman SJ, Jorgensen EM, Tramontano AC, Jones DB, Mendiola ML, Ricciotti HA, Hur HC (2021) Use of fundamentals of laparoscopic surgery testing to assess gynecologic surgeons: a retrospective cohort study of 10-years experience. J Minim Invasive Gynecol 28:794–800

Bilgic E, Kaneva P, Okrainec A, Ritter EM, Schwaitzberg SD, Vassiliou MC (2018) Trends in the Fundamentals of Laparoscopic Surgery® (FLS) certification exam over the past 9 years. Surg Endosc 32:2101–2105

Rooney DM, Brissman IC, Gauger PG (2015) Ongoing evaluation of video-based assessment of proctors’ scoring of the fundamentals of laparoscopic surgery manual skills examination. J Surg Educ 72:471–476

Ritter EM, Brissman IC (2016) Systematic development of a proctor certification examination for the Fundamentals of Laparoscopic Surgery testing program. Am J Surg 211:458–463

Xeroulis G, Dubrowski A, Leslie K (2009) Simulation in laparoscopic surgery: a concurrent validity study for FLS. Surg Endosc 23:161–165

General Medical Council UK (2024) Performance assessments as part of an investigation or hearing. GMC-UK. www.gmc-uk.org

Sousa J, Mansilha A (2021) European panorama on vascular surgery: results from five years of FEBVS examinations. Eur J Vasc Endovasc Surg 61:S41–S42

Louridas M, Szasz P, de Montbrun S, Harris KA, Grantcharov TP (2016) International assessment practices along the continuum of surgical training. Am J Surg 212:354–360

Funding

Open Access funding provided by the IReL Consortium. This work is supported by the Royal College of Surgeons/Hermitage Medical Clinic Strategic Academic Recruitment (StAR MD) program.

Ethics declarations

Disclosures

Conor Toale received grant funding, as part of undertaking a higher degree, from the Royal College of Surgeons in Ireland/Hermitage Medical Clinic Strategic Academic Recruitment (StAR MD) program. Marie Morris, Adam Roche, Miroslav Voborsky, Oscar Traynor, and Dara Kavanagh have no conflicts of interest to disclose.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Data from this study were presented at the Annual Congress of the Association of Surgeons of Great Britain and Ireland, 17–19 May 2023, Harrogate, UK.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Toale, C., Morris, M., Roche, A. et al. Development and validation of a simulation-based assessment of operative competence for higher specialist trainees in general surgery. Surg Endosc (2024). https://doi.org/10.1007/s00464-024-11024-1

DOI: https://doi.org/10.1007/s00464-024-11024-1