Abstract

This qualitative study explored factors influencing the scoring decisions of raters in a simulation-based assessment of operative competence, by analysing feedback provided to trainees who received ‘borderline’ or ‘not competent’ global scores across stations in an Objective Structured Clinical Examination (OSCE). Directed qualitative content analysis was conducted on feedback provided to trainees during a novel simulation-based assessment. Initial codes were derived from the domains of the Objective Structured Assessment of Technical Skills (OSATS) tool. Further quantitative analysis compared the frequency of codes in feedback provided to ‘junior’ and ‘senior’ general surgery trainees. Thirteen trainees undertook the eight-station OSCE and were observed by ten trained assessors. Of these trainees, seven were ‘senior’ trainees in their last 4 years of surgical training, while six were ‘junior’ trainees in their first 4 years. A total of 130 individual observations were recorded. Written feedback was available for 44 of the 51 observations scored as ‘borderline’ or ‘not competent’. On content analysis, ‘knowledge of the specific procedure’ was the most commonly cited reason for failure, while ‘judgement’ and ‘the model as a confounder’ were two newly generated categories found to contribute to scoring decisions. The OSATS tool can capture a majority of the reasons cited for ‘borderline’ or ‘not competent’ performance. Adequately capturing deficiencies in ‘judgement’ may require simultaneous assessment of non-technical skills. It is imperative that assessors and candidates are adequately familiarised with models prior to assessment, to limit the potential impact of model unfamiliarity as a confounder.

Introduction

Competency-based education (CBE) principles underpin the development of outcomes-based, nominally time-independent training programmes across jurisdictions [1, 2]. CBE is focussed on the certification of “graduate outcome abilities”, de-emphasising time-based training in favour of “greater accountability, flexibility, and learner-centeredness” [3]. Valid and reliable assessments are of great importance to any such programme. Given the acknowledged challenges of using more established assessment modalities, such as operative case logs or workplace-based assessments, to determine learner competence, simulation has emerged as a potentially more standardisable and objective assessment modality [4, 5]. The success of such simulation-based assessments (SBA), however, depends on the validity of the models, tools, and assessment framework employed.

Within Messick’s unified validity framework, ‘response process validity’ refers to the fit between the construct and the detailed nature of the performance […] actually engaged in [6]. Measures of response process validity therefore indicate the extent to which the documented rating reflects the observed performance, and are often explored through analysis of raters’ thoughts or actions during the assessment (for example, using a ‘think-aloud’ protocol) [7]. How raters arrive at a pass or fail decision in a high-stakes competency assessment also bears on the ‘content validity’ of that assessment (i.e., “the relationship between the content of a test and the construct it is intended to measure” [6]) and on its consequences, because these global ratings are often used in standard setting to determine the pass/fail standard.

In this qualitative study, we explore the factors that influence the scoring decisions of raters in a novel simulation-based assessment of operative competence, by analysing feedback provided to trainees receiving ‘borderline’ or ‘not competent’ global rating scores across stations in an eight-station OSCE (Objective Structured Clinical Examination). Through qualitative content analysis, we explore the extent to which the assessment captures the competencies expected of surgical trainees. This study seeks to provide insights into the challenges inherent in assessing operative competence in surgical trainees using simulation-based assessments, and to identify areas for improvement in assessment tools and models.

Methods

Methodological orientation

Qualitative content analysis was conducted using a directed content analysis framework. Findings are reported in accordance with the Consolidated criteria for Reporting Qualitative research (COREQ) guidelines (2007) [8]. Ethical approval was granted by the ethics committee of the University of Medicine and Health Sciences at the Royal College of Surgeons in Ireland.

Participants

Volunteer surgical trainees in Ireland undertook a feasibility study exploring a novel formative simulation-based assessment of operative competence. This assessment was designed to assess operative competence at the end of ‘phase 2’ of specialist training in Ireland and the United Kingdom, i.e. the point at which competent performance (to the level of a ‘day-1’ consultant) is expected across a range of core general and emergency surgery procedures, before the development of a sub-specialty interest. It is expected that competent performance will be observed for trainees in their sixth year of dedicated post-graduate surgical training (ST6). Participants and assessors were approached via email, through purposive sampling. Trained assessors were consultant surgeons responsible for the training and education of surgical trainees at academic teaching hospitals. All assessors underwent rater training and standard setting using a modified Angoff approach prior to conducting the assessment [9].
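The details of the modified Angoff procedure used here are not reported. As a minimal sketch, assuming the common formulation in which each judge estimates, for every item, the probability that a borderline (minimally competent) candidate would succeed, the cut score is the mean across judges of each judge's summed item estimates. The function name `angoff_cut_score` and the ratings below are hypothetical and are not data from this study.

```python
from statistics import mean


def angoff_cut_score(ratings: list[list[float]]) -> float:
    """Cut score under an Angoff-style standard-setting procedure (sketch).

    ratings[j][i] is judge j's estimate (0 to 1) that a borderline
    candidate succeeds on item i. This input layout is an assumption
    made for illustration.
    """
    if not ratings:
        raise ValueError("at least one judge is required")
    n_items = len(ratings[0])
    for judge in ratings:
        if len(judge) != n_items:
            raise ValueError("every judge must rate the same items")
        if any(not 0.0 <= p <= 1.0 for p in judge):
            raise ValueError("estimates must lie between 0 and 1")
    # Each judge's expected score for a borderline candidate is the sum of
    # their item estimates; the panel cut score is the mean of those sums.
    return mean(sum(judge) for judge in ratings)


# Hypothetical panel: three judges rating a four-item checklist
ratings = [
    [0.6, 0.7, 0.5, 0.8],
    [0.5, 0.8, 0.6, 0.7],
    [0.7, 0.6, 0.5, 0.9],
]
cut = angoff_cut_score(ratings)
print(f"cut score: {cut:.2f} of {len(ratings[0])} items")  # cut score: 2.63 of 4 items
```

In practice, panels often discuss and revise their estimates over several rounds, and the modification applied in this study may differ from this simple averaging.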

Data collection

Trainees were directly observed across eight task-based and procedural stations, each of 15 min duration (ileostomy reversal, pilonidal sinus excision, laparoscopic appendicectomy, emergency laparotomy (blunt liver trauma), laparoscopic cholecystectomy, laparoscopic right hemi-colectomy, fistula-in-ano, and epigastric hernia repair). These procedures were chosen based on the results of a national modified Delphi consensus of expert surgeons (unpublished data). Open surgical skills and procedures were simulated using bio-hybrid models, while the three laparoscopic procedures were simulated using the LapSim™ simulator (Surgical Science, Sweden). The LapSim is a portable virtual reality laparoscopic simulator with published validity evidence across general surgery, gynaecology and thoracic surgery training applications [10]. As well as simulating basic laparoscopic skills, the LapSim generates procedural simulations. Users interact with left- and right-handed ‘instruments’ connected to a screen-based interface, and haptic feedback is simulated. The three LapSim stations were laparoscopic appendicectomy, laparoscopic cholecystectomy and laparoscopic right hemi-colectomy (vessel ligation).

Two stations (ileostomy reversal and emergency laparotomy) were scored by a second independent rater. Performance was scored using a modified Procedure-Based Assessment (PBA) tool, the Objective Structured Assessment of Technical Skill (OSATS), and a global rating of ‘not competent’, ‘borderline’ or ‘competent’. An example score sheet is included in the supplementary data (Appendix 1). The assessment took place in the National Surgical Training Centre, Royal College of Surgeons in Ireland. Data were collected using QPERCOM assessment software (QPERCOM, Galway, Ireland) to reduce the data-entry errors associated with paper recording. Performance across stations was recorded using the LearningSpace platform (CAE Healthcare) for quality assurance. A ‘borderline’ or ‘not competent’ global rating prompted the assessor to provide written feedback to the assessed trainee.

Data analysis

The OSATS tool is the most commonly used technical skill assessment tool, and has accrued substantial validity evidence across a wide range of procedural, sub-specialty, workplace-based and simulation-based settings [11]. Given the volume of existing theory and prior research regarding technical skill assessment, a directed content analysis approach, in line with that of Hsieh and Shannon [12], was deemed the most appropriate methodological framework. Initial categories were therefore derived from the seven domains of the OSATS tool, namely: (1) respect for tissues, (2) instrument handling, (3) knowledge of instruments, (4) flow of operation, (5) use of assistants, (6) knowledge of specific procedure, and (7) time and motion. Written feedback, recorded using QPERCOM, was initially analysed by a single researcher (CT). Codes, sub-categories and categories were subsequently reviewed and confirmed by the research team (MM, DOK). Any text that could not be categorised according to these domains was given a new code. Further quantitative content analysis was conducted on codes and categories to determine the relative frequency of categories among ‘junior’ (ST2-ST4) and ‘senior’ (ST5-ST8) trainees. Statistical comparisons between groups were made using Fisher’s exact tests (SPSS version 23, IBM Corp., Armonk, NY, USA).
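Fisher's exact test suits small samples like this one because it computes the p-value exactly from the hypergeometric distribution rather than relying on a large-sample approximation. The sketch below is a standard-library Python illustration of the two-sided test for a single 2×2 table, assuming each category was compared as cited/not cited between junior and senior trainees; the study does not report its table layout, and SPSS may apply an extension (such as the Freeman-Halton test) to larger tables. The function name and counts are hypothetical; `scipy.stats.fisher_exact` offers an equivalent 2×2 test.

```python
from math import comb


def fisher_exact_2x2(a: int, b: int, c: int, d: int) -> float:
    """Two-sided Fisher's exact test p-value for the 2x2 table

        [[a, b],    e.g. junior: category cited, not cited
         [c, d]]         senior: category cited, not cited
    """
    row1 = a + b            # total in the first row
    col1 = a + c            # total in the first column
    n = a + b + c + d
    denom = comb(n, col1)

    def table_prob(x: int) -> float:
        # Hypergeometric probability that the top-left cell equals x,
        # with all row and column totals held fixed.
        return comb(row1, x) * comb(n - row1, col1 - x) / denom

    p_observed = table_prob(a)
    lo = max(0, col1 - (n - row1))
    hi = min(row1, col1)
    # Two-sided p-value: sum over every table with the same margins that is
    # no more probable than the observed one (tolerance guards float ties).
    p_value = 0.0
    for x in range(lo, hi + 1):
        p = table_prob(x)
        if p <= p_observed * (1 + 1e-7):
            p_value += p
    return min(1.0, p_value)


# Hypothetical counts, not taken from this study
print(round(fisher_exact_2x2(3, 1, 1, 3), 4))  # 0.4857
```

A p-value above the chosen significance level (commonly 0.05) means that no difference was detected; with counts this small, it is not evidence that the groups are the same.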

Reflexivity

As qualitative content analysis requires the researchers to make judgements when assigning ‘units of meaning’ to categories, a brief statement regarding reflexivity follows. CT (first author) completed Core Surgical Training (CST) at RCSI (Royal College of Surgeons in Ireland). Annual simulation-based assessments are conducted as part of this programme and are used to inform decisions regarding progression to higher specialist training. MM is a senior lecturer within RCSI with research expertise in assessment in surgical education. DOK is Head of Surgical Research within RCSI and a consultant general/colorectal surgeon. The research team were familiar with the OSATS tool and its domains and definitions.

Results

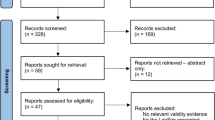

Thirteen general surgery trainees (7 ‘senior’ trainees, ST5-ST8, and 6 ‘junior’ trainees, ST2-ST4) were observed over eight OSCE stations, each scored by a trained surgeon examiner. Two stations were scored by a second independent rater. Of the 130 scores awarded, 51 were marked as either ‘borderline’ or ‘not competent’, and of these, 44 had associated written feedback. Categories and example quotes are outlined in Table 1.
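The total of 130 scores is consistent with the design described in the Methods, assuming that every trainee completed all eight stations and received a second rating at both double-scored stations:

```latex
\begin{aligned}
N_{\text{scores}} &= \underbrace{13 \times 8}_{\text{primary ratings}} + \underbrace{13 \times 2}_{\text{second ratings}} = 104 + 26 = 130,\\
\frac{51}{130} &\approx 39.2\%\ \text{rated borderline or not competent},\\
\frac{44}{51} &\approx 86.3\%\ \text{of those with written feedback}.
\end{aligned}
```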

From the 44 analysed texts, 65 units of meaning (words, phrases or sentences) were mapped to the categories outlined in Table 1. Of these, 55 (84.62%) could be mapped to the seven domains of the OSATS tool. Two new categories were generated on qualitative content analysis: ‘judgement’ and ‘the model as a confounder’. The relative frequencies across ‘junior’ and ‘senior’ groups are outlined in Table 2; the reasons cited for competency decisions did not differ significantly between the two groups.
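Expressed as a worked proportion, the share of units of meaning that mapped to an OSATS domain is:

```latex
\frac{55}{65} = \frac{11}{13} \approx 0.8462 = 84.62\%,
\qquad 65 - 55 = 10\ \text{units fell outside the seven OSATS domains}.
```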

‘Knowledge of the specific procedure’ was the most frequently cited reason for a ‘borderline’ or ‘not competent’ decision on the global rating. Deficits in both procedural knowledge (e.g. “unfamiliar with steps”) and anatomical knowledge (e.g. “unable to identify the layers”) were identified across groups. Assessors also cited ‘judgement’, or decision making, as contributing to pass/fail decisions; this is not adequately captured by the OSATS tool. Interestingly, all three observations regarding the candidate’s judgement were made during the ‘emergency laparotomy’ station, which required the trainee to demonstrate how to pack an injured liver and how to perform a Pringle manoeuvre to manage bleeding. Deficits in judgement referred to two candidates who sought to proceed to hepatectomy, and one candidate whom the assessors believed did not recognise the need for escalation to specialist (hepatobiliary) care in case of failed initial measures: “wanted to try to bring back to theatre again in local hospital”.

The second new category generated from content analysis was the ‘model as a confounder’ in making pass/fail decisions. This contributed to seven of the decisions regarding a trainee’s level of competency, as assessors could not be sure, based on the trainee’s performance on the model, whether perceived deficits were due to unfamiliarity with the model, the tissue handling properties of the model, or true deficiencies in competence. The ‘model as a confounder’ was highlighted by three different independent assessors across three different stations; notably, these were the three stations that used the LapSim (Surgical Science, Sweden) laparoscopic simulator to emulate laparoscopic appendicectomy, laparoscopic cholecystectomy and laparoscopic right hemi-colectomy procedures. In part, this reflected a view that “tissue properties were not adequately simulated” [assessor, laparoscopic appendicectomy station] to allow a summary judgement regarding competence. In the laparoscopic cholecystectomy station, “Calot’s triangle [was] not a realistic simulation”, though the assessor reported that the “dissection from the liver”, in contrast, “tests the trainee’s ability”. This assessor also noted that “the forces required to dissect are far greater than could be safely applied in real life”, suggesting that adequate performance on the model would not necessarily reflect safe performance in the operating theatre. Similarly, an assessor observing the laparoscopic right hemi-colectomy station identified that “the main difficulty [encountered by the trainee] was the tissue behaviour in the model”.

Beyond the fidelity of the model itself, another contributor to global pass/fail decisions was the perceived familiarity of the trainee with the model. One assessor [right hemi-colectomy station] noted that the assessed trainee seemed “familiar with the operation but not the simulator”. Another assessor [laparoscopic cholecystectomy station] identified improved performance as trainees moved from one LapSim station to the next, remarking that: “this is the third [LapSim] simulated activity that the trainee has performed today, and the benefit of experience on the platform is evident”. The same assessor also raised an interesting view with respect to the use of virtual reality models to assess real-world competence, remarking that “the scoring system here can be used to assess someone on the simulator but not on the operation of cholecystectomy”.

Discussion

This study used qualitative and quantitative directed content analysis to identify contributors to global competent/not competent decisions made by trained surgeon assessors. The OSATS tool captures the majority of reasons by which assessors come to pass–fail decisions for trainees, with 55 of the 65 units of meaning (84.62%) identified in the written feedback mapping to a domain of the OSATS tool. While junior trainees failed more stations than their more senior colleagues, no statistically significant difference was observed on quantitative analysis in the reasons identified for borderline/not competent decisions, although for several categories the sample sizes were likely too small to infer any meaningful differences.

Deficits in ‘judgement’ contributed to three of the 44 borderline/not competent decisions, a facet not adequately assessed by the OSATS tool alone. We have previously found that key stakeholders consider ‘higher order’ skills, such as intra-operative judgement or decision making, to be key elements of competence, particularly when assessing senior trainees [13]. Interestingly, all three judgement deficits were identified by assessors observing the ‘emergency laparotomy’ station, which simulated liver trauma and required candidates to pack the injured liver and perform a Pringle manoeuvre to stop bleeding. We hypothesise that this more difficult scenario, which trainees are less likely to have encountered in practice, requires candidates to verbalise and act upon intra-operative planning decisions. Such ‘emergency’ scenarios may be useful assessments of both non-technical and technical skill. The Non-Technical Skills for Surgeons (NOTSS) assessment tool contains a domain on ‘decision making’, along with ‘situation awareness’, ‘communication and teamwork’ and ‘leadership’ [14]. This tool may strengthen the validity and reliability of future simulation-based competence assessments, though it may require more representative mock operating theatres and high-fidelity inter-disciplinary assessments [15] in order to assess technical and non-technical skill concurrently.

The importance of model fidelity and model familiarity to the assessment of technical skills, particularly in high-stakes assessments of operative competence, cannot be overstated. Studies demonstrating the validity of the LapSim platform as a training and assessment modality often do so within a ‘train to proficiency’ paradigm [16,17,18], in which trainees reach pre-defined competency metrics on the LapSim prior to assessment. Within this framework, transfer of skill from the simulated environment to the operating theatre has been demonstrated [18]. There is, however, an inherent learning curve with virtual reality platforms. Within the constraints of simulation-based assessment, trainees need to learn technical aspects of the model (e.g. how to change instruments or the camera view) that by necessity will not reflect the process of doing so in the operating theatre. Therefore, while skills may transfer from the simulated environment to the operating room, it does not necessarily follow that the reverse is true: competent performance in the operating room may not be captured by the simulator, because of new difficulties introduced by the simulator’s limitations. The validity of the assessment as a whole therefore depends on whether the models used have sufficient physical, visual and psychological fidelity to reflect real-world competence. Furthermore, trainee performance can vary across simulated platforms. Some studies have concluded that manual dexterity or technical skill may be better taught using physical models [19], others have demonstrated no significant difference in training outcomes across platforms [20], and a 2016 meta-analysis (Alaker et al.) concluded that virtual reality training was significantly more effective than training using a box trainer [21]. The most suitable models for assessment of operative competence, however, remain to be established.

In a time-limited examination format, where candidates may not be entirely familiar with the platform, it is understandable that such limitations would affect a candidate’s perceived competence in completing the simulated task or operation. This limitation of simulation-based assessment could be overcome by ensuring that candidates have sufficient time for model familiarisation before undertaking the assessment, though this has significant time and resource implications for high-stakes assessments. While this applies to high-stakes assessments such as the Fundamentals of Laparoscopic Surgery (FLS) qualification, it is a particularly important consideration for centralised end-of-training assessments that take place outside a simulation-based training programme [5, 22]. How this familiarisation can be feasibly conducted is an area for future research. Should candidates have a ‘warm-up’ session, and how would ‘familiarisation’ be sufficiently measured? How would the ‘familiarisation curve’ differ from, and be distinguished from, the ‘competency curve’? How would theories of spaced learning [23] inform the timing of these familiarisation sessions, to reduce skill attrition over time? Ultimately, should high-stakes assessments form part of a broader simulation-based train-to-proficiency curriculum for Higher Specialist trainees, and if so, how is this best delivered in the context of a national training programme?

If candidates are given sufficient time on simulators to achieve a degree of familiarity, then the extent to which the assessment tests real-world competence, rather than simulator-specific skill, depends on the visual, physical and psychological fidelity of the simulator to the real-world task. The comment from one assessor in this study that the assessment “can be used to assess someone on the simulator but not on the operation of cholecystectomy” reflects this difficulty, as does the same assessor’s observation that higher levels of performance on the LapSim could in part be attributable to candidates having undertaken two prior assessments of different skills on the LapSim. Future studies should investigate not only the transfer of skills acquired in the simulated environment to the operating room, but also the transfer of skills from the operating room to simulation-based assessments. The development of objective assessment tools, including those using advanced computational and artificial intelligence methods [24,25,26], may further improve the validity and reliability of simulation-based operative competence assessments in the near future.

Limitations

One limitation of this study is its relatively small sample size. Relative frequencies of codes were not significantly different across trainee groups, though this study is likely underpowered to identify smaller differences in distribution.
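To illustrate why a small sample limits the detection of differences, the sketch below estimates, by Monte Carlo simulation, the power of a two-sided Fisher's exact test at a 0.05 significance level. The group sizes, true proportions, number of simulations and function names are all hypothetical choices for illustration; they are not derived from this study's data.

```python
import random
from math import comb


def fisher_exact_2x2(a: int, b: int, c: int, d: int) -> float:
    """Two-sided Fisher's exact test p-value for [[a, b], [c, d]]."""
    row1, col1, n = a + b, a + c, a + b + c + d
    denom = comb(n, col1)

    def table_prob(x: int) -> float:
        # Hypergeometric probability of top-left cell x, margins fixed
        return comb(row1, x) * comb(n - row1, col1 - x) / denom

    p_observed = table_prob(a)
    lo, hi = max(0, col1 - (n - row1)), min(row1, col1)
    # Sum the probabilities of all tables no more likely than the observed one
    total = sum(p for p in (table_prob(x) for x in range(lo, hi + 1))
                if p <= p_observed * (1 + 1e-7))
    return min(1.0, total)


def simulated_power(n1: int, n2: int, p1: float, p2: float,
                    alpha: float = 0.05, sims: int = 2000,
                    seed: int = 1) -> float:
    """Fraction of simulated studies in which Fisher's test gives p < alpha.

    Each simulated study draws n1 units from a group in which a category is
    cited with probability p1, and n2 units from a group with probability p2.
    """
    rng = random.Random(seed)
    rejections = 0
    for _ in range(sims):
        x1 = sum(rng.random() < p1 for _ in range(n1))
        x2 = sum(rng.random() < p2 for _ in range(n2))
        if fisher_exact_2x2(x1, n1 - x1, x2, n2 - x2) < alpha:
            rejections += 1
    return rejections / sims


# Hypothetical scenario: ~30 units per group, category cited 20% vs 40%
print(f"estimated power: {simulated_power(30, 35, 0.20, 0.40):.2f}")
```

In this hypothetical scenario, a twofold difference in how often a category is cited would be detected well under the conventional 80% of the time, which is consistent with the caution above.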

Conclusion

The OSATS tool can capture a large majority of the reasons cited for borderline or not competent performance in a simulation-based assessment. A small number of observations were classified as not competent due to perceived deficiencies in intra-operative judgement. To capture this beyond a gestalt global rating, a simultaneous assessment of non-technical skills, for example using the NOTSS tool, could be considered. Prior to assessment, it is imperative that assessors and candidates are familiar with the models being used, to mitigate the impact of the model as a confounder in examiner scoring. Furthermore, the model should be of sufficient fidelity to reflect real-world performance. This is particularly important if the model is to be used for trainee assessment outside a ‘train to simulation proficiency’ curriculum.

References

1. The general surgery milestone project. J Grad Med Educ. 2014;6(1 Suppl 1):320–8. https://doi.org/10.4300/JGME-06-01s1-40.1.

2. Lund J. The new general surgical curriculum and ISCP. Surgery (Oxf). 2020;38(10):601–6. https://doi.org/10.1016/j.mpsur.2020.07.005.

3. Frank JR, Mungroo R, Ahmad Y, Wang M, De Rossi S, Horsley T. Toward a definition of competency-based education in medicine: a systematic review of published definitions. Med Teach. 2010;32(8):631–7. https://doi.org/10.3109/0142159X.2010.500898.

4. de Montbrun SL, et al. A novel approach to assessing technical competence of colorectal surgery residents: the development and evaluation of the colorectal objective structured assessment of technical skill (COSATS). Ann Surg. 2013;258(6):1001–6. https://doi.org/10.1097/SLA.0b013e31829b32b8.

5. de Montbrun S, Roberts PL, Satterthwaite L, MacRae H. Implementing and evaluating a national certification technical skills examination: the colorectal objective structured assessment of technical skill. Ann Surg. 2016;264(1):1–6. https://doi.org/10.1097/sla.0000000000001620.

6. American Educational Research Association. Validity. In: Standards for educational and psychological testing. 2014. p. 11–31.

7. Cook DA, Hatala R. Validation of educational assessments: a primer for simulation and beyond. Adv Simul. 2016;1(1):31. https://doi.org/10.1186/s41077-016-0033-y.

8. Tong A, Sainsbury P, Craig J. Consolidated criteria for reporting qualitative research (COREQ): a 32-item checklist for interviews and focus groups. Int J Qual Health Care. 2007;19(6):349–57. https://doi.org/10.1093/intqhc/mzm042.

9. George S, Haque MS, Oyebode F. Standard setting: comparison of two methods. BMC Med Educ. 2006;6(1):46. https://doi.org/10.1186/1472-6920-6-46.

10. Toale C, Morris M, Kavanagh DO. Training and assessment using the LapSim laparoscopic simulator: a scoping review of validity evidence. Surg Endosc. 2023;37(3):1658–71. https://doi.org/10.1007/s00464-022-09593-0.

11. Vaidya A, Aydin A, Ridgley J, Raison N, Dasgupta P, Ahmed K. Current status of technical skills assessment tools in surgery: a systematic review. J Surg Res. 2020. https://doi.org/10.1016/j.jss.2019.09.006.

12. Hsieh HF, Shannon SE. Three approaches to qualitative content analysis. Qual Health Res. 2005;15(9):1277–88. https://doi.org/10.1177/1049732305276687.

13. Toale C, Morris M, Kavanagh DO. Perspectives on simulation-based assessment of operative skill in surgical training. Med Teach. 2023. https://doi.org/10.1080/0142159X.2022.2134001.

14. Jung JJ, Borkhoff CM, Jüni P, Grantcharov TP. Non-technical skills for surgeons (NOTSS): critical appraisal of its measurement properties. Am J Surg. 2018;216(5):990–7. https://doi.org/10.1016/j.amjsurg.2018.02.021.

15. Owei L, et al. In situ operating room-based simulation: a review. J Surg Educ. 2017. https://doi.org/10.1016/j.jsurg.2017.01.001.

16. Palter VN, Graafland M, Schijven MP, Grantcharov TP. Designing a proficiency-based, content validated virtual reality curriculum for laparoscopic colorectal surgery: a Delphi approach. Surgery. 2012;151(3):391–7. https://doi.org/10.1016/j.surg.2011.08.005.

17. Gauger PG, et al. Laparoscopic simulation training with proficiency targets improves practice and performance of novice surgeons. Am J Surg. 2010;199(1):72–80. https://doi.org/10.1016/j.amjsurg.2009.07.034.

18. Ahlberg G, et al. Proficiency-based virtual reality training significantly reduces the error rate for residents during their first 10 laparoscopic cholecystectomies. Am J Surg. 2007;193(6):797–804. https://doi.org/10.1016/j.amjsurg.2006.06.050.

19. Breimer GE, et al. Simulation-based education for endoscopic third ventriculostomy: a comparison between virtual and physical training models. Oper Neurosurg (Hagerstown). 2017;13(1):89–95. https://doi.org/10.1227/neu.0000000000001317.

20. Whitehurst SV, et al. Comparison of two simulation systems to support robotic-assisted surgical training: a pilot study (swine model). J Minim Invasive Gynecol. 2015. https://doi.org/10.1016/j.jmig.2014.12.160.

21. Alaker M, Wynn GR, Arulampalam T. Virtual reality training in laparoscopic surgery: a systematic review and meta-analysis. Int J Surg. 2016. https://doi.org/10.1016/j.ijsu.2016.03.034.

22. Sousa J, Mansilha A. European panorama on vascular surgery: results from 5 years of FEBVS examinations. Angiologia e Cirurgia Vascular. 2019. https://doi.org/10.1016/j.ejvs.2021.01.034.

23. Versteeg M, Hendriks RA, Thomas A, Ommering BWC, Steendijk P. Conceptualising spaced learning in health professions education: a scoping review. Med Educ. 2020;54(3):205–16. https://doi.org/10.1111/medu.14025.

24. Lam K, et al. Machine learning for technical skill assessment in surgery: a systematic review. NPJ Digit Med. 2022. https://doi.org/10.1038/s41746-022-00566-0.

25. Lavanchy JL, et al. Automation of surgical skill assessment using a three-stage machine learning algorithm. Sci Rep. 2021. https://doi.org/10.1038/s41598-021-84295-6.

26. Soangra R, Sivakumar R, Anirudh ER, Reddy YS, John EB. Evaluation of surgical skill using machine learning with optimal wearable sensor locations. PLoS ONE. 2022. https://doi.org/10.1371/journal.pone.0267936.

Funding

Open Access funding provided by the IReL Consortium. Royal College of Surgeons in Ireland/Hermitage Medical Clinic Strategic Academic Recruitment (StAR MD) programme

Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Toale, C., Morris, M. & Kavanagh, D.O. Why do residents fail simulation-based assessments of operative competence? A qualitative analysis. Global Surg Educ 2, 83 (2023). https://doi.org/10.1007/s44186-023-00161-1

DOI: https://doi.org/10.1007/s44186-023-00161-1