Abstract

The factor graph of an instance of a constraint satisfaction problem with n variables and m constraints is the bipartite graph between [m] and [n] describing which variable appears in which constraints. Thus, an instance of a CSP is completely determined by its factor graph and the list of predicates. We show optimal inapproximability of Max-3-LIN over non-Abelian groups (both in the perfect completeness case and in the imperfect completeness case), even when the factor graph is fixed. Previous reductions which proved similar optimal inapproximability results produced factor graphs that were dependent on the input instance. Along the way, we also show that these optimal hardness results hold even when we restrict the linear equations in the Max-3-LIN instances to the form \(x\cdot y\cdot z = g\), where x, y, z are the variables and g is a group element. We use representation theory and Fourier analysis over non-Abelian groups to analyze the reductions.

1 Introduction

Constraint Satisfaction Problems (CSPs), and especially k-LIN, are among the most fundamental optimization problems. An instance of a CSP consists of a set of n variables and a set of m local constraints, where each constraint involves a small number of variables. The goal is to decide whether there exists an assignment to the variables that satisfies all the constraints. 3-LIN is a special type of CSP in which each constraint is a linear equation in the variables it involves. More specifically, a 3-LIN instance over a (non-Abelian or Abelian) group G has constraints of the form \(a_{1} \cdot x_{1}\cdot a_{2} \cdot x_{2}\cdot a_{3} \cdot x_{3} = b\), where \(a_{1}, a_{2}, a_{3}, b\) are group elements and \(x_1, x_2, x_3\) are the variables. Sometimes inverses of the variables are also allowed in the equations.

For most CSPs, the decision version is \(\text {NP}\)-complete. Therefore, from the algorithmic point of view, one can relax the goal to finding an assignment that satisfies as many constraints as possible. An \(\alpha \)-approximation algorithm for a Max-CSP is an algorithm that always returns a solution that satisfies at least \(\alpha \cdot \text {OPT}\) many constraints, where \(\text {OPT}\) is the maximum number of constraints that can be satisfied by an assignment.

The famous PCP theorem [1, 2, 12] shows that certain Max-CSPs are hard to approximate within a factor \(c<1\). A seminal result of Håstad [14] gives optimal inapproximability results for many CSPs including Max-k-SAT, Max-k-LIN over Abelian groups, Set Splitting, etc. Once we understand the optimal worst-case complexity of a Max-CSP, it is interesting to understand how the complexity of the problem changes under certain restrictions on the instances. One example of such a restriction is the study of promise CSPs [4, 7], in which it is guaranteed that a richer solution exists (e.g., a given graph has a proper 3-coloring) and the goal is to find or even approximate a weaker solution (e.g., find a coloring with 10 colors that maximizes the number of non-monochromatic edges). Another example is the study of the complexity of a Max-CSP when the instance is generated using a certain random process [11, 16].

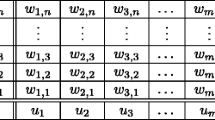

In this paper we study the complexity of Max-3-LIN when the underlying constraints vs. variables graph is fixed. A factor graph of an instance is a bipartite graph between \(\{C_1, C_2, \ldots , C_m\}\) and \(\{x_1, x_2, \ldots , x_n\}\) where we connect \(C_i\) to \(x_j\) if the constraint \(C_i\) depends on the variable \(x_j\). An instance can be completely described by its factor graph and by specifying which predicate to use for each constraint. Feige and Jozeph [13] were interested in understanding the effect of the factor graph on the complexity of approximating CSPs. Namely, for a given CSP, is there a factor graph \(G_n\) for each input length n such that the CSP remains hard even after restricting its factor graph to \(G_n\)? They answered this positively by showing that there exists a family of factor graphs for Max-3-SAT such that it is \(\text {NP}\)-hard to approximate within a factor of \(\frac{77}{80} + \varepsilon \), for every \(\varepsilon >0\). They called such a family of graphs universal factor graphs.
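As an informal illustration of why fixing the factor graph leaves only the predicates free, the following Python sketch builds the edge set of a factor graph from constraint scopes. The function name `factor_graph`, the 0-based indexing, and the placeholder right-hand sides are assumptions made for this example and do not come from the paper.

```python
from typing import List, Set, Tuple


def factor_graph(scopes: List[Tuple[int, ...]]) -> Set[Tuple[int, int]]:
    """Edge set {(i, j)}: constraint C_i is joined to variable x_j (0-based)."""
    return {(i, j) for i, scope in enumerate(scopes) for j in scope}


# Hypothetical scopes of three 3-LIN constraints on four variables.
scopes = [(0, 1, 2), (1, 2, 3), (0, 2, 3)]

# Two instances that share the scopes and differ only in the right-hand sides
# (the strings are placeholders for group elements).
instance_a = list(zip(scopes, ["g1", "g2", "g3"]))
instance_b = list(zip(scopes, ["h1", "h2", "h3"]))

graph_a = factor_graph([scope for scope, _ in instance_a])
graph_b = factor_graph([scope for scope, _ in instance_b])
```

Both instances yield the same bipartite graph, so once the graph is fixed, an instance is described by its right-hand sides alone.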

Definition 1.1

A family of (c, s)-universal factor graphs for a Max-CSP is a family of factor graphs, one for each input length, such that given a Max-CSP instance restricted to the factor graphs in the family, it is \(\text {NP}\)-hard (under deterministic polynomial time reductions) to find a solution with value at least s even if it is guaranteed that there is a solution with value at least c.

In a follow-up work by Jozeph [15], it was shown that there are universal factor graphs for every \(\text {NP}\)-hard Boolean CSP and for every APX-hard Boolean Max-CSP. However, the inapproximability factors in these results are weaker than what was known in the standard setting.

The result of Feige and Jozeph [13] was vastly improved recently by Austrin, Brown-Cohen and Håstad [3]. They gave optimal inapproximability results for many well-known CSPs including Max-k-SAT, Max-TSA, “\((2+\varepsilon )\)-SAT” and any predicate supporting a pairwise independent subgroup. Namely, they show the existence of \((1,\frac{7}{8}+\varepsilon )\)-universal factor graphs for Max-3-SAT, \((1-\varepsilon ,\frac{1}{2}+\varepsilon )\)-universal factor graphs for Max-3-LIN over \({\mathbb {Z}}_2\), etc., for every \(\varepsilon >0\). The optimal universal factor graph inapproximability of Max-3-LIN over any finite Abelian group was also shown in [3].

In this paper, we investigate the existence of universal factor graphs for Max-3-LIN over finite non-Abelian groups. Engebretsen, Holmerin, and Russell [10] showed optimal inapproximability of Max-3-LIN over non-Abelian groups with imperfect completeness. Recently, the first author and Khot [6] showed optimal inapproximability of Max-3-LIN over non-Abelian groups with perfect completeness. Our main theorems extend both these results by showing the existence of universal factor graphs with the same hardness factor.

In the imperfect completeness case, we show that it is \(\text {NP}\)-hard to do better than the random assignment even if the factor graph is fixed, thereby extending the result of [10] to the universal factor graph setting.

Theorem 1.2

For every \(\varepsilon >0\) and any finite non-Abelian group G, there are \((1-\varepsilon , \frac{1}{|G|}+\varepsilon )\)-universal factor graphs for Max-3-LIN over G.

For a non-Abelian group G, we denote by [G, G] the commutator subgroup of G, i.e., the subgroup generated by the elements \(\{g^{-1}h^{-1}gh\mid g,h\in G\}\). The factor \(\frac{1}{|[G,G]|}\) arises naturally in results on approximating Max-3-LIN over G in the perfect completeness setting. The reason is that G/[G, G] is an Abelian group, which yields a \(\frac{1}{|[G,G]|}\)-approximation algorithm for Max-3-LIN. For a concrete example, consider \(S_3\), the group of all permutations of a three-element set. The commutator subgroup of \(S_3\) is \(\{(), (1,2,3), (1,3,2)\}\), which is isomorphic to \({\mathbb {Z}}_3\). In this case, \(S_3/{\mathbb {Z}}_3 \cong {\mathbb {Z}}_2\), which is an Abelian group. More generally, a given Max-3-LIN instance \(\phi \) over G can be viewed as a Max-3-LIN instance \(\phi '\) over G/[G, G] by replacing each group constant by its coset in G/[G, G]. If \(\phi \) is satisfiable over G, then \(\phi '\) is satisfiable over G/[G, G]. A satisfying assignment for \(\phi '\) can be found in polynomial time, as \(\phi '\) is a collection of linear equations over an Abelian group. To get the final assignment for \(\phi \), we assign to each variable x a uniformly random element from the coset given by the satisfying assignment of \(\phi '\). It is easy to see that this random assignment satisfies each equation of \(\phi \) with probability \(\frac{1}{|[G,G]|}\).
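The \(S_3\) example and the coset-rounding step can be checked by brute force. The sketch below is only an illustration under our own conventions: permutations are 0-based tuples, the product is \((p\cdot q)(i) = p[q[i]]\), and all helper names are hypothetical. It computes \([S_3,S_3]\) and counts, for every choice of cosets and every compatible right-hand side, how many triples drawn from the cosets satisfy \(x\cdot y\cdot z = g\).

```python
from itertools import permutations, product


def mul(p, q):
    """Compose permutations given as tuples: (p*q)(i) = p[q[i]]."""
    return tuple(p[q[i]] for i in range(len(q)))


def inv(p):
    """Inverse permutation."""
    r = [0] * len(p)
    for i, image in enumerate(p):
        r[image] = i
    return tuple(r)


G = list(permutations(range(3)))  # S_3


def generated_subgroup(gens):
    """Closure under multiplication, which suffices in a finite group."""
    elems = {tuple(range(3))} | set(gens)
    while True:
        new = {mul(a, b) for a in elems for b in elems} - elems
        if not new:
            return elems
        elems |= new


# Commutators g^{-1} h^{-1} g h and the subgroup H = [S_3, S_3] they generate.
commutators = {mul(mul(inv(g), inv(h)), mul(g, h)) for g in G for h in G}
H = generated_subgroup(commutators)


def coset(g):
    """Coset gH (H is normal, so left and right cosets coincide)."""
    return frozenset(mul(g, h) for h in H)


cosets = {coset(g) for g in G}


def satisfied_count(A, B, C, g):
    """(number of (x, y, z) in A x B x C with x*y*z = g, total number of triples)."""
    triples = list(product(A, B, C))
    good = sum(1 for x, y, z in triples if mul(mul(x, y), z) == g)
    return good, len(triples)


# For all coset triples and every g in the product coset, record the counts.
counts = set()
for A, B, C in product(cosets, repeat=3):
    a, b, c = next(iter(A)), next(iter(B)), next(iter(C))
    for g in coset(mul(mul(a, b), c)):
        counts.add(satisfied_count(A, B, C, g))
```

In every case exactly 9 of the 27 triples satisfy the equation, matching the probability \(\frac{1}{|[G,G]|} = \frac{1}{3}\) for \(G = S_3\).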

In the perfect completeness situation too, we get the optimal universal factor graph hardness for Max-3-LIN, matching the inapproximability threshold of [6].

Theorem 1.3

For every \(\varepsilon >0\) and any finite non-Abelian group G, there are \((1, \frac{1}{|[G,G]|}+\varepsilon )\)-universal factor graphs for Max-3-LIN over G.

The actual theorem statements are stronger than stated above. In addition to universal factor graph hardness, the instances have the following additional structure.

1. In the imperfect completeness case, our hardness result from Theorem 1.2 holds for constraints of the form \(x_{1}\cdot x_{2}\cdot x_{3} = g\) for some \(g\in G\). In [10], the constraints involve inverses of the variables as well as group constants on the left-hand side; for example, the constraints can be of the form \(a_{1} \cdot x_{1}\cdot a_{2} \cdot x_{2}^{-1}\cdot a_{3} \cdot x_{3} = b\).

2. Similarly, our hardness result from Theorem 1.3 holds even if we restrict the constraints in the Max-3-LIN instance to the form \(x_{1}\cdot x_{2}\cdot x_{3} = g\) for some \(g\in G\). In comparison, in [6], the definition of a linear equation involves using constants on the left-hand side of the equations.

To sum it up, our results show that the exact ‘literal patterns’ as well as the factor graphs are not the main reasons for the optimal \(\text {NP}\)-hardness of the aforementioned results [6, 10] on Max-3-LIN over finite non-Abelian groups.

1.1 Techniques

In this section, we highlight the main differences between the previous works [3, 6, 10] and this work.

A typical way of obtaining optimal inapproximability results is to start with a gap \((1, 1-\varepsilon )\) \(\text {NP}\)-hard instance of a Max-CSP and apply parallel repetition to it to create a 2-CSP (a.k.a. Label Cover, see Definition 2.32) with an arbitrarily large gap of \((1,\delta )\), for any constant \(\delta >0\). Each vertex on one side of the Label Cover corresponds to a subset of constraints of the initial Max-CSP instance. In order to reduce Label Cover to a given Max-CSP over a smaller alphabet, one key component of the reduction is a long-code encoding of the labels (i.e., the assignments to the variables in the constraints associated with the vertex) in the Label Cover instance. A long code of a label \(i\in [n]\) is given by the truth table of the function \(f: [q]^n \rightarrow [q]\) defined as \(f(x) = x_i\). One of the main reasons for using the long code is that its high redundancy allows one to implicitly check whether the assignment satisfies the set of constraints associated with a vertex, using an operation called folding. Thus, the only thing left to check is whether the given encoding is indeed (close to) a long-code encoding of a label, which is why this approach has been successful in obtaining many tight inapproximability results.
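To make the long code concrete, here is a minimal Python sketch that writes out the truth table of the dictator function \(f(x) = x_i\). It assumes \([q] = \{0,\ldots ,q-1\}\) and 0-based coordinates, and the name `long_code` is ours. Folding is not modelled.

```python
from itertools import product


def long_code(i: int, n: int, q: int) -> dict:
    """Truth table of f(x) = x_i on [q]^n, with [q] = {0, ..., q-1}."""
    return {x: x[i] for x in product(range(q), repeat=n)}


# Encoding of the label i = 1 among n = 3 labels over a binary alphabet.
table = long_code(i=1, n=3, q=2)
```

The table has \(q^n\) entries for a label that needs only about \(\log n\) bits, which is the redundancy referred to above.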

One issue with the foldings that appeared before the work of [3] is that the folding structure changes if we change the literal structure of the underlying constraints. Therefore, even if we start with a universal factor graph hard instance of a gap \((1, 1-\varepsilon )\) Max-CSP, different literal patterns give different constraints vs. variables graphs for the final Max-CSP instances. Therefore, the reduction template is not enough to get the same factor graph.

To overcome this difficulty, functional folding was introduced in [3]. This folding is ‘weaker’ than the previously used foldings in terms of decoding to a label that satisfies the underlying predicate in the Label Cover instance. However, they show that this type of folding can be used to get tight inapproximability results. The key lemma proved in [3] is that non-zero Fourier coefficients of such a folded function correspond to sets of assignments with an odd number of them satisfying the constraints.

We cannot directly use the functional folding defined in [3] for two main reasons. Firstly, functional folding as defined there always assumes that the underlying group is Abelian. Secondly, the aforementioned main lemma from [3] concerns the non-zero Fourier coefficients of the folded function when viewed as a function over an Abelian group. Since our soundness analyses use Fourier analysis over non-Abelian groups (similar to [6, 10]), we cannot directly use their folding.

In this work, we define functional folding for functions \(f: G^n \rightarrow G\), where G is a finite non-Abelian group (see Definition 2.36). One of the main contributions of this work is to prove that non-zero Fourier coefficients (Fourier matrices to be precise) of such functionally folded functions also have properties that are sufficient to complete the soundness analysis.

For the proof of Theorem 1.2, it is enough to show that for functionally folded functions, any non-zero Fourier coefficient of the function has at least one assignment that satisfies all the constraints of the Label Cover vertex. We prove this in Lemma 2.40. This conclusion is analogous to the one in [3]. In order to make sure that we do not use inverses in the constraints as mentioned in the previous section, we follow the proof strategy of [6] in the imperfect completeness case. Compared to [6], in the imperfect completeness case, one needs to control extra terms related to dimension 1 representations of the group which necessitates a different approach for the soundness analysis. In particular, we use the independent noise to take care of all high-dimensional terms in the Fourier expansion of the test acceptance probability.

For proving Theorem 1.3, we need a stronger conclusion on the non-zero Fourier coefficients of functionally folded functions. This is because the decoding strategy in [6] is highly non-standard: the reduction can only decode from a very specific subset of the list corresponding to non-zero Fourier coefficients. Therefore, we need to show that for this type of non-zero Fourier coefficients, there exists an assignment from that subset satisfying the constraints of the Label Cover vertex. This is done in Lemma 2.41.

We also observe that for the soundness proof to work, one does not need to fold all the functions. We use this observation to conclude that the reduced instance of Max-3-LIN has constraints of the form \(x_{1}\cdot x_{2}\cdot x_{3} = g\) for some \(g\in G\). Since the factor graph is fixed, these instances are completely specified by specifying only one group element per constraint.

1.2 Organization

We start with preliminaries in Sect. 2. In Sect. 2.1, we give an overview of representation theory and Fourier analysis over non-Abelian groups. In Sect. 2.2, we formally define the problem that we study and universal factor graphs. In Sect. 2.3, we define functional folding and prove two key lemmas that will be used in the main soundness analysis.

In Sect. 3, we prove the existence of \((1-\varepsilon ,\frac{1}{|G|}+\varepsilon )\)-universal factor graphs for Max-3-LIN over a non-Abelian group G with imperfect completeness. Finally, in Sect. 4, we prove the existence of \((1,\frac{1}{|[G,G]|}+\varepsilon )\)-universal factor graphs for Max-3-LIN over a non-Abelian group G with perfect completeness.

2 Preliminaries

2.1 Representation Theory and Fourier Analysis on Non-abelian Groups

In this section we give a brief description of the concepts from representation theory used in this work. We will not prove any claims in this section; instead, we refer the reader interested in a more detailed treatment to [19, 20].

Let us start by introducing a definition of representation.

Definition 2.1

A representation \((V_{\rho },\rho )\) of a group G is a group homomorphism \(\rho :G \rightarrow \text {GL}(V_{\rho })\), where \(V_{\rho }\) is a complex vector space.

For the sake of brevity, throughout this article we will use only the symbol \(\rho \) to denote a representation, and we will assume that the vector space \(V_{\rho }\) can be deduced from the context. We work with finite groups, and hence assume that \(V_{\rho } = {\mathbb {C}}^{n}\), where \(n \in {\mathbb {N}}\), and that \(\text {GL}(V_{\rho })\) is the group of invertible \(n \times n\) matrices. Furthermore, we always work with unitary representations.

The study of representations can be reduced to the study of irreducible representations. In order to introduce them, we first bring in the following definition.

Definition 2.2

Let \(\rho \) be a representation of a group G. A vector subspace \(W \subseteq V_{\rho }\) is G-invariant if and only if

$$\rho (g)\, w \in W \quad \text {for all } g \in G \text { and } w \in W.$$

Observe that if W is G-invariant then the restriction \(\rho \vert _{W}\) of \(\rho \) to W is a representation of G. We can now introduce irreducible representations.

Definition 2.3

A representation \(\rho \) of a group G is irreducible if \(V_{\rho } \ne \left\{ 0\right\} \) and its only G-invariant subspaces are \(\left\{ 0\right\} \) and \(V_{\rho }\).

We use Irrep(G) to denote the set of all irreducible representations of G up to an isomorphism, where an isomorphism between representations is given by the following definition.

Definition 2.4

Two representations \(\rho \) and \(\tau \) of a group G are isomorphic if there is an invertible linear operator \(\varphi :V_{\rho } \rightarrow V_{\tau }\) such that

$$\varphi \circ \rho (g) = \tau (g) \circ \varphi \quad \text {for all } g \in G.$$

We write \(\rho \cong \tau \) to denote that \(\rho \) is isomorphic to \(\tau \).

Representation theory is used in this work because it is a natural language for expressing Fourier analysis of the space \(L^{2} (G) = \left\{ f: G \rightarrow {\mathbb {C}} \right\} \), which will be a ubiquitous tool in this work. We endow the space \(L^{2}(G)\) with the scalar product defined as

$$\langle f, g \rangle _{L^{2}(G)} = \frac{1}{|G|} \sum _{x \in G} f(x)\, \overline{g(x)},$$

which induces a norm on \(L^{2}(G)\) via

$$\Vert f \Vert _{L^{2}(G)} = \sqrt{\langle f, f \rangle _{L^{2}(G)}}.$$

We can now introduce the Fourier transform as follows.

Definition 2.5

For \(f \in L^{2}(G)\), the Fourier transform of f is an element \({\hat{f}}\) of \(\prod _{\rho \in \text {Irrep}(G)} \text {End}(V_{\rho })\) given by

$${\hat{f}}(\rho ) = \frac{1}{|G|} \sum _{x \in G} f(x)\, \rho (x).$$

In the definition above we use \(\text {End}(V_{\rho })\) to denote the set of endomorphisms of the vector space \(V_{\rho }\). In particular, once we fix bases of \(\left\{ V_{\rho }\right\} _{\rho \in \text {Irrep}(G)}\), we can identify each \({\hat{f}}(\rho )\) with a matrix. Throughout this article we will consider the space of matrices of the same dimension to be equipped with the scalar product defined as

$$\langle A, B \rangle _{\text {End}(V)} = \text {tr}(A B^{*}). \tag{1}$$

Note that if we consider A and B as operators on a vector space V, the definition of \(\langle A,B \rangle _{\text {End}(V)}\) does not change under unitary transformations of basis. Let us now state the Fourier inversion theorem which shows that the function is uniquely determined by its Fourier coefficients.

Lemma 2.6

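(Fourier inversion) For \(f \in L^{2}(G)\) we have

$$f(x) = \sum _{\rho \in \text {Irrep}(G)} \dim (\rho )\, \langle {\hat{f}}(\rho ), \rho (x) \rangle _{\text {End}(V_{\rho })}.$$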

Plancherel’s identity can be written in this setting as follows.

Lemma 2.7

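(Plancherel’s identity) For \(f, g \in L^{2}(G)\) we have

$$\langle f, g \rangle _{L^{2}(G)} = \sum _{\rho \in \text {Irrep}(G)} \dim (\rho )\, \langle {\hat{f}}(\rho ), {\hat{g}}(\rho ) \rangle _{\text {End}(V_{\rho })}.$$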

A straightforward corollary of Plancherel’s identity is Parseval’s identity, given in the following lemma.

Lemma 2.8

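(Parseval’s identity) For \(f :G \rightarrow {\mathbb {C}}\) we have

$$\Vert f \Vert _{L^{2}(G)}^{2} = \sum _{\rho \in \text {Irrep}(G)} \dim (\rho )\, \Vert {\hat{f}}(\rho ) \Vert _{HS}^{2},$$

where \(\Vert \cdot \Vert _{HS}\) is the norm induced by the scalar product (1), i.e., it is the Hilbert-Schmidt norm defined on the set of linear operators on \(V_{\rho }\) by

$$\Vert A \Vert _{HS} = \sqrt{\langle A, A \rangle _{\text {End}(V_{\rho })}} = \sqrt{\text {tr}(A A^{*})}.$$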

The following lemma characterizes the values of scalar products between matrix entries of two representations. In particular, it shows that the matrix entries of two non-isomorphic irreducible representations are orthogonal, and that the squared \(L^{2}(G)\)-norm of each entry of an irreducible representation \(\rho \) equals \(1/\dim (\rho )\).

Lemma 2.9

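If \(\rho \) and \(\tau \) are two non-isomorphic irreducible representations of G then for any i, j, k, l we have

$$\langle [\rho ]_{ij}, [\tau ]_{kl} \rangle _{L^{2}(G)} = 0.$$

Furthermore,

$$\langle [\rho ]_{ij}, [\rho ]_{kl} \rangle _{L^{2}(G)} = \frac{\delta _{ik}\, \delta _{jl}}{\dim (\rho )},$$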

where \(\delta _{ij}\) is the Kronecker delta function.

By taking \(\tau \cong 1 \) in the previous lemma we obtain the following corollary.

Lemma 2.10

Let \(\rho \in \text {Irrep}(G)\setminus \left\{ 1\right\} \). Then

$$\sum _{g \in G} \rho (g) = 0.$$

Let us now associate a character with each representation.

Definition 2.11

The character \(\chi _{\rho }:G \rightarrow {\mathbb {C}}\) of a representation \(\rho :G \rightarrow \text {GL}(V)\) is a function defined by

$$\chi _{\rho }(g) = \text {tr}(\rho (g)).$$

Characters are orthogonal to each other as shown by the following lemma.

Lemma 2.12

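For \(\rho ,\tau \in \text {Irrep}(G)\) we have

$$\langle \chi _{\rho }, \chi _{\tau } \rangle _{L^{2}(G)} = \begin{cases} 1, & \text {if } \rho \cong \tau , \\ 0, & \text {otherwise}. \end{cases}$$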

Another nice identity that characters satisfy is given in the following lemma.

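Lemma 2.13

For \(g \in G\) we have

$$\sum _{\rho \in \text {Irrep}(G)} \dim (\rho )\, \chi _{\rho }(g) = \begin{cases} |G|, & \text {if } g = 1_G, \\ 0, & \text {otherwise}. \end{cases}$$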

Taking \(g=1_G\) in the previous lemma implies that \(\sum _{\rho \in \text {Irrep}(G)} \dim (\rho )^{2}=|G|\), and hence for every \(\rho \in \text {Irrep}(G)\) we have \(\dim (\rho )\le \sqrt{|G|}\).
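For a concrete sanity check, the character identities can be verified on \(S_3\) by direct enumeration. The sketch below uses the three standard irreducible characters of \(S_3\) (trivial, sign, and the two-dimensional one, whose value is the number of fixed points minus one). The normalized inner product \(\frac{1}{|G|}\sum _{g} \chi (g)\overline{\psi (g)}\) and all names in the code are our assumptions for this illustration.

```python
from fractions import Fraction
from itertools import permutations

G = list(permutations(range(3)))  # S_3 as tuples, p[i] is the image of i
identity = tuple(range(3))


def sign(p):
    """Sign of a permutation, computed from its number of inversions."""
    inversions = sum(1 for i in range(3) for j in range(i + 1, 3) if p[i] > p[j])
    return -1 if inversions % 2 else 1


def fixed_points(p):
    return sum(1 for i in range(3) if p[i] == i)


# name -> (dimension, character); all three characters are real-valued.
characters = {
    "trivial": (1, lambda p: 1),
    "sign": (1, sign),
    "standard": (2, lambda p: fixed_points(p) - 1),
}


def inner(chi, psi):
    """Normalized inner product; no conjugation needed for real characters."""
    return Fraction(sum(chi(g) * psi(g) for g in G), len(G))


# Gram matrix of the characters (orthonormality check).
gram = {
    (a, b): inner(characters[a][1], characters[b][1])
    for a in characters
    for b in characters
}

# Dimension-weighted character sum at each group element.
weighted = {g: sum(d * chi(g) for d, chi in characters.values()) for g in G}

sum_dim_sq = sum(d * d for d, _ in characters.values())
```

Here `sum_dim_sq` equals \(1 + 1 + 4 = 6 = |S_3|\), and the largest dimension, 2, is indeed at most \(\sqrt{6}\).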

In this article we will also encounter convolution of functions in \(L^{2}(G)\), which is defined as follows.

Definition 2.14

Given \(f,g \in L^{2}(G)\), their convolution \(f*g \in L^{2}(G)\) is defined as

$$(f * g)(x) = \frac{1}{|G|} \sum _{y \in G} f(y)\, g(y^{-1} x).$$

Fourier analysis interacts nicely with the convolution, as shown by the following lemma.

Lemma 2.15

For \(f,g \in L^{2}(G)\) we have

$$\widehat{f * g}(\rho ) = {\hat{f}}(\rho ) \cdot {\hat{g}}(\rho ).$$

Given a group G we can define the group \(G^{n}\) as the set of n-tuples of elements of G on which the group operation is performed coordinate-wise. It is of interest to study the structure of representations of \(G^{n}\), particularly in relation to representations of G. In order to do so, let us first introduce direct sum and tensor product of representations.

Definition 2.16

Let \(\rho \) and \(\tau \) be two representations of G. We define their direct sum \(\rho \oplus \tau \) to be the representation of G defined on vectors \((v,w) \in V_{\rho } \oplus V_{\tau }\) by

$$(\rho \oplus \tau )(g)(v, w) = (\rho (g) v, \tau (g) w).$$

Observe that if a representation \(\rho \) is not irreducible then there are G-invariant vector subspaces \(V_1,V_2 \subseteq V_{\rho }\) such that \(V_{\rho }\) is a direct sum of \(V_1\) and \(V_2\), i.e., \(V_{\rho }=V_1 \oplus V_2\). Hence, by fixing a suitable basis of \(V_{\rho }\) we can write \(\rho \) as a block diagonal matrix with two blocks on the diagonal corresponding to \(\rho \vert _{V_1}\) and \( \rho \vert _{V_2}\), i.e., we have that \(\rho \cong \rho \vert _{V_1} \oplus \rho \vert _{V_2}\). Since we assumed that \(\dim (\rho )<\infty \), it follows by induction that we can represent each \(\rho \) as

$$\rho \cong \oplus _{s=1}^{u} \tau ^{s},$$

where \(\tau ^{s} \in \text {Irrep}(G)\). We now introduce the tensor product of representations.

Definition 2.17

Let \(\rho \) and \(\tau \) be representations of G. The tensor product \(\rho \otimes \tau \) of \(\rho \) and \(\tau \) is a representation of G on \(V_{\rho }\otimes V_{\tau }\) defined by

$$(\rho \otimes \tau )(g)(v \otimes w) = \rho (g) v \otimes \tau (g) w$$

and extended to all vectors of \(V_{\rho }\otimes V_{\tau }\) by linearity.

The following lemma characterizes irreducible representations of \(G^{n}\) in terms of irreducible representations of G.

Lemma 2.18

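Let G be a group and let \(n \in {\mathbb {N}}\). All irreducible representations of the group \(G^{n}\) are given by

$$\text {Irrep}(G^{n}) = \left\{ \otimes _{d=1}^{n} \rho _d \mid \rho _d \in \text {Irrep}(G) \right\} .$$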

We will sometimes use \(\rho =(\rho _1,\ldots ,\rho _n)\) to denote that \(\rho =\otimes _{d \in [n]} \rho _d\). Furthermore, we will use \(|\rho |\) to denote the number of \(\rho _d\not \cong 1\).

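Let us remark that for a representation \(\rho =\otimes _{d=1}^n \rho _d\) as given in the lemma above, every entry \([\rho ]_{ij}, 1 \le i,j \le \dim (\rho ),\) is a product of entries of representations \(\rho _1,\ldots ,\rho _n\), and hence it can be written as

$$[\rho ]_{ij}(x) = \prod _{d=1}^{n} [\rho _d]_{d_i d_j}(x_d), \quad \text {where } x = (x_1, \ldots , x_n) \in G^{n} \text { and } 1 \le d_i, d_j \le \dim (\rho _d).$$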

In our arguments we will work with a map \(\pi :[R] \rightarrow [L]\), where \(L,R \in {\mathbb {N}}\), and the notation [n] for \(n \in {\mathbb {N}}\) is used to mean \([n]=\left\{ 1,2,\ldots ,n\right\} \). Let us denote by \({\tilde{\pi }}:G^{L} \rightarrow G^{R}\) the map given by

$${\tilde{\pi }}(x_1, \ldots , x_L) = (x_{\pi (1)}, \ldots , x_{\pi (R)}).$$

If \(\alpha \in \text {Irrep}(G^{R})\), then the map \(\alpha \circ {\tilde{\pi }}\) is a representation of \(G^{L}\). Observe that \(\alpha \circ {\tilde{\pi }}\) might not be irreducible, but it can nevertheless be decomposed into its irreducible components, i.e., we have that

$$\alpha \circ {\tilde{\pi }} \cong \oplus _{s=1}^{u} \tau ^{s},$$

where \(u\in {\mathbb {N}}\) and \(\tau ^{s} \in \text {Irrep}(G^{L})\). In particular, with a suitable choice of basis the representation \(\alpha \circ {\tilde{\pi }}\) can be realized as a block diagonal matrix \(\oplus _{s=1}^{u} \tau ^{s}\). Hence, let us fix this basis and identify \(\alpha \circ {\tilde{\pi }}\) with a \(\dim (\alpha )\times \dim (\alpha )\) matrix with u diagonal blocks. To each \(1 \le i,j \le \dim (\alpha )\), such that (i, j) belongs to some of the blocks we can assign a representation \(\tau ^{s} \in \text {Irrep}(G^{L})\) and \(1 \le i',j'\le \dim (\tau ^{s})\) such that (i, j)-th matrix entry of \(\alpha \circ {\tilde{\pi }}\) corresponds to \((i',j')\)-th matrix entry of \(\tau ^{s}\). Throughout this work we will use \(Q(\alpha ,i,j)\) to denote \((\tau ^{s},i',j')\) obtained by this correspondence.
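The following Python sketch makes the map \({\tilde{\pi }}\) concrete, reading it as \((x_1,\ldots ,x_L) \mapsto (x_{\pi (1)},\ldots ,x_{\pi (R)})\), which is the natural map of type \(G^{L} \rightarrow G^{R}\) induced by \(\pi :[R] \rightarrow [L]\). It checks on \(G = S_3\) that this map is a group homomorphism, which is the property that makes \(\alpha \circ {\tilde{\pi }}\) a representation of \(G^{L}\). Indices are 0-based, and the particular \(\pi \) and all helper names are hypothetical.

```python
from itertools import permutations, product

G = list(permutations(range(3)))  # S_3 as tuples, p[i] is the image of i


def mul(p, q):
    """Group product in S_3: (p*q)(i) = p[q[i]]."""
    return tuple(p[q[i]] for i in range(3))


def mul_tuple(x, y):
    """Coordinate-wise product in G^k."""
    return tuple(mul(a, b) for a, b in zip(x, y))


def tilde_pi(pi, x):
    """Send x in G^L to (x[pi[0]], ..., x[pi[R-1]]) in G^R."""
    return tuple(x[l] for l in pi)


pi = [0, 0, 1]  # hypothetical projection with R = 3 and L = 2

# Exhaustive check of tilde_pi(x * y) == tilde_pi(x) * tilde_pi(y) on G^2.
is_homomorphism = all(
    tilde_pi(pi, mul_tuple(x, y)) == mul_tuple(tilde_pi(pi, x), tilde_pi(pi, y))
    for x in product(G, repeat=2)
    for y in product(G, repeat=2)
)
```

With this \(\pi \), an input \((a, b)\) is sent to \((a, a, b)\): coordinates of \(G^{R}\) with the same image under \(\pi \) receive the same group element.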

Furthermore, for a fixed \(\alpha \in \text {Irrep}(G^{R})\) and \(1 \le i \le \dim (\alpha ),\) let us denote with \(\text {B}(\alpha ,i)\) the set of all \(j\in \left\{ 1,\ldots ,\dim (\alpha )\right\} \) such that (i, j)-th matrix entry of \(\alpha \circ {\tilde{\pi }}\) belongs to some block in the block diagonal matrix \(\oplus _{s=1}^{u} \tau ^{s}\). In particular, \(B(\alpha ,i)\) denotes all column indices j which have non-trivial entry in their i-th row of \(\alpha \circ {\tilde{\pi }}\). Observe that \(B(\alpha ,i)\) remains the same if we switch the roles of columns and rows in the previous definition. In particular, \(\text {B}(\alpha ,i)\) is also the set of all \(j\in \left\{ 1,\ldots ,\dim (\alpha )\right\} \) such that (j, i)-th matrix entry of \(\alpha \circ {\tilde{\pi }}\) belongs to some block in the block diagonal matrix \(\oplus _{s=1}^{u} \tau ^{s}\).

Since \(\alpha \in \text {Irrep}(G^{R})\), by Lemma 2.18 we have that \(\alpha =\otimes _{d=1}^{R}\alpha _d\) where \(\alpha _d \in \text {Irrep}(G)\). Similarly, the representation \(\tau ^{s} \in \text {Irrep}(G^{L})\) from \( \alpha \circ {\tilde{\pi }} \cong \oplus _{s=1}^{u} \tau ^{s}\) can be represented as \(\tau ^{s}= \otimes _{d=1}^{L} \tau ^{s}_d\) where \(\tau ^{s}_d \in \text {Irrep}(G)\). Since all \(\tau ^{s}_d\) are completely determined by \(\alpha \) and \(\pi \), it is of interest to study the relation between \(\tau ^{s}_d\) and \(\alpha _d\). In particular, we prove the following lemma, which states that if \(\ell \in [R]\) is such that \(\alpha _{\ell } \not \cong 1\) and for every other \(\ell ' \in [R] {\setminus }\left\{ \ell \right\} \) such that \(\pi (\ell ') = \pi (\ell )\) we have \(\alpha _{\ell '}\cong 1\), then \(\tau ^{s}_{\pi (\ell )} \not \cong 1\) for each s.

Lemma 2.19

Let \(\alpha \in \text {Irrep}(G^{R})\), \(\alpha =\otimes _{d=1}^{R} \alpha _d\), and let \(\pi :[R] \rightarrow [L]\). Consider the representation \(\alpha \circ {\tilde{\pi }} \cong \oplus _{s=1}^{u} \tau ^{s}\) of \(G^{L}\) where each \(\tau ^{s} \in \text {Irrep}(G^{L})\). Furthermore, assume that for \(\ell \in [R]\) we have that \(\alpha _{\ell } \not \cong 1\), and also that for every \(\ell ' \in [R] \setminus \left\{ \ell \right\} \) such that \(\pi (\ell )=\pi (\ell ')\) we have that \(\alpha _{\ell '}\cong 1\). Then if we represent each \(\tau ^{s}\) as a tensor product of representations of G, i.e., \(\tau ^{s}=\otimes _{d=1}^L\tau ^{s}_d\), we have that \(\tau ^{s}_{\pi (\ell )} \not \cong 1\).

Proof

Consider any block \(\tau ^s\) in the block diagonal matrix \(\alpha \circ {\tilde{\pi }} \cong \oplus _{s=1}^{u} \tau ^{s}\), and let \(1\le i',j'\le \dim (\tau ^{s})\) be arbitrary. Let \(1\le i,j \le \dim (\alpha )\) be such that \([\alpha \circ {\tilde{\pi }}]_{ij}\) corresponds to \([\tau ^{s}]_{i'j'}\), i.e., i, j are unique such that \(Q(\alpha ,i,j) = (\tau ^{s},i',j')\). Since \(\alpha =\otimes _{d=1}^{R} \alpha _d\), we have that \([\alpha ]_{ij} = \prod _{d=1}^{R}[\alpha _{d}]_{d_id_j}\), where \(1\le d_i,d_j \le \dim (\alpha _d)\). But then

$$[\tau ^{s}]_{i'j'}(\textbf{x}) = [\alpha \circ {\tilde{\pi }}]_{ij}(\textbf{x}) = [\alpha _{\ell }]_{\ell _i \ell _j}(x_{\pi (\ell )}) \cdot \prod _{d \in [R] \setminus \left\{ \ell \right\} } [\alpha _{d}]_{d_id_j}(x_{\pi (d)}).$$

Now since each \(d \in [R]\setminus \left\{ \ell \right\} \) satisfies either \(\pi (d) \ne \pi (\ell )\) or \(\alpha _d \cong 1\), we have that \(\prod _{d \in [R] {\setminus } \left\{ \ell \right\} }[\alpha _{d}]_{d_id_j} (x_{\pi (d)})\) does not depend on \(x_{\pi (\ell )}\). But then since \(\alpha _{\ell } \not \cong 1\) we have that \([\tau ^{s}]_{i'j'}(\textbf{x})\) depends on \(x_{\pi (\ell )}\) for at least one \(i',j'\), and therefore we cannot have \(\tau ^{s}_{\pi (\ell )} \cong 1\). Since the choice of s was arbitrary, the claim of the lemma follows. \(\square \)

In the proof of the previous lemma we used the fact that \([\tau ^{s}]_{i'j'}(x)=[\alpha \circ {\tilde{\pi }}]_{ij} (x) = \prod _{d=1}^{R}[\alpha _{d}]_{d_id_j} (x_{\pi (d)})\) where \(Q(\alpha ,i,j) = (\tau ^{s},i',j')\). Hence, if for \(\ell \in [L]\) and each \(\ell ' \in [R]\) such that \(\pi (\ell ')=\ell \) we have that \(\alpha _{\ell '} \cong 1\), then \(\tau ^{s}_{\ell } \cong 1\) where \(\tau ^{s}_{\ell } \in \text {Irrep}(G)\) is a component of the tensor product \(\tau ^{s}=\otimes _{d=1}^{L}\tau ^{s}_d\). In particular, we have the following lemma.

Lemma 2.20

Let \(\alpha \in \text {Irrep}(G^{R})\), \(\alpha =\otimes _{d=1}^{R} \alpha _d\), and let \(\pi :[R] \rightarrow [L]\). Consider the representation \(\alpha \circ {\tilde{\pi }} \cong \oplus _{s=1}^{u} \tau ^{s}\) of \(G^{L}\) where each \(\tau ^{s} \in \text {Irrep}(G^{L})\). Furthermore, let \(\ell \in [L]\) be such that for every \(\ell ' \in [R]\) with \(\pi (\ell ')=\ell \) we have that \(\alpha _{\ell '}\cong 1\). Then for each \(s=1,\ldots ,u\), the representation \(\tau ^{s} \in \text {Irrep}(G^{L})\) does not depend on its \(\ell \)-th argument, i.e., in the decomposition \(\tau ^{s} = \otimes _{d=1}^{L} \tau ^{s}_{d}\) we have \(\tau ^{s}_{\ell }\cong 1\).

Since the statements of the previous two lemmas are somewhat cumbersome, let us summarize them into a claim with a more elegant description. Before that, we introduce the following definition.

Definition 2.21

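Let \(\alpha \in \text {Irrep}(G^{R})\), \(\alpha =\otimes _{d=1}^{R} \alpha _d\), and let \(\pi :[R] \rightarrow [L]\). We define \(T(\alpha )\) to be the set of all d for which \(\alpha _d\) is non-trivial, i.e.,

$$T(\alpha ) = \left\{ d \in [R] \mid \alpha _d \not \cong 1 \right\} ,$$

and let \(T_{\pi }(\alpha )\) be the set of all \(\pi (d)\) where \(d \in T(\alpha )\), i.e.,

$$T_{\pi }(\alpha ) = \left\{ \pi (d) \mid d \in T(\alpha ) \right\} .$$

Finally, let \(T^{uq}_{\pi }(\alpha )\) be the set of all \(\ell \in T_{\pi }(\alpha )\) that are mapped to uniquely by \(\pi \), i.e., let

$$T^{uq}_{\pi }(\alpha ) = \left\{ \ell \in T_{\pi }(\alpha ) \mid \text {there is exactly one } d \in T(\alpha ) \text { with } \pi (d) = \ell \right\} .$$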

Now, Lemma 2.19 and Lemma 2.20 can be summarized as follows.

Lemma 2.22

Let \(\alpha \in \text {Irrep}(G^{R})\), \(\alpha =\otimes _{d=1}^{R} \alpha _d\), and let \(\pi :[R] \rightarrow [L]\). Consider the representation \(\alpha \circ {\tilde{\pi }} \cong \oplus _{s=1}^{u} \tau ^{s}\) of \(G^{L}\) where each \(\tau ^{s} \in \text {Irrep}(G^{L})\). Then for each s we have that \(T_{\pi }^{uq}(\alpha ) \subseteq T(\tau ^{s}) \subseteq T_{\pi }(\alpha )\).

In our proofs of hardness of Max-3-Lin with perfect completeness, when decomposing a representation \(\alpha =\otimes _{d=1}^{R}\alpha _d\), instead of distinguishing between \(\alpha _d \cong 1\) and \(\alpha _d \not \cong 1\) we will need to distinguish between the cases \(\dim (\alpha _d)=1\) and \(\dim (\alpha _d)>1\). For that reason, we introduce analogues of Definition 2.21 and Lemma 2.22 as follows.

Definition 2.23

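Let \(\alpha \in \text {Irrep}(G^{R})\), \(\alpha =\otimes _{d=1}^{R} \alpha _d\), and let \(\pi :[R] \rightarrow [L]\). Let \({\tilde{T}}(\alpha )\) be the set of all d such that \(\dim (\alpha _d) \ge 2\), i.e.,

$${\tilde{T}}(\alpha ) = \left\{ d \in [R] \mid \dim (\alpha _d) \ge 2 \right\} ,$$

and let \({\tilde{T}}_{\pi }(\alpha )\) be the set of all \(\pi (d)\) where \(d \in {\tilde{T}}(\alpha )\), i.e.,

$${\tilde{T}}_{\pi }(\alpha ) = \left\{ \pi (d) \mid d \in {\tilde{T}}(\alpha ) \right\} .$$

Finally, let \({\tilde{T}}^{uq}_{\pi }(\alpha )\) be the set of all \(\ell \in {\tilde{T}}_{\pi }(\alpha )\) that are mapped to uniquely by \(\pi \), i.e., let

$${\tilde{T}}^{uq}_{\pi }(\alpha ) = \left\{ \ell \in {\tilde{T}}_{\pi }(\alpha ) \mid \text {there is exactly one } d \in {\tilde{T}}(\alpha ) \text { with } \pi (d) = \ell \right\} .$$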

Lemma 2.24

Let \(\alpha \in \text {Irrep}(G^{R})\), \(\alpha =\otimes _{d=1}^{R} \alpha _d\), and let \(\pi :[R] \rightarrow [L]\). Consider the representation \(\alpha \circ {\tilde{\pi }} \cong \oplus _{s=1}^{u} \tau ^{s}\) of \(G^{L}\) where each \(\tau ^{s} \in \text {Irrep}(G^{L})\). Then for each s we have that \({\tilde{T}}_{\pi }^{uq}(\alpha ) \subseteq {\tilde{T}}(\tau ^{s}) \subseteq {\tilde{T}}_{\pi }(\alpha )\).

The proof of Lemma 2.24 is deferred to the appendix.
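The sets from Definitions 2.21 and 2.23 are easy to compute once one knows which components \(\alpha _d\) count as non-trivial. The following is a minimal sketch, assuming 0-indexed coordinates and reading "mapped uniquely" as "exactly one element of \(T(\alpha )\) is sent to that label"; the function name `t_sets` and the sample data are hypothetical.

```python
from collections import Counter


def t_sets(flags, pi):
    """Return (T, T_pi, T_uq) for one representation alpha and projection pi.

    flags[d] is True when the component alpha_d counts as non-trivial
    (alpha_d not isomorphic to 1, or dim(alpha_d) >= 2 for the tilde variant).
    pi[d] is the image of coordinate d under the projection [R] -> [L].
    Coordinates are 0-indexed here, unlike the 1-indexed text.
    """
    T = {d for d, flag in enumerate(flags) if flag}
    T_pi = {pi[d] for d in T}
    hits = Counter(pi[d] for d in T)  # how many d in T land on each label
    T_uq = {label for label in T_pi if hits[label] == 1}
    return T, T_pi, T_uq


flags = [True, True, False, True]  # hypothetical alpha with R = 4
pi = [0, 0, 1, 2]                  # hypothetical projection with L = 3
T, T_pi, T_uq = t_sets(flags, pi)
print(T, T_pi, T_uq)
```

In this example two non-trivial coordinates collide on label 0, so only label 2 survives in the uniquely mapped set, which is the situation the sandwich \(T_{\pi }^{uq}(\alpha ) \subseteq T(\tau ^{s}) \subseteq T_{\pi }(\alpha )\) is designed to handle.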

2.2 Max-3-Lin and Universal Factor Graphs

We begin by introducing the Max-3-Lin problem over a group G.

Definition 2.25

In the Max-3-Lin problem over a group G, the input is given by n variables \(x_1,\ldots ,x_n\) taking values in G and m constraints, where the i-th constraint is of the form

\[x_{i_1} \cdot x_{i_2} \cdot x_{i_3} = c_i, \quad c_i \in G.\]

Note that in this definition we do not allow constants between the variables, i.e., we do not allow equations of the form \(x_{i_1} \cdot g_i \cdot x_{i_2} \cdot g_i'\cdot x_{i_3} = c_i,\) with \(g_i,g_i' \in G\). Such equations were allowed in the previous works [6, 10] on hardness of Max-3-Lin over non-Abelian groups. Furthermore, this definition does not allow inverses in equations. For example, the constraint \(x_{i_1} x_{i_2}^{-1} x_{i_3}^{-1}=c_i\) is not allowed, while in [10] such equations appeared since the analysis of the soundness required certain functions to be skew-symmetric (see Lemma 15 and Lemma 23 from [10]). Since in this work we consider hardness results, our proofs also imply the hardness of instances defined in the sense of [6, 10].

Another strengthening of the previous results considered in this work comes from assuming that the instances have universal factor graphs. In order to formally explain this strengthening, we need to introduce the notion of a factor graph of a constraint satisfaction problem. We first recall the definition of a constraint satisfaction problem.

Definition 2.26

A constraint satisfaction problem (CSP) over a language \( \Sigma = [q], q \in {\mathbb {N}}\), is a finite collection of predicates \(K \subseteq \{ P:[q] ^k \rightarrow \{0,1\} \mid k \in {\mathbb {N}}\}\).

We use \(\text {ar}(P)=k\) to denote the arity of a predicate \(P:[q] ^k \rightarrow \{0,1\}\). As an input to our problem we get an instance of a CSP K, which is defined as follows.

Definition 2.27

An instance \({\mathcal {I}}\) of a CSP K consists of a set \(X = \{x_1,\ldots ,x_n\}\) of n variables taking values in \(\Sigma \) and m constraints \(C_1,\ldots ,C_{m}\), where each constraint \(C_i\) is a pair \((P_i,S_i)\), with \(P_i \in K\) being a predicate with arity \(k_i:= \text {ar}(P_i)\), and \(S_i\) being an ordered tuple containing \(k_i\) distinct variables which we call scope.

Let us denote by \(\sigma :X \rightarrow \Sigma \) an assignment to the variables X of a CSP instance \({\mathcal {I}}\). We interpret \(\sigma (S_i)\) as a coordinate-wise action of \(\sigma \) on \(S_i\). Given \(\sigma \), we define the value \(\text {Val}_{\sigma }({\mathcal {I}})\) of \(\sigma \) as

\[\text {Val}_{\sigma }({\mathcal {I}}) = \frac{1}{m} \sum _{i=1}^{m} P_i(\sigma (S_i)).\]

We work with Max-CSPs in which we are interested in maximizing \(\text {Val}_{\sigma }({\mathcal {I}})\). We use \(\text {Opt}({\mathcal {I}})\) to denote the optimal value of \({\mathcal {I}}\), i.e., \(\text {Opt}({\mathcal {I}}) = \max \limits _{\sigma } \left( \text {Val}_{\sigma }({\mathcal {I}}) \right) .\) Observe that Max-3-Lin over G can be seen as a Max-CSP with predicates \(K=\left\{ P_g\right\} _{g \in G}\), where

\[P_g(x,y,z) = 1 \iff x \cdot y \cdot z = g.\]

We can now introduce factor graphs and the notion of hardness considered in this article.

Definition 2.28

The factor graph \({\mathcal {F}}\) of an instance \({\mathcal {I}}\) consists of the scopes \(S_r,r=1,\ldots ,m\). A family \(\left\{ {\mathcal {F}}_n\right\} _{n \in {\mathbb {N}}}\) is explicit if it can be constructed in time that is polynomial in n.
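To make the separation between the factor graph and the predicates concrete, here is a small sketch over the symmetric group \(S_3\). It assumes that the value of an assignment is the fraction of satisfied constraints, and all names (`mul`, `value`, `opt`, the sample scopes) are hypothetical. Two instances share the same scopes but differ in their right-hand sides.

```python
from itertools import permutations, product

S3 = list(permutations(range(3)))  # elements of the symmetric group S_3
IDENTITY = (0, 1, 2)


def mul(p, q):
    """Group product: (p * q)(i) = p(q(i))."""
    return tuple(p[q[i]] for i in range(3))


def value(scopes, rhs, sigma):
    """Fraction of constraints x_i * x_j * x_k = c satisfied by sigma."""
    satisfied = sum(
        1
        for (i, j, k), c in zip(scopes, rhs)
        if mul(mul(sigma[i], sigma[j]), sigma[k]) == c
    )
    return satisfied / len(scopes)


def opt(scopes, rhs, n):
    """Brute-force optimum over all |G|^n assignments (tiny n only)."""
    return max(value(scopes, rhs, sigma) for sigma in product(S3, repeat=n))


scopes = [(0, 1, 2), (1, 2, 0), (2, 0, 1)]  # the shared factor graph
swap = (1, 0, 2)                            # a transposition in S_3
rhs_a = [IDENTITY, IDENTITY, IDENTITY]
rhs_b = [IDENTITY, IDENTITY, swap]
print(opt(scopes, rhs_a, 3), opt(scopes, rhs_b, 3))
```

The first instance is fully satisfiable, while the second has optimum 2/3: the three products \(xyz\), \(yzx\), \(zxy\) are conjugates of one another, so they are either all the identity or all different from it. The factor graph alone therefore does not determine the optimum.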

Definition 2.29

We say that Max-CSP(K) is (c, s)-UFG-NP-hard if there is an explicit family of factor graphs \(\left\{ {\mathcal {F}}_n\right\} _n\) for K and a polynomial time reduction R from 3-Sat instances \(I_n\) on n variables to Max-CSP(K) instances \(R(I_n)\) with the factor graph \({\mathcal {F}}_n\) such that the following holds:

-

Completeness: If \(I_n\) is satisfiable then \(\text {Opt}(R(I_n)) \ge c\).

-

Soundness: If \(I_n\) is not satisfiable then \(\text {Opt}(R(I_n)) \le s\).

We will say that Max-CSP(K) has “universal factor graphs” to mean that Max-CSP(K) is (c, s)-UFG-NP-hard, in which case the values of c and s will be clear from the context.

Definition 2.30

A polynomial time reduction R from CSP K to CSP \(K'\) is factor graph preserving if it satisfies the following property:

-

Whenever two instances \(I_1,I_2\) of K have the same factor graphs, the respective instances \(R(I_1)\) and \(R(I_2)\) output by the reduction R have the same factor graphs as well.

The definition of the (c, s)-UFG-NP-hardness given here matches the one from [3]. Note that another view is given by the definition from [13] which considered hardness from the perspective of circuit complexity, i.e., with the starting point being \(\text {NP}\not \subseteq \text {P}/\text {Poly}\) one would aim to show non-existence of polynomially sized circuits which distinguish soundness from completeness of instances with fixed factor graphs. This difference is only technical, and in particular it is not hard to see that the analogues of all the results given in this work also hold in the alternative setting considered in [13].

The majority of strong hardness of approximation reductions, such as [8, 14], use as an intermediate step the parallel repetition [17, 18] of 3-Sat instances output by the PCP theorem [1, 2, 9]. Let us briefly describe parallel repetition as a game played between a verifier and two provers that cannot communicate with each other. We first fix the number of repetitions \(r \in {\mathbb {N}}\). Then, in each round the verifier picks r random constraints \(C_{i_1},C_{i_2},\ldots ,C_{i_r}, 1 \le i_1,\ldots ,i_r \le m,\) where \(C_{i_j}\) are constraints of the starting 3-Sat instance with m constraints. Then, the verifier sends these r constraints to the prover \(P_1\). The verifier also picks one variable from each constraint sent to \(P_1\) and sends these variables to the prover \(P_2\). The first prover responds with a satisfying assignment to the r constraints, while \(P_2\) responds with values for the r variables. The verifier accepts if the assignments of the provers \(P_1\) and \(P_2\) agree on the variables sent to \(P_2\).

The parallel repetition game is usually conceptualized by the Label Cover problem, which is defined as follows.

Definition 2.31

A Label Cover instance \(\Lambda =\left( V,W,E,[L],[R],\Pi \right) \) is a tuple in which

-

(V, W, E) is a bipartite graph with vertex sets V and W, and an edge set E.

-

[L] and [R] are alphabets, where \(L,R \in {\mathbb {N}}\).

-

\(\Pi \) is a set which consists of projection constraints \(\pi _e: [R] \rightarrow [L]\) for each edge \(e \in E \).

The value of a Label Cover instance under an assignment \(\sigma _L :V \rightarrow [L]\), \(\sigma _R :W \rightarrow [R],\) is defined as the fraction of edges \(e \in E\) that are satisfied, where an edge \(e=(v,w)\) is satisfied if \(\pi _e(\sigma _R(w)) = \sigma _L(v)\). We will write \(\text {Val}_{\sigma }(\Lambda )\) for the value of the Label Cover instance \(\Lambda \) under an assignment \(\sigma =(\sigma _L,\sigma _R)\).
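As a small illustration of this definition, the following sketch computes the value of a toy Label Cover instance. The data layout (a list of edges `(v, w)` and a dictionary of projections stored as lists) and the sample instance are assumptions made for the example only.

```python
def label_cover_value(edges, projections, sigma_L, sigma_R):
    """Fraction of edges (v, w) with pi_e(sigma_R(w)) == sigma_L(v).

    projections[(v, w)] is a list of length R whose entry at index d
    is the label in [L] that pi_e assigns to d (0-indexed).
    """
    satisfied = sum(
        1
        for (v, w) in edges
        if projections[(v, w)][sigma_R[w]] == sigma_L[v]
    )
    return satisfied / len(edges)


# Toy instance: V = {0}, W = {0, 1}, R = 4, L = 2.
edges = [(0, 0), (0, 1)]
projections = {(0, 0): [0, 0, 1, 1], (0, 1): [0, 1, 0, 1]}
good = label_cover_value(edges, projections, {0: 1}, {0: 2, 1: 3})
bad = label_cover_value(edges, projections, {0: 1}, {0: 0, 1: 3})
print(good, bad)
```

The first assignment satisfies both projection constraints, while the second one violates the constraint on the edge (0, 0).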

In this definition one can see [R] as the set of all possible satisfying assignments of the prover \(P_1\) for a question \(w \in W\). Hence, the set [R] depends not only on the factor graph of the starting 3-Sat instance but also on the predicates applied to each triplet of variables. Due to this obstacle we cannot use Label Cover as a black-box input to our problem. In particular, we need to resort to bookkeeping the types of predicates received by the prover \(P_1\). Let us now describe the approach taken in this work.

The first difference compared to the “classical” approach to parallel repetition described above comes from the fact that, as in [3], we apply parallel repetition to the Max-TSA problem, which is \((1,1-\varepsilon )\)-UFG-NP-hard [13] for some \(\varepsilon >0\). The Max-TSA problem is a CSP with predicates \(TSA_b:\left\{ 0,1\right\} ^{5}\rightarrow \left\{ 0,1\right\} , b \in \left\{ 0,1\right\} ,\) given by

\[TSA_b(x_1,\ldots ,x_5) = 1 \iff x_1 + x_2 + x_3 + x_4 \cdot x_5 = b \pmod 2.\]

The reason for using the Max-TSA problem instead of 3-Sat is technical in nature. If we use Max-TSA and identify [R] with all the possible assignments to the 5r variables received by \(P_1\), then checking whether an assignment \(\ell \in [R]\) returned by the prover satisfies all the constraints is equivalent to checking whether the r equations

\[{\mathcal {P}}_j(\ell ) = b_{i_j}, \quad j=1,\ldots ,r,\]

are true. This is achieved by setting each \({\mathcal {P}}_j\) to be the function that extracts the values of the variables appearing in the j-th constraint received by \(P_1\); if the values of these variables are \(x_1,x_2,\ldots ,x_5\), then \({\mathcal {P}}_j\) returns the value \(x_1 + x_2 + x_3 +x_4 \cdot x_5\). If we used the same setup with 3-Sat as the starting instance, the predicates \({\mathcal {P}}_j\) would also depend on negations applied to the variables, which would be an issue (see Footnote 3) for functional folding, which will be introduced in Sect. 2.3.

We can now conceptualize the game between the two provers described above with the following definition.

Definition 2.32

A UFG Label Cover instance \(\Lambda _{UFG}\) is defined as \(\Lambda _{UFG}=(\Lambda , U)\), where \(\Lambda =\left( V,W,E,[L],[R],\Pi \right) \) is a Label Cover instance, while \(U=\left( {\mathcal {P}}, \textbf{b}, I \right) \) consists of

-

\({\mathcal {P}}\), which is a set of functions \({\mathcal {P}}_1,\ldots ,{\mathcal {P}}_r :[R] \rightarrow \left\{ 0,1\right\} \),

-

\(\textbf{b} \in \left\{ 0,1\right\} ^{m}, \textbf{b}=(b_1,\ldots ,b_m)\),

-

I, which is a collection of functions \(I_w: [r] \rightarrow [m], w \in W\).

The value of a UFG Label Cover instance under an assignment \(\sigma =(\sigma _L,\sigma _R)\) is the fraction of satisfied edges of \(\Lambda \), where an edge \(e=(v,w)\) is satisfied if it satisfies the projection constraint and if furthermore \({\mathcal {P}}_i(\sigma _R(w)) = b_{I_w(i)}\) for \(i=1,\ldots ,r\).

In the definition above \(I_w\) returns the indices of the constraints received by the prover \(P_1\) when given a query w.

Since the starting Max-TSA problem is (1, 0.51)-UFG-NP-hard [3], by the parallel repetition theorem [18] we have the following lemma.

Lemma 2.33

For any \(\gamma >0\) there is a reduction from (1, 0.51)-Max-TSA to UFG Label Cover \(\Lambda _{UFG}=(\Lambda ,U)\) such that it is NP-hard to distinguish the following cases:

-

Completeness \(\text {Opt}(\Lambda _{UFG})=1\),

-

Soundness \(\text {Opt}(\Lambda _{UFG}) < \gamma \).

Furthermore, starting from two instances of Max-TSA with the same factor graphs, the reduction will produce instances \(\Lambda _{UFG},\Lambda _{UFG}'\) which differ only in their respective values of vectors \(\textbf{b},\mathbf{b'}\).

In our work, for technical reasons, we actually use smooth parallel repetition, which we describe in terms of a two-prover game as follows. In this version, apart from r we also fix \(t \in {\mathbb {N}}, 1< t <r\), and instead of picking r variables from the constraints \(C_{i_1},\ldots ,C_{i_r}\) sent to \(P_1\) and sending them to \(P_2\), the verifier selects t constraints at random and sends one variable from each of these constraints to \(P_2\). The verifier also sends the \(r-t\) remaining constraints to \(P_2\). Note that in this version we do not need to force \(P_2\) to satisfy these constraints since this is enforced by the agreement test performed by the verifier. We use smooth parallel repetition because we want to say that for any two answers given by \(P_1\) it is highly likely that \(P_2\) will have only one answer that will be accepted by the verifier. The UFG Label Cover described in this paragraph will be referred to as (r, t)-smooth UFG Label Cover.

In order to express the smoothness property of \(\Lambda _{UFG}\) we introduce the following definition.

Definition 2.34

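Consider \(\pi :[R] \rightarrow [L]\) and let \(S \subseteq [R]\). We use \({\mathcal {C}}(S,\pi )\) to denote the indicator variable which is equal to 1 if and only if there are two distinct \(i,j \in S\) such that \(\pi (i)=\pi (j)\), or formally:

\[{\mathcal {C}}(S,\pi ) = \begin{cases} 1, & \text {if there exist } i,j \in S,\ i \ne j, \text { with } \pi (i)=\pi (j),\\ 0, & \text {otherwise.} \end{cases}\]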

Now, as shown by Claim 2.20 in [3], we can assume that the UFG Label Cover instance satisfies the smoothness property defined as follows.

Lemma 2.35

Let \(0 \le t \le r, t \in {\mathbb {N}}\), consider (r, t)-smooth UFG Label Cover \(\Lambda _{UFG}\) and let \(w \in W\) be any vertex of W. If we denote with \(E_w\) the set of all edges e incident to w, then for \(S \subseteq [R]\) we have that

The difference in the lemma above and Claim 2.20 in [3] is due to the fact that we use r and t here in the role played by \((r+t)\) and r in [3], respectively. Let us also observe that choosing sufficiently large t ensures that the parallel repetition of (1, 0.51)-UFG-NP-hard Max-TSA still yields \(\Lambda _{UFG}\) instance with completeness 1 and soundness \(\gamma \). In more detail, completeness holds due to the consistency of our construction, while for the soundness we can ignore \(r-t\) constraints introduced for smoothness (observe that they can only make soundness lower), and then for every fixed \(\textbf{b}\) we are left with the basic projection game for which the parallel repetition theorem holds [18].
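The indicator from Definition 2.34 and its average over the edges at a fixed vertex can be sketched as follows. The sketch assumes that projections are stored as 0-indexed lists and that the relevant quantity is the plain average of the indicator over \(E_w\); the names `collides` and `average_collision` and the sample projections are hypothetical, and no particular bound is claimed.

```python
def collides(S, pi):
    """Indicator C(S, pi): 1 if two distinct elements of S share an image."""
    images = [pi[i] for i in S]
    return 1 if len(set(images)) < len(images) else 0


def average_collision(S, projections):
    """Average of C(S, pi_e) over the projections on the edges at one vertex."""
    return sum(collides(S, pi) for pi in projections) / len(projections)


# Three hypothetical projections [R] -> [L] with R = 4 and L = 2.
projections = [[0, 0, 1, 1], [0, 1, 0, 1], [0, 1, 1, 0]]
print(average_collision({0, 1}, projections))
print(average_collision({0, 1, 2}, projections))
```

For a two-element set only one of the three projections collides, whereas any three-element set must collide under every projection here because there are only two labels. Smoothness asks that small sets S rarely collide on a random edge.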

2.3 Functional Folding Meets Representation Theory

The next step in the “classical reduction” consists in encoding the answers of provers to a query w for \(P_1\) (or v for \(P_2\)) by a long code which can be thought of as a function \(f_w:G^{R}\rightarrow G\) for \(P_1\) (or \(f_v :G^{L} \rightarrow G\) for \(P_2\)), and using a suitable test along each edge of a Label Cover instance that depends on the hardness result we are trying to prove. For example, in the classical reduction of Håstad [14] which gives NP-hardness result of approximating Max-3-Lin over \({\mathbb {F}}_2\) (as well as Abelian groups among other things), the test is of the form

where \(\textbf{x},\textbf{y},\textbf{z}\) are uniformly random binary strings which are correlated, and the correlation depends on the constraint in the Label Cover. Without folding, the provers can answer with functions \(f_w= f_v = 0\), which will always satisfy the equations. In order to force provers to give non-trivial answers, Håstad [14] required functions \(f_w\) and \(f_v\) to satisfy

\[f_w(\textbf{x}+\textbf{1}) = f_w(\textbf{x}) + 1 \quad \text {and} \quad f_v(\textbf{y}+\textbf{1}) = f_v(\textbf{y}) + 1,\]

where \(\textbf{1}\) is the all-ones vector. This in particular forced the functions \(f_w\) and \(f_v\) to be “balanced”, i.e., they take values 0 and 1 equally often, which was enough to prove the result for Max-3-Lin over \({\mathbb {F}}_2\). In particular, the Fourier coefficient of both functions indexed by the empty set is zero, and hence the Fourier weight is concentrated on non-empty sets (see Footnote 4), which is useful to decode significant “coordinates” of the functions \(f_v\) and \(f_w\). The idea of folding was introduced by Bellare et al. [5] as “folding over 1”. The same article explains well the intuition behind using long codes and folding. The generalization to the (Abelian) group setting is also given by [14], i.e., \(f_w\) and \(f_v\) are folded by requiring them to satisfy

\[f_w(c\textbf{x}) = c f_w(\textbf{x}) \quad \text {and} \quad f_v(c\textbf{y}) = c f_v(\textbf{y}),\]

where \(c \in G\) and for \(\textbf{x}=(x_1,\ldots ,x_R)\) the action of c on \(\textbf{x}\) is performed coordinate-wise, i.e., \(c\textbf{x} = (cx_1,\ldots ,cx_R)\). Once again this makes functions \(f_w,f_v,\) “balanced”, which is enough to prove hardness, once again by noticing that Fourier coefficient on \(\emptyset \) vanishes. This definition makes sense for non-Abelian groups as well, and it was used in hardness of approximation results for Max-3-Lin over non-Abelian groups [6, 10].

In our setting, however, it is not enough to ask that the Fourier coefficient of \(f_w\) corresponding to \(\emptyset \) vanishes. In particular, even when we have a non-zero Fourier coefficient (see Footnote 5) on a set \(S \subseteq [R]\), it can happen that for every \(d \in S\) we have some constraint \({\mathcal {P}}_i(d) \ne b_i\), and hence when trying to decode the strategy of the prover \(P_1\) we do not get labels satisfying the UFG Label Cover conditions introduced in Definition 2.32. For that reason we need a stricter notion of folding, which ensures that we can always find some \(d \in S\) such that \({\mathcal {P}}_i(d) = b_i\) for every \(i \in [r]\), as shown in Lemmas 2.40 and 2.41.

Let us now introduce the notion of functional folding, which generalizes the functional folding introduced in [3] to the non-Abelian setting.

Definition 2.36

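Given a fixed collection of functions \({\mathcal {P}}_1,\ldots ,{\mathcal {P}}_r :[R] \rightarrow \left\{ 0,1\right\} \), let us introduce an equivalence relation \(\sim \) on \(G^{R}\) by

\[\textbf{x} \sim \textbf{y} \iff \text {there is } F :\left\{ 0,1\right\} ^{r} \rightarrow G \text { such that } x_d = F({\mathcal {P}}_1(d),\ldots ,{\mathcal {P}}_r(d))\, y_d \text { for every } d \in [R].\]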

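Let \(\textbf{b}\in \left\{ 0,1\right\} ^{r}, \textbf{b} = (b_1,\ldots ,b_r)\). We say that a function \(f_\textbf{b} :G^{R} \rightarrow G\) is functionally folded with respect to \({\mathcal {P}}_1,\ldots ,{\mathcal {P}}_r\) if for every \(\textbf{x} \sim \textbf{y}\) such that

\[x_d = F({\mathcal {P}}_1(d),\ldots ,{\mathcal {P}}_r(d))\, y_d, \quad d \in [R],\]

we have

\[f_\textbf{b}(\textbf{x}) = F(b_1,\ldots ,b_r)\, f_\textbf{b}(\textbf{y}). \qquad (4)\]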

For the sake of notational convenience we will usually omit the subscript \(\textbf{b}\) and also not mention that the folding is with respect to \({\mathcal {P}}_1,\ldots ,{\mathcal {P}}_r\), especially when this can be easily inferred from the context.

We obtain classical folding over \({\mathbb {F}}_2\) from this definition by restricting (see Footnote 6) F to be the constant \(-1\) function, and in the group setting we have that F takes any constant value from G. This in particular ensures that the restriction of the function (see Footnote 7) f to each equivalence class is balanced, which then implies that the whole function f is balanced. In our setting, we will use the fact that the following subsets of each equivalence class are balanced. We first fix any input, and then translate only “good coordinates” by applying any \(c \in G\) to them. This will make our function \(f_\textbf{b}\) balanced on these sets, which in turn is enough to show that “bad Fourier coefficients” vanish. For more details, we refer to the proofs of Lemmas 2.40 and 2.41.

Note that in order to construct a functionally folded function f we only need to define it on a fixed representative \(\textbf{y}\) of each equivalence class \([\textbf{y}]\). We can then extend the value of f to any \(\textbf{x} \sim \textbf{y}\) by saying that

As an immediate consequence of the definition we have the following lemma.

Lemma 2.37

If \(f :G^{R} \rightarrow G\) is functionally folded, then for any \(c \in G\) and any \(\textbf{x} \in G^{R}\) we have

\[f(c\textbf{x}) = c f(\textbf{x}).\]

Proof

By choosing \(F \equiv c\) we have that \(c\textbf{x} \sim \textbf{x}\), and since f is folded by (4) we have \(f(c\textbf{x}) = c f(\textbf{x})\), irrespective of the values of \(b_i\) since F is constant. \(\square \)

The following lemma allows us to eliminate trivial representations in the Fourier spectrum of a function \(\rho \circ f\), where f is functionally folded.

Lemma 2.38

Let \(f :G^{n} \rightarrow G\) satisfy \(f(c\textbf{x}) = cf(\textbf{x})\) for every \(c \in G\) and every \(\textbf{x} \in G^{n}\), and let \(\rho \in \text {Irrep}(G) {\setminus } \left\{ 1\right\} \). Then for \(g=[\rho \circ f]_{pq}\), where \(1 \le p,q \le \dim (\rho )\), if \(\alpha \cong 1\) we have \({\hat{g}}(\alpha )=0\). The same statement holds if we replace the condition that f satisfies \(f(c\textbf{x})=cf(\textbf{x})\) by \(f(\textbf{x}c) = f(\textbf{x}) c\) for every \(c \in G\).

Proof

where the last equality holds since \(\sum _{c \in G}\rho (c)_{pr}=0 \) by Lemma 2.10. The proof in case \(f(\textbf{x} c) = f(\textbf{x}) c\) is analogous. \(\square \)
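The vanishing sum \(\sum _{c \in G}\rho (c)_{pr}=0\) used in this proof can be checked by hand on a small example. The sketch below does so for the two-dimensional standard representation of \(S_3\), written with integer matrices in the basis \(e_0-e_2, e_1-e_2\). This basis is not unitary, which does not affect the vanishing of the sum; the helper names are hypothetical.

```python
from itertools import permutations

S3 = list(permutations(range(3)))
# Coordinates of e_i modulo e_2 in the basis (e_0 - e_2, e_1 - e_2).
COORDS = {0: (1, 0), 1: (0, 1), 2: (0, 0)}


def mul(p, q):
    """Group product: (p * q)(i) = p(q(i))."""
    return tuple(p[q[i]] for i in range(3))


def rho(p):
    """2x2 integer matrix (as rows) of p in the standard representation."""
    col0 = tuple(a - b for a, b in zip(COORDS[p[0]], COORDS[p[2]]))
    col1 = tuple(a - b for a, b in zip(COORDS[p[1]], COORDS[p[2]]))
    return ((col0[0], col1[0]), (col0[1], col1[1]))


def matmul(A, B):
    """Product of two 2x2 matrices given as tuples of rows."""
    return tuple(
        tuple(sum(A[i][k] * B[k][j] for k in range(2)) for j in range(2))
        for i in range(2)
    )


# Entry-wise sum of rho(c) over the whole group.
total = tuple(
    tuple(sum(rho(c)[i][j] for c in S3) for j in range(2)) for i in range(2)
)
print(total)
```

Every matrix entry sums to zero over the group, which is the fact that makes the Fourier coefficient at the trivial representation vanish for folded functions.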

The lemma above will be useful when deriving the result for Max-3-Lin with almost perfect completeness. For Max-3-Lin over G with perfect completeness we will actually view all representations \(\beta \in \text {Irrep}(G^{L})\) with \(\dim (\beta )=1\) as trivial, and hence we will need the following lemma.

Lemma 2.39

Let \(f :G^{n} \rightarrow G\) satisfy \(f(c\textbf{x}) = cf(\textbf{x})\) for every \(c \in G\) and every \(\textbf{x} \in G^{n}\), and let \(\rho \in \text {Irrep}(G), \dim (\rho ) \ge 2\). Then for \(g=[\rho \circ f]_{pq}\) where \(1\le p,q \le \dim (\rho ),\) and \(\alpha \in \text {Irrep}(G^{n}), \dim (\alpha )=1,\) we have that \({\hat{g}}(\alpha )=0\). The same statement holds if we replace the condition that f satisfies \(f(c\textbf{x})=cf(\textbf{x})\) by \(f(\textbf{x}c) = f(\textbf{x}) c\) for every \(c \in G\).

The statement and the proof of this lemma already appeared in [6] as Lemma 2.29, and hence we omit the details here.

Observe that the proof of Lemma 2.38 and Lemma 2.39 did not require any additional properties of functional folding, and therefore they can be applied for f that is “classically folded” as well.

The next two lemmas we prove can be thought of as strengthenings of Lemma 2.38 and Lemma 2.39, respectively, for functionally folded functions f. In particular they show that if f is functionally folded, then for \(g=[\rho \circ f]_{pq}\) and \(\alpha \in \text {Irrep}(G^{R}), \alpha =(\alpha _1,\ldots ,\alpha _R)\) such that \({\hat{g}}(\alpha )\ne 0\) we need to have a “non-trivial” \(\alpha _{\ell }\) such that \(\ell \in [R]\) satisfies all constraints \({\mathcal {P}}_i(\ell ) = b_i\), \(i=1,\ldots ,r\).

We first state and prove the lemma that will be useful for the soundness analysis of Max-3-Lin with almost perfect completeness.

Lemma 2.40

Let \(f :G^{R} \rightarrow G\) be functionally folded with respect to \({\mathcal {P}}_1,\ldots ,{\mathcal {P}}_r\), and let \(\rho \in \text {Irrep}(G)\) be a representation such that \(\rho \not \cong 1\). Given some \(1 \le p,q \le \dim (\rho )\), let \(g(\textbf{x}) = \rho (f(\textbf{x}))_{pq}\), and consider \(\alpha \in \text {Irrep}(G^{R})\), \(\alpha =(\alpha _1,\ldots ,\alpha _R)\). If for every \(d \in [R]\) such that \(\alpha _{d} \not \cong 1\) we have \(({\mathcal {P}}_1(d),\ldots ,{\mathcal {P}}_r(d)) \ne (b_1,\ldots ,b_r)\), then \({\hat{g}}(\alpha ) = 0\).

Proof

For \(c \in G \) let us define a function \(F_c :\left\{ 0,1\right\} ^{r} \rightarrow G\) by

\[F_c(y_1,\ldots ,y_r) = \begin{cases} c, & \text {if } (y_1,\ldots ,y_r) = (b_1,\ldots ,b_r),\\ 1_G, & \text {otherwise,} \end{cases}\]

and let us denote with \(\mathbf {F_c}\) the vector in \(G^{R}\) defined by \(\mathbf {F_c}_d = F_c({\mathcal {P}}_1(d),\ldots ,{\mathcal {P}}_r(d))\), \(1 \le d \le R\). Then for \(1 \le i,j \le \dim (\alpha )\) we can write

Here we used the fact that \(\{ \mathbf {F_c} \textbf{x} \}_{c \in G}\) is uniformly distributed over the coordinates d in which \(({\mathcal {P}}_1(d),\ldots ,{\mathcal {P}}_r(d)) = (b_1,\ldots ,b_r)\), and constant in the rest. Let us now fix \(\textbf{x} \in G^{R}\) and study the term \(\sum _{c \in G} g(\mathbf {F_c} \textbf{x}) \alpha (\mathbf {F_c}{} \textbf{x})_{ij}\). Denote with \(\textbf{y}^{c}:=\mathbf {F_c}{} \textbf{x}\), and observe that for each \(c \in G\) we have \(\textbf{y}^{c} \sim \textbf{x}\) since

Therefore, since f is functionally folded we have that

Let us now study the term \(\alpha (\mathbf {F_c}{} \textbf{x})_{ij}\). Using Lemma 2.18 let us represent \(\alpha \) as the tensor product of irreducible representations \(\alpha _d \in \text {Irrep}(G), d=1,\ldots ,R\), i.e., let us write \(\alpha (\textbf{x})=\alpha _1(x_1) \otimes \alpha _2(x_2) \otimes \ldots \otimes \alpha _R(x_R)\). Consider any \(d \in [R]\) such that \(\alpha _{d} \not \cong 1\). By the assumption of the lemma we have \(({\mathcal {P}}_1(d),\ldots ,{\mathcal {P}}_r(d)) \ne (b_1,\ldots ,b_r)\). But in this case \(\mathbf {F_c}_d = 1_G\), and since

\[y_d^{c} = \mathbf {F_c}_d\, x_d,\]

we have that \(x_{d}=y_{d}^c\). Since for the remaining d we have that \(\alpha _d\) are constant (i.e., \(\alpha _d \cong 1\)), we have that \(\alpha (\mathbf {F_c}{} \textbf{x})_{ij} = \alpha (\textbf{x})_{ij}\). Hence we can write

Finally, we have that

where we used the fact that \(\sum _{c \in G} \rho (c)_{pr}=0\), which holds since \(\rho \not \cong 1\) allows us to use Lemma 2.9. \(\square \)

The next lemma we prove will be useful for decoding strategies of the provers in the analysis of soundness for Max-k-Lin with perfect completeness.

Lemma 2.41

Let \(f :G^{R} \rightarrow G\) be functionally folded with respect to \({\mathcal {P}}_1,\ldots ,{\mathcal {P}}_r\), and let \(\rho \in \text {Irrep}(G)\) be a representation such that \(\dim (\rho )\ge 2\). Given some \(1 \le p,q \le \dim (\rho )\), let \(g(\textbf{x}) = \rho (f(\textbf{x}))_{pq}\), and consider \(\alpha \in \text {Irrep}(G^{R})\), \(\alpha =(\alpha _1,\ldots ,\alpha _R)\). If for each \(d \in [R]\) such that \(\dim (\alpha _d)\ge 2\) we have that \(({\mathcal {P}}_1(d),\ldots ,{\mathcal {P}}_r(d)) \ne (b_1,\ldots ,b_r)\), then \({\hat{g}}(\alpha ) = 0\).

Proof

For \(c \in G \) we let a function \(F_c :\left\{ 0,1\right\} ^{r} \rightarrow G\) and a vector \(\mathbf {F_c}\) be defined in the same way as in the proof of Lemma 2.40. For \(1 \le i,j \le \dim (\alpha )\) we can write

We now fix \(\textbf{x} \in G^{R}\) and study the term \(\sum _{c \in G} g(\mathbf {F_c} \textbf{x}) \alpha (\mathbf {F_c}{} \textbf{x})_{ij}\). Denote with \(\mathbf{y^{c}}:=\mathbf {F_c}{} \textbf{x}\), and observe that for each \(c \in G\) we have \(\mathbf{y^{c}} \sim \textbf{x}\) since

Hence, since f is functionally folded we have that

Let us now study the term \(\alpha (\mathbf {F_c}{} \textbf{x})_{ij}\). By Lemma 2.18 we can write \(\alpha (\textbf{x})\) as \(\alpha (\textbf{x})=\alpha _1(x_1) \otimes \alpha _2(x_2) \otimes \ldots \otimes \alpha _R(x_R)\). For each \(d \in [R]\) such that \(\dim (\alpha _d) \ge 2\) by the assumption of the lemma we have that \(({\mathcal {P}}_1(d),\ldots ,{\mathcal {P}}_r(d)) \ne (b_1,\ldots ,b_r)\). Hence, for such d we have that \(\mathbf {F_c}_d = 1_G\) and

\[y_d^{c} = \mathbf {F_c}_d\, x_d = x_d.\]

Therefore, in the expression

each representation \(\alpha _d\) of dimension greater than 1 satisfies \(\alpha _d(\mathbf {F_c}_dx_d)=\alpha _d(x_d)\), and in particular for each such d the value of \(\alpha _d(\mathbf {F_c}_dx_d)\) does not change with c. Hence, for a fixed \(\textbf{x}\), if we denote with J the set of all the indices \(d \in [R]\) such that \(({\mathcal {P}}_1(d),\ldots ,{\mathcal {P}}_r(d)) = (b_1,\ldots ,b_r)\), we can write

where \(C_{\alpha ,\textbf{x}} \in {\mathbb {C}}\) is a constant that depends on \(\alpha \) and \(\textbf{x}\) but does not depend on c, and \(1\le d_i,d_j \le \dim (\alpha _d)\). Observe that each \(\alpha _d\) with \(d \in J\) is one-dimensional. Hence, we can write

Finally, since the product of one-dimensional representations is a one-dimensional representation, we can define with \(\beta (c)\) the representation on G given by

Then, we have that \({\tilde{\beta }}:G \rightarrow {\mathbb {C}}\) defined by \({\tilde{\beta }}(c) = \overline{\beta (c)}\) is also a one-dimensional representation, as shown in Claim 2.25 in [6]. But then

where the last equality holds because \(\sum _{c \in G} \rho (c)_{pr} \overline{{\tilde{\beta }}(c)}= |G| \langle \rho _{pr}, {\tilde{\beta }} \rangle _{L^{2}(G)}=0\) by Lemma 2.9, since \({\tilde{\beta }}\) and \(\rho \) are of different dimensions and hence non-isomorphic. \(\square \)

3 Hardness of Max-3-Lin with Almost Perfect Completeness with Universal Factor Graphs

In this section we describe a reduction from (r, t)-smooth UFG Label Cover to instances of Max-3-Lin over a non-Abelian G with factor graph independent of \(\textbf{b}=(b_1,\ldots ,b_m)\). Let us introduce some notation first. For each \(w \in W\) let \(f_w^{F} :G^{R}\rightarrow G\) be a functionally folded function with respect to \({\mathcal {P}}_1,\ldots ,{\mathcal {P}}_r\), and for each \(v \in V\) let \(f_v^{F}:G^{L} \rightarrow G\) be “classically folded”, i.e., the function \(f_v^{F}\) satisfies

\[f_v^{F}(\textbf{y} c) = f_v^{F}(\textbf{y})\, c \quad \text {for every } c \in G \text { and } \textbf{y} \in G^{L}.\]

Finally, let \(f_w:G^{R}\rightarrow G\) be a function without any restriction. When querying the function \(f_w^{F}(\textbf{x})\) in the procedure we describe below, we do not query \(f_w^{F}(\textbf{x})\) directly. Instead, we find the equivalence class of \(\textbf{x}\) and the fixed representative \(\bar{\textbf{x}}\) of the equivalence class \([\textbf{x}]\). Since \(\textbf{x} \sim \bar{\textbf{x}}\), there is a function \(F :\left\{ 0,1\right\} ^{r} \rightarrow G\) such that \(x_d = F({\mathcal {P}}_1(d),\ldots ,{\mathcal {P}}_r(d))\, {\bar{x}}_d\) for every \(d \in [R]\). Because \(f_w^{F}\) is functionally folded we have

\[f_w^{F}(\textbf{x}) = F(b_{I_w(1)},\ldots ,b_{I_w(r)})\, f_w^{F}(\bar{\textbf{x}}),\]

and instead of \(f_w^{F}(\textbf{x})\) we actually query \(f_w^{F}(\bar{\textbf{x}})\) from the prover \(P_1\), and whenever we use \(f_w^{F}(\textbf{x})\) we actually mean \(F(b_{I_w(1)},\ldots ,b_{I_w(r)}) f_w^{F}(\bar{\textbf{x}})\). Note that \(f_w^{F}(\bar{\textbf{x}})\) does not depend on the values of \(b_{I_w(1)},\ldots ,b_{I_w(r)}\). Similarly, when querying \(f_v^{F}(\textbf{x}\cdot c)\) in the test below we use \(f_v^{F}(\textbf{x}\cdot c)\) to mean \(f_v^{F}(\bar{\textbf{x}}) c\). With this in mind, let us now describe the Max-3-Lin instance we reduce to as a distribution of constraints generated by the following algorithm.

-

Sample an edge \(e=(v,w)\) from E.

-

Sample \(\textbf{y}\) uniformly at random from \(G^{L}\).

-

Sample \(\textbf{x}\) uniformly at random from \(G^{R}\).

-

Set \(\textbf{z} \in G^{R}\) such that \(z_i = x_i^{-1} \cdot \eta _i \cdot (y \circ \pi _e)_i^{-1} \), where \(\eta _i=1\) w.p. \(1-\varepsilon \), and \(\eta _i \sim G\) w.p. \(\varepsilon \).

-

Test \(f_w^{F}(\textbf{x}) f_w(\textbf{z}) f_v^{F}(\textbf{y}) = 1_G\).
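The sampling steps above can be sketched as follows for a fixed edge, with \(G=S_3\). The sketch assumes the projection \(\pi _e\) is given as a 0-indexed list and returns the noise vector as well, purely for inspection; the names `sample_queries`, `mul` and `inv` are hypothetical, and folding is not modelled.

```python
import random
from itertools import permutations

G = list(permutations(range(3)))  # the group S_3
ONE = (0, 1, 2)                   # identity element 1_G


def mul(p, q):
    """Group product: (p * q)(i) = p(q(i))."""
    return tuple(p[q[i]] for i in range(3))


def inv(p):
    """Inverse permutation."""
    r = [0] * 3
    for i, image in enumerate(p):
        r[image] = i
    return tuple(r)


def sample_queries(pi, L, eps, rng):
    """Sample (x, y, z, eta) for one edge with projection pi: [R] -> [L]."""
    R = len(pi)
    y = [rng.choice(G) for _ in range(L)]
    x = [rng.choice(G) for _ in range(R)]
    eta = [rng.choice(G) if rng.random() < eps else ONE for _ in range(R)]
    # z_i = x_i^{-1} * eta_i * (y o pi)_i^{-1}
    z = [mul(mul(inv(x[i]), eta[i]), inv(y[pi[i]])) for i in range(R)]
    return x, y, z, eta


rng = random.Random(0)
pi = [0, 0, 1, 1]  # hypothetical projection with R = 4, L = 2
x, y, z, eta = sample_queries(pi, 2, 0.1, rng)
# For dictator functions on a coordinate d and its image pi[d], the tested
# product x_d * z_d * y_{pi(d)} collapses to the noise element eta_d.
print(all(mul(mul(x[d], z[d]), y[pi[d]]) == eta[d] for d in range(4)))
```

With dictator functions the test therefore passes exactly when the noise on the chosen coordinate is trivial, which matches the \(1-\varepsilon \) bound in the completeness analysis.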

Since \(f_v^{F}\) and \(f_w^{F}\) are folded, the test \(f_w^{F}(\textbf{x}) f_w(\textbf{z}) f_v^{F}(\textbf{y}) = 1_G\) is actually

which by rearranging gives us

Since \(f_w^{F}(\bar{\textbf{x}}),f_w(\textbf{z}),f_v^{F}(\bar{\textbf{y}})\) do not depend on the values of \(b_{I_w(1)},\ldots ,b_{I_w(r)}\) we get a Max-3-Lin instance whose factor graph does not depend on \(b_1,\ldots ,b_m\), and hence it remains to prove the following theorem.

Theorem 3.1

Let \(\varepsilon , \delta >0\), let \(\zeta = \frac{\log (2|G|^{3}/\delta )}{\varepsilon }\), and consider (r, t)-smooth UFG Label Cover \(\Lambda _{UFG}\) obtained by the parallel repetition of (1, 0.51)-UFG-NP-hard Max-TSA, where t is chosen such that \(\Lambda _{UFG}\) has soundness at most \(\frac{\delta ^{4}}{32 |G|^{12}}\zeta ^{-1}\), and r is chosen such that

If I is the instance of Max-3-Lin produced by the procedure described above with \(\Lambda _{UFG}\) as the starting point, then the following holds:

-

Completeness: If \(\text {Opt}(\Lambda _{UFG}) = 1\), then \(\text {Opt}(I) \ge 1-\varepsilon \).

-

Soundness: If \(\text {Opt}(\Lambda _{UFG}) \le \frac{\delta ^{4}}{32 |G|^{12}}\zeta ^{-1}\), then \(\text {Opt}(I) \le 1/|G| + \delta .\)

We first show completeness.

Lemma 3.2

If \(\text {Opt}(\Lambda _{UFG}) = 1\) then \(\text {Opt}(I) \ge 1-\varepsilon \).

Proof

If \(\text {Opt}(\Lambda _{UFG}) = 1\) then there is an assignment \(\sigma \) which satisfies all the constraints of \(\Lambda _{UFG}\). Let \(f_w^{F}(x_1,\ldots ,x_R)\) be defined as

\[f_w^{F}(x_1,\ldots ,x_R) = x_{\sigma (w)}.\]

Note that \(f_w^{F}\) is functionally folded. In particular, if \((x_1,\ldots ,x_R):=\textbf{x} \sim \bar{\textbf{x}} = (\overline{x_1},\ldots ,\overline{x_R})\), then by the definition there is a function \(F:\left\{ 0,1\right\} ^{r}\rightarrow G\) such that

But then we have that

Since \(\sigma \) is a satisfying assignment we have that  for every \(i \in [r]\), and therefore

for every \(i \in [r]\), and therefore

which is sufficient to conclude that \(f_w^F\) is folded.

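We also define \(f_w\) by \(f_w(z_1,\ldots ,z_R) = z_{\sigma (w)}\) and \(f_v^{F}(y_1,\ldots ,y_L) = y_{\sigma (v)}\). It is straightforward to check that \(f_v^{F}\) is “classically folded”. Hence, it remains to show that the probability that the test

\[ f_w^{F}(\textbf{x}) f_w(\textbf{z}) f_v^{F}(\textbf{y}) = 1_G \]

is passed is at least \(1-\varepsilon \). But in case \(\eta _{\sigma (w)}=1\), which happens with probability at least \(1-\varepsilon \), we have that \(z_{\sigma (w)}= x_{\sigma (w)}^{-1} y_{\sigma (v)}^{-1}\), since \(\pi _e(\sigma (w)) = \sigma (v)\), and hence

\[ f_w^{F}(\textbf{x}) f_w(\textbf{z}) f_v^{F}(\textbf{y}) = x_{\sigma (w)} \cdot x_{\sigma (w)}^{-1} y_{\sigma (v)}^{-1} \cdot y_{\sigma (v)} = 1_G, \]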

and therefore the test passes with probability at least \(1-\varepsilon \). \(\square \)
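As a sanity check of the completeness argument, the following minimal Python sketch samples the test for dictator tables over \(G = S_3\). It is an illustration under stated assumptions and not part of the reduction: the names `run_test`, `pi`, `sigma_w` and `sigma_v` are hypothetical, \(\pi _e\) is modelled as a 0-indexed list of length R with values in [L], and folding is ignored because the dictators are evaluated directly on the sampled points.

```python
import itertools
import random

# S_3 as permutations of (0, 1, 2); composition plays the role of the group product.
G = list(itertools.permutations(range(3)))
IDENTITY = (0, 1, 2)

def mul(a, b):
    """Product a*b of two permutations: apply b first, then a."""
    return tuple(a[b[i]] for i in range(3))

def inv(a):
    """Inverse permutation."""
    out = [0] * 3
    for i, ai in enumerate(a):
        out[ai] = i
    return tuple(out)

def run_test(pi, L, sigma_w, sigma_v, eps, rng):
    """Sample one query triple (x, z, y) and evaluate the dictators on it.

    Returns (passed, noise_free), where noise_free records whether the
    noise at the dictator coordinate sigma_w was the identity.
    """
    R = len(pi)
    x = [rng.choice(G) for _ in range(R)]
    y = [rng.choice(G) for _ in range(L)]
    # eta_i is the identity w.p. 1 - eps and uniform over G w.p. eps.
    eta = [rng.choice(G) if rng.random() < eps else IDENTITY for _ in range(R)]
    # z_i = x_i^{-1} * eta_i * (y o pi)_i^{-1}
    z = [mul(mul(inv(x[i]), eta[i]), inv(y[pi[i]])) for i in range(R)]
    value = mul(mul(x[sigma_w], z[sigma_w]), y[sigma_v])
    return value == IDENTITY, eta[sigma_w] == IDENTITY
```

For labels with `pi[sigma_w] == sigma_v` the product collapses to \(\eta _{\sigma (w)}\), so in this sketch the test passes exactly when the noise at the dictator coordinate is trivial, which happens with probability at least \(1-\varepsilon \).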

Let us now show the contrapositive of soundness from Theorem 3.1.

Lemma 3.3

If \(\text {Opt}(I) > \frac{1}{|G|}+\delta \) then \(\text {Opt}(\Lambda _{UFG}) > \frac{\delta ^{4}}{32 |G|^{12}}\zeta ^{-1}\).

Proof

By Lemma 2.13 the test is passed with probability

Since the probability of acceptance is at least \(1/|G|+\delta \) and \(\sum _{\rho \in \text {Irrep}(G)} \dim (\rho )^{2}=|G|\), there is at least one \(\rho \in \text {Irrep}(G) \setminus \left\{ 1\right\} \) such that

Let us fix one such \(\rho \), and expand \(\mathop {\text {E}}_{\textbf{x,y,z}} \left[ \chi _{\rho }(f_w^{F}(\textbf{x}) f_w(\textbf{z}) f_v^{F}(\textbf{y}))\right] \) as follows

Hence, for at least one triple p, q, r we have that

Let us now fix such p, q, r, and for the sake of notational convenience define

We can then write (5) as

We now study the term \(\Theta ^e:= \mathop {\text {E}}_{\textbf{x,y,z}} \left[ g_F(\textbf{z})g(\textbf{x}) h_F(\textbf{y})\right] \). Note that the functions \(h_F,g,g_F\) depend on the edge \(e=(v,w)\) as well, but since we consider e to be fixed in the next couple of paragraphs, we omit this dependence to avoid notational clutter. If we denote by \({\varvec{\eta }}=(\eta _1,\ldots ,\eta _R)\) the random variable whose coordinates \(\eta _d\) are the random variables defined at the beginning of this section, then by using the definition of \(\textbf{z}\) we have that

Now, by taking the Fourier transform of \(h_F,g,\) and \(g_F\), we can write the previous expression as

Because \(\mathop {\text {E}}_{\eta }[\alpha (\eta )] = (1-\varepsilon )^{|\alpha |} \cdot Id\), we can rewrite the expression above as

Now, we expand the expressions \(\left\langle \widehat{g_F} ( \alpha ) {\widehat{g}}(\alpha ), \alpha (\textbf{y}^{-1}\circ \pi _e) \right\rangle \) and \(\langle \widehat{h_F}(\beta ),\beta (\textbf{y}) \rangle \) and get

Consider the function \(\alpha \circ {\tilde{\pi }}_e(\textbf{y}) = \alpha (\textbf{y} \circ \pi _e)\). As discussed in Sect. 2.1, the homomorphism \(\alpha \circ {\tilde{\pi }}_e (\textbf{y})\) is a (not necessarily irreducible) representation of \(G^{L}\), and therefore \(\alpha \circ {\tilde{\pi }}_e(\textbf{y}) \cong \oplus _{s=1}^{u} \tau ^{s}(\textbf{y})\), where \(\tau ^{s}\) are irreducible representations of \(G^{L}\). Hence, if the (k, i)-th matrix entry of \(\alpha \circ {\tilde{\pi }}_e\) does not belong to any diagonal block \(\tau ^{s}\), we have that \(\alpha (\textbf{y} \circ \pi _e)_{ki}=0\). Otherwise, we can let \((\beta ',d_1,d_2) = Q(\alpha ,k,i)\) and calculate

where in the last equality we used the fact that \(\beta \) is a unitary representation. Now, by Theorem 2.9 we have that

only if \(Q(\alpha ,k,i) = (\beta ,i',j')\). In case \(Q(\alpha ,k,i)=(\beta ,i',j')\), again by Theorem 2.9, we have that

Hence, going back to (7) we can write

Applying the Cauchy-Schwarz inequality gives us

Now by Parseval’s identity we have that

where the last equality holds since \(\rho \) is unitary. Therefore, we can write

By applying the Cauchy-Schwarz inequality to the inner term we obtain

By Lemma 2.22 we can upper bound this expression as

Recall that from (6) we have that

By convexity we have that \( \mathop {\text {E}}_{e \in E}|\Theta ^e |^{2} \ge |\mathop {\text {E}}_e \Theta ^e |^{2}\), and therefore

which with the expression in (8) gives us

The contribution of the terms with \(|\alpha |>\zeta \), where \(\zeta =\frac{\log (2|G|^{3}/\delta )}{ \varepsilon }\), is small, since in that case \((1-\varepsilon )^{2|\alpha |} \le e^{-2\varepsilon \zeta } \le \frac{\delta ^{2}}{4|G|^{6}}\), and hence

Therefore we have

As in Lemma 2.35 let us use \(E_w\) to denote the set of all edges e incident to w. If we denote the inner term by \(t^e(\alpha ,\beta ):=\dim (\alpha ) \Vert \widehat{g_F}(\alpha )\Vert _{HS}^{2} \dim (\beta ) \Vert \widehat{h_F}(\beta )\Vert _{HS}^{2}\), we can write

where

and

Let us now show that the contribution of the term \(I_1\) is small due to the smoothness of \(\Lambda _{UFG}\). By unwrapping the definition of \(t^e(\alpha ,\beta )\) we have

But then by Lemma 2.35 we have that

where in the last inequality we used the fact that \(\sum _{\alpha \in \text {Irrep}(G^{R})}\dim (\alpha ) \Vert \widehat{g_F}(\alpha )\Vert _{HS}^{2} \le \Vert g_F\Vert _{L^{2}(G^{R})}^{2} \le 1 \). Finally, due to our choice of r, t we have that

and hence

Recalling our definition of \(I_2\) we can summarize our work so far by writing

Hence, by Markov’s inequality there is \(E' \subseteq E, |E'| \ge \frac{\delta ^{2}}{4 |G|^{6}} |E| \) such that for each \(e \in E'\) we have

Consider now the following randomized labelling of the starting UFG Label Cover instance. For a vertex w we pick a label \(\sigma _w\) as follows. First, we pick a representation \(\alpha \) with probability \(\dim (\alpha )\Vert \widehat{g_F}(\alpha )\Vert _{HS}^{2}\). Then, if we consider the decomposition \(\alpha =(\alpha _1,\ldots ,\alpha _R)\), we select \(\sigma _w\) uniformly at random from the set

Observe that whenever \(\widehat{g_F}(\alpha )\ne 0\) the set above is not empty by Lemma 2.40, and therefore we can always make such a choice. For a vertex v we pick a label \(\sigma _v\) in an analogous way, i.e., we first pick a representation \(\beta \) with probability \(\dim (\beta )\Vert \widehat{h_F}(\beta )\Vert _{HS}^{2}\). Then, if we consider the decomposition \(\beta =(\beta _1,\ldots ,\beta _L)\), we select \(\sigma _v\) uniformly at random from the set \(\left\{ d \in [L] \mid \beta _d \not \cong 1\right\} \). Note again that this set is not empty by Lemma 2.38. Then, by inequality (9), for each \(e \in E'\) the pair \(\alpha ,\beta \) satisfies the following properties with probability at least \(\frac{\delta ^{2}}{4 |G|^{6}}\):

- \(|\alpha | <\zeta \),

- \({\mathcal {C}}(T(\alpha ),\pi _e)=0\),

- \(T_{\pi _e}^{uq}(\alpha ) \subseteq T(\beta ) \subseteq T_{\pi _e}(\alpha )\).

Let us now condition on this event happening and calculate the probability that the agreement test is passed along the edge e. Observe that since \( T(\beta ) \subseteq T_{\pi _e}(\alpha )\) we have that \(|\beta | \le |\alpha | \le \zeta \). Furthermore, since \({\mathcal {C}}(T(\alpha ),\pi _e)=0\) we have that \(\pi _e(\sigma _w) \in T_{\pi _e}^{uq}(\alpha )\) and hence since \(T(\beta ) \supseteq T_{\pi _e }^{uq}(\alpha )\) the probability that the test is passed is at least

Hence, for \(e \in E'\) the test passes with probability at least

and since \(|E'| \ge \frac{\delta ^{2}}{4 |G|^{6}} |E|\) the randomized assignment in expectation satisfies at least

fraction of edges. But then observe that by the method of conditional expectations there is a labelling that satisfies at least a \(\frac{\delta ^{4}}{16 |G|^{12}}\zeta ^{-1}\) fraction of edges, which contradicts the assumption that \(\text {Opt}(\Lambda _{UFG}) \le \frac{\delta ^{4}}{32 |G|^{12}} \zeta ^{-1}\). Hence, the initial assumption in our argument is incorrect, and therefore \(\text {Opt}(I) \le 1/|G|+\delta \). \(\square \)
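The parameter bookkeeping in this proof can be checked numerically. The sketch below is only illustrative: the function name `soundness_parameters` is hypothetical, the logarithm in \(\zeta \) is assumed to be natural, and the conditional agreement probability is assumed to be at least \(1/\zeta \) (the bound suggested by \(|\beta | \le \zeta \)). It evaluates the tail bound on \((1-\varepsilon )^{2\zeta }\) and the product of the three factors appearing at the end of the argument, and compares the latter with the soundness threshold of \(\Lambda _{UFG}\).

```python
import math

def soundness_parameters(group_order, delta, eps):
    """Evaluate the quantities of the soundness argument for sample inputs."""
    # zeta = log(2|G|^3 / delta) / eps, with the natural logarithm (assumption).
    zeta = math.log(2 * group_order**3 / delta) / eps
    # Largest possible weight factor (1 - eps)^(2|alpha|) when |alpha| > zeta.
    tail = (1 - eps) ** (2 * zeta)
    tail_bound = delta**2 / (4 * group_order**6)
    edge_fraction = delta**2 / (4 * group_order**6)  # |E'| / |E|
    event_prob = delta**2 / (4 * group_order**6)     # (alpha, beta) has the listed properties
    agree_prob = 1 / zeta                            # assumed conditional success probability
    expected = edge_fraction * event_prob * agree_prob
    threshold = delta**4 / (32 * group_order**12) / zeta
    return zeta, tail, tail_bound, expected, threshold
```

Under these assumptions the expected fraction of satisfied edges equals \(\frac{\delta ^{4}}{16|G|^{12}}\zeta ^{-1}\), which is twice the soundness threshold; for instance, one may call `soundness_parameters(6, 0.1, 0.05)` for \(G = S_3\).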

4 Hardness of Max-3-Lin with Perfect Completeness with Universal Factor Graphs

In this section we discuss the hardness result for Max-3-Lin with perfect completeness. Since many steps in this result share a lot of similarities with the proof from the previous section and with [6], we will keep the discussion in this section much shorter and focus mostly on the new techniques specific to the reduction introduced in this section.

In order to apply the argument from [6] for high-dimensional terms we will show that we can suitably pick (r, t) such that (r, t)-smooth UFG Label Cover \(\Lambda _{UFG}\) satisfies the same smoothness property as the starting Label Cover instance in [6]. In particular, we have the following lemma.

Lemma 4.1

Consider (r, t)-smooth UFG Label Cover \(\Lambda _{UFG}\) constructed by the parallel repetition of (1, 0.51)-UFG-NP-hard Max-TSA instance. There is a constant \(d_0 \in (0,1/3)\) such that, given any fixed t, for all sufficiently big r we have that

We provide the proof of this lemma in the appendix. We remark that one can also prove this lemma by applying Lemma 6.9 from [14] to our setting, in which case the statement holds for any \(r\ge t\). However, since in this work we use parallel repetition of Max-TSA instead of Max-3-Sat, we opt to reprove this lemma for the sake of completeness. We use a less involved argument at the expense of a possibly much larger r, which increases the size of the starting \(\Lambda _{UFG}\) instance only polynomially and hence does not affect the overall argument of this section.

As in the previous section we start from (r, t)-smooth UFG Label Cover, and along each edge we test functions \(f_w^F,f_w :G^{R} \rightarrow G, f_v^{F} :G^{L} \rightarrow G\), where \(f_w^F\) and \(f_v^{F}\) are folded in the same way as previously. The reduction to a Max-3-Lin instance is given as a distribution of constraints generated by the following algorithm.

- Sample an edge \(e=(v,w)\) from E.

- Sample \(\textbf{y}\) uniformly at random from \(G^{L}\).

- Sample \(\textbf{x}\) uniformly at random from \(G^{R}\).

- Set \(\textbf{z} \in G^{R}\) such that \(z_i = x_i^{-1} \cdot (y \circ \pi _e)_i^{-1} \).

- Test \(f_w^{F}(\textbf{x}) f_w(\textbf{z}) f_v^{F}(\textbf{y}) = 1_G\).

This is essentially the same test as in [6]; the only difference comes from the fact that we are folding two instead of three functions and that we are using functional folding on one of the functions. Let us now state the main theorem of this section.

Theorem 4.2

Let \(\varepsilon , \delta >0\), and C be a constant such that \(C^{-d_0/2} \le \frac{\delta ^{2}}{12|G|^{6}}\). Consider (r, t)-smooth UFG Label Cover \(\Lambda _{UFG}\) obtained by the parallel repetition of (1, 0.51)-UFG-NP-hard Max-TSA, where t is chosen such that \(\Lambda _{UFG}\) has soundness at most \(\frac{\delta ^{4}}{257 |G|^{12+2C}}C^{-1}\), and r is chosen sufficiently big so that Lemma 4.1 holds and

If I is the instance of Max-3-Lin produced by the procedure described above with \(\Lambda _{UFG}\) as the starting point, then the following holds:

- Completeness: If \(\text {Opt}(\Lambda _{UFG}) = 1\) then \(\text {Opt}(I) = 1\).

- Soundness: If \(\text {Opt}(\Lambda _{UFG}) \le \frac{\delta ^{4}}{257 |G|^{12+2C}}C^{-1}\) then \(\text {Opt}(I) \le 1/|[G,G]| + \delta \).
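To make the soundness threshold \(1/|[G,G]|\) concrete, the following Python sketch computes the commutator subgroup of a small permutation group by brute force. It is an illustration only: the helper names are hypothetical and the approach (closing the set of commutators under multiplication) is feasible only for tiny groups.

```python
import itertools

def mul(a, b):
    """Product a*b of two permutations: apply b first, then a."""
    return tuple(a[b[i]] for i in range(len(a)))

def inv(a):
    """Inverse permutation."""
    out = [0] * len(a)
    for i, ai in enumerate(a):
        out[ai] = i
    return tuple(out)

def commutator_subgroup(group):
    """Subgroup generated by all commutators a b a^{-1} b^{-1}."""
    subgroup = {mul(mul(a, b), mul(inv(a), inv(b))) for a in group for b in group}
    # In a finite group, closing under multiplication already gives a subgroup.
    while True:
        bigger = subgroup | {mul(a, b) for a in subgroup for b in subgroup}
        if bigger == subgroup:
            return subgroup
        subgroup = bigger

S3 = list(itertools.permutations(range(3)))
derived = commutator_subgroup(S3)
print(len(S3), len(derived))  # 6 3
```

For \(G = S_3\) the commutator subgroup is \(A_3\), so the soundness in Theorem 4.2 reads \(1/3+\delta \), compared with \(1/6+\delta \) in Theorem 3.1.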

Proof

As in the proof from the previous section the completeness case follows by setting \(f_w^F, f_w\), and \(f_v^{F}\) to be the dictator functions encoding the satisfying assignment to \(\Lambda _{UFG}\).
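Since the test of this section has no noise, the completeness claim can be verified exhaustively for tiny parameters. The sketch below does this over \(G = S_3\); the function name `dictators_always_pass` and the 0-indexed list `pi` standing in for \(\pi _e\) are hypothetical, and folding is again ignored because the dictators are evaluated directly.

```python
import itertools

G = list(itertools.permutations(range(3)))  # S_3
IDENTITY = (0, 1, 2)

def mul(a, b):
    """Product a*b of two permutations: apply b first, then a."""
    return tuple(a[b[i]] for i in range(3))

def inv(a):
    """Inverse permutation."""
    out = [0] * 3
    for i, ai in enumerate(a):
        out[ai] = i
    return tuple(out)

def dictators_always_pass(pi, L, sigma_w, sigma_v):
    """Check the noiseless test on every pair (x, y) for the given dictators."""
    R = len(pi)
    for x in itertools.product(G, repeat=R):
        for y in itertools.product(G, repeat=L):
            # z_i = x_i^{-1} * (y o pi)_i^{-1}
            z = [mul(inv(x[i]), inv(y[pi[i]])) for i in range(R)]
            if mul(mul(x[sigma_w], z[sigma_w]), y[sigma_v]) != IDENTITY:
                return False
    return True
```

With `pi = [0, 1]` and `L = 2`, labels that respect the projection (for example `sigma_w = 1`, `sigma_v = 1`) pass on every input, whereas inconsistent labels (for example `sigma_w = 1`, `sigma_v = 0`) fail on some input.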

For the soundness case we closely follow the proof given in [6]. In particular, the test introduced in the beginning of this section is passed with probability

Let us argue by contradiction and assume that \(\text {Opt}(I) > 1/|[G,G]| + \delta \) which allows us to fix \(\rho \) such that \(\dim (\rho )\ge 2\) and

By replacing \(\chi _{\rho }\) with \(\text {tr}\circ \rho \) and expanding the expressions in the same way as in the proof of Theorem 3.1 we conclude that for some \(1 \le p,q,r \le \dim (\rho )\) we have that

Let us define \(g_F,g:G^{R}\rightarrow {\mathbb {C}},h_F:G^{L}\rightarrow {\mathbb {C}}\) and \(\Theta ^e\) as before, and observe that by writing these functions in their respective Fourier basis we arrive at the expression

By Lemma 2.39 we have for \(\alpha \in \text {Irrep}(G^{R})\) with \(\dim (\alpha )=1\) that \(\widehat{g_F}(\alpha )=0\), and for \(\beta \in \text {Irrep}(G^{L})\) with \(\dim (\beta )=1\) that \(\widehat{h_F}(\beta )=0\). Hence, by following the notation of [6] let us denote with \({\textbf {Term}}^e(\alpha ,\beta )\) the inner term

and write

where

In order to arrive at a contradiction to \(|\mathop {\text {E}}_{e \in E}[ \Theta ^e ]|\ge \delta / |G|^{3}\) we will show that \(\left| \mathop {\text {E}}_{ e \in E} \Theta ^{e}(\text {low})\right| < \frac{\delta }{2|G|^{3}}\) and \(\left| \mathop {\text {E}}_{e\in E} \Theta ^{e}(\text {high})\right| \le \frac{\delta }{2|G|^{3}}\). To bound \(\mathop {\text {E}}_{e \in E} \Theta ^{e}(\text {high})\), we observe that, due to our choice of the constant C, Claim 4.7 from [6] can be applied in our setting to yield

In particular, since the starting UFG Label Cover instance is smooth, one can just replace \(g,g',h\) in the proof of Claim 4.7 with \(g_F,g,h_F\) and check the correctness of all the steps in the proof for these functions.

For the low degree terms we can use an argument identical to the one in Claim 4.6 of [6] to arrive at the expression